8.2 Non-Equivalent Groups Designs

Learning objectives.

- Describe the different types of nonequivalent groups quasi-experimental designs.

- Identify some of the threats to internal validity associated with each of these designs.

Recall that when participants in a between-subjects experiment are randomly assigned to conditions, the resulting groups are likely to be quite similar. In fact, researchers consider them to be equivalent. When participants are not randomly assigned to conditions, however, the resulting groups are likely to be dissimilar in some ways. For this reason, researchers consider them to be nonequivalent. A nonequivalent groups design, then, is a between-subjects design in which participants have not been randomly assigned to conditions. There are several types of nonequivalent groups designs we will consider.

Posttest Only Nonequivalent Groups Design

The first nonequivalent groups design we will consider is the posttest only nonequivalent groups design. In this design, participants in one group are exposed to a treatment, a nonequivalent group is not exposed to the treatment, and then the two groups are compared. Imagine, for example, a researcher who wants to evaluate a new method of teaching fractions to third graders. One way would be to conduct a study with a treatment group consisting of one class of third-grade students and a control group consisting of another class of third-grade students. This design would be a nonequivalent groups design because the students are not randomly assigned to classes by the researcher, which means there could be important differences between them. For example, the parents of higher achieving or more motivated students might have been more likely to request that their children be assigned to Ms. Williams’s class. Or the principal might have assigned the “troublemakers” to Mr. Jones’s class because he is a stronger disciplinarian. Of course, the teachers’ styles and even the classroom environments might be very different and might cause different levels of achievement or motivation among the students. If at the end of the study there was a difference in the two classes’ knowledge of fractions, it might have been caused by the difference between the teaching methods, but it might also have been caused by any of these confounding variables.

Of course, researchers using a posttest only nonequivalent groups design can take steps to ensure that their groups are as similar as possible. In the present example, the researcher could try to select two classes at the same school, where the students in the two classes have similar scores on a standardized math test and the teachers are the same sex, are close in age, and have similar teaching styles. Taking such steps would increase the internal validity of the study because it would eliminate some of the most important confounding variables. But without true random assignment of the students to conditions, there remains the possibility of other important confounding variables that the researcher was not able to control.

Pretest-Posttest Nonequivalent Groups Design

Another way to improve upon the posttest only nonequivalent groups design is to add a pretest. In the pretest-posttest nonequivalent groups design, there is a treatment group that is given a pretest, receives a treatment, and then is given a posttest. But at the same time there is a nonequivalent control group that is given a pretest, does not receive the treatment, and then is given a posttest. The question, then, is not simply whether participants who receive the treatment improve, but whether they improve more than participants who do not receive the treatment.

Imagine, for example, that students in one school are given a pretest on their attitudes toward drugs, then are exposed to an anti-drug program, and finally are given a posttest. Students in a similar school are given the pretest, are not exposed to an anti-drug program, and finally are given a posttest. Again, if students in the treatment condition become more negative toward drugs, this change in attitude could be an effect of the treatment, but it could also be a matter of history or maturation. If it really is an effect of the treatment, then students in the treatment condition should become more negative than students in the control condition. But if it is a matter of history (e.g., news of a celebrity drug overdose) or maturation (e.g., improved reasoning), then students in the two conditions would be likely to show similar amounts of change. This type of design does not completely eliminate the possibility of confounding variables, however. Something could occur at one of the schools but not the other (e.g., a student drug overdose), so students at the first school would be affected by it while students at the other school would not.

Returning to the example of evaluating a new method of teaching fractions to third graders, this study could be improved by adding a pretest of students’ knowledge of fractions. The changes in scores from pretest to posttest would then be evaluated and compared across conditions to determine whether one group demonstrated a bigger improvement in knowledge of fractions than the other. Of course, the teachers’ styles and even the classroom environments might still be very different and might cause different levels of achievement or motivation among the students that are independent of the teaching intervention. Once again, differential history also represents a potential threat to internal validity. If asbestos is found in one of the schools, causing it to be shut down for a month, then this interruption in teaching could produce a difference across groups on posttest scores.
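The comparison of pretest-to-posttest changes across conditions can be sketched in a few lines of Python. This is only an illustration: the scores are made up, and the function name `mean_gain` is an assumption rather than part of any standard analysis package.

```python
from statistics import mean

def mean_gain(pretest, posttest):
    """Average pretest-to-posttest change for one group (scores paired by student)."""
    return mean(post - pre for pre, post in zip(pretest, posttest))

# Hypothetical fraction-test scores for two intact (nonequivalent) classes.
treatment_pre = [8, 10, 9, 12, 11]
treatment_post = [14, 15, 13, 17, 16]
control_pre = [9, 11, 10, 12, 8]
control_post = [11, 12, 12, 14, 10]

treatment_gain = mean_gain(treatment_pre, treatment_post)
control_gain = mean_gain(control_pre, control_post)

# The quantity of interest is how much MORE the treatment class improved.
difference_in_gains = treatment_gain - control_gain
print(treatment_gain, control_gain, difference_in_gains)
```

A positive difference in gains is consistent with a treatment effect, but it does not rule out confounds such as differential history, which would also show up as a difference in gains.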

If participants in this kind of design are randomly assigned to conditions, it becomes a true between-groups experiment rather than a quasi-experiment. In fact, it is the kind of experiment that Eysenck called for—and that has now been conducted many times—to demonstrate the effectiveness of psychotherapy.

Interrupted Time-Series Design with Nonequivalent Groups

One way to improve upon the interrupted time-series design is to add a control group. The interrupted time-series design with nonequivalent groups involves taking a set of measurements at intervals over a period of time both before and after an intervention of interest in two or more nonequivalent groups. Once again consider the manufacturing company that measures its workers’ productivity each week for a year before and after reducing work shifts from 10 hours to 8 hours. This design could be improved by locating another manufacturing company that does not plan to change its shift length and using it as a nonequivalent control group. If productivity increased rather quickly after the shortening of the work shifts in the treatment group but productivity remained consistent in the control group, then this provides better evidence for the effectiveness of the treatment.

Similarly, in the example of examining the effects of taking attendance on student absences in a research methods course, the design could be improved by using students in another section of the research methods course as a control group. If a consistently high number of absences was found in the treatment group before the intervention, followed by a sustained drop in absences after the treatment, while the nonequivalent control group showed consistently high absences across the semester, then this would provide superior evidence for the effectiveness of the treatment in reducing absences.
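As a rough illustration of this logic, the sketch below compares the change in average weekly absences before and after the intervention in each section. The weekly counts are hypothetical, and `level_change` is an illustrative helper, not an established time-series method; a real analysis would also consider trends and variability.

```python
from statistics import mean

def level_change(series, intervention_index):
    """Mean of the observations after the intervention minus the mean before it."""
    before = series[:intervention_index]
    after = series[intervention_index:]
    return mean(after) - mean(before)

# Hypothetical weekly absence counts; the instructor of the treatment section
# starts taking attendance at week index 5. The control section never does.
treatment_absences = [8, 9, 8, 10, 9, 4, 3, 4, 3, 4]
control_absences = [9, 8, 9, 9, 10, 9, 8, 10, 9, 9]

treatment_change = level_change(treatment_absences, 5)
control_change = level_change(control_absences, 5)
print(treatment_change, control_change)
```

A sustained drop in the treatment section alongside no change in the control section is the pattern described above.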

Pretest-Posttest Design With Switching Replication

Some of these nonequivalent control group designs can be further improved by adding a switching replication. In a pretest-posttest design with switching replication, nonequivalent groups are administered a pretest of the dependent variable. One group then receives a treatment while a nonequivalent control group does not, and the dependent variable is assessed again. The treatment is then added to the control group, and finally the dependent variable is assessed one last time.

As a concrete example, let’s say we wanted to introduce an exercise intervention for the treatment of depression. We recruit one group of patients experiencing depression and a nonequivalent control group of students experiencing depression. We first measure depression levels in both groups, and then we introduce the exercise intervention to the patients experiencing depression, but we hold off on introducing the treatment to the students. We then measure depression levels in both groups. If the treatment is effective we should see a reduction in the depression levels of the patients (who received the treatment) but not in the students (who have not yet received the treatment). Finally, while the group of patients continues to engage in the treatment, we would introduce the treatment to the students with depression. Now and only now should we see the students’ levels of depression decrease.

One of the strengths of this design is that it includes a built-in replication. In the example given, we would get evidence for the efficacy of the treatment in two different samples (patients and students). Another strength of this design is that it provides more control over history effects. It becomes rather unlikely that some outside event would perfectly coincide with the introduction of the treatment in the first group and with the delayed introduction of the treatment in the second group. For instance, if a change in the weather occurred when we first introduced the treatment to the patients, and this explained their reductions in depression the second time that depression was measured, then we would see depression levels decrease in both groups at the second measurement, not only in the patients. Similarly, the switching replication helps to control for maturation and instrumentation. Both groups would be expected to show the same rates of spontaneous remission of depression, and if the instrument for assessing depression happened to change at some point in the study, the change would be consistent across both of the groups. Of course, demand characteristics, placebo effects, and experimenter expectancy effects can still be problems. But they can be controlled for using some of the methods described in Chapter 5.
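The staggered pattern that a switching replication looks for can be written down as a simple check on the group means at the three measurement times. The sketch below is illustrative only: the scores are invented, and the `min_drop` threshold is an arbitrary assumption standing in for a proper statistical test.

```python
def shows_switching_replication(first_group, second_group, min_drop=2.0):
    """Check the staggered-improvement pattern across three measurements.

    Each argument is a (time1, time2, time3) tuple of mean depression scores.
    The first group is treated between time 1 and time 2; the second group
    is treated between time 2 and time 3.
    """
    first_improves_early = first_group[0] - first_group[1] >= min_drop
    second_stable_early = abs(second_group[0] - second_group[1]) < min_drop
    second_improves_late = second_group[1] - second_group[2] >= min_drop
    return first_improves_early and second_stable_early and second_improves_late

# Hypothetical means consistent with a treatment effect.
treatment_pattern = shows_switching_replication((24, 17, 16), (22, 21.5, 15))

# Hypothetical means consistent with a history effect (e.g., the weather):
# both groups drop at time 2, before the students have been treated.
history_pattern = shows_switching_replication((24, 17, 16), (22, 15, 14))
print(treatment_pattern, history_pattern)
```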

Switching Replication with Treatment Removal Design

In a basic pretest-posttest design with switching replication, the first group receives a treatment and the second group receives the same treatment a little bit later on (while the initial group continues to receive the treatment). In contrast, in a switching replication with treatment removal design, the treatment is removed from the first group when it is added to the second group. Once again, let’s assume we first measure the depression levels of patients with depression and students with depression. Then we introduce the exercise intervention to only the patients. After they have been exposed to the exercise intervention for a week we assess depression levels again in both groups. If the intervention is effective then we should see depression levels decrease in the patient group but not the student group (because the students haven’t received the treatment yet). Next, we would remove the treatment from the group of patients with depression. So we would tell them to stop exercising. At the same time, we would tell the student group to start exercising. After a week of the students exercising and the patients not exercising, we would reassess depression levels. Now if the intervention is effective we should see that the depression levels have decreased in the student group but that they have increased in the patient group (because they are no longer exercising).

Demonstrating a treatment effect in two groups staggered over time and demonstrating the reversal of the treatment effect after the treatment has been removed can provide strong evidence for the efficacy of the treatment. In addition to providing evidence for the replicability of the findings, this design can also provide evidence for whether the treatment continues to show effects after it has been withdrawn.
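One hedged way to express "whether the treatment continues to show effects after it has been withdrawn" is as the share of the initial improvement that is still present at the final measurement. The function name and the scores below are hypothetical, chosen only to illustrate the arithmetic.

```python
def retained_effect(time1, time2, time3):
    """Fraction of the initial improvement still present after treatment removal.

    time1: score before treatment, time2: score after treatment,
    time3: score after the treatment has been removed.
    Lower scores mean less depression.
    """
    drop = time1 - time2          # improvement while treated
    rebound = time3 - time2       # worsening after removal
    if drop <= 0:
        raise ValueError("no initial improvement to retain")
    return 1 - rebound / drop

# Hypothetical patient-group means: 24 before, 16 after a week of exercise,
# 22 a week after being told to stop exercising.
retained = retained_effect(24, 16, 22)
print(retained)
```

A value near 0 indicates that the effect reversed almost completely once the treatment was removed, while a value near 1 indicates that the effect persisted.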

Key Takeaways

- Quasi-experimental research involves the manipulation of an independent variable without the random assignment of participants to conditions or counterbalancing of orders of conditions.

- There are three types of quasi-experimental designs that are within-subjects in nature. These are the one-group posttest only design, the one-group pretest-posttest design, and the interrupted time-series design.

- There are five types of quasi-experimental designs that are between-subjects in nature. These are the posttest only design with nonequivalent groups, the pretest-posttest design with nonequivalent groups, the interrupted time-series design with nonequivalent groups, the pretest-posttest design with switching replication, and the switching replication with treatment removal design.

- Quasi-experimental research eliminates the directionality problem because it involves the manipulation of the independent variable. However, it does not eliminate the problem of confounding variables, because it does not involve random assignment to conditions or counterbalancing. For these reasons, quasi-experimental research is generally higher in internal validity than non-experimental studies but lower than true experiments.

- Of all of the quasi-experimental designs, those that include a switching replication are highest in internal validity.

- Practice: Imagine that two professors decide to test the effect of giving daily quizzes on student performance in a statistics course. They decide that Professor A will give quizzes but Professor B will not. They will then compare the performance of students in their two sections on a common final exam. List five other variables that might differ between the two sections that could affect the results.



Random Assignment in Experiments | Introduction & Examples

Published on March 8, 2021 by Pritha Bhandari. Revised on June 22, 2023.

In experimental research, random assignment is a way of placing participants from your sample into different treatment groups using randomization.

With simple random assignment, every member of the sample has a known or equal chance of being placed in a control group or an experimental group. Studies that use simple random assignment are also called completely randomized designs.

Random assignment is a key part of experimental design. It helps you ensure that all groups are comparable at the start of a study: any differences between them are due to random factors, not research biases like sampling bias or selection bias.


Random assignment is an important part of control in experimental research, because it helps strengthen the internal validity of an experiment and avoid biases.

In experiments, researchers manipulate an independent variable to assess its effect on a dependent variable, while controlling for other variables. To do so, they often use different levels of an independent variable for different groups of participants.

This is called a between-groups or independent measures design.

You use three groups of participants that are each given a different level of the independent variable:

- a control group that’s given a placebo (no dosage, to control for a placebo effect),

- an experimental group that’s given a low dosage,

- a second experimental group that’s given a high dosage.

Random assignment helps you make sure that the treatment groups don’t differ in systematic ways at the start of the experiment, as this can seriously affect (and even invalidate) your work.

If you don’t use random assignment, you may not be able to rule out alternative explanations for your results.

- participants recruited from cafes are placed in the control group,

- participants recruited from local community centers are placed in the low dosage experimental group,

- participants recruited from gyms are placed in the high dosage group.

With this type of assignment, it’s hard to tell whether the participant characteristics are the same across all groups at the start of the study. Gym-users may tend to engage in more healthy behaviors than people who frequent cafes or community centers, and this would introduce a healthy user bias in your study.

Although random assignment helps even out baseline differences between groups, it doesn’t always make them completely equivalent. There may still be extraneous variables that differ between groups, and there will always be some group differences that arise from chance.

Most of the time, the random variation between groups is low, and, therefore, it’s acceptable for further analysis. This is especially true when you have a large sample. In general, you should always use random assignment in experiments when it is ethically possible and makes sense for your study topic.
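To illustrate why chance differences between randomly assigned groups tend to shrink as the sample grows, here is a small simulation sketch. Everything in it is an assumption made for illustration: the baseline scores are drawn from a normal distribution with mean 100 and standard deviation 15, and `average_baseline_gap` is a made-up helper name.

```python
import random

def average_baseline_gap(sample_size, trials=200, seed=0):
    """Average absolute difference in baseline means between two
    randomly assigned groups, over many simulated studies."""
    rng = random.Random(seed)  # fixed seed only so the sketch is reproducible
    gaps = []
    for _ in range(trials):
        scores = [rng.gauss(100, 15) for _ in range(sample_size)]
        rng.shuffle(scores)  # random assignment: first half vs. second half
        half = sample_size // 2
        group_a, group_b = scores[:half], scores[half:]
        mean_a = sum(group_a) / len(group_a)
        mean_b = sum(group_b) / len(group_b)
        gaps.append(abs(mean_a - mean_b))
    return sum(gaps) / len(gaps)

small_sample_gap = average_baseline_gap(20)
large_sample_gap = average_baseline_gap(2000)
print(small_sample_gap, large_sample_gap)
```

In runs of this sketch, the average gap for the larger sample should be much smaller than for the smaller sample, although any single study can still show a chance difference.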

Receive feedback on language, structure, and formatting

Professional editors proofread and edit your paper by focusing on:

- Academic style

- Vague sentences

- Style consistency

See an example

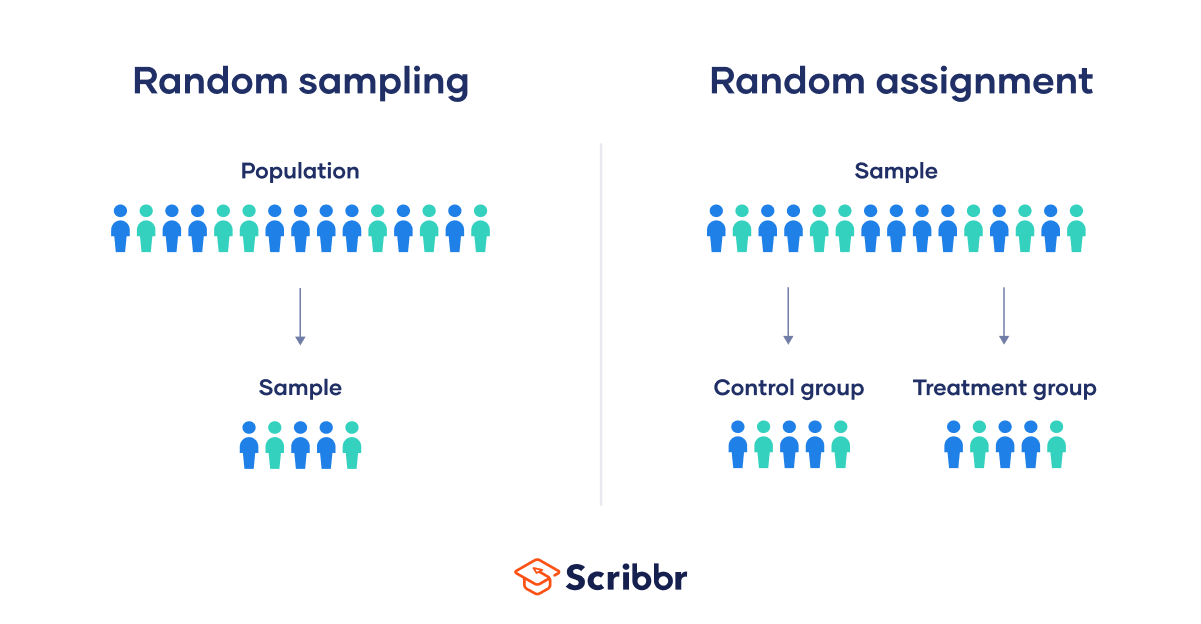

Random sampling and random assignment are both important concepts in research, but it’s important to understand the difference between them.

Random sampling (also called probability sampling or random selection) is a way of selecting members of a population to be included in your study. In contrast, random assignment is a way of sorting the sample participants into control and experimental groups.

While random sampling is used in many types of studies, random assignment is only used in between-subjects experimental designs.

Some studies use both random sampling and random assignment, while others use only one or the other.

Random sampling enhances the external validity or generalizability of your results, because it helps ensure that your sample is unbiased and representative of the whole population. This allows you to make stronger statistical inferences.

You use a simple random sample to collect data. Because you have access to the whole population (all employees), you can assign all 8000 employees a number and use a random number generator to select 300 employees. These 300 employees are your full sample.
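The sampling step described above can be sketched with Python's standard `random` module. The employee numbers 1 to 8000 and the seed value are assumptions made for illustration; the seed is fixed only so that the example is reproducible.

```python
import random

rng = random.Random(42)  # fixed seed only so the sketch is reproducible

# Every employee gets a number from 1 to 8000.
employee_ids = range(1, 8001)

# Draw 300 distinct employees; each employee is equally likely to be chosen.
sample_ids = rng.sample(employee_ids, 300)
print(len(sample_ids))
```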

Random assignment enhances the internal validity of the study, because it helps ensure that there are no systematic differences between the participants in each group. This helps you conclude that the outcomes can be attributed to the independent variable.

- a control group that receives no intervention.

- an experimental group that has a remote team-building intervention every week for a month.

You use random assignment to place participants into the control or experimental group. To do so, you take your list of participants and assign each participant a number. Again, you use a random number generator to place each participant in one of the two groups.

To use simple random assignment, you start by giving every member of the sample a unique number. Then, you can use computer programs or manual methods to randomly assign each participant to a group.

- Random number generator: Use a computer program to generate random numbers from the list for each group.

- Lottery method: Place all numbers individually in a hat or a bucket, and draw numbers at random for each group.

- Flip a coin: When you only have two groups, for each number on the list, flip a coin to decide if they’ll be in the control or the experimental group.

- Roll a die: When you have three groups, for each number on the list, roll a die to decide which of the groups they will be in. For example, assume that rolling 1 or 2 lands them in a control group; 3 or 4 in an experimental group; and 5 or 6 in a second control or experimental group.

This type of random assignment is the most powerful method of placing participants in conditions, because each individual has an equal chance of being placed in any one of your treatment groups.
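The random number generator approach can be sketched as a shuffle followed by dealing participants into groups. This is one possible implementation, not the only one: unlike a coin flip per participant, it forces the groups to be (nearly) equal in size. The function name `randomly_assign` and the group labels are illustrative assumptions.

```python
import random

def randomly_assign(participant_ids, group_names, seed=None):
    """Shuffle the participants, then deal them into groups in turn."""
    rng = random.Random(seed)
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)
    groups = {name: [] for name in group_names}
    for position, participant in enumerate(shuffled):
        group = group_names[position % len(group_names)]
        groups[group].append(participant)
    return groups

# 300 sampled participants, numbered 1 to 300, split into two groups.
assignment = randomly_assign(range(1, 301), ["control", "experimental"], seed=1)
print(len(assignment["control"]), len(assignment["experimental"]))
```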

Random assignment in block designs

In more complicated experimental designs, random assignment is only used after participants are grouped into blocks based on some characteristic (e.g., test score or demographic variable). These groupings mean that you need a larger sample to achieve high statistical power.

For example, a randomized block design involves placing participants into blocks based on a shared characteristic (e.g., college students versus graduates), and then using random assignment within each block to assign participants to every treatment condition. This helps you assess whether the characteristic affects the outcomes of your treatment.
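A randomized block assignment can be sketched as follows: group the participants by the blocking characteristic, then shuffle and deal within each block. The participant records, the `block_of` callback, and the condition labels are all hypothetical.

```python
import random
from collections import defaultdict

def block_randomize(participants, block_of, conditions, seed=None):
    """Randomly assign participants to conditions separately within each block."""
    rng = random.Random(seed)
    blocks = defaultdict(list)
    for person in participants:
        blocks[block_of(person)].append(person)
    assignment = {}
    for members in blocks.values():
        rng.shuffle(members)  # randomize order within this block only
        for position, person in enumerate(members):
            assignment[person] = conditions[position % len(conditions)]
    return assignment

# Hypothetical sample: 6 college students and 4 graduates, as (id, status) pairs.
participants = [(i, "student" if i <= 6 else "graduate") for i in range(1, 11)]
blocked = block_randomize(
    participants, lambda p: p[1], ["control", "treatment"], seed=3
)
print(blocked)
```

Because assignment happens within blocks, each condition ends up with the same mix of students and graduates.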

In an experimental matched design, you use blocking and then match up individual participants from each block based on specific characteristics. Within each matched pair or group, you randomly assign each participant to one of the conditions in the experiment and compare their outcomes.
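The within-pair randomization can be sketched as below. This is a simplified illustration under stated assumptions: matching is done on a single made-up score by pairing neighbors in rank order, there is no prior blocking step, and with an odd number of participants the last one is left unassigned.

```python
import random

def matched_pair_assignment(scores_by_id, seed=None):
    """Pair participants with adjacent scores, then randomize within each pair."""
    rng = random.Random(seed)
    ranked = sorted(scores_by_id, key=scores_by_id.get)  # ids ordered by score
    assignment = {}
    for i in range(0, len(ranked) - 1, 2):
        pair = [ranked[i], ranked[i + 1]]
        rng.shuffle(pair)  # equivalent to a coin flip for this pair
        assignment[pair[0]] = "control"
        assignment[pair[1]] = "treatment"
    return assignment

# Hypothetical matching variable (e.g., a pretest score) for six participants.
scores = {"p1": 55, "p2": 91, "p3": 58, "p4": 88, "p5": 70, "p6": 73}
matched = matched_pair_assignment(scores, seed=7)
print(matched)
```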

Sometimes, it’s not relevant or ethical to use simple random assignment, so groups are assigned in a different way.

When comparing different groups

Sometimes, differences between participants are the main focus of a study, for example, when comparing men and women or people with and without health conditions. Participants are not randomly assigned to different groups, but instead assigned based on their characteristics.

In this type of study, the characteristic of interest (e.g., gender) is an independent variable, and the groups differ based on the different levels (e.g., men, women, etc.). All participants are tested the same way, and then their group-level outcomes are compared.

When it’s not ethically permissible

When studying unhealthy or dangerous behaviors, it’s not possible to use random assignment. For example, if you’re studying heavy drinkers and social drinkers, it’s unethical to randomly assign participants to one of the two groups and ask them to drink large amounts of alcohol for your experiment.

When you can’t assign participants to groups, you can also conduct a quasi-experimental study. In a quasi-experiment, you study the outcomes of pre-existing groups who receive treatments that you may not have any control over (e.g., heavy drinkers and social drinkers). These groups aren’t randomly assigned, but may be considered comparable when some other variables (e.g., age or socioeconomic status) are controlled for.


In experimental research, random assignment is a way of placing participants from your sample into different groups using randomization. With this method, every member of the sample has a known or equal chance of being placed in a control group or an experimental group.

Random selection, or random sampling, is a way of selecting members of a population for your study’s sample.

In contrast, random assignment is a way of sorting the sample into control and experimental groups.

Random sampling enhances the external validity or generalizability of your results, while random assignment improves the internal validity of your study.

Random assignment is used in experiments with a between-groups or independent measures design. In this research design, there’s usually a control group and one or more experimental groups. Random assignment helps ensure that the groups are comparable.

In general, you should always use random assignment in this type of experimental design when it is ethically possible and makes sense for your study topic.

To implement random assignment, assign a unique number to every member of your study’s sample.

Then, you can use a random number generator or a lottery method to randomly assign each number to a control or experimental group. You can also do so manually, by flipping a coin or rolling a die to randomly assign participants to groups.


Bhandari, P. (2023, June 22). Random Assignment in Experiments | Introduction & Examples. Scribbr. Retrieved March 20, 2024, from https://www.scribbr.com/methodology/random-assignment/


Random versus nonrandom assignment in controlled experiments: do you get the same answer?

Affiliation.

- 1 Department of Psychology, University of Memphis, Tennessee 38152, USA.

- PMID: 8991316

Psychotherapy meta-analyses commonly combine results from controlled experiments that use random and nonrandom assignment without examining whether the 2 methods give the same answer. Results from this article call this practice into question. With the use of outcome studies of marital and family therapy, 64 experiments using random assignment yielded consistently higher mean post-test effects and less variable posttest effects than 36 studies using nonrandom assignment. This difference was reduced by about half by taking into account various covariates, especially pretest effect size levels and various characteristics of control groups. The importance of this finding depends on (a) whether one is discussing meta-analysis or primary experiments, (b) how precise an answer is desired, and (c) whether some adjustment to the data from studies using nonrandom assignment is possible. It is concluded that studies using nonrandom assignment may produce acceptable approximations to results from randomized experiments under some circumstances but that reliance on results from randomized experiments as the gold standard is still well founded.

Publication types

- Comparative Study

- Family Therapy

- Marital Therapy

- Meta-Analysis as Topic*

- Random Allocation*

IResearchNet

Nonexperimental Designs

The most frequently used experimental design type for research in industrial and organizational psychology and a number of allied fields is the nonexperiment. This design type differs from that of both the randomized experiment and the quasi-experiment in several important respects. Prior to describing the nonexperimental design type, we note that the article on experimental designs in this section considers basic issues associated with (a) the validity of inferences stemming from empirical research and (b) the settings within which research takes place. Thus, the same set of issues is not addressed in this entry.

Attributes of Nonexperimental Designs

Nonexperimental designs differ from both quasi-experimental designs and randomized experimental designs in several important respects. Overall, these differences lead research using nonexperimental designs to be far weaker than that using alternative designs, in terms of internal validity and several other criteria.

Measurement of Assumed Causes

In nonexperimental research, variables that are assumed causes are measured, as opposed to being manipulated. For example, a researcher interested in testing the relation between organizational commitment (an assumed cause) and worker productivity (an assumed effect) would have to measure the levels of these variables. Because commitment levels were measured rather than manipulated, the study would have little if any internal validity. Note, moreover, that the internal validity of such research would not be at all improved by a host of data analytic strategies (e.g., path analysis, structural equation modeling) that purport to allow for inferences about causal connections between and among variables (Stone-Romero, 2002; Stone-Romero & Rosopa, 2004).

Nonrandom Assignment of Participants and Absence of Conditions

In nonexperiments, there are typically no explicitly defined research conditions. For example, a researcher interested in assessing the relation between job satisfaction (an assumed cause) and organizational commitment (an assumed effect) would simply measure the level of both such variables. Because participants were not randomly assigned to conditions in which the level of job satisfaction was manipulated, the researcher would be left in the uncomfortable position of not having information about the many variables that were confounded with job satisfaction. Thus, the internal validity of the study would be a major concern. Moreover, even if the study involved the comparison of scores on one or more dependent variables across existing conditions over which the researcher had no control, the researcher would have no control over the assignment of participants to the conditions. For example, a researcher investigating the assumed effects of incentive systems on firm productivity in several manufacturing firms would have no control over the attributes of such systems. Again, this would serve to greatly diminish the internal validity of the study.

Measurement of Assumed Dependent Variables

In nonexperimental research, assumed dependent variables are measured. Note that the same is true of both randomized experiments and quasi-experiments. However, there are very important differences among the three experimental design types that warrant attention. More specifically, in the case of well-conducted randomized experiments, the researcher can be highly confident that the scores on the dependent variable(s) were a function of the study’s manipulations. Moreover, in quasi-experiments with appropriate design features, the investigator can be fairly confident that the study’s manipulations were responsible for observed differences on the dependent variable(s). However, in nonexperimental studies, the researcher is placed in the uncomfortable position of having to assume that what he or she views as dependent variables are indeed effects. Regrettably, in virtually all nonexperimental research, this assumption rests on a very shaky foundation. Thus, for example, in a study of the assumed effect of job satisfaction on intentions to quit a job, what the researcher assumes to be the effect may in fact be the cause. That is, individuals who have decided to quit for reasons that were not based on job satisfaction could, in the interest of cognitive consistency, view their jobs as not being satisfying.

Control Over Extraneous or Confounding Variables

Because nonexperimental research does not benefit from the controls (e.g., random assignment to conditions) that are common to studies using randomized experimental designs, there is relatively little potential to control extraneous variables. As a result, the results of nonexperimental research tend to have little, if any, internal validity. For instance, assume that a researcher did a nonexperimental study of the assumed causal relation between negative affectivity and job-related strain and found these variables to be positively related. It would be inappropriate to conclude that these variables were causally related. At least one important reason for this is that the measures of these constructs have common items. Thus, any detected relation between them could well be spurious (Stone-Romero, 2005).

In hopes of bolstering causal inference, researchers who do nonexperimental studies often measure variables that are assumed to be confounds and then use such procedures as hierarchical multiple regression, path analysis, and structural equation modeling to control them. Regrettably, such procedures have little potential to control confounds. There are at least four reasons for this. First, researchers are seldom aware of all of the relevant confounds. Second, even if all of them were known, it is seldom possible to measure more than a few of them in any given study and use them as controls. Third, to the degree that the measures of confounds are unreliable, procedures such as multiple regression will fail to fully control for the effects of measured confounds. Fourth, and finally, because a large number of causal models may be consistent with a given set of covariances among a set of variables, statistical procedures are incapable of providing compelling evidence about the superiority of any given model over alternative models.
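The third reason can be illustrated with a small simulation. The sketch below is an assumption-laden illustration, not an analysis from the cited literature: the variable names, the noise levels, and the hand-written two-predictor regression are all hypothetical. A confound drives both the assumed cause and the assumed effect, and there is no causal link between the two. Controlling for a perfectly measured confound removes the spurious relation, whereas controlling for an unreliable measure of it does not.

```python
import random

def partial_slope(y, x, w):
    """OLS coefficient on x when y is regressed on x and w (with intercept)."""
    n = len(y)
    my, mx, mw = sum(y) / n, sum(x) / n, sum(w) / n
    sxx = sum((a - mx) ** 2 for a in x)
    sww = sum((c - mw) ** 2 for c in w)
    sxw = sum((a - mx) * (c - mw) for a, c in zip(x, w))
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    swy = sum((c - mw) * (b - my) for c, b in zip(w, y))
    return (sxy * sww - sxw * swy) / (sxx * sww - sxw ** 2)

rng = random.Random(0)
n = 20000
confound = [rng.gauss(0, 1) for _ in range(n)]
# Assumed cause and assumed effect: each depends on the confound only.
x = [z + rng.gauss(0, 1) for z in confound]
y = [z + rng.gauss(0, 1) for z in confound]
# Hypothetical unreliable measure of the confound (reliability about 0.5).
noisy_confound = [z + rng.gauss(0, 1) for z in confound]

slope_perfect = partial_slope(y, x, confound)      # close to 0
slope_noisy = partial_slope(y, x, noisy_confound)  # clearly above 0
print(round(slope_perfect, 3), round(slope_noisy, 3))
```

Under these assumed variances the coefficient on the assumed cause is about one third in expectation when the noisy measure is used as the control, even though the true causal effect is zero.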

References:

- Cook, T. D., & Campbell, D. T. (1979). Quasi-experimentation: Design and analysis issues for field settings. Boston: Houghton Mifflin.

- Shadish, W. R., Cook, T. D., & Campbell, D. T. (2002). Experimental and quasi-experimental designs for generalized causal inference. Boston: Houghton Mifflin.

- Stone-Romero, E. F. (2002). The relative validity and usefulness of various empirical research designs. In S. G. Rogelberg (Ed.), Handbook of research methods in industrial and organizational psychology (pp. 77-98). Malden, MA: Blackwell.

- Stone-Romero, E. F. (2005). Personality-based stigmas and unfair discrimination in work organizations. In R. L. Dipboye & A. Colella (Eds.), Discrimination at work: The psychological and organizational bases (pp. 255-280). Mahwah, NJ: Lawrence Erlbaum.

- Stone-Romero, E. F., & Rosopa, P. (2004). Inference problems with hierarchical multiple regression-based tests of mediating effects. Research in Personnel and Human Resources Management, 23, 249-290.

Random Assignment in Experiments | Introduction & Examples

Published on 6 May 2022 by Pritha Bhandari. Revised on 13 February 2023.

In experimental research, random assignment is a way of placing participants from your sample into different treatment groups using randomisation.

With simple random assignment, every member of the sample has a known or equal chance of being placed in a control group or an experimental group. Studies that use simple random assignment are also called completely randomised designs.

Random assignment is a key part of experimental design. It helps you ensure that all groups are comparable at the start of a study: any differences between them are due to random factors.

Table of contents

- Why does random assignment matter?

- Random sampling vs random assignment

- How do you use random assignment?

- When is random assignment not used?

- Frequently asked questions about random assignment

Random assignment is an important part of control in experimental research, because it helps strengthen the internal validity of an experiment.

In experiments, researchers manipulate an independent variable to assess its effect on a dependent variable, while controlling for other variables. To do so, they often use different levels of an independent variable for different groups of participants.

This is called a between-groups or independent measures design.

You use three groups of participants that are each given a different level of the independent variable:

- A control group that’s given a placebo (no dosage)

- An experimental group that’s given a low dosage

- A second experimental group that’s given a high dosage

Random assignment helps you make sure that the treatment groups don’t differ in systematic or biased ways at the start of the experiment.

If you don’t use random assignment, you may not be able to rule out alternative explanations for your results.

- Participants recruited from pubs are placed in the control group

- Participants recruited from local community centres are placed in the low-dosage experimental group

- Participants recruited from gyms are placed in the high-dosage group

With this type of assignment, it’s hard to tell whether the participant characteristics are the same across all groups at the start of the study. Gym users may tend to engage in more healthy behaviours than people who frequent pubs or community centres, and this would introduce a healthy user bias in your study.

Although random assignment helps even out baseline differences between groups, it doesn’t always make them completely equivalent. There may still be extraneous variables that differ between groups, and there will always be some group differences that arise from chance.

Most of the time, the random variation between groups is low, and, therefore, it’s acceptable for further analysis. This is especially true when you have a large sample. In general, you should always use random assignment in experiments when it is ethically possible and makes sense for your study topic.

Random sampling and random assignment are both important concepts in research, but it’s important to understand the difference between them.

Random sampling (also called probability sampling or random selection) is a way of selecting members of a population to be included in your study. In contrast, random assignment is a way of sorting the sample participants into control and experimental groups.

While random sampling is used in many types of studies, random assignment is only used in between-subjects experimental designs.

Some studies use both random sampling and random assignment, while others use only one or the other.

Random sampling enhances the external validity or generalisability of your results, because it helps to ensure that your sample is unbiased and representative of the whole population. This allows you to make stronger statistical inferences.

You use a simple random sample to collect data. Because you have access to the whole population (all employees), you can assign all 8,000 employees a number and use a random number generator to select 300 employees. These 300 employees are your full sample.

Random assignment enhances the internal validity of the study, because it ensures that there are no systematic differences between the participants in each group. This helps you conclude that the outcomes can be attributed to the independent variable.

- A control group that receives no intervention

- An experimental group that has a remote team-building intervention every week for a month

You use random assignment to place participants into the control or experimental group. To do so, you take your list of participants and assign each participant a number. Again, you use a random number generator to place each participant in one of the two groups.
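The two steps in this example, random sampling followed by random assignment, can be sketched in a few lines. This is a minimal illustration under stated assumptions: the employee IDs are hypothetical placeholders, the fixed seed is there only so the allocation can be reproduced, and splitting the sample into two equal groups of 150 is a choice made for the sketch (the example itself does not specify group sizes).

```python
import random

rng = random.Random(42)  # fixed seed so the allocation can be reproduced

# Hypothetical employee IDs 1..8000 stand in for the whole population.
population = list(range(1, 8001))

# Random sampling: select 300 employees, each with the same chance of selection.
sample = rng.sample(population, 300)

# Random assignment: shuffle the sample, then split it into two groups.
shuffled = sample[:]
rng.shuffle(shuffled)
control = shuffled[:150]
experimental = shuffled[150:]

print(len(sample), len(control), len(experimental))
```

The explicit shuffle is technically redundant, because `random.sample` already returns the selection in random order, but it keeps the sampling step and the assignment step visibly separate.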

To use simple random assignment, you start by giving every member of the sample a unique number. Then, you can use computer programs or manual methods to randomly assign each participant to a group.

- Random number generator: Use a computer program to generate random numbers from the list for each group.

- Lottery method: Place all numbers individually into a hat or a bucket, and draw numbers at random for each group.

- Flip a coin: When you only have two groups, for each number on the list, flip a coin to decide if they’ll be in the control or the experimental group.

- Roll a die: When you have three groups, for each number on the list, roll a die to decide which of the groups they will be in. For example, assume that rolling 1 or 2 lands them in a control group; 3 or 4 in an experimental group; and 5 or 6 in a second control or experimental group.

This type of random assignment is the most powerful method of placing participants in conditions, because each individual has an equal chance of being placed in any one of your treatment groups.

Random assignment in block designs

In more complicated experimental designs, random assignment is only used after participants are grouped into blocks based on some characteristic (e.g., test score or demographic variable). These groupings mean that you need a larger sample to achieve high statistical power.

For example, a randomised block design involves placing participants into blocks based on a shared characteristic (e.g., college students vs graduates), and then using random assignment within each block to assign participants to every treatment condition. This helps you assess whether the characteristic affects the outcomes of your treatment.

In an experimental matched design, you use blocking and then match up individual participants from each block based on specific characteristics. Within each matched pair or group, you randomly assign each participant to one of the conditions in the experiment and compare their outcomes.
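A randomised block design of the kind described above can be sketched as follows. The participant labels, the two blocks, and the helper name `block_randomise` are hypothetical; the sketch simply shuffles participants within each block and deals them to conditions in turn, which is one straightforward way to randomise within blocks, not the only one.

```python
import random
from collections import defaultdict

def block_randomise(participants, block_of, conditions, rng):
    """Randomly assign participants to conditions separately within each block."""
    blocks = defaultdict(list)
    for person in participants:
        blocks[block_of[person]].append(person)

    assignment = {}
    for block_name in sorted(blocks):
        members = blocks[block_name]
        rng.shuffle(members)
        # Deal the shuffled members to conditions in turn so each block is balanced.
        for i, person in enumerate(members):
            assignment[person] = conditions[i % len(conditions)]
    return assignment

rng = random.Random(7)
participants = [f"P{i:02d}" for i in range(1, 13)]
# Hypothetical blocking characteristic: the first six are students, the rest graduates.
block_of = {p: ("student" if i < 6 else "graduate") for i, p in enumerate(participants)}
assignment = block_randomise(participants, block_of, ["control", "treatment"], rng)
```

Because assignment happens inside each block, both conditions contain the same number of students and the same number of graduates, so the blocking characteristic cannot differ systematically between conditions.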

Sometimes, it’s not relevant or ethical to use simple random assignment, so groups are assigned in a different way.

When comparing different groups

Sometimes, differences between participants are the main focus of a study, for example, when comparing children and adults or people with and without health conditions. Participants are not randomly assigned to different groups, but instead assigned based on their characteristics.

In this type of study, the characteristic of interest (e.g., gender) is an independent variable, and the groups differ based on the different levels (e.g., men, women). All participants are tested the same way, and then their group-level outcomes are compared.

When it’s not ethically permissible

When studying unhealthy or dangerous behaviours, it’s not possible to use random assignment. For example, if you’re studying heavy drinkers and social drinkers, it’s unethical to randomly assign participants to one of the two groups and ask them to drink large amounts of alcohol for your experiment.

When you can’t assign participants to groups, you can also conduct a quasi-experimental study. In a quasi-experiment, you study the outcomes of pre-existing groups who receive treatments that you may not have any control over (e.g., heavy drinkers and social drinkers).

These groups aren’t randomly assigned, but may be considered comparable when some other variables (e.g., age or socioeconomic status) are controlled for.

In experimental research, random assignment is a way of placing participants from your sample into different groups using randomisation. With this method, every member of the sample has a known or equal chance of being placed in a control group or an experimental group.

Random selection, or random sampling, is a way of selecting members of a population for your study’s sample.

In contrast, random assignment is a way of sorting the sample into control and experimental groups.

Random sampling enhances the external validity or generalisability of your results, while random assignment improves the internal validity of your study.

Random assignment is used in experiments with a between-groups or independent measures design. In this research design, there’s usually a control group and one or more experimental groups. Random assignment helps ensure that the groups are comparable.

In general, you should always use random assignment in this type of experimental design when it is ethically possible and makes sense for your study topic.

To implement random assignment, assign a unique number to every member of your study’s sample.

Then, you can use a random number generator or a lottery method to randomly assign each number to a control or experimental group. You can also do so manually, by flipping a coin or rolling a die to randomly assign participants to groups.

Cite this Scribbr article

Bhandari, P. (2023, February 13). Random Assignment in Experiments | Introduction & Examples. Scribbr. Retrieved 21 March 2024, from https://www.scribbr.co.uk/research-methods/random-assignment-experiments/

- Review Article

- Open access

- Published: 29 July 2021

Clinical Research

Errors in the implementation, analysis, and reporting of randomization within obesity and nutrition research: a guide to their avoidance

- Colby J. Vorland ORCID: orcid.org/0000-0003-4225-372X 1 ,

- Andrew W. Brown ORCID: orcid.org/0000-0002-1758-8205 1 ,

- John A. Dawson 2 ,

- Stephanie L. Dickinson ORCID: orcid.org/0000-0002-2998-4467 3 ,

- Lilian Golzarri-Arroyo ORCID: orcid.org/0000-0002-1221-6701 3 ,

- Bridget A. Hannon 4 ,

- Moonseong Heo ORCID: orcid.org/0000-0001-7711-1209 5 ,

- Steven B. Heymsfield ORCID: orcid.org/0000-0003-1127-9425 6 ,

- Wasantha P. Jayawardene ORCID: orcid.org/0000-0002-8798-0894 1 ,

- Chanaka N. Kahathuduwa 7 ,

- Scott W. Keith 8 ,

- J. Michael Oakes 9 ,

- Carmen D. Tekwe 3 ,

- Lehana Thabane 10 &

- David B. Allison ORCID: orcid.org/0000-0003-3566-9399 3

International Journal of Obesity volume 45, pages 2335–2346 (2021)

Randomization is an important tool used to establish causal inferences in studies designed to further our understanding of questions related to obesity and nutrition. To take advantage of the inferences afforded by randomization, scientific standards must be upheld during the planning, execution, analysis, and reporting of such studies. We discuss ten errors in randomized experiments from real-world examples from the literature and outline best practices for their avoidance. These ten errors include: representing nonrandom allocation as random, failing to adequately conceal allocation, not accounting for changing allocation ratios, replacing subjects in nonrandom ways, failing to account for non-independence, drawing inferences by comparing statistical significance from within-group comparisons instead of between-groups, pooling data and breaking the randomized design, failing to account for missing data, failing to report sufficient information to understand study methods, and failing to frame the causal question as testing the randomized assignment per se. We hope that these examples will aid researchers, reviewers, journal editors, and other readers to endeavor to a high standard of scientific rigor in randomized experiments within obesity and nutrition research.

Introduction

Randomization in scientific experiments bolsters causal inference. Determining a true causal effect would require observing the difference between two outcomes within a single unit (e.g., person, animal) in one case after exogenous manipulation (e.g., “treatment”) and in another case without the manipulation, with all else, including the time of observation, held constant [ 1 ]. However, this true causal effect would require parallel universes in which the same unit at the same time undergoes manipulation in one universe but does not in the other. In the absence of parallel universes, we can estimate average causal effects by balancing all differences between multiple units, such that one group looks as similar as possible to the other group. In practice, however, balancing all variables is likely impossible. For practical application, randomization is an alternative because the selection process is independent of the individual’s pre-randomization (observed and unobserved) characteristics that could confound the outcome, and also balances in the long run the distributions of variables that would otherwise be potential confounders, thereby providing unbiased estimation of treatment effects [ 2 ]. Randomization and exogenous treatment allow inferential statistics to create unbiased effect estimates [ 3 ]. Departures from randomization may increase uncertainty and yield bias.
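The argument in this paragraph is often written in potential-outcomes notation. The symbols below are an assumption of this sketch and do not appear in the article: Y_i(1) and Y_i(0) denote the outcomes of unit i with and without the manipulation, and T denotes the assigned group. Only one of the two outcomes can be observed for any unit, which is why the individual effect is not directly measurable; when assignment is independent of the potential outcomes, as randomization is intended to ensure, the average effect equals the difference between the group means.

```latex
\tau_i = Y_i(1) - Y_i(0), \qquad
\mathrm{ATE} = \mathbb{E}\left[ Y(1) - Y(0) \right], \qquad
T \perp\!\!\!\perp \{ Y(0), Y(1) \}
\;\Longrightarrow\;
\mathrm{ATE} = \mathbb{E}[\, Y \mid T = 1 \,] - \mathbb{E}[\, Y \mid T = 0 \,].
```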

Randomization is a seemingly simple concept: just assign people (or more generically, “units” [e.g., mice, rats, flies, classrooms, clinics, families]) randomly to one treatment or intervention versus another. The importance of randomization may have been first recognized at the end of the nineteenth century, and formalized in the 1920s [ 4 ]. Yet since its inception there have been errors in the implementation or interpretation of randomized experiments. In 1930, the Lanarkshire Milk investigation tested whether raw or pasteurized milk altered weight and height vs. a control condition in 20,000 schoolchildren [ 5 ]. After publication of the experiment, William Gosset (writing as “Student” of “Student’s t-test” fame) critiqued the study [ 6 ], noting that while there was some random selection of students, a subset of the children were selected on the basis of being either “well fed or ill nourished,” which favored more of the smaller and lighter children being selected, rather than randomized, to the milk groups. Thus, the greater growth in individuals assigned to the milk groups could have been from receiving the milk intervention, or the result of selection bias, an invalidating design flaw. Such selection violates the assumption that the intervention is independent of pre-randomization characteristics of the person being assigned.

Methodologists continue to improve our understanding of the implications of effective randomization, including random sequence generation, implementation (like allocation concealment and blinding), special randomization situations (e.g., randomizing groups of individuals), analysis (e.g., how to analyze an experiment with missing data), and reporting (e.g., how to describe the randomization procedures). Herein, we identify recent publications within obesity and nutrition literature that contain errors in these aspects (see Supplementary Table 1 for a structured list). These examples largely focus on errors arising in the context of null hypothesis significance testing; while there are misconceptions associated with the understanding of p values per se [ 7 , 8 ], it is the framework by which authors typically draw conclusions. The examples span randomized experiments and trials, without or with control groups (i.e., randomized controlled trials [RCTs]). We use these examples to discuss how errors can bias study findings and fail to meet best practices for performing and reporting randomized studies. We clarify that the examples represent a convenience sample, and we make no claims about the frequency of these errors other than that they are frequent enough to have caught our attention. Our categories of errors are neither exhaustive nor in any rank order of severity. Furthermore, we make no assumptions about the circumstances that led to the errors. Rather, we share these examples in the spirit of Gosset who wrote in 1931 on the Lanarkshire Milk experiment, “…but what follows is written not so much in criticism of what was done…as in the hope that in any further work full advantage may be taken of the light which may be thrown on the best methods of arrangement by the defects as well as by the merits” [ 6 ].

Errors in implementing group allocation

1. Error: representing nonrandom allocation methods as random

Description

Participants are allocated into treatment groups by use of methods that are not random, but the study is labeled as randomized.

Explanation

Allocation refers to the assignment of subjects into experimental groups. The use of random methods gives each study participant a known probability of being assigned to any experimental group. When any nonrandom allocation is used, studies should not be labeled as randomized.

Authors of studies published in a sample of Chinese journals that were labeled as randomized were interviewed about their methods, and in only ~7% was randomization determined to be properly implemented [ 9 ]. Improperly labeling studies as randomized is not uncommon in both human and animal research on topics of nutrition and obesity, and can occur in different ways.

In one instance, a vitamin D supplementation trial used a nonrandomized convenience sample from a different hospital as a control group, yet labeled the trial as randomized [ 10 ]. In a reply [ 11 ], the authors suggested that no selection bias occurred during the allocation because they detected no significant differences between groups on measured covariates. However, this assumption is unjustified because (a) unobserved or mismeasured covariates can potentially introduce bias, or measurement of a covariate may be imperfect, (b) the inferential validity of randomization rests on the assumption that the distributions of all pre-randomization variables are the same in the long run across levels of the treatment groups, not that the distributions are the same across groups in any one sample, and (c) concluding that groups are identical at baseline because no significant differences were detected entails fallaciously “accepting the null.” Regardless of the lack of observed statistical differences between groups, treatment allocation was not randomized and should not be labeled as such.

In another example, researchers first allocated all participants to the intervention to ensure a sufficient sample size and then randomized future participants [ 12 ]. This violates the assumption that every subject has some probability of being assigned to every group [ 13 ]; the participants first allocated had no probability of being in the control group. In addition, those in the initial allocation wave may have had different characteristics from those with later enrollment.

If units are not all concurrently randomized (e.g., one group is enrolled at a different time), there is also a time-associated confound [ 14 ]. This is exemplified by a study of the effects of a nutraceutical formulation on hair growth that was labeled as randomized [ 15 ]. Participants were randomized to one of two treatment groups, and then each group underwent placebo and treatment sequentially (essentially a pretest-posttest design). The sequential order suggested a hair growth-by-time confound, with hair growth differing by season [ 16 ].

Nonrandom allocation can leave a signature in baseline between-group differences. With randomization, on average, the p values of baseline group comparisons will be uniform for independent measurements. While there are limitations to applying this principle broadly to assessing literature [ 17 , 18 , 19 ], in some cases it has proved useful as a prompt for more information about how and whether randomization was actually employed. An analysis by Carlisle of baseline p value distributions in over 5000 trials flagged apparent deviations from this expectation [ 20 ], suggesting that many studies labeled as randomized may not be. One trial flagged [ 21 ] was the Primary Prevention of Cardiovascular Disease with a Mediterranean Diet (PREDIMED) trial, which highlighted the significant impact of advice to consume a Mediterranean-style diet coupled with additional intake of extra-virgin olive oil or mixed nuts on risk for cardiovascular disease, compared with advice to consume a low-fat diet [ 22 ]. An audit by the PREDIMED authors discovered that members of some of the households were nonrandomly assigned to the same group as the randomized member. Furthermore, one intervention site switched from individuals to clinics as the randomization unit [ 23 ] (see section 5, “Error: failing to account for non-independence” for discussion of non-independence). Thus, the original analysis at the individual level was inappropriate for these participants because some did not have a known probability of being assigned to one of the treatment groups or the control. A retraction and reanalysis did not change the main results or conclusions [ 23 ], although causal language in the article was tempered. Conclusions from secondary analyses were affected, however, such as the 5-year change in body weight and waist circumference, which changed statistical significance for the olive oil group [ 24 ]. 
Use of statistical principles to examine the likelihood that randomization was properly implemented has flagged other studies related to nutrition and obesity, too [ 25 , 26 , 27 , 28 ]. In at least four cases, publications were retracted [ 22 , 26 , 29 , 30 ].
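The expectation that baseline p values are uniform under randomization can be checked with a small simulation. The sketch below rests on several assumptions that are not from the article: a single baseline variable with a known standard deviation of 1, a z-test in place of the tests used in real trials, and a hypothetical haphazard allocation rule in which units that appear lighter tend to end up in the first group. If p values are uniform, about 5% of baseline comparisons should fall below 0.05; a much larger share hints that allocation depended on baseline characteristics.

```python
import random
from statistics import NormalDist, mean

def baseline_p_value(group_a, group_b, sd=1.0):
    """Two-sided z-test p value for a difference in baseline means (SD assumed known)."""
    se = sd * (1 / len(group_a) + 1 / len(group_b)) ** 0.5
    z = (mean(group_a) - mean(group_b)) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))

def share_significant(n_trials, n_per_group, randomized, rng):
    """Fraction of simulated trials whose baseline comparison has p < 0.05."""
    hits = 0
    for _ in range(n_trials):
        baseline = [rng.gauss(0, 1) for _ in range(2 * n_per_group)]
        if randomized:
            rng.shuffle(baseline)  # allocation ignores the baseline value
        else:
            # Hypothetical haphazard rule: order units by baseline plus a noisy
            # judgement, so lighter-looking units tend to land in the first group.
            baseline.sort(key=lambda value: value + rng.gauss(0, 3))
        group_a, group_b = baseline[:n_per_group], baseline[n_per_group:]
        if baseline_p_value(group_a, group_b) < 0.05:
            hits += 1
    return hits / n_trials

rng = random.Random(2021)
random_share = share_significant(2000, 50, True, rng)
nonrandom_share = share_significant(2000, 50, False, rng)
print(random_share, nonrandom_share)
```

As the text cautions, this kind of check is a prompt for further questions rather than proof: correlated baseline variables, rounding, and small samples can all distort the distribution of p values in real reports.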

Best practices

Where randomization is impossible, methods should be clearly stated so that there is no conflation of nonrandomized with randomized experiments. Investigators should establish procedures a priori to monitor how randomization is implemented. Furthermore, although a given randomized sample may not appear balanced on all measurable baseline variables, by definition those imbalances have occurred by chance. Altering the allocation process to enforce balance with the use of nonrandom methods may introduce bias. Importantly, use of nonrandom methods may warrant changing how study results are communicated. At a practical level, most methodologists and statisticians would agree that if an RCT is properly randomized, it is reasonable to make causal claims about intervention assignment and outcomes. Whereas the purpose of most research is to seek causal effects [ 31 ], errors discussed herein break randomization, and thereby introduce additional concerns that must be satisfied to increase the confidence in unbiased estimates. While a nuanced discussion of the use of causal language is outside the scope of this review, from a purist perspective, the description of relationships as causal from nonrandom methods is inappropriate [ 32 ].

Where important pre-randomization factors are identified that could influence results if they are imbalanced (such as animal body weight), forms of restricted randomization exist to maintain the benefits of randomization with control over such factors, instead of using haphazard methods that may introduce bias. These include blocking and stratification [ 33 , 34 ], which necessitate additional consideration at the analysis stage beyond a simple randomization scheme (see section 5, “Error: failing to account for non-independence”).

2. Error: failing to adequately conceal allocation from investigators

The assigned condition is inadequately concealed from the investigators who assign treatments and from the participants who receive them.

Allocation concealment, when implemented properly, prevents researchers from foreknowing the allocation of the next participant. It also prevents participants from foreknowing their assignment; participants who know it in advance may choose to drop out if they will not receive a preferred treatment. Thus, concealment prevents selection bias and confounding [ 35 , 36 , 37 ]. Whereas randomization is a method to create unbiased estimates of effect, allocation concealment is necessary to remove the human element of decisions (whether conscious or unconscious) when participants are assigned to groups, and both are important for a rigorous trial. When concealment is broken, sample estimates can become biased in different ways.

Even with the use of random allocation methods, the failure to conceal allocation means that the researchers, and sometimes participants, will know upcoming assignments. The audit of PREDIMED, as discussed in section 1, “Error: representing nonrandom allocation methods as random,” also clarified that allocation was not concealed [ 23 ], despite using computer-generated randomization tables. In the case of the Lanarkshire study as described above [ 5 , 6 ], the failure to conceal allocation led to conscious bias in how schoolchildren were assigned to the interventions. In other cases, researchers may unconsciously bias allocations if they have any involvement in the allocation. For example, if the researcher who is doing the allocation is using a physical method of randomization such as rolling a die or flipping a coin in the presence of the subject, their perception of how the die or coin is rolled or flipped, or how it falls, leaves room to redo it in ways that may select for certain subjects being allocated to particular assignments.

Nonrandom allocation also may make concealment impossible; examples and explanations are presented in Table 1 .

Appropriate concealment strategies may vary by study, but it is ideal that concealment be implemented. The random generation and storage of allocation codes is essential to allocation concealment, using generic numerals or letters unknown to the investigator. Electronic generation and storage of allocations in a protected centralized database is sometimes preferred [ 33 , 38 ] to opaque sealed envelopes [ 39 , 40 ], which are not completely immune to breach and can bias the results if poorly carried out or intentionally compromised [ 41 , 42 , 43 ]. Furthermore, if feasible, real-time generation may be favored over pre-generated allocations [ 44 ]. Regardless of physical or electronic concealment, the allocation codes and other important information about the assignment scheme, such as block size in permuted block randomization [ 45 ], should remain concealed from all research staff and participants. Initial allocation concealment can still be implemented and would improve the rigor of trials even if blinding (i.e., preventing post-randomization knowledge of group assignments) throughout the trial cannot be maintained.

3. Error: not accounting for changes in allocation ratios

The allocation ratio or number of treatment groups is changed partway through a study, but the change is not accounted for in the statistical analysis.

Over the course of a study, researchers may intentionally change treatment group allocations, such as adding, dropping, or combining treatment arms, for various reasons. When researchers change allocation ratios mid-study, this must be taken into account during statistical analysis [ 46 ]. Allocation ratios also change in “adaptive trials,” which have specific methods and concerns beyond what we can cover here (see [ 47 ] for more information).

A study evaluating effects of weight loss on telomere length performed one phase by randomizing participants to three treatment groups (in-person counseling, telephone counseling, and usual care) with 1:1:1 allocation. After no significant difference was found between in-person and telephone counseling, participants in the next phase of the study were randomized with 1:1 allocation into a combined intervention of in-person and telephone counseling or usual care [ 48 ]. In addition to the authors’ choice of analyzing interim results before starting another phase (which risks increasing false-positive findings and should be accounted for in statistical analysis [ 49 ]), the analysis combined these two phases, effectively analyzing 2:1 and 1:1 allocations together [ 50 ]. Another study of low-calorie sweeteners and sucrose and weight-related outcomes [ 51 ] started by randomly allocating participants evenly to five treatment groups with 1:1:1:1:1 allocation, but changed to 2:1:1:1:1 midway through after one group had a higher attrition rate. Neither of these two studies reported accounting for these different phases of study in the statistical analysis. Using different allocation ratios for different groups can bias study results [ 46 , 50 ]. This is because differences may exist between the different periods of recruitment in participant characteristics, such as baseline BMI [ 46 , 50 ]. Thus, when the wave of participants allocated at the 2:1 ratio is pooled with the wave allocated at the 1:1 ratio, baseline differences between the waves would exaggerate the between-group differences if the data are analyzed as though all participants were allocated at the same time.

When allocation ratios change within studies or between randomized experiments that are pooled, caution should be used in combining data. Changes in allocation ratios must be properly taken into account in statistical analysis (see section 7, “Error: improper pooling of data”).
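
The bias from naive pooling can be illustrated with simple arithmetic. The following Python sketch uses made-up numbers (not data from the studies cited above): two recruitment phases with different allocation ratios and different mean outcomes, and no treatment effect within either phase. The function names and phase values are hypothetical and chosen only for illustration.

```python
# Hypothetical recruitment phases. Within each phase the treated and control
# means are identical, so the true treatment effect is zero by construction.
PHASES = [
    {"n_t": 200, "mean_t": 10.0, "n_c": 100, "mean_c": 10.0},  # 2:1 allocation
    {"n_t": 100, "mean_t": 20.0, "n_c": 100, "mean_c": 20.0},  # 1:1 allocation
]


def pooled_difference(phases):
    """Treated-minus-control difference in means, ignoring phase."""
    n_t = sum(p["n_t"] for p in phases)
    n_c = sum(p["n_c"] for p in phases)
    mean_t = sum(p["n_t"] * p["mean_t"] for p in phases) / n_t
    mean_c = sum(p["n_c"] * p["mean_c"] for p in phases) / n_c
    return mean_t - mean_c


def stratified_difference(phases):
    """Within-phase differences, averaged with weights equal to phase size."""
    total = sum(p["n_t"] + p["n_c"] for p in phases)
    weighted = sum(
        (p["n_t"] + p["n_c"]) * (p["mean_t"] - p["mean_c"]) for p in phases
    )
    return weighted / total


if __name__ == "__main__":
    print("pooled:    ", pooled_difference(PHASES))
    print("stratified:", stratified_difference(PHASES))
```

With these numbers the pooled comparison shows a difference of about -1.67 even though neither phase has any treatment effect, whereas the phase-stratified comparison returns 0. Weighting by phase size is only one of several reasonable choices; the point is that phase enters the analysis.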

4. Error: replacements are not randomly selected

Participants who drop out are replaced in ways that are nonrandom, for instance, by allocating individuals to a single treatment that experienced a high percentage of participant dropout.

Nonrandom replacement of dropouts is another example of changing allocation ratios. Dropout is common in real-world studies and often leads to missing data, bias, and potentially a loss of power. A meta-analysis of pharmaceutical trials for obesity estimated an average 1-year dropout rate of 37% [ 52 ]. Similarly, a secondary analysis of a diet intervention estimated that the probability of completing the trial was only 60% after just 12 weeks [ 53 ]. Analytical approaches like intention-to-treat (ITT) analysis and imputation of data (described in the Errors in analysis section below) may obviate the need to consider replacing subjects after the initial randomization [ 52 , 54 ]. Yet replacement is sometimes observed in the literature, and failing to use random methods when replacing subjects introduces another source of potential bias.

In a properly implemented simple RCT, every subject will have the same a priori probability of belonging to any group as any other subject. When a subject who has dropped out is replaced with the next person enrolled instead of by using randomization for assignment, the new participant did not have the same chances as the other subjects in the study of being allocated to that group. This corrupts the process of randomization, potentially introducing bias, and compromises causal inference. Furthermore, allocating participants this way makes allocation concealment impossible.

It is vital to account for dropout in the calculation of sample size and allocation ratios when designing the study. Nevertheless, if dropout was not accounted for a priori, one option is to enroll as many new participants as there were dropouts and to randomly assign each new participant to groups using the same allocation ratios as in the original randomization [ 55 ]. Note that if dropout is higher in a particular group and only completers are analyzed, this may result in an imbalance in the final sample group allocation, but this is not an issue if the ITT principle is adhered to (see section 8, “Error: failing to account for missing data”).
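
As a minimal sketch of the option just described, the following Python function assigns replacement participants at random using the original allocation ratios, regardless of which arm the dropouts came from. The function name, arm labels, and seed are hypothetical; it uses simple (unrestricted) randomization and does not reproduce any blocking or stratification a real trial might have used.

```python
import random


def assign_replacements(n_dropouts, arms, ratios, seed=None):
    """Randomly assign one replacement per dropout.

    arms   -- list of arm labels, e.g. ["A", "B", "C"]
    ratios -- original allocation ratios, e.g. [1, 1, 1] or [2, 1]
    seed   -- optional seed so the allocation list can be reproduced and audited

    Each replacement is drawn independently with probability proportional to
    the original ratios. It is NOT simply placed in the arm that lost the
    participant.
    """
    if len(arms) != len(ratios):
        raise ValueError("arms and ratios must have the same length")
    rng = random.Random(seed)
    return rng.choices(arms, weights=ratios, k=n_dropouts)


if __name__ == "__main__":
    # Hypothetical example: 6 dropouts in a three-arm trial with 1:1:1 allocation.
    print(assign_replacements(6, ["in_person", "telephone", "usual_care"], [1, 1, 1], seed=2024))
```

In practice the allocation list would be generated and held by someone independent of enrollment so that allocation concealment is preserved.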

Often, studies do not specify the methods used to replace subjects and use nondescript sentences similar to “subjects who dropped out were replaced” [ 56 , 57 , 58 , 59 ]. As discussed in regard to a trial on green tea ointment and pain and wound healing [ 60 ], such vagueness might suggest introduction of bias and lead to questionable conclusions.

Although replacing subjects may indeed help with the problem of power, the consequences can be detrimental if not properly implemented. Therefore, the decision to replace participants should be thoroughly considered, preplanned if at all possible, and performed by using correct methods, if found to be necessary.

Errors in the analysis of randomized experiments

5. Error: failing to account for non-independence

Groups of subjects (e.g., classrooms, schools, cages of animals) are randomly assigned to experimental conditions together but the data are analyzed as if they were randomized individually. Or, measures are treated as independent when individually randomized subjects have repeated within-subject measures or are treated in groups.

The use of cluster randomized trial (cRCT) designs is increasing in nutrition and obesity studies, particularly for the study of school-based interventions, and in contexts where participants are exposed to the other group(s) and as such there is a lack of independence. Similarly, animals are commonly housed together (e.g., in cages, tanks) or grouped by litter. If investigators randomize all animals to treatments by groups instead of individually, this correlation must be addressed in the analysis, but is often unrecognized or ignored. These concerns also exist in cell culture experiments, for example, if treatments are randomized to an entire plate instead of individual wells. In cluster designs, the unit of randomization is the cluster, and not the individual. A frequent error in such interventions is to power and analyze the study at the individual (e.g., person, animal) level instead of the cluster level. Failing to account for within-cluster correlation (often measured by the intraclass correlation coefficient) and cluster-level impacts during study planning leads to an overestimation of statistical power [ 61 ] and typically leads to p values and associated confidence intervals that are artificially small [ 62 , 63 ].
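
A common approximation for how much within-cluster correlation inflates variance is the design effect, 1 + (m - 1) × ICC, for clusters of equal size m. The Python sketch below applies that approximation to show how quickly the effective sample size shrinks. It assumes equal cluster sizes, and the function names and example numbers are illustrative rather than taken from the studies cited here.

```python
def design_effect(cluster_size, icc):
    """Variance inflation factor for equal-sized clusters: 1 + (m - 1) * ICC."""
    if cluster_size < 1:
        raise ValueError("cluster_size must be at least 1")
    if not 0.0 <= icc <= 1.0:
        raise ValueError("icc must be between 0 and 1")
    return 1.0 + (cluster_size - 1) * icc


def effective_sample_size(n_clusters, cluster_size, icc):
    """Number of independent observations the clustered sample is worth."""
    n_total = n_clusters * cluster_size
    return n_total / design_effect(cluster_size, icc)


if __name__ == "__main__":
    # Hypothetical trial: 20 classrooms of 25 students, ICC = 0.05.
    print(design_effect(25, 0.05))              # about 2.2
    print(effective_sample_size(20, 25, 0.05))  # about 227 of 500 students
```

Under these assumptions, 500 students in 20 classrooms carry roughly the information of 227 independent students, so a power calculation based on 500 would overstate power. When the ICC is 1, the effective sample size equals the number of clusters.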

If cRCTs are implemented incorrectly to start, valid inferential analysis for treatment effects is not possible without untestable assumptions [ 61 ]. For instance, randomly assigning one school to an intervention and one to a control yields no degrees of freedom, akin to randomizing one individual to treatment and one to control and treating multiple measurements on each of the two individuals as though those measurements were independent [ 61 ].

Studies that randomize at the individual level may also have correlated observations that should be considered in the analysis, and so it is important to identify potential sources of clustering. For example, outcome measures may be correlated when animals are individually randomized but then group housed for treatment. Likewise, participants individually randomized may be treated in group sessions (such as classes related to the intervention), or may be grouped together within surgeons who do not operate equally in all study arms. These types of scenarios require consideration in statistical analysis [ 64 ]. When repeated measurements are taken on subjects, the analysis must similarly account for within-subject correlation. Taking multiple measurements within individuals (e.g., measuring eyesight in the left and right eye or longitudinal data within person over time) and treating them as independent will lead to invalid inferences [ 64 ].

A distinct issue exists when using forms of restricted randomization (e.g., stratification, blocking, minimization) that are employed to have tighter control over particular factors of interest. In such situations, it is important to include the factors on which randomization restrictions occur as covariates in the statistical model to account for the added correlation between groups [ 65 , 66 ]. Not doing so can result in p values and associated confidence intervals that are artificially large, and in reduced statistical power. On the other hand, given that one is likely employing restricted randomization because of a small number of units of randomization, losing even a few “denominator” degrees of freedom due to the inclusion of additional covariates in the model may also adversely affect power [ 67 , 68 ].

Failing to account for clustering is one of the most pervasive errors in nutrition and obesity studies that we observe [ 6 , 61 , 69 , 70 , 71 , 72 , 73 , 74 , 75 , 76 , 77 , 78 , 79 ]. A review of school-based randomized trials with weight-related outcomes found that only 21.5% of studies used intracluster correlation coefficients in their power analysis, and only 68.6% applied multilevel models to account for clustering [ 80 ]. In the most severe cases that we observe, a failure to appropriately focus on the cluster as the unit of randomization invalidates any hope of deriving causal inferences [ 70 , 75 , 81 ]. For additional discussion of errors in implementation and reporting in cRCTs, see ref. [ 61 ].

In an example of clustering within participants, a study of vitamin E on diabetic neuropathy randomized participants to the intervention or placebo, but for outcomes related to nerve conduction, the authors conducted measurements in limbs, stating that “left and right sides were treated independently” [ 82 ]. Because these measures were taken within the same participants, within-subject correlations must be taken into account in statistical analyses. Treating non-independent measurements as independent in statistical analysis is sometimes called “pseudoreplication” and is also a common error in animal and cell culture experiments [ 83 ].
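
One simple way to avoid treating within-participant measurements as independent is to collapse them to a single summary value per participant before comparing groups. The Python sketch below does this for hypothetical limb-level records; the function name, record layout, and values are assumptions for illustration and are not the analysis used in the cited study. Mixed-effects models are a more flexible alternative.

```python
from collections import defaultdict
from statistics import mean


def per_participant_means(records):
    """Collapse repeated measures to one mean per participant.

    records -- iterable of (participant_id, arm, value) tuples, where a
               participant may contribute several values (e.g. left and right limb).
    Returns a list of (participant_id, arm, mean_value), one row per participant,
    so that the unit of analysis matches the unit of randomization.
    """
    values = defaultdict(list)
    arm_of = {}
    for participant_id, arm, value in records:
        values[participant_id].append(value)
        arm_of[participant_id] = arm
    return [(pid, arm_of[pid], mean(vals)) for pid, vals in values.items()]


if __name__ == "__main__":
    # Hypothetical nerve conduction values: two limbs per participant.
    limb_records = [
        ("p1", "treatment", 40.0), ("p1", "treatment", 44.0),
        ("p2", "placebo", 38.0), ("p2", "placebo", 39.0),
    ]
    for row in per_participant_means(limb_records):
        print(row)
```

The four limb-level rows become two participant-level rows, which is the sample size the between-group test should use.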

When planning cRCTs, it is critical to perform a power calculation that incorporates the number of clusters in the design [ 61 ]. Moreover, analyses of such designs, as well as individually randomized designs, need to include the correlations from clustering for proper treatment inferences, just as repeated measurements of outcomes within subjects must be treated as non-independent.

6. Error: basing conclusions on within-group statistical tests instead of between-groups tests

Experimental groups are analyzed separately for significant differences in the change from baseline and a difference is concluded if one is significant and the other(s) not, instead of comparing directly between groups.

The probative comparison for RCTs is between groups. Sometimes, however, researchers use pre-post within-group tests and draw conclusions based on whether the within-group significance is different, for example, significant in one group but not the other (the so-called “Difference in Nominal Significance” or DINS error [ 84 ]). Using these within-group tests to imply differences between groups inflates the false-positive rate from the nominal 5% to as much as 50% for equal group sizes (and higher for unequal groups) [ 85 ] and is therefore invalid.
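
One way to see where an error rate this large can come from: if the two groups are independent and there is no true between-group difference, the DINS rule declares a difference whenever exactly one within-group test is significant. With rejection probabilities p_a and p_b for the two within-group tests, that happens with probability p_a(1 - p_b) + p_b(1 - p_a). The Python sketch below evaluates this expression; it assumes independent groups and takes the within-group rejection probabilities as given inputs, and it is an illustration rather than the derivation used in [ 85 ].

```python
def dins_false_positive_rate(p_a, p_b=None):
    """Probability that exactly one of two independent within-group tests is significant.

    p_a, p_b -- probability that the within-group (pre-post) test rejects in
                group A and group B. With equal group sizes and the same true
                within-group change, p_b equals p_a (the default).
    """
    if p_b is None:
        p_b = p_a
    for p in (p_a, p_b):
        if not 0.0 <= p <= 1.0:
            raise ValueError("probabilities must be between 0 and 1")
    return p_a * (1.0 - p_b) + p_b * (1.0 - p_a)


if __name__ == "__main__":
    # No within-group change at all: each test rejects 5% of the time.
    print(dins_false_positive_rate(0.05))      # about 0.095
    # Both groups change equally and each test has 50% power.
    print(dins_false_positive_rate(0.5))       # 0.5
    # Unequal group sizes give unequal power for the same true change.
    print(dins_false_positive_rate(0.9, 0.1))  # about 0.82
```

For equal rejection probabilities the expression is 2p(1 - p), which peaks at 0.5 when p = 0.5. When group sizes differ, the two tests have different power for the same true change and the rate can exceed 0.5.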

The DINS error was identified in an RCT testing isomaltulose vs. sucrose in the context of effects of an energy-reduced diet on weight and fat mass, where some conclusions, such as the outcome of fat mass, were drawn from within-group comparisons but the between-group comparison was not statistically different [ 86 ]. We observe this error frequently in nutrition and obesity research [ 87 , 88 , 89 , 90 , 91 , 92 , 93 , 94 , 95 , 96 , 97 , 98 , 99 , 100 , 101 , 102 , 103 ]. Sometimes using this logic still reaches the correct conclusions (i.e., the between-group and within-group comparisons are both statistically significant or not), but often it does not, and therefore it is an unreliable approach for inferences.

For proper analysis of RCTs, within-group testing should not be represented as the comparison of interest [ 71 , 84 , 85 , 87 , 102 ]. Journal editors, reviewers, and readers should request that conclusions be drawn from between-group comparisons.

7. Error: improper pooling of data

Data for a single RCT are pooled without maintaining the randomized design, or data from multiple RCTs are pooled (i.e., meta-analysis) without accounting for study in statistical analysis.

Data for statistical analysis can be pooled either within one or multiple RCTs, but errors can arise when the random elements of assignment are disregarded. Pooling within one study refers to the process of combining data across different groups, subgroups, or sites to include in a single analysis. When a single RCT is performed across multiple sites or subgroups and the same allocation ratio is not used across all sites or subgroups, or the randomization allocation to study arms changes during the course of an RCT, these different sites, subgroups, or phases of the study need to be taken into account during data analysis. This is because assignment probability is confounded with subset. If data are pooled simply with no account for subsets, any differences between subsets can bias effect estimation [ 50 ].