- 02 October 2018

Supercharge your data wrangling with a graphics card

- David Matthews

David Matthews is a freelance writer based in Berlin.

Illustration by The Project Twins

Evan Schneider, an astrophysicist at Princeton University in New Jersey, spent her doctorate learning to harness a chip that’s causing a quiet revolution in scientific data processing: the graphics processing unit (GPU).

Over the past decade or so, GPUs have been challenging the conventional workhorse of computing, the central processing unit (CPU), for dominance in computationally intensive work. As a result, chips that were designed to make video games look better are now being deployed to power everything from virtual reality to self-driving cars and cryptocurrencies.

Of the 100 most powerful supercomputing clusters in the world, 20 now incorporate GPUs from NVIDIA, one of the leading chipmakers. That includes the world’s fastest computer, the Summit cluster at the US Department of Energy’s Oak Ridge National Laboratory in Tennessee, which boasts more than 27,000 GPUs.

For Schneider, running astrophysical models on GPUs “has opened up a new area of my work that just wouldn’t have been accessible without doing this”. By rewriting her code to run on GPU-based supercomputers rather than CPU-focused ones, she has been able to simulate regions of the Galaxy in ten times as much detail, she says. As a result of the increase in resolution, she explains, the entire model now works differently — for example, giving new insights into how gas behaves on the outskirts of galaxies.

Put simply, GPUs can perform vastly more calculations simultaneously than CPUs, although these tasks have to be comparatively basic — for example, working out which colour each pixel should display during a video game. By comparison, CPUs can bring more power to bear on each task, but have to work through them one by one. GPUs can therefore drastically speed up scientific models that can be divvied up into lots of identical tasks, an approach known as parallel processing.
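As a rough illustration of "lots of identical tasks", the sketch below applies one small function to every pixel of a tiny image. It is plain Python rather than GPU code, and the `shade` function and the 4-by-3 image size are hypothetical. The point is only that no pixel's result depends on any other, which is what would let a GPU compute them all at once.

```python
# Every pixel is coloured by the same small function, independently of the rest.
WIDTH, HEIGHT = 4, 3  # hypothetical tiny image


def shade(x, y):
    """Return an (r, g, b) colour for one pixel: a simple two-axis gradient."""
    r = 255 * x // (WIDTH - 1)
    g = 255 * y // (HEIGHT - 1)
    return (r, g, 128)


# On a CPU this runs one pixel after another. On a GPU, each (x, y) pair
# could be handed to its own thread, because the calls share no state.
image = [[shade(x, y) for x in range(WIDTH)] for y in range(HEIGHT)]
```

Because `shade` reads nothing but its own coordinates, the order in which pixels are computed does not matter.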

There are no hard rules about which types of calculation GPUs can accelerate, but proponents agree they work best when applied to problems that involve lots of things — atoms, for example — that can be modelled simultaneously. The technique has become particularly prevalent in fields such as molecular dynamics, astrophysics and machine learning.

Schneider, for example, models galaxies by splitting them up into millions of discrete areas and then dividing the work of simulating each of them between a GPU’s many cores, the units that actually execute calculations. Whereas CPUs normally have at most tens of cores — the number has increased as they themselves have become more parallel — GPUs can have thousands. The difference, she explains, is that whereas each CPU core can work autonomously on a different task, GPU cores are like a workforce that has to carry out similar processes. The result can be a massive acceleration in scientific computing, making previously intractable problems solvable. But to achieve those benefits, researchers will probably need to invest in some hardware or cloud computing — not to mention re-engineering their software.
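The idea of splitting a model into discrete areas can be sketched with a toy one-dimensional grid. This is not Schneider's code: the `diffuse_step` function, the smoothing rule and the constant `k` are assumptions chosen for illustration. Each cell's new value is computed only from the previous state of itself and its two neighbours, so every cell could be updated by a separate core in the same step.

```python
def diffuse_step(old, k=0.25):
    """Advance a 1D grid by one step; every cell reads only the old grid."""
    new = list(old)  # the two boundary cells keep their values
    for i in range(1, len(old) - 1):
        # Independent of all other iterations: only `old` is read.
        new[i] = old[i] + k * (old[i - 1] - 2 * old[i] + old[i + 1])
    return new


grid = [0.0, 0.0, 1.0, 0.0, 0.0]
grid = diffuse_step(grid)
```

Writing results into a separate `new` list, rather than updating `old` in place, is what keeps the iterations independent of one another.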

A bit at a time

Fundamentally, parallelizing work for GPUs means breaking it up into little pieces and farming those pieces out to individual cores, where they are run simultaneously. There are several ways scientists can get started. One option, Schneider says, is to attend a parallel-processing workshop, where “you’ll spend literally a day or two with people who know how to program with GPUs, implementing the simplest solutions”.
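The pattern of breaking work into pieces and farming them out can be sketched as follows. This is a minimal illustration using Python's standard thread pool, not GPU code; `simulate_piece` is a hypothetical stand-in for real work, and Python threads will not actually speed up arithmetic like this. Only the decomposition is the point.

```python
from concurrent.futures import ThreadPoolExecutor


def simulate_piece(piece):
    """Stand-in for the real work done on one piece (hypothetical)."""
    return [value * value for value in piece]


def split(data, n_pieces):
    """Break data into at most n_pieces contiguous pieces of similar size."""
    size = -(-len(data) // n_pieces)  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


data = list(range(10))
pieces = split(data, 4)

# Hand each piece to a worker; pool.map returns results in input order.
with ThreadPoolExecutor(max_workers=4) as pool:
    results = list(pool.map(simulate_piece, pieces))

# Stitch the per-piece results back together.
combined = [value for piece in results for value in piece]
```

The same three stages (split, process each piece independently, recombine) are what a GPU port has to express, with thousands of cores in place of four workers.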

OpenACC, for instance, is a programming model that allows scientists to take code written for CPUs and parallelize some processes; this allows researchers to get a feel for whether their code will run significantly faster on GPUs, Schneider says. The OpenACC community runs regular workshops and group programming events called hackathons to get scientists started on porting their code to GPUs. When contemplating whether to experiment with GPUs, “the first thing to think about is: ‘Is there a piece of my problem where everything could be done in parallel?’”, says Schneider.

The next step is to rewrite code specifically to take advantage of GPUs. To speed up his code, Philipp Germann, a systems biologist at the European Molecular Biology Laboratory in Barcelona, Spain, learnt CUDA, an NVIDIA-created parallel processing architecture that includes a language similar to C++ that is specifically designed for GPUs. “I’m definitely not a particularly experienced programmer,” he admits, but says the tool took only around two weeks to learn.

One cell-modelling program that Germann and his colleagues created proved to be two to three orders of magnitude faster on GPUs than were equivalent CPU-based programs. “We can really simulate each cell — when will it divide, how will it move, how will it signal to others,” Germann says. “Before, that was simply not possible.”

CUDA works exclusively on NVIDIA chips; an alternative tool, the open-source OpenCL, works on any GPU, including those from rival chipmaker AMD. Matthew Liska, an astrophysicist at the University of Amsterdam, prefers CUDA for its user-friendliness. Liska wrote GPU-accelerated code simulating black holes as part of a research project (M. Liska et al. Mon. Not. R. Astron. Soc. Lett. 474, L81–L85; 2018); the GPUs accelerated that code by at least an order of magnitude, he says. Other scientists who spoke to Nature also said CUDA was easier to use, with plenty of code libraries and support available.

You might need to block off a month or two to focus on the conversion, advises Alexander Tchekhovskoy, an astrophysicist at Northwestern University in Evanston, Illinois, who was also involved in Liska’s project. Relatively simple code can work with little modification on GPUs, says Marco Nobile, a high-performance-computing specialist at the University of Milan Bicocca in Italy who has co-authored an overview of GPUs in bioinformatics, computational biology and systems biology (M. S. Nobile et al. Brief. Bioinform. 18, 870–885; 2017). But, he warns, for an extreme performance boost, users might need to rewrite their algorithms, optimize data structures and remove conditional branches (places where the code can follow multiple possible paths, complicating parallelism). “Sometimes, you need months to really squeeze out performance.”
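One common way to remove a conditional branch, offered here as an illustration rather than as the specific technique Nobile has in mind, is to compute both outcomes and blend them with a 0-or-1 mask. The two functions below are hypothetical and return the same values; the second follows a single sequence of operations for every input, which suits cores that must execute in lockstep.

```python
def update_branchy(x):
    """Different inputs follow different code paths."""
    if x > 0:
        return 2 * x
    else:
        return -x


def update_branch_free(x):
    """Every input follows the same path: compute both results, then select."""
    positive = int(x > 0)  # 1 if x > 0, otherwise 0
    return positive * (2 * x) + (1 - positive) * (-x)


values = [-3, -1, 0, 2, 5]
branchy = [update_branchy(v) for v in values]
branch_free = [update_branch_free(v) for v in values]
```

The branch-free version does more arithmetic per element, so it pays off only when divergent paths would otherwise stall the cores that share an instruction stream.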

And sometimes, the effort simply isn’t worth it. Dagmar Iber, a computational biologist at the Swiss Federal Institute of Technology in Zurich, says that her group considered using GPUs to process light-sheet microscopy data. In the end, the researchers managed to get acceptable results using CPUs, and decided not to explore GPU acceleration because it would have meant making too many adaptations. And not all approaches will work, either: one of Tchekhovskoy’s attempts yielded no speed improvement whatsoever over a CPU. “You can invest a lot of time, and you might not get much return on your investment,” he says.

More often than not, however, that’s not the case; he says that in the field of fluid dynamics, researchers typically have seen speed-ups “from a factor of two to an order of magnitude”.

Cloud processing

As for the hardware itself, all computers need a CPU, which acts as the computer’s brain, but not all come with a dedicated GPU. Some instead integrate their graphics processing with the CPU or motherboard, although a separate GPU can normally be added. To add multiple GPUs, users might need a new motherboard with extra slots, says Liska, as well as a more robust power supply.

One way to test out parallel processing without having to actually buy new hardware is to rent GPU power from a cloud-computing provider such as Amazon Web Services, says Tim Lanfear, director of solution architecture and engineering for NVIDIA in Europe, the Middle East and Africa. (See, for example, this computational notebook, which exploits GPUs in the Google cloud: go.nature.com/2ngfst8.) But cloud computing can be expensive; if a researcher finds they need to use a GPU constantly, he says, “you’re better off buying your own than renting one from Amazon”.

Lanfear suggests experimenting with parallel processing on a cheaper GPU aimed at gamers and then deploying code on a more professional chip. A top-of-the-range gaming GPU can cost US$1,200, whereas NVIDIA’s Tesla GPUs, designed for high-performance computing, have prices in the multiple thousands of dollars. Apple computers do not officially support current NVIDIA GPUs, only those from AMD.
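The rent-or-buy trade-off can be framed as a break-even calculation. In the sketch below, the US$1,200 purchase price comes from the article, but the hourly cloud rate is a made-up placeholder, not a quoted price, and electricity, hosting and resale value are ignored.

```python
GPU_PRICE_USD = 1200.0          # top-of-the-range gaming GPU (from the article)
CLOUD_RATE_USD_PER_HOUR = 0.90  # hypothetical rental rate, not a real quote


def break_even_hours(purchase_price, hourly_rate):
    """Hours of rented GPU time that cost as much as buying the card."""
    return purchase_price / hourly_rate


hours = break_even_hours(GPU_PRICE_USD, CLOUD_RATE_USD_PER_HOUR)
days_of_constant_use = hours / 24
```

Under these assumed numbers, a GPU kept busy around the clock would pay for itself in under two months, whereas occasional experiments would take far longer to reach that point.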

Despite the growth of GPU computing, the technology’s progress through different scientific fields has been patchy. It has reached maturity in molecular dynamics, according to Lanfear, and taken off in machine learning. That’s because the algorithm implemented by a neural network “can be expressed as solving a large set of equations”, which suits parallel processing. “Whenever you’ve got lots of something, a GPU is typically good. So, lots of equations, lots of data, lots of atoms in your molecules,” he says. The technology has also been used to interpret seismic data, because GPUs can model millions of sections of Earth independently to see how they interact with their neighbours.

But as a practical matter, harnessing that power can be tricky. In astrophysics, Schneider estimates that perhaps 1 in 20 colleagues she meets have become adopters, held back by the effort it takes to rewrite code.

Germann echoes that sentiment. “More and more people are recognizing the potential,” he says. But, “I think still quite a few labs are scared to some degree” because “it has the reputation of being hard to program”.

Nature 562, 151–152 (2018)

doi: https://doi.org/10.1038/d41586-018-06870-8

Latest NVIDIA Graphics Research Advances Generative AI’s Next Frontier

NVIDIA today introduced a wave of cutting-edge AI research that will enable developers and artists to bring their ideas to life — whether still or moving, in 2D or 3D, hyperrealistic or fantastical.

Around 20 NVIDIA Research papers advancing generative AI and neural graphics, including collaborations with over a dozen universities in the U.S., Europe and Israel, are headed to SIGGRAPH 2023, the premier computer graphics conference, taking place Aug. 6-10 in Los Angeles.

The papers include generative AI models that turn text into personalized images; inverse rendering tools that transform still images into 3D objects; neural physics models that use AI to simulate complex 3D elements with stunning realism; and neural rendering models that unlock new capabilities for generating real-time, AI-powered visual details.

Innovations by NVIDIA researchers are regularly shared with developers on GitHub and incorporated into products, including the NVIDIA Omniverse platform for building and operating metaverse applications and NVIDIA Picasso, a recently announced foundry for custom generative AI models for visual design. Years of NVIDIA graphics research helped bring film-style rendering to games, like the recently released Cyberpunk 2077 Ray Tracing: Overdrive Mode, the world’s first path-traced AAA title.

The research advancements presented this year at SIGGRAPH will help developers and enterprises rapidly generate synthetic data to populate virtual worlds for robotics and autonomous vehicle training. They’ll also enable creators in art, architecture, graphic design, game development and film to more quickly produce high-quality visuals for storyboarding, previsualization and even production.

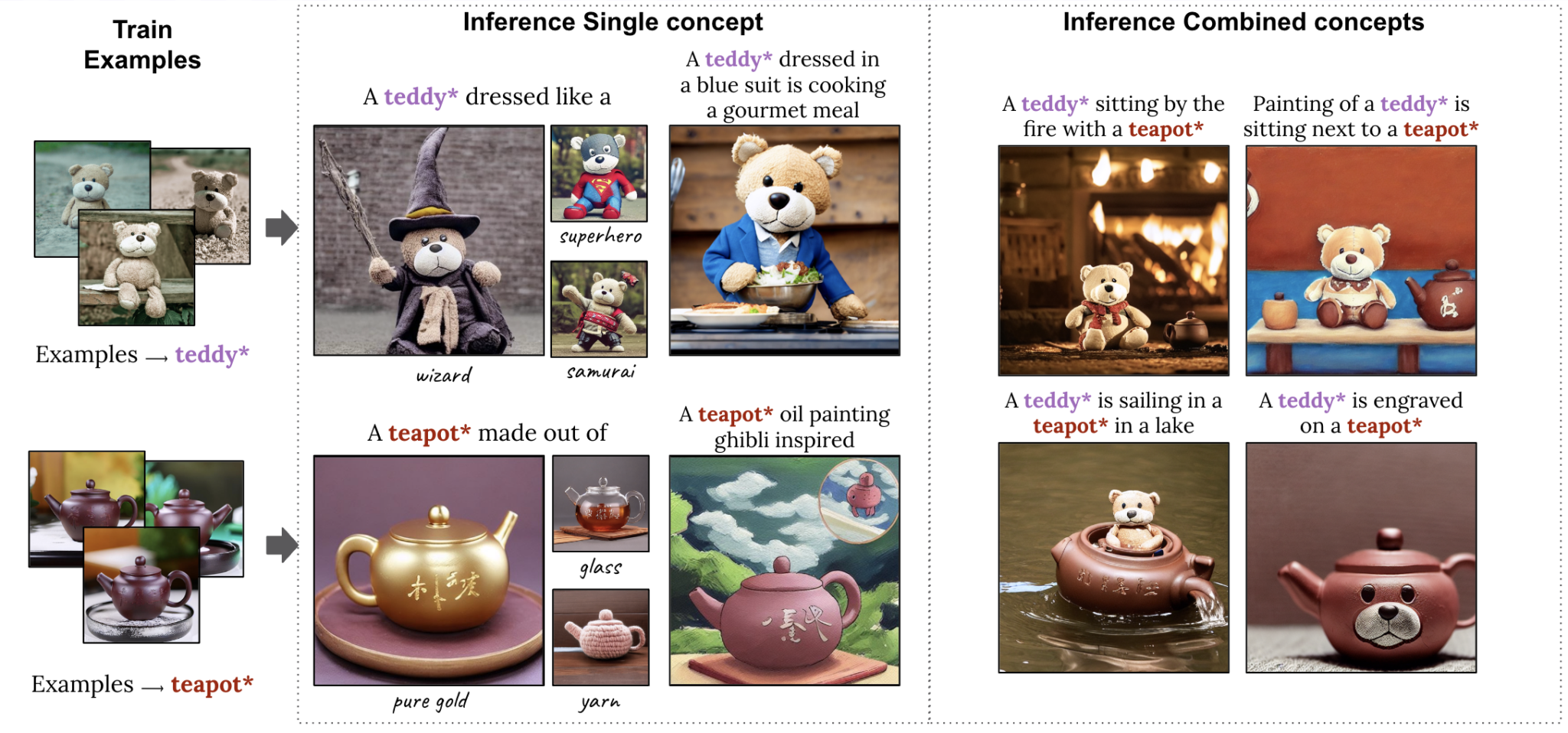

AI With a Personal Touch: Customized Text-to-Image Models

Generative AI models that transform text into images are powerful tools to create concept art or storyboards for films, video games and 3D virtual worlds. Text-to-image AI tools can turn a prompt like “children’s toys” into nearly infinite visuals a creator can use for inspiration — generating images of stuffed animals, blocks or puzzles.

However, artists may have a particular subject in mind. A creative director for a toy brand, for example, could be planning an ad campaign around a new teddy bear and want to visualize the toy in different situations, such as a teddy bear tea party. To enable this level of specificity in the output of a generative AI model, researchers from Tel Aviv University and NVIDIA have two SIGGRAPH papers that enable users to provide image examples that the model quickly learns from.

One paper describes a technique that needs a single example image to customize its output, accelerating the personalization process from minutes to roughly 11 seconds on a single NVIDIA A100 Tensor Core GPU, more than 60x faster than previous personalization approaches.

A second paper introduces a highly compact model called Perfusion, which takes a handful of concept images to allow users to combine multiple personalized elements, such as a specific teddy bear and teapot, into a single AI-generated visual.

Serving in 3D: Advances in Inverse Rendering and Character Creation

Once a creator comes up with concept art for a virtual world, the next step is to render the environment and populate it with 3D objects and characters. NVIDIA Research is inventing AI techniques to accelerate this time-consuming process by automatically transforming 2D images and videos into 3D representations that creators can import into graphics applications for further editing.

A third paper created with researchers at the University of California, San Diego, discusses tech that can generate and render a photorealistic 3D head-and-shoulders model based on a single 2D portrait — a major breakthrough that makes 3D avatar creation and 3D video conferencing accessible with AI. The method runs in real time on a consumer desktop, and can generate a photorealistic or stylized 3D telepresence using only conventional webcams or smartphone cameras.

A fourth project, a collaboration with Stanford University, brings lifelike motion to 3D characters. The researchers created an AI system that can learn a range of tennis skills from 2D video recordings of real tennis matches and apply this motion to 3D characters. The simulated tennis players can accurately hit the ball to target positions on a virtual court, and even play extended rallies with other characters.

Beyond the test case of tennis, this SIGGRAPH paper addresses the difficult challenge of producing 3D characters that can perform diverse skills with realistic movement — without the use of expensive motion-capture data.

Not a Hair Out of Place: Neural Physics Enables Realistic Simulations

Once a 3D character is generated, artists can layer in realistic details such as hair — a complex, computationally expensive challenge for animators.

Humans have an average of 100,000 hairs on their heads, with each reacting dynamically to an individual’s motion and the surrounding environment. Traditionally, creators have used physics formulas to calculate hair movement, simplifying or approximating its motion based on the resources available. That’s why virtual characters in a big-budget film sport much more detailed heads of hair than real-time video game avatars.

A fifth paper showcases a method that can simulate tens of thousands of hairs in high resolution and in real time using neural physics, an AI technique that teaches a neural network to predict how an object would move in the real world.

The team’s novel approach for accurate simulation of full-scale hair is specifically optimized for modern GPUs. It offers significant performance leaps compared to state-of-the-art, CPU-based solvers, reducing simulation times from multiple days to merely hours — while also boosting the quality of hair simulations possible in real time. This technique finally enables both accurate and interactive physically based hair grooming.

Neural Rendering Brings Film-Quality Detail to Real-Time Graphics

After an environment is filled with animated 3D objects and characters, real-time rendering simulates the physics of light reflecting through the virtual scene. Recent NVIDIA research shows how AI models for textures, materials and volumes can deliver film-quality, photorealistic visuals in real time for video games and digital twins.

NVIDIA invented programmable shading over two decades ago, enabling developers to customize the graphics pipeline. In these latest neural rendering inventions, researchers extend programmable shading code with AI models that run deep inside NVIDIA’s real-time graphics pipelines.

In a sixth SIGGRAPH paper, NVIDIA will present neural texture compression that delivers up to 16x more texture detail without taking additional GPU memory. Neural texture compression can substantially increase the realism of 3D scenes, as seen in the image below, which demonstrates how neural-compressed textures (right) capture sharper detail than previous formats, where the text remains blurry (center).

A related paper announced last year is now available in early access as NeuralVDB, an AI-enabled data compression technique that decreases by 100x the memory needed to represent volumetric data such as smoke, fire, clouds and water.

NVIDIA also released today more details about neural materials research that was shown in the most recent NVIDIA GTC keynote. The paper describes an AI system that learns how light reflects from photoreal, many-layered materials, reducing the complexity of these assets down to small neural networks that run in real time, enabling up to 10x faster shading.

The level of realism can be seen in this neural-rendered teapot, which accurately represents the ceramic, the imperfect clear-coat glaze, fingerprints, smudges and even dust.

More Generative AI and Graphics Research

These are just the highlights; read more about all the NVIDIA research presentations at SIGGRAPH. And save the date for NVIDIA founder and CEO Jensen Huang’s keynote address at the conference, taking place Aug. 8. NVIDIA will also present six courses, four talks and two Emerging Technology demos at SIGGRAPH, with topics including path tracing, telepresence and diffusion models for generative AI.

NVIDIA Research has hundreds of scientists and engineers worldwide, with teams focused on topics including AI, computer graphics, computer vision, self-driving cars and robotics.

NVIDIA websites use cookies to deliver and improve the website experience. See our cookie policy for further details on how we use cookies and how to change your cookie settings.

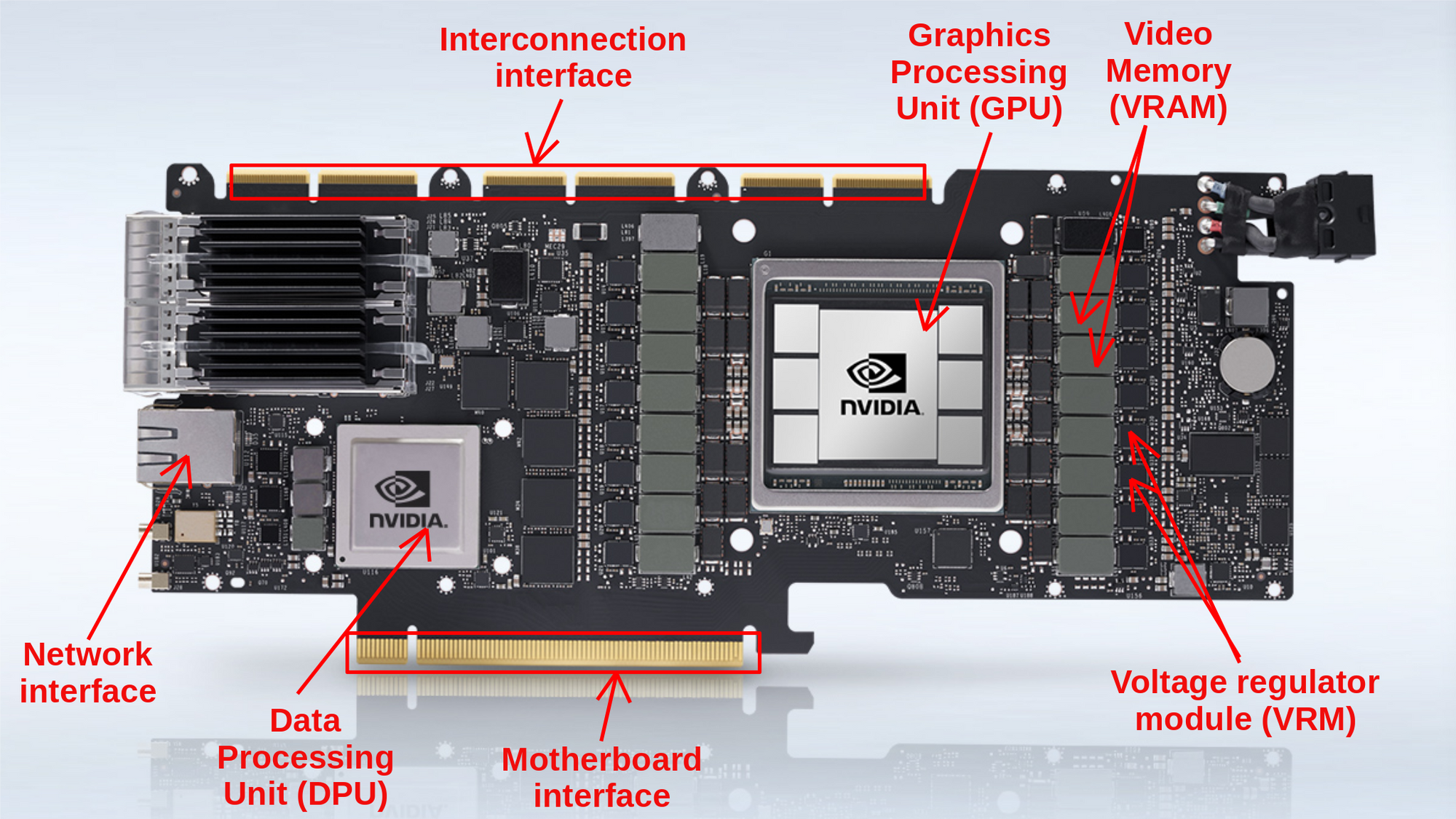

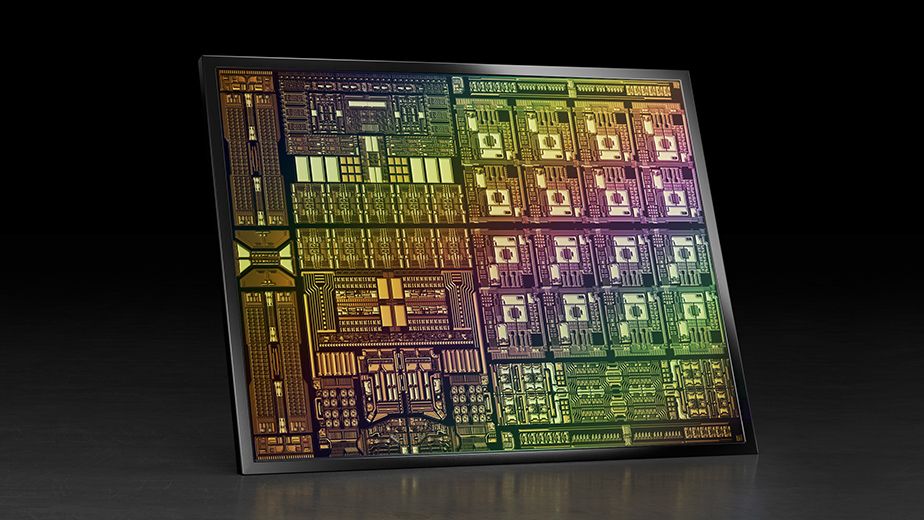

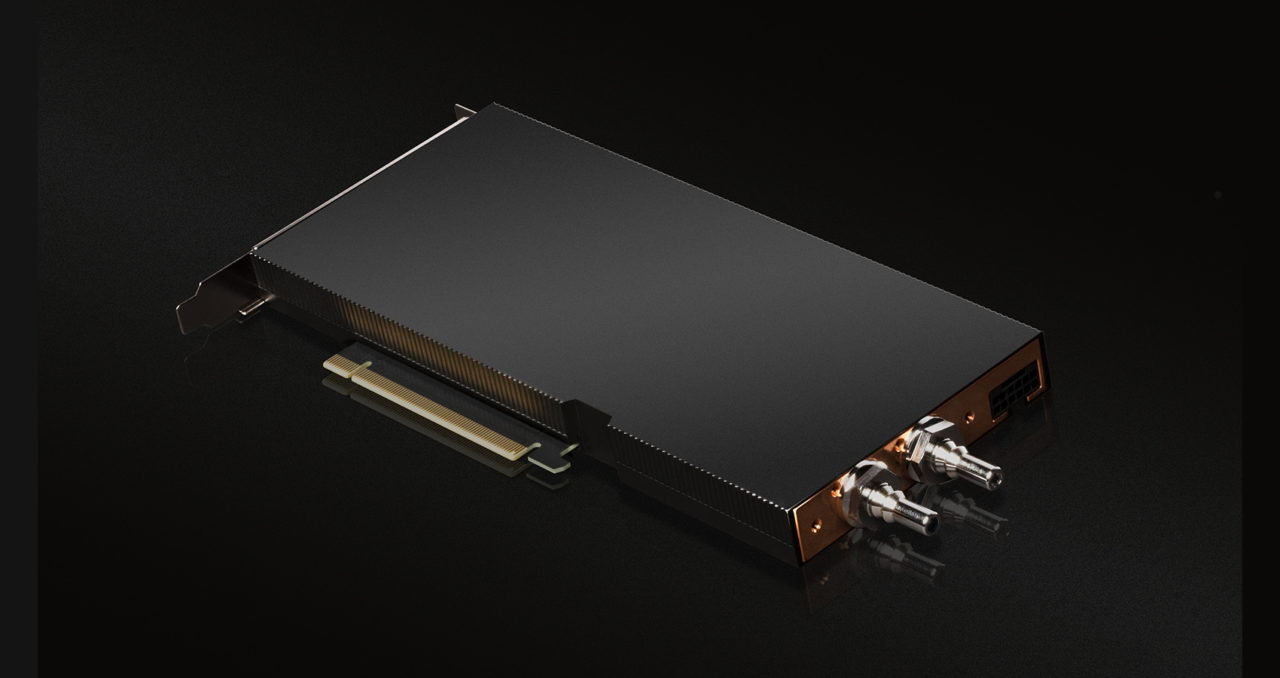

A complete anatomy of a graphics card: Case study of the NVIDIA A100

In our latest blog post, we shine a spotlight on the NVIDIA A100, taking a technical look at the technology behind graphics cards, their components and architecture, and how the innovations within have made them the best tool for deep learning.

In this article, we'll take a technical examination of the technology behind graphics cards, their components, architecture, and how they relate to machine learning.

The task of a graphics card is very complex, yet its concepts and components are simple to comprehend. We will look at the essential components of a video card and what they accomplish. And at each stage, we'll use the NVIDIA A100 - 40 GB as an example of the current state of the art of graphics cards. The A100 arguably represents the best single GPU available for deep learning on the market.

Graphics card breakdown

A graphics card, often known as a video card, graphics adapter, display card, or display adapter, is a type of expansion card that processes data and produces graphical output. As a result, it's commonly used for video editing, gaming, and 3D rendering. However, it's become the go-to powerhouse for machine learning applications and cryptocurrency mining in recent years. A graphics card accomplishes these highly demanding tasks with the help of the following components:

- Graphics Processing Unit (GPU)

- Data Processing Unit (DPU)

- Video memory (VRAM)

- Video BIOS (VBIOS)

- Voltage regulator module (VRM)

- Motherboard interface

- Interconnection interface

- Network interface & controller

- Output interfaces

- Cooling system

Graphics Processing Unit (GPU)

The GPU is frequently mistaken for the graphics card itself. Unlike a computer's CPU, the GPU is designed to handle the large volume of mathematical and geometric calculations required for graphics rendering. GPUs, on average, have more transistors and a higher density of computing cores, with more arithmetic logic units (ALUs), than a typical CPU.

There are four classifications of these units:

- Streaming Multiprocessors (SMs)

- Load/Store (LD/ST) units

- Special Function Units (SFU)

- Texture Mapping Unit (TMU)

1) A Streaming Multiprocessor (SM) is an execution unit made up of a collection of cores that share a register file, shared memory and an L1 cache. The cores in an SM execute many threads simultaneously. Two vendors' core designs compete at this level:

- Compute Unified Device Architecture (CUDA) or Tensor cores by NVIDIA

- Stream Processors by AMD

NVIDIA's CUDA cores and Tensor Cores are generally regarded as the more stable and better optimized of the two, particularly for machine learning applications. CUDA cores have been present on every NVIDIA GPU released in the last decade, whereas Tensor Cores are a newer addition. Tensor Cores are much quicker than CUDA cores at the matrix arithmetic that dominates machine learning: a CUDA core carries out one operation per clock cycle, whereas a Tensor Core performs several. For machine learning models, CUDA cores are therefore slower than Tensor Cores, although for some applications they are more than enough. As a result, Tensor Cores are the best option for training machine learning models.
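The "several operations per cycle" of a Tensor Core come from treating a small matrix multiply-accumulate, D = A x B + C, as a single hardware operation. The pure-Python sketch below only spells out the arithmetic involved; the 4-by-4 tile size is an assumption used for illustration, and the function name is hypothetical.

```python
def matmul_accumulate(a, b, c):
    """Return D = A x B + C for square matrices given as lists of rows."""
    n = len(a)
    return [
        [sum(a[i][k] * b[k][j] for k in range(n)) + c[i][j] for j in range(n)]
        for i in range(n)
    ]


N = 4  # illustrative tile size (an assumption, not taken from this article)
identity = [[1.0 if i == j else 0.0 for j in range(N)] for i in range(N)]
ones = [[1.0] * N for _ in range(N)]

d = matmul_accumulate(identity, ones, ones)  # I x ones + ones
multiply_adds = N ** 3  # scalar multiply-adds folded into one tile operation
```

A scalar core would need one step per multiply-add, whereas a unit built around the whole tile handles all of them together, which is where the throughput advantage for neural-network workloads comes from.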

The performance of these cores is measured in FLOPS (floating-point operations per second). On these measurements, the NVIDIA A100 achieves record-breaking values.

According to the NVIDIA documentation, using a sparse format for data representation can even double some of these values.
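A peak FLOPS figure is usually derived as cores x clock rate x operations per core per cycle. The sketch below applies that formula using NVIDIA's published A100 figures (6,912 FP32 CUDA cores, a 1.41 GHz boost clock, and 156 TFLOPS for dense TF32 Tensor Core maths); none of these numbers appears in this article, so treat them as assumptions.

```python
def peak_flops(cores, clock_hz, flops_per_core_per_cycle):
    """Theoretical peak: every core busy on every clock cycle."""
    return cores * clock_hz * flops_per_core_per_cycle


A100_FP32_CORES = 6912       # assumed, from NVIDIA's published specifications
A100_BOOST_CLOCK_HZ = 1.41e9  # assumed boost clock
FLOPS_PER_FMA = 2            # a fused multiply-add counts as two operations

fp32_tflops = peak_flops(A100_FP32_CORES, A100_BOOST_CLOCK_HZ, FLOPS_PER_FMA) / 1e12

# Structured sparsity doubles the Tensor Core figures, not the FP32 one.
TF32_DENSE_TFLOPS = 156.0    # assumed, from NVIDIA's published specifications
tf32_sparse_tflops = 2 * TF32_DENSE_TFLOPS
```

These are theoretical ceilings; real workloads reach them only when memory traffic and scheduling keep every core fed.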

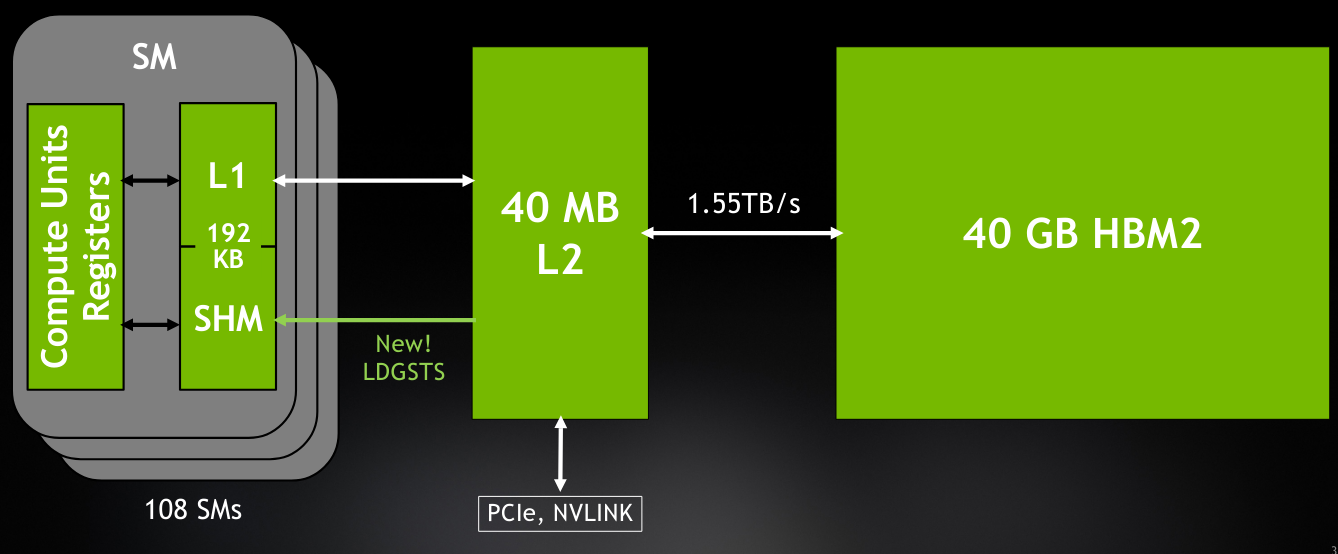

Inside the A100, cache management is designed to make data transfer between the cores and VRAM as fast and smooth as possible. For this purpose, the A100 GPU has three levels of cache: L0, L1 and L2.

The L0 instruction cache is private to a single sub-processing block of a streaming multiprocessor, the L1 instruction cache is private to an SM, and the L2 cache is unified: it is shared across all SMs and holds both instructions and data. At 40 MB, the L2 cache in the A100 is larger than that of any previous GPU, and it acts as the bridge between the private L1 caches and the 40 GB of HBM2 VRAM, which is covered in detail later in this article.

2) Load/Store (LD/ST) units allow threads to perform multiple loads from and stores to memory per clock cycle. In the A100, these units introduce a new method for asynchronous data copy, which makes it possible to load data that is shared between threads without consuming extra thread resources. This method improves data loading times between shared memory and local caches by around 20%.

3) Special Function Units (SFUs) efficiently perform structured arithmetic or mathematical functions on vector data, for example sine, cosine, reciprocal and square root.

4) Texture Mapping Units (TMUs) handle application-specific tasks such as image rotation, resizing, adding distortion and noise, and moving 3D plane objects.

Data Processing Unit (DPU)

The DPU is a non-standard component of the graphics card. Data processing units are a recently introduced class of programmable processor that has joined CPUs and GPUs as one of the three main pillars of computing. A DPU is a stand-alone processor that is generally deployed in machine learning systems and data centers. It offers a set of accelerated software capabilities for managing networking, storage and security. The A100 card examined here has the latest BlueField-2 DPU on board, which offers great advantages when handling workloads with massive multiple-input multiple-output (MIMO), AI-on-5G deployments, and even more specialized workloads such as signal processing or multi-node training.

Video memory (VRAM)

In its broadest definition, video random-access memory (VRAM) is analogous to system RAM. VRAM is the working memory the GPU uses to hold the large amounts of data required for graphics or other applications. All data held in VRAM is temporary. VRAM is frequently much faster than system RAM and, more importantly, it is physically close to the GPU: it is soldered directly to the graphics card's PCB. This enables remarkably fast data transport with minimal latency, allowing for high-resolution graphics rendering or deep learning model training.

On current graphics cards, VRAM comes in a variety of sizes, speeds and bus widths. Two families of technology are in use, GDDR and HBM, each with its own variations. GDDR (Graphics Double Data Rate SGRAM) has been the industry standard for more than a decade. It achieves high clock speeds, but at the expense of physical space and higher-than-average power consumption. HBM (High Bandwidth Memory), on the other hand, is the state of the art for VRAM. It consumes less power and can be stacked to increase memory size while taking up less real estate on the graphics card. It also delivers higher bandwidth at lower clock speeds. The 40 GB NVIDIA A100 uses HBM2 memory with a bandwidth of up to 1555 GB/s, a 73% increase over the previous-generation Tesla V100; the 80 GB variant moves to the latest HBM2e memory, with a bandwidth of up to 1935 GB/s.
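The quoted 73% increase can be checked with a line of arithmetic. The sketch assumes NVIDIA's published peak bandwidths of 900 GB/s for the Tesla V100 and 1555 GB/s for the 40 GB A100, neither of which is given as a baseline in this article. Under those assumptions the 73% figure corresponds to the 40 GB card, and 1935 GB/s would be a considerably larger jump.

```python
def percent_increase(new, old):
    """Relative increase of `new` over `old`, in percent."""
    return 100.0 * (new - old) / old


V100_GB_PER_S = 900.0        # assumed, from NVIDIA's published specifications
A100_40GB_GB_PER_S = 1555.0  # assumed, HBM2 variant
A100_80GB_GB_PER_S = 1935.0  # HBM2e figure quoted in the text

increase_40gb = percent_increase(A100_40GB_GB_PER_S, V100_GB_PER_S)
increase_80gb = percent_increase(A100_80GB_GB_PER_S, V100_GB_PER_S)
```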

Voltage regulator module (VRM)

The voltage regulator module (VRM) ensures that the GPU receives the necessary power at a constant voltage. A low-quality VRM can cause a series of problems, including GPU shutdowns under stress, limited overclocking performance and even a shortened GPU lifespan. The graphics card receives electricity at 12 volts from a modern power supply unit (PSU). GPUs, however, are sensitive to voltage and cannot tolerate that value. This is where the VRM comes into play: it steps the 12-volt supply down to 1.1 volts before sending it to the GPU cores and memory. The power stage of the A100, with all its VRMs, can sustain a power delivery of up to 300 watts.

The A100 receives power from the power supply unit through its 8-pin power connector and forwards the current to the VRMs, which supply the GPU and DPU at 1.1 V DC, with a maximum enforced limit of 300 W and a theoretical limit of 400 W.
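Stepping the voltage down while holding power constant means the current must rise, since P = V x I. The short sketch below works this out for the 300 W limit and the 12 V and 1.1 V levels given in the text; it ignores conversion losses, which a real VRM would add.

```python
def current_amps(power_watts, voltage_volts):
    """Current drawn at a given power and voltage, from P = V * I."""
    return power_watts / voltage_volts


POWER_LIMIT_W = 300.0  # enforced limit quoted in the text

input_current = current_amps(POWER_LIMIT_W, 12.0)  # at the 12 V connector
core_current = current_amps(POWER_LIMIT_W, 1.1)    # at the 1.1 V rail
```

The input side carries 25 A, whereas the core side carries roughly 273 A, which is why the work is spread across many VRM phases placed close to the GPU.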

The motherboard interface is the sub-component of the graphics card that plugs into the system's motherboard. It is via this interface, or 'slot', that the graphics card and the computer exchange data and control commands. At the start of the 2000s, manufacturers implemented many types of interface: PCI, PCIe, PCI-X, and AGP. Today, PCIe has become the go-to interface for nearly all graphics card manufacturers.

PCIe, or PCI Express, short for Peripheral Component Interconnect Express, is the most common standardized motherboard interface for connecting graphics cards, hard disk drive host adapters, SSDs, and Wi-Fi and Ethernet hardware.

The PCIe standard has gone through several generations, and each generation brings a major increase in speed and bandwidth.

PCIe slots can be implemented in different physical configurations: x1, x4, x8, x16, and x32. The number indicates how many lanes the slot implements. The more lanes there are, the higher the bandwidth available between the graphics card and the motherboard. The NVIDIA A100 comes with a PCIe 4.0 x16 interface, which was the most performant commercially available generation of the interface when the card was released.
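The relationship between generation, lane count, and bandwidth can be sketched with a short calculation. The per-lane transfer rates and line encodings below come from the published PCI-SIG specifications rather than from this article, and the result ignores protocol overhead, so treat the figures as approximate upper bounds. `pcie_bandwidth_gb_s` is a hypothetical helper name.

```python
# (transfer rate in GT/s per lane, line-encoding efficiency) per PCIe generation.
# Generations 1 and 2 use 8b/10b encoding; generations 3 to 5 use 128b/130b.
PCIE_GENERATIONS = {
    1: (2.5, 8 / 10),
    2: (5.0, 8 / 10),
    3: (8.0, 128 / 130),
    4: (16.0, 128 / 130),
    5: (32.0, 128 / 130),
}


def pcie_bandwidth_gb_s(generation: int, lanes: int) -> float:
    """Approximate one-direction bandwidth in GB/s for a PCIe link."""
    rate_gt_s, efficiency = PCIE_GENERATIONS[generation]
    # Each transfer carries one bit per lane; divide by 8 to convert bits to bytes.
    return rate_gt_s * efficiency * lanes / 8


for gen in sorted(PCIE_GENERATIONS):
    print(f"PCIe {gen}.0 x16: {pcie_bandwidth_gb_s(gen, 16):.1f} GB/s per direction")
```

For the PCIe 4.0 x16 link used by the A100, this works out to about 31.5 GB/s in each direction.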

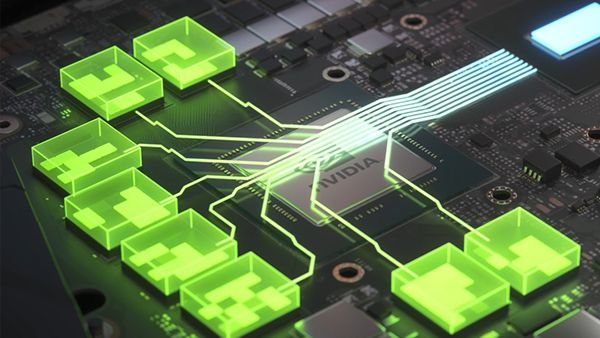

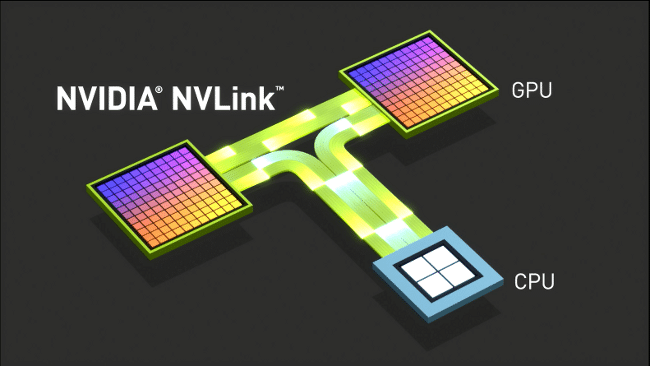

The interconnection interface is a bus that lets system builders connect multiple graphics cards mounted on a single motherboard, so that processing power can scale across cards. This multi-card scaling can be done through the PCIe bus on the motherboard or through a dedicated interconnection interface that acts as a data bridge. AMD and NVIDIA each offer a proprietary scaling method: AMD with its CrossFireX technology and NVIDIA with its SLI technology. SLI was deprecated during the Turing generation with the introduction of NVLink, which is considered the top of the line among multi-card scaling technologies.

The NVIDIA A100 uses the third generation of NVLink, which offers up to 600 GB/s between two GPUs. It is also a more energy-efficient way than PCI Express to move data between GPUs.

Network interface

The network interface is not a standard component of the graphics card. It is only available on high-performance cards that require direct data tunneling to their DPU and GPU. In the case of the A100, the network interface comprises two 100 Gbps Ethernet ports that allow faster processing, especially for applications involving AI-based networking.

The output interfaces are the ports built into the graphics card that allow it to connect to a monitor. Multiple connection types can be implemented.

Older systems used VGA and DVI, whereas manufacturers now tend to use HDMI and DisplayPort, and some portable systems use USB Type-C as the main port.

As for the card under our microscope in this article, the A100 does not have an output interface. It was designed from the start as a professional card for ML/DL and data center use, so there is no reason for it to have display connectivity.

A video BIOS, often known as VBIOS, is a graphics card's Basic Input Output System (BIOS). Like the system BIOS, the video BIOS provides a set of video-related information that programs can use to access the graphics card, and it stores vendor-specific settings such as the card name, clock frequencies, VRAM types, voltages, and fan speed control parameters.

Cooling is generally not listed among the components of a graphics card. But because of its importance, it cannot be neglected in this technical deep dive.

Because graphics cards consume a large amount of energy, they generate a large amount of heat. To maintain performance while the card is active and to preserve its long-term usability, core temperatures should be kept low enough to avoid thermal throttling, which is the reduction in performance caused by high temperatures at the GPU and VRAM level.

Two techniques are mainly used for this: air cooling and liquid cooling. We'll take a look at the liquid cooling method used by the A100.

The coolant enters the graphics card through heat-conductive pipes and absorbs heat as it passes through the system. A pump then moves the coolant toward the radiator, which acts as a heat exchanger between the liquid in the pipes and the air surrounding the radiator. Cloud GPU services often come with built-in tools for monitoring this temperature, such as the monitoring tools in Paperspace Gradient Notebooks. These serve as a warning system and help prevent overheating when you are running particularly expensive programs.
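Outside a managed notebook, a similar warning system can be approximated with NVIDIA's `nvidia-smi` command-line tool. The following is a minimal sketch, not the method Paperspace uses: it relies on the `--query-gpu=index,temperature.gpu` and `--format=csv,noheader,nounits` options of `nvidia-smi`, and the 80 °C threshold and all function names are assumptions chosen for illustration.

```python
import subprocess

THROTTLE_WARNING_C = 80  # hypothetical warning threshold, not an NVIDIA figure


def parse_temperatures(csv_text: str) -> dict[int, int]:
    """Parse lines such as '0, 45' into a {gpu_index: temperature_celsius} mapping."""
    temps = {}
    for line in csv_text.strip().splitlines():
        index, temp = (field.strip() for field in line.split(","))
        temps[int(index)] = int(temp)
    return temps


def read_gpu_temperatures() -> dict[int, int]:
    """Query nvidia-smi for the current core temperature of every GPU."""
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=index,temperature.gpu",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_temperatures(result.stdout)


def hot_gpus(temps: dict[int, int], limit: int = THROTTLE_WARNING_C) -> list[int]:
    """Return the indices of GPUs at or above the warning threshold."""
    return [index for index, temp in sorted(temps.items()) if temp >= limit]


if __name__ == "__main__":
    try:
        temperatures = read_gpu_temperatures()
    except (FileNotFoundError, subprocess.CalledProcessError, ValueError):
        print("nvidia-smi is not available or returned unexpected output")
    else:
        for gpu in hot_gpus(temperatures):
            print(f"Warning: GPU {gpu} is at {temperatures[gpu]} C")
```

Keeping the parsing separate from the subprocess call makes the logic easy to check on a machine without a GPU.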

How to measure the performance of a graphics card?

Now that we know the major components and parts of a graphics card, we will see how the performance of a given card can be measured so it can be compared to other cards.

To evaluate a graphics card, two approaches can be followed: evaluate the technical specifications of the sub-components and compare them with those of other cards, or run a test (a benchmark) on the cards and compare the scores.

Specifications based evaluation

Graphics cards have dozens of technical specifications that can help determine their performance. We'll list the most important ones to look for when making a choice based on this evaluation method:

Core count: the number of cores on a GPU is a good starting point when looking at the potential performance of a card. However, it can give a biased comparison between GPUs with different core types and architectures.

Core speed: the clock frequency of the cores, measured in MHz or GHz, which indicates how many cycles each core completes every second. Another figure to look for when building a personal system is the maximum overclocked core speed, which is generally higher than the stock speed.

Memory size: the more VRAM a card has, the more data it can handle at a given time. However, increasing VRAM does not by itself increase performance, since performance also depends on other components that can become a bottleneck.

Memory type: memory chips of the same size can perform differently depending on the technology implemented. HBM, HBM2, and HBM2e memory chips generally perform better than GDDR5 and GDDR6.

Memory bandwidth: a broader measure of a graphics card's VRAM performance. It is the rate at which data can be read from or written to the card's VRAM, usually quoted in GB/s.

Thermal design power (TDP): the maximum amount of heat, in watts, that the cooling system is designed to dissipate. When building systems, TDP is an important element for evaluating the power needs of the card.
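Of the specifications above, memory bandwidth is the easiest to sanity-check by hand, because peak bandwidth is simply the bus width multiplied by the per-pin data rate. The sketch below shows that arithmetic. The 5,120-bit bus and 3.024 Gbit/s per pin used for the A100 are assumed values, chosen because they reproduce the 1,935 GB/s figure quoted earlier; the 256-bit, 14 Gbit/s configuration is a generic illustrative GDDR6 setup, not a specific card.

```python
def memory_bandwidth_gb_s(bus_width_bits: int, data_rate_gbit_s: float) -> float:
    """Peak memory bandwidth in GB/s.

    bus_width_bits:   number of data pins between the GPU and its VRAM
    data_rate_gbit_s: effective data rate per pin, in Gbit/s
    """
    # Total Gbit/s across the bus, divided by 8 to convert bits to bytes.
    return bus_width_bits * data_rate_gbit_s / 8


# Assumed A100 80 GB configuration (see the note above).
a100_bandwidth = memory_bandwidth_gb_s(5120, 3.024)

# Illustrative GDDR6 configuration with a much narrower but faster bus.
gddr6_bandwidth = memory_bandwidth_gb_s(256, 14.0)

print(f"HBM2e example: {a100_bandwidth:.0f} GB/s")
print(f"GDDR6 example: {gddr6_bandwidth:.0f} GB/s")
```

The comparison illustrates the point made about memory types: HBM reaches high bandwidth through a very wide bus at a modest per-pin rate, while GDDR relies on a narrow bus running at high speed.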

Benchmark based evaluation

While the technical specifications give a broad idea of where a graphics card stands in comparison with others, they do not provide a definite, quantifiable means of comparison.

Enter the benchmark, a test that produces a quantifiable result that can be compared directly between cards. For machine-learning-oriented graphics cards, a logical benchmark is an ML model that is trained and evaluated on each of the cards being compared. On Paperspace, multiple DL model benchmarks (YOLOR, StyleGAN_XL, and EfficientNet) were run on all cards available on either Core or Gradient. For each one, the completion time of the benchmark was the quantity measured.

Spoiler alert! The A100 had the best results across all three benchmark scenarios.

The advantage of the benchmark based evaluation is that it produces a single measurable element that can simply be used for comparison. Unlike the specification based evaluation, this method allows for a more complete evaluation of the graphics card as a unified system.
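A completion-time benchmark of this kind can be sketched in a few lines. The harness below is a hypothetical illustration, not the code used for the Paperspace benchmarks: `toy_workload` stands in for a real training run, and the timings passed to `rank_by_completion_time` are placeholder values, not measurements.

```python
import statistics
import time
from typing import Callable


def benchmark(workload: Callable[[], object], repeats: int = 5, warmup: int = 1) -> float:
    """Return the median wall-clock time in seconds over `repeats` runs."""
    for _ in range(warmup):
        workload()  # warm-up runs are executed but not timed
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        workload()
        timings.append(time.perf_counter() - start)
    return statistics.median(timings)


def rank_by_completion_time(results: dict[str, float]) -> list[tuple[str, float]]:
    """Sort cards from fastest (lowest completion time) to slowest."""
    return sorted(results.items(), key=lambda item: item[1])


def toy_workload() -> int:
    """Stand-in for a real model training or inference run."""
    return sum(i * i for i in range(100_000))


elapsed = benchmark(toy_workload)
print(f"median completion time: {elapsed:.4f} s")

# Placeholder timings in seconds for three hypothetical cards.
ranking = rank_by_completion_time({"card_a": 12.0, "card_b": 7.5, "card_c": 9.1})
print(ranking)
```

When the workload runs on a GPU, the device usually has to be synchronized before the timer is stopped, because GPU work is typically launched asynchronously. The median is used here so that a single slow run does not skew the score.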

Why are graphics cards suitable for machine learning?

In contrast to CPUs, GPUs are built from the ground up to process large amounts of data in parallel. While CPU manufacturers strive for per-core performance increases, which have recently started to plateau, GPUs get around this by tailoring hardware and compute arrangements to a particular need. The Single Instruction, Multiple Data (SIMD) style of parallel computing they use makes it possible to spread workloads effectively among GPU cores.
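The data-parallel pattern behind this can be illustrated on a CPU: the same operation is applied to independent chunks of the data, one chunk per "lane". The sketch below uses Python threads purely to show the structure. It is an analogy rather than GPU code, and in standard CPython it will not run faster than a plain loop; all function names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def scale_and_shift(chunk: list[float], a: float = 2.0, b: float = 1.0) -> list[float]:
    """The single 'instruction': compute a * x + b for every element of a chunk."""
    return [a * x + b for x in chunk]


def split(data: list[float], lanes: int) -> list[list[float]]:
    """Split data into at most `lanes` contiguous chunks of near-equal size."""
    if not data:
        return []
    size = -(-len(data) // lanes)  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def data_parallel_map(data: list[float], lanes: int = 4) -> list[float]:
    """Apply the same operation to every chunk and stitch the results back in order."""
    chunks = split(data, lanes)
    with ThreadPoolExecutor(max_workers=lanes) as pool:
        processed = list(pool.map(scale_and_shift, chunks))
    return [value for chunk in processed for value in chunk]


print(data_parallel_map([0.0, 1.0, 2.0, 3.0, 4.0], lanes=2))
```

Because no chunk depends on the result of another, the work can be spread across as many lanes as the hardware provides, which is the property that lets a GPU keep thousands of cores busy at once.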

Machine learning algorithms improve by learning from data, so they need to be fed ever larger data sets. More data means these algorithms can learn more effectively and produce more reliable models. The parallel computing capabilities offered by graphics cards make it practical to run complex multi-step processes on that data, deep learning algorithms and neural networks in particular.

What are the best graphics cards for machine learning?

Short answer: The NVIDIA A100 - 80GB is the best single GPU available.

Long answer: Machine learning applications are a perfect match for the architecture of the NVIDIA A100 in particular and the Ampere series in general. Traffic moving to and from the DPU is processed directly by the A100 GPU cores. This opens up a completely new class of networking and security applications that use AI, such as data leak detection, network performance optimization, and prediction.

While the A100 is the nuclear option when it comes to machine learning applications, more power does not always mean better. Depending on the ML model, the size of the dataset, the training and evaluation time constraints, sometimes a lower tier graphics card can be more than enough while keeping the cost as low as it can be. That's why having a cloud platform that offers a variety of graphics cards is important for an ML expert. For each mission, there is a perfect weapon.

Be sure to check out the Paperspace Cloud GPU comparison site to find the best deals available for the GPU you need! The A100 80 GB is currently only available in the cloud from Paperspace.




Title: Synthesizing Proteins on the Graphics Card. Protein Folding and the Limits of Critical AI Studies

Abstract: This paper investigates the application of the transformer architecture in protein folding, as exemplified by DeepMind's AlphaFold project, and its implications for the understanding of large language models as models of language. The prevailing discourse often assumes a ready-made analogy between proteins -- encoded as sequences of amino acids -- and natural language -- encoded as sequences of discrete symbols. Instead of assuming as given the linguistic structure of proteins, we critically evaluate this analogy to assess the kind of knowledge-making afforded by the transformer architecture. We first trace the analogy's emergence and historical development, carving out the influence of structural linguistics on structural biology beginning in the mid-20th century. We then examine three often overlooked pre-processing steps essential to the transformer architecture, including subword tokenization, word embedding, and positional encoding, to demonstrate its regime of representation based on continuous, high-dimensional vector spaces, which departs from the discrete, semantically demarcated symbols of language. The successful deployment of transformers in protein folding, we argue, discloses what we consider a non-linguistic approach to token processing intrinsic to the architecture. We contend that through this non-linguistic processing, the transformer architecture carves out unique epistemological territory and produces a new class of knowledge, distinct from established domains. We contend that our search for intelligent machines has to begin with the shape, rather than the place, of intelligence. Consequently, the emerging field of critical AI studies should take methodological inspiration from the history of science in its quest to conceptualize the contributions of artificial intelligence to knowledge-making, within and beyond the domain-specific sciences.



Research paper about graphics cards

- Thread starter Hoempapaa

- Start date Apr 7, 2007


Hi guys, I am a student at the technical university Eindhoven (in Holland) and I'm writing a paper about the technological trajectories of graphics cards. For this paper I'm looking for a graph that contains the speed of all, or the major, graphics cards from 1993 until now. This graph could best be about the millions of triangles per second or something alike. I would be very glad if anybody here could help me find a graph like this. Or maybe anyone knows where to find data about this so I can construct a graph myself. Thanks!

TheGreatGrapeApe

As good as it gets IMO; http://www.techarp.com/showarticle.aspx?artno=88 http://users.erols.com/chare/video.htm


Microsoft Research Blog

Microsoft at CHI 2024: Innovations in human-centered design

Published May 15, 2024


The ways people engage with technology, through its design and functionality, determine its utility and acceptance in everyday use, setting the stage for widespread adoption. When computing tools and services respect the diversity of people’s experiences and abilities, technology is not only functional but also universally accessible. Human-computer interaction (HCI) plays a crucial role in this process, examining how technology integrates into our daily lives and exploring ways digital tools can be shaped to meet individual needs and enhance our interactions with the world.

The ACM CHI Conference on Human Factors in Computing Systems is a premier forum that brings together researchers and experts in the field, and Microsoft is honored to support CHI 2024 as a returning sponsor. We’re pleased to announce that 33 papers by Microsoft researchers and their collaborators have been accepted this year, with four winning the Best Paper Award and seven receiving honorable mentions.

This research aims to redefine how people work, collaborate, and play using technology, with a focus on design innovation to create more personalized, engaging, and effective interactions. Several projects emphasize customizing the user experience to better meet individual needs, such as exploring the potential of large language models (LLMs) to help reduce procrastination. Others investigate ways to boost realism in virtual and mixed reality environments, using touch to create a more immersive experience. There are also studies that address the challenges of understanding how people interact with technology. These include applying psychology and cognitive science to examine the use of generative AI and social media, with the goal of using the insights to guide future research and design directions. This post highlights these projects.


Best Paper Award recipients

DynaVis: Dynamically Synthesized UI Widgets for Visualization Editing Priyan Vaithilingam, Elena L. Glassman, Jeevana Priya Inala , Chenglong Wang GUIs used for editing visualizations can overwhelm users or limit their interactions. To address this, the authors introduce DynaVis, which combines natural language interfaces with dynamically synthesized UI widgets, enabling people to initiate and refine edits using natural language.

Generative Echo Chamber? Effects of LLM-Powered Search Systems on Diverse Information Seeking Nikhil Sharma, Q. Vera Liao , Ziang Xiao Conversational search systems powered by LLMs potentially improve on traditional search methods, yet their influence on increasing selective exposure and fostering echo chambers remains underexplored. This research suggests that LLM-driven conversational search may enhance biased information querying, particularly when the LLM’s outputs reinforce user views, emphasizing significant implications for the development and regulation of these technologies.

Piet: Facilitating Color Authoring for Motion Graphics Video Xinyu Shi, Yinghou Wang, Yun Wang , Jian Zhao Motion graphic (MG) videos use animated visuals and color to effectively communicate complex ideas, yet existing color authoring tools are lacking. This work introduces Piet, a tool prototype that offers an interactive palette and support for quick theme changes and controlled focus, significantly streamlining the color design process.

The Metacognitive Demands and Opportunities of Generative AI Lev Tankelevitch , Viktor Kewenig, Auste Simkute, Ava Elizabeth Scott, Advait Sarkar , Abigail Sellen , Sean Rintel Generative AI systems offer unprecedented opportunities for transforming professional and personal work, yet they present challenges around prompting, evaluating and relying on outputs, and optimizing workflows. This paper shows that metacognition—the psychological ability to monitor and control one’s thoughts and behavior—offers a valuable lens through which to understand and design for these usability challenges.

Honorable Mentions

Big or Small, It’s All in Your Head: Visuo-Haptic Illusion of Size-Change Using Finger-Repositioning Myung Jin Kim, Eyal Ofek, Michel Pahud, Mike J. Sinclair, Andrea Bianchi This research introduces a fixed-sized VR controller that uses finger repositioning to create a visuo-haptic illusion of dynamic size changes in handheld virtual objects, allowing users to perceive virtual objects as significantly smaller or larger than the actual device.

LLMR: Real-time Prompting of Interactive Worlds Using Large Language Models Fernanda De La Torre, Cathy Mengying Fang, Han Huang, Andrzej Banburski-Fahey, Judith Amores , Jaron Lanier Large Language Model for Mixed Reality (LLMR) is a framework for the real-time creation and modification of interactive mixed reality experiences using LLMs. It uses novel strategies to tackle difficult cases where ideal training data is scarce or where the design goal requires the synthesis of internal dynamics, intuitive analysis, or advanced interactivity.

Observer Effect in Social Media Use Koustuv Saha, Pranshu Gupta, Gloria Mark, Emre Kiciman , Munmun De Choudhury This work investigates the observer effect in behavioral assessments on social media use. The observer effect is a phenomenon in which individuals alter their behavior due to awareness of being monitored. Conducted over an average of 82 months (about 7 years) retrospectively and five months prospectively using Facebook data, the study found that deviations in expected behavior and language post-enrollment in the study reflected individual psychological traits. The authors recommend ways to mitigate the observer effect in these scenarios.

Reading Between the Lines: Modeling User Behavior and Costs in AI-Assisted Programming Hussein Mozannar, Gagan Bansal , Adam Fourney , Eric Horvitz By investigating how developers use GitHub Copilot, the authors created CUPS, a taxonomy of programmer activities during system interaction. This approach not only elucidates interaction patterns and inefficiencies but can also drive more effective metrics and UI design for code-recommendation systems with the goal of improving programmer productivity.

SharedNeRF: Leveraging Photorealistic and View-dependent Rendering for Real-time and Remote Collaboration Mose Sakashita, Bala Kumaravel, Nicolai Marquardt , Andrew D. Wilson SharedNeRF, a system for synchronous remote collaboration, utilizes neural radiance field (NeRF) technology to provide photorealistic, viewpoint-specific renderings that are seamlessly integrated with point clouds to capture dynamic movements and changes in a shared space. A preliminary study demonstrated its effectiveness, as participants used this high-fidelity, multi-perspective visualization to successfully complete a flower arrangement task.

Understanding the Role of Large Language Models in Personalizing and Scaffolding Strategies to Combat Academic Procrastination Ananya Bhattacharjee, Yuchen Zeng, Sarah Yi Xu, Dana Kulzhabayeva, Minyi Ma, Rachel Kornfield, Syed Ishtiaque Ahmed, Alex Mariakakis, Mary P. Czerwinski , Anastasia Kuzminykh, Michael Liut, Joseph Jay Williams In this study, the authors explore the potential of LLMs for customizing academic procrastination interventions, employing a technology probe to generate personalized advice. Their findings emphasize the need for LLMs to offer structured, deadline-oriented advice and adaptive questioning techniques, providing key design insights for LLM-based tools while highlighting cautions against their use for therapeutic guidance.

Where Are We So Far? Understanding Data Storytelling Tools from the Perspective of Human-AI Collaboration Haotian Li, Yun Wang , Huamin Qu This paper evaluates data storytelling tools using a dual framework to analyze the stages of the storytelling workflow—analysis, planning, implementation, communication—and the roles of humans and AI in each stage, such as creators, assistants, optimizers, and reviewers. The study identifies common collaboration patterns in existing tools, summarizes lessons from these patterns, and highlights future research opportunities for human-AI collaboration in data storytelling.

Learn more about our work and contributions to CHI 2024, including our full list of publications, on our conference webpage.



This paper presents an effort to bring GPU computing closer to programmers and wider community of users. GPU computing is explored through NVIDIA Compute Unified Device Architecture (CUDA) that is ...

Published manuscript (IEEE Digital Library) Graphics processing units (GPUs) power today's fastest supercomputers, are the dominant platform for deep learning, and provide the intelligence for devices ranging from self-driving cars to robots and smart cameras. They also generate compelling photorealistic images at real-time frame rates.

Graphics processing units (GPUs) power today's fastest supercomputers, are the dominant platform for deep learning, and provide the intelligence for devices ranging from self-driving cars to robots and smart cameras. They also generate compelling photorealistic images at real-time frame rates. GPUs have evolved by adding features to support new use cases. NVIDIA's GeForce 256, the first ...

effort to studying GPU and its importance and influential role in various fields. In this review paper, a brief synopsis of history, applications, challenges, and some research in various areas ...

Lanfear suggests experimenting with parallel processing on a cheaper GPU aimed at gamers and then deploying code on a more professional chip. A top-of-the-range gaming GPU can cost US$1,200 ...

Graphic Programming Unit (GPU) is a parallel processor designed with high computational ability. The extensive use of GPU was in the field of gaming and rendering of 3D graphics. With the fast ...

NVIDIA's latest processor family, the Turing GPU, was designed to realize a vision for next-generation graphics combining rasterization, ray tracing, and deep learning. It includes fundamental advancements in several key areas: streaming multiprocessor efficiency, a Tensor Core for accelerated AI inferencing, and an RTCore for accelerated ray tracing. With these innovations, Turing unlocks ...


The increasing incorporation of Graphics Processing Units (GPUs) as accelerators has been one of the forefront High Performance Computing (HPC) trends and provides unprecedented performance; however, the prevalent adoption of the Single-Program Multiple-Data (SPMD) programming model brings with it challenges of resource underutilization. In other words, under SPMD, every CPU needs GPU ...

Our publications provide insight into some of our leading-edge research. Research Labs . All Research Labs. 3D Deep Learning. Applied Research. Autonomous Vehicles ... Computer Graphics (294) Computer Architecture (222) Robotics (106) Circuits and VLSI Design (101) ... Best Paper Award. MedPart: A Multi-Level Evolutionary Differentiable ...

Around 20 NVIDIA Research papers advancing generative AI and neural graphics — including collaborations with over a dozen universities in the U.S., Europe and Israel — are headed to SIGGRAPH 2023, the premier computer graphics conference, taking place Aug. 6-10 in Los Angeles. The papers include generative AI models that turn text into ...

various fields. In this review paper, a brief synopsis of history, applications, challenges, and some research in various areas of life covers some aspects of GPU. Keywords—GAS model, Graph processing, GPU, Graph, BSP model. I. INTRODUCTION A graphics processing unit (PC / GPU) is a computer chip that

GPU-Card Performance Research in Satellite Imagery Classification Problems Using Machine Learning ... application of various machine learning models for solving practical problems largely depends on the performance of GPU cards. The paper compares the accuracy of image recognition based on the experiments with NVIDIA's GPU cards and gives ...

Seven papers on the latest advances in GPU research will be presented at this year's most prominent GPU and graphics conferences: SIGGRAPH, EGSR, and HPG. Author. Anton Kaplanyan. Intel. Intel continues to evolve its integrated GPU strategy and has entered the market of discrete and data center GPUs with products like Intel® Arc™ graphics ...

GPU is a graphical processing unit which enables you to run high definitions graphics on your PC, which are the demand of modern computing. Like the CPU (Central Processing Unit), it is a single-chip processor. However, as shown in Fig. 1, the GPU has hundreds of cores as compared to the 4 or 8 in the latest CPUs.

It's directly soldered to the graphics card's PCB. This enables remarkably fast data transport with minimal latency, allowing for high-resolution graphics rendering or deep learning model training. NVIDIA GeForce RTX 3050 VRAM Positionning . On current graphics cards, VRAM comes in a variety of sizes, speeds, and bus widths.

Explore the latest full-text research PDFs, articles, conference papers, preprints and more on GPU. Find methods information, sources, references or conduct a literature review on GPU

Turing is the latest graphical processing unit (GPU) architecture from NVIDIA, and it underpins the new RTX graphics cards. RTX is shorthand for real-time ray tracing, which is one of the biggest advantages of the Turing architecture.

The two largest civil dedicated graphics card supply companies are AMD and NVIDIA and both of them produce gaming graphics cards and graph design graphics cards (Jon Peddie Research, 2014). NVIDIA and AMD have been competing with each other for a very long time. The competition in the dedicated graphics card area has a significant point and it is

AMD Radeon RX 7800 XT, RX 7700 XT Are Easy to Buy on Launch Day. No bots, scalpers, or digital queues. Demand for GPUs has normalized, making it easy to snag AMD's newest graphics cards from ...

And nowadays it is used in most of the processing applications. This paper gives a glimpse about those applications which require the GPU for computing. The mentioned research papers give the validation that by integrating the GPU in the computing, system processes can be made fast. I. INTRODUCTION The GPU which stands for Graphics Processing Unit.


The positive effects of tea on Alzheimer's disease (AD) have increasingly captured researchers' attention. Nevertheless, quantitative, comprehensive analysis in the relevant literature is lacking. This paper aims to thoroughly examine the current research status and hotspots from 2014 to 2023, providing a valuable reference for subsequent research. Documents spanning from 2014 to 2023 were ...