Null and Alternative Hypotheses | Definitions & Examples

Published on 5 October 2022 by Shaun Turney . Revised on 6 December 2022.

The null and alternative hypotheses are two competing claims that researchers weigh evidence for and against using a statistical test:

- Null hypothesis (H 0): There’s no effect in the population.

- Alternative hypothesis (H A): There’s an effect in the population.

The effect is usually the effect of the independent variable on the dependent variable.

Table of contents

- Answering your research question with hypotheses

- What is a null hypothesis?

- What is an alternative hypothesis?

- Differences between null and alternative hypotheses

- How to write null and alternative hypotheses

- Frequently asked questions about null and alternative hypotheses

The null and alternative hypotheses offer competing answers to your research question. When the research question asks “Does the independent variable affect the dependent variable?”, the null hypothesis (H 0) answers “No, there’s no effect in the population.” On the other hand, the alternative hypothesis (H A) answers “Yes, there is an effect in the population.”

The null and alternative are always claims about the population. That’s because the goal of hypothesis testing is to make inferences about a population based on a sample. Often, we infer whether there’s an effect in the population by looking at differences between groups or relationships between variables in the sample.

You can use a statistical test to decide whether the evidence favors the null or alternative hypothesis. Each type of statistical test comes with a specific way of phrasing the null and alternative hypothesis. However, the hypotheses can also be phrased in a general way that applies to any test.

The null hypothesis is the claim that there’s no effect in the population.

If the sample provides enough evidence against the claim that there’s no effect in the population (p ≤ α), then we can reject the null hypothesis. Otherwise, we fail to reject the null hypothesis.
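The decision rule above can be sketched in a few lines of Python. This is a minimal illustration, not part of the original article: the function name `decide` and the default significance level of 0.05 are assumptions chosen for the example.

```python
def decide(p_value: float, alpha: float = 0.05) -> str:
    """Return the conventional wording for a significance-test decision.

    `alpha` defaults to 0.05 purely for illustration; choose it before
    looking at the data.
    """
    if not 0.0 <= p_value <= 1.0:
        raise ValueError("p_value must be between 0 and 1")
    if not 0.0 < alpha < 1.0:
        raise ValueError("alpha must be strictly between 0 and 1")
    # Reject H0 only when the p-value is at or below the significance level.
    return "reject H0" if p_value <= alpha else "fail to reject H0"


print(decide(0.03))  # p is below alpha, so H0 is rejected
print(decide(0.20))  # p is above alpha, so we fail to reject H0
```

Note that the function never returns "accept H0", which mirrors the wording convention described in this section.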

Although “fail to reject” may sound awkward, it’s the conventional wording in statistics. Be careful not to say you “prove” or “accept” the null hypothesis.

Null hypotheses often include phrases such as “no effect”, “no difference”, or “no relationship”. When written in mathematical terms, they always include an equality (usually =, but sometimes ≥ or ≤).

Examples of null hypotheses

The table below gives examples of research questions and null hypotheses. There’s always more than one way to answer a research question, but these null hypotheses can help you get started.

*Note that some researchers prefer to always write the null hypothesis in terms of “no effect” and “=”. It would be fine to say that daily meditation has no effect on the incidence of depression and p 1 = p 2 .

The alternative hypothesis (H A) is the other answer to your research question. It claims that there’s an effect in the population.

Often, your alternative hypothesis is the same as your research hypothesis. In other words, it’s the claim that you expect or hope will be true.

The alternative hypothesis is the complement to the null hypothesis. Null and alternative hypotheses are exhaustive, meaning that together they cover every possible outcome. They are also mutually exclusive, meaning that only one can be true at a time.
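One way to make "exhaustive" and "mutually exclusive" concrete is to treat each hypothesis as a set of parameter values. The notation below is an illustrative assumption (θ for the population parameter, Θ for all of its possible values) and does not come from the article:

```latex
H_0 : \theta \in \Theta_0, \qquad
H_A : \theta \in \Theta_A, \qquad
\Theta_0 \cup \Theta_A = \Theta, \qquad
\Theta_0 \cap \Theta_A = \emptyset
```

For instance, a null hypothesis of μ ≤ 20 and an alternative of μ > 20 split the possible values of μ into two pieces that do not overlap and leave nothing out.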

Alternative hypotheses often include phrases such as “an effect”, “a difference”, or “a relationship”. When alternative hypotheses are written in mathematical terms, they always include an inequality (usually ≠, but sometimes > or <). As with null hypotheses, there are many acceptable ways to phrase an alternative hypothesis.

Examples of alternative hypotheses

The table below gives examples of research questions and alternative hypotheses to help you get started with formulating your own.

Null and alternative hypotheses are similar in some ways:

- They’re both answers to the research question

- They both make claims about the population

- They’re both evaluated by statistical tests.

However, there are important differences between the two types of hypotheses, summarized in the following table.

To help you write your hypotheses, you can use the template sentences below. If you know which statistical test you’re going to use, you can use the test-specific template sentences. Otherwise, you can use the general template sentences.

The only things you need to know to use these general template sentences are your dependent and independent variables. To write your research question, null hypothesis, and alternative hypothesis, fill in the following sentences with your variables:

Does [independent variable] affect [dependent variable]?

- Null hypothesis (H 0): [Independent variable] does not affect [dependent variable].

- Alternative hypothesis (H A): [Independent variable] affects [dependent variable].
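The general template sentences can be filled in mechanically. The sketch below is only an illustration: the function name `general_hypotheses` and the dictionary keys are hypothetical, and the example variables reuse the meditation and depression example mentioned earlier.

```python
def general_hypotheses(independent: str, dependent: str) -> dict[str, str]:
    """Fill the general templates with an independent and a dependent variable."""
    iv = independent.strip()
    dv = dependent.strip()
    if not iv or not dv:
        raise ValueError("both variables must be non-empty")
    # Capitalise only the first letter so the variable can start a sentence.
    iv_cap = iv[0].upper() + iv[1:]
    return {
        "research_question": f"Does {iv} affect {dv}?",
        "null": f"H0: {iv_cap} does not affect {dv}.",
        "alternative": f"HA: {iv_cap} affects {dv}.",
    }


for sentence in general_hypotheses("daily meditation", "the incidence of depression").values():
    print(sentence)
```

The output is a starting point only; a test-specific phrasing is usually more precise once the statistical test is known.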

Test-specific

Once you know the statistical test you’ll be using, you can write your hypotheses in a more precise and mathematical way specific to the test you chose. The table below provides template sentences for common statistical tests.

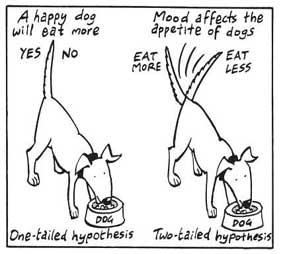

Note: The template sentences above assume that you’re performing two-tailed tests. Two-tailed tests are appropriate for most studies.

The null hypothesis is often abbreviated as H 0 . When the null hypothesis is written using mathematical symbols, it always includes an equality symbol (usually =, but sometimes ≥ or ≤).

The alternative hypothesis is often abbreviated as H a or H 1 . When the alternative hypothesis is written using mathematical symbols, it always includes an inequality symbol (usually ≠, but sometimes < or >).

A research hypothesis is your proposed answer to your research question. The research hypothesis usually includes an explanation (‘ x affects y because …’).

A statistical hypothesis, on the other hand, is a mathematical statement about a population parameter. Statistical hypotheses always come in pairs: the null and alternative hypotheses. In a well-designed study, the statistical hypotheses correspond logically to the research hypothesis.

Turney, S. (2022, December 06). Null and Alternative Hypotheses | Definitions & Examples. Scribbr. Retrieved 14 May 2024, from https://www.scribbr.co.uk/stats/null-and-alternative-hypothesis/

Once you have developed a clear and focused research question or set of research questions, you’ll be ready to conduct further research, a literature review, on the topic to help you make an educated guess about the answer to your question(s). This educated guess is called a hypothesis.

In research, there are two types of hypotheses: null and alternative. They work as a complementary pair, each stating that the other is wrong.

- Null Hypothesis (H 0 ) – This can be thought of as the implied hypothesis. “Null” meaning “nothing.” This hypothesis states that there is no difference between groups or no relationship between variables. The null hypothesis is a presumption of status quo or no change.

- Alternative Hypothesis (H a ) – This is also known as the claim. This hypothesis should state what you expect the data to show, based on your research on the topic. This is your answer to your research question.

- Null Hypothesis (H 0): There is no difference in the salary of factory workers based on gender.

- Alternative Hypothesis (H a): Male factory workers have a higher salary than female factory workers.

- Null Hypothesis (H 0): There is no relationship between height and shoe size.

- Alternative Hypothesis (H a): There is a positive relationship between height and shoe size.

- Null Hypothesis (H 0): Experience on the job has no impact on the quality of a brick mason’s work.

- Alternative Hypothesis (H a): The quality of a brick mason’s work is influenced by on-the-job experience.

- Last Updated: Apr 19, 2024 3:09 PM

- URL: https://resources.nu.edu/statsresources

Neag School of Education

Educational Research Basics by Del Siegle

Null and alternative hypotheses.

Converting research questions to hypotheses is a simple task. Take the question and turn it into a positive statement that says a relationship exists (correlational studies) or a difference exists between the groups (experimental studies), and you have the alternative hypothesis. Write the statement such that a relationship does not exist or a difference does not exist, and you have the null hypothesis. You can reverse the process if you have a hypothesis and wish to write a research question.

When you are comparing two groups, the groups are the independent variable. When you are testing whether something affects something else, the cause is the independent variable. The independent variable is the one you manipulate.

Consider this hypothesis: teachers given higher pay will have more positive attitudes toward children than teachers given lower pay. The first step is to ask yourself “Are there two or more groups being compared?” The answer is “Yes.” What are the groups? Teachers who are given higher pay and teachers who are given lower pay. The independent variable is teacher pay. The dependent variable (the outcome) is attitude toward children.

You could also approach it another way. “Is something causing something else?” The answer is “Yes.” What is causing what? Teacher pay is causing attitude toward children. Therefore, teacher pay is the independent variable (cause) and attitude toward children is the dependent variable (outcome).

By tradition, we try to disprove (reject) the null hypothesis. We can never prove a null hypothesis, because it is impossible to prove something does not exist. We can disprove something does not exist by finding an example of it. Therefore, in research we try to disprove the null hypothesis. When we do find that a relationship (or difference) exists then we reject the null and accept the alternative. If we do not find that a relationship (or difference) exists, we fail to reject the null hypothesis (and go with it). We never say we accept the null hypothesis because it is never possible to prove something does not exist. That is why we say that we failed to reject the null hypothesis, rather than we accepted it.

Del Siegle, Ph.D. Neag School of Education – University of Connecticut [email protected] www.delsiegle.com

10.1 - Setting the Hypotheses: Examples

A significance test examines whether the null hypothesis provides a plausible explanation of the data. The null hypothesis itself does not involve the data. It is a statement about a parameter (a numerical characteristic of the population). These population values might be proportions or means or differences between means or proportions or correlations or odds ratios or any other numerical summary of the population. The alternative hypothesis is typically the research hypothesis of interest. Here are some examples.

Example 10.2: Hypotheses with One Sample of One Categorical Variable

About 10% of the human population is left-handed. Suppose a researcher at Penn State speculates that students in the College of Arts and Architecture are more likely to be left-handed than people found in the general population. We only have one sample since we will be comparing a population proportion based on a sample value to a known population value.

- Research Question : Are artists more likely to be left-handed than people found in the general population?

- Response Variable : Classification of the student as either right-handed or left-handed

State Null and Alternative Hypotheses

- Null Hypothesis : Students in the College of Arts and Architecture are no more likely to be left-handed than people in the general population (population percent of left-handed students in the College of Arts and Architecture = 10% or p = .10).

- Alternative Hypothesis : Students in the College of Arts and Architecture are more likely to be left-handed than people in the general population (population percent of left-handed students in the College of Arts and Architecture > 10% or p > .10). This is a one-sided alternative hypothesis.
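As a rough illustration of how these hypotheses could be tested, the sketch below computes an exact one-sided binomial p-value for H0: p = .10 against the alternative p > .10. The sample (16 left-handed students out of 100) is hypothetical, the function name is an assumption, and the exact binomial test is one reasonable choice rather than the method the lesson prescribes.

```python
from math import comb


def binomial_upper_tail(successes: int, n: int, p0: float) -> float:
    """P(X >= successes) for X ~ Binomial(n, p0).

    This is the one-sided p-value for the alternative p > p0.
    """
    if not 0 <= successes <= n:
        raise ValueError("successes must be between 0 and n")
    return sum(
        comb(n, k) * p0**k * (1 - p0) ** (n - k)
        for k in range(successes, n + 1)
    )


# Hypothetical data: 16 of 100 sampled students are left-handed.
p_value = binomial_upper_tail(16, 100, 0.10)
print(f"one-sided p-value = {p_value:.4f}")
```

A small p-value would count as evidence against the null value of 10%; a large one would mean we fail to reject it.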

Example 10.3: Hypotheses with One Sample of One Measurement Variable

A generic brand of the anti-histamine Diphenhydramine markets a capsule with a 50 milligram dose. The manufacturer is worried that the machine that fills the capsules has come out of calibration and is no longer creating capsules with the appropriate dosage.

- Research Question : Does the data suggest that the population mean dosage of this brand is different than 50 mg?

- Response Variable : dosage of the active ingredient found by a chemical assay.

- Null Hypothesis : On the average, the dosage sold under this brand is 50 mg (population mean dosage = 50 mg).

- Alternative Hypothesis : On the average, the dosage sold under this brand is not 50 mg (population mean dosage ≠ 50 mg). This is a two-sided alternative hypothesis.
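For a two-sided hypothesis about a single mean, a common test statistic is the one-sample t statistic. The sketch below computes it with the standard library only; the dosage values are made up for illustration, and converting the statistic to a p-value (which needs the t distribution with n - 1 degrees of freedom) is left to a table or a statistics package.

```python
from math import sqrt
from statistics import mean, stdev


def one_sample_t(sample, mu0):
    """Return (t statistic, degrees of freedom) for H0: population mean = mu0."""
    n = len(sample)
    if n < 2:
        raise ValueError("need at least two observations")
    # Standard error of the mean uses the sample standard deviation.
    # A sample with zero variance would make this division fail.
    standard_error = stdev(sample) / sqrt(n)
    return (mean(sample) - mu0) / standard_error, n - 1


# Hypothetical assay results in milligrams (not real data).
dosages = [49.2, 50.1, 48.7, 49.5, 50.3, 49.0, 48.9, 49.8]
t_stat, df = one_sample_t(dosages, 50.0)
print(f"t = {t_stat:.3f} with {df} degrees of freedom")
```

Because the alternative is two-sided, both large positive and large negative values of t count as evidence against the null hypothesis.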

Example 10.4: Hypotheses with Two Samples of One Categorical Variable

Many people are starting to prefer vegetarian meals on a regular basis. Specifically, a researcher believes that females are more likely than males to eat vegetarian meals on a regular basis.

- Research Question : Does the data suggest that females are more likely than males to eat vegetarian meals on a regular basis?

- Response Variable : Classification of whether or not a person eats vegetarian meals on a regular basis

- Explanatory (Grouping) Variable: Sex

- Null Hypothesis : There is no sex effect regarding those who eat vegetarian meals on a regular basis (population percent of females who eat vegetarian meals on a regular basis = population percent of males who eat vegetarian meals on a regular basis or p females = p males ).

- Alternative Hypothesis : Females are more likely than males to eat vegetarian meals on a regular basis (population percent of females who eat vegetarian meals on a regular basis > population percent of males who eat vegetarian meals on a regular basis or p females > p males ). This is a one-sided alternative hypothesis.
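A pooled two-proportion z-test is one standard way to examine hypotheses of this form. The following sketch assumes large samples (so the normal approximation is reasonable) and uses invented survey counts; the function name is hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z(x1, n1, x2, n2):
    """z statistic and one-sided p-value for H0: p1 = p2 vs HA: p1 > p2."""
    p1, p2 = x1 / n1, x2 / n2
    # Under H0 the two groups share one proportion, so pool the counts.
    pooled = (x1 + x2) / (n1 + n2)
    standard_error = sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / standard_error
    p_value = 1 - NormalDist().cdf(z)  # upper tail only: one-sided alternative
    return z, p_value


# Hypothetical counts: 60 of 200 females and 40 of 200 males eat vegetarian meals regularly.
z, p_value = two_proportion_z(60, 200, 40, 200)
print(f"z = {z:.3f}, one-sided p-value = {p_value:.4f}")
```

Group 1 is the group expected to have the larger proportion, which here is females.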

Example 10.5: Hypotheses with Two Samples of One Measurement Variable

Obesity is a major health problem today. Research is starting to show that people may be able to lose more weight on a low carbohydrate diet than on a low fat diet.

- Research Question : Does the data suggest that, on the average, people are able to lose more weight on a low carbohydrate diet than on a low fat diet?

- Response Variable : Weight loss (pounds)

- Explanatory (Grouping) Variable : Type of diet

- Null Hypothesis : There is no difference in the mean amount of weight loss when comparing a low carbohydrate diet with a low fat diet (population mean weight loss on a low carbohydrate diet = population mean weight loss on a low fat diet).

- Alternative Hypothesis : The mean weight loss should be greater for those on a low carbohydrate diet when compared with those on a low fat diet (population mean weight loss on a low carbohydrate diet > population mean weight loss on a low fat diet). This is a one-sided alternative hypothesis.
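One way to examine a one-sided difference in means without distributional tables is a permutation test, sketched below. This is a swapped-in technique chosen because it needs only the standard library, not the method the lesson uses; the weight-loss figures are invented and the function name is hypothetical.

```python
import random
from statistics import mean


def permutation_p_value(group_a, group_b, n_permutations=10_000, seed=0):
    """Approximate one-sided p-value for HA: mean(group_a) > mean(group_b)."""
    rng = random.Random(seed)  # fixed seed so the result is reproducible
    observed = mean(group_a) - mean(group_b)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    at_least_as_large = 0
    for _ in range(n_permutations):
        # Under H0 the group labels are arbitrary, so reshuffle them.
        rng.shuffle(pooled)
        diff = mean(pooled[:n_a]) - mean(pooled[n_a:])
        if diff >= observed:
            at_least_as_large += 1
    # Add-one correction keeps the estimate strictly above zero.
    return (at_least_as_large + 1) / (n_permutations + 1)


# Hypothetical weight loss in pounds (not real data).
low_carb = [12.1, 9.4, 15.0, 10.2, 13.3, 8.7]
low_fat = [7.9, 10.1, 6.5, 9.0, 8.2, 11.4]
print(f"one-sided p-value ≈ {permutation_p_value(low_carb, low_fat):.4f}")
```

The p-value estimates how often a difference at least as large as the observed one arises when diet labels are assigned at random.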

Example 10.6: Hypotheses about the relationship between Two Categorical Variables

- Research Question : Do the odds of having a stroke increase if you inhale second-hand smoke? In a case-control study, non-smoking stroke patients and controls of the same age and occupation are asked whether someone in their household smokes.

- Variables : There are two different categorical variables (Stroke patient vs control and whether the subject lives in the same household as a smoker). Living with a smoker (or not) is the natural explanatory variable and having a stroke (or not) is the natural response variable in this situation.

- Null Hypothesis : There is no relationship between whether or not a person has a stroke and whether or not a person lives with a smoker (odds ratio between stroke and second-hand smoke situation is = 1).

- Alternative Hypothesis : There is a relationship between whether or not a person has a stroke and whether or not a person lives with a smoker (odds ratio between stroke and second-hand smoke situation is > 1). This is a one-tailed alternative.

This research question might also be addressed like Example 10.4 by making the hypotheses about comparing the proportion of stroke patients that live with smokers to the proportion of controls that live with smokers.
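The odds ratio named in these hypotheses can be computed directly from a 2×2 table of counts. The sketch below uses invented counts and a hypothetical function name; it shows only the point estimate, not a significance test.

```python
def odds_ratio(exposed_cases, unexposed_cases, exposed_controls, unexposed_controls):
    """Sample odds ratio for a 2x2 case-control table.

    Odds of exposure among cases divided by odds of exposure among controls.
    """
    if unexposed_cases == 0 or exposed_controls == 0:
        raise ValueError("odds ratio is undefined when a denominator cell is zero")
    return (exposed_cases * unexposed_controls) / (unexposed_cases * exposed_controls)


# Hypothetical counts: 20 of 100 stroke patients and 10 of 100 controls live with a smoker.
estimate = odds_ratio(20, 80, 10, 90)
print(f"sample odds ratio = {estimate:.2f}")
```

A sample odds ratio above 1 points in the direction of the alternative hypothesis, but a test is still needed to judge whether the population odds ratio plausibly equals 1.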

Example 10.7: Hypotheses about the relationship between Two Measurement Variables

- Research Question : A financial analyst believes there might be a positive association between the change in a stock's price and the amount of the stock purchased by non-management employees the previous day (stock trading by management being under "insider-trading" regulatory restrictions).

- Variables : Daily price change information (the response variable) and previous day stock purchases by non-management employees (explanatory variable). These are two different measurement variables.

- Null Hypothesis : The correlation between the daily stock price change (\$) and the daily stock purchases by non-management employees (\$) = 0.

- Alternative Hypothesis : The correlation between the daily stock price change (\$) and the daily stock purchases by non-management employees (\$) > 0. This is a one-sided alternative hypothesis.
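For hypotheses about a correlation, the usual starting point is the sample correlation r and the statistic t = r·√(n − 2) / √(1 − r²), which has n − 2 degrees of freedom under H0. The sketch below computes both; the data are invented and the function names are assumptions for illustration.

```python
from math import sqrt


def pearson_r(x, y):
    """Sample Pearson correlation between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need two sequences of equal length, at least 3 values each")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def correlation_t(r, n):
    """t statistic for H0: population correlation = 0 (n - 2 degrees of freedom)."""
    return r * sqrt((n - 2) / (1 - r * r))


# Hypothetical daily figures (not real market data).
price_change = [0.5, -0.2, 1.1, 0.3, -0.4, 0.8]
purchases = [120, 80, 150, 100, 60, 130]
r = pearson_r(purchases, price_change)
print(f"r = {r:.3f}, t = {correlation_t(r, len(purchases)):.3f}")
```

With a one-sided alternative of a positive correlation, only large positive values of t count as evidence against the null hypothesis.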

Example 10.8: Hypotheses about comparing the relationship between Two Measurement Variables in Two Samples

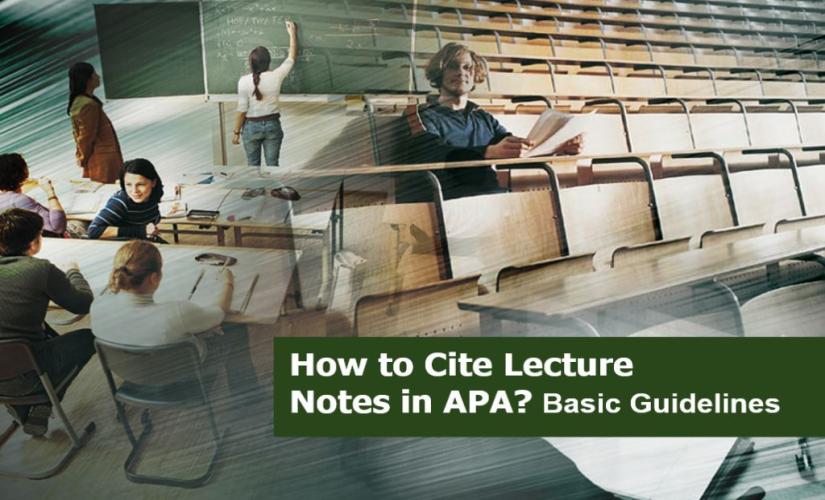

- Research Question : Is there a linear relationship between the amount of the bill (\$) at a restaurant and the tip (\$) that was left? Is the strength of this association different for family restaurants than for fine dining restaurants?

- Variables : There are two different measurement variables. The size of the tip would depend on the size of the bill so the amount of the bill would be the explanatory variable and the size of the tip would be the response variable.

- Null Hypothesis : The correlation between the amount of the bill (\$) at a restaurant and the tip (\$) that was left is the same at family restaurants as it is at fine dining restaurants.

- Alternative Hypothesis : The correlation between the amount of the bill (\$) at a restaurant and the tip (\$) that was left is different at family restaurants than it is at fine dining restaurants. This is a two-sided alternative hypothesis.
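A common approach to comparing two independent correlations is Fisher's z-transformation. The sketch below is an assumption about how such a comparison might be run, not the lesson's own procedure; the sample correlations and sample sizes are invented.

```python
from math import atanh, sqrt
from statistics import NormalDist


def compare_correlations(r1, n1, r2, n2):
    """z statistic and two-sided p-value for H0: the two population correlations are equal."""
    if min(n1, n2) <= 3:
        raise ValueError("each sample needs more than 3 observations")
    if not (-1 < r1 < 1 and -1 < r2 < 1):
        raise ValueError("correlations must be strictly between -1 and 1")
    # Fisher's transformation makes each sample correlation roughly normal.
    z1, z2 = atanh(r1), atanh(r2)
    standard_error = sqrt(1 / (n1 - 3) + 1 / (n2 - 3))
    z = (z1 - z2) / standard_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))  # both tails: two-sided alternative
    return z, p_value


# Hypothetical results: r = 0.85 from 60 family-restaurant bills, r = 0.70 from 50 fine-dining bills.
z, p_value = compare_correlations(0.85, 60, 0.70, 50)
print(f"z = {z:.3f}, two-sided p-value = {p_value:.4f}")
```

The approximation assumes the two samples are independent and reasonably large.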

Writing Null Hypotheses in Research and Statistics

Last Updated: January 17, 2024 Fact Checked

This article was co-authored by Joseph Quinones and by wikiHow staff writer, Jennifer Mueller, JD. Joseph Quinones is a High School Physics Teacher working at South Bronx Community Charter High School. Joseph specializes in astronomy and astrophysics and is interested in science education and science outreach, currently practicing ways to make physics accessible to more students with the goal of bringing more students of color into the STEM fields. He has experience working on Astrophysics research projects at the Museum of Natural History (AMNH). Joseph received his Bachelor's degree in Physics from Lehman College and his Masters in Physics Education from City College of New York (CCNY). He is also a member of a network called New York City Men Teach. There are 7 references cited in this article, which can be found at the bottom of the page. This article has been fact-checked, ensuring the accuracy of any cited facts and confirming the authority of its sources. This article has been viewed 25,573 times.

Are you working on a research project and struggling with how to write a null hypothesis? Well, you've come to the right place! Start by recognizing that the basic definition of "null" is "none" or "zero"—that's your biggest clue as to what a null hypothesis should say. Keep reading to learn everything you need to know about the null hypothesis, including how it relates to your research question and your alternative hypothesis as well as how to use it in different types of studies.

Things You Should Know

- Write a research null hypothesis as a statement that the studied variables have no relationship to each other, or that there's no difference between 2 groups.

- Adjust the format of your null hypothesis to match the statistical method you used to test it, such as using "mean" if you're comparing the mean between 2 groups.

What is a null hypothesis?

- Research hypothesis: States in plain language that there's no relationship between the 2 variables or there's no difference between the 2 groups being studied.

- Statistical hypothesis: States the predicted outcome of statistical analysis through a mathematical equation related to the statistical method you're using.

Examples of Null Hypotheses

Null Hypothesis vs. Alternative Hypothesis

- For example, your alternative hypothesis could state a positive correlation between 2 variables while your null hypothesis states there's no relationship. If there's a negative correlation, then both hypotheses are false.

- You need additional data or evidence to show that your alternative hypothesis is correct—proving the null hypothesis false is just the first step.

- In smaller studies, sometimes it's enough to show that there's some relationship and your hypothesis could be correct—you can leave the additional proof as an open question for other researchers to tackle.

How do I test a null hypothesis?

- Group means: Compare the mean of the variable in your sample with the mean of the variable in the general population. [6]

- Group proportions: Compare the proportion of the variable in your sample with the proportion of the variable in the general population. [7]

- Correlation: Correlation analysis looks at the relationship between 2 variables, specifically whether they tend to happen together. [8]

- Regression: Regression analysis reveals the correlation between 2 variables while also controlling for the effect of other, interrelated variables. [9]

Templates for Null Hypotheses

- Research null hypothesis: There is no difference in the mean [dependent variable] between [group 1] and [group 2].

- Research null hypothesis: The proportion of [dependent variable] in [group 1] and [group 2] is the same.

- Research null hypothesis: There is no correlation between [independent variable] and [dependent variable] in the population.

- Research null hypothesis: There is no relationship between [independent variable] and [dependent variable] in the population.

- https://online.stat.psu.edu/stat100/lesson/10/10.1

- https://online.stat.psu.edu/stat501/lesson/2/2.12

- https://support.minitab.com/en-us/minitab/21/help-and-how-to/statistics/basic-statistics/supporting-topics/basics/null-and-alternative-hypotheses/

- https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5635437/

- https://online.stat.psu.edu/statprogram/reviews/statistical-concepts/hypothesis-testing

- https://education.arcus.chop.edu/null-hypothesis-testing/

- https://sphweb.bumc.bu.edu/otlt/mph-modules/bs/bs704_hypothesistest-means-proportions/bs704_hypothesistest-means-proportions_print.html

How to Write a Null Hypothesis (5 Examples)

A hypothesis test uses sample data to determine whether or not some claim about a population parameter is true.

Whenever we perform a hypothesis test, we always write a null hypothesis and an alternative hypothesis, which take the following forms:

H 0 (Null Hypothesis): Population parameter =, ≤, ≥ some value

H A (Alternative Hypothesis): Population parameter <, >, ≠ some value

Note that the null hypothesis always contains the equal sign .

We interpret the hypotheses as follows:

Null hypothesis: The sample data provides no evidence to support some claim being made by an individual.

Alternative hypothesis: The sample data does provide sufficient evidence to support the claim being made by an individual.

For example, suppose it’s assumed that the average height of a certain species of plant is 20 inches tall. However, one botanist claims the true average height is greater than 20 inches.

To test this claim, she may go out and collect a random sample of plants. She can then use this sample data to perform a hypothesis test using the following two hypotheses:

H 0 : μ ≤ 20 (the true mean height of plants is equal to or even less than 20 inches)

H A : μ > 20 (the true mean height of plants is greater than 20 inches)

If the sample data gathered by the botanist shows that the mean height of this species of plants is significantly greater than 20 inches, she can reject the null hypothesis and conclude that the mean height is greater than 20 inches.

Read through the following examples to gain a better understanding of how to write a null hypothesis in different situations.

Example 1: Weight of Turtles

A biologist wants to test whether or not the true mean weight of a certain species of turtles is 300 pounds. To test this, he goes out and measures the weight of a random sample of 40 turtles.

Here is how to write the null and alternative hypotheses for this scenario:

H 0 : μ = 300 (the true mean weight is equal to 300 pounds)

H A : μ ≠ 300 (the true mean weight is not equal to 300 pounds)

Example 2: Height of Males

It’s assumed that the mean height of males in a certain city is 68 inches. However, an independent researcher believes the true mean height is greater than 68 inches. To test this, he goes out and collects the height of 50 males in the city.

H 0 : μ ≤ 68 (the true mean height is equal to or even less than 68 inches)

H A : μ > 68 (the true mean height is greater than 68 inches)

Example 3: Graduation Rates

A university states that 80% of all students graduate on time. However, an independent researcher believes that less than 80% of all students graduate on time. To test this, she collects data on the proportion of students who graduated on time last year at the university.

H 0 : p ≥ 0.80 (the true proportion of students who graduate on time is 80% or higher)

H A : p < 0.80 (the true proportion of students who graduate on time is less than 80%)

Example 4: Burger Weights

A food researcher wants to test whether or not the true mean weight of a burger at a certain restaurant is 7 ounces. To test this, he goes out and measures the weight of a random sample of 20 burgers from this restaurant.

H 0 : μ = 7 (the true mean weight is equal to 7 ounces)

H A : μ ≠ 7 (the true mean weight is not equal to 7 ounces)

Example 5: Citizen Support

A politician claims that less than 30% of citizens in a certain town support a certain law. To test this, he goes out and surveys 200 citizens on whether or not they support the law.

H 0 : p ≥ 0.30 (the true proportion of citizens who support the law is greater than or equal to 30%)

H A : p < 0.30 (the true proportion of citizens who support the law is less than 30%)
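The five examples follow one pattern: the direction of the claim determines which pair of symbols appears in the two hypotheses. The helper below is a hypothetical sketch of that pattern; the function name and the claim labels ("different", "greater", "less") are assumptions made for the example.

```python
def write_hypotheses(symbol, value, claim):
    """Return (null, alternative) strings for a claim about one parameter.

    claim: "different", "greater", or "less", describing the alternative.
    """
    # Each alternative sign is paired with the null sign that contains equality.
    operators = {
        "different": ("=", "≠"),
        "greater": ("≤", ">"),
        "less": ("≥", "<"),
    }
    if claim not in operators:
        raise ValueError(f"claim must be one of {sorted(operators)}")
    null_op, alt_op = operators[claim]
    return f"H0: {symbol} {null_op} {value}", f"HA: {symbol} {alt_op} {value}"


print(write_hypotheses("μ", 300, "different"))  # turtle weights, Example 1
print(write_hypotheses("μ", 68, "greater"))     # male heights, Example 2
print(write_hypotheses("p", 0.8, "less"))       # graduation rates, Example 3
```

Use μ for a claim about a mean and p for a claim about a proportion, as in the examples above.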

Hey there. My name is Zach Bobbitt. I have a Masters of Science degree in Applied Statistics and I’ve worked on machine learning algorithms for professional businesses in both healthcare and retail. I’m passionate about statistics, machine learning, and data visualization and I created Statology to be a resource for both students and teachers alike. My goal with this site is to help you learn statistics through using simple terms, plenty of real-world examples, and helpful illustrations.


How to Write a Null and Alternative Hypothesis: A Guide With Examples

- 18 May 2024

When undertaking a qualitative or quantitative research project, researchers must first formulate a research question, from which they develop a hypothesis. A hypothesis is a prediction that a researcher makes about the answer to the research question, and it can be either affirmative or negative. A research question has three main components: variables (independent and dependent), a population sample, and the relationship between the variables. When the prediction states that the variables are unrelated, it is referred to as a null hypothesis. In short, a null hypothesis is a statement that there is no relationship between the independent and dependent variables. Hence, researchers need to learn how to write a good null and alternative hypothesis to present quality studies.

General Aspect of Writing a Null and Alternative Hypothesis

Students with qualitative or quantitative research assignments must learn how to formulate and write a good research question and hypothesis. A hypothesis is an assumption or prediction that a researcher makes before undertaking an experimental investigation. Academic standards require such a prediction to be a precise and testable statement, meaning that the evidence gathered in the course of the assignment must be able to support or refute it. The main components of a hypothesis are variables (independent and dependent), a population sample, and the relationship between the variables. Therefore, a research hypothesis is a prediction that researchers write about the relationship between two or more variables. In turn, the research inquiry is the process that seeks to answer the research question and, in doing so, tests the hypothesis by confirming or refuting it.

Types of Hypotheses

There are several types of hypotheses, including an alternative hypothesis, a null hypothesis, a directional hypothesis, and a non-directional hypothesis. Basically, the directional hypothesis is a prediction of how the independent variable affects the dependent variable. In contrast, the non-directional hypothesis predicts that the independent variable influences the dependent variable, but does not specify how. Regardless of the type, all hypotheses are about predicting the relationship between the independent and dependent variables.

What Is a Null and Alternative Hypothesis

A null hypothesis, usually symbolized as “H0,” is a statement that contradicts the research hypothesis. In other words, it is a negative statement, indicating that there is no relationship between the independent and dependent variables. By testing the null hypothesis, a researcher can determine whether the results are due to chance or to the effect of manipulating the independent variable. In most instances, a null hypothesis is paired with an alternative hypothesis, a positive statement that a relationship exists between the independent and dependent variables. It is also recommended that a researcher write the alternative hypothesis before the null hypothesis.

10 Examples of Research Questions With H0 and H1 Hypotheses

Before developing a hypothesis, a researcher must formulate the research question. The next step is to transform the question into a negative statement that claims there is no relationship between the independent and dependent variables. This statement is the null hypothesis, symbolized as H0. Alternatively, researchers can change the question into a positive statement that claims a relationship exists between the variables. This latter statement becomes the alternative hypothesis and is symbolized as H1. Some examples of research questions with their null and alternative hypotheses are as follows:

1. Do physical exercises help individuals to age gracefully?

A Null Hypothesis (H0): Physical exercises are not a guarantee for graceful old age.

An Alternative Hypothesis (H1): Engaging in physical exercises enables individuals to remain healthy and active into old age.

2. What are the implications of therapeutic interventions in the fight against substance abuse?

H0: Therapeutic interventions are of no help in the fight against substance abuse.

H1: Exposing individuals with substance abuse disorders to therapeutic interventions helps to control and even stop their addictions.

3. How do sexual orientation and gender identity affect the experiences of late adolescents in foster care?

H0: Sexual orientation and gender identity have no effects on the experiences of late adolescents in foster care.

H1: The reality of stereotypes in society makes sexual orientation and gender identity factors complicate the experiences of late adolescents in foster care.

4. Does income inequality contribute to crime in high-density urban areas?

H0: There is no correlation between income inequality and incidences of crime in high-density urban areas.

H1: The high crime rates in high-density urban areas are due to the incidence of income inequality in those areas.

5. Does placement in foster care impact individuals’ mental health?

H0: There is no correlation between being in foster care and having mental health problems.

H1: Individuals placed in foster care experience anxiety and depression at one point in their life.

6. Do assistive devices and technologies lessen the mobility challenges of older adults with a stroke?

H0: Assistive devices and technologies do not provide any assistance to the mobility of older adults diagnosed with a stroke.

H1: Assistive devices and technologies enhance the mobility of older adults diagnosed with a stroke.

7. Does race identity undermine classroom participation?

H0: There is no correlation between racial identity and the ability to participate in classroom learning.

H1: Students from racial minorities are not as active as white students in classroom participation.

8. Do high school grades determine future success?

H0: There is no correlation between how one performs in high school and their success level in life.

H1: Attaining high grades in high school positions one for greater success in the future personal and professional lives.

9. Does critical thinking predict academic achievement?

H0: There is no correlation between critical thinking and academic achievement.

H1: Being a critical thinker is a pathway to academic success.

10. What benefits does group therapy provide to victims of domestic violence?

H0: Group therapy does not help victims of domestic violence because individuals prefer to hide rather than expose their shame.

H1: Group therapy provides domestic violence victims with a platform to share their hurt and connect with others with similar experiences.

Summing Up on How to Write a Null and Alternative Hypothesis

The formulation of research questions in qualitative and quantitative assignments helps students develop a hypothesis for their experiment. Learning how to write a good hypothesis helps students and researchers make their research relevant. The difference between a null and an alternative hypothesis is that the former denies the relationship posed in the research question, while the latter affirms it. In short, a null hypothesis is a negative statement relative to the research question, and an alternative hypothesis is a positive statement. Moreover, the null hypothesis is developed at the beginning of the assignment for prediction purposes. The research work then answers the research question and confirms or refutes the hypothesis. Hence, some of the tips that students and researchers need to know when developing a null hypothesis include:

- Formulate a research question that specifies the relationship between an independent variable and a dependent variable.

- Develop an alternative hypothesis stating that a relationship exists between the variables.

- Develop a null hypothesis stating that no relationship exists between the variables.

- Conduct the research to answer the research question, which allows the null hypothesis to be either rejected or retained.


9.1 Null and Alternative Hypotheses

The actual test begins by considering two hypotheses . They are called the null hypothesis and the alternative hypothesis . These hypotheses contain opposing viewpoints.

H 0 , the null hypothesis: a statement of no difference between sample means or proportions or no difference between a sample mean or proportion and a population mean or proportion. In other words, the difference equals 0.

H a , the alternative hypothesis: a claim about the population that is contradictory to H 0 and what we conclude when we reject H 0 .

Since the null and alternative hypotheses are contradictory, you must examine evidence to decide if you have enough evidence to reject the null hypothesis or not. The evidence is in the form of sample data.

After you have determined which hypothesis the sample supports, you make a decision. There are two options: reject H 0 if the sample information favors the alternative hypothesis, or do not reject H 0 (decline to reject H 0 ) if the sample information is insufficient to reject the null hypothesis.

Mathematical Symbols Used in H 0 and H a :

H 0 always has a symbol with an equal in it. H a never has a symbol with an equal in it. The choice of symbol depends on the wording of the hypothesis test. However, be aware that many researchers use = in the null hypothesis, even with > or < as the symbol in the alternative hypothesis. This practice is acceptable because we only make the decision to reject or not reject the null hypothesis.
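The pairing rule for symbols can be sketched as a small lookup. This is only an illustration: the names `NULL_SYMBOL_FOR` and `state_hypotheses` are made up here, and the code follows the stricter convention in which H 0 uses the complement of the symbol in H a , rather than the also acceptable practice of always writing = in the null.

```python
# Each alternative-hypothesis symbol pairs with the null symbol that
# covers every remaining case, so H0 always includes equality.
NULL_SYMBOL_FOR = {">": "≤", "<": "≥", "≠": "="}


def state_hypotheses(parameter, alt_symbol, value):
    """Return (H0, Ha) strings for the given alternative symbol."""
    if alt_symbol not in NULL_SYMBOL_FOR:
        raise ValueError("Ha must use one of >, < or ≠")
    h0 = f"H0: {parameter} {NULL_SYMBOL_FOR[alt_symbol]} {value}"
    ha = f"Ha: {parameter} {alt_symbol} {value}"
    return h0, ha


print(state_hypotheses("μ", "≠", 2.0))
```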

Example 9.1

H 0 : No more than 30 percent of the registered voters in Santa Clara County voted in the primary election. p ≤ 0.30

H a : More than 30 percent of the registered voters in Santa Clara County voted in the primary election. p > 0.30

A medical trial is conducted to test whether or not a new medicine reduces cholesterol by 25 percent. State the null and alternative hypotheses.

Example 9.2

We want to test whether the mean GPA of students in American colleges is different from 2.0 (out of 4.0). The null and alternative hypotheses are the following:

H 0 : μ = 2.0

H a : μ ≠ 2.0

We want to test whether the mean height of eighth graders is 66 inches. State the null and alternative hypotheses. Fill in the correct symbol (=, ≠, ≥, <, ≤, >) for the null and alternative hypotheses.

- H 0 : μ __ 66

- H a : μ __ 66

Example 9.3

We want to test if college students take fewer than five years to graduate from college, on average. The null and alternative hypotheses are the following:

H 0 : μ ≥ 5

H a : μ < 5

We want to test if it takes fewer than 45 minutes to teach a lesson plan. State the null and alternative hypotheses. Fill in the correct symbol ( =, ≠, ≥, <, ≤, >) for the null and alternative hypotheses.

- H 0 : μ __ 45

- H a : μ __ 45

Example 9.4

An article on school standards stated that about half of all students in France, Germany, and Israel take advanced placement exams and a third of the students pass. The same article stated that 6.6 percent of U.S. students take advanced placement exams and 4.4 percent pass. Test if the percentage of U.S. students who take advanced placement exams is more than 6.6 percent. State the null and alternative hypotheses.

H 0 : p ≤ 0.066

H a : p > 0.066

On a state driver’s test, about 40 percent pass the test on the first try. We want to test if more than 40 percent pass on the first try. Fill in the correct symbol (=, ≠, ≥, <, ≤, >) for the null and alternative hypotheses.

- H 0 : p __ 0.40

- H a : p __ 0.40

Collaborative Exercise

Bring to class a newspaper, some news magazines, and some internet articles. In groups, find articles from which your group can write null and alternative hypotheses. Discuss your hypotheses with the rest of the class.


This book may not be used in the training of large language models or otherwise be ingested into large language models or generative AI offerings without OpenStax's permission.

Want to cite, share, or modify this book? This book uses the Creative Commons Attribution License and you must attribute Texas Education Agency (TEA). The original material is available at: https://www.texasgateway.org/book/tea-statistics . Changes were made to the original material, including updates to art, structure, and other content updates.

Access for free at https://openstax.org/books/statistics/pages/1-introduction

- Authors: Barbara Illowsky, Susan Dean

- Publisher/website: OpenStax

- Book title: Statistics

- Publication date: Mar 27, 2020

- Location: Houston, Texas

- Book URL: https://openstax.org/books/statistics/pages/1-introduction

- Section URL: https://openstax.org/books/statistics/pages/9-1-null-and-alternative-hypotheses

© Jan 23, 2024 Texas Education Agency (TEA). The OpenStax name, OpenStax logo, OpenStax book covers, OpenStax CNX name, and OpenStax CNX logo are not subject to the Creative Commons license and may not be reproduced without the prior and express written consent of Rice University.

Research Hypothesis In Psychology: Types, & Examples

Saul Mcleod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul Mcleod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.


Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.


A research hypothesis (plural: hypotheses) is a specific, testable prediction about the anticipated results of a study, established at its outset. It is a key component of the scientific method .

Hypotheses connect theory to data and guide the research process towards expanding scientific understanding.

Some key points about hypotheses:

- A hypothesis expresses an expected pattern or relationship. It connects the variables under investigation.

- It is stated in clear, precise terms before any data collection or analysis occurs. This makes the hypothesis testable.

- A hypothesis must be falsifiable. It should be possible, even if unlikely in practice, to collect data that disconfirms rather than supports the hypothesis.

- Hypotheses guide research. Scientists design studies to explicitly evaluate hypotheses about how nature works.

- For a hypothesis to be valid, it must be testable against empirical evidence. The evidence can then confirm or disprove the testable predictions.

- Hypotheses are informed by background knowledge and observation, but go beyond what is already known to propose an explanation of how or why something occurs.

Predictions typically arise from a thorough knowledge of the research literature, curiosity about real-world problems or implications, and integrating this to advance theory. They build on existing literature while providing new insight.

Types of Research Hypotheses

Alternative hypothesis.

The research hypothesis is often called the alternative or experimental hypothesis in experimental research.

It typically suggests a potential relationship between two key variables: the independent variable, which the researcher manipulates, and the dependent variable, which is measured based on those changes.

The alternative hypothesis states a relationship exists between the two variables being studied (one variable affects the other).


In summary, a hypothesis is a precise, testable statement of what researchers expect to happen in a study and why. Hypotheses connect theory to data and guide the research process towards expanding scientific understanding.

An experimental hypothesis predicts what change(s) will occur in the dependent variable when the independent variable is manipulated.

It states that the results are not due to chance and are significant in supporting the theory being investigated.

The alternative hypothesis can be directional, indicating a specific direction of the effect, or non-directional, suggesting a difference without specifying its nature. It’s what researchers aim to support or demonstrate through their study.

Null Hypothesis

The null hypothesis states no relationship exists between the two variables being studied (one variable does not affect the other). There will be no changes in the dependent variable due to manipulating the independent variable.

It states results are due to chance and are not significant in supporting the idea being investigated.

The null hypothesis, positing no effect or relationship, is a foundational contrast to the research hypothesis in scientific inquiry. It establishes a baseline for statistical testing, promoting objectivity by initiating research from a neutral stance.

Many statistical methods are tailored to test the null hypothesis, determining the likelihood of observed results if no true effect exists.

This dual-hypothesis approach provides clarity, ensuring that research intentions are explicit, and fosters consistency across scientific studies, enhancing the standardization and interpretability of research outcomes.

Nondirectional Hypothesis

A non-directional hypothesis, also known as a two-tailed hypothesis, predicts that there is a difference or relationship between two variables but does not specify the direction of this relationship.

It merely indicates that a change or effect will occur without predicting which group will have higher or lower values.

For example, “There is a difference in performance between Group A and Group B” is a non-directional hypothesis.

Directional Hypothesis

A directional (one-tailed) hypothesis predicts the nature of the effect of the independent variable on the dependent variable. It predicts in which direction the change will take place. (i.e., greater, smaller, less, more)

It specifies whether one variable is greater, lesser, or different from another, rather than just indicating that there’s a difference without specifying its nature.

For example, “Exercise increases weight loss” is a directional hypothesis.
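The practical difference between non-directional (two-tailed) and directional (one-tailed) hypotheses shows up in how the p-value is computed. The sketch below assumes a z statistic has already been obtained and that the directional hypothesis predicts a positive effect; the value 1.8 and the function name `p_values` are chosen purely for illustration.

```python
from statistics import NormalDist


def p_values(z):
    """Return (one_tailed, two_tailed) p-values for a z statistic.

    The one-tailed value assumes the directional hypothesis predicts
    a positive effect (z > 0).
    """
    standard_normal = NormalDist()
    one_tailed = 1 - standard_normal.cdf(z)
    two_tailed = 2 * (1 - standard_normal.cdf(abs(z)))
    return one_tailed, two_tailed


# Hypothetical test statistic chosen for illustration.
one_tailed, two_tailed = p_values(1.8)
print(f"one-tailed p = {one_tailed:.4f}, two-tailed p = {two_tailed:.4f}")
```

For this illustrative z of 1.8, the one-tailed p-value falls below 0.05 while the two-tailed p-value does not, which is one reason the direction should be chosen before looking at the data.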

Falsifiability

The Falsification Principle, proposed by Karl Popper , is a way of demarcating science from non-science. It suggests that for a theory or hypothesis to be considered scientific, it must be testable and refutable.

Falsifiability emphasizes that scientific claims shouldn’t just be confirmable but should also have the potential to be proven wrong.

It means that there should exist some potential evidence or experiment that could prove the proposition false.

However many confirming instances exist for a theory, it only takes one counter observation to falsify it. For example, the hypothesis that “all swans are white,” can be falsified by observing a black swan.

For Popper, science should attempt to disprove a theory rather than attempt to continually provide evidence to support a research hypothesis.

Can a Hypothesis be Proven?

Hypotheses make probabilistic predictions. They state the expected outcome if a particular relationship exists. However, a study result supporting a hypothesis does not definitively prove it is true.

All studies have limitations. There may be unknown confounding factors or issues that limit the certainty of conclusions. Additional studies may yield different results.

In science, hypotheses can realistically only be supported with some degree of confidence, not proven. The process of science is to incrementally accumulate evidence for and against hypothesized relationships in an ongoing pursuit of better models and explanations that best fit the empirical data. But hypotheses remain open to revision and rejection if that is where the evidence leads.

- Disproving a hypothesis is definitive. Solid disconfirmatory evidence will falsify a hypothesis and require altering or discarding it based on the evidence.

- However, confirming evidence is always open to revision. Other explanations may account for the same results, and additional or contradictory evidence may emerge over time.

We can never 100% prove the alternative hypothesis. Instead, we see if we can disprove, or reject the null hypothesis.

If we reject the null hypothesis, this doesn’t mean that our alternative hypothesis is correct but does support the alternative/experimental hypothesis.

Upon analysis of the results, an alternative hypothesis can be rejected or supported, but it can never be proven to be correct. We must avoid any reference to results proving a theory as this implies 100% certainty, and there is always a chance that evidence may exist which could refute a theory.

How to Write a Hypothesis

- Identify variables . The researcher manipulates the independent variable and the dependent variable is the measured outcome.

- Operationalize the variables being investigated . Operationalization refers to the process of making the variables physically measurable or testable, e.g. if you are about to study aggression, you might count the number of punches given by participants.

- Decide on a direction for your prediction . If there is evidence in the literature to support a specific effect of the independent variable on the dependent variable, write a directional (one-tailed) hypothesis. If there are limited or ambiguous findings in the literature regarding the effect of the independent variable on the dependent variable, write a non-directional (two-tailed) hypothesis.

- Make it Testable : Ensure your hypothesis can be tested through experimentation or observation. It should be possible to prove it false (principle of falsifiability).

- Clear & concise language . A strong hypothesis is concise (typically one to two sentences long), and formulated using clear and straightforward language, ensuring it’s easily understood and testable.

Consider a hypothesis many teachers might subscribe to: students work better on Monday morning than on Friday afternoon (IV=Day, DV= Standard of work).

Now, if we decide to study this by giving the same group of students a lesson on a Monday morning and a Friday afternoon and then measuring their immediate recall of the material covered in each session, we would end up with the following:

- The alternative hypothesis states that students will recall significantly more information on a Monday morning than on a Friday afternoon.

- The null hypothesis states that there will be no significant difference in the amount recalled on a Monday morning compared to a Friday afternoon. Any difference will be due to chance or confounding factors.
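One way the Monday versus Friday hypotheses might be tested is sketched below. The article does not prescribe a statistical test, so this uses an exact sign-flip (permutation) test on the paired recall scores as an assumed stand-in; the scores and the function name `sign_flip_p_value` are hypothetical.

```python
from itertools import product


def sign_flip_p_value(monday, friday):
    """One-sided exact sign-flip test on paired scores.

    Under H0 each student's Monday-minus-Friday difference is equally
    likely to be positive or negative, so every sign pattern is
    equally probable.
    """
    diffs = [m - f for m, f in zip(monday, friday)]
    observed = sum(diffs)
    patterns = list(product((1, -1), repeat=len(diffs)))
    # Count sign patterns whose total is at least as large as observed.
    at_least = sum(
        1 for signs in patterns
        if sum(s * d for s, d in zip(signs, diffs)) >= observed
    )
    return at_least / len(patterns)


# Hypothetical recall scores for the same eight students.
monday = [14, 12, 15, 11, 13, 16, 12, 14]
friday = [12, 11, 13, 12, 11, 13, 10, 13]
print(f"p-value = {sign_flip_p_value(monday, friday):.4f}")
```

A small p-value would count as evidence against the null hypothesis of no difference. Because the alternative here is directional, only sign patterns with totals at least as large as the observed one are counted.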

More Examples

- Memory : Participants exposed to classical music during study sessions will recall more items from a list than those who studied in silence.

- Social Psychology : Individuals who frequently engage in social media use will report higher levels of perceived social isolation compared to those who use it infrequently.

- Developmental Psychology : Children who engage in regular imaginative play have better problem-solving skills than those who don’t.

- Clinical Psychology : Cognitive-behavioral therapy will be more effective in reducing symptoms of anxiety over a 6-month period compared to traditional talk therapy.

- Cognitive Psychology : Individuals who multitask between various electronic devices will have shorter attention spans on focused tasks than those who single-task.

- Health Psychology : Patients who practice mindfulness meditation will experience lower levels of chronic pain compared to those who don’t meditate.

- Organizational Psychology : Employees in open-plan offices will report higher levels of stress than those in private offices.

- Behavioral Psychology : Rats rewarded with food after pressing a lever will press it more frequently than rats who receive no reward.


- Research article

- Open access

- Published: 19 May 2010

The null hypothesis significance test in health sciences research (1995-2006): statistical analysis and interpretation

- Luis Carlos Silva-Ayçaguer 1 ,

- Patricio Suárez-Gil 2 &

- Ana Fernández-Somoano 3

BMC Medical Research Methodology volume 10, Article number: 44 (2010)


The null hypothesis significance test (NHST) is the most frequently used statistical method, although its inferential validity has been widely criticized since its introduction. In 1988, the International Committee of Medical Journal Editors (ICMJE) warned against sole reliance on NHST to substantiate study conclusions and suggested supplementary use of confidence intervals (CI). Our objective was to evaluate the extent and quality in the use of NHST and CI, both in English and Spanish language biomedical publications between 1995 and 2006, taking into account the International Committee of Medical Journal Editors recommendations, with particular focus on the accuracy of the interpretation of statistical significance and the validity of conclusions.

Original articles published in three English and three Spanish biomedical journals in three fields (General Medicine, Clinical Specialties and Epidemiology - Public Health) were considered for this study. Papers published in 1995-1996, 2000-2001, and 2005-2006 were selected through a systematic sampling method. After excluding the purely descriptive and theoretical articles, analytic studies were evaluated for their use of NHST with P-values and/or CI for interpretation of statistical "significance" and "relevance" in study conclusions.

Among 1,043 original papers, 874 were selected for detailed review. The exclusive use of P-values was less frequent in English language publications as well as in Public Health journals; overall such use decreased from 41% in 1995-1996 to 21% in 2005-2006. While the use of CI increased over time, the "significance fallacy" (to equate statistical and substantive significance) appeared very often, mainly in journals devoted to clinical specialties (81%). In papers originally written in English and Spanish, 15% and 10%, respectively, mentioned statistical significance in their conclusions.

Conclusions

Overall, the results of our review show some improvement in the handling of statistical results, but further efforts by scholars and journal editors are clearly required to move communication toward the ICMJE's advice, especially in the clinical setting, and this seems most pressing among publications in Spanish.


Null hypothesis statistical testing (NHST) has been the most widely used statistical approach in health research over the past 80 years. Its origins date back to 1279 [ 1 ], although it was in the second decade of the twentieth century that the statistician Ronald Fisher formally introduced the concept of the "null hypothesis" H 0 , which, generally speaking, establishes that certain parameters do not differ from each other. He was the inventor of the "P-value" through which it could be assessed [ 2 ]. Fisher's P-value is defined as a conditional probability calculated using the results of a study. Specifically, the P-value is the probability of obtaining a result at least as extreme as the one that was actually observed, assuming that the null hypothesis is true. The Fisherian significance testing theory considered the P-value as an index to measure the strength of evidence against the null hypothesis in a single experiment. The father of NHST never endorsed, however, the inflexible application of the ultimately subjective threshold levels almost universally adopted later on (although he was also the one who introduced the 0.05 threshold).

A few years later, Jerzy Neyman and Egon Pearson considered the Fisherian approach inefficient, and in 1928 they published an article [ 3 ] that would provide the theoretical basis of what they called hypothesis statistical testing . The Neyman-Pearson approach is based on the notion that one out of two choices has to be taken: accept the null hypothesis based on the information provided, or reject it in favor of an alternative one. Thus, one can incur one of two types of errors: a Type I error, if the null hypothesis is rejected when it is actually true, and a Type II error, if the null hypothesis is accepted when it is actually false. They established a rule to optimize the decision process, using the P-value introduced by Fisher, by setting the maximum frequency of errors that would be admissible.
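The two error types can be illustrated with a small simulation. The sketch below is not taken from the article: it assumes a two-sided z-test with known standard deviation, a sample size of 25, a threshold of 0.05 and a true mean of 0.5 in the "H 0 false" scenario, all chosen arbitrarily, and the function names are hypothetical.

```python
import random
from math import sqrt
from statistics import NormalDist, mean

ALPHA = 0.05
Z_CRIT = NormalDist().inv_cdf(1 - ALPHA / 2)  # two-sided cutoff


def rejects_h0(sample, mu0, sigma):
    """Two-sided z-test of H0: mu = mu0 with known sigma."""
    z = (mean(sample) - mu0) / (sigma / sqrt(len(sample)))
    return abs(z) > Z_CRIT


def rejection_rate(true_mu, mu0=0.0, sigma=1.0, n=25, trials=2000, seed=1):
    """Fraction of simulated studies in which H0 is rejected."""
    rng = random.Random(seed)
    rejections = 0
    for _ in range(trials):
        sample = [rng.gauss(true_mu, sigma) for _ in range(n)]
        rejections += rejects_h0(sample, mu0, sigma)
    return rejections / trials


# H0 true: every rejection is a Type I error.
type_1_rate = rejection_rate(true_mu=0.0)
# H0 false: every non-rejection is a Type II error.
type_2_rate = 1 - rejection_rate(true_mu=0.5)
print(f"Type I rate ~ {type_1_rate:.3f}, Type II rate ~ {type_2_rate:.3f}")
```

The simulated Type I rate should land close to the chosen threshold, while the Type II rate depends on the assumed true effect and sample size.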

The null hypothesis statistical testing, as applied today, is a hybrid coming from the amalgamation of the two methods [ 4 ]. As a matter of fact, some 15 years later, both procedures were combined to give rise to the nowadays widespread use of an inferential tool that would satisfy none of the statisticians involved in the original controversy. The present method essentially goes as follows: given a null hypothesis, an estimate of the parameter (or parameters) is obtained and used to create statistics whose distribution, under H 0 , is known. With these data the P-value is computed. Finally, the null hypothesis is rejected when the obtained P-value is smaller than a certain comparative threshold (usually 0.05) and it is not rejected if P is larger than the threshold.
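The procedure just described can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the authors' method: it uses a two-sided z-test for a mean with a known standard deviation, and the summary figures and the function name `nhst_decision` are invented.

```python
from math import sqrt
from statistics import NormalDist


def nhst_decision(sample_mean, mu0, sigma, n, alpha=0.05):
    """Two-sided z-test of H0: mu = mu0, assuming sigma is known."""
    # Step 1: test statistic whose distribution under H0 is standard normal.
    z = (sample_mean - mu0) / (sigma / sqrt(n))
    # Step 2: P-value, the probability under H0 of a result at least this extreme.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    # Step 3: compare with the chosen threshold.
    decision = "reject H0" if p_value < alpha else "do not reject H0"
    return z, p_value, decision


# Hypothetical summary figures, invented for illustration.
print(nhst_decision(sample_mean=103.0, mu0=100.0, sigma=15.0, n=100))
```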

The first reservations about the validity of the method began to appear around 1940, when some statisticians censured the logical roots and practical convenience of Fisher's P-value [ 5 ]. Significance tests and P-values have repeatedly drawn the attention and criticism of many authors over the past 70 years, who have kept questioning its epistemological legitimacy as well as its practical value. What remains in spite of these criticisms is the lasting legacy of researchers' unwillingness to eradicate or reform these methods.

Although there are very comprehensive works on the topic [ 6 ], we list below some of the criticisms most universally accepted by specialists.

The P-values are used as a tool to make decisions in favor of or against a hypothesis. What really may be relevant, however, is to get an effect size estimate (often the difference between two values) rather than rendering dichotomous true/false verdicts [ 7 – 11 ].
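As an illustration of reporting an effect size estimate instead of a dichotomous verdict, the sketch below computes a difference in means with a confidence interval. It assumes a normal approximation (small samples would normally call for a t-based interval), and the two groups of scores and the function name `mean_difference_ci` are hypothetical.

```python
from math import sqrt
from statistics import NormalDist, mean, variance


def mean_difference_ci(group_a, group_b, level=0.95):
    """Return (difference, lower, upper) using a normal approximation."""
    diff = mean(group_a) - mean(group_b)
    se = sqrt(variance(group_a) / len(group_a) + variance(group_b) / len(group_b))
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return diff, diff - z * se, diff + z * se


# Hypothetical outcome scores for two groups.
treated = [5.1, 6.3, 5.8, 6.0, 5.5, 6.1, 5.9, 6.4]
control = [5.0, 5.2, 5.6, 5.1, 5.4, 5.3, 5.7, 5.2]
diff, lower, upper = mean_difference_ci(treated, control)
print(f"difference = {diff:.2f}, 95% CI = ({lower:.2f}, {upper:.2f})")
```

The interval conveys both the size of the estimated effect and its uncertainty, which a bare "significant" or "not significant" label does not.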

The P-value is a conditional probability of the data, provided that some assumptions are met, but what really interests the investigator is the inverse probability: what degree of validity can be attributed to each of several competing hypotheses, once that certain data have been observed [ 12 ].

The two elements that affect the results, namely the sample size and the magnitude of the effect, are inextricably linked in the value of p and we can always get a lower P-value by increasing the sample size. Thus, the conclusions depend on a factor completely unrelated to the reality studied (i.e. the available resources, which in turn determine the sample size) [ 13 , 14 ].

Those who defend the NHST often assert the objective nature of that test, but the process is actually far from being so. NHST does not ensure objectivity. This is reflected in the fact that we generally operate with thresholds that are ultimately no more than conventions, such as 0.01 or 0.05. What is more, for many years their use has unequivocally demonstrated the inherent subjectivity that goes with the concept of P, regardless of how it will be used later [ 15 – 17 ].

In practice, the NHST is limited to a binary response sorting hypotheses into "true" and "false" or declaring "rejection" or "no rejection", without demanding a reasonable interpretation of the results, as has been noted time and again for decades. This binary orthodoxy validates categorical thinking, which results in a very simplistic view of scientific activity that induces researchers not to test theories about the magnitude of effect sizes [ 18 – 20 ].
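The sample-size criticism listed above can be made concrete with a small sketch. It assumes a z-test with known standard deviation and holds the observed effect fixed while only the sample size changes; the function name `two_sided_p` and all numbers are illustrative assumptions, not data from the study.

```python
from math import sqrt
from statistics import NormalDist


def two_sided_p(effect, sigma, n):
    """Two-sided P-value of a z-test for an observed mean difference `effect`."""
    z = effect / (sigma / sqrt(n))
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Same observed effect (0.1 standard deviations), increasing sample sizes.
p_values = {n: two_sided_p(effect=0.1, sigma=1.0, n=n) for n in (25, 100, 400, 1600)}
for n, p in p_values.items():
    print(n, round(p, 5))
```

Under these assumptions the P-value falls below 0.05 somewhere between n = 100 and n = 400, although the magnitude of the effect never changes.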

Despite the weaknesses and shortcomings of the NHST, it is frequently taught as if it were the key inferential statistical method or the most appropriate, or even the sole unquestioned one. Statistical textbooks, with only some exceptions, do not even mention the NHST controversy. Instead, the myth is spread that NHST is the "natural" final action of scientific inference and the only procedure for testing hypotheses. However, relevant specialists and important regulators of the scientific world advocate avoiding it.

Taking especially into account that NHST does not offer the most important information (i.e. the magnitude of an effect of interest, and the precision of the estimate of the magnitude of that effect), many experts recommend the reporting of point estimates of effect sizes with confidence intervals as the appropriate representation of the inherent uncertainty linked to empirical studies [ 21 – 25 ]. Since 1988, the International Committee of Medical Journal Editors (ICMJE, known as the Vancouver Group ) incorporates the following recommendation to authors of manuscripts submitted to medical journals: "When possible, quantify findings and present them with appropriate indicators of measurement error or uncertainty (such as confidence intervals). Avoid relying solely on statistical hypothesis testing, such as P-values, which fail to convey important information about effect size" [ 26 ].
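As an illustration of this kind of reporting, the sketch below computes a point estimate of an effect size (a difference between two means) together with a confidence interval. It is only an example under stated assumptions: it uses a normal approximation, which suits large samples (a t quantile would be more appropriate for small ones), and the function name `mean_difference_ci` and the data are hypothetical.

```python
from math import sqrt
from statistics import NormalDist, mean, variance


def mean_difference_ci(a, b, level=0.95):
    """Point estimate and normal-approximation CI for mean(a) - mean(b)."""
    diff = mean(a) - mean(b)
    # Standard error of the difference between two independent sample means.
    se = sqrt(variance(a) / len(a) + variance(b) / len(b))
    # Normal quantile for the requested confidence level (about 1.96 for 95%).
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return diff, (diff - z * se, diff + z * se)


# Hypothetical outcome values in two groups.
treated = [12.0, 14.0, 13.0, 15.0, 16.0]
control = [10.0, 11.0, 12.0, 13.0, 9.0]
diff, (low, high) = mean_difference_ci(treated, control)
print(diff, round(low, 2), round(high, 2))
```

Unlike a bare P-value, the pair (estimate, interval) conveys both the magnitude of the effect and the precision with which it was estimated.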

As will be shown, the use of confidence intervals (CI), occasionally accompanied by P-values, is recommended as a more appropriate method for reporting results. Some authors noted several shortcomings of CI long ago [ 27 ]. In spite of the fact that calculating CI can indeed be complicated, and that their interpretation is far from simple [ 28 , 29 ], authors are urged to use them because they provide much more information than the NHST and do not merit most of the criticisms directed at it [ 30 ]. While some have proposed different options (for instance, likelihood-based information theoretic methods [ 31 ], and the Bayesian inferential paradigm [ 32 ]), confidence interval estimation of effect sizes is clearly the most widespread alternative approach.

Although twenty years have passed since the ICMJE began to disseminate such recommendations, systematically ignored by the vast majority of textbooks and hardly incorporated in medical publications [ 33 ], it is interesting to examine the extent to which the NHST is used in articles published in medical journals during recent years, in order to identify what is still lacking in the process of eradicating the widespread ceremonial use that is made of statistics in health research [ 34 ]. Furthermore, it is enlightening in this context to examine whether these patterns differ between English- and Spanish-speaking worlds and, if so, to see if the changes in paradigms are occurring more slowly in Spanish-language publications. In such a case we would offer various suggestions.

In addition to assessing the adherence to the above cited statistical recommendation proposed by ICMJE relative to the use of P-values, we consider it of particular interest to estimate the extent to which the significance fallacy is present, an inertial deficiency that consists of attributing -- explicitly or not -- qualitative importance or practical relevance to the found differences simply because statistical significance was obtained.

Many authors produce misleading statements such as "a significant effect was (or was not) found" when it should be said that "a statistically significant difference was (or was not) found". A detrimental consequence of this equivalence is that some authors believe that finding out whether there is "statistical significance" or not is the aim, so that this term is then mentioned in the conclusions [ 35 ]. This means virtually nothing, except that it indicates that the author is letting a computer do the thinking. Since the real research questions are never statistical ones, the answers cannot be statistical either. Accordingly, the conversion of the dichotomous outcome produced by a NHST into a conclusion is another manifestation of the mentioned fallacy.

The general objective of the present study is to evaluate the extent and quality of use of NHST and CI, both in English- and in Spanish-language biomedical publications, between 1995 and 2006 taking into account the International Committee of Medical Journal Editors recommendations, with particular focus on accuracy regarding interpretation of statistical significance and the validity of conclusions.

We reviewed the original articles from six journals, three in English and three in Spanish, over three disjoint periods sufficiently separated from each other (1995-1996, 2000-2001, 2005-2006) as to properly describe the evolution in prevalence of the target features along the selected periods.

The selection of journals was intended to represent each of the following three thematic areas: clinical specialties (Obstetrics & Gynecology and Revista Española de Cardiología); Public Health and Epidemiology (International Journal of Epidemiology and Atención Primaria); and general and internal medicine (British Medical Journal and Medicina Clínica). Five of the selected journals formally endorsed ICMJE guidelines; the remaining one (Revista Española de Cardiología) suggests observing ICMJE demands in relation to specific issues. We attempted to capture journal diversity in the sample by selecting general and specialty journals with different degrees of influence, as reflected by their impact factors in 2007, which ranged from 1.337 (MC) to 9.723 (BMJ). No special reasons guided us to choose these specific journals, but we opted for journals with rather large paid circulations. For instance, the Spanish Cardiology Journal has the largest impact factor among the fourteen Spanish journals devoted to clinical specialties that have an impact factor, and Obstetrics & Gynecology has an outstanding impact factor among the huge number of journals available for selection.

It was decided to take around 60 papers for each biennium and journal, which means a total of around 1,000 papers. As recently suggested [ 36 , 37 ], this number was not established using a conventional method, but by means of a purposive and pragmatic approach in choosing the maximum sample size that was feasible.

Systematic sampling in phases [ 38 ] was used in applying a sampling fraction equal to 60/N, where N is the number of articles, in each of the 18 subgroups defined by crossing the six journals and the three time periods. Table 1 lists the population size and the sample size for each subgroup. While the sample within each subgroup was selected with equal probability, estimates based on other subsets of articles (defined across time periods, areas, or languages) are based on samples with various selection probabilities. Proper weights were used to take into account the stratified nature of the sampling in these cases.
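The sampling and weighting logic can be sketched as follows. This is a simplified illustration, not the authors' procedure: it implements plain systematic sampling with a random start (not the phased variant cited above), and the function names and stratum figures are hypothetical.

```python
import random


def systematic_sample(items, n_target, rng):
    """Equal-probability systematic sample of n_target items (all items if fewer)."""
    N = len(items)
    if N <= n_target:
        return list(items)
    step = N / n_target          # inverse of the sampling fraction n_target / N
    start = rng.random() * step  # random start in [0, step)
    # min() guards against a floating-point index equal to N.
    return [items[min(int(start + k * step), N - 1)] for k in range(n_target)]


def weighted_proportion(strata):
    """Combine stratum proportions; strata is a list of (N, n, count_with_feature)."""
    total = sum(N for N, _, _ in strata)
    # Each stratum proportion x / n is weighted by its population size N.
    return sum(N * (x / n) for N, n, x in strata) / total


# Hypothetical subgroup of 300 articles, from which about 60 are drawn.
sample = systematic_sample(list(range(300)), 60, random.Random(0))
print(len(sample))

# Two hypothetical subgroups with different selection probabilities.
print(weighted_proportion([(300, 60, 30), (120, 60, 45)]))
```

Weighting by population size matters because an article drawn from a subgroup of 300 represents five articles, whereas one drawn from a subgroup of 120 represents only two; an unweighted average would over-represent the smaller subgroup.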

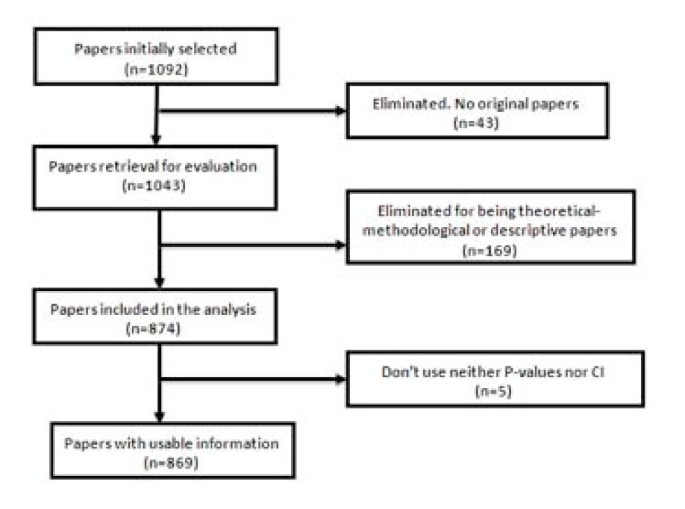

Forty-nine of the 1,092 selected papers were eliminated because, although the journal section to which they were assigned suggested they were original articles, detailed scrutiny revealed that they were not. The sample, therefore, consisted of 1,043 papers. Each of them was classified into one of three categories: (1) purely descriptive papers, those designed to review or characterize the state of affairs as it exists at present, (2) analytical papers, or (3) articles that address theoretical, methodological or conceptual issues. An article was regarded as analytical if it sought to explain the reasons behind a particular occurrence by discovering causal relationships or if, even when self-classified as descriptive, it was carried out to assess cause-effect associations among variables. We classified as theoretical or methodological those articles that do not handle empirical data as such, and focus instead on proposing or assessing research methods. We identified 169 papers as purely descriptive or theoretical, which were therefore excluded from the sample. Figure 1 presents a flow chart showing the process for determining eligibility for inclusion in the sample.

Flow chart of the selection process for eligible papers.

To estimate the adherence to ICMJE recommendations, we considered whether the papers used P-values, confidence intervals, and both simultaneously. By "the use of P-values" we mean that the article contains at least one P-value, explicitly mentioned in the text or at the bottom of a table, or that it reports that an effect was considered as statistically significant. It was deemed that an article uses CI if it explicitly contained at least one confidence interval, but not when it only provides information that could allow its computation (usually by presenting both the estimate and the standard error). Probability intervals provided in Bayesian analysis were classified as confidence intervals (although conceptually they are not the same) since what is really of interest here is whether or not the authors quantify the findings and present them with appropriate indicators of the margin of error or uncertainty.

In addition, we determined whether the "Results" section of each article attributed the status of "significant" to an effect on the sole basis of the outcome of a NHST (i.e., without clarifying that it is strictly statistical significance). Similarly, we examined whether the term "significant" (applied to a test) was mistakenly used as synonymous with substantive, relevant or important. The use of the term "significant effect" when it is only appropriate as a reference to a "statistically significant difference" can be considered a direct expression of the significance fallacy [ 39 ] and, as such, constitutes one way to detect the problem in a specific paper.

We also assessed whether the "Conclusions," which sometimes appear as a separate section in the paper or otherwise in the last paragraphs of the "Discussion" section, mentioned statistical significance and, if so, whether any such mentions were no more than an allusion to results.

To perform these analyses we considered both the abstract and the body of the article. To assess the handling of the significance issue, however, only the body of the manuscript was taken into account.

The information was collected by four trained observers. Every paper was assigned to two reviewers. Disagreements were discussed and, if no agreement was reached, a third reviewer was consulted to break the tie and so moderate the effect of subjectivity in the assessment.