- Open access

- Published: 10 October 2023

Clinical systematic reviews – a brief overview

- Mayura Thilanka Iddagoda 1 , 2 &

- Leon Flicker 1 , 2

BMC Medical Research Methodology volume 23, Article number: 226 (2023)


Systematic reviews answer research questions through a defined methodology. Conducting one is a complex task, and reviewers usually need to consult multiple articles to acquire the wide range of knowledge it requires. The aim of this article is to bring the process together in a single paper.

The authors examine the statistical concepts and the sequence of steps involved in conducting a systematic review or a meta-analysis.

This article describes the process of conducting a clinical systematic review in seven manageable steps. Each step is explained with examples to make the method clear.

The complex process of conducting a systematic review is presented simply in a single article.


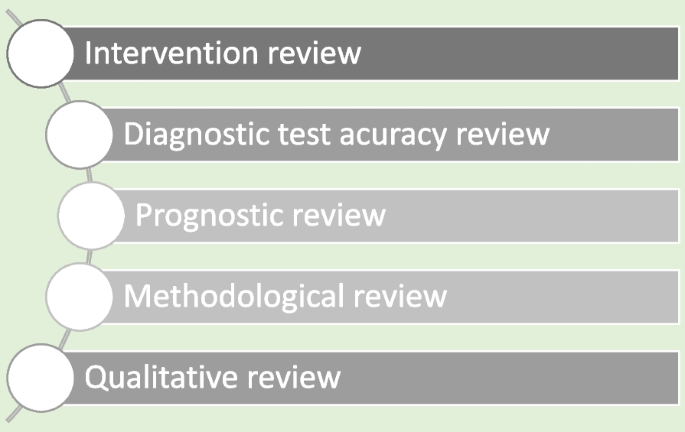

Systematic reviews are a structured approach to answering a research question based on all suitable available empirical evidence. The statistical methodology used to synthesize results in such a review is called 'meta-analysis'. This article describes five types of clinical systematic review (see Fig. 1): intervention, diagnostic test accuracy, prognostic, methodological and qualitative. It provides a very brief overview in a narrative fashion and does not cover systematic reviews of more epidemiologically based studies. The recommended process for a systematic review is described in seven steps [1].

Types of systematic reviews

Resources are available both for beginners and for those gaining more expertise (see Table 1). Cochrane runs online interactive master classes on systematic reviews throughout the year and offers web tutorials in the form of e-learning modules. Some groups in Cochrane commission a limited number of systematic reviews and can be contacted directly for support ([email protected]). Several institutions run systematic review training programs, including Johns Hopkins (Coursera), the Joanna Briggs Institute (JBI education), Yale University (search strategy), the University of York (Centre for Reviews) and Mayo Clinic Libraries. The BMC Systematic Reviews group has also introduced a "Peer review mentoring" program to support early-career researchers in systematic reviews. The local university or hospital librarian is usually a good first point of contact for searches and can direct reviewers to other support.

Research question and study protocol

A clearly defined study question is vital and directs the subsequent steps of a systematic review. The question should have some novelty (e.g. it should not already be answered by an existing review unless new primary studies have since been published) and be of interest to the reviewers. Major conflicts of interest can be problematic (e.g. employment by a company that manufactures the intervention). The primary components to consider when framing the question include the inclusion criteria, search strategy, analysis or outcome measures, and interpretation. The type of review determines the category of research question, such as intervention, prognostic or diagnostic [1].

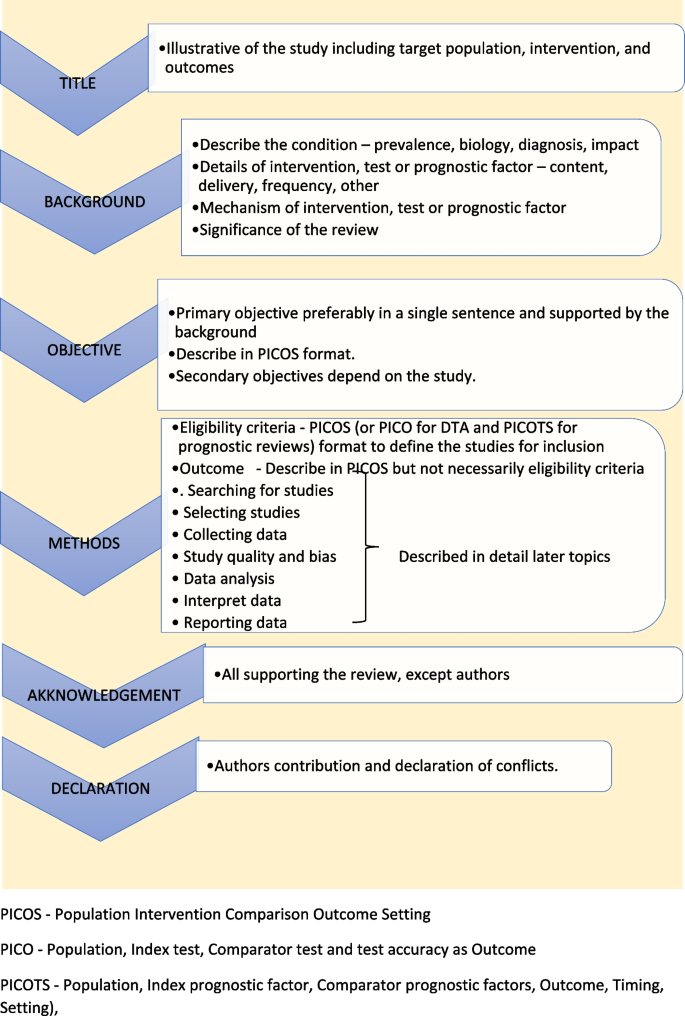

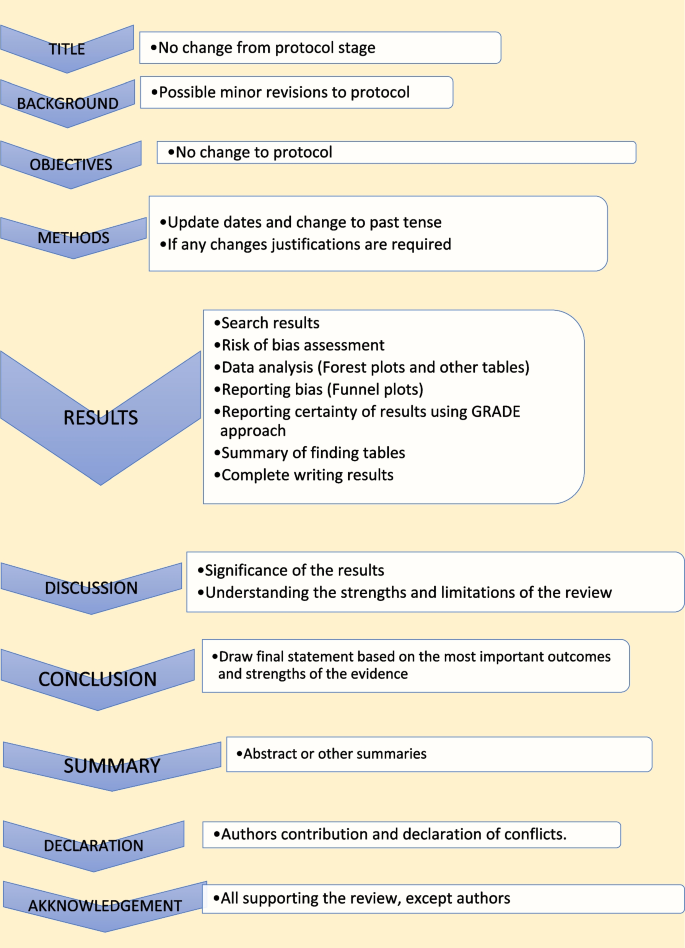

The study protocol elaborates on the research question. Its language is important: it is usually written in the future tense, in accessible language, in the active voice and in full sentences [2]. The structure of the review protocol is described in Fig. 2.

Structure of the review protocol

Searching studies

The comprehensive search for eligible studies is the defining step of a systematic review. Guidance from an information specialist or experienced librarian is a key requirement for designing a thorough search strategy [3, 4].

The search strategy should explore multiple sources rigorously and be reproducible. It is important to balance sensitivity and precision when designing a search plan. A sensitive search retrieves a large number of studies, which lowers the risk of missing relevant studies but may produce a large workload. A focused (precise) search, on the other hand, gives a more manageable number of studies but increases the risk of missing some.
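To make the sensitivity/precision trade-off concrete, the sketch below scores a search against a set of studies already known to be relevant (for example, key papers identified while piloting). The record IDs are hypothetical and `search_performance` is an illustrative name: sensitivity is the share of relevant studies retrieved, and precision is the share of retrieved records that are relevant.

```python
def search_performance(retrieved: set[str], relevant: set[str]) -> tuple[float, float]:
    """Return (sensitivity, precision) of a search.

    sensitivity = relevant records retrieved / all relevant records
    precision   = relevant records retrieved / all records retrieved
    """
    hits = retrieved & relevant
    sensitivity = len(hits) / len(relevant) if relevant else 0.0
    precision = len(hits) / len(retrieved) if retrieved else 0.0
    return sensitivity, precision


# Hypothetical record IDs: four known relevant studies.
relevant = {"s1", "s2", "s3", "s4"}
broad = relevant | {f"x{i}" for i in range(96)}  # 100 records retrieved
focused = {"s1", "s2", "x1", "x2"}               # 4 records retrieved

print(search_performance(broad, relevant))    # (1.0, 0.04)
print(search_performance(focused, relevant))  # (0.5, 0.5)
```

The broad search finds every relevant study but only 4 of its 100 records are useful; the focused search is far less work to screen but misses half of the relevant studies.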

There are multiple sources to search for eligible studies in a systematic review or meta-analysis. The key databases are CENTRAL (the Cochrane Central Register of Controlled Trials), MEDLINE (via PubMed) and Embase. Many other databases, published reviews and reference lists may also be used. Forward citation tracking of retrieved studies can be done using citation indices such as Google Scholar, Scopus or Web of Science. Some studies are issued by governmental and non-governmental organizations at various levels rather than by commercial publishers; these are called 'grey literature'. Identifying grey literature requires extensive searching of different sources, and information specialists are helpful in finding these studies [2].

Designing the search strategy requires a structured approach, and again, assistance from a librarian or information specialist is recommended. The PICO, PICOS and PICOTS frameworks (Population, Intervention, Comparator and Outcome, with Study design added in PICOS and Timing and Setting in PICOTS) are used to define the key concepts. Participants and study design are relevant elements in all reviews. Intervention reviews require specification of the exact nature of the intervention, and outcomes are important for both intervention and prognostic reviews.

Search terms are then developed from the key concepts. There are two main types of search terms: text words and index terms. Text words, or natural-language terms, are the words that appear in publications, and different authors may use different text words for the same pathology. For example, words such as injury, wound and trauma are all used to describe physical damage to the body. Index terms, on the other hand, are controlled vocabularies defined by database indexers [4]. Common examples are MeSH (Medical Subject Headings) in MEDLINE and Emtree in Embase. Index terms do not change with the interface (e.g. the MeSH term 'Wounds and Injuries' is used for all types of damage to the body from external causes) [5].

Search filters, which are pre-tested combinations of search terms, can be used to retrieve particular study designs; the choice of filter depends on the study design, database and interface. Search terms are combined using specific words called 'Boolean operators'. The main Boolean operators are 'OR', which broadens the search (accidents OR falls retrieves studies containing either term), and 'AND', which narrows it (accidents AND falls retrieves only studies containing both terms). In a standard search strategy, all terms within a key concept are combined with 'OR', and the concepts are combined with each other using 'AND'.
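The OR-within, AND-between convention can be sketched in a few lines. This is a minimal illustration under stated assumptions: the concept names, the terms and the `build_query` helper are hypothetical, and real interfaces (PubMed, Ovid, Embase.com) add their own field tags and truncation syntax, so the output is a generic string rather than a ready-to-run database query.

```python
def build_query(concepts: dict[str, list[str]]) -> str:
    """OR the terms inside each concept, then AND the concepts together."""
    blocks = []
    for terms in concepts.values():
        # Wrap multi-word phrases in quotes so they are searched as phrases.
        quoted = [f'"{t}"' if " " in t else t for t in terms]
        blocks.append("(" + " OR ".join(quoted) + ")")
    return " AND ".join(blocks)


# Hypothetical key concepts for a question about falls in older adults.
concepts = {
    "population": ["older adults", "elderly", "aged"],
    "outcome": ["accidents", "falls"],
}
print(build_query(concepts))
# ("older adults" OR elderly OR aged) AND (accidents OR falls)

# The same logic applied to sets of hypothetical record IDs.
accidents = {1, 2, 3}
falls = {3, 4}
print(accidents | falls)  # OR  -> {1, 2, 3, 4}
print(accidents & falls)  # AND -> {3}
```

The set version shows why OR broadens (a union of results) and AND narrows (an intersection).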

Limits and restrictions are used in a search strategy to improve precision. Common restrictions are language, publication date and publication format. These limits may result in missing relevant studies, so it is good practice to explain the reason for any restrictions in the search strategy. It is also important to be aware of errors and retractions in selected studies; information specialists can add terms to remove such studies during the search. The final step is piloting the search strategy, which gives an opportunity to adjust it for optimal sensitivity and precision [6].

All systematic reviews require consistent management of the retrieved studies, and managing a large number of studies manually is challenging. Reference management software can merge all search results, remove duplicates, record the number of studies selected at each step, store the methodology and selection criteria, and export selected studies to analysis software. Dedicated platforms and software packages can save considerable time and effort in navigating the search and compiling the appropriate data. Many software packages are available for systematic review reference management, including Covidence, Abstrackr, CADIMA, SUMARI and DistillerSR.
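As a rough sketch of what duplicate removal does inside such software, the code below treats two records as duplicates if they share a DOI or a normalized title. The records, field names and helper functions are hypothetical; real tools use more elaborate matching (authors, year, pages), and title-only matching can occasionally merge distinct papers, so flagged duplicates are worth checking by hand.

```python
import re


def normalise_title(title: str) -> str:
    """Lower-case a title, drop punctuation and collapse whitespace."""
    letters_only = re.sub(r"[^a-z0-9 ]", "", title.lower())
    return re.sub(r"\s+", " ", letters_only).strip()


def deduplicate(records: list[dict]) -> list[dict]:
    """Keep the first record seen for each DOI or normalised title."""
    seen: set[tuple[str, str]] = set()
    unique = []
    for rec in records:
        keys = {("title", normalise_title(rec["title"]))}
        if rec.get("doi"):
            keys.add(("doi", rec["doi"].lower()))
        if keys & seen:
            continue  # shares a DOI or title with a record already kept
        seen |= keys
        unique.append(rec)
    return unique


# Hypothetical search results exported from three databases.
records = [
    {"title": "Falls in Older Adults: A Trial", "doi": "10.1000/ABC1", "source": "MEDLINE"},
    {"title": "Falls in older adults - a trial.", "doi": "10.1000/abc1", "source": "Embase"},
    {"title": "Falls in older adults: a trial", "doi": None, "source": "CENTRAL"},
    {"title": "Hip protectors for fracture prevention", "doi": None, "source": "CENTRAL"},
]
unique = deduplicate(records)
print(len(records) - len(unique), "duplicates removed")  # 2 duplicates removed
```

The number removed here is exactly the figure that must be recorded for the flow diagram of the selection process.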

Documentation is crucial throughout the search process. Important records include the search criteria and strategy, the total number of studies at each step, the databases and non-database sources searched, and copies of internet results. If the search is more than 12 months old, it is advisable to re-run it to minimize the risk of missing new studies [2, 6].

Selecting studies

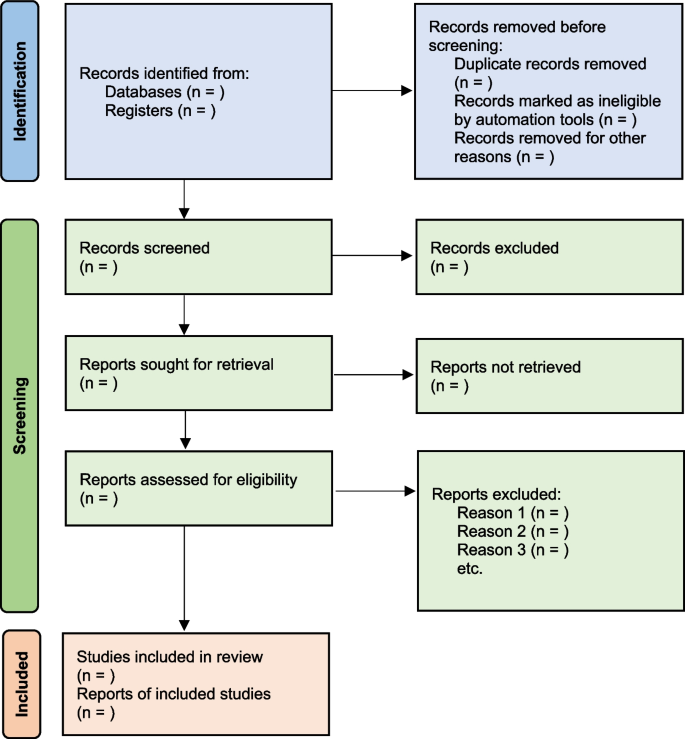

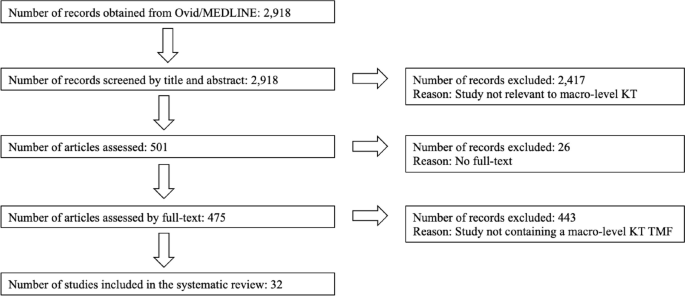

The retrieved studies are screened against the eligibility criteria to select those included in the synthesis, and the number of studies at each stage of selection needs to be documented. The PRISMA flow diagram (Fig. 3) can be used to report the selection process [7].
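The boxes of a PRISMA flow diagram are linked by simple subtraction, so documenting the counts at each stage lets them be checked for consistency. The sketch below is a simplified, hypothetical version of the main path only (it ignores other sources such as citation searching and the breakdown of exclusion reasons); the `PrismaCounts` class and all counts are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class PrismaCounts:
    identified: int             # records from all databases and registers
    duplicates_removed: int
    excluded_on_screening: int  # title/abstract screening
    reports_not_retrieved: int
    excluded_full_text: int

    def screened(self) -> int:
        return self.identified - self.duplicates_removed

    def sought_for_retrieval(self) -> int:
        return self.screened() - self.excluded_on_screening

    def assessed_for_eligibility(self) -> int:
        return self.sought_for_retrieval() - self.reports_not_retrieved

    def included(self) -> int:
        return self.assessed_for_eligibility() - self.excluded_full_text


counts = PrismaCounts(identified=1250, duplicates_removed=310,
                      excluded_on_screening=860, reports_not_retrieved=5,
                      excluded_full_text=57)
for stage in ("screened", "sought_for_retrieval", "assessed_for_eligibility", "included"):
    print(stage, getattr(counts, stage)())
# screened 940, sought_for_retrieval 80, assessed_for_eligibility 75, included 18
```

A negative number at any stage signals a recording error before the diagram is drawn.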

PRISMA flow diagram map for systematic review study selection process

During the selection process, it is important to minimize bias. This can be achieved by measures such as having a pre-planned written review protocol with inclusion and exclusion criteria, including study design as an inclusion criterion, and having at least two researchers select studies independently. Items to consider in collecting data are source, eligibility, methods, outcomes and results. Outcomes should be based on what is important to patients, not on what researchers have decided to measure. Other items of interest are bibliographic information and references to other relevant studies. The most important decisions for the entire review are whether individual studies are included in or excluded from subsequent analyses, as these may be the major determinant of the review's final composite results. It is important to resolve any discrepancies between reviewers' individual judgements as objectively as possible, always remembering that individuals may be, by nature, "lumpers" or "splitters" (Darwin C. Letter no. 2130, 1 August 1857. Darwin Correspondence Project).
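Independent screening by two reviewers produces two sets of decisions whose disagreements must then be resolved. The sketch below lists the discrepant studies and, as an addition not described in the text, computes Cohen's kappa, a chance-corrected agreement statistic that reviews often report. The reviewer decisions and the `compare_screening` helper are hypothetical.

```python
def compare_screening(a: dict[str, bool], b: dict[str, bool]) -> tuple[list[str], float]:
    """Return the studies two reviewers disagree on, and Cohen's kappa.

    a and b map study IDs to include (True) or exclude (False).
    """
    ids = sorted(a.keys() & b.keys())
    n = len(ids)
    disagreements = [i for i in ids if a[i] != b[i]]
    observed = 1 - len(disagreements) / n
    # Agreement expected by chance, from each reviewer's inclusion rate.
    pa = sum(a[i] for i in ids) / n
    pb = sum(b[i] for i in ids) / n
    expected = pa * pb + (1 - pa) * (1 - pb)
    kappa = (observed - expected) / (1 - expected) if expected < 1 else 1.0
    return disagreements, kappa


# Hypothetical title/abstract decisions for ten studies.
ids = [f"s{i}" for i in range(1, 11)]
reviewer_1 = {i: i in {"s1", "s2", "s3", "s4"} for i in ids}
reviewer_2 = {i: i in {"s1", "s2", "s3", "s5"} for i in ids}
to_resolve, kappa = compare_screening(reviewer_1, reviewer_2)
print(to_resolve, round(kappa, 2))  # ['s4', 's5'] 0.58
```

The listed studies are the ones to discuss (or refer to a third reviewer); raw agreement here is 80%, but kappa is lower because some agreement is expected by chance.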

Once the items to collect are decided, data extraction forms can be used to collect data for the review. The extraction form can be set up on paper, as an electronic document (Word, Excel or PDF format) or as a database in dedicated software (e.g. Covidence, EPPI-Reviewer). All recordable outcome measures are collected to allow optimal analysis. Some included studies nearly always fail to provide usable data for extraction; these challenges are managed as shown in Table 2.

It is important to be polite and clear when contacting authors. Imputing missing data carries a risk of error, so it is best to obtain as much information as possible from the relevant authors. Research studies report outcomes using different data types; Table 3 summarizes common data types with examples [2].

Study quality and bias

When a study is biased, its results do not represent accurate evidence, and such poor-quality studies introduce bias into a systematic review. Risk of bias is decreased, and study quality improved, by clear-cut randomization, outcome data on all participants (i.e. complete follow-up) and blinding (of both participants and outcome assessors) [2, 8].

The Cochrane risk of bias tool (RoB) [9] can be used to assess risk of bias in randomized controlled trials (RCTs). For non-randomized studies of interventions (NRSI), tools such as the Newcastle-Ottawa Scale [10], ROBINS-I [11] and the Downs and Black checklist [12] can be used. Bias domains in RCTs and NRSI are shown in Table 4.
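Domain-level judgements are combined into an overall judgement for each study. The sketch below assumes the five domains and three-level judgements of the current Cochrane tool for randomized trials (RoB 2), which the text refers to only as the Cochrane RoB tool: the overall rating is low only if every domain is low, and high if any domain is high. The tool leaves "some concerns in several domains" to reviewer judgement; the `many_concerns_is_high` threshold below is a hypothetical stand-in for that judgement, not part of the tool.

```python
LOW, SOME, HIGH = "low", "some concerns", "high"

# Domains of the Cochrane RoB 2 tool for randomized trials.
ROB2_DOMAINS = (
    "randomization process",
    "deviations from intended interventions",
    "missing outcome data",
    "measurement of the outcome",
    "selection of the reported result",
)


def overall_rob(judgements: dict[str, str], many_concerns_is_high: int = 3) -> str:
    """Simplified overall judgement from domain-level judgements."""
    missing = set(ROB2_DOMAINS) - set(judgements)
    if missing:
        raise ValueError(f"missing domains: {sorted(missing)}")
    values = [judgements[d] for d in ROB2_DOMAINS]
    if HIGH in values:
        return HIGH  # any high-risk domain makes the study high risk
    n_some = values.count(SOME)
    if n_some >= many_concerns_is_high:
        return HIGH  # hypothetical cut-off standing in for reviewer judgement
    return SOME if n_some else LOW


trial = {d: LOW for d in ROB2_DOMAINS}
trial["missing outcome data"] = SOME
print(overall_rob(trial))  # some concerns
```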

Blinding and masking can minimize bias arising from deviations from intended interventions. Missing outcome data, or attrition due to participant withdrawal, loss to follow-up or lost data, is also a common cause of bias in studies. Researchers use imputation to address missing data, which can lead to over- or underestimation of intervention effects; sensitivity analysis can be conducted to investigate the effect of such assumptions. Selective reporting is another problem that is difficult to identify; sources such as clinical trial registries or published trial protocols can be used to detect such discrepancies.
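One common form of sensitivity analysis for missing dichotomous outcomes is to recompute the effect under extreme assumptions about the participants with missing data. The sketch below uses hypothetical counts and assumes the outcome is an adverse event (so a lower risk favours treatment); it compares a complete-case risk ratio with best-case and worst-case scenarios. The function names are illustrative.

```python
def risk_ratio(events_t, n_t, events_c, n_c):
    """Risk in the treatment group divided by risk in the control group."""
    return (events_t / n_t) / (events_c / n_c)


def missing_data_scenarios(events_t, analysed_t, missing_t,
                           events_c, analysed_c, missing_c):
    """Risk ratios under complete-case, best-case and worst-case assumptions.

    Assumes the outcome is harmful, so a lower risk favours treatment.
    """
    return {
        "complete case": risk_ratio(events_t, analysed_t, events_c, analysed_c),
        # Best case for treatment: no missing treated participant had the
        # event, and every missing control participant did.
        "best case": risk_ratio(events_t, analysed_t + missing_t,
                                events_c + missing_c, analysed_c + missing_c),
        # Worst case: the reverse assumption.
        "worst case": risk_ratio(events_t + missing_t, analysed_t + missing_t,
                                 events_c, analysed_c + missing_c),
    }


# Hypothetical trial: 10 participants lost to follow-up in each arm.
for name, rr in missing_data_scenarios(20, 90, 10, 30, 90, 10).items():
    print(f"{name}: RR = {rr:.2f}")
```

With these numbers the complete-case risk ratio of about 0.67 moves to 0.50 in the best case and 1.00 (no effect) in the worst case, which would signal that the missing data could change the conclusion.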

Data analysis

Analysis of data is crucial in a systematic review, and important aspects of this step are described below [2, 13].

- Effect measure

Outcome data from the selected studies may be reported using different measures. It is important to select a comparable effect measure across all studies for a particular outcome so that an overall effect can be synthesized. Common effect measures for dichotomous outcomes are risk ratios (RR), odds ratios (OR) and risk differences (RD; a reduction in risk is the absolute risk reduction, ARR). These measures are chosen for the analysis based on their consistency, mathematical properties and ease of communication. For diagnostic test accuracy (DTA) reviews, sensitivity and specificity are commonly used.
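The dichotomous effect measures and the DTA measures are all calculated from 2x2 tables. The sketch below uses a hypothetical trial and the standard large-sample formula for the standard error of log(RR); the cell labels a to d and the function names are assumptions of this example.

```python
import math


def dichotomous_effects(a, b, c, d):
    """RR (with 95% CI), OR and RD from a 2x2 table.

    a = events, b = non-events in the intervention group;
    c = events, d = non-events in the control group.
    """
    risk_t = a / (a + b)
    risk_c = c / (c + d)
    rr = risk_t / risk_c
    odds_ratio = (a * d) / (b * c)
    rd = risk_t - risk_c  # a negative RD is an absolute risk reduction
    se_log_rr = math.sqrt(1 / a - 1 / (a + b) + 1 / c - 1 / (c + d))
    ci = (math.exp(math.log(rr) - 1.96 * se_log_rr),
          math.exp(math.log(rr) + 1.96 * se_log_rr))
    return {"RR": rr, "RR 95% CI": ci, "OR": odds_ratio, "RD": rd}


def sensitivity_specificity(tp, fp, fn, tn):
    """Test accuracy against the reference standard."""
    sensitivity = tp / (tp + fn)  # diseased people correctly detected
    specificity = tn / (tn + fp)  # non-diseased people correctly ruled out
    return sensitivity, specificity


# Hypothetical trial: 15/100 events with intervention, 30/100 with control.
for name, value in dichotomous_effects(15, 85, 30, 70).items():
    print(name, value)
print(sensitivity_specificity(tp=90, fp=20, fn=10, tn=180))  # (0.9, 0.9)
```

Here the same data give an RR of 0.5, an OR of about 0.41 and an RD of -0.15, which illustrates why the choice of measure affects how results are communicated. Studies with a zero cell would need a continuity correction or another method, which this sketch does not handle.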

The mean difference (MD) is the most common effect measure for continuous outcome data. When interpreting an MD, report details such as the size of the difference, the nature of the outcome (good or bad) and the characteristics of the scale, so that readers can understand the results. However, studies in a review may not use the same scales, and standardization of results may be required. The standardized mean difference (SMD) can be calculated in such situations if the studies measure the same concept. The SMD is expressed in units of standard deviation (SD). It is important to align the direction of the scales before combining them. All outcome data should be reported with a measure of uncertainty such as a confidence interval (CI).
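An SMD divides the difference in means by a pooled SD. The sketch below computes Hedges' g (Cohen's d with a small-sample correction) and an approximate 95% CI using the standard-error approximation given in the Cochrane Handbook; other SMD variants exist. The `reverse_scale` flag is a hypothetical convenience for aligning a scale on which higher scores mean a worse outcome, and the input values are invented.

```python
import math


def smd_hedges_g(mean1, sd1, n1, mean2, sd2, n2, reverse_scale=False):
    """Hedges' g for group 1 versus group 2, with an approximate 95% CI."""
    pooled_sd = math.sqrt(((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / (n1 + n2 - 2))
    d = (mean1 - mean2) / pooled_sd           # Cohen's d
    g = d * (1 - 3 / (4 * (n1 + n2) - 9))    # small-sample correction
    if reverse_scale:
        g = -g  # flip so that all studies point in the same direction
    se = math.sqrt((n1 + n2) / (n1 * n2) + g**2 / (2 * (n1 + n2 - 3.94)))
    return g, (g - 1.96 * se, g + 1.96 * se)


# Hypothetical pain scores (lower is better): intervention vs control.
g, (lower, upper) = smd_hedges_g(20, 10, 50, 25, 10, 50)
print(f"SMD {g:.2f} (95% CI {lower:.2f} to {upper:.2f})")
```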

Studies may report outcomes either as endpoint (final) values or as changes from baseline. Although the two types of data can be combined when the MD is used, SMD calculations are inaccurate if they are mixed, because the SDs of endpoint values and of change scores reflect different amounts of variability. It is also good practice to conduct sensitivity analyses to assess the robustness of the choices made.

Meta-analysis

There are many advantages to performing a meta-analysis: it combines samples and provides more precise quantitative answers to the study objective. Study quality, comparability of data and data formats affect the output of the meta-analysis. The accepted steps in meta-analysis are described in Table 5.
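At the core of most meta-analyses is a weighted average in which each study's weight is the inverse of its variance, so larger, more precise studies count for more. The sketch below shows fixed-effect inverse-variance pooling of three hypothetical log risk ratios; ratio measures are pooled on the log scale and exponentiated back. The `fixed_effect` name is illustrative.

```python
import math


def fixed_effect(estimates: list[float], ses: list[float]):
    """Inverse-variance fixed-effect pooled estimate, its SE and 95% CI."""
    weights = [1 / se**2 for se in ses]
    pooled = sum(w * y for w, y in zip(weights, estimates)) / sum(weights)
    se_pooled = math.sqrt(1 / sum(weights))
    return pooled, se_pooled, (pooled - 1.96 * se_pooled, pooled + 1.96 * se_pooled)


# Hypothetical studies: risk ratios of 0.8, 0.7 and 0.9 with their log-scale SEs.
log_rrs = [math.log(0.8), math.log(0.7), math.log(0.9)]
ses = [0.2, 0.1, 0.2]
pooled, se, (low, high) = fixed_effect(log_rrs, ses)
print(f"Pooled RR {math.exp(pooled):.2f} (95% CI {math.exp(low):.2f} to {math.exp(high):.2f})")
```

The middle study has half the SE of the others and therefore four times their weight, which pulls the pooled estimate towards 0.7.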

- Heterogeneity

Variation across studies beyond that expected by chance is called heterogeneity. There are several types: clinical (variation in populations and interventions), methodological (differences in designs and outcomes) and statistical (variation in measured effects). Statistical heterogeneity is the most important type to discuss in a meta-analysis [2, 14, 15].

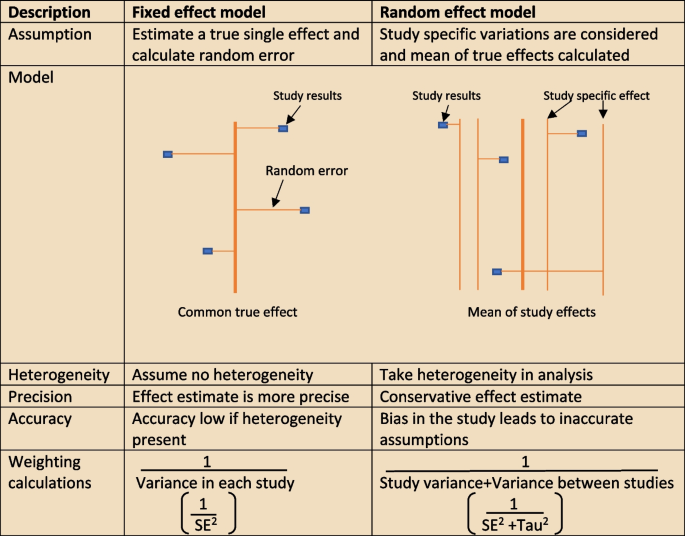

Assumptions about heterogeneity affect the data analysis. Two models, described in Fig. 4, are used to account for heterogeneity. If heterogeneity is minimal, Tau² (τ²) is close to zero and the weights estimated by the two methods are similar. Tau (τ) is the standard deviation of the true effects across studies, and τ² is their variance.
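Assuming the two models in Fig. 4 are the usual fixed-effect and random-effects models, the sketch below shows how τ² enters the weights. It uses the DerSimonian-Laird estimator, one common choice among several (others include REML); when τ² is zero the random-effects weights reduce to the fixed-effect weights, as described above. The input values and the function name are hypothetical.

```python
def random_effects_dl(estimates, ses):
    """DerSimonian-Laird random-effects pooling.

    Returns the pooled estimate, tau^2 and the random-effects weights.
    """
    if len(estimates) < 2:
        raise ValueError("need at least two studies to estimate tau^2")
    w = [1 / se**2 for se in ses]  # fixed-effect (inverse-variance) weights
    fixed = sum(wi * yi for wi, yi in zip(w, estimates)) / sum(w)
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, estimates))
    df = len(estimates) - 1
    c = sum(w) - sum(wi**2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - df) / c)  # negative estimates are truncated at zero
    w_re = [1 / (se**2 + tau2) for se in ses]  # tau^2 added to every variance
    pooled = sum(wi * yi for wi, yi in zip(w_re, estimates)) / sum(w_re)
    return pooled, tau2, w_re


# Hypothetical: two precise studies with very different results.
pooled, tau2, weights = random_effects_dl([0.0, 1.0], [0.1, 0.1])
print(round(tau2, 2), round(pooled, 2))  # 0.49 0.5
```

Because τ² is added to every study's variance, a large τ² makes the weights more equal, so small studies gain relative influence under the random-effects model.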

Heterogeneity assumption methods

There are a few tools for assessing heterogeneity: the Q test, the I² statistic and visual inspection of the forest plot. The easiest method is visual inspection of the forest plot: studies whose confidence intervals do not overlap are not homogeneous. When studies fall on both sides of the line of no effect, heterogeneity becomes more relevant to the analysis because it affects the direction of the effect. The chi-squared (Q) test assumes that all studies measure the same effect, and a low p value suggests heterogeneity. However, the Q test is unreliable when the number of studies is very small or very large: its p value becomes less sensitive or too sensitive, under- or over-diagnosing heterogeneity respectively. The other tool is the I² statistic, which expresses heterogeneity as a percentage. Low values, below 30%, suggest minimal heterogeneity.
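Q and I² can be computed directly from each study's estimate and standard error, as in the sketch below (hypothetical inputs; `heterogeneity` is an illustrative name). The p value of the Q test comes from a chi-squared distribution with k - 1 degrees of freedom, for example via `scipy.stats.chi2.sf(q, df)` if SciPy is available; it is omitted here to keep the example dependency-free.

```python
def heterogeneity(estimates, ses):
    """Cochran's Q, its degrees of freedom, and I^2 as a percentage."""
    w = [1 / se**2 for se in ses]
    pooled = sum(wi * yi for wi, yi in zip(w, estimates)) / sum(w)
    q = sum(wi * (yi - pooled) ** 2 for wi, yi in zip(w, estimates))
    df = len(estimates) - 1
    # I^2 = (Q - df) / Q, truncated at zero when Q < df.
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return q, df, i2


q, df, i2 = heterogeneity([0.0, 1.0], [0.1, 0.1])
print(f"Q = {q:.1f} on {df} df, I^2 = {i2:.0f}%")  # Q = 50.0 on 1 df, I^2 = 98%
```

An I² of 98% is far above the 30% threshold mentioned above, consistent with two studies whose confidence intervals do not overlap.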

The next step is to deal with heterogeneity by exploring its possible causes. Errors in data collection or analysis, and true variation in population or intervention, are common reasons for outlying results. Identified causes should be presented cautiously in subgroup analyses. If no cause is identified, report this in the review as unexplained heterogeneity (which is considered in the GRADE approach, described later). In each subgroup, heterogeneity and effect modification should be reported. It is also important to have a logical basis for each factor reported in a subgroup analysis, as too many factors may confuse readers, and to make sure the subgroups have meaningful clinical relevance.

Different study designs and missing data

Some studies have more than one intervention arm. It is reasonable to ignore arms that are not of interest to the review. If all treatment arms need to be included, the control group can be split evenly between the intervention arms, or the arms can be combined or analyzed separately. Unit-of-analysis errors are common in analyses of cluster randomized trials, because clusters rather than individuals are the units of randomization. Similarly, the correlation between periods should be taken into account in crossover trials to avoid over- or under-weighting the study in the analysis. Analyses of non-randomized studies (NRS) carry a high risk of bias and heterogeneity; however, the usual effect measures can be used in meta-analyses of relatively homogeneous NRS.
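Two of these adjustments reduce to short arithmetic. The sketch below shows an approximate correction for a cluster randomized trial reported without adjustment for clustering (dividing the sample size and event count by the design effect, 1 + (m - 1) × ICC, where m is the mean cluster size) and the even split of a shared control group between arms. The ICC, counts and function names are hypothetical; in practice the ICC often has to be borrowed from a similar study, and effective sizes are sometimes rounded to whole numbers.

```python
def design_effect(mean_cluster_size: float, icc: float) -> float:
    """Design effect for a cluster randomized trial: 1 + (m - 1) * ICC."""
    return 1 + (mean_cluster_size - 1) * icc


def effective_arm(n: int, events: int, mean_cluster_size: float, icc: float):
    """Approximate 'effective' sample size and events for one trial arm."""
    de = design_effect(mean_cluster_size, icc)
    return n / de, events / de


def split_shared_control(n_control: int, events_control: int, n_arms: int):
    """Divide a shared control group evenly across the intervention arms."""
    return n_control / n_arms, events_control / n_arms


# Hypothetical arm: 500 participants in clusters of 25, ICC of 0.02.
print(effective_arm(500, 100, mean_cluster_size=25, icc=0.02))
# Hypothetical three-arm trial: one control group shared by two interventions.
print(split_shared_control(120, 30, n_arms=2))  # (60.0, 15.0)
```

Splitting the control group prevents its participants from being counted twice when both comparisons enter the same meta-analysis.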

Sometimes statistics are missing, and it may be reasonable to calculate means and SDs from other available data, such as standard errors or confidence intervals. Imputation of data should be done cautiously and explored in a sensitivity analysis.
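Two common back-calculations for a missing SD are shown below: from the standard error of a group mean, and from its 95% CI. The CI formula assumes a large sample (using 1.96); for small samples a t-distribution quantile is usually substituted. The values and function names are hypothetical.

```python
import math


def sd_from_se(se: float, n: int) -> float:
    """SD of a group mean from its standard error: SD = SE * sqrt(n)."""
    return se * math.sqrt(n)


def sd_from_ci(lower: float, upper: float, n: int, z: float = 1.96) -> float:
    """SD from a 95% CI around a group mean (large-sample approximation)."""
    return math.sqrt(n) * (upper - lower) / (2 * z)


print(sd_from_se(1.5, 100))               # 15.0
print(round(sd_from_ci(10, 14, 100), 2))  # 10.2
```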

Reporting and interpretation of results

It is important to report results in depth, not merely as statistical values. The main measures used to report a meta-analysis are the confidence interval (CI) and the SMD [2].

The CI is the range within which the true value probably lies, and a narrow CI suggests a more precise effect. The CI is usually presented as a 95% interval (corresponding to a p value of 0.05) and occasionally as a 90% interval (p of 0.1). An effect is statistically significant when the CI excludes the line of no effect. However, even statistically significant effects may have no clinical value if they do not reach the minimal important difference. Conversely, effects that are not statistically significant may still be clinically important, which raises questions about the overall power of the meta-analysis to detect clinically important effects.
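The two questions in this paragraph (does the CI exclude no effect, and is the effect large enough to matter?) can be separated explicitly. The sketch below does this for a mean difference; the minimal important difference (MID) values are hypothetical and would come from the clinical literature for the outcome in question. For ratio measures, the line of no effect is 1 rather than 0. Judging clinical importance from the point estimate alone is a simplification.

```python
def interpret_md(md: float, se: float, mid: float, z: float = 1.96) -> str:
    """Interpret a mean difference against zero and a (positive) MID."""
    lower, upper = md - z * se, md + z * se
    significant = lower > 0 or upper < 0  # CI excludes the line of no effect
    important = abs(md) >= mid            # point estimate reaches the MID
    if significant and important:
        return "statistically significant and clinically important"
    if significant:
        return "statistically significant, but below the minimal important difference"
    if important:
        return "not statistically significant, but possibly clinically important"
    return "neither statistically significant nor clinically important"


# Hypothetical results on a scale with an MID of 5 points.
print(interpret_md(3, 1, mid=5))
print(interpret_md(6, 4, mid=5))
print(interpret_md(8, 1.5, mid=5))
```

The second case (CI from about -1.8 to 13.8) is the situation described above in which a meta-analysis may lack the power to confirm a clinically important effect.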

The SMD is defined above (see the "Data analysis" section) as an effect measure. A value different from zero indicates a difference between the intervention and control groups, although whether that difference is statistically significant depends on its CI. Interpreting the magnitude of an SMD is difficult because it is reported in units of standard deviation (SD). Reasonable methods of reporting SMD results include Cohen's rule of thumb (SMD < 0.4 small effect, > 0.7 large effect, and moderate in between), transformation to an OR (assuming equal SDs in the control and intervention arms) and calculating an estimated MD on a familiar scale.
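The three ways of presenting an SMD can be sketched as follows. The thresholds follow the rule of thumb quoted in the text; the SMD-to-OR conversion uses the commonly cited approximation ln(OR) = SMD × π/√3, which assumes a logistic underlying distribution with equal SDs in both arms; and the MD re-expression multiplies the SMD by a typical SD of a familiar instrument (the value 10 below is hypothetical). Function names are illustrative.

```python
import math


def describe_smd(smd: float) -> str:
    """Rule of thumb from the text: <0.4 small, 0.4-0.7 moderate, >0.7 large."""
    size = abs(smd)
    if size < 0.4:
        return "small"
    if size <= 0.7:
        return "moderate"
    return "large"


def smd_to_odds_ratio(smd: float) -> float:
    """Approximate OR from an SMD via ln(OR) = SMD * pi / sqrt(3)."""
    return math.exp(smd * math.pi / math.sqrt(3))


def smd_to_md(smd: float, sd_familiar_scale: float) -> float:
    """Re-express an SMD as an MD on a familiar scale with a typical SD."""
    return smd * sd_familiar_scale


print(describe_smd(0.5), round(smd_to_odds_ratio(0.5), 2), smd_to_md(0.5, 10))
# moderate 2.48 5.0
```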

Reporting bias and certainty of evidence

Reporting bias is the risk that information is missing from a systematic review because of selective reporting at any stage, from writing the study protocol to publication. Many factors, such as authors' beliefs, word limits and editorial and reviewer decisions, can cause reporting bias. Funnel plots are a recommended graphical method for detecting reporting bias in systematic reviews and meta-analyses.
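A funnel plot places each study's effect estimate against its standard error; without reporting bias, the points scatter roughly symmetrically inside a funnel of pseudo 95% confidence limits around the pooled estimate. The sketch below computes those limits and flags studies outside them, leaving the plotting itself to any charting library. The data and the `funnel` helper are hypothetical, and asymmetry can also have causes other than reporting bias (for example, genuine heterogeneity).

```python
def funnel(estimates, ses, z=1.96):
    """Pooled estimate, pseudo 95% limits for each SE, and studies outside them.

    The funnel is centred on the fixed-effect (inverse-variance) estimate.
    """
    w = [1 / se**2 for se in ses]
    pooled = sum(wi * yi for wi, yi in zip(w, estimates)) / sum(w)
    limits = [(pooled - z * se, pooled + z * se) for se in ses]
    outside = [i for i, (y, (lo, hi)) in enumerate(zip(estimates, limits))
               if not lo <= y <= hi]
    return pooled, limits, outside


# Hypothetical: three precise studies near zero, one small study with a large effect.
pooled, limits, outside = funnel([0.1, 0.0, -0.1, 0.9], [0.1, 0.1, 0.1, 0.3])
print(outside)  # [3]
```

A small, imprecise study with a large effect sitting outside the funnel on one side is the pattern that prompts suspicion of reporting bias.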

Reporting the certainty of the results is another important step at the end of the analysis. The Grading of Recommendations, Assessment, Development and Evaluation (GRADE) approach is a recommended structured method for reporting the certainty of evidence. Table 6 describes the domains used to rate the certainty up or down in the GRADE system [16]. Another important aspect of a systematic review is to categorize and present research studies based on their quality.

The final rating of certainty in a meta-analysis is based on the combination of all domains, for each study and across the studies overall. This information should be presented numerically in the results section and explained in the text of the discussion. The same system can be used in a narrative synthesis of results in systematic reviews. Remember that rating up is relevant only to non-randomized studies, because randomized studies start with higher certainty.
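The starting points and direction of rating described here can be written as a small function. This is a deliberately simplified sketch: the domain names follow the GRADE approach, but GRADE ratings are judgements rather than arithmetic, and the function and parameter names are hypothetical.

```python
from typing import Optional

LEVELS = ("very low", "low", "moderate", "high")
DOWN_DOMAINS = {"risk of bias", "inconsistency", "indirectness",
                "imprecision", "publication bias"}
UP_DOMAINS = {"large effect", "dose-response", "plausible confounding"}


def grade_certainty(randomized: bool, rate_down: dict[str, int],
                    rate_up: Optional[dict[str, int]] = None) -> str:
    """Simplified GRADE rating: start level, minus downgrades, plus upgrades."""
    rate_up = rate_up or {}
    if not set(rate_down) <= DOWN_DOMAINS or not set(rate_up) <= UP_DOMAINS:
        raise ValueError("unknown GRADE domain")
    level = 3 if randomized else 1      # RCTs start high, NRS start low
    level -= sum(rate_down.values())    # each domain: 1 (serious) or 2 (very serious)
    if not randomized:
        level += sum(rate_up.values())  # rating up applies to NRS only
    return LEVELS[max(0, min(level, 3))]


print(grade_certainty(True, {"imprecision": 1, "inconsistency": 1}))  # low
print(grade_certainty(False, {}, {"large effect": 1}))                # moderate
```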

Reporting the review

The last step of a systematic review or meta-analysis is report writing. Here, all parts are merged to write the review in structured format, using the protocol as the starting point. All systematic reviews should have a protocol to begin with as shown in Fig. 5 [ 2 ].

Structure for report writing

Summary of findings table

The 'summary of findings' table is a useful step in the writing. All outcomes, with the list of contributing studies, are recorded in this table. The relative and absolute effects (imported from the forest plots), the certainty of evidence (based on GRADE) and comments are then included in separate columns. Footnotes can be used to explain decisions. Software such as GRADEpro, which is compatible with RevMan, can be used to develop summary of findings tables [17].
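Absolute effects in a summary of findings table are usually derived by applying the pooled relative effect to an assumed control-group risk. The sketch below shows the conversion per 1000 people from an RR and from an OR; the control risk, effect sizes and function names are hypothetical, and tools such as GRADEpro perform this step in practice.

```python
def per_1000_from_rr(control_risk: float, rr: float) -> tuple[float, float]:
    """Assumed (control) and corresponding (intervention) risk per 1000."""
    return control_risk * 1000, control_risk * rr * 1000


def per_1000_from_or(control_risk: float, odds_ratio: float) -> float:
    """Corresponding intervention risk per 1000 from an odds ratio."""
    control_odds = control_risk / (1 - control_risk)
    intervention_odds = control_odds * odds_ratio
    return intervention_odds / (1 + intervention_odds) * 1000


assumed, corresponding = per_1000_from_rr(0.20, 0.75)
print(f"{assumed:.0f} vs {corresponding:.0f} per 1000: "
      f"{assumed - corresponding:.0f} fewer per 1000")
# 200 vs 150 per 1000: 50 fewer per 1000
print(round(per_1000_from_or(0.20, 0.5)))  # 111
```

Because the same relative effect gives different absolute effects at different baseline risks, the assumed control risk should be stated in the table.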

Presenting results

The first paragraph of the results describes the search process, and the PRISMA flow diagram (Fig. 3) is recommended for reporting the search summary [7]. The second section summarizes the risk of bias assessment for the included studies. This is only a brief narrative of significant differences, as the risk of bias of individual studies is presented in detail in data tables. Review findings are then presented in a structured format.

The effects of interventions are presented in forest plots and in data tables and figures. This is not the section to interpret or draw inferences from the results. All outcomes planned in the protocol should be reported, including outcomes for which no evidence was found. Keep the order of outcomes consistent throughout the review: present intervention versus no intervention before one intervention versus another, and primary outcomes before secondary outcomes. Throughout the writing, check that results are consistent across plots, tables, figures and text. It may not be feasible to publish all plots and tables in the main document; journals offer supplementary materials or appendices for less important analyses.

Sometimes the selected studies are too diverse for a meta-analysis, and narrative synthesis is then an option for analyzing the results. Grouping studies makes the data easier to examine in a narrative synthesis. Avoid vote counting of positive and negative studies in narrative reviews.

The first paragraph of the discussion should summarize the main findings (both positive and negative) along with the certainty of the evidence. The summary of findings table can be used to identify the most important outcomes. Then describe whether the results address the study question, using the PICOS format.

The quality of the review evidence is discussed afterwards. All domains of GRADE assessment including inconsistency, indirectness, imprecision, publication bias should be discussed in relation to the conclusions. Selection bias of studies can be included in the strengths/limitations section along with other assumptions made during the review. It is reasonable to mention agreements/disagreements with other reviews at the end in the context of past reviews.

The conclusion summarizes the review findings to guide readers in making decisions in policy or clinical practice. It is important to mention both the positive and the negative salient results of the review. Make sure only your own findings are presented, and do not comment on outside sources. After presenting the results, recommendations can be made to fill gaps in the evidence. The primary value of systematic reviews is to drive improvements in evidence-based practice, based on the needs of patients.

Reviews often also include plain-language summaries that present the major findings for the benefit of consumers and the general public. Bullet points are advisable, and subheadings can be phrased as questions (What is the intervention? Why is it important? What did we find? What are the limitations? What is the conclusion?). It is better to write in the first person and the active voice to address readers directly.

All types of summary should provide information consistent with the main text. When describing uncertainty, be clear about the study limitations. As the summary portrays the study report, focus on the main results and the quality of the evidence.

Availability of data and materials

Not applicable.

Chandler J, Cumpston M, Thomas J, Higgins JP, Deeks JJ, Clarke MJ, Li T, Page MJ, Welch VA. Chapter 1: introduction. Cochrane Handbook Syst Rev Interv Ver. 2019;5(0):3–8.

Cumpston M, Li T, Page MJ, Chandler J, Welch VA, Higgins JP, Thomas J. Updated guidance for trusted systematic reviews: a new edition of the Cochrane Handbook for Systematic Reviews of Interventions. Cochrane Database Syst Rev. 2019;2019(10).

Mueller M, D’Addario M, Egger M, Cevallos M, Dekkers O, Mugglin C, Scott P. Methods to systematically review and meta-analyse observational studies: a systematic scoping review of recommendations. BMC Med Res Methodol. 2018;18(1):1–8.


Chojecki D, Tjosvold L. Documenting and reporting the search process. HTAI Vortal [online]. 2020.

Sorden N. New MeSH Browser Available. NLM Tech Bull. 2016;(413):e2. https://www.nlm.nih.gov/pubs/techbull/nd16/nd16_mesh_browser_upgrade.html .

Tawfik GM, Dila KA, Mohamed MY, Tam DN, Kien ND, Ahmed AM, Huy NT. A step by step guide for conducting a systematic review and meta-analysis with simulation data. Trop Med Health. 2019;47(1):1–9.

Page MJ, McKenzie JE, Bossuyt PM, Boutron I, Hoffmann TC, Mulrow CD, et al. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ. 2021;372:n71. https://doi.org/10.1136/bmj.n71 .


Ma LL, Wang YY, Yang ZH, Huang D, Weng H, Zeng XT. Methodological quality (risk of bias) assessment tools for primary and secondary medical studies: what are they and which is better? Military Med Res. 2020;7(1):1–1.

Higgins JP, Savović J, Page MJ, Elbers RG, Sterne JA. Assessing risk of bias in a randomized trial. Cochrane Handb Syst Rev Interv. 2019:205–28.

Deeks JJ, Dinnes J, D’Amico R, Sowden AJ, Sakarovitch C, Song F, Petticrew M, Altman DG. Evaluating non-randomised intervention studies. Health Technol Assess (Winchester, England). 2003;7(27):iii–173.


Sterne JAC, Hernán MA, Reeves BC, Savović J, Berkman ND, Viswanathan M, Henry D, Altman DG, Ansari MT, Boutron I, Carpenter JR, Chan AW, Churchill R, Deeks JJ, Hróbjartsson A, Kirkham J, Jüni P, Loke YK, Pigott TD, Ramsay CR, Regidor D, Rothstein HR, Sandhu L, Santaguida PL, Schünemann HJ, Shea B, Shrier I, Tugwell P, Turner L, Valentine JC, Waddington H, Waters E, Wells GA, Whiting PF, Higgins JPT. ROBINS-I: a tool for assessing risk of bias in non-randomized studies of interventions. BMJ. 2016;355:i4919. https://doi.org/10.1136/bmj.i4919 .

Downs SH, Black N. The feasibility of creating a checklist for the assessment of the methodological quality both of randomised and non-randomised studies of health care interventions. J Epidemiol Community Health. 1998;52(6):377–84.


Ahn E, Kang H. Introduction to systematic review and meta-analysis. Korean J Anesthesiol. 2018;71(2):103–12.

Lin L. Comparison of four heterogeneity measures for meta-analysis. J Eval Clin Pract. 2020;26(1):376–84.


Mohan BP, Adler DG. Heterogeneity in systematic review and meta-analysis: how to read between the numbers. Gastrointest Endosc. 2019;89(4):902–3.

Schünemann HJ. GRADE: from grading the evidence to developing recommendations. A description of the system and a proposal regarding the transferability of the results of clinical research to clinical practice. Zeitschrift Evidenz, Fortbildung Qualitat Gesundheitswesen. 2009;103(6):391–400.

Taito S. The construct of certainty of evidence has not been disseminated to systematic reviews and clinical practice guidelines; response to ‘The GRADE Working Group’ et al. J Clin Epidemiol. 2022;147:171.


Acknowledgements

This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

Author information

Authors and affiliations.

University of Western Australia, Stirling Hwy, Crawley, Perth, WA, 6009, Australia

Mayura Thilanka Iddagoda & Leon Flicker

Perioperative Service, Royal Perth Hospital, Wellington Street, Perth, WA, 6000, Australia


Contributions

M.I. was involved in conceptualization, literature search and writing the article. L.F. reviewed and corrected the content. All authors reviewed the manuscript.

Corresponding author

Correspondence to Mayura Thilanka Iddagoda .

Ethics declarations

Ethics approval and consent to participate, consent for publication, competing interests.

The authors declare no competing interests.

Additional information

Publisher’s note.

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/ . The Creative Commons Public Domain Dedication waiver ( http://creativecommons.org/publicdomain/zero/1.0/ ) applies to the data made available in this article, unless otherwise stated in a credit line to the data.


About this article

Cite this article.

Iddagoda, M.T., Flicker, L. Clinical systematic reviews – a brief overview. BMC Med Res Methodol 23 , 226 (2023). https://doi.org/10.1186/s12874-023-02047-8


Received : 02 March 2023

Accepted : 27 September 2023

Published : 10 October 2023

DOI : https://doi.org/10.1186/s12874-023-02047-8


- Systematic review

- Meta-analysis

- Risk of bias

- Certainty of evidence

BMC Medical Research Methodology

ISSN: 1471-2288

- General enquiries: [email protected]

- Open access

- Published: 08 June 2023

Guidance to best tools and practices for systematic reviews

- Kat Kolaski 1 ,

- Lynne Romeiser Logan 2 &

- John P. A. Ioannidis 3

Systematic Reviews volume 12, Article number: 96 (2023)


Data continue to accumulate indicating that many systematic reviews are methodologically flawed, biased, redundant, or uninformative. Some improvements have occurred in recent years based on empirical methods research and standardization of appraisal tools; however, many authors do not routinely or consistently apply these updated methods. In addition, guideline developers, peer reviewers, and journal editors often disregard current methodological standards. Although these issues are extensively acknowledged and explored in the methodological literature, most clinicians seem unaware of them and may automatically accept evidence syntheses (and clinical practice guidelines based on their conclusions) as trustworthy.

A plethora of methods and tools are recommended for the development and evaluation of evidence syntheses. It is important to understand what these are intended to do (and cannot do) and how they can be utilized. Our objective is to distill this sprawling information into a format that is understandable and readily accessible to authors, peer reviewers, and editors. In doing so, we aim to promote appreciation and understanding of the demanding science of evidence synthesis among stakeholders. We focus on well-documented deficiencies in key components of evidence syntheses to elucidate the rationale for current standards. The constructs underlying the tools developed to assess reporting, risk of bias, and methodological quality of evidence syntheses are distinguished from those involved in determining overall certainty of a body of evidence. Another important distinction is made between tools used by authors to develop their syntheses and those used to ultimately judge their work.

Exemplar methods and research practices are described, complemented by novel pragmatic strategies to improve evidence syntheses. The latter include preferred terminology and a scheme to characterize types of research evidence. We organize best practice resources in a Concise Guide that can be widely adopted and adapted for routine implementation by authors and journals. Appropriate, informed use of these is encouraged, but we caution against their superficial application and emphasize that their endorsement does not substitute for in-depth methodological training. By highlighting best practices with their rationale, we hope this guidance will inspire further evolution of methods and tools that can advance the field.

Part 1. The state of evidence synthesis

Evidence syntheses are commonly regarded as the foundation of evidence-based medicine (EBM). They are widely credited with providing reliable evidence and, as such, they have significantly influenced medical research and clinical practice. Despite their uptake throughout health care and ubiquity in contemporary medical literature, some important aspects of evidence syntheses are generally overlooked or not well recognized. Evidence syntheses are mostly retrospective exercises; they often depend on weak or irreparably flawed data, and they may use tools that have acknowledged or yet unrecognized limitations. They are complicated and time-consuming undertakings prone to bias and errors. Production of a good evidence synthesis requires careful preparation and high levels of organization in order to limit potential pitfalls [ 1 ]. Many authors do not recognize the complexity of such an endeavor and the many methodological challenges they may encounter. Failing to recognize this complexity is likely to waste research effort and resources.

Given their potential impact on people’s lives, it is crucial for evidence syntheses to correctly report on the current knowledge base. For evidence syntheses to be perceived as trustworthy, reliable demonstration of their accuracy is equally imperative [ 2 ]. Concerns about the trustworthiness of evidence syntheses are not recent developments. From the early years when EBM first began to gain traction until recent times, when thousands of systematic reviews are published monthly [ 3 ], the rigor of evidence syntheses has always varied. Many systematic reviews and meta-analyses had obvious deficiencies because original methods and processes had gaps, lacked precision, and/or were not widely known. The situation has improved with empirical research concerning which methods to use and standardization of appraisal tools. However, given the geometric increase in the number of evidence syntheses being published, a larger absolute pool of unreliable evidence syntheses is being published today.
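
The point about absolute numbers can be made concrete with a purely hypothetical calculation. The counts and proportions below are invented for illustration and are not drawn from the studies cited above; they only show how a falling share of unreliable reviews can coexist with a rising number of them when total output grows quickly.

```python
# Hypothetical illustration only: these numbers are invented for the example,
# not taken from any study of systematic review quality.

def unreliable_count(total_published: int, unreliable_fraction: float) -> int:
    """Number of unreliable reviews implied by a total and a proportion."""
    return round(total_published * unreliable_fraction)

# Earlier period: fewer reviews, but a higher share of them flawed.
earlier = unreliable_count(total_published=1_000, unreliable_fraction=0.60)

# Later period: the share of flawed reviews falls, but output grows tenfold.
later = unreliable_count(total_published=10_000, unreliable_fraction=0.40)

print(earlier, later)  # 600 4000
```

Even with the proportion of flawed reviews dropping from 60% to 40% in this toy scenario, the absolute number of flawed reviews rises more than sixfold.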

Publication of methodological studies that critically appraise the methods used in evidence syntheses is increasing at a fast pace. This reflects the availability of tools specifically developed for this purpose [ 4 , 5 , 6 ]. Yet many clinical specialties report that alarming numbers of evidence syntheses fail on these assessments. The syntheses identified report on a broad range of common conditions including, but not limited to, cancer [ 7 ], chronic obstructive pulmonary disease [ 8 ], osteoporosis [ 9 ], stroke [ 10 ], cerebral palsy [ 11 ], chronic low back pain [ 12 ], refractive error [ 13 ], major depression [ 14 ], pain [ 15 ], and obesity [ 16 , 17 ]. The situation is even more concerning with regard to evidence syntheses included in clinical practice guidelines (CPGs) [ 18 , 19 , 20 ]. Astonishingly, in a sample of CPGs published in 2017–18, more than half did not apply even basic systematic methods in the evidence syntheses used to inform their recommendations [ 21 ].

These reports, while not widely acknowledged, suggest there are pervasive problems not limited to evidence syntheses that evaluate specific kinds of interventions or include primary research of a particular study design (eg, randomized versus non-randomized) [ 22 ]. Similar concerns about the reliability of evidence syntheses have been expressed by proponents of EBM in widely circulated medical journals [ 23 , 24 , 25 , 26 ]. These publications have also raised awareness about redundancy, inadequate input of statistical expertise, and deficient reporting. These issues plague primary research as well; however, there is heightened concern for the impact of these deficiencies given the critical role of evidence syntheses in policy and clinical decision-making.

Methods and guidance to produce a reliable evidence synthesis

Several international consortia of EBM experts and national health care organizations currently provide detailed guidance (Table 1 ). They draw criteria from the reporting and methodological standards of currently recommended appraisal tools, and regularly review and update their methods to reflect new information and changing needs. In addition, they endorse the Grading of Recommendations Assessment, Development and Evaluation (GRADE) system for rating the overall quality of a body of evidence [ 27 ]. These groups typically certify or commission systematic reviews that are published in exclusive databases (eg, Cochrane, JBI) or are used to develop government or agency sponsored guidelines or health technology assessments (eg, National Institute for Health and Care Excellence [NICE], Scottish Intercollegiate Guidelines Network [SIGN], Agency for Healthcare Research and Quality [AHRQ]). They offer developers of evidence syntheses various levels of methodological advice, technical and administrative support, and editorial assistance. Use of specific protocols and checklists is required for development teams within these groups, but their online methodological resources are accessible to any potential author.

Notably, Cochrane is the largest single producer of evidence syntheses in biomedical research; however, these account for only 15% of the total [ 28 ]. The World Health Organization requires that Cochrane standards be used to develop evidence syntheses that inform its CPGs [ 29 ]. Authors investigating questions of intervention effectiveness in syntheses developed for Cochrane follow the Methodological Expectations of Cochrane Intervention Reviews [ 30 ] and undergo multi-tiered peer review [ 31 , 32 ]. Several empirical evaluations have shown that Cochrane systematic reviews are of higher methodological quality compared with non-Cochrane reviews [ 4 , 7 , 9 , 11 , 14 , 32 , 33 , 34 , 35 ]. However, some of these assessments have biases: they may be conducted by Cochrane-affiliated authors, and they sometimes use scales and tools developed and used in the Cochrane environment and by its partners. In addition, evidence syntheses published in the Cochrane database are not subject to space or word restrictions, while non-Cochrane syntheses are often limited. As a result, information that may be relevant to the critical appraisal of non-Cochrane reviews is often removed or is relegated to online-only supplements that may not be readily or fully accessible [ 28 ].

Influences on the state of evidence synthesis

Many authors are familiar with the evidence syntheses produced by the leading EBM organizations but can be intimidated by the time and effort necessary to apply their standards. Instead of following their guidance, authors may employ methods that are discouraged or outdated [ 28 ]. Suboptimal methods described in the literature may then be taken up by others. For example, the Newcastle–Ottawa Scale (NOS) is a commonly used tool for appraising non-randomized studies [ 36 ]. Many authors justify their selection of this tool with reference to a publication that describes the unreliability of the NOS and recommends against its use [ 37 ]. Obviously, the authors who cite this report for that purpose have not read it. Authors and peer reviewers have a responsibility to use reliable and accurate methods and not copycat previous citations or substandard work [ 38 , 39 ]. Similar cautions may extend to automation tools. These have concentrated on evidence searching [ 40 ] and selection, given how demanding it is for humans to maintain truly up-to-date evidence [ 2 , 41 ]. Cochrane has deployed machine learning to identify randomized controlled trials (RCTs) and studies related to COVID-19 [ 2 , 42 ], but such tools are not yet commonly used [ 43 ]. The routine integration of automation tools in the development of future evidence syntheses should not displace the interpretive part of the process.

Editorials about unreliable or misleading systematic reviews highlight several of the intertwining factors that may contribute to continued publication of unreliable evidence syntheses: shortcomings and inconsistencies of the peer review process, lack of endorsement of current standards on the part of journal editors, the incentive structure of academia, industry influences, publication bias, and the lure of “predatory” journals [ 44 , 45 , 46 , 47 , 48 ]. At this juncture, the extent to which each of these factors contributes remains speculative, but their impact is likely to be synergistic.

Over time, the generalized acceptance of the conclusions of systematic reviews as incontrovertible has affected trends in the dissemination and uptake of evidence. Reporting of the results of evidence syntheses and recommendations of CPGs has shifted beyond medical journals to press releases and news headlines and, more recently, to the realm of social media and influencers. The lay public and policy makers may depend on these outlets for interpreting evidence syntheses and CPGs. Unfortunately, communication to the general public often reflects intentional or unintentional misrepresentation or “spin” of the research findings [ 49 , 50 , 51 , 52 ]. News and social media outlets also tend to reduce conclusions on a body of evidence and recommendations for treatment to binary choices (eg, “do it” versus “don’t do it”) that may be assigned an actionable symbol (eg, red/green traffic lights, smiley/frowning face emoji).

Strategies for improvement

Many authors and peer reviewers are volunteer health care professionals or trainees who lack formal training in evidence synthesis [ 46 , 53 ]. Informing them about research methodology could increase the likelihood they will apply rigorous methods [ 25 , 33 , 45 ]. We tackle this challenge, from both a theoretical and a practical perspective, by offering guidance applicable to any specialty. It is based on recent methodological research that is extensively referenced to promote self-study. However, the information presented is not intended to be a substitute for committed training in evidence synthesis methodology; instead, we hope to inspire our target audience to seek such training. We also hope to inform a broader audience of clinicians and guideline developers influenced by evidence syntheses. Notably, these communities often include the same members who serve in different capacities.

In the following sections, we highlight methodological concepts and practices that may be unfamiliar, problematic, confusing, or controversial. In Part 2, we consider various types of evidence syntheses and the types of research evidence summarized by them. In Part 3, we examine some widely used (and misused) tools for the critical appraisal of systematic reviews and reporting guidelines for evidence syntheses. In Part 4, we discuss how to meet methodological conduct standards applicable to key components of systematic reviews. In Part 5, we describe the merits and caveats of rating the overall certainty of a body of evidence. Finally, in Part 6, we summarize suggested terminology, methods, and tools for development and evaluation of evidence syntheses that reflect current best practices.

Part 2. Types of syntheses and research evidence

A good foundation for the development of evidence syntheses requires an appreciation of their various methodologies and the ability to correctly identify the types of research potentially available for inclusion in the synthesis.

Types of evidence syntheses

Systematic reviews have historically focused on the benefits and harms of interventions; over time, various types of systematic reviews have emerged to address the diverse information needs of clinicians, patients, and policy makers [ 54 ]. Systematic reviews with traditional components have become defined by the different topics they assess (Table 2.1 ). In addition, other distinctive types of evidence syntheses have evolved, including overviews or umbrella reviews, scoping reviews, rapid reviews, and living reviews. The popularity of these has been increasing in recent years [ 55 , 56 , 57 , 58 ]. A summary of the development, methods, available guidance, and indications for these unique types of evidence syntheses is available in Additional File 2A.

Both Cochrane [ 30 , 59 ] and JBI [ 60 ] provide methodologies for many types of evidence syntheses; they describe these with different terminology, but there is obvious overlap (Table 2.2 ). The majority of evidence syntheses published by Cochrane (96%) and JBI (62%) are categorized as intervention reviews. This reflects the earlier development and dissemination of their intervention review methodologies; these remain well-established [ 30 , 59 , 61 ] as both organizations continue to focus on topics related to treatment efficacy and harms. In contrast, intervention reviews represent only about half of the total published in the general medical literature, and several non-intervention review types account for a significant proportion of the other half.

Types of research evidence

There is consensus on the importance of using multiple study designs in evidence syntheses; at the same time, there is a lack of agreement on methods to identify included study designs. Authors of evidence syntheses may use various taxonomies and associated algorithms to guide selection and/or classification of study designs. These tools differentiate categories of research and apply labels to individual study designs (eg, RCT, cross-sectional). A familiar example is the Design Tree endorsed by the Centre for Evidence-Based Medicine [ 70 ]. Such tools may not be helpful to authors of evidence syntheses for multiple reasons.

Suboptimal levels of agreement and accuracy even among trained methodologists reflect challenges with the application of such tools [ 71 , 72 ]. Problematic distinctions or decision points (eg, experimental or observational, controlled or uncontrolled, prospective or retrospective) and design labels (eg, cohort, case control, uncontrolled trial) have been reported [ 71 ]. The variable application of ambiguous study design labels to non-randomized studies is common, making them especially prone to misclassification [ 73 ]. In addition, study labels do not denote the unique design features that make different types of non-randomized studies susceptible to different biases, including those related to how the data are obtained (eg, clinical trials, disease registries, wearable devices). Given this limitation, it is important to be aware that design labels preclude the accurate assignment of non-randomized studies to a “level of evidence” in traditional hierarchies [ 74 ].

These concerns suggest that available tools and nomenclature used to distinguish types of research evidence may not uniformly apply to biomedical research and non-health fields that utilize evidence syntheses (eg, education, economics) [ 75 , 76 ]. Moreover, primary research reports often do not describe study design or do so incompletely or inaccurately; thus, indexing in PubMed and other databases does not address the potential for misclassification [ 77 ]. Yet proper identification of research evidence has implications for several key components of evidence syntheses. For example, search strategies limited by index terms using design labels or study selection based on labels applied by the authors of primary studies may cause inconsistent or unjustified study inclusions and/or exclusions [ 77 ]. In addition, because risk of bias (RoB) tools consider attributes specific to certain types of studies and study design features, results of these assessments may be invalidated if an inappropriate tool is used. Appropriate classification of studies is also relevant for the selection of a suitable method of synthesis and interpretation of those results.

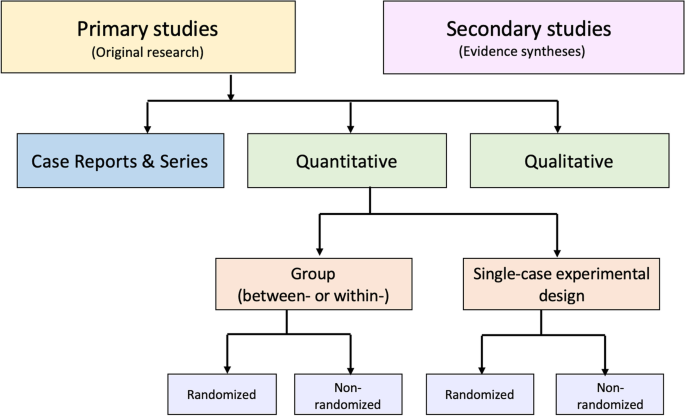

An alternative to these tools and nomenclature involves application of a few fundamental distinctions that encompass a wide range of research designs and contexts. While these distinctions are not novel, we integrate them into a practical scheme (see Fig. 1 ) designed to guide authors of evidence syntheses in the basic identification of research evidence. The initial distinction is between primary and secondary studies. Primary studies are then further distinguished by: 1) the type of data reported (qualitative or quantitative); and 2) two defining design features (group or single-case and randomized or non-randomized). The different types of studies and study designs represented in the scheme are described in detail in Additional File 2B. It is important to conceptualize their methods as complementary as opposed to contrasting or hierarchical [ 78 ]; each offers advantages and disadvantages that determine their appropriateness for answering different kinds of research questions in an evidence synthesis.
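
The basic distinctions in the scheme can be expressed as a small decision procedure. The Python sketch below is an illustration only: the `Study` fields are hypothetical, and it assumes that the two design features (group or single-case; randomized or non-randomized) are applied to quantitative primary studies, so Fig. 1 and Additional File 2B should be consulted for the authors’ exact branching. Note that the output describes design features rather than assigning a conventional label such as “cohort” or “case control”.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Study:
    """Hypothetical record of what a reviewer has established about a study."""
    is_primary: bool                      # primary research vs secondary (e.g., a review)
    data_type: Optional[str] = None       # "qualitative" or "quantitative" (primary only)
    is_group: Optional[bool] = None       # group design vs single-case design
    is_randomized: Optional[bool] = None  # randomized vs non-randomized allocation

def classify(study: Study) -> str:
    """Return a descriptive category built from the basic distinctions."""
    if not study.is_primary:
        return "secondary"
    if study.data_type == "qualitative":
        return "primary/qualitative"
    if study.data_type != "quantitative":
        raise ValueError("primary studies need data_type 'qualitative' or 'quantitative'")
    # Assumption: design features are recorded for quantitative primary studies.
    if study.is_group is None or study.is_randomized is None:
        raise ValueError("quantitative studies need both design features recorded")
    unit = "group" if study.is_group else "single-case"
    allocation = "randomized" if study.is_randomized else "non-randomized"
    return f"primary/quantitative/{unit}/{allocation}"

example = Study(is_primary=True, data_type="quantitative", is_group=True, is_randomized=False)
print(classify(example))  # primary/quantitative/group/non-randomized
```

Raising an error when a design feature is missing, rather than guessing, mirrors the concern that incompletely described primary studies are easily misclassified.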

Distinguishing types of research evidence

Application of these basic distinctions may avoid some of the potential difficulties associated with study design labels and taxonomies. Nevertheless, debatable methodological issues are raised when certain types of research identified in this scheme are included in an evidence synthesis. We briefly highlight those associated with inclusion of non-randomized studies, case reports and series, and a combination of primary and secondary studies.

Non-randomized studies

When investigating an intervention’s effectiveness, it is important for authors to recognize the uncertainty of observed effects reported by studies with high RoB. Results of statistical analyses that include such studies need to be interpreted with caution in order to avoid misleading conclusions [ 74 ]. Review authors may consider excluding randomized studies with high RoB from meta-analyses. Non-randomized studies of intervention (NRSI) are affected by a greater potential range of biases and thus vary more than RCTs in their ability to estimate a causal effect [ 79 ]. If data from NRSI are synthesized in meta-analyses, it is helpful to separately report their summary estimates [ 6 , 74 ].
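
Reporting separate summary estimates for randomized studies and NRSI can be sketched as pooling within each design group rather than across them. The example below assumes a fixed-effect inverse-variance model on the log scale and uses invented log risk ratios; it is not a method prescribed by the source, and a random-effects model or a formal subgroup comparison may be more appropriate in practice.

```python
import math
from collections import defaultdict

def pool_fixed_effect(estimates, std_errors):
    """Inverse-variance fixed-effect pooled estimate and its standard error."""
    weights = [1.0 / se**2 for se in std_errors]
    pooled = sum(w * y for w, y in zip(weights, estimates)) / sum(weights)
    pooled_se = math.sqrt(1.0 / sum(weights))
    return pooled, pooled_se

def pool_by_design(studies):
    """Pool separately within each design group instead of mixing RCTs and NRSI."""
    groups = defaultdict(lambda: ([], []))
    for design, estimate, se in studies:
        groups[design][0].append(estimate)
        groups[design][1].append(se)
    return {design: pool_fixed_effect(ys, ses) for design, (ys, ses) in groups.items()}

# Hypothetical log risk ratios and standard errors (illustrative only).
studies = [
    ("RCT", -0.20, 0.10),
    ("RCT", -0.10, 0.20),
    ("NRSI", -0.50, 0.15),
    ("NRSI", -0.40, 0.25),
]

for design, (est, se) in pool_by_design(studies).items():
    low, high = est - 1.96 * se, est + 1.96 * se
    print(f"{design}: RR {math.exp(est):.2f} (95% CI {math.exp(low):.2f} to {math.exp(high):.2f})")
```

Keeping the two summaries side by side lets readers see whether the non-randomized evidence points in the same direction as the randomized evidence without one silently dominating a single pooled figure.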

Nonetheless, certain design features of NRSI (eg, which parts of the study were prospectively designed) may help to distinguish stronger from weaker ones. Cochrane recommends that authors of a review including NRSI focus on relevant study design features when determining eligibility criteria instead of relying on non-informative study design labels [ 79 , 80 ]. This process is facilitated by a study design feature checklist; guidance on using the checklist is included with the developers’ description of the tool [ 73 , 74 ]. Authors collect information about these design features during data extraction and then consider it when making final study selection decisions and when performing RoB assessments of the included NRSI.
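
The idea of basing eligibility on extracted design features rather than on the label a study’s authors chose can be sketched as follows. The feature names and the eligibility rule are hypothetical placeholders, not the items of the study design feature checklist cited above. In this toy example a study its authors called a “case series” qualifies because it reports a comparison group, while a study labeled “prospective cohort” does not.

```python
from dataclasses import dataclass, field

@dataclass
class ExtractedStudy:
    """Hypothetical extraction record; feature names are illustrative,
    not the items of the published design feature checklist."""
    study_id: str
    author_label: str                      # recorded for transparency, not used for eligibility
    features: dict = field(default_factory=dict)

# Hypothetical eligibility rule written in terms of design features only.
REQUIRED_FEATURES = {
    "has_comparison_group": True,
    "outcome_measured_prospectively": True,
}

def is_eligible(study: ExtractedStudy) -> bool:
    """Eligible only if every required feature is recorded with the required value;
    an unrecorded feature counts as not meeting the criterion."""
    return all(study.features.get(name) == value
               for name, value in REQUIRED_FEATURES.items())

studies = [
    ExtractedStudy("S1", "case series",
                   {"has_comparison_group": True, "outcome_measured_prospectively": True}),
    ExtractedStudy("S2", "prospective cohort",
                   {"has_comparison_group": False, "outcome_measured_prospectively": True}),
    ExtractedStudy("S3", "cohort", {"has_comparison_group": True}),  # one feature not reported
]

eligible_ids = [s.study_id for s in studies if is_eligible(s)]
print(eligible_ids)  # ['S1']
```

Treating an unreported feature as unmet is a conservative design choice; a real review might instead flag such studies for author contact.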

Case reports and case series

Correctly identified case reports and case series can contribute evidence not well captured by other designs [ 81 ]; in addition, some topics may be limited to a body of evidence that consists primarily of uncontrolled clinical observations. Murad and colleagues offer a framework for how to include case reports and series in an evidence synthesis [ 82 ]. Distinguishing between cohort studies and case series in these syntheses is important, especially for those that rely on evidence from NRSI. Additional data obtained from studies misclassified as case series can potentially increase the confidence in effect estimates. Mathes and Pieper provide authors of evidence syntheses with specific guidance on distinguishing between cohort studies and case series, but emphasize the increased workload involved [ 77 ].

Primary and secondary studies

Synthesis of combined evidence from primary and secondary studies may provide a broad perspective on the entirety of available literature on a topic. This is, in fact, the recommended strategy for scoping reviews that may include a variety of sources of evidence (eg, CPGs, popular media). However, except for scoping reviews, the synthesis of data from primary and secondary studies is discouraged unless there are strong reasons to justify doing so.

Combining primary and secondary sources of evidence is challenging for authors of other types of evidence syntheses for several reasons [ 83 ]. Assessments of RoB for primary and secondary studies are derived from conceptually different tools, which makes it difficult to reach an overall RoB assessment for a combination of these study types. In addition, authors who include primary and secondary studies must devise non-standardized methods for synthesis. Note this contrasts with well-established methods available for updating existing evidence syntheses with additional data from new primary studies [ 84 , 85 , 86 ]. However, a new review that synthesizes data from primary and secondary studies raises questions of validity and may unintentionally support a biased conclusion because no existing methodological guidance is currently available [ 87 ].

Recommendations

We suggest that journal editors require authors to identify which type of evidence synthesis they are submitting and reference the specific methodology used for its development. This will clarify the research question and methods for peer reviewers and potentially simplify the editorial process. Editors should announce this practice and include it in the instructions to authors. To decrease bias and apply correct methods, authors must also accurately identify the types of research evidence included in their syntheses.

Part 3. Conduct and reporting

The need to develop criteria to assess the rigor of systematic reviews was recognized soon after the EBM movement began to gain international traction [ 88 , 89 ]. Systematic reviews rapidly became popular, but many were very poorly conceived, conducted, and reported. These problems remain highly prevalent [ 23 ] despite development of guidelines and tools to standardize and improve the performance and reporting of evidence syntheses [ 22 , 28 ]. Table 3.1 provides some historical perspective on the evolution of tools developed specifically for the evaluation of systematic reviews, with or without meta-analysis.

These tools are often interchangeably invoked when referring to the “quality” of an evidence synthesis. However, quality is a vague term that is frequently misused and misunderstood; more precisely, these tools specify different standards for evidence syntheses. Methodological standards address how well a systematic review was designed and performed [ 5 ]. RoB assessments refer to systematic flaws or limitations in the design, conduct, or analysis of research that distort the findings of the review [ 4 ]. Reporting standards help systematic review authors describe the methodology they used and the results of their synthesis in sufficient detail [ 92 ]. It is essential to distinguish between these evaluations: a systematic review may be biased, it may fail to report sufficient information on essential features, or it may exhibit both problems; a thoroughly reported evidence synthesis may still be biased and flawed, while an otherwise unbiased one may suffer from deficient documentation.

We direct attention to the currently recommended tools listed in Table 3.1 but concentrate on AMSTAR-2 (update of AMSTAR [A Measurement Tool to Assess Systematic Reviews]) and ROBIS (Risk of Bias in Systematic Reviews), which evaluate methodological quality and RoB, respectively. For comparison and completeness, we include PRISMA 2020 (update of the 2009 Preferred Reporting Items for Systematic Reviews and Meta-Analyses statement), which offers guidance on reporting standards. The exclusive focus on these three tools is by design; it addresses concerns related to the considerable variability in tools used for the evaluation of systematic reviews [ 28 , 88 , 96 , 97 ]. We highlight the underlying constructs these tools were designed to assess, then describe their components and applications. Their known (or potential) uptake, impact, and limitations are also discussed.

Evaluation of conduct

Development

AMSTAR [ 5 ] was in use for a decade prior to the 2017 publication of AMSTAR-2; both provide a broad evaluation of methodological quality of intervention systematic reviews, including flaws arising through poor conduct of the review [ 6 ]. ROBIS, published in 2016, was developed to specifically assess RoB introduced by the conduct of the review; it is applicable to systematic reviews of interventions and several other types of reviews [ 4 ]. Both tools reflect a shift to a domain-based approach as opposed to generic quality checklists. There are a few items unique to each tool; however, similarities between items have been demonstrated [ 98 , 99 ]. AMSTAR-2 and ROBIS are recommended for use by: 1) authors of overviews or umbrella reviews and CPGs to evaluate systematic reviews considered as evidence; 2) authors of methodological research studies to appraise included systematic reviews; and 3) peer reviewers for appraisal of submitted systematic review manuscripts. For authors, these tools may function as teaching aids and inform conduct of their review during its development.

Description

Systematic reviews that include randomized and/or non-randomized studies as evidence can be appraised with AMSTAR-2 and ROBIS. Other characteristics of AMSTAR-2 and ROBIS are summarized in Table 3.2 . Both tools define categories for an overall rating; however, neither tool is intended to generate a total score by simply calculating the number of responses satisfying criteria for individual items [ 4 , 6 ]. AMSTAR-2 focuses on the rigor of a review’s methods irrespective of the specific subject matter. ROBIS places emphasis on a review’s results section; this suggests it may be optimally applied by appraisers with some knowledge of the review’s topic, as they may be better equipped to determine if certain procedures (or lack thereof) would impact the validity of a review’s findings [ 98 , 100 ]. Reliability studies show AMSTAR-2 overall confidence ratings strongly correlate with the overall RoB ratings in ROBIS [ 100 , 101 ].
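
Why a summed score misleads can be illustrated with the overall confidence scheme published by the AMSTAR-2 developers, which is driven by critical flaws and non-critical weaknesses: high (no or one non-critical weakness), moderate (more than one non-critical weakness), low (one critical flaw, with or without non-critical weaknesses), and critically low (more than one critical flaw). The sketch below takes the two counts as given; which items are treated as critical, and whether each is satisfied, remain appraiser judgments, and the developers also allow a review with many non-critical weaknesses to be moved down from moderate to low, which this simplification does not model. The review profiles are hypothetical.

```python
def amstar2_overall_confidence(critical_flaws: int, noncritical_weaknesses: int) -> str:
    """Overall confidence category from counts of critical flaws and
    non-critical weaknesses (simplified; appraiser judgment still required)."""
    if critical_flaws < 0 or noncritical_weaknesses < 0:
        raise ValueError("counts must be non-negative")
    if critical_flaws > 1:
        return "critically low"
    if critical_flaws == 1:
        return "low"
    if noncritical_weaknesses > 1:
        return "moderate"
    return "high"

# Two hypothetical reviews appraised on the 16 AMSTAR-2 items.
ITEMS_TOTAL = 16
review_a = {"critical_flaws": 1, "noncritical_weaknesses": 0}
review_b = {"critical_flaws": 0, "noncritical_weaknesses": 4}

for name, review in [("A", review_a), ("B", review_b)]:
    satisfied = ITEMS_TOTAL - review["critical_flaws"] - review["noncritical_weaknesses"]
    print(name, satisfied, amstar2_overall_confidence(**review))
# A 15 low
# B 12 moderate
```

A naive count of satisfied items would rank review A above review B, yet the single critical flaw places A in a lower confidence category.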

Interrater reliability has been shown to be acceptable for AMSTAR-2 [ 6 , 11 , 102 ] and ROBIS [ 4 , 98 , 103 ], but neither tool has been shown to be superior in this regard [ 100 , 101 , 104 , 105 ]. Overall, variability in reliability for both tools has been reported across items, between pairs of raters, and between centers [ 6 , 100 , 101 , 104 ]. The effects of appraiser experience on the results of AMSTAR-2 and ROBIS require further evaluation [ 101 , 105 ]. Updates to both tools should address items shown to be prone to individual appraisers’ subjective biases and opinions [ 11 , 100 ]; this may involve modifications of the current domains and signaling questions as well as incorporation of methods to make an appraiser’s judgments more explicit. Future revisions of these tools may also consider the addition of standards for aspects of systematic review development currently lacking (eg, rating overall certainty of evidence [ 99 ], methods for synthesis without meta-analysis [ 105 ]) and removal of items that assess aspects of reporting that are thoroughly evaluated by PRISMA 2020.

Application

A good understanding of what is required to satisfy the standards of AMSTAR-2 and ROBIS involves study of the accompanying guidance documents written by the tools’ developers; these contain detailed descriptions of each item’s standards. In addition, accurate appraisal of a systematic review with either tool requires training. Most experts recommend independent assessment by at least two appraisers with a process for resolving discrepancies as well as procedures to establish interrater reliability, such as pilot testing, a calibration phase or exercise, and development of predefined decision rules [ 35 , 99 , 100 , 101 , 103 , 104 , 106 ]. These methods may, to some extent, address the challenges associated with the diversity in methodological training, subject matter expertise, and experience using the tools that are likely to exist among appraisers.
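
The text does not prescribe an agreement statistic, but Cohen’s kappa is one commonly used measure of chance-corrected agreement between two independent appraisers, for example during a pilot or calibration round. The ratings below are hypothetical item-level responses; kappa is only one input and does not replace discussion and predefined decision rules for resolving discrepancies.

```python
from collections import Counter

def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters assigning categorical ratings to the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two equal-length, non-empty rating lists")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    categories = set(counts_a) | set(counts_b)
    # Agreement expected by chance from each rater's marginal frequencies.
    expected = sum(counts_a[c] * counts_b[c] for c in categories) / n**2
    if expected == 1.0:
        # Both raters used one identical category throughout; kappa is undefined,
        # and returning 1.0 here is a convention, not part of the definition.
        return 1.0
    return (observed - expected) / (1 - expected)

# Hypothetical item-level ratings from a pilot round (illustrative only).
appraiser_1 = ["yes", "yes", "no", "partial yes", "yes", "no", "yes", "no"]
appraiser_2 = ["yes", "no", "no", "partial yes", "yes", "no", "yes", "yes"]

print(round(cohens_kappa(appraiser_1, appraiser_2), 2))  # 0.58
```

Here the appraisers agree on 6 of 8 items (75%), but after correcting for chance agreement kappa is about 0.58, which is why raw percentage agreement alone can overstate reliability.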

The standards of AMSTAR, AMSTAR-2, and ROBIS have been used in many methodological studies and epidemiological investigations. However, the increased publication of overviews or umbrella reviews and CPGs has likely been a greater influence on the widening acceptance of these tools. Critical appraisal of the secondary studies considered evidence is essential to the trustworthiness of both the recommendations of CPGs and the conclusions of overviews. Currently both Cochrane [ 55 ] and JBI [ 107 ] recommend AMSTAR-2 and ROBIS in their guidance for authors of overviews or umbrella reviews. However, ROBIS and AMSTAR-2 were released in 2016 and 2017, respectively; thus, to date, limited data have been reported about the uptake of these tools or which of the two may be preferred [ 21 , 106 ]. Currently, in relation to CPGs, AMSTAR-2 appears to be overwhelmingly popular compared to ROBIS. A Google Scholar search of this topic (search terms “AMSTAR 2 AND clinical practice guidelines,” “ROBIS AND clinical practice guidelines” 13 May 2022) found 12,700 hits for AMSTAR-2 and 1,280 for ROBIS. The apparent greater appeal of AMSTAR-2 may relate to its longer track record given the original version of the tool was in use for 10 years prior to its update in 2017.

Barriers to the uptake of AMSTAR-2 and ROBIS include the real or perceived time and resources necessary to complete the items they include and appraisers’ confidence in their own ratings [ 104 ]. Reports from comparative studies available to date indicate that appraisers find AMSTAR-2 questions, responses, and guidance to be clearer and simpler compared with ROBIS [ 11 , 101 , 104 , 105 ]. This suggests that for appraisal of intervention systematic reviews, AMSTAR-2 may be a more practical tool than ROBIS, especially for novice appraisers [ 101 , 103 , 104 , 105 ]. The unique characteristics of each tool, as well as their potential advantages and disadvantages, should be taken into consideration when deciding which tool should be used for an appraisal of a systematic review. In addition, the choice of one or the other may depend on how the results of an appraisal will be used; for example, a peer reviewer’s appraisal of a single manuscript versus an appraisal of multiple systematic reviews in an overview or umbrella review, CPG, or systematic methodological study.

Authors of overviews and CPGs report results of AMSTAR-2 and ROBIS appraisals for each of the systematic reviews they include as evidence. Ideally, an independent judgment of their appraisals can be made by the end users of overviews and CPGs; however, most stakeholders, including clinicians, are unlikely to have a sophisticated understanding of these tools. Nevertheless, they should at least be aware that AMSTAR-2 and ROBIS ratings reported in overviews and CPGs may be inaccurate if the tools are not applied as intended by their developers. This can result from inadequate training of the overview or CPG authors who perform the appraisals, or from modifications they impose on the appraisal tools. The potential variability in overall confidence and RoB ratings highlights why appraisers applying these tools need to support their judgments with explicit documentation; this allows readers to judge for themselves whether they agree with the criteria used by appraisers [ 4 , 108 ]. When these judgments are explicit, the underlying rationale used when applying these tools can be assessed [ 109 ].
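One way to keep appraisal judgments explicit is to store every item response together with the rationale behind it and to refuse to record a response that has none. The sketch below assumes a hypothetical record schema; the `ItemJudgment` class, its field names, and the `to_rows` helper are illustrative and are not part of AMSTAR-2, ROBIS, or any registry format.

```python
from dataclasses import dataclass, asdict
from typing import List


@dataclass(frozen=True)
class ItemJudgment:
    """One appraisal decision plus the evidence behind it (hypothetical schema)."""
    review_id: str   # identifier of the appraised systematic review
    tool: str        # e.g. "AMSTAR-2" or "ROBIS"
    item: str        # item or signalling-question label as used by the tool
    response: str    # response option chosen by the appraiser
    rationale: str   # explicit justification, e.g. quoted text and its location

    def __post_init__(self) -> None:
        # A response without a rationale cannot be scrutinized by readers,
        # so it is rejected at creation time.
        if not self.rationale.strip():
            raise ValueError(
                f"{self.review_id}, {self.tool} item {self.item}: rationale is required"
            )


def to_rows(judgments: List[ItemJudgment]) -> List[dict]:
    """Flatten judgments into plain dictionaries, e.g. for a supplementary table."""
    return [asdict(j) for j in judgments]
```

Publishing such rows as a supplement would let end users of an overview or CPG see, item by item, why a rating was given.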

Theoretically, we would expect AMSTAR-2 to be associated with improved methodological rigor, and ROBIS with lower RoB, in recent systematic reviews compared with those published before 2017. To our knowledge, this has not yet been demonstrated; however, as with reports about the actual uptake of these tools, time will tell. Additional data on user experience are also needed to further elucidate the practical challenges and methodological nuances encountered with the application of these tools. This information could potentially inform the creation of unifying criteria to guide and standardize the appraisal of evidence syntheses [ 109 ].

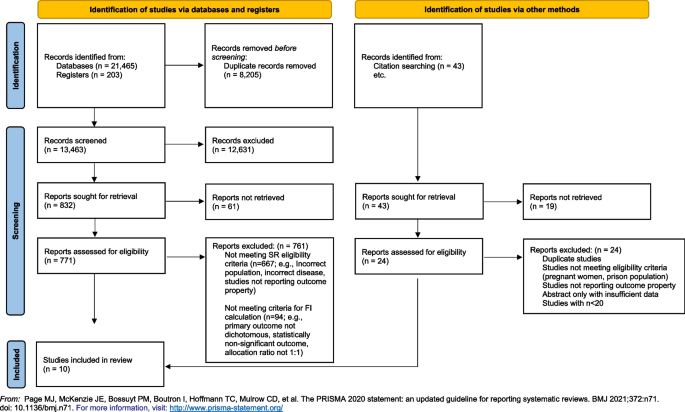

Evaluation of reporting

Complete reporting is essential for users to establish the trustworthiness and applicability of a systematic review’s findings. Efforts to standardize and improve the reporting of systematic reviews resulted in the 2009 publication of the PRISMA statement [ 92 ] with its accompanying explanation and elaboration document [ 110 ]. This guideline was designed to help authors prepare a complete and transparent report of their systematic review. In addition, adherence to PRISMA is often used to evaluate the thoroughness of reporting of published systematic reviews [ 111 ]. The updated version, PRISMA 2020 [ 93 ], and its guidance document [ 112 ] were published in 2021. Items on the original and updated versions of PRISMA are organized by the six basic review components they address (title, abstract, introduction, methods, results, discussion). The PRISMA 2020 update is a considerably expanded version of the original; it includes standards and examples for the 27 original and 13 additional reporting items that capture methodological advances and may enhance the replicability of reviews [ 113 ].

The original PRISMA statement fostered the development of various PRISMA extensions (Table 3.3 ). These include reporting guidance for scoping reviews and reviews of diagnostic test accuracy and for intervention reviews that report on the following: harms outcomes, equity issues, the effects of acupuncture, the results of network meta-analyses and analyses of individual participant data. Detailed reporting guidance for specific systematic review components (abstracts, protocols, literature searches) is also available.

Uptake and impact

The 2009 PRISMA standards [ 92 ] for reporting have been widely endorsed by authors, journals, and EBM-related organizations. We anticipate the same for PRISMA 2020 [ 93 ] given its co-publication in multiple high-impact journals. However, to date, there is a lack of strong evidence for an association between improved systematic review reporting and endorsement of PRISMA 2009 standards [ 43 , 111 ]. Most journals require that a PRISMA checklist accompany submissions of systematic review manuscripts. However, the accuracy of information presented on these self-reported checklists is not necessarily verified. It remains unclear which strategies (eg, authors’ self-report of checklists, peer reviewer checks) might improve adherence to the PRISMA reporting standards; in addition, the feasibility of any potentially effective strategies must be taken into consideration given the structure and limitations of current research and publication practices [ 124 ].
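A peer reviewer check of a self-reported checklist amounts to comparing two sets of yes/no answers. The minimal sketch below assumes each checklist is held as a mapping from an item label to whether that item is reported; the function name and the item labels used in testing are hypothetical placeholders, not official PRISMA 2020 identifiers. The proportion it returns describes completeness of reporting only and says nothing about how well the review was conducted.

```python
from typing import Dict, List, Tuple


def check_self_report(
    self_reported: Dict[str, bool], verified: Dict[str, bool]
) -> Tuple[List[str], float]:
    """Compare an author-completed checklist with an independent check.

    Returns (items the authors claimed as reported that the checker could not
    confirm, proportion of items the checker confirmed as reported).
    """
    unconfirmed = sorted(
        item
        for item, claimed in self_reported.items()
        if claimed and not verified.get(item, False)
    )
    # True counts as 1, so the sum is the number of confirmed items.
    confirmed_share = sum(verified.values()) / len(verified) if verified else 0.0
    return unconfirmed, confirmed_share
```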

Pitfalls and limitations of PRISMA, AMSTAR-2, and ROBIS

Misunderstanding of the roles of these tools and their misapplication may be widespread problems. PRISMA 2020 is a reporting guideline that is most beneficial if consulted while developing a review, as opposed to merely completing the checklist when submitting to a journal; by then, the review is finished and its methodological choices, good or bad, have already been made. Even so, PRISMA checklists evaluate how completely an element of review conduct was reported, but do not evaluate the caliber of conduct or performance of a review. Thus, review authors and readers should not assume that a rigorous systematic review can be produced simply by following the PRISMA 2020 guidelines. Similarly, it is important to recognize that AMSTAR-2 and ROBIS are tools to evaluate the conduct of a review but do not substitute for conceptual methodological guidance. In addition, they are not intended to be simple checklists. In fact, they have the potential for misuse or abuse if applied as such; for example, by calculating a total score to make a judgment about a review’s overall confidence or RoB. Proper selection of a response for the individual items on AMSTAR-2 and ROBIS requires training or at least reference to their accompanying guidance documents.

Not surprisingly, it has been shown that compliance with the PRISMA checklist is not necessarily associated with satisfying the standards of ROBIS [ 125 ]. AMSTAR-2 and ROBIS were not available when PRISMA 2009 was developed; however, they were considered in the development of PRISMA 2020 [ 113 ]. Therefore, future studies may show a positive relationship between fulfillment of PRISMA 2020 standards for reporting and meeting the standards of tools evaluating methodological quality and RoB.

Choice of an appropriate tool for the evaluation of a systematic review first involves identification of the underlying construct to be assessed. For systematic reviews of interventions, recommended tools include AMSTAR-2 and ROBIS for appraisal of conduct and PRISMA 2020 for completeness of reporting. All three tools were developed rigorously and provide easily accessible and detailed user guidance, which is necessary for their proper application and interpretation. When a manuscript is being considered for publication, training in these tools can sensitize peer reviewers and editors to major issues that may affect the review’s trustworthiness and completeness of reporting. Judgment of the overall certainty of a body of evidence and formulation of recommendations rely, in part, on AMSTAR-2 or ROBIS appraisals of systematic reviews. Therefore, training on the application of these tools is essential for authors of overviews and developers of CPGs. Peer reviewers and editors considering an overview or CPG for publication must hold their authors to a high standard of transparency regarding both the conduct and reporting of these appraisals.

Part 4. Meeting conduct standards

Many authors, peer reviewers, and editors erroneously equate fulfillment of the items on the PRISMA checklist with superior methodological rigor. For direction on methodology, we refer them to available resources that provide comprehensive conceptual guidance [ 59 , 60 ] as well as primers with basic step-by-step instructions [ 1 , 126 , 127 ]. This section is intended to complement study of such resources by facilitating use of AMSTAR-2 and ROBIS, tools specifically developed to evaluate methodological rigor of systematic reviews. These tools are widely accepted by methodologists; however, in the general medical literature, they are not uniformly selected for the critical appraisal of systematic reviews [ 88 , 96 ].

To enable their uptake, Table 4.1 links review components to the corresponding appraisal tool items. Expectations of AMSTAR-2 and ROBIS are concisely stated, and the reasoning behind them is provided.

Issues involved in meeting the standards for seven review components (identified in bold in Table 4.1 ) are addressed in detail. These were chosen for elaboration for one (or both) of two reasons: 1) the component has been identified as potentially problematic for systematic review authors based on consistent reports of their frequent AMSTAR-2 or ROBIS deficiencies [ 9 , 11 , 15 , 88 , 128 , 129 ]; and/or 2) the review component is judged by standards of an AMSTAR-2 “critical” domain. Critical domains have the greatest implications for how a systematic review will be appraised: under the AMSTAR-2 rating scheme, failure to meet the standards of one critical domain lowers the overall rating to “low confidence,” and failure in more than one results in “critically low confidence.”
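The overall rating logic published with AMSTAR-2 counts critical flaws and non-critical weaknesses rather than summing item scores: high (no more than one non-critical weakness), moderate (more than one non-critical weakness), low (one critical flaw), critically low (more than one critical flaw). The sketch below encodes that logic under a stated simplification: only a “no” response counts as a flaw or weakness. The critical item numbers are those the AMSTAR-2 developers suggest, which they present as a default that appraisers may adapt. The function name is hypothetical, and a script like this cannot replace item-level judgment made with the tool’s guidance.

```python
from typing import Dict, FrozenSet

# Items suggested as critical domains by the AMSTAR-2 developers
# (protocol, search, excluded studies, RoB assessment, meta-analysis methods,
# RoB in interpretation, publication bias). Appraisers may adapt this set.
CRITICAL_ITEMS: FrozenSet[int] = frozenset({2, 4, 7, 9, 11, 13, 15})


def overall_confidence(
    responses: Dict[int, str], critical: FrozenSet[int] = CRITICAL_ITEMS
) -> str:
    """Rate overall confidence from AMSTAR-2 item responses (items 1 to 16).

    Simplification: only "no" is counted; a "no" on a critical item is a
    critical flaw and on any other item a non-critical weakness.
    """
    flaws = sum(1 for item, r in responses.items() if r == "no" and item in critical)
    weaknesses = sum(
        1 for item, r in responses.items() if r == "no" and item not in critical
    )
    if flaws > 1:
        return "critically low"
    if flaws == 1:
        return "low"
    if weaknesses > 1:
        return "moderate"
    return "high"
```

For example, a review answered “yes” on every item except 10 and 16 is rated moderate, while one answered “yes” on every item except 4 and 9 is rated critically low, even though both have 14 “yes” responses. This is why a total score can conceal exactly the deficiencies that matter most.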

Research question

Specific and unambiguous research questions may have more value for reviews that deal with hypothesis testing. Mnemonics for the various elements of research questions are suggested by JBI and Cochrane (Table 2.1 ). These prompt authors to consider the specialized methods involved for developing different types of systematic reviews; however, while inclusion of the suggested elements makes a review compliant with the methods of a particular review type, it does not necessarily make a research question appropriate. Table 4.2 lists acronyms that may aid in developing the research question. They include overlapping concepts of importance in this time of proliferating reviews of uncertain value [ 130 ]. If these issues are not prospectively contemplated, systematic review authors may establish an overly broad scope, or let the scope run away from them, straying from predefined choices relating to key comparisons and outcomes.
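For intervention reviews, PICO (population, intervention, comparator, outcome) is one widely used mnemonic. The minimal sketch below, using a hypothetical `PICOQuestion` class, records the four elements and reports which are blank. As the paragraph above cautions, passing this completeness check only shows that the elements are present; it cannot show that the question is appropriate, focused, or worth answering.

```python
from dataclasses import dataclass, fields
from typing import List


@dataclass(frozen=True)
class PICOQuestion:
    population: str
    intervention: str
    comparator: str
    outcome: str

    def missing_elements(self) -> List[str]:
        """Names of PICO elements that were left blank."""
        return [f.name for f in fields(self) if not getattr(self, f.name).strip()]

    def as_text(self) -> str:
        """Render the elements as one question (illustrative wording only)."""
        return (
            f"In {self.population}, what is the effect of {self.intervention} "
            f"compared with {self.comparator} on {self.outcome}?"
        )
```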

Once a research question is established, searching registry sites and databases for existing systematic reviews addressing the same or a similar topic is necessary to avoid contributing to research waste [ 131 ]. Repeating an existing systematic review must be justified; for example, previous reviews may be out of date or methodologically flawed. A full discussion on replication of intervention systematic reviews, including a consensus checklist, can be found in the work of Tugwell and colleagues [ 84 ].

Protocol development is considered a core component of systematic reviews [ 125 , 126 , 132 ]. Review protocols may allow researchers to plan and anticipate potential issues, assess validity of methods, prevent arbitrary decision-making, and minimize bias that can be introduced by the conduct of the review. Registration of a protocol that allows public access promotes transparency of the systematic review’s methods and processes and reduces the potential for duplication [ 132 ]. Thinking early and carefully about all the steps of a systematic review is pragmatic and logical and may mitigate the influence of the authors’ prior knowledge of the evidence [ 133 ]. In addition, the protocol stage is when the scope of the review can be carefully considered by authors, reviewers, and editors; this may help to avoid production of overly ambitious reviews that include excessive numbers of comparisons and outcomes or are undisciplined in their study selection.

An association between publication of a prospective protocol and attainment of AMSTAR standards in systematic reviews has been reported [ 134 ]. However, completeness of reporting does not seem to differ between reviews with and without a protocol [ 135 ]. PRISMA-P [ 116 ] and its accompanying elaboration and explanation document [ 136 ] can be used to guide and assess the reporting of protocols. A final version of the review should fully describe any protocol deviations. Peer reviewers may compare the submitted manuscript with any available pre-registered protocol; this is required if AMSTAR-2 or ROBIS are used for critical appraisal.
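Part of comparing a manuscript with its registered protocol is checking whether the outcomes match. The minimal sketch below, built around a hypothetical `outcome_deviations` function, lists outcomes that were planned but not reported and outcomes reported but not planned. It assumes outcomes are available as short text labels and matches them only after ignoring case and extra spaces; real comparisons still need human judgment about synonyms, time points, and measurement instruments.

```python
from typing import Dict, Iterable, List, Set


def _normalise(labels: Iterable[str]) -> Set[str]:
    # Case- and whitespace-insensitive matching only; no synonym handling.
    return {" ".join(label.lower().split()) for label in labels}


def outcome_deviations(
    protocol: Iterable[str], manuscript: Iterable[str]
) -> Dict[str, List[str]]:
    """Outcomes that differ between a registered protocol and a manuscript."""
    planned, reported = _normalise(protocol), _normalise(manuscript)
    return {
        "planned_not_reported": sorted(planned - reported),
        "reported_not_planned": sorted(reported - planned),
    }
```

Any non-empty list is a prompt to look for the corresponding explanation of the deviation in the final report.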

There are multiple options for the recording of protocols (Table 4.3 ). Some journals will peer review and publish protocols. In addition, many online sites offer date-stamped and publicly accessible protocol registration. Some of these are exclusively for protocols of evidence syntheses; others are less restrictive and offer researchers the capacity for data storage, sharing, and other workflow features. These sites document protocol details to varying extents and have different requirements [ 137 ]. The most popular site for systematic reviews, the International Prospective Register of Systematic Reviews (PROSPERO), for example, only registers reviews that report on an outcome with direct relevance to human health. The PROSPERO record documents protocols for all types of reviews except literature and scoping reviews. Of note, PROSPERO requires that authors register their review protocols before any data extraction begins [ 133 , 138 ]. The electronic records of most of these registry sites allow authors to update their protocols and facilitate transparent tracking of protocol changes, which are not unexpected as a review progresses [ 139 ].
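Because registry records are date-stamped, the timing requirement can be checked mechanically once the relevant dates are known. The sketch below assumes the registration date, the date data extraction started, and a dated list of protocol amendments are available; the `Amendment` class and `timing_check` function are hypothetical. It also lists amendments dated on or after the start of extraction, which are arguably the changes most in need of explanation in the final report.

```python
from dataclasses import dataclass
from datetime import date
from typing import List


@dataclass(frozen=True)
class Amendment:
    made_on: date
    description: str


def timing_check(
    registered_on: date, extraction_started_on: date, amendments: List[Amendment]
) -> dict:
    """Check registration timing and list amendments made once extraction began."""
    return {
        # Strictly earlier; a same-day registration returns False so that it
        # can be checked manually.
        "registered_before_extraction": registered_on < extraction_started_on,
        # Descriptions of amendments dated on or after the extraction start,
        # in chronological order.
        "amendments_after_extraction_start": [
            a.description
            for a in sorted(amendments, key=lambda a: a.made_on)
            if a.made_on >= extraction_started_on
        ],
    }
```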

Study design inclusion

For most systematic reviews, broad inclusion of study designs is recommended [ 126 ]. This may allow comparison of results between contrasting study design types [ 126 ]. Certain study designs may be considered preferable depending on the type of review and nature of the research question. However, prevailing stereotypes about what each study design does best may not be accurate. For example, in systematic reviews of interventions, randomized designs are typically thought to answer highly specific questions while non-randomized designs are often expected to reveal greater information about harms or real-world evidence [ 126 , 140 , 141 ]. This may be a false distinction; randomized trials may be pragmatic [ 142 ], they may offer important (and more unbiased) information on harms [ 143 ], and data from non-randomized trials may not necessarily be more real-world-oriented [ 144 ].