LEARN STATISTICS EASILY

Learn Data Analysis Now!

Non-Parametric Statistics: A Comprehensive Guide

Exploring the Versatile World of Non-Parametric Statistics: Mastering Flexible Data Analysis Techniques.

Introduction

Non-parametric statistics serve as a critical toolset in data analysis. They are known for their adaptability and the capacity to provide valid results without the stringent prerequisites demanded by parametric counterparts. This article delves into the fundamentals of non-parametric techniques, shedding light on their operational mechanisms, advantages, and scenarios of optimal application. By equipping readers with a solid grasp of non-parametric statistics , we aim to enhance their analytical capabilities, enabling the effective handling of diverse datasets, especially those that challenge conventional parametric assumptions. Through a precise, technical exposition, this guide seeks to elevate the reader’s proficiency in applying non-parametric methods to extract meaningful insights from data, irrespective of its distribution or scale.

- Non-parametric statistics bypass assumptions for true data integrity.

- Flexible methods in non-parametric statistics reveal hidden data patterns.

- Real-world applications of non-parametric statistics solve complex issues.

- Non-parametric techniques like Mann-Whitney U bring clarity to data.

- Ethical data analysis through non-parametric statistics upholds truth.

Ad description. Lorem ipsum dolor sit amet, consectetur adipiscing elit.

Understanding Non-Parametric Statistics

Non-parametric statistics are indispensable in data analysis, mainly due to their capacity to process data without the necessity for predefined distribution assumptions. This distinct attribute sets non-parametric methods apart from parametric ones, which mandate that data adhere to certain distribution norms, such as the normal distribution. The utility of non-parametric techniques becomes especially pronounced with datasets where the distribution is either unknown, non-normal, or insufficient sample size to validate any distributional assumptions.

The cornerstone of non-parametric statistics is their reliance on the ranks or order of data points instead of the actual data values. This approach renders them inherently resilient to outliers and aptly suited for analyzing non-linear relationships within the data. Such versatility makes non-parametric methods applicable across diverse data types and research contexts, including situations involving ordinal data or instances where scale measurements are infeasible.

By circumventing the assumption of a specific underlying distribution, non-parametric methods facilitate a more authentic data analysis, capturing its intrinsic structure and characteristics. This capability allows researchers to derive conclusions that are more aligned with the actual nature of their data, which is particularly beneficial in disciplines where data may not conform to the conventional assumptions underpinning parametric tests.

Non-Parametric Statistics Flexibility

The core advantage of Non-Parametric Statistics lies in its inherent flexibility, which is crucial for analyzing data that doesn’t conform to the assumptions required by traditional parametric methods. This flexibility stems from the ability of non-parametric techniques to make fewer assumptions about the data distribution, allowing for a broader application across various types of data structures and distributions.

For instance, non-parametric methods do not assume a specific underlying distribution (such as normal distribution), making them particularly useful for skewed, outliers, or ordinal data. This is a significant technical benefit when dealing with real-world data, often deviating from idealized statistical assumptions.

Moreover, non-parametric statistics are adept at handling small sample sizes where the central limit theorem might not apply, and parametric tests could be unreliable. This makes them invaluable in fields where large samples are difficult to obtain, such as in rare disease research or highly specialized scientific studies.

Another technical aspect of non-parametric methods is their use in hypothesis testing, particularly with the Wilcoxon Signed-Rank Test for paired data and the Mann-Whitney U Test for independent samples. These tests are robust alternatives to the t-test when the data does not meet the necessary parametric assumptions, providing a means to conduct meaningful statistical analysis without the stringent requirements of normality and homoscedasticity.

The flexibility of non-parametric methods extends to their application in correlation analysis with Spearman’s rank correlation and in estimating distribution functions with the Kaplan-Meier estimator, among others. These tools are indispensable in fields ranging from medical research to environmental studies, where the nature of the data and the research questions do not fit neatly into parametric frameworks.

Techniques and Methods

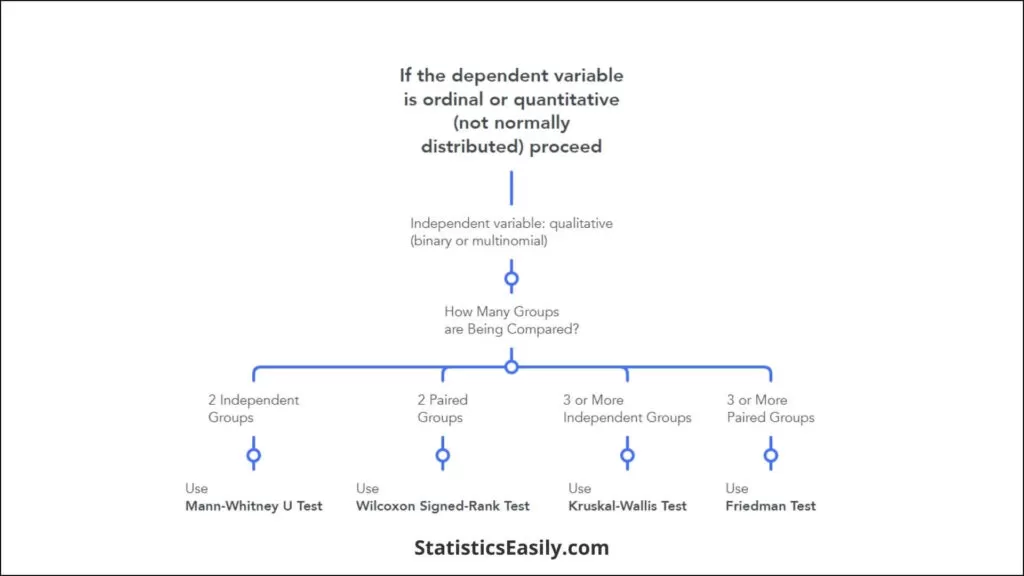

In non-parametric statistics , several essential techniques and methods stand out for their utility and versatility across various types of data analysis. This section delves into six standard non-parametric tests, providing a technical overview of each method and its application.

Mann-Whitney U Test : Often employed as an alternative to the t-test for independent samples, the Mann-Whitney U test is pivotal when comparing two independent groups. It assesses whether their distributions differ significantly, relying not on the actual data values but on the ranks of these values. This test is instrumental when the data doesn’t meet the normality assumption required by parametric tests.

Wilcoxon Signed-Rank Test : This test is a non-parametric alternative to the paired t-test, used when assessing the differences between two related samples, matched samples, or repeated measurements on a single sample. The Wilcoxon test evaluates whether the median differences between pairs of observations are zero. It is ideal for the paired differences that do not follow a normal distribution.

Kruskal-Wallis Test : As the non-parametric counterpart to the one-way ANOVA, the Kruskal-Wallis test extends the Mann-Whitney U test to more than two independent groups. It evaluates whether the populations from which the samples are drawn have identical distributions. Like the Mann-Whitney U, it bases its analysis on the rank of the data, making it suitable for data that does not follow a normal distribution.

Friedman Test : Analogous to the repeated measures ANOVA in parametric statistics, the Friedman test is a non-parametric method for detecting differences in treatments across multiple test attempts. It is beneficial for analyzing data from experiments where measurements are taken from the same subjects under different conditions, allowing for assessing the effects of other treatments on a single sample population.

Spearman’s Rank Correlation : Spearman’s rank correlation coefficient offers a non-parametric measure of the strength and direction of association between two variables. It is especially applicable in scenarios where the variables are measured on an ordinal scale or when the relationship between variables is not linear. This method emphasizes the monotonic relationship between variables, providing insights into the data’s behavior beyond linear correlations.

Kendall’s Tau : Kendall’s Tau is a correlation measure designed to assess the association between two measured quantities. It determines the strength and direction of the relationship, much like Spearman’s rank correlation, but focuses on the concordance and discordance between data points. Kendall’s Tau is particularly useful for data that involves ordinal or ranked variables, providing insight into the monotonic relationship without assuming linearity.

Chi-square Test: The Chi-square test is a non-parametric statistical tool used to determine whether there is a significant difference between the expected frequencies and the observed frequencies in one or more categories. It is beneficial in categorical data analysis, where the variables are nominal or ordinal, and the data are in the form of frequencies or counts. This test is valuable when evaluating hypotheses on the independence of two variables or the goodness of fit for a particular distribution.

Non-Parametric Statistics Real-World Applications

The practical utility of Non-Parametric Statistics is vast and varied, spanning numerous fields and research disciplines. This section showcases real-world case studies and examples where non-parametric methods have provided insightful solutions to complex problems, highlighting the depth and versatility of these techniques.

Environmental Science : In a study examining the impact of industrial pollution on river water quality, researchers employed the Kruskal-Wallis test to compare the pH levels across multiple sites. This non-parametric method was chosen due to the non-normal distribution of pH levels and the presence of outliers caused by sporadic pollution events. The test revealed significant differences in water quality, guiding policymakers in identifying pollution hotspots.

Medical Research : In a longitudinal study on chronic pain management, the Wilcoxon Signed-Rank Test was employed to assess the effectiveness of a novel therapy compared to conventional treatment. Each patient underwent both treatments in different periods, with pain scores recorded on an ordinal scale before and after each treatment phase. Given the non-normal distribution of differences in pain scores before and after each treatment for the same patient, the Wilcoxon test facilitated a statistically robust analysis. It revealed a significant reduction in pain intensity with the new therapy compared to conventional treatment, thereby demonstrating its superior efficacy in a manner that was both robust and suited to the paired nature of the data.

Market Research : A market research firm used Spearman’s Rank Correlation to analyze survey data to understand customer satisfaction across various service sectors. The ordinal ranking of satisfaction levels and the non-linear relationship between service features and customer satisfaction made Spearman’s correlation an ideal choice, uncovering critical drivers of customer loyalty.

Education : In educational research, the Friedman test was utilized to assess the effectiveness of different teaching methods on student performance over time. With data collected from the same group of students under three distinct teaching conditions, the test provided insights into which method led to significant improvements, informing curriculum development.

Social Sciences : Kendall’s Tau was applied in a sociological study to examine the relationship between social media usage and community engagement among youths. Given the ordinal data and the interest in understanding the direction and strength of the association without assuming linearity, Kendall’s Tau offered nuanced insights, revealing a weak but significant negative correlation.

Non-Parametric Statistics Implementation in R

Implementing non-parametric statistical methods in R involves a systematic approach to ensure accurate and ethical analysis. This step-by-step guide will walk you through the process, from data preparation to result interpretation, while emphasizing the importance of data integrity and ethical considerations.

1. Data Preparation:

- Begin by importing your dataset into R using functions like read.csv() for CSV files or read.table() for tab-delimited data.

- Perform initial data exploration using functions like summary(), str(), and head() to understand your data’s structure, variables, and any apparent issues like missing values or outliers.

2. Choosing the Right Test:

- Determine the appropriate non-parametric test based on your data type and research question. For two independent samples, consider the Mann-Whitney U test (wilcox.test() function); for paired samples, use the Wilcoxon Signed-Rank test (wilcox.test() with paired = TRUE); for more than two independent groups, use the Kruskal-Wallis test (kruskal.test()); and for correlation analysis, use Spearman’s rank correlation (cor.test() with method = “spearman”).

3. Executing the Test:

- Execute the chosen test using its corresponding function. Ensure your data meets the test’s requirements, such as correctly ranked or categorized.

- For example, to run a Mann-Whitney U test, use wilcox.test(group1, group2), replacing group1 and group2 with your actual data vectors.

4. Result Interpretation:

- Carefully interpret the output, paying attention to the test statistic and p-value. A p-value less than your significance level (commonly 0.05) indicates a statistically significant difference or correlation.

- Consider the effect size and confidence intervals to assess the practical significance of your findings.

5. Data Integrity and Ethical Considerations:

- Ensure data integrity by double-checking data entry, handling missing values appropriately, and conducting outlier analysis.

- Maintain ethical standards by respecting participant confidentiality, obtaining necessary permissions for data use, and reporting findings honestly without data manipulation.

6. Reporting:

- When documenting your analysis, include a detailed methodology section that outlines the non-parametric tests used, reasons for their selection, and any data preprocessing steps.

- Present your results using visual aids like plots or tables where applicable, and discuss the implications of your findings in the context of your research question.

Throughout this article, we have underscored the significance and value of non-parametric statistics in data analysis. These methods enable us to approach data sets with unknown or non-normal distributions, providing genuine insights and unveiling the truth and beauty hidden within the data. We encourage readers to maintain an open mind and a steadfast commitment to uncovering authentic insights when applying statistical methods to their research and projects. We invite you to explore the potential of non-parametric statistics in your endeavors and to share your findings with the scientific and academic community, contributing to the collective enrichment of knowledge and the advancement of science.

Recommended Articles

Discover more about the transformative power of data analysis in our collection of articles. Dive deeper into the world of statistics with our curated content and join our community of truth-seeking analysts.

Understanding the Assumptions for Chi-Square Test of Independence

- What is the difference between t-test and Mann-Whitney test?

- Mastering the Mann-Whitney U Test: A Comprehensive Guide

- A Comprehensive Guide to Hypotheses Tests in Statistics

- A Guide to Hypotheses Tests

Frequently Asked Questions (FAQs)

Q1: What Are Non-Parametric Statistics? Non-parametric statistics are methods that don’t rely on data from specific distributions. They are used when data doesn’t meet the assumptions of parametric tests.

Q2: Why Choose Non-Parametric Methods? They offer flexibility in analyzing data with unknown distributions or small sample sizes, providing a more ethical approach to data analysis.

Q3: What Is the Mann-Whitney U Test? It’s a non-parametric test for assessing whether two independent samples come from the same distribution, especially useful when data doesn’t meet normality assumptions.

Q4: How Do Non-Parametric Methods Enhance Data Integrity? By not imposing strict assumptions on data, non-parametric methods respect the natural form of data, leading to more truthful insights.

Q5: Can Non-Parametric Statistics Handle Outliers? Yes, non-parametric statistics are less sensitive to outliers, making them suitable for datasets with extreme values.

Q6: What Is the Kruskal-Wallis Test? This test is a non-parametric method for comparing more than two independent samples, proper when the ANOVA assumptions are not met.

Q7: How Does Spearman’s Rank Correlation Work? Spearman’s rank correlation measures the strength and direction of association between two ranked variables, ideal for non-linear relationships.

Q8: What Are the Real-World Applications of Non-Parametric Statistics? They are widely used in fields like environmental science, education, and medicine, where data may not follow standard distributions.

Q9: What Are the Benefits of Using Non-Parametric Statistics in Data Analysis? They provide a more inclusive data analysis, accommodating various data types and distributions and revealing deeper insights.

Q10: How to Get Started with Non-Parametric Statistical Analysis? Begin by understanding the nature of your data and choosing appropriate non-parametric methods that align with your analysis goals.

Similar Posts

How Do You Calculate Degrees of Freedom?

Master “How do you calculate degrees of freedom” in statistical analysis to enhance data accuracy and insights.

ANOVA versus ANCOVA: Breaking Down the Differences

Explore the crucial differences between ANOVA versus ANCOVA, and learn when to use each method for optimal data analysis.

“What Does The P-Value Mean” Revisited

We Have Already Presented A Didactic Explanation Of The P-Value, But Not That Precise. Now Learn An Accurate Definition For The P-Value!

What’s Regression Analysis? A Comprehensive Guide for Beginners

Discover what’s regression analysis, its types, key concepts, applications, and common pitfalls in our comprehensive guide for beginners.

Explore the assumptions and applications of the Chi-Square Test of Independence, a crucial tool for analyzing categorical data in various fields.

How to Report Simple Linear Regression Results in APA Style

With this article, you will learn How to Report Simple Linear Regression in APA-style. Ensure accurate, credible research results.

Leave a Reply Cancel reply

Your email address will not be published. Required fields are marked *

Save my name, email, and website in this browser for the next time I comment.

Thank you for visiting nature.com. You are using a browser version with limited support for CSS. To obtain the best experience, we recommend you use a more up to date browser (or turn off compatibility mode in Internet Explorer). In the meantime, to ensure continued support, we are displaying the site without styles and JavaScript.

- View all journals

- Explore content

- About the journal

- Publish with us

- Sign up for alerts

- Published: 29 April 2014

Points of significance

Nonparametric tests

- Martin Krzywinski 1 &

- Naomi Altman 2

Nature Methods volume 11 , pages 467–468 ( 2014 ) Cite this article

64k Accesses

54 Citations

13 Altmetric

Metrics details

- Research data

- Statistical methods

A Corrigendum to this article was published on 27 June 2014

This article has been updated

Nonparametric tests robustly compare skewed or ranked data.

You have full access to this article via your institution.

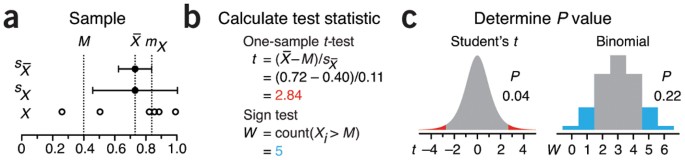

We have seen that the t -test is robust with respect to assumptions about normality and equivariance 1 and thus is widely applicable. There is another class of methods—nonparametric tests—more suitable for data that come from skewed distributions or have a discrete or ordinal scale. Nonparametric tests such as the sign and Wilcoxon rank-sum tests relax distribution assumptions and are therefore easier to justify, but they come at the cost of lower sensitivity owing to less information inherent in their assumptions. For small samples, the performance of these tests is also constrained because their P values are only coarsely sampled and may have a large minimum. Both issues are mitigated by using larger samples.

These tests work analogously to their parametric counterparts: a test statistic and its distribution under the null are used to assign significance to observations. We compare in Figure 1 the one-sample t -test 2 to a nonparametric equivalent, the sign test (though more sensitive and sophisticated variants exist), using a putative sample X whose source distribution we cannot readily identify ( Fig. 1a ). The null hypothesis of the sign test is that the sample median m X is equal to the proposed median, M = 0.4. The test uses the number of sample values larger than M as its test statistic, W —under the null we expect to see as many values below the median as above, with the exact probability given by the binomial distribution ( Fig. 1c ). The median is a more useful descriptor than the mean for asymmetric and otherwise irregular distributions. The sign test makes no assumptions about the distribution—only that sample values be independent. If we propose that the population median is M = 0.4 and we observe X , we find W = 5 ( Fig. 1b ). The chance of observing a value of W under the null that is at least as extreme ( W ≤ 1 or W ≥ 5) is P = 0.22, using both tails of the binomial distribution ( Fig. 1c ). To limit the test to whether the median of X was biased towards values larger than M , we would consider only the area for W ≥ 5 in the right tail to find P = 0.11.

The P value of 0.22 from the sign test is much higher than that from the t -test ( P = 0.04), reflecting that the sign test is less sensitive. This is because it is not influenced by the actual distance between the sample values and M —it measures only 'how many' instead of 'how much'. Consequently, it needs larger sample sizes or more supporting evidence than the t -test. For the example of X , to obtain P < 0.05 we would need to have all values larger than M ( W = 6). Its large P values and straightforward application makes the sign test a useful diagnostic. Take, for example, a hypothetical situation slightly different from that in Figure 1 , where P > 0.05 is reported for the case where a treatment has lowered blood pressure in 6 out of 6 subjects. You may think this P seems implausibly large, and you'd be right because the equivalent scenario for the sign test ( W = 6, n = 6) gives a two-tailed P = 0.03.

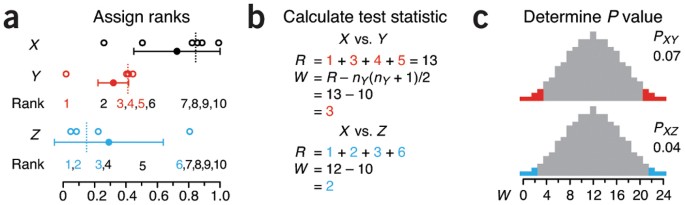

To compare two samples, the Wilcoxon rank-sum test is widely used and is sometimes referred to as the Mann-Whitney or Mann-Whitney-Wilcoxon test. It tests whether the samples come from distributions with the same median. It doesn't assume normality, but as a test of equality of medians, it requires both samples to come from distributions with the same shape. The Wilcoxon test is one of many methods that reduce the dynamic range of values by converting them to their ranks in the list of ordered values pooled from both samples ( Fig. 2a ). The test statistic, W , is the degree to which the sum of ranks is larger than the lowest possible in the sample with the lower ranks ( Fig. 2b ). We expect that a sample from a population with a smaller median will be converted to a set of smaller ranks.

( a ) Sample comparisons of X vs. Y and X vs. Z start with ranking pooled values and identifying the ranks in the smaller-sized sample (e.g., 1, 3, 4, 5 for Y ; 1, 2, 3, 6 for Z ). Error bars show sample mean and s.d., and sample medians are shown by vertical dotted lines. ( b ) The Wilcoxon rank-sum test statistic W is the difference between the sum of ranks and the smallest possible observed sum. ( c ) For small sample sizes the exact distribution of W can be calculated. For samples of size (6, 4), there are only 210 different rank combinations corresponding to 25 distinct values of W .

Because there is a finite number (210) of combinations of rank-ordering for X ( n X = 6) and Y ( n Y = 4), we can enumerate all outcomes of the test and explicitly construct the distribution of W ( Fig. 2c ) to assign a P value to W . The smallest value of W = 0 occurs when all values in one sample are smaller than those in the other. When they are all larger, the statistic reaches a maximum, W = n X n Y = 24. For X versus Y , W = 3, and there are 14 of 210 test outcomes with W ≤ 3 or W ≥ 21. Thus, P XY =14/210 = 0.067. For X versus Z , W = 2, and P XZ = 8/210 = 0.038. For cases in which both samples are larger than 10, W is approximately normal, and we can obtain the P value from a z -test of ( W – μ W )/ σ W , where μ W = n 1 ( n 1 + n 2 + 1)/2 and σ W = √( μ W n 2 /6).

The ability to enumerate all outcomes of the test statistic makes calculating the P value straightforward ( Figs. 1c and 2c ), but there is an important consequence: there will be a minimum P value, P min . Depending on the size of samples, P min can be relatively large. For comparisons of samples of size n X = 6 and n Y = 4 ( Fig. 2a ), P min = 1/210 = 0.005 for a one-tailed test, or 0.01 for a two-tailed test, corresponding to W = 0. Moreover, because there are only 25 distinct values of W ( Fig. 2c ), only two other two-tailed P values are <0.05: P = 0.02 ( W = 1) and P = 0.038 ( W = 2). The next-largest P value ( W = 3) is P = 0.07. Because there is no P with value 0.05, the test cannot be set to reject the null at a type I rate of 5%. Even if we test at α = 0.05, we will be rejecting the null at the next lower P —for an effective type I error of 3.8%. We will see how this affects test performance for small samples further on. In fact, it may even be impossible to reach significance at α = 0.05 because there is a limited number of ways in which small samples can vary in the context of ranks, and no outcome of the test happens less than 5% of the time. For example, samples of size 4 and 3 offer only 35 arrangements of ranks and a two-tailed P min = 2/35 = 0.057. Contrast this to the t -test, which can produce any P value because the test statistic can take on an infinite number of values.

This has serious implications in multiple-testing scenarios discussed in the previous column 3 . Recall that when N tests are performed, multiple-testing corrections will scale the smallest P value to NP . In the same way as a test may never yield a significant result ( P min > α ), applying multiple-testing correction may also preclude it ( NP min > α ). For example, making N = 6 comparisons on samples such as X and Y shown in Figure 2a ( n X = 6, n Y = 4) will never yield an adjusted P value lower than α = 0.05 because P min = 0.01 > α / N . To achieve two-tailed significance at α = 0.05 across N = 10, 100 or 1,000 tests, we require sample sizes that produce at least 400, 4,000 or 40,000 distinct rank combinations. This is achieved for sample pairs of size of (5, 6), (7, 8) and (9, 9), respectively.

The P values from the Wilcoxon test ( P XY = 0.07, P XZ = 0.04) in Figure 2a appear to be in conflict with those obtained from the t -test ( P XY = 0.04, P XZ = 0.06). The two methods tell us contradictory information—or do they? As mentioned, the Wilcoxon test concerns the median, whereas the t -test concerns the mean. For asymmetric distributions, these values can be quite different, and it is conceivable that the medians are the same but the means are different. The t -test does not identify the difference in means of X and Z as significant because the standard deviation, s Z , is relatively large owing to the influence of the sample's largest value (0.81). Because the t -test reacts to any change in any sample value, the presence of outliers can easily influence its outcome when samples are small. For example, simply increasing the largest value in X (1.00) by 0.3 will increase s X from 0.28 to 0.35 and result in a P XY value that is no longer significant at α = 0.05. This change does not alter the Wilcoxon P value because the rank scheme remains unaltered. This insensitivity to changes in the data—outliers and typical effects alike—reduces the sensitivity of rank methods.

The fact that the output of a rank test is driven by the probability that a value drawn from distribution A will be smaller (or larger) than one drawn from B without regard to their absolute difference has an interesting consequence: we cannot use this probability (pairwise preferences, in general) to impose an order on distributions. Consider a case of three equally prevalent diseases for which treatment A has cure times of 2, 2 and 5 days for the three diseases, and treatment B has 1, 4 and 4. Without treatment, each disease requires 3 days to cure—let's call this control C . Treatment A is better than C for the first two diseases but not the third, and treatment B is better only for the first. Can we determine which of the three options ( A , B , C ) is better? If we try to answer this using the probability of observing a shorter time to cure, we find P ( A < C ) = 67% and P ( C < B ) = 67% but also that P ( B < A ) = 56%—a rock-paper-scissors scenario.

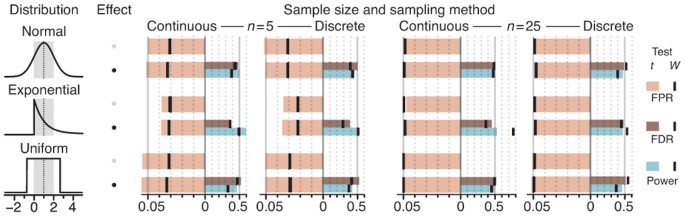

The question about which test to use does not have an unqualified answer—both have limitations. To illustrate how the t - and Wilcoxon tests might perform in a practical setting, we compared their false positive rate (FPR), false discovery rate (FDR) and power at α = 0.05 for different sampling distributions and sample sizes ( n = 5 and 25) in the presence and absence of an effect ( Fig. 3 ). At n = 5, Wilcoxon FPR = 0.032 < α because this is the largest P value it can produce smaller than α , not because the test inherently performs better. We can always reach this FPR with the t -test by setting α = 0.032, where we'll find that it will still have slightly higher power than a Wilcoxon test that rejects at this rate. At n = 5, Wilcoxon performs better for discrete sampling—the power (0.43) is essentially the same as the t -test's (0.46), but the FDR is lower. When both tests are applied at α = 0.032, Wilcoxon power (0.43) is slightly higher than t -test power (0.39). The differences between the tests for n = 25 diminishes because the number of arrangements of ranks is extremely large and the normal approximation to sample means is more accurate. However, one case stands out: in the presence of skew (e.g., exponential distribution), Wilcoxon power is much higher than that of the t -test, particularly for continuous sampling. This is because the majority of values are tightly spaced and ranks are more sensitive to small shifts. Skew affects t -test FPR and power in a complex way, depending on whether one- or two-tailed tests are performed and the direction of the skew relative to the direction of the population shift that is being studied 4 .

Data were sampled from three common analytical distributions with μ = 1 (dotted lines) and σ = 1 (gray bars, μ ± σ ). Discrete sampling was simulated by rounding values to the nearest integer. The FPR, FDR and power of Wilcoxon tests (black lines) and t -tests (colored bars) for 100,000 sample pairs for each combination of sample size ( n = 5 and 25), effect chance (0 and 10%) and sampling method. In the absence of an effect, both sample values were drawn from a given distribution type with μ = 1. With effect, the distribution for the second sample was shifted by d ( d = 1.4 for n = 5; d = 0.57 for n = 25). The effect size was chosen to yield 50% power for the t -test in the normal noise scenario. Two-tailed P at α = 0.05.

Nonparametric methods represent a more cautious approach and remove the burden of assumptions about the distribution. They apply naturally to data that are already in the form of ranks or degree of preference, for which numerical differences cannot be interpreted. Their power is generally lower, especially in multiple-testing scenarios. However, when data are very skewed, rank methods reach higher power and are a better choice than the t -test.

Change history

23 may 2014.

In the version of this article initially published, the expression X ( n X = 6) was incorrectly written as X ( n Y = 6). The error has been corrected in the HTML and PDF versions of the article.

Krzywinski, M. & Altman, N. Nat. Methods 11 , 215–216 (2014).

Article PubMed CAS Google Scholar

Krzywinski, M. & Altman, N. Nat. Methods 10 , 1041–1042 (2013).

Krzywinski, M. & Altman, N. Nat. Methods 11 , 355–356 (2014).

Article CAS Google Scholar

Reineke, D. M., Baggett, J. & Elfessi, A. J. Stat. Educ. 11 (2003).

Download references

Author information

Authors and affiliations.

Martin Krzywinski is a staff scientist at Canada's Michael Smith Genome Sciences Centre.,

Martin Krzywinski

Naomi Altman is a Professor of Statistics at The Pennsylvania State University.,

- Naomi Altman

You can also search for this author in PubMed Google Scholar

Ethics declarations

Competing interests.

The authors declare no competing financial interests.

Rights and permissions

Reprints and permissions

About this article

Cite this article.

Krzywinski, M., Altman, N. Nonparametric tests. Nat Methods 11 , 467–468 (2014). https://doi.org/10.1038/nmeth.2937

Download citation

Published : 29 April 2014

Issue Date : May 2014

DOI : https://doi.org/10.1038/nmeth.2937

Share this article

Anyone you share the following link with will be able to read this content:

Sorry, a shareable link is not currently available for this article.

Provided by the Springer Nature SharedIt content-sharing initiative

This article is cited by

Diseased and healthy murine local lung strains evaluated using digital image correlation.

- T. M. Nelson

- K. A. M. Quiros

- M. Eskandari

Scientific Reports (2023)

A machine learning approach to predicting early and late postoperative reintubation

- Mathew J. Koretsky

- Ethan Y. Brovman

- Nick Cheney

Journal of Clinical Monitoring and Computing (2023)

Survival analysis—time-to-event data and censoring

- Tanujit Dey

- Stuart R. Lipsitz

Nature Methods (2022)

Analysis of COVID-19 data using neutrosophic Kruskal Wallis H test

- Rehan Ahmad Khan Sherwani

- Huma Shakeel

- Muhammad Aslam

BMC Medical Research Methodology (2021)

Restoration of tumour-growth suppression in vivo via systemic nanoparticle-mediated delivery of PTEN mRNA

- Mohammad Ariful Islam

Nature Biomedical Engineering (2018)

Quick links

- Explore articles by subject

- Guide to authors

- Editorial policies

Sign up for the Nature Briefing newsletter — what matters in science, free to your inbox daily.

Parametric vs. Non-Parametric Tests and When to Use Them

The fundamentals of data science include computer science, statistics and math. It’s very easy to get caught up in the latest and greatest, most powerful algorithms — convolutional neural nets, reinforcement learning, etc.

As an ML/health researcher and algorithm developer, I often employ these techniques. However, something I have seen rife in the data science community after having trained ~10 years as an electrical engineer is that if all you have is a hammer, everything looks like a nail. Suffice it to say that while many of these exciting algorithms have immense applicability, too often the statistical underpinnings of the data science community are overlooked.

What is the Difference Between Parametric and Non-Parametric Tests?

A parametric test makes assumptions about a population’s parameters, and a non-parametric test does not assume anything about the underlying distribution.

I’ve been lucky enough to have had both undergraduate and graduate courses dedicated solely to statistics , in addition to growing up with a statistician for a mother. So this article will share some basic statistical tests and when/where to use them.

A parametric test makes assumptions about a population’s parameters:

- Normality : Data in each group should be normally distributed.

- Independence : Data in each group should be sampled randomly and independently.

- No outliers : No extreme outliers in the data.

- Equal Variance : Data in each group should have approximately equal variance.

If possible, we should use a parametric test. However, a non-parametric test (sometimes referred to as a distribution free test ) does not assume anything about the underlying distribution (for example, that the data comes from a normal (parametric distribution).

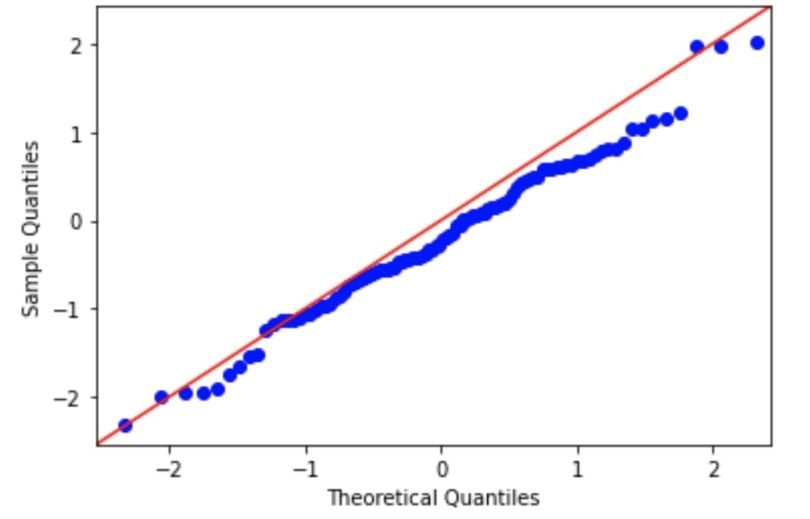

We can assess normality visually using a Q-Q (quantile-quantile) plot. In these plots, the observed data is plotted against the expected quantile of a normal distribution . A demo code in Python is seen here, where a random normal distribution has been created. If the data are normal, it will appear as a straight line.

Read more about data science Random Forest Classifier: A Complete Guide to How It Works in Machine Learning

Tests to Check for Normality

- Shapiro-Wilk

- Kolmogorov-Smirnov

The null hypothesis of both of these tests is that the sample was sampled from a normal (or Gaussian) distribution. Therefore, if the p-value is significant, then the assumption of normality has been violated and the alternate hypothesis that the data must be non-normal is accepted as true.

Selecting the Right Test

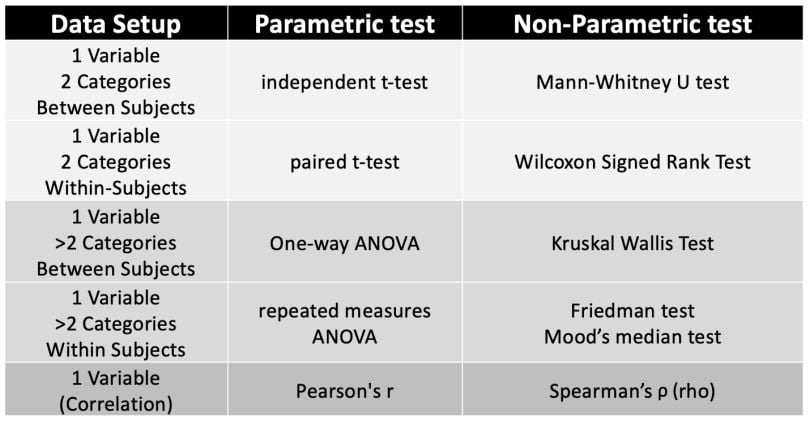

You can refer to this table when dealing with interval level data for parametric and non-parametric tests.

Read more about data science Statistical Tests: When to Use T-Test, Chi-Square and More

Advantages and Disadvantages

Non-parametric tests have several advantages, including:

- More statistical power when assumptions of parametric tests are violated.

- Assumption of normality does not apply.

- Small sample sizes are okay.

- They can be used for all data types, including ordinal, nominal and interval (continuous).

- Can be used with data that has outliers.

Disadvantages of non-parametric tests:

- Less powerful than parametric tests if assumptions haven’t been violated

[1] Kotz, S.; et al., eds. (2006), Encyclopedia of Statistical Sciences , Wiley.

[2] Lindstrom, D. (2010). Schaum’s Easy Outline of Statistics , Second Edition (Schaum’s Easy Outlines) 2nd Edition. McGraw-Hill Education

[3] Rumsey, D. J. (2003). Statistics for dummies, 18th edition

Built In’s expert contributor network publishes thoughtful, solutions-oriented stories written by innovative tech professionals. It is the tech industry’s definitive destination for sharing compelling, first-person accounts of problem-solving on the road to innovation.

Great Companies Need Great People. That's Where We Come In.

Nonparametric Tests

- 1

- | 2

- | 3

- | 4

- | 5

- | 6

- | 7

- | 8

- | 9

All Modules

Introduction to Nonparametric Testing

This module will describe some popular nonparametric tests for continuous outcomes. Interested readers should see Conover 3 for a more comprehensive coverage of nonparametric tests.

The techniques described here apply to outcomes that are ordinal, ranked, or continuous outcome variables that are not normally distributed. Recall that continuous outcomes are quantitative measures based on a specific measurement scale (e.g., weight in pounds, height in inches). Some investigators make the distinction between continuous, interval and ordinal scaled data. Interval data are like continuous data in that they are measured on a constant scale (i.e., there exists the same difference between adjacent scale scores across the entire spectrum of scores). Differences between interval scores are interpretable, but ratios are not. Temperature in Celsius or Fahrenheit is an example of an interval scale outcome. The difference between 30º and 40º is the same as the difference between 70º and 80º, yet 80º is not twice as warm as 40º. Ordinal outcomes can be less specific as the ordered categories need not be equally spaced. Symptom severity is an example of an ordinal outcome and it is not clear whether the difference between much worse and slightly worse is the same as the difference between no change and slightly improved. Some studies use visual scales to assess participants' self-reported signs and symptoms. Pain is often measured in this way, from 0 to 10 with 0 representing no pain and 10 representing agonizing pain. Participants are sometimes shown a visual scale such as that shown in the upper portion of the figure below and asked to choose the number that best represents their pain state. Sometimes pain scales use visual anchors as shown in the lower portion of the figure below.

Visual Pain Scale

In the upper portion of the figure, certainly 10 is worse than 9, which is worse than 8; however, the difference between adjacent scores may not necessarily be the same. It is important to understand how outcomes are measured to make appropriate inferences based on statistical analysis and, in particular, not to overstate precision.

Assigning Ranks

The nonparametric procedures that we describe here follow the same general procedure. The outcome variable (ordinal, interval or continuous) is ranked from lowest to highest and the analysis focuses on the ranks as opposed to the measured or raw values. For example, suppose we measure self-reported pain using a visual analog scale with anchors at 0 (no pain) and 10 (agonizing pain) and record the following in a sample of n=6 participants:

7 5 9 3 0 2

The ranks, which are used to perform a nonparametric test, are assigned as follows: First, the data are ordered from smallest to largest. The lowest value is then assigned a rank of 1, the next lowest a rank of 2 and so on. The largest value is assigned a rank of n (in this example, n=6). The observed data and corresponding ranks are shown below:

A complicating issue that arises when assigning ranks occurs when there are ties in the sample (i.e., the same values are measured in two or more participants). For example, suppose that the following data are observed in our sample of n=6:

Observed Data: 7 7 9 3 0 2

The 4 th and 5 th ordered values are both equal to 7. When assigning ranks, the recommended procedure is to assign the mean rank of 4.5 to each (i.e. the mean of 4 and 5), as follows:

Suppose that there are three values of 7. In this case, we assign a rank of 5 (the mean of 4, 5 and 6) to the 4 th , 5 th and 6 th values, as follows:

Using this approach of assigning the mean rank when there are ties ensures that the sum of the ranks is the same in each sample (for example, 1+2+3+4+5+6=21, 1+2+3+4.5+4.5+6=21 and 1+2+3+5+5+5=21). Using this approach, the sum of the ranks will always equal n(n+1)/2. When conducting nonparametric tests, it is useful to check the sum of the ranks before proceeding with the analysis.

To conduct nonparametric tests, we again follow the five-step approach outlined in the modules on hypothesis testing.

- Set up hypotheses and select the level of significance α. Analogous to parametric testing, the research hypothesis can be one- or two- sided (one- or two-tailed), depending on the research question of interest.

- Select the appropriate test statistic. The test statistic is a single number that summarizes the sample information. In nonparametric tests, the observed data is converted into ranks and then the ranks are summarized into a test statistic.

- Set up decision rule. The decision rule is a statement that tells under what circumstances to reject the null hypothesis. Note that in some nonparametric tests we reject H 0 if the test statistic is large, while in others we reject H 0 if the test statistic is small. We make the distinction as we describe the different tests.

- Compute the test statistic. Here we compute the test statistic by summarizing the ranks into the test statistic identified in Step 2.

- Conclusion. The final conclusion is made by comparing the test statistic (which is a summary of the information observed in the sample) to the decision rule. The final conclusion is either to reject the null hypothesis (because it is very unlikely to observe the sample data if the null hypothesis is true) or not to reject the null hypothesis (because the sample data are not very unlikely if the null hypothesis is true).

return to top | previous page | next page

Content ©2017. All Rights Reserved. Date last modified: May 4, 2017. Wayne W. LaMorte, MD, PhD, MPH

An official website of the United States government

The .gov means it’s official. Federal government websites often end in .gov or .mil. Before sharing sensitive information, make sure you’re on a federal government site.

The site is secure. The https:// ensures that you are connecting to the official website and that any information you provide is encrypted and transmitted securely.

- Publications

- Account settings

Preview improvements coming to the PMC website in October 2024. Learn More or Try it out now .

- Advanced Search

- Journal List

- J Bras Pneumol

- v.47(4); Jul-Aug 2021

Nonparametric statistical tests: friend or foe?

Testes estatísticos não paramétricos: mocinho ou bandido, maría teresa politi.

1 . Methods in Epidemiologic, Clinical, and Operations Research (MECOR) program, American Thoracic Society/Asociación Latinoamericana del Tórax, Montevideo, Uruguay.

2 . Laboratorio de Estadística Aplicada a las Ciencias de la Salud - LEACS - Departamento de Toxicología y Farmacología, Facultad de Ciencias Médicas, Universidad de Buenos Aires, Buenos Aires, Argentina.

Juliana Carvalho Ferreira

3 . Divisão de Pneumologia, Instituto do Coração, Hospital das Clínicas, Faculdade de Medicina, Universidade de São Paulo, São Paulo (SP) Brasil.

Cecilia María Patino

4 . Department of Preventive Medicine, Keck School of Medicine, University of Southern California, Los Angeles, CA, USA.

PRACTICAL SCENARIO

The head of an ICU would like to assess if obese patients admitted for a COPD exacerbation have a longer hospital length of stay (LOS) than do non-obese patients. After recruiting 200 patients, she finds that the distribution of LOS is strongly skewed to the right ( Figure 1 A). If she were to perform a test of hypothesis, would it be appropriate to use a t-test to compare LOS between obese and non-obese patients with a COPD exacerbation?

PARAMETRIC VS. NONPARAMETRIC TESTS IN STATISTICS

Parametric tests assume that the distribution of data is normal or bell-shaped ( Figure 1 B) to test hypotheses. For example, the t-test is a parametric test that assumes that the outcome of interest has a normal distribution, that can be characterized by two parameters 1 : the mean and the standard deviation ( Figure 1 B).

Nonparametric tests do not require that the data fulfill this restrictive distribution assumption for the outcome variable. Therefore, they are more flexible and can be widely applied to various different distributions. Nonparametric techniques use ranks 1 instead of the actual values of the observations. For this reason, in addition to continuous data, they can be used to analyze ordinal data, for which parametric tests are usually inappropriate. 2

What are the pitfalls? If the outcome variable is normally distributed and the assumptions for using parametric tests are met, nonparametric techniques have lower statistical power than do the comparable parametric tests. This means that nonparametric tests are less likely to detect a statistically significant result (i.e., less likely to find a p-value < 0.05 than a parametric test). Additionally, parametric tests provide parameter estimations-in the case of the t test, the mean and the standard deviation are the calculated parameters-and a confidence interval for these parameters. For example, in our practical scenario, if the difference in LOS between the groups were analyzed with a t-test, it would report a sample mean difference in LOS between the groups and the standard deviation of that difference in LOS. Finally, the 95% confidence interval of the sample mean difference could be reported to express the range of values for the mean difference in the population. Conversely, nonparametric tests do not estimate parameters such as mean, standard deviation, or confidence intervals. They only calculate a p-value. 2

HOW TO CHOOSE BETWEEN PARAMETRIC AND NONPARAMETRIC TESTS?

When sample sizes are large, that is, greater than 100, parametric tests can usually be applied regardless of the outcome variable distribution. This is due to the central limit theorem, which states that if the sample size is large enough, the distribution of a given variable is approximately normal. The farther the distribution departs from being normal, the larger the sample size will be necessary to approximate normality.

When sample sizes are small, and outcome variable distributions are extremely non-normal, nonparametric tests are more appropriate. For example, some variables are naturally skewed, such as hospital LOS or number of asthma exacerbations per year. In these cases, extremely skewed variables should always be analyzed with nonparametric tests, even with large sample sizes. 2

In our practical scenario, because the distribution of LOS is strongly skewed to the right, the relationship between obesity and LOS among the patients hospitalized for COPD exacerbations should be analyzed with a nonparametric test (Wilcoxon rank sum test or Mann-Whitney test) instead of a t-test.

- Search Search Please fill out this field.

- Corporate Finance

- Financial Analysis

Nonparametric Statistics: Overview, Types, and Examples

:max_bytes(150000):strip_icc():format(webp)/TimothyLi-picture1-4fb5c746f503451bacfee414a08f5c1f.jpg)

Investopedia / Zoe Hansen

What Are Nonparametric Statistics?

Nonparametric statistics refers to a statistical method in which the data are not assumed to come from prescribed models that are determined by a small number of parameters; examples of such models include the normal distribution model and the linear regression model. Nonparametric statistics sometimes uses data that is ordinal, meaning it does not rely on numbers, but rather on a ranking or order of sorts. For example, a survey conveying consumer preferences ranging from like to dislike would be considered ordinal data.

Nonparametric statistics includes nonparametric descriptive statistics , statistical models, inference, and statistical tests. The model structure of nonparametric models is not specified a priori but is instead determined from data. The term nonparametric is not meant to imply that such models completely lack parameters, but rather that the number and nature of the parameters are flexible and not fixed in advance. A histogram is an example of a nonparametric estimate of a probability distribution.

Key Takeaways

- Nonparametric statistics are easy to use but do not offer the pinpoint accuracy of other statistical models.

- This type of analysis is often best suited when considering the order of something, where even if the numerical data changes, the results will likely stay the same.

Understanding Nonparametric Statistics

In statistics, parametric statistics includes parameters such as the mean, standard deviation, Pearson correlation, variance, etc. This form of statistics uses the observed data to estimate the parameters of the distribution. Under parametric statistics, data are often assumed to come from a normal distribution with unknown parameters μ (population mean) and σ2 (population variance), which are then estimated using the sample mean and sample variance.

Nonparametric statistics makes no assumption about the sample size or whether the observed data is quantitative.

Nonparametric statistics does not assume that data is drawn from a normal distribution. Instead, the shape of the distribution is estimated under this form of statistical measurement. While there are many situations in which a normal distribution can be assumed, there are also some scenarios in which the true data generating process is far from normally distributed.

Examples of Nonparametric Statistics

In the first example, consider a financial analyst who wishes to estimate the value-at-risk (VaR) of an investment. The analyst gathers earnings data from 100’s of similar investments over a similar time horizon. Rather than assume that the earnings follow a normal distribution, they use the histogram to estimate the distribution nonparametrically. The 5th percentile of this histogram then provides the analyst with a nonparametric estimate of VaR.

For a second example, consider a different researcher who wants to know whether average hours of sleep is linked to how frequently one falls ill. Because many people get sick rarely, if at all, and occasional others get sick far more often than most others, the distribution of illness frequency is clearly non-normal, being right-skewed and outlier-prone. Thus, rather than use a method that assumes a normal distribution for illness frequency, as is done in classical regression analysis, for example, the researcher decides to use a nonparametric method such as quantile regression analysis.

Special Considerations

Nonparametric statistics have gained appreciation due to their ease of use. As the need for parameters is relieved, the data becomes more applicable to a larger variety of tests. This type of statistics can be used without the mean, sample size, standard deviation, or the estimation of any other related parameters when none of that information is available.

Since nonparametric statistics makes fewer assumptions about the sample data, its application is wider in scope than parametric statistics. In cases where parametric testing is more appropriate, nonparametric methods will be less efficient. This is because nonparametric statistics discard some information that is available in the data, unlike parametric statistics.

:max_bytes(150000):strip_icc():format(webp)/focused-businesswoman-working-at-computer-in-office-1075535804-f2fc718646164388a11d41f6160ca5e9.jpg)

- Terms of Service

- Editorial Policy

- Privacy Policy

- Your Privacy Choices

- Math Article

- Non Parametric Test

Non-Parametric Test

Non-parametric tests are experiments that do not require the underlying population for assumptions. It does not rely on any data referring to any particular parametric group of probability distributions . Non-parametric methods are also called distribution-free tests since they do not have any underlying population. In this article, we will discuss what a non-parametric test is, different methods, merits, demerits and examples of non-parametric testing methods.

Table of Contents:

- Non-parametric T Test

- Non-parametric Paired T-Test

Mann Whitney U Test

Wilcoxon signed-rank test, kruskal wallis test.

- Advantages and Disadvantages

- Applications

What is a Non-parametric Test?

Non-parametric tests are the mathematical methods used in statistical hypothesis testing, which do not make assumptions about the frequency distribution of variables that are to be evaluated. The non-parametric experiment is used when there are skewed data, and it comprises techniques that do not depend on data pertaining to any particular distribution.

The word non-parametric does not mean that these models do not have any parameters. The fact is, the characteristics and number of parameters are pretty flexible and not predefined. Therefore, these models are called distribution-free models.

Non-Parametric T-Test

Whenever a few assumptions in the given population are uncertain, we use non-parametric tests, which are also considered parametric counterparts. When data are not distributed normally or when they are on an ordinal level of measurement, we have to use non-parametric tests for analysis. The basic rule is to use a parametric t-test for normally distributed data and a non-parametric test for skewed data.

Non-Parametric Paired T-Test

The paired sample t-test is used to match two means scores, and these scores come from the same group. Pair samples t-test is used when variables are independent and have two levels, and those levels are repeated measures.

Non-parametric Test Methods

The four different techniques of parametric tests, such as Mann Whitney U test, the sign test, the Wilcoxon signed-rank test, and the Kruskal Wallis test are discussed here in detail. We know that the non-parametric tests are completely based on the ranks, which are assigned to the ordered data. The four different types of non-parametric test are summarized below with their uses, null hypothesis , test statistic, and the decision rule.

Kruskal Wallis test is used to compare the continuous outcome in greater than two independent samples.

Null hypothesis, H 0 : K Population medians are equal.

Test statistic:

If N is the total sample size, k is the number of comparison groups, R j is the sum of the ranks in the jth group and n j is the sample size in the jth group, then the test statistic, H is given by:

\(\begin{array}{l}H = \left ( \frac{12}{N(N+1)}\sum_{j=1}^{k} \frac{R_{j}^{2}}{n_{j}}\right )-3(N+1)\end{array} \)

Decision Rule: Reject the null hypothesis H 0 if H ≥ critical value

The sign test is used to compare the continuous outcome in the paired samples or the two matches samples.

Null hypothesis, H 0 : Median difference should be zero

Test statistic: The test statistic of the sign test is the smaller of the number of positive or negative signs.

Decision Rule: Reject the null hypothesis if the smaller of number of the positive or the negative signs are less than or equal to the critical value from the table.

Mann Whitney U test is used to compare the continuous outcomes in the two independent samples.

Null hypothesis, H 0 : The two populations should be equal.

If R 1 and R 2 are the sum of the ranks in group 1 and group 2 respectively, then the test statistic “U” is the smaller of:

\(\begin{array}{l}U_{1}= n_{1}n_{2}+\frac{n_{1}(n_{1}+1)}{2}-R_{1}\end{array} \)

\(\begin{array}{l}U_{2}= n_{1}n_{2}+\frac{n_{2}(n_{2}+1)}{2}-R_{2}\end{array} \)

Decision Rule: Reject the null hypothesis if the test statistic, U is less than or equal to critical value from the table.

Wilcoxon signed-rank test is used to compare the continuous outcome in the two matched samples or the paired samples.

Null hypothesis, H 0 : Median difference should be zero.

Test statistic: The test statistic W, is defined as the smaller of W+ or W- .

Where W+ and W- are the sums of the positive and the negative ranks of the different scores.

Decision Rule: Reject the null hypothesis if the test statistic, W is less than or equal to the critical value from the table.

Advantages and Disadvantages of Non-Parametric Test

The advantages of the non-parametric test are:

- Easily understandable

- Short calculations

- Assumption of distribution is not required

- Applicable to all types of data

The disadvantages of the non-parametric test are:

- Less efficient as compared to parametric test

- The results may or may not provide an accurate answer because they are distribution free

Applications of Non-Parametric Test

The conditions when non-parametric tests are used are listed below:

- When parametric tests are not satisfied.

- When testing the hypothesis, it does not have any distribution.

- For quick data analysis.

- When unscaled data is available.

Frequently Asked Questions on Non-Parametric Test

What is meant by a non-parametric test.

The non-parametric test is one of the methods of statistical analysis, which does not require any distribution to meet the required assumptions, that has to be analyzed. Hence, the non-parametric test is called a distribution-free test.

What is the advantage of a non-parametric test?

The advantage of nonparametric tests over the parametric test is that they do not consider any assumptions about the data.

Is Chi-square a non-parametric test?

Yes, the Chi-square test is a non-parametric test in statistics, and it is called a distribution-free test.

Mention the different types of non-parametric tests.

The different types of non-parametric test are: Kruskal Wallis Test Sign Test Mann Whitney U test Wilcoxon signed-rank test

When to use the parametric and non-parametric test?

If the mean of the data more accurately represents the centre of the distribution, and the sample size is large enough, we can use the parametric test. Whereas, if the median of the data more accurately represents the centre of the distribution, and the sample size is large, we can use non-parametric distribution.

Register with BYJU'S & Download Free PDFs

Register with byju's & watch live videos.

- > Statistics

Non-Parametric Statistics: Types, Tests, and Examples

- Pragya Soni

- May 12, 2022

Statistics, an essential element of data management and predictive analysis , is classified into two types, parametric and non-parametric.

Parametric tests are based on the assumptions related to the population or data sources while, non-parametric test is not into assumptions, it's more factual than the parametric tests. Here is a detailed blog about non-parametric statistics.

What is the Meaning of Non-Parametric Statistics ?

Unlike, parametric statistics, non-parametric statistics is a branch of statistics that is not solely based on the parametrized families of assumptions and probability distribution. Non-parametric statistics depend on either being distribution free or having specified distribution, without keeping any parameters into consideration.

Non-parametric statistics are defined by non-parametric tests; these are the experiments that do not require any sample population for assumptions. For this reason, non-parametric tests are also known as distribution free tests as they don’t rely on data related to any particular parametric group of probability distributions.

In other terms, non-parametric statistics is a statistical method where a particular data is not required to fit in a normal distribution. Usually, non-parametric statistics used the ordinal data that doesn’t rely on the numbers, but rather a ranking or order. For consideration, statistical tests, inferences, statistical models, and descriptive statistics.

Non-parametric statistics is thus defined as a statistical method where data doesn’t come from a prescribed model that is determined by a small number of parameters. Unlike normal distribution model, factorial design and regression modeling, non-parametric statistics is a whole different content.

Unlike parametric models, non-parametric is quite easy to use but it doesn’t offer the exact accuracy like the other statistical models. Therefore, non-parametric statistics is generally preferred for the studies where a net change in input has minute or no effect on the output. Like even if the numerical data changes, the results are likely to stay the same.

Also Read | What is Regression Testing?

How does Non-Parametric Statistics Work ?

Parametric statistics consists of the parameters like mean, standard deviation , variance, etc. Thus, it uses the observed data to estimate the parameters of the distribution. Data are often assumed to come from a normal distribution with unknown parameters.

While, non-parametric statistics doesn’t assume the fact that the data is taken from a same or normal distribution. In fact, non-parametric statistics assume that the data is estimated under a different measurement. The actual data generating process is quite far from the normally distributed process.

Types of Non-Parametric Statistics

Non-parametric statistics are further classified into two major categories. Here is the brief introduction to both of them:

1. Descriptive Statistics

Descriptive statistics is a type of non-parametric statistics. It represents the entire population or a sample of a population. It breaks down the measure of central tendency and central variability.

2. Statistical Inference

Statistical inference is defined as the process through which inferences about the sample population is made according to the certain statistics calculated from the sample drawn through that population.

Some Examples of Non-Parametric Tests

In the recent research years, non-parametric data has gained appreciation due to their ease of use. Also, non-parametric statistics is applicable to a huge variety of data despite its mean, sample size, or other variation. As non-parametric statistics use fewer assumptions, it has wider scope than parametric statistics.

Here are some common examples of non-parametric statistics :

Consider the case of a financial analyst who wants to estimate the value of risk of an investment. Now, rather than making the assumption that earnings follow a normal distribution, the analyst uses a histogram to estimate the distribution by applying non-parametric statistics.

Consider another case of a researcher who is researching to find out a relation between the sleep cycle and healthy state in human beings. Taking parametric statistics here will make the process quite complicated.

So, despite using a method that assumes a normal distribution for illness frequency. The researcher will opt to use any non-parametric method like quantile regression analysis.

Similarly, consider the case of another health researcher, who wants to estimate the number of babies born underweight in India, he will also employ the non-parametric measurement for data testing.

A marketer that is interested in knowing the market growth or success of a company, will surely employ a non-statistical approach.

Any researcher that is testing the market to check the consumer preferences for a product will also employ a non-statistical data test. As different parameters in nutritional value of the product like agree, disagree, strongly agree and slightly agree will make the parametric application hard.

Any other science or social science research which include nominal variables such as age, gender, marital data, employment, or educational qualification is also called as non-parametric statistics. It plays an important role when the source data lacks clear numerical interpretation.

Also Read | Applications of Statistical Techniques

What are Non-Parametric Tests ?

Types of Non-Parametric Tests

Here is the list of non-parametric tests that are conducted on the population for the purpose of statistics tests :

Wilcoxon Rank Sum Test

The Wilcoxon test also known as rank sum test or signed rank test. It is a type of non-parametric test that works on two paired groups. The main focus of this test is comparison between two paired groups. The test helps in calculating the difference between each set of pairs and analyses the differences.

The Wilcoxon test is classified as a statistical hypothesis tes t and is used to compare two related samples, matched samples, or repeated measurements on a single sample to assess whether their population mean rank is different or not.

Mann- Whitney U Test

The Mann-Whitney U test also known as the Mann-Whitney-Wilcoxon test, Wilcoxon rank sum test and Wilcoxon-Mann-Whitney test. It is a non-parametric test based on null hypothesis. It is equally likely that a randomly selected sample from one sample may have higher value than the other selected sample or maybe less.

Mann-Whitney test is usually used to compare the characteristics between two independent groups when the dependent variable is either ordinal or continuous. But these variables shouldn’t be normally distributed. For a Mann-Whitney test, four requirements are must to meet. The first three are related to study designs and the fourth one reflects the nature of data.

Kruskal Wallis Test

Sometimes referred to as a one way ANOVA on ranks, Kruskal Wallis H test is a nonparametric test that is used to determine the statistical differences between the two or more groups of an independent variable. The word ANOVA is expanded as Analysis of variance.

The test is named after the scientists who discovered it, William Kruskal and W. Allen Wallis. The major purpose of the test is to check if the sample is tested if the sample is taken from the same population or not.

Friedman Test

The Friedman test is similar to the Kruskal Wallis test. It is an alternative to the ANOVA test. The only difference between Friedman test and ANOVA test is that Friedman test works on repeated measures basis. Friedman test is used for creating differences between two groups when the dependent variable is measured in the ordinal.

The Friedman test is further divided into two parts, Friedman 1 test and Friedman 2 test. It was developed by sir Milton Friedman and hence is named after him. The test is even applicable to complete block designs and thus is also known as a special case of Durbin test.

Distribution Free Tests

Distribution free tests are defined as the mathematical procedures. These tests are widely used for testing statistical hypotheses. It makes no assumption about the probability distribution of the variables. An important list of distribution free tests is as follows:

- Anderson-Darling test: It is done to check if the sample is drawn from a given distribution or not.

- Statistical bootstrap methods: It is a basic non-statistical test used to estimate the accuracy and sampling distribution of a statistic.

- Cochran’s Q: Cochran’s Q is used to check constant treatments in block designs with 0/1 outcomes.

- Cohen’s kappa: Cohen kappa is used to measure the inter-rater agreement for categorical items.

- Kaplan-Meier test: Kaplan Meier test helps in estimating the survival function from lifetime data, modeling, and censoring.

- Two-way analysis Friedman test: Also known as ranking test, it is used to randomize different block designs.

- Kendall’s tau: The test helps in defining the statistical dependency between two different variables.

- Kolmogorov-Smirnov test: The test draws the inference if a sample is taken from the same distribution or if two or more samples are taken from the same sample.

- Kendall’s W: The test is used to measure the inference of an inter-rater agreement .

- Kuiper’s test: The test is done to determine if the sample drawn from a given distribution is sensitive to cyclic variations or not.

- Log Rank test: This test compares the survival distribution of two right-skewed and censored samples.

- McNemar’s test: It tests the contingency in the sample and revert when the row and column marginal frequencies are equal to or not.

- Median tests: As the name suggests, median tests check if the two samples drawn from the similar population have similar median values or not.

- Pitman’s permutation test: It is a statistical test that yields the value of p variables. This is done by examining all possible rearrangements of labels.

- Rank products: Rank products are used to detect expressed genes in replicated microarray experiments.

- Siegel Tukey tests: This test is used for differences in scale between two groups.

- Sign test: Sign test is used to test whether matched pair samples are drawn from distributions from equal medians.

- Spearman’s rank: It is used to measure the statistical dependence between two variables using a monotonic function.

- Squared ranks test: Squared rank test helps in testing the equality of variances between two or more variables.

- Wald-Wolfowitz runs a test: This test is done to check if the elements of the sequence are mutually independent or random.

Also Read | Factor Analysis

Advantages and Disadvantages of Non-Parametric Tests

The benefits of non-parametric tests are as follows:

It is easy to understand and apply.

It consists of short calculations.

The assumption of the population is not required.

Non-parametric test is applicable to all data kinds

The limitations of non-parametric tests are:

It is less efficient than parametric tests.

Sometimes the result of non-parametric data is insufficient to provide an accurate answer.

Applications of Non-Parametric Tests

Non-parametric tests are quite helpful, in the cases :

Where parametric tests are not giving sufficient results.

When the testing hypothesis is not based on the sample.

For the quicker analysis of the sample.

When the data is unscaled.

The current scenario of research is based on fluctuating inputs, thus, non-parametric statistics and tests become essential for in-depth research and data analysis .

Share Blog :

Be a part of our Instagram community

Trending blogs

5 Factors Influencing Consumer Behavior

Elasticity of Demand and its Types

An Overview of Descriptive Analysis

What is PESTLE Analysis? Everything you need to know about it

What is Managerial Economics? Definition, Types, Nature, Principles, and Scope

5 Factors Affecting the Price Elasticity of Demand (PED)

6 Major Branches of Artificial Intelligence (AI)

Scope of Managerial Economics

Dijkstra’s Algorithm: The Shortest Path Algorithm

Different Types of Research Methods

Latest Comments

brenwright30

THIS IS HOW YOU CAN RECOVER YOUR LOST CRYPTO? Are you a victim of Investment, BTC, Forex, NFT, Credit card, etc Scam? Do you want to investigate a cheating spouse? Do you desire credit repair (all bureaus)? Contact Hacker Steve (Funds Recovery agent) asap to get started. He specializes in all cases of ethical hacking, cryptocurrency, fake investment schemes, recovery scam, credit repair, stolen account, etc. Stay safe out there! [email protected] https://hackersteve.great-site.net/

The Importance of Non-Parametric Tests in Statistical Analysis

Updated: March 7, 2023 by Ken Feldman

Many statistical tests have underlying assumptions about the population data. But, what happens if you violate those assumptions? This is when you might need to use a non-parametric test to answer your statistical question.

Non-parametric refers to a type of statistical analysis that does not make any assumptions about the underlying probability distribution or population parameters of the data being analyzed. In contrast, parametric analysis assumes that the data is drawn from a particular distribution, such as a normal distribution , and estimates parameters, such as the mean and variance , based on the sample data.

Overview: What is non-parametric?

Non-parametric methods are often used when the assumptions of parametric methods are not met or when the data is not normally distributed. Non-parametric methods can be used to test hypotheses, estimate parameters, and perform regression analysis.

Examples of non-parametric methods include the Wilcoxon rank-sum test , the Kruskal-Wallis test , and the Mann-Whitney U test. These tests do not assume any specific distribution of the data and are often used when the data is skewed, has outliers, or is not normally distributed.

Be aware that a non-parametric test like Mann-Whitney will have an equivalent parametric test such as the 2-sample t test . While the t test compares two population means, the Mann Whitney will be comparing two population medians.

5 benefits of non-parametric

There are several benefits to using non-parametric methods:

1. Distribution-free

Non-parametric methods do not require any assumptions about the underlying probability distribution of the data. This means you can use any type of data, including data that is not normally distributed or has outliers .

2. Robustness

Non-parametric methods are often more robust to outliers and extreme values than parametric methods. They can provide more accurate results in the presence of such data points.

3. Flexibility

Non-parametric methods are very flexible and can be used to analyze a wide range of data types, including ordinal and nominal data .

4. Simplicity

Non-parametric methods are often simpler and easier to use than parametric methods. They do not require advanced mathematical knowledge or complex software.

5. Small Sample Sizes

Non-parametric methods can be used with small sample sizes, as they do not require large sample sizes to provide accurate results.

Why is non-parametric important to understand?

Understanding non-parametric methods is important for several reasons:

Real-world data

Real-world data often does not meet the assumptions of parametric methods. Non-parametric methods can be used to analyze such data accurately.

Complementary

Non-parametric methods can complement parametric methods. By understanding non-parametric methods, you can use the appropriate method for their data type and ensure accurate results.

Flexibility

Non-parametric methods provide a flexible set of tools that can be used to analyze a wide range of data types, including data that is not normally distributed or has outliers.

An industry example of a non-parametric

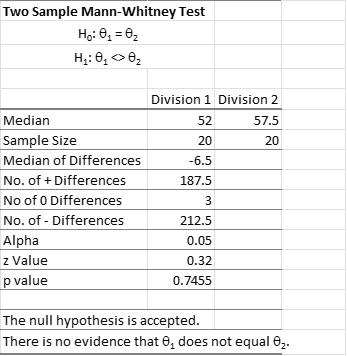

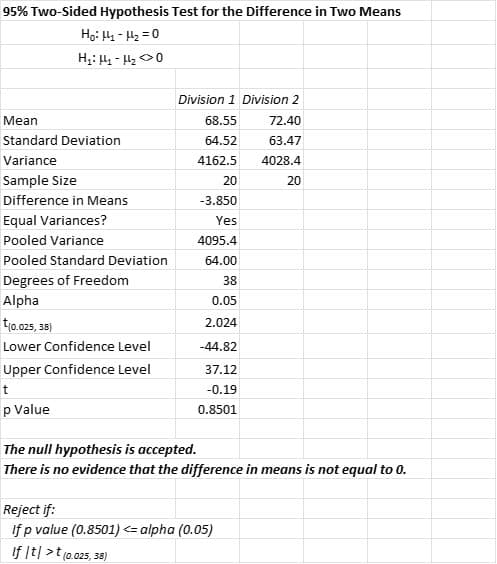

A sales manager for a consumer products company wanted to compare the sales of two sales divisions. When she tested the data for normality, she found the data from both divisions was not normally distributed. The company’s Six Sigma Master Black Belt recommended she use a Mann-Whitney test to determine if there was any statistically significant difference between the two groups. Below is the output of the data she ran. Note, that while there is a mathematical difference between median sales, it is not statistically significant using the Mann-Whitney test.

Interestingly, the use of the parametric 2-sample t test results in the same conclusions despite the violation of the assumption of normality. That could be due to the robustness of that assumption.

7 best practices when thinking about non-parametric

Here are some best practices for using non-parametric methods:

1. Understand the data

Before applying non-parametric methods, it is important to understand the characteristics of the data. This includes assessing the distribution of the data, identifying outliers, and considering the scale of measurement (nominal, ordinal, or interval).

2. Choose the appropriate test

There are many different non-parametric tests available, each with different assumptions and requirements. It is important to choose the appropriate test for the research question and data type.