Speech Recognition in Unity3D – The Ultimate Guide

There are three main strategies in converting user speech input to text:

- Voice Commands

- Free Dictation

- Grammar Mode

These strategies exist in any speech recognition engine (Google, Microsoft, Amazon, Apple, Nuance, Intel, or others), so the concepts described here give you a good reference point for working with any of them. In today’s article, we’ll explore the differences between the methods, understand their use cases, and see a quick implementation of the main ones.

Prerequisites

To write and execute code, you need to install the following software:

- Visual Studio 2019 Community

Unity3D uses a Microsoft API that works on any Windows 10 device (Desktop, UWP, HoloLens, Xbox). Similar APIs also exist for Android and iOS.

Source code

The source code of the project is available in our LightBuzz GitHub account. Feel free to download, fork, and even extend it!

1) Voice commands

We are first going to examine the simplest form of speech recognition: plain voice commands.

Description

Voice commands are predictable single words or expressions, such as:

- “Forward”

- “Left”

- “Fire”

- “Answer call”

The detection engine listens to the user and compares the result with various possible interpretations. If one of them is close enough to the spoken phrase, within a certain confidence threshold, it’s marked as a proposed answer.

Since this is an “all or nothing” approach, the engine will either recognize the phrase or nothing at all.

This method fails when you have several ways to say one thing. For example, the words “hello”, “hi”, “hey there” are all forms of greeting. Using this approach, you have to define all of them explicitly.

This method is useful for short, expected phrases, such as in-game controls.

Our original article includes detailed examples of using simple voice commands. You may also check out the Voice Commands Scene in the sample project.

Below, you can see the simplest C# code example for recognizing a few words:
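A minimal sketch of such a recognizer, assuming Unity's built-in UnityEngine.Windows.Speech API on Windows 10. The component name VoiceCommandListener and the choice of ConfidenceLevel.Medium are illustrative and not taken from the sample project:

```csharp
using UnityEngine;
using UnityEngine.Windows.Speech;

// Hypothetical component name; attach it to any GameObject in the scene.
public class VoiceCommandListener : MonoBehaviour
{
    // The only phrases the engine will listen for.
    private readonly string[] keywords = { "Forward", "Left", "Fire", "Answer call" };

    private KeywordRecognizer recognizer;

    private void Start()
    {
        // Matches below the minimum confidence level are rejected by the engine.
        recognizer = new KeywordRecognizer(keywords, ConfidenceLevel.Medium);
        recognizer.OnPhraseRecognized += OnPhraseRecognized;
        recognizer.Start();
    }

    private void OnPhraseRecognized(PhraseRecognizedEventArgs args)
    {
        // args.text is always one of the registered keywords.
        Debug.Log($"Recognized \"{args.text}\" (confidence: {args.confidence})");
    }

    private void OnDestroy()
    {
        if (recognizer == null) return;

        recognizer.OnPhraseRecognized -= OnPhraseRecognized;
        if (recognizer.IsRunning) recognizer.Stop();
        recognizer.Dispose();
    }
}
```

To cover several ways of saying one thing (“hello”, “hi”, “hey there”), each variant has to be added to the keywords array explicitly.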

2) Free Dictation

To solve the challenges of simple voice commands, we shall use the dictation mode.

While the user speaks in this mode, the engine listens for every possible word. While listening, it tries to find the best possible match of what the user meant to say.

This is the mode your mobile device activates when you dictate a new email by voice. The engine manages to write the text less than a second after you finish saying a word.

Technically, this is really impressive, especially considering that it compares your voice across multi-lingual dictionaries, while also checking grammar rules.

Use this mode for free-form text. If your application has no idea what to expect, the Dictation mode is your best bet.

You can see an example of the Dictation mode in the sample project Dictation Mode Scene . Here is the simplest way to use the Dictation mode:
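A minimal sketch, assuming Unity's built-in DictationRecognizer from UnityEngine.Windows.Speech. The component name DictationListener is illustrative, and the handlers only log what they receive:

```csharp
using UnityEngine;
using UnityEngine.Windows.Speech;

// Hypothetical component name; attach it to any GameObject in the scene.
public class DictationListener : MonoBehaviour
{
    private DictationRecognizer dictation;

    private void Start()
    {
        dictation = new DictationRecognizer();

        // Register for all four events before starting the engine.
        dictation.DictationHypothesis += OnHypothesis;
        dictation.DictationResult += OnResult;
        dictation.DictationComplete += OnComplete;
        dictation.DictationError += OnError;

        dictation.Start();
    }

    // Fast, but possibly inaccurate, guesses while the user is still speaking.
    private void OnHypothesis(string text)
    {
        Debug.Log($"Hypothesis: {text}");
    }

    // The final sentence, delivered after the user pauses.
    private void OnResult(string text, ConfidenceLevel confidence)
    {
        Debug.Log($"Result: {text} (confidence: {confidence})");
    }

    // The engine has stopped; the cause says whether a restart may help.
    private void OnComplete(DictationCompletionCause cause)
    {
        if (cause != DictationCompletionCause.Complete)
        {
            Debug.LogWarning($"Dictation stopped: {cause}");
        }
    }

    private void OnError(string error, int hresult)
    {
        Debug.LogError($"Dictation error: {error} (HRESULT: {hresult})");
    }

    private void OnDestroy()
    {
        if (dictation == null) return;

        if (dictation.Status == SpeechSystemStatus.Running) dictation.Stop();
        dictation.Dispose();
    }
}
```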

As you can see, we first create a new dictation engine and register for the possible events.

- It starts with DictationHypothesis events, which are thrown really fast as the user speaks. However, hypothesized phrases may contain lots of errors.

- DictationResult is an event thrown after the user stops speaking for 1–2 seconds. It’s only then that the engine provides a single sentence with the highest probability.

- DictationComplete is thrown on several occasions when the engine shuts down. Some occasions are irreversible technical issues, while others just require a restart of the engine to get back to work.

- DictationError is thrown for other unpredictable errors.

Here are two general rules-of-thumb:

- For the highest quality, use DictationResult .

- For the fastest response, use DictationHypothesis .

Having both quality and speed is impossible with this technique.

Is it even possible to combine high-quality recognition with high speed?

Well, there is a reason we are not yet using voice commands as Iron Man does: in real-world applications, users frequently complain about typing errors, which probably occur in less than 10% of cases… Dictation makes many more mistakes than that.

To increase accuracy and keep the speed fast at the same time, we need the best of both worlds — the freedom of the Dictation and the response time of the Voice Commands.

The solution is Grammar Mode. This mode requires us to write a dictionary. A dictionary is an XML file that defines rules for the things the user will potentially say. This way, we can ignore languages we don’t need and phrases the user will probably not use.

The grammar file also tells the engine which words it can expect to receive next, shrinking the search space from anything at all to a known set of options. This significantly increases performance and quality.

For example, using a Grammar, we could greet with either of these phrases:

- “Hello, how are you?”

- “Hi there”

- “Hey, what’s up?”

- “How’s it going?”

All of those could be listed in a rule that says:
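As a rough sketch only, here is how such a rule might look in the W3C SRGS XML format, loaded through Unity's GrammarRecognizer. The rule id greeting, the file name greetings.xml, and the component name are assumptions made for illustration; punctuation is left out of the phrases on purpose:

```csharp
using System.IO;
using UnityEngine;
using UnityEngine.Windows.Speech;

// Hypothetical component name; attach it to any GameObject in the scene.
public class GreetingGrammar : MonoBehaviour
{
    // An SRGS grammar with a single public rule listing the accepted greetings.
    private const string GrammarXml =
@"<?xml version=""1.0"" encoding=""utf-8""?>
<grammar version=""1.0"" xml:lang=""en-US"" root=""greeting""
         xmlns=""http://www.w3.org/2001/06/grammar"">
  <rule id=""greeting"" scope=""public"">
    <one-of>
      <item>hello how are you</item>
      <item>hi there</item>
      <item>hey what's up</item>
      <item>how's it going</item>
    </one-of>
  </rule>
</grammar>";

    private GrammarRecognizer recognizer;

    private void Start()
    {
        // GrammarRecognizer expects a file path, so the grammar is written to disk first.
        string path = Path.Combine(Application.persistentDataPath, "greetings.xml");
        File.WriteAllText(path, GrammarXml);

        recognizer = new GrammarRecognizer(path);
        recognizer.OnPhraseRecognized += args => Debug.Log($"Greeting heard: {args.text}");
        recognizer.Start();
    }

    private void OnDestroy()
    {
        if (recognizer == null) return;

        if (recognizer.IsRunning) recognizer.Stop();
        recognizer.Dispose();
    }
}
```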

If the user starts saying something that sounds like “Hello”, the engine only has to tell it apart from the other phrases in the grammar, e.g. “Ciao”. Without a grammar, it would also have to tell it apart from similar-sounding words such as “Yellow” or “Halo”.

We are going to see how to create our own Grammar file in a future article.

For your reference, this is the official specification for structuring a Grammar file.

In this tutorial, we described two methods of recognizing voice in Unity3D: Voice Commands and Dictation. Voice Commands are the easiest way to recognize pre-defined words. Dictation is a way to recognize free-form phrases. In a future article, we are going to see how to develop our own Grammar and feed it to Unity3D.

Until then, why don’t you start writing your code by speaking to your PC?

You made it to this point? Awesome! Here is the source code for your convenience.

Shachar Oz is a product manager and UX specialist with extensive experience with emergent technologies, like AR, VR and computer vision. He designed Human Machine Interfaces for the last 10 years for video games, apps, robots and cars, using interfaces like face tracking, hand gestures and voice recognition.

11 comments.

Hello, I have a question, while in unity everything works perfectly, but when I build the project for PC, and open the application, it doesn’t work. Please help.

hi Omar, well, i have built it with Unity 2019.1 as well as with 2019.3 and it works perfectly.

i apologize if it doesn’t. please try to make a build from the github source code, and feel free to send us some error messages that occur.

Hello, I’m trying Dictation Recognizer and I want to change the language to Spanish but I still don’t quite get it. Can you help me with this?

hi Alexis, perhaps check if the code here could help you: https://docs.microsoft.com/en-us/windows/apps/design/input/specify-the-speech-recognizer-language

You need an object – protected PhraseRecognizer recognizer;

in the example nr 1. Take care and thanks for this article!

Thank you Carl. Happy you liked it.

does this support android builds

Hi there. Sadly not. Android and ios have different speech api. this api supports microsoft devices.

Any working example for the grammar case?

Well, you can find this example from Microsoft. It should work anyway on PC. A combination between Grammar and machine learning is how most of these mechanisms work today.

https://learn.microsoft.com/en-us/dotnet/api/system.speech.recognition.grammar?view=netframework-4.8.1#examples


© 2024 LIGHTBUZZ INC. Privacy Policy & Terms of Service


How to use Text-to-Speech in Unity

Enhance your Unity game by integrating artificial intelligence capabilities. This Unity AI tutorial will walk you through the process of using the Eden AI Unity Plugin, covering key steps from installation to implementing various AI models.

What is Unity?

Established in 2004, Unity is a gaming company offering a powerful game development engine that empowers developers to create immersive games across various platforms, including mobile devices, consoles, and PCs.

If you're aiming to elevate your gameplay, Unity allows you to integrate artificial intelligence (AI), enabling intelligent behaviors, decision-making, and advanced functionalities in your games or applications.

Unity offers multiple paths for AI integration. Notably, the Unity Eden AI Plugin effortlessly syncs with the Eden AI API, enabling easy integration of AI tasks like text-to-speech conversion within your Unity applications.

Benefits of integrating Text to Speech into video game development

Integrating Text-to-Speech (TTS) into video game development offers a range of benefits, enhancing both the gaming experience and the overall development process:

1. Immersive Player Interaction

TTS enables characters in the game to speak, providing a more immersive and realistic interaction between players and non-player characters (NPCs).

2. Accessibility for Diverse Audiences

TTS can be utilized to cater to a diverse global audience by translating in-game text into spoken words, making the gaming experience more accessible for players with varying linguistic backgrounds.

3. Customizable Player Experience

Developers can use TTS to create personalized and adaptive gaming experiences, allowing characters to respond dynamically to player actions and choices.

4. Innovative Gameplay Mechanics

Game developers can introduce innovative gameplay mechanics by pairing TTS with voice commands, allowing players to control in-game actions using spoken words and hear spoken responses, leading to a more interactive gaming experience.

5. Adaptive NPC Behavior

NPCs with TTS capabilities can exhibit more sophisticated and human-like behaviors, responding intelligently to player actions and creating a more challenging and exciting gaming environment.

6. Multi-Modal Gaming Experiences

TTS opens the door to multi-modal gaming experiences, combining visual elements with spoken dialogues, which can be especially beneficial for players who prefer or require alternative communication methods.

Integrating TTS into video games enhances the overall gameplay, contributing to a more inclusive, dynamic, and enjoyable gaming experience for players.

Use cases of Video Game Text-to-Speech Integration

Text-to-Speech (TTS) integration in video games introduces various use cases, enhancing player engagement, accessibility, and overall gaming experiences. Here are several applications of TTS in the context of video games:

Quest Guidance

TTS can guide players through quests by providing spoken instructions, hints, or clues, offering an additional layer of assistance in navigating game objectives.

Interactive Conversations

Enable players to engage in interactive conversations with NPCs through TTS, allowing for more realistic and dynamic exchanges within the game world.

Accessibility for Visually Impaired Players

TTS aids visually impaired players by converting in-game text into spoken words, providing crucial information about game elements, menus, and story developments.

Character AI Interaction

TTS can enhance interactions with AI-driven characters by allowing them to vocally respond to player queries, creating a more realistic and immersive gaming environment.

Interactive Learning Games

In educational or serious games, TTS can assist in delivering instructional content, quizzes, or interactive learning experiences, making the gameplay educational and engaging.

Procedural Content Generation

TTS can contribute to procedural content generation by dynamically narrating events, backstory, or lore within the game, adding depth and context to the gaming world.

Integrating TTS into video games offers a versatile set of applications that go beyond traditional text presentation, providing new dimensions of interactivity, accessibility, and storytelling.

How to integrate TTS into your video game with Unity

Step 1. Install the Eden AI Unity Plugin

Ensure that you have a Unity project open and ready for integration. If you haven't installed the Eden AI plugin, follow these steps:

- Open your Unity Package Manager

- Add package from GitHub

Step 2. Obtain your Eden AI API Key

To get started with the Eden AI API, you need to sign up for an account on the Eden AI platform.

Once registered, you will get an API key which you will need to use the Eden AI Unity Plugin. You can set it in your script or add a file auth.json to your user folder (path: ~/.edenai (Linux/Mac) or %USERPROFILE%/.edenai/ (Windows)) as follows:

Alternatively, you can pass the API key as a parameter when creating an instance of the EdenAIApi class. If the API key is not provided, it will attempt to read it from the auth.json file in your user folder.
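Before relying on the file-based fallback, it can help to confirm that auth.json is where the plugin will look for it. The helper below is a small sketch in plain .NET and is not part of the Eden AI plugin; the class name EdenAuthFile is hypothetical, and it only builds the path described above and checks that the file exists:

```csharp
using System;
using System.IO;

// Hypothetical helper, not part of the Eden AI plugin.
public static class EdenAuthFile
{
    // Builds <user folder>/.edenai/auth.json, i.e. ~/.edenai on Linux/Mac
    // and %USERPROFILE%/.edenai on Windows.
    public static string GetPath()
    {
        string home = Environment.GetFolderPath(Environment.SpecialFolder.UserProfile);
        return Path.Combine(home, ".edenai", "auth.json");
    }

    // True when the key file is present at the expected location.
    public static bool Exists()
    {
        return File.Exists(GetPath());
    }
}
```

Calling EdenAuthFile.Exists() at startup and logging a warning when it returns false makes a missing key easier to diagnose than a failed request later on.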

Step 3. Integrate Text-to-Speech on Unity

Bring vitality to your non-player characters (NPCs) by empowering them to vocalize through the implementation of text-to-speech functionality.

Leveraging the Eden AI plugin, you can seamlessly integrate a variety of services, including Google Cloud, OpenAI, AWS, IBM Watson, LovoAI, Microsoft Azure, and ElevenLabs text-to-speech providers, into your Unity project (refer to the complete list here).

This capability allows you to tailor the voice model, language, and audio format to align with the desired atmosphere of your game.

1. Open your script file where you want to implement the text-to-speech functionality.

2. Import the required namespaces at the beginning of your script:

3. Create an instance of the Eden AI API class:

4. Implement the SendTextToSpeechRequest function with the necessary parameters:
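The four steps above might come together roughly as follows. This is a sketch under assumptions: the EdenAI namespace, the TextToSpeechOption enum, and the argument order (provider, text, audio format, voice option, language) are believed to match the plugin's README but should be checked against the version you installed. The provider name, sample text, and component name are illustrative values:

```csharp
using EdenAI;      // ASSUMPTION: namespace exposed by the Eden AI Unity plugin
using UnityEngine;

// Hypothetical component name; attach it to an NPC GameObject.
public class NpcVoice : MonoBehaviour
{
    private async void Start()
    {
        // No key is passed here, so the plugin falls back to auth.json (see Step 2).
        EdenAIApi edenAI = new EdenAIApi();

        // ASSUMPTION: argument order and enum name follow the plugin README;
        // verify them against your installed version before relying on this call.
        TextToSpeechResponse response = await edenAI.SendTextToSpeechRequest(
            "amazon",                   // provider
            "Hello, traveler!",         // text to speak
            "mp3",                      // audio format
            TextToSpeechOption.FEMALE,  // voice option
            "en");                      // language

        Debug.Log(response != null ? "TTS response received." : "No TTS response.");
    }
}
```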

Step 4: Handle the Text-to-Speech Response

The SendTextToSpeechRequest function returns a TextToSpeechResponse object.

Access the response attributes as needed. For example:

Step 5: Customize Parameters (Optional)

The SendTextToSpeechRequest function allows you to customize various parameters:

- Rate: Adjust speaking rate.

- Pitch: Modify speaking pitch.

- Volume: Control audio volume.

- VoiceModel: Specify a specific voice model.

Include these optional parameters based on your preferences.

Step 6: Test and Debug

Run your Unity project and test the text-to-speech functionality. Monitor the console for any potential errors or exceptions, and make adjustments as necessary.

Now, your Unity project is equipped with text-to-speech functionality using the Eden AI plugin. Customize the parameters to suit your game's atmosphere, and enhance the immersive experience for your players.

TTS integration enhances immersion and opens doors for diverse gameplay experiences. Feel free to experiment with optional parameters for further fine-tuning. Explore additional AI functionalities offered by Eden AI to elevate your game development here.

About Eden AI

Eden AI is the future of AI usage in companies: our app allows you to call multiple AI APIs.

- Centralized and fully monitored billing

- Unified API: quick switch between AI models and providers

- Standardized response format: the JSON output format is the same for all suppliers.

- The best Artificial Intelligence APIs in the market are available

- Data protection: Eden AI will not store or use any data.


© 2023 Eden AI. All rights reserved.

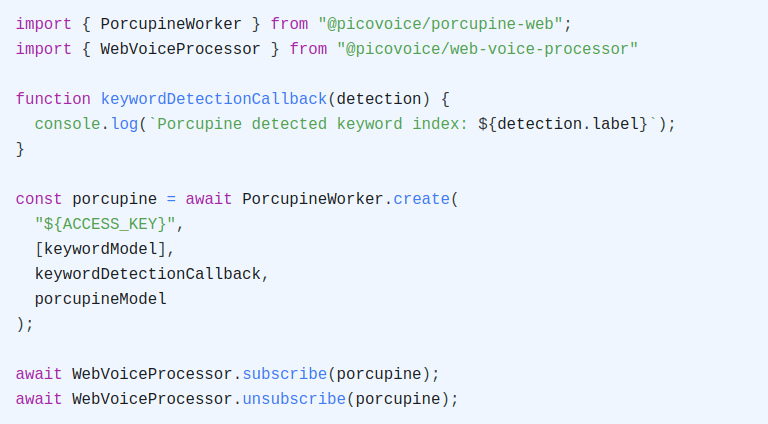

Unity Speech Recognition

This article serves as a comprehensive guide for adding on-device Speech Recognition to a Unity project.

When used casually, Speech Recognition usually refers solely to Speech-to-Text. However, Speech-to-Text represents only a single facet of Speech Recognition technologies. It also refers to features such as Wake Word Detection, Voice Command Recognition, and Voice Activity Detection (VAD). In the context of Unity projects, Speech Recognition can be used to implement a Voice Interface.

Fortunately, Picovoice offers a few tools to help implement Voice Interfaces. If all that is needed is to recognize when specific phrases or words are said, use Porcupine Wake Word. If Voice Commands need to be understood and intent extracted with details (i.e. slot values), Rhino Speech-to-Intent is more suitable. Keep reading to see how to quickly start with both of them.

Picovoice Unity SDKs have cross-platform support for Linux, macOS, Windows, Android and iOS!

Porcupine Wake Word

To integrate the Porcupine Wake Word SDK into your Unity project, download and import the latest Porcupine Unity package.

Sign up for a free Picovoice Console account and obtain your AccessKey. The AccessKey is only required for authentication and authorization.

Create a custom wake word model using Picovoice Console.

Download the .ppn model file and copy it into your project's StreamingAssets folder.

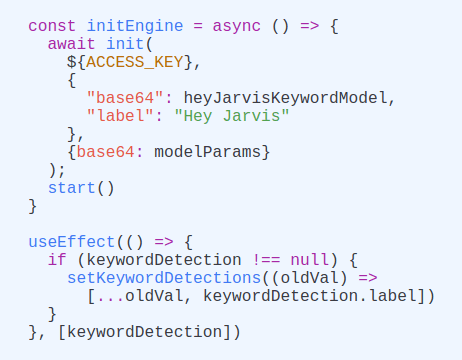

Write a callback that takes action when a keyword is detected:

- Initialize the Porcupine Wake Word engine with the callback and the .ppn file name (or path relative to the StreamingAssets folder):

- Start detecting:
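The callback, initialization, and start steps could be sketched as below, assuming the PorcupineManager class from the Pv.Unity namespace of the Porcupine Unity package. The file name my_wake_word.ppn, the ${ACCESS_KEY} placeholder, and the component name are illustrative:

```csharp
using System.Collections.Generic;
using Pv.Unity;
using UnityEngine;

// Hypothetical component name; attach it to any GameObject in the scene.
public class WakeWordListener : MonoBehaviour
{
    // Placeholder: paste the AccessKey from your Picovoice Console account.
    private const string AccessKey = "${ACCESS_KEY}";

    private PorcupineManager porcupineManager;

    private void Start()
    {
        try
        {
            porcupineManager = PorcupineManager.FromKeywordPaths(
                AccessKey,
                // Hypothetical file name, relative to the StreamingAssets folder.
                new List<string> { "my_wake_word.ppn" },
                OnWakeWordDetected);

            // Begins capturing microphone audio and listening for the wake word.
            porcupineManager.Start();
        }
        catch (PorcupineException e)
        {
            Debug.LogError($"Porcupine failed to start: {e.Message}");
        }
    }

    // keywordIndex is the position of the detected keyword in the list above.
    private void OnWakeWordDetected(int keywordIndex)
    {
        Debug.Log($"Wake word detected (index {keywordIndex})");
    }

    private void OnDestroy()
    {
        // Releases the native resources held by the engine.
        porcupineManager?.Delete();
    }
}
```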

For further details, visit the Porcupine Wake Word product page or refer to Porcupine's Unity SDK quick start guide.

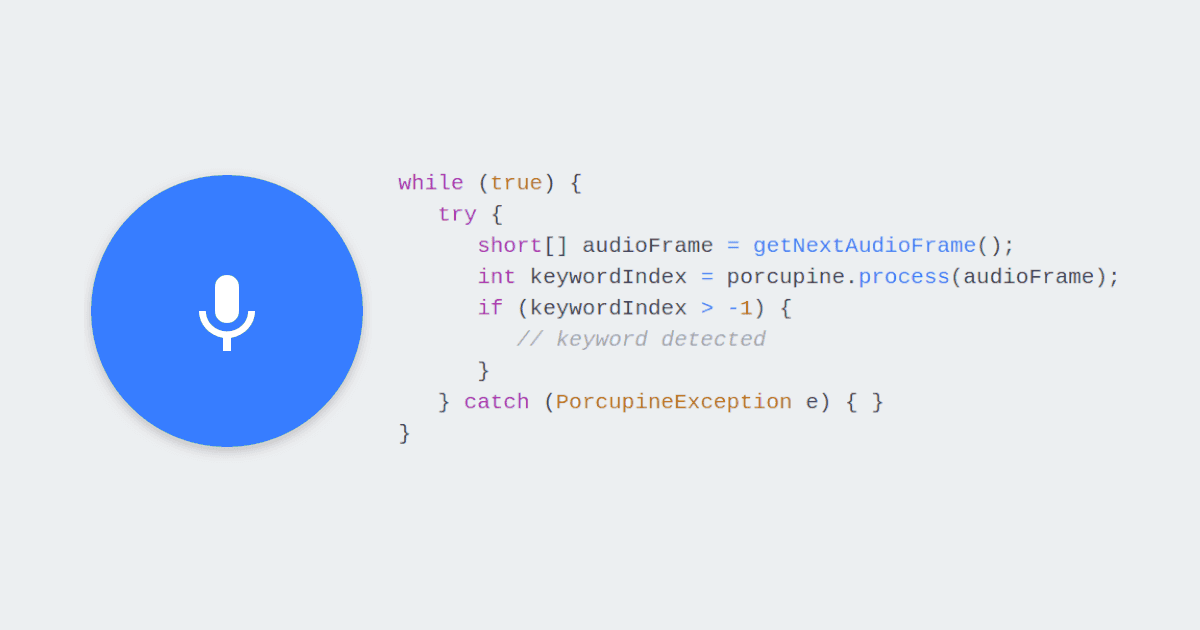

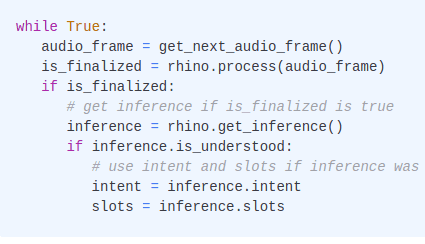

Rhino Speech-to-Intent

To integrate the Rhino Speech-to-Intent SDK into your Unity project, download and import the latest Rhino Unity package.

Create a custom context model using Picovoice Console.

Download the .rhn model file and copy it into your project's StreamingAssets folder.

Write a callback that takes action when a user's intent is inferred:

- Initialize the Rhino Speech-to-Intent engine with the callback and the .rhn file name (or path relative to the StreamingAssets folder):

- Start inferring:
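A comparable sketch for Rhino, assuming the RhinoManager and Inference classes from the Pv.Unity namespace of the Rhino Unity package. The file name my_context.rhn, the ${ACCESS_KEY} placeholder, and the component name are illustrative:

```csharp
using Pv.Unity;
using UnityEngine;

// Hypothetical component name; attach it to any GameObject in the scene.
public class IntentListener : MonoBehaviour
{
    // Placeholder: paste the AccessKey from your Picovoice Console account.
    private const string AccessKey = "${ACCESS_KEY}";

    private RhinoManager rhinoManager;

    private void Start()
    {
        try
        {
            // Hypothetical context file name, relative to the StreamingAssets folder.
            rhinoManager = RhinoManager.Create(AccessKey, "my_context.rhn", OnInference);

            // Starts listening until one inference has been produced.
            rhinoManager.Process();
        }
        catch (RhinoException e)
        {
            Debug.LogError($"Rhino failed to start: {e.Message}");
        }
    }

    private void OnInference(Inference inference)
    {
        if (!inference.IsUnderstood)
        {
            Debug.Log("Command not understood.");
            return;
        }

        Debug.Log($"Intent: {inference.Intent}");
        foreach (var slot in inference.Slots)
        {
            Debug.Log($"  {slot.Key}: {slot.Value}");
        }
    }

    private void OnDestroy()
    {
        // Releases the native resources held by the engine.
        rhinoManager?.Delete();
    }
}
```

In this sketch the engine listens for a single command; to handle the next one, Process() would be called again, for example at the end of the callback.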

For further details, visit the Rhino Speech-to-Intent product page or refer to Rhino's Unity SDK quick start guide.

Subscribe to our newsletter

More from Picovoice

Learn how to perform Speech Recognition in JavaScript, including Speech-to-Text, Voice Commands, Wake Word Detection, and Voice Activity Det...

Learn how to perform Speech Recognition in iOS, including Speech-to-Text, Voice Commands, Wake Word Detection, and Voice Activity Detection.

Learn how to perform Speech Recognition in the web using React, including Voice Commands and Wake Word Detection.

Learn how to perform Speech Recognition in Android, including Speech-to-Text, Voice Commands, Wake Word Detection, and Voice Activity Detect...

Learn how to perform Speech Recognition in Python, including Speech-to-Text, Voice Commands, Wake Word Detection, and Voice Activity Detecti...

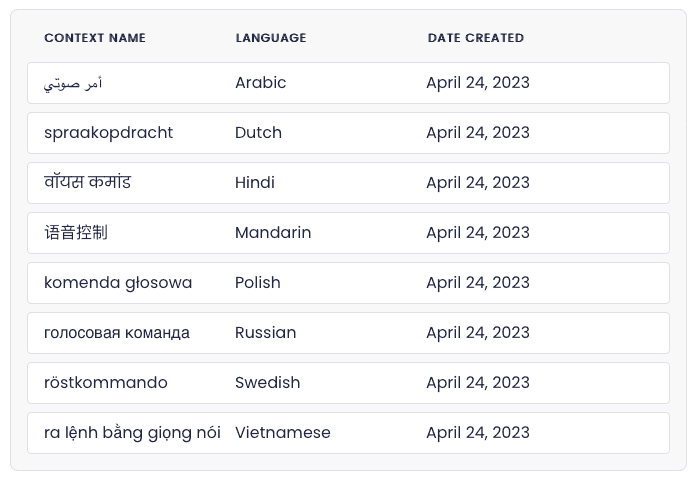

New releases of Porcupine Wake Word and Rhino Speech-to-Intent engines add support for Arabic, Dutch, Farsi, Hindi, Mandarin, Polish, Russia...

Learn how to create offline voice assistants like Alexa or Siri that run fully on-device using an STM32 microcontroller.

Learn how to transcribe speech to text on an Android device. Picovoice Leopard and Cheetah Speech-to-Text SDKs run on mobile, desktop, and e...

Introducing the Unity Text-to-Speech Plugin from ReadSpeaker

Having trouble adding synthetic speech to your next video game release? Try the Unity text-to-speech plugin from ReadSpeaker AI. Learn more here.

As a game developer, how will you use text to speech (TTS)?

We’ve only begun to discover what this tool can do in the hands of creators. What we do know is that TTS can solve tough development problems, that it’s a cornerstone of accessibility, and that it’s a key component of dynamic AI-enhanced characters: NPCs that carry on original conversations with players.

There have traditionally been a few technical roadblocks between TTS and the game studio: Devs find it cumbersome to create and import TTS sound files through an external TTS engine. Some TTS speech labors under perceptible latency, making it unsuitable for in-game audio. And an unintegrated TTS engine creates a whole new layer of project management, threatening already drum-tight production schedules.

What devs need is a latency-free TTS tool they can use independently, without leaving the game engine—and that’s exactly what you get with ReadSpeaker AI’s Unity text-to-speech plugin.

ReadSpeaker AI’s Unity Text-to-Speech Plugin

ReadSpeaker AI offers a market-ready TTS plugin for Unity and Unreal Engine, and will work with studios to provide APIs for other game engines. For now, though, we’ll confine our discussion to Unity, which claims nearly 65% of the game development engine market. ReadSpeaker AI’s TTS plugin is an easy-to-install tool that allows devs to create and manipulate synthetic speech directly in Unity: no file management, no swapping between interfaces, and a deep library of rich, lifelike TTS voices. ReadSpeaker AI uses deep neural networks (DNN) to create AI-powered TTS voices of the highest quality, complete with industry-leading pronunciation thanks to custom pronunciation dictionaries and linguist support.

With this neural TTS at their fingertips, developers can improve the game development process—and the player’s experience—limited only by their creativity. So far, we’ve identified four powerful uses for a TTS game engine plugin. These include:

- User interface (UI) narration for accessibility. User interface narration is an accessibility feature that remediates barriers for players with vision impairments and other disabilities; TTS makes it easy to implement. Even before ReadSpeaker AI released the Unity plugin, The Last of Us Part 2 (released in 2020) used ReadSpeaker TTS for its UI narration feature. A triple-A studio like Naughty Dog can take the time to generate TTS files outside the game engine; those files were ultimately shipped on the game disc. That solution might not work ideally for digital games or independent studios, but a TTS game engine plugin will.

- Prototyping dialogue at early stages of development. Don’t wait until you’ve got a voice actor in the studio to find out your script doesn’t flow perfectly. The Unity TTS plugin allows developers to draft scenes within the engine, tweaking lines and pacing to get the plan perfect before the recording studio’s clock starts running.

- Instant audio narration for in-game text chat. Unity speech synthesis from ReadSpeaker AI renders audio instantly at runtime, through a speech engine embedded in the game files, so it’s ideal for narrating chat messages instantly. This is another powerful accessibility tool—one that’s now required for online multiplayer games in the U.S., according to the 21st Century Communications and Video Accessibility Act (CVAA). But it’s also great for players who simply prefer to listen rather than read in the heat of action.

- Lifelike speech for AI NPCs and procedurally generated text. Natural language processing allows software to understand human speech and create original, relevant responses. Only TTS can make these conversational voicebots—which is essentially what AI NPCs are—speak out loud. Besides, AI NPCs are just one use of procedurally generated speech in video games. What are the others? You decide. Game designers are artists, and dynamic, runtime TTS from ReadSpeaker AI is a whole new palette.

Text to Speech vs. Human Voice Actors for Video Game Characters

Note that our list of use cases for TTS in game development doesn’t include replacing voice talent for in-game character voices, other than AI NPCs that generate dialogue in real time. Voice actors remain the gold standard for character speech, and that’s not likely to change any time soon. In fact, every great neural TTS voice starts with a great voice actor; they provide the training data that allows the DNN technology to produce lifelike speech, with contracts that ensure fair, ethical treatment for all parties. So while there’s certainly a place for TTS in character voices, it is not a replacement for human talent. Instead, think of TTS as a tool for development, accessibility, and the growing role of AI in gaming.

ReadSpeaker AI brings more than 20 years of experience in TTS, with a focus on performance. That expertise helped us develop an embedded TTS engine that renders audio on the player’s machine, eliminating latency. We also offer more than 90 top-quality voices in over 30 languages, plus SSML support so you can control expression precisely. These capabilities set ReadSpeaker AI apart from the crowd. Curious? Keep reading for a real-world example.

ReadSpeaker AI Speech Synthesis in Action

Soft Leaf Studios used ReadSpeaker AI’s Unity text-to-speech plugin for scene prototyping and UI and story narration for its highly accessible game, in development at publication time, Stories of Blossom. Check out this video to see how it works:

“Without a TTS plugin like this, we would be left guessing what audio samples we would need to generate, and how they would play back,” Conor Bradley, Stories of Blossom lead developer, told ReadSpeaker AI. “The plugin allows us to experiment without the need to lock our decisions, which is a very powerful tool to have the privilege to use.”

This example raises the question every game developer will soon be asking themselves, a variation on the question we started with: What could a Unity text-to-speech plugin do for your next release? Reach out to start the conversation.


Using the Speech-to-Text API with C#

1. Overview

Google Cloud Speech-to-Text API enables developers to convert audio to text in 120 languages and variants, by applying powerful neural network models in an easy-to-use API.

In this codelab, you will focus on using the Speech-to-Text API with C#. You will learn how to send an audio file in English and other languages to the Cloud Speech-to-Text API for transcription.

What you'll learn

- How to use the Cloud Shell

- How to enable the Speech-to-Text API

- How to Authenticate API requests

- How to install the Google Cloud client library for C#

- How to transcribe audio files in English

- How to transcribe audio files with word timestamps

- How to transcribe audio files in different languages

What you'll need

- A Google Cloud Platform Project

- A browser, such as Chrome or Firefox

- Familiarity using C#

2. Setup and requirements

Self-paced environment setup

- Sign in to the Google Cloud Console and create a new project or reuse an existing one. If you don't already have a Gmail or Google Workspace account, you must create one.

- The Project name is the display name for this project's participants. It is a character string not used by Google APIs. You can always update it.

- The Project ID is unique across all Google Cloud projects and is immutable (cannot be changed after it has been set). The Cloud Console auto-generates a unique string; usually you don't care what it is. In most codelabs, you'll need to reference your Project ID (typically identified as PROJECT_ID). If you don't like the generated ID, you might generate another random one. Alternatively, you can try your own, and see if it's available. It can't be changed after this step and remains for the duration of the project.

- For your information, there is a third value, a Project Number, which some APIs use. Learn more about all three of these values in the documentation.

- Next, you'll need to enable billing in the Cloud Console to use Cloud resources/APIs. Running through this codelab won't cost much, if anything at all. To shut down resources to avoid incurring billing beyond this tutorial, you can delete the resources you created or delete the project. New Google Cloud users are eligible for the $300 USD Free Trial program.

Start Cloud Shell

While Google Cloud can be operated remotely from your laptop, in this codelab you will be using Google Cloud Shell, a command line environment running in the Cloud.

Activate Cloud Shell

If this is your first time starting Cloud Shell, you're presented with an intermediate screen describing what it is. If you were presented with an intermediate screen, click Continue.

It should only take a few moments to provision and connect to Cloud Shell.

This virtual machine is loaded with all the development tools needed. It offers a persistent 5 GB home directory and runs in Google Cloud, greatly enhancing network performance and authentication. Much, if not all, of your work in this codelab can be done with a browser.

Once connected to Cloud Shell, you should see that you are authenticated and that the project is set to your project ID.

- Run `gcloud auth list` in Cloud Shell to confirm that you are authenticated.

- Run `gcloud config list project` in Cloud Shell to confirm that the gcloud command knows about your project.

If the project is not set, you can set it with `gcloud config set project <PROJECT_ID>`.

3. Enable the Speech-to-Text API

Before you can begin using the Speech-to-Text API, you must enable the API. You can enable it by running `gcloud services enable speech.googleapis.com` in Cloud Shell.

4. Install the Google Cloud Speech-to-Text API client library for C#

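First, create a simple C# console application that you will use to run the Speech-to-Text API samples by running `dotnet new console -n SpeechToTextApiDemo`. You should see the application created and its dependencies resolved.

Next, navigate to the SpeechToTextApiDemo folder with `cd SpeechToTextApiDemo` and add the Google.Cloud.Speech.V1 NuGet package to the project with `dotnet add package Google.Cloud.Speech.V1`.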

Now, you're ready to use Speech-to-Text API!

5. Transcribe Audio Files

In this section, you will transcribe a pre-recorded audio file in English. The audio file is available on Google Cloud Storage.

To transcribe an audio file, open the code editor from the top right side of the Cloud Shell.

Navigate to the Program.cs file inside the SpeechToTextApiDemo folder and replace the code with the following:

Take a minute or two to study the code and see how it is used to transcribe an audio file.

The Encoding parameter tells the API which type of audio encoding the audio file uses. Flac is the encoding type for FLAC-compressed audio files (see the doc for encoding type for more details).

In the RecognitionAudio object, you can pass the API either the URI of the audio file in Cloud Storage or the local file path of the audio file. Here, we're using a Cloud Storage URI.
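As a minimal sketch of how these two pieces fit together, the following Program.cs builds a RecognitionConfig and a RecognitionAudio and prints every transcript that comes back. It assumes the Google.Cloud.Speech.V1 package is installed and that Application Default Credentials are available (as they normally are in Cloud Shell). The BuildConfig helper is a hypothetical name, and the Cloud Storage URI is an assumed public sample file that you can replace with your own.

```csharp
using System;
using Google.Cloud.Speech.V1;

namespace SpeechToTextApiDemo
{
    public static class Program
    {
        // Assumed sample file; replace with your own Cloud Storage URI.
        private const string AudioUri =
            "gs://cloud-samples-data/speech/brooklyn_bridge.flac";

        // Hypothetical helper: builds the recognition settings described above.
        public static RecognitionConfig BuildConfig(string languageCode)
        {
            return new RecognitionConfig
            {
                Encoding = RecognitionConfig.Types.AudioEncoding.Flac,
                LanguageCode = languageCode
            };
        }

        public static void Main(string[] args)
        {
            // Picks up Application Default Credentials from the environment.
            SpeechClient speech = SpeechClient.Create();
            RecognitionAudio audio = RecognitionAudio.FromStorageUri(AudioUri);

            RecognizeResponse response = speech.Recognize(BuildConfig("en-US"), audio);

            foreach (SpeechRecognitionResult result in response.Results)
            {
                foreach (SpeechRecognitionAlternative alternative in result.Alternatives)
                {
                    Console.WriteLine($"Transcript: {alternative.Transcript}");
                }
            }
        }
    }
}
```

To read a local file instead, RecognitionAudio.FromFile takes a file path in place of the Cloud Storage URI.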

Back in Cloud Shell, run the app with `dotnet run`.

You should see the transcript of the audio file printed in the console.

In this step, you were able to transcribe an audio file in English and print out the result. Read more about Transcribing.

6. Transcribe with word timestamps

Speech-to-Text can detect time offset (timestamp) for the transcribed audio. Time offsets show the beginning and end of each spoken word in the supplied audio. A time offset value represents the amount of time that has elapsed from the beginning of the audio, in increments of 100ms.

To transcribe an audio file with time offsets, navigate to the Program.cs file inside the SpeechToTextApiDemo folder and replace the code with the following:

Take a minute or two to study the code and see how it is used to transcribe an audio file with word timestamps. The EnableWordTimeOffsets parameter tells the API to enable time offsets (see the doc for more details).
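A sketch of what a timestamp-enabled request could look like is shown below. It assumes the Google.Cloud.Speech.V1 package and working credentials; the FormatRange helper is a hypothetical name added for readability, and the Cloud Storage URI is an assumed public sample file.

```csharp
using System;
using System.Globalization;
using Google.Cloud.Speech.V1;

public static class WordTimestampsSketch
{
    // Hypothetical helper: formats a word's start and end offsets in seconds.
    public static string FormatRange(TimeSpan start, TimeSpan end)
    {
        return string.Format(CultureInfo.InvariantCulture,
            "{0:F1}s - {1:F1}s", start.TotalSeconds, end.TotalSeconds);
    }

    public static void Main()
    {
        var config = new RecognitionConfig
        {
            Encoding = RecognitionConfig.Types.AudioEncoding.Flac,
            LanguageCode = "en-US",
            EnableWordTimeOffsets = true // ask for per-word start and end times
        };

        // Assumed sample file; replace with your own Cloud Storage URI.
        var audio = RecognitionAudio.FromStorageUri(
            "gs://cloud-samples-data/speech/brooklyn_bridge.flac");

        var response = SpeechClient.Create().Recognize(config, audio);

        foreach (var result in response.Results)
        {
            foreach (var alternative in result.Alternatives)
            {
                Console.WriteLine($"Transcript: {alternative.Transcript}");
                foreach (var word in alternative.Words)
                {
                    // StartTime and EndTime are protobuf Durations.
                    Console.WriteLine($"  {word.Word}: " + FormatRange(
                        word.StartTime.ToTimeSpan(), word.EndTime.ToTimeSpan()));
                }
            }
        }
    }
}
```

Because offsets are reported in 100 ms increments, one decimal place is enough when printing seconds.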

In this step, you were able to transcribe an audio file in English with word timestamps and print out the result. Read more about Transcribing with word offsets.

7. Transcribe different languages

The Speech-to-Text API supports transcription in over 100 languages! A list of supported languages is available in the Speech-to-Text documentation.

In this section, you will transcribe a pre-recorded audio file in French. The audio file is available on Google Cloud Storage.

To transcribe the French audio file, navigate to the Program.cs file inside the SpeechToTextApiDemo folder and replace the code with the following:

Take a minute or two to study the code and see how it is used to transcribe an audio file. The LanguageCode parameter tells the API what language the audio recording is in.

The transcribed sentence is from a popular French children's tale.

In this step, you were able to transcribe an audio file in French and print out the result. Read more about supported languages.

8. Congratulations!

You learned how to use the Speech-to-Text API using C# to perform different kinds of transcription on audio files!

To avoid incurring charges to your Google Cloud Platform account for the resources used in this quickstart:

- Go to the Cloud Platform Console.

- Select the project you want to shut down, then click 'Delete' at the top: this schedules the project for deletion.

- Google Cloud Speech-to-Text API: https://cloud.google.com/speech-to-text/docs

- C#/.NET on Google Cloud Platform: https://cloud.google.com/dotnet/

- Google Cloud .NET client: https://googlecloudplatform.github.io/google-cloud-dotnet/

This work is licensed under a Creative Commons Attribution 2.0 Generic License.


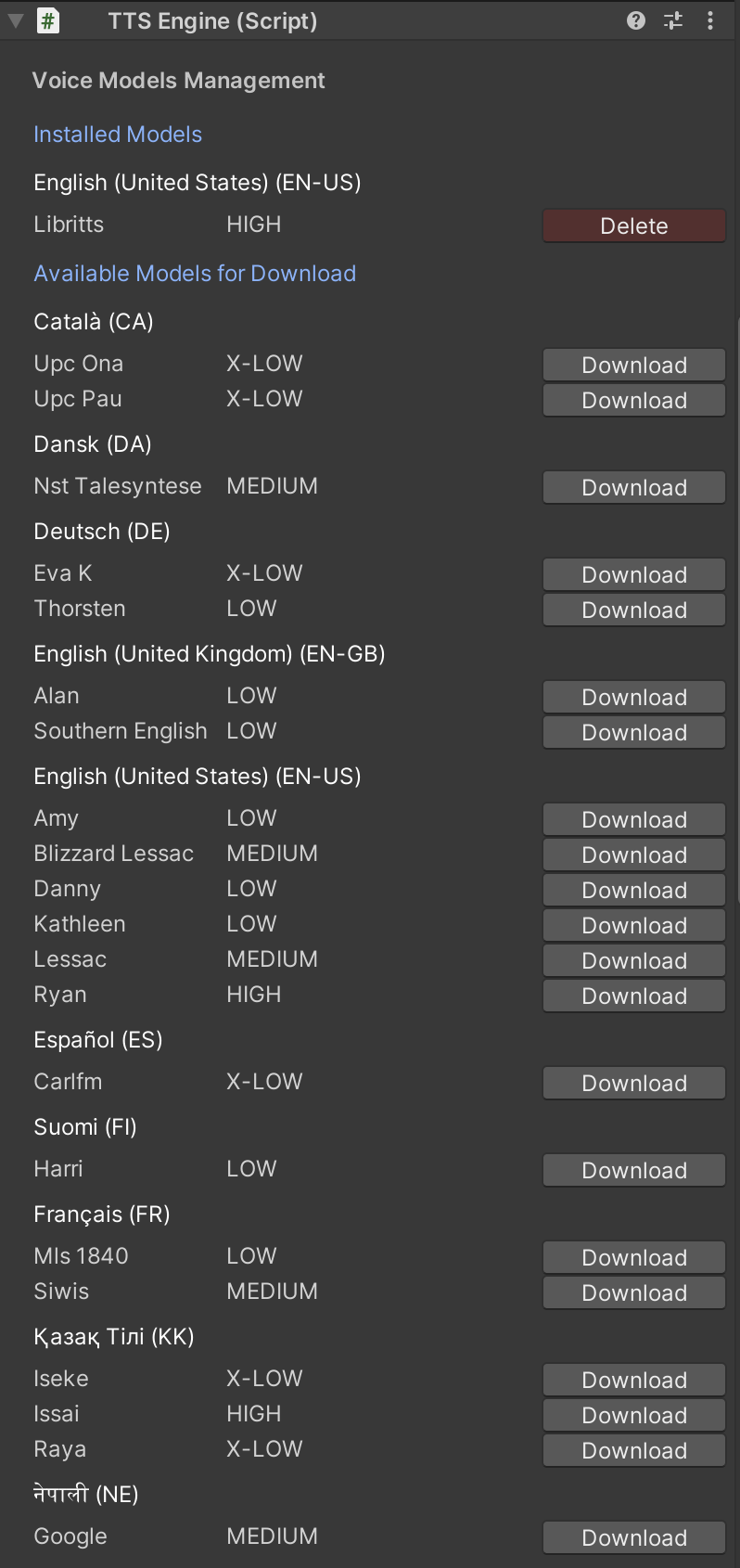

Overtone - Realistic AI Offline Text to Speech (TTS)

Overtone is an offline Text-to-Speech asset for Unity. Enrich your game with 15+ languages, 900+ English voices, rapid performance, and cross-platform support.

Getting Started

Welcome to the Overtone documentation! In this section, we’ll walk you through the initial steps to start using the tools. We will explain the various features of Overtone, how to set it up, and provide guidance on using the different models for text to speech.

Overtone provides a versatile text-to-speech solution, supporting over 15 languages to cater to a diverse user base. It is important to note that the quality of each model varies, which in turn affects the voice output. Overtone offers four quality variations: X-LOW, LOW, MEDIUM, and HIGH, allowing users to choose the one that best fits their needs.

The plugin includes a default English-only model, called LibriTTS, which boasts a selection of more than 900 distinct voices, readily available for use. As lower quality models are faster to process, they are particularly well-suited for mobile devices, where speed and efficiency are crucial.

How to download models

The TTSVoice component provides a convenient interface for downloading the models with just a click. Alternatively, you can open the window from Window > Overtone > Download Manager.

The plugin contains a demo to demonstrate the functionality: Text to speech. You can input text, select a downloaded voice in the TTSVoice component, and listen to it.

The TTSEngine class loads the model and sets it up in memory. Add it to every scene in which Overtone will be used. It exposes one method, Speak, which receives a string and a TTSVoice and returns an AudioClip.

Example programmatic usage:

The TTSVoice script loads a voice model and frees it when necessary. It also allows the user to select the speaker ID to use in the voice model.

Script Reference for TTSVoice.cs

TTSPlayer.cs is a script that combines a TTSVoice and a TTSEngine to synthesize speech from text.

Script Reference for TTSPlayer.cs

SSMLPreprocessor

SSMLPreprocessor.cs is a static class that offers limited SSML (Speech Synthesis Markup Language) support for Overtone. Currently, this class supports preprocessing for the <break> tag.

Speech Synthesis Markup Language (SSML) is an XML-based markup language that provides a standard way to control various aspects of synthesized speech output, including pronunciation, volume, pitch, and speed.

While we plan to add partial SSML support in future updates, for now, the SSMLPreprocessor class only recognizes the <break> tag.

The <break> tag allows you to add a pause in the synthesized speech output.
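To illustrate the general idea of preprocessing a break tag, here is a minimal sketch in plain C#. It is not Overtone's implementation: the BreakTagSketch class and its Split method are hypothetical, and it assumes the standard SSML form of the tag with a time attribute in seconds or milliseconds, such as `<break time="500ms"/>`. The text is cut at each tag, and each chunk is paired with the pause that should follow it.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Text.RegularExpressions;

public static class BreakTagSketch
{
    // Matches <break time="500ms"/> or <break time="1.5s"/>.
    private static readonly Regex BreakTag = new Regex(
        "<break\\s+time=\"(?<value>[0-9]*\\.?[0-9]+)(?<unit>ms|s)\"\\s*/>",
        RegexOptions.IgnoreCase);

    // Returns text chunks paired with the pause (in seconds) that follows each chunk.
    // A chunk can be empty when the input starts with a break tag.
    public static List<(string Text, float PauseSeconds)> Split(string ssml)
    {
        var parts = new List<(string Text, float PauseSeconds)>();
        int cursor = 0;

        foreach (Match match in BreakTag.Matches(ssml))
        {
            float value = float.Parse(
                match.Groups["value"].Value, CultureInfo.InvariantCulture);
            bool isMilliseconds =
                match.Groups["unit"].Value.ToLowerInvariant() == "ms";
            float seconds = isMilliseconds ? value / 1000f : value;

            string chunk = ssml.Substring(cursor, match.Index - cursor).Trim();
            parts.Add((chunk, seconds));
            cursor = match.Index + match.Length;
        }

        // Whatever follows the last tag is spoken with no trailing pause.
        string tail = ssml.Substring(cursor).Trim();
        if (tail.Length > 0)
        {
            parts.Add((tail, 0f));
        }
        return parts;
    }
}
```

A caller could then synthesize each chunk separately and insert silence of the given length between the resulting clips.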

Supported Platforms

Overtone supports the following platforms:

If interested in any other platforms, please reach out.

Supported Languages

Troubleshooting

For any questions, issues or feature requests, don’t hesitate to email us at [email protected] or join the Discord. We are happy to help and aim to have very fast response times :)

We are a small company focused on building tools for game developers. Send us an email at [email protected] if you are interested in working with us. For any other inquiries, feel free to contact us at [email protected] or on the Discord.


[Open Source] whisper.unity - free speech to text running on your machine

Discussion in 'Assets and Asset Store' started by Macoron, Apr 12, 2023.

whisper.unity

Several months ago OpenAI released a powerful automatic speech recognition (ASR) model called Whisper. Code and weights are under the MIT license. I used another open source implementation called whisper.cpp and moved it to Unity.

Main features:

- Multilanguage, supports around 60 languages

- Can do transcription from one language to another, for example transcribe German audio to English text

- Works faster than realtime. On my Mac it transcribes 11 seconds of audio in 220 ms

- Runs on the local user machine without an Internet connection

- Free and open source, can be used in commercial projects

Feel free to use it in your projects: https://github.com/Macoron/whisper.unity

I implemented this into my new project. It works great, thanks for this! I couldn't get this work for IL2CPP, though, I had to use Mono. (Unity 2022.3.0)

Macoron said: Great, nice to hear that you used it for your project. For what platform did you have the problem with IL2CPP? It should be supported.

Gord10 said: I get the following errors in the player (64-bit Windows build)

Great! Windows, Mac (tested only for Silicon) and Linux IL2CPP builds work perfectly, now, thanks for the fix.

Warfighter789

Hi there, is this asset compatible with VR devices such as Meta Quest 2? I attempted to integrate it into my project but encountered an error. Thanks in advance. Error Unity NotSupportedException: IL2CPP doesn't allow marshaling delegates that reference instance methods to native code. The method we're trying to marshal is: Whisper.Native.whisper_progress_callback::Invoke.


Hi, Unfortunately, I have an initialization error with Unity 2022.3 LTS concerning libwhisper.dll (DllNotFoundException: libwhisper assembly) Any advice will be welcome to allow a compilation with this version.

Macoron said: Check the messages above. This error should be fixed by a recent update. Btw, I didn't test on Oculus Quest 2, but I'm really interested to see how fast it works. Please write back. Edit: Make sure you use the very latest version, which includes this update: https://github.com/Macoron/whisper.unity/pull/41

Warfighter789 said: The latest update fixed the issue, thank you! The speed is quite fast. I noticed that the Speech to Voice isn't as accurate anymore, is that normal?

Macoron said: What do you mean Speech to Voice isn't accurate anymore? Do you have bad transcription results? If that is the case, what language do you use?

Warfighter789 said: Yeah, my transcription results are having problems. I've noticed that it's not picking up my voice as accurately as before. Sometimes when I say something, it comes out differently in the transcript. I'm using English.

Macoron said: Well, you can try to use an older release. The latest master uses whisper.cpp 1.4.2, which may work differently from 1.2.2. I also noticed some changes, but I'm not sure if it's better or worse. https://github.com/Macoron/whisper.unity/releases/tag/1.1.1 If you are using English, I highly recommend you switch to the `whisper.tiny.en` or `whisper.base.en` models. They are much better at English transcription. I personally use `whisper.small.en`, but it might be too heavy for Quest.

I tested Whisper.unity successfully on my Windows 11 laptop PC without any issues. I used Unity 2021.3.9 and the latest 2022.3.4 LTS. So, my initialization problem with Unity 2022.3.0 seems to be related only to my specific desktop PC configuration... (Windows 10 with security restrictions).


Bullybolton

@Warfighter789 what did you do to test on Quest 2? I've just built the microphone sample scene onto quest and it was very slow.

Spellbook said: This is something I've worked towards for years and it has effectively been impossible unless you're Google, Apple or Amazon... I don't think people quite realize how revolutionary this stuff is yet.

One issue I've run into is sampling a short audio clip returns 0 segments. Using push-to-talk, someone might quickly say "Yes" and the clip is 1 or 2 seconds long. The WhisperWrapper line "var n = WhisperNative.whisper_full_n_segments(_whisperCtx);" returns 0, finding no segments. I assume this is probably a limitation of the Whisper internals? I wanted to ask before I artificially append a few seconds to the end of audio clips as a hack solution.

Excuse my English, I'm French. Sorry if this is a noob question: I can't find how to translate text from one language to another, except of course for the bool translateToEnglish. I want to translate all speech, whatever the language, into French. Any help would be highly appreciated. Thanks for this great package!

Macoron said: Find Whisper Manager in your scene and there find the "Language" field. Write the "fr" language code and make sure that "Translate To English" is disabled. Now any speech in any language will be translated to French text. Keep in mind that it doesn't work as well as English translation and you will probably need a bigger model than "tiny". With smaller models it will probably be just gibberish.

Quick update: whisper.unity updated to version 1.2.0! The biggest changes are prompting and streaming support. For more information, check the release notes in the GitHub repository.

This is amazing I've always wanted to see something like this

Hey, this is working great for me in the editor, but when I do an Android build it dies thusly:

09-11 23:43:30.505 1781 2132 I Unity : Trying to load Whisper model from buffer...
09-11 23:43:30.553 1781 1817 E Unity : DllNotFoundException: __Internal assembly:<unknown assembly> type:<unknown type> member null)
09-11 23:43:30.553 1781 1817 E Unity : at (wrapper managed-to-native) Whisper.Native.WhisperNative.whisper_init_from_buffer(intptr,uintptr)
09-11 23:43:30.553 1781 1817 E Unity : at Whisper.WhisperWrapper.InitFromBuffer (System.Byte[] buffer) [0x00054] in <82e321693d1448d4ae1fba9fa7e11c76>:0
09-11 23:43:30.553 1781 1817 E Unity : at Whisper.WhisperWrapper+<>c__DisplayClass27_0.<InitFromBufferAsync>b__0 () [0x00000] in <82e321693d1448d4ae1fba9fa7e11c76>:0
09-11 23:43:30.553 1781 1817 E Unity : at System.Threading.Tasks.Task`1[TResult].InnerInvoke () [0x0000f] in <0bfb382d99114c52bcae2561abca6423>:0
09-11 23:43:30.553 1781 1817 E Unity : at System.Threading.Tasks.Task.Execute () [0x00000] in <0bfb382d99114c52bcae2561abca6423>:0
09-11 23:43:30.553 1781 1817 E Unity : --- End of stack trace from previous location where exception was thrown ---
09-11 23:43:30.553 1781 1817 E Unity :
09-11 23:43:30.553 1781 1817 E Unity : at Whisper.WhisperWrapper.InitFromBufferAsync (System.Byte[] buffer) [0x0007d] in <82e321693d1448d4ae1fba9fa7e11c76>:0
09-11 23:43:30.553 1781 1817 E Unity : at Whisper.WhisperWrapper.InitFromFileAsync (System.String modelPath) [0x000c1] in <82e321693d1448d4ae1fba9fa7e11c76>:0
09-11 23:43:30.553 1781 1817 E Unity : at Whisper.WhisperManager.InitModel () [0x000bd]
09-11 23:43:35.290 1781 1817 E Unity : Whisper model isn't loaded! Init Whisper model first!

Macoron said: For what device are you building? Which version of Unity? Please also check that your Player Settings has the IL2CPP Scripting Backend and that you are building for the ARM64 architecture.

Is there a way to add custom words? There are some words that I need to use but it doesn't pick them up as that word ever.

Also, @Macoron I tried installing the package from the package manager and kept getting an error about OnRecordStop delegate not being found. Removed it and copied the package com.whisper.unity package folder in the downloaded git zip download and no error.

Strategos said: Thanks, I will check these things and report back.

New major update: whisper now supports GPU inference on CUDA and Metal. It should also improve quality and fix some minor bugs. More details are in the release notes.

Tyke18 said: Hi, does this have a voice activity detection feature? I want to be able to capture mic input on a Meta Quest 3 without the user having to press anything to indicate they are speaking (audio permissions must be granted first, of course).

How does the Initial prompt work? My application needs to work in the following way. It needs to listen to a user's natural speech and pick out any keywords (held in a database) on the fly during their speech. If it detects a keyword, it will then trigger an event. Is filling the Initial Prompt field with my keywords the best solution to this? Should each word be comma separated in the Initial Prompt field?

Macoron said: In my experience, the initial prompt works best for:

- Making whisper better understand rare or new words, for example a person or company name, location name, etc.

- Guiding transcription in certain writing styles, for example "WRITE ALL TEXT IN CAPS LOCK"

- Setting up the context of a previous transcription

You can try to write the initial prompt like "house, car, house, house, train, car..." to set up some context for the model. This might help whisper to work better or might cause the model to hallucinate. Hard to tell without experiments. If you have a limited set of words, you might be interested in grammar rules. They basically constrain the model and force it to recognize words based on the set of rules. Unfortunately, they are not supported by whisper.unity yet.

DanielSCG said: Hi, thanks for the fast reply. I have tried using the initial prompt but it doesn't seem to help much. To your knowledge, is Whisper the best solution to use for my requirements? Maybe there would be some other transcriber that would work similarly? All I need it to do is recognise if a keyword is spoken with a good degree of accuracy.

Macoron said: Whisper should be good for such a task. The accuracy grows greatly with model size. If you want to support only English, it also makes sense to use English-only models. They are much better than general models. I would also recommend not using streaming, because it's less accurate. Whisper.cpp fails to transcribe any audio that is less than 1 second in duration, so keep some audio margins for your words.
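One way to keep such a margin is to pad short recordings with trailing silence before transcription. The sketch below is a plain C# helper under stated assumptions: the AudioPadding class and PadToMinimumDuration method are hypothetical names and not part of whisper.unity, and the minimum duration to use is up to you.

```csharp
using System;

public static class AudioPadding
{
    // Returns the input unchanged if it is already long enough; otherwise
    // returns a copy padded with trailing silence (zero-valued samples).
    // 'samples' is interleaved PCM, as returned by Unity's AudioClip.GetData.
    public static float[] PadToMinimumDuration(
        float[] samples, int sampleRate, int channels, float minSeconds)
    {
        if (samples == null) throw new ArgumentNullException(nameof(samples));
        if (sampleRate <= 0) throw new ArgumentOutOfRangeException(nameof(sampleRate));
        if (channels <= 0) throw new ArgumentOutOfRangeException(nameof(channels));

        // Number of frames needed, times the number of interleaved channels.
        int minLength = (int)Math.Ceiling(minSeconds * sampleRate) * channels;
        if (samples.Length >= minLength)
        {
            return samples;
        }

        var padded = new float[minLength]; // new arrays are zero-filled
        Array.Copy(samples, padded, samples.Length);
        return padded;
    }
}
```

In Unity, the sample rate and channel count would typically come from the AudioClip's frequency and channels properties. Whether padding actually improves results for very short utterances such as "Yes" is something to verify by experiment.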

DanielSCG said: I need my application to recognise and list keywords the user is speaking in real time. For example, if a user was saying a sentence like "I am in a house and I am about to inspect the door frame", the application should show the keywords to the user on the screen in real time. In this example the keywords would be in a database [House, Door]. So I need the app and speech to text to run for about 5 minutes in real time and just indicate to the user when they have spoken a keyword. I am developing this for Android mobile devices, so it needs to be lightweight enough to run on them. My Surface Book is what I'm using for development and ggml-small.eng.bin is the largest model it can handle. Are there some parameters in whisper I could change to better achieve these requirements?
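The keyword check described in this post can be done on the transcribed text itself, independently of the speech engine. The following is a minimal sketch in plain C#; the KeywordSpotter class and FindKeywords method are hypothetical names, and the keyword list stands in for whatever the database returns.

```csharp
using System;
using System.Collections.Generic;
using System.Text.RegularExpressions;

public static class KeywordSpotter
{
    // Returns the keywords that occur in the transcript as whole words,
    // ignoring case, in the order in which the keywords were supplied.
    public static List<string> FindKeywords(
        string transcript, IEnumerable<string> keywords)
    {
        var found = new List<string>();
        foreach (string keyword in keywords)
        {
            // \b anchors keep "door" from matching inside "doorbell".
            string pattern = "\\b" + Regex.Escape(keyword) + "\\b";
            if (Regex.IsMatch(transcript, pattern,
                    RegexOptions.IgnoreCase | RegexOptions.CultureInvariant))
            {
                found.Add(keyword);
            }
        }
        return found;
    }
}
```

For the sentence in the example above and the keywords House, Door and Window, this returns House and Door. Running the check on each newly transcribed segment keeps the per-update cost small.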


akeller / WatsonSpeechToText.cs


akeller commented May 23, 2018 • edited

BREAKING CHANGES MADE TO SDK: this is on my to-do list to fix, but the gist currently does not work with the most recent version in the Asset Store.

(Free) Runtime Text To Speech Plugin

I needed a simple TTS plugin for a small Unity Windows game that I'm working on. I know the Asset Store has a few, but they seem to rely on Windows Speech platform voices, and the one plugin that was completely standalone didn't really have good enough speech quality. First I tried to use Mozilla-TTS (to generate voice files in advance), but it was impossible to get it to compile due to some weird tricks needed. Then I found espeak-ng, which seems to be good enough quality and already had a dotnet wrapper available! I compiled the espeak-ng DLL with Visual Studio and had a few issues with the wrapper, but got them fixed (compare Client.cs with the original). The next issue was that the DLL kept crashing; I fixed it by using DLLManipulator.

Unity project: https://github.com/unitycoder/UnityRuntimeTextToSpeech

Note: See github issues for known issues and ideas to improve this.

Updates: Now outputs to AudioSource component, thanks to updates from @autious fork.


So cool. What platforms does this work on? IOS? Android?

Not tested, but I'm pretty sure that the DLL doesn't work on those platforms (especially because of the DLLManipulator, which needs to read the DLL file from a specific folder or so).

Better to use the paid Asset Store versions, they work on many platforms.

Cool. Is it possible to change the language?

Try this: https://github.com/unitycoder/UnityRuntimeTextToSpeech/issues/3

Will this work offline? If not, please recommend another one. I need offline TTS.

Yes, it's offline.

list of alternatives https://github.com/unitycoder/UnityRuntimeTextToSpeech/wiki/Alternative-TTS-plugins


Title: UnitY: Two-pass Direct Speech-to-speech Translation with Discrete Units

Abstract: Direct speech-to-speech translation (S2ST), in which all components can be optimized jointly, is advantageous over cascaded approaches to achieve fast inference with a simplified pipeline. We present a novel two-pass direct S2ST architecture, UnitY, which first generates textual representations and predicts discrete acoustic units subsequently. We enhance the model performance by subword prediction in the first-pass decoder, advanced two-pass decoder architecture design and search strategy, and better training regularization. To leverage large amounts of unlabeled text data, we pre-train the first-pass text decoder based on the self-supervised denoising auto-encoding task. Experimental evaluations on benchmark datasets at various data scales demonstrate that UnitY outperforms a single-pass speech-to-unit translation model by 2.5-4.2 ASR-BLEU with 2.83x decoding speed-up. We show that the proposed methods boost the performance even when predicting spectrogram in the second pass. However, predicting discrete units achieves 2.51x decoding speed-up compared to that case.


Introducing GPT-4o: OpenAI’s new flagship multimodal model now in preview on Azure

By Eric Boyd Corporate Vice President, Azure AI Platform, Microsoft

Posted on May 13, 2024

Microsoft is thrilled to announce the launch of GPT-4o, OpenAI’s new flagship model on Azure AI. This groundbreaking multimodal model integrates text, vision, and audio capabilities, setting a new standard for generative and conversational AI experiences. GPT-4o is available now in Azure OpenAI Service, to try in preview, with support for text and image.

A step forward in generative AI for Azure OpenAI Service

GPT-4o offers a shift in how AI models interact with multimodal inputs. By seamlessly combining text, images, and audio, GPT-4o provides a richer, more engaging user experience.

Launch highlights: Immediate access and what you can expect

Azure OpenAI Service customers can explore GPT-4o’s extensive capabilities through a preview playground in Azure OpenAI Studio starting today in two regions in the US. This initial release focuses on text and vision inputs to provide a glimpse into the model’s potential, paving the way for further capabilities like audio and video.

Efficiency and cost-effectiveness

GPT-4o is engineered for speed and efficiency. Its advanced ability to handle complex queries with minimal resources can translate into cost savings and performance.

Potential use cases to explore with GPT-4o

The introduction of GPT-4o opens numerous possibilities for businesses in various sectors:

- Enhanced customer service : By integrating diverse data inputs, GPT-4o enables more dynamic and comprehensive customer support interactions.

- Advanced analytics : Leverage GPT-4o’s capability to process and analyze different types of data to enhance decision-making and uncover deeper insights.

- Content innovation : Use GPT-4o’s generative capabilities to create engaging and diverse content formats, catering to a broad range of consumer preferences.

Exciting future developments: GPT-4o at Microsoft Build 2024

We are eager to share more about GPT-4o and other Azure AI updates at Microsoft Build 2024, to help developers further unlock the power of generative AI.

Get started with Azure OpenAI Service

Begin your journey with GPT-4o and Azure OpenAI Service by taking the following steps:

- Try out GPT-4o in Azure OpenAI Service Chat Playground (in preview).

- If you are not a current Azure OpenAI Service customer, apply for access by completing this form.

- Learn more about Azure OpenAI Service and the latest enhancements.

- Understand responsible AI tooling available in Azure with Azure AI Content Safety.

- Review the OpenAI blog on GPT-4o.
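
Beyond the playground, a deployed GPT-4o model can also be called programmatically. The following is a minimal sketch, not an official sample: it only builds (and does not send) a chat completions request that combines text and an image, the two input types supported in this preview. The resource name, deployment name, key, image URL, and `api_version` value are placeholders and assumptions; check the Azure OpenAI Service documentation for the API version that applies to your deployment.

```python
import json
import urllib.request


def build_gpt4o_request(resource, deployment, api_key, prompt, image_url,
                        api_version="2024-02-01"):
    """Build (but do not send) a chat completions request with text and image input.

    All argument values are supplied by the caller; the default api_version
    is an assumption and may need to change for a preview model.
    """
    url = (
        f"https://{resource}.openai.azure.com/openai/deployments/"
        f"{deployment}/chat/completions?api-version={api_version}"
    )
    body = {
        "messages": [
            {
                "role": "user",
                # A multimodal message is a list of typed content parts.
                "content": [
                    {"type": "text", "text": prompt},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            }
        ],
        "max_tokens": 200,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )


# Hypothetical values for illustration only.
request = build_gpt4o_request(
    resource="my-resource",
    deployment="my-gpt-4o-deployment",
    api_key="<your-api-key>",
    prompt="Describe this image in one sentence.",
    image_url="https://example.com/picture.png",
)
```

Passing the returned object to `urllib.request.urlopen` would send the request; that step is left out here because it needs a real resource and key.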

Benedictine College nuns denounce Harrison Butker's speech at their school

John Helton

Kansas City Chiefs kicker Harrison Butker speaks to the media during NFL football Super Bowl 58 opening night on Feb. 5, 2024, in Las Vegas. Butker railed against Pride month along with President Biden's leadership during the COVID-19 pandemic and his stance on abortion during a commencement address at Benedictine College last weekend. Charlie Riedel/AP

An order of nuns affiliated with Benedictine College rejected Kansas City Chiefs kicker Harrison Butker's comments in a commencement speech there last weekend that stirred up a culture war skirmish.

"The sisters of Mount St. Scholastica do not believe that Harrison Butker's comments in his 2024 Benedictine College commencement address represent the Catholic, Benedictine, liberal arts college that our founders envisioned and in which we have been so invested," the nuns wrote in a statement posted on Facebook.

In his 20-minute address, Butker denounced abortion rights, Pride Month, COVID-19 lockdowns and "the tyranny of diversity, equity and inclusion" at the Catholic liberal arts college in Atchison, Kan.

He also told women in the audience to embrace the "vocation" of homemaker.

"I want to speak directly to you briefly because I think it is you, the women, who have had the most diabolical lies told to you. How many of you are sitting here now about to cross the stage, and are thinking about all the promotions and titles you're going to get in your career?" he asked. "Some of you may go on to lead successful careers in the world. But I would venture to guess that the majority of you are most excited about your marriage and the children you will bring into this world."

That was one of the themes that the sisters of Mount St. Scholastica took issue with.

"Instead of promoting unity in our church, our nation, and the world, his comments seem to have fostered division," they wrote. "One of our concerns was the assertion that being a homemaker is the highest calling for a woman. We sisters have dedicated our lives to God and God's people, including the many women whom we have taught and influenced during the past 160 years. These women have made a tremendous difference in the world in their roles as wives and mothers and through their God-given gifts in leadership, scholarship, and their careers."

The Benedictine sisters of Mount St. Scholastica founded a school for girls in Atchison in the 1860s. It merged with St. Benedict's College in 1971 to form Benedictine College.

Neither Butker nor the Chiefs have commented on the controversy. An online petition calling for the Chiefs to release the kicker had nearly 215,000 signatures as of Sunday morning.

The NFL, for its part, has distanced itself from Butker's remarks.

"Harrison Butker gave a speech in his personal capacity," Jonathan Beane, the NFL's senior VP and chief diversity and inclusion officer, told NPR on Thursday. "His views are not those of the NFL as an organization."

Meanwhile, Butker's No. 7 jersey is one of the league's top-sellers, rivaling those of better-known teammates Patrick Mahomes and Travis Kelce.

Butker has been open about his faith. The 28-year-old father of two told the Eternal Word Television Network in 2019 that he grew up Catholic but practiced less in high school and college before rediscovering his belief later in life.

His comments have gotten some support from football fan social media accounts and Christian and conservative media personalities.

A video of his speech posted on Benedictine College's YouTube channel has 1.5 million views.

Rachel Treisman contributed to this story.

Unlock a new era of innovation with Windows Copilot Runtime and Copilot+ PCs

- Pavan Davuluri – Corporate Vice President, Windows + Devices

I am excited to be back at Build with the developer community this year.

Over the last year, we have worked on reimagining Windows PCs and yesterday, we introduced the world to a new category of Windows PCs called Copilot+ PCs.

Copilot+ PCs are the fastest, most intelligent Windows PCs ever with AI infused at every layer, starting with the world’s most powerful PC Neural Processing Units (NPUs) capable of delivering 40+ TOPS of compute. The new class of PCs is up to 20 times more powerful 1 and up to 100 times as efficient 2 for running AI workloads compared to traditional PCs. This is a quantum leap in performance, made possible by a quantum leap in efficiency. The NPU is part of a new System on Chip (SoC) that enables the most powerful and efficient Windows PCs ever built, with outstanding performance, incredible all day battery life, and great app experiences. Copilot+ PCs will be available in June, starting with Qualcomm’s Snapdragon X Series processors. Later this year we will have more devices in this category from Intel and AMD.

I am also excited that Qualcomm announced this morning its Snapdragon Dev Kit for Windows, which has a special developer edition Snapdragon X Elite SoC. Featuring the NPU that powers the Copilot+ PCs, the Snapdragon Dev Kit for Windows has a form factor that is easily stackable and is designed specifically to be a developer’s everyday dev box, providing the maximum power and flexibility developers need. It is powered by a 3.8 GHz 12-core Oryon CPU with dual-core boost up to 4.3 GHz, comes with 32 GB LPDDR5x memory, 512 GB M.2 storage, an 80-watt system architecture, and support for up to 3 concurrent external displays, and uses 20% ocean-bound plastic. Learn more.

This new class of powerful next generation AI devices is an invitation to app developers to deliver differentiated AI experiences that run on the edge, taking advantage of NPUs that offer the benefits of minimal latency, cost efficiency, data privacy, and more.

As we continue our journey into the AI era of computing, we want to give developers who are at the forefront of this AI transformation the right software tools, in addition to these powerful NPU-powered devices, to accelerate the creation of differentiated AI experiences for over 1 billion users. Today, I’m thrilled to share some of the great capabilities coming to Windows, making Windows the best place for your development needs.

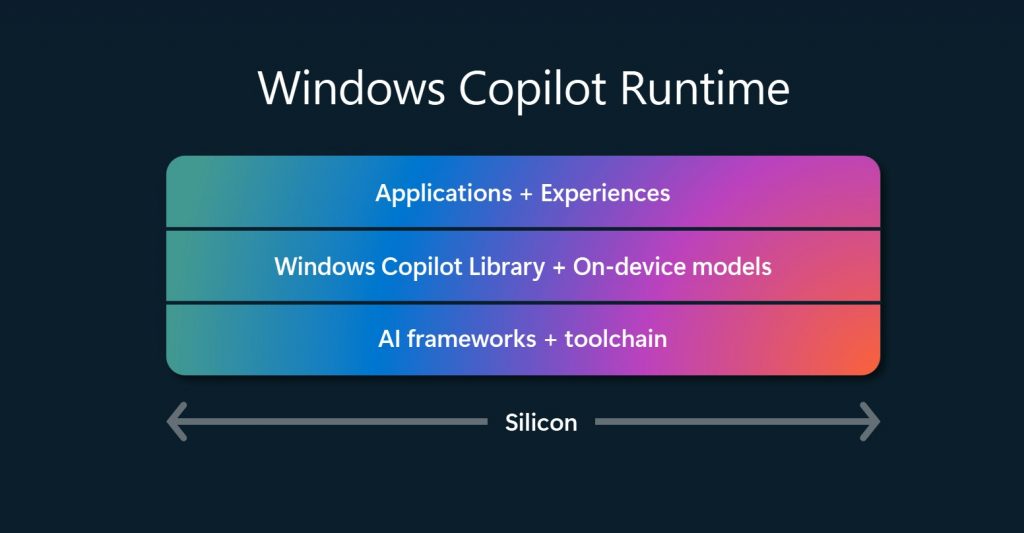

- We are excited to extend the Microsoft Copilot stack to Windows with Windows Copilot Runtime. We have infused AI into every layer of Windows, including a fundamental transformation of the OS itself to enable developers to accelerate AI development on Windows.

- Windows Copilot Runtime has everything you need to build great AI experiences regardless of where you are on your AI journey – whether you are just getting started or already have your own models. Windows Copilot Runtime includes Windows Copilot Library which is a set of APIs that are powered by the 40+ on-device AI models that ship with Windows. It also includes AI frameworks and toolchains to help developers bring their own on-device models to Windows. This is built on the foundation of powerful client silicon, including GPUs and NPUs.

- We are introducing Windows Semantic Index, a new OS capability which redefines search on Windows and powers new experiences like Recall. Later, we will make this capability available for developers with Vector Embeddings API to build their own vector store and RAG within their applications and with their app data.

- We are introducing Phi Silica which is built from the Phi series of models and is designed specifically for the NPUs in Copilot+ PCs. Windows is the first platform to have a state-of-the-art small language model (SLM) custom built for the NPU and shipping inbox.

- Phi Silica API along with OCR, Studio Effects, Live Captions, Recall User Activity APIs will be available in Windows Copilot Library in June. More APIs like Vector Embedding, RAG API, Text Summarization will be coming later.
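
To make the "vector store and RAG" idea above more concrete, here is a minimal, generic sketch of the retrieval step: documents are stored alongside embedding vectors, and a query vector is matched against them by cosine similarity. This is not the Windows Vector Embeddings API, which is not described in this post; the class name `TinyVectorStore` and the hand-written three-dimensional embeddings are hypothetical stand-ins for what an embedding model would produce.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction, so treat it as unrelated
    return dot / (norm_a * norm_b)


class TinyVectorStore:
    """Hypothetical in-memory store that keeps (text, embedding) pairs."""

    def __init__(self):
        self._items = []

    def add(self, text, embedding):
        self._items.append((text, list(embedding)))

    def search(self, query_embedding, top_k=1):
        """Return the top_k stored texts most similar to the query vector."""
        scored = [
            (cosine_similarity(query_embedding, embedding), text)
            for text, embedding in self._items
        ]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [text for _, text in scored[:top_k]]


# Toy embeddings chosen by hand for illustration only.
store = TinyVectorStore()
store.add("Battery settings", [1.0, 0.0, 0.0])
store.add("Display settings", [0.0, 1.0, 0.0])
store.add("Network settings", [0.0, 0.0, 1.0])
closest = store.search([0.9, 0.1, 0.0], top_k=2)
```

In a RAG setup, the texts returned by `search` would be added to the prompt sent to a language model; a real application would use model-generated embeddings and an indexed store rather than a linear scan.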

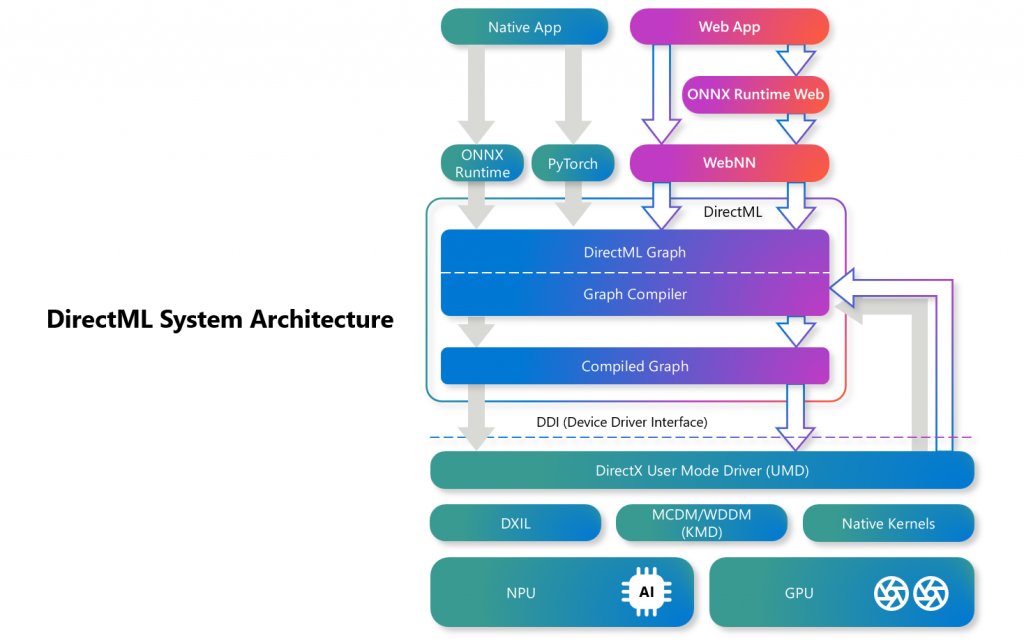

- We are introducing native support for PyTorch on Windows with DirectML which allows for thousands of Hugging Face models to just work on Windows.

- We are introducing Web Neural Network (WebNN) Developer Preview to Windows through DirectML. This allows web developers to take advantage of the silicon to deliver performant AI features in their web apps and to scale their AI investments across the breadth of the Windows ecosystem.

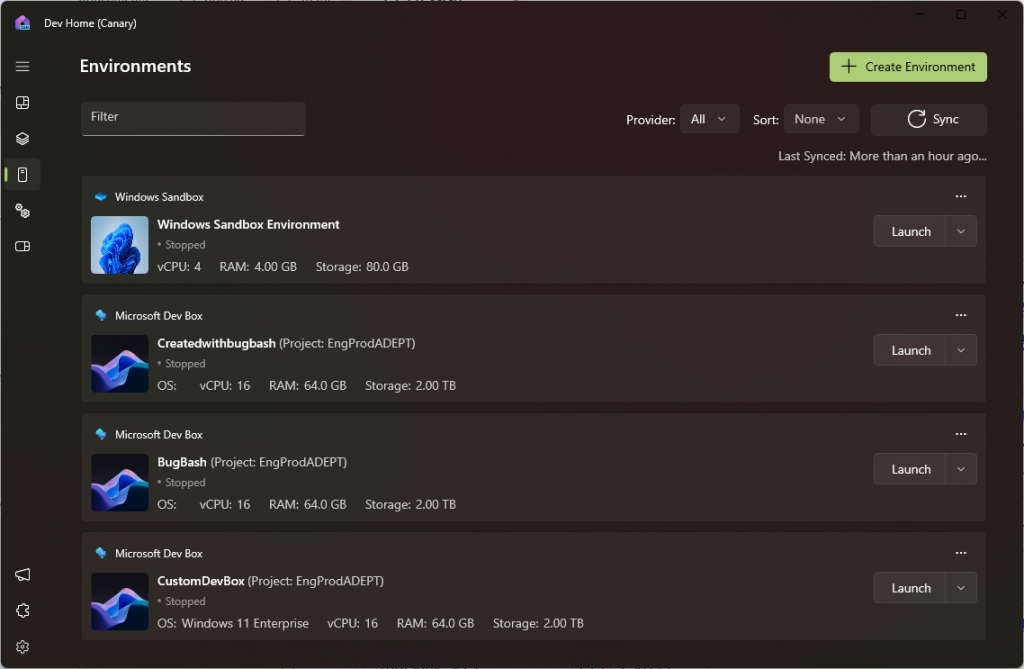

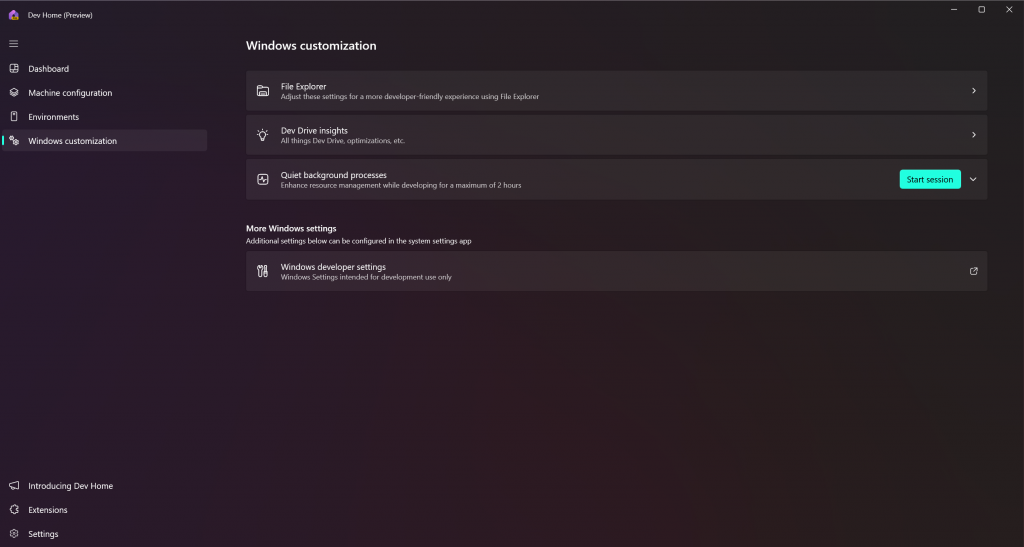

- We are introducing new productivity features in Dev Home like Environments, improvements to WSL, DevDrive and new updates to WinUI3 and WPF to help every developer become more productive on Windows.

I can’t wait to share more with you during our keynote today. Be sure to register for Build and tune in!

Introducing Windows Copilot Runtime to provide a powerful AI platform for developers

We want to democratize the ability to experiment, to build, and to reach people with breakthrough AI experiences. That’s why we’re committed to making Windows the most open platform for AI development. Building a powerful AI platform takes more than a new chip or model; it takes reimagining the entire system, from top to bottom. The new Windows Copilot Runtime is that system. Developers can take advantage of Windows Copilot Runtime in a variety of ways, from higher-level APIs that can be accessed via a simple settings toggle, all the way to bringing your own machine learning models. It represents the end-to-end Windows ecosystem:

- Applications and Experiences created by Microsoft and developers like you across Windows shell, Win32 Apps and Web apps.

- Windows Copilot Library is the set of APIs powered by the 40+ on-device models that ship with Windows. This includes APIs and algorithms that power Windows experiences and are available for developers to tap into.

- AI frameworks like DirectML, ONNX Runtime, PyTorch, WebNN and toolchains like Olive, AI Toolkit for Visual Studio Code and more to help developers bring their own models and scale their AI apps across the breadth of the Windows hardware ecosystem.

- Windows Copilot Runtime is built on the foundation of powerful client silicon, including GPUs and NPUs.

New experiences built using the Windows Copilot Runtime