A PyTorch implementation of the paper `Probabilistic Anchor Assignment with IoU Prediction for Object Detection` ECCV 2020 ( https://arxiv.org/abs/2007.08103 )

Probabilistic Anchor Assignment with IoU Prediction for Object Detection

By Kang Kim and Hee Seok Lee.

This is a PyTorch implementation of the paper Probabilistic Anchor Assignment with IoU Prediction for Object Detection (paper link), based on ATSS and maskrcnn-benchmark.

Now the code supports PyTorch 1.6.

PAA is available at mmdetection. Many thanks to @jshilong for the great work!

Introduction

In object detection, determining which anchors to assign as positive or negative samples, known as anchor assignment , has been revealed as a core procedure that can significantly affect a model's performance. In this paper we propose a novel anchor assignment strategy that adaptively separates anchors into positive and negative samples for a ground truth bounding box according to the model's learning status such that it is able to reason about the separation in a probabilistic manner. To do so we first calculate the scores of anchors conditioned on the model and fit a probability distribution to these scores. The model is then trained with anchors separated into positive and negative samples according to their probabilities. Moreover, we investigate the gap between the training and testing objectives and propose to predict the Intersection-over-Unions of detected boxes as a measure of localization quality to reduce the discrepancy.

Installation

Please check INSTALL.md for installation instructions.

The inference command line on coco minival split:

Please note that:

- If your model's name is different, please replace PAA_R_50_FPN_1x.pth with your own.

- If you encounter an out-of-memory error, please try reducing TEST.IMS_PER_BATCH to 1.

- If you want to evaluate a different model, please change --config-file to its config file (in configs/paa) and MODEL.WEIGHT to its weights file.

Results on COCO

We provide the performance of the following trained models. All models are trained with the same configuration as ATSS.

[1] 1x, 1.5x and 2x mean the model is trained for 90K, 135K and 180K iterations, respectively.

[2] All results are obtained with a single model.

[3] dcnv2 denotes deformable convolutional networks v2. Note that for ResNet based models, we apply deformable convolutions from stage c3 to c5 in backbones. For ResNeXt based models only stage c4 and c5 use deformable convolutions. All dcnv2 models use deformable convolutions in the last layer of detector towers.

[4] Please use TEST.BBOX_AUG.ENABLED True to enable multi-scale testing.

Results of Faster R-CNN

We also provide experimental results that apply PAA to Region Proposal Network of Faster R-CNN. Code is available at PAA_Faster-RCNN .

The following command line will train PAA_R_50_FPN_1x on 8 GPUs with Synchronous Stochastic Gradient Descent (SGD):

- If you want to use fewer GPUs, please change --nproc_per_node to the number of GPUs. No other settings need to be changed. The total batch size does not depend on nproc_per_node. If you want to change the total batch size, please change SOLVER.IMS_PER_BATCH in configs/paa/paa_R_50_FPN_1x.yaml.

- The models will be saved into OUTPUT_DIR .

- If you want to train PAA with other backbones, please change --config-file .

Contributing to the project

Any pull requests or issues are welcome.

Probabilistic Anchor Assignment with IoU Prediction for Object Detection

In object detection, determining which anchors to assign as positive or negative samples, known as anchor assignment, has been revealed as a core procedure that can significantly affect a model’s performance. In this paper we propose a novel anchor assignment strategy that adaptively separates anchors into positive and negative samples for a ground truth bounding box according to the model’s learning status such that it is able to reason about the separation in a probabilistic manner. To do so we first calculate the scores of anchors conditioned on the model and fit a probability distribution to these scores. The model is then trained with anchors separated into positive and negative samples according to their probabilities. Moreover, we investigate the gap between the training and testing objectives and propose to predict the Intersection-over-Unions of detected boxes as a measure of localization quality to reduce the discrepancy. The combined score of classification and localization qualities, serving as a box selection metric in non-maximum suppression, aligns well with the proposed anchor assignment strategy and leads to significant performance improvements. The proposed methods add only a single convolutional layer to the RetinaNet baseline and do not require multiple anchors per location, so they are efficient. Experimental results verify the effectiveness of the proposed methods. In particular, our models set new records for single-stage detectors on the MS COCO test-dev dataset with various backbones. Code is available at https://github.com/kkhoot/PAA .

1 Introduction

Object detection, in which objects in a given image are classified and localized, is considered one of the fundamental problems in Computer Vision. Since the seminal work of R-CNN [8], recent advances in object detection have shown rapid improvements with many innovative architectural designs [28, 21, 41, 43], training objectives [7, 22, 3, 29] and post-processing schemes [2, 13, 15] with strong CNN backbones [19, 17, 31, 32, 11, 36, 5]. For most CNN-based detectors, a dominant paradigm of representing objects of various sizes and shapes is to enumerate anchor boxes of multiple scales and aspect ratios at every spatial location. In this paradigm, an anchor assignment procedure, in which anchors are assigned as positive or negative samples, needs to be performed. The most common strategy to determine positive samples is to use the Intersection-over-Union (IoU) between an anchor and a ground truth (GT) bounding box. For each GT box, one or more anchors are assigned as positive samples if their IoUs with the GT box exceed a certain threshold. Target values for both classification and localization (i.e. regression offsets) of these anchors are determined by the object category and the spatial coordinates of the GT box.
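The IoU-threshold heuristic described above can be sketched in a few lines of Python. This is only an illustration: the helper names `iou` and `assign_by_iou`, the `(x1, y1, x2, y2)` box format and the threshold of 0.5 are assumptions made for the example, not values taken from the paper.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), assumed format


def iou(a: Box, b: Box) -> float:
    """Intersection-over-Union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def assign_by_iou(anchors: List[Box], gt: Box, pos_thr: float = 0.5) -> List[bool]:
    """Mark an anchor as positive when its IoU with the GT box exceeds pos_thr."""
    return [iou(anchor, gt) > pos_thr for anchor in anchors]


gt = (0.0, 0.0, 10.0, 10.0)
anchors = [(0.0, 0.0, 10.0, 10.0), (5.0, 5.0, 15.0, 15.0), (0.0, 0.0, 10.0, 8.0)]
print(assign_by_iou(anchors, gt))
```

Note that the decision depends only on box geometry; nothing in it looks at image content or at the model, which is the limitation the following paragraphs discuss.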

Although the simplicity and intuitiveness of this heuristic make it a popular choice, it has a clear limitation in that it ignores the actual content of the intersecting region, which may contain noisy background, nearby objects or few meaningful parts of the target object to be detected. Several recent studies [42, 34, 40, 16, 20] have identified this limitation and suggested various new anchor assignment strategies. These works include selecting positive samples based on the detection-specific likelihood [42], the statistics of anchor IoUs [40] or the cleanness score of anchors [16, 20]. All these methods show improvements compared to the baseline, and verify the importance of anchor assignment in object detection.

In this paper we would like to extend some of these ideas further and propose a novel anchor assignment strategy. In order for an anchor assignment strategy to be effective, a flexible number of anchors should be assigned as positives (or negatives) based not only on IoUs between anchors and a GT box but also on how probable it is that a model can reason about the assignment. In this respect, the model needs to take part in the assignment procedure, and positive samples need to vary depending on the model. When no anchor has a high IoU for a GT box, some of the anchors need to be assigned as positive samples to reduce the impact of the improper anchor design. In this case, anchors in which the model finds the most meaningful cues about the target object (that may not necessarily be anchors of the highest IoU) can be assigned as positives. On the other hand, when there are many anchors that the model finds equally of high quality and competitive, all of these anchors need to be treated as positives so as not to confuse the training process. Most importantly, to satisfy all these conditions, the quality of anchors as a positive sample needs to be evaluated reflecting the model’s current learning status, i.e. its parameter values.

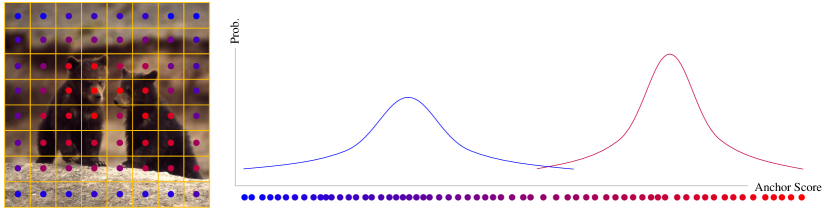

With this motivation, we propose a probabilistic anchor assignment (PAA) strategy that adaptively separates a set of anchors into positive and negative samples for a GT box according to the learning status of the model associated with it. To do so we first define a score of a detected bounding box that reflects both the classification and localization qualities. We then identify the connection between this score and the training objectives and represent the score as the combination of two loss objectives. Based on this scoring scheme, we calculate the scores of individual anchors that reflect how the model finds useful cues to detect a target object in each anchor. With these anchor scores, we aim to find a probability distribution of two modalities that best represents the scores as positive or negative samples as in Figure 1. Under the found probability distribution, anchors whose probabilities from the positive component are high are selected as positive samples. This transforms the anchor assignment problem into a maximum likelihood estimation for a probability distribution where the parameters of the distribution are determined by anchor scores. Based on the assumption that anchor scores calculated by the model are samples drawn from a probability distribution, it is expected that the model can infer the sample separation in a probabilistic way, leading to easier training of the model compared to other non-probabilistic assignments. Moreover, since positive samples are adaptively selected based on the anchor score distribution, it requires neither a pre-defined number of positive samples nor an IoU threshold.

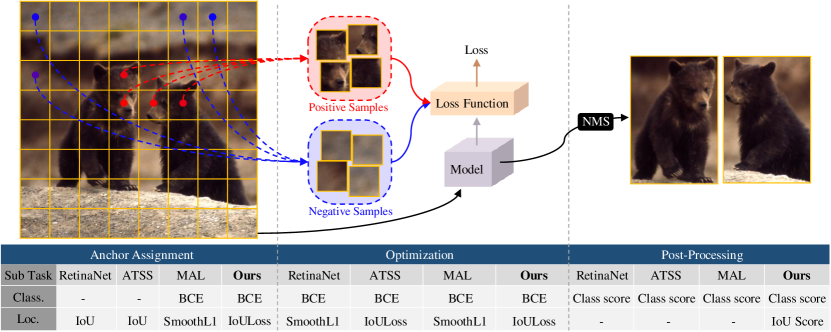

On top of that, we identify that in most modern object detectors, there is inconsistency between the testing scheme (selecting boxes according to the classification score only during NMS) and the training scheme (minimizing both classification and localization losses). Ideally, the quality of detected boxes should be measured based not only on classification but also on localization. To improve this incomplete scoring scheme and at the same time to reduce the discrepancy of objectives between the training and testing procedures, we propose to predict the IoU of a detected box as a localization quality, and multiply the classification score by the IoU score as a metric to rank detected boxes. This scoring is intuitive, and allows the box scoring scheme in the testing procedure to share the same ground not only with the objectives used during training, but also with the proposed anchor assignment strategy that brings both classification and localization into account, as depicted in Figure 2. Combined with the proposed PAA, this simple extension significantly contributes to detection performance. We also compare the IoU prediction with the centerness prediction [33, 40] and show the superiority of the proposed method.

With an additional improvement in post-processing named score voting, each of our methods shows clear improvements as revealed in the ablation studies. In particular, on the COCO test-dev set [23] all our models achieve new state-of-the-art performance with significant margins. Our model only requires adding a single convolutional layer, and uses a single anchor per spatial location similar to [40], resulting in a smaller number of parameters compared to RetinaNet [22]. The proposed anchor assignment can be parallelized using GPUs and does not require extra computation at test time. All this evidence verifies the efficacy of our proposed methods. The contributions of this paper are summarized below:

1. We model the anchor assignment as a probabilistic procedure by calculating anchor scores from a detector model and maximizing the likelihood of these scores for a probability distribution. This allows the model to infer the assignment in a probabilistic way and adaptively determines positive samples.

2. To align the objectives of anchor assignment, optimization and post-processing procedures, we propose to predict the IoU of detected boxes and use the unified score of classification and localization as a ranking metric for NMS. On top of that, we propose the score voting method as an additional post-processing using the unified score to further boost the performance.

3. We perform extensive ablation studies and verify the effectiveness of the proposed methods. Our experiments on MS COCO dataset with five backbones set up new AP records for all tested settings.

2 Related Work

2.1 Recent Advances in Object Detection

Since Region-CNN [8] and its improvements [7, 28], the concept of anchors and offset regression between anchors and ground truth (GT) boxes along with object category classification has been widely adopted. In many cases, multiple anchors of different scales and aspect ratios are assigned to each spatial location to cover various object sizes and shapes. Anchors that have IoU values greater than a threshold with one of the GT boxes are considered positive samples. Some systems use two-stage detectors [8, 7, 28, 21], which apply the anchor mechanism in a region proposal network (RPN) for class-agnostic object proposals. A second-stage detection head is run on aligned features [28, 10] of each proposal. Some systems use single-stage detectors [25, 24, 26, 41, 22, 43], which do not have an RPN and directly predict object categories and regression offsets at each spatial location. More recently, anchor-free models that do not rely on anchors to define positive and negative samples and regression offsets have been introduced. These models predict various key points such as corners [18], extreme points [44], center points [6, 33] or arbitrary feature points [38] induced from deformable convolution [5]. [45] combines anchor-based detectors with anchor-free detection by adding additional anchor-free regression branches. It has been found in [40] that anchor-based and anchor-free models show similar performance when they use the same anchor assignment strategy.

2.2 Anchor Assignment in Object Detection

The task of selecting which anchors (or locations for anchor-free models) are to be designated as positive or negative samples has recently been identified as a crucial factor that greatly affects a model’s performance [37, 42, 40]. In this regard, several methods have been proposed to overcome the limitation of the IoU-based hard anchor assignment. MetaAnchor [37] predicts the parameters of the anchor functions (the last convolutional layers of detection heads) dynamically and takes anchor shapes as an argument, which provides the ability to change anchors in training and testing. Rather than enumerating pre-defined anchors across spatial locations, GuidedAnchoring [34] defines the locations of anchors near the center of GTs as positives and predicts their shapes. FreeAnchor [42] proposes a detection-customized likelihood that takes both the recall and precision of samples into account and determines positive anchors based on the estimated likelihood. ATSS [40] suggests an adaptive anchor assignment that calculates the mean and standard deviation of IoU values from a set of close anchors for each GT. It assigns anchors whose IoU values are higher than the sum of the mean and the standard deviation as positives. Although these works show some improvements, they either require additional layers and complicated structures [34, 37], or force only one anchor to have a full classification score, which is not desirable in cases where multiple anchors are of high quality and competitive [42], or rely on IoUs between pre-defined anchors and GTs and consider neither the actual content of the intersecting regions nor the model’s learning status [40].
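For comparison with what follows, the ATSS thresholding rule summarized above (mean plus standard deviation of the candidate IoUs) can be sketched as below. The function name `atss_positive_mask` is hypothetical, the use of the sample standard deviation is an assumption, and the candidate selection step of ATSS is not modelled.

```python
from statistics import mean, stdev
from typing import List


def atss_positive_mask(candidate_ious: List[float]) -> List[bool]:
    """Positives are candidates whose IoU is above mean + std of all candidate IoUs."""
    # Assumption: sample standard deviation; needs at least two candidates.
    threshold = mean(candidate_ious) + stdev(candidate_ious)
    return [value > threshold for value in candidate_ious]


candidate_ious = [0.1, 0.2, 0.3, 0.6, 0.7]
print(atss_positive_mask(candidate_ious))
```

The threshold adapts to each GT box, but it is still computed from anchor-to-GT IoUs alone, without consulting the model's predictions.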

Similar to our work, MultipleAnchorLearning (MAL) [16] and NoisyAnchor [20] define anchor score functions based on classification and localization losses. However, they do not model the anchor selection procedure as a likelihood maximization for a probability distribution; rather, they choose a fixed number of best scoring anchors. Such a mechanism prevents these models from selecting a flexible number of positive samples according to the model’s learning status and input. MAL uses a linear scheduling that reduces the number of positives as training proceeds and requires a heuristic feature perturbation to mitigate it. NoisyAnchor fixes the number of positive samples throughout training. Also, they either miss the relation between the anchor scoring scheme and the box selection objective in NMS [16] or only indirectly relate them using soft-labels [20].

2.3 Predicting Localization Quality in Object Detection

Predicting IoUs as a localization quality of detected bounding boxes is not new. YOLO and YOLOv2 [25, 26] predict an “objectness score”, which is the IoU of a detected box with its corresponding GT box, and multiply it with the classification score during inference. However, they do not investigate its effectiveness compared to the method that uses classification scores only, and their latest version [27] removes this prediction. IoU-Net [15] also predicts the IoUs of predicted boxes and proposes “IoU-guided NMS”, which uses predicted IoUs instead of classification scores as the ranking key and adjusts the selected box’s score to the maximum score of overlapping boxes. Although this approach can be effective, they do not correlate the classification score with the IoU as a unified score, nor do they relate the NMS procedure and the anchor assignment process. In contrast to predicting IoUs, some works [12, 4] add an additional head to predict the variance of localization to regularize training [12] or penalize the classification score in testing [4].

3 Proposed Methods

3.1 Probabilistic Anchor Assignment Algorithm

Our goal here is to devise an anchor assignment strategy that takes three key considerations into account: Firstly, it should measure the quality of a given anchor based on how likely the model associated with it finds evidence to identify the target object with that anchor. Secondly, the separation of anchors into positive and negative samples should be adaptive so that it does not require a hyperparameter such as an IoU threshold. Lastly, the assignment strategy should be formulated as a likelihood maximization for a probability distribution in order for the model to be able to reason about the assignment in a probabilistic way. In this respect, we design an anchor scoring scheme and propose an anchor assignment that brings the scoring scheme into account.

Specifically, let us define the score of an anchor that reflects the quality of its bounding box prediction for the closest ground truth (GT) $g$. One intuitive way is to calculate a classification score (compatibility with the GT class) and a localization score (compatibility with the GT box) and multiply them:

$$S(f_{\theta}(a,x),g) = S_{cls}(f_{\theta}(a,x),g) \times S_{loc}(f_{\theta}(a,x),g)^{\lambda} \tag{1}$$

where $S_{cls}$, $S_{loc}$, and $\lambda$ are the classification score and the localization score of anchor $a$ given $g$, and a scalar to control the relative weight of the two scores, respectively. $x$ and $f_{\theta}$ are an input image and a model with parameters $\theta$. Note that this scoring function is dependent on the model parameters $\theta$. We can define and get $S_{cls}$ from the output of the classification head. How to define $S_{loc}$ is less obvious, since the output of the localization head is encoded offset values rather than a score. Here we use the Intersection-over-Union (IoU) of a predicted box with its GT box as $S_{loc}$, as its range matches that of the classification score and its values naturally correspond to the quality of localization:

$$S_{loc}(f_{\theta}(a,x),g) = \mathrm{IoU}(f_{\theta}(a,x),g) \tag{2}$$

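Taking the negative logarithm of the score function $S$, we get the following:

$$-\log S(f_{\theta}(a,x),g) = -\log S_{cls}(f_{\theta}(a,x),g) - \lambda \log S_{loc}(f_{\theta}(a,x),g) = \mathcal{L}_{cls}(f_{\theta}(a,x),g) + \lambda \mathcal{L}_{IoU}(f_{\theta}(a,x),g) \tag{3}$$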

where $\mathcal{L}_{cls}$ and $\mathcal{L}_{IoU}$ denote binary cross entropy loss and IoU loss [39] respectively (we assume a binary classification task; extending it to a multi-class case is straightforward). One can also replace any of the losses with a more advanced objective such as Focal Loss [22] or GIoU Loss [29]. It is then legitimate that the negative sum of the two losses can act as a scoring function of an anchor given a GT box.
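A small numeric check may make the relation between the score and the two losses concrete. The sketch below assumes the score has the form `cls_prob * iou ** lam`, that the classification loss is binary cross entropy against a positive target, and that the IoU loss is `-log(IoU)`; the function names are hypothetical and the numbers are arbitrary.

```python
import math


def anchor_score(cls_prob: float, iou: float, lam: float = 1.0) -> float:
    """Score of an anchor: classification score times IoU raised to lam (assumed form)."""
    return cls_prob * iou ** lam


def bce_positive(cls_prob: float) -> float:
    """Binary cross entropy for a positive target."""
    return -math.log(cls_prob)


def iou_loss(iou: float) -> float:
    """IoU loss taken as the negative log of the IoU (assumed form)."""
    return -math.log(iou)


cls_prob, iou_value, lam = 0.8, 0.5, 2.0
neg_log_score = -math.log(anchor_score(cls_prob, iou_value, lam))
loss_sum = bce_positive(cls_prob) + lam * iou_loss(iou_value)
print(neg_log_score, loss_sum)  # the two values agree up to rounding
```

Under these assumptions, ranking anchors by score is the same as ranking them by the negative of the combined loss.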

To allow a model to be able to reason about whether it should predict an anchor as a positive sample in a probabilistic way, we model anchor scores for a certain GT as samples drawn from a probability distribution and maximize the likelihood of the anchor scores w.r.t. the parameters of the distribution. The anchors are then separated into positive and negative samples according to the probability of each being a positive or a negative. Since our goal is to distinguish a set of anchors into two groups (positives and negatives), any probability distribution that can model the multi-modality of samples can be used. Here we choose a Gaussian Mixture Model (GMM) of two modalities to model the anchor score distribution:

$$P(a \mid x, g, \theta) = w_1 \mathcal{N}_1(a; m_1, p_1) + w_2 \mathcal{N}_2(a; m_2, p_2)$$

where $w_1, m_1, p_1$ and $w_2, m_2, p_2$ represent the weight, mean and precision of the two Gaussians, respectively. Given a set of anchor scores, the likelihood of this GMM can be optimized using the Expectation-Maximization (EM) algorithm.

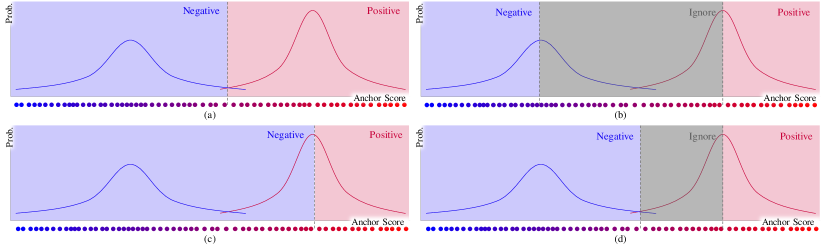

With the parameters of GMM estimated by EM, the probability of each anchor being a positive or a negative sample can be determined. With these probability values, various techniques can be used to separate the anchors into two groups. Figure 3 illustrates different examples of separation boundaries based on anchor probabilities. The proposed algorithm using one of these boundary schemes is described in Procedure 1. To calculate anchor scores, anchors are first allocated to the GT of the highest IoU (Line 3). To make EM efficient, we collect top $K$ anchors from each pyramid level (Lines 5-11) and perform EM (Line 12). Non-top $K$ anchors are assigned as negative samples (Line 16).
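The fitting and separation step can be illustrated with a plain-Python EM for a one-dimensional, two-component GMM. This is a sketch under stated assumptions, not the released implementation: the helper names are hypothetical, the scores are taken as negative combined losses (log-scores), EM runs for a fixed number of iterations, and the separation rule (posterior of the higher-mean component above 0.5) is just one possible boundary, not necessarily one of those in Figure 3. The initialization follows the training details: the minimum and maximum scores as the two means and unit precisions.

```python
import math
from typing import List, Tuple


def gaussian_pdf(x: float, mean: float, precision: float) -> float:
    """1-D Gaussian density parameterized by mean and precision (1 / variance)."""
    return math.sqrt(precision / (2.0 * math.pi)) * math.exp(-0.5 * precision * (x - mean) ** 2)


def posteriors(scores, weights, means, precisions) -> List[List[float]]:
    """E-step: responsibility of each component for every score."""
    result = []
    for s in scores:
        p = [weights[k] * gaussian_pdf(s, means[k], precisions[k]) for k in range(2)]
        total = p[0] + p[1]
        if total > 0.0:
            result.append([p[0] / total, p[1] / total])
        else:
            # Both densities underflowed: fall back to the nearer mean.
            nearer = 0 if abs(s - means[0]) <= abs(s - means[1]) else 1
            result.append([1.0 - nearer, float(nearer)])
    return result


def fit_gmm(scores: List[float], n_iter: int = 20,
            var_floor: float = 1e-6) -> Tuple[List[float], List[float], List[float]]:
    """Fit a two-component GMM with EM, initialized at the min and max score."""
    weights = [0.5, 0.5]
    means = [min(scores), max(scores)]
    precisions = [1.0, 1.0]
    for _ in range(n_iter):
        resp = posteriors(scores, weights, means, precisions)
        for k in range(2):  # M-step
            nk = sum(r[k] for r in resp)
            if nk <= 0.0:
                continue  # empty component: keep its previous parameters
            weights[k] = nk / len(scores)
            means[k] = sum(r[k] * s for r, s in zip(resp, scores)) / nk
            var = sum(r[k] * (s - means[k]) ** 2 for r, s in zip(resp, scores)) / nk
            precisions[k] = 1.0 / (var + var_floor)
    return weights, means, precisions


def assign_positives(scores: List[float]) -> List[bool]:
    """Positive if the higher-mean component explains the score best (assumed rule)."""
    weights, means, precisions = fit_gmm(scores)
    pos = 0 if means[0] > means[1] else 1
    resp = posteriors(scores, weights, means, precisions)
    return [r[pos] > 0.5 for r in resp]


log_scores = [-4.0, -3.8, -3.5, -3.9, -4.2, -0.5, -0.3, -0.4]
positive_mask = assign_positives(log_scores)
print(positive_mask)
```

The number of positives is not passed in anywhere; it falls out of the fitted mixture, which is the property the next paragraph emphasizes.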

Note that the number of positive samples is adaptively determined depending on the estimated probability distribution conditioned on the model’s parameters. This is in contrast to previous approaches that ignore the model [40] or heuristically determine the number of samples as a hyperparameter [16, 20] without modeling the anchor assignment as a likelihood maximization for a probability distribution. FreeAnchor [42] defines a detection-customized likelihood and models the product of the recall and the precision as the training objective. But their approach is significantly different from ours in that we do not separately design likelihoods for recall and precision, nor do we restrict the number of anchors that have a full classification score to one. In contrast, our likelihood is based on a simple one-dimensional GMM of two modalities conditioned on the model’s parameters, allowing the anchor assignment strategy to be easily identified by the model. This results in easier learning compared to other anchor assignment methods that require complicated sub-routines (e.g. the mean-max function to stabilize training [42] or the anchor depression procedure to avoid local minima [16]) and thus leads to better performance as shown in the experiments.

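To summarize our method and plug it into the training process of an object detector, we formulate the final training objective for an input image $x$ (we omit $x$ for brevity; $A_g$ denotes the set of anchors allocated to $g$):

$$\arg\max_{\theta} \prod_{g} \prod_{a \in A_g} P_{pos}(a,\theta,g)\, S_{pos}(a,\theta,g) + P_{neg}(a,\theta,g)\, S_{neg}(a,\theta)$$

$$S_{pos}(a,\theta,g) = S(f_{\theta}(a),g) = \exp\left(-\mathcal{L}_{cls}(f_{\theta}(a),g) - \lambda \mathcal{L}_{IoU}(f_{\theta}(a),g)\right)$$

$$S_{neg}(a,\theta) = \exp\left(-\mathcal{L}_{cls}(f_{\theta}(a),\varnothing)\right)$$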

where $P_{pos}(a,\theta,g)$ and $P_{neg}(a,\theta,g)$ indicate the probability of an anchor being a positive or a negative and can be obtained by the proposed PAA. $\varnothing$ means the background class. Our PAA algorithm can be viewed as a procedure to compute $P_{pos}$ and $P_{neg}$ and approximate them as binary values (i.e. separate anchors into two groups) to ease optimization. In each training iteration, after estimating $P_{pos}$ and $P_{neg}$, the gradients of the loss objectives w.r.t. $\theta$ can be calculated and stochastic gradient descent can be performed.

3.2 IoU Prediction as Localization Quality

The anchor scoring function in the proposed anchor assignment is derived from the training objective (i.e. the combined loss of two tasks), so the anchor assignment procedure is well aligned with the loss optimization. However, this is not the case for the testing procedure, where non-maximum suppression (NMS) is performed solely on the classification score. To remedy this, the localization quality can be incorporated into the NMS procedure so that the same scoring function (Equation 1) can be used. However, GT information is only available during training, and so the IoU between a detected box and its corresponding GT box cannot be computed at test time.

Here we propose a simple solution to this: we extend our model to predict the IoU of a predicted box with its corresponding GT box. This extension is straightforward as it requires a single convolutional layer as an additional prediction head that outputs a scalar value per anchor. We use Sigmoid activation on the output to obtain valid IoU values. The training objective then becomes (we omit input x for brevity):

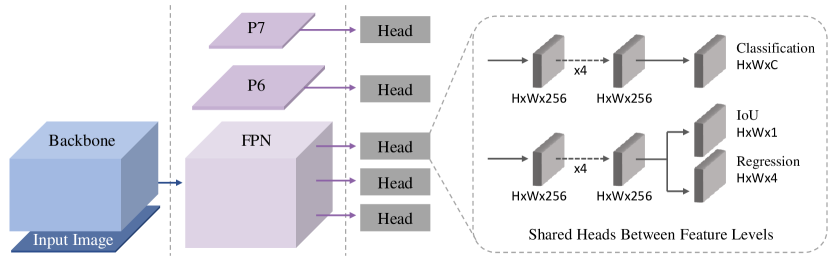

where $L_{IoUP}$ is the IoU prediction loss defined as binary cross entropy between predicted IoUs and true IoUs. With the predicted IoU, we compute the unified score of the detected box using Equation 1 and use it as a ranking metric for the NMS procedure. As shown in the experiments, bringing IoU prediction into NMS significantly improves performance, especially when coupled with the proposed probabilistic anchor assignment. The overall network architecture is exactly the same as the one in FCOS [33] and ATSS [40], which is RetinaNet with modified feature towers and an auxiliary prediction head. Note that this structure uses only a single anchor per spatial location and so has a smaller number of parameters and FLOPs compared to RetinaNet-based models using nine anchors.
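To see how the ranking metric changes the outcome of NMS, consider the following sketch. It is illustrative only: the greedy `nms` helper, the IoU threshold of 0.6, the box values, and the choice of multiplying the classification score by the predicted IoU with unit exponent are all assumptions made for the example.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), assumed format


def iou(a: Box, b: Box) -> float:
    """Intersection-over-Union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: List[Box], scores: List[float], iou_thr: float = 0.6) -> List[int]:
    """Greedy NMS: visit boxes by descending score, keep those not overlapping a kept box."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        if all(iou(boxes[i], boxes[j]) <= iou_thr for j in keep):
            keep.append(i)
    return keep


boxes = [(0.0, 0.0, 10.0, 10.0), (1.0, 1.0, 11.0, 11.0), (20.0, 20.0, 30.0, 30.0)]
cls_scores = [0.9, 0.8, 0.7]       # classification confidence
predicted_ious = [0.5, 0.9, 0.8]   # output of the assumed IoU prediction head
unified = [c * q for c, q in zip(cls_scores, predicted_ious)]

print(nms(boxes, cls_scores))  # ranking by classification score only
print(nms(boxes, unified))     # ranking by the unified score
```

With classification scores alone, the first box suppresses the second even though the second is predicted to be better localized; with the unified score the order is reversed.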

3.3 Score Voting

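As an additional improvement, we propose a simple yet effective post-processing scheme. The proposed score voting method works on each box $b$ of the remaining boxes after the NMS procedure as follows:

$$p_i = e^{-(1 - \mathrm{IoU}(b, b_i))^2 / \sigma_t}, \qquad \hat{b} = \frac{\sum_i p_i s_i b_i}{\sum_i p_i s_i} \quad \text{subject to } \mathrm{IoU}(b, b_i) > 0$$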

where $\hat{b}$, $s_i$ and $\sigma_t$ are the updated box, the score computed by Equation 1 and a hyperparameter to adjust the weights of adjacent boxes $b_i$, respectively. Note that this voting algorithm is inspired by “variance voting” described in [12], and $p_i$ is defined in the same way. However, we do not use the variance prediction to calculate the weight of each neighboring box. Instead we use the unified score of classification and localization $s_i$ as a weight along with $p_i$.

We found that using $p_i$ alone as a box weight leads to a performance improvement, and multiplying it by $s_i$ further boosts the performance. In contrast to variance voting, detectors without the variance prediction are capable of using score voting by just weighting boxes with $p_i$. Detectors with an IoU prediction head, like ours, can multiply it by $s_i$ for better accuracy. Unlike the classification score alone, $s_i$ can act as a reliable weight since it does not assign large weights to boxes that have a high classification score and a poor localization quality.
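A minimal sketch of score voting is given below. It assumes the weight $p_i = \exp(-(1 - \mathrm{IoU}(b, b_i))^2 / \sigma_t)$ from variance voting [12], multiplies it by the unified score $s_i$, and averages the coordinates of all candidate boxes that overlap $b$ (including $b$ itself). The function names and the example boxes are hypothetical.

```python
import math
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), assumed format


def iou(a: Box, b: Box) -> float:
    """Intersection-over-Union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def score_voting(b: Box, candidates: List[Box], scores: List[float],
                 sigma_t: float = 0.025) -> Box:
    """Refine box b as a weighted average of the candidate boxes overlapping it."""
    weighted_sum = [0.0, 0.0, 0.0, 0.0]
    total_weight = 0.0
    for b_i, s_i in zip(candidates, scores):
        overlap = iou(b, b_i)
        if overlap <= 0.0:
            continue  # only boxes that overlap b take part in the vote
        weight = math.exp(-((1.0 - overlap) ** 2) / sigma_t) * s_i
        total_weight += weight
        for k in range(4):
            weighted_sum[k] += weight * b_i[k]
    if total_weight == 0.0:
        return b  # nothing to vote with: leave the box unchanged
    return (weighted_sum[0] / total_weight, weighted_sum[1] / total_weight,
            weighted_sum[2] / total_weight, weighted_sum[3] / total_weight)


kept_box = (0.0, 0.0, 10.0, 10.0)
candidates = [kept_box, (1.0, 0.0, 11.0, 10.0), (20.0, 20.0, 30.0, 30.0)]
unified_scores = [0.8, 0.8, 0.9]
voted = score_voting(kept_box, candidates, unified_scores)
print(voted)
```

In this example the kept box is nudged slightly towards its overlapping neighbour, while the distant high-scoring box has no influence.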

4 Experiments

In this section we conduct extensive experiments to verify the effectiveness of the proposed methods on the MS COCO benchmark [23]. We follow the common practice of using ‘trainval35k’ as training data (about 118k images) for all experiments. For ablation studies we measure accuracy on ‘minival’ of 5k images, and comparisons with previous methods are done on ‘test-dev’ of about 20k images. All accuracy numbers are computed using the official COCO evaluation code.

4.1 Training Details

We use a COCO training setting which is the same as [40] in the batch size, frozen Batch Normalization, learning rate, etc. The exact setting can be found in the supplementary material. For ablation studies we use Res50 backbone and run 135k iterations of training. For comparisons with previous methods we run 180k iterations with various backbones. Similar to recent works [33, 40], we use GroupNorm [35] in detection feature towers, Focal Loss [22] as the classification loss, GIoU Loss [29] as the localization loss, and add trainable scalars to the regression head. $\lambda_1$ is set to 1 to compute anchor scores and 1.3 when calculating Equation 3. $\lambda_2$ is set to 0.5 to balance the scales of each loss term. $\sigma_t$ is set to 0.025 if the score voting is used. Note that we do not use “centerness” prediction or “center sampling” [33, 40] in our models. We set $\mathcal{K}$ to 9, although our method is not sensitive to its value, similar to [40]. For GMM optimization, we set the minimum and maximum scores of the candidate anchors as the means of the two Gaussians and set the precision values to one as an initialization of EM.

4.2 Ablation Studies

4.2.1 Comparison between different anchor separation points

Here we compare the anchor separation boundaries depicted in Figure 3. The left part of Table 1 shows the results. All the separation schemes work well, and we find that (c) gives the most stable performance. We also compare our method with two simpler methods, namely fixed numbers of positives (FNP) and fixed positive score ranges (FSR). FNP defines a pre-defined number of top-scoring samples as positives, while FSR treats all anchors whose scores exceed a certain threshold as positives. As the results in the right part of Table 1 show, both methods perform worse than PAA. FSR ($>0.3$) fails because the model cannot find anchors whose scores are within the range at early iterations. This shows an advantage of PAA, which adaptively determines the separation boundaries without hyperparameters that require careful hand-tuning and so are hard to adapt per data.
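The two baselines can be written down in a few lines, which also shows why a fixed score range can break early in training. The function names and the score values below are made up for illustration; only the threshold of 0.3 comes from the text.

```python
from typing import List


def fixed_number_positives(scores: List[float], n_pos: int) -> List[bool]:
    """FNP: the n_pos top-scoring anchors are positives."""
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:n_pos]
    mask = [False] * len(scores)
    for i in top:
        mask[i] = True
    return mask


def fixed_score_range(scores: List[float], threshold: float) -> List[bool]:
    """FSR: every anchor whose score exceeds the threshold is a positive."""
    return [s > threshold for s in scores]


early_scores = [0.02, 0.05, 0.11, 0.08]  # hypothetical scores at an early iteration
late_scores = [0.10, 0.45, 0.80, 0.75]   # hypothetical scores later in training

print(fixed_score_range(early_scores, 0.3))    # no anchor passes the threshold
print(fixed_number_positives(late_scores, 1))  # a near-tie is still cut to one anchor
```

In the first case FSR leaves the GT box without any positive sample, and in the second FNP discards an anchor that scores almost as well as the selected one.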

4.2.2 Effects of individual modules

In this section we verify the effectiveness of individual modules of the proposed methods. Accuracy numbers for various combinations are in Table 1. Changing anchor assignment from the IoU-based hard assignment to the proposed PAA shows improvements of 5.3% in AP score. Adding an IoU prediction head and applying the unified score function in the NMS procedure further boosts the performance to 40.8%. To further verify the impact of IoU prediction, we compare it with the centerness prediction used in [33, 40]. As can be seen in the results, centerness does not bring improvements to PAA. This is expected, as weighting the scores of detected boxes according to their centerness can hinder the detection of acentric or slanted objects. This shows that centerness-based scoring does not generalize well and the proposed IoU-based scoring can overcome this limitation. We also verify that IoU prediction is more effective than centerness prediction for ATSS [40] (39.8% vs. 39.4%). Finally, applying the score voting improves the performance to 41.0%, surpassing previous methods with Res50 backbone in Table 2 (left) with significant margins.

4.2.3 Accuracy of IoU prediction

We calculate the average error of IoU prediction for various backbones in Table 2 (right). All backbones show errors below 0.1, indicating that IoU prediction is feasible with an additional convolutional head.
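One way such an average error could be computed is sketched below, assuming the error is the mean absolute difference between the predicted IoU and the actual IoU of each predicted box with its ground-truth box. The text does not define the error metric, so this definition, the function names and the sample boxes are assumptions.

```python
def box_iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def mean_iou_prediction_error(pred_boxes, gt_boxes, pred_ious):
    """Mean absolute error between predicted and actual IoUs (assumed metric)."""
    errors = [abs(q - box_iou(p, g))
              for p, g, q in zip(pred_boxes, gt_boxes, pred_ious)]
    return sum(errors) / len(errors)


# Hypothetical predictions: the actual IoUs are 1.0 and 0.5.
pred_boxes = [(0, 0, 10, 10), (0, 0, 10, 10)]
gt_boxes = [(0, 0, 10, 10), (0, 0, 10, 5)]
pred_ious = [0.9, 0.6]

print(mean_iou_prediction_error(pred_boxes, gt_boxes, pred_ious))
```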

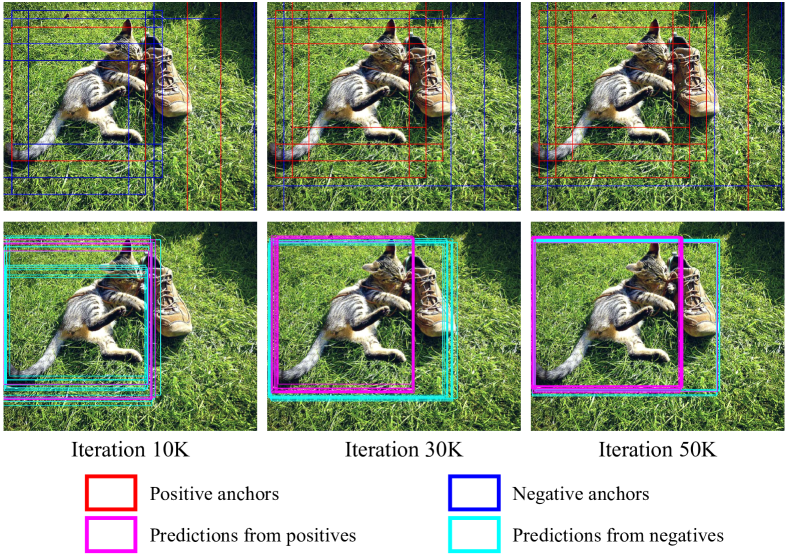

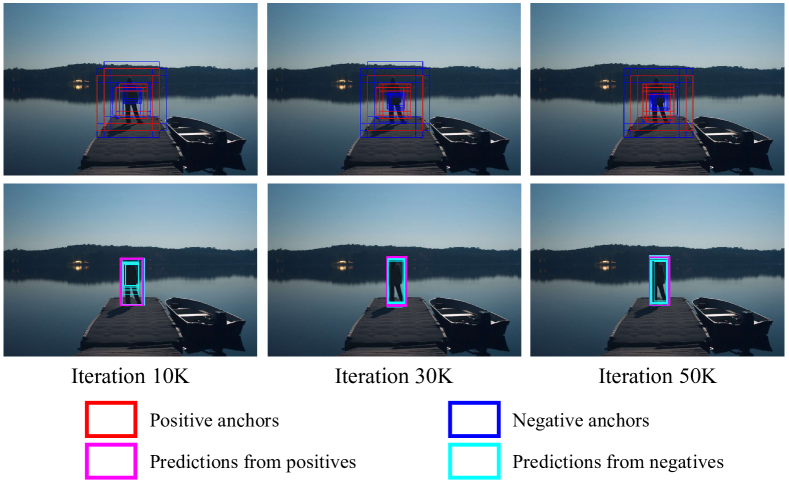

4.2.4 Visualization of anchor assignment

We visualize positive and negative samples separated by PAA in Figure 4(a). As training proceeds, the distinction between positive and negative samples becomes clearer. Note that the positive anchors do not necessarily have larger IoU values with the target bounding box than the negative ones. Also, many negative anchors at iterations 30k and 50k have high IoU values. Methods with a fixed number of positive samples [16, 20] can assign these anchors as positives, and the model might then predict these anchors with high scores during inference. Finally, many positive anchors localize more accurately as training proceeds. In contrast to ours, methods like FreeAnchor [42] penalize all these anchors except the single best one, which can confuse training.

4.2.5 Statistics of positive samples

To compare our method with recent works that also select positive samples by scoring anchors, we plot the number of positive samples against training iterations in Figure 4(b). Unlike methods that either fix the number of samples [20] or use a linear decay [16], our method chooses a different number of samples at each iteration, showing its adaptability.

4.3 Comparison with State-of-the-art Methods

To compare our methods with previous state-of-the-art ones, we conduct experiments with five backbones as in Table 3. We first compare our models trained with Res101 and previous models trained with the same backbone. Our Res101 model achieves 44.8% accuracy, surpassing the previous best models [40, 16] at 43.6%. With ResNext101 our model improves to 46.6% (single-scale testing) and 49.4% (multi-scale testing), which also beats the previous best model at 45.9% and 47.0% [16]. Then we extend our models by applying deformable convolution to the backbones and the last layer of the feature towers, in the same way as [40]. These models also outperform their ATSS counterparts, showing 1.1% and 1.3% improvements. Finally, with the deformable ResNext152 backbone, our models set new records for both single-scale testing (50.8%) and multi-scale testing (53.5%).

5 Conclusions

In this paper we proposed a probabilistic anchor assignment (PAA) algorithm in which the anchor assignment is performed as a likelihood optimization for a probability distribution given anchor scores computed by the model associated with it. The core of PAA is in determining positive and negative samples in favor of the model so that it can infer the separation in a probabilistically reasonable way, leading to easier training compared to the heuristic IoU hard assignment or non-probabilistic assignment strategies. In addition to PAA, we identified the discrepancy of objectives in key procedures of object detection and proposed IoU prediction as a measure of localization quality, so that a unified score of classification and localization can be applied to the NMS procedure. We also provided the score voting method, a simple yet effective post-processing scheme that is applicable to most dense object detectors. Experiments showed that the proposed methods significantly boosted the detection performance and surpassed all previous methods on the COCO test-dev set.

- [1] NPS Photo, https://www.nps.gov/features/yell/slidefile/mammals/grizzlybear/Images/00110.jpg

- [2] Bodla, N., Singh, B., Chellappa, R., Davis, L.S.: Soft-nms–improving object detection with one line of code. In: Proceedings of the IEEE international conference on computer vision. pp. 5561–5569 (2017)

- [3] Chen, K., Li, J., Lin, W., See, J., Wang, J., Duan, L., Chen, Z., He, C., Zou, J.: Towards accurate one-stage object detection with ap-loss. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. pp. 5119–5127 (2019)

- [4] Choi, J., Chun, D., Kim, H., Lee, H.J.: Gaussian yolov3: An accurate and fast object detector using localization uncertainty for autonomous driving. In: Proceedings of the IEEE International Conference on Computer Vision. pp. 502–511 (2019)

- [5] Dai, J., Qi, H., Xiong, Y., Li, Y., Zhang, G., Hu, H., Wei, Y.: Deformable convolutional networks. In: Proceedings of the IEEE international conference on computer vision. pp. 764–773 (2017)

- [6] Duan, K., Bai, S., Xie, L., Qi, H., Huang, Q., Tian, Q.: Centernet: Keypoint triplets for object detection. In: Proceedings of the IEEE International Conference on Computer Vision. pp. 6569–6578 (2019)

- [7] Girshick, R.: Fast r-cnn. In: Proceedings of the IEEE international conference on computer vision. pp. 1440–1448 (2015)

- [8] Girshick, R., Donahue, J., Darrell, T., Malik, J.: Rich feature hierarchies for accurate object detection and semantic segmentation. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 580–587 (2014)

- [9] Goyal, P., Dollár, P., Girshick, R., Noordhuis, P., Wesolowski, L., Kyrola, A., Tulloch, A., Jia, Y., He, K.: Accurate, large minibatch sgd: Training imagenet in 1 hour. arXiv preprint arXiv:1706.02677 (2017)

- [10] He, K., Gkioxari, G., Dollár, P., Girshick, R.: Mask r-cnn. In: Proceedings of the IEEE international conference on computer vision. pp. 2961–2969 (2017)

- [11] He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 770–778 (2016)

- [12] He, Y., Zhu, C., Wang, J., Savvides, M., Zhang, X.: Bounding box regression with uncertainty for accurate object detection. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. pp. 2888–2897 (2019)

- [13] Hosang, J., Benenson, R., Schiele, B.: Learning non-maximum suppression. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 4507–4515 (2017)

- [14] Ioffe, S., Szegedy, C.: Batch normalization: Accelerating deep network training by reducing internal covariate shift. arXiv preprint arXiv:1502.03167 (2015)

- [15] Jiang, B., Luo, R., Mao, J., Xiao, T., Jiang, Y.: Acquisition of localization confidence for accurate object detection. In: Proceedings of the European Conference on Computer Vision (ECCV). pp. 784–799 (2018)

- [16] Ke, W., Zhang, T., Huang, Z., Ye, Q., Liu, J., Huang, D.: Multiple anchor learning for visual object detection. arXiv preprint arXiv:1912.02252 (2019)

- [17] Krizhevsky, A., Sutskever, I., Hinton, G.E.: Imagenet classification with deep convolutional neural networks. In: Advances in neural information processing systems. pp. 1097–1105 (2012)

- [18] Law, H., Deng, J.: Cornernet: Detecting objects as paired keypoints. In: Proceedings of the European Conference on Computer Vision (ECCV). pp. 734–750 (2018)

- [19] LeCun, Y., Boser, B., Denker, J.S., Henderson, D., Howard, R.E., Hubbard, W., Jackel, L.D.: Backpropagation applied to handwritten zip code recognition. Neural computation 1 (4), 541–551 (1989)

- [20] Li, H., Wu, Z., Zhu, C., Xiong, C., Socher, R., Davis, L.S.: Learning from noisy anchors for one-stage object detection. arXiv preprint arXiv:1912.05086 (2019)

- [21] Lin, T.Y., Dollár, P., Girshick, R., He, K., Hariharan, B., Belongie, S.: Feature pyramid networks for object detection. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 2117–2125 (2017)

- [22] Lin, T.Y., Goyal, P., Girshick, R., He, K., Dollár, P.: Focal loss for dense object detection. In: Proceedings of the IEEE international conference on computer vision. pp. 2980–2988 (2017)

- [23] Lin, T.Y., Maire, M., Belongie, S., Hays, J., Perona, P., Ramanan, D., Dollár, P., Zitnick, C.L.: Microsoft coco: Common objects in context. In: European conference on computer vision. pp. 740–755. Springer (2014)

- [24] Liu, W., Anguelov, D., Erhan, D., Szegedy, C., Reed, S., Fu, C.Y., Berg, A.C.: Ssd: Single shot multibox detector. In: European conference on computer vision. pp. 21–37. Springer (2016)

- [25] Redmon, J., Divvala, S., Girshick, R., Farhadi, A.: You only look once: Unified, real-time object detection. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 779–788 (2016)

- [26] Redmon, J., Farhadi, A.: Yolo9000: better, faster, stronger. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 7263–7271 (2017)

- [27] Redmon, J., Farhadi, A.: Yolov3: An incremental improvement. arXiv preprint arXiv:1804.02767 (2018)

- [28] Ren, S., He, K., Girshick, R., Sun, J.: Faster r-cnn: Towards real-time object detection with region proposal networks. In: Advances in neural information processing systems. pp. 91–99 (2015)

- [29] Rezatofighi, H., Tsoi, N., Gwak, J., Sadeghian, A., Reid, I., Savarese, S.: Generalized intersection over union: A metric and a loss for bounding box regression. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. pp. 658–666 (2019)

- [30] Russakovsky, O., Deng, J., Su, H., Krause, J., Satheesh, S., Ma, S., Huang, Z., Karpathy, A., Khosla, A., Bernstein, M., et al.: Imagenet large scale visual recognition challenge. International journal of computer vision 115 (3), 211–252 (2015)

- [31] Simonyan, K., Zisserman, A.: Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556 (2014)

- [32] Szegedy, C., Liu, W., Jia, Y., Sermanet, P., Reed, S., Anguelov, D., Erhan, D., Vanhoucke, V., Rabinovich, A.: Going deeper with convolutions. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 1–9 (2015)

- [33] Tian, Z., Shen, C., Chen, H., He, T.: Fcos: Fully convolutional one-stage object detection. In: Proceedings of the IEEE International Conference on Computer Vision. pp. 9627–9636 (2019)

- [34] Wang, J., Chen, K., Yang, S., Loy, C.C., Lin, D.: Region proposal by guided anchoring. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. pp. 2965–2974 (2019)

- [35] Wu, Y., He, K.: Group normalization. In: Proceedings of the European Conference on Computer Vision (ECCV). pp. 3–19 (2018)

- [36] Xie, S., Girshick, R., Dollár, P., Tu, Z., He, K.: Aggregated residual transformations for deep neural networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 1492–1500 (2017)

- [37] Yang, T., Zhang, X., Li, Z., Zhang, W., Sun, J.: Metaanchor: Learning to detect objects with customized anchors. In: Advances in Neural Information Processing Systems. pp. 320–330 (2018)

- [38] Yang, Z., Liu, S., Hu, H., Wang, L., Lin, S.: Reppoints: Point set representation for object detection. In: Proceedings of the IEEE International Conference on Computer Vision. pp. 9657–9666 (2019)

- [39] Yu, J., Jiang, Y., Wang, Z., Cao, Z., Huang, T.: Unitbox: An advanced object detection network. In: Proceedings of the 24th ACM international conference on Multimedia. pp. 516–520 (2016)

- [40] Zhang, S., Chi, C., Yao, Y., Lei, Z., Li, S.Z.: Bridging the gap between anchor-based and anchor-free detection via adaptive training sample selection. arXiv preprint arXiv:1912.02424 (2019)

- [41] Zhang, S., Wen, L., Bian, X., Lei, Z., Li, S.Z.: Single-shot refinement neural network for object detection. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 4203–4212 (2018)

- [42] Zhang, X., Wan, F., Liu, C., Ji, R., Ye, Q.: Freeanchor: Learning to match anchors for visual object detection. In: Advances in Neural Information Processing Systems. pp. 147–155 (2019)

- [43] Zhao, Q., Sheng, T., Wang, Y., Tang, Z., Chen, Y., Cai, L., Ling, H.: M2det: A single-shot object detector based on multi-level feature pyramid network. In: Proceedings of the AAAI Conference on Artificial Intelligence. vol. 33, pp. 9259–9266 (2019)

- [44] Zhou, X., Zhuo, J., Krahenbuhl, P.: Bottom-up object detection by grouping extreme and center points. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. pp. 850–859 (2019)

- [45] Zhu, C., He, Y., Savvides, M.: Feature selective anchor-free module for single-shot object detection. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. pp. 840–849 (2019)

6.1 Training Details

We train our models with 8 GPUs, each of which holds two images during training. The parameters of Batch Normalization layers [14] are frozen, as is common practice. All backbones are pre-trained on the ImageNet dataset [30]. We set the initial learning rate to 0.01 and decay it by a factor of 10 at 90k and 120k iterations for the 135k setting and at 120k and 160k iterations for the 180k setting. For the 180k setting, the multi-scale training strategy (resizing the shorter side of input images to a scale randomly chosen from 640 to 800) is adopted, as is also common practice. The momentum and weight decay are set to 0.9 and 1e-4 respectively. Following [9] we use learning rate warmup for the first 500 iterations. We note that multiplying individual localization losses by the scores of an auxiliary task (in our case, the predicted IoUs with the corresponding GT boxes, or centerness scores when using centerness prediction as in [33, 40]), which is also done in previous works [33, 40], helps training converge faster and leads to better performance.
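The learning rate schedule described above can be written as a small function. This is a sketch under stated assumptions: the warmup is taken to be linear and to start from one third of the base rate (`warmup_factor`), neither of which is specified in the text, and the function and constant names are hypothetical. The base rate, decay factor, milestones and warmup length are the values given above.

```python
def learning_rate(iteration, milestones, base_lr=0.01, gamma=0.1,
                  warmup_iters=500, warmup_factor=1.0 / 3):
    """Step schedule with linear warmup.

    warmup_factor (the starting fraction of base_lr) and the linear shape
    of the warmup are assumptions; the text only states that warmup lasts
    500 iterations.
    """
    # Apply one decay step for every milestone already reached.
    decays = sum(1 for m in milestones if iteration >= m)
    lr = base_lr * (gamma ** decays)
    if iteration < warmup_iters:
        alpha = iteration / warmup_iters
        lr *= warmup_factor * (1 - alpha) + alpha
    return lr


SCHEDULE_135K = (90_000, 120_000)   # decay points for the 135k setting
SCHEDULE_180K = (120_000, 160_000)  # decay points for the 180k setting

for it in (0, 500, 100_000, 130_000):
    print(it, learning_rate(it, SCHEDULE_135K), learning_rate(it, SCHEDULE_180K))
```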

6.2 Network architecture

Figure 5 visualizes our network architecture. It is a modified RetinaNet architecture with a single anchor per spatial location, which is exactly the same as the models used in FCOS [33] and ATSS [40]. The only difference is that the additional head in our model predicts IoUs of predicted boxes, whereas the FCOS and ATSS models predict centerness scores.

6.3 More Ablation Studies

We conduct additional ablation studies regarding the effects of topk $\mathcal{K}$ and the default anchor scale. All the experiments in the main paper are conducted with $\mathcal{K}=9$ and the default anchor scale of 8. The anchor size for each pyramid level is determined by the product of its stride and the default anchor scale (so with the default anchor scale 8 and a feature pyramid of strides from 8 to 128, the anchor sizes are from 64 to 1024). Table 4 shows the results on different default anchor scales. It shows that the proposed probabilistic anchor assignment is robust to both $\mathcal{K}$ and anchor sizes.
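The anchor size rule is simple enough to check by hand. In the sketch below the intermediate strides (16, 32, 64) are assumed to double at each pyramid level; the text only gives the endpoints 8 and 128. The function name is hypothetical.

```python
def anchor_sizes(strides, anchor_scale):
    """Anchor size per pyramid level: stride times the default anchor scale."""
    return [stride * anchor_scale for stride in strides]


# Assumed pyramid strides; only the endpoints 8 and 128 are stated in the text.
STRIDES = [8, 16, 32, 64, 128]

print(anchor_sizes(STRIDES, 8))  # [64, 128, 256, 512, 1024]
```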

6.4 More Visualization of Anchor Assignment

We visualize the proposed anchor assignment during training. Figure 6 shows anchor assignment results on COCO training set. Figure 7 shows anchor assignment results on a non-COCO image.

6.5 Visualization of Detection Results

We visualize detection results on COCO minival set in Figure 8 .

Probabilistic Anchor Assignment with IoU Prediction for Object Detection

- Conference paper

- First Online: 20 November 2020

- Kang Kim 12 &

- Hee Seok Lee 13

Part of the book series: Lecture Notes in Computer Science ((LNIP,volume 12370))

Included in the following conference series:

- European Conference on Computer Vision

In object detection, determining which anchors to assign as positive or negative samples, known as anchor assignment, has been revealed as a core procedure that can significantly affect a model’s performance. In this paper we propose a novel anchor assignment strategy that adaptively separates anchors into positive and negative samples for a ground truth bounding box according to the model’s learning status such that it is able to reason about the separation in a probabilistic manner. To do so we first calculate the scores of anchors conditioned on the model and fit a probability distribution to these scores. The model is then trained with anchors separated into positive and negative samples according to their probabilities. Moreover, we investigate the gap between the training and testing objectives and propose to predict the Intersection-over-Unions of detected boxes as a measure of localization quality to reduce the discrepancy. The combined score of classification and localization qualities, serving as a box selection metric in non-maximum suppression, aligns well with the proposed anchor assignment strategy and leads to significant performance improvements. The proposed methods only add a single convolutional layer to the RetinaNet baseline and do not require multiple anchors per location, so they are efficient. Experimental results verify the effectiveness of the proposed methods. In particular, our models set new records for single-stage detectors on the MS COCO test-dev dataset with various backbones. Code is available at https://github.com/kkhoot/PAA.

K. Kim—Work done while at Qualcomm Korea YH.

We assume a binary classification task. Extending it to a multi-class case is straightforward.

Author information

Authors and affiliations.

XL8 Inc., San Jose, USA

Qualcomm Korea YH, Seoul, South Korea

Hee Seok Lee

Corresponding author

Correspondence to Kang Kim .

Editor information

Editors and affiliations.

University of Oxford, Oxford, UK

Andrea Vedaldi

Graz University of Technology, Graz, Austria

Horst Bischof

University of Freiburg, Freiburg im Breisgau, Germany

Thomas Brox

University of North Carolina at Chapel Hill, Chapel Hill, NC, USA

Jan-Michael Frahm

Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper.

Kim, K., Lee, H.S. (2020). Probabilistic Anchor Assignment with IoU Prediction for Object Detection. In: Vedaldi, A., Bischof, H., Brox, T., Frahm, JM. (eds) Computer Vision – ECCV 2020. ECCV 2020. Lecture Notes in Computer Science(), vol 12370. Springer, Cham. https://doi.org/10.1007/978-3-030-58595-2_22

DOI : https://doi.org/10.1007/978-3-030-58595-2_22

Published : 20 November 2020

Publisher Name : Springer, Cham

Print ISBN : 978-3-030-58594-5

Online ISBN : 978-3-030-58595-2

eBook Packages : Computer Science Computer Science (R0)

Probabilistic anchor assignment (PAA) adaptively separates a set of anchors into positive and negative samples for a GT box according to the learning status of the model associated with it. To do so we first define a score of a detected bounding box that reflects both the classification and localization qualities. We then identify the connection between this score and the training objectives and represent the score as the combination of two loss objectives. Based on this scoring scheme, we calculate the scores of individual anchors that reflect how the model finds useful cues to detect a target object in each anchor. With these anchor scores, we aim to find a probability distribution of two modalities that best represents the scores as positive or negative samples, as in the Figure.

Under the found probability distribution, anchors whose probabilities under the positive component are high are selected as positive samples. This transforms the anchor assignment problem into a maximum likelihood estimation for a probability distribution whose parameters are determined by the anchor scores. Based on the assumption that anchor scores calculated by the model are samples drawn from a probability distribution, it is expected that the model can infer the sample separation in a probabilistic way, leading to easier training of the model compared to other non-probabilistic assignments. Moreover, since positive samples are adaptively selected based on the anchor score distribution, PAA requires neither a pre-defined number of positive samples nor an IoU threshold.
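The idea of fitting a two-mode distribution to anchor scores and reading off the positives can be sketched as follows. This is an illustration only, not the released PAA code. It assumes a two-component 1-D Gaussian mixture fitted with a hand-written EM loop, and it uses one possible separation rule (an anchor is positive when the high-score component explains it better than the low-score one). All names, the initialization and the sample scores are hypothetical.

```python
import math


def gaussian_pdf(x, mean, var):
    """Density of a 1-D Gaussian with the given mean and variance."""
    return math.exp(-(x - mean) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)


def fit_two_gaussians(scores, iters=50, min_var=1e-6):
    """Fit a two-component 1-D Gaussian mixture to anchor scores with EM.

    Component 0 starts at the lowest score and component 1 at the highest,
    so component 1 plays the role of the positive (high-score) mode.
    Returns the per-anchor responsibilities [p(comp 0), p(comp 1)].
    """
    means = [min(scores), max(scores)]
    spread = (max(scores) - min(scores)) ** 2 / 4
    variances = [max(spread, min_var)] * 2
    weights = [0.5, 0.5]
    resp = []
    for _ in range(iters):
        # E-step: responsibility of each component for each score.
        resp = []
        for s in scores:
            p = [weights[k] * gaussian_pdf(s, means[k], variances[k])
                 for k in range(2)]
            total = sum(p) or 1e-300  # guard against numerical underflow
            resp.append([pk / total for pk in p])
        # M-step: re-estimate weights, means and variances.
        for k in range(2):
            nk = sum(r[k] for r in resp) or 1e-300
            weights[k] = nk / len(scores)
            means[k] = sum(r[k] * s for r, s in zip(resp, scores)) / nk
            var = sum(r[k] * (s - means[k]) ** 2
                      for r, s in zip(resp, scores)) / nk
            variances[k] = max(var, min_var)
    return resp


def assign_positives(scores):
    """Mark anchors that the high-score component explains better."""
    return [r[1] > r[0] for r in fit_two_gaussians(scores)]


# Hypothetical anchor scores for one GT box: four low, three high.
anchor_scores = [0.05, 0.08, 0.10, 0.12, 0.70, 0.75, 0.80]
print(assign_positives(anchor_scores))
```

No positive count or score threshold is passed in; the split follows from the fitted distribution, which is the property the description above emphasizes.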

Computer Vision – ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part XXV

40 Facts About Elektrostal

Written by Lanette Mayes

Modified & Updated: 01 Jun 2024

Reviewed by Jessica Corbett

Elektrostal is a vibrant city located in the Moscow Oblast region of Russia. With a rich history, stunning architecture, and a thriving community, Elektrostal is a city that has much to offer. Whether you are a history buff, nature enthusiast, or simply curious about different cultures, Elektrostal is sure to captivate you.

This article will provide you with 40 fascinating facts about Elektrostal, giving you a better understanding of why this city is worth exploring. From its origins as an industrial hub to its modern-day charm, we will delve into the various aspects that make Elektrostal a unique and must-visit destination.

So, join us as we uncover the hidden treasures of Elektrostal and discover what makes this city a true gem in the heart of Russia.

Key Takeaways:

- Elektrostal, known as the “Motor City of Russia,” is a vibrant and growing city with a rich industrial history, offering diverse cultural experiences and a strong commitment to environmental sustainability.

- With its convenient location near Moscow, Elektrostal provides a picturesque landscape, vibrant nightlife, and a range of recreational activities, making it an ideal destination for residents and visitors alike.

Known as the “Motor City of Russia.”

Elektrostal, a city located in the Moscow Oblast region of Russia, earned the nickname “Motor City” due to its significant involvement in the automotive industry.

Home to the Elektrostal Metallurgical Plant.

Elektrostal is renowned for its metallurgical plant, which has been producing high-quality steel and alloys since its establishment in 1916.

Boasts a rich industrial heritage.

Elektrostal has a long history of industrial development, contributing to the growth and progress of the region.

Founded in 1916.

The city of Elektrostal was founded in 1916 as a result of the construction of the Elektrostal Metallurgical Plant.

Located approximately 50 kilometers east of Moscow.

Elektrostal is situated in close proximity to the Russian capital, making it easily accessible for both residents and visitors.

Known for its vibrant cultural scene.

Elektrostal is home to several cultural institutions, including museums, theaters, and art galleries that showcase the city’s rich artistic heritage.

A popular destination for nature lovers.

Surrounded by picturesque landscapes and forests, Elektrostal offers ample opportunities for outdoor activities such as hiking, camping, and birdwatching.

Hosts the annual Elektrostal City Day celebrations.

Every year, Elektrostal organizes festive events and activities to celebrate its founding, bringing together residents and visitors in a spirit of unity and joy.

Has a population of approximately 160,000 people.

Elektrostal is home to a diverse and vibrant community of around 160,000 residents, contributing to its dynamic atmosphere.

Boasts excellent education facilities.

The city is known for its well-established educational institutions, providing quality education to students of all ages.

A center for scientific research and innovation.

Elektrostal serves as an important hub for scientific research, particularly in the fields of metallurgy, materials science, and engineering.

Surrounded by picturesque lakes.

The city is blessed with numerous beautiful lakes, offering scenic views and recreational opportunities for locals and visitors alike.

Well-connected transportation system.

Elektrostal benefits from an efficient transportation network, including highways, railways, and public transportation options, ensuring convenient travel within and beyond the city.

Famous for its traditional Russian cuisine.

Food enthusiasts can indulge in authentic Russian dishes at numerous restaurants and cafes scattered throughout Elektrostal.

Home to notable architectural landmarks.

Elektrostal boasts impressive architecture, including the Church of the Transfiguration of the Lord and the Elektrostal Palace of Culture.

Offers a wide range of recreational facilities.

Residents and visitors can enjoy various recreational activities, such as sports complexes, swimming pools, and fitness centers, enhancing the overall quality of life.

Provides a high standard of healthcare.

Elektrostal is equipped with modern medical facilities, ensuring residents have access to quality healthcare services.

Home to the Elektrostal History Museum.

The Elektrostal History Museum showcases the city’s fascinating past through exhibitions and displays.

A hub for sports enthusiasts.

Elektrostal is passionate about sports, with numerous stadiums, arenas, and sports clubs offering opportunities for athletes and spectators.

Celebrates diverse cultural festivals.

Throughout the year, Elektrostal hosts a variety of cultural festivals, celebrating different ethnicities, traditions, and art forms.

Electric power played a significant role in its early development.

Elektrostal owes its name and initial growth to the establishment of electric power stations and the utilization of electricity in the industrial sector.

Boasts a thriving economy.

The city’s strong industrial base, coupled with its strategic location near Moscow, has contributed to Elektrostal’s prosperous economic status.

Houses the Elektrostal Drama Theater.

The Elektrostal Drama Theater is a cultural centerpiece, attracting theater enthusiasts from far and wide.

Popular destination for winter sports.

Elektrostal’s proximity to ski resorts and winter sport facilities makes it a favorite destination for skiing, snowboarding, and other winter activities.

Promotes environmental sustainability.

Elektrostal prioritizes environmental protection and sustainability, implementing initiatives to reduce pollution and preserve natural resources.

Home to renowned educational institutions.

Elektrostal is known for its prestigious schools and universities, offering a wide range of academic programs to students.

Committed to cultural preservation.

The city values its cultural heritage and takes active steps to preserve and promote traditional customs, crafts, and arts.

Hosts an annual International Film Festival.

The Elektrostal International Film Festival attracts filmmakers and cinema enthusiasts from around the world, showcasing a diverse range of films.

Encourages entrepreneurship and innovation.

Elektrostal supports aspiring entrepreneurs and fosters a culture of innovation, providing opportunities for startups and business development.

Offers a range of housing options.

Elektrostal provides diverse housing options, including apartments, houses, and residential complexes, catering to different lifestyles and budgets.

Home to notable sports teams.

Elektrostal is proud of its sports legacy, with several successful sports teams competing at regional and national levels.

Boasts a vibrant nightlife scene.

Residents and visitors can enjoy a lively nightlife in Elektrostal, with numerous bars, clubs, and entertainment venues.

Promotes cultural exchange and international relations.

Elektrostal actively engages in international partnerships, cultural exchanges, and diplomatic collaborations to foster global connections.

Surrounded by beautiful nature reserves.

Nearby nature reserves, such as the Barybino Forest and Luchinskoye Lake, offer opportunities for nature enthusiasts to explore and appreciate the region’s biodiversity.

Commemorates historical events.

The city pays tribute to significant historical events through memorials, monuments, and exhibitions, ensuring the preservation of collective memory.

Promotes sports and youth development.

Elektrostal invests in sports infrastructure and programs to encourage youth participation, health, and physical fitness.

Hosts annual cultural and artistic festivals.

Throughout the year, Elektrostal celebrates its cultural diversity through festivals dedicated to music, dance, art, and theater.

Provides a picturesque landscape for photography enthusiasts.

The city’s scenic beauty, architectural landmarks, and natural surroundings make it a paradise for photographers.

Connects to Moscow via a direct train line.

The convenient train connection between Elektrostal and Moscow makes commuting between the two cities effortless.

A city with a bright future.

Elektrostal continues to grow and develop, aiming to become a model city in terms of infrastructure, sustainability, and quality of life for its residents.

In conclusion, Elektrostal is a fascinating city with a rich history and a vibrant present. From its origins as a center of steel production to its modern-day status as a hub for education and industry, Elektrostal has plenty to offer both residents and visitors. With its beautiful parks, cultural attractions, and proximity to Moscow, there is no shortage of things to see and do in this dynamic city. Whether you’re interested in exploring its historical landmarks, enjoying outdoor activities, or immersing yourself in the local culture, Elektrostal has something for everyone. So, next time you find yourself in the Moscow region, don’t miss the opportunity to discover the hidden gems of Elektrostal.

Q: What is the population of Elektrostal?

A: As of the latest data, the population of Elektrostal is approximately XXXX.

Q: How far is Elektrostal from Moscow?

A: Elektrostal is located approximately XX kilometers away from Moscow.

Q: Are there any famous landmarks in Elektrostal?

A: Yes, Elektrostal is home to several notable landmarks, including XXXX and XXXX.

Q: What industries are prominent in Elektrostal?

A: Elektrostal is known for its steel production industry and is also a center for engineering and manufacturing.

Q: Are there any universities or educational institutions in Elektrostal?

A: Yes, Elektrostal is home to XXXX University and several other educational institutions.

Q: What are some popular outdoor activities in Elektrostal?

A: Elektrostal offers several outdoor activities, such as hiking, cycling, and picnicking in its beautiful parks.

Q: Is Elektrostal well-connected in terms of transportation?

A: Yes, Elektrostal has good transportation links, including trains and buses, making it easily accessible from nearby cities.

Q: Are there any annual events or festivals in Elektrostal?

A: Yes, Elektrostal hosts various events and festivals throughout the year, including XXXX and XXXX.

Elektrostal's fascinating history, vibrant culture, and promising future make it a city worth exploring. For more captivating facts about cities around the world, discover the unique characteristics that define each city. Uncover the hidden gems of Moscow Oblast through our in-depth look at Kolomna. Lastly, dive into the rich industrial heritage of Teesside, a thriving industrial center with its own story to tell.

- Open access

- Published: 03 June 2024

Predicting gene expression state and prioritizing putative enhancers using 5hmC signal

- Edahi Gonzalez-Avalos ORCID: orcid.org/0000-0002-6817-4854 1, 2,

- Atsushi Onodera ORCID: orcid.org/0000-0002-3715-9408 1, 3,

- Daniela Samaniego-Castruita ORCID: orcid.org/0000-0001-6082-6603 1, 4,

- Anjana Rao ORCID: orcid.org/0000-0002-1870-1775 1, 2, 5, 6, 7 &

- Ferhat Ay ORCID: orcid.org/0000-0002-0708-6914 1, 2, 7, 8

Genome Biology volume 25, Article number: 142 (2024)

12 Accesses

49 Altmetric

Metrics details

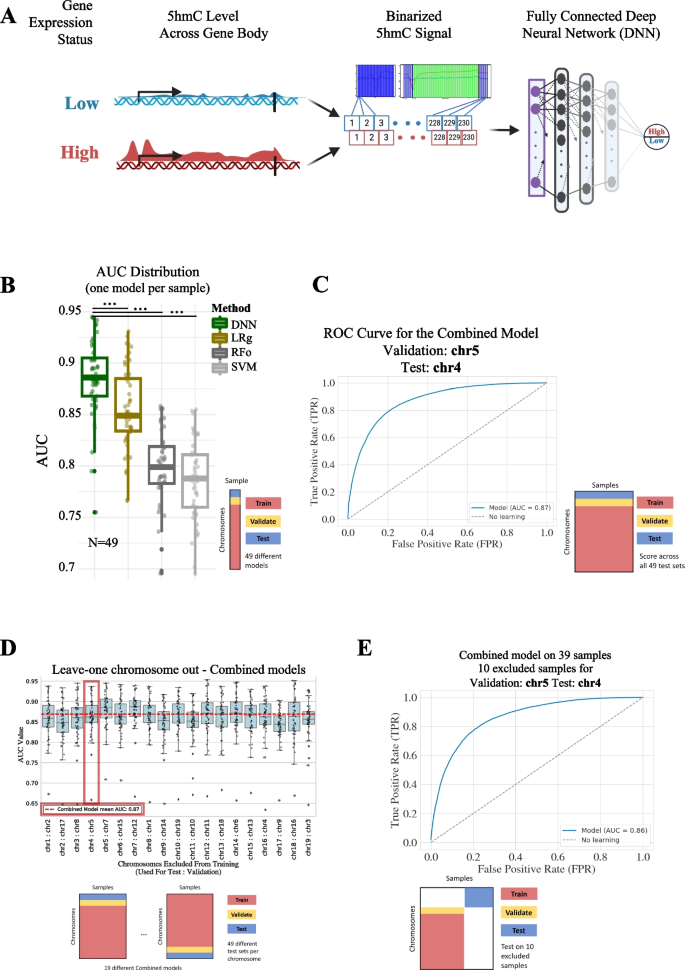

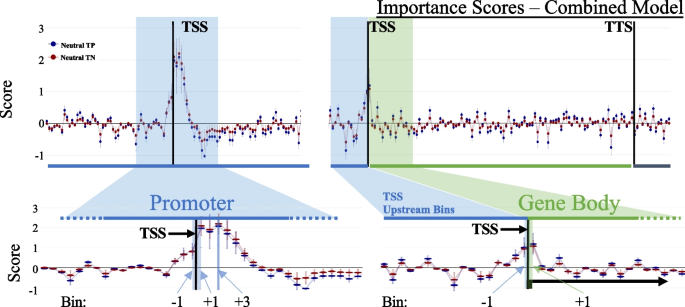

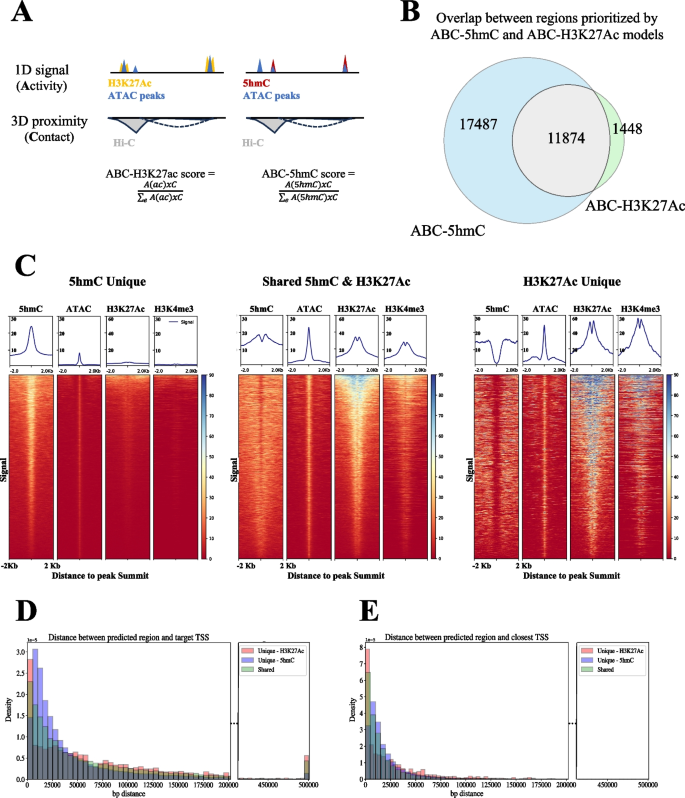

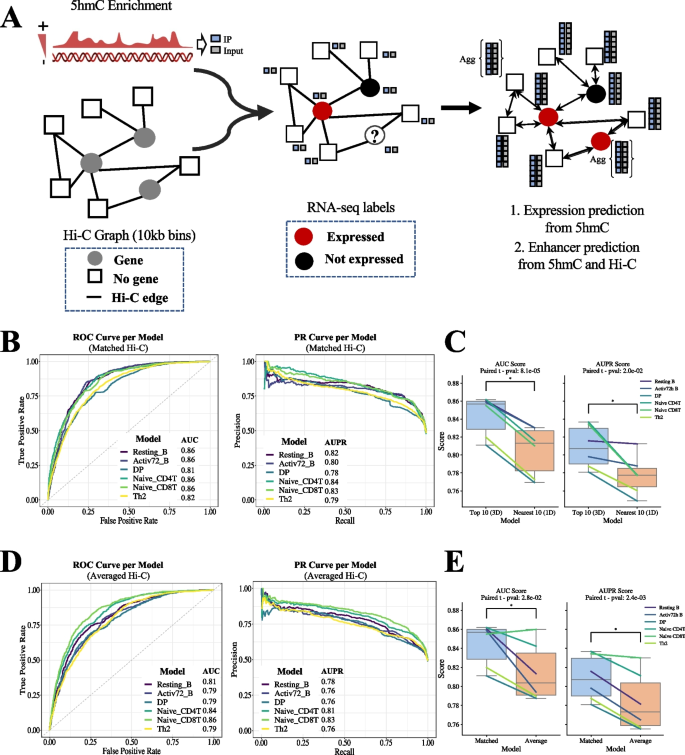

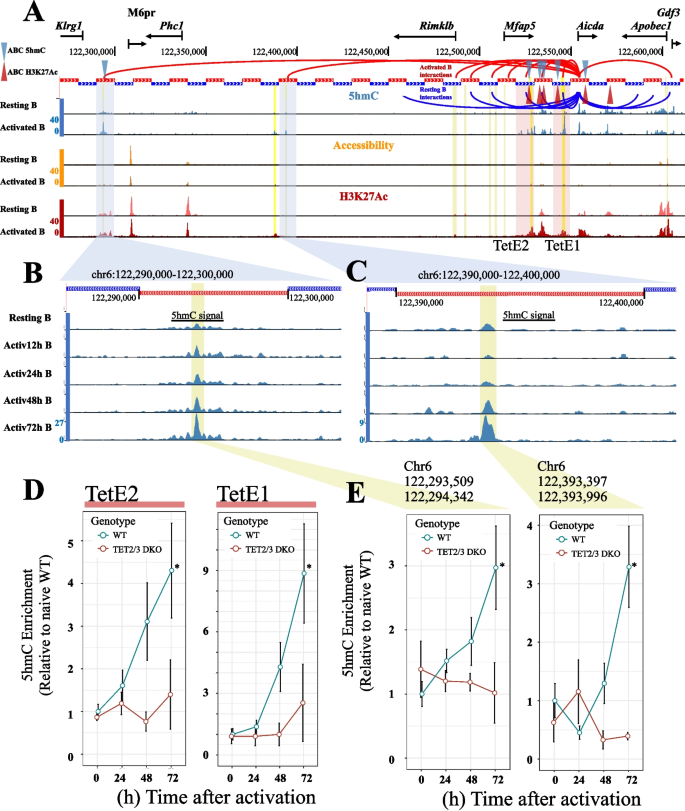

Like its parent base 5-methylcytosine (5mC), 5-hydroxymethylcytosine (5hmC) is a direct epigenetic modification of cytosines in the context of CpG dinucleotides. 5hmC is the most abundant oxidized form of 5mC, generated through the action of TET dioxygenases at gene bodies of actively transcribed genes and at active or lineage-specific enhancers. Although such enrichments are reported for 5hmC, to date, predictive models of gene expression state or putative regulatory regions for genes using 5hmC have not been developed.

Here, using only 5hmC enrichment in genic regions and their vicinity, we develop neural network models that predict gene expression state across 49 cell types. We show that our deep neural network models distinguish high vs low expression state using only 5hmC levels and that these predictive models generalize to unseen cell types. Further, to leverage 5hmC signal in distal enhancers for expression prediction, we employ an Activity-by-Contact model and also develop a graph convolutional neural network model, both of which use Hi-C data and 5hmC enrichment to prioritize enhancer-promoter links. These approaches identify known and novel putative enhancers for key genes in multiple immune cell subsets.
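To illustrate how an Activity-by-Contact style score can rank candidate enhancers for one gene, the sketch below uses the generic form of that model: each element's activity multiplied by its contact frequency with the gene's promoter, normalized by the sum over all candidate elements. It assumes that 5hmC enrichment stands in for activity and a Hi-C contact value for contact, as the text indicates. The function name `abc_scores`, the input values, and the exact normalization are illustrative assumptions, not details taken from the article.

```python
import numpy as np


def abc_scores(activity, contact):
    """Activity-by-Contact style scores for candidate elements of one gene.

    activity: per-element activity (assumed here: 5hmC enrichment).
    contact:  per-element contact frequency with the promoter (assumed: Hi-C).
    Returns each element's share of the total activity-by-contact signal.
    """
    a = np.asarray(activity, dtype=float)
    c = np.asarray(contact, dtype=float)
    weighted = a * c
    total = weighted.sum()
    if total <= 0:
        # No signal at all: no element can be prioritised.
        return np.zeros_like(weighted)
    return weighted / total


# Hypothetical values for three candidate enhancers near one gene.
scores = abc_scores(activity=[2.0, 1.0, 1.0], contact=[0.5, 0.25, 0.25])
ranking = np.argsort(-scores)  # indices from highest to lowest score
```

Elements with both strong 5hmC signal and frequent promoter contact receive the largest share, so sorting by score gives a simple prioritization of enhancer-promoter links.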

Conclusions