Controlled experiments

Introduction

How are hypotheses tested?

- One pot of seeds gets watered every afternoon.

- The other pot of seeds doesn't get any water at all.

Control and experimental groups

Independent and dependent variables

Independent variables

Dependent variables

Variability and repetition

Controlled experiment case study: CO2 and coral bleaching

- What your control and experimental groups would be

- What your independent and dependent variables would be

- What results you would predict in each group

Experimental setup

- Some corals were grown in tanks of normal seawater, which is not very acidic (pH around 8.2). The corals in these tanks served as the control group.

- Other corals were grown in tanks of seawater that were more acidic than usual due to the addition of CO2. One set of tanks was medium-acidity (pH about 7.9), while another set was high-acidity (pH about 7.65). Both the medium-acidity and high-acidity groups were experimental groups.

- In this experiment, the independent variable was the acidity (pH) of the seawater. The dependent variable was the degree of bleaching of the corals.

- The researchers used a large sample size and repeated their experiment. Each tank held 5 fragments of coral, and there were 5 identical tanks for each group (control, medium-acidity, and high-acidity).

Figure: Experimental setup to test effects of water acidity on coral bleaching. The water acidity is the independent variable.

- Control group: Coral fragments are placed in a tank of normal seawater (pH 8.2).

- Experimental group 1: Coral fragments are placed in a tank of slightly acidified seawater (pH 7.9).

- Experimental group 2: Coral fragments are placed in a tank of more strongly acidified seawater (pH 7.65).

8 weeks are allowed to pass for each of the tanks. Degree of coral bleaching is the dependent variable.

- Control group: Corals are about 10% bleached on average.

- Experimental group 1 (medium acidity): Corals are about 20% bleached on average.

- Experimental group 2 (higher acidity): Corals are about 40% bleached on average.

Note: None of these tanks was "acidic" on an absolute scale. That is, the pH values were all above the neutral pH of 7.0. However, the two groups of experimental tanks were moderately and highly acidic to the corals, that is, relative to their natural habitat of plain seawater.
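The design and the average outcomes described above can be tabulated in a few lines of Python. This is only an illustrative sketch: the numbers are the ones quoted in the case study, while the names `groups`, `fragments_per_group`, and `bleaching_vs_control` are invented here for the example.

```python
# Design and average outcomes as quoted in the case study above.
FRAGMENTS_PER_TANK = 5
TANKS_PER_GROUP = 5

# group name -> (seawater pH, average percent of coral bleached after 8 weeks)
groups = {
    "control": (8.2, 10),
    "medium-acidity": (7.9, 20),
    "high-acidity": (7.65, 40),
}


def fragments_per_group():
    """Number of coral fragments tested in each group."""
    return FRAGMENTS_PER_TANK * TANKS_PER_GROUP


def bleaching_vs_control(groups):
    """Average bleaching of each experimental group minus the control's."""
    control_bleaching = groups["control"][1]
    return {
        name: bleached - control_bleaching
        for name, (ph, bleached) in groups.items()
        if name != "control"
    }


print(fragments_per_group())         # 25
print(bleaching_vs_control(groups))  # {'medium-acidity': 10, 'high-acidity': 30}
```

Comparing each experimental group with the control, rather than looking at its bleaching percentage alone, is what lets the difference be attributed to the independent variable.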

Analyzing the results

Chapter 6: Experimental Research

Conducting Experiments

Learning Objectives

- Describe several strategies for recruiting participants for an experiment.

- Explain why it is important to standardize the procedure of an experiment and several ways to do this.

- Explain what pilot testing is and why it is important.

The information presented so far in this chapter is enough to design a basic experiment. When it comes time to conduct that experiment, however, several additional practical issues arise. In this section, we consider some of these issues and how to deal with them. Much of this information applies to nonexperimental studies as well as experimental ones.

Recruiting Participants

Of course, at the start of any research project you should be thinking about how you will obtain your participants. Unless you have access to people with schizophrenia or incarcerated juvenile offenders, for example, then there is no point designing a study that focuses on these populations. But even if you plan to use a convenience sample, you will have to recruit participants for your study.

There are several approaches to recruiting participants. One is to use participants from a formal subject pool —an established group of people who have agreed to be contacted about participating in research studies. For example, at many colleges and universities, there is a subject pool consisting of students enrolled in introductory psychology courses who must participate in a certain number of studies to meet a course requirement. Researchers post descriptions of their studies and students sign up to participate, usually via an online system. Participants who are not in subject pools can also be recruited by posting or publishing advertisements or making personal appeals to groups that represent the population of interest. For example, a researcher interested in studying older adults could arrange to speak at a meeting of the residents at a retirement community to explain the study and ask for volunteers.

“Study” Retrieved from http://imgs.xkcd.com/comics/study.png (CC-BY-NC 2.5)

The Volunteer Subject

Even if the participants in a study receive compensation in the form of course credit, a small amount of money, or a chance at being treated for a psychological problem, they are still essentially volunteers. This is worth considering because people who volunteer to participate in psychological research have been shown to differ in predictable ways from those who do not volunteer. Specifically, there is good evidence that on average, volunteers have the following characteristics compared with nonvolunteers (Rosenthal & Rosnow, 1976) [1] :

- They are more interested in the topic of the research.

- They are more educated.

- They have a greater need for approval.

- They have higher intelligence quotients (IQs).

- They are more sociable.

- They are higher in social class.

This difference can be an issue of external validity if there is reason to believe that participants with these characteristics are likely to behave differently than the general population. For example, in testing different methods of persuading people, a rational argument might work better on volunteers than it does on the general population because of their generally higher educational level and IQ.

In many field experiments, the task is not recruiting participants but selecting them. For example, researchers Nicolas Guéguen and Marie-Agnès de Gail conducted a field experiment on the effect of being smiled at on helping, in which the participants were shoppers at a supermarket. A confederate walking down a stairway gazed directly at a shopper walking up the stairway and either smiled or did not smile. Shortly afterward, the shopper encountered another confederate, who dropped some computer diskettes on the ground. The dependent variable was whether or not the shopper stopped to help pick up the diskettes (Guéguen & de Gail, 2003) [2] . Notice that these participants were not “recruited,” but the researchers still had to select them from among all the shoppers taking the stairs that day. It is extremely important that this kind of selection be done according to a well-defined set of rules that is established before the data collection begins and can be explained clearly afterward. In this case, with each trip down the stairs, the confederate was instructed to gaze at the first person he encountered who appeared to be between the ages of 20 and 50. Only if the person gazed back did he or she become a participant in the study. The point of having a well-defined selection rule is to avoid bias in the selection of participants. For example, if the confederate was free to choose which shoppers he would gaze at, he might choose friendly-looking shoppers when he was set to smile and unfriendly-looking ones when he was not set to smile. As we will see shortly, such biases can be entirely unintentional.
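A selection rule like the one described above is easy to state as code, which is one way to check that it really is well defined. The sketch below is hypothetical: the dictionary keys `apparent_age` and `gazed_back` are invented for illustration, and it assumes that a trip yields no participant when the first eligible shopper does not gaze back.

```python
def select_participant(shoppers_in_order):
    """Apply the selection rule for one trip down the stairs.

    The confederate gazes at the first shopper who appears to be
    between 20 and 50 years old. That shopper becomes a participant
    only if he or she gazes back. Assumption: otherwise the trip
    produces no participant.
    """
    for shopper in shoppers_in_order:
        if 20 <= shopper["apparent_age"] <= 50:
            return shopper if shopper["gazed_back"] else None
    return None


trip = [
    {"apparent_age": 65, "gazed_back": True},   # skipped: outside age range
    {"apparent_age": 34, "gazed_back": True},   # first eligible shopper
    {"apparent_age": 28, "gazed_back": True},   # never considered
]
print(select_participant(trip))  # {'apparent_age': 34, 'gazed_back': True}
```

Because the function never looks at whether the confederate is set to smile, the condition cannot influence who is selected, which is the point of fixing the rule in advance.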

Standardizing the Procedure

It is surprisingly easy to introduce extraneous variables during the procedure. For example, the same experimenter might give clear instructions to one participant but vague instructions to another. Or one experimenter might greet participants warmly while another barely makes eye contact with them. To the extent that such variables affect participants’ behaviour, they add noise to the data and make the effect of the independent variable more difficult to detect. If they vary across conditions, they become confounding variables and provide alternative explanations for the results. For example, if participants in a treatment group are tested by a warm and friendly experimenter and participants in a control group are tested by a cold and unfriendly one, then what appears to be an effect of the treatment might actually be an effect of experimenter demeanor. When there are multiple experimenters, the possibility for introducing extraneous variables is even greater, but is often necessary for practical reasons.

Experimenter’s Sex as an Extraneous Variable

It is well known that whether research participants are male or female can affect the results of a study. But what about whether the experimenter is male or female? There is plenty of evidence that this matters too. Male and female experimenters have slightly different ways of interacting with their participants, and of course participants also respond differently to male and female experimenters (Rosenthal, 1976) [3] .

For example, in a recent study on pain perception, participants immersed their hands in icy water for as long as they could (Ibolya, Brake, & Voss, 2004) [4] . Male participants tolerated the pain longer when the experimenter was a woman, and female participants tolerated it longer when the experimenter was a man.

Researcher Robert Rosenthal has spent much of his career showing that this kind of unintended variation in the procedure does, in fact, affect participants’ behaviour. Furthermore, one important source of such variation is the experimenter’s expectations about how participants “should” behave in the experiment. This outcome is referred to as an experimenter expectancy effect (Rosenthal, 1976) [5] . For example, if an experimenter expects participants in a treatment group to perform better on a task than participants in a control group, then he or she might unintentionally give the treatment group participants clearer instructions or more encouragement or allow them more time to complete the task. In a striking example, Rosenthal and Kermit Fode had several students in a laboratory course in psychology train rats to run through a maze. Although the rats were genetically similar, some of the students were told that they were working with “maze-bright” rats that had been bred to be good learners, and other students were told that they were working with “maze-dull” rats that had been bred to be poor learners. Sure enough, over five days of training, the “maze-bright” rats made more correct responses, made the correct response more quickly, and improved more steadily than the “maze-dull” rats (Rosenthal & Fode, 1963) [6] . Clearly it had to have been the students’ expectations about how the rats would perform that made the difference. But how? Some clues come from data gathered at the end of the study, which showed that students who expected their rats to learn quickly felt more positively about their animals and reported behaving toward them in a more friendly manner (e.g., handling them more).

The way to minimize unintended variation in the procedure is to standardize it as much as possible so that it is carried out in the same way for all participants regardless of the condition they are in. Here are several ways to do this:

- Create a written protocol that specifies everything that the experimenters are to do and say from the time they greet participants to the time they dismiss them.

- Create standard instructions that participants read themselves or that are read to them word for word by the experimenter.

- Automate the rest of the procedure as much as possible by using software packages for this purpose or even simple computer slide shows.

- Anticipate participants’ questions and either raise and answer them in the instructions or develop standard answers for them.

- Train multiple experimenters on the protocol together and have them practice on each other.

- Be sure that each experimenter tests participants in all conditions.
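The last point in the list, having each experimenter test participants in all conditions, can be planned ahead of time with a balanced schedule. The following is a minimal sketch under assumed names (`balanced_schedule`, `sessions_per_cell`); it is one possible way to build such a schedule, not a prescribed procedure.

```python
import itertools
import random
from collections import Counter


def balanced_schedule(experimenters, conditions, sessions_per_cell, seed=0):
    """Return a shuffled list of (experimenter, condition) sessions.

    Every experimenter runs the same number of sessions in every
    condition, so experimenter and condition are not confounded.
    """
    sessions = [
        (experimenter, condition)
        for experimenter, condition in itertools.product(experimenters, conditions)
        for _ in range(sessions_per_cell)
    ]
    random.Random(seed).shuffle(sessions)  # fixed seed keeps the plan reproducible
    return sessions


schedule = balanced_schedule(["A", "B"], ["treatment", "control"], sessions_per_cell=3)
print(len(schedule))      # 12
print(Counter(schedule))  # each (experimenter, condition) pair appears 3 times
```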

Another good practice is to arrange for the experimenters to be “blind” to the research question or to the condition that each participant is tested in. The idea is to minimize experimenter expectancy effects by minimizing the experimenters’ expectations. For example, in a drug study in which each participant receives the drug or a placebo, it is often the case that neither the participants nor the experimenter who interacts with the participants know which condition he or she has been assigned to. Because both the participants and the experimenters are blind to the condition, this technique is referred to as a double-blind study . (A single-blind study is one in which the participant, but not the experimenter, is blind to the condition.) Of course, there are many times this blinding is not possible. For example, if you are both the investigator and the only experimenter, it is not possible for you to remain blind to the research question. Also, in many studies the experimenter must know the condition because he or she must carry out the procedure in a different way in the different conditions.

“Placebo Blocker” retrieved from http://imgs.xkcd.com/comics/placebo_blocker.png (CC-BY-NC 2.5)

Record Keeping

It is essential to keep good records when you conduct an experiment. As discussed earlier, it is typical for experimenters to generate a written sequence of conditions before the study begins and then to test each new participant in the next condition in the sequence. As you test them, it is a good idea to add to this list basic demographic information; the date, time, and place of testing; and the name of the experimenter who did the testing. It is also a good idea to have a place for the experimenter to write down comments about unusual occurrences (e.g., a confused or uncooperative participant) or questions that come up. This kind of information can be useful later if you decide to analyze sex differences or effects of different experimenters, or if a question arises about a particular participant or testing session.

It can also be useful to assign an identification number to each participant as you test them. Simply numbering them consecutively beginning with 1 is usually sufficient. This number can then also be written on any response sheets or questionnaires that participants generate, making it easier to keep them together.
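The record-keeping practices above can be sketched as a small log: a condition sequence generated before the study, consecutive identification numbers, and a record per session. All names here (`SessionRecord`, `StudyLog`, `make_condition_sequence`) are hypothetical, and a paper sheet or spreadsheet would serve equally well.

```python
import random
from dataclasses import dataclass, field


@dataclass
class SessionRecord:
    participant_id: int
    condition: str
    experimenter: str
    tested_at: str          # date and time of testing
    place: str
    demographics: dict = field(default_factory=dict)
    comments: str = ""      # unusual occurrences, questions that came up


def make_condition_sequence(conditions, n_per_condition, seed=0):
    """Generate the full sequence of conditions before the study begins."""
    sequence = list(conditions) * n_per_condition
    random.Random(seed).shuffle(sequence)
    return sequence


class StudyLog:
    def __init__(self, condition_sequence):
        self._sequence = list(condition_sequence)
        self.records = []

    def add_session(self, experimenter, tested_at, place, demographics=None, comments=""):
        """Record the next participant in the next condition of the sequence."""
        participant_id = len(self.records) + 1  # consecutive IDs starting at 1
        record = SessionRecord(
            participant_id=participant_id,
            condition=self._sequence[participant_id - 1],
            experimenter=experimenter,
            tested_at=tested_at,
            place=place,
            demographics=demographics or {},
            comments=comments,
        )
        self.records.append(record)
        return record


log = StudyLog(make_condition_sequence(["stressed", "unstressed"], n_per_condition=10))
first = log.add_session("Experimenter A", "2024-01-15 10:00", "Room 1")
print(first.participant_id, first.condition)
```

In this sketch, adding more sessions than the planned sequence contains raises an `IndexError`, which flags that the plan needs to be extended rather than silently improvising a condition.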

Pilot Testing

It is always a good idea to conduct a pilot test of your experiment. A pilot test is a small-scale study conducted to make sure that a new procedure works as planned. In a pilot test, you can recruit participants formally (e.g., from an established participant pool) or you can recruit them informally from among family, friends, classmates, and so on. The number of participants can be small, but it should be enough to give you confidence that your procedure works as planned. There are several important questions that you can answer by conducting a pilot test:

- Do participants understand the instructions?

- What kind of misunderstandings do participants have, what kind of mistakes do they make, and what kind of questions do they ask?

- Do participants become bored or frustrated?

- Is an indirect manipulation effective? (You will need to include a manipulation check.)

- Can participants guess the research question or hypothesis?

- How long does the procedure take?

- Are computer programs or other automated procedures working properly?

- Are data being recorded correctly?

Of course, to answer some of these questions you will need to observe participants carefully during the procedure and talk with them about it afterward. Participants are often hesitant to criticize a study in front of the researcher, so be sure they understand that their participation is part of a pilot test and you are genuinely interested in feedback that will help you improve the procedure. If the procedure works as planned, then you can proceed with the actual study. If there are problems to be solved, you can solve them, pilot test the new procedure, and continue with this process until you are ready to proceed.

Key Takeaways

- There are several effective methods you can use to recruit research participants for your experiment, including through formal subject pools, advertisements, and personal appeals. Field experiments require well-defined participant selection procedures.

- It is important to standardize experimental procedures to minimize extraneous variables, including experimenter expectancy effects.

- It is important to conduct one or more small-scale pilot tests of an experiment to be sure that the procedure works as planned.

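- Practice: List two ways that you might recruit participants from each of the following populations:

- elderly adults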

- unemployed people

- regular exercisers

- math majors

- Discussion: Imagine a study in which you will visually present participants with a list of 20 words, one at a time, wait for a short time, and then ask them to recall as many of the words as they can. In the stressed condition, they are told that they might also be chosen to give a short speech in front of a small audience. In the unstressed condition, they are not told that they might have to give a speech. What are several specific things that you could do to standardize the procedure?

- Rosenthal, R., & Rosnow, R. L. (1976). The volunteer subject. New York, NY: Wiley.

- Guéguen, N., & de Gail, Marie-Agnès. (2003). The effect of smiling on helping behaviour: Smiling and good Samaritan behaviour. Communication Reports, 16, 133–140.

- Rosenthal, R. (1976). Experimenter effects in behavioural research (enlarged ed.). New York, NY: Wiley.

- Ibolya, K., Brake, A., & Voss, U. (2004). The effect of experimenter characteristics on pain reports in women and men. Pain, 112, 142–147.

- Rosenthal, R., & Fode, K. (1963). The effect of experimenter bias on performance of the albino rat. Behavioural Science, 8, 183–189.

- Research Methods in Psychology. Authored by: Paul C. Price, Rajiv S. Jhangiani, and I-Chant A. Chiang. Provided by: BCCampus. Located at: https://opentextbc.ca/researchmethods/. License: CC BY-NC-SA: Attribution-NonCommercial-ShareAlike

How to Conduct a Psychology Experiment

Kendra Cherry, MS, is a psychosocial rehabilitation specialist, psychology educator, and author of the "Everything Psychology Book."

Emily is a board-certified science editor who has worked with top digital publishing brands like Voices for Biodiversity, Study.com, GoodTherapy, Vox, and Verywell.

Conducting your first psychology experiment can be a long, complicated, and sometimes intimidating process. It can be especially confusing if you are not quite sure where to begin or which steps to take.

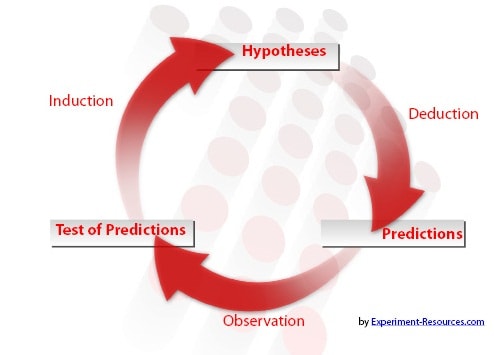

Like other sciences, psychology utilizes the scientific method and bases conclusions upon empirical evidence. When conducting an experiment, it is important to follow the basic steps of the scientific method:

- Ask a testable question

- Define your variables

- Conduct background research

- Design your experiment

- Perform the experiment

- Collect and analyze the data

- Draw conclusions

- Share the results with the scientific community

At a Glance

It's important to know the steps of the scientific method if you are conducting an experiment in psychology or other fields. The process encompasses finding a problem you want to explore, learning what has already been discovered about the topic, determining your variables, and finally designing and performing your experiment. But the process doesn't end there! Once you've collected your data, it's time to analyze the numbers, determine what they mean, and share what you've found.

Find a Research Problem or Question

Picking a research problem can be one of the most challenging steps when you are conducting an experiment. After all, there are so many different topics you might choose to investigate.

Are you stuck for an idea? Consider some of the following:

Investigate a Commonly Held Belief

Folk knowledge is a good source of questions that can serve as the basis for psychological research. For example, many people believe that staying up all night to cram for a big exam can actually hurt test performance.

You could conduct a study to compare the test scores of students who stayed up all night with the scores of students who got a full night's sleep before the exam.

Review Psychology Literature

Published studies are a great source of unanswered research questions. In many cases, the authors will even note the need for further research. Find a published study that you find intriguing, and then come up with some questions that require further exploration.

Think About Everyday Problems

There are many practical applications for psychology research. Explore various problems that you or others face each day, and then consider how you could research potential solutions. For example, you might investigate different memorization strategies to determine which methods are most effective.

Define Your Variables

Variables are anything that might impact the outcome of your study. An operational definition describes exactly what the variables are and how they are measured within the context of your study.

For example, if you were doing a study on the impact of sleep deprivation on driving performance, you would need to operationally define sleep deprivation and driving performance.

An operational definition refers to a precise way that an abstract concept will be measured. For example, you cannot directly observe and measure something like test anxiety. You can, however, use an anxiety scale and assign values based on how many anxiety symptoms a person is experiencing.

In this example, you might define sleep deprivation as getting less than seven hours of sleep at night. You might define driving performance as how well a participant does on a driving test.

What is the purpose of operationally defining variables? The main purpose is control. By understanding what you are measuring, you can control for it by holding the variable constant between all groups or manipulating it as an independent variable.
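Writing an operational definition as code makes it obvious when a definition is still too vague to apply. The sketch below uses the seven-hour cutoff from the example above; the function names and the sample data are hypothetical, and the driving-test score scale is an assumption.

```python
SLEEP_DEPRIVATION_CUTOFF_HOURS = 7  # from the example definition above


def is_sleep_deprived(hours_slept):
    """Operational definition: fewer than seven hours of sleep at night."""
    return hours_slept < SLEEP_DEPRIVATION_CUTOFF_HOURS


def split_by_sleep(participants):
    """Group driving-test scores by the operationally defined sleep status.

    Each participant is a (hours_slept, driving_test_score) pair; the
    score scale is whatever the driving test uses (assumed numeric).
    """
    scores = {"sleep_deprived": [], "not_sleep_deprived": []}
    for hours_slept, driving_test_score in participants:
        key = "sleep_deprived" if is_sleep_deprived(hours_slept) else "not_sleep_deprived"
        scores[key].append(driving_test_score)
    return scores


print(split_by_sleep([(5.5, 62), (8.0, 81), (7.0, 77), (6.9, 70)]))
# {'sleep_deprived': [62, 70], 'not_sleep_deprived': [81, 77]}
```

Note that the definition forces a decision about the boundary case: a participant who slept exactly seven hours is not counted as sleep-deprived here.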

Develop a Hypothesis

The next step is to develop a testable hypothesis that predicts how the operationally defined variables are related. In the previous example, the hypothesis might be: "Students who are sleep-deprived will perform worse than students who are not sleep-deprived on a test of driving performance."

Null Hypothesis

In order to determine if the results of the study are significant, it is essential to also have a null hypothesis. The null hypothesis is the prediction that one variable will have no association with the other variable.

In other words, the null hypothesis assumes that there will be no difference in the effects of the two treatments in our experimental and control groups.

The null hypothesis is assumed to be valid unless contradicted by the results. The experimenters can either reject the null hypothesis in favor of the alternative hypothesis or not reject the null hypothesis.

It is important to remember that not rejecting the null hypothesis does not mean that you are accepting the null hypothesis. To say that you are accepting the null hypothesis is to suggest that something is true simply because you did not find any evidence against it. This represents a logical fallacy that should be avoided in scientific research.
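The two possible outcomes can be made explicit in a small decision function. This is an illustrative sketch: the function name `decide` is invented, and the 0.05 threshold is a common convention assumed here, not something specified in the text above.

```python
def decide(p_value, alpha=0.05):
    """Return the conclusion of a hypothesis test.

    There are only two outcomes: reject the null hypothesis or fail to
    reject it. "Accept the null hypothesis" is deliberately not an option.
    alpha=0.05 is a conventional threshold, assumed for illustration.
    """
    if not 0.0 <= p_value <= 1.0:
        raise ValueError("p_value must be between 0 and 1")
    if p_value < alpha:
        return "reject the null hypothesis"
    return "fail to reject the null hypothesis"


print(decide(0.01))  # reject the null hypothesis
print(decide(0.40))  # fail to reject the null hypothesis
```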

Conduct Background Research

Once you have developed a testable hypothesis, it is important to spend some time doing some background research. What do researchers already know about your topic? What questions remain unanswered?

You can learn about previous research on your topic by exploring books, journal articles, online databases, newspapers, and websites devoted to your subject.

Reading previous research helps you gain a better understanding of what you will encounter when conducting an experiment. Understanding the background of your topic provides a better basis for your own hypothesis.

After conducting a thorough review of the literature, you might choose to alter your own hypothesis. Background research also allows you to explain why you chose to investigate your particular hypothesis and articulate why the topic merits further exploration.

As you research the history of your topic, take careful notes and create a working bibliography of your sources. This information will be valuable when you begin to write up your experiment results.

Select an Experimental Design

After conducting background research and finalizing your hypothesis, your next step is to develop an experimental design. There are three basic types of designs that you might utilize. Each has its own strengths and weaknesses:

Pre-Experimental Design

A single group of participants is studied, and there is no comparison between a treatment group and a control group. Examples of pre-experimental designs include case studies (one group is given a treatment and the results are measured) and pre-test/post-test studies (one group is tested, given a treatment, and then retested).

Quasi-Experimental Design

This type of experimental design does include a control group but does not include randomization. This type of design is often used if it is not feasible or ethical to perform a randomized controlled trial.

True Experimental Design

A true experimental design, also known as a randomized controlled trial, includes both of the elements that pre-experimental designs and quasi-experimental designs lack—control groups and random assignment to groups.
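Random assignment, the feature that separates a true experiment from a quasi-experiment, takes only a few lines. The sketch below is one simple approach (shuffle, then deal participants out in turn); the function name and group labels are hypothetical.

```python
import random


def randomly_assign(participant_ids, groups=("control", "treatment"), seed=None):
    """Randomly assign participants to groups of (nearly) equal size."""
    rng = random.Random(seed)
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)  # chance alone decides the order
    assignment = {group: [] for group in groups}
    for index, participant in enumerate(shuffled):
        assignment[groups[index % len(groups)]].append(participant)
    return assignment


assignment = randomly_assign(range(1, 21), seed=42)
print({group: len(members) for group, members in assignment.items()})
# {'control': 10, 'treatment': 10}
```

Passing a `seed` makes the assignment reproducible, which helps when the procedure has to be documented or audited later.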

Standardize Your Procedures

In order to arrive at legitimate conclusions, it is essential to compare apples to apples.

Each participant in each group must receive the same treatment under the same conditions.

For example, in our hypothetical study on the effects of sleep deprivation on driving performance, the driving test must be administered to each participant in the same way. The driving course must be the same, the obstacles faced must be the same, and the time given must be the same.

Choose Your Participants

In addition to making sure that the testing conditions are standardized, it is also essential to ensure that your pool of participants is the same.

If the individuals in your control group (those who are not sleep deprived) all happen to be amateur race car drivers while your experimental group (those that are sleep deprived) are all people who just recently earned their driver's licenses, your experiment will lack standardization.

When choosing subjects, there are some different techniques you can use.

Simple Random Sample

In a simple random sample, the participants are randomly selected from a group. A simple random sample can be used to represent the entire population from which the representative sample is drawn.

Drawing a simple random sample can be helpful when you don't know a lot about the characteristics of the population.

Stratified Random Sample

Participants must be randomly selected from different subsets of the population. These subsets might include characteristics such as geographic location, age, sex, race, or socioeconomic status.

Stratified random samples are more complex to carry out. However, you might opt for this method if there are key characteristics about the population that you want to explore in your research.
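The difference between the two sampling techniques is easier to see side by side. In this minimal sketch the function names, the `stratum_of` callback, and the toy population are all assumptions made for illustration; it draws the same number of people from every stratum, which is only one of several ways to stratify.

```python
import random
from collections import defaultdict


def simple_random_sample(population, n, seed=None):
    """Every member of the population has the same chance of selection."""
    return random.Random(seed).sample(list(population), n)


def stratified_random_sample(population, stratum_of, n_per_stratum, seed=None):
    """Randomly select n_per_stratum members from each subset (stratum)."""
    rng = random.Random(seed)
    strata = defaultdict(list)
    for person in population:
        strata[stratum_of(person)].append(person)
    sample = []
    for members in strata.values():
        sample.extend(rng.sample(members, n_per_stratum))
    return sample


# Toy population: (id, age group) pairs, 30 people in each age group.
population = [(i, "18-29") for i in range(30)] + [(i, "30+") for i in range(30, 60)]
print(simple_random_sample(population, 6, seed=1))
print(stratified_random_sample(population, lambda p: p[1], 3, seed=1))
```

The simple random sample may by chance over-represent one age group, while the stratified sample is guaranteed to contain three people from each.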

Conduct Tests and Collect Data

After you have selected participants, the next steps are to conduct your tests and collect the data. Before doing any testing, however, there are a few important concerns that need to be addressed.

Address Ethical Concerns

First, you need to be sure that your testing procedures are ethical. Generally, you will need to gain permission to conduct any type of testing with human participants by submitting the details of your experiment to your school's Institutional Review Board (IRB), sometimes referred to as the Human Subjects Committee.

Obtain Informed Consent

After you have gained approval from your institution's IRB, you will need to present informed consent forms to each participant. This form offers information on the study, the data that will be gathered, and how the results will be used. The form also gives participants the option to withdraw from the study at any point in time.

Once this step has been completed, you can begin administering your testing procedures and collecting the data.

Analyze the Results

After collecting your data, it is time to analyze the results of your experiment. Researchers use statistics to determine if the results of the study support the original hypothesis and if the results are statistically significant.

Statistical significance means that the study's results are unlikely to have occurred simply by chance.
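One way to see what "unlikely to have occurred simply by chance" means is a permutation test: shuffle the group labels many times and count how often chance alone produces a difference at least as large as the one observed. This is a sketch of one possible analysis using only the standard library, with invented scores; it is not the method any particular study must use.

```python
import random
from statistics import mean


def permutation_p_value(group_a, group_b, n_permutations=2000, seed=0):
    """Two-sided permutation test for a difference in group means."""
    rng = random.Random(seed)
    observed = abs(mean(group_a) - mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    at_least_as_extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # reassign scores to groups purely by chance
        difference = abs(mean(pooled[:n_a]) - mean(pooled[n_a:]))
        if difference >= observed:
            at_least_as_extreme += 1
    # Add 1 to numerator and denominator so the estimate is never exactly 0.
    return (at_least_as_extreme + 1) / (n_permutations + 1)


# Hypothetical driving-test scores, invented for illustration.
rested = [11, 12, 13, 14, 15, 16]
sleep_deprived = [1, 2, 3, 4, 5, 6]
print(permutation_p_value(rested, sleep_deprived))  # a small value
```

A small result means that random relabeling rarely reproduces the observed difference, which is the sense in which the result is statistically significant.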

The types of statistical methods you use to analyze your data depend largely on the type of data that you collected. If you are using a random sample of a larger population, you will need to utilize inferential statistics.

These statistical methods make inferences about how the results relate to the population at large.

Because you are making inferences based on a sample, it has to be assumed that there will be a certain margin of error. This refers to the amount of error in your results. A large margin of error means that there will be less confidence in your results, while a small margin of error means that you are more confident that your results are an accurate reflection of what exists in that population.
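For a sample mean, a margin of error is commonly approximated as z times the standard error, where the standard error is the sample standard deviation divided by the square root of the sample size. The sketch below assumes a 95% confidence level with the normal approximation (z = 1.96); the function name and the data are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev


def margin_of_error(sample, z=1.96):
    """Approximate margin of error for the sample mean.

    z=1.96 corresponds to a 95% confidence level under the normal
    approximation, which is an assumption made for this illustration.
    """
    return z * stdev(sample) / sqrt(len(sample))


scores = [2, 4, 4, 4, 5, 5, 7, 9]  # invented data
moe = margin_of_error(scores)
print(f"{mean(scores)} +/- {moe:.2f}")  # 5 +/- 1.48
```

Because the sample size sits under a square root in the denominator, collecting more data with similar spread shrinks the margin of error, but with diminishing returns.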

Share Your Results After Conducting an Experiment

Your final task in conducting an experiment is to communicate your results. By sharing your experiment with the scientific community, you are contributing to the knowledge base on that particular topic.

One of the most common ways to share research results is to publish the study in a peer-reviewed professional journal. Other methods include sharing results at conferences, in book chapters, or academic presentations.

In your case, it is likely that your class instructor will expect a formal write-up of your experiment in the same format required in a professional journal article or lab report:

- Introduction

- Tables and figures

What This Means For You

Designing and conducting a psychology experiment can be quite intimidating, but breaking the process down step-by-step can help. No matter what type of experiment you decide to perform, always check with your instructor and your school's institutional review board for permission before you begin.


By Kendra Cherry, MSEd Kendra Cherry, MS, is a psychosocial rehabilitation specialist, psychology educator, and author of the "Everything Psychology Book."


Experimental Research

23 Experiment Basics

Learning Objectives

- Explain what an experiment is and recognize examples of studies that are experiments and studies that are not experiments.

- Distinguish between the manipulation of the independent variable and control of extraneous variables and explain the importance of each.

- Recognize examples of confounding variables and explain how they affect the internal validity of a study.

- Define what a control condition is, explain its purpose in research on treatment effectiveness, and describe some alternative types of control conditions.

What Is an Experiment?

As we saw earlier in the book, an experiment is a type of study designed specifically to answer the question of whether there is a causal relationship between two variables. In other words, an experiment tests whether changes in one variable (referred to as an independent variable) cause a change in another variable (referred to as a dependent variable). Experiments have two fundamental features. The first is that the researchers manipulate, or systematically vary, the level of the independent variable. The different levels of the independent variable are called conditions. For example, in Darley and Latané’s experiment, the independent variable was the number of witnesses that participants believed to be present. The researchers manipulated this independent variable by telling participants that there were either one, two, or five other students involved in the discussion, thereby creating three conditions. A new researcher can easily confuse these terms and conclude that there are three independent variables in this situation (one, two, or five students involved in the discussion), but there is actually only one independent variable (number of witnesses) with three different levels or conditions (one, two, or five students). The second fundamental feature of an experiment is that the researcher exerts control over, or minimizes the variability in, variables other than the independent and dependent variables. These other variables are called extraneous variables. Darley and Latané tested all their participants in the same room, exposed them to the same emergency situation, and so on. They also randomly assigned their participants to conditions so that the three groups would be similar to each other to begin with. Notice that although the words manipulation and control have similar meanings in everyday language, researchers make a clear distinction between them. They manipulate the independent variable by systematically changing its levels and control other variables by holding them constant.
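The idea of one independent variable with three conditions, combined with random assignment, can be sketched in a few lines. This is a minimal illustration rather than a description of the original study's procedure; the participant IDs, the group size, and the function name `assign_to_conditions` are all hypothetical.

```python
import random


def assign_to_conditions(participants, conditions, seed=None):
    """Randomly assign participants to conditions in near-equal group sizes."""
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)
    assignment = {condition: [] for condition in conditions}
    # Deal the shuffled participants out to the conditions in turn.
    for index, participant in enumerate(shuffled):
        condition = conditions[index % len(conditions)]
        assignment[condition].append(participant)
    return assignment


# One independent variable (number of witnesses) with three levels.
conditions = ["one witness", "two witnesses", "five witnesses"]
participants = [f"P{n:02d}" for n in range(1, 31)]  # hypothetical participant IDs

groups = assign_to_conditions(participants, conditions, seed=42)
for condition, members in groups.items():
    print(condition, len(members))
```

Note that `conditions` is a single list: there is one independent variable, and its entries are the levels of that variable.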

Manipulation of the Independent Variable

Again, to manipulate an independent variable means to change its level systematically so that different groups of participants are exposed to different levels of that variable, or the same group of participants is exposed to different levels at different times. For example, to see whether expressive writing affects people’s health, a researcher might instruct some participants to write about traumatic experiences and others to write about neutral experiences. The different levels of the independent variable are referred to as conditions, and researchers often give the conditions short descriptive names to make it easy to talk and write about them. In this case, the conditions might be called the “traumatic condition” and the “neutral condition.”

Notice that the manipulation of an independent variable must involve the active intervention of the researcher. Comparing groups of people who differ on the independent variable before the study begins is not the same as manipulating that variable. For example, a researcher who compares the health of people who already keep a journal with the health of people who do not keep a journal has not manipulated this variable and therefore has not conducted an experiment. This distinction is important because groups that already differ in one way at the beginning of a study are likely to differ in other ways too. For example, people who choose to keep journals might also be more conscientious, more introverted, or less stressed than people who do not. Therefore, any observed difference between the two groups in terms of their health might have been caused by whether or not they keep a journal, or it might have been caused by any of the other differences between people who do and do not keep journals. Thus the active manipulation of the independent variable is crucial for eliminating potential alternative explanations for the results.

Of course, there are many situations in which the independent variable cannot be manipulated for practical or ethical reasons and therefore an experiment is not possible. For example, whether or not people have a significant early illness experience cannot be manipulated, making it impossible to conduct an experiment on the effect of early illness experiences on the development of hypochondriasis. This caveat does not mean it is impossible to study the relationship between early illness experiences and hypochondriasis—only that it must be done using nonexperimental approaches. We will discuss this type of methodology in detail later in the book.

Independent variables can be manipulated to create two conditions, and experiments involving a single independent variable with two conditions are often referred to as a single-factor two-level design. However, sometimes greater insights can be gained by adding more conditions to an experiment. When an experiment has one independent variable that is manipulated to produce more than two conditions, it is referred to as a single-factor multi-level design. So rather than comparing a condition in which there was one witness to a condition in which there were five witnesses (which would represent a single-factor two-level design), Darley and Latané’s experiment used a single-factor multi-level design, manipulating the independent variable to produce three conditions (a one-witness, a two-witness, and a five-witness condition).

Control of Extraneous Variables

As we have seen previously in the chapter, an extraneous variable is anything that varies in the context of a study other than the independent and dependent variables. In an experiment on the effect of expressive writing on health, for example, extraneous variables would include participant variables (individual differences) such as their writing ability, their diet, and their gender. They would also include situational or task variables such as the time of day when participants write, whether they write by hand or on a computer, and the weather. Extraneous variables pose a problem because many of them are likely to have some effect on the dependent variable. For example, participants’ health will be affected by many things other than whether or not they engage in expressive writing. This influencing factor can make it difficult to separate the effect of the independent variable from the effects of the extraneous variables, which is why it is important to control extraneous variables by holding them constant.

Extraneous Variables as “Noise”

Extraneous variables make it difficult to detect the effect of the independent variable in two ways. One is by adding variability or “noise” to the data. Imagine a simple experiment on the effect of mood (happy vs. sad) on the number of happy childhood events people are able to recall. Participants are put into a negative or positive mood (by showing them a happy or sad video clip) and then asked to recall as many happy childhood events as they can. The two leftmost columns of Table 5.1 show what the data might look like if there were no extraneous variables and the number of happy childhood events participants recalled was affected only by their moods. Every participant in the happy mood condition recalled exactly four happy childhood events, and every participant in the sad mood condition recalled exactly three. The effect of mood here is quite obvious. In reality, however, the data would probably look more like those in the two rightmost columns of Table 5.1 . Even in the happy mood condition, some participants would recall fewer happy memories because they have fewer to draw on, use less effective recall strategies, or are less motivated. And even in the sad mood condition, some participants would recall more happy childhood memories because they have more happy memories to draw on, they use more effective recall strategies, or they are more motivated. Although the mean difference between the two groups is the same as in the idealized data, this difference is much less obvious in the context of the greater variability in the data. Thus one reason researchers try to control extraneous variables is so their data look more like the idealized data in Table 5.1 , which makes the effect of the independent variable easier to detect (although real data never look quite that good).
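The contrast between idealized and noisy data can be sketched numerically. The values below are invented for illustration and are not the contents of the table referenced above; the helper name `summarize` is hypothetical. Both data sets have the same mean difference between conditions, but the noisy set has far more within-group variability.

```python
from statistics import mean, pstdev


def summarize(happy, sad):
    """Return the mean difference and the average within-group spread."""
    mean_difference = mean(happy) - mean(sad)
    within_group_sd = (pstdev(happy) + pstdev(sad)) / 2
    return mean_difference, within_group_sd


# Idealized data: mood is the only thing affecting recall.
ideal_happy = [4] * 10
ideal_sad = [3] * 10

# Noisy data (invented): same group means, but extraneous variables add spread.
noisy_happy = [3, 6, 2, 4, 3, 5, 6, 2, 5, 4]
noisy_sad = [1, 3, 5, 4, 1, 3, 2, 3, 5, 3]

ideal_diff, ideal_sd = summarize(ideal_happy, ideal_sad)
noisy_diff, noisy_sd = summarize(noisy_happy, noisy_sad)

print(f"Idealized: difference = {ideal_diff}, within-group SD = {ideal_sd:.2f}")
print(f"Noisy:     difference = {noisy_diff}, within-group SD = {noisy_sd:.2f}")
```

The mean difference is identical in both cases; what changes is how large that difference looks relative to the spread within each group.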

One way to control extraneous variables is to hold them constant. This technique can mean holding situation or task variables constant by testing all participants in the same location, giving them identical instructions, treating them in the same way, and so on. It can also mean holding participant variables constant. For example, many studies of language limit participants to right-handed people, who generally have their language areas isolated in their left cerebral hemispheres [1] . Left-handed people are more likely to have their language areas isolated in their right cerebral hemispheres or distributed across both hemispheres, which can change the way they process language and thereby add noise to the data.

In principle, researchers can control extraneous variables by limiting participants to one very specific category of person, such as 20-year-old, heterosexual, female, right-handed psychology majors. The obvious downside to this approach is that it would lower the external validity of the study—in particular, the extent to which the results can be generalized beyond the people actually studied. For example, it might be unclear whether results obtained with a sample of younger lesbian women would apply to older gay men. In many situations, the advantages of a diverse sample (increased external validity) outweigh the reduction in noise achieved by a homogeneous one.

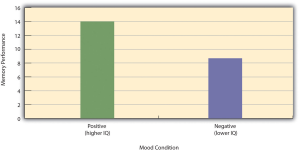

Extraneous Variables as Confounding Variables

The second way that extraneous variables can make it difficult to detect the effect of the independent variable is by becoming confounding variables. A confounding variable is an extraneous variable that differs on average across levels of the independent variable (i.e., it is an extraneous variable that varies systematically with the independent variable). For example, in almost all experiments, participants’ intelligence quotients (IQs) will be an extraneous variable. But as long as there are participants with lower and higher IQs in each condition so that the average IQ is roughly equal across the conditions, then this variation is probably acceptable (and may even be desirable). What would be bad, however, would be for participants in one condition to have substantially lower IQs on average and participants in another condition to have substantially higher IQs on average. In this case, IQ would be a confounding variable.

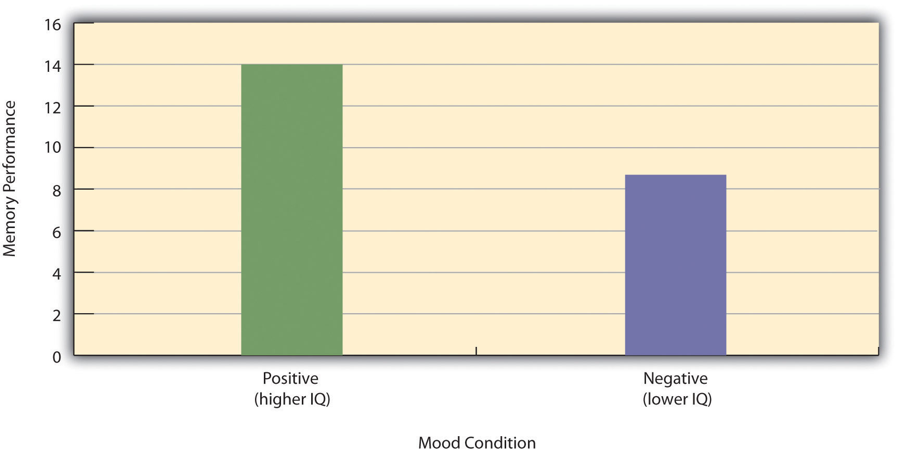

To confound means to confuse , and this effect is exactly why confounding variables are undesirable. Because they differ systematically across conditions—just like the independent variable—they provide an alternative explanation for any observed difference in the dependent variable. Figure 5.1 shows the results of a hypothetical study, in which participants in a positive mood condition scored higher on a memory task than participants in a negative mood condition. But if IQ is a confounding variable—with participants in the positive mood condition having higher IQs on average than participants in the negative mood condition—then it is unclear whether it was the positive moods or the higher IQs that caused participants in the first condition to score higher. One way to avoid confounding variables is by holding extraneous variables constant. For example, one could prevent IQ from becoming a confounding variable by limiting participants only to those with IQs of exactly 100. But this approach is not always desirable for reasons we have already discussed. A second and much more general approach—random assignment to conditions—will be discussed in detail shortly.
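A short simulation can illustrate why systematic differences across conditions are a problem and why random assignment helps. This is a sketch under stated assumptions: the IQ scores are simulated from a normal distribution with mean 100 and standard deviation 15, the group sizes are arbitrary, and the helper name `mean_gap` is hypothetical.

```python
import random
from statistics import mean

rng = random.Random(1)
# Simulated IQ scores for 200 hypothetical participants.
iqs = [rng.gauss(100, 15) for _ in range(200)]


def mean_gap(group_a, group_b):
    """Difference in average IQ between two conditions."""
    return mean(group_a) - mean(group_b)


# Confounded assignment: the higher-IQ half all end up in the
# positive mood condition, so IQ varies systematically with mood.
ranked = sorted(iqs)
confounded_gap = mean_gap(ranked[100:], ranked[:100])

# Random assignment: shuffle first, then split into two conditions.
shuffled = iqs[:]
rng.shuffle(shuffled)
random_gap = mean_gap(shuffled[:100], shuffled[100:])

print(f"IQ gap with confounded assignment: {confounded_gap:.1f}")
print(f"IQ gap with random assignment:     {random_gap:.1f}")
```

Under the confounded assignment, any difference in memory scores could be attributed to either mood or IQ; under random assignment, the average IQ of the two conditions is much closer, so IQ is a far less plausible alternative explanation.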

Treatment and Control Conditions

In psychological research, a treatment is any intervention meant to change people’s behavior for the better. This intervention includes psychotherapies and medical treatments for psychological disorders but also interventions designed to improve learning, promote conservation, reduce prejudice, and so on. To determine whether a treatment works, participants are randomly assigned to either a treatment condition , in which they receive the treatment, or a control condition , in which they do not receive the treatment. If participants in the treatment condition end up better off than participants in the control condition—for example, they are less depressed, learn faster, conserve more, express less prejudice—then the researcher can conclude that the treatment works. In research on the effectiveness of psychotherapies and medical treatments, this type of experiment is often called a randomized clinical trial .

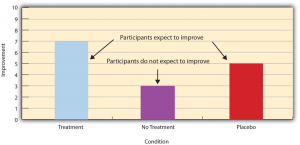

There are different types of control conditions. In a no-treatment control condition , participants receive no treatment whatsoever. One problem with this approach, however, is the existence of placebo effects. A placebo is a simulated treatment that lacks any active ingredient or element that should make it effective, and a placebo effect is a positive effect of such a treatment. Many folk remedies that seem to work—such as eating chicken soup for a cold or placing soap under the bed sheets to stop nighttime leg cramps—are probably nothing more than placebos. Although placebo effects are not well understood, they are probably driven primarily by people’s expectations that they will improve. Having the expectation to improve can result in reduced stress, anxiety, and depression, which can alter perceptions and even improve immune system functioning (Price, Finniss, & Benedetti, 2008) [2] .

Placebo effects are interesting in their own right (see Note “The Powerful Placebo” ), but they also pose a serious problem for researchers who want to determine whether a treatment works. Figure 5.2 shows some hypothetical results in which participants in a treatment condition improved more on average than participants in a no-treatment control condition. If these conditions (the two leftmost bars in Figure 5.2 ) were the only conditions in this experiment, however, one could not conclude that the treatment worked. It could be instead that participants in the treatment group improved more because they expected to improve, while those in the no-treatment control condition did not.

Fortunately, there are several solutions to this problem. One is to include a placebo control condition, in which participants receive a placebo that looks much like the treatment but lacks the active ingredient or element thought to be responsible for the treatment’s effectiveness. When participants in a treatment condition take a pill, for example, then those in a placebo control condition would take an identical-looking pill that lacks the active ingredient in the treatment (a “sugar pill”). In research on psychotherapy effectiveness, the placebo might involve going to a psychotherapist and talking in an unstructured way about one’s problems. The idea is that if participants in both the treatment and the placebo control groups expect to improve, then any improvement in the treatment group over and above that in the placebo control group must have been caused by the treatment and not by participants’ expectations. This difference is what is shown by a comparison of the two outer bars in Figure 5.2.
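The logic of comparing three conditions can be written out as simple arithmetic. The improvement scores below are invented for illustration, and the function name `decompose_improvement` is hypothetical; this is a sketch of the reasoning, not an analysis of real data.

```python
from statistics import mean


def decompose_improvement(treatment, placebo, no_treatment):
    """Split average improvement into a placebo effect and a treatment effect.

    placebo_effect:   improvement attributable to expectations alone
    treatment_effect: improvement over and above the placebo
    """
    return {
        "placebo_effect": mean(placebo) - mean(no_treatment),
        "treatment_effect": mean(treatment) - mean(placebo),
    }


# Hypothetical improvement scores for three conditions.
treatment_scores = [9, 11, 10, 12, 8]
placebo_scores = [6, 7, 5, 8, 4]
no_treatment_scores = [2, 3, 1, 4, 0]

effects = decompose_improvement(treatment_scores, placebo_scores, no_treatment_scores)
print(effects)
```

Without the placebo condition, the full difference between the treatment and no-treatment groups would be credited to the treatment, even though part of it reflects participants' expectations.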

Of course, the principle of informed consent requires that participants be told that they will be assigned to either a treatment or a placebo control condition, even though they cannot be told which until the experiment ends. In many cases the participants who had been in the control condition are then offered an opportunity to have the real treatment. An alternative approach is to use a wait-list control condition, in which participants are told that they will receive the treatment but must wait until the participants in the treatment condition have already received it. This disclosure allows researchers to compare participants who have received the treatment with participants who are not currently receiving it but who still expect to improve (eventually). A final solution to the problem of placebo effects is to leave out the control condition completely and compare any new treatment with the best available alternative treatment. For example, a new treatment for simple phobia could be compared with standard exposure therapy. Because participants in both conditions receive a treatment, their expectations about improvement should be similar. This approach also makes sense because once there is an effective treatment, the interesting question about a new treatment is not simply “Does it work?” but “Does it work better than what is already available?”

The Powerful Placebo

Many people are not surprised that placebos can have a positive effect on disorders that seem fundamentally psychological, including depression, anxiety, and insomnia. However, placebos can also have a positive effect on disorders that most people think of as fundamentally physiological. These include asthma, ulcers, and warts (Shapiro & Shapiro, 1999) [3] . There is even evidence that placebo surgery—also called “sham surgery”—can be as effective as actual surgery.

Medical researcher J. Bruce Moseley and his colleagues conducted a study on the effectiveness of two arthroscopic surgery procedures for osteoarthritis of the knee (Moseley et al., 2002) [4] . The control participants in this study were prepped for surgery, received a tranquilizer, and even received three small incisions in their knees. But they did not receive the actual arthroscopic surgical procedure. Note that the IRB would have carefully considered the use of deception in this case and judged that the benefits of using it outweighed the risks and that there was no other way to answer the research question (about the effectiveness of a placebo procedure) without it. The surprising result was that all participants improved in terms of both knee pain and function, and the sham surgery group improved just as much as the treatment groups. According to the researchers, “This study provides strong evidence that arthroscopic lavage with or without débridement [the surgical procedures used] is not better than and appears to be equivalent to a placebo procedure in improving knee pain and self-reported function” (p. 85).

- Knecht, S., Dräger, B., Deppe, M., Bobe, L., Lohmann, H., Flöel, A., . . . Henningsen, H. (2000). Handedness and hemispheric language dominance in healthy humans. Brain: A Journal of Neurology, 123(12), 2512-2518. http://dx.doi.org/10.1093/brain/123.12.2512

- Price, D. D., Finniss, D. G., & Benedetti, F. (2008). A comprehensive review of the placebo effect: Recent advances and current thought. Annual Review of Psychology, 59, 565–590.

- Shapiro, A. K., & Shapiro, E. (1999). The powerful placebo: From ancient priest to modern physician. Baltimore, MD: Johns Hopkins University Press.

- Moseley, J. B., O’Malley, K., Petersen, N. J., Menke, T. J., Brody, B. A., Kuykendall, D. H., … Wray, N. P. (2002). A controlled trial of arthroscopic surgery for osteoarthritis of the knee. The New England Journal of Medicine, 347, 81–88.

Experiment: A type of study designed specifically to answer the question of whether there is a causal relationship between two variables.

Independent variable: The variable the experimenter manipulates.

Dependent variable: The variable the experimenter measures (it is the presumed effect).

Conditions: The different levels of the independent variable to which participants are assigned.

Control: Holding extraneous variables constant in order to separate the effect of the independent variable from the effect of the extraneous variables.

Extraneous variable: Any variable other than the independent and dependent variables.

Manipulation: Changing the level, or condition, of the independent variable systematically so that different groups of participants are exposed to different levels of that variable, or the same group of participants is exposed to different levels at different times.

Single-factor two-level design: An experiment design involving a single independent variable with two conditions.

Single-factor multi-level design: An experiment design in which one independent variable is manipulated to produce more than two conditions.

Confounding variable: An extraneous variable that varies systematically with the independent variable, and thus confuses the effect of the independent variable with the effect of the extraneous one.

Treatment: Any intervention meant to change people’s behavior for the better.

Treatment condition: The condition in which participants receive the treatment.

Control condition: The condition in which participants do not receive the treatment.

Randomized clinical trial: An experiment that researches the effectiveness of psychotherapies and medical treatments.

No-treatment control condition: The condition in which participants receive no treatment whatsoever.

Placebo: A simulated treatment that lacks any active ingredient or element that is hypothesized to make the treatment effective, but is otherwise identical to the treatment.

Placebo effect: An effect that is due to the placebo rather than the treatment.

Placebo control condition: The condition in which participants receive a placebo rather than the treatment.

Wait-list control condition: The condition in which participants are told that they will receive the treatment but must wait until the participants in the treatment condition have already received it.

Research Methods in Psychology Copyright © 2019 by Rajiv S. Jhangiani, I-Chant A. Chiang, Carrie Cuttler, & Dana C. Leighton is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License , except where otherwise noted.


5.1: Experiment Basics


Learning Objectives

- Explain what an experiment is and recognize examples of studies that are experiments and studies that are not experiments.

- Distinguish between the manipulation of the independent variable and control of extraneous variables and explain the importance of each.

- Recognize examples of confounding variables and explain how they affect the internal validity of a study.

What Is an Experiment?

As we saw earlier in the book, an experiment is a type of study designed specifically to answer the question of whether there is a causal relationship between two variables. In other words, an experiment tests whether changes in an independent variable cause a change in a dependent variable. Experiments have two fundamental features. The first is that the researchers manipulate, or systematically vary, the level of the independent variable. The different levels of the independent variable are called conditions. For example, in Darley and Latané’s experiment, the independent variable was the number of witnesses that participants believed to be present. The researchers manipulated this independent variable by telling participants that there were either one, two, or five other students involved in the discussion, thereby creating three conditions. A new researcher can easily confuse these terms and conclude that there are three independent variables in this situation (one, two, or five students involved in the discussion), but there is actually only one independent variable (number of witnesses) with three different levels or conditions (one, two, or five students). The second fundamental feature of an experiment is that the researcher controls, or minimizes the variability in, variables other than the independent and dependent variables. These other variables are called extraneous variables. Darley and Latané tested all their participants in the same room, exposed them to the same emergency situation, and so on. They also randomly assigned their participants to conditions so that the three groups would be similar to each other to begin with. Notice that although the words manipulation and control have similar meanings in everyday language, researchers make a clear distinction between them. They manipulate the independent variable by systematically changing its levels and control other variables by holding them constant.

Manipulation of the Independent Variable

Again, to manipulate an independent variable means to change its level systematically so that different groups of participants are exposed to different levels of that variable, or the same group of participants is exposed to different levels at different times. For example, to see whether expressive writing affects people’s health, a researcher might instruct some participants to write about traumatic experiences and others to write about neutral experiences. As discussed earlier in this chapter, the different levels of the independent variable are referred to as conditions, and researchers often give the conditions short descriptive names to make it easy to talk and write about them. In this case, the conditions might be called the “traumatic condition” and the “neutral condition.”

Notice that the manipulation of an independent variable must involve the active intervention of the researcher. Comparing groups of people who differ on the independent variable before the study begins is not the same as manipulating that variable. For example, a researcher who compares the health of people who already keep a journal with the health of people who do not keep a journal has not manipulated this variable and therefore has not conducted an experiment. This distinction is important because groups that already differ in one way at the beginning of a study are likely to differ in other ways too. For example, people who choose to keep journals might also be more conscientious, more introverted, or less stressed than people who do not. Therefore, any observed difference between the two groups in terms of their health might have been caused by whether or not they keep a journal, or it might have been caused by any of the other differences between people who do and do not keep journals. Thus the active manipulation of the independent variable is crucial for eliminating potential alternative explanations for the results.

Of course, there are many situations in which the independent variable cannot be manipulated for practical or ethical reasons and therefore an experiment is not possible. For example, whether or not people have a significant early illness experience cannot be manipulated, making it impossible to conduct an experiment on the effect of early illness experiences on the development of hypochondriasis. This caveat does not mean it is impossible to study the relationship between early illness experiences and hypochondriasis—only that it must be done using nonexperimental approaches. We will discuss this type of methodology in detail later in the book.

Independent variables can be manipulated to create two conditions, and experiments involving a single independent variable with two conditions are often referred to as a single-factor two-level design. However, sometimes greater insights can be gained by adding more conditions to an experiment. When an experiment has one independent variable that is manipulated to produce more than two conditions, it is referred to as a single-factor multi-level design. So rather than comparing a condition in which there was one witness to a condition in which there were five witnesses (which would represent a single-factor two-level design), Darley and Latané used a single-factor multi-level design, manipulating the independent variable to produce three conditions (a one-witness, a two-witness, and a five-witness condition).

Control of Extraneous Variables

As we have seen previously in the chapter, an extraneous variable is anything that varies in the context of a study other than the independent and dependent variables. In an experiment on the effect of expressive writing on health, for example, extraneous variables would include participant variables (individual differences) such as participants’ writing ability, diet, and gender. They would also include situational or task variables such as the time of day when participants write, whether they write by hand or on a computer, and the weather. Extraneous variables pose a problem because many of them are likely to have some effect on the dependent variable. For example, participants’ health will be affected by many things other than whether or not they engage in expressive writing. These other influences can make it difficult to separate the effect of the independent variable from the effects of the extraneous variables, which is why it is important to control extraneous variables by holding them constant.

Extraneous Variables as “Noise”

Extraneous variables make it difficult to detect the effect of the independent variable in two ways. One is by adding variability or “noise” to the data. Imagine a simple experiment on the effect of mood (happy vs. sad) on the number of happy childhood events people are able to recall. Participants are put into a positive or negative mood (by showing them a happy or sad video clip) and then asked to recall as many happy childhood events as they can. The two leftmost columns of Table 1 show what the data might look like if there were no extraneous variables and the number of happy childhood events participants recalled was affected only by their moods. Every participant in the happy mood condition recalled exactly four happy childhood events, and every participant in the sad mood condition recalled exactly three. The effect of mood here is quite obvious. In reality, however, the data would probably look more like those in the two rightmost columns of Table 1. Even in the happy mood condition, some participants would recall fewer happy memories because they have fewer to draw on, use less effective recall strategies, or are less motivated. And even in the sad mood condition, some participants would recall more happy childhood memories because they have more happy memories to draw on, they use more effective recall strategies, or they are more motivated. Although the mean difference between the two groups is the same as in the idealized data, this difference is much less obvious in the context of the greater variability in the data. Thus one reason researchers try to control extraneous variables is so their data look more like the idealized data in Table 1, which makes the effect of the independent variable easier to detect (although real data never look quite that good).
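The idea of noise in the mood-and-recall example can be made concrete with a small calculation. The Python sketch below uses invented recall counts (they are assumptions for illustration, not data from any study) in which both data sets have the same mean difference of one event, but the noisy set has far more spread within each condition. The helper names `mean_difference` and `pooled_sd` are hypothetical.

```python
import statistics

# Invented recall counts (number of happy childhood events recalled).
# These numbers are assumptions chosen so that both data sets have
# condition means of 4 (happy) and 3 (sad).
ideal_happy = [4, 4, 4, 4, 4, 4]
ideal_sad = [3, 3, 3, 3, 3, 3]
noisy_happy = [3, 6, 2, 4, 5, 4]
noisy_sad = [1, 3, 5, 2, 4, 3]


def mean_difference(group_a, group_b):
    """Difference between the two condition means."""
    return statistics.mean(group_a) - statistics.mean(group_b)


def pooled_sd(group_a, group_b):
    """Pooled standard deviation for two groups of equal size."""
    var_a = statistics.variance(group_a)
    var_b = statistics.variance(group_b)
    return ((var_a + var_b) / 2) ** 0.5


for label, happy, sad in [
    ("idealized", ideal_happy, ideal_sad),
    ("noisy", noisy_happy, noisy_sad),
]:
    diff = mean_difference(happy, sad)
    spread = pooled_sd(happy, sad)
    print(f"{label}: mean difference = {diff}, pooled SD = {spread:.2f}")
```

In the idealized data the spread within each condition is zero, so the one-event difference stands out completely. In the noisy data the same one-event difference is smaller than the typical spread among participants in a single condition, which is why it is harder to detect.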

One way to control extraneous variables is to hold them constant. This technique can mean holding situation or task variables constant by testing all participants in the same location, giving them identical instructions, treating them in the same way, and so on. It can also mean holding participant variables constant. For example, many studies of language limit participants to right-handed people, who generally have their language areas isolated in their left cerebral hemispheres. Left-handed people are more likely to have their language areas isolated in their right cerebral hemispheres or distributed across both hemispheres, which can change the way they process language and thereby add noise to the data.

In principle, researchers can control extraneous variables by limiting participants to one very specific category of person, such as 20-year-old, heterosexual, female, right-handed psychology majors. The obvious downside to this approach is that it would lower the external validity of the study—in particular, the extent to which the results can be generalized beyond the people actually studied. For example, it might be unclear whether results obtained with a sample of younger heterosexual women would apply to older homosexual men. In many situations, the advantages of a diverse sample (increased external validity) outweigh the reduction in noise achieved by a homogeneous one.

Extraneous Variables as Confounding Variables

The second way that extraneous variables can make it difficult to detect the effect of the independent variable is by becoming confounding variables. A confounding variable is an extraneous variable that differs on average across levels of the independent variable (i.e., it is an extraneous variable that varies systematically with the independent variable). For example, in almost all experiments, participants’ intelligence quotients (IQs) will be an extraneous variable. But as long as there are participants with lower and higher IQs in each condition so that the average IQ is roughly equal across the conditions, then this variation is probably acceptable (and may even be desirable). What would be bad, however, would be for participants in one condition to have substantially lower IQs on average and participants in another condition to have substantially higher IQs on average. In this case, IQ would be a confounding variable.

To confound means to confuse, and this effect is exactly why confounding variables are undesirable. Because they differ systematically across conditions, just like the independent variable, they provide an alternative explanation for any observed difference in the dependent variable. Figure 5.1 shows the results of a hypothetical study, in which participants in a positive mood condition scored higher on a memory task than participants in a negative mood condition. But if IQ is a confounding variable, with participants in the positive mood condition having higher IQs on average than participants in the negative mood condition, then it is unclear whether it was the positive moods or the higher IQs that caused participants in the first condition to score higher. One way to avoid confounding variables is by holding extraneous variables constant. For example, one could prevent IQ from becoming a confounding variable by limiting participants only to those with IQs of exactly 100. But this approach is not always desirable for reasons we have already discussed. A second and much more general approach, random assignment to conditions, will be discussed in detail shortly.
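A minimal Python sketch can show how a confound produces a misleading difference between conditions. It rests on a deliberately artificial assumption: memory scores depend only on IQ, and mood has no effect at all. The IQ values, the scoring rule, and the names `memory_score` and `condition_gap` are all hypothetical.

```python
import statistics


def memory_score(iq, mood):
    """Hypothetical model: the score depends on IQ only.

    The mood argument is accepted but deliberately ignored, so any
    difference between mood conditions must come from IQ.
    """
    return iq / 10


# Invented IQ values for the participants in each mood condition.
confounded = {
    "positive": [110, 115, 120, 125],  # higher IQs on average
    "negative": [85, 90, 95, 100],     # lower IQs on average
}
balanced = {
    "positive": [85, 100, 110, 125],   # mean IQ 105
    "negative": [90, 95, 115, 120],    # mean IQ 105
}


def condition_gap(groups):
    """Mean memory score in the positive condition minus the negative one."""
    means = {
        mood: statistics.mean(memory_score(iq, mood) for iq in iqs)
        for mood, iqs in groups.items()
    }
    return means["positive"] - means["negative"]


print("gap when IQ is confounded with mood:", condition_gap(confounded))
print("gap when IQ is balanced across moods:", condition_gap(balanced))
```

When IQ differs on average across the two conditions, the positive mood condition scores higher even though mood does nothing in this model. When average IQ is equal across conditions, the gap disappears, even though IQ still varies from participant to participant.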

Key Takeaways

- An experiment is a type of empirical study that features the manipulation of an independent variable, the measurement of a dependent variable, and control of extraneous variables.

- An extraneous variable is any variable other than the independent and dependent variables. A confound is an extraneous variable that varies systematically with the independent variable.

- Practice: List five variables that can be manipulated by the researcher in an experiment. List five variables that cannot be manipulated by the researcher in an experiment.

- Effect of parietal lobe damage on people’s ability to do basic arithmetic.

- Effect of being clinically depressed on the number of close friendships people have.

- Effect of group training on the social skills of teenagers with Asperger’s syndrome.

- Effect of paying people to take an IQ test on their performance on that test.


Chapter 6: Experimental Research

Conducting Experiments

Learning Objectives

- Describe several strategies for recruiting participants for an experiment.

- Explain why it is important to standardize the procedure of an experiment and several ways to do this.

- Explain what pilot testing is and why it is important.

The information presented so far in this chapter is enough to design a basic experiment. When it comes time to conduct that experiment, however, several additional practical issues arise. In this section, we consider some of these issues and how to deal with them. Much of this information applies to nonexperimental studies as well as experimental ones.

Recruiting Participants

Of course, at the start of any research project you should be thinking about how you will obtain your participants. Unless you have access to people with schizophrenia or incarcerated juvenile offenders, for example, there is no point in designing a study that focuses on these populations. But even if you plan to use a convenience sample, you will have to recruit participants for your study.

There are several approaches to recruiting participants. One is to use participants from a formal subject pool, an established group of people who have agreed to be contacted about participating in research studies. For example, at many colleges and universities, there is a subject pool consisting of students enrolled in introductory psychology courses who must participate in a certain number of studies to meet a course requirement. Researchers post descriptions of their studies and students sign up to participate, usually via an online system. Participants who are not in subject pools can also be recruited by posting or publishing advertisements or making personal appeals to groups that represent the population of interest. For example, a researcher interested in studying older adults could arrange to speak at a meeting of the residents at a retirement community to explain the study and ask for volunteers.

The Volunteer Subject

Even if the participants in a study receive compensation in the form of course credit, a small amount of money, or a chance at being treated for a psychological problem, they are still essentially volunteers. This is worth considering because people who volunteer to participate in psychological research have been shown to differ in predictable ways from those who do not volunteer. Specifically, there is good evidence that on average, volunteers have the following characteristics compared with nonvolunteers (Rosenthal & Rosnow, 1976) [1]:

- They are more interested in the topic of the research.

- They are more educated.

- They have a greater need for approval.

- They have higher intelligence quotients (IQs).

- They are more sociable.

- They are higher in social class.

These differences can be an issue of external validity if there is reason to believe that participants with these characteristics are likely to behave differently than the general population. For example, in testing different methods of persuading people, a rational argument might work better on volunteers than it does on the general population because of their generally higher educational level and IQ.

In many field experiments, the task is not recruiting participants but selecting them. For example, researchers Nicolas Guéguen and Marie-Agnès de Gail conducted a field experiment on the effect of being smiled at on helping, in which the participants were shoppers at a supermarket. A confederate walking down a stairway gazed directly at a shopper walking up the stairway and either smiled or did not smile. Shortly afterward, the shopper encountered another confederate, who dropped some computer diskettes on the ground. The dependent variable was whether or not the shopper stopped to help pick up the diskettes (Guéguen & de Gail, 2003) [2]. Notice that these participants were not “recruited,” but the researchers still had to select them from among all the shoppers taking the stairs that day. It is extremely important that this kind of selection be done according to a well-defined set of rules that is established before the data collection begins and can be explained clearly afterward. In this case, with each trip down the stairs, the confederate was instructed to gaze at the first person he encountered who appeared to be between the ages of 20 and 50. Only if the person gazed back did he or she become a participant in the study. The point of having a well-defined selection rule is to avoid bias in the selection of participants. For example, if the confederate were free to choose which shoppers he would gaze at, he might choose friendly-looking shoppers when he was set to smile and unfriendly-looking ones when he was not set to smile. As we will see shortly, such biases can be entirely unintentional.
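One way to see what makes a selection rule "well defined" is to write it down as a procedure that leaves no room for the confederate's judgment about whom to pick. The Python sketch below encodes the rule described above for a single trip down the stairs. The `Shopper` class, the function name `select_participant`, and the choice to treat ages 20 and 50 as included are assumptions made for illustration and are not details reported by the researchers.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Shopper:
    apparent_age: int  # the age the shopper appears to be (a judgment call)
    gazed_back: bool   # whether the shopper returns the confederate's gaze


def select_participant(shoppers_in_order: List[Shopper]) -> Optional[Shopper]:
    """Apply the selection rule for one trip down the stairs.

    The confederate gazes at the FIRST shopper who appears to be
    between 20 and 50 (bounds assumed inclusive here). That shopper
    becomes a participant only if he or she gazes back. No later
    shopper is considered on the same trip.
    """
    for shopper in shoppers_in_order:
        if 20 <= shopper.apparent_age <= 50:
            return shopper if shopper.gazed_back else None
    return None  # nobody on this trip met the age criterion


# Usage: the 65-year-old is skipped, and the first eligible shopper is the target.
trip = [Shopper(65, True), Shopper(30, True), Shopper(25, True)]
print(select_participant(trip))
```

Because the rule always targets the first eligible shopper, the confederate cannot favor friendly-looking shoppers in one condition and unfriendly-looking shoppers in the other, whether intentionally or not.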

Standardizing the Procedure

It is surprisingly easy to introduce extraneous variables during the procedure. For example, the same experimenter might give clear instructions to one participant but vague instructions to another. Or one experimenter might greet participants warmly while another barely makes eye contact with them. To the extent that such variables affect participants’ behaviour, they add noise to the data and make the effect of the independent variable more difficult to detect. If they vary across conditions, they become confounding variables and provide alternative explanations for the results. For example, if participants in a treatment group are tested by a warm and friendly experimenter and participants in a control group are tested by a cold and unfriendly one, then what appears to be an effect of the treatment might actually be an effect of experimenter demeanor. When there are multiple experimenters, the possibility of introducing extraneous variables is even greater, but using multiple experimenters is often necessary for practical reasons.

Experimenter’s Sex as an Extraneous Variable