
Data-driven testing with Python

Pay attention to zeros. If there is a zero, someone will divide by it.

When writing code, good test coverage is essential, both for the reliability of the code and for the developer's peace of mind.

There are tools (for example Nose) that measure the coverage of a codebase by counting the lines executed while the tests run. Excellent line coverage, however, does not necessarily imply equally good coverage of the code's functionality: the same statement can work correctly with certain data and fail with other, equally legitimate data. Some values are also more likely than others to trigger errors. These edge cases include the boundary values of an interval, the index of the last iteration of a loop, characters encoded in an unexpected way, zeros, and so on.

To cover this type of error effectively, it is easy to end up replicating entire blocks of code in the tests, varying only minimal parts of them.

In this article, we will look at some of the tools offered by the Python ecosystem to manage this need elegantly (and “DRY”).

py.test parametrize

Pytest is a valid alternative to unittest, only a pip install away. The two main innovations introduced by pytest are fixtures and the parametrize decorator. Fixtures manage the setup of a test in a more granular way than the classic setUp() method. In this post, however, we are mainly interested in the parametrize decorator, which lets us take a step of abstraction when writing test cases by separating the test logic from the input data. We can then verify that the code works correctly with different edge cases while avoiding duplicated logic.
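A minimal sketch of a parametrized test, using the names test_func, value_A and value_B from the text. The assertions are placeholders (an assumption), since the code under test is not shown here.

```python
import pytest


@pytest.mark.parametrize(
    "value_A, value_B",
    [
        ("first case", 1),
        ("second case", 2),
    ],
)
def test_func(value_A, value_B):
    # Placeholder checks: a real test would call the code under test here.
    assert isinstance(value_A, str)
    assert value_B > 0
```

Running pytest on a file containing this function collects one test per tuple in the list.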

In the example, test_func will be run twice: first with value_A = 'first case', value_B = 1, and then with value_A = 'second case', value_B = 2.

During execution, each set of parameters is treated as an independent test case and, in the event of failure, an identifier containing the data provided lets the developer quickly trace the problematic case.

Faker

Faker provides methods to generate plausible data for our tests on the fly.

The data is generated by providers included in the library (a complete list is in the documentation), but it is also possible to create custom ones, which become usable once they are added to the generator object the library is based on.

To see where Faker can come in handy, suppose we want to run tests that verify the correct creation of users in a database.

In this case, one possibility would be to recreate the database each time the test suite is run. However, creating a database usually takes time, so it would be preferable to create it only the first time, perhaps using a dedicated command line option. The problem is that, if we use hardcoded data in the test case and there is some kind of constraint on the users (for example, a unique email), the test would fail if run twice on the same database. With Faker we can easily avoid these conflicts because, instead of explicit data, we have a function call that returns different data each time.

In this case, however, we give up the reproducibility of the test: since Faker chooses its values at random, a value that exposes an error in the code may or may not be generated, so the test would produce different results in an unpredictable way.

Hypothesis

Hypothesis is a data generation engine. The programmer establishes the criteria the data must satisfy, and the library generates examples that respect those criteria. The terminology is inspired by the scientific world: the data generated by Hypothesis are called “examples”, and we will also see other keywords such as “given” and “assume”.

For example, if we want to test a function that takes integers, it is sufficient to apply the given decorator to the test and pass it the integers strategy. The documentation lists all the strategies included in the library.
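A minimal sketch of such a test, using the names test_my_function, value_A and value_B from the text. The function my_function and the property being checked are hypothetical stand-ins for the real code under test.

```python
from hypothesis import given
from hypothesis import strategies as st


def my_function(a, b):
    # Hypothetical function under test.
    return a + b


@given(value_A=st.integers(), value_B=st.integers())
def test_my_function(value_A, value_B):
    # Example property: the result does not depend on the argument order.
    assert my_function(value_A, value_B) == my_function(value_B, value_A)
```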

The test test_my_function takes two input parameters, value_A and value_B. Through the given decorator, Hypothesis fills these parameters with valid data according to the specified strategy.

The main advantage over Faker is that the test will be run many times, with different combinations of value_A and value_B each time. Hypothesis is also designed to look for edge cases that could hide errors. In our example we have not defined any lower or upper bound for the integers to be generated, so it is reasonable to expect that the generated examples will include, in addition to the simplest cases, zero and values large enough (in absolute value) to cause integer overflow in some representations.

These are some examples generated by the text strategy:

(yes, most of these characters don’t even display in my browser)

Delegating to an external library the task of imagining edge cases that could trip up our code is a great way to find errors we had not thought of while keeping the test code lean.

Note that the number of test runs is not entirely at the discretion of the programmer. Through the settings decorator it is possible to set a maximum number of examples to be generated, but this limit can still be exceeded if the test fails. This behaviour is due to another feature of Hypothesis: in case of failure, the test is repeated with increasingly simple examples in order to find (and report) the simplest example that makes the code fail.
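As a sketch, the maximum number of examples can be set with the max_examples setting. The test name and the property checked are illustrative assumptions.

```python
from hypothesis import given, settings
from hypothesis import strategies as st


@settings(max_examples=50)  # upper bound on generated examples for this test
@given(value=st.integers())
def test_abs_is_non_negative(value):
    assert abs(value) >= 0
```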

In this case, for example, Hypothesis manages to find the limit for which the code actually fails:
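One way to observe this shrinking directly is the find function, which searches for an example satisfying a condition and then reduces it to the simplest one it can. The threshold of 100 and the is_too_large helper are hypothetical, standing in for the point at which some code under test starts to fail.

```python
from hypothesis import find
from hypothesis import strategies as st


def is_too_large(value):
    # Hypothetical failure condition: the code breaks from 100 upwards.
    return value >= 100


# find() looks for a satisfying example, then shrinks it as far as it can.
minimal = find(st.integers(), is_too_large)
print(minimal)
```

With a condition like this one, the shrunk value is expected to land on the boundary itself rather than on whatever large number was found first.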

A slightly more realistic example can be this:

Hypothesis stores in its cache the values obtained from the “falsification” process and provides them as the very first examples in subsequent executions of the test, so the developer can immediately verify whether a previously revealed bug has been fixed. We therefore have reproducibility for the examples that caused failures. To formalise this behaviour and obtain it in a non-local environment as well, such as a continuous integration server, we can use the example decorator to specify examples that will always be executed before the randomly generated ones.

The example decorator is also an excellent “bookmark” for those who will read the code in the future, as it highlights potentially misleading cases that could be missed at first sight.
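A short sketch of an explicit example pinned next to the generated ones. The safe_ratio function is hypothetical; the pinned case is the zero denominator mentioned at the start of the article.

```python
from hypothesis import example, given
from hypothesis import strategies as st


def safe_ratio(numerator, denominator):
    # Hypothetical function under test: returns None instead of dividing by zero.
    if denominator == 0:
        return None
    return numerator / denominator


@given(
    numerator=st.integers(min_value=-1000, max_value=1000),
    denominator=st.integers(min_value=-1000, max_value=1000),
)
@example(numerator=1, denominator=0)  # always run, before the generated examples
def test_safe_ratio(numerator, denominator):
    result = safe_ratio(numerator, denominator)
    assert result is None or isinstance(result, float)
```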

Hypothesis: creating personalised strategies

All this is very useful, but in our tests we often need structures more complex than a simple string. Hypothesis provides tools to generate complex data at will.

To start, the data output by a strategy can be transformed with map or restricted with filter.
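A minimal sketch of both methods. The strategy names and the properties checked are illustrative assumptions.

```python
from hypothesis import given
from hypothesis import strategies as st

# map transforms every generated value: here, any integer becomes an even one.
even_integers = st.integers().map(lambda n: n * 2)

# filter discards the generated values that do not satisfy the predicate.
not_five = st.integers(min_value=0, max_value=10).filter(lambda n: n != 5)


@given(even=even_integers, number=not_five)
def test_map_and_filter(even, number):
    assert even % 2 == 0
    assert 0 <= number <= 10
    assert number != 5
```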

Another possibility is to chain multiple strategies using flatmap.
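A sketch of chaining with flatmap, in which an integer strategy chooses a length and a list strategy then generates lists of exactly that length. The variable and test names are assumptions.

```python
from hypothesis import given
from hypothesis import strategies as st

# First strategy: a list length between 0 and 10, never equal to 5.
list_lengths = st.integers(min_value=0, max_value=10).filter(lambda n: n != 5)

# flatmap feeds each drawn length into a second strategy that depends on it.
fixed_length_lists = list_lengths.flatmap(
    lambda n: st.lists(st.integers(), min_size=n, max_size=n)
)


@given(values=fixed_length_lists)
def test_list_lengths(values):
    assert len(values) <= 10
    assert len(values) != 5
```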

In the example, the first call to st.integers determines the length of the lists generated by st.lists. It caps them at 10 elements and excludes lists with exactly 5 elements.

For more complex operations we can use the strategies.composite decorator, which lets us draw data from existing strategies, modify it, and assemble it into a new strategy to be used in tests or as a building block for another custom strategy.

For example, to generate a valid payload for a web application, we could write something like the following code.

Suppose the payloads we want to generate include some mandatory fields and some optional ones. We then construct a payloads strategy, which first draws the values for the mandatory fields, inserts them into a dictionary and, in a second phase, enriches this dictionary with a subset of the optional fields.

In the example we also wanted to include assume, which imposes an additional rule on the generated data and can be very useful.

All that remains is to define subdictionaries: a utility function, usable both as a stand-alone strategy and as a component of other custom strategies.

Our subdictionaries is little more than a call to random.sample(), but by using the randoms strategy we let Hypothesis control the random seed. Hypothesis can therefore treat the custom strategy exactly like those of the library during the “falsification” of failed test cases.

Both functions take a draw argument, which is supplied by Hypothesis and never passed by the caller. The payloads strategy is therefore used like this:
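The following is a sketch of how the two strategies described above and their use in a test could look. The field names, their strategies and the assume condition are hypothetical, since the actual payload format is not given here.

```python
from hypothesis import assume, given
from hypothesis import strategies as st

# Hypothetical field definitions: each field name maps to a strategy for its value.
MANDATORY_FIELDS = {
    "username": st.text(min_size=1, max_size=20),
    "age": st.integers(min_value=0, max_value=120),
}
OPTIONAL_FIELDS = {
    "nickname": st.text(max_size=20),
    "newsletter": st.booleans(),
    "score": st.floats(allow_nan=False),
}


@st.composite
def subdictionaries(draw, source):
    """Draw a dictionary containing a random subset of the items of `source`."""
    rnd = draw(st.randoms())  # a random generator whose seed Hypothesis controls
    size = draw(st.integers(min_value=0, max_value=len(source)))
    keys = rnd.sample(sorted(source), size)
    return {key: source[key] for key in keys}


@st.composite
def payloads(draw):
    """Draw a payload with all mandatory fields and some optional ones."""
    payload = {name: draw(strategy) for name, strategy in MANDATORY_FIELDS.items()}
    # Additional rule on the data: discard payloads with a blank username.
    assume(payload["username"].strip() != "")
    optional = draw(subdictionaries(OPTIONAL_FIELDS))
    for name, strategy in optional.items():
        payload[name] = draw(strategy)
    return payload


@given(payload=payloads())
def test_payload(payload):
    # Placeholder checks: a real test would send the payload to the application.
    assert set(MANDATORY_FIELDS) <= set(payload)
    assert set(payload) <= set(MANDATORY_FIELDS) | set(OPTIONAL_FIELDS)
```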

Custom strategies lend themselves particularly well to testing the correct behaviour of the application, whereas writing dedicated strategies to verify how our code behaves in specific failure cases could become overly burdensome. We can, however, reuse the work done for the custom strategy and alter the data provided by Hypothesis so as to cause the failures we want to verify.

As the complexity and nesting of the strategies grow, data generation may become slower, to the point of causing an internal Hypothesis health check to fail.

However, if this complexity is necessary for our purpose, we can suppress the check in question for the individual tests that would risk random failures by adjusting the settings decorator:
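A minimal sketch of suppressing the too_slow health check for a single test. The nested strategy here is only an illustrative stand-in for a genuinely slow one.

```python
from hypothesis import HealthCheck, given, settings
from hypothesis import strategies as st

# Illustrative nested strategy; a real one would be slow enough to trip the check.
nested = st.lists(
    st.dictionaries(
        st.text(max_size=5),
        st.lists(st.integers(), max_size=5),
        max_size=5,
    ),
    max_size=5,
)


@settings(suppress_health_check=[HealthCheck.too_slow])
@given(data=nested)
def test_with_slow_strategy(data):
    assert isinstance(data, list)
```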

These are just some of the tools available for data-driven testing in Python, which is a constantly evolving ecosystem. The pytest parametrize decorator is a tool to bear in mind when writing tests, because it helps us obtain more elegant code.

Faker is an interesting possibility: it can be used to see varied data flowing through our tests, but it doesn’t add much, while Hypothesis is undoubtedly a more powerful and mature library. It must be said that writing strategies for Hypothesis takes time, especially when the data to be generated consists of several nested parts, but all the tools needed to do it are available. Hypothesis is perhaps not suitable for a unit test written quickly while drafting the code, but it is definitely useful for an in-depth analysis of our own code. As often happens in Test Driven Development, designing the tests helps us write better code from the start: Hypothesis encourages the developer to consider those borderline cases that otherwise sometimes end up being omitted.

February 7, 2024

Demystifying Hypothesis Generation: A Guide to AI-Driven Insights

Hypothesis generation involves making informed guesses about various aspects of a business, market, or problem that need further exploration and testing. This article discusses the process to follow when generating hypotheses and how an AI tool, like Akaike's BYOB, can help you complete that process faster and better.

What is Hypothesis Generation?

Hypothesis generation involves making informed guesses about various aspects of a business, market, or problem that need further exploration and testing. It's a crucial step while applying the scientific method to business analysis and decision-making.

Here is an example from a popular B-school marketing case study:

A bicycle manufacturer noticed that their sales had dropped significantly in 2002 compared to the previous year. The team investigating the reasons for this had many hypotheses. One of them was: “many cycling enthusiasts have switched to walking with their iPods plugged in.” The Apple iPod was launched in late 2001 and was an immediate hit among young consumers. Data collected manually by the team seemed to show that the geographies around Apple stores had indeed shown a sales decline.

Traditionally, hypothesis generation is time-consuming and labour-intensive. However, the advent of Large Language Models (LLMs) and Generative AI (GenAI) tools has transformed the practice. These AI tools can rapidly process extensive datasets, quickly identifying patterns, correlations, and insights that might have escaped human eyes, thus streamlining the stages of hypothesis generation.

These tools have also revolutionised experimentation by optimising test designs, reducing resource-intensive processes, and delivering faster results. LLMs' role in hypothesis generation goes beyond mere assistance, bringing innovation and easy, data-driven decision-making to businesses.

Hypotheses come in various types, such as simple, complex, null, alternative, logical, statistical, or empirical. These categories are defined based on the relationships between the variables involved and the type of evidence required for testing them. In this article, we aim to demystify hypothesis generation. We will explore the role of LLMs in this process and outline the general steps involved, highlighting why it is a valuable tool in your arsenal.

Understanding Hypothesis Generation

A hypothesis is born from a set of underlying assumptions and a prediction of how those assumptions are anticipated to unfold in a given context. Essentially, it's an educated, articulated guess that forms the basis for action and outcome assessment.

A hypothesis is a declarative statement that has not yet been proven true. Based on past scholarship, we could sum it up as the following:

- A definite statement, not a question

- Based on observations and knowledge

- Testable and can be proven wrong

- Predicts the anticipated results clearly

- Contains a dependent and an independent variable where the dependent variable is the phenomenon being explained and the independent variable does the explaining

In a business setting, hypothesis generation becomes essential when people are made to explain their assumptions. This clarity from hypothesis to expected outcome is crucial, as it allows people to acknowledge a failed hypothesis if it does not provide the intended result. Promoting such a culture of effective hypothesising can lead to more thoughtful actions and a deeper understanding of outcomes. Failures become just another step on the way to success, and success brings more success.

Hypothesis generation is a continuous process where you start with an educated guess and refine it as you gather more information. You form a hypothesis based on what you know or observe.

Say you're a pen maker whose sales are down. You look at what you know:

- I can see that pen sales for my brand are down in May and June.

- I also know that schools are closed in May and June and that schoolchildren use a lot of pens.

- I hypothesise that my sales are down because school children are not using pens in May and June, and thus not buying newer ones.

The next step is to collect and analyse data to test this hypothesis, like tracking sales before and after school vacations. As you gather more data and insights, your hypothesis may evolve. You might discover that your hypothesis only holds in certain markets but not others, leading to a more refined hypothesis.

Once your hypothesis is proven correct, there are many actions you may take: (a) reduce supply in these months, (b) reduce the price so that sales pick up, (c) release a limited supply of novelty pens, and so on.

Once you decide on your action, you will further monitor the data to see if your actions are working. This iterative cycle of formulating, testing, and refining hypotheses - and using insights in decision-making - is vital in making impactful decisions and solving complex problems in various fields, from business to scientific research.

How do Analysts generate Hypotheses? Why is it iterative?

A typical human working towards a hypothesis would start with:

1. Picking the Default Action

2. Determining the Alternative Action

3. Figuring out the Null Hypothesis (H0)

4. Inverting the Null Hypothesis to get the Alternate Hypothesis (H1)

5. Hypothesis Testing

The default action is what you would naturally do, regardless of any hypothesis or in a case where you get no further information. The alternative action is the opposite of your default action.

The null hypothesis, or H0, is what brings about your default action. The alternative hypothesis (H1) is essentially the negation of H0.

For example, suppose you are tasked with analysing highway tollgate data (timestamp, vehicle number, toll amount) to see if a raise in tollgate rates will increase revenue or cause a volume drop. Following the above steps, we can determine:

Now, we can start looking at past data of tollgate traffic in and around rate increases for different tollgates. Some data might be irrelevant. For example, some tollgates might be much cheaper so customers might not have cared about an increase. Or, some tollgates are next to a large city, and customers have no choice but to pay.

Ultimately, you are looking for a statistically significant relationship between traffic and rates for comparable tollgates. Significance is usually reported as a p-value, or probability value. The p-value measures how surprising your test results are, assuming that your H0 holds true.

The lower the p-value, the more convincing your data is as a reason to change your default action.

Usually, a p-value below 0.05 is considered statistically significant, meaning there is reason to reject your null hypothesis and change your default action. In our example, a low p-value would suggest that a 10% increase in the toll rate causes a significant dip in traffic (>3%). Thus, it is better to keep our rates as they are if we want to maintain revenue.
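To make the p-value concrete, here is a minimal sketch of a one-sided permutation test in Python. The daily vehicle counts are invented for illustration, and the permutation test is just one of several ways to obtain a p-value; it is not presented as the method an analyst would necessarily use on real tollgate data.

```python
import random
from statistics import mean


def permutation_p_value(before, after, n_permutations=10_000, seed=0):
    """One-sided permutation test for 'traffic dropped after the rate increase'.

    Under H0 the rate increase makes no difference, so the 'before' and
    'after' labels are interchangeable and can be shuffled freely.
    """
    rng = random.Random(seed)
    observed = mean(before) - mean(after)
    pooled = list(before) + list(after)
    n_before = len(before)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        diff = mean(pooled[:n_before]) - mean(pooled[n_before:])
        if diff >= observed:
            extreme += 1
    # Add-one correction so the estimated p-value is never exactly zero.
    return (extreme + 1) / (n_permutations + 1)


# Hypothetical daily vehicle counts at one tollgate (invented numbers).
before_increase = [1000, 1010, 990, 1005, 995, 1002, 998, 1007]
after_increase = [960, 955, 970, 965, 950, 962, 958, 968]

p_value = permutation_p_value(before_increase, after_increase)
print(f"p-value: {p_value:.4f}")
if p_value < 0.05:
    print("Reject H0: the drop in traffic is statistically significant.")
else:
    print("Not enough evidence to reject H0.")
```

The p-value here is the share of random relabellings that produce a drop at least as large as the observed one, which is exactly the "how surprising is this if H0 is true" idea described above.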

In other examples, where one has to explore the significance of different variables, we might find that some variables are not correlated at all. In general, hypothesis generation is an iterative process - you keep looking for data and keep considering whether that data convinces you to change your default action.

Internal and External Data

Hypothesis generation feeds on data. Data can be internal or external. In businesses, internal data is produced by company owned systems (areas such as operations, maintenance, personnel, finance, etc). External data comes from outside the company (customer data, competitor data, and so on).

Let’s consider a real-life hypothesis generated from internal data:

Multinational company Johnson & Johnson was looking to enhance employee performance and retention. Initially, they favoured experienced industry candidates for recruitment, assuming they'd stay longer and contribute faster. However, HR and the people analytics team at J&J hypothesised that recent college graduates outlast experienced hires and perform equally well. They compiled data on 47,000 employees to test the hypothesis and, based on it, Johnson & Johnson increased hires of new graduates by 20%, leading to reduced turnover with consistent performance.

For an analyst (or an AI assistant), external data is often hard to source - it may not be available as organised datasets (or reports), or it may be expensive to acquire. Teams might have to collect new data from surveys, questionnaires, customer feedback and more.

Further, there is the problem of context. Suppose an analyst is looking at the dynamic pricing of hotels offered on his company’s platform in a particular geography. Suppose further that the analyst has no context of the geography, the reasons people visit the locality, or of local alternatives; then the analyst will have to learn additional context to start making hypotheses to test.

Internal data, of course, is internal, meaning access is already guaranteed. However, this probably adds up to staggering volumes of data.

Looking Back, and Looking Forward

Data analysts often have to generate hypotheses retrospectively, where they formulate and evaluate H0 and H1 based on past data. For the sake of this article, let's call it retrospective hypothesis generation.

Alternatively, a prospective approach to hypothesis generation could be one where hypotheses are formulated before data collection or before a particular event or change is implemented.

For example:

A pen seller has a hypothesis that during the lean periods of summer, when schools are closed, a Buy One Get One (BOGO) campaign will lead to a 100% sales recovery because customers will buy pens in advance. He then collects feedback from customers in the form of a survey and also implements a BOGO campaign in a single territory to see whether his hypothesis is correct, or not.

The HR head of a multi-office employer realises that some of the company’s offices have been providing snacks at 4:30 PM in the common area, and the rest have not. He has a hunch that these offices have higher productivity. The leader asks the company’s data science team to look at employee productivity data and the employee location data. “Am I correct, and to what extent?”, he asks.

These examples also reflect another nuance, in which the data is collected differently:

- Observational: Observational testing happens when researchers observe a sample population and collect data as it occurs without intervention. The data for the snacks vs productivity hypothesis was observational.

- Experimental: In experimental testing, the sample is divided into multiple groups, with one control group. The test for the non-control groups will be varied to determine how the data collected differs from that of the control group. The data collected by the pen seller in the single territory experiment was experimental.

Such data-backed insights are a valuable resource for businesses because they allow for more informed decision-making, leading to the company's overall growth. Making decisions in a data-driven way, from forming a hypothesis, through updating and validating it across iterations, to acting on your insights, reduces guesswork, minimises risks, and guides businesses towards strategies that are more likely to succeed.

How can GenAI help in Hypothesis Generation?

Of course, hypothesis generation is not always straightforward. Understanding the earlier examples is easy for us because we're already inundated with context. But, in a situation where an analyst has no domain knowledge, suddenly, hypothesis generation becomes a tedious and challenging process.

AI, particularly high-capacity, robust tools such as LLMs, has radically changed how we process and analyse large volumes of data. With its help, we can sift through massive datasets with precision and speed, regardless of context, whether it's customer behaviour, financial trends, medical records, or more. Generative AI models, including LLMs, are trained on diverse text data, enabling them to comprehend and process various topics.

Now, imagine an AI assistant helping you with hypothesis generation. LLMs are not born with context. Instead, they are trained upon vast amounts of data, enabling them to develop context in a completely unfamiliar environment. This skill is instrumental when adopting a more exploratory approach to hypothesis generation. For example, the HR leader from earlier could simply ask an LLM tool: “Can you look at this employee productivity data and find cohorts of high-productivity and see if they correlate to any other employee data like location, pedigree, years of service, marital status, etc?”

For an LLM-based tool to be useful, it requires a few things:

- Domain Knowledge: A human could take months to years to acclimatise to a particular field fully, but LLMs, when fed extensive information and utilising Natural Language Processing (NLP), can familiarise themselves in a very short time.

- Explainability: The tool must be able to explain its thought process and output, so that it ceases to be a "black box".

- Customisation: For consistent improvement, contextual AI must allow tweaks, letting users change its behaviour to meet their expectations. Human intervention and validation are necessary steps in adopting AI tools.

NLP allows these tools to discern context within textual data, meaning they can read, categorise, and analyse data at great speed. LLMs can thus quickly develop contextual understanding and generate human-like text while processing vast amounts of unstructured data, making it easier for businesses and researchers to organise and utilise data effectively.

LLMs have the potential to become indispensable tools for businesses. The future rests on AI tools that harness the powers of LLMs and NLP to deliver actionable insights, mitigate risks, inform decision-making, predict future trends, and drive business transformation across various sectors.

Together, these technologies empower data analysts to unravel hidden insights within their data. For our pen maker, for example, an AI tool could aid data analytics. It can look through historical data to track when sales peaked or go through sales data to identify the pens that sold the most. It can refine a hypothesis across iterations, just as a human analyst would. It can even be used to brainstorm other hypotheses.

Consider the situation where you ask the LLM, "Where do I sell the most pens?". It will go through all of the data you have made available (places where you sell pens, the number of pens you sold) to return the answer. If we were to do this on our own, even if we were particularly meticulous about keeping records, it would take us at least five to ten minutes, and only if we know how to query a database and extract the needed information. If we don't, there's the added effort required to find and train such a person. An AI assistant, on the other hand, could share the answer with us in mere seconds. Its ability to sort through data, identify patterns, refine hypotheses iteratively, and generate data-backed insights enhances problem-solving and decision-making.

Top-Down and Bottom-Up Hypothesis Generation

As we discussed earlier, every hypothesis begins with a default action that determines your initial hypotheses and all your subsequent data collection. You look at data, and a lot of it. The significance of your data depends on its effect on, and relevance to, your default action. This is the top-down approach to hypothesis generation.

There is also the bottom-up method, where you start by going through your data and checking whether there are any interesting correlations that you could put to better use. This method is usually not as focused as the earlier approach and, as a result, involves even more data collection, processing, and analysis. AI is a strong tool for Exploratory Data Analysis (EDA). Wading through swathes of data to highlight trends, patterns, gaps, opportunities, errors, and concerns is hardly a challenge for an AI tool equipped with NLP and powered by LLMs.

EDA can help with:

- Cleaning your data

- Understanding your variables

- Analysing relationships between variables

An AI assistant performing EDA can help you review your data, remove redundant data points, identify errors, note relationships, and more. All of this ensures ease, efficiency, and, best of all, speed for your data analysts.
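The three EDA tasks listed above can be sketched by hand in a few lines of Python. The records, field names and numbers below are invented for illustration; an AI assistant would work on real data and at a far larger scale.

```python
from statistics import mean, median

# Hypothetical store-level records (invented data).
rows = [
    {"store": "A", "footfall": 120, "pens_sold": 30},
    {"store": "B", "footfall": 200, "pens_sold": 52},
    {"store": "B", "footfall": 200, "pens_sold": 52},    # exact duplicate
    {"store": "C", "footfall": 150, "pens_sold": None},  # missing value
    {"store": "D", "footfall": 310, "pens_sold": 75},
    {"store": "E", "footfall": 90, "pens_sold": 21},
]


def clean(records):
    """Cleaning: drop exact duplicates and rows with missing values."""
    seen = set()
    cleaned = []
    for record in records:
        key = tuple(sorted(record.items()))
        if key in seen or any(value is None for value in record.values()):
            continue
        seen.add(key)
        cleaned.append(record)
    return cleaned


def summarise(values):
    """Understanding a variable: a few basic descriptive statistics."""
    return {
        "min": min(values),
        "max": max(values),
        "mean": mean(values),
        "median": median(values),
    }


def pearson(xs, ys):
    """Relationship between two variables: Pearson correlation coefficient."""
    mean_x, mean_y = mean(xs), mean(ys)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sum((x - mean_x) ** 2 for x in xs) ** 0.5
    spread_y = sum((y - mean_y) ** 2 for y in ys) ** 0.5
    return covariance / (spread_x * spread_y)


cleaned = clean(rows)
footfall = [record["footfall"] for record in cleaned]
pens_sold = [record["pens_sold"] for record in cleaned]

print(summarise(pens_sold))
print(f"correlation: {pearson(footfall, pens_sold):.3f}")
```

A strong correlation found this way is only a candidate hypothesis in the bottom-up sense; it still has to be tested as described earlier.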

Good hypotheses are extremely difficult to generate. They are nuanced and, without necessary context, almost impossible to ascertain in a top-down approach. On the other hand, an AI tool adopting an exploratory approach is swift, easily running through available data - internal and external.

If you want to rearrange how your LLM looks at your data, you can also do that. Changing the weight you assign to the various events and categories in your data is a simple process. That’s why LLMs are a great tool in hypothesis generation - analysts can tailor them to their specific use cases.

Ethical Considerations and Challenges

There are numerous reasons why you should adopt AI tools into your hypothesis generation process. But why are they still not as popular as they should be?

Some worry that AI tools can inadvertently pick up human biases through the data they are fed. Others fear AI and raise privacy and trust concerns. Data quality and ability are also often questioned. Since LLMs and Generative AI are developing technologies, such issues are bound to arise, but these are all obstacles researchers are earnestly tackling.

One oft-raised complaint against LLM tools (like OpenAI's ChatGPT) is that they 'fill in' gaps in knowledge, providing information where there is none and thus giving inaccurate, embellished, or outright wrong answers. This tendency to "hallucinate" is a major cause for concern. To combat it, newer AI tools have started providing citations with the insights they offer so that their answers become verifiable. Human validation remains an essential step in interpreting AI-generated hypotheses and queries in general. This is why we need collaboration between human and artificial intelligence to ensure optimised performance.

Clearly, hypothesis generation is an immensely time-consuming activity. But AI can take care of all these steps for you. From helping you figure out your default action, determining all the major research questions, initial hypotheses and alternative actions, and exhaustively weeding through your data to collect all relevant points, AI can help make your analysts' jobs easier. It can take any approach - prospective, retrospective, exploratory, top-down, bottom-up, etc. Furthermore, with LLMs, your structured and unstructured data are taken care of, meaning no more worries about messy data! With the wonders of human intuition and the ease and reliability of Generative AI and Large Language Models, you can speed up and refine your process of hypothesis generation based on feedback and new data to provide the best assistance to your business.



Perspective

Published: 10 January 2012

Machine learning and data mining: strategies for hypothesis generation

M A Oquendo, E Baca-Garcia, A Artés-Rodríguez, F Perez-Cruz, H C Galfalvy, H Blasco-Fontecilla, D Madigan & N Duan

Molecular Psychiatry volume 17, pages 956–959 (2012)

5429 Accesses

62 Citations

6 Altmetric

Metrics details

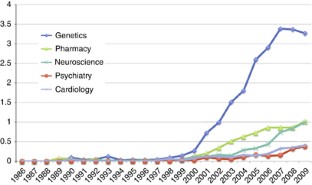

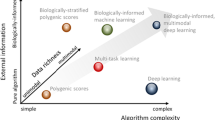

- Data mining

- Machine learning

- Neurological models

Strategies for generating knowledge in medicine have included observation of associations in clinical or research settings and more recently, development of pathophysiological models based on molecular biology. Although critically important, they limit hypothesis generation to an incremental pace. Machine learning and data mining are alternative approaches to identifying new vistas to pursue, as is already evident in the literature. In concert with these analytic strategies, novel approaches to data collection can enhance the hypothesis pipeline as well. In data farming, data are obtained in an ‘organic’ way, in the sense that it is entered by patients themselves and available for harvesting. In contrast, in evidence farming (EF), it is the provider who enters medical data about individual patients. EF differs from regular electronic medical record systems because frontline providers can use it to learn from their own past experience. In addition to the possibility of generating large databases with farming approaches, it is likely that we can further harness the power of large data sets collected using either farming or more standard techniques through implementation of data-mining and machine-learning strategies. Exploiting large databases to develop new hypotheses regarding neurobiological and genetic underpinnings of psychiatric illness is useful in itself, but also affords the opportunity to identify novel mechanisms to be targeted in drug discovery and development.



Acknowledgements

Dr Blasco-Fontecilla acknowledges the Spanish Ministry of Health (Rio Hortega CM08/00170), Alicia Koplowitz Foundation, and Conchita Rabago Foundation for funding his post-doctoral rotation at CHRU, Montpellier, France. SAF2010-21849.

Author information

Authors and affiliations.

Department of Psychiatry, New York State Psychiatric Institute and Columbia University, New York, NY, USA

M A Oquendo, E Baca-Garcia, H C Galfalvy & N Duan

Fundacion Jimenez Diaz and Universidad Autonoma, CIBERSAM, Madrid, Spain

E Baca-Garcia & H Blasco-Fontecilla

Department of Signal Theory and Communications, Universidad Carlos III de Madrid, Madrid, Spain

A Artés-Rodríguez & F Perez-Cruz

Princeton University, Princeton, NJ, USA

F Perez-Cruz

Department of Statistics, Columbia University, New York, NY, USA

Department of Biostatistics, Columbia University, New York, NY, USA


Corresponding author

Correspondence to M A Oquendo .

Ethics declarations

Competing interests.

Dr Oquendo has received unrestricted educational grants and/or lecture fees from Astra-Zeneca, Bristol Myers Squibb, Eli Lilly, Janssen, Otsuka, Pfizer, Sanofi-Aventis and Shire. Her family owns stock in Bristol Myers Squibb. The remaining authors declare no conflict of interest.


About this article

Cite this article.

Oquendo, M., Baca-Garcia, E., Artés-Rodríguez, A. et al. Machine learning and data mining: strategies for hypothesis generation. Mol Psychiatry 17 , 956–959 (2012). https://doi.org/10.1038/mp.2011.173

Download citation

Received : 15 July 2011

Revised : 20 October 2011

Accepted : 21 November 2011

Published : 10 January 2012

Issue Date : October 2012

DOI : https://doi.org/10.1038/mp.2011.173


- data farming

- inductive reasoning



[Python] tests/test_feather.py::test_roundtrip : hypothesis.errors.FailedHealthCheck: Data generation is extremely slow […] #41318

mgorny commented Apr 21, 2024


Test faster, fix more

Generating the right data

One thing that often causes people problems is figuring out how to generate the right data to fit their data model. You can start with just generating strings and integers, but eventually you want to be able to generate objects from your domain model. Hypothesis provides a lot of tools to help you build the data you want, but sometimes the choice can be a bit overwhelming.

Here’s a worked example to walk you through some of the details and help you get to grips with how to use them.

Suppose we have the following class:

A project has a name, a start date, and an end date.
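The class definition itself is not reproduced here. A minimal sketch consistent with the description, assuming the attribute names `name`, `start` and `end` (these names are illustrative, not taken from the original), could look like this:

```python
from datetime import datetime, timezone


class Project:
    """A project with a name, a start date and an end date (hypothetical layout)."""

    def __init__(self, name, start, end):
        self.name = name
        self.start = start
        self.end = end

    def __repr__(self):
        return f"Project({self.name!r}, {self.start!r}, {self.end!r})"


# Usage: build one project by hand, with made-up values.
example = Project(
    "website redesign",
    datetime(2020, 1, 1, tzinfo=timezone.utc),
    datetime(2020, 6, 1, tzinfo=timezone.utc),
)
```

Keeping the constructor to plain positional arguments makes the class easy to feed from generated values later on.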

How do we generate such a thing?

The idea is to break the problem down into parts, and then use the tools Hypothesis provides to assemble those parts into a strategy for generating our projects.

We’ll start by generating the data we need for each field, and then at the end we’ll see how to put it all together to generate a Project.

First we need to generate a name. We’ll use Hypothesis’s standard text strategy for that:

Let's customize this a bit. First off, let's say project names have to be non-empty.

Now, let's avoid the high-end Unicode for now (of course, your system should handle the full range of Unicode, but this is just an example, right?).

To do this we need to pass an alphabet to the text strategy. This can either be a range of characters or another strategy. We’re going to use the characters strategy, which gives you a flexible way of describing a strategy for single-character text strings, to do that.

The max and min codepoint parameters do what you’d expect: They limit the range of permissible codepoints. We’ve blocked off the 0 codepoint (it’s not really useful and tends to just cause trouble with C libraries) and anything with a codepoint above 1000 - so we’re considering non-ASCII characters but nothing really high end.

The blacklist_categories parameter uses the notion of unicode category to limit the range of acceptable characters. If you want to see what category a character has you can use Python’s unicodedata module to find out:
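As a quick illustration using only the standard library (the characters picked here are arbitrary examples):

```python
import unicodedata

# Two-letter general category codes for a few sample characters.
print(unicodedata.category("a"))       # Ll (lowercase letter)
print(unicodedata.category(" "))       # Zs (space separator)
print(unicodedata.category("\n"))      # Cc (control character)
print(unicodedata.category("\ud800"))  # Cs (surrogate)
```

`Cc` and `Cs` are the two category codes relevant to the exclusion discussed here.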

The categories we’ve excluded are control characters and surrogates . Surrogates are excluded by default but when you explicitly pass in blacklist categories you need to exclude them yourself.

So we can put that together with text() to get a name matching our requirements:

But this is still not quite right: We’ve allowed spaces in names, but we don’t really want a name to start with or end with a space. You can see that this is currently allowed by asking Hypothesis for a more specific example:

So let's fix that by stripping the spaces off the name.

To do this we’re going to use the strategy’s map method, which lets you compose it with an arbitrary function to post-process the results into the form you want:

Now let's check that we can no longer have the above problem:

The problem is that our initial test worked because the strings we were generating were always non-empty because of the min_size parameter. We’re still only generating non-empty strings, but if we generate a string which is all spaces then strip it, the result will be empty after our map.

We can fix this using the strategy’s filter function, which restricts to only generating things which satisfy some condition:

And repeating the check:

Hypothesis raises NoSuchExample to indicate that… well, that there’s no such example.

In general you should be a little bit careful with filter and only use it to filter to conditions that are relatively hard to happen by accident. In this case it’s fine because the filter condition only fails if our initial draw was a string consisting entirely of spaces, but if we’d e.g. tried the opposite and tried to filter to strings that only had spaces, we’d have had a bad time of it and got a very slow and not very useful test.

Anyway, we now really do have a strategy that produces decent names for our projects. Let's put this all together into a test that demonstrates that our names now have the desired properties:
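A sketch of how the pieces described so far might fit together. The variable and test names are assumptions, and `blacklist_categories` follows the spelling used in this article; as far as I know, recent Hypothesis releases prefer `exclude_categories` for the same parameter.

```python
import unicodedata

from hypothesis import given, strategies as st

# Single characters: codepoints 1..1000, no control characters or surrogates.
name_characters = st.characters(
    min_codepoint=1,
    max_codepoint=1000,
    blacklist_categories=("Cc", "Cs"),
)

# Non-empty text, stripped of surrounding whitespace, then filtered so that
# an all-whitespace draw (which is empty after stripping) is rejected.
names = (
    st.text(alphabet=name_characters, min_size=1)
    .map(lambda s: s.strip())
    .filter(lambda s: len(s) > 0)
)


@given(names)
def test_names_match_our_requirements(name):
    assert len(name) > 0
    assert name == name.strip()
    assert all(1 <= ord(c) <= 1000 for c in name)
    assert all(unicodedata.category(c) not in ("Cc", "Cs") for c in name)
```

Calling `test_names_match_our_requirements()` directly (or collecting it with pytest) runs the property against many generated names.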

It’s not common practice to write tests for your strategies, but it can be helpful when trying to figure things out.

Dates and times

Hypothesis has date and time generation in a hypothesis.extra subpackage because it relies on pytz to generate them, but other than that it works in exactly the same way as before:

Let's constrain our dates to be UTC, because the sensible thing to do is to use UTC internally and convert on display to the user:

We can also constrain our projects to start in a reasonable range of years, as by default Hypothesis will cover the whole of representable history:

Again we can put together a test that checks this behaviour (though we have less code here so it’s less useful):
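The `hypothesis.extra` module mentioned above belongs to older releases. As an assumption, the sketch below uses the core `datetimes()` strategy available in recent Hypothesis versions instead, with an arbitrarily chosen year range of 2000 to 2030:

```python
from datetime import datetime, timedelta, timezone

from hypothesis import given, strategies as st

# The bounds must be naive datetimes; the timezone is attached afterwards
# from the `timezones` strategy, which here always yields UTC.
dates = st.datetimes(
    min_value=datetime(2000, 1, 1),
    max_value=datetime(2030, 1, 1),
    timezones=st.just(timezone.utc),
)


@given(dates)
def test_dates_are_utc_and_in_range(d):
    assert d.utcoffset() == timedelta(0)
    assert 2000 <= d.year <= 2030
```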

Putting it all together

We can now generate all the parts for our project definitions, but how do we generate a project?

The first thing to reach for is the builds function.

builds lets you take a set of strategies and feed their results as arguments to a function (or, in this case, a class; anything callable, really). This creates a new strategy that draws those arguments and then passes them to the callable to produce each example.

Unfortunately, this isn’t quite right:

Projects can start after they end when we use builds this way. One way to fix this would be to use filter():

This will work, but it starts to edge into the territory of where filter should be avoided - about half of the initially generated examples will fail the filter.

What we’ll do instead is draw two dates and use whichever is earlier as the start and whichever is later as the end. This is hard to do with builds because of the dependence between the arguments, so instead we’ll use builds’ more advanced cousin, composite:

The idea of composite is you get passed a magic first argument ‘draw’ that you can use to get examples out of a strategy. You then make as many draws as you want and use these to return the desired data.

You can also use the assume function to discard the current call if you get yourself into a state where you can’t proceed or where it’s easier to start again. In this case we do that when we draw the same date twice.

Note that in all of our examples we’re now writing projects() instead of projects. That’s because composite returns a function rather than a strategy. Any arguments to your defining function other than the first are also arguments to the one produced by composite.
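A self-contained sketch of the composite approach described above. The `Project` class, the simplified `names` strategy and the date range are stand-ins chosen for illustration, not the original definitions:

```python
from datetime import datetime, timezone

from hypothesis import assume, given, strategies as st


class Project:
    # Hypothetical stand-in for the class under test.
    def __init__(self, name, start, end):
        self.name = name
        self.start = start
        self.end = end


names = st.text(min_size=1)  # simplified; any name strategy would do here
dates = st.datetimes(
    min_value=datetime(2000, 1, 1),
    max_value=datetime(2030, 1, 1),
    timezones=st.just(timezone.utc),
)


@st.composite
def projects(draw):
    name = draw(names)
    date1 = draw(dates)
    date2 = draw(dates)
    # Discard this attempt if both draws gave the same date.
    assume(date1 != date2)
    # The earlier date becomes the start, the later one the end.
    return Project(name, min(date1, date2), max(date1, date2))


@given(projects())
def test_projects_end_after_they_start(project):
    assert project.start < project.end
```

Because the ordering is enforced by construction, only the rare equal-dates draw is discarded, rather than roughly half of all examples as with a filter.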

We can now put together one final test that we got this bit right too:

Wrapping up

There’s a lot more to Hypothesis’s data generation than this, but hopefully it gives you a flavour of the sort of things to try and the sort of things that are possible.

It’s worth having a read of the documentation for this, and if you’re still stuck then try asking the community for some help. We’re pretty friendly.


I have what I believe is a fairly straightforward test that is using an out-of-the box strategy, but I am getting failed health checks that the data generation is very slow. The entire test file is at the bottom of this issue so you can let me know if there is anything glaringly wrong with the test.

hypothesis.errors.FailedHealthCheck: Data generation is extremely slow: Only produced 7 valid examples in 1.20 seconds (0 invalid ones and 2 exceeded maximum size). Try decreasing size of the data you're generating (with e.g. max_size or max_leaves parameters). I wonder why 100 elements in a list became a "large data set".

Hypothesis' health checks are designed to detect and warn you about performance problems where your tests are slow, inefficient, or generating very large examples. ... or occasionally for Hypothesis internal reasons. too_slow = 3: Check for when your data generation is extremely slow and likely to hurt testing.

For example, everything_except(int) returns a strategy that can generate anything that from_type() can ever generate, except for instances of int, and excluding instances of types added via register_type_strategy(). This is useful when writing tests which check that invalid input is rejected in a certain way. hypothesis.strategies. frozensets (elements, *, min_size = 0, max_size = None ...

The strategy is quite slow (it generates complex objects) and from time to time one of the tests fails the too_slow health check. When that happens, I take a deep sigh and I add. @settings(suppress_health_check=(HealthCheck.too_slow,)) to the test. Is there a way to suppress HealthCheck.too_slow once and for all, for all the tests that use the ...
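One possible way to handle this, sketched under assumptions: the per-test decorator mirrors the snippet above, while the profile name `tolerate_slow_generation` and the sample test are invented for illustration. A settings profile applies the suppression to every test that does not override it.

```python
from hypothesis import HealthCheck, given, settings, strategies as st


# Per-test suppression, as in the snippet above.
@settings(suppress_health_check=[HealthCheck.too_slow])
@given(st.lists(st.integers(), max_size=100))
def test_sorting_is_idempotent(xs):
    assert sorted(sorted(xs)) == sorted(xs)


# Project-wide alternative: register a profile once (for example in
# conftest.py) and load it, so individual tests need no decorator.
settings.register_profile(
    "tolerate_slow_generation",
    suppress_health_check=[HealthCheck.too_slow],
)
settings.load_profile("tolerate_slow_generation")
```

Suppressing the check hides the symptom only; if generation really is slow, shrinking the strategy (smaller `max_size`, fewer nested draws) is usually worth trying first.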

It aims to improve the integration between Hypothesis and Pytest by providing extra information and convenient access to config options. pytest --hypothesis-show-statistics can be used to display test and data generation statistics. pytest --hypothesis-profile=<profile name> can be used to load a settings profile.

The data generated by Hypothesis are called "examples". We will also see other keywords such as "given", "assume"… that respect the given criteria). ... Data generation is extremely slow. However, if the complexity achieved is necessary for this purpose, we can suppress the control in question for those single tests that would ...


This is a very common mistake data science beginners make. Hypothesis generation is a process beginning with an educated guess whereas hypothesis testing is a process to conclude that the educated guess is true/false or the relationship between the variables is statistically significant or not.

Data-Driven Hypothesis Generation in Clinical Research 1 Introduction based on hypotheses related to A hypothesis is an educated guess about the relationships among several variables 1,2. Hypothesis generation occurs at the very early stage of the lifecycle of a research project 1,3-5. Typically, after hypothesis generation, study

Hypothesis generation involves making informed guesses about various aspects of a business, market, or problem that need further exploration and testing. This article discusses the process you need to follow while generating hypothesis and how an AI tool, like Akaike's BYOB can help you achieve the process quicker and better. BYOB. Data Analytics.


Data Synthesis Strategies (new in 0.6.0). pandera provides a utility for generating synthetic data purely from pandera schema or schema component objects. Under the hood, the schema metadata is collected to create a data-generating strategy using hypothesis, which is a property-based testing library.

FAILED tests/test_convert_builtin.py::test_array_to_pylist_roundtrip - hypothesis.errors.FailedHealthCheck: Data generation is extremely slow: Only produced 9 valid examples in 1.86 seconds (0 invalid ...

Hypothesis testing is a common statistical tool used in research and data science to support the certainty of findings. The aim of testing is to answer how probable an apparent effect is detected by chance given a random data sample. This article provides a detailed explanation of the key concepts in ...

The problem is that your strategy for unique names doesn't allow Hypothesis to generate the same name in two different runs of your test function, which must be allowed - otherwise, we can't try variations or discover a minimal failing example (among other problems). This may also require some work to reset the state of your API for each input Hypothesis tries.
