Computer science articles from across Nature Portfolio

Computer science is the study and development of the protocols required for automated processing and manipulation of data. This includes, for example, creating algorithms for efficiently searching large volumes of information or encrypting data so that it can be stored and transmitted securely.

Latest Research and Reviews

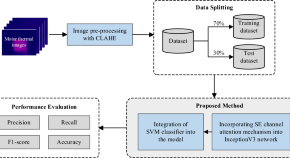

A deep learning approach for electric motor fault diagnosis based on modified InceptionV3

- Soo Siang Teoh

- Haidi Ibrahim

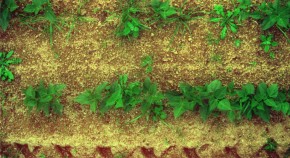

An accurate semantic segmentation model for bean seedlings and weeds identification based on improved ERFnet

- Haozhang Gao

- Mingyang Qi

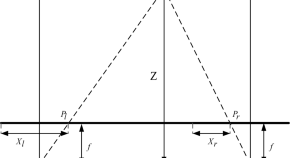

Research on underwater robot ranging technology based on semantic segmentation and binocular vision

- Kekuan Wang

- Zhongyang Wang

Machine vision-based autonomous road hazard avoidance system for self-driving vehicles

- Chengqun Qiu

- Changli Zha

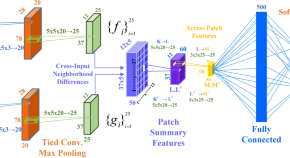

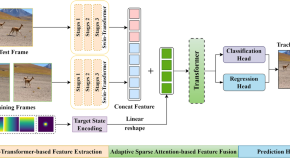

Adaptive sparse attention-based compact transformer for object tracking

- Lianyu Zhao

- Chenglin Wang

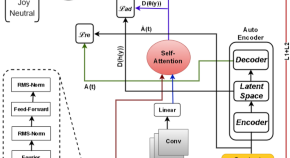

Predicting multi-label emojis, emotions, and sentiments in code-mixed texts using an emojifying sentiments framework

- Gopendra Vikram Singh

- Soumitra Ghosh

- Pushpak Bhattacharyya

News and Comment

Who owns your voice? Scarlett Johansson OpenAI complaint raises questions

In the age of artificial intelligence, situations are emerging that challenge the laws over rights to a persona.

- Nicola Jones

Anglo-American bias could make generative AI an invisible intellectual cage

- Queenie Luo

- Michael Puett

AlphaFold3 — why did Nature publish it without its code?

Criticism of our decision to publish AlphaFold3 raises important questions. We welcome readers’ views.

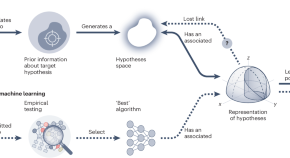

Back to basics to open the black box

Most research efforts in machine learning focus on performance and are detached from an explanation of the behaviour of the model. We call for going back to basics of machine learning methods, with more focus on the development of a basic understanding grounded in statistical theory.

- Diego Marcondes

- Adilson Simonis

- Junior Barrera

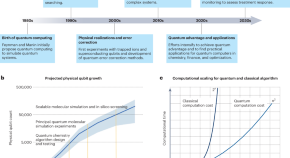

Quantum computing for oncology

As quantum technology advances, it holds immense potential to accelerate oncology discovery through enhanced molecular modeling, genomic analysis, medical imaging, and quantum sensing.

- Siddhi Ramesh

- Teague Tomesh

- Alexander T. Pearson

Autonomous interference-avoiding machine-to-machine communications

An article in IEEE Journal on Selected Areas in Communications proposes algorithmic solutions to dynamically optimize MIMO waveforms to minimize or eliminate interference in autonomous machine-to-machine communications.

Computer Science News

- Tracking Animals Without Markers

- Blueprints of Self-Assembly

- Chatbots Tell People What They Want to Hear

- Male Vs Female Brain Structure

- Coming out to a Chatbot?

- Metro-Area Quantum Computer Network Demo

- AI for Computing Plasma Physics in Fusion

- AI Tool to Improve Heart Failure Care

- VR With Cinematographics More Engaging

- Placebo Effect and AI

Earlier Headlines

Monday, May 13, 2024

- Cats Purrfectly Demonstrate What It Takes to Trust Robots

- New Work Extends the Thermodynamic Theory of Computation

Thursday, May 9, 2024

- New Machine Learning Algorithm Promises Advances in Computing

- Robotic System Feeds People With Severe Mobility Limitations

Wednesday, May 8, 2024

- New Study Finds AI-Generated Empathy Has Its Limits

Tuesday, May 7, 2024

- New Super-Pure Silicon Chip Opens Path to Powerful Quantum Computers

- Engineers Develop Innovative Microbiome Analysis Software Tools

Monday, May 6, 2024

- Cybersecurity Education Varies Widely in US

- Experiment Opens Door for Millions of Qubits on One Chip

Friday, May 3, 2024

- Stretchable E-Skin Could Give Robots Human-Level Touch Sensitivity

Wednesday, May 1, 2024

- Science Has an AI Problem: This Group Says They Can Fix It

- Physicists Build New Device That Is Foundation for Quantum Computing

- Researchers Unlock Potential of 2D Magnetic Devices for Future Computing

- New Computer Algorithm Supercharges Climate Models and Could Lead to Better Predictions of Future Climate Change

- Improved AI Process Could Better Predict Water Supplies

Monday, April 29, 2024

- Researchers Develop a New Way to Instruct Dance in Virtual Reality

Friday, April 26, 2024

- Computer Scientists Unveil Novel Attacks on Cybersecurity

- From Disorder to Order: Flocking Birds and 'Spinning' Particles

- New Circuit Boards Can Be Repeatedly Recycled

- Why Can't Robots Outrun Animals?

- High-Precision Blood Glucose Level Prediction Achieved by Few-Molecule Reservoir Computing

Thursday, April 25, 2024

- Computer Vision Researcher Develops Privacy Software for Surveillance Videos

Wednesday, April 24, 2024

- Artificial Intelligence Helps Scientists Engineer Plants to Fight Climate Change

- Scientists Tune the Entanglement Structure in an Array of Qubits

- Artificial Intelligence Can Develop Treatments to Prevent 'Superbugs'

- Computer Game in School Made Students Better at Detecting Fake News

- Holographic Displays Offer a Glimpse Into an Immersive Future

- Opening Up the Potential of Thin-Film Electronics for Flexible Chip Design

Tuesday, April 23, 2024

- This Tiny Chip Can Safeguard User Data While Enabling Efficient Computing on a Smartphone

- Super Mario Hackers' Tricks Could Protect Software from Bugs

Monday, April 22, 2024

- 2D Materials Rotate Light Polarization

Friday, April 19, 2024

- Accelerating the Discovery of New Materials Via the Ion-Exchange Method

Thursday, April 18, 2024

- Teaching a Computer to Type Like a Human

- Skyrmions Move at Record Speeds: A Step Towards the Computing of the Future

Wednesday, April 17, 2024

- How 3D Printers Can Give Robots a Soft Touch

Monday, April 15, 2024

- Millions of Gamers Advance Biomedical Research

Thursday, April 11, 2024

- New Computer Vision Tool Wins Prize for Social Impact

- 'Surprising' Hidden Activity of Semiconductor Material Spotted by Researchers

- Breakthrough Promises Secure Quantum Computing at Home

Wednesday, April 10, 2024

- A Faster, Better Way to Prevent an AI Chatbot from Giving Toxic Responses

- Waterproof 'e-Glove' Could Help Scuba Divers Communicate

Monday, April 8, 2024

- A Pulse of Innovation: AI at the Service of Heart Research

- Protecting Art and Passwords With Biochemistry

Thursday, April 4, 2024

- New Privacy-Preserving Robotic Cameras Obscure Images Beyond Human Recognition

Wednesday, April 3, 2024

- Computer Scientists Show the Way: AI Models Need Not Be So Power Hungry

Tuesday, April 2, 2024

- 100 Kilometers of Quantum-Encrypted Transfer

Monday, April 1, 2024

- I Spy With My Speedy Eye: Scientists Discover Speed of Visual Perception Ranges Widely in Humans

Thursday, March 28, 2024

- Revolutionary Biomimetic Olfactory Chips to Enable Advanced Gas Sensing and Odor Detection

- An Innovative Mixed Light Field Technique for Immersive Projection Mapping

Wednesday, March 27, 2024

- More Efficient TVs, Screens and Lighting

- New Software Enables Blind and Low-Vision Users to Create Interactive, Accessible Charts

Monday, March 25, 2024

- Pairing Crypto Mining With Green Hydrogen Offers Clean Energy Boost

- Scientists Deliver Quantum Algorithm to Develop New Materials and Chemistry

- The World Is One Step Closer to Secure Quantum Communication on a Global Scale

- Novel Quantum Algorithm for High-Quality Solutions to Combinatorial Optimization Problems

- Semiconductors at Scale: New Processor Achieves Remarkable Speed-Up in Problem Solving

- Artificial Nanofluidic Synapses Can Store Computational Memory

Friday, March 22, 2024

- Physicists Develop Modeling Software to Diagnose Serious Diseases

Thursday, March 21, 2024

- N-Channel Diamond Field-Effect Transistor

- AI Ethics Are Ignoring Children, Say Researchers

Wednesday, March 20, 2024

- AI Can Now Detect COVID-19 in Lung Ultrasound Images

- Verifying the Work of Quantum Computers

- Quantum Talk With Magnetic Disks

- Powerful New AI Can Predict People's Attitudes to Vaccines

Tuesday, March 19, 2024

- Brain-Inspired Wireless System to Gather Data from Salt-Sized Sensors

- Researchers Develop Deep Learning Model to Predict Breast Cancer

Monday, March 18, 2024

- Where Quantum Computers Can Score

Thursday, March 14, 2024

- New Study Shows Analog Computing Can Solve Complex Equations and Use Far Less Energy

- Information Overload Is a Personal and Societal Danger

Wednesday, March 13, 2024

- Artificial Intelligence Detects Heart Defects in Newborns

- Straightening Teeth? AI Can Help

- Staying in the Loop: How Superconductors Are Helping Computers 'Remember'

- Mathematicians Use AI to Identify Emerging COVID-19 Variants

- New AI Technology Enables 3D Capture and Editing of Real-Life Objects

Monday, March 11, 2024

- How Do Neural Networks Learn? A Mathematical Formula Explains How They Detect Relevant Patterns

- Accessibility Toolkit for Game Engine Unity

- Design Rules and Synthesis of Quantum Memory Candidates

Thursday, March 7, 2024

- The Role of Machine Learning and Computer Vision in Imageomics

- Method Rapidly Verifies That a Robot Will Avoid Collisions

Wednesday, March 6, 2024

- Making Quantum Bits Fly

- 3D Reflector Microchips Could Speed Development of 6G Wireless

- AI Can Speed Design of Health Software

- Can You Tell AI-Generated People from Real Ones?

Tuesday, March 5, 2024

- Shortcut to Success: Toward Fast and Robust Quantum Control Through Accelerating Adiabatic Passage

Friday, March 1, 2024

- Software Speeds Up Drug Development

Thursday, February 29, 2024

- Researchers Use AI, Google Street View to Predict Household Energy Costs on Large Scale

Wednesday, February 28, 2024

- New AI Model Could Streamline Operations in a Robotic Warehouse

Monday, February 26, 2024

- Robots, Monitoring and Healthy Ecosystems Could Halve Pesticide Use Without Hurting Productivity

Thursday, February 22, 2024

- Researchers Harness 2D Magnetic Materials for Energy-Efficient Computing

Wednesday, February 21, 2024

- Method Identified to Double Computer Processing Speeds

Tuesday, February 20, 2024

- Science Fiction Meets Reality: New Technique to Overcome Obstructed Views

- Plasma Scientists Develop Computer Programs That Could Reduce the Cost of Microchips and Stimulate American Manufacturing

- Researchers Develop AI That Can Understand Light in Photographs

Friday, February 16, 2024

- New Chip Opens Door to AI Computing at Light Speed

Thursday, February 15, 2024

- A New Design for Quantum Computers

- Artificial Intelligence: Aim Policies at 'Hardware' to Ensure AI Safety, Say Experts

Wednesday, February 14, 2024

- Fundamental Equation for Superconducting Quantum Bits Revised

Monday, February 12, 2024

- Widespread Machine Learning Methods Behind 'Link Prediction' Are Performing Very Poorly

- Why Insects Navigate More Efficiently Than Robots

Friday, February 9, 2024

- Innovations in Depth from Focus/Defocus Pave the Way to More Capable Computer Vision Systems

Trending Research

Automated Unit Test Improvement using Large Language Models at Meta

Codium-ai/cover-agent • 14 Feb 2024

This paper describes Meta's TestGen-LLM tool, which uses LLMs to automatically improve existing human-written tests.

Software Engineering

LCB-net: Long-Context Biasing for Audio-Visual Speech Recognition

The growing prevalence of online conferences and courses presents a new challenge in improving automatic speech recognition (ASR) with enriched textual information from video slides.

Sound; Multimedia; Audio and Speech Processing

CAM++: A Fast and Efficient Network for Speaker Verification Using Context-Aware Masking

Time delay neural network (TDNN) has been proven to be efficient for speaker verification.

Sound; Audio and Speech Processing

Empowering Robotics with Large Language Models: osmAG Map Comprehension with LLMs

In this letter, we address the problem of enabling LLMs to comprehend Area Graph, a text-based map representation, in order to enhance their applicability in the field of mobile robotics.

Open-Source, Cost-Aware Kinematically Feasible Planning for Mobile and Surface Robotics

ros-planning/navigation2 • 23 Jan 2024

This work is motivated by the lack of performant and available feasible planners for mobile and surface robotics research.

Filter-adapted spatiotemporal sampling for real-time rendering

electronicarts/fastnoise • 23 Oct 2023

Stochastic sampling techniques are ubiquitous in real-time rendering, where performance constraints force the use of low sample counts, leading to noisy intermediate results.

Graphics I.3.3; I.3.7

HiFi-Codec: Group-residual Vector quantization for High Fidelity Audio Codec

Despite their usefulness, two challenges persist: (1) training these audio codec models can be difficult due to the lack of publicly available training processes and the need for large-scale data and GPUs; (2) achieving good reconstruction performance requires many codebooks, which increases the burden on generation models.

Panoptic-SLAM: Visual SLAM in Dynamic Environments using Panoptic Segmentation

iit-dlslab/panoptic-slam • 3 May 2024

The ones that deal with dynamic objects in the scenes usually rely on deep-learning-based methods to detect and filter these objects.

COIN-LIO: Complementary Intensity-Augmented LiDAR Inertial Odometry

ethz-asl/COIN-LIO • 2 Oct 2023

To effectively leverage intensity as an additional modality, we present a novel feature selection scheme that detects uninformative directions in the point cloud registration and explicitly selects patches with complementary image information.

SR-LIO: LiDAR-Inertial Odometry with Sweep Reconstruction

ZikangYuan/sr_livo • 19 Oct 2022

This paper proposes a novel LiDAR-Inertial odometry (LIO), named SR-LIO, based on an iterated extended Kalman filter (iEKF) framework.

Robotics 68T40 I.2.9

MIT News | Massachusetts Institute of Technology

Computer science and technology

Looking for a specific action in a video? This AI-based method can find it for you

A new approach could streamline virtual training processes or aid clinicians in reviewing diagnostic videos.

May 29, 2024

Controlled diffusion model can change material properties in images

“Alchemist” system adjusts the material attributes of specific objects within images to potentially modify video game models to fit different environments, fine-tune VFX, and diversify robotic training.

May 28, 2024

School of Engineering welcomes new faculty

Fifteen new faculty members join six of the school’s academic departments.

May 23, 2024

Robotic palm mimics human touch

MIT CSAIL researchers enhance robotic precision with sophisticated tactile sensors in the palm and agile fingers, setting the stage for improvements in human-robot interaction and prosthetic technology.

May 20, 2024

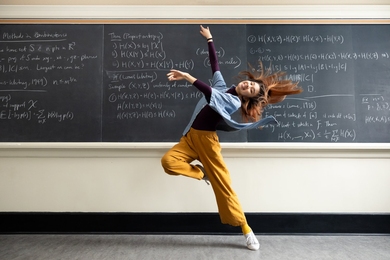

Janabel Xia: Algorithms, dance rhythms, and the drive to succeed

When the senior isn’t using mathematical and computational methods to boost driverless vehicles and fairer voting, she performs with MIT’s many dance groups to keep her on track.

May 17, 2024

Scientists use generative AI to answer complex questions in physics

A new technique that can automatically classify phases of physical systems could help scientists investigate novel materials.

May 16, 2024

New tool empowers users to fight online misinformation

The Trustnet browser extension lets individuals assess the accuracy of any content on any website.

Using ideas from game theory to improve the reliability of language models

A new “consensus game,” developed by MIT CSAIL researchers, elevates AI’s text comprehension and generation skills.

May 14, 2024

A better way to control shape-shifting soft robots

A new algorithm learns to squish, bend, or stretch a robot’s entire body to accomplish diverse tasks like avoiding obstacles or retrieving items.

May 10, 2024

Creating bespoke programming languages for efficient visual AI systems

Associate Professor Jonathan Ragan-Kelley optimizes how computer graphics and images are processed for the hardware of today and tomorrow.

May 3, 2024

Three from MIT named 2024-25 Goldwater Scholars

Undergraduates Ben Lou, Srinath Mahankali, and Kenta Suzuki, whose research explores math and physics, are honored for their academic excellence.

May 2, 2024

Fostering research, careers, and community in materials science

MICRO internship program expands, brings undergraduate interns from other schools to campus.

May 1, 2024

Natural language boosts LLM performance in coding, planning, and robotics

Three neurosymbolic methods help language models find better abstractions within natural language, then use those representations to execute complex tasks.

MIT Emerging Talent opens pathways for underserved global learners

Learners across 24 countries build technical and employment skills in a collaborative community.

April 25, 2024

Study demonstrates efficacy of MIT-led Brave Behind Bars program

Programming course for incarcerated people boosts digital literacy and self-efficacy, highlighting potential for reduced recidivism.

April 24, 2024

Massachusetts Institute of Technology 77 Massachusetts Avenue, Cambridge, MA, USA

Where computing might go next

The future of computing depends in part on how we reckon with its past.

By Margaret O’Mara

If the future of computing is anything like its past, then its trajectory will depend on things that have little to do with computing itself.

Technology does not appear from nowhere. It is rooted in time, place, and opportunity. No lab is an island; machines’ capabilities and constraints are determined not only by the laws of physics and chemistry but by who supports those technologies, who builds them, and where they grow.

Popular characterizations of computing have long emphasized the quirkiness and brilliance of those in the field, portraying a rule-breaking realm operating off on its own. Silicon Valley’s champions and boosters have perpetuated the mythos of an innovative land of garage startups and capitalist cowboys. The reality is different. Computing’s history is modern history—and especially American history—in miniature.

The United States’ extraordinary push to develop nuclear and other weapons during World War II unleashed a torrent of public spending on science and technology. The efforts thus funded trained a generation of technologists and fostered multiple computing projects, including ENIAC, the first all-digital computer, which was completed in 1946. Many of those funding streams eventually became permanent, financing basic and applied research at a scale unimaginable before the war.

The strategic priorities of the Cold War drove rapid development of transistorized technologies on both sides of the Iron Curtain. In a grim race for nuclear supremacy amid an optimistic age of scientific aspiration, government became computing’s biggest research sponsor and largest single customer. Colleges and universities churned out engineers and scientists. Electronic data processing defined the American age of the Organization Man, a nation built and sorted on punch cards.

The space race, especially after the Soviets beat the US into space with the launch of the Sputnik orbiter in late 1957, jump-started a silicon semiconductor industry in a sleepy agricultural region of Northern California, eventually shifting tech’s center of entrepreneurial gravity from East to West. Lanky engineers in white shirts and narrow ties turned giant machines into miniature electronic ones, sending Americans to the moon. (Of course, there were also women playing key, though often unrecognized, roles.)

In 1965, semiconductor pioneer Gordon Moore, who with colleagues had broken ranks with his boss William Shockley of Shockley Semiconductor to launch a new company, predicted that the number of transistors on an integrated circuit would double every year while costs would stay about the same. Moore’s Law was proved right. As computing power became greater and cheaper, digital innards replaced mechanical ones in nearly everything from cars to coffeemakers.

A new generation of computing innovators arrived in the Valley, beneficiaries of America’s great postwar prosperity but now protesting its wars and chafing against its culture. Their hair grew long; their shirts stayed untucked. Mainframes were seen as tools of the Establishment, and achievement on earth overshadowed shooting for the stars. Small was beautiful. Smiling young men crouched before home-brewed desktop terminals and built motherboards in garages. A beatific newly minted millionaire named Steve Jobs explained how a personal computer was like a bicycle for the mind. Despite their counterculture vibe, they were also ruthlessly competitive businesspeople. Government investment ebbed and private wealth grew.

The ARPANET became the commercial internet. What had been a walled garden accessible only to government-funded researchers became an extraordinary new platform for communication and business, as the screech of dial-up modems connected millions of home computers to the World Wide Web. Making this strange and exciting world accessible were very young companies with odd names: Netscape, eBay, Amazon.com, Yahoo.

By the turn of the millennium, a president had declared that the era of big government was over and the future lay in the internet’s vast expanse. Wall Street clamored for tech stocks, then didn’t; fortunes were made and lost in months. After the bust, new giants emerged. Computers became smaller: a smartphone in your pocket, a voice assistant in your kitchen. They grew larger, into the vast data banks and sprawling server farms of the cloud.

Fed with oceans of data, largely unfettered by regulation, computing got smarter. Autonomous vehicles trawled city streets, humanoid robots leaped across laboratories, algorithms tailored social media feeds and matched gig workers to customers. Fueled by the explosion of data and computation power, artificial intelligence became the new new thing. Silicon Valley was no longer a place in California but shorthand for a global industry, although tech wealth and power were consolidated ever more tightly in five US-based companies with a combined market capitalization greater than the GDP of Japan.

It was a trajectory of progress and wealth creation that some believed inevitable and enviable. Then, starting two years ago, resurgent nationalism and an economy-upending pandemic scrambled supply chains, curtailed the movement of people and capital, and reshuffled the global order. Smartphones recorded death on the streets and insurrection at the US Capitol. AI-enabled drones surveyed the enemy from above and waged war on those below. Tech moguls sat grimly before congressional committees, their talking points ringing hollow to freshly skeptical lawmakers.

Our relationship with computing had suddenly changed.

The past seven decades have produced stunning breakthroughs in science and engineering. The pace and scale of change would have amazed our mid-20th-century forebears. Yet techno-optimistic assurances about the positive social power of a networked computer on every desk have proved tragically naïve. The information age of late has been more effective at fomenting discord than advancing enlightenment, exacerbating social inequities and economic inequalities rather than transcending them.

The technology industry—produced and made wealthy by these immense advances in computing—has failed to imagine alternative futures both bold and practicable enough to address humanity’s gravest health and climatic challenges. Silicon Valley leaders promise space colonies while building grand corporate headquarters below sea level. They proclaim that the future lies in the metaverse, in the blockchain, in cryptocurrencies whose energy demands exceed those of entire nation-states.

The future of computing feels more tenuous, harder to map in a sea of information and disruption. That is not to say that predictions are futile, or that those who build and use technology have no control over where computing goes next. To the contrary: history abounds with examples of individual and collective action that altered social and political outcomes. But there are limits to the power of technology to overcome earthbound realities of politics, markets, and culture.

To understand computing’s future, look beyond the machine.

1. The hoodie problem

First, look to who will get to build the future of computing.

The tech industry long celebrated itself as a meritocracy, where anyone could get ahead on the strength of technical know-how and innovative spark. This assertion has been belied in recent years by the persistence of sharp racial and gender imbalances, particularly in the field’s topmost ranks. Men still vastly outnumber women in the C-suites and in key engineering roles at tech companies. Venture capital investors and venture-backed entrepreneurs remain mostly white and male. The number of Black and Latino technologists of any gender remains shamefully tiny.

Much of today’s computing innovation was born in Silicon Valley. And looking backward, it becomes easier to understand where tech’s meritocratic notions come from, as well as why its diversity problem has been difficult to solve.

Silicon Valley was once indeed a place where people without family money or connections could make a career and possibly a fortune. Those lanky engineers of the Valley’s space-age 1950s and 1960s were often heartland boys from middle-class backgrounds, riding the extraordinary escalator of upward mobility that America delivered to white men like them in the prosperous quarter-century after the end of World War II.

Many went to college on the GI Bill and won merit scholarships to places like Stanford and MIT, or paid minimal tuition at state universities like the University of California, Berkeley. They had their pick of engineering jobs as defense contracts fueled the growth of the electronics industry. Most had stay-at-home wives whose unpaid labor freed husbands to focus their energy on building new products, companies, markets. Public investments in suburban infrastructure made their cost of living reasonable, the commutes easy, the local schools excellent. Both law and market discrimination kept these suburbs nearly entirely white.

In the last half-century, political change and market restructuring slowed this escalator of upward mobility to a crawl, right at the time that women and minorities finally had opportunities to climb on. By the early 2000s, the homogeneity among those who built and financed tech products entrenched certain assumptions: that women were not suited for science, that tech talent always came dressed in a hoodie and had attended an elite school—whether or not someone graduated. It limited thinking about what problems to solve, what technologies to build, and what products to ship.

Having so much technology built by a narrow demographic—highly educated, West Coast based, and disproportionately white, male, and young—becomes especially problematic as the industry and its products grow and globalize. It has fueled considerable investment in driverless cars without enough attention to the roads and cities these cars will navigate. It has propelled an embrace of big data without enough attention to the human biases contained in that data. It has produced social media platforms that have fueled political disruption and violence at home and abroad. It has left rich areas of research and potentially vast market opportunities neglected.

Computing’s lack of diversity has always been a problem, but only in the past few years has it become a topic of public conversation and a target for corporate reform. That’s a positive sign. The immense wealth generated within Silicon Valley has also created a new generation of investors, including women and minorities who are deliberately putting their money in companies run by people who look like them.

But change is painfully slow. The market will not take care of imbalances on its own.

For the future of computing to include more diverse people and ideas, there needs to be a new escalator of upward mobility: inclusive investments in research, human capital, and communities that give a new generation the same assist the first generation of space-age engineers enjoyed. The builders cannot do it alone.

2. Brainpower monopolies

Then, look at who the industry's customers are and how it is regulated.

The military investment that undergirded computing’s first all-digital decades still casts a long shadow. Major tech hubs of today—the Bay Area, Boston, Seattle, Los Angeles—all began as centers of Cold War research and military spending. As the industry further commercialized in the 1970s and 1980s, defense activity faded from public view, but it hardly disappeared. For academic computer science, the Pentagon became an even more significant benefactor starting with Reagan-era programs like the Strategic Defense Initiative, the computer-enabled system of missile defense memorably nicknamed “Star Wars.”

In the past decade, after a brief lull in the early 2000s, the ties between the technology industry and the Pentagon have tightened once more. Some in Silicon Valley protest its engagement in the business of war, but their objections have done little to slow the growing stream of multibillion-dollar contracts for cloud computing and cyberweaponry. It is almost as if Silicon Valley is returning to its roots.

Defense work is one dimension of the increasingly visible and freshly contentious entanglement between the tech industry and the US government. Another is the growing call for new technology regulation and antitrust enforcement, with potentially significant consequences for how technological research will be funded and whose interests it will serve.

The extraordinary consolidation of wealth and power in the technology sector and the role the industry has played in spreading disinformation and sparking political ruptures have led to a dramatic change in the way lawmakers approach the industry. The US has had little appetite for reining in the tech business since the Department of Justice took on Microsoft 20 years ago. Yet after decades of bipartisan chumminess and laissez-faire tolerance, antitrust and privacy legislation is now moving through Congress. The Biden administration has appointed some of the industry’s most influential tech critics to key regulatory roles and has pushed for significant increases in regulatory enforcement.

The five giants—Amazon, Apple, Facebook, Google, and Microsoft—now spend as much or more lobbying in Washington, DC, as banks, pharmaceutical companies, and oil conglomerates, aiming to influence the shape of anticipated regulation. Tech leaders warn that breaking up large companies will open a path for Chinese firms to dominate global markets, and that regulatory intervention will squelch the innovation that made Silicon Valley great in the first place.

Viewed through a longer lens, the political pushback against Big Tech’s power is not surprising. Although sparked by the 2016 American presidential election, the Brexit referendum, and the role social media disinformation campaigns may have played in both, the political mood echoes one seen over a century ago.

We might be looking at a tech future where companies remain large but regulated, comparable to the technology and communications giants of the middle part of the 20th century. This model did not squelch technological innovation. Today, it could actually aid its growth and promote the sharing of new technologies.

Take the case of AT&T, a regulated monopoly for seven decades before its ultimate breakup in the early 1980s. In exchange for allowing it to provide universal telephone service, the US government required AT&T to stay out of other communication businesses, first by selling its telegraph subsidiary and later by steering clear of computing.

Like any for-profit enterprise, AT&T had a hard time sticking to the rules, especially after the computing field took off in the 1940s. One of these violations resulted in a 1956 consent decree under which the US required the telephone giant to license the inventions produced in its industrial research arm, Bell Laboratories, to other companies. One of those products was the transistor. Had AT&T not been forced to share this and related technological breakthroughs with other laboratories and firms, the trajectory of computing would have been dramatically different.

Right now, industrial research and development activities are extraordinarily concentrated once again. Regulators mostly looked the other way over the past two decades as tech firms pursued growth at all costs, and as large companies acquired smaller competitors. Top researchers left academia for high-paying jobs at the tech giants as well, consolidating a huge amount of the field’s brainpower in a few companies.

More so than at any other time in Silicon Valley’s ferociously entrepreneurial history, it is remarkably difficult for new entrants and their technologies to sustain meaningful market share without being subsumed or squelched by a larger, well-capitalized, market-dominant firm. More of computing’s big ideas are coming from a handful of industrial research labs and, not surprisingly, reflecting the business priorities of a select few large tech companies.

Tech firms may decry government intervention as antithetical to their ability to innovate. But follow the money, and the regulation, and it is clear that the public sector has played a critical role in fueling new computing discoveries—and building new markets around them—from the start.

3. Location, location, location

Last, think about where the business of computing happens.

The question of where “the next Silicon Valley” might grow has consumed politicians and business strategists around the world for far longer than you might imagine. French president Charles de Gaulle toured the Valley in 1960 to try to unlock its secrets. Many world leaders have followed in the decades since.

Silicon Somethings have sprung up across many continents, their gleaming research parks and California-style subdivisions designed to lure a globe-trotting workforce and cultivate a new set of tech entrepreneurs. Many have fallen short of their startup dreams, and all have fallen short of the standard set by the original, which has retained an extraordinary ability to generate one blockbuster company after another, through boom and bust.

While tech startups have begun to appear in a wider variety of places, about three in 10 venture capital firms and close to 60% of available investment dollars remain concentrated in the Bay Area. After more than half a century, it remains the center of computing innovation.

It does, however, have significant competition. China has been making the kinds of investments in higher education and advanced research that the US government made in the early Cold War, and its technology and internet sectors have produced enormous companies with global reach.

The specter of Chinese competition has driven bipartisan support for renewed American tech investment, including a potentially massive infusion of public subsidies into the US semiconductor industry. American companies have been losing ground to Asian competitors in the chip market for years. The economy-choking consequences of this became painfully clear when covid-related shutdowns slowed chip imports to a trickle, throttling production of the many consumer goods that rely on semiconductors to function.

As when Japan posed a competitive threat 40 years ago, the American agitation over China runs the risk of slipping into corrosive stereotypes and lightly veiled xenophobia. But it is also true that computing technology reflects the state and society that makes it, whether it be the American military-industrial complex of the late 20th century, the hippie-influenced West Coast culture of the 1970s, or the communist-capitalist China of today.

What’s next

Historians like me dislike making predictions. We know how difficult it is to map the future, especially when it comes to technology, and how often past forecasters have gotten things wrong.

Intensely forward-thinking and impatient with incrementalism, many modern technologists—especially those at the helm of large for-profit enterprises—are the opposite. They disdain politics, and resist getting dragged down by the realities of past and present as they imagine what lies over the horizon. They dream of a new age of quantum computers and artificial general intelligence, where machines do most of the work and much of the thinking.

They could use a healthy dose of historical thinking.

Whatever computing innovations will appear in the future, what matters most is how our culture, businesses, and society choose to use them. And those of us who analyze the past also should take some inspiration and direction from the technologists who have imagined what is not yet possible. Together, looking forward and backward, we may yet be able to get where we need to go.



Open research in computer science

Spanning networks and communications to security and cryptology to big data, complexity, and analytics, SpringerOpen and BMC publish one of the leading open access portfolios in computer science. Learn about our journals and the research we publish here on this page.


Spotlight on

EPJ Data Science

See how EPJ Data Science brings attention to data science

Reasons to publish in Human-centric Computing and Information Sciences

Download this handy infographic to see all the reasons why Human-centric Computing and Information Sciences is a great place to publish.

We've asked a few of our authors about their experience of publishing with us.

What authors say about publishing in our journals:

- “Fast, transparent, and fair.” (EPJ Data Science)

- “Easy submission process through online portal.” (Journal of Cloud Computing)

- “Patient support and constant reminder at every phase.” (Journal of Cloud Computing)

- “Quick and relevant.” (Journal of Big Data)


Computer science blog posts

Read the latest from the SpringerOpen blog

The SpringerOpen blog highlights recent noteworthy research of general interest published in our open access journals.



Computer Science > Machine Learning

Title: Application of Machine Learning in Agriculture: Recent Trends and Future Research Avenues

Abstract: Food production is a vital global concern, and the potential for an agritech revolution through artificial intelligence (AI) remains largely unexplored. This paper presents a comprehensive review focused on the application of machine learning (ML) in agriculture, aiming to explore its transformative potential in farming practices and efficiency enhancement. To understand the extent of research activity in this field, statistical data have been gathered, revealing a substantial growth trend in recent years. This growth indicates that the field stands out as one of the most dynamic and vibrant research domains. By introducing the concept of ML and delving into the realm of smart agriculture, including Precision Agriculture, Smart Farming, Digital Agriculture, and Agriculture 4.0, we investigate how AI can optimize crop output and minimize environmental impact. We highlight the capacity of ML to analyze and classify agricultural data, providing examples of improved productivity and profitability on farms. Furthermore, we discuss prominent ML models and their unique features that have shown promising results in agricultural applications. Through a systematic review of the literature, this paper addresses the existing literature gap on AI in agriculture and offers valuable information to newcomers and researchers. By shedding light on unexplored areas within this emerging field, our objective is to facilitate a deeper understanding of the significant contributions and potential of AI in agriculture, ultimately benefiting the research community.


Computer Science: Recently Published Documents


Hiring CS Graduates: What We Learned from Employers

Computer science (CS) majors are in high demand and account for a large part of national computer and information technology job market applicants. Employment in this sector is projected to grow 12% between 2018 and 2028, which is faster than the average of all other occupations. Published data are available on traditional, non-computer-science-specific hiring processes. However, the hiring process for CS majors may be different. It is critical to have up-to-date information on questions such as “what positions are in high demand for CS majors?,” “what is a typical hiring process?,” and “what do employers say they look for when hiring CS graduates?” This article discusses the analysis of a survey of 218 recruiters hiring CS graduates in the United States. We used Atlas.ti to analyze qualitative survey data and report the results on what positions are in the highest demand, the hiring process, and the resume review process. Our study revealed that software developer was the most common position the recruiters were looking to fill. We found that the hiring process steps for CS graduates are generally aligned with traditional hiring steps, with an additional emphasis on technical and coding tests. Recruiters reported that their hiring choices were based on reviewing the experience, GPA, and projects sections of resumes. The results provide insights into the hiring process, decision making, resume analysis, and some discrepancies between current undergraduate CS program outcomes and employers’ expectations.

A Systematic Literature Review of Empiricism and Norms of Reporting in Computing Education Research Literature

Context. Computing Education Research (CER) is critical to help the computing education community and policy makers support the increasing population of students who need to learn computing skills for future careers. For a community to systematically advance knowledge about a topic, the members must be able to understand published work thoroughly enough to perform replications, conduct meta-analyses, and build theories. There is a need to understand whether published research allows the CER community to systematically advance knowledge and build theories. Objectives. The goal of this study is to characterize the reporting of empiricism in Computing Education Research literature by identifying whether publications include content necessary for researchers to perform replications, meta-analyses, and theory building. We answer three research questions related to this goal: (RQ1) What percentage of papers in CER venues have some form of empirical evaluation? (RQ2) Of the papers that have empirical evaluation, what are the characteristics of the empirical evaluation? (RQ3) Of the papers that have empirical evaluation, do they follow norms (both for inclusion and for labeling of information needed for replication, meta-analysis, and, eventually, theory-building) for reporting empirical work? Methods. We conducted a systematic literature review of the 2014 and 2015 proceedings or issues of five CER venues: Technical Symposium on Computer Science Education (SIGCSE TS), International Symposium on Computing Education Research (ICER), Conference on Innovation and Technology in Computer Science Education (ITiCSE), ACM Transactions on Computing Education (TOCE), and Computer Science Education (CSE). We developed and applied the CER Empiricism Assessment Rubric to the 427 papers accepted and published at these venues over 2014 and 2015. Two people evaluated each paper using the Base Rubric for characterizing the paper. 
A single person applied the other rubrics to characterize the norms of reporting, as appropriate for the paper type. Any discrepancies or questions were resolved through discussion among multiple reviewers. Results. We found that over 80% of papers accepted across all five venues had some form of empirical evaluation. Quantitative evaluation methods were the most frequently reported. Papers most frequently reported results on interventions around pedagogical techniques, curriculum, community, or tools. Papers were split on whether they included some type of comparison between an intervention and some other dataset or baseline. Most papers reported related work, following the expectations for doing so in the SIGCSE and CER community. However, many papers lacked properly reported research objectives, goals, research questions, or hypotheses; description of participants; study design; data collection; and threats to validity. These results align with prior surveys of the CER literature. Conclusions. CER authors are contributing empirical results to the literature; however, not all norms for reporting are met. We encourage authors to provide clear, labeled details about their work so readers can use the study methodologies and results for replications and meta-analyses. As our community grows, our reporting of CER should mature to help establish computing education theory to support the next generation of computing learners.

Light Diacritic Restoration to Disambiguate Homographs in Modern Arabic Texts

Diacritic restoration (also known as diacritization or vowelization) is the process of inserting the correct diacritical marks into a text. Modern Arabic text, e.g., in newspapers, is typically written without diacritics. This lack of diacritical marks often causes ambiguity, and though native readers are adept at resolving it, they sometimes fail. Diacritic restoration is a classical problem in computer science. However, whereas most prior work tackles full (heavy) diacritization of text, we are interested in diacritizing text using fewer diacritics. Studies have shown that fully diacritized text is visually displeasing and slows down reading. This article proposes a system that diacritizes homographs using the fewest possible diacritics, hence the name “light.” A large class of words falls under the homograph category; we deal with the class of words that share the spelling but not the meaning. With fewer diacritics, we do not expect any effect on reading speed, while eye strain is reduced. The system consists of a morphological analyzer and a context-similarity component. The morphological analyzer generates all diacritized candidates for a word. Then, through a statistical approach and context similarities, we resolve the homographs. Experimentally, the system shows very promising results, and our best accuracy is 85.6%.
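The context-similarity step can be illustrated with a minimal sketch. The abstract does not specify the similarity measure or the statistical model, so this example assumes plain bag-of-words cosine similarity and is not the authors' method. The candidate readings, their context profiles, and the helper names `cosine` and `disambiguate` are all hypothetical, and English context words stand in for Arabic ones. Given the candidates a morphological analyzer might produce for the undiacritized homograph علم (ʿilm, "knowledge", or ʿalam, "flag"), it picks the reading whose typical context most resembles the sentence.

```python
from collections import Counter
from math import sqrt


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm_a = sqrt(sum(v * v for v in a.values()))
    norm_b = sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an empty vector is treated as dissimilar to everything
    return dot / (norm_a * norm_b)


def disambiguate(context_words: list[str], candidate_profiles: dict[str, Counter]) -> str:
    """Return the candidate reading whose context profile best matches the sentence."""
    context = Counter(context_words)
    return max(candidate_profiles, key=lambda c: cosine(context, candidate_profiles[c]))


# Hypothetical context profiles for the two readings of the homograph علم.
# A real system would estimate such counts from a diacritized corpus.
PROFILES = {
    "ilm": Counter({"university": 3, "student": 2, "research": 2}),  # عِلْم, "knowledge"
    "alam": Counter({"country": 3, "raise": 2, "national": 2}),      # عَلَم, "flag"
}

print(disambiguate(["the", "student", "went", "to", "university"], PROFILES))  # prints ilm
```

Only the chosen reading's distinguishing diacritic would then need to be written, which is the sense in which the restoration stays "light."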

A genre-based analysis of questions and comments in Q&A sessions after conference paper presentations in computer science

Gender Diversity in Computer Science at a Large Public R1 Research University: Reporting on a Self-Study

With the number of jobs in computer occupations on the rise, there is a greater need for computer science (CS) graduates than ever. At the same time, women make up only 25–30% of the students in most CS departments across the country, meaning that we are failing to draw interest from a large portion of the population. In this work, we explore the gender gap in CS at Rutgers University–New Brunswick, a large public R1 research university, using three data sets that span thousands of students across six academic years. Specifically, we combine these data sets to study the gender gaps in four core CS courses and explore the correlation of several factors with retention and the impact of these factors on changes to the gender gap as students proceed through the CS courses toward completing the CS major. For example, we find that a significant percentage of women students taking the introductory CS1 course for majors do not intend to major in CS, which may be a contributing factor to a large increase in the gender gap immediately after CS1. This finding implies that part of the retention task is attracting these women students to further explore the major. Results from our study include both novel findings and findings that are consistent with known challenges for increasing gender diversity in CS. In both cases, we provide extensive quantitative data in support of the findings.

Designing for Student-Directedness: How K–12 Teachers Utilize Peers to Support Projects

Student-directed projects—projects in which students have individual control over what they create and how to create it—are a promising practice for supporting the development of conceptual understanding and personal interest in K–12 computer science classrooms. In this article, we explore a central (and perhaps counterintuitive) design principle identified by a group of K–12 computer science teachers who support student-directed projects in their classrooms: in order for students to develop their own ideas and determine how to pursue them, students must have opportunities to engage with other students’ work. In this qualitative study, we investigated the instructional practices of 25 K–12 teachers using a series of in-depth, semi-structured interviews to develop understandings of how they used peer work to support student-directed projects in their classrooms. Teachers described supporting their students in navigating three stages of project development: generating ideas, pursuing ideas, and presenting ideas. For each of these three stages, teachers considered multiple factors to encourage engagement with peer work in their classrooms, including the quality and completeness of shared work and the modes of interaction with the work. We discuss how this pedagogical approach offers students new relationships to their own learning, to their peers, and to their teachers and communicates important messages to students about their own competence and agency, potentially contributing to aims within computer science for broadening participation.

Creativity in CS1: A Literature Review

Computer science is a fast-growing field in today’s digitized age, and working in this industry often requires creativity and innovative thought. An issue within computer science education, however, is that large introductory programming courses often involve little opportunity for creative thinking within coursework. The undergraduate introductory programming course (CS1) is notorious for its poor student performance and retention rates across multiple institutions. Integrating opportunities for creative thinking may help combat this issue by adding a personal touch to course content, which could allow beginner CS students to better relate to the abstract world of programming. Research on the role of creativity in computer science education (CSE) is an interesting area with a lot of room for exploration, because creativity is a complex phenomenon and because CSE is a fairly new research field compared with other education fields where this topic has been explored more closely. To contribute to this area of research, this article provides a literature review exploring the concept of creativity as relevant to computer science education and CS1 in particular. Based on the review of the literature, we conclude that creativity is an essential component of computer science and that the type of creativity computer science requires is, in fact, a teachable skill through the use of various tools and strategies. These strategies include the integration of open-ended assignments, large collaborative projects, learning by teaching, multimedia projects, small creative computational exercises, game development projects, digitally produced art, robotics, digital story-telling, music manipulation, and project-based learning. Research on each of these strategies and their effects on student experiences within CS1 is discussed in this review.
Last, six main components of creativity-enhancing activities are identified based on the studies about incorporating creativity into CS1. These components are as follows: Collaboration, Relevance, Autonomy, Ownership, Hands-On Learning, and Visual Feedback. The purpose of this article is to contribute to computer science educators’ understanding of how creativity is best understood in the context of computer science education and explore practical applications of creativity theory in CS1 classrooms. This is an important collection of information for restructuring aspects of future introductory programming courses in creative, innovative ways that benefit student learning.

CATS: Customizable Abstractive Topic-based Summarization

Neural sequence-to-sequence models are the state-of-the-art approach used in abstractive summarization of textual documents, useful for producing condensed versions of source text narratives without being restricted to using only words from the original text. Despite the advances in abstractive summarization, custom generation of summaries (e.g., towards a user’s preference) remains unexplored. In this article, we present CATS, an abstractive neural summarization model that summarizes content in a sequence-to-sequence fashion while also introducing a new mechanism to control the underlying latent topic distribution of the produced summaries. We empirically illustrate the efficacy of our model in producing customized summaries and present findings that facilitate the design of such systems. We use the well-known CNN/DailyMail dataset to evaluate our model. Furthermore, we present a transfer-learning method and demonstrate the effectiveness of our approach in a low-resource setting, i.e., abstractive summarization of meeting minutes, where combining the main available meeting transcript datasets, AMI and International Computer Science Institute (ICSI), results in merely a few hundred training documents.

Exploring students’ and lecturers’ views on collaboration and cooperation in computer science courses - a qualitative analysis

Factors Affecting Student Educational Choices Regarding OER Material in Computer Science


Computer Science Library Research Guide


Engineering Librarian

Library Databases

Below are some key engineering databases. If you have any questions, please feel free to contact me.

- ACM Digital Library Provides access to the Association for Computing Machinery (ACM) journals and magazines, as well as conference proceedings.

- IEEE Xplore Digital Library Provides full-text access to IEEE transactions, IEEE and IEE journals, magazines, and conference proceedings published since 1988, and all current IEEE standards; brings additional search and access features to IEEE/IEE digital library users. Produced by the Institute of Electrical and Electronics Engineers.

- Inspec This database provides access to citations and abstracts in physics, electrical engineering, electronics, communications, control engineering, computers and computing, information technology, and manufacturing and production engineering. Produced by the Institution of Electrical Engineers.

- SPIE Digital Library SPIE proceedings include more than 450,000 optics and photonics conference papers spanning biomedicine, communications, sensors, defense and security, manufacturing, electronics, energy, and imaging. SPIE journals offer peer-reviewed articles on applied research in optics and photonics, including optical engineering, electronic imaging, biomedical optics, microlithography, remote sensing, and nanophotonics. Published by the Society of Photo-optical Instrumentation Engineers.

- MathSciNet American Mathematical Society's searchable database covering the world's mathematical literature since 1940.

- SIAM Society for Industrial and Applied Mathematics' journals and proceedings

- CRC Handbook of Chemistry and Physics Full-text access to the CRC Handbook of Chemistry and Physics, 99th Edition. The CRC Handbook of Chemistry and Physics contains tables of physical, chemical, and other scientific data, including: basic units and conversion factors; symbols and terminology; physical constants of organic compounds; properties of elements and inorganic compounds; thermochemistry, electrochemistry and kinetics; fluid properties; biochemistry, analytical chemistry, molecular structure, and spectroscopy; atomic, molecular, and optical physics; nuclear and particle physics; properties of solids; polymer properties; geophysics, astronomy, and acoustics; mathematical tables.

- O'Reilly O'Reilly (previously Safari Tech Books Online) provides access to more than 35,000 full-text e-books from O'Reilly, Sams, Peachpit Press, Que, Addison-Wesley, and other publishers. Coverage includes business, information technology, software development, and computer science.

Multidisciplinary Databases

- Web of Science Search the world’s leading scholarly journals, books, and proceedings in the sciences, social sciences, and arts and humanities and navigate the full citation network. All cited references for all publications are fully indexed and searchable. Search across all authors and all author affiliations. Track citation activity with Citation Alerts. See citation activity and trends graphically with Citation Report. Use Analyze Results to identify trends and publication patterns. Your edition(s): Science Citation Index Expanded (1900-present); Social Sciences Citation Index (1900-present); Arts & Humanities Citation Index (1975-present); Conference Proceedings Citation Index – Science (1990-present); Conference Proceedings Citation Index – Social Science & Humanities (1990-present); Book Citation Index – Science (2005-present); Book Citation Index – Social Sciences & Humanities (2005-present); Emerging Sources Citation Index (2015-present); Current Chemical Reactions (1986-present; includes Institut National de la Propriete Industrielle structure data back to 1840); Index Chemicus (1993-present).

- Annual Reviews includes Biochemistry, Biomedical Data Science, Biomedical Engineering, Biophysics, Cancer Biology, Chemical and Biomolecular Engineering, Computer Science, Control, Robotics, and Autonomous Systems, Environment, Fluid Mechanics, Food Science and Technology, Materials Research, and more.

- ProQuest Central: This database serves as a central resource for researchers at all levels. It covers more than 160 subject areas and features a diverse mix of content, including scholarly journals, trade publications, magazines, books, newspapers, reports, and videos.

- Very Short Introductions - Oxford Academic: Very Short Introductions offer concise and original introductions to a wide range of subjects. Our expert authors combine facts, analysis, new insights, and enthusiasm to make often challenging topics highly readable and to help you develop core knowledge.

Other Resources

- CiteSeerX - CiteSeerX is an evolving scientific literature digital library and search engine that focuses primarily on the literature of computer and information science.

- MITCogNet - AI/Computational Modelling - books and articles

- dblp: computer science bibliography - this service provides open bibliographic information on major computer science journals and proceedings

- engrXiv preprints - engineering archive

- TechRxiv - preprints in Technology Research

- SSRN - The Computer Science Research Network on SSRN, an open-access preprint server, provides a platform for disseminating early-stage research.

- bioRxiv - the preprint server for biology


- Last Updated: Feb 27, 2024 1:52 PM

- URL: https://guides.library.harvard.edu/cs


Advancements in Fake News Detection: A Comprehensive Machine Learning Approach Across Varied Datasets

- Original Research

- Published: 25 May 2024

- Volume 5, article number 583 (2024)


- Adeel Aslam (1)

- Fazeel Abid (2)

- Jawad Rasheed (3), ORCID: orcid.org/0000-0003-3761-1641

- Anza Shabbir (1)

- Manahil Murtaza (1)

- Shtwai Alsubai (4)

- Harun Elkiran (3)

Fake news has become a major social problem in an era shaped by modern technology and the unrestricted flow of information across digital platforms. The deliberate spread of inaccurate or misleading information undermines the public's ability to make informed decisions and seriously threatens the credibility of news sources. This study examines the problem of identifying false news, applying state-of-the-art tools and approaches to a problem that sits at the intersection of information sharing and technology. It uses machine learning (ML) models, namely multinomial Naive Bayes (MNB), linear support vector classifiers (SVC), random forests (RF), logistic regression (LR), gradient boosting (GB), and decision trees (DT), to detect instances of fake news. On publicly available datasets, the approach achieves 94% accuracy on the first dataset and 84% on the second. These results indicate that the approach can reliably detect fake news and contribute to the ongoing effort to counter misinformation in the digital age. Beyond the technical details of applying diverse ML models, the research emphasizes the broader societal importance of limiting the impact of fake news on public discourse. The findings highlight the need for proactive measures to develop robust systems that can identify and counter the spread of false information. As technology evolves, these insights can serve as a foundation for strategies that uphold the integrity of information sources and safeguard the public's ability to make well-informed decisions in an increasingly digital world.
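The abstract lists multinomial Naive Bayes (MNB) among the classifiers compared. As a rough illustration of how an MNB text classifier scores a news item, here is a minimal, dependency-free Python sketch. It assumes bag-of-words counts, Laplace smoothing, and a simple lowercase regex tokenizer; the tokenizer, the class and function names, and the four toy headlines are hypothetical and are not taken from the study's code or datasets. For each class c it computes log P(c) plus the sum of log P(w | c) over the words w in the text and predicts the highest-scoring class.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Simplifying assumption: lowercase, keep runs of letters, digits, apostrophes.
    return re.findall(r"[a-z0-9']+", text.lower())


class MultinomialNB:
    """Multinomial Naive Bayes over word counts with additive (Laplace) smoothing."""

    def __init__(self, alpha: float = 1.0) -> None:
        self.alpha = alpha  # smoothing constant added to every word count

    def fit(self, docs: list[str], labels: list[str]) -> "MultinomialNB":
        self.classes = sorted(set(labels))
        self.doc_counts = Counter(labels)  # documents per class, for the prior P(c)
        self.word_counts = {c: Counter() for c in self.classes}
        for doc, label in zip(docs, labels):
            self.word_counts[label].update(tokenize(doc))
        self.vocab = set()
        for counts in self.word_counts.values():
            self.vocab.update(counts)
        self.total_words = {c: sum(self.word_counts[c].values()) for c in self.classes}
        return self

    def log_score(self, doc: str, c: str) -> float:
        # log P(c) + sum of log P(w | c); the shared normalizer P(doc) is dropped.
        score = math.log(self.doc_counts[c] / sum(self.doc_counts.values()))
        denom = self.total_words[c] + self.alpha * len(self.vocab)
        for w in tokenize(doc):
            if w in self.vocab:  # words unseen in training are ignored
                score += math.log((self.word_counts[c][w] + self.alpha) / denom)
        return score

    def predict(self, doc: str) -> str:
        return max(self.classes, key=lambda c: self.log_score(doc, c))


def accuracy(y_true: list[str], y_pred: list[str]) -> float:
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical toy headlines, invented for illustration only.
train_docs = [
    "shocking miracle cure doctors hate this secret",
    "you won't believe this shocking secret revealed",
    "government confirms budget figures in official report",
    "officials report quarterly economic growth figures",
]
train_labels = ["fake", "fake", "real", "real"]

model = MultinomialNB().fit(train_docs, train_labels)
test_docs = ["shocking secret cure revealed", "official report on economic figures"]
test_labels = ["fake", "real"]
predictions = [model.predict(d) for d in test_docs]
print(predictions)                         # ['fake', 'real']
print(accuracy(test_labels, predictions))  # 1.0
```

In practice, a comparison like the one the abstract describes would more likely rely on scikit-learn's `MultinomialNB` with a `CountVectorizer` or `TfidfVectorizer`, which also makes it easy to swap in `LinearSVC`, `RandomForestClassifier`, `LogisticRegression`, `GradientBoostingClassifier`, and `DecisionTreeClassifier`; the paper's exact preprocessing and hyperparameters are not given here.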


Similar content being viewed by others

Fake news, disinformation and misinformation in social media: a review

Cyber risk and cybersecurity: a systematic review of data availability

The disaster of misinformation: a review of research in social media

Data availability

The dataset is publicly available (Ref. [9]).

References

Abdulrahman A, Baykara M. Fake news detection using machine learning and deep learning algorithms. In: 2020 international conference on advanced science and engineering (ICOASE). 2020. https://doi.org/10.1109/icoase51841.2020.9436605

Ahmed H, Traore I, Saad S. Detection of online fake news using N-gram analysis and machine learning techniques. 2017.

Ahmed H, Traore I, Saad S. Detecting opinion spams and fake news using text classification. J Secur Priv (Wiley). 2018;1(1).

Amazeen MA. Checking the fact-checkers in 2008: predicting political ad scrutiny and assessing consistency. J Political Mark. 2016;15(4):433–64.


Arora P, Pandey M. Fake news detection using machine learning: a survey. 2020.

Baptista JP, Gradim A. Understanding fake news consumption: a review. Soc Sci (MDPI). 2020;9(10):185. https://doi.org/10.3390/socsci9100185

Beutel I, Kirschler O, Kokott S. How do fake news and hate speech affect political discussion and target persons and how can they be detected? Central and Eastern European eDem and eGov Days. 2022;342:37–81.

Bharadwaj P, Shao Z. Detecting fake news: a deep learning approach. arXiv preprint arXiv:1903.05781. 2019.

Bozku E. Fake news detection datasets. Kaggle. 2022. https://www.kaggle.com/datasets/emineyetm/fake-news-detection-datasets

Capuano N, Fenza G, Loia V, Nota FD. Content based fake news detection with machine and deep learning: a systematic review. Neurocomputing. 2023.

Chelmis C, Cambria E, SI L. Fake news detection on social media: a data mining perspective. ACM Trans Data Sci. 2019.

Chen L, Zhang T, Li T. Gradient boosting model for unbalanced quantitative mass spectra quality assessment. In: 2017 International conference on security, pattern analysis, and cybernetics (SPAC). 2017. https://doi.org/10.1109/spac.2017.8304311

Chen Y, Zhang J, Wu L, Yin D, Zhou X. Fake news detection with human-in-the-loop. 2021.

Wu C, Dongol B, Sifa R, Winslett M. Fake news detection on social media using a multimodal approach. In: Proceedings of the 2020 ACM multimedia conference. 2020.

Da San Martino G, Strapparava C. Leveraging linguistic patterns for fake news detection and mitigation. In: Proceedings of the 27th International conference on computational linguistics. 2018.

Ghenai A, Davey ARH. Real-time classification of news into fact-checked categories. In: IEEE/ACM International conference on advances in social networks analysis and mining. 2018.

Granik M, Mesyura V. Fake news detection using naive Bayes classifier. In: 2017 IEEE first Ukraine conference on electrical and computer engineering (UKRCON). IEEE. 2017. pp. 900–903.

Granik M, Mayer KJ, Sukthankar G. Fake news detection on social media: a data mining perspective. In: ACM transactions on data science. 2018.

Islam F, Barbier G, Rokne J. Fake news detection in social media using linguistic metaproperties. ACM Trans Inf Syst. 2018.

Jiang B, Shi Y, Song Y, Li C, Zhang W. Fake news detection with attention mechanism. 2020.

Kabalci Y, Koçabaş B. Fake news detection using machine learning: an ensemble learning approach. J Ambient Intell Humaniz Comput. 2020.

Kaliyar RK. A comprehensive survey on fake news detection techniques. In: Handbook of research on applications and implementations of machine learning and artificial intelligence. 2021.

Kaliyar RK, Goswami A, Narang P. Multiclass fake news detection using ensemble machine learning. In: 2019 IEEE 9th international conference on advanced computing (IACC). 2019. https://doi.org/10.1109/iacc48062.2019.8971579

Kaliyar RK, Goswami A, Narang P. FakeBERT: fake news detection in social media with a BERT-based deep learning approach. Multimed Tools Appl. 2021;80(8):11765–88. https://doi.org/10.1007/s11042-020-10183-2

Karimi H, Tang J. Fake news detection: a hierarchical discourse-level structure (HDSF). 2021.

Kaur S, Kaur P. A multilevel fake news detection system using ensemble learning. Int J Mach Learn Cybern. 2019.

Kaur S, Kaur P. Fake news detection using hybrid technique of machine learning classifiers. In: Soft computing and signal processing. 2021.

Kudarvalli H, Fiaidhi J. Detecting fake news using machine learning algorithms. 2020. https://doi.org/10.36227/techrxiv.12089133

Li Z, Zhang Y, Wang S. Fake news detection with transfer learning. 2021.

Liu J, Cao Y, Lin C, Shu K, Wang S. Fake news detection with multimodal analysis. 2020.

Liu Y, Wu YFB. Early detection of fake news on social media through propagation path classification with recurrent and Convolutional Networks. New Jersey Institute of Technology. https://researchwith.njit.edu/en/publications/early-detection-of-fake-news-on-social-media-through-propagation -. 1970

Mandical RR, Thampi GT, George G, Mathew G. Fake news classification using machine learning: a comprehensive evaluation. Future Gener Comput Syst. 2019.

Mishra R, Bhatia K, Singh A. Fake news detection with recurrent neural networks. arXiv preprint arXiv:1903.08989. 2019.

Olan F, Jayawickrama U, Arakpogun EO, Suklan J, Liu S. Fake news on social media: the impact on society. Inf Syst Front. 2022:1–16.

Potthast M, Kiesel J, Reinartz K, Bevendorff J, Stein B, Holzinger A. A stylometric inquiry into hyperpartisan and fake news. 2017.

Preotiuc-Pietro D, Gaman M, Aletras N. Automatically identifying complaints in social media. 2019. arXiv.org. https://arxiv.org/abs/1906.03890

Puri H, Apte V. Detecting fake news on social media using geolocation information. 2020.

Qian F, Gong C, Sharma K, Liu Y. Neural user response generator: Fake news detection with collective user intelligence. In: Proceedings of the twenty-seventh international joint conference on artificial intelligence. 2018. https://doi.org/10.24963/ijcai.2018/533

Rasheed J. Analyzing the effect of filtering and feature-extraction techniques in a machine learning model for identification of infectious disease using radiography imaging. Symmetry. 2022;14(7):1398. https://doi.org/10.3390/sym14071398

Shu K, Mahudeswaran D, Wang S, Lee D, Liu H. Fake news detection on social media: a data mining perspective. ACM Comput. 2019.

Shu K, Sliva A, Wang S, Tang J, Liu H. Fake news detection on social media. ACM SIGKDD Explor Newsl. 2017;19(1):22–36. https://doi.org/10.1145/3137597.3137600

Shu K, Sliva A, Wang S, Tang J, Liu H. Fake news detection on social media: a data mining perspective. 2017. arXiv.org. https://arxiv.org/abs/1708.01967

Singh V, Khamparia A, Gudnavar S. Fake news detection using support vector machine and linguistic inquiry word count. In: Advanced data analytics for improved business outcomes. 2020.

Tahir T, Gence S, Rasool G, Umer T, Rasheed J, Yeo SF, Cevik T. Early software defects density prediction: training the international software benchmarking cross projects data using supervised learning. IEEE Access. 2023. https://doi.org/10.1109/ACCESS.2023.3339994

Verma A, Agrawal P, Hussain M, Varma V. Fake news detection with adversarial learning. 2020.

Wang X, Yu F, Jiang L, Meng D. Fake news detection with meta-learning. 2021.

Waziry S, Wardak AB, Rasheed J, Shubair RM, Rajab K, Shaikh A. Performance comparison of machine learning driven approaches for classification of complex noises in quick response code images. Heliyon. 2023. https://doi.org/10.1016/j.heliyon.2023.e15108

Wu H, Yang P, Zhang C, Wu W, Yu Y. Fake news detection with fact-checking. 2020.

Zhang S, Dou D, Liu N, Tang J, Lin CY, Cheng X. Combating fake news: a survey on identification and mitigation techniques. ACM Trans Intell Syst Technol (TIST). 2019.

Zhang Y, Huang W, Liu Y. Fake news detection with crowdsourcing. 2021.

Zhao H, Chen X, Nguyen T, Huang JZ, Williams G, Chen H. Stratified over-sampling bagging method for random forests on imbalanced data. Intell Secur Inform. 2016. https://doi.org/10.1007/978-3-319-31863-9_5


Acknowledgements

All the authors appreciate the valuable contributions of Dr. Fahad Mahmoud Ghabban to the study.

This research received no external funding.

Author information

Authors and affiliations

National College of Business Administration and Economics, Lahore, Pakistan

Adeel Aslam, Anza Shabbir & Manahil Murtaza

Department of Information Systems, University of Management and Technology, Lahore, Pakistan

Fazeel Abid

Department of Computer Engineering, Istanbul Sabahattin Zaim University, Istanbul, Turkey

Jawad Rasheed & Harun Elkiran

Department of Computer Science, College of Computer Engineering and Sciences in Al-Kharj, Prince Sattam Bin Abdulaziz University, P.O. Box 151, 11942, Al-Kharj, Saudi Arabia

Shtwai Alsubai
