My Speech Class

Public Speaking Tips & Speech Topics

41 Psychology Speech Topic Ideas

Jim Peterson has over 20 years of experience in speech writing. He has written over 300 free speech topic ideas and how-to guides for all kinds of public speaking and speech writing assignments at My Speech Class.

- Abraham Maslow's hierarchy of human needs. The levels of the hierarchy make good main points: the physiological, safety, belonging, esteem and self-actualisation needs.

- Why do so many people find adolescence so difficult? Rapid physical and emotional changes can make life feel like a roller-coaster ride. Mention the causes and the ways to cope. Psychology speech topics like this one can help and advise other people.

- How do you remember what you know? In other words, describe how your brain handles short-term and long-term memories. Molecular and chemical actions and reactions can be part of your informative talk.

- Artificial Intelligence technologies. E.g. computer systems performing like humans (robotics), problem solving and knowledge management with reasoning based on past cases and data.

- Strong stimuli that cause temporary changes in behavior. E.g. pills, money, food, sugar. In that case it is a good idea to speak about energy drinks and their short-term effectiveness – do not forget to mention the dangers …

- Jung’s theory about our ego, personal unconscious and collective unconscious. Psychoanalyst Carl Jung discovered that neurosis is based on tensions between our psyche and attitudes.

- What exactly is Emotional Intelligence, and why is it considered more important than IQ ratings nowadays? Which personality tests can measure EI?

- That brings me to the next psychology speech topic: the dangers of personality tests.

- Psychological persuasion techniques in speeches. E.g. body language, understanding audience’s motivations, trance and hypnosis.

- Marketing and selling techniques based on psychological effects. E.g. attractive stimulating colorful packaging, or influencing behavior of consumers while shopping in malls.

- Meditation helps to focus and calm the mind. E.g. teach your audience to focus on breathing, to replay each movement of an activity in slow motion, or to try walking meditation.

- The reasons for and against becoming a behaviorist. Behaviorism is the school of psychology that applies scientific methods to the study of observable behavior.

- Sigmund Freud and his ideas. With a little imagination you can convert these example themes into attractive psychology speech topics: our defense mechanisms, hypnosis and catharsis, psychosexual stages of development.

- Causes of depression. E.g. biological and genetic, environmental, and emotional factors.

- When your boss is a woman. What happens to men? And to women?

- The first signs of anxiety disorders. E.g. sleeping and concentration problems, edgy and irritable feelings.

- How psychotherapy by trained professionals helps people to recover.

- How to improve your nonverbal communication skills and communicate effectively. Study someone’s incongruent body signs, vary your tone of voice, keep eye contact while talking informally or formally with a person.

- Always talk after traumatic events. This applies to children, firefighters, police officers, and medics in conflict zones.

- The number one phobia on earth is fear of public speaking – and not fear of dying. That I think is a very catchy psychological topic …

And a few more topics you can develop yourself:

- Dangers of personality tests.

- How to set and achieve unrealistic goals.

- Sigmund Freud Theory.

- Maslow’s hierarchy of needs theory.

- Three ways to measure Emotional Intelligence.

- Why public speaking is the number one phobia on the planet.

- Animated violence does influence the attitude of young people.

- Becoming a millionaire will not make you happy.

- Being a pacifist is equal to being naive.

- Change doesn’t equal progress.

- Everyone is afraid to speak in public.

- Ideas have effect and consequence on lives.

- Mental attitude affects the healing process.

- Philanthropy is the fundament of curiosity.

- Praise in public and criticize or punish in private.

- Sometimes it is okay to lie.

- The importance of asking yourself why you stand for something.

- The only answer to cruelty is kindness.

- The trauma of shooting incidents lasts a lifetime.

- To grab people’s attention on stage, keep a close eye on their attitude and social backgrounds.

- Torture as an interrogation technique is never acceptable.


© 2024 My Speech Class

6 Mind-Blowing TED Talks About Psychology & Human Behavior

The human brain is complex and confusing, which explains why human behavior is so complex and confusing. People have a tendency to act one way when they feel something completely different.

How many times have you been asked “What’s wrong?” only to answer “Nothing,” even though something truly was wrong? Personally, I’ve lost count. Humans are strange creatures, indeed.

And yet, the craziness of human behavior doesn’t end there. There are hundreds--even thousands--of different aspects to our behavior that have a strange science behind them. Some of what you believe may actually be false.

Here are a few interesting TED Talks that delve into human psychology and try to explain why we are the way we are.

The Paradox of Choice

http://youtu.be/VO6XEQIsCoM

The secret to happiness is low expectations.

With a quotable snippet like that, I think it’s easy to see that the giver of this TED Talk is very entertaining in his delivery and insightful in his material.

In this 20-minute clip, Barry Schwartz talks about the gradual increase in choices that we have as consumers and how making the right decision in a sea of choices can have a negative impact on our lives. He calls it the paradox of choice, and it all stems from a simple assumption: more freedom leads to more happiness.

Watch this video and learn why the availability of choices can actually be a detriment to your happiness as an individual.

A Kinder, Gentler Philosophy of Success

http://youtu.be/MtSE4rglxbY

Alain de Botton, a Swiss philosopher, presents a philosophical discussion on the modern world’s idea of success and how the structure of society influences our notions of success and failure. With a number of insightful illustrations and analogies, I think de Botton successfully challenges the popular understanding of individual success.

He’s articulate, eloquent, and quite witty with his comments and remarks. Plus, he’s just plain funny. Even if the subject matter bores you (which I guarantee it won’t), you’ll still be entertained by his delivery style and his intelligent jokes.

Watching this clip was a pleasure and you won’t be disappointed.

The Surprising Science of Motivation

http://youtu.be/rrkrvAUbU9Y

In this talk, Daniel Pink discusses the outdated notion of extrinsic motivators in the modern world. Outside factors, like increased pay and other incentives, can actually be damaging to creativity, inspiration, and motivation.

While the idea of extrinsic motivation was useful and effective in the 20th Century, Pink argues that this outdated idea must be replaced by a new one: intrinsic motivation. Because 21st Century tasks differ so fundamentally from 20th Century tasks, new motivational forces are required.

This is a mind-blowing talk that confirms what we, as humans, already know: productivity is heightened when we want to do something rather than when we are only encouraged to do something. A must-see clip, indeed.

How We Read Each Other’s Minds

http://youtu.be/GOCUH7TxHRI

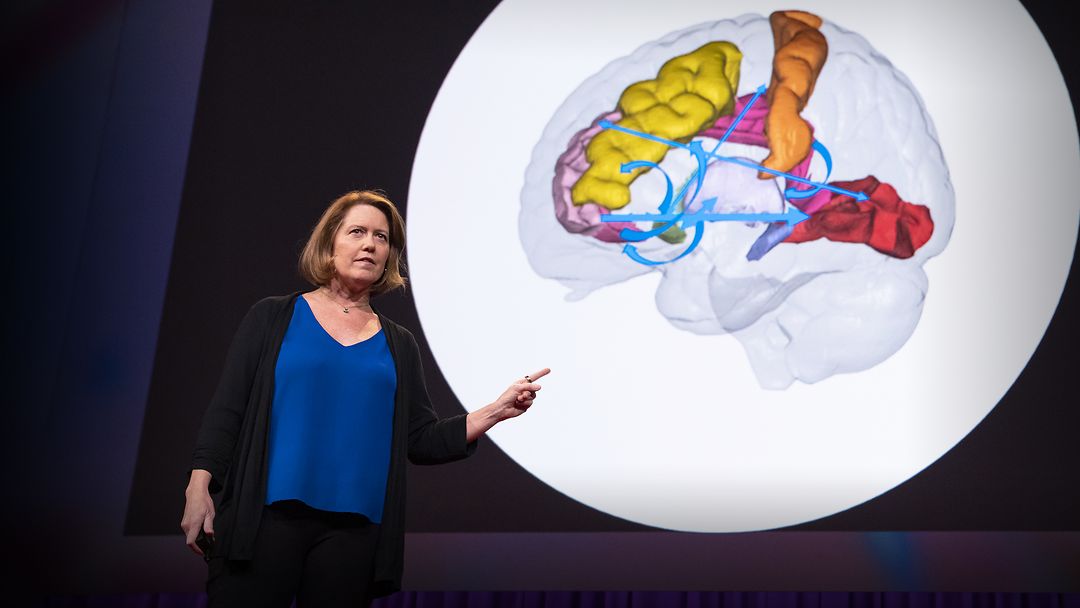

In this TED Talk, scientist Rebecca Saxe discusses a region of the brain--called the Right Temporo-Parietal Junction--that is used when you make judgments about other people and what they’re thinking.

Through a series of experiments, Saxe shows how the development of this area of the brain contributes to how you view other people, their thoughts, and their motives behind their actions. In other words, underdevelopment of the RTPJ results in a lessened ability for representing and understanding another’s beliefs.

If you love science jargon and scientific analysis, this one’s for you.

What You Don’t Know About Marriage

http://youtu.be/Y8u42OjH0ss

Nowadays, it’s a well-known fact that marriages--at least in America--are more likely to end in divorce than a happily-ever-after scenario. However, writer Jenna McCarthy presents her researched findings about the factors that are common in all successful marriages.

As a writer, McCarthy has injected jokes and humor into her presentation. Some may find her cute and pleasant to listen to. Others may be put off by her attempts to make the subject matter funny.

Nonetheless, if you are interested in making your current marriage work or if you want to know how to bulletproof your future marriage, this is the TED Talk for you.

The 8 Secrets to Success

http://youtu.be/Y6bbMQXQ180

Secrets? Perhaps if you’re young or if you’ve been hiding under a rock for the last decade. The topic of success--and how you can achieve it--has been examined and studied to death. Everyone wants to be successful, but not everyone is successful.

How can you increase the chance for your own personal success? In this TED Talk, Richard St. John tells you in eight simple words.

But beware: even if these are the secrets to success, they are not shortcuts to success. There are no shortcuts to success. So buckle down, watch this video, and prepare yourself for the mental fortitude that you’ll need in order to cross the line from failure to success.

Do you follow TED Talks? Tell us about your picks in the subject of your choice.

Image Credits: Psychology Image Via Shutterstock

What makes us tick? These TED Talks -- from psychologists and journalists, doctors and patients -- share the latest research on why we do what we do.

Video playlists about Psychology

The political mind

A decade in review

The most popular TED Talks in Hindi

How perfectionism fails us

Talks about psychology.

The bias behind your undiagnosed chronic pain

When is anger justified? A philosophical inquiry

How to know if you're being selfish (and whether or not that's bad)

How to get motivated even when you don’t feel like it

My mission to change the narrative of mental health

How stress drains your brain — and what to do about it

How to make smart decisions more easily

The science behind how sickness shapes your mood

Why you shouldn't trust boredom

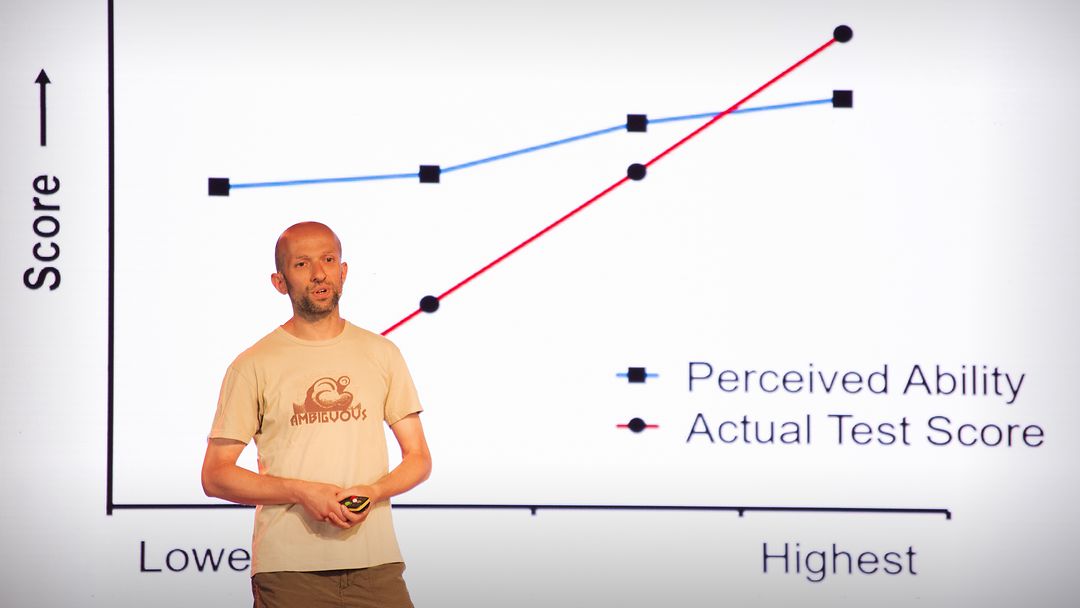

Are you really as good at something as you think?

How to overcome your mistakes

The single most important parenting strategy

Does more freedom at work mean more fulfillment?

How to hack your brain when you're in pain

Why is it so hard to break a bad habit?

How to enter flow state

Exclusive articles about psychology

A smart way to handle anxiety, courtesy of soccer great Lionel Messi

How do top athletes get into the zone? By getting uncomfortable

Here’s how you can handle stress like a lion, not a gazelle

26 Speech Perception

Sven L. Mattys, Department of Psychology, University of York, York, UK

- Published: 03 June 2013

Speech perception is conventionally defined as the perceptual and cognitive processes leading to the discrimination, identification, and interpretation of speech sounds. However, to gain a broader understanding of the concept, such processes must be investigated relative to their interaction with long-term knowledge, lexical information in particular. This chapter starts with a review of some of the fundamental characteristics of the speech signal and an evaluation of the constraints that these characteristics impose on modeling speech perception. Long-standing questions are then discussed in the context of classic and more recent theories. Recurrent themes include the following: (1) the involvement of articulatory knowledge in speech perception, (2) the existence of a speech-specific mode of auditory processing, (3) the multimodal nature of speech perception, (4) the relative contribution of bottom-up and top-down flows of information to sound categorization, (5) the impact of the auditory environment on speech perception in infancy, and (6) the flexibility of the speech system in the face of novel or atypical input.

The complexity, variability, and fine temporal properties of the acoustic signal of speech have puzzled psycholinguists and speech engineers for decades. How can a signal seemingly devoid of regularity be decoded and recognized almost instantly, without any formal training, and despite being often experienced in suboptimal conditions? Without any real effort, we identify over a dozen speech sounds (phonemes) per second, recognize the words they constitute, almost immediately understand the message generated by the sentences they form, and often elaborate appropriate verbal and nonverbal responses before the utterance ends.

Unlike theories of letter perception and written-word recognition, theories of speech perception and spoken-word recognition have devoted a great deal of their investigation to a description of the signal itself, most of it carried out within the field of phonetics. In particular, the fact that speech is conveyed in the auditory modality has dramatic implications for the perceptual and cognitive operations underpinning its recognition. Research in speech perception has focused on the constraining effects of three main properties of the auditory signal: sequentiality, variability, and continuity.

Nature of the Speech Signal

Sequentiality

One of the most obvious disadvantages of the auditory system compared to its visual counterpart is that the distribution of the auditory information is time bound, transient, and solely under the speaker’s control. Moreover, the auditory signal conveys its acoustic content in a relatively serial fashion, one bit of information at a time. The extreme spreading of information over time in the speech domain has important consequences for the mechanisms involved in perceiving and interpreting the input.

Figure 26.1. Illustration of the sequential nature of speech processing. (A) Waveform of a complete sentence, that is, air pressure changes (Y axis) over time (X axis). (B–D) Illustration of a listener’s progressive processing of the sentence at three successive points in time. The visible waveform represents the portion of signal that is available for processing at time t1 (B), t2 (C), and t3 (D).

In particular, given that relatively little information is conveyed per unit of time, the extraction of meaning can only be done within a window of time that far exceeds the amount of information that can be held in echoic memory (Huggins, 1975 ; Nooteboom, 1979 ). Likewise, given that there are no such things as “auditory saccades,” in which listeners would be able to skip ahead of the signal or replay the words or sentences they just heard, speech perception and lexical-sentential integration must take place sequentially, in real time (Fig. 26.1 ).

For the most part, listeners are extremely good at keeping up with the rapid flow of speech sounds. Marslen-Wilson (1987) showed that many words in sentences are recognized well before their offset, sometimes as early as 200 ms after their onset, the average duration of one or two syllables. Other words, however, can only be disentangled from competitors later on, especially when they are short and phonetically reduced, for example, “you are” pronounced as “you’re” (Bard, Shillcock, & Altmann, 1988). Yet, in general, there is a consensus that speech perception and lexical access closely shadow the unfolding of the signal (e.g., the Cohort Model; Marslen-Wilson, 1987), even though “right-to-left” effects can sometimes be observed as well (Dahan, 2010).

Given the inevitable sequentiality of speech perception and the limited amount of information that humans can hold in their auditory short-term memory, an obvious question is whether fast speech, which allows more information to be packed into the same amount of time, helps listeners handle the transient nature of speech and, specifically, whether it affects the mechanisms leading to speech recognition. A problem, however, is that fast speech tends to be less clearly articulated (hypoarticulated), and hence, less intelligible. Thus, any processing gain due to denser information packing might be offset by diminished intelligibility. However, this confound can be avoided experimentally. Indeed, speech rate can be accelerated with minimal loss of intrinsic intelligibility via computer-assisted signal compression (e.g., Foulke & Sticht, 1969; van Buuren, Festen, & Houtgast, 1999). Time compression experiments have led to mixed results. Dupoux and Mehler (1990), for instance, found no effect of speech rate on how phonemes are perceived in monosyllabic versus disyllabic words. They started from the observation that the initial consonant of a monosyllabic word is detected faster if the word is high frequency than if it is low frequency, whereas frequency has no effect in multisyllabic words. This difference can be attributed to the use of a lexical route with short words and of a phonemic route with longer words. That is, short words are mapped directly onto lexical representations, whereas longer words undergo a process of decomposition into phonemes first. Critically, Dupoux and Mehler reported that a frequency effect did not appear when the duration of the disyllabic words was compressed to that of the monosyllabic words, suggesting that whether listeners use a lexical or phonemic route to identify phonemes depends on structural factors (number of phonemes or syllables) rather than time. Thus, on this account, the transient nature of speech has only a limited effect on the mechanisms underlying speech recognition.

In contrast, others have found significant effects of speech rate on lexical access. For example, both Pitt and Samuel ( 1995 ) and Radeau, Morais, Mousty, and Bertelson ( 2000 ) observed that the uniqueness point of a word, that is, the sequential point at which it can be uniquely specified (e.g., “spag” for “spaghetti”), could be dramatically altered when speech rate was manipulated. However, most changes were observed at slower rates, not at faster rates. Thus, changes in speech rate can have effects on recognition mechanisms, but these are observed mainly with time expansion, not with time compression. In sum, although the studies by Dupoux and Mehler ( 1990 ), Pitt and Samuel ( 1995 ), and Radeau et al. ( 2000 ) highlight different effects of time manipulation on speech processing, they all agree that packing more information per unit of time by accelerating speech rate does not compensate for the transient nature of the speech signal and for memory limitations. This is probably due to intrinsic perceptual and mnemonic limitations on how fast information can be processed by the speech system—at any rate.
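The notion of a uniqueness point can be made concrete with a minimal sketch. It assumes a toy lexicon in which spelling stands in for a phonemic transcription; the function name `uniqueness_point` and the word list are hypothetical and serve only as an illustration, not as a model used in the studies cited above.

```python
def uniqueness_point(word, lexicon):
    """Return the shortest initial portion of `word` that no other
    lexicon entry shares, or None if the word never becomes unique
    before its offset (e.g., it is the beginning of a longer word)."""
    competitors = [w for w in lexicon if w != word]
    for end in range(1, len(word) + 1):
        prefix = word[:end]
        # The word is uniquely specified once no competitor starts this way.
        if not any(c.startswith(prefix) for c in competitors):
            return prefix
    return None


# Hypothetical toy lexicon; letters stand in for phonemes.
TOY_LEXICON = ["spaghetti", "spa", "space", "spade", "spark", "speech"]

if __name__ == "__main__":
    for w in ("spaghetti", "spa"):
        print(w, "->", uniqueness_point(w, TOY_LEXICON))
```

With this toy lexicon, “spaghetti” diverges from its competitors at “spag,” whereas “spa” never becomes unique before its offset because longer words continue it. The sketch deliberately ignores time: it says where in the sequence a word becomes unique, not how speech rate might shift that point.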

In general, the sequential nature of speech processing is a feature that many models have struggled to implement not only because it requires taking into account echoic and short-term memory mechanisms (Mattys, 1997 ) but also because the sequentiality problem is compounded by a lack of clear boundaries between phonemes and between words, as described later.

Continuity

The inspection of a speech waveform does not reveal clear acoustic correlates of what the human ear perceives as phoneme and word boundaries. The lack of boundaries is due to coarticulation between phonemes (the blending of articulatory gestures between adjacent phonemes) within and across words. Even though the degree of coarticulation between phonemes is somewhat less pronounced across than within words (Fougeron & Keating, 1997), the lack of clear and reliable gaps between words, along with the sequential nature of speech delivery, makes speech continuity one of the most challenging obstacles for both psycholinguistic theory and automatic speech recognition applications. Yet the absence of phoneme and word boundary markers hardly seems to pose a problem for everyday listening, as the subjective experience of speech is not one of continuity but, rather, of discreteness, that is, a string of sounds making up a string of words.

A great deal of the segmentation problem can be solved, at least in theory, based on lexical knowledge and contextual information. Key notions here are lexical competition and segmentation by lexical subtraction. In this view, lexical candidates are activated in multiple locations in the speech signal (that is, multiple alignment), and they compete for a segmentation solution that does not leave any fragments lexically unaccounted for (e.g., “great wall” is favored over “gray twall,” because “twall” is not an English word). Importantly, this knowledge-driven approach does not assign a specific computational status to segmentation, other than being the mere consequence of mechanisms associated with lexical competition (e.g., McClelland & Elman, 1986; Norris, 1994).
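The constraint that a segmentation must leave no fragment lexically unaccounted for can be sketched as an exhaustive parse. This is only an illustration of the constraint, not an implementation of the activation and competition dynamics of the models cited above; the function name `segmentations`, the three-word lexicon, and the rough ARPAbet-like phoneme labels are all assumptions made for the example.

```python
def segmentations(phonemes, lexicon):
    """Return every way of dividing `phonemes` into lexicon words such
    that the whole input is covered and no fragment is left over."""
    if not phonemes:
        return [[]]  # one valid parse of the empty remainder: no words
    parses = []
    for word, pronunciation in lexicon.items():
        n = len(pronunciation)
        # A candidate is aligned here if its phonemes match the input onset.
        if tuple(phonemes[:n]) == pronunciation:
            for rest in segmentations(phonemes[n:], lexicon):
                parses.append([word] + rest)
    return parses


# Hypothetical toy lexicon with approximate transcriptions.
TOY_LEXICON = {
    "gray": ("g", "r", "ey"),
    "great": ("g", "r", "ey", "t"),
    "wall": ("w", "ao", "l"),
}

if __name__ == "__main__":
    signal = ("g", "r", "ey", "t", "w", "ao", "l")
    print(segmentations(signal, TOY_LEXICON))
```

Both “gray” and “great” are aligned with the onset of the input, but only the parse beginning with “great” survives, because the remainder after “gray” (the equivalent of “twall”) matches no lexical entry.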

Another source of information for word segmentation draws upon broad prosodic and segmental regularities in the signal, which listeners use as heuristics for locating word boundaries. For example, languages whose words have a predominant rhythmic pattern (e.g., word-initial stress is predominant in English; word-final lengthening is predominant in French) provide a relatively straightforward, though probabilistic, segmentation strategy to their listeners (Cutler, 1994). The heuristic for English would go as follows: every time a strong syllable is encountered, a boundary is posited before that syllable. For French, it would be: every time a lengthened syllable is encountered, a boundary is posited after that syllable. Another documented heuristic is based on phonotactic probability, that is, the likelihood that specific phonemes follow each other in the words of a language (McQueen, 1998). Specifically, phonemes that are rarely found next to each other in words (e.g., very few English words contain the /fh/ diphone) would be probabilistically interpreted as having occurred across a word boundary (e.g., “tough hero”). Finally, a wide array of acoustic-phonetic cues can also give away the position of a word boundary (Umeda & Coker, 1974). Indeed, phonemes tend to be realized differently depending on their position relative to a word or a syllable boundary. For example, in English, word-initial vowels are frequently glottalized (brief closure of the glottis, e.g., /e/ in “isle end,” compared to no closure in “I lend”), and word-initial stop consonants are often aspirated (burst of air accompanying the release of a consonant, e.g., /t/ in “gray tanker” compared to no aspiration in “great anchor”).
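The English stress-based heuristic described above can be written down in a few lines. The sketch below assumes that the input has already been divided into syllables and that each syllable carries a strong/weak label; both the function name `metrical_segmentation` and the labelled examples are hypothetical.

```python
def metrical_segmentation(syllables):
    """Group (syllable, is_strong) pairs into hypothesized words by
    positing a boundary before every strong syllable."""
    chunks = []
    for text, is_strong in syllables:
        if is_strong or not chunks:
            chunks.append([text])    # a strong syllable opens a new word
        else:
            chunks[-1].append(text)  # a weak syllable joins the current word
    return chunks


if __name__ == "__main__":
    # Strong-initial words are segmented as intended.
    print(metrical_segmentation(
        [("doc", True), ("tor", False), ("send", True),
         ("let", True), ("ters", False)]))
    # A weak-strong word such as "about" is wrongly split in two.
    print(metrical_segmentation([("a", False), ("bout", True)]))
```

The second call shows why the strategy is probabilistic rather than deterministic: it succeeds for the predominant strong-initial pattern but missegments words that begin with a weak syllable.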

It is important to note that, in everyday speech, lexically and sublexically driven segmentation cues usually coincide and reinforce each other. However, in suboptimal listening conditions (e.g., noise) or in rare cases where a conflict arises between those two sources of information, listeners have been shown to downplay sublexical discrepancies and give more heed to lexical plausibility (Mattys, White, & Melhorn, 2005 ; Fig. 26.2 ).

Figure 26.2. Sketch of Mattys, White, and Melhorn’s (2005) hierarchical approach to speech segmentation. The relative weights of speech segmentation cues are illustrated by the width of the gray triangle. In optimal listening conditions, the cues in Tier I dominate. When lexical access is compromised or ambiguous, the cues in Tier II take over. Cues from Tier III are recruited when both lexical and segmental cues are compromised (e.g., background of severe noise). (Reprinted from Mattys, S. L., White, L., & Melhorn, J. F. [2005]. Integration of multiple speech segmentation cues: A hierarchical framework. Journal of Experimental Psychology: General, 134, 477–500 [Figure 7], by permission of the American Psychological Association.)

Variability

Perhaps the most defining challenge for the field of speech perception is the enormous variability of the signal relative to the stored representations onto which it must be matched. Variability can be found at the word level, where there are infinite ways a given word can be pronounced depending on accents, voice quality, speech rate, and so on, leading to a multitude of surface realizations for a unique target representation. But this many-to-one mapping problem is not different from the one encountered with written words in different handwritings or object recognition in general. In those cases, signal normalization can be effectively achieved by defining a set of core features unique to each word or object stored in memory and by reducing the mapping process to those features only.

The real issue with speech variability happens at a lower level, namely, phoneme categorization. Unlike letters whose realizations have at least some commonality from one instance to another, phonemes can vary widely in their acoustic manifestation—even within the same speaker. For example, as shown in Figure 26.3A , the realization of the phoneme /d/ has no immediately apparent acoustic commonality in /di/ and /du/ (Delattre, Liberman, & Cooper, 1955 ). This lack of acoustic invariance is the consequence of coarticulation: The articulation of /d/ in /di/ is partly determined by the articulatory preparation for /i/, and likewise for /d/ in /du/. The power of coarticulation is easily demonstrated by observing a speaker’s mouth prior to saying /di/ compared to /du/. The mode of articulation of /i/ (unrounded) versus /u/ (rounded) is visible on the speaker’s lips even before /d/ has been uttered. The resulting acoustics of /d/ preceding each vowel have therefore little in common.

The success of the search for acoustic cues, or invariants, capable of uniquely identifying phonemes or phonetic categories has been highly feature specific. For example, as illustrated in Figure 26.3A , the place of articulation of phonemes (i.e., the place in the vocal tract where the airstream is most constricted, which distinguishes, e.g., /b/, /d/, /g/) has been difficult to map onto specific acoustic cues. However, the difference between voiced and unvoiced stop consonants (/b/, /d/, /g/ vs. /p/, /t/, /k/) can be traced back fairly reliably to the duration between the release of the consonant and the moment when the vocal folds start vibrating, that is, the voice onset time (VOT; Liberman, Delattre, & Cooper, 1958 ). In English, the VOT of voiced stop consonants is typically around 0 ms (or at least shorter than 20 ms), whereas it is generally over 25 ms for voiceless consonants. Although this contrast has been shown to be somewhat influenced by consonant type and vocalic context (e.g., Lisker & Abramson, 1970 ), VOT is a fairly robust cue for the voiced-voiceless distinction.
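The VOT values given above for English can be turned into a minimal decision rule. The sketch below treats the two figures from the text (under 20 ms, over 25 ms) as hard cutoffs, which is a simplifying assumption: as noted, the contrast shifts with consonant type and vocalic context. The function name `classify_stop_voicing` and its parameters are hypothetical.

```python
def classify_stop_voicing(vot_ms, voiced_max=20.0, voiceless_min=25.0):
    """Label an English stop consonant from its voice onset time (ms).

    The default cutoffs are the approximate values quoted in the text,
    used here as fixed thresholds purely for illustration."""
    if vot_ms < voiced_max:
        return "voiced"      # e.g., /b/, /d/, /g/
    if vot_ms > voiceless_min:
        return "voiceless"   # e.g., /p/, /t/, /k/
    return "ambiguous"       # falls between the two typical ranges


if __name__ == "__main__":
    for vot in (5.0, 22.0, 60.0):
        print(vot, "ms ->", classify_stop_voicing(vot))
```

A fixed-threshold rule of this kind is exactly what becomes unreliable when a cue depends on time, since a change in speaking rate moves the measured values relative to the thresholds.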

Figure 26.3. (A) Stylized spectrograms of /di/ and /du/. The dark bars, or formants, represent areas of peak energy on the frequency scale (Y axis), which correlate with zones of high resonance in the vocal tract. The curved leads into the formants are formant transitions. They show coarticulation between the consonant and the following vowel. Note the dissimilarity between the second formant transitions in /di/ (rising) and /du/ (falling). However, as shown in (B), the extrapolation back in time of the two second-formant transitions points to a common frequency locus.

Vowels are subject to coarticulatory influences, too, but the spectral structure of their middle portion is usually relatively stable, and hence, a taxonomy of vowels based on their unique distribution of energy bands along the frequency spectrum, or formants, can be attempted. However, such distribution is influenced by speaking rate, with fast speech typically leading to the target frequency of the formants being missed or leading to an asymmetric shortening of stressed versus unstressed vowels (Lindblom, 1963; Port, 1977). In general, speech rate variation is particularly problematic for acoustic cues involving time. Even stable cues such as VOT can lose their discriminative power when speech rate is altered. For example, at fast speech rates, the VOT difference between voiced and voiceless stop consonants decreases, making the two types of phonemes more difficult to distinguish (Summerfield, 1981). The same problem has been noted for the difference between /b/ and /w/, with /b/ having rapid formant transitions into the vowel and /w/ less rapid ones. This difference is less pronounced at fast speech rates (Miller & Liberman, 1979).

Yet, except for those conditions in which subtle differences are manipulated in the laboratory, listeners are surprisingly good at compensating for the acoustic distortions introduced by coarticulation and changes in speech rate. Thus, input variability, phonetic-context effects, and the lack of invariance do not appear to pose a serious problem for everyday speech perception. As reviewed later, however, theoretical accounts aiming to reconcile the complexity of the signal with the effortlessness of perception vary greatly.

Basic Phenomena and Questions in Speech Perception

Following are some of the observations that have shaped theoretical thinking in speech perception over the past 60 years. Most of them concern, in one way or another, the extent to which speech perception is carried out by a part of the auditory system dedicated to speech and involving speech-specific mechanisms not recruited for nonspeech sounds.

Categorical Perception

Categorical perception is a sensory phenomenon whereby a physically continuous dimension is perceived as discrete categories, with abrupt perceptual boundaries between categories and poor discrimination within categories (e.g., perception of the visible electromagnetic radiation spectrum as discrete colors). Early on, categorical perception was found to apply to phonemes, or at least some of them. For example, Liberman, Harris, Hoffman, and Griffith (1957) showed that synthesized syllables ranging from /ba/ to /da/ to /ga/, created by gradually adjusting the transition between the consonant and the vowel’s formants (i.e., the formant transitions), were perceived as falling into coarse /b/, /d/, and /g/ categories, with poor discrimination between syllables belonging to a perceptual category and high discrimination between syllables straddling a perceptual boundary (Fig. 26.4). Importantly, categorical perception was not observed for matched auditory stimuli devoid of phonemic significance (Liberman, Harris, Eimas, Lisker, & Bastian, 1961). Moreover, since categorical perception meant that easy-to-identify syllables (spectrum endpoints) were also easy syllables to pronounce, whereas less-easy-to-identify syllables (spectrum midpoints) were generally less easy to pronounce, categorical perception was seen as a highly adaptive property of the speech system, and hence, evidence for a dedicated speech mode of the auditory system. This claim was later weakened by reports of categorical perception for nonspeech sounds (e.g., Miller, Wier, Pastore, Kelly, & Dooling, 1976) and for speech sounds by nonhuman species (e.g., Kluender, Diehl, & Killeen, 1987; Kuhl, 1981).

Figure 26.4. Idealized identification pattern (solid line, left Y axis) and discrimination pattern (dashed line, right Y axis) for categorical perception. Illustration with a /ba/ to /da/ continuum. Identification shows a sharp perceptual boundary between categories. Discrimination is finer around the boundary than inside the categories.
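The idealized pattern can be reproduced numerically under two stated assumptions, neither of which is taken from the studies cited above. First, identification along a nine-step /ba/ to /da/ continuum is assumed to follow a logistic function; the boundary and slope values are arbitrary. Second, discrimination in an ABX task is assumed to rely entirely on covert labelling: the listener labels each stimulus independently and guesses whenever the two comparison stimuli receive the same label. Under that assumption, the expected proportion correct is 0.5 + 0.5 (p_A − p_B)², where p_A and p_B are the probabilities of labelling each stimulus /da/. The function names are hypothetical.

```python
import math


def identification(step, boundary=5.0, slope=2.0):
    """Assumed probability of a /da/ response at a given continuum step
    (logistic function; boundary and slope are illustrative values)."""
    return 1.0 / (1.0 + math.exp(-slope * (step - boundary)))


def predicted_abx(step_a, step_b, boundary=5.0, slope=2.0):
    """Expected ABX proportion correct if discrimination relies only on
    covert category labels, with guessing when the labels agree."""
    p_a = identification(step_a, boundary, slope)
    p_b = identification(step_b, boundary, slope)
    return 0.5 + 0.5 * (p_a - p_b) ** 2


if __name__ == "__main__":
    # Compare stimuli two steps apart along a nine-step continuum.
    for a in range(1, 8):
        b = a + 2
        print(f"steps {a}-{b}: {predicted_abx(a, b):.3f}")
```

In this sketch, predicted discrimination stays near chance for pairs drawn from within a category and peaks for the pair that straddles the boundary, which is the qualitative shape of the idealized curves.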

Effects of Phonetic Context

The effect of adjacent phonemes on the acoustic realization of a target phoneme (e.g., /d/ in /di/ vs. /du/) was mentioned earlier as a core element of the variability challenge. This challenge, that is, achieving perceptual constancy despite input variability, is perhaps most directly illustrated by the converse phenomenon, namely, the varying perception of a constant acoustic input as a function of its changing phonetic environment. Mann (1980) showed that the perception of a /da/-/ga/ continuum was shifted in the direction of reporting more /ga/ when it was preceded by /al/ and more /da/ when it was preceded by /ar/. Since these shifts are in the opposite direction of coarticulation between adjacent phonemes, listeners appear to compensate for the expected consequences of coarticulation. Whether compensation for coarticulation is evidence for a highly sophisticated mechanism whereby listeners use their implicit knowledge of how phonemes are produced (that is, coarticulated) to guide perception (e.g., Fowler, 2006) or simply a consequence of long-term association between the signal and the percept (e.g., Diehl, Lotto, & Holt, 2004; Lotto & Holt, 2006) has been a question of fundamental importance for theories of speech perception, as discussed later.

Integration of Acoustic and Optic Cues

The chief outcome of speech production is the emission of an acoustic signal. However, visual correlates, such as facial and lip movements, are often available to the listener as well. The effect of visual information on speech perception has been extensively studied, especially in the context of the benefit provided by visual cues for listeners with hearing impairments (e.g., Lachs, Pisoni, & Kirk, 2001) and for speech perception in noise (e.g., Sumby & Pollack, 1954). Visual-based enhancement is also observed for undegraded speech with a semantically complicated content or for foreign-accented speech (Reisberg, McLean, & Goldfield, 1987). In the laboratory, audiovisual integration is strikingly illustrated by the well-known McGurk effect. McGurk and MacDonald (1976) showed that listeners presented with an acoustic /ba/ dubbed over a face saying /ga/ tended to report hearing /da/, a syllable whose place of articulation is intermediate between /ba/ and /ga/. The robustness and automaticity of the effect suggest that the acoustic and (visual) articulatory cues of speech are integrated at an early stage of processing. Whether early integration indicates that the primitives of speech perception are articulatory in nature or whether it simply highlights a learned association between acoustic and optic information has been a theoretically divisive debate (see Rosenblum, 2005, for a review).

Lexical and Sentential Effects on Speech Perception

Although traditional approaches to speech perception often stop where word recognition begins (in the same way that approaches to word recognition often stop where sentence comprehension begins), speech perception has been profoundly influenced by the debate on how higher order knowledge affects the identification and categorization of phonemes and phonetic features. A key observation is that lexical knowledge and sentential context can aid phoneme identification, especially when the signal is ambiguous or degraded. For example, Warren and Obusek ( 1971 ) showed that a word can be heard as intact even when a component phoneme is missing and replaced with noise, for example, “legi*lature,” where the asterisk denotes the replaced phoneme. In this case, lexical knowledge dictates what the listener should have heard rather than what was actually there, a phenomenon referred to as phoneme restoration. Likewise, Warren and Warren ( 1970 ) showed that a word whose initial phoneme is degraded, for example, “*eel,” tends to be heard as “wheel” in “It was found that the *eel was on the axle” and as “peel” in “It was found that the *eel was on the orange.” Thus, phoneme identification can be strongly influenced by lexical and sentential knowledge even when the disambiguating context appears later than the degraded phoneme.

But is this truly of interest for speech perception? In other words, could phoneme restoration (and other similar speech illusions) simply result from postperceptual, strategic biases? In this case, “*eel” would be interpreted as “wheel” simply because it makes pragmatic sense to do so in a particular sentential context, not because our perceptual system is genuinely tricked by high-level expectations. If so, contextual effects are of interest to speech-perception scientists only insofar as they suggest that speech perception happens in a system that is impenetrable by higher order knowledge, an unfortunately convenient way of indirectly perpetuating the confinement of speech perception to the study of phoneme identification. The evidence for a postperceptual explanation is mixed. While Norris, McQueen, and Cutler (2000), Massaro (1989), and Oden and Massaro (1978), among others, found no evidence for online top-down feedback to the perceptual system and no logical reasons why such feedback should exist, Samuel (1981, 1997, 2001), Connine and Clifton (1987), and Magnuson, McMurray, Tanenhaus, and Aslin (2003), among others, have reported lexical effects on perception that challenge feedforward models, such as evidence that lexical information truly alters low-level perceptual discrimination (Samuel, 1981). This debate has fostered extreme empirical ingenuity over the past decades but comparatively little change to theory. One exception, however, is that the debate has now spread to the long-term effects of higher order knowledge on speech perception. For example, while Norris, McQueen, and Cutler (2000) argue against online top-down feedback, the same group (2003) recognizes that perceptual (re-)tuning can happen over time, in the context of repeated exposure and learning. Placing the feedforward/feedback debate in the time domain provides an opportunity to examine the speech system at the interface with cognition, and memory functions in particular. It also allows more applied considerations to be introduced, such as the role of perceptual recalibration for second-language learning and speech perception in difficult listening conditions (Samuel & Kraljic, 2009), as discussed later.

Theories of Speech Perception (Narrowly and Broadly Construed)

Motor and Articulatory-Gesture Theories

The Motor Theory of speech perception, reported in a series of articles in the early 1950s by Liberman, Delattre, Cooper, and other researchers from the Haskins Laboratories, was the first to offer a conceptual solution to the lack-of-invariance problem. As mentioned earlier, the main stumbling block for speech-perception theories was the observation that many phonemes cannot uniquely be identified by a set of stable and reliable acoustic cues. For example, the formant transitions of /d/, especially the second formant, differ as a function of the following vowel. However, Delattre et al. ( 1955 ) found commonality between different /d/s by extrapolating the formant transitions back in time to their convergence point, or locus (or hub ; Potter, Kopp, & Green, 1947 ), as shown in Figure 26.3B . Thus, what is common to the formants of all /d/s is the frequency at their origin, that is, the frequency that would best reflect the position of the articulators prior to the release of the consonant. This led to one of the key arguments in support of the motor theory, namely that a one-to-one relationship between acoustics and phonemes can be established if the speech system includes a mechanism that allows the listener to work backward through the rules of production in order to identify the speaker’s intended phonemes. In other words, the lack-of-invariance problem can be solved if it can be demonstrated that listeners perceive speech by identifying the speaker’s intended speech gestures rather than (or in addition to) relying solely on the acoustic manifestation of such gestures. The McGurk effect, whereby auditory perception is dramatically altered by seeing the speaker’s moving lips (articulatory gestures), was an important contributor to the view that the perceptual primitives of speech are gestural in nature.

In addition to claiming that the motor system is recruited for perceiving speech (and partly because of this claim), the Motor Theory also posits that speech perception takes place in a highly specialized and speech-specific module that is neurally isolated and is most likely a unique and innate human endowment (Liberman, 1996 ; Liberman & Mattingly, 1985 ). However, even among supporters of a motor basis for speech perception, agreeing upon an operational definition of intended speech gestures and providing empirical evidence for the contribution of such intended gestures to perception proved difficult. This led Fowler and her colleagues to propose that the objects of speech perception are not intended articulatory gestures but real gestures, that is, actual vocal tract movements that are inferable from the acoustic signal itself (e.g., Fowler, 1986 , 1996 ). Thus, although Fowler’s Direct Realism approach aligns with the Motor Theory in that it claims that perceiving speech is perceiving gestures, it asserts that the acoustic signal itself is rich enough in articulatory information to provide a stable (i.e., invariant) signal-to-phoneme mapping algorithm. In doing so, Direct Realism can do away with claims about specialized and/or innate structures for speech perception.

Although the popularity of the original tenets of the Motor Theory—and, to some extent, associated gesture theories—has waned over the years, the theory has brought forward essential questions about the specificity of speech, the specialization of speech perception, and, more recently, the neuroanatomical substrate of a possible motor component of the speech apparatus (e.g., Gow & Segawa, 2009 ; Pulvermüller et al., 2006 ; Sussman, 1989 ; Whalen et al., 2006 ), a topic that regained interest following the discovery of mirror neurons in the premotor cortex (e.g., Rizzolatti & Craighero, 2004 ; but see Lotto, Hickok, & Holt, 2009 ). The debate has also shifted to a discussion of the extent to which the involvement of articulation during speech perception might in fact be under the listener’s control and its manifestation partly task specific (Yuen, Davis, Brysbaert, & Rastle, 2010 , Fig. 26.5 ; see comments by McGettigan, Agnew, & Scott, 2010 ; Rastle, Davis, & Brysbaert, 2010 ). The Motor Theory has also been extensively reviewed—and revisited—in an attempt to address problems highlighted by auditory-based models, as described later (e.g., Fowler, 2006 , 2008 ; Galantucci, Fowler, & Turvey, 2006 ; Lotto & Holt, 2006 ; Massaro & Chen, 2008 ).

Figure 26.5. Electropalatographic data showing the proportion of tongue contact on alveolar electrodes during the initial and final portions of /k/-initial (e.g., kib) or /s/-initial (e.g., sib) syllables (collapsed) while a congruent or incongruent distractor is presented (Yuen et al., 2010). The distractor was presented auditorily in conditions A and B and visually in condition C. With the target kib as an example, the congruent distractor in the A condition was kib and the incongruent distractor started with a phoneme involving a different place of articulation (e.g., tib). In condition B, the incongruent distractor started with a phoneme that differed from the target only by its voicing status, not by its place of articulation (e.g., gib). Condition C was the same as condition A, except that the distractor was presented visually. The results show “traces” of the incongruent distractors in target production when the distractor is in articulatory competition with the target, particularly in the early portion of the phoneme (condition A), but not when it involves the same place of articulation (condition B), or when it is presented visually (condition C). The results suggest a close relationship between speech perception and speech production. (Reprinted from Yuen, I., Davis, M. H., Brysbaert, M., & Rastle, K. [2010]. Activation of articulatory information in speech perception. Proceedings of the National Academy of Sciences USA, 107, 592–597 [Figure 2], by permission of the National Academy of Sciences.)

Auditory Theory(ies)

The role of articulatory gestures in perceiving speech and the special status of the speech-perception system progressively came under attack largely because of insufficient hard evidence and lack of computational parsimony. Recall that recourse to articulatory gestures was originally posited as a way to solve the lack-of-invariance problem and turn a many(acoustic traces)-to-one(phoneme) mapping problem into a one(gesture)-to-one(phoneme) mapping solution. However, the lack-of-invariance problem turned out to be less prevalent and, at the same time, more complicated than originally claimed. Indeed, as mentioned earlier, many phonemes were found to preserve distinctive features across contexts (e.g., Blumstein & Stevens, 1981; Stevens & Blumstein, 1981). At the same time, lack of invariance was found in domains for which a gestural explanation was only of limited use, for example, voice quality, loudness, and speech rate.

Perhaps most problematic for gesture-based accounts was the finding by Kluender, Diehl, and Killeen (1987) that phonemic categorization, which was viewed by such accounts as necessitating access to gestural primitives, could be observed in species lacking the anatomical prerequisites for articulatory knowledge and practice (Japanese quail; Fig. 26.6). This result was seen by many as undermining both the motor component of speech perception and its human-specific nature. Parsimony became the new driving force. As Kluender et al. put it, “A theory of human phonetic categorization may need to be no more (and no less) complex than that required to explain the behavior of these quail” (p. 1197). The gestural explanation for compensation for coarticulation effects (Mann, 1980) was challenged by a general auditory mechanism as well. In Mann’s experiment, the perceptual shift on the /da/-/ga/ continuum induced by the preceding /al/ versus /ar/ context was explained by reference to articulatory gestures. However, Lotto and Kluender (1998) found a similar shift when the preceding context consisted of nonspeech sounds mimicking the spectral characteristics of the actual syllables (e.g., tone glides). Thus, the acoustic composition of the context, and in particular its spectral contrast with the following syllable, rather than an underlying reference to abstract articulatory gestures, was able to account for Mann’s context effect (but see Fowler, Brown, & Mann’s, 2000, subsequent multimodal challenge to the auditory account).

However, auditory theories have been criticized for lacking theoretical content. Auditory accounts are indeed largely based on counterarguments (and counterevidence) to the motor and gestural theories, rather than resting on a clear set of falsifiable principles (Diehl et al., 2004). While it is clear that a great many phenomena previously believed to require a gestural account can be explained within an arguably simpler auditory framework, it remains to be seen whether auditory theories can provide a satisfactory explanation for the entire class of phenomena in which the many-to-one puzzle has been observed (e.g., Pardo & Remez, 2006).

Figure 26.6. Pecking rates at test for positive stimuli (/dVs/) and negative stimuli (all others) for one of the quail in Kluender et al.’s (1987) study in eight vowel contexts. The test session was preceded by a learning phase in which the quail learned to discriminate /dVs/ syllables (i.e., syllables starting with /d/ and ending with /s/, with a varying medial vowel) from /bVs/ and /gVs/ syllables, with four different medial vowels not used in the test phase. During learning, the quail was rewarded for pecking in response to /d/-initial syllables (positive trials) but not to /b/- and /g/-initial syllables (negative trials). The figure shows that, at test, the quail pecked substantially more to positive than negative syllables, even though these syllables contained entirely new vowels, that is, vowels leading to different formant transitions with the initial consonant than those experienced during the learning phase. (Reprinted from Kluender, K. R., Diehl, R. L., & Killeen, P. R. [1987]. Japanese Quail can form phonetic categories. Science, 237, 1195–1197 [Figure 1], by permission of the National Academy of Sciences.)

Top-Down Theories

This rubric and the following one (bottom-up theories) review theories of speech perception broadly construed. They are broadly construed in that they consider phonemic categorization, the scope of the narrowly construed theories, in the context of its interface with lexical knowledge. Although the traditional separation between narrowly and broadly construed theories originates from the respective historical goals of speech perception and spoken-word recognition research (Pisoni & Luce, 1987), an understanding of speech perception cannot be complete without an analysis of the impact of long-term knowledge on early sensory processes (see useful reviews in Goldinger, Pisoni, & Luce, 1996; Jusczyk & Luce, 2002).

The hallmark of top-down approaches to speech perception is that phonetic analysis and categorization can be influenced by knowledge stored in long-term memory, lexical knowledge in particular. As mentioned earlier, phoneme restoration studies (e.g., Warren & Obusek, 1971; Warren & Warren, 1970) showed that word knowledge could affect listeners’ interpretation of what they heard, but they did not provide direct evidence that phonetic categorization per se (i.e., perception, as it was referred to in that literature) was modified by lexical expectations. However, Samuel (1981) demonstrated that auditory acuity was indeed altered when lexical information was available (e.g., “pr*gress” [from “progress”], with * indicating the portion on which auditory acuity was measured) compared to when it was not (e.g., “cr*gress” [from the nonword “crogress”]).

This kind of result (see also, e.g., Ganong, 1980; Marslen-Wilson & Tyler, 1980; and, more recently, Gow, Segawa, Ahlfors, & Lin, 2008) led to conceptualizing the speech system as being deeply interactive, with information flowing not only from bottom to top but also from top to bottom. For example, the TRACE model (more specifically, TRACE II; McClelland & Elman, 1986) is an interactive-activation model made of a large number of units organized into three levels: features, phonemes, and words (Fig. 26.7A). The model includes bottom-up excitatory connections (from features to phonemes and from phonemes to words), inhibitory lateral connections (within each level), and, critically, top-down excitatory connections (from words to phonemes and from phonemes to features). Thus, the activation levels of features, for example, voicing, nasality, and burst, are partly determined by the activation levels of phonemes, and these are partly determined by the activation levels of words. In essence, this architecture places speech perception within a system that allows a given sensory input to yield a different perceptual experience (as opposed to interpretive experience) when it occurs in a word versus a nonword or next to phoneme x versus phoneme y, and so on. TRACE has been shown to simulate a large range of perceptual and psycholinguistic phenomena, for example, categorical perception, cue trading relations, phonetic context effects, compensation for coarticulation, lexical effects on phoneme detection/categorization, segmentation of embedded words, and so on. All this takes place within an architecture that is neither domain nor species specific. Later instantiations of TRACE have been proposed by McClelland (1991) and Movellan and McClelland (2001), but all of them preserve the core interactive architecture described in the original model.
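The interactive architecture described above can be illustrated with a deliberately tiny Python sketch. It is not the TRACE implementation: it omits the feature level and time slices, and the function name, gain values, update rule, and two-word lexicon are all assumptions chosen for illustration. It only shows how bottom-up excitation, within-level inhibition, and top-down excitation can let lexical knowledge bias an ambiguous phoneme (here, a sound halfway between /g/ and /k/ followed by "ift").

```python
def run_interactive_activation(bottom_up, lexicon, top_down_gain,
                               steps=50, rate=0.1, bottom_up_gain=0.25,
                               inhibition=0.5, decay=1.0):
    """Toy two-level network (phonemes and words); all parameters are hypothetical.

    bottom_up maps (position, symbol) phoneme units to sensory input strength.
    """
    phonemes = {unit: 0.0 for unit in bottom_up}
    words = {word: 0.0 for word in lexicon}
    for _ in range(steps):
        new_phonemes = {}
        for (pos, sym), act in phonemes.items():
            # Lateral inhibition from other phonemes competing for the same position.
            rivals = sum(a for (p, s), a in phonemes.items()
                         if p == pos and s != sym)
            # Top-down excitation from words containing this phoneme at this position.
            feedback = sum(a for w, a in words.items()
                           if pos < len(w) and w[pos] == sym)
            net = (bottom_up[(pos, sym)] + top_down_gain * feedback
                   - inhibition * rivals - decay * act)
            new_phonemes[(pos, sym)] = min(1.0, max(0.0, act + rate * net))
        new_words = {}
        for word, act in words.items():
            # Bottom-up excitation from the phonemes that make up the word.
            support = sum(phonemes.get((pos, sym), 0.0)
                          for pos, sym in enumerate(word))
            # Lateral inhibition from competing words.
            rivals = sum(a for w, a in words.items() if w != word)
            net = bottom_up_gain * support - inhibition * rivals - decay * act
            new_words[word] = min(1.0, max(0.0, act + rate * net))
        phonemes, words = new_phonemes, new_words
    return phonemes, words


# Ambiguous first segment (equal evidence for /g/ and /k/) followed by clear "ift".
ambiguous = {(0, "g"): 0.5, (0, "k"): 0.5,
             (1, "i"): 1.0, (2, "f"): 1.0, (3, "t"): 1.0}
for gain in (0.0, 0.5):
    phon, _ = run_interactive_activation(ambiguous, ["gift", "kiss"], gain)
    print(gain, round(phon[(0, "g")], 3), round(phon[(0, "k")], 3))
```

With the top-down gain set to zero, the two candidate phonemes remain tied; with a positive gain, the word unit for "gift" feeds activation back to /g/, which then suppresses /k/. This mirrors, in a very reduced form, the kind of lexical effect on phoneme categorization that the model is said to simulate.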

Like TRACE, Grossberg’s Adaptive Resonance Theory (ART; e.g., Grossberg, 1986; Grossberg & Myers, 1999) suggests that perception emerges from a compromise, or stable state, between sensory information and stored lexical knowledge (Fig. 26.7B). ART includes items (akin to subphonemic features or feature clusters) and list chunks (combinations of items whose composition is the result of prior learning; e.g., phonemes, syllables, or words). In ART, a sensory input activates items that, in turn, activate list chunks. List chunks feed back to component items, and items back to list chunks again in a bottom-up/top-down cyclic manner that extends over time, ultimately creating stable resonance between a set of items and a list chunk. Both TRACE and ART posit that connections between levels are only excitatory and connections within levels are only inhibitory. In ART, in typical circumstances, attention is directed to large chunks (e.g., words), and hence the content of smaller chunks is generally less readily available. Small mismatches between large chunks and small chunks do not prevent resonance, but large mismatches do. In other words, unlike TRACE, ART does not allow the speech system to “hallucinate” information that is not already there (however, for circumstances in which it could, see Grossberg, 2000a). Large mismatches lead to the establishment of new chunks, and these gain resonance via subsequent exposure. In doing so, ART provides a solution to the stability-plasticity dilemma, that is, the unwanted erasure of prior learning by more recent learning (Grossberg, 1987), also referred to as catastrophic interference (e.g., McCloskey & Cohen, 1989).
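The matching logic described above (small mismatches still resonate, large mismatches create a new chunk, and existing chunks are never overwritten) can be sketched in a few lines of Python. This is a loose illustration in the spirit of a vigilance-style match test, not Grossberg's model of speech: there are no continuous dynamics, items are reduced to position-letter pairs, and the names `as_items`, `present`, and the `vigilance` threshold of 0.7 are assumptions introduced here.

```python
def as_items(word):
    """Represent an input as a set of (position, symbol) items (a simplification)."""
    return frozenset(enumerate(word))


def present(word, chunks, vigilance=0.7):
    """Return (chunk_index, created_new) for one input presentation.

    An input "resonates" with the best-matching stored chunk if the proportion
    of its items found in that chunk reaches the (hypothetical) vigilance level.
    Otherwise a new chunk is stored, leaving earlier chunks untouched.
    """
    items = as_items(word)
    if not items:
        raise ValueError("input must contain at least one item")
    best_index, best_match = None, 0.0
    for index, chunk in enumerate(chunks):
        match = len(items & chunk) / len(items)
        if match > best_match:
            best_index, best_match = index, match
    if best_index is not None and best_match >= vigilance:
        return best_index, False      # small mismatch at most: resonance
    chunks.append(items)              # large mismatch: establish a new chunk
    return len(chunks) - 1, True


chunks = [as_items("progress")]
print(present("prxgress", chunks))    # (0, False): one deviant item, still resonates
print(present("elephant", chunks))    # (1, True): no overlap, new chunk created
print(present("elephant", chunks))    # (1, False): the new chunk now resonates
```

Because learning a new chunk only appends to the list, the earlier "progress" chunk survives intact, which is the stability half of the stability-plasticity trade-off in this toy setting.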

Thus, like TRACE, ART posits that speech perception results from an online interaction between prelexical and lexical processes. However, ART is more deeply grounded in, and motivated by, biologically plausible neural dynamics, where reciprocal connectivity and resonance states have been observed (e.g., Felleman & Van Essen, 1991). Likewise, ART replaces the hierarchical structure of TRACE with a more flexible one, in which tiers self-organize over time through competitive dynamics rather than being predefined. Although sometimes accused of placing too few constraints on empirical expectations (Norris et al., 2000), the functional architecture of ART is thought to be more computationally economical than that of TRACE and more amenable to modeling both real-time and long-term temporal aspects of speech processing (Grossberg, Boardman, & Cohen, 1997).

Bottom-Up Theories