Machine Learning - CMU

PhD Dissertations

[all are .pdf files].

Learning Models that Match Jacob Tyo, 2024

Improving Human Integration across the Machine Learning Pipeline Charvi Rastogi, 2024

Reliable and Practical Machine Learning for Dynamic Healthcare Settings Helen Zhou, 2023

Automatic customization of large-scale spiking network models to neuronal population activity (unavailable) Shenghao Wu, 2023

Estimation of BVk functions from scattered data (unavailable) Addison J. Hu, 2023

Rethinking object categorization in computer vision (unavailable) Jayanth Koushik, 2023

Advances in Statistical Gene Networks Jinjin Tian, 2023

Post-hoc calibration without distributional assumptions Chirag Gupta, 2023

The Role of Noise, Proxies, and Dynamics in Algorithmic Fairness Nil-Jana Akpinar, 2023

Collaborative learning by leveraging siloed data Sebastian Caldas, 2023

Modeling Epidemiological Time Series Aaron Rumack, 2023

Human-Centered Machine Learning: A Statistical and Algorithmic Perspective Leqi Liu, 2023

Uncertainty Quantification under Distribution Shifts Aleksandr Podkopaev, 2023

Probabilistic Reinforcement Learning: Using Data to Define Desired Outcomes, and Inferring How to Get There Benjamin Eysenbach, 2023

Comparing Forecasters and Abstaining Classifiers Yo Joong Choe, 2023

Using Task Driven Methods to Uncover Representations of Human Vision and Semantics Aria Yuan Wang, 2023

Data-driven Decisions - An Anomaly Detection Perspective Shubhranshu Shekhar, 2023

Applied Mathematics of the Future Kin G. Olivares, 2023

Methods and Applications of Explainable Machine Learning Joon Sik Kim, 2023

Neural Reasoning for Question Answering Haitian Sun, 2023

Principled Machine Learning for Societally Consequential Decision Making Amanda Coston, 2023

Long term brain dynamics extend cognitive neuroscience to timescales relevant for health and physiology Maxwell B. Wang, 2023

Long term brain dynamics extend cognitive neuroscience to timescales relevant for health and physiology Darby M. Losey, 2023

Calibrated Conditional Density Models and Predictive Inference via Local Diagnostics David Zhao, 2023

Towards an Application-based Pipeline for Explainability Gregory Plumb, 2022

Objective Criteria for Explainable Machine Learning Chih-Kuan Yeh, 2022

Making Scientific Peer Review Scientific Ivan Stelmakh, 2022

Facets of regularization in high-dimensional learning: Cross-validation, risk monotonization, and model complexity Pratik Patil, 2022

Active Robot Perception using Programmable Light Curtains Siddharth Ancha, 2022

Strategies for Black-Box and Multi-Objective Optimization Biswajit Paria, 2022

Unifying State and Policy-Level Explanations for Reinforcement Learning Nicholay Topin, 2022

Sensor Fusion Frameworks for Nowcasting Maria Jahja, 2022

Equilibrium Approaches to Modern Deep Learning Shaojie Bai, 2022

Towards General Natural Language Understanding with Probabilistic Worldbuilding Abulhair Saparov, 2022

Applications of Point Process Modeling to Spiking Neurons (Unavailable) Yu Chen, 2021

Neural variability: structure, sources, control, and data augmentation Akash Umakantha, 2021

Structure and time course of neural population activity during learning Jay Hennig, 2021

Cross-view Learning with Limited Supervision Yao-Hung Hubert Tsai, 2021

Meta Reinforcement Learning through Memory Emilio Parisotto, 2021

Learning Embodied Agents with Scalably-Supervised Reinforcement Learning Lisa Lee, 2021

Learning to Predict and Make Decisions under Distribution Shift Yifan Wu, 2021

Statistical Game Theory Arun Sai Suggala, 2021

Towards Knowledge-capable AI: Agents that See, Speak, Act and Know Kenneth Marino, 2021

Learning and Reasoning with Fast Semidefinite Programming and Mixing Methods Po-Wei Wang, 2021

Bridging Language in Machines with Language in the Brain Mariya Toneva, 2021

Curriculum Learning Otilia Stretcu, 2021

Principles of Learning in Multitask Settings: A Probabilistic Perspective Maruan Al-Shedivat, 2021

Towards Robust and Resilient Machine Learning Adarsh Prasad, 2021

Towards Training AI Agents with All Types of Experiences: A Unified ML Formalism Zhiting Hu, 2021

Building Intelligent Autonomous Navigation Agents Devendra Chaplot, 2021

Learning to See by Moving: Self-supervising 3D Scene Representations for Perception, Control, and Visual Reasoning Hsiao-Yu Fish Tung, 2021

Statistical Astrophysics: From Extrasolar Planets to the Large-scale Structure of the Universe Collin Politsch, 2020

Causal Inference with Complex Data Structures and Non-Standard Effects Kwhangho Kim, 2020

Networks, Point Processes, and Networks of Point Processes Neil Spencer, 2020

Dissecting neural variability using population recordings, network models, and neurofeedback (Unavailable) Ryan Williamson, 2020

Predicting Health and Safety: Essays in Machine Learning for Decision Support in the Public Sector Dylan Fitzpatrick, 2020

Towards a Unified Framework for Learning and Reasoning Han Zhao, 2020

Learning DAGs with Continuous Optimization Xun Zheng, 2020

Machine Learning and Multiagent Preferences Ritesh Noothigattu, 2020

Learning and Decision Making from Diverse Forms of Information Yichong Xu, 2020

Towards Data-Efficient Machine Learning Qizhe Xie, 2020

Change modeling for understanding our world and the counterfactual one(s) William Herlands, 2020

Machine Learning in High-Stakes Settings: Risks and Opportunities Maria De-Arteaga, 2020

Data Decomposition for Constrained Visual Learning Calvin Murdock, 2020

Structured Sparse Regression Methods for Learning from High-Dimensional Genomic Data Micol Marchetti-Bowick, 2020

Towards Efficient Automated Machine Learning Liam Li, 2020

Learning Collections of Functions Emmanouil Antonios Platanios, 2020

Provable, structured, and efficient methods for robustness of deep networks to adversarial examples Eric Wong, 2020

Reconstructing and Mining Signals: Algorithms and Applications Hyun Ah Song, 2020

Probabilistic Single Cell Lineage Tracing Chieh Lin, 2020

Graphical network modeling of phase coupling in brain activity (unavailable) Josue Orellana, 2019

Strategic Exploration in Reinforcement Learning - New Algorithms and Learning Guarantees Christoph Dann, 2019

Learning Generative Models using Transformations Chun-Liang Li, 2019

Estimating Probability Distributions and their Properties Shashank Singh, 2019

Post-Inference Methods for Scalable Probabilistic Modeling and Sequential Decision Making Willie Neiswanger, 2019

Accelerating Text-as-Data Research in Computational Social Science Dallas Card, 2019

Multi-view Relationships for Analytics and Inference Eric Lei, 2019

Information flow in networks based on nonstationary multivariate neural recordings Natalie Klein, 2019

Competitive Analysis for Machine Learning & Data Science Michael Spece, 2019

The When, Where and Why of Human Memory Retrieval Qiong Zhang, 2019

Towards Effective and Efficient Learning at Scale Adams Wei Yu, 2019

Towards Literate Artificial Intelligence Mrinmaya Sachan, 2019

Learning Gene Networks Underlying Clinical Phenotypes Under SNP Perturbations From Genome-Wide Data Calvin McCarter, 2019

Unified Models for Dynamical Systems Carlton Downey, 2019

Anytime Prediction and Learning for the Balance between Computation and Accuracy Hanzhang Hu, 2019

Statistical and Computational Properties of Some "User-Friendly" Methods for High-Dimensional Estimation Alnur Ali, 2019

Nonparametric Methods with Total Variation Type Regularization Veeranjaneyulu Sadhanala, 2019

New Advances in Sparse Learning, Deep Networks, and Adversarial Learning: Theory and Applications Hongyang Zhang, 2019

Gradient Descent for Non-convex Problems in Modern Machine Learning Simon Shaolei Du, 2019

Selective Data Acquisition in Learning and Decision Making Problems Yining Wang, 2019

Anomaly Detection in Graphs and Time Series: Algorithms and Applications Bryan Hooi, 2019

Neural dynamics and interactions in the human ventral visual pathway Yuanning Li, 2018

Tuning Hyperparameters without Grad Students: Scaling up Bandit Optimisation Kirthevasan Kandasamy, 2018

Teaching Machines to Classify from Natural Language Interactions Shashank Srivastava, 2018

Statistical Inference for Geometric Data Jisu Kim, 2018

Representation Learning @ Scale Manzil Zaheer, 2018

Diversity-promoting and Large-scale Machine Learning for Healthcare Pengtao Xie, 2018

Distribution and Histogram (DIsH) Learning Junier Oliva, 2018

Stress Detection for Keystroke Dynamics Shing-Hon Lau, 2018

Sublinear-Time Learning and Inference for High-Dimensional Models Enxu Yan, 2018

Neural population activity in the visual cortex: Statistical methods and application Benjamin Cowley, 2018

Efficient Methods for Prediction and Control in Partially Observable Environments Ahmed Hefny, 2018

Learning with Staleness Wei Dai, 2018

Statistical Approach for Functionally Validating Transcription Factor Bindings Using Population SNP and Gene Expression Data Jing Xiang, 2017

New Paradigms and Optimality Guarantees in Statistical Learning and Estimation Yu-Xiang Wang, 2017

Dynamic Question Ordering: Obtaining Useful Information While Reducing User Burden Kirstin Early, 2017

New Optimization Methods for Modern Machine Learning Sashank J. Reddi, 2017

Active Search with Complex Actions and Rewards Yifei Ma, 2017

Why Machine Learning Works George D. Montañez, 2017

Source-Space Analyses in MEG/EEG and Applications to Explore Spatio-temporal Neural Dynamics in Human Vision Ying Yang, 2017

Computational Tools for Identification and Analysis of Neuronal Population Activity Pengcheng Zhou, 2016

Expressive Collaborative Music Performance via Machine Learning Gus (Guangyu) Xia, 2016

Supervision Beyond Manual Annotations for Learning Visual Representations Carl Doersch, 2016

Exploring Weakly Labeled Data Across the Noise-Bias Spectrum Robert W. H. Fisher, 2016

Optimizing Optimization: Scalable Convex Programming with Proximal Operators Matt Wytock, 2016

Combining Neural Population Recordings: Theory and Application William Bishop, 2015

Discovering Compact and Informative Structures through Data Partitioning Madalina Fiterau-Brostean, 2015

Machine Learning in Space and Time Seth R. Flaxman, 2015

The Time and Location of Natural Reading Processes in the Brain Leila Wehbe, 2015

Shape-Constrained Estimation in High Dimensions Min Xu, 2015

Spectral Probabilistic Modeling and Applications to Natural Language Processing Ankur Parikh, 2015

Computational and Statistical Advances in Testing and Learning Aaditya Kumar Ramdas, 2015

Corpora and Cognition: The Semantic Composition of Adjectives and Nouns in the Human Brain Alona Fyshe, 2015

Learning Statistical Features of Scene Images Wooyoung Lee, 2014

Towards Scalable Analysis of Images and Videos Bin Zhao, 2014

Statistical Text Analysis for Social Science Brendan T. O'Connor, 2014

Modeling Large Social Networks in Context Qirong Ho, 2014

Semi-Cooperative Learning in Smart Grid Agents Prashant P. Reddy, 2013

On Learning from Collective Data Liang Xiong, 2013

Exploiting Non-sequence Data in Dynamic Model Learning Tzu-Kuo Huang, 2013

Mathematical Theories of Interaction with Oracles Liu Yang, 2013

Short-Sighted Probabilistic Planning Felipe W. Trevizan, 2013

Statistical Models and Algorithms for Studying Hand and Finger Kinematics and their Neural Mechanisms Lucia Castellanos, 2013

Approximation Algorithms and New Models for Clustering and Learning Pranjal Awasthi, 2013

Uncovering Structure in High-Dimensions: Networks and Multi-task Learning Problems Mladen Kolar, 2013

Learning with Sparsity: Structures, Optimization and Applications Xi Chen, 2013

GraphLab: A Distributed Abstraction for Large Scale Machine Learning Yucheng Low, 2013

Graph Structured Normal Means Inference James Sharpnack, 2013 (Joint Statistics & ML PhD)

Probabilistic Models for Collecting, Analyzing, and Modeling Expression Data Hai-Son Phuoc Le, 2013

Learning Large-Scale Conditional Random Fields Joseph K. Bradley, 2013

New Statistical Applications for Differential Privacy Rob Hall, 2013 (Joint Statistics & ML PhD)

Parallel and Distributed Systems for Probabilistic Reasoning Joseph Gonzalez, 2012

Spectral Approaches to Learning Predictive Representations Byron Boots, 2012

Attribute Learning using Joint Human and Machine Computation Edith L. M. Law, 2012

Statistical Methods for Studying Genetic Variation in Populations Suyash Shringarpure, 2012

Data Mining Meets HCI: Making Sense of Large Graphs Duen Horng (Polo) Chau, 2012

Learning with Limited Supervision by Input and Output Coding Yi Zhang, 2012

Target Sequence Clustering Benjamin Shih, 2011

Nonparametric Learning in High Dimensions Han Liu, 2010 (Joint Statistics & ML PhD)

Structural Analysis of Large Networks: Observations and Applications Mary McGlohon, 2010

Modeling Purposeful Adaptive Behavior with the Principle of Maximum Causal Entropy Brian D. Ziebart, 2010

Tractable Algorithms for Proximity Search on Large Graphs Purnamrita Sarkar, 2010

Rare Category Analysis Jingrui He, 2010

Coupled Semi-Supervised Learning Andrew Carlson, 2010

Fast Algorithms for Querying and Mining Large Graphs Hanghang Tong, 2009

Efficient Matrix Models for Relational Learning Ajit Paul Singh, 2009

Exploiting Domain and Task Regularities for Robust Named Entity Recognition Andrew O. Arnold, 2009

Theoretical Foundations of Active Learning Steve Hanneke, 2009

Generalized Learning Factors Analysis: Improving Cognitive Models with Machine Learning Hao Cen, 2009

Detecting Patterns of Anomalies Kaustav Das, 2009

Dynamics of Large Networks Jurij Leskovec, 2008

Computational Methods for Analyzing and Modeling Gene Regulation Dynamics Jason Ernst, 2008

Stacked Graphical Learning Zhenzhen Kou, 2007

Actively Learning Specific Function Properties with Applications to Statistical Inference Brent Bryan, 2007

Approximate Inference, Structure Learning and Feature Estimation in Markov Random Fields Pradeep Ravikumar, 2007

Scalable Graphical Models for Social Networks Anna Goldenberg, 2007

Measure Concentration of Strongly Mixing Processes with Applications Leonid Kontorovich, 2007

Tools for Graph Mining Deepayan Chakrabarti, 2005

Automatic Discovery of Latent Variable Models Ricardo Silva, 2005

17 Compelling Machine Learning Ph.D. Dissertations

Machine Learning, Modeling, Research. Posted by Daniel Gutierrez, ODSC, August 12, 2021

Working in data science, I’m always seeking ways to keep current in the field, and there are a number of important resources available for this purpose: new book titles, blog articles, conference sessions, Meetups, webinars/podcasts, not to mention the gems floating around in social media. But to dig even deeper, I routinely look at what’s coming out of the world’s research labs. One great way to keep a pulse on what the research community is working on is to monitor the flow of new machine learning Ph.D. dissertations. Admittedly, many such theses are laser-focused and narrow, but in my experience reading these documents, you can learn an awful lot about new ways to solve difficult problems over a vast range of problem domains.

In this article, I present a number of hand-picked machine learning dissertations that I found compelling in terms of my own areas of interest and aligned with problems that I’m working on. I hope you’ll find a number of them that match your own interests. Each dissertation may be challenging to consume but the process will result in hours of satisfying summer reading. Enjoy!

Please check out my previous data science dissertation round-up article.

1. Fitting Convex Sets to Data: Algorithms and Applications

This machine learning dissertation concerns the geometric problem of finding a convex set that best fits a given data set. The overarching question serves as an abstraction for data-analytical tasks arising in a range of scientific and engineering applications with a focus on two specific instances: (i) a key challenge that arises in solving inverse problems is ill-posedness due to a lack of measurements. A prominent family of methods for addressing such issues is based on augmenting optimization-based approaches with a convex penalty function so as to induce a desired structure in the solution. These functions are typically chosen using prior knowledge about the data. The thesis also studies the problem of learning convex penalty functions directly from data for settings in which we lack the domain expertise to choose a penalty function. The solution relies on suitably transforming the problem of learning a penalty function into a fitting task; and (ii) the problem of fitting tractably-described convex sets given the optimal value of linear functionals evaluated in different directions.

2. Structured Tensors and the Geometry of Data

This machine learning dissertation analyzes data to build a quantitative understanding of the world. Linear algebra is the foundation of algorithms, dating back one hundred years, for extracting structure from data. Modern technologies provide an abundance of multi-dimensional data, in which multiple variables or factors can be compared simultaneously. To organize and analyze such data sets we can use a tensor, the higher-order analogue of a matrix. However, many theoretical and practical challenges arise in extending linear algebra to the setting of tensors. The first part of the thesis studies and develops the algebraic theory of tensors. The second part of the thesis presents three algorithms for tensor data. The algorithms use algebraic and geometric structure to give guarantees of optimality.

3. Statistical approaches for spatial prediction and anomaly detection

This machine learning dissertation is primarily a description of three projects. It starts with a method for spatial prediction and parameter estimation for irregularly spaced, non-Gaussian data. It is shown that by judiciously replacing the likelihood with an empirical likelihood in the Bayesian hierarchical model, approximate posterior distributions for the mean and covariance parameters can be obtained. Due to the complex nature of the hierarchical model, standard Markov chain Monte Carlo methods cannot be applied to sample from the posterior distributions. To overcome this issue, a generalized sequential Monte Carlo algorithm is used. Finally, this method is applied to iron concentrations in California. The second project focuses on anomaly detection for functional data, specifically functional data where the observed functions may lie over different domains. Each function is approximated as a low-rank sum of spline basis functions, and the coefficients are compared for each basis across functions. The idea is that if two functions are similar, their respective coefficients should not be significantly different. This project concludes with an application of the proposed method to detect anomalous behavior of users of a supercomputer at NREL. The final project is an extension of the second project to two-dimensional data. This project aims to detect location and temporal anomalies from ground motion data from a fiber-optic cable using distributed acoustic sensing (DAS).

4. Sampling for Streaming Data

Advances in data acquisition technology pose challenges in analyzing large volumes of streaming data. Sampling is a natural yet powerful tool for analyzing such data sets because of its good estimation accuracy and low computational cost. Unfortunately, sampling methods and their statistical properties for streaming data, especially streaming time series data, are not well studied in the literature. Meanwhile, estimating the dependence structure of multidimensional streaming time-series data in real time is challenging. With large volumes of streaming data, the problem becomes more difficult when the multidimensional data are collected asynchronously across distributed nodes, which motivates sampling representative data points from streams. This machine learning dissertation proposes a series of leverage score-based sampling methods for streaming time series data. Simulation studies and real data analyses are conducted to validate the proposed methods. A theoretical analysis of the asymptotic behavior of the least-squares estimator based on the subsamples is also developed.
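To make the idea of leverage score-based sampling concrete, here is a minimal sketch for a static, simple linear regression. It is not the dissertation's streaming method: the function names, the synthetic data, and the choice of inverse-probability weights are all assumptions made for illustration. Points are drawn with probability proportional to their leverage, and the least-squares fit on the subsample reweights each draw by the inverse of that probability.

```python
import random


def leverage_scores(xs):
    """Leverage of each point in a simple regression with an intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    return [1.0 / n + (x - mean_x) ** 2 / sxx for x in xs]


def leverage_subsample(xs, ys, size, seed=0):
    """Draw `size` points with probability proportional to leverage.

    Returns (x, y, weight) triples, where weight = 1 / sampling probability.
    """
    rng = random.Random(seed)
    scores = leverage_scores(xs)
    total = sum(scores)  # equals 2 here: one intercept plus one slope
    probs = [s / total for s in scores]
    picks = rng.choices(range(len(xs)), weights=probs, k=size)
    return [(xs[i], ys[i], 1.0 / probs[i]) for i in picks]


def weighted_least_squares(sample):
    """Weighted least-squares fit of y = intercept + slope * x."""
    sw = sum(w for _, _, w in sample)
    mx = sum(w * x for x, _, w in sample) / sw
    my = sum(w * y for _, y, w in sample) / sw
    sxy = sum(w * (x - mx) * (y - my) for x, y, w in sample)
    sxx = sum(w * (x - mx) ** 2 for x, _, w in sample)
    slope = sxy / sxx
    return my - slope * mx, slope


# Hypothetical noiseless data on the line y = 1 + 2x, so the fit is exact.
xs = [i / 10 for i in range(200)]
ys = [1.0 + 2.0 * x for x in xs]
intercept, slope = weighted_least_squares(leverage_subsample(xs, ys, size=20))
```

With noisy data the subsample estimate would vary from draw to draw, which is the behavior the dissertation's asymptotic analysis addresses.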

5. Statistical Machine Learning Methods for Complex, Heterogeneous Data

This machine learning dissertation develops statistical machine learning methodology for three distinct tasks. Each method blends classical statistical approaches with machine learning methods to provide principled solutions to problems with complex, heterogeneous data sets. The first framework proposes two methods for high-dimensional shape-constrained regression and classification. These methods reshape pre-trained prediction rules to satisfy shape constraints like monotonicity and convexity. The second method provides a nonparametric approach to the econometric analysis of discrete choice. This method provides a scalable algorithm for estimating utility functions with random forests, and combines this with random effects to properly model preference heterogeneity. The final method draws inspiration from early work in statistical machine translation to construct embeddings for variable-length objects like mathematical equations.
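As a one-dimensional illustration of what "reshaping" predictions to satisfy a monotonicity constraint can mean, the sketch below uses the classical pool-adjacent-violators algorithm for isotonic regression. This is a swapped-in textbook technique, not the dissertation's high-dimensional method, and the input values are hypothetical predictions already sorted by the feature of interest.

```python
def pava(values):
    """Least-squares projection of a sequence onto nondecreasing sequences."""
    blocks = []  # each block is [sum of values, number of values]
    for v in values:
        blocks.append([v, 1])
        # Merge backwards while the previous block's mean exceeds the last one's.
        while (len(blocks) > 1
               and blocks[-2][0] / blocks[-2][1] > blocks[-1][0] / blocks[-1][1]):
            s, c = blocks.pop()
            blocks[-1][0] += s
            blocks[-1][1] += c
    fitted = []
    for s, c in blocks:
        fitted.extend([s / c] * c)  # every point in a block gets the block mean
    return fitted


# Hypothetical predictions that violate monotonicity at positions 1 and 2.
reshaped = pava([1.0, 3.0, 2.0, 4.0])
```

The violating pair is replaced by its average, which is the closest nondecreasing sequence in the least-squares sense.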

6. Topics in Multivariate Statistics with Dependent Data

This machine learning dissertation comprises four chapters. The first is an introduction to the topics of the dissertation and the remaining chapters contain the main results. Chapter 2 gives new results for consistency of maximum likelihood estimators with a focus on multivariate mixed models. The presented theory builds on the idea of using subsets of the full data to establish consistency of estimators based on the full data. The theory is applied to two multivariate mixed models for which it was unknown whether maximum likelihood estimators are consistent. In Chapter 3 an algorithm is proposed for maximum likelihood estimation of a covariance matrix when the corresponding correlation matrix can be written as the Kronecker product of two lower-dimensional correlation matrices. The proposed method is fully likelihood-based. Some desirable properties of separable correlation in comparison to separable covariance are also discussed. Chapter 4 is concerned with Bayesian vector auto-regressions (VARs). A collapsed Gibbs sampler is proposed for Bayesian VARs with predictors and the convergence properties of the algorithm are studied.
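The separable correlation structure in Chapter 3 can be illustrated with a small sketch. The matrices and standard deviations below are hypothetical, and the code only builds the structure; it does not implement the dissertation's estimation algorithm. The correlation matrix is a Kronecker product of two smaller correlation matrices, while the variances remain free parameters.

```python
def kron(a, b):
    """Kronecker product of two matrices given as lists of rows."""
    return [
        [a_ij * b_kl for a_ij in row_a for b_kl in row_b]
        for row_a in a
        for row_b in b
    ]


# Hypothetical 2x2 correlation matrices for the two "sides" of the data.
row_corr = [[1.0, 0.5], [0.5, 1.0]]
col_corr = [[1.0, 0.2], [0.2, 1.0]]

# Separable 4x4 correlation matrix.
corr = kron(row_corr, col_corr)

# Hypothetical standard deviations, one per variable, chosen freely.
sds = [1.0, 2.0, 0.5, 3.0]

# Covariance = D * corr * D, with D the diagonal matrix of standard deviations.
cov = [[sds[i] * corr[i][j] * sds[j] for j in range(4)] for i in range(4)]
```

Under a separable covariance the variances themselves would have to follow a Kronecker pattern; here only the correlations do.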

7. Model Selection and Estimation for High-dimensional Data Analysis

In the era of big data, uncovering useful information and hidden patterns in the data is prevalent in different fields. However, it is challenging to effectively select input variables in data and estimate their effects. The goal of this machine learning dissertation is to develop reproducible statistical approaches that provide mechanistic explanations of the phenomena observed in big data analysis. The research contains two parts: variable selection and model estimation. The first part investigates how to measure and interpret the usefulness of an input variable using an approach called “variable importance learning” and builds tools (methodology and software) that can be widely applied. Two variable importance measures are proposed, a parametric measure SOIL and a non-parametric measure CVIL, using the ideas of model combining and cross-validation, respectively. The SOIL method is theoretically shown to have the inclusion/exclusion property: when the model weights are properly concentrated around the true model, the SOIL importance can well separate the variables in the true model from the rest. The CVIL method possesses desirable theoretical properties and enhances the interpretability of many mysterious but effective machine learning methods. The second part focuses on how to estimate the effect of a useful input variable in the case where the interaction of two input variables exists. The dissertation investigates the minimax rate of convergence for regression estimation in high-dimensional sparse linear models with two-way interactions, and constructs an adaptive estimator that achieves the minimax rate of convergence regardless of the true heredity condition and the sparsity indices.
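One way to picture an importance measure built on model combining is sketched below. It assumes that a variable's importance is the total (normalized) weight of the candidate models that include it; the function name, candidate models, and weights are hypothetical, and how the weights are obtained is left out entirely.

```python
def combined_importance(models, weights, n_vars):
    """Importance of each variable as the total weight of models containing it.

    models  : list of sets of variable indices (hypothetical candidate models)
    weights : nonnegative model weights, normalized here to sum to one
    """
    total = sum(weights)
    importance = [0.0] * n_vars
    for variables, weight in zip(models, weights):
        for j in variables:
            importance[j] += weight / total
    return importance


# Three hypothetical candidate models over three variables.
models = [{0, 1}, {0}, {0, 2}]
weights = [0.6, 0.3, 0.1]
importance = combined_importance(models, weights, n_vars=3)
```

Variable 0 appears in every candidate model and so receives importance close to 1, while variable 2 appears only in the lowest-weight model. This mirrors the inclusion/exclusion intuition: when the weights sit near the true model, its variables score high and the rest score low.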

8. High-Dimensional Structured Regression Using Convex Optimization

While the term “Big Data” can have multiple meanings, this dissertation considers the type of data in which the number of features can be much greater than the number of observations (also known as high-dimensional data). High-dimensional data is abundant in contemporary scientific research due to the rapid advances in new data-measurement technologies and computing power. Recent advances in statistics have witnessed great development in the field of high-dimensional data analysis. This machine learning dissertation proposes three methods that study three different components of a general framework of the high-dimensional structured regression problem. A general theme of the proposed methods is that they cast a certain structured regression as a convex optimization problem. In so doing, the theoretical properties of each method can be well studied, and efficient computation is facilitated. Each method is accompanied by a thorough theoretical analysis of its performance, and also by an R package containing its practical implementation. It is shown that the proposed methods perform favorably (both theoretically and practically) compared with pre-existing methods.

9. Asymptotics and Interpretability of Decision Trees and Decision Tree Ensembles

Decision trees and decision tree ensembles are widely used nonparametric statistical models. A decision tree is a binary tree that recursively segments the covariate space along the coordinate directions to create hyperrectangles as basic prediction units, fitting a constant value within each of them. A decision tree ensemble combines multiple decision trees, either in parallel or in sequence, in order to increase model flexibility and accuracy, as well as to reduce prediction variance. Despite the fact that tree models have been extensively used in practice, results on their asymptotic behaviors are scarce. This machine learning dissertation presents analyses of tree asymptotics from the perspectives of tree terminal nodes, tree ensembles, and models incorporating tree ensembles, respectively. The study introduces a few new tree-related learning frameworks which provide provable statistical guarantees and interpretations. A study of the Gini index used in the greedy tree building algorithm reveals its limiting distribution, leading to the development of a test of better splitting that helps to measure the uncertain optimality of a decision tree split. This test is combined with the concept of decision tree distillation, which implements a decision tree to mimic the behavior of a black box model, to generate stable interpretations by guaranteeing a unique distillation tree structure as long as there are sufficiently many random sample points. Mild modification and regularization are also applied to standard tree boosting to create a new boosting framework named Boulevard. It integrates two new mechanisms: honest trees, which isolate the tree terminal values from the tree structure, and adaptive shrinkage, which scales the boosting history to create an equally weighted ensemble. This theoretical development provides the prerequisite for the practice of statistical inference with boosted trees. Lastly, the thesis investigates the feasibility of incorporating existing semi-parametric models with tree boosting.
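For readers who have not seen it written out, the Gini index used in greedy tree building can be sketched in a few lines. This is the standard textbook impurity criterion, not the dissertation's test of better splitting, and the data and thresholds are hypothetical.

```python
from collections import Counter


def gini_impurity(labels):
    """Gini impurity 1 - sum_k p_k^2 of a list of class labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    counts = Counter(labels)
    return 1.0 - sum((c / n) ** 2 for c in counts.values())


def gini_gain(xs, ys, threshold):
    """Decrease in impurity from splitting one feature at x <= threshold."""
    left = [y for x, y in zip(xs, ys) if x <= threshold]
    right = [y for x, y in zip(xs, ys) if x > threshold]
    n = len(ys)
    weighted = (len(left) / n) * gini_impurity(left) \
        + (len(right) / n) * gini_impurity(right)
    return gini_impurity(ys) - weighted


# Hypothetical data: the classes separate perfectly at x = 3.
xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
ys = [0, 0, 0, 1, 1, 1]
best_gain = gini_gain(xs, ys, 3.0)   # both children are pure
worse_gain = gini_gain(xs, ys, 1.0)  # right child is still mixed
```

A greedy tree builder picks the threshold with the largest gain. Because that gain is computed from a finite sample, it is itself random, which is why a limiting distribution for it is useful when comparing candidate splits.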

10. Bayesian Models for Imputing Missing Data and Editing Erroneous Responses in Surveys

This dissertation develops Bayesian methods for handling unit nonresponse, item nonresponse, and erroneous responses in large-scale surveys and censuses containing categorical data. The focus is on applications of nested household data where individuals are nested within households and certain combinations of the variables are not allowed, such as the U.S. Decennial Census, as well as surveys subject to both unit and item nonresponse, such as the Current Population Survey.

11. Localized Variable Selection with Random Forest

Due to recent advances in computer technology, the cost of collecting and storing data has dropped drastically. This makes it feasible to collect large amounts of information for each data point. This increasing trend in feature dimensionality justifies the need for research on variable selection. Random forest (RF) has demonstrated the ability to select important variables and model complex data. However, simulations confirm that in some cases it fails to detect less influential features in the presence of variables with large impacts. This dissertation proposes two algorithms for localized variable selection: clustering-based feature selection (CBFS) and locally adjusted feature importance (LAFI). Both methods aim to find regions where the effects of weaker features can be isolated and measured. CBFS combines RF variable selection with a two-stage clustering method to detect variables whose effect can be detected only in certain regions. LAFI, on the other hand, uses a binary tree approach to split data into bins based on response variable rankings, and implements RF to find important variables in each bin. Larger LAFI is assigned to variables that get selected in more bins. Simulations and real data sets are used to evaluate these variable selection methods.

12. Functional Principal Component Analysis and Sparse Functional Regression

The focus of this dissertation is on functional data which are sparsely and irregularly observed. Such data require special consideration, as classical functional data methods and theory were developed for densely observed data. As is the case in much of functional data analysis, the functional principal components (FPCs) play a key role in current sparse functional data methods via the Karhunen-Loève expansion. Thus, after a review of relevant background material, this dissertation is divided roughly into two parts, the first focusing specifically on theoretical properties of FPCs, and the second on regression for sparsely observed functional data.
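For reference, the Karhunen-Loève expansion mentioned above is usually written as follows. The notation is an assumption, not taken from the dissertation: X is the random function, μ its mean function, φ_k the eigenfunctions of its covariance operator (the FPCs), and λ_k the corresponding eigenvalues.

```latex
X(t) = \mu(t) + \sum_{k=1}^{\infty} \xi_k \, \phi_k(t), \qquad
\xi_k = \int \bigl( X(t) - \mu(t) \bigr) \, \phi_k(t) \, dt, \qquad
\operatorname{Var}(\xi_k) = \lambda_k
```

The scores ξ_k are uncorrelated with mean zero. With sparse, irregular observations the integral defining ξ_k cannot be approximated directly from a handful of points per curve, which is one reason such data need special treatment.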

13. Essays In Causal Inference: Addressing Bias In Observational And Randomized Studies Through Analysis And Design

In observational studies, identifying assumptions may fail, often quietly and without notice, leading to biased causal estimates. Although less of a concern in randomized trials where treatment is assigned at random, bias may still enter the equation through other means. This dissertation has three parts, each developing new methods to address a particular pattern or source of bias in the setting being studied. The first part extends the conventional sensitivity analysis methods for observational studies to better address patterns of heterogeneous confounding in matched-pair designs. The second part develops a modified difference-in-difference design for comparative interrupted time-series studies. The method permits partial identification of causal effects when the parallel trends assumption is violated by an interaction between group and history. The method is applied to a study of the repeal of Missouri’s permit-to-purchase handgun law and its effect on firearm homicide rates. The final part presents a study design to identify vaccine efficacy in randomized control trials when there is no gold standard case definition. The approach augments a two-arm randomized trial with natural variation of a genetic trait to produce a factorial experiment.
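As background for the second part, the conventional difference-in-differences estimate that the modified design builds on is simple arithmetic. The sketch below shows only that standard estimator, not the dissertation's partial identification method, and the outcome values are made up for illustration.

```python
def difference_in_differences(treated_pre, treated_post,
                              control_pre, control_post):
    """Standard DiD: change in the treated group minus change in the control group."""
    return (treated_post - treated_pre) - (control_post - control_pre)


# Hypothetical average outcomes before and after a policy change.
effect = difference_in_differences(
    treated_pre=4.0, treated_post=6.0,
    control_pre=5.0, control_post=5.5,
)
```

This estimate has a causal reading only under the parallel trends assumption, that is, the treated group would have changed by the same amount as the control group had the policy not changed. The dissertation's contribution concerns what can still be learned when that assumption fails.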

14. Bayesian Shrinkage: Computation, Methods, and Theory

Sparsity is a standard structural assumption made when modeling high-dimensional statistical parameters. This assumption essentially entails a lower-dimensional embedding of the high-dimensional parameter, thus enabling sound statistical inference. Apart from this obvious statistical motivation, in many modern applications of statistics, such as genomics and neuroscience, the parameters of interest are indeed of this nature. For almost two decades, spike-and-slab priors have been the Bayesian gold standard for modeling sparsity. However, due to their computational bottlenecks, shrinkage priors have emerged as a powerful alternative. Nearly every prior in this family can be represented as a scale mixture of Gaussian distributions, and posterior Markov chain Monte Carlo (MCMC) updates of the related parameters are then relatively easy to design. Although shrinkage priors were touted as computationally scalable in high dimensions, they come with their own computational challenges when the number of parameters is in the thousands or more. Standard MCMC algorithms implementing shrinkage priors generally scale cubically in the dimension of the parameter, severely limiting real-life application of these priors.

The first chapter of this dissertation addresses this computational issue and proposes an alternative exact posterior sampling algorithm whose complexity scales linearly in the ambient dimension. The algorithm developed in the first chapter is specifically designed for regression problems. The second chapter develops a Bayesian method based on shrinkage priors for high-dimensional multiple response regression. Chapter three chooses a specific member of the shrinkage family known as the horseshoe prior and studies its convergence rates in several high-dimensional models.
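As a minimal sketch of the scale-mixture representation mentioned above (not the dissertation's sampling algorithm), the horseshoe prior can be drawn as a Gaussian whose standard deviation is the product of a global scale and a half-Cauchy local scale. The function name `sample_horseshoe` and the fixed global scale `tau` are illustrative assumptions.

```python
import numpy as np

def sample_horseshoe(p, tau=1.0, seed=None):
    """Draw p coefficients from a horseshoe prior via its Gaussian scale mixture."""
    rng = np.random.default_rng(seed)
    # Local scales: lambda_j ~ half-Cauchy(0, 1), i.e. |standard Cauchy|.
    lam = np.abs(rng.standard_cauchy(p))
    # Conditionally Gaussian: beta_j | lambda_j, tau ~ N(0, tau^2 * lambda_j^2).
    beta = rng.normal(loc=0.0, scale=tau * lam)
    return beta, lam

beta, lam = sample_horseshoe(p=10_000, tau=0.1, seed=0)
# Most draws sit near zero while a few are very large (heavy tails).
print(np.median(np.abs(beta)), np.max(np.abs(beta)))
```

The gap between the median and the maximum magnitude illustrates why such priors suit sparse signals: strong shrinkage for most coefficients, with heavy tails that leave large ones nearly untouched.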

15. Topics in Measurement Error Analysis and High-Dimensional Binary Classification

This dissertation proposes novel methods to tackle two problems, the misspecified model with measurement error and high-dimensional binary classification, both of which have a crucial impact on applications in public health. The first problem arises in epidemiological practice. Epidemiologists often categorize a continuous risk predictor since categorization is thought to be more robust and interpretable, even when the true risk model is not a categorical one. Thus, their goal is to fit the categorical model and interpret the categorical parameters. The second project considers the problem of high-dimensional classification between two groups with unequal covariance matrices. Rather than estimating the full quadratic discriminant rule, it proposes performing simultaneous variable selection and linear dimension reduction on the original data, followed by quadratic discriminant analysis on the reduced space. To support the proposed methodology, two R packages, CCP and DAP, were developed, along with two vignettes that serve as long-format illustrations of their usage.

16. Model-Based Penalized Regression

This dissertation contains three chapters that consider penalized regression from a model-based perspective, interpreting penalties as assumed prior distributions for unknown regression coefficients. The first chapter shows that treating a lasso penalty as a prior can facilitate the choice of tuning parameters when standard methods for choosing the tuning parameters are not available, and when it is necessary to choose multiple tuning parameters simultaneously. The second chapter considers a possible drawback of treating penalties as models, specifically possible misspecification. The third chapter introduces structured shrinkage priors for dependent regression coefficients which generalize popular independent shrinkage priors. These can be useful in various applied settings where many regression coefficients are not only expected to be nearly or exactly equal to zero, but also structured.
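The correspondence between penalties and priors can be stated in one line. As a sketch, assume a Gaussian linear model with noise variance $\sigma^2$ and independent Laplace priors with rate $\lambda$ on each coefficient (these symbols are illustrative, not the dissertation's notation). The posterior mode is then a lasso estimate:

```latex
\hat{\beta}_{\mathrm{MAP}}
  = \arg\max_{\beta}\; \Bigl[ -\tfrac{1}{2\sigma^{2}} \lVert y - X\beta \rVert_2^{2} - \lambda \lVert \beta \rVert_1 \Bigr]
  = \arg\min_{\beta}\; \tfrac{1}{2} \lVert y - X\beta \rVert_2^{2} + \sigma^{2}\lambda \lVert \beta \rVert_1 .
```

Under this reading, the lasso tuning parameter is the product $\sigma^{2}\lambda$ of model quantities, which is what makes it possible to choose it by model-based reasoning.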

17. Topics on Least Squares Estimation

This dissertation revisits and makes progress on some old but challenging problems concerning least squares estimation, the work-horse of supervised machine learning. Two major problems are addressed: (i) least squares estimation with heavy-tailed errors, and (ii) least squares estimation in non-Donsker classes. For (i), this problem is studied both from a worst-case perspective, and a more refined envelope perspective. For (ii), two case studies are performed in the context of (a) estimation involving sets and (b) estimation of multivariate isotonic functions. Understanding these particular aspects of least squares estimation problems requires several new tools in the empirical process theory, including a sharp multiplier inequality controlling the size of the multiplier empirical process, and matching upper and lower bounds for empirical processes indexed by non-Donsker classes.

How to Learn More about Machine Learning

At our upcoming event this November 16th-18th in San Francisco, ODSC West 2021 will feature a plethora of talks, workshops, and training sessions on machine learning and machine learning research. You can register now for 50% off all ticket types before the discount drops to 40% in a few weeks. Some highlighted sessions on machine learning include:

- Towards More Energy-Efficient Neural Networks? Use Your Brain!: Olaf de Leeuw | Data Scientist | Dataworkz

- Practical MLOps: Automation Journey: Evgenii Vinogradov, PhD | Head of DHW Development | YooMoney

- Applications of Modern Survival Modeling with Python: Brian Kent, PhD | Data Scientist | Founder The Crosstab Kite

- Using Change Detection Algorithms for Detecting Anomalous Behavior in Large Systems: Veena Mendiratta, PhD | Adjunct Faculty, Network Reliability and Analytics Researcher | Northwestern University

Sessions on MLOps:

- Tuning Hyperparameters with Reproducible Experiments: Milecia McGregor | Senior Software Engineer | Iterative

- MLOps… From Model to Production: Filipa Peleja, PhD | Lead Data Scientist | Levi Strauss & Co

- Operationalization of Models Developed and Deployed in Heterogeneous Platforms: Sourav Mazumder | Data Scientist, Thought Leader, AI & ML Operationalization Leader | IBM

- Develop and Deploy a Machine Learning Pipeline in 45 Minutes with Ploomber: Eduardo Blancas | Data Scientist | Fidelity Investments

Sessions on Deep Learning:

- GANs: Theory and Practice, Image Synthesis With GANs Using TensorFlow: Ajay Baranwal | Center Director | Center for Deep Learning in Electronic Manufacturing, Inc

- Machine Learning With Graphs: Going Beyond Tabular Data: Dr. Clair J. Sullivan | Data Science Advocate | Neo4j

- Deep Dive into Reinforcement Learning with PPO using TF-Agents & TensorFlow 2.0: Oliver Zeigermann | Software Developer | embarc Software Consulting GmbH

- Get Started with Time-Series Forecasting using the Google Cloud AI Platform: Karl Weinmeister | Developer Relations Engineering Manager | Google

Daniel Gutierrez, ODSC

Daniel D. Gutierrez is a practicing data scientist who’s been working with data long before the field came in vogue. As a technology journalist, he enjoys keeping a pulse on this fast-paced industry. Daniel is also an educator having taught data science, machine learning and R classes at the university level. He has authored four computer industry books on database and data science technology, including his most recent title, “Machine Learning and Data Science: An Introduction to Statistical Learning Methods with R.” Daniel holds a BS in Mathematics and Computer Science from UCLA.



- Data Analytics and Machine Learning Group

- TUM School of Computation, Information and Technology

- Technical University of Munich

Open Topics

We offer multiple Bachelor/Master theses, Guided Research projects and IDPs in the area of data mining/machine learning. A non-exhaustive list of open topics is listed below.

If you are interested in a thesis or a guided research project, please send your CV and transcript of records to Prof. Stephan Günnemann via email and we will arrange a meeting to talk about the potential topics.

Graph Neural Networks for Spatial Transcriptomics

Type: Master's Thesis

Prerequisites:

- Strong machine learning knowledge

- Proficiency with Python and deep learning frameworks (PyTorch, TensorFlow, JAX)

- Knowledge of graph neural networks (e.g., GCN, MPNN)

- Optional: Knowledge of bioinformatics and genomics

Description:

Spatial transcriptomics is a cutting-edge field at the intersection of genomics and spatial analysis, aiming to understand gene expression patterns within the context of tissue architecture. Our project focuses on leveraging graph neural networks (GNNs) to unlock the full potential of spatial transcriptomic data. Unlike traditional methods, GNNs can effectively capture the intricate spatial relationships between cells, enabling more accurate modeling and interpretation of gene expression dynamics across tissues. We seek motivated students to explore novel GNN architectures tailored for spatial transcriptomics, with a particular emphasis on addressing challenges such as spatial heterogeneity, cell-cell interactions, and spatially varying gene expression patterns.

Contact: Filippo Guerranti, Alessandro Palma

References:

- Cell clustering for spatial transcriptomics data with graph neural network

- Unsupervised spatially embedded deep representation of spatial transcriptomics

- SpaGCN: Integrating gene expression, spatial location and histology to identify spatial domains and spatially variable genes by graph convolutional network

- DeepST: identifying spatial domains in spatial transcriptomics by deep learning

- Deciphering spatial domains from spatially resolved transcriptomics with an adaptive graph attention auto-encoder

- GCNG: graph convolutional networks for inferring gene interaction from spatial transcriptomics data

Generative Models for Drug Discovery

Type: Master's Thesis / Guided Research

- Proficiency with Python and deep learning frameworks (PyTorch or TensorFlow)

- Knowledge of graph neural networks (e.g. GCN, MPNN)

- No formal education in chemistry, physics or biology needed!

Effectively designing molecular geometries is essential to advancing pharmaceutical innovations, a domain that has received great attention through the success of generative models. These models promise a more efficient exploration of the vast chemical space and the generation of novel compounds with specific properties by leveraging their learned representations, potentially leading to the discovery of molecules with unique properties that would otherwise go undiscovered. Our topics lie at the intersection of generative models, such as diffusion and flow matching models, and graph representation learning, e.g., graph neural networks. The focus of our projects can be model development with an emphasis on downstream tasks (e.g., diffusion guidance at inference time) and a better understanding of the limitations of existing models.

Contact: Johanna Sommer, Leon Hetzel

- Equivariant Diffusion for Molecule Generation in 3D

- Equivariant Flow Matching with Hybrid Probability Transport for 3D Molecule Generation

- Structure-based Drug Design with Equivariant Diffusion Models

Efficient Machine Learning: Pruning, Quantization, Distillation, and More - DAML x Pruna AI

Type: Master's Thesis / Guided Research / Hiwi

- Strong knowledge in machine learning

- Proficiency with Python and deep learning frameworks (TensorFlow or PyTorch)

The efficiency of machine learning algorithms is commonly evaluated by looking at target performance, speed, and memory footprint metrics. Reducing the costs associated with these metrics is of primary importance for real-world applications with limited resources (e.g., embedded systems, real-time predictions). In this project, you will work in collaboration with the DAML research group and the Pruna AI startup on investigating solutions to improve the efficiency of machine learning models by looking at multiple techniques like pruning, quantization, distillation, and more.
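To make two of these techniques concrete, here is a minimal NumPy sketch of unstructured magnitude pruning and symmetric int8 quantization applied to a single weight matrix. The function names and the 50% sparsity level are illustrative assumptions. This is a generic textbook formulation, not the project's or Pruna AI's method.

```python
import numpy as np

def magnitude_prune(weights, sparsity):
    """Zero out the given fraction of entries with the smallest magnitudes."""
    k = int(sparsity * weights.size)
    if k == 0:
        return weights.copy()
    # The k-th smallest absolute value serves as the pruning threshold.
    threshold = np.partition(np.abs(weights).ravel(), k - 1)[k - 1]
    return np.where(np.abs(weights) <= threshold, 0.0, weights)

def quantize_int8(weights):
    """Symmetric uniform quantization to int8; returns codes and the scale."""
    max_abs = float(np.max(np.abs(weights)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    codes = np.clip(np.round(weights / scale), -127, 127).astype(np.int8)
    return codes, scale

rng = np.random.default_rng(0)
w = rng.normal(size=(64, 64))
w_pruned = magnitude_prune(w, sparsity=0.5)
codes, scale = quantize_int8(w_pruned)
w_restored = codes.astype(np.float64) * scale
print("fraction of zeros:", np.mean(w_pruned == 0.0))
print("max quantization error:", np.max(np.abs(w_restored - w_pruned)))
```

The reconstruction error of the quantized weights is bounded by half the quantization step, which is the kind of accuracy-versus-footprint trade-off such a project would evaluate.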

Contact: Bertrand Charpentier

- The Efficiency Misnomer

- A Gradient Flow Framework for Analyzing Network Pruning

- Distilling the Knowledge in a Neural Network

- A Survey of Quantization Methods for Efficient Neural Network Inference

Deep Generative Models

Type: Master Thesis / Guided Research

- Strong machine learning and probability theory knowledge

- Knowledge of generative models and their basics (e.g., Normalizing Flows, Diffusion Models, VAE)

- Optional: Neural ODEs/SDEs, Optimal Transport, Measure Theory

With recent advances, such as Diffusion Models, Transformers, Normalizing Flows, Flow Matching, etc., the field of generative models has gained significant attention in the machine learning and artificial intelligence research community. However, many problems and questions remain open, and the application to complex data domains such as graphs, time series, point processes, and sets is often non-trivial. We are interested in supervising motivated students to explore and extend the capabilities of state-of-the-art generative models for various data domains.

Contact: Marcel Kollovieh, David Lüdke

- Flow Matching for Generative Modeling

- Auto-Encoding Variational Bayes

- Denoising Diffusion Probabilistic Models

- Structured Denoising Diffusion Models in Discrete State-Spaces

Active Learning for Multi Agent 3D Object Detection

Type: Master's Thesis

Industrial partner: BMW

Prerequisites:

- Strong knowledge in machine learning

- Knowledge in Object Detection

- Excellent programming skills

- Proficiency with Python and deep learning frameworks (TensorFlow or PyTorch)

Description:

In autonomous driving, state-of-the-art deep neural networks are used for perception tasks such as 3D object detection. To provide promising results, these networks often require a lot of complex annotation data for training. These annotations are often costly and redundant. Active learning is used to select the most informative samples for annotation and cover a dataset with as little annotated data as possible.

The objective is to explore active learning approaches for 3D object detection using combined uncertainty and diversity based methods.
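One simple way to combine uncertainty and diversity, shown here only as a hedged sketch, is to pre-select the most uncertain samples by predictive entropy and then spread the final picks out in feature space with greedy farthest-point selection. The function `select_for_annotation`, the `pool_factor` parameter, and the random stand-in data are assumptions for illustration. They are not taken from the referenced papers.

```python
import numpy as np

def select_for_annotation(probs, feats, budget, pool_factor=3):
    """Pick `budget` sample indices: uncertain first, then diverse in feature space."""
    if budget <= 0:
        return []
    # Uncertainty: predictive entropy of each sample's class probabilities.
    entropy = -np.sum(probs * np.log(probs + 1e-12), axis=1)
    # Keep the most uncertain candidates as a pre-selection pool.
    pool = np.argsort(-entropy)[: budget * pool_factor]
    # Diversity: greedy farthest-point selection inside the pool.
    chosen = [pool[0]]
    min_dist = np.linalg.norm(feats[pool] - feats[pool[0]], axis=1)
    while len(chosen) < min(budget, len(pool)):
        nxt = int(np.argmax(min_dist))
        chosen.append(pool[nxt])
        dist_to_new = np.linalg.norm(feats[pool] - feats[pool[nxt]], axis=1)
        min_dist = np.minimum(min_dist, dist_to_new)
    return [int(i) for i in chosen]

rng = np.random.default_rng(0)
probs = rng.dirichlet(np.ones(4), size=200)   # stand-in for detector class scores
feats = rng.normal(size=(200, 16))            # stand-in for sample embeddings
picked = select_for_annotation(probs, feats, budget=10)
print(picked)
```

In a real 3D detection setting, the scores and embeddings would come from the detector itself, and the uncertainty measure would typically account for box regression as well as classification.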

Contact: Sebastian Schmidt

References:

- Exploring Diversity-based Active Learning for 3D Object Detection in Autonomous Driving

- Efficient Uncertainty Estimation for Semantic Segmentation in Videos

- KECOR: Kernel Coding Rate Maximization for Active 3D Object Detection

- Towards Open World Active Learning for 3D Object Detection

Graph Neural Networks

Type: Master's thesis / Bachelor's thesis / guided research

- Knowledge of graph/network theory

Graph neural networks (GNNs) have recently achieved great successes in a wide variety of applications, such as chemistry, reinforcement learning, knowledge graphs, traffic networks, or computer vision. These models leverage graph data by updating node representations based on messages passed between nodes connected by edges, or by transforming node representation using spectral graph properties. These approaches are very effective, but many theoretical aspects of these models remain unclear and there are many possible extensions to improve GNNs and go beyond the nodes' direct neighbors and simple message aggregation.
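The message-passing update described above can be sketched in a few lines of NumPy. This is a simplified layer with self-loops and row-normalized (mean) aggregation, not the exact normalization of any particular paper in the reference list. The function name and the toy graph are assumptions.

```python
import numpy as np

def message_passing_layer(adj, h, weight):
    """One round of mean-aggregation message passing followed by a ReLU."""
    # Add self-loops so each node keeps its own features.
    adj_hat = adj + np.eye(adj.shape[0])
    # Row-normalize: each node averages over itself and its neighbors.
    adj_norm = adj_hat / adj_hat.sum(axis=1, keepdims=True)
    return np.maximum(adj_norm @ h @ weight, 0.0)

# Path graph 0 - 1 - 2 with one-hot node features.
adj = np.array([[0, 1, 0],
                [1, 0, 1],
                [0, 1, 0]], dtype=float)
h = np.eye(3)
weight = np.eye(3)  # identity weights make the aggregation easy to read
out = message_passing_layer(adj, h, weight)
print(out)
```

After one layer, node 0 mixes only its own features with those of node 1. Information from node 2 reaches it only after a second layer, which illustrates the limitation to direct neighbors mentioned above.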

Contact: Simon Geisler

- Semi-supervised classification with graph convolutional networks

- Relational inductive biases, deep learning, and graph networks

- Diffusion Improves Graph Learning

- Weisfeiler and leman go neural: Higher-order graph neural networks

- Reliable Graph Neural Networks via Robust Aggregation

Physics-aware Graph Neural Networks

Type: Master's thesis / guided research

- Proficiency with Python and deep learning frameworks (JAX or PyTorch)

- Knowledge of graph neural networks (e.g. GCN, MPNN, SchNet)

- Optional: Knowledge of machine learning on molecules and quantum chemistry

Deep learning models, especially graph neural networks (GNNs), have recently achieved great successes in predicting quantum mechanical properties of molecules. There is a vast amount of applications for these models, such as finding the best method of chemical synthesis or selecting candidates for drugs, construction materials, batteries, or solar cells. However, GNNs have only been proposed in recent years and there remain many open questions about how to best represent and leverage quantum mechanical properties and methods.

Contact: Nicholas Gao

- Directional Message Passing for Molecular Graphs

- Neural message passing for quantum chemistry

- Learning to Simulate Complex Physics with Graph Network

- Ab initio solution of the many-electron Schrödinger equation with deep neural networks

- Ab-Initio Potential Energy Surfaces by Pairing GNNs with Neural Wave Functions

- Tensor field networks: Rotation- and translation-equivariant neural networks for 3D point clouds

Robustness Verification for Deep Classifiers

Type: Master's thesis / Guided research

- Strong machine learning knowledge (at least equivalent to IN2064 plus an advanced course on deep learning)

- Strong background in mathematical optimization (preferably combined with Machine Learning setting)

- Proficiency with Python and deep learning frameworks (PyTorch or TensorFlow)

- (Preferred) Knowledge of training techniques to obtain classifiers that are robust against small perturbations in data

Description: Recent work shows that deep classifiers suffer in the presence of adversarial examples: misclassified points that are very close to the training samples or even visually indistinguishable from them. This undesired behaviour constrains the deployment of promising neural-network-based classification methods in safety-critical scenarios. Therefore, new training methods should be proposed that promote (or preferably ensure) robust behaviour of the classifier around training samples.
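A small worked example may help show how such an adversarial example arises. The sketch below applies a fast gradient sign step (the attack from "Explaining and harnessing adversarial examples") to a logistic-regression classifier. The weights, input, and step size `eps` are made-up values chosen for illustration.

```python
import numpy as np

def fgsm_linear(x, y, w, b, eps):
    """Fast gradient sign perturbation for a logistic-regression classifier.

    y is the label in {0, 1}; the loss is the binary cross-entropy.
    """
    p = 1.0 / (1.0 + np.exp(-(w @ x + b)))   # predicted P(y = 1 | x)
    grad_x = (p - y) * w                      # gradient of the loss w.r.t. x
    return x + eps * np.sign(grad_x)          # L-infinity step of size eps

w = np.array([1.0, -2.0, 0.5])
b = 0.0
x = np.array([0.3, -0.1, 0.2])   # logit is positive: classified as 1
x_adv = fgsm_linear(x, y=1, w=w, b=b, eps=0.2)
print("clean logit:", w @ x + b)
print("adversarial logit:", w @ x_adv + b)
```

For a linear model, the step lowers the logit by `eps` times the L1 norm of `w`, so an input whose margin is smaller than that amount changes class. Verification methods aim to certify that no such perturbation exists within a given radius.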

Contact: Aleksei Kuvshinov

References (Background):

- Intriguing properties of neural networks

- Explaining and harnessing adversarial examples

- SoK: Certified Robustness for Deep Neural Networks

- Certified Adversarial Robustness via Randomized Smoothing

- Formal guarantees on the robustness of a classifier against adversarial manipulation

- Towards deep learning models resistant to adversarial attacks

- Provable defenses against adversarial examples via the convex outer adversarial polytope

- Certified defenses against adversarial examples

- Lipschitz-margin training: Scalable certification of perturbation invariance for deep neural networks

Uncertainty Estimation in Deep Learning

Type: Master's Thesis / Guided Research

- Strong knowledge in probability theory

Safe prediction is a key feature in many intelligent systems. Classically, machine learning models compute output predictions regardless of the underlying uncertainty of the encountered situations. In contrast, aleatoric and epistemic uncertainty bring knowledge about undecidable and uncommon situations. The uncertainty view can be a substantial help in detecting and explaining unsafe predictions, and can therefore make ML systems more robust. The goal of this project is to improve uncertainty estimation in ML models across various types of tasks.
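One common way to separate the two kinds of uncertainty, assumed here purely for illustration, is the entropy decomposition over an ensemble of predictors. The entropy of the averaged prediction is the total uncertainty, the average entropy of the members is the aleatoric part, and their difference (the mutual information) is the epistemic part. The function names and toy predictions below are hypothetical.

```python
import numpy as np

def entropy(p, axis=-1):
    """Shannon entropy in nats, with a small constant for numerical safety."""
    return -np.sum(p * np.log(p + 1e-12), axis=axis)

def decompose_uncertainty(member_probs):
    """Split the predictive entropy of an ensemble into aleatoric and epistemic parts.

    member_probs has shape (n_members, n_classes) for a single input.
    """
    total = entropy(member_probs.mean(axis=0))   # entropy of the averaged prediction
    aleatoric = entropy(member_probs).mean()     # average entropy of each member
    epistemic = total - aleatoric                # mutual information (disagreement)
    return total, aleatoric, epistemic

# Members agree on a 50/50 prediction: ambiguous input, no model disagreement.
agree = np.array([[0.5, 0.5], [0.5, 0.5], [0.5, 0.5]])
# Members are individually confident but contradict each other.
disagree = np.array([[0.99, 0.01], [0.01, 0.99], [0.99, 0.01]])
print(decompose_uncertainty(agree))
print(decompose_uncertainty(disagree))
```

The first case has high aleatoric and near-zero epistemic uncertainty (an undecidable situation). The second has the opposite profile, which is typical of inputs the models have not seen during training.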

Contact: Tom Wollschläger, Dominik Fuchsgruber, Bertrand Charpentier

- Can You Trust Your Model’s Uncertainty? Evaluating Predictive Uncertainty Under Dataset Shift

- Predictive Uncertainty Estimation via Prior Networks

- Posterior Network: Uncertainty Estimation without OOD samples via Density-based Pseudo-Counts

- Evidential Deep Learning to Quantify Classification Uncertainty

- Weight Uncertainty in Neural Networks

Hierarchies in Deep Learning

Type: Master's Thesis / Guided Research

Multi-scale structures are ubiquitous in real-life datasets. As an example, phylogenetic nomenclature naturally reveals a hierarchical classification of species based on their evolutionary history. Learning multi-scale structures can help to reveal natural and meaningful organization in the data and to obtain compact data representations. The goal of this project is to leverage multi-scale structures to improve the speed, performance, and understanding of deep learning models.

Contact: Marcel Kollovieh, Bertrand Charpentier

- Tree Sampling Divergence: An Information-Theoretic Metric for Hierarchical Graph Clustering

- Hierarchical Graph Representation Learning with Differentiable Pooling

- Gradient-based Hierarchical Clustering

- Gradient-based Hierarchical Clustering using Continuous Representations of Trees in Hyperbolic Space


- Survey Paper

- Open access

- Published: 01 July 2020

Cybersecurity data science: an overview from machine learning perspective

- Iqbal H. Sarker ORCID: orcid.org/0000-0003-1740-5517 1 , 2 ,

- A. S. M. Kayes 3 ,

- Shahriar Badsha 4 ,

- Hamed Alqahtani 5 ,

- Paul Watters 3 &

- Alex Ng 3

Journal of Big Data volume 7, Article number: 41 (2020)


In a computing context, cybersecurity has recently been undergoing massive shifts in technology and operations, and data science is driving the change. Extracting security incident patterns or insights from cybersecurity data and building a corresponding data-driven model is the key to making a security system automated and intelligent. To understand and analyze the actual phenomena with data, various scientific methods, machine learning techniques, processes, and systems are used, which is commonly known as data science. In this paper, we focus on and briefly discuss cybersecurity data science, where the data is gathered from relevant cybersecurity sources, and the analytics complement the latest data-driven patterns to provide more effective security solutions. The concept of cybersecurity data science makes the computing process more actionable and intelligent than traditional approaches in the domain of cybersecurity. We then discuss and summarize a number of associated research issues and future directions. Furthermore, we provide a machine learning based multi-layered framework for cybersecurity modeling. Overall, our goal is not only to discuss cybersecurity data science and relevant methods but also to highlight their applicability to data-driven intelligent decision making for protecting systems from cyber-attacks.

Introduction

Due to the increasing dependency on digitalization and the Internet of Things (IoT) [1], various security incidents such as unauthorized access [2], malware attacks [3], zero-day attacks [4], data breaches [5], denial of service (DoS) [2], and social engineering or phishing [6] have grown at an exponential rate in recent years. For instance, in 2010, there were fewer than 50 million unique malware executables known to the security community. By 2012, that number had doubled to around 100 million, and in 2019 there were more than 900 million malicious executables known to the security community, a number that is likely to grow, according to the statistics of the AV-TEST institute in Germany [7]. Cybercrime and attacks can cause devastating financial losses and affect organizations and individuals alike. It is estimated that a data breach costs 8.19 million USD in the United States and 3.9 million USD on average [8], and that the annual cost of cybercrime to the global economy is 400 billion USD [9]. According to Juniper Research [10], the number of records breached each year is expected to nearly triple over the next 5 years. Thus, it is essential that organizations adopt and implement a strong cybersecurity approach to mitigate the loss. According to [11], the national security of a country depends on businesses, government, and individual citizens having access to applications and tools that are highly secure, and on the capability to detect and eliminate such cyber-threats in a timely way. Therefore, effectively identifying various cyber incidents, whether previously seen or unseen, and intelligently protecting the relevant systems from such cyber-attacks is a key issue to be solved urgently.

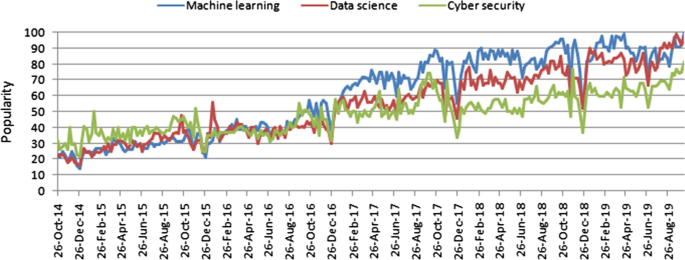

Popularity trends of data science, machine learning and cybersecurity over time, where x-axis represents the timestamp information and y axis represents the corresponding popularity values

Cybersecurity is a set of technologies and processes designed to protect computers, networks, programs and data from attack, damage, or unauthorized access [12]. Recently, cybersecurity has been undergoing massive shifts in technology and operations in the context of computing, and data science (DS) is driving the change, where machine learning (ML), a core part of “Artificial Intelligence” (AI), can play a vital role in discovering insights from data. Machine learning can significantly change the cybersecurity landscape, and data science is leading a new scientific paradigm [13, 14]. The popularity of these related technologies is increasing day by day, as shown in Fig. 1, based on data from the last five years collected from Google Trends [15]. The figure shows timestamp information in terms of a particular date on the x-axis and the corresponding popularity in the range of 0 (minimum) to 100 (maximum) on the y-axis. As shown in Fig. 1, the popularity values of these areas were less than 30 in 2014, while they exceeded 70 in 2019, i.e., popularity more than doubled. In this paper, we focus on cybersecurity data science (CDS), which is broadly related to these areas in terms of security data processing techniques and intelligent decision making in real-world applications. Overall, CDS is security data-focused, applies machine learning methods to quantify cyber risks, and ultimately seeks to optimize cybersecurity operations. Thus, this paper is intended for those in academia and industry who want to study and develop a data-driven smart cybersecurity model based on machine learning techniques. Therefore, great emphasis is placed on a thorough description of various types of machine learning methods, and their relations and usage in the context of cybersecurity. This paper does not describe all of the different techniques used in cybersecurity in detail; instead, it gives an overview of cybersecurity data science modeling based on artificial intelligence, particularly from a machine learning perspective.

The ultimate goal of cybersecurity data science is data-driven intelligent decision making from security data for smart cybersecurity solutions. CDS represents a partial paradigm shift from traditional well-known security solutions such as firewalls, user authentication and access control, and cryptography systems, which might not be effective for today’s needs in the cyber industry [16, 17, 18, 19]. The problem is that these are typically handled statically by a few experienced security analysts, with data management done in an ad-hoc manner [20, 21]. However, as an increasing number of cybersecurity incidents in the different formats mentioned above continuously appear over time, such conventional solutions have encountered limitations in mitigating such cyber risks. As a result, numerous advanced attacks are created and spread very quickly throughout the Internet. Although several researchers use various data analysis and learning techniques to build cybersecurity models, which are summarized in the “Machine learning tasks in cybersecurity” section, a comprehensive security model based on the effective discovery of security insights and the latest security patterns could be more useful. To address this issue, we need to develop more flexible and efficient security mechanisms that can respond to threats and update security policies to mitigate them intelligently in a timely manner. To achieve this goal, it is inherently required to analyze a massive amount of relevant cybersecurity data generated from various sources, such as network and system sources, and to discover insights or proper security policies with minimal human intervention in an automated manner.

Analyzing cybersecurity data and building the right tools and processes to successfully protect against cybersecurity incidents goes beyond a simple set of functional requirements and knowledge about risks, threats or vulnerabilities. To effectively extract insights or patterns of security incidents, several machine learning techniques, such as feature engineering, data clustering, classification, and association analysis, or neural network-based deep learning techniques can be used, which are briefly discussed in the “Machine learning tasks in cybersecurity” section. These learning techniques are capable of finding anomalies or malicious behavior and data-driven patterns of associated security incidents to make an intelligent decision. Thus, based on the concept of data-driven decision making, we focus on cybersecurity data science, where the data is gathered from relevant cybersecurity sources such as network activity, database activity, application activity, or user activity, and the analytics complement the latest data-driven patterns to provide corresponding security solutions.

The contributions of this paper are summarized as follows.

We first briefly discuss the concept of cybersecurity data science and relevant methods to understand its applicability to data-driven intelligent decision making in the domain of cybersecurity. For this purpose, we also briefly review different machine learning tasks in cybersecurity, and summarize various cybersecurity datasets, highlighting their usage in different data-driven cyber applications.

We then discuss and summarize a number of associated research issues and future directions in the area of cybersecurity data science that could help those in both academia and industry to pursue further research and development in relevant application areas.

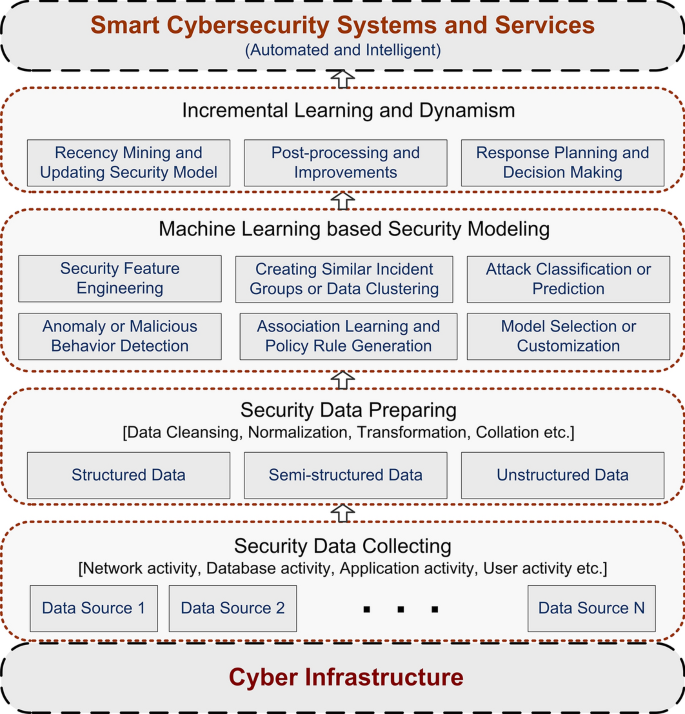

Finally, we provide a generic multi-layered framework of the cybersecurity data science model based on machine learning techniques. In this framework, we briefly discuss how the cybersecurity data science model can be used to discover useful insights from security data and make data-driven intelligent decisions to build smart cybersecurity systems.

The remainder of the paper is organized as follows. The “Background” section summarizes the background of our study and gives an overview of the related technologies of cybersecurity data science. The “Cybersecurity data science” section defines and briefly discusses cybersecurity data science, including various categories of cyber incident data. In the “Machine learning tasks in cybersecurity” section, we briefly discuss various categories of machine learning techniques, including their relations with cybersecurity tasks, and summarize a number of machine learning based cybersecurity models in the field. The “Research issues and future directions” section briefly discusses and highlights various research issues and future directions in the area of cybersecurity data science. In the “A multi-layered framework for smart cybersecurity services” section, we suggest a machine learning-based framework to build a cybersecurity data science model and discuss its various layers and their roles. In the “Discussion” section, we highlight several key points regarding our study. Finally, the “Conclusion” section concludes this paper.

In this section, we give an overview of the related technologies of cybersecurity data science including various types of cybersecurity incidents and defense strategies.

- Cybersecurity

Over the last half-century, the information and communication technology (ICT) industry has evolved greatly and is now ubiquitous and closely integrated with modern society. Thus, protecting ICT systems and applications from cyber-attacks has become a major concern for security policymakers in recent years [ 22 ]. The act of protecting ICT systems from various cyber-threats or attacks has come to be known as cybersecurity [ 9 ]. Several aspects are associated with cybersecurity: measures to protect information and communication technology; the raw data and information it contains, and their processing and transmission; the associated virtual and physical elements of the systems; the degree of protection resulting from the application of those measures; and eventually the associated field of professional endeavor [ 23 ]. Craigen et al. defined “cybersecurity as a set of tools, practices, and guidelines that can be used to protect computer networks, software programs, and data from attack, damage, or unauthorized access” [ 24 ]. According to Aftergood et al. [ 12 ], “cybersecurity is a set of technologies and processes designed to protect computers, networks, programs and data from attacks and unauthorized access, alteration, or destruction”. Overall, cybersecurity is concerned with understanding diverse cyber-attacks and devising corresponding defense strategies that preserve the properties defined below [ 25 , 26 ].

Confidentiality is a property used to prevent the access and disclosure of information to unauthorized individuals, entities or systems.

Integrity is a property used to prevent any modification or destruction of information in an unauthorized manner.

Availability is a property used to ensure timely and reliable access of information assets and systems to an authorized entity.

The term cybersecurity applies in a variety of contexts, from business to mobile computing, and can be divided into several common categories: network security, which mainly focuses on securing a computer network from cyber attackers or intruders; application security, which is concerned with keeping software and devices free of risks or cyber-threats; information security, which mainly considers the security and privacy of relevant data; and operational security, which includes the processes of handling and protecting data assets. Typical cybersecurity systems are composed of network security systems and computer security systems containing a firewall, antivirus software, or an intrusion detection system [ 27 ].

Cyberattacks and security risks

The risks typically associated with any attack involve three security factors: threats, i.e., who is attacking; vulnerabilities, i.e., the weaknesses they are attacking; and impacts, i.e., what the attack does [ 9 ]. A security incident is an act that threatens the confidentiality, integrity, or availability of information assets and systems. Several types of cybersecurity incidents may result in security risks to an organization’s systems and networks or to an individual [ 2 ]. These are:

Unauthorized access, which describes the act of accessing a network, systems, or data without authorization, resulting in a violation of a security policy [ 2 ];

Malware, also known as malicious software, is any program or software that is intentionally designed to cause damage to a computer, client, server, or computer network, e.g., botnets. Examples of different types of malware include computer viruses, worms, Trojan horses, adware, ransomware, spyware, malicious bots, etc. [ 3 , 26 ]. Ransom malware, or ransomware, is an emerging form of malware that prevents users from accessing their systems, personal files, or devices, and then demands an anonymous online payment in order to restore access;

Denial-of-Service is an attack meant to shut down a machine or network, making it inaccessible to its intended users by flooding the target with traffic that triggers a crash. The Denial-of-Service (DoS) attack typically uses one computer with an Internet connection, while distributed denial-of-service (DDoS) attack uses multiple computers and Internet connections to flood the targeted resource [ 2 ];

Phishing, a type of social engineering used for a broad range of malicious activities accomplished through human interactions, is a fraudulent attempt to obtain sensitive information such as banking and credit card details, login credentials, or personally identifiable information by disguising oneself as a trusted individual or entity via an electronic communication such as email, text, or instant message [ 26 ];

Zero-day attack is the term used to describe the threat of an unknown security vulnerability for which either a patch has not been released or of which the application developers are unaware [ 4 , 28 ].

Besides the attacks mentioned above, privilege escalation [ 29 ], password attack [ 30 ], insider threat [ 31 ], man-in-the-middle [ 32 ], advanced persistent threat [ 33 ], SQL injection attack [ 34 ], cryptojacking attack [ 35 ], web application attack [ 30 ], etc. are well-known security incidents in the field of cybersecurity. A data breach, also known as a data leak, is another type of security incident, which involves the unauthorized access of data by an individual, application, or service [ 5 ]. Thus, all data breaches are security incidents; however, not all security incidents are data breaches. Most data breaches occur in the banking industry, involving credit card numbers and personal information, followed by the healthcare sector and the public sector [ 36 ].

Cybersecurity defense strategies

Defense strategies are needed to protect data or information, information systems, and networks from cyber-attacks or intrusions. More granularly, they are responsible for preventing data breaches or security incidents and for monitoring and reacting to intrusions, which can be defined as any kind of unauthorized activity that causes damage to an information system [ 37 ]. An intrusion detection system (IDS) is typically represented as “a device or software application that monitors a computer network or systems for malicious activity or policy violations” [ 38 ]. The traditional well-known security solutions such as anti-virus, firewalls, user authentication, access control, data encryption and cryptography systems, however, might not be effective for today’s needs in the cyber industry [ 16 , 17 , 18 , 19 ]. On the other hand, an IDS addresses these issues by analyzing security data from several key points in a computer network or system [ 39 , 40 ]. Moreover, intrusion detection systems can be used to detect both internal and external attacks.

Intrusion detection systems fall into different categories according to their usage scope. For instance, the host-based intrusion detection system (HIDS) and the network intrusion detection system (NIDS) are the most common types, with scope ranging from single computers to large networks. A HIDS monitors important files on an individual system, while a NIDS analyzes and monitors network connections for suspicious traffic. Similarly, based on methodology, signature-based IDS and anomaly-based IDS are the most well-known variants [ 37 ].
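As a minimal sketch of the file-monitoring idea behind a HIDS, the following Python example records a hash baseline for a set of files and later reports which of them have changed. The function names (`file_digest`, `build_baseline`, `changed_files`) and the choice of SHA-256 are assumptions made for illustration; they are not taken from any particular HIDS product.

```python
import hashlib
import os
import tempfile


def file_digest(path):
    """Return the SHA-256 hex digest of a file's contents."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(8192), b""):
            h.update(chunk)
    return h.hexdigest()


def build_baseline(paths):
    """Record a trusted digest for every monitored file (hypothetical helper)."""
    return {p: file_digest(p) for p in paths}


def changed_files(baseline):
    """Return monitored paths that are missing or whose contents differ."""
    changed = []
    for path, digest in baseline.items():
        if not os.path.exists(path) or file_digest(path) != digest:
            changed.append(path)
    return changed


def demo():
    """Create a temporary file, tamper with it, and report the findings."""
    with tempfile.TemporaryDirectory() as d:
        path = os.path.join(d, "config.txt")
        with open(path, "w") as f:
            f.write("allow_root_login = no\n")
        baseline = build_baseline([path])
        before = changed_files(baseline)   # nothing modified yet
        with open(path, "w") as f:
            f.write("allow_root_login = yes\n")
        after = changed_files(baseline)    # the tampered file is reported
        return before, after, path
```

In this sketch the baseline is held in memory; a real deployment would need to store it somewhere an attacker cannot modify.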

Signature-based IDS: A signature can be a predefined string, pattern, or rule that corresponds to a known attack. In a signature-based IDS, identifying a particular pattern amounts to detecting the corresponding attack. An example of a signature is a known pattern or byte sequence in network traffic, or a sequence used by malware. To detect attacks, anti-virus software uses such sequences or patterns as signatures while performing the matching operation. Signature-based IDS is also known as knowledge-based or misuse detection [ 41 ]. This technique can efficiently process a high volume of network traffic; however, it is strictly limited to known attacks. Thus, detecting new or unseen attacks is one of the biggest challenges faced by a signature-based system.
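The matching operation described above can be sketched in a few lines of Python. The signature names and byte patterns below are hypothetical examples chosen for illustration, not entries from a real rule set, and plain substring search stands in for the more elaborate rule languages used in practice.

```python
# Hypothetical signature database: name -> byte pattern of a known attack.
SIGNATURES = {
    "sql-injection-probe": b"' OR '1'='1",
    "path-traversal": b"../../",
}


def match_signatures(payload, signatures=SIGNATURES):
    """Return the sorted names of all signatures found in the payload."""
    return sorted(
        name for name, pattern in signatures.items() if pattern in payload
    )
```

For example, `match_signatures(b"GET /../../etc/passwd")` reports the `path-traversal` signature, while a payload that matches no stored pattern yields an empty list, which illustrates why unseen attacks go undetected.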

Anomaly-based IDS: The concept of anomaly-based detection overcomes the issues of signature-based IDS discussed above. In an anomaly-based intrusion detection system, the behavior of the network is first examined to find dynamic patterns and to automatically create a data-driven model that profiles normal behavior; deviations from this profile are then detected as anomalies [ 41 ]. Thus, anomaly-based IDS can be treated as a dynamic approach, which follows behavior-oriented detection. The main advantage of anomaly-based IDS is the ability to identify unknown or zero-day attacks [ 42 ]. However, the identified anomaly or abnormal behavior is not always an indicator of intrusion. It may sometimes arise from other factors, such as policy changes or the offering of a new service.
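To make the profile-then-deviate idea concrete, the sketch below builds a very simple profile of normal behavior (the mean and standard deviation of a single traffic feature) and flags values that lie far from it. The feature (requests per minute), the z-score rule, and the threshold of 3 are assumptions for illustration; the anomaly-based systems surveyed here typically rely on richer data-driven models.

```python
import statistics


def fit_profile(normal_values):
    """Profile normal behavior as (mean, sample standard deviation)."""
    return statistics.mean(normal_values), statistics.stdev(normal_values)


def is_anomalous(value, profile, threshold=3.0):
    """Flag a value whose z-score against the profile exceeds the threshold."""
    mean, std = profile
    if std == 0:
        return value != mean
    return abs(value - mean) / std > threshold


# Hypothetical requests-per-minute observed during normal operation.
normal_traffic = [100, 102, 98, 101, 99, 100, 103, 97]
profile = fit_profile(normal_traffic)
```

With this profile, a reading of 101 is treated as normal and a reading of 150 is flagged. A flagged value is only a deviation from the profile: as noted above, it may equally reflect a policy change or a newly offered service.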

In addition, a hybrid detection approach [ 43 , 44 ] that takes into account both the misuse and anomaly-based techniques discussed above can be used to detect intrusions. In a hybrid system, the misuse detection system is used for detecting known types of intrusions and the anomaly detection system is used for novel attacks [ 45 ]. Besides these approaches, stateful protocol analysis can also be used to detect intrusions; it identifies deviations of protocol state similarly to the anomaly-based method, but uses predetermined universal profiles based on accepted definitions of benign activity [ 41 ]. In Table 1 , we summarize these common approaches, highlighting their pros and cons. Once detection has been completed, the intrusion prevention system (IPS), which is intended to prevent malicious events, can be used to mitigate the risks in different ways, such as a manual process, notification, or an automatic process [ 46 ]. Among these approaches, an automatic response system could be more effective, as it does not involve a human interface between the detection and response systems.
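One possible way to combine the two techniques, followed by a response step, is sketched below in Python. The order of the checks (signatures first, then a rate-based anomaly test), the verdict labels, and the three response modes are assumptions introduced for illustration; the signature table and rate profile are hypothetical values.

```python
# Hypothetical inputs: known-attack patterns and a (mean, std) rate profile.
SIGNATURES = {"path-traversal": b"../../"}
RATE_PROFILE = (100.0, 2.0)


def hybrid_detect(payload, request_rate, signatures=SIGNATURES,
                  rate_profile=RATE_PROFILE, threshold=3.0):
    """Run misuse detection first, then fall back to anomaly detection."""
    for name, pattern in signatures.items():
        if pattern in payload:
            return ("known-attack", name)
    mean, std = rate_profile
    if std > 0 and abs(request_rate - mean) / std > threshold:
        return ("possible-novel-attack", "rate-anomaly")
    return ("benign", None)


def respond(verdict, mode="automatic"):
    """Map a verdict to an action for a manual, notify, or automatic mode."""
    label, _ = verdict
    if label == "benign":
        return "allow"
    if mode == "automatic":
        return "block"
    if mode == "notify":
        return "alert-operator"
    return "queue-for-manual-review"
```

In the automatic mode the response follows directly from the verdict, with no operator between detection and response, which is the property the text identifies as potentially more effective.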

- Data science

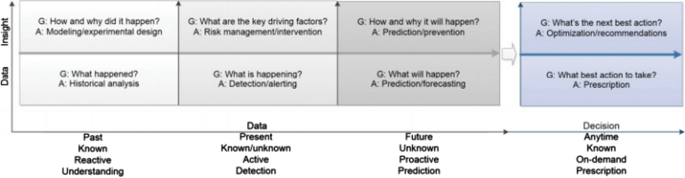

We are living in the age of data, advanced analytics, and data science, which are related to data-driven intelligent decision making. Although the process of searching for patterns or discovering hidden and interesting knowledge from data is known as data mining [ 47 ], in this paper we use the broader term “data science” rather than data mining. The reason is that data science, in its most fundamental form, is all about understanding data. It involves studying, processing, and extracting valuable insights from a set of information. In addition to data mining, data analytics is also related to data science. The development of data mining, knowledge discovery, and machine learning, which refers to creating algorithms and programs that learn on their own, together with the original data analysis and descriptive analytics from the statistical perspective, forms the general concept of “data analytics” [ 47 ]. Nowadays, many researchers use the term “data science” to describe the interdisciplinary field of data collection, preprocessing, inferring, or making decisions by analyzing the data. To understand and analyze actual phenomena with data, various scientific methods, machine learning techniques, processes, and systems are used, which is commonly known as data science. According to Cao et al. [ 47 ] “data science is a new interdisciplinary field that synthesizes and builds on statistics, informatics, computing, communication, management, and sociology to study data and its environments, to transform data to insights and decisions by following a data-to-knowledge-to-wisdom thinking and methodology”. As a high-level statement in the context of cybersecurity, we can conclude that cybersecurity data science is the study of security data to provide data-driven solutions for given security problems, also known as “the science of cybersecurity data”.
Figure 2 shows the typical data-to-insight-to-decision transfer at different periods and general analytic stages in data science, in terms of a variety of analytics goals (G) and approaches (A) to achieve the data-to-decision goal [ 47 ].

Data-to-insight-to-decision analytic stages in data science [ 47 ]