Reliability and Validity – Definitions, Types & Examples

Published by Alvin Nicolas at August 16th, 2021 , Revised On October 26, 2023

A researcher must test the collected data before making any conclusion. Every research design needs to be concerned with reliability and validity to measure the quality of the research.

What is Reliability?

Reliability refers to the consistency of a measurement. It shows how trustworthy a test score is. If the collected data show the same results when tested with various methods and sample groups, the information is reliable. Reliability is necessary for validity but does not guarantee it: a reliable method can still produce consistently inaccurate results.

Example: If you weigh yourself on a weighing scale throughout the day, you’ll get the same results. These are considered reliable results obtained through repeated measures.

Example: Suppose a teacher gives her students a math test and repeats it the following week with the same questions. If the students get the same scores, the reliability of the test is high.

What is Validity?

Validity refers to the accuracy of the measurement. Validity shows how suitable a specific test is for a particular situation. If the results are accurate according to the researcher’s situation, explanation, and prediction, then the research is valid.

If the method of measuring is accurate, then it’ll produce accurate results. A valid method must also be reliable, but a reliable method is not necessarily valid. In contrast, if a method is not reliable, it’s not valid.

Example: Your weighing scale shows different results each time you weigh yourself within a day, even though you handle it carefully and weigh yourself before and after meals. Your weighing machine might be malfunctioning. It means your method has low reliability. Hence you are getting inaccurate or inconsistent results that are not valid.

Example: Suppose a questionnaire is distributed among a group of people to check the quality of a skincare product, and the same questionnaire is then repeated with many groups. If you get the same responses from the various participants, the questionnaire has high reliability, which is a necessary condition for its validity.

Most of the time, validity is difficult to measure even though the process of measurement is reliable. It isn’t easy to interpret the real situation.

Example: If the weighing scale shows the same result, let’s say 70 kg each time, even though your actual weight is 55 kg, then the weighing scale is malfunctioning. It shows consistent results, so it is reliable, but the results are inaccurate, so they cannot be considered valid. It means the method has low validity.
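The difference between consistency and accuracy in the weighing-scale example can be made concrete with a little arithmetic. The following is a minimal sketch in Python; the readings are hypothetical values invented for illustration, and the two summary quantities (spread and bias) are one simple way to separate random from systematic error, not the only one.

```python
import statistics

true_weight_kg = 55.0
# Hypothetical repeated readings from the malfunctioning scale.
readings_kg = [70.0, 70.1, 69.9, 70.0, 70.0]

# Spread of the readings: small spread means consistent (reliable) readings.
spread = statistics.pstdev(readings_kg)

# Distance of the average reading from the true weight: large bias means
# the readings are inaccurate (not valid), however consistent they are.
bias = statistics.mean(readings_kg) - true_weight_kg

print(f"spread = {spread:.2f} kg, bias = {bias:.1f} kg")
```

Here the spread is a small fraction of a kilogram while the bias is about 15 kg, so the scale is consistent but wrong.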

Internal Vs. External Validity

One of the key features of randomised designs is that they can achieve high internal validity and, when the sample is representative, high external validity.

Internal validity is the ability to draw a causal link between your treatment and the dependent variable of interest. It means the observed changes should be due to the experiment conducted, and any external factor should not influence the variables.

Examples of such external factors: age, level, height, and grade.

External validity is the ability to identify and generalise your study outcomes to the population at large. The relationship between the study’s situation and the situations outside the study is considered external validity.

Threats to Internal Validity

Threats to External Validity

How to Assess Reliability and Validity

Reliability can be measured by comparing the consistency of the procedure and its results. There are various methods to measure validity and reliability. Reliability can be measured through various statistical methods depending on the type of reliability, as explained below:

Types of Reliability

Types of Validity

As discussed above, the reliability of a measurement alone cannot determine its validity. Validity is difficult to measure even if the method is reliable. The following types of tests are conducted to measure validity.

How to Increase Reliability?

- Use an appropriate questionnaire to measure the competency level.

- Ensure a consistent environment for participants.

- Make the participants familiar with the criteria of assessment.

- Train the participants appropriately.

- Analyse the research items regularly to avoid poor performance.

How to Increase Validity?

Ensuring validity is also not an easy job. Some ways to ensure validity are given below:

- Minimising reactivity should be the first concern.

- The Hawthorne effect should be reduced.

- The respondents should be motivated.

- The intervals between the pre-test and post-test should not be lengthy.

- High dropout rates should be avoided.

- The inter-rater reliability should be ensured.

- Control and experimental groups should be matched with each other.

How to Implement Reliability and Validity in your Thesis?

According to experts, it is helpful to implement the concepts of reliability and validity. These concepts are widely adopted, especially in theses and dissertations. The method for implementation is given below:

Frequently Asked Questions

What is reliability and validity in research?

Reliability in research refers to the consistency and stability of measurements or findings. Validity relates to the accuracy and truthfulness of results, measuring what the study intends to. Both are crucial for trustworthy and credible research outcomes.

What is validity?

Validity in research refers to the extent to which a study accurately measures what it intends to measure. It ensures that the results are truly representative of the phenomena under investigation. Without validity, research findings may be irrelevant, misleading, or incorrect, limiting their applicability and credibility.

What is reliability?

Reliability in research refers to the consistency and stability of measurements over time. If a study is reliable, repeating the experiment or test under the same conditions should produce similar results. Without reliability, findings become unpredictable and lack dependability, potentially undermining the study’s credibility and generalisability.

What is reliability in psychology?

In psychology, reliability refers to the consistency of a measurement tool or test. A reliable psychological assessment produces stable and consistent results across different times, situations, or raters. It ensures that an instrument’s scores are not due to random error, making the findings dependable and reproducible in similar conditions.

What is test-retest reliability?

Test-retest reliability assesses the consistency of measurements taken by a test over time. It involves administering the same test to the same participants at two different points in time and comparing the results. A high correlation between the scores indicates that the test produces stable and consistent results over time.

How to improve reliability of an experiment?

- Standardise procedures and instructions.

- Use consistent and precise measurement tools.

- Train observers or raters to reduce subjective judgments.

- Increase sample size to reduce random errors.

- Conduct pilot studies to refine methods.

- Repeat measurements or use multiple methods.

- Address potential sources of variability.

What is the difference between reliability and validity?

Reliability refers to the consistency and repeatability of measurements, ensuring results are stable over time. Validity indicates how well an instrument measures what it’s intended to measure, ensuring accuracy and relevance. While a test can be reliable without being valid, a valid test must inherently be reliable. Both are essential for credible research.

Are interviews reliable and valid?

Interviews can be both reliable and valid, but they are susceptible to biases. The reliability and validity depend on the design, structure, and execution of the interview. Structured interviews with standardised questions improve reliability. Validity is enhanced when questions accurately capture the intended construct and when interviewer biases are minimised.

Are IQ tests valid and reliable?

IQ tests are generally considered reliable, producing consistent scores over time. Their validity, however, is a subject of debate. While they effectively measure certain cognitive skills, whether they capture the entirety of “intelligence” or predict success in all life areas is contested. Cultural bias and over-reliance on tests are also concerns.

Are questionnaires reliable and valid?

Questionnaires can be both reliable and valid if well-designed. Reliability is achieved when they produce consistent results over time or across similar populations. Validity is ensured when questions accurately measure the intended construct. However, factors like poorly phrased questions, respondent bias, and lack of standardisation can compromise their reliability and validity.

Reliability – Types, Examples and Guide

Reliability

Definition:

Reliability refers to the consistency, dependability, and trustworthiness of a system, process, or measurement to perform its intended function or produce consistent results over time. It is a desirable characteristic in various domains, including engineering, manufacturing, software development, and data analysis.

Reliability In Engineering

In engineering and manufacturing, reliability refers to the ability of a product, equipment, or system to function without failure or breakdown under normal operating conditions for a specified period. A reliable system consistently performs its intended functions, meets performance requirements, and withstands various environmental factors, stress, or wear and tear.

Reliability In Software Development

In software development, reliability relates to the stability and consistency of software applications or systems. A reliable software program operates consistently without crashing, produces accurate results, and handles errors or exceptions gracefully. Reliability is often measured by metrics such as mean time between failures (MTBF) and mean time to repair (MTTR).
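The two metrics named above can be computed from a simple incident log. The sketch below assumes a hypothetical log of (hours of operation before a failure, hours needed to repair it); the availability ratio at the end is a commonly used derived figure and is an addition here, not something the text above defines.

```python
# Hypothetical incident log: (hours running before failure, hours to repair).
incidents = [(120.0, 2.0), (200.0, 1.0), (160.0, 3.0)]

total_uptime = sum(up for up, _ in incidents)
total_repair = sum(repair for _, repair in incidents)
failures = len(incidents)

# Mean time between failures: average running time per failure.
mtbf = total_uptime / failures

# Mean time to repair: average repair time per failure.
mttr = total_repair / failures

# Steady-state availability (assumed definition): share of time in service.
availability = mtbf / (mtbf + mttr)

print(f"MTBF = {mtbf:.1f} h, MTTR = {mttr:.1f} h, availability = {availability:.3f}")
```

A higher MTBF and a lower MTTR both push availability towards 1.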

Reliability In Data Analysis and Statistics

In data analysis and statistics, reliability refers to the consistency and repeatability of measurements or assessments. For example, if a measurement instrument consistently produces similar results when measuring the same quantity or if multiple raters consistently agree on the same assessment, it is considered reliable. Reliability is often assessed using statistical measures such as test-retest reliability, inter-rater reliability, or internal consistency.

Research Reliability

Research reliability refers to the consistency, stability, and repeatability of research findings. It indicates the extent to which a research study produces consistent and dependable results when conducted under similar conditions. In other words, research reliability assesses whether the same results would be obtained if the study were replicated with the same methodology, sample, and context.

What Affects Reliability in Research

Several factors can affect the reliability of research measurements and assessments. Here are some common factors that can impact reliability:

Measurement Error

Measurement error refers to the variability or inconsistency in the measurements that is not due to the construct being measured. It can arise from various sources, such as the limitations of the measurement instrument, environmental factors, or the characteristics of the participants. Measurement error reduces the reliability of the measure by introducing random variability into the data.

Rater/Observer Bias

In studies involving subjective assessments or ratings, the biases or subjective judgments of the raters or observers can affect reliability. If different raters interpret and evaluate the same phenomenon differently, it can lead to inconsistencies in the ratings, resulting in lower inter-rater reliability.

Participant Factors

Characteristics or factors related to the participants themselves can influence reliability. For example, factors such as fatigue, motivation, attention, or mood can introduce variability in responses, affecting the reliability of self-report measures or performance assessments.

Instrumentation

The quality and characteristics of the measurement instrument can impact reliability. If the instrument lacks clarity, has ambiguous items or instructions, or is prone to measurement errors, it can decrease the reliability of the measure. Poorly designed or unreliable instruments can introduce measurement error and decrease the consistency of the measurements.

Sample Size

Sample size can affect reliability, especially in studies where the reliability coefficient is based on correlations or variability within the sample. A larger sample size generally provides more stable estimates of reliability, while smaller samples can yield less precise estimates.
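To illustrate how sample size affects the precision of a correlation-based reliability estimate, the sketch below computes an approximate 95% confidence interval for a correlation coefficient using the Fisher z-transformation. This technique is not named in the text above; it is a standard approximation that assumes roughly bivariate-normal scores, and the function name `pearson_ci` is made up for this example.

```python
import math

def pearson_ci(r, n, z_crit=1.96):
    """Approximate confidence interval for a correlation r from n pairs.

    Uses the Fisher z-transformation; requires n > 3 and -1 < r < 1.
    """
    z = math.atanh(r)                 # transform r to the z scale
    se = 1.0 / math.sqrt(n - 3)       # standard error on the z scale
    return math.tanh(z - z_crit * se), math.tanh(z + z_crit * se)

# Same observed reliability coefficient, two hypothetical sample sizes.
small_low, small_high = pearson_ci(0.8, 20)
large_low, large_high = pearson_ci(0.8, 200)

print(f"n=20:  [{small_low:.2f}, {small_high:.2f}]")
print(f"n=200: [{large_low:.2f}, {large_high:.2f}]")
```

The interval for the larger sample is noticeably narrower, which is what "more stable estimates" means in practice.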

Time Interval

The time interval between test administrations can impact test-retest reliability. If the time interval is too short, participants may recall their previous responses and answer in a similar manner, artificially inflating the reliability coefficient. On the other hand, if the time interval is too long, true changes in the construct being measured may occur, leading to lower test-retest reliability.

Content Sampling

The specific items or questions included in a measure can affect reliability. If the measure does not adequately sample the full range of the construct being measured or if the items are too similar or redundant, it can result in lower internal consistency reliability.

Scoring and Data Handling

Errors in scoring, data entry, or data handling can introduce variability and impact reliability. Inaccurate or inconsistent scoring procedures, data entry mistakes, or mishandling of missing data can affect the reliability of the measurements.

Context and Environment

The context and environment in which measurements are obtained can influence reliability. Factors such as noise, distractions, lighting conditions, or the presence of others can introduce variability and affect the consistency of the measurements.

Types of Reliability

There are several types of reliability that are commonly discussed in research and measurement contexts. Here are some of the main types of reliability:

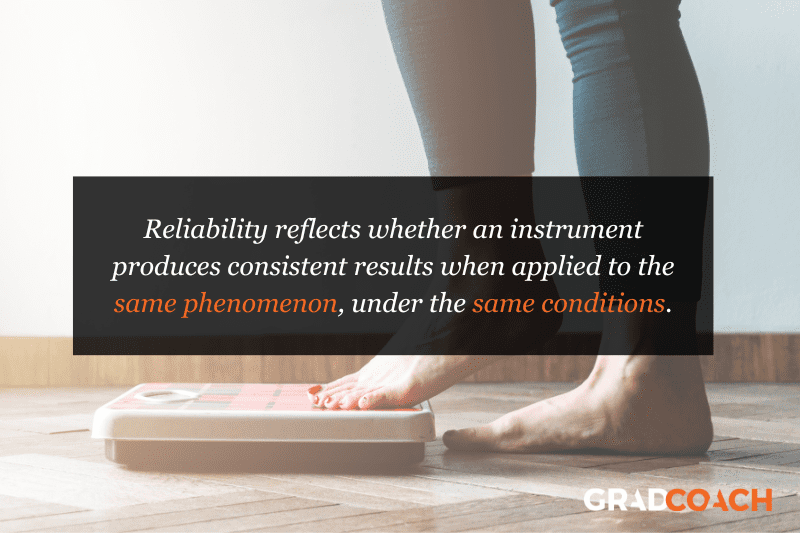

Test-Retest Reliability

This type of reliability assesses the consistency of a measure over time. It involves administering the same test or measure to the same group of individuals on two separate occasions and then comparing the results. If the scores are similar or highly correlated across the two testing points, it indicates good test-retest reliability.
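As a rough illustration, test-retest reliability is often summarised with the Pearson correlation between the two sets of scores. The sketch below uses made-up scores for five participants and a small hand-written `pearson` helper (a hypothetical name) so that it runs with the standard library alone.

```python
import math
import statistics

def pearson(x, y):
    """Pearson correlation between two equal-length lists of numbers."""
    mean_x, mean_y = statistics.mean(x), statistics.mean(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(ss_x * ss_y)

# Hypothetical scores for the same five participants on two occasions.
first_administration = [12, 15, 11, 18, 14]
second_administration = [13, 14, 11, 19, 15]

test_retest_r = pearson(first_administration, second_administration)
print(f"test-retest r = {test_retest_r:.2f}")
```

A coefficient close to 1 suggests the measure is stable over the interval between the two administrations.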

Inter-Rater Reliability

Inter-rater reliability examines the degree of agreement or consistency between different raters or observers who are assessing the same phenomenon. It is commonly used in subjective evaluations or assessments where judgments are made by multiple individuals. High inter-rater reliability suggests that different observers are likely to reach the same conclusions or make consistent assessments.
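For categorical judgments from two raters, one common agreement statistic is Cohen's kappa, which corrects the observed agreement for the agreement expected by chance. The following is a minimal sketch with invented pass/fail ratings; the function name `cohens_kappa` is chosen for this example.

```python
from collections import Counter

def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters labelling the same items.

    Undefined (division by zero) if chance agreement is exactly 1.
    """
    n = len(rater_a)
    # Proportion of items on which the raters gave the same label.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance from each rater's label frequencies.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    return (observed - expected) / (1 - expected)

# Hypothetical ratings of eight essays by two raters.
rater_a = ["pass", "pass", "fail", "pass", "fail", "fail", "pass", "pass"]
rater_b = ["pass", "pass", "fail", "fail", "fail", "fail", "pass", "pass"]

kappa = cohens_kappa(rater_a, rater_b)
print(f"kappa = {kappa:.2f}")
```

In this example the raters agree on 7 of 8 essays, chance agreement is 0.5, and kappa works out to 0.75.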

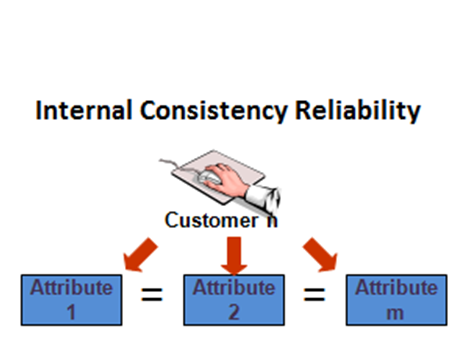

Internal Consistency Reliability

Internal consistency reliability assesses the extent to which the items or questions within a measure are consistent with each other. It is commonly measured using techniques such as Cronbach’s alpha. High internal consistency reliability indicates that the items within a measure are measuring the same construct or concept consistently.
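Cronbach's alpha can be computed directly from a respondents-by-items score table. The sketch below uses the usual formula, alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores), with made-up responses to a three-item scale; the function name and data are illustrative only.

```python
import statistics

def cronbach_alpha(scores):
    """Cronbach's alpha for a list of respondents, each a list of item scores."""
    k = len(scores[0])                       # number of items
    items = list(zip(*scores))               # one tuple of scores per item
    item_variances = sum(statistics.variance(item) for item in items)
    total_variance = statistics.variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - item_variances / total_variance)

# Hypothetical responses: five respondents, three items on a 1-5 scale.
scores = [
    [4, 5, 4],
    [2, 3, 2],
    [5, 5, 4],
    [3, 3, 3],
    [1, 2, 2],
]

alpha = cronbach_alpha(scores)
print(f"Cronbach's alpha = {alpha:.2f}")
```

Because the three items rise and fall together across respondents, alpha comes out high for this toy data set.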

Parallel Forms Reliability

Parallel forms reliability assesses the consistency of different versions or forms of a test that are intended to measure the same construct. Two equivalent versions of a test are administered to the same group of individuals, and the scores are compared to determine the level of agreement between the forms.

Split-Half Reliability

Split-half reliability involves splitting a measure into two halves and examining the consistency between the two halves. It can be done by dividing the items into odd-even pairs or by randomly splitting the items. The scores from the two halves are then compared to assess the degree of consistency.
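The odd-even split described above can be sketched as follows. The responses are invented, and the final step applies the Spearman-Brown correction, which the text above does not mention; it is included as a commonly used adjustment because each half is only half as long as the full measure.

```python
import math
import statistics

def pearson(x, y):
    """Pearson correlation between two equal-length lists of numbers."""
    mean_x, mean_y = statistics.mean(x), statistics.mean(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(ss_x * ss_y)

# Hypothetical responses: five respondents, six items on a 1-5 scale.
responses = [
    [4, 4, 5, 4, 4, 5],
    [2, 3, 2, 2, 3, 2],
    [5, 5, 4, 5, 5, 5],
    [3, 3, 3, 2, 3, 3],
    [1, 2, 1, 2, 1, 1],
]

# Odd-even split: items 1, 3, 5 in one half and items 2, 4, 6 in the other.
odd_half = [sum(row[0::2]) for row in responses]
even_half = [sum(row[1::2]) for row in responses]

half_r = pearson(odd_half, even_half)

# Spearman-Brown correction (an added step): estimate for the full-length test.
full_length_estimate = 2 * half_r / (1 + half_r)

print(f"half-test r = {half_r:.2f}, corrected = {full_length_estimate:.2f}")
```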

Alternate Forms Reliability

Alternate forms reliability is similar to parallel forms reliability, but it involves administering two different versions of a test to the same group of individuals. The two forms should be equivalent and measure the same construct. The scores from the two forms are then compared to assess the level of agreement.

Applications of Reliability

Reliability has several important applications across various fields and disciplines. Here are some common applications of reliability:

Psychological and Educational Testing

Reliability is crucial in psychological and educational testing to ensure that the scores obtained from assessments are consistent and dependable. It helps to determine the accuracy and stability of measures such as intelligence tests, personality assessments, academic exams, and aptitude tests.

Market Research

In market research, reliability is important for ensuring consistent and dependable data collection. Surveys, questionnaires, and other data collection instruments need to have high reliability to obtain accurate and consistent responses from participants. Reliability analysis helps researchers identify and address any issues that may affect the consistency of the data.

Health and Medical Research

Reliability is essential in health and medical research to ensure that measurements and assessments used in studies are consistent and trustworthy. This includes the reliability of diagnostic tests, patient-reported outcome measures, observational measures, and psychometric scales. High reliability is crucial for making valid inferences and drawing reliable conclusions from research findings.

Quality Control and Manufacturing

Reliability analysis is widely used in industries such as manufacturing and quality control to assess the reliability of products and processes. It helps to identify and address sources of variation and inconsistency, ensuring that products meet the required standards and specifications consistently.

Social Science Research

Reliability plays a vital role in social science research, including fields such as sociology, anthropology, and political science. It is used to assess the consistency of measurement tools, such as surveys or observational protocols, to ensure that the data collected is reliable and can be trusted for analysis and interpretation.

Performance Evaluation

Reliability is important in performance evaluation systems used in organizations and workplaces. Whether it’s assessing employee performance, evaluating the reliability of scoring rubrics, or measuring the consistency of ratings by supervisors, reliability analysis helps ensure fairness and consistency in the evaluation process.

Psychometrics and Scale Development

Reliability analysis is a fundamental step in psychometrics, which involves developing and validating measurement scales. Researchers assess the reliability of items and subscales to ensure that the scale measures the intended construct consistently and accurately.

Examples of Reliability

Here are some examples of reliability in different contexts:

Test-Retest Reliability Example: A researcher administers a personality questionnaire to a group of participants and then administers the same questionnaire to the same participants after a certain period, such as two weeks. The scores obtained from the two administrations are highly correlated, indicating good test-retest reliability.

Inter-Rater Reliability Example: Multiple teachers assess the essays of a group of students using a standardized grading rubric. The ratings assigned by the teachers show a high level of agreement or correlation, indicating good inter-rater reliability.

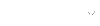

Internal Consistency Reliability Example: A researcher develops a questionnaire to measure job satisfaction. The researcher administers the questionnaire to a group of employees and calculates Cronbach’s alpha to assess internal consistency. The calculated value of Cronbach’s alpha is high (e.g., above 0.8), indicating good internal consistency reliability.

Parallel Forms Reliability Example: Two versions of a mathematics exam are created, which are designed to measure the same mathematical skills. Both versions of the exam are administered to the same group of students, and the scores from the two versions are highly correlated, indicating good parallel forms reliability.

Split-Half Reliability Example: A researcher develops a survey to measure self-esteem. The survey consists of 20 items, and the researcher randomly divides the items into two halves. The scores obtained from each half of the survey show a high level of agreement or correlation, indicating good split-half reliability.

Alternate Forms Reliability Example: A researcher develops two versions of a language proficiency test, which are designed to measure the same language skills. Both versions of the test are administered to the same group of participants, and the scores from the two versions are highly correlated, indicating good alternate forms reliability.

Where to Write About Reliability in A Thesis

When writing about reliability in a thesis, there are several sections where you can address this topic. Here are some common sections in a thesis where you can discuss reliability:

Introduction:

In the introduction section of your thesis, you can provide an overview of the study and briefly introduce the concept of reliability. Explain why reliability is important in your research field and how it relates to your study objectives.

Theoretical Framework:

If your thesis includes a theoretical framework or a literature review, this is a suitable section to discuss reliability. Provide an overview of the relevant theories, models, or concepts related to reliability in your field. Discuss how other researchers have measured and assessed reliability in similar studies.

Methodology:

The methodology section is crucial for addressing reliability. Describe the research design, data collection methods, and measurement instruments used in your study. Explain how you ensured the reliability of your measurements or data collection procedures. This may involve discussing pilot studies, inter-rater reliability, test-retest reliability, or other techniques used to assess and improve reliability.

Data Analysis:

In the data analysis section, you can discuss the statistical techniques employed to assess the reliability of your data. This might include measures such as Cronbach’s alpha, Cohen’s kappa, or intraclass correlation coefficients (ICC), depending on the nature of your data and research design. Present the results of reliability analyses and interpret their implications for your study.

Discussion:

In the discussion section, analyze and interpret the reliability results in relation to your research findings and objectives. Discuss any limitations or challenges encountered in establishing or maintaining reliability in your study. Consider the implications of reliability for the validity and generalizability of your results.

Conclusion:

In the conclusion section, summarize the main points discussed in your thesis regarding reliability. Emphasize the importance of reliability in research and highlight any recommendations or suggestions for future studies to enhance reliability.

Importance of Reliability

Reliability is of utmost importance in research, measurement, and various practical applications. Here are some key reasons why reliability is important:

- Consistency: Reliability ensures consistency in measurements and assessments. Consistent results indicate that the measure or instrument is stable and produces similar outcomes when applied repeatedly. This consistency allows researchers and practitioners to have confidence in the reliability of the data collected and the conclusions drawn from it.

- Accuracy: Reliability is closely linked to accuracy. A reliable measure limits random error, which is a precondition for results that are close to the true value or state of the phenomenon being measured. When a measure is unreliable, it introduces error and uncertainty into the data, which can lead to incorrect interpretations and flawed decision-making.

- Trustworthiness: Reliability enhances the trustworthiness of measurements and assessments. When a measure is reliable, it indicates that it is dependable and can be trusted to provide consistent results. This is particularly important in fields where decisions and actions are based on the data collected, such as education, healthcare, and market research.

- Comparability: Reliability enables meaningful comparisons between different groups, individuals, or time points. When measures are reliable, differences or changes observed can be attributed to true differences in the underlying construct, rather than measurement error. This allows for valid comparisons and evaluations, both within a study and across different studies.

- Validity: Reliability is a prerequisite for validity. Validity refers to the extent to which a measure or assessment accurately captures the construct it is intended to measure. If a measure is unreliable, it cannot be valid, as it does not consistently reflect the construct of interest. Establishing reliability is an important step in establishing the validity of a measure.

- Decision-making: Reliability is crucial for making informed decisions based on data. Whether it’s evaluating employee performance, diagnosing medical conditions, or conducting research studies, reliable measurements and assessments provide a solid foundation for decision-making processes. They help to reduce uncertainty and increase confidence in the conclusions drawn from the data.

- Quality Assurance: Reliability is essential for maintaining quality assurance in various fields. It allows organizations to assess and monitor the consistency and dependability of their processes, products, and services. By ensuring reliability, organizations can identify areas of improvement, address sources of variation, and deliver consistent and high-quality outcomes.

Limitations of Reliability

Here are some limitations of reliability:

- Limited to consistency: Reliability primarily focuses on the consistency of measurements and findings. However, it does not guarantee the accuracy or validity of the measurements. A measurement can be consistent but still systematically biased or flawed, leading to inaccurate results. Reliability alone cannot address validity concerns.

- Context-dependent: Reliability can be influenced by the specific context, conditions, or population under study. A measurement or instrument that demonstrates high reliability in one context may not necessarily exhibit the same level of reliability in a different context. Researchers need to consider the specific characteristics and limitations of their study context when interpreting reliability.

- Inadequate for complex constructs: Reliability is often based on the assumption of unidimensionality, which means that a measurement instrument is designed to capture a single construct. However, many real-world phenomena are complex and multidimensional, making it challenging to assess reliability accurately. Reliability measures may not adequately capture the full complexity of such constructs.

- Susceptible to systematic errors: Reliability focuses on minimizing random errors, but it may not detect or address systematic errors or biases in measurements. Systematic errors can arise from flaws in the measurement instrument, data collection procedures, or sample selection. Reliability assessments may not fully capture or address these systematic errors, leading to biased or inaccurate results.

- Relies on assumptions: Reliability assessments often rely on certain assumptions, such as the assumption of measurement invariance or the assumption of stable conditions over time. These assumptions may not always hold true in real-world research settings, particularly when studying dynamic or evolving phenomena. Failure to meet these assumptions can compromise the reliability of the research.

- Limited to quantitative measures: Reliability is typically applied to quantitative measures and instruments, which can be problematic when studying qualitative or subjective phenomena. Reliability measures may not fully capture the richness and complexity of qualitative data, limiting their applicability in certain research domains.

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer

Understanding Reliability and Validity

These related research issues ask us to consider whether we are studying what we think we are studying and whether the measures we use are consistent.

Reliability

Reliability is the extent to which an experiment, test, or any measuring procedure yields the same result on repeated trials. Without the agreement of independent observers able to replicate research procedures, or the ability to use research tools and procedures that yield consistent measurements, researchers would be unable to satisfactorily draw conclusions, formulate theories, or make claims about the generalizability of their research. In addition to its important role in research, reliability is critical for many parts of our lives, including manufacturing, medicine, and sports.

Reliability is such an important concept that it has been defined in terms of its application to a wide range of activities. For researchers, four key types of reliability are:

Equivalency Reliability

Equivalency reliability is the extent to which two items measure identical concepts at an identical level of difficulty. Equivalency reliability is determined by relating two sets of test scores to one another to highlight the degree of relationship or association. In quantitative studies and particularly in experimental studies, a correlation coefficient, statistically referred to as r, is used to show the strength of the correlation between a dependent variable (the subject under study), and one or more independent variables, which are manipulated to determine effects on the dependent variable. An important consideration is that equivalency reliability is concerned with correlational, not causal, relationships.

For example, a researcher studying university English students happened to notice that when some students were studying for finals, their holiday shopping began. Intrigued by this, the researcher attempted to observe how often, or to what degree, these two behaviors co-occurred throughout the academic year. The researcher used the results of the observations to assess the correlation between studying throughout the academic year and shopping for gifts. The researcher concluded there was poor equivalency reliability between the two actions. In other words, studying was not a reliable predictor of shopping for gifts.

Stability Reliability

Stability reliability (sometimes called test-retest reliability) is the agreement of measuring instruments over time. To determine stability, a measure or test is repeated on the same subjects at a future date. Results are compared and correlated with the initial test to give a measure of stability.

An example of stability reliability would be the method of maintaining weights used by the U.S. Bureau of Standards. Platinum objects of fixed weight (one kilogram, one pound, etc.) are kept locked away. Once a year they are taken out and weighed, allowing scales to be reset so they are "weighing" accurately. Keeping track of how much the scales are off from year to year establishes a stability reliability for these instruments. In this instance, the platinum weights themselves are assumed to have a perfectly fixed stability reliability.

Internal Consistency

Internal consistency is the extent to which tests or procedures assess the same characteristic, skill or quality. It is a measure of the precision between the observers or of the measuring instruments used in a study. This type of reliability often helps researchers interpret data and predict the value of scores and the limits of the relationship among variables.

For example, a researcher designs a questionnaire to find out about college students' dissatisfaction with a particular textbook. Analyzing the internal consistency of the survey items dealing with dissatisfaction will reveal the extent to which items on the questionnaire focus on the notion of dissatisfaction.
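One statistic commonly used to analyze internal consistency, though not named in the text above, is Cronbach's alpha. The sketch below assumes a hypothetical four-item dissatisfaction questionnaire scored from 1 to 5; the responses and the function name cronbach_alpha are invented for illustration.

```python
from statistics import variance

def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of k item scores."""
    k = len(responses[0])
    if k < 2 or len(responses) < 2 or any(len(row) != k for row in responses):
        raise ValueError("need at least 2 respondents and 2 items, same length per row")
    items = list(zip(*responses))  # regroup scores so each tuple holds one item
    item_variance_sum = sum(variance(item) for item in items)
    total_variance = variance([sum(row) for row in responses])
    if total_variance == 0:
        raise ValueError("alpha is undefined when total scores do not vary")
    return (k / (k - 1)) * (1 - item_variance_sum / total_variance)

# Hypothetical responses (invented data): five students, four items each.
responses = [
    [4, 5, 4, 5],
    [2, 2, 3, 2],
    [5, 4, 5, 5],
    [1, 2, 1, 2],
    [3, 3, 4, 3],
]

alpha = cronbach_alpha(responses)
print(f"alpha = {alpha:.2f}")
```

Higher values of alpha suggest that the items move together and so plausibly focus on the same notion. What counts as an acceptable value depends on the field and the purpose of the instrument.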

Interrater Reliability

Interrater reliability is the extent to which two or more individuals (coders or raters) agree. Interrater reliability addresses the consistency of the implementation of a rating system.

A test of interrater reliability would be the following scenario: Two or more researchers are observing a high school classroom. The class is discussing a movie that they have just viewed as a group. The researchers have a sliding rating scale (1 being most positive, 5 being most negative) with which they are rating the students' oral responses. Interrater reliability assesses the consistency of how the rating system is implemented. For example, if one researcher gives a "1" to a student response, while another researcher gives a "5," obviously the interrater reliability would be inconsistent. Interrater reliability is dependent upon the ability of two or more individuals to be consistent. Training, education and monitoring skills can enhance interrater reliability.
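Agreement between two raters can be quantified in several ways. The sketch below shows two common options that the text above does not itself name: simple percent agreement, and Cohen's kappa, which discounts the agreement expected by chance. The ratings and function names are invented for illustration, using the 1 to 5 scale from the classroom scenario.

```python
from collections import Counter

def percent_agreement(ratings_a, ratings_b):
    """Share of responses on which both raters gave the same rating."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("need two non-empty rating lists of equal length")
    matches = sum(a == b for a, b in zip(ratings_a, ratings_b))
    return matches / len(ratings_a)

def cohen_kappa(ratings_a, ratings_b):
    """Cohen's kappa for two raters: agreement corrected for chance agreement."""
    n = len(ratings_a)
    observed = percent_agreement(ratings_a, ratings_b)
    counts_a, counts_b = Counter(ratings_a), Counter(ratings_b)
    # Chance agreement: probability both raters pick the same category at random,
    # given how often each rater used each category.
    categories = set(ratings_a) | set(ratings_b)
    expected = sum(counts_a[c] * counts_b[c] for c in categories) / (n * n)
    if expected == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (observed - expected) / (1 - expected)

# Hypothetical ratings (invented data) of ten student responses by two researchers.
rater_a = [1, 2, 2, 3, 5, 4, 1, 3, 2, 5]
rater_b = [1, 2, 3, 3, 5, 4, 2, 3, 2, 4]

agreement = percent_agreement(rater_a, rater_b)
kappa = cohen_kappa(rater_a, rater_b)
print(f"agreement = {agreement:.2f}, kappa = {kappa:.2f}")
```

Note that kappa treats every disagreement alike, so a "1" against a "5" counts the same as a "1" against a "2". A weighted variant would be needed to reflect how far apart the two ratings are.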

Related Information: Reliability Example

An example of the importance of reliability is the use of measuring devices in Olympic track and field events. For the vast majority of people, ordinary measuring rulers and their degree of accuracy are reliable enough. However, for an Olympic event, such as the discus throw, the slightest variation in a measuring device -- whether it is a tape, clock, or other device -- could mean the difference between the gold and silver medals. Additionally, it could mean the difference between a new world record and outright failure to qualify for an event. Olympic measuring devices, then, must be reliable from one throw or race to another and from one competition to another. They must also be reliable when used in different parts of the world, as temperature, air pressure, humidity, interpretation, or other variables might affect their readings.

Validity refers to the degree to which a study accurately reflects or assesses the specific concept that the researcher is attempting to measure. While reliability is concerned with the accuracy of the actual measuring instrument or procedure, validity is concerned with the study's success at measuring what the researchers set out to measure.

Researchers should be concerned with both external and internal validity. External validity refers to the extent to which the results of a study are generalizable or transferable. (Most discussions of external validity focus solely on generalizability; see Campbell and Stanley, 1966. We include a reference here to transferability because many qualitative research studies are not designed to be generalized.)

Internal validity refers to (1) the rigor with which the study was conducted (e.g., the study's design, the care taken to conduct measurements, and decisions concerning what was and wasn't measured) and (2) the extent to which the designers of a study have taken into account alternative explanations for any causal relationships they explore (Huitt, 1998). In studies that do not explore causal relationships, only the first of these definitions should be considered when assessing internal validity.

Scholars discuss several types of internal validity. Brief discussions of several of these types follow.

Face Validity

Face validity is concerned with how a measure or procedure appears. Does it seem like a reasonable way to gain the information the researchers are attempting to obtain? Does it seem well designed? Does it seem as though it will work reliably? Unlike content validity, face validity does not depend on established theories for support (Fink, 1995).

Criterion Related Validity

Criterion related validity, also referred to as instrumental validity, is used to demonstrate the accuracy of a measure or procedure by comparing it with another measure or procedure which has been demonstrated to be valid.

For example, imagine a hands-on driving test has been shown to be an accurate test of driving skills. By comparing the scores on the written driving test with the scores from the hands-on driving test, the written test can be validated by using a criterion related strategy in which the hands-on driving test is compared to the written test.

Construct Validity

Construct validity seeks agreement between a theoretical concept and a specific measuring device or procedure. For example, a researcher inventing a new IQ test might spend a great deal of time attempting to "define" intelligence in order to reach an acceptable level of construct validity.

Construct validity can be broken down into two sub-categories: convergent validity and discriminant validity. Convergent validity is the actual general agreement among ratings, gathered independently of one another, where measures should be theoretically related. Discriminant validity is the lack of a relationship among measures which theoretically should not be related.

To understand whether a piece of research has construct validity, three steps should be followed. First, the theoretical relationships must be specified. Second, the empirical relationships between the measures of the concepts must be examined. Third, the empirical evidence must be interpreted in terms of how it clarifies the construct validity of the particular measure being tested (Carmines & Zeller, p. 23).

Content Validity

Content validity is based on the extent to which a measurement reflects the specific intended domain of content (Carmines & Zeller, 1991, p. 20).

Content validity is illustrated using the following examples: Researchers aim to study mathematical learning and create a survey to test for mathematical skill. If these researchers only tested for multiplication and then drew conclusions from that survey, their study would not show content validity because it excludes other mathematical functions. Although the establishment of content validity for placement-type exams seems relatively straight-forward, the process becomes more complex as it moves into the more abstract domain of socio-cultural studies. For example, a researcher needing to measure an attitude like self-esteem must decide what constitutes a relevant domain of content for that attitude. For socio-cultural studies, content validity forces the researchers to define the very domains they are attempting to study.

Related Information: Validity Example

Many recreational activities of high school students involve driving cars. A researcher, wanting to measure whether recreational activities have a negative effect on grade point average in high school students, might conduct a survey asking how many students drive to school and then attempt to find a correlation between these two factors. Because many students might use their cars for purposes other than or in addition to recreation (e.g., driving to work after school, driving to school rather than walking or taking a bus), this research study might prove invalid. Even if a strong correlation was found between driving and grade point average, driving to school in and of itself would seem to be an invalid measure of recreational activity.

The challenges of achieving reliability and validity are among the most difficult faced by researchers. In this section, we offer commentaries on these challenges.

Difficulties of Achieving Reliability

It is important to understand some of the problems concerning reliability which might arise. It would be ideal to reliably measure, every time, exactly those things which we intend to measure. However, researchers can go to great lengths and make every attempt to ensure accuracy in their studies, and still deal with the inherent difficulties of measuring particular events or behaviors. Sometimes, and particularly in studies of natural settings, the only measuring device available is the researcher's own observations of human interaction or human reaction to varying stimuli. As these methods are ultimately subjective in nature, results may be unreliable and multiple interpretations are possible. Three of these inherent difficulties are quixotic reliability, diachronic reliability and synchronic reliability.

Quixotic reliability refers to the situation where a single manner of observation consistently, yet erroneously, yields the same result. It is often a problem when research appears to be going well. This consistency might seem to suggest that the experiment was demonstrating perfect stability reliability. This, however, would not be the case.

For example, if a measuring device used in an Olympic competition always read 100 meters for every discus throw, this would be an example of an instrument consistently, yet erroneously, yielding the same result. However, quixotic reliability is often more subtle in its occurrences than this. For example, suppose a group of German researchers doing an ethnographic study of American attitudes ask questions and record responses. Parts of their study might produce responses which seem reliable, yet turn out to measure felicitous verbal embellishments required for "correct" social behavior. Asking Americans, "How are you?" for example, would in most cases, elicit the token, "Fine, thanks." However, this response would not accurately represent the mental or physical state of the respondents.

Diachronic reliability refers to the stability of observations over time. It is similar to stability reliability in that it deals with time. While this type of reliability is appropriate to assess features that remain relatively unchanged over time, such as landscape benchmarks or buildings, the same level of reliability is more difficult to achieve with socio-cultural phenomena.

For example, in a follow-up study one year later of reading comprehension in a specific group of school children, diachronic reliability would be hard to achieve. If the test were given to the same subjects a year later, many confounding variables would have impacted the researchers' ability to reproduce the same circumstances present at the first test. The final results would almost assuredly not reflect the degree of stability sought by the researchers.

Synchronic reliability refers to the similarity of observations within the same time frame; it is not about the similarity of things observed. Synchronic reliability, unlike diachronic reliability, rarely involves observations of identical things. Rather, it concerns itself with particularities of interest to the research.

For example, a researcher studies the actions of a duck's wing in flight and the actions of a hummingbird's wing in flight. Despite the fact that the researcher is studying two distinctly different kinds of wings, the action of the wings and the phenomenon produced is the same.

Comments on a Flawed, Yet Influential Study

An example of the dangers of generalizing from research that is inconsistent, invalid, unreliable, and incomplete is found in the Time magazine article, "On A Screen Near You: Cyberporn" (De Witt, 1995). This article relies on a study done at Carnegie Mellon University to determine the extent and implications of online pornography. Inherent to the study are methodological problems of unqualified hypotheses and conclusions, unsupported generalizations and a lack of peer review.

Ignoring the functional problems that manifest themselves later in the study, it seems that there are a number of ethical problems within the article. The article claims to be an exhaustive study of pornography on the Internet, but it was anything but exhaustive; it resembles a case study more than anything else. Marty Rimm, author of the undergraduate paper that Time used as a basis for the article, claims the paper was an "exhaustive study" of online pornography when, in fact, the study based most of its conclusions about pornography on the Internet on the "descriptions of slightly more than 4,000 images" (Meeks, 1995, p. 1). Some USENET groups see hundreds of postings in a day.

Considering the thousands of USENET groups, 4,000 images no longer carries the authoritative weight that its author intended. The real problem is that the study (an undergraduate paper similar to a second-semester composition assignment) was based not on pornographic images themselves, but on the descriptions of those images. This kind of reduction detracts significantly from the integrity of the final claims made by the author. In fact, this kind of research is commensurate with doing a study of the content of pornographic movies based on the titles of the movies, then making sociological generalizations based on what those titles indicate. (This is obviously a problem with a number of types of validity, because Rimm is not studying what he thinks he is studying, but instead something quite different.)

The author of the Time article, Philip Elmer De Witt, writes, "The research team at CMU has undertaken the first systematic study of pornography on the Information Superhighway" (Godwin, 1995, p. 1). His statement is problematic in at least three ways. First, the research team actually consisted of a few of Rimm's undergraduate friends with no methodological training whatsoever. Additionally, no mention of the degree of interrater reliability is made. Second, this systematic study is actually merely a "non-randomly selected subset of commercial bulletin-board systems that focus on selling porn" (Godwin, p. 6). As pornography vending is actually just a small part of the whole concerning the use of pornography on the Internet, the entire premise of this study's content validity is firmly called into question. Finally, the use of the term "Information Superhighway" is a false assessment of what in actuality is only a few USENET groups and BBSs (Bulletin Board Systems), which make up only a small fraction of the entire "Information Superhighway" traffic. Essentially, what is here is yet another violation of content validity.

De Witt is quoted as saying: "In an 18-month study, the team surveyed 917,410 sexually-explicit pictures, descriptions, short-stories and film clips. On those USENET newsgroups where digitized images are stored, 83.5 percent of the pictures were pornographic" (De Witt, p. 40).

Statistically, some interesting contradictions arise. The figure 917,410 was taken from adult-oriented BBSs; none came from actual USENET groups or the Internet itself. This is a glaring discrepancy. Out of the 917,410 files, 212,114 are only descriptions (Hoffman & Novak, 1995, p. 2). The question is, how many actual images did the "researchers" see?

"Between April and July 1994, the research team downloaded all available images (3,254)...the team encountered technical difficulties with 13 percent of these images...This left a total of 2,830 images for analysis" (p. 2). This means that out of 917,410 files discussed in this study, 914,580 of them were not even pictures! As for the 83.5 percent figure, this is actually based on "17 alt.binaries groups that Rimm considered pornographic" (p. 2).

In real terms, 17 USENET groups is a fraction of a percent of all USENET groups available. Worse yet, Time claimed that "...only about 3 percent of all messages on the USENET [represent pornographic material], while the USENET itself represents 11.5 percent of the traffic on the Internet" (De Witt, p. 40).

Time neglected to carry the interpretation of this data out to its logical conclusion, which is that less than half of 1 percent (3 percent of 11.5 percent) of the images on the Internet are associated with newsgroups that contain pornographic imagery. Furthermore, of this half percent, an unknown but even smaller percentage of the messages in newsgroups that are 'associated with pornographic imagery' actually contained pornographic material (Hoffman & Novak, p. 3).
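The arithmetic behind this critique can be checked in a few lines. The sketch below uses only the figures quoted in the preceding paragraphs; the variable names are chosen here for illustration.

```python
# Figures as quoted in the critique above.
total_files = 917_410         # files the study claimed to have surveyed
downloaded_images = 3_254     # images the team actually downloaded
analyzed_images = 2_830       # images left after technical difficulties

# Share of downloaded images lost to technical difficulties (quoted as 13 percent).
lost_share = (downloaded_images - analyzed_images) / downloaded_images

# Files in the study that were not among the images actually analyzed.
not_analyzed = total_files - analyzed_images

# Shares quoted from Time: 3 percent of USENET messages, and USENET as
# 11.5 percent of Internet traffic.
usenet_message_share = 0.03
usenet_traffic_share = 0.115
internet_share = usenet_message_share * usenet_traffic_share

print(f"lost to technical difficulties: {lost_share:.1%}")
print(f"files not analyzed as images:   {not_analyzed:,}")
print(f"share of Internet traffic:      {internet_share:.3%}")
```

The product of the two quoted shares comes to roughly a third of 1 percent, which is consistent with the claim of less than half of 1 percent.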

Another blunder can be seen in the avoidance of peer-review, which suggests that there were some political interests being served in having the study become a Time cover story. Marty Rimm contracted the Georgetown Law Review and Time in an agreement to publish his study as long as they kept it under lock and key. During the months before publication, many interested scholars and professionals tried in vain to obtain a copy of the study in order to check it for flaws. De Witt justified not letting such peer-review take place, and also justified the reliability and validity of the study, on the grounds that because the Georgetown Law Review had accepted it, it was therefore reliable and valid, and needed no peer-review. What he didn't know was that law reviews are not edited by professionals, but by "third year law students" (Godwin, p. 4).

There are many consequences of the failure to subject such a study to the scrutiny of peer review. If it was Rimm's desire to publish an article about on-line pornography in a manner that legitimized his article, yet escaped the kind of critical review the piece would have to undergo if published in a scholarly journal of computer science, engineering, marketing, psychology, or communications, what better venue than a law journal? A law journal article would have the added advantage of being taken seriously by law professors, lawyers, and legally-trained policymakers. By virtue of where it appeared, it would automatically be catapulted into the center of the policy debate surrounding online censorship and freedom of speech (Godwin).

Herein lies the dangerous implication of such a study: Because the questions surrounding pornography are of such immediate political concern, the study was placed in the forefront of the U.S. domestic policy debate over censorship on the Internet (an integral aspect of current anti-First Amendment legislation) with little regard for its validity or reliability.

On June 26, the day the article came out, Senator Grassley (co-sponsor of the anti-porn bill, along with Senator Dole) began drafting a speech that was to be delivered that very day in the Senate, using the study as evidence. The same day, at the same time, Mike Godwin posted on WELL (Whole Earth 'Lectronic Link, a forum for professionals on the Internet) what turned out to be the overstatement of the year: "Philip's story is an utter disaster, and it will damage the debate about this issue because we will have to spend lots of time correcting misunderstandings that are directly attributable to the story" (Meeks, p. 7).

As Godwin was writing this, Senator Grassley was speaking to the Senate: "Mr. President, I want to repeat that: 83.5 percent of the 900,000 images reviewed--these are all on the Internet--are pornographic, according to the Carnegie-Mellon study" (p. 7). Several days later, Senator Dole was waving the magazine in front of the Senate like a battle flag.

Donna Hoffman, professor at Vanderbilt University, summed up the dangerous political implications by saying, "The critically important national debate over First Amendment rights and restrictions of information on the Internet and other emerging media requires facts and informed opinion, not hysteria" (p. 1).

In addition to the hysteria, Hoffman sees a plethora of other problems with the study. "Because the content analysis and classification scheme are 'black boxes,'" Hoffman said, "because no reliability and validity results are presented, because no statistical testing of the differences both within and among categories for different types of listings has been performed, and because not a single hypothesis has been tested, formally or otherwise, no conclusions should be drawn until the issues raised in this critique are resolved" (p. 4).

However, the damage has already been done. This questionable research by an undergraduate engineering major has been generalized to such an extent that even the U.S. Senate, and in particular Senators Grassley and Dole, have been duped, albeit through the strength of their own desires to see only what they wanted to see.

Annotated Bibliography

American Psychological Association. (1985). Standards for educational and psychological testing. Washington, DC: Author.

This work focuses on reliability, validity and the standards that testers need to achieve in order to ensure accuracy.

Babbie, E.R. & Huitt, R.E. (1979). The practice of social research (2nd ed.). Belmont, CA: Wadsworth Publishing.

An overview of social research and its applications.

Beauchamp, T. L., Faden, R.R., Wallace, Jr., R.J. & Walters, L. (1982). Ethical issues in social science research. Baltimore and London: The Johns Hopkins University Press.

A systematic overview of ethical issues in Social Science Research written by researchers with firsthand familiarity with the situations and problems researchers face in their work. This book raises several questions of how reliability and validity can be affected by ethics.

Borman, K.M. et al. (1986). Ethnographic and qualitative research design and why it doesn't work. American behavioral scientist 30, 42-57.

The authors pose questions concerning threats to qualitative research and suggest solutions.

Bowen, K. A. (1996, Oct. 12). The sin of omission - punishable by death to internal validity: An argument for integration of quantitative research methods to strengthen internal validity. Available: http://trochim.human.cornell.edu/gallery/bowen/hss691.htm

An entire Web site that examines the merits of integrating qualitative and quantitative research methodologies through triangulation. The author argues that improving the internal validity of social science will be the result of such a union.

Brinberg, D. & McGrath, J.E. (1985). Validity and the research process. Beverly Hills: Sage Publications.

The authors investigate validity as value and propose the Validity Network Schema, a process by which researchers can infuse validity into their research.

Bussières, J-F. (1996, Oct. 12). Reliability and validity of information provided by museum Web sites. Available: http://www.oise.on.ca/~jfbussieres/issue.html

This Web page examines the validity of museum Web sites, which calls into question the validity of Web-based resources in general. It argues that all Web sites should be examined with skepticism about the validity of the information contained within them.

Campbell, D. T. & Stanley, J.C. (1963). Experimental and quasi-experimental designs for research. Boston: Houghton Mifflin.

An overview of experimental research that includes pre-experimental designs, controls for internal validity, and tables listing sources of invalidity in quasi-experimental designs. Reference list and examples.

Carmines, E. G. & Zeller, R.A. (1991). Reliability and validity assessment. Newbury Park: Sage Publications.

An introduction to research methodology that includes classical test theory, validity, and methods of assessing reliability.

Carroll, K. M. (1995). Methodological issues and problems in the assessment of substance use. Psychological Assessment, Sep. 7 n3, 349-58.

Discusses methodological issues in research involving the assessment of substance abuse. Introduces strategies for avoiding problems with the reliability and validity of methods.

Connelly, F. M. & Clandinin, D.J. (1990). Stories of experience and narrative inquiry. Educational Researcher 19:5, 2-12.

A survey of narrative inquiry that outlines criteria, methods, and writing forms. It includes a discussion of risks and dangers in narrative studies, as well as a research agenda for curricula and classroom studies.

De Witt, P.E. (1995, July 3). On a screen near you: Cyberporn. Time, 38-45.

A Time cover story reporting on a Carnegie Mellon study of online pornography by Marty Rimm, an electrical engineering student, which the article presents as exhaustive.

Fink, A., ed. (1995). The survey handbook, v. 1. Thousand Oaks, CA: Sage.

A guide to surveys; this is the first in a series referred to as the "survey kit". It includes bibliographical references. Addresses survey design, analysis, reporting surveys and how to measure the validity and reliability of surveys.

Fink, A., ed. (1995). How to measure survey reliability and validity, v. 7. Thousand Oaks, CA: Sage.

This volume seeks to select and apply reliability criteria and select and apply validity criteria. The fundamental principles of scaling and scoring are considered.

Godwin, M. (1995, July). JournoPorn, dissection of the Time article. Available: http://www.hotwired.com

A detailed critique of Time magazine's Cyberporn, outlining flaws of methodology as well as exploring the underlying assumptions of the article.

Hambleton, R.K. & Zaal, J.N., eds. (1991). Advances in educational and psychological testing. Boston: Kluwer Academic.

Information on the concepts of reliability and validity in psychology and education.

Harnish, D.L. (1992). Human judgment and the logic of evidence: A critical examination of research methods in special education transition literature. In D.L. Harnish et al. eds., Selected readings in transition.

This article investigates threats to validity in special education research.

Haynes, N. M. (1995). How skewed is 'the bell curve'? Book Product Reviews. 1-24.

This paper claims that R.J. Herrnstein and C. Murray's The Bell Curve: Intelligence and Class Structure in American Life does not have scientific merit and claims that the bell curve is an unreliable measure of intelligence.

Healey, J. F. (1993). Statistics: A tool for social research, 3rd ed. Belmont: Wadsworth Publishing.

Inferential statistics, measures of association, and multivariate techniques in statistical analysis for social scientists are addressed.

Helberg, C. (1996, Oct. 12). Pitfalls of data analysis (or how to avoid lies and damned lies). Available: http//maddog/fammed.wisc.edu/pitfalls/

A discussion of things researchers often overlook in their data analysis and how statistics are often used to skew reliability and validity for the researchers' purposes.

Hoffman, D. L. and Novak, T.P. (1995, July). A detailed critique of the Time article: Cyberporn. Available: http://www.hotwired.com

A methodological critique of the Time article that uncovers some of the fundamental flaws in the statistics and the conclusions made by De Witt.

Huitt, William G. (1998). Internal and External Validity. http://www.valdosta.peachnet.edu/~whuitt/psy702/intro/valdgn.html

A Web document addressing key issues of external and internal validity.

Jones, J. E. & Bearley, W.L. (1996, Oct 12). Reliability and validity of training instruments. Organizational Universe Systems. Available: http://ous.usa.net/relval.htm

The authors discuss the reliability and validity of training design in a business setting. Basic terms are defined and examples provided.

Cultural Anthropology Methods Journal. (1996, Oct. 12). Available: http://www.lawrence.edu/~bradleyc/cam.html

An online journal containing articles on the practical application of research methods when conducting qualitative and quantitative research. Reliability and validity are addressed throughout.

Kirk, J. & Miller, M. M. (1986). Reliability and validity in qualitative research. Beverly Hills: Sage Publications.

This text describes objectivity in qualitative research by focusing on the issues of validity and reliability in terms of their limitations and applicability in the social and natural sciences.

Krakower, J. & Niwa, S. (1985). An assessment of validity and reliability of the institutional performance survey. Boulder, CO: National Center for Higher Education Management Systems.

Educational surveys and higher education research and the effectiveness of organization.

Lauer, J. M. & Asher, J.W. (1988). Composition Research. New York: Oxford University Press.

A discussion of empirical designs in the context of composition research as a whole.

Laurent, J. et al. (1992, Mar.). Review of validity research on the Stanford-Binet Intelligence Scale: 4th Ed. Psychological Assessment. 102-112.

This paper looks at the results of construct and criterion-related validity studies to determine if the SB:FE is a valid measure of intelligence.

LeCompte, M. D., Millroy, W.L., & Preissle, J. eds. (1992). The handbook of qualitative research in education. San Diego: Academic Press.

A compilation of the range of methodological and theoretical qualitative inquiry in the human sciences and education research. Numerous contributing authors apply their expertise to discussing a wide variety of issues pertaining to educational and humanities research as well as suggestions about how to deal with problems when conducting research.

McDowell, I. & Newell, C. (1987). Measuring health: A guide to rating scales and questionnaires. New York: Oxford University Press.

This gives a variety of examples of health measurement techniques and scales and discusses the validity and reliability of important health measures.

Meeks, B. (1995, July). Muckraker: How Time failed. Available: http://www.hotwired.com

A step-by-step outline of the events which took place during the researching, writing, and negotiating of the Time article of 3 July, 1995 titled: On A Screen Near You: Cyberporn.

Merriam, S. B. (1995). What can you tell from an N of 1?: Issues of validity and reliability in qualitative research. Journal of Lifelong Learning v4, 51-60.

Addresses issues of validity and reliability in qualitative research for education. Discusses philosophical assumptions underlying the concepts of internal validity, reliability, and external validity or generalizability. Presents strategies for ensuring rigor and trustworthiness when conducting qualitative research.

Morris, L.L, Fitzgibbon, C.T., & Lindheim, E. (1987). How to measure performance and use tests. In J.L. Herman (Ed.), Program evaluation kit (2nd ed.). Newbury Park, CA: Sage.

Discussion of reliability and validity as they pertain to measuring students' performance.

Murray, S., et al. (1979, April). Technical issues as threats to internal validity of experimental and quasi-experimental designs. San Francisco: University of California. 8-12.

(From Yang et al. bibliography--unavailable as of this writing.)

Russ-Eft, D. F. (1980). Validity and reliability in survey research. American Institutes for Research in the Behavioral Sciences August, 227 151.

An investigation of validity and reliability in survey research with an overview of the concepts of reliability and validity. Specific procedures for measuring sources of error are suggested as well as general suggestions for improving the reliability and validity of survey data. An extensive annotated bibliography is provided.

Ryser, G. R. (1994). Developing reliable and valid authentic assessments for the classroom: Is it possible? Journal of Secondary Gifted Education Fall, v6 n1, 62-66.

Defines the meanings of reliability and validity as they apply to standardized measures of classroom assessment. This article defines reliability as scorability and stability and validity is seen as students' ability to use knowledge authentically in the field.

Schmidt, W., et al. (1982). Validity as a variable: Can the same certification test be valid for all students? Institute for Research on Teaching July, ED 227 151.

A technical report that presents specific criteria for judging content, instructional and curricular validity as related to certification tests in education.

Scholfield, P. (1995). Quantifying language. A researcher's and teacher's guide to gathering language data and reducing it to figures. Bristol: Multilingual Matters.

A guide to categorizing, measuring, testing, and assessing aspects of language. A source for language-related practitioners and researchers in conjunction with other resources on research methods and statistics. Questions of reliability and validity are also explored.

Scriven, M. (1993). Hard-Won Lessons in Program Evaluation. San Francisco: Jossey-Bass Publishers.

A common sense approach for evaluating the validity of various educational programs and how to address specific issues facing evaluators.

Shou, P. (1993, Jan.). The singer loomis inventory of personality: A review and critique. [Paper presented at the Annual Meeting of the Southwest Educational Research Association.]

Evidence for reliability and validity is reviewed. A summary evaluation suggests that SLIP (developed by two Jungian analysts to allow examination of personality from the perspective of Jung's typology) appears to be a useful tool for educators and counselors.

Sutton, L.R. (1992). Community college teacher evaluation instrument: A reliability and validity study. Diss. Colorado State University.

Studies of reliability and validity in occupational and educational research.

Thompson, B. & Daniel, L.G. (1996, Oct.). Seminal readings on reliability and validity: A "hit parade" bibliography. Educational and psychological measurement v. 56, 741-745.

Editorial board members of Educational and Psychological Measurement generated a bibliography of definitive publications of measurement research. Many articles are directly related to reliability and validity.

Thompson, E. Y., et al. (1995). Overview of qualitative research. Diss. Colorado State University.

A discussion of strengths and weaknesses of qualitative research and its evolution and adaptation. Appendices and annotated bibliography.

Traver, C. et al. (1995). Case Study. Diss. Colorado State University.

This presentation gives an overview of case study research, providing definitions and a brief history and explanation of how to design research.

Trochim, William M. K. (1996). External validity. Available: http://trochim.human.cornell.edu/kb/EXTERVAL.htm

A comprehensive treatment of external validity found in William Trochim's online text about research methods and issues.

Trochim, William M. K. (1996). Introduction to validity. Available: http://trochim.human.cornell.edu/kb/INTROVAL.htm

An introduction to validity found in William Trochim's online text about research methods and issues.

Trochim, William M. K. (1996). Reliability. Available: http://trochim.human.cornell.edu/kb/reltypes.htm

A comprehensive treatment of reliability found in William Trochim's online text about research methods and issues.

Validity. (1996, Oct. 12). Available: http://vislab-www.nps.navy.mil/~haga/validity.html

A source for definitions of various forms and types of reliability and validity.

Vinsonhaler, J. F., et al. (1983, July). Improving diagnostic reliability in reading through training. Institute for Research on Teaching ED 237 934.

This technical report investigates the practical application of a program intended to improve the diagnoses of reading deficient students. Here, reliability is assumed and a pragmatic answer to a specific educational problem is suggested as a result.

Wentland, E. J. & Smith, K.W. (1993). Survey responses: An evaluation of their validity. San Diego: Academic Press.

This book looks at the factors affecting response validity (or the accuracy of self-reports in surveys) and provides several examples with varying accuracy levels.

Wiget, A. (1996). Father juan greyrobe: Reconstructing tradition histories, and the reliability and validity of uncorroborated oral tradition. Ethnohistory 43:3, 459-482.

This paper presents a convincing argument for the validity of oral histories in ethnographic research where at least some of the evidence can be corroborated through written records.

Yang, G. H., et al. (1995). Experimental and quasi-experimental educational research. Diss. Colorado State University.

This discussion defines experimentation and considers the rhetorical issues and advantages and disadvantages of experimental research. Annotated bibliography.

Yarroch, W. L. (1991, Sept.). The implications of content versus item validity on science tests. Journal of Research in Science Teaching, 619-629.

The use of content validity as the primary assurance of the measurement accuracy for science assessment examinations is questioned. An alternative accuracy measure, item validity, is proposed to look at qualitative comparisons between different factors.

Yin, R. K. (1989). Case study research: Design and methods. London: Sage Publications.

This book discusses the design process of case study research, including collection of evidence, composing the case study report, and designing single and multiple case studies.

Related Links

Internal Validity Tutorial. An interactive tutorial on internal validity.

http://server.bmod.athabascau.ca/html/Validity/index.shtml

Howell, Jonathan, Paul Miller, Hyun Hee Park, Deborah Sattler, Todd Schack, Eric Spery, Shelley Widhalm, & Mike Palmquist. (2005). Reliability and Validity. Writing@CSU. Colorado State University. https://writing.colostate.edu/guides/guide.cfm?guideid=66

- Reliability vs Validity in Research: Types & Examples

In everyday life, we often use "reliable" and "valid" as if they meant the same thing. However, in research and testing, reliability and validity are not the same things.

When it comes to data analysis, reliability refers to how easily replicable an outcome is. For example, if you measure a cup of rice three times, and you get the same result each time, that result is reliable.

Validity, on the other hand, refers to the measurement’s accuracy. This means that if the standard weight for a cup of rice is 5 grams, and you measure a cup of rice, it should be 5 grams.

So, while reliability and validity are intertwined, they are not synonymous. If part of your measurement setup, such as your scale, is miscalibrated, the results will be consistent but invalid.
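The distinction can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the readings are made-up numbers, `summarize` is a hypothetical helper, and the 5-gram reference value is simply the figure used in the example above. The spread of repeated readings stands in for reliability, and the gap between their average and the reference value stands in for validity.

```python
from statistics import mean, pstdev

def summarize(readings, reference):
    """Return (spread, bias) for repeated readings of one known quantity."""
    spread = pstdev(readings)          # small spread: readings are consistent
    bias = mean(readings) - reference  # small bias: readings are accurate
    return spread, bias

REFERENCE_WEIGHT = 5.0  # grams, the illustrative figure from the text

# Hypothetical readings, invented for this sketch.
good_scale = [4.9, 5.0, 5.1]    # consistent and accurate
skewed_scale = [6.9, 7.0, 7.1]  # just as consistent, but reads about 2 g heavy

good_spread, good_bias = summarize(good_scale, REFERENCE_WEIGHT)
skewed_spread, skewed_bias = summarize(skewed_scale, REFERENCE_WEIGHT)

print(f"good scale:   spread={good_spread:.2f} g, bias={good_bias:+.2f} g")
print(f"skewed scale: spread={skewed_spread:.2f} g, bias={skewed_bias:+.2f} g")
```

Both scales show the same small spread, so both are equally reliable; only the first has a bias near zero, so only the first is valid.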

Data must be consistent and accurate to be used to draw useful conclusions. In this article, we’ll look at how to assess data reliability and validity, as well as how to apply it.

What is Reliability?

When a measurement is consistent, it’s reliable. But reliability doesn’t mean your outcome will be exactly the same each time; it means it will fall within the same range.

For example, if you scored 95% on a test the first time and 96% the next, your results are reliable. So, even if there is a minor difference in the outcomes, as long as it is within the error margin, your results are reliable.

Reliability allows you to assess the degree of consistency in your results. So, if you’re getting similar results, reliability provides an answer to the question of how similar your results are.

What is Validity?

A measurement or test is valid when it correlates with the expected result. Validity examines the accuracy of your result.

Here’s where things get tricky: to establish the validity of a test, the results must be consistent. Looking at most experiments (especially physical measurements), the standard value that establishes the accuracy of a measurement is the outcome of repeating the test to obtain a consistent result.

For example, before I can conclude that all 12-inch rulers are one foot, I must repeat the experiment several times and obtain very similar results, indicating that 12-inch rulers are indeed one foot.

In most scientific experiments, validity and reliability are inextricably linked. For example, if you’re measuring distance or depth, valid answers are likely to be reliable.

But in social research, one isn’t an indication of the other. For example, many people believe that people who wear glasses are smart.

Of course, I’ll find examples of people who wear glasses and have high IQs (reliability), but the truth is that most people who wear glasses simply need their vision to be better (validity).

So reliable answers aren’t always correct, but valid answers are always reliable.

How Are Reliability and Validity Assessed?

When assessing reliability, we want to know if the measurement can be replicated. Of course, we’d have to change some variables to ensure that this test holds, the most important of which are time, items, and observers.

If the main factor you change when performing a reliability test is time, you’re performing a test-retest reliability assessment.

However, if you are changing items, you are performing an internal consistency assessment. It means you’re measuring multiple items with a single instrument.

Finally, if you’re measuring the same item with the same instrument but using different observers or judges, you’re performing an inter-rater reliability test.
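As a rough illustration of the inter-rater case, the sketch below computes Cohen's kappa for two observers. The article does not name a specific statistic, so kappa is an assumption here: it is one widely used way to express agreement beyond what chance alone would produce. The function name `cohen_kappa` and the pass/fail ratings are hypothetical.

```python
from collections import Counter

def cohen_kappa(rater_a, rater_b):
    """Agreement between two raters, corrected for chance agreement."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must rate the same non-empty set of items")
    n = len(rater_a)
    # Share of items on which the two raters gave the same label.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected if each rater labelled at random with their own rates.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1:
        return 1.0  # both raters used a single identical label throughout
    return (observed - expected) / (1 - expected)

# Hypothetical ratings of the same eight essays by two judges.
judge_1 = ["pass", "pass", "fail", "pass", "fail", "fail", "pass", "pass"]
judge_2 = ["pass", "pass", "fail", "fail", "fail", "fail", "pass", "pass"]

kappa = cohen_kappa(judge_1, judge_2)
print(f"kappa = {kappa:.2f}")
```

A value near 1 suggests the observers are largely interchangeable, while a value near 0 suggests they agree no more often than chance would predict.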

Assessing Validity

Evaluating validity can be more tedious than reliability. With reliability, you’re attempting to demonstrate that your results are consistent, whereas, with validity, you want to prove the correctness of your outcome.

Although validity is mainly categorized under two sections (internal and external), there are more than fifteen ways to check the validity of a test. In this article, we’ll be covering four.

First, content validity measures whether the test covers all the content it needs to provide the outcome you’re expecting.

Suppose I wanted to test the hypothesis that 90% of Generation Z uses social media polls for surveys while 90% of millennials use forms. I’d need a sample size that accounts for how Gen Z and millennials gather information.

Next, criterion validity is when you compare your results to what you’re supposed to get based on a chosen criterion. It can be measured in two ways: predictive validity or concurrent validity.

Following that, we have face validity. It’s whether a test looks, on its surface, like it measures what we expect it to. For instance, when answering a customer service survey, I’d expect to be asked about how I feel about the service provided.

Lastly, construct-related validity. This is a little more complicated, but it helps to show how the validity of research is based on different findings.

As a result, it provides information that either proves or disproves that certain things are related.

Types of Reliability

We have three main types of reliability assessment and here’s how they work:

1) Test-retest Reliability

This assessment refers to the consistency of outcomes over time. Testing reliability over time does not imply changing the amount of time it takes to conduct an experiment; rather, it means repeating the experiment multiple times in a short time.

For example, if I measure the length of my hair today and again tomorrow, I’ll most likely get the same result each time.

A short period is relative in terms of reliability; two days for measuring hair length is considered short. But that’s far too long to test how quickly water dries on the sand.

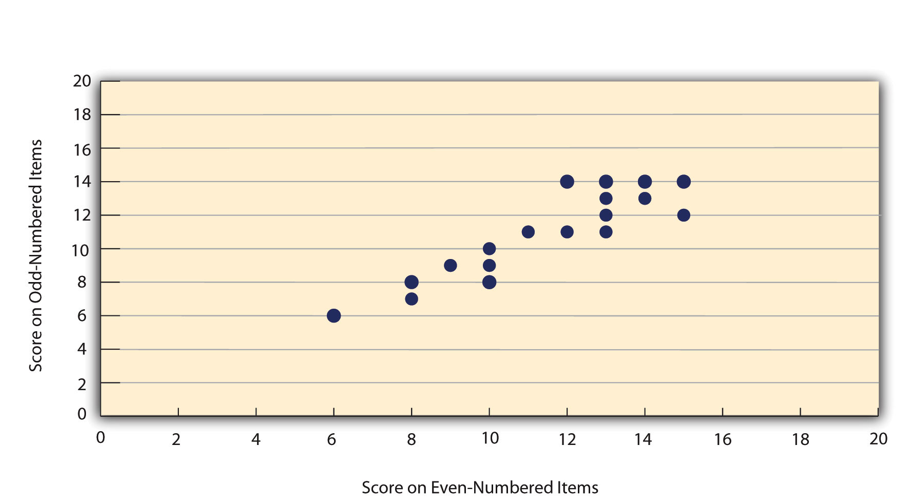

A test-retest correlation is used to compare the consistency of your results. It is typically shown as a scatter plot in which each point pairs a value from the first experiment with the matching value from the second.

If your answers are reliable, the points will cluster tightly along a diagonal line, but if they aren’t, the points (values) will be spread across the graph.
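A test-retest correlation can also be computed directly rather than read off a plot. The following is a minimal sketch using the Pearson correlation coefficient, which is an assumption on our part since the article does not say which coefficient it has in mind. The scores are invented, and `pearson_r` is a hypothetical helper written out by hand so the arithmetic is visible.

```python
from math import sqrt

def pearson_r(first, second):
    """Pearson correlation between paired results from two test sessions."""
    n = len(first)
    mean_1 = sum(first) / n
    mean_2 = sum(second) / n
    # Sum of products of deviations (covariance up to a constant factor).
    cov = sum((a - mean_1) * (b - mean_2) for a, b in zip(first, second))
    ss_1 = sum((a - mean_1) ** 2 for a in first)
    ss_2 = sum((b - mean_2) ** 2 for b in second)
    return cov / sqrt(ss_1 * ss_2)

# Hypothetical scores for the same five people on two occasions.
session_1 = [95, 82, 70, 88, 64]
session_2 = [96, 80, 72, 87, 65]  # each person scores about the same again
shuffled = [72, 96, 65, 80, 87]   # same scores, attached to the wrong people

r_reliable = pearson_r(session_1, session_2)
r_unreliable = pearson_r(session_1, shuffled)

print(f"consistent retest:   r = {r_reliable:.2f}")
print(f"inconsistent retest: r = {r_unreliable:.2f}")
```

A coefficient close to 1 corresponds to the tight diagonal cluster on the scatter plot; a coefficient close to 0 corresponds to points scattered across the graph.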

2) Internal Consistency

It’s also known as internal reliability. It refers to the consistency of results for various items when measured on the same scale.

This is particularly important in social science research, such as surveys, because it helps determine the consistency of people’s responses when asked the same questions.

Most introverts, for example, would say they enjoy spending time alone and having few friends. However, if some introverts claim that they either do not want time alone or prefer to be surrounded by many friends, it doesn’t add up.

Either these people aren’t really introverts, or this factor isn’t a reliable way of measuring introversion.
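One common way to put a number on internal consistency is Cronbach's alpha. The article does not mention it, so treat the sketch below as an assumption about how such a check might be run, not as the method described here. The survey data are invented: each row is one respondent's answers to three hypothetical introversion items on a 1-to-5 scale.

```python
from statistics import variance

def cronbach_alpha(responses):
    """Cronbach's alpha for rows of respondents and columns of items."""
    k = len(responses[0])                 # number of items
    items = list(zip(*responses))         # regroup the scores by item
    item_variance_sum = sum(variance(item) for item in items)
    total_variance = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - item_variance_sum / total_variance)

# Hypothetical answers: people who agree with one item tend to agree with all.
consistent = [
    [5, 5, 4],
    [4, 4, 4],
    [2, 2, 1],
    [1, 2, 1],
    [3, 3, 3],
]

# Hypothetical answers: responses to one item say little about the others.
inconsistent = [
    [5, 1, 4],
    [4, 5, 1],
    [2, 4, 5],
    [1, 2, 2],
    [3, 3, 3],
]

alpha_consistent = cronbach_alpha(consistent)
alpha_inconsistent = cronbach_alpha(inconsistent)

print(f"consistent items:   alpha = {alpha_consistent:.2f}")
print(f"inconsistent items: alpha = {alpha_inconsistent:.2f}")
```

Higher values suggest the items move together and plausibly measure the same trait; low or negative values suggest that at least one item is measuring something else.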