
Python Yield – What does the yield keyword do?

- September 7, 2020

- Venmani A D

Adding the yield keyword to a function makes the function return a generator object that can be iterated over.

What does the yield keyword do?

- Approaches to overcome generator exhaustion

- How to materialize generators

- How yield works, step by step

- Exercise 1: Write a program to create a generator that generates cubes of numbers up to 1000 using yield

- Exercise 2: Write a program to return odd numbers by pipelining generators

- Difference between yield and return

yield can be used in a function much like the return statement. When you do so, instead of returning its output, the function returns a generator that can be iterated over.

You can then iterate through the generator to extract items, either with a for loop or by calling the next() function. But what exactly happens when you use yield?

What the yield keyword does is as follows:

Each time you ask for a value, Python runs the function’s code until it encounters a yield statement. It then hands back the yielded value and pauses the function in that state without exiting.

The next time a value is requested (for example, with next()), the function resumes from exactly the point where it was paused, with its state intact. This continues until the generator is exhausted.
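Here is a minimal sketch of that pause-and-resume cycle. The function count_up_to() and its print() calls are hypothetical, added only to make the order of execution visible.

```python
def count_up_to(limit):
    """Hypothetical example: yield 1, 2, ..., limit and show where it pauses."""
    n = 1
    while n <= limit:
        print(f"about to yield {n}")
        yield n                     # pause here until next() is called again
        print(f"resumed after {n}")
        n += 1                      # n survives the pause
    print("no more values")         # runs just before StopIteration is raised


gen = count_up_to(2)   # nothing is printed yet: the body hasn't started
print(next(gen))       # prints "about to yield 1", then 1
print(next(gen))       # prints "resumed after 1", "about to yield 2", then 2
```

A third next(gen) would print "resumed after 2" and "no more values", and then raise StopIteration.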

What does remembering the state mean?

It means that any local variables created inside the function before yield was reached are still available when the generator resumes. A regular function does not behave this way.

Now, how is it different from using the return keyword?

Had you used return in place of yield, the function would have returned the value and exited. All the local variables it had computed would be discarded, and the next call would start from scratch.
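A small comparison, using two hypothetical functions, makes the difference visible: the return version forgets its counter on every call, while the generator keeps it between next() calls.

```python
def next_number_return():
    count = 0          # recreated from scratch on every call
    count += 1
    return count


def next_number_yield():
    count = 0          # created once, then kept across pauses
    while True:
        count += 1
        yield count


print(next_number_return(), next_number_return())   # 1 1: state is lost

gen = next_number_yield()
print(next(gen), next(gen))                          # 1 2: state is kept
```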

Because yield lets the function remember its ‘state’, you can use it to produce values according to logic you define. So the function becomes a ‘generator’.

You can iterate through the generator object, but only once.

Iterating over it a second time produces nothing, because the generator object is already exhausted and has to be recreated.

If you call next() on the exhausted generator, a StopIteration exception is raised.
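A minimal sketch of exhaustion, assuming a hypothetical simple_generator() that yields 1, 2 and 3 (the article’s own listing isn’t shown here):

```python
def simple_generator():
    # hypothetical body: the article's original listing is not shown
    yield 1
    yield 2
    yield 3


gen = simple_generator()
print(list(gen))   # [1, 2, 3]
print(list(gen))   # []: the same generator object is already exhausted

try:
    next(gen)
except StopIteration:
    print("StopIteration raised")
```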

To overcome generator exhaustion, you can:

- Approach 1: Recreate the generator and iterate over the new one. You just saw how to do this.

- Approach 2: Iterate by calling the function that created the generator in the first place.

- Approach 3 (best): Convert it to a class that implements an __iter__() method. Each time you iterate over an instance, __iter__() returns a fresh iterator, so you don’t have to worry about the generator getting exhausted.

We’ve seen the first approach already. Approach 2: The second approach is to simply replace the generator with a call to the function that produced it, which is simple_generator() in this case. This keeps working no matter how many times you iterate.
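Approaches 1 and 2 look like this in a minimal sketch; simple_generator() is given a hypothetical body that yields 1, 2 and 3.

```python
def simple_generator():
    # hypothetical body: the article's original listing is not shown
    yield 1
    yield 2
    yield 3


# Approach 1: recreate the generator before iterating again
gen = simple_generator()
first = list(gen)
gen = simple_generator()      # a brand-new generator object
second = list(gen)

# Approach 2: call the generator function directly in each loop
for _ in range(2):
    print([value for value in simple_generator()])   # [1, 2, 3] both times
```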

Approach 3: Now, let’s try creating a class that implements an __iter__() method. It returns a new iterator every time you iterate over the object, so you don’t have to keep recreating the generator.
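A sketch of Approach 3, assuming a hypothetical class SimpleIterable. Because __iter__() itself contains yield, every call to it (for example, at the start of each for loop) returns a brand-new generator.

```python
class SimpleIterable:
    """Hypothetical re-iterable object: each iteration gets a fresh generator."""

    def __init__(self, limit):
        self.limit = limit

    def __iter__(self):
        # __iter__ contains yield, so calling it returns a new generator
        # object every time; the instance itself never runs out.
        for i in range(1, self.limit + 1):
            yield i


numbers = SimpleIterable(3)
print(list(numbers))   # [1, 2, 3]
print(list(numbers))   # [1, 2, 3] again
```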

We often store data in a list when we want to materialize it at some point. If we do so, the contents of the list occupy real memory, and the larger the list gets, the more memory it takes.

But if there is a definite logic behind producing the items you want, you don’t have to store them in a list. Instead, you can write a generator that produces the items when you need them.

Let’s say you want to iterate through the squares of the numbers from 1 to 10. There are at least two ways to go about it: create the list beforehand and iterate over it, or create a generator that produces these numbers.

Let’s do the same with generators now.
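Both options can be sketched side by side; squares() is a hypothetical name for the generator version.

```python
# Option 1: build the whole list first, then iterate over it
squares_list = [i * i for i in range(1, 11)]
for sq in squares_list:
    print(sq, end=" ")
print()


# Option 2: a hypothetical generator that computes each square on request
def squares(start, stop):
    for i in range(start, stop + 1):
        yield i * i


for sq in squares(1, 10):
    print(sq, end=" ")
print()
```

Both loops print the same ten numbers; only the list version keeps all of them in memory at once.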

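Generators are memory efficient because the values are not materialized until they are requested. They can also save time when you don’t end up needing every value, although they are not automatically faster than iterating over a list you already have. You will want to use a generator especially if you know the logic for producing the next number (or any object) that you want to generate.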

Can a generator be materialized to a list?

Yes. You can do so easily by calling list() on the generator or by using a list comprehension.
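A quick sketch of both ways to materialize a generator, using a hypothetical squares() generator:

```python
def squares(start, stop):
    # hypothetical generator of i * i for i in start..stop
    for i in range(start, stop + 1):
        yield i * i


as_list = list(squares(1, 5))              # [1, 4, 9, 16, 25]
as_list_comp = [x for x in squares(1, 5)]  # same result
print(as_list, as_list_comp)
```

Each call to squares() creates a new generator, which is why both lines get the full sequence.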

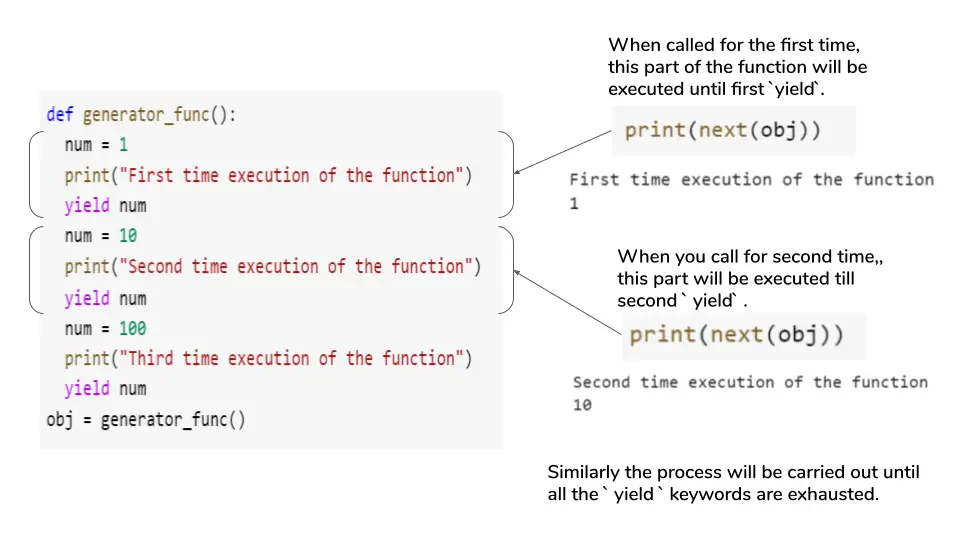

yield is a keyword that returns a value from the function without destroying the state of its local variables. When you replace return with yield in a function, the function hands back a generator object to its caller. In effect, yield pauses the function instead of exiting it, and the next call to next() resumes execution from the point where it paused.

Here, I have created a function using the yield keyword. Calling it produces an object, obj, which is a generator (a kind of iterator), so to access its values you use the next() function. Each call runs the function until the next yield statement is reached.
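The article’s listing isn’t reproduced here, so the following is a hypothetical stand-in, simple_func(), that shows the same behaviour: x keeps its value between calls to next().

```python
def simple_func():
    # hypothetical example: the article's original listing is not shown
    x = 1
    yield x
    x += 1          # resumes here on the second next() call
    yield x
    x += 1
    yield x


obj = simple_func()
print(type(obj))   # <class 'generator'>
print(next(obj))   # 1
print(next(obj))   # 2
print(next(obj))   # 3
```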

Next, I am going to create a generator function that returns the cubes of numbers, one at a time, using the yield keyword, and stops once the cube would exceed 1000. Only the value currently being produced needs to be held in memory; once it has been consumed, it can be discarded.
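A possible solution to Exercise 1, assuming “up to 1000” means cubes no greater than 1000; cubes_up_to() is a hypothetical name:

```python
def cubes_up_to(limit=1000):
    """Yield 1**3, 2**3, ... for as long as the cube does not exceed limit."""
    n = 1
    while n ** 3 <= limit:
        yield n ** 3
        n += 1


for cube in cubes_up_to(1000):
    print(cube, end=" ")
print()
# 1 8 27 64 125 216 343 512 729 1000
```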

Multiple generators can be pipelined (one generator consuming another) as a series of operations in the same code. Pipelining can also make the code more memory efficient and easier to read. To pipeline generators, call one generator function and pass the resulting generator object as the argument to another.
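A possible solution to Exercise 2: the hypothetical numbers() generator feeds the hypothetical odd_only() generator, so values flow through the pipeline one at a time.

```python
def numbers(limit):
    # first stage: produce 0, 1, ..., limit - 1
    for n in range(limit):
        yield n


def odd_only(values):
    # second stage: consume another generator and pass on the odd values
    for v in values:
        if v % 2 == 1:
            yield v


# Pipeline: the output of numbers() is the input of odd_only()
print(list(odd_only(numbers(10))))   # [1, 3, 5, 7, 9]
```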


© Machinelearningplus. All rights reserved.


Using Python Generators and yield: A Complete Guide

- February 15, 2023

In this tutorial, you’ll learn how to use generators in Python, including how to interpret the yield expression and how to use generator expressions. You’ll learn what the benefits of Python generators are and why they’re often referred to as lazy iteration. Then, you’ll learn how they work and how they’re different from normal functions.

Python generators provide you with the means to create your own iterator functions. These functions let you express complex, memory-intensive operations that are executed lazily, meaning that you can better manage the memory of your Python program.

By the end of this tutorial, you’ll have learned:

- What Python generators are and how to use the yield expression

- How to use multiple yield keywords in a single generator

- How to use generator expressions to make generators simpler to write

- Some common use cases for Python generators


Understanding Python Generators

Before diving into what generators are, let’s explore what iterators are. Iterators are objects that can be iterated over, meaning that they return one item at a time. To be considered an iterator, an object needs to implement two methods: __iter__() and __next__(). Common examples of iterators in Python include file objects and the objects returned by map(), zip(), and enumerate(); for loops and list comprehensions are the constructs that consume them.

Generators are a Pythonic way of creating iterators without needing to explicitly implement a class with __iter__() and __next__() methods. You also don’t need to keep track of the object’s internal state yourself. An important thing to note is that generators produce their values lazily, meaning they do not store all of their contents in memory at once.

The yield statement’s job is to control the flow of a generator function. It also preserves the state of the generator function, pausing it until it is resumed with the next() function.

Creating a Simple Generator

In this section, you’ll learn how to create a basic generator. One of the key syntactical differences between a normal function and a generator function is that the generator function includes a yield statement.

Let’s see how we can create a simple generator function:
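The listing itself isn’t shown here; reconstructed from the breakdown below, return_n_values() would look roughly like this:

```python
def return_n_values(n):
    num = 0
    while num < n:
        yield num      # hand back the current value and pause
        num += 1       # runs when the generator is resumed
```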

Let’s break down what is happening here:

- We define a function, return_n_values() , which takes a single parameter, n

- In the function, we first set the value of num to 0

- We then enter a while loop that evaluates whether the value of num is less than our function argument, n

- While that condition is True , we yield the value of num

- Then, we increment the value of num using the augmented assignment operator

Immediately, there are two very interesting things that happen:

- We use yield instead of return

- A statement follows the yield statement, which isn’t ignored

Let’s see how we can actually use this function:
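A minimal usage sketch, assuming the return_n_values() definition described in this section (repeated so the snippet runs on its own):

```python
def return_n_values(n):
    num = 0
    while num < n:
        yield num
        num += 1


values = return_n_values(5)
print(values)   # something like <generator object return_n_values at 0x...>
```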

In the code above, we create a variable values , which is the result of calling our generator function with an argument of 5 passed in. When we print the value of values , a generator object is returned.

So, how do we access the values in our generator object? This is done using the next() function, which calls the generator’s internal .__next__() method. Let’s see how this works in Python:

We can see here that the value of 0 is returned. However, intuitively, we know that the values of 0 through 4 should be returned. Because a Python generator remembers the function’s state, we can call the next() function multiple times. Let’s call it a few more times:

In this case, we’ve yielded all of the values that the while loop will accept. Let’s see what happens when we call the next() function a sixth time:

We can see in the code sample above that when the condition of our while loop is no longer True , Python will raise StopIteration .
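Putting those steps together, here is a sketch of calling next() five times and then a sixth time (the StopIteration is caught so the snippet runs to completion):

```python
def return_n_values(n):
    num = 0
    while num < n:
        yield num
        num += 1


values = return_n_values(5)
print(next(values))  # 0
print(next(values))  # 1
print(next(values))  # 2
print(next(values))  # 3
print(next(values))  # 4

try:
    next(values)     # sixth call: num < n is now False
except StopIteration:
    print("StopIteration")
```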

In the next section, you’ll learn how to create a Python generator using a for loop.

Creating a Python Generator with a For Loop

In the previous example, you learned how to create and use a simple generator. However, the example above is complicated by the fact that we’re yielding a value and then incrementing it. This can often make generators much more difficult for beginners and novices to understand.

Instead, we can use a for loop, rather than a while loop, for simpler generators. Let’s rewrite our previous generator using a for loop to make the process a little more intuitive:
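A sketch of the for-loop version, keeping the name return_n_values():

```python
def return_n_values(n):
    for num in range(n):   # range(n) produces 0, 1, ..., n - 1
        yield num
```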

In the code block above, we used a for loop instead of a while loop. We used the Python range() function to create a range of values from 0 up to, but not including, n. This simplifies the generator a little bit, making it more approachable to readers of your code.

Unpacking a Generator with a For Loop

In many cases, you’ll see generators wrapped inside of for loops, in order to exhaust all possible yields. In these cases, the benefit of generators is less about remembering the state (though this is used, of course, internally), and more about using memory wisely.
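A sketch of exhausting the generator with a for loop, printing the values on one line:

```python
def return_n_values(n):
    for num in range(n):
        yield num


for value in return_n_values(5):
    print(value, end=" ")   # end=" " replaces the default "\n"
print()
# 0 1 2 3 4
```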

In the code block above, we used a for loop to loop over each iteration of the generator. This implicitly calls the __next__() method. Note that we’re using the optional end= parameter of the print() function, which lets you replace the default newline character.

Creating a Python Generator with Multiple Yield Statements

A very interesting difference between Python functions and generators is that a generator can hold more than one yield expression! A function can also contain more than one return statement, but as soon as one of them executes, the function ends, so nothing after it runs.

Let’s take a look at an example where we define a generator with more than one yield statement:
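A minimal example with three yield statements; multiple_yields() is a hypothetical name:

```python
def multiple_yields():
    yield "first"
    yield "second"
    yield "third"


gen = multiple_yields()
print(next(gen))  # first
print(next(gen))  # second
print(next(gen))  # third
# A fourth next(gen) would raise StopIteration.
```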

In the code block above, our generator has more than one yield statement. When we call the first next() function, it returns only the first yielded value. We can keep calling the next() function until all the yielded values are depleted. At this point, the generator will raise a StopIteration exception.

Understanding the Performance of Python Generators

One of the key things to understand is why you’d want to use a Python generator. Because Python generators evaluate lazily, they use significantly less memory than building every value up front in a list or other container.

For example, if we created a generator that yielded the first one million numbers, the generator doesn’t actually hold the values. Meanwhile, by using a list comprehension to create a list of the first one million values, the list actually holds the values. Let’s see what this looks like:
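A sketch of the comparison using sys.getsizeof(). The exact byte counts depend on the Python version and platform, so the snippet prints them rather than assuming particular values:

```python
import sys

gen = (i for i in range(1_000_000))    # stores only its current state
lst = [i for i in range(1_000_000)]    # stores a reference to every value

gen_size = sys.getsizeof(gen)
list_size = sys.getsizeof(lst)
print(f"generator: {gen_size} bytes")
print(f"list:      {list_size} bytes")
print(f"ratio:     {list_size // gen_size}x")
```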

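In the code block above, we import the sys module, which gives us access to the getsizeof() function. We then print the size of both the generator and the list. In the original run, the list was over 75,000 times larger. The exact figures vary with the Python version and platform, and getsizeof() counts only the list object itself, not the integer objects it refers to, so the real difference in memory use is larger still.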

In the following section, you’ll learn how to simplify creating generators by using generator expressions.

Creating Python Generator Expressions

When you want to create one-off generators, using a function can seem redundant. Similar to list and dictionary comprehensions, Python allows you to create generator expressions. This simplifies the process of creating generators, especially for generators that you only need to use once.

In order to create a generator expression, you wrap the expression in parentheses. Say you wanted to create a generator that yields the numbers from zero through four. Then, you could write (i for i in range(5)).
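A sketch of that generator expression in use:

```python
values = (i for i in range(5))

print(next(values))  # 0
print(next(values))  # 1
print(next(values))  # 2
print(next(values))  # 3
print(next(values))  # 4
```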

In the example above, we used a generator expression to yield values from 0 to 4. We then call the next() function five times to print out the values in the generator.

In the following section, we’ll dive further into the yield statement.

Understanding the Python yield Statement

The Python yield statement can often feel unintuitive to newcomers to generators. What separates the yield statement from the return statement is that rather than ending the process, it simply suspends the current process.

The yield statement will suspend the process and return the yielded value. When the subsequent next() function is called, the process is resumed until the following value is yielded.

What is great about this is that the state of the process is saved. This means that Python will know where to pick up its iteration, allowing it to move forward without a problem.

How to Throw Exceptions in Python Generators Using throw

Python generators have a special method, .throw(), which raises an exception inside the generator at the point where it is currently paused. If the generator doesn’t handle the exception, it propagates back to the caller. This can be helpful if you know that an erroneous value may exist in the generator.

Let’s take a look at how we can use the .throw() method in a Python generator:
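A sketch that follows the steps described below. It assumes a ValueError as the thrown exception and wraps the loop in try/except so the error doesn’t end the program:

```python
values = (i for i in range(5))
seen = []

try:
    for value in values:
        if value == 3:
            # Raise the exception inside the generator, where it is paused.
            # The generator expression doesn't catch it, so it propagates
            # out of .throw() and into this loop.
            values.throw(ValueError("Bad value: 3"))
        seen.append(value)
        print(value)
except ValueError as exc:
    print(f"Caught: {exc}")
# Prints 0, 1, 2, then "Caught: Bad value: 3"
```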

Let’s break down how we can use the .throw() method to throw an exception in a Python generator:

- We create our generator using a generator expression

- We then use a for loop to loop over each value

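- Within the for loop, we use an if statement to check if the value is equal to 3. If it is, we call the .throw() method, which raises the exception inside the generator; since the generator doesn’t catch it, the error propagates back out to the loop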

In some cases, you may simply want to stop a generator, rather than throwing an exception. This is what you’ll learn in the following section.

How to Stop a Python Generator Using close

Python allows you to stop iterating over a generator by using its .close() method. This can be very helpful if you’re reading a file using a generator and you only want to read the file until a certain condition is met.

Let’s repeat our previous example, though we’ll stop the generator rather than throwing an exception:
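A sketch of the same loop, calling .close() when the value 3 appears:

```python
values = (i for i in range(5))
seen = []

for value in values:
    if value == 3:
        values.close()   # finish the generator; no exception reaches us
    seen.append(value)
    print(value)
# Prints 0, 1, 2, 3. When the loop asks for the next value, the closed
# generator raises StopIteration and the loop ends normally.
```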

In the code block above we used the .close() method to stop the iteration. While the example above is simple, it can be extended quite a lot. Imagine reading a file using Python – rather than reading the entire file, you may only want to read it until you find a given line.

In this tutorial, you learned how to use generators in Python, including how to interpret the yield expression and how to use generator expressions. You learned what the benefits of Python generators are and why they’re often referred to as lazy iteration. Then, you learned how they work and how they’re different from normal functions.


Nik Piepenbreier

Nik is the author of datagy.io and has over a decade of experience working with data analytics, data science, and Python. He specializes in teaching developers how to use Python for data science using hands-on tutorials.



Making sense of generators, coroutines, and “yield from” in Python

May 8, 2020 . By Reuven

Consider the following (ridiculous) Python function:
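The original listing isn’t shown here; something along these lines matches the description (the name myfunc is an assumption):

```python
def myfunc():
    return 1
    return 2   # never reached
    return 3   # never reached


print(myfunc())   # 1
```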

I can define the function, and then run it. What do I get back?

Not surprisingly, I get 1 back. That’s because Python reaches that first “return” statement and returns 1. There’s no need to continue onto the second and third “return” statements. (Actually, from Python’s perspective, those latter two statements don’t even exist; they are removed from the bytecode altogether at compilation time.)

What happens if I write my function a bit differently, using “yield” instead of “return”?

If I run my function now, I get the following:
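A sketch of the yield version, again using the assumed name myfunc:

```python
def myfunc():
    yield 1
    yield 2
    yield 3


g = myfunc()   # the function body has not run yet
print(g)       # something like <generator object myfunc at 0x...>
```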

That’s right: Because I used “yield” instead of “return”, running the function doesn’t execute the function body. Rather, I get back a generator object, meaning something that implements the iterator protocol. For this reason, the second kind of function (using “yield”) is called a “generator function,” although you’ll often hear people describe them as “generators.”

Because generators (i.e., the objects returned by generator functions) implement the iterator protocol, they can be put into “for” loops:
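A minimal sketch of putting the generator into a “for” loop (myfunc is an assumed name):

```python
def myfunc():
    yield 1
    yield 2
    yield 3


for one_item in myfunc():
    print(one_item)
# 1
# 2
# 3
```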

What do we get back?

How does this work? With each iteration, the body of the generator function runs until it reaches a “yield” statement, and that yielded value is returned to the “for” loop. And then, most significantly, the generator goes to sleep, pausing immediately after that “yield” statement executes. When the next iteration occurs, the function wakes up at the point where it was paused, and continues running, as if nothing at all had happened.

In other words:

- A generator function, when executed, returns a generator object.

- The generator object implements the iterator protocol, meaning that it knows what to do in a “for” loop.

- Each time the generator reaches a “yield” statement, it returns the yielded value to the “for” loop, and goes to sleep.

- With each successive iteration, the generator starts running from where it paused (i.e., just after the most recent “yield” statement)

- When the generator reaches the end of the function, or encounters a “return” statement, it raises a StopIteration exception, which is how Python iterators indicate that they’ve reached the end of the line.

We can simulate this all ourselves, as follows:
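Here is a sketch of that simulation, calling “next” by hand on a generator built from the assumed myfunc:

```python
def myfunc():
    yield 1
    yield 2
    yield 3


g = myfunc()
print(next(g))   # 1
print(next(g))   # 2
print(next(g))   # 3

try:
    next(g)      # the body has finished, so the generator is exhausted
except StopIteration:
    print("StopIteration")
```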

The “next” built-in function is how Python asks an iterator for … well, for the next object that it wants to produce. The response to “next” can either be an object or the StopIteration exception.

This kind of generator function can be quite useful: You can use it for caching, filtering, and treating infinite (or very large) data sets in smaller chunks.

But used in this way, generator functions are for one-way communication. We can retrieve information from a generator using “next”, but we cannot interact with it, modify its trajectory, or otherwise affect its execution while it’s running. (That’s not entirely true: The “throw” method allows you to force an exception to be raised within the generator, which you can use to affect what the generator should do.)

A number of years ago, Python introduced the “send” method for generators, and a modification of how “yield” can be used. Consider this code:

The above code looks a bit weird, in that “yield” is on the right side of an assignment statement. This means that “yield” must be providing a value to the generator. Where is it getting that value from?

Answer: From the “send” method, which can be invoked in place of the “next” function. The “send” method works just like “next”, except that you can pass any Python data structure you want into the generator. And whatever you send to the generator is then assigned to “x”.

Now, you need to “prime” it the first time with “next” (or “send(None)”); sending any other value to a generator that hasn’t started yet raises a TypeError. But other than that, it works just like any other generator, except that whatever you “send” becomes the value of the “yield” expression inside the coroutine. As before, each invocation of next/send will execute all of the code until and including the “yield” statement.

It might seem weird, but because the “yield” is on the right side of an assignment operator, and because the right side of an assignment always executes before the left side, the generator goes to sleep after yielding the value on the right side, but before assigning any value to the left side. When it wakes up, the first thing that happens is that the sent value is assigned to the left side.

Here’s how that can look:
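The original example isn’t reproduced here. As a hedged sketch, here is a hypothetical dialer() coroutine that waits for phone numbers sent to it:

```python
def dialer():
    # hypothetical example: the article's original listing is not shown
    status = "Ready to dial"
    while True:
        # Hand `status` to the caller and pause. When the caller uses
        # send(value), execution resumes here and value becomes `number`.
        number = yield status
        status = f"Dialing {number}"


d = dialer()
print(next(d))               # prime it: runs to the first yield -> Ready to dial
print(d.send("555-1234"))    # Dialing 555-1234
print(d.send("555-9876"))    # Dialing 555-9876
```

Calling d.send("555-1234") before the priming next(d) would raise a TypeError, because the coroutine hasn’t reached a “yield” yet.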

Now, this is admittedly pretty neat: Our coroutine hangs around, waiting for us to give it a number to dial.

For a long time, it seemed like such coroutines were solutions looking for problems. After all, what can you do with such a thing? From what I can tell, people in the Python world were excited about this sort of idea, but aside from a handful who really understood the potential, coroutines were ignored and seen as somewhere between weird and esoteric.

(I should add that the term “coroutine” has changed its meaning somewhat in the Python world over the last few years, as the “asyncio” library has gained in popularity. I have nothing against asyncio, and have been increasingly impressed with what it does, and how it does it. But that’s not the sort of coroutine I’m talking about here. Note that asyncio’s coroutines started off as generators, and there are still many things to understand in asyncio via generators. But that’s not my topic here.)

So, where do you use a generator-based coroutine? How can you think about it?

My suggestion: Think of it as an in-program microservice. A nanoservice, if you will, available to your Python program.

Why do I say this? Because the moment that you think of it this way, what you do and don’t want to do with coroutines becomes much clearer.

- Want to communicate with a database? Use a coroutine, whose local variables will stick around across queries, and can thus remain connected without using lots of ugly global variables. Send your SQL queries to the coroutine, and get back the query results.

- Want to communicate with an external network service, such as a stock-market quote system? Use a coroutine, to which you can send a tuple of symbol and date, and from which you’ll receive the latest information in a dictionary.

- Want to automatically translate files from one format to another? Use a coroutine, which can take input in one encoding/format and produce output in another encoding/format.

Let’s create two simple coroutines that demonstrate how this can work. First, a Pig Latin translator, which will receive strings in English and will return them translated into Pig Latin:

Our service coroutine is “pig_latin_translator”, which uses the “pl_sentence” function to do its translation work. Let’s fire it up:
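The original code isn’t shown; the following is a sketch under assumptions. pig_latin_translator and pl_sentence are the names used above, while pl_word is a hypothetical helper and the Pig Latin rules are simplified (lowercase words; vowel-initial words get “way”, everything else moves the first letter to the end and adds “ay”):

```python
def pl_word(word):
    # hypothetical helper with simplified Pig Latin rules
    if word[0] in "aeiou":
        return f"{word}way"
    return f"{word[1:]}{word[0]}ay"


def pl_sentence(sentence):
    return " ".join(pl_word(word) for word in sentence.split())


def pig_latin_translator():
    s = ""
    while True:
        s = yield pl_sentence(s)   # wait for the next English sentence
        if s is None:              # sending None shuts the service down
            break


translator = pig_latin_translator()
next(translator)                             # prime the coroutine
print(translator.send("hello out there"))   # ellohay outway heretay
```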

Amazing! Whenever we want to translate some English into Pig Latin, we can do so with our translator, sitting in memory and waiting to serve us. Perhaps this isn’t the most elegant or sophisticated use of coroutines, but it certainly works.

Let’s look at another example: A corporate support chatbot. You know, the sort of thing that appears on a company’s Web site, allows you to enter your complaints, and then actually helps you. No, wait — that’s science fiction; in reality, such chat bots are always unable to help, while telling you how important you are. Let’s create such an unhelpful chatbot:

This chatbot, as its name implies, waits for your input, and then ignores it entirely, returning a canned message meant to make you feel good about yourself and the service you’re getting. Of course, you don’t really feel good after such a conversation, but at least the company has saved on salaries, right?

But I digress.

Let’s see what happens when we run our chatbot:
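A sketch of such a chatbot; the name support_bot and the canned message are hypothetical:

```python
def support_bot():
    # hypothetical canned reply: the article's exact wording is not shown
    reply = "Thank you for contacting us. Your satisfaction is our priority!"
    while True:
        message = yield reply   # receive the complaint, then ignore it
        if message is None:     # sending None ends the conversation
            break


bot = support_bot()
next(bot)                                     # prime the coroutine
print(bot.send("My order never arrived."))   # the canned reply
print(bot.send("Can I talk to a human?"))    # the same canned reply
```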

A number of years ago, Python introduced a new form of “yield”, known as “yield from”. And I have to say that the documentation and examples are … well, they make the simple case very obvious and easy to understand, but make the hard case quite difficult to understand. I hope that I can clear that up.

The basic idea is that if you have a function, it’s normal to call other functions from within it. That’s a standard technique in programming, one which allows us to write shorter, more specific functions, as well as to take advantage of abstraction.

But what if you have a generator that wants to return data from another generator, or any other iterable? You could do something like this:

In other words, we turn to “g”, our generator. And with each iteration, we ask it for its next element. What does the generator do? It invokes a “for” loop on “data”. So with each iteration, we’re asking “g”, and “g” is asking “data”. We can shorten this code with “yield from”:
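A sketch of both versions. wrapper, g and data are the names used in the text; wrapper_with_loop is a hypothetical name for the longer version:

```python
def wrapper_with_loop(data):
    # explicit loop: ask `data` for each item and re-yield it
    for one_item in data:
        yield one_item


def wrapper(data):
    # delegate the whole iteration to `data`
    yield from data


data = "abc"
print(list(wrapper_with_loop(data)))   # ['a', 'b', 'c']

g = wrapper(data)
print(list(g))                         # ['a', 'b', 'c']
```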

We got the same result, even though the body of “wrapper” is now dramatically shorter. “yield from” basically lets us outsource the “yield” to another iterable, namely “data”. Our generator is basically saying, “I don’t want to deal with this any more, so I’ll just ask data to take over from here.”

This is the simple use case for “yield from”, and it’s not really very compelling. After all, did they need to add new syntax to the language in order to reduce our “for” loops? The answer is “no.”

So what is “yield from” used for? Consider the two coroutines that we wrote above, for Pig Latin and customer service. Imagine that the companies providing these services have now merged, and that we would like to have a single in-memory service that handles both of them. In other words, we would like to have a coroutine to which we can send “1” to translate Pig Latin and “2” to get customer service.

This all sounds fine, until we realize that we’re somehow going to need to get a value from the caller’s “send” method, and then pass it along to one of our coroutines. That’s going to look rather messy, no?

And so, the real reason to use “yield from” is when you have a coroutine that acts as an agent between its caller and other coroutines. By using “yield from”, you not only outsource the yielded values to a sub-generator, but you also allow that sub-generator to get inputs from the user. For example:

Now, what happens if we invoke this?
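A self-contained sketch, under assumptions: the sub-coroutines are simplified, hypothetical versions of the translator and chatbot (redefined here so the snippet runs on its own), and switchboard is an assumed name:

```python
def pl_sentence(sentence):
    # hypothetical, simplified Pig Latin helper
    words = []
    for word in sentence.split():
        if word[0] in "aeiou":
            words.append(f"{word}way")
        else:
            words.append(f"{word[1:]}{word[0]}ay")
    return " ".join(words)


def pig_latin_translator():
    s = ""
    while True:
        s = yield pl_sentence(s)
        if s is None:
            break


def support_bot():
    while True:
        message = yield "Your call is very important to us."
        if message is None:
            break


def switchboard():
    choice = yield "Send 1 for Pig Latin, 2 for customer support"
    if choice == 1:
        # from here on, values sent to the switchboard go to the translator
        yield from pig_latin_translator()
    elif choice == 2:
        yield from support_bot()


s = switchboard()
print(next(s))                  # the menu prompt
print(repr(s.send(1)))          # '' : the translator's first (priming) yield
print(s.send("hello there"))    # ellohay heretay
```

The empty string comes from the translator’s first yield: “yield from” primes the sub-coroutine automatically, and whatever it yields goes straight back to the caller.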

Fantastic, right? We’re calling “s.send” — meaning, our messages are being sent to the switchboard coroutine. But because it has used “yield from”, our message is passed along to “pig_latin_translator”. And when the translation is done, that coroutine yields its value, which bubbles up directly to the original caller.

Of course, I can also get customer support:

Pretty nifty, eh? But we can do even better, allowing people to go back from our sub-generator to our main one, and then choose a different one:

Here’s an example of how that would work:
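A sketch of the looping version, with the same kind of hypothetical, simplified sub-coroutines. Sending None to a sub-coroutine makes it break out of its loop, which ends the “yield from” and returns control to the switchboard’s menu:

```python
def pig_latin_translator():
    # hypothetical, simplified translator
    s = ""
    while True:
        words = [f"{w}way" if w[0] in "aeiou" else f"{w[1:]}{w[0]}ay"
                 for w in s.split()]
        s = yield " ".join(words)
        if s is None:
            break          # hand control back to the switchboard


def support_bot():
    # hypothetical, unhelpful chatbot
    while True:
        message = yield "Your call is very important to us."
        if message is None:
            break


def switchboard():
    while True:
        choice = yield "Send 1 for Pig Latin, 2 for customer support"
        if choice == 1:
            yield from pig_latin_translator()
        elif choice == 2:
            yield from support_bot()
        # any other choice simply shows the menu again


s = switchboard()
print(next(s))                    # the menu
s.send(1)                         # enter the translator
print(s.send("apple pie"))        # appleway iepay
print(s.send(None))               # translator ends: the menu comes back
print(s.send(2))                  # now talking to the support bot
print(s.send("It is broken"))     # Your call is very important to us.
```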

So, what have we seen here?

- Coroutines are like in-memory microservices, with state that remains across calls.

- We use “next” to prime a coroutine the first time, and then use “send” to deliver additional messages.

- If we want to provide a meta-microservice, or a coroutine that invokes other coroutines, then we can use “yield from”.

- “yield from” connects the initial “send” method with the sub-coroutine, effectively passing through the coroutine that’s using “yield from”.

I hope that this helps you to consider when and how to use coroutines — and also how you can use “yield from” in your code in more sophisticated ways than just avoiding “for” loops.


This article is very nice and well written. Yet I have a question: a function which sends requests to a database could be a coroutine, or the method of a class. In both cases, connection parameters could be stored, and the class or the coroutine would sit in memory waiting for requests. The advantage of the coroutine remains unclear to me. Where is the asynchronicity here? Will the code behave somehow differently? Be faster? Better handle blocking IO? If I use a coroutine vs. a method?

It’s mostly a matter of packaging and style. Objects give you a higher-level abstraction. Closures are probably a bit faster (but not much, necessarily), and keep things a bit more private.

To be honest, I usually think of this kind of coroutine as (a) an interesting intellectual exercise or (b) a way to start understanding the mindset of asyncio, which uses a different type of coroutine, but is still in the same direction.

That said, I recently spoke with people who are using precisely this kind of architecture for Web applications in Rust (not in Python). I was fascinated to hear what they had to say about it, and was a bit surprised to hear that it was really in use as an architecture!

For the most part, generator-based coroutines in Python are no longer really used.


In the last example when you send None what is going on?

He is breaking out of the Latin translator (there is an “if s is None: break”), and so the main message (the menu) is displayed.

Thank You very much. I really learned something new.

Thank you very much! This is just what needed!

I learned something new. Thank you!

Very helpful article, thanks. (Found it through a google search for `yield from`). FWIW, I noticed one typo: in your second generation switchboard example, you show the prompt after the switchboard assignment instead of after the initial `next()`.

Thanks, and I’ll fix the typo!

I also found this article through searching `yield from`. It was very confusing to me, but this article for sure helped me so much!


Understanding Python's "yield" Keyword

The yield keyword in Python is used to create generators. A generator is a type of iterator that produces items on the fly and can only be iterated over once. Because it does not hold all of its items in memory at the same time, a generator can use far less memory than an equivalent list, and this can also improve your application's performance.

In this article we'll explain how to use the yield keyword in Python and what it does exactly. But first, let's study the difference between a simple list and a generator, and then we will see how yield can be used to create more complex generators.

- Differences Between a List and Generator

In the following script we will create both a list and a generator and will try to see where they differ. First we'll create a simple list and check its type:
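The original script is not shown here. The following is a minimal sketch of this first step. It assumes the list holds the squares of 1 through 5; the input values are an assumption, though they are consistent with the length of 5 mentioned further below.

```python
# Build a list of squares with a list comprehension (input values assumed)
squared_list = [x ** 2 for x in [1, 2, 3, 4, 5]]

# type() reports the built-in list class
print(type(squared_list))  # <class 'list'>

# A list can be iterated over directly
for number in squared_list:
    print(number)
```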

When running this code you should see that the type displayed will be "list".

Now let's iterate over all the items in squared_list.

The above script will produce the following results:

Now let's create a generator and perform the same exact task:

To create a generator, you start exactly as you would with a list comprehension, but you use parentheses instead of square brackets. The above script will display "generator" as the type of the squared_gen variable. Now let's iterate over the generator using a for-loop.
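Here is a sketch of the generator version, using the same assumed input values as the list. Only the brackets change, yet the result is a generator object that produces each square when the loop asks for it.

```python
# Same expression, but the parentheses make it a generator expression
squared_gen = (x ** 2 for x in [1, 2, 3, 4, 5])

print(type(squared_gen))  # <class 'generator'>

# Values are produced one at a time as the loop requests them
for number in squared_gen:
    print(number)
```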

The output will be:

The output is the same as that of the list. So what is the difference? One of the main differences lies in the way lists and generators store elements in memory. A list stores all of its elements in memory at once, whereas a generator creates each item on the fly, hands it to the caller, and then moves on to the next element, discarding the previous one from memory.

One way to verify this is to check the length of both the list and the generator that we just created. len(squared_list) returns 5, while len(squared_gen) raises a TypeError because a generator has no length. Also, you can iterate over a list as many times as you want, but you can iterate over a generator only once. To iterate again, you must create the generator again.
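Both checks can be run side by side in a short sketch. It assumes the same squares of 1 through 5 as above.

```python
squared_list = [x ** 2 for x in [1, 2, 3, 4, 5]]
squared_gen = (x ** 2 for x in [1, 2, 3, 4, 5])

print(len(squared_list))  # 5

try:
    len(squared_gen)
    has_len = True
except TypeError as exc:
    has_len = False
    print("TypeError:", exc)  # e.g. object of type 'generator' has no len()

# A list can be traversed repeatedly...
first_pass = list(squared_list)
second_pass = list(squared_list)

# ...but a generator is exhausted after a single pass
gen_first = list(squared_gen)
gen_second = list(squared_gen)
print(gen_first, gen_second)  # [1, 4, 9, 16, 25] []
```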

- Using the Yield Keyword

Now that we know the difference between simple collections and generators, let us see how yield can help us define a generator.

In the previous examples, we created a generator implicitly using a generator expression, which is written like a list comprehension. However, in more complex scenarios we can instead create functions that return a generator. The yield keyword, unlike the return statement, turns a regular Python function into a generator function. This is used as an alternative to returning an entire list at once. This will again be explained with the help of some simple examples.

Again, let's first see what our function returns if we do not use the yield keyword. Execute the following script:

In this script, a function cube_numbers is created that accepts a list of numbers, takes their cubes, and returns the entire list to the caller. When this function is called, a list of cubes is returned and stored in the cubes variable. You can see from the output that the returned data is in fact a full list:
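A sketch of that list-returning version follows. It reuses the names cube_numbers, cube_list, and cubes from the text. The input [1, 2, 3, 4, 5] is an assumption.

```python
def cube_numbers(nums):
    # Build the whole result list in memory, then return it at once
    cube_list = []
    for i in nums:
        cube_list.append(i ** 3)
    return cube_list


cubes = cube_numbers([1, 2, 3, 4, 5])
print(cubes)  # [1, 8, 27, 64, 125]
```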

Now, instead of returning a list, let's modify the above script so that it returns a generator.


In the above script, the cube_numbers function returns a generator instead of a list of cubed numbers. It's very simple to create a generator using the yield keyword. Here we do not need the temporary cube_list variable to store the cubed numbers, so even our cube_numbers function is simpler. Also, no return statement is needed; instead, the yield keyword is used to hand back each cubed number inside the for-loop.
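A sketch of the generator version, with the same assumed input, might look like this. The temporary list and the return statement are gone.

```python
def cube_numbers(nums):
    # No temporary list: each cube is handed to the caller as it is computed
    for i in nums:
        yield i ** 3


cubes = cube_numbers([1, 2, 3, 4, 5])
print(cubes)  # e.g. <generator object cube_numbers at 0x7f...>
```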

Now, when the cube_numbers function is called, a generator is returned, which we can verify by running the code:

Even though we called the cube_numbers function, it doesn't actually execute at this point in time, and there are not yet any items stored in memory.

To get the function to execute, and therefore to get the next item from the generator, we use the built-in next() function. When you call next() on the generator for the first time, the function body runs until the yield keyword is encountered. Once yield is reached, the value passed to it is returned to the caller and the generator function is paused in its current state.

Here is how you get a value from your generator:

The above call returns 1. When you call next() on the generator again, the cube_numbers function resumes executing from where it previously stopped at yield, and it continues until it reaches yield again. Repeated next() calls keep returning the cubed values one by one until all the values in the list have been processed.

Once all the values have been produced, the next call to next() raises a StopIteration exception. It is important to mention that the cubes generator doesn't store any of these items in memory; rather, the cubed values are computed at runtime, returned, and forgotten. The only extra memory used is the state data for the generator itself, which is usually much less than a large list. This makes generators ideal for memory-intensive tasks.
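The pause-and-resume behaviour can be traced step by step with next(). This sketch uses a short assumed input so that the generator runs out quickly.

```python
def cube_numbers(nums):
    for i in nums:
        yield i ** 3


cubes = cube_numbers([1, 2, 3])

# Each next() runs the body up to the following yield, then pauses there
values = [next(cubes), next(cubes), next(cubes)]
print(values)  # [1, 8, 27]

exhausted = False
try:
    next(cubes)  # the loop inside cube_numbers has finished
except StopIteration:
    exhausted = True
    print("StopIteration: no more values")
```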

Instead of always having to call next(), you can use a "for" loop to iterate over a generator's values. When you use a "for" loop, next() is called behind the scenes until the generator raises StopIteration, at which point the loop ends.
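To make "behind the scenes" concrete, here is a rough hand-written equivalent of the for loop. It is a simplified model of what the loop does, not CPython's exact implementation.

```python
def cube_numbers(nums):
    for i in nums:
        yield i ** 3


# A plain for loop: next() and StopIteration are handled for you
for_loop_result = []
for cube in cube_numbers([1, 2, 3]):
    for_loop_result.append(cube)

# Roughly what the for loop does
manual_result = []
iterator = iter(cube_numbers([1, 2, 3]))
while True:
    try:
        cube = next(iterator)
    except StopIteration:
        break
    manual_result.append(cube)

print(for_loop_result, manual_result)  # [1, 8, 27] [1, 8, 27]
```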

- Optimized Performance

As mentioned earlier, generators are very handy when it comes to memory-intensive tasks, since they do not need to store all of the collection items in memory; rather, they generate items on the fly and discard each one as soon as the iterator moves to the next item.

In the previous examples the performance difference of a simple list and generator was not visible since the list sizes were so small. In this section we'll check out some examples where we can distinguish between the performance of lists and generators.

In the code below we will write a function that returns a list that contains 1 million dummy car objects. We will calculate the memory occupied by the process before and after calling the function (which creates the list).

Take a look at the following code:

Note: You may have to pip install psutil to get this code to work on your machine.

On the machine on which the code was run, the following results were obtained (yours may look slightly different):

Before the list was created, the process memory was 8 MB, and after the creation of the list with 1 million items, the occupied memory jumped to 334 MB. Also, the time it took to create the list was 1.58 seconds.

Now, let's repeat the above process but replace the list with generator. Execute the following script:

Here we have to use the for car in car_list_gen(1000000) loop to ensure that all 1,000,000 cars are actually generated.

The following results were obtained by executing the above script:

From the output, you can see that with a generator the memory difference is much smaller than before (from 8 MB to 40 MB), since the generator does not store the items in memory. Furthermore, generating all the cars was a bit faster as well, at 1.37 seconds, which is roughly 13% less time than the list creation took.
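The article's measurement script is not reproduced here. The following is a rough sketch of the same comparison. It swaps psutil for the standard library's tracemalloc module, so it reports the peak memory traced by Python's allocator rather than the process memory. The Car class, car_list, and the helper functions are hypothetical stand-ins, and n defaults to 100,000 to keep the run short.

```python
import time
import tracemalloc


class Car:
    # Hypothetical dummy object standing in for the article's car objects
    def __init__(self, car_id):
        self.car_id = car_id
        self.make = "Toyota"


def car_list(n):
    # Builds and returns all n objects at once
    return [Car(i) for i in range(n)]


def car_list_gen(n):
    # Yields one object at a time
    for i in range(n):
        yield Car(i)


def use_list(n):
    for car in car_list(n):
        pass


def use_generator(n):
    for car in car_list_gen(n):
        pass


def measure(consume, n):
    """Return (peak traced bytes, elapsed seconds) for consume(n)."""
    tracemalloc.start()
    start = time.perf_counter()
    consume(n)
    elapsed = time.perf_counter() - start
    _, peak = tracemalloc.get_traced_memory()
    tracemalloc.stop()
    return peak, elapsed


if __name__ == "__main__":
    n = 100_000
    list_peak, list_time = measure(use_list, n)
    gen_peak, gen_time = measure(use_generator, n)
    print(f"list:      peak {list_peak / 1e6:.1f} MB, {list_time:.2f} s")
    print(f"generator: peak {gen_peak / 1e6:.1f} MB, {gen_time:.2f} s")
```

Tracing slows down every allocation, so the timings are only useful for comparing the two runs with each other. The absolute figures will not match the ones reported above.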

Hopefully from this article you have a better understanding of the yield keyword, including how it's used, what it's used for, and why you'd want to use it. Python generators are a great way to improve the performance of your programs and they're very simple to use, but understanding when to use them is the challenge for many novice programmers.


© 2013- 2024 Stack Abuse. All rights reserved.


Table of Contents

Introduction

Understanding generators, explanation of 'yield from', tips and tricks, common error-prone cases and how to avoid them.

- To fully appreciate the 'yield from' construct, it's essential first to understand generators in Python. A generator function is a special type of function that returns an iterator, called a generator. It lets you iterate over a sequence of values, but unlike a list or tuple, it doesn't store the whole sequence in memory. Instead, it generates each value on the fly, which can be very memory-efficient for large sequences.

- Now, let's dive into the 'yield from' construct. 'yield from' is an expression that delegates part of a generator's operations to another generator or iterable. It can simplify a generator function, particularly one that would otherwise need an inner for loop whose only job is to re-yield each item of a sub-iterable.
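The sketch below compares an explicit inner loop with 'yield from'. The function names and data are illustrative only. The second part shows a documented detail from PEP 380: when 'yield from' delegates to another generator, the expression evaluates to that generator's return value.

```python
def flatten_with_loop(nested):
    # Explicit inner loop that only re-yields each item
    for sublist in nested:
        for item in sublist:
            yield item


def flatten_with_yield_from(nested):
    # yield from delegates to each sublist's iterator
    for sublist in nested:
        yield from sublist


data = [[1, 2], [3], [4, 5, 6]]
print(list(flatten_with_loop(data)))        # [1, 2, 3, 4, 5, 6]
print(list(flatten_with_yield_from(data)))  # [1, 2, 3, 4, 5, 6]


def inner():
    yield 1
    yield 2
    return "inner done"


def outer():
    # The value of yield from is whatever the subgenerator returns
    result = yield from inner()
    yield result


print(list(outer()))  # [1, 2, 'inner done']
```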

- Remember to use 'yield from' only when you want to delegate part of a generator's operations to another generator or iterable. If you're going to yield a single value, use 'yield' instead.

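- When using 'yield from' with an iterable, be aware of whether it produces an infinite sequence. If it does, the code after the 'yield from' never runs, and any consumer that tries to exhaust your generator (for example, list() or a for loop without a break) will never finish.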

- Keep in mind that 'yield from' only works in Python 3.3 and later. If you're using an earlier version of Python, you'll have to use a for loop instead.

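- One common mistake is misplacing 'yield' or 'yield from'. Both can only appear inside a function body, and any def that contains them automatically becomes a generator function. Adding one to a regular function therefore silently changes it to return a generator instead of a value. Using them outside a function (for example, at module level) raises a SyntaxError, and 'yield from' is also rejected with a SyntaxError inside an async def.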

- Another common error is trying to yield from a non-iterable object. 'yield from' requires an iterable, so make sure the object you're yielding from can be iterated over. If it can't, Python will raise a TypeError.

- To avoid these errors, always ensure that your 'yield from' statement is in a generator function and that the object you're yielding from is iterable.
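The error cases above can be reproduced in a small sketch. The function names are illustrative. compile() is used so that the module-level yield is checked without crashing the script.

```python
# 1. Adding yield to a def makes it a generator function; no error is raised
def greet():
    yield "hello"


print(type(greet()).__name__)  # generator

# 2. yield outside any function body is rejected when the code is compiled
syntax_error_raised = False
try:
    compile("yield 1", "<example>", "exec")
except SyntaxError as exc:
    syntax_error_raised = True
    print("SyntaxError:", exc.msg)  # e.g. 'yield' outside function


# 3. yield from a non-iterable fails with TypeError when that line runs
def bad():
    yield from 42


gen = bad()  # creating the generator does not run the body yet
type_error_raised = False
try:
    next(gen)
except TypeError as exc:
    type_error_raised = True
    print("TypeError:", exc)  # e.g. 'int' object is not iterable
```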

- 'yield from' is a powerful feature in Python that can make your code cleaner and more readable. It's an advanced topic, but once you understand it, it can be a great tool in your Python programming toolbox.

- Just remember to use 'yield from' appropriately and watch out for common error-prone cases. With practice, you'll be able to use 'yield from' effectively and write more efficient Python code.


Python yield Keyword


Yield three values from a function, one at a time:
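A minimal sketch of such an example follows. The function name and values are illustrative. Calling the function returns a generator, and the for loop pulls out one value per yield.

```python
def my_func():
    yield "Hello"
    yield 51
    yield "Good Bye"


x = my_func()  # a generator object, not a list
for z in x:
    print(z)   # Hello, then 51, then Good Bye
```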

Definition and Usage

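The yield keyword turns a function into a generator function: calling it returns a generator object that produces a sequence of values, one per yield, instead of a single value.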

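Unlike the return keyword, which stops further execution of the function, yield pauses the function after producing a value. Execution resumes right after that yield the next time a value is requested, and it continues this way until the end of the function.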

When you call a function that contains yield keyword(s), the return value is a generator object. Iterating over it (for example, with a for loop) produces one value for each yield that is executed.

Related Pages

Use the return keyword to return only one value, and stop further execution.

Read more about functions in our Python Functions Tutorial.



PEP 572 – Assignment Expressions

The importance of real code, exceptional cases, scope of the target, relative precedence of :=, change to evaluation order, differences between assignment expressions and assignment statements, specification changes during implementation, _pydecimal.py, datetime.py, sysconfig.py, simplifying list comprehensions, capturing condition values, changing the scope rules for comprehensions, alternative spellings, special-casing conditional statements, special-casing comprehensions, lowering operator precedence, allowing commas to the right, always requiring parentheses, why not just turn existing assignment into an expression, with assignment expressions, why bother with assignment statements, why not use a sublocal scope and prevent namespace pollution, style guide recommendations, acknowledgements, a numeric example, appendix b: rough code translations for comprehensions, appendix c: no changes to scope semantics.

This is a proposal for creating a way to assign to variables within an expression using the notation NAME := expr.

As part of this change, there is also an update to dictionary comprehension evaluation order to ensure key expressions are executed before value expressions (allowing the key to be bound to a name and then re-used as part of calculating the corresponding value).

During discussion of this PEP, the operator became informally known as “the walrus operator”. The construct’s formal name is “Assignment Expressions” (as per the PEP title), but they may also be referred to as “Named Expressions” (e.g. the CPython reference implementation uses that name internally).

Naming the result of an expression is an important part of programming, allowing a descriptive name to be used in place of a longer expression, and permitting reuse. Currently, this feature is available only in statement form, making it unavailable in list comprehensions and other expression contexts.

Additionally, naming sub-parts of a large expression can assist an interactive debugger, providing useful display hooks and partial results. Without a way to capture sub-expressions inline, this would require refactoring of the original code; with assignment expressions, this merely requires the insertion of a few name := markers. Removing the need to refactor reduces the likelihood that the code be inadvertently changed as part of debugging (a common cause of Heisenbugs), and is easier to dictate to another programmer.

During the development of this PEP many people (supporters and critics both) have had a tendency to focus on toy examples on the one hand, and on overly complex examples on the other.

The danger of toy examples is twofold: they are often too abstract to make anyone go “ooh, that’s compelling”, and they are easily refuted with “I would never write it that way anyway”.

The danger of overly complex examples is that they provide a convenient strawman for critics of the proposal to shoot down (“that’s obfuscated”).

Yet there is some use for both extremely simple and extremely complex examples: they are helpful to clarify the intended semantics. Therefore, there will be some of each below.

However, in order to be compelling , examples should be rooted in real code, i.e. code that was written without any thought of this PEP, as part of a useful application, however large or small. Tim Peters has been extremely helpful by going over his own personal code repository and picking examples of code he had written that (in his view) would have been clearer if rewritten with (sparing) use of assignment expressions. His conclusion: the current proposal would have allowed a modest but clear improvement in quite a few bits of code.

Another use of real code is to observe indirectly how much value programmers place on compactness. Guido van Rossum searched through a Dropbox code base and discovered some evidence that programmers value writing fewer lines over shorter lines.

Case in point: Guido found several examples where a programmer repeated a subexpression, slowing down the program, in order to save one line of code, e.g. instead of writing:

they would write:
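The original snippets are missing here. The sketch below shows the two shapes described, plus the assignment-expression form this PEP proposes. The regex and the input string are hypothetical.

```python
import re

pattern = re.compile(r"(\d+)")  # hypothetical pattern
data = "42 items"               # hypothetical input

# Two lines, one regex call
match = pattern.match(data)
group = match.group(1) if match else None

# One line, but the regex runs twice
group = pattern.match(data).group(1) if pattern.match(data) else None

# With an assignment expression: one line and a single call
# (the condition is evaluated first, so match is bound before it is used)
group = match.group(1) if (match := pattern.match(data)) else None
print(group)  # 42
```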

Another example illustrates that programmers sometimes do more work to save an extra level of indentation:

This code tries to match pattern2 even if pattern1 has a match (in which case the match on pattern2 is never used). The more efficient rewrite would have been:
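With an assignment expression, the efficient version no longer needs an extra level of nesting. The following is a sketch using hypothetical patterns. pattern2 is only tried when pattern1 fails.

```python
import re

pattern1 = re.compile(r"id=(\d+)")     # hypothetical patterns
pattern2 = re.compile(r"(\w+)@(\w+)")


def extract(data):
    if match1 := pattern1.match(data):
        return match1.group(1)
    elif match2 := pattern2.match(data):  # only reached if pattern1 failed
        return match2.group(2)
    return None


print(extract("id=7"))      # 7
print(extract("bob@host"))  # host
print(extract("???"))       # None
```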

Syntax and semantics

In most contexts where arbitrary Python expressions can be used, a named expression can appear. This is of the form NAME := expr where expr is any valid Python expression other than an unparenthesized tuple, and NAME is an identifier.

The value of such a named expression is the same as the incorporated expression, with the additional side-effect that the target is assigned that value:
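A minimal illustration of both halves of that rule follows. The names are arbitrary.

```python
# The expression's value is the assigned value...
print((x := 10) * 2)  # 20
# ...and the name is bound as a side effect
print(x)              # 10

values = [3, 8, 1]
if (n := len(values)) > 2:
    print(f"{n} values")  # 3 values
```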

There are a few places where assignment expressions are not allowed, in order to avoid ambiguities or user confusion:

This rule is included to simplify the choice for the user between an assignment statement and an assignment expression – there is no syntactic position where both are valid.

Again, this rule is included to avoid two visually similar ways of saying the same thing.

This rule is included to disallow excessively confusing code, and because parsing keyword arguments is complex enough already.

This rule is included to discourage side effects in a position whose exact semantics are already confusing to many users (cf. the common style recommendation against mutable default values), and also to echo the similar prohibition in calls (the previous bullet).

The reasoning here is similar to the two previous cases; this ungrouped assortment of symbols and operators composed of : and = is hard to read correctly.

This allows lambda to always bind less tightly than :=; having a name binding at the top level inside a lambda function is unlikely to be of value, as there is no way to make use of it. In cases where the name will be used more than once, the expression is likely to need parenthesizing anyway, so this prohibition will rarely affect code.

This shows that what looks like an assignment operator in an f-string is not always an assignment operator. The f-string parser uses : to indicate formatting options. To preserve backwards compatibility, assignment operator usage inside of f-strings must be parenthesized. As noted above, this usage of the assignment operator is not recommended.
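The code for these exceptional cases is missing above. One way to check them is to compile each snippet without running it. This is a sketch, and the undefined names f and foo are never executed.

```python
def is_valid(source):
    """Return True if source compiles, False on SyntaxError (nothing is run)."""
    try:
        compile(source, "<example>", "exec")
    except SyntaxError:
        return False
    return True


invalid = [
    "y := f(x)",                           # unparenthesized expression statement
    "y0 = y1 := f(x)",                     # unparenthesized right-hand side
    "foo(x = y := f(x))",                  # keyword argument value
    "def foo(answer = p := 42): pass",     # default value
    "def foo(answer: p := 42 = 5): pass",  # annotation
    "(lambda: x := 1)",                    # top level of a lambda body
]
valid = [
    "(y := f(x))",
    "y0 = (y1 := f(x))",
    "foo(x=(y := f(x)))",
    "def foo(answer=(p := 42)): pass",
    "lambda: (x := 1)",
    "f'{(x := 10)}'",
]

for src in invalid + valid:
    print(is_valid(src), src)

# Unparenthesized, ':=10' inside an f-string is a format spec, not an assignment
x = 10
print(repr(f"{x:=10}"))  # '        10' ('=' alignment, width 10)
```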

An assignment expression does not introduce a new scope. In most cases the scope in which the target will be bound is self-explanatory: it is the current scope. If this scope contains a nonlocal or global declaration for the target, the assignment expression honors that. A lambda (being an explicit, if anonymous, function definition) counts as a scope for this purpose.

There is one special case: an assignment expression occurring in a list, set or dict comprehension or in a generator expression (below collectively referred to as “comprehensions”) binds the target in the containing scope, honoring a nonlocal or global declaration for the target in that scope, if one exists. For the purpose of this rule the containing scope of a nested comprehension is the scope that contains the outermost comprehension. A lambda counts as a containing scope.

The motivation for this special case is twofold. First, it allows us to conveniently capture a “witness” for an any() expression, or a counterexample for all(), for example:

Second, it allows a compact way of updating mutable state from a comprehension, for example:
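The following sketch shows both motivations with small made-up inputs. In each case the target of := is bound in the scope that contains the comprehension or generator expression.

```python
lines = ["first line", "# a comment", "last line"]

# any() stops at the first match, and comment keeps the witness
if any((comment := line).startswith("#") for line in lines):
    print("First comment:", comment)  # First comment: # a comment
else:
    print("There are no comments")

# A running total updated from inside a list comprehension
values = [1, 2, 3, 4]
total = 0
partial_sums = [total := total + v for v in values]
print(partial_sums, total)  # [1, 3, 6, 10] 10
```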

However, an assignment expression target name cannot be the same as a for -target name appearing in any comprehension containing the assignment expression. The latter names are local to the comprehension in which they appear, so it would be contradictory for a contained use of the same name to refer to the scope containing the outermost comprehension instead.

For example, [i := i+1 for i in range(5)] is invalid: the for i part establishes that i is local to the comprehension, but the i := part insists that i is not local to the comprehension. The same reason makes these examples invalid too:

While it’s technically possible to assign consistent semantics to these cases, it’s difficult to determine whether those semantics actually make sense in the absence of real use cases. Accordingly, the reference implementation [1] will ensure that such cases raise SyntaxError, rather than executing with implementation defined behaviour.

This restriction applies even if the assignment expression is never executed:

For the comprehension body (the part before the first “for” keyword) and the filter expression (the part after “if” and before any nested “for”), this restriction applies solely to target names that are also used as iteration variables in the comprehension. Lambda expressions appearing in these positions introduce a new explicit function scope, and hence may use assignment expressions with no additional restrictions.

Due to design constraints in the reference implementation (the symbol table analyser cannot easily detect when names are re-used between the leftmost comprehension iterable expression and the rest of the comprehension), named expressions are disallowed entirely as part of comprehension iterable expressions (the part after each “in”, and before any subsequent “if” or “for” keyword):

A further exception applies when an assignment expression occurs in a comprehension whose containing scope is a class scope. If the rules above were to result in the target being assigned in that class’s scope, the assignment expression is expressly invalid. This case also raises SyntaxError:

(The reason for the latter exception is the implicit function scope created for comprehensions – there is currently no runtime mechanism for a function to refer to a variable in the containing class scope, and we do not want to add such a mechanism. If this issue ever gets resolved this special case may be removed from the specification of assignment expressions. Note that the problem already exists for using a variable defined in the class scope from a comprehension.)
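The examples for these comprehension rules are missing. The following sketch checks them with compile(), so nothing is executed and the undefined name stuff is never looked up. All snippets are illustrative.

```python
def is_valid(source):
    """Return True if source compiles, False on SyntaxError (nothing is run)."""
    try:
        compile(source, "<example>", "exec")
    except SyntaxError:
        return False
    return True


rejected = [
    "[i := i + 1 for i in range(5)]",                     # rebinds the iteration variable
    "[[(j := j) for i in range(5)] for j in range(5)]",   # ...of an outer comprehension
    "[False and (i := 0) for i, j in stuff]",             # rejected though never executed
    "[x for x in (y := [1, 2, 3])]",                      # inside the iterable expression
    "class Example:\n    [(j := i) for i in range(5)]\n", # would bind in class scope
]
accepted = [
    "[(last := i) for i in range(5)]",  # target differs from every iteration name
]

for src in rejected + accepted:
    print(is_valid(src), src.splitlines()[0])
```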

See Appendix B for some examples of how the rules for targets in comprehensions translate to equivalent code.

The := operator groups more tightly than a comma in all syntactic positions where it is legal, but less tightly than all other operators, including or, and, not, and conditional expressions (A if C else B). As follows from section “Exceptional cases” above, it is never allowed at the same level as =. In case a different grouping is desired, parentheses should be used.

The := operator may be used directly in a positional function call argument; however it is invalid directly in a keyword argument.
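The grouping and call-argument rules are easy to get wrong, so here is a small sketch. The function f is a hypothetical helper.

```python
def f(v):
    return v * 2


a = [1, 2, 3]

# := binds less tightly than '>', so this captures the comparison result
if flag := len(a) > 2:
    print(flag)  # True

# Parentheses capture the length itself
if (n := len(a)) > 2:
    print(n)  # 3

# Commas bind more loosely: this builds a tuple and sets x to 1
t = (x := 1, 2)
print(t, x)  # (1, 2) 1

# Allowed directly as a positional argument...
print(f(y := 5), y)  # 10 5
# ...but f(v=y := 5) is a SyntaxError; write f(v=(y := 5)) instead
```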

Some examples to clarify what’s technically valid or invalid:

Most of the “valid” examples above are not recommended, since human readers of Python source code who are quickly glancing at some code may miss the distinction. But simple cases are not objectionable:

(This PEP recommends always putting spaces around :=, similar to PEP 8’s recommendation for = when used for assignment, whereas the latter disallows spaces around = used for keyword arguments.)

In order to have precisely defined semantics, the proposal requires evaluation order to be well-defined. This is technically not a new requirement, as function calls may already have side effects. Python already has a rule that subexpressions are generally evaluated from left to right. However, assignment expressions make these side effects more visible, and we propose a single change to the current evaluation order:

- In a dict comprehension {X: Y for ...} , Y is currently evaluated before X . We propose to change this so that X is evaluated before Y . (In a dict display like {X: Y} this is already the case, and also in dict((X, Y) for ...) which should clearly be equivalent to the dict comprehension.)
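The new order can be observed with a tracing helper. The sketch below assumes Python 3.8 or later, where this change took effect. The names trace and order are illustrative.

```python
order = []


def trace(label, value):
    # Record when each part of the dict comprehension is evaluated
    order.append(label)
    return value


d = {trace("key", k): trace("value", k * 10) for k in range(2)}
print(order)  # ['key', 'value', 'key', 'value']
print(d)      # {0: 0, 1: 10}

# Because the key is evaluated first, it can be named and reused in the value
shout = {(upper := w.upper()): upper + "!" for w in ["a", "b"]}
print(shout)  # {'A': 'A!', 'B': 'B!'}
```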

Most importantly, since := is an expression, it can be used in contexts where statements are illegal, including lambda functions and comprehensions.

Conversely, assignment expressions don’t support the advanced features found in assignment statements:

- Multiple targets are not directly supported:

      x = y = z = 0  # Equivalent: (z := (y := (x := 0)))

- Single assignment targets other than a single NAME are not supported:

      # No equivalent
      a[i] = x
      self.rest = []

- Priority around commas is different:

      x = 1, 2     # Sets x to (1, 2)
      (x := 1, 2)  # Sets x to 1

- Iterable packing and unpacking (both regular or extended forms) are not supported:

      # Equivalent needs extra parentheses
      loc = x, y  # Use (loc := (x, y))
      info = name, phone, *rest  # Use (info := (name, phone, *rest))

      # No equivalent
      px, py, pz = position
      name, phone, email, *other_info = contact

- Inline type annotations are not supported:

      # Closest equivalent is "p: Optional[int]" as a separate declaration
      p: Optional[int] = None

- Augmented assignment is not supported:

      total += tax  # Equivalent: (total := total + tax)

The following changes have been made based on implementation experience and additional review after the PEP was first accepted and before Python 3.8 was released:

- for consistency with other similar exceptions, and to avoid locking in an exception name that is not necessarily going to improve clarity for end users, the originally proposed TargetScopeError subclass of SyntaxError was dropped in favour of just raising SyntaxError directly. [3]

- due to a limitation in CPython’s symbol table analysis process, the reference implementation raises SyntaxError for all uses of named expressions inside comprehension iterable expressions, rather than only raising them when the named expression target conflicts with one of the iteration variables in the comprehension. This could be revisited given sufficiently compelling examples, but the extra complexity needed to implement the more selective restriction doesn’t seem worthwhile for purely hypothetical use cases.

Examples from the Python standard library

env_base is only used on these lines, putting its assignment on the if moves it as the “header” of the block.

- Current:

      env_base = os.environ.get("PYTHONUSERBASE", None)
      if env_base:
          return env_base

- Improved:

      if env_base := os.environ.get("PYTHONUSERBASE", None):
          return env_base

Avoid nested if and remove one indentation level.

- Current:

      if self._is_special:
          ans = self._check_nans(context=context)
          if ans:
              return ans

- Improved:

      if self._is_special and (ans := self._check_nans(context=context)):
          return ans

The code looks more regular and avoids multiple nested if statements. (See Appendix A for the origin of this example.)

- Current:

      reductor = dispatch_table.get(cls)
      if reductor:
          rv = reductor(x)
      else:
          reductor = getattr(x, "__reduce_ex__", None)
          if reductor:
              rv = reductor(4)
          else:
              reductor = getattr(x, "__reduce__", None)
              if reductor:
                  rv = reductor()
              else:
                  raise Error(
                      "un(deep)copyable object of type %s" % cls)

- Improved:

      if reductor := dispatch_table.get(cls):
          rv = reductor(x)
      elif reductor := getattr(x, "__reduce_ex__", None):
          rv = reductor(4)
      elif reductor := getattr(x, "__reduce__", None):
          rv = reductor()
      else:
          raise Error("un(deep)copyable object of type %s" % cls)

tz is only used for s += tz; moving its assignment inside the if helps to show its scope.

- Current:

      s = _format_time(self._hour, self._minute,
                       self._second, self._microsecond,
                       timespec)
      tz = self._tzstr()
      if tz:
          s += tz
      return s

- Improved:

      s = _format_time(self._hour, self._minute,
                       self._second, self._microsecond,
                       timespec)
      if tz := self._tzstr():
          s += tz
      return s

Calling fp.readline() in the while condition and calling .match() on the if lines make the code more compact without making it harder to understand.

- Current:

      while True:
          line = fp.readline()
          if not line:
              break
          m = define_rx.match(line)
          if m:
              n, v = m.group(1, 2)
              try:
                  v = int(v)
              except ValueError:
                  pass
              vars[n] = v
          else:
              m = undef_rx.match(line)
              if m:
                  vars[m.group(1)] = 0

- Improved:

      while line := fp.readline():
          if m := define_rx.match(line):
              n, v = m.group(1, 2)
              try:
                  v = int(v)
              except ValueError:
                  pass
              vars[n] = v
          elif m := undef_rx.match(line):
              vars[m.group(1)] = 0
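The improved loop can be run end to end on an in-memory file. In this sketch, define_rx and undef_rx are hypothetical, simplified patterns, and parse_defines is a made-up name. The result dict replaces vars so that the builtin is not shadowed.

```python
import io
import re

# Hypothetical, simplified patterns for "#define NAME VALUE" and "/* #undef NAME */"
define_rx = re.compile(r"#define (\w+) (.*)")
undef_rx = re.compile(r"/\* #undef (\w+) \*/")


def parse_defines(fp):
    result = {}
    while line := fp.readline():  # readline() returns "" at end of file
        if m := define_rx.match(line):
            n, v = m.group(1, 2)
            try:
                v = int(v)
            except ValueError:
                pass
            result[n] = v
        elif m := undef_rx.match(line):
            result[m.group(1)] = 0
    return result


sample = io.StringIO("#define FOO 1\n#define BAR yes\n/* #undef BAZ */\n")
print(parse_defines(sample))  # {'FOO': 1, 'BAR': 'yes', 'BAZ': 0}
```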

A list comprehension can map and filter efficiently by capturing the condition:

Similarly, a subexpression can be reused within the main expression, by giving it a name on first use:

Note that in both cases the variable y is bound in the containing scope (i.e. at the same level as results or stuff).
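Here is a sketch of both comprehension patterns. The function f is hypothetical and stands in for any computation you only want to run once per element.

```python
def f(x):
    # Hypothetical function standing in for an expensive computation
    return x - 2


input_data = [1, 2, 3, 4]

# Map and filter in one pass: f(x) is computed once per element
results = [(x, y, x / y) for x in input_data if (y := f(x)) > 0]
print(results)  # [(3, 1, 3.0), (4, 2, 2.0)]

# Name a subexpression on first use and reuse it in the same item
stuff = [[y := f(x), x / y] for x in range(3, 6)]
print(stuff[:2])  # [[1, 3.0], [2, 2.0]]

# y is visible here, in the containing scope
print(y)  # 3
```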

Assignment expressions can be used to good effect in the header of an if or while statement:

Particularly with the while loop, this can remove the need to have an infinite loop, an assignment, and a condition. It also creates a smooth parallel between a loop which simply uses a function call as its condition, and one which uses that as its condition but also uses the actual value.
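The contrast is easiest to see side by side. In this sketch, an in-memory io.BytesIO stream stands in for a file or socket.

```python
import io

stream = io.BytesIO(b"abcdefghij")  # stands in for a file or socket

# Without :=, the loop needs while True, an assignment, and a break
chunks = []
while True:
    chunk = stream.read(4)
    if not chunk:
        break
    chunks.append(chunk)

# With :=, the read and the test share the loop header
stream.seek(0)
chunks2 = []
while chunk := stream.read(4):
    chunks2.append(chunk)

print(chunks2)  # [b'abcd', b'efgh', b'ij']
```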

An example from the low-level UNIX world:

Rejected alternative proposals

Proposals broadly similar to this one have come up frequently on python-ideas. Below are a number of alternative syntaxes, some of them specific to comprehensions, which have been rejected in favour of the one given above.

A previous version of this PEP proposed subtle changes to the scope rules for comprehensions, to make them more usable in class scope and to unify the scope of the “outermost iterable” and the rest of the comprehension. However, this part of the proposal would have caused backwards incompatibilities, and has been withdrawn so the PEP can focus on assignment expressions.

Broadly the same semantics as the current proposal, but spelled differently.

Since EXPR as NAME already has meaning in import , except and with statements (with different semantics), this would create unnecessary confusion or require special-casing (e.g. to forbid assignment within the headers of these statements).

(Note that with EXPR as VAR does not simply assign the value of EXPR to VAR – it calls EXPR.__enter__() and assigns the result of that to VAR .)

Additional reasons to prefer := over this spelling include:

- In if f(x) as y the assignment target doesn’t jump out at you: it just reads like if f x blah blah, and it is too similar visually to if f(x) and y.

- In all other situations where an as clause is allowed, the keyword that starts the line leads readers to anticipate that clause, and the grammar ties that keyword closely to it:

  - import foo as bar

  - except Exc as var

  - with ctxmgr() as var

  To the contrary, the assignment expression does not belong to the if or while that starts the line, and we intentionally allow assignment expressions in other contexts as well.

- The parallel cadence between

  - NAME = EXPR

  - if NAME := EXPR

  reinforces the visual recognition of assignment expressions.

This syntax is inspired by languages such as R and Haskell, and some programmable calculators. (Note that a left-facing arrow y <- f(x) is not possible in Python, as it would be interpreted as less-than and unary minus.) This syntax has a slight advantage over ‘as’ in that it does not conflict with with , except and import , but otherwise is equivalent. But it is entirely unrelated to Python’s other use of -> (function return type annotations), and compared to := (which dates back to Algol-58) it has a much weaker tradition.

Adorning statement-local names with a leading dot (for example .y) has the advantage that leaked usage can be readily detected, removing some forms of syntactic ambiguity. However, this would be the only place in Python where a variable’s scope is encoded into its name, making refactoring harder.

Adding a where: clause to any statement to create local name bindings inverts execution order (the indented body is performed first, followed by the “header”). This requires a new keyword, unless an existing keyword is repurposed (most likely with:). See PEP 3150 for prior discussion on this subject (with the proposed keyword being given:).

The spelling TARGET from EXPR has fewer conflicts than as does (conflicting only with the raise Exc from Exc notation), but is otherwise comparable to it. Instead of paralleling with expr as target: (which can be useful but can also be confusing), this has no parallels, but is evocative.

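One of the most popular use-cases is if and while statements. Instead of a more general solution, this proposal enhances the syntax of these two statements to add a means of capturing the compared value. For example, if re.search(pat, text) as match: would bind the match object to match for use in the body.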

This works beautifully if and ONLY if the desired condition is based on the truthiness of the captured value. It is thus effective for specific use-cases (regex matches, socket reads that return '' when done), and completely useless in more complicated cases (e.g. where the condition is f(x) < 0 and you want to capture the value of f(x)). It also has no benefit to list comprehensions.

Advantages: No syntactic ambiguities. Disadvantages: Answers only a fraction of possible use-cases, even in if / while statements.
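For contrast, the general := form handles the case this proposal cannot: the test is a comparison, but the value is still needed. Here is a minimal sketch, with f() as a hypothetical stand-in for an expensive function:

```python
def f(x):
    """Hypothetical function standing in for an expensive computation."""
    return x - 10


def classify(x):
    # The condition compares the captured value, and the value itself
    # is still available afterwards without calling f() a second time.
    if (y := f(x)) < 0:
        return f"negative: {y}"
    return f"non-negative: {y}"


print(classify(3))     # negative: -7
print(classify(12))    # non-negative: 2
```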

Another common use-case is comprehensions (list/set/dict, and genexps). As above, proposals have been made for comprehension-specific solutions.

One option introduces a new keyword to bind the subexpression, spelled where, let or given, as in stuff = [(y, x/y) where y = f(x) for x in range(5)]. This brings the subexpression to a location in between the ‘for’ loop and the expression. It introduces an additional language keyword, which creates conflicts. Of the three, where reads the most cleanly, but also has the greatest potential for conflict (e.g. SQLAlchemy and numpy have where methods, as does tkinter.dnd.Icon in the standard library).

A second option works as above but reuses the with keyword, as in stuff = [(y, x/y) with y = f(x) for x in range(5)]. It doesn’t read too badly and needs no additional language keyword. It is restricted to comprehensions, though, and cannot as easily be transformed into “longhand” for-loop syntax. It has the C problem that an equals sign in an expression can now create a name binding, rather than performing a comparison. It would also raise the question of why “with NAME = EXPR:” cannot be used as a statement on its own.

A third option is like the with option, but uses as rather than an equals sign (with f(x) as y). This aligns syntactically with other uses of as for name binding. However, a simple transformation to for-loop longhand would create drastically different semantics: the meaning of with inside a comprehension would be completely different from its meaning as a stand-alone statement, while retaining identical syntax.

Regardless of the spelling chosen, this introduces a stark difference between comprehensions and the equivalent unrolled long-hand form of the loop. It is no longer possible to unwrap the loop into statement form without reworking any name bindings. The only keyword that can be repurposed to this task is with, thus giving it sneakily different semantics in a comprehension than in a statement; alternatively, a new keyword is needed, with all the costs therein.
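For comparison, the accepted := spelling keeps the comprehension and its long-hand loop in step. In this sketch f() is a hypothetical function. At module scope, the unrolled loop binds y in the same scope that the comprehension's := target binds it:

```python
def f(x):
    """Hypothetical stand-in for an expensive function."""
    return x * x + 1


# Comprehension form: f(x) is computed once per item and reused.
stuff = [[y := f(x), x / y] for x in range(5)]

# Equivalent long-hand loop: the binding of y carries over unchanged.
unrolled = []
for x in range(5):
    y = f(x)
    unrolled.append([y, x / y])

print(stuff == unrolled)    # True
```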

There are two logical precedences for the := operator. Either it should bind as loosely as possible, as does statement-assignment; or it should bind more tightly than comparison operators. Placing its precedence between the comparison and arithmetic operators (to be precise: just lower than bitwise OR) allows most uses inside while and if conditions to be spelled without parentheses, as it is most likely that you wish to capture the value of something, then perform a comparison on it. For example, starting from pos = -1, the loop while pos := buffer.find(search_term, pos + 1) >= 0: would then bind pos to each index returned by find().

Once find() returns -1, the loop terminates. If := binds as loosely as = does, this would capture the result of the comparison (generally either True or False), which is less useful.

While this behaviour would be convenient in many situations, it is also harder to explain than “the := operator behaves just like the assignment statement”, and as such, the precedence for := has been made as close as possible to that of = (with the exception that it binds tighter than comma).
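In Python 3.8 and later, := does bind as loosely as described. The following sketch uses an assumed buffer and search_term to illustrate this. Without parentheses the comparison result is captured, so parentheses are needed to capture the index returned by find():

```python
buffer = "a,b,c"
search_term = ","

# Unparenthesized, := binds loosely, so pos captures the result of
# the comparison (a bool), not the index.
if pos := buffer.find(search_term) >= 0:
    captured = pos
    print(captured)    # True

# Parentheses capture the index itself before the comparison.
positions = []
pos = -1
while (pos := buffer.find(search_term, pos + 1)) >= 0:
    positions.append(pos)
print(positions)       # [1, 3]
```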

Some critics have claimed that the assignment expressions should allow unparenthesized tuples on the right, so that (point := (x, y)) and (point := x, y) would be equivalent.

(With the current version of the proposal, the latter would be equivalent to ((point := x), y).)

However, adopting this stance would logically lead to the conclusion that, when used in a function call, assignment expressions also bind less tightly than comma. That would produce the confusing equivalence of foo(x := 1, y) and foo(x := (1, y)).

The less confusing option is to make := bind more tightly than comma.

It’s been proposed to just always require parentheses around an assignment expression. This would resolve many ambiguities, and indeed parentheses will frequently be needed to extract the desired subexpression. But in the following cases the extra parentheses feel redundant:
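Typical cases are the top level of an if condition and the sole argument of a short call. The sketch below shows both without extra parentheses. The regular expression and the helper names first_key and count_lines are assumptions for illustration:

```python
import re

pattern = re.compile(r"(\w+)=(\d+)")


def first_key(line):
    # Top level of an if condition: no parentheses needed.
    if match := pattern.match(line):
        return match.group(1)
    return None


def count_lines(text):
    # Sole argument of a short call: no parentheses needed either.
    n = len(lines := text.splitlines())
    return n, lines


print(first_key("width=80"))    # width
print(first_key("no match"))    # None
print(count_lines("a\nb"))      # (2, ['a', 'b'])
```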

Frequently Raised Objections

C and its derivatives define the = operator as an expression, rather than a statement as is Python’s way. This allows assignments in more contexts, including contexts where comparisons are more common. The syntactic similarity between if (x == y) and if (x = y) belies their drastically different semantics. Thus this proposal uses := to clarify the distinction.

The two forms have different flexibilities. The := operator can be used inside a larger expression; the = statement can be augmented to += and its friends, can be chained, and can assign to attributes and subscripts.
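The difference in flexibility can be checked directly. This sketch uses the built-in compile() to test which spellings are accepted. Box, data and is_valid are hypothetical names used only for illustration:

```python
class Box:
    """Hypothetical container with one attribute."""
    value = 0


box = Box()
data = {}

# Assignment statements can chain, augment, and target attributes
# and subscripts.
a = b = 0
a += 5
box.value = 1
data["key"] = 2

# The := operator only binds a plain name, but it can appear inside
# a larger expression.
total = (c := 3) + 4


def is_valid(source):
    """Return True if source compiles, False on SyntaxError."""
    try:
        compile(source, "<example>", "exec")
    except SyntaxError:
        return False
    return True


print(is_valid("(box.value := 1)"))    # False: no attribute targets
print(is_valid("(data['k'] := 1)"))    # False: no subscript targets
print(is_valid("(a += 1)"))            # False: statements are not expressions
print(is_valid("if x = 1: pass"))      # False: = is not an expression
print(is_valid("if x := 1: pass"))     # True
```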

Previous revisions of this proposal involved sublocal scope (restricted to a single statement), preventing name leakage and namespace pollution. While a definite advantage in a number of situations, this increases complexity in many others, and the costs are not justified by the benefits. In the interests of language simplicity, the name bindings created here are exactly equivalent to any other name bindings, including that usage at class or module scope will create externally-visible names. This is no different from for loops or other constructs, and can be solved the same way: del the name once it is no longer needed, or prefix it with an underscore.
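Here is a small sketch of that behaviour at module scope; the names values, doubled, last and leaked are arbitrary. The target of := in a comprehension stays visible afterwards, and del removes it like any other name:

```python
# At module scope, a name bound by := inside a comprehension becomes a
# module-level name, just as the target of a for loop would.
values = [3, 8, 1]
doubled = [last := v * 2 for v in values]
leaked = last
print(leaked)    # 2

# As with any other binding, remove it once it is no longer needed.
del last
try:
    last
except NameError:
    print("last is no longer defined")
```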

(The author wishes to thank Guido van Rossum and Christoph Groth for their suggestions to move the proposal in this direction. [2] )

As expression assignments can sometimes be used equivalently to statement assignments, the question of which should be preferred will arise. For the benefit of style guides such as PEP 8, two recommendations are suggested.

- If either assignment statements or assignment expressions can be used, prefer statements; they are a clear declaration of intent.

- If using assignment expressions would lead to ambiguity about execution order, restructure it to use statements instead.

The authors wish to thank Alyssa Coghlan and Steven D’Aprano for their considerable contributions to this proposal, and members of the core-mentorship mailing list for assistance with implementation.

Appendix A: Tim Peters’s findings

Here’s a brief essay Tim Peters wrote on the topic.

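I dislike “busy” lines of code, and also dislike putting conceptually unrelated logic on a single line. So, for example, instead of i = j = count = nerrors = 0, I prefer i = j = 0 followed by count = 0 and nerrors = 0 on lines of their own. So I suspected I’d find few places I’d want to use assignment expressions. I didn’t even consider them for lines already stretching halfway across the screen. In other cases, “unrelated” ruled: the two statements mylast = mylast[1] and yield mylast[0] are a vast improvement over the briefer yield (mylast := mylast[1])[0].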

The original two statements are doing entirely different conceptual things, and slamming them together is conceptually insane.

In other cases, combining related logic made it harder to understand, such as rewriting:

as the briefer:

The while test there is too subtle, crucially relying on strict left-to-right evaluation in a non-short-circuiting or method-chaining context. My brain isn’t wired that way.
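The subtlety can be seen in a small self-contained sketch. It applies the same shape of while test to an assumed example: summing the Taylor series for exp(x) until adding another term leaves the floating-point total unchanged. The function names and the series are illustrative choices, not the code under discussion:

```python
import math


def exp_dense(x):
    """Sum the series for exp(x) using the compact while test."""
    total, term, k = 0.0, 1.0, 0
    # The left operand 'total' is evaluated first (the old value); only
    # then does := rebind total to the new sum. The loop stops once
    # adding term leaves the total unchanged.
    while total != (total := total + term):
        k += 1
        term *= x / k
    return total


def exp_loop_and_a_half(x):
    """The same computation spelled out with an explicit old value."""
    total, term, k = 0.0, 1.0, 0
    while True:
        old = total
        total += term
        if old == total:
            return total
        k += 1
        term *= x / k


print(exp_dense(1.0), math.e)
```

Both functions perform the same additions in the same order. The compact version is correct only because Python evaluates the left operand of != before the := on its right.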

But cases like that were rare. Name binding is very frequent, and “sparse is better than dense” does not mean “almost empty is better than sparse”. For example, I have many functions that return None or 0 to communicate “I have nothing useful to return in this case, but since that’s expected often I’m not going to annoy you with an exception”. This is essentially the same as regular expression search functions returning None when there is no match. So there was lots of code of the form:

I find that clearer, and certainly a bit less typing and pattern-matching reading, as:
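Here is a minimal sketch of the two forms being compared. solution() is a hypothetical helper that returns None when it has nothing useful to report, standing in for the functions described:

```python
def solution(xs, n):
    """Hypothetical helper: the elements of xs greater than n, or None
    when there are none (an expected, non-exceptional outcome)."""
    found = [x for x in xs if x > n]
    return found or None


def report_two_lines(xs, n):
    # The two-statement form: bind, then test.
    result = solution(xs, n)
    if result:
        return f"found {result}"
    return "nothing"


def report_one_line(xs, n):
    # The assignment-expression form: bind and test in one line.
    if result := solution(xs, n):
        return f"found {result}"
    return "nothing"


print(report_one_line([1, 5, 9], 4))    # found [5, 9]
print(report_one_line([1, 2], 4))       # nothing
```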

It’s also nice to trade away a small amount of horizontal whitespace to get another _line_ of surrounding code on screen. I didn’t give much weight to this at first, but it was so very frequent it added up, and I soon enough became annoyed that I couldn’t actually run the briefer code. That surprised me!

There are other cases where assignment expressions really shine. Rather than pick another from my code, Kirill Balunov gave a lovely example from the standard library’s copy() function in copy.py:

The ever-increasing indentation is semantically misleading: the logic is conceptually flat, “the first test that succeeds wins”:

Using easy assignment expressions allows the visual structure of the code to emphasize the conceptual flatness of the logic; ever-increasing indentation obscured it.
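A simplified sketch of that flat, first-match-wins chain follows. It is modelled loosely on a reductor lookup of the kind described, but it is not the standard library's code. The function name pick_reduction, its explicit dispatch_table parameter, the Point class and the plain ValueError are all assumptions made to keep the example self-contained:

```python
def pick_reduction(x, dispatch_table):
    """Return a reduction of x using the first reductor that exists."""
    cls = type(x)
    # Each branch binds reductor and tests it in one step, so the
    # alternatives sit at the same indentation level.
    if reductor := dispatch_table.get(cls):
        rv = reductor(x)
    elif reductor := getattr(x, "__reduce_ex__", None):
        rv = reductor(4)
    elif reductor := getattr(x, "__reduce__", None):
        rv = reductor()
    else:
        raise ValueError(f"un(deep)copyable object of type {cls}")
    return rv


class Point:
    """Hypothetical class with no special copy support."""

    def __init__(self, x, y):
        self.x, self.y = x, y


table = {complex: lambda z: (complex, (z.real, z.imag))}
print(pick_reduction(1 + 2j, table))             # (<class 'complex'>, (1.0, 2.0))
print(type(pick_reduction(Point(1, 2), table)))  # <class 'tuple'>
```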

A smaller example from my code delighted me, both allowing to put inherently related logic in a single line, and allowing to remove an annoying “artificial” indentation level:

That if is about as long as I want my lines to get, but remains easy to follow.
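Here is a sketch of that kind of combined test. It assumes a hypothetical helper, shared_factor, that looks for a nontrivial common factor of a difference and a modulus. Short-circuiting means gcd() is never called when the difference is zero:

```python
from math import gcd


def shared_factor(x, x_base, n):
    """Hypothetical helper: a common factor > 1 of (x - x_base) and n, else None."""
    # Both names are bound and tested in one condition; if diff is 0,
    # the "and" short-circuits and g is never computed.
    if (diff := x - x_base) and (g := gcd(diff, n)) > 1:
        return g
    return None


print(shared_factor(10, 4, 9))    # 3
print(shared_factor(5, 5, 9))     # None
print(shared_factor(7, 5, 9))     # None
```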

So, in all, in most lines binding a name, I wouldn’t use assignment expressions, but because that construct is so very frequent, that leaves many places I would. In most of the latter, I found a small win that adds up due to how often it occurs, and in the rest I found a moderate to major win. I’d certainly use it more often than ternary if, but significantly less often than augmented assignment.

I have another example that quite impressed me at the time.

Where all variables are positive integers, and a is at least as large as the n’th root of x, this algorithm returns the floor of the n’th root of x, roughly doubling the number of accurate bits per iteration:

It’s not obvious why that works, but is no more obvious in the “loop and a half” form. It’s hard to prove correctness without building on the right insight (the “arithmetic mean - geometric mean inequality”), and knowing some non-trivial things about how nested floor functions behave. That is, the challenges are in the math, not really in the coding.

If you do know all that, then the assignment-expression form is easily read as “while the current guess is too large, get a smaller guess”, where the “too large?” test and the new guess share an expensive sub-expression.

To my eyes, the original form is harder to understand:
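Both forms of the integer n’th-root loop described above can be sketched as follows. The function names are hypothetical, and the sketch assumes the integer Newton step a = ((n-1)*a + d) // n, where d = x // a**(n-1) is the shared expensive sub-expression:

```python
def iroot_assign(x, n, a):
    """Floor of the n'th root of x, given a >= that root (all positive ints)."""
    # "While the current guess is too large, get a smaller guess";
    # d is shared by the test and the update.
    while a > (d := x // a ** (n - 1)):
        a = ((n - 1) * a + d) // n
    return a


def iroot_loop_and_a_half(x, n, a):
    """The same algorithm written as a loop and a half."""
    while True:
        d = x // a ** (n - 1)
        if a <= d:
            break
        a = ((n - 1) * a + d) // n
    return a


print(iroot_assign(1000, 3, 1000))    # 10
print(iroot_assign(999, 3, 1000))     # 9
```

Starting from a = x is always safe for positive x and n >= 2, because x is at least as large as its own n’th root.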

Appendix B: Rough code translations for comprehensions

This appendix attempts to clarify (though not specify) the rules when a target occurs in a comprehension or in a generator expression. For a number of illustrative examples we show the original code, containing a comprehension, and the translation, where the comprehension has been replaced by an equivalent generator function plus some scaffolding.

Since [x for ...] is equivalent to list(x for ...) these examples all use list comprehensions without loss of generality. And since these examples are meant to clarify edge cases of the rules, they aren’t trying to look like real code.

Note: comprehensions are already implemented via synthesizing nested generator functions like those in this appendix. The new part is adding appropriate declarations to establish the intended scope of assignment expression targets (the same scope they resolve to as if the assignment were performed in the block containing the outermost comprehension). For type inference purposes, these illustrative expansions do not imply that assignment expression targets are always Optional (but they do indicate the target binding scope).

Let’s start with a reminder of what code is generated for a generator expression without assignment expression.

- Original code (EXPR usually references VAR):

      def f():
          a = [EXPR for VAR in ITERABLE]

- Translation (let’s not worry about name conflicts):

      def f():
          def genexpr(iterator):
              for VAR in iterator:
                  yield EXPR
          a = list(genexpr(iter(ITERABLE)))
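Here is a concrete instance of this template; the choices EXPR = x * 10, VAR = x and ITERABLE = range(4) are arbitrary. The comprehension and its generator-function translation build the same list:

```python
def f_plain():
    return [x * 10 for x in range(4)]


def f_plain_translated():
    # The comprehension body becomes a nested generator function; the
    # outermost iterable is evaluated here and passed in as an iterator.
    def genexpr(iterator):
        for x in iterator:
            yield x * 10
    return list(genexpr(iter(range(4))))


print(f_plain() == f_plain_translated())    # True
```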

Let’s add a simple assignment expression.

- Original code:

      def f():
          a = [TARGET := EXPR for VAR in ITERABLE]

- Translation:

      def f():
          if False:
              TARGET = None  # Dead code to ensure TARGET is a local variable
          def genexpr(iterator):
              nonlocal TARGET
              for VAR in iterator:
                  TARGET = EXPR
                  yield TARGET
          a = list(genexpr(iter(ITERABLE)))
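Here is a concrete, runnable instance of this translation, with TARGET = last, EXPR = x * x and ITERABLE = range(4) chosen arbitrarily. Both versions produce the same list and leave the same final value bound in the enclosing function:

```python
def f_walrus():
    a = [last := x * x for x in range(4)]
    # The := target is bound in f_walrus's own scope, not the comprehension's.
    return a, last


def f_walrus_translated():
    if False:
        last = None  # Dead code: makes last a local variable of this function
    def genexpr(iterator):
        nonlocal last
        for x in iterator:
            last = x * x
            yield last
    a = list(genexpr(iter(range(4))))
    return a, last


print(f_walrus())               # ([0, 1, 4, 9], 9)
print(f_walrus_translated())    # ([0, 1, 4, 9], 9)
```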

Let’s add a global TARGET declaration in f().

- Original code:

      def f():
          global TARGET
          a = [TARGET := EXPR for VAR in ITERABLE]

- Translation:

      def f():
          global TARGET
          def genexpr(iterator):
              global TARGET
              for VAR in iterator:
                  TARGET = EXPR
                  yield TARGET
          a = list(genexpr(iter(ITERABLE)))
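With a global declaration, the same check works at module level. In this sketch the names last_g and last_t are arbitrary stand-ins for TARGET. They are kept distinct so that each version's effect on the module namespace is visible:

```python
def f_global():
    global last_g
    # The := target resolves to the global declared in f_global.
    return [last_g := x + 1 for x in range(3)]


def f_global_translated():
    global last_t

    def genexpr(iterator):
        global last_t
        for x in iterator:
            last_t = x + 1
            yield last_t

    return list(genexpr(iter(range(3))))


print(f_global(), last_g)               # [1, 2, 3] 3
print(f_global_translated(), last_t)    # [1, 2, 3] 3
```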

Or instead let’s add a nonlocal TARGET declaration in f().

- Original code:

      def g():
          TARGET = ...
          def f():
              nonlocal TARGET
              a = [TARGET := EXPR for VAR in ITERABLE]