

Research Techniques – Methods, Types and Examples


Research Techniques

Definition:

Research techniques refer to the various methods, processes, and tools used to collect, analyze, and interpret data for the purpose of answering research questions or testing hypotheses.

Methods of Research Techniques

The methods of research techniques refer to the overall approaches or frameworks that guide a research study, including the theoretical perspective, research design, sampling strategy, data collection and analysis techniques, and ethical considerations. Some common methods of research techniques are:

- Quantitative research: This is a research method that focuses on collecting and analyzing numerical data to identify patterns, associations, and cause-and-effect relationships. Examples of quantitative research techniques are surveys, experiments, and statistical analysis.

- Qualitative research: This is a research method that focuses on collecting and analyzing non-numerical data, such as text, images, and videos, to gain insights into the subjective experiences and perspectives of the participants. Examples of qualitative research techniques are interviews, focus groups, and content analysis.

- Mixed-methods research: This is a research method that combines quantitative and qualitative research techniques to provide a more comprehensive understanding of a research question. Examples of mixed-methods research techniques are surveys with open-ended questions and case studies with statistical analysis.

- Action research: This is a research method that focuses on solving real-world problems by collaborating with stakeholders and using a cyclical process of planning, action, and reflection. Examples of action research techniques are participatory action research and community-based participatory research.

- Experimental research: This is a research method that involves manipulating one or more variables and observing the effect on an outcome in order to establish cause-and-effect relationships. Examples of experimental research techniques are randomized controlled trials and quasi-experimental designs.

- Observational research: This is a research method that involves observing and recording behavior or phenomena in natural settings to gain insights into the subject of study. Examples of observational research techniques are naturalistic observation and structured observation.
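Randomized controlled trials, mentioned under experimental research above, rely on random assignment: each participant has the same chance of ending up in the treatment or the control group, so pre-existing differences are spread across groups by chance. The sketch below illustrates only that allocation step; the function name `randomly_assign` and the participant IDs are hypothetical, and real trials often add refinements such as blocking or stratified randomization.

```python
import random

def randomly_assign(participants, seed=None):
    """Shuffle participants and split them into two groups of (nearly) equal size.

    Hypothetical helper for illustration: with an odd number of participants,
    the control group receives the extra person.
    """
    rng = random.Random(seed)      # a seeded generator makes the allocation reproducible
    shuffled = list(participants)  # copy so the caller's list is left untouched
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"treatment": shuffled[:half], "control": shuffled[half:]}

# Hypothetical participant IDs P01..P10, for illustration only.
groups = randomly_assign([f"P{i:02d}" for i in range(1, 11)], seed=42)
print(groups)
```

Recording the seed lets another researcher reproduce exactly the same allocation.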

Types of Research Techniques

There are several types of research techniques used in various fields. Some of the most common ones are:

- Surveys: This is a quantitative research technique that uses questionnaires or structured interviews to gather information from a large group of people.

- Experiments: This is a scientific research technique that involves manipulating one or more variables and observing the effect on an outcome in order to establish cause-and-effect relationships.

- Case studies: This is a qualitative research technique that involves in-depth analysis of a single case, such as an individual, group, or event, to understand the complexities of the case.

- Observational studies: This is a research technique that involves observing and recording behavior or phenomena in natural settings to gain insights into the subject of study.

- Content analysis: This is a research technique used to analyze text or other media content to identify patterns, themes, or meanings.

- Focus groups: This is a research technique that involves gathering a small group of people to discuss a topic or issue, or to provide feedback on a product or service.

- Meta-analysis: This is a statistical research technique that involves combining data from multiple studies to assess the overall effect of a treatment or intervention.

- Action research: This is a research technique used to solve real-world problems by collaborating with stakeholders and using a cyclical process of planning, action, and reflection.

- Interviews: Interviews are another technique used in research, and they can be conducted in person or over the phone. They are often used to gather in-depth information about an individual’s experiences or opinions. For example, a researcher might conduct interviews with cancer patients to learn more about their experiences with treatment.
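The meta-analysis entry in the list above can be made concrete with the simplest pooling rule, a fixed-effect, inverse-variance weighted average: each study $i$ with effect estimate $y_i$ and standard error $SE_i$ gets weight $w_i = 1/SE_i^2$, the pooled effect is $\sum_i w_i y_i / \sum_i w_i$, and its standard error is $\sqrt{1/\sum_i w_i}$. This is a minimal sketch with made-up study results; the fixed-effect model assumes all studies estimate one common true effect, and random-effects models are commonly used instead when studies differ substantially.

```python
import math

def fixed_effect_pool(effects, standard_errors):
    """Inverse-variance weighted (fixed-effect) pooled estimate.

    Each study i gets weight w_i = 1 / SE_i**2, so more precise studies count more.
    Returns the pooled effect and its standard error sqrt(1 / sum(w_i)).
    """
    if len(effects) != len(standard_errors) or not effects:
        raise ValueError("need one standard error per effect, and at least one study")
    weights = [1.0 / se ** 2 for se in standard_errors]
    pooled = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    pooled_se = math.sqrt(1.0 / sum(weights))
    return pooled, pooled_se

# Hypothetical effect sizes (e.g. standardized mean differences) from three studies.
estimate, se = fixed_effect_pool([0.30, 0.10, 0.25], [0.10, 0.20, 0.05])
low, high = estimate - 1.96 * se, estimate + 1.96 * se  # approximate 95% confidence interval
print(f"pooled effect = {estimate:.3f}, 95% CI = ({low:.3f}, {high:.3f})")
```

The third study has the smallest standard error, so it dominates the pooled estimate.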

Example of Research Techniques

Here’s an example of how research techniques might be used by a student conducting a research project:

Let’s say a high school student is interested in investigating the impact of social media on mental health. They could use a variety of research techniques to gather data and analyze their findings, including:

- Literature review: The student could conduct a literature review to gather existing research studies, articles, and books that discuss the relationship between social media and mental health. This would provide a foundation of knowledge on the topic and help the student identify gaps in the research that they could address.

- Surveys: The student could design and distribute a survey to gather information from a sample of individuals about their social media usage and how it affects their mental health. The survey could include questions about the frequency of social media use, the types of content consumed, and how it makes them feel.

- Interviews: The student could interview individuals who have experienced mental health issues and ask them about their social media use and how it has affected their mental health. This could provide a more in-depth understanding of how social media affects people on an individual level.

- Data analysis: The student could use statistical software to analyze the survey data, which would allow them to identify patterns and relationships between social media usage and mental health outcomes. The interview transcripts could be analyzed qualitatively, for example by coding them for recurring themes.

- Report writing: Based on the findings from their research, the student could write a report that summarizes their research methods, findings, and conclusions. They could present their report to their peers or their teacher to share their insights on the topic.
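For the data analysis step in the student example, one common first check of a "relationship between social media usage and mental health outcomes" is the Pearson correlation coefficient $r$, which ranges from $-1$ to $1$. The sketch below computes it from its definition; the survey numbers (daily hours and a 1 to 10 well-being score) are invented for illustration, and in practice the student would use their statistical software's built-in function.

```python
import math

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length numeric sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two sequences of the same length (n >= 2)")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    # Covariance and variances, all without the 1/(n-1) factor, which cancels in r.
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)

# Hypothetical survey responses: daily hours on social media and a 1-10 well-being score.
hours = [1, 2, 2, 3, 4, 5, 6]
wellbeing = [8, 7, 8, 6, 5, 5, 3]
r = pearson_r(hours, wellbeing)
print(f"r = {r:.2f}")
```

A strongly negative $r$ would show an association in this sample, not that social media use causes lower well-being; establishing cause and effect requires an experimental design.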

Overall, by using a combination of research techniques, the student can investigate their research question thoroughly and systematically, and make meaningful contributions to the field of social media and mental health research.

Purpose of Research Techniques

The purposes of research techniques are as follows:

- To investigate and gain knowledge about a particular phenomenon or topic

- To generate new ideas and theories

- To test existing theories and hypotheses

- To identify and evaluate potential solutions to problems

- To gather data and evidence to inform decision-making

- To identify trends and patterns in data

- To explore cause-and-effect relationships between variables

- To develop and refine measurement tools and methodologies

- To establish the reliability and validity of research findings

- To communicate research findings to others clearly and concisely

Applications of Research Techniques

Here are some applications of research techniques:

- Scientific research: to explore, investigate and understand natural phenomena, and to generate new knowledge and theories.

- Market research: to collect and analyze data about consumer behavior, preferences, and trends, and to help businesses make informed decisions about product development, pricing, and marketing strategies.

- Medical research: to study diseases and their treatments, and to develop new medicines, therapies, and medical technologies.

- Social research: to explore and understand human behavior, attitudes, and values, and to inform public policy decisions related to education, health care, social welfare, and other areas.

- Educational research: to study teaching and learning processes, and to develop effective teaching methods and instructional materials.

- Environmental research: to investigate the impact of human activities on the environment, and to develop solutions to environmental problems.

- Engineering research: to design, develop, and improve products, processes, and systems, and to optimize their performance and efficiency.

- Criminal justice research: to study crime patterns, causes, and prevention strategies, and to evaluate the effectiveness of criminal justice policies and programs.

- Psychological research: to investigate human cognition, emotion, and behavior, and to develop interventions to address mental health issues.

- Historical research: to study past events, societies, and cultures, and to develop an understanding of how they shape our present.

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer



Research methods--quantitative, qualitative, and more: overview.


About Research Methods

This guide provides an overview of research methods, how to choose and use them, and supports and resources at UC Berkeley.

As Patten and Newhart note in the book Understanding Research Methods, "Research methods are the building blocks of the scientific enterprise. They are the 'how' for building systematic knowledge. The accumulation of knowledge through research is by its nature a collective endeavor. Each well-designed study provides evidence that may support, amend, refute, or deepen the understanding of existing knowledge...Decisions are important throughout the practice of research and are designed to help researchers collect evidence that includes the full spectrum of the phenomenon under study, to maintain logical rules, and to mitigate or account for possible sources of bias. In many ways, learning research methods is learning how to see and make these decisions."

The choice of methods varies by discipline, by the kind of phenomenon being studied and the data being used to study it, by the technology available, and more. This guide is an introduction, but if you don't see what you need here, always contact your subject librarian, and/or take a look to see if there's a library research guide that will answer your question.

Suggestions for changes and additions to this guide are welcome!

START HERE: SAGE Research Methods

Without question, the most comprehensive resource available from the library is SAGE Research Methods. The library provides an online guide to this one-stop collection, and some helpful links are below:

- SAGE Research Methods

- Little Green Books (Quantitative Methods)

- Little Blue Books (Qualitative Methods)

- Dictionaries and Encyclopedias

- Case studies of real research projects

- Sample datasets for hands-on practice

- Streaming video: see methods come to life

- Methodspace: a community for researchers

- SAGE Research Methods Course Mapping

Library Data Services at UC Berkeley

Library Data Services Program and Digital Scholarship Services

The LDSP offers a variety of services and tools! Its website has pages on each of the following topics: discovering data, managing data, collecting data, GIS data, text data mining, publishing data, digital scholarship, open science, and the Research Data Management Program.

Be sure also to check out the visual guide to where to seek assistance on campus with any research question you may have!

Library GIS Services

Other Data Services at Berkeley

- D-Lab: Supports Berkeley faculty, staff, and graduate students with research in data-intensive social science, including a wide range of training and workshop offerings.

- Dryad: A simple self-service tool for researchers to use in publishing their datasets. It provides tools for the effective publication of and access to research data.

- Geospatial Innovation Facility (GIF): Provides leadership and training across a broad array of integrated mapping technologies on campus.

- Research Data Management: A UC Berkeley guide and consulting service for research data management issues.

General Research Methods Resources

Here are some general resources for assistance:

- Assistance from ICPSR (you must create an account to access it): Getting Help with Data, and Resources for Students

- Wiley Stats Ref for background information on statistics topics

- Survey Documentation and Analysis (SDA): a program for easy web-based analysis of survey data.

Consultants

- D-Lab/Data Science Discovery Consultants: Request help with your research project from peer consultants.

- Research data management (RDM) consulting: Meet with RDM consultants before designing the data security, storage, and sharing aspects of your qualitative project.

- Statistics Department Consulting Services: Advanced graduate students, under faculty supervision, are available for consultations during specified hours in the Fall and Spring semesters.

Related Resources

- IRB / CPHS: Qualitative research projects with human subjects often require that you go through an ethics review.

- OURS (Office of Undergraduate Research and Scholarships): OURS supports undergraduates who want to embark on research projects and assistantships. In particular, check out their "Getting Started in Research" workshops.

- Sponsored Projects: Sponsored Projects works with researchers applying for major external grants.


- Last Updated: Apr 25, 2024 11:09 AM

- URL: https://guides.lib.berkeley.edu/researchmethods


Research Methods | Definition, Types, Examples

Research methods are specific procedures for collecting and analysing data. Developing your research methods is an integral part of your research design. When planning your methods, there are two key decisions you will make.

First, decide how you will collect data. Your methods depend on what type of data you need to answer your research question:

- Qualitative vs quantitative: Will your data take the form of words or numbers?

- Primary vs secondary: Will you collect original data yourself, or will you use data that have already been collected by someone else?

- Descriptive vs experimental: Will you take measurements of something as it is, or will you perform an experiment?

Second, decide how you will analyse the data.

- For quantitative data, you can use statistical analysis methods to test relationships between variables.

- For qualitative data, you can use methods such as thematic analysis to interpret patterns and meanings in the data.

Methods for collecting data

Data are the information that you collect for the purposes of answering your research question . The type of data you need depends on the aims of your research.

Qualitative vs quantitative data

Your choice of qualitative or quantitative data collection depends on the type of knowledge you want to develop.

For questions about ideas, experiences and meanings, or to study something that can’t be described numerically, collect qualitative data.

If you want to develop a more mechanistic understanding of a topic, or your research involves hypothesis testing, collect quantitative data.

You can also take a mixed methods approach, where you use both qualitative and quantitative research methods.

Primary vs secondary data

Primary data are any original information that you collect for the purposes of answering your research question (e.g. through surveys, observations and experiments). Secondary data are information that has already been collected by other researchers (e.g. in a government census or previous scientific studies).

If you are exploring a novel research question, you’ll probably need to collect primary data. But if you want to synthesise existing knowledge, analyse historical trends, or identify patterns on a large scale, secondary data might be a better choice.

Descriptive vs experimental data

In descriptive research, you collect data about your study subject without intervening. The validity of your research will depend on your sampling method.

In experimental research, you systematically intervene in a process and measure the outcome. The validity of your research will depend on your experimental design.

To conduct an experiment, you need to be able to vary your independent variable, precisely measure your dependent variable, and control for confounding variables. If it’s practically and ethically possible, this method is the best choice for answering questions about cause and effect.
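Once the independent variable has been varied and the dependent variable measured, one way to judge whether the difference between groups could have arisen by chance is a permutation test. It is used here as one illustrative choice among several tests (a t-test is a common alternative). The sketch assumes two randomly assigned groups; the scores and the function names are hypothetical.

```python
import random

def mean(values):
    return sum(values) / len(values)

def permutation_test(treatment, control, n_permutations=10_000, seed=0):
    """Two-sided permutation test for a difference in group means.

    If the intervention has no effect, the group labels are exchangeable, so we
    shuffle them and count how often a difference at least as large as the
    observed one arises by chance.
    """
    rng = random.Random(seed)
    observed = mean(treatment) - mean(control)
    pooled = list(treatment) + list(control)
    n_t = len(treatment)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        diff = mean(pooled[:n_t]) - mean(pooled[n_t:])
        if abs(diff) >= abs(observed) - 1e-12:  # small tolerance for floating-point rounding
            extreme += 1
    # Adding one to numerator and denominator keeps the p-value from being exactly zero.
    p_value = (extreme + 1) / (n_permutations + 1)
    return observed, p_value

# Hypothetical outcome scores (the dependent variable) after random assignment.
treatment = [12, 15, 14, 16, 13, 17]
control = [10, 11, 13, 9, 12, 11]
diff, p = permutation_test(treatment, control)
print(f"difference in means = {diff:.2f}, p ≈ {p:.4f}")
```

A small p-value suggests the difference is unlikely under "no effect"; the causal interpretation still rests on the random assignment and on controlling confounding variables.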

Methods for analysing data

Your data analysis methods will depend on the type of data you collect and how you prepare them for analysis.

Data can often be analysed both quantitatively and qualitatively. For example, survey responses could be analysed qualitatively by studying the meanings of responses or quantitatively by studying the frequencies of responses.
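The quantitative reading of survey responses mentioned above amounts to counting how often each answer occurs. A minimal sketch with Python's standard `collections.Counter`; the responses to a 5-point agreement question are invented for illustration.

```python
from collections import Counter

# Hypothetical answers to one closed survey question on a 5-point Likert scale.
responses = ["agree", "neutral", "agree", "strongly agree", "disagree", "agree", "neutral"]

counts = Counter(responses)
total = len(responses)
for answer, n in counts.most_common():
    # Absolute frequency and share of all responses.
    print(f"{answer:<15} {n}  ({n / total:.0%})")
```

The same responses could instead be analysed qualitatively, for example by asking why respondents chose "neutral" in a follow-up open-ended question.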

Qualitative analysis methods

Qualitative analysis is used to understand words, ideas, and experiences. You can use it to interpret data that were collected:

- From open-ended survey and interview questions, literature reviews, case studies, and other sources that use text rather than numbers.

- Using non-probability sampling methods.

Qualitative analysis tends to be quite flexible and relies on the researcher’s judgement, so you have to reflect carefully on your choices and assumptions.
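As a rough illustration of how coded qualitative data can be tallied, the sketch below applies a keyword-based codebook to open-ended answers. This is a deliberately crude, dictionary-based form of content analysis, not thematic analysis: the codebook, the keywords, and the answers are all hypothetical, and the researcher's judgement in defining and refining codes is exactly what the keywords stand in for.

```python
from collections import Counter

# Hypothetical codebook: each code is matched by a few keywords chosen by the researcher.
CODEBOOK = {
    "comparison": ["compare", "jealous", "perfect"],
    "connection": ["friends", "family", "support"],
    "sleep": ["sleep", "night", "tired"],
}

def code_response(text, codebook=CODEBOOK):
    """Return the set of codes whose keywords appear (as substrings) in a free-text answer."""
    lowered = text.lower()
    return {code for code, keywords in codebook.items()
            if any(keyword in lowered for keyword in keywords)}

# Hypothetical open-ended survey answers.
answers = [
    "I compare myself to others and feel jealous.",
    "It helps me keep in touch with friends and family.",
    "I scroll late at night and wake up tired.",
    "My friends post perfect photos and I compare myself.",
]
code_counts = Counter(code for answer in answers for code in code_response(answer))
print(code_counts.most_common())
```

Substring matching will miss synonyms and catch unintended words, which is why automated coding like this is usually checked against a researcher's manual reading.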

Quantitative analysis methods

Quantitative analysis uses numbers and statistics to understand frequencies, averages and correlations (in descriptive studies) or cause-and-effect relationships (in experiments).

You can use quantitative analysis to interpret data that were collected either:

- During an experiment.

- Using probability sampling methods.
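The descriptive side of quantitative analysis (frequencies, averages, spread) can be computed with Python's standard `statistics` module. The exam scores below are hypothetical and stand in for data from a probability sample.

```python
import statistics

# Hypothetical exam scores from a probability sample of students.
scores = [62, 71, 71, 75, 80, 84, 90]

summary = {
    "n": len(scores),
    "mean": statistics.mean(scores),
    "median": statistics.median(scores),
    "mode": statistics.mode(scores),
    "sd": statistics.stdev(scores),  # sample standard deviation (n - 1 denominator)
}
print(summary)
```

`statistics.stdev` estimates the population spread from a sample; `statistics.pstdev` would be the choice if the scores covered the entire population.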

Because the data are collected and analysed in a statistically valid way, the results of quantitative analysis can be easily standardised and shared among researchers.

Frequently asked questions about methodology

Quantitative research deals with numbers and statistics, while qualitative research deals with words and meanings.

Quantitative methods allow you to test a hypothesis by systematically collecting and analysing data, while qualitative methods allow you to explore ideas and experiences in depth.

In mixed methods research, you use both qualitative and quantitative data collection and analysis methods to answer your research question.

A sample is a subset of individuals from a larger population. Sampling means selecting the group that you will actually collect data from in your research.

For example, if you are researching the opinions of students in your university, you could survey a sample of 100 students.

Statistical sampling allows you to test a hypothesis about the characteristics of a population. There are various sampling methods you can use to ensure that your sample is representative of the population as a whole.
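Following the example of surveying 100 students, the sketch below draws a simple random sample (every student has the same chance of selection) and a proportionate stratified sample (each subgroup is represented in proportion to its size). The sampling frame of 2,000 student IDs split into undergraduates and postgraduates is hypothetical, as are the function names.

```python
import random

def simple_random_sample(population, n, seed=None):
    """Every member has the same chance of selection (sampling without replacement)."""
    return random.Random(seed).sample(population, n)

def stratified_sample(population, stratum_of, n, seed=None):
    """Proportionate stratified sample: each stratum contributes in proportion to its size.

    Rounding can make the total differ slightly from n; this sketch does not correct for it.
    """
    rng = random.Random(seed)
    strata = {}
    for member in population:
        strata.setdefault(stratum_of(member), []).append(member)
    sample = []
    for members in strata.values():
        k = round(n * len(members) / len(population))
        sample.extend(rng.sample(members, k))
    return sample

# Hypothetical sampling frame: 2,000 students, 1,200 undergraduates and 800 postgraduates.
students = [(f"S{i:04d}", "undergraduate" if i < 1200 else "postgraduate") for i in range(2000)]
srs = simple_random_sample(students, 100, seed=1)
strat = stratified_sample(students, stratum_of=lambda s: s[1], n=100, seed=1)
print(len(srs), sum(1 for s in strat if s[1] == "undergraduate"))  # 100 60
```

Stratifying guarantees that the 60/40 split of the population is mirrored exactly in the sample, whereas a simple random sample matches it only on average.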

The research methods you use depend on the type of data you need to answer your research question.

- If you want to measure something or test a hypothesis, use quantitative methods. If you want to explore ideas, thoughts, and meanings, use qualitative methods.

- If you want to analyse a large amount of readily available data, use secondary data. If you want data specific to your purposes with control over how they are generated, collect primary data.

- If you want to establish cause-and-effect relationships between variables, use experimental methods. If you want to understand the characteristics of a research subject, use descriptive methods.

Methodology refers to the overarching strategy and rationale of your research project. It involves studying the methods used in your field and the theories or principles behind them, in order to develop an approach that matches your objectives.

Methods are the specific tools and procedures you use to collect and analyse data (e.g. experiments, surveys, and statistical tests).

In shorter scientific papers, where the aim is to report the findings of a specific study, you might simply describe what you did in a methods section.

In a longer or more complex research project, such as a thesis or dissertation, you will probably include a methodology section, where you explain your approach to answering the research questions and cite relevant sources to support your choice of methods.


About methods, techniques, and research tools

The next step in the work of a researcher: the choice of a research method.

Let’s consider a research project as a journey: its purpose is to find answers to the research questions that matter to the researcher. The path to this goal is marked out by the research method. Your role as a social researcher is to consciously choose the most appropriate path and follow its principles and guidelines; which path is appropriate depends on the research question you have chosen. You also have to consider the reliability of your measurements: in the scientific community, only reliable data are considered worth analyzing, drawing conclusions from, and arguing about. Thus, the objectives of the research together with the research questions set the direction of your research, while the research method indicates how you will collect data. Research methods also determine the research tools that should be used to gather data and draw conclusions.

Note : The aim of this module is to provide practical tips and necessary knowledge for choosing the appropriate research method. During this module, you will see examples of research methods used in social sciences, but at this stage we will not discuss them in detail. You will learn more about the application of selected methods in the next section of the Toolbox: Conducting Research.

A research method is defined as a way of scientifically studying a phenomenon. It consists of specific activities within the research procedure, supplemented by a set of tools used to collect and analyze data.

The intentional, planned, and conscious choice of a research method is a precondition for the success of the entire research project. The choice of research method should therefore precede the research itself, and the principles of the method should then be applied consistently throughout the study (small deviations are possible in qualitative research, which you will learn about soon). A research method is characterized by its replicability: the results of the study should be verifiable, and the method should be described well enough for another researcher to replicate the study in another context. The diagram below presents the characteristics of a research method that you should keep in mind when implementing your research project.

The concept of the research method is related to another concept that is important to us: the research technique . For each method, we can indicate many techniques that in some way detail the sequence of research activities within the selected method, while also indicating the optimal research tools. In other words, while the research method quite generally dictates the way of scientific study, the technique tells what the process should look like and with what tools it should be carried out. Usually, we are talking about a data collection technique, but its choice also determines the method of data analysis and verification of research hypotheses.

Various classifications of research methods and techniques have been proposed in the academic literature. Sometimes one author classifies a procedure as a method, while another defines it as a technique. This problem is highlighted by Bäcker et al. (2016: 66-67):

in practice, the distinction between research method and research technique is vague. It is said that a method is more general than a research technique, but the line between general and specific is not set at one precise point. This distinction is somewhat intuitive (...) Ultimately, it is less important whether the set of methodological rules used in the study is called a method or a technique. It is more important that it is applied correctly and complies with the requirements.

When carrying out a research project, you will almost certainly come across the terms "methodology", "method", and "research technique". These terms look similar but should not be used interchangeably, and using them correctly shows that you are ready for the role of a researcher. In students' work, the word "methodology" is often misused: it simply means the study of scientific research methods and research procedures. You have already learned what we mean by the term "research method".

Top 21 must-have digital tools for researchers

Last updated: 12 May 2023

Reviewed by: Jean Kaluza

Research drives many decisions across various industries, including:

- Uncovering customer motivations and behaviors to design better products

- Assessing whether a market exists for your product or service

- Running clinical studies to develop a medical breakthrough

Conducting effective and shareable research can be a painstaking process. Manual processes are sluggish and archaic, and they can also be inaccurate. That’s where advanced online tools can help.

The right tools can enable businesses to lean into research for better forecasting, planning, and more reliable decisions.

Why do researchers need research tools?

Research is challenging and time-consuming. Analyzing data, running focus groups, reading research papers, and looking for useful insights take plenty of heavy lifting.

These days, researchers can’t just rely on manual processes. Instead, they’re using advanced tools that:

- Speed up the research process

- Enable new ways of reaching customers

- Improve organization and accuracy

- Allow better monitoring throughout the process

- Enhance collaboration across key stakeholders

- The most important digital tools for researchers

Some tools can help at every stage, making research simpler and faster.

They ensure accurate and efficient information collection, management, referencing, and analysis.

Some of the most important digital tools for researchers include:

Research management tools

Research management can be a complex and challenging process. Some tools address the various challenges that arise when referencing and managing papers.

Zotero

Billed as a personal research assistant, Zotero brings efficiency to the research process. It helps researchers collect, organize, annotate, and share research easily.

Zotero integrates with internet browsers, so researchers can easily save an article, publication, or research study on the platform for later.

The tool also has an advanced organizing system to allow users to label, tag, and categorize information for faster insights and a seamless analysis process.

Messy paper stacks, digital or physical, are a thing of the past with Paperpile. This reference management tool integrates with Google Docs, saving users time on citations and paper management.

Referencing, researching, and gaining insights is much cleaner and more productive, as all papers are in the same place. Plus, it’s easier to find a paper when you need it.

Acting as a single source of truth (SSOT), Dovetail houses research from the entire organization in a simple-to-use place. Researchers can use the all-in-one platform to collate and store data from interviews, forms, surveys, focus groups, and more.

Dovetail helps users quickly categorize and analyze data to uncover truly actionable insights. This helps organizations bring customer insights into every decision for better forecasting, planning, and decision-making.

Dovetail integrates with other helpful tools like Slack, Atlassian, Notion, and Zapier for a truly efficient workflow.

Putting together papers and referencing sources can be a huge time consumer. EndNote claims that researchers waste 200,000 hours per year formatting citations.

To address the issue, the tool formats citations automatically, building a bibliography while the user writes.

EndNote is also a cloud-based system that allows remote working, multiple-user interaction and collaboration, and seamless working on different devices.

Information survey tools

Surveys are a common way to gain data from customers. These tools can make the process simpler and more cost-effective.

With ready-made survey templates for collecting NPS data, customer effort scores, five-star surveys, and more, getting started with Delighted is straightforward.

Delighted helps teams collect and analyze survey feedback without needing any technical knowledge. The templates are customizable, so you can align the content with your brand. That way, the survey feels like it’s coming from your company, not a third party.

SurveyMonkey

With millions of customers worldwide, SurveyMonkey is another leader in online surveys. SurveyMonkey offers hundreds of templates that researchers can use to set up and deploy surveys quickly.

Whether your survey is about team performance, hotel feedback, post-event feedback, or an employee exit, SurveyMonkey has a ready-to-use template.

Typeform offers free templates that you can quickly embed, with one point of difference: it designs forms and surveys with people in mind, focusing on the respondent's enjoyment.

Typeform employs the ‘one question at a time’ method to keep engagement rates and completions high. It focuses on surveys that feel more like conversations than a list of questions.

Web data analysis tools

Collecting data can take time, especially technical information. Some tools make that process simpler.

For those conducting clinical research, data collection can be incredibly time-consuming. Teamscope provides an online platform to collect and manage data simply and easily.

Researchers and medical professionals often collect clinical data through paper forms or other digital means, which are easy to lose, tricky to manage, and challenging to collaborate on.

With Teamscope, you can easily collect, store, and electronically analyze data like patient-reported outcomes and surveys.

Heap is a digital insights platform providing context on the entire customer journey. This helps businesses improve customer feedback, conversion rates, and loyalty.

Through Heap, you can seamlessly view and analyze the customer journey across all platforms and touchpoints, whether through the app or website.

Another analytics tool, Smartlook, combines quantitative and qualitative analytics into one platform. This helps organizations understand user behavior and make crucial improvements.

Smartlook is useful for analyzing web pages, purchasing flows, and optimizing conversion rates.

Project management tools

Managing multiple research projects across many teams can be complex and challenging. Project management tools can ease the burden on researchers.

Visual productivity tool Trello helps research teams manage their projects more efficiently. Trello makes project tracking easier with:

A range of workflow options

Unique project board layouts

Advanced descriptions

Integrations

Trello also works as an SSOT to stay on top of projects and collaborate effectively as a team.

To connect research, workflows, and teams, Airtable provides a clean interactive interface.

With Airtable, it’s simple to place research projects in a list view, workstream, or road map to synthesize information and quickly collaborate. The Sync feature makes it easy to link all your research data to one place for faster action.

For product teams, Asana gathers development, copywriting, design, research teams, and product managers in one space.

As a task management platform, Asana offers all the expected features and more, including time-tracking and Jira integration. The platform offers reporting alongside data collection methods, so it’s a favorite for product teams in the tech space.

Grammar checker tools

Grammar tools help keep your research projects professional and well proofread.

No one’s perfect, especially when it comes to spelling, punctuation, and grammar. That’s where Grammarly can help.

Grammarly’s AI-powered platform reviews your content and suggests corrections. Through integrations with other platforms, such as Gmail, Google Docs, Twitter, and LinkedIn, it’s simple to spellcheck as you go.

Another helpful grammar tool is Trinka AI. Trinka is designed specifically for technical and academic writing. It doesn’t just correct mistakes in spelling, punctuation, and grammar; it also explains the errors it flags and offers additional information.

Researchers can also use Trinka to enhance their writing and:

Align it with technical and academic styles

Improve areas like syntax and word choice

Discover relevant suggestions based on the content topic

Plagiarism checker tools

Avoiding plagiarism is crucial for the integrity of research. Using checker tools can ensure your work is original.

Plagiarism checker Quetext uses DeepSearch™ technology to quickly sort through online content to search for signs of plagiarism.

With color coding, annotations, and an overall score, it’s easy to identify conflict areas and fix them accordingly.

Duplichecker

Another helpful plagiarism tool is Duplichecker, which scans pieces of content for issues. The service is free for content up to 1,000 words, with paid options available after that.

If it detects plagiarism, a percentage shows how much of the content is duplicated. However, the interface is relatively basic, offering little additional information.

Journal finder tools

Finding the right journals for your project can be challenging, especially given the amount of inaccurate or predatory content online. Journal finder tools can solve this issue.

Enago Journal Finder

The Enago Open Access Journal Finder sorts through online journals to verify their legitimacy. Through Enago, you can discover pre-vetted, high-quality journals through a validated journal index.

Enago’s search tool also helps users find relevant journals for their subject matter, speeding up the research process.

JournalFinder

JournalFinder is another journal tool that’s popular with academics and researchers. It speeds up the discovery of relevant journals by using a machine-learning algorithm.

This is useful for discovering key information and finding the right journals to publish and share your work in.

Social networking for researchers

Collaboration between researchers can improve the accuracy and sharing of information. Promoting research findings can also be essential for public health, safety, and more.

While typical social networks exist, some are specifically designed for academics.

ResearchGate

Networking platform ResearchGate encourages researchers to connect, collaborate, and share within the scientific community. With 20 million researchers on the platform, it's a popular choice.

ResearchGate was founded with the goal of advancing research. The platform provides topic pages that connect users within a field of expertise, plus access to millions of publications to help them stay up to date.

Academia is another commonly used platform that connects 220 million academics and researchers within their specialties.

The platform aims to accelerate research with discovery tools and grow a researcher’s audience to promote their ideas.

On Academia, users can access 47 million PDFs for free. They cover topics from mechanical engineering to applied economics and child psychology.

- Expedited research with the power of tools

For researchers, finding data and information can be time-consuming and complex to manage. That’s where the power of tools comes in.

Manual processes are slow, outdated, and have a larger potential for inaccuracies.

Leaning into tools can help researchers speed up their processes, conduct efficient research, boost their accuracy, and share their work effectively.

With tools available for project and data management, web data collection, and journal finding, researchers have plenty of assistance at their disposal.

When it comes to customers, advanced tools help teams stay connected with them and keep their needs and wants at the center of products and services.

What are primary research tools?

Primary research is data and information that you collect firsthand through surveys, customer interviews, or focus groups.

Secondary research is data and information from other sources, such as journals, research bodies, or online content.

Primary research tools support methods like surveys and customer interviews. You can use these tools to collect, store, and manage information effectively and uncover more accurate insights.

What is the difference between tools and methods in research?

Research methods relate to how researchers gather information and data.

For example, surveys, focus groups, customer interviews, and A/B testing are research methods that gather information.

On the other hand, tools assist areas of research. Researchers may use tools to more efficiently gather data, store data securely, or uncover insights.

Tools can improve research methods, ensuring efficiency and accuracy while reducing complexity.


Impact of NIH Research

Revolutionizing Science

Research Tools

NIH leads the charge on developing new research tools that have broad applications, pushing the boundaries on multiple research fronts.

Small Molecule Screening


Thanks to NIH, publicly funded researchers now have access to resources and tools with the capacity to screen large numbers of small molecules, helping them to more efficiently study genes and discover treatments for human diseases. Researchers used these resources to develop FDA-approved treatments for ulcerative colitis and relapsing forms of multiple sclerosis.

Image credit: National Center for Advancing Translational Sciences, NIH

- This advancement in small molecule research makes it easier for scientists to use and understand molecular compounds in basic research and drug development.

- The NIH Common Fund Molecular Libraries and Imaging Program also launched PubChem, an open chemistry database that contains information on chemical structures, properties, and biological activities of over 100 million compounds, including small molecules.

- NIH also developed tools and resources to help scientists conduct preclinical research, with a focus on small molecule screening.

Single Cell Analysis


NIH fostered a technological revolution in single cell analysis research, leading to the development of cutting-edge tools, methods, platforms, and cell atlases to identify and characterize features of single cells within a variety of human tissues. These technologies are available to the entire research community to foster additional breakthroughs in research.

Image credit: NIH

- The human body contains approximately 37 trillion cells, carefully organized in tissues to carry out the daily processes that keep the body alive and healthy. Analysis of single cells poses many technological challenges.

- Between 2012 and 2017, the NIH Common Fund Single Cell Analysis Program found a three-fold increase in the number of single cell analysis projects funded by NIH and an approximate doubling of relevant publications.

- Understanding cells at the individual level may lead to new understandings of development, health, aging, and disease.

Cryo-Electron Microscopy

NIH funded the development and dissemination of cryo-electron microscopy (cryo-EM), a tool that enables high-resolution images of proteins and other biological structures. Cryo-EM has helped researchers identify potential new therapeutic targets for vaccines and drugs.

Image credit: Huilin Li, Brookhaven National Laboratory, and Bruce Stillman, Cold Spring Harbor Laboratory

- An NIH-funded researcher was awarded the 2017 Nobel Prize in Chemistry for their work characterizing proteins using cryo-EM.

- Since 2018, the NIH-supported National Centers for Cryo-EM have enabled researchers to determine the structures of more than 300 proteins, including the SARS-CoV-2 spike protein, and have trained more than 1,000 investigators in this cutting-edge technique.

Cell Culture Technology


NIH scientists created Matrigel, a specialized gel that promotes cell growth on a 3-D surface that mimics the environment within the body. Today, Matrigel is widely used in labs around the world to study cells that were previously impossible to grow and to investigate complex cell activities in a more relevant environment.

Image credit: David Sone

- Prior to this invention, scientists grew cells in a flat layer in plastic culture dishes, which was not sufficient to grow specialized cells, like stem cells.

- Using Matrigel, researchers discovered new insights into nerve growth, the formation of blood vessels, and stem and cancer cell biology. It is also being used to screen cancer drugs and to support development of artificial tissues that can mimic organ function.

- More than 13,000 scientific papers have cited the use of Matrigel in their studies.

Cancer Genome Atlas


The Cancer Genome Atlas (TCGA) is a landmark NIH cancer genomics program that transformed our understanding of cancer by analyzing tumors from 11,000 patients with 33 different cancer types. Findings from TCGA identified new ways to prevent, diagnose, and treat cancers, such as gliomas and stomach cancer.

Image credit: Darryl Leja, National Human Genome Research Institute, NIH

- TCGA showed that different cancers can share molecular traits regardless of the organ or tissue they are found in. This enabled the emergence of precision medicine in oncology—cancer treatment based on molecular traits rather than the tissue in the body where the cancer started.

- TCGA generated over 2.5 petabytes (1 petabyte = 500 billion pages of standard printed text!) of data on genes, proteins, and their modifications in cancer by bringing together 20 collaborating institutions across the U.S. and Canada.

Recombinant DNA


Because of NIH-funded research on recombinant DNA technology, researchers developed techniques that can enable the production of large quantities of important peptides—the building blocks of proteins—which can be used to produce certain medicines.

Image credit: National Human Genome Research Institute, NIH

- Scientists use specialized molecules to snip out a specific gene from a long strand of DNA, creating recombinant DNA by inserting it into bacterial or yeast cells. These cells reproduce quickly and, following the gene’s instructions, make large amounts of the desired peptide.

- These techniques enabled the production of synthetic insulin to treat diabetes.

- Medicines produced using these techniques have been used for more than 30 years.

- In 1980, an NIH-funded researcher received a Nobel Prize for research on recombinant DNA.

Imaging Technology


Significant innovation in clinical imaging technology is a result of NIH-funded research. Imaging technologies now have higher resolution and greater sensitivity, with new categories of imaging, like digital 3D reconstructions, now being commonly used.

Image credit: Clinical Center, NIH

- A new type of positron emission tomography (PET) that looks for prostate cancer-specific proteins has been found to be 27% more accurate than standard methods for detecting prostate cancers.

- NIH-supported improvements in PET technologies resulted in a more sensitive technology that can capture scans in under a minute and reduce the dose of radioactive tracer given to patients.

- NIH-funded research led to the development of nuclear magnetic resonance imaging, work recognized with a Nobel Prize; it is the same technique used in clinical MRI.

- Molecular Libraries and Imaging: https://commonfund.nih.gov/molecularlibraries/index

- Preclinical Research Toolbox: https://ncats.nih.gov/expertise/preclinical

- PubChem: https://pubchemdocs.ncbi.nlm.nih.gov/statistics

- Molecular Libraries and Imaging Program Highlights: https://commonfund.nih.gov/Molecularlibraries/programhighlights

- Article: Ozanimod accepted for priority review by FDA for the treatment of ulcerative colitis: https://www.scripps.edu/news-and-events/press-room/2021/20210203-rosen-roberts-ozanimod-fda-ulcerative-colitis.html

- Article: U.S. Food and Drug Administration Approves Bristol Myers Squibb’s Zeposia® (ozanimod), an Oral Treatment for Adults with Moderately to Severely Active Ulcerative Colitis: https://news.bms.com/news/corporate-financial/2021/U.S.-Food-and-Drug-Administration-Approves-Bristol-Myers-Squibbs-Zeposia-ozanimod-an-Oral-Treatment-for-Adults-with-Moderately-to-Severely-Active-Ulcerative-Colitis1/default.aspx

- NIH Single Cell Analysis Program: https://commonfund.nih.gov/singlecell

- Roy AL, et al. Sci Adv. 2018;4(8):eaat8573. PMID: 30083611.

- The Human BioMolecular Atlas Program: https://commonfund.nih.gov/HuBMAP

- HuBMAP Data Portal: https://portal.hubmapconsortium.org/

- Cellular Senescence Network: https://commonfund.nih.gov/senescence

- LungMAP: https://www.lungmap.net/

- GenitoUrinary Development Molecular Anatomy Project: https://www.gudmap.org/

- Transformative High-Resolution Cryoelectron Microscopy Program: https://commonfund.nih.gov/CryoEM

- Cryo-Electron Microscopy Program Centers: https://www.cryoemcenters.org

- Zhang K, et al. bioRxiv [Preprint]. 2020:2020.08.11.245696. Update in: QRB Discov. 2020;1:e11. PMID: 32817943.

- NIH Nobel Laureates: https://www.nih.gov/about-nih/what-we-do/nih-almanac/nobel-laureates

- Cressey D, et al. Nature. 2017;550(7675):167. PMID: 29022937.

- Simian M, et al. J Cell Biol. 2017;216(1):31-40. PMID: 28031422.

- Article: An Interview with Hynda Kleinman: https://irp.nih.gov/catalyst/v21i4/alumni-news

- Kleinman HK, et al. Semin Cancer Biol. 2005;15(5):378-86. PMID: 15975825.

- Article: Hair today, gone tomorrow: NIDCR'S Hynda Kleinman takes off for new horizons: https://nihsearch.cit.nih.gov/catalyst/2005/05.11.01/page4.html

- The Cancer Genome Atlas Program: https://www.cancer.gov/about-nci/organization/ccg/research/structural-genomics/tcga

- NIGMS-Supported Nobelists: https://www.nigms.nih.gov/pages/GMNobelists.aspx

- Article: Celebrating the discovery and development of insulin: www.niddk.nih.gov/news/archive/2021/celebrating-discovery-development-insulin

- National Institute of General Medical Sciences. The New Genetics . 2010. https://nigms.nih.gov/education/Booklets/the-new-genetics/Documents/Booklet-The-New-Genetics.pdf

- Article: Commemorating the 50th Anniversary of the National Cancer Act (NCA50): Clinical Imaging — Then and Now: https://dctd.cancer.gov/NewsEvents/20210712_NCA50.htm?cid=soc_ig_en_enterprise_nca50

- EXPLORER Total Body PET Scanner: https://health.ucdavis.edu/radiology/myexam/PET/Equipment/explorer.html

- Badawi RD, et al. J Nucl Med. 2019;60(3):299-303. PMID: 30733314.

- Article: PSMA PET-CT Accurately Detects Prostate Cancer Spread, Trial Shows: https://www.cancer.gov/news-events/cancer-currents-blog/2020/prostate-cancer-psma-pet-ct-metastasis

- Magnetic Resonance Imaging (MRI): https://www.nibib.nih.gov/science-education/science-topics/magnetic-resonance-imaging-mri

This page last reviewed on March 1, 2023


Data Analysis Techniques in Research – Methods, Tools & Examples

Varun Saharawat is a seasoned professional in the fields of SEO and content writing. With a profound knowledge of the intricate aspects of these disciplines, Varun has established himself as a valuable asset in the world of digital marketing and online content creation.

Data analysis techniques in research are essential because they allow researchers to derive meaningful insights from data sets to support their hypotheses or research objectives.

While various groups, institutions, and professionals may approach data analysis differently, a common definition captures its essence: data analysis involves refining, transforming, and interpreting raw data to derive actionable insights that guide informed decision-making.

A straightforward illustration of data analysis emerges when we make everyday decisions, basing our choices on past experiences or predictions of potential outcomes.


What is Data Analysis?

Data analysis is the systematic process of inspecting, cleaning, transforming, and interpreting data with the objective of discovering valuable insights and drawing meaningful conclusions. This process involves several steps:

- Inspecting: Initial examination of data to understand its structure, quality, and completeness.

- Cleaning: Removing errors, inconsistencies, or irrelevant information to ensure accurate analysis.

- Transforming: Converting data into a format suitable for analysis, such as normalization or aggregation.

- Interpreting: Analyzing the transformed data to identify patterns, trends, and relationships.
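The four steps can be sketched in plain Python. This is a minimal illustration under assumed inputs: the records, the `score` field, and the helper names `inspect_records`, `clean_records`, and `min_max_normalize` are hypothetical, and min-max normalization stands in for whichever transformation a real study would need.

```python
from statistics import mean

# Hypothetical raw records; None marks a missing value.
raw = [
    {"student": "A", "score": 72},
    {"student": "B", "score": None},
    {"student": "C", "score": 88},
    {"student": "D", "score": 95},
    {"student": "C", "score": 88},  # exact duplicate of an earlier row
]

def inspect_records(records):
    """Inspecting: report row count, missing values, and exact duplicates."""
    missing = sum(1 for r in records if r["score"] is None)
    unique = {tuple(sorted(r.items())) for r in records}
    return {"rows": len(records), "missing": missing,
            "duplicates": len(records) - len(unique)}

def clean_records(records):
    """Cleaning: drop rows with a missing score and repeated rows."""
    seen, cleaned = set(), []
    for r in records:
        key = tuple(sorted(r.items()))
        if r["score"] is None or key in seen:
            continue
        seen.add(key)
        cleaned.append(r)
    return cleaned

def min_max_normalize(values):
    """Transforming: rescale values to the 0-1 range."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # avoid dividing by zero for constant data
    return [(v - lo) / (hi - lo) for v in values]

report = inspect_records(raw)
cleaned = clean_records(raw)
scores = [r["score"] for r in cleaned]
normalized = min_max_normalize(scores)

# Interpreting: summarize the cleaned data.
print(report)
print(f"mean score: {mean(scores):.1f}")
```

In practice a library such as pandas would handle these steps, but the order of operations is the point: inspect before cleaning so you know what the cleaning step removed.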

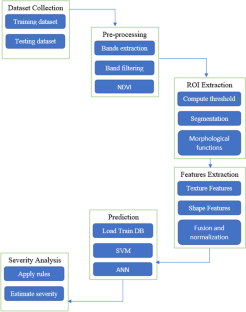

Types of Data Analysis Techniques in Research

Data analysis techniques in research are categorized into qualitative and quantitative methods, each with its specific approaches and tools. These techniques are instrumental in extracting meaningful insights, patterns, and relationships from data to support informed decision-making, validate hypotheses, and derive actionable recommendations. Below is an in-depth exploration of the various types of data analysis techniques commonly employed in research:

1) Qualitative Analysis:

Definition: Qualitative analysis focuses on understanding non-numerical data, such as opinions, concepts, or experiences, to derive insights into human behavior, attitudes, and perceptions.

- Content Analysis: Examines textual data, such as interview transcripts, articles, or open-ended survey responses, to identify themes, patterns, or trends.

- Narrative Analysis: Analyzes personal stories or narratives to understand individuals’ experiences, emotions, or perspectives.

- Ethnographic Studies: Involves observing and analyzing cultural practices, behaviors, and norms within specific communities or settings.
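Content analysis often starts by coding text against a codebook and then counting how often each theme appears. The sketch below assumes a hypothetical keyword codebook (`CODEBOOK`) and made-up responses; real qualitative coding is usually done by trained coders or with dedicated software, so treat this only as an illustration of the counting step.

```python
import re
from collections import Counter

# Hypothetical codebook: theme -> keywords that signal it.
CODEBOOK = {
    "flexibility": {"flexible", "pace", "schedule"},
    "isolation": {"lonely", "isolated", "alone"},
    "technical_issues": {"crash", "lag", "connection"},
}

def code_response(text, codebook=CODEBOOK):
    """Return the set of themes whose keywords appear in one response."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    return {theme for theme, keywords in codebook.items() if words & keywords}

def theme_frequencies(responses, codebook=CODEBOOK):
    """Count how many responses mention each theme (at most once per response)."""
    counts = Counter()
    for text in responses:
        counts.update(code_response(text, codebook))
    return counts

# Hypothetical open-ended survey answers.
responses = [
    "I like that I can study at my own pace.",
    "The video player would lag and my connection dropped.",
    "Flexible schedule, but I felt isolated.",
]
print(theme_frequencies(responses))
```

Keyword matching misses synonyms and context, which is why human coders typically refine the codebook iteratively.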

2) Quantitative Analysis:

Quantitative analysis emphasizes numerical data and employs statistical methods to explore relationships, patterns, and trends. It encompasses several approaches:

Descriptive Analysis:

- Frequency Distribution: Represents the number of occurrences of distinct values within a dataset.

- Central Tendency: Measures such as mean, median, and mode provide insights into the central values of a dataset.

- Dispersion: Techniques like variance and standard deviation indicate the spread or variability of data.
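The three descriptive measures can be computed with Python's standard `statistics` module. The quiz scores below are hypothetical; the sketch uses population formulas (`pvariance`, `pstdev`), which is an assumption about treating the data as the whole group of interest.

```python
from collections import Counter
from statistics import mean, median, mode, pstdev, pvariance

# Hypothetical quiz scores (out of 10) for one group of students.
scores = [2, 4, 4, 4, 5, 5, 7, 9]

# Frequency distribution: how often each distinct value occurs.
freq = Counter(scores)
for value in sorted(freq):
    print(f"{value:>2}: {'*' * freq[value]}")

# Central tendency.
print("mean:", mean(scores), "median:", median(scores), "mode:", mode(scores))

# Dispersion (population formulas; use variance()/stdev() for a sample).
print("range:", max(scores) - min(scores))
print("variance:", pvariance(scores), "std dev:", pstdev(scores))
```

Here the mean (5) sits above the median (4.5), a first hint that a few high scores pull the distribution to the right.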

Diagnostic Analysis:

- Regression Analysis: Assesses the relationship between dependent and independent variables, enabling prediction; drawing causal conclusions additionally requires a suitable study design, such as random assignment.

- ANOVA (Analysis of Variance): Tests whether differences between the means of two or more groups are statistically significant.
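To show what a one-way ANOVA actually computes, here is a minimal sketch of the F statistic: the ratio of between-group variance to within-group variance. The function name `one_way_anova_f` and the group data are hypothetical. Turning F into a p-value needs the F distribution; `scipy.stats.f_oneway` returns both, so this sketch only covers the statistic itself.

```python
from statistics import mean

def one_way_anova_f(*groups):
    """Return (F, df_between, df_within) for a one-way ANOVA."""
    all_values = [x for g in groups for x in g]
    grand_mean = mean(all_values)
    group_means = [mean(g) for g in groups]
    k, n = len(groups), len(all_values)
    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2
                     for g, m in zip(groups, group_means))
    # Within-group sum of squares: spread of values around their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within

# Hypothetical scores under three teaching formats.
f, dfb, dfw = one_way_anova_f([1, 2, 3], [4, 5, 6], [7, 8, 9])
print(f"F({dfb}, {dfw}) = {f:.2f}")
```

A large F means the group means differ by much more than the scatter inside each group would suggest.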

Predictive Analysis:

- Time Series Forecasting: Uses historical data points to predict future trends or outcomes.

- Machine Learning Algorithms: Techniques like decision trees, random forests, and neural networks predict outcomes based on patterns in data.
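As one concrete example of time series forecasting, the sketch below implements simple exponential smoothing, a basic method chosen here for illustration (the text does not name a specific model). The function name, the `alpha` default, and the term scores are assumptions.

```python
def simple_exponential_smoothing(series, alpha=0.5):
    """Return the one-step-ahead forecast from simple exponential smoothing.

    alpha in (0, 1]: higher values weight recent observations more heavily.
    """
    if not series:
        raise ValueError("series must not be empty")
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    level = series[0]  # initialize the level with the first observation
    for y in series[1:]:
        # New level is a weighted blend of the latest value and the old level.
        level = alpha * y + (1 - alpha) * level
    return level

# Hypothetical average term scores for one cohort.
term_scores = [68, 70, 69, 73, 75]
print(simple_exponential_smoothing(term_scores, alpha=0.5))
```

Because this method has no trend term, it lags behind steadily rising series; methods such as Holt's linear trend or ARIMA models address that.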

Prescriptive Analysis:

- Optimization Models: Utilizes linear programming, integer programming, or other optimization techniques to identify the best solutions or strategies.

- Simulation: Mimics real-world scenarios to evaluate various strategies or decisions and determine optimal outcomes.
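Optimization models search for the best option under constraints. For a very small problem, exhaustive search finds the same optimum an integer-programming solver would, so the sketch below uses brute-force enumeration as a stand-in (it is not linear programming). The resource names, costs, benefits, and budget are hypothetical.

```python
from itertools import combinations

def best_subset(options, budget):
    """Exhaustively search all subsets for the highest total benefit within budget.

    options: dict of name -> (cost, benefit). Runtime grows as 2**len(options),
    so this only suits small problems; larger ones need an integer-programming solver.
    """
    best_names, best_benefit = (), 0
    names = list(options)
    for r in range(1, len(names) + 1):
        for combo in combinations(names, r):
            cost = sum(options[n][0] for n in combo)
            benefit = sum(options[n][1] for n in combo)
            if cost <= budget and benefit > best_benefit:
                best_names, best_benefit = combo, benefit
    return best_names, best_benefit

# Hypothetical resources: name -> (weekly hours needed, expected score gain).
resources = {
    "video lectures": (4, 7),
    "quizzes": (2, 4),
    "forums": (3, 3),
    "tutoring": (5, 9),
}
print(best_subset(resources, budget=9))
```

Note that the cheapest-per-point items are not necessarily chosen; the search weighs every feasible combination against the budget.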

Specific Techniques:

- Monte Carlo Simulation: Models probabilistic outcomes to assess risk and uncertainty.

- Factor Analysis: Reduces the dimensionality of data by identifying underlying factors or components.

- Cohort Analysis: Studies specific groups or cohorts over time to understand trends, behaviors, or patterns within these groups.

- Cluster Analysis: Classifies objects or individuals into homogeneous groups or clusters based on similarities or attributes.

- Sentiment Analysis: Uses natural language processing and machine learning techniques to determine sentiment, emotions, or opinions from textual data.
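Cluster analysis is often done with k-means. Below is a minimal sketch of Lloyd's algorithm in plain Python; the function name `k_means`, the deterministic seeding (first k points), and the student data are assumptions made to keep the example small and reproducible.

```python
from math import dist

def k_means(points, k, max_iter=100):
    """Cluster points (tuples of numbers) into k groups with Lloyd's algorithm.

    Simplification: the first k points seed the centroids; production code usually
    uses random or k-means++ seeding with several restarts.
    """
    centroids = [tuple(p) for p in points[:k]]
    labels = None
    for _ in range(max_iter):
        # Assignment step: each point joins its nearest centroid.
        new_labels = [min(range(k), key=lambda c: dist(p, centroids[c]))
                      for p in points]
        if new_labels == labels:
            break  # assignments stopped changing, so the algorithm has converged
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # keep the old centroid if a cluster empties out
                centroids[c] = tuple(sum(axis) / len(members)
                                     for axis in zip(*members))
    return labels, centroids

# Hypothetical students: (weekly engagement hours, average score).
students = [(1, 50), (2, 55), (1.5, 52), (8, 90), (9, 88), (8.5, 92)]
labels, centroids = k_means(students, 2)
print(labels, centroids)
```

When features sit on very different scales, as hours and scores do here, standardizing them first keeps one feature from dominating the distance.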


Data Analysis Techniques in Research Examples

To provide a clearer understanding of how data analysis techniques are applied in research, let’s consider a hypothetical research study focused on evaluating the impact of online learning platforms on students’ academic performance.

Research Objective:

Determine if students using online learning platforms achieve higher academic performance compared to those relying solely on traditional classroom instruction.

Data Collection:

- Quantitative Data: Academic scores (grades) of students using online platforms and those using traditional classroom methods.

- Qualitative Data: Feedback from students regarding their learning experiences, challenges faced, and preferences.

Data Analysis Techniques Applied:

1) Descriptive Analysis:

- Calculate the mean, median, and mode of academic scores for both groups.

- Create frequency distributions to represent the distribution of grades in each group.

2) Diagnostic Analysis:

- Conduct an Analysis of Variance (ANOVA) to determine if there’s a statistically significant difference in academic scores between the two groups.

- Perform Regression Analysis to assess the relationship between the time spent on online platforms and academic performance.
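The regression step can be sketched as ordinary least squares with one predictor. The function name and the hours/scores data are hypothetical; the sketch also reports R², the share of score variance the fitted line explains.

```python
def simple_linear_regression(x, y):
    """Fit y = intercept + slope * x by ordinary least squares."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equal-length sequences with at least 2 points")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # R^2: share of the variance in y explained by the fitted line.
    ss_res = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    r_squared = 1 - ss_res / ss_tot
    return slope, intercept, r_squared

# Hypothetical weekly hours on the platform and final scores.
hours = [2, 4, 6, 8, 10]
final_scores = [65, 70, 74, 81, 85]
slope, intercept, r2 = simple_linear_regression(hours, final_scores)
print(f"score = {intercept:.1f} + {slope:.2f} * hours (R^2 = {r2:.3f})")
```

A positive slope shows an association only; students who spend more time online may differ in other ways that also affect their scores.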

3) Predictive Analysis:

- Utilize Time Series Forecasting to predict future academic performance trends based on historical data.

- Implement Machine Learning algorithms to develop a predictive model that identifies factors contributing to academic success on online platforms.

4) Prescriptive Analysis:

- Apply Optimization Models to identify the optimal combination of online learning resources (e.g., video lectures, interactive quizzes) that maximize academic performance.

- Use Simulation Techniques to evaluate different scenarios, such as varying student engagement levels with online resources, to determine the most effective strategies for improving learning outcomes.
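A Monte Carlo simulation of engagement scenarios might look like the sketch below. The scoring model (baseline 60, 1.5 points per hour, normal noise) is entirely hypothetical and exists only to show the mechanics: draw many simulated students per scenario and compare the averages. The same seed is reused across scenarios so that differences come from the scenario, not the random draws.

```python
import random
from statistics import mean

def simulate_scenario(mean_hours, n_students=10_000, seed=0):
    """Monte Carlo estimate of the average score under one engagement scenario.

    Assumed toy model (hypothetical, not from any study): weekly engagement hours
    are drawn from a normal distribution, clipped at zero, and each hour adds
    1.5 points to a baseline of 60, plus normally distributed noise.
    """
    rng = random.Random(seed)  # seeded so runs are reproducible
    scores = []
    for _ in range(n_students):
        hours = max(0.0, rng.gauss(mean_hours, 2.0))
        score = 60 + 1.5 * hours + rng.gauss(0, 8)
        scores.append(min(100.0, max(0.0, score)))  # keep scores in 0-100
    return mean(scores)

for label, hours in [("low", 2), ("medium", 5), ("high", 8)]:
    print(f"{label:>6} engagement: {simulate_scenario(hours):.1f}")
```

The conclusions of such a simulation are only as good as the assumed model, so in a real study its parameters would be estimated from data.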

5) Specific Techniques:

- Conduct Factor Analysis on coded or rating-scale survey responses to identify common factors influencing students’ perceptions and experiences with online learning.

- Perform Cluster Analysis to segment students based on their engagement levels, preferences, or academic outcomes, enabling targeted interventions or personalized learning strategies.

- Apply Sentiment Analysis on textual feedback to categorize students’ sentiments as positive, negative, or neutral regarding online learning experiences.
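Below is a minimal, lexicon-based sketch of sentiment classification. It counts positive and negative words and labels each comment by the sign of the difference. The word lists and feedback strings are hypothetical. A real study would use a validated lexicon or a trained model, because simple word counting ignores negation ("not helpful") and sarcasm.

```python
import re

# Tiny illustrative lexicon (hypothetical); not a validated sentiment dictionary.
POSITIVE = {"helpful", "flexible", "clear", "engaging", "enjoy", "easy"}
NEGATIVE = {"confusing", "boring", "isolated", "difficult", "slow", "distracting"}

def classify_sentiment(text):
    """Label text positive, negative, or neutral by counting lexicon hits."""
    words = re.findall(r"[a-z']+", text.lower())
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

feedback = [
    "The video lectures were clear and really helpful.",
    "I felt isolated and the platform was confusing.",
    "I watched the lectures on Tuesdays.",
]
print([classify_sentiment(f) for f in feedback])
# ['positive', 'negative', 'neutral']
```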

By applying a combination of qualitative and quantitative data analysis techniques, this research example aims to provide comprehensive insights into the effectiveness of online learning platforms.

Also Read: Learning Path to Become a Data Analyst in 2024

Data Analysis Techniques in Quantitative Research

Quantitative research involves collecting numerical data to examine relationships, test hypotheses, and make predictions. Various data analysis techniques are employed to interpret and draw conclusions from quantitative data. Here are some key data analysis techniques commonly used in quantitative research:

1) Descriptive Statistics:

- Description: Descriptive statistics are used to summarize and describe the main aspects of a dataset, such as central tendency (mean, median, mode), variability (range, variance, standard deviation), and distribution (skewness, kurtosis).

- Applications: Summarizing data, identifying patterns, and providing initial insights into the dataset.
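The measures of variability and shape listed above can be computed with Python's standard `statistics` module. The sketch uses the sample (n − 1) variance and standard deviation. Skewness uses the uncorrected Fisher-Pearson form (the mean cubed z-score), which is only one of several conventions that statistical packages apply. The `summarize` helper is a hypothetical name.

```python
from statistics import mean, pstdev, stdev, variance

def summarize(data):
    """Range, sample variance, sample standard deviation, and skewness."""
    m = mean(data)
    sd_pop = pstdev(data)
    # Uncorrected Fisher-Pearson skewness: average cubed z-score (population SD).
    skew = sum(((x - m) / sd_pop) ** 3 for x in data) / len(data)
    return {
        "range": max(data) - min(data),
        "variance": variance(data),  # sample variance (n - 1 denominator)
        "std_dev": stdev(data),      # sample standard deviation
        "skewness": skew,            # > 0 means a longer right tail
    }

stats = summarize([2, 4, 4, 4, 5, 5, 7, 9])
```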

2) Inferential Statistics:

- Description: Inferential statistics involve making predictions or inferences about a population based on a sample of data. This family of techniques includes hypothesis testing, confidence intervals, t-tests, chi-square tests, analysis of variance (ANOVA), regression analysis, and correlation analysis.

- Applications: Testing hypotheses, making predictions, and generalizing findings from a sample to a larger population.
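A confidence interval is a compact illustration of generalizing from a sample. The sketch below builds a large-sample (z-based) interval for a population mean, estimated as the sample mean ± z × standard error. The function name is hypothetical, and the normal approximation is an assumption that suits large samples. For small samples, a t critical value (for example from SciPy's `scipy.stats.t.ppf`) should replace z.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev

def mean_confidence_interval(sample, confidence=0.95):
    """Large-sample (z-based) confidence interval for a population mean."""
    n = len(sample)
    m = mean(sample)
    se = stdev(sample) / sqrt(n)                     # standard error of the mean
    z = NormalDist().inv_cdf(0.5 + confidence / 2)   # about 1.96 for 95%
    return m - z * se, m + z * se

low, high = mean_confidence_interval([4, 6] * 50)
```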

3) Regression Analysis:

- Description: Regression analysis is a statistical technique used to model and examine the relationship between a dependent variable and one or more independent variables. Linear regression, multiple regression, logistic regression, and nonlinear regression are common types of regression analysis.

- Applications: Predicting outcomes, identifying relationships between variables, and understanding the impact of independent variables on the dependent variable.
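For the single-predictor case, ordinary least squares has a closed form: slope = Sxy / Sxx and intercept = ȳ − slope × x̄. The sketch below implements it directly. The hours/score data and the function name are hypothetical. On Python 3.10+, `statistics.linear_regression(x, y)` returns the same slope and intercept.

```python
from statistics import mean

def fit_simple_linear_regression(x, y):
    """Ordinary least squares fit of y = intercept + slope * x."""
    x_bar, y_bar = mean(x), mean(y)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return intercept, slope

# Hypothetical data: weekly hours on an online platform vs. exam score.
hours = [1, 2, 3, 4, 5]
scores = [62, 66, 71, 73, 78]
intercept, slope = fit_simple_linear_regression(hours, scores)
predicted_at_6_hours = intercept + slope * 6
```

The slope reads as the expected change in score per extra weekly hour. This describes an association only, unless the study design supports a causal interpretation.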

4) Correlation Analysis:

- Description: Correlation analysis is used to measure and assess the strength and direction of the relationship between two or more variables. The Pearson correlation coefficient, Spearman rank correlation coefficient, and Kendall’s tau are commonly used measures of correlation.

- Applications: Identifying associations between variables and assessing the degree and nature of the relationship.
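The sketch below shows how two of the measures named above differ. Pearson's r measures linear association. Spearman's rho is Pearson's r computed on ranks, with ties given their average rank, so it captures any monotonic relationship. The function names are hypothetical. Kendall's tau is omitted for brevity.

```python
from math import sqrt
from statistics import mean

def pearson(x, y):
    """Pearson correlation coefficient."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return cov / sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))

def ranks(values):
    """1-based ranks, giving tied values the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = avg_rank
        i = j + 1
    return result

def spearman(x, y):
    """Spearman's rho: Pearson correlation computed on the ranks."""
    return pearson(ranks(x), ranks(y))

x = [1, 2, 3, 4, 5]
y = [v ** 2 for v in x]  # monotonic but not linear
```

For this curved but strictly increasing relationship, Spearman's rho is exactly 1, while Pearson's r is slightly below 1.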

5) Factor Analysis:

- Description: Factor analysis is a multivariate statistical technique used to identify and analyze underlying relationships or factors among a set of observed variables. It helps in reducing the dimensionality of data and identifying latent variables or constructs.

- Applications: Identifying underlying factors or constructs, simplifying data structures, and understanding the underlying relationships among variables.

6) Time Series Analysis:

- Description: Time series analysis involves analyzing data collected or recorded over a specific period at regular intervals to identify patterns, trends, and seasonality. Techniques such as moving averages, exponential smoothing, autoregressive integrated moving average (ARIMA), and Fourier analysis are used.

- Applications: Forecasting future trends, analyzing seasonal patterns, and understanding time-dependent relationships in data.
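Two of the simpler techniques above can be sketched in a few lines: a trailing moving average and simple exponential smoothing, where s_t = α·x_t + (1 − α)·s_(t−1). The term-by-term series and α = 0.5 are hypothetical choices. Using the last smoothed value as the next-period forecast is the standard naive forecast for simple exponential smoothing. ARIMA and Fourier methods would normally come from a dedicated library.

```python
def moving_average(series, window):
    """Trailing simple moving average; the first window-1 points get no value."""
    return [sum(series[i - window + 1 : i + 1]) / window
            for i in range(window - 1, len(series))]

def exponential_smoothing(series, alpha):
    """Simple exponential smoothing: s_t = alpha * x_t + (1 - alpha) * s_{t-1}."""
    smoothed = [series[0]]  # initialise with the first observation
    for x in series[1:]:
        smoothed.append(alpha * x + (1 - alpha) * smoothed[-1])
    return smoothed

# Hypothetical term-by-term average scores.
term_means = [70, 72, 71, 75, 77, 76]
ma = moving_average(term_means, 3)
ses = exponential_smoothing(term_means, 0.5)
next_term_forecast = ses[-1]  # naive one-step-ahead forecast
```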

7) ANOVA (Analysis of Variance):

- Description: Analysis of variance (ANOVA) is a statistical technique used to analyze and compare the means of two or more groups or treatments to determine if they are statistically different from each other. One-way ANOVA, two-way ANOVA, and MANOVA (Multivariate Analysis of Variance) are common types of ANOVA.

- Applications: Comparing group means, testing hypotheses, and determining the effects of categorical independent variables on a continuous dependent variable.

8) Chi-Square Tests:

- Description: Chi-square tests are non-parametric statistical tests that compare observed frequencies of categorical data with the frequencies expected under a null hypothesis. The Chi-square test of independence and the test of homogeneity are applied to a contingency table, while the goodness-of-fit test compares a single categorical variable with a hypothesized distribution.

- Applications: Testing relationships between categorical variables, assessing goodness-of-fit, and evaluating independence.
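The test of independence can be sketched from its definition. The expected count in each cell is (row total × column total) / grand total. The statistic sums (observed − expected)² / expected over all cells, with (r − 1)(c − 1) degrees of freedom. The counts below are hypothetical. SciPy's `scipy.stats.chi2_contingency` also returns the p-value and, by default, applies Yates' continuity correction to 2 × 2 tables, so its statistic can differ from this uncorrected value.

```python
def chi_square_independence(table):
    """Pearson chi-square statistic and degrees of freedom for an r x c table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / grand_total
            chi2 += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return chi2, dof

# Hypothetical counts: rows = learning mode (online, classroom); columns = passed, failed.
counts = [[40, 10], [30, 20]]
chi2, dof = chi_square_independence(counts)
```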

These quantitative data analysis techniques provide researchers with valuable tools and methods to analyze, interpret, and derive meaningful insights from numerical data. The selection of a specific technique often depends on the research objectives, the nature of the data, and the underlying assumptions of the statistical methods being used.

Also Read: Analysis vs. Analytics: How Are They Different?

Data Analysis Methods

Data analysis methods refer to the techniques and procedures used to analyze, interpret, and draw conclusions from data. These methods are essential for transforming raw data into meaningful insights, facilitating decision-making processes, and driving strategies across various fields. Here are some common data analysis methods:

1) Descriptive Statistics:

- Description: Descriptive statistics summarize and organize data to provide a clear and concise overview of the dataset. Measures such as mean, median, mode, range, variance, and standard deviation are commonly used.

2) Inferential Statistics:

- Description: Inferential statistics involve making predictions or inferences about a population based on a sample of data. Techniques such as hypothesis testing, confidence intervals, and regression analysis are used.

3) Exploratory Data Analysis (EDA):

- Description: EDA techniques involve visually exploring and analyzing data to discover patterns, relationships, anomalies, and insights. Methods such as scatter plots, histograms, box plots, and correlation matrices are utilized.

- Applications: Identifying trends, patterns, outliers, and relationships within the dataset.
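Box plots flag outliers with a simple rule that is easy to reproduce outside a plotting tool. Any point outside [Q1 − 1.5 × IQR, Q3 + 1.5 × IQR] is treated as an outlier. The sketch uses `statistics.quantiles` with its default "exclusive" method. Quartiles from other tools may differ slightly because they use different interpolation methods. The function name and data are hypothetical.

```python
from statistics import quantiles

def iqr_outliers(data, k=1.5):
    """Flag values outside [Q1 - k*IQR, Q3 + k*IQR], the usual box-plot whisker rule."""
    q1, _, q3 = quantiles(data, n=4)  # default 'exclusive' method; sorts internally
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [x for x in data if x < low or x > high]

# Hypothetical measurements with one suspicious entry.
readings = [10, 12, 12, 13, 12, 11, 14, 13, 15, 10, 10, 100]
flagged = iqr_outliers(readings)
```

A flagged value is a prompt for investigation (data-entry error or genuine extreme case), not an automatic deletion.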

4) Predictive Analytics:

- Description: Predictive analytics use statistical algorithms and machine learning techniques to analyze historical data and make predictions about future events or outcomes. Techniques such as regression analysis, time series forecasting, and machine learning algorithms (e.g., decision trees, random forests, neural networks) are employed.

- Applications: Forecasting future trends, predicting outcomes, and identifying potential risks or opportunities.

5) Prescriptive Analytics:

- Description: Prescriptive analytics involve analyzing data to recommend actions or strategies that optimize specific objectives or outcomes. Optimization techniques, simulation models, and decision-making algorithms are utilized.

- Applications: Recommending optimal strategies, decision-making support, and resource allocation.

6) Qualitative Data Analysis:

- Description: Qualitative data analysis involves analyzing non-numerical data, such as text, images, videos, or audio, to identify themes, patterns, and insights. Methods such as content analysis, thematic analysis, and narrative analysis are used.

- Applications: Understanding human behavior, attitudes, perceptions, and experiences.

7) Big Data Analytics:

- Description: Big data analytics methods are designed to analyze large volumes of structured and unstructured data to extract valuable insights. Technologies such as Hadoop, Spark, and NoSQL databases are used to process and analyze big data.

- Applications: Analyzing large datasets, identifying trends, patterns, and insights from big data sources.

8) Text Analytics:

- Description: Text analytics methods involve analyzing textual data, such as customer reviews, social media posts, emails, and documents, to extract meaningful information and insights. Techniques such as sentiment analysis, text mining, and natural language processing (NLP) are used.

- Applications: Analyzing customer feedback, monitoring brand reputation, and extracting insights from textual data sources.
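Term-frequency counting is a basic first step in text mining. The sketch below tokenizes each document, drops a small stop-word list, and tallies the remaining words across documents. The stop-word list and reviews are hypothetical. Production NLP pipelines usually add stemming or lemmatization and use a fuller stop-word list.

```python
import re
from collections import Counter

# Small, hypothetical stop-word list for illustration.
STOPWORDS = {"the", "a", "an", "and", "is", "was", "to", "of", "it", "i", "but"}

def top_terms(documents, n=3):
    """Count word frequencies across documents, ignoring stop words."""
    counts = Counter()
    for doc in documents:
        words = re.findall(r"[a-z']+", doc.lower())
        counts.update(w for w in words if w not in STOPWORDS)
    return counts.most_common(n)

reviews = [
    "Delivery was slow but the product is great.",
    "Great price, slow delivery.",
    "The product broke; delivery was fast though.",
]
print(top_terms(reviews, 1))  # [('delivery', 3)]
```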

These data analysis methods are instrumental in transforming data into actionable insights, informing decision-making processes, and driving organizational success across various sectors, including business, healthcare, finance, marketing, and research. The selection of a specific method often depends on the nature of the data, the research objectives, and the analytical requirements of the project or organization.

Also Read: Quantitative Data Analysis: Types, Analysis & Examples

Data Analysis Tools

Data analysis tools are essential instruments that facilitate the process of examining, cleaning, transforming, and modeling data to uncover useful information, make informed decisions, and drive strategies. Here are some prominent data analysis tools widely used across various industries:

1) Microsoft Excel:

- Description: A spreadsheet software that offers basic to advanced data analysis features, including pivot tables, data visualization tools, and statistical functions.

- Applications: Data cleaning, basic statistical analysis, visualization, and reporting.

2) R Programming Language:

- Description: An open-source programming language specifically designed for statistical computing and data visualization.

- Applications: Advanced statistical analysis, data manipulation, visualization, and machine learning.

3) Python (with Libraries like Pandas, NumPy, Matplotlib, and Seaborn):

- Description: A versatile programming language with libraries that support data manipulation, analysis, and visualization.

- Applications: Data cleaning, statistical analysis, machine learning, and data visualization.

4) SPSS (Statistical Package for the Social Sciences):

- Description: A comprehensive statistical software suite used for data analysis, data mining, and predictive analytics.

- Applications: Descriptive statistics, hypothesis testing, regression analysis, and advanced analytics.

5) SAS (Statistical Analysis System):

- Description: A software suite used for advanced analytics, multivariate analysis, and predictive modeling.

- Applications: Data management, statistical analysis, predictive modeling, and business intelligence.

6) Tableau:

- Description: A data visualization tool that allows users to create interactive and shareable dashboards and reports.

- Applications: Data visualization, business intelligence, and interactive dashboard creation.

7) Power BI:

- Description: A business analytics tool developed by Microsoft that provides interactive visualizations and business intelligence capabilities.

- Applications: Data visualization, business intelligence, reporting, and dashboard creation.

8) SQL (Structured Query Language) Databases (e.g., MySQL, PostgreSQL, Microsoft SQL Server):

- Description: Database management systems that support data storage, retrieval, and manipulation using SQL queries.

- Applications: Data retrieval, data cleaning, data transformation, and database management.
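The sketch below shows retrieval, cleaning, and aggregation in a single SQL query. It uses Python's built-in `sqlite3` module with an in-memory database as a lightweight stand-in for MySQL, PostgreSQL, or SQL Server. The `scores` table, its columns, and the rows are hypothetical. The same `SELECT ... WHERE ... GROUP BY` pattern carries over to the other systems.

```python
import sqlite3

# In-memory SQLite database standing in for a production SQL server.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE scores (student TEXT, mode TEXT, score REAL)")
conn.executemany(
    "INSERT INTO scores VALUES (?, ?, ?)",
    [("s1", "online", 85), ("s2", "online", None), ("s3", "online", 91),
     ("s4", "classroom", 78), ("s5", "classroom", 82)],
)

# Drop missing scores, then count and average the remaining ones per learning mode.
rows = conn.execute(
    """
    SELECT mode, COUNT(score) AS n, AVG(score) AS mean_score
    FROM scores
    WHERE score IS NOT NULL
    GROUP BY mode
    ORDER BY mode
    """
).fetchall()
conn.close()
print(rows)  # [('classroom', 2, 80.0), ('online', 2, 88.0)]
```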

9) Apache Spark:

- Description: A fast and general-purpose distributed computing system designed for big data processing and analytics.

- Applications: Big data processing, machine learning, data streaming, and real-time analytics.

10) IBM SPSS Modeler:

- Description: A data mining software application used for building predictive models and conducting advanced analytics.

- Applications: Predictive modeling, data mining, statistical analysis, and decision optimization.

These tools serve various purposes and cater to different data analysis needs, from basic statistical analysis and data visualization to advanced analytics, machine learning, and big data processing. The choice of a specific tool often depends on the nature of the data, the complexity of the analysis, and the specific requirements of the project or organization.

Also Read: How to Analyze Survey Data: Methods & Examples

Importance of Data Analysis in Research

Data analysis is the backbone of any scientific investigation: it is the step that turns collected observations into evidence. Here are several key reasons why data analysis is crucial in the research process:

- Data analysis helps ensure that the results obtained are valid and reliable. By systematically examining the data, researchers can identify any inconsistencies or anomalies that may affect the credibility of the findings.

- Effective data analysis provides researchers with the necessary information to make informed decisions. By interpreting the collected data, researchers can draw conclusions, make predictions, or formulate recommendations based on evidence rather than intuition or guesswork.