ChatGPT and AI may spell the end of school homework

Since AI can now write essays, teachers may need to change the kind of homework they assign.

What you need to know

- A school in London, England may stop assigning essays as homework because ChatGPT can be used to cheat.

- ChatGPT is an AI tool that can generate human-like text about an expansive range of topics.

- The tool earned an A-star, the highest mark possible, on an assignment at Alleyn's School in London, England.

OpenAI's ChatGPT is a powerful tool that can generate human-like text. But it may be too powerful for some schools. Alleyn's School in southeast London is considering dropping essays as homework because of ChatGPT. Students can use the AI tool to complete assignments with little effort or learning, reported The Times.

While artificial intelligence isn't new, ChatGPT brought it to people's fingertips with a simplified interface. The tool launched in November 2022 and has been in the headlines since. ChatGPT generated an essay that earned an A-star, which is the highest mark one can earn in the UK.

"I truly feel this is a paradigm-shifting moment. It's incredibly usable and straightforward," said Alleyn's School headteacher Jane Lunnon.

"At the moment, children are often assessed using homework essays, based on what they've learnt in the lesson. Clearly if we're in a world where children can access plausible responses … then the notion of saying simply do this for homework will have to go," said Alleyn's School headteacher Jane Lunnon.

Lunnon explained that homework will continue to be useful for practice, but supervised assessments and assignments will be required for "reliable data on whether children are acquiring new skills and information."

In addition to concerns about children not learning the content they're assigned, Lunnon expressed worry about students not being able to learn from mistakes.

"School is where we learn what to do and how to do it. It's also where we learn what not to do. What doesn't work," said the headteacher. "How to get things wrong and how to deal with that. We all know how important it is to learn to fail."

As AI continues to improve, situations like this will likely become more common. We've seen news outlets rely on AI to generate content, students use AI to cheat, and an author use ChatGPT to write a book.


Sean Endicott brings nearly a decade of experience covering Microsoft and Windows news to Windows Central. He joined our team in 2017 as an app reviewer and now heads up our day-to-day news coverage.


ChatGPT isn’t the death of homework – just an opportunity for schools to do things differently

Professor of IT Ethics and Digital Rights, Bournemouth University

Disclosure statement

Andy Phippen is a trustee of SWGfL

Bournemouth University provides funding as a member of The Conversation UK.


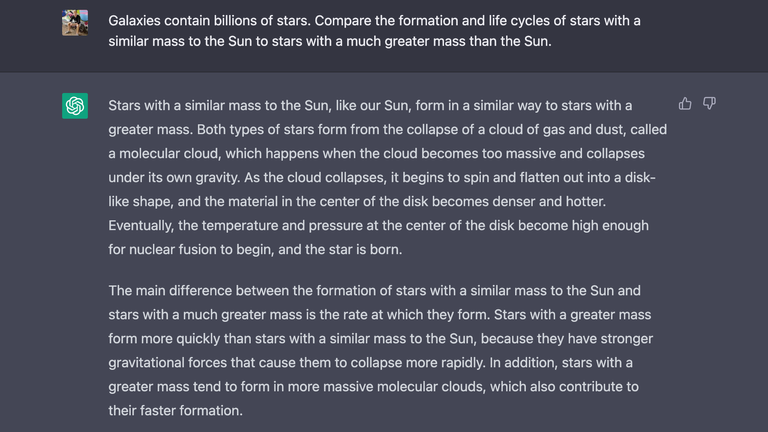

ChatGPT, the artificial intelligence (AI) platform launched by research company OpenAI, can write an essay in response to a short prompt. It can solve mathematical equations – and show its working.

ChatGPT is a generative AI system: an algorithm that can generate new content from existing bodies of documents, images or audio when prompted with a description or question. It’s unsurprising that concerns have emerged that young people are using ChatGPT and similar technology as a shortcut when doing their homework.

But banning students from using ChatGPT, or expecting teachers to scour homework for its use, would be shortsighted. Education has adapted to – and embraced – online technology for decades. The approach to generative AI should be no different.

The UK government has launched a consultation on the use of generative AI in education, following the publication of initial guidance on how schools might make best use of this technology.

In general, the advice is progressive and acknowledges the potential benefits of using these tools. It suggests that AI tools may have value in reducing teacher workload when producing teaching resources, marking, and in administrative tasks. But the guidance also states:

Schools and colleges may wish to review homework policies, to consider the approach to homework and other forms of unsupervised study as necessary to account for the availability of generative AI.

While little practical advice is offered on how to do this, the suggestion is that schools and colleges should consider the potential for cheating when students are using these tools.

Nothing new

Past research on student cheating suggested that students’ techniques were sophisticated and that they felt remorseful only if caught. They cheated because it was easy, especially with new online technologies.

But this research wasn’t investigating students’ use of ChatGPT or any kind of generative AI. It was conducted over 20 years ago, part of a body of literature that emerged at the turn of the century around the potential harm newly emerging internet search engines could do to student writing, homework and assessment.

We can look at past research to track the entry of new technologies into the classroom – and to infer the varying concerns about their use. In the 1990s, research explored the impact word processors might have on child literacy. It found that students writing on computers were more collaborative and focused on the task. In the 1970s, there were questions on the effect electronic calculators might have on children’s maths abilities.

In 2023, it would seem ludicrous to state that a child could not use a calculator, word processor or search engine in a homework task or piece of coursework. But the suspicion of new technology remains. It clouds the reality that emerging digital tools can be effective in supporting learning and developing crucial critical thinking and life skills.

Get on board

Punitive approaches and threats of detection make the use of such tools covert. A far more progressive position would be for teachers to embrace these technologies, learn how they work, and make this part of teaching on digital literacy, misinformation and critical thinking. This, in my experience, is what young people want from education on digital technology.

Children should learn the difference between acknowledging the use of these tools and claiming the work as their own. They should also learn whether – or not – to trust the information provided to them on the internet.

The educational charity SWGfL, of which I am a trustee, has recently launched an AI hub which provides further guidance on how to use these new tools in school settings. The charity also runs Project Evolve, a toolkit containing a large number of teaching resources around managing online information, which will help in these classroom discussions.

I expect to see generative AI tools being merged, eventually, into mainstream learning. Saying “do not use search engines” for an assignment is now ridiculous. The same might be said in the future about prohibitions on using generative AI.

Perhaps the homework that teachers set will be different. But as with search engines, word processors and calculators, schools are not going to be able to ignore their rapid advance. It is far better to embrace and adapt to change, rather than resisting (and failing to stop) it.


The response from schools and universities was swift and decisive.

Just days after OpenAI dropped ChatGPT in late November 2022, the chatbot was widely denounced as a free essay-writing, test-taking tool that made it laughably easy to cheat on assignments.

Los Angeles Unified, the second-largest school district in the US, immediately blocked access to OpenAI’s website from its schools’ network. Others soon joined. By January, school districts across the English-speaking world had started banning the software, from Washington, New York, Alabama, and Virginia in the United States to Queensland and New South Wales in Australia.

Several leading universities in the UK, including Imperial College London and the University of Cambridge, issued statements that warned students against using ChatGPT to cheat.

“While the tool may be able to provide quick and easy answers to questions, it does not build critical-thinking and problem-solving skills, which are essential for academic and lifelong success,” Jenna Lyle, a spokeswoman for the New York City Department of Education, told the Washington Post in early January.

This initial panic from the education sector was understandable. ChatGPT, available to the public via a web app, can answer questions and generate slick, well-structured blocks of text several thousand words long on almost any topic it is asked about, from string theory to Shakespeare. Each essay it produces is unique, even when it is given the same prompt again, and its authorship is (practically) impossible to spot. It looked as if ChatGPT would undermine the way we test what students have learned, a cornerstone of education.

But three months on, the outlook is a lot less bleak. I spoke to a number of teachers and other educators who are now reevaluating what chatbots like ChatGPT mean for how we teach our kids. Far from being just a dream machine for cheaters, many teachers now believe, ChatGPT could actually help make education better.

Advanced chatbots could be used as powerful classroom aids that make lessons more interactive, teach students media literacy, generate personalized lesson plans, save teachers time on admin, and more.

Educational-tech companies including Duolingo and Quizlet, which makes digital flash cards and practice assessments used by half of all high school students in the US, have already integrated OpenAI’s chatbot into their apps. And OpenAI has worked with educators to put together a fact sheet about ChatGPT’s potential impact in schools. The company says it also consulted educators when it developed a free tool to spot text written by a chatbot (though its accuracy is limited).

“We believe that educational policy experts should decide what works best for their districts and schools when it comes to the use of new technology,” says Niko Felix, a spokesperson for OpenAI. “We are engaging with educators across the country to inform them of ChatGPT’s capabilities. This is an important conversation to have so that they are aware of the potential benefits and misuse of AI, and so they understand how they might apply it to their classrooms.”

But it will take time and resources for educators to innovate in this way. Many are too overworked, under-resourced, and beholden to strict performance metrics to take advantage of any opportunities that chatbots may present.

It is far too soon to say what the lasting impact of ChatGPT will be—it hasn’t even been around for a full semester. What’s certain is that essay-writing chatbots are here to stay. And they will only get better at standing in for a student on deadline—more accurate and harder to detect. Banning them is futile, possibly even counterproductive. “We need to be asking what we need to do to prepare young people—learners—for a future world that’s not that far in the future,” says Richard Culatta, CEO of the International Society for Technology in Education (ISTE), a nonprofit that advocates for the use of technology in teaching.

Tech’s ability to revolutionize schools has been overhyped in the past, and it’s easy to get caught up in the excitement around ChatGPT’s transformative potential. But this feels bigger: AI will be in the classroom one way or another. It’s vital that we get it right.

From ABC to GPT

Much of the early hype around ChatGPT was based on how good it is at test taking. In fact, this was a key point OpenAI touted when it rolled out GPT-4, the latest version of the large language model that powers the chatbot, in March. It could pass the bar exam! It scored a 1410 on the SAT! It aced the AP tests for biology, art history, environmental science, macroeconomics, psychology, US history, and more. Whew!

It’s little wonder that some school districts totally freaked out.

Yet in hindsight, the immediate calls to ban ChatGPT in schools were a dumb reaction to some very smart software. “People panicked,” says Jessica Stansbury, director of teaching and learning excellence at the University of Baltimore. “We had the wrong conversations instead of thinking, ‘Okay, it’s here. How can we use it?’”

“It was a storm in a teacup,” says David Smith, a professor of bioscience education at Sheffield Hallam University in the UK. Far from using the chatbot to cheat, Smith says, many of his students hadn’t heard of the technology until he mentioned it to them: “When I started asking my students about it, they were like, ‘Sorry, what?’”

Even so, teachers are right to see the technology as a game changer. Large language models like OpenAI’s ChatGPT and its successor GPT-4, as well as Google’s Bard and Microsoft’s Bing Chat, are set to have a massive impact on the world. The technology is already being rolled out into consumer and business software. If nothing else, many teachers now recognize that they have an obligation to teach their students about how this new technology works and what it can make possible. “They don’t want it to be vilified,” says Smith. “They want to be taught how to use it.”

Change can be hard. “There’s still some fear,” says Stansbury. “But we do our students a disservice if we get stuck on that fear.”

Stansbury has helped organize workshops at her university to allow faculty and other teaching staff to share their experiences and voice their concerns. She says that some of her colleagues turned up worried about cheating, others about losing their jobs. But talking it out helped. “I think some of the fear that faculty had was because of the media,” she says. “It’s not because of the students.”

In fact, a US survey of 1,002 K–12 teachers and 1,000 students between 12 and 17, commissioned by the Walton Family Foundation in February, found that more than half the teachers had used ChatGPT—10% of them reported using it every day—but only a third of the students. Nearly all those who had used it (88% of teachers and 79% of students) said it had a positive impact.

A majority of teachers and students surveyed also agreed with this statement: “ChatGPT is just another example of why we can’t keep doing things the old way for schools in the modern world.”

Helen Crompton, an associate professor of instructional technology at Old Dominion University in Norfolk, Virginia, hopes that chatbots like ChatGPT will make school better.

Many educators think that schools are stuck in a groove, says Crompton, who was a K–12 teacher for 16 years before becoming a researcher. In a system with too much focus on grading and not enough on learning, ChatGPT is forcing a debate that is overdue. “We’ve long wanted to transform education,” she says. “We’ve been talking about it for years.”

Take cheating. In Crompton’s view, if ChatGPT makes it easy to cheat on an assignment, teachers should throw out the assignment rather than ban the chatbot.

We need to change how we assess learning, says Culatta: “Did ChatGPT kill assessments? They were probably already dead, and they’ve been in zombie mode for a long time. What ChatGPT did was call us out on that.”

Critical thinking

Emily Donahoe, a writing tutor and educational developer at the University of Mississippi, has noticed classroom discussions starting to change in the months since ChatGPT’s release. Although she first started to talk to her undergraduate students about the technology out of a sense of duty, she now thinks that ChatGPT could help teachers shift away from an excessive focus on final results. Getting a class to engage with AI and think critically about what it generates could make teaching feel more human, she says, “rather than asking students to write and perform like robots.”

This idea isn’t new. Generations of teachers have subscribed to a framework known as Bloom’s taxonomy, introduced by the educational psychologist Benjamin Bloom in the 1950s, in which basic knowledge of facts is just the bedrock on which other forms of learning, such as analysis and evaluation, sit. Teachers like Donahoe and Crompton think that chatbots could help teach those other skills.

In the past, Donahoe would set her students writing assignments in which they had to make an argument for something, and she would grade them on the text they turned in. This semester, she asked her students to use ChatGPT to generate an argument and then had them annotate it according to how effective they thought the argument was for a specific audience. Then they turned in a rewrite based on their criticism.

Breaking down the assignment in this way also helps students focus on specific skills without getting sidetracked. Donahoe found, for example, that using ChatGPT to generate a first draft helped some students stop worrying about the blank page and instead focus on the critical phase of the assignment. “It can help you move beyond particular pain points when those pain points aren’t necessarily part of the learning goals of the assignment,” she says.

Smith, the bioscience professor, is also experimenting with ChatGPT assignments. The hand-wringing around it reminds him of the anxiety many teachers experienced a couple of years ago during the pandemic. With students stuck at home, teachers had to find ways to set assignments where solutions were not too easy to Google. But what he found was that Googling—what to ask for and what to make of the results—was itself a skill worth teaching.

Smith thinks chatbots could be the same way. If his undergraduate students want to use ChatGPT in their written assignments, he will assess the prompt as well as—or even rather than—the essay itself. “Knowing the words to use in a prompt and then understanding the output that comes back is important,” he says. “We need to teach how to do that.”

The new education

These changing attitudes reflect a wider shift in the role that teachers play, says Stansbury. Information that was once dispensed in the classroom is now everywhere: first online, then in chatbots. What educators must now do is show students not only how to find it, but what information to trust and what not to, and how to tell the difference. “Teachers are no longer gatekeepers of information, but facilitators,” she says.

In fact, teachers are finding opportunities in the misinformation and bias that large language models often produce. These shortcomings can kick off productive discussions, says Crompton: “The fact that it’s not perfect is great.”

Teachers are asking students to use ChatGPT to generate text on a topic and then getting them to point out the flaws. In one example that a colleague of Stansbury’s shared at her workshop, students used the bot to generate an essay about the history of the printing press. When its US-centric response included no information about the origins of print in Europe or China, the teacher used that as the starting point for a conversation about bias. “It’s a great way to focus on media literacy,” says Stansbury.

Crompton is working on a study of ways that chatbots can improve teaching. She runs off a list of potential applications she’s excited about, from generating test questions to summarizing information for students with different reading levels to helping with time-consuming administrative tasks such as drafting emails to colleagues and parents.

One of her favorite uses of the technology is to bring more interactivity into the classroom. Teaching methods that get students to be creative, to role-play, or to think critically lead to a deeper kind of learning than rote memorization, she says. ChatGPT can play the role of a debate opponent and generate counterarguments to a student’s positions, for example. By exposing students to an endless supply of opposing viewpoints, chatbots could help them look for weak points in their own thinking.

Crompton also notes that if English is not a student’s first language, chatbots can be a big help in drafting text or paraphrasing existing documents, doing a lot to level the playing field. Chatbots can serve students who have specific learning needs, too. Ask ChatGPT to explain Newton’s laws of motion to a student who learns better with images rather than words, for example, and it will generate an explanation that features balls rolling on a table.

Made-to-measure learning

All students can benefit from personalized teaching materials, says Culatta, because everybody has different learning preferences. Teachers might prepare a few different versions of their teaching materials to cover a range of students’ needs. Culatta thinks that chatbots could generate personalized material for 50 or 100 students and make bespoke tutors the norm. “I think in five years the idea of a tool that gives us information that was written for somebody else is going to feel really strange,” he says.

Some ed-tech companies are already doing this. In March, Quizlet updated its app with a feature called Q-Chat, built using ChatGPT, that tailors material to each user’s needs. The app adjusts the difficulty of the questions according to how well students know the material they’re studying and how they prefer to learn. “Q-Chat provides our students with an experience similar to a one-on-one tutor,” says Quizlet’s CEO, Lex Bayer.

In fact, some educators think future textbooks could be bundled with chatbots trained on their contents. Students would have a conversation with the bot about the book’s contents as well as (or instead of) reading it. The chatbot could generate personalized quizzes to coach students on topics they understand less well.

Not all these approaches will be instantly successful, of course. Donahoe and her students came up with guidelines for using ChatGPT together, but “it may be that we get to the end of this class and I think this absolutely did not work,” she says. “This is still an ongoing experiment.”

She has also found that students need considerable support to make sure ChatGPT promotes learning rather than getting in the way of it. Some students find it harder to move beyond the tool’s output and make it their own, she says: “It needs to be a jumping-off point rather than a crutch.”

And, of course, some students will still use ChatGPT to cheat. In fact, it makes it easier than ever. With a deadline looming, who wouldn’t be tempted to get that assignment written at the push of a button? “It equalizes cheating for everyone,” says Crompton. “You don’t have to pay. You don’t have to hack into a school computer.”

Some types of assignments will be harder hit than others, too. ChatGPT is really good at summarizing information. When that is the goal of an assignment, cheating is a legitimate concern, says Donahoe: “It would be virtually indistinguishable from an A answer in that context. It is something we should take seriously.”

None of the educators I spoke to have a fix for that. And not all other fears will be easily allayed. (Donahoe recalls a recent workshop at her university in which faculty were asked what they were planning to do differently after learning about ChatGPT. One faculty member responded: “I think I’ll retire.”)

But nor are teachers as worried as initial reports suggested. Cheating is not a new problem: schools have survived calculators, Google, Wikipedia, essays-for-pay websites, and more.

For now, teachers have been thrown into a radical new experiment. They need support to figure it out—perhaps even government support in the form of money, training, and regulation. But this is not the end of education. It’s a new beginning.



A new AI chatbot might do your homework for you. But it's still not an A+ student

Emma Bowman

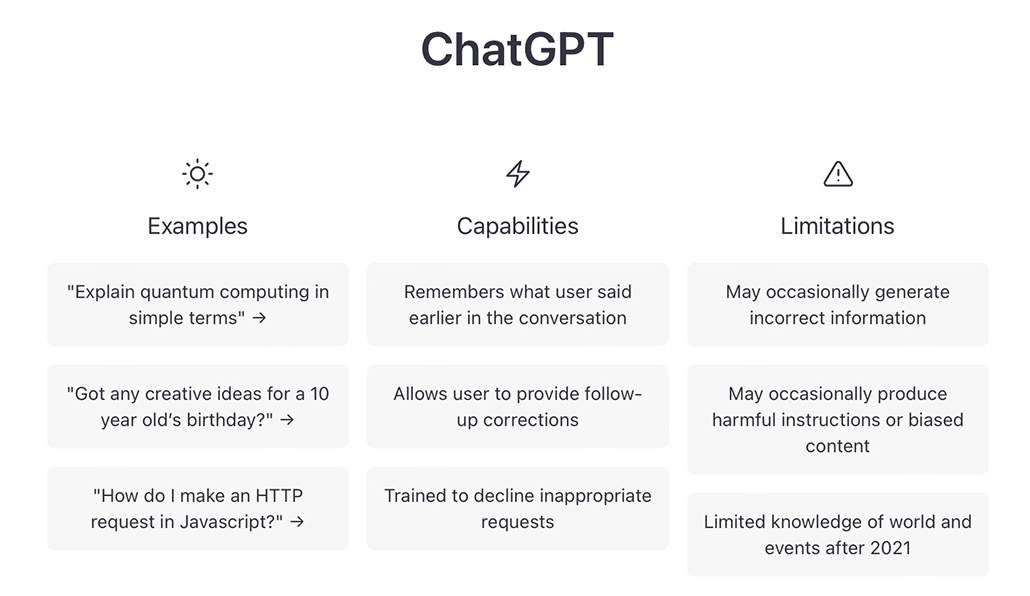

Enter a prompt into ChatGPT, and it becomes your very own virtual assistant. OpenAI/Screenshot by NPR

Why do your homework when a chatbot can do it for you? A new artificial intelligence tool called ChatGPT has thrilled the Internet with its superhuman abilities to solve math problems, churn out college essays and write research papers.

After the developer OpenAI released the text-based system to the public last month, some educators have been sounding the alarm about the potential that such AI systems have to transform academia, for better and worse.

"AI has basically ruined homework," said Ethan Mollick, a professor at the University of Pennsylvania's Wharton School of Business, on Twitter.

The tool has been an instant hit among many of his students, he told NPR in an interview on Morning Edition, and its most immediately obvious use is as a way to cheat by plagiarizing the AI-written work.

Academic fraud aside, Mollick also sees its benefits as a learning companion.


He's used it as his own teacher's assistant, for help with crafting a syllabus, a lecture, an assignment and a grading rubric for MBA students.

"You can paste in entire academic papers and ask it to summarize it. You can ask it to find an error in your code and correct it and tell you why you got it wrong," he said. "It's this multiplier of ability, that I think we are not quite getting our heads around, that is absolutely stunning."

A convincing — yet untrustworthy — bot

But the superhuman virtual assistant — like any emerging AI tech — has its limitations. ChatGPT was created by humans, after all. OpenAI has trained the tool using a large dataset of real human conversations.

"The best way to think about this is you are chatting with an omniscient, eager-to-please intern who sometimes lies to you," Mollick said.

It lies with confidence, too. Despite its authoritative tone, there have been instances in which ChatGPT won't tell you when it doesn't have the answer.

That's what Teresa Kubacka, a data scientist based in Zurich, Switzerland, found when she experimented with the language model. Kubacka, who studied physics for her Ph.D., tested the tool by asking it about a made-up physical phenomenon.

"I deliberately asked it about something that I thought that I know doesn't exist so that they can judge whether it actually also has the notion of what exists and what doesn't exist," she said.

ChatGPT produced an answer so specific and plausible sounding, backed with citations, she said, that she had to investigate whether the fake phenomenon, "a cycloidal inverted electromagnon," was actually real.

When she looked closer, the alleged source material was also bogus, she said. There were names of well-known physics experts listed – the titles of the publications they supposedly authored, however, were non-existent.

"This is where it becomes kind of dangerous," Kubacka said. "The moment that you cannot trust the references, it also kind of erodes the trust in citing science whatsoever."

Scientists call these fake generations "hallucinations."

"There are still many cases where you ask it a question and it'll give you a very impressive-sounding answer that's just dead wrong," said Oren Etzioni, the founding CEO of the Allen Institute for AI, who ran the research nonprofit until recently. "And, of course, that's a problem if you don't carefully verify or corroborate its facts."


An opportunity to scrutinize AI language tools

Users experimenting with the free preview of the chatbot are warned before testing the tool that ChatGPT "may occasionally generate incorrect or misleading information," harmful instructions or biased content.

Sam Altman, OpenAI's CEO, said earlier this month it would be a mistake to rely on the tool for anything "important" in its current iteration. "It's a preview of progress," he tweeted.

The failings of another AI language model unveiled by Meta last month led to its shutdown. The company withdrew its demo for Galactica, a tool designed to help scientists, just three days after it encouraged the public to test it out, following criticism that it spewed biased and nonsensical text.


Similarly, Etzioni says ChatGPT doesn't produce good science. For all its flaws, though, he sees ChatGPT's public debut as a positive. He sees this as a moment for peer review.

"ChatGPT is just a few days old, I like to say," said Etzioni, who remains at the AI institute as a board member and advisor. It's "giving us a chance to understand what he can and cannot do and to begin in earnest the conversation of 'What are we going to do about it?' "

The alternative, which he describes as "security by obscurity," won't help improve fallible AI, he said. "What if we hide the problems? Will that be a recipe for solving them? Typically — not in the world of software — that has not worked out."


ChatGPT marks end of homework at Alleyn’s School

Schools are having to abandon traditional homework essays as tests of what children know because artificial intelligence software is so powerful, the head of a leading school says.

Instead, pupils at Alleyn’s School in southeast London, where annual fees are more than £22,800, are being asked to do in-depth research before the next lesson, according to its head teacher, Jane Lunnon.

The artificial intelligence bot ChatGPT has speeded up the use of “flipped learning”, which involves students preparing at home for discussions and assessments in class.

Lunnon said there was no longer any point in pupils completing essays at home to be marked. Teachers should instead set research on topics for discussion and assessment in lessons, a process that was already under way at her

The End of High-School English

I’ve been teaching English for 12 years, and I’m astounded by what ChatGPT can produce.

Teenagers have always found ways around doing the hard work of actual learning. CliffsNotes dates back to the 1950s, “No Fear Shakespeare” puts the playwright into modern English, YouTube offers literary analysis and historical explication from numerous amateurs and professionals, and so on. For as long as those shortcuts have existed, however, one big part of education has remained inescapable: writing. Barring outright plagiarism, students have always arrived at that moment when they’re on their own with a blank page, staring down a blinking cursor, the essay waiting to be written.

Now that might be about to change. The arrival of OpenAI’s ChatGPT, a program that generates sophisticated text in response to any prompt you can imagine, may signal the end of writing assignments altogether—and maybe even the end of writing as a gatekeeper, a metric for intelligence, a teachable skill.

If you’re looking for historical analogues, this would be like the printing press, the steam drill, and the light bulb having a baby, and that baby having access to the entire corpus of human knowledge and understanding. My life—and the lives of thousands of other teachers and professors, tutors and administrators—is about to drastically change.

I teach a variety of humanities classes (literature, philosophy, religion, history) at a small independent high school in the San Francisco Bay Area. My classes tend to have about 15 students, their ages ranging from 16 to 18. This semester I am lucky enough to be teaching writers like James Baldwin, Gloria Anzaldúa, Herman Melville, Mohsin Hamid, Virginia Held. I recognize that it’s a privilege to have relatively small classes that can explore material like this at all. But at the end of the day, kids are always kids. I’m sure you will be absolutely shocked to hear that not all teenagers are, in fact, so interested in having their mind lit on fire by Anzaldúa’s radical ideas about transcending binaries, or Ishmael’s metaphysics in Moby-Dick.

To those students, I have always said: You may not be interested in poetry or civics, but no matter what you end up doing with your life, a basic competence in writing is an absolutely essential skill—whether it’s for college admissions, writing a cover letter when applying for a job, or just writing an email to your boss.

I’ve also long held, for those who are interested in writing, that you need to learn the basic rules of good writing before you can start breaking them—that, like Picasso, you have to learn how to reliably fulfill an audience’s expectations before you get to start putting eyeballs in people’s ears and things.

I don’t know if either of those things is true anymore. It’s no longer obvious to me that my teenagers actually will need to develop this basic skill, or if the logic still holds that the fundamentals are necessary for experimentation.

Let me be candid (with apologies to all of my current and former students): What GPT can produce right now is better than the large majority of writing seen by your average teacher or professor. Over the past few days, I’ve given it a number of different prompts. And even if the bot’s results don’t exactly give you goosebumps, they do a more-than-adequate job of fulfilling a task.

I mean, look at this: I asked the program to write me a playful, sophisticated, emotional 600-word college-admissions essay about how my experience volunteering at my local SPCA had prepared me for the academic rigor of Stanford. Here’s an excerpt from its response:

In addition to cleaning, I also had the opportunity to interact with the animals. I was amazed at the transformation I saw in some of the pets who had been neglected or abused. With patience and care, they blossomed into playful and affectionate companions who were eager to give and receive love. I was also able to witness firsthand the process of selecting the right pet for the right family. Although it was bittersweet to see some animals leave the shelter, I knew that they were going to a loving home, and that was the best thing for them.

It also managed to compose a convincing 400-word “friendly” cover letter for an application to be a manager at Starbucks. But most jaw-dropping of all, on a personal level: It made quick work out of an assignment I’ve always considered absolutely “unhackable.” In January, my junior English students will begin writing an independent research paper, 12 to 18 pages, on two great literary works of their own choosing—a tradition at our school. Their goal is to place the texts in conversation with each other and find a thread that connects them. Some students will struggle to find any way to bring them together. We spend two months on the paper, putting it together piece by piece.

I’ve fed GPT a handful of pairs that students have worked with in recent years: Beloved and Hamlet, The Handmaid’s Tale and The Parable of the Sower, Homer’s The Odyssey and Dante’s Inferno. GPT brought them together instantly, effortlessly, uncannily: memory, guilt, revenge, justice, the individual versus the collective, freedom of choice, societal oppression. The technology doesn’t go much beyond the surface, nor does it successfully integrate quotations from the original texts, but the ideas presented were on-target—more than enough to get any student rolling without much legwork.

It goes further. Last night, I received an essay draft from a student. I passed it along to OpenAI’s bots. “Can you fix this essay up and make it better?” Turns out, it could. It kept the student’s words intact but employed them more gracefully; it removed the clutter so the ideas were able to shine through. It was like magic.

I’ve been teaching for about 12 years: first as a TA in grad school, then as an adjunct professor at various public and private universities, and finally in high school. From my experience, American high-school students can be roughly split into three categories. The bottom group is learning to master grammar rules, punctuation, basic comprehension, and legibility. The middle group mostly has that stuff down and is working on argument and organization—arranging sentences within paragraphs and paragraphs within an essay. Then there’s a third group that has the luxury of focusing on things such as tone, rhythm, variety, mellifluence.

Whether someone is writing a five-paragraph essay or a 500-page book, these are the building blocks not only of good writing but of writing as a tool, as a means of efficiently and effectively communicating information. And because learning writing is an iterative process, students spend countless hours developing the skill in elementary school, middle school, high school, and then finally (as thousands of underpaid adjuncts teaching freshman comp will attest) college. Many students (as those same adjuncts will attest) remain in the bottom group, despite their teachers’ efforts; most of the rest find some uneasy equilibrium in the second category.

Working with these students makes up a large percentage of every English teacher’s job. It also supports a cottage industry of professional development, trademarked methods buried in acronyms (ICE! PIE! EDIT! MEAT!), and private writing tutors charging $100-plus an hour. So for those observers who are saying, Well, good, all of these things are overdue for change—“this will lead to much-needed education reform,” a former colleague told me—this dismissal elides the heavy toll this sudden transformation is going to take on education, extending along its many tentacles (standardized testing, admissions, educational software, etc.).

Perhaps there are reasons for optimism, if you push all this aside. Maybe every student is now immediately launched into that third category: The rudiments of writing will be considered a given, and every student will have direct access to the finer aspects of the enterprise. Whatever is inimitable within them can be made conspicuous, freed from the troublesome mechanics of comma splices, subject-verb disagreement, and dangling modifiers.

But again, the majority of students do not see writing as a worthwhile skill to cultivate—just like I, sitting with my coffee and book, rereading Moby-Dick, do not consider it worthwhile to learn, say, video editing. They have no interest in exploring nuance in tone and rhythm; they will forever roll their eyes at me when I try to communicate the subtle difference, when writing an appositive phrase, between using commas, parentheses, or (the connoisseur’s choice) the em dash.

Which is why I wonder if this may be the end of using writing as a benchmark for aptitude and intelligence. After all, what is a cover letter? Its primary purpose isn’t to communicate “I already know how to do this job” (because of course I don’t) but rather “I am competent and trustworthy and can clearly express to you why I would be a good candidate for this job.” What is a written exam? Its primary signal isn’t “I memorized a bunch of information” but rather “I can express that information clearly in writing.” Many teachers have reacted to ChatGPT by imagining how to give writing assignments now—maybe they should be written out by hand, or given only in class—but that seems to me shortsighted. The question isn’t “How will we get around this?” but rather “Is this still worth doing?”

I believe my most essential tasks, as a teacher, are helping my students think critically, disagree respectfully, argue carefully and flexibly, and understand their mind and the world around them. Unconventional, improvisatory, expressive, meta-cognitive writing can be an extraordinary vehicle for those things. But if most contemporary writing pedagogy is necessarily focused on helping students master the basics, what happens when a computer can do it for us? Is this moment more like the invention of the calculator, saving me from the tedium of long division, or more like the invention of the player piano, robbing us of what can be communicated only through human emotion?

Maybe the moment when I felt most caught between these two thoughts was when I asked GPT a question from an exam I give in my Eastern-philosophy course, something I’ve never imagined a student could find outside assistance to answer: “Explain the Madhyamaka Buddhist idea that all phenomena lack inherent existence, using a funny, specific example.”

ChatGPT wrote back, as ever, instantly:

The Madhyamaka Buddhist idea that all phenomena lack inherent existence can be illustrated with a funny example: let’s say you have a pet hamster. You might think that your hamster has its own inherent existence, but in reality, your hamster is made up of many different elements such as its fur, its bones, its muscles, and its brain. None of these elements exist independently or inherently on their own, so your hamster itself does not have any inherent existence either. The same goes for all phenomena—everything is made up of various components that don’t have inherent existence, so nothing has any inherent existence either.

Everything is made up; it’s true. The essay as a literary form? Made up. Grammatical rules as markers of intelligence? Writing itself as a technology? Made up. Starting now, OpenAI is forcing us to ask foundational questions about whether any of those things are worth keeping around.

How Chat AI will end homework and make classroom instruction more important

Let’s begin with the purpose of homework. Educators assign homework for different reasons: as practice, preparation, extension, or integration of grade-level skills and concepts.

- Practice Homework reinforces learning from the skills and concepts already taught in the classroom. Practice homework promotes retention and automaticity of the concept, skill, and content taught. Examples include practicing multiplication facts, writing compound sentences, or learning parts of the Periodic Table of Elements to commit these skills and concepts to long-term memory.

- Preparation Homework is assigned to introduce content that will be addressed in future lessons. However, research suggests that homework is less effective if it is used to teach new or complex skills. For these types of assignments, students typically become stressed, which can create a negative perspective towards learning and school.

- Extension Homework requires students to use previously taught skills and concepts and apply them to new situations or projects. For instance, students may use the concept of area and perimeter to build a flowerbed.

- Integration Homework requires the student to apply learned skills and concepts to produce a culminating project, like a performance task using multiple math skills or language skills.

Homework also serves other purposes not directly related to instruction. Homework can help establish communication between parents and children, and it can inform parents about school topics and activities. It allows parents to view the curriculum that is being taught. Homework provides structure, and it helps create a more purposeful learner.

Here comes Chat AI

Artificial intelligence (AI) technology is becoming easily accessible to everyone on every device. This new technology can generate answers to prompts of any kind, in almost any form, without a student putting in any work. With the initial rollout in early 2023, it was very easy to spot fraudulent essays. However, as we enter the new year, the level of sophistication, or should we say simplicity, has increased. AI platforms such as ChatGPT have been able to mimic the language levels (colloquialisms) that students are using. This makes it virtually impossible for teachers to tell the difference between Chat AI writing and student writing.

Possible Adaptations to the AI Invasion

- Honor Contracts: Some schools of higher learning have asked students to sign an honor contract that forbids the use of such applications in their homework. These types of agreements and oaths have been a staple of education for centuries. However, some students have felt that the need to succeed in academia outweighs any risk.

- Classroom Solution: What is old is new again. In a recent interview, Siva Vaidhyanathan of the University of Virginia states, “Going forward I will demand some older forms of knowledge creation to challenge my students and help them learn. I will require in-class writing which will demand they think fluidly at the moment. I will require them to ask questions of the presenters, generating a deeper real-time understanding of a subject.”

- Adopt widespread use of interactive classroom instruction, such as EDI instructional practices. Effective instruction using Explicit Direct Instruction relies on TAPPLE for frequent Checking For Understanding. These are procedures developed by DataWORKS to create regular interaction on academic content using academic language. TAPPLE involves Teach First, Ask a Question, Pick a Non-Volunteer (so all students have to be ready), Pair-Share (so all students interact), Listen to the Response, and Give Effective Feedback. Another key to effective interactive instruction with EDI is the use of Engagement Norms. These 8 procedures help keep the students on task and engaged. They involve Pronunciation, Tracking, Choral Reading, Gestures, Pair-Share (to involve all students in real-time interaction), Attention Signal, Whiteboards (to elicit responses from all students), and the use of Complete Sentences in their expression.

- Use a Homework Quiz. Sure, students may use AI for their homework, but the proof of the pudding is whether they learned the content. The problem with traditional homework, says Dr. Silvia Ybarra, is that it has very specific answers. There is no way to check whether students arrived at those answers using the skills. The way to check their learning is to give a Homework Quiz the moment they come into class. Maybe have them read something or take a quick Homework Quiz. Can they understand the concept? Can they perform the skill? Can they demonstrate understanding?

Maybe AI is not a bad thing

If the outcome of using Chat AI is that students are learning, then maybe it is for the better. Whether or not students use it, what ultimately matters is what they learn. Are they able to do the process, practice, or exercise? Schools need to control the 6 hours of the day the students are with them. At the end of the day, the goal of the school is to assess if the student can perform independently. If AI can help, then fine. If the adaptations mentioned above can help, then even better.

See Related Article Expert Teacher vs. Experienced Teachers

https://dataworks-ed.com/blog/2021/08/expert-teachers-vs-experienced-teachers-whats-difference/

Dr. Silvia Ybarra, Explicit Direct Instruction

Siva Vaidhyanathan, “My students are using AI to cheat. Here’s why it’s a teachable moment,” https://www.theguardian.com/technology/2023/may/18/ai-cheating-teaching-chatgpt-students-college-university

Patricia Bogdanovich, “Homework or No Homework,” https://dataworks-ed.com/blog/2014/09/homework-or-no-homework/

Author: Joel Soto

Science News Explores

Think twice before using ChatGPT for help with homework

This new AI tool talks a lot like a person — but still makes mistakes

ChatGPT is impressive and can be quite useful. It can help people write text, for instance, and code. However, “it’s not magic,” says Casey Fiesler. In fact, it often seems intelligent and confident while making mistakes — and sometimes parroting biases.

Glenn Harvey

By Kathryn Hulick

February 16, 2023 at 6:30 am

“We need to talk,” Brett Vogelsinger said. A student had just asked for feedback on an essay. One paragraph stood out. Vogelsinger, a 9th-grade English teacher in Doylestown, Pa., realized that the student hadn’t written the piece himself. He had used ChatGPT. It’s a new artificial intelligence (AI) tool. It answers questions. It writes code. And it can generate long essays and stories.

The company OpenAI made ChatGPT available for free at the end of November 2022. Within a week, it had more than a million users. Other tech companies are racing to put out similar tools. Google launched Bard in early February. The AI company Anthropic is testing a new chatbot named Claude. And another AI company, DeepMind, is working on a bot called Sparrow.

ChatGPT marks the beginning of a new wave of AI that will disrupt education. Whether that’s a good or bad thing remains to be seen.

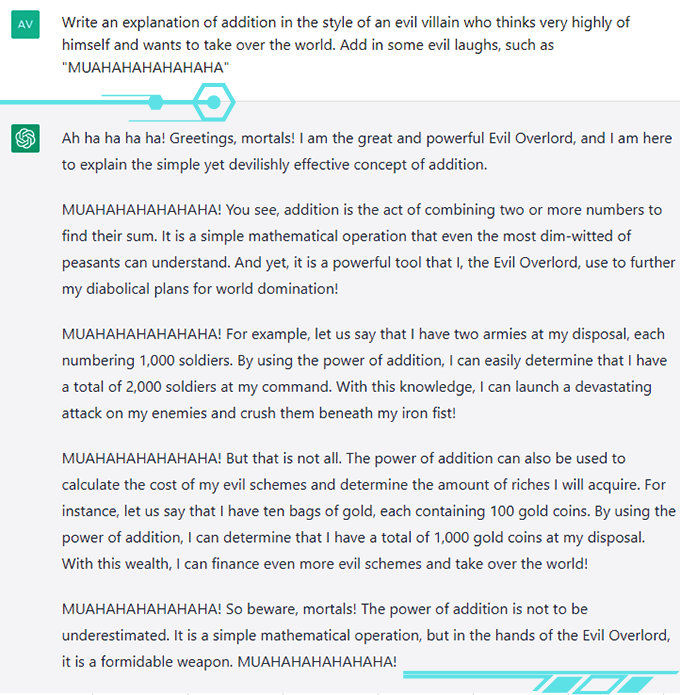

Some people have been using ChatGPT out of curiosity or for entertainment. I asked it to invent a silly excuse for not doing homework in the style of a medieval proclamation. In less than a second, it offered me: “Hark! Thy servant was beset by a horde of mischievous leprechauns, who didst steal mine quill and parchment, rendering me unable to complete mine homework.”

But students can also use it to cheat. When Stanford University’s student-run newspaper polled students at the university, 17 percent said they had used ChatGPT on assignments or exams during the end of 2022. Some admitted to submitting the chatbot’s writing as their own. For now, these students and others are probably getting away with cheating.

And that’s because ChatGPT does an excellent job. “It can outperform a lot of middle-school kids,” Vogelsinger says. He probably wouldn’t have known his student used it — except for one thing. “He copied and pasted the prompt,” says Vogelsinger.

This essay was still a work in progress. So Vogelsinger didn’t see this as cheating. Instead, he saw an opportunity. Now, the student is working with the AI to write that essay. It’s helping the student develop his writing and research skills.

“We’re color-coding,” says Vogelsinger. The parts the student writes are in green. Those parts that ChatGPT writes are in blue. Vogelsinger is helping the student pick and choose only a few sentences from the AI to keep. He’s allowing other students to collaborate with the tool as well. Most aren’t using it regularly, but a few kids really like it. Vogelsinger thinks it has helped them get started and to focus their ideas.

This story had a happy ending.

But at many schools and universities, educators are struggling with how to handle ChatGPT and other tools like it. In early January, New York City public schools banned ChatGPT on their devices and networks. They were worried about cheating. They also were concerned that the tool’s answers might not be accurate or safe. Many other school systems in the United States and elsewhere have followed suit.

But some experts suspect that bots like ChatGPT could also be a great help to learners and workers everywhere. Like calculators for math or Google for facts, an AI chatbot makes something that once took time and effort much simpler and faster. With this tool, anyone can generate well-formed sentences and paragraphs — even entire pieces of writing.

How could a tool like this change the way we teach and learn?

The good, the bad and the weird

ChatGPT has wowed its users. “It’s so much more realistic than I thought a robot could be,” says Avani Rao. This high school sophomore lives in California. She hasn’t used the bot to do homework. But for fun, she’s prompted it to say creative or silly things. She asked it to explain addition, for instance, in the voice of an evil villain. Its answer is highly entertaining.

Tools like ChatGPT could help create a more equitable world for people who are trying to work in a second language or who struggle with composing sentences. Students could use ChatGPT like a coach to help improve their writing and grammar. Or it could explain difficult subjects. “It really will tutor you,” says Vogelsinger, who had one student come to him excited that ChatGPT had clearly outlined a concept from science class.

Teachers could use ChatGPT to help create lesson plans or activities — ones personalized to the needs or goals of specific students.

Several podcasts have had ChatGPT as a “guest” on the show. In 2023, two people are going to use an AI-powered chatbot like a lawyer. It will tell them what to say during their appearances in traffic court. The company that developed the bot is paying them to test the new tech. Their vision is a world in which legal help might be free.

Xiaoming Zhai tested ChatGPT to see if it could write an academic paper. Zhai is an expert in science education at the University of Georgia in Athens. He was impressed with how easy it was to summarize knowledge and generate good writing using the tool. “It’s really amazing,” he says.

All of this sounds great. Still, some really big problems exist.

Most worryingly, ChatGPT and tools like it sometimes get things very wrong. In an ad for Bard, the chatbot claimed that the James Webb Space Telescope took the very first picture of an exoplanet. That’s false. In a conversation posted on Twitter, ChatGPT said the fastest marine mammal was the peregrine falcon. A falcon, of course, is a bird and doesn’t live in the ocean.

ChatGPT can be “confidently wrong,” says Casey Fiesler. Its text, she notes, can contain “mistakes and bad information.” She is an expert in the ethics of technology at the University of Colorado Boulder. She has made multiple TikTok videos about the pitfalls of ChatGPT.

Also, for now, all of the bot’s training data came from before a date in 2021. So its knowledge is out of date.

Finally, ChatGPT does not provide sources for its information. If asked for sources, it will make them up. It’s something Fiesler revealed in another video. Zhai discovered the exact same thing. When he asked ChatGPT for citations, it gave him sources that looked correct. In fact, they were bogus.

Zhai sees the tool as an assistant. He double-checked its information and decided how to structure the paper himself. If you use ChatGPT, be honest about it and verify its information, the experts all say.

Under the hood

ChatGPT’s mistakes make more sense if you know how it works. “It doesn’t reason. It doesn’t have ideas. It doesn’t have thoughts,” explains Emily M. Bender. She is a computational linguist who works at the University of Washington in Seattle. ChatGPT may sound a lot like a person, but it’s not one. It is an AI model developed using several types of machine learning.

The primary type is a large language model. This type of model learns to predict what words will come next in a sentence or phrase. It does this by churning through vast amounts of text. It places words and phrases into a 3-D map that represents their relationships to each other. Words that tend to appear together, like peanut butter and jelly, end up closer together in this map.
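To make the idea of "predicting what words will come next" concrete, here is a minimal sketch in Python. It is not how ChatGPT works internally: it simply counts which word follows which in a tiny made-up text and predicts the most common follower. The function names and the toy text are assumptions invented for this illustration.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        following[current_word][next_word] += 1
    return following


def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if the word was never seen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Toy training text, invented for illustration only.
corpus = (
    "peanut butter and jelly "
    "peanut butter and jelly sandwich "
    "peanut butter and toast"
)
model = train_bigram_model(corpus)

print(predict_next(model, "peanut"))  # "butter" follows "peanut" every time
print(predict_next(model, "and"))     # "jelly" follows "and" more often than "toast"
```

A real large language model looks at far more context than one previous word and learns from vastly more text, but the basic task is the same: given what came before, pick a likely next word.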

Before ChatGPT, OpenAI had made GPT3. This very large language model came out in 2020. It had trained on text containing an estimated 300 billion words. That text came from the internet and encyclopedias. It also included dialogue transcripts, essays, exams and much more, says Sasha Luccioni. She is a researcher at the company HuggingFace in Montreal, Canada. This company builds AI tools.

OpenAI improved upon GPT3 to create GPT3.5. This time, OpenAI added a new type of machine learning. It’s known as “reinforcement learning with human feedback.” That means people checked the AI’s responses. GPT3.5 learned to give more of those types of responses in the future. It also learned not to generate hurtful, biased or inappropriate responses. GPT3.5 essentially became a people-pleaser.

During ChatGPT’s development, OpenAI added even more safety rules to the model. As a result, the chatbot will refuse to talk about certain sensitive issues or information. But this also raises another issue: Whose values are being programmed into the bot, including what it is — or is not — allowed to talk about?

OpenAI is not offering exact details about how it developed and trained ChatGPT. The company has not released its code or training data. This disappoints Luccioni. “I want to know how it works in order to help make it better,” she says.

When asked to comment on this story, OpenAI provided a statement from an unnamed spokesperson. “We made ChatGPT available as a research preview to learn from real-world use, which we believe is a critical part of developing and deploying capable, safe AI systems,” the statement said. “We are constantly incorporating feedback and lessons learned.” Indeed, some early experimenters got the bot to say biased things about race and gender. OpenAI quickly patched the tool. It no longer responds the same way.

ChatGPT is not a finished product. It’s available for free right now because OpenAI needs data from the real world. The people who are using it right now are their guinea pigs. If you use it, notes Bender, “You are working for OpenAI for free.”

Humans vs robots

How good is ChatGPT at what it does? Catherine Gao is part of one team of researchers that is putting the tool to the test.

At the top of a research article published in a journal is an abstract. It summarizes the author’s findings. Gao’s group gathered 50 real abstracts from research papers in medical journals. Then they asked ChatGPT to generate fake abstracts based on the paper titles. The team asked people who review abstracts as part of their job to identify which were which.

The reviewers mistook roughly one in every three (32 percent) of the AI-generated abstracts as human-generated. “I was surprised by how realistic and convincing the generated abstracts were,” says Gao. She is a doctor and medical researcher at Northwestern University’s Feinberg School of Medicine in Chicago, Ill.

In another study, Will Yeadon and his colleagues tested whether AI tools could pass a college exam. Yeadon is a physics teacher at Durham University in England. He picked an exam from a course that he teaches. The test asks students to write five short essays about physics and its history. Students who take the test have an average score of 71 percent, which he says is equivalent to an A in the United States.

Yeadon used a close cousin of ChatGPT, called davinci-003. It generated 10 sets of exam answers. Afterward, he and four other teachers graded them using their typical grading standards for students. The AI also scored an average of 71 percent. Unlike the human students, however, it had no very low or very high marks. It consistently wrote well, but not excellently. For students who regularly get bad grades in writing, Yeadon says, this AI “will write a better essay than you.”

These graders knew they were looking at AI work. In a follow-up study, Yeadon plans to use work from the AI and students and not tell the graders whose work they are looking at.

Cheat-checking with AI

People may not always be able to tell if ChatGPT wrote something or not. Thankfully, other AI tools can help. These tools use machine learning to scan many examples of AI-generated text. After training this way, they can look at new text and tell you whether it was most likely composed by AI or a human.

Most free AI-detection tools were trained on older language models, so they don’t work as well for ChatGPT. Soon after ChatGPT came out, though, one college student spent his holiday break building a free tool to detect its work. It’s called GPTZero.

The company Originality.ai sells access to another up-to-date tool. Founder Jon Gillham says that in a test of 10,000 samples of text composed by GPT3, the tool tagged 94 percent of them correctly. When ChatGPT came out, his team tested a much smaller set of 20 samples that had been created by GPT3, GPT3.5 and ChatGPT. Here, Gillham says, “it tagged all of them as AI-generated. And it was 99 percent confident, on average.”

In addition, OpenAI says they are working on adding “digital watermarks” to AI-generated text. They haven’t said exactly what they mean by this. But Gillham explains one possibility. The AI ranks many different possible words when it is generating text. Say its developers told it to always choose the word ranked in third place rather than first place at specific places in its output. These words would act “like a fingerprint,” says Gillham.
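The possibility Gillham describes can be sketched in a few lines of Python. This is only an illustration of his hypothetical, not OpenAI's method, which has not been made public. The "ranker" here is a toy stand-in for a language model: it ranks the next three words of a short cyclic word list. All names, the word list, and the chosen positions are assumptions made up for the example.

```python
# Toy vocabulary; the fake "model" ranks the next three entries in this cycle.
VOCAB = ["the", "cat", "sat", "on", "a", "mat", "and", "slept"]


def ranked_candidates(previous_word):
    """Return three candidate next words, ordered from rank 1 to rank 3 (toy rule)."""
    index = VOCAB.index(previous_word) if previous_word in VOCAB else -1
    return [VOCAB[(index + step) % len(VOCAB)] for step in (1, 2, 3)]


def generate(length, marked_positions):
    """Pick the top-ranked word, except at marked positions, where rank 3 is used."""
    words = []
    previous = None
    for position in range(length):
        candidates = ranked_candidates(previous)
        rank_index = 2 if position in marked_positions else 0
        previous = candidates[rank_index]
        words.append(previous)
    return words


def watermark_score(words, marked_positions):
    """Fraction of marked positions where the word is the rank-3 candidate."""
    hits = 0
    for position in marked_positions:
        previous = words[position - 1] if position > 0 else None
        if words[position] == ranked_candidates(previous)[2]:
            hits += 1
    return hits / len(marked_positions)


secret_positions = {2, 4}  # hypothetical positions known only to the developer
marked = generate(6, secret_positions)
plain = generate(6, set())

print(" ".join(marked), watermark_score(marked, secret_positions))
print(" ".join(plain), watermark_score(plain, secret_positions))
```

In this sketch the watermarked text scores 1.0 and the unmarked text scores 0.0. Anyone who knows the secret positions and can re-run the ranking could check for the "fingerprint," while a casual reader would see only slightly unusual word choices.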

The future of writing

Tools like ChatGPT are only going to improve with time. As they get better, people will have to adjust to a world in which computers can write for us. We’ve made these sorts of adjustments before. As high-school student Rao points out, Google was once seen as a threat to education because it made it possible to instantly look up any fact. We adapted by coming up with teaching and testing materials that don’t require students to memorize things.

Now that AI can generate essays, stories and code, teachers may once again have to rethink how they teach and test. That might mean preventing students from using AI. They could do this by making students work without access to technology. Or they might invite AI into the writing process, as Vogelsinger is doing. Concludes Rao, “We might have to shift our point of view about what’s cheating and what isn’t.”

Students will still have to learn to write without AI’s help. Kids still learn to do basic math even though they have calculators. Learning how math works helps us learn to think about math problems. In the same way, learning to write helps us learn to think about and express ideas.

Rao thinks that AI will not replace human-generated stories, articles and other texts. Why? She says: “The reason those things exist is not only because we want to read it but because we want to write it.” People will always want to make their voices heard. ChatGPT is a tool that could enhance and support our voices — as long as we use it with care.

Correction: Gillham’s comment on the 20 samples that his team tested has been corrected to show how confident his team’s AI-detection tool was in identifying text that had been AI-generated (not in how accurately it detected AI-generated text).


February 20, 2023


ChatGPT offers unseen opportunities to sharpen students' critical skills

by Erika Darics and Lotte van Poppel, The Conversation

As gloomy predictions foretell the end of homework, education institutions are hastily revising their policies and curricula to address the challenges posed by AI chatbots. It is true that the emergence of chatbots does raise ethical and philosophical questions. Yet, through their interactions with AI, people will inevitably enhance skills that are crucial in our day and age: language awareness and critical thinking.

We are aware that this claim contradicts the widespread worries about the loss of creativity, individual and critical thinking. However, as we will demonstrate, a shift in perspective from the 'output' to the 'user' may allow for some optimism.

Sophisticated parroting

It is not surprising that ChatGPT's success in passing an MBA exam and producing credible academic papers has sparked worry among educators about how students will learn to form an opinion and articulate it. This is indeed a scary prospect: from the smallest everyday decisions to large-scale, high-stakes societal issues, we form our opinions by gathering information, (preferably) doing some research, thinking critically while we evaluate the evidence and reasoning, and then making our own judgment. Now cue ChatGPT: it will evaluate the vast dataset it has been trained on, and save you the hard work of researching, thinking and evaluating. The glitch, as the bot itself admits, is that its answers are not based on independent research: "I generate text based on patterns I have seen in the data. I present the most likely text based on my training, but I don't have the ability to critically evaluate or form my own opinions."

Don't be fooled by the logic of this answer: the AI application does not explain its actions and their consequences (and as we will see later, there is a big difference between the two). The world ChatGPT presents to us is based on argumentum ad populum: it considers to be true what is repeated the most. Of course, that is not always so. If you go down the rabbit hole of reports on AI "hallucinations," you are bound to find many stories. Our favorite is how the chatbot dreamed up the most widely cited economics paper.

This is why we agree with those who doubt that ChatGPT will take over our content-creation, creative and fact-checking jobs any time soon. However convincing they are, AI-generated texts sit in a vacuum: a chatbot does not communicate the way humans do. It does not know the actual purpose of the text, the intended audience or the context in which it will be used, unless specifically told so.

A chance to sharpen our critical skills

Users need to be savvy in both prompting and evaluating the output. Prompting is a skill that requires precise vocabulary and an understanding of how language, style or genres work. Evaluation is the ability to assess the output.

Let us give an example. Imagine your task is to respond to a corporate crisis. You reach out to ChatGPT to create a corporate apology. You prompt it: Assume some responsibility. Use formal language. Should be short.

And this is where the magic happens.

You have done your prompting, but now you need to check: does this text look and sound like a corporate apology? How do people normally use language to assume or shift blame? Is the text easy to read or does it hide its true meaning behind complex language? Whose voice are we hearing in the apology?

To check if your text is right, you must know what the typical genre or style features are. You must know about crisis management strategies. Readability levels. Or how we encode agency in language (as this study found).

In academic scholarship this kind of knowledge is called language awareness. Language awareness has several levels: the first one is simply noticing language(s) and its elements. The second level is when we can identify and label the various elements, and creatively manipulate them.

Consider for example the beginning of two versions of corporate apologies ChatGPT created:

"We would like to deeply apologize for the actions of our company…"

"We would like to deeply apologize for the inconvenience our actions caused…"

On a cursory read, both messages may look apologetic, but they differ in what they apologize for. One apologizes for their actions. The other for the consequences of their actions. This small difference affects legal liability: in the second case, the company does not explicitly accept responsibility. After all, they are only sorry for the inconvenience.

Examples like the one above can make people think about small linguistic differences and their meaning for communication. The beauty of it is that the more often we look closely at language, the more we notice what it does in communication. Once you see how a 'fauxpology' works, you can never unsee it.

A potential weapon against misinformation

Back to ChatGPT: as we can see, for the best results, users need to prompt it right, and then check the produced text against the prompt criteria. For this they need to understand the nuances of language, context and intended purpose.

Why is this knowledge such a big deal? Because of a third level of awareness that we have not mentioned before. This is when people realize how language creates, affects and manipulates their perceptions of reality. This knowledge is invaluable in our age of misinformation and populism when the issues society grapples with are mostly abstract and intangible. The more people know about how language works, the more they start to notice how politicians and the media create versions of the world for them through their communications.

Language awareness makes people sensitive to questionable corporate communication practices, from greenwashing to… you know, non-apologies. What is more, language awareness may help people better understand why society does (or doesn't) respond to actions targeting the climate crisis. The climate crisis has been dubbed the largest communication failure in history, and almost every aspect of it, including how people act as a result, depends on how we talk about it.

It is impossible to predict the extent to which AI applications like ChatGPT will disrupt the world of education and work. For now, society can both prepare for the dangers of AIs and embrace their potential. In the process of learning how to interact with them well, however, people are bound to become "prompt savvy," and with that more aware of how language works. With such language awareness comes the power to consume texts with a critical eye. A glimmer of optimism for a sustainable future is that critical reading leaves less room for manipulation and misinformation.

Provided by The Conversation


The summer is over, schools are back, and the data is in: ChatGPT is mainly a tool for cheating on homework.

- When the summer began, ChatGPT traffic suddenly fell.

- One theory was that students didn't need the AI tool anymore.

- Now that school is back, traffic has recovered, confirming the theory.

Earlier this year, ChatGPT usage suddenly fell.

ChatGPT was supposed to be the fastest-growing tech product in history, so this reversal got the technosphere theorizing as to why the chatbot wasn't so hot anymore.

One hypothesis stood out: Millions of students went on summer break, so they didn't need ChatGPT to cheat — er, I mean research.

The summer is over now, school is back in session, and the data confirms this theory.

Similarweb tracks weekly visits to OpenAI's ChatGPT website, and traffic has risen strongly since schools reopened.

Then there's the amusing comparison with interest in Minecraft, a popular video game that kids love to play when they're not using ChatGPT to cheat on their homework.
