11 Tips For Writing a Dissertation Data Analysis

Since the advent of the fourth industrial revolution, the digital world, we have been surrounded by data. Terabytes of it sit around us and in data centers, waiting to be processed and used. To be useful, that data must be analyzed appropriately, and dissertation data analysis is built on this foundation. If the data analysis is valid and free from errors, the research outcomes will be reliable and lead to a successful dissertation.

Given the complexity of many data analysis projects, it is hard to get precise results if analysts are not properly familiar with data analysis tools and tests. Analysis is a time-consuming process that starts with collecting valid, relevant data and ends with presenting error-free results.

So, in today’s topic, we will cover the need to analyze data, dissertation data analysis, and mainly the tips for writing an outstanding data analysis dissertation. If you are a doctoral student and plan to perform dissertation data analysis on your data, make sure that you give this article a thorough read for the best tips!

What is Data Analysis in Dissertation?

Dissertation data analysis is the process of understanding, gathering, compiling, and processing a large amount of data, then identifying common patterns in responses and critically examining facts and figures to find the rationale behind those outcomes.

Even if you have collected and compiled the data in the form of facts and figures, that alone is not enough to prove your research outcomes. You still need to analyze the data before you can use it in the dissertation. The analysis provides scientific support for the thesis and conclusion of the research.

Data Analysis Tools

There are plenty of indicative tests used to analyze data and infer relevant results for the discussion part. Following are some tests used to perform analysis of data leading to a scientific conclusion:

11 Most Useful Tips for Dissertation Data Analysis

Doctoral students need to perform dissertation data analysis and then write the dissertation to receive their degree. Many Ph.D. students find dissertation data analysis hard because they are not trained in it.

1. Dissertation Data Analysis Services

The first tip applies to those students who can afford to look for help with their dissertation data analysis work. It’s a viable option, and it can help with time management and with building the other elements of the dissertation with much detail.

Dissertation analysis services are professional services that help doctoral students with all the basics of their dissertation work: planning, research and clarification, methodology, dissertation data analysis and review, literature review, and the final PowerPoint presentation.

One reference for professional dissertation data analysis services is Statistics Solutions, which has been helping students succeed in their dissertation work for over 22 years.

For a proper dissertation data analysis, the student should have a clear understanding and statistical knowledge. Through this knowledge and experience, a student can perform dissertation analysis on their own.

Following are some helpful tips for writing a splendid dissertation data analysis:

2. Relevance of Collected Data

If the data is irrelevant and not appropriate, you might get distracted from the point of focus. To show the reader that you can critically solve the problem, make sure that you write a theoretical proposition regarding the selection and analysis of data.

3. Data Analysis

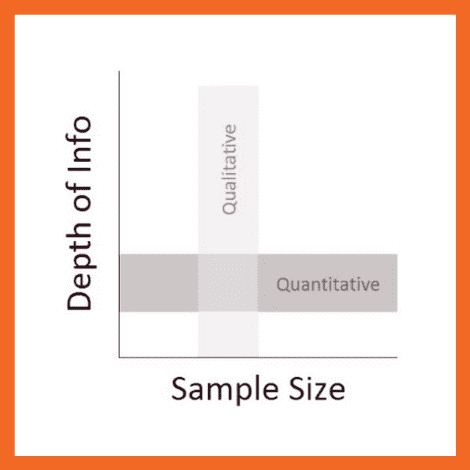

For analysis, it is crucial to use the methods that best fit the types of data collected and the research objectives. Explain these methods thoroughly, along with the reasons that justify your data collection methods. Show the reader that you did not choose your method at random but arrived at it after critical analysis and prolonged research.

Quantitative analysis, for its part, refers to the analysis and interpretation of facts and figures to build the reasoning behind the primary findings. An assessment of the main results and the literature review plays a pivotal role in both qualitative and quantitative analysis.

The overall objective of data analysis is to detect patterns and tendencies in the data and then present the outcomes clearly. It provides a solid foundation for critical conclusions and helps the researcher complete the dissertation proposal.

4. Qualitative Data Analysis

Qualitative data refers to data that does not involve numbers. You are required to analyze data collected through experiments, focus groups, and interviews. This can be time-consuming because it requires iterative examination and sometimes the application of hermeneutics. Note that the aim of a qualitative technique is not only to generate good outcomes but also to unveil deeper knowledge that can be transferable.

Presenting qualitative data analysis in a dissertation can also be challenging, because the data contains longer and more detailed responses. Placing such comprehensive data coherently in one chapter can be difficult for two reasons. First, it is not always clear which data to include and which to exclude. Second, unlike quantitative data, qualitative data is hard to present in figures and tables, since it rarely condenses into a visual representation. As a writer, it is essential to address both of these challenges.

Qualitative Data Analysis Methods

Following are the methods used to perform qualitative data analysis.

- Deductive Method

This method involves analyzing qualitative data based on an argument that a researcher already defines. It’s a comparatively easy approach to analyze data. It is suitable for the researcher with a fair idea about the responses they are likely to receive from the questionnaires.

- Inductive Method

In this method, the researcher analyzes the data without relying on any predefined rules. It is a time-consuming process used by students who have very little knowledge of the research phenomenon.
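As a rough illustration of the deductive method, the sketch below tags each response with themes from a codebook that the researcher defines in advance and then counts how often each theme appears. The codebook, the keywords, and the sample responses are all invented for illustration, and plain substring matching is a deliberate simplification of real qualitative coding.

```python
from collections import Counter

# Hypothetical codebook: theme -> keywords that signal it (an assumption for illustration).
CODEBOOK = {
    "cost": ["price", "expensive", "afford"],
    "support": ["supervisor", "help", "guidance"],
}

def code_response(text, codebook=CODEBOOK):
    """Return the set of predefined themes whose keywords appear in one response."""
    lowered = text.lower()
    # Substring matching is crude: "help" also matches "helpful".
    return {theme for theme, words in codebook.items()
            if any(word in lowered for word in words)}

def theme_counts(responses, codebook=CODEBOOK):
    """Count how many responses touch each predefined theme."""
    counts = Counter()
    for response in responses:
        counts.update(code_response(response, codebook))
    return counts

# Invented interview snippets.
responses = [
    "The software was too expensive for me.",
    "My supervisor gave clear guidance.",
    "I could not afford the licence, and nobody could help.",
]
counts = theme_counts(responses)
print(counts)
```

An inductive analysis would start without `CODEBOOK` and let the themes emerge from repeated reading, which is why it tends to take longer.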

5. Quantitative Data Analysis

Quantitative data contains facts and figures obtained from scientific research and requires extensive statistical analysis. After collection and analysis, you will be able to draw conclusions. Outcomes can be generalized beyond the sample to a larger group by assuming that the sample is representative, which is one of the preliminary checks to carry out in your analysis. This method is also referred to as the “scientific method”, with its roots in the natural sciences.

The presentation of quantitative data depends on the domain to which it is being presented. It is beneficial to consider your audience while writing your findings. Quantitative data for hard sciences might require numeric inputs and statistics. As for natural sciences, such comprehensive analysis is not required.

Quantitative Analysis Methods

Following are some of the methods used to perform quantitative data analysis.

- Trend analysis: This corresponds to a statistical analysis approach to look at the trend of quantitative data collected over a considerable period.

- Cross-tabulation: This method uses a tabular format to draw inferences among data sets in research.

- Conjoint analysis: A quantitative data analysis method that can collect and analyze advanced measures. These measures provide a thorough view of purchasing decisions and of the parameters that matter most.

- TURF analysis: This approach assesses the total market reach of a service or product or a mix of both.

- Gap analysis: It utilizes the side-by-side matrix to portray quantitative data, which captures the difference between the actual and expected performance.

- Text analysis: In this method, innovative tools enumerate open-ended data into easily understandable data.
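Two of the methods listed above, cross-tabulation and trend analysis, are simple enough to sketch with the Python standard library. The records and yearly scores below are invented for illustration, and the trend is summarized here as the slope of a least-squares line, which is only one of several ways to describe a trend.

```python
from collections import Counter
from statistics import mean

def cross_tabulate(rows, row_key, col_key):
    """Count records for every (row category, column category) pair."""
    return Counter((r[row_key], r[col_key]) for r in rows)

def trend_slope(periods, values):
    """Slope of the least-squares line through the (period, value) points."""
    mx, my = mean(periods), mean(values)
    num = sum((x - mx) * (y - my) for x, y in zip(periods, values))
    den = sum((x - mx) ** 2 for x in periods)
    return num / den

# Hypothetical survey records.
survey = [
    {"level": "Masters", "tool": "SPSS"},
    {"level": "Masters", "tool": "R"},
    {"level": "PhD", "tool": "R"},
    {"level": "PhD", "tool": "R"},
]
table = cross_tabulate(survey, "level", "tool")
print(table[("PhD", "R")])        # number of PhD respondents using R

# Hypothetical yearly measurements.
years = [2019, 2020, 2021, 2022]
scores = [10.0, 12.0, 14.0, 16.0]
print(trend_slope(years, scores))  # average change per year
```

A positive slope indicates an upward trend over the period, while a pair missing from the cross-tabulation simply counts as zero.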

6. Data Presentation Tools

Since large volumes of data need to be represented, it becomes a difficult task to present such an amount of data in coherent ways. To resolve this issue, consider all the available choices you have, such as tables, charts, diagrams, and graphs.

Tables help in presenting both qualitative and quantitative data concisely. While presenting data, always keep your reader in mind. Anything clear to you may not be apparent to your reader. So, constantly rethink whether your data presentation method is understandable to someone less conversant with your research and findings. If the answer is “No”, you may need to rethink your presentation.

7. Include Appendix or Addendum

After presenting a large amount of data, your dissertation analysis part might get messy and look disorganized. At the same time, you will not want to cut down or exclude data you spent days and months collecting. To resolve this, include an appendix.

Put the data you find hard to arrange within the text in the appendix of the dissertation, along with questionnaires, copies of focus group and interview transcripts, and data sheets. The statistical analysis and the statements quoted from interviewees, on the other hand, belong within the dissertation itself.

8. Thoroughness of Data

It is a common misconception that the data presented is self-explanatory. Many students provide the data and quotes and assume that these explain everything. They do not. Rather than just quoting everything, you should analyze the data and identify which parts you will use to support or refute your standpoints.

Thoroughly demonstrate the ideas and critically analyze each perspective taking care of the points where errors can occur. Always make sure to discuss the anomalies and strengths of your data to add credibility to your research.

9. Discussing Data

Discussion of data involves elaborating the dimensions to classify patterns, themes, and trends in presented data. For balance, also take theoretical interpretations into account. Discuss the reliability of your data by assessing their effect and significance. Do not hide the anomalies. While using interviews to discuss the data, make sure you use relevant quotes to develop a strong rationale.

It also involves answering what you are trying to do with the data and how you have structured your findings. Once you have presented the results, the reader will be looking for interpretation. Hence, it is essential to deliver the interpretation as soon as you have presented your data.

10. Findings and Results

Findings refer to the facts derived after the analysis of collected data. These outcomes should be stated clearly; the statements should tightly support your objective and provide logical reasoning and scientific backing for your point. This part makes up the majority of the dissertation.

In the finding part, you should tell the reader what they are looking for. There should be no suspense for the reader as it would divert their attention. State your findings clearly and concisely so that they can get the idea of what is more to come in your dissertation.

11. Connection with Literature Review

At the end of your data analysis in the dissertation, make sure to compare your data with other published research. In this way, you can identify the points of difference and agreement. Check whether your findings are consistent and whether they meet your expectations, and look for any bottlenecks. Analyze and discuss the reasons behind them. Identify the key themes, gaps, and the relation of your findings with the literature review. In short, you should link your data with your research question, and the research questions should form a basis for the literature review.


Wrapping Up

Writing the data analysis in a dissertation involves dedication, and carrying it out demands sound knowledge and proper planning. Choosing your topic, gathering relevant data, analyzing it, presenting your data and findings correctly, discussing the results, and connecting them with the literature and conclusions are its milestones. Among these checkpoints, the data analysis stage is the most important and requires a lot of care.

In this article, we thoroughly looked at the tips that prove valuable for writing a data analysis in a dissertation. Make sure to give this article a thorough read before you write data analysis in the dissertation leading to the successful future of your research.

Oxbridge Essays. Top 10 Tips for Writing a Dissertation Data Analysis.

Emidio Amadebai

I am an IT Engineer who is passionate about learning and sharing. I have worked with and learned quite a bit from Data Engineers, Data Analysts, Business Analysts, and Key Decision Makers for almost the past 5 years. I am interested in learning more about Data Science and how to leverage it for better decision-making in my business, and hopefully in helping you do the same in yours.


Data analysis techniques

In STAGE NINE: Data analysis, we discuss the data you will have collected during STAGE EIGHT: Data collection. However, before you collect your data, having followed the research strategy you set out in this STAGE SIX, it is useful to think about the data analysis techniques you may apply to your data when it is collected.

The statistical tests that are appropriate for your dissertation will depend on (a) the research questions/hypotheses you have set, (b) the research design you are using, and (c) the nature of your data. You should already be clear about your research questions/hypotheses from STAGE THREE: Setting research questions and/or hypotheses, as well as knowing the goal of your research design from STEP TWO: Research design in this STAGE SIX: Setting your research strategy. These two pieces of information - your research questions/hypotheses and research design - will let you know, in principle, the statistical tests that may be appropriate to run on your data in order to answer your research questions.

We highlight the words "in principle" and "may" because the most appropriate statistical test to run on your data depends not only on your research questions/hypotheses and research design, but also on the nature of your data. As you should have identified in STEP THREE: Research methods, and in the article, Types of variables, in the Fundamentals part of Lærd Dissertation, (a) not all data is the same, and (b) not all variables are measured in the same way (i.e., variables can be dichotomous, ordinal or continuous). In addition, not all data is normal, nor is the data when comparing groups necessarily equal, terms we explain in the Data Analysis section in the Fundamentals part of Lærd Dissertation. As a result, you might think that running a particular statistical test is correct at this point of setting your research strategy (e.g., a statistical test called a dependent t-test), based on the research questions/hypotheses you have set, but when you collect your data (i.e., during STAGE EIGHT: Data collection), the data may fail certain assumptions that are important to such a statistical test (e.g., normality and homogeneity of variance). As a result, you have to run another statistical test (e.g., a Wilcoxon signed-rank test instead of a dependent t-test).
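To make the contrast between the two tests concrete, here is a minimal sketch that computes the test statistic for a dependent (paired) t-test and the rank sums for a Wilcoxon signed-rank test on the same before/after measurements. The data are invented, the function names are hypothetical, and the code stops at the statistics: deciding whether the assumptions hold and looking up p-values is left to a statistics package.

```python
from math import sqrt
from statistics import mean, stdev

def paired_t_statistic(before, after):
    """t statistic and degrees of freedom for a dependent (paired) t-test."""
    diffs = [a - b for a, b in zip(after, before)]
    n = len(diffs)
    return mean(diffs) / (stdev(diffs) / sqrt(n)), n - 1

def average_ranks(values):
    """Rank values from 1 upward, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # positions are 0-based, ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks

def wilcoxon_signed_rank(before, after):
    """Return (W_plus, W_minus): rank sums of the positive and negative differences."""
    diffs = [a - b for a, b in zip(after, before) if a != b]  # zero differences are dropped
    ranks = average_ranks([abs(d) for d in diffs])
    w_plus = sum(r for d, r in zip(diffs, ranks) if d > 0)
    w_minus = sum(r for d, r in zip(diffs, ranks) if d < 0)
    return w_plus, w_minus

# Invented scores for five participants measured twice.
before = [10, 12, 9, 14, 11]
after = [12, 15, 9, 13, 16]
t_stat, df = paired_t_statistic(before, after)
w_plus, w_minus = wilcoxon_signed_rank(before, after)
print(f"paired t = {t_stat:.3f} (df = {df})")
print(f"Wilcoxon W+ = {w_plus}, W- = {w_minus}")
```

The t statistic works with the size of each difference, whereas the Wilcoxon rank sums only use their order, which is why the latter is the usual fallback when normality is doubtful.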

At this stage in the dissertation process, it is important, or at the very least, useful to think about the data analysis techniques you may apply to your data when it is collected. We suggest that you do this for two reasons:

REASON A Supervisors sometimes expect you to know what statistical analysis you will perform at this stage of the dissertation process

This is not always the case, but if you have had to write a Dissertation Proposal or Ethics Proposal, there is sometimes an expectation that you explain the type of data analysis that you plan to carry out. An understanding of the data analysis that you will carry out on your data can also be an expected component of the Research Strategy chapter of your dissertation write-up (i.e., usually Chapter Three: Research Strategy). Therefore, it is a good time to think about the data analysis process if you plan to start writing up this chapter at this stage.

REASON B It takes time to get your head around data analysis

When you come to analyse your data in STAGE NINE: Data analysis, you will need to think about (a) selecting the correct statistical tests to perform on your data, (b) running these tests on your data using a statistics package such as SPSS, and (c) learning how to interpret the output from such statistical tests so that you can answer your research questions or hypotheses. Whilst we show you how to do this for a wide range of scenarios in the Data Analysis section in the Fundamentals part of Lærd Dissertation, it can be a time consuming process. Unless you took an advanced statistics module/option as part of your degree (i.e., not just an introductory course to statistics, which is often taught in undergraduate and master's degrees), it can take time to get your head around data analysis. Starting this process at this stage (i.e., STAGE SIX: Research strategy), rather than waiting until you finish collecting your data (i.e., STAGE EIGHT: Data collection) is a sensible approach.

Final thoughts...

Setting the research strategy for your dissertation required you to describe, explain and justify the research paradigm, quantitative research design, research method(s), sampling strategy, and approach towards research ethics and data analysis that you plan to follow, as well as determine how you will ensure the research quality of your findings so that you can effectively answer your research questions/hypotheses. However, from a practical perspective, just remember that the main goal of STAGE SIX: Research strategy is to have a clear research strategy that you can implement (i.e., operationalize). After all, if you are unable to clearly follow your plan and carry out your research in the field, you will struggle to answer your research questions/hypotheses. Once you are sure that you have a clear plan, it is a good idea to take a step back, speak with your supervisor, and assess where you are before moving on to collect data. Therefore, when you are ready, proceed to STAGE SEVEN: Assessment point.


5 Tips for Handling your Thesis Data Analysis


- 23rd June 2015

When writing your thesis, the process of analyzing data and working with statistics can be pretty hard at first. This is true whether you’re using specialized data analysis software, like SPSS, or a more descriptive approach. But there are a few guidelines you can follow to make things simpler.

1. Choose the Best Analytical Method for Your Project

The sheer variety of techniques available for data analysis can be confusing! If you are writing a thesis on internet marketing, for instance, your approach to analysis will be very different to someone writing about biochemistry. As such it is important to adopt an approach appropriate to your research.

2. Double Check Your Methodology

If you are working with quantitative data, it is important to make sure that your analytical techniques are compatible with the methods used to gather your data. Having a clear understanding of what you have done so far will ensure that you achieve accurate results.

For instance, when performing statistical analysis, you may have to choose between parametric and non-parametric testing. If your data is sampled from a population with a broadly Gaussian (i.e., normal) distribution, you will almost always want to use some form of parametric testing.

But if you can’t remember or aren’t sure how you selected your sample, you won’t necessarily know the best test to use!

3. Familiarize Yourself with Statistical Analysis and Analytical Software

Thanks to various clever computer programs, you no longer have to be a math genius to conduct top-grade statistical analysis. Nevertheless, learning the basics will help you make informed choices when designing your research and prevent you from making basic mistakes.


Likewise, trying out different software packages will allow you to pick the one best suited to your needs on your current project.

4. Present Your Data Clearly and Consistently

This is possibly one of the most important parts of writing up your results. Even if your data and statistics are perfect, failure to present your analysis clearly will make it difficult for your reader to follow.

Ask yourself how your analysis would look to someone unfamiliar with your project. If they would be able to understand your analysis, you’re on the right track!

5. Make It Relevant!

Finally, remember that data analysis is about more than just presenting your data. You should also relate your analysis back to your research objectives, discussing its relevance and justifying your interpretations.

This will ensure that your work is easy to follow and demonstrate your understanding of the methods used. So no matter what you are writing about, the analysis is a great time to show off how clever you are!


How do I make a data analysis for my bachelor, master or PhD thesis?

A data analysis is an evaluation of formal data to gain knowledge for the bachelor’s, master’s or doctoral thesis. The aim is to identify patterns in the data, i.e. regularities, irregularities or at least anomalies.

Data can come in many forms, from numbers to extensive descriptions of objects. For a data analysis, the data is usually in numerical form, such as time series, numerical sequences or statistics of all kinds. Note, however, that statistics are already processed data.

Data analysis requires some creativity because the solution is usually not obvious. After all, no one has conducted an analysis like this before, or at least you haven't found anything about it in the literature.

The results of a data analysis are answers to initial questions and detailed questions. The answers are numbers and graphics and the interpretation of these numbers and graphics.

What are the advantages of data analysis compared to other methods?

- Numbers are universal

- The data is tangible.

- There are algorithms for calculations and it is easier than a text evaluation.

- The addressees quickly understand the results.

- You can really do magic and impress the addressees.

- It’s easier to visualize the results.

What are the disadvantages of data analysis?

- Garbage in, garbage out. If the quality of the data is poor, it’s impossible to obtain reliable results.

- The dependency in data retrieval can be quite annoying. Here are some tips for attracting participants for a survey.

- You have to know or learn methods or find someone who can help you.

- Mistakes can be devastating.

- Missing substance can be detected quickly.

- Pictures say more than a thousand words. Therefore, if you can’t fill the pages with words, at least throw in graphics. However, usually only the words count.

Under what conditions can or should I conduct a data analysis?

- If I have to.

- You must be able to get the right data.

- If I can perform the calculations myself or at least understand, explain and repeat the calculated evaluations of others.

- You want a clear personal contribution right from the start.

How do I create the evaluation design for the data analysis?

The most important thing is to ask the right questions, enough questions and also clearly formulated questions. Here are some techniques for asking the right questions:

Good formulation: What is the relationship between Alpha and Beta?

Poor formulation: How are Alpha and Beta related?

Now it’s time for the methods for the calculation. There are dozens of statistical methods, but as always, most calculations can be done with only a handful of statistical methods.

- Which detailed questions can be formulated as the research question?

- What data is available? In what format? How is the data prepared?

- Which key figures allow statements?

- What methods are available to calculate such indicators? Do my details match? By type (scales), by size (number of records).

- Do I not need to have a lot of data for a data analysis?

It depends on the media, the questions and the methods I want to use.

A common rule of thumb is that you need at least 30 records for a statistical analysis in order to make statements about the population. Beyond that threshold, the sample does not have to be huge: a well-drawn sample of modest size can stand in for a population of millions, although more records do make the estimates more precise. That's why statistics were invented...
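To see how the number of records affects precision, the sketch below computes an approximate 95% margin of error for a sample mean at a few sample sizes. The standard deviation of 10 is an invented value, and the normal approximation used here is a simplification (for small samples a t-distribution would give a slightly wider margin).

```python
from math import sqrt
from statistics import NormalDist

def margin_of_error(sample_sd, n, confidence=0.95):
    """Approximate half-width of a confidence interval for a mean (normal approximation)."""
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return z * sample_sd / sqrt(n)

# Hypothetical standard deviation of 10 units, three sample sizes.
for n in (30, 300, 3000):
    print(n, round(margin_of_error(10.0, n), 2))
```

The margin shrinks with the square root of the sample size, so a hundred times more records only makes the estimate ten times more precise.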

What mistakes do I need to watch out for?

- Don't do the analysis at the last minute.

- Formulate questions and hypotheses for evaluation BEFORE data collection!

- Stay persistent, keep going.

- Leave the results for a while then revise them.

- You have to combine theory and the state of research with your results.

- You must have the time under control

Which tools can I use?

You can use programs of all kinds for calculations. But asking questions is your most powerful aide.

Who can legally help me with a data analysis?

The great intellectual challenge is to develop the research design, to obtain the data and to interpret the results in the end.

Am I allowed to let others perform the calculations?

That's a thing. In the end, every program is useful. If someone else is operating a program, then they can simply be seen as an extension of the program. But this is a comfortable view... Of course, it’s better if you do your own calculations.

A good compromise is to find some help, do a practical calculation then follow the calculation steps meticulously so next time you can do the math yourself. Basically, this functions as a permitted training. One can then justify each step of the calculation in the defense.

What's the best place to start?

Clearly with the detailed questions and hypotheses. These two guide the entire data analysis. So formulate as many detailed questions as possible to answer your main question or research question. You can find detailed instructions and examples for the formulation of these so-called detailed questions in the Thesis Guide.

How does the Aristolo Guide help with data evaluation for the bachelor’s or master’s thesis or dissertation?

The Thesis Guide or Dissertation Guide has instructions for data collection, data preparation, data analysis and interpretation. The guide can also teach you how to formulate questions and answer them with data to create your own experiment. We also have many templates for questionnaires and analyses of all kinds. Good luck writing your text! Silvio and the Aristolo Team PS: Check out the Thesis-ABC and the Thesis Guide for writing a bachelor or master thesis in 31 days.


Writing the Data Analysis Chapter(s): Results and Evidence

Posted by Rene Tetzner | Oct 19, 2021 | PhD Success | 0 |

4.4 Writing the Data Analysis Chapter(s): Results and Evidence

Unlike the introduction, literature review and methodology chapter(s), your results chapter(s) will need to be written for the first time as you draft your thesis even if you submitted a proposal, though this part of your thesis will certainly build upon the preceding chapters. You should have carefully recorded and collected the data (test results, participant responses, computer print outs, observations, transcriptions, notes of various kinds etc.) from your research as you conducted it, so now is the time to review, organise and analyse the data. If your study is quantitative in nature, make sure that you know what all the numbers mean and that you consider them in direct relation to the topic, problem or phenomenon you are investigating, and especially in relation to your research questions and hypotheses. You may find that you require the services of a statistician to help make sense of the data, in which case, obtaining that help sooner rather than later is advisable, because you need to understand your results thoroughly before you can write about them. If, on the other hand, your study is qualitative, you will need to read through the data you have collected several times to become familiar with them both as a whole and in detail so that you can establish important themes, patterns and categories. Remember that ‘qualitative analysis is a creative process and requires thoughtful judgments about what is significant and meaningful in the data’ (Roberts, 2010, p.174; see also Miles & Huberman, 1994) – judgements that often need to be made before the findings can be effectively analysed and presented. If you are combining methodologies in your research, you will also need to consider relationships between the results obtained from the different methods, integrating all the data you have obtained and discovering how the results of one approach support or correlate with the results of another. 
Ideally, you will have taken careful notes recording your initial thoughts and analyses about the sources you consulted and the results and evidence provided by particular methods and instruments as you put them into practice (as suggested in Sections 2.1.2 and 2.1.4), as these will prove helpful while you consider how best to present your results in your thesis.

Although the ways in which to present and organise the results of doctoral research differ markedly depending on the nature of the study and its findings, as well as on author and committee preferences and university and department guidelines, there are several basic principles that apply to virtually all theses. First and foremost is the need to present the results of your research both clearly and concisely, and in as objective and factual a manner as possible. There will be time and space to elaborate and interpret your results and speculate on their significance and implications in the final discussion chapter(s) of your thesis, but, generally speaking, such reflection on the meaning of the results should be entirely separate from the factual report of your research findings. There are exceptions, of course, and some candidates, supervisors and departments may prefer the factual presentation and interpretive discussion of results to be blended, just as some thesis topics may demand such treatment, but this is rare and best avoided unless there are persuasive reasons to avoid separating the facts from your thoughts about them. If you do find that you need to blend facts and interpretation in reporting your results, make sure that your language leaves no doubt about the line between the two: words such as ‘seems,’ ‘appears,’ ‘may,’ ‘might,’ ‘probably’ and the like will effectively distinguish analytical speculation from more factual reporting (see also Section 4.5).

You need not dedicate much space in this part of the thesis to the methods you used to arrive at your results because these have already been described in your methodology chapter(s), but they can certainly be revisited briefly to clarify or lend structure to your report. Results are most often presented in a straightforward narrative form which is often supplemented by tables and perhaps by figures such as graphs, charts and maps. An effective approach is to decide immediately which information would be best included in tables and figures, and then to prepare those tables and figures before you begin writing the text for the chapter (see Section 4.4.1 on designing effective tables and figures). Arranging your data into the visually immediate formats provided by tables and figures can, for one, produce interesting surprises by enabling you to see trends and details that you may not have noticed previously, and writing the report of your results will prove easier when you have the tables and figures to work with just as your readers ultimately will. In addition, while the text of the results chapter(s) should certainly highlight the most notable data included in tables and figures, it is essential not to repeat information unnecessarily, so writing with the tables and figures already constructed will help you keep repetition to a minimum. Finally, writing about the tables and figures you create will help you test their clarity and effectiveness for your readers, and you can make any necessary adjustments to the tables and figures as you work. Be sure to refer to each table and figure by number in your text and to make it absolutely clear what you want your readers to see or understand in the table or figure (e.g., ‘see Table 1 for the scores’ and ‘Figure 2 shows this relationship’).

Beyond combining textual narration with the data presented in tables and figures, you will need to organise your report of the results in a manner best suited to the material. You may choose to arrange the presentation of your results chronologically or in a hierarchical order that represents their importance; you might subdivide your results into sections (or separate chapters if there is a great deal of information to accommodate) focussing on the findings of different kinds of methodology (quantitative versus qualitative, for instance) or of different tests, trials, surveys, reviews, case studies and so on; or you may want to create sections (or chapters) focussing on specific themes, patterns or categories or on your research questions and/or hypotheses. The last approach allows you to cluster results that relate to a particular question or hypothesis into a single section and can be particularly useful because it provides cohesion for the thesis as a whole and forces you to focus closely on the issues central to the topic, problem or phenomenon you are investigating. You will, for instance, be able to refer back to the questions and hypotheses presented in your introduction (see Section 3.1), to answer the questions and confirm or dismiss the hypotheses and to anticipate in relation to those questions and hypotheses the discussion and interpretation of your findings that will appear in the next part of the thesis (see Section 4.5). Less effective is an approach that organises the presentation of results according to the items of a survey or questionnaire, because these lend the structure of the instrument used to the results instead of connecting those results directly to the aims, themes and argument of your thesis, but such an organisation can certainly be an important early step in your analysis of the findings and might even be valid for the final thesis if, for instance, your work focuses on developing the instrument involved.

The results generated by doctoral research are unique, and this book cannot hope to outline all the possible approaches for presenting the data and analyses that constitute research results, but it is essential that you devote considerable thought and special care to the way in which you structure the report of your results (Section 6.1 on headings may prove helpful). Whatever structure you choose should accurately reflect the nature of your results and highlight their most important and interesting trends, and it should also effectively allow you (in the next part of the thesis) to discuss and speculate upon your findings in ways that will test the premises of your study, work well in the overall argument of your thesis and lead to significant implications for your research. Regardless of how you organise the main body of your results chapter(s), however, you should include a final paragraph (or more than one paragraph if necessary) that briefly summarises and explains the key results and also guides the reader on to the discussion and interpretation of those results in the following chapter(s).

Why PhD Success?

To Graduate Successfully

This article is part of a book called "PhD Success", which focuses on the writing process of a PhD thesis. Its aim is to provide sound practices and principles for reporting and formatting in text the methods, results and discussion of even the most innovative and unique research in ways that are clear, correct, professional and persuasive.

The assumption of the book is that the doctoral candidate reading it is both eager to write and more than capable of doing so, but nonetheless requires information and guidance on exactly what he or she should be writing and how best to approach the task. The basic components of a doctoral thesis are outlined and described, as are the elements of complete and accurate scholarly references, and detailed descriptions of writing practices are clarified through the use of numerous examples.

PhD Success provides guidance for students familiar with English and the procedures of English universities, but it also acknowledges that many theses in the English language are now written by candidates whose first language is not English, so it carefully explains the scholarly styles, conventions and standards expected of a successful doctoral thesis in the English language.

Individual chapters of this book address reflective and critical writing early in the thesis process; working successfully with thesis supervisors and benefiting from commentary and criticism; drafting and revising effective thesis chapters and developing an academic or scientific argument; writing and formatting a thesis in clear and correct scholarly English; citing, quoting and documenting sources thoroughly and accurately; and preparing for and excelling in thesis meetings and examinations.

Completing a doctoral thesis successfully requires long and penetrating thought, intellectual rigour and creativity, original research and sound methods (whether established or innovative), precision in recording detail and a wide-ranging thoroughness, as much perseverance and mental toughness as insight and brilliance, and, no matter how many helpful writing guides are consulted, a great deal of hard work over a significant period of time. Writing a thesis can be an enjoyable as well as a challenging experience, however, and even if it is not always so, the personal and professional rewards of achieving such an enormous goal are considerable, as all doctoral candidates no doubt realise, and will last a great deal longer than any problems that may be encountered during the process.


CUNY Academic Works


Data Analysis & Visualization Master’s Theses and Capstone Projects

Dissertations/Theses/Capstones from 2024

The Charge Forward: An Assessment of Electric Vehicle Charging Infrastructure in New York City, Christopher S. Cali

Visualizing a Life, Uprooted: An Interactive, Web-Map and Scroll-Driven Exploration of the Oral History of my Great-Grandfather – from Ottoman Cilicia to Lebanon and Beyond, Alyssa Campbell

Examining the Health Risks of Particulate Matter 2.5 in New York City: How it Affects Marginalized Groups and the Steps Needed to Reduce Air Pollution, Freddy Castro

Clustering of Patients with Heart Disease, Mukadder Cinar

Modeling of COVID-19 Clinical Outcomes in Mexico: An Analysis of Demographic, Clinical, and Chronic Disease Factors, Livia Clarete

Invisible Hand of Socioeconomic Factors in Rising Trend of Maternal Mortality Rates in the U.S., Disha Kanada

Multi-Perspective Analysis for Derivative Financial Product Prediction with Stacked Recurrent Neural Networks, Natural Language Processing and Large Language Model, Ethan Lo

What Does One Billion Dollars Look Like?: Visualizing Extreme Wealth, William Mahoney Luckman

Making Sense of Making Parole in New York, Alexandra McGlinchy

Employment Outcomes in Higher Education, Yunxia Wei

Dissertations/Theses/Capstones from 2023

Phantom Shootings, Allan Ambris

Naming Venus: An Exploration of Goddesses, Heroines, and Famous Women, Kavya Beheraj

Social Impacts of Robotics on the Labor and Employment Market, Kelvin Espinal

Fighting the Invisibility of Domestic Violence, Yesenny Fernandez

Navigating Through World’s Military Spending Data with Scroll-Event Driven Visualization, Hong Beom Hur

Evocative Visualization of Void and Fluidity, Tomiko Karino

Analyzing Relationships with Machine Learning, Oscar Ko

Analyzing ‘Fight the Power’ Part 1: Music and Longevity Across Evolving Marketing Eras, Shokolatte Tachikawa

Stand-up Comedy Visualized, Berna Yenidogan

Dissertations/Theses/Capstones from 2022

El Ritmo del Westside: Exploring the Musical Landscape of San Antonio’s Historic Westside, Valeria Alderete

A Comparison of Machine Learning Techniques for Validating Students’ Proficiency in Mathematics, Alexander Avdeev

A Machine Learning Approach to Predicting the Onset of Type II Diabetes in a Sample of Pima Indian Women, Meriem Benarbia

Disrepair, Displacement and Distress: Finding Housing Stories Through Data Visualizations, Jennifer Cheng

Blockchain: Key Principles, Nadezda Chikurova

Data for Power: A Visual Tool for Organizing Unions, Shay Culpepper

Happiness From a Different Perspective, Suparna Das

Happiness and Policy Implications: A Sociological View, Sarah M. Kahl

Heating Fire Incidents in New York City, Merissa K. Lissade

NYC vs. Covid-19: The Human and Financial Resources Deployed to Fight the Most Expensive Health Emergency in History in NYC during the Year 2020, Elmer A. Maldonado Ramirez

Slices of the Big Apple: A Visual Explanation and Analysis of the New York City Budget, Joanne Ramadani

The Value of NFTs, Angelina Tham

Air Pollution, Climate Change, and Our Health, Kathia Vargas Feliz

Peru's Fishmeal Industry: Its Societal and Environmental Impact, Angel Vizurraga

Why, New York City? Gauging the Quality of Life Through the Thoughts of Tweeters, Sheryl Williams

Dissertations/Theses/Capstones from 2021

Data Analysis and Visualization to Dismantle Gender Discrimination in the Field of Technology, Quinn Bolewicki

Remaking Cinema: Black Hollywood Films, Filmmakers, and Finances, Kiana A. Carrington

Detecting Stance on Covid-19 Vaccine in a Polarized Media, Rodica Ceslov

Dota 2 Hero Selection Analysis, Zhan Gong

An Analysis of Machine Learning Techniques for Economic Recession Prediction, Sheridan Kamal

Black Women in Romance, Vianny C. Lugo Aracena

The Public Innovations Explorer: A Geo-Spatial & Linked-Data Visualization Platform For Publicly Funded Innovation Research In The United States, Seth Schimmel

Making Space for Unquantifiable Data: Hand-drawn Data Visualization, Eva Sibinga

Who Pays? New York State Political Donor Matching with Machine Learning, Annalisa Wilde



- Data Descriptor

- Open access

- Published: 03 May 2024

A dataset for measuring the impact of research data and their curation

- Libby Hemphill (ORCID: orcid.org/0000-0002-3793-7281) 1,2

- Andrea Thomer 3

- Sara Lafia 1

- Lizhou Fan 2

- David Bleckley (ORCID: orcid.org/0000-0001-7715-4348) 1

- Elizabeth Moss 1

Scientific Data volume 11, Article number: 442 (2024)


- Research data

- Social sciences

Science funders, publishers, and data archives make decisions about how to responsibly allocate resources to maximize the reuse potential of research data. This paper introduces a dataset developed to measure the impact of archival and data curation decisions on data reuse. The dataset describes 10,605 social science research datasets, their curation histories, and reuse contexts in 94,755 publications that cover 59 years from 1963 to 2022. The dataset was constructed from study-level metadata, citing publications, and curation records available through the Inter-university Consortium for Political and Social Research (ICPSR) at the University of Michigan. The dataset includes information about study-level attributes (e.g., PIs, funders, subject terms); usage statistics (e.g., downloads, citations); archiving decisions (e.g., curation activities, data transformations); and bibliometric attributes (e.g., journals, authors) for citing publications. This dataset provides information on factors that contribute to long-term data reuse, which can inform the design of effective evidence-based recommendations to support high-impact research data curation decisions.


Background & Summary

Recent policy changes in funding agencies and academic journals have increased data sharing among researchers and between researchers and the public. Data sharing advances science and provides the transparency necessary for evaluating, replicating, and verifying results. However, many data-sharing policies do not explain what constitutes an appropriate dataset for archiving or how to determine the value of datasets to secondary users [1, 2, 3]. Questions about how to allocate data-sharing resources efficiently and responsibly have gone unanswered [4, 5, 6]. For instance, data-sharing policies recognize that not all data should be curated and preserved, but they do not articulate metrics or guidelines for determining what data are most worthy of investment.

Despite the potential for innovation and advancement that data sharing holds, the best strategies to prioritize datasets for preparation and archiving are often unclear. Some datasets are likely to have more downstream potential than others, and data curation policies and workflows should prioritize high-value data instead of being one-size-fits-all. Though prior research in library and information science has shown that the “analytic potential” of a dataset is key to its reuse value [7], work is needed to implement conceptual data reuse frameworks [8, 9, 10, 11, 12, 13, 14]. In addition, publishers and data archives need guidance to develop metrics and evaluation strategies to assess the impact of datasets.

Several existing resources have been compiled to study the relationship between the reuse of scholarly products, such as datasets (Table 1); however, none of these resources include explicit information on how curation processes are applied to data to increase their value, maximize their accessibility, and ensure their long-term preservation. The CCex (Curation Costs Exchange) provides models of curation services along with cost-related datasets shared by contributors but does not make explicit connections between them or include reuse information [15]. Analyses on platforms such as DataCite [16] have focused on metadata completeness and record usage, but have not included related curation-level information. Analyses of GenBank [17] and FigShare [18, 19] citation networks do not include curation information. Related studies of GitHub repository reuse [20] and Softcite software citation [21] reveal significant factors that impact the reuse of secondary research products but do not focus on research data. RD-Switchboard [22] and DSKG [23] are scholarly knowledge graphs linking research data to articles, patents, and grants, but largely omit social science research data and do not include curation-level factors. To our knowledge, other studies of curation work in organizations similar to ICPSR – such as GESIS [24], Dataverse [25], and DANS [26] – have not made their underlying data available for analysis.

This paper describes a dataset [27] compiled for the MICA project (Measuring the Impact of Curation Actions) led by investigators at ICPSR, a large social science data archive at the University of Michigan. The dataset was originally developed to study the impacts of data curation and archiving on data reuse. The MICA dataset has supported several previous publications investigating the intensity of data curation actions [28], the relationship between data curation actions and data reuse [29], and the structures of research communities in a data citation network [30]. Collectively, these studies help explain the return on various types of curatorial investments. The dataset that we introduce in this paper, which we refer to as the MICA dataset, has the potential to address research questions in the areas of science (e.g., knowledge production), library and information science (e.g., scholarly communication), and data archiving (e.g., reproducible workflows).

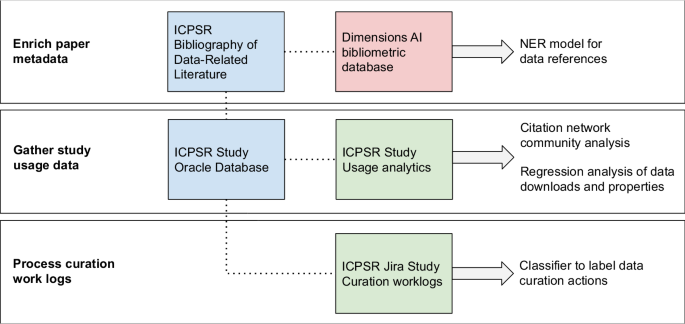

We constructed the MICA dataset [27] using records available at ICPSR, a large social science data archive at the University of Michigan. Dataset creation involved: collecting and enriching metadata for articles indexed in the ICPSR Bibliography of Data-related Literature against the Dimensions AI bibliometric database; gathering usage statistics for studies from ICPSR’s administrative database; processing data curation work logs from ICPSR’s project tracking platform, Jira; and linking data in social science studies and series to citing analysis papers (Fig. 1).

Fig. 1: Steps to prepare the MICA dataset for analysis. External sources are red, primary internal sources are blue, and internal linked sources are green.

Enrich paper metadata

The ICPSR Bibliography of Data-related Literature is a growing database of literature in which data from ICPSR studies have been used. Its creation was funded by the National Science Foundation (Award 9977984), and for the past 20 years it has been supported by ICPSR membership and multiple US federally-funded and foundation-funded topical archives at ICPSR. The Bibliography was originally launched in the year 2000 to aid in data discovery by providing a searchable database linking publications to the study data used in them. The Bibliography collects the universe of output based on the data shared in each study, which is made available through each ICPSR study’s webpage. The Bibliography contains both peer-reviewed and grey literature, which provides evidence for measuring the impact of research data. For an item to be included in the ICPSR Bibliography, it must contain an analysis of data archived by ICPSR or contain a discussion or critique of the data collection process, study design, or methodology [31]. The Bibliography is manually curated by a team of librarians and information specialists at ICPSR who enter and validate entries. Some publications are supplied to the Bibliography by data depositors, and some citations are submitted to the Bibliography by authors who abide by ICPSR’s terms of use requiring them to submit citations to works in which they analyzed data retrieved from ICPSR. Most of the Bibliography is populated by Bibliography team members, who create custom queries for ICPSR studies performed across numerous sources, including Google Scholar, ProQuest, SSRN, and others. Each record in the Bibliography is one publication that has used one or more ICPSR studies. The version we used was captured on 2021-11-16 and included 94,755 publications.

To expand the coverage of the ICPSR Bibliography, we searched exhaustively for all ICPSR study names, unique numbers assigned to ICPSR studies, and DOIs [32] using a full-text index available through the Dimensions AI database [33]. We accessed Dimensions through a license agreement with the University of Michigan. ICPSR Bibliography librarians and information specialists manually reviewed and validated new entries that matched one or more search criteria. We then used Dimensions to gather enriched metadata and full-text links for items in the Bibliography with DOIs. We matched 43% of the items in the Bibliography to enriched Dimensions metadata including abstracts, field of research codes, concepts, and authors’ institutional information; we also obtained links to full text for 16% of Bibliography items. Based on licensing agreements, we included Dimensions identifiers and links to full text so that users with valid publisher and database access can construct an enriched publication dataset.
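The search criteria described above (study names, study numbers, and DOIs) can be illustrated with a minimal Python sketch. This is not the authors' implementation: the regular expressions, the function name `find_study_mentions`, and the sample titles are assumptions made for illustration, and the DOI pattern assumes ICPSR DOIs of the form `10.3886/ICPSR<number>.v<version>`. Candidate matches found this way would still need the manual review the paragraph describes.

```python
import re

# Assumed patterns (not taken from the paper): ICPSR DOIs such as
# 10.3886/ICPSR02766.v1, and textual mentions such as "ICPSR 2766".
DOI_PATTERN = re.compile(r"10\.3886/ICPSR(\d+)(?:\.v\d+)?", re.IGNORECASE)
NUMBER_PATTERN = re.compile(
    r"\bICPSR\s*(?:study\s*)?(?:no\.?\s*|#\s*)?(\d{3,6})\b", re.IGNORECASE
)


def find_study_mentions(text, study_names):
    """Return the set of study numbers mentioned by DOI, number, or name.

    study_names maps a study number (int) to its title (str).
    """
    found = set()
    # DOI and study-number mentions both capture the numeric identifier.
    for pattern in (DOI_PATTERN, NUMBER_PATTERN):
        for match in pattern.finditer(text):
            found.add(int(match.group(1)))
    # Study-name mentions: a simple case-insensitive substring check.
    lowered = text.lower()
    for number, name in study_names.items():
        if name.lower() in lowered:
            found.add(number)
    return found


# Hypothetical study titles, invented for this example.
EXAMPLE_NAMES = {1234: "Example Household Survey", 9999: "Unused Study"}
EXAMPLE_TEXT = (
    "We analysed the Example Household Survey "
    "(https://doi.org/10.3886/ICPSR02766.v1) and ICPSR 4321."
)
mentions = find_study_mentions(EXAMPLE_TEXT, EXAMPLE_NAMES)
```

Substring matching on titles is deliberately naive here; short or generic study names would produce false positives, which is one reason a manual validation step is useful.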

Gather study usage data

ICPSR maintains a relational administrative database, DBInfo, that organizes study-level metadata and information on data reuse across separate tables. Studies at ICPSR consist of one or more files collected at a single time or for a single purpose; studies in which the same variables are observed over time are grouped into series. Each study at ICPSR is assigned a DOI, and its metadata are stored in DBInfo. Study metadata follows the Data Documentation Initiative (DDI) Codebook 2.5 standard. DDI elements included in our dataset are title, ICPSR study identification number, DOI, authoring entities, description (abstract), funding agencies, subject terms assigned to the study during curation, and geographic coverage. We also created variables based on DDI elements: total variable count, the presence of survey question text in the metadata, the number of author entities, and whether an author entity was an institution. We gathered metadata for ICPSR’s 10,605 unrestricted public-use studies available as of 2021-11-16 ( https://www.icpsr.umich.edu/web/pages/membership/or/metadata/oai.html ).

To link study usage data with study-level metadata records, we joined study metadata from DBInfo with study usage information, which included total study downloads (data and documentation), individual data file downloads, and cumulative citations from the ICPSR Bibliography. We also gathered descriptive metadata for each study and its variables, which allowed us to summarize and append recoded fields onto the study-level metadata such as curation level, number and type of principal investigators, total variable count, and binary variables indicating whether the study data were made available for online analysis, whether survey question text was made searchable online, and whether the study variables were indexed for search. These characteristics describe aspects of the discoverability of the data to compare with other characteristics of the study. We used the study and series numbers included in the ICPSR Bibliography as unique identifiers to link papers to metadata and analyze the community structure of dataset co-citations in the ICPSR Bibliography [32].

Process curation work logs

Researchers deposit data at ICPSR for curation and long-term preservation. Between 2016 and 2020, more than 3,000 research studies were deposited with ICPSR. Since 2017, ICPSR has organized curation work into a central unit that provides several levels of curation, which differ in the intensity and complexity of the data enhancement they provide. While the levels of curation are standardized as to effort (level one = least effort, level three = most effort), the specific curatorial actions undertaken for each dataset vary. The specific curation actions are captured in Jira, a work tracking program, which data curators at ICPSR use to collaborate and communicate their progress through tickets. We obtained access to a corpus of 669 completed Jira tickets corresponding to the curation of 566 unique studies between February 2017 and December 2019 [28].

To process the tickets, we focused only on their work log portions, which contained free text descriptions of work that data curators had performed on a deposited study, along with the curators’ identifiers, and timestamps. To protect the confidentiality of the data curators and the processing steps they performed, we collaborated with ICPSR’s curation unit to propose a classification scheme, which we used to train a Naive Bayes classifier and label curation actions in each work log sentence. The eight curation action labels we proposed [28] were: (1) initial review and planning, (2) data transformation, (3) metadata, (4) documentation, (5) quality checks, (6) communication, (7) other, and (8) non-curation work. We note that these categories of curation work are very specific to the curatorial processes and types of data stored at ICPSR, and may not match the curation activities at other repositories. After applying the classifier to the work log sentences, we obtained summary-level curation actions for a subset of all ICPSR studies (5%), along with the total number of hours spent on data curation for each study, and the proportion of time associated with each action during curation.
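To make the sentence-labelling step concrete, the following is a minimal sketch of a multinomial Naive Bayes text classifier with add-one smoothing, written with the Python standard library only. It is an illustration under stated assumptions, not the classifier the authors trained: the tokenizer, the class name `NaiveBayes`, and the six training sentences are invented for this example, and only three of the eight action labels are used.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(sentence):
    """Lowercase a sentence and split it into alphabetic tokens."""
    return re.findall(r"[a-z]+", sentence.lower())


class NaiveBayes:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, sentences, labels):
        self.label_counts = Counter(labels)
        self.word_counts = defaultdict(Counter)
        self.vocab = set()
        for sentence, label in zip(sentences, labels):
            tokens = tokenize(sentence)
            self.word_counts[label].update(tokens)
            self.vocab.update(tokens)
        return self

    def predict(self, sentence):
        total = sum(self.label_counts.values())
        tokens = [t for t in tokenize(sentence) if t in self.vocab]
        best_label, best_score = None, -math.inf
        for label, count in self.label_counts.items():
            # Log prior plus the sum of smoothed log likelihoods.
            score = math.log(count / total)
            denom = sum(self.word_counts[label].values()) + len(self.vocab)
            for token in tokens:
                score += math.log((self.word_counts[label][token] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Invented work log sentences; the labels are three of the eight listed above.
TRAINING = [
    ("Recoded missing values in the income variable", "data transformation"),
    ("Converted the SPSS file to Stata format", "data transformation"),
    ("Updated the study description and subject terms", "metadata"),
    ("Added funding agency to the study metadata record", "metadata"),
    ("Emailed the depositor about undocumented codes", "communication"),
    ("Sent a follow-up message to the PI", "communication"),
]
model = NaiveBayes().fit(
    [sentence for sentence, _ in TRAINING],
    [label for _, label in TRAINING],
)
```

Calling `model.predict("Recoded the age variable")` on this toy model returns one label per sentence; counting those labels per study gives the kind of summary-level curation actions described above.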

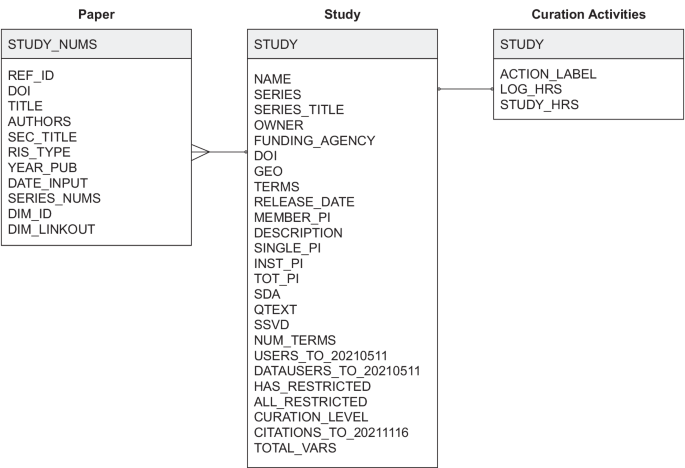

Data Records

The MICA dataset [27] connects records for each of ICPSR’s archived research studies to the research publications that use them and related curation activities available for a subset of studies (Fig. 2). Each of the three tables published in the dataset is available as a study archived at ICPSR. The data tables are distributed as statistical files available for use in SAS, SPSS, Stata, and R as well as delimited and ASCII text files. The dataset is organized around studies and papers as primary entities. The studies table lists ICPSR studies, their metadata attributes, and usage information; the papers table was constructed using the ICPSR Bibliography and Dimensions database; and the curation logs table summarizes the data curation steps performed on a subset of ICPSR studies.

Studies (“ICPSR_STUDIES”): 10,605 social science research datasets available through ICPSR up to 2021-11-16 with variables for ICPSR study number, digital object identifier, study name, series number, series title, authoring entities, full-text description, release date, funding agency, geographic coverage, subject terms, topical archive, curation level, single principal investigator (PI), institutional PI, the total number of PIs, total variables in data files, question text availability, study variable indexing, level of restriction, total unique users downloading study data files and codebooks, total unique users downloading data only, and total unique papers citing data through November 2021. Studies map to the papers and curation logs table through ICPSR study numbers as “STUDY”. However, not every study in this table will have records in the papers and curation logs tables.

Papers (“ICPSR_PAPERS”): 94,755 publications collected from 2000-08-11 to 2021-11-16 in the ICPSR Bibliography and enriched with metadata from the Dimensions database with variables for paper number, identifier, title, authors, publication venue, item type, publication date, input date, ICPSR series numbers used in the paper, ICPSR study numbers used in the paper, the Dimension identifier, and the Dimensions link to the publication’s full text. Papers map to the studies table through ICPSR study numbers in the “STUDY_NUMS” field. Each record represents a single publication, and because a researcher can use multiple datasets when creating a publication, each record may list multiple studies or series.

Curation logs (“ICPSR_CURATION_LOGS”): 649 curation logs for 563 ICPSR studies (although most studies in the subset had one curation log, some studies were associated with multiple logs, with a maximum of 10) curated between February 2017 and December 2019 with variables for study number, action labels assigned to work description sentences using a classifier trained on ICPSR curation logs, hours of work associated with a single log entry, and total hours of work logged for the curation ticket. Curation logs map to the study and paper tables through ICPSR study numbers as “STUDY”. Each record represents a single logged action, and future users may wish to aggregate actions to the study level before joining tables.
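Because each record is a single logged action, a study-level summary requires summing hours per study and per action before joining. The sketch below shows one way to do that with Python's standard library. Only "STUDY" is a column name taken from the text; "ACTION" and "HOURS" are hypothetical names, and the rows are made up for illustration.

```python
from collections import defaultdict

# Made-up records; ACTION and HOURS are hypothetical column names (assumption).
log_rows = [
    {"STUDY": "1001", "ACTION": "metadata", "HOURS": 2.0},
    {"STUDY": "1001", "ACTION": "quality checks", "HOURS": 6.0},
    {"STUDY": "1001", "ACTION": "metadata", "HOURS": 2.0},
    {"STUDY": "1002", "ACTION": "documentation", "HOURS": 3.0},
]


def summarize_by_study(rows):
    """Return {study: {"total_hours": float, "proportions": {action: share}}}."""
    hours = defaultdict(lambda: defaultdict(float))
    for row in rows:
        hours[row["STUDY"]][row["ACTION"]] += float(row["HOURS"])
    summary = {}
    for study, by_action in hours.items():
        total = sum(by_action.values())
        # Guard against division by zero when a study has no logged hours.
        shares = {a: h / total for a, h in by_action.items()} if total else {}
        summary[study] = {"total_hours": total, "proportions": shares}
    return summary


study_summary = summarize_by_study(log_rows)
```

The resulting dictionary has one entry per study, which matches the one-row-per-study grain of the studies table.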

Fig. 2: Entity-relation diagram.

Technical Validation

We report on the reliability of the dataset’s metadata in the following subsections. To support future reuse of the dataset, curation services provided through ICPSR improved data quality by checking for missing values, adding variable labels, and creating a codebook.

All 10,605 studies available through ICPSR have a DOI and a full-text description summarizing what the study is about, the purpose of the study, the main topics covered, and the questions the PIs attempted to answer when they conducted the study. Personal names (i.e., principal investigators) and organizational names (i.e., funding agencies) are standardized against an authority list maintained by ICPSR; geographic names and subject terms are also standardized and hierarchically indexed in the ICPSR Thesaurus 34 . Many of ICPSR’s studies (63%) are in a series and are distributed through the ICPSR General Archive (56%), a non-topical archive that accepts any social or behavioral science data. While study data have been available through ICPSR since 1962, the earliest digital release date recorded for a study was 1984-03-18, when ICPSR’s database was first employed, and the most recent date is 2021-10-28 when the dataset was collected.

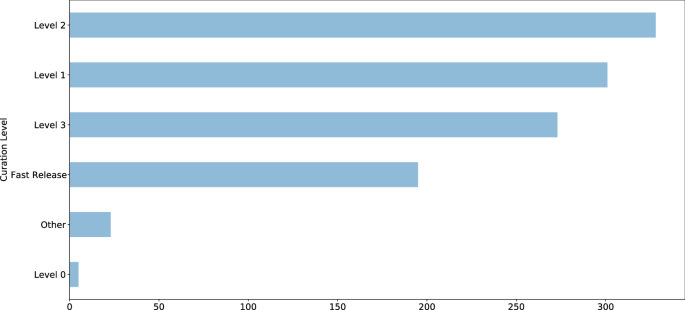

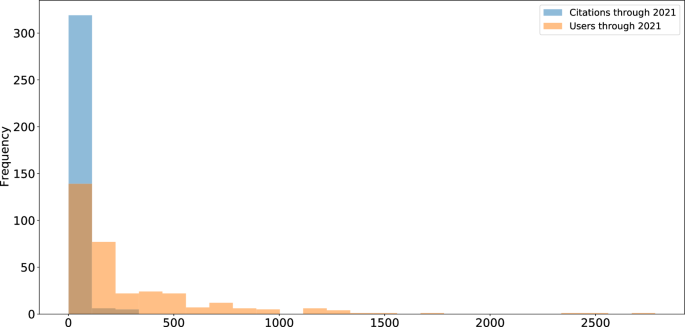

Curation level information was recorded starting in 2017 and is available for 1,125 studies (11%); approximately 80% of studies with assigned curation levels received curation services, equally distributed between Levels 1 (least intensive), 2 (moderately intensive), and 3 (most intensive) (Fig. 3). Detailed descriptions of ICPSR’s curation levels are available online 35. Additional metadata are available for a subset of 421 studies (4%), including whether the study has a single PI or an institutional PI, the total number of PIs involved, the total number of variables recorded, and whether the study is available for online analysis, has searchable question text, has variables that are indexed for search, contains one or more restricted files, or is completely restricted. We provided additional metadata for this subset of ICPSR studies because they were released within the past five years and detailed curation and usage information were available for them. Usage statistics, including total downloads and data file downloads, are available for this subset of studies as well; citation statistics are available for 8,030 studies (76%). Most ICPSR studies have fewer than 500 users (as indicated by total downloads) or citations (Fig. 4).

Fig. 3: ICPSR study curation levels.

Fig. 4: ICPSR study usage.

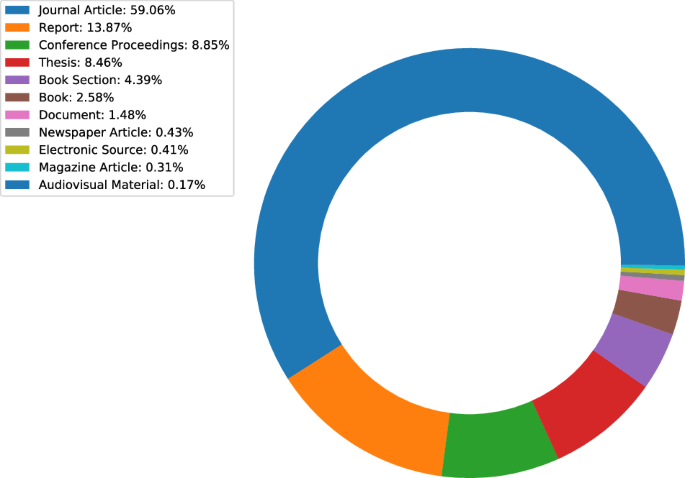

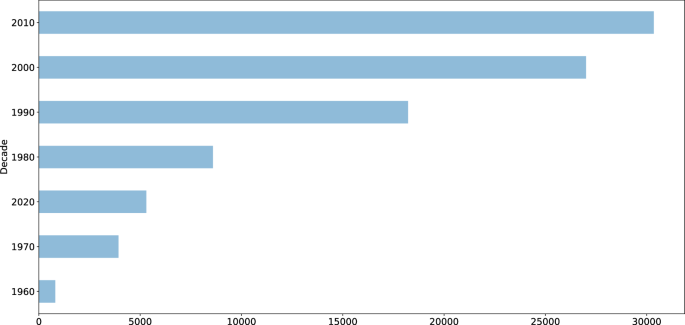

A subset of 43,102 publications (45%) available in the ICPSR Bibliography had a DOI. Author metadata were entered as free text, meaning that variations may exist and require additional normalization and pre-processing prior to analysis. While author information is standardized for each publication, individual names may appear in different sort orders (e.g., “Earls, Felton J.” and “Stephen W. Raudenbush”). Most of the items in the ICPSR Bibliography as of 2021-11-16 were journal articles (59%), reports (14%), conference presentations (9%), or theses (8%) (Fig. 5 ). The number of publications collected in the Bibliography has increased each decade since the inception of ICPSR in 1962 (Fig. 6 ). Most ICPSR studies (76%) have one or more citations in a publication.
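The mixed sort orders can be reconciled before analysis. The sketch below assumes that a comma marks a "Last, First" form and that the final token of a name without a comma is the family name. Both are simplifying assumptions that will fail for some names (for example, multi-word surnames or suffixes such as "Jr."), so results should be spot-checked.

```python
def normalize_author(name):
    """Return a name in 'Last, First Middle' order with collapsed whitespace."""
    name = " ".join(name.split())
    if "," in name:
        # Already in 'Last, First' order; only tidy the spacing.
        last, _, given = name.partition(",")
        return f"{last.strip()}, {given.strip()}"
    parts = name.split(" ")
    if len(parts) == 1:
        return name
    # Assumption: the final token is the family name.
    return f"{parts[-1]}, {' '.join(parts[:-1])}"


normalized = [
    normalize_author(n) for n in ["Earls, Felton J.", "Stephen W. Raudenbush"]
]
# normalized == ["Earls, Felton J.", "Raudenbush, Stephen W."]
```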

Fig. 5: ICPSR Bibliography citation types.

Fig. 6: ICPSR citations by decade.

Usage Notes

The dataset consists of three tables that can be joined using the “STUDY” key as shown in Fig. 2. The “ICPSR_PAPERS” table contains one row per paper with one or more cited studies in the “STUDY_NUMS” column. We manipulated and analyzed the tables as CSV files with the Pandas library 36 in Python and the Tidyverse packages 37 in R.
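One way to prepare the join is to expand each paper row into one row per cited study. The sketch below uses only the Python standard library rather than Pandas. Only "STUDY" and "STUDY_NUMS" are column names taken from the text; "PAPER_ID", "NAME", the sample rows, and the delimiter inside "STUDY_NUMS" (the pattern accepts semicolons, commas, or whitespace) are assumptions, so the codebook should be checked for the actual layout.

```python
import re

# Made-up rows; PAPER_ID and NAME are hypothetical column names (assumption).
studies = [
    {"STUDY": "1001", "NAME": "Example Survey A"},
    {"STUDY": "1002", "NAME": "Example Survey B"},
]
papers = [
    {"PAPER_ID": "p1", "STUDY_NUMS": "1001; 1002"},
    {"PAPER_ID": "p2", "STUDY_NUMS": "1002"},
    {"PAPER_ID": "p3", "STUDY_NUMS": "9999"},  # study absent from the table
]


def paper_study_pairs(paper_rows, delimiter_pattern=r"[;,\s]+"):
    """Yield one (paper id, study number) pair per study in STUDY_NUMS."""
    for row in paper_rows:
        for study in re.split(delimiter_pattern, row["STUDY_NUMS"].strip()):
            if study:
                yield row["PAPER_ID"], study


def join_on_study(paper_rows, study_rows):
    """Inner-join the expanded paper rows to the studies table on STUDY."""
    by_study = {row["STUDY"]: row for row in study_rows}
    return [
        {"PAPER_ID": paper_id, **by_study[study]}
        for paper_id, study in paper_study_pairs(paper_rows)
        if study in by_study
    ]


joined = join_on_study(papers, studies)
```

An inner join silently drops papers whose study numbers are not in the studies table, so it is worth counting unmatched pairs before relying on the joined result.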

The present MICA dataset can be used independently to study the relationship between curation decisions and data reuse. Evidence of reuse for specific studies is available in several forms: usage information, including downloads and citation counts; and citation contexts within papers that cite data. Analysis may also be performed on the citation network formed between datasets and papers that use them. Finally, curation actions can be associated with properties of studies and usage histories.
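As an illustration of the network view, papers and datasets form a bipartite graph in which two studies are related when the same paper cites both. The sketch below derives per-study citation counts and co-citation counts from hypothetical (paper, study) pairs. It is one simple starting point, not the analysis used in the work cited in this paper.

```python
from collections import Counter
from itertools import combinations

# Hypothetical (paper, study) citation pairs (assumption).
pairs = [
    ("p1", "1001"), ("p1", "1002"),
    ("p2", "1002"),
    ("p3", "1001"), ("p3", "1002"),
]


def citation_counts(edges):
    """Count the distinct papers citing each study."""
    return Counter(study for _, study in set(edges))


def co_citations(edges):
    """Count how often two studies are cited by the same paper."""
    studies_by_paper = {}
    for paper, study in edges:
        studies_by_paper.setdefault(paper, set()).add(study)
    counts = Counter()
    for cited_studies in studies_by_paper.values():
        # Sorting gives each unordered pair a single canonical key.
        counts.update(combinations(sorted(cited_studies), 2))
    return counts


cited = citation_counts(pairs)
together = co_citations(pairs)
```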

This dataset has several limitations of which users should be aware. First, Jira tickets can only be used to represent the intensiveness of curation for activities undertaken since 2017, when ICPSR started using both Curation Levels and Jira. Studies published before 2017 were all curated, but documentation of the extent of that curation was not standardized and therefore could not be included in these analyses. Second, the measure of publications relies upon the authors’ clarity of data citation and the ICPSR Bibliography staff’s ability to discover citations with varying formality and clarity. Thus, there is always a chance that some secondary-data-citing publications have been left out of the Bibliography. Finally, there may be some cases in which a paper in the ICPSR Bibliography did not actually obtain data from ICPSR. For example, PIs have often written about or even distributed their data prior to their archival at ICPSR. Those publications would not have cited ICPSR, but they are still collected in the Bibliography as being directly related to the data that were eventually deposited at ICPSR.

In summary, the MICA dataset contains relationships between two main types of entities – papers and studies – which can be mined. The tables in the MICA dataset have supported network analysis (community structure and clique detection) 30 ; natural language processing (NER for dataset reference detection) 32 ; visualizing citation networks (to search for datasets) 38 ; and regression analysis (on curation decisions and data downloads) 29 . The data are currently being used to develop research metrics and recommendation systems for research data. Given that DOIs are provided for ICPSR studies and articles in the ICPSR Bibliography, the MICA dataset can also be used with other bibliometric databases, including DataCite, Crossref, OpenAlex, and related indexes. Subscription-based services, such as Dimensions AI, are also compatible with the MICA dataset. In some cases, these services provide abstracts or full text for papers from which data citation contexts can be extracted for semantic content analysis.

Code availability

The code 27 used to produce the MICA project dataset is available on GitHub at https://github.com/ICPSR/mica-data-descriptor and through Zenodo with the identifier https://doi.org/10.5281/zenodo.8432666 . Data manipulation and pre-processing were performed in Python. Data curation for distribution was performed in SPSS.

References

He, L. & Han, Z. Do usage counts of scientific data make sense? An investigation of the Dryad repository. Library Hi Tech 35, 332–342 (2017).

Brickley, D., Burgess, M. & Noy, N. Google dataset search: Building a search engine for datasets in an open web ecosystem. In The World Wide Web Conference - WWW ’19, 1365–1375 (ACM Press, San Francisco, CA, USA, 2019).

Buneman, P., Dosso, D., Lissandrini, M. & Silvello, G. Data citation and the citation graph. Quantitative Science Studies 2, 1399–1422 (2022).

Chao, T. C. Disciplinary reach: Investigating the impact of dataset reuse in the earth sciences. Proceedings of the American Society for Information Science and Technology 48, 1–8 (2011).

Parr, C. et al. A discussion of value metrics for data repositories in earth and environmental sciences. Data Science Journal 18, 58 (2019).

Eschenfelder, K. R., Shankar, K. & Downey, G. The financial maintenance of social science data archives: Four case studies of long–term infrastructure work. J. Assoc. Inf. Sci. Technol. 73, 1723–1740 (2022).

Palmer, C. L., Weber, N. M. & Cragin, M. H. The analytic potential of scientific data: Understanding re-use value. Proceedings of the American Society for Information Science and Technology 48, 1–10 (2011).

Zimmerman, A. S. New knowledge from old data: The role of standards in the sharing and reuse of ecological data. Sci. Technol. Human Values 33, 631–652 (2008).

Cragin, M. H., Palmer, C. L., Carlson, J. R. & Witt, M. Data sharing, small science and institutional repositories. Philosophical Transactions of the Royal Society A: Mathematical, Physical and Engineering Sciences 368, 4023–4038 (2010).

Fear, K. M. Measuring and Anticipating the Impact of Data Reuse. Ph.D. thesis, University of Michigan (2013).

Borgman, C. L., Van de Sompel, H., Scharnhorst, A., van den Berg, H. & Treloar, A. Who uses the digital data archive? An exploratory study of DANS. Proceedings of the Association for Information Science and Technology 52, 1–4 (2015).

Pasquetto, I. V., Borgman, C. L. & Wofford, M. F. Uses and reuses of scientific data: The data creators’ advantage. Harvard Data Science Review 1 (2019).

Gregory, K., Groth, P., Scharnhorst, A. & Wyatt, S. Lost or found? Discovering data needed for research. Harvard Data Science Review (2020).

York, J. Seeking equilibrium in data reuse: A study of knowledge satisficing. Ph.D. thesis, University of Michigan (2022).

Kilbride, W. & Norris, S. Collaborating to clarify the cost of curation. New Review of Information Networking 19, 44–48 (2014).

Robinson-Garcia, N., Mongeon, P., Jeng, W. & Costas, R. DataCite as a novel bibliometric source: Coverage, strengths and limitations. Journal of Informetrics 11, 841–854 (2017).

Qin, J., Hemsley, J. & Bratt, S. E. The structural shift and collaboration capacity in GenBank networks: A longitudinal study. Quantitative Science Studies 3, 174–193 (2022).

Acuna, D. E., Yi, Z., Liang, L. & Zhuang, H. Predicting the usage of scientific datasets based on article, author, institution, and journal bibliometrics. In Smits, M. (ed.) Information for a Better World: Shaping the Global Future. iConference 2022, 42–52 (Springer International Publishing, Cham, 2022).

Zeng, T., Wu, L., Bratt, S. & Acuna, D. E. Assigning credit to scientific datasets using article citation networks. Journal of Informetrics 14, 101013 (2020).

Koesten, L., Vougiouklis, P., Simperl, E. & Groth, P. Dataset reuse: Toward translating principles to practice. Patterns 1, 100136 (2020).

Du, C., Cohoon, J., Lopez, P. & Howison, J. Softcite dataset: A dataset of software mentions in biomedical and economic research publications. J. Assoc. Inf. Sci. Technol. 72, 870–884 (2021).

Aryani, A. et al. A research graph dataset for connecting research data repositories using RD-Switchboard. Sci Data 5, 180099 (2018).

Färber, M. & Lamprecht, D. The data set knowledge graph: Creating a linked open data source for data sets. Quantitative Science Studies 2, 1324–1355 (2021).

Perry, A. & Netscher, S. Measuring the time spent on data curation. Journal of Documentation 78, 282–304 (2022).