Want to create or adapt books like this? Learn more about how Pressbooks supports open publishing practices.

3.2 Quantitative Research Designs

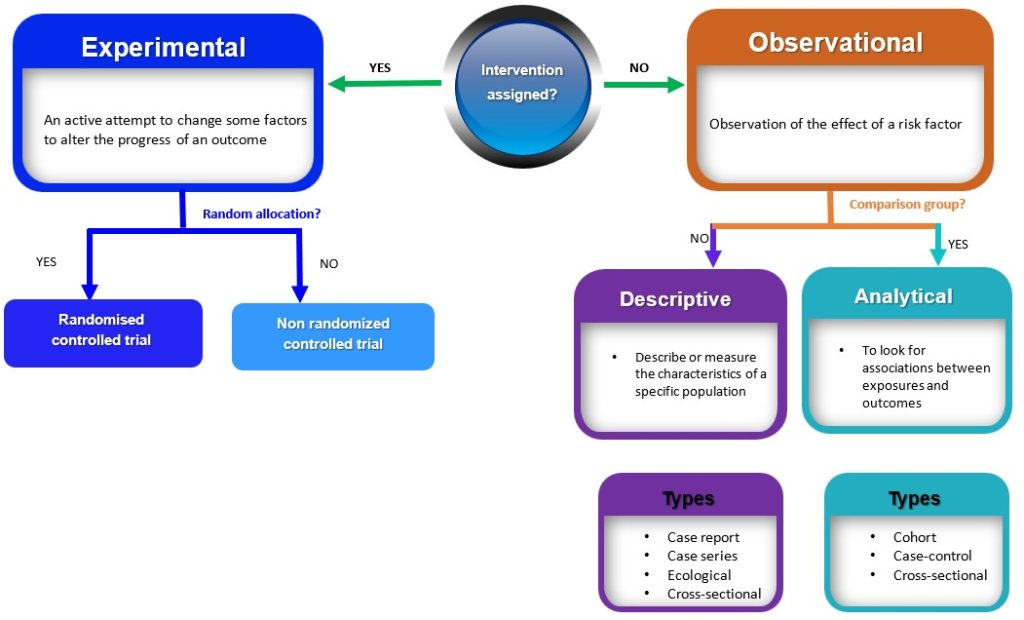

Quantitive research study designs can be broadly classified into two main groups (observational and experimental) depending on if an intervention is assigned. If an intervention is assigned, then an experimental study design will be considered; however, if no intervention is planned or assigned, then an observational study will be conducted. 3 These broad classes are further subdivided into specific study designs, as shown in Figure 3.1. In practice, quantitative studies usually begin simply as descriptive studies, which could subsequently be progressed to more complex analytic studies and then to experimental studies where appropriate.

Observational studies

Observational studies are research designs that involve observing and measuring the characteristics of a sample or population without intervening, altering or manipulating any variables (Figure 3.1). 3 Observational studies can be further subdivided into descriptive and analytic studies. 3

Descriptive observational studies

Descriptive studies are research designs that describe or measure the characteristics of a specific population or phenomenon. These characteristics include descriptions related to the phenomenon under investigation, the people involved, the place, and the time. 4 These study designs are typically non-experimental and do not involve manipulating variables; rather, they rely on the collection and analysis of numerical data to draw conclusions. Examples of descriptive studies include case reports, case series, ecological studies and cross-sectional (prevalence studies). 2 These are discussed below

- Case Reports and Case series

Case reports and case series are both types of descriptive studies in research. A case report is a detailed account of the medical history, diagnosis, treatment, and outcome of a single patient. 5 On the other hand, case series is a collection of cases with similar clinical features. 5 Case series are frequently used to explain the natural history of a disease, the clinical characteristics, and the health outcomes for a group of patients who underwent a certain treatment. Case series typically involve a larger number of patients than case reports. 5 Both case reports and case series are used to illustrate unusual or atypical features found in patients in practice. 5 In a typical, real-world clinical situation, they are both used to describe the clinical characteristics and outcomes of individual patients or a group of patients with a particular condition. These studies have the potential to generate new research questions and ideas. 5 However, there are drawbacks to both case reports and case series, such as the absence of control groups and the potential for bias. Yet, they can be useful sources of clinical data, particularly when researching uncommon or recently discovered illnesses. 5 An example of a case report is the study by van Tulleken, Tipton and Haper, 2018 which showed that open-water swimming was used as a treatment for major depressive disorder for a 24-year-old female patient. 6 Weekly open (cold) water swimming was trialled, leading to an immediate improvement in mood following each swim. A sustained and gradual reduction in symptoms of depression, and consequently a reduction in, and cessation of, medication was observed. 6 An example of a case series is the article by Chen et al , 2020 which described the epidemiology and clinical characteristics of COVID-19 infection among 12 confirmed cases in Jilin Province, China. 7

- Ecological studies

Ecological studies examine the relationship between exposure and outcome at the population level. Unlike other epidemiological studies focusing on individual-level data, ecological studies use aggregate data to investigate the relationship between exposure and outcome of interest. 8 In ecological studies, data on prevalence and the degree of exposure to a given risk factor within a population are typically collected and analysed to see if exposure and results are related. 8 Ecological studies shed light on the total burden of disease or health-related events within a population and assist in the identification of potential risk factors that might increase the incidence of disease/event. However, these studies cannot prove causation or take into account characteristics at the individual level that can influence the connection between exposure and result. This implies that ecological findings cannot be interpreted and extrapolated to individuals. 9 For example, the association between urbanisation and Type 2 Diabetes was investigated at the country level, and the role of intermediate variables (physical inactivity, sugar consumption and obesity) was examined. One of the key findings of the study showed that in high-income countries (HIC), physical inactivity and obesity were the main determinants of T2D prevalence. 10 However, it will be wrong to infer that people who are physically inactive and obese in HIC have a higher risk of T2D.

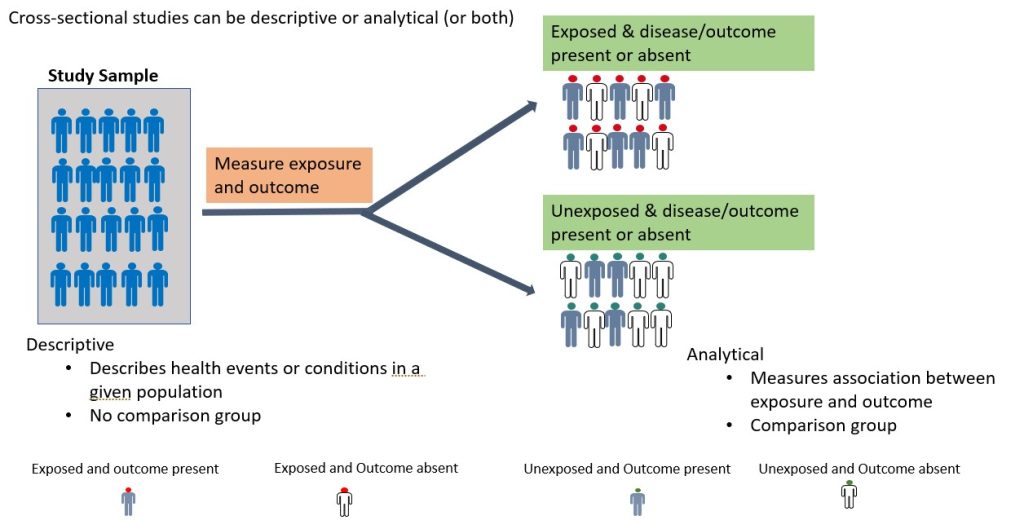

- Cross-sectional Descriptive (Prevalence) studies

A cross-sectional study is an observational study in which the researcher collects data on a group of participants at a single point in time. 11 The goal is to describe the characteristics of the group or to explore relationships between variables. Cross-sectional studies can be either descriptive or analytical (Figure 3.2). 11 Descriptive cross-sectional studies are also known as prevalence studies measuring the proportions of health events or conditions in a given population. 11 Although analytical cross-sectional studies also measure prevalence, however, the relationship between the outcomes and other variables, such as risk factors, is also assessed. 12 The main strength of cross-sectional studies is that they are quick and cost-effective. However, they cannot establish causality and may be vulnerable to bias and confounding ( these concepts will be discussed further later in this chapter under “avoiding error in quantitative research) . An example of a cross-sectional study is the study by Kim et al., 2020 which examined burnout and job stress among physical and occupational therapists in various Korean hospital settings. 13 Findings of the study showed that burnout and work-related stress differed significantly based on several factors, with hospital size, gender, and age as the main contributory factors. The more vulnerable group consisted of female therapists in their 20s at small- or medium-sized hospitals with lower scores for quality of life. 13

Analytical Observational studies

Analytical observational studies aim to establish an association between exposure and outcome and identify causes of disease (causal relationship). 14 Analytical observational studies include analytical cross-sectional ( discussed above ), case-control and cohort studies. 14 This research method could be prospective(cohort study) or retrospective (case-control study), depending on the direction of the enquiry. 14

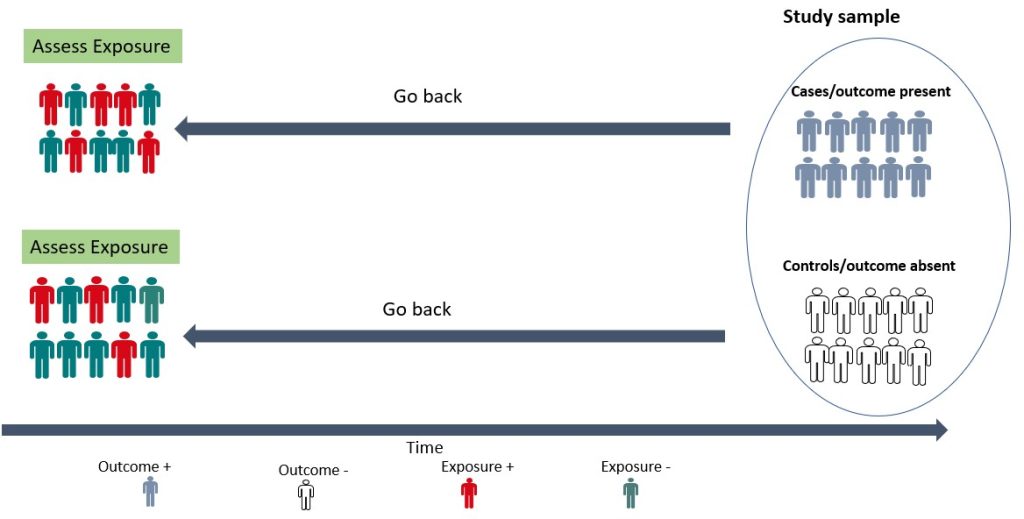

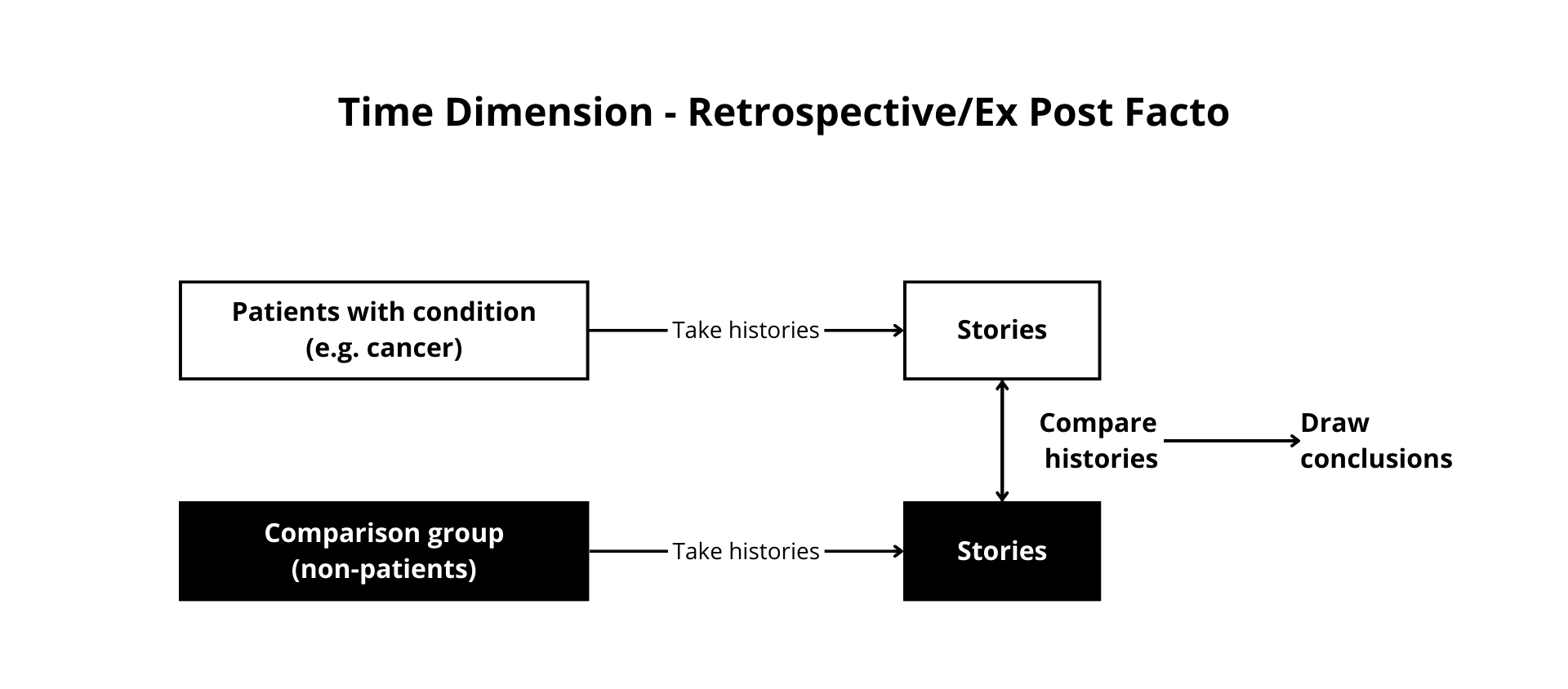

- Case-control studies

A case-control study is a retrospective study in which the researcher compares a group of individuals with a specific outcome (cases) to a group of individuals without that outcome (controls) to identify factors associated with the outcome. 15 As shown in Figure 3.3 below, the cases and controls are recruited and asked questions retrospectively (going back in time) about possible risk factors for the outcome under investigation. A case-control study is relatively efficient in terms of time, money and effort, suited for rare diseases or outcomes with a long latent period, and can examine multiple risk factors. 15 For example, before the cause of lung cancer, was established, a case-control study was conducted by British researchers Richard Doll and Bradford Hill in 1950. 16 Subjects with lung cancer were compared with those who did not have lung cancer, and details about their smoking habits were obtained. 16 The findings from this initial study showed that cancer patients were more frequent and heavy smokers. 16 Over the years, more evidence has been generated implicating tobacco as a significant cause of lung cancer. 17, 18 Case-control studies are, therefore, useful for examining rare outcomes and can be conducted more quickly and with fewer resources than other study designs. Nonetheless, it should be noted that case-control studies are susceptible to bias in selecting cases and controls and may not be representative of the overall population. 15

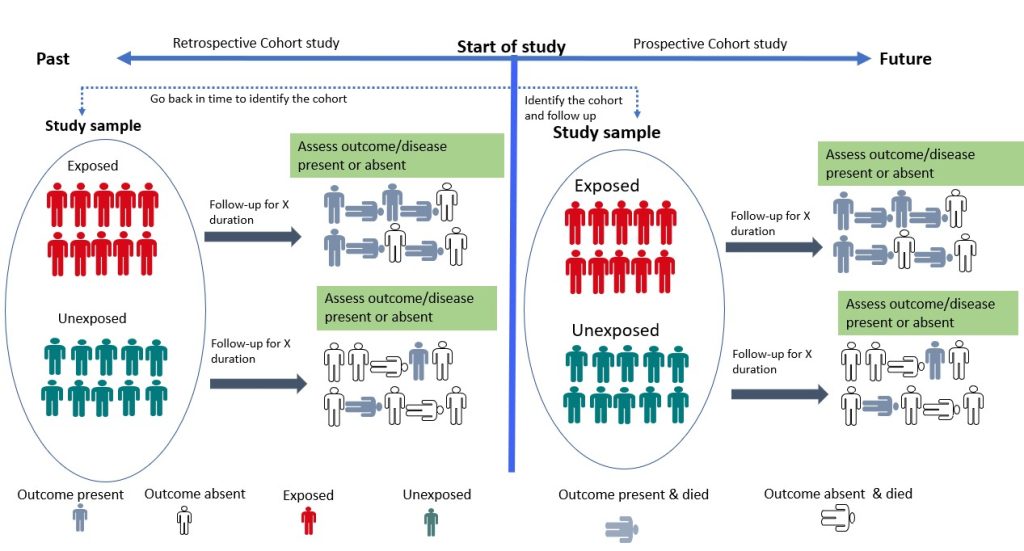

- Cohort Study

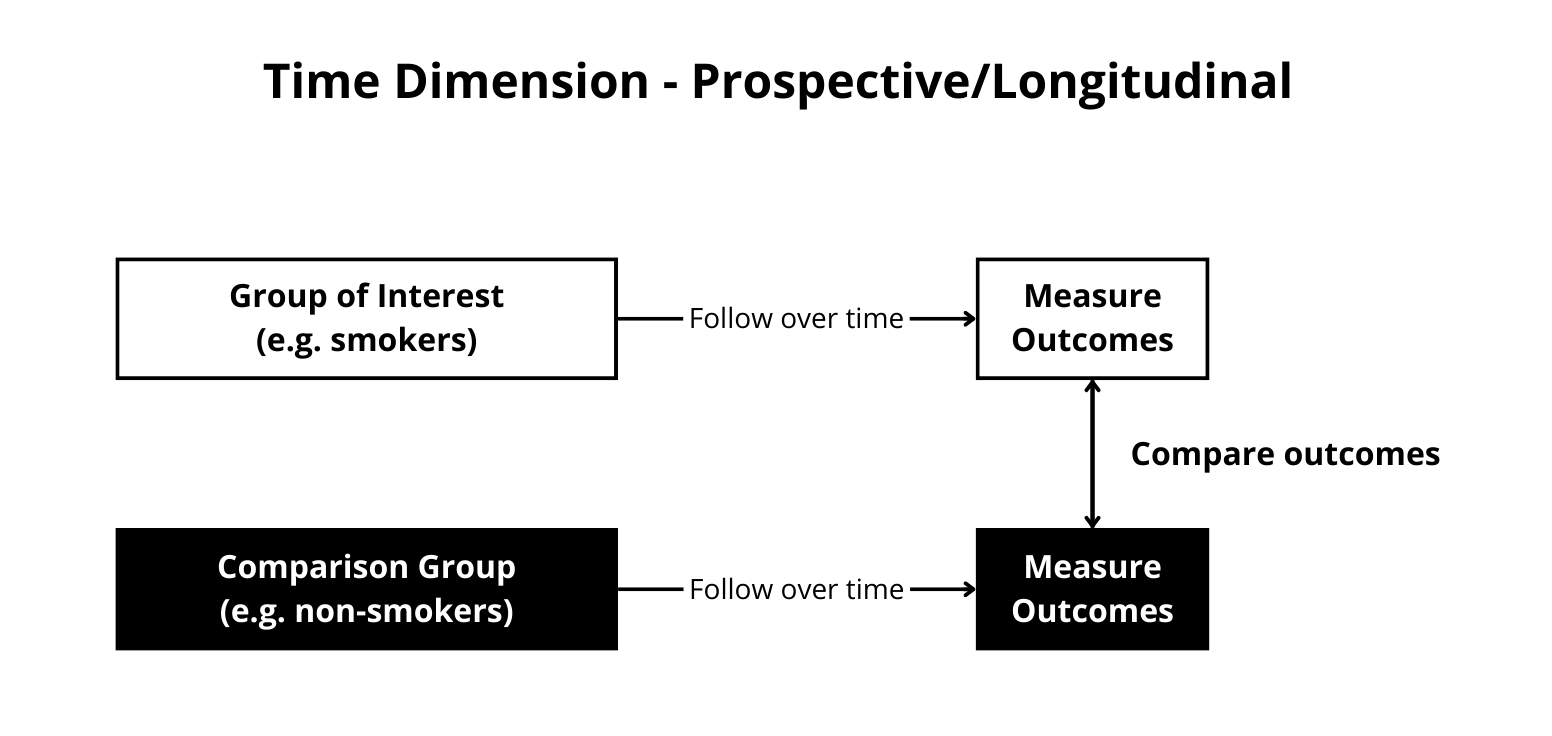

Cohort studies are longitudinal studies in which the researcher follows a group of individuals who share a common characteristic (e.g., age, occupation) over time to monitor the occurrence of a particular health outcome. 19 The study begins with the selection of a group of individuals who are initially free of the disease or health outcome of interest (the “cohort”). The cohort is then divided into two or more groups based on their level of exposure (for example, those who have been exposed to a certain risk factor and those who have not). 19 Participants are then followed up, and their health outcomes are tracked over time. The incidence of the health outcome is compared between exposed and non-exposed groups, and the relationship between exposure and the outcome is quantified using statistical methods. 19 Cohort studies can be prospective or retrospective (Figure 3.4). 20 In a prospective cohort study, the researchers plan the study so that participants are enrolled at the start of the study and followed over time. 20, 21 In a retrospective cohort study, data on exposure and outcome are collected from existing records or databases. The researchers go back in time (via available records) to find a cohort that was initially healthy and “at risk” and assess each participant’s exposure status at the start of the observation period. 20, 21 Cohort studies provide an understanding of disease risk factors based on findings in thousands of individuals over many years and are the foundation of epidemiological research. 19 They are useful for investigating the natural history of a disease, identifying risk factors for a disease, providing strong evidence for causality and estimating the incidence of a disease or health outcome in a population. However, they can be expensive and time-consuming to conduct. 15 An example of a cohort study is the study by Watts et al, 2015 which investigated whether the communication and language skills of children who have a history of stuttering are different from children who do not have a history of stuttering at ages 2–5 years. 22 The findings revealed that children with a history of stuttering, as a group, demonstrated higher scores on early communication and language measures compared to their fluent peers. According to the authors, clinicians can be reassured by the finding that, on average, children who stutter have early communication and language skills that meet developmental expectations. 22

Experimental Study Designs (Interventional studies)

Experimental studies involve manipulating one or more variables in order to measure their effects on one or more outcomes. 23 In this type of study, the researcher assigns individuals to two or more groups that receive or do not receive the intervention. Well-designed and conducted interventional studies are used to establish cause-and-effect relationships between variables. 23 Experimental studies can be broadly classified into two – randomised controlled trials and non-randomised controlled trials. 23 These study designs are discussed below:

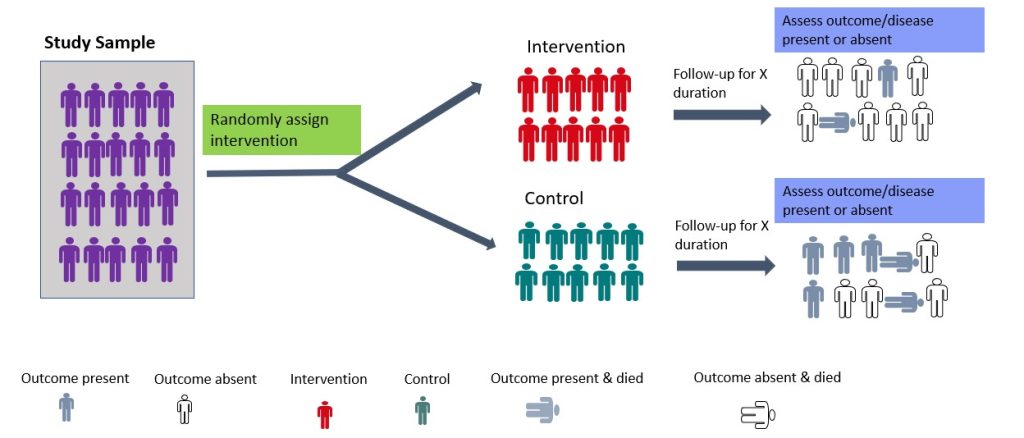

- Randomised Controlled Trial

Randomised controlled trials (RCTs) are experimental studies in which participants are randomly assigned to the intervention or control arm of the study. 23 The experimental group receives the intervention, while the control group does not (Figure 3.5). RCTs involve random allocation (not by choice of the participants or investigators) of participants to a control or intervention group (Figure 3.5). 24 Randomization or random allocation minimises bias and offers a rigorous method to analyse cause-and-effect links between an intervention and outcome. 24 Randomization balances participant characteristics (both observed and unobserved) between the groups. 24 This is so that any differences in results can be attributed to the research intervention. 24 The most basic form of randomisation is allocating treatment by tossing a coin. Other methods include using statistical software to generate random number tables and assigning participants by simple randomisation or allocating them sequentially using numbered opaque envelopes containing treatment information. 25 This is why RCTs are often considered the gold standard in research methodology. 24 While RCTs are effective in establishing causality, they are not without limitations. RCTs are expensive to conduct and time-consuming. In addition, ethical considerations may limit the types of interventions that can be tested in RCTs. They may also not be appropriate for rare events or diseases and may not always reflect real-world situations, limiting their application in clinical practice. 24 An example of a randomised controlled trial is the study by Shebib et al., 2019 which investigated the effect of a 12-week digital care program (DCP) on improving lower-back pain. The treatment group (DCP) received the 12-week DCP, consisting of sensor-guided exercise therapy, education, cognitive behavioural therapy, team and individual behavioural coaching, activity tracking, and symptom tracking – all administered remotely via an app. 26 While the control group received three digital education articles only. The findings of the study showed that the DCP resulted in improved health outcomes compared to treatment-as-usual and has the potential to scale personalised evidence-based non-invasive treatment for patients with lower-back pain. 26

- Non-randomised controlled design (Quasi-experimental)

Non-randomised controlled trial (non-RCT) designs are used where randomisation is impossible or difficult to achieve. This type of study design requires allocation of the exposure/intervention by the researcher. 23 In some clinical settings, it is impossible to randomise or blind participants. In such cases, non-randomised designs are employed. 27 Examples include pre-posttest design (with or without controls) and interrupted time series. 27, 28 For the pre-posttest design that involves a control group, participants (subjects) are allocated to intervention or control groups (without randomisation) by the researcher. 28 On the other hand, it could be a single pre-posttest design study where all subjects are assessed at baseline, the intervention is given, and the subjects are re-assessed post-intervention. 28 An example of this type of study was reported by Lamont and Brunero (2018 ), who examined the effect of a workplace violence training program for generalist nurses in the acute hospital setting. The authors found a statistically significant increase in behaviour intention scores and overall confidence in coping with patient aggression post-test. 29 Another type of non-RCT study is the interrupted time series (ITS) in which data are gathered before and after intervention at various evenly spaced time points (such as weekly, monthly, or yearly). 30 Thus, it is crucial to take note of the precise moment an intervention occurred. The primary goal of an interrupted time series is to determine whether the data pattern observed post-intervention differs from that noted prior. 30 Several ITS were conducted to investigate the effectiveness of the different prevention strategies (such as lockdown and border closure) used during the COVID pandemic. 31, 32 Although non-RCT may be more feasible to RCTs, they are more prone to bias than RCTs due to the lack of randomisation and may not be able to control for all the variables that might affect the outcome. 23

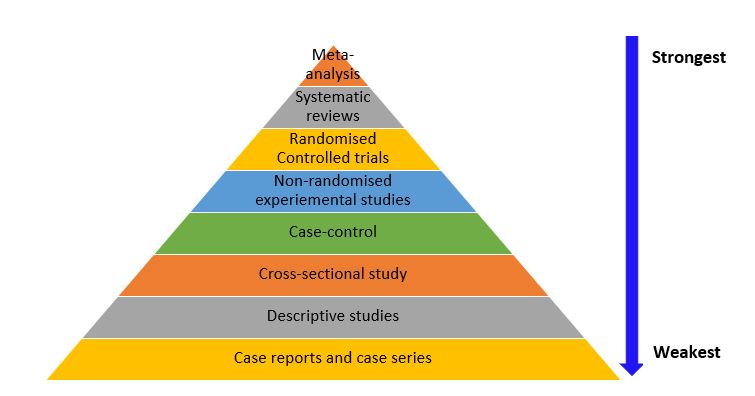

Hierarchy of Evidence

While each study design has its unique characteristics and strengths, they are not without weaknesses (as already discussed) that impact the accuracy of the results and research evidence they provide. The hierarchy of evidence is a framework used to rank the evidence provided by different study designs in research evaluating healthcare interventions with respect to the strength of the presented results (i.e., validity and reliability of the findings). 33 Study designs can be ranked in terms of their ability to provide valid evidence on the effectiveness (intervention achieves the intended outcomes), appropriateness (impact of the intervention from the perspective of its recipient) and feasibility (intervention is implementable) of the research results they provide. 33 As shown in Figure 3.6, meta-analyses, systematic reviews, and RCTs provide stronger best-practice evidence and scientific base for clinical practice than descriptive studies as well as case reports and case series. Nonetheless, it is important to note that the research question/ hypothesis determines the study design, and not all questions can be answered using an interventional design. In addition, there are other factors that need to be considered when choosing a study design, such as funding, time constraints, and ethical considerations, and these factors are discussed in detail in chapter 6.

An Introduction to Research Methods for Undergraduate Health Profession Students Copyright © 2023 by Faith Alele and Bunmi Malau-Aduli is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License , except where otherwise noted.

Academia.edu no longer supports Internet Explorer.

To browse Academia.edu and the wider internet faster and more securely, please take a few seconds to upgrade your browser .

Enter the email address you signed up with and we'll email you a reset link.

- We're Hiring!

- Help Center

Chapter 3: Methodology (Quantitative

Related Papers

Godwill Medukenya

Sandeep Kumar

Alliana Ulila

Spring Season Publications

vasant kothari

Ralph Renzo Salangsang

Second Language Learning and Teaching

Magdalena Walenta

Air Medical Journal

cheryl Thompson

ribwar aljaf

RELATED PAPERS

OEm Conversations With

Filipa Pinho

Vichi Iacob

Economic and Environmental Geology

Sang-Mo KOH

Jurnal Potensi

Dedi Hantono

Geraldine Macdonald

Brazilian Journal of Development

Karem Vieira

The American journal of tropical medicine and hygiene

Camila Petzoldt

Bernardo Pérez

Bioelectrochemistry

Benoit Van den Eynde

Physical Science International Journal

Khan Hossain

Nina Mastra

Mustapha Lemiti

Sustainability

Ana Fernández-Viciana

Vittorio Morfino

Biochemical Engineering Journal

Sergio Quispe Rios

Synthetic Metals

Talat Yalcin

Mikko Itälahti

Frontiers in Cellular Neuroscience

Anton Liopo

International Journal of Accounting, Finance and Social Science Research (IJAFSSR)

Muyiwa DAGUNDURO

IOP Conference Series: Earth and Environmental Science

siti halimah

Acta ethologica

Natividade Vieira

Journal of Cleaner Production

heinz leuenberger

실시간카지노 토토사이트

Ernesto Rodriguez Ramos

West African Journal of Applied Ecology

Irene Appeaning

- We're Hiring!

- Help Center

- Find new research papers in:

- Health Sciences

- Earth Sciences

- Cognitive Science

- Mathematics

- Computer Science

- Academia ©2024

Research Methodology

- First Online: 29 June 2019

Cite this chapter

- Vaneet Kaur 3

Part of the book series: Innovation, Technology, and Knowledge Management ((ITKM))

1059 Accesses

The chapter presents methodology employed for examining framework developed, during the literature review, for the purpose of present study. In light of the research objectives, the chapter works upon the ontology, epistemology as well as the methodology adopted for the present study. The research is based on positivist philosophy which postulates that phenomena of interest in the social world, can be studied as concrete cause and effect relationships, following a quantitative research design and a deductive approach. Consequently, the present study has used the existing body of literature to deduce relationships between constructs and develops a strategy to test the proposed theory with the ultimate objective of confirming and building upon the existing knowledge in the field. Further, the chapter presents a roadmap for the study which showcases the journey towards achieving research objectives in a series of well-defined logical steps. The process followed for building survey instrument as well as sampling design has been laid down in a similar manner. While the survey design enumerates various methods adopted along with justifications, the sampling design sets forth target population, sampling frame, sampling units, sampling method and suitable sample size for the study. The chapter also spells out the operational definitions of the key variables before exhibiting the three-stage research process followed in the present study. In the first stage, questionnaire has been developed based upon key constructs from various theories/researchers in the field. Thereafter, the draft questionnaire has been refined with the help of a pilot study and its reliability and validity has been tested. Finally, in light of the results of the pilot study, the questionnaire has been finalized and final data has been collected. In doing so, the step-by-step process of gathering data from various sources has been presented. Towards end, the chapter throws spotlight on various statistical methods employed for analysis of data, along with the presentation of rationale for the selection of specific techniques used for the purpose of presentation of outcomes of the present research.

This is a preview of subscription content, log in via an institution to check access.

Access this chapter

- Available as EPUB and PDF

- Read on any device

- Instant download

- Own it forever

- Compact, lightweight edition

- Dispatched in 3 to 5 business days

- Free shipping worldwide - see info

- Durable hardcover edition

Tax calculation will be finalised at checkout

Purchases are for personal use only

Institutional subscriptions

Aasland, A. (2008). A user manual for SPSS analysis (pp. 1–60).

Google Scholar

Accenture Annual Report. (2016). Annual Report: 2016 Leading in the New. Retrieved February 13, 2017 from https://www.accenture.com/t20161030T213116__w__/in-en/_acnmedia/PDF-35/Accenture-2016-Shareholder-Letter10-K006.pdf#zoom=50 .

Achieng’Nyaura, L., & Omwenga, D. J. (2016). Factors affecting employee retention in the hotel industry in Mombasa County. Imperial Journal of Interdisciplinary Research, 2 (12).

Agariya, A. K., & Yayi, S. H. (2015). ERM scale development and validation in Indian IT sector. Journal of Internet Banking and Commerce, 20 (1), 1–16.

Aibinu, A. A., & Al-Lawati, A. M. (2010). Using PLS-SEM technique to model construction organizations’ willingness to participate in e-bidding. Automation in Construction, 19 (6), 714–724.

Article Google Scholar

Akgün, A. E., Keskin, H., & Byrne, J. (2012). Antecedents and contingent effects of organizational adaptive Capability on firm product innovativeness. Journal of Production and Innovation Management, 29 (S1), 171–189.

Akman, G., & Yilmaz, C. (2008). Innovative capability, innovation strategy and market orientation. International Journal of Innovation and Management, 12 (1), 69–111.

Akroush, M. N., Abu-ElSamen, A. A., Al-Shibly, M. S., & Al-Khawaldeh, F. M. (2010). Conceptualisation and development of customer service skills scale: An investigation of Jordanian customers. International Journal of Mobile Communications, 8 (6), 625–653.

AlKindy, A. M., Shah, I. M., & Jusoh, A. (2016). The impact of transformational leadership behaviors on work performance of Omani civil service agencies. Asian Social Science, 12 (3), 152.

Al-Mabrouk, K., & Soar, J. (2009). A delphi examination of emerging issues for successful information technology transfer in North Africa a case of Libya. African Journal of Business Management, 3 (3), 107.

Alonso-Almeida. (2015). Proactive and reactive strategies deployed by restaurants in times of crisis: Effects on capabilities, organization and competitive advantage. International Journal of Contemporary Hospitality Management, 27 (7), 1641–1661.

Alrubaiee, P., Alzubi, H. M., Hanandeh, R., & Ali, R. A. (2015). Investigating the relationship between knowledge management processes and organizational performance the mediating effect of organizational innovation. International Review of Management and Business Research, 4 (4), 989–1009.

Alters, B. J. (1997). Whose nature of science? Journal of Research in Science Teaching, 34 (1), 39–55.

Al-Thawwad, R. M. (2008). Technology transfer and sustainability-adapting factors: Culture, physical environment, and geographical location. In Proceedings of the 2008 IAJC-IJME International Conference .

Ammachchi, N. (2017). Healthcare demand spurring cloud & analytics development rush. Retrieved February 19, 2017 from http://www.nearshoreamericas.com/firms-focus-developing-low-cost-solutions-demand-outsourcing-rises-healthcare-sector-report/ .

Anatan, L. (2014). Factors influencing supply chain competitive advantage and performance. International Journal of Business and Information, 9 (3), 311–335.

Arkkelin, D. (2014). Using SPSS to understand research and data analysis.

Aroian, K. J., Kulwicki, A., Kaskiri, E. A., Templin, T. N., & Wells, C. L. (2007). Psychometric evaluation of the Arabic language version of the profile of mood states. Research in Nursing & Health, 30 (5), 531–541.

Asongu, S. A. (2013). Liberalization and financial sector competition: A critical contribution to the empirics with an African assessment.

Ayagre, P., Appiah-Gyamerah, I., & Nartey, J. (2014). The effectiveness of internal control systems of banks. The case of Ghanaian banks. International Journal of Accounting and Financial Reporting, 4 (2), 377.

Azizi, R., Maleki, M., Moradi-moghadam, M., & Cruz-machado, V. (2016). The impact of knowledge management practices on supply chain quality management and competitive advantages. Management and Production Engineering Review, 7 (1), 4–12.

Baariu, F. K. (2015). Factors influencing subscriber adoption of Mobile payments: A case of Safaricom’s Lipana M-Pesa Service in Embu Town , Kenya (Doctoral dissertation, University of Nairobi).

Babbie, E. R. (2011). Introduction to social research . Belmont: Wadsworth Cengage Learning.

Bagozzi, R. P., & Heatherton, T. F. (1994). A general approach to representing multifaceted personality constructs: Application to state self-esteem. Structural Equation Modeling: A Multidisciplinary Journal, 1 (1), 35–67.

Barlett, J. E., Kotrlik, J. W., & Higgins, C. C. (2001). Organizational research: Determining appropriate sample size in survey research. Information Technology, Learning, and Performance Journal, 19 (1), 43.

Barrales-molina, V., Bustinza, Ó. F., & Gutiérrez-gutiérrez, L. J. (2013). Explaining the causes and effects of dynamic capabilities generation: A multiple-indicator multiple-cause modelling approach. British Journal of Management, 24 , 571–591.

Barrales-molina, V., Martínez-lópez, F. J., & Gázquez-abad, J. C. (2014). Dynamic marketing capabilities: Toward an integrative framework. International Journal of Management Reviews, 16 , 397–416.

Bastian, R. W., & Thomas, J. P. (2016). Do talkativeness and vocal loudness correlate with laryngeal pathology? A study of the vocal overdoer/underdoer continuum. Journal of Voice, 30 (5), 557–562.

Bentler, P. M., & Mooijaart, A. B. (1989). Choice of structural model via parsimony: A rationale based on precision. Psychological Bulletin, 106 (2), 315–317.

Boari, C., Fratocchi, L., & Presutti, M. (2011). The Interrelated Impact of Social Networks and Knowledge Acquisition on Internationalisation Process of High-Tech Small Firms. In Proceedings of the 32th Annual Conference Academy of International Business, Bath .

Boralh, C. F. (2013). Impact of stress on depression and anxiety in dental students and professionals. International Public Health Journal, 5 (4), 485.

Bound, J. P., & Voulvoulis, N. (2005). Household disposal of pharmaceuticals as a pathway for aquatic contamination in the United Kingdom. Environmental Health Perspectives, 113 , 1705–1711.

Breznik, L., & Lahovnik, M. (2014). Renewing the resource base in line with the dynamic capabilities view: A key to sustained competitive advantage in the IT industry. Journal for East European Management Studies, 19 (4), 453–485.

Breznik, L., & Lahovnik, M. (2016). Dynamic capabilities and competitive advantage: Findings from case studies. Management: Journal of Contemporary Management Issues, 21 (Special issue), 167–185.

Cadiz, D., Sawyer, J. E., & Griffith, T. L. (2009). Developing and validating field measurement scales for absorptive capacity and experienced community of practice. Educational and Psychological Measurement, 69 (6), 1035–1058.

Carroll, G. B., Hébert, D. M., & Roy, J. M. (1999). Youth action strategies in violence prevention. Journal of Adolescent Health, 25 (1), 7–13.

Cepeda, G., & Vera, D. (2007). Dynamic capabilities and operational capabilities: A knowledge management perspective. Journal of Business Research, 60 (5), 426–437.

Chaharmahali, S. M., & Siadat, S. A. (2010). Achieving organizational ambidexterity: Understanding and explaining ambidextrous organisation.

Champoux, A., & Ommanney, C. S. L. (1986). Photo-interpretation, digital mapping, and the evolution of glaciers in glacier National Park, BC. Annals of Glaciology, 8 (1), 27–30.

Charan, C. S., & Nambirajan, T. (2016). An empirical investigation of supply chain engineering on lean thinking paradigms of in-house goldsmiths. The International Journal of Applied Business and Economic Research, 14 (6), 4475–4492.

Chau, P. Y. (2001). Inhibitors to EDI adoption in small business: An empirical investigation. Journal of Electronic Commerce Research, 2 (2), 78–88.

Chen, L. C. (2010). Multi-skilling in the hotel industry in Taiwan.

Chen, H. H., Lee, P. Y., & Lay, T. J. (2009). Drivers of dynamic learning and dynamic competitive capabilities in international strategic alliances. Journal of Business Research, 62 (12), 1289–1295.

Chen, C. W., Yu, P. H., & Li, Y. J. (2016). Understanding group-buying websites continuous use behavior: A use and gratifications theory perspective. Journal of Economics and Management, 12 (2), 177–204.

Chua, R. L., Cockfield, G., & Al-Hakim, L. (2008, November). Factors affecting trust within Australian beef supply chain. In 4th international congress on logistics and SCM systems: Effective supply chain and logistic management for sustainable development (pp. 26–28).

Cognizant Annual Report. (2015). Cognizant annual report 2015. Retrieved February 14, 2017 from http://investors.cognizant.com/download/Cognizant_AnnualReport_2015.pdf .

Cox, B. G., Mage, D. T., & Immerman, F. W. (1988). Sample design considerations for indoor air exposure surveys. JAPCA, 38 (10), 1266–1270.

Creswell, J. W. (2009). Editorial: Mapping the field of mixed methods research. Journal of Mixed Methods Research, 3 (2), 95–108.

Creswell, J. W., & Clark, V. L. P. (2007). Designing and conducting mixed methods research . Thousand Oaks: Sage.

Daniel, J. (2011). Sampling essentials: Practical guidelines for making sampling choices . London: Sage.

De Winter, J. C., & Dodou, D. (2010). Five-point Likert items: T test versus Mann-Whitney-Wilcoxon. Practical Assessment, Research & Evaluation, 15 (11), 1–12.

Deans, P. C., Karwan, K. R., Goslar, M. D., Ricks, D. A., & Toyne, B. (1991). Identification of key international information systems issues in US-based multinational corporations. Journal of Management Information Systems, 7 (4), 27–50.

Dei Mensah, R. (2014). Effects of human resource management practices on retention of employees in the banking industry in Accra, Ghana (Doctoral dissertation, Kenyatta University).

Dubey, R. (2016). Re-imagining Infosys. Retrieved February 19, 2017 from http://www.businesstoday.in/magazine/cover-story/how-infosys-ceo-is-trying-to-bring-back-the-company-into-high-growth-mode/story/230431.html .

Dunn, S., Cragg, B., Graham, I. D., Medves, J., & Gaboury, I. (2013). Interprofessional shared decision making in the NICU: A survey of an interprofessional healthcare team. Journal of Research in Interprofessional Practice and Education, 3 (1).

Einwiller, S. (2003). When reputation engenders trust: An empirical investigation in business-to-consumer electronic commerce. Electronic Markets, 13 (3), 196–209.

Eliassen, K. M., & Hopstock, L. A. (2011). Sleep promotion in the intensive care unit—A survey of nurses’ interventions. Intensive and Critical Care Nursing, 27 (3), 138–142.

Elliott, M., Page, K., Worrall-Carter, L., & Rolley, J. (2013). Examining adverse events after intensive care unit discharge: Outcomes from a pilot questionnaire. International Journal of Nursing Practice, 19 (5), 479–486.

Fabrigar, L. R., Wegener, D. T., MacCallum, R. C., & Strahan, E. J. (1999). Evaluating the use of exploratory factor analysis in psychological research. Psychological Methods, 4 (3), 272–299.

Filippini, R., Güttel, W. H., & Nosella, A. (2012). Dynamic capabilities and the evolution of knowledge management projects in SMEs. International Journal of Technology Management, 60 (3/4), 202.

Finstad, K. (2010). Response interpolation and scale sensitivity: Evidence against 5-point scales. Journal of Usability Studies, 5 (3), 104–110.

Fleming, C. M., & Bowden, M. (2009). Web-based surveys as an alternative to traditional mail methods. Journal of Environmental Management, 90 (1), 284–292.

Foss, N. J., & Pedersen, T. (2004). Organizing knowledge processes in the multinational corporation: An introduction. Journal of International Business Studies, 35 (5), 340–349.

Frosi, G., Barros, V. A., Oliveira, M. T., Cavalcante, U. M. T., Maia, L. C., & Santos, M. G. (2016). Increase in biomass of two woody species from a seasonal dry tropical forest in association with AMF with different phosphorus levels. Applied Soil Ecology, 102 , 46–52.

Fujisato, H., Ito, M., Takebayashi, Y., Hosogoshi, H., Kato, N., Nakajima, S., & Horikoshi, M. (2017). Reliability and validity of the Japanese version of the emotion regulation skills questionnaire. Journal of Affective Disorders, 208 , 145–152.

Garg, R., & De, K. (2012). Impact of dynamic capabilities on the export orientation and export performance of small and medium sized enterprises in emerging markets: A conceptual model. African Journal of Business Management, 6 (29), 8464–8474.

Gerbing, D. W., & Anderson, J. C. (1988). An updated paradigm for scale development incorporating unidimensionality and its assessment. Journal of Marketing Research, 25 , 186–192.

Getz, L. M., Marks, S., & Roy, M. (2014). The influence of stress, optimism, and music training on music uses and preferences. Psychology of Music, 42 (1), 71–85.

Gibson, C. B., & Birkinshaw, J. (2004). The antecedents, consequences, and mediating role of organizational ambidexterity. Academy of Management Journal, 47 (2), 209–226.

Glasow, P. A. (2005). Fundamentals of survey research methodology.

Global MAKE Report. (2016). Global Most Admired Knowledge Enterprises (MAKE) report: Executive summary. Retrieved February 22, 2017 from http://www.knowledgebusiness.com/knowledgebusiness/templates/ViewAttachment.aspx?hyperLinkId=6695 .

Gold, A. H., Malhotra, A., & Segars, A. H. (2001). Knowledge management: An organizational capabilities perspective. Journal of Management Information Systems, 18 (1), 185–214.

Goltz, N. G. (2012). Influence of the first impression on credibility evaluation of online information (Bachelor’s thesis, University of Twente).

Graham, J. D., Beaulieu, N. D., Sussman, D., Sadowitz, M., & Li, Y. C. (1999). Who lives near coke plants and oil refineries? An exploration of the environmental inequity hypothesis. Risk Analysis, 19 (2), 171–186.

Granados, M. L. (2015). Knowing what social enterprises know. In 5th EMES International Research Conference on Social Enterprise (pp. 1–20).

Guo, Y. M., & Poole, M. S. (2009). Antecedents of flow in online shopping: A test of alternative models. Information Systems Journal, 19 (4), 369–390.

Hadadi, M., Ebrahimi Takamjani, I., Ebrahim Mosavi, M., Aminian, G., Fardipour, S., & Abbasi, F. (2016). Cross-cultural adaptation, reliability, and validity of the Persian version of the Cumberland ankle instability tool. Disability and Rehabilitation , 8288(February), 1–9. https://doi.org/10.1080/09638288.2016.1207105

Haghighi, M. A., Bagheri, R., & Kalat, P. S. (2015). The relationship of knowledge management and organizational performance in science and technology parks of Tehran. Independent Journal of Management & Production, 6 (2), 422–448.

Hahm, S., Knuth, D., Kehl, D., & Schmidt, S. (2016). The impact of different natures of experience on risk perception regarding fire-related incidents: A comparison of firefighters and emergency survivors using cross-national data. Safety Science, 82 , 274–282.

Hansen, S. S., & Lee, J. K. (2013). What drives consumers to pass along marketer-generated eWOM in social network games? Social and game factors in play. Journal of Theoretical and Applied Electronic Commerce Research, 8 (1), 53–68.

Haq, M. (2015). A comparative analysis of qualitative and quantitative research methods and a justification for adopting mixed methods in social research.

Hashim, Y. A. (2010). Determining sufficiency of sample size in management survey research activities. International Journal of Organisational Management & Entrepreneurship Development, 6 (1), 119–130.

Hill, R. (1998). What sample size is “enough” in internet survey research. Interpersonal Computing and Technology: An Electronic Journal for the 21st Century, 6 (3–4), 1–12.

Hinkin, T. R. (1995). A review of scale development practices in the study of organizations. Journal of Management, 21 (5), 967–988.

Hogan, S. J., Soutar, G. N., McColl-Kennedy, J. R., & Sweeney, J. C. (2011). Reconceptualizing professional service firm innovation capability: Scale development. Industrial Marketing Management, 40 (8), 1264–1273.

Holm, K. E., LaChance, H. R., Bowler, R. P., Make, B. J., & Wamboldt, F. S. (2010). Family factors are associated with psychological distress and smoking status in chronic obstructive pulmonary disease. General Hospital Psychiatry, 32 (5), 492–498.

Horng, J. S., Teng, C. C., & Baum, T. G. (2009). Evaluating the quality of undergraduate hospitality, tourism and leisure programmes. Journal of Hospitality, Leisure, Sport and Tourism Education, 8 (1), 37–54.

Huan, Y., & Li, D. (2015). Effects of intellectual capital on innovative performance: The role of knowledge- based dynamic capability. Management Decision, 53 (1), 40–56.

Huckleberry, S. D. (2011). Commitment to coaching: Using the sport commitment model as a theoretical framework with soccer coaches (Doctoral dissertation, Ohio University).

Humborstad, S. I. W., & Perry, C. (2011). Employee empowerment, job satisfaction and organizational commitment: An in-depth empirical investigation. Chinese Management Studies, 5 (3), 325–344.

Infosys Annual Report. (2015). Infosys annual report 2015. Retrieved February 12, 2017 from https://www.infosys.com/investors/reports-filings/annual-report/annual/Documents/infosys-AR-15.pdf .

Investment Standard. (2016). Cognizant is the best pick out of the 4 information technology service providers. Retrieved February 19, 2017 from http://seekingalpha.com/article/3961500-cognizant-best-pick-4-information-technology-service-providers .

Jansen, J. J., Van Den Bosch, F. A., & Volberda, H. W. (2005). Managing potential and realized absorptive capacity: How do organizational antecedents matter? Academy of Management Journal, 48 (6), 999–1015.

John, N. A., Seme, A., Roro, M. A., & Tsui, A. O. (2017). Understanding the meaning of marital relationship quality among couples in peri-urban Ethiopia. Culture, Health & Sexuality, 19 (2), 267–278.

Joo, J., & Sang, Y. (2013). Exploring Koreans’ smartphone usage: An integrated model of the technology acceptance model and uses and gratifications theory. Computers in Human Behavior, 29 (6), 2512–2518.

Kaehler, C., Busatto, F., Becker, G. V., Hansen, P. B., & Santos, J. L. S. (2014). Relationship between adaptive capability and strategic orientation: An empirical study in a Brazilian company. iBusiness .

Kajfez, R. L. (2014). Graduate student identity: A balancing act between roles.

Kam Sing Wong, S., & Tong, C. (2012). The influence of market orientation on new product success. European Journal of Innovation Management, 15 (1), 99–121.

Karttunen, V., Sahlman, H., Repo, J. K., Woo, C. S. J., Myöhänen, K., Myllynen, P., & Vähäkangas, K. H. (2015). Criteria and challenges of the human placental perfusion–Data from a large series of perfusions. Toxicology In Vitro, 29 (7), 1482–1491.

Kaur, V., & Mehta, V. (2016a). Knowledge-based dynamic capabilities: A new perspective for achieving global competitiveness in IT sector. Pacific Business Review International, 1 (3), 95–106.

Kaur, V., & Mehta, V. (2016b). Leveraging knowledge processes for building higher-order dynamic capabilities: An empirical evidence from IT sector in India. JIMS 8M , July- September.

Kaya, A., Iwamoto, D. K., Grivel, M., Clinton, L., & Brady, J. (2016). The role of feminine and masculine norms in college women’s alcohol use. Psychology of Men & Masculinity, 17 (2), 206–214.

Kenny, A., McLoone, S., Ward, T., & Delaney, D. (2006). Using user perception to determine suitable error thresholds for dead reckoning in distributed interactive applications.

Kianpour, K., Jusoh, A., & Asghari, M. (2012). Importance of Price for buying environmentally friendly products. Journal of Economics and Behavioral Studies, 4 (6), 371–375.

Kim, J., & Forsythe, S. (2008). Sensory enabling technology acceptance model (SE-TAM): A multiple-group structural model comparison. Psychology & Marketing, 25 (9), 901–922.

Kim, Y. J., Oh, Y., Park, S., Cho, S., & Park, H. (2013). Stratified sampling design based on data mining. Healthcare Informatics Research, 19 (3), 186–195.

Kim, R., Yang, H., & Chao, Y. (2016). Effect of brand equity& country origin on Korean consumers’ choice for beer brands. The Business & Management Review, 7 (3), 398.

Kimweli, J. M. (2013). The role of monitoring and evaluation practices to the success of donor funded food security intervention projects a case study of Kibwezi District. International Journal of Academic Research in Business and Social Sciences, 3 (6), 9.

Kinsfogel, K. M., & Grych, J. H. (2004). Interparental conflict and adolescent dating relationships: Integrating cognitive, emotional, and peer influences. Journal of Family Psychology, 18 (3), 505–515.

Kivimäki, M., Vahtera, J., Pentti, J., Thomson, L., Griffiths, A., & Cox, T. (2001). Downsizing, changes in work, and self-rated health of employees: A 7-year 3-wave panel study. Anxiety, Stress and Coping, 14 (1), 59–73.

Klemann, B. (2012). The unknowingly consumers of Fairtrade products.

Kothari, C. R. (2004). Research methodology: Methods and techniques . New Delhi: New Age International.

Krause, D. R. (1999). The antecedents of buying firms’ efforts to improve suppliers. Journal of Operations Management, 17 (2), 205–224.

Krejcie, R. V., & Morgan, D. W. (1970). Determining sample size for research activities. Educational and Psychological Measurement., 30 , 607–610.

Krige, S. M., Mahomoodally, F. M., Subratty, A. H., & Ramasawmy, D. (2012). Relationship between socio-demographic factors and eating practices in a multicultural society. Food and Nutrition Sciences, 3 (3), 286–295.

Krzakiewicz, K. (2013). Dynamic capabilities and knowledge management. Management, 17 (2), 1–15.

Kuzic, J., Fisher, J., Scollary, A., Dawson, L., Kuzic, M., & Turner, R. (2005). Modus vivendi of E-business. PACIS 2005 Proceedings , 99.

Laframboise, K., Croteau, A. M., Beaudry, A., & Manovas, M. (2009). Interdepartmental knowledge transfer success during information technology projects. International Journal of Knowledge Management , 189–210.

Landaeta, R. E. (2008). Evaluating benefits and challenges of knowledge transfer across projects. Engineering Management Journal, 20 (1), 29–38.

Lee, Y., Chen, A., Yang, Y. L., Ho, G. H., Liu, H. T., & Lai, H. Y. (2005). The prophylactic antiemetic effects of ondansetron, propofol, and midazolam in female patients undergoing sevoflurane anaesthesia for ambulatory surgery: A-42. European Journal of Anaesthesiology (EJA), 22 , 11–12.

Lee, V. H., Foo, A. T. L., Leong, L. Y., & Ooi, K. B. (2016). Can competitive advantage be achieved through knowledge management? A case study on SMEs. Expert Systems with Applications, 65 , 136–151.

Leech, N. L., Barrett, K. C., & Morgan, G. A. (2005). SPSS for intermediate statistics: Use and interpretation . New Jersey: Psychology Press.

Leonardi, F., Spazzafumo, L., & Marcellini, F. (2005). Subjective Well-being: The constructionist point of view. A longitudinal study to verify the predictive power of top-down effects and bottom-up processes. Social Indicators Research, 70 (1), 53–77.

Li, D. Y., & Liu, J. (2014). Dynamic capabilities, environmental dynamism, and competitive advantage: Evidence from China. Journal of Business Research, 67 (1), 2793–2799.

Liao, S. H., Fei, W. C., & Chen, C. C. (2007). Knowledge sharing, absorptive capacity, and innovation capability: An empirical study of Taiwan’s knowledge-intensive industries. Journal of Information Science, 33 (3), 340–359.

Liao, S. H., & Wu, C. C. (2009). The relationship among knowledge management, organizational learning, and organizational performance. International Journal of Business and Management, 4 (4), 64.

Liao, T. S., Rice, J., & Lu, J. C. (2014). The vicissitudes of Competitive advantage: Empirical evidence from Australian manufacturing SMEs. Journal of Small Business Management, 53 (2), 469–481.

Liu, S., & Deng, Z. (2015). Understanding knowledge management capability in business process outsourcing: A cluster analysis. Management Decision, 53 (1), 1–11.

Liu, C. L. E., Ghauri, P. N., & Sinkovics, R. R. (2010). Understanding the impact of relational capital and organizational learning on alliance outcomes. Journal of World Business, 45 (3), 237–249.

Luís, C., Cothran, E. G., & do Mar Oom, M. (2007). Inbreeding and genetic structure in the endangered Sorraia horse breed: Implications for its conservation and management. Journal of Heredity, 98 (3), 232–237.

MacDonald, C. M., & Atwood, M. E. (2014, June). What does it mean for a system to be useful?: An exploratory study of usefulness. In Proceedings of the 2014 conference on designing interactive systems (pp. 885–894). New York: ACM.

Mafini, C., & Dlodlo, N. (2014). The relationship between extrinsic motivation, job satisfaction and life satisfaction amongst employees in a public organisation. SA Journal of Industrial Psychology, 40 (1), 01–12.

Mafini, C., Dhurup, M., & Mandhlazi, L. (2014). Shopper typologies amongst a generation Y consumer cohort and variations in terms of age in the fashion apparel market: Original research. Acta Commercii, 14 (1), 1–11.

Mageswari, S. U., Sivasubramanian, C., & Dath, T. S. (2015). Knowledge management enablers, processes and innovation in Small manufacturing firms: A structural equation modeling approach. IUP Journal of Knowledge Management, 13 (1), 33.

Mahoney, J. T. (2005). Resource-based theory, dynamic capabilities, and real options. In Foundations for organizational science. Economic foundations of strategy . Thousand Oaks: SAGE Publications.

Malhotra, N., Hall, J., Shaw, M., & Oppenheim, P. (2008). Essentials of marketing research, 2nd Australian edition.

Manan, R. M. (2016). The use of hangman game in motivating students in Learning English. ELT Perspective, 4 (2).

Manco-Johnson, M., Morrissey-Harding, G., Edelman-Lewis, B., Oster, G., & Larson, P. (2004). Development and validation of a measure of disease-specific quality of life in young children with haemophilia. Haemophilia, 10 (1), 34–41.

Marek, L. (2016). Guess which Illinois company uses the most worker visas. Retrieved February 13, 2017 from http://www.chicagobusiness.com/article/20160227/ISSUE01/302279994/guess-which-illinois-company-uses-the-most-worker-visas .

Martin, C. M., Roach, V. A., Nguyen, N., Rice, C. L., & Wilson, T. D. (2013). Comparison of 3D reconstructive technologies used for morphometric research and the translation of knowledge using a decision matrix. Anatomical Sciences Education, 6 (6), 393–403.

Maskatia, S. A., Altman, C. A., Morris, S. A., & Cabrera, A. G. (2013). The echocardiography “boot camp”: A novel approach in pediatric cardiovascular imaging education. Journal of the American Society of Echocardiography, 26 (10), 1187–1192.

Matson, J. L., Boisjoli, J., Rojahn, J., & Hess, J. (2009). A factor analysis of challenging behaviors assessed with the baby and infant screen for children with autism traits. Research in Autism Spectrum Disorders, 3 (3), 714–722.

Matusik, S. F., & Heeley, M. B. (2005). Absorptive capacity in the software Industry: Identifying dimensions that affect knowledge and knowledge creation activities. Journal of Management, 31 (4), 549–572.

Matveev, A. V. (2002). The advantages of employing quantitative and qualitative methods in intercultural research: Practical implications from the study of the perceptions of intercultural communication competence by American and Russian managers. Bulletin of Russian Communication Association Theory of Communication and Applied Communication, 1 , 59–67.

McDermott, E. P., & Ervin, D. (2005). The influence of procedural and distributive variables on settlement rates in employment discrimination mediation. Journal of Dispute Resolution, 45 , 1–16.

McKelvie, A. (2007). Innovation in new firms: Examining the role of knowledge and growth willingness.

Mendonca, J., & Sen, A. (2016). IT companies including TCS, Infosys, Wipro bracing for slowest topline expansion on annual basis. Retrieved February 19 2017 from http://economictimes.indiatimes.com/markets/stocks/earnings/it-companies-including-tcs-infosys-wipro-bracing-for-slowest-topline-expansion-on-annual-basis/articleshow/51639858.cms .

Mesina, F., De Deyne, C., Judong, M., Vandermeersch, E., & Heylen, R. (2005). Quality survey of pre-operative assessment: Influence of a standard questionnaire: A-38. European Journal of Anaesthesiology (EJA), 22 , 11.

Michailova, S., & Zhan, W. (2014). Dynamic capabilities and innovation in MNC subsidiaries. Journal of World Business , 1–9.

Miller, R., Salmona, M., & Melton, J. (2012). Modeling student concern for professional online image. Journal of Internet Social Networking & Virtual Communities, 3 (2), 1.

Minarro-Viseras, E., Baines, T., & Sweeney, M. (2005). Key success factors when implementing strategic manufacturing initiatives. International Journal of Operations & Production Management, 25 (2), 151–179.

Monferrer, D., Blesa, A., & Ripollés, M. (2015). Catching dynamic capabilities through market-oriented networks. European Journal of International Management, 9 (3), 384–408.

Moyer, J. E. (2007). Learning from leisure reading: A study of adult public library patrons. Reference & User Services Quarterly, 46 , 66–79.

Mulaik, S. A., James, L. R., Van Alstine, J., Bennett, N., Lind, S., & Stilwell, C. D. (1989). Evaluation of goodness-of-fit indices for structural equation models. Psychological Bulletin, 105 (3), 430–445.

Murphy, T. H., & Terry, H. R. (1998). Faculty needs associated with agricultural distance education. Journal of Agricultural Education, 39 , 17–27.

Murphy, C., Hearty, C., Murray, M., & McCaul, C. (2005). Patient preferences for desired post-anaesthesia outcomes-a comparison with medical provider perspective: A-40. European Journal of Anaesthesiology (EJA), 22 , 11.

Nair, A., Rustambekov, E., McShane, M., & Fainshmidt, S. (2014). Enterprise risk management as a dynamic Capability: A test of its effectiveness during a crisis. Managerial and Decision Economics, 35 , 555–566.

Nandan, S. (2010). Determinants of customer satisfaction on service quality: A study of railway platforms in India. Journal of Public Transportation, 13 (1), 6.

NASSCOM Indian IT-BPM Industry Report. (2016). NASSCOM Indian IT-BPM Industry Report 2016. Retrieved January 11, 2017 from http://www.nasscom.in/itbpm-sector-india-strategic-review-2016 .

Nedzinskas, Š. (2013). Dynamic capabilities and organizational inertia interaction in volatile environment. Retrieved from http://archive.ism.lt/handle/1/301 .

Nguyen, T. N. Q. (2010). Knowledge management capability and competitive advantage: An empirical study of Vietnamese enterprises.

Nguyen, N. T. D., & Aoyama, A. (2014). Achieving efficient technology transfer through a specific corporate culture facilitated by management practices. The Journal of High Technology Management Research, 25 (2), 108–122.

Nguyen, Q. T. N., & Neck, P. A. (2008, July). Knowledge management as dynamic capabilities: Does it work in emerging less developed countries. In Proceedings of the 16th Annual Conference on Pacific Basin Finance, Economics, Accounting and Management (pp. 1–18).

Nieves, J., & Haller, S. (2014). Building dynamic capabilities through knowledge resources. Tourism Management, 40 , 224–232.

Nirmal, R. (2016). Indian IT firms late movers in digital race. Retrieved February 19, 2017 from http://www.thehindubusinessline.com/info-tech/indian-it-firms-late-movers-in-digital-race/article8505379.ece .

Numthavaj, P., Bhongmakapat, T., Roongpuwabaht, B., Ingsathit, A., & Thakkinstian, A. (2017). The validity and reliability of Thai Sinonasal outcome Test-22. European Archives of Oto-Rhino-Laryngology, 274 (1), 289–295.

Obwoge, M. E., Mwangi, S. M., & Nyongesa, W. J. (2013). Linking TVET institutions and industry in Kenya: Where are we. The International Journal of Economy, Management and Social Science, 2 (4), 91–96.

Oktemgil, M., & Greenley, G. (1997). Consequences of high and low adaptive capability in UK companies. European Journal of Marketing, 31 (7), 445–466.

Ouyang, Y. (2015). A cyclic model for knowledge management capability-a review study. Arabian Journal of Business and Management Review, 5 (2), 1–9.

Paloniemi, R., & Vainio, A. (2011). Legitimacy and empowerment: Combining two conceptual approaches for explaining forest owners’ willingness to cooperate in nature conservation. Journal of Integrative Environmental Sciences, 8 (2), 123–138.

Pant, S., & Lado, A. (2013). Strategic business process offshoring and Competitive advantage: The role of strategic intent and absorptive capacity. Journal of Information Science and Technology, 9 (1), 25–58.

Paramati, S. R., Gupta, R., Maheshwari, S., & Nagar, V. (2016). The empirical relationship between the value of rupee and performance of information technology firms: Evidence from India. International Journal of Business and Globalisation, 16 (4), 512–529.

Parida, V., Oghazi, P., & Cedergren, S. (2016). A study of how ICT capabilities can influence dynamic capabilities. Journal of Enterprise Information Management, 29 (2), 1–22.

Parkhurst, K. A., Conwell, Y., & Van Orden, K. A. (2016). The interpersonal needs questionnaire with a shortened response scale for oral administration with older adults. Aging & Mental Health, 20 (3), 277–283.

Payne, A. A., Gottfredson, D. C., & Gottfredson, G. D. (2006). School predictors of the intensity of implementation of school-based prevention programs: Results from a national study. Prevention Science, 7 (2), 225–237.

Pereira-Moliner, J., Font, X., Molina-Azorín, J., Lopez-Gamero, M. D., Tarí, J. J., & Pertusa-Ortega, E. (2015). The holy grail: Environmental management, competitive advantage and business performance in the Spanish hotel industry. International Journal of Contemporary Hospitality Management, 27 (5), 714–738.

Persada, S. F., Razif, M., Lin, S. C., & Nadlifatin, R. (2014). Toward paperless public announcement on environmental impact assessment (EIA) through SMS gateway in Indonesia. Procedia Environmental Sciences, 20 , 271–279.

Pertusa-Ortega, E. M., Molina-Azorín, J. F., & Claver-Cortés, E. (2010). Competitive strategy, structure and firm performance: A comparison of the resource-based view and the contingency approach. Management Decision, 48 (8), 1282–1303.

Peters, M. D., Wieder, B., Sutton, S. G., & Wake, J. (2016). Business intelligence systems use in performance measurement capabilities: Implications for enhanced competitive advantage. International Journal of Accounting Information Systems, 21 (1–17), 1–17.

Protogerou, A., Caloghirou, Y., & Lioukas, S. (2011). Dynamic capabilities and their indirect impact on firm performance. Industrial and Corporate Change, 21 (3), 615–647.

Rapiah, M., Wee, S. H., Ibrahim Kamal, A. R., & Rozainun, A. A. (2010). The relationship between strategic performance measurement systems and organisational competitive advantage. Asia-Pacific Management Accounting Journal, 5 (1), 1–20.

Reuner, T. (2016). HfS blueprint Report, ServiceNow services 2016, excerpt for Cognizant. Retrieved February 2, 2017 from https://www.cognizant.com/services-resources/Services/hfs-blueprint-report-servicenow-2016.pdf .

Ríos, V. R., & del Campo, E. P. (2013). Business research methods: Theory and practice . Madrid: ESIC Editorial.

Sachitra, V. (2015). Review of Competitive advantage measurements: The case of agricultural firms. IV, 303–317.

Sahney, S., Banwet, D. K., & Karunes, S. (2004). Customer requirement constructs: The premise for TQM in education: A comparative study of select engineering and management institutions in the Indian context. International Journal of Productivity and Performance Management, 53 (6), 499–520.

Sampe, F. (2012). The influence of organizational learning on performance in Indonesian SMEs.

Sarlak, M. A., Shafiei, M., Sarlak, M. A., Shafiei, M., Capability, M., Capability, I., & Competitive, S. (2013). A research in relationship between entrepreneurship, marketing Capability, innovative Capability and sustainable Competitive advantage. Kaveh Industrial City, 7 (8), 1490–1497.

Saunders, M., Lewis, P., & Thornhill, A. (2012). Research methods for business students . Pearson.

Schiff, J. H., Fornaschon, S., Schiff, M., Martin, E., & Motsch, J. (2005). Measuring patient dissatisfaction with anethesia care: A-41. European Journal of Anaesthesiology (EJA), 22 , 11.

Schwartz, S. J., Coatsworth, J. D., Pantin, H., Prado, G., Sharp, E. H., & Szapocznik, J. (2006). The role of ecodevelopmental context and self-concept in depressive and externalizing symptoms in Hispanic adolescents. International Journal of Behavioral Development, 30 (4), 359–370.

Scott, V. C., Sandberg, J. G., Harper, J. M., & Miller, R. B. (2012). The impact of depressive symptoms and health on sexual satisfaction for older couples: Implications for clinicians. Contemporary Family Therapy, 34 (3), 376–390.

Shafia, M. A., Shavvalpour, S., Hosseini, M., & Hosseini, R. (2016). Mediating effect of technological innovation capabilities between dynamic capabilities and competitiveness of research and technology organisations. Technology Analysis & Strategic Management, 28 , 1–16. https://doi.org/10.1080/09537325.2016.1158404 .

Shahzad, K., Faisal, A., Farhan, S., Sami, A., Bajwa, U., & Sultani, R. (2016). Integrating knowledge management (KM) strategies and processes to enhance organizational creativity and performance: An empirical investigation. Journal of Modelling in Management, 11 (1), 1–34.

Sharma, A. (2016). Five reasons why you should avoid investing in IT stocks. Retrieved February 19, 2017 from http://www.businesstoday.in/markets/company-stock/five-reasons-why-you-should-avoid-investing-in-infosys-tcs-wipro/story/238225.html .

Sharma, J. K., & Singh, A. K. (2012). Absorptive capability and competitive advantage: Some insights from Indian pharmaceutical Industry. International Journal of Management and Business Research, 2 (3), 175–192.

Shepherd, R. M., & Edelmann, R. J. (2005). Reasons for internet use and social anxiety. Personality and Individual Differences, 39 (5), 949–958.

Singh, R., & Khanduja, D. (2010). Customer requirements grouping–a prerequisite for successful implementation of TQM in technical education. International Journal of Management in Education, 4 (2), 201–215.

Small, M. J., Gupta, J., Frederic, R., Joseph, G., Theodore, M., & Kershaw, T. (2008). Intimate partner and nonpartner violence against pregnant women in rural Haiti. International Journal of Gynecology & Obstetrics, 102 (3), 226–231.

Srivastava, M. (2016). IT biggies expect weaker Sept quarter. Retrieved February 19, 2017 from http://www.business-standard.com/article/companies/it-biggies-expect-weaker-sept-quarter-116100400680_1.html .

Stoten, D. W. (2016). Discourse, knowledge and power: The continuing debate over the DBA. Journal of Management Development, 35 (4), 430–447.

Sudarvel, J., & Velmurugan, R. (2015). Semi month effect in Indian IT sector with reference to BSE IT index. International Journal of Advance Research in Computer Science and Management Studies, 3 (10), 155–159.

Sylvia, M., & Terhaar, M. (2014). An approach to clinical data Management for the Doctor of nursing practice curriculum. Journal of Professional Nursing, 30 (1), 56–62.

Tabachnick, B. G., & Fidell, L. S. (2007). Multivariate analysis of variance and covariance. Using Multivariate Statistics, 3 , 402–407.

Teece, D. J. (2014). The foundations of Enterprise performance: Dynamic and ordinary capabilities in an (economic) theory of firms. The Academy of Management Perspectives, 28 (4), 328–352.

Teece, D. J., Pisano, G., & Shuen, A. (1997). Dynamic capabilities and strategic management. Strategic Management Journal, 18 (7), 509–533.

Thomas, J. B., Sussman, S. W., & Henderson, J. C. (2001). Understanding “strategic learning”: Linking organizational learning, knowledge management, and sensemaking. Organization Science, 12 (3), 331–345.

Travis, S. E., & Grace, J. B. (2010). Predicting performance for ecological restoration: A case study using Spartina alterniflora. Ecological Applications, 20 (1), 192–204.

Tseng, S., & Lee, P. (2014). The effect of knowledge management capability and dynamic capability on organizational performance. Journal of Enterprise Information Management, 27 (2), 158–179.

Turker, D. (2009). Measuring corporate social responsibility: A scale development study. Journal of Business Ethics, 85 (4), 411–427.

Vanham, D., Mak, T. N., & Gawlik, B. M. (2016). Urban food consumption and associated water resources: The example of Dutch cities. Science of the Total Environment, 565 , 232–239.

Visser, P. S., Krosnick, J. A., & Lavrakas, P. J. (2000). Survey research. In H.T. Reis & C.M. Judd (Eds.), Handbook of research methods in social and personality psychology (pp. 223-252). New York: Cambridge.

Vitale, G., Sala, F., Consonni, F., Teruzzi, M., Greco, M., Bertoli, E., & Maisano, P. (2005). Perioperative complications correlate with acid-base balance in elderly trauma patients: A-37. European Journal of Anaesthesiology (EJA), 22 , 10–11.

Wang, C. L., & Ahmed, P. K. (2004). Leveraging knowledge in the innovation and learning process at GKN. International Journal of Technology Management, 27 (6/7), 674–688.

Wang, C. L., Senaratne, C., & Rafiq, M. (2015). Success traps, dynamic capabilities and firm performance. British Journal of Management, 26 , 26–44.

Wasswa Katono, I. (2011). Student evaluation of e-service quality criteria in Uganda: The case of automatic teller machines. International Journal of Emerging Markets, 6 (3), 200–216.

Wasylkiw, L., Currie, M. A., Meuse, R., & Pardoe, R. (2010). Perceptions of male ideals: The power of presentation. International Journal of Men's Health, 9 (2), 144–153.

Wilhelm, H., Schlömer, M., & Maurer, I. (2015). How dynamic capabilities affect the effectiveness and efficiency of operating routines under high and Low levels of environmental dynamism. British Journal of Management , 1–19.

Wilkens, U., Menzel, D., & Pawlowsky, P. (2004). Inside the black-box : Analysing the generation of Core competencies and dynamic capabilities by exploring collective minds. An organizational learning perspective. Management Review, 15 (1), 8–27.

Willemsen, M. C., & de Vries, H. (1996). Saying “no” to environmental tobacco smoke: Determinants of assertiveness among nonsmoking employees. Preventive Medicine, 25 (5), 575–582.

Williams, M., Peterson, G. M., Tenni, P. C., & Bindoff, I. K. (2012). A clinical knowledge measurement tool to assess the ability of community pharmacists to detect drug-related problems. International Journal of Pharmacy Practice, 20 (4), 238–248.

Wintermark, M., Huss, D. S., Shah, B. B., Tustison, N., Druzgal, T. J., Kassell, N., & Elias, W. J. (2014). Thalamic connectivity in patients with essential tremor treated with MR imaging–guided focused ultrasound: In vivo Fiber tracking by using diffusion-tensor MR imaging. Radiology, 272 (1), 202–209.

Wipro Annual Report. (2015). Wipro annual report 2014–15. Retrieved February 16, 2017 from http://www.wipro.com/documents/investors/pdf-files/Wipro-annual-report-2014-15.pdf .

Wu, J., & Chen, X. (2012). Leaders’ social ties, knowledge acquisition capability and firm competitive advantage. Asia Pacific Journal of Management, 29 (2), 331–350.

Yamane, T. (1967). Elementary Sampling Theory Prentice Inc. Englewood Cliffs. NS, USA, 1, 371–390.

Zahra, S., Sapienza, H. J., & Davidsson, P. (2006). Entrepreneurship and dynamic capabilities: A review, model and research agenda. Journal of Management Studies, 43 (4), 917–955.

Zaied, A. N. H. (2012). An integrated knowledge management capabilities framework for assessing organizational performance. International Journal of Information Technology and Computer Science, 4 (2), 1–10.

Zakaria, Z. A., Anuar, H. S., & Udin, Z. M. (2015). The relationship between external and internal factors of information systems success towards employee performance: A case of Royal Malaysia custom department. International Journal of Economics, Finance and Management, 4 (2), 54–60.

Zheng, S., Zhang, W., & Du, J. (2011). Knowledge-based dynamic capabilities and innovation in networked environments. Journal of Knowledge Management, 15 (6), 1035–1051.

Zikmund, W. G., Babin, B. J., Carr, J. C., & Griffin, M. (2010). Business research methods . Mason: South Western Cengage Learning.

Download references

Author information

Authors and affiliations.

The University of Texas at Dallas, Richardson, TX, USA

Vaneet Kaur

You can also search for this author in PubMed Google Scholar

Rights and permissions

Reprints and permissions

Copyright information

© 2019 Springer Nature Switzerland AG

About this chapter

Kaur, V. (2019). Research Methodology. In: Knowledge-Based Dynamic Capabilities. Innovation, Technology, and Knowledge Management. Springer, Cham. https://doi.org/10.1007/978-3-030-21649-8_3

Download citation

DOI : https://doi.org/10.1007/978-3-030-21649-8_3

Published : 29 June 2019

Publisher Name : Springer, Cham

Print ISBN : 978-3-030-21648-1

Online ISBN : 978-3-030-21649-8

eBook Packages : Business and Management Business and Management (R0)

Share this chapter

Anyone you share the following link with will be able to read this content:

Sorry, a shareable link is not currently available for this article.

Provided by the Springer Nature SharedIt content-sharing initiative

- Publish with us

Policies and ethics

- Find a journal

- Track your research

Want to create or adapt books like this? Learn more about how Pressbooks supports open publishing practices.

2 Types of Quantitative Research Designs

There are three main groups of Research Designs that will be explored in this chapter.

- Experimental

- Quasi-experimental

- Non-experimental

When reviewing each design, the purpose and key features of the design, advantages and disadvantages, and the most commonly used designs within the category will be reviewed.

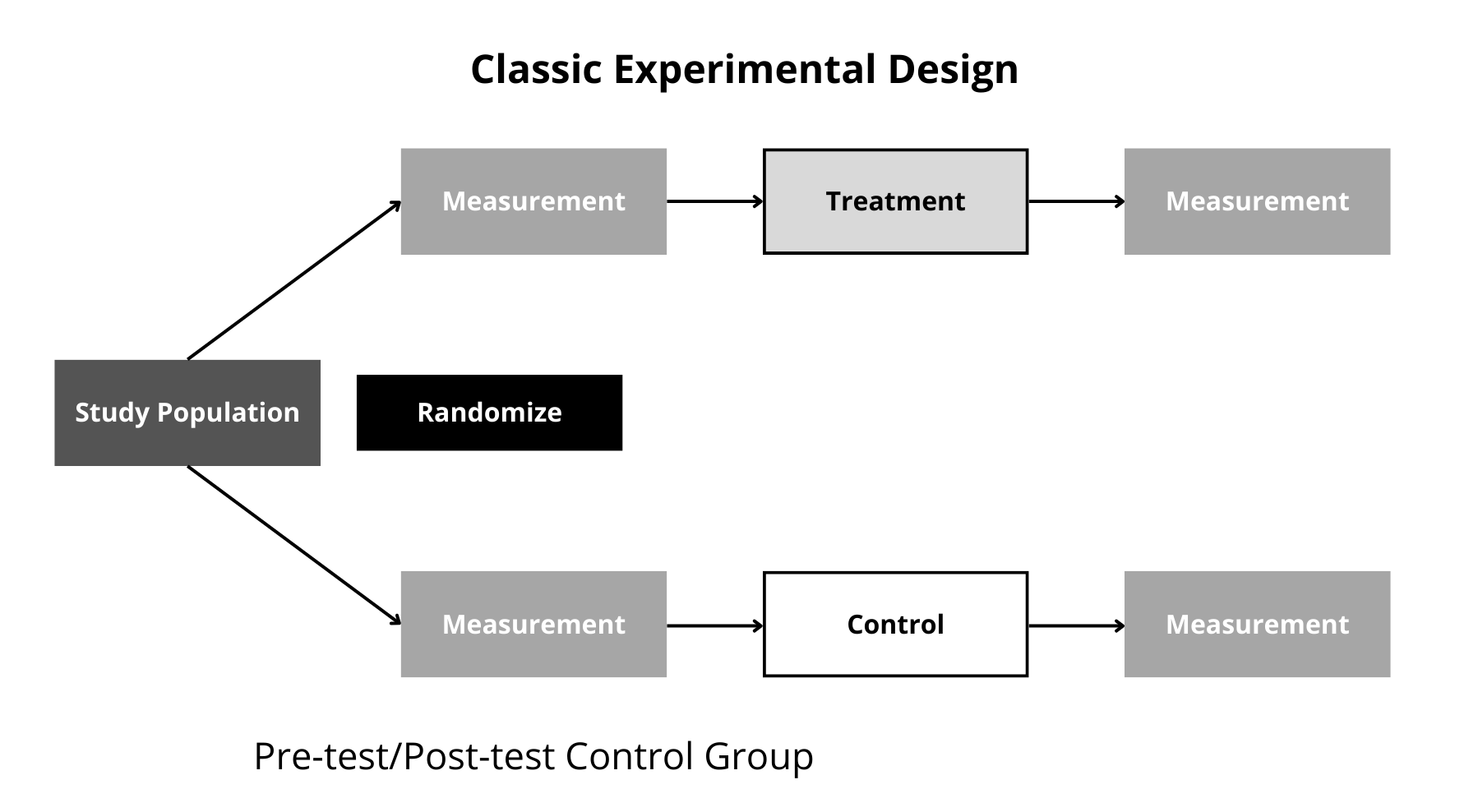

1. Experimental Design

Purpose: Evaluate outcomes in terms of efficacy and/or cost effectiveness

Experimental design features include:

- Randomization of subjects to groups

- Manipulation of independent variable (e.g., an intervention or treatment)

- Control – the use of a control group and control measures (for controlling extraneous variables )

Advantages:

- Most appropriate for testing cause-and-effect relationships (e.g., generalizability is most likely)

- Provides the highest level of evidence (e.g., level II) for single studies

Disadvantages:

- Attrition especially control group participants or with ‘before-after’ experimental designs

- Feasibility and logistics may be an issue is certain settings (e.g., long-term care homes)

Caution: Not all research questions are amenable to experimental manipulation or randomization

Most Commonly Used Experimental Designs

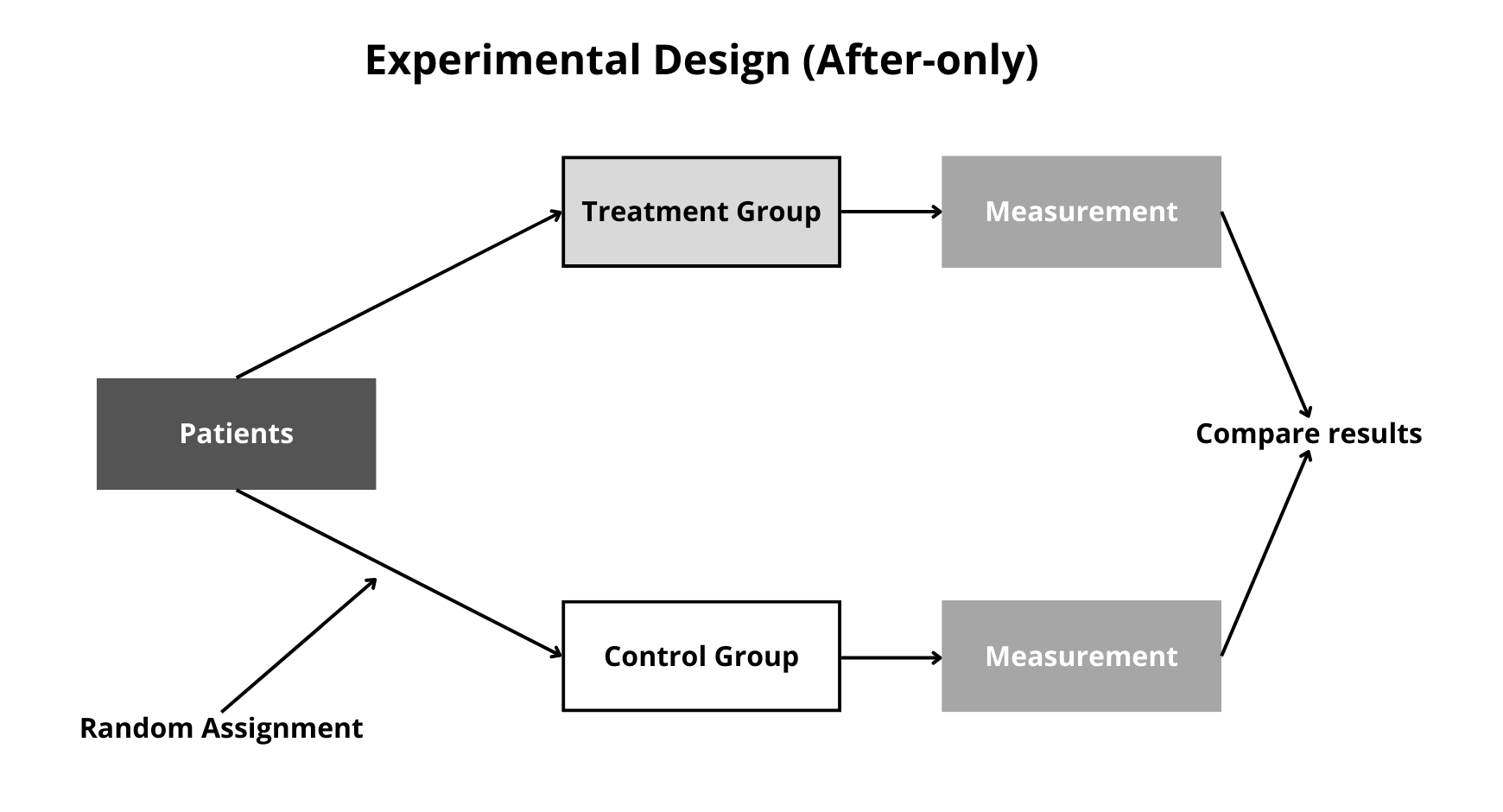

- True experimental (pre- post-test ) design (also referred to as Randomized Control Trials or RCTs ):

Figure 3. True experimental design (pre-post-test).

- After-only (post-test only) design :

Figure 4. After-only (post-test only) design.

- Solomon four-group design

This design is similar to the true experimental design but has an additional two groups, for a total of four groups. Two groups are experimental, while two groups are control. These “extra” groups do not receive the pre-test, allowing the researchers to evaluate the effect of the pretest on the post-test in the first two groups.

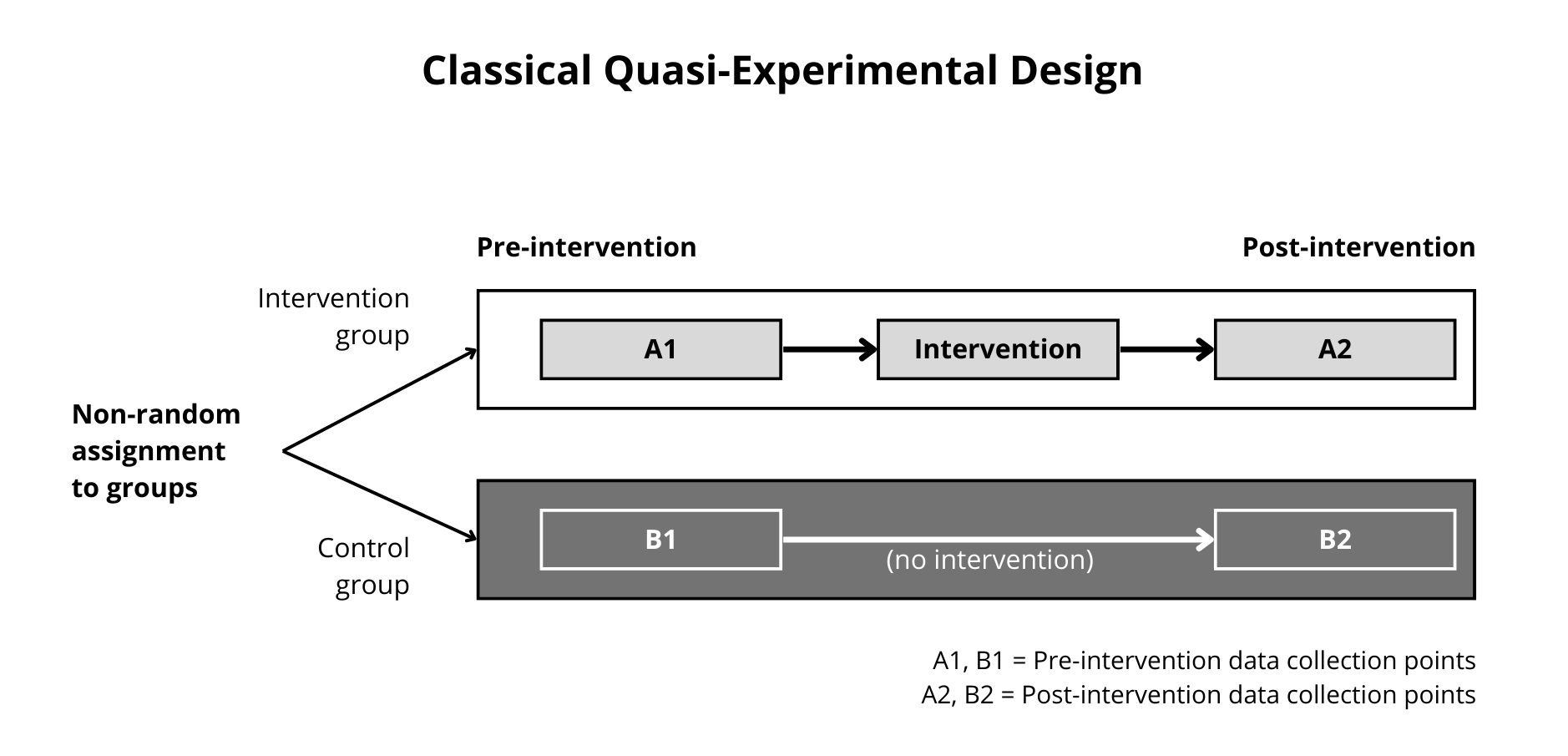

2. Quasi-Experimental Design

Purpose: Similar to experimental design, but used when not all the features of an experimental design can be met:

- Manipulation of the independent variable (e.g., an intervention or treatment)

- Experimental and control groups may not be randomly assigned (no randomization)

- There may or may not be a control group

Advantages:

- Feasibility and logistics are enhanced, particularly in clinical settings

- Offers some degree of generalizability (e.g., applicable to population of interest)

- May be more adaptable in real-world practice environments

Disadvantages:

- Generally weaker than experimental designs because groups may not be equal with respect to extraneous variable due to the lack of randomization

- As a result, cause-and-effect relationships are difficult to claim

Options for Quasi-experimental Designs include :

- Non-equivalent control group design

Figure 5. Classical Quasi-Experimental Design. Adapted from Knowledge for Health

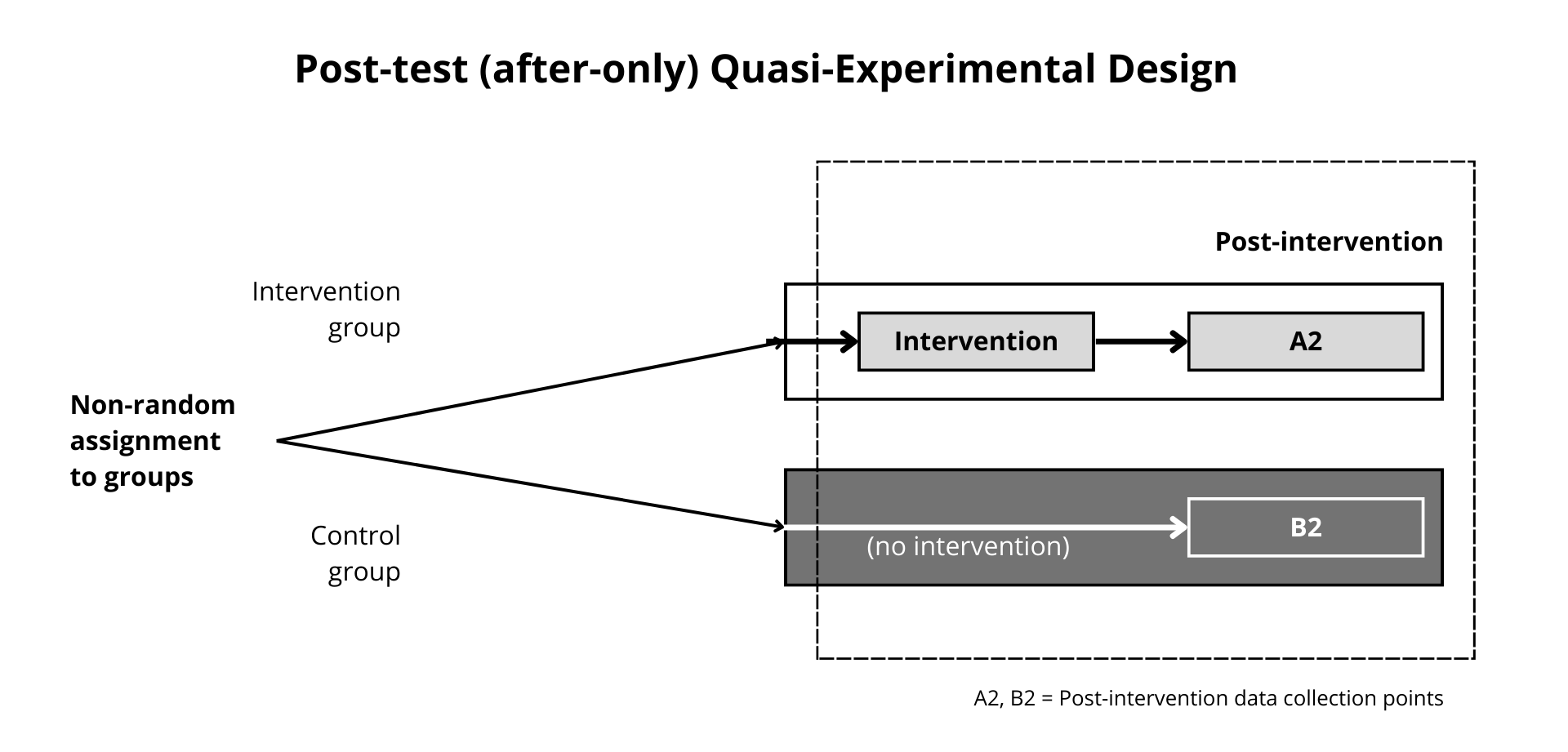

- After-only control group design

Figure 6. Post-Test Only Quasi-Experimental Design. Adapted from Knowledge for Health.

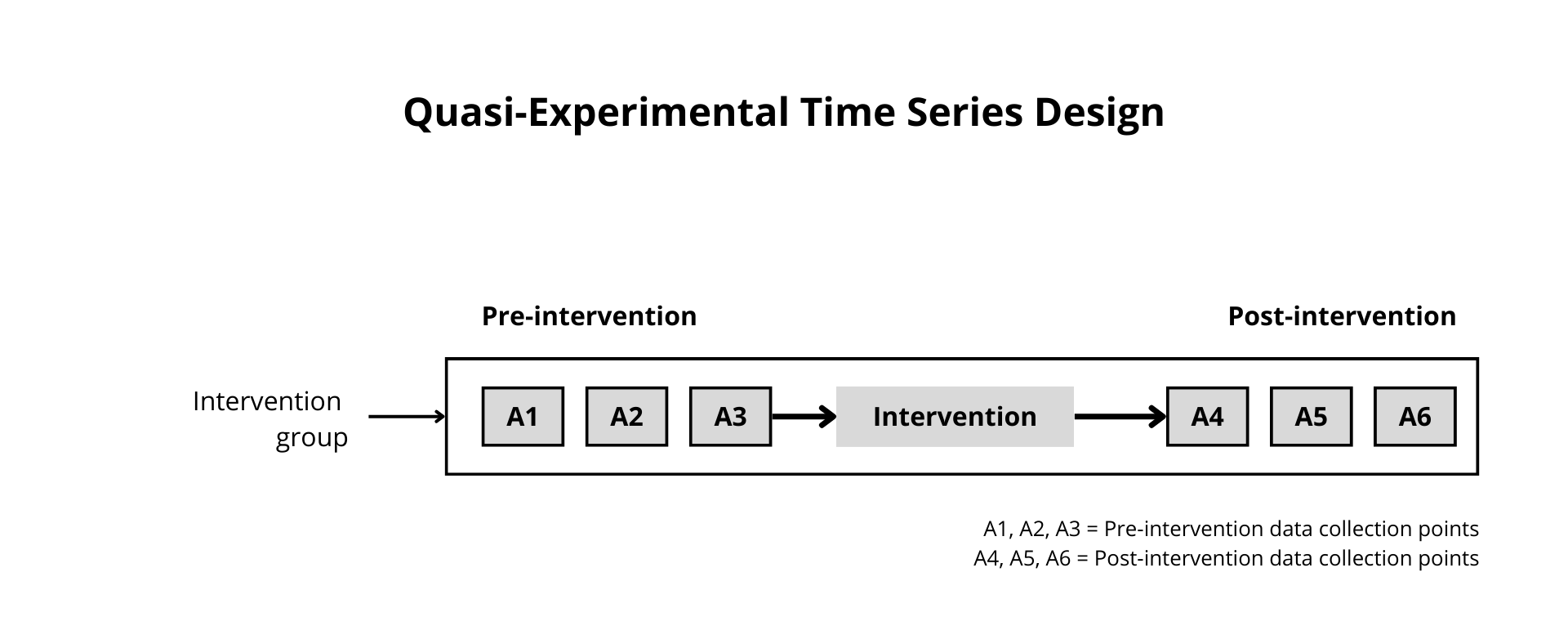

- Time-series design Important note: The time series design is considered quasi-experimental because subjects serve as their ‘own controls’ (same group of people, compared before and after the intervention for changes over time).

Figure 7. Time-series design. Adapted from Knowledge for Health

- One group pre-test-post-design design In this design there is no control group. The one group, considered the experimental group, is tested pre and post the intervention. The design is still considered quasi-experimental as there is manipulation of the intervention.

3. Non-experimental

Purpose: When the problem to be solved or examined is not amenable to experimentation; used when the researcher wants to:

- Study a phenomenon at one point in time or over a period of time

- Study (and measure) variables as they naturally occur

- Test relationships and differences among variables

- Used when the knowledge base on a phenomenon of interest is limited or when the research question is broad or exploratory in nature

- Appropriate for forecasting or making predictions

- Useful when the features of an experiment (e.g., randomization, control, and manipulation) are not appropriate or possible (e.g., ethical issues)

- Inability to claim cause-and-effect relationships

Options for Non-experimental Designs include:

- Survey studies: descriptive, exploratory, comparative

- Relationship or difference studies: Correlational, developmental

- Cross-sectional studies

- Longitudinal or Prospective studies

Figure 8. Longitudinal or Prospective studies. Adapted from University of Minnesota, Driven for Discover Libraries .

- Retrospective ( Ex Post Facto ) studies

Additional terms to consider when reading research

Learners may find it difficult when reading research to identify the Research Design used. Please consult the table below for more information on terms frequently used in research.

This refers to how the sample is selected. When randomization is used each participant from the desired population has an equal chance of being assigned to the experimental or control group.

These are variable that may interfere with the independent and dependent variables. Also called mediating variables.

The loss of participants from the study.

An Introduction to Quantitative Research Design for Students in Health Sciences Copyright © 2024 by Amy Hallaran and Julie Gaudet is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License , except where otherwise noted.

Share This Book

- Technical Support

- Find My Rep

You are here

Research Design Qualitative, Quantitative, and Mixed Methods Approaches

- John W. Creswell - Department of Family Medicine, University of Michigan

- J. David Creswell - Carnegie Mellon University, USA

- Description

See what’s new to this edition by selecting the Features tab on this page. Should you need additional information or have questions regarding the HEOA information provided for this title, including what is new to this edition, please email [email protected] . Please include your name, contact information, and the name of the title for which you would like more information. For information on the HEOA, please go to http://ed.gov/policy/highered/leg/hea08/index.html .

For assistance with your order: Please email us at [email protected] or connect with your SAGE representative.

SAGE 2455 Teller Road Thousand Oaks, CA 91320 www.sagepub.com

Supplements

“A long time ago, I participated in one of Dr. Creswell’s workshops on mixed methods research.... I am still learning from Dr. Creswell. I appreciate how he takes complex topics and makes them accessible to everyone. But I must caution my students that Dr. Creswell’s easygoing cadence and elegant descriptions sometimes mask the depth of the material. This reminds me of why he is such a highly respected researcher and teacher.”

“I always have enjoyed using Creswell's books (as a student and as an instructor) because the writing is straightforward.”

“This book is based around dissertation chapters, and that's why I love it using in my class. Practical, concise, and to the point!”

“This book is easy to use. The information and additional charts are also helpful.”

Clear material, student support website, and faculty resources.

The book provides a comprehensive overview and does well at demystifying the research philosophy. I have recommended it to my level 7 students for their dissertation project.

This book will be added to next academic year's reading list.

I am fed up with trying to get access to this "inspection copy". You don't respond to emails (and the email addresses you provide do not work). I get regular emails from you saying my ebook order is ready, but it does not appear in VitalSource and I cannot access it through any link on this web page. I am not willing to waste any more time on this. There are good alternatives.

Excellent introduction for research methods.

Creswell has always had excellent textbooks. Sixth Edition is no exception!

- Fully updated for the 7th edition of the Publication Manual of the American Psychological Association.

- More inclusive and supportive language throughout helps readers better see themselves in the research process.

- Learning Objectives provide additional structure and clarity to the reading process.

- The latest information on participatory research, evaluating literature for quality, using software to design literature maps, and additional statistical software types is newly included in this edition.

- Chapter 4: Writing Strategies and Ethical Considerations now includes information on indigenous populations and data collection after IRB review.

- An updated Chapter 8: Quantitative Methods now includes more foundational details, such as Type 1 and Type 2 errors and discussions of advantages and disadvantages of quantitative designs.

- A restructured and revised Chapter 10: Mixed Methods Procedures brings state-of-the-art thinking to this increasingly popular approach.

- Chapters 8, 9, and 10 now have parallel structures so readers can better compare and contrast each approach.

- Reworked end-of-chapter exercises offer a more straightforward path to application for students.

- New research examples throughout the text offer students contemporary studies for evaluation.

- Current references and additional readings are included in this new edition.

- Compares qualitative, quantitative, and mixed methods research in one book for unparalleled coverage.

- Highly interdisciplinary examples make this book widely appealing to a broad range of courses and disciplines.