What is Ad Hoc Analysis and Reporting? Process, Examples

Appinio Research · 26.03.2024 · 33min read

Have you ever needed to find quick answers to pressing questions or solve unexpected problems in your business? Enter ad hoc analysis, a powerful approach that allows you to dive into your data on demand, uncover insights, and make informed decisions in real time. In today's fast-paced world, where change is constant and uncertainties abound, having the ability to explore data flexibly and adaptively is invaluable. Whether you're trying to understand customer behavior, optimize operations, or mitigate risks, ad hoc analysis empowers you to extract actionable insights from your data swiftly and effectively. It's like having a flashlight in the dark, illuminating hidden patterns and revealing opportunities that may have otherwise gone unnoticed.

What is Ad Hoc Analysis?

Ad hoc analysis is a dynamic process that involves exploring data to answer specific questions or address immediate needs. Unlike routine reporting, which follows predefined formats and schedules, ad hoc analysis is driven by the need for timely insights and actionable intelligence. Its purpose is to uncover hidden patterns, trends, and relationships within data that may not be readily apparent, enabling organizations to make informed decisions and respond quickly to changing circumstances.

The term "ad hoc" comes from Latin and means "for this purpose," which captures the improvised, question-driven nature of this type of analysis. It allows analysts to dig deeper into datasets, ask questions as they arise, and derive meaningful insights that may not have been anticipated beforehand.

Purpose of Ad Hoc Analysis

The primary purpose of ad hoc analysis is to support decision-making by providing timely and relevant insights into complex datasets. It allows organizations to:

- Identify emerging trends or patterns that may impact business operations.

- Investigate anomalies or outliers to understand their underlying causes.

- Explore relationships between variables to uncover opportunities or risks.

- Generate hypotheses and test assumptions in real time.

- Inform strategic planning, resource allocation, and risk management efforts.

By enabling analysts to explore data in an iterative and exploratory manner, ad hoc analysis empowers organizations to adapt to changing environments, seize opportunities, and mitigate risks effectively.

Importance of Ad Hoc Analysis in Decision Making

Ad hoc analysis plays a crucial role in decision-making across various industries and functions. Here are some key reasons why ad hoc analysis is important:

- Flexibility: Ad hoc analysis offers flexibility and agility, allowing organizations to respond quickly to evolving business needs and market dynamics. It enables decision-makers to explore new ideas, test hypotheses, and adapt strategies in real time.

- Customization: Unlike standardized reports or dashboards, ad hoc analysis allows for customization and personalization. Analysts can tailor their analyses to specific questions or problems, ensuring that insights are directly relevant to decision-makers' needs.

- Insight Generation: Ad hoc analysis uncovers insights that may not be captured by routine reporting or predefined metrics. By delving into data with a curious and open-minded approach, analysts can uncover hidden patterns, trends, and correlations that drive innovation and competitive advantage.

- Risk Management: In today's fast-paced and uncertain business environment, proactive risk management is essential. Ad hoc analysis enables organizations to identify and mitigate risks by analyzing historical data, monitoring key indicators, and anticipating potential threats.

- Opportunity Identification: Ad hoc analysis helps organizations identify new opportunities for growth, innovation, and optimization. By exploring data from different angles and perspectives, analysts can uncover untapped markets, customer segments, or product offerings that drive revenue and profitability.

- Continuous Improvement: Ad hoc analysis fosters a culture of continuous improvement and learning within organizations. By encouraging experimentation and exploration, organizations can drive innovation, refine processes, and stay ahead of the competition.

Ad hoc analysis is not just a tool for data analysis—it's a mindset and approach that empowers organizations to harness the full potential of their data, make better decisions, and achieve their strategic objectives.

Understanding Ad Hoc Analysis

Ad hoc analysis is a dynamic process that involves digging into your data to answer specific questions or solve immediate problems. Let's delve deeper into what it entails.

Ad Hoc Analysis Characteristics

At its core, ad hoc analysis refers to the flexible and on-demand examination of data to gain insights or address specific queries. Unlike routine reporting, which follows predetermined schedules, ad hoc analysis is triggered by the need to explore a particular issue or opportunity.

Its characteristics include:

- Flexibility: Ad hoc analysis adapts to the ever-changing needs of businesses, allowing analysts to explore data as new questions arise.

- Timeliness: It offers timely insights, enabling organizations to make informed decisions quickly in response to emerging issues or opportunities.

- Unstructured Nature: Ad hoc analysis often deals with unstructured or semi-structured data, requiring creativity and resourcefulness in data exploration.

Ad Hoc Analysis vs. Regular Reporting

While regular reporting provides standardized insights on predetermined metrics, ad hoc analysis offers a more customized and exploratory approach. Here's how they differ:

- Purpose: Regular reporting aims to track key performance indicators (KPIs) over time, while ad hoc analysis seeks to uncover new insights or address specific questions.

- Frequency: Regular reporting occurs at regular intervals (e.g., daily, weekly, monthly), whereas ad hoc analysis occurs on an as-needed basis.

- Scope: Regular reporting focuses on predefined metrics and reports, whereas ad hoc analysis explores a wide range of data sources and questions.

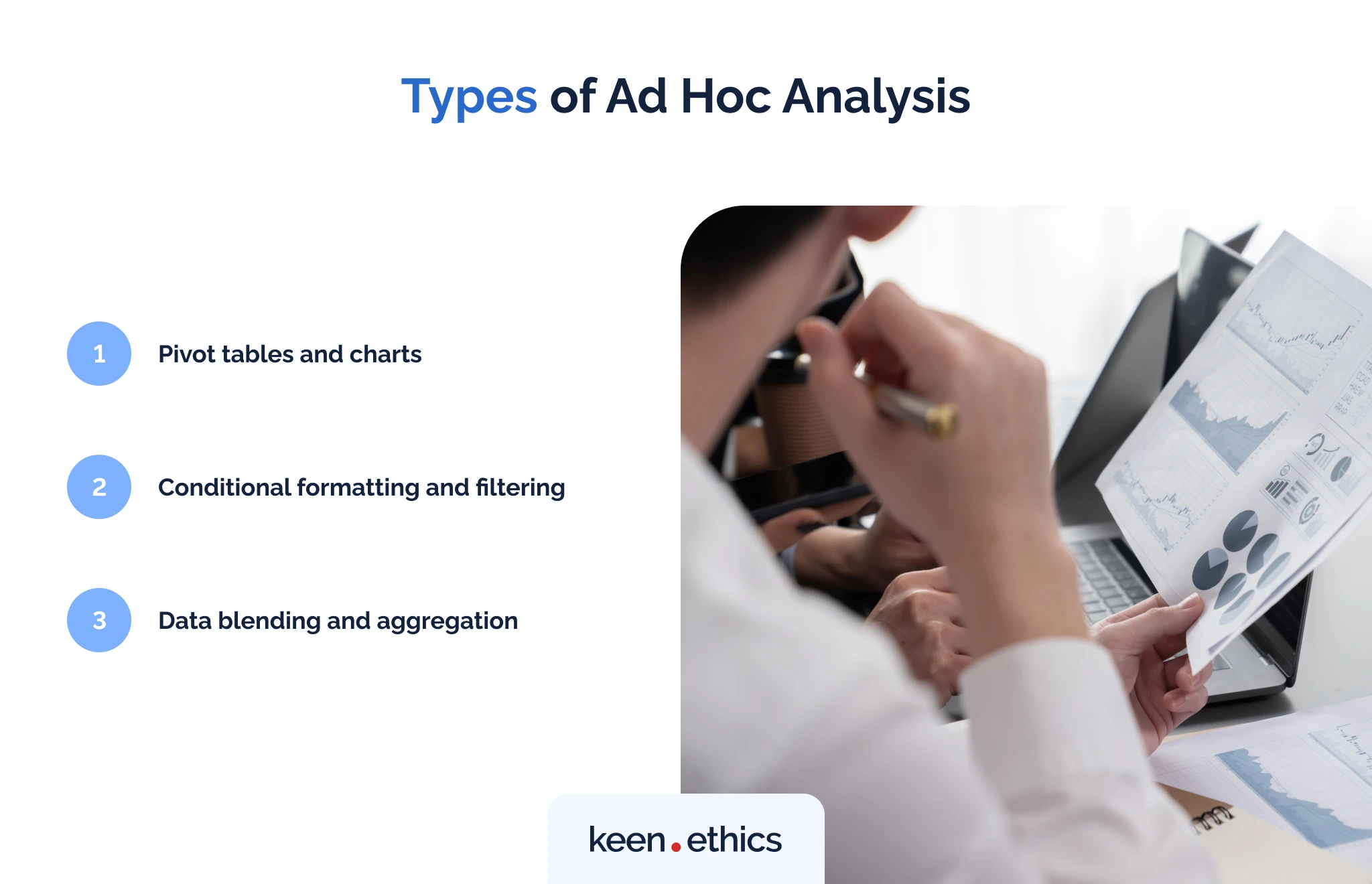

Types of Ad Hoc Analysis

Ad hoc analysis encompasses various types, each serving distinct purposes in data exploration and decision-making. These types include:

- Exploratory Analysis: This type involves exploring data to identify patterns, trends, or relationships without predefined hypotheses. It's often used in the initial stages of data exploration.

- Diagnostic Analysis: Diagnostic analysis aims to uncover the root causes of observed phenomena or issues. It delves deeper into data to understand why specific outcomes occur.

- Predictive Analysis: Predictive analysis leverages historical data to forecast future trends, behaviors, or events. It employs statistical modeling and machine learning algorithms to make predictions based on past patterns.

Common Data Sources

Ad hoc analysis can draw upon a wide array of data sources, depending on the nature of the questions being addressed and the data availability. Common data sources include:

- Structured Data : This includes data stored in relational databases, spreadsheets, and data warehouses, typically organized in rows and columns.

- Unstructured Data : Unstructured data sources, such as text documents, social media feeds, and multimedia content, require specialized techniques for analysis.

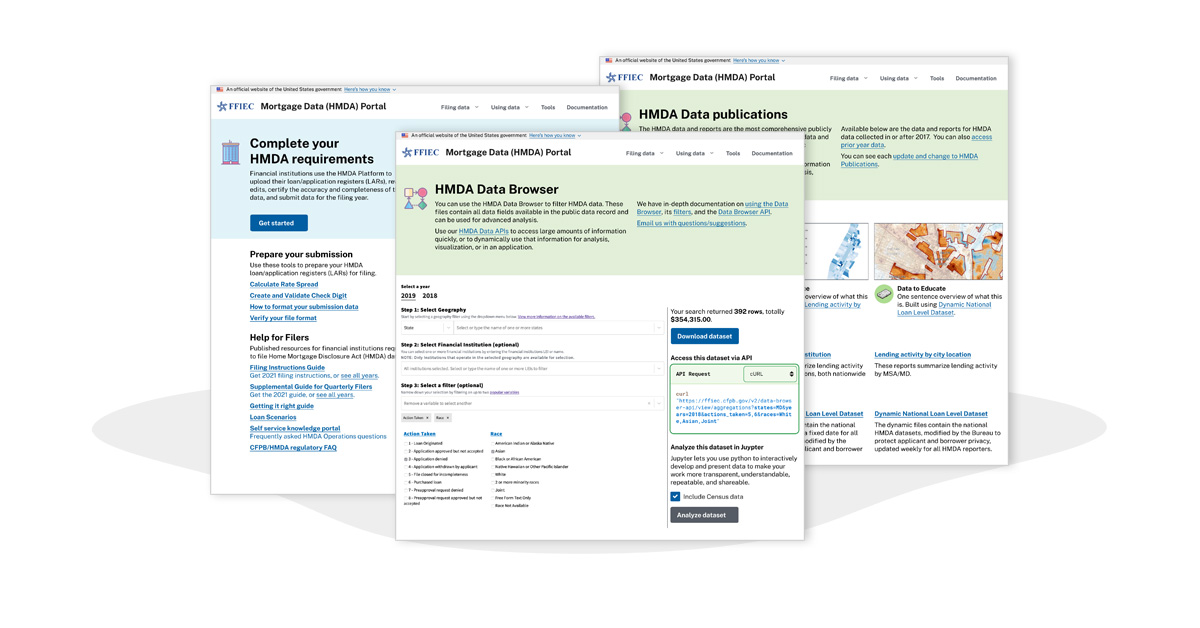

- External Data : Organizations may also tap into external data sources, such as market research reports, government databases, or third-party APIs, to enrich their analyses.

Organizations can gain comprehensive insights and make more informed decisions by leveraging diverse data sources. Understanding these foundational aspects of ad hoc analysis is crucial for conducting effective data exploration and driving actionable insights.

How to Prepare for Ad Hoc Analysis?

Before diving into ad hoc analysis, it's crucial to lay a solid foundation by preparing adequately. This involves defining your objectives, gathering and organizing data, selecting the right tools, and ensuring data quality. Let's explore these steps in detail.

Defining Objectives and Questions

The first step in preparing for ad hoc analysis is to clearly define your objectives and formulate the questions you seek to answer.

- Identify Key Objectives: Determine the overarching goals of your analysis. What are you trying to achieve? Are you looking to optimize processes, identify growth opportunities, or solve a specific problem?

- Formulate Relevant Questions: Break down your objectives into specific, actionable questions. What information do you need to answer these questions? What insights are you hoping to uncover?

By defining clear objectives and questions, you can focus your analysis efforts and ensure that you gather the necessary data to address your specific needs.

Data Collection and Organization

Once you have defined your objectives and questions, the next step is to gather relevant data and organize it in a format conducive to analysis.

- Identify Data Sources: Determine where your data resides. This may include internal databases, third-party sources, or even manual sources such as surveys or interviews.

- Extract and Collect Data: Extract data from the identified sources and collect it in a central location. This may involve using data extraction tools, APIs, or manual data entry.

- Clean and Preprocess Data: Before conducting analysis, it's essential to clean and preprocess the data to ensure its quality and consistency. This may involve removing duplicates, handling missing values, and standardizing formats.
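
The cleaning step above can be sketched in a few lines of Python. This is a minimal illustration using only the standard library, not a prescribed workflow: the rows, the field names (`customer_id`, `signup_date`, `country`), and the two accepted date formats are all invented for the example.

```python
from datetime import datetime

# Hypothetical raw rows; field names and values are invented for illustration.
RAW_ROWS = [
    {"customer_id": "C001", "signup_date": "2024-03-01", "country": " de "},
    {"customer_id": "C001", "signup_date": "2024-03-01", "country": " de "},  # exact duplicate
    {"customer_id": "C002", "signup_date": "05.03.2024", "country": "US"},
    {"customer_id": "C003", "signup_date": "", "country": None},
]

# Date formats we assume may appear in the raw data.
DATE_FORMATS = ("%Y-%m-%d", "%d.%m.%Y")


def parse_date(text):
    """Return an ISO date string, or None if the value is missing or unparseable."""
    if not text:
        return None
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue
    return None


def clean_rows(rows):
    """Drop exact duplicates, standardize formats, and mark missing values as None."""
    seen = set()
    cleaned = []
    for row in rows:
        # A hashable fingerprint of the raw row, used to detect exact duplicates.
        key = tuple(sorted(row.items(), key=lambda kv: kv[0]))
        if key in seen:
            continue
        seen.add(key)
        country = (row.get("country") or "").strip().upper() or None
        cleaned.append({
            "customer_id": row["customer_id"],
            "signup_date": parse_date(row.get("signup_date")),
            "country": country,
        })
    return cleaned


cleaned = clean_rows(RAW_ROWS)
for row in cleaned:
    print(row)
```

Missing values are kept as explicit `None` rather than dropped, so the decision about how to handle them (exclude, impute, or flag) stays visible to whoever runs the analysis.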

Organizing your data in a systematic manner will streamline the analysis process and ensure that you can easily access and manipulate the data as needed. For a streamlined data collection process that complements your ad hoc analysis needs, consider leveraging Appinio.

With its intuitive platform and robust capabilities, Appinio simplifies data collection from diverse sources, allowing you to gather real-time consumer insights effortlessly. By incorporating Appinio into your data collection strategy, you can expedite the process and focus on deriving actionable insights to drive your business forward.

Ready to experience the power of rapid data collection? Book a demo today and see how Appinio can revolutionize your ad hoc analysis workflow.


Tools and Software

Choosing the right tools and software is critical for conducting ad hoc analysis efficiently and effectively.

- Analytical Capabilities: Choose tools that offer a wide range of analytical capabilities, including data visualization, statistical analysis, and predictive modeling.

- Ease of Use: Look for user-friendly and intuitive tools, especially if you're not a seasoned data analyst. This will reduce the learning curve and enable you to get up and running quickly.

- Compatibility: Ensure the tools you choose are compatible with your existing systems and data sources. This will facilitate seamless integration and data exchange.

- Scalability: Consider the tools' scalability, especially if your analysis needs are likely to grow over time. Choose tools that can accommodate larger datasets and more complex analyses.

Popular tools for ad hoc analysis include Microsoft Excel, Python (with libraries such as pandas and NumPy), R, and business intelligence platforms such as Tableau and Power BI.

Data Quality Assurance

Ensuring the quality of your data is paramount for obtaining reliable insights and making informed decisions. To assess and maintain data quality:

- Data Validation: Perform data validation checks to ensure the data is accurate, complete, and consistent. This may involve verifying data against predefined rules or business logic.

- Data Cleansing: Cleanse the data by removing duplicates, correcting errors, and standardizing formats. This will help eliminate discrepancies and ensure uniformity across the dataset.

- Data Governance: Implement data governance policies and procedures to maintain data integrity and security. This may include access controls, data encryption, and regular audits.

- Continuous Monitoring: Continuously monitor data quality metrics and address any issues that arise promptly. This will help prevent data degradation over time and ensure your analyses are based on reliable information.

By prioritizing data quality assurance, you can enhance the accuracy and reliability of your ad hoc analyses, leading to more confident decision-making and better outcomes.
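
To make "verifying data against predefined rules" concrete, here is a small Python sketch of rule-based validation. The order records and the three rules (unique non-empty `order_id`, positive integer `quantity`, non-negative `unit_price`) are hypothetical and stand in for whatever business logic applies to your own data.

```python
def validate_orders(rows):
    """Return a list of (row_index, message) for every rule violation found."""
    problems = []
    seen_ids = set()
    for i, row in enumerate(rows):
        # Rule 1: order_id must be present and unique.
        if not row.get("order_id"):
            problems.append((i, "missing order_id"))
        elif row["order_id"] in seen_ids:
            problems.append((i, "duplicate order_id"))
        else:
            seen_ids.add(row["order_id"])
        # Rule 2: quantity must be a positive integer.
        qty = row.get("quantity")
        if not isinstance(qty, int) or qty <= 0:
            problems.append((i, "quantity must be a positive integer"))
        # Rule 3: unit_price must be a non-negative number.
        price = row.get("unit_price")
        if not isinstance(price, (int, float)) or price < 0:
            problems.append((i, "unit_price must be a non-negative number"))
    return problems


# Hypothetical records, invented for illustration.
orders = [
    {"order_id": "A1", "quantity": 2, "unit_price": 9.99},
    {"order_id": "A1", "quantity": 1, "unit_price": 4.50},   # duplicate id
    {"order_id": "A2", "quantity": 0, "unit_price": 3.00},   # invalid quantity
    {"order_id": "", "quantity": 1, "unit_price": -1.0},     # missing id, negative price
]

problems = validate_orders(orders)
for index, message in problems:
    print(f"row {index}: {message}")
```

Collecting every violation instead of stopping at the first one gives a fuller picture of data quality before the analysis begins.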

How to Perform Ad Hoc Analysis?

Now that you've prepared your data and defined your objectives, it's time to conduct ad hoc analysis. This involves selecting appropriate analytical techniques, exploring your data, applying advanced statistical methods, visualizing your findings, and validating hypotheses.

Choosing Analytical Techniques

Selecting the proper analytical techniques is crucial for extracting meaningful insights from your data.

- Nature of the Data: Assess the nature of your data, including its structure, size, and complexity. Different techniques may be more suitable for structured versus unstructured data or small versus large datasets.

- Objectives of Analysis: Align the choice of techniques with your analysis objectives. Are you trying to identify patterns, relationships, anomalies, or trends? Choose techniques that are well-suited to address your specific questions.

- Expertise and Resources: Consider your team's knowledge and the availability of resources, such as computational power and software tools. Choose techniques that your team is comfortable with and that can be executed efficiently.

Standard analytical techniques include descriptive statistics, inferential statistics, machine learning algorithms, and data mining techniques.

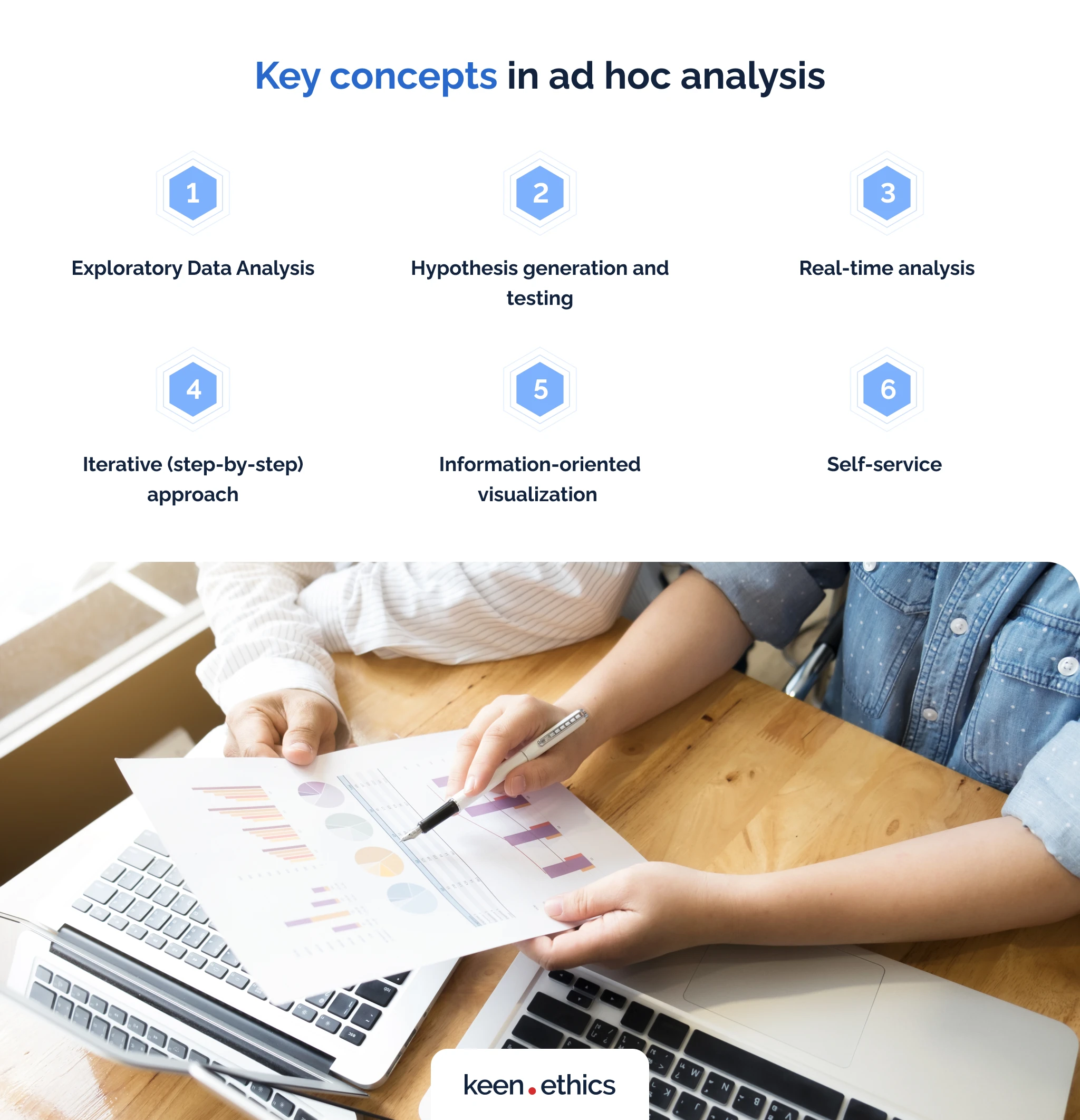

Exploratory Data Analysis (EDA)

Exploratory Data Analysis (EDA) is a critical step in ad hoc analysis that involves uncovering patterns, trends, and relationships within your data. Here's how to approach EDA:

- Summary Statistics: Calculate summary statistics such as mean, median, mode, variance, and standard deviation to understand the central tendencies and variability of your data.

- Data Visualization: Visualize your data using charts, graphs, and plots to identify patterns and outliers. Popular visualization techniques include histograms, scatter plots, box plots, and heat maps.

- Correlation Analysis: Explore correlations between variables to understand how they are related to each other. Use correlation matrices and scatter plots to visualize relationships.

- Dimensionality Reduction: If working with high-dimensional data, consider using dimensionality reduction techniques such as principal component analysis (PCA) or t-distributed stochastic neighbor embedding (t-SNE) to visualize and explore the data in lower dimensions.
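
As a rough illustration of the first and third items above, the sketch below computes summary statistics and a Pearson correlation coefficient with Python's standard library. The two series (`ad_spend`, `signups`) are made-up numbers; in practice a library such as pandas would usually handle this on real datasets.

```python
import statistics
from math import sqrt

# Hypothetical weekly figures, invented for illustration.
ad_spend = [10, 20, 30, 40, 50]
signups = [12, 25, 31, 48, 52]


def summarize(values):
    """Central tendency and spread for one numeric variable."""
    return {
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "variance": statistics.variance(values),  # sample variance (n - 1)
        "stdev": statistics.stdev(values),        # sample standard deviation
    }


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length series."""
    mx, my = statistics.mean(xs), statistics.mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


spend_summary = summarize(ad_spend)
r = pearson_r(ad_spend, signups)
print(spend_summary)
print(f"correlation between spend and signups: {r:.3f}")
```

A coefficient close to 1 here only says the two series move together in this sample; it does not by itself show that spend causes signups.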

Advanced Statistical Methods

For more in-depth analysis, consider applying advanced statistical methods to your data. These methods can help uncover hidden insights and relationships. Some advanced statistical methods include:

- Regression Analysis: Use regression analysis to model the relationship between dependent and independent variables. Linear regression, logistic regression, and multivariate regression are common techniques.

- Hypothesis Testing: Conduct hypothesis tests to assess the statistical significance of observed differences or relationships. Standard tests include t-tests, chi-square tests, ANOVA, and Mann-Whitney U tests.

- Time Series Analysis: If working with time series data, apply time-series analysis techniques to understand patterns and trends over time. This may involve methods such as autocorrelation, seasonal decomposition, and forecasting.
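
To show what the simplest of these methods looks like in practice, here is a sketch of one-variable linear regression fitted by ordinary least squares in plain Python. The data points are invented, and the function name `fit_line` is just a label for this example; statistical packages provide the same fit along with diagnostics such as standard errors.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = intercept + slope * x.

    Returns (slope, intercept, r_squared).
    """
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # R squared: share of the variance in y explained by the fitted line.
    ss_res = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return slope, intercept, 1 - ss_res / ss_tot


# Hypothetical observations, invented for illustration.
x = [1, 2, 3, 4, 5]
y = [2.9, 5.2, 6.8, 9.1, 11.0]

slope, intercept, r_squared = fit_line(x, y)
print(f"y = {intercept:.2f} + {slope:.2f} * x  (R^2 = {r_squared:.3f})")
```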

Data Visualization

Visualizing your findings is essential for communicating insights effectively to stakeholders.

- Choose the Right Visualizations: Select visualizations that best represent your data and convey your key messages. Consider factors such as the type of data, the relationships you want to highlight, and the audience's preferences.

- Use Clear Labels and Titles: Ensure that your visualizations are easy to interpret by using clear labels, titles, and legends. Avoid clutter and unnecessary decorations that may distract from the main message.

- Interactive Visualizations: If possible, create interactive visualizations that let users explore the data themselves. This can enhance engagement and enable users to gain deeper insights by drilling down into specific data points.

- Accessibility: Make your visualizations accessible to all users, including those with visual impairments. Use appropriate color schemes, font sizes, and contrast ratios to ensure readability.

Iterative Approach and Hypothesis Testing

Adopting an iterative approach to analysis allows you to refine your hypotheses and validate your findings through hypothesis testing.

- Formulate Hypotheses: Based on your initial explorations, formulate hypotheses about the relationships or patterns in the data that you want to test.

- Design Experiments: Design experiments or tests to evaluate your hypotheses. This may involve collecting additional data or conducting statistical tests.

- Evaluate Results: Analyze the results of your experiments and assess whether they support or refute your hypotheses. Consider factors such as statistical significance, effect size, and practical significance.

- Iterate as Needed: If the results are inconclusive or unexpected, iterate on your analysis by refining your hypotheses and conducting further investigations. This iterative process helps ensure that your conclusions are robust and reliable.
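
The "Evaluate Results" step can be sketched with an exact permutation test, which is one of several ways to assess statistical significance and is used here because it needs no distributional assumptions, together with Cohen's d as an effect size. The two groups are hypothetical measurements, for example task completion times under two page designs.

```python
from itertools import combinations
from math import sqrt
from statistics import mean, variance

# Hypothetical measurements for two groups, invented for illustration.
group_a = [12, 14, 15, 16, 18]
group_b = [20, 21, 23, 24, 26]


def permutation_p_value(a, b):
    """Exact two-sided permutation test on the difference in group means.

    Enumerates every way to split the pooled values into groups of the
    original sizes, so it is only practical for small samples.
    """
    pooled = a + b
    total = sum(pooled)
    observed = abs(mean(a) - mean(b))
    extreme = 0
    count = 0
    for idx in combinations(range(len(pooled)), len(a)):
        group_sum = sum(pooled[i] for i in idx)
        diff = abs(group_sum / len(a) - (total - group_sum) / len(b))
        if diff >= observed - 1e-12:  # small tolerance for float rounding
            extreme += 1
        count += 1
    return extreme / count


def cohens_d(a, b):
    """Standardized mean difference (b minus a) using the pooled standard deviation."""
    pooled_var = ((len(a) - 1) * variance(a) + (len(b) - 1) * variance(b)) / (len(a) + len(b) - 2)
    return (mean(b) - mean(a)) / sqrt(pooled_var)


p_value = permutation_p_value(group_a, group_b)
effect = cohens_d(group_a, group_b)
print(f"p-value: {p_value:.4f}, Cohen's d: {effect:.2f}")
```

Reporting the effect size next to the p-value helps separate "is the difference real" from "is the difference large enough to matter", which is the practical significance question raised above.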

By following these steps and techniques, you can perform ad hoc analysis effectively, uncover valuable insights, and make informed decisions based on data-driven evidence.

Ad Hoc Analysis Examples

To better understand how ad hoc analysis can be applied in real-world scenarios, let's explore some examples across different industries and domains:

1. Marketing Campaign Optimization

Imagine you're a marketing analyst tasked with optimizing a company's digital advertising campaigns. Through ad hoc analysis, you can delve into various metrics such as click-through rates, conversion rates, and return on ad spend (ROAS) to identify trends and patterns. For instance, you may discover that certain demographic segments or ad creatives perform better than others. By iteratively testing and refining different campaign strategies based on these insights, you can improve overall campaign performance and maximize ROI.
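
A quick sketch of how those metrics might be compared across segments is shown below. The segment names and all figures are invented, and the formulas follow common definitions: click-through rate as clicks divided by impressions, conversion rate as conversions divided by clicks, and ROAS as revenue divided by ad spend.

```python
# Hypothetical campaign results per demographic segment, invented for illustration.
campaign_rows = [
    {"segment": "18-24", "impressions": 10000, "clicks": 300,
     "conversions": 30, "spend": 500.0, "revenue": 1500.0},
    {"segment": "25-34", "impressions": 20000, "clicks": 400,
     "conversions": 60, "spend": 800.0, "revenue": 3200.0},
]


def campaign_metrics(row):
    """Compute CTR, conversion rate, and ROAS for one segment."""
    return {
        "segment": row["segment"],
        "ctr": row["clicks"] / row["impressions"],
        "conversion_rate": row["conversions"] / row["clicks"],
        "roas": row["revenue"] / row["spend"],
    }


metrics = [campaign_metrics(row) for row in campaign_rows]
best = max(metrics, key=lambda m: m["roas"])

for m in metrics:
    print(f"{m['segment']}: CTR {m['ctr']:.1%}, "
          f"conversion {m['conversion_rate']:.1%}, ROAS {m['roas']:.1f}")
print(f"highest ROAS: {best['segment']}")
```

In this made-up data the segment with the lower click-through rate has the higher ROAS, which is the kind of pattern that a single headline metric would hide.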

2. Supply Chain Optimization

In the realm of supply chain management, ad hoc analysis can play a critical role in identifying inefficiencies and optimizing processes. For example, you might analyze historical sales data, inventory levels, and production schedules to identify bottlenecks or excess inventory. Through exploratory analysis, you may uncover seasonal demand patterns or supply chain disruptions that impact operations. Armed with these insights, supply chain managers can make data-driven decisions to streamline operations, reduce costs, and improve customer satisfaction.

3. Financial Risk Assessment

Financial institutions leverage ad hoc analysis to assess and mitigate various types of risks, such as credit risk, market risk, and operational risk. For example, a bank may analyze loan performance data to identify factors associated with loan defaults or delinquencies. By applying advanced statistical methods such as logistic regression or decision trees, analysts can develop predictive models to assess creditworthiness and optimize lending strategies. This enables banks to make informed decisions about loan approvals, pricing, and risk management.

4. Retail Merchandising Analysis

In the retail industry, ad hoc analysis is used to optimize merchandising strategies, pricing decisions, and inventory management. Retailers may analyze sales data, customer demographics, and market trends to identify product preferences and purchasing behaviors. Through segmentation analysis, retailers can tailor their merchandising efforts to specific customer segments and optimize product assortments. By monitoring key performance indicators (KPIs) such as sell-through rates and inventory turnover, retailers can make data-driven decisions to maximize sales and profitability.
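
For readers unfamiliar with the two KPIs named above, the sketch below uses one common definition of each; retailers vary in the exact formula, so treat these as assumptions. Sell-through rate is taken as units sold divided by units received in a period, and inventory turnover as cost of goods sold divided by average inventory value. All figures are invented.

```python
def sell_through_rate(units_sold, units_received):
    """Share of received stock that was sold during the period."""
    return units_sold / units_received


def inventory_turnover(cost_of_goods_sold, opening_inventory, closing_inventory):
    """How many times average inventory was sold through during the period."""
    average_inventory = (opening_inventory + closing_inventory) / 2
    return cost_of_goods_sold / average_inventory


# Hypothetical figures for one product line over one year.
rate = sell_through_rate(units_sold=150, units_received=200)
turnover = inventory_turnover(
    cost_of_goods_sold=120_000,
    opening_inventory=25_000,
    closing_inventory=35_000,
)
print(f"sell-through rate: {rate:.0%}, inventory turnover: {turnover:.1f}x")
```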

How to Report Ad Hoc Analysis Findings?

After conducting ad hoc analysis, effectively communicating your findings is essential for driving informed decision-making within your organization. Let's explore how to structure your report, interpret and communicate results, tailor reports to different audiences, incorporate visual aids, and document methods and assumptions.

1. Structure the Report

Structuring your report clearly and logically enhances readability and ensures that your findings are presented cohesively.

- Executive Summary: Provide a brief overview of your analysis, including the objectives, key findings, and recommendations. This section should concisely summarize the main points of your report.

- Introduction: Introduce the purpose and scope of the analysis, as well as any background information or context that is relevant to understanding the findings.

- Methodology: Describe the methods and techniques used in the analysis, including data collection, analytical approaches, and any assumptions made.

- Findings: Present the main findings of your analysis, organized in a logical sequence. Use headings, subheadings, and bullet points to enhance clarity and readability.

- Discussion: Interpret the findings in the context of the objectives and provide insights into their implications. Discuss any patterns, trends, or relationships observed in the data.

- Recommendations: Based on the analysis findings, provide actionable recommendations. Clearly outline the steps to address any issues or capitalize on opportunities identified.

- Conclusion: Summarize the main findings and recommendations, reiterating their importance and potential impact on the organization.

- References: Include a list of references or citations for any sources of information or data used in the analysis.

2. Interpret and Communicate Results

Interpreting and communicating the results of your analysis effectively is crucial for ensuring that stakeholders understand the implications and can make informed decisions.

- Use Plain Language: Avoid technical jargon and complex terminology that may confuse or alienate non-technical stakeholders. Use plain language to explain concepts and findings in a clear and accessible manner.

- Provide Context: Help stakeholders understand the significance of the findings by providing relevant context and background information. Explain why the analysis was conducted and how the findings relate to broader organizational goals or objectives.

- Highlight Key Insights: Focus on the most important insights and findings rather than overwhelming stakeholders with excessive detail. Use visual aids, summaries, and bullet points to highlight key takeaways.

- Address Implications: Discuss the implications of the findings and their potential impact on the organization. Consider both short-term and long-term implications and any risks or uncertainties.

- Encourage Dialogue: Foster open communication and encourage stakeholders to ask questions and seek clarification. Be prepared to engage in discussions and provide additional context or information as needed.

3. Tailor Reports to Different Audiences

Different stakeholders may have varying levels of expertise and interests, so it's essential to tailor your reports to meet their specific needs and preferences.

- Executive Summary for Decision Makers: Provide a concise executive summary highlighting key findings and recommendations for senior leaders and decision-makers who may not have time to review the full report.

- Detailed Analysis for Analysts: Include more thorough analysis, methodologies, and supporting data for analysts or technical stakeholders who require a deeper understanding of the analysis process and results.

- Customized Dashboards or Visualizations: Create customized dashboards or visualizations for different audiences, allowing them to interact with the data and explore insights relevant to their areas of interest.

- Personalized Presentations: Deliver personalized presentations or briefings to different stakeholder groups, focusing on the aspects of the analysis most relevant to their roles or responsibilities.

By tailoring your reports to different audiences, you can ensure that each stakeholder receives the information they need in a meaningful and actionable format.

4. Incorporate Visual Aids

Visual aids such as charts, graphs, and diagrams can enhance the clarity and impact of your reports by making complex information more accessible and engaging.

- Choose Appropriate Visualizations: Select visualizations that best represent the data and convey the key messages of your analysis. Choose from various chart types, including bar charts, line charts, pie charts, scatter plots, and heat maps.

- Simplify Complex Data: Use visualizations to simplify complex data and highlight trends, patterns, or relationships. Avoid clutter and unnecessary detail that may detract from the main message.

- Ensure Readability: Use clear labels, titles, and legends to ensure that visualizations are easy to read and interpret. Use appropriate colors, fonts, and formatting to enhance readability and accessibility.

- Use Interactive Features: If possible, incorporate interactive features into your visualizations that allow stakeholders to explore the data further. This can enhance engagement and enable stakeholders to gain deeper insights by drilling down into specific data points.

- Provide Context: Provide context and annotations to help stakeholders understand the significance of the visualizations and how they relate to the analysis objectives.

By incorporating visual aids effectively, you can make your reports more engaging and persuasive, helping stakeholders better understand and act on the findings of your analysis.

5. Document Methods and Assumptions

Documenting the methods and assumptions used in your analysis is essential for transparency and reproducibility. It allows stakeholders to understand how the findings were obtained and evaluate their reliability.

- Describe Data Sources and Collection Methods: Provide details about the sources of data used in the analysis and the methods used to collect and prepare the data for analysis.

- Explain Analytical Techniques: Describe the analytical techniques and methodologies used in the analysis, including any statistical methods, algorithms, or models employed.

- Document Assumptions and Limitations: Clearly state any assumptions made during the analysis, as well as any limitations or constraints that may impact the validity of the findings. Be transparent about the uncertainties and risks associated with the analysis.

- Provide Reproducible Code or Scripts: If applicable, provide reproducible code or scripts that allow others to replicate the analysis independently. This can include programming code, SQL queries, or data manipulation scripts.

- Include References and Citations: Provide references or citations for any external sources of information or data used in the analysis, ensuring that proper credit is given and allowing stakeholders to access additional information if needed.

By documenting methods and assumptions thoroughly, you can build trust and credibility with stakeholders and facilitate collaboration and knowledge sharing within your organization.

Ad Hoc Analysis Best Practices

Performing ad hoc analysis effectively requires a combination of skills, techniques, and strategies. Here are some best practices and tips to help you conduct ad hoc analysis more efficiently and derive valuable insights:

- Define Clear Objectives: Before analyzing the data, clearly define the objectives and questions you seek to answer. This will help you focus your efforts and ensure that you stay on track.

- Start with Exploratory Analysis: Begin your analysis with exploratory techniques to gain an initial understanding of the data and identify any patterns or trends. This will provide valuable insights that can guide further analysis.

- Iterate and Refine: Adopt an iterative approach to analysis, refining your hypotheses and techniques based on initial findings. Be open to adjusting your approach as new insights emerge.

- Leverage Diverse Data Sources: Tap into diverse data sources to enrich your analysis and gain comprehensive insights. Consider both internal and external sources of data that may provide valuable context or information.

- Maintain Data Quality: Prioritize data quality assurance throughout the analysis process, ensuring your findings are based on accurate, reliable data. Cleanse, validate, and verify the data to minimize errors and inconsistencies.

- Document Processes and Assumptions: Document the methods, assumptions, and decisions made during the analysis to ensure transparency and reproducibility. This will facilitate collaboration and knowledge sharing within your organization.

- Communicate Findings Effectively: Use clear, concise language to communicate your findings and recommendations to stakeholders. Tailor your reports and presentations to the needs and preferences of different audiences.

- Stay Curious and Open-Minded: Approach ad hoc analysis with curiosity and an open mind, remaining receptive to unexpected insights and discoveries. Embrace uncertainty and ambiguity as opportunities for learning and exploration.

- Seek Feedback and Collaboration: Solicit feedback from colleagues, mentors, and stakeholders throughout the analysis process. Collaboration and peer review can help validate findings and identify blind spots or biases.

- Continuously Learn and Improve: Invest in ongoing learning and professional development to expand your analytical skills and stay abreast of emerging trends and techniques in data analysis.

Ad Hoc Analysis Challenges

While ad hoc analysis offers numerous benefits, it also presents unique challenges that analysts must navigate. Here are some common challenges associated with ad hoc analysis:

- Data Quality Issues: Poor data quality, including missing values, errors, and inconsistencies, can hinder the accuracy and reliability of ad hoc analysis results. Addressing data quality issues requires careful data cleansing and validation.

- Time Constraints: Ad hoc analysis often needs to be performed quickly to respond to immediate business needs or opportunities. Time constraints can limit the depth and thoroughness of analysis, requiring analysts to prioritize key insights.

- Resource Limitations: Limited access to data, tools, or expertise can pose challenges for ad hoc analysis. Organizations may need to invest in training, infrastructure, or external resources to support effective analysis.

- Complexity of Unstructured Data: Dealing with unstructured or semi-structured data, such as text documents or social media feeds, can be challenging. Analysts must employ specialized techniques and tools to extract insights from these data types.

- Overcoming Analytical Bias: Analysts may inadvertently introduce biases into their analysis, leading to skewed or misleading results. It's essential to remain vigilant and transparent about potential biases and take steps to mitigate them.

By recognizing and addressing these challenges, analysts can enhance the effectiveness and credibility of their ad hoc analysis efforts, ultimately driving more informed decision-making within their organizations.
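As an illustration of the data quality challenge above, a quick pre-analysis check might look like the following minimal sketch. The function name `quality_report`, the field names, and the sample rows are all hypothetical and chosen for illustration only; a real dataset would need checks tailored to its own schema.

```python
from collections import Counter

def quality_report(rows, required_fields):
    """Count missing values per field and exact duplicate rows in a list of dicts."""
    missing = Counter()
    seen = set()
    duplicates = 0
    for row in rows:
        for field in required_fields:
            value = row.get(field)
            # Treat None and blank strings as missing.
            if value is None or (isinstance(value, str) and value.strip() == ""):
                missing[field] += 1
        # A row is a duplicate if all required fields match an earlier row.
        key = tuple((f, row.get(f)) for f in required_fields)
        if key in seen:
            duplicates += 1
        else:
            seen.add(key)
    return {"rows": len(rows), "missing": dict(missing), "duplicates": duplicates}

# Hypothetical sample data, for illustration only.
sample_rows = [
    {"order_id": 1, "region": "EU", "revenue": 120.0},
    {"order_id": 2, "region": "", "revenue": 80.0},
    {"order_id": 3, "region": "US", "revenue": None},
    {"order_id": 1, "region": "EU", "revenue": 120.0},
]
report = quality_report(sample_rows, ["order_id", "region", "revenue"])
print(report)
```

Running a check like this before a time-pressured analysis gives a rough sense of how much cleansing is needed and whether the results can be trusted.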

Conclusion for Ad Hoc Analysis

Ad hoc analysis is a versatile tool that empowers organizations to navigate the complexities of data and make informed decisions quickly. By enabling analysts to explore data on demand, ad hoc analysis provides a flexible and adaptive approach to problem-solving, allowing organizations to respond effectively to changing circumstances and capitalize on opportunities. From marketing campaign optimization to supply chain management, healthcare outcomes analysis, financial risk assessment, and retail merchandising analysis, the applications of ad hoc analysis are vast and varied. By embracing the principles of ad hoc analysis and incorporating best practices into their workflows, organizations can unlock the full potential of their data and drive business success. In today's data-driven world, the ability to extract actionable insights from data is more critical than ever. Ad hoc analysis offers a pathway to deeper understanding and better decision-making, enabling organizations to stay agile, competitive, and resilient in the face of uncertainty. By harnessing the power of ad hoc analysis, organizations can gain a competitive edge, optimize processes, mitigate risks, and uncover new opportunities for growth and innovation. As technology continues to evolve and data volumes grow exponentially, the importance of ad hoc analysis will only continue to increase. So, whether you're a seasoned data analyst or just beginning your journey into data analysis, embracing ad hoc analysis can lead to better outcomes and brighter futures for your organization.

How to Quickly Collect Data for Ad Hoc Analysis?

Introducing Appinio, your gateway to lightning-fast market research within the realm of ad hoc analysis. As a real-time market research platform, Appinio specializes in delivering immediate consumer insights, empowering companies to make swift, data-driven decisions.

With Appinio, conducting your own market research becomes a breeze:

- Lightning-fast Insights: From questions to insights in mere minutes, Appinio accelerates the pace of ad hoc analysis, ensuring you get the answers you need precisely when you need them.

- Intuitive Platform: No need for a PhD in research—Appinio's platform is designed to be user-friendly and accessible to all, allowing anyone to conduct sophisticated market research effortlessly.

- Global Reach: With access to over 90 countries and the ability to define precise target groups from 1200+ characteristics, Appinio enables you to gather insights from diverse demographics worldwide, all with an average field time of under 23 minutes for 1,000 respondents.


Unveiling the Power of Ad Hoc Analysis: A Comprehensive Guide

Introduction

In the ever-evolving landscape of data analytics, the concept of ad hoc analysis stands as a dynamic catalyst for informed decision-making. Ad hoc analysis represents a departure from traditional, structured data examinations, offering the freedom to explore and derive insights on the fly. This real-time, impromptu approach enables professionals at all levels to interact with data intuitively, fostering a more responsive and agile decision-making process. In a world where business landscapes change swiftly, ad hoc analysis serves as a valuable tool for identifying trends, anomalies, and emerging opportunities. This article embarks on a comprehensive exploration of ad hoc analysis, delving into its fundamental principles, key components, and the manifold benefits it brings to organizations. By understanding the significance of ad hoc analysis and its transformative impact on user empowerment and rapid decision-making, businesses can unlock new dimensions of analytical capabilities, ensuring they stay ahead in an increasingly data-centric world. Join us as we unravel the layers of ad hoc analysis, navigating its applications, best practices, and the promising future it holds in the realm of data-driven decision-making.

Understanding Ad Hoc Analysis

At the core of modern data analytics, Ad Hoc Analysis emerges as a dynamic and indispensable tool, providing organizations with the agility to respond to ever-changing data landscapes. Ad Hoc Analysis is essentially an on-the-fly approach to data exploration, allowing users to conduct impromptu analyses without relying on pre-determined queries or structured reports. Its significance in data analysis lies in its ability to accommodate the unpredictable nature of business questions, facilitating real-time insights and informed decision-making.

Traditional data analysis methods often involve predefined queries and structured reports, limiting the flexibility to adapt to emerging trends or unexpected patterns. Ad Hoc Analysis, on the other hand, offers a dynamic environment where users can explore data interactively, posing questions and uncovering insights in real-time. This adaptability is crucial in situations where immediate decisions are required or when dealing with rapidly evolving data scenarios.

The importance of Ad Hoc Analysis is underscored by its empowerment of users at all levels within an organization. By offering a user-friendly interface and intuitive tools, it lets individuals across various departments analyze data independently, reducing dependence on dedicated data analysts and teams. This democratization of data analysis enhances organizational responsiveness and ensures that decision-makers have the freedom to explore and extract insights without the constraints of predefined structures. In essence, Ad Hoc Analysis stands as a linchpin in the data analytics toolkit, championing a dynamic, user-centric, and real-time approach to uncovering actionable insights.

Key Components of Ad Hoc Analysis

Flexibility in Manipulating Data:

The efficacy of Ad Hoc Analysis lies in its key components that contribute to a dynamic and user-driven approach to data exploration. At the forefront is the flexibility it provides in manipulating and analyzing data. Unlike traditional reporting and analysis methods that adhere to rigid structures, Ad Hoc Analysis allows users to interactively manipulate data, tailor analyses to specific questions, and adjust parameters on the fly. This flexibility ensures that users can adapt their analytical approach to the ever-evolving nature of business data, fostering a more responsive decision-making process.

Real-Time Exploration and Analysis:

Real-time exploration and analysis constitute another crucial component of Ad Hoc Analysis. In a rapidly changing business environment, the ability to derive insights in real-time is paramount. Ad Hoc Analysis facilitates this by allowing business users to explore data dynamically as it is generated, ensuring that organizations can respond swiftly to emerging trends, identify anomalies, and seize opportunities promptly.

User Empowerment Across the Organization:

Moreover, Ad Hoc Analysis stands out for its capacity to empower users at all levels within an organization. The tools associated with Ad Hoc Analysis often boast user-friendly interfaces and intuitive features, enabling individuals across various departments to independently analyze data without necessitating advanced technical skills. This democratization of data analysis not only reduces the burden on dedicated data teams but also ensures that decision-makers at different organizational levels have the autonomy to extract valuable insights, promoting a culture of data-driven decision-making throughout the organization. As a result, Ad Hoc Analysis stands as a cornerstone, fostering adaptability, responsiveness, and user empowerment in the data analytics landscape.

Benefits of Ad Hoc Analysis

Rapid Decision-Making:

Ad hoc analysis emerges as a linchpin in facilitating rapid decision-making, offering a swift and responsive mechanism for professionals to adapt to changing scenarios. In dynamic environments where market conditions, consumer preferences, or internal factors evolve swiftly, the ability to quickly analyze and interpret data becomes paramount. Ad hoc analysis enables decision-makers to promptly access insights, empowering them to make informed choices on the spot without waiting for pre-structured reports or analyses.

Customized Insights:

A significant advantage of ad hoc analysis lies in its capacity to provide customized insights tailored to specific questions or scenarios. Unlike standardized reports that may not address niche inquiries, ad hoc analysis allows users to frame questions dynamically, ensuring that the analyses generated are directly relevant to the unique needs of the moment. This customization enhances the precision and applicability of the insights derived, supporting decision-makers in gaining a nuanced understanding of the data at hand.

Identifying Trends and Anomalies:

Ad hoc analysis serves as a proactive tool for identifying both trends and anomalies within datasets. The real-time exploration capability enables users to spot emerging patterns or irregularities that might go unnoticed in traditional reporting structures. This anticipatory approach allows organizations to stay ahead of trends, capitalize on emerging opportunities, and address anomalies before they escalate, contributing to a more resilient and foresighted decision-making process.
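To make the idea of spotting irregularities concrete, the sketch below flags values that sit far from the mean of a series using a simple z-score rule. This is only one of many possible techniques, not a method prescribed by any particular tool; the function name `flag_anomalies`, the threshold of 2.0, and the sales figures are assumptions made for illustration.

```python
from statistics import mean, pstdev

def flag_anomalies(values, threshold=2.0):
    """Return (index, value) pairs whose z-score magnitude exceeds the threshold."""
    mu = mean(values)
    sigma = pstdev(values)  # population standard deviation
    if sigma == 0:
        return []  # all values identical, nothing to flag
    return [(i, v) for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]

# Hypothetical daily sales with one obvious spike.
daily_sales = [100, 102, 98, 101, 99, 103, 250, 100]
anomalies = flag_anomalies(daily_sales)
print(anomalies)
```

On this sample, only the spike of 250 is flagged. Because a large outlier also inflates the standard deviation, a median-based rule may be a more robust choice for small or heavily skewed samples.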

Reduced Dependence on IT:

Ad hoc analysis tools often boast user-friendly interfaces that empower non-technical users to conduct analyses independently. This reduction in dependence on IT teams streamlines the decision-making process, enabling professionals from various departments to explore and derive insights without requiring extensive technical skills. The democratization of data analysis through intuitive interfaces enhances organizational agility, fostering a culture where data-driven decision-making is accessible to a broader spectrum of users.

Examples of Ad Hoc Analysis in Action:

Real-World Scenarios:

Ad hoc analysis has proven invaluable in numerous real-world scenarios, showcasing its adaptability and effectiveness across diverse industries. In the financial sector, for instance, investment analysts often utilize ad hoc analysis to quickly respond to market fluctuations. By dynamically exploring data, they can make timely investment decisions, adapting to changing economic conditions and staying ahead of market trends. In the healthcare industry, ad hoc analysis plays a crucial role in patient care and resource allocation. Healthcare professionals use on-the-fly analyses to identify patterns in patient data, allowing for personalized treatment plans and more efficient use of medical resources. During public health crises, such as a pandemic, ad hoc analysis becomes instrumental in tracking the spread of diseases, predicting hotspots, and allocating resources strategically.

Industries and Use Cases:

Several industries benefit significantly from the flexibility and immediacy of ad hoc analysis. In retail, for instance, ad hoc analysis helps optimize inventory management by quickly identifying product trends and adjusting stock levels accordingly. E-commerce platforms leverage this approach to analyze customer behavior in real-time, enhancing personalized recommendations and improving the overall shopping experience.

The telecommunications sector relies on ad hoc analysis to monitor network performance and identify potential issues swiftly. Telecom operators can analyze data on-the-fly to optimize network resources, ensuring seamless connectivity and addressing disruptions promptly. Similarly, in manufacturing, ad hoc analysis aids in quality control by enabling real-time monitoring of production processes and identifying deviations that may affect product quality.

In the technology industry, especially in software development, ad hoc analysis is employed to identify bugs, optimize code performance, and make swift adjustments during the development process. The ability to analyze data dynamically ensures a more agile and responsive approach to software development, leading to faster problem resolution and product improvements.

These examples underscore the versatility of ad hoc analysis, demonstrating its applicability in enhancing decision-making and efficiency across a spectrum of industries and use cases.

Challenges and Considerations

Challenges Associated with Ad Hoc Analysis:

Despite its numerous benefits, ad hoc analysis is not without its challenges. One significant challenge is the potential for data inconsistency and accuracy issues. Since ad hoc analyses often involve quick, on-the-fly exploration, there is a risk of overlooking data quality, leading to erroneous conclusions. Additionally, the lack of predefined structures may result in varied interpretations of the same dataset, posing challenges in maintaining consistency across analyses. Security concerns also arise, as ad hoc analyses may involve sensitive or confidential data, necessitating robust access controls to prevent unauthorized access and data breaches.

Considerations for Effective Implementation:

To maximize the benefits of ad hoc analysis while mitigating challenges, certain considerations are crucial for effective implementation. Establishing clear guidelines and best practices for ad hoc analysis is essential to maintain consistency and accuracy. Organizations should prioritize data governance, ensuring that data quality and security measures are upheld during impromptu analyses. Providing adequate training for users, especially those without a strong background in data analysis, is vital for fostering a data-literate culture and preventing misinterpretations. Collaborative platforms that enable sharing and documentation of ad hoc reports and analyses can enhance transparency and communication within the organization.

Moreover, organizations must strike a balance between flexibility and control by implementing governance frameworks that guide users in their ad hoc analyses while allowing for innovation. Regularly reviewing and updating data policies, security protocols, and analysis guidelines will ensure that ad hoc analysis of company data remains a valuable and risk-mitigated tool in the organization's decision-making arsenal.

Introduction to Ad Hoc Analysis Tools and Technologies:

As the demand for dynamic, on-the-fly data exploration rises, a variety of tools and technologies have emerged, each designed to facilitate impromptu analyses and empower users at various technical skill levels. Among these, Sprinkle Data stands out as a powerful and versatile solution, offering innovative features alongside other popular tools in the ad hoc analysis space.

Popular Tools for Ad Hoc Analysis

- Sprinkle Data:

- Sprinkle Data stands as a leading player in the ad hoc analysis arena, known for its user-friendly interface and robust functionality.

- With Sprinkle Data, users can effortlessly navigate and explore data in real-time, leveraging features that facilitate quick insights and informed decision-making.

- Its intuitive design allows for seamless ad hoc analyses, making it accessible to both technical and non-technical users.

- Tableau:

- Tableau's reputation for an intuitive interface extends to ad hoc analysis, providing users with drag-and-drop capabilities for dynamic visualizations.

- Renowned for its visualization prowess, Tableau enables users to create interactive analyses effortlessly.

- Power BI:

- Microsoft's Power BI is a versatile tool for ad hoc analysis, featuring natural language querying and integration with various Microsoft applications.

- Its robust suite of tools facilitates dynamic data exploration, enhancing the overall ad hoc analysis experience.

- Google Data Studio:

- Google Data Studio is celebrated for its simplicity and collaborative features, allowing users to create, customize, and share reports and dashboards effortlessly.

- Seamless integration with other Google services contributes to a user-friendly environment for ad hoc analysis.

Features Enhancing User Experience:

- Drag-and-Drop Interfaces:

- Common to many ad hoc analysis tools, drag-and-drop interfaces simplify data manipulation and dynamic visualization creation, reducing the need for complex coding.

- Natural Language Processing (NLP):

- Tools with NLP capabilities, including Sprinkle Data, enable users to interact with data using plain language, enhancing accessibility for non-technical users.

- Collaboration and Sharing:

- Robust collaboration features in these tools, such as shared workspaces and real-time collaboration, promote teamwork and contribute to a more agile decision-making process.

- Data Connectivity:

- Ad hoc analysis tools, including Sprinkle Data, often support connectivity to a diverse range of data sources, ensuring users can analyze information from various channels.

As organizations navigate the complexities of data-driven decision-making, the landscape of ad hoc reporting and analysis tools continues to evolve, with a collective focus on enhancing usability, collaboration, and the overall user experience.

Best Practices for Ad Hoc Analysis:

Tips for Effective Ad Hoc Analysis:

- Define Clear Objectives:

- Begin by clearly defining the objectives of your ad hoc analysis. Clearly articulate the questions you seek to answer or the insights you aim to uncover. This focused approach ensures that your analysis remains purposeful and aligned with your goals.

- Start Simple:

- Begin with simple analyses before diving into complex queries. Gradually refine your approach based on the insights gained. This iterative process allows for a more thorough understanding of the data and prevents potential misinterpretations.

- Utilize Visualization Tools:

- Leverage visualization tools to represent data intuitively. Graphs, charts, and dashboards can enhance comprehension and aid in identifying patterns or outliers more efficiently. Tools like Sprinkle Data, Tableau, or Power BI offer robust visualization features.

- Regularly Save and Document:

- Save your analyses regularly and provide clear documentation. This ensures that insights are reproducible and shareable within your team. Documentation becomes crucial for future reference and contributes to a collaborative analytical environment.

Importance of Data Accuracy and Quality:

Ensure Data Consistency:

Validate and ensure the consistency of your data sources. Discrepancies or inaccuracies in datasets can lead to unreliable conclusions. Regularly verify data integrity to maintain the accuracy of your ad hoc analyses.

Verify Data Sources:

Verify the credibility and reliability of your data sources. Relying on accurate and trustworthy data is fundamental for making informed decisions. Cross-checking data from multiple sources adds an extra layer of validation.

Implement Data Governance:

Establish robust data management and governance practices to maintain high data quality. This involves defining data ownership, implementing data validation processes, and ensuring compliance with data quality standards.

Data Cleansing and Transformation:

Prioritize data cleansing and transformation processes to handle missing or inconsistent data. Addressing data quality issues at the preprocessing stage contributes to the reliability of your ad hoc analyses.
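A minimal sketch of such a preprocessing step is shown below. It normalizes inconsistent text labels and fills missing numeric values with the median of the known values. The function name `clean_records`, the field names, and the choice of median imputation are assumptions for illustration; the right treatment of missing data depends on the dataset and the question being asked.

```python
from statistics import median

def clean_records(rows, text_field, numeric_field):
    """Return cleaned copies of rows: normalized labels, median-filled numbers."""
    known = [r[numeric_field] for r in rows if r.get(numeric_field) is not None]
    fill = median(known) if known else None
    cleaned = []
    for r in rows:
        row = dict(r)  # copy so the raw data stays untouched
        label = row.get(text_field)
        if isinstance(label, str):
            # Trim whitespace and lowercase so " Email " and "email" match.
            row[text_field] = label.strip().lower()
        if row.get(numeric_field) is None:
            row[numeric_field] = fill
        cleaned.append(row)
    return cleaned

# Hypothetical marketing spend records, for illustration only.
raw = [
    {"channel": " Email ", "spend": 200.0},
    {"channel": "email", "spend": None},
    {"channel": "SOCIAL", "spend": 300.0},
]
cleaned = clean_records(raw, "channel", "spend")
print(cleaned)
```

Keeping the raw records unchanged and working on copies makes it easier to document what was altered, which supports the reproducibility practices described earlier.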

Following these best practices, coupled with a commitment to data accuracy and quality, establishes a solid foundation for organizations seeking to derive meaningful insights from their dynamic data environments. As the landscape of data analytics continues to evolve, adherence to these practices ensures that ad hoc analyses contribute significantly to informed decision-making processes.

Future Trends in Ad Hoc Analysis:

Emerging Trends and Advancements:

Machine Learning Integration:

The integration of machine learning algorithms within ad hoc analysis tools is an emerging trend. This advancement allows systems to learn from user interactions, offering automated insights and predictive analytics as users navigate through the data dynamically.

Natural Language Processing (NLP) Enhancements:

NLP capabilities are expected to undergo significant enhancements. Future ad hoc analysis tools may feature more sophisticated NLP, enabling users to interact with data using even more natural and context-aware language, making it accessible to a broader range of users.

Augmented Analytics:

Augmented analytics, combining machine learning and AI-driven insights, is poised to transform ad hoc analysis. These tools will proactively assist users in formulating queries, interpreting results, and suggesting relevant visualizations, making the analytical process more intuitive and efficient.

Evolution of the Landscape:

Increased Integration with Big Data Platforms:

As organizations continue to leverage big data, ad hoc analysis tools are likely to integrate more seamlessly with big data platforms. This evolution ensures that users can explore and analyze vast datasets efficiently, unlocking insights from diverse and complex data sources.

Enhanced Collaboration Features:

The future of ad hoc analysis will see a heightened emphasis on collaboration features. Real-time collaborative environments will become more sophisticated, allowing teams to work together seamlessly on ad hoc analyses, fostering collective decision-making.

Advancements in Data Visualization:

The evolution of data visualization techniques will play a pivotal role. Ad hoc analysis tools will likely incorporate more advanced visualization options, including augmented reality (AR) and immersive data experiences, providing users with novel ways to interpret and communicate insights.

Greater Automation for Routine Tasks:

Routine and repetitive tasks in ad hoc analysis, such as data cleaning and basic exploratory analyses, are expected to become more automated. This allows users to focus on more complex and strategic aspects of the analysis, enhancing overall productivity.

As ad hoc analysis becomes increasingly integral to organizational decision-making, these emerging trends and advancements signify a future where the ad hoc report process is not only more sophisticated but also more accessible and collaborative. The evolving landscape promises a more intelligent, automated, and user-friendly ad hoc analysis experience, empowering organizations to glean deeper insights from their data.

Conclusion:

In the dynamic landscape of data analysis, this exploration into ad hoc analysis has revealed its pivotal role in reshaping the way organizations extract insights and make informed decisions. The ability to conduct impromptu, on-the-fly analyses emerged as a powerful tool, providing users across various industries with unprecedented flexibility and responsiveness.

In summarizing the key points, we began by defining ad hoc analysis, highlighting its dynamic nature that sets it apart from traditional, predefined approaches. The discussion then unfolded to showcase real-world scenarios where ad hoc analysis proved instrumental, emphasizing its effectiveness in diverse industries, from finance and healthcare to retail and telecommunications.

The many benefits of ad hoc analysis, from rapid decision-making and customized insights to identifying trends and reducing dependence on IT, underscored its transformative impact on organizational agility. We explored popular tools like Sprinkle Data, Tableau, Power BI, and Google Data Studio, noting how their features enhance the user experience, making ad hoc analysis accessible to both technical and non-technical users.

Delving into challenges and considerations, we acknowledged potential hurdles while providing insights into mitigating risks and ensuring effective implementation of reporting solutions. Best practices for ad hoc analysis, focusing on clear objectives, starting simple, and emphasizing data accuracy, offered practical guidance for users navigating the dynamic data landscape.

Looking towards the future, we identified emerging trends like machine learning integration, enhanced NLP capabilities, and augmented analytics, forecasting a landscape where ad hoc analysis and business intelligence become more sophisticated, collaborative, and automated.

In conclusion, ad hoc analysis stands as a cornerstone in the data-driven era, empowering organizations to navigate complexities, respond swiftly to challenges, and seize opportunities. Its significance lies not just in the analyses it produces, but in the agility it brings to decision-making processes, ensuring organizations remain adaptive and thrive in an ever-evolving business environment. As the data analytics landscape continues to evolve, ad hoc analysis remains a key protagonist, promising continued innovation and transformative insights for those who harness its capabilities effectively.


Understanding manufacturing repurposing: a multiple-case study of ad hoc healthcare product production during COVID-19

- Open access

- Published: 28 July 2022

- Volume 15, pages 1257–1269 (2022)


- Wan Ri Ho ORCID: orcid.org/0000-0003-2540-0732 1 ,

- Omid Maghazei ORCID: orcid.org/0000-0002-2257-3550 1 &

- Torbjørn H. Netland ORCID: orcid.org/0000-0001-7382-1051 1


The repurposing of manufacturing facilities has provided a solution to the surge in demand for healthcare products during the COVID-19 pandemic. Despite being a widespread and important phenomenon, manufacturing repurposing has received scarce research. This paper develops a grounded understanding of the key factors that influence manufacturing repurposing at the macro and micro levels. We collected rich qualitative data from 45 case studies of firms’ repurposing initiatives during COVID-19. Our study focuses on four types of healthcare products that experienced skyrocketing demand during the first months of the COVID-19 pandemic: face shields, facemasks, hand sanitizers, and medical ventilators. Based on the case studies, we identify and generalize driving factors for manufacturing repurposing and their relationships, which are summarized in causal loop diagrams at both macro and micro levels. Our research provides practitioners, policymakers, and scholars with a conceptual understanding of the phenomenon of manufacturing repurposing. It helps manufacturing managers understand why, when, and how they should engage in manufacturing repurposing and informs policymakers when and how to tailor incentive policies and support schemes to changing situations. Scholars can build on our work to develop and test dynamic system–behavior models of the phenomenon or to pursue other research paths we discover. The world stands to benefit from improved manufacturing repurposing capabilities to be better prepared for future disruptions.


1 Introduction

As the COVID-19 pandemic swept across the world in 2020, the demand for particular healthcare equipment skyrocketed far beyond the level of any safety stock (Hald and Coslugeanu 2021). Manufacturing repurposing has been considered a rapid response to addressing the global shortage of critical items during the COVID-19 pandemic (Joglekar et al. 2020; López-Gómez et al. 2020). Manufacturers from different industries engaged in manufacturing repurposing either to gain goodwill or to capture the business opportunities presented (Betti and Heinzmann 2020; López-Gómez et al. 2020). Firms have particularly started to produce personal protective equipment (PPE) or medical equipment products. For example, beer manufacturer BrewDog began producing hand sanitizers, sports car manufacturer Ferrari manufactured respirator valves, and luxury label Prada made facemasks (Netland 2020; Garza-Reyes et al. 2021). In other cases, firms sought to fight the pandemic by inventing new products (e.g., modified scuba masks for ventilators and hygienic surgical gowns) or finding new ways to use novel technologies (e.g., collaborative robots and additive manufacturing) (Malik et al. 2020). However, these efforts have raised substantial challenges and risks (Garza-Reyes et al. 2021). For example, there has been high uncertainty related to the dynamics of the pandemic, and firms have generally lacked experience in repurposing manufacturing. During the past two years, the scale and scope of manufacturing repurposing have been unprecedented, which raises many intriguing questions for research.

We define manufacturing repurposing as a firm’s rapid conversion of capacities and capabilities to produce new-to-the-firm products. Manufacturing repurposing has been a strong phenomenon in the industry during COVID-19, but it is almost entirely new to the literature. In particular, the literature on manufacturing repurposing in the context of pandemics is in its infancy (Garza-Reyes et al. 2021). Existing reports have barely started to explain why and how manufacturing firms repurposed to respond to COVID-19 (e.g., Ashforth 2020; Avery 2020; De Massis and Rondi 2020; George et al. 2020; Lawton et al. 2020; Shepherd 2020; Rouleau et al. 2021). In contrast, there is already extensive literature on the effects of COVID-19 on existing operations and supply chains (Phillips et al. 2022; Barbieri et al. 2020; Naz et al. 2021; Reed 2021; Yu et al. 2021). Therefore, there should be ample opportunity to contribute new insights into manufacturing repurposing, considering the extent and variety of repurposing during the COVID-19 pandemic. In this paper, we ask the following research question at the macro and micro levels: What factors affect manufacturing repurposing activities, and how do they relate to each other?

Addressing our research question, we contribute an understanding of manufacturing repurposing and the factors that affect its dynamic development. As one of the first multi-case empirical analyses of manufacturing repurposing, this is a novel contribution to the emerging literature. We use a multiple case study approach to map and analyze a large number of manufacturing repurposing initiatives during the COVID-19 pandemic. We focus on healthcare products that experienced explosive demand growth during spring 2020, more precisely from March to June 2020, depending on location. After analyzing the data via structured coding methods, we visualized the system dynamics of manufacturing repurposing in causal loop diagrams at the macro and micro levels. By bringing forward the key constructs of manufacturing repurposing, we lay a foundation for future research. The causal loop diagrams also provide practical insights for practitioners and policymakers, which can help improve decision-making processes in future emergencies. We also elaborate on the challenges and opportunities of manufacturing repurposing and outline promising research avenues.

The remainder of this paper is structured as follows. Section 2 provides a literature review of manufacturing repurposing. Section 3 details the research methodology. Section 4 presents our structured qualitative analysis. Section 5 summarizes the results in the form of macro- and micro-level causal loop diagrams. Section 6 discusses the implications of this study for both research and practice as well as its limitations and outlook. Section 7 concludes the paper.

2 Literature review

Manufacturing repurposing is not a new phenomenon, but it lacks a dedicated and established stream of research. During all kinds of crises throughout history, humans have ingeniously developed ways to produce the needed products and tools to fix arising problems. During wartime, for example, repurposing production capacities to produce armaments, ammunition, and other products in high demand is normal (e.g., Overy 1994). During natural disasters or other emergency events, local needs often require swift responses from local companies, which can help by producing products other than those they normally make. For example, during the Aisin Seiki fire in the Kariya factory in 1997, Toyota’s supply of brake fluid valves was disrupted. This disruption drove Toyota to request other suppliers to repurpose production lines to produce valves for Toyota; within a week, several companies began producing the needed valves. Manufacturing history is rife with such stories, but they have not been studied collectively as a phenomenon. However, this is changing due to the unprecedented scale and scope of manufacturing repurposing the world has experienced during the COVID-19 pandemic.

During the recent pandemic, “manufacturing/production repurposing” has been used as a term to represent activities where a manufacturer uses its current capacities and capabilities to shift production to high-demand healthcare products like ventilators, facemasks, or sanitizers (e.g., Betti and Heinzmann 2020; López-Gómez et al. 2020). Scholars have picked up this term and studied the phenomenon using a variety of problem statements and approaches. The three dominant streams in the nascent literature have been: (1) barriers to and success factors for repurposing, (2) supply chain issues, and (3) innovation.

Regarding the first stream of literature, Okorie et al. (2020) evaluate manufacturing repurposing as a firm-level pandemic response tool and identify enablers and barriers to repurposing. In particular, Okorie et al. (2020) recommend that manufacturing companies increase their flexibility, accelerate the adoption of digital technologies, and improve organizational processes such as decision making and organizational learning during pandemic and post-pandemic situations. The role of digital transformation in swift repurposing has also been emphasized by Soldatos et al. (2021). Relatedly, Poduval et al. (2021) use a model-based approach to identify and rank 11 types of barriers that played a central role in the repurposing of an existing manufacturing plant. Poduval et al. (2021) show that the identified barriers are interrelated and highlight the complexity of the manufacturing repurposing phenomenon. However, none of these studies have aimed to provide an understanding of all the internal and external factors that affect manufacturing repurposing and their causal relationships.

The second stream takes a supply chain perspective on manufacturing repurposing. For example, Falcone et al. (2022) use the concept of supply chain plasticity, which is defined as a firm’s “capability of rapidly making major changes to a supply chain to accommodate significant shifts in the business environment” (Zinn and Goldsby 2019, p. 184). Falcone et al. (2022) argue that the more supply chain plasticity is developed in a firm, the more capability the firm has to repurpose existing operations during disruptions. Some industrial reports also extrapolated the repurposing concept to supply chains, which could increase resilience and social responsibility (e.g., see Accenture 2022). Such approaches allow mobilizing available resources in supply chains, similar to Toyota’s supply chain response during the Aisin Seiki fire (Nishiguchi and Beaudet 1998). Ivanov (2021) even suggests that repurposing could be used as an adaptation strategy to maintain supply chain viability during a crisis. While offering important contributions, the supply chain stream fails to capture the complex and interrelated system dynamics that occur between firms, their supply chains, and the external environment during manufacturing repurposing initiatives.

The third notable stream of research in the nascent literature on manufacturing repurposing focuses on innovation. For example, Liu et al. (2021a) explore the effect of shared purpose in driving change in innovation processes and explain how design capability and manufacturing flexibility play key roles in accelerating innovation processes during disruptions. Focusing on the repurposing case of VentilatorChallengeUK, Liu et al. (2021b) highlight open innovation, exaptation, and ecosystem strategies during the rapid scale-up of ventilator production. Poduval et al. (2021) also point out that innovation is one of the main barriers. Relatedly, Schwabe et al. (2021) provide a maturity model, which focuses on the speed of innovation diffusion from ideation to market saturation based on the repurposing and customization of existing mass manufacturing infrastructures during the COVID-19 pandemic. Innovation of products, processes, and organizations is key to successful repurposing, but it is not sufficient in its own right.

From the nascent but growing literature on manufacturing repurposing reviewed above, it is clear that it is a multifaceted, complex, and dynamic phenomenon. We aim to bring the facets together in a holistic understanding of the phenomenon. We empirically examine macro- and micro-level interactions within manufacturing repurposing projects, which we use to delineate dynamic cause-and-effect relationships that drive or slow down manufacturing repurposing.

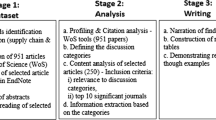

3 Research method

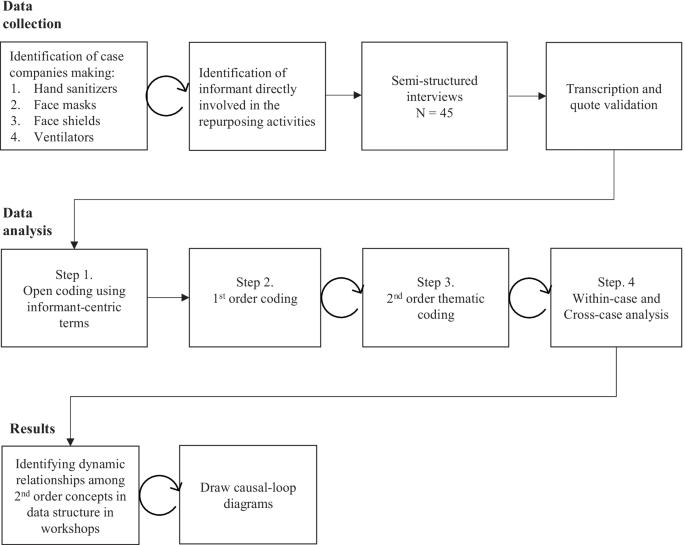

We set out to build a grounded understanding of manufacturing repurposing. We used an inductive approach based on the systematic collection and analysis of data (Glaser and Strauss 1967; Gioia et al. 2013). To aid in collecting systematic, representative, and in-depth data, we turned to the rich methodological literature on case studies (e.g., Yin 1989; Voss et al. 2002). Case studies summarize insiders’ views of particular events to portray new insights, methods, or techniques. Figure 1 provides a high-level overview of our research process, and the details are explained in the following sections. Curved arrows represent iterations. This section explains the data collection process.

Fig. 1 Flowchart of the research process

To increase internal validity, we narrowed down the focus of our study to four commonly repurposed products during COVID-19: face shields, face masks, hand sanitizers, and ventilators. The selected healthcare products were among those listed in the World Health Organization’s (WHO) technical guidance on essential resource planning during COVID-19 (WHO 2020). The unit of analysis was manufacturing repurposing operations in factories, including links to the internal and external stakeholders and partners involved, which provided the focal point of the research and served as the basis for sample selection.

To identify respondents, we used purposive and snowball sampling procedures, as explained by Miles and Huberman (1994). We explicitly sought a balanced sample that was not limited to only “successful” repurposing initiatives. The respondents were selected based on their direct involvement in producing these products. First, we focused on the repurposing projects of the selected products that were most visible in media reports and contacted the companies via LinkedIn or email. Second, additional respondents were selected through a snowballing approach in which our primary respondents or contacted persons connected us with another potential respondent. We reached out to around 500 companies, of which about one in ten agreed to participate. In total, we interviewed 45 senior managers from 45 different companies. Semi-structured interviews were conducted from January 2021 to July 2021. The respondents’ profiles are summarized in Table 1.

Due to travel restrictions during the pandemic, all interviews were conducted using videoconferencing. The interview questions were separated into two parts: the macro level of the supply chain and external issues and the micro level of firm repurposing operations (the interview guide is included in Appendix A). The semi-structured interviews lasted an average of 65 min. They were recorded, and the relevant content was transcribed. We collected a qualitative database consisting of 915 pages (185,100 words). The interviews were carried out by two researchers, and the notes were cross-compared after the interviews. Internal reliability was improved by validating the transcribed reports with the informants.
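As a quick consistency check, the sampling and transcript figures reported above can be combined in a few lines. The sketch below uses only values stated in the text (around 500 companies contacted, 45 interviews averaging 65 min, 915 pages, 185,100 words); the variable names and the derived ratios are illustrative and are not reported by the study itself.

```python
# Values as reported in the text; "around 500" is an approximation there.
companies_contacted = 500
interviews = 45
avg_minutes = 65
pages = 915
words = 185_100

# Derived ratios (illustrative; not figures reported by the study).
participation_rate = interviews / companies_contacted  # share of contacted firms interviewed
total_hours = interviews * avg_minutes / 60             # approximate total interview time
words_per_page = words / pages                          # transcript density
words_per_interview = words / interviews                # average transcript length

print(f"participation rate: {participation_rate:.1%}")
print(f"total interview time: {total_hours:.2f} h")
print(f"words per page: {words_per_page:.0f}")
print(f"words per interview: {words_per_interview:.0f}")
```

The participation rate of 9% agrees with the statement that about one in ten contacted companies took part.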

To analyze the data, we used the Gioia method (see Gioia et al. 2013), which is an inductive approach that uses many iterations of analysis to arrive at higher-level concepts. We first carried out open coding with MAXQDA software (Berlin, Germany). First- and second-order codes were assigned based on the in-vivo texts from the semi-structured interviews. This thematic coding was then discussed with the research team to reduce coding bias and improve the interpretation of the qualitative data. Second, for our higher-level constructs, we purposefully coded for context, antecedents, enablers, and barriers to manufacturing repurposing. As is common in qualitative research, these steps were iterative. We then conducted a within-case analysis and summarized each case along with the second-order codes. An example is shown in Appendix B, split into the macro level of our analysis (Table B-1 Panel A) and the micro level (Table B-1 Panel B).

Once all cases were coded and described, the next step was a cross-case analysis. We used second-order codes from the interviews to build patterns of key constructs. To structure and present our findings, we applied a data visualization tool from system dynamics called causal loop diagrams (see Forrester 1994). This method was selected for its ability to model complex business decisions to form a structural and behavioral representation of the system (Forrester 1961; Sterman 2000). Causal loop diagrams map all essential relationships in a system. They show variables as text labels, and the causal relationships among them are represented as arrows. We gradually built the causal loop diagrams through workshops, as we added case after case to the grounded emerging “story.” Consistent with our data, we developed two levels of causal loop diagrams to delineate the relationships between the factors involved externally (macro) and internally (micro) in the firm.
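To make the notation concrete, a causal loop diagram can be stored as a signed directed graph, and each closed loop can be classified by the standard system dynamics rule: a loop is reinforcing (R) when the product of its link polarities is positive and balancing (B) when it is negative. The following is a minimal sketch under stated assumptions; the variable names, links, and polarities are hypothetical and are not the constructs identified in this study.

```python
from math import prod

# Hypothetical links for illustration only; these are NOT the constructs
# or polarities of the study's causal loop diagrams.
# +1: the effect moves in the same direction as the cause; -1: opposite.
LINKS = {
    ("supply shortage", "repurposing activity"): +1,
    ("repurposing activity", "product supply"): +1,
    ("product supply", "supply shortage"): -1,
    ("variable_a", "variable_b"): +1,
    ("variable_b", "variable_a"): +1,
}


def loop_type(loop, links=LINKS):
    """Return "R" (reinforcing) or "B" (balancing) for a closed loop.

    `loop` lists the variables in causal order; the last variable links
    back to the first. The loop is reinforcing when the product of its
    link polarities is positive and balancing when it is negative.
    """
    edges = zip(loop, loop[1:] + loop[:1])
    polarity = prod(links[edge] for edge in edges)
    return "R" if polarity > 0 else "B"


shortage_loop = ["supply shortage", "repurposing activity", "product supply"]
generic_loop = ["variable_a", "variable_b"]
print(loop_type(shortage_loop))
print(loop_type(generic_loop))
```

With these hypothetical links, the first loop contains one negative link and is therefore balancing, while the second has only positive links and is reinforcing.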

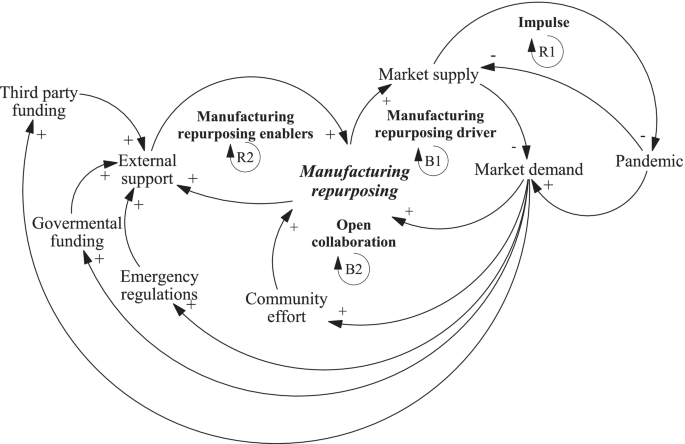

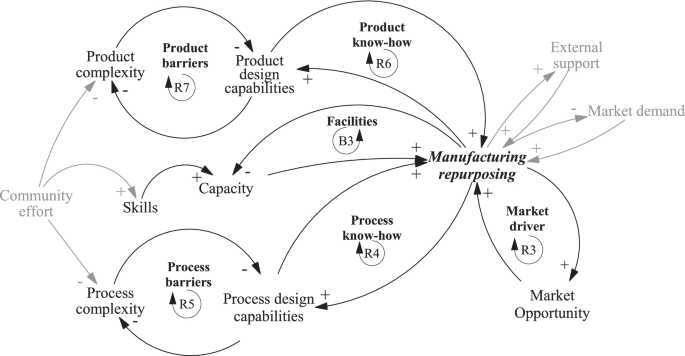

The causal loop diagrams in Figs. 2 and 3 show manufacturing repurposing from the macro- and micro-level perspectives, respectively.

Fig. 2 Causal loop diagram of manufacturing repurposing at the macro level. Notes: R Reinforcing loop, B Balancing loop

Fig. 3 Causal loop diagram of manufacturing repurposing at the micro level. Notes: R Reinforcing loop, B Balancing loop

5.1 Macro perspective on manufacturing repurposing

Figure 2 illustrates the system dynamics of manufacturing repurposing at the macro level. The pandemic provides an impulse to increase the market demand for specific products, which is represented by the reinforcing loop R1. Due to the surge in demand, the COVID-19 pandemic led to a supply shortage of PPE and clinical care equipment (CCE) in spring 2020, resulting in a reinforcing loop of supply shortages caused by the pandemic. Thus, a balancing loop was necessary to regulate supply shortages, leading to manufacturing repurposing activities (see balancing loop B1).
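The qualitative behavior of a balancing loop such as B1 can be illustrated with a toy simulation. This is a minimal sketch under strong assumptions, not a model estimated from the interview data: demand and baseline supply are held constant, repurposed capacity grows each week in proportion to the remaining shortage, and all parameter values (`demand`, `base_supply`, `ramp_rate`) are hypothetical.

```python
def simulate_shortage(weeks=12, demand=100.0, base_supply=40.0, ramp_rate=0.3):
    """Return the weekly shortage under a first-order balancing loop.

    All parameters are hypothetical. Each week, repurposed capacity grows
    by `ramp_rate` times the remaining shortage, so a larger shortage
    triggers more repurposing, which in turn shrinks the shortage.
    """
    repurposed = 0.0
    shortages = []
    for _ in range(weeks):
        shortage = max(demand - base_supply - repurposed, 0.0)
        shortages.append(shortage)
        repurposed += ramp_rate * shortage
    return shortages


shortages = simulate_shortage()
for week, shortage in enumerate(shortages):
    print(f"week {week:2d}: shortage = {shortage:6.2f}")
```

Under these assumptions, the shortage shrinks by a constant fraction each week and approaches zero without overshooting, which is the goal-seeking pattern typical of a balancing loop.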

Manufacturing repurposing serves as a balancing mechanism for the overall system. It reduces unmet market demand by producing the required PPE and CCE to close the gaps created by the pandemic. Manufacturing repurposing took the form of various organizations venturing into new product development or collaborating to scale up a legacy product, as denoted by the open collaboration loop (B2). This collaboration played a pivotal role in enabling manufacturers to repurpose supply chains through open-source platforms (i.e., community efforts). Two examples of community efforts are the WHO’s initiative to publish the formula for manufacturing hand sanitizers online and other firms’ and designers’ initiatives to make 3D printing designs available online for direct printing. This collaborative effort significantly accelerated the manufacturing repurposing process. Manufacturers could bypass the design phase and directly channel their resources into manufacturing and scaling up, resembling the open innovation practices introduced by Chesbrough (2003).