Research Question Generator for Free

If you’re looking for the best research question generator, you’re in the right place. Get a list of ideas for your essay, research paper, or any other project with this online tool.

- 🎓 How to Use the Tool

- 🤔 What Is a Research Question?

- 😺 Research Question Examples

- 👣 Steps to Making a Research Question

- 📝 Research Question Maker: the Benefits

- 🔗 References

🎓 Research Question Generator: How to Use It

Research can’t be done without a clear purpose, an intention behind it.

This intention is usually reflected in a research question, which indicates how you approach your study topic.

If you’re unsure how to write a good research question or are new to this process, you’ll surely benefit from our free online tool. All you need to do is:

- Indicate your search term or title

- Stipulate the subject or academic area

- Press “Generate questions”

- Choose a suitable research question from the generated list.

As you can see, this is the best research question generator requiring minimal input for smart question formulation. Try it out to see how simple the process is.

🤔 Why Make an Inquiry Question?

A research question is a question that you formulate for your scientific inquiry. It sets the scope for your study and determines how you will approach the identified problem, gap, or issue.

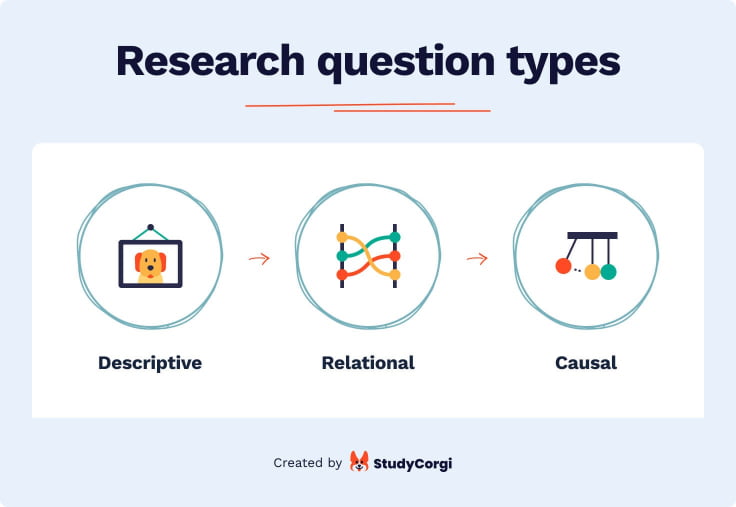

Questions can be descriptive, meaning they aim to describe or measure a subject of the researcher's interest.

Otherwise, they can be exploratory, focusing on under-researched areas and aiming to expand the existing research evidence on the topic.

If there's enough knowledge about the subject, and you want to dig deeper into the existing trends and relationships, you can also use an explanatory research question.

What Makes a Strong Research Question?

The strength of your research question determines the quality of your research, whether it’s a short argumentative essay or an extensive research paper. So, you should review the quality of your question before conducting the full-scale study.

Its parameters of quality are as follows:

- Clarity. The question should be specific about the focus of your inquiry.

- Complexity. It should not be self-evident or answerable with a simple “yes” or “no.”

- Focus. The question should match the size and type of your academic assignment.

- Conciseness. It should be brief and understandable.

- Debatability. There should be more than one potential answer to the question.

😺 Research Question Examples: Good & Not So Good

Here are some examples to illustrate what we mean by quality criteria and how you can ensure that your question meets them.

Lack of Clarity

The bad example is too general and does not clearly estimate what effect you want to analyze or what aspect of video gaming you're interested in. A much better variant is in the right column.

Look at some other research question examples that are clear enough:

- Terrorism: what is it and how to counter it?

- Sex trafficking: why do we have to address it?

- Palliative care: what constitutes the best technique for technicians' communication with patients and families?

- How do vacuum cleaners work?

- What does it mean to age well?

Lack of Focus

The bad example is not focused, as it doesn’t specify what benefits you want to identify and in what context the uniform is approached. A more effective variant is in the right column.

Look at some other research question examples that are focused enough:

- How are biochemical conditions and brain activity linked to crime?

- World wars and national conflicts: what were the reasons?

- Why does crime exist in society?

- Decolonization in Canada: what does decolonization mean?

Lack of Complexity

The bad example is too simplistic and doesn’t focus on the aspects of help that dogs can give to their owners. A more effective variant is in the right column.

Look at some other research question examples that are complex enough:

- How is resource scarcity impacting the chocolate industry?

- What should the Brazilian government do about reducing Amazon’s deforestation?

- Why is a collaborative approach vital during a pandemic?

- What impact has COVID-19 had on the economy?

- How to teach handwriting effectively?

Lack of Debatability

The problem of diabetes is well-known and doesn’t cause any doubts. So, you should add debatability to the discussed issue.

Look at some other research question examples that are debatable enough:

- Online vs. print journalism: what is more beneficial?

- Why will artificial intelligence not replace humans in the near future?

- What are the differences between art and design?

- Crime TV: how is criminality represented on television?

Lack of Conciseness

The question in the left column is too long and ambiguous, making the readers lose focus. You can shorten it without losing the essence.

Look at some other research question examples that are concise enough:

- What is the best way to address obesity in the US?

- Doctoral degree in nursing: why is it important?

- What are the benefits of X-rays in medicine?

- To what extent do emotions influence moral judgment?

- Why did the Industrial Revolution happen in England?

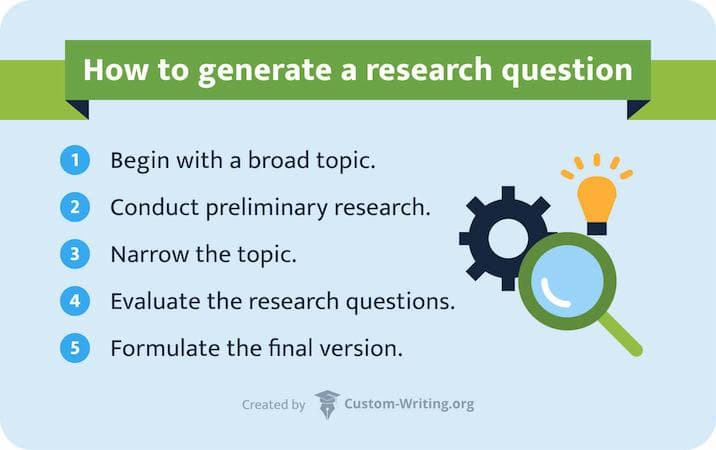

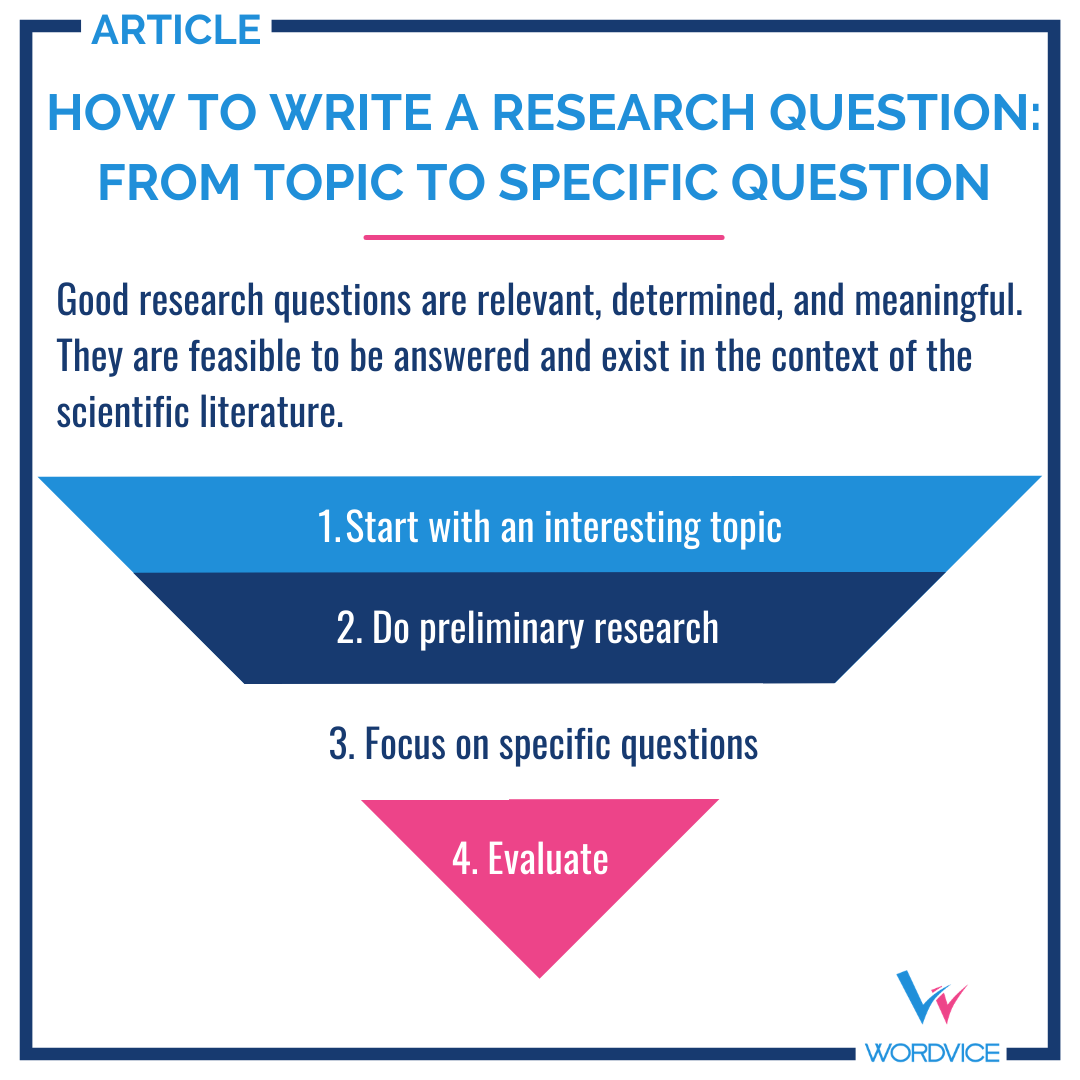

👣 Steps to Generate Research Questions

Now, it’s time to move from theory to practice. Here is a tried-and-tested algorithm for killer research question generation.

- Pick a topic. Once you get a writing assignment, find an appropriate topic first. You can’t formulate a thesis statement or research question if you know nothing about your subject, so narrow your scope and find out as much as possible about the upcoming task.

- Research the topic. After you’ve brainstormed several topic options, you should do some research. This stage takes the guesswork out of the academic process, allowing you to discover what scholars and other respected people think about your subject.

- Clarify who your audience is . Think about who will read your piece. Will it be the professor, your classmates, or the general audience consisting of laypersons? Ensure the research question sounds competent enough for a professor and understandable enough for laypeople.

- Approach the subject critically . With a well-articulated topic at hand, you should start asking the "why's" and "how's" about it. Look at the subject as a kid; don't limit your curiosity. You're sure to arrive at some interesting topics to reveal the hidden sides of the chosen issue.

- Evaluate the questions . Now that you have a couple of questions about your topic, evaluate them in terms of research value. Are all of them clear and focused? Will answering all of them take time and research, or is the answer already on the surface? By assessing each option you’ve formulated, you’re sure to choose one leader and use it as your main research question for the scientific study.

Thank you for reading this article! If you need to quickly formulate a thesis statement, consider using our free thesis maker .

❓ Research Questions Generator FAQ

Updated: Oct 25th, 2023



With our question generator, you can get a unique research question for your assignment, be it an essay, research, proposal, or speech. In a couple of clicks, our tool will make a perfect question for you to ease the process of writing. Try our generator to write the best work possible.

Research Question Generator: Best Tool for Students

Stuck formulating a research question? Try the tool we’ve made! With our research question generator, you’ll get a list of ideas for an academic assignment of any level. All you need to do is add the keywords you’re interested in, push the button, and enjoy the result!


Why Use Research Question Generator?

The choice of research topic is a vital step in the process of any academic task completion. Whether you’re working on a small essay or a large dissertation, your topic will make it fail or fly. The best way to cope with the naming task and proceed to the writing part is to use our free online tool for title generation. Its benefits are indisputable.

- The tool generates research questions, not just topics

- It makes questions focused on your field of interest

- It’s free and quick in use

Research Question Generator: How to Use

Using our research question generator tool, you won’t need to crack your brains over this part of the writing assignment anymore. All you need to do is:

- Insert your study topic of interest in the relevant tab

- Choose a subject and click “Generate topics”

- Grab one of the offered options on the list

The results will be preliminary; you should use them as an initial reference point and refine them further for a workable, correctly formulated research question.

Research Questions: Types & Examples

Depending on your type of study (quantitative vs. qualitative), you might need to formulate different research question types. For instance, a typical quantitative research project would need a quantitative research question, which can be created with the following formula:

Variable(s) + object that possesses that variable + socio-demographic characteristics
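One way to read this formula is as a fill-in-the-blanks template. The Python sketch below is only an illustration of that idea: the function name `quantitative_question` and its parameters are hypothetical and are not taken from the tool itself.

```python
def quantitative_question(variable: str, population: str, demographics: str = "") -> str:
    """Join the formula's three parts into one question.

    variable     -- what is measured (hypothetical example: "daily calorie intake")
    population   -- the object that possesses the variable
    demographics -- optional socio-demographic qualifier
    """
    parts = [f"What is the {variable} of {population}"]
    if demographics:
        parts.append(demographics)
    return " ".join(parts) + "?"


print(quantitative_question("daily calorie intake", "preschoolers", "in Japan"))
```

The wording produced this way is a starting point; it still needs to be adjusted to the question type you choose.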

You can choose among three quantitative research question types: descriptive, comparative, and relationship-based. Let's consider each type in more detail to clarify the practical side of question formulation.

Descriptive

As its name suggests, a descriptive research question inquires about the number, frequency, or intensity of something and aims to describe a quantitative issue. Some examples include:

- How often do people download personal finance apps in 2022?

- How regularly do Americans go on holidays abroad?

- How many subscriptions for paid learning resources do UK students make a year?

Comparative

Comparative research questions presuppose comparing and contrasting things within a research study. You should pick two or more objects, select a criterion for comparison, and discuss it in detail. Here are good examples:

- What is the difference in calorie intake between Japanese and American preschoolers?

- Does male and female social media use duration per day differ in the USA?

- What are the attitudes of Baby Boomers versus Millennials to freelance work?

Relationship-based

Relationship-based research is a bit more complex, so you'll need extra work to formulate a good research question. Here, you should single out:

- The independent variable

- The dependent variable

- The socio-demographics of your population of interest

Let’s illustrate how it works:

- How does the socio-economic status affect schoolchildren’s dropout rates in the UK?

- What is the relationship between screen time and obesity among American preschoolers?

Research Question Maker FAQ

In a nutshell, a research question is the one you set out to answer by performing a specific academic study. Thus, for instance, if your research question is, “How did global warming affect bird migration in California?”, you will study bird migration patterns in relation to global warming dynamics.

You should think about the population affected by your topic, the specific aspect of your concern, and the timing/historical period you want to study. It’s also necessary to specify the location – a specific country, company, industry sector, the whole world, etc.

A great, effective research question should answer the "who, what, when, where" questions. In other words, you should define the subject of interest, the issue of your concern related to that subject, the timeframe, and the location of your study.
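The "who, what, when, where" check can be treated as a simple completeness checklist. The sketch below assumes a hypothetical `QuestionScope` record and `missing_elements` helper; both names are invented for illustration.

```python
from dataclasses import dataclass, fields


@dataclass
class QuestionScope:
    who: str = ""    # subject or population of interest
    what: str = ""   # issue of concern related to that subject
    when: str = ""   # timeframe of the study
    where: str = ""  # location of the study


def missing_elements(scope: QuestionScope) -> list[str]:
    """Return the names of the elements that are still empty."""
    return [f.name for f in fields(scope) if not getattr(scope, f.name).strip()]


scope = QuestionScope(
    who="migratory birds",
    what="effect of global warming on migration",
    where="California",
)
print(missing_elements(scope))
```

In this example the timeframe has not been filled in, so it is the element that still needs to be defined.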

If you don’t know how to write a compelling research question, use our automated tool to complete the task in seconds. You only need to insert your subject of interest, and smart algorithms will do the rest, presenting a set of workable, interesting question suggestions.

Research Question Generator for Students

Our online topic question generator is a free tool that creates topic questions in no time. It can easily make an endless list of random research questions based on your query.

Can't decide on the topic question for your project? Check out our free topic question generator and get a suitable research question in 3 steps!


- 👉 Why Use Our Tool?

- 💡 What Is a Topic Question?

- ✒️ How to Write a Research Question

- 📜 Research Question Example

- 🔗 References

👉 Why Use Our Topic Question Generator?

Our research topic question generator is worth using for several reasons:

- It saves you time. You can develop many ideas and formulate research questions for all of them within seconds.

- It is entirely free. Our tool doesn’t have any limits, probation periods, or subscription plans. Use it as much as you want and don’t pay a cent.

- It is download- and registration-free. Use it in any browser from any device. No applications are needed. You also don’t have to submit any personal data.

- It’s easy to use. You can see an explanation for every step next to each field you need to fill in.

- You can easily check yourself. Spend a couple of seconds to check your research question on logic and coherence.

💡 What Is a Topic Question?

A research topic question is a question you aim to answer while researching and writing your paper. It states the matter you study and the hypothesis you will prove or disprove. This question shares your assumptions and goals, giving your readers a basic understanding of your paper’s content.

It also helps you focus while researching and gives your research scope and limitations. Of course, your research question needs to be relevant to your study subject and attractive to you. Any paper will lack an objective and specificity without an adequately stated research question.

Research Topic Vs. Research Topic Question

‘Research topic’ and ‘research question’ are different concepts that are often confused.

Research Question Types: Quantitative and Qualitative

Another essential distinction is between quantitative and qualitative research questions.

- Quantitative research questions are more specific and number-oriented. They seek clear answers such as “yes” or “no,” a number, or another straightforward solution. Example: How many senior high school students in New York failed to achieve the desired SAT scores due to stress factors?

- Qualitative research questions can be broader and more flexible. They seek an explanation of phenomena rather than a short answer. Example: What is the role of stress factors in the academic performance of high school senior students who reside in New York?

✒️ How to Write a Research Question

Now let’s look at how to create your own research question. This skill will help you structure your papers more efficiently.

Step 1: Choose Your Research Topic

If you’ve already received general guidelines from your instructor, find a specific area of knowledge that interests you. It shouldn’t be too broad or too narrow. You can divide it into sub-topics and note them. Discuss your topic with someone or brainstorm to get more ideas. You can write down all your thoughts and extract potential issues from this paragraph or text.

Step 2: Research

After you’ve chosen a topic, do preliminary research. Search for keywords relevant to your topic to see what the current discussions in the scientific community are. It will be easier for you to cross out the ideas that are already well researched. In addition, you might spot some knowledge gaps that you can later fill in. We recommend avoiding poorly researched areas unless you are confident you can rely solely on the data you gather.

Step 3: Narrow Your Topic

At this stage, you already have some knowledge about the matter. You can tell good ideas from bad ones and formulate a couple of research questions. Leave only the best options that you actually want to proceed with. You can create several draft variations of your top picks and research them again. Depending on the results you get, you can leave the best alternatives for the next step.

Step 4: Evaluate What You’ve Got

Evaluate your topics by these criteria:

- Clarity. Check if there are any vague details and consider adjusting them.

- Focus. Your research matter should be unambiguous, without other interpretations.

- Complexity. A good topic research question shouldn’t be too difficult or too easy.

- Ethics. Your ideas and word choice shouldn’t be prejudiced or offensive.

- Relevance. Your hypothesis and research question should correspond with current discussions.

- Feasibility. Make sure you can conduct the research that will answer your question.

Step 5: Edit Your Research Question

Now you can create the final version of your research question. Use our tool to compare your interpretation with the one produced by artificial intelligence. Though you might change the question later based on your findings, aim for a polished statement now and make it as narrow as possible. If you don’t know how to make it more specific, leave it until you get the first research results.

📜 Research Question Generator: Examples

Compare a good and bad research question to understand the importance of following all rules:

Thank you for reading till the end. We hope you found the information and tool useful for your studies. Don’t forget to share it with your peers, and good luck with your paper!

Updated: May 17th, 2024


Quantitative Research Question Generator

A research question is the core of any academic paper. Yet, the formulation of a solid quantitative research question can be a challenging task for students. That’s why the NerdyRoo team created an outstanding tool that will become your ultimate academic assistant.

- 🚀 Why Use Our Generator?

- 🔎 What Is a Quantitative Research Question?

- ✍️ Writing Steps

- ✨ Question Examples

- 🔗 References

🚀 Why Use Our Generator?

Doubting whether our quantitative research question generator is worth using? It is! Our tool has many benefits:

- It’s entirely free

- It’s accessible online and without registration

- It’s easy to use

- It saves you time

- It boosts productivity

- It instantly generates a high-quality quantitative research question.

🔎 What Is a Quantitative Research Question?

Quantitative questions are close-ended questions used for gathering numerical data. Such questions help to collect responses from big samples and, relying on the findings, make data-driven conclusions. A research question is essential to any quantitative research paper because it presents the topic and the paper's aim.

Quantitative research questions always contain variables: things that are being studied. It's crucial to ensure your variables are attainable and measurable. For example, you cannot measure organizational change and emergency response, but you can determine the frequency of organizational change and an emergency response score.

Types of Quantitative Research Questions

Do you know that there are 3 types of quantitative research questions? Take a look at them and decide which type is the most suitable for your paper.

Quantitative vs. Qualitative Research Questions

Many students confuse quantitative and qualitative research. Despite having similar-sounding names, they're very different:

- Quantitative questions are aimed at collecting raw numerical data.

- Qualitative questions have an answer expressed in words. They also allow getting respondents’ personal perspectives on a research topic.

Let’s examine the main differences between qualitative and quantitative research:

✍️ How to Write a Quantitative Research Question

Want to craft an outstanding quantitative research question? We know how to help you! Follow the 5 steps below and get a flawless result:

1. Choose the type of research question.

Decide whether you need a descriptive, comparative, or relationship-based quantitative research question. How your question starts will also depend on the type.

2. Identify the variables.

See how many variables you have. Don't forget to distinguish between dependent and independent ones.

3. Set the groups.

Your study will focus on one or more groups. For example, you might be interested in social media use among Gen-Z Americans, male Millennials, LGBTQ+ people, or any other demographic.

4. Specify the context.

Include any words that will make your research question more specific, such as "per month," "the most significant," or "daily."

5. Compose the research question.

Combine all the elements from the previous steps. Use our quantitative research question generator to ensure the highest result quality!
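The five steps above can be sketched as a small function that picks a sentence pattern from the question type and then fills in variables, groups, and context. This is a minimal illustration under stated assumptions: `compose_question` and its sentence patterns are hypothetical and do not describe the generator's actual implementation.

```python
def compose_question(kind, variables, groups, context=""):
    """Assemble a quantitative research question.

    kind      -- "descriptive", "comparative", or "relationship" (step 1)
    variables -- list of variables; for "relationship": [independent, dependent] (step 2)
    groups    -- list of groups under study (step 3)
    context   -- optional qualifier such as "per day" (step 4)
    """
    ctx = f" {context}" if context else ""
    if kind == "descriptive":
        (variable,) = variables
        (group,) = groups
        return f"What is the {variable}{ctx} among {group}?"
    if kind == "comparative":
        (variable,) = variables
        first, second = groups
        return f"What is the difference in {variable}{ctx} between {first} and {second}?"
    if kind == "relationship":
        independent, dependent = variables
        (group,) = groups
        return f"What is the relationship between {independent} and {dependent}{ctx} among {group}?"
    raise ValueError(f"unknown question type: {kind}")


# Step 5: combine the elements.
print(compose_question("comparative", ["social media use"],
                       ["Gen-Z Americans", "male Millennials"], "per day"))
```

Unpacking the lists with a fixed number of names makes the function fail early if, say, a comparative question is given only one group.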

✨ Quantitative Research Question Examples

Now, let's look at some well-formulated quantitative research questions with explanations for variables and groups.

Thanks for visiting our webpage! Good luck with your quantitative research. Use our online tool and share it with your friends!

❓ Quantitative Research Questions FAQs

❓ What is an example of a quantitative research question?

A quantitative research question might be the following: "What is the relationship between website user-friendliness and customer purchase intention among male and female consumers of age 25 to 30?" Another example would be: "What percentage of Bachelor's graduates acquire a Master's degree?"

❓ What are the quantitative questions?

Quantitative questions are close-ended questions used for collecting numerical data. Such questions help gather responses from big samples and trace patterns in the selected study area. Relying on the findings of quantitative research, the researcher can make solid decisions.

❓ How do you write a quantitative research question?

- Choose the type of research question.

- Identify the variables.

- Set the groups you will study.

- Specify the context.

- Compose the research question.

To ensure the best result, use our online generator. It will create a flawless research question for free in a couple of seconds!

❓ What questions does quantitative research answer?

Quantitative research answers any kind of question involving numerical data analysis. For example, it may help to determine the interdependence of variables, examine current trends within the industry, and even create forecasts.


Create Key Questions to Guide Your Studies

AI Generators in Science and Research

🧪🔍 Formulate relevant questions to guide your scientific work. Define your research objective precisely!


The quality of a study is often based on the relevance of its initial questions. In the vast world of research, asking the right questions is essential to obtaining meaningful, impactful answers.

📝 Questions That Matter

Our generator helps you formulate pertinent, targeted questions to give a clear direction to your research.

💡 Suitable for All Subjects

Whether it's life sciences, astrophysics, or sociology, we cover a wide range of fields to meet your needs.

🌐 Set Your Course

With thoughtful questions, optimize the reach and efficiency of your research within the scientific community.


Qualitative Research Question Generator

Wondering how to come up with a fascinating research question? This tool is for you.

- 📝 Qualitative Research Definition

- ✍️ Creating a Qualitative Research Question

- 👼 Useful Tips

- 🔗 References

What Does the Qualitative Research Question Generator Do?

Our qualitative research question generator creates science topics for any studying field.

You just need to:

- Add the necessary information into the textboxes;

- Click “Generate”;

- Look at the examples if necessary.

You will see different research areas, from broad to narrow ones. If you didn't find the ones you like, click "see more."

Don't worry if it's your first qualitative research experience. We will teach you how to formulate research questions and get the most out of this tool.

📝 What Is Qualitative Research?

First, let's define the difference between qualitative and quantitative research. Qualitative research focuses on meaning and experience. Quantitative research aims to provide empirical evidence to prove a hypothesis.

Qualitative research is an inquiry-based information-gathering method used to study social phenomena. In most cases, qualitative research relies on interviews, observations, focus groups, and case studies. The main goal of a researcher is to gain deep insights instead of focusing on numbers and statistics. Qualitative research is popular in psychology, anthropology, sociology, political science, education studies, and social work.

Take a look at the 6 characteristics of qualitative research:

- It relies on a real-world setting.

- It uses multiple data collection methods.

- It depends on researchers' data more than on prior research.

- It considers participants' understanding of phenomena.

- It uses complex reasoning.

- It has a flexible course of study.

What Is a Qualitative Research Question?

A qualitative research question seeks to understand the meaning people attach to a concept. It aims to explore phenomena rather than explain them. For that reason, a qualitative research question is usually more general.

How to write a good qualitative research question? Make sure it meets the requirements below:

- Broadness. Qualitative research questions need to be broad enough to allow exploration yet specific enough to guide the study.

- Relevance. A topic should interest your audience and follow the given instructions.

- Applicability. The results of your research should have scientific value and implementation opportunities.

- Clarity. Your readers need to know what your paper is about after seeing the research question.

- Flexibility. The scenario of your research can change while you conduct it. That is why qualitative research questions are often open-ended.

✍️ How to Make a Qualitative Research Question?

Now we will explain how to choose and create an excellent research title. Just make sure you follow these steps:

- Brainstorm your ideas. Take a piece of paper or use any digital device to note your thoughts. Based on the task, make a list of as many research topics as you can.

- Choose the topics that look most promising at this stage. You can start with your interests and the areas already familiar to you. Use your old papers. If you noticed any knowledge gaps while working on them, add these topics too. Think about how your work can contribute to the chosen field of science.

- Conduct a preliminary literature review on your topics. It will help to eliminate the ones that are already studied well.

- Define the purpose of your research. For example, you want to understand what rewards and perks are the most stimulating for employees. Discuss your ideas with your instructor. If you have an opportunity to choose a supervisor, find a professor with experience within your area of interest.

- Choose one topic and think of a research question . If you discuss your research in class, listen to what your peers say about it. It can give you new ideas and insights on the topic.

- After choosing your topic, research the area more thoroughly. You might rely on your supervisor's instructions at this stage. Take notes on your findings and think about how you can use them. Think of the questions you had after reading the materials and how you can answer them.

- Formulate your research question. Make the question as concise as possible. If you find it challenging to put your thoughts into one sentence, write a small paragraph. Then you can shorten it and form a question.

- Create 1-3 sub-questions in addition to your main qualitative research question. They should highlight the purpose of your research and give more information about your work.

✨ Benefits of Qualitative Research Question Generator

Knowing how to create a qualitative research topic is excellent. But imagine what you can do if you combine your knowledge with artificial intelligence.

Here's how students can benefit from using our tool:

- It saves time. The tool generates an infinite list of topics in one second. You just need to choose the ones that appeal to you.

- It is 100% free. No registration, subscription, or donation is required.

- It has no limits. Use this tool as many times as needed.

- It is easy to use. Type your research keywords into the search bar and click "Search topic."

- It values your privacy. You don't need to disclose any personal data to use this tool.

👼 Tips for Writing a Qualitative Research Question

Here are some nice bonus tips for you:

- Use qualitative verbs such as "describe", "understand", "discover".

- Avoid quantitative research-related verbs such as "affect", "influence", "relate".

- Be sure that it is possible to answer the question fully.

- Don't be afraid to adjust your research question if your research leads to biased results.

- Check if your research question and information-gathering methods are ethical.

- Make sure your research question doesn't contradict your purpose statement.

- Rely on research findings rather than on your predictions.

❓ How do you write a qualitative research question?

Brainstorm your ideas and highlight the best ones. To formulate a research question, use qualitative words and broad ideas. Create a paragraph to narrow it down later. The complexity of your research question depends on the type of paper and the instructions you received.

❓ What are typical qualitative research questions?

Typical qualitative research questions begin with words such as "how," "what," "to what extent," etc. They imply that there should be an extended deep answer to the question. In addition, there can be qualitative sub-questions that are narrower than the central question.

❓ What are examples of research questions?

Here are two examples of nursing research questions:

Qualitative research question: What are the consequences of psychological side effects of ADHD medication for children?

Quantitative research question: How do the psychological side effects of ADHD medication for children influence academic performance?


Scientific Research Question Generator

Feeling stuck trying to make a fresh and creative research question? Try our free research question generator! Choose a suitable question from a list of suggestions or build your own.

- Question Maker

- Question Finder

Make a scientific research question with this tool in 3 simple steps:


- 🧪 What Is This Tool?

- ‼️ Why Are Research Questions Important?

- 📃 How to Create a Science Paper?

- 🔗 References

🧪 Scientific Research Question Generator: What Is It?

Welcome to the page of our scientific research question generator! Right about now, you’re probably wondering – what is this tool, and how does it work? We present you with two options – a generator and a builder. You can read more about them below.

Scientific Question Generator

Using a question generator is a great way to save time and get what you want. You won’t have to spend hours looking for a fresh and creative idea! Once you customize the generator to your requirements, you’ll get incredible results.

What is good about this option? Simply put, you’ll only need to follow a few basic steps to create a research question. First, enter the keywords for your future work. You can also select a research area to optimize the generator’s search. Run a search for results and choose a question option from the many suggested ideas! You can refresh your search until you find the research question that fits and inspires you the most.

Research Question Builder

This tool has another feature that may come in handy: a builder that creates individual research questions from scratch. You don’t need to come up with your own options or guess how to word an idea well. It is a valuable function that will save time and produce more creative outcomes. To build a question, you’ll have to specify more qualifying study details.

As the first step, decide on your study group and the factor that affects it. Next, try to formulate a measurable outcome of your work. You can add another study group to expand the generator’s capabilities. And finally, specify the time frame of the study. As a result, you have a ready-made individualized research question.
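
The builder steps above can be pictured as filling in a sentence template. The sketch below is only an illustration under stated assumptions: the field names, the `build_question` helper, and the template wording are hypothetical and do not describe how the actual tool works.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class QuestionParts:
    """Inputs named in the text; the field names are illustrative."""
    study_group: str                        # who is studied
    factor: str                             # what affects the group
    outcome: str                            # measurable outcome
    comparison_group: Optional[str] = None  # optional second study group
    time_frame: Optional[str] = None        # optional time frame


def build_question(parts: QuestionParts) -> str:
    """Assemble one question from a fixed, hypothetical template."""
    question = (
        f"How does {parts.factor} affect {parts.outcome} "
        f"in {parts.study_group}"
    )
    # Optional details are appended only when they are provided.
    if parts.comparison_group:
        question += f" compared with {parts.comparison_group}"
    if parts.time_frame:
        question += f" over {parts.time_frame}"
    return question + "?"


example = QuestionParts(
    study_group="first-year nursing students",
    factor="peer tutoring",
    outcome="exam scores",
    comparison_group="students without tutoring",
    time_frame="one semester",
)
print(build_question(example))
```

A single template like this is rigid; a real question usually needs rewording by hand after the parts are in place.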

‼️ Why Are Research Questions Important?

A research question is a helpful tool both for students and researchers. Sound and well-constructed questions are the ones that can shape the structure of your study. They should be grounded in consciously chosen data, instead of random variables. We can use these important questions not only for academic objectives but also in other life situations. For example, by studying the research questions of a potential employer, you can understand the suitability of the company and this kind of job for you.

A well-worded question will be easier for you to answer. You can also use it to outline your research and identify possible problems. That approach will reduce the time it takes to prepare the design of your study. To create a good research question, you need to:

- Choose an area of interest.

- Focus on a specific topic.

- Compose smaller support questions.

- Select the type of data collection and review the applicable literature.

- Identify your target audience.

📃 How to Create a Good Science Paper?

Scientific research papers are similar to the standard essays you are used to writing in school and college. But they have their specificities that you should be aware of. In this section, we have broken down the structure of a typical science paper and explained what goes into each part.

Title

Your title should be specific and concise. It should also describe the subject and be comprehensive. However, it should be clear enough to be understood by a broader target audience, not just narrowly focused specialists.

Abstract

The abstract is often a necessary component of academic work. Its principal aim is to give the reader a quick look at the scientific material so they can decide whether they are interested. However, this part shouldn’t be as technical as the main study, so as not to distract them. The abstract consists of general objectives, methods, results, and conclusions, and is approximately 150 to 250 words long. Note that you shouldn’t include citations, notations, or abbreviations.

Introduction

You should write an introduction describing the statement of your problem, and why it’s relevant and worthwhile. A few paragraphs will be enough. You can mention the main sources you have been working with to keep your audience involved. Also, remember to provide the necessary context and background information for your research. You can finish the introduction by explaining the essence of your research question and the value of your answer.

Methods & Materials

In this section of the paper, you should describe the methods and materials you used for your study. Give enough detail to make your results replicable, and state whether you used qualitative or quantitative research methods (or a mix of both). You can use tables, diagrams, and charts to visually represent this information. You shouldn’t disclose your findings here, but you can include preliminary conclusions for reference.

Results

At this point, present the final study results, outlining the essential conclusions. Remember, there is no need to discuss the findings or cause-and-effect relationships here. Avoid including intermediate results that don’t affect the bottom line. Also, avoid manipulating your audience or exaggerating your achievements, as your results should be testable.

Discussion

Provide the most meaningful results for discussion. Describe how these results relate to your question and how they are consistent with the results of other researchers. Indicate if the results coincided with your expectations and how you can interpret them. Also, mention if your findings raise issues and how they impact the scope of the study. You may finish up with the relevance of your conclusions.

Tables and Charts

When you give data in tables or charts, be sure to include a header describing the information in them. Don’t use tables or charts if they are irrelevant. Also, don’t insert them if you need to display data that can fit into a couple of sentences. Make sure to annotate all the visual data you end up using and mention them in the list of figures in the appendix.

References

Every scientific research paper must have a list of references at the end. This is to avoid plagiarism and to support the validity of your study. Remember to use notations as you go along and indicate them in the text. Then, you must list all the literature used in alphabetical order at the end of the paper. Double-check the citation style of your institution before making this list.

We hope you found our tool helpful in your work! Be sure to check out the FAQ section below if you still have any questions.

❓ Scientific Question Generator – FAQ

❓ How do you develop a scientific question?

Formulate the question in such a way that you can study it. It should be clear, understandable, and brief. After reading your research question, the reader should understand what your paper will be about. Therefore, it should have an objective, relevance, and meaning.

❓ What are good examples of a science research question?

“What are the legal aspects affecting the decrease in people who drive under the influence of alcohol in the USA?” — This question focuses on a defined topic and reviews the effectiveness of existing legislation.

“How can universities improve the environment for students to become more LGBT-inclusive?” — This question focuses on one specific issue and addresses a narrowly targeted area.

❓ What are the 3 qualities of a good scientific question?

A good question should be feasible given the research, methods, and materials available in the field of study, and it should be ethical. It should be interesting, engaging, and intriguing to the target audience. Finally, it should also be relevant and provide new ideas to the chosen field for future research.

Updated: May 17th, 2024


Research Question Maker


Looking for a research question maker to get a ready research question or build one from scratch?

Search no more!

This 2-in-1 online research question making tool can do both in seconds.

Try it and break free from the stress. The tool is user-friendly, and you can easily access it online for free.

- 🤔 How to Use the Tool?

- 🕵🏽 What Is a Research Question?

- 🔢 Research Question Formula

- 🔎 Research Question Types

- ✅ Research Question Checklist

- 👀 Examples

- 🔗 References

🤔 Research Question Maker: How to Use the 2-in-1 Tool?

Getting a Ready Research Question

Our research question generator takes the stress out of the process: you get results within a few seconds.

Get your research question by following the steps below:

- Enter the keywords related to the research question you are interested in exploring.

- Choose your study area if necessary.

- Run the search and wait for the results.

- Look at the many ideas that the question maker will propose.

You can refresh the search until you find the question that suits your research paper.

Building a Tailor-made Research Question

Another option of this 2-in-1 tool enables you to build a tailor-made research question from scratch. To get one quickly, perform the following steps:

🕵🏽 What Is a Research Question?

A research question is important in guiding your research paper, essay, or thesis. It offers the direction of your research and clarifies what you want to focus on.

Good research questions require you to synthesize facts from several sources and interpret them to get an answer.

It is essential to understand the features of a good research question before you start the formulation process.

Your question should be:

- Focused. It should focus on one research issue.

- Specific. The question should contain narrowed ideas.

- Researchable. You should get answers from qualitative and quantitative research sources.

- Feasible. It should be workable within the practical limitations.

- Original. The question should be unique and authentic.

- Relevant. It needs to be based on your subject discipline.

- Complex. It should offer adequate scope for analysis and discussion.

Research papers or essays require one research question, as a rule. However, extensive projects like dissertations and theses can have several research questions focusing on the main research issue.

The thesis statement is the response you develop; it sets the direction of your arguments. It should be relevant to the research question.

Thus, you can also use an online thesis maker to ensure it aligns with your formulated questions.

🔢 Research Question Formula

In research writing, you must begin with a topic of interest. Analyzing the original title you have chosen will give you a good and well-defined research question.

There is an effective formula you can use when formulating your research question.

Topic + Concept = Research question

The topic should be specific with a strong focus on a subject matter, while the concept surrounding it should be from a broad field.

For example:

Your topic could be social media, nursing, standardized tests, cybersecurity, etc. Conversely, concepts can be the risks and benefits of your topic, the recent trends, challenges faced by the industry, etc.

Let us explore the formula and create a few research questions.

- Standardized tests (topic) + recent trends (concept) = How have standardized tests impacted the education sector? (research question)

- Cybersecurity (topic) + effect (concept) = How has cybersecurity affected the evolution of technology? (research question)

Therefore, ensure your research question is neither too broad nor too narrow. Broad topics and concepts might overwhelm you with numerous sources. On the other hand, narrow questions will limit you when exploring the project's scope.
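
The formula above can be read as a simple lookup: pick a question template for the concept, then fill in the topic. The sketch below is a hedged illustration only. The `TEMPLATES` table, the `research_question` helper, and the extra `target` parameter (the field or group the question is about) are assumptions introduced for this example and are not part of the tool described here.

```python
# Hypothetical templates keyed by concept; the wording is an assumption,
# not the output of the tool described in this article.
TEMPLATES = {
    "effect": "How has {topic} affected {target}?",
    "risks and benefits": "What are the risks and benefits of {topic} for {target}?",
    "recent trends": "What recent trends in {topic} are shaping {target}?",
    "challenges": "What challenges does {topic} pose for {target}?",
}


def research_question(topic: str, concept: str, target: str) -> str:
    """Combine a topic and a concept into a question via a template."""
    key = concept.strip().lower()
    if key not in TEMPLATES:
        raise ValueError(f"No template for concept: {concept!r}")
    return TEMPLATES[key].format(topic=topic, target=target)


print(research_question("cybersecurity", "effect", "the evolution of technology"))
print(research_question("standardized tests", "recent trends", "the education sector"))
```

The first call reproduces the cybersecurity example from the list above. Treat the output as a starting draft, since a template cannot judge whether the result is too broad or too narrow.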

🔎 Research Question Types

When formulating your research question, choose from 3 fundamental types that your academic paper can focus on.

Descriptive Research Question

When your investigation intends to disclose existing patterns within the research subject, you should use this type.

A descriptive question urges you to collect measurable information about the attributes of subjects with certain views. It could be a number, occurrence, or amount that describes a research problem.

Here are some examples:

- What is the percentage of people with fitness apps in 2022?

- What is the average debt load of an American?

- How often do students use online writing services in the UK?

Relational Research Question

This type focuses on comparing two or more entities in a research investigation. After picking your variables, you must choose a comparison parameter and provide its detailed discussion.

Some examples are as follows:

- What is the difference between men's and women's salaries in IT?

- What is the correlation between alcohol and depression?

- Is there a relationship between a vegan diet and the low-income bracket?

Causal Research Question

This is a cause-and-effect type of research question. It seeks to prove how one variable affects another one.

Great examples are:

- How does advertising impact consumer behavior?

- Do public opinion polls alter voter inclinations?

- How does employee training affect performance in the employment market?

✅ How to Make a Research Question Stronger? The Checklist

Developing questions seems like a simple task for students. But it can be quite challenging if you want to create an effective research question. The latter can make or break your paper, so you should focus on strengthening and refining it.

How do you make your research question strong? The criteria below will show whether you've already arrived at a workable question.

👀 Research Question Examples

- What does a change-ready organization look like?

- Wearable medical devices: how will they transform healthcare?

- What effect did the World War II wartime experience have on African Americans?

- Biodiversity on the Earth: why is it crucial for the environment?

- What makes William Shakespeare relevant in the modern day?

- How did the Civil War affect the distribution of wealth in the United States?

- What is love?

- Why should businesses embrace remote work?

- What impact has feminism had on the study of women and crime?

- How to construct a mixed methods research design?

- What is a halogenated hydrocarbon?

Thank you for reading this article! If you need to formulate a research title, try using our title-generating tool.

❓ Research Question Maker Tool FAQ

❓ What is a good research question?

A great research question is specific and answerable within a workable time frame. It should focus on one topic and be researchable using primary and secondary data. In short, it should have a clear statement of what the researcher is supposed to do to get practical answers.

❓ How to formulate a research question?

To understand how to create a research question, you need to think about how your topic affects a particular population. You should also consider the period of investigation and the location – it could be an organization, country, or commercial industry.

❓ How to write a qualitative research question?

Your questions should reveal research issues and opinions from the respondents in your study. Qualitative questions seek to discover and understand how people make sense of their life experiences and events. The results of qualitative research are analyzed narratively, so don't try to quantify them.

❓ How to find a research question?

If you find it difficult to compose a unique research question, use our question maker tool and get it within a few seconds. Just enter the right keywords about your subject of interest, and the smart algorithms will produce a list of questions that suit your case.



Creating a Good Research Question

- Advice & Growth

- Process in Practice

Successful translation of research begins with a strong question. How do you get started? How do good research questions evolve? And where do you find inspiration to generate good questions in the first place? It’s helpful to understand existing frameworks, guidelines, and standards, as well as hear from researchers who utilize these strategies in their own work.

In the fall and winter of 2020, Naomi Fisher, MD, conducted 10 interviews with clinical and translational researchers at Harvard University and affiliated academic healthcare centers, with the purpose of capturing their experiences developing good research questions. The researchers featured in this project represent various specialties, drawn from every stage of their careers. Below you will find clips from their interviews and additional resources that highlight how to get started, as well as helpful frameworks and factors to consider. Additionally, visit the Advice & Growth section to hear candid advice and explore the Process in Practice section to hear how researchers have applied these recommendations to their published research.

- Naomi Fisher, MD, is associate professor of medicine at Harvard Medical School (HMS), and clinical staff at Brigham and Women’s Hospital (BWH). Fisher is founder and director of Hypertension Services and the Hypertension Specialty Clinic at the BWH, where she is a renowned endocrinologist. She serves as a faculty director for communication-related Boundary-Crossing Skills for Research Careers webinar sessions and the Writing and Communication Center.

- Christopher Gibbons, MD, is associate professor of neurology at HMS, and clinical staff at Beth Israel Deaconess Medical Center (BIDMC) and Joslin Diabetes Center. Gibbons’ research focus is on peripheral and autonomic neuropathies.

- Clare Tempany-Afdhal, MD, is professor of radiology at HMS and the Ferenc Jolesz Chair of Research, Radiology at BWH. Her major areas of research are MR imaging of the pelvis and image-guided therapy.

- David Sykes, MD, PhD, is assistant professor of medicine at Massachusetts General Hospital (MGH) and principal investigator at the Sykes Lab at MGH. His special interest area is rare hematologic conditions.

- Elliot Israel, MD, is professor of medicine at HMS, director of the Respiratory Therapy Department, the director of clinical research in the Pulmonary and Critical Care Medical Division and associate physician at BWH. Israel’s research interests include therapeutic interventions to alter asthmatic airway hyperactivity and the role of arachidonic acid metabolites in airway narrowing.

- Jonathan Williams, MD, MMSc, is assistant professor of medicine at HMS, and associate physician at BWH. He focuses on endocrinology, specifically unravelling the intricate relationship between genetics and environment with respect to susceptibility to cardiometabolic disease.

- Junichi Tokuda, PhD, is associate professor of radiology at HMS, and is a research scientist at the Department of Radiology, BWH. Tokuda is particularly interested in technologies to support image-guided “closed-loop” interventions. He also serves as a principal investigator leading several projects funded by the National Institutes of Health and industry.

- Osama Rahma, MD, is assistant professor of medicine at HMS and clinical staff member in medical oncology at Dana-Farber Cancer Institute (DFCI). Rahma is currently a principal investigator at the Center for Immuno-Oncology and Gastroenterology Cancer Center at DFCI. His research focus is on drug development of combinational immune therapeutics.

- Sharmila Dorbala, MD, MPH, is professor of radiology at HMS and clinical staff at BWH in cardiovascular medicine and radiology. She is also the president of the American Society of Nuclear Medicine. Dorbala’s specialty is using nuclear medicine for cardiovascular discoveries.

- Subha Ramani, PhD, MBBS, MMed, is associate professor of medicine at HMS, as well as associate physician in the Division of General Internal Medicine and Primary Care at BWH. Ramani’s scholarly interests focus on innovative approaches to teaching, learning and assessment of clinical trainees, faculty development in teaching, and qualitative research methods in medical education.

- Ursula Kaiser, MD, is professor at HMS and chief of the Division of Endocrinology, Diabetes and Hypertension, and senior physician at BWH. Kaiser’s research focuses on understanding the molecular mechanisms by which pulsatile gonadotropin-releasing hormone regulates the expression of luteinizing hormone and follicle-stimulating hormone genes.

Insights on Creating a Good Research Question

Play Junichi Tokuda video

Play Ursula Kaiser video

Start Successfully: Build the Foundation of a Good Research Question

Start Successfully Resources

Ideation in Device Development: Finding Clinical Need, by Josh Tolkoff, MS. A lecture explaining the critical importance of identifying a compelling clinical need before embarking on a research project. Play Ideation in Device Development video.

Radical Innovation, by Jeff Karp, PhD. This ThinkResearch podcast episode focuses on one researcher’s approach using radical simplicity to break down big problems and questions. Play Radical Innovation.

Using Healthcare Data: How can Researchers Come up with Interesting Questions? By Anupam Jena, MD, PhD. Another ThinkResearch podcast episode addresses how to discover good research questions by using a backward design approach, which involves analyzing big data and allowing the research question to unfold from findings. Play Using Healthcare Data.

Important Factors: Consider Feasibility and Novelty

Refining Your Research Question

Play video of Clare Tempany-Afdhal

Play Elliot Israel video

Frameworks and Structure: Evaluate Research Questions Using Tools and Techniques

Frameworks and Structure Resources

Designing Clinical Research, by Hulley et al. A comprehensive and practical guide to clinical research, including the FINER framework for evaluating research questions. Learn more about the book.

Translational Medicine Library Guide, Queens University Library. An introduction to popular frameworks for research questions, including FINER and PICO. Review translational medicine guide.

Asking a Good T3/T4 Question, by Niteesh K. Choudhry, MD, PhD. This video explains the PICO framework in practice as participants in a workshop propose research questions that compare interventions. Play Asking a Good T3/T4 Question video.

Introduction to Designing & Conducting Mixed Methods Research. An online course that provides a deeper dive into mixed methods research questions and methodologies. Learn more about the course.

Network and Support: Find the Collaborators and Stakeholders to Help Evaluate Research Questions

Network & Support Resource

Bench-to-bedside, Bedside-to-bench, by Christopher Gibbons, MD. In this lecture, Gibbons shares his experience of bringing research from bench to bedside, and from bedside to bench. His talk highlights the formation and evolution of research questions based on clinical need. Play Bench-to-bedside.

Research Question Generator Online

Are you looking for effective aid in research question formulation? Try our research question generator and get ideas for any project instantly.

- 🤖 How to Use the Tool

- ❗ Why Is a Research Question Important?

- 🔖 Research Question Types & Examples

- 🗺️ How to Generate a Research Question

- 👀 More Examples

- 🔍 References

🤖 How to Use a Research Question Generator?

Struggling to develop a good research question for your college essay, proposal, or dissertation? Don't waste time anymore, as our research question generator is available online for free.

Our tool is designed to provide original questions to suit any subject discipline.

Generate your questions in a few easy steps as shown below:

- Add your research group and the influencing factor.

- Indicate your dependent variable (the thing you’re planning to measure).

- Add the optional parameters (the second research group and the time frame).

- Look at the examples if necessary.

Once you get the initial results, you can still refine the questions to get relevant and practical research questions for your project.

❗ Why Is a Research Question Important?

The main purpose of formulating a research question is to break down a broad topic and narrow it to a specific field of investigation. It helps you gain practical knowledge of the topic of interest. The research question also acts as a guiding structure for the entire investigation from paragraph to paragraph. Besides, it lets you define research issues and spot gaps in the study.

The research questions disclose the boundaries and limitations of your research, ensuring it is consistent and relevant. Ultimately, these questions will directly affect the research methods you will use to collect and analyze data. They also affect the process of generating a thesis statement. With a proposal checker, you can also polish your research question to ensure it aligns with the research purpose.

🔖 Research Question Types & Examples

The research writing process covers different types of questions, depending on the depth of study and subject matter. It is important to know the kind of research you want to do; it will help you in the formulation of an effective research question. You can select quantitative, qualitative, or mixed methods studies to develop your questions.

Let us explore some of these question types in detail to help you choose a workable option for your project:

Quantitative Research Questions

Quantitative questions are specific and objective, providing detailed information about a particular research topic. The data you collect from this research type is quantifiable and can be studied using figures.

These questions also delineate a relationship between the research design and the research question.

Quantitative questions focus on issues like:

- "How often"

- "How intense"

- "Is there a statistical relationship"

They illustrate the response with numbers.
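
As a rough illustration of the cues listed above, the sketch below flags a question as likely quantitative when it contains one of those phrases. This is a hypothetical keyword heuristic, not a method from this article: the `looks_quantitative` helper is an assumed name, and the last two cues in the tuple are assumptions added for the example. It cannot replace your own judgement about the study design.

```python
# The first three cues come from the list above; the last two are
# assumptions added for illustration.
QUANTITATIVE_CUES = (
    "how often",
    "how intense",
    "is there a statistical relationship",
    "how many",
    "what is the percentage",
)


def looks_quantitative(question: str) -> bool:
    """Return True if the question contains a quantitative cue phrase."""
    text = question.strip().lower()
    return any(cue in text for cue in QUANTITATIVE_CUES)


for q in (
    "How often do students use online writing services in the UK?",
    "How do human infants acquire a language?",
):
    label = "likely quantitative" if looks_quantitative(q) else "no quantitative cue found"
    print(f"{q} -> {label}")
```

A question without any of these cues is not necessarily qualitative; the check only reports that none of the listed phrases appear.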

In addition, quantitative questions help you to explore existing patterns in data from a specific location or context. The collected information allows researchers to make logical and data-driven conclusions.

This type of research question can be classified further into 3 categories.

Descriptive Research Questions

Such questions seek to describe a quantifiable problem and investigate the numbers, rates, or intensity of the issue. They are usually used to write descriptive papers.

Comparative Research Questions

As the name suggests, comparative questions intend to compare and contrast two or more issues in a research project. These questions are used in comparative papers. To formulate such a question, identify two or more variables, choose a standard for comparison, and present an in-depth discussion.

Let's look at a few examples.

Relationship-based Research Questions

Relationship-based questions reveal and identify a connection between two or more research variables. Such questions entail a dependent variable, an independent variable, and the socio-demographics of the population you are interested in studying.

Qualitative Research Questions

Qualitative research questions are open-ended and aim to explore or explain respondents' subjective meanings and experiences. You can't measure the data you collect from a qualitative research question in figures, as it's mostly narrative. Some of the common types include those described below.

Exploratory Research Questions

These questions investigate a particular research topic without any assumptions.

Explanatory Research Questions

These questions examine the reasons and find connections between existing entities.

Mixed Methods Studies

When you combine quantitative and qualitative research questions, you will get a mixed-method research study. It answers your research question more comprehensively since it combines the advantages of both research methods in a pragmatic study.

This mixed study can focus on quantitative data (score comparison with attitude ranking) and qualitative insights from student interviews about attitudes.

🗺️ How to Generate a Research Question

We have outlined a few steps to generate exceptional questions for students who don't know how to write them effectively.

👀 More Research Question Examples

- Why do minorities delay going to the doctor?

- What makes humans mortal genetically?

- Why and how did the US get involved in the Korean War?

- The virus COVID-19: what went wrong?

- What is cancel culture, and can it go too far?

- How do human infants acquire a language?

- Eastern vs. Western religions: what’s the difference?

- Why is capitalism better than socialism?

- What do Hamlet and Oedipus have in common?

- How does language influence our world?

- Competence for nurses: why is it important?

- COVID-19 pandemic: what can we learn from the past?

❓ Research Question Generator FAQ

❓ How to form a research question?

You should select an interesting topic related to the subject you are studying. Carry out preliminary research with our research question generator online and pick the question from the list of offered suggestions. Refine the question until you are satisfied with the result.

❓ What makes a good research question?

An effective research question should focus on a single issue and clearly state the research direction you will take. The topic should neither be too broad nor too narrow – just enough to keep you focused on the main scope of the study. Also, it should be answerable with a comprehensive analysis.

❓ How to find the research question in an article?

In an academic article, the research question is usually placed at the end of the introduction, right before the literature review. At times, it may be included in the methods section – after the review of academic research.

❓ How to write a quantitative research question?

Identify what claim you want to make in your research purpose. Choose a dependent variable, an independent variable, and a target population, and formulate the assumed relationship between the variables for that respondent group. Ensure the data you collect is measured within a specific context.



How to Write a Research Question

What is a research question?

A research question is the question around which you center your research. It should be:

- clear: it provides enough specifics that one’s audience can easily understand its purpose without needing additional explanation.

- focused: it is narrow enough that it can be answered thoroughly in the space the writing task allows.

- concise: it is expressed in the fewest possible words.

- complex: it is not answerable with a simple “yes” or “no,” but rather requires synthesis and analysis of ideas and sources prior to composition of an answer.

- arguable: its potential answers are open to debate rather than accepted facts.

You should ask a question about an issue that you are genuinely curious and/or passionate about.

The question you ask should be developed for the discipline you are studying. A question appropriate for Biology, for instance, is different from an appropriate one in Political Science or Sociology. If you are developing your question for a course other than first-year composition, you may want to discuss your ideas for a research question with your professor.

Why is a research question essential to the research process?

Research questions help writers focus their research by providing a path through the research and writing process. The specificity of a well-developed research question helps writers avoid the “all-about” paper and work toward supporting a specific, arguable thesis.

Steps to developing a research question:

- Choose an interesting general topic. Most professional researchers focus on topics they are genuinely interested in studying. Writers should choose a broad topic about which they genuinely would like to know more. An example of a general topic might be “Slavery in the American South” or “Films of the 1930s.”

- Do some preliminary research on your general topic. Do a few quick searches in current periodicals and journals on your topic to see what’s already been done and to help you narrow your focus. What issues are scholars and researchers discussing, when it comes to your topic? What questions occur to you as you read these articles?

- Consider your audience. For most college papers, your audience will be academic, but always keep your audience in mind when narrowing your topic and developing your question. Would that particular audience be interested in the question you are developing?

- Start asking questions. Taking into consideration all of the above, start asking yourself open-ended “how” and “why” questions about your general topic. For example, “Why were slave narratives effective tools in working toward the abolishment of slavery?” or “How did the films of the 1930s reflect or respond to the conditions of the Great Depression?”

- Evaluate your question. Check each draft question against the following:

- Is your research question clear? With so much research available on any given topic, research questions must be as clear as possible in order to be effective in helping the writer direct his or her research.

- Is your research question focused? Research questions must be specific enough to be well covered in the space available.

- Is your research question complex? Research questions should not be answerable with a simple “yes” or “no” or by easily-found facts. They should, instead, require both research and analysis on the part of the writer. They often begin with “How” or “Why.”

- Begin your research. After you’ve come up with a question, think about the possible paths your research could take. What sources should you consult as you seek answers to your question? What research process will ensure that you find a variety of perspectives and responses to your question?
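The evaluation checks in the steps above (clear, focused, complex) mostly need human judgment, but a few surface signals can be screened automatically. The sketch below is a crude heuristic: the function name `evaluate_question`, the opener word lists, and the 40-word limit are assumptions made for this example, not rules from the guide.

```python
import re

# Words that typically open a closed (yes/no) question. This list is an
# illustrative assumption, not an exhaustive grammar.
CLOSED_OPENERS = {"is", "are", "was", "were", "do", "does", "did",
                  "can", "could", "will", "would", "should", "has", "have"}
OPEN_OPENERS = {"how", "why"}


def evaluate_question(question: str, max_words: int = 40) -> dict[str, bool]:
    """Return rough pass/fail flags for a draft research question."""
    words = re.findall(r"[A-Za-z0-9'-]+", question.lower())
    first = words[0] if words else ""
    return {
        "ends_with_question_mark": question.strip().endswith("?"),
        "not_yes_no": first not in CLOSED_OPENERS,
        "opens_with_how_or_why": first in OPEN_OPENERS,
        "within_length_limit": 0 < len(words) <= max_words,
    }


good = evaluate_question(
    "How did the films of the 1930s reflect or respond to the "
    "conditions of the Great Depression?"
)
weak = evaluate_question("Is social media harmful?")
print(good)
print(weak)
```

The first draft passes every flag, while the second is flagged as a likely yes/no question. A passing result does not show that a question is clear, focused, or arguable; it only rules out a few obvious problems.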

Sample Research Questions

Unclear: How should social networking sites address the harm they cause?

Clear: What action should social networking sites like MySpace and Facebook take to protect users’ personal information and privacy?

The unclear version of this question doesn’t specify which social networking sites or suggest what kind of harm the sites might be causing. It also assumes that this “harm” is proven and/or accepted. The clearer version specifies sites (MySpace and Facebook), the type of potential harm (privacy issues), and who may be experiencing that harm (users). A strong research question should never leave room for ambiguity or interpretation.

Unfocused: What is the effect on the environment from global warming?

Focused: What is the most significant effect of glacial melting on the lives of penguins in Antarctica?

The unfocused research question is so broad that it couldn’t be adequately answered in a book-length piece, let alone a standard college-level paper. The focused version narrows down to a specific effect of global warming (glacial melting), a specific place (Antarctica), and a specific animal that is affected (penguins). It also requires the writer to take a stance on which effect has the greatest impact on the affected animal. When in doubt, make a research question as narrow and focused as possible.

Too simple: How are doctors addressing diabetes in the U.S.?

Appropriately complex: What main environmental, behavioral, and genetic factors predict whether Americans will develop diabetes, and how can these commonalities be used to aid the medical community in prevention of the disease?

The simple version of this question can be looked up online and answered in a few factual sentences; it leaves no room for analysis. The more complex version is written in two parts; it is thought provoking and requires both significant investigation and evaluation from the writer. As a general rule of thumb, if a quick Google search can answer a research question, it’s likely not very effective.

Last updated 8/8/2018

The Writing Center


© Copyright 2024 George Mason University. All Rights Reserved.


Develop your research question


STEP 1: Understand your research objective

Before you start developing your research question, think about your research objectives:

- What are you trying to do? (compare, analyse)

- What do you need to know about the topic?

- What type of research are you doing?

- What types of information/studies do you need? (e.g. randomised controlled trial, case study, guideline, protocol?)

- Does the information need to be current?

Watch the following video (6:26) to get you started:

Key points from the video

- All good academic research starts with a research question.

- A research question is an actual question you want to answer about a particular topic.

- Developing a question helps you focus on an aspect of your topic, which will streamline your research and writing.

- Pick a topic you are interested in.

- Narrow the topic to a particular aspect.

- Brainstorm some questions around your topic aspect.

- Select a question to work with.

- Focus the question by making it more specific. Make sure your question clearly states who, what, when, where, and why.

- A good research question focuses on one issue only and requires analysis.

- Your search for information should be directed by your research question.

- Your thesis or hypothesis should be a direct answer to your research question, summarised into one sentence.

STEP 2: Search before you research

Doing a background search helps you:

- gather more background knowledge on a subject

- explore different aspects of your topic

- identify additional keywords and terminology

STEP 3: Choose a topic

The resources linked below are a good place to start:

- UpToDate: covers thousands of clinical topics grouped into specialties, with links to articles, drugs and drug interaction databases, medical calculators, and guidelines.

- An@tomedia: an online anatomy resource that features images, videos, and slides together with interactive, educational text and quiz questions.

- Anatomy.tv: find 3D anatomical images, functional anatomy animations and videos, and MRI, anatomy, and clinical slides. Test your knowledge through interactive activities and quizzes.

STEP 4: Brainstorm your questions

Now that you have explored different aspects of your topic, you can construct more focused questions. Draft a few and pick one later.

Learn more:

- Clear and present questions: formulating questions for evidence based practice (Booth 2006). This article provides an overview of thinking in relation to the theory and practice of formulating answerable research questions.

STEP 5: Pick a question and focus

Once you have a few questions to choose from, pick one and refine it even further.

Are you required to use "PICO"?


The PICO framework (or other variations) can be useful for developing an answerable clinical question.

The example question used in this guide is a PICO question: How does speech therapy compare to cognitive behavioural therapy in improving speech fluency in adolescents?
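To make the structure of such a question concrete, here is a minimal sketch that stores the four PICO elements (Population, Intervention, Comparison, Outcome) and reassembles the example question from them. The `PicoQuestion` class and its methods are hypothetical, and the mapping of the example onto the four elements is one reading of the question rather than something the guide spells out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PicoQuestion:
    """Hypothetical holder for the four PICO elements of a clinical question."""

    population: str    # P: who the question is about
    intervention: str  # I: the treatment or exposure of interest
    comparison: str    # C: the alternative it is compared against
    outcome: str       # O: the result being measured

    def render(self) -> str:
        # Phrasing modelled on the guide's example question.
        return (
            f"How does {self.intervention} compare to {self.comparison} "
            f"in improving {self.outcome} in {self.population}?"
        )

    def search_terms(self) -> list[str]:
        # Each element can seed a keyword group for a literature search.
        return [self.population, self.intervention,
                self.comparison, self.outcome]


example = PicoQuestion(
    population="adolescents",
    intervention="speech therapy",
    comparison="cognitive behavioural therapy",
    outcome="speech fluency",
)
print(example.render())
print(example.search_terms())
```

Separating the elements this way also gives a starting list of keywords for the search step, one group per element.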

Use the interactive PICO worksheet to get started with your question, or you can download the worksheet document.

- Building your question with PICO

Here are some different frameworks you may want to use:

There are a number of PICO variations which can be used for different types of questions, such as qualitative, and background and foreground questions. Visit the Evidence-Based Practice (EBP) Guide to learn more:

- Evidence Based Practice guide


- Last Updated: Apr 18, 2024 10:51 AM

- URL: https://guides.library.unisa.edu.au/Chemistry

The text within this Guide is licensed CC BY 4.0. Image licenses can be found within the image attributions document on the last page of the Guide. Ask the Library for information about reuse rights for other content within this Guide.


Chapter 2: Getting Started in Research

Generating Good Research Questions

Learning Objectives

- Describe some common sources of research ideas and generate research ideas using those sources.

- Describe some techniques for turning research ideas into empirical research questions and use those techniques to generate questions.

- Explain what makes a research question interesting and evaluate research questions in terms of their interestingness.

Good research must begin with a good research question. Yet coming up with good research questions is something that novice researchers often find difficult and stressful. One reason is that this is a creative process that can appear mysterious, even magical, with experienced researchers seeming to pull interesting research questions out of thin air. However, psychological research on creativity has shown that it is neither as mysterious nor as magical as it appears. It is largely the product of ordinary thinking strategies and persistence (Weisberg, 1993) [1]. This section covers some fairly simple strategies for finding general research ideas, turning those ideas into empirically testable research questions, and finally evaluating those questions in terms of how interesting they are and how feasible they would be to answer.

Finding Inspiration

Research questions often begin as more general research ideas—usually focusing on some behaviour or psychological characteristic: talkativeness, learning, depression, bungee jumping, and so on. Before looking at how to turn such ideas into empirically testable research questions, it is worth looking at where such ideas come from in the first place. Three of the most common sources of inspiration are informal observations, practical problems, and previous research.