

Secondary Data – Types, Methods and Examples


Secondary Data

Definition:

Secondary data refers to information that has been collected, processed, and published by someone else, rather than the researcher gathering the data firsthand. This can include data from sources such as government publications, academic journals, market research reports, and other existing datasets.

Secondary Data Types

Types of secondary data are as follows:

- Published data: Published data refers to data that has been published in books, magazines, newspapers, and other print media. Examples include statistical reports, market research reports, and scholarly articles.

- Government data: Government data refers to data collected by government agencies and departments. This can include data on demographics, economic trends, crime rates, and health statistics.

- Commercial data: Commercial data is data collected by businesses for their own purposes. This can include sales data, customer feedback, and market research data.

- Academic data: Academic data refers to data collected by researchers for academic purposes. This can include data from experiments, surveys, and observational studies.

- Online data: Online data refers to data that is available on the internet. This can include social media posts, website analytics, and online customer reviews.

- Organizational data: Organizational data is internal data that a business or other organization collects about its own operations. This can include data on employee performance, financial records, and customer satisfaction.

- Historical data: Historical data refers to data that was collected in the past and is still available for research purposes. This can include census data, historical documents, and archival records.

- International data: International data refers to data collected from other countries for research purposes. This can include data on international trade, health statistics, and demographic trends.

- Public data: Public data refers to data that is available to the general public. This can include data from government agencies, non-profit organizations, and other sources.

- Private data: Private data refers to data that is not available to the general public. This can include confidential business data, personal medical records, and financial data.

- Big data: Big data refers to large, complex datasets that are difficult to manage and analyze using traditional data processing methods. This can include social media data, sensor data, and other types of data generated by digital devices.

Secondary Data Collection Methods

Secondary data collection methods are as follows:

- Published sources: Researchers can gather secondary data from published sources such as books, journals, reports, and newspapers. These sources often provide comprehensive information on a variety of topics.

- Online sources: With the growth of the internet, researchers can now access a vast amount of secondary data online. This includes websites, databases, and online archives.

- Government sources: Government agencies often collect and publish a wide range of secondary data on topics such as demographics, crime rates, and health statistics. Researchers can obtain this data through government websites, publications, or data portals.

- Commercial sources: Businesses often collect and analyze data for marketing research or customer profiling. Researchers can obtain this data through commercial data providers or by purchasing market research reports.

- Academic sources: Researchers can also obtain secondary data from academic sources such as published research studies, academic journals, and dissertations.

- Personal contacts: Researchers can also obtain secondary data from personal contacts, such as experts in a particular field or individuals with specialized knowledge.

Secondary Data Formats

Secondary data can come in various formats depending on the source from which it is obtained. Here are some common formats of secondary data:

- Numeric Data: Numeric data is often in the form of statistics and numerical figures that have been compiled and reported by organizations such as government agencies, research institutions, and commercial enterprises. This can include data such as population figures, GDP, sales figures, and market share.

- Textual Data: Textual data is often in the form of written documents, such as reports, articles, and books. This can include qualitative data such as descriptions, opinions, and narratives.

- Audiovisual Data: Audiovisual data is often in the form of recordings, videos, and photographs. This can include data such as interviews, focus group discussions, and other types of qualitative data.

- Geospatial Data: Geospatial data is often in the form of maps, satellite images, and geographic information systems (GIS) data. This can include data such as demographic information, land use patterns, and transportation networks.

- Transactional Data: Transactional data is often in the form of digital records of financial and business transactions. This can include data such as purchase histories, customer behavior, and financial transactions.

- Social Media Data: Social media data is often in the form of user-generated content from social media platforms such as Facebook, Twitter, and Instagram. This can include data such as user demographics, content trends, and sentiment analysis.

Secondary Data Analysis Methods

Secondary data analysis involves the use of pre-existing data for research purposes. Here are some common methods of secondary data analysis:

- Descriptive Analysis: This method involves describing the characteristics of a dataset, such as the mean, standard deviation, and range of the data. Descriptive analysis can be used to summarize data and provide an overview of trends.

- Inferential Analysis: This method involves making inferences and drawing conclusions about a population based on a sample of data. Inferential analysis can be used to test hypotheses and determine the statistical significance of relationships between variables.

- Content Analysis: This method involves analyzing textual or visual data to identify patterns and themes. Content analysis can be used to study the content of documents, media coverage, and social media posts.

- Time-Series Analysis: This method involves analyzing data over time to identify trends and patterns. Time-series analysis can be used to study economic trends, climate change, and other phenomena that change over time.

- Spatial Analysis: This method involves analyzing data in relation to geographic location. Spatial analysis can be used to study patterns of disease spread, land use patterns, and the effects of environmental factors on health outcomes.

- Meta-Analysis: This method involves combining data from multiple studies to draw conclusions about a particular phenomenon. Meta-analysis can be used to synthesize the results of previous research and provide a more comprehensive understanding of a particular topic.
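As a rough illustration of two of the methods above, the following Python sketch computes the descriptive statistics named in the list (mean, standard deviation, range) and a simple pooled estimate of the kind a meta-analysis might produce. The function names and all figures are hypothetical, and the pooling uses a fixed-effect, inverse-variance weighting, which is only one of several approaches and assumes the studies estimate the same underlying effect.

```python
import math
import statistics


def describe(values):
    """Descriptive analysis: summarize one numeric variable."""
    return {
        "n": len(values),
        "mean": statistics.mean(values),
        "stdev": statistics.stdev(values),  # sample standard deviation
        "range": max(values) - min(values),
    }


def fixed_effect_pool(effects, std_errors):
    """Meta-analysis sketch: inverse-variance weighted pooled estimate."""
    # Each study is weighted by 1 / SE^2, so more precise studies count more.
    weights = [1.0 / se ** 2 for se in std_errors]
    pooled = sum(w * e for w, e in zip(weights, effects)) / sum(weights)
    pooled_se = math.sqrt(1.0 / sum(weights))
    return pooled, pooled_se


# Hypothetical figures, for illustration only.
unemployment_rates = [4.0, 5.0, 6.0, 5.0]
summary = describe(unemployment_rates)

study_effects = [0.2, 0.4]      # effect sizes reported by two studies
study_std_errors = [0.1, 0.1]   # their reported standard errors
pooled, pooled_se = fixed_effect_pool(study_effects, study_std_errors)

print(summary)
print(pooled, pooled_se)
```

With equal standard errors the pooled estimate is simply the average of the two effects; when the studies differ in precision, the weighting pulls the result toward the more precise one.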

Secondary Data Gathering Guide

Here are some steps to follow when gathering secondary data:

- Define your research question: Start by defining your research question and identifying the specific information you need to answer it. This will help you identify the type of secondary data you need and where to find it.

- Identify relevant sources: Identify potential sources of secondary data, including published sources, online databases, government sources, and commercial data providers. Consider the reliability and validity of each source.

- Evaluate the quality of the data: Evaluate the quality and reliability of the data you plan to use. Consider the data collection methods, sample size, and potential biases. Make sure the data is relevant to your research question and is suitable for the type of analysis you plan to conduct.

- Collect the data: Collect the relevant data from the identified sources. Use a consistent method to record and organize the data to make analysis easier.

- Validate the data: Validate the data to ensure that it is accurate and reliable. Check for inconsistencies, missing data, and errors. Address any issues before analyzing the data.

- Analyze the data: Analyze the data using appropriate statistical and analytical methods. Use descriptive and inferential statistics to summarize and draw conclusions from the data.

- Interpret the results: Interpret the results of your analysis and draw conclusions based on the data. Make sure your conclusions are supported by the data and are relevant to your research question.

- Communicate the findings: Communicate your findings clearly and concisely. Use appropriate visual aids such as graphs and charts to help explain your results.
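The validation step in the guide above (checking for inconsistencies, missing data, and errors) could be sketched along the following lines. This is a minimal example under stated assumptions: the records are already loaded as a list of dictionaries, and the field names, plausible ranges, and sample rows are all hypothetical.

```python
def validate_records(records, required_fields, valid_ranges):
    """Return a list of (row_index, problem) tuples found in the records."""
    problems = []
    seen = set()
    for i, row in enumerate(records):
        # Missing data: a required field is absent or empty.
        for field in required_fields:
            if row.get(field) in (None, ""):
                problems.append((i, f"missing {field}"))
        # Errors: a numeric value falls outside its plausible range.
        for field, (low, high) in valid_ranges.items():
            value = row.get(field)
            if isinstance(value, (int, float)) and not low <= value <= high:
                problems.append((i, f"{field} out of range"))
        # Inconsistencies: the same record appears more than once.
        key = tuple(sorted(row.items()))
        if key in seen:
            problems.append((i, "duplicate row"))
        seen.add(key)
    return problems


# Hypothetical rows, for illustration only.
records = [
    {"region": "North", "year": 2020, "rate": 4.1},
    {"region": "South", "year": 2020, "rate": None},
    {"region": "East", "year": 2020, "rate": 140.0},
    {"region": "North", "year": 2020, "rate": 4.1},
]
issues = validate_records(
    records,
    required_fields=["region", "year", "rate"],
    valid_ranges={"rate": (0.0, 100.0)},
)
for index, problem in issues:
    print(index, problem)
```

The function only reports problems; how to address them (dropping rows, going back to the source, or imputing values) depends on the research question.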

Examples of Secondary Data

Here are some examples of secondary data from different fields:

- Healthcare: Hospital records, medical journals, clinical trial data, and disease registries are examples of secondary data sources in healthcare. These sources can provide researchers with information on patient demographics, disease prevalence, and treatment outcomes.

- Marketing: Market research reports, customer surveys, and sales data are examples of secondary data sources in marketing. These sources can provide marketers with information on consumer preferences, market trends, and competitor activity.

- Education: Student test scores, graduation rates, and enrollment statistics are examples of secondary data sources in education. These sources can provide researchers with information on student achievement, teacher effectiveness, and educational disparities.

- Finance: Stock market data, financial statements, and credit reports are examples of secondary data sources in finance. These sources can provide investors with information on market trends, company performance, and creditworthiness.

- Social Science: Government statistics, census data, and survey data are examples of secondary data sources in social science. These sources can provide researchers with information on population demographics, social trends, and political attitudes.

- Environmental Science: Climate data, remote sensing data, and ecological monitoring data are examples of secondary data sources in environmental science. These sources can provide researchers with information on weather patterns, land use, and biodiversity.

Purpose of Secondary Data

The purpose of secondary data is to give researchers access to information that others have already collected, usually for a different purpose. Secondary data can be used to address research questions, test hypotheses, and meet research objectives. Some of the key purposes of secondary data are:

- To gain a better understanding of the research topic: Secondary data can be used to provide context and background information on a research topic. This can help researchers understand the historical and social context of their research and gain insights into relevant variables and relationships.

- To save time and resources: Collecting new primary data can be time-consuming and expensive. Using existing secondary data sources can save researchers time and resources by providing access to pre-existing data that has already been collected and organized.

- To provide comparative data: Secondary data can be used to compare and contrast findings across different studies or datasets. This can help researchers identify trends, patterns, and relationships that may not have been apparent from individual studies.

- To support triangulation: Triangulation is the process of using multiple sources of data to confirm or refute research findings. Secondary data can be used to support triangulation by providing additional sources of data to support or refute primary research findings.

- To supplement primary data: Secondary data can be used to supplement primary data by providing additional information or insights that were not captured by the primary research. This can help researchers gain a more complete understanding of the research topic and draw more robust conclusions.

When to use Secondary Data

Secondary data can be useful in a variety of research contexts, and there are several situations in which it may be appropriate to use secondary data. Some common situations in which secondary data may be used include:

- When primary data collection is not feasible: Collecting primary data can be time-consuming and expensive, and in some cases, it may not be feasible to collect primary data. In these situations, secondary data can provide valuable insights and information.

- When exploring a new research area: Secondary data can be a useful starting point for researchers who are exploring a new research area. Secondary data can provide context and background information on a research topic, and can help researchers identify key variables and relationships to explore further.

- When comparing and contrasting research findings: Secondary data can be used to compare and contrast findings across different studies or datasets. This can help researchers identify trends, patterns, and relationships that may not have been apparent from individual studies.

- When triangulating research findings: Triangulation is the process of using multiple sources of data to confirm or refute research findings. Secondary data can be used to support triangulation by providing additional sources of data to support or refute primary research findings.

- When validating research findings: Secondary data can be used to validate primary research findings by providing additional sources of data that support or refute the primary findings.

Characteristics of Secondary Data

Secondary data have several characteristics that distinguish them from primary data. Here are some of the key characteristics of secondary data:

- Non-reactive: Secondary data are non-reactive, meaning that they were collected before, and independently of, the current research study, so the researcher's presence could not have influenced how the subjects responded. It also means that the researcher has no control over the data collection process and cannot influence how the data were collected.

- Time-saving: Secondary data are pre-existing, meaning that they have already been collected and organized by someone else. This can save the researcher time and resources, as they do not need to collect the data themselves.

- Wide-ranging: Secondary data sources can provide a wide range of information on a variety of topics. This can be useful for researchers who are exploring a new research area or seeking to compare and contrast research findings.

- Less expensive: Secondary data are generally less expensive than primary data, as they do not require the researcher to incur the costs associated with data collection.

- Potential for bias: Secondary data may be subject to biases that were present in the original data collection process. For example, data may have been collected using a biased sampling method or the data may be incomplete or inaccurate.

- Lack of control: The researcher has no control over the data collection process and cannot ensure that the data were collected using appropriate methods or measures.

- Requires careful evaluation: Secondary data sources must be evaluated carefully to ensure that they are appropriate for the research question and analysis. This includes assessing the quality, reliability, and validity of the data sources.

Advantages of Secondary Data

There are several advantages to using secondary data in research, including:

- Time-saving: Collecting primary data can be time-consuming and expensive. Secondary data can be accessed quickly and easily, which can save researchers time and resources.

- Cost-effective: Secondary data are generally less expensive than primary data, as they do not require the researcher to incur the costs associated with data collection.

- Large sample size: Secondary data sources often have larger sample sizes than primary data sources, which can increase the statistical power of the research.

- Access to historical data: Secondary data sources can provide access to historical data, which can be useful for researchers who are studying trends over time.

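- Fewer ethical concerns: Secondary data are already in existence, so the researcher avoids many of the ethical concerns related to collecting data from human subjects. Privacy, consent, and any conditions attached to the data's reuse still need to be respected.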

- May be more objective: Secondary data may be more objective than primary data, as the data were not collected for the specific purpose of the research study.
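To make the sample-size point above concrete: under simple random sampling, the standard error of a sample mean is the sample standard deviation divided by the square root of the sample size, so a larger dataset gives a more precise estimate. The short Python sketch below uses hypothetical numbers; note that many large secondary datasets use complex sampling designs, in which case this simple formula is only an approximation.

```python
import math


def standard_error(sample_stdev, n):
    """Standard error of the mean, assuming simple random sampling."""
    return sample_stdev / math.sqrt(n)


# Hypothetical comparison: a small primary survey vs. a large secondary dataset,
# both with the same spread in the measured variable.
primary_se = standard_error(10.0, 100)
secondary_se = standard_error(10.0, 10000)

print(primary_se, secondary_se)
```

A hundredfold increase in sample size reduces the standard error tenfold, which is why large secondary sources can detect smaller effects.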

Limitations of Secondary Data

While there are many advantages to using secondary data in research, there are also some limitations that should be considered. Some of the main limitations of secondary data include:

- Lack of control over data quality: Researchers do not have control over the data collection process, which means they cannot ensure the accuracy or completeness of the data.

- Limited availability: Secondary data may not be available for the specific research question or study design.

- Lack of information on sampling and data collection methods: Researchers may not have access to information on the sampling and data collection methods used to gather the secondary data. This can make it difficult to evaluate the quality of the data.

- Data may not be up-to-date: Secondary data may not be up-to-date or relevant to the current research question.

- Data may be incomplete or inaccurate: Secondary data may be incomplete or inaccurate due to missing or incorrect data points, data entry errors, or other factors.

- Biases in data collection: The data may have been collected using biased sampling or data collection methods, which can limit the validity of the data.

- Lack of control over variables: Researchers have limited control over the variables that were measured in the original data collection process, which can limit the ability to draw conclusions about causality.

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer


Secondary research: definition, methods, & examples.

This ultimate guide to secondary research helps you understand changes in market trends, customers' buying patterns, and your competition using existing data sources.

In situations where you’re not involved in the data gathering process (primary research), you have to rely on existing information and data to arrive at specific research conclusions or outcomes. This approach is known as secondary research.

In this article, we’re going to explain what secondary research is, how it works, and share some examples of it in practice.


What is secondary research?

Secondary research, also known as desk research, is a research method that involves compiling existing data sourced from a variety of channels. This includes internal sources (e.g., in-house research) or, more commonly, external sources (such as government statistics, organizational bodies, and the internet).

Secondary research comes in several formats, such as published datasets, reports, and survey responses, and can also be sourced from websites, libraries, and museums.

The information is usually free, or available at a limited access cost, and was originally gathered using surveys, telephone interviews, observation, face-to-face interviews, and more.

When using secondary research, researchers collect, verify, and analyze existing data, then incorporate it into their own work to help them confirm their research goals for the research period.

As well as the above, it can be used to review previous research into an area of interest. Researchers can look for patterns across data spanning several years and identify trends — or use it to verify early hypothesis statements and establish whether it’s worth continuing research into a prospective area.

How to conduct secondary research

There are five key steps to conducting secondary research effectively and efficiently:

1. Identify and define the research topic

First, understand what you will be researching and define the topic by thinking about the research questions you want to be answered.

Ask yourself: What is the point of conducting this research? Then, ask: What do we want to achieve?

The answers may point to an exploratory aim (finding out why something happened) or a confirmatory one (testing a hypothesis). They may also suggest ideas that need primary research, secondary research, or a combination of the two to investigate.

2. Find research and existing data sources

If secondary research is needed, think about where you might find the information. This helps you narrow down your secondary sources to those that help you answer your questions. What keywords do you need to use?

Which organizations are closely working on this topic already? Are there any competitors that you need to be aware of?

Create a list of the data sources, information, and people that could help you with your work.

3. Begin searching and collecting the existing data

Now that you have the list of data sources, start accessing the data and collect the information into an organized system. This may mean you start setting up research journal accounts or making telephone calls to book meetings with third-party research teams to verify the details around data results.

As you search and access information, remember to check the data’s date, the credibility of the source, the relevance of the material to your research topic, and the methodology used by the third-party researchers. Start small and as you gain results, investigate further in the areas that help your research’s aims.
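The four checks above (date, credibility, relevance, methodology) can be treated as a simple screening checklist. The Python sketch below is one hypothetical way to record them; the `Source` fields, the age threshold, and the example entries are all assumptions made for illustration, and in practice each judgment would need to be made by a person reading the source.

```python
from dataclasses import dataclass


@dataclass
class Source:
    name: str
    published_year: int
    credible_publisher: bool       # judged by the researcher
    relevant_to_topic: bool        # judged by the researcher
    methodology_documented: bool   # is the collection method described?


def screen(sources, current_year, max_age_years):
    """Return the names of sources that pass all four checks."""
    kept = []
    for s in sources:
        recent = current_year - s.published_year <= max_age_years
        if (recent and s.credible_publisher
                and s.relevant_to_topic and s.methodology_documented):
            kept.append(s.name)
    return kept


# Hypothetical entries, for illustration only.
sources = [
    Source("National statistics office report", 2023, True, True, True),
    Source("Old industry blog post", 2012, False, True, False),
    Source("Trade body survey", 2021, True, False, True),
]
shortlist = screen(sources, current_year=2024, max_age_years=5)
print(shortlist)
```

Recording the checks in a consistent structure also makes it easier to explain later why a source was included or left out.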

4. Combine the data and compare the results

When you have your data in one place, you need to understand, filter, order, and combine it intelligently. Data may come in different formats where some data could be unusable, while other information may need to be deleted.

After this, you can start to look at different data sets to see what they tell you. You may find that you need to compare the same datasets over different periods for changes over time or compare different datasets to notice overlaps or trends. Ask yourself: What does this data mean to my research? Does it help or hinder my research?
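Comparing the same dataset across two periods, as described above, might look like the following Python sketch. It assumes the two datasets share a common key (here a region name) and that baseline values are non-zero; the function name and all figures are hypothetical.

```python
def percent_change(earlier, later):
    """Percent change per key, for keys present in both periods."""
    shared = earlier.keys() & later.keys()
    # Assumes every baseline value in `earlier` is non-zero.
    return {
        key: (later[key] - earlier[key]) / earlier[key] * 100.0
        for key in sorted(shared)
    }


# Hypothetical sales figures by region, for illustration only.
sales_2022 = {"North": 200.0, "South": 100.0, "East": 50.0}
sales_2023 = {"North": 250.0, "South": 90.0, "West": 70.0}

changes = percent_change(sales_2022, sales_2023)

# Keys that appear in only one dataset cannot be compared directly
# and may need to be filtered out or investigated.
unmatched = sales_2022.keys() ^ sales_2023.keys()

print(changes)
print(sorted(unmatched))
```

Listing the unmatched keys separately makes the gaps between datasets visible rather than silently dropping them.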

5. Analyze your data and explore further

In this last stage of the process, look at the information you have and ask yourself if this answers your original questions for your research. Are there any gaps? Do you understand the information you’ve found? If you feel there is more to cover, repeat the steps and delve deeper into the topic so that you can get all the information you need.

If secondary research can’t provide these answers, consider supplementing your results with data gained from primary research. As you explore further, add to your knowledge and update your findings. This will help you present clear, credible information.

Primary vs secondary research

Unlike secondary research, primary research involves creating data first-hand by directly working with interviewees, target users, or a target market. Primary research focuses on the method for carrying out research, asking questions, and collecting data using approaches such as:

- Interviews (panel, face-to-face or over the phone)

- Questionnaires or surveys

- Focus groups

Using these methods, researchers can get in-depth, targeted responses to questions, making results more accurate and specific to their research goals. However, it does take time to do and administer.

Unlike primary research, secondary research uses existing data, which also includes published results from primary research. Researchers summarize the existing research and use the results to support their research goals.

Both primary and secondary research have their places. Primary research can support the findings found through secondary research (and fill knowledge gaps), while secondary research can be a starting point for further primary research. Because of this, these research methods are often combined for optimal research results that are accurate at both the micro and macro level.

Sources of Secondary Research

There are two types of secondary research sources: internal and external. Internal data refers to in-house data that can be gathered from the researcher’s organization. External data refers to data published outside of and not owned by the researcher’s organization.

Internal data

Internal data is a good first port of call for insights and knowledge, as you may already have relevant information stored in your systems. Because you own this information (and it won’t be available to other researchers), it can give you a competitive edge. Examples of internal data include:

- Database information on sales history and business goal conversions

- Information from website applications and mobile site data

- Customer-generated data on product and service efficiency and use

- Previous research results or supplemental research areas

- Previous campaign results

External data

External data is useful when you: 1) need information on a new topic, 2) want to fill in gaps in your knowledge, or 3) want data that breaks down a population or market for trend and pattern analysis. Examples of external data include:

- Government, non-government agencies, and trade body statistics

- Company reports and research

- Competitor research

- Public library collections

- Textbooks and research journals

- Media stories in newspapers

- Online journals and research sites

Three examples of secondary research methods in action

How and why might you conduct secondary research? Let’s look at a few examples:

1. Collecting factual information from the internet on a specific topic or market

There are plenty of sites that hold data for people to view and use in their research. For example, Google Scholar, ResearchGate, or Wiley Online Library all provide previous research on a particular topic. Researchers can create free accounts and use the search facilities to look into a topic by keyword, before following the instructions to download or export results for further analysis.

This can be useful for exploring a new market that your organization wants to consider entering. For instance, by viewing the U.S. Census Bureau demographic data for that area, you can see what the demographics of your target audience are, and create compelling marketing campaigns accordingly.

2. Finding out the views of your target audience on a particular topic

If you’re interested in seeing the historical views on a particular topic, for example, attitudes to women’s rights in the US, you can turn to secondary sources.

Textbooks, news articles, reviews, and journal entries can all provide qualitative reports and interviews covering how people discussed women’s rights. There may be multimedia elements like video or documented posters of propaganda showing biased language usage.

By gathering this information, synthesizing it, and evaluating the language, who created it and when it was shared, you can create a timeline of how a topic was discussed over time.

3. When you want to know the latest thinking on a topic

Educational institutions, such as schools and colleges, create a lot of research-based reports on younger audiences or their academic specialisms. Students' dissertations can also be submitted to research journals, making these journals useful places to see the latest insights from a new generation of academics.

Information can be requested — and sometimes academic institutions may want to collaborate and conduct research on your behalf. This can provide key primary data in areas that you want to research, as well as secondary data sources for your research.

Advantages of secondary research

There are several benefits of using secondary research, which we’ve outlined below:

- Easily and readily available data – There is an abundance of readily accessible data sources that have been pre-collected for use, in person at local libraries and online using the internet. This data is usually sorted by filters or can be exported into spreadsheet format, meaning that little technical expertise is needed to access and use the data.

- Faster research speeds – Since the data is already published and in the public arena, you don’t need to collect this information through primary research. This can make the research easier to do and faster, as you can get started with the data quickly.

- Low financial and time costs – Most secondary data sources can be accessed for free or at a small cost to the researcher, so the overall research costs are kept low. In addition, by saving on preliminary research, the time costs for the researcher are kept down as well.

- Secondary data can drive additional research actions – The insights gained can support future research activities (like conducting a follow-up survey or specifying future detailed research topics) or help add value to these activities.

- Secondary data can be useful pre-research insights – Secondary source data can provide pre-research insights and information on effects that can help resolve whether research should be conducted. It can also help highlight knowledge gaps, so subsequent research can consider this.

- Ability to scale up results – Secondary sources can include large datasets (like Census data results across several states) so research results can be scaled up quickly using large secondary data sources.

Disadvantages of secondary research

The disadvantages of secondary research are worth considering in advance of conducting research:

- Secondary research data can be out of date – Secondary sources can be updated regularly, but if you’re exploring the data between two updates, the data can be out of date. Researchers will need to consider whether the data available provides the right research coverage dates, so that insights are accurate and timely, or if the data needs to be updated. Also, fast-moving markets may find secondary data expires very quickly.

- Secondary research needs to be verified and interpreted – Where there’s a lot of data from one source, a researcher needs to review and analyze it. The data may need to be verified against other data sets or your hypotheses for accuracy and to ensure you’re using the right data for your research.

- The researcher has had no control over the secondary research – As the researcher has not been involved in the secondary research, invalid data can affect the results. It’s therefore vital to review the methodology and controls closely to confirm that the data was collected in a systematic and error-free way.

- Secondary research data is not exclusive – As data sets are commonly available, there is no exclusivity and many researchers can use the same data. This can be problematic where researchers want to have exclusive rights over the research results and risk duplication of research in the future.

When do we conduct secondary research?

Now that you know the basics of secondary research, when do researchers normally conduct secondary research?

It’s often used at the beginning of research, when the researcher is trying to understand the current landscape. In addition, if the research area is new to the researcher, it can form crucial background context to help them understand what information exists already. This can plug knowledge gaps, supplement the researcher’s own learning or add to the research.

Secondary research can also be used in conjunction with primary research. Secondary research can become the formative research that helps pinpoint where further primary research is needed to find out specific information. It can also support or verify the findings from primary research.

You can use secondary research where high levels of control aren’t needed by the researcher, but a lot of knowledge on a topic is required from different angles.

Secondary research should not be used in place of primary research, as the two are very different and suit different circumstances.

Questions to ask before conducting secondary research

Before you start your secondary research, ask yourself these questions:

- Is there similar internal data that we have created for a similar area in the past?

If your organization has past research, it’s best to review this work before starting a new project. The older work may provide you with the answers, and give you a starting dataset and context of how your organization approached the research before. However, be mindful that the work is probably out of date and view it with that note in mind. Read through and look for where this helps your research goals or where more work is needed.

- What am I trying to achieve with this research?

When you have clear goals, and understand what you need to achieve, you can look for the right type of secondary or primary research to support the aims. Different secondary research data will provide you with different information – for example, looking at news stories to get a breakdown of your market’s buying patterns won’t be as useful as internal or external e-commerce and sales data sources.

- How credible will my research be?

If you are looking for credibility, you want to consider how accurate the research results will need to be, and if you can sacrifice credibility for speed by using secondary sources to get you started. Bear in mind which sources you choose — low-credibility data sites, like political party websites that are highly biased to favor their own party, would skew your results.

- What is the date of the secondary research?

When you’re looking to conduct research, you want the results to be as useful as possible, so using data that is 10 years old won’t be as accurate as using data that was created a year ago. Since a lot can change in a few years, note the date of your research and look for newer data sets that can give you a more recent picture of results. One caveat to this is using data collected over a long-term period for comparisons with earlier periods, which can tell you about the rate and direction of change.

- Can the data sources be verified? Does the information you have check out?

If you can’t verify the data by looking at the research methodology, speaking to the original team or cross-checking the facts with other research, it could be hard to be sure that the data is accurate. Think about whether you can use another source, or if it’s worth doing some supplementary primary research to replicate and verify results to help with this issue.
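The date and verification questions above can be turned into a simple screening check. The following is a minimal sketch rather than a prescribed method: the function name `screen_source`, its parameters, and the five-year default age limit are all illustrative assumptions, and the right cut-off depends on how fast your market moves.

```python
from datetime import date


def screen_source(published_year, verifiable, max_age_years=5, today=None):
    """Return a list of concerns for one secondary source.

    published_year: year the data was collected or published.
    verifiable: True if the methodology or facts can be cross-checked.
    max_age_years: assumed freshness limit (hypothetical default of 5).
    """
    today = today or date.today()
    concerns = []
    age = today.year - published_year
    if age > max_age_years:
        concerns.append(f"data is {age} years old")
    if not verifiable:
        concerns.append("methodology or facts cannot be verified")
    return concerns


# Example: a ten-year-old report whose methodology is documented.
print(screen_source(2014, True, today=date(2024, 1, 1)))
```

An empty list means the source passed both checks. A source with concerns is not necessarily unusable (long-term data can still be valuable for comparisons), but it should be noted and weighed accordingly.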



What is secondary research?

Last updated: 7 February 2023

Reviewed by: Cathy Heath

In this guide, we explain in detail what secondary research is, including the difference between this research method and primary research, the different sources for secondary research, and how you can benefit from this research method.


- Overview of secondary research

Secondary research is a method by which the researcher finds existing data, filters it to meet the context of their research question, analyzes it, and then summarizes it to come up with valid research conclusions.

This research method involves searching for information, often via the internet, using keywords or search terms relevant to the research question. The goal is to find data from internal and external sources that are up-to-date and authoritative, and that fully answer the question.

Secondary research reviews existing research and looks for patterns, trends, and insights, which helps determine what further research, if any, is needed.

- Secondary research methods

Secondary research is more economical than primary research, mainly because the methods for this type of research use existing data and do not require the data to be collected first-hand or by a third party that you have to pay.

Secondary research is also referred to as ‘desk research’ or ‘desktop research,’ since the data can be retrieved from behind a desk instead of having to host a focus group and create the research from scratch.

Finding existing research is relatively easy since there are numerous accessible sources organizations can use to obtain the information they need. These include:

The internet: This data is either free or behind a paywall. Yet, while there are plenty of sites on the internet with information that can be used, businesses need to be careful to collect information from trusted and authentic websites to ensure the data is accurate.

Government agencies: Government agencies are typically known to provide valuable, trustworthy information that companies can use for their research.

The public library: This establishment holds paper-based and online sources of reliable information, including business databases, magazines, newspapers, and government publications. Be mindful of any copyright restrictions that may apply when using these sources.

Commercial information: This source provides first-hand information on politics, demographics, and economic developments through information aggregators, newspapers, magazines, radio, blogs, podcasts, and journals. This information may be free or behind a paywall.

Educational and scientific facilities: Universities, colleges, and specialized research facilities carry out significant amounts of research. As a result, they have data that may be available to the public and businesses for use.

- Key differences between primary research and secondary research

Both primary and secondary research methods provide researchers with vital, complementary information, despite some major differences between the two approaches.

Primary research involves gathering first-hand information by directly working with the target market, users, and interviewees. Researchers ask questions directly using surveys, interviews, and focus groups.

Through the primary research method, researchers obtain targeted responses and accurate results directly related to their overall research goals.

Secondary research uses existing data, such as published reports, that have already been completed through earlier primary and secondary research. Researchers can use this existing data to support their research goals and preliminary research findings.

Other notable differences between primary and secondary research include:

Relevance: Primary research uses raw data relevant to the investigation's goals. Secondary research may contain irrelevant data or may not neatly fit the parameters of the researcher's goals.

Time: Primary research takes a lot of time. Secondary research can be done relatively quickly.

Researcher bias: Primary research can be subject to researcher bias.

Cost: Primary research can be expensive. Secondary research can be more affordable because the data is often free. However, valuable data is often behind a paywall. The piece of secondary research you want may not exist or may be very expensive, so you may have to turn to primary research to fill the information gap.

- When to conduct secondary research

Both primary and secondary research have roles to play in providing a holistic and accurate understanding of a topic. Generally, secondary research is done at the beginning of the research phase, especially if the topic is new.

Secondary research can provide context and critical background information to understand the issue at hand and identify any gaps that could then be filled by primary research.

- How to conduct secondary research

Researchers usually follow several steps for secondary research.

1. Identify and define the research topic

Before starting either of these research methods, you first need to determine the following:

Topic to be researched

Purpose of this research

For instance, you may want to explore a question, determine why something happened, or confirm whether an issue is true.

At this stage, you also need to consider what search terms or keywords might be the most effective for this topic. You could do this by looking at what synonyms exist for your topic, the use of industry terms and acronyms, as well as the balance between statistical or quantitative data and contextual data to support your research topic.

It’s also essential to define what you don’t want to cover in your secondary research process. This might mean choosing to use only recent information or focusing only on research based on a particular country or type of consumer. From there, once you know what you want to know and why, you can decide whether you need to use both primary and secondary research to answer your questions.

2. Find research and existing data sources

Once you have determined your research topic, select the information sources that will provide you with the most appropriate and relevant data for your research. If you need secondary research, you want to determine where this information can likely be found, for example:

Trade associations

Government sources

Create a list of the relevant data sources, and other organizations or people that can help you find what you need.

3. Begin searching and collecting the existing data

Once you have narrowed down your sources, you will start gathering this information and putting it into an organized system. This often involves:

Checking the credibility of the source

Setting up meetings with research teams

Signing up for accounts to access certain websites or journals

One search result on the internet often leads to other pieces of helpful information, known as ‘pearl gathering’ or ‘pearl harvesting.’ This is usually a serendipitous activity, which can lead to valuable nuggets of information you may not have been aware of or considered.

4. Combine the data and compare the results

Once you have gathered all the data, start going through it by carefully examining all the information and comparing it to ensure the data is usable and that it isn’t duplicated or corrupted. Contradictory information is useful—just make sure you note the contradiction and the context. Be mindful of copyright and plagiarism when using secondary research and always cite your sources.

Once you have assessed everything, you will begin to look at what this information tells you by checking out the trends and comparing the different datasets. You will also investigate what this information means for your research, whether it helps your overall goal, and any gaps or deficiencies.
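The combine-and-compare step can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the record keys (`source`, `metric`, `year`, `value`), the function name `combine_sources`, and the sample figures are all hypothetical and not taken from any real dataset.

```python
from collections import defaultdict


def combine_sources(records):
    """Drop verbatim duplicate records and flag metrics where sources disagree.

    Each record is a dict with the hypothetical keys
    'source', 'metric', 'year', and 'value'.
    """
    seen = set()
    unique = []
    for rec in records:
        key = (rec["source"], rec["metric"], rec["year"], rec["value"])
        if key not in seen:  # skip records already collected
            seen.add(key)
            unique.append(rec)

    # Group reported values by (metric, year) to spot contradictions.
    values = defaultdict(set)
    for rec in unique:
        values[(rec["metric"], rec["year"])].add(rec["value"])
    contradictions = sorted(k for k, v in values.items() if len(v) > 1)
    return unique, contradictions


# Made-up sample data for illustration only.
records = [
    {"source": "agency_report", "metric": "market_size", "year": 2022, "value": 120},
    {"source": "agency_report", "metric": "market_size", "year": 2022, "value": 120},
    {"source": "trade_association", "metric": "market_size", "year": 2022, "value": 135},
    {"source": "trade_association", "metric": "growth_rate", "year": 2022, "value": 4},
]
unique, contradictions = combine_sources(records)
print(len(unique), contradictions)
```

Contradictions are flagged rather than removed, in line with the advice above to note the contradiction and its context instead of discarding either source.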

5. Analyze your data and explore further

In the final stage of conducting secondary research, you will analyze the data you have gathered and determine if it answers the questions you had before you started researching. Check that you understand the information, whether it fills in all your gaps, and whether it provides you with other insights or actions you should take next.

If you still need further data, repeat these steps to find additional information that can help you explore your topic more deeply. You may also need to supplement what you find with primary research to ensure that your data is complete, accurate, transparent, and credible.

- The advantages of secondary research

There are numerous advantages to performing secondary research. Some key benefits are:

Quicker than primary research: Because the data is already available, you can usually find the information you need fairly quickly. Not only will secondary research help you research faster, but you will also start optimizing the data more quickly.

Plenty of available data: There are countless sources for you to choose from, making research more accessible. This data may be already compiled and arranged, such as statistical information, so you can quickly make use of it.

Lower costs: Since you will not have to carry out the research from scratch, secondary research tends to be much more affordable than primary research.

Opens doors to further research: Existing research usually identifies whether more research needs to be done. This could mean follow-up surveys or telephone interviews with subject matter experts (SMEs) to add value to your own research.

- The disadvantages of secondary research

While there are plenty of benefits to secondary research, there are some issues you should be aware of. These include:

Credibility issues: It is important to verify the sources used. Some information may be biased and may not reflect, or may even hide, relevant issues or challenges. It could also be inaccurate.

No recent information: Even if data may seem accurate, it may not be up to date, so the information you gather may no longer be correct. Outdated research can distort your overall findings.

Poor quality: Because secondary research tends to make conclusions from primary research data, the success of secondary research will depend on the quality and context of the research that has already been completed. If the research you are using is of poor quality, this will bring down the quality of your own findings.

Research doesn’t exist or is not easily accessible, or is expensive: Sometimes the information you need is confidential or proprietary, such as sales or earnings figures. Many information-based businesses attach value to the information they hold or publish, so the costs to access this information can be prohibitive.

Should you complete secondary research or primary research first?

Due to the costs and time involved in primary research, it may be more beneficial to conduct secondary market research first. This will save you time and provide a picture of what issues you may come across in your research. This allows you to focus on using more expensive primary research to get the specific answers you want.

What should you ask yourself before using secondary research data?

Check the date of the research to make sure it is still relevant. Also, determine the data source so you can assess how credible and trustworthy it is likely to be. For example, data from known brands, professional organizations, and government agencies is usually an excellent source for your secondary research, as it tends to be trustworthy.

Be careful when using some websites and personal blogs as they may be based on opinions rather than facts. However, these sources can be useful for determining sentiment about a product or service, and help direct any primary research.


Secondary Research: Definition, Methods and Examples.

In the world of research, there are two main types of data sources: primary and secondary. While primary research involves collecting new data directly from individuals or sources, secondary research involves analyzing existing data already collected by someone else. Today we’ll discuss secondary research.

One common source of this research is published research reports and other documents. These materials can often be found in public libraries, on websites, or even as data extracted from previously conducted surveys. In addition, many government and non-government agencies maintain extensive data repositories that can be accessed for research purposes.


While secondary research may not offer the same level of control as primary research, it can be a highly valuable tool for gaining insights and identifying trends. Researchers can save time and resources by leveraging existing data sources while still uncovering important information.

What is Secondary Research: Definition

Secondary research is a research method that involves using already existing data. Existing data is summarized and collated to increase the overall effectiveness of the research.

One of the key advantages of secondary research is that it allows us to gain insights and draw conclusions without having to collect new data ourselves. This can save time and resources and also allow us to build upon existing knowledge and expertise.

When conducting secondary research, it’s important to be thorough and thoughtful in our approach. This means carefully selecting the sources and ensuring that the data we’re analyzing is reliable and relevant to the research question. It also means being critical and analytical in the analysis and recognizing any potential biases or limitations in the data.

Secondary research is much more cost-effective than primary research because it uses existing data. In primary research, by contrast, organizations or businesses collect data firsthand or employ a third party to collect it on their behalf.

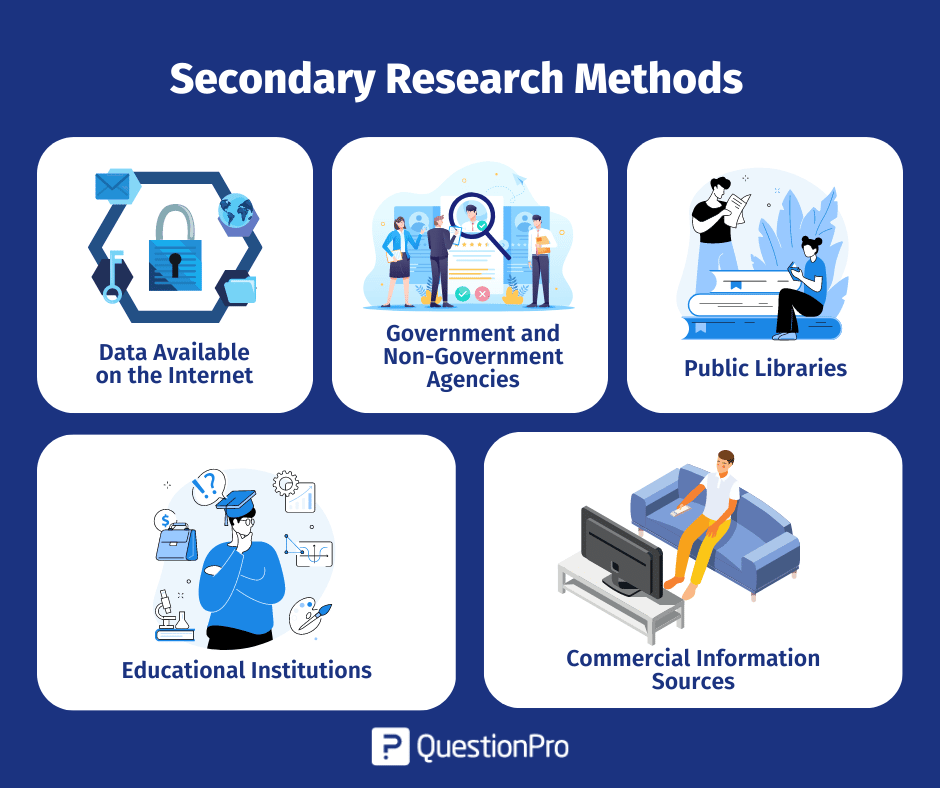

Secondary Research Methods with Examples

Secondary research is cost-effective, which is one of the reasons it is a popular choice among many businesses and organizations. Not every organization is able to pay a huge sum of money to conduct research and gather data. Secondary research is also aptly termed “desk research”, as data can be retrieved while sitting behind a desk.

The following are popularly used secondary research methods and examples:

1. Data Available on The Internet

One of the most popular ways to collect secondary data is the internet. Data is readily available on the internet and can be downloaded at the click of a button.

This data is practically free of cost, or one may have to pay a negligible amount to download the already existing data. Websites have a lot of information that businesses or organizations can use to suit their research needs. However, organizations should collect information only from authentic and trusted websites.

2. Government and Non-Government Agencies

Data for secondary research can also be collected from some government and non-government agencies. For example, the US Government Printing Office, the US Census Bureau, and Small Business Development Centers have valuable and relevant data that businesses or organizations can use.

A cost may apply to download or use data available from these agencies. Data obtained from these agencies is generally authentic and trustworthy.

3. Public Libraries

Public libraries are another good source to search for data for this research. Public libraries have copies of important research that were conducted earlier. They are a storehouse of important information and documents from which information can be extracted.

The services provided in these public libraries vary from one library to another. Libraries often have a large collection of government publications with market statistics, as well as a large collection of business directories and newsletters.

4. Educational Institutions

The importance of collecting data from educational institutions for secondary research is often overlooked. However, more research is conducted in colleges and universities than in any other business sector.

The data that is collected by universities is mainly for primary research. However, businesses or organizations can approach educational institutions and request data from them.

5. Commercial Information Sources

Local newspapers, journals, magazines, radio and TV stations are a great source to obtain data for secondary research. These commercial information sources have first-hand information on economic developments, political agenda, market research, demographic segmentation and similar subjects.

Businesses or organizations can request to obtain data that is most relevant to their study. Businesses not only have the opportunity to identify their prospective clients but can also know about the avenues to promote their products or services through these sources as they have a wider reach.

Key Differences between Primary Research and Secondary Research

Understanding the distinction between primary research and secondary research is essential in determining which research method is best for your project. These are the two main types of research methods, each with advantages and disadvantages. In this section, we will explore the critical differences between the two and when it is appropriate to use them.

How to Conduct Secondary Research?

We have already learned about the differences between primary and secondary research. Now, let’s take a closer look at how to conduct it.

Secondary research is an important tool for gathering information already collected and analyzed by others. It can help us save time and money and allow us to gain insights into the subject we are researching. So, in this section, we will discuss some common methods and tips for conducting it effectively.

Here are the steps involved in conducting secondary research:

1. Identify the topic of research: Before beginning secondary research, identify the topic that needs research. Once that’s done, list the research attributes and its purpose.

2. Identify research sources: Next, narrow down the information sources that will provide the most relevant data and information for your research.

3. Collect existing data: Once the data collection sources are narrowed down, check for any previously collected data that is closely related to the topic. Data related to research can be obtained from various sources such as newspapers, public libraries, and government and non-government agencies.

4. Combine and compare: Once data is collected, combine and compare the data to check for any duplication, and assemble the data into a usable format. Make sure to collect data from authentic sources. Incorrect data can hamper research severely.

5. Analyze data: Analyze the collected data and identify whether all questions are answered. If not, repeat the process if there is a need to delve further into actionable insights.

Advantages of Secondary Research

Secondary research offers a number of advantages to researchers, including efficiency, the ability to build upon existing knowledge, and the ability to conduct research in situations where primary research may not be possible or ethical. By carefully selecting their sources and being thoughtful in their approach, researchers can leverage secondary research to drive impact and advance the field. Some key advantages are the following:

1. Most information in this research is readily available. There are many sources from which relevant data can be collected and used, unlike primary research, where data needs to be collected from scratch.

2. This is a less expensive and less time-consuming process, as the data required is easily available and doesn’t cost much when extracted from authentic sources. Only minimal expenditure is needed to obtain data.

3. The data that is collected through secondary research gives organizations or businesses an idea about the effectiveness of primary research. Hence, organizations or businesses can form a hypothesis and evaluate the cost of conducting primary research.

4. Secondary research is quicker to conduct because of the availability of data. It can be completed within a few weeks depending on the objective of businesses or scale of data needed.

As we can see, this research is the process of analyzing data already collected by someone else, and it can offer a number of benefits to researchers.

Disadvantages of Secondary Research

On the other hand, secondary research comes with some disadvantages. Some of the most notable are the following:

1. Although data is readily available, credibility evaluation must be performed to understand the authenticity of the information available.

2. Not all secondary data resources offer the latest reports and statistics. Even when the data is accurate, it may not be recent enough to reflect current conditions.

3. Secondary research derives its conclusions from collective primary research data. The success of your research will depend, to a great extent, on the quality of the primary research already conducted.


In conclusion, secondary research is an important tool for researchers exploring various topics. By leveraging existing data sources, researchers can save time and resources, build upon existing knowledge, and conduct research in situations where primary research may not be feasible.

There are a variety of methods and examples of secondary research, from analyzing public data sets to reviewing previously published research papers. As students and aspiring researchers, it’s important to understand the benefits and limitations of this research and to approach it thoughtfully and critically. By doing so, we can continue to advance our understanding of the world around us and contribute to meaningful research that positively impacts society.



Secondary Research Guide: Definition, Methods, Examples

Apr 3, 2024


The internet has vastly expanded our access to information, allowing us to learn almost anything about everything. But not all market research is created equal, and this secondary research guide explains why.

There are two key ways to do research. One is to test your own ideas, make your own observations, and collect your own data to derive conclusions. The other is to use secondary research — where someone else has done most of the heavy lifting for you.

Here’s an overview of secondary research and the value it brings to data-driven businesses.

- Secondary Research Definition: What Is Secondary Research?

- Primary vs. Secondary Market Research

- What Are Secondary Research Methods?

- Advantages of Secondary Research

- Disadvantages of Secondary Research

- Best Practices for Secondary Research

- How to Conduct Secondary Research With Meltwater

Secondary Research Definition: What Is Secondary Research?

Secondary research definition: The process of collecting information from existing sources and data that have already been analyzed by others.

Secondary research (aka desk research or complementary research) provides a foundation to help you understand a topic, with the goal of building on existing knowledge. Secondary sources often cover the same information as primary sources, but they add a layer of analysis and explanation.

Users can choose from several secondary research types and sources, including:

- Journal articles

- Research papers

With secondary sources, users can draw insights, detect trends, and validate findings to jumpstart their research efforts.

Primary vs. Secondary Market Research

We’ve touched a little on primary research, but it’s essential to understand exactly how primary and secondary research differ.

Think of primary research as the “thing” itself, and secondary research as the analysis of the “thing,” like these primary and secondary research examples:

- An expert gives an interview (primary research) and a marketer uses that interview to write an article (secondary research).

- A company conducts a consumer satisfaction survey (primary research) and a business analyst uses the survey data to write a market trend report (secondary research).

- A marketing team launches a new advertising campaign across various platforms (primary research) and a marketing research firm, like Meltwater for market research, compiles the campaign performance data to benchmark against industry standards (secondary research).

In other words, primary sources make original contributions to a topic or issue, while secondary sources analyze, synthesize, or interpret primary sources.

Both are necessary when optimizing a business, gaining a competitive edge, improving marketing, or understanding consumer trends that may impact your business.

What Are Secondary Research Methods?

Secondary research methods focus on analyzing existing data rather than collecting primary data. Common examples of secondary research methods include:

- Literature review. Researchers analyze and synthesize existing literature (e.g., white papers, research papers, articles) to find knowledge gaps and build on current findings.

- Content analysis. Researchers review media sources and published content to find meaningful patterns and trends.

- AI-powered secondary research. Platforms like Meltwater for market research analyze vast amounts of complex data and use AI technologies like natural language processing and machine learning to turn data into contextual insights.
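
As a rough illustration of content analysis, the sketch below counts how many pieces of published content mention each term. The sample texts, the stopword list, and the function name `term_document_frequency` are hypothetical placeholders chosen for this example. They are not taken from any tool named in this guide.

```python
import re
from collections import Counter

# Hypothetical snippets standing in for published content (reviews, articles, posts).
documents = [
    "Customers praise fast delivery and friendly support.",
    "Slow delivery remains the top complaint this quarter.",
    "Support response times improved, but delivery delays persist.",
]

# A tiny, illustrative stopword list; a real analysis would use a fuller one.
STOPWORDS = {"and", "the", "but", "this", "a", "an", "of", "to", "in"}


def term_document_frequency(docs):
    """Count how many documents mention each term (not total occurrences)."""
    counts = Counter()
    for doc in docs:
        # Lowercase, keep alphabetic words only, and de-duplicate within a document.
        terms = set(re.findall(r"[a-z]+", doc.lower())) - STOPWORDS
        counts.update(terms)
    return counts


frequencies = term_document_frequency(documents)
for term, doc_count in frequencies.most_common(5):
    print(f"{term}: mentioned in {doc_count} of {len(documents)} documents")
```

Counting documents rather than raw occurrences keeps one long, repetitive source from dominating the pattern, which matters when you are looking for trends across many sources.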

Researchers today have access to more secondary research companies and market research tools and technology than ever before, allowing them to streamline their efforts and improve their findings.

Advantages of Secondary Research

Conducting secondary research offers benefits in every job function and use case, from marketing to the C-suite. Here are a few advantages you can expect.

Cost and time efficiency

Using existing research saves you time and money compared to conducting primary research. Secondary data is readily available and easily accessible via libraries, free publications, or the Internet. This is particularly advantageous when you face time constraints or when a project requires a large amount of data and research.

Access to large datasets

Secondary data gives you access to larger data sets and sample sizes compared to what primary methods may produce. Larger sample sizes can improve the statistical power of the study and add more credibility to your findings.
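
To make the sample-size point concrete, here is a minimal sketch that computes the approximate 95% margin of error for a survey proportion at two sample sizes. It assumes a simple random sample and the normal approximation. The sample sizes are made-up illustrations, and published secondary data may use other sampling designs.

```python
import math


def margin_of_error(sample_size, proportion=0.5, z=1.96):
    """Approximate margin of error for a proportion (normal approximation).

    Assumes a simple random sample; z=1.96 corresponds to ~95% confidence.
    """
    return z * math.sqrt(proportion * (1 - proportion) / sample_size)


# Hypothetical sizes: a small in-house survey vs. a large published dataset.
small = margin_of_error(400)
large = margin_of_error(10_000)

print(f"n=400:    +/- {small:.2%}")
print(f"n=10000:  +/- {large:.2%}")
```

Under these assumptions, a 25-fold increase in sample size cuts the margin of error by a factor of five, because the error shrinks with the square root of the sample size.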

Ability to analyze trends and patterns

Using larger sample sizes, researchers have more opportunities to find and analyze trends and patterns. The more data that supports a trend or pattern, the more trustworthy the trend becomes and the more useful for making decisions.

Historical context

Using a combination of older and recent data allows researchers to gain historical context about patterns and trends. Learning what’s happened before can help decision-makers gain a better current understanding and improve how they approach a problem or project.

Basis for further research

Ideally, you’ll use secondary research to further other efforts. Secondary sources help you identify knowledge gaps, highlight areas for improvement, and guide deeper investigations.

Disadvantages of Secondary Research

Secondary research comes with a few drawbacks, though these aren’t necessarily deal breakers when deciding to use secondary sources.

Reliability concerns

Researchers don’t always know where the data comes from or how it was collected, which can lead to reliability concerns. They don’t control the initial process, nor do they always know the original purpose for collecting the data, both of which can lead to skewed results.

Potential bias

The original data collectors may have a specific agenda when doing their primary research, which may lead to biased findings. Evaluating the credibility and integrity of secondary data sources can prove difficult.

Outdated information

Secondary sources may contain outdated information, especially when dealing with rapidly evolving trends or fields. Using outdated information can lead to inaccurate conclusions and widen knowledge gaps.

Limitations in customization

Relying on secondary data means being at the mercy of what’s already published. It wasn’t collected with your specific use cases in mind, which limits how you can customize and use the data.

A lack of relevance

Secondary research rarely holds all the answers you need, at least from a single source. You typically need multiple secondary sources to piece together a narrative, and even then you might not find the specific information you need.

Best Practices for Secondary Research

To make secondary market research your new best friend, you’ll need to think critically about its strengths and find ways to overcome its weaknesses. Let’s review some best practices to use secondary research to its fullest potential.

Identify credible sources for secondary research

To overcome the challenges of bias, accuracy, and reliability, choose secondary sources that have a demonstrated history of excellence. For example, an article published in a medical journal naturally has more credibility than a blog post on a little-known website.

Assess credibility based on peer review, author expertise, sampling techniques, publication reputation, and data collection methodologies. Cross-reference the data with other sources to check whether independent sources agree.

The more credibility “factors” a source has, the more confidently you can rely on it.
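
One way to apply this consistently is a simple checklist that counts how many credibility factors a source meets. The sketch below is only an illustration. The class name `SourceAssessment`, the equal weighting of factors, and the two example sources are assumptions made for this example, not an established scoring standard.

```python
from dataclasses import dataclass


@dataclass
class SourceAssessment:
    """Checklist of the credibility factors listed above (equal weights assumed)."""
    name: str
    peer_reviewed: bool
    author_is_expert: bool
    sampling_documented: bool
    reputable_publication: bool
    methodology_documented: bool

    def credibility_factors(self) -> int:
        """Return how many of the five factors this source satisfies."""
        return sum([
            self.peer_reviewed,
            self.author_is_expert,
            self.sampling_documented,
            self.reputable_publication,
            self.methodology_documented,
        ])


# Hypothetical sources for illustration only.
journal = SourceAssessment("Medical journal article", True, True, True, True, True)
blog = SourceAssessment("Little-known blog post", False, True, False, False, False)

for source in sorted([blog, journal], key=lambda s: s.credibility_factors(), reverse=True):
    print(f"{source.name}: {source.credibility_factors()} of 5 factors")
```

A count like this is a screening aid rather than a verdict. You may decide that some factors, such as documented methodology, should weigh more heavily for your project.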

Evaluate the quality and relevance of secondary data

You can gauge the quality of the data by asking simple questions:

- How complete is the data?

- How old is the data?

- Is this data relevant to my needs?

- Does the data come from a known, trustworthy source?
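
The first two questions can be checked mechanically once you have the data in hand. The sketch below assumes the dataset is a list of dictionaries. The field names, sample records, and dates are hypothetical.

```python
from datetime import date


def completeness(records, required_fields):
    """Share of records that have a non-empty value for every required field."""
    if not records:
        return 0.0
    complete = sum(
        all(record.get(field) not in (None, "") for field in required_fields)
        for record in records
    )
    return complete / len(records)


def age_in_years(published, today):
    """Approximate age of a dataset in years."""
    return (today - published).days / 365.25


# Hypothetical records from a downloaded secondary dataset.
records = [
    {"region": "North", "satisfaction": 4.2},
    {"region": "South", "satisfaction": 3.9},
    {"region": "East", "satisfaction": None},   # missing value
    {"region": "West", "satisfaction": 4.5},
]

share_complete = completeness(records, ["region", "satisfaction"])
dataset_age = age_in_years(date(2020, 1, 1), date(2024, 1, 1))

print(f"Complete records: {share_complete:.0%}")
print(f"Dataset age: {dataset_age:.1f} years")
```

Relevance and trustworthiness still call for human judgment, but simple checks like these can flag incomplete or stale datasets before you invest time in them.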

It’s best to focus on data that aligns with your research objectives. Knowing the questions you want to answer and the outcomes you want to achieve ahead of time helps you focus only on data that offers meaningful insights.

Document your sources

If you’re sharing secondary data with others, it’s essential to document your sources to gain their trust. They don’t have the benefit of being “in the trenches” with you during your research, so sharing your sources adds credibility to your findings and helps win buy-in.

How to Conduct Secondary Research With Meltwater

Secondary market research offers an efficient, cost-effective way to learn more about a topic or trend, providing a comprehensive understanding of the customer journey. Compared to primary research, users can gain broader insights, analyze trends and patterns, and gain a solid foundation for further exploration by using secondary sources.

Meltwater for market research shortens the time to value of secondary research with AI-powered insights, enhancing your understanding of the customer journey. Using natural language processing, machine learning, and trusted data science processes, Meltwater helps you find relevant data and automatically surfaces insights to help you understand its significance. Our solution identifies hidden connections between data points you might not know to look for and spells out what the data means, allowing you to make better decisions based on accurate conclusions. Learn more about Meltwater's power as a secondary research solution by requesting a demo.

What is Secondary Research? Types, Methods, Examples

Appinio Research · 20.09.2023

Have you ever wondered how researchers gather valuable insights without conducting new experiments or surveys? That's where secondary research steps in: a powerful approach that lets us explore existing data and information that others have collected.

Whether you're a student, a professional, or someone seeking to make informed decisions, understanding the art of secondary research opens doors to a wealth of knowledge.

What is Secondary Research?

Secondary research is the process of gathering and analyzing existing data, information, and knowledge that has been previously collected and compiled by others. This approach allows researchers to leverage available sources, such as articles, reports, and databases, to gain insights, validate hypotheses, and make informed decisions without collecting new data.

Benefits of Secondary Research

Secondary research offers a range of advantages that can significantly enhance your research process and the quality of your findings.

- Time and Cost Efficiency: Secondary research saves time and resources by utilizing existing data sources, eliminating the need for data collection from scratch.

- Wide Range of Data: Secondary research provides access to vast information from various sources, allowing for comprehensive analysis.

- Historical Perspective: Examining past research helps identify trends, changes, and long-term patterns that might not be immediately apparent.

- Reduced Researcher Bias: Because others collected the data, your own expectations cannot shape how it was gathered, although the original collectors' biases may still be present.

- Support for Primary Research: Secondary research can lay the foundation for primary research by providing context and insights into gaps in existing knowledge.

- Comparative Analysis: By integrating data from multiple sources, you can conduct robust comparative analyses for more accurate conclusions.

- Benchmarking and Validation: Secondary research aids in benchmarking performance against industry standards and validating hypotheses.

Primary Research vs. Secondary Research

When it comes to research methodologies, primary and secondary research each have their distinct characteristics and advantages. Here's a brief comparison to help you understand the differences.

Primary Research

- Data Source: Involves collecting new data directly from original sources.

- Data Collection: Researchers design and conduct surveys, interviews, experiments, or observations.

- Time and Resources: Typically requires more time, effort, and resources due to data collection.

- Fresh Insights: Provides firsthand, up-to-date information tailored to specific research questions.

- Control: Researchers control the data collection process and can shape methodologies.

Secondary Research

- Data Source: Involves utilizing existing data and information collected by others.

- Data Collection: Researchers search, select, and analyze data from published sources, reports, and databases.

- Time and Resources: Generally more time-efficient and cost-effective as data is already available.

- Existing Knowledge: Utilizes data that has been previously compiled, often providing broader context.

- Less Control: Researchers have little or no control over how the data was originally collected.

Choosing between primary and secondary research depends on your research objectives, available resources, and the depth of insights you require.

Types of Secondary Research

Secondary research encompasses various types of existing data sources that can provide valuable insights for your research endeavors. Understanding these types can help you choose the most relevant sources for your objectives.