
iResearchNet


Comparative Research

Most social sciences recognize a specific comparative research methodology. Its definition often refers to countries and cultures at the same time, because cultural differences between countries can be rather small (e.g., among the Scandinavian countries), whereas very different cultural or ethnic groups may live within one country (e.g., minorities in the United States). Comparative studies face problems at every level of research, from theory and the types of research questions to operationalization, instruments, sampling, and the interpretation of results.

The major problem in comparative research, regardless of the discipline, is that every aspect of the analysis, from theory to datasets, may vary in definitions and/or categories. Because the objects of comparison usually belong to different systemic contexts, establishing equivalence and comparability is a major challenge of comparative research. This is often “operationalized” as functional equivalence, i.e., the research objects must fulfill equivalent functions within their different system contexts. Neither equivalence nor its absence (“bias”) can be presumed; both have to be analyzed and tested at every level of the research process.

Equivalence And Bias

Equivalence has to be analyzed and established on at least three levels: the construct, the item, and the method (van de Vijver & Tanzer 1997). Whenever a test at any of these levels yields a negative result, cultural bias must be suspected; bias at these three levels can thus be described as the opposite of equivalence. Van de Vijver and Leung (1997) define bias as variance in certain variables or indicators that can only be caused by culturally unspecific measurement. For example, a media content analysis could measure the amount of foreign affairs coverage in one variable by the length of newspaper articles. If, however, newspaper articles in country A are generally longer than in country B, irrespective of their topic, a sum or mean index of foreign affairs coverage would almost inevitably suggest that country A offers more foreign affairs coverage than country B. This outcome would hardly be surprising and would miss the research question, because the amount of foreign affairs coverage in a country is unrelated to its national average article length. To avoid cultural bias, the results must be standardized or weighted, for example by each country’s mean article length.
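The weighting step can be illustrated with a small sketch. The articles, lengths, and function names below are hypothetical, and dividing by each country's mean article length over all topics is only one of several possible weighting schemes.

```python
from statistics import mean

# Hypothetical coded articles: (country, topic, length in words).
# Articles in country A are longer overall, irrespective of topic.
ARTICLES = [
    ("A", "foreign", 900), ("A", "domestic", 1000), ("A", "domestic", 1100),
    ("B", "foreign", 600), ("B", "domestic", 500), ("B", "domestic", 400),
]


def raw_foreign_length(articles, country):
    """Mean length of foreign affairs articles in one country (bias-prone)."""
    return mean(n for c, topic, n in articles if c == country and topic == "foreign")


def weighted_foreign_length(articles, country):
    """Foreign affairs length relative to the country's mean article length."""
    overall = mean(n for c, _, n in articles if c == country)
    return raw_foreign_length(articles, country) / overall


for country in ("A", "B"):
    print(country, raw_foreign_length(ARTICLES, country),
          round(weighted_foreign_length(ARTICLES, country), 2))
```

On the raw measure, country A appears to cover foreign affairs more extensively (900 vs. 600 words). After weighting, foreign affairs articles are shorter than the national average in A (0.9) and longer than average in B (1.2), so the conclusion reverses.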

To find out whether construct equivalence can be assumed, the researcher usually needs external data and fairly complex procedures of culture-specific construct validation. Ideally, this includes analyses of the external structure, i.e., theoretical references to other constructs, as well as an examination of the latent or internal structure. The internal structure consists of the relationships between the construct’s sub-dimensions and can be tested using confirmatory factor analyses, multidimensional scaling, or item analyses. Equivalence can be assumed if the construct validation has been successful in every culture and if the internal and external structures are identical in every country. However, it is hardly possible to prove construct equivalence beyond all doubt (Wirth & Kolb 2004).
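The paragraph names confirmatory factor analysis among the tools for the internal structure. A simpler index often used in cross-cultural work to compare factor loadings estimated separately per culture is Tucker's congruence coefficient (φ), the cosine between two loading vectors; it is swapped in here for illustration and is not the full procedure described above. The loadings and function name are hypothetical, and because interpretive cut-offs differ between authors, none is hard-coded.

```python
from math import sqrt


def tucker_phi(loadings_x, loadings_y):
    """Tucker's congruence coefficient between two loading vectors on the same items."""
    if len(loadings_x) != len(loadings_y):
        raise ValueError("both loading vectors must refer to the same items")
    cross = sum(x * y for x, y in zip(loadings_x, loadings_y))
    norm = sqrt(sum(x * x for x in loadings_x) * sum(y * y for y in loadings_y))
    return cross / norm


# Hypothetical loadings of four items on one factor, estimated separately per country.
country_a = [0.70, 0.65, 0.60, 0.55]
country_b = [0.68, 0.62, 0.58, 0.20]

print(round(tucker_phi(country_a, country_b), 3))
```

Here the fourth item loads much more weakly in country B, yet φ is still about 0.96. The coefficient summarizes the whole loading pattern and can mask a single deviant item, which is one reason a high value supports, but does not prove, construct equivalence.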

Even when construct equivalence is given, bias can still occur at the item level. The wording of items in surveys and of definitions and categories in content analyses can cause bias through culture-specific connotations. Item bias is mostly caused by poor (that is, nonequivalent) translation or by culture-specific questions and categories (van de Vijver & Leung 1997). Compared with the complex procedures needed for construct equivalence, testing for item bias is rather simple once construct equivalence has been established: persons from different cultures who occupy the same position or rank on a latent construct scale must respond in the same way to every item that measures the construct. Statistically, the correlations of the individual items with the total (sum) score have to be identical in every culture, because test theory generally uses the total score to estimate each individual’s position on the construct scale. In brief, equivalence at the item level is established whenever the same sub-dimensions or issues can be used to explain the same theoretical construct in every country (Wirth & Kolb 2004).

When the instruments are ready for application, method equivalence comes to the fore. Method equivalence consists of sample equivalence, instrument equivalence, and administration equivalence; a violation of any of these produces method bias. Sample equivalence refers to an equivalent selection of subjects or units of analysis. Instrument equivalence concerns whether people in every culture are equally willing to take part in the study and equally familiar with the instruments (Lauf & Peter 2001). Finally, bias at the administration level can arise from culture-specific attitudes of the interviewers that may elicit culture-specific answers. Another source of administration bias lies in socio-demographic differences between the various national interviewer teams (van de Vijver & Tanzer 1997).

The Role Of Theory

When choosing a comparative research strategy, theory plays a major role in three respects: theoretical diversity, theory-drivenness, and contextual factors (Wirth & Kolb 2004). Swanson (1992) distinguishes three principal strategies for dealing with international theoretical diversity. A common one is the avoidance strategy. Many international comparisons are carried out by teams from a single culture or nation, whose research interests are usually limited by their own (scientific) socialization. Within this monocultural context, broad approaches cannot be applied and “intertheoretical” questions cannot be answered. This strategy includes atheoretical and unitheoretical (referring to one national theory) studies, with or without contextualization (van de Vijver & Leung 2000; Wirth & Kolb 2004).

The pretheoretical strategy tries to avoid cultural and theoretical bias in another way: these studies are conducted without a strict theoretical background until the results are interpreted. The advantage of this strategy lies in exploration, i.e., in developing new theories. However, by the strict principles of critical rationalism, the lack of a theoretical background means that theoretically deduced hypotheses cannot be tested (Popper 1994). Most of the results remain on a descriptive level and never reach theoretical diversity. Moreover, the instruments for pretheoretical studies must be almost “holistic” in order to integrate every theoretical construct conceivable for the interpretation. These studies are mostly contextualized and can thus become rather extensive (Swanson 1992).

Finally, when a research team develops a meta-theoretical orientation as a framework for the basic theories and research questions, the data can be analyzed from different theoretical backgrounds. This meta-theoretical strategy allows extensive use of all data and contextual factors but produces a variety of often very different results, which are not easily summarized in one report (Swanson 1992). Clearly, the greater the theoretical diversity, the greater the effort required to establish construct equivalence.

Research Questions

Van de Vijver and Leung (1996, 1997) distinguish between two types of research questions: structure-oriented questions are mainly interested in the relationships between variables, whereas level-oriented questions focus on parameter values. If, for example, a knowledge gap study analyzes how the knowledge gained from television news relates to recipients’ high or low socio-economic status (SES) in the UK and the US, the question is structure oriented: the focus is on the relationship between the knowledge indices within each nation, and the mean knowledge gain is not taken into account. Structure-oriented questions usually call for correlation or regression analyses. If the main interest of the study is instead a comparison of the mean knowledge gain of people with low SES in the UK and the US, the research question is level oriented, because the knowledge indices of the two nations are compared directly. In this case, one would most probably use analysis of variance. The risk of cultural bias is the same for both kinds of research questions.

Emic And Etic Strategies Of Operationalization

Before operationalizing an international comparison, the research team has to analyze construct equivalence to establish comparability. If internal construct equivalence is missing, the construct cannot be measured equivalently in every country, and deciding whether or not to use the same instruments in every country does not solve this problem. An emic approach could solve it. Here, the operationalization for measuring the construct(s) is developed nationally, so that each national instrument is highly adequate to its culture. Comparison on the construct level remains possible even though the instruments vary across cultures, because the culture-specific measurement establishes functional equivalence on the construct level. This procedure is generally possible even if national instruments already exist.

Because measurement differs from culture to culture, integrating the national results can be very difficult. Strictly speaking, this disadvantage of emic studies allows only structure-oriented outcomes to be interpreted, and only after a thorny validation process: it has to be shown that measurements with different indicators on different scales really yield data on equivalent constructs. Using external reference data from every culture, complex weighting and standardization procedures may lead to a valid equalization of levels and variance (van de Vijver & Leung 1996, 1997). In research practice, emic measurement and data analysis are often used to shed light on cultural differences.

If construct equivalence can be assumed after an in-depth analysis, an etic modus operandi can be recommended. In this logic, approaching the different cultures with the same or a slightly adapted instrument is valid because the constructs “function” equally in every culture. Consequently, an emic procedure would most probably arrive at similar instruments in every culture. Conversely, an etic approach must lead to bias and measurement artifacts when applied in the absence of construct equivalence.

The advantages of emic procedures lie not only in the adequate measurement of culture-specific elements, but also in the possible inclusion of, e.g., idiographic elements of each culture. This approach can thus be seen as a compromise between qualitative and quantitative methodologies. Some comparative researchers suggest analyzing cultural processes holistically rather than breaking them down into variables, arguing that psychometric, quantitative data collection suits only similar cultures. Against this simplification, one should remember the emic approach’s potential to provide researchers with comparable data, as described above. Holistic analyses, in contrast, produce culture-specific outcomes that will not be comparable; the problem of equivalence and bias has merely been shifted to the interpretation of results.

Adaptation Of The Instruments

Difficulties in establishing equivalence are regularly linked to linguistic problems. How can a researcher establish functional equivalence without knowing every language of the cultures under examination? For the linguistic adaptation of both the theoretical background and the instruments, one can again distinguish between “more etic” and “more emic” approaches.

Translation-oriented approaches produce two translated versions of the text: one in the “foreign” language and one retranslated into the original language (back-translation). The latter can be compared with the original version to evaluate the translation. Note that this method produces etic instruments, which can only work if functional equivalence has been established at every higher level. Van de Vijver and Tanzer (1997) call this procedure “application” of an instrument in another language. In a “more emic” cultural adaptation, cultural singularities can be included, e.g., by counterbalancing culture-specific connotations with a different item wording.

Purely emic approaches develop entirely culture-specific instruments without translation. Two assembly approaches are available (van de Vijver & Tanzer 1997). First, in the committee approach, an international, interdisciplinary group of experts on the cultures, languages, and research field decides whether the instruments should be developed culture-specifically or whether a cultural adaptation will suffice. Second, the dual-focus approach seeks a compromise between literal, grammatical, syntactical, and construct equivalence: native speakers and/or bilinguals work with the research team in a multistep procedure to arrange the different language versions (Erkut et al. 1999).

Usually, researchers select the countries or cultures to study according to personal preference and the accessibility of data. Forming an atheoretical sample in this way avoids many problems (but not cultural bias!), yet it also forgoes some advantages. Przeworski and Teune (1970) suggest two systematic, theory-driven approaches. The quasi-experimental most similar systems design focuses on cultural differences. To minimize the possible causes of the differences, the countries chosen are the “most similar” ones, so that the few dissimilarities between them are most likely to be the reason for the different outcomes. Whenever the hypotheses emphasize intercultural similarities, the most different systems design is appropriate. Here, in a kind of reversed quasi-experimental logic, the focus lies on similarities between cultures even though these cultures differ as much as possible (Kolb 2004; Wirth & Kolb 2004).
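Przeworski and Teune describe the two designs conceptually. The sketch below is only one hypothetical way to operationalize them: it ranks country pairs by Euclidean distance on standardized context variables and picks the closest pair with different outcomes (most similar systems) or the most distant pair with the same outcome (most different systems). The countries, context variables, outcomes, and function names are invented for illustration; in practice the context variables would have to be chosen on theoretical grounds.

```python
from itertools import combinations
from math import dist  # Python 3.8+

# Hypothetical countries: standardized context variables and a binary outcome.
COUNTRIES = {
    "A": ((0.1, 0.2, 0.0), 1),
    "B": ((0.2, 0.1, 0.1), 0),
    "C": ((1.5, -1.0, 2.0), 1),
    "D": ((-1.2, 1.4, -0.8), 1),
}


def _pairs(countries, same_outcome):
    """Yield (context distance, country, country) for pairs matching the outcome filter."""
    for a, b in combinations(sorted(countries), 2):
        (ctx_a, out_a), (ctx_b, out_b) = countries[a], countries[b]
        if (out_a == out_b) == same_outcome:
            yield dist(ctx_a, ctx_b), a, b


def most_similar_systems(countries):
    """Closest context profiles with different outcomes, or None if no such pair."""
    return min(_pairs(countries, same_outcome=False), default=None)


def most_different_systems(countries):
    """Most distant context profiles with the same outcome, or None if no such pair."""
    return max(_pairs(countries, same_outcome=True), default=None)


print(most_similar_systems(COUNTRIES)[1:], most_different_systems(COUNTRIES)[1:])
```

With this toy data, A and B form the most similar pair (nearly identical context, different outcome), while C and D form the most different pair (very different context, same outcome). With the small numbers of cases typical of international comparisons, such a mechanical search can at best support, not replace, a theory-driven choice.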

Random sampling and representativeness play a minor role in international comparisons. The number of states in the world is limited, and a normal distribution of the social factors under examination, i.e., the precondition of random sampling, cannot be assumed. Moreover, many statistical methods run into problems when the number of cases is small (Hartmann 1995).

Data Analysis And Interpretation Of Results

Given the conceptual and methodological problems of international research presented above, special care must be taken over data analysis and the interpretation of results. Because it is hardly possible to include every relevant variable in international research, documenting methods, work process, and data analysis is even more important here than in single-culture studies. The results must therefore be evaluated in additional studies. In any case, an intensive use of different statistical analyses beyond the “general” comparison of arithmetic means can further validate the results and especially their interpretation. Van de Vijver and Leung (1997) present a comprehensive summary of data analysis procedures, including structure- and level-oriented approaches, examples of SPSS syntax, and references.

Following Przeworski and Teune’s (1970) research strategies, results of comparative research can be classified into differences and similarities between the research objects. Kohn (1989) introduces a separate way of interpreting each type. At first glance, intercultural similarities seem easier to interpret. Difficulties emerge, however, with regard to equivalence on the one hand (covert cultural differences may hide within culturally biased similarities) and with regard to the causes of similarities on the other. The causes are especially hard to determine for “most different” countries, because different combinations of different indicators can, in theory, produce the same results. Esser (2000) points out that diverse theoretical backgrounds will lead either to differences (e.g., action-theoretically based micro-research) or to similarities (e.g., system-theoretically oriented macro-approaches). In general, Przeworski and Teune’s (1970) quasi-experimental approach for “most similar systems with different outcome” seems to be the easier way to interesting results and interpretations. Besides the advantages for causal interpretation, “most similar” systems are likely to be equivalent from the top level of the construct down to the bottom level of indicators and items. “Controlling” other influences can minimize methodological problems and make analysis and interpretation more valid.

References:

- Erkut, S., Alarcón, O., García Coll, C., Tropp, L. R., & Vázquez García, H. A. (1999). The dual-focus approach to creating bilingual measures. Journal of Cross-Cultural Psychology, 30(2), 206–218.

- Esser, F. (2000). Journalismus vergleichen: Journalismustheorie und komparative Forschung [Comparing journalism: Journalism theory and comparative research]. In M. Löffelholz (ed.), Theorien des Journalismus: Ein diskursives Handbuch [Journalism theories: A discoursal handbook]. Wiesbaden: Westdeutscher, pp. 123–146.

- Esser, F., & Pfetsch, B. (eds.) (2004). Comparing political communication: Theories, cases, and challenges. Cambridge: Cambridge University Press.

- Hartmann, J. (1995). Vergleichende Politikwissenschaft: Ein Lehrbuch [Comparative political science: A textbook]. Frankfurt: Campus.

- Kohn, M. L. (1989). Cross-national research as an analytic strategy. In M. L. Kohn (ed.), Cross-national research in sociology. Newbury Park, CA: Sage, pp. 77–102.

- Kolb, S. (2004). Voraussetzungen für und Gewinn bringende Anwendung von quasiexperimentellen Ansätzen in der kulturvergleichenden Kommunikationsforschung [Preconditions for and advantageous application of quasi-experimental approaches in comparative communication research]. In W. Wirth, E. Lauf, & A. Fahr (eds.), Forschungslogik und -design in der Kommunikationswissenschaft, vol. 1: Einführung, Problematisierungen und Aspekte der Methodenlogik aus kommunikationswissenschaftlicher Perspektive [Logic of inquiry and research designs in communication research, vol. 1: Introduction, problematization, and aspects of methodology from a communications point of view]. Cologne: Halem, pp. 157–178.

- Lauf, E., & Peter, J. (2001). Die Codierung verschiedensprachiger Inhalte: Erhebungskonzepte und Gütemaße [Coding of content in different languages: Concepts of inquiry and quality indices]. In E. Lauf & W. Wirth (eds.), Inhaltsanalyse: Perspektiven, Probleme, Potentiale [Content analysis: Perspectives, problems, potentialities]. Cologne: Halem, pp. 199–217.

- Popper, K. R. (1994). Logik der Forschung [Logic of inquiry], 10th edn. Tübingen: Mohr.

- Przeworski, A., & Teune, H. (1970). The logic of comparative social inquiry. Malabar, FL: Krieger.

- Swanson, D. L. (1992). Managing theoretical diversity in cross-national studies of political communication. In J. G. Blumler, J. M. McLeod, & K. E. Rosengren (eds.), Comparatively speaking: Communication and culture across space and time. Newbury Park, CA: Sage, pp. 19–34.

- Vijver, F. van de, & Leung, K. (1996). Methods and data analysis of comparative research. In J. W. Berry, Y. H. Poortinga, & J. Pandey (eds.), Handbook of cross-cultural research. Boston, MA: Allyn and Bacon, pp. 257–300.

- Vijver, F. van de, & Leung, K. (1997). Methods and data analysis of cross-cultural research. Thousand Oaks, CA: Sage.

- Vijver, F. van de, & Leung, K. (2000). Methodological issues in psychological research on culture. Journal of Cross-Cultural Psychology, 31(1), 33–51.

- Vijver, F. van de, & Tanzer, N. K. (1997). Bias and equivalence in cross-cultural assessment: An overview. European Journal of Applied Psychology, 47(4), 263–279.

- Wirth, W., & Kolb, S. (2004). Designs and methods of comparative political communication research. In F. Esser & B. Pfetsch (eds.), Comparing political communication: Theories, cases, and challenges. Cambridge: Cambridge University Press, pp. 87–111.

3. Comparative Research Methods

This chapter examines the ‘art of comparing’ by showing how to relate a theoretically guided research question to a properly founded research answer by developing an adequate research design. It first considers the role of variables in comparative research, before discussing the meaning of ‘cases’ and case selection. It then looks at the ‘core’ of the comparative research method: the use of the logic of comparative inquiry to analyse the relationships between variables (representing theory), and the information contained in the cases (the data). Two logics are distinguished: Method of Difference and Method of Agreement. The chapter concludes with an assessment of some problems common to the use of comparative methods.


Rethinking Comparison

Qualitative comparative methods – and specifically controlled qualitative comparisons – are central to the study of politics. They are not the only kind of comparison, though, that can help us better understand political processes and outcomes. Yet there are few guides for how to conduct non-controlled comparative research. This volume brings together chapters from more than a dozen leading methods scholars from across the discipline of political science, including positivist and interpretivist scholars, qualitative methodologists, mixed-methods researchers, ethnographers, historians, and statisticians. Their work revolutionizes qualitative research design by diversifying the repertoire of comparative methods available to students of politics, offering readers clear suggestions for what kinds of comparisons might be possible, why they are useful, and how to execute them. By systematically thinking through how we engage in qualitative comparisons and the kinds of insights those comparisons produce, these collected essays create new possibilities to advance what we know about politics.

PERAN PEMUDA RELAWAN DEMOKRASI DALAM MENINGKATKAN PARTISIPASI POLITIK MASYARAKAT PADA PEMILIHAN UMUM LEGISLATIF TAHUN 2014 DAN IMPLIKASINYA TERHADAP KETAHANAN POLITIK WILAYAH (STUDI PADA RELAWAN DEMOKRASI BANYUMAS, JAWA TENGAH)

This research was going to described the role of Banyumas Democracy Volunteer ( Relawan Demokrasi Banyumas) in increasing political public partitipation in Banyumas’s legislative election 2014 and its implication to Banyumas’s political resilience. This research used qualitative research design as a research method. Data were collected by in depth review, observation and documentation. This research used purpossive sampling technique with stakeholder sampling variant to pick informants. The research showed that Banyumas Democracy Volunteer had a positive role in developing political resilience in Banyumas. Their role was gave political education and election education to voters in Banyumas. In the other words, Banyumas Democracy Volunteer had a vital role in developing ideal political resilience in Banyumas.Keywords: Banyumas Democracy Volunteer, Democracy, Election, Political Resilience of Region.

Ezer Kenegdo: Eksistensi Perempuan dan Perannya dalam Keluarga

AbstractPurpose of this study was to describe the meaning of ezer kenegdo and to know position and role of women in the family. The research method used is qualitative research methods (library research). The term of “ ezer kenegdo” refer to a helper but her position withoutsuperiority and inferiority. “The patner model” between men and women is uderstood in relation to one another as the same function, where differences are complementary and mutually beneficial in all walks of life and human endeavors.Keywords: Ezer Kenegdo; Women; Family.AbstrakTujuan penulisan artikel ini adalah untuk mendeskripsikan pengertian ezer kenegdo dan mengetahui kedudukan dan peran perempuan dalam keluarga. Metode yang digunakan adalah metode kualitatif library research. Ungkapan “ezer kenegdo” menunjuk pada seorang penolong namun kedudukannya adalah setara tanpa ada superioritas dan inferioritas. “Model kepatneran” antara laki-laki dan perempuan dipahami dengan hubungan satu dengan yang lain sebagai fungsi yang sama, yang mana perbedaan adalah saling melengkapi dan saling menguntungkan dalam semua lapisan kehidupan dan usaha manusia.Kata Kunci: Ezer Kenegdo, Prerempuan, Keluarga.

Commentary on ‘Opportunities and Challenges of Engaged Indigenous Scholarship’ (Van de Ven, Meyer, & Jing, 2018)

The mission ofManagement and Organization Review, founded in 2005, is to publish research about Chinese management and organizations, foreign organizations operating in China, or Chinese firms operating globally. The aspiration is to develop knowledge that is unique to China as well as universal knowledge that may transcend China. Articulated in the first editorial published in the inaugural issue of MOR (2005) and further elaborated in a second editorial (Tsui, 2006), the question of contextualization is framed, discussing the role of context in the choices of the research question, theory, measurement, and research design. The idea of ‘engaged indigenous research’ by Van de Ven, Meyer, and Jing (2018) describes the highest level of contextualization, with the local context serving as the primary factor guiding all the decisions of a research project. Tsui (2007: 1353) refers to it as ‘deep contextualization’.

PERAN DINAS KESEHATAN DALAM MENANGGULANGI GIZI BURUK ANAK DI KECAMATAN NGAMPRAH KABUPATEN BANDUNG BARAT

The title of this research is "The Role of the Health Office in Tackling Child Malnutrition in Ngamprah District, West Bandung Regency". The problem in this research is not yet optimal implementation of the Health Office in Overcoming Malnutrition in Children in Ngamprah District, West Bandung Regency. The research method that researchers use is descriptive research methods with a qualitative approach. The technique of determining the informants used was purposive sampling technique. The results showed that in carrying out its duties and functions, the health office, the Health Office had implemented a sufficiently optimal role seen from the six indicators of success in overcoming malnutrition, namely: All Posyandu carry out weighing operations at least once a month All toddlers are weighed, All cases of malnutrition are referred to the Puskemas Nursing or Home Sick, all cases of malnutrition are treated at the health center. Nursing or hospitalization is handled according to the management of malnutrition. All post-treatment malnourished toddlers receive assistance.

Jazz jem sessions in the aspect of listener perception

The purpose of the article is to identify the characteristic features ofjazz jam sessions as creative and concert events. The research methods arebased on the use of a number of empirical approaches. The historicalmethod has characterized the periodization of the emergence andpopularity of jam session as an artistic phenomenon. The use of themethod of comparison of jazz jam sessions and jazz concert made itpossible to determine the characteristic features of jams. An appeal toaxiological research methods has identified the most strikingimprovisational solos of leading jazz artists. Of particular importance inthe context of the article are the methods of analysis and synthesis,observation and generalization. It is important to pay attention to the use ofa structural-functional scientific-research method that indicates theeffectiveness of technological and execution processes on jams. Scientificinnovation. The article is about discovering the peculiarities of the jamsession phenomenon and defining the role of interaction between theaudience of improviser listeners and musicians throughout the jams. Theprocesses of development of jazz concerts and improvisations at jamsessions are revealed. Conclusions. The scientific research providedconfirms the fact that system of interactions between musicians amongthemselves and the audience, as well as improvisation of the performers atthe jam sessions is immense and infinite. That is why modern jazz singersand the audience will always strive for its development and understanding.This way is worth starting with repeated listening to improvisation, in theimmediate presence of the jam sessions (both participant and listener).

THE ROLE OF TECHNOLOGY INFORMATION SYSTEMS AND APPLICATION OF SAK ETAP ON DEVELOPMENT MODEL FINANCIAL POSITION REPORT

Bina Siswa SMA Plus Cisarua addressing in Jl. colonel canal masturi no. 64. At the time of document making, record-keeping of transaction relating to account real or financial position report account especially, Bina Siswa SMA Plus Cisarua has applied computer that is by using the application of Microsoft Office Word 2007 and Microsoft Excel 2007, in practice of control to relative financial position report account unable to be added with the duration process performed within financial statement making. For the problems then writer takes title: “The Role Of Technology Information Systems And Aplication Of SAK ETAP On Development Model Financial Position Report”. Research type which writer applies is research type academy, data type which writer applies is qualitative data and quantitative data, research design type which writer applies is research design deskriptif-analistis, research method which writer applies is descriptive research method, survey and eksperiment, data collecting technique which writer applies is field researcher what consisted of interview and observation library research, system development method which writer applies is methodologies orienting at process, data and output. System development structure applied is Iterasi. Design of information system applied is context diagram, data flow diagram, and flowchart. Design of this financial position report accounting information system according to statement of financial accounting standard SAK ETAP and output consisted of information of accumulated fixed assets, receivable list, transaction summary of cash, transaction summary of bank and financial position report.

Dilema Hakim Pengadilan Agama dalam Menyelesaikan Perkara Hukum Keluarga Melalui Mediasi

This article aims to determine the role of judges in resolving family law cases through mediation in the Religious Courts, where judges have the position as state officials as regulated in Law Number 43 of 1999 concerning Basic Personnel, can also be a mediator in the judiciary. as regulated in Supreme Court Regulation Number 1 of 2016 concerning Mediation Procedures where judges have the responsibility to seek peace at every level of the trial and are also involved in mediation procedures. The research method used in this article uses normative legal research methods. Whereas until now judges still have a very important role in resolving family law cases in the Religious Courts due to the fact that there are still many negotiating processes with mediation assisted by judges, even though on the one hand the number of non-judge mediators is available, although in each region it is not evenly distributed in terms of number and capacity. non-judge mediator.

Anime's Effects on Human IQ and Behavior in Saudi Arabia

The present study attempted to determine the effects of watching anime, to understand whether watching anime could affect the mental and social development of children and other age groups, and to decide whether teenagers and children should watch anime. The study used a descriptive, observational research design in which data and facts from direct observations and online questionnaires were used to answer the research question. The findings suggested that anime viewers have a higher level of general knowledge than non-anime viewers, as well as a significantly higher IQ in a specific group. In addition, anime can be used to spread background knowledge about other cultures and can play a role in boosting the economy.

Comparison in Scientific Research: Uncovering statistically significant relationships

by Anthony Carpi, Ph.D., Anne E. Egger, Ph.D.

Did you know that when Europeans first saw chimpanzees, they thought the animals were hairy, adult humans with stunted growth? A study of chimpanzees paved the way for comparison to be recognized as an important research method. Later, Charles Darwin and others used this comparative research method in the development of the theory of evolution.

Comparison is used to determine and quantify relationships between two or more variables by observing different groups that either by choice or circumstance are exposed to different treatments.

Comparison includes both retrospective studies, which look at events that have already occurred, and prospective studies, which examine variables from the present forward.

Comparative research is similar to experimentation in that it involves comparing a treatment group to a control, but it differs in that the treatment is observed rather than consciously imposed, either because of ethical concerns or because imposing it is not possible, as in a retrospective study.

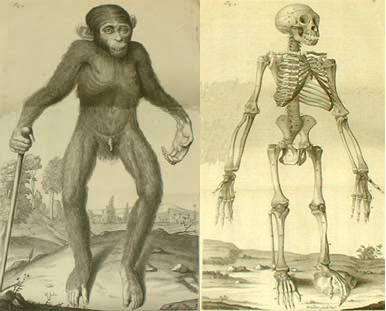

Anyone who has stared at a chimpanzee in a zoo (Figure 1) has probably wondered about the animal's similarity to humans. Chimps make facial expressions that resemble humans, use their hands in much the same way we do, are adept at using different objects as tools, and even laugh when they are tickled. It may not be surprising to learn then that when the first captured chimpanzees were brought to Europe in the 17th century, people were confused, labeling the animals "pygmies" and speculating that they were stunted versions of "full-grown" humans. A London physician named Edward Tyson obtained a "pygmie" that had died of an infection shortly after arriving in London, and began a systematic study of the animal that cataloged the differences between chimpanzees and humans, thus helping to establish comparative research as a scientific method.

Figure 1: A chimpanzee

- A brief history of comparative methods

In 1698, Tyson, a member of the Royal Society of London, began a detailed dissection of the "pygmie" he had obtained and published his findings in the 1699 work: Orang-Outang, sive Homo Sylvestris: or, the Anatomy of a Pygmie Compared with that of a Monkey, an Ape, and a Man. The title of the work further reflects the misconception that existed at the time: Tyson did not use the term Orang-Outang in its modern sense to refer to the orangutan; he used it in its literal translation from the Malay language as "man of the woods," as that is how the chimps were viewed.

Tyson took great care in his dissection. He precisely measured and compared a number of anatomical variables such as brain size of the "pygmie," ape, and human. He recorded his measurements of the "pygmie," even down to the direction in which the animal's hair grew: "The tendency of the Hair of all of the Body was downwards; but only from the Wrists to the Elbows 'twas upwards" (Russell, 1967). Aided by William Cowper, Tyson made drawings of various anatomical structures, taking great care to accurately depict the dimensions of these structures so that they could be compared to those in humans (Figure 2). His systematic comparative study of the dimensions of anatomical structures in the chimp, ape, and human led him to state:

in the Organization of abundance of its Parts, it more approached to the Structure of the same in Men: But where it differs from a Man, there it resembles plainly the Common Ape, more than any other Animal. (Russell, 1967)

Tyson's comparative studies proved exceptionally accurate and his research was used by others, including Thomas Henry Huxley in Evidence as to Man's Place in Nature (1863) and Charles Darwin in The Descent of Man (1871).

Figure 2: Edward Tyson's drawing of the external appearance of a "pygmie" (left) and the animal's skeleton (right) from The Anatomy of a Pygmie Compared with that of a Monkey, an Ape, and a Man from the second edition, London, printed for T. Osborne, 1751.

Tyson's methodical and scientific approach to anatomical dissection contributed to the development of evolutionary theory and helped establish the field of comparative anatomy. Further, Tyson's work helps to highlight the importance of comparison as a scientific research method.

- Comparison as a scientific research method

Comparative research represents one approach in the spectrum of scientific research methods and in some ways is a hybrid of other methods, drawing on aspects of both experimental science (see our Experimentation in Science module) and descriptive research (see our Description in Science module). Similar to experimentation, comparison seeks to decipher the relationship between two or more variables by documenting observed differences and similarities between two or more subjects or groups. In contrast to experimentation, the comparative researcher does not subject one of those groups to a treatment, but rather observes a group that either by choice or circumstance has been subject to a treatment. Thus comparison involves observation in a more "natural" setting, not subject to experimental confines, and in this way evokes similarities with description.

Importantly, the simple comparison of two variables or objects is not comparative research. Tyson's work would not have been considered scientific research if he had simply noted that "pygmies" looked like humans without measuring bone lengths and hair growth patterns. Instead, comparative research involves the systematic cataloging of the nature and/or behavior of two or more variables, and the quantification of the relationship between them.

Figure 3: Skeleton of the juvenile chimpanzee dissected by Edward Tyson, currently displayed at the Natural History Museum, London.

While the choice of which research method to use is a personal decision based in part on the training of the researchers conducting the study, there are a number of scenarios in which comparative research would likely be the primary choice.

- The first scenario is one in which the scientist is not trying to measure a response to change, but rather he or she may be trying to understand the similarities and differences between two subjects. For example, Tyson was not observing a change in his "pygmie" in response to an experimental treatment. Instead, his research was a comparison of the unknown "pygmie" to humans and apes in order to determine the relationship between them.

- A second scenario in which comparative studies are common is when the physical scale or timeline of a question may prevent experimentation. For example, in the field of paleoclimatology, researchers have compared cores taken from sediments deposited millions of years ago in the world's oceans to see if the sedimentary composition is similar across all oceans or differs according to geographic location. Because the sediments in these cores were deposited millions of years ago, it would be impossible to obtain these results through the experimental method. Research designed to look at past events such as sediment cores deposited millions of years ago is referred to as retrospective research.

- A third common comparative scenario is when the ethical implications of an experimental treatment preclude an experimental design. Researchers who study the toxicity of environmental pollutants or the spread of disease in humans are precluded from purposefully exposing a group of individuals to the toxin or disease for ethical reasons. In these situations, researchers would set up a comparative study by identifying individuals who have been accidentally exposed to the pollutant or disease and comparing their symptoms to those of a control group of people who were not exposed. Research designed to look at events from the present into the future, such as a study looking at the development of symptoms in individuals exposed to a pollutant, is referred to as prospective research.

Comparative science was significantly strengthened in the late 19th and early 20th century with the introduction of modern statistical methods. These were used to quantify the association between variables (see our Statistics in Science module). Today, statistical methods are critical for quantifying the nature of relationships examined in many comparative studies. The outcome of comparative research is often presented in one of the following ways: as a probability, as a statement of statistical significance, or as a declaration of risk. For example, in 2007 Kristensen and Bjerkedal showed that there is a statistically significant relationship (at the 95% confidence level) between birth order and IQ by comparing test scores of first-born children to those of their younger siblings (Kristensen & Bjerkedal, 2007). And numerous studies have contributed to the determination that the risk of developing lung cancer is 30 times greater in smokers than in nonsmokers (NCI, 1997).
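
A "declaration of risk" is often expressed as a relative risk: the incidence of an outcome in an exposed group divided by its incidence in an unexposed group. The Python sketch below illustrates only that arithmetic; the function name and the counts are hypothetical and are not taken from the NCI report or any study cited here.

```python
def relative_risk(exposed_cases: int, exposed_total: int,
                  unexposed_cases: int, unexposed_total: int) -> float:
    """Ratio of outcome incidence in the exposed group to that in the unexposed group."""
    if exposed_total <= 0 or unexposed_total <= 0:
        raise ValueError("group sizes must be positive")
    if unexposed_cases == 0:
        raise ValueError("relative risk is undefined when the unexposed group has no cases")
    risk_exposed = exposed_cases / exposed_total        # incidence among the exposed
    risk_unexposed = unexposed_cases / unexposed_total  # incidence among the unexposed
    return risk_exposed / risk_unexposed


# Hypothetical cohort: 90 cases per 10,000 exposed people vs. 3 cases per 10,000 unexposed people.
rr = relative_risk(90, 10_000, 3, 10_000)
print(f"relative risk = {rr:.1f}")  # prints "relative risk = 30.0"
```

A relative risk of 30 means the exposed group's risk is 30 times that of the unexposed group; on its own it says nothing about why the groups differ.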

- Comparison in practice: The case of cigarettes

In 1919, Dr. George Dock, chairman of the Department of Medicine at Barnes Hospital in St. Louis, asked all of the third- and fourth-year medical students at the teaching hospital to observe an autopsy of a man with a disease so rare, he claimed, that most of the students would likely never see another case of it in their careers. With the medical students gathered around, the physicians conducting the autopsy observed that the patient's lungs were speckled with large dark masses of cells that had caused extensive damage to the lung tissue and had forced the airways to close and collapse. Dr. Alton Ochsner, one of the students who observed the autopsy, would write years later that "I did not see another case until 1936, seventeen years later, when in a period of six months, I saw nine patients with cancer of the lung. – All the afflicted patients were men who smoked heavily and had smoked since World War I" (Meyer, 1992).

Figure 4: Image from a stereoptic card showing a woman smoking a cigarette circa 1900

The American physician Dr. Isaac Adler was, in fact, the first scientist to propose a link between cigarette smoking and lung cancer in 1912, based on his observation that lung cancer patients often reported that they were smokers. Adler's observations, however, were anecdotal, and provided no scientific evidence toward demonstrating a relationship. The German epidemiologist Franz Müller is credited with the first case-control study of smoking and lung cancer in the 1930s. Müller sent a survey to the relatives of individuals who had died of cancer, and asked them about the smoking habits of the deceased. Based on the responses he received, Müller reported a higher incidence of lung cancer among heavy smokers compared to light smokers. However, the study had a number of problems. First, it relied on the memory of relatives of deceased individuals rather than first-hand observations, and second, no statistical association was made. Soon after this, the tobacco industry began to sponsor research with the biased goal of repudiating negative health claims against cigarettes (see our Scientific Institutions and Societies module for more information on sponsored research).

Beginning in the 1950s, several well-controlled comparative studies were initiated. In 1950, Ernest Wynder and Evarts Graham published a retrospective study comparing the smoking habits of 605 hospital patients with lung cancer to 780 hospital patients with other diseases (Wynder & Graham, 1950). Their study showed that 1.3% of lung cancer patients were nonsmokers while 14.6% of patients with other diseases were nonsmokers. In addition, 51.2% of lung cancer patients were "excessive" smokers while only 19.1% of other patients were excessive smokers. Both of these comparisons proved to be statistically significant differences. The statisticians who analyzed the data concluded:
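
The two comparisons above can be checked with a standard pooled two-proportion z-test. This is a swapped-in textbook test, not necessarily the procedure Wynder and Graham's statisticians used, and the counts below are reconstructed from the rounded percentages (an assumption), so the statistics are approximate.

```python
import math


def two_proportion_z_test(x1: int, n1: int, x2: int, n2: int) -> tuple[float, float]:
    """Pooled two-proportion z-test; returns (z, two-sided p-value)."""
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)  # common proportion under the null hypothesis of no difference
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail area of the standard normal
    return z, p_value


# Assumed counts: 1.3% of 605 is about 8 nonsmokers; 14.6% of 780 is about 114 nonsmokers.
z_non, p_non = two_proportion_z_test(8, 605, 114, 780)
# Assumed counts: 51.2% of 605 is about 310 excessive smokers; 19.1% of 780 is about 149.
z_exc, p_exc = two_proportion_z_test(310, 605, 149, 780)
print(f"nonsmokers:        z = {z_non:.2f}, p = {p_non:.1e}")
print(f"excessive smokers: z = {z_exc:.2f}, p = {p_exc:.1e}")
```

A |z| well above 1.96 corresponds to a two-sided p-value below 0.05, which is what "statistically significant" conventionally means at the 95% confidence level.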

when the nonsmokers and the total of the high smoking classes of patients with lung cancer are compared with patients who have other diseases, we can reject the null hypothesis that smoking has no effect on the induction of cancer of the lungs.

Wynder and Graham also suggested that there might be a lag of ten years or more between the period of smoking in an individual and the onset of clinical symptoms of cancer. This would present a major challenge to researchers since any study that investigated the relationship between smoking and lung cancer in a prospective fashion would have to last many years.

Richard Doll and Austin Hill published a similar comparative study in 1950 in which they showed that there was a statistically significantly higher incidence of smoking among lung cancer patients compared to patients with other diseases (Doll & Hill, 1950). In their discussion, Doll and Hill raise an interesting point regarding comparative research methods by saying,

This is not necessarily to state that smoking causes carcinoma of the lung. The association would occur if carcinoma of the lung caused people to smoke or if both attributes were end-effects of a common cause.

They go on to assert that because the habit of smoking was seen to develop before the onset of lung cancer, the argument that lung cancer leads to smoking can be rejected. They therefore conclude, "that smoking is a factor, and an important factor, in the production of carcinoma of the lung."

Despite this substantial evidence, both the tobacco industry and unbiased scientists raised objections, claiming that the retrospective research on smoking was "limited, inconclusive, and controversial." The industry stated that the studies published did not demonstrate cause and effect, but rather a spurious association between two variables. Dr. Wilhelm Hueper of the National Cancer Institute, a scientist with a long history of research into occupational causes of cancers, argued that the emphasis on cigarettes as the only cause of lung cancer would compromise research support for other causes of lung cancer. Ronald Fisher, a renowned statistician, also was opposed to the conclusions of Doll and others, purportedly because they promoted a "puritanical" view of smoking.

The tobacco industry mounted an extensive campaign of misinformation, sponsoring and then citing research that showed that smoking did not cause "cardiac pain" as a distraction from the studies that were being published regarding cigarettes and lung cancer. The industry also highlighted studies that showed that individuals who quit smoking suffered from mild depression, and they pointed to the fact that even some doctors themselves smoked cigarettes as evidence that cigarettes were not harmful (Figure 5).

Figure 5: Cigarette advertisement circa 1946.

While the scientific research began to impact health officials and some legislators, the industry's ad campaign was effective. The US Federal Trade Commission banned tobacco companies from making health claims about their products in 1955. However, more significant regulation was averted. An editorial that appeared in the New York Times in 1963 summed up the national sentiment when it stated that the tobacco industry made a "valid point," and the public should refrain from making a decision regarding cigarettes until further reports were issued by the US Surgeon General.

In 1951, Doll and Hill enrolled 40,000 British physicians in a prospective comparative study to examine the association between smoking and the development of lung cancer. In contrast to the retrospective studies that followed patients with lung cancer back in time, the prospective study was designed to follow the group forward in time. In 1952, Drs. E. Cuyler Hammond and Daniel Horn enrolled 187,783 white males in the United States in a similar prospective study. And in 1959, the American Cancer Society (ACS) began the first of two large-scale prospective studies of the association between smoking and the development of lung cancer. The first ACS study, named Cancer Prevention Study I, enrolled more than 1 million individuals and tracked their health, smoking and other lifestyle habits, development of diseases, cause of death, and life expectancy for almost 13 years (Garfinkel, 1985).

All of the studies demonstrated that smokers are at a higher risk of developing and dying from lung cancer than nonsmokers. The ACS study further showed that smokers have elevated rates of other pulmonary diseases, coronary artery disease, stroke, and cardiovascular problems. The two ACS Cancer Prevention Studies would eventually show that 52% of deaths among smokers enrolled in the studies were attributed to cigarettes.

In the second half of the 20th century, evidence from other scientific research methods would contribute multiple lines of evidence to the conclusion that cigarette smoke is a major cause of lung cancer:

Descriptive studies of the pathology of lungs of deceased smokers would demonstrate that smoking causes significant physiological damage to the lungs. Experiments that exposed mice, rats, and other laboratory animals to cigarette smoke showed that it caused cancer in these animals (see our Experimentation in Science module for more information). Physiological models would help demonstrate the mechanism by which cigarette smoke causes cancer.

As evidence linking cigarette smoke to lung cancer and other diseases accumulated, the public, the legal community, and regulators slowly responded. In 1957, the US Surgeon General first acknowledged an association between smoking and lung cancer when a report was issued stating, "It is clear that there is an increasing and consistent body of evidence that excessive cigarette smoking is one of the causative factors in lung cancer." In 1965, over objections by the tobacco industry and the American Medical Association, which had just accepted a $10 million grant from the tobacco companies, the US Congress passed the Federal Cigarette Labeling and Advertising Act, which required that cigarette packs carry the warning: "Caution: Cigarette Smoking May Be Hazardous to Your Health." In 1967, the US Surgeon General issued a second report stating that cigarette smoking is the principal cause of lung cancer in the United States. While the tobacco companies found legal means to protect themselves for decades following this, in 1996, Brown and Williamson Tobacco Company was ordered to pay $750,000 in a tobacco liability lawsuit; it became the first liability award paid to an individual by a tobacco company.

- Comparison across disciplines

Comparative studies are used in a host of scientific disciplines, from anthropology to archaeology, comparative biology, epidemiology, psychology, and even forensic science. DNA fingerprinting, a technique used to incriminate or exonerate a suspect using biological evidence, is based on comparative science. In DNA fingerprinting, segments of DNA are isolated from a suspect and from biological evidence such as blood, semen, or other tissue left at a crime scene. Up to 20 different DNA segments from the suspect are compared with the corresponding segments in the DNA found at the crime scene. If all of the segments match, the investigator can calculate the statistical probability that a randomly chosen, unrelated person would also match by chance. Thus DNA matches are described in terms of a "1 in 1 million" or "1 in 1 billion" random match probability.
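
Such a probability is commonly computed with the product rule: if the DNA segments (loci) are inherited independently, the chance that an unrelated person matches at every locus is the product of the per-locus genotype frequencies. The sketch below assumes independence and uses a hypothetical frequency of 0.1 at each of 20 loci; forensic laboratories use population databases and additional corrections that are not modeled here.

```python
import math


def random_match_probability(genotype_frequencies: list[float]) -> float:
    """Multiply per-locus genotype frequencies (the product rule).

    Assumes the loci are independent; real casework adds corrections
    (for example, for relatives or population substructure) omitted here.
    """
    if not genotype_frequencies:
        raise ValueError("need at least one locus")
    for f in genotype_frequencies:
        if not 0 < f <= 1:
            raise ValueError("each genotype frequency must be in (0, 1]")
    return math.prod(genotype_frequencies)


# Hypothetical: a genotype frequency of 0.1 at each of 20 loci.
rmp = random_match_probability([0.1] * 20)
print(f"about 1 in {1 / rmp:.1e}")
```

Multiplying many modest per-locus frequencies is what drives the combined probability down to the very small figures quoted for full-profile matches.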

Comparative methods are also commonly used in studies involving humans due to the ethical limits of experimental treatment. For example, in 2007, Petter Kristensen and Tor Bjerkedal published a study in which they compared the IQ of over 250,000 male Norwegians in the military (Kristensen & Bjerkedal, 2007). The researchers found a significant relationship between birth order and IQ, where the average IQ of first-born male children was approximately three points higher than the average IQ of the second-born male in the same family. The researchers further showed that this relationship appeared to reflect social rather than biological factors, as second-born males who grew up in families in which the first-born child died had average IQs similar to other first-born children. One might imagine a scenario in which this type of study could be carried out experimentally, for example, purposefully removing first-born male children from certain families, but the ethics of such an experiment preclude it from ever being conducted.

- Limitations of comparative methods

One of the primary limitations of comparative methods is the control of other variables that might influence a study. For example, as pointed out by Doll and Hill in 1950, the association between smoking and cancer deaths could have meant that: a) smoking caused lung cancer, b) lung cancer caused individuals to take up smoking, or c) a third unknown variable caused lung cancer AND caused individuals to smoke (Doll & Hill, 1950). As a result, comparative researchers often go to great lengths to choose two different study groups that are similar in almost all respects except for the treatment in question. In fact, many comparative studies in humans are carried out on identical twins for this exact reason. For example, in the field of tobacco research, dozens of comparative twin studies have been used to examine everything from the health effects of cigarette smoke to the genetic basis of addiction.
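
Because the two members of a twin pair share so much, a matched design compares differences within each pair rather than overall group averages. A minimal sketch of a paired t statistic follows; the function name, the "lung-function scores," and the five pairs of values are all invented for illustration.

```python
import math
import statistics


def paired_comparison(treated: list[float], control: list[float]) -> tuple[float, float]:
    """Return (mean within-pair difference, paired t statistic) for matched pairs."""
    if len(treated) != len(control) or len(treated) < 2:
        raise ValueError("need at least two matched pairs")
    diffs = [t - c for t, c in zip(treated, control)]  # one difference per pair
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample standard deviation of the differences
    if sd_diff == 0:
        raise ValueError("differences have no variation; t is undefined")
    t_stat = mean_diff / (sd_diff / math.sqrt(len(diffs)))
    return mean_diff, t_stat


# Hypothetical lung-function scores for five twin pairs (one smoking, one non-smoking twin each).
smoking_twin = [3.1, 2.8, 3.4, 2.9, 3.0]
nonsmoking_twin = [3.4, 3.0, 3.5, 3.3, 3.2]
mean_diff, t_stat = paired_comparison(smoking_twin, nonsmoking_twin)
print(f"mean difference = {mean_diff:.2f}, paired t = {t_stat:.2f}")
```

With 4 degrees of freedom, |t| of about 4.7 exceeds the conventional two-sided 5% critical value of about 2.78; pairing removes much of the between-family variation that would otherwise blur the comparison.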

- Comparison in modern practice

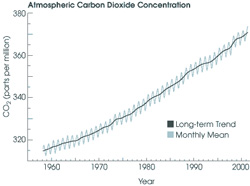

Figure 6: The "Keeling curve," a long-term record of atmospheric CO₂ concentration measured at the Mauna Loa Observatory (Keeling et al.). Although the annual oscillations represent natural, seasonal variations, the long-term increase means that concentrations are higher than they have been in 400,000 years. Graphic courtesy of NASA's Earth Observatory.

Despite the lessons learned during the debate that ensued over the possible effects of cigarette smoke, misconceptions still surround comparative science. For example, in the late 1950s, Charles Keeling, an oceanographer at the Scripps Institution of Oceanography, began to publish data he had gathered from a long-term descriptive study of atmospheric carbon dioxide (CO₂) levels at the Mauna Loa Observatory in Hawaii (Keeling, 1958). Keeling observed that atmospheric CO₂ levels were increasing at a rapid rate (Figure 6). He and other researchers began to suspect that rising CO₂ levels were associated with increasing global mean temperatures, and several comparative studies have since correlated rising CO₂ levels with rising global temperature (Keeling, 1970). Together with research from modeling studies (see our Modeling in Scientific Research module), this research has provided evidence for an association between global climate change and the burning of fossil fuels (which emits CO₂).
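
Correlating two series, as these comparative studies did, typically means computing a coefficient such as Pearson's r. The sketch below uses invented yearly values, not Mauna Loa or temperature records, purely to show the calculation.

```python
import math


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson correlation coefficient between two equal-length series."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two series of equal length (at least 2 points)")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (spread_x * spread_y)


# Hypothetical yearly values invented for illustration only.
co2_ppm = [316.0, 320.0, 325.0, 331.0, 339.0, 346.0, 354.0]
temperature_anomaly_c = [0.02, -0.05, 0.03, 0.10, 0.18, 0.12, 0.30]
r = pearson_r(co2_ppm, temperature_anomaly_c)
print(f"Pearson r = {r:.2f}")
```

A high r shows that the series move together, but, as Doll and Hill noted for smoking, an association alone does not show which variable drives the other; that is why multiple lines of evidence matter.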

Yet in a move reminiscent of the fight launched by the tobacco companies, the oil and fossil fuel industry launched a major public relations campaign against climate change research. As late as 1989, scientists funded by the oil industry were producing reports that called the research on climate change "noisy junk science" (Roberts, 1989). As with the tobacco issue, challenges to early comparative studies tried to paint the method as less reliable than experimental methods. But the challenges actually strengthened the science by prompting more researchers to launch investigations, thus providing multiple lines of evidence supporting an association between atmospheric CO₂ concentrations and climate change. As a result, the culmination of multiple lines of scientific evidence prompted the Intergovernmental Panel on Climate Change organized by the United Nations to issue a report stating that "Warming of the climate system is unequivocal," and "Carbon dioxide is the most important anthropogenic greenhouse gas" (IPCC, 2007).

Comparative studies are a critical part of the spectrum of research methods currently used in science. They allow scientists to apply a treatment-control design in settings that preclude experimentation, and they can provide invaluable information about the relationships between variables. The intense scrutiny that comparison has undergone in the public arena due to cases involving cigarettes and climate change has actually strengthened the method by clarifying its role in science and emphasizing the reliability of data obtained from these studies.

Comparative research: what it is and how to conduct it

Comparative research involves comparing elements to better understand the similarities and differences between them, applying rigorous methods and analyzing the results to draw meaningful conclusions. It helps to expand knowledge and provides a basis for informed decisions.

Learn more about its features and how it can be done.

- 1 What is comparative research?

- 2 Why comparative research?

- 3 How to Conduct Comparative Research

- 3.1 1. Define the goal of comparative research

- 3.2 2. Select the items to compare

- 3.3 3. Collect data

- 3.4 4. Analysis of the data

- 3.5 5. Interpretation of results

- 3.6 6. Conclusion(s) from the comparative research

- 3.7 7. Present the results of the comparative research

- 3.8 Conclusion

What is comparative research?

Comparative research is research designed to analyse and compare two or more elements or phenomena to identify similarities, differences, and patterns between them. It is used in various disciplines such as science, psychology, sociology and economics.

The main features of comparative research are:

- Comparison: A direct comparison is made between two or more objects. Similarities and differences in characteristics, behaviors, effects, or other relevant aspects are examined.

- Clear objectives: It has specific, clearly defined goals. These may be to understand the causes of the differences or similarities observed, to explain the effects of the variables being compared, or to suggest better approaches or solutions.

- Context: Comparative research is carried out in a specific context. This means taking into account factors such as time, location, culture, and socio-economic environment, which may influence the elements being compared.

- Different approaches: Various methods and techniques can be used to collect data, including case studies, surveys, direct observation, document analysis, and statistical analysis.

- Analysis and conclusion: Comparative research involves analyzing the data collected and drawing conclusions based on the comparisons made. These conclusions can provide important information about causal relationships, trends, or observed patterns.

- Generalization: Depending on the scope of the study, the results may allow generalizations about the elements being compared. However, it is important to be aware of the limitations and to consider the validity of the results in different contexts.

Why comparative research?

Comparative research is used in a variety of situations and for a variety of purposes. Here are some examples where you can use this approach:

- Understanding cultural differences: Comparative research is useful for analyzing and understanding cultural differences between different groups of people. It can help identify particular practices, values, beliefs and behaviors in different societies.

- Evaluation of policies and programs: It makes it possible to analyse how policies are implemented and what results are achieved in different contexts, thus identifying good practices or areas for improvement.

- Market research: In business, comparative research is used to analyse and compare the demand for products or services in different markets. This helps companies understand consumer preferences, adapt their marketing strategies and make informed decisions about expanding into new markets.

- Scientific research: Comparative research is applied in a variety of scientific disciplines. In biology, for example, it can be used to compare species and study their characteristics and behavior. In psychology, it is used to compare groups of people and understand differences in behavior or personality.

- Analysis of educational policies and systems: Comparative research is used in the field of education to analyse and compare the educational policies and systems of different countries or regions. This helps identify successful practices, common challenges and opportunities for improvement in education.

- Labor market studies: They help analyse and compare working conditions, wages and other work-related aspects in different industries or countries. This provides information about labor market trends and inequalities.

How to Conduct Comparative Research

Conducting comparative research requires a few basic steps. Here is a simple explanation of how to do it:

1. Define the goal of comparative research

Before you begin, you should be clear about what you want to achieve with comparative research. Clearly define the goal and the research questions you want to answer.

2. Select the items to compare

Determine the elements, phenomena, or groups you want to compare. These can be different countries, cultures, policies, products, groups of people, etc. Make sure they are comparable and that you can get relevant data for each item.

Make sure you have a clear understanding of the elements you want to compare and that they are relevant to your research objective. The selected elements should be comparable to each other. This means that they should have characteristics and properties that can be measured and compared in a meaningful way.

When selecting a sample of items for comparison, ensure that it is a representative sample of the population or group to which you want to generalize the results.
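
One common way to keep a sample representative is proportional stratified sampling: divide the population into groups (strata) and sample each group in proportion to its size. A minimal Python sketch follows; the function name and the "urban" and "rural" strata are hypothetical and not part of any particular survey tool.

```python
import random


def stratified_sample(strata: dict[str, list[str]], total_size: int,
                      seed: int = 0) -> dict[str, list[str]]:
    """Draw a proportional stratified random sample from named strata.

    Each stratum contributes roughly its share of the population; because the
    per-stratum sizes are rounded, the overall total can differ slightly from total_size.
    """
    rng = random.Random(seed)  # seeded so the draw is reproducible
    population_size = sum(len(members) for members in strata.values())
    sample = {}
    for name, members in strata.items():
        k = round(total_size * len(members) / population_size)
        sample[name] = rng.sample(members, min(k, len(members)))
    return sample


# Hypothetical population: 600 urban and 400 rural respondents.
population = {
    "urban": [f"urban-{i}" for i in range(600)],
    "rural": [f"rural-{i}" for i in range(400)],
}
sample = stratified_sample(population, total_size=50)
print({name: len(chosen) for name, chosen in sample.items()})  # {'urban': 30, 'rural': 20}
```

Sampling each stratum separately guarantees that both groups appear in the same proportions as in the population, which a simple random sample only achieves on average.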

3. Collect data

Use a variety of sources and methods to collect data about the items being compared. Appropriate sources may include surveys, interviews, direct observations, databases, historical records, government reports, academic literature, media, and others.

Perform a quality check on the data collected. This includes checking the consistency, accuracy and completeness of the data. If necessary, perform additional checks or contact participants to clarify any ambiguities or errors in the data.
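
Parts of this quality check can be automated. The sketch below flags missing required fields (completeness) and values outside a plausible range (accuracy); the function name, the field names `id`, `country`, and `age`, and the 0 to 120 age range are hypothetical choices for illustration.

```python
def quality_report(records: list[dict], required: list[str],
                   valid_ranges: dict[str, tuple[float, float]]) -> list[str]:
    """Return human-readable problems: missing fields and out-of-range values."""
    problems = []
    for i, record in enumerate(records):
        for field in required:
            if record.get(field) is None:  # absent or explicitly empty
                problems.append(f"record {i}: missing '{field}'")
        for field, (low, high) in valid_ranges.items():
            value = record.get(field)
            if value is not None and not low <= value <= high:
                problems.append(f"record {i}: '{field}'={value} outside [{low}, {high}]")
    return problems


# Hypothetical survey records.
records = [
    {"id": 1, "country": "A", "age": 34},
    {"id": 2, "country": None, "age": 29},
    {"id": 3, "country": "B", "age": 240},
]
for problem in quality_report(records, required=["id", "country", "age"],
                              valid_ranges={"age": (0, 120)}):
    print(problem)
```

Flagged records can then be checked by hand or clarified with participants, as described above.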

4. Analysis of the data

Review the data collected and conduct a comparative analysis. Identify similarities and differences between the elements being compared. You can use statistical analysis techniques and comparison graphs, or simply compare the data qualitatively.

You can use descriptive statistics to summarize and present quantitative data clearly and concisely. This can include measures of central tendency (such as mean, median, or mode) and measures of dispersion (such as standard deviation or range). Descriptive statistics help you understand the main characteristics of the items being compared.
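As a sketch of how descriptive statistics support a comparison, Python's standard-library `statistics` module can summarize two groups side by side. The scores for Country A and Country B are invented for illustration.

```python
import statistics

def describe(values):
    """Summarize one group with measures of central tendency and dispersion."""
    return {
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "mode": statistics.mode(values),
        "stdev": statistics.stdev(values),  # sample standard deviation
        "range": max(values) - min(values),
    }

# Invented scores for two countries
groups = {"Country A": [4, 5, 5, 6, 10], "Country B": [3, 3, 4, 8, 12]}
for name, values in groups.items():
    summary = describe(values)
    print(name, {k: round(v, 2) for k, v in summary.items()})
```

Both groups have the same mean (6). However, Country B has a lower median, a wider range (9 vs. 6), and a larger standard deviation. Comparing means alone would hide these differences, which is why you should report measures of dispersion alongside measures of central tendency.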

5. Interpretation of results

Based on the analyses carried out, interpret the results of the investigation. Identify patterns, trends, or causal relationships that emerge from the comparison. Explain the similarities and differences observed and look for possible explanations.

Identify key variables that may influence the similarities and differences you have found. Consider whether underlying causal factors or mediating variables could explain the results obtained.

6. Conclusion(s) from the comparative research

Draw relevant conclusions based on the interpretation of the results. Summarize the most important results of the comparative research and answer the research questions asked in the first step.

Reflect on the impact of your findings in the broader context. Explore how the results may contribute to existing knowledge on the topic and how they might affect practice. Additionally, identify the limitations of your comparative research, such as possible biases or limitations in the sample or methods used.

7. Present the results of the comparative research

Communicate the results of your comparative research clearly and concisely. You can use written reports, visual presentations, charts, or comparative tables, whichever works best for your audience.

Comparative research is used to analyse and compare elements, phenomena or practices in order to understand differences, identify best practices, evaluate policies or programs and make informed decisions in various fields such as culture, economics, science, education, etc.

Remember that data collection is a crucial phase in comparative research. It is important that it is carried out carefully and accurately in order to obtain reliable and valid information that allows you to make meaningful comparisons between the selected items.

Online survey tools such as QuestionPro can help with structured data collection.



What is comparative analysis? A complete guide

Last updated

18 April 2023

Reviewed by

Jean Kaluza

Comparative analysis is a valuable tool for acquiring deep insights into your organization’s processes, products, and services so you can continuously improve them.

Similarly, if you want to streamline your operations, price your offerings appropriately, and ultimately become a market leader, you’ll likely need to draw on comparative analyses often.

When faced with multiple options or solutions to a given problem, a thorough comparative analysis can help you compare and contrast your options and make a clear, informed decision.

If you want to get up to speed on conducting a comparative analysis or need a refresher, here’s your guide.


What exactly is comparative analysis?

A comparative analysis is a side-by-side comparison that systematically compares two or more things to pinpoint their similarities and differences. The focus of the investigation might be conceptual—a particular problem, idea, or theory—or perhaps something more tangible, like two different data sets.

For instance, you could use comparative analysis to investigate how your product features measure up to the competition.

After a successful comparative analysis, you should be able to identify strengths and weaknesses and clearly understand which product is more effective.

You could also use comparative analysis to examine different methods of producing that product and determine which way is most efficient and profitable.

The potential applications for using comparative analysis in everyday business are almost unlimited. That said, a comparative analysis is most commonly used to examine:

- Emerging trends and opportunities (new technologies, marketing)

- Competitor strategies

- Financial health

- Effects of trends on a target audience

Why is comparative analysis so important?

Comparative analysis can help narrow your focus so your business pursues the most meaningful opportunities rather than attempting dozens of improvements simultaneously.

A comparative approach also helps frame data to illuminate interrelationships. For example, comparative research might reveal nuanced relationships or critical contexts behind specific processes or dependencies that wouldn’t be well understood without the research.

For instance, if your business compares the cost of producing several existing products relative to which ones have historically sold well, that should provide helpful information once you’re ready to look at developing new products or features.

Comparative vs. competitive analysis: what’s the difference?

Comparative analysis is generally divided into three subtypes, which use quantitative or qualitative data and then extend the findings to a larger group. These include:

- Pattern analysis: identifying patterns or recurring trends and behaviors across large data sets.

- Data filtering: analyzing large data sets to extract an underlying subset of information. It may involve rearranging, excluding, and apportioning comparative data to fit different criteria.

- Decision tree: flowcharting to visually map and assess potential outcomes, costs, and consequences.
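Decision trees are often evaluated by expected value. For each option, you weight each outcome's payoff by its probability, sum the results, and subtract the cost of the option. The minimal sketch below models a single level of chance outcomes. The options, probabilities, and payoffs are hypothetical, and so is the `Option` class.

```python
from dataclasses import dataclass

@dataclass
class Option:
    """One branch of a decision (hypothetical structure for illustration)."""
    name: str
    cost: float
    outcomes: list[tuple[float, float]]  # (probability, payoff) pairs

def expected_value(option: Option) -> float:
    """Probability-weighted payoff of the option's outcomes, minus its cost."""
    total_p = sum(p for p, _ in option.outcomes)
    if abs(total_p - 1.0) > 1e-9:
        raise ValueError(f"probabilities for {option.name!r} sum to {total_p}, not 1")
    return sum(p * payoff for p, payoff in option.outcomes) - option.cost

# Hypothetical options, probabilities, and payoffs
options = [
    Option("launch feature", cost=50_000, outcomes=[(0.6, 120_000), (0.4, 20_000)]),
    Option("do nothing", cost=0, outcomes=[(1.0, 25_000)]),
]
for option in options:
    print(f"{option.name}: {expected_value(option):,.0f}")
best = max(options, key=expected_value)
print("best:", best.name)
```

With these numbers, launching has an expected value of 30,000, compared with 25,000 for doing nothing. Real decision trees usually nest further decision and chance nodes, which this sketch does not model.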

In contrast, competitive analysis is a type of comparative analysis in which you deeply research one or more of your industry competitors. In this case, you’re using qualitative research to explore what the competition is up to across one or more dimensions.

For example, you might compare competitors on:

- Service delivery: metrics such as Net Promoter Score (NPS) indicate customer satisfaction levels.

- Market position: the share of the market that a competitor has captured.

- Brand reputation: how well known or recognized your competitors are within their target market.
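Two of these metrics come down to simple arithmetic. Net Promoter Score is conventionally computed from 0 to 10 "how likely are you to recommend" ratings. It equals the percentage of promoters (ratings of 9 or 10) minus the percentage of detractors (0 to 6). Market share is a company's sales divided by total sales in the market. The ratings and sales figures below are invented for illustration, and the function names are hypothetical.

```python
def net_promoter_score(ratings):
    """NPS on a -100 to 100 scale from 0-10 recommendation ratings."""
    if not ratings:
        raise ValueError("need at least one rating")
    promoters = sum(1 for r in ratings if r >= 9)   # 9 or 10
    detractors = sum(1 for r in ratings if r <= 6)  # 0 to 6; 7 and 8 are passives
    return 100 * (promoters - detractors) / len(ratings)

def market_shares(sales):
    """Each company's share of total sales in the market."""
    total = sum(sales.values())
    return {company: amount / total for company, amount in sales.items()}

# Invented survey ratings and sales figures
print(net_promoter_score([10, 9, 9, 8, 7, 6, 3, 10]))  # → 25.0
print(net_promoter_score([9, 8, 7, 7, 5, 4]))
print(market_shares({"us": 300, "competitor A": 500, "competitor B": 200}))
```

Passives (7 and 8) count toward the total number of respondents but toward neither promoters nor detractors. This is why the second group scores negative even though most of its ratings are 7 or higher.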

Tips for optimizing your comparative analysis

Conduct original research

Thorough, independent research is a significant asset when doing comparative analysis. It provides evidence to support your findings and may present a perspective or angle not considered previously.

Make analysis routine

To get the maximum benefit from comparative research, make it a regular practice, and establish a cadence you can realistically stick to. Some business areas you could plan to analyze regularly include:

- Profitability

- Competition

Experiment with controllable and uncontrollable variables

In addition to simply comparing and contrasting, explore how different variables might affect your outcomes.

Examples of controllable variables include offering a seasonal feature, such as a shopping bot to assist with holiday shopping, or raising or lowering a product’s selling price.

Uncontrollable variables include weather, changing regulations, the current political climate, or global pandemics.

Put equal effort into each point of comparison

Most people enter into comparative research with a particular idea or hypothesis already in mind to validate. For instance, you might try to prove that launching a new service is worthwhile, so you may be disappointed if your analysis doesn’t support your plan.

However, in any comparative analysis, try to maintain an unbiased approach by spending equal time debating the merits and drawbacks of any decision. Ultimately, this is a more practical and sustainable long-term approach for your business than focusing only on the evidence that favors your argument or strategy.

Writing a comparative analysis in five steps

To put together a coherent, insightful analysis that goes beyond a list of pros and cons or similarities and differences, try organizing the information into these five components:

1. Frame of reference

Here is where you provide context. First, what driving idea or problem is your research anchored in? Then, for added substance, cite existing research or insights from a subject matter expert, such as a thought leader in marketing, startup growth, or investment.

2. Grounds for comparison

Why have you chosen to examine the two things you’re analyzing instead of focusing on two entirely different things? What are you hoping to accomplish?

3. Thesis

What argument or choice are you advocating for? What will be the before and after effects of going with either decision? What do you anticipate happening with and without this approach?

For example, “If we release an AI feature for our shopping cart, we will have an edge over the rest of the market before the holiday season.” The finished comparative analysis will weigh the pros and cons of building the expensive new AI feature. These include variables such as how “intelligent” it will be, what it “pushes” customers to use, and how much work it takes off the customer service team’s plate.

Ultimately, you will gauge whether building an AI feature is the right plan for your e-commerce shop.

4. Organize the scheme

Typically, there are two ways to organize a comparative analysis report. First, you can discuss everything about comparison point “A” and then everything about point “B.” Or, you can alternate back and forth between points “A” and “B,” an approach sometimes referred to as point-by-point analysis.

Using the AI feature as an example again, you could cover all the pros and cons of building the AI feature, then discuss the benefits and drawbacks of building and maintaining the feature. Or you could compare and contrast each aspect of the AI feature one at a time. For example, you could compare shopping with the AI feature side by side with shopping without it, then proceed to another point of differentiation.
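The two organizing schemes differ only in the order in which the same findings are presented. The small sketch below shows both orderings under some assumptions. Findings are stored in a dict keyed first by subject and then by point of comparison, and every subject shares the same points. The subject names, point names, and notes are hypothetical placeholders.

```python
def block_order(findings):
    """Subject by subject: every point about the first subject, then the next subject."""
    return [(subject, point) for subject, points in findings.items() for point in points]

def point_by_point_order(findings):
    """Point by point: cover each point of comparison for every subject in turn."""
    subjects = list(findings)
    points = list(findings[subjects[0]])  # assumes every subject shares the same points
    return [(subject, point) for point in points for subject in subjects]

# Hypothetical findings for the AI feature example
findings = {
    "with AI feature": {"cost": "higher build budget", "support load": "fewer tickets"},
    "without AI feature": {"cost": "no new spend", "support load": "unchanged"},
}
for subject, point in point_by_point_order(findings):
    print(f"{point} / {subject}: {findings[subject][point]}")
```

Keeping the findings in one structure and only changing the order makes it easy to try both layouts before choosing the one that reads best for your audience.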

5. Connect the dots

Tie it all together in a way that either confirms or disproves your hypothesis.

For instance, “Building the AI bot would allow our customer service team to save 12% on returns in Q3 while offering optimizations and savings in future strategies. However, it would also increase the product development budget by 43% in both Q1 and Q2. Our budget for product development won’t increase again until series 3 of funding is reached, so despite its potential, we will hold off building the bot until funding is secured and more opportunities and benefits can be proved effective.”


Characteristics of a Comparative Research Design

Hannah Richardson, 28 June 2018.

Comparative research essentially compares two groups in an attempt to draw a conclusion about them. Researchers attempt to identify and analyze similarities and differences between groups. These studies are most often cross-national, comparing two separate groups of people. Comparative studies can increase understanding between cultures and societies and create a foundation for compromise and collaboration. They can draw on both quantitative and qualitative research methods.


1 Comparative Quantitative