What Is Statistical Analysis?

Statistical analysis is a technique we use to find patterns in data and make inferences about those patterns to describe variability in the results of a data set or an experiment.

In its simplest form, statistical analysis answers questions about:

- Quantification — how big/small/tall/wide is it?

- Variability — growth, increase, decline

- The confidence level of these variations

What Are the 2 Types of Statistical Analysis?

- Descriptive Statistics: Descriptive statistical analysis describes the main features of the data by summarizing large data sets into single measures.

- Inferential Statistics: Inferential statistical analysis allows you to draw conclusions from your sample data set and make predictions about a population using statistical tests.

What’s the Purpose of Statistical Analysis?

Using statistical analysis, you can determine trends in the data by calculating your data set’s mean or median. You can also measure how far individual data points vary from the mean by calculating the standard deviation. Furthermore, to test the validity of your conclusions, you can use hypothesis testing and its p-value, which estimates how likely it is that variability at least as large as what you observed would occur by chance alone.

More From Abdishakur Hassan The 7 Best Thematic Map Types for Geospatial Data

Statistical Analysis Methods

There are two major types of statistical data analysis: descriptive and inferential.

Descriptive Statistical Analysis

Descriptive statistical analysis describes the main features of the data by summarizing large data sets into single measures.

Within the descriptive analysis branch, there are two main types: measures of central tendency (i.e. mean, median and mode) and measures of dispersion or variation (i.e. variance, standard deviation and range).

For example, you can calculate the average exam result in a class using a measure of central tendency, in particular the mean. In that case, you’d sum all student results and divide by the number of tests. You can also calculate the data set’s spread by calculating the variance. To calculate the variance, subtract the mean from each exam result, square each difference, add everything together and divide by the number of tests. (Dividing by the number of tests gives the population variance; when the results are a sample from a larger group, analysts usually divide by one less than the number of tests instead.)
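The exam example above can be sketched in a few lines of Python. The scores are hypothetical, and the `describe` helper is an illustrative name, not part of any library. The variance is computed by hand exactly as described (population variance, dividing by the number of tests) and the other measures come from Python's standard `statistics` module.

```python
import statistics

# Hypothetical exam results for one class (illustrative data only)
scores = [72, 85, 90, 66, 85, 78, 94, 70]

def describe(values):
    """Return common measures of central tendency and dispersion."""
    n = len(values)
    mean = sum(values) / n  # sum of all results / number of tests
    # Population variance: average squared distance from the mean
    variance = sum((x - mean) ** 2 for x in values) / n
    return {
        "mean": mean,
        "median": statistics.median(values),
        "mode": statistics.mode(values),
        "variance": variance,
        "std_dev": variance ** 0.5,  # standard deviation = sqrt(variance)
        "range": max(values) - min(values),
    }

summary = describe(scores)
for name, value in summary.items():
    print(f"{name:>8}: {value:.2f}")
```

If the class is treated as a sample of a larger population, `statistics.variance` (which divides by n - 1) is the usual choice instead of the hand-rolled population variance.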

Inferential Statistics

On the other hand, inferential statistical analysis allows you to draw conclusions from your sample data set and make predictions about a population using statistical tests.

There are two main types of inferential statistical analysis: hypothesis testing and regression analysis. We use hypothesis testing to test and validate assumptions in order to draw conclusions about a population from the sample data. Popular tools include the Z-test, F-test, ANOVA and confidence intervals. Regression analysis, on the other hand, primarily estimates the relationship between a dependent variable and one or more independent variables. There are numerous types of regression analysis, but the most popular ones include linear and logistic regression.
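To make the Z-test and confidence interval concrete, here is a minimal sketch using only Python's standard library. It assumes the textbook setting in which the population standard deviation is known; the package weights, the 100 g target and the 5 g standard deviation are hypothetical values chosen for illustration, and `z_test_and_ci` is an illustrative helper name.

```python
from statistics import NormalDist

def z_test_and_ci(sample, mu0, sigma, confidence=0.95):
    """One-sample, two-sided z-test plus a confidence interval for the mean.

    Assumes the population standard deviation `sigma` is known, which is the
    textbook setting for a Z-test (otherwise a t-test is usually used)."""
    n = len(sample)
    x_bar = sum(sample) / n
    se = sigma / n ** 0.5                                # standard error of the mean
    z = (x_bar - mu0) / se                               # test statistic
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))         # two-sided p-value
    z_crit = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    ci = (x_bar - z_crit * se, x_bar + z_crit * se)
    return z, p_value, ci

# Hypothetical sample: fill weights (grams) of 10 packages labeled 100 g,
# with an assumed known process standard deviation of 5 g.
weights = [102, 98, 105, 110, 97, 103, 108, 101, 99, 107]
z, p, (low, high) = z_test_and_ci(weights, mu0=100, sigma=5)
print(f"z = {z:.2f}, p = {p:.3f}, 95% CI = ({low:.1f}, {high:.1f})")
```

With these made-up numbers the p-value lands just above 0.05 and the 95% interval just includes 100, which shows how a two-sided test and its matching confidence interval lead to the same decision.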

Statistical Analysis Steps

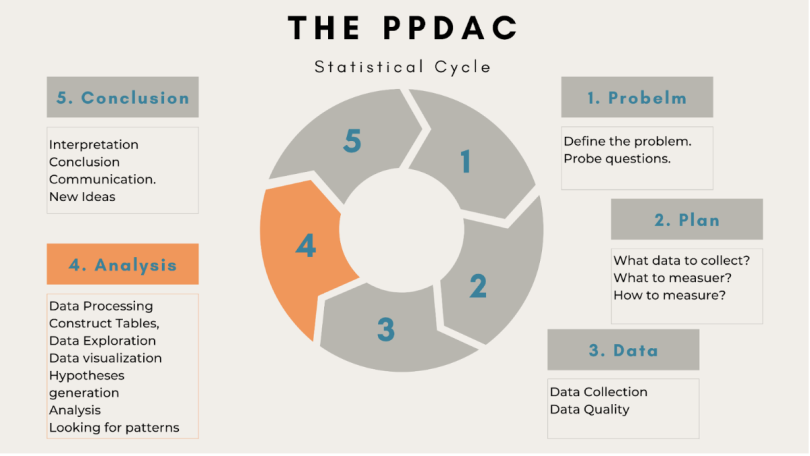

In the era of big data and data science, there is rising demand for a more problem-driven approach, so we must approach statistical analysis holistically. Using the well-known PPDAC model of statistics, we can divide the entire process into five stages: Problem, Plan, Data, Analysis and Conclusion.

1. Problem

In the first stage, you define the problem you want to tackle and explore questions about the problem.

2. Plan

Next is the planning phase. You check whether data is available or whether you need to collect data for your problem. You also determine what to measure and how to measure it.

3. Data

The third stage involves data collection, understanding the data and checking its quality.

4. Analysis

Statistical data analysis is the fourth stage. Here you process and explore the data with the help of tables, graphs and other data visualizations. You also develop and scrutinize your hypothesis in this stage of analysis.

5. Conclusion

The final step involves interpretations and conclusions from your analysis. It also covers generating new ideas for the next iteration. Thus, statistical analysis is not a one-time event but an iterative process.

Statistical Analysis Uses

Statistical analysis is useful for research and decision making because it allows us to understand the world around us and draw conclusions by testing our assumptions. Statistical analysis is important for various applications, including:

- Statistical quality control and analysis in product development

- Clinical trials

- Customer satisfaction surveys and customer experience research

- Marketing operations management

- Process improvement and optimization

- Training needs


Benefits of Statistical Analysis

Here are some of the reasons why statistical analysis is widespread in many applications and why it’s necessary:

Understand Data

Statistical analysis gives you a better understanding of the data and what they mean. These types of analyses provide information that would otherwise be difficult to obtain by merely looking at the numbers without considering their relationship.

Find Causal Relationships

Statistical analysis can help you investigate causation or establish the precise meaning of an experiment, like when you’re looking for a relationship between two variables.

Make Data-Informed Decisions

Businesses are constantly looking to find ways to improve their services and products. Statistical analysis allows you to make data-informed decisions about your business or future actions by helping you identify trends in your data, whether positive or negative.

Determine Probability

Statistical analysis is an approach to understanding how the probability of certain events affects the outcome of an experiment. It helps scientists and engineers decide how much confidence they can have in the results of their research, how to interpret their data and what questions they can feasibly answer.


What Are the Risks of Statistical Analysis?

Statistical analysis can be valuable and effective, but it’s an imperfect approach. Even if the analyst or researcher performs a thorough statistical analysis, known or unknown problems may still affect the results. Statistical analysis is therefore not a one-size-fits-all process: to get good results, you need to know what you’re doing, and it can take a lot of time to figure out which type of statistical analysis will work best for your situation.

Remember that conclusions drawn from statistical analysis don’t always guarantee correct results. This can be dangerous when making business decisions. In marketing, for example, we may come to the wrong conclusion about a product. The conclusions we draw from statistical data analysis are often approximations, because testing for every factor that affects an observation is impossible.

Built In’s expert contributor network publishes thoughtful, solutions-oriented stories written by innovative tech professionals. It is the tech industry’s definitive destination for sharing compelling, first-person accounts of problem-solving on the road to innovation.


What Is Statistical Analysis? Definition, Types, and Jobs

Statistical analytics is a high demand career with great benefits. Learn how you can apply your statistical and data science skills to this growing field.

![Analysts study sheets of paper containing statistical charts and graphs](https://d3njjcbhbojbot.cloudfront.net/api/utilities/v1/imageproxy/https://images.ctfassets.net/wp1lcwdav1p1/AQKsPKoyLl59rCLTXDp5F/51987f157870c8376005fa5a73d11f36/GettyImages-1133887505__1_.jpg?w=1500&h=680&q=60&fit=fill&f=faces&fm=jpg&fl=progressive&auto=format%2Ccompress&dpr=1&w=1000)

Statistical analysis is the process of collecting large volumes of data and then using statistics and other data analysis techniques to identify trends, patterns, and insights. If you're a whiz at data and statistics, statistical analysis could be a great career match for you. The rise of big data, machine learning, and technology in our society has created a high demand for statistical analysts, and it's an exciting time to develop these skills and find a job you love. In this article, you'll learn more about statistical analysis, including its definition, its different types, how it's done, and jobs that use it. At the end, you'll also explore suggested cost-effective courses that can help you gain greater knowledge of both statistical and data analytics.

Statistical analysis definition

Statistical analysis is the process of collecting and analyzing large volumes of data in order to identify trends and develop valuable insights.

In the professional world, statistical analysts take raw data and find correlations between variables to reveal patterns and trends to relevant stakeholders. Working in a wide range of different fields, statistical analysts are responsible for new scientific discoveries, improving the health of our communities, and guiding business decisions.

Types of statistical analysis

There are two main types of statistical analysis: descriptive and inferential. As a statistical analyst, you'll likely use both types in your daily work to ensure that data is both clearly communicated to others and that it's used effectively to develop actionable insights. At a glance, here's what you need to know about both types of statistical analysis:

Descriptive statistical analysis

Descriptive statistics summarizes the information within a data set without drawing conclusions about its contents. For example, if a business gave you a book of its expenses and you summarized the percentage of money it spent on different categories of items, then you would be performing a form of descriptive statistics.
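The expense example above boils down to grouping and totaling. A minimal Python sketch follows; the ledger entries are hypothetical and `share_by_category` is an illustrative helper, not a standard function. It reports each category's share of total spending, which describes the data without drawing any conclusion from it.

```python
from collections import defaultdict

# Hypothetical expense ledger: (category, amount in dollars)
expenses = [
    ("rent", 4000), ("salaries", 9000), ("software", 1200),
    ("travel", 800), ("software", 300), ("travel", 700),
]

def share_by_category(records):
    """Return each category's share of total spending, in percent."""
    totals = defaultdict(float)
    for category, amount in records:
        totals[category] += amount
    grand_total = sum(totals.values())
    return {category: 100 * total / grand_total for category, total in totals.items()}

shares = share_by_category(expenses)
for category, pct in sorted(shares.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{category:<9} {pct:5.1f}%")
```

Recommending where to cut spending would be the inferential step described next; this summary only reports what the ledger contains.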

When performing descriptive statistics, you will often use data visualization to present information in the form of graphs, tables, and charts to clearly convey it to others in an understandable format. Typically, leaders in a company or organization will then use this data to guide their decision making going forward.

Inferential statistical analysis

Inferential statistics takes the results of descriptive statistics one step further by drawing conclusions from the data and then making recommendations. For example, instead of only summarizing the business's expenses, you might go on to recommend in which areas to reduce spending and suggest an alternative budget.

Inferential statistical analysis is often used by businesses to inform company decisions and in scientific research to find new relationships between variables.

Statistical analyst duties

Statistical analysts focus on making large sets of data understandable to a more general audience. In effect, you'll use your math and data skills to translate big numbers into easily digestible graphs, charts, and summaries for key decision makers within businesses and other organizations. Typical job responsibilities of statistical analysts include:

- Extracting and organizing large sets of raw data

- Determining which data is relevant and which should be excluded

- Developing new data collection strategies

- Meeting with clients and professionals to review data analysis plans

- Creating data reports and easily understandable representations of the data

- Presenting data

- Interpreting data results

- Creating recommendations for a company or other organizations

Your job responsibilities will differ depending on whether you work for a federal agency, a private company, or another business sector. Many industries need statistical analysts, so exploring your passions and seeing how you can best apply your data skills can be exciting.

Statistical analysis skills

Because most of your job responsibilities will likely focus on data and statistical analysis, mathematical skills are crucial. High-level math skills can help you fact-check your work and create strategies to analyze the data, even if you use software for many computations. When honing your mathematical skills, focusing on statistics, specifically statistics with large data sets, can help set you apart when searching for job opportunities. Competency with computer software and learning new platforms will also help you excel in more advanced positions and put you in high demand.

Data analytics, problem-solving, and critical thinking are vital skills to help you determine the data set’s true meaning and bigger picture. Large data sets often do not represent what they appear to on the surface. To get to the bottom of things, you'll need to think critically about factors that may influence the data set, create an informed analysis plan, and parse out bias to identify insightful trends.

To excel in the workplace, you'll need to hone your database management skills, keep up to date on statistical methodology, and continually improve your research skills. These skills take time to build, so starting with introductory courses and having patience while you build skills is important.

Common software used in statistical analytics jobs

Statistical analysis often involves computations using big data that is too large to compute by hand. The good news is that many kinds of statistical software have been developed to help analyze data effectively and efficiently. Gaining mastery over this statistical software can make you look attractive to employers and allow you to work on more complex projects.

Statistical software is beneficial for both descriptive and inferential statistics. You can use it to generate charts and graphs or perform computations to draw conclusions and inferences from the data. While the type of statistical software you will use will depend on your employer, common software used include:


Pathways to a career in statistical analytics

Many paths to becoming a statistical analyst exist, but most jobs in this field require a bachelor’s degree. Employers will typically look for a degree in an area that focuses on math, computer science, statistics, or data science to ensure you have the skills needed for the job. If your bachelor’s degree is in another field, gaining experience through entry-level data entry jobs can help get your foot in the door. Many employers look for work experience in related careers such as being a research assistant, data manager, or intern in the field.

Earning a graduate degree in statistical analytics or a related field can also help you stand out on your resume and demonstrate a deep knowledge of the skills needed to perform the job successfully. Generally, employers focus more on making sure you have the mathematical and data analysis skills required to perform complex statistical analytics on their data. After all, you will be helping them to make decisions, so they want to feel confident in your ability to advise them in the right direction.


How much do statistical analytics professionals earn?

Statistical analysts earn well above the national average and enjoy many benefits on the job. There are many careers utilizing statistical analytics, so comparing salaries can help determine if the job benefits align with your expectations.

Actuary

Median annual salary: $113,990

Job outlook for 2022 to 2032: 23% [1]

Data scientist

Median annual salary: $103,500

Job outlook for 2022 to 2032: 35% [2]

Financial risk specialist

Median annual salary: $102,120

Job outlook for 2022 to 2032: 8% [3]

Investment analyst

Median annual salary: $95,080

Operations research analyst

Median annual salary: $85,720

Job outlook for 2022 to 2032: 23% [4]

Market research analyst

Median annual salary: $68,230

Job outlook for 2022 to 2032: 13% [5]

Statistician

Median annual salary: $99,960

Job outlook for 2022 to 2032: 30% [6]


Statistical analysis job outlook

Jobs that use statistical analysis have a positive outlook for the foreseeable future.

According to the US Bureau of Labor Statistics (BLS), employment of mathematicians and statisticians is projected to grow by 30 percent between 2022 and 2032, with about 3,500 openings projected each year, on average, throughout the decade [6].

As we create more ways to collect data worldwide, there will be an increased need for people able to analyze and make sense of the data.

Ready to take the next step in your career?

Statistical analytics could be an excellent career match for those with an affinity for math, data, and problem-solving. Here are some popular courses to consider as you prepare for a career in statistical analysis:

Learn fundamental processes and tools with Google's Data Analytics Professional Certificate. You'll learn how to process and analyze data, use key analysis tools, apply R programming, and create visualizations that can inform key business decisions.

Grow your comfort using R with Duke University's Data Analysis with R Specialization. Statistical analysts commonly use R for testing, modeling, and analysis. Here, you'll learn and practice those processes.

Apply statistical analysis with Rice University's Business Statistics and Analysis Specialization. Contextualize your technical and analytical skills by using them to solve business problems and complete a hands-on Capstone Project to demonstrate your knowledge.

Article sources

[1] US Bureau of Labor Statistics. "Occupational Outlook Handbook: Actuaries," https://www.bls.gov/ooh/math/actuaries.htm. Accessed November 21, 2023.

[2] US Bureau of Labor Statistics. "Occupational Outlook Handbook: Data Scientists," https://www.bls.gov/ooh/math/data-scientists.htm. Accessed November 21, 2023.

[3] US Bureau of Labor Statistics. "Occupational Outlook Handbook: Financial Analysts," https://www.bls.gov/ooh/business-and-financial/financial-analysts.htm. Accessed November 21, 2023.

[4] US Bureau of Labor Statistics. "Occupational Outlook Handbook: Operations Research Analysts," https://www.bls.gov/ooh/math/operations-research-analysts.htm. Accessed November 21, 2023.

[5] US Bureau of Labor Statistics. "Occupational Outlook Handbook: Market Research Analysts," https://www.bls.gov/ooh/business-and-financial/market-research-analysts.htm. Accessed November 21, 2023.

[6] US Bureau of Labor Statistics. "Occupational Outlook Handbook: Mathematicians and Statisticians," https://www.bls.gov/ooh/math/mathematicians-and-statisticians.htm. Accessed November 21, 2023.


Coursera staff.

Editorial Team



Datamation content and product recommendations are editorially independent. We may make money when you click on links to our partners.

Statistical analysis is a systematic method of gathering, analyzing, interpreting, presenting, and deriving conclusions from data. It employs statistical tools to find patterns, trends, and links within datasets to facilitate informed decision-making. Data collection, description, exploratory data analysis (EDA), inferential statistics, statistical modeling, data visualization, and interpretation are all important aspects of statistical analysis.

Used in quantitative research to gather and analyze data, statistical data analysis provides a more comprehensive view of operational landscapes and gives organizations the insights they need to make strategic, evidence-based decisions. Here’s what you need to know.


How Does Statistical Analysis Work?

The strategic use of statistical analysis procedures helps organizations get insights from data to make educated decisions. These procedures, which span everything from data extraction to the creation of actionable recommendations, provide a systematic way to comprehend, interpret, and use large datasets. By working through these steps, businesses uncover hidden patterns in their data and extract important insights that can guide strategic decision-making.

Extracting and Organizing Raw Data

Extracting and organizing raw data entails gathering information from a variety of sources, combining datasets, and assuring data quality through rigorous cleaning. In healthcare, for example, this method may comprise combining patient information from several systems to assess patterns in illness prevalence and treatment outcomes.
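The healthcare example above (combining patient information from several systems and cleaning it) can be sketched with plain Python. The records, field names such as `patient_id` and `hba1c`, and the `combine` helper are all hypothetical; a real pipeline would typically use a database or a dataframe library and richer quality rules.

```python
# Hypothetical records from two separate systems that share a patient_id key
clinic_records = [
    {"patient_id": 1, "age": 54, "diagnosis": "diabetes"},
    {"patient_id": 2, "age": 61, "diagnosis": "hypertension"},
    {"patient_id": 2, "age": 61, "diagnosis": "hypertension"},  # duplicate row
    {"patient_id": 3, "age": None, "diagnosis": "diabetes"},    # missing age
]
lab_records = [
    {"patient_id": 1, "hba1c": 7.9},
    {"patient_id": 2, "hba1c": 5.6},
    {"patient_id": 3, "hba1c": 8.4},
]

def combine(primary, secondary, key="patient_id"):
    """Join two record lists on `key`, skipping duplicates and incomplete rows."""
    lookup = {row[key]: row for row in secondary}
    kept_keys = set()
    merged = []
    for row in primary:
        if row[key] in kept_keys:
            continue  # a row with this key was already kept: treat as duplicate
        if any(value is None for value in row.values()):
            continue  # basic quality check: drop rows with missing fields
        if row[key] not in lookup:
            continue  # inner join: keep only patients present in both systems
        kept_keys.add(row[key])
        merged.append({**row, **lookup[row[key]]})
    return merged

for record in combine(clinic_records, lab_records):
    print(record)
```

Dropping incomplete rows is only one possible cleaning rule; depending on the analysis, imputing or flagging missing values may be more appropriate.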

Identifying Essential Data

Identifying key data—and excluding irrelevant data—necessitates a thorough analysis of the dataset. Analysts use variable selection strategies to filter datasets to emphasize characteristics most relevant to the objectives, resulting in more focused and meaningful analysis.

Developing Innovative Collection Strategies

Innovative data collection procedures include everything from designing effective surveys and organizing experiments to using data mining to extract data from a wide range of sources. Researchers in environmental studies might use remote sensing technology to obtain data on how plants and land cover change over time. Modern approaches such as satellite photography and machine learning algorithms help scientists improve the depth and precision of data collection, opening the way for more nuanced analyses and informed decision-making.

Collaborating With Experts

Collaborating with clients and specialists to review data analysis tactics can align analytical approaches with organizational objectives. In finance, for example, engaging with investment professionals helps ensure that the analysis focuses on the market trends that matter for investment decisions. By incorporating feedback from domain experts, analysts can adjust their tactics, making the resulting study more relevant and applicable to the given sector or subject.

Creating Reports and Visualizations

Creating data reports and visualizations entails generating thorough summaries and graphical representations for clarity. In e-commerce, reports might show customer purchase trends, using visualizations like heatmaps to highlight popular products. Businesses that present data in a visually accessible format can rapidly spot patterns and make data-driven choices that optimize product offerings and improve the overall customer experience.

Analyzing Data Findings

This step entails using statistical tools to discover patterns, correlations, and insights in the dataset. In manufacturing, data analysis can identify connections between production factors and product faults, guiding process improvement efforts. Using statistical tools and methodologies, engineers can find and resolve root causes, resulting in higher product quality and operational efficiency.
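A common first check for a connection between a production factor and faults is the Pearson correlation coefficient. The sketch below computes it from its definition (covariance divided by the product of the spreads) so it runs on any Python 3 version; the oven temperatures and defect counts are hypothetical.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))  # co-variation
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))          # spread of xs
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))          # spread of ys
    return cov / (sx * sy)

# Hypothetical batch data: oven temperature (deg C) and defective units per 1,000
temperature = [180, 185, 190, 195, 200, 205]
defects = [2, 3, 3, 5, 6, 8]
r = pearson_r(temperature, defects)
print(f"r = {r:.2f}")
```

A strong correlation like this one points engineers toward a candidate root cause, but it does not by itself prove causation; a controlled experiment on the temperature setting would be the usual next step.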

Acting on the Data

Synthesizing findings from data analysis leads to the development of organizational recommendations. In the hospitality business, for example, data analysis might indicate trends in client preferences, resulting in strategic suggestions for tailored services and marketing efforts. Continuous improvement ideas based on analytical results help the firm adapt to a changing market scenario and compete more effectively.

The Importance of Statistical Analysis

The importance of statistical analysis goes far beyond data processing: it is the cornerstone for providing the insights required for strategic decision-making, especially in the dynamic area of launching new products. By meticulously examining data, statistical analysis not only reveals trends and patterns but also provides a fuller picture of customer behavior, preferences, and market dynamics.

This abundance of information is a guiding force for enterprises, allowing them to make data-driven decisions that optimize product launches, improve market positioning, and ultimately drive success in an ever-changing business landscape.

2 Types of Statistical Analysis

There are two forms of statistical analysis, descriptive statistics and statistical inference, both of which play an important role in ensuring that data is interpreted correctly and communicated clearly.

By combining descriptive statistics with statistical inference, analysts can fully characterize the data and draw relevant insights that extend beyond the observed sample, supporting conclusions that are robust, trustworthy, and applicable to a larger context. This dual method improves the overall dependability of statistical analysis, making it an effective tool for extracting important information from a variety of datasets.

Descriptive Statistics

This type of statistical analysis is about summarizing data, often visually. Raw data doesn’t mean much on its own, and the sheer quantity can be overwhelming to digest. Descriptive statistical analysis condenses the data into summary measures, such as the mean and standard deviation, and into graphs, charts, and other visuals that help people understand the meaning of the values in the data set. Descriptive analysis isn’t about explaining or drawing conclusions, though. It is only the practice of digesting and summarizing raw data to better understand it.

Statistical Inference

Inferential statistics involves more upfront hypotheses and follow-up explanations than descriptive statistics. In this type of statistical analysis, rather than working with the entire population, you take a sample and test your hypothesis or initial estimate. From this sample and the results of your experiment, you can use inferential statistics to draw conclusions about the larger population the sample was drawn from.

6 Benefits of Statistical Analysis

Statistical analysis enables a methodical and data-driven approach to decision-making and helps organizations maximize the value of their data, resulting in increased efficiency, informed decision-making, and innovation. Here are six of the most important benefits:

- Competitive Analysis: Statistical analysis quantifies your company’s performance; knowing common metrics like sales revenue and net profit margin allows you to compare your performance to competitors.

- True Sales Visibility: The sales team says it is having a good week, and the numbers look good, but how can you accurately measure the impact on sales numbers? Statistical data analysis measures sales data and associates it with specific timeframes, products, and individual salespeople, which gives better visibility of marketing and sales successes.

- Predictive Analytics: Predictive analytics allows you to use past numerical data to predict future outcomes and areas where your team should make adjustments to improve performance.

- Risk Assessment and Management: Statistical tools help organizations analyze and manage risks more efficiently. Organizations may use historical data to identify possible hazards, anticipate future outcomes, and apply risk mitigation methods, lowering uncertainty and improving overall risk management.

- Resource Optimization: Statistical analysis identifies areas of inefficiency or underutilization, improving personnel management, budget allocation, and resource deployment and leading to increased operational efficiency and cost savings.

- Informed Decision Making: Statistical analysis allows businesses to base judgments on factual data rather than intuition. Data analysis allows firms to uncover patterns, trends, and correlations, resulting in better informed and strategic decision-making processes.

5-Step Statistical Analysis Process

Here are five essential steps for executing a thorough statistical analysis. By carefully following these stages, analysts may undertake a complete and rigorous statistical analysis, creating the framework for informed decision-making and providing actionable insights for both individuals and businesses.

Step 1: Data Identification and Description

Identify and clarify the features of the data to be analyzed. Understanding the nature of the dataset is critical in building the framework for a thorough statistical analysis.

Step 2: Establishing the Population Connection

Establish a meaningful relationship between the studied data (the sample) and the larger population from which it is drawn. This stage entails placing the data in the context of the population it represents, increasing the analysis’s relevance and applicability.

Step 3: Model Construction and Synthesis

Create a model that accurately captures and synthesizes the complex relationship between the population under study and the unique dataset. Creating a well-defined model is essential for analyzing data and generating useful insights.

Step 4: Model Validity Verification

Subject the model to thorough testing and inspection to verify its validity. This stage checks that the model properly represents the population’s underlying dynamics, which improves the trustworthiness of later analysis and results.

Step 5: Predictive Analysis of Future Trends

Using predictive analytics tools, you can take your analysis to the next level. This final stage forecasts future trends and events based on the developed model, providing insights into probable developments and supporting proactive decision-making.

5 Statistical Analysis Methods

Here are five common statistical analysis methods, each suited to distinct data goals and able to guide rapid decision-making. The approach you choose depends on the nature of your dataset and the goals you want to achieve.

Mean

The mean, or average, is the center point of the dataset; it is computed by adding all the values and dividing by the number of observations. In real-world situations, the mean provides a representative value that captures the usual magnitude of a group of observations. For example, in educational evaluations, the mean score of a class gives educators a concise measure of overall performance, allowing them to gauge the general level of comprehension.

Standard Deviation

The standard deviation measures the degree of variance or dispersion within a dataset. By demonstrating how far individual values differ from the mean, it provides information about the dataset’s general dispersion. In practice, the standard deviation is used in financial analysis to analyze the volatility of stock prices. A higher standard deviation indicates greater price volatility, which helps investors evaluate and manage risks associated with various investment opportunities.
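In practice, stock volatility is usually measured as the standard deviation of returns rather than of raw prices. The sketch below makes that assumption explicit; the price series are hypothetical, and annualizing by the square root of 252 trading days is a common market convention (it assumes independent daily returns), not something the text above specifies.

```python
import statistics

def daily_returns(prices):
    """Simple day-over-day returns from a list of closing prices."""
    return [(today - yesterday) / yesterday for yesterday, today in zip(prices, prices[1:])]

def volatility(prices, trading_days=252):
    """Sample standard deviation of daily returns, plus an annualized figure.

    Annualizing by sqrt(trading_days) assumes independent daily returns and
    about 252 trading days per year, a common market convention."""
    daily = statistics.stdev(daily_returns(prices))
    return daily, daily * trading_days ** 0.5

# Hypothetical closing prices for two stocks over six trading days
steady = [100, 101, 100, 101, 100, 101]
choppy = [100, 105, 97, 108, 95, 110]
for name, prices in [("steady", steady), ("choppy", choppy)]:
    daily, annual = volatility(prices)
    print(f"{name}: daily {daily:.2%}, annualized {annual:.1%}")
```

The choppier series has the larger standard deviation, which is the sense in which a higher standard deviation signals greater price volatility and risk.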

Regression Analysis

Regression analysis seeks to understand and predict relationships between variables. This statistical approach is used in a variety of disciplines, including marketing, where it helps anticipate sales based on advertising spend. For example, a corporation may use regression analysis to estimate how changes in advertising spending relate to product sales, allowing for more efficient resource allocation for future marketing efforts.
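The advertising example can be sketched as a simple linear regression fitted by ordinary least squares. The monthly figures and the `fit_line` helper are hypothetical, and a single-predictor straight line is the simplest possible model; real marketing analyses usually control for other factors such as seasonality and pricing.

```python
def fit_line(x, y):
    """Ordinary least squares fit of y = intercept + slope * x (one predictor)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))  # co-variation
    sxx = sum((a - mean_x) ** 2 for a in x)                       # spread of x
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical monthly data: ad spend (thousands of dollars) and units sold
ad_spend = [10, 20, 30, 40, 50]
units_sold = [120, 150, 185, 210, 245]
slope, intercept = fit_line(ad_spend, units_sold)
print(f"units ~ {intercept:.1f} + {slope:.2f} * ad_spend")
print(f"predicted units at 60k spend: {intercept + slope * 60:.0f}")
```

In this made-up data, each extra $1,000 of ad spend is associated with about 3.1 more units sold. On Python 3.10+, `statistics.linear_regression(x, y)` returns the same slope and intercept. Note that a fitted slope describes association; reading it as the causal effect of advertising requires additional assumptions or an experiment.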

Hypothesis Testing

Hypothesis testing is used to determine the validity of a claim or hypothesis regarding a population parameter. In medical research, hypothesis testing may be used to compare the efficacy of a novel medicine against a traditional treatment. Researchers develop a null hypothesis, implying that there is no difference between treatments, and then use statistical tests to assess if there is sufficient evidence to reject the null hypothesis in favor of the alternative.
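The treatment comparison can be illustrated with a permutation test, swapped in here because it needs only Python's standard library; a two-sample t-test is the more traditional choice in clinical work. The improvement scores are hypothetical. Under the null hypothesis of no difference, the treatment labels are interchangeable, so shuffling them shows how often a difference as large as the observed one would arise by chance.

```python
import random

def mean(values):
    return sum(values) / len(values)

def permutation_test(group_a, group_b, n_resamples=10_000, seed=0):
    """Two-sided permutation test for a difference in group means.

    Null hypothesis: treatment labels are interchangeable, i.e. there is no
    difference between treatments. The p-value is the share of random
    relabelings whose mean difference is at least as extreme as the observed one."""
    rng = random.Random(seed)
    observed = mean(group_a) - mean(group_b)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)
        diff = mean(pooled[:n_a]) - mean(pooled[n_a:])
        if abs(diff) >= abs(observed) - 1e-12:  # tolerance for float rounding
            extreme += 1
    # Add-one correction so the Monte Carlo estimate is never exactly zero
    p_value = (extreme + 1) / (n_resamples + 1)
    return observed, p_value

# Hypothetical symptom-improvement scores for each treatment group
new_drug = [12, 15, 14, 16, 13, 17, 15, 14]
standard = [10, 11, 9, 12, 10, 11, 13, 10]
observed, p = permutation_test(new_drug, standard)
print(f"difference in means = {observed:.2f}, p = {p:.4f}")
print("reject null at alpha = 0.05" if p < 0.05 else "fail to reject null")
```

Fixing the random seed makes the estimated p-value reproducible; more resamples make it more precise.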

Sample Size Determination

Choosing an adequate sample size is critical for producing trustworthy and relevant results. In clinical studies, for example, researchers fix the sample size in advance so that the study has enough statistical power to detect a meaningful difference in treatment outcomes. A well-chosen sample size balances the need for precision against practical constraints such as cost and time. This strengthens the study's results and supports evidence-based decision-making.
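As a sketch, consider a two-arm trial comparing means. The standard normal-approximation formula gives the per-group sample size. It assumes three inputs: a known common standard deviation, a two-sided test, and a chosen minimum detectable difference. All numbers below are hypothetical.

Software that uses the t distribution instead of the normal may report a slightly larger n.

```python
import math
from statistics import NormalDist


def sample_size_per_group(sigma, delta, alpha=0.05, power=0.80):
    """Per-group n for a two-sided, two-sample comparison of means.

    Normal-approximation formula:
        n = 2 * (z_{1-alpha/2} + z_{power})**2 * sigma**2 / delta**2
    where sigma is the assumed common standard deviation and delta is the
    smallest difference in means worth detecting.
    """
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_power = z.inv_cdf(power)
    n = 2 * (z_alpha + z_power) ** 2 * sigma**2 / delta**2
    return math.ceil(n)  # round up: a fractional participant is not possible


# Hypothetical trial: outcome SD of 10 points, aiming to detect a 5-point difference.
print(sample_size_per_group(sigma=10, delta=5))  # 63
```

Halving the detectable difference roughly quadruples the required sample, which is why precision targets drive recruitment cost.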

Bottom Line: Identify Patterns and Trends With Statistical Analysis

Statistical analysis can give organizations insight into customer behavior, market dynamics, and operational efficiency. This information supports decision-making and helps organizations adapt to changing conditions. Organizations that use capable statistical analysis tools can put their data to work, uncover trends, and gain a competitive advantage in a fast-changing technological landscape.



A dataset for measuring the impact of research data and their curation

Background & Summary

Recent policy changes in funding agencies and academic journals have increased data sharing among researchers and between researchers and the public. Data sharing advances science and provides the transparency necessary for evaluating, replicating, and verifying results. However, many data-sharing policies do not explain what constitutes an appropriate dataset for archiving or how to determine the value of datasets to secondary users [1–3]. Questions about how to allocate data-sharing resources efficiently and responsibly have gone unanswered [4–6]. For instance, data-sharing policies recognize that not all data should be curated and preserved, but they do not articulate metrics or guidelines for determining what data are most worthy of investment.

Despite the potential for innovation and advancement that data sharing holds, the best strategies to prioritize datasets for preparation and archiving are often unclear. Some datasets are likely to have more downstream potential than others, and data curation policies and workflows should prioritize high-value data instead of being one-size-fits-all. Though prior research in library and information science has shown that the “analytic potential” of a dataset is key to its reuse value [7], work is needed to implement conceptual data reuse frameworks [8–14]. In addition, publishers and data archives need guidance to develop metrics and evaluation strategies to assess the impact of datasets.

Several existing resources have been compiled to study the reuse of scholarly products such as datasets (Table 1). However, none of these resources include explicit information on how curation processes are applied to data to increase their value, maximize their accessibility, and ensure their long-term preservation.

- The CCex (Curation Costs Exchange) provides models of curation services along with cost-related datasets shared by contributors. It does not make explicit connections between them or include reuse information [15].
- Analyses on platforms such as DataCite [16] have focused on metadata completeness and record usage but have not included related curation-level information.
- Analyses of GenBank [17] and FigShare [18, 19] citation networks do not include curation information.
- Related studies of GitHub repository reuse [20] and Softcite software citation [21] reveal significant factors that affect the reuse of secondary research products but do not focus on research data.
- RD-Switchboard [22] and DSKG [23] are scholarly knowledge graphs linking research data to articles, patents, and grants. They largely omit social science research data and do not include curation-level factors.

To our knowledge, other studies of curation work in organizations similar to ICPSR (such as GESIS [24], Dataverse [25], and DANS [26]) have not made their underlying data available for analysis.

This paper describes a dataset [27] compiled for the MICA project (Measuring the Impact of Curation Actions) led by investigators at ICPSR, a large social science data archive at the University of Michigan. The dataset was originally developed to study the impacts of data curation and archiving on data reuse. The MICA dataset has supported several previous publications investigating the intensity of data curation actions [28], the relationship between data curation actions and data reuse [29], and the structures of research communities in a data citation network [30]. Collectively, these studies help explain the return on various types of curatorial investments. The dataset that we introduce in this paper, which we refer to as the MICA dataset, has the potential to address research questions in the areas of science (e.g., knowledge production), library and information science (e.g., scholarly communication), and data archiving (e.g., reproducible workflows).

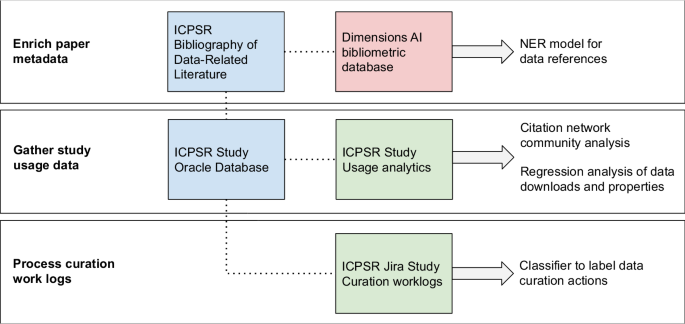

We constructed the MICA dataset [27] using records available at ICPSR. Dataset creation involved four steps (Fig. 1):

- collecting and enriching metadata for articles indexed in the ICPSR Bibliography of Data-related Literature against the Dimensions AI bibliometric database;
- gathering usage statistics for studies from ICPSR’s administrative database;
- processing data curation work logs from ICPSR’s project tracking platform, Jira; and
- linking data in social science studies and series to citing analysis papers.

Fig. 1: Steps to prepare the MICA dataset for analysis. External sources are red, primary internal sources are blue, and internal linked sources are green.

Enrich paper metadata

The ICPSR Bibliography of Data-related Literature is a growing database of literature in which data from ICPSR studies have been used. Its creation was funded by the National Science Foundation (Award 9977984). For the past 20 years it has been supported by ICPSR membership and by multiple US federally funded and foundation-funded topical archives at ICPSR. The Bibliography was launched in 2000 to aid data discovery by providing a searchable database that links publications to the study data used in them. It aims to collect the universe of output based on the data shared in each study, and this output is made available through each ICPSR study’s webpage.

The Bibliography contains both peer-reviewed and grey literature, which provides evidence for measuring the impact of research data. For an item to be included, it must either contain an analysis of data archived by ICPSR or discuss or critique the data collection process, study design, or methodology [31]. A team of librarians and information specialists at ICPSR curates the Bibliography manually, entering and validating entries. Some publications are supplied by data depositors. Other citations are submitted by authors who abide by ICPSR’s terms of use, which require them to submit citations to works in which they analyzed data retrieved from ICPSR. Most of the Bibliography, however, is populated by Bibliography team members, who run custom queries for ICPSR studies across numerous sources, including Google Scholar, ProQuest, SSRN, and others.

Each record in the Bibliography is one publication that has used one or more ICPSR studies. The version we used was captured on 2021-11-16 and included 94,755 publications.

To expand the coverage of the ICPSR Bibliography, we searched exhaustively for all ICPSR study names, unique numbers assigned to ICPSR studies, and DOIs [32] using a full-text index available through the Dimensions AI database [33]. We accessed Dimensions through a license agreement with the University of Michigan. ICPSR Bibliography librarians and information specialists manually reviewed and validated new entries that matched one or more search criteria. We then used Dimensions to gather enriched metadata and full-text links for items in the Bibliography with DOIs. We matched 43% of the items in the Bibliography to enriched Dimensions metadata, including abstracts, field of research codes, concepts, and authors’ institutional information; we also obtained links to full text for 16% of Bibliography items. Under our licensing agreements, we included Dimensions identifiers and links to full text so that users with valid publisher and database access can construct an enriched publication dataset.
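The DOI-based enrichment step can be sketched as a join on normalized DOIs. This is a hypothetical illustration, not the MICA project's code; the records, field names (`doi`, `enriched`), and index are invented. Normalization relies on DOI names being case-insensitive and strips common resolver prefixes. Items without a DOI stay in the denominator, so the match rate is measured over all Bibliography items, as the 43% figure is.

```python
DOI_PREFIXES = ("https://doi.org/", "http://doi.org/",
                "https://dx.doi.org/", "http://dx.doi.org/", "doi:")


def normalize_doi(raw):
    """Lower-case a DOI and strip common resolver prefixes (DOIs are case-insensitive)."""
    doi = raw.strip().lower()
    for prefix in DOI_PREFIXES:
        if doi.startswith(prefix):
            return doi[len(prefix):]
    return doi


def enrich_by_doi(bibliography, index):
    """Attach index metadata to bibliography items that share a DOI.

    `index` maps normalized DOIs to metadata records. Items without a DOI
    are kept but left unmatched, so the match rate covers all items.
    """
    enriched = []
    for item in bibliography:
        doi = item.get("doi")
        meta = index.get(normalize_doi(doi)) if doi else None
        enriched.append({**item, "enriched": meta})
    matched = sum(1 for row in enriched if row["enriched"] is not None)
    return enriched, matched / len(bibliography)


# Hypothetical records; real Dimensions metadata is available only under license.
bibliography = [
    {"id": 1, "doi": "https://doi.org/10.1000/ABC123"},
    {"id": 2, "doi": "10.1000/xyz789"},
    {"id": 3, "doi": None},
    {"id": 4, "doi": "doi:10.1000/not-indexed"},
]
index = {
    "10.1000/abc123": {"abstract": "example abstract"},
    "10.1000/xyz789": {"abstract": "example abstract"},
}

rows, match_rate = enrich_by_doi(bibliography, index)
print(f"{match_rate:.0%} of items matched")  # 50% of items matched
```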

Gather study usage data

ICPSR maintains a relational administrative database, DBInfo, that organizes study-level metadata and information on data reuse across separate tables. Studies at ICPSR consist of one or more files collected at a single time or for a single purpose; studies in which the same variables are observed over time are grouped into series. Each study at ICPSR is assigned a DOI, and its metadata are stored in DBInfo. Study metadata follow the Data Documentation Initiative (DDI) Codebook 2.5 standard.

DDI elements included in our dataset are:

- title
- ICPSR study identification number
- DOI
- authoring entities
- description (abstract)
- funding agencies
- subject terms assigned to the study during curation
- geographic coverage

We also created variables based on DDI elements: total variable count, the presence of survey question text in the metadata, the number of author entities, and whether an author entity was an institution. We gathered metadata for ICPSR’s 10,605 unrestricted public-use studies available as of 2021-11-16 (https://www.icpsr.umich.edu/web/pages/membership/or/metadata/oai.html).
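Some of the derived variables can be sketched by parsing a DDI Codebook record with the standard library. This is a hypothetical illustration, not the authors' pipeline. It assumes the DDI Codebook 2.5 namespace `ddi:codebook:2_5` and a heavily simplified record.

The sketch derives three of the variables: variable count, question-text presence, and number of author entities. It does not attempt the "author is an institution" flag, which needs rules or an authority list beyond the XML itself.

```python
import xml.etree.ElementTree as ET

# Assumed namespace for DDI Codebook 2.5 documents.
NS = {"ddi": "ddi:codebook:2_5"}


def derive_study_variables(ddi_xml):
    """Derive simple study-level counts and flags from a DDI Codebook record."""
    root = ET.fromstring(ddi_xml)
    variables = root.findall(".//ddi:dataDscr/ddi:var", NS)
    authors = root.findall(".//ddi:rspStmt/ddi:AuthEnty", NS)
    question_texts = root.findall(".//ddi:var/ddi:qstn/ddi:qstnLit", NS)
    return {
        "total_variables": len(variables),
        # True if at least one variable carries non-empty literal question text
        "has_question_text": any((q.text or "").strip() for q in question_texts),
        "author_entity_count": len(authors),
    }


# A heavily simplified, hypothetical DDI fragment (real records are far richer).
SAMPLE = """<codeBook xmlns="ddi:codebook:2_5">
  <stdyDscr>
    <citation>
      <titlStmt><titl>Example Study</titl></titlStmt>
      <rspStmt>
        <AuthEnty>Doe, Jane</AuthEnty>
        <AuthEnty>Example Research Institute</AuthEnty>
      </rspStmt>
    </citation>
  </stdyDscr>
  <dataDscr>
    <var name="AGE"><qstn><qstnLit>How old are you?</qstnLit></qstn></var>
    <var name="WEIGHT"/>
  </dataDscr>
</codeBook>"""

print(derive_study_variables(SAMPLE))
```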

To link study usage data with study-level metadata records, we joined study metadata from DBInfo with study usage information. Usage information included total study downloads (data and documentation), individual data file downloads, and cumulative citations from the ICPSR Bibliography. We also gathered descriptive metadata for each study and its variables. This allowed us to summarize and append recoded fields onto the study-level metadata, such as curation level, number and type of principal investigators, total variable count, and binary variables indicating:

- whether the study data were made available for online analysis;
- whether survey question text was made searchable online; and
- whether the study variables were indexed for search.

These characteristics describe aspects of the data's discoverability, which can be compared with other characteristics of the study. We used the study and series numbers in the ICPSR Bibliography as unique identifiers to link papers to metadata and to analyze the community structure of dataset co-citations in the ICPSR Bibliography [32].
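The join of usage information onto study metadata can be sketched as a left join keyed by study number. This is a hypothetical illustration. Only `STUDY` comes from the dataset description; the usage field names and values are invented.

Studies without usage records receive `None` rather than 0. This keeps "unknown" distinct from "no reuse".

```python
def left_join_usage(studies, usage_by_study):
    """Left-join usage counts onto study metadata by ICPSR study number.

    Studies without usage records keep None (unknown) rather than 0, so
    missing data are not mistaken for zero reuse.
    """
    usage_fields = ("total_downloads", "data_downloads", "citations")
    joined = []
    for study in studies:
        usage = usage_by_study.get(study["STUDY"], {})
        joined.append({**study, **{f: usage.get(f) for f in usage_fields}})
    return joined


# Hypothetical records keyed by study number.
studies = [{"STUDY": 1001, "TITLE": "Study A"}, {"STUDY": 1002, "TITLE": "Study B"}]
usage = {1001: {"total_downloads": 420, "data_downloads": 310, "citations": 12}}

for row in left_join_usage(studies, usage):
    print(row)
```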

Process curation work logs

Researchers deposit data at ICPSR for curation and long-term preservation. Between 2016 and 2020, more than 3,000 research studies were deposited with ICPSR. Since 2017, ICPSR has organized curation work into a central unit. This unit provides several levels of curation, which differ in the intensity and complexity of the data enhancement they involve. The levels are standardized by effort (level one = least effort, level three = most effort), but the specific curatorial actions undertaken for each dataset vary. These actions are captured in Jira, a work-tracking program that ICPSR data curators use to collaborate and communicate their progress through tickets. We obtained access to a corpus of 669 completed Jira tickets corresponding to the curation of 566 unique studies between February 2017 and December 2019 [28].

To process the tickets, we focused only on their work log portions. These contained free-text descriptions of the work data curators had performed on a deposited study, along with the curators’ identifiers and timestamps. To protect the confidentiality of the data curators and the processing steps they performed, we worked with ICPSR’s curation unit to propose a classification scheme. We used this scheme to train a Naive Bayes classifier and label the curation actions in each work log sentence.

The eight curation action labels we proposed [28] were:

1. initial review and planning
2. data transformation
3. metadata
4. documentation
5. quality checks
6. communication
7. other
8. non-curation work

These categories are very specific to the curatorial processes and types of data stored at ICPSR and may not match curation activities at other repositories. After applying the classifier to the work log sentences, we obtained summary-level curation actions for a subset of all ICPSR studies (5%). We also obtained the total number of hours spent on data curation for each study and the proportion of time associated with each action during curation.
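For readers unfamiliar with the technique, here is a toy multinomial Naive Bayes sentence classifier with add-one (Laplace) smoothing. It is not the authors' classifier or feature set. The training sentences are hand-written and hypothetical, since the real work logs are confidential, and they cover only five of the eight labels.

The model scores each label as log P(label) plus the sum of log P(word | label) over the sentence's known words. It then picks the highest-scoring label.

```python
import math
from collections import Counter, defaultdict


def tokenize(text):
    """Lower-case and split on anything that is not a letter or digit."""
    return "".join(ch.lower() if ch.isalnum() else " " for ch in text).split()


class MultinomialNaiveBayes:
    """Multinomial Naive Bayes with add-alpha (Laplace) smoothing."""

    def __init__(self, alpha=1.0):
        self.alpha = alpha

    def fit(self, sentences, labels):
        self.class_counts = Counter(labels)
        self.word_counts = defaultdict(Counter)
        self.vocab = set()
        for sentence, label in zip(sentences, labels):
            tokens = tokenize(sentence)
            self.word_counts[label].update(tokens)
            self.vocab.update(tokens)
        self.total_words = {c: sum(self.word_counts[c].values()) for c in self.class_counts}
        self.n_docs = len(labels)
        return self

    def predict(self, sentence):
        # Words never seen in training carry no information, so skip them.
        tokens = [t for t in tokenize(sentence) if t in self.vocab]
        vocab_size = len(self.vocab)
        best_label, best_score = None, -math.inf
        for label, doc_count in self.class_counts.items():
            # log P(label) + sum of log P(word | label), computed in log space
            score = math.log(doc_count / self.n_docs)
            denom = self.total_words[label] + self.alpha * vocab_size
            for t in tokens:
                score += math.log((self.word_counts[label][t] + self.alpha) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Hypothetical, hand-written work log sentences (not real ICPSR logs).
train = [
    ("Reviewed the deposit and wrote a processing plan", "initial review and planning"),
    ("Recoded missing values and converted the data file", "data transformation"),
    ("Converted the SPSS file to Stata format", "data transformation"),
    ("Added variable labels and study metadata", "metadata"),
    ("Emailed the depositor about a question", "communication"),
    ("Replied to depositor email", "communication"),
    ("Ran frequency checks on all variables", "quality checks"),
]
model = MultinomialNaiveBayes().fit([s for s, _ in train], [lab for _, lab in train])
print(model.predict("Emailed depositor again"))  # communication
```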

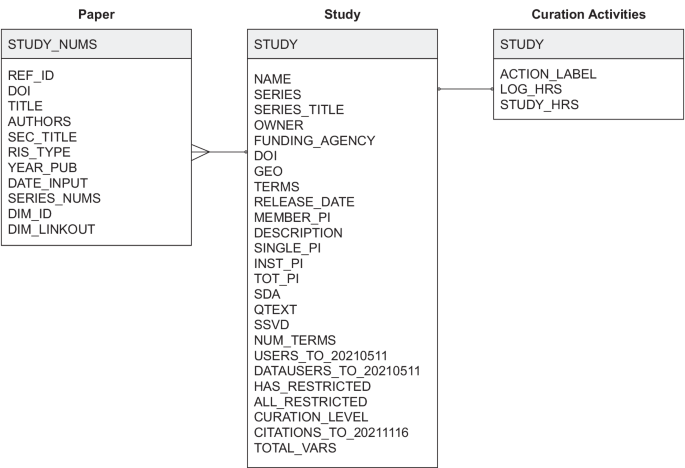

Data Records

The MICA dataset [27] connects records for each of ICPSR’s archived research studies to the research publications that use them and related curation activities available for a subset of studies (Fig. 2). Each of the three tables published in the dataset is available as a study archived at ICPSR. The data tables are distributed as statistical files available for use in SAS, SPSS, Stata, and R as well as delimited and ASCII text files. The dataset is organized around studies and papers as primary entities. The studies table lists ICPSR studies, their metadata attributes, and usage information; the papers table was constructed using the ICPSR Bibliography and Dimensions database; and the curation logs table summarizes the data curation steps performed on a subset of ICPSR studies.

Studies (“ICPSR_STUDIES”): 10,605 social science research datasets available through ICPSR up to 2021-11-16. The table has variables for:

- ICPSR study number, digital object identifier, study name, series number, and series title
- authoring entities, full-text description, release date, funding agency, geographic coverage, subject terms, and topical archive
- curation level
- single principal investigator (PI), institutional PI, and the total number of PIs
- total variables in data files, question text availability, and study variable indexing
- level of restriction
- total unique users downloading study data files and codebooks, total unique users downloading data only, and total unique papers citing data through November 2021

Studies map to the papers and curation logs tables through ICPSR study numbers as “STUDY”. However, not every study in this table will have records in the papers and curation logs tables.

Papers (“ICPSR_PAPERS”): 94,755 publications collected from 2000-08-11 to 2021-11-16 in the ICPSR Bibliography and enriched with metadata from the Dimensions database. The table has variables for:

- paper number, identifier, title, authors, publication venue, item type, publication date, and input date
- ICPSR series numbers used in the paper and ICPSR study numbers used in the paper
- the Dimensions identifier and the Dimensions link to the publication’s full text

Papers map to the studies table through ICPSR study numbers in the “STUDY_NUMS” field. Each record represents a single publication. Because a researcher can use multiple datasets when creating a publication, each record may list multiple studies or series.

Curation logs (“ICPSR_CURATION_LOGS”): 649 curation logs for 563 ICPSR studies (although most studies in the subset had one curation log, some studies were associated with multiple logs, with a maximum of 10) curated between February 2017 and December 2019 with variables for study number, action labels assigned to work description sentences using a classifier trained on ICPSR curation logs, hours of work associated with a single log entry, and total hours of work logged for the curation ticket. Curation logs map to the study and paper tables through ICPSR study numbers as “STUDY”. Each record represents a single logged action, and future users may wish to aggregate actions to the study level before joining tables.
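Aggregating log entries to the study level before joining, as suggested above, can be sketched as follows. Only `STUDY` comes from the table description. The `ACTION` and `HOURS` column names and all values are hypothetical placeholders for the action-label and hours fields.

```python
from collections import defaultdict


def summarize_curation(log_rows):
    """Aggregate per-entry curation logs to one summary per study.

    Returns {study: {"total_hours": h, "share_by_action": {action: fraction}}}.
    """
    hours = defaultdict(lambda: defaultdict(float))
    for row in log_rows:
        hours[row["STUDY"]][row["ACTION"]] += row["HOURS"]

    summary = {}
    for study, by_action in hours.items():
        total = sum(by_action.values())
        summary[study] = {
            "total_hours": total,
            # Fraction of the study's logged time spent on each action
            "share_by_action": {a: h / total for a, h in by_action.items()} if total else {},
        }
    return summary


# Hypothetical log entries (column names other than STUDY are assumptions).
logs = [
    {"STUDY": 1, "ACTION": "metadata", "HOURS": 2.0},
    {"STUDY": 1, "ACTION": "quality checks", "HOURS": 6.0},
    {"STUDY": 2, "ACTION": "metadata", "HOURS": 1.5},
]
for study, info in summarize_curation(logs).items():
    print(study, info)
```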

Fig. 2: Entity-relation diagram.

Technical Validation

We report on the reliability of the dataset’s metadata in the following subsections. To support future reuse of the dataset, curation services provided through ICPSR improved data quality by checking for missing values, adding variable labels, and creating a codebook.

All 10,605 studies available through ICPSR have a DOI and a full-text description. The description summarizes what the study is about, its purpose, the main topics covered, and the questions the PIs attempted to answer when they conducted it. Personal names (i.e., principal investigators) and organizational names (i.e., funding agencies) are standardized against an authority list maintained by ICPSR. Geographic names and subject terms are also standardized and hierarchically indexed in the ICPSR Thesaurus [34]. Most ICPSR studies (63%) are part of a series, and 56% are distributed through the ICPSR General Archive, a non-topical archive that accepts any social or behavioral science data. Study data have been available through ICPSR since 1962. However, the earliest digital release date recorded for a study is 1984-03-18, when ICPSR’s database was first employed, and the most recent release date recorded at the time of data collection is 2021-10-28.

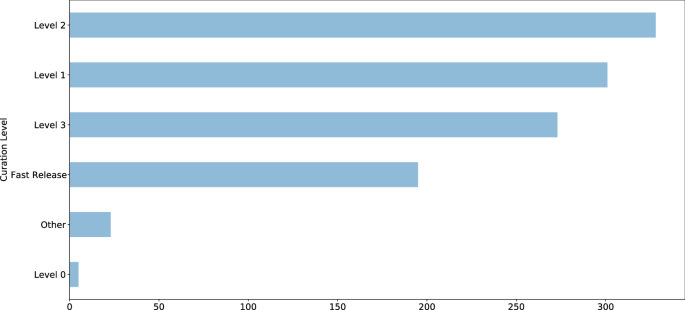

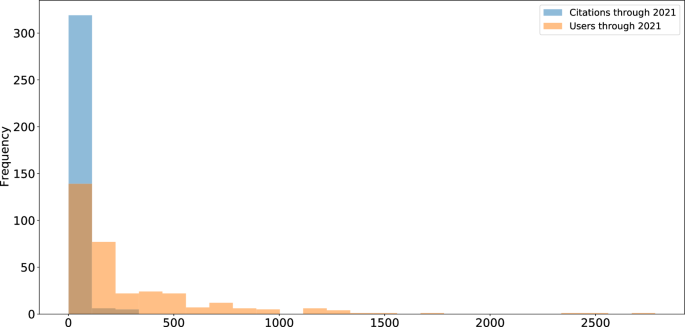

Curation level information was recorded starting in 2017 and is available for 1,125 studies (11%). Approximately 80% of studies with assigned curation levels received curation services, equally distributed among Levels 1 (least intensive), 2 (moderately intensive), and 3 (most intensive) (Fig. 3). Detailed descriptions of ICPSR’s curation levels are available online [35].

Additional metadata are available for a subset of 421 studies (4%). They record whether the study has a single PI or an institutional PI, the total number of PIs involved, and the total number of variables recorded. They also record whether the study:

- is available for online analysis;
- has searchable question text;
- has variables that are indexed for search;
- contains one or more restricted files; or
- is completely restricted.

We provided additional metadata for this subset because these studies were released within the past five years and detailed curation and usage information was available for them. Usage statistics, including total downloads and data file downloads, are also available for this subset. Citation statistics are available for 8,030 studies (76%). Most ICPSR studies have fewer than 500 users, as indicated by total downloads or citations (Fig. 4).

Fig. 3: ICPSR study curation levels.

Fig. 4: ICPSR study usage.

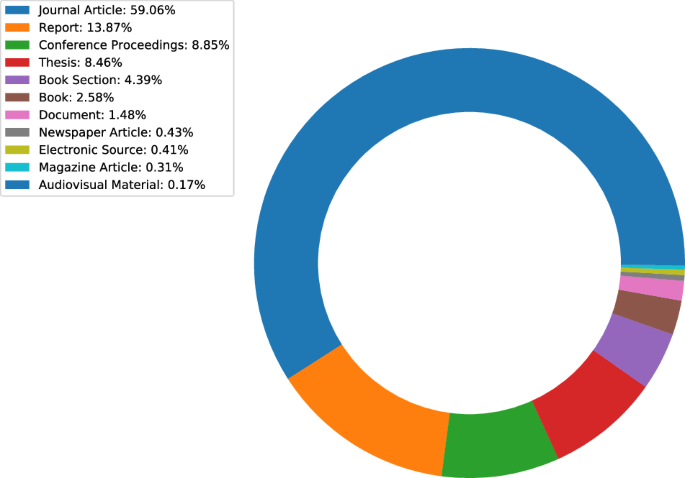

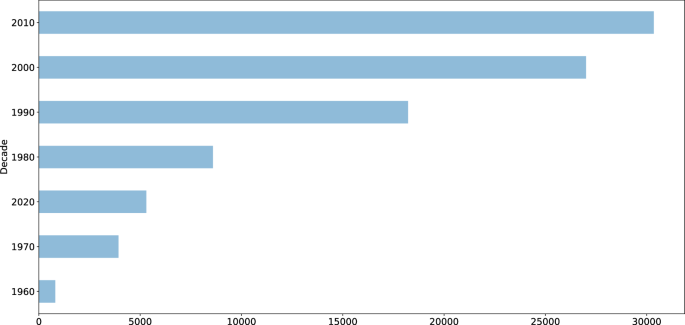

A subset of 43,102 publications (45%) in the ICPSR Bibliography had a DOI. Author metadata were entered as free text, so variations may exist that require additional normalization and pre-processing before analysis. Author information is standardized within each publication record, but individual names may appear in different sort orders across records (e.g., “Earls, Felton J.” and “Stephen W. Raudenbush”). As of 2021-11-16, most items in the ICPSR Bibliography were journal articles (59%), reports (14%), conference presentations (9%), or theses (8%) (Fig. 5). The number of publications collected in the Bibliography has increased each decade since the inception of ICPSR in 1962 (Fig. 6). Most ICPSR studies (76%) have one or more citations in a publication.
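The sort-order inconsistency described above can be handled with a simple normalization pass before grouping authors. This is a hypothetical sketch, not a procedure from the paper. It assumes "Last, First" is the only inverted form present. Suffixes such as "Jr." and institutional authors would need extra rules not covered here.

```python
def normalize_author(name):
    """Convert "Last, First" to "First Last" and collapse extra whitespace.

    Caveat: names with suffixes (e.g. "Smith, John, Jr.") or institutional
    authors need extra rules not handled here.
    """
    name = " ".join(name.split())
    if "," in name:
        last, first = (part.strip() for part in name.split(",", 1))
        return f"{first} {last}" if first else last
    return name


def author_key(name):
    """Case- and period-insensitive key for grouping name variants."""
    return normalize_author(name).replace(".", "").lower()


print(normalize_author("Earls, Felton J."))        # Felton J. Earls
print(normalize_author("Stephen W. Raudenbush"))   # Stephen W. Raudenbush
print(author_key("Earls, Felton J.") == author_key("Felton J Earls"))  # True
```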

Fig. 5: ICPSR Bibliography citation types.

Fig. 6: ICPSR citations by decade.

Usage Notes

The dataset consists of three tables that can be joined using the “STUDY” key as shown in Fig. 2. The “ICPSR_PAPERS” table contains one row per paper with one or more cited studies in the “STUDY_NUMS” column. We manipulated and analyzed the tables as CSV files with the pandas library [36] in Python and the Tidyverse packages [37] in R.
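Because “STUDY_NUMS” can hold several study numbers, one way to join papers to studies is to first expand each paper into one row per cited study. This is a hypothetical sketch with tiny inline CSV extracts. The `;` separator inside “STUDY_NUMS” and the `PAPER_ID`/`STUDY_NAME` columns are assumptions, so check the distributed files for the actual format.

```python
import csv
import io

# Hypothetical CSV extracts; only STUDY and STUDY_NUMS come from the dataset
# description, and the ";" separator inside STUDY_NUMS is an assumption.
PAPERS_CSV = """PAPER_ID,TITLE,STUDY_NUMS
p1,Paper one,1001;1002
p2,Paper two,1002
"""
STUDIES_CSV = """STUDY,STUDY_NAME
1001,Study A
1002,Study B
"""


def explode_study_nums(papers, sep=";"):
    """Yield one (paper, study) row per study listed in STUDY_NUMS."""
    for paper in papers:
        for raw in (paper["STUDY_NUMS"] or "").split(sep):
            if raw.strip():
                yield {**paper, "STUDY": raw.strip()}


papers = list(csv.DictReader(io.StringIO(PAPERS_CSV)))
studies = {row["STUDY"]: row for row in csv.DictReader(io.StringIO(STUDIES_CSV))}

# Left join: papers citing a study missing from the studies table get None.
paper_study = [
    {**row, "STUDY_NAME": studies.get(row["STUDY"], {}).get("STUDY_NAME")}
    for row in explode_study_nums(papers)
]
for row in paper_study:
    print(row["PAPER_ID"], row["STUDY"], row["STUDY_NAME"])
```

The keys stay as strings on both sides. When loading real files, make sure the study numbers have the same type in every table before joining. In pandas, the same steps map onto `Series.str.split`, `DataFrame.explode`, and `DataFrame.merge`.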

The present MICA dataset can be used independently to study the relationship between curation decisions and data reuse. Evidence of reuse for specific studies is available in several forms: usage information, including downloads and citation counts; and citation contexts within papers that cite data. Analysis may also be performed on the citation network formed between datasets and papers that use them. Finally, curation actions can be associated with properties of studies and usage histories.

This dataset has several limitations of which users should be aware.

First, Jira tickets can only represent the intensiveness of curation for activities undertaken since 2017, when ICPSR started using both Curation Levels and Jira. Studies published before 2017 were all curated, but documentation of the extent of that curation was not standardized and therefore could not be included in these analyses.

Second, the measure of publications relies on how clearly authors cite data and on the ICPSR Bibliography staff’s ability to discover citations of varying formality and clarity. Some publications that cite secondary data may therefore have been left out of the Bibliography.

Finally, in some cases a paper in the ICPSR Bibliography may not actually have obtained data from ICPSR. For example, PIs have often written about or even distributed their data before archiving it at ICPSR. Such publications would not have cited ICPSR, but they are still collected in the Bibliography as being directly related to the data that were eventually deposited at ICPSR.

In summary, the MICA dataset contains relationships between two main types of entities, papers and studies, which can be mined. The tables in the MICA dataset have supported:

- network analysis (community structure and clique detection) [30];
- natural language processing (NER for dataset reference detection) [32];
- visualizing citation networks (to search for datasets) [38]; and
- regression analysis (on curation decisions and data downloads) [29].

The data are currently being used to develop research metrics and recommendation systems for research data. Because DOIs are provided for ICPSR studies and articles in the ICPSR Bibliography, the MICA dataset can also be used with other bibliometric databases, including DataCite, Crossref, OpenAlex, and related indexes. Subscription-based services, such as Dimensions AI, are also compatible with the MICA dataset. In some cases, these services provide abstracts or full text for papers, from which data citation contexts can be extracted for semantic content analysis.
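As a starting point for network analysis, the paper-to-study links can be turned into a weighted co-citation edge list. An edge connects two studies cited by the same paper, and its weight counts such papers. This is a hypothetical sketch with invented study numbers, not the method used in the cited work.

```python
from collections import Counter
from itertools import combinations


def co_citation_counts(paper_studies):
    """Count how often each pair of studies is cited by the same paper.

    `paper_studies` is an iterable of study-number lists, one per paper.
    Returns a Counter keyed by (smaller_study, larger_study).
    """
    counts = Counter()
    for studies in paper_studies:
        # Deduplicate and sort so each unordered pair has one canonical key.
        for pair in combinations(sorted(set(studies)), 2):
            counts[pair] += 1
    return counts


# Hypothetical papers and the studies each one cites.
papers = [[1001, 1002, 1003], [1001, 1002], [1003]]
edges = co_citation_counts(papers)
print(edges.most_common())
```

The resulting weighted edges can be loaded into a graph library such as NetworkX for community detection or clique analysis.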

Code availability

The code [27] used to produce the MICA project dataset is available on GitHub at https://github.com/ICPSR/mica-data-descriptor and through Zenodo with the identifier https://doi.org/10.5281/zenodo.8432666. Data manipulation and pre-processing were performed in Python. Data curation for distribution was performed in SPSS.

References

1. He, L. & Han, Z. Do usage counts of scientific data make sense? An investigation of the Dryad repository. Library Hi Tech 35, 332–342 (2017).

2. Brickley, D., Burgess, M. & Noy, N. Google dataset search: Building a search engine for datasets in an open web ecosystem. In The World Wide Web Conference - WWW ’19, 1365–1375 (ACM Press, San Francisco, CA, USA, 2019).

3. Buneman, P., Dosso, D., Lissandrini, M. & Silvello, G. Data citation and the citation graph. Quantitative Science Studies 2, 1399–1422 (2022).

4. Chao, T. C. Disciplinary reach: Investigating the impact of dataset reuse in the earth sciences. Proceedings of the American Society for Information Science and Technology 48, 1–8 (2011).

5. Parr, C. et al. A discussion of value metrics for data repositories in earth and environmental sciences. Data Science Journal 18, 58 (2019).

6. Eschenfelder, K. R., Shankar, K. & Downey, G. The financial maintenance of social science data archives: Four case studies of long-term infrastructure work. J. Assoc. Inf. Sci. Technol. 73, 1723–1740 (2022).

7. Palmer, C. L., Weber, N. M. & Cragin, M. H. The analytic potential of scientific data: Understanding re-use value. Proceedings of the American Society for Information Science and Technology 48, 1–10 (2011).

8. Zimmerman, A. S. New knowledge from old data: The role of standards in the sharing and reuse of ecological data. Sci. Technol. Human Values 33, 631–652 (2008).

9. Cragin, M. H., Palmer, C. L., Carlson, J. R. & Witt, M. Data sharing, small science and institutional repositories. Philosophical Transactions of the Royal Society A: Mathematical, Physical and Engineering Sciences 368, 4023–4038 (2010).

10. Fear, K. M. Measuring and Anticipating the Impact of Data Reuse. Ph.D. thesis, University of Michigan (2013).

11. Borgman, C. L., Van de Sompel, H., Scharnhorst, A., van den Berg, H. & Treloar, A. Who uses the digital data archive? An exploratory study of DANS. Proceedings of the Association for Information Science and Technology 52, 1–4 (2015).

12. Pasquetto, I. V., Borgman, C. L. & Wofford, M. F. Uses and reuses of scientific data: The data creators’ advantage. Harvard Data Science Review 1 (2019).

13. Gregory, K., Groth, P., Scharnhorst, A. & Wyatt, S. Lost or found? Discovering data needed for research. Harvard Data Science Review (2020).

14. York, J. Seeking equilibrium in data reuse: A study of knowledge satisficing. Ph.D. thesis, University of Michigan (2022).

15. Kilbride, W. & Norris, S. Collaborating to clarify the cost of curation. New Review of Information Networking 19, 44–48 (2014).

16. Robinson-Garcia, N., Mongeon, P., Jeng, W. & Costas, R. DataCite as a novel bibliometric source: Coverage, strengths and limitations. Journal of Informetrics 11, 841–854 (2017).

17. Qin, J., Hemsley, J. & Bratt, S. E. The structural shift and collaboration capacity in GenBank networks: A longitudinal study. Quantitative Science Studies 3, 174–193 (2022).

18. Acuna, D. E., Yi, Z., Liang, L. & Zhuang, H. Predicting the usage of scientific datasets based on article, author, institution, and journal bibliometrics. In Smits, M. (ed.) Information for a Better World: Shaping the Global Future. iConference 2022, 42–52 (Springer International Publishing, Cham, 2022).

19. Zeng, T., Wu, L., Bratt, S. & Acuna, D. E. Assigning credit to scientific datasets using article citation networks. Journal of Informetrics 14, 101013 (2020).

20. Koesten, L., Vougiouklis, P., Simperl, E. & Groth, P. Dataset reuse: Toward translating principles to practice. Patterns 1, 100136 (2020).

21. Du, C., Cohoon, J., Lopez, P. & Howison, J. Softcite dataset: A dataset of software mentions in biomedical and economic research publications. J. Assoc. Inf. Sci. Technol. 72, 870–884 (2021).

22. Aryani, A. et al. A research graph dataset for connecting research data repositories using RD-Switchboard. Sci Data 5, 180099 (2018).

23. Färber, M. & Lamprecht, D. The data set knowledge graph: Creating a linked open data source for data sets. Quantitative Science Studies 2, 1324–1355 (2021).

24. Perry, A. & Netscher, S. Measuring the time spent on data curation. Journal of Documentation 78, 282–304 (2022).

25. Trisovic, A. et al. Advancing computational reproducibility in the Dataverse data repository platform. In Proceedings of the 3rd International Workshop on Practical Reproducible Evaluation of Computer Systems, P-RECS ’20, 15–20, https://doi.org/10.1145/3391800.3398173 (Association for Computing Machinery, New York, NY, USA, 2020).

26. Borgman, C. L., Scharnhorst, A. & Golshan, M. S. Digital data archives as knowledge infrastructures: Mediating data sharing and reuse. Journal of the Association for Information Science and Technology 70, 888–904, https://doi.org/10.1002/asi.24172 (2019).

27. Lafia, S. et al. MICA Data Descriptor. Zenodo https://doi.org/10.5281/zenodo.8432666 (2023).

28. Lafia, S., Thomer, A., Bleckley, D., Akmon, D. & Hemphill, L. Leveraging machine learning to detect data curation activities. In 2021 IEEE 17th International Conference on eScience (eScience), 149–158, https://doi.org/10.1109/eScience51609.2021.00025 (2021).

29. Hemphill, L., Pienta, A., Lafia, S., Akmon, D. & Bleckley, D. How do properties of data, their curation, and their funding relate to reuse? J. Assoc. Inf. Sci. Technol. 73, 1432–1444, https://doi.org/10.1002/asi.24646 (2021).

30. Lafia, S., Fan, L., Thomer, A. & Hemphill, L. Subdivisions and crossroads: Identifying hidden community structures in a data archive’s citation network. Quantitative Science Studies 3, 694–714, https://doi.org/10.1162/qss_a_00209 (2022).

31. ICPSR. ICPSR Bibliography of Data-related Literature: Collection Criteria. https://www.icpsr.umich.edu/web/pages/ICPSR/citations/collection-criteria.html (2023).

32. Lafia, S., Fan, L. & Hemphill, L. A natural language processing pipeline for detecting informal data references in academic literature. Proc. Assoc. Inf. Sci. Technol. 59, 169–178, https://doi.org/10.1002/pra2.614 (2022).

33. Hook, D. W., Porter, S. J. & Herzog, C. Dimensions: Building context for search and evaluation. Frontiers in Research Metrics and Analytics 3, 23, https://doi.org/10.3389/frma.2018.00023 (2018).

34. ICPSR. ICPSR Thesaurus. https://www.icpsr.umich.edu/web/ICPSR/thesaurus (2002).

35. ICPSR. ICPSR Curation Levels. https://www.icpsr.umich.edu/files/datamanagement/icpsr-curation-levels.pdf (2020).

36. McKinney, W. Data Structures for Statistical Computing in Python. In van der Walt, S. & Millman, J. (eds.) Proceedings of the 9th Python in Science Conference, 56–61 (2010).

37. Wickham, H. et al. Welcome to the Tidyverse. Journal of Open Source Software 4, 1686 (2019).

38. Fan, L., Lafia, S., Li, L., Yang, F. & Hemphill, L. DataChat: Prototyping a conversational agent for dataset search and visualization. Proc. Assoc. Inf. Sci. Technol. 60, 586–591 (2023).


Acknowledgements

We thank the ICPSR Bibliography staff, the ICPSR Data Curation Unit, and the ICPSR Data Stewardship Committee for their support of this research. This material is based upon work supported by the National Science Foundation under grant 1930645. This project was made possible in part by the Institute of Museum and Library Services LG-37-19-0134-19.

Author information

Authors and affiliations

Inter-university Consortium for Political and Social Research, University of Michigan, Ann Arbor, MI, 48104, USA

Libby Hemphill, Sara Lafia, David Bleckley & Elizabeth Moss

School of Information, University of Michigan, Ann Arbor, MI, 48104, USA

Libby Hemphill & Lizhou Fan

School of Information, University of Arizona, Tucson, AZ, 85721, USA

Andrea Thomer


Contributions

L.H. and A.T. conceptualized the study design, D.B., E.M., and S.L. prepared the data, S.L., L.F., and L.H. analyzed the data, and D.B. validated the data. All authors reviewed and edited the manuscript.

Corresponding author

Correspondence to Libby Hemphill.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.


About this article

Cite this article

Hemphill, L., Thomer, A., Lafia, S. et al. A dataset for measuring the impact of research data and their curation. Sci Data 11, 442 (2024). https://doi.org/10.1038/s41597-024-03303-2


Received: 16 November 2023

Accepted: 24 April 2024

Published: 03 May 2024

DOI: https://doi.org/10.1038/s41597-024-03303-2



Statistical Papers

Statistical Papers is a forum for presentation and critical assessment of statistical methods encouraging the discussion of methodological foundations and potential applications.

- The Journal stresses statistical methods that have broad applications, giving special attention to those relevant to the economic and social sciences.

- Covers all topics of modern data science, such as frequentist and Bayesian design and inference as well as statistical learning.

- Contains original research papers (regular articles), survey articles, short communications, reports on statistical software, and book reviews.

- High author satisfaction with 90% likely to publish in the journal again.

- Werner G. Müller

- Carsten Jentsch

- Shuangzhe Liu

- Ulrike Schneider


Journal information

- Australian Business Deans Council (ABDC) Journal Quality List

- Current Index to Statistics

- Google Scholar

- Japanese Science and Technology Agency (JST)

- Mathematical Reviews

- Norwegian Register for Scientific Journals and Series

- OCLC WorldCat Discovery Service

- Research Papers in Economics (RePEc)

- Science Citation Index Expanded (SCIE)

- TD Net Discovery Service

- UGC-CARE List (India)


© Springer-Verlag GmbH Germany, part of Springer Nature


Effective Use of Statistics in Research – Methods and Tools for Data Analysis

Remember that sinking feeling you get when you are asked to analyze your data? Now that you have all the required raw data, you need to test your hypothesis statistically. Presenting your numerical data with proper statistics will also help break the stereotype of the biology student who can’t do math.

Statistical methods are essential for scientific research. In fact, they run through the entire research process: planning, designing, collecting data, analyzing it, drawing meaningful interpretations and reporting research findings. The results acquired from a research project remain meaningless raw data unless they are analyzed with statistical tools. Applying statistics in research is therefore essential to justify research findings. In this article, we will discuss how statistical methods can help biologists draw meaningful conclusions from their studies.


Role of Statistics in Biological Research

Statistics is a branch of science that deals with the collection, organization and analysis of data, and with generalizing from a sample to the whole population. Moreover, it helps researchers design a study more carefully and provides a logical basis for accepting or rejecting a hypothesis. Biology focuses on living organisms and their complex, highly dynamic pathways, which are difficult to explain with logical reasoning alone. Statistics complements this by describing and explaining the patterns in a study based on the samples and sample sizes used. To be precise, statistics reveals the trends in the conducted study.

Biological researchers often disregard statistics when planning their research and use statistical tools mainly at the end of their experiments. This often gives rise to a complicated set of results that cannot easily be analyzed with statistical tools. Statistics in research can help a researcher approach the study in a stepwise manner, with statistical analysis following these steps:

1. Establishing a Sample Size

Usually, a biological experiment starts with choosing samples and selecting the right number of replicate experiments. Statistics provides the basics here: random sampling and the law of large numbers. It shows how drawing a sample at random from a large pool helps researchers extrapolate their findings and reduce experimental bias and error.
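A power calculation is one common way to choose the number of replicates before an experiment starts. The sketch below illustrates that approach and is not taken from this article. It assumes a two-sided, two-sample comparison of means with equal group sizes, a known common standard deviation `sigma`, and the normal approximation. `sample_size_per_group` is a hypothetical helper name, and the planning values in the example are made up.

```python
import math
from statistics import NormalDist


def sample_size_per_group(delta, sigma, alpha=0.05, power=0.80):
    """Approximate number of samples per group for a two-sided, two-sample
    comparison of means (normal approximation, equal group sizes).

    delta: smallest difference in means worth detecting
    sigma: assumed common standard deviation of the measurements
    """
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # critical value for the significance level
    z_beta = z.inv_cdf(power)  # quantile matching the desired power
    n = 2 * (z_alpha + z_beta) ** 2 * sigma ** 2 / delta ** 2
    return math.ceil(n)  # round up: a fraction of a replicate is not possible


# Hypothetical planning values: detect a 5-unit difference when the SD is about 10
print(sample_size_per_group(delta=5, sigma=10))  # 63
```

Because `n` scales with 1/`delta`², halving the difference you want to detect roughly quadruples the required sample size. This is why small effects call for large experiments.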

2. Testing of Hypothesis

When conducting a statistical study with a large sample pool, biological researchers must make sure that their conclusions are statistically significant. To achieve this, a researcher must formulate a hypothesis before examining the distribution of the data. Statistics then helps interpret whether the data cluster near the mean of the distribution or spread across it. These trends help researchers analyze the sample and test whether the data support the hypothesis.
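Here is one hypothesis test made concrete: an exact permutation test for a difference in means, written with only the Python standard library. The null hypothesis is that the treatment has no effect, so the group labels are interchangeable. The p-value is the share of all possible relabelings that produce a difference at least as large as the one observed. The measurements are invented for illustration. Full enumeration is only practical for small samples, and a t-test from a statistics package is a common alternative.

```python
from itertools import combinations
from statistics import mean


def permutation_test(group_a, group_b):
    """Exact two-sided permutation test for a difference in means.

    Null hypothesis: the group labels are exchangeable (no treatment effect).
    Every possible relabeling of the pooled data is enumerated, so this is
    only practical for small samples.
    """
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    observed = abs(mean(group_a) - mean(group_b))
    extreme = total = 0
    for idx in combinations(range(len(pooled)), n_a):
        chosen = set(idx)
        a = [pooled[i] for i in idx]
        b = [pooled[i] for i in range(len(pooled)) if i not in chosen]
        # Count relabelings at least as extreme as the observed difference
        # (a small tolerance guards against floating-point rounding)
        if abs(mean(a) - mean(b)) >= observed - 1e-12:
            extreme += 1
        total += 1
    return extreme / total


# Invented measurements for illustration only
treated = [5.2, 5.6, 4.9, 5.4]
control = [4.1, 3.8, 4.5, 4.0]
p = permutation_test(treated, control)
print(f"p = {p:.3f}")  # p = 0.029
```

Of the 70 possible splits, only the observed split and its mirror image are this extreme, so p = 2/70. That falls below the conventional 0.05 threshold.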

3. Data Interpretation Through Analysis

When dealing with large data sets, statistics assists with data analysis and helps researchers draw sound conclusions from their experiments and observations. Drawing conclusions manually or from visual inspection alone may give erroneous results. A thorough statistical analysis instead takes into account the relevant statistical measures and the variance in the sample to provide a detailed interpretation of the data. As a result, researchers can produce detailed, reliable evidence to support their conclusions.

Types of Statistical Research Methods That Aid in Data Analysis

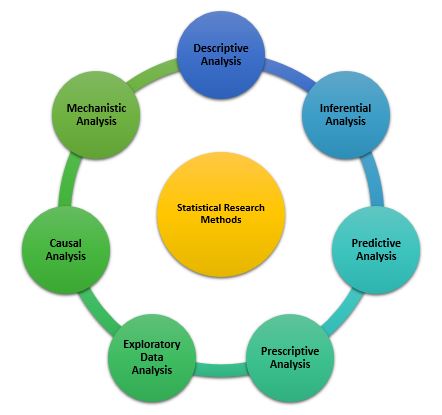

Statistical analysis is the process of analyzing samples of data to uncover patterns or trends that help researchers anticipate situations and draw appropriate research conclusions. Depending on the type of data, statistical analyses fall into the following types:

1. Descriptive Analysis

Descriptive statistical analysis organizes and summarizes large data sets in graphs and tables. It involves processes such as tabulation, measures of central tendency, measures of dispersion or variance, and measures of skewness.
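Python's built-in `statistics` module can compute the measures listed above. This minimal sketch runs on a made-up data set. `describe` is a hypothetical helper, not a library function. The skewness line uses the population moment coefficient (g1), which is only one of several skewness definitions.

```python
from statistics import mean, median, mode, pstdev, stdev, variance


def describe(data):
    """Summarize a numeric data set with common descriptive measures.

    Skewness is undefined (division by zero) when all values are equal.
    """
    m = mean(data)
    s_pop = pstdev(data)  # population SD, used for the moment-based skewness
    skewness = sum((x - m) ** 3 for x in data) / (len(data) * s_pop ** 3)
    return {
        "n": len(data),
        # Central tendency
        "mean": m,
        "median": median(data),
        "mode": mode(data),
        # Dispersion
        "variance": variance(data),  # sample variance (n - 1 denominator)
        "stdev": stdev(data),  # sample standard deviation
        "range": max(data) - min(data),
        # Shape: positive values indicate a longer right tail
        "skewness": skewness,
    }


# Made-up exam scores
print(describe([2, 4, 4, 4, 5, 5, 7, 9]))
```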

2. Inferential Analysis

Inferential statistical analysis allows researchers to extrapolate data acquired from a sample to the complete population. It helps researchers draw conclusions and make decisions about the whole population on the basis of sample data. It is highly recommended for research projects that work with a smaller sample but aim to draw conclusions about a large population.
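To illustrate extrapolating from a sample to a population, the sketch below computes a percentile bootstrap confidence interval for the population mean. Bootstrapping is a substitute technique, chosen here because it needs only the standard library. It assumes the sample is a random, representative draw from the population, and with very small samples the interval tends to be too narrow. The data, the seed, and the `bootstrap_ci` name are all illustrative.

```python
import random
from statistics import mean


def bootstrap_ci(sample, confidence=0.95, n_resamples=10_000, seed=0):
    """Percentile bootstrap confidence interval for the population mean."""
    rng = random.Random(seed)  # fixed seed makes the result reproducible
    n = len(sample)
    # Resample with replacement (same size as the sample) and record each mean
    boot_means = sorted(
        sum(rng.choices(sample, k=n)) / n for _ in range(n_resamples)
    )
    alpha = 1 - confidence
    lo = boot_means[round(alpha / 2 * (n_resamples - 1))]
    hi = boot_means[round((1 - alpha / 2) * (n_resamples - 1))]
    return lo, hi


# Illustrative measurements from a sample (not real data)
sample = [4.1, 3.8, 4.5, 4.0, 5.2, 4.7, 4.4, 3.9, 4.6, 4.3]
low, high = bootstrap_ci(sample)
print(f"sample mean = {mean(sample):.2f}, 95% CI for the population mean = ({low:.2f}, {high:.2f})")
```

The interval expresses how far the population mean could plausibly sit from the sample mean, given the variability in the sample.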

3. Predictive Analysis

Predictive analysis is used to predict future events, typically from historical data. It is used by marketing companies, insurance organizations, online service providers and financial corporations, and in data-driven marketing.
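A very simple form of prediction fits a straight line to past observations and extends it forward. This sketch uses ordinary least squares. The monthly figures are hypothetical, and real predictive models usually need more variables plus validation on held-out data.

```python
from statistics import mean


def fit_line(x, y):
    """Ordinary least-squares fit of y = intercept + slope * x."""
    mx, my = mean(x), mean(y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    slope = sxy / sxx
    intercept = my - slope * mx
    return slope, intercept


def predict(x_new, slope, intercept):
    """Extend the fitted trend to a new (e.g. future) x value."""
    return intercept + slope * x_new


# Hypothetical monthly figures
months = [1, 2, 3, 4, 5, 6]
sales = [10, 12, 14, 16, 18, 20]
slope, intercept = fit_line(months, sales)
print(predict(7, slope, intercept))  # 22.0
```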

4. Prescriptive Analysis

Prescriptive analysis examines data to determine what should be done next. It is widely used in business analysis to find the best possible course of action in a given situation. It is closely related to descriptive and predictive analysis. However, prescriptive analysis goes further by recommending the most appropriate option among those available.

5. Exploratory Data Analysis

EDA is generally the first step of the data analysis process and is conducted before any other statistical analysis technique. It focuses on analyzing patterns in the data to recognize potential relationships. EDA is used to discover unknown associations within data, inspect the collected data for missing values and gain as much insight as possible.
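The sketch below shows two typical first EDA steps on a small invented table: counting missing values and checking whether two variables move together. Rows are plain dictionaries with `None` marking a missing value, which is an assumption about how the data are stored. The correlation is computed only on complete cases. That is one simple choice among several ways to handle missing data.

```python
from statistics import mean


def missing_counts(rows, columns):
    """Count missing values (None) in each column."""
    return {c: sum(1 for r in rows if r.get(c) is None) for c in columns}


def complete_cases(rows, col_x, col_y):
    """Keep only rows where both columns are observed."""
    pairs = [(r[col_x], r[col_y]) for r in rows
             if r.get(col_x) is not None and r.get(col_y) is not None]
    return [p[0] for p in pairs], [p[1] for p in pairs]


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


# Small invented table; None marks a missing measurement
rows = [
    {"dose": 1, "response": 2.0},
    {"dose": 2, "response": 4.1},
    {"dose": 3, "response": None},
    {"dose": None, "response": 5.9},
    {"dose": 4, "response": 8.0},
    {"dose": 5, "response": 9.9},
]
print(missing_counts(rows, ["dose", "response"]))  # {'dose': 1, 'response': 1}
doses, responses = complete_cases(rows, "dose", "response")
print(pearson_r(doses, responses))
```

A coefficient close to 1 flags a strong linear association worth investigating further. On its own, it says nothing about cause and effect.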

6. Causal Analysis

Causal analysis helps researchers understand and determine why things happen the way they do. It helps identify the root cause of failures, or simply the underlying reason why something happens. For example, causal analysis is used to understand what will happen to one variable if another variable changes.

7. Mechanistic Analysis

This is the least common type of statistical analysis. Mechanistic analysis is used in big data analytics and biological science. It seeks to understand how individual changes in some variables cause corresponding changes in other variables, while excluding external influences.

Important Statistical Tools In Research

Many researchers in the biological field find statistical analysis the scariest aspect of completing research. However, statistical tools can help researchers understand what to do with their data and how to interpret the results, making the process much easier.

1. Statistical Package for the Social Sciences (SPSS)

It is a widely used software package for human behavior research. SPSS can compile descriptive statistics as well as graphical depictions of results. Moreover, it includes the option to create scripts that automate analyses or carry out more advanced statistical processing.

2. R Foundation for Statistical Computing

This software package is used in human behavior research and other fields. R is a powerful tool, but it has a steep learning curve because it requires a certain level of coding. It also comes with an active community that builds and enhances the software and its associated packages.

3. MATLAB (The MathWorks)

It is both an analytical platform and a programming language. Researchers and engineers use it to write their own code to answer their research questions. While MATLAB can be a difficult tool for novices, it offers flexibility in terms of what the researcher needs.

4. Microsoft Excel

It is not the best solution for statistical analysis in research, but MS Excel offers a wide variety of tools for data visualization and simple statistics. It is easy to generate summaries and customizable graphs and figures. MS Excel is the most accessible option for those wanting to start with statistics.

5. Statistical Analysis System (SAS)

It is a statistical platform used in business, healthcare, and human behavior research alike. It can carry out advanced analyses and produce publication-worthy figures, tables and charts.

6. GraphPad Prism

It is premium software used primarily by biology researchers, but it can be used in a variety of other fields as well. Similar to SPSS, GraphPad offers a scripting option to automate analyses and carry out complex statistical calculations.

7. Minitab

This software offers basic as well as advanced statistical tools for data analysis. Like GraphPad and SPSS, Minitab can automate analyses through scripting, which requires some command of coding.

Use of Statistical Tools In Research and Data Analysis

Statistical tools make large data sets manageable. Many biological studies use large data sets to analyze trends and patterns, so statistical tools become essential: they make data processing more convenient.

Following these steps will help biological researchers present the statistics in their research in detail, develop accurate hypotheses and use the correct tools to test them.

There is a range of statistical tools that can help researchers manage their research data and improve research outcomes through better interpretation of the data. How you use statistics in research will depend on your research question, your knowledge of statistics and your experience with coding.

Have you faced challenges while using statistics in research? How did you manage it? Did you use any of the statistical tools to help you with your research data? Do write to us or comment below!

Frequently Asked Questions

Statistics in research can help a researcher approach the study in a stepwise manner:

1. Establishing a sample size
2. Testing of hypothesis
3. Data interpretation through analysis