A mobile survey is still a survey: Why market researchers need to go beyond “mobile-first”

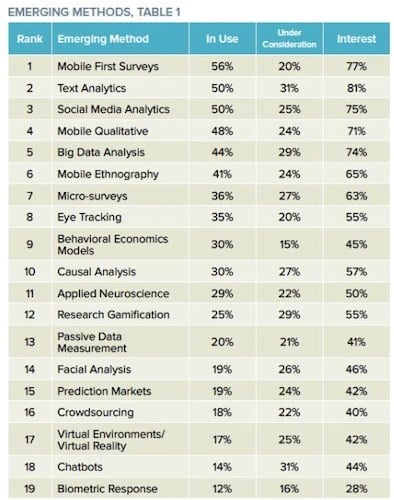

According to the 2020 Insights Practice edition of the GreenBook Research Industry Trends (GRIT) report, mobile-first surveys are finally the most popular emerging research method. The annual study found that 56% of researchers are now using mobile surveys, easily beating text analytics (50%) and social media analytics (50%) as the most widely used emerging market research method today.

The popularity of mobile surveys is pretty broad. For instance, a relatively equal percentage of research buyers (52%) and suppliers (58%) are using this technology. Globally, mobile surveys also enjoy wide adoption, with a majority of researchers in North America (55%), Europe (63%) and APAC (57%) using them.

While it’s encouraging to see that mobile surveys are finally being used by a majority of researchers, GreenBook’s study raises an important question: Is the market research industry adopting mobile-first practices fast enough? 🤔

GreenBook’s survey found that 20% of researchers are considering bringing in mobile survey software into their research toolkit, but 15% are still unsure—and 6% are not interested at all. Other mobile-first methodologies such as mobile ethnography and micro-surveys are growing but aren’t being used by the majority yet.

“It is worrying that 20% of people only list mobile-first surveys as being under consideration,” writes Ray Poynter, Chief Research Officer at Potentiate, in his analysis of GreenBook’s report. “For some time, it has been necessary to accommodate mobile devices for most projects, and it is widely recognized that mobile first is the best way of doing that.”

According to recent stats, 5 billion people globally have mobile devices. Many of these people, particularly younger ones like Gen Zs, are mobile-first consumers or digital natives. If companies want to reach and hear from a more diverse set of customers, their market research strategy should be mobile-first rather than simply being mobile-friendly.

A mobile survey is still a survey

While adopting survey software that supports mobile-first initiatives is important, it’s not enough if companies want to increase response rates, get richer insights and improve business outcomes. Using mobile surveys doesn’t address the hard reality that surveys in general are falling out of favor with consumers.

The volume of feedback requests people get is resulting in a survey backlash. As MarketingMag Editor Ben Ice recently pointed out, customers are “buried under the immense weight of requests for feedback and survey participation.” From the ubiquitous NPS and CSAT questionnaires to those annoying pop-up website surveys, people are constantly being asked to rate this and that.

Unfortunately, customers don’t see or understand how their feedback is resulting in meaningful improvements.

“Many customers submit themselves to [the survey] process, hoping for better experiences,” points out Gene Leganza, VP at Forrester, in an article about his 2020 predictions. “But firms have rewarded these customers poorly: Customer experiences haven’t gotten better for three years.”

The terrible survey experience is resulting in lower response rates and declining ROI, according to Forrester. The research firm is predicting that more companies will adopt customer intelligence tools and build in-house research platforms this year as a result of the survey backlash.

Unfortunately, re-sizing buttons and open-text boxes so they fit on mobile screens won’t increase consumer appetite for surveys. What’s needed is a complete re-imagining of the survey experience and an examination of the value we’re providing research participants.

Think back to the last time you took an online survey. Was the experience fun and rewarding, or did it seem like a super long interrogation? I’m willing to bet it was the latter.

“If you’ve ever taken an online survey, you know that most look like you’re taking a test,” Reach3 Insights CEO & Founder Matt Kleinschmit recently explained to MediaPost. “They’re oftentimes very long, there are endless multi-choice grids, radio buttons, and very clinical, formalized language.”

This approach is problematic, according to Kleinschmit, as it results in feedback that’s not as rich, candid or authentic.

“People get into what I refer to as test-taking mode. They start to think about their answers, they’re rationalizing their responses, they feel like they’re trying to provide the answers that the person who is giving the test wants to hear.”

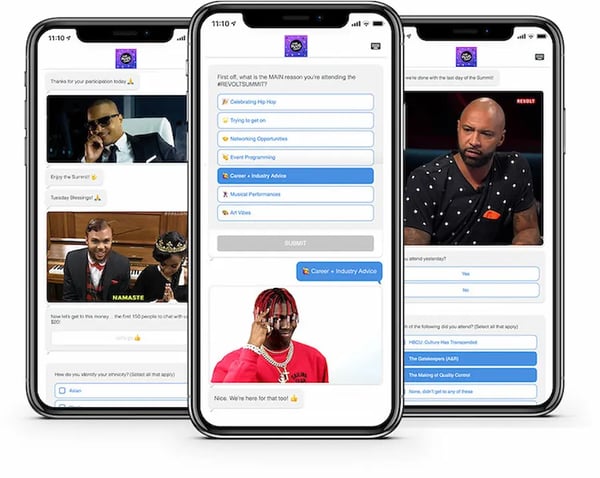

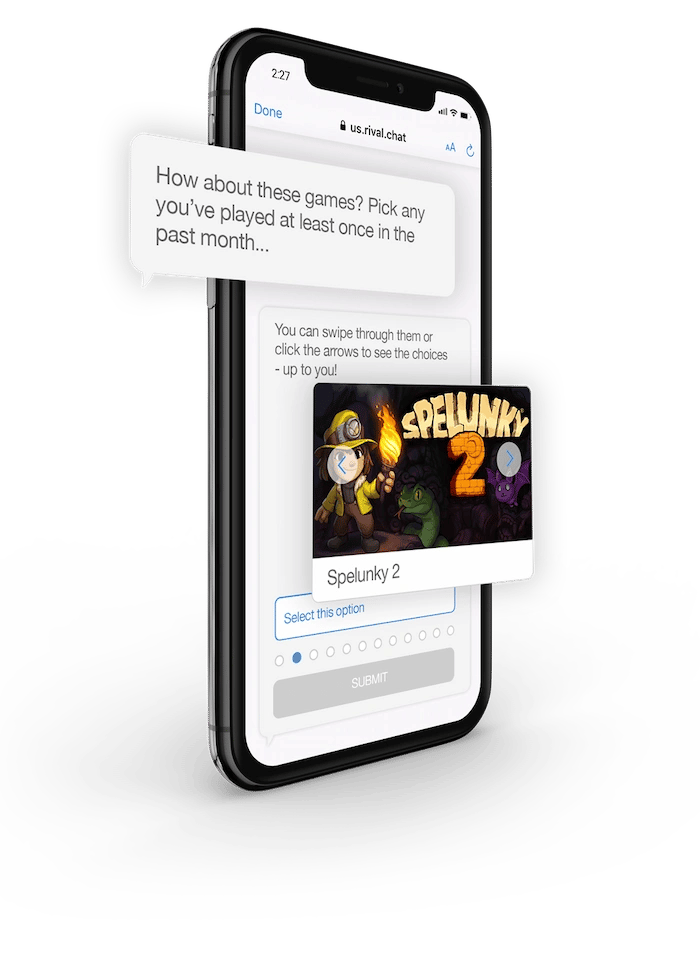

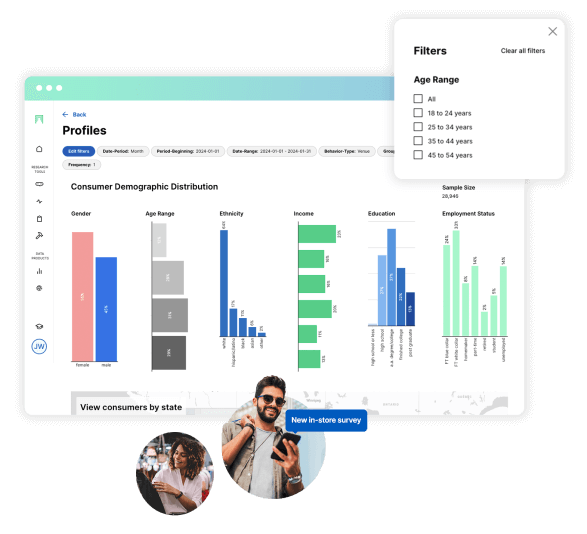

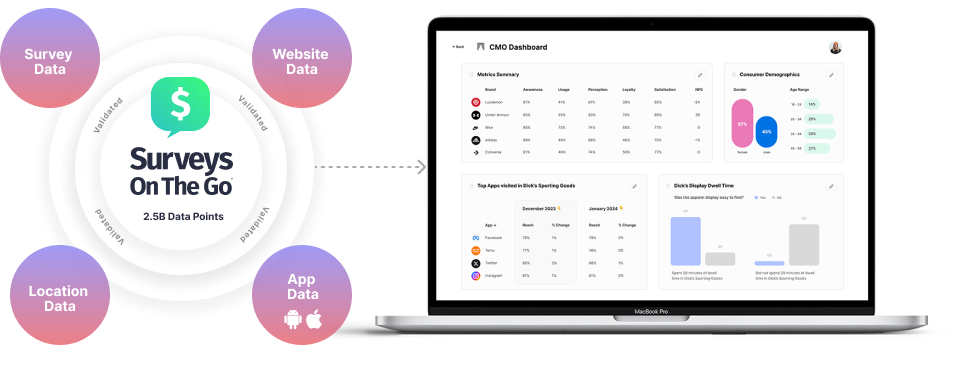

At Rival, we think the survey fatigue issue is so big that we created a new insight platform that, in many ways, is similar to traditional survey tools but is also significantly different.

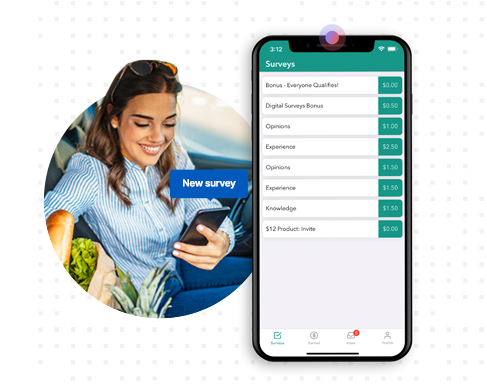

We don’t call our surveys “surveys.” We call them chats: conversational surveys hosted through messaging apps like Facebook Messenger, WeChat, WhatsApp or on mobile web browsers. Chat surveys are mobile-first, but they’re not just mobile surveys.

Yes, you can capture both quantitative and qualitative data through chats, but they’re different from mobile surveys in several ways:

- Everything about chats, from the language and tone used to the user experience, is conversational. If it sounds like a survey, it’s not really a chat!

- Chats are friendlier, more informal and more visual than mobile surveys

- They’re shorter and more iterative

- They use the smartphone’s capabilities to make it easy and seamless for research participants to submit photos, audio and selfie videos

Early research-on-research on chats shows that people are responding to chats as a new way of engaging with brands.

Just as important, chats provide the continuity researchers need to be confident in the data they get. In a parallel study we did last year, we saw that chats would generally yield the same business decisions as traditional surveys. Chat survey research also does not introduce any demographic skews if the sample source is the same. That said, given that chats work seamlessly with popular mobile messaging apps and social media, we see a huge potential for this technology to improve in-the-moment research and to reach Gen Zs and more ethnically diverse respondents.

Conclusion

It’s great to see mobile surveys moving from merely a market research trend into a mainstream, everyday tool for capturing customer insights. As we explored here, however, there’s a bigger opportunity to completely re-imagine surveys and deliver a respondent experience that’s more fun, more conversational and more human.

Kelvin Claveria (@kcclaveria) is Director of Demand Generation at Rival Technologies.

The Impact of Mobile Devices on Survey Responses: Why Question Types Matter More Than Ever.

With the proliferation of smartphones and tablets, it’s no surprise that more and more people are completing surveys on their mobile devices. But what does this mean for marketers, product managers, and market researchers?

In this article, we’ll explore how mobile devices have changed the survey landscape and why it’s crucial to design mobile-friendly surveys. We’ll dive into the various question types, discuss their effectiveness on mobile devices, and provide best practices for designing surveys that work well on screens of all sizes.

But first, let’s take a step back and consider how mobile devices have changed our interaction with technology. These devices have revolutionized how we communicate, consume content, and engage with brands in just a few short years. People spend more time on their phones than ever before, and this trend will continue.

As marketers and researchers, we must keep up with these changes and adapt our strategies accordingly. By understanding the impact of mobile devices on survey responses, we can design surveys that are more engaging, more effective, and ultimately more valuable for our businesses. So let’s dive in and explore the exciting world of mobile surveys!

The Mobile Survey Landscape

The mobile survey landscape is constantly evolving, and staying up-to-date with the latest trends and statistics is essential. According to Statista, there were 6.92 billion smartphone users worldwide in 2023, equivalent to 86.29% of the world’s population. This suggests that a large percentage of survey respondents are completing surveys on their mobile devices.

While mobile surveys offer many benefits, such as increased convenience and accessibility, they also present some unique challenges. One of the biggest challenges is the limited screen size of mobile devices. It’s crucial to design surveys that are optimized for smaller screens, with clear and concise questions and answer options.

In a survey by Google, 94% of respondents reported using their smartphones to take surveys.

Another challenge is user attention span. Mobile users often multitask and are easily distracted, so surveys must be engaging and easy to complete. If a survey takes too long or requires too much effort, respondents will likely abandon it before completing it.

Despite these challenges, mobile surveys can be highly effective when designed correctly. In fact, a study found that mobile surveys have a completion rate that is 10% higher than desktop surveys. Additionally, mobile surveys tend to have higher response rates and lower costs, making them an attractive option for brands.

Understanding Question Types

Understanding the different types of survey questions is crucial to designing effective mobile surveys. Let’s closely examine some of the most common question types and how they work on mobile devices.

Open-ended questions allow respondents to provide their own answers and can be useful for collecting qualitative data. However, they can be more challenging to answer on a mobile device, as they often require more typing and can be harder to read on a smaller screen. In contrast, closed-ended questions provide a set of predefined answer options, such as yes or no, and are often easier to answer on a mobile device.

Multiple-choice questions are a popular closed-ended question type, where respondents are given a set of answer options to choose from. These can be effective on mobile devices if the options are clear and easy to read. However, if the options are too lengthy or complex, they may be difficult to read on a small screen.

Rating scales are another common question type, where respondents are asked to rate their level of agreement or satisfaction on a scale of 1 to 5 or 1 to 10. Rating scales can be effective on mobile devices if they are designed to fit the smaller screen size, and the rating options are clearly labeled and easy to select.

Research by Quirk’s Media found that surveys optimized for mobile devices are completed 30-40% faster than those optimized for desktops.

It’s worth noting that some question types, such as matrix questions or grid questions, can be challenging to answer on a mobile device. These types of questions require respondents to evaluate multiple items, which can be difficult to do on a smaller screen.
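One way to handle a grid on a small screen is to show each row as its own question. The sketch below illustrates that idea only; the `MatrixQuestion` and `SingleQuestion` types and the sample wording are hypothetical and do not come from any particular survey platform.

```python
from dataclasses import dataclass


@dataclass
class MatrixQuestion:
    prompt: str        # shared stem of the grid
    items: list[str]   # grid rows
    scale: list[str]   # grid columns (shared answer options)


@dataclass
class SingleQuestion:
    text: str
    options: list[str]


def split_matrix(question: MatrixQuestion) -> list[SingleQuestion]:
    """Turn each grid row into its own single-select question."""
    return [
        SingleQuestion(text=f"{question.prompt} {item}", options=list(question.scale))
        for item in question.items
    ]


# Hypothetical example grid
grid = MatrixQuestion(
    prompt="How satisfied are you with our",
    items=["delivery speed?", "packaging?", "customer support?"],
    scale=["Very dissatisfied", "Dissatisfied", "Neutral", "Satisfied", "Very satisfied"],
)

for q in split_matrix(grid):
    print(q.text)
```

Each resulting question reuses the same answer scale, so respondents evaluate one item per screen instead of scanning a wide grid.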

Best Practices for Mobile-Friendly Surveys

Designing surveys that are mobile-friendly is crucial to maximizing completion rates and gathering accurate data. Here are some best practices for designing mobile-friendly surveys:

- Keep it concise: Mobile users have limited attention spans, so it’s essential to keep survey questions and answer options short and to the point. Avoid using long or complicated sentences, and consider breaking up longer questions into smaller, more manageable chunks.

- Use clear formatting: Use a clear and easy-to-read font, with a font size of at least 14 points, to ensure the text is readable on smaller screens. Use plenty of white space between questions and answer options to help respondents navigate the survey more easily.

- Optimize for different devices: Make sure your survey is optimized for different screen sizes and device types. Test your survey on different devices to ensure it looks and functions correctly on each one.

- Keep answer options consistent: Make sure that answer options are consistent throughout the survey. This will make it easier for respondents to understand the question and select the appropriate answer.

- Provide clear instructions: Provide clear and concise instructions at the beginning of the survey to help respondents understand how to complete the survey. Include instructions on how to navigate through the survey and how long it is expected to take.

- Use skip logic: Skip logic allows respondents to skip questions that are not relevant to them, which can help to reduce survey fatigue and improve completion rates. However, ensure that skip logic is used sparingly, as it can add complexity to the survey.

- Test and iterate: Testing and iterating are essential parts of survey design. Test your survey on a small sample of respondents before launching it to a larger audience, and use their feedback to make improvements.
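To make the skip logic point above more concrete, here is a minimal sketch of how a survey might decide which questions to show. The question ids, wording and `show_if` rules are invented for illustration; real survey tools configure this through their own interfaces.

```python
from typing import Callable, Optional

Answers = dict[str, str]

# (question id, question text, optional rule that decides whether to show it).
# All ids, texts and rules below are hypothetical.
Question = tuple[str, str, Optional[Callable[[Answers], bool]]]

QUESTIONS: list[Question] = [
    ("owns_pet", "Do you own a pet? (yes/no)", None),
    ("pet_food", "Which pet food brand do you buy most often?",
     lambda a: a.get("owns_pet") == "yes"),
    ("recommend", "Would you recommend our store? (yes/no)", None),
]


def questions_to_ask(answers: Answers) -> list[str]:
    """Return the ids of the questions a respondent should see."""
    return [
        qid
        for qid, _text, show_if in QUESTIONS
        if show_if is None or show_if(answers)
    ]


print(questions_to_ask({"owns_pet": "no"}))
print(questions_to_ask({"owns_pet": "yes"}))
```

A respondent who answers "no" to the first question never sees the pet food question, which shortens the survey for them. Keeping the number of rules small, as the tip suggests, makes the flow easier to test.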

Key Takeaways

Mobile devices have revolutionized how people interact with technology, including completing surveys. To maximize response rates and gather accurate data, it’s essential to design mobile-friendly surveys.

This means selecting the right question types and optimizing surveys for different screen sizes and devices.

Key takeaways from this blog post include:

- Mobile devices are an important platform for survey completion and should be taken into consideration when designing surveys.

- Closed-ended questions, such as multiple-choice questions and rating scales, tend to work better on mobile devices than open-ended questions.

- Mobile surveys should be concise, well-formatted, and optimized for different devices.

- Best practices for mobile surveys include keeping answer options consistent, providing clear instructions, and testing and iterating.

Brands and researchers can create engaging, effective surveys that provide valuable insights into consumer behavior and preferences by using a mobile-first approach and following these best practices.


How to choose between mobile surveys and online surveys

If you’re planning on venturing into the unknown, whether it’s a new business venture, new product, or even something a little more laid back, like launching a new event, getting the measure of potential ups and downs is vital; that’s where mobile surveys can be extremely important.

A well-constructed survey can give you valuable insight into key criteria such as:

- How much people are willing to pay for a product

- Where they usually buy a product

- How often they use a service

All of which is vital information for success.

There are two main ways to conduct a survey: mobile surveys and online surveys. What are the differences and which one is best for you?

Here are a few considerations that should help you decide which survey to choose.

Mobile Surveys vs. Online Surveys

What Is An Online Survey?

Online surveys are surveys that are created with an online survey platform and distributed online through a variety of survey distribution methods.

These methods include (but are not limited to):

- Assisted crowdsourcing

- Sending surveys via email

- Sharing your survey on your website or blog

What Is A Mobile Survey?

Mobile surveys are created with an online survey platform, optimized for and distributed exclusively on mobile devices.

These survey platforms create relationships with app publishers, delivering surveys inside mobile apps in exchange for in-app incentives like an extra life in a game or access to exclusive recipes in a cooking app.

There are pros and cons to different survey methods. Check out how the two stack up below.

Potential Reach

Over the past fifteen years, mobile phone use has expanded drastically. This makes surveying respondents on their mobile devices a great choice as a research tool because the potential reach is bigger than ever. What’s more, the nature of mobile device use inspires respondents to fill out mobile surveys more completely than their email-based online counterparts.

Surveys conducted via email invitation will also struggle to get through email filters, something that mobile surveys don’t need to worry about. A survey sent straight to a mobile device has a much higher open rate.

Survey Feedback

Online surveys offer really in-depth data which definitely helps decision-makers. However, the data sometimes takes a few hours, or even days, to be compiled meaning decisions can’t be made as quickly. Mobile surveys on the other hand usually offer real-time feedback, making data analysis a much more responsive task.

Target Audience

Surveys are ineffective if the data collected is from an inaccurate source; if your target market is 25-30, there isn’t much point in researching 65 to 70-year-olds! Luckily, both mobile and online surveys offer excellent targeting to make sure you’re reaching the right people with your questions.

Of course, you can choose to target certain demographics with your survey, usually at an extra cost. Mobile wins out here, too. Although both methods offer segmentation to help improve survey accuracy, mobile devices are usually unique to an individual, so there’s less room for error or the wrong user filling in the survey.

Survey Questions and Options

While mobile surveys offer up a great way to reach plenty of people who are more willing to answer your survey, the questions you can ask are definitely more limited than those available in an emailed survey. Why? Basically, mobile users are less inclined to read lengthy questions. So if you’re using mobile surveys, questions need to be short and to the point, whereas online surveys can deliver more in-depth questions.

There you have it, plenty of things to think about before you conduct your next survey. On the face of it, mobile surveys (which are definitely growing in favor) seem to offer more benefits. However, it’s safe to say either online or mobile surveys are a valuable, integral part of any market research depending on the audience you are trying to reach and what your survey needs are.


- Apr 12, 2022

Mobile Survey: Everything You Need To Know

Updated: Mar 26

Mobile phones have become a key part of everyone's daily lives and are used for far more than making phone calls and texting, from uploading videos to social media and running online searches to making contactless payments. So, it is crucial to tap into this for feedback and research by conducting a mobile survey to reach and engage with your key audience.

Table of contents:

What is a mobile survey?

What is an advantage of mobile surveys?

How are mobile surveys used?

9 tips in using a mobile survey

[Disclosure: This post contains affiliate links, meaning we get a commission if you decide to make a purchase through these links at no additional cost to you.]

A mobile survey is one where a targeted group of individuals answers survey questions using a mobile device, whether a mobile phone or a tablet, with the survey optimised to display on mobile devices (an approach otherwise known as Mobile First).

Mobile surveys are accessible to a wide audience that is likely to respond, as the number of mobile users continues to grow worldwide and people use these devices for many purposes, such as social media or taking pictures.

Accessibility in reaching a large audience is one advantage of mobile surveys, but there are many more benefits of using a mobile survey, which are highlighted below.

Enables you to access hard to reach groups

As mentioned earlier, a growing number of people use mobile devices, so you are able to reach younger audiences who are more receptive to technology. Mobile surveys make it easier to contact this audience, which is more difficult to reach via desktop surveys.

Adapts to all screen sizes

Following on from the above point, mobile surveys can be used on all screen sizes, provided they are optimised to display on mobile devices. Standard online surveys, by contrast, have tended to be more suitable for laptops or desktop computers and have struggled to appear correctly on mobile devices, where certain questions, especially those with large visuals, may not work properly.

Easy to use and distribute

Mobile surveys are great for capturing feedback from respondents while they are on the move. This type of survey is compatible with any mobile device, so individuals taking part can complete it at their own convenience and don’t need to be at a computer. Distribution is easy as well, via SMS, email, website pop-up, social media, QR codes and push notifications.

Better response rates

As people carry around mobile phones all the time, it makes it a very convenient way for the surveys to be completed at any time as well as reaching all audiences thus a higher response rate compared to more standard online surveys. This is particularly true if you can make these surveys more engaging with the tools available and even include your own branding, so these respondents have a better user experience when answering your questions.

Faster responses

As mobile surveys are much shorter than traditional surveys, you tend to get a better response as mentioned above but with the additional benefit that you can collect and analyse results in real time rather than waiting, so you can take action sooner.

There are a variety of ways mobile surveys are utilised, whether that is for market research or feedback, below are just some examples of how mobile surveys are used by businesses and organisations.

Post product feedback

A short time after purchase, customers are asked via a downloaded app, email or SMS (mobile survey invite) to give their thoughts on and review of the new product.

Restaurant and food delivery order feedback

Customers are prompted to give their feedback after mobile payment through a QR code or sent a message regarding the meal, service and delivery time.

Research trackers

For continuous research trackers of consumer product or service brands, mobile surveys are an ideal way to improve response rates and receive results quicker over time, particularly if you are trying to reach younger audiences or people in remote areas.

Ad hoc surveys

For one-off surveys such as concept tests, pricing research, new product development and ad evaluations, using mobile surveys is great for the same reasons mentioned above: reaching all consumers and getting quicker results.

In-store experience

With the use of QR codes or downloaded apps, you can ask customers for feedback while they are in the store on their overall experience, their reasons for visiting and other aspects of their shopping experience, and have them tag their location.

Video diaries

Respondents record videos of their experience with a brand, such as product reviews, so you can see the products, the shop and a copy of their receipts, which gives context to what they are saying.

Website feedback

Pop-ups appear when people visit a website on their mobile phone and ask for their feedback about the website: whether it is easy to navigate, its visual appearance, their reasons for visiting and how they heard about it.

Customer service feedback

This could be based on your experience in-store or over the phone with a sales representative, which tend to be post purchase feedback on the overall service you received.

The following are 9 tips to keep in mind when using a mobile survey:

1. Keep the overall length of the survey short

People have a limited attention span especially when using their mobile for a survey, so the ideal length for this type of survey tends to be 3-4 minutes but should be no more than 10 minutes, otherwise you will have many people dropping out.

2. Questions should be short and simple

Questions should be easy to understand and concise especially as there is not much room available on a mobile screen.

3. Ensure the surveys are touch screen enabled

You need to ensure the surveys are touch screen enabled in order to be suitable for different screen sizes.

4. Minimise the amount of scrolling

Limit the amount of scrolling the respondents will need to do in order to answer each question.

5. Avoid large images

It is best to avoid large images; otherwise, respondents will not be able to see the image clearly in portrait view without scrolling in every direction, and the file size may prolong download time. If you are having difficulty showing an image clearly, add an instruction at the start of the question asking the respondent to rotate their mobile screen to landscape to see the picture more clearly.

6. One question should appear at a time

Keep it limited to one question at a time as you cannot get away with multiple questions on one mobile screen as you may do with a survey on desktop.

7. Limit the number of open-ended questions

As these questions invite respondents to give their opinion in their own words, they can take some time to answer, so it’s best to keep the number down to 1 or 2 open-ended questions.

8. Keep away from matrix style questions if you can

It's best to stay away from matrix style questions if possible because these questions may work well on desktop computers but these large grids are not compatible for mobile screen sizes, so it’s best to break this down into smaller chunks for the respondent to digest this information and respond.

9. Make sure to test the mobile survey

It’s best practice to test a mobile survey a number of times before you launch the survey. You can spread the task of testing amongst your family, friends and colleagues to ensure your survey works properly and identify any issues early on to resolve.
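Some of the tips above can be checked automatically before you hand the survey to testers. The sketch below is only an illustration: the 10-minute limit and the cap of 2 open-ended questions come from tips 1 and 7, but the per-question timings are placeholder assumptions and the `Question` type is hypothetical.

```python
from dataclasses import dataclass

# Assumed average answer times in seconds. These are placeholders,
# not figures from this article.
SECONDS_PER_TYPE = {"single_choice": 15, "rating": 15, "open_ended": 60, "matrix": 60}

MAX_MINUTES = 10     # upper limit from tip 1
MAX_OPEN_ENDED = 2   # limit from tip 7


@dataclass
class Question:
    text: str
    kind: str  # one of the keys in SECONDS_PER_TYPE


def check_mobile_survey(questions: list[Question]) -> list[str]:
    """Return a list of warnings; an empty list means no issues were found."""
    warnings = []
    minutes = sum(SECONDS_PER_TYPE[q.kind] for q in questions) / 60
    if minutes > MAX_MINUTES:
        warnings.append(f"Estimated length {minutes:.1f} min exceeds {MAX_MINUTES} min")
    open_ended = sum(1 for q in questions if q.kind == "open_ended")
    if open_ended > MAX_OPEN_ENDED:
        warnings.append(f"{open_ended} open-ended questions; keep to {MAX_OPEN_ENDED} or fewer")
    if any(q.kind == "matrix" for q in questions):
        warnings.append("Matrix question found; consider splitting it into single questions")
    return warnings


draft = [
    Question("Why did you visit today?", "open_ended"),
    Question("What could we improve?", "open_ended"),
    Question("Any other comments?", "open_ended"),
    Question("Rate each department", "matrix"),
]
for warning in check_mobile_survey(draft):
    print(warning)
```

A check like this does not replace testing on real devices with real people, but it can catch obvious problems early.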

Hope you enjoyed reading this article and if you are interested in running your own mobile survey, why not check out the 5 best survey maker platforms to consider using which enable you to do mobile surveys easily and much more.

- Short Report

- Open access

- Published: 08 July 2013

Use of mobile devices to answer online surveys: implications for research

John A Cunningham, Clayton Neighbors, Nicolas Bertholet & Christian S Hendershot

BMC Research Notes volume 6, Article number: 258 (2013)

There is a growing use of mobile devices to access the Internet. We examined whether participants who used a mobile device to access a brief online survey were quicker to respond to the survey but also less likely to complete it than participants using a traditional web browser.

Using data from a recently completed online intervention trial, we found that participants using mobile devices were quicker to access the survey but less likely to complete it compared to participants using a traditional web browser. More concerning, mobile device users were also less likely to respond to a request to complete a six week follow-up survey compared to those using traditional web browsers.

Conclusions

With roughly a third of participants using mobile devices to answer an online survey in this study, the impact of mobile device usage on survey completion rates is a concern.

Trial registration

ClinicalTrials.gov: NCT01521078

Introduction

A common means of recruiting participants for Internet-based interventions trials, particularly in college populations, is to use mass invitations through email lists (e.g.,[ 1 – 3 ]). In this procedure, a list of student email addresses is obtained from the college registrar and students are sent an invitation to participate in a research trial that contains a link to a web page which describes the trial and asks a series of questions regarding the behavior under study. The strengths of this method include the speed with which a large sample can be recruited and the ability to proactively engage participants who exhibit the risk behavior under study but who would not normally seek help (e.g., heavy drinking college students).

This method of recruitment has been used fairly extensively over the last decade. But will it remain a profitable way of recruiting participants with the widespread adoption of mobile devices with Internet and email capabilities (e.g., iPhone, Blackberry, Android device, iPad)? A Pew Internet Project survey conducted in 2012 found that 66% of US 18–29 year-olds owned smart phones[ 4 ]. One hypothesis is that this prevalence of smart phones and other mobile devices could be an advantage to participant recruitment through email invitation because potential participants can access their email quickly from anywhere. An opposing hypothesis concerns how content is displayed on a small screen and how easy it is to answer a survey that has been optimized for a computer screen. This alternate hypothesis posits that mobile devices are a disadvantage because fewer participants who use these devices would complete the survey and/or the online intervention under study. This research note examines both of these hypotheses using results from a recent online intervention trial which employed an email list as its recruitment method.

Undergraduates at an American university (N = 10,000) received an email invitation to participate in an online survey of alcohol use[ 5 ] in the spring of 2012. The email invitation offered a $5 online gift card in exchange for completing a brief screening survey and included a hyperlink to the web survey. This brief screener served to identify heavy drinkers who would then be enrolled into the intervention trial. Students who accessed the survey were directed to an online consent form. Those who provided informed consent proceeded to the brief screening survey, which consisted only of demographic questions and a 3-item alcohol screener (the AUDIT-C,[ 6 ]). The AUDIT-C items assess frequency of drinking, usual quantity of drinking, and number of days on which participants consumed 5 or more drinks (a 3-month reference frame was applied for AUDIT-C questions). Upon completing the survey, participants who met a pre-defined cutoff for heavy drinking (AUDIT-C score of 4 or greater) were asked to agree to a follow-up survey in 6 weeks, whereas those with scores less than 4 were thanked for their participation and screened out of the subsequent trial.

Heavy drinkers who agreed to be contacted for a subsequent survey were randomized to receive immediate access to an online alcohol use intervention program, or to receive no access. Those randomized to the access condition received a prompt to view a website, Check Your Drinking University (CYDU,[ 7 ]). The CYDU program, described in more detail elsewhere[ 5 ] provides users with brief personalized feedback about their alcohol use, including normative feedback specific to age, sex and geographic region. This web-based feedback program, tailored to undergraduates, is based on a similar screening program shown to reduce alcohol use in a community sample of adults[ 8 ].

Students who were offered access to the CYDU program could either agree (in which case they were immediately redirected to the site), or decline (in which case they were not redirected). Six weeks following the screening questionnaire, participants in both experimental conditions received an email link with a request to complete a follow-up survey of alcohol use. Follow-up drinking rates were evaluated to examine the potential impact of providing students with open access to web-based self-help materials on subsequent drinking behavior. Results pertaining to the intervention component of the study are reported elsewhere[ 5 ]. Upon each instance of survey access, the web-based data collection software recorded information on the web browser type used by participants to complete the survey. Of interest for the current analyses was the proportion of individuals who used a mobile device to access the screening survey, as well as associations of mobile device use with survey completion rates, and retention in the study during the 6-week follow-up. Both the baseline and follow-up surveys, as well as the CYDU, can be viewed on a mobile device. However, the surveys and CYDU have not been optimized for viewing on a mobile device platform.

Ethics and consent procedure

The study was approved by the standing research ethics board of the Centre for Addiction and Mental Health (#152/2011) and is in compliance with the Helsinki declaration for research involving human participants. Informed consent was obtained using an online consent form that the participant had to actively agree to and all participants were over the age of 16.

Survey completion rates

Of 10,000 email invitations, 1768 students (17.7%) responded by accessing the survey link. Of these participants, 89% (1575) provided informed consent and accessed the screener questions. The vast majority (98.4%) of those who accessed the screener questions completed and submitted the survey. Attesting to the brevity of the survey, 92.8% of survey completers submitted the completed survey within 2 minutes of initial access.

Use of mobile devices for survey access

Web browser signatures collected during survey submissions were used to identify instances where survey access occurred via mobile device web browsers (e.g., iPhone, iPad, Android, Blackberry), versus traditional web browsers. Of the 1768 participants who accessed the survey, 514 (29.1% of responders) did so using mobile devices. The most common mobile device type used was the iPhone (reflecting 55% of submissions from mobile devices). Mobile phones reflected the majority of mobile devices, although a proportion used tablet software (iPad, 9% of mobile device responses). Note that the analyses were also re-conducted with the iPad coded as a traditional web browser. However, there was no difference in the pattern of results. Unfortunately, there were not enough participants using iPads to allow for a separate category.
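The paper does not publish the rules used to read browser signatures, so the following is only a minimal sketch of how such a classification might look. The token list, the function names and the `ipad_as_mobile` switch (mirroring the re-analysis with the iPad coded as a traditional browser) are all assumptions made for illustration.

```python
# Hypothetical token list; the authors' actual matching rules are not given in the paper.
MOBILE_TOKENS = ("iphone", "ipad", "ipod", "android", "blackberry")


def classify_device(user_agent: str, ipad_as_mobile: bool = True) -> str:
    """Return 'mobile' or 'traditional' for a browser User-Agent string."""
    ua = user_agent.lower()
    # Optionally treat the iPad as a traditional browser, as in the sensitivity check.
    if "ipad" in ua and not ipad_as_mobile:
        return "traditional"
    return "mobile" if any(token in ua for token in MOBILE_TOKENS) else "traditional"


def mobile_share(user_agents) -> float:
    """Fraction of User-Agent strings classified as mobile (0.0 for an empty input)."""
    labels = [classify_device(ua) for ua in user_agents]
    return labels.count("mobile") / len(labels) if labels else 0.0


IPHONE_UA = "Mozilla/5.0 (iPhone; CPU iPhone OS 6_0 like Mac OS X) AppleWebKit/536.26 Mobile/10A5376e Safari/8536.25"
IPAD_UA = "Mozilla/5.0 (iPad; CPU OS 6_0 like Mac OS X) AppleWebKit/536.26 Mobile/10A5376e Safari/8536.25"
DESKTOP_UA = "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 Chrome/27.0.1453.94 Safari/537.36"

print(classify_device(IPHONE_UA), classify_device(DESKTOP_UA))
```

Substring matching of this kind is crude, which is consistent with the limitation the authors acknowledge about categorizing devices from browser signatures.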

Primary analyses examined participation outcomes as a function of whether participants accessed the surveys using a mobile device. Of participants who accessed the survey and proceeded to the consent form, mobile device users were significantly less likely than traditional users to complete the form by indicating their agreement to participate (83.1% vs. 91.9% respectively; χ²(1) = 30.20, p < .001). By extension, mobile device users were also significantly less likely to complete the entire survey (80.4% vs. 90.7% respectively; χ²(1) = 35.12, p < .001). Although the vast majority of those providing informed consent completed the survey, mobile device users were nonetheless more likely than non-users to terminate the survey before completion (3.3% of users vs. 1.0% of non-users; χ²(1) = 10.73, p < .005). An examination of survey completion time, conducted after truncating response time at 9 minutes (to account for browsers that were left open for extended periods following the initial access, which excluded 52 participants from the analysis), revealed that mobile device users took marginally longer (1.23 minutes, SD = .95) than non-users (1.05 minutes, SD = .36) to complete the survey; this difference was statistically significant (F (1) = 29.16, p < .001). Most participants logged onto the baseline survey within the first 24 hours of the email invitation being sent out (80%, 1421). Of participants who responded in the first 72 hours (n = 1673), mobile device users logged on significantly faster on average (M = 484 minutes, SD = 706 minutes) than non-mobile users (M = 651 minutes, SD = 873 minutes), F (1, 1671) = 14.31, p < .001.

Follow-up survey access rates

A total of 425 participants who met heavy drinking criteria agreed to be contacted for the 6-week follow-up survey. Of these, 29.6% had used a mobile device to access the baseline survey. A total of 294 heavy drinkers completed the follow-up survey at 6 weeks (69% of those who agreed to the 6-week follow-up). Notably, those who used a mobile device to complete the baseline screening questionnaire were significantly less likely to complete the 6-week follow-up survey (57.9% follow-up completion rate) compared to those who used a traditional operating system (73.9% follow-up completion rate), χ²(1) = 10.61, p = .002.
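As a rough check on the follow-up comparison, the test statistic can be recomputed from a 2×2 table. The cell counts below are not reported in the paper; they are reconstructed from the published percentages (29.6% of 425 is about 126 mobile users, of whom 57.9% is about 73 completers), so treat them as an assumption. The helper `chi_square_2x2` is a hypothetical name for a plain Pearson chi-square without continuity correction.

```python
def chi_square_2x2(a: int, b: int, c: int, d: int) -> float:
    """Pearson chi-square (no continuity correction) for the table [[a, b], [c, d]]."""
    n = a + b + c + d
    return n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))


# Counts reconstructed from the reported percentages (assumed, not published).
mobile_done, mobile_total = 73, 126   # 73 / 126 = 57.9%
trad_done, trad_total = 221, 299      # 221 / 299 = 73.9%

chi2 = chi_square_2x2(
    mobile_done, mobile_total - mobile_done,
    trad_done, trad_total - trad_done,
)
print(f"chi-square(1) = {chi2:.2f}")  # prints: chi-square(1) = 10.61
```

Under these reconstructed counts the statistic agrees with the reported χ²(1) = 10.61, and the two groups sum to the 294 completers out of 425.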

Rates of agreement to view the web-based intervention

Of participants in the intervention condition (n = 211), 62% agreed to be sent to a website that would let them see how their drinking compares with other university students. There was no significant difference ( p > .05) between those who used a mobile device (57.1% agreed) and those who used a traditional operating system (64.9%) on agreement to be redirected to the website. Participants who completed the 6-week follow-up (n = 294) and were in the intervention condition (n = 151) were asked if they had tried the CYDU website. There were no significant differences ( p > .05) between those who used a mobile device (17.6%) and those who used a traditional operating system (26.5%) on stating that they had tried the website.

Using data from a randomized controlled trial evaluating an online alcohol intervention conducted with a sizable college student sample, we examined the impact of using mobile devices when making initial contact with a web-based intervention. This method of recruitment is distinct from another growing line of research – the use of mobile devices to collect momentary ecological response data over a period of time[ 9 ]. The current analysis was concerned with the impact of mobile devices on the completion of web-based surveys optimized for a traditional web browser (i.e., on a desktop or laptop computer). There were two hypotheses explored – that mobile device use would lead to quicker access and that mobile device use would lead to poorer completion rates. Both of these hypotheses were supported. Participants who employed a mobile device accessed the survey an average of nearly three hours earlier, which was approximately 25% faster than those who employed a standard computer internet service. However, once they had accessed the survey, participants who employed a mobile device were less likely to complete the survey (despite its brevity) than those who used a traditional operating system. More importantly (at least for intervention trial studies), those participants who used a mobile device to respond to the survey at baseline were more than 20% less likely to complete the six-week follow-up (57.9% vs. 73.9%) than participants employing a regular computer Internet service.
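The relative comparisons in this paragraph can be rechecked from the figures reported in the Results. The sketch below only does that arithmetic; `relative_difference` is a hypothetical helper, and the inputs are the published means and completion rates.

```python
def relative_difference(value: float, reference: float) -> float:
    """Shortfall of `value` relative to `reference`, as a fraction of `reference`."""
    return (reference - value) / reference


# Mean minutes to first access: mobile (484) vs. non-mobile (651).
access_gain = relative_difference(484, 651)
# Six-week follow-up completion (%): mobile (57.9) vs. traditional (73.9).
followup_drop = relative_difference(57.9, 73.9)

print(f"earlier access: {651 - 484} minutes ({access_gain:.1%} faster)")
print(f"lower follow-up completion: {followup_drop:.1%}")
```

The access gap works out to 167 minutes, a little under three hours, and the follow-up shortfall to roughly 22% in relative terms (16 percentage points).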

These results may be even more pronounced in other studies, because the survey employed in this trial was only five items long and most participants completed it in less than two minutes. Other online intervention trials normally include considerably longer surveys. It is possible, though, that participants using a mobile device who were confronted by a longer survey would simply move to a computer to respond to it. Our primary concern about using a mobile device to complete this type of research trial was that it would make participants less likely to complete the intervention component of the trial (in this case an online personalized feedback intervention for problem drinkers). This concern did not gain clear support. However, this was likely because so few participants who were provided access to the online intervention actually said they tried it that any difference in completion rates between those using a mobile device and those using a traditional device would need to be very large to reach statistical significance.

There were several limitations to these analyses. Primarily, the categorization of participants’ mobile device was rather crude and based on information available in web browser signatures. We made the assumption that iPads should be categorized with other mobile devices (e.g., iPhone, Blackberry) rather than with traditional web browsers. However, the look and feel of completing a survey optimized for a computer screen would probably be easier to respond to using an iPad than on other mobile devices. Unfortunately, there were not enough participants using an iPad in the current sample to allow for a more fine grained analysis. In addition, it would be worthwhile replicating these findings using a different study sample within a research study that employed longer surveys in order to confirm these results. Nevertheless, this study does point to a growing limitation with the email list method of participant recruitment as mobile device use becomes more common. Further, it speaks to the need to consider the different platforms that participants use to reply to the online surveys in future research studies. Based on the results of the current study, attempts to maximize participant engagement and retention in web-based intervention studies by limiting access from mobile devices may be warranted. Potential strategies could include programming surveys or intervention materials so as to preempt access via mobile device software, or restricting participant contact to those times of day when respondents are most likely to have access to a desktop computer. Alternatively, some online survey material could be optimized for completion on a mobile device and the participant could be directed to this version of the survey if the online survey collection program detects connection from a mobile device web browser.

This paper explored the impact of mobile devices on the completion of web-based surveys. It was determined that while mobile device users accessed a web-based survey significantly faster than their counterparts using traditional web browsers, participants who had accessed the survey on their mobile device were less likely to complete it, as well as its subsequent follow-up. These findings highlight important limitations with web-based surveys, particularly with regard to retention and engagement of participants using mobile devices.

1. Bingham CR, Barretto AI, Walton MA, Bryant CM, Shope JT, Raghunathan TE: Efficacy of a web-based, tailored, alcohol prevention/intervention program for college students: initial findings. J Am Coll Health. 2010, 58: 349-356. 10.1080/07448480903501178.

2. Neighbors C, Lewis MA, Atkins DC, Jensen MM, Walter T, Fossos N, Lee CM, Larimer ME: Efficacy of web-based personalized normative feedback: a two-year randomized controlled trial. J Consult Clin Psychol. 2010, 78: 898-911.

3. Neighbors C, Lee CM, Atkins DC, Lewis MA, Kaysen D, Mittmann A, Fossos N, Geisner IM, Zheng C, Larimer ME: A randomized controlled trial of event-specific prevention strategies for reducing problematic drinking associated with 21st birthday celebrations. J Consult Clin Psychol. 2012, 80: 850-862.

4. Two-thirds of young adults and those with higher income are smart phone owners. http://pewinternet.org/Reports/2012/Smartphone-Update-Sept-2012.aspx

5. Cunningham JA, Hendershot CS, Murphy M, Neighbors C: Pragmatic randomized controlled trial of providing access to a brief personalized alcohol feedback intervention in university students. Addict Sci Clin Pract. 2012, 7: 21. 10.1186/1940-0640-7-21.

6. Dawson DA, Grant BF, Stinson FS, Zhou Y: Effectiveness of the derived Alcohol Use Disorders Identification Test (AUDIT-C) in screening for alcohol use disorders and risk drinking in the US general population. Alcohol Clin Exp Res. 2005, 29: 844-854. 10.1097/01.ALC.0000164374.32229.A2.

7. Check Your Drinking University. http://www.CheckYourDrinkingU.net

8. Cunningham JA, Wild TC, Cordingley J, van Mierlo T, Humphreys K: A randomized controlled trial of an internet-based intervention for alcohol abusers. Addiction. 2009, 104: 2023-2032. 10.1111/j.1360-0443.2009.02726.x.

9. Kuntsche E, Labhart F: Investigating the drinking patterns of young people over the course of the evening at weekends. Drug Alcohol Depend. 2012, 124: 319-324. 10.1016/j.drugalcdep.2012.02.001.

Acknowledgements

John Cunningham was supported as the Canada Research Chair in Brief Interventions for Addictive Behaviours during the conduct of this research.

Author information

Authors and affiliations.

Centre for Mental Health Research, The Australian National University, Canberra, Australia

John A Cunningham

Centre for Addiction and Mental Health, 33 Russell St, Toronto, M5S 2S1, Canada

John A Cunningham & Christian S Hendershot

Department of Psychology, University of Houston, 126 Heyne Bldg, Houston, 77204-5022, USA

Clayton Neighbors

Alcohol Treatment Center, Department of Community Medicine and Health, Lausanne University Hospital, Lausanne, Switzerland

Nicolas Bertholet


Corresponding author

Correspondence to John A Cunningham .

Additional information

Competing interests.

The authors declare that they have no competing interests.

Authors’ contributions

JC, CN, NB, and CH contributed to the design of the study. JC conducted the analysis and wrote the draft of the manuscript. All authors read and approved the final manuscript.

Rights and permissions

This article is published under license to BioMed Central Ltd. This is an Open Access article distributed under the terms of the Creative Commons Attribution License ( http://creativecommons.org/licenses/by/2.0 ), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


About this article

Cite this article.

Cunningham, J.A., Neighbors, C., Bertholet, N. et al. Use of mobile devices to answer online surveys: implications for research. BMC Res Notes 6 , 258 (2013). https://doi.org/10.1186/1756-0500-6-258


Received : 29 December 2012

Accepted : 03 July 2013

Published : 08 July 2013

DOI : https://doi.org/10.1186/1756-0500-6-258


- Brief intervention

- Mobile device

BMC Research Notes

ISSN: 1756-0500


Mobile Research

Mobile research is a rapidly growing discipline of researchers who focus primarily on mobile-based research studies.


Content Index

- What is Mobile Research?

Applications of Mobile Research

Factors affecting mobile research.

- Advantages of Mobile Research

Mobile Research Survey Tool


Most of the 2000s were spent on static devices like desktops. The world has transformed since then, and so has the use of digital platforms: these days every person is online “on the go”. Almost 95% of US citizens own a mobile phone and 90% of them have mobile internet access. Moreover, smartphone usage has doubled in the past 3 years, with October 2016 marking the first time in history when mobile access through smartphones surpassed desktops.

With the rapid growth of mobile internet access, there is an unprecedented opportunity to tap into this newly assembled base of users to conduct focused and more precise mobile research.

So, what is mobile research?

Mobile research is a rapidly growing discipline of researchers who focus primarily on mobile-based research studies to tap into the flexibility, customizability, accuracy, and localization to get faster and more precise insights.

It’s easy, convenient and straightforward to capture data from anywhere and anytime as it uses the benefits of “mobile” technology to conduct effective “research”.

This research type can be used in three major ways:

- Recruiting a panel that will take a survey using their mobile platforms.

- Appointing interviewers to collect responses using tablets or smartphones.

- Collecting data without internet (offline mobile surveys).

In the first method, you can arrange a panel that would respond to your surveys and give you precise insights. As a panel consists of selected, filtered and handpicked individuals who already qualify for the research, asking them questions and getting insights is not just easier but also far more accurate and detailed.

The second method is applied on site for B2B or B2C purposes where you appoint interviewers or in most cases employees, to collect data on mobile devices. This method is very effective during concerts or live events where face to face data collection is possible for understanding user experience and making improvements.

Another way of conducting this research is by collecting data from locations where internet isn’t available. In such cases, the data collected offline will get automatically synced once internet becomes accessible.
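The offline method can be pictured as a local buffer that is flushed once a connection returns. The sketch below is an illustration only and is not QuestionPro's implementation: the class name `OfflineResponseQueue` and the `is_online` and `upload` callbacks are hypothetical stand-ins for whatever connectivity check and upload call a real app would use.

```python
import json
from typing import Callable, Dict, List


class OfflineResponseQueue:
    """Hypothetical local buffer for survey responses collected without a connection."""

    def __init__(self) -> None:
        self._pending: List[str] = []

    def record(self, response: Dict[str, str]) -> None:
        # Serialize immediately so later changes to the dict cannot alter the stored answer.
        self._pending.append(json.dumps(response, sort_keys=True))

    def sync(self, is_online: Callable[[], bool], upload: Callable[[str], bool]) -> int:
        """Upload pending responses in order while online; return how many were sent."""
        sent = 0
        while self._pending and is_online():
            if not upload(self._pending[0]):
                break  # keep the response queued for the next attempt
            self._pending.pop(0)
            sent += 1
        return sent

    def __len__(self) -> int:
        return len(self._pending)


queue = OfflineResponseQueue()
queue.record({"q1": "yes"})
queue.record({"q1": "no"})
uploaded: List[str] = []
print(queue.sync(lambda: False, lambda payload: True))  # offline: nothing is sent
print(queue.sync(lambda: True, lambda payload: uploaded.append(payload) or True))
```

A response is removed from the buffer only after its upload succeeds, so a dropped connection mid-sync leaves the remaining answers queued.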

In mobile research, all respondents take part from a mobile device. This presents researchers with both opportunities and restrictions. Keep the following factors in mind, as they affect the mobile research process:

Scrolling can get on people’s nerves. Respondents do not mind switching pages to answer any number of questions, but they do mind long scrolling surveys. This can reduce the number of people completing the survey and can be a decisive factor for mobile research. If your survey has many questions, increase the number of pages rather than the length of a single page, which would increase the scrolling time.

This can also be done by evaluating the questions that you intend to add in this research survey. Removing all the redundant questions will not only shorten the surveys but will also increase the effectiveness of the survey as a shorter survey will be easy and less time consuming to fill out.
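One way to apply the paging advice is to split a question list into short pages before rendering. This is a minimal sketch, not a feature of any particular survey tool; the function name `paginate` and the default of three questions per page are assumptions chosen for illustration.

```python
from typing import List, Sequence


def paginate(questions: Sequence[str], per_page: int = 3) -> List[List[str]]:
    """Split questions into consecutive pages of at most `per_page` items."""
    if per_page < 1:
        raise ValueError("per_page must be at least 1")
    return [list(questions[i:i + per_page]) for i in range(0, len(questions), per_page)]


survey = [f"Question {n}" for n in range(1, 8)]
for number, page in enumerate(paginate(survey), start=1):
    print(f"Page {number}: {page}")
```

With seven questions and three per page, this yields two full pages and a final page with one question, so no single page needs long scrolling.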

For any kind of survey, question types are important, and for mobile surveys they matter even more. Create questions that respondents can easily answer from their mobile devices. Multiple choice questions are among the most popular question types for a mobile-friendly survey. You may also want to avoid open-ended or descriptive questions because of the limitations that come with the size of the mobile screen. Instead of asking one long question, you can split it into several multiple choice questions, which may get you better results.

Apart from the question types in general, you may want to take care of the answer options too. Offering positive options first and then the negative options will affect the kind of answers you get. For mobile devices, horizontal scrolling should be strictly restricted as it can get very laborious for respondents to do that.

Loading times for videos and images differ between laptops and mobile devices. Most mobile devices run on data from phone networks rather than ethernet or wi-fi, so videos can take longer to load on a phone than on a laptop.

Keep the number of videos in a mobile survey to the minimum you need, so that loading delays do not reduce the number of people who complete it.

A few other factors that affect mobile research:

- Mobile-compatible logos.

- Mobile-friendly fonts and text.

- An option for full-screen coverage that eliminates interruptions from other applications.

Advantages of Mobile Research:

As everyone is on mobile devices these days, mobile research captures respondents’ attention more promptly than other means. With various modes available, such as surveys, mobile applications and GPS, getting in touch with your respondents becomes very easy. If the survey has direct, relevant questions, survey makers can get faster and more accurate answers via these research surveys.

In case a survey requires the respondents to fill in specific details, uploading images or recording voice notes or collecting information in a diary format is easier using mobile surveys. This is the main reason why these surveys are more adaptable than the traditional ones.

Quicker survey completion time, higher rate in collecting data, tracking of the respondent’s geo location etc. are other reasons that mobile research surveys are better.

These research surveys can be made interactive by asking the respondents to submit videos or images.

All QuestionPro mobile research survey tools offer 100% mobile compatible surveys. All the surveys created using our online platform are by default mobile compatible, with no display restrictions regardless of the type of questions (standard or advanced).

QuestionPro also offers an offline mobile application to conduct these surveys in locations where an internet connection isn’t available. In case the responses are collected offline, they can be conveniently synced when the network is accessible again. Due to this integral feature, you can get in touch with respondents that you usually wouldn’t be able to reach with the other survey tools in the market.

Along with these offerings, QuestionPro also provides 250+ mobile friendly survey templates.


5 Major Benefits of Mobile Surveys for Market Research

Benefits of Mobile Survey

Today, around 7.26 billion people own mobile devices. It’s a multi-purpose device from which we shop, consult our bank accounts, make restaurant reservations, interact with other people or consume all kinds of multimedia content. This figure is expected to grow steadily, which makes sense considering that we’ve collectively come to depend on our mobile phones to meet a significant number of needs in our lives.

The constant and widespread use of mobile phones makes for countless benefits for conducting a mobile survey. Mobile surveys guarantee, among other things, speed, reliability, and convenience. This makes them stand out from other platforms such as computers, which are not readily available to a large part of the population.

At Zinklar, we have created a mobile survey platform that has the unique benefit of providing high-quality data in real-time. Let’s take a look at some of the additional benefits that come from mobile surveys and why we believe the future of market research is mobile.

Read below the top 5 benefits of using a mobile survey:

1. Greater Representation Within the Sample

One of the key advantages of conducting surveys via mobile devices is the ability to reach very diverse audiences. The phone allows access to representative samples of all ages, conditions, and socioeconomic levels, which enriches the quality of the data compared to other platforms. Computer access presents a legitimate barrier to getting in contact with all users. Not everyone has access to a computer and, if they do, they have to make the time to be in front of the computer to answer the questionnaire. With a mobile device, it is always easier to find a moment between activities in which to do so.

2. Faster Result Time

These days, who takes even five minutes away from their phone? Beyond the day-to-day smartphone capabilities that we rely on, mobile phones are the primary means of internet access for many people. So if brands want to reach the consumer faster and generate faster insights, the key is reaching people in their pockets. With mobile surveys, users can answer the survey in total comfort at any time, without having to wait to be able to sit in front of a computer. Data can be collected earlier, which speeds up decision-making.

3. Up-to-the-Moment Precision

Creating mobile-exclusive studies allows for fast results timed precisely to real-time activities. Interaction via mobile lets companies select the specific moments when they want to send out surveys, making it more likely that consumers are engaged. For example, a survey on pizza consumption could be sent out late on a Friday afternoon, a study on cleaning product consumption could go out on a Sunday mid-morning, or a study on multimedia consumption in the family could be launched on a Saturday evening.

4. Brevity Yields Better Results

Surveys designed for cell phones are shorter and more concise. Brevity helps to increase the sample’s willingness to complete the study and consequently allows for better-quality data collection. In fact, studies have shown that surveys that clock in at around seven minutes ultimately yield the best quality data. To this end, insights teams need to work to design questionnaires that consistently and accurately collect the most relevant information in the least amount of time.

5. Access to Additional Data

Mobile phones make it possible to combine stated findings with additional user data that is more easily accessible using technology. For example, users can scan a purchase receipt with the phone camera to report the last purchase details or tag their location when leaving a review. Users can opt into sharing this information at the moment that is most relevant and convenient for them.

These mobile survey benefits make it possible to achieve two vital goals for market research: sample quality and data quality. With these variables at the forefront of market research efforts, mobile technology is the best solution for brands looking to optimize their research processes. Putting convenience at the center of the user experience and combining it with process agility is the way forward, and mobile surveys are the key to getting there.


- Research article

- Open access

- Published: 12 September 2013

A survey study of the association between mobile phone use and daytime sleepiness in California high school students

- Nila Nathan 1 &

- Jamie Zeitzer 2 , 3

BMC Public Health volume 13, Article number: 840 (2013)


Mobile phone use is near ubiquitous in teenagers. Paralleling the rise in mobile phone use is an equally rapid decline in the amount of time teenagers are spending asleep at night. Prior research indicates that there might be a relationship between daytime sleepiness and nocturnal mobile phone use in teenagers in a variety of countries. As such, the aim of this study was to see if there was an association between mobile phone use, especially at night, and sleepiness in a group of U.S. teenagers.

A questionnaire containing an Epworth Sleepiness Scale (ESS) modified for use in teens and questions about qualitative and quantitative use of the mobile phone was completed by students attending Mountain View High School in Mountain View, California (n = 211).

Multivariate regression analysis indicated that ESS score was significantly associated with being female, feeling a need to be accessible by mobile phone all of the time, and a past attempt to reduce mobile phone use. The number of daily texts or phone calls was not directly associated with ESS. Those individuals who felt they needed to be accessible and those who had attempted to reduce mobile phone use were also ones who stayed up later to use the mobile phone and were awakened more often at night by the mobile phone.

Conclusions

The relationship between daytime sleepiness and mobile phone use was not directly related to the volume of texting but may be related to the temporal pattern of mobile phone use.


Mobile phone use has drastically increased in recent years, fueled by new technology such as ‘smart phones’. In 2012, it was estimated that 78% of all Americans aged 12–17 years had a mobile phone and 37% had a smart phone [ 1 ]. Despite the growing number of adolescent mobile phone users, there has been limited examination of the behavioral effects of mobile phone usage on adolescents and their sleep and subsequent daytime sleepiness.

Mobile phone use in teens likely compounds the biological causes of sleep loss. With the onset of puberty, there are changes in innate circadian rhythms that lead to a delay in the habitual timing of sleep onset [ 2 ]. As school start times are not correspondingly later, this leads to a reduction in the time available for sleep and is consequently thought to contribute to the endemic sleepiness of teenagers. The use of mobile phones may compound this sleepiness by extending the waking hours further into the night. Munezawa and colleagues [ 3 ] analyzed 94,777 responses to questionnaires sent out to junior and senior high school students in Japan and found that the use of mobile phones for calling or sending text messages after they went to bed was associated with sleep disturbances such as short sleep duration, subjective poor sleep quality, excessive daytime sleepiness and insomnia symptoms. Soderqvist et al., in their study of Swedish adolescents aged 15–19 years, found that regular users of mobile phones reported health symptoms such as tiredness, stress, headache, anxiety, concentration difficulties and sleep disturbances more often than less frequent users [ 4 ]. Van der Bulck studied 1,656 school children in Belgium and found that prevalent mobile phone use in adolescents was related to increased levels of daytime tiredness [ 5 ]. Punamaki et al. studied Finnish teens and found that intensive mobile phone use led to more health complaints and musculoskeletal symptoms in girls both directly and through deteriorated sleep, as well as increased daytime tiredness [ 6 ]. In one prospective study of young Swedish adults, aged 20–24, those who were high volume mobile phone users and male, but not female, were at greater risk for developing sleep disturbances a year later [ 7 ]. The association of mobile phone utilization and either sleep or sleepiness in teens in the United States has only been described by a telephone poll. In the 2011 National Sleep Foundation poll, 20% of those under the age of 30 reported that they were awakened by a phone call, text or e-mail message at least a few nights a week [ 8 ]. This type of nocturnal awakening was self-reported more frequently by those who also reported that they drove while drowsy.

As there has been limited examination of how mobile phone usage affects the behavior of young children and adolescents, none of which have addressed the effects of such usage on daytime sleepiness in U.S. teens, it seemed worthwhile to attempt a cross-sectional study of sleep and mobile phone utilization in a U.S. high school. As such, it was the purpose of this study to examine the association of mobile phone utilization and sleepiness patterns in a sample of U.S. teens. We hypothesized that an increased number of calls would be associated with increased sleepiness.

We designed a survey that contained questions concerning sleepiness and mobile phone use (see Additional file 1 ). Sleepiness was assessed using a version of the Epworth Sleepiness Scale (ESS) [ 9 ] modified for use in adolescents [ 10 ]. The modified ESS consists of eight questions that assessed the likelihood of dozing in the following circumstances: sitting and reading, watching TV, sitting inactive in a public place, as a passenger in a car for an hour without a break, lying down to rest in the afternoon when circumstances permit, sitting and talking to someone, sitting quietly after a lunch, in a car while stopped for a few minutes in traffic. Responses were limited to a Likert-like scale using the following: no chance of dozing (0), slight chance of dozing (1), moderate chance of dozing (2), or high chance of dozing (3). This yielded total ESS scores ranging from 0 to 24, with scores over 10 being associated with clinically-significant sleepiness [ 9 ]. We also included a set of modified questions, originally designed by Thomée et al., that assess the subjective impact of mobile phone use [ 7 ]. These included the number of mobile calls made or received each day, the number of texts made or received each day, being awakened by the mobile phone at night (never/occasionally/monthly/weekly/daily), staying up late to use the mobile phone (never/occasionally/monthly/weekly/daily), expectations of accessibility by mobile phone (never/occasionally/daily/all day/around-the-clock), stressfulness of accessibility (not at all/a little bit/rather/very), use mobile phone too much (yes/no), and tried and failed to reduce mobile phone use (yes/no).
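The scoring rule described above can be sketched in a few lines of Python. This is only an illustration of the arithmetic (eight items scored 0 to 3, summed to a 0 to 24 total, with totals over 10 flagged); the function names and the example respondent are hypothetical and are not taken from the study materials.

```python
from typing import Sequence

ESS_ITEMS = 8        # the modified ESS has eight situations
MAX_ITEM_SCORE = 3   # 0 = no chance of dozing ... 3 = high chance of dozing


def ess_total(responses: Sequence[int]) -> int:
    """Sum eight item responses (each 0-3) into a total ESS score (0-24)."""
    if len(responses) != ESS_ITEMS:
        raise ValueError(f"expected {ESS_ITEMS} responses, got {len(responses)}")
    for r in responses:
        if r not in range(MAX_ITEM_SCORE + 1):
            raise ValueError(f"item response out of range: {r!r}")
    return sum(responses)


def is_clinically_sleepy(total: int, cutoff: int = 10) -> bool:
    """True when the total exceeds the cutoff (scores over 10, per the text)."""
    return total > cutoff


# Hypothetical respondent, not data from the study.
example = [1, 2, 0, 3, 2, 0, 1, 2]
total = ess_total(example)
print(total, is_clinically_sleepy(total))
```

The cutoff is left as a parameter so that a different threshold convention can be applied without changing the scoring function.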

An email invitation to complete an electronic form of the survey (http://www.surveymonkey.com) was sent to the entire student body of the Mountain View High School, located in Mountain View, California, USA, on April 5, 2012. Out of the approximately 2,000 students attending the school, a total of 211 responded by the collection date of April 23, 2012. Data analyses are described below (OriginPro 8, OriginLab, Northampton MA). Summary data are provided as mean ± SD for age and ESS and as median (range) for the number of texts and/or phone calls made or received per day as these were non-normally distributed (p’s < 0.001, Kolmogorov–Smirnov test). To examine the relationship between sleepiness and predictor variables, stepwise multivariate regression analyses were performed. Collinearity in the data was examined by calculating the Variance Inflation Factor (VIF). Post hoc t-tests, ANOVA, Mann–Whitney U tests, and Spearman correlations were used, as appropriate, to examine specific components of the model and their relationship to sleepiness. χ² tests were used to examine categorical variables. The study was done within the regulations codified by the Declaration of Helsinki and approved by the administration of Mountain View High School.
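For readers unfamiliar with the collinearity check, the VIF of a predictor is conventionally defined as 1 / (1 − R²), where R² comes from regressing that predictor on the remaining predictors. The sketch below covers only the simplest case of two predictors, where R² equals the squared Pearson correlation between them. It is not the procedure run in the analysis software named above; the function names and the coded responses are hypothetical.

```python
from math import sqrt
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equal-length samples."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two samples of equal length >= 2")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return sxy / sqrt(sxx * syy)


def vif_two_predictors(x1: Sequence[float], x2: Sequence[float]) -> float:
    """VIF = 1 / (1 - R^2); with two predictors, R^2 is r squared."""
    r_squared = pearson_r(x1, x2) ** 2
    if r_squared >= 1.0:
        return float("inf")  # perfectly collinear predictors
    return 1.0 / (1.0 - r_squared)


# Hypothetical coded responses (0 = never ... 4 = daily), not study data.
stays_up_late = [0, 1, 1, 2, 3, 4, 0, 2]
awakened_at_night = [0, 0, 1, 1, 2, 3, 1, 0]
print(f"VIF = {vif_two_predictors(stays_up_late, awakened_at_night):.2f}")
```

A VIF of 1 means the predictors are uncorrelated, and larger values indicate increasing shared variance. With more than two predictors, R² would have to come from a full multiple regression, which this sketch does not implement.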

Sixty-eight males and 143 females responded to the survey. Most (96.7%) respondents owned a mobile phone. The remainder of the analyses presented herein is on the 202 respondents (64 male, 138 female) who indicated that they owned a mobile phone (Tables 1 and 2). The youngest participant in the survey was 14 years old and the oldest was 19 years old (16 ± 1.2 years), representative of the age range of this school. The median number of mobile phone calls made or received per day was 2 and ranged from 0 to 60. The median number of text messages sent or received per day was 22.5 and ranged from 0 to 700. While about half of the respondents (53%) had never been awakened by the mobile phone at night, 35% were occasionally awakened, 5.9% were awakened a few times a month, 5.0% were awakened a few times a week, and 1.0% were awakened almost every night. About one-quarter (27%) of respondents had never stayed awake later than a target bedtime in order to use the mobile phone; however, 36% occasionally stayed awake, 19% stayed awake a few times a month, 8.5% stayed awake a few times a week, and 10% stayed awake almost every night in order to use the mobile phone. With regard to feeling an expectation of accessibility, 7.5% reported that they needed to be accessible around the clock, 26% reported that they needed to be accessible all day, 52% reported they needed to be accessible daily, 13% reported that they only needed to be accessible now and then, and 1.0% reported they never needed to be accessible. Nearly half (49%) of the survey participants viewed accessibility via mobile phones to be not at all stressful, 45% found it to be a little bit stressful, 4.5% found it rather stressful, and 1.0% found it very stressful. More than one-third (36%) reported that they or someone close to them thought that they used the mobile phone too much. Few (17%) had tried but were unable to reduce their mobile phone use.

Subjective sleepiness on the ESS ranged from 0 to 18 (6.8 ± 3.5, with higher numbers indicating greater sleepiness), with 25% of participants having ESS scores in the excessively sleepy range (ESS ≥ 10). We examined predictors of subjective sleepiness (ESS score) using stepwise multivariate regression analysis with the following independent variables: age, sex, frequency of nocturnal awakening by the phone, frequency of staying up too late to use the phone, self-perceived accessibility by phone, stressfulness of this accessibility, attempted and failed to reduce phone use, excessive phone use determined by others, number of texts per day, and number of phone calls per day. Only subjects with complete data sets were used in our modeling (n = 191 of 202). Our final model (Table 3) indicated that sex, frequency of accessibility, and a failed attempt to reduce mobile phone use were all predictive of daytime sleepiness (F(6,194) = 4.35, p < 0.001, r² = 0.12). These model variables lacked collinearity (VIF’s < 3.9), indicating that they were not likely to represent the same source of variance. Despite the lack of significance in the multivariate model, given previously published data [ 4 – 6 ], we independently tested if there was a relationship between the number of estimated texts and sleepiness, but found no such correlation (r = 0.13, p = 0.07; Spearman correlation). In examining the final model, it appears that those who felt that they needed to be accessible “around the clock” (ESS = 9.2 ± 2.9) were sleepier than all others (ESS = 6.7 ± 3.4) (p < 0.01, post hoc t-test). The relationship between sleepiness and reporting having tried, but failed, to reduce mobile phone use was such that those who had tried to reduce phone use were more sleepy (ESS = 8.3 ± 3.6) than those who had not (ESS = 6.5 ± 3.4) (p < 0.01, post hoc t-test). While more females had tried to reduce their mobile phone use, sex did not modify the relationship between the attempt to reduce mobile phone use and sleepiness (p = 0.32, two-way ANOVA), thus retaining attempt and failure to reduce mobile phone use as an independent modifier of ESS scores.
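The Spearman correlation used for the texts-versus-sleepiness check is a Pearson correlation computed on ranks, which suits heavily skewed counts such as texts per day. A minimal sketch follows, assuming tied values receive their average rank. The data are invented for illustration and do not reproduce the reported r = 0.13, and the function names are hypothetical.

```python
from math import sqrt
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # 1-based average rank for the tied run
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman_rho(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman correlation: Pearson correlation of the rank vectors."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two samples of equal length >= 2")
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sxx = sum((a - mx) ** 2 for a in rx)
    syy = sum((b - my) ** 2 for b in ry)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return sxy / sqrt(sxx * syy)


# Hypothetical texts-per-day and ESS totals, not data from the study.
texts_per_day = [0, 5, 22, 22, 40, 150, 700]
ess_scores = [4, 9, 6, 7, 5, 11, 8]
print(f"Spearman rho = {spearman_rho(texts_per_day, ess_scores):.2f}")
```

Because only ranks enter the calculation, a single respondent reporting 700 texts per day has no more influence than any other highest-ranked value.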