The text is released under the CC-BY-NC-ND license, and code is released under the MIT license.

Working with Time Series


Pandas was developed in the context of financial modeling, so as you might expect, it contains a fairly extensive set of tools for working with dates, times, and time-indexed data. Date and time data comes in a few flavors, which we will discuss here:

- Time stamps reference particular moments in time (e.g., July 4th, 2015 at 7:00am).

- Time intervals and periods reference a length of time between a particular beginning and end point; for example, the year 2015. Periods usually reference a special case of time intervals in which each interval is of uniform length and does not overlap (e.g., 24 hour-long periods comprising days).

- Time deltas or durations reference an exact length of time (e.g., a duration of 22.56 seconds).

In this section, we will introduce how to work with each of these types of date/time data in Pandas. This short section is by no means a complete guide to the time series tools available in Python or Pandas, but instead is intended as a broad overview of how you as a user should approach working with time series. We will start with a brief discussion of tools for dealing with dates and times in Python, before moving more specifically to a discussion of the tools provided by Pandas. After listing some resources that go into more depth, we will review some short examples of working with time series data in Pandas.

Dates and Times in Python

The Python world has a number of available representations of dates, times, deltas, and timespans. While the time series tools provided by Pandas tend to be the most useful for data science applications, it is helpful to see their relationship to other packages used in Python.

Native Python dates and times: datetime and dateutil

Python's basic objects for working with dates and times reside in the built-in datetime module. Along with the third-party dateutil module, you can use it to quickly perform a host of useful functionalities on dates and times. For example, you can manually build a date using the datetime type:
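A minimal sketch of building a date by hand with the standard library; the variable name `date` is only illustrative:

```python
from datetime import datetime

# Build a datetime from its components; the time defaults to midnight
date = datetime(year=2015, month=7, day=4)
print(date)
```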

Or, using the dateutil module, you can parse dates from a variety of string formats:
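As a sketch, parsing a loosely formatted string with `dateutil` might look like this (the input string is just an example):

```python
from dateutil import parser

# dateutil guesses the layout of the string and returns a datetime
date = parser.parse("4th of July, 2015")
print(date)
```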

Once you have a datetime object, you can do things like printing the day of the week:
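A short sketch, assuming the default (English) locale for day names:

```python
from datetime import datetime

date = datetime(2015, 7, 4)

# "%A" is the strftime code for the full weekday name
weekday = date.strftime("%A")
print(weekday)
```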

In the final line, we've used one of the standard string format codes for printing dates ("%A"), which you can read about in the strftime section of Python's datetime documentation. Documentation of other useful date utilities can be found in dateutil's online documentation. A related package to be aware of is pytz, which contains tools for working with the most migraine-inducing piece of time series data: time zones.

The power of datetime and dateutil lies in their flexibility and easy syntax: you can use these objects and their built-in methods to easily perform nearly any operation you might be interested in. Where they break down is when you wish to work with large arrays of dates and times: just as lists of Python numerical variables are suboptimal compared to NumPy-style typed numerical arrays, lists of Python datetime objects are suboptimal compared to typed arrays of encoded dates.

Typed arrays of times: NumPy's datetime64

The weaknesses of Python's datetime format inspired the NumPy team to add a set of native time series data types to NumPy. The datetime64 dtype encodes dates as 64-bit integers, and thus allows arrays of dates to be represented very compactly. The datetime64 dtype requires a very specific input format:
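For instance, an ISO-style string can be converted as in the following sketch:

```python
import numpy as np

# An ISO 8601 date string is accepted; NumPy infers a day-based unit here
date = np.array("2015-07-04", dtype=np.datetime64)
print(date, date.dtype)
```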

Once we have this date formatted, however, we can quickly do vectorized operations on it:
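A small sketch of such a vectorized operation, adding a range of integer day offsets to a single date:

```python
import numpy as np

date = np.array("2015-07-04", dtype=np.datetime64)

# Integers are interpreted in the date's own unit (days), giving 12 consecutive dates
dates = date + np.arange(12)
print(dates)
```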

Because of the uniform type in NumPy datetime64 arrays, this type of operation can be accomplished much more quickly than if we were working directly with Python's datetime objects, especially as arrays get large (we introduced this type of vectorization in Computation on NumPy Arrays: Universal Functions).

One detail of the datetime64 and timedelta64 objects is that they are built on a fundamental time unit. Because the datetime64 object is limited to 64-bit precision, the range of encodable times is $2^{64}$ times this fundamental unit. In other words, datetime64 imposes a trade-off between time resolution and maximum time span.

For example, if you want a time resolution of one nanosecond, you only have enough information to encode a range of $2^{64}$ nanoseconds, or just under 600 years. NumPy will infer the desired unit from the input; for example, here is a day-based datetime:
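A sketch of the day-based case:

```python
import numpy as np

# A bare date is stored with a day unit
day = np.datetime64("2015-07-04")
print(day, day.dtype)
```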

Here is a minute-based datetime:
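A sketch of the minute-based case:

```python
import numpy as np

# Including hours and minutes makes NumPy choose a minute unit
minute = np.datetime64("2015-07-04 12:00")
print(minute, minute.dtype)
```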

In older NumPy releases (before 1.11), the displayed value was shifted to the local time zone of the computer executing the code; current releases treat datetime64 as time zone naive and show the value as given. You can force any desired fundamental unit using one of many format codes; for example, here we'll force a nanosecond-based time:
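As a sketch, the unit code is passed as the second argument:

```python
import numpy as np

# The second argument forces the unit, here nanoseconds
precise = np.datetime64("2015-07-04 12:59:59.50", "ns")
print(precise, precise.dtype)
```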

The following table, drawn from the NumPy datetime64 documentation, lists the available format codes along with the relative and absolute timespans that they can encode:

For the types of data we see in the real world, a useful default is datetime64[ns], as it can encode a useful range of modern dates with a suitably fine precision.

Finally, we will note that while the datetime64 data type addresses some of the deficiencies of the built-in Python datetime type, it lacks many of the convenient methods and functions provided by datetime and especially dateutil. More information can be found in NumPy's datetime64 documentation.

Dates and times in pandas: best of both worlds

Pandas builds upon all the tools just discussed to provide a Timestamp object, which combines the ease-of-use of datetime and dateutil with the efficient storage and vectorized interface of numpy.datetime64. From a group of these Timestamp objects, Pandas can construct a DatetimeIndex that can be used to index data in a Series or DataFrame; we'll see many examples of this below.

For example, we can use Pandas tools to repeat the demonstration from above. We can parse a flexibly formatted string date, and use format codes to output the day of the week:
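A sketch of that demonstration with Pandas; recent versions may emit a warning about inferring the format of such a string, and the weekday name assumes the default locale:

```python
import pandas as pd

# Parse a loosely formatted string into a Timestamp
date = pd.to_datetime("4th of July, 2015")
print(date)

# Timestamp supports the same strftime codes as datetime
weekday = date.strftime("%A")
print(weekday)
```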

Additionally, we can do NumPy-style vectorized operations directly on this same object:
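For example, adding an array of day offsets to a single Timestamp could be sketched as:

```python
import numpy as np
import pandas as pd

date = pd.to_datetime("2015-07-04")

# Timestamp + TimedeltaIndex broadcasts to a DatetimeIndex of 12 days
dates = date + pd.to_timedelta(np.arange(12), unit="D")
print(dates)
```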

In the next section, we will take a closer look at manipulating time series data with the tools provided by Pandas.

Pandas Time Series: Indexing by Time

Where the Pandas time series tools really become useful is when you begin to index data by timestamps. For example, we can construct a Series object that has time-indexed data:
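A minimal sketch, using four made-up dates and values:

```python
import pandas as pd

# Illustrative dates; any list of parseable dates would do
index = pd.DatetimeIndex(["2014-07-04", "2014-08-04",
                          "2015-07-04", "2015-08-04"])
data = pd.Series([0, 1, 2, 3], index=index)
print(data)
```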

Now that we have this data in a Series, we can make use of any of the Series indexing patterns we discussed in previous sections, passing values that can be coerced into dates:
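For instance, a label-based slice with date strings (both endpoints are included), sketched on the same illustrative series:

```python
import pandas as pd

index = pd.DatetimeIndex(["2014-07-04", "2014-08-04",
                          "2015-07-04", "2015-08-04"])
data = pd.Series([0, 1, 2, 3], index=index)

# The strings are coerced to dates; the slice is inclusive on both ends
subset = data["2014-07-04":"2015-07-04"]
print(subset)
```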

There are additional special date-only indexing operations, such as passing a year to obtain a slice of all data from that year:
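A sketch of selecting by year; `.loc` is used here as the explicit label-based accessor:

```python
import pandas as pd

index = pd.DatetimeIndex(["2014-07-04", "2014-08-04",
                          "2015-07-04", "2015-08-04"])
data = pd.Series([0, 1, 2, 3], index=index)

# A bare year string selects every entry that falls in that year
in_2015 = data.loc["2015"]
print(in_2015)
```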

Later, we will see additional examples of the convenience of dates-as-indices. But first, a closer look at the available time series data structures.

Pandas Time Series Data Structures

This section will introduce the fundamental Pandas data structures for working with time series data:

- For time stamps, Pandas provides the Timestamp type. As mentioned before, it is essentially a replacement for Python's native datetime, but is based on the more efficient numpy.datetime64 data type. The associated Index structure is DatetimeIndex.

- For time periods, Pandas provides the Period type. This encodes a fixed-frequency interval based on numpy.datetime64. The associated index structure is PeriodIndex.

- For time deltas or durations, Pandas provides the Timedelta type. Timedelta is a more efficient replacement for Python's native datetime.timedelta type, and is based on numpy.timedelta64. The associated index structure is TimedeltaIndex.

The most fundamental of these date/time objects are the Timestamp and DatetimeIndex objects. While these class objects can be invoked directly, it is more common to use the pd.to_datetime() function, which can parse a wide variety of formats. Passing a single date to pd.to_datetime() yields a Timestamp; passing a series of dates by default yields a DatetimeIndex:
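A sketch of both cases. The dates are illustrative, and a single consistent string format is used here because recent Pandas versions are stricter about mixing formats in one call:

```python
import pandas as pd

# One date in, one Timestamp out
single = pd.to_datetime("2015-07-04")

# A list of dates in, a DatetimeIndex out
dates = pd.to_datetime(["2015-07-03", "2015-07-04", "2015-07-06",
                        "2015-07-07", "2015-07-08"])
print(type(single).__name__, type(dates).__name__)
```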

Any DatetimeIndex can be converted to a PeriodIndex with the to_period() function with the addition of a frequency code; here we'll use 'D' to indicate daily frequency:
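A sketch of the conversion on a small illustrative index:

```python
import pandas as pd

dates = pd.to_datetime(["2015-07-03", "2015-07-04", "2015-07-06"])

# Each timestamp becomes the one-day period that contains it
periods = dates.to_period("D")
print(periods)
```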

A TimedeltaIndex is created, for example, when a date is subtracted from another:
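For instance, subtracting the first date from every date in an illustrative index:

```python
import pandas as pd

dates = pd.to_datetime(["2015-07-03", "2015-07-04", "2015-07-06"])

# DatetimeIndex minus Timestamp gives elapsed time per entry
deltas = dates - dates[0]
print(deltas)
```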

Regular sequences: pd.date_range()

To make the creation of regular date sequences more convenient, Pandas offers a few functions for this purpose: pd.date_range() for timestamps, pd.period_range() for periods, and pd.timedelta_range() for time deltas. We've seen that Python's range() and NumPy's np.arange() turn a startpoint, endpoint, and optional stepsize into a sequence. Similarly, pd.date_range() accepts a start date, an end date, and an optional frequency code to create a regular sequence of dates. By default, the frequency is one day:
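A sketch with an explicit start and end date (both illustrative):

```python
import pandas as pd

# Daily frequency by default; both endpoints are included
days = pd.date_range("2015-07-03", "2015-07-10")
print(days)
```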

Alternatively, the date range can be specified not with a start and endpoint, but with a startpoint and a number of periods:
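The same sequence, sketched with a start date and a count:

```python
import pandas as pd

# Eight daily timestamps beginning at the start date
days = pd.date_range("2015-07-03", periods=8)
print(days)
```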

The spacing can be modified by altering the freq argument, which defaults to D . For example, here we will construct a range of hourly timestamps:
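A sketch of the hourly case. The lowercase alias "h" is used on the assumption that it is accepted by both older and current Pandas releases, whereas the uppercase "H" is deprecated in recent ones:

```python
import pandas as pd

# Eight timestamps spaced one hour apart
hours = pd.date_range("2015-07-03", periods=8, freq="h")
print(hours)
```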

To create regular sequences of Period or Timedelta values, the very similar pd.period_range() and pd.timedelta_range() functions are useful. Here are some monthly periods:
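A sketch of monthly periods starting from an illustrative month:

```python
import pandas as pd

# Eight consecutive calendar months as Period objects
months = pd.period_range("2015-07", periods=8, freq="M")
print(months)
```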

And a sequence of durations increasing by an hour:
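A sketch of hourly durations, again using the lowercase "h" alias:

```python
import pandas as pd

# Ten durations: 0 h, 1 h, ..., 9 h
durations = pd.timedelta_range("0 days", periods=10, freq="h")
print(durations)
```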

All of these require an understanding of Pandas frequency codes, which we'll summarize in the next section.

Frequencies and Offsets

Fundamental to these Pandas time series tools is the concept of a frequency or date offset. Just as we saw the D (day) and H (hour) codes above, we can use such codes to specify any desired frequency spacing. The following table summarizes the main codes available:

The monthly, quarterly, and annual frequencies are all marked at the end of the specified period. By adding an S suffix to any of these, they instead will be marked at the beginning:

Additionally, you can change the month used to mark any quarterly or annual code by adding a three-letter month code as a suffix:

- Q-JAN, BQ-FEB, QS-MAR, BQS-APR, etc.

- A-JAN, BA-FEB, AS-MAR, BAS-APR, etc.

In the same way, the split-point of the weekly frequency can be modified by adding a three-letter weekday code:

- W-SUN, W-MON, W-TUE, W-WED, etc.

On top of this, codes can be combined with numbers to specify other frequencies. For example, for a frequency of 2 hours 30 minutes, we can combine the hour (H) and minute (T) codes as follows:
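A sketch of the combined frequency. It spells the codes as "h" and "min", the forms current Pandas releases prefer over "H" and "T"; that both spellings are accepted by older releases is an assumption:

```python
import pandas as pd

# Nine durations spaced 2 hours 30 minutes apart
steps = pd.timedelta_range("0 days", periods=9, freq="2h30min")
print(steps)
```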

All of these short codes refer to specific instances of Pandas time series offsets, which can be found in the pd.tseries.offsets module. For example, we can create a business day offset directly as follows:
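A sketch using the `BDay` offset class; the start date is illustrative (a Wednesday), so the range skips the following weekend:

```python
import pandas as pd
from pandas.tseries.offsets import BDay

# Five consecutive business days starting Wednesday 2015-07-01
bdays = pd.date_range("2015-07-01", periods=5, freq=BDay())
print(bdays)
```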

For more discussion of the use of frequencies and offsets, see the "DateOffset" section of the Pandas documentation.

Resampling, Shifting, and Windowing

The ability to use dates and times as indices to intuitively organize and access data is an important piece of the Pandas time series tools. The benefits of indexed data in general (automatic alignment during operations, intuitive data slicing and access, etc.) still apply, and Pandas provides several additional time series-specific operations.

We will take a look at a few of those here, using some stock price data as an example. Because Pandas was developed largely in a finance context, it includes some very specific tools for financial data. For example, the accompanying pandas-datareader package (installable via conda install pandas-datareader) knows how to import financial data from a number of available sources, including Yahoo Finance, Google Finance, and others. Here we will load Google's closing price history:

For simplicity, we'll use just the closing price:

We can visualize this using the plot() method, after the normal Matplotlib setup boilerplate (see Chapter 4):

Resampling and converting frequencies

One common need for time series data is resampling at a higher or lower frequency. This can be done using the resample() method, or the much simpler asfreq() method. The primary difference between the two is that resample() is fundamentally a data aggregation, while asfreq() is fundamentally a data selection.

Taking a look at the Google closing price, let's compare what the two return when we down-sample the data. Here we will resample the data at the end of business year:
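The contrast can be sketched on a small synthetic series. Everything here is an assumption for illustration: the values are made up rather than stock prices, and a weekly "W-FRI" frequency stands in for the business-year frequency so the example stays short.

```python
import numpy as np
import pandas as pd

# Hypothetical stand-in for prices: 20 business days with values 0..19
idx = pd.bdate_range("2015-01-05", periods=20)  # starts on a Monday
prices = pd.Series(np.arange(20.0), index=idx)

# resample() aggregates: the mean of each week ending on Friday
weekly_mean = prices.resample("W-FRI").mean()

# asfreq() selects: the single value observed on each Friday
weekly_last = prices.asfreq("W-FRI")

print(pd.DataFrame({"resample": weekly_mean, "asfreq": weekly_last}))
```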

Notice the difference: at each point, resample reports the average of the previous year, while asfreq reports the value at the end of the year.

For up-sampling, resample() and asfreq() are largely equivalent, though resample has many more options available. In this case, the default for both methods is to leave the up-sampled points empty, that is, filled with NA values. Just as with the fillna() method discussed previously, asfreq() accepts a method argument to specify how values are imputed. Here, we will resample the business day data at a daily frequency (i.e., including weekends):
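A sketch of up-sampling with and without filling, on four made-up business-day values that straddle a weekend:

```python
import pandas as pd

# Thursday, Friday, Monday, Tuesday
idx = pd.bdate_range("2015-01-08", periods=4)
s = pd.Series([1.0, 2.0, 3.0, 4.0], index=idx)

daily = s.asfreq("D")                    # weekend rows are NaN
ffilled = s.asfreq("D", method="ffill")  # weekend takes Friday's value
bfilled = s.asfreq("D", method="bfill")  # weekend takes Monday's value

print(pd.DataFrame({"raw": daily, "ffill": ffilled, "bfill": bfilled}))
```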

The top panel is the default: non-business days are left as NA values and do not appear on the plot. The bottom panel shows the differences between two strategies for filling the gaps: forward-filling and backward-filling.

Time-shifts

Another common time series-specific operation is shifting of data in time. Pandas has two closely related methods for computing this: shift() and tshift(). In short, the difference between them is that shift() shifts the data, while tshift() shifts the index. In both cases, the shift is specified in multiples of the frequency. (tshift() was deprecated in pandas 1.1 and removed in 2.0; on current versions, calling shift() with a freq argument shifts the index instead.)

Here we will both shift() and tshift() by 900 days:
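The two behaviors can be sketched on a tiny made-up series with a shift of 2 days rather than 900. Since tshift() is not available in recent Pandas, this sketch uses shift() with a freq argument for the index shift:

```python
import pandas as pd

idx = pd.date_range("2015-01-01", periods=5, freq="D")
s = pd.Series([10, 20, 30, 40, 50], index=idx)

# Shift the data: the index stays put, values slide down, NaN fills the gap
shifted_data = s.shift(2)

# Shift the index: values stay attached to their rows, dates move forward
shifted_index = s.shift(2, freq="D")

print(shifted_data)
print(shifted_index)
```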

We see here that shift(900) shifts the data by 900 days, pushing some of it off the end of the graph (and leaving NA values at the other end), while tshift(900) shifts the index values by 900 days.

A common context for this type of shift is in computing differences over time. For example, we use shifted values to compute the one-year return on investment for Google stock over the course of the dataset:

This helps us to see the overall trend in Google stock: thus far, the most profitable times to invest in Google have been (unsurprisingly, in retrospect) shortly after its IPO, and in the middle of the 2009 recession.

Rolling windows

Rolling statistics are a third type of time series-specific operation implemented by Pandas. These can be accomplished via the rolling() method of Series and DataFrame objects, which returns a view similar to what we saw with the groupby operation (see Aggregation and Grouping). This rolling view makes available a number of aggregation operations by default.

For example, here is the one-year centered rolling mean and standard deviation of the Google stock prices:
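A sketch of the same pattern on a short made-up series, with a window of 3 instead of 365 so the result is easy to check by hand:

```python
import numpy as np
import pandas as pd

s = pd.Series(np.arange(10.0))

# A centered window of 3 covers each point and its two neighbors
rolling = s.rolling(3, center=True)
mean = rolling.mean()
std = rolling.std()

print(pd.DataFrame({"input": s, "mean": mean, "std": std}))
```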

As with group-by operations, the aggregate() and apply() methods can be used for custom rolling computations.

Where to Learn More

This section has provided only a brief summary of some of the most essential features of time series tools provided by Pandas; for a more complete discussion, you can refer to the "Time Series/Date" section of the Pandas online documentation.

Another excellent resource is the textbook Python for Data Analysis by Wes McKinney (O'Reilly, 2012). Although it is now a few years old, it is an invaluable resource on the use of Pandas. In particular, this book emphasizes time series tools in the context of business and finance, and focuses much more on particular details of business calendars, time zones, and related topics.

As always, you can also use the IPython help functionality to explore and try further options available to the functions and methods discussed here. I find this often is the best way to learn a new Python tool.

Example: Visualizing Seattle Bicycle Counts

As a more involved example of working with some time series data, let's take a look at bicycle counts on Seattle's Fremont Bridge. This data comes from an automated bicycle counter, installed in late 2012, which has inductive sensors on the east and west sidewalks of the bridge. The hourly bicycle counts can be downloaded from http://data.seattle.gov/; here is the direct link to the dataset.

As of summer 2016, the CSV can be downloaded as follows:

Once this dataset is downloaded, we can use Pandas to read the CSV output into a DataFrame. We will specify that we want the Date as an index, and we want these dates to be automatically parsed:

For convenience, we'll further process this dataset by shortening the column names and adding a "Total" column:

Now let's take a look at the summary statistics for this data:

Visualizing the data

We can gain some insight into the dataset by visualizing it. Let's start by plotting the raw data:

The ~25,000 hourly samples are far too dense for us to make much sense of. We can gain more insight by resampling the data to a coarser grid. Let's resample by week:
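The weekly resampling step can be sketched on synthetic hourly counts. The series below is a hypothetical stand-in for the bridge data, with one rider per hour over two full weeks:

```python
import pandas as pd

# Hypothetical data: two full Monday-to-Sunday weeks of hourly counts
idx = pd.date_range("2015-01-05", periods=24 * 14, freq="h")
counts = pd.Series(1, index=idx)

# Sum the hourly counts within each week (weeks end on Sunday by default)
weekly = counts.resample("W").sum()
print(weekly)
```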

This shows us some interesting seasonal trends: as you might expect, people bicycle more in the summer than in the winter, and even within a particular season the bicycle use varies from week to week (likely dependent on weather; see In Depth: Linear Regression where we explore this further).

Another way that comes in handy for aggregating the data is to use a rolling mean, via the rolling() method (the older pd.rolling_mean() function has been removed from Pandas). Here we'll do a 30 day rolling mean of our data, making sure to center the window:
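A sketch of a centered 30-day rolling mean, again on hypothetical constant hourly counts so the outcome is predictable:

```python
import pandas as pd

# Hypothetical data: 60 days of hourly counts, one rider per hour
idx = pd.date_range("2015-01-01", periods=24 * 60, freq="h")
counts = pd.Series(1, index=idx)

# Aggregate to daily totals, then smooth with a centered 30-day window
daily = counts.resample("D").sum()
smoothed = daily.rolling(30, center=True).mean()
print(smoothed.dropna().head())
```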

The jaggedness of the result is due to the hard cutoff of the window. We can get a smoother version of a rolling mean using a window function, for example a Gaussian window. The following code specifies both the width of the window (we chose 50 days) and the width of the Gaussian within the window (we chose 10 days):

Digging into the data

While these smoothed data views are useful to get an idea of the general trend in the data, they hide much of the interesting structure. For example, we might want to look at the average traffic as a function of the time of day. We can do this using the GroupBy functionality discussed in Aggregation and Grouping:
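Grouping by time of day can be sketched as follows. The data is hypothetical: each hourly count is simply set equal to the hour, so the group means are known in advance:

```python
from datetime import time

import pandas as pd

# Hypothetical data: one week of hourly counts, each equal to its hour
idx = pd.date_range("2015-01-05", periods=24 * 7, freq="h")
counts = pd.Series(idx.hour, index=idx)

# Group on the time-of-day part of the index and average across days
by_time = counts.groupby(counts.index.time).mean()
print(by_time.loc[time(8, 0)])
```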

The hourly traffic is a strongly bimodal distribution, with peaks around 8:00 in the morning and 5:00 in the evening. This is likely evidence of a strong component of commuter traffic crossing the bridge. This is further evidenced by the differences between the western sidewalk (generally used going toward downtown Seattle), which peaks more strongly in the morning, and the eastern sidewalk (generally used going away from downtown Seattle), which peaks more strongly in the evening.

We also might be curious about how things change based on the day of the week. Again, we can do this with a simple groupby:

This shows a strong distinction between weekday and weekend totals, with around twice as many average riders crossing the bridge on Monday through Friday as on Saturday and Sunday.

With this in mind, let's do a compound GroupBy and look at the hourly trend on weekdays versus weekends. We'll start by grouping by both a flag marking the weekend, and the time of day:
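A sketch of the compound grouping on hypothetical data, where weekday hours are given a count of 2 and weekend hours a count of 1:

```python
import numpy as np
import pandas as pd

# Hypothetical data: two weeks of hourly counts, busier on weekdays
idx = pd.date_range("2015-01-05", periods=24 * 14, freq="h")
counts = pd.Series(np.where(idx.weekday >= 5, 1, 2), index=idx)

# Flag each row as weekday or weekend, then group by flag and time of day
weekend = np.where(counts.index.weekday >= 5, "Weekend", "Weekday")
by_time = counts.groupby([weekend, counts.index.time]).mean()

print(by_time.loc["Weekday"].head())
print(by_time.loc["Weekend"].head())
```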

Now we'll use some of the Matplotlib tools described in Multiple Subplots to plot two panels side by side:

The result is very interesting: we see a bimodal commute pattern during the work week, and a unimodal recreational pattern during the weekends. It would be interesting to dig through this data in more detail, and examine the effect of weather, temperature, time of year, and other factors on people's commuting patterns; for further discussion, see my blog post "Is Seattle Really Seeing an Uptick In Cycling?", which uses a subset of this data. We will also revisit this dataset in the context of modeling in In Depth: Linear Regression.


ARIMA Model – Complete Guide to Time Series Forecasting in Python

- August 22, 2021

- Selva Prabhakaran

Using an ARIMA model, you can forecast a time series using the series' past values. In this post, we build an optimal ARIMA model from scratch and extend it to Seasonal ARIMA (SARIMA) and SARIMAX models. You will also see how to build auto-ARIMA models in Python.

- Introduction to Time Series Forecasting

- Introduction to ARIMA Models

- What does the p, d and q in ARIMA model mean?

- What are AR and MA models

- How to find the order of differencing (d) in ARIMA model

- How to find the order of the AR term (p)

- How to find the order of the MA term (q)

- How to handle if a time series is slightly under or over differenced

- How to build the ARIMA Model

- How to find the optimal ARIMA model manually using Out-of-Time Cross validation

- Accuracy Metrics for Time Series Forecast

- How to do Auto Arima Forecast in Python

- How to interpret the residual plots in ARIMA model

- How to automatically build SARIMA model in python

- How to build SARIMAX Model with exogenous variable

- Practice Exercises

1. Introduction to Time Series Forecasting

A time series is a sequence where a metric is recorded over regular time intervals.

Depending on the frequency, a time series can be yearly (ex: annual budget), quarterly (ex: expenses), monthly (ex: air traffic), weekly (ex: sales qty), daily (ex: weather), hourly (ex: stock prices), by the minute (ex: inbound calls in a call center) and even by the second (ex: web traffic).

We have already seen the steps involved in a previous post on Time Series Analysis . If you haven’t read it, I highly encourage you to do so.

Forecasting is the next step where you want to predict the future values the series is going to take.

But why forecast?

Because forecasting a time series (like demand and sales) is often of tremendous commercial value.

In most manufacturing companies, it drives the fundamental business planning, procurement and production activities. Any errors in the forecasts will ripple down throughout the supply chain or any business context for that matter. So getting the forecasts accurate is important for saving on costs and is critical to success.

Not just in manufacturing, the techniques and concepts behind time series forecasting are applicable in any business.

Now forecasting a time series can be broadly divided into two types.

If you use only the previous values of the time series to predict its future values, it is called Univariate Time Series Forecasting .

And if you use predictors other than the series (a.k.a. exogenous variables) to forecast, it is called Multivariate Time Series Forecasting.

This post focuses on a particular type of forecasting method called ARIMA modeling.

ARIMA, short for ‘AutoRegressive Integrated Moving Average’, is a forecasting algorithm based on the idea that the information in the past values of the time series can alone be used to predict the future values.

2. Introduction to ARIMA Models

So what exactly is an ARIMA model?

ARIMA, short for ‘Auto Regressive Integrated Moving Average’ is actually a class of models that ‘explains’ a given time series based on its own past values, that is, its own lags and the lagged forecast errors, so that equation can be used to forecast future values.

Any ‘non-seasonal’ time series that exhibits patterns and is not a random white noise can be modeled with ARIMA models.

An ARIMA model is characterized by 3 terms: p, d, q

p is the order of the AR term

q is the order of the MA term

d is the number of differencing required to make the time series stationary

If a time series has seasonal patterns, then you need to add seasonal terms and it becomes SARIMA, short for ‘Seasonal ARIMA’. More on that once we finish ARIMA.

So, what does the ‘order of AR term’ even mean? Before we go there, let’s first look at the ‘d’ term.

3. What does the p, d and q in ARIMA model mean?

The first step to build an ARIMA model is to make the time series stationary.

Why? Because the term ‘Auto Regressive’ in ARIMA means it is a linear regression model that uses its own lags as predictors. Linear regression models, as you know, work best when the predictors are not correlated and are independent of each other.

So how to make a series stationary?

The most common approach is to difference it. That is, subtract the previous value from the current value. Sometimes, depending on the complexity of the series, more than one differencing may be needed.
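As a quick sketch of what differencing does, here is a made-up series with a quadratic trend: one round of differencing leaves a linear trend, and a second round leaves a constant.

```python
import pandas as pd

# Hypothetical series with a quadratic trend (perfect squares)
y = pd.Series([1, 4, 9, 16, 25, 36])

# First difference: current value minus previous value
d1 = y.diff().dropna()

# Second difference: difference the first difference again
d2 = y.diff().diff().dropna()

print(list(d1))
print(list(d2))
```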

The value of d, therefore, is the minimum number of differencing needed to make the series stationary. And if the time series is already stationary, then d = 0.

Next, what are the ‘p’ and ‘q’ terms?

‘p’ is the order of the ‘Auto Regressive’ (AR) term. It refers to the number of lags of Y to be used as predictors. And ‘q’ is the order of the ‘Moving Average’ (MA) term. It refers to the number of lagged forecast errors that should go into the ARIMA Model.


4. What are AR and MA models?

So what are AR and MA models? What is the actual mathematical formula for the AR and MA models?

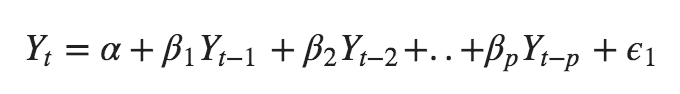

A pure Auto Regressive (AR only) model is one where Yt depends only on its own lags. That is, Yt is a function of the ‘lags of Yt’.

where $Y_{t-1}$ is the lag 1 of the series, $\beta_1$ is the coefficient of lag 1 that the model estimates, and $\alpha$ is the intercept term, also estimated by the model.

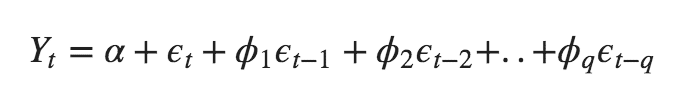

Likewise a pure Moving Average (MA only) model is one where Yt depends only on the lagged forecast errors.

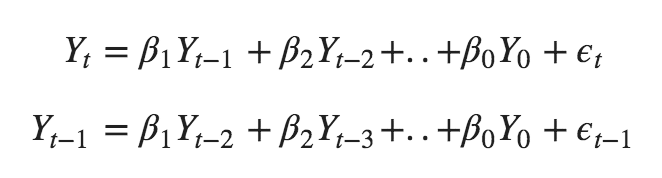

where the error terms are the errors of the autoregressive models of the respective lags. The errors $\epsilon_t$ and $\epsilon_{t-1}$ are the errors from the following equations:

That was AR and MA models respectively.

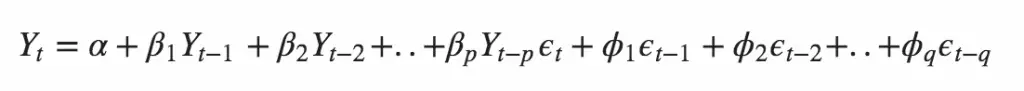

So what does the equation of an ARIMA model look like?

An ARIMA model is one where the time series was differenced at least once to make it stationary and you combine the AR and the MA terms. So the equation becomes:

ARIMA model in words:

Predicted $Y_t$ = Constant + Linear combination of lags of Y (up to p lags) + Linear combination of lagged forecast errors (up to q lags)
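Written out in standard notation, the three model forms described in this section can be sketched as follows. The symbols $\alpha$ and $\beta_i$ follow the text above; $\phi_i$ for the MA coefficients and $\epsilon_t$ for the error term are assumed here for illustration. In the ARIMA form, $Y_t$ stands for the series after it has been differenced d times.

Pure AR model:

$$Y_t = \alpha + \beta_1 Y_{t-1} + \beta_2 Y_{t-2} + \dots + \beta_p Y_{t-p} + \epsilon_t$$

Pure MA model:

$$Y_t = \alpha + \epsilon_t + \phi_1 \epsilon_{t-1} + \phi_2 \epsilon_{t-2} + \dots + \phi_q \epsilon_{t-q}$$

Combined AR and MA terms:

$$Y_t = \alpha + \beta_1 Y_{t-1} + \dots + \beta_p Y_{t-p} + \epsilon_t + \phi_1 \epsilon_{t-1} + \dots + \phi_q \epsilon_{t-q}$$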

The objective, therefore, is to identify the values of p, d and q. But how?

Let’s start with finding the ‘d’.

5. How to find the order of differencing (d) in ARIMA model

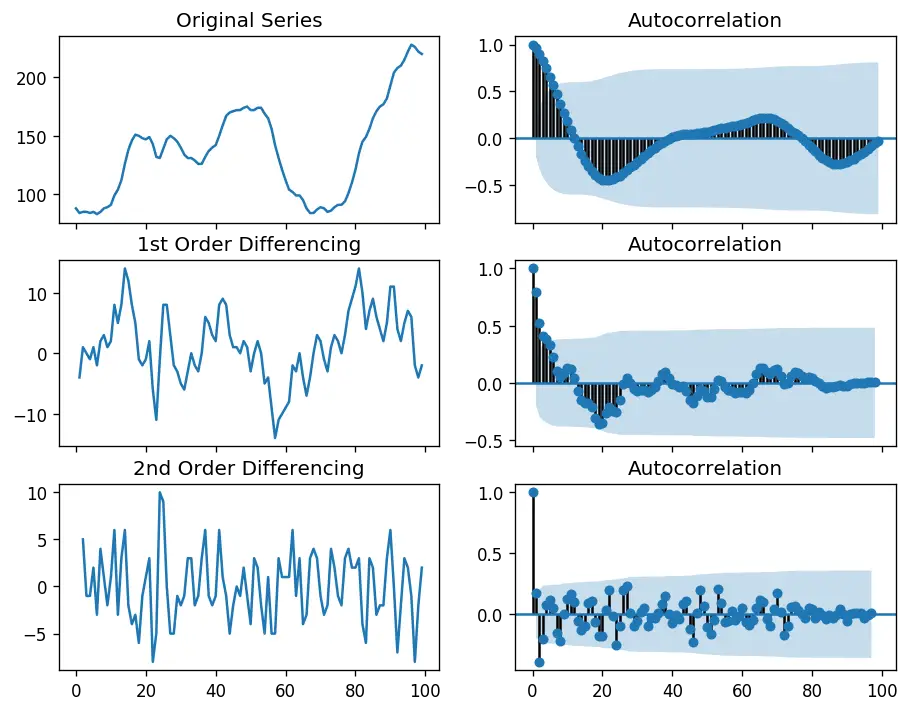

The purpose of differencing is to make the time series stationary.

But you need to be careful not to over-difference the series. An over-differenced series may still be stationary, but the extra differencing will in turn affect the model parameters.

So how to determine the right order of differencing?

The right order of differencing is the minimum differencing required to get a near-stationary series that roams around a defined mean and whose ACF plot reaches zero fairly quickly.

If the autocorrelations are positive for many lags (10 or more), then the series needs further differencing. On the other hand, if the lag 1 autocorrelation itself is too negative, then the series is probably over-differenced.

If you cannot really decide between two orders of differencing, go with the order that gives the smallest standard deviation in the differenced series.

Let’s see how to do it with an example.

First, I am going to check if the series is stationary using the Augmented Dickey Fuller test ( adfuller() ), from the statsmodels package.

Because, you need differencing only if the series is non-stationary. Else, no differencing is needed, that is, d=0.

The null hypothesis of the ADF test is that the time series is non-stationary. So, if the p-value of the test is less than the significance level (0.05) then you reject the null hypothesis and infer that the time series is indeed stationary.

So, in our case, if P Value > 0.05 we go ahead with finding the order of differencing.

Since the p-value is greater than the significance level, let’s difference the series and see what the autocorrelation plot looks like.

For the above series, the time series reaches stationarity with two orders of differencing. But on looking at the autocorrelation plot for the 2nd differencing, the lag goes into the far negative zone fairly quickly, which indicates that the series might have been over-differenced.

So, I am going to tentatively fix the order of differencing as 1 even though the series is not perfectly stationary (weak stationarity).

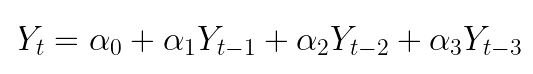

6. How to find the order of the AR term (p)

The next step is to identify if the model needs any AR terms. You can find out the required number of AR terms by inspecting the Partial Autocorrelation (PACF) plot.

But what is PACF?

Partial autocorrelation can be imagined as the correlation between the series and its lag, after excluding the contributions from the intermediate lags. So, PACF sort of conveys the pure correlation between a lag and the series. That way, you will know if that lag is needed in the AR term or not.

So what is the formula for PACF mathematically?

Partial autocorrelation of lag (k) of a series is the coefficient of that lag in the autoregression equation of Y.
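For a series regressed on its first three lags, the autoregression equation takes a form like the following (a sketch; the $\alpha_i$ naming of the coefficients is assumed for illustration):

$$Y_t = \alpha_0 + \alpha_1 Y_{t-1} + \alpha_2 Y_{t-2} + \alpha_3 Y_{t-3}$$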

That is, suppose $Y_t$ is the current series and $Y_{t-1}$ is the lag 1 of Y; then the partial autocorrelation of lag 3 ($Y_{t-3}$) is the coefficient $\alpha_3$ of $Y_{t-3}$ in the above equation.

Good. Now, how to find the number of AR terms?

Any autocorrelation in a stationarized series can be rectified by adding enough AR terms. So, we initially take the order of the AR term to be equal to the number of lags that cross the significance limit in the PACF plot.

You can observe that the PACF lag 1 is quite significant since it is well above the significance line. Lag 2 turns out to be significant as well, slightly managing to cross the significance limit (blue region). But I am going to be conservative and tentatively fix the p as 1.

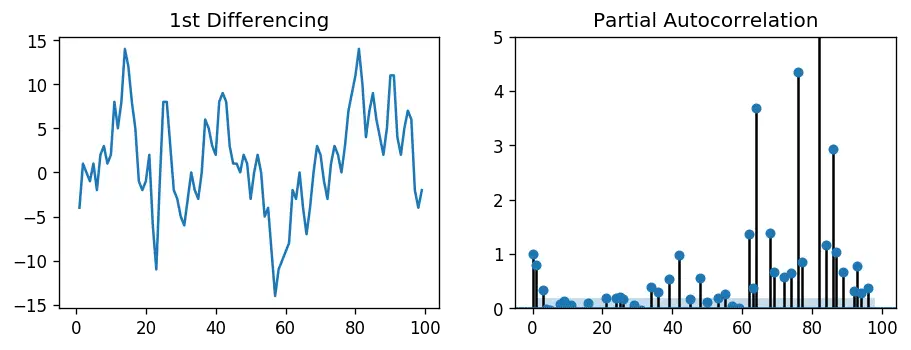

7. How to find the order of the MA term (q)

Just like how we looked at the PACF plot for the number of AR terms, you can look at the ACF plot for the number of MA terms. An MA term is, technically, the error of the lagged forecast.

The ACF tells how many MA terms are required to remove any autocorrelation in the stationarized series.

Let’s see the autocorrelation plot of the differenced series.

A couple of lags are well above the significance line. So, let’s tentatively fix q as 2. When in doubt, go with the simpler model that sufficiently explains the Y.

8. How to handle if a time series is slightly under or over differenced

It may happen that your series is slightly under-differenced, yet differencing it one more time makes it slightly over-differenced.

How to handle this case?

If your series is slightly under-differenced, adding one or more additional AR terms usually makes up for it. Likewise, if it is slightly over-differenced, try adding an additional MA term.

9. How to build the ARIMA Model

Now that you’ve determined the values of p, d and q, you have everything needed to fit the ARIMA model. Let’s use the ARIMA() implementation in statsmodels package.

The model summary reveals a lot of information. The table in the middle is the coefficients table where the values under ‘coef’ are the weights of the respective terms.

Notice here the coefficient of the MA2 term is close to zero and the P-Value in ‘P>|z|’ column is highly insignificant. It should ideally be less than 0.05 for the respective X to be significant.

So, let’s rebuild the model without the MA2 term.

The model AIC has reduced, which is good. The P Values of the AR1 and MA1 terms have improved and are highly significant (<< 0.05).

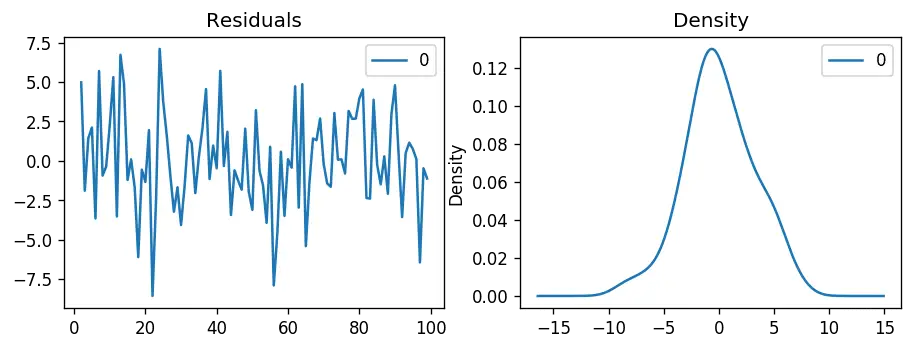

Let’s plot the residuals to ensure there are no patterns (that is, look for constant mean and variance).

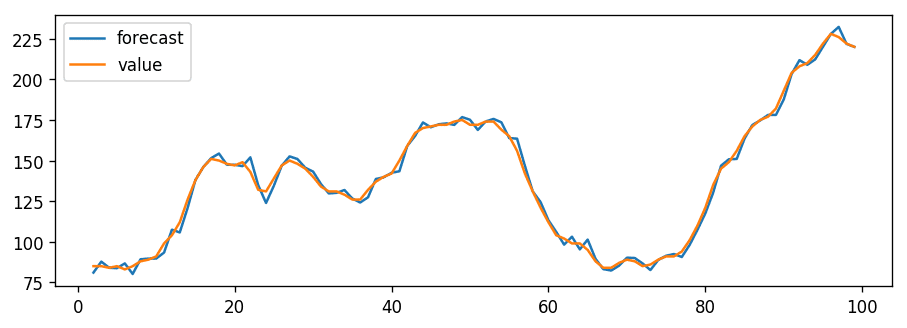

The residual errors seem fine with near zero mean and uniform variance. Let’s plot the actuals against the fitted values using plot_predict() .

When you set dynamic=False the in-sample lagged values are used for prediction.

That is, the model gets trained up until the previous value to make the next prediction. This can make the fitted forecast and actuals look artificially good.

So, we seem to have a decent ARIMA model. But is that the best?

Can’t say that at this point because we haven’t actually forecasted into the future and compared the forecast with the actual performance.

So, the real validation you need now is the Out-of-Time cross-validation.

10. How to find the optimal ARIMA model manually using Out-of-Time cross-validation

In Out-of-Time cross-validation, you take a few steps back in time and forecast into the future for as many steps as you took back. Then you compare the forecast against the actuals.

To do out-of-time cross-validation, you need to create the training and testing datasets by splitting the time series into 2 contiguous parts in an approximately 75:25 ratio, or another reasonable proportion based on the time frequency of the series.

Why am I not sampling the training data randomly, you ask?

That’s because the order of the time series must stay intact in order to use it for forecasting.
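The split itself is just slicing. A minimal sketch, assuming a made-up monthly series of 100 points (the names and dates are illustrative):

```python
import numpy as np
import pandas as pd

# Hypothetical monthly series with 100 observations
index = pd.date_range("2015-01-01", periods=100, freq="MS")
series = pd.Series(np.arange(100, dtype=float), index=index)

# Contiguous 75:25 split: no shuffling, the test set is the most recent part
split_point = int(len(series) * 0.75)
train = series.iloc[:split_point]
test = series.iloc[split_point:]

print("train:", train.index.min().date(), "to", train.index.max().date())
print("test: ", test.index.min().date(), "to", test.index.max().date())
```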

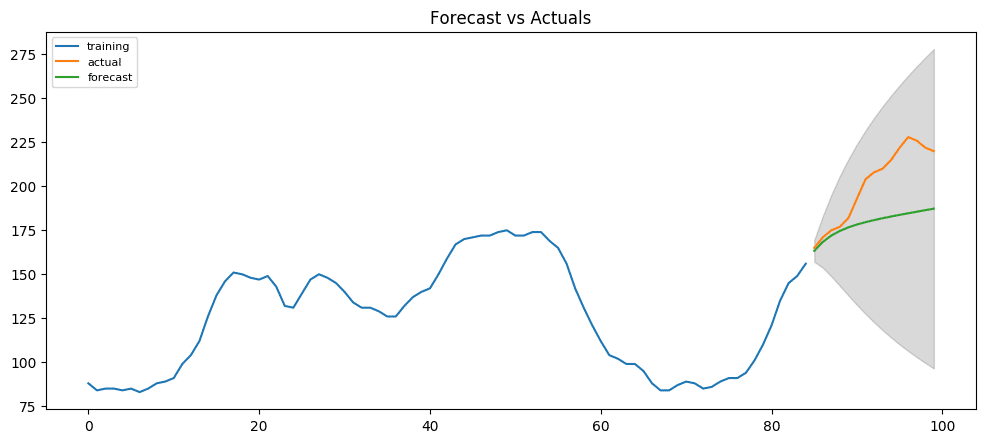

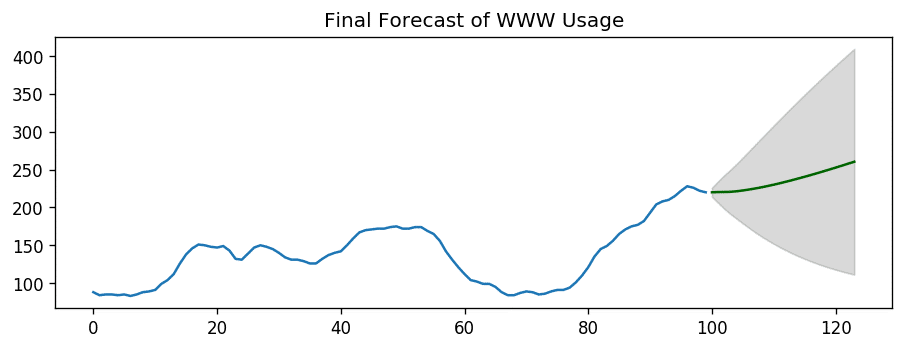

You can now build the ARIMA model on the training dataset, forecast and plot it.

From the chart, the ARIMA(1,1,1) model seems to give a directionally correct forecast. And the actual observed values lie within the 95% confidence band. That seems fine.

But each of the predicted forecasts is consistently below the actuals. That means adding a small constant to our forecast would likely improve the accuracy. So, there is scope for improvement.

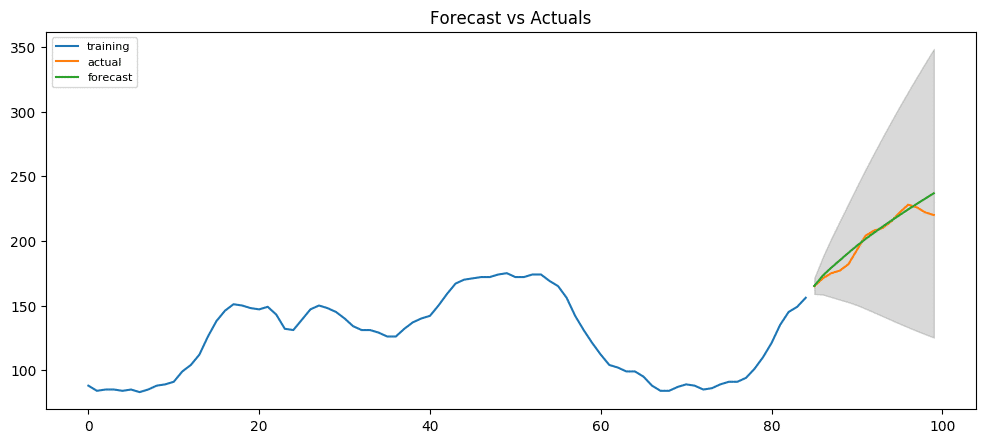

So, what I am going to do is increase the order of differencing to two, that is, set d=2, and iteratively increase p up to 5 and then q up to 5 to see which model gives the lowest AIC, while also looking for a chart where the forecasts track the actuals more closely.

While doing this, I keep an eye on the P values of the AR and MA terms in the model summary. They should be as close to zero as possible, ideally less than 0.05.

The AIC has reduced to 440 from 515. Good. The P-values of the X terms are less than 0.05, which is great.

So overall it’s much better.

Ideally, you should go back multiple points in time, like, go back 1, 2, 3 and 4 quarters and see how your forecasts are performing at various points in the year.

Here’s a great practice exercise: Try to go back 27, 30, 33, 36 data points and see how the forecasts perform. The forecast performance can be judged using the accuracy metrics discussed next.

11. Accuracy Metrics for Time Series Forecast

The commonly used accuracy metrics to judge forecasts are:

- Mean Absolute Percentage Error (MAPE)

- Mean Error (ME)

- Mean Absolute Error (MAE)

- Mean Percentage Error (MPE)

- Root Mean Squared Error (RMSE)

- Lag 1 Autocorrelation of Error (ACF1)

- Correlation between the Actual and the Forecast (corr)

- Min-Max Error (minmax)

Typically, if you are comparing forecasts of two different series, the MAPE, Correlation and Min-Max Error can be used.

Why not use the other metrics?

Because these three are scale-free. MAPE and Min-Max Error are relative errors that usually fall between 0 and 1 (MAPE can exceed 1 when forecasts are far off), and the correlation lies between -1 and 1. That way, you can judge how good the forecast is irrespective of the scale of the series.

Most of the other error metrics (ME, MAE, RMSE) are quantities in the units of the series. That implies that an RMSE of 100 for a series whose mean is in the 1000’s is better than an RMSE of 5 for a series in the 10’s. So, you can’t really use them to compare the forecasts of two differently scaled time series.
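The metrics listed above can all be computed with plain NumPy. The helper below is a sketch: the function name `forecast_accuracy` and the toy numbers are illustrative, and the Min-Max Error is taken as 1 minus the mean of min/max of each forecast and actual pair, which is one common definition.

```python
import numpy as np

def forecast_accuracy(forecast, actual):
    """Return common forecast accuracy metrics as a dict."""
    forecast = np.asarray(forecast, dtype=float)
    actual = np.asarray(actual, dtype=float)
    error = forecast - actual
    centered = error - error.mean()
    mins = np.minimum(forecast, actual)
    maxs = np.maximum(forecast, actual)
    return {
        "mape": np.mean(np.abs(error) / np.abs(actual)),   # mean absolute percentage error
        "me": np.mean(error),                              # mean error (bias)
        "mae": np.mean(np.abs(error)),                     # mean absolute error
        "mpe": np.mean(error / actual),                    # mean percentage error
        "rmse": np.sqrt(np.mean(error ** 2)),              # root mean squared error
        "acf1": np.sum(centered[1:] * centered[:-1]) / np.sum(centered ** 2),  # lag-1 autocorrelation of the error
        "corr": np.corrcoef(forecast, actual)[0, 1],       # correlation of forecast and actual
        "minmax": 1 - np.mean(mins / maxs),                # min-max error
    }

# Toy example
actual = np.array([100.0, 200.0, 300.0, 400.0])
forecast = np.array([110.0, 190.0, 330.0, 360.0])
metrics = forecast_accuracy(forecast, actual)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

Note that MAPE, MPE and the Min-Max Error divide by the actual values, so they break down when the series contains zeros.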

Around 2.2% MAPE implies the model is about 97.8% accurate in predicting the next 15 observations.

Now you know how to build an ARIMA model manually.

But in industrial situations, you will be given a lot of time series to forecast, and the forecasting exercise will be repeated regularly.

So we need a way to automate the best model selection process.

12. How to do Auto Arima Forecast in Python

Like R’s popular auto.arima() function, the pmdarima package provides auto_arima() with similar functionality.

auto_arima() uses a stepwise approach to search multiple combinations of p,d,q parameters and chooses the best model that has the least AIC.

13. How to interpret the residual plots in ARIMA model

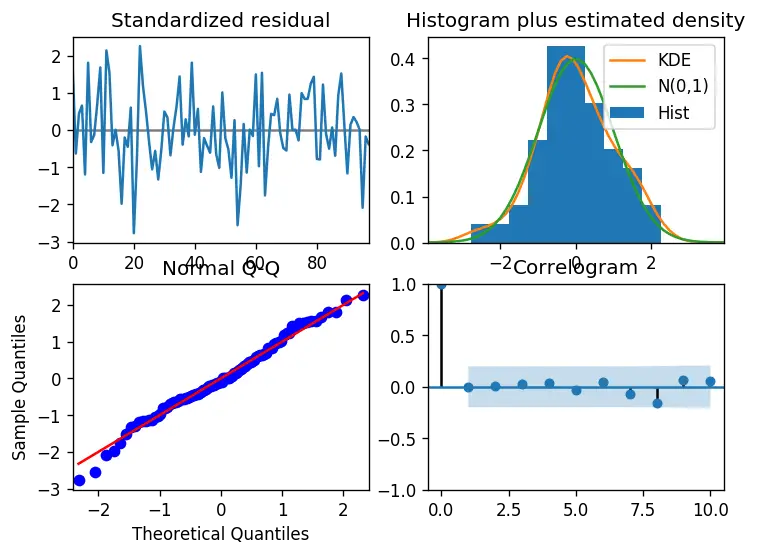

Let’s review the residual plots using stepwise_fit.

So how to interpret the plot diagnostics?

Top left: The residual errors seem to fluctuate around a mean of zero and have a uniform variance.

Top right: The density plot suggests a normal distribution with mean zero.

Bottom left: All the dots should fall closely in line with the red line. Any significant deviations would imply the distribution is skewed.

Bottom right: The correlogram, aka the ACF plot, shows the residual errors are not autocorrelated. Any autocorrelation would imply that there is some pattern in the residual errors which is not explained by the model. So you would need to look for more X’s (predictors) for the model.

Overall, it seems to be a good fit. Let’s forecast.

14. How to automatically build SARIMA model in python

The problem with the plain ARIMA model is that it does not support seasonality.

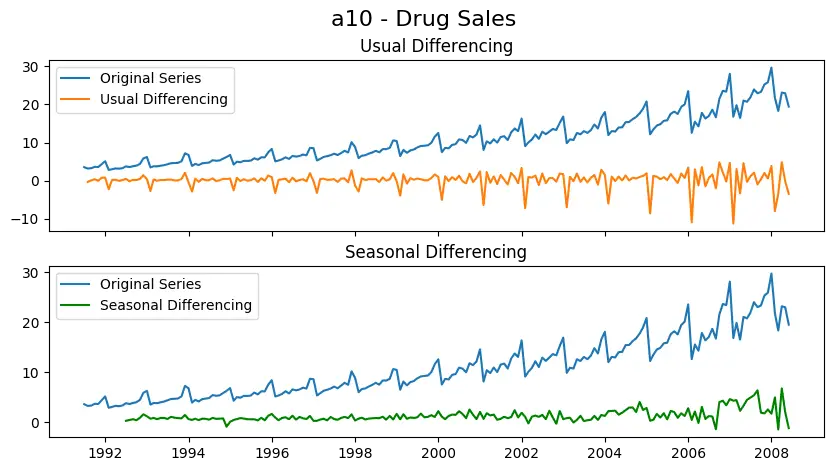

If your time series has defined seasonality, then go for SARIMA, which uses seasonal differencing.

Seasonal differencing is similar to regular differencing, but instead of subtracting consecutive terms, you subtract the value from the previous season.
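In pandas this is the difference between `diff(1)` and `diff(12)` for monthly data. A small sketch on a made-up series with a linear trend and a pattern that repeats every 12 months:

```python
import numpy as np
import pandas as pd

# Hypothetical monthly series: linear trend plus a repeating 12-month pattern
months = pd.date_range("2010-01-01", periods=48, freq="MS")
pattern = np.array([5, 3, 0, -2, -4, -5, -4, -2, 0, 2, 4, 6], dtype=float)
t = np.arange(48)
series = pd.Series(0.5 * t + pattern[t % 12], index=months)

# Regular differencing: subtract the previous month
regular_diff = series.diff(1).dropna()

# Seasonal differencing: subtract the same month of the previous year
seasonal_diff = series.diff(12).dropna()

print("std after regular differencing: ", round(regular_diff.std(), 3))
print("std after seasonal differencing:", round(seasonal_diff.std(), 3))
```

The regular difference removes the trend but keeps the seasonal swings, while the seasonal difference removes the repeating pattern and leaves only the yearly change.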

So, the model will be represented as SARIMA(p,d,q)x(P,D,Q), where P, D and Q are the SAR order, the order of seasonal differencing and the SMA order respectively, and 'x' is the frequency of the time series.

If your model has well defined seasonal patterns, then enforce D=1 for a given frequency ‘x’.

Here’s some practical advice on building SARIMA model:

As a general rule, set the model parameters such that D never exceeds one. And the total differencing ‘d + D’ never exceeds 2. Try to keep only either SAR or SMA terms if your model has seasonal components.

Let’s build an SARIMA model on 'a10' – the drug sales dataset.

As you can clearly see, the seasonal spikes are intact after applying the usual differencing (lag 1), whereas they are rectified after seasonal differencing.

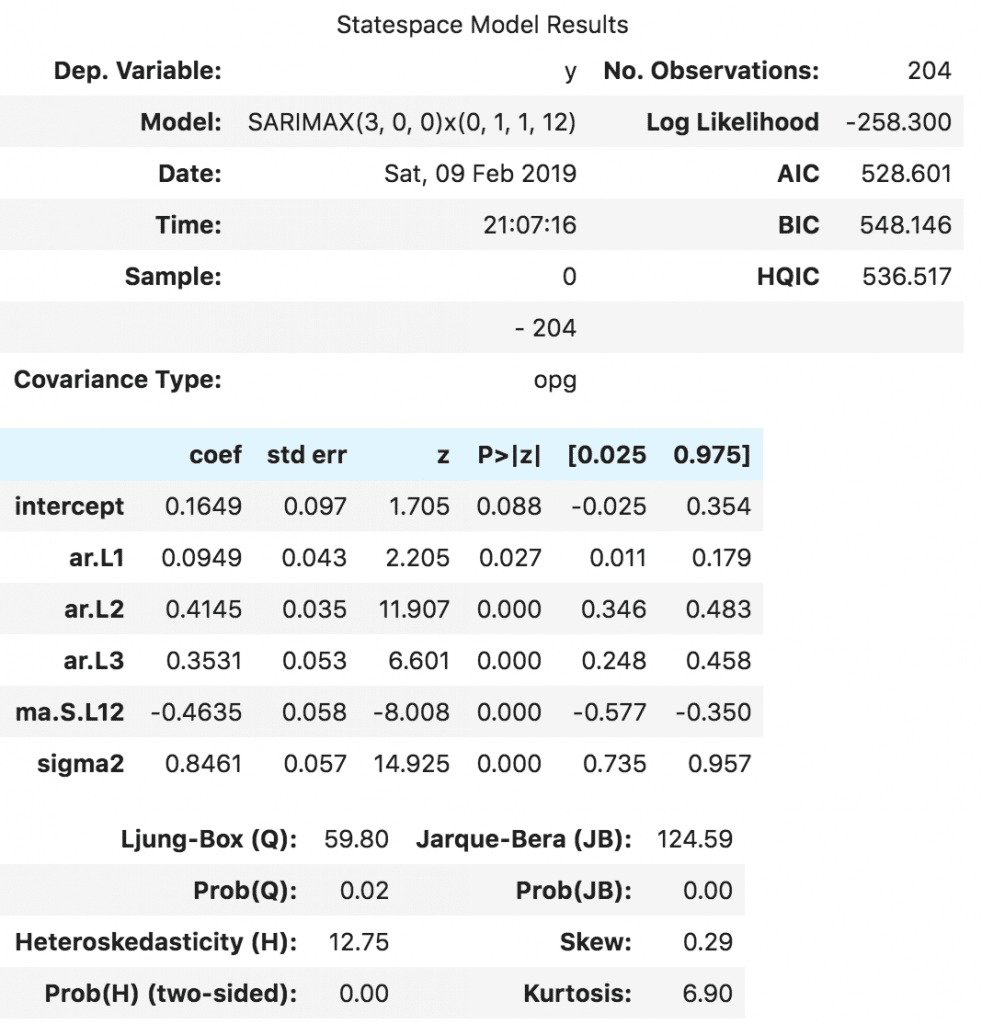

Let’s build the SARIMA model using pmdarima’s auto_arima(). To do that, you need to set seasonal=True, set the frequency m=12 for a month-wise series and enforce D=1.

The model has estimated the AIC and the P values of the coefficients look significant. Let’s look at the residual diagnostics plot.

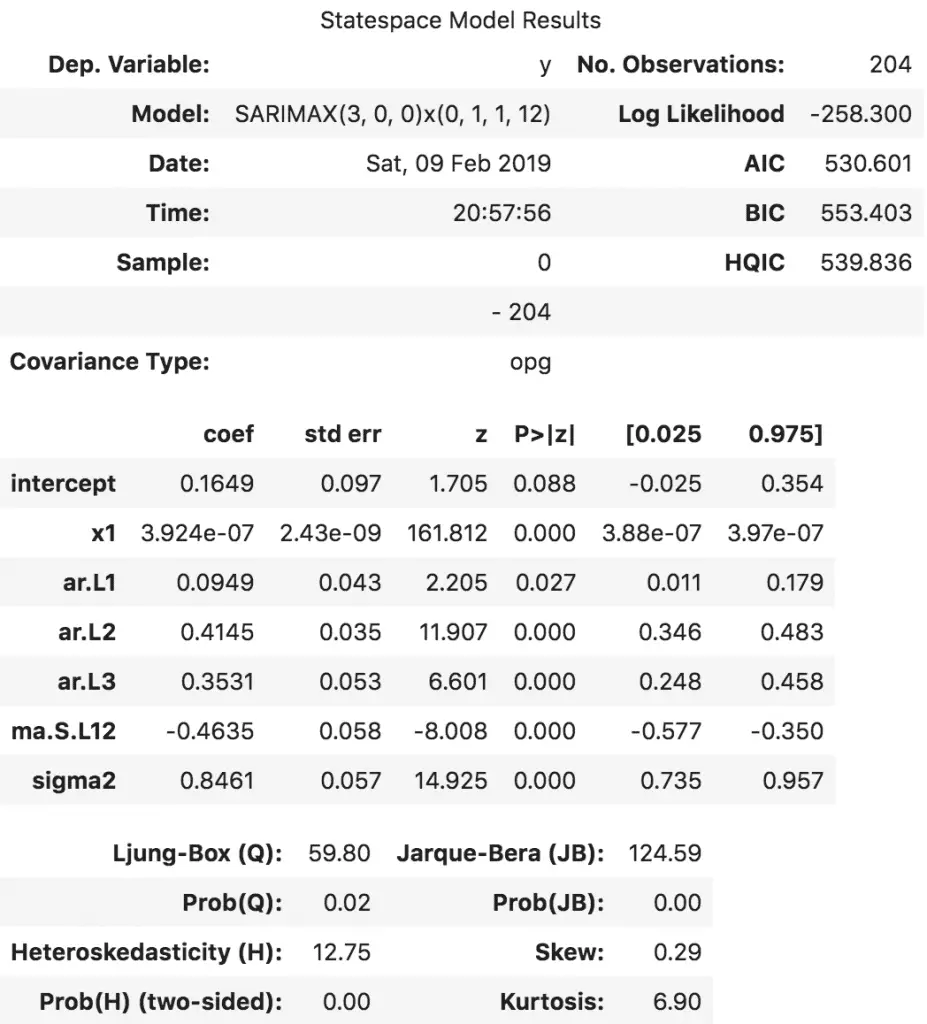

The best model SARIMAX(3, 0, 0)x(0, 1, 1, 12) has an AIC of 528.6 and the P Values are significant.

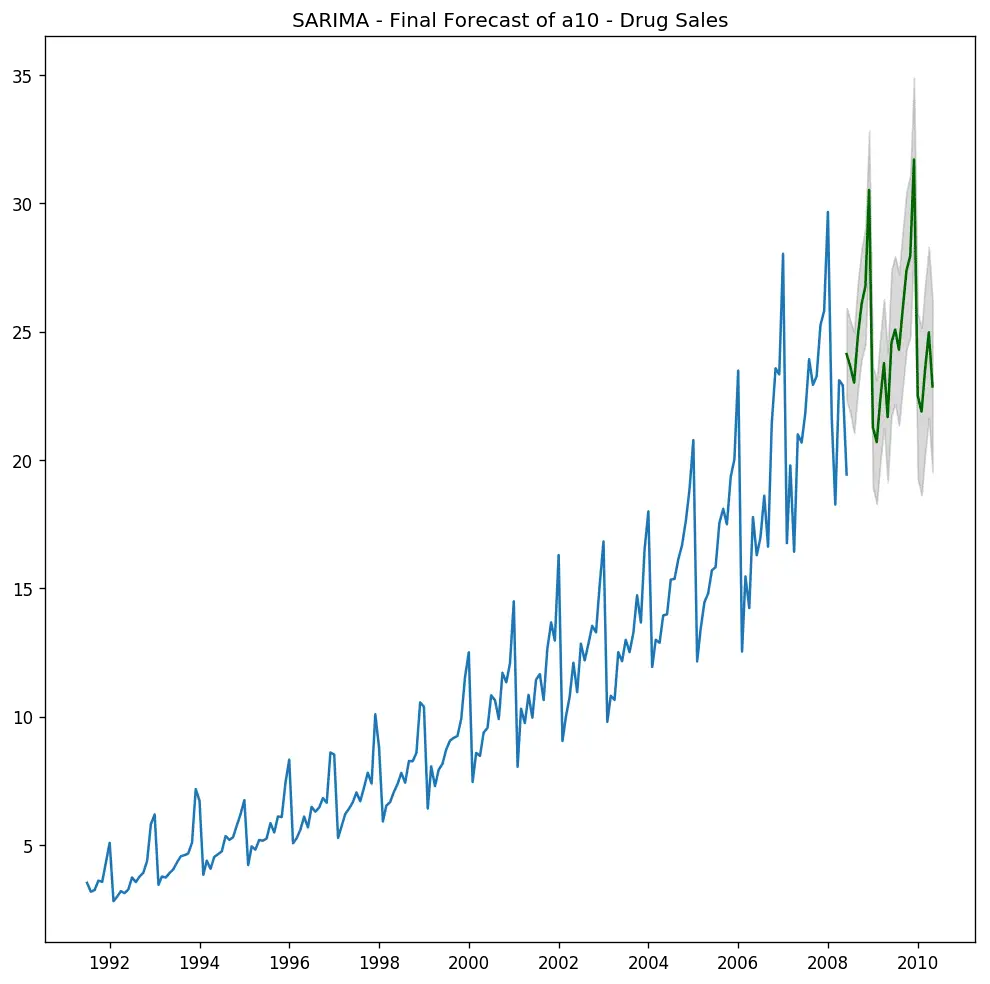

Let’s forecast for the next 24 months.

There you have a nice forecast that captures the expected seasonal demand pattern.

15. How to build SARIMAX Model with exogenous variable

The SARIMA model we built is good. I would stop here typically.

But for the sake of completeness, let’s try and force an external predictor, also called an ‘exogenous variable’, into the model. This model is called the SARIMAX model.

The only requirement to use an exogenous variable is that you need to know the value of the variable during the forecast period as well.

For the sake of demonstration, I am going to use the seasonal index from the classical seasonal decomposition on the latest 36 months of data.

Why the seasonal index? Isn’t SARIMA already modeling the seasonality, you ask?

You are correct.

But also, I want to see how the model looks if we force the recent seasonality pattern into the training and forecast.

Secondly, this is a good variable for demo purposes. So you can use this as a template and plug any of your variables into the code. The seasonal index is a good exogenous variable because it repeats every frequency cycle, 12 months in this case.

So, you will always know what values the seasonal index will hold for the future forecasts.

Let’s compute the seasonal index so that it can be forced as an (exogenous) predictor to the SARIMAX model.

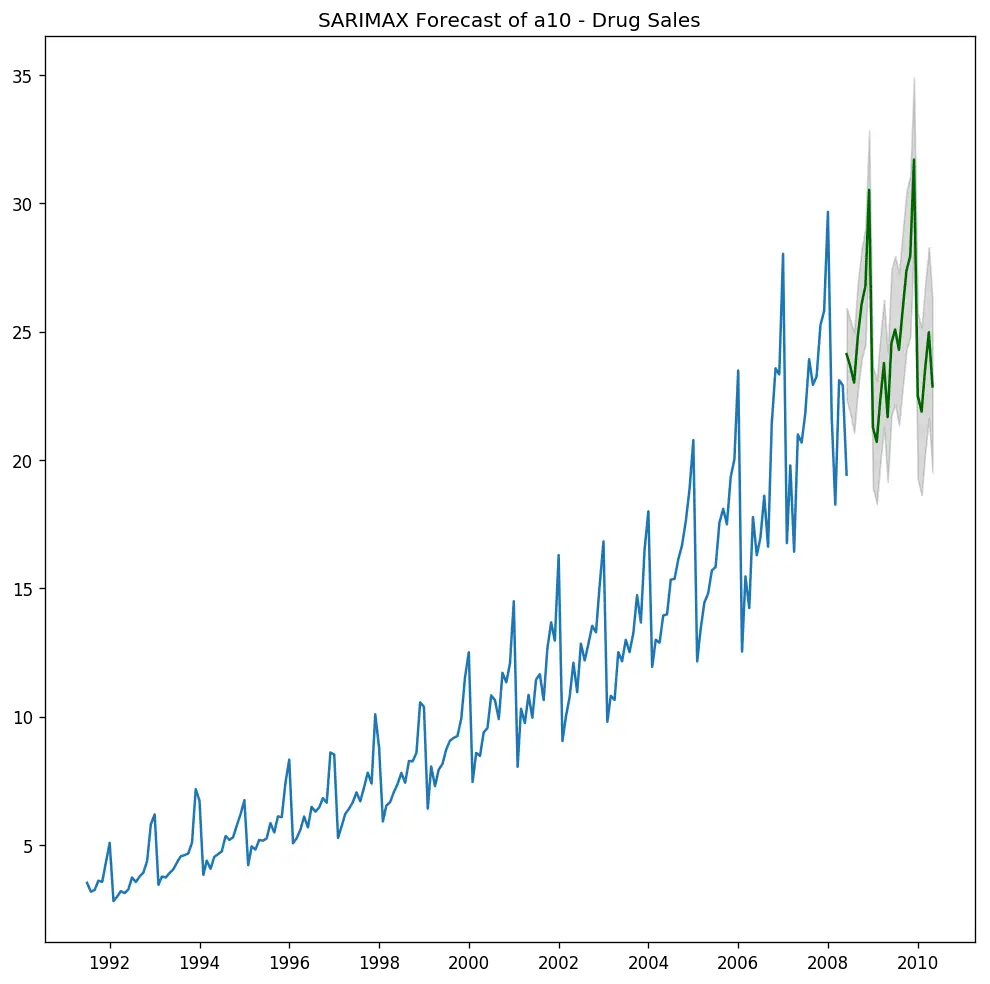

The exogenous variable (seasonal index) is ready. Let’s build the SARIMAX model.

So, we have the model with the exogenous term. But the coefficient is very small for x1 , so the contribution from that variable will be negligible. Let’s forecast it anyway.

We have effectively forced the seasonal effect of the latest 3 years into the model instead of the entire history.

Alright, let’s forecast into the next 24 months. For this, you need the value of the seasonal index for the next 24 months.

16. Practice Exercises

In the AirPassengers dataset, go back 12 months in time and build the SARIMA forecast for the next 12 months.

- Is the series stationary? If not, what sort of differencing is required?

- What is the order of your best model?

- What is the AIC of your model?

- What is the MAPE achieved in OOT cross-validation?

- What is the order of the best model selected by the auto_arima() method?

17. Conclusion

Congrats if you reached this point. Give yourself a BIG hug if you were able to solve the practice exercises.

I really hope you found this useful.

We have covered a lot of concepts starting from the very basics of forecasting, AR, MA, ARIMA, SARIMA and finally the SARIMAX model. If you have any questions please write in the comments section. Meanwhile, I will work on the next article.

Happy Learning!


Lesson 22: Plotting time series and generated data ¶

So far, we have only seen how to plot measured data. We have made plots using Bokeh where each glyph represents a single measurement. We used some glyphs that did not represent single measurements when we made box plots, histograms, and formal (staircase-like) ECDFs, but the construction of those plots was abstracted away in the iqplot package. In this lesson, we will learn how to make plots where the points are related to one another via an ordering so that we can connect the points with lines. It is one extra aspect to think about when constructing a graphic.

Plotting time series data ¶

One class of measured data we have not considered is time series data. Time series data are typically not plotted as points, but rather with joined lines. To get some experience plotting data sets like this, we will use some data from Markus Meister’s group, collected by Dawna Bagherian and Kyu Lee. The file ~/git/bootcamp/data/retina_spikes.csv contains the data set. They put electrodes in the retinal cells of a mouse and measured voltage. From the time trace of voltage, they can detect and characterize spiking events. The first column of the CSV file is the time in milliseconds (ms) that the measurement was taken, and the second column is the voltage in units of microvolts (µV). Let’s load in the data.

Let’s create a figure to hold our plot. We will make it wide and narrow, since it is a long time course of potentials.

To make a plot of these data, we use the p.line() method. This makes a line with joints at each measurement. I usually choose to specify the line_width=2 kwarg, giving me a line two pixels in width, since I find the default of one pixel to be a bit thin. You can check out the available line properties in the Bokeh docs.
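As a rough sketch of these steps, the snippet below builds a wide, short figure and draws a trace with `p.line()`. The data are synthetic and the column names `"t (ms)"` and `"V (µV)"` are assumptions standing in for whatever the CSV file actually uses.

```python
import numpy as np
import pandas as pd
import bokeh.plotting

# Synthetic stand-in for the voltage trace: noise with a few sharp dips
rng = np.random.default_rng(0)
t = np.arange(0, 1000, 0.5)
V = rng.normal(scale=5.0, size=t.size)
V[(t % 200) < 2] -= 80.0
df = pd.DataFrame({"t (ms)": t, "V (µV)": V})

# Wide, short figure for a long time course
p = bokeh.plotting.figure(
    frame_width=500,
    frame_height=200,
    x_axis_label="time (ms)",
    y_axis_label="voltage (µV)",
)

# Join the measurements with a line two pixels wide
p.line(source=df, x="t (ms)", y="V (µV)", line_width=2)

# Call bokeh.io.show(p) to render the plot
```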

We can clearly see several spiking events in the data. When we zoom, we can resolve the finer structure.

Plotting generated data ¶

You’re now a pro at plotting measured data. But sometimes, you want to plot smooth functions. To do this, you can use Numpy and/or Scipy to generate arrays of values of smooth functions.

We will plot the Airy disk , which we encounter in biology when doing microscopy as the diffraction pattern of light passing through a pinhole. Here is a picture of the diffraction pattern from a laser (with the main peak overexposed).

The equation for the radial light intensity of an Airy disk is

\begin{align} \frac{I(x)}{I_0} = 4 \left(\frac{J_1(x)}{x}\right)^2, \end{align}

where \(I_0\) is the maximum intensity (the intensity at the center of the image) and \(x\) is the radial distance from the center. Here, \(J_1(x)\) is the first order Bessel function of the first kind. Yeesh. How do we plot that ?

Fortunately, SciPy has lots of special functions available. Specifically, scipy.special.j1() computes exactly what we are after! We pass in a NumPy array that has the values of \(x\) we want to plot and then compute the \(y\) -values using the expression for the normalized intensity.

To plot a smooth curve, we use the np.linspace() function with lots of points. We then connect the points with straight lines, which to the eye look like a smooth curve. Let’s try it. We’ll use 400 points, which I find is a good rule of thumb for not-too-oscillating functions.
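A minimal sketch of the computation follows. The range of -15 to 15 for \(x\) is an assumption chosen for illustration; with 400 evenly spaced points on that range, \(x = 0\) is not one of them.

```python
import numpy as np
import scipy.special

# 400 points on a symmetric range; zero itself is skipped
x = np.linspace(-15, 15, 400)

# Normalized intensity I(x)/I_0 = 4 (J_1(x) / x)^2
norm_I = 4 * (scipy.special.j1(x) / x) ** 2

print("x = 0 in array:", bool(np.any(x == 0)))
print("peak normalized intensity:", norm_I.max())
```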

Now that we have the values we want to plot, we could construct a Pandas DataFrame to pass in as the source to p.line() . We do not need to take this extra step, though. If we instead leave source unspecified, and pass in NumPy arrays for x and y , Bokeh will directly use those in constructing the plot.

We could also plot dots (which doesn’t make sense here, but we’ll show it just to see how the line joining works to make a plot of a smooth function).

There is one detail I swept under the rug here. What happens if we compute the function for \(x = 0\) ?

We get a RuntimeWarning because we divided by zero. We know that

\begin{align} \lim_{x\to 0} \frac{J_1(x)}{x} = \frac{1}{2}, \end{align}

so we could write a new function that checks if \(x = 0\) and returns the appropriate limit for \(x = 0\) . In the x array I constructed for the plot, we hopped over zero, so it was never evaluated. If we were being careful, we could write our own Airy function that deals with this.
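One possible version of such a function is sketched here. The name `airy_disk` is made up for this example. It fills in the limiting value \(4 \cdot (1/2)^2 = 1\) wherever \(x = 0\) and uses the formula everywhere else.

```python
import numpy as np
import scipy.special

def airy_disk(x):
    """Normalized Airy disk intensity, with the x = 0 limit handled."""
    x = np.atleast_1d(np.asarray(x, dtype=float))

    # Start from the limiting value at x = 0, which is 4 * (1/2)**2 = 1
    out = np.ones_like(x)

    # Apply the formula only where division is safe
    nonzero = x != 0
    out[nonzero] = 4 * (scipy.special.j1(x[nonzero]) / x[nonzero]) ** 2
    return out

x = np.linspace(-15, 15, 401)  # 401 points, so x = 0 is included
norm_I = airy_disk(x)
print("value at x = 0:", airy_disk(0.0)[0])
```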

Computing environment ¶
