
Critical Thinking Testing and Assessment

The purpose of assessment in instruction is improvement. The purpose of assessing instruction for critical thinking is improving the teaching of discipline-based thinking (historical, biological, sociological, mathematical, etc.). It is to improve students’ abilities to think their way through content using disciplined skill in reasoning. The more particular we can be about what we want students to learn about critical thinking, the better we can devise instruction with that particular end in view.

The Foundation for Critical Thinking offers assessment instruments which share in the same general goal: to enable educators to gather evidence relevant to determining the extent to which instruction is teaching students to think critically (in the process of learning content). To this end, the Fellows of the Foundation recommend:

that academic institutions and units establish an oversight committee for critical thinking, and

that this oversight committee utilize a combination of assessment instruments (the more the better) to generate incentives for faculty, by providing them with as much evidence as feasible of the actual state of instruction for critical thinking.

The following instruments are available to generate evidence relevant to critical thinking teaching and learning:

Course Evaluation Form: Provides evidence of whether, and to what extent, students perceive faculty as fostering critical thinking in instruction (course by course). Machine-scoreable.

Online Critical Thinking Basic Concepts Test: Provides evidence of whether, and to what extent, students understand the fundamental concepts embedded in critical thinking (and hence tests student readiness to think critically). Machine-scoreable.

Critical Thinking Reading and Writing Test: Provides evidence of whether, and to what extent, students can read closely and write substantively (and hence tests students' abilities to read and write critically). Short-answer.

International Critical Thinking Essay Test: Provides evidence of whether, and to what extent, students are able to analyze and assess excerpts from textbooks or professional writing. Short-answer.

Commission Study Protocol for Interviewing Faculty Regarding Critical Thinking: Provides evidence of whether, and to what extent, critical thinking is being taught at a college or university. Can be adapted for high school. Based on the California Commission Study. Short-answer.

Protocol for Interviewing Faculty Regarding Critical Thinking: Provides evidence of whether, and to what extent, critical thinking is being taught at a college or university. Can be adapted for high school. Short-answer.

Protocol for Interviewing Students Regarding Critical Thinking: Provides evidence of whether, and to what extent, students are learning to think critically at a college or university. Can be adapted for high school. Short-answer.

Criteria for Critical Thinking Assignments: Can be used by faculty in designing classroom assignments, or by administrators in assessing the extent to which faculty are fostering critical thinking.

Rubrics for Assessing Student Reasoning Abilities: A useful tool in assessing the extent to which students are reasoning well through course content.

All of the above assessment instruments can be used as part of pre- and post-assessment strategies to gauge development over various time periods.

Consequential Validity

All of the above assessment instruments, when used appropriately and graded accurately, should lead to a high degree of consequential validity. In other words, the use of the instruments should cause teachers to teach in such a way as to foster critical thinking in their various subjects. In this light, for students to perform well on the various instruments, teachers will need to design instruction so that students can perform well on them. Students cannot become skilled in critical thinking without learning (first) the concepts and principles that underlie critical thinking and (second) applying them in a variety of forms of thinking: historical thinking, sociological thinking, biological thinking, etc. Students cannot become skilled in analyzing and assessing reasoning without practicing it. However, when they have routine practice in paraphrasing, summarizing, analyzing, and assessing, they will develop skills of mind requisite to the art of thinking well within any subject or discipline, not to mention thinking well within the various domains of human life.

Supplement to Critical Thinking

How can one assess, for purposes of instruction or research, the degree to which a person possesses the dispositions, skills and knowledge of a critical thinker?

In psychometrics, assessment instruments are judged according to their validity and reliability.

Roughly speaking, an instrument is valid if it measures accurately what it purports to measure, given standard conditions. More precisely, the degree of validity is “the degree to which evidence and theory support the interpretations of test scores for proposed uses of tests” (American Educational Research Association 2014: 11). In other words, a test is not valid or invalid in itself. Rather, validity is a property of an interpretation of a given score on a given test for a specified use. Determining the degree of validity of such an interpretation requires collection and integration of the relevant evidence, which may be based on test content, test takers’ response processes, a test’s internal structure, relationship of test scores to other variables, and consequences of the interpretation (American Educational Research Association 2014: 13–21). Criterion-related evidence consists of correlations between scores on the test and performance on another test of the same construct; its weight depends on how well supported is the assumption that the other test can be used as a criterion. Content-related evidence is evidence that the test covers the full range of abilities that it claims to test. Construct-related evidence is evidence that a correct answer reflects good performance of the kind being measured and an incorrect answer reflects poor performance.

An instrument is reliable if it consistently produces the same result, whether across different forms of the same test (parallel-forms reliability), across different items (internal consistency), across different administrations to the same person (test-retest reliability), or across ratings of the same answer by different people (inter-rater reliability). Internal consistency should be expected only if the instrument purports to measure a single undifferentiated construct, and thus should not be expected of a test that measures a suite of critical thinking dispositions or critical thinking abilities, assuming that some people are better in some of the respects measured than in others (for example, very willing to inquire but rather closed-minded). Otherwise, reliability is a necessary but not a sufficient condition of validity; a standard example of a reliable instrument that is not valid is a bathroom scale that consistently under-reports a person’s weight.
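The point about internal consistency can be illustrated with a small sketch. It computes Cronbach's alpha, one common internal-consistency statistic (the text above does not name a specific statistic, so this choice is an assumption). The response data and the item groupings are hypothetical, invented only to show that a test mixing two unrelated dispositions can have lower overall consistency than either of its sub-scales.

```python
from statistics import pvariance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of rows (one row of item scores per test taker)."""
    k = len(responses[0])  # number of items
    item_variances = [pvariance([row[i] for row in responses]) for i in range(k)]
    total_variance = pvariance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical data: columns 0-1 probe willingness to inquire,
# columns 2-3 probe open-mindedness. The two dispositions vary independently.
responses = [
    [5, 5, 1, 1],  # very willing to inquire, rather closed-minded
    [5, 5, 5, 5],
    [1, 1, 1, 1],
    [1, 1, 5, 5],
]

alpha_full = cronbach_alpha(responses)                          # whole test
alpha_inquiry = cronbach_alpha([row[:2] for row in responses])  # one sub-scale

print(f"whole test: {alpha_full:.3f}, inquiry sub-scale: {alpha_inquiry:.3f}")
```

In this toy data each sub-scale is perfectly consistent, while the whole test is noticeably less so, which is what one would expect of an instrument that measures a suite of dispositions rather than a single construct.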

Assessing dispositions is difficult if one uses a multiple-choice format with known adverse consequences of a low score. It is pretty easy to tell what answer to the question “How open-minded are you?” will get the highest score and to give that answer, even if one knows that the answer is incorrect. If an item probes less directly for a critical thinking disposition, for example by asking how often the test taker pays close attention to views with which the test taker disagrees, the answer may differ from reality because of self-deception or simple lack of awareness of one’s personal thinking style, and its interpretation is problematic, even if factor analysis enables one to identify a distinct factor measured by a group of questions that includes this one (Ennis 1996). Nevertheless, Facione, Sánchez, and Facione (1994) used this approach to develop the California Critical Thinking Dispositions Inventory (CCTDI). They began with 225 statements expressive of a disposition towards or away from critical thinking (using the long list of dispositions in Facione 1990a), validated the statements with talk-aloud and conversational strategies in focus groups to determine whether people in the target population understood the items in the way intended, administered a pilot version of the test with 150 items, and eliminated items that failed to discriminate among test takers or were inversely correlated with overall results or added little refinement to overall scores (Facione 2000). They used item analysis and factor analysis to group the measured dispositions into seven broad constructs: open-mindedness, analyticity, cognitive maturity, truth-seeking, systematicity, inquisitiveness, and self-confidence (Facione, Sánchez, and Facione 1994). The resulting test consists of 75 agree-disagree statements and takes 20 minutes to administer. 
A repeated disturbing finding is that North American students taking the test tend to score low on the truth-seeking sub-scale (on which a low score results from agreeing to such statements as the following: “To get people to agree with me I would give any reason that worked”. “Everyone always argues from their own self-interest, including me”. “If there are four reasons in favor and one against, I’ll go with the four”.) Development of the CCTDI made it possible to test whether good critical thinking abilities and good critical thinking dispositions go together, in which case it might be enough to teach one without the other. Facione (2000) reports that administration of the CCTDI and the California Critical Thinking Skills Test (CCTST) to almost 8,000 post-secondary students in the United States revealed a statistically significant but weak correlation between total scores on the two tests, and also between paired sub-scores from the two tests. The implication is that both abilities and dispositions need to be taught, that one cannot expect improvement in one to bring with it improvement in the other.
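A correlation can be statistically significant and still weak, as the following sketch shows. The source does not report the correlation coefficient, so the value `r = 0.2` below is purely hypothetical, and the sample size of 8,000 is a rounded stand-in for "almost 8,000". The sketch uses the standard t-statistic for a Pearson correlation.

```python
import math


def t_statistic_for_r(r, n):
    """t-statistic for testing whether a Pearson correlation r differs from zero."""
    return r * math.sqrt((n - 2) / (1 - r * r))


r = 0.2   # hypothetical weak correlation (not a figure from Facione 2000)
n = 8000  # rounded stand-in for "almost 8,000" test takers

t = t_statistic_for_r(r, n)
shared_variance = r * r  # proportion of variance the two scores share

print(f"t = {t:.1f}, shared variance = {shared_variance:.0%}")
```

With a sample this large, even a small correlation yields a t-statistic far beyond conventional significance thresholds, yet under the assumed value the two scores would share only a few percent of their variance. That is the sense in which improvement in abilities cannot be expected to bring improvement in dispositions with it.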

A more direct way of assessing critical thinking dispositions would be to see what people do when put in a situation where the dispositions would reveal themselves. Ennis (1996) reports promising initial work with guided open-ended opportunities to give evidence of dispositions, but no standardized test seems to have emerged from this work. There are however standardized aspect-specific tests of critical thinking dispositions. The Critical Problem Solving Scale (Berman et al. 2001: 518) takes as a measure of the disposition to suspend judgment the number of distinct good aspects attributed to an option judged to be the worst among those generated by the test taker. Stanovich, West and Toplak (2011: 800–810) list tests developed by cognitive psychologists of the following dispositions: resistance to miserly information processing, resistance to myside thinking, absence of irrelevant context effects in decision-making, actively open-minded thinking, valuing reason and truth, tendency to seek information, objective reasoning style, tendency to seek consistency, sense of self-efficacy, prudent discounting of the future, self-control skills, and emotional regulation.

It is easier to measure critical thinking skills or abilities than to measure dispositions. The following eight currently available standardized tests purport to measure them: the Watson-Glaser Critical Thinking Appraisal (Watson & Glaser 1980a, 1980b, 1994), the Cornell Critical Thinking Tests Level X and Level Z (Ennis & Millman 1971; Ennis, Millman, & Tomko 1985, 2005), the Ennis-Weir Critical Thinking Essay Test (Ennis & Weir 1985), the California Critical Thinking Skills Test (Facione 1990b, 1992), the Halpern Critical Thinking Assessment (Halpern 2016), the Critical Thinking Assessment Test (Center for Assessment & Improvement of Learning 2017), the Collegiate Learning Assessment (Council for Aid to Education 2017), the HEIghten Critical Thinking Assessment (https://territorium.com/heighten/), and a suite of critical thinking assessments for different groups and purposes offered by Insight Assessment (https://www.insightassessment.com/products). The Critical Thinking Assessment Test (CAT) is unique among them in being designed for use by college faculty to help them improve their development of students’ critical thinking skills (Haynes et al. 2015; Haynes & Stein 2021). Also, for some years the United Kingdom body OCR (Oxford Cambridge and RSA Examinations) awarded AS and A Level certificates in critical thinking on the basis of an examination (OCR 2011). Many of these standardized tests have received scholarly evaluations at the hands of, among others, Ennis (1958), McPeck (1981), Norris and Ennis (1989), Fisher and Scriven (1997), Possin (2008, 2013a, 2013b, 2013c, 2014, 2020) and Hatcher and Possin (2021). 
Their evaluations provide a useful set of criteria that such tests ideally should meet, as does the description by Ennis (1984) of problems in testing for competence in critical thinking: the soundness of multiple-choice items, the clarity and soundness of instructions to test takers, the information and mental processing used in selecting an answer to a multiple-choice item, the role of background beliefs and ideological commitments in selecting an answer to a multiple-choice item, the tenability of a test’s underlying conception of critical thinking and its component abilities, the set of abilities that the test manual claims are covered by the test, the extent to which the test actually covers these abilities, the appropriateness of the weighting given to various abilities in the scoring system, the accuracy and intellectual honesty of the test manual, the interest of the test to the target population of test takers, the scope for guessing, the scope for choosing a keyed answer by being test-wise, precautions against cheating in the administration of the test, clarity and soundness of materials for training essay graders, inter-rater reliability in grading essays, and clarity and soundness of advance guidance to test takers on what is required in an essay. Rear (2019) has challenged the use of standardized tests of critical thinking as a way to measure educational outcomes, on the grounds that they (1) fail to take into account disputes about conceptions of critical thinking, (2) are not completely valid or reliable, and (3) fail to evaluate skills used in real academic tasks. He proposes instead assessments based on discipline-specific content.

There are also aspect-specific standardized tests of critical thinking abilities. Stanovich, West and Toplak (2011: 800–810) list tests of probabilistic reasoning, insights into qualitative decision theory, knowledge of scientific reasoning, knowledge of rules of logical consistency and validity, and economic thinking. They also list instruments that probe for irrational thinking, such as superstitious thinking, belief in the superiority of intuition, over-reliance on folk wisdom and folk psychology, belief in “special” expertise, financial misconceptions, overestimation of one’s introspective powers, dysfunctional beliefs, and a notion of self that encourages egocentric processing. They regard these tests along with the previously mentioned tests of critical thinking dispositions as the building blocks for a comprehensive test of rationality, whose development (they write) may be logistically difficult and would require millions of dollars.

A superb example of assessment of an aspect of critical thinking ability is the Test on Appraising Observations (Norris & King 1983, 1985, 1990a, 1990b), which was designed for classroom administration to senior high school students. The test focuses entirely on the ability to appraise observation statements and in particular on the ability to determine in a specified context which of two statements there is more reason to believe. According to the test manual (Norris & King 1985, 1990b), a person’s score on the multiple-choice version of the test, which is the number of items that are answered correctly, can justifiably be given either a criterion-referenced or a norm-referenced interpretation.

On a criterion-referenced interpretation, those who do well on the test have a firm grasp of the principles for appraising observation statements, and those who do poorly have a weak grasp of them. This interpretation can be justified by the content of the test and the way it was developed, which incorporated a method of controlling for background beliefs articulated and defended by Norris (1985). Norris and King synthesized from judicial practice, psychological research and common-sense psychology 31 principles for appraising observation statements, in the form of empirical generalizations about tendencies, such as the principle that observation statements tend to be more believable than inferences based on them (Norris & King 1984). They constructed items in which exactly one of the 31 principles determined which of two statements was more believable. Using a carefully constructed protocol, they interviewed about 100 students who responded to these items in order to determine the thinking that led them to choose the answers they did (Norris & King 1984). In several iterations of the test, they adjusted items so that selection of the correct answer generally reflected good thinking and selection of an incorrect answer reflected poor thinking. Thus they have good evidence that good performance on the test is due to good thinking about observation statements and that poor performance is due to poor thinking about observation statements. Collectively, the 50 items on the final version of the test require application of 29 of the 31 principles for appraising observation statements, with 13 principles tested by one item, 12 by two items, three by three items, and one by four items. Thus there is comprehensive coverage of the principles for appraising observation statements. Fisher and Scriven (1997: 135–136) judge the items to be well worked and sound, with one exception. 
The test is clearly written at a grade 6 reading level, meaning that poor performance cannot be attributed to difficulties in reading comprehension by the intended adolescent test takers. The stories that frame the items are realistic, and are engaging enough to stimulate test takers’ interest. Thus the most plausible explanation of a given score on the test is that it reflects roughly the degree to which the test taker can apply principles for appraising observations in real situations. In other words, there is good justification of the proposed interpretation that those who do well on the test have a firm grasp of the principles for appraising observation statements and those who do poorly have a weak grasp of them.
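The coverage figures reported above can be checked with simple arithmetic. The sketch below uses only the counts stated in the text and assumes, as the text says, that each item turns on exactly one principle; the variable names are illustrative.

```python
# Number of principles tested by exactly 1, 2, 3, or 4 items, as reported above.
principles_by_item_count = {1: 13, 2: 12, 3: 3, 4: 1}

principles_tested = sum(principles_by_item_count.values())
total_items = sum(items * count for items, count in principles_by_item_count.items())
principles_untested = 31 - principles_tested

print(principles_tested, total_items, principles_untested)
```

The totals agree with the stated 29 principles and 50 items, leaving two of the 31 principles untested.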

To get norms for performance on the test, Norris and King arranged for seven groups of high school students in different types of communities and with different levels of academic ability to take the test. The test manual includes percentiles, means, and standard deviations for each of these seven groups. These norms allow teachers to compare the performance of their class on the test to that of a similar group of students.
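A norm-referenced interpretation of this kind can be sketched as follows. The norm-group scores are invented for illustration and are not taken from the test manual, and `percentile_rank` is a hypothetical helper that uses one common convention (the percentage of the norm group scoring at or below a given score).

```python
from statistics import mean, pstdev


def percentile_rank(score, norm_scores):
    """Percentage of the norm group scoring at or below the given score."""
    at_or_below = sum(1 for s in norm_scores if s <= score)
    return 100 * at_or_below / len(norm_scores)


# Hypothetical norm group (scores out of 50); not data from Norris & King.
norm_scores = [22, 25, 28, 30, 31, 33, 35, 36, 38, 41]

student_score = 33
rank = percentile_rank(student_score, norm_scores)
z = (student_score - mean(norm_scores)) / pstdev(norm_scores)

print(f"percentile rank: {rank:.0f}, z-score: {z:.2f}")
```

A teacher would substitute the published norms for the comparison group that most resembles the class.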

Copyright © 2022 by David Hitchcock < hitchckd @ mcmaster . ca >

The Stanford Encyclopedia of Philosophy is copyright © 2024 by The Metaphysics Research Lab, Department of Philosophy, Stanford University

Library of Congress Catalog Data: ISSN 1095-5054

Yes, We Can Define, Teach, and Assess Critical Thinking Skills

Jeff Heyck-Williams (He, His, Him) Director of the Two Rivers Learning Institute in Washington, DC

Today’s learners face an uncertain present and a rapidly changing future that demand far different skills and knowledge than were needed in the 20th century. We also know so much more about enabling deep, powerful learning than we ever did before. Our collective future depends on how well young people prepare for the challenges and opportunities of 21st-century life.

While the idea of teaching critical thinking has been bandied around in education circles since at least the time of John Dewey, it has taken greater prominence in the education debates with the advent of the term “21st century skills” and discussions of deeper learning. There is increasing agreement among education reformers that critical thinking is an essential ingredient for long-term success for all of our students.

However, there are still those in the education establishment and in the media who argue that critical thinking isn’t really a thing, or that these skills aren’t well defined and, even if they could be defined, they can’t be taught or assessed.

I have to disagree with those naysayers. Critical thinking is a thing. We can define it; we can teach it; and we can assess it. In fact, as part of a multi-year Assessment for Learning Project, Two Rivers Public Charter School in Washington, D.C., has done just that.

Before I dive into what we have done, I want to acknowledge that some of the criticism has merit.

First, there are those who argue that critical thinking can only exist when students have a vast fund of knowledge, meaning that a student cannot think critically without something substantive to think about. I agree. Students do need a robust foundation of core content knowledge to effectively think critically. Schools still have a responsibility for building students’ content knowledge.

However, I would argue that students don’t need to wait to think critically until after they have mastered some arbitrary amount of knowledge. They can start building critical thinking skills when they walk in the door. All students come to school with experience and knowledge which they can immediately think critically about. In fact, some of the thinking that they learn to do helps augment and solidify the discipline-specific academic knowledge that they are learning.

The second criticism is that critical thinking skills are always highly contextual. In this argument, the critics make the point that the types of thinking that students do in history are categorically different from the types of thinking students do in science or math. Thus, the idea of teaching broadly defined, content-neutral critical thinking skills is impossible. I agree that there are domain-specific thinking skills that students should learn in each discipline. However, I also believe that there are several generalizable skills that elementary school students can learn that have broad applicability to their academic and social lives. That is what we have done at Two Rivers.

Defining Critical Thinking Skills

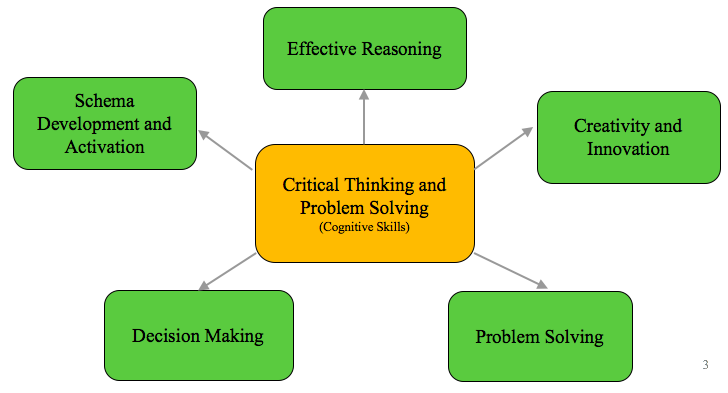

We began this work by first defining what we mean by critical thinking. After a review of the literature and looking at the practice at other schools, we identified five constructs that encompass a set of broadly applicable skills: schema development and activation; effective reasoning; creativity and innovation; problem solving; and decision making.

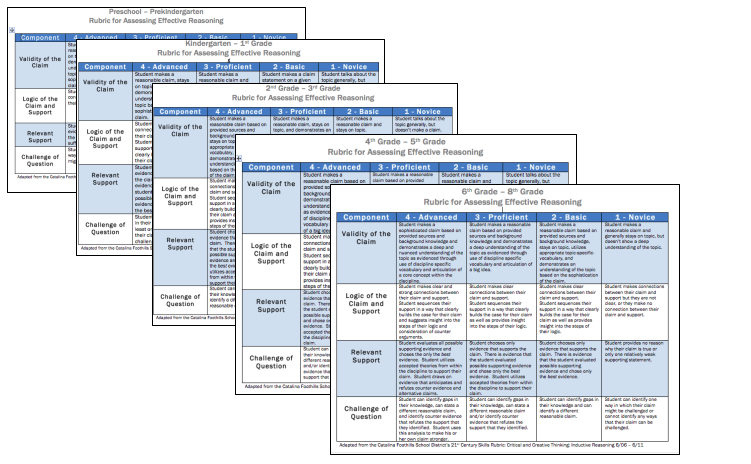

We then created rubrics to provide a concrete vision of what each of these constructs looks like in practice. Working with the Stanford Center for Assessment, Learning and Equity (SCALE), we refined these rubrics to capture clear and discrete skills.

For example, we defined effective reasoning as the skill of creating an evidence-based claim: students need to construct a claim, identify relevant support, link their support to their claim, and identify possible questions or counter claims. Rubrics provide an explicit vision of the skill of effective reasoning for students and teachers. By breaking the rubrics down for different grade bands, we have been able not only to describe what reasoning is but also to delineate how the skills develop in students from preschool through 8th grade.

Before moving on, I want to freely acknowledge that in narrowly defining reasoning as the construction of evidence-based claims we have disregarded some elements of reasoning that students can and should learn. For example, the difference between constructing claims through deductive versus inductive means is not highlighted in our definition. However, by privileging a definition that has broad applicability across disciplines, we are able to gain traction in developing the roots of critical thinking: in this case, the ability to formulate well-supported claims or arguments.

Teaching Critical Thinking Skills

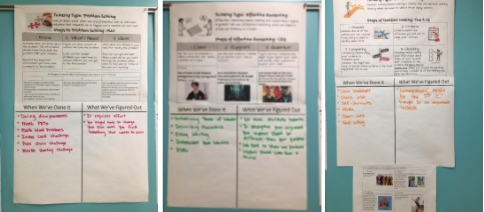

The definitions of critical thinking constructs were only useful to us inasmuch as they translated into practical skills that teachers could teach and students could learn and use. Consequently, we have found that to teach a set of cognitive skills, we needed thinking routines that defined the regular application of these critical thinking and problem-solving skills across domains. Building on Harvard’s Project Zero Visible Thinking work, we have named routines aligned with each of our constructs.

For example, with the construct of effective reasoning, we aligned the Claim-Support-Question thinking routine to our rubric. Teachers then were able to teach students that whenever they were making an argument, the norm in the class was to use the routine in constructing their claim and support. The flexibility of the routine has allowed us to apply it from preschool through 8th grade and across disciplines from science to economics and from math to literacy.

Kathryn Mancino, a 5th grade teacher at Two Rivers, has deliberately taught three of our thinking routines to students using the anchor charts above. Her charts name the components of each routine and have a place for students to record when they’ve used it and what they have figured out about the routine. By using this structure with a chart that can be added to throughout the year, students see the routines as broadly applicable across disciplines and are able to refine their application over time.

Assessing Critical Thinking Skills

By defining specific constructs of critical thinking and building thinking routines that support their implementation in classrooms, we have operated under the assumption that students are developing skills that they will be able to transfer to other settings. However, we recognized both the importance and the challenge of gathering reliable data to confirm this.

With this in mind, we have developed a series of short performance tasks around novel discipline-neutral contexts in which students can apply the constructs of thinking. Through these tasks, we have been able to provide an opportunity for students to demonstrate their ability to transfer the types of thinking beyond the original classroom setting. Once again, we have worked with SCALE to define tasks where students easily access the content but where the cognitive lift requires them to demonstrate their thinking abilities.

These assessments demonstrate that it is possible to capture meaningful data on students’ critical thinking abilities. They are not intended to be high-stakes accountability measures. Instead, they are designed to give students, teachers, and school leaders discrete formative data on hard-to-measure skills.

While it is clearly difficult, and we have not solved all of the challenges to scaling assessments of critical thinking, we can define, teach, and assess these skills. In fact, knowing how important they are for the economy of the future and our democracy, it is essential that we do.

Jeff Heyck-Williams is the director of the Two Rivers Learning Institute and a founder of Two Rivers Public Charter School. He has led work around creating school-wide cultures of mathematics, developing assessments of critical thinking and problem-solving, and supporting project-based learning.

Critical Thinking Skills Toolbox

CTS Tools for Faculty and Student Assessment

A number of critical thinking skills inventories and measures have been developed:

- Watson-Glaser Critical Thinking Appraisal (WGCTA)
- Cornell Critical Thinking Test
- California Critical Thinking Disposition Inventory (CCTDI)
- California Critical Thinking Skills Test (CCTST)
- Health Science Reasoning Test (HSRT)
- Professional Judgment Rating Form (PJRF)
- Teaching for Thinking Student Course Evaluation Form
- Holistic Critical Thinking Scoring Rubric
- Peer Evaluation of Group Presentation Form

Excluding the Watson-Glaser Critical Thinking Appraisal and the Cornell Critical Thinking Test, Facione and Facione developed the critical thinking skills instruments listed above. However, it is important to point out that all of these measures are of questionable utility for dental educators because their content is general rather than specific to dental education. (See Critical Thinking and Assessment.)

Table 7. Purposes of Critical Thinking Skills Instruments

Reliability and Validity

Reliability means that individual scores from an instrument should be the same or nearly the same from one administration of the instrument to another. A reliable instrument can be assumed to be free of bias and measurement error (68). Alpha coefficients are often used to report an estimate of internal consistency. Coefficients of .70 or higher indicate that the instrument is sufficiently reliable when the stakes are moderate. Coefficients of .80 and higher are appropriate when the stakes are high.

Validity means that individual scores from a particular instrument are meaningful, make sense, and allow researchers to draw conclusions from the sample to the population that is being studied (69). Researchers often refer to "content" or "face" validity. Content validity or face validity is the extent to which questions on an instrument are representative of the possible questions that a researcher could ask about that particular content or skill.
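To make the alpha coefficient concrete, the following is a minimal sketch of how Cronbach's alpha could be computed from item-level scores and read against the .70 and .80 thresholds mentioned above. The function names and the sample data are hypothetical and are not drawn from any of the instruments discussed here.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a list of respondents, each a list of item scores.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    """
    n_items = len(scores[0])
    if n_items < 2 or len(scores) < 2:
        raise ValueError("need at least two items and two respondents")
    # Transpose so each tuple holds every respondent's score on one item.
    item_columns = list(zip(*scores))
    item_variance_sum = sum(variance(col) for col in item_columns)
    total_variance = variance([sum(row) for row in scores])
    return n_items / (n_items - 1) * (1 - item_variance_sum / total_variance)


def interpret_alpha(alpha):
    """Map a coefficient onto the thresholds cited in the text."""
    if alpha >= 0.80:
        return "appropriate for high-stakes use"
    if alpha >= 0.70:
        return "appropriate for moderate-stakes use"
    return "below the cited thresholds"


# Hypothetical data: three respondents answering three items.
sample = [[1, 1, 1], [2, 2, 2], [3, 3, 3]]
alpha = cronbach_alpha(sample)
print(round(alpha, 3), interpret_alpha(alpha))
```

In this toy data set every respondent gives the same score on all items, so the items are perfectly consistent with one another and the coefficient reaches its upper bound.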

Watson-Glaser Critical Thinking Appraisal-FS (WGCTA-FS)

The WGCTA-FS is a 40-item inventory created to replace Forms A and B of the original test, which participants reported were too long (70). This inventory assesses test takers' skills in:

(a) Inference: whether an individual discriminates among degrees of truth or falsity of inferences drawn from given data
(b) Recognition of assumptions: whether an individual recognizes whether assumptions are clearly stated
(c) Deduction: whether an individual decides if certain conclusions follow from the information provided
(d) Interpretation: whether an individual considers evidence provided and determines whether generalizations from data are warranted
(e) Evaluation of arguments: whether an individual distinguishes strong and relevant arguments from weak and irrelevant arguments

Researchers investigated the reliability and validity of the WGCTA-FS for subjects in academic fields. Participants included 586 university students. Internal consistencies for the total WGCTA-FS among students majoring in psychology, educational psychology, and special education, including undergraduates and graduates, ranged from .74 to .92. The correlations between course grades and total WGCTA-FS scores for all groups ranged from .24 to .62 and were significant at the p < .05 or p < .01 level. In addition, internal consistency and test-retest reliability for the WGCTA-FS have been measured as .81. The WGCTA-FS was found to be a reliable and valid instrument for measuring critical thinking (71).

Cornell Critical Thinking Test (CCTT)

There are two forms of the CCTT, X and Z. Form X is for students in grades 4-14. Form Z is for advanced and gifted high school students, undergraduate and graduate students, and adults. Reliability estimates for Form Z range from .49 to .87 across the 42 groups who have been tested. Measures of validity were computed in standard conditions, roughly defined as conditions that do not adversely affect test performance. Correlations between Level Z and other measures of critical thinking are about .50 (72). The CCTT is reportedly as predictive of graduate school grades as the Graduate Record Exam (GRE), a measure of aptitude, and the Miller Analogies Test, and tends to correlate between .2 and .4 (73).

California Critical Thinking Disposition Inventory (CCTDI)

Facione and Facione have reported significant relationships between the CCTDI and the CCTST. When faculty focus on critical thinking in planning curriculum development, modest cross-sectional and longitudinal gains have been demonstrated in students' CTS (74). The CCTDI consists of seven subscales and an overall score. The recommended cut-off score for each scale is 40, the suggested target score is 50, and the maximum score is 60. Scores below 40 on a specific scale indicate weakness in that CT disposition, and scores above 50 on a scale indicate strength in that dispositional aspect. An overall score below 280 shows serious deficiency in disposition toward CT, while an overall score of 350 or above (while rare) shows across-the-board strength. The seven subscales are analyticity, self-confidence, inquisitiveness, maturity, open-mindedness, systematicity, and truth seeking (75).
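The scoring bands described above can be sketched in a few lines of code. This is an illustration only, not the publisher's scoring procedure: the function names, the label used for scores between the cut-off and the target, and the handling of scores that fall exactly on a boundary are assumptions.

```python
SUBSCALES = (
    "analyticity", "self-confidence", "inquisitiveness", "maturity",
    "open-mindedness", "systematicity", "truth seeking",
)
CUTOFF, TARGET, MAXIMUM = 40, 50, 60  # per-scale values given in the text


def classify_scale(score):
    """Classify one subscale score against the cut-off and target."""
    if not 0 <= score <= MAXIMUM:
        raise ValueError("subscale scores cannot exceed 60")
    if score < CUTOFF:
        return "weak"
    if score > TARGET:
        return "strong"
    return "between cut-off and target"  # label is an assumption


def classify_overall(scores):
    """Sum the seven subscales and compare with 280 (7 x 40) and 350 (7 x 50)."""
    missing = [name for name in SUBSCALES if name not in scores]
    if missing:
        raise ValueError(f"missing subscales: {missing}")
    total = sum(scores[name] for name in SUBSCALES)
    if total < len(SUBSCALES) * CUTOFF:       # boundary handling is an assumption
        return total, "serious deficiency"
    if total >= len(SUBSCALES) * TARGET:      # boundary handling is an assumption
        return total, "across-the-board strength"
    return total, "between cut-off and target"


# Hypothetical respondent scoring 45 on every subscale.
respondent = {name: 45 for name in SUBSCALES}
print(classify_overall(respondent))
```

The overall thresholds are simply the per-scale cut-off and target multiplied by the seven subscales, which is why 280 and 350 appear as the overall reference points.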

In a study of instructional strategies and their influence on the development of critical thinking among undergraduate nursing students, Tiwari, Lai, and Yuen found that, compared with lecture students, problem-based learning (PBL) students showed significantly greater improvement in overall CCTDI (p = .0048), Truth seeking (p = .0008), Analyticity (p = .0368), and Critical Thinking Self-confidence (p = .0342) subscale scores from the first to the second time points; in overall CCTDI (p = .0083), Truth seeking (p = .0090), and Analyticity (p = .0354) subscale scores from the second to the third time points; and in Truth seeking (p = .0173) and Systematicity (p = .0440) subscale scores from the first to the fourth time points (76).

California Critical Thinking Skills Test (CCTST)

Studies have shown that the California Critical Thinking Skills Test captured gain scores in students' critical thinking over one quarter or one semester. Multiple health science programs have demonstrated significant gains in students' critical thinking using site-specific curriculum. Studies conducted to control for re-test bias showed no testing effect from pre- to post-test means using two independent groups of CT students. Since behavioral science measures can be affected by social-desirability bias (the participant's desire to answer in ways that would please the researcher), researchers are urged to have participants take the Marlowe Crowne Social Desirability Scale simultaneously when measuring pre- and post-test changes in critical thinking skills. The CCTST is a 34-item instrument. This test has been correlated with the CCTDI with a sample of 1,557 nursing education students. The correlation is r = .201, and the relationship between the CCTST and the CCTDI is significant at p < .001. Significant relationships between the CCTST and other measures, including the GRE total, GRE-Analytic, GRE-Verbal, GRE-Quantitative, the WGCTA, and the SAT Math and Verbal, have also been reported. The two forms of the CCTST, A and B, are considered statistically equivalent. Depending on the testing context, KR-20 alphas range from .70 to .75. The newest version is CCTST Form 2000, and depending on the testing context, KR-20 alphas range from .78 to .84 (77).
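KR-20 is the form of the alpha coefficient used for items scored right or wrong. As a minimal sketch, assuming each response is coded 1 (correct) or 0 (incorrect), it could be computed as follows. The function name and the sample responses are hypothetical.

```python
from statistics import pvariance


def kr20(responses):
    """Kuder-Richardson Formula 20 for dichotomously scored items.

    responses: list of test takers, each a list of 0/1 item scores.
    KR-20 = k / (k - 1) * (1 - sum(p * q) / variance(total scores))
    where p is the proportion answering an item correctly and q = 1 - p.
    """
    n_takers = len(responses)
    n_items = len(responses[0])
    if n_items < 2 or n_takers < 2:
        raise ValueError("need at least two items and two test takers")
    proportions = [sum(col) / n_takers for col in zip(*responses)]
    pq_sum = sum(p * (1 - p) for p in proportions)
    # Population variance keeps the denominator consistent with p * q.
    total_variance = pvariance([sum(row) for row in responses])
    return n_items / (n_items - 1) * (1 - pq_sum / total_variance)


# Hypothetical data: four test takers, three items.
sample = [[1, 1, 1], [1, 1, 0], [1, 0, 0], [0, 0, 0]]
print(round(kr20(sample), 2))
```

With a real 34-item test the same calculation applies. Only the size of the response matrix changes.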

The Health Science Reasoning Test (HSRT)

Items within this inventory cover the domain of CT cognitive skills identified by a Delphi group of experts whose work resulted in the development of the CCTDI and CCTST. This test measures health science undergraduate and graduate students' CTS. Although test items are set in health sciences and clinical practice contexts, test takers are not required to have discipline-specific health sciences knowledge. For this reason, the test may have limited utility in dental education (78).

Preliminary estimates of internal consistency show that overall KR-20 coefficients range from .77 to .83 (79). The instrument has moderate reliability on analysis and inference subscales, although the factor loadings appear adequate. The low KR-20 coefficients may be the result of small sample size, variance in item response, or both (see following table).

Table 8. Estimates of Internal Consistency and Factor Loading by Subscale for HSRT

Professional Judgment Rating Form (PJRF)

The scale consists of two sets of descriptors. The first set relates primarily to the attitudinal (habits of mind) dimension of CT. The second set relates primarily to CTS.

A single rater should know the student well enough to respond to at least 17 of the 20 descriptors with confidence. If not, the validity of the ratings may be questionable. If a single rater is used and ratings over time show some consistency, comparisons between ratings may be used to assess changes. If more than one rater is used, then inter-rater reliability must be established among the raters to yield meaningful results. While the PJRF can be used to assess the effectiveness of training programs for individuals or groups, participants' actual skills are best measured by an objective tool such as the California Critical Thinking Skills Test.
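The text does not name a statistic for inter-rater reliability. One common choice for two raters assigning categorical ratings is Cohen's kappa, which corrects raw agreement for the agreement expected by chance. The sketch below assumes that choice, and its function name and sample ratings are hypothetical.

```python
from collections import Counter


def cohen_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who rated the same students.

    kappa = (observed agreement - chance agreement) / (1 - chance agreement)
    """
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must rate the same non-empty set")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: product of each rater's marginal proportions per category.
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1:
        raise ValueError("kappa is undefined when both raters use one category")
    return (observed - expected) / (1 - expected)


# Hypothetical ratings of four students on a single descriptor.
rater_1 = ["yes", "yes", "no", "no"]
rater_2 = ["yes", "no", "no", "no"]
print(cohen_kappa(rater_1, rater_2))
```

Here the raters agree on three of four students (.75), but half of that agreement would be expected by chance, so kappa is .5 rather than .75.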

Teaching for Thinking Student Course Evaluation Form

Course evaluations typically ask for responses of "agree" or "disagree" to items focusing on teacher behavior. Typically the questions do not solicit information about student learning. Because contemporary thinking about curriculum is interested in student learning, this form was developed to address differences in pedagogy and subject matter, learning outcomes, student demographics, and course level characteristic of education today. This form also grew out of a "one size fits all" approach to teaching evaluations and a recognition of the limitations of this practice. It offers information about how a particular course enhances student knowledge, sensitivities, and dispositions. The form gives students an opportunity to provide feedback that can be used to improve instruction.

Holistic Critical Thinking Scoring Rubric

This assessment tool uses a four-point classification schema that lists particular opposing reasoning skills for select criteria. One advantage of a rubric is that it offers clearly delineated components and scales for evaluating outcomes. This rubric explains how students' CTS will be evaluated, and it provides a consistent framework for the professor as evaluator. Users can add or delete any of the statements to reflect their institution's effort to measure CT. Like most rubrics, this form is likely to have high face validity since the items tend to be relevant or descriptive of the target concept. This rubric can be used to rate student work or to assess learning outcomes. Experienced evaluators should engage in a process leading to consensus regarding what kinds of things should be classified and in what ways (80). If used improperly or by inexperienced evaluators, unreliable results may occur.

Peer Evaluation of Group Presentation Form

This form offers a common set of criteria to be used by peers and the instructor to evaluate student-led group presentations regarding concepts, analysis of arguments or positions, and conclusions (81). Users have an opportunity to rate the degree to which each component was demonstrated. Open-ended questions give users an opportunity to cite examples of how concepts, the analysis of arguments or positions, and conclusions were demonstrated.

Table 8. Proposed Universal Criteria for Evaluating Students' Critical Thinking Skills

Aside from the use of the above-mentioned assessment tools, Dexter et al. recommended that all schools develop universal criteria for evaluating students' development of critical thinking skills (82).

Their rationale for the proposed criteria is that if faculty give feedback using these criteria, graduates will internalize these skills and use them to monitor their own thinking and practice (see Table 4).


Development and validation of an instrument to measure undergraduate chemistry students’ critical thinking skills

First published on 12th July 2019

The importance of developing and assessing student critical thinking at university can be seen through its inclusion as a graduate attribute for universities and from research highlighting the value employers, educators and students place on demonstrating critical thinking skills. Critical thinking skills are seldom explicitly assessed at universities. Commercial critical thinking assessments, which are often generic in context, are available. However, literature suggests that assessments that use a context relevant to the students more accurately reflect their critical thinking skills. This paper describes the development and evaluation of a chemistry critical thinking test (the Danczak–Overton–Thompson Chemistry Critical Thinking Test or DOT test), set in a chemistry context, and designed to be administered to undergraduate chemistry students at any level of study. Development and evaluation occurred over three versions of the DOT test through a variety of quantitative and qualitative reliability and validity testing phases. The studies suggest that the final version of the DOT test has good internal reliability, strong test–retest reliability, moderate convergent validity relative to a commercially available test and is independent of previous academic achievement and university of study. Criterion validity testing revealed that third year students performed statistically significantly better on the DOT test relative to first year students, and postgraduates and academics performed statistically significantly better than third year students. The statistical and qualitative analysis indicates that the DOT test is a suitable instrument for the chemistry education community to use to measure the development of undergraduate chemistry students’ critical thinking skills.

Introduction

A survey of 167 recent science graduates compared the development of a variety of skills at university to the skills used in the work place ( Sarkar et al. , 2016 ). It found that 30% of graduates in full-time positions identified critical thinking as one of the top five skills they would like to have developed further within their undergraduate studies. Students, governments and employers all recognise that not only is developing students’ critical thinking an intrinsic good, but that it better prepares them to meet and exceed employer expectations when making decisions, solving problems and reflecting on their own performance ( Lindsay, 2015 ). Hence, it has become somewhat of an expectation from governments, employers and students that it is the responsibility of higher education providers to develop students’ critical thinking skills. Yet, despite the clear need to develop these skills, measuring student attainment of critical thinking is challenging.

The definition of critical thinking

Cognitive psychologists and education researchers use the term critical thinking to describe a set of cognitive skills, strategies or behaviours that increase the likelihood of a desired outcome (Halpern, 1996b; Tiruneh et al., 2014). Psychologists typically investigate critical thinking experimentally and have developed a series of reasoning schemas with which to study and define critical thinking: conditional reasoning, statistical reasoning, methodological reasoning and verbal reasoning (Nisbett et al., 1987; Lehman and Nisbett, 1990). Halpern (1993) expanded on these schemas to define critical thinking as the thinking required to solve problems, formulate inferences, calculate likelihoods and make decisions.

In education research there is often an emphasis on critical thinking as a skill set ( Bailin, 2002 ) or putting critical thought into tangible action ( Barnett, 1997 ). Dressel and Mayhew (1954) suggested it is educationally useful to define critical thinking as the sum of specific behaviours which could be observed from student acts. They identify these critical thinking abilities as identifying central issues, recognising underlying assumptions, evaluating evidence or authority, and drawing warranted conclusions. Bailin (2002) raises the point that from a pedagogical perspective many of the skills or dispositions commonly used to define critical thinking are difficult to observe and, therefore, difficult to assess. Consequently, Bailin suggests that the concept of critical thinking should explicitly focus on adherence to criteria and standards to reflect ‘good’ critical thinking ( Bailin, 2002, p. 368 ).

It appears that there are several definitions of critical thinking of equally valuable meaning ( Moore, 2013 ). There is agreement across much of the field that meta-cognitive skills, such as self-evaluation, are essential to a well-rounded process of critical thinking ( Glaser, 1984 ; Kuhn, 1999 ; Pithers and Soden, 2000 ). There are key themes such as ‘critical thinking: as judgement, as scepticism, as originality, as sensitive reading, or as rationality’ which can be identified across the literature. In the context of developing an individual's critical thinking it is important that these themes take the form of observable behaviours.

Developing students’ critical thinking

In the latter half of the 20th century informal logic gained academic credence as it challenged the previous idea that logic related purely to deduction or inference, and argued that there were, in fact, theories of argumentation and logical fallacies (Johnson et al., 1996). These theories began to be taught at universities as standalone courses free from any context in efforts to teach the structure of arguments and recognition of fallacies using abstract theories and symbolism. Cognitive psychology research lent evidence to the argument that critical thinking could be developed within a specific discipline and that those reasoning skills were, at least to some degree, transferable to situations encountered in daily life (Lehman et al., 1988; Lehman and Nisbett, 1990). These perspectives form the basis of the subject generalist position, which holds that critical thinking can be developed independent of subject specific knowledge.

McMillan (1987) carried out a review of 27 empirical studies conducted at higher education institutions where critical thinking was taught, either in standalone courses or integrated into discipline-specific courses such as science. The review found that standalone and integrated courses were equally successful in developing critical thinking, provided critical thinking developmental goals were made explicit to the students. The review also suggested that the development of critical thinking was most effective when its principles were taught across a variety of discipline areas so as to make knowledge retrieval easier.

Ennis (1989) suggested that there is a range of approaches through which critical thinking can be taught: general, where critical thinking is taught separate from content or ‘discipline’; infusion, where the subject matter is covered in great depth and teaching of critical thinking is explicit; immersion, where the subject matter is covered in great depth but critical thinking goals are implicit; and mixed, a combination of the general approach with either the infusion or immersion approach. Ennis (1990) arrived at a pragmatic view, conceding that the best critical thinking occurs within one's area of expertise, or domain specificity, but that critical thinking can still be effectively developed with or without discipline specific knowledge (McMillan, 1987; Ennis, 1990).

Many scholars still remain entrenched in the debate regarding the role discipline-specific knowledge has in the development of critical thinking. For example, Moore (2011) rejected the use of critical thinking as a catch-all term to describe a range of cognitive skills, believing that to teach critical thinking as a set of generalisable skills is insufficient to provide students with an adequate foundation for the breadth of problems they will encounter throughout their studies. Conversely, Davies (2013) accepts that critical thinking skills share fundamentals at the basis of all disciplines and that there can be a need to accommodate the discipline-specific needs ‘higher up’ in tertiary education via the infusion approach. However, Davies considers the specifist approach to developing critical thinking ‘dangerous and wrong-headed’ (Davies, 2013, p. 543), citing government reports and primary literature which demonstrate tertiary students’ inability to identify elements of arguments, and championing the need for standalone critical thinking courses.

Pedagogical approaches to developing critical thinking in chemistry in higher education range from writing exercises ( Oliver-Hoyo, 2003 ; Martineau and Boisvert, 2011 ; Stephenson and Sadler-Mcknight, 2016 ), inquiry-based projects ( Gupta et al. , 2015 ), flipped lectures ( Flynn, 2011 ) and open-ended practicals ( Klein and Carney, 2014 ) to gamification ( Henderson, 2010 ), and work integrated learning (WIL) ( Edwards et al. , 2015 ). Researchers have demonstrated the benefits of developing critical thinking skills across all first, second and third year programs of an undergraduate degree ( Phillips and Bond, 2004 ; Iwaoka et al. , 2010 ). Phillips and Bond (2004) indicated that such interventions help develop a culture of inquiry, and better prepare students for employment.

Some studies demonstrate the outcomes of teaching interventions via validated commercially available critical thinking tests, available from a variety of vendors for a fee (Abrami et al., 2008; Tiruneh et al., 2014; Abrami et al., 2015; Carter et al., 2015). There are arguments against the generalisability of these commercially available tests. Many academics believe assessments need to closely align with the intervention(s) (Ennis, 1993) and that a more accurate representation of student ability is obtained when a critical thinking assessment is related to a student's discipline, as they attach greater significance to the assessment (Halpern, 1998).

Review of commercial critical thinking assessment tools

Several reviews of empirical studies suggest that the WGCTA is the most prominent test in use ( Behar-Horenstein and Niu, 2011 ; Carter et al. , 2015 ; Huber and Kuncel, 2016 ). However, the CCTST was developed much later than the WGCTA and recent trends suggest the CCTST has gained popularity amongst researchers since its inception. Typically, the tests are administered to address questions regarding the development of critical thinking over time or the effect of a teaching intervention. The results of this testing are inconsistent; some studies report significant changes while others report no significant changes in critical thinking ( Behar-Horenstein and Niu, 2011 ). For example, Carter et al. (2015) found studies which used the CCTST or the WGCTA did not all support the hypothesis of improved critical thinking with time, with some studies reporting increases, and some studies reporting decreases or no change over time. These reviews highlight the importance of experimental design when evaluating critical thinking. McMillan (1987) reviewed 27 studies and found that only seven of them demonstrated significant changes in critical thinking. He concluded that tests which were designed by the researcher are a better measure of critical thinking, as they specifically address the desired critical thinking learning outcomes, as opposed to commercially available tools which attempt to measure critical thinking as a broad and generalised construct.

The need for a contextualised chemistry critical thinking test

Several examples of chemistry specific critical thinking tests and teaching tools were found in the literature. However, while all of these tests and teaching activities were set within a chemistry context, they required discipline specific knowledge and/or were not suitable for very large cohorts of students. For example, Jacob (2004) presented students with six questions each consisting of a statement requiring an understanding of declarative chemical knowledge. Students were expected to select whether the conclusion was valid, possible or invalid and provide a short statement to explain their reasoning. Similarly, Kogut (1993) developed exercises where students were required to note observations and underlying assumptions of chemical phenomena, then develop hypotheses and experimental designs with which to test these hypotheses. However, understanding the observations and underlying assumptions was dependent on declarative chemical knowledge such as trends in the periodic table or the ideal gas law.

Garratt et al. (1999) developed an entire book dedicated to developing chemistry critical thinking, titled ‘A Question of Chemistry’. In writing this book the authors took the view that thinking critically in chemistry draws on the generic skills of critical thinking and what they call ‘an ethos of a particular scientific method’ ( Garratt et al. , 2000, p. 153 ). The approach to delivering these questions ranged from basic multiple choice questions, to rearranging statements to generate a cohesive argument, or open-ended responses. The statements preceding the questions are very discipline specific and the authors acknowledge they are inaccessible to a lay person. Overall the chemistry context is used because ‘it adds to the students’ motivation if they can see the exercises are firmly rooted in, and therefore relevant to, their chosen discipline’ ( Garratt et al. , 2000, p. 166 ).

Thus, an opportunity has been identified to develop a chemistry critical thinking test which could be used to assist chemistry educators and chemistry education researchers in evaluating the effectiveness of teaching interventions designed to develop the critical thinking skills of chemistry undergraduate students. This study aimed to determine whether a valid and reliable critical thinking test could be developed and contextualised within the discipline of chemistry, yet independent of any discipline-specific knowledge, so as to accurately reflect the critical thinking ability of chemistry students from any level of study, at any university.

This study describes the development and reliability and validity testing of an instrument with which to measure undergraduate chemistry students’ critical thinking skills: The Danczak–Overton–Thompson Chemistry Critical Thinking Test (DOT test).

As critical thinking tests are considered to evaluate a psychometric construct ( Nunnally and Bernstein, 1994 ) there must be supporting evidence of their reliability and validity ( Kline, 2005 ; DeVellis, 2012 ). Changes made to each iteration of the DOT test and the qualitative and quantitative analysis performed at each stage of the study are described below.

Qualitative data analysis

The qualitative data for DOT V1 and DOT V2 were treated as separate studies. The data for these studies were collected and analysed separately as described in the following. The data collected from focus groups throughout this research were recorded with permission of the participants and transcribed verbatim into Microsoft Word, at which point participants were de-identified. The transcripts were then imported into NVivo version 11 and an initial analysis was performed to identify emergent themes. The data then underwent a second analysis to ensure any underlying themes were identified. A third review of the data used a redundancy approach to combine similar themes. The final themes were then used for subsequent coding of the transcripts ( Bryman, 2008 ).

Developing an operational definition of critical thinking

Therefore, students, teaching staff and employers did not define critical thinking in the holistic fashion of philosophers, cognitive psychologists or education researchers. In fact, very much in line with the constructivist paradigm ( Ferguson, 2007 ), participants seem to have drawn on elements of critical thinking relative to the environments in which they had previously been required to use critical thinking. For example, students focused on analysis and problem solving, possibly due to the assessment driven environment of university, whereas employers cited innovation and global contexts, likely to be reflective of a commercial environment.

The definitions of critical thinking in the literature ( Lehman et al. , 1988 ; Facione, 1990 ; Halpern, 1996b ) cover a wide range of skills and behaviours. These definitions often imply that to think critically necessitates that all of these skills or behaviours be demonstrated. However, it seems almost impossible that all of these attributes could be observed at a given point in time, let alone assessed ( Dressel and Mayhew, 1954 ; Bailin, 2002 ). Whilst the students in Danczak et al. (2017) used ‘problem solving’ and ‘analysis’ to define critical thinking, it does not necessarily mean that their description accurately reflects the critical thinking skills they have actually acquired, but rather their perception of what critical thinking skills they have developed. Therefore, to base a chemistry critical thinking test solely on analysis and problem solving skills would lead to the omission of the assessment of other important aspects of critical thinking.

To this end, the operational definition of critical thinking acknowledges the analysis and problem solving focus that students predominantly used to describe critical thinking, whilst expanding into other important aspects of critical thinking such as inference and judgement. Consequently, guidance was sought from existing critical thinking assessments, as described below.

Development of the Danczak–Overton–Thompson chemistry critical thinking test (DOT test)

The WGCTA is an 85 item test which has undergone extensive scrutiny in the literature since its inception in the 1920s (Behar-Horenstein and Niu, 2011; Huber and Kuncel, 2016). The WGCTA covers the core principles of critical thinking divided into five sections: inference, assumption identification, deduction, interpreting information and evaluation of arguments. The questions test each aspect of critical thinking independent of context. Each section consists of brief instructions and three or four short parent statements. Each parent statement acts as a prompt for three to seven subsequent questions. The instructions, parent statements, and the questions themselves were concise with respect to language and reading requirements. The fact that the WGCTA focused on testing assumptions, deductions, inferences, analysing arguments and interpreting information was an inherent limitation in its ability to assess all critical thinking skills and behaviours. However, these elements are commonly described by many definitions of critical thinking (Facione, 1990; Halpern, 1996b).

The questions from the WGCTA practice test were analysed and used to structure the DOT test. The pilot version of the DOT test (DOT P) was initially developed with 85 questions, set within a chemistry or science context, and using similar structure and instructions to the WGCTA with five sub-scales: making assumptions, analysing arguments, developing hypotheses, testing hypotheses, and drawing conclusions.

Below is an example of paired statements and questions written for the DOT P. This question is revisited throughout this paper to illustrate how the test evolved over the course of the study. The DOT P used essentially the same instructions as provided on the WGCTA practice test. In later versions of the DOT test the instructions were changed, as will be discussed later.

The parent statement of the exemplar question from the WGCTA required the participant to recognise proposition A and proposition B are different, and explicitly states that there is a relationship between proposition A and proposition C. This format was used to generate the following parent statement:

A chemist tested a metal centred complex by placing it in a magnetic field. The complex was attracted to the magnetic field. From this result the chemist decided the complex had unpaired electrons and was therefore paramagnetic rather than diamagnetic.

In writing an assumption question for the DOT test, paramagnetic and diamagnetic behaviour of metal complexes replaced propositions A and B. The relationship between propositions A and C was replaced with paramagnetic behaviour being related to unpaired electrons. The question then asked if it is a valid or invalid assumption that proposition B is not related to proposition C.

Diamagnetic metal centred complexes do not have any unpaired electrons.

The correct answer was a valid assumption as this question required the participant to identify proposition B and C were not related. The explanation for the correct answer was as follows:

The paragraph suggests that if the complex has unpaired electrons it is paramagnetic. This means diamagnetic complexes likely cannot have unpaired electrons.

All 85 questions on the WGCTA practice test were analysed in the manner exemplified above to develop the DOT P. In designing the test there were two requirements that had to be met. Firstly, the test needed to be comfortably completable within 30 minutes so that it could be administered in short time frames, such as at the end of laboratory sessions, and to increase the likelihood of voluntary completion by students. Secondly, the test needed to accurately assess the critical thinking of chemistry students at any level of study, from first year general chemistry students to final year students. To this end, chemistry terminology was carefully chosen to ensure that prior knowledge of chemistry was not necessary to comprehend the questions. Chemical phenomena were explained and contextualised completely within the parent statement and the questions.

DOT pilot study: content validity

The test took in excess of 40 minutes to complete. Therefore, questions which were identified as unclear, which did not elicit the intended responses, or which caused misconceptions of the scientific content were removed. The resulting DOT V1 contained seven questions relating to ‘Making Assumptions’, seven questions relating to ‘Analysing Arguments’, six questions relating to ‘Developing Hypotheses’, five questions relating to ‘Testing Hypotheses’ and five questions relating to ‘Drawing Conclusions’. The terms used to select a multiple choice option were written in a manner more accessible to science students, for example using terms such as ‘Valid Assumption’ or ‘Invalid Assumption’ instead of ‘Assumption Made’ or ‘Assumption Not Made’. Finally, the number of options in the ‘Developing Hypotheses’ section was reduced from five to three: ‘likely to be an accurate inference’, ‘insufficient information to determine accuracy’ and ‘unlikely to be an accurate inference’.

Data treatment of responses to the DOT test and WGCTA-S

All responses to the DOT test and WGCTA-S were imported into IBM SPSS statistics (V22). Frequency tables were generated to identify erroneous or missing data. Data was considered erroneous when participants had selected ‘C’ for questions which only contained options ‘A’ or ‘B’, or when undergraduate students identified their education/occupation as that of an academic. The erroneous data was deleted and treated as missing data points. In each study a variable was created to determine the sum of unanswered questions (missing data) for each participant. Pallant (2016, pp. 58–59) suggests a judgement call is required when considering missing data and whether to treat certain cases as genuine attempts to complete the test or not. In the context of this study a genuine attempt was based on the number of questions a participant left unanswered. Participants who attempted at least 27 questions were considered to have genuinely attempted the test.
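
The screening rules above can be illustrated with a minimal Python sketch. It is not the SPSS procedure used in the study: the function names, the 30-item layout and the example answer sheet are hypothetical, and only the option-based rule and the 27-question threshold are taken from the text.

```python
from typing import List, Optional, Sequence, Set

MIN_ATTEMPTED = 27  # threshold stated in the text


def clean_answer(answer: Optional[str], allowed: Set[str]) -> Optional[str]:
    """Return the answer if it is a permitted option, otherwise None (missing)."""
    if answer is None:
        return None
    answer = answer.strip().upper()
    return answer if answer in allowed else None


def clean_sheet(answers: Sequence[Optional[str]],
                allowed_per_item: Sequence[Set[str]]) -> List[Optional[str]]:
    """Clean one participant's answer sheet, item by item."""
    if len(answers) != len(allowed_per_item):
        raise ValueError("answer sheet length does not match the number of items")
    return [clean_answer(a, opts) for a, opts in zip(answers, allowed_per_item)]


def count_missing(cleaned: Sequence[Optional[str]]) -> int:
    """Number of unanswered (or deleted) responses for one participant."""
    return sum(a is None for a in cleaned)


def is_genuine_attempt(cleaned: Sequence[Optional[str]],
                       min_attempted: int = MIN_ATTEMPTED) -> bool:
    """A sheet counts as a genuine attempt if enough questions were answered."""
    return len(cleaned) - count_missing(cleaned) >= min_attempted


# Hypothetical 30-item sheet: items 0-24 offer A/B, items 25-29 offer A/B/C.
allowed = [{"A", "B"}] * 25 + [{"A", "B", "C"}] * 5
sheet: List[Optional[str]] = ["A"] * 30
sheet[3] = "C"    # not a permitted option for item 3, so treated as missing
sheet[10] = None  # unanswered
sheet[27] = "C"   # permitted for item 27
cleaned = clean_sheet(sheet, allowed)
print(count_missing(cleaned), is_genuine_attempt(cleaned))
```

Erroneous responses are converted to missing values before the threshold is applied, so a sheet with many invalid selections can fail the genuine-attempt check even if every question was marked.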

Responses to all DOT test (and WGCTA-S) questions were coded as correct or incorrect responses. Upon performing descriptive statistics, the DOT V1 scores were found to exhibit a normal (Gaussian) distribution whereas the DOT V3 scores exhibited a non-normal distribution. In light of these distributions it was decided to treat all data obtained as non-parametric.

Internal reliability of each iteration of the DOT test was determined by calculating Cronbach's α (Cronbach, 1951). Within this study the comparison between two continuous variables was made using Spearman's rank order correlation, the non-parametric equivalent of Pearson's r, as recommended by Pallant (2016). Continuous variables included DOT test scores and previous academic achievement as measured by tertiary entrance scores (ATAR score). When comparing DOT test scores between education groups, which were treated as categorical variables, the Mann–Whitney U test, the non-parametric equivalent of a t-test, was used. When comparing a continuous variable of the same participants taken at different times, the Wilcoxon signed rank test, the non-parametric equivalent of a paired t-test, was used.
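
As an illustration of the internal reliability calculation, the following is a minimal sketch of Cronbach's α for responses coded as correct (1) or incorrect (0). It uses the standard formula α = k/(k − 1) × (1 − Σ item variances / variance of total scores); the function name and the example responses are hypothetical, and the study itself used SPSS.

```python
from statistics import variance
from typing import Sequence


def cronbach_alpha(scores: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha for a participants x items matrix of item scores."""
    if len(scores) < 2:
        raise ValueError("at least two participants are required")
    n_items = len(scores[0])
    if n_items < 2:
        raise ValueError("at least two items are required")
    if any(len(row) != n_items for row in scores):
        raise ValueError("every participant must have a score for every item")

    # Sample variance of each item across participants.
    item_variances = [variance([row[i] for row in scores]) for i in range(n_items)]
    # Sample variance of each participant's total score.
    total_variance = variance([sum(row) for row in scores])
    if total_variance == 0:
        raise ValueError("total scores do not vary; alpha is undefined")

    return (n_items / (n_items - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical coded responses: five participants, four items (1 = correct).
responses = [
    [1, 1, 1, 0],
    [1, 0, 1, 1],
    [0, 0, 1, 0],
    [1, 1, 1, 1],
    [0, 0, 0, 0],
]
print(round(cronbach_alpha(responses), 3))
```

Missing responses would need to be handled before calling this function (for example by scoring them as incorrect or excluding the participant); the text does not state which convention was applied, so the sketch assumes complete data.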

DOT V1: internal reliability and content validity

Internal reliability method

Content validity method

DOT V2: test–retest reliability, convergent validity and content validity

The most extensive rewriting of the parent statements occurred in the ‘Analysing Arguments’ section. The feedback provided from the focus groups indicated that parent statements did not include sufficient information to adequately respond to the questions.

Additional qualifying statements were added to several questions in order to reduce ambiguity. In the parent statement of the exemplar question the first sentence was added to eliminate the need to understand that differences exist between diamagnetic and paramagnetic metal complexes, with respect to how they interact with magnetic fields:

Paramagnetic and diamagnetic metal complexes behave differently when exposed to a magnetic field. A chemist tested a metal complex by placing it in a magnetic field. From the result of the test the chemist decided the metal complex had unpaired electrons and was therefore paramagnetic.

Finally, great effort was made in the organisation of the DOT V2 to guide the test taker through a critical thinking process. This organisation is similar to Halpern's approach to analysing an argument (Halpern, 1996a). Halpern teaches that an argument is comprised of several conclusions, and that the credibility of these conclusions must be evaluated. Furthermore, the validity of any assumptions, inferences and deductions used to construct the conclusions within an argument needs to be analysed. To this end the test taker was provided with scaffolding from making assumptions to analysing arguments in line with Halpern's approach.

Test–retest reliability and convergent validity method

On the first day, demographic data was collected: sex, dominant language, previous academic achievement using tertiary entrance scores (ATAR), level of chemistry being studied and highest level of chemistry study completed at Monash University. Students completed the DOT V2 using an optical reader multiple choice answer sheet. This was followed by completion of the WGCTA-S in line with procedures outlined by the Watson-Glaser critical thinking appraisal short form manual (2006). The WGCTA-S was chosen for analysis of convergent validity, as it was similar in length to the DOT V2 and was intended to measure the same aspects of critical thinking. The fact that participants completed the DOT V2 and then the WGCTA-S may have affected the participants’ performance on the WGCTA-S. This limitation will be addressed in the results.

After a brief break, the participants were divided into groups of five to eight students and interviewed about their overall impression of the WGCTA-S and their approach to various questions. Interviewers prevented the participants from discussing the DOT V2 so as not to influence each other's responses upon retesting.

On the second day participants repeated the DOT V2. DOT V2 attempts were completed on consecutive days to minimise participant attrition. Upon completion of the DOT V2 and after a short break, participants were divided into two groups of nine and interviewed about their impressions of the DOT V2, how they approached various questions and comparisons between the DOT V2 and WGCTA-S.

Responses to the tests and demographic data were imported into IBM SPSS (V22). Data was treated in accordance with the procedure outlined earlier. With the exception of tertiary entrance score, there was no missing or erroneous demographic data. Spearman's rank order correlations were performed comparing ATAR scores to scores on the WGCTA-S and the DOT V2. Test–retest reliability was determined using a Wilcoxon signed rank test (Pallant, 2016, pp. 234–236, 249–253). When the scores of the tests taken at different times have no significant difference, as determined by a p value greater than 0.05, the test can be considered to have acceptable test–retest reliability (Pallant, 2016, p. 235). Acceptable test–retest reliability does not imply that test attempts are equivalent. Rather, good test–retest reliability suggests that the precision of the test to measure the construct of interest is acceptable. Median scores of the participants’ first attempt of the DOT V2 were compared with the median score of the participants’ second attempt of the DOT V2. To determine the convergent validity of the DOT V2 the relationship between scores on the DOT V2 and performance on the WGCTA-S was investigated using Spearman's rank order correlation.
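
The two analyses described above can be sketched in plain Python as follows. This is an illustration under stated assumptions rather than a reproduction of the SPSS output: the Wilcoxon p value uses the large-sample normal approximation with a tie correction and no continuity correction, zero differences are dropped, and all function names and scores are hypothetical.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple


def average_ranks(values: Sequence[float]) -> List[float]:
    """Rank values from 1 to n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1  # positions are 0-based
        for pos in range(start, end + 1):
            ranks[order[pos]] = mean_rank
        start = end + 1
    return ranks


def spearman_rho(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rank order correlation: Pearson's r computed on the ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = math.sqrt(sum((a - mx) ** 2 for a in rx))
    sy = math.sqrt(sum((b - my) ** 2 for b in ry))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined when one variable is constant")
    return cov / (sx * sy)


def wilcoxon_signed_rank(first: Sequence[float],
                         second: Sequence[float]) -> Tuple[float, float]:
    """Return (z, two-sided p) for paired scores using a normal approximation."""
    diffs = [b - a for a, b in zip(first, second) if b != a]
    n = len(diffs)
    if n == 0:
        return 0.0, 1.0  # identical attempts: no evidence of a difference
    abs_diffs = [abs(d) for d in diffs]
    ranks = average_ranks(abs_diffs)
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean_w = n * (n + 1) / 4
    tie_term = sum(t ** 3 - t for t in Counter(abs_diffs).values()) / 48
    var_w = n * (n + 1) * (2 * n + 1) / 24 - tie_term
    z = (w_plus - mean_w) / math.sqrt(var_w)
    return z, math.erfc(abs(z) / math.sqrt(2))


def retest_is_acceptable(p_value: float, alpha: float = 0.05) -> bool:
    """Criterion from the text: no significant difference between attempts."""
    return p_value > alpha


# Hypothetical scores for eight participants.
dot_day1 = [18, 22, 15, 25, 20, 17, 23, 19]
dot_day2 = [19, 21, 15, 26, 19, 18, 22, 20]
wgcta_s = [28, 33, 24, 36, 30, 27, 31, 29]
z, p = wilcoxon_signed_rank(dot_day1, dot_day2)
print(retest_is_acceptable(p), round(spearman_rho(dot_day1, wgcta_s), 2))
```

With small samples an exact Wilcoxon p value may differ from the normal approximation used here, so the sketch is best read as a demonstration of the decision rule rather than of the precise values a statistics package would report.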

After approximately 15 minutes of participants freely discussing the relevant test, the interviewers asked the participants to look at a given section on a test, for example the ‘Testing Hypotheses’ section of the DOT V2, and identify any questions they found problematic. In the absence of students identifying any problematic questions, the interviewers used a list of questions from each test to prompt discussion. The participants were then asked as a group:

• ‘ What do you think the question is asking you? ’

• ‘ What do you think is the important information in this question? ’

• ‘ Why did you give the answer(s) you did to this question? ’

The interview recordings were transcribed and analysed in line with the procedures and theoretical frameworks described previously to result in four distinct themes which were used to code the transcripts.

DOT V3: internal reliability, criterion validity, content validity and discriminate validity

Many scientific terms were either simplified or removed in the DOT V3. In the case of the exemplar question, the focus was moved to an alloy of thallium and lead rather than a ‘metal complex’. Generalising this question to focus on an alloy allowed these questions to retain scientific accuracy and reduce the tendency for participants to draw on knowledge outside the information presented in the questions:

Metals which are paramagnetic or diamagnetic behave differently when exposed to an induced magnetic field. A chemist tested a metallic alloy sample containing thallium and lead by placing it in an induced magnetic field. From the test results the chemist decided the metallic alloy sample repelled the induced magnetic field and therefore was diamagnetic.

This statement was then followed by the prompt asking the participant to decide if the assumption presented was valid or invalid:

Paramagnetic metals do not repel induced magnetic fields.

Several terms were rewritten as their use in science implied assumptions as identified by the student focus groups. These assumptions were not intended and hence the questions were reworded. For example, question 14 asked whether a ‘low yield’ would occur in a given synthetic route. The term ‘low yield’ was changed to ‘an insignificant amount’ to remove any assumptions regarding the term ‘yield’.

The study of the DOT V3 required participants to be drawn from several distinct groups in order to assess criterion and discriminate validity. For the purpose of criterion validity, the DOT V3 was administered to first year and third year undergraduate chemistry students, honours and PhD students and post-doctoral researchers at Monash University, and chemistry education academics from an online community of practice. Furthermore, third year undergraduate chemistry students from another Australian higher education institution (Curtin University) also completed the DOT V3 to determine discriminate validity with respect to performance of the DOT V3 outside of Monash University.

Participants