Essay, Precis & Comprehension: English Language Preparation

The English language paper is a scoring section and a major deciding factor in various Bank & Government examinations. It tests your writing and reading skills, grammar and vocabulary.

In most exams, such as the RBI Grade B, the English paper comprises an Essay, a Precis and Comprehension. The comprehension part is also common to the SBI, IBPS and SSC CGL examinations. In this article, we provide a few tips to help you score better in the English paper of Bank & Government exams.

English Comprehension

- You need a good reading speed to master comprehension

Learn how to improve your reading speed: Improve Reading Speed for English Paper

- Understand the context of the passage as sometimes the questions may not be direct

- Read newspapers and editorials on trending topics. Comprehension passages in Bank exam English papers may be based on topics like Economy, Banking, Finance, etc.

- Master your vocabulary. Though this comprehension won’t test you on vocabulary, you should be able to understand the meaning of the words through the context

- Read up on idioms and phrases and understand their meaning. You can expect questions on idioms and phrases

What should an Essay comprise?

An essay is a short piece of writing on a particular topic. A good essay is error-free in terms of grammar and spelling, follows a structure, and has a flow of ideas. The following is a basic ideal essay structure:

Introduction:

Should contain a brief introduction to the topic and its background. Mention your view before elaborating on it in the body of the essay.

Body:

Used to present your views on the subject in a detailed manner. Restrict this to 2 or 3 paragraphs. Give detailed examples to support your view. Put forth your strongest argument first, followed by the second strongest, and so on. Each paragraph can contain one idea and the sentences supporting it.

Conclusion:

Summarize your main argument using the strongest evidence that supports it. Don’t introduce a new idea here. Restate your main view, but do not use the same words used in the body.

It’s important that you plan your essay before you type it out. Spend a few minutes on planning and think about what you’re going to write instead of starting immediately. Suppose you have 15 minutes, spend 5 minutes planning, 8 minutes writing and 2 minutes revising/editing.
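The 5/8/2 split above can be scaled to other time limits. The following is a minimal sketch, assuming the same 5:8:2 ratio of planning, writing and revising carries over to any duration; the function name `essay_time_plan` and the rounding rule are illustrative choices, not part of any exam guidance.

```python
def essay_time_plan(total_minutes: int) -> dict[str, int]:
    """Split a time limit using the 5:8:2 ratio (planning:writing:revising)."""
    if total_minutes <= 0:
        raise ValueError("total_minutes must be positive")
    # Round planning and revising, then give writing whatever remains,
    # so the three parts always add up to the total.
    planning = round(total_minutes * 5 / 15)
    revising = round(total_minutes * 2 / 15)
    writing = total_minutes - planning - revising
    return {"planning": planning, "writing": writing, "revising": revising}


print(essay_time_plan(15))
print(essay_time_plan(30))
```

Assigning the remainder to writing keeps the plan consistent with the total even when the ratio does not divide evenly.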

A precis (pronounced "pray-see") is a concise summary of a passage. It includes all the essential points, the mood and tone of the author, and the main idea of the original passage.

- Go through sample precis and practice

- Follow rules for precis writing

- Stick to the word limit provided

Step 1: Read the given passage, highlight and underline the important points and note down the keywords in the same order as in the original passage.

Step 2: Note down the central theme/gist of the passage and tone of the author.

Step 3: Re-read the passage and compare it with the notes you made to check if you missed any crucial information.

Step 4: Give your precis an appropriate title.

Step 5: Draft a precis based on the notes.

For a more detailed guide on how to write a perfect precis, see: Comprehensive Guide to Write a Perfect Precis for English Paper.

General tips

- Ensure correct usage of grammar, spelling & punctuation

- Stick to the word limit

- Avoid using fancy words. Use simple language

- Solve previous year English language papers

- Read newspaper editorials daily. Topics are mostly based on what has been in the news over the past year. Refer to current affairs capsules, pick topics from there, read up on them and write short essays on them

- Make a note of important facts and figures

- Don’t take the time for granted. In the descriptive section of most Bank & Government exams you will have to type on a keyboard, so those of you who are slow at typing need to practice typing precis and essays on a computer. The keyboard may be an old model and hence slow, so a faster typing speed will give you an edge over others in the exam

We hope these last minute tips on English language paper of Bank and Government Exams help you prepare better. Download and use this article as a handy guide during your preparation to improve your score in English paper.

We wish you all the very best for your exam.

Further reading:

RBI Grade B Phase 2 Economic & Social Issues Preparation Guide

Rbi grade b phase 2 finance & management preparation guide.

The most comprehensive online preparation portal for MBA, Banking and Government exams. Explore a range of mock tests and study material at www.oliveboard.in

Oliveboard Live Courses & Mock Test Series

- Download 500+ Free Ebooks for Govt. Exams Preparations

- Attempt Free SSC CGL Mock Test 2024

- Attempt Free IBPS Mock Test 2024

- Attempt Free SSC CHSL Mock Test 2024

- Download Oliveboard App

- Follow Us on Google News for Latest Update

- Join Telegram Group for Latest Govt Jobs Update

Leave a comment Cancel reply

Download 500+ Free Ebooks (Limited Offer)👉👉

Thank You 🙌

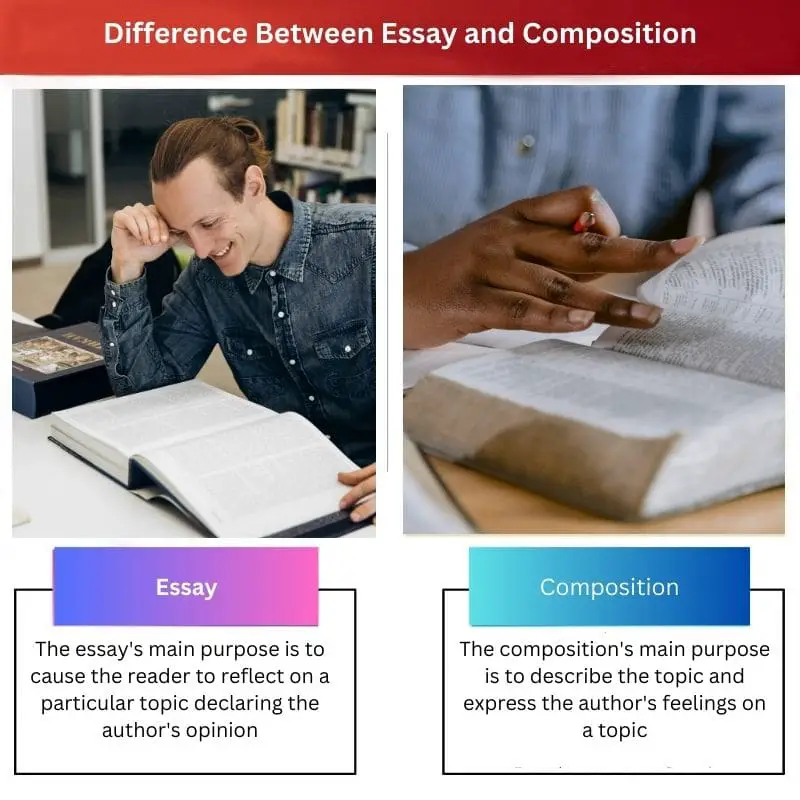

Essay vs Composition: Difference and Comparison

Some students mistakenly think that essay and composition are synonymous. The terms are not opposites, but there is a significant distinction between them.

Key Takeaways

- Essay and composition are both forms of academic writing that require critical thinking, analysis, and effective communication; essay is the more specific term and refers to a piece of writing that presents a thesis statement and supports it with evidence and analysis.
- A composition can encompass various types of writing, including essays, narratives, and descriptive pieces; an essay is a specific type of composition with a more structured format.
- An essay includes an introduction, body paragraphs, and a conclusion, while a composition may not have a specific structure or format.

Essay vs. Composition

Essays are about the writer’s opinion on a particular topic. They are structured and follow a pattern: an introduction, body paragraphs, and a conclusion. A composition can be about any topic, and it is not structured. It is not about any specific opinion or argument.

As such, essay and composition are not interchangeable terms. They also have different writing purposes. An essay aims to push readers to develop their own position on a topic. A composition explains the topic and compares phenomena without declaring the author’s position.

An essay is a short text (a college essay can sometimes run to 7-10 pages, but the required length is usually no more than 2-3 pages). The essay is written in prose. In an essay, the author states his personal opinion on a topic.

The author can express his vision in a free form. In an essay, the author discusses a particular phenomenon, event, or opinion and reasons from his own view. The essay requires not only gathering specific relevant information but also combining it with your own thoughts and arguments.

This is not a one-day job for most students. That is why they apply to paper writing services for help from skilled professional writers. These services aim to teach students how to explain their thoughts and structure their essays correctly.

The work produced with the help of writing services is a completed essay to which the student can add personal impressions. A composition, by contrast, is a creative paper presenting the author’s thoughts and feelings on the topic without explaining his opinion.

For example, the composition topic about the Great Depression is “Franklin D. Roosevelt’s role during the Great Depression.” The essay topic about the Great Depression will be: “Did the New Deal solve the problem of the Great Depression?”

Comparison Table

What is an Essay?

This genre has recently become popular, but its roots date back to the 16th century. Today, the essay is offered as a college and university assignment. An essay is a type of work built around a central topic.

The main purpose of writing an essay is to provoke the reader into reflection. Writing an essay teaches you to formulate your thoughts, structure information, find arguments, express your individual impressions, and state your position.

The characteristics of an essay are a small volume, a specific topic, and free composition. The author must build a trusting relationship with the reader; therefore, writing an essay is much more difficult than writing a composition.

What is Composition?

A composition is a creative work on a prescribed topic. It has a clear presentation structure.

In the composition, you can agree or disagree with the opinion of other authors, express your thoughts about what you read, compare works of different authors, and analyze their vision. A composition is expected to provide full disclosure of the topic.

To provide it, the paper must follow a set structure: an introduction that outlines the essential problem of the topic, a body that explains and reveals the main idea of the composition, and a logical conclusion. Therefore, a composition has a larger volume than an essay.

Main Differences Between An Essay And Composition

- There is a significant difference in style. A composition mainly contains an analysis of the topic, whereas an essay clearly expresses the author’s position.

- Compositions and essays vary in length. The essay, most often, has a small volume because the author’s thoughts must be clearly stated. The composition has a prescribed structure and a larger volume.

- An essay allows the author to express creativity and show his vision and attitude toward a specific phenomenon. A composition explains the topic according to its concept and doesn’t have to be supplemented with unusual thoughts.

- To write an essay, it is important to find an original idea or develop an out-of-the-box view of a situation. Writing a composition, by contrast, requires reading about the topic and discussing it.

Last Updated : 11 June, 2023

Emma Smith holds an MA degree in English from Irvine Valley College. She has been a Journalist since 2002, writing articles on the English language, Sports, and Law.

British Council Malaysia

Tips on how to prepare for comprehension and composition

Comprehension and composition are topics that our children learn in school. They are part of the learning process that will help them to eventually become better readers, writers and thinkers. Some students experience difficulty in this area especially when it comes to the comprehension and composition exercises.

Here are some helpful tips to guide us, both parents and children, in overcoming the hurdles related to this subject matter:

How important is background knowledge?

Over the past two decades, research into how we read and write has shown that the biggest factor affecting comprehension and composition is how much we know about the topic we are reading or writing about.

Your Pokémon Go-obsessed child with a C average would likely score an A in a comprehension paper if the text were about Pokémon Go. Students with good background knowledge can open a comprehension paper on any range of topics and quickly understand what the writer is talking about. In their composition paper, they can easily pull out interesting and well-developed ideas for any question they are asked. Unfortunately, Pokémon Go is unlikely to be the sole topic of exams.

In order for your child to produce the relevant, interesting, and wide ranging essay required to get top marks, knowledge of the following topic areas is useful:

- Choice is a new issue in many countries. For most of human history, the concept of choosing clothes, schools, or even spouses was an alien idea.

- Even now, not all countries or societies have the same amount of choices available.

- Some countries choose to restrict their citizens’ choices for economic or ideological reasons.

Without knowing this, students can only write about their own limited experience of choice – perhaps the difference between a hawker centre and a mall, which won’t lead to failure but also won’t bring in the higher grades.

What does your child need to do?

In the comprehension paper, the question types that students struggle with most – inference, authorial intention, and summary – are all based on their understanding of the passage.

An encyclopaedic knowledge of all 151 Pokémon may help your child ‘catch ‘em all’ but because of the huge variety of topics that comprehension and composition tests could include, this is not enough to succeed academically. For example, topics may include food, geography, consumerism and technology, science, nature and pollution.

Bearing this in mind, how can your child build their background knowledge to do better in this kind of exam?

The short term

In the short term, teens often possess a fair amount of background knowledge gained from the variety of subjects they study and their personal interests (sports, travel, Pokémon Go!).

In the lead-up to the exam, try drawing these strands together. For example, get your child to make a timeline charting when scientific discoveries were made, historical events happened, or works of literature were written. They can also try placing on a map the countries they have read about in social studies, English, or geography. Research into cognitive processes suggests that drawing these kinds of links makes our knowledge more meaningful and memorable.

The long term

Building up a general knowledge bank over time will give your child a huge advantage. Our curriculum at the British Council supports this by focusing on essay writing and non-fiction texts filled with information.

From Secondary 1, our students study a topic for five weeks and regularly review what they’ve learned through quizzes and assessment tasks throughout the year. This leads to long-term learning and retention: absolutely essential for those mid-year and end-of-year exams!

That doesn’t mean that your child’s passion for Pokémon should be squashed. Research is clear that the more students read about a topic, whatever it’s about, the better they do in school exams.

But in addition to this, your child needs to build a broad general knowledge to be able to quickly comprehend exam questions on a whole range of topics. Who knows, perhaps a well-chosen example of how Pokémon Go has affected sedentary lifestyles might make a difference!

Difference Between Essay and Composition

Main Difference – Essay vs Composition

Many students think that the two words Essay and Composition mean the same and can be used interchangeably. While it is true that an essay is a type of composition, not all compositions are essays. Let us first look at the meaning of composition. A composition can refer to any creative work, be it a short story, poem, essay, research paper or a piece of music. Therefore, the main difference between essay and composition is that an essay is a type of composition whereas composition refers to any creative work.

What is an Essay

An essay is a literary composition that describes, analyzes, and evaluates a certain topic or issue. It typically contains a combination of facts and figures and the personal opinions and ideas of the writer. Essays are a commonly used type of academic writing in the field of education. In fact, the essay can be introduced as the main type of literary composition written at school level.

An essay typically consists of a brief introduction, a body that consists of supporting paragraphs, and a conclusion. However, the structure, content and purpose of an essay can depend on the type of essay. Essays can be classified into various types depending on the given essay title or the style of the writer. Narrative, Descriptive, Argumentative, Expository, Persuasive, etc. are some of these essay types. The content, structure and style of the essay also depend on the nature of the essay, as does its complexity. For example, narrative and descriptive essays can be written even by primary school students, whereas argumentative and persuasive essays are usually written by older students.

What is a Composition

The term composition can refer to any creative work. A composition can be a piece of music, art, or literature. For example, Symphony No. 40 in G minor is a composition by Mozart.

The term literary composition can refer to a poem, short story, essay, drama, novel or even a research paper. It refers to an original and creative literary work.

Definition

Essay is a relatively short piece of writing on a particular topic.

Composition is a creative work.

Interconnection

Essay is a type of composition.

Not all compositions are essays.

Types

Essay can be categorized as narrative, descriptive, persuasive, argumentative, expository, etc.

A composition can be a short story, novel, poem, essay, drama, painting, piece of music, etc.

Prose vs verse

Essay is always written in prose.

Relations between Reading and Writing: A Longitudinal Examination from Grades 3 to 6

Young-Suk Grace Kim

University of California Irvine

Yaacov Petscher

Florida Center for Reading Research

Jeanne Wanzek

Vanderbilt University

Stephanie Al Otaiba

Southern Methodist University

We investigated developmental trajectories of and the relation between reading and writing (word reading, reading comprehension, spelling, and written composition), using longitudinal data from students in Grades 3 to 6 in the US. Results revealed that word reading and spelling were best described as having linear growth trajectories whereas reading comprehension and written composition showed nonlinear growth trajectories with a quadratic function during the examined developmental period. Word reading and spelling were consistently strongly related (.73 ≤ rs ≤ .80) whereas reading comprehension and written composition were weakly related (.21 ≤ rs ≤ .37). Initial status and linear slope were negatively and moderately related for word reading (−.44) whereas they were strongly and positively related for spelling (.73). Initial status of word reading predicted initial status and growth rate of spelling; and growth rate of word reading predicted growth rate of spelling. In contrast, spelling did not predict word reading. When it comes to reading comprehension and writing, initial status of reading comprehension predicted initial status (.69), but not linear growth rate, of written composition. These results indicate that reading-writing relations are stronger at the lexical level than at the discourse level and may be a unidirectional one from reading to writing at least between Grades 3 and 6. Results are discussed in light of the interactive dynamic literacy model of reading-writing relations, and component skills of reading and writing development.

Reading and writing are the foundational skills for academic achievement and civic life. Many tasks, including those in school, require both reading and writing (e.g., taking notes or summarizing a chapter). Although reading and writing have been considered separately in much of the previous research in terms of theoretical models and curriculum ( Shanahan, 2006 ), their relations have been recognized (see Fitzgerald & Shanahan, 2000 ; Langer & Flihan, 2000 ; Shanahan, 2006 for review). In the present study, our goal was to expand our understanding of developmental trajectories of reading and writing (word reading, reading comprehension, spelling, and written composition), and to examine developmental relations between reading and writing at the lexical level (word reading and spelling) and discourse level (reading comprehension and written composition), using longitudinal data from upper-elementary grades (Grades 3 to 6).

Successful reading comprehension entails construction of an accurate situation model based on the given written text ( Kintsch, 1988 ). Therefore, decoding or reading words is a necessary skill (Hoover & Gough, 1990). The other necessary skill is comprehension, which involves parsing and analysis of linguistic information of the given text. This requires working memory and attention to hold and access linguistic information (Daneman & Merikle, 1996; Kim, 2017 ) as well as oral language skills such as vocabulary and grammatical knowledge ( Cromley & Azevedo, 2007 ; Elleman, Lindo, Morphy, & Compton, 2009 ; Kim, 2015 , 2017 ; National Institute of Child Health and Human Development, 2000 ; Vellutino, Tunmer, Jaccard, & Chen, 2007 ). In addition, construction of an accurate situation model requires making inferences and integrating propositions across the text and with one’s background knowledge to establish global coherence. These inference and integration processes draw on higher order cognitive skills such as inference, perspective taking, and comprehension monitoring ( Cain & Oakhill, 1999 ; Cain, Oakhill, & Bryant, 2004 ; Cromley & Azevedo, 2007 ; Kim, 2015 , 2017 ; Kim & Phillips, 2014 ; Oakhill & Cain, 2012 ; Pressley & Ghatala, 1990 ).

In writing (written composition), one has to generate content in print. As a production task, transcription skills (spelling, handwriting or keyboarding fluency) are necessary (e.g., Berninger & Amtmann, 2002; Graham, Berninger, Abbott, Abbott, & Whitaker, 1997 ; Juel, Griffith, & Gough, 1986 ). Generated ideas undergo translation into oral language in order to express ideas and propositions with accurate words and sentence structures; and thus, writing draws on oral language skills ( Berninger & Abbott, 2010 ; Kim et al., 2011 , 2013 , 2015a ; Olinghouse, 2008 ). Of course, quality writing is not a sum of words and sentences, but requires local and global coherence ( Kim & Schatschneider, 2017 ; Bamberg, 1983 ). Coherence is achieved when propositions are logically and tightly presented and organized, and meet the needs of the audience. This draws on higher order cognitive skills such as inference, perspective taking ( Kim & Schatschneider, 2017 ; Kim & Graham, 2018 ), and self-regulation and monitoring (Berninger & Amtmann, 2002; Kim & Graham, 2018 ; Limpo & Alves, 2013 ). Coordinating these multiple processes of generating, translating, and transcribing ideas relies on working memory to access short term and long term memory (Berninger & Amtmann, 2002; Hayes & Chenoweth, 2007 ; Kellogg, 1999 ; Kim & Schatschneider, 2017 ) as well as sustained attention ( Berninger & Winn, 2006 ).

What is apparent in this brief review is the similarity of the component skills of reading and writing (see Kim & Graham, 2018; Fitzgerald & Shanahan, 2000). Then, what is the nature of reading and writing relations? According to the interactive and dynamic literacy model (Kim & Graham, 2018), reading and writing are hypothesized to co-develop and influence each other during development (interactive), but the relations change as a function of grain size and developmental phase (dynamic). The interactive nature of the relation is expected for two reasons. First, if reading and writing share language and cognitive resources to a large extent, then development of those skills would influence both reading and writing. Second, the functional and experiential aspect of reading and writing facilitates co-development (Fitzgerald & Shanahan, 2000). The majority of reading and writing tasks occur together (e.g., writing in response to written source materials; note taking after reading); and this functional aspect would facilitate and reinforce learning key knowledge and meta-awareness about print and text attributes (e.g., text structures) in the context of reading and writing.

Reading-writing relations are also expected to be dynamic or to change as a function of various factors such as grain size (Kim & Graham, 2018). When the grain size is relatively small (i.e., word reading and spelling), reading-writing relations are expected to be stronger because these draw on a more or less confined set of skills such as orthography, phonology, and semantics (Adams, 1990; Carlisle & Katz, 2006; Deacon & Bryant, 2005; Kim, Apel, & Al Otaiba, 2013; Nagy, Berninger, & Abbott, 2006; Ehri, 2000; Treiman, 1993). In contrast, when the grain size is larger (i.e., discourse-level skills such as reading comprehension and written composition), the relation is hypothesized to be weaker because discourse literacy skills draw on a more highly complex set of component skills, which entails more ways to be divergent (see Kim & Graham, 2018). Extant evidence provides support for different magnitudes of relations as a function of grain size (i.e., lexical versus discourse level literacy skills). Moderate to strong correlations have been reported for lexical-level literacy skills (i.e., word reading and spelling; .50 ≤ rs ≤ .84; Ahmed, Wagner, & Lopez, 2014; Berninger & Swanson, 1994; Ehri, 2000; Juel et al., 1986; Kim, 2011; Kim, Al Otaiba, Wanzek, & Gatlin, 2015a) whereas a weaker relation has been reported for reading comprehension and written composition (.01 ≤ rs ≤ .59; Abbott & Berninger, 1993; Ahmed et al., 2014; Berninger & Abbott, 2010; Berninger et al., 1993; Juel et al., 1986; Kim et al., 2015a).

Although previous work on reading-writing relations has been informative, empirical investigations of developmental relations between reading and writing using longitudinal data were limited. In fact, little is known about developmental patterns of writing skills (for reading development, see, for example, Kieffer, 2011; McCoach, O’Connell, Reis, & Levitt, 2006; Morgan, Farkas, & Wu, 2011), let alone developmental relations between reading and writing. In other words, our understanding is limited about a) the functional form or shape of development – whether writing skills, including both spelling and written composition, develop linearly or non-linearly; and b) the nature of growth in terms of the relation between initial status and the other growth parameters (linear slope and/or quadratic function) – a positive relation between initial status and linear growth would indicate that students with more advanced skills at initial status would grow faster, similar to the Matthew Effect (Stanovich, 1986), whereas a negative relation would indicate a mastery relation where students with advanced initial status show less growth.
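The growth parameters named above can be written out as a generic quadratic growth model. This is a textbook-style sketch, not the authors' exact specification; the symbols $y_{ti}$, $\pi_{0i}$, $\pi_{1i}$, $\pi_{2i}$, and $\varepsilon_{ti}$ are notation assumed here for illustration.

$$
y_{ti} = \pi_{0i} + \pi_{1i}\, t + \pi_{2i}\, t^{2} + \varepsilon_{ti}
$$

Here $y_{ti}$ is the score of student $i$ at time $t$, $\pi_{0i}$ is the initial status, $\pi_{1i}$ the linear slope, $\pi_{2i}$ the quadratic term, and $\varepsilon_{ti}$ the residual. A linear trajectory is the special case $\pi_{2i} = 0$. A positive correlation between $\pi_{0i}$ and $\pi_{1i}$ corresponds to the Matthew Effect pattern, and a negative correlation to the mastery pattern.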

Relatively few studies have investigated developmental trajectories for either spelling or writing. In spelling, a nonlinear developmental trajectory was reported for Norwegian-speaking children in the first three years of schooling ( Lervag & Hulme, 2010 ). Nonlinear developmental trajectories in spelling were also found for Korean-speaking children, and developmental trajectories differed as a function of word characteristics ( Kim, Petscher, & Park, 2016 ). In written composition, only a couple of studies have investigated developmental trajectories. Kim, Puranik, and Al Otaiba (2015b) investigated growth trajectories of writing within Grade 1 (beginning to end) for three groups of English-speaking children: typically developing children, children with language impairment, and those with speech impairment. They found that although there were differences in initial status among the three groups, the linear developmental rate in writing did not differ among the three groups of children. This study was limited, however, because it examined development within a relatively short period (Grade 1), and the functional form of the growth trajectory was limited to a linear model because only three waves of data were available. Another longitudinal study, conducted by Ahmed and her colleagues ( 2014 ), followed English-speaking children from Grades 1 to 4, but growth trajectories over time were not examined because their focus was the relation between reading and writing, using changes in scores between grades.

The vast majority of previous studies on reading-writing relations have been cross-sectional investigations, and they have reported somewhat mixed findings. Some reported a unidirectional relation of reading to writing ( Kim, 2011 ; Kim et al., 2015a ); some reported a direction from writing to reading ( Berninger, Abbott, Abbott, Graham, & Richards, 2002 ; see also Graham & Hebert’s [2010] meta-analysis); and others reported bidirectional relations ( Berninger & Abbott, 2010 ; Kim & Graham, 2018 ; Shanahan & Lomax, 1986; Shanahan & Lomax, 1988). Results from limited extant longitudinal studies are also mixed. Lerkkanen, Rasku-Puttonen, Aunola, and Nurmi (2004) , using longitudinal data (4 time points across the year) from Finnish first grade children, reported a bidirectional relation between reading (composed of word reading and reading comprehension) and spelling during the initial phase of development, but not during the later phase. As for the relation between written composition and reading (composed of word reading and reading comprehension), the direction was from writing to reading, but not the other way around. Ahmed et al. (2014) examined reading-writing relations at the lexical, sentence, and discourse levels using longitudinal data from Grades 1 to 4, and found different patterns at different grain sizes. They reported a unidirectional relation from reading to writing at the lexical (word reading-spelling) and discourse levels (reading comprehension and written composition), but a bidirectional relation at the sentence level.

Findings from these studies suggest that reading and writing are related, but the developmental nature of relations still remains unclear. Building on these previous studies, the primary goal of the present study was to expand our understanding of the development of reading and writing, and their interrelations. To this end, we examined growth trajectories and developmental relations of reading and writing at the lexical and discourse-levels. Although previous studies did reveal relations between reading and writing, the number of studies which explicitly examined developmental relations at the same grain size of language (i.e., lexical level and discourse level) using longitudinal data is extremely limited, with the above noted Ahmed et al.’s (2014) study as an exception. We examined the reading-writing relations at the lexical-level and discourse-level, respectively. This is because theory and evidence clearly indicate that the component skills of reading and writing differ for lexical literacy skills (e.g., Adams, 1990 ; Treiman, 1993 ) versus discourse literacy skills (e.g., Berninger & Winn, 2006 ; Hoover & Gough, 1990; Perfetti & Stafura, 2014; Kim, 2017 ).

With the overarching goal of examining developmental relations between reading and writing at the lexical and discourse-levels, we had the following two research questions:

- What are the patterns of development of reading (word reading and reading comprehension) and writing (spelling and written composition) from Grades 3 to 6?

- How are growth trajectories in reading and writing interrelated over time from Grades 3 to 6?

With regard to the first research question, we expected nonlinear growth trajectories for word reading, spelling, and reading comprehension where linear development is followed by a slowing down (or plateau). Due to lack of prior evidence in the grades we examined (i.e., Grades 3 to 6), we did not have a specific hypothesis about the functional form of growth trajectories for written composition. In terms of reading-writing relations, we hypothesized a stronger relation between word reading and spelling than that for reading comprehension and written composition. We also hypothesized a bidirectional relation particularly between word reading and spelling based on fairly strong bivariate relations reviewed above. For reading comprehension and written composition, we expected a weaker relation, and did not have a specific hypothesis about bidirectionality, given lack of empirical data in upper elementary grades.

Participants

Data for the present study are from a longitudinal study of students’ reading and writing development in the southeastern region of the US. Cross-sectional results on predictors of writing in Grades 1 to 3 have been reported previously ( Kim et al., 2014 , 2015a ). However, longitudinal data from Grades 3 (mean age = 8.25, SD = .39) to 6, the focal grades in the present study, have not been reported. The longitudinal study was composed of two cohorts of children in the same district. In other words, the sample sizes in each grade (see Table 1 ) were the sum of two cohorts of children.

Descriptive statistics for outcome measures

Note. WJ = Woodcock Johnson; LWID = Letter Word Identification Task; PC = Passage Comprehension; WIAT = Wechsler Individual Achievement Test; One day = One day prompt; TDTO = Thematic Development and Text Organization

As shown in Table 1 , total sample size in each grade varied across years and measures 2 . For instance, in spelling, data from a total of 359 children were available in Grade 3 whereas in Grade 6, data were available for 278 children. An empirical test of whether missingness is completely at random (MCAR; Little, 1988 ) or not revealed that all data in grades 3–6 were MCAR, χ 2 (492) = 530.13, p = .114, with the exception of the grade 6 writing data, χ 2 (4) = 21.46, p < .001. However, a review of the data suggested that the missingness was ignorable and that the patterns of missing data were unrelated to the variables themselves. As such, full-information maximum likelihood was the appropriate method for estimating coefficients in the presence of missing data ( Enders, 2010 ).

The sample was composed of 53% male students and was predominantly African American (59%), followed by White (29%), Multi-racial (9%), Other (2%), and Native American or Asian (1%). We noted a pattern of greater attrition related to free or reduced-price lunch status. In grade 3, 51% of students were eligible for free or reduced-price lunch compared to 49% in grade 4, 39% in grade 5, and 29% in grade 6. Further, 10% of students in grade 3 were identified with a primary exceptionality, 7% in grade 4, 6% in grade 5, and 6% in grade 6. No students were identified as having limited English proficiency.

Word reading

Children’s word reading was assessed by the Letter Word Identification task of the Woodcock Johnson-III (WJ-III; Woodcock, McGrew, & Mather, 2001 ). In this task, the child is asked to read aloud words of increasing difficulty. This task assesses children’s decoding skill and knowledge of word-specific spellings in English. Cronbach’s alpha estimates across grades 3–6 ranged from .90 to .91 according to the test manual. The Letter Word Identification task of WJ-III has been widely used in previous studies and has been shown to be strongly related to other word reading tasks (e.g., r = .92; Kim et al., 2015a ; Kim & Wagner, 2015 ).

Reading comprehension

The Passage Comprehension task of WJ-III was used. This is a cloze task where the child is asked to read sentences and short passages and to fill in the blanks. Cronbach’s alpha estimates across the grades ranged from .76 to .84. This has also been widely used as a measure of reading comprehension with strong correlations with other well-established measures of reading comprehension (e.g., .70 ≤ r s ≤ .82; Keenan et al., 2008 ; Kim & Wagner, 2015 ).

Spelling

The Spelling task of WJ-III was used. This is a dictation task where the child hears the word in isolation, in a sentence, and in isolation again, and is asked to spell it. Cronbach’s alpha estimates across the grades ranged from .90 to .91. The WJ-III Spelling task has been reported to be strongly related to word reading skills (.76 ≤ r s ≤ .83; Kim et al., 2015a ; McGrew, Schrank, & Woodcock, 2007 ).

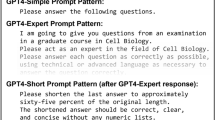

Written composition

Written composition was measured by two tasks: the Essay Composition task of the Wechsler Individual Achievement Test-3rd (WIAT-3; Wechsler, 2009 ) and an experimental task that was used in previous studies ( Kim et al., 2014 , 2015a ; McMaster, Du, & Pétursdóttir, 2009 ; also see Abbott & Berninger, 1993 for a similar prompt). In the WIAT task, the child was asked to write about their favorite game and provide three reasons. The WIAT task has been widely used in previous studies (e.g., Berninger & Abbott, 2010 ) and was related to other writing prompts (.38 ≤ r s ≤ .45; Kim et al., 2015a ). In the experimental task, the child was asked to write about something interesting that happened after they got home from school one day (One day prompt hereafter). The One day prompt has been shown to be related to the WIAT writing task ( r = .45; Kim et al., 2015a ) and was related to other indicators of writing proficiency such as writing productivity and fluency ( McMaster et al., 2009 ). Children were given 15 minutes to complete each of their writing tasks.

Students’ written compositions were evaluated on the quality of ideas on a scale of 0 (unscorable) to 7, which was modified from the widely used 6+1 Trait approach ( Northwest Regional Educational Laboratory, 2011 ). A similar approach has been widely used in previous studies ( Graham, Berninger, & Fan, 2007 ; Hooper, Swartz, Wakely, de Kruif, & Montgomery, 2002 ; Kim et al., 2014 , 2015a ; Olinghouse, 2008 ). Compositions that had rich and clear ideas with details received higher scores. In addition to the idea quality, the WIAT Essay Composition task was also evaluated on thematic development and text organization (TDTO hereafter) following the examiner’s manual. Coders were rigorously trained to achieve high reliability within each year as well as across the years. For the present study, we established inter-rater reliability using 40–50 written compositions per prompt per year; Cohen’s Kappa ranged from .78 to .97.
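
To make the agreement statistic concrete, the following is a minimal Python sketch of unweighted Cohen’s kappa for two coders. The ratings are hypothetical toy data, not data from the study, and the article does not state whether a weighted variant of kappa was used; the function name `cohens_kappa` is likewise only illustrative.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa for two raters scoring the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Both raters must score the same non-empty set of items.")
    n = len(rater_a)
    # Observed agreement: proportion of items given identical scores.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of the raters' marginal proportions, summed over categories.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    p_chance = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    return (p_observed - p_chance) / (1 - p_chance)


# Hypothetical idea-quality scores (0-7 scale) from two coders for ten compositions.
coder_1 = [4, 5, 3, 4, 6, 2, 4, 5, 3, 4]
coder_2 = [4, 5, 3, 4, 5, 2, 4, 5, 3, 3]
kappa = cohens_kappa(coder_1, coder_2)
print(round(kappa, 2))
```

In this toy example the coders agree on 8 of 10 compositions, and kappa discounts that raw agreement by the agreement expected from the marginal score distributions alone.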

Children were assessed in the spring by carefully trained assessors in a quiet space in each school. Assessment consisted of two individual sessions and two small group sessions. Research assistants were trained for two hours prior to each assessment session and were required to pass a fidelity check before administering assessments to the participants in order to ensure accuracy in administration and scoring. The reading tasks and the spelling task were individually administered whereas the written compositions were administered in a small group setting (3–4 children).

Data Analytic Approach

We employed a combination of latent individual growth curve modeling and structural equation modeling in this study. An important aspect of evaluating the structural cross-construct relations is first understanding the underlying functional form of growth for each of the four outcome types. To this end, four specific latent variable models were tested for each outcome: a linear growth model, a non-linear growth model with non-linearity defined through a quadratic term, a linear freed loading growth model, and a linear latent change score (or dual change score) model. Each of these models reflects an alternative consideration of how growth is shaped ( Petscher, Quinn, & Wagner, 2016 ). The linear latent growth model describes a strictly linear relation over time regardless of the number of time points in the model; thus, even though there are four observed waves of data, the linear model forces a linear growth curve. The non-linear growth model extends the linear model by allowing multiple non-linear terms to be added above the linear slope; and the different alternative nonlinear models were evaluated for precise estimation of non-linearity. In the present data, four available time points permitted specification of a quadratic parameter to capture the rate of acceleration or deceleration in growth. The freed loading growth curve model is so named because the loadings on the slope factor in the growth model are freely estimated rather than fixed at particular time intervals. In this way, the shape of the curve is defined by the estimated loadings, not a priori determined values. For example, in a linear growth model the loadings may be coded as 0, 1, 2, 3 for four time points and the equal interval coding points to the assumption of equal interval change over time.
A freed loading growth model may code the loading structure as 0, *, *, 1 where 0 and 1 denote the beginning and end of change and * denotes freely estimated proportional change that may occur between times 1 and 4. The dual change score model ( McArdle, 2009 ) may be viewed as a hybrid of direct and/or indirect models with individual growth curve analysis. Dual change models include two types of change parameters, an average slope factor, such as in the linear model, and a proportional change parameter that reflects the relation between a prior time point and the change between two time points.
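
The difference between these specifications can be illustrated with a short Python sketch of the mean trajectory each one implies. This is only an illustration under stated assumptions, not the model code used in the study: all parameter values below are hypothetical, the function names are invented for this example, and the dual change recursion follows the general form described above (a constant change component plus a component proportional to the prior score).

```python
def implied_means(intercept, slope, loadings):
    """Model-implied mean at each wave: intercept + loading * slope."""
    return [intercept + loading * slope for loading in loadings]


def dual_change_trajectory(initial, constant_change, proportional_change, n_waves):
    """Iterate y[t] = y[t-1] + constant_change + proportional_change * y[t-1]."""
    scores = [initial]
    for _ in range(n_waves - 1):
        previous = scores[-1]
        scores.append(previous + constant_change + proportional_change * previous)
    return scores


# Hypothetical parameter values chosen only for illustration.
# Linear model: fixed loadings 0, 1, 2, 3 force equal change between waves.
linear = implied_means(intercept=500.0, slope=6.0, loadings=[0, 1, 2, 3])

# Freed loading model: the slope is the total change from wave 1 to wave 4;
# the two middle loadings (0.5 and 0.8 here) stand in for estimated values.
freed = implied_means(intercept=500.0, slope=18.0, loadings=[0, 0.5, 0.8, 1])

# Dual change score model: a negative proportional parameter slows growth
# as scores rise.
dual = dual_change_trajectory(initial=10.0, constant_change=8.0,
                              proportional_change=-0.2, n_waves=4)

print(linear)
print([round(m, 1) for m in freed])
print([round(y, 2) for y in dual])
```

With these values the linear model gains the same amount at every wave, whereas the freed loading and dual change score trajectories both show larger early gains followed by smaller ones.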

For the word reading, spelling, and reading comprehension outcomes, the latent growth models were fit directly to the observed measures. However, for written composition, with multiple measures of writing data at each time point, multiple indicator growth models ( Meredith & Tisak, 1990 ) were specified for each of the four general model types described above. The inclusion of the multiple indicators necessitates additional model testing steps to evaluate levels of longitudinal invariance for the loadings, intercepts, and variances. Establishing measurement invariance serves to ensure that the latent variables are measured on the same metric over time so that differences in the latent means and variances are due to individual differences in the latent scores and not due to biases that are consequential to a lack of measurement invariance. Loading invariance was first tested, followed by various iterations of freeing model constraints on the basis of modification indices. Once a decision was made regarding measurement invariance, the multiple indicator growth models were specified.

Following the growth model evaluations, two structural equation models were specified for pairs of constructs. First, the latent intercept and slope factors from the word reading growth model were used as predictors of factors in the spelling growth model, as well as the latent intercept from the spelling growth model as a predictor of growth in word reading. Second, the latent intercept and slope factors from the reading comprehension growth model were used as predictors of factors in the writing growth model. Fit for all latent variables was evaluated using the comparative fit index (CFI; Bentler, 1990 ), Tucker-Lewis index (TLI; Bentler & Bonett, 1980 ), and the root mean square error of approximation (RMSEA; Browne & Cudeck, 1992 ). CFI and TLI values greater than .90 are considered to be minimally sufficient criteria for acceptable model fit ( Hooper, Coughlan, & Mullen, 2008 ) and RMSEA values <.10 are desirable. The Bayesian Information Criterion (BIC) was used as another index for comparing model fit, with a difference of at least 5 between models suggesting practically important differences (Raftery, 1995).
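
The cutoffs stated above can be written as a simple decision rule. The Python sketch below is only illustrative: the function name and argument names are assumptions made for this example, and the index values passed in are those reported in this article for the baseline and final writing invariance models.

```python
def acceptable_fit(cfi, tli, rmsea, min_cfi_tli=0.90, max_rmsea=0.10):
    """Return True when CFI and TLI exceed .90 and RMSEA is below .10."""
    return cfi > min_cfi_tli and tli > min_cfi_tli and rmsea < max_rmsea


# Fully invariant (baseline) writing model: CFI = .68, TLI = .72, RMSEA = .107.
baseline_ok = acceptable_fit(cfi=0.68, tli=0.72, rmsea=0.107)

# Final partially invariant writing model: CFI = .96, TLI = .96, RMSEA = .043.
final_ok = acceptable_fit(cfi=0.96, tli=0.96, rmsea=0.043)

print(baseline_ok, final_ok)
```

Under these cutoffs the baseline model fails on all three indices, while the final model meets each of them.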

Descriptive Statistics

Table 1 provides the descriptive statistics (W scores for the WJ measures and standard scores when available) across all measures and time points. Mean standard scores in the standardized and normed tasks were in the average range across the years (93–111). Average W scores on WJ-III Letter-Word Identification increased from grade 3 ( M = 499.40, SD = 19.34) to grade 6 ( M = 519.48, SD = 17.98), as did the WJ-III Spelling scores (grade 3: M = 498.85, SD = 17.08; grade 6: M = 515.91, SD = 16.16), and the WJ-III Passage Comprehension scores (grade 3: M = 491.34, SD = 11.26; grade 6: M = 501.61, SD = 11.49).

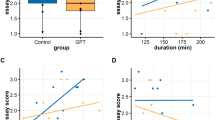

For writing measures, raw scores showed increases from grades 3 to 6 on both the WIAT TDTO (grade 3: M = 6.67, SD = 2.88; grade 6: M = 9.62, SD = 4.01) and the WIAT idea quality (grade 3: M = 3.80, SD = 0.88; grade 6: M = 4.33, SD = 1.15). The mean WIAT TDTO standard scores were in the average range (106–111). In contrast, mean scores for the One Day idea quality measure did not show a similar pattern of growth, but decreased slightly (grade 3: M = 4.40, SD = 1.07; grade 6: M = 4.25, SD = 0.99). Although this may appear surprising, a slight dip or no growth in a particular year in writing quality has been previously reported ( Ahmed et al., 2014 ).

Correlations among the measures across grades are reported in Table 2 . The relations between reading and writing in each grade varied: Word reading and spelling were strongly related (.73 ≤ r s ≤ .80) whereas reading comprehension and writing were somewhat weakly related (.21 ≤ r s ≤ .37). Correlation matrices within tasks across grades show that word reading tasks (.75 ≤ r s ≤ .86) and spelling (.83 ≤ r s ≤ .89) were strongly correlated across grades. Reading comprehension was also fairly strongly related across the grades (.60 ≤ r s ≤ .69). In contrast, writing scores across grades were weakly to moderately correlated (.15 ≤ r s ≤ .50).

Correlations among variables

G = Grade; LWID = Letter Word Identification Task; PC = Passage Comprehension; WT = WIAT Essay task; One day = One day prompt; TDTO = Thematic Development and Text Organization

Research Question 1

What are the patterns of development in reading (word reading and reading comprehension) and writing (spelling and written composition) from Grades 3 to 6?

Prior to the specification of the growth models for all outcomes, the longitudinal invariance of the writing measures was evaluated with Mplus v7.0 ( Muthén & Muthén, 1998–2013 ). The first phase of the model building was to identify the extent to which a single factor best represented the measurement-level covariances among the three measured writing variables at each of the grade levels. However, because the model was just-identified (i.e., 0 degrees of freedom), fit indices were not available for the grade-based models. The baseline model for longitudinal invariance specified longitudinal constraints on the loadings, intercepts, and residual variances and the model fit was poor: χ 2 (75) = 480.97, RMSEA = .107, (90% RMSEA CI = .098, .116), CFI = .68, TLI = .72. Through a series of model revisions a final model was specified that included invariant loadings and intercepts, partially invariant residual variances (i.e., grade 3 WIAT TDTO was freely estimated), and the addition of three residual covariances among writing measures, χ 2 (62) = 115.40, RMSEA = .043 (90% RMSEA CI = .030, .055), CFI = .96, TLI = .96, and the fit of this final model was significantly better than the fully invariant model (Δχ 2 = 365.57, Δdf = 13, p < .001).
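
The nested-model comparison reported above can be checked with a few lines of Python. This is a sketch under two assumptions that the article does not confirm: that an ordinary (unscaled) chi-square difference test applies, and that a standard-library closed form for the chi-square tail probability is adequate. The function name `chi2_sf` is chosen for this example only.

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability of a chi-square variate with integer df."""
    if x <= 0:
        return 1.0
    z = x / 2.0
    if df % 2 == 0:
        # Even df: exp(-z) * sum_{k=0}^{df/2 - 1} z^k / k!
        term, total = 1.0, 1.0
        for k in range(1, df // 2):
            term *= z / k
            total += term
        return math.exp(-z) * total
    # Odd df: erfc(sqrt(z)) + exp(-z) * sum_{j=1}^{(df-1)/2} z^(j - 1/2) / Gamma(j + 1/2)
    total = sum(z ** (j - 0.5) / math.gamma(j + 0.5)
                for j in range(1, (df - 1) // 2 + 1))
    return math.erfc(math.sqrt(z)) + math.exp(-z) * total


# Values reported for the fully invariant and final partially invariant models.
delta_chi2 = 480.97 - 115.40
delta_df = 75 - 62
p_value = chi2_sf(delta_chi2, delta_df)

print(round(delta_chi2, 2), delta_df, p_value < 0.001)
```

The differences reproduce the reported Δχ 2 = 365.57 and Δdf = 13, and the resulting tail probability is far below .001.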

As noted above, four alternative growth models were examined and compared for each of the outcomes, word reading, spelling, reading comprehension, and writing. Model fit results are reported in Table 3 . Generally, each model configuration fit well to the outcomes. For example, the word reading models all maintained acceptable CFI and TLI (>.95) as well as RMSEA (<.10). When using the BIC to compare relative model fit, both the dual change score model (BIC = 9,720) and freed loading model (BIC = 9,719) were lower by at least 5 points from the linear latent growth (BIC = 9,735) and quadratic growth (BIC = 9,729) models but only differed by 1 point from each other. Based on the χ 2 /df ratio and the measurement simplicity, the freed loading model was selected for word reading. The results from the freed loading model indicated that 45% of the total growth in word reading occurred between grades 3 and 4, 26% between grades 4 and 5, and 29% between grades 5 and 6. The comparison of the spelling growth models showed an advantage for the dual change score model over the freed loading and non-linear growth models by 11 points on the BIC, as well as a 41 point difference with the linear latent growth model.
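
The BIC comparison for word reading can be reproduced as follows. The Python sketch uses the BIC values and growth percentages reported in this article; the function names are assumptions for this example, and the slope loadings derived at the end are an inference from the reported percentages (cumulative proportions under a 0, *, *, 1 coding), not values reported by the authors.

```python
from itertools import accumulate


def bic_gaps(bic_by_model):
    """Difference between each model's BIC and the lowest BIC in the set."""
    best = min(bic_by_model.values())
    return {model: bic - best for model, bic in bic_by_model.items()}


def close_to_best(bic_by_model, threshold=5):
    """Models whose BIC is within `threshold` points of the best model."""
    gaps = bic_gaps(bic_by_model)
    return sorted(model for model, gap in gaps.items() if gap < threshold)


word_reading_bic = {
    "linear": 9735,
    "quadratic": 9729,
    "freed loading": 9719,
    "dual change score": 9720,
}
gaps = bic_gaps(word_reading_bic)
candidates = close_to_best(word_reading_bic)

# Reported share of total growth per interval (grades 3-4, 4-5, 5-6), in percent.
growth_shares = [45, 26, 29]
# Inferred slope loadings: cumulative share of growth reached by each wave.
implied_loadings = [0.0] + [total / 100 for total in accumulate(growth_shares)]

print(gaps)
print(candidates)
print(implied_loadings)
```

Only the freed loading and dual change score models fall within 5 BIC points of the best model, which is why a secondary criterion was needed to choose between them.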

Developmental model fit for word reading, spelling, reading comprehension, and writing

Note. BIC = Bayesian Information Criterion, df = degrees of freedom, RMSEA = root mean square error of approximation, LB = 90% RMSEA lower bound, UB = 90% RMSEA upper bound, CFI = comparative fit index, TLI = Tucker-Lewis index.

For reading comprehension, the quadratic growth and dual change score models fit better than the other two alternatives, and similar to the word reading model selection, the χ 2 /df ratio and the measurement parsimony led to the selection of the quadratic growth model. Finally, the quadratic growth model was selected for the writing outcome based on its relative fit to the dual change models (i.e., ΔBIC = 30), and its superior fit to the linear latent growth model (Δχ 2 = 14.74, Δdf = 4, p < .01) and the freed loading model (Δχ 2 = 8.74, Δdf = 2, p < .05).

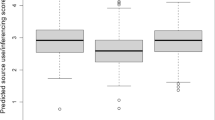

Randomly selected individual growth curves ( n = 25) for each of the four outcomes are presented in Figure 1 . The word reading curves reflect the linear relation over time with slight individual differences in the amount of change occurring. Similarly, though spelling change over time appears non-linear, the variances in the linear and quadratic slope functions were minimal and resulted in relatively parallel development. Both the reading comprehension and latent writing trajectories demonstrated individual differences in change, with large differences observed in the latent writing development.

Randomly selected estimated individual curves from n =25 for word reading, spelling, reading comprehension, and latent writing across grades 3–6 (Times 1–4).

Research Question 2

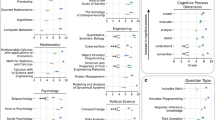

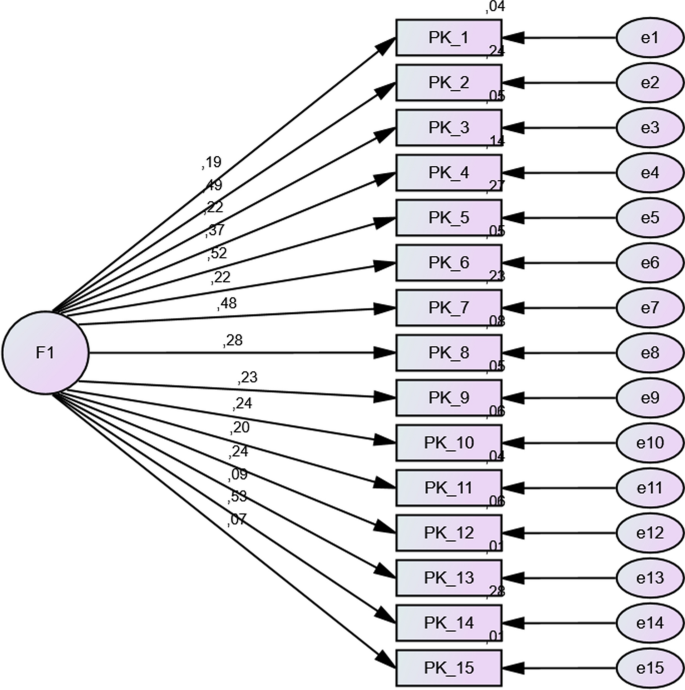

The first structural analysis tested the relation between word reading intercept (centered in grade 3) and slope in predicting spelling intercept (centered in grade 3) and slope, χ 2 (22) = 27.92, RMSEA = .024, 90% RMSEA CI = .000, .047, CFI = .99, TLI = .99. Standardized path coefficients are presented in Figure 2 (Unstandardized model coefficients are reported in Appendix A1 ). Word reading intercept (initial status) and slope were moderately and negatively related (−.44), indicating that children who had higher word reading in Grade 3 had a slower growth rate in word reading. In contrast, spelling intercept and slope had a strong and positive relation (.73), indicating that children who had a higher spelling skill showed a faster growth rate over time. In terms of the relation between word reading and spelling, Grade 3 word reading scores significantly predicted Grade 3 spelling scores (.86) as well as the average spelling growth trajectory (.96). Word reading growth also uniquely predicted the average spelling growth trajectory (.22). In contrast, Grade 3 spelling scores did not significantly predict growth in word reading (.16, p > .50). A model including bi-directional paths from word reading slope to spelling slope did not converge; a final model included a covariance between word reading and spelling slopes with the correlation estimated as .08 ( p > .50). The inclusion of the word reading predictors resulted in 75% of the variance in Grade 3 spelling explained along with 84% of the variance in spelling growth.

Latent word reading development predicting latent spelling development. LWID = Letter Word Identification

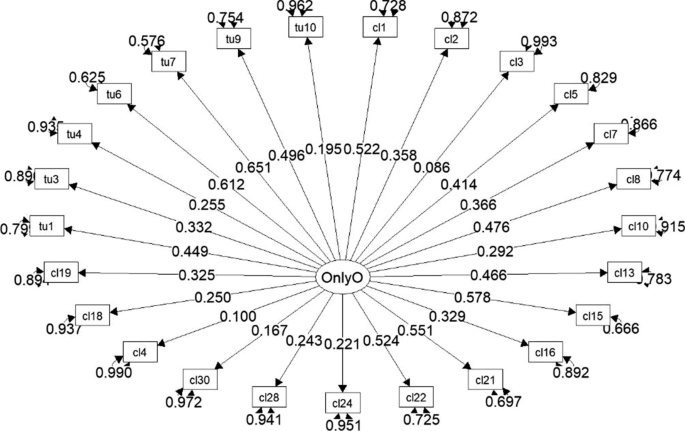

Standardized path coefficients for the predictive model of reading comprehension development to writing development are shown in Figure 3 : χ 2 (110) = 218.45, RMSEA = .045, 90% RMSEA CI = .037, .054, CFI = .95, TLI = .94 (Unstandardized model coefficients are reported in Appendix A2 ). Although our goal was to examine how growth trajectories (initial status, linear slope, and quadratic terms) in reading comprehension and writing are related to one another, this was not permitted due to zero variance in the linear slope and quadratic terms in reading comprehension as well as the quadratic term in writing. As shown in Figure 3 , Grade 3 reading comprehension significantly predicted Grade 3 writing (.69) 3 , but did not significantly explain differences in the linear writing slope (.10, p = .29). Grade 3 reading comprehension explained 48% of the variance in Grade 3 writing and 1% of the variance in the linear writing slope. Furthermore, intercept and linear slope in writing were not related (.09, p = .74); and the relation between intercept and linear slope in reading comprehension was not estimated due to lack of variance in the slope of reading comprehension.
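
The variance-explained figures above follow from the standardized paths. The Python sketch below assumes that Grade 3 reading comprehension is the only predictor of each writing factor, in which case the proportion of variance explained equals the squared standardized coefficient; the function name is chosen for this example only.

```python
def variance_explained(standardized_path):
    """R-squared for an outcome with a single standardized predictor."""
    return standardized_path ** 2


# Standardized paths reported in the text.
intercept_r2 = variance_explained(0.69)  # reading comprehension -> Grade 3 writing
slope_r2 = variance_explained(0.10)      # reading comprehension -> linear writing slope

print(round(intercept_r2 * 100), round(slope_r2 * 100))
```

The squared paths round to the reported 48% and 1%. With more than one correlated predictor, as in the word reading and spelling model, this shortcut no longer applies.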

Latent reading comprehension development predicting latent writing development. PC = Passage Comprehension; W = Writing quality

Two overarching questions guided the present study: a) what are the growth trajectories or growth patterns in reading and writing across Grades 3 to 6, and b) what is the developmental relation between reading and writing for children in these grades? We focused on development from Grades 3 to 6 when children are expected to have developed foundational literacy skills, but continue to develop reading and writing skills. Colloquially, they have moved from a “learning to read” phase to a “reading to learn” phase (Chall, 1983).

We found that different growth models best described the four different reading and writing outcomes. Overall, alternative models for the four literacy outcomes fit the data well. Unlike our hypothesis of nonlinear trajectories, for lexical-level literacy skills, word reading and spelling, linear models (freed loading and dual change models) described data best, at least from Grades 3 to 6. For word reading, the freed loading growth curve fit the data best and showed that the amount of growth in word reading varied as a function of developmental time points. The largest amount of growth (45% of total growth) occurred between grades 3 and 4 with less growth between grades 4 and 5 (26%) and between grades 5 and 6 (29%). This is convergent with a previous study, which found that growth in reading skills was larger in lower grades than upper grades ( Kieffer, 2011 ). For spelling, the dual change score model described the data best. The dual change score model does not differ from the traditional growth model in terms of shapes of growth pattern. The dual change score model, however, adds nuances because it captures proportional (i.e., auto-regressive) growth parameters (changes between two time points) in addition to average growth parameters in traditional growth models (changes across all the time points).

Interestingly, however, for reading comprehension and written composition, nonlinear trajectories with a quadratic function described the data best. In other words, developmental trajectories were characterized by an initial linear development followed by slowing down (or plateau). This nonlinear trajectory in reading comprehension is convergent with previous work (e.g., Kieffer, 2011 ). The present study is the first one to describe any growth pattern over time in written composition beyond a single academic year and/or from Grades 3 to 6. Taken together with the limited extant work, it appears that reading comprehension and written composition develop in nonlinear trajectories, characterized by initial strong growth and followed by a pattern of deceleration, or slowing down, at least from Grades 3 to 6.

Another interesting finding about growth trajectories in reading and writing was the relation between initial status and linear growth rate. For word reading, children’s status in Grade 3 was negatively related to rate of growth (−.44) such that those who had advanced word reading in Grade 3 had a slower growth rate through the grades. Spelling, on the other hand, showed a different pattern with a strong positive relation between initial status and growth rate (.73), indicating that students with a higher spelling skill at Grade 3 developed at a faster rate from Grades 3 to 6. Although there might be several explanations, we speculate that these results are attributable, at least partially, to the fact that children in these grades are in different developmental phases in word reading versus spelling. In word reading, many children have reached high levels of proficiency by Grade 3, and therefore, their subsequent learning rate is slower as their learning approaches a ceiling. In spelling, however, students’ overall development in Grade 3 did not quite reach as high because spelling requires greater accuracy and precision in orthographic representations than reading ( Ehri, 2000 ). Therefore, there is sufficient room for further growth for the majority of learners, and those with more advanced spelling in Grade 3 continue to grow at a fast rate in subsequent grades, presumably because they have more solid foundations in component skills of spelling. Another possible explanation may relate to instruction; that is, by third grade, relatively little reading instructional focus is at the word level, because students are expected to have mastered learning to read, whereas spelling instruction may continue, particularly for more complex word patterns. This speculation, however, requires future studies.

Results for discourse-level literacy skills were less clear. Unfortunately, the relation between initial status and growth rates was not estimable for reading comprehension due to the lack of variance in the linear slope and quadratic parameters. In written composition, although there was variation in the linear slope, the relation between initial status and linear slope was not statistically significant. This finding suggests that initial student writing levels do not necessarily predict future growth in writing. However, given that this was the first study to explicitly examine the relations between initial status and growth trajectories in writing, our findings cannot be compared to any previous research, and so will require replication in future studies.

Turning to the relation between reading and writing, we hypothesized a dynamic relation between reading and writing as a function of grain size – differential relations for the lexical-level skills versus discourse-level skills, specifically a stronger relation between word reading and spelling than between reading comprehension and written composition. This hypothesis was supported such that bivariate correlations between word reading and spelling were strong across grades (.73 ≤ r s ≤ .80). The strong correlation between word reading and spelling is convergent with theoretical explanations and empirical evidence that word reading and spelling rely on a limited number of highly similar skills such as phonological awareness, orthographic awareness (letter knowledge and letter patterns), and morphological awareness ( Apel, Wilson-Fowler, Brimo, & Perrin, 2012 ; Berninger et al., 1998 ; Ehri, Satlow, & Gaskins, 2009 ; Kim, 2011 ; Kim et al., 2013 ; Treiman, 1998).

When reading-writing relations were examined at the discourse level, the relation was weak (.21 ≤ rs ≤ .37), convergent with previous evidence (Ahmed et al., 2014; Berninger & Swanson, 1994; Berninger & Abbott, 2010; Kim et al., 2015a). The overall weak relation indicates that reading comprehension and written composition have shared variance, but are unique and independent to a large extent, at least during the relatively early phase of development examined in the present study (Grades 3–6). Reading comprehension and written composition draw on complex, similar sets of skills and knowledge such as oral language, lexical-level literacy skills, higher-order cognitive skills, background knowledge, and self-regulatory processes (e.g., Berninger & Abbott, 2010; Berninger et al., 2002; Conners, 2009; Cain, Oakhill, & Bryant, 2004; Compton, Miller, Elleman, & Steacy, 2014; Cromley & Azevedo, 2007; Graham et al., 2002; Graham et al., 2007; Kim, 2015, 2017; Kim & Schatschneider, 2017; Kim & Schatschneider, 2018; Vellutino et al., 2007). However, as noted earlier, higher-order skills such as reading comprehension and written composition, which draw on a large number of skills, knowledge sources, and other factors, are likely to diverge as constructs. Furthermore, the demands of reading comprehension and written composition differ. As a production task that involves multiple processes of planning (including generating and organizing ideas), goal setting, translating, monitoring, reviewing, evaluating, and revising (Hayes, 2012; Hayes & Flower, 1980), skilled writing requires regulating one’s attention, decisions, and behaviors throughout these processes (Berninger & Winn, 2006; Hayes & Flower, 1980; Hayes, 2012). Therefore, although reading comprehension and written composition draw on a similar set of knowledge and skills (e.g., oral language, self-regulation), the extent to which component skills are required for reading comprehension versus writing tasks might vary, resulting in a weaker relation (Kim & Graham, 2018).

The hypothesis about the interactive nature of relations between reading and writing was not supported in the present study. Instead, our findings indicate a unidirectional relation from reading to writing at both the lexical and discourse levels. Initial status in word reading strongly predicted initial status (.86) and linear growth rate of spelling (.96). In other words, children who had higher word reading in Grade 3 also had higher spelling in Grade 3 and experienced a faster growth rate in spelling. Growth rate in word reading also predicted growth rate in spelling (.22) after accounting for the contribution of initial status in word reading, indicating that children who had faster growth in word reading also had faster growth in spelling. When the contribution of spelling to word reading was examined, initial status in spelling was positively related to word reading slope, but the relation was not statistically significant. When growth rate in spelling was hypothesized to predict growth rate in word reading, the model did not converge. Although the cause of the model non-convergence is unclear, overall the present findings indicate that development of word reading facilitates development of spelling skills but not the other way around, at least from Grades 3 to 6. The unidirectional relation from word reading to spelling is convergent with a previous longitudinal study from Grades 1 to 4 (Ahmed et al., 2014), but divergent from a meta-analysis reporting a large effect of spelling instruction on word reading (average effect size = .68; Graham & Hebert, 2010).

Furthermore, reading comprehension in Grade 3 fairly strongly predicted writing in Grade 3 (.69). However, neither initial status in reading comprehension (in Grade 3) nor initial status in written composition predicted linear growth in written composition. The relation from reading comprehension to writing is convergent with an earlier study by Ahmed et al. (2014) with younger children, and suggests that knowledge of and experiences with reading comprehension are likely to contribute to written composition, but not the other way around, at least during Grades 3 to 6. This appears to contradict previous findings on the effect of writing instruction on reading (Graham & Hebert, 2010) or the positive effects on reading and writing when instruction explicitly targets both reading and writing (Graham et al., in press). These discrepancies might suggest that for writing to transfer to reading at the discourse level, explicit and targeted instruction might be necessary. Writing acquisition and experiences may help children to think about and reflect on how information is presented in written texts, which promotes awareness of text structure and text meaning and, consequently, reading comprehension (Graham & Harris, 2017; Langer & Flihan, 2000). However, these benefits might be limited to children who have highly developed meta-cognition, or might require instruction that explicitly identifies these aspects to promote transfer of skills between writing and reading comprehension. Future studies are needed to test this speculation.

Limitations and Conclusion

The present findings should be interpreted with the following limitations in mind. First, there was a lack of variance in the linear parameter of reading comprehension as well as in the quadratic parameters of reading comprehension and written composition. This indicates that children in Grades 3 to 6 did not vary in linear growth rate in reading comprehension or in the quadratic function in reading comprehension and written composition. While these are potentially important findings in themselves, they limited the scope of relations that could be estimated in the present study. Measuring a construct (e.g., reading comprehension) using multiple tasks would be beneficial in several respects, including reducing measurement error and addressing the issue of zero variance in future studies. Furthermore, previous studies have shown that reading comprehension measures vary in the extent to which they tap into component skills (Cutting & Scarborough, 2006; Keenan, Betjemann, & Olson, 2008). Therefore, the extent to which our present findings are influenced by the use of a particular reading comprehension task (i.e., WJ Passage Comprehension) is an open question and requires future work. Second, the foci of the present study were developmental trajectories and reading-writing relations; thus, an investigation of component skills and their relations to growth trajectories of reading and writing was beyond the scope of the present study. Such an investigation would shed light on shared and unique aspects of reading and writing development (see Kim & Graham, 2018). Third, we did not observe the amount or quality of instruction in reading or writing; future research might explore how instruction and interventions mediate growth trajectories. Moreover, variation across classrooms across the grades was not accounted for in the statistical model because of the complexity this would have added. Finally, our findings should be replicated with samples of students that differ in ethnicity, English language proficiency, and free and reduced-price lunch status.

In conclusion, we found that linear developmental trajectories describe development of lexical-level literacy skills whereas a nonlinear function describes development of discourse-level literacy skills from Grades 3 to 6. We also found that reading-writing relations are more likely to run from reading to writing at both the lexical and discourse levels, at least during these grades. Future longitudinal and experimental investigations are needed to replicate and extend the present study and to further reveal the similarities and unique aspects of reading versus writing, as well as the nature of their relations.

Acknowledgments

This research was supported by [masked for blind review]. The authors thank the participating children, their parents, and their teachers and school personnel.

Unstandardized model coefficients for passage comprehension and writing structural equation model

Note. WG3 = grade 3 writing, WG4 = grade 4 writing, WG5 = grade 5 writing, WG6 = grade 6 writing; PC = passage comprehension; Int. = intercept; Var./Res. Var. = model variances and residual variances. p-values of 999 are indicative of model coefficients that were assigned a fixed value.

Unstandardized model coefficients for word reading and spelling structural equation model

Note. SG3 = grade 3 spelling, SG4 = grade 4 spelling, SG5 = grade 5 spelling, SG6 = grade 6 spelling; SG34 = grade 3–4 latent change score, SG45 = grade 4–5 latent change score, SG56 = grade 5–6 latent change score; WR = word reading; LWID = letter word identification; Int. = intercept; Var./Res. Var. = model variances and residual variances. p-values of 999 are indicative of model coefficients that were assigned a fixed value.

1 The fact that reading and writing draw on similar skills does not indicate that reading and writing are the same or a single construct (Kim & Graham, 2018). Instead, reading and writing differ in their demands and thus in the extent to which they draw on resources. Spelling places greater demands on memory for accurate recall of word-specific spelling patterns than does word reading, and word reading and spelling are not likely the same construct (see Ehri, 2000 for a review; but see Kent et al., 2015; Mehta, Foorman, Branum-Martin, & Taylor, 2005). Written composition is also a more self-directed process than reading comprehension, and thus is likely to draw on self-regulation to a greater extent than reading comprehension does (Kim & Graham, 2018).

2 There is a dip in sample size in Grade 4. This was primarily because of a few schools’ decision not to participate in the study during that year following changes in leadership.

3 An alternative model tested a covariance between reading comprehension and written composition initial status, resulting in a .67 correlation between the constructs.

Contributor Information

Young-Suk Grace Kim, University of California Irvine.

Yaacov Petscher, Florida Center for Reading Research.

Jeanne Wanzek, Vanderbilt University.

Stephanie Al Otaiba, Southern Methodist University.

- Abbott RD, Berninger VW. Structural equation modeling of relationships among developmental skills and writing skills in primary- and intermediate-grade writers. Journal of Educational Psychology. 1993; 85:478–508.

- Adams MJ. Beginning to read: Thinking and learning about print. Cambridge, MA: MIT Press; 1990.

- Ahmed Y, Wagner RK, Lopez D. Developmental relations between reading and writing at the word, sentence, and text levels: A latent change score analysis. Journal of Educational Psychology. 2014; 106:419–434.

- Apel K, Wilson-Fowler EB, Brimo D, Perrin NA. Metalinguistic contributions to reading and spelling in second and third grade students. Reading and Writing: An Interdisciplinary Journal. 2012; 25:1283–1305.

- Bamberg B. What makes a text coherent? College Composition and Communication. 1983; 34:417–429.

- Bentler PM. Comparative fit indexes in structural models. Psychological Bulletin. 1990; 107:238–246.

- Bentler PM, Bonett DG. Significance tests and goodness of fit in the analysis of covariance structures. Psychological Bulletin. 1980; 88:588.

- Berninger VW, Abbott RD. Listening comprehension, oral expression, reading comprehension, and written expression: Related yet unique language systems in Grades 1, 3, 5, and 7. Journal of Educational Psychology. 2010; 102:635–651. doi: 10.1037/a0019319.

- Berninger VW, Abbott RD, Abbott SP, Graham S, Richards T. Writing and reading: Connections between language by hand and language by eye. Journal of Learning Disabilities. 2002; 35:39–56. doi: 10.1177/002221940203500104.

- Berninger VW, Abbott RD, Rogan L, Reed E, Abbott S, Brooks A, … Graham S. Teaching spelling to children with specific learning disabilities: The mind’s ear and eye beat the computer or pencil. Learning Disability Quarterly. 1998; 21:106–122.

- Berninger V, Amtmann D. Preventing written expression disabilities through early and continuing assessment and intervention for handwriting and/or spelling problems: Research into practice. In: Swanson H, Harris K, Graham S, editors. Handbook of Learning Disabilities. New York: The Guilford Press; 2003. pp. 323–344.

- Berninger VW, Swanson HL. Children’s writing; toward a process theory of the development of skilled writing. In: Butterfield E, editor. Children’s writing: Toward a process theory of development of skilled writing. Greenwich, CT: JAI Press; 1994. pp. 57–81. Reproduced in The Learning and Teaching of Reading and Writing (by R. Stainthorp). Wiley, 2006.

- Berninger VW, Winn WD. Implications of advancements in brain research and technology for writing development, writing instruction, and educational evolution. In: MacArthur C, Graham S, Fitzgerald J, editors. Handbook of writing research. New York, NY: Guilford Press; 2006. pp. 96–114.

- Bourassa D, Treiman R. Linguistic foundations of spelling development. In: Wyse D, Andrews R, Hoffman J, editors. Routledge international handbook of English, language and literacy teaching. London, UK: Routledge; 2009. pp. 182–192.

- Bourassa DC, Treiman R, Kessler B. Use of morphology in spelling by children with dyslexia and typically developing children. Memory & Cognition. 2006; 34:703–714.