Data Analysis Techniques in Research – Methods, Tools & Examples

Varun Saharawat is a seasoned professional in the fields of SEO and content writing. With a profound knowledge of the intricate aspects of these disciplines, Varun has established himself as a valuable asset in the world of digital marketing and online content creation.

Data analysis techniques in research are essential because they allow researchers to derive meaningful insights from data sets to support their hypotheses or research objectives.

While groups, institutions, and professionals may approach data analysis differently, a general definition captures its essence: data analysis involves refining, transforming, and interpreting raw data to derive actionable insights that guide informed decision-making.

A straightforward illustration of data analysis emerges when we make everyday decisions, basing our choices on past experiences or predictions of potential outcomes.

What is Data Analysis?

Data analysis is the systematic process of inspecting, cleaning, transforming, and interpreting data with the objective of discovering valuable insights and drawing meaningful conclusions. This process involves several steps:

- Inspecting : Initial examination of data to understand its structure, quality, and completeness.

- Cleaning : Removing errors, inconsistencies, or irrelevant information to ensure accurate analysis.

- Transforming : Converting data into a format suitable for analysis, such as normalization or aggregation.

- Interpreting : Analyzing the transformed data to identify patterns, trends, and relationships.

Types of Data Analysis Techniques in Research

Data analysis techniques in research are categorized into qualitative and quantitative methods, each with its specific approaches and tools. These techniques are instrumental in extracting meaningful insights, patterns, and relationships from data to support informed decision-making, validate hypotheses, and derive actionable recommendations. Below is an in-depth exploration of the various types of data analysis techniques commonly employed in research:

1) Qualitative Analysis:

Definition: Qualitative analysis focuses on understanding non-numerical data, such as opinions, concepts, or experiences, to derive insights into human behavior, attitudes, and perceptions.

- Content Analysis: Examines textual data, such as interview transcripts, articles, or open-ended survey responses, to identify themes, patterns, or trends.

- Narrative Analysis: Analyzes personal stories or narratives to understand individuals’ experiences, emotions, or perspectives.

- Ethnographic Studies: Involves observing and analyzing cultural practices, behaviors, and norms within specific communities or settings.

2) Quantitative Analysis:

Quantitative analysis emphasizes numerical data and employs statistical methods to explore relationships, patterns, and trends. It encompasses several approaches:

Descriptive Analysis:

- Frequency Distribution: Represents the number of occurrences of distinct values within a dataset.

- Central Tendency: Measures such as mean, median, and mode provide insights into the central values of a dataset.

- Dispersion: Techniques like variance and standard deviation indicate the spread or variability of data.
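
The three descriptive measures above can be sketched with Python's standard library. This is a minimal illustration, not a prescribed workflow; the `scores` list and the `describe` helper are hypothetical names invented for the example.

```python
from collections import Counter
import statistics

# Hypothetical exam scores, invented for illustration only.
scores = [72, 85, 85, 90, 64, 78, 85, 90, 70, 81]

def describe(values):
    """Return frequency distribution, central tendency, and dispersion."""
    return {
        "frequency": dict(Counter(values)),        # occurrences of each distinct value
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "mode": statistics.mode(values),
        "variance": statistics.variance(values),   # sample variance (divides by n - 1)
        "std_dev": statistics.stdev(values),       # sample standard deviation
    }

summary = describe(scores)
for name, value in summary.items():
    print(f"{name}: {value}")
```

Note that `statistics.variance` and `statistics.stdev` compute sample statistics; `pvariance` and `pstdev` are the population versions.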

Diagnostic Analysis:

- Regression Analysis: Models the relationship between a dependent variable and one or more independent variables, enabling prediction. On its own it shows association; causal claims also require an appropriate study design.

- ANOVA (Analysis of Variance): Compares the means of groups to test whether the differences between them are statistically significant.
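
As a rough sketch of regression analysis, the snippet below fits a straight line by ordinary least squares using only built-in arithmetic. The `fit_line` helper and the study-hours data are assumptions made up for the example; in practice a statistics package would also report standard errors and p-values.

```python
def fit_line(x, y):
    """Ordinary least squares for one predictor: y ~ intercept + slope * x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # R^2: share of the variance in y that the fitted line explains.
    ss_res = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    r_squared = 1 - ss_res / ss_tot
    return slope, intercept, r_squared

# Hypothetical data: weekly study hours and exam scores.
hours = [1, 2, 3, 4, 5]
score = [52, 55, 61, 64, 68]

slope, intercept, r2 = fit_line(hours, score)
print(f"score = {intercept:.1f} + {slope:.1f} * hours (R^2 = {r2:.3f})")
```

The slope describes how the score changes per additional hour in this sample; it does not by itself establish that studying causes the change.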

Predictive Analysis:

- Time Series Forecasting: Uses historical data points to predict future trends or outcomes.

- Machine Learning Algorithms: Techniques like decision trees, random forests, and neural networks predict outcomes based on patterns in data.

Prescriptive Analysis:

- Optimization Models: Utilizes linear programming, integer programming, or other optimization techniques to identify the best solutions or strategies.

- Simulation: Mimics real-world scenarios to evaluate various strategies or decisions and determine optimal outcomes.

Specific Techniques:

- Monte Carlo Simulation: Models probabilistic outcomes to assess risk and uncertainty.

- Factor Analysis: Reduces the dimensionality of data by identifying underlying factors or components.

- Cohort Analysis: Studies specific groups or cohorts over time to understand trends, behaviors, or patterns within these groups.

- Cluster Analysis: Classifies objects or individuals into homogeneous groups or clusters based on similarities or attributes.

- Sentiment Analysis: Uses natural language processing and machine learning techniques to determine sentiment, emotions, or opinions from textual data.
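
To make one of these techniques concrete, here is a small Monte Carlo simulation sketch. It assumes a hypothetical project with three tasks whose durations follow triangular distributions; the task figures, the 13-day deadline, and the `simulate_project` helper are all invented for illustration.

```python
import random

# Hypothetical tasks: (optimistic, pessimistic, most likely) durations in days.
TASKS = [(2, 6, 3), (1, 4, 2), (3, 9, 5)]

def simulate_project(tasks, deadline, trials=20_000, seed=42):
    """Estimate the mean total duration and the chance of missing a deadline."""
    rng = random.Random(seed)  # local, seeded generator so runs are repeatable
    totals = []
    for _ in range(trials):
        # Draw one duration per task from a triangular distribution and add them up.
        totals.append(sum(rng.triangular(low, high, mode) for low, high, mode in tasks))
    mean_total = sum(totals) / trials
    risk = sum(t > deadline for t in totals) / trials
    return mean_total, risk

mean_total, risk = simulate_project(TASKS, deadline=13)
print(f"mean duration: {mean_total:.2f} days, P(late): {risk:.1%}")
```

The estimate improves as `trials` grows; the fixed seed is only there so that the example gives the same answer on every run.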

Data Analysis Techniques in Research Examples

To provide a clearer understanding of how data analysis techniques are applied in research, let’s consider a hypothetical research study focused on evaluating the impact of online learning platforms on students’ academic performance.

Research Objective:

Determine if students using online learning platforms achieve higher academic performance compared to those relying solely on traditional classroom instruction.

Data Collection:

- Quantitative Data: Academic scores (grades) of students using online platforms and those using traditional classroom methods.

- Qualitative Data: Feedback from students regarding their learning experiences, challenges faced, and preferences.

Data Analysis Techniques Applied:

1) Descriptive Analysis:

- Calculate the mean, median, and mode of academic scores for both groups.

- Create frequency distributions to represent the distribution of grades in each group.

2) Diagnostic Analysis:

- Conduct an Analysis of Variance (ANOVA) to determine if there’s a statistically significant difference in academic scores between the two groups.

- Perform Regression Analysis to assess the relationship between the time spent on online platforms and academic performance.

3) Predictive Analysis:

- Utilize Time Series Forecasting to predict future academic performance trends based on historical data.

- Implement Machine Learning algorithms to develop a predictive model that identifies factors contributing to academic success on online platforms.

4) Prescriptive Analysis:

- Apply Optimization Models to identify the optimal combination of online learning resources (e.g., video lectures, interactive quizzes) that maximize academic performance.

- Use Simulation Techniques to evaluate different scenarios, such as varying student engagement levels with online resources, to determine the most effective strategies for improving learning outcomes.

5) Specific Techniques:

- Conduct Factor Analysis on numerically coded feedback (for example, Likert-scale survey items) to identify the underlying factors influencing students’ perceptions and experiences with online learning.

- Perform Cluster Analysis to segment students based on their engagement levels, preferences, or academic outcomes, enabling targeted interventions or personalized learning strategies.

- Apply Sentiment Analysis on textual feedback to categorize students’ sentiments as positive, negative, or neutral regarding online learning experiences.

By applying a combination of qualitative and quantitative data analysis techniques, this research example aims to provide comprehensive insights into the effectiveness of online learning platforms.

Data Analysis Techniques in Quantitative Research

Quantitative research involves collecting numerical data to examine relationships, test hypotheses, and make predictions. Various data analysis techniques are employed to interpret and draw conclusions from quantitative data. Here are some key data analysis techniques commonly used in quantitative research:

1) Descriptive Statistics:

- Description: Descriptive statistics are used to summarize and describe the main aspects of a dataset, such as central tendency (mean, median, mode), variability (range, variance, standard deviation), and distribution (skewness, kurtosis).

- Applications: Summarizing data, identifying patterns, and providing initial insights into the dataset.

2) Inferential Statistics:

- Description: Inferential statistics involve making predictions or inferences about a population based on a sample of data. This technique includes hypothesis testing, confidence intervals, t-tests, chi-square tests, analysis of variance (ANOVA), regression analysis, and correlation analysis.

- Applications: Testing hypotheses, making predictions, and generalizing findings from a sample to a larger population.

3) Regression Analysis:

- Description: Regression analysis is a statistical technique used to model and examine the relationship between a dependent variable and one or more independent variables. Linear regression, multiple regression, logistic regression, and nonlinear regression are common types of regression analysis.

- Applications: Predicting outcomes, identifying relationships between variables, and understanding the impact of independent variables on the dependent variable.

4) Correlation Analysis:

- Description: Correlation analysis is used to measure and assess the strength and direction of the relationship between two or more variables. The Pearson correlation coefficient, Spearman rank correlation coefficient, and Kendall’s tau are commonly used measures of correlation.

- Applications: Identifying associations between variables and assessing the degree and nature of the relationship.
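
The difference between the Pearson and Spearman coefficients can be seen in a short sketch. The helper names (`pearson`, `ranks`, `spearman`) and the data are assumptions for this example; Spearman is computed here as the Pearson correlation of average ranks.

```python
import math

def pearson(x, y):
    """Pearson correlation: strength of the linear relationship."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

def ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = average_rank
        i = j + 1
    return result

def spearman(x, y):
    """Spearman correlation: Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))

# Hypothetical data: y grows with x, but not linearly.
x = [1, 2, 3, 4, 5]
y = [1, 4, 9, 16, 25]
r_pearson = pearson(x, y)
r_spearman = spearman(x, y)
print(f"Pearson: {r_pearson:.3f}, Spearman: {r_spearman:.3f}")
```

Because the relationship is perfectly monotonic but curved, Spearman reaches 1 while Pearson stays slightly below it.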

5) Factor Analysis:

- Description: Factor analysis is a multivariate statistical technique used to identify and analyze underlying relationships or factors among a set of observed variables. It helps in reducing the dimensionality of data and identifying latent variables or constructs.

- Applications: Identifying underlying factors or constructs, simplifying data structures, and understanding the underlying relationships among variables.

6) Time Series Analysis:

- Description: Time series analysis involves analyzing data collected or recorded over a specific period at regular intervals to identify patterns, trends, and seasonality. Techniques such as moving averages, exponential smoothing, autoregressive integrated moving average (ARIMA), and Fourier analysis are used.

- Applications: Forecasting future trends, analyzing seasonal patterns, and understanding time-dependent relationships in data.
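
Two of the simpler techniques named above, moving averages and exponential smoothing, can be sketched in a few lines. The series and the smoothing factor `alpha=0.5` are arbitrary choices for illustration; ARIMA and Fourier analysis need dedicated libraries and are not shown.

```python
def moving_average(series, window):
    """Mean of each run of `window` consecutive observations."""
    return [
        sum(series[i - window + 1 : i + 1]) / window
        for i in range(window - 1, len(series))
    ]

def exponential_smoothing(series, alpha):
    """Simple exponential smoothing: recent values get weight `alpha`."""
    smoothed = [series[0]]  # start from the first observation
    for value in series[1:]:
        smoothed.append(alpha * value + (1 - alpha) * smoothed[-1])
    return smoothed

# Hypothetical monthly measurements.
series = [10, 12, 14, 16, 18, 20]

ma = moving_average(series, window=3)
es = exponential_smoothing(series, alpha=0.5)
print(ma)
print(es)
```

A larger `window` or a smaller `alpha` smooths more aggressively, at the cost of reacting more slowly to real changes in the series.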

7) ANOVA (Analysis of Variance):

- Description: Analysis of variance (ANOVA) is a statistical technique used to analyze and compare the means of two or more groups or treatments to determine if they are statistically different from each other. One-way ANOVA, two-way ANOVA, and MANOVA (Multivariate Analysis of Variance) are common types of ANOVA.

- Applications: Comparing group means, testing hypotheses, and determining the effects of categorical independent variables on a continuous dependent variable.
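
The core of a one-way ANOVA is the F statistic, the ratio of between-group to within-group variance. The sketch below computes it by hand for three hypothetical groups; `one_way_anova_f` is an illustrative helper, and turning F into a p-value requires the F distribution (for instance via `scipy.stats.f_oneway`, if SciPy is available).

```python
def one_way_anova_f(*groups):
    """Return (F statistic, between-groups df, within-groups df)."""
    all_values = [v for g in groups for v in g]
    grand_mean = sum(all_values) / len(all_values)
    group_means = [sum(g) / len(g) for g in groups]

    # Variation of the group means around the grand mean.
    ss_between = sum(
        len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means)
    )
    # Variation of individual values around their own group mean.
    ss_within = sum(
        (v - m) ** 2 for g, m in zip(groups, group_means) for v in g
    )

    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within

# Hypothetical scores under three teaching methods.
f_stat, df_b, df_w = one_way_anova_f([1, 2, 3], [2, 3, 4], [5, 6, 7])
print(f"F({df_b}, {df_w}) = {f_stat:.2f}")
```

A large F suggests that at least one group mean differs from the others, but it does not say which one; post-hoc comparisons are needed for that.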

8) Chi-Square Tests:

- Description: Chi-square tests are non-parametric statistical tests used to assess the association between categorical variables in a contingency table. The Chi-square test of independence, goodness-of-fit test, and test of homogeneity are common chi-square tests.

- Applications: Testing relationships between categorical variables, assessing goodness-of-fit, and evaluating independence.
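
For the test of independence, the statistic compares observed counts in a contingency table with the counts expected if the two variables were unrelated. The following is a minimal sketch with a made-up 2×2 table; `chi_square_independence` is a hypothetical helper, and no continuity correction is applied.

```python
def chi_square_independence(table):
    """Return (chi-square statistic, degrees of freedom) for a contingency table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)

    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count if rows and columns were independent.
            expected = row_totals[i] * col_totals[j] / total
            chi2 += (observed - expected) ** 2 / expected

    dof = (len(table) - 1) * (len(col_totals) - 1)
    return chi2, dof

# Hypothetical survey: rows are two groups, columns are "yes" / "no" answers.
observed = [[30, 10],
            [20, 40]]
chi2, dof = chi_square_independence(observed)
print(f"chi-square = {chi2:.2f}, df = {dof}")
```

The statistic is then compared with a chi-square distribution with `dof` degrees of freedom to obtain a p-value.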

These quantitative data analysis techniques provide researchers with valuable tools and methods to analyze, interpret, and derive meaningful insights from numerical data. The selection of a specific technique often depends on the research objectives, the nature of the data, and the underlying assumptions of the statistical methods being used.

Data Analysis Methods

Data analysis methods refer to the techniques and procedures used to analyze, interpret, and draw conclusions from data. These methods are essential for transforming raw data into meaningful insights, facilitating decision-making processes, and driving strategies across various fields. Here are some common data analysis methods:

1) Descriptive Statistics:

- Description: Descriptive statistics summarize and organize data to provide a clear and concise overview of the dataset. Measures such as mean, median, mode, range, variance, and standard deviation are commonly used.

2) Inferential Statistics:

- Description: Inferential statistics involve making predictions or inferences about a population based on a sample of data. Techniques such as hypothesis testing, confidence intervals, and regression analysis are used.

3) Exploratory Data Analysis (EDA):

- Description: EDA techniques involve visually exploring and analyzing data to discover patterns, relationships, anomalies, and insights. Methods such as scatter plots, histograms, box plots, and correlation matrices are utilized.

- Applications: Identifying trends, patterns, outliers, and relationships within the dataset.

4) Predictive Analytics:

- Description: Predictive analytics use statistical algorithms and machine learning techniques to analyze historical data and make predictions about future events or outcomes. Techniques such as regression analysis, time series forecasting, and machine learning algorithms (e.g., decision trees, random forests, neural networks) are employed.

- Applications: Forecasting future trends, predicting outcomes, and identifying potential risks or opportunities.

5) Prescriptive Analytics:

- Description: Prescriptive analytics involve analyzing data to recommend actions or strategies that optimize specific objectives or outcomes. Optimization techniques, simulation models, and decision-making algorithms are utilized.

- Applications: Recommending optimal strategies, decision-making support, and resource allocation.

6) Qualitative Data Analysis:

- Description: Qualitative data analysis involves analyzing non-numerical data, such as text, images, videos, or audio, to identify themes, patterns, and insights. Methods such as content analysis, thematic analysis, and narrative analysis are used.

- Applications: Understanding human behavior, attitudes, perceptions, and experiences.

7) Big Data Analytics:

- Description: Big data analytics methods are designed to analyze large volumes of structured and unstructured data to extract valuable insights. Technologies such as Hadoop, Spark, and NoSQL databases are used to process and analyze big data.

- Applications: Analyzing large datasets, identifying trends, patterns, and insights from big data sources.

8) Text Analytics:

- Description: Text analytics methods involve analyzing textual data, such as customer reviews, social media posts, emails, and documents, to extract meaningful information and insights. Techniques such as sentiment analysis, text mining, and natural language processing (NLP) are used.

- Applications: Analyzing customer feedback, monitoring brand reputation, and extracting insights from textual data sources.

These data analysis methods are instrumental in transforming data into actionable insights, informing decision-making processes, and driving organizational success across various sectors, including business, healthcare, finance, marketing, and research. The selection of a specific method often depends on the nature of the data, the research objectives, and the analytical requirements of the project or organization.

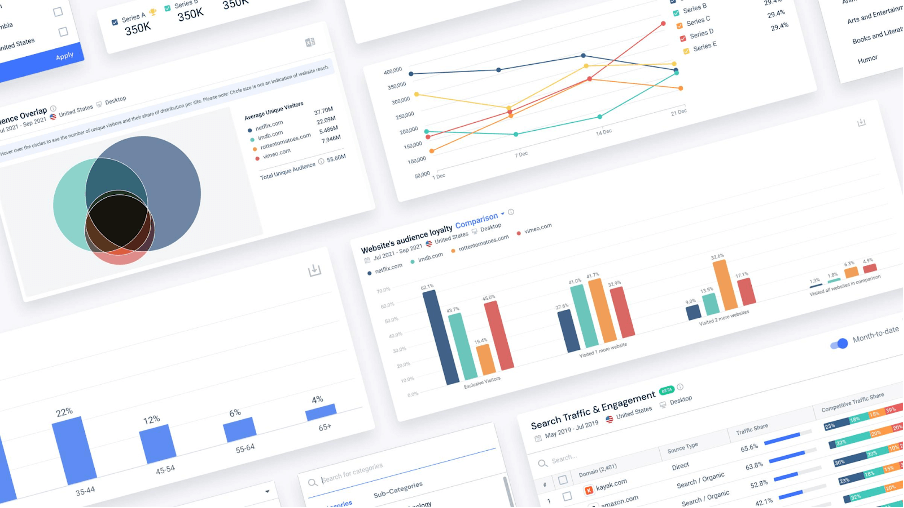

Data Analysis Tools

Data analysis tools are essential instruments that facilitate the process of examining, cleaning, transforming, and modeling data to uncover useful information, make informed decisions, and drive strategies. Here are some prominent data analysis tools widely used across various industries:

1) Microsoft Excel:

- Description: A spreadsheet software that offers basic to advanced data analysis features, including pivot tables, data visualization tools, and statistical functions.

- Applications: Data cleaning, basic statistical analysis, visualization, and reporting.

2) R Programming Language:

- Description: An open-source programming language specifically designed for statistical computing and data visualization.

- Applications: Advanced statistical analysis, data manipulation, visualization, and machine learning.

3) Python (with Libraries like Pandas, NumPy, Matplotlib, and Seaborn):

- Description: A versatile programming language with libraries that support data manipulation, analysis, and visualization.

- Applications: Data cleaning, statistical analysis, machine learning, and data visualization.

4) SPSS (Statistical Package for the Social Sciences):

- Description: A comprehensive statistical software suite used for data analysis, data mining, and predictive analytics.

- Applications: Descriptive statistics, hypothesis testing, regression analysis, and advanced analytics.

5) SAS (Statistical Analysis System):

- Description: A software suite used for advanced analytics, multivariate analysis, and predictive modeling.

- Applications: Data management, statistical analysis, predictive modeling, and business intelligence.

6) Tableau:

- Description: A data visualization tool that allows users to create interactive and shareable dashboards and reports.

- Applications: Data visualization, business intelligence, and interactive dashboard creation.

7) Power BI:

- Description: A business analytics tool developed by Microsoft that provides interactive visualizations and business intelligence capabilities.

- Applications: Data visualization, business intelligence, reporting, and dashboard creation.

8) SQL (Structured Query Language) Databases (e.g., MySQL, PostgreSQL, Microsoft SQL Server):

- Description: Database management systems that support data storage, retrieval, and manipulation using SQL queries.

- Applications: Data retrieval, data cleaning, data transformation, and database management.

9) Apache Spark:

- Description: A fast and general-purpose distributed computing system designed for big data processing and analytics.

- Applications: Big data processing, machine learning, data streaming, and real-time analytics.

10) IBM SPSS Modeler:

- Description: A data mining software application used for building predictive models and conducting advanced analytics.

- Applications: Predictive modeling, data mining, statistical analysis, and decision optimization.

These tools serve various purposes and cater to different data analysis needs, from basic statistical analysis and data visualization to advanced analytics, machine learning, and big data processing. The choice of a specific tool often depends on the nature of the data, the complexity of the analysis, and the specific requirements of the project or organization.

Importance of Data Analysis in Research

The importance of data analysis in research cannot be overstated; it serves as the backbone of any scientific investigation or study. Here are several key reasons why data analysis is crucial in the research process:

- Data analysis helps ensure that the results obtained are valid and reliable. By systematically examining the data, researchers can identify any inconsistencies or anomalies that may affect the credibility of the findings.

- Effective data analysis provides researchers with the necessary information to make informed decisions. By interpreting the collected data, researchers can draw conclusions, make predictions, or formulate recommendations based on evidence rather than intuition or guesswork.

- Data analysis allows researchers to identify patterns, trends, and relationships within the data. This can lead to a deeper understanding of the research topic, enabling researchers to uncover insights that may not be immediately apparent.

- In empirical research, data analysis plays a critical role in testing hypotheses. Researchers collect data to either support or refute their hypotheses, and data analysis provides the tools and techniques to evaluate these hypotheses rigorously.

- Transparent and well-executed data analysis enhances the credibility of research findings. By clearly documenting the data analysis methods and procedures, researchers allow others to replicate the study, thereby contributing to the reproducibility of research findings.

- In fields such as business or healthcare, data analysis helps organizations allocate resources more efficiently. By analyzing data on consumer behavior, market trends, or patient outcomes, organizations can make strategic decisions about resource allocation, budgeting, and planning.

- In public policy and social sciences, data analysis is instrumental in developing and evaluating policies and interventions. By analyzing data on social, economic, or environmental factors, policymakers can assess the effectiveness of existing policies and inform the development of new ones.

- Data analysis allows for continuous improvement in research methods and practices. By analyzing past research projects, identifying areas for improvement, and implementing changes based on data-driven insights, researchers can refine their approaches and enhance the quality of future research endeavors.

Data Analysis Techniques in Research FAQs

What are the 5 techniques for data analysis?

The five techniques for data analysis include: Descriptive Analysis, Diagnostic Analysis, Predictive Analysis, Prescriptive Analysis, and Qualitative Analysis.

What are techniques of data analysis in research?

Techniques of data analysis in research encompass both qualitative and quantitative methods. These techniques involve processes like summarizing raw data, investigating causes of events, forecasting future outcomes, offering recommendations based on predictions, and examining non-numerical data to understand concepts or experiences.

What are the 3 methods of data analysis?

The three primary methods of data analysis are: Qualitative Analysis, Quantitative Analysis, and Mixed-Methods Analysis.

What are the four types of data analysis techniques?

The four types of data analysis techniques are: Descriptive Analysis, Diagnostic Analysis, Predictive Analysis, and Prescriptive Analysis.

Data Analysis in Research: Types & Methods

What is data analysis in research?

Definition of data analysis in research: According to LeCompte and Schensul, research data analysis is a process used by researchers to reduce data to a story and interpret it to derive insights. The data analysis process helps reduce a large chunk of data into smaller fragments that make sense.

Three essential things occur during the data analysis process. The first is data organization. The second is data reduction through summarization and categorization, which helps find patterns and themes in the data for easy identification and linking. The third is the data analysis itself, which researchers carry out in both top-down and bottom-up fashion.

On the other hand, Marshall and Rossman describe data analysis as a messy, ambiguous, and time-consuming but creative and fascinating process through which a mass of collected data is brought to order, structure and meaning.

We can say that “the data analysis and data interpretation is a process representing the application of deductive and inductive logic to the research and data analysis.”

Researchers rely heavily on data as they have a story to tell or research problems to solve. It starts with a question, and data is nothing but an answer to that question. But, what if there is no question to ask? Well! It is possible to explore data even without a problem – we call it ‘Data Mining’, which often reveals some interesting patterns within the data that are worth exploring.

Regardless of the type of data researchers explore, their mission and their audience’s vision guide them in finding the patterns that shape the story they want to tell. One of the essential things expected from researchers while analyzing data is to stay open and remain unbiased toward unexpected patterns, expressions, and results. Remember, data analysis sometimes tells the most unforeseen yet exciting stories that were not expected when the analysis began. Therefore, rely on the data you have at hand and enjoy the journey of exploratory research.

Types of data in research

Every kind of data describes something once a specific value is assigned to it. For analysis, these values need to be organized, processed, and presented in a given context to be useful. Data can take different forms; here are the primary data types.

- Qualitative data: When the data presented consists of words and descriptions, we call it qualitative data. Although you can observe this data, it is subjective and harder to analyze in research, especially for comparison. Example: anything describing taste, experience, texture, or an opinion is considered qualitative data. This type of data is usually collected through focus groups, personal qualitative interviews, qualitative observation, or open-ended questions in surveys.

- Quantitative data: Any data expressed in numbers or numerical figures is called quantitative data. This type of data can be distinguished into categories, grouped, measured, calculated, or ranked. Example: answers to questions about age, rank, cost, length, weight, scores, and so on all fall under this type of data. You can present such data in graphs or charts, or apply statistical analysis methods to it. Outcomes Measurement Systems (OMS) questionnaires in surveys are a significant source of numeric data.

- Categorical data: This is data presented in groups, where an item cannot belong to more than one group. Example: a survey respondent’s lifestyle, marital status, smoking habit, or drinking habit is categorical data. A chi-square test is a standard method used to analyze this data.

Data analysis in qualitative research

Data analysis in qualitative research works a little differently from the analysis of numerical data, because qualitative data is made up of words, descriptions, images, objects, and sometimes symbols. Getting insight from such complicated information is a complicated process, so it is typically used for exploratory research and data analysis.

Although there are several ways to find patterns in textual information, a word-based method is the most relied-upon and widely used technique for research and data analysis. Notably, the data analysis process in qualitative research is manual: researchers usually read the available data and look for repetitive or commonly used words.

For example, while studying data collected from African countries to understand the most pressing issues people face, researchers might find “food” and “hunger” are the most commonly used words and will highlight them for further analysis.
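
The word-based method described here is done by reading, but the counting step can be approximated in code. The sketch below is only an illustration under stated assumptions: the three responses are invented, and the `STOP_WORDS` set and `word_frequencies` helper are hypothetical and far from complete.

```python
import re
from collections import Counter

# A tiny, hypothetical stop-word list; a real study would use a fuller one.
STOP_WORDS = {
    "the", "a", "an", "and", "is", "are", "of", "to", "in",
    "we", "for", "our", "too",
}

def word_frequencies(responses, top_n=5):
    """Count the most common non-stop-words across free-text responses."""
    words = []
    for text in responses:
        tokens = re.findall(r"[a-z']+", text.lower())
        words.extend(t for t in tokens if t not in STOP_WORDS)
    return Counter(words).most_common(top_n)

# Invented survey responses, for illustration only.
responses = [
    "Food is scarce and hunger is rising in our village.",
    "We need food and clean water.",
    "Hunger affects the children; food prices are too high.",
]

top_words = word_frequencies(responses, top_n=2)
print(top_words)
```

The frequent words only flag candidates for closer reading; interpreting what respondents mean by them remains the researcher's job.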

The keyword context is another widely used word-based technique. In this method, the researcher tries to understand the concept by analyzing the context in which the participants use a particular keyword.

For example, researchers studying the concept of ‘diabetes’ amongst respondents might analyze the context of when and how each respondent has used or referred to the word ‘diabetes.’
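
A keyword-in-context listing can be sketched as follows. The transcript text, the three-word window, and the `keyword_in_context` helper are assumptions for this example; the simple tokenizer ignores punctuation, so a window may span a sentence boundary.

```python
import re

def keyword_in_context(text, keyword, window=3):
    """Return (left words, keyword, right words) for every occurrence."""
    tokens = re.findall(r"\w+", text.lower())
    hits = []
    for i, token in enumerate(tokens):
        if token == keyword.lower():
            left = tokens[max(0, i - window) : i]
            right = tokens[i + 1 : i + 1 + window]
            hits.append((" ".join(left), token, " ".join(right)))
    return hits

# Invented interview excerpt, for illustration only.
transcript = (
    "My father was diagnosed with diabetes last year. "
    "I worry that diabetes runs in our family."
)

hits = keyword_in_context(transcript, "diabetes")
for left, word, right in hits:
    print(f"{left:>20} [{word}] {right}")
```

Lining up the occurrences this way makes it easier to see whether respondents mention the keyword in connection with diagnosis, worry, family, or something else.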

The scrutiny-based technique is another highly recommended text analysis method for identifying patterns in qualitative data. Compare and contrast is the most widely used method under this technique; it examines how specific pieces of text are similar to or different from each other.

For example: to find out the “importance of resident doctor in a company,” the collected data is divided into people who think it is necessary to hire a resident doctor and those who think it is unnecessary. Compare and contrast is the best method for analyzing polls that have single-answer question types.

Metaphors can be used to reduce the data pile and find patterns in it so that it becomes easier to connect data with theory.

Variable Partitioning is another technique used to split variables so that researchers can find more coherent descriptions and explanations from the enormous data.

Methods used for data analysis in qualitative research

There are several techniques to analyze data in qualitative research; here are some commonly used methods:

- Content Analysis: This is a widely accepted and the most frequently employed technique for data analysis in research methodology. It can be used to analyze documented information from text, images, and sometimes physical items. When and where to use this method depends on the research questions.

- Narrative Analysis: This method is used to analyze content gathered from various sources such as personal interviews, field observation, and surveys. Most of the time, the stories or opinions shared by people are examined to find answers to the research questions.

- Discourse Analysis: Similar to narrative analysis, discourse analysis is used to analyze interactions with people. However, this method considers the social context in which the communication between the researcher and respondent takes place. In addition, discourse analysis also takes the respondent’s lifestyle and day-to-day environment into account when deriving any conclusion.

- Grounded Theory: When you want to explain why a particular phenomenon happened, grounded theory is the best approach for analyzing qualitative data. Grounded theory is applied to study data about a host of similar cases occurring in different settings. When researchers use this method, they might alter explanations or produce new ones until they arrive at a conclusion.


Data analysis in quantitative research

The first stage in research and data analysis is to prepare the data for analysis so that the raw data can be converted into something meaningful. Data preparation consists of the phases below.

Phase I: Data Validation

Data validation is done to understand whether the collected data sample meets the pre-set standards or is a biased data sample. It is divided into four stages:

- Fraud: To ensure an actual human being records each response to the survey or the questionnaire

- Screening: To make sure each participant or respondent is selected or chosen in compliance with the research criteria

- Procedure: To ensure ethical standards were maintained while collecting the data sample

- Completeness: To ensure that the respondent has answered all the questions in an online survey or, in an interview, that the interviewer has asked all the questions devised in the questionnaire.

Phase II: Data Editing

More often than not, an extensive research data sample comes loaded with errors. Respondents sometimes fill in some fields incorrectly or skip them accidentally. Data editing is the process in which researchers confirm that the provided data is free of such errors. They need to conduct basic checks and outlier checks to edit the raw data and make it ready for analysis.
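
As a rough illustration of an outlier check, the sketch below flags values that fall far outside the middle half of the data. It uses Tukey's 1.5 × IQR rule, which is one common convention rather than the only one; the function name `flag_outliers` and the ages are assumptions made for the example.

```python
import statistics

def flag_outliers(values, k=1.5):
    """Return values lying more than k * IQR outside the quartiles (Tukey's rule)."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]

# Hypothetical reported ages; 230 is presumably a typing error for 23.
ages = [21, 22, 22, 23, 24, 25, 25, 26, 27, 230]
print(flag_outliers(ages))
```

A flagged value is only a candidate for editing. Whether it is corrected, removed, or kept is a judgment the researcher still has to make.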

Phase III: Data Coding

Of the three phases, this is the most critical phase of data preparation. It involves grouping survey responses and assigning values to them. If a survey is completed with a sample size of 1,000, the researcher might create age brackets to distinguish respondents based on their age. It then becomes easier to analyze small data buckets than to deal with the massive data pile.
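
The age-bracket example might be coded along these lines. The bracket boundaries, labels, and the helper `code_age` are assumptions chosen for illustration; a real study would define its own brackets.

```python
from bisect import bisect_right
from collections import Counter

# Assumed bracket boundaries; a real study would choose its own.
LOWER_BOUNDS = [18, 25, 35, 45, 55, 65]
LABELS = ["under 18", "18-24", "25-34", "35-44", "45-54", "55-64", "65+"]

def code_age(age):
    """Map a raw age to the label of the bracket that contains it."""
    return LABELS[bisect_right(LOWER_BOUNDS, age)]

# Hypothetical respondent ages standing in for the full survey sample.
ages = [17, 18, 24, 25, 31, 44, 45, 58, 65, 72]
coded = [code_age(a) for a in ages]
print(Counter(coded))
```

Once every response carries a bracket label, the later analysis can work with a handful of groups instead of hundreds of distinct ages.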


After the data is prepared for analysis, researchers can use different research and data analysis methods to derive meaningful insights. Statistical analysis is the most favored approach for numerical data. In statistical analysis, distinguishing between categorical data and numerical data is essential: categorical data involves distinct categories or labels, while numerical data consists of measurable quantities. Statistical methods are classified into two groups: ‘descriptive statistics,’ used to describe data, and ‘inferential statistics,’ which help in comparing data and generalizing from it.

Descriptive statistics

This method is used to describe the basic features of various types of data in research. It presents the data in such a meaningful way that patterns in the data start making sense. However, descriptive analysis does not go beyond the data to draw conclusions; any conclusions still rest on the hypotheses researchers have formulated so far. Here are a few major types of descriptive analysis methods.

Measures of Frequency

- Count, Percent, Frequency

- It is used to denote how often a particular event occurs.

- Researchers use it when they want to showcase how often a response is given.
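
Counts and percentages are simple to compute. A minimal sketch, assuming a single-choice survey question with made-up answers:

```python
from collections import Counter

# Hypothetical answers to a single-choice survey question.
answers = ["yes", "no", "yes", "yes", "undecided", "no", "yes", "yes"]

counts = Counter(answers)
total = len(answers)

# Print count and percentage for each response, most common first.
for answer, count in counts.most_common():
    print(f"{answer:<10} count={count}  percent={100 * count / total:.1f}%")
```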

Measures of Central Tendency

- Mean, Median, Mode

- These measures are widely used to summarize a distribution with a single representative value.

- Researchers use them when they want to showcase the most common or the average response.

Measures of Dispersion or Variation

- Range, Variance, Standard deviation

- Range = the difference between the highest and lowest values.

- Variance = the average squared difference between each observed score and the mean; the standard deviation is its square root.

- These measures identify the spread of scores by stating intervals.

- Researchers use them to show how far the data spreads out and how much that spread affects how representative the mean is.
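
Written out as formulas, the measures of dispersion look like this. The version below uses the sample variance (dividing by n − 1); dividing by n instead gives the population variance. The five scores in the worked example are assumed values, chosen only to keep the arithmetic easy to follow.

```latex
\begin{align*}
\text{Range} &= x_{\max} - x_{\min} \\
\bar{x} &= \frac{1}{n}\sum_{i=1}^{n} x_i \\
s^2 &= \frac{1}{n-1}\sum_{i=1}^{n} \left(x_i - \bar{x}\right)^2 \\
s &= \sqrt{s^2} \\[6pt]
\text{For scores } 4, 6, 8, 10, 12:\quad
\text{Range} &= 12 - 4 = 8, \qquad \bar{x} = 8 \\
s^2 &= \frac{16 + 4 + 0 + 4 + 16}{4} = 10, \qquad s = \sqrt{10} \approx 3.16
\end{align*}
```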

Measures of Position

- Percentile ranks, Quartile ranks

- These measures rely on standardized scores, helping researchers identify the relationship between different scores.

- They are often used when researchers want to compare scores with the average.
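
A small sketch of measures of position follows. Several definitions of percentile rank are in use; this one counts the share of scores at or below a given value. The helper `percentile_rank` and the test scores are assumptions made for the example.

```python
import statistics

def percentile_rank(scores, value):
    """Percentage of scores that are less than or equal to `value`."""
    at_or_below = sum(1 for s in scores if s <= value)
    return 100 * at_or_below / len(scores)

# Hypothetical test scores.
scores = [52, 58, 61, 65, 70, 74, 78, 83, 89, 95]

print(percentile_rank(scores, 78))        # share of scores at or below 78
print(statistics.quantiles(scores, n=4))  # the three quartile cut points
```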

In quantitative research, descriptive analysis often gives absolute numbers, but those numbers alone are rarely sufficient to demonstrate the rationale behind them. It is therefore necessary to think about which method of research and data analysis best suits your survey questionnaire and the story researchers want to tell. For example, the mean is the best way to demonstrate students’ average scores in schools. It is better to rely on descriptive statistics when researchers intend to keep the research or outcome limited to the provided sample without generalizing it. For example, when you want to compare the average number of votes cast in two different cities, descriptive statistics are enough.

Descriptive analysis is also called a ‘univariate analysis’ since it is commonly used to analyze a single variable.

Inferential statistics

Inferential statistics are used to make predictions about a larger population after analyzing a sample collected from that population. For example, you can ask about 100 audience members at a movie theater whether they like the movie they are watching. Researchers then use inferential statistics on the collected sample to reason that about 80-90% of people like the movie.

Here are two significant areas of inferential statistics.

- Estimating parameters: It takes statistics from the sample research data and demonstrates something about the population parameter.

- Hypothesis test: It’s about sampling research data to answer the survey research questions. For example, researchers might be interested in understanding whether the recently launched shade of lipstick is good or not, or whether multivitamin capsules help children perform better at games.
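
To see how parameter estimation leads to a statement like “about 80-90% of people like the movie,” here is a worked sketch for the movie theater example. It assumes, purely for illustration, that 85 of the 100 people asked said they liked the movie, and it uses the usual large-sample 95% confidence interval for a proportion.

```latex
\begin{align*}
\hat{p} &= \frac{85}{100} = 0.85 \\
\mathrm{SE} &= \sqrt{\frac{\hat{p}\,(1-\hat{p})}{n}}
             = \sqrt{\frac{0.85 \times 0.15}{100}} \approx 0.0357 \\
\hat{p} \pm 1.96\,\mathrm{SE} &\approx 0.85 \pm 0.07 = [0.78,\ 0.92]
\end{align*}
```

Under these assumptions, the sample supports saying that roughly 78% to 92% of the wider audience likes the movie, provided the 100 people asked are a reasonably random sample of that audience.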

These are sophisticated analysis methods used to showcase the relationship between different variables instead of describing a single variable. They are often used when researchers want something beyond absolute numbers to understand the relationship between variables.

Here are some of the commonly used methods for data analysis in research.

- Correlation: When researchers are not conducting experimental or quasi-experimental research but are interested in understanding the relationship between two or more variables, they opt for correlational research methods.

- Cross-tabulation: Also called contingency tables, cross-tabulation is used to analyze the relationship between multiple variables. Suppose the provided data has age and gender categories presented in rows and columns. A two-dimensional cross-tabulation makes analysis easier by showing the number of males and females in each age category.

- Regression analysis: To understand the strength of the relationship between variables, researchers commonly rely on regression analysis, which is also a type of predictive analysis. In this method, you have an essential factor called the dependent variable and one or more independent variables. You work to find out the impact of the independent variables on the dependent variable. The values of both independent and dependent variables are assumed to have been ascertained in an error-free, random manner.

- Frequency tables: A frequency table lists each value or category of a variable together with the number of times it occurs in the data, which makes it easy to see which responses are common and which are rare.

- Analysis of variance: This statistical procedure is used for testing the degree to which two or more groups vary or differ in an experiment. A considerable degree of variation between groups, relative to the variation within them, suggests that the research findings are significant. In many contexts, the terms ANOVA testing and variance analysis are used interchangeably.

- Researchers must have the necessary research skills to analyze and manipulate the data, and should be trained to demonstrate a high standard of research practice. Ideally, researchers should possess more than a basic understanding of the rationale for selecting one statistical method over another to obtain better data insights.

- Research and data analytics projects usually differ by scientific discipline; therefore, getting statistical advice at the beginning of analysis helps in designing a survey questionnaire, selecting data collection methods, and choosing samples.


- The primary aim of research and data analysis is to derive insights that are unbiased. Any mistake or bias in collecting data, selecting an analysis method, or choosing an audience sample will lead to a biased inference.

- No amount of sophistication in research and data analysis can rectify poorly defined objective outcome measurements. Whether the design is at fault or the intentions are unclear, a lack of clarity might mislead readers, so avoid the practice.

- The motive behind data analysis in research is to present accurate and reliable data. As far as possible, avoid statistical errors, and find a way to deal with everyday challenges like outliers, missing data, data altering, data mining, or developing graphical representations.

The sheer amount of data generated daily is frightening, especially now that data analysis has taken center stage. In 2018, the total data supply amounted to 2.8 trillion gigabytes. Hence, it is clear that enterprises willing to survive in the hypercompetitive world must possess an excellent capability to analyze complex research data, derive actionable insights, and adapt to new market needs.


QuestionPro is an online survey platform that empowers organizations in data analysis and research and provides them a medium to collect data by creating appealing surveys.


Top 21 must-have digital tools for researchers

Last updated: 12 May 2023

Reviewed by: Jean Kaluza

Research drives many decisions across various industries, including:

- Uncovering customer motivations and behaviors to design better products

- Assessing whether a market exists for your product or service

- Running clinical studies to develop a medical breakthrough

Conducting effective and shareable research can be a painstaking process. Manual processes are sluggish and archaic, and they can also be inaccurate. That’s where advanced online tools can help.

The right tools can enable businesses to lean into research for better forecasting, planning, and more reliable decisions.

Why do researchers need research tools?

Research is challenging and time-consuming. Analyzing data, running focus groups, reading research papers, and looking for useful insights take plenty of heavy lifting.

These days, researchers can’t just rely on manual processes. Instead, they’re using advanced tools that:

- Speed up the research process

- Enable new ways of reaching customers

- Improve organization and accuracy

- Allow better monitoring throughout the process

- Enhance collaboration across key stakeholders

The most important digital tools for researchers

Some tools can help at every stage, making researching simpler and faster.

They ensure accurate and efficient information collection, management, referencing, and analysis.

Some of the most important digital tools for researchers include:

Research management tools

Research management can be a complex and challenging process. Some tools address the various challenges that arise when referencing and managing papers.

Zotero

Coined as a personal research assistant, Zotero is a tool that brings efficiency to the research process. Zotero helps researchers collect, organize, annotate, and share research easily.

Zotero integrates with internet browsers, so researchers can easily save an article, publication, or research study on the platform for later.

The tool also has an advanced organizing system to allow users to label, tag, and categorize information for faster insights and a seamless analysis process.

Paperpile

Messy paper stacks, digital or physical, are a thing of the past with Paperpile. This reference management tool integrates with Google Docs, saving users time with citations and paper management.

Referencing, researching, and gaining insights is much cleaner and more productive, as all papers are in the same place. Plus, it’s easier to find a paper when you need it.

Dovetail

Acting as a single source of truth (SSOT), Dovetail houses research from the entire organization in a simple-to-use place. Researchers can use the all-in-one platform to collate and store data from interviews, forms, surveys, focus groups, and more.

Dovetail helps users quickly categorize and analyze data to uncover truly actionable insights. This helps organizations bring customer insights into every decision for better forecasting, planning, and decision-making.

Dovetail integrates with other helpful tools like Slack, Atlassian, Notion, and Zapier for a truly efficient workflow.

EndNote

Putting together papers and referencing sources can be hugely time-consuming. EndNote claims that researchers waste 200,000 hours per year formatting citations.

To address the issue, the tool formats citations automatically, creating a bibliography while the user writes.

EndNote is also a cloud-based system that allows remote working, multiple-user interaction and collaboration, and seamless working on different devices.

Information survey tools

Surveys are a common way to gain data from customers. These tools can make the process simpler and more cost-effective.

Delighted

With ready-made survey templates for collecting NPS data, customer effort scores, five-star surveys, and more, getting going with Delighted is straightforward.

Delighted helps teams collect and analyze survey feedback without needing any technical knowledge. The templates are customizable, so you can align the content with your brand. That way, the survey feels like it’s coming from your company, not a third party.

SurveyMonkey

With millions of customers worldwide, SurveyMonkey is another leader in online surveys. SurveyMonkey offers hundreds of templates that researchers can use to set up and deploy surveys quickly.

Whether your survey is about team performance, hotel feedback, post-event feedback, or an employee exit, SurveyMonkey has a ready-to-use template.

Typeform

Typeform offers free templates you can quickly embed, and they come with a point of difference: Typeform designs forms and surveys with people in mind, focusing on customer enjoyment.

Typeform employs the ‘one question at a time’ method to keep engagement rates and completions high. It focuses on surveys that feel more like conversations than a list of questions.

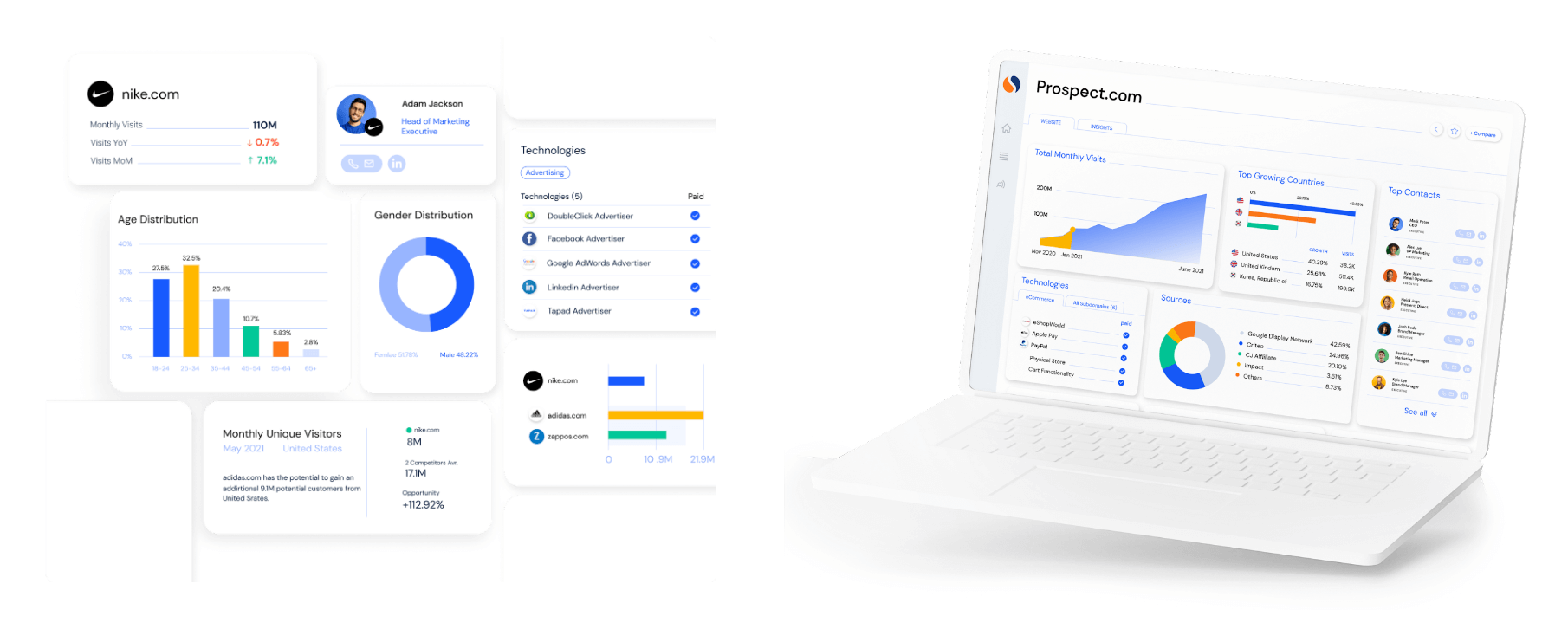

Web data analysis tools

Collecting data can take time, especially technical information. Some tools make that process simpler.

Teamscope

For those conducting clinical research, data collection can be incredibly time-consuming. Teamscope provides an online platform to collect and manage data simply and easily.

Researchers and medical professionals often collect clinical data through paper forms or digital means. Those are too easy to lose, tricky to manage, and challenging to collaborate on.

With Teamscope, you can easily collect, store, and electronically analyze data like patient-reported outcomes and surveys.

Heap

Heap is a digital insights platform providing context on the entire customer journey. This helps businesses improve customer feedback, conversion rates, and loyalty.

Through Heap, you can seamlessly view and analyze the customer journey across all platforms and touchpoints, whether through the app or website.

Smartlook

Another analytics tool, Smartlook, combines quantitative and qualitative analytics into one platform. This helps organizations understand user behavior and make crucial improvements.

Smartlook is useful for analyzing web pages and purchasing flows and for optimizing conversion rates.

Project management tools

Managing multiple research projects across many teams can be complex and challenging. Project management tools can ease the burden on researchers.

Trello

Visual productivity tool Trello helps research teams manage their projects more efficiently. Trello makes product tracking easier with:

- A range of workflow options

- Unique project board layouts

- Advanced descriptions

- Integrations

Trello also works as an SSOT to stay on top of projects and collaborate effectively as a team.

Airtable

To connect research, workflows, and teams, Airtable provides a clean interactive interface.

With Airtable, it’s simple to place research projects in a list view, workstream, or road map to synthesize information and quickly collaborate. The Sync feature makes it easy to link all your research data to one place for faster action.

Asana

For product teams, Asana gathers development, copywriting, design, research teams, and product managers in one space.

As a task management platform, Asana offers all the expected features and more, including time-tracking and Jira integration. The platform offers reporting alongside data collection methods, so it’s a favorite for product teams in the tech space.

Grammar checker tools

Grammar tools ensure your research projects are professional and proofed.

Grammarly

No one’s perfect, especially when it comes to spelling, punctuation, and grammar. That’s where Grammarly can help.

Grammarly’s AI-powered platform reviews your content and corrects any mistakes. Through helpful integrations with other platforms, such as Gmail, Google Docs, Twitter, and LinkedIn, it’s simple to spellcheck as you go.

Trinka AI

Another helpful grammar tool is Trinka AI. Trinka is specifically for technical and academic styles of writing. It doesn’t just correct mistakes in spelling, punctuation, and grammar; it also offers explanations and additional information when errors show.

Researchers can also use Trinka to enhance their writing and:

- Align it with technical and academic styles

- Improve areas like syntax and word choice

- Discover relevant suggestions based on the content topic

Plagiarism checker tools

Avoiding plagiarism is crucial for the integrity of research. Using checker tools can ensure your work is original.

Quetext

Plagiarism checker Quetext uses DeepSearch™ technology to quickly sort through online content to search for signs of plagiarism.

With color coding, annotations, and an overall score, it’s easy to identify conflict areas and fix them accordingly.

Duplichecker

Another helpful plagiarism tool is Duplichecker, which scans pieces of content for issues. The service is free for content up to 1000 words, with paid options available after that.

If plagiarism occurs, a percentage identifies how much is duplicate content. However, the interface is relatively basic, offering little additional information.

Journal finder tools

Finding the right journals for your project can be challenging, especially with the plethora of inaccurate or predatory content online. Journal finder tools can solve this issue.

Enago Journal Finder

The Enago Open Access Journal Finder sorts through online journals to verify their legitimacy. Through Enago, you can discover pre-vetted, high-quality journals through a validated journal index.

Enago’s search tool also helps users find relevant journals for their subject matter, speeding up the research process.

JournalFinder

JournalFinder is another journal tool that’s popular with academics and researchers. It makes the process of discovering relevant journals fast by leaning into a machine-learning algorithm.

This is useful for discovering key information and finding the right journals to publish and share your work in.

Social networking for researchers

Collaboration between researchers can improve the accuracy and sharing of information. Promoting research findings can also be essential for public health, safety, and more.

While typical social networks exist, some are specifically designed for academics.

ResearchGate

Networking platform ResearchGate encourages researchers to connect, collaborate, and share within the scientific community. With 20 million researchers on the platform, it's a popular choice.

ResearchGate is founded on an intention to advance research. The platform provides topic pages for easy connection within a field of expertise and access to millions of publications to help users stay up to date.

Academia

Academia is another commonly used platform that connects 220 million academics and researchers within their specialties.

The platform aims to accelerate research with discovery tools and grow a researcher’s audience to promote their ideas.

On Academia, users can access 47 million PDFs for free. They cover topics from mechanical engineering to applied economics and child psychology.

Expedited research with the power of tools

For researchers, finding data and information can be time-consuming and complex to manage. That’s where the power of tools comes in.

Manual processes are slow, outdated, and have a larger potential for inaccuracies.

Leaning into tools can help researchers speed up their processes, conduct efficient research, boost their accuracy, and share their work effectively.

With tools available for project and data management, web data collection, and journal finding, researchers have plenty of assistance at their disposal.

When it comes to connecting with customers, advanced tools boost customer connection while continually bringing their needs and wants into products and services.

What are primary research tools?

Primary research is data and information that you collect firsthand through surveys, customer interviews, or focus groups.

Secondary research is data and information from other sources, such as journals, research bodies, or online content.

Primary research tools support methods like surveys and customer interviews. You can use these tools to collect, store, or manage information effectively and uncover more accurate insights.

What is the difference between tools and methods in research?

Research methods relate to how researchers gather information and data.

For example, surveys, focus groups, customer interviews, and A/B testing are research methods that gather information.

On the other hand, tools assist areas of research. Researchers may use tools to more efficiently gather data, store data securely, or uncover insights.

Tools can improve research methods, ensuring efficiency and accuracy while reducing complexity.


Statistical Methods for Data Analysis: a Comprehensive Guide

In today’s data-driven world, understanding statistical methods for data analysis is like having a superpower.

Whether you’re a student, a professional, or just a curious mind, diving into the realm of data can unlock insights and decisions that propel success.

Statistical methods for data analysis are the tools and techniques used to collect, analyze, interpret, and present data in a meaningful way.

From businesses optimizing operations to researchers uncovering new discoveries, these methods are foundational to making informed decisions based on data.

In this blog post, we’ll embark on a journey through the fascinating world of statistical analysis, exploring its key concepts, methodologies, and applications.

Introduction to Statistical Methods

At its core, statistical methods are the backbone of data analysis, helping us make sense of numbers and patterns in the world around us.

Whether you’re looking at sales figures, medical research, or even your fitness tracker’s data, statistical methods are what turn raw data into useful insights.

But before we dive into complex formulas and tests, let’s start with the basics.

Data comes in two main types: qualitative and quantitative data.

Quantitative data is all about numbers and quantities (like your height or the number of steps you walked today), while qualitative data deals with categories and qualities (like your favorite color or the breed of your dog).

And when we talk about measuring these data points, we use different scales like nominal, ordinal, interval, and ratio.

These scales help us understand the nature of our data—whether we’re ranking it (ordinal), simply categorizing it (nominal), or measuring it with a true zero point (ratio).

In a nutshell, statistical methods start with understanding the type and scale of your data.

This foundational knowledge sets the stage for everything from summarizing your data to making complex predictions.

Descriptive Statistics: Simplifying Data

Imagine you’re at a party and you meet a bunch of new people.

When you go home, your roommate asks, “So, what were they like?” You could describe each person in detail, but instead, you give a summary: “Most were college students, around 20-25 years old, pretty fun crowd!”

That’s essentially what descriptive statistics does for data.

It summarizes and describes the main features of a collection of data in an easy-to-understand way. Let’s break this down further.

The Basics: Mean, Median, and Mode

- Mean is just a fancy term for the average. If you add up everyone’s age at the party and divide by the number of people, you’ve got your mean age.

- Median is the middle number in a sorted list. If you line up everyone from the youngest to the oldest and pick the person in the middle, their age is your median. This is super handy when someone’s age is way off the chart (like if your grandma crashed the party), as it doesn’t skew the data.

- Mode is the most common age at the party. If you notice a lot of people are 22, then 22 is your mode. It’s like the age that wins the popularity contest.
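
The party example is easy to try out with Python's built-in `statistics` module. The guest ages below are made up for illustration.

```python
import statistics

# Hypothetical ages of party guests.
ages = [21, 22, 22, 22, 23, 24, 25]
print(statistics.mean(ages), statistics.median(ages), statistics.mode(ages))

# Grandma (age 80) crashes the party: the mean jumps, the median barely moves.
ages_with_grandma = ages + [80]
print(statistics.mean(ages_with_grandma), statistics.median(ages_with_grandma))
```

With grandma included, the mean rises from under 23 to almost 30, while the median only shifts from 22 to 22.5, which is why the median is the safer summary when a few values are far off the chart.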

Spreading the News: Range, Variance, and Standard Deviation

- Range gives you an idea of how spread out the ages are. It’s the difference between the oldest and the youngest. A small range means everyone’s around the same age, while a big range means a wider variety.

- Variance is a bit more complex. It measures how much the ages differ from the average age. A higher variance means ages are more spread out.

- Standard Deviation is the square root of variance. It’s like variance but back on a scale that makes sense. It tells you, on average, how far each person’s age is from the mean age.
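
Here is a quick sketch of the three spread measures, again with made-up party ages. It treats the guests as the whole population, so it uses the population versions `pvariance` and `pstdev`; for a sample drawn from a larger group, `variance` and `stdev` would be the usual choice.

```python
import statistics

# Hypothetical ages of party guests.
ages = [21, 22, 22, 22, 23, 24, 25]

age_range = max(ages) - min(ages)      # oldest minus youngest
variance = statistics.pvariance(ages)  # mean squared distance from the mean
std_dev = statistics.pstdev(ages)      # square root of the variance, in years

print(f"range={age_range}  variance={variance:.2f}  std dev={std_dev:.2f}")
```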

Picture Perfect: Graphical Representations

- Histograms are like bar charts showing how many people fall into different age groups. They give you a quick glance at how ages are distributed.

- Bar Charts are great for comparing different categories, like how many men vs. women were at the party.

- Box Plots (or box-and-whisker plots) show you the median, the range, and if there are any outliers (like grandma).

- Scatter Plots are used when you want to see if there’s a relationship between two things, like if bringing more snacks means people stay longer at the party.

Why Descriptive Statistics Matter

Descriptive statistics are your first step in data analysis.

They help you understand your data at a glance and prepare you for deeper analysis.

Without them, you’re like someone trying to guess what a party was like without any context.

Whether you’re looking at survey responses, test scores, or party attendees, descriptive statistics give you the tools to summarize and describe your data in a way that’s easy to grasp.

This approach is crucial in educational settings, particularly for enhancing math learning outcomes. For those looking to deepen their understanding of math or seeking additional support, check out this link: https://www.mathnasium.com/math-tutors-near-me.

Remember, the goal of descriptive statistics is to simplify the complex.

Inferential Statistics: Beyond the Basics

Let’s keep the party analogy rolling, but this time, imagine you couldn’t attend the party yourself.

You’re curious if the party was as fun as everyone said it would be.

Instead of asking every single attendee, you decide to ask a few friends who went.

Based on their experiences, you try to infer what the entire party was like.

This is essentially what inferential statistics does with data.

It allows you to make predictions or draw conclusions about a larger group (the population) based on a smaller group (a sample). Let’s dive into how this works.

Probability

Inferential statistics is all about playing the odds.

When you make an inference, you’re saying, “Based on my sample, there’s a certain probability that my conclusion about the whole population is correct.”

It’s like betting on whether the party was fun, based on a few friends’ opinions.

The Central Limit Theorem (CLT)

The Central Limit Theorem is the superhero of statistics.

It tells us that if you repeatedly draw large enough samples from a population, the sample means (averages) will form an approximately normal distribution (a bell curve), almost no matter what the population distribution looks like.

This is crucial because it allows us to use sample data to make inferences about the population mean with a known level of uncertainty.
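
You can watch the Central Limit Theorem at work with a small simulation. The sketch below assumes a deliberately lopsided population (exponentially distributed values with mean 1), and the sample size and number of samples are arbitrary choices for illustration.

```python
import random
import statistics

random.seed(42)  # fixed seed so the sketch is repeatable

def draw_sample(size):
    """Draw `size` values from a skewed population (exponential, mean 1.0)."""
    return [random.expovariate(1.0) for _ in range(size)]

sample_size = 50     # guests asked per sample (arbitrary choice)
num_samples = 2000   # how many times we repeat the sampling (arbitrary choice)

sample_means = [statistics.fmean(draw_sample(sample_size)) for _ in range(num_samples)]

mean_of_means = statistics.fmean(sample_means)
spread_of_means = statistics.stdev(sample_means)
# Theory: the spread of sample means is sigma / sqrt(n); sigma is 1.0 here.
expected_spread = 1.0 / sample_size ** 0.5

print(f"mean of sample means   = {mean_of_means:.3f} (population mean is 1.0)")
print(f"spread of sample means = {spread_of_means:.3f} (theory: {expected_spread:.3f})")
```

Even though individual values are heavily skewed, the sample means cluster symmetrically around the population mean, with a spread close to what the theorem predicts.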

Confidence Intervals

Imagine you’re pretty sure the party was fun, but you want to know how fun.

A confidence interval gives you a range of values within which you believe the true mean fun level of the party lies.

It’s like saying, “I’m 95% confident the party’s fun rating was between 7 and 9 out of 10.”
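
Here is a minimal sketch of how such an interval can be calculated. The fun ratings are made up, and the function uses the normal (z) critical value for simplicity. With a sample this small, a t-based critical value would give a slightly wider and more honest interval.

```python
import statistics
from statistics import NormalDist

# Hypothetical fun ratings (out of 10) from twelve friends who attended.
ratings = [7, 8, 9, 8, 7, 9, 8, 8, 7, 9, 8, 8]

def mean_confidence_interval(values, confidence=0.95):
    """Approximate confidence interval for the mean using a z critical value."""
    n = len(values)
    mean = statistics.mean(values)
    standard_error = statistics.stdev(values) / n ** 0.5
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    return mean - z * standard_error, mean + z * standard_error

low, high = mean_confidence_interval(ratings)
print(f"95% confidence interval for the mean fun rating: {low:.2f} to {high:.2f}")
```

Asking for more confidence (say 99%) widens the interval, and asking more friends narrows it.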

Hypothesis Testing

This is where you get to be a bit of a detective. You start with a hypothesis (a guess) about the population.

For example, your null hypothesis might be “the party was average fun.” Then you use your sample data to test this hypothesis.

If the data strongly suggests otherwise, you might reject the null hypothesis in favor of the alternative hypothesis, which could be “the party was super fun.”

The p-value tells you how likely it is that you would see data at least as extreme as yours if the null hypothesis were true.

A low p-value (typically less than 0.05) indicates that your findings are statistically significant: results like these would be unlikely if only chance were at work.

It’s like saying, “If the party had been just average, it would be really unlikely for my friends to rave about it this much, so the party probably was fun.”
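
To see a p-value computed from scratch, the sketch below uses a sign test, chosen here because it can be worked out exactly with the standard library. It is not necessarily the test you would pick in practice. The ratings are made up, and the null hypothesis is that the party was average fun, so each friend is equally likely to rate it above or below 5.

```python
from math import comb

# Hypothetical fun ratings from ten friends (made-up data).
ratings = [8, 7, 9, 6, 8, 7, 4, 9, 8, 7]
null_value = 5  # "the party was average fun"

above = sum(r > null_value for r in ratings)
below = sum(r < null_value for r in ratings)
n = above + below  # ratings exactly equal to the null value are dropped

# One-sided p-value: chance of seeing `above` or more high ratings out of n
# if each rating were a fair coin flip between "above" and "below".
p_value = sum(comb(n, k) for k in range(above, n + 1)) / 2 ** n

print(f"{above} of {n} friends rated the party above {null_value}")
print(f"p-value = {p_value:.4f}")
```

Nine out of ten high ratings would be rare under the null hypothesis, so the p-value falls below 0.05 and you would reject “average fun.”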

Why Inferential Statistics Matter?

Inferential statistics let us go beyond just describing our data.

They allow us to make educated guesses about a larger population based on a sample.

This is incredibly useful in almost every field—science, business, public health, and yes, even planning your next party.

By using probability, the Central Limit Theorem, confidence intervals, hypothesis testing, and p-values, we can make informed decisions without needing to ask every single person in the population.

It saves time and resources, and it helps us understand the world more scientifically.

Remember, while inferential statistics gives us powerful tools for making predictions, those predictions come with a level of uncertainty.

Being a good data scientist means understanding and communicating that uncertainty clearly.

So next time you hear about a party you missed, use inferential statistics to figure out just how much FOMO (fear of missing out) you should really feel!

Common Statistical Tests: Choosing Your Data’s Best Friend

Alright, now that we’ve covered the basics of descriptive and inferential statistics, it’s time to talk about how we actually apply these concepts to make sense of data.

It’s like deciding on the best way to find out who was the life of the party.

You have several tools (tests) at your disposal, and choosing the right one depends on what you’re trying to find out and the type of data you have.

Let’s explore some of the most common statistical tests and when to use them.

T-Tests: Comparing Averages

Imagine you want to know if the average fun level was higher at this year’s party compared to last year’s.

A t-test helps you compare the means (averages) of two groups to see if they’re statistically different.

There are a couple of flavors:

- Independent t-test : Use this when comparing two different groups, like this year’s party vs. last year’s party.

- Paired t-test : Use this when comparing the same group at two different times or under two different conditions, like if you measured everyone’s fun level before and after the party.
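
The two flavors differ mainly in what they compare. This sketch computes both t statistics by hand on made-up ratings. The independent version uses Welch’s formula, which does not require equal variances (an assumption on my part, since this article doesn’t name a specific variant). Turning a t statistic into a p-value needs the t distribution, which a statistics library such as SciPy provides.

```python
import statistics

def independent_t(group_a, group_b):
    """Welch's t statistic for two independent groups."""
    var_a, var_b = statistics.variance(group_a), statistics.variance(group_b)
    standard_error = (var_a / len(group_a) + var_b / len(group_b)) ** 0.5
    return (statistics.mean(group_a) - statistics.mean(group_b)) / standard_error

def paired_t(before, after):
    """t statistic for the same people measured twice."""
    differences = [a - b for b, a in zip(before, after)]
    standard_error = statistics.stdev(differences) / len(differences) ** 0.5
    return statistics.mean(differences) / standard_error

# Hypothetical fun ratings (made-up data).
this_year = [8, 7, 9, 8, 6, 9, 7, 8]
last_year = [6, 5, 7, 6, 7, 5, 6, 6]
before_party = [5, 6, 4, 7, 5, 6]
after_party = [7, 8, 6, 8, 7, 7]

print(f"independent t = {independent_t(this_year, last_year):.2f}")
print(f"paired t      = {paired_t(before_party, after_party):.2f}")
```

The further a t statistic is from zero, the harder it is to explain the difference in means as random noise.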

ANOVA: When Three’s Not a Crowd

But what if you had three or more parties to compare? That’s where ANOVA (Analysis of Variance) comes in handy.

It lets you compare the means across multiple groups at once to see if at least one of them is significantly different.

It’s like comparing the fun levels across several years’ parties to see if one year stood out.
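
Under the hood, ANOVA compares how much the group means differ from each other with how much individuals differ within their own group. Here is a minimal sketch of that F statistic for a one-way ANOVA, using made-up ratings from three parties. As with the t-test, converting F to a p-value requires the F distribution from a statistics library.

```python
import statistics

def one_way_anova_f(groups):
    """Return (F statistic, between-groups df, within-groups df)."""
    all_values = [value for group in groups for value in group]
    grand_mean = statistics.mean(all_values)
    k, n = len(groups), len(all_values)

    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (statistics.mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of individual ratings around their own group's mean.
    ss_within = sum((value - statistics.mean(g)) ** 2 for g in groups for value in g)

    ms_between = ss_between / (k - 1)
    ms_within = ss_within / (n - k)
    return ms_between / ms_within, k - 1, n - k

# Hypothetical fun ratings from three years' parties (made-up data).
parties = [
    [6, 7, 6, 5, 6],
    [7, 8, 7, 6, 7],
    [9, 8, 9, 9, 10],
]

f_stat, df_between, df_within = one_way_anova_f(parties)
print(f"F({df_between}, {df_within}) = {f_stat:.2f}")
```

A large F means the parties differ from one another far more than guests differ within any single party.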

Chi-Square Test: Categorically Speaking

Now, let’s say you’re interested in whether the type of music (pop, rock, electronic) is related to whether invited guests actually show up.

Since you’re dealing with categories (type of music, attended or skipped) and counts (number of guests in each combination), you’ll use the Chi-Square test.

It’s great for seeing if there’s a relationship between two categorical variables.
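
The idea is to compare the counts you observed with the counts you would expect if the two variables were unrelated. The sketch below does this for a made-up table of music type versus attendance. The p-value shortcut at the end is exact only because this table has 2 degrees of freedom. Other table sizes need the chi-square distribution from a statistics library.

```python
from math import exp

# Hypothetical counts: music type -> (guests who attended, guests who skipped).
observed = {"pop": (45, 15), "rock": (30, 30), "electronic": (35, 25)}

def chi_square_statistic(table):
    """Return (chi-square statistic, degrees of freedom) for a contingency table."""
    rows = list(table.values())
    row_totals = [sum(row) for row in rows]
    col_totals = [sum(col) for col in zip(*rows)]
    grand_total = sum(row_totals)

    chi2 = 0.0
    for row, row_total in zip(rows, row_totals):
        for count, col_total in zip(row, col_totals):
            expected = row_total * col_total / grand_total
            chi2 += (count - expected) ** 2 / expected

    dof = (len(rows) - 1) * (len(col_totals) - 1)
    return chi2, dof

chi2, dof = chi_square_statistic(observed)
# Closed form valid only when dof == 2.
p_value = exp(-chi2 / 2)

print(f"chi-square = {chi2:.2f}, dof = {dof}, p-value = {p_value:.4f}")
```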

Correlation and Regression: Finding Relationships

What if you suspect that the amount of snacks available at the party affects how long guests stay? To explore this, you’d use:

- Correlation analysis to see if there’s a relationship between two continuous variables (like snacks and party duration). It tells you how strongly, and in which direction, two things move together.

- Regression analysis goes a step further by not only showing if there’s a relationship but also how one variable predicts the other. It’s like saying, “For every extra bag of chips, guests stay an average of 10 minutes longer.”

Non-parametric Tests: When Assumptions Don’t Hold

Most of the tests mentioned above (t-tests, ANOVA, and linear regression) assume your data is roughly normally distributed and meets other criteria, such as similar variances across groups.

But what if your data doesn’t play by these rules?

Enter non-parametric tests, like the Mann-Whitney U test (for comparing two groups when you can’t use a t-test) or the Kruskal-Wallis test (like ANOVA but for non-normal distributions).

Picking the Right Test

Choosing the right statistical test is crucial and depends on:

- The type of data you have (categorical vs. continuous).

- Whether you’re comparing groups or looking for relationships.

- The distribution of your data (normal vs. non-normal).
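
These three questions can be written down as a tiny decision helper. The function below is a hypothetical, deliberately simplified sketch that only covers tests named in this article. Real test selection also depends on sample size, study design, and other assumptions.

```python
def suggest_test(goal, data_type="continuous", groups=2, paired=False, normal=True):
    """Suggest a test for a simple scenario.

    goal:      "compare" (groups) or "relationship" (between two variables)
    data_type: "categorical" or "continuous"
    groups:    number of groups being compared
    paired:    True if the same people are measured twice
    normal:    True if the data is roughly normally distributed
    """
    if data_type == "categorical":
        return "Chi-Square test"
    if goal == "relationship":
        return "correlation / regression" if normal else "Spearman's rank correlation"
    if groups >= 3:
        return "ANOVA" if normal else "Kruskal-Wallis test"
    if paired:
        return "paired t-test" if normal else "Wilcoxon signed-rank test"
    return "independent t-test" if normal else "Mann-Whitney U test"

print(suggest_test("compare", groups=2))                # this year vs. last year
print(suggest_test("compare", groups=3, normal=False))  # several skewed groups
print(suggest_test("relationship", data_type="categorical"))
```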

Why These Tests Matter?

Just like you’d pick the right tool for a job, selecting the appropriate statistical test helps you make valid and reliable conclusions about your data.

Whether you’re trying to prove a point, make a decision, or just understand the world a bit better, these tests are your gateway to insights.

By mastering these tests, you become a detective in the world of data, ready to uncover the truth behind the numbers!

Regression Analysis: Predicting the Future

Ever wondered if you could predict how much fun you’re going to have at a party based on the number of friends going, or how the amount of snacks available might affect the overall party vibe?

That’s where regression analysis comes into play, acting like a crystal ball for your data.

What is Regression Analysis?

Regression analysis is a powerful statistical method that allows you to examine the relationship between two or more variables of interest.

Think of it as detective work, where you’re trying to figure out if, how, and to what extent certain factors (like snacks and music volume) predict an outcome (like the fun level at a party).

The Two Main Characters: Independent and Dependent Variables

- Independent Variable(s): These are the predictors or factors that you suspect might influence the outcome. For example, the quantity of snacks.

- Dependent Variable: This is the outcome you’re interested in predicting. In our case, it could be the fun level of the party.

Linear Regression: The Straight Line Relationship

The most basic form of regression analysis is linear regression.

It predicts the outcome based on a linear relationship between the independent and dependent variables.

If you plot this on a graph, you’d ideally see a straight line where, as the amount of snacks increases, so does the fun level (hopefully!).

- Simple Linear Regression involves just one independent variable. It’s like saying, “Let’s see if just the number of snacks can predict the fun level.”

- Multiple Linear Regression takes it up a notch by including more than one independent variable. Now, you’re looking at whether the quantity of snacks, type of music, and number of guests together can predict the fun level.

Logistic Regression: When Outcomes are Either/Or

Not all predictions are about numbers.

Sometimes, you just want to know if something will happen or not—will the party be a hit or a flop?

Logistic regression is used for these binary outcomes.

Instead of predicting a precise fun level, it predicts the probability of the party being a hit based on the same predictors (snacks, music, guests).
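
The trick behind logistic regression is the logistic (sigmoid) function, which squeezes any number into a probability between 0 and 1. The sketch below shows only the prediction step. The intercept and coefficients are invented for illustration, not fitted to real data. In practice a statistics or machine learning library estimates them for you.

```python
from math import exp

# Hypothetical model, made up for illustration (not fitted to any data).
INTERCEPT = -4.0
COEFFICIENTS = {"snack_bags": 0.3, "guests": 0.1, "music_volume": 0.05}

def hit_probability(party):
    """Predicted probability that a party is a hit, given its predictors."""
    log_odds = INTERCEPT + sum(COEFFICIENTS[name] * party[name] for name in COEFFICIENTS)
    return 1 / (1 + exp(-log_odds))  # logistic function: log-odds -> probability

quiet_party = {"snack_bags": 2, "guests": 5, "music_volume": 3}
big_party = {"snack_bags": 12, "guests": 30, "music_volume": 8}

print(f"quiet party: {hit_probability(quiet_party):.0%} chance of being a hit")
print(f"big party:   {hit_probability(big_party):.0%} chance of being a hit")
```

To turn the probability into a yes-or-no prediction, you pick a cutoff (0.5 is a common default) and call anything above it a hit.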

Making Sense of the Results

- Coefficients: In regression analysis, each predictor has a coefficient, telling you how much the dependent variable is expected to change when that predictor changes by one unit, all else being equal.

- R-squared: This value, between 0 and 1, tells you what proportion of the variation in your dependent variable is explained by the independent variables. A higher R-squared means the model fits the data it was built on more closely, though not necessarily that it will predict new data better.

Why Regression Analysis Rocks?

Regression analysis is like having a superpower. It helps you understand which factors matter most, which can be ignored, and how different factors come together to influence the outcome.

This insight is invaluable whether you’re planning a party, running a business, or conducting scientific research.

Bringing It All Together

Imagine you’ve gathered data on several parties, including the number of guests, type of music, and amount of snacks, along with a fun level rating for each.

By running a regression analysis, you can start to predict future parties’ success, tailoring your planning to maximize fun.

It’s a practical tool for making informed decisions based on past data, helping you throw legendary parties, optimize business strategies, or understand complex relationships in your research.

In essence, regression analysis helps turn your data into actionable insights, guiding you towards smarter decisions and better predictions.

So next time you’re knee-deep in data, remember: regression analysis might just be the key to unlocking its secrets.

Non-parametric Methods: Playing By Different Rules

So far, we’ve talked a lot about statistical methods that rely on certain assumptions about your data, like it being normally distributed (forming that classic bell curve) or having a specific scale of measurement.

But what happens when your data doesn’t fit these molds?

Maybe the scores from your last party’s karaoke contest are all over the place, or you’re trying to compare the popularity of various party games but only have rankings, not scores.

This is where non-parametric methods come to the rescue.

Breaking Free from Assumptions

Non-parametric methods are the rebels of the statistical world.

They don’t assume your data follows a normal distribution or that it meets strict requirements regarding measurement scales.

These methods are perfect for dealing with ordinal data (like rankings), nominal data (like categories), or when your data is skewed or has outliers that would throw off other tests.

When to Use Non-parametric Methods?

- Your data is not normally distributed, and transformations don’t help.

- You have ordinal data (like survey responses that range from “Strongly Disagree” to “Strongly Agree”).

- You’re dealing with ranks or categories rather than precise measurements.

- Your sample size is small, making it hard to check whether the assumptions required for parametric tests actually hold.

Some Popular Non-parametric Tests

- Mann-Whitney U Test: Think of it as the non-parametric counterpart to the independent samples t-test. Use this when you want to compare the differences between two independent groups on a ranking or ordinal scale.

- Kruskal-Wallis Test: This is your go-to when you have three or more groups to compare, and it’s similar to an ANOVA but for ranked/ordinal data or when your data doesn’t meet ANOVA’s assumptions.

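- Spearman’s Rank Correlation: When you want to see if there’s a relationship between two sets of rankings, Spearman’s got your back. It works like Pearson’s correlation but is calculated on ranks instead of raw values, so it picks up any consistently increasing or decreasing relationship, not just straight-line ones.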

- Wilcoxon Signed-Rank Test: Use this for comparing two related samples when you can’t use the paired t-test, typically because the differences between pairs are not normally distributed.

The Beauty of Flexibility

The real charm of non-parametric methods is their flexibility.

They let you work with data that’s not textbook perfect, which is often the case in the real world.

Whether you’re analyzing customer satisfaction surveys, comparing the effectiveness of different marketing strategies, or just trying to figure out if people prefer pizza or tacos at parties, non-parametric tests provide a robust way to get meaningful insights.

Keeping It Real