
Dartmouth Summer Research Project: The Birth of Artificial Intelligence

Held in the summer of 1956, the Dartmouth Summer Research Project on Artificial Intelligence brought together some of the brightest minds in computing and cognitive science, and it is considered to have founded artificial intelligence (AI) as a field.

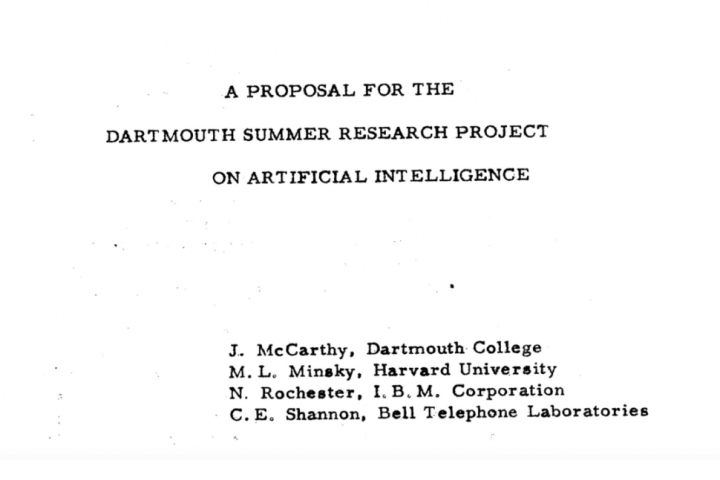

In the early 1950s, the field of “thinking machines” was given an array of names, from cybernetics to automata theory to complex information processing. Prior to the conference, John McCarthy, a young Assistant Professor of Mathematics at Dartmouth College, had been disappointed by submissions to the Annals of Mathematics Studies series. He regretted that contributors didn’t focus on the potential for computers to possess intelligence beyond simple behaviors. So he decided to organize a group to clarify and develop ideas about thinking machines.

“At the time I believed if only we could get everyone who was interested in the subject together to devote time to it and avoid distractions, we could make real progress,” said John McCarthy.

McCarthy approached the Rockefeller Foundation to request funding for a summer seminar at Dartmouth for 10 participants. In 1955, he formally proposed the project, along with friends and colleagues Marvin Minsky (Harvard University), Nathaniel Rochester (IBM Corporation), and Claude Shannon (Bell Telephone Laboratories).

Laying the Foundations of AI

The workshop was based on the conjecture that, “Every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it. An attempt will be made to find how to make machines use language, form abstractions and concepts, solve kinds of problems now reserved for humans, and improve themselves.”

Although they came from very different backgrounds, all the attendees believed that the act of thinking is not unique either to humans or even biological beings. Participants came and went, and discussions were wide-reaching. The term “artificial intelligence” itself was coined, and research directions such as symbolic methods were initiated. Many of the participants would later make key contributions to AI, ushering in a new era.

IBM’s First Computer

Nathaniel Rochester designs the IBM 701, the first computer marketed by IBM.

“Machine Learning” is coined

Attendee Arthur Samuel coins the term “machine learning” and creates the Samuel Checkers-Playing program, one of the world’s first successful self-learning programs.

Marvin Minsky Wins the Turing Award

Marvin Minsky wins the Turing Award for his “central role in creating, shaping, promoting and advancing the field of artificial intelligence.”


The Meeting of the Minds That Launched AI

There’s more to this group photo from a 1956 AI workshop than you’d think.

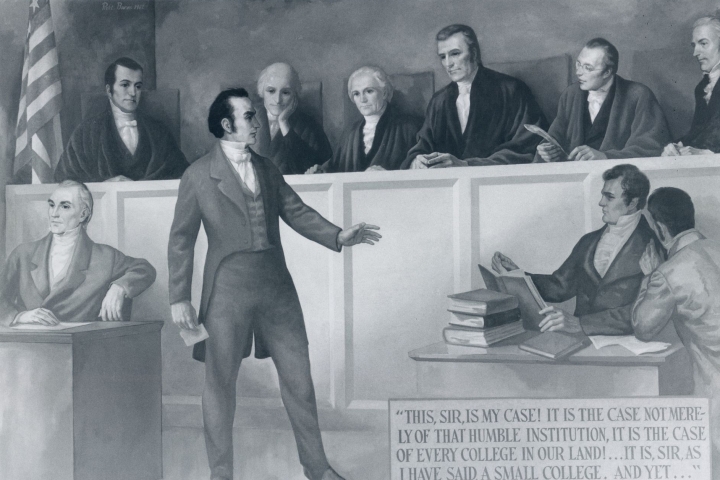

At the 1956 Dartmouth AI workshop, the organizers and a few other participants gathered in front of Dartmouth Hall.

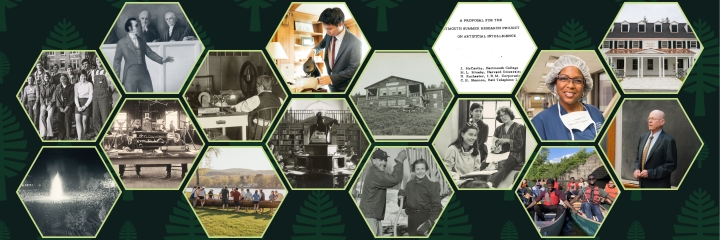

The Dartmouth Summer Research Project on Artificial Intelligence, held from 18 June through 17 August of 1956, is widely considered the event that kicked off AI as a research discipline. Organized by John McCarthy, Marvin Minsky, Claude Shannon, and Nathaniel Rochester, it brought together a few dozen of the leading thinkers in AI, computer science, and information theory to map out future paths for investigation.

A group photo [shown above] captured seven of the main participants. When the photo was reprinted in Eliza Strickland’s October 2021 article “The Turbulent Past and Uncertain Future of Artificial Intelligence” in IEEE Spectrum, the caption identified six people, plus one “unknown.” So who was this unknown person?

Who is in the photo?

Six of the people in the photo are easy to identify. In the back row, from left to right, we see Oliver Selfridge, Nathaniel Rochester, Marvin Minsky, and John McCarthy. Sitting in front on the left is Ray Solomonoff, and on the right, Claude Shannon. All six contributed to AI, computer science, or related fields in the decades following the Dartmouth workshop.

Between Solomonoff and Shannon is the unknown person. Over the years, some people suggested that this was Trenchard More, another AI expert who attended the workshop.

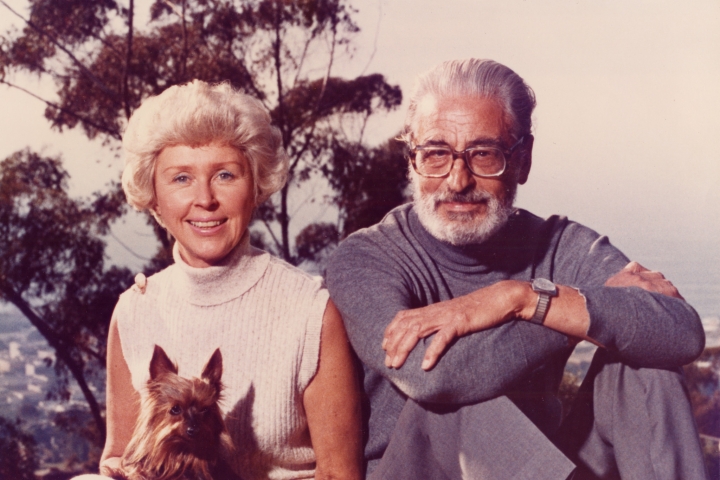

I first ran across the Dartmouth group photo in 2018, when I was gathering material for Ray’s memorial website. Ray and I had met in 1969, and we got married in 1989; he passed away in late 2009. Over the years, I had attended a number of his talks, and I had met many of Ray’s peers and colleagues in AI, so I was curious about the photo.

I thought, “Gee, that guy in the middle doesn’t look like my memory of Trenchard.” So I called up Trenchard’s son Paul More. He assured me that the unknown person was not his father.

More recently, I discovered a letter among Ray’s papers. On 8 November 1956, Nat Rochester sent a short note and a copy of the photo to some colleagues: “Enclosed is a print of the photograph I took of the Artificial Intelligence group.” He sent his note to McCarthy, Minsky, Selfridge, Shannon, Solomonoff—and Peter Milner.

So the unknown person must be Milner! This makes perfect sense. Milner was working on neuropsychology at McGill University, in Montreal, although he had trained as an electrical engineer. He’s not generally lumped in with the other AI pioneers because his research interests diverged from theirs. Even at Dartmouth, he felt he was in over his head, as he wrote in his 1999 autobiography: “I was invited to a meeting of computer scientists and information theorists at Dartmouth College…. Most of the time I had no idea what they were talking about.”

In his fascinating autobiography, Milner writes about his work in radar development during World War II, and his switch after the war from nuclear-reactor design to psychology. His doctoral thesis in 1954, “Effects of Intracranial Stimulation on Rat Behaviour,” examined the effects of electrical stimulation on certain rat neurons, which became widely and enthusiastically known as “pleasure centers.”

This work led to one of Milner’s most famous papers, “The Cell Assembly: Mark II,” in 1957. The paper describes how, when a neuron in the brain fires, it excites similar connected neurons (especially those already aroused by sensory input) and randomly excites other cortical neurons. Cells may form assemblies and connect with other assemblies. But the neurons don’t seem to exhibit the same snowballing behavior of atoms that leads to an exponential explosion. Ideas about how neurons might inhibit this effect were among those that led to new insights at the workshop.

Milner’s work contributed to the early development of artificial neural networks, and it’s why he was included in the Dartmouth meeting. There was considerable interest among AI researchers in studying the brain and neurons in order to reproduce its functions and intelligence.

But as Strickland notes in her October 2021 Spectrum article, a division was already forming in AI research. One side focused on replicating the brain, while the other was more interested in what the mind might do to directly solve problems. Scientists interested in this latter approach were also represented at Dartmouth and later championed the rise of symbolic logic, using heuristic and algorithmic processes, which I’ll discuss in a bit.

Where Was the Photo Taken?

Rochester’s photo from 1956 shows the left-hand side of Dartmouth Hall in the background. In 2006 Dartmouth convened a conference, AI@50, to celebrate the 50th anniversary of the AI gathering and to discuss AI’s present and future. Trenchard More, the person most often misidentified as the “unknown person” in Nat’s photo, met with the organizers, James Moor and Carey Heckman, as well as Wendy Conquest, who was working on a movie about AI for the conference. None of the AI@50 organizers knew exactly where the 1956 meeting had taken place.

More led them across the lawn and to the left-hand side door of Dartmouth Hall. He showed them the rooms that were used, which in turn triggered an old memory. During the 1956 meeting, as More recalled in a 2011 interview, “Selfridge, and Minsky, and McCarthy, and Ray Solomonoff, and I gathered around a dictionary on a stand to look up the word heuristic, because we thought that might be a useful word.” On that 2006 tour of Dartmouth Hall, he was delighted to find that the dictionary was still there.

The word heuristic was invoked all through the summer of 1956. Instead of trying to analyze the brain to develop machine intelligence, some participants focused on the operational steps needed to solve a given problem, making particular use of heuristic methods to quickly identify the steps.

Early in the summer, for instance, Herb Simon and Allen Newell gave a talk on a program they had written, the logic theory machine. The program relied on early ideas of symbolic logic, with algorithmic steps and heuristic guidance in list form. They later won the 1975 Turing Award for these ideas. Think of heuristics as intuitive guides. The logic theory machine used such guides to initiate the algorithmic steps, that is, the set of instructions to actually carry out the problem solving.
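Heuristic guidance is easiest to see in a small search problem. The Python sketch below is a generic greedy best-first search, not a reconstruction of the logic theory machine, which worked on theorems of symbolic logic; the toy puzzle (turn 1 into 10 using “add 1” or “double”) and the names `best_first`, `moves`, and `distance` are hypothetical. The heuristic only estimates how far a state is from the goal, and the search always expands whichever state looks most promising.

```python
import heapq

def best_first(start, goal, moves, heuristic):
    """Greedy best-first search: expand the state the heuristic rates closest to the goal."""
    # Frontier entries are (heuristic score, state, path taken to reach the state).
    frontier = [(heuristic(start, goal), start, [start])]
    seen = {start}
    while frontier:
        _, state, path = heapq.heappop(frontier)  # most promising state first
        if state == goal:
            return path
        for nxt in moves(state):
            if nxt not in seen:  # skip states already queued or expanded
                seen.add(nxt)
                heapq.heappush(frontier, (heuristic(nxt, goal), nxt, path + [nxt]))
    return None  # frontier exhausted without reaching the goal

# Hypothetical toy puzzle: from a number, either add 1 or double it.
def moves(n):
    return [n + 1, n * 2]

# Heuristic "intuitive guide": how far the number still is from the goal.
def distance(n, goal):
    return abs(goal - n)

print(best_first(1, 10, moves, distance))  # [1, 2, 4, 8, 9, 10]
```

The guess pays off quickly here, but the path is not the shortest: 1, 2, 4, 5, 10 takes one fewer step. That is the usual trade-off of heuristic guidance, which narrows the search without guaranteeing the best answer.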

Who Wasn’t in the Photo

There was one person who was at the Dartmouth Workshop from time to time but was never included in any of the lists of attendees: Gloria Minsky, Marvin’s wife.

But Gloria was definitely a presence that summer. Marvin, Ray, and John McCarthy were the only three participants to stay for the entire eight-week workshop. Everyone else came and went as their schedules allowed. At the time, Gloria was a pediatrics fellow at Children’s Hospital in Boston, but whenever she could, she would drive up to Dartmouth, stay in Marvin’s apartment, and visit with whoever was at the workshop.

Several years earlier, in the spring of 1952, Gloria had been doing her residency in pathology at New York’s Bellevue Hospital, when she began dating Marvin. Marvin was a Ph.D. student at Princeton, as was McCarthy, and the two were invited to Bell Labs for the summer to work under Claude Shannon. In July, just four months after their first meeting, Gloria and Marvin got married. Although Marvin was working nonstop for Shannon, Shannon insisted he and Gloria take a honeymoon in New Mexico.

Four years later, McCarthy, Shannon, and Minsky, along with Nat Rochester, organized the Dartmouth workshop. Gloria remembered a conversation between her husband and Ray, in which Marvin expressed a thought that later became one of his hallmarks: “You need to see something in more than one way to understand it.” In Minsky’s 2007 book The Emotion Machine, he looked at how emotions, intuitions, and feelings create different descriptions and provide different ways of looking at things. He tended to favor symbolic logic and deductive methods in AI, which he called “good old-fashioned AI.”

Ray, meanwhile, was focused on probabilities—the likelihood of something happening and predictions of how it might evolve. He later developed algorithmic probability, an early version of algorithmic information theory, in which each different description of something leads with a probabilistic likelihood (some more likely, some less likely) of a given outcome in the future. Probabilistic methods eventually became the underpinnings of machine learning.
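The idea of many descriptions, each carrying its own likelihood, has a compact standard statement. In the usual notation (assumed here, not given in the text), U is a universal prefix Turing machine, p ranges over programs, |p| is a program’s length in bits, and U(p) = x* means the output of p begins with the string x:

```latex
M(x) = \sum_{p \,:\, U(p) = x*} 2^{-|p|}
```

Every description that reproduces x contributes to its probability, but shorter descriptions dominate: a 10-bit program adds 2^-10, eight times the weight of a 13-bit one. The quantity is not computable in general, so practical methods can only approximate it.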

These days, as chatbots enter the limelight, and compression methods are used more in AI, the value of understanding things in many ways and using probabilistic predictions will only grow in importance. That is, logic and probability methods are uniting. These in turn are being aided by new work on neural nets as well as symbolic logic. And so the photo that Nat Rochester took not only captured a moment in time for AI. It also offered a glimpse into how AI would develop.

The author thanks Gloria Minsky, Margaret Minsky, Nicholas Rochester, Julie Sussman, Gerald Jay Sussman, and Paul More for their help and patience.


A Look Back on the Dartmouth Summer Research Project on Artificial Intelligence

At this conference, held on campus in the summer of 1956, scientists coined the term “artificial intelligence.”

For six weeks in the summer of 1956, a group of scientists convened on Dartmouth’s campus for the Dartmouth Summer Research Project on Artificial Intelligence. It was at this meeting that the term “artificial intelligence” was coined. Decades later, artificial intelligence has made significant advancements. While recent programs like ChatGPT are changing the artificial intelligence landscape once again, The Dartmouth investigates the history of artificial intelligence on campus.

That initial conference in 1956 paved the way for the future of artificial intelligence in academia, according to Cade Metz, author of the book “Genius Makers: The Mavericks Who Brought AI to Google, Facebook, and the World.”

“It set the goals for this field,” Metz said. “The way we think about the technology is because of the way it was framed at that conference.”

However, the connection between Dartmouth and the birth of AI is not very well-known, according to some students. DALI Lab outreach chair and developer Jason Pak ’24 said that he had heard of the conference, but that he didn’t think it was widely discussed in the computer science department.

“In general, a lot of CS students don’t know a lot about the history of AI at Dartmouth,” Pak said. “When I’m taking CS classes, it is not something that I’m actively thinking about.”

Even though the connection between Dartmouth and the birth of artificial intelligence is not widely known on campus today, the conference’s influence on academic research in AI was far-reaching, Metz said. In fact, four of the conference participants built three of the largest and most influential AI labs at other universities across the country, shifting the nexus of AI research away from Dartmouth.

Conference participants John McCarthy and Marvin Minsky would establish AI labs at Stanford and MIT, respectively, while two other participants, Allen Newell and Herbert Simon, built an AI lab at Carnegie Mellon. Taken together, the labs at MIT, Stanford and Carnegie Mellon drove AI research for decades, Metz said.

Although the conference participants were optimistic, in the following decades they would fall short of many of the milestones they believed AI would make possible. Some participants in the conference, for example, believed that a computer would be able to beat any human in chess within just a decade.

“The goal was to build a machine that could do what the human brain could do,” Metz said. “Generally speaking, they didn’t think [the development of AI] would take that long.”

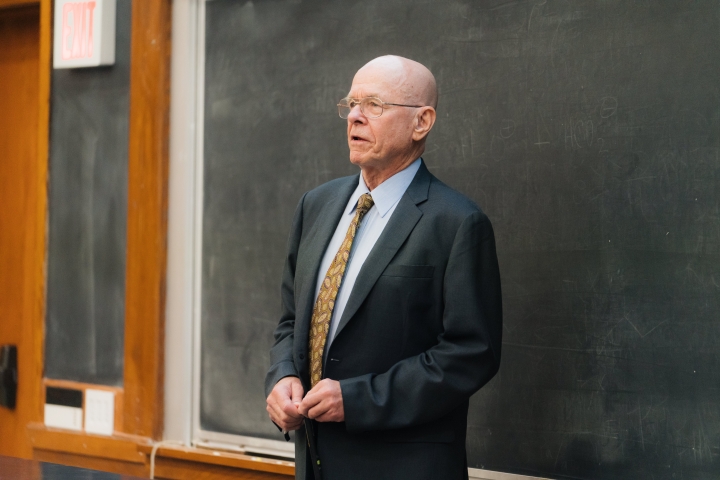

The conference mostly consisted of brainstorming ideas about how AI should work. However, “there was very little written record” of the conference, according to computer science professor emeritus Thomas Kurtz, in an interview that is part of the Rauner Special Collections archives.

The conference represented all kinds of disciplines coming together, Metz said. At that point, AI was a field at the intersection of computer science and psychology and it had overlaps with other emerging disciplines, such as neuroscience, he added.

Metz said that after the conference, two camps of AI research emerged. One camp believed in what is called neural networks, mathematical systems that learn skills by analyzing data. The idea of neural networks was based on the concept that machines can learn like the human brain, creating new connections and growing over time by responding to real-world input data.
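The neural-network approach of learning from examples can be sketched with its simplest case, a single perceptron, the kind of network later analyzed in Minsky and Papert’s “Perceptrons.” The Python below is a minimal sketch under stated assumptions: the function names, learning rate, and epoch count are illustrative choices rather than details from the article. The network learns the logical AND function from four labeled examples by adjusting its weights after every mistake.

```python
def predict(w, b, x):
    """Output 1 if the weighted sum of the inputs plus the bias is positive, else 0."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0

def train_perceptron(samples, epochs=20, lr=1.0):
    """Perceptron learning rule: after each error, shift weights toward the target."""
    w = [0.0] * len(samples[0][0])  # one weight per input, starting at zero
    b = 0.0
    for _ in range(epochs):
        for x, target in samples:
            error = target - predict(w, b, x)  # -1, 0, or +1
            w = [wi + lr * error * xi for wi, xi in zip(w, x)]
            b += lr * error
    return w, b

# Labeled examples: ((input1, input2), desired output).
AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

w, b = train_perceptron(AND)
print([predict(w, b, x) for x, _ in AND])  # [0, 0, 0, 1]

w, b = train_perceptron(XOR)
print([predict(w, b, x) for x, _ in XOR])  # cannot equal [0, 1, 1, 0]
```

Trained on XOR instead, the same code never gets all four cases right, because no single straight line separates XOR’s 1s from its 0s. Limitations of single-layer networks like this one were a central subject of “Perceptrons.”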

Some of the conference participants would go on to argue that it wasn’t possible for machines to learn on their own. Instead, they believed in what is called “symbolic AI.”

“They felt like you had to build AI rule-by-rule,” Metz said. “You had to define intelligence yourself; you had to — rule-by-rule, line-by-line — define how intelligence would work.”

Notably, conference participant Marvin Minsky would go on to cast doubt on the neural network idea, particularly after the 1969 publication of “Perceptrons,” co-authored by Minsky and mathematician Seymour Papert, which Metz said led to a decline in neural network research.

Over the decades, Minsky adapted his ideas about neural networks, according to Joseph Rosen, a surgery professor at Dartmouth Hitchcock Medical Center. Rosen first met Minsky in 1989 and remained a close friend of his until Minsky’s death in 2016.

Minsky’s views on neural networks were complex, Rosen said, but his interest in studying AI was driven by a desire to understand human intelligence and how it worked.

“Marvin was most interested in how computers and AI could help us better understand ourselves,” Rosen said.

In about 2010, however, the neural network idea “was proven to be the way forward,” Metz said. Neural networks allow artificial intelligence programs to learn tasks on their own, which has driven a current boom in AI research, he added.

Given the boom in research activity around neural networks, some Dartmouth students feel like there is an opportunity for growth in AI-related courses and research opportunities. According to Pak, currently, the computer science department mostly focuses on research areas other than AI. Of the 64 general computer science courses offered every year, only two are related to AI, according to the computer science department website.

“A lot of our interests are shaped by the classes we take,” Pak said. “There is definitely room for more growth in AI-related courses.”

There is a high demand for classes related to AI, according to Pak. Despite being a computer science and music double major, he said he could not get into a course called MUS 14.05: “Music and Artificial Intelligence” because of the demand.

DALI Lab developer and former development lead Samiha Datta ’23 said that she is doing her senior thesis on natural language processing, a subfield of AI and machine learning. Datta said that the conference is pretty well-referenced, but she believes that many students do not know much about the specifics.

She added she thinks the department is aware of and trying to improve the lack of courses taught directly related to AI, and that it is “more possible” to do AI research at Dartmouth now than it would have been a few years ago, due to the recent onboarding of four new professors who do AI research.

“I feel lucky to be doing research on AI at the same place where the term was coined,” Datta said.


The Dartmouth

Science in the News


The History of Artificial Intelligence

by Rockwell Anyoha

Can Machines Think?

In the first half of the 20th century, science fiction familiarized the world with the concept of artificially intelligent robots. It began with the “heartless” Tin Man from the Wizard of Oz and continued with the humanoid robot that impersonated Maria in Metropolis. By the 1950s, we had a generation of scientists, mathematicians, and philosophers with the concept of artificial intelligence (or AI) culturally assimilated in their minds. One such person was Alan Turing, a young British polymath who explored the mathematical possibility of artificial intelligence. Turing suggested that humans use available information as well as reason in order to solve problems and make decisions, so why can’t machines do the same thing? This was the logical framework of his 1950 paper, “Computing Machinery and Intelligence,” in which he discussed how to build intelligent machines and how to test their intelligence.

Making the Pursuit Possible

Unfortunately, talk is cheap. What stopped Turing from getting to work right then and there? First, computers needed to fundamentally change. Before 1949, computers lacked a key prerequisite for intelligence: they couldn’t store commands, only execute them. In other words, computers could be told what to do but couldn’t remember what they did. Second, computing was extremely expensive. In the early 1950s, the cost of leasing a computer ran up to $200,000 a month. Only prestigious universities and big technology companies could afford to dillydally in these uncharted waters. A proof of concept as well as advocacy from high-profile people were needed to persuade funding sources that machine intelligence was worth pursuing.

The Conference that Started it All

Five years later, the proof of concept arrived in the form of Allen Newell, Cliff Shaw, and Herbert Simon’s Logic Theorist. The Logic Theorist was a program designed to mimic the problem-solving skills of a human and was funded by the RAND (Research and Development) Corporation. It’s considered by many to be the first artificial intelligence program and was presented at the Dartmouth Summer Research Project on Artificial Intelligence (DSRPAI) hosted by John McCarthy and Marvin Minsky in 1956. In this historic conference, McCarthy, imagining a great collaborative effort, brought together top researchers from various fields for an open-ended discussion on artificial intelligence, the term which he coined at the very event. Sadly, the conference fell short of McCarthy’s expectations; people came and went as they pleased, and the participants failed to agree on standard methods for the field. Despite this, everyone wholeheartedly aligned with the sentiment that AI was achievable. The significance of this event cannot be overstated, as it catalyzed the next twenty years of AI research.

Roller Coaster of Success and Setbacks

From 1957 to 1974, AI flourished. Computers could store more information and became faster, cheaper, and more accessible. Machine learning algorithms also improved, and people got better at knowing which algorithm to apply to their problem. Early demonstrations such as Newell and Simon’s General Problem Solver and Joseph Weizenbaum’s ELIZA showed promise toward the goals of problem solving and the interpretation of natural language, respectively. These successes, as well as the advocacy of leading researchers (namely the attendees of the DSRPAI), convinced government agencies such as the Defense Advanced Research Projects Agency (DARPA) to fund AI research at several institutions. The government was particularly interested in a machine that could transcribe and translate spoken language, as well as in high-throughput data processing. Optimism was high and expectations were even higher. In 1970 Marvin Minsky told Life Magazine, “from three to eight years we will have a machine with the general intelligence of an average human being.” However, while the basic proof of principle was there, there was still a long way to go before the end goals of natural language processing, abstract thinking, and self-recognition could be achieved.

Breaching the initial fog of AI revealed a mountain of obstacles. The biggest was the lack of computational power to do anything substantial: computers simply couldn’t store enough information or process it fast enough. In order to communicate, for example, one needs to know the meanings of many words and understand them in many combinations. Hans Moravec, a doctoral student of McCarthy at the time, stated that “computers were still millions of times too weak to exhibit intelligence.” As patience dwindled so did the funding, and research came to a slow roll for ten years.

In the 1980s, AI was reignited by two sources: an expansion of the algorithmic toolkit, and a boost of funds. John Hopfield and David Rumelhart popularized neural network techniques, forerunners of today’s “deep learning,” which allowed computers to learn using experience. On the other hand, Edward Feigenbaum introduced expert systems, which mimicked the decision-making process of a human expert. The program would ask an expert in a field how to respond in a given situation, and once this was learned for virtually every situation, non-experts could receive advice from that program. Expert systems were widely used in industry. The Japanese government heavily funded expert systems and other AI-related endeavors as part of its Fifth Generation Computer Project (FGCP). From 1982 to 1990, it invested $400 million with the goals of revolutionizing computer processing, implementing logic programming, and improving artificial intelligence. Unfortunately, most of the ambitious goals were not met. However, it could be argued that the indirect effects of the FGCP inspired a talented young generation of engineers and scientists. Regardless, funding of the FGCP ceased, and AI fell out of the limelight.
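The idea of capturing an expert’s answers as rules and letting the program chain them together can be sketched in a few lines of Python. This is a minimal forward-chaining sketch under stated assumptions: the car-trouble rules, the fact names, and `forward_chain` are hypothetical, and real expert systems were far larger and more elaborate.

```python
# Hypothetical rule base: (facts that must all hold, conclusion to add).
RULES = [
    ({"engine_cranks", "no_start"}, "check_fuel"),
    ({"no_crank", "lights_dim"}, "battery_weak"),
    ({"battery_weak"}, "charge_battery"),
]

def forward_chain(facts, rules):
    """Fire every rule whose conditions are known, until no new conclusions appear."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # A rule fires when all its conditions are already known facts.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known

observed = {"no_crank", "lights_dim"}
print(sorted(forward_chain(observed, RULES) - observed))  # ['battery_weak', 'charge_battery']
```

Here `charge_battery` is reached only because `battery_weak` was derived first, which is how a chain of simple if-then rules can turn raw observations into advice a non-expert could act on.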

Ironically, in the absence of government funding and public hype, AI thrived. During the 1990s and 2000s, many of the landmark goals of artificial intelligence were achieved. In 1997, reigning world chess champion and grandmaster Garry Kasparov was defeated by IBM’s Deep Blue, a chess-playing computer program. This highly publicized match was the first time a reigning world chess champion lost a match to a computer and served as a huge step towards an artificially intelligent decision-making program. In the same year, speech recognition software developed by Dragon Systems was implemented on Windows. This was another great step forward, but in the direction of the spoken language interpretation endeavor. It seemed that there wasn’t a problem machines couldn’t handle. Even human emotion was fair game, as evidenced by Kismet, a robot developed by Cynthia Breazeal that could recognize and display emotions.

Time Heals all Wounds

We haven’t gotten any smarter about how we are coding artificial intelligence, so what changed? It turns out the fundamental limit of computer storage that was holding us back 30 years ago was no longer a problem. Moore’s Law, which estimates that the number of transistors on a chip, and with it the memory and speed of computers, doubles roughly every two years, had finally caught up with and, in many cases, surpassed our needs. This is precisely how Deep Blue was able to defeat Garry Kasparov in 1997, and how Google’s AlphaGo was able to defeat Chinese Go champion Ke Jie only a few months ago. It offers a bit of an explanation for the roller coaster of AI research: we saturate the capabilities of AI to the level of our current computational power (computer storage and processing speed), and then wait for Moore’s Law to catch up again.
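The “wait for Moore’s Law to catch up” argument rests on compounding. As a rough worked example, assuming a constant doubling period of T years (a simplification, since real hardware trends have been uneven), the growth factor after t years is G(t) below. Over the 30 years mentioned above, Moore’s original 1965 projection of yearly doubling and his 1975 revision to roughly every two years give very different totals:

```latex
G(t) = 2^{t/T}, \qquad
G(30)\big|_{T=2} = 2^{15} = 32{,}768, \qquad
G(30)\big|_{T=1} = 2^{30} \approx 1.07 \times 10^{9}
```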

Artificial Intelligence is Everywhere

We now live in the age of “big data,” an age in which we have the capacity to collect huge sums of information too cumbersome for a person to process. The application of artificial intelligence in this regard has already been quite fruitful in several industries such as technology, banking, marketing, and entertainment. We’ve seen that even if algorithms don’t improve much, big data and massive computing simply allow artificial intelligence to learn through brute force. There may be evidence that Moore’s law is slowing down a tad, but the increase in data certainly hasn’t lost any momentum. Breakthroughs in computer science, mathematics, or neuroscience all serve as potential outs through the ceiling of Moore’s Law.

So what is in store for the future? In the immediate future, AI language is looking like the next big thing. In fact, it’s already underway. I can’t remember the last time I called a company and directly spoke with a human. These days, machines are even calling me! One could imagine interacting with an expert system in a fluid conversation, or having a conversation in two different languages being translated in real time. We can also expect to see driverless cars on the road in the next twenty years (and that is conservative). In the long term, the goal is general intelligence, that is, a machine that surpasses human cognitive abilities in all tasks. This is along the lines of the sentient robot we are used to seeing in movies. To me, it seems inconceivable that this would be accomplished in the next 50 years. Even if the capability is there, the ethical questions would serve as a strong barrier against fruition. When that time comes (or better, even before it comes), we will need to have a serious conversation about machine policy and ethics (ironically both fundamentally human subjects), but for now, we’ll allow AI to steadily improve and run amok in society.

Rockwell Anyoha is a graduate student in the department of molecular biology with a background in physics and genetics. His current project employs the use of machine learning to model animal behavior. In his free time, Rockwell enjoys playing soccer and debating mundane topics.

This article is part of a Special Edition on Artificial Intelligence.

For more information:

Brief Timeline of AI

https://www.livescience.com/47544-history-of-a-i-artificial-intelligence-infographic.html

Complete Historical Overview

http://courses.cs.washington.edu/courses/csep590/06au/projects/history-ai.pdf

Dartmouth Summer Research Project on Artificial Intelligence

https://www.aaai.org/ojs/index.php/aimagazine/article/view/1904/1802

Future of AI

https://www.technologyreview.com/s/602830/the-future-of-artificial-intelligence-and-cybernetics/

Discussion on Future Ethical Challenges Facing AI

http://www.bbc.com/future/story/20170307-the-ethical-challenge-facing-artificial-intelligence

Detailed Review of Ethics of AI

https://intelligence.org/files/EthicsofAI.pdf

Dartmouth Milestones

Read stories about Dartmouth’s unique character, indelible spirit, and rich history.

The award celebrates the field of “click chemistry,” a name Sharpless coined.

Beilock is the first woman elected to the position in Dartmouth’s more than 250-year history.

Renamed the Frank J. Guarini School of Graduate and Advanced Studies in 2018 in acknowledgment of an investment by the Honorable Frank J. Guarini ’46, the Guarini School supports more than 1,000 graduate students, doctoral candidates, and postdoctoral scholars.

The residence hall welcomes the LGBTQIA community and allied students who share a passion for social justice issues.

Dartmouth renamed its medical school, founded in 1797, in honor of Audrey and Theodor Geisel, Class of 1925.

(Photo by Jay Davis ’90)

The First Year Student Enrichment Program empowers first-generation students to thrive academically.

Alumna Andrea Hayes-Jordan ’87, MED ’91 became the nation’s first Black female pediatric surgeon.

(Photo by Eli Burakian ’00)

Sharpless credited a Dartmouth professor for helping set the course of his life’s work. He won the Nobel Prize in Chemistry.

Each year the farm grows more than 2,000 pounds of diverse, fresh, tasty, organic produce.

In September 1972, one hundred seventy-seven women matriculated as freshmen, along with 74 female transfer students.

(Photo by Robert Gill)

Today the program involves over 90 percent of the freshman class.

He won the Thayer Prize in Mathematics and the Kramer Fellowship at Dartmouth. He went on to win the Nobel Prize in Physics.

The Dartmouth Summer Research Project on Artificial Intelligence was a seminal event for artificial intelligence as a field.

On Dec. 15, 1956, Polly Case straddled a small disk attached to a cable—a “poma lift”—and rode to the top of Holt’s Ledge in Lyme, N.H.

The lodge was constructed to serve some of the nation’s earliest competitive skiing.

Sanborn Library offers a tea service each weekday afternoon at 4 o’clock.

The library was designed by college architect Jens Frederick Larson, modeled after Independence Hall in Philadelphia.

A group of Dartmouth students gathered together with the goal of restoring a rowing team to the College.

Elizabeth Reynolds Hapgood, a talented linguist, created the Russian program at the College.

The Amos Tuck School of Business Administration and Finance was the first institution in the world to offer a master’s degree in business administration. Edward Tuck, class of 1862, donated an initial grant of $300,000 to found the school in 1899, naming it in memory of his father Amos Tuck, class of 1835.

The medical x-ray, like many inventions, is the result of different people working simultaneously on the same idea.

During Dartmouth Night and Homecoming, alumni return to join students in a festive celebration that includes a colorful parade and blazing bonfire.

Sylvanus Thayer, Class of 1807, established the engineering school at his alma mater.

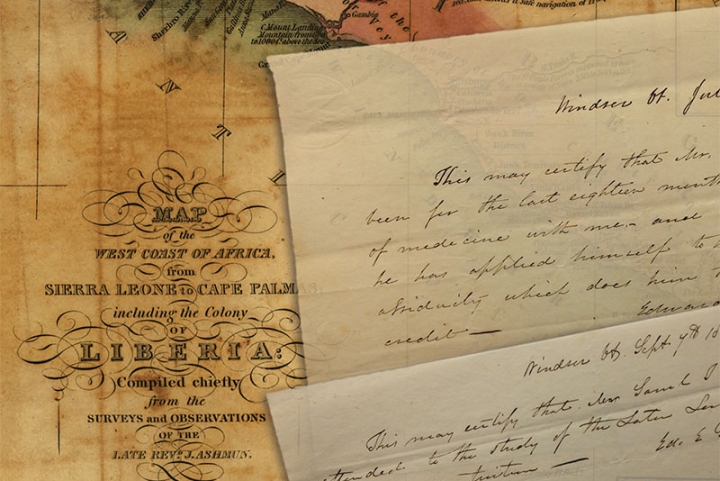

Born in the United States and raised in Liberia, Samuel Ford McGill, Class of 1839, was the first Black graduate of a U.S. medical school.

A landmark ruling in the development of U.S. constitutional and corporate law, Trustees of Dartmouth College v. Woodward held that the College would remain a private institution and not become a state university.

Dartmouth’s “Medical Department” was established when founder Nathan Smith delivered his first lecture on Nov. 22.

The Society of Social Friends “Socials” maintained a student-funded and managed library, circulating holdings to its members only.

April 18, 2024

AI Report Shows ‘Startlingly Rapid’ Progress—And Ballooning Costs

A new report finds that AI matches or outperforms people at tasks such as competitive math and reading comprehension

By Nicola Jones & Nature magazine

xijian/Getty Images

Artificial intelligence (AI) systems, such as the chatbot ChatGPT, have become so advanced that they now very nearly match or exceed human performance in tasks including reading comprehension, image classification and competition-level mathematics, according to a new report. Rapid progress in the development of these systems also means that many common benchmarks and tests for assessing them are quickly becoming obsolete.

These are just a few of the top-line findings from the Artificial Intelligence Index Report 2024, which was published on 15 April by the Institute for Human-Centered Artificial Intelligence at Stanford University in California. The report charts the meteoric progress in machine-learning systems over the past decade.

In particular, the report says, new ways of assessing AI — for example, evaluating their performance on complex tasks, such as abstraction and reasoning — are more and more necessary. “A decade ago, benchmarks would serve the community for 5–10 years” whereas now they often become irrelevant in just a few years, says Nestor Maslej, a social scientist at Stanford and editor-in-chief of the AI Index. “The pace of gain has been startlingly rapid.”

Stanford’s annual AI Index, first published in 2017, is compiled by a group of academic and industry specialists to assess the field’s technical capabilities, costs, ethics and more — with an eye towards informing researchers, policymakers and the public. This year’s report, which is more than 400 pages long and was copy-edited and tightened with the aid of AI tools, notes that AI-related regulation in the United States is sharply rising. But the lack of standardized assessments for responsible use of AI makes it difficult to compare systems in terms of the risks that they pose.

The rising use of AI in science is also highlighted in this year’s edition: for the first time, it dedicates an entire chapter to science applications, highlighting projects including Graph Networks for Materials Exploration (GNoME), a project from Google DeepMind that aims to help chemists discover materials, and GraphCast, another DeepMind tool, which does rapid weather forecasting.

The current AI boom, built on neural networks and machine-learning algorithms, dates back to the early 2010s. The field has since rapidly expanded. For example, the number of AI coding projects on GitHub, a common platform for sharing code, increased from about 800 in 2011 to 1.8 million last year. And journal publications about AI roughly tripled over this period, the report says.

Much of the cutting-edge work on AI is being done in industry: that sector produced 51 notable machine-learning systems last year, whereas academic researchers contributed 15. “Academic work is shifting to analysing the models coming out of companies — doing a deeper dive into their weaknesses,” says Raymond Mooney, director of the AI Lab at the University of Texas at Austin, who wasn’t involved in the report.

That includes developing tougher tests to assess the visual, mathematical and even moral-reasoning capabilities of large language models (LLMs), which power chatbots. One of the latest tests is the Graduate-Level Google-Proof Q&A Benchmark (GPQA), developed last year by a team including machine-learning researcher David Rein at New York University.

The GPQA, consisting of more than 400 multiple-choice questions, is tough: PhD-level scholars could correctly answer questions in their field 65% of the time. The same scholars, when attempting to answer questions outside their field, scored only 34%, despite having access to the Internet during the test (randomly selecting answers would yield a score of 25%). As of last year, AI systems scored about 30–40%. This year, Rein says, Claude 3 — the latest chatbot released by AI company Anthropic, based in San Francisco, California — scored about 60%. “The rate of progress is pretty shocking to a lot of people, me included,” Rein adds. “It’s quite difficult to make a benchmark that survives for more than a few years.”

Cost of business

As performance is skyrocketing, so are costs. GPT-4 — the LLM that powers ChatGPT and that was released in March 2023 by San Francisco-based firm OpenAI — reportedly cost US$78 million to train. Google’s chatbot Gemini Ultra, launched in December, cost $191 million. Many people are concerned about the energy use of these systems, as well as the amount of water needed to cool the data centres that help to run them. “These systems are impressive, but they’re also very inefficient,” Maslej says.

Costs and energy use for AI models are high in large part because one of the main ways to make current systems better is to make them bigger. This means training them on ever-larger stocks of text and images. The AI Index notes that some researchers now worry about running out of training data. Last year, according to the report, the non-profit research institute Epoch projected that we might exhaust supplies of high-quality language data as soon as this year. (However, the institute’s most recent analysis suggests that 2028 is a better estimate.)

Ethical concerns about how AI is built and used are also mounting. “People are way more nervous about AI than ever before, both in the United States and across the globe,” says Maslej, who sees signs of a growing international divide. “There are now some countries very excited about AI, and others that are very pessimistic.”

In the United States, the report notes a steep rise in regulatory interest. In 2016, there was just one US regulation that mentioned AI; last year, there were 25. “After 2022, there’s a massive spike in the number of AI-related bills that have been proposed” by policymakers, Maslej says.

Regulatory action is increasingly focused on promoting responsible AI use. Although benchmarks are emerging that can score metrics such as an AI tool’s truthfulness, bias and even likability, not everyone is using the same models, Maslej says, which makes cross-comparisons hard. “This is a really important topic,” he says. “We need to bring the community together on this.”

This article is reproduced with permission and was first published on April 15, 2024.

Franklin Regional approves summer artificial intelligence program

Franklin Regional school board members ultimately approved a proposed summer program focused on artificial intelligence, after members of its curriculum committee spoke with program organizers about their concerns.

Inspirit AI is an eight-session summer program developed and taught by graduates of Stanford University and the Massachusetts Institute of Technology, or MIT.

“Students will learn the fundamental concepts of AI and gain a deeper understanding of how AI is used to build ChatGPT and generative AI, fight the covid-19 pandemic, power self-driving cars and more,” according to a program description provided by Inspirit AI. “Students will learn to program AI using Python … (and) discuss ethics and bias within AI.”

Some school board members objected to ethics and bias being taught as part of the voluntary course, and a representative from Inspirit gave a presentation at the board’s most recent curriculum committee meeting.

“They offered to remove the ethics and bias section of the program, and that’s not what the board or the committee really wanted,” said school board member Joshua Zebrak, who attended the committee meeting. “That wasn’t what I wanted, either. I just wanted to make sure the ethics and bias portion was being taught in an objective way. … I wanted them to focus on things that are objectively true, as opposed to an opinion.”

School board and curriculum committee member Scott Weinman said the discussion with Inspirit was helpful.

“I asked what they meant by ‘ethics and bias,’ if it was more about looking at the code and what output it provides, and how to minimize ethics and bias within the coding, and it was,” Weinman said. “We also asked if the kids can choose whatever project they want to work on — that there’s no steering them in a particular direction.”

At the school board’s April meeting, Assistant Superintendent Matthew Delp said projects within the Inspirit program are chosen by students, rather than prescribed by instructors.

“I’m not looking to control what a student is personally interested in,” Zebrak said. “I just don’t want it being dictated from a place of power, like someone with a master’s degree from an Ivy League school.”

The board voted unanimously to approve the program. Board members Mark Kozlosky and Herb Yingling were absent.

Patrick Varine is a TribLive reporter covering Delmont, Export and Murrysville. He is a Western Pennsylvania native and joined the Trib in 2010 after working as a reporter and editor with the former Dover Post Co. in Delaware.

Research Roundup: April 2024

Will AI Manage Your Next Research Project?

Much discussion about artificial intelligence (AI) has revolved around concerns that the technology might one day replace human workers. However, two researchers have explored its potential to manage large-scale projects in ways that help human workers be more productive.

Doctoral student Maximilian Koehler and professor of strategy Henry Sauermann, both from the European School of Management and Technology (ESMT) in Berlin, have published a paper in the journal Research Policy that explores the potential for algorithmic management (AM) to facilitate several aspects of projects.

AM, they note, could act as a “project manager” that assigns and coordinates tasks, offers direction and motivation, and provides relevant learning opportunities to human team members. AM support could be especially effective in streamlining and accelerating the progress of large-scale research projects.

Koehler and Sauermann studied several large projects by reviewing online documents, interviewing AI developers and project participants, and even joining some teams as participants themselves. In the process, the pair identified projects that used AM, so that they could take a closer look at how AI performed management functions and where it might be more effective.

They found that endeavors using AM were often larger than those that did not, suggesting that AM might enable projects to scale more than they could otherwise. AM-enabled projects also were able to marshal technical infrastructures—including access to shared AI tools—that smaller, standalone projects might not be able to develop.

“The capabilities of artificial intelligence have reached a point where AI can now significantly enhance the scope and efficiency of scientific research by managing complex, large-scale projects,” says Koehler.

Although AM would require business organizations and higher education institutions to invest in infrastructure, that investment could pay off in the long run, Sauermann adds. “If AI can take over some of the more algorithmic and mundane functions of management, human leaders could shift their attention to more strategic and social tasks such as identifying high-value research targets, raising funding, or building an effective organizational culture.”

The Unsung Benefits of Virtual Meetings

Many business leaders are eager to end the pandemic-era practice of holding virtual meetings, believing that the social distance and technical glitches inherent to video conferencing are detrimental to worker productivity. But companies that switch back fully to face-to-face meetings could do so at the cost of happier and more productive employees, say the co-authors of a new study appearing in the Journal of Vocational Behavior.

Virtual meetings also helped employees reduce their tendency to procrastinate and the need to recover from stress, while improving work-life balance.

“These findings challenge the prevailing narrative surrounding the costs of virtual meetings, offering a fresh lens through which organizations can evaluate and optimize their virtual communication strategies,” says Rivkin. “In the era of hybrid work, recognizing and harnessing the potential of virtual meetings to improve employee functioning and well-being is crucial.”

Understanding the Complexity of Overconfidence

When people are confident in their abilities to handle uncertainty due to their knowledge and preparation, they often achieve positive outcomes. But what causes that confidence to transform into its riskier cousin, overconfidence?

Overconfidence can make decision-making a literal gamble—what a study published in Management Science refers to as “betting on oneself.” Because these bets can have such negative consequences in business, several researchers have sought to better understand what contributes to overconfident behavior. They include Mohammed Abdellaoui, professor at HEC Paris; Han Bleichrodt, professor of economics at the University of Alicante in Spain; and Cédric Gutierrez, assistant professor of management and technology at Bocconi University in Milan, Italy.

The research team conducted several experiments, including one in which they divided participants into two groups: One group completed an “easy” reasoning test, while the other completed a “hard” reasoning test. Before knowing their actual scores, participants were asked to make bets on their performance midway through the test, as well as at the end. In each case, they bet on whether they thought their scores fell above or below a certain percentage of correct answers (absolute performance) and on how they thought they ranked out of 100 randomly selected participants (relative performance).

The researchers analyzed participants’ betting patterns to determine participants’ “attitudinal optimism”—that is, whether they were overconfident (their actual performance was worse than they expected) or underconfident (their actual performance was better than they expected).

The researchers found that those taking the hard test were overconfident about their absolute scores, but underconfident about their relative group ranking. Those taking the easy test, however, exhibited underconfidence about their scores but overconfidence about their rank. Additionally, participants in the “easy task” group demonstrated greater attitudinal optimism than those in the “hard task” group.

This finding shows that people can be underconfident about their own absolute performance while staying irrationally optimistic about their performance compared to others. Overall, says Abdellaoui, the study shows a “complex interplay between uncertainty attitudes and overestimation tendencies” that can influence decision-making.

This interplay has real-world implications for decision-making, he emphasizes. Overconfidence might lead CEOs to make unwise acquisitions, entrepreneurs to take out excessively large loans, or business students to overestimate how well they’ll do on tests for which they haven’t studied. In other words, people might “bet on themselves” even when the probability of success is low.

“These findings underscore the complexity of overconfidence,” Abdellaoui says. “This nuanced understanding suggests that effectively addressing overconfidence in decision-making contexts requires targeting both inaccurate beliefs and the attitudinal biases that contribute to this phenomenon.”

A New Ethical Risk: The ‘NGO Halo Effect’

People can be inspired by a noble cause, but when they are “blinded” by that inspiration, they can be more willing to engage in unethical behavior, says researcher Isabel de Bruin. The “aura of moral goodness” that often surrounds charitable organizations can lead people to glorify a charity’s goals and values, causing what de Bruin calls the “NGO halo effect.”

This phenomenon was the subject of the doctoral thesis that de Bruin successfully defended at the Rotterdam School of Management at Erasmus University in the Netherlands. In 2023, de Bruin surveyed 256 employees of charitable organizations worldwide. Respondents shared that they felt deeply connected to their organizations; they described the missions of their charities as “part of their DNA” and an “embodiment of their moral identity.” That devotion, de Bruin finds, can lead people to prioritize those charitable missions over ethics and integrity.

Survey respondents shared examples of bad acts they had witnessed at their organizations, including discrimination, sexual harassment, and bullying, behaviors that result from what de Bruin refers to as “moral naivety.” In 45 percent of these incidents of moral naivety, respondents noted that their colleagues were not sanctioned; moreover, rules meant to prevent such behaviors were weakly implemented or not implemented at all.

When people identify too strongly with a charitable organization’s aura of goodness, it can lead to negative outcomes in two ways, de Bruin explains. First, when behaviors contradict an organization’s internal perception of morality, “it can lead to cognitive dissonance,” she says. “It can be psychologically easier to downplay or ignore unethical behavior, as it means asking fewer, harder questions about yourself and your own perceived identity as ‘good.’”

Second, people who identify with an organization’s mission can adopt an “end-justifies-the-means mentality,” says de Bruin. The survey showed that some charity staff and volunteers “strongly believe that achieving their charity’s mission by any means necessary is OK. This can extend to organizations manipulating data to exaggerate the impact of their work to raise more funds for their mission.”

According to de Bruin, her investigation indicates the need for greater research into the “NGO halo effect” as a new risk factor driving unethical behavior in charitable organizations. Future studies can help nonprofit leaders adopt effective methods to prevent, detect, and address bad behavior among their employees and volunteers.

Research News

■ Thought leadership for family businesses. The Skoll Centre for Social Entrepreneurship at the University of Oxford’s Saïd Business School in the United Kingdom has launched Ownership Project Insights, a new white paper series to provide guidance, case studies, analyses, and data-driven observations to support the impact and growth of family-led businesses. The series is part of the center’s Ownership Project 2.0: Private Capital Owners and Impact.

The first publication in the series focuses on succession and philanthropy in family-led businesses. It features essays from Oxford Saïd MBAs who will be working with family-led organizations. The Ownership Project 2.0 also invites academics and professionals to contribute to the series on relevant topics.

The series was created to improve the “scattered knowledge marketplace” for family organizations, which have great potential to contribute to societal impact, says Bridget Kustin, senior research fellow and director of the project. Ownership Project 2.0, she adds, is a “critical friend” to family-led organizations, providing them with the “tools and knowledge to scale their impact.”

■ Snapshot of prospective student preferences. The Graduate Management Admission Council (GMAC) has released the results of its 2024 Prospective Students Survey. This year, respondents point to data analysis and problem-solving as the skills they most want to develop in their business programs, and demand for curricula focused on generative artificial intelligence is up 38 percent year-on-year. In a trend reversal, the two-year MBA is once again the most popular degree format among respondents, while the Master of Management degree is growing more popular.

In addition, nearly 75 percent of prospective students have identified equity and inclusion, sustainability, and health and well-being as “important” or “very important” to their choice of business program. More candidates than ever are also eschewing study abroad to instead apply to schools in their countries of citizenship.

Except for those in Central and South Asia, prospective students worldwide express a preference for hybrid learning. This trend mirrors the increasing prevalence of hybrid workplaces, says Andrew Walker, GMAC’s director of research analysis and communications. Prospective students’ “appetite for flexibility is increasing,” he says. “While in-person learning remains the most preferred delivery format among most candidates, its dominance among candidates is diminishing.”

■ Podcast on workplace culture. The Haas School of Business at the University of California, Berkeley, has launched “The Culture Kit with Jenny & Sameer.” The new podcast features Jenny Chatman, Paul J. Cortese Distinguished Professor of Management, and Sameer Srivastava, the Ewald T. Grether Professor of Business Administration and Public Policy. They also co-direct the Berkeley Center for Workplace Culture and Innovation.

In each 15-minute podcast, Chatman and Srivastava address a problem submitted by a business leader, drawing on the latest academic research and their years of experience advising organizations on ways to improve workplace cultures. The first season launched with two episodes: one on a question from WD-40 CEO Steve Brass about maintaining a strong work culture, and one on a question from HubSpot CEO Yamini Rangan about keeping hybrid workers connected.

The co-hosts plan to launch new episodes every two weeks. “We hope to expand the reach of the work we've been doing through a new medium with the goal of reaching more people,” says Chatman. “Business leaders can submit ‘fixit tickets’ laying out the topics on their minds. Our goal is to give them actionable steps they can take to improve their organization’s culture.”

- Today, we’re introducing Meta Llama 3, the next generation of our state-of-the-art open source large language model.

- Llama 3 models will soon be available on AWS, Databricks, Google Cloud, Hugging Face, Kaggle, IBM WatsonX, Microsoft Azure, NVIDIA NIM, and Snowflake, and with support from hardware platforms offered by AMD, AWS, Dell, Intel, NVIDIA, and Qualcomm.

- We’re dedicated to developing Llama 3 in a responsible way, and we’re offering various resources to help others use it responsibly as well. This includes introducing new trust and safety tools with Llama Guard 2, Code Shield, and CyberSecEval 2.

- In the coming months, we expect to introduce new capabilities, longer context windows, additional model sizes, and enhanced performance, and we’ll share the Llama 3 research paper.

- Meta AI, built with Llama 3 technology, is now one of the world’s leading AI assistants that can boost your intelligence and lighten your load, helping you learn, get things done, create content, and connect to make the most out of every moment. You can try Meta AI here.

Today, we’re excited to share the first two models of the next generation of Llama, Meta Llama 3, available for broad use. This release features pretrained and instruction-fine-tuned language models with 8B and 70B parameters that can support a broad range of use cases. This next generation of Llama demonstrates state-of-the-art performance on a wide range of industry benchmarks and offers new capabilities, including improved reasoning. We believe these are the best open source models of their class, period. In support of our longstanding open approach, we’re putting Llama 3 in the hands of the community. We want to kickstart the next wave of innovation in AI across the stack—from applications to developer tools to evals to inference optimizations and more. We can’t wait to see what you build and look forward to your feedback.

Our goals for Llama 3

With Llama 3, we set out to build the best open models that are on par with the best proprietary models available today. We wanted to address developer feedback to increase the overall helpfulness of Llama 3 and are doing so while continuing to play a leading role on responsible use and deployment of LLMs. We are embracing the open source ethos of releasing early and often to enable the community to get access to these models while they are still in development. The text-based models we are releasing today are the first in the Llama 3 collection of models. Our goal in the near future is to make Llama 3 multilingual and multimodal, have longer context, and continue to improve overall performance across core LLM capabilities such as reasoning and coding.

State-of-the-art performance

Our new 8B and 70B parameter Llama 3 models are a major leap over Llama 2 and establish a new state-of-the-art for LLMs at those scales. Thanks to improvements in pretraining and post-training, our pretrained and instruction-fine-tuned models are the best models existing today at the 8B and 70B parameter scale. Improvements in our post-training procedures substantially reduced false refusal rates, improved alignment, and increased diversity in model responses. We also saw greatly improved capabilities like reasoning, code generation, and instruction following, making Llama 3 more steerable.

*Please see evaluation details for the settings and parameters with which these evaluations are calculated.

In the development of Llama 3, we looked at model performance on standard benchmarks and also sought to optimize for performance in real-world scenarios. To this end, we developed a new high-quality human evaluation set. This evaluation set contains 1,800 prompts that cover 12 key use cases: asking for advice, brainstorming, classification, closed question answering, coding, creative writing, extraction, inhabiting a character/persona, open question answering, reasoning, rewriting, and summarization. To prevent accidental overfitting of our models on this evaluation set, even our own modeling teams do not have access to it. The chart below shows aggregated results of our human evaluations across all of these categories and prompts against Claude Sonnet, Mistral Medium, and GPT-3.5.

Preference rankings by human annotators based on this evaluation set highlight the strong performance of our 70B instruction-following model compared to competing models of comparable size in real-world scenarios.
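Pairwise human preference results like these are usually summarized as win, tie, and loss rates per competitor. The sketch below shows that aggregation under stated assumptions: each annotator judgment is recorded as a hypothetical `(competitor, outcome)` pair, and the sample data is made up for illustration, not taken from the evaluation described above.

```python
from collections import Counter, defaultdict


def win_rates(judgments):
    """Aggregate pairwise human judgments into win/tie/loss rates.

    `judgments` is an iterable of (competitor, outcome) pairs, where outcome is
    "win", "tie", or "loss" from the point of view of the model under test.
    This record format is hypothetical, chosen only for illustration.
    """
    counts = defaultdict(Counter)
    for competitor, outcome in judgments:
        if outcome not in ("win", "tie", "loss"):
            raise ValueError(f"unknown outcome: {outcome!r}")
        counts[competitor][outcome] += 1

    rates = {}
    for competitor, c in counts.items():
        total = sum(c.values())
        # A Counter returns 0 for outcomes that never occurred.
        rates[competitor] = {k: c[k] / total for k in ("win", "tie", "loss")}
    return rates


# Made-up judgments; not real evaluation results.
sample = [
    ("model_a", "win"), ("model_a", "win"), ("model_a", "tie"), ("model_a", "loss"),
    ("model_b", "win"), ("model_b", "loss"),
]
rates = win_rates(sample)
print(rates)
```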

Our pretrained model also establishes a new state-of-the-art for LLMs at those scales.

To develop a great language model, we believe it’s important to innovate, scale, and optimize for simplicity. We adopted this design philosophy throughout the Llama 3 project with a focus on four key ingredients: the model architecture, the pretraining data, scaling up pretraining, and instruction fine-tuning.

Model architecture

In line with our design philosophy, we opted for a relatively standard decoder-only transformer architecture in Llama 3. Compared to Llama 2, we made several key improvements. Llama 3 uses a tokenizer with a vocabulary of 128K tokens that encodes language much more efficiently, which leads to substantially improved model performance. To improve the inference efficiency of Llama 3 models, we’ve adopted grouped query attention (GQA) across both the 8B and 70B sizes. We trained the models on sequences of 8,192 tokens, using a mask to ensure self-attention does not cross document boundaries.
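Two of the ideas above can be shown in a few lines. The first function builds a causal attention mask that also blocks attention across document boundaries when several documents are packed into one training sequence. The second shows the core of grouped query attention: several query heads share one key/value head, so the key/value cache shrinks by the ratio of query heads to key/value heads. This is a minimal sketch; the head counts (32 query heads, 8 key/value heads) are assumptions for illustration, not figures stated in this post.

```python
def document_causal_mask(doc_ids):
    """Return mask[i][j] == True where query position i may attend to key j.

    Combines the usual causal constraint (j <= i) with a same-document
    constraint, so tokens packed into one sequence never attend across a
    document boundary.
    """
    n = len(doc_ids)
    return [[j <= i and doc_ids[i] == doc_ids[j] for j in range(n)] for i in range(n)]


def kv_head_for_query_head(q_head, n_q_heads, n_kv_heads):
    """Grouped query attention: map a query head to the key/value head it shares.

    Each consecutive group of n_q_heads // n_kv_heads query heads reuses one
    key/value head.
    """
    if n_q_heads % n_kv_heads != 0:
        raise ValueError("n_q_heads must be a multiple of n_kv_heads")
    group_size = n_q_heads // n_kv_heads
    return q_head // group_size


# A packed sequence holding two documents (ids 0 and 1).
mask = document_causal_mask([0, 0, 1, 1, 1])
for row in mask:
    print("".join("x" if allowed else "." for allowed in row))

# Hypothetical head counts: 32 query heads sharing 8 key/value heads.
mapping = [kv_head_for_query_head(h, 32, 8) for h in range(8)]
print(mapping)
```

With 32 query heads and 8 key/value heads, only a quarter as many keys and values need to be cached per token during inference, which is where the efficiency gain of GQA comes from.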

Training data

To train the best language model, the curation of a large, high-quality training dataset is paramount. In line with our design principles, we invested heavily in pretraining data. Llama 3 is pretrained on over 15T tokens that were all collected from publicly available sources. Our training dataset is seven times larger than that used for Llama 2, and it includes four times more code. To prepare for upcoming multilingual use cases, over 5% of the Llama 3 pretraining dataset consists of high-quality non-English data that covers over 30 languages. However, we do not expect the same level of performance in these languages as in English.
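The figures in the paragraph above imply a couple of derived numbers. Because "over 15T" and "over 5%" are lower bounds, this back-of-the-envelope calculation gives approximate values only.

```python
# Back-of-the-envelope figures implied by the numbers in the text.
# "Over 15T" and "over 5%" are lower bounds, so the results are approximate.
llama3_tokens = 15e12      # Llama 3 pretraining tokens
size_ratio = 7             # "seven times larger than that used for Llama 2"
non_english_share = 0.05   # "over 5%" high-quality non-English data

implied_llama2_tokens = llama3_tokens / size_ratio
non_english_tokens = llama3_tokens * non_english_share

print(f"Implied Llama 2 dataset: ~{implied_llama2_tokens / 1e12:.1f}T tokens")
print(f"Non-English data: at least ~{non_english_tokens / 1e9:.0f}B tokens")
```

The implied Llama 2 figure of roughly 2T tokens is consistent with the dataset size reported for Llama 2.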

To ensure Llama 3 is trained on data of the highest quality, we developed a series of data-filtering pipelines. These pipelines include using heuristic filters, NSFW filters, semantic deduplication approaches, and text classifiers to predict data quality. We found that previous generations of Llama are surprisingly good at identifying high-quality data, hence we used Llama 2 to generate the training data for the text-quality classifiers that are powering Llama 3.
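A filtering pipeline like the one described chains cheap checks before expensive ones. The sketch below is illustrative only and swaps in simple stand-ins: a keyword blocklist in place of an NSFW classifier, word-trigram Jaccard similarity in place of semantic (embedding-based) deduplication, and lexical diversity in place of a learned text-quality classifier. All thresholds, the `blockedterm` placeholder, and the function names are hypothetical.

```python
def heuristic_ok(doc, min_words=5, min_alpha_ratio=0.6):
    """Rule-based filter: drop very short or mostly non-alphabetic documents."""
    if len(doc.split()) < min_words:
        return False
    visible = [ch for ch in doc if not ch.isspace()]
    alpha = sum(ch.isalpha() for ch in visible)
    return alpha / len(visible) >= min_alpha_ratio


def word_shingles(doc, n=3):
    """Set of lowercase word n-grams, used for near-duplicate detection."""
    words = doc.lower().split()
    return {tuple(words[i:i + n]) for i in range(max(len(words) - n + 1, 1))}


def jaccard(a, b):
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def lexical_diversity(doc):
    """Toy stand-in for a learned quality classifier: share of unique words."""
    words = doc.lower().split()
    return len(set(words)) / len(words)


def filter_corpus(docs, blocklist=frozenset({"blockedterm"}), dup_threshold=0.8,
                  quality_fn=lexical_diversity, min_quality=0.5):
    kept, kept_shingles = [], []
    for doc in docs:
        if not heuristic_ok(doc):
            continue  # heuristic filter
        if blocklist & set(doc.lower().split()):
            continue  # stand-in for an NSFW filter
        shingles = word_shingles(doc)
        if any(jaccard(shingles, seen) >= dup_threshold for seen in kept_shingles):
            continue  # near-duplicate of a document already kept
        if quality_fn(doc) < min_quality:
            continue  # stand-in for a text-quality classifier
        kept.append(doc)
        kept_shingles.append(shingles)
    return kept


docs = [
    "The transformer model reads text and predicts the next token.",
    "THE transformer model reads text and predicts the next token.",  # duplicate
    "too short",                                                      # heuristic
    "spam spam spam spam spam spam",                                  # low quality
    "this sentence mentions a blockedterm and should go",             # blocklist
    "### $$$ %%% ^^^ &&& ***",                                        # non-alphabetic
]
print(filter_corpus(docs))
```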

We also performed extensive experiments to evaluate the best ways of mixing data from different sources in our final pretraining dataset. These experiments enabled us to select a data mix that ensures that Llama 3 performs well across use cases including trivia questions, STEM, coding, historical knowledge, etc.
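Once a data mix has been chosen, one common way to apply it is to sample each training example's source in proportion to mixture weights. This is a minimal sketch; the post does not disclose the actual Llama 3 mix, so the source names and weights below are hypothetical.

```python
import random
from collections import Counter


def sample_sources(mix, n, seed=0):
    """Draw n source labels in proportion to the mixture weights in `mix`."""
    rng = random.Random(seed)
    names = list(mix)
    return rng.choices(names, weights=[mix[name] for name in names], k=n)


# Hypothetical mixture weights, for illustration only.
HYPOTHETICAL_MIX = {"web": 0.50, "code": 0.25, "math": 0.15, "multilingual": 0.10}
draws = sample_sources(HYPOTHETICAL_MIX, n=10_000)
counts = Counter(draws)
for source, weight in HYPOTHETICAL_MIX.items():
    print(f"{source:>12}: target {weight:.2f}, sampled {counts[source] / len(draws):.3f}")
```

Evaluating candidate mixes then amounts to training small models on data drawn this way and comparing them on downstream tasks.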

Scaling up pretraining

To effectively leverage our pretraining data in Llama 3 models, we put substantial effort into scaling up pretraining. Specifically, we have developed a series of detailed scaling laws for downstream benchmark evaluations. These scaling laws enable us to select an optimal data mix and to make informed decisions on how to best use our training compute. Importantly, scaling laws allow us to predict the performance of our largest models on key tasks (for example, code generation as evaluated on the HumanEval benchmark—see above) before we actually train the models. This helps us ensure strong performance of our final models across a variety of use cases and capabilities.
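The basic mechanics of a scaling law can be sketched as fitting a power law to results from small training runs and extrapolating it to a larger compute budget. This is a generic illustration, not Meta's methodology: it fits loss = a · C^(-b) by least squares in log-log space, omits the irreducible-loss term that many scaling-law forms include, and does not show the further step of mapping loss to benchmark accuracy. The "measurements" are synthetic, generated from known parameters so the fit can be checked.

```python
import math


def fit_power_law(compute, loss):
    """Least-squares fit of loss = a * compute**(-b) in log-log space.

    Taking logs turns the power law into a straight line:
    log(loss) = log(a) - b * log(compute). Returns (a, b).
    """
    xs = [math.log(c) for c in compute]
    ys = [math.log(y) for y in loss]
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = my - slope * mx
    return math.exp(intercept), -slope


def predict(a, b, compute):
    return a * compute ** (-b)


# Synthetic small-scale runs generated from a known law (a=20, b=0.05),
# standing in for real measurements.
small_compute = [1e18, 1e19, 1e20, 1e21]
small_loss = [predict(20.0, 0.05, c) for c in small_compute]
a, b = fit_power_law(small_compute, small_loss)
print(f"a={a:.3f}, b={b:.4f}, predicted loss at 1e24 FLOPs: {predict(a, b, 1e24):.4f}")
```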

We made several new observations on scaling behavior during the development of Llama 3. For example, while the Chinchilla-optimal amount of training compute for an 8B parameter model corresponds to ~200B tokens, we found that model performance continues to improve even after the model is trained on two orders of magnitude more data. Both our 8B and 70B parameter models continued to improve log-linearly after we trained them on up to 15T tokens. Larger models can match the performance of these smaller models with less training compute, but smaller models are generally preferred because they are much more efficient during inference.
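The numbers in the paragraph above can be checked quickly. The sketch uses the widely cited Chinchilla rule of thumb of roughly 20 training tokens per parameter as an approximation. It lands at the same order of magnitude as the ~200B figure quoted, while the exact optimum depends on the fitted constants.

```python
import math

params = 8e9               # 8B-parameter model
chinchilla_tokens = 200e9  # "~200B tokens" quoted in the text
actual_tokens = 15e12      # trained on up to 15T tokens

# Rule of thumb (an approximation): ~20 training tokens per parameter.
rule_of_thumb_tokens = 20 * params

ratio = actual_tokens / chinchilla_tokens
doublings = math.log2(ratio)

print(f"20 tokens/param rule of thumb: {rule_of_thumb_tokens / 1e9:.0f}B tokens")
print(f"Data beyond ~200B: {ratio:.0f}x, about 10^{math.log10(ratio):.1f}")
# "Log-linear" improvement means roughly a fixed gain per doubling of data.
print(f"Doublings of data beyond ~200B: {doublings:.1f}")
```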

To train our largest Llama 3 models, we combined three types of parallelization: data parallelization, model parallelization, and pipeline parallelization. Our most efficient implementation achieves a compute utilization of over 400 TFLOPS per GPU when trained on 16K GPUs simultaneously. We performed training runs on two custom-built 24K GPU clusters. To maximize GPU uptime, we developed an advanced new training stack that automates error detection, handling, and maintenance. We also greatly improved our hardware reliability and detection mechanisms for silent data corruption, and we developed new scalable storage systems that reduce overheads of checkpointing and rollback. Those improvements resulted in an overall effective training time of more than 95%. Combined, these improvements increased the efficiency of Llama 3 training by ~three times compared to Llama 2.
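Combining three kinds of parallelism means every GPU gets a coordinate in a 3D grid: which data-parallel replica it belongs to, which pipeline stage it runs, and which slice of each layer it holds. The sketch below reads "model parallelization" as tensor parallelism and assumes a layout of 8-way tensor, 16-way pipeline, and 128-way data parallelism. These degrees are hypothetical, as is treating "16K GPUs" as 16,384. It also multiplies out the aggregate throughput implied by 400 TFLOPS per GPU.

```python
def rank_to_coords(rank, dp, pp, tp):
    """Map a global GPU rank to (data, pipeline, tensor) parallel coordinates.

    Tensor-parallel ranks vary fastest, so each tensor-parallel group occupies
    consecutive ranks (typically GPUs in the same node, which share the fastest
    interconnect).
    """
    world = dp * pp * tp
    if not 0 <= rank < world:
        raise ValueError("rank out of range")
    tp_rank = rank % tp
    pp_rank = (rank // tp) % pp
    dp_rank = rank // (tp * pp)
    return dp_rank, pp_rank, tp_rank


# Hypothetical layout for a 16,384-GPU job.
DP, PP, TP = 128, 16, 8
world_size = DP * PP * TP
coords = {rank_to_coords(r, DP, PP, TP) for r in range(world_size)}
print(f"{world_size} ranks, {len(coords)} distinct coordinates")

# Aggregate throughput implied by 400 TFLOPS per GPU on 16,384 GPUs.
sustained_flops = world_size * 400e12
print(f"Aggregate sustained throughput: ~{sustained_flops / 1e18:.1f} EFLOPS")
```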

Instruction fine-tuning

To fully unlock the potential of our pretrained models in chat use cases, we innovated on our approach to instruction-tuning as well. Our approach to post-training is a combination of supervised fine-tuning (SFT), rejection sampling, proximal policy optimization (PPO), and direct preference optimization (DPO). The quality of the prompts that are used in SFT and the preference rankings that are used in PPO and DPO has an outsized influence on the performance of aligned models. Some of our biggest improvements in model quality came from carefully curating this data and performing multiple rounds of quality assurance on annotations provided by human annotators.
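Of the post-training ingredients listed above, rejection sampling is the simplest to sketch. The model generates several candidate responses to a prompt, a reward model scores them, and the best one becomes a new fine-tuning example. In the minimal version below, `toy_generate` and `toy_reward` are hypothetical stand-ins for a real policy and reward model. The generator cycles through canned answers so the example is deterministic.

```python
from itertools import cycle


def rejection_sample(prompt, generate, reward, k=4):
    """Best-of-k rejection sampling: draw k candidates for a prompt, score each
    with a reward model, and keep the highest-scoring one as a training target."""
    candidates = [generate(prompt) for _ in range(k)]
    best = max(candidates, key=lambda response: reward(prompt, response))
    return {"prompt": prompt, "response": best}


# Hypothetical stand-ins for a policy model and a reward model.
_canned = cycle(["2 + 2 = 5", "2 + 2 = 4", "I am not sure."])


def toy_generate(prompt):
    return next(_canned)


def toy_reward(prompt, response):
    return 1.0 if response.endswith("= 4") else 0.0


result = rejection_sample("What is 2 + 2?", toy_generate, toy_reward, k=3)
print(result)
```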

Learning from preference rankings via PPO and DPO also greatly improved the performance of Llama 3 on reasoning and coding tasks. We found that if you ask a model a reasoning question that it struggles to answer, the model will sometimes produce the right reasoning trace: The model knows how to produce the right answer, but it does not know how to select it. Training on preference rankings enables the model to learn how to select it.
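This "learning to select" effect can be seen directly in the DPO objective. For a prompt with a preferred (chosen) and a dispreferred (rejected) response, DPO lowers the loss as the policy raises the chosen response's log-probability relative to a frozen reference model and lowers the rejected one's. A minimal sketch of the per-pair loss follows. The log-probabilities are made up, and `beta=0.1` is an illustrative value, not a setting reported for Llama 3.

```python
import math


def softplus(x):
    """Numerically stable log(1 + exp(x))."""
    if x > 0:
        return x + math.log1p(math.exp(-x))
    return math.log1p(math.exp(x))


def dpo_loss(policy_chosen, policy_rejected, ref_chosen, ref_rejected, beta=0.1):
    """Direct preference optimization loss for one (chosen, rejected) pair.

    Arguments are summed log-probabilities of each full response under the
    policy being trained and under the frozen reference model.
    """
    margin = beta * ((policy_chosen - ref_chosen) - (policy_rejected - ref_rejected))
    return softplus(-margin)  # equals -log(sigmoid(margin))


# Policy identical to the reference: the loss is log(2).
same = dpo_loss(-12.0, -15.0, -12.0, -15.0)
# Policy has shifted probability toward the chosen response: lower loss.
shifted = dpo_loss(-10.0, -17.0, -12.0, -15.0)
print(same, shifted)
```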

Building with Llama 3

Our vision is to enable developers to customize Llama 3 to support relevant use cases and to make it easier to adopt best practices and improve the open ecosystem. With this release, we’re providing new trust and safety tools including updated components with both Llama Guard 2 and CyberSecEval 2, and the introduction of Code Shield, an inference-time guardrail for filtering insecure code produced by LLMs.
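Conceptually, an inference-time code guardrail sits between the model and the user. It scans generated code and blocks or flags anything that matches known insecure patterns. The sketch below is a toy illustration of that idea only. It is not Code Shield's API or rule set, and the three regex rules are hypothetical examples.

```python
import re

# Hypothetical rules for illustration; a real guardrail uses far richer analysis.
INSECURE_PATTERNS = {
    "python-eval": re.compile(r"\beval\s*\("),
    "shell-true": re.compile(r"subprocess\.\w+\(.*shell\s*=\s*True"),
    "weak-hash-md5": re.compile(r"hashlib\.md5\s*\("),
}


def scan_generated_code(code):
    """Return the sorted names of rules matched by a model-generated snippet."""
    return sorted(name for name, pattern in INSECURE_PATTERNS.items() if pattern.search(code))


def guard(code):
    """Pass clean code through unchanged; withhold flagged code and report why."""
    findings = scan_generated_code(code)
    if findings:
        return None, findings
    return code, []


snippet = "import subprocess\nsubprocess.run(user_cmd, shell=True)\n"
print(guard(snippet))
```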

We’ve also co-developed Llama 3 with torchtune, the new PyTorch-native library for easily authoring, fine-tuning, and experimenting with LLMs. torchtune provides memory-efficient and hackable training recipes written entirely in PyTorch. The library is integrated with popular platforms such as Hugging Face, Weights & Biases, and EleutherAI and even supports ExecuTorch for enabling efficient inference to be run on a wide variety of mobile and edge devices. For everything from prompt engineering to using Llama 3 with LangChain, we have a comprehensive getting started guide that takes you from downloading Llama 3 all the way to deployment at scale within your generative AI application.

A system-level approach to responsibility

We have designed Llama 3 models to be maximally helpful while ensuring an industry leading approach to responsibly deploying them. To achieve this, we have adopted a new, system-level approach to the responsible development and deployment of Llama. We envision Llama models as part of a broader system that puts the developer in the driver’s seat. Llama models will serve as a foundational piece of a system that developers design with their unique end goals in mind.

Instruction fine-tuning also plays a major role in ensuring the safety of our models. Our instruction-fine-tuned models have been red-teamed (tested) for safety through internal and external efforts. Our red teaming approach leverages human experts and automation methods to generate adversarial prompts that try to elicit problematic responses. For instance, we apply comprehensive testing to assess risks of misuse related to Chemical, Biological, Cyber Security, and other risk areas. All of these efforts are iterative and used to inform safety fine-tuning of the models being released. You can read more about our efforts in the model card.

Llama Guard models are meant to be a foundation for prompt and response safety and can easily be fine-tuned to create a new taxonomy depending on application needs. As a starting point, the new Llama Guard 2 uses the recently announced MLCommons taxonomy, in an effort to support the emergence of industry standards in this important area. Additionally, CyberSecEval 2 expands on its predecessor by adding measures of an LLM’s propensity to allow for abuse of its code interpreter, offensive cybersecurity capabilities, and susceptibility to prompt injection attacks (learn more in our technical paper). Finally, we’re introducing Code Shield which adds support for inference-time filtering of insecure code produced by LLMs. This offers mitigation of risks around insecure code suggestions, code interpreter abuse prevention, and secure command execution.

With the speed at which the generative AI space is moving, we believe an open approach is an important way to bring the ecosystem together and mitigate these potential harms. As part of that, we’re updating our Responsible Use Guide (RUG) that provides a comprehensive guide to responsible development with LLMs. As we outlined in the RUG, we recommend that all inputs and outputs be checked and filtered in accordance with content guidelines appropriate to the application. Additionally, many cloud service providers offer content moderation APIs and other tools for responsible deployment, and we encourage developers to also consider using these options.
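The recommendation to check both inputs and outputs amounts to a simple wrapper around the model call. The minimal sketch below shows that structure. `model` and `classify` are placeholders for an LLM and a safety classifier such as a Llama Guard-style model, and the keyword-based `toy_classify` and the echo model are hypothetical stand-ins so the example runs on its own.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class SafetyVerdict:
    safe: bool
    categories: tuple = ()


def guarded_chat(prompt: str,
                 model: Callable[[str], str],
                 classify: Callable[[str], SafetyVerdict],
                 refusal: str = "Sorry, I can't help with that.") -> str:
    """Check the input, call the model, then check the output before returning it."""
    if not classify(prompt).safe:
        return refusal
    response = model(prompt)
    if not classify(response).safe:
        return refusal
    return response


# Hypothetical stand-ins so the sketch is self-contained.
BLOCKED_PHRASES = ("make a weapon",)


def toy_classify(text: str) -> SafetyVerdict:
    hits = tuple(p for p in BLOCKED_PHRASES if p in text.lower())
    return SafetyVerdict(safe=not hits, categories=hits)


def echo_model(prompt: str) -> str:
    return f"Echo: {prompt}"


def leaky_model(prompt: str) -> str:
    return "Here is how to make a weapon."


print(guarded_chat("Hello there", echo_model, toy_classify))
print(guarded_chat("How do I make a weapon?", echo_model, toy_classify))
print(guarded_chat("Tell me a story", leaky_model, toy_classify))
```

Filtering the output as well as the input matters because a harmless-looking prompt can still produce content that violates the application's guidelines, as the third call shows.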

Deploying Llama 3 at scale

Llama 3 will soon be available on all major platforms including cloud providers, model API providers, and much more. Llama 3 will be everywhere.