SYSTEMATIC REVIEW article

Differentiated instruction in secondary education: a systematic review of research evidence.

- Department of Teacher Education, University of Groningen, Groningen, Netherlands

Differentiated instruction is a pedagogical-didactical approach that provides teachers with a starting point for meeting students' diverse learning needs. Although differentiated instruction has gained a lot of attention in practice and research, not much is known about the status of the empirical evidence and its benefits for enhancing student achievement in secondary education. The current review sets out to provide an overview of the theoretical conceptualizations of differentiated instruction as well as prior findings on its effectiveness. Then, by means of a systematic review of the literature from 2006 to 2016, empirical evidence on the effects of within-class differentiated instruction for secondary school students' academic achievement is evaluated and summarized. After a rigorous search and selection process, only 14 papers about 12 unique empirical studies on the topic were selected for review. A narrative description of the selected papers shows that differentiated instruction has been operationalized in many different ways. The selection includes studies on generic teacher trainings for differentiated instruction, ability grouping and tiering, individualization, mastery learning, heterogeneous grouping, and remediation in flipped classroom lessons. The majority of the studies show small to moderate positive effects of differentiated instruction on student achievement. Summarized effect sizes across studies range from d = +0.741 to +0.509 (omitting an outlier). These empirical findings give some indication of the possible benefits of differentiated instruction. However, they also point out that there are still severe knowledge gaps. More research is needed before drawing convincing conclusions regarding the effectiveness and value of different approaches to differentiated instruction for secondary school classes.

Introduction

Differentiation is a hot topic in education nowadays. Policy-makers and researchers urge teachers to embrace diversity and to adapt their instruction to the diverse learning needs of students in their classrooms ( Schleicher, 2016 ; Unesco, 2017 ). Differentiation is a philosophy of teaching rooted in deep respect for students, acknowledgment of their differences, and the drive to help all students thrive. Such ideas imply that teachers proactively modify curricula, teaching methods, resources, learning activities, or requirements for student products to better meet students' learning needs ( Tomlinson et al., 2003 ). When teachers deliberately plan such adaptations to facilitate students' learning and execute these adaptations during their lessons, we call it differentiated instruction. A number of developments in education have boosted the need for differentiated instruction. First, contemporary classes are becoming relatively heterogeneous because of policies focused on detracking, the inclusion of students from culturally and linguistically diverse backgrounds, and inclusive education in which students with special educational needs (SEN) attend classes along with non-SEN students ( Rock et al., 2008 ; Tomlinson, 2015 ). Since early stratification of students may have unintended effects on the educational opportunities of students with varying background characteristics, addressing students' learning needs by teaching adaptively within heterogeneous classrooms has been proposed as the best choice for a fair educational system ( Oakes, 2008 ; Schütz et al., 2008 ; Schofield, 2010 ; OECD, 2012 , 2018 ). In addition, even within relatively homogeneous classrooms, there are considerable differences between students that need attention ( Wilkinson and Penney, 2014 ). Second, the idea that learners have different learning needs and that a one-size-fits-all approach does not suffice is gaining momentum ( Subban, 2006 ).
Policy makers stress that all students should be supported to develop their knowledge and skills at their own level ( Rock et al., 2008 ; Schleicher, 2016 ) and there is the wish to improve equity or equality among students ( Unesco, 2017 ; Kyriakides et al., 2018 ). When the aim is to decrease the gap between low and high achieving students, teachers could invest most in supporting low achieving students. This is called convergent differentiation ( Bosker, 2005 ). Alternatively, teachers may apply divergent differentiation in which they strive for equality by dividing their efforts equally across all students, allowing for variation between students in the learning goals they reach, time they use, and outcomes they produce ( Bosker, 2005 ).

Although the concept of differentiated instruction is quite well-known, teachers find it difficult to grasp how differentiated instruction should be implemented in their classrooms ( Van Casteren et al., 2017 ). A recent study found that teachers across different countries infrequently adapt their instruction to student characteristics ( Schleicher, 2016 ). Struggling students may work on too difficult tasks or, conversely, high ability students may practice skills they have already mastered ( Tomlinson et al., 2003 ). Clearly, more information about effective practices is needed. A recent review and meta-analysis of differentiated instruction practices in primary education shows that differentiated instruction has some potential for improving student outcomes, when implemented well ( Deunk et al., 2018 ). However, these results may not generalize directly to secondary education, since the situation in which teachers teach multiple classes in secondary education is rather different in nature compared to primary education ( Van Casteren et al., 2017 ). For secondary education, evidence for the benefits of differentiated instruction is scarce ( Coubergs et al., 2013 ). The bulk of studies in secondary education focus on differentiation of students between classes by means of streaming or tracking ( Slavin, 1990a ; Schofield, 2010 ). Alternatively, the current study seeks to scrutinize which empirical evidence there is on the effectiveness of within-class differentiated instruction in secondary education, how studies operationalize the approach, and in which contexts the studies were performed.

Theory and Operationalizations

Operationalizing differentiated instruction in the classroom.

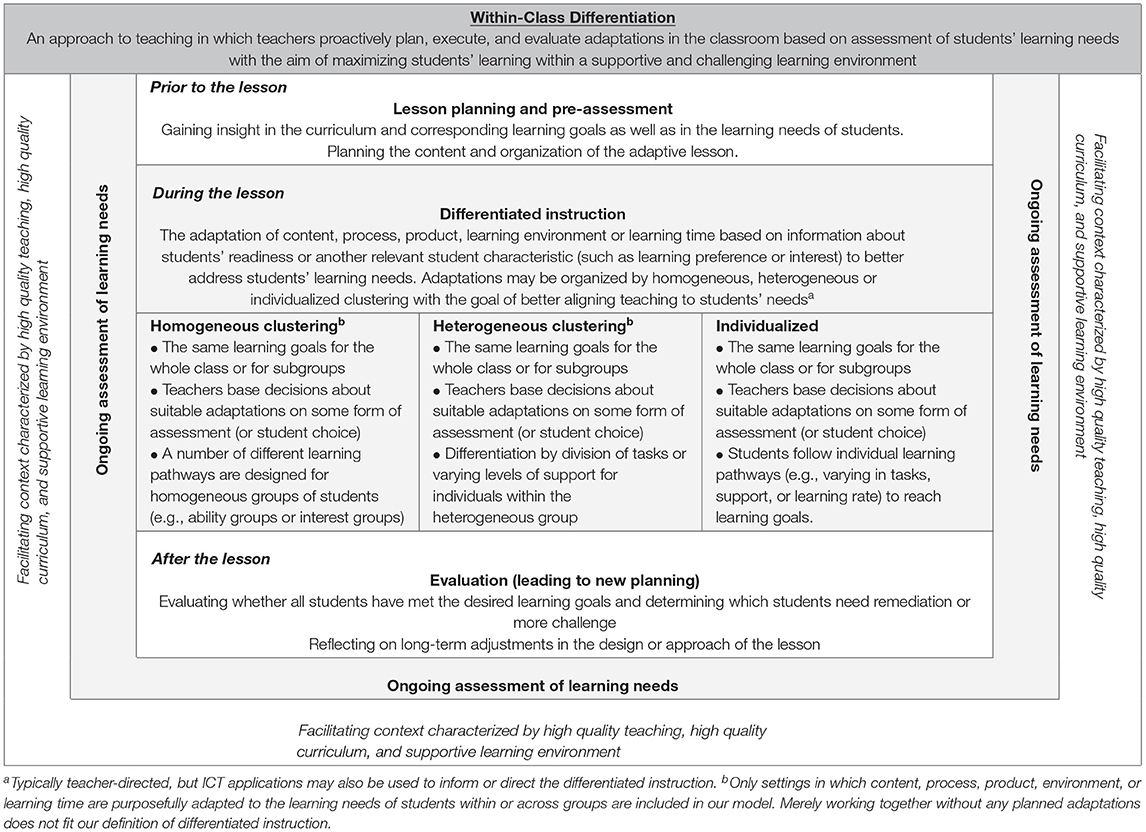

Theories of differentiation are bound by several guiding principles. They include a focus on essential ideas and skills in each content area, responsiveness to individual differences, integration of assessment and instruction, and ongoing adjustment of content, process, and products to meet students' learning needs ( Rock et al., 2008 ). Differentiation typically includes pro-active and deliberate adaptations of the content, process, product, learning environment or learning time, based on the assessment of students' readiness or another relevant student characteristic such as learning preference or interest ( Roy et al., 2013 ; Tomlinson, 2014 ). In Table 1 , we have schematized the theoretical construct of differentiated instruction in the lesson within the broader definition of within-class differentiation.

Table 1 . Theoretical model of within-class differentiation.

Differentiated instruction in the classroom entails two aspects. First is the pedagogy and didactics of differentiated instruction: which teaching practices and techniques do teachers use and what do they differentiate ( McQuarrie et al., 2008 ; Valiande and Koutselini, 2009 )? Teachers may offer students adapted content, offer various options in the learning process, use different assessment products, or adapt the learning environment to students' learning needs ( Tomlinson, 2014 ). Teachers may also offer certain students more learning time or, conversely, encourage high achievers to speed up their learning process ( Coubergs et al., 2013 ). Regarding the process, they may use pre-teaching or extended instruction to cater to the needs of students ( Smets and Struyven, 2018 ), or they could adapt instructions throughout the lesson. Second, the organizational aspect of differentiated instruction entails the structure in which it is embedded. There are different approaches a teacher may choose (see Table 1 ). In macro-adaptive approaches, teachers use some form of homogeneous clustering to organize their differentiated instruction ( Corno, 2008 ), including fixed or flexible grouping of students based on a common characteristic such as readiness or interest. Alternatively, teachers could use heterogeneous grouping to organize their differentiated instruction. Differentiation of the learning process may occur because students divide tasks within the group based on their learning preferences or abilities. Alternatively, a teacher may suggest a division of tasks or support based on assessment of learning needs ( Coubergs et al., 2013 ). When adaptations are taken to the level at which individual students work at their own rate on their level, this is called individualization ( Education Endowment Foundation, n.d. ). The learning goals are the same, but learning trajectories are tailored to individuals' needs.
Some authors include individualized approaches into the theoretical construct of differentiated instruction ( Smit et al., 2011 ; Coubergs et al., 2013 ; Tomlinson, 2014 ), whereas others separate it from differentiated instruction ( Bray and McClaskey, 2013 ; Roy et al., 2013 ).

Lastly, there are teaching models or strategies in which differentiated instruction has a central place. One well-known example is group-based mastery learning . In this approach, subject matter is divided into small blocks or units. For each unit, the teacher gives uniform instructions to the whole group of students. Then, a formative assessment informs the teacher which students reach the desired level of mastery of the unit (usually set at 80–90% correct). Students below this criterion receive corrective instruction in small groups, or alternatively, forms of tutoring, peer tutoring or independent practice are also possible to differentiate the learning process ( Slavin, 1987 ). Differentiated instruction may also be embedded in other instructional approaches like peer tutoring, problem-based learning, flipped classroom models etc. ( Mastropieri et al., 2006 ; Coubergs et al., 2013 ; Altemueller and Lindquist, 2017 ).

Immediate, unplanned adaptations to student needs, so-called “micro-adaptations” ( Corno, 2008 ), are not included in the theoretical model in Table 1 , since differentiated instruction is—by nature—planned and deliberate ( Coubergs et al., 2013 ; Tomlinson, 2014 ; Keuning et al., 2017 ). Furthermore, we did not include the concept of “personalization” in our model since in personalized approaches students follow their own learning trajectories, pursue their own learning goals, and co-construct the learning trajectory, which makes it notably different from typical operationalizations of differentiated instruction ( Bray and McClaskey, 2013 ; Cavanagh, 2014 ).

Differentiation as a Sum of Its Parts

As noted above, differentiated instruction during the lesson is in fact only one piece of the mosaic ( Tomlinson, 1999 ). There are a lot of other steps that are crucial for successful implementation of differentiated instruction ( Keuning et al., 2017 ; Van Geel et al., 2019 ). Table 1 shows other behaviors that are related to what teachers do in the classroom. First, continuous monitoring and (formative) assessment and differentiated instruction are inseparable ( Hall, 1992 ; Valiande and Koutselini, 2009 ; Roy et al., 2013 ; Tomlinson, 2014 ; Denessen and Douglas, 2015 ; Prast et al., 2015 ). Some teachers may be inclined to use rather one-dimensional, fixed categorizations of students based on their learning needs at some point in time ( Smets and Struyven, 2018 ). Nevertheless, high quality differentiated instruction is based on the frequent assessment of learning needs and flexible adaptations to meet those needs. Prior to the lesson including differentiated instruction, teachers should have clear goals for their students, use some form of pre-assessment , and plan their adaptive instruction ( Prast et al., 2015 ; Keuning et al., 2017 ; Van Geel et al., 2019 ). Then, teachers proceed to the actual differentiated instruction during the lesson . After the lesson, teachers should evaluate students' progress toward their goals.

Besides these steps, more general high-quality teaching behaviors are preconditions to create a good context for differentiated instruction ( Wang et al., 1990 ; Tomlinson, 2014 ). For instance, creating a safe and stimulating learning environment in which students feel welcomed and respected is essential ( Tomlinson, 2014 ). In addition, good classroom management may help teachers to implement differentiated instruction in an orderly manner ( Maulana et al., 2015 ; Prast et al., 2015 ). In empirical studies, differentiated instruction has been found to be a separate domain of teaching, while at the same time being strongly interrelated with other high quality teaching behaviors ( Van de Grift et al., 2014 ; Maulana et al., 2015 ; Van der Lans et al., 2017 , 2018 ). In turn, high quality teaching behaviors like questioning, explaining the lesson content, or giving examples can be applied in a differentiated way, which underlines that high quality teaching is both a contextual factor and a direct source of input for teachers' differentiated instruction.

Prior Review Studies on Differentiated Instruction

Although studies on within-class differentiated instruction in secondary education are scarce, a number of reviews and meta-analyses have shed some light on the effects on student achievement. Subban (2006) discusses a number of studies showing that adapting content or processes can make learning more engaging for students than one-size-fits-all teaching, and some studies showed positive effects of differentiated instruction on student achievement. The narrative review by Tomlinson et al. (2003) revealed studies showing that students achieve better results in mixed-ability classrooms in which the teacher differentiates instruction than in homogeneous classes where a more single-size approach is used. In a recent narrative research synthesis on adaptive teaching, one study on differentiated instruction was included. The authors found positive results of different types of adaptive teaching on students' academic and non-academic outcomes in primary education ( Parsons et al., 2018 ). In a large-scale meta-analysis by Scheerens (2016) , adaptive teaching was operationalized with some relevant indicators such as using variable teaching methods, orientation toward individual learning processes, and considering students' prerequisites. In this meta-analysis, a very small effect of adaptive teaching on student achievement was found.

A number of reviews report on specific operationalizations of within-class differentiated instruction. One of the most frequently reviewed forms is ability grouping . In within-class ability grouping, teachers cluster students into different homogeneous groups based on their abilities or readiness. In her narrative review, Tieso (2003) summarizes that ability grouping has a potential influence on student achievement when grouping is flexible, and teachers adapt their instruction to the needs of different groups. Steenbergen-Hu et al. (2016) performed a meta-synthesis including five other meta-analyses of the effects of ability grouping in K-12 education. In their study, within-class grouping was found to have at least a small positive impact on students' academic achievement (Hedges g = + 0.25). In the study of Kulik (1992) , who also combined results from different meta-analyses, a comparable effect size of Glass's Δ = + 0.25 in favor of within-class ability grouping was found. In the meta-analysis of Lou et al. (1996) on grouping in secondary education, within-class grouping was found to have a small positive effect (Cohen's d = + 0.12) on student outcomes. Substantive achievement gains were found in studies in which teachers adapted their teaching to needs of the different ability groups (Cohen's d = + 0.25), but not in studies in which teachers provided the same instruction for the different groups (Cohen's d = + 0.02). In his large meta-analysis of effects of instructional approaches on student outcomes, Hattie (2009) reported a small positive effect of within-class ability grouping on students' academic achievement (Cohen's d = +0.16). Conversely, Slavin (1990a) did not find significant effects of (between and within-class) ability grouping on achievement in secondary education. 
In a meta-synthesis of multiple meta-analyses on ability grouping—including between-class ability grouping—no overall positive effects of the approach were found ( Sipe and Curlette, 1996 ). Some studies have found that ability grouping effects may differ for subgroups of students. For instance, Lou et al. (1996) found that low-ability students learned significantly more in heterogeneous (mixed-ability) groups, average-ability students benefitted most in homogeneous ability groups, and for high-ability students group composition made no significant difference. In primary education, Deunk et al. (2018) found a negative effect of within-class homogeneous grouping for low achieving pupils. Conversely, Steenbergen-Hu et al. (2016) concluded that high-, average-, and low-ability students all benefited equally from ability grouping. Thus, the findings on differential effects of ability grouping remain inconclusive.
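The reviews above report effects in three related metrics: Cohen's d, Hedges' g, and Glass's Δ. As a reading aid, the following minimal sketch shows how these standardized mean differences are conventionally computed from group means, standard deviations, and sample sizes. The function names and the example numbers are hypothetical illustrations and are not taken from any of the cited studies; the individual meta-analyses may have used slightly different estimators.

```python
import math


def pooled_sd(sd_t, n_t, sd_c, n_c):
    """Pooled standard deviation of a treatment and a control group."""
    return math.sqrt(
        ((n_t - 1) * sd_t ** 2 + (n_c - 1) * sd_c ** 2) / (n_t + n_c - 2)
    )


def cohens_d(mean_t, sd_t, n_t, mean_c, sd_c, n_c):
    """Cohen's d: mean difference divided by the pooled standard deviation."""
    return (mean_t - mean_c) / pooled_sd(sd_t, n_t, sd_c, n_c)


def hedges_g(mean_t, sd_t, n_t, mean_c, sd_c, n_c):
    """Hedges' g: Cohen's d with the usual approximate small-sample correction."""
    correction = 1 - 3 / (4 * (n_t + n_c) - 9)
    return cohens_d(mean_t, sd_t, n_t, mean_c, sd_c, n_c) * correction


def glass_delta(mean_t, mean_c, sd_c):
    """Glass's delta: mean difference divided by the control-group SD only."""
    return (mean_t - mean_c) / sd_c


# Hypothetical example: two classes of 30 students, test scores with SD = 10.
d = cohens_d(75.0, 10.0, 30, 72.5, 10.0, 30)
g = hedges_g(75.0, 10.0, 30, 72.5, 10.0, 30)
delta = glass_delta(75.0, 72.5, 10.0)
print(round(d, 3), round(g, 3), round(delta, 3))
```

With equal group standard deviations, d and Δ coincide and g is slightly smaller, which is why values such as g = +0.25 and Δ = +0.25 can be read as roughly comparable in magnitude.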

Another possible approach to differentiated instruction is tiering. Tiering refers to using the same curriculum material for all learners, but adjusting the depth of content, the learning activity process, and/or the type of product developed by the student to students' readiness, interest or learning style ( Pierce and Adams, 2005 ; Richards and Omdal, 2007 ). Teachers design a number of variations or tiers to a learning task, process or product, to which students are assigned based on assessed abilities. To our knowledge, there are no specific reviews of the literature or meta-analyses summarizing the effects of tiering on student achievement, but the approach is often combined with homogeneous (ability) grouping.

Alternatively, turning to heterogeneous grouping as an organizational structure for differentiated instruction, there is evidence that students of varying backgrounds working together may learn from each other's knowledge, from observing each other, and from commenting on each other's errors ( Nokes-Malach et al., 2015 ). However, based on their narrative review about differentiated instruction in secondary schools, Coubergs et al. (2013) concluded that little is known about the effectiveness of differentiated instruction in heterogeneous settings. They found that guiding heterogeneous groups is challenging for teachers, and that it is difficult to address the learning needs of all students in these mixed groups.

Reviews of effectiveness of individualized instruction indicate small effects on student outcomes. Hattie (2009) reports a small effect of individualization on student achievement (Cohen's d = +0.23). In addition, in another review a wide range of effects across meta-analyses was found of individualization on academic achievement of students (from −0.07 to +0.40; Education Endowment Foundation, n.d. ). Currently, mostly ICT-applications are used to individualize instruction. Review studies show that such adaptive ICT applications may considerably improve student achievement ( Ma et al., 2014 ; Van der Kleij et al., 2015 ; Kulik and Fletcher, 2016 ; Shute and Rahimi, 2017 ).

Guskey and Pigott (1988) performed a meta-analysis on the effects of group-based mastery learning on students' academic outcomes from grade one up to college. They reported positive effects on students' academic achievement as a result of the application of group-based mastery learning for, among others, high school students (Hedges g = +0.48). Later on, Kulik et al. (1990) and Hattie (2009) also reported relatively large positive effects of group-based mastery learning on student achievement (ES = +0.59 and Cohen's d = +0.58, respectively). Low ability students were generally found to profit most from the convergent approach ( Guskey and Pigott, 1988 ; Kulik et al., 1990 ). Mastery learning was among the most effective educational approaches in a meta-synthesis of multiple meta-analyses ( Sipe and Curlette, 1996 ). However, mastery learning may be particularly valuable to train specific skills but may yield fewer positive results for more general skills as measured by standardized tests ( Slavin, 1987 , 1990b ). Mastery learning has also been incorporated into broader interventions in secondary education such as the IMPROVE method ( Mevarech and Kramarski, 1997 ).

Overall, from previous review studies we can conclude that there is some evidence that differentiated instruction has the potential to affect students' academic achievement positively, with small to medium effects. However, the evidence is limited and heterogeneous in nature. The effectiveness of some approaches to differentiated instruction, such as ability grouping, has been reviewed extensively, while other approaches have received less attention. Furthermore, most studies were conducted some time ago and in the context of primary education, while only a few studies focus specifically on secondary education.

Contextual and Personal Factors Influencing Differentiated Instruction

When analyzing the effectiveness of differentiated instruction, it is important to acknowledge that classroom processes do not occur in a vacuum. Both internal and external sources determine whether teachers will succeed in developing complex teaching skills ( Clarke and Hollingsworth, 2002 ). In the case of differentiated instruction, teacher-level variables like education, professional development and personal characteristics like knowledge, attitudes, beliefs, values and self-efficacy may influence their behavior ( Tomlinson, 1995 ; Tomlinson et al., 2003 ; Kiley, 2011 ; De Jager, 2013 ; Parsons et al., 2013 ; Dixon et al., 2014 ; De Neve and Devos, 2016 ; Suprayogi et al., 2017 ; Stollman, 2018 ). Teachers need thorough content knowledge and a broad range of pedagogical and didactic skills to plan and execute differentiated instruction ( Van Casteren et al., 2017 ). At the classroom level, diversity of the student population ( De Neve and Devos, 2016 ) and class-size ( Blatchford et al., 2011 ; Suprayogi et al., 2017 ; Stollman, 2018 ) influence interactions between teachers and their students. Moreover, school characteristics matter. For instance, a school principal's support can influence implementation of differentiated instruction ( Hertberg-Davis and Brighton, 2006 ). Additionally, structural organizational conditions, such as time and resources available for professional development, and cultural organizational conditions such as the learning environment, support from the school board, and a professional culture of collaboration may influence teaching ( Imants and Van Veen, 2010 ; Stollman, 2018 ). Teachers have reported that preparation time is a crucial factor determining the implementation of differentiated instruction ( De Jager, 2013 ; Van Casteren et al., 2017 ). 
Moreover, collaboration is key; a high pedagogical team culture influences both the learning climate and the implementation of differentiated instruction ( Smit and Humpert, 2012 ; Stollman, 2018 ). Lastly, country level requirements and (assessment) policies that stress differentiated instruction may influence implementation ( Mills et al., 2014 ).

Research Questions

Researchers and teachers lack a systematic overview of the current empirical evidence for different approaches to within-class differentiated instruction in secondary education. Therefore, we aim to (1) give an overview of the empirical literature on effects of differentiated instruction on student achievement in secondary education, and (2) consider the degree to which contextual and personal factors inhibit or enhance the effects of within-class differentiated instruction.

Our study is guided by the following research questions:

RQ1. What is the research base regarding the effects of within-class differentiated instruction on students' academic achievement in secondary education?

RQ2. How are the selected approaches to differentiated instruction operationalized?

RQ3. What are the overall effects of differentiated instruction on students' academic achievement?

RQ4. Which contextual and personal factors inhibit or enhance the effects of differentiated instruction on student achievement?

Based on previous research, we expect to find literature on multiple possible approaches to differentiated instruction in the classroom. Probably, there will be more evidence for some operationalizations (like ability grouping) than for others. Overall, we hypothesize that differentiated instruction will have a small to medium positive effect on students' academic achievement. Several contextual and personal factors may affect the implementation. In this review, we will include information about relevant contextual and personal variables, when provided, in the interpretation of the literature.

Study Design

In order to provide a systematic overview of the literature on within-class differentiated instruction, a best evidence synthesis ( Slavin, 1986 , 1995 ; Best Evidence Encyclopedia, n.d.) was applied. This was done by a-priori defining consistent, transparent standards to identify relevant studies about within-class differentiated instruction. Each selected study is discussed in some detail and results are evaluated. In case enough papers are found that are comparable, findings can be pooled across studies. The best-evidence strategy is particularly suitable for topics—such as differentiated instruction—for which the body of literature is expected to be rather small and diverse. In such cases, it is important to learn as much as possible from each study, not just to average quantitative outcomes and study characteristics (compare Slavin and Cheung, 2005 ). In a recent review study on differentiated instruction in primary schools, the best evidence synthesis approach was used as well ( Deunk et al., 2018 ). In this study, the authors mentioned the benefits of selecting studies using strict pre-defined criteria (to avoid a garbage in-garbage-out effect). Moreover, combining a meta-analysis with relatively extended descriptions of the included studies in order to make the information more fine-grained was found to improve the interpretability of the results.

Working Definition of Differentiated Instruction

To select relevant studies for our review, we used the following working definition of differentiated instruction: Differentiated teaching in the classroom consisting of planned adaptations in process, learning time, content, product or learning environment for groups of students or individual students. Adaptations can be based on achievement/readiness or another relevant student characteristic (such as prior knowledge, learning preferences, and interest) with the goal of meeting students' learning needs.

Adaptations that are merely organizational, such as placing students in homogeneous groups without adapting the teaching to relevant inter-learner differences, were excluded. Interventions using approaches like peer tutoring, project-based learning and other types of collaborative learning were eligible, but only when planned differentiated instruction was applied based on relevant student characteristics (e.g., by assigning specific roles based on students' abilities). Beyond the scope of this review were studies on differentiated instruction outside the classroom such as between-class differentiation (streaming or tracking), tutoring outside the classroom, or stratification of students between schools.

Search Strategy

The studies for our best evidence synthesis were identified in a number of steps. First, we performed a systematic search in the online databases ERIC, PsycINFO, and Web of Science (SSCI). Following the guidelines of Petticrew and Roberts (2006) , a set of keywords referring to the intervention (differentiation combined with keywords referring to instruction), the population (secondary education) and the outcomes of interest (academic outcomes) were used. We limited the findings to studies published between 2006 and 2016 that were published in academic journals. Although this first search yielded relevant studies, it failed to identify a number of important studies on differentiated instruction practices known from the literature. This was because search terms like “differentiation” and “adaptive” were not used in all relevant studies. Some authors used more specific terms such as ability grouping, tiered lessons, flexible grouping and mastery learning. Therefore, an additional search was performed in ERIC and PsycINFO with more specific keywords associated with differentiated instruction. We added keywords referring to various homogeneous or heterogeneous clustering approaches, to mastery learning approaches, or to convergent or divergent approaches (see Appendix A for the full search string) 1 .

In addition to this protocol-driven approach, we used more informal approaches to trace relevant studies. We cross-referenced the selected papers and recent review studies on related topics, used personal knowledge about relevant papers, and consulted experts in the field. We only used newly identified papers if they were from journals indexed in the online databases EBSCOhost, Web of Science, or Scopus, to avoid selecting predatory journal outputs.

Selection of Papers

The identified papers were screened in pre-designed Excel sheets in two stages. First, two independent coders applied a set of inclusion criteria (criteria 1–8) to all papers based on title, abstract, and keywords. The papers that met the following conditions were reviewed in full text: (1) one or both of the coders judged the paper to be included for full text review based on the inclusion criteria using the title, abstract, and keywords, or (2) the study fulfilled some of the inclusion criteria but not all criteria could be discerned clearly from the title, abstract or keywords. Second, in a full text review, two coders applied the inclusion criteria again after reading the full paper. If a study met the basic criteria 1–8, additional methodological criteria (9–13) were checked in order to make the final selection. To assure the quality of the coding process, full-text coding of both coders was compared. Differences between coders about whether the study met certain inclusion criteria were resolved by discussion and consensus. The dual coding process by two reviewers was used since this substantially increases the chance that eligible studies are rightfully included ( Edwards et al., 2002 ). Only studies that met all 13 inclusion criteria were included in the review.
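The two-stage decision rule described above can be summarized in a short sketch. This is only an illustration of the logic as stated in the text, not the authors' actual Excel procedure; the function names and the `criteria_unclear` flag (standing in for condition 2, where not all criteria could be discerned from title, abstract, or keywords) are assumptions made for the example.

```python
BASIC_CRITERIA = range(1, 9)     # inclusion criteria 1-8
METHOD_CRITERIA = range(9, 14)   # additional methodological criteria 9-13


def advance_to_full_text(coder_a_include, coder_b_include, criteria_unclear):
    """Stage 1: screening on title, abstract, and keywords.

    A paper goes to full-text review if at least one coder judged it
    eligible, or if some criteria could not be judged clearly.
    """
    return coder_a_include or coder_b_include or criteria_unclear


def final_inclusion(criteria_met):
    """Stage 2: full-text review.

    criteria_met maps criterion number (1-13) to the consensus judgment
    of the two coders. Methodological criteria are only checked when
    all basic criteria are met; a paper is included only if all 13 hold.
    """
    if not all(criteria_met.get(c, False) for c in BASIC_CRITERIA):
        return False
    return all(criteria_met.get(c, False) for c in METHOD_CRITERIA)


# Hypothetical example: only one coder flags the paper, so it still advances.
print(advance_to_full_text(True, False, False))

# Hypothetical example: the paper fails methodological criterion 11.
judgments = {c: True for c in range(1, 14)}
judgments[11] = False
print(final_inclusion(judgments))
```

The first stage is deliberately permissive (a single positive judgment is enough), while the second stage is strict, which matches the stated aim of not missing eligible studies while keeping the final selection rigorous.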

Inclusion Criteria

The following inclusion criteria were used to select the relevant papers. These criteria were based on a prior review study on differentiated instruction in primary education ( Deunk et al., 2018 ) and the best evidence studies by Slavin and colleagues ( Slavin and Cheung, 2005 ; Slavin et al., 2008 , 2009 ; Slavin, 2013 ; Cheung et al., 2017 ).

1. Within-class differentiated instruction: The study is about the effect of within-class differentiated instruction, as defined in our study (see section Working Definition of Differentiated Instruction).

2. Practicality : The differentiated instruction approach is practical for teachers ( Janssen et al., 2015 ). Teachers must be able to apply this intervention themselves in a regular classroom. In addition, the intervention is time- and cost-effective, meaning that it should not take excessive training or coaching nor use of external teachers in the classroom to implement the approach. Interventions in which ICT applications are used to support the teachers' instruction and can be controlled by the teacher (e.g., in blended learning environments in which teachers make use of on-line tools or PowerPoint) could be included. However, studies on the effects of fully computerized adaptive programs (e.g., with adaptive feedback or intelligent tutors) or differentiation approaches for which an external teacher (or tutor) is needed (such as pullout interventions) were excluded.

3. Study type: Students in a differentiated instruction intervention condition are compared to those in a control condition in which students are taught using standard practice (“business as usual”), or to an alternative intervention (compare Slavin et al., 2008 , 2009 ; Slavin, 2013 ; Cheung et al., 2017 ; Deunk et al., 2018 ). The design could be truly randomized or quasi-experimental or matched (the control condition could be a group of other students in a between-group design, or students could be their own control group in a within-groups design) 2 . Additionally, large-scale survey designs in which within-class differentiated instruction is retrospectively linked to academic outcomes were eligible for inclusion (compare Deunk et al., 2018 ). Surveys have increasingly been used in reviews of effectiveness, although one must keep in mind that no finding from a survey is definitive ( Petticrew and Roberts, 2006 ).

4. Quantitative empirical study : The study contains quantitative empirical data of at least 15 students per experimental group (compare Slavin et al., 2008 , 2009 ; Slavin, 2013 ; Cheung et al., 2017 ; Deunk et al., 2018 ). Other studies such as qualitative studies, case studies with fewer than 15 students, or theoretical or descriptive studies were excluded.

5. Secondary education: The study was executed in secondary education, for example, in middle schools, high schools, vocational schools, sixth-form schools, or comparable levels of education for students from an age of about 11 or 12 years onwards. In some contexts, secondary schools could include grades as low as five, but they usually start with sixth or seventh grade (compare Slavin, 1990a ).

6. Mainstream education : The study was performed in a mainstream school setting (in a regular school, during school hours). Studies that were performed in non-school settings (e.g., in a laboratory or the workplace) or in an alternate school setting (e.g., an on-line course, a summer school, a special needs school) were excluded.

7. Academic achievement : Academic achievement of students is reported as a quantitative dependent variable, such as mathematics skills, language comprehension, or knowledge of history.

8. Language : The paper is written in English or Dutch (all authors master these languages), but the actual studies could be performed in any country.

Additional inclusion criteria used in the full-text review:

9. Differentiated instruction purpose: The study is about differentiated instruction with the aim of addressing cognitive differences (e.g., readiness, achievement level, intelligence) or differences in motivation / interest or learning profiles ( Tomlinson et al., 2003 ). Studies in which adaptations were made based on other factors such as culture (“culturally responsive teaching”) or physical or mental disabilities are beyond the scope of this review.

10. Implementation : The intervention is (at least partly) implemented. If this was not specifically reported, implementation was assumed.

11. Outcome measurement: The dependent variables/outcome measures include quantitative measures of achievement. Experimenter-made measures were accepted if they were comprehensive and fair to both groups; no treatment-inherent measures were included ( Slavin and Madden, 2011 ).

12. Effect sizes : The paper provides enough information to calculate or extract effect sizes about the effectiveness of the differentiated instruction approach.

13. Comparability : Pretest information is provided (unless random assignment of at least 30 units was used and there were no indications of initial inequality). Studies with pretest differences of more than 50% of a standard deviation were excluded because, even with analyses of covariance, large pretest differences cannot be adequately adjusted for ( Slavin et al., 2009 ; Slavin, 2013 ; Cheung et al., 2017 ; compare Deunk et al., 2018 ).
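Criterion 13 can be read as a simple numerical check. The sketch below assumes that the pretest difference is standardized by the pooled pretest standard deviation; the criterion does not state which standard deviation was used, so that choice, like the function names and the example numbers, is illustrative only.

```python
import math


def pretest_difference_in_sd(mean_exp, sd_exp, n_exp,
                             mean_ctrl, sd_ctrl, n_ctrl):
    """Absolute pretest difference between conditions, in pooled-SD units.

    Assumption: the pooled pretest SD is used as the standardizer.
    """
    pooled_var = ((n_exp - 1) * sd_exp ** 2 + (n_ctrl - 1) * sd_ctrl ** 2) / (
        n_exp + n_ctrl - 2
    )
    return abs(mean_exp - mean_ctrl) / math.sqrt(pooled_var)


def meets_comparability(diff_in_sd, threshold=0.5):
    """True when the pretest difference is at most 50% of a standard deviation."""
    return diff_in_sd <= threshold


# Made-up pretest scores, not taken from any of the reviewed studies.
diff = pretest_difference_in_sd(52.0, 10.0, 40, 48.0, 10.0, 40)
print(round(diff, 2), meets_comparability(diff))
```

A study with these hypothetical pretest scores would pass the check, whereas a difference above half a standard deviation would lead to exclusion.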

Data Extraction

After the final selection of papers based on the criteria above, relevant information was extracted from the papers and coded by two independent reviewers in a pre-designed Excel sheet (see Appendix B ). Discrepancies between the extractions of both reviewers were discussed until consensus was reached. Missing information regarding the methodology or results was requested from the authors by e-mail (although only a few responses were received). The content coding was used (in addition to the full texts) to inform the literature synthesis and to extract data for the calculation of effect sizes.

Data Analysis

We transformed all outcomes on student achievement from the selected papers to Cohen's d , which is the standardized mean difference between groups ( Petticrew and Roberts, 2006 ; Borenstein et al., 2009 ). To do so, the program Comprehensive Meta-Analysis (CMA) version 2 was used ( Borenstein et al., 2009 ). Effect sizes were calculated using a random effects model since we have no reason to assume that the studies are “identical” in the sense that the true effect size is exactly the same in all studies ( Borenstein et al., 2010 ). Methods of calculating effects using different types of data are described in Borenstein et al. (2009) and Lyons (2003) . When outcomes were reported in multiple formats in the paper, we chose the means and standard deviations to arrive at transparent and comparable outcomes. The effects were standardized using post-score standard deviations for measures where this was needed. For some outcome formats, CMA requires the user to insert a pre-post correlation. Because none of the selected papers provided this number, we assumed a correlation of 0.80 in the analyses, which is a reasonable value for pre-post correlations in studies in secondary education ( Swanson and Lussier, 2001 ; Cole et al., 2011 ). This correlation does not affect the Cohen's d statistic but does have an impact on its variance component. For the papers in which multiple outcome measures were reported, we used the means of the different measures. In case only subgroup means (of subgroups within classes of schools) were reported, we combined the outcomes of the subgroups with study as the unit of analysis to calculate a combined effect ( Borenstein et al., 2009 ). For one study in which the intervention was executed in separate schools differing in implementation and findings, we included the schools in the analyses separately (using schools in which the intervention took place as the unit of analysis).
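To make the computations behind these choices concrete, the sketch below shows the textbook formulas for Cohen's d from means and standard deviations and for pooling effects under a random effects model. It is not the CMA implementation: the DerSimonian-Laird estimator of the between-study variance is an assumption (the text does not state which estimator was applied), and the function names are hypothetical.

```python
import math


def cohens_d(mean_exp, sd_exp, n_exp, mean_ctrl, sd_ctrl, n_ctrl):
    """Return (d, variance of d) for two independent groups."""
    pooled_sd = math.sqrt(
        ((n_exp - 1) * sd_exp ** 2 + (n_ctrl - 1) * sd_ctrl ** 2)
        / (n_exp + n_ctrl - 2)
    )
    d = (mean_exp - mean_ctrl) / pooled_sd
    var_d = (n_exp + n_ctrl) / (n_exp * n_ctrl) + d ** 2 / (2 * (n_exp + n_ctrl))
    return d, var_d


def random_effects_pool(effects, variances):
    """Pool effects with DerSimonian-Laird weights (assumed estimator).

    Returns (pooled effect, standard error, tau squared).
    """
    k = len(effects)
    w = [1.0 / v for v in variances]  # fixed-effect (inverse-variance) weights
    fixed = sum(wi * di for wi, di in zip(w, effects)) / sum(w)
    q = sum(wi * (di - fixed) ** 2 for wi, di in zip(w, effects))
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    # Between-study variance, truncated at zero.
    tau2 = max(0.0, (q - (k - 1)) / c) if c > 0 else 0.0
    w_star = [1.0 / (v + tau2) for v in variances]
    pooled = sum(wi * di for wi, di in zip(w_star, effects)) / sum(w_star)
    se = math.sqrt(1.0 / sum(w_star))
    return pooled, se, tau2


# Made-up numbers for illustration only.
d, var_d = cohens_d(10.0, 4.0, 50, 8.0, 4.0, 50)
pooled, se, tau2 = random_effects_pool([0.2, 0.4], [0.01, 0.01])
```

Under the random effects model, the between-study variance is added to each study's own variance before weighting, so small and large studies are weighted more equally than under a fixed effect model.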

Search Results

Our search led to 1,365 hits from the online databases ERIC, PsycINFO and Web of Science and 34 cross-referenced papers. Excluding duplicates, 1,029 papers were reviewed. See Appendix C for a flow-chart of the selection process. In total, 14 papers met the eligibility criteria for inclusion. Papers reporting on the same project and outcomes were taken together as one study. The papers by Altintas and Özdemir (2015a , b) report on the same project. The same applies to two other papers as well ( Vogt and Rogalla, 2009 ; Bruhwiler and Blatchford, 2011 ). Thus, in the end, 12 unique studies were included in our review and meta-analysis leading to 15 effects in total (since for one study the four different schools in which the intervention was executed were taken as the unit of analysis).
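The counts in this paragraph can be retraced with a few lines of arithmetic. The variable names are illustrative; the numbers are those reported above.

```python
# Records identified: database hits plus cross-referenced papers.
records_identified = 1365 + 34

# 14 eligible papers, of which two pairs report on the same project.
papers_included = 14
paired_projects = 2
unique_studies = papers_included - paired_projects

# One study contributes four school-level effects instead of a single effect.
schools_in_split_study = 4
effects = (unique_studies - 1) + schools_in_split_study

print(records_identified, unique_studies, effects)
```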

Study Characteristics

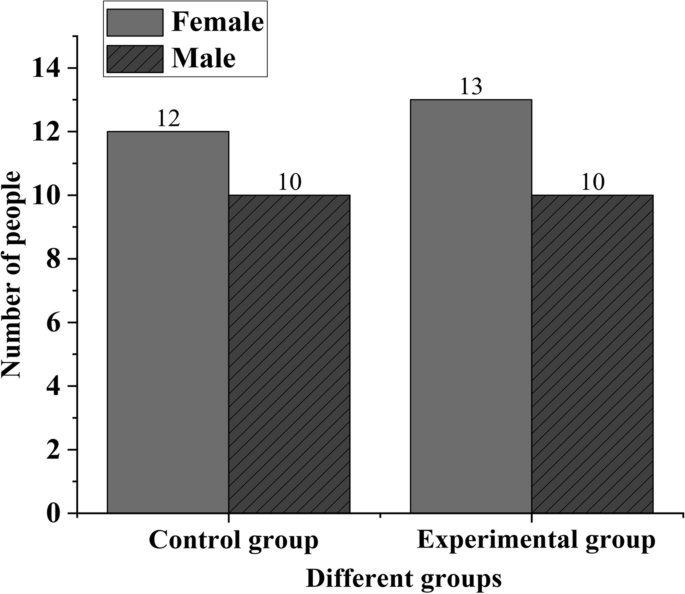

In Table 2 , the characteristics and individual effects of the studies included in our review are summarized. The selection of studies includes eight quasi-experimental studies in which classes were randomly allocated to a control or experimental condition ( Mastropieri et al., 2006 ; Richards and Omdal, 2007 ; Huber et al., 2009 ; Vogt and Rogalla, 2009 ; Little et al., 2014 ; Altintas and Özdemir, 2015a , b ; Bal, 2016 ; Bhagat et al., 2016 ), three studies in which schools were randomly allocated to conditions ( Wambugu and Changeiywo, 2008 ; Mitee and Obaitan, 2015 ; Bikić et al., 2016 ), and one survey study ( Smit and Humpert, 2012 ). These studies covered a wide range of academic subjects, including science, mathematics and reading. In terms of the number of participating students, six studies were small-scale studies ( N < 250) and six were large-scale studies ( N > 250). However, note that all experiments had nested designs. Only the studies of Little et al. (2014) and Vogt and Rogalla (2009) have at least 15 cases in each experimental condition at the level of randomization. Four studies were performed in the United States of America, five in Europe, one in Taiwan, and two in Africa. All studies were performed in secondary education, but the Vogt and Rogalla study represents a combined sample of primary and secondary education students.

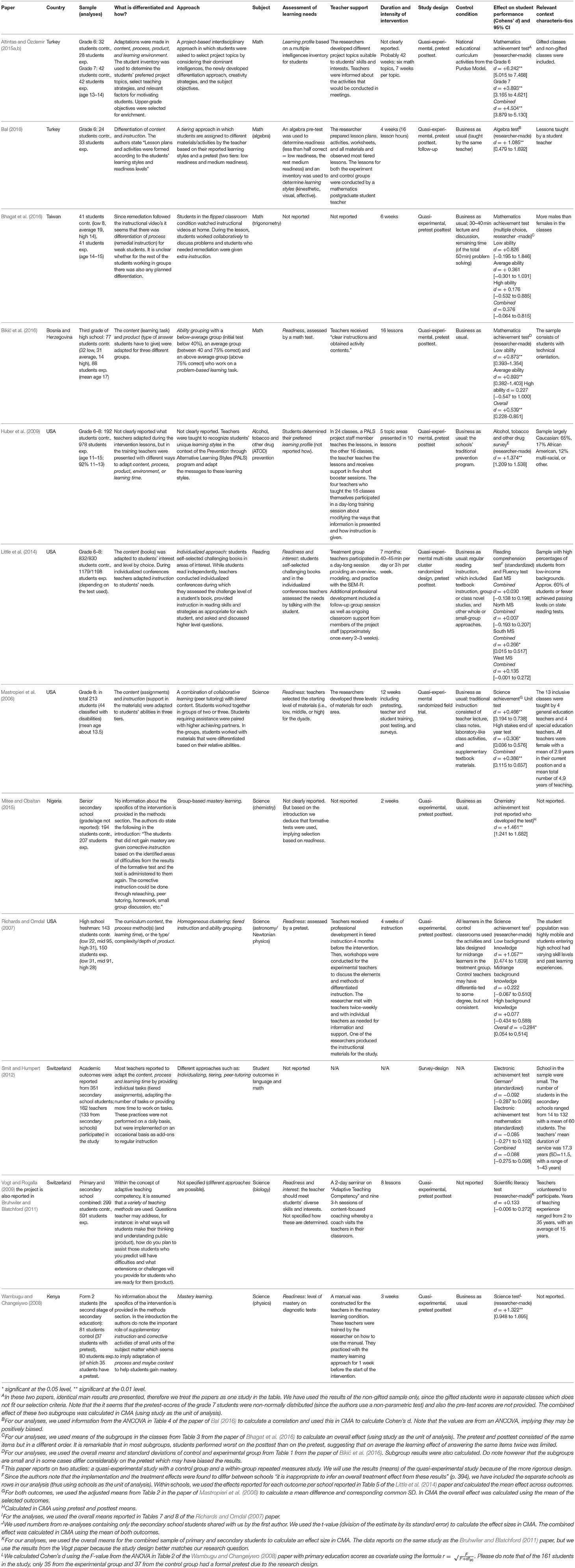

Table 2 . Summary of contents of the selected papers and the effects of the individual studies on student achievement.

Literature Synthesis

To further reflect on the findings from the selected studies in respect to our research questions, we will give a more detailed description of the study designs, implementations and findings here.

Studies on Generic Approaches to Differentiated Instruction

Although adaptive teaching does not necessarily include differentiated instruction, we found two quasi-experimental studies on adaptive teaching that (to some extent) matched our definition of differentiated instruction. In the large-scale study by Vogt and Rogalla (2009) , teachers were trained in adaptive teaching competency to improve their teaching and, in turn, to maximize students' learning. In the project “Adaptive Teaching Competency,” which was also covered in the paper of Bruhwiler and Blatchford (2011) , adaptive teaching was characterized as including: sufficient subject knowledge, taking the diverse pre-conditions and learning processes of students into account, using various effective teaching methods for the whole group, differentiating for students' varying learning needs, supporting students in the regulation of learning processes, and using effective classroom management. In the project, teachers learned to focus on both adaptive planning prior to the lesson and making adaptations during the lesson. Teachers of 27 primary school classes and 23 secondary school classes with 623 students were recruited to learn more about adaptive teaching. They participated in a 2-day workshop, received several coaching sessions in the classroom and used the adaptive teaching framework in their classes for eight science lessons. After the intervention, the researchers measured, among other things, whether teachers differentiated to meet students' diverse skills and interests. Teachers' competency in planning adaptive lessons had increased significantly, but their “Adaptive Implementation” did not change much. Unfortunately, in the coaching sessions, teachers often did not discuss issues of adapting to the diversity of students' skills and their pre-existing knowledge. The results of students in the experimental classes were compared to those of 299 control students.
The authors reported that the secondary students in the experimental group outperformed their counterparts in control classrooms on a science achievement test after the intervention. However, since we only had access to the means of the combined sample in primary and secondary education we used the combined sample results. Our calculation based on these means shows a small non-significant intervention effect of d = +0.133 (see Table 2 ). The authors argue that more coaching may be needed to foster the implementation of adaptive teaching in the classroom, although it would decrease the cost-effectiveness of the approach.

In the study by Huber et al. (2009) , teachers learned about adaptive teaching in a workshop, and were asked to incorporate it into their lessons. The intervention was the Prevention through Alternative Learning Styles (PALS) program aimed at prevention of alcohol, tobacco, and other drug (ATOD) abuse. Prevention of alcohol, tobacco, and other drug abuse is rather commonplace in secondary schools. For instance, in the US, students typically take part in prevention programs more than once in their school career ( Kumar et al., 2013 ) and European schools are also encouraged to take action in promoting students' health ( World Health Organization, 2011 ). Teachers attended a 1-day workshop about adaptive teaching by means of: modifying time, increasing or decreasing the number of items to be learned or completed, increasing the level of support, changing the input or the way the material is presented, changing the output, adapting the amount of active participation, changing to alternate goals and expectations, adapting the level of difficulty for each individual, and providing different instruction and materials. In addition, teachers learned about alternative learning styles and disabilities. PALS materials were developed by the research team to match students' specific needs and related abilities. In a quasi-experimental study, four grade 6–8 teachers taught the 10 PALS intervention lessons to their classes and PALS team members taught another 24 classes. School officials suggested a convenient comparison group receiving the traditional prevention program. Compared with the control group, the PALS program had a large significant effect of d = +1.374 on students' knowledge of the effects of ATOD (see Table 2 ). These results were replicated in a second, within-group repeated measures design.
Although the findings seem promising, more information is needed about how the approach was implemented; in the paper, it is unclear how teachers applied the information from the training in their instruction. Moreover, replication of the findings in a study in which teachers teach all project lessons may also help clarify whether the effects of the intervention were affected by the fact that project staff taught most lessons in the experimental condition.

We only selected two studies using a generic approach to differentiated instruction and the effects of the studies described above differ considerably regarding their intervention, school subject, and findings. This makes it hard to estimate the overall effectiveness of generic approaches. The study of Huber et al. (2009) seems promising, but unfortunately, the study of Vogt and Rogalla (2009) did not lead to positive achievement effects for students across the primary and secondary school group. More studies are needed to gain insight into how teachers could effectively and efficiently be supported or coached to master the multifaceted approach of differentiated instruction.

Studies on Differentiated Instruction Using Homogeneous Clustering

A number of selected studies use a macro-adaptive approach to differentiated instruction ( Richards and Omdal, 2007 ; Altintas and Özdemir, 2015a , b ; Bal, 2016 ; Bikić et al., 2016 ). Of these studies, the study of Richards and Omdal (2007) has the most robust design. In this study, first year students were randomized over 14 classes and then classes were randomly assigned to conditions. Within the experimental condition, the science content for ability groups was adapted to students' learning needs by means of tiering. To study the effectiveness of the approach, 194 students were randomly assigned to classes in which the teachers used tiered content, while 194 other students were in the control group that worked with the midrange curriculum for 4 weeks. Each teacher was assigned at least one treatment and one control class. After a pretest, students in the experimental condition were assigned to three ability groups: a low background knowledge group (around the lowest scoring 10 percent of all students), a midrange group (about 80 percent), and a high background group (the highest scoring 10 percent). One of the researchers produced the instructional materials for the study. To develop the differentiated materials, first core instructional materials were developed that were aimed at the midrange group. Next, the content was differentiated for the low and high background students. Adaptations were made to the depth of content, the degree of teacher dependence and structuring, the number of steps, the skills, time on task, the product, and the available resources. Students were asked to work together within their tiers. There was an overall small significant effect of the intervention of d = +0.284 in favor of the tiering condition (see Table 2 ). Closer analyses of subgroup results (see Table 2 ) show that this is particularly due to a large effect for the low background learners of d = +1.057. 
For high-range learners, differences between the control condition and the experimental condition are near to zero ( d = +0.077), although this may be partly due to a ceiling effect on the test. The authors conclude that curriculum differentiation through tiered assignments can be an effective way to address the needs of low achieving students. They recommend, however, that it should be accompanied by professional support and that teachers who design the tiers should have substantial subject matter knowledge and experience with learners with different needs.

In the study by Bikić et al. (2016) , the effectiveness of differentiated instruction of geometry content within a problem-based learning approach is studied. In the quasi-experiment, the authors compare an approach in which students solved mathematics problems on three levels differing in complexity using problem-based learning to a control condition. The study design is not described in detail, but since the authors state “students of the experimental group and control group were not the students from the same school” it seems that schools were allocated to an experimental or control condition to study the effectiveness of the approach. Within the experimental condition, 88 secondary school students were assigned to three groups (low-, average-, or high-achievers) based on an initial test, and then worked on adapted levels of geometry problems for 16 lessons before completing a final test. An example of the differentiated materials in the paper shows that the three ability groups all received a different task (which was a variation of the same task differing in complexity). Unfortunately, it is not described exactly how the students processed the content. In the control condition, 77 other students were taught in the usual, traditional manner. Students in the ability grouping condition outperformed the control students with a moderate positive effect of d = +0.539 (see Table 2 ). Subgroup analyses indicate that the approach was most effective for average ability students; students in the high achieving group did not outperform high achieving students in the control group. Note, however, that the high achieving groups were small (12 experimental vs. 14 control students); hence, these results should be interpreted with caution. More research would be needed to clarify to what extent the differentiated content improved the effectiveness of the problem-based learning approach.

A different grouping approach is one based on preferred learning styles. In the study of Bal (2016) , grade 6 students completed an algebra pre-test and filled out a learning style inventory (kinesthetic, visual, affective learning styles). Algebra learning materials and activities were adapted for two tiers (low-performing and high-performing students) and for the different learning styles of students in the experimental group. Despite the fact that there are reasons not to use learning styles as a distinction between students (see e.g., Kirschner et al., 2018 ), the authors did find large positive effects of the tiering approach after 4 weeks of teaching ( d = +1.085, see Table 2 ). Note, however, that ANCOVA results were used to calculate the effects, which may lead to some positive bias in this estimate. Based on information from student interviews presented in the paper, it seems that students experienced success in learning and enjoyed the materials and activities developed for the experimental condition. It is unclear, however, how the materials and activities were made more appropriate for students' readiness (and learning style) and how they differed from the approach in the control condition that used traditional teaching. In that sense, it is difficult to judge what caused these positive findings. In another study on mathematics by Altintas and Özdemir (2015a , b) , teachers assessed students' preferred learning modalities by means of a multiple intelligences inventory. The data obtained from the inventory were used to determine the students' project topics, to select the teachers' teaching strategies, and to determine the relevant factors for motivating students. The effectiveness of the approach, which was originally designed for gifted students, was evaluated in a sample of 5th to 7th grade students in Turkey.
After pretesting, one class of students was allocated to the experimental condition and one class of the same grade formed the control group. The authors report a very large effect of the intervention after six practices lasting 7 weeks each when compared to classes working with the Purdue model for both grade 6 and grade 7 students ( d = +4.504 across subgroups, see Table 2 ). However, it is difficult to discern what exactly caused this finding. Little information was provided about how exactly the teachers planned and executed the lessons and how students' activities and objectives were matched to their dominant intelligences, nor was there much information about possible confounding factors. In addition, since the researcher who developed the multiple intelligences theory admits that the theory is no longer up to date ( Gardner, 2016 ), one could question whether learning preferences could be better determined based on another distinction.

In summary, from the studies we found on the effectiveness of approaches to differentiated instruction using homogeneous clustering, we could infer that overall small to medium sized effects (and in some cases also large effects) of the approach on student achievement can be achieved in science and mathematics subjects. The study of Altintas and Özdemir shows a very large effect of this approach and the study of Bal also shows large effects. However, before we can corroborate these findings, more information would be needed. When we look at the operationalizations of differentiated instruction in the two larger studies, we see that teachers used variations of learning tasks that were designed to better match the learning needs of different ability groups. Differential effects for student outcomes are somewhat variable; the results are most pronounced for the low achieving group in the study by Richards and Omdal (2007) , and for the low and average achieving group in the study of Bikić et al. (2016) . In both studies, effectiveness for the high achieving group seemed negligible.

Studies on Mastery Learning

In two included studies, mastery learning was used to boost student achievement in physics and mathematics. The quasi-experimental studies reporting on mastery learning approaches in secondary education used randomization of schools to conditions and were both performed in African schools ( Wambugu and Changeiywo, 2008 ; Mitee and Obaitan, 2015 ). In the papers, the authors describe similar characteristics of mastery learning in their theoretical framework, such as specifying learning goals, breaking down the curriculum into small units, formative assessment, using corrective instruction for students who did not reach mastery, and retesting. This process continues until virtually all the students master the taught material ( Mitee and Obaitan, 2015 ), which emphasizes its aim of convergent differentiation. Mitee and Obaitan report a large effect of the mastery learning approach of d = +1.461 based on an experiment in which about 400 students from four schools were allocated to a mastery learning or a control condition (see Table 2 ). Wambugu and Changeiywo randomly divided four classes from four schools over the mastery learning (experimental) and the control condition. Comparing the results on the physics achievement test of the two experimental classes and two control classes, they found a large effect of mastery learning ( d = +1.322 based on the findings of an ANOVA, see Table 2 ). Note, however, that pretests were only available for two out of four classes (one control and one experimental).

Unfortunately, the information on the mastery learning approach in the lessons is rather limited in both papers. Therefore, it is difficult to judge how such large achievement gains can be reached by implementing mastery learning in secondary education. Nevertheless, we can extract a number of recommendations. First, both studies use corrective instruction for helping students gain mastery. Second, in both studies the authors refer to some type of collaborative learning in the corrective instruction phase. Lastly, Wambugu and Changeiywo note that the time needed to develop the learning objectives, formative tests, and corrective activities is considerable, so teachers may want to work together in teacher teams to achieve these goals. More high-quality research is needed to replicate these findings and to gain insight into how teachers can apply this approach in practice.

Studies on Individualized Differentiated Instruction

The large-scale quasi-experimental study on differentiated reading instruction in middle schools by Little et al. (2014) used individualized adaptations to address students' learning needs. They used a program called the Schoolwide Enrichment Model-Reading Framework (SEM-R) to support students' reading adaptively. The SEM-R approach consists of three phases: (1) short read-alouds by the teacher (“Book Hooks”) and brief discussions about books, (2) students read independently in self-selected, challenging books while the teacher organizes individualized 5- to 7-min conferences with each student once every 1 to 2 weeks, (3) interest-based and more project-oriented activities. Professional development of teachers included workshops as well as classroom support from project staff. The focus of the intervention was on phases 1 and 2. Teachers were expected to implement SEM-R on a daily basis for about 40 to 45 min per day or 3 h per week. In a cluster-randomized design executed in four middle schools with 2,150 students, the effectiveness of the approach was compared to that of traditional teaching. The effects of the approach varied considerably across the different schools. The authors reported that, for the reading fluency outcome, SEM-R students significantly outperformed their control counterparts in two out of four schools. The standardized mean differences ranged from about −0.1 to +0.3 between the schools (see Table 2 ). The authors conclude that the intervention was at least as effective as traditional instruction. However, the wide range of implementations and effects on student outcomes between classes and schools illustrates the difficulty of implementing intensive forms of individualization in practice.

In the survey study of Smit and Humpert (2012) , the authors assessed which teaching practices teachers used to differentiate their teaching. In this sub-study of the project “Schools in Alpine Regions,” teachers from 8 primary schools and 14 secondary schools in the rural Alpine region of Switzerland participated. Teachers responded to a teacher questionnaire about differentiated instruction. They mainly reported making adaptations at the individual level by, for instance, providing students with individual tasks (tiered assignments), adapting the number of tasks, or providing more time to work on tasks. Teachers often used “learning plans” as well as tasks in which students could take individual learning trajectories varying the content or learning rate. Flexible grouping was less common and alternative assessments were very rare. Peer tutoring occurred frequently, and tiered assignments were very common. On average, 38% of teachers' weekly lessons were differentiated. The authors conclude that teachers in their sample, on average, did not execute very elaborate differentiated instruction. Moreover, no significant relation between differentiated instruction and student achievement was found for either a standardized language test ( d = −0.092) or a standardized mathematics test ( d = −0.085, see Table 2 ). Following the survey study, an intervention study was executed with 10 of the schools that were included in the survey study. In this study (which was not included in our selection since it was not published in an academic journal), teachers participated in workshops and team meetings and logged their learning experiences in portfolios. Teachers barely progressed in their differentiated instruction during the 2.5-year project ( Smit et al., 2011 ).
Nevertheless, a strong pedagogical team culture in schools was found to have a positive influence on teachers' differentiated instruction ( Smit et al., 2011 ; Smit and Humpert, 2012 ), and as such may be one of the keys to achieving improvement.

Overall, it seems that it is rather difficult to boost the achievement of the whole class by means of individualized approaches. However, as Little et al. (2014) suggest, individualization may be used as an approach to increase students' engagement with the learning content. A drawback of the approach may be that the requirements for organizing and monitoring learning activities by the teacher in individualized approaches could leave less time for high quality pedagogical interaction. Possibly, future research on individualization supported by digital technology may open up more possibilities for this approach to have high impact on student achievement ( Education Endowment Foundation, n.d. ).

Studies on Differentiated Instruction Using Heterogeneous Clustering

One of the included studies used differentiated instruction within mixed-ability learning settings. In the study by Mastropieri et al. (2006) , grade eight students worked on science assignments in groups of two or three. Peer-mediated differentiated instruction and tiering were used to adapt the content to students' learning needs within the groups. The authors developed three tiers of each assignment varying in complexity. Within the peer groups, students could work on activities at their own appropriate level and continue to the next level once proficiency was obtained. All lower ability level students, including students with learning disabilities, were required to begin with the lowest tier. In the experiment, 13 classes with a total of 216 students were assigned to the peer-mediated differentiated content condition or a teacher-led control condition. The researchers divided the classes in such a way that each teacher taught at least one experimental and one control classroom. After about 12 weeks, a small positive effect was found in favor of the peer-mediated condition with tiered content on both the unit test and the high stakes end of year test ( d = +0.466 and d = +0.306, respectively, see Table 2 ). The overall effect of d = +0.386 is comparable to that of the tiering intervention of Richards and Omdal (2007) discussed earlier. The effect is slightly higher, but this may also partly be affected by the use of adjusted means. In any case, more research is needed to disentangle the effects of the peer-learning and the differentiated content.
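The overall effect reported for this study is consistent with a simple unweighted mean of the two outcome measures, in line with the rule stated in the Data Analysis section for papers with multiple outcomes. The short check below illustrates this; the variable names are hypothetical, and the calculation is a sketch rather than the CMA procedure itself.

```python
from statistics import mean

# Effect sizes reported above for the two achievement measures.
unit_test_d = 0.466
end_of_year_test_d = 0.306

overall_d = mean([unit_test_d, end_of_year_test_d])
print(round(overall_d, 3))
```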

Studies on Differentiated Instruction in Flipped Classrooms

In flipped classroom instruction, content dissemination (lecture) is moved outside of the classroom, typically by letting students watch instructional videos before the lesson. This opens up more time for active learning inside the classroom (Leo and Puzio, 2016). This format implies differentiation of learning time and pace before the lesson, since students may rewind, pause, or watch the videos multiple times according to their learning needs. However, whether the activities during the lesson fit our operationalization of differentiated instruction (see Table 1) varies. From a recent meta-analysis on flipping the classroom (Akçayir and Akçayir, 2018), we identified one study in secondary education in which remediation in the classroom was mentioned as being part of the intervention. Bhagat et al. (2016) report on a quasi-experiment in which 41 high school students were assigned to a flipped classroom and 41 students were in the control condition. The experimental group received “flipped” lessons on trigonometry for 6 weeks, while the control group followed similar lessons using the conventional learning method. Students in the flipped condition watched videos of 15–20 min before the lesson. During the lesson, students discussed problems collaboratively and, in the meantime, students who needed remediation were provided with extra instruction. After the intervention, students from the flipped classrooms outperformed their counterparts on a mathematics test and were more motivated. The authors report a large effect of the intervention on students' mathematics achievement based on analysis of covariance. However, the combined effect across the subgroup mean differences is modest (d = +0.376, see Table 2). On average, experimental students of all abilities performed better, except for high achievers, who did not significantly outperform the control group.
These differential effects should be interpreted with caution because of the limited number of students in the subgroups. A strength of this study is that it gives some insight into the benefits of differentiated instruction embedded in an innovative approach to teaching. Yet, the authors did not specify clearly what the remediation and collaborative learning in the classroom consisted of, and the effects of the different elements of the intervention cannot be disentangled. More research is needed to clarify the role and effectiveness of differentiated instruction in flipped settings.

Contextual and Personal Variables

As we discussed in the theoretical framework, many variables may influence teachers' implementation of differentiated instruction. We hoped to find evidence for this assumption in our selection of papers. However, in general, little information was provided about contextual and personal factors such as school, class, or teacher characteristics.

In our sample of studies, differentiated instruction was mostly applied to teaching mathematics and science. There were also papers on literacy and social sciences. No clear differences in effectiveness could be observed between the subjects. Students varied in background characteristics across the studies. In the study by Little et al. (2014), for instance, about 48 to 77 percent of students were from low-SES backgrounds. In the study by Mastropieri et al. (2006), many ethnicities were represented. In the studies by Huber et al. (2009), students were mostly European-American. Student ages varied from about 11 to 17 years old (see Table 2). Teacher characteristics were rarely reported. In the study by Mastropieri et al. (2006), relatively inexperienced teachers participated, with a mean of about 3 years in their current position, and in the studies by Vogt and Rogalla (2009) and Smit and Humpert (2012), years of teaching experience varied considerably, with an average of about 15 to 17 years.

The only variable that is rather consistent across the studies is that teachers in the included studies relied considerably on external sources of information or support to help them implement differentiated instruction within their classrooms. In most of the selected studies, the research team developed materials for students, and teachers were instructed or coached in implementing the interventions (see Table 2 ). Although we aimed to select practical interventions, little information is provided about whether teachers were able to successfully execute the differentiated instruction practices independently in the long run.

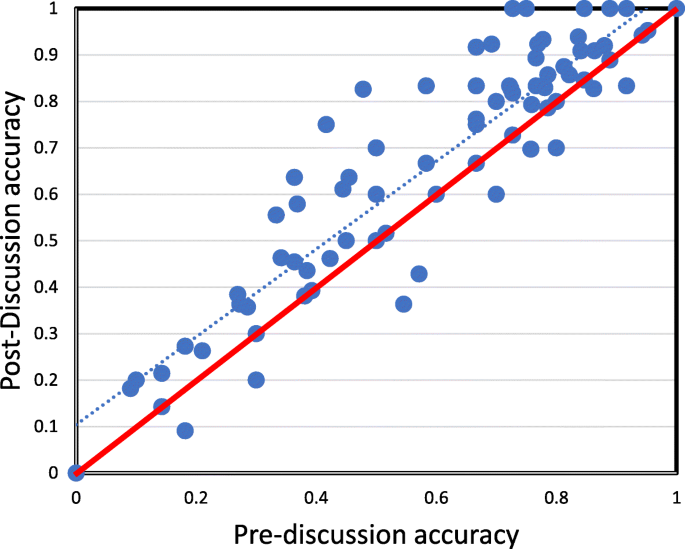

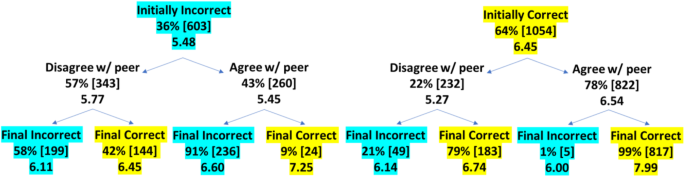

Overall Effects of Differentiated Instruction

Ideally, combining our narrative reflection on the included papers with a meta-analysis of the findings would give us an answer as to how effective within-class differentiated instruction in secondary education may be. Unfortunately, the number of papers that remained after applying our selection criteria is limited and the studies are heterogeneous in nature, so meta-analyses of the results should be interpreted with caution. To inform readers, however, we did add a forest plot with an overview of the average effect size of each individual study to the appendix (see Appendix D). In Table 2, the effects and intermediate calculations for individual studies are described. A summary effect across all studies is also reported (d = +0.741; 95% CI = 0.397–1.085; Q = 507.701; df = 14; p < 0.01). The p-value of the Q statistic was significant, which may indicate heterogeneity among the papers, meaning that the true effects of the interventions may vary. Notably, the largest studies in our sample show small positive effects of differentiated instruction. In contrast, the relatively small studies reported large effects, and the other studies mostly show moderate effects of the approach. A cumulative analysis (see Appendix D) illustrates that the small study by Altintas and Özdemir (2015a, b) considerably shifts the point estimate of the effect size in the positive direction. Excluding this outlier, the summary effect of differentiated instruction is d = +0.509 (95% CI = 0.215–0.803; see Appendix D). A funnel plot was made to check for publication bias (see Appendix E). Using Duval and Tweedie's Trim and Fill method (Duval and Tweedie, 2000), no adjusted values were estimated. This indicates that there is no evidence of publication bias. These analyses give some information about the range of effects that can be achieved with differentiated instruction interventions.
However, more information is clearly needed before drawing a more definitive conclusion about the overall and relative effects of different approaches to differentiated instruction in secondary schools.
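To give a sense of what the reported statistics imply, the sketch below derives two quantities that the review does not report itself: the I² heterogeneity index implied by the Q statistic and its degrees of freedom, and the standard error implied by the confidence interval of the outlier-excluded summary effect. Both rest on assumptions: the conventional formula I² = (Q − df)/Q and a symmetric 95% interval under a normal approximation. The function names are illustrative, not taken from the authors' analysis.

```python
def i_squared(q: float, df: int) -> float:
    """Return I^2 in percent: the share of variation across studies beyond chance."""
    if q <= 0:
        return 0.0
    return max(0.0, (q - df) / q) * 100.0


def ci_midpoint(lower: float, upper: float) -> float:
    """Midpoint of an interval; for a symmetric CI this equals the point estimate."""
    return (lower + upper) / 2


def se_from_ci(lower: float, upper: float, z: float = 1.96) -> float:
    """Standard error implied by a symmetric 95% CI (normal approximation assumed)."""
    return (upper - lower) / (2 * z)


# Values reported in the text above.
q_stat, q_df = 507.701, 14
ci_low, ci_high = 0.215, 0.803  # summary effect without the outlier

i2 = i_squared(q_stat, q_df)
midpoint = ci_midpoint(ci_low, ci_high)
se = se_from_ci(ci_low, ci_high)

print(f"I^2 = {i2:.1f}%")
print(f"CI midpoint = {midpoint:+.3f}, implied SE = {se:.3f}")
```

Under these assumptions, I² comes out at roughly 97%, which is in line with the caution expressed above about pooling such heterogeneous studies, and the interval midpoint reproduces the reported d = +0.509.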

Suggestions for Reporting on Differentiated Instruction Interventions

One of the issues we encountered when performing this review was that interventions and research methodologies were often described rather briefly. In addition, relevant context information was frequently missing. This is problematic, not only from a scientific point of view, but also for judging the transferability of the findings to practice. Therefore, we encourage researchers to diligently report on the methods and analytical techniques they used and to be specific about the outcomes that led to their conclusions (see e.g., Hancock and Mueller, 2010). Beyond this general suggestion, we would like to provide a number of specific recommendations for reporting on differentiated instruction interventions (see Appendix F).

Conclusion and discussion

The most important conclusion from our systematic review of the literature is that there are too few high-quality studies on the effectiveness of differentiated instruction in secondary education. Only 12 studies from 14 papers were selected after applying strict selection criteria to a large amount of literature on the topic. As expected, we found papers on various operationalizations of differentiated instruction like homogeneous grouping, differentiated instruction in peer-learning, and individualization. However, even within the most well-known approaches like ability grouping, the empirical evidence was limited. High-quality teacher-led differentiated instruction studies in secondary education are scarce, although the literature on ICT-applications for differentiated instruction seems to be on the rise. This paucity has not changed much since our search, although there are some interesting recent endeavors for teacher professionalization in differentiated instruction (Brink and Bartz, 2017; Schipper et al., 2017, 2018; Valiandes and Neophytou, 2018) and there have been some recent small-scale studies including aspects of differentiated instruction (Sezer, 2017; Adeniji et al., 2018). This paucity is remarkable given the large interest in the topic of differentiated instruction both in the literature and in policy and practice. Apparently, the premises of differentiated instruction seem substantial enough for schools and policy makers to move towards implementation before a solid research base has been established. On the one hand, this seems defensible; differentiated instruction matches the ambitions of educationists to be more student-oriented and to improve equity among students.
In addition, there is prior research showing benefits of approaches like ability grouping and mastery learning for K-12 students' achievement (Guskey and Pigott, 1988; Kulik et al., 1990; Kulik, 1992; Lou et al., 1996; Hattie, 2009; Steenbergen-Hu et al., 2016). Furthermore, the ideas behind differentiated instruction are in line with approaches which have repeatedly been linked to better learning, such as having students work on an appropriate level of moderate challenge according to their “zone of proximal development” and matching learning tasks to students' abilities and interests to create “flow” (Tomlinson et al., 2003). On the other hand, more research on different operationalizations of differentiated instruction is needed to help teachers and policy makers determine which approaches are helpful for students with different characteristics and to gain insight into how these could be implemented successfully. From prior research in primary education, we know that it is likely that not all approaches have comparable effects, and that effects for low-, average-, and high-ability students may vary (Deunk et al., 2018). Our current review shows that there is much work to be done in order to further clarify which approaches work and why within the context of secondary education.

Having said that, the studies that we did find give some indication of what we may expect regarding the effectiveness of differentiated instruction in secondary education. Most well-designed studies in our sample reported small to medium-sized positive effects of differentiated instruction on student achievement. This finding is comparable to the moderate effects found in most differentiated instruction reviews (e.g., Kulik, 1992; Lou et al., 1996; Steenbergen-Hu et al., 2016) and other studies on educational interventions (Sipe and Curlette, 1996). The overall effect in our study is a bit higher than in prior reviews, possibly due to the inclusion of various approaches to differentiated instruction, including mastery learning and more holistic approaches. Although we cannot give a conclusive answer about the effectiveness of differentiated instruction in secondary education, most of the included studies do illustrate the possibility of improving student achievement by means of differentiated instruction.

Moreover, the selected papers give insight into the many different ways that differentiated instruction can be operationalized and studied in secondary education. For instance, a number of studies used generic training of teachers in principles of differentiated instruction. Based on the findings, we would suggest that more research is needed to study how teachers can adequately be guided to implement such holistic approaches into their daily teaching (compare practicality theory by Janssen et al., 2015). Alternatively, in four of the selected studies homogeneous clustering by means of tiering and ability grouping was used as a structure for differentiated instruction. For the subgroups, learning content was adapted to better fit the needs of the students (Richards and Omdal, 2007; Altintas and Özdemir, 2015a, b; Bal, 2016; Bikić et al., 2016). Medium to large positive effects were reported for such an approach, indicating that this may be one of the ways in which teachers can implement differentiated instruction. This finding is comparable to findings on ability grouping in the meta-analyses by Steenbergen-Hu et al. (2016) and Lou et al. (1996). The effects were somewhat larger than those in the studies in primary education discussed by Deunk et al. (2018) and Slavin (1990a). One possible explanation might be that some of the studies mentioned in those previous reviews may have included grouping without any instructional adaptations, which was excluded from the current review. Also, in our selected papers on homogeneous clustering, researcher-developed outcome measures were used. Researcher-developed measures have previously been associated with larger effects than standardized measures (Slavin, 1987; Lou et al., 1996). Turning to another approach, two studies were reviewed on the effectiveness of mastery learning. The authors reported large effects of mastery learning on student achievement.
However, since the research methods were not thoroughly described in the papers, we cannot say much about the quality of the intervention or its implementation. Two other studies focused on individualization. Overall, small and non-significant effects of this approach were found. It could be that teachers grapple with the organizational requirements of individualized instruction (Education Endowment Foundation, n.d.). Additionally, a study was found that successfully embedded differentiated instruction in a peer-learning setting by means of tiered content matching students' learning needs (Mastropieri et al., 2006). Lastly, one of the studies embedded remediation and collaboration in a flipped-classroom format, illustrating how differentiated instruction can be applied within different approaches to teaching (Bhagat et al., 2016).