

- Published: 29 August 2022

Principal Component Analyses (PCA)-based findings in population genetic studies are highly biased and must be reevaluated

- Eran Elhaik 1

Scientific Reports volume 12 , Article number: 14683 ( 2022 ) Cite this article

110k Accesses

40 Citations

579 Altmetric


- Computational models

- Population genetics

Principal Component Analysis (PCA) is a multivariate analysis that reduces the complexity of datasets while preserving data covariance. The outcome can be visualized on colorful scatterplots, ideally with only a minimal loss of information. PCA applications, implemented in well-cited packages like EIGENSOFT and PLINK, are extensively used as the foremost analyses in population genetics and related fields (e.g., animal and plant or medical genetics). PCA outcomes are used to shape study design, identify, and characterize individuals and populations, and draw historical and ethnobiological conclusions on origins, evolution, dispersion, and relatedness. The replicability crisis in science has prompted us to evaluate whether PCA results are reliable, robust, and replicable. We analyzed twelve common test cases using an intuitive color-based model alongside human population data. We demonstrate that PCA results can be artifacts of the data and can be easily manipulated to generate desired outcomes. PCA adjustment also yielded unfavorable outcomes in association studies. PCA results may not be reliable, robust, or replicable as the field assumes. Our findings raise concerns about the validity of results reported in the population genetics literature and related fields that place a disproportionate reliance upon PCA outcomes and the insights derived from them. We conclude that PCA may have a biasing role in genetic investigations and that 32,000-216,000 genetic studies should be reevaluated. An alternative mixed-admixture population genetic model is discussed.


Introduction

The ongoing reproducibility crisis, undermining the foundation of science 1 , raises various concerns ranging from study design to statistical rigor 2 , 3 . Population genetics is confounded by its utilization of small sample sizes, ignorance of effect sizes, and adoption of questionable study designs. The field is relatively small and may involve financial interests 4 , 5 , 6 and ethical dilemmas 7 , 8 . Since biases in the field rapidly propagate to related disciplines like medical genetics, biogeography, association studies, forensics, and paleogenomics in humans and non-humans alike, it is imperative to ask whether and to what extent our most elementary tools satisfy risk criteria.

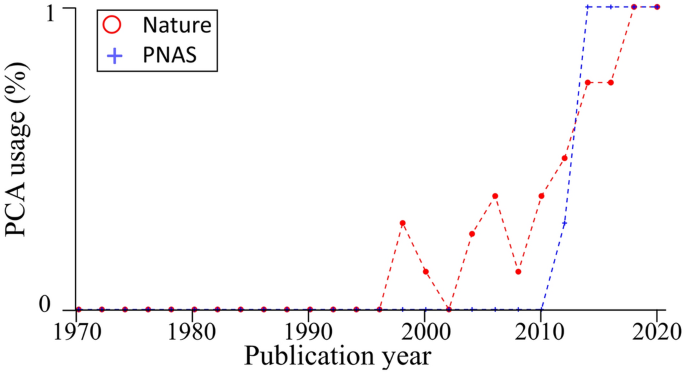

Principal Component Analysis (PCA) is a multivariate analysis that reduces the data’s dimensionality while preserving their covariance. When applied to bi-allelic genotype data, typically encoded as AA, AB, and BB, PCA finds the eigenvalues and eigenvectors of the covariance matrix of allele frequencies. The data are reduced to a small number of dimensions termed principal components (PCs), each describing a decreasing proportion of the genomic variation. Genotypes are then projected onto the space spanned by the PC axes, which allows visualizing the samples and their distances from one another in a colorful scatter plot. In this visualization, sample overlap is considered evidence of identity, due to common origin or ancestry 9 , 10 . PCA’s most attractive property for population geneticists is that the distances between clusters allegedly reflect the genetic and geographic distances between them. PCA also supports the projection of points onto the components calculated from a different dataset, presumably accounting for insufficient data in the projected dataset. Initially adapted for human genomic data in 1963 11 , PCA slowly gained popularity over time. It was not until the release of the SmartPCA tool (EIGENSOFT package) 10 that PCA was propelled to the front stage of population genetics.
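The procedure just described can be sketched in a few lines of Python with NumPy. This is a minimal illustration under stated assumptions, not SmartPCA: it encodes genotypes as counts of the B allele (AA = 0, AB = 1, BB = 2), centres each SNP, and takes the PCs from a singular value decomposition (SVD) of the centred matrix. SmartPCA, by default, also rescales each SNP by a function of its allele frequency (Patterson et al. 9 ); that step is omitted here. The function names and the toy genotypes are hypothetical. The `project` helper mirrors the projection of samples onto PCs computed from a different dataset.

```python
import numpy as np

def encode_genotypes(calls):
    """Map bi-allelic genotype calls to counts of allele B: AA -> 0, AB -> 1, BB -> 2."""
    code = {"AA": 0, "AB": 1, "BA": 1, "BB": 2}
    return np.array([[code[g] for g in row] for row in calls], dtype=float)

def fit_pca(G, n_components=2):
    """PCA of an individuals x SNPs allele-count matrix via SVD of the column-centred data.

    Returns the per-SNP means, the loadings (SNPs x PCs), and the sample scores (individuals x PCs).
    """
    mean = G.mean(axis=0)
    X = G - mean
    # The rows of Vt are the eigenvectors of the SNP covariance matrix X.T @ X / (n - 1),
    # ordered by decreasing singular value, i.e., by decreasing explained variance.
    _, _, Vt = np.linalg.svd(X, full_matrices=False)
    loadings = Vt[:n_components].T
    scores = X @ loadings
    return mean, loadings, scores

def project(G_new, mean, loadings):
    """Place new samples on PCs that were computed from a different (reference) dataset."""
    return (G_new - mean) @ loadings

# Hypothetical toy data: five individuals genotyped at four SNPs.
calls = [["AA", "AB", "BB", "AA"],
         ["AB", "AB", "AA", "AB"],
         ["BB", "AA", "AB", "BB"],
         ["AA", "BB", "BB", "AB"],
         ["AB", "AA", "AB", "AA"]]
G = encode_genotypes(calls)
mean, loadings, scores = fit_pca(G, n_components=2)
print(scores.round(3))  # one (PC1, PC2) point per individual, ready for a scatterplot
```

Projecting the reference samples themselves with `project` returns exactly their fitted scores; projecting a second cohort places it on axes that were defined without it.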

PCA is used as the first analysis of data investigation and data description in most population genetic analyses, e.g., Refs. 12 , 13 , 14 , 15 . It has a wide range of applications. It is used to examine the population structure of a cohort or individuals to determine ancestry, analyze demographic history and admixture, decide on the genetic similarity of samples and exclude outliers, decide how to model the populations in downstream analyses, describe the ancient and modern genetic relationships between the samples, infer kinship, identify ancestral clines in the data, e.g., Refs. 16 , 17 , 18 , 19 , detect genomic signatures of natural selection, e.g., Ref. 20 , and identify convergent evolution 21 . PCA or PCA-like tools are considered the ‘gold standard’ in genome-wide association studies (GWAS) and GWAS meta-analyses. They are routinely used to cluster individuals with shared genetic ancestry and to detect, quantify, and adjust for population structure 22 . PCA is also used to identify cases, controls 23 , 24 , 25 , and outliers (samples or data) 17 , and to calculate population structure covariates 26 . The demand for large sample sizes has prompted researchers to “outsource” analyses to direct-to-consumer companies, which employ discretion in their choice of tools, methods, and data (none of which are shared) and return the PCA loadings and other “summary statistics” 27 , 28 . Loadings are also offered by databases like gnomAD 29 and the UK Biobank 30 . PCA serves as the primary tool to identify the origins of ancient samples in paleogenomics 14 , to identify biomarkers for forensic reconstruction in evolutionary biology 31 , and to geolocalize samples 32 . As of April 2022, 32,000-216,000 genetic papers employed PC scatterplots to interpret genetic data, draw historical and ethnobiological conclusions, and describe the evolution of various taxa from prehistorical times to the present: no doubt Herculean tasks for any scatterplot.

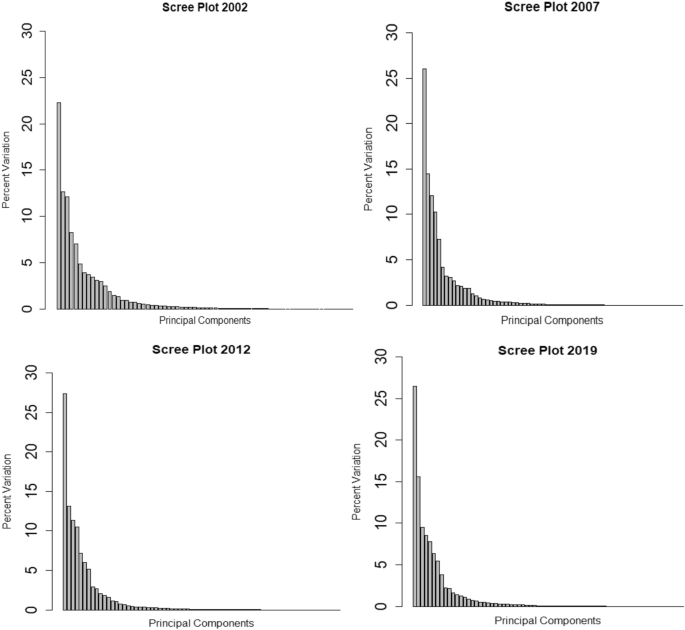

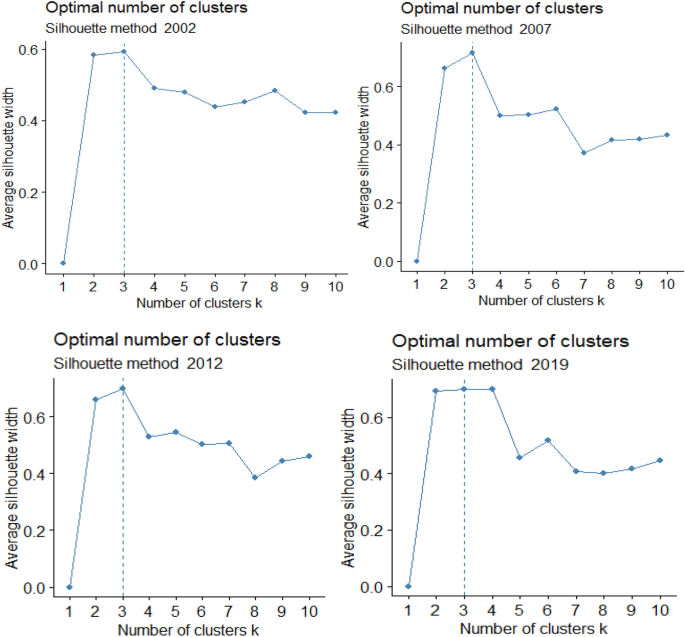

PCA’s widespread use could not have been achieved without several key traits that distinguish it from other tools, all tied to the replicability crisis. PCA can be applied to any numerical dataset, small or large, and it always yields results. It is parameter-free and nearly assumption-free 9 . It does not involve measures of significance, effect size evaluations, or error estimates. It is, by and large, a “black box” harboring complex calculations that cannot be traced. Except for the squared cosines, which are not commonly used, the proportion of explained variance of the data is the only quantity used to evaluate the quality of PCA. There is no consensus on the number of PCs to analyze. Price et al. 10 recommended using 10 PCs, and Patterson et al. 9 proposed the Tracy–Widom statistic to determine the number of components. However, this statistic is highly sensitive and inflates the number of PCs. In practice, most authors use the first two PCs, which are expected to reflect genetic similarities that are difficult to observe in higher PCs. The remaining authors use an arbitrary number of PCs or adopt ad hoc strategies to aid their decision, e.g., Ref. 33 . Pardiñas et al. 34 , for example, selected the first five PCs “as recommended for most GWAS approaches” and principal components 6, 9, 11, 12, 13, and 19, whereas Wainschtein et al. 35 preferred the top 280 PCs. There are no proper usage guidelines for PCA, and “innovations” toward less restrictive usage are adopted quickly. Recently, even the practice of displaying the proportion of variation explained by each PC has faded as those proportions dwindled 14 . Since PCA is affected by the choice of markers, samples, populations, the precise implementation, and various flags implemented in the PCA packages, each of which has an unpredictable effect on the results, replication cannot be expected.
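To make the two quality measures mentioned above concrete, here is a sketch (our own illustration, not taken from any PCA package; the function name is hypothetical) of how the proportion of explained variance per PC and the squared cosines of each sample can be computed. A sample's squared cosine on a PC is the fraction of its squared distance from the centroid that lies along that PC. Summed over all PCs it equals 1, so a low sum over PC1 and PC2 flags a sample whose position on the usual 2D plot says little about where it really lies. The genotypes below are random placeholders.

```python
import numpy as np

def pca_quality(G):
    """Proportion of explained variance per PC and squared cosines per sample.

    G is an individuals x markers matrix (e.g., allele counts 0/1/2).
    Returns (explained, cos2): explained[k] is the share of total variance on PC k+1;
    cos2[i, k] is the share of sample i's squared distance to the centroid lying on PC k+1.
    """
    X = G - G.mean(axis=0)
    U, S, _ = np.linalg.svd(X, full_matrices=False)
    eig = S**2                      # proportional to the covariance-matrix eigenvalues
    explained = eig / eig.sum()
    scores = U * S                  # coordinates of every sample on every PC
    sq_dist = (X**2).sum(axis=1, keepdims=True)
    with np.errstate(divide="ignore", invalid="ignore"):
        cos2 = np.where(sq_dist > 0, scores**2 / sq_dist, 0.0)
    return explained, cos2

rng = np.random.default_rng(42)
G = rng.integers(0, 3, size=(30, 500)).astype(float)  # placeholder genotypes
explained, cos2 = pca_quality(G)
print("variance explained by PC1 + PC2:", round(float(explained[:2].sum()), 3))
print("median squared cosine on PC1 + PC2:", round(float(np.median(cos2[:, :2].sum(axis=1))), 3))
```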

In population genetics, PCA and admixture-like analyses are the de facto standards used as non-parametric genetic data descriptors. They are considered the hammer and chisel of genetic analyses 36 . Lawson et al. 37 and Elhaik and Graur 38 commented on the misuse of admixture-like tools and argued that they should not be used to draw historical conclusions. Thus far, no investigation has thoroughly explored PCA usage and accuracy across the most common study designs.

Because PCA fulfills many of the risk criteria for reproducibility 2 and is typically used as a first hypothesis generator in population genetic studies, this study assesses its reliability, robustness, and reproducibility. As PCA is a mathematical model employed to describe the unknown truth, testing its accuracy requires a convincing model where the truth is unambiguous. For that, we developed an intuitive and simple color-based model (Fig. 1 A). Because all colors consist of three dimensions (red, green, and blue), they can be plotted in a 3D plot representing the true colors (Fig. 1 B). Applied to these data, PCA reduces the dataset to two dimensions that explain most of the variation. This allows us to visualize the true colors (still using their 3D values) in PCA’s 2D scatterplot, measure the distances between the colors on the PCs, and compare them to their true 3D distances. We can thereby generate “color populations,” always consisting of 3 variables, analogous to SNPs, to aid us in evaluating the accuracy of PCA. If PCA works well, we expect it to properly represent the true distances of the colors from one another in a 2D plot (i.e., light Green should cluster near Green; Red, Green, and Blue should cluster away from each other). Let us agree that if PCA cannot perform well in this simplistic setting, where subpopulations are genetically distinct ( F ST is maximized), and the dimensions are well separated and defined, it should not be used in more complex analyses and certainly cannot be used to derive far-reaching conclusions about history. In parallel, we analyzed genotype data of modern and ancient human populations.
Because the inferred population structure and population history may be debatable, we asked whether and to what extent PCA can generate contradictory results and lead to absurd conclusions ( reductio ad absurdum ), whether seemingly “correct” conclusions can be derived without prior knowledge ( cherry-picking or circular reasoning ), and whether PCA grants a posteriori knowledge independent of experience (a priori). Let us also agree that if the answer to any of those questions is negative, PCA is of no use to population geneticists.

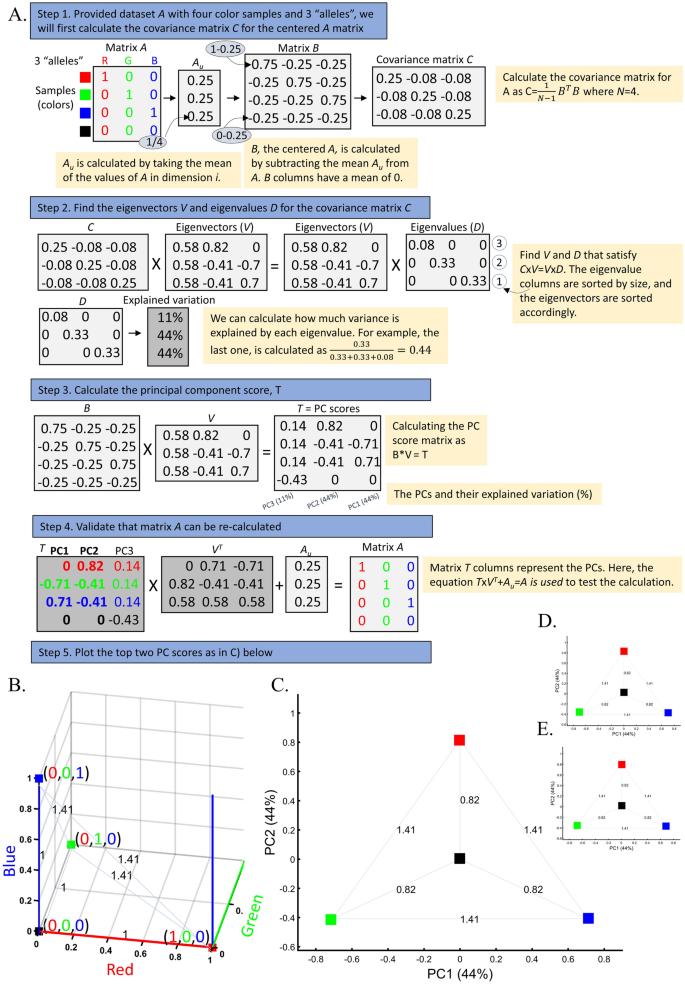

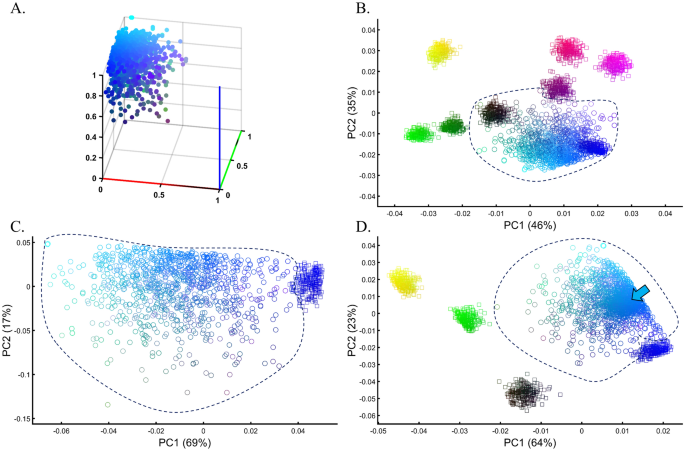

Applying PCA to four color populations. ( A ) An illustration of the PCA procedure (using the singular value decomposition (SVD) approach) applied to a color dataset consisting of four colors ( n All = 1). ( B ) A 3D plot of the original color dataset with the axes representing the primary colors; each color is represented by three numbers (“SNPs”). After PCA is applied to this dataset, the projections of color samples or populations (in their original color) are plotted along their first two eigenvectors (or principal components [PCs]) with ( C ) n All = 1, ( D ) n All = 100, and ( E ) n All = 10,000. The latter two results are identical to those of ( C ). Grey lines and labels mark the Euclidean distances between the color populations calculated across all three PCs.

We carried out an extensive empirical evaluation of PCA through twelve test cases, each assessing a typical usage of PCA using color and human genomic data. In all the cases, we applied PCA according to the standards in the literature but modulated the choice of populations, sample sizes, and, in one case, the selection of markers. The PCA tool used here yields near-identical results to the PCA implemented in EIGENSOFT (Supplementary Figs. S1 – S2 ). To illustrate the way PCA can be used to support multiple opposing arguments in the same debate, we constructed fictitious scenarios with parallels to many investigations in human ancestry that are shown in boxes. We reasoned that if PCA results are irreproducible, contradictory, or absurd, and if they can be manipulated, directed, or controlled by the experimenter, then PCA must not be used for genetic investigations, and an incalculable number of findings based on its results should be reevaluated. We found that this is indeed the case.

The near-perfect case of dimensionality reduction

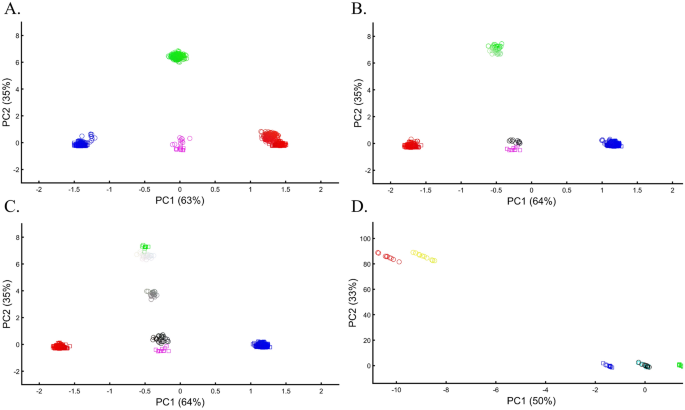

Applying principal component analysis (PCA) to a dataset of four evenly sampled populations, the three primary colors (Red, Green, and Blue) and Black, illustrates a near-ideal example of dimension reduction. PCA condensed the dataset of these four samples from a 3D Euclidean space (Fig. 1 B) into three principal components (PCs), the first two of which explained 88% of the variation and can be visualized in a 2D scatterplot (Fig. 1 C). Here, and in all other color-based analyses, the colors represent the true 3D structure, whereas their positions on the 2D plots are the outcome of PCA. Although PCA correctly positioned the primary colors at even distances from each other and from Black, it distorted the distances between the primary colors and Black (from 1 in 3D space to 0.82 in 2D space). Thus, even in this limited and near-perfect demonstration of data reduction, the observed distances do not reflect the actual distances between the samples (which are impossible to recreate in a 2D dataset). In other words, distances between samples in a reduced dimensionality plot do not, and cannot be expected to, represent actual genetic distances. Evenly increasing all the sample sizes yields identical results irrespective of the sample size (Fig. 1 D,E).
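The color model is small enough to check directly. The sketch below (our own illustration; `color_pca` and `distance` are hypothetical helper names) builds the Red, Green, Blue, and Black populations, centres them, and runs PCA via SVD as in Fig. 1 A. With equal sample sizes, the first two PCs capture 8/9 of the variance, the primary colors stay √2 apart as in 3D, and Black sits at the origin, √(2/3) ≈ 0.82 from each primary instead of 1. Replicating every color 100 or 10,000 times leaves these numbers unchanged, because proportional replication only rescales the covariance matrix and does not change its eigenvectors.

```python
import numpy as np

COLORS = {"Red": (1, 0, 0), "Green": (0, 1, 0), "Blue": (0, 0, 1), "Black": (0, 0, 0)}

def color_pca(sizes, n_pcs=2):
    """PCA of 'color populations'; sizes maps a color name to its number of samples.

    Returns the share of variance on the first n_pcs PCs and each color's coordinates on them.
    """
    names = list(sizes)
    G = np.array([COLORS[c] for c in names for _ in range(sizes[c])], dtype=float)
    mean = G.mean(axis=0)
    _, S, Vt = np.linalg.svd(G - mean, full_matrices=False)
    explained = float((S[:n_pcs] ** 2).sum() / (S**2).sum())
    coords = {c: (np.array(COLORS[c], dtype=float) - mean) @ Vt[:n_pcs].T for c in names}
    return explained, coords

def distance(coords, a, b):
    """Euclidean distance between two populations on the retained PCs."""
    return float(np.linalg.norm(coords[a] - coords[b]))

for n_all in (1, 100, 10_000):
    explained, coords = color_pca({c: n_all for c in COLORS})
    print(n_all, round(explained, 3),
          round(distance(coords, "Red", "Green"), 3),   # 3D distance is sqrt(2)
          round(distance(coords, "Black", "Red"), 3))   # 3D distance is 1
```

Passing `n_pcs=3` keeps all three PCs, and the Black-to-Red distance returns to exactly 1, which is why the grey distance labels in Fig. 1 are computed across all PCs.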

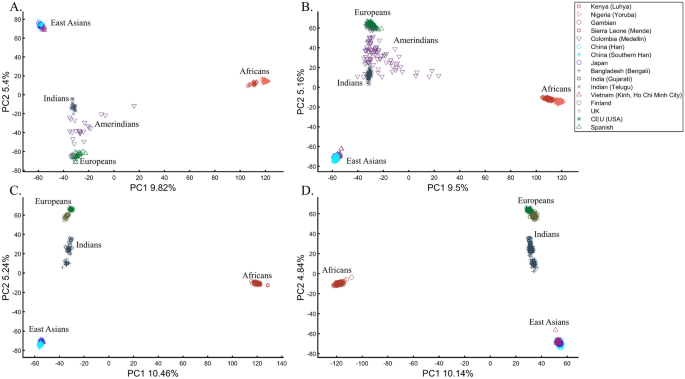

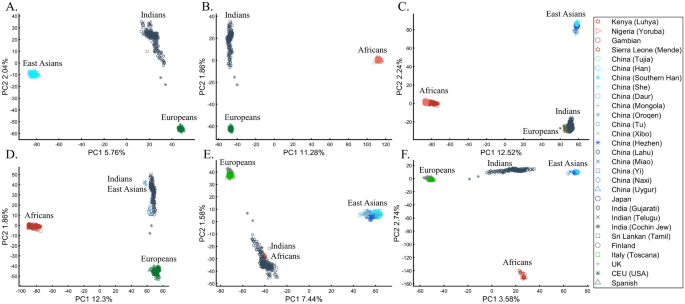

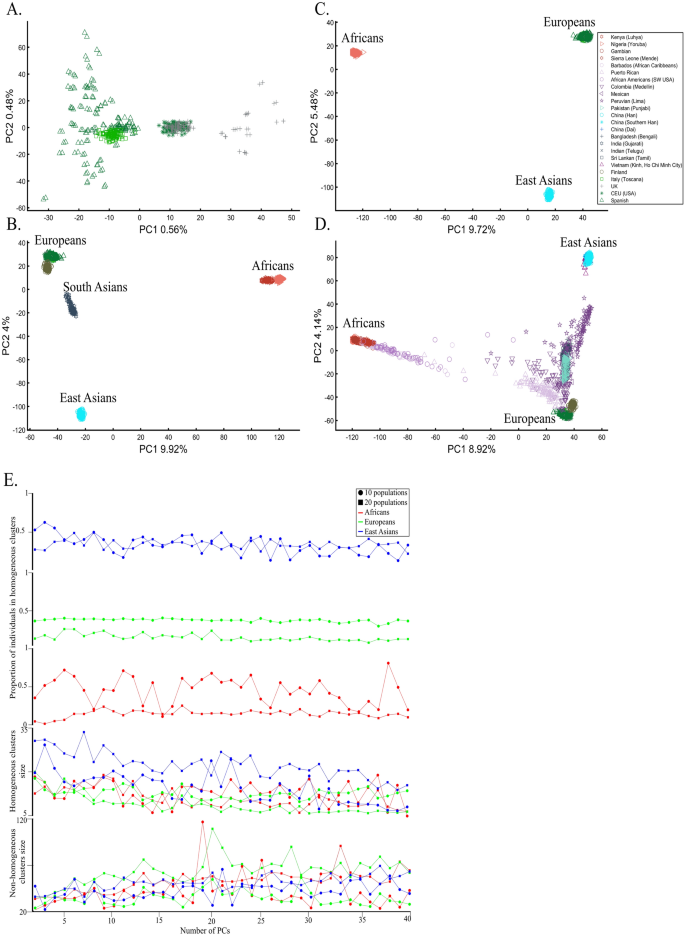

When analyzing human populations, which harbor most of the genomic variation between continental populations (12%) with only 1% of the genetic variation distributed within continental populations 39 , PCA tends to position Africans, Europeans, and East Asians at the corners of an imaginary triangle, which closely resembles our color-population model and illustration. Analyzing continental populations, we obtained similar results for two even-sized sample datasets (Fig. 2 A,C) and their quadrupled counterparts (Fig. 2 B,D). As before, the distances between the populations remain similar (Fig. 2 A–D), demonstrating that for same-sized populations, sample size does not contribute to the distortion of the results if the increase in size is proportional.

Testing the effect of even sample sizes using two population sets. The top plots show nine populations with n = 50 ( A ) and n = 188 ( B ). The bottom plots show a different set of nine populations with n = 50 ( C ) and n = 192 ( D ). In both cases, increasing the sample size did not alter the PCs (the y-axis flip between ( C ) and ( D ) is a known phenomenon: the sign of each eigenvector is arbitrary).
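A y-axis flip such as the one between panels (C) and (D) arises because an eigenvector v and its negation -v are equally valid solutions, so a PCA routine may return either. One common remedy, sketched below under our own assumptions (the sign rule and the function name are hypothetical, not the convention of any particular package), is to flip each loading vector so that its largest-magnitude entry is positive. With that rule, the PC scores of the original individuals are identical after every individual is replicated four times, which is the even-sample-size behavior shown in this figure. The genotypes are random placeholders.

```python
import numpy as np

def pca_scores(G, k=2):
    """Top-k PC scores of an individuals x markers matrix, with a fixed sign convention.

    Each loading vector is multiplied by -1 if needed so that its largest-magnitude
    entry is positive; this removes arbitrary mirror-image flips between runs.
    """
    X = G - G.mean(axis=0)
    _, _, Vt = np.linalg.svd(X, full_matrices=False)
    V = Vt[:k].T                                   # markers x k loadings
    rows = np.argmax(np.abs(V), axis=0)            # largest-magnitude entry of each PC
    V = V * np.sign(V[rows, np.arange(k)])
    return X @ V

rng = np.random.default_rng(1)
G = rng.integers(0, 3, size=(30, 200)).astype(float)  # placeholder genotypes
original = pca_scores(G)
quadrupled = pca_scores(np.tile(G, (4, 1)))[:30]      # every individual sampled four times
print(np.allclose(original, quadrupled))
```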

The case of different sample sizes

The extent to which different-sized populations produce results with conflicting interpretations is illustrated through a typical study case in Box 1 .

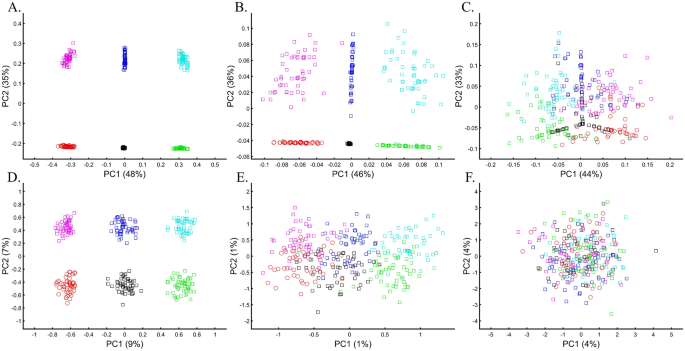

Note that unlike in Figs. 1 C and 4 A, where Black is in the middle, in other figures, the overrepresentation of certain “alleles” (e.g., Fig. 4 B) shifts Black away from (0,0). Intuitively, this can be thought of as the most common “allele” (Green in Fig. 4 B) repelling Black, which has three null or alternative “alleles”.

PCA is commonly reported as yielding a stable differentiation of continental populations (e.g., Africans vs. non-Africans, Europeans vs. Asians, and Asians vs. Native Americans or Oceanians, on the primary PCs 40 , 41 , 42 , 43 ). This prompted prehistorical inferences of migrations and admixture, viewing the PCA results that position Africans, East Asians, and Europeans in three corners of an imaginary triangle as representing the post-Out of Africa dispersal followed by multiple migrations, differentiation, and admixture events. Inferences for Amerindians or Aboriginals typically follow this reconstruction. For instance, Silva-Zolezzi et al. 42 argued that the Zapotecos did not experience a recent admixture due to their location on the Amerindian PCA cluster at the Asian end of the European-Asian cline.

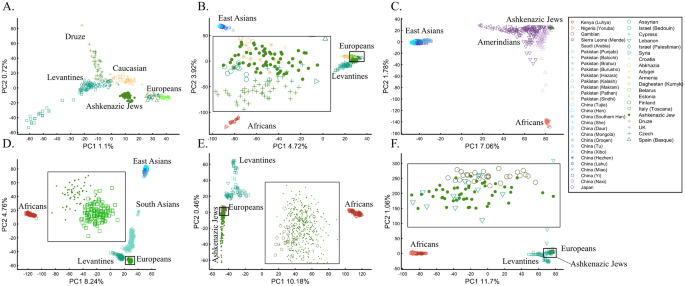

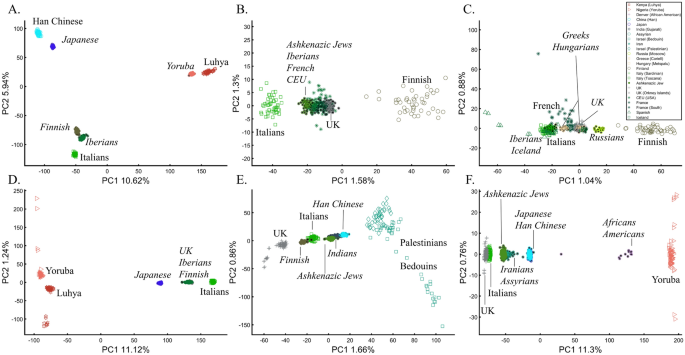

Here we show that the appearance of continental populations at the corners of a triangle is an artifact of the sampling scheme, because variable sample sizes can easily create alternative results as well as alternative “clines”. We first replicated the triangular depiction of continental populations (Fig. 3 A,B) before altering it (Fig. 3 C–F). Now, East Asians appear as a three-way admixed group of Africans, Europeans, and Melanesians (Fig. 3 C), whereas Europeans appear on an African-East Asian cline (Fig. 3 D). Europeans can also be made to appear in the middle of the plot as an admixed group of African, Asian, and Oceanian origins (Fig. 3 E), and Oceanians can cluster with (Fig. 3 F) or without East Asians (Fig. 3 E). The latter depiction maximizes the proportion of explained variance, which common wisdom would consider the correct explanation. According to some of these results, only Europeans and Oceanians (Fig. 3 C) or East Asians and Oceanians (Fig. 3 D) experienced the Out of Africa event. By contrast, East Asians (Fig. 3 C) and Europeans (Fig. 3 D) may have remained in Africa. Contrary to Silva-Zolezzi et al.’s 42 claim, the same Mexican–American cohort can appear closer to Europeans (Fig. 3 A) or as a European-Asian admixed group (Fig. 3 B). It is easy to see that none of those scenarios stands out as more or less correct than the others.

PCA of uneven-sized African (Af), European (Eu), Asian (As), and Mexican-American (Ma) or Oceanian (Oc) populations. Fixing the sample size of Mexican-Americans and altering the sample sizes of other populations, ( A ) n Af = 198; n Eu = 20; n As = 483; n Ma = 64 and ( B ) n Af = 20; n Eu = 343; n As = 20; n Ma = 64, changes the results. An even more dramatic change can be seen when repeating this analysis on Oceanians: ( C ) n Af = 5; n Eu = 25; n As = 10; n Oc = 20 and ( D ) n Af = 5; n Eu = 10; n As = 15; n Oc = 20, and when altering their sample sizes: ( E ) n Af = 98; n Eu = 25; n As = 150; n Oc = 24 and ( F ) n Af = 98; n Eu = 83; n As = 30; n Oc = 15.

Reich et al. 44 presented further PCA-based “evidence” for the ‘out of Africa’ scenario. Applying PCA to Africans and non-Africans, they reported that non-Africans cluster together at the center of African populations when PC1 was plotted against PC4 and that this “rough cluster[ing]” of non-Africans is “about what would be expected if all non-African populations were founded by a single dispersal ‘out of Africa.’” However, observing PC1 and PC4 for Supplementary Fig. S3 , we found no “rough cluster” of non-Africans at the center of Africans, contrary to Reich et al.’s 44 claim. Remarkably, we found a “rough cluster” of Africans at the center of non-Africans (Supplementary Fig. S3 C), suggesting that Africans were founded by a single dispersal ‘into Africa’ by non-Africans. We could also infer, based on PCA, either that Europeans never left Africa (Supplementary Fig. S3 D), that Europeans left Africa through Oceania (Supplementary Fig. S3 B), that Asians and Oceanians never left Europe (or the other way around) (Supplementary Fig. S3 F), or, since all are valid PCA results, all of the above. Unlike Reich et al. 44 , we do not believe that their example “highlights how PCA methods can provide evidence of important migration events”. Instead, our examples (Fig. 3 , Supplementary Fig. S3 ) show how PCA can be used to generate conflicting and absurd scenarios, all mathematically correct but, obviously, biologically incorrect, and to cherry-pick the most favorable solution. This is an example of how vital a priori knowledge is to PCA. It is thereby misleading to present one or a handful of PC plots without acknowledging the existence of many other solutions, let alone without disclosing the proportion of explained variance.

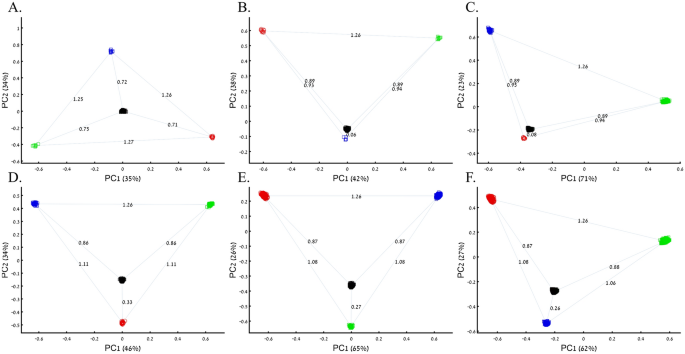

Box 1: Studying the origin of Black using the primary colors

Three research groups sought to study the origin of Black. A previous study that employed even sample-sized color populations suggested that Black is a mixture of all colors (Fig. 1 B–D). A follow-up study with a larger sample size ( n Red = n Green = n Blue = 10) and enriched in Black samples ( n Black = 200) (Fig. 4 A) reached the same conclusion. However, the Black-is-Blue group suspected that the Blue population was mixed. After QC procedures, the Blue sample size was reduced, which decreased the distance between Black and Blue and supported their speculation that Black has a Blue origin (Fig. 4 B). The Black-is-Red group hypothesized that the underrepresentation of Green, compared to its actual population size, masks the Red origin of Black. They comprehensively sampled the Green population and showed that Black is very close to Red (Fig. 4 C). Another Black-is-Red group contributed to the debate by genotyping more Red samples. To reduce the bias from other color populations, they kept the Blue and Green sample sizes even. Their results replicated the previous finding that Black is closer to Red and thereby shares a common origin with it (Fig. 4 D). A new Black-is-Green group challenged those results, arguing that the small sample size and omission of Green samples biased the results. They increased the sample sizes of the populations of the previous study and demonstrated that Black is closer to Green (Fig. 4 E). The Black-is-Blue group challenged these findings on the grounds of the relatively small sample sizes that may have skewed the results and dramatically increased all the sample sizes. However, believing that they are of Purple descent, Blue refused to participate in further studies. Their relatively small cohort was explained by their isolation and small effective population size. The results of the new sampling scheme confirmed that Black is closer to Blue (Fig. 4 F), and the group was praised for the large sample sizes that, no doubt, captured the actual variation in nature better than the former studies.

PCA of uneven-sized samples of four color populations. ( A ) n Red = n Green = n Blue = 10; n Black = 200, ( B ) n Red = n Green = 10; n Blue = 5; n Black = 200, ( C ) n Red = 10; n Green = 200; n Blue = 50; n Black = 200, ( D ) n Red = 25; n Green = n Blue = 50; n Black = 200, ( E ) n Red = 300; n Green = 200; n Blue = n Black = 300, and ( F ) n Red = 1000; n Green = 2000; n Blue = 300; n Black = 2000. Scatter plots show the top two PCs. The numbers on the grey bars reflect the Euclidean distances between the color populations over all PCs. Colors include Red [1,0,0], Green [0,1,0], Blue [0,0,1], and Black [0,0,0].
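The Box 1 scenarios can be explored in the same color model. The sketch below (our own illustration; sample sizes copied from panels A to F of the caption above, helper names hypothetical) computes the distance on PC1 and PC2 between Black and each primary color. Under scheme A, where Red, Green, and Blue are equally sized, Black remains equidistant from all three, at √(2/3) as in Fig. 1, whereas merely shrinking Blue from 10 to 5 samples (scheme B) moves Black much closer to Blue than to Red. The colors themselves never change between schemes; only the sample sizes do.

```python
import numpy as np

COLORS = {"Red": (1, 0, 0), "Green": (0, 1, 0), "Blue": (0, 0, 1), "Black": (0, 0, 0)}

# (Red, Green, Blue, Black) sample sizes for panels A-F.
SCHEMES = {
    "A": (10, 10, 10, 200),
    "B": (10, 10, 5, 200),
    "C": (10, 200, 50, 200),
    "D": (25, 50, 50, 200),
    "E": (300, 200, 300, 300),
    "F": (1000, 2000, 300, 2000),
}

def black_distances(sizes):
    """Distance between Black and each primary color on PC1-PC2 for the given sample sizes."""
    names = list(COLORS)
    base = np.array([COLORS[c] for c in names], dtype=float)
    G = np.repeat(base, sizes, axis=0)          # color row i repeated sizes[i] times
    mean = G.mean(axis=0)
    _, _, Vt = np.linalg.svd(G - mean, full_matrices=False)
    xy = (base - mean) @ Vt[:2].T               # one PC1-PC2 point per color
    return {c: float(np.linalg.norm(xy[i] - xy[3])) for i, c in enumerate(names[:3])}

for label, sizes in SCHEMES.items():
    d = black_distances(sizes)
    print(label, {c: round(v, 3) for c, v in d.items()})
```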

The case of one admixed population

The question of who the ancestors of admixed populations are and the extent of their contribution to other groups is at the heart of population genetics. It may not be surprising that authors hold conflicting views on interpreting these admixtures from PCA. Here, we explore how an admixed group appears in PCA, whether its ancestral groups are identifiable, and how its presence affects the findings for unmixed groups through a typical study case (Box 2 ).

To understand the impact of parameter choices on the interpretation of PCA, we revisited the first large-scale study of Indian population history carried out by Reich et al. 45 . The authors applied PCA to a cohort of Indians, Europeans, Asians, and Africans using various sample sizes that ranged from 2 (Srivastava) (out of 132 Indians) to 203 (Yoruban) samples. After applying PCA to Indians and the three continental populations to exclude “outliers” that supposedly had more African or Asian ancestries than other samples, PCA was applied again in various settings.

At this point, the authors engaged in circular logic as, on the one hand, they removed samples that appeared via PCA to have experienced gene flow from Africa (their Note 2, iii ) and, on the other hand, employed an a priori claim (unsupported by historical documents) that “African history has little to do with Indian history” (which must stand in sharp contrast to the rich history of gene flow from Utah (US) residents to Indians, which was equally unsupported). Reich et al. provided no justification for the exact protocol used or any discussion about the impact of using different parameter values on the resulting clusters. They then generated a plethora of conflicting PCA figures, never disclosing the proportion of explained variance along with the first four PCs examined. They then inferred, based on PCA, that Gujarati Americans exhibit no “unusual relatedness to West Africans (YRI) or East Asians (CHB or JPT)” (Supplementary Fig. S4 ) 45 . Their concluding analysis of Indians, Asians, and Europeans (Fig. 4 ) 45 showed Indians at the apex of a triangle with Europeans and Asians at the opposite corners. This plot was interpreted as evidence of an “ancestry that is unique to India” and an “Indian cline”. Indian groups were explained to have inherited different proportions of ancestry from “Ancestral North Indians” (ANI), related to western Eurasians, and “Ancestral South Indians” (ASI), who split from Onge. The authors then followed up with additional analyses using Africans as an outgroup, supposedly confirming the results of their selected PCA plot. Indians have since been described using the terms ANI and ASI.

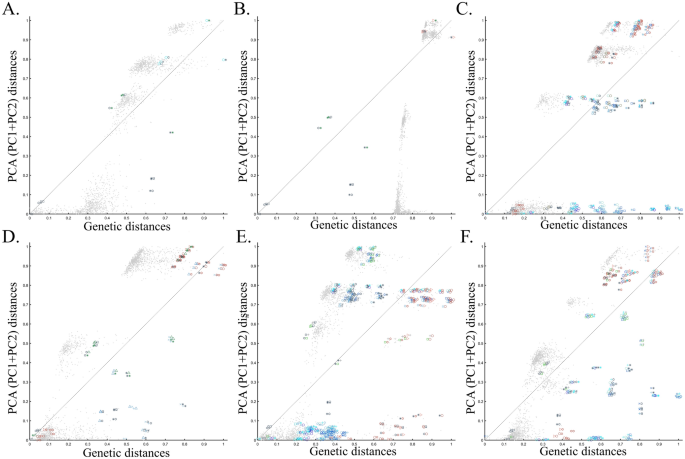

In evaluating the claims of Reich et al. 45 that rest on PCA, we first replicated the finding of the alleged “Indian cline” (Fig. 5 A). We next garnered support for an alternative cline using Indians, Africans, and Europeans (Fig. 5 B). We then demonstrated that PCA results support Indians being Europeans (Fig. 5 C), East Asians (Fig. 5 D), and Africans (Fig. 5 E), as well as a genuinely European-Asian admixed population (Fig. 5 F). Whereas the first two PCs of Reich et al.’s primary figure explain less than 8% of the variation (according to our Fig. 5 A; Reich et al.’s Fig. 4 does not report this information), four out of five of our alternative depictions explain 8–14% of the variation. Our results also expose the arbitrariness of the scheme used by Reich et al. and show how radically different clustering can be obtained merely by manipulating the non-Indian populations used in the analyses. Our results also question the authors’ choice of an analysis that explained such a small proportion of the variation (let alone not reporting it) and yielded no support for a unique ancestry to India, and they cast doubt on the reliability and usefulness of the ANI-ASI model to describe Indians, given the authors’ exclusive reliance on a priori knowledge in interpreting the PCA patterns. Although supported by downstream analyses, the plurality of PCA results could not be used to support the authors’ findings because, using PCA, it is impossible to answer a priori whether Africa is in India or the other way around (Fig. 5 E). We speculate that the motivation for Reich et al.'s strategy was to declare Africans an outgroup, an essential component of D-statistics. Clearly, PCA-based a posteriori inferences can lead to errors of Columbian magnitude.

Studying the origin of Indians using PCA. ( A ) Replicating Reich et al.’s 45 results using n Eu = 99; n As = 146; n Ind = 321. Generating alternative PCA scenarios using: ( B ) n Af = 178; n Eu = 99; n Ind = 321, ( C ) n Af = 400; n Eu = 40; n As = 100; n Ind = 321, ( D ) n Af = 477; n Eu = 253; n As = 23; n Ind = 321, ( E ) n Af = 25; n Eu = 220; n As = 490; n Ind = 320, and ( F ) n Af = 30; n Eu = 200; n As = 50; n Ind = 320.

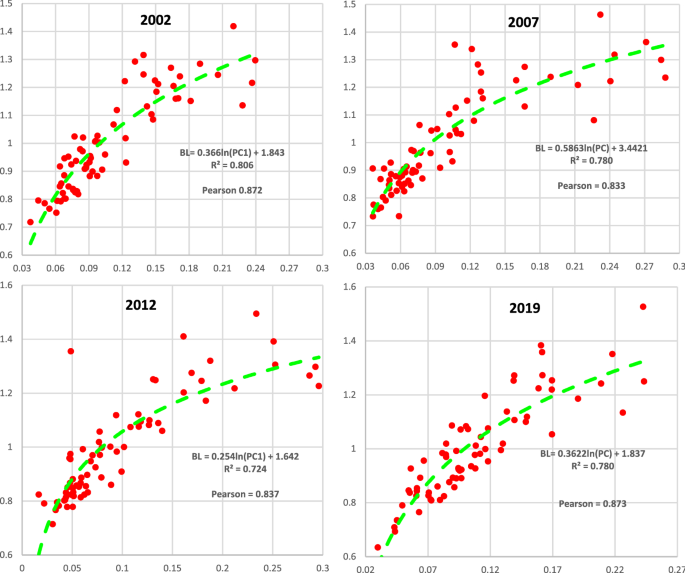

To evaluate the extent of deviation of PCA results from genetic distances, we adopted a simple genetic distance scheme where we measured the Euclidean distance between allelic counts (0,1,2) in the same data used for PCA calculations. We are aware of the diversity of existing genetic distance measures. However, to the best of our knowledge, no study has ever shown that PCA outcomes numerically correlate with any genetic distance measure, except in very simple scenarios and with ADMIXTURE-like tools, which, like PCA, exhibit high design flexibility. Plotting the genetic distances against those obtained from the top two PCs shows the deviation between these two measures for each dataset. We found that all the PC projections (Fig. 6 ) distorted the genetic distances in unexpected ways that differ between the datasets. PCA correctly represented the genetic distances for only a minority of the populations, and, just like the most poorly represented populations, none of these were distinguishable from other populations. Moreover, populations that clustered under PCA exhibited mixed results, questioning the accuracy of PCA clusters. Although it remains unclear which sampling scheme to adopt, none of the schemes is genetically accurate. These results further question the genetic validity of the ANI-ASI model.

Comparing the genetic distances with PCA-based distances for the corresponding datasets of Fig. 5 . Genetic and PCA (PC1 + PC2) distances between population pairs (symbol pairs) and 2000 random individual pairs (grey dots) were calculated using Euclidean distances and normalized to range from 0 to 1. Population and individual pairs whose PC distances reflect their genetic distances are shown along the x = y dotted line. Note that the position of heterogeneous populations on the plot may deviate from that of their samples and that some populations are very small.
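The comparison in Fig. 6 can be sketched as follows. This is our own minimal version, not the authors' code: it simulates genotypes for three hypothetical populations of unequal size, takes Euclidean distances on the raw allele counts (0, 1, 2) as the genetic distance, takes Euclidean distances on PC1 and PC2 as the PCA distance, and min-max normalizes both to the range 0 to 1. Unlike the figure, it uses all individual pairs rather than population pairs plus 2000 random individual pairs. Keeping every PC reproduces the raw distances exactly, so any departure from the x = y line comes from discarding the higher PCs.

```python
import numpy as np
from itertools import combinations

def minmax(v):
    """Rescale distances to the range [0, 1]."""
    return (v - v.min()) / (v.max() - v.min())

def distance_pairs(G, n_pcs=2):
    """Normalized genetic and PCA distances for every pair of individuals in G."""
    X = G - G.mean(axis=0)
    U, S, _ = np.linalg.svd(X, full_matrices=False)
    scores = U[:, :n_pcs] * S[:n_pcs]
    pairs = list(combinations(range(len(G)), 2))
    genetic = np.array([np.linalg.norm(G[i] - G[j]) for i, j in pairs])
    pca = np.array([np.linalg.norm(scores[i] - scores[j]) for i, j in pairs])
    return minmax(genetic), minmax(pca)

# Hypothetical data: three populations with random allele frequencies at 500 SNPs.
rng = np.random.default_rng(0)
freqs = rng.uniform(0.05, 0.95, size=(3, 500))
G = np.vstack([rng.binomial(2, f, size=(n, 500)) for f, n in zip(freqs, (40, 10, 5))]).astype(float)

genetic, pca = distance_pairs(G)
print("pairs:", len(genetic))
print("mean |PCA - genetic|:", round(float(np.abs(pca - genetic).mean()), 3))
```

Plotting `genetic` against `pca` gives a scatter analogous to one panel of Fig. 6.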

We are aware that PCA disciples may reject our reductio ad absurdum argument and attempt to read into these results, as ridiculous as they may be, a valid description of Indian ancestry. For those readers, demonstrating the ability of the experimenter to generate near-endless contradictory historical scenarios using PCA may be more convincing, or at least exhausting. For brevity, we present six more such scenarios that show PCA support for Indians as a heterogeneous group with European admixture and Mexican-Americans as an Indian-European mixed population (Supplementary Fig. S4 A), Mexican-Americans as an admixed African-European group and Indians as a heterogeneous group with European admixture (Supplementary Fig. S4 B), Indians and Mexican-Americans as European-Japanese admixed groups with common origins and high genetic relatedness (Supplementary Fig. S4 C), Indians and Mexican-Americans as European-Japanese admixed groups with no common origins or genetic relatedness (Supplementary Fig. S4 D), Europeans as an admixed group of Indians and Mexican-Americans, with Japanese fully clustering with the latter (Supplementary Fig. S4 E), and Japanese and Europeans clustering as admixed Indian and Mexican-American groups (Supplementary Fig. S4 F). Readers are encouraged to use our code to produce novel alternative histories. We suspect that almost any topology could be obtained by finding the right set of input parameters. In this sense, any PCA output can reasonably be considered meaningless.

Contrary to Reich et al.'s claims, a more common interpretation of PCA is that the populations at the corners of the triangle are ancestral or are related to the mixed groups within the triangle, which are the outcome of admixture events, typically referred to as “gradient” or “clines 45 ”. However, some authors held different opinions. Studying the African component of Ethiopian genomes, Pagani et al. 46 produced a PC plot showing Europeans (CEU), Yoruba (western African), and Ethiopians (Eastern Africans) at the corners of a triangle (Supplementary Fig. S4 ) 46 . Rather than suggesting that the populations within the triangle (e.g., Egyptians, Spaniards, Saudi) are mixtures of these supposedly ancestral populations, the authors argued that Ethiopians have western and eastern African origins, unlike the central populations with “different patterns of admixture”. Obviously, neither interpretation is correct. Reich et al.’s interpretation does not explain why CEUs are not an Indian-African admix nor why Africans are not a European-Indian admix and is analogous to arguing that Red has Green and Blue origins (Fig. 1 ). Pagani et al.’s interpretation is a tautology, ignores the contribution of non-Africans, and is analogous to arguing that Red has Red and Green origins. We carried out forward simulations of populations with various numbers of ancestral populations and found that admixture cannot be inferred from the positions of samples in a PCA plot (Supplementary Text 1 ).

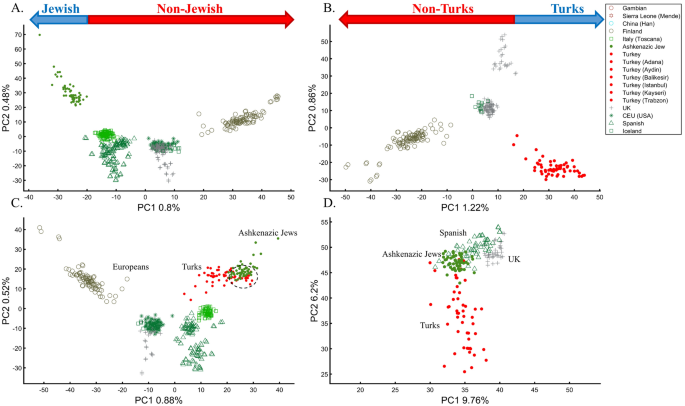

In a separate effort to study the origins of AJs, Need et al. 47 applied PCA to 55 Ashkenazic Jews (AJs) and 507 non-Jewish Caucasians. Their PCA plot showed that AJs (marked as “Jews”) formed a distinct cluster from Europeans (marked as “non-Jews”). Based on these results, the authors suggested that PCA can be used to detect linkage to Jewishness. A follow-up PCA where Middle Eastern (Bedouin, Palestinians, and Druze) and Caucasus (Adygei) populations were included showed that AJs formed a distinct cluster that nested between the Adygei (and the European cluster) and Druze (and the Middle Eastern cluster). The authors then concluded that AJs might have mixed Middle Eastern and European ancestries. The proximity to the Adygei cluster was noted as interesting but dismissed based on the small sample size of the Adygei ( n = 17). The authors concluded that AJ genomes carry an “unambiguous signature of their Jewish heritage, and this seems more likely to be due to their specific Middle Eastern ancestry than to inbreeding”. A similar strategy was employed by Bray et al. 48 to claim that PCA “confirmed that the AJ individuals cluster distinctly from Europeans, aligning closest to Southern European populations along with the first principal component, suggesting a more southern origin, and aligning with Central Europeans along the second, consistent with migration to this region.” Other authors 49 , 50 made similar claims.

It is easy to show why PCA cannot be used to reach such conclusions. We first replicated Need et al.’s 47 primary results (Fig. 7 A), showing that AJs cluster separately from Europeans. However, such an outcome is typical when comparing Europeans and non-European populations like Turks (Fig. 7 B). It is not unique to AJs, nor does it prove that they are genetically detectable. A slightly modified design shows that most AJs overlap with Turks in support of the Turkic (or Near Eastern) origin of AJs (Fig. 7 C). We can easily refute our conclusion by including continental populations and showing that most AJs cluster with Iberians rather than Turks (Fig. 7 D). This last design explains more of the variance than all the previous analyses together, although, as should be evident by now, it is not indicative of accuracy. This analysis questions PCA's use as a discriminatory genetic utility and to infer genetic ancestry.

Studying the origin of 55 AJs using PCA. ( A ) Replicating Need et al.’s results using n Eu = 507; Generating alternative PCA scenarios using: ( B ) n Eu = 223; n Turks = 56; ( C ) n Eu = 400; n Turks + Caucasus = 56, and ( D ) n Af = 100, n As = 100 (Africans and Asians are not shown), n Eu = 100; and n Turks = 50. Need et al.'s faulty terminology was adopted in A and B .

There are several more oddities in the report of Need et al. 47 . First, they did not report the variance explained by their sampling scheme (it is likely ~1%, as in Fig. 7 A). Second, they misrepresented the actual populations analyzed: AJs are not the only Jews, and Europeans are not the only non-Jews (Figs. 1 , 7 A) 47 . Finally, their dual interpretation of AJs as a mixed population and of Middle Eastern origin is based solely on a priori belief: first, most of the populations in their PCA are nested between and within other populations, yet the authors did not suggest that they are all admixed; and second, AJs nested between the Adygei and Druze 51 , 52 , both of which formed in the Near East. The conclusions of Need et al. 47 were thus obtained from particular PCA schemes and what may be preconceived ideas of AJ origins that are no more real than the Iberian origin of AJs (Fig. 7 D). This is yet another demonstration (discussed in Elhaik 36 ) of how PCA's design flexibility allows it to be misused to promote ethnocentric claims.

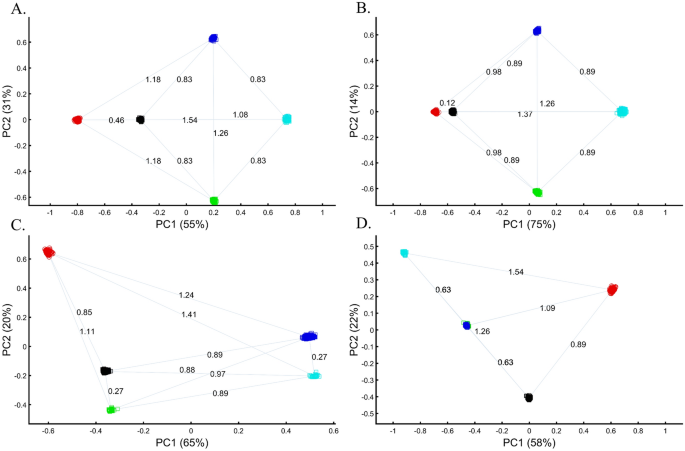

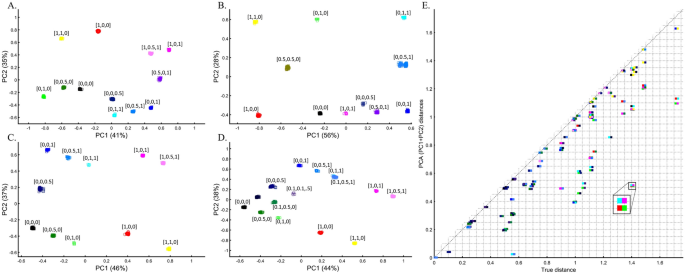

Box 2: Studying the origin of Black using the primary colors and one secondary (admixed) color population

Following criticism of the sampling scheme used to study the origin of Black (Box 1 ), the redoubtable Black-is-Red group genotyped Cyan. Using even sample sizes, they demonstrated that Black is closer to Red ( D Black-Red = 0.46) (Fig. 8 A), where D is the Euclidean distance between the samples over all three PCs (short distances indicate high similarity). The Black-is-Green school criticized these findings on the grounds that the Cyan samples were biased and that the results did not apply to the broad Black cohort. They also reckoned that the even sampling scheme favored Red because Blue is related to Cyan through shared language and customs. The Black-is-Red group responded by enriching their cohort in Cyan and Black ( n Cyan , n Black = 1000) and provided even more robust evidence that Black is Red ( D Black-Red = 0.12) (Fig. 8 B). However, the Black-is-Green camp dismissed these findings. Conscious of the effects of admixture, they retained only the most homogeneous Green and Cyan ( n Green , n Cyan = 33), genotyped new Blue and Black ( n Blue , n Black = 400), and analyzed them with the published Red cohort ( n Red = 100). The Black-is-Green results supported their hypothesis that Black is Green ( D Black-Green = 0.27) and that Cyan shared a common origin with Blue ( D Blue-Green = 0.27) (Fig. 8 C) and should therefore be considered an admixed Blue population. Unsurprisingly, the Black-is-Red group claimed that these results were due to the under-representation of Black, since when they oversampled Black, PCA supported their findings (Fig. 8 A). In response, the Black-is-Green school maintained even sample sizes for Cyan, Blue, and Green ( n Blue , n Green , n Cyan = 33) and enriched Black and Red ( n Red , n Black = 100). Not only did their results ( D Black-Green = 0.63 < D Black-Red = 0.89) support their previous findings, but they also demonstrated that Green and Blue completely overlapped, presumably due to their shared co-ancestry, and that together with Cyan ( D Cyan-Green = 0.63 < D Cyan-Red = 1.09) (Fig. 8 B,D) they represent an ancient color clade. They explained that these color populations only appeared separated due to genetic drift but still retained sufficient cryptic genetic information for PCA to uncover if the correct sampling scheme is used. Further analyses by the other groups contested these findings (Supplementary Fig. S5 A-D). Among other claims, it was argued that Black is a Green–Red admixed group (Supplementary Fig. S5 C) and that Black and Cyan were the ancestors of Blue and Green (Supplementary Fig. S5 D).

PCA with the primary and mixed color populations. ( A ) n all = 100; n Black = 200, ( B ) n Red = n Green = n Blue = 100; n Black = n Cyan = 500, ( C ) n Red = 100; n Green = n Cyan = 33; n Blue = n Black = 400; and ( D ) n Red = n Black = 100; n Green = n Blue = n Cyan = 33; Scatter plots show the top two PCs. The numbers on the grey bars reflect the Euclidean distances between the color populations over all PCs. Colors include Red [1,0,0], Green [0,1,0], Blue [0,0,1], Cyan [0,1,1], and Black [0,0,0].

The case of a multi-admixed population

The question of how analyzing admixed groups with multiple ancestral populations affects the findings for unmixed groups is illustrated through a typical study case in Box 3 .

To understand how PCA can be misused to study multiple mixed populations, we will investigate other PCA applications to the study of AJs. Such analyses follow a recurring interpretive theme: the clustering of AJ samples is taken as evidence of a shared Levantine origin, e.g., Refs. 12 , 13 ; “short” distances between AJs and Levantines are taken to indicate close genetic relationships in support of a shared Levantine past, e.g., Ref. 12 ; whereas the “short” distances between AJs and Europeans are taken as evidence of admixture 13 . Finally, as a rule, the much shorter distances between AJs and the Caucasus or Turkish populations, observed by all recent studies, were ignored 12 , 13 , 47 , 48 . Bray et al. 48 concluded not only that AJs have a “more southern origin” but also that their alignment with Central Europeans is “consistent with migration to this region”. In these studies, “short” and “between” received a multitude of interpretations. For example, Gladstein and Hammer's 53 PCA plot, which showed AJs at the extreme edge of the plot with Bedouins and French at the other edges, was interpreted as AJs clustering “tightly between European and Middle Eastern populations”. The authors interpreted the lack of “outliers” among AJs (which were never defined) as evidence of common AJ ancestry.

Following the rationale of these studies, it is easy to show how PCA can be orchestrated to yield a multitude of origins for AJs. We replicated the observation that AJs are a “population isolate,” i.e., AJs form a distinct group, separated from all other populations (Fig. 9 A), and are thereby genetically distinguishable 47 . We also replicated the most common yet often-ignored observation that AJs cluster tightly with Caucasus populations (Fig. 9 B). We next produced novel results where AJs cluster tightly with Amerindians due to the north Eurasian or Amerindian origins of both groups (Fig. 9 C). We can also show that AJs cluster much closer to South Europeans than to Levantines (Fig. 9 D) and overlap Finns entirely, in solid evidence of AJs’ ancient Finnish origin (Fig. 9 E). Last, we wish to refute our previous finding and show that only half of the AJs are of Finnish origin. The remaining half supports the lucrative Levantine origin (Fig. 9 F), a discovery touted by all the previous reports though never actually shown. Excitingly enough, the primary PCs of this last Eurasian Finnish-Levantine mixed-origin depiction explained the highest amount of variance. An intuitive interpretation of those results is a recent migration of the Finnish AJs to the Levant, where they experienced high admixture with the local Levantine populations that altered their genetic background. These examples demonstrate that PCA plots generate nonsensical results for the same populations and provide no a posteriori knowledge.

An in-depth study of the origin of AJs using PCA in relation to Africans (Af), Europeans (Eu), East Asians (Ea), Amerindians (Am), Levantines (Le), and South Asians (Sa). ( A ) n Eu = 159; n AJ = 60; n Le = 82, ( B ) n Af = 30; n Eu = 159; n Ea = 50; n AJ = 60; n Le = 60, ( C ) n Af = 30; n Ea = 583; n AJ = 60; n Am = 255, ( D ) n Af = 200; n Eu = 115; n Ea = 200; n AJ = 60; n Le = 235; n Sa = 88, ( E ) n Af = 200; n Eu = 30; n AJ = 400; n Le = 80, ( F ) n Af = 200; n Eu = 30; n AJ = 50; n Le = 160. Large squares indicate insets.

Box 3: Studying the origin of Black using the primary and multiple mixed colors

The value of using mixed color populations to study origins prompted new analyses using even (Fig. 10 A) and variable sample sizes (Fig. 10 B–D). Using this novel sampling scheme, the Black-is-Green school reaffirmed that Black is the closest to Green (Fig. 10 A, 10 C, and 10 D) in a series of analyses, but using a different cohort yielded a novel finding that Black is closest to Pink (Fig. 10 B).

PCA with the primary and multiple mixed color populations. ( A ) n all = 50, ( B ) n all = 50 or 10, ( C , D ) n all = [50, 5, 100, or 25]. Scatter plots show the top two PCs. Color codes are shown. ( E ) The difference between the true distances in 3D color space between every color population pair (shown side by side) from ( D ) and their Euclidean distances calculated from the top two PCs. Pairs whose PC distances reflect their true 3D distances are shown along the x = y dotted line. One of the largest PCA distortions is the distance between the Red and Green populations (inset). The true Red-Green distance is 1.41 (x-axis), but the PCA distance is 0.5 (y-axis).

The extent to which PCA distances obtained from the top two PCs reflect the true distances among color population pairs is shown in Fig. 10 E. PCA distorted the distances between most color populations, but the distortion was uneven among the pairs: while a minority of the pairs are correctly projected via PCA, most are not. Identifying which pairs are correctly projected is impossible without a priori information. For example, some shades of blue and purple were less biased than similar shades. We thereby show that PCA-inferred distances are biased in an unpredictable manner and are therefore uninformative for clustering.
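The comparison in Fig. 10 E can be sketched in a few lines. This is a minimal illustration, not the authors' code: it assumes each individual is an RGB vector plus small Gaussian noise, computes PCA by SVD of the mean-centered data, and compares population-centroid distances over all three PCs with those over PC1 and PC2 only. The sample sizes in `sizes` are hypothetical. Because keeping all PCs amounts to a rotation of the centered data, the all-PC distances reproduce the true 3D distances (about 1.41 for Red-Green); dropping PC3 can only shrink a distance, and which pairs shrink most depends on the cohort composition.

```python
import numpy as np

COLORS = {"Red": [1, 0, 0], "Green": [0, 1, 0], "Blue": [0, 0, 1], "Cyan": [0, 1, 1],
          "Purple": [1, 0, 1], "Yellow": [1, 1, 0], "Black": [0, 0, 0]}
sizes = {"Red": 50, "Green": 5, "Blue": 100, "Cyan": 25,
         "Purple": 50, "Yellow": 5, "Black": 100}   # hypothetical, deliberately uneven

def pca_scores(X):
    """All PC scores of X (rows = individuals) via SVD of the mean-centered matrix."""
    Xc = X - X.mean(axis=0)
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    return Xc @ Vt.T

def centroid_distances(scores, labels, n_pcs):
    """Euclidean distances between population centroids on the first n_pcs PCs."""
    labels = np.asarray(labels)
    names = sorted(set(labels.tolist()))
    cents = {p: scores[labels == p, :n_pcs].mean(axis=0) for p in names}
    return {(a, b): float(np.linalg.norm(cents[a] - cents[b]))
            for i, a in enumerate(names) for b in names[i + 1:]}

rng = np.random.default_rng(0)
X = np.vstack([np.array(COLORS[c], dtype=float) + rng.normal(0, 0.01, (n, 3))
               for c, n in sizes.items()])
labels = [c for c, n in sizes.items() for _ in range(n)]

scores = pca_scores(X)
full = centroid_distances(scores, labels, n_pcs=3)   # all PCs: a rotation, true 3D distances
top2 = centroid_distances(scores, labels, n_pcs=2)   # what a PC1-PC2 scatterplot shows
for pair in sorted(full, key=lambda p: top2[p] / full[p])[:3]:   # most shrunken pairs
    print(pair, round(full[pair], 2), round(top2[pair], 2))
```

Re-running with different values in `sizes` is one way to explore how the cohort composition changes which pairs are distorted.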

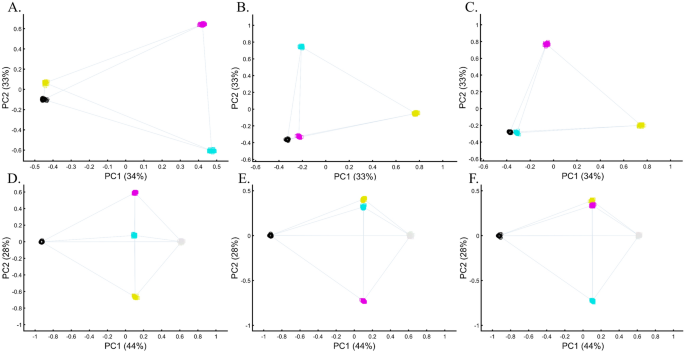

The case of multiple admixed populations without “unmixed” populations

Unlike stochastic models that possess inherent randomness, PCA is a deterministic process, a property that contributes to its perceived robustness. To explore the behavior of PCA, we tested whether the same computer code can produce similar or different results when the only variable that changes is the standard randomization technique used throughout the paper to generate the individual samples of the color populations (to avoid clutter).

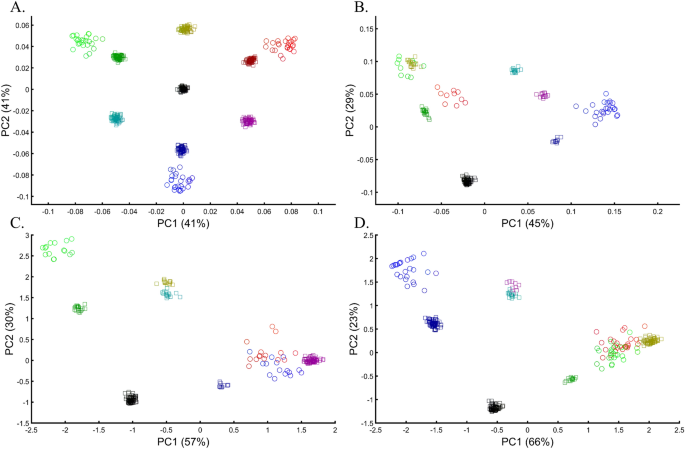

We evaluated two color sets. In the first set, Black was the closest to Yellow (Fig. 11 A), Purple (Fig. 11 C), and Cyan (Fig. 11 D,E). In the second set, which added White, Black behaved as an outgroup, and the distances between the secondary colors deviated substantially from expectation and produced false results (Fig. 11 D–F). These results illustrate the sensitivity of PCA to tiny changes in the dataset that are unrelated to the populations or the sample sizes.

Studying the effects of minor sample variation on PCA results using color populations ( n all = 50). ( A – C ) Analyzing secondary colors and Black. ( D – E ) Analyzing secondary colors, White, and Black. Scatter plots show the top two PCs. Colors include Cyan [0,1,1], Purple [1,0,1], Yellow [1,1,0], White [1,1,1], and Black [0,0,0].

To explore this effect on human populations, we curated a cohort of 16 populations and carried out PCA on ten random individuals from each of 15 random populations. These analyses yield spurious and conflicting results (Fig. 12 ). Puerto Ricans, for instance, clustered close to Europeans (A), between Africans and Europeans (B), close to Adygei (C), and close to Europeans and Adygei (D). Indians clustered with Mexicans (A, B, and D) or apart from them (C). Mexicans themselves clustered with (A and D) or without (B and C) Africans. Papuans and Russians clustered close to (B) or far from (C) East Asian populations. More robust clustering was observed for East Asians, Caucasians, and Europeans, as well as Africans. However, these clusters were not only indistinguishable from the less robust ones but also failed to replicate over multiple runs (results not shown). These examples show that PCA results are unpredictable and irreproducible even when 94% of the populations are the same. Note that the proportion of explained variance was similar in all the analyses, demonstrating that it is not an indication of accuracy or robustness.

Studying the effect of sampling on PCA results. A cohort of 16 worldwide populations (see legend) was selected. In each analysis, a random population was excluded. Populations were represented by random samples ( n = 10). The clusters highlight the most notable differences.

We found that although PCA is a deterministic process, it behaves unexpectedly, and minor variations can lead to an ensemble of different outputs that appear stochastic. This effect is more substantial when continental populations are excluded from the analysis.
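A toy version of the first color set of Fig. 11 (secondary colors and Black) makes the point concrete. In this sketch, which assumes Gaussian noise (SD 0.05) clipped to [0, 1] and n = 50 per population, only the random seed changes between runs. PCA itself returns identical output for identical input; any variation comes from the samples. One way to see why this particular set is fragile: Cyan, Purple, Yellow, and Black sit at the corners of a regular tetrahedron in RGB space, so with equal sample sizes the between-population variance is the same in every direction, and the choice of PC1 and PC2 is left largely to sampling noise. The function names are illustrative.

```python
import numpy as np

COLORS = {"Cyan": [0, 1, 1], "Purple": [1, 0, 1], "Yellow": [1, 1, 0], "Black": [0, 0, 0]}

def sample_colors(seed, n=50, sd=0.05):
    """Draw n noisy individuals per color population; only `seed` varies between runs."""
    rng = np.random.default_rng(seed)
    X = np.vstack([np.clip(np.array(v, dtype=float) + rng.normal(0, sd, (n, 3)), 0, 1)
                   for v in COLORS.values()])
    labels = np.repeat(list(COLORS), n)
    return X, labels

def pca_top2(X):
    """PC1-PC2 scores via SVD, with signs fixed so each axis's largest loading is positive."""
    Xc = X - X.mean(axis=0)
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    V = Vt[:2].T.copy()
    V *= np.sign(V[np.abs(V).argmax(axis=0), np.arange(2)])
    return Xc @ V

def nearest_to_black(X, labels):
    """Name of the population whose PC1-PC2 centroid is closest to Black's."""
    scores = pca_top2(X)
    cents = {p: scores[labels == p].mean(axis=0) for p in np.unique(labels)}
    others = [p for p in cents if p != "Black"]
    return min(others, key=lambda p: np.linalg.norm(cents[p] - cents["Black"]))

for seed in range(5):
    print(seed, nearest_to_black(*sample_colors(seed)))
```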

The cases of case–control matching and GWAS

Samples of unknown ancestry or self-reported ancestry are typically identified by applying PCA to a cohort of test samples combined with reference populations of known ancestry (e.g., 1000 Genomes), e.g., Refs. 22 , 54 , 55 , 56 . To test whether using PCA to identify the ancestry of an unknown cohort with known samples is feasible, we simulated a large and heterogeneous Cyan population (Fig. 13 A, circles) of self-reported Blue ancestry. Following a typical GWAS scheme, we carried out PCA for these individuals and seven known and distinct color populations. PCA grouped the Cyan individuals with Blue and Black individuals (Fig. 13 B), although none of the Cyan individuals were Blue or Black (Fig. 13 A), as a different PCA scheme confirmed (Fig. 13 C). A case–control assignment of this cohort to Blue or Black based on the PCA result (Fig. 13 B) produced poor matches that reduced the power of the analysis. When repeating the analysis with different reference populations (Fig. 13 D), the simulated individuals exhibited minimal overlap with Blue, no overlap with Black, and overlapped mostly with the Cyan reference population present this time. We thereby showed that the clustering with Blue and Black is an artifact of the choice of reference populations. In other words, introducing reference populations whose ancestries do not match those of the unknown samples biases the ancestry inference of the latter.
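The assignment step can be sketched as nearest-centroid matching in the space of the top PCs, computed jointly on the test and reference samples. The helper below is hypothetical and written for illustration only (it is not part of EIGENSOFT or PLINK, and real pipelines differ in how they assign ancestry); the colors, noise levels, and sample sizes are assumptions loosely modeled on Fig. 13. Its structural weakness mirrors the simulation above: the function can only answer with one of the supplied reference labels, so when no Cyan reference is present, every Cyan individual is necessarily matched to some other population.

```python
import numpy as np

def top_pcs(X, k=2):
    """Scores on the top-k PCs of the mean-centered matrix X (rows = individuals)."""
    Xc = X - X.mean(axis=0)
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    return Xc @ Vt[:k].T

def assign_by_nearest_reference(X_test, refs, k=2):
    """Hypothetical helper: label each test individual with the reference population
    whose centroid is nearest on the top-k PCs of the pooled test + reference data."""
    names = list(refs)
    pooled = np.vstack([X_test] + [refs[p] for p in names])
    scores = top_pcs(pooled, k)
    test_scores = scores[: len(X_test)]
    centroids, start = [], len(X_test)
    for p in names:
        n = len(refs[p])
        centroids.append(scores[start:start + n].mean(axis=0))
        start += n
    C = np.array(centroids)
    # Distance from every test individual to every reference centroid.
    d = np.linalg.norm(test_scores[:, None, :] - C[None, :, :], axis=2)
    return [names[i] for i in d.argmin(axis=1)]

rng = np.random.default_rng(0)

def draw(rgb, n, sd):
    return np.clip(np.array(rgb, dtype=float) + rng.normal(0, sd, (n, 3)), 0, 1)

test_cyan = draw([0, 1, 1], 1000, sd=0.15)   # heterogeneous cohort, "self-reported Blue"
refs = {name: draw(rgb, 250, sd=0.05) for name, rgb in {
    "Yellow": [1, 1, 0], "Purple": [1, 0, 1], "Black": [0, 0, 0],
    "Green": [0, 1, 0], "Blue": [0, 0, 1]}.items()}   # note: no Cyan reference
labels = assign_by_nearest_reference(test_cyan, refs)
print({name: labels.count(name) for name in refs})
```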

Evaluating the accuracy of PCA clustering for a heterogeneous test population in a simulation of a GWAS setting. ( A ) The true distribution of the test Cyan population ( n = 1000). ( B ) PCA of the test population with eight even-sized ( n = 250) samples from reference populations. ( C ) PCA of the test population with Blue from the previous analysis shows a minimal overlap between the cohorts. ( D ) PCA of the test population with five even-sized ( n = 250) samples from reference populations, including Cyan (marked by an arrow). Colors ( B ) from top to bottom and left to right include: Yellow [1,1,0], light Red [1,0,0.5], Purple [1,0,1], Dark Purple [0.5,0,0.5], Black [0,0,0], dark Green [0,0.5,0], Green [0,1,0], and Blue [0,0,1].

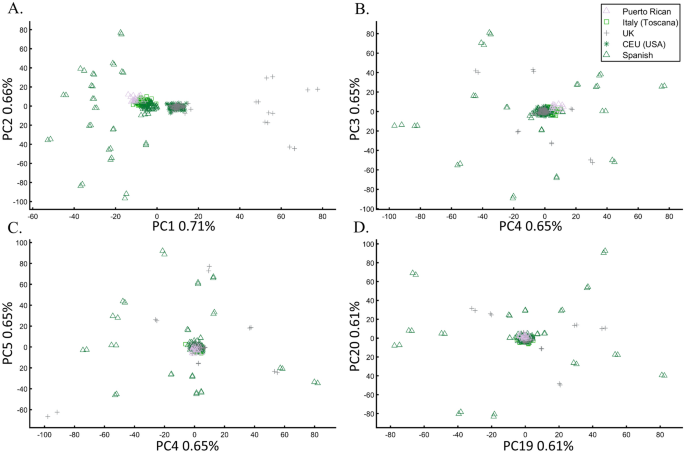

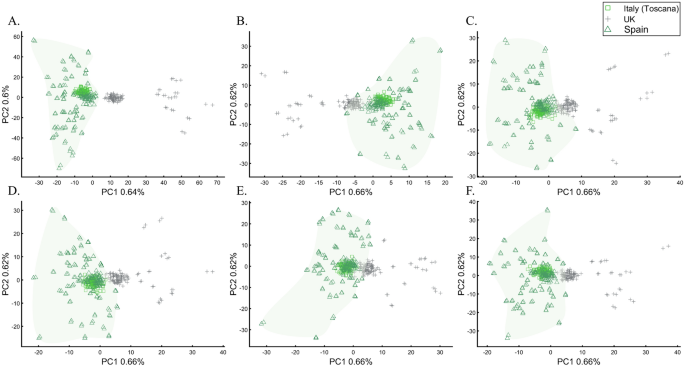

We next asked whether PCA can group Europeans into homogeneous clusters. Analyzing four European populations yielded 43% homogeneous clusters (Fig. 14 A). Adding Africans and Asians, and then South Asian populations, decreased the European cluster homogeneity to 14% and 10%, respectively (Fig. 14 B,C). Including the 1000 Genomes populations, as customarily done, yielded 14% homogeneous clusters (Fig. 14 D). Although the Europeans remained the same, the addition of other continental populations resulted in a three- to four-fold decrease in the homogeneity of their clusters.
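The homogeneity figures above depend on how clusters are defined, which this section does not spell out. The helper below is a minimal sketch that takes cluster assignments as given (from whatever clustering of the PC scores is used) and assumes that a cluster is homogeneous when all of its members share one population label; it reports both the share of homogeneous clusters and the share of individuals who fall in them. The function name and toy labels are illustrative.

```python
from collections import defaultdict

def cluster_homogeneity(cluster_ids, population_labels):
    """Return (share of homogeneous clusters, share of individuals in them).

    Assumed definition: a cluster is homogeneous when all its members share one label.
    """
    members = defaultdict(list)
    for cluster, pop in zip(cluster_ids, population_labels):
        members[cluster].append(pop)
    homogeneous = [c for c, pops in members.items() if len(set(pops)) == 1]
    n_in_homogeneous = sum(len(members[c]) for c in homogeneous)
    return len(homogeneous) / len(members), n_in_homogeneous / len(population_labels)

# Toy example: clusters 0 and 2 are homogeneous; cluster 1 mixes CEU and GBR.
print(cluster_homogeneity([0, 0, 1, 1, 2], ["TSI", "TSI", "CEU", "GBR", "IBS"]))
# prints (0.6666666666666666, 0.6)
```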

Evaluating the cluster homogeneity of European samples. PCA was applied to the four European populations (Tuscan Italians [TSI], Northern and Western Europeans from Utah [CEU], British [GBR], and Spanish [IBS]) alone ( A ), together with an African and Asian population ( B ), as well as South Asian population ( C ), and finally with all the 1000 Genomes Populations ( D ). ( E ) Evaluating the usefulness of PCA-based clustering. The bottom two plots show the sizes of non-homogeneous and homogeneous clusters, and the top three plots show the proportion of individuals in homogeneous clusters. Each plot shows the results for 10 or 20 random African, European, or Asian populations for the same PCs ( x -axis).

The number of PCs analyzed in the literature ranges from 2 to at least 280 35 , which raises the question of whether using more PCs increases cluster homogeneity or is another cherry-picking strategy. We calculated the cluster homogeneity for different PCs for either 10 or 20 African ( n 10 = 337, n 20 = 912), Asian ( n 10 = 331, n 20 = 785), and European ( n 10 = 440, n 20 = 935) populations of similar sample sizes (Fig. 14 E). Even in this favorable setting, which included only continental populations, the homogeneous clusters identified using PCA were, on average, significantly smaller than the non-homogeneous clusters ( µ Homogeneous = 12.5 samples; µ Non-homogeneous = 42.6 samples; Kruskal–Wallis test [ n Homogeneous = n Non-homogeneous = 238 samples, p = 1.95 × 10^−75, Chi-square = 338]) and included a minority of the individuals when 20 populations were analyzed. Analyzing higher PCs decreased the size of the homogeneous clusters and increased the size of the non-homogeneous ones. The maximum number of individuals in the homogeneous clusters fluctuated for different populations and sample sizes. Mixing other continental populations with each cohort decreased the homogeneity of the clusters and their sizes (results not shown). Overall, these examples show that PCA is a poor clustering tool, particularly as sample size increases, in agreement with Elhaik and Ryan 57 , who reported that PCA clusters are neither genetically nor geographically homogeneous and that PCA does not handle admixed individuals well. Note that the cluster homogeneity in this limited setting should not be confused with the amount of variance explained by additional PCs.
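For readers who want to check the reported statistic, the Kruskal–Wallis H test can be computed directly from ranks. The sketch below is a plain-Python version with the usual tie correction; with two groups (homogeneous versus non-homogeneous clusters), H is compared with a chi-square distribution with one degree of freedom, whose upper tail equals erfc(√(H/2)). In practice, `scipy.stats.kruskal` performs the same test; the function names here are illustrative.

```python
import math

def kruskal_wallis_h(*groups):
    """Kruskal-Wallis H statistic, using average ranks for ties and the tie correction."""
    pooled = sorted((value, g) for g, grp in enumerate(groups) for value in grp)
    n = len(pooled)
    rank_sums = [0.0] * len(groups)
    tie_term = 0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and pooled[j + 1][0] == pooled[i][0]:
            j += 1                      # extend the run of tied values
        avg_rank = (i + j) / 2 + 1      # mean of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            rank_sums[pooled[k][1]] += avg_rank
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1
    h = 12 / (n * (n + 1)) * sum(r ** 2 / len(grp) for r, grp in zip(rank_sums, groups))
    h -= 3 * (n + 1)
    return h / (1 - tie_term / (n ** 3 - n))

def chi2_sf_df1(x):
    """Upper-tail probability of a chi-square variable with 1 degree of freedom."""
    return math.erfc(math.sqrt(x / 2))

h = kruskal_wallis_h([1, 2, 3], [4, 5, 6])
print(h, chi2_sf_df1(h))
```

As a sanity check on magnitudes, `chi2_sf_df1(338)` is on the order of 10^−75, consistent with the p-value reported above (the reported H is rounded).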

To further assess whether PCA clustering represents shared ancestry or biogeography, two of the most common applications of PCA, e.g., Ref. 22 , we applied PCA to 20 Puerto Ricans (Fig. 15 ) and 300 Europeans. The Puerto Ricans clustered indistinguishably with Europeans (in contrast to Fig. 12 ) using the first two and higher PCs (Fig. 15 ). The Puerto Ricans represented over 6% of the cohort, sufficient to generate a stratification bias in an association study. We tested this by randomly assigning case–control labels to the European samples, with all the Puerto Ricans as controls. We then generated causal alleles for the evenly-sized cohorts and computed the association before and after PCA adjustment. We repeated the analysis with randomly assigned labels for all the samples. In all 12 of our case–control analyses, the outcomes of the PCA adjustment for 2 and 10 PCs were worse than the unadjusted results, i.e., PCA-adjusted results had more false positives, fewer true positives, and weaker p -values than the unadjusted results (Supplementary Text 3 ).
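PCA adjustment in association testing commonly means adding the top PCs as covariates to a regression of the trait on each genotype. The following is a minimal sketch of that idea, assuming a quantitative trait, ordinary least squares, and simulated genotypes; it is not the case–control simulation of Supplementary Text 3, and all names and parameters (cohort size, SNP count, allele frequency, effect size) are illustrative.

```python
import numpy as np

def top_pcs(G, k):
    """Top-k PC scores of a genotype matrix (individuals x SNPs), SNPs mean-centered."""
    Gc = G - G.mean(axis=0)
    U, S, _ = np.linalg.svd(Gc, full_matrices=False)
    return U[:, :k] * S[:k]

def assoc_t(y, g, covariates=None):
    """OLS t-statistic for genotype g in the model y ~ 1 + g (+ covariates)."""
    cols = [np.ones_like(y), g]
    if covariates is not None:
        cols.append(covariates)
    X = np.column_stack(cols)
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    sigma2 = resid @ resid / (len(y) - X.shape[1])
    se = np.sqrt(sigma2 * np.linalg.inv(X.T @ X)[1, 1])
    return beta[1] / se

rng = np.random.default_rng(0)
n, m = 320, 200                          # e.g., 300 + 20 individuals; hypothetical SNP count
G = rng.binomial(2, 0.3, size=(n, m)).astype(float)
y = 1.0 * G[:, 0] + rng.normal(size=n)   # SNP 0 is the simulated causal allele

t_raw = assoc_t(y, G[:, 0])
t_pc2 = assoc_t(y, G[:, 0], top_pcs(G, 2))
t_pc10 = assoc_t(y, G[:, 0], top_pcs(G, 10))
print(round(t_raw, 2), round(t_pc2, 2), round(t_pc10, 2))
```

Comparing `t_raw` with `t_pc2` and `t_pc10` across differently composed simulated cohorts is one way to explore how adjustment changes the signal.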

PCA of 20 Puerto Ricans and 300 random Europeans from the 1000 Genomes. The results are shown for various PCs.

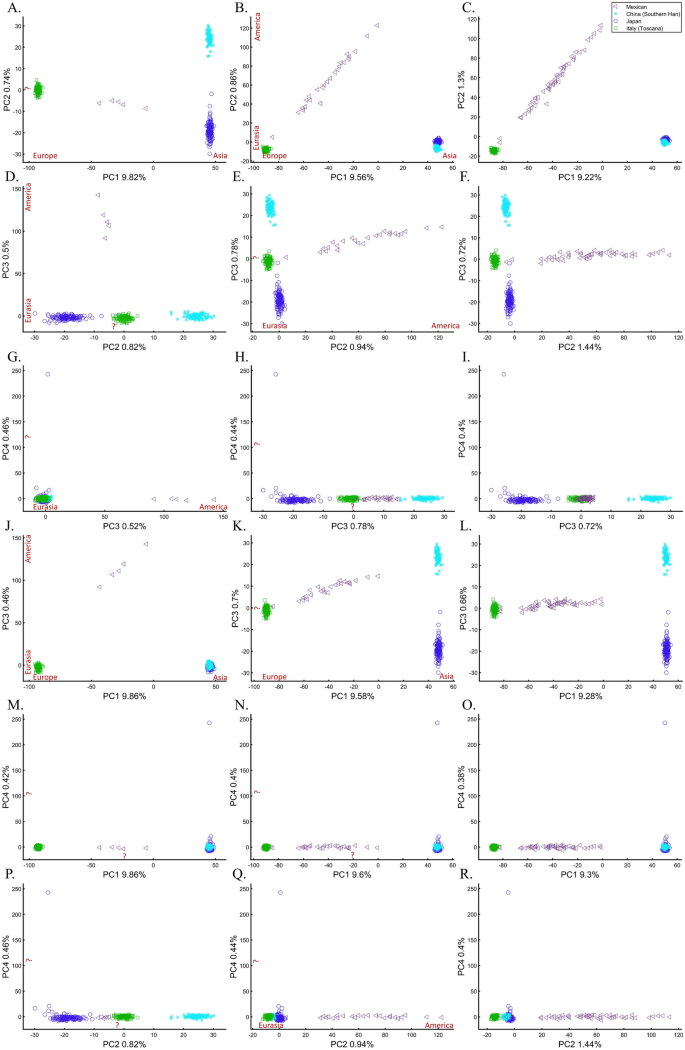

We next assessed whether the distance between individuals and populations is a meaningful biological or demographic quantity by studying the relationships between Chinese and Japanese, a question of major interest in the literature 58 , 59 . We previously applied PCA to Chinese and Japanese, using Europeans as an outgroup (Supplementary Fig. S2.4 ). The only element that varied in the following analyses was the number of Mexicans added as a second outgroup (5, 25, and 50). The proportion of homogeneous Japanese and Chinese clusters dropped from 100% (Fig. 16 A) to 93.33% (Fig. 16 B) and 40% (Fig. 16 C), demonstrating that the genetic distances between Chinese and Japanese depend entirely on the number of Mexicans in the cohort rather than, as one may expect, on the actual genetic relationships between these populations.

The effect of varying the number of Mexican-Americans on the inference of genetic distances between Chinese and Japanese using various PCs. We analyzed a fixed number of 135 Han Chinese (CHB), 133 Japanese (JPT), 115 Italians (TSI), and a variable number of Mexicans (MXL), including 5 (left column), 25 (middle column), and 50 (right column) individuals, over the top four PCs. We found that the overlap between Chinese and Japanese in PC scatterplots, typically used to infer genomic distances, was unexpectedly conditional on the number of Mexicans in the cohort. We noted the meaning of the axes of variation whenever apparent (red). The right column had the same axes of variation as the middle one.

Some authors consider higher PCs informative and advise considering these PCs alongside the first two. In our case, however, these PCs were not only susceptible to bias due to the addition of Mexicans but also exhibited the exact opposite pattern to that observed in the primary PCs (e.g., Fig. 16 G–I). It has also been suggested that in datasets with ancestry differences between samples, axes of variation often have a geographic interpretation 10 . If so, the changes in the order of the axes of variation caused by adding Mexicans would make the analysis of additional PCs valuable. We demonstrate that this is not always the case. Except for PC1, over 60% of the axes had no geographical interpretation or an incorrect one. A priori knowledge of the current distribution of the populations was essential to differentiate these cases. The addition of the first 20 Mexicans replaced the second axis of variation (initially undefined) with a third axis (Eurasia-America) in the middle and right columns and resulted in a minor decline of ~5% in the homogeneous clusters. Adding 25 Mexicans to the second cohort did not affect the axes, but the proportion of homogeneous clusters declined by 66%. The axis changes were unexpected and altered the interpretation of PCA results. Such changes were not detectable without a priori knowledge.

These results demonstrate that (1) the observable distances (and thereby clusters) between populations inferred from PCA plots (Figs. 14 , 15 , 16 ) are artifacts of the cohort and do not provide meaningful biological or historical information; (2) distances between samples can be easily manipulated by the experimenter in a way that produces unpredictable results; (3) considering higher PCs produces conflicting patterns, which are difficult to reconcile and interpret; and (4) our extensive “exploration” of PCA solutions to Chinese and Japanese relationships using 18 scatterplots and four PCs produced no insight. The multitude of conflicting results allows the experimenter to select the favorable solution that reflects their a priori knowledge.

The case of projections

Incorporating precalculated PCA is done by projecting the PCA results calculated for the first dataset onto the second one, e.g., Ref. 17 . Here, we tested the accuracy of this approach by projecting one or more color populations onto precalculated color populations that may or may not match the projected ones. The accuracy of the results was dependent on the identity of the populations of the two cohorts. When the same populations were analyzed, they overlapped (Fig. 17 A), but when unique populations were found in the two datasets, PCA created misleading matches (Figs. 17 B–D). In the latter case, and when the sample sizes were uneven (Fig. 17 C), the projected samples formed clusters with the wrong populations, and their positioning in the plot was incorrect. Overall, we found that PCA projections are unreliable and misleading, with correct outcomes indistinguishable from incorrect ones.
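In its simplest form, a projection reuses the mean and the principal axes estimated on the base dataset and applies them unchanged to the new samples. The sketch below assumes that plain form (tools such as EIGENSOFT's smartpca offer additional projection options that are not modeled here), and the function names and colors are illustrative. The projected samples have no influence on the axes, so anything in which they differ from the base populations along directions the base does not vary is simply discarded.

```python
import numpy as np

def fit_pca(X_base, k=2):
    """Return the base mean and the top-k principal axes (as rows) of X_base."""
    mean = X_base.mean(axis=0)
    _, _, Vt = np.linalg.svd(X_base - mean, full_matrices=False)
    return mean, Vt[:k]

def project(X_new, mean, axes):
    """Place new samples on the precomputed axes, centering with the *base* mean."""
    return (X_new - mean) @ axes.T

rng = np.random.default_rng(1)

def draw(rgb, n, sd=0.02):
    return np.array(rgb, dtype=float) + rng.normal(0, sd, (n, 3))

base = np.vstack([draw([1, 0, 0], 50), draw([0, 1, 0], 50), draw([0, 0, 1], 50)])
mean, axes = fit_pca(base, k=2)
cyan_on_base = project(draw([0, 1, 1], 20), mean, axes)       # Cyan is absent from the base
admix_on_base = project(draw([0, 0.5, 0.5], 20), mean, axes)  # a 50:50 Green-Blue mix
print(cyan_on_base.mean(axis=0), admix_on_base.mean(axis=0))
```

Under these assumptions the projected Cyan centroid lands at roughly twice the offset of the Green-Blue mixture, in the same direction: on the base axes, a distinct population looks like an exaggerated Green-Blue admixture, because the base populations do not vary along the direction that separates Cyan from that mixture.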

Examining the accuracy of PCA projections. The PCA results of one dataset (circles) were projected onto another (squares). In ( A ), testing the case of varying sample sizes between the first ( n Red = 200, n Green = 10, n Blue = 200, n Purple = 10) and second ( n Red = 200, n Green = 200, n Blue = 10, n Purple = 10) datasets, where in the second dataset, colors varied a little (e.g., [1,0,0] → [1,0.1,0.1]). In ( B – D ), the sample size varied (10 ≤ n ≤ 300) for both datasets. Colors include Red [1,0,0], Green [0,1,0], light Green [1,0.2,1], Cyan [0,1,1], Blue [0,0,1], Purple [1,0,1], Yellow [1,1,0], Grey [0.5,0.5,0.5], White [1,1,1], and Black [0,0,0].

To evaluate the reliability of projections for human populations, we tested whether the projected populations cluster with their closest groups and to what extent these results can be manipulated. We found that populations can be shown to align correctly with continental populations when the base (or test) populations and the projected populations are very similar (Fig. 18 A), which gives us confidence in the accuracy of PCA projections. However, even in this simplest scenario of using three continental populations, it is unclear how to interpret the overlap between the base and projected populations, since the Spanish would not be considered genetically closer to Finns than to Italians, as suggested by PCA. In another simple scenario, where Europeans are projected onto other Europeans, distinct populations like AJs, Iberians, French, CEU, and British overlap entirely (Fig. 18 B), whereas Finns and Italians remain separate. Not only do the results bear no apparent resemblance to the geographical distribution, but they also produce conflicting information about the genetic distances between these populations, two properties that PCA enthusiasts claim it represents. Adding more populations, even if only to the projected populations, contributes to further distortions, with previously distinct populations (Fig. 18 B) now clustering together (Fig. 18 C). In a different dataset, projecting Japanese onto a base dataset of Africans and Europeans places them as an admixed African-European population. The projected Finns cluster with other Europeans (Fig. 18 D), at odds with the previous results (Fig. 18 B) that singled them out.

PCA projections of populations (italic font, with a black star inside the shape) onto base populations (regular font) with even-sized samples ( n = 50, unless noted otherwise). In ( A ) n projected = 100, ( B ) n projected = 50, ( C ) n projected = 20, ( D ) n projected = 100, ( E ) n projected = 80 and n projected = 100, and ( F ) 80 ≤ n projected ≤ 100 and 12 ≤ n projected ≤ 478.

To test the behavior of PCA when projecting populations different from the base populations, we projected Chinese, Finns, Indians, and AJs onto Levantine and two European populations (Fig. 18 E). The results imply that the Chinese and AJs are of Indian origin, itself derived from a European-Levantine mix. Replacing Levantines with Africans does not stabilize the projected results (Fig. 18 F): now the projected Chinese and Japanese overlap, and AJs cluster with Iranians.

Overall, our results show that it is unfeasible to rely on PCA projections, particularly in studies involving different populations, as is commonly done. Even when the projected populations are identical to the base ones, the base and projected populations may or may not overlap.

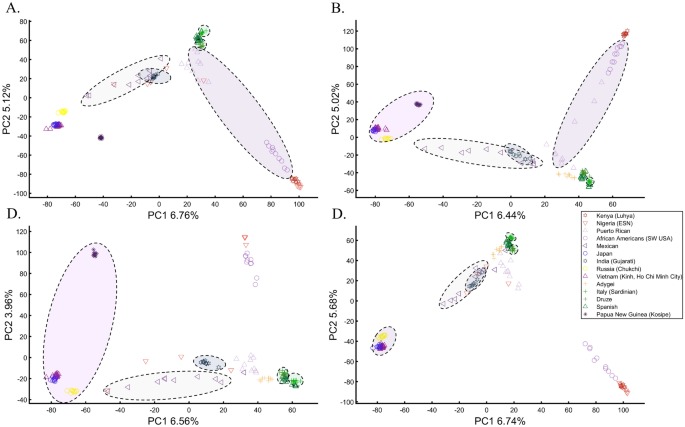

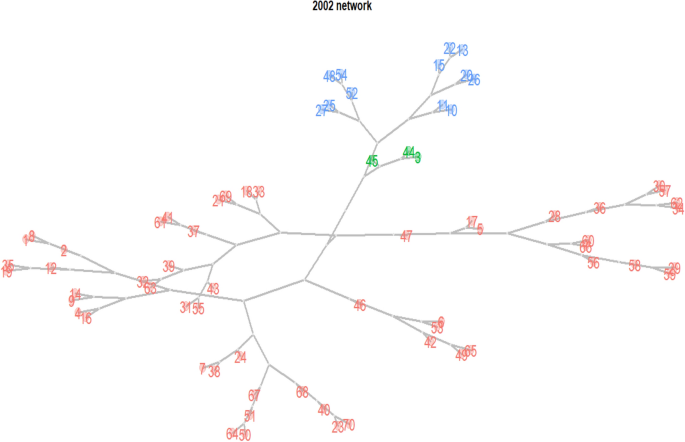

The case of ancient DNA

PCA is the primary tool in paleogenomics, where ancient samples are initially identified based on their clustering with modern or other ancient samples. Here, a wide variety of strategies is employed. In some studies, ancient and modern samples are combined 60 . In other studies, PCA is performed separately for each ancient individual and “particular reference samples”, and the PC loadings are combined 61 . Some authors projected present-day human populations onto the top two principal components defined by ancient hominins (and non-humans) 62 . The most common strategy is to project ancient DNA onto the top two principal components defined by modern-day populations 14 . Here, we will investigate the accuracy of this strategy.

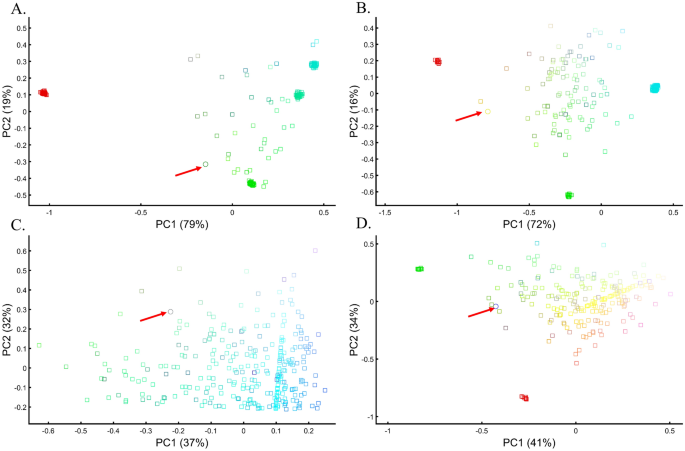

Since ancient populations show more genetic diversity than modern ones 14, we defined “ancient colors” (a) as brighter colors whose allele frequency is 0.95 with an SD of 0.05, and “modern colors” (m) as darker colors whose allele frequency is 0.6 with an SD of 0.02. We analyzed the two datasets using two approaches. The first was calculating PCA separately for each dataset and presenting the results jointly (Fig. 19 A,B). The second was projecting the PCA results of the “ancient” populations onto the “modern” ones (Fig. 19 C,D). In both cases, meaningful results would show the ancient colors clustering close to their modern counterparts, at distances corresponding to their true distances.
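The "ancient" and "modern" color populations defined above can be simulated roughly as follows. This sketch rests on several assumptions not stated in the text. Each color is treated as a three-marker RGB vector. Channels that are "on" are centered on the stated allele frequency (0.95 or 0.6), and "off" channels are centered on 0. Every value is drawn from a normal distribution with the stated SD and clipped to [0, 1]. The function name is hypothetical.

```python
import random

# (allele frequency, SD) pairs taken from the text; how they map onto
# RGB channels below is an assumption made for illustration.
ANCIENT = (0.95, 0.05)
MODERN = (0.6, 0.02)


def simulate_color_population(base_rgb, n, freq, sd, rng):
    """Draw n individuals for one color population.

    Assumption: each channel that is "on" in base_rgb (value > 0) is centred
    on `freq` and "off" channels on 0. Each value is drawn from
    Normal(centre, sd) and clipped to the valid frequency range [0, 1].
    """
    centres = [freq if c > 0 else 0.0 for c in base_rgb]
    return [[min(1.0, max(0.0, rng.gauss(mu, sd))) for mu in centres]
            for _ in range(n)]


rng = random.Random(42)  # fixed seed so the draw is reproducible
a_red = simulate_color_population([1, 0, 0], 25, *ANCIENT, rng)  # aRed, n = 25
m_red = simulate_color_population([1, 0, 0], 75, *MODERN, rng)   # mRed, n = 75
mean_a = sum(ind[0] for ind in a_red) / len(a_red)
mean_m = sum(ind[0] for ind in m_red) / len(m_red)
print(round(mean_a, 2), round(mean_m, 2))
```

The larger SD of the "ancient" colors encodes the higher diversity of ancient populations assumed in the text. Clipping at 1 pulls the aRed mean slightly below 0.95.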

Merging PCA of “ancient” (circles) and “modern” (squares) color populations using two approaches. First, PCA is calculated separately on the two datasets, and the results are plotted together (A, B). Second, PCA results of “ancient” populations are projected onto the PCs of the “modern” ones (C, D). In (A), even-sized samples from “ancient” (n = 25) and “modern” (n = 75) color populations are used. In (B), different-sized samples from “ancient” (10 ≤ n ≤ 25) and “modern” (10 ≤ n ≤ 75) populations are used. In (C) and (D), different-sized samples from “ancient” populations (10 ≤ n ≤ 75) are used alongside even-sized samples from “modern” populations: (C) n = 15 and (D) n = 25. Colors include Red [1,0,0], dark Red [0.6,0,0], Green [0,1,0], dark Green [0,0.6,0], Blue [0,0,1], dark Blue [0,0,0.6], light Cyan [0,0.6,0.6], light Yellow [0.6,0.6,0], light Purple [0.6,0,0.6], and Black [0,0,0].

These are indeed the results of PCA when even-sized “modern” and “ancient” samples from color populations are analyzed and the color palette is balanced (Fig. 19 A). In the more realistic scenario, the color palette is imbalanced and sample sizes differ. Here, PCA produced incorrect results: ancient Green (aGreen) clustered with modern Yellow (mYellow), away from its closest counterpart, mGreen, which clustered close to aRed. mPurple appeared as a four-way mix of aRed, aBlue, mCyan, and mDark Blue. Instead of being at the center (Fig. 19 A), Black became an outgroup, and its distances to the other colors were distorted (Fig. 19 B). Projecting “ancient” colors onto “modern” ones also severely misrepresented the relationships among the ancient samples. aRed overlapped with aBlue or aGreen, mYellow appeared closer to mCyan or aRed, and the outgroups continuously changed (Fig. 19 C,D). Note that the first two PCs of the last analysis explained the largest proportion of the variance (89%) of all the analyses.

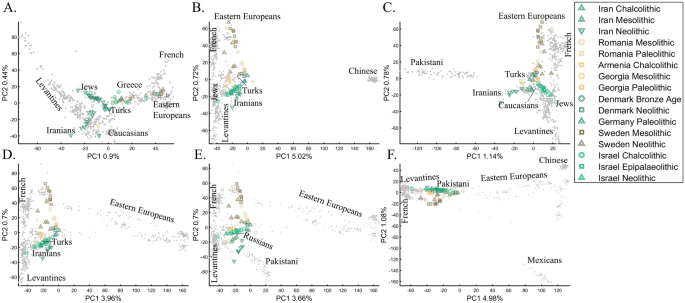

Recently, Lazaridis et al. 14 projected ancient Eurasians onto modern-day Eurasians. They reported that ancient samples from Israel clustered at one end of the Near Eastern “cline” and ancient Iranians at the other, close to modern-day Jews. Insights from the positions of the ancient populations were then used in their admixture modeling, which supposedly confirmed the PCA results. We tested whether the authors’ inferences were correct and to what extent those PCA results are unique. To do so, we used similar modern and ancient populations to replicate the results of Lazaridis et al. 14 (Fig. 20 A). By adding the modern-day populations that Lazaridis et al. 14 omitted, we found that the ancient Levantines cluster with Turks (Fig. 20 B), Caucasians (Fig. 20 C), Iranians (Fig. 20 D), Russians (Fig. 20 E), and Pakistani (Fig. 20 F) populations. The overlap between the ancient Levantines and other populations also varied widely. They clustered with ancient Iranians and Anatolians, with Caucasians, or alone as a “population isolate.” Moreover, the remaining ancient populations exhibited conflicting results inconsistent with our understanding of their origins. Mesolithic and Neolithic Swedes, for instance, clustered with modern Eastern Europeans (Fig. 20 A–C) or remotely from them (Fig. 20 D–F). These examples show the wide variety of results and interpretations that can be generated when ancient populations are projected onto modern ones. Lazaridis et al.’s 14 results are neither the only possible ones nor those that explain the most variation. In the first outcome (Fig. 20 A), the first two components explained only 1.35% of the variation in our replication analysis (Lazaridis et al. did not report the proportion of explained variation). All the alternative outcomes explained a much larger portion of the variation (1.92–6.06%). It is therefore difficult to justify Lazaridis et al.’s 14 preference for the first outcome.
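The percentages compared above follow the standard definition of explained variance. For the top k PCs, it is the sum of the k largest eigenvalues of the marker covariance matrix divided by its trace, (λ1 + … + λk) / Σλi. A minimal pure-Python sketch under that definition follows. The function names are hypothetical, and power iteration stands in for the eigensolvers that real PCA software uses.

```python
import random


def covariance(rows):
    """Sample covariance matrix between markers (columns) of a samples-by-markers matrix."""
    n, p = len(rows), len(rows[0])
    means = [sum(col) / n for col in zip(*rows)]
    c = [[x - m for x, m in zip(r, means)] for r in rows]
    return [[sum(r[i] * r[j] for r in c) / (n - 1) for j in range(p)]
            for i in range(p)]


def top_eigenvalues(cov, k, iters=1000, seed=0):
    """Largest k eigenvalues of a symmetric PSD matrix via power iteration with deflation."""
    rng = random.Random(seed)
    p = len(cov)
    a = [row[:] for row in cov]
    values = []
    for _ in range(k):
        v = [rng.random() + 0.1 for _ in range(p)]
        norm = sum(x * x for x in v) ** 0.5
        v = [x / norm for x in v]
        for _ in range(iters):
            w = [sum(a[i][j] * v[j] for j in range(p)) for i in range(p)]
            norm = sum(x * x for x in w) ** 0.5
            if norm == 0:
                break
            v = [x / norm for x in w]
        lam = sum(v[i] * a[i][j] * v[j] for i in range(p) for j in range(p))  # Rayleigh quotient
        values.append(lam)
        # Remove the found component so the next pass finds the next eigenvalue.
        a = [[a[i][j] - lam * v[i] * v[j] for j in range(p)] for i in range(p)]
    return values


def explained_variance(rows, k=2):
    """Share of total variance captured by the top-k PCs: (lambda_1 + ... + lambda_k) / trace."""
    cov = covariance(rows)
    total = sum(cov[i][i] for i in range(len(cov)))  # trace = sum of all eigenvalues
    return sum(top_eigenvalues(cov, k)) / total


# Toy data: marker 1 has 4x the variance of marker 2 and they are uncorrelated,
# so PC1 captures 0.8 of the total variance and PC1 + PC2 capture all of it.
toy = [[-2.0, -1.0], [2.0, -1.0], [-2.0, 1.0], [2.0, 1.0]]
print(explained_variance(toy, k=1), explained_variance(toy, k=2))
```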

PCA of 65 ancient Palaeolithic, Mesolithic, Chalcolithic, and Neolithic individuals from Iran (12), Israel (16), the Caucasus (7), Romania (10), Scandinavia (15), and Central Europe (5) (colorful shapes) projected onto modern-day populations of various sample sizes (grey dots, black labels). The full population labels are shown in Supplementary Fig. S8. In addition to the modern-day populations used in (A), the following subfigures also include (B) Han Chinese, (C) Pakistani (Punjabi), (D) additional Russians, (E) Pakistani (Punjabi) and additional Russians, and (F) Pakistani (Punjabi), additional Russians, Han Chinese, and Mexicans. The ancient samples remained the same in all the analyses. In each plot (A–F), the ancient Levantines cluster with different modern-day populations.

We note that for high-dimensional data in which markers are in high LD, projected samples tend to “shrink,” i.e., move towards the center of the plot. Corrections for this phenomenon have been proposed in the literature, e.g., Ref. 63. This phenomenon does not affect our datasets, which are either very small (Fig. 19) or LD-pruned (Fig. 20).

The case of marker choice

The effect of marker choice on PCA results has received little attention in the literature. Although PCA is routinely applied to different SNP sets, the resulting PCs are typically deemed comparable. In forensic applications, which typically employ 100–300 markers, this is a major problem. Our color model cannot be used to evaluate the effect of different marker types on PCA outcomes. It can, however, be used to study the effects of missing data and noise, which are common in genomic datasets and reflect the biological properties of different marker types in capturing the population structure. Remarkably, the original population structure could still be recovered with 50% (Fig. 21 A) and even 90% missingness (Fig. 21 B). The structure decayed when random noise was added to the latter dataset (Fig. 21 C). To further explore the effect of noise, we added random markers to the dataset. Adding 10% noisy markers increased the dispersion of the data, but the original structure was retained (Fig. 21 D). Interestingly, even after adding 100% noisy markers, the key features of the original structure could still be identified (Fig. 21 E). Only when 1000% noisy markers were added did the original structure disappear (Fig. 21 F). Note that the introduction of noise also reduced the percentage of variation explained by the PCs. These results highlight two needs: using ancestry informative markers (AIMs) to uncover the true structure of the dataset, and accounting for disruptive markers.
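The perturbations described above (random missingness and added uninformative markers) can be sketched as follows. This is a hypothetical illustration, not the procedure used here. The text does not state how missing entries were handled before PCA, so mean imputation is an assumption. Drawing noise markers uniformly from [0, 1] is also an assumption. The function names are hypothetical.

```python
import random


def add_missingness(rows, fraction, rng):
    """Blank out (set to None) each value independently with probability `fraction`."""
    return [[None if rng.random() < fraction else x for x in r] for r in rows]


def mean_impute(rows):
    """Assumed strategy: replace missing values with the mean of the observed
    values for that marker (0.0 if a marker is entirely missing)."""
    means = []
    for col in zip(*rows):
        obs = [x for x in col if x is not None]
        means.append(sum(obs) / len(obs) if obs else 0.0)
    return [[means[j] if x is None else x for j, x in enumerate(r)] for r in rows]


def add_noise_markers(rows, n_markers, rng):
    """Append n_markers uninformative markers drawn uniformly from [0, 1]."""
    return [r + [rng.random() for _ in range(n_markers)] for r in rows]


rng = random.Random(1)
# Two toy "color" populations (Red and Green), 50 samples each, 3 markers.
data = ([[1.0, 0.0, 0.0] for _ in range(50)]
        + [[0.0, 1.0, 0.0] for _ in range(50)])
sparse = add_missingness(data, 0.9, rng)                 # 90% missingness
filled = mean_impute(sparse)                             # ready for PCA
noisy = add_noise_markers(data, 10 * len(data[0]), rng)  # "1000%" noise markers
print(sum(x is None for r in sparse for x in r) / 300, len(noisy[0]))
```

Here, "1000%" is read as ten noise markers per original marker. This matches the 300 to 3000 ratio between panels (E) and (F) of the figure.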

Testing the effects of missingness and noise in a PCA of six fixed-size (n = 50) samples from color populations. The top plots show the effect of missingness alone or combined with noise: (A) 50% missingness, (B) 90% missingness, and (C) 90% missingness and low-level random noise in all the markers. The bottom plots test the effect of noise when added to the original markers in the above plots using: (D) 30 random markers, (E) 300 random markers, and (F) 3000 random markers. Colors include Red [1,0,0], Green [0,1,0], Blue [0,0,1], Cyan [0,1,1], Yellow [1,1,0], and Black [0,0,0].

To evaluate the extent to which marker types represent the population structure, we studied the relationships between UK British and other Europeans (Italians and Iberians) using sets of 30,000 SNPs of different types. This number is comparable in magnitude to the number of SNPs analyzed by some groups 64, 65. According to the full SNP set, the British do not overlap with the other Europeans (Fig. 22 A). However, coding SNPs show considerable overlap (Fig. 22 B) compared with intronic SNPs (Fig. 22 C). Protein-coding SNPs, RNA molecules, and upstream or downstream SNPs (Fig. 22 D–F, respectively) also show a small overlap. The identification of “outliers,” already a subjective measure, may also differ based on the proportions of each marker type. These results illustrate how profoundly the choice of markers and populations affects PCA results. They also illustrate the difficulty of recovering the population structure in exome datasets. Overall, different marker types represent the population structure differently.

PCA of Tuscany Italians (n = 115), British (n = 105), and Iberians (n = 150) across all markers (p ~ 129,000) (A) and different marker types (p ~ 30,000): (B) coding SNPs, (C) intronic SNPs, (D) protein-coding SNPs, (E) RNA molecules, and (F) upstream and downstream SNPs. A convex hull was used to outline the European cluster.
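The caption notes that a convex hull was used to outline the European cluster. One hypothetical way to put a number on the "overlap" discussed above is the fraction of British samples whose PC1–PC2 coordinates fall inside that hull. The sketch below computes it with Andrew's monotone-chain hull algorithm. This overlap score is an illustrative assumption, not a statistic reported here, and the coordinates are toy values.

```python
def cross(o, a, b):
    """z-component of (a - o) x (b - o); > 0 means a counter-clockwise turn."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def convex_hull(points):
    """Andrew's monotone chain; returns hull vertices in counter-clockwise order."""
    pts = sorted(set(map(tuple, points)))
    if len(pts) <= 2:
        return pts
    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]  # drop duplicated endpoints


def inside_hull(point, hull):
    """True if point lies inside or on a counter-clockwise convex polygon."""
    if len(hull) < 3:  # degenerate cluster: only exact matches count
        return tuple(point) in hull
    n = len(hull)
    return all(cross(hull[i], hull[(i + 1) % n], point) >= 0 for i in range(n))


def overlap_fraction(query, cluster):
    """Hypothetical overlap score: share of query points inside the cluster's hull."""
    if not query:
        return 0.0
    hull = convex_hull(cluster)
    return sum(inside_hull(q, hull) for q in query) / len(query)


european_pcs = [(0, 0), (4, 0), (4, 4), (0, 4), (2, 2)]  # toy PC1-PC2 coordinates
british_pcs = [(1, 1), (3, 3), (5, 5), (-1, 2)]
print(overlap_fraction(british_pcs, european_pcs))
```

A hull-based score inherits the sensitivity of the hull to single extreme samples. Any such "overlap" figure would therefore shift with the marker set and sampling, as the panels above show.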

The case of inferring a personal ancestry

PCA is used to infer the ancestry of individuals for various purposes. However, with a sample size of one, such inferences may be even more subject to biases than population studies. We found that such biases can occur. An individual of Green ancestry clustered near admixed Cyan individuals rather than with Greens (Fig. 23 A). An individual of Yellow ancestry clustered near Orange rather than by itself (Fig. 23 B). A Grey individual clustered with Cyan when it was the only available population (Fig. 23 C). Similarly, a Blue individual clustered with Green samples (Fig. 23 D).

Inferring single individual ancestries using reference individuals. (A) Even-sized samples from reference populations (n = 37): Red [1,0,0], Green [0,1,0], bright Cyan [0, 0.9, 0.8], dark Cyan [0, 0.9, 0.6], and a heterogeneous darker Cyan [0, 0.9, 0.4] with a high standard deviation (0.25), with a light Green test individual [0, 0.5, 0]. (B) The same reference populations as in (A) with uneven sizes: Red (n = 15), Green (n = 15), bright Cyan (n = 100), dark Cyan (n = 15), and heterogeneous darker Cyan (n = 100), with a Yellow test individual [1,1,0]. (C) A heterogeneous Cyan population [0, 1, 1] (n = 300) with a high standard deviation (0.25) and a Grey test individual [0.5, 0.5, 0.5]. (D) Red [1,0,0] (n = 10), Green [0,1,0] (n = 10), a heterogeneous population [1, 1, 0.5] (n = 200), and a Blue test individual [0,0,1].

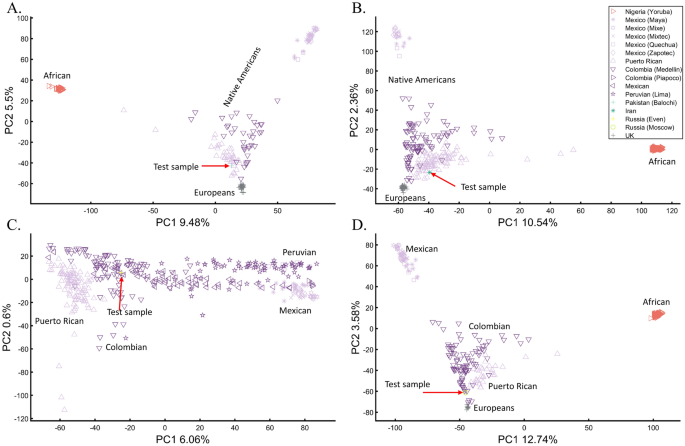

Arguably, one of the most famous cases of personal ancestral inference occurred in the lead-up to the 2020 US presidential primaries. A candidate published the outcome of a genetic analysis, performed by Carlos Bustamante, that tested their Native American ancestry (https://elizabethwarren.com/wp-content/uploads/2018/10/Bustamante_Report_2018.pdf). Analyzing 764,958 SNPs, Bustamante sought to test for the existence of Native American ancestry using populations from the 1000 Genomes Project and Amerindians. RFMix 66 was used to identify segments of Native American ancestry. PCA, elevated to a “machine learning technique,” was used to verify that ancestry independently of RFMix. The longest of five genetic segments judged to be of Native American origin was analyzed using PCA. It was reported to be “clearly distinct from segments of European ancestry” and “strongly associated with Native American ancestry,” as it clustered with Native Americans distinctly from Europeans and Africans (Fig. 1 in their report) and between Native American samples (Fig. 2 in their report). Bustamante concluded that “While the vast majority of the individual’s ancestry is European, the results strongly support the existence of an unadmixed Native American ancestor in the individual’s pedigree, likely in the range of 6–10 generations ago”.

We have already shown that AJs (Fig. 9 C) and Pakistanis (Fig. 14 D) can cluster with Native Americans. With the candidate’s DNA unavailable (and their specific European ancestry undisclosed), we tested whether the two PCA patterns observed by Bustamante can be reproduced for modern-day Eurasians without any reported Native American ancestry (Pakistani, Iranian, Even Russian, and Moscow Russian) (Figs. 24 A–D, respectively).

Evaluation of Native American ancestry for four Eurasians. (A) Even sample sizes (n = 37) for Africans, Mexican-Americans, British, Puerto Ricans, Colombians, and a Pakistani. (B) Uneven sample sizes for Africans (n = 100), Mexican-Americans (n = 20), British (n = 50), Puerto Ricans (n = 89), Colombians (n = 89), and an Iranian. (C) A whole-Amerindian cohort of Colombians (n = 93), Mexican-Americans (n = 117), Peruvians (n = 75), Puerto Ricans (n = 102), and an Even Russian. (D) Uneven sample sizes for Africans (n = 100), Mexican-Americans (n = 53), British (n = 20), Puerto Ricans (n = 30), Colombians (n = 89), and a Moscow Russian. All the samples were randomly selected.

These analyses show that the experimenter can easily generate desired patterns to support personal ancestral claims, making PCA an unreliable and misleading tool for inferring personal ancestry. We further question the accuracy of Bustamante’s report, given the biased reference population panel used by RFMix to infer the DNA segments of alleged Amerindian origin, which excluded East European and North Eurasian populations. We draw no conclusions about the candidate’s ancestry.

The reproducibility crisis in science has called for a rigorous evaluation of scientific tools and methods. Due to PCA’s centrality in population genetics, and since it was never proven to yield correct results, we sought to assess its reliability, robustness, and reproducibility for twelve test cases. We used both a simple color-based model, in which the true population structure was known, and real human populations. PCA failed on all three measures.

PCA did not produce correct and/or consistent results across all the design schemes, whether or not even sampling was used, and whether for unmixed or admixed populations. We have shown that the distances between the samples are biased and can be easily manipulated to create the illusion of closely or distantly related populations. The clustering of populations between other populations in the scatterplot has been regarded as “decisive proof” or “very strong evidence” of their admixture 18. We demonstrated, however, that such patterns are artifacts of the sampling scheme and meaningless for any biohistorical purpose. Sample clustering, a subject that has received much attention in the literature, e.g., Ref. 9, is another artifact of the sampling scheme and likewise biologically meaningless (e.g., Figs. 12, 13, 14, 15). This is unsurprising if the distances are distorted. PCA’s violations of the true distances and clusters between samples limit its usability as a dimensionality reduction tool for genetic analyses. Except for PC1, where the distribution patterns may (e.g., Fig. 5 a) or may not (e.g., Fig. 9) bear some geographical resemblance, most of the other PCs are mirages (e.g., Fig. 16). The axes of variation may also change unexpectedly when a few samples are added, altering the interpretation.