A Proposal for the Dartmouth Summer Research Project on Artificial Intelligence

The proposal for the Dartmouth Summer Research Project on Artificial Intelligence was, so far as I know, the first use of the phrase Artificial Intelligence. If you are interested in the exact typography, you will have to consult a paper copy.



Dartmouth Summer Research Project: The Birth of Artificial Intelligence

Held in the summer of 1956, the Dartmouth Summer Research Project on Artificial Intelligence brought together some of the brightest minds in computing and cognitive science, and it is considered to have founded artificial intelligence (AI) as a field.

In the early 1950s, the field of “thinking machines” went by an array of names, from cybernetics to automata theory to complex information processing. Before the conference, John McCarthy, a young assistant professor of mathematics at Dartmouth College, had been disappointed by the submissions to Automata Studies, a volume in the Annals of Mathematics Studies series. He regretted that contributors didn’t focus on the potential for computers to possess intelligence beyond simple behaviors. So he decided to organize a group to clarify and develop ideas about thinking machines.

“At the time I believed if only we could get everyone who was interested in the subject together to devote time to it and avoid distractions, we could make real progress,” John McCarthy said.

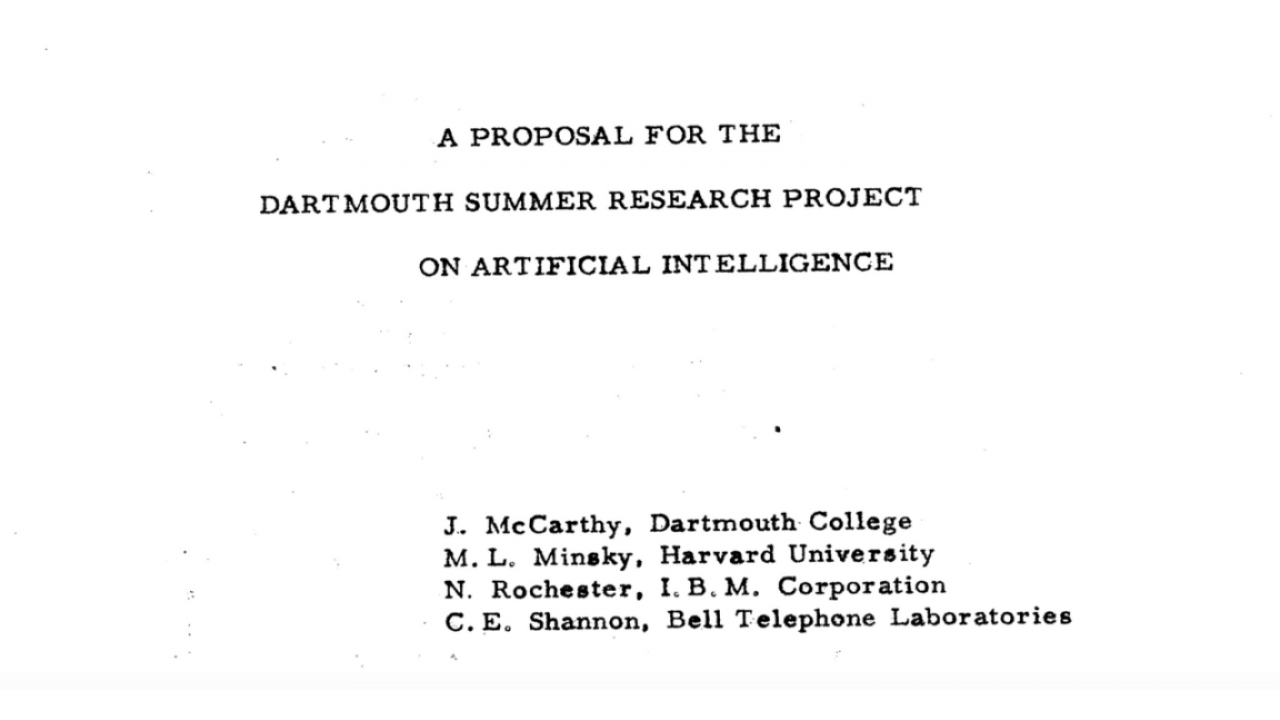

McCarthy approached the Rockefeller Foundation to request funding for a summer seminar at Dartmouth for 10 participants. In 1955, he formally proposed the project along with friends and colleagues Marvin Minsky (Harvard University), Nathaniel Rochester (IBM Corporation), and Claude Shannon (Bell Telephone Laboratories).

Laying the Foundations of AI

The workshop was based on the conjecture that “every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it. An attempt will be made to find how to make machines use language, form abstractions and concepts, solve kinds of problems now reserved for humans, and improve themselves.”

Although they came from very different backgrounds, all the attendees believed that thinking is not unique to humans or even to biological beings. Participants came and went, and discussions were wide-ranging. The term AI itself was coined, and research directions such as symbolic methods were launched. Many of the participants would later make key contributions to AI, ushering in a new era.

IBM’s First Computer

Nathaniel Rochester designs the IBM 701, the first computer marketed by IBM.

“Machine Learning” is coined

Attendee Arthur Samuel coins the term “machine learning” and creates the Samuel Checkers-Playing program, one of the world’s first successful self-learning programs.

Marvin Minsky Wins the Turing Award

Marvin Minsky wins the Turing Award for his “central role in creating, shaping, promoting and advancing the field of artificial intelligence.”


A Look Back on the Dartmouth Summer Research Project on Artificial Intelligence

The term “artificial intelligence” was coined by scientists at a conference held on campus in the summer of 1956.

For six weeks in the summer of 1956, a group of scientists convened on Dartmouth’s campus for the Dartmouth Summer Research Project on Artificial Intelligence. It was at this meeting that the term “artificial intelligence” was coined. Decades later, artificial intelligence has made significant advancements. While the recent arrival of programs like ChatGPT is changing the artificial intelligence landscape once again, The Dartmouth investigates the history of artificial intelligence on campus.

That initial conference in 1956 paved the way for the future of artificial intelligence in academia, according to Cade Metz, author of the book “Genius Makers: The Mavericks Who Brought AI to Google, Facebook, and the World.”

“It set the goals for this field,” Metz said. “The way we think about the technology is because of the way it was framed at that conference.”

However, the connection between Dartmouth and the birth of AI is not well known, according to some students. DALI Lab outreach chair and developer Jason Pak ’24 said that he had heard of the conference, but that he didn’t think it was widely discussed in the computer science department.

“In general, a lot of CS students don’t know a lot about the history of AI at Dartmouth,” Pak said. “When I’m taking CS classes, it is not something that I’m actively thinking about.”

Even though the connection between Dartmouth and the birth of artificial intelligence is not widely known on campus today, the conference’s influence on academic research in AI was far-reaching, Metz said. In fact, four of the conference participants built three of the largest and most influential AI labs at other universities across the country, shifting the nexus of AI research away from Dartmouth.

Conference participants John McCarthy and Marvin Minsky would establish AI labs at Stanford and MIT, respectively, while two other participants, Allen Newell and Herbert Simon, built an AI lab at Carnegie Mellon. Taken together, the labs at MIT, Stanford and Carnegie Mellon drove AI research for decades, Metz said.

Although the conference participants were optimistic, in the following decades they would fall short of many of the goals they had set for AI. Some participants in the conference, for example, believed that a computer would be able to beat any human at chess within just a decade.

“The goal was to build a machine that could do what the human brain could do,” Metz said. “Generally speaking, they didn’t think [the development of AI] would take that long.”

The conference mostly consisted of brainstorming ideas about how AI should work. However, “there was very little written record” of the conference, according to computer science professor emeritus Thomas Kurtz, in an interview that is part of the Rauner Special Collections archives.

The conference represented all kinds of disciplines coming together, Metz said. At that point, AI was a field at the intersection of computer science and psychology and it had overlaps with other emerging disciplines, such as neuroscience, he added.

Metz said that after the conference, two camps of AI research emerged. One camp believed in what are called neural networks, mathematical systems that learn skills by analyzing data. The idea of neural networks was based on the concept that machines can learn like the human brain, creating new connections and growing over time by responding to real-world input data.

Some of the conference participants would go on to argue that it wasn’t possible for machines to learn on their own. Instead, they believed in what is called “symbolic AI.”

“They felt like you had to build AI rule-by-rule,” Metz said. “You had to define intelligence yourself; you had to — rule-by-rule, line-by-line — define how intelligence would work.”

Notably, conference participant Marvin Minsky would go on to cast doubt on the neural network idea, particularly after the 1969 publication of “Perceptrons,” co-authored by Minsky and mathematician Seymour Papert, which Metz said led to a decline in neural network research.

Over the decades, Minsky adapted his ideas about neural networks, according to Joseph Rosen, a surgery professor at Dartmouth Hitchcock Medical Center. Rosen first met Minsky in 1989 and remained a close friend of his until Minsky’s death in 2016.

Minsky’s views on neural networks were complex, Rosen said, but his interest in studying AI was driven by a desire to understand human intelligence and how it worked.

“Marvin was most interested in how computers and AI could help us better understand ourselves,” Rosen said.

In about 2010, however, the neural network idea “was proven to be the way forward,” Metz said. Neural networks allow artificial intelligence programs to learn tasks on their own, which has driven a current boom in AI research, he added.

Given the boom in research activity around neural networks, some Dartmouth students feel there is room to expand AI-related courses and research opportunities. According to Pak, the computer science department currently focuses mostly on research areas other than AI. Of the 64 general computer science courses offered every year, only two are related to AI, according to the computer science department website.

“A lot of our interests are shaped by the classes we take,” Pak said. “There is definitely room for more growth in AI-related courses.”

There is a high demand for classes related to AI, according to Pak. Despite being a computer science and music double major, he said he could not get into a course called MUS 14.05: “Music and Artificial Intelligence” because of the demand.

DALI Lab developer and former development lead Samiha Datta ’23 said that she is doing her senior thesis on natural language processing, a subfield of AI and machine learning. Datta said that the conference is pretty well referenced, but she believes that many students do not know much about the specifics.

She added that she thinks the department is aware of the shortage of courses directly related to AI and is trying to address it, and that it is “more possible” to do AI research at Dartmouth now than it would have been a few years ago, due to the recent onboarding of four new professors who do AI research.

“I feel lucky to be doing research on AI at the same place where the term was coined,” Datta said.


The Dartmouth

Artificial Intelligence Coined at Dartmouth

The Dartmouth Summer Research Project on Artificial Intelligence was a seminal event for artificial intelligence as a field.

In 1956, a small group of scientists gathered for the Dartmouth Summer Research Project on Artificial Intelligence, which was the birth of this field of research.

To celebrate the 50th anniversary, more than 100 researchers and scholars again met at Dartmouth for AI@50, a conference that not only honored the past and assessed present accomplishments, but also helped seed ideas for future artificial intelligence research.

The initial meeting was organized by John McCarthy, then a mathematics professor at the College. In his proposal, he stated that the conference was “to proceed on the basis of the conjecture that every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it.”

Professor of Philosophy James Moor, the director of AI@50, says that the researchers who came to Hanover 50 years ago thought about ways to make machines more cognizant, and they wanted to lay out a framework to better understand human intelligence.


Vol. 27 No. 4: Winter 2006



Copyright © 2021, Association for the Advancement of Artificial Intelligence. All rights reserved.

Hitting the Books: Why a Dartmouth professor coined the term 'artificial intelligence'

If the Wu-Tang produced it in '23 instead of '93, they'd have called it D.R.E.A.M., because data rules everything around me. Where once our society brokered power based on the strength of our arms and purse strings, the modern world is driven by data empowering algorithms to sort, silo and sell us out. These black box oracles of imperious and imperceptible decision-making decide who gets home loans, who gets bail, who finds love and who gets their kids taken from them by the state.

In their new book, How Data Happened: A History from the Age of Reason to the Age of Algorithms, which builds off their existing curriculum, Columbia University Professors Chris Wiggins and Matthew L Jones examine how data is curated into actionable information and used to shape everything from our political views and social mores to our military responses and economic activities. In the excerpt below, Wiggins and Jones look at the work of mathematician John McCarthy, the junior Dartmouth professor who single-handedly coined the term "artificial intelligence"... as part of his ploy to secure summer research funding.

Excerpted from How Data Happened: A History from the Age of Reason to the Age of Algorithms by Chris Wiggins and Matthew L Jones. Published by WW Norton. Copyright © 2023 by Chris Wiggins and Matthew L Jones. All rights reserved.

Confecting “Artificial Intelligence”

A passionate advocate of symbolic approaches, the mathematician John McCarthy is often credited with inventing the term “artificial intelligence,” including by himself: “I invented the term artificial intelligence,” he explained, “when we were trying to get money for a summer study” to aim at “the long term goal of achieving human level intelligence.” The “summer study” in question was titled “The Dartmouth Summer Research Project on Artificial Intelligence,” and the funding requested was from the Rockefeller Foundation. At the time a junior professor of mathematics at Dartmouth, McCarthy was aided in his pitch to Rockefeller by his former mentor Claude Shannon. As McCarthy describes the term’s positioning, “Shannon thought that artificial intelligence was too flashy a term and might attract unfavorable notice.” However, McCarthy wanted to avoid overlap with the existing field of “automata studies” (including “nerve nets” and Turing machines) and took a stand to declare a new field. “So I decided not to fly any false flags anymore.” The ambition was enormous; the 1955 proposal claimed “every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it.” McCarthy ended up with more brain modelers than axiomatic mathematicians of the sort he wanted at the 1956 meeting, which came to be known as the Dartmouth Workshop. The event saw the coming together of diverse, often contradictory efforts to make digital computers perform tasks considered intelligent, yet as historian of artificial intelligence Jonnie Penn argues, the absence of psychological expertise at the workshop meant that the account of intelligence was “informed primarily by a set of specialists working outside the human sciences.” Each participant saw the roots of their enterprise differently. 
McCarthy reminisced, “anybody who was there was pretty stubborn about pursuing the ideas that he had before he came, nor was there, as far as I could see, any real exchange of ideas.”

Like Turing’s 1950 paper, the 1955 proposal for a summer workshop in artificial intelligence seems in retrospect incredibly prescient. The seven problems that McCarthy, Shannon, and their collaborators proposed to study became major pillars of computer science and the field of artificial intelligence:

- “Automatic Computers” (programming languages)

- “How Can a Computer be Programmed to Use a Language” (natural language processing)

- “Neuron Nets” (neural nets and deep learning)

- “Theory of the Size of a Calculation” (computational complexity)

- “Self-improvement” (machine learning)

- “Abstractions” (feature engineering)

- “Randomness and Creativity” (Monte Carlo methods including stochastic learning)

The term “artificial intelligence,” in 1955, was an aspiration rather than a commitment to one method. AI, in this broad sense, involved both discovering what comprises human intelligence by attempting to create machine intelligence as well as a less philosophically fraught effort simply to get computers to perform difficult activities a human might attempt.

Only a few of these aspirations fueled the efforts that, in current usage, became synonymous with artificial intelligence: the idea that machines can learn from data. Among computer scientists, learning from data would be de-emphasized for generations.

Most of the first half century of artificial intelligence focused on combining logic with knowledge hard-coded into machines. Data collected from everyday activities was hardly the focus; it paled in prestige next to logic. In the last five years or so, artificial intelligence and machine learning have begun to be used synonymously; it’s a powerful thought-exercise to remember that it didn’t have to be this way. For the first several decades in the life of artificial intelligence, learning from data seemed to be the wrong approach, a nonscientific approach, used by those who weren’t willing “to just program” the knowledge into the computer. Before data reigned, rules did.

For all their enthusiasm, most participants at the Dartmouth workshop brought few concrete results with them. One group was different. A team from the RAND Corporation, led by Herbert Simon, had brought the goods, in the form of an automated theorem prover. This algorithm could produce proofs of basic arithmetical and logical theorems. But math was just a test case for them. As historian Hunter Heyck has stressed, that group started less from computing or mathematics than from the study of how to understand large bureaucratic organizations and the psychology of the people solving problems within them. For Simon and Newell, human brains and computers were problem solvers of the same genus.

Our position is that the appropriate way to describe a piece of problem-solving behavior is in terms of a program: a specification of what the organism will do under varying environmental circumstances in terms of certain elementary information processes it is capable of performing... Digital computers come into the picture only because they can, by appropriate programming, be induced to execute the same sequences of information processes that humans execute when they are solving problems. Hence, as we shall see, these programs describe both human and machine problem solving at the level of information processes.

Though they provided many of the first major successes in early artificial intelligence, Simon and Newell focused on a practical investigation of the organization of humans. They were interested in human problem-solving that mixed what Jonnie Penn calls a “composite of early twentieth century British symbolic logic and the American administrative logic of a hyper-rationalized organization.” Before adopting the moniker of AI, they positioned their work as the study of “information processing systems” comprising humans and machines alike, that drew on the best understanding of human reasoning of the time.

Simon and his collaborators were deeply involved in debates about the nature of human beings as reasoning animals. Simon later received the Nobel Prize in Economics for his work on the limitations of human rationality. He was concerned, alongside a bevy of postwar intellectuals, with rebutting the notion that human psychology should be understood as animal-like reaction to positive and negative stimuli. Like others, he rejected a behaviorist vision of the human as driven by reflexes, almost automatically, and that learning primarily concerned the accumulation of facts acquired through such experience. Great human capacities, like speaking a natural language or doing advanced mathematics, never could emerge only from experience—they required far more. To focus only on data was to misunderstand human spontaneity and intelligence. This generation of intellectuals, central to the development of cognitive science, stressed abstraction and creativity over the analysis of data, sensory or otherwise. Historian Jamie Cohen-Cole explains, “Learning was not so much a process of acquiring facts about the world as of developing a skill or acquiring proficiency with a conceptual tool that could then be deployed creatively.” This emphasis on the conceptual was central to Simon and Newell’s Logic Theorist program, which didn’t just grind through logical processes, but deployed human-like “heuristics” to accelerate the search for the means to achieve ends. Scholars such as George Pólya investigating how mathematicians solved problems had stressed the creativity involved in using heuristics to solve math problems. So mathematics wasn’t drudgery — it wasn’t like doing lots and lots of long division or of reducing large amounts of data. It was creative activity — and, in the eyes of its makers, a bulwark against totalitarian visions of human beings, whether from the left or the right. 
(And so, too, was life in a bureaucratic organization — it need not be drudgery in this picture — it could be a place for creativity. Just don’t tell that to its employees.)

Research Revealed: Navigating BU’s AI Revolution and the Future of Research and Learning

BU Task Force Releases Guidance for Generative AI in Research and Education

This month, BU’s Artificial Intelligence (AI) Task Force released a comprehensive report on Generative AI in research and education, including opportunities, challenges, and guiding principles of relevance to BU researchers, as well as 100+ policies and advice documents on generative AI in higher education. The report offers resources and recommendations to improve and accelerate learning and research outcomes through the use of Generative AI while helping to prevent its misuse or negative impact.

Research Funding

Artificial Intelligence for Information Security

The spring 2024 call for proposals for Amazon Research Awards is open through Tuesday, May 7. The awards fund machine learning (ML) research on the following topics in information security:

- Threat, intrusion, and anomaly detection for cloud security

- Generative AI and foundation models for information security

- Graph modeling and anomaly detection on graphs

- Learning with limited/noisy labels and weakly supervised learning

- ML for malware analysis and detection

- Finding security vulnerabilities using ML

- Causal inference for information security

- Zero/one-shot learning for information security

- Reinforcement learning for information security

- Protecting and preserving data privacy in the cloud

- Securing Generative AI and foundation models

Federal Funding Opportunities for Foreign Languages and Culture Initiatives

A new listing of funding opportunities for foreign language and culture initiatives offered by federal agencies is available on the Federal Relations website (Kerberos log-in required). It covers programs that aim to foster cultural understanding and global cooperation by supporting language learning and exchange initiatives between the US and international educational and research communities.

Support for Early Career Researchers

The National Science Foundation (NSF) is accepting applications for the Faculty Early Career Development (CAREER) Program through Wednesday, July 24. Assistant professors are encouraged to apply. Answers to frequently asked questions are available on the NSF website.

Limited Submission Opportunities

Faculty require institutional endorsement for the following opportunities. All current competitions can be found at bu.infoready4.com.

- Keck Foundation Research Program (5/15)

- William T. Grant Scholars Program (notify Office of Research by 5/20)

- Hartwell Foundation Individual Biomedical Research Awards (5/22)

Extensive Database for Funding Across Disciplines

BU offers all faculty and staff a free subscription to Pivot-RP, a database updated daily with federal, non-federal, foundation, and private funding opportunities in every discipline. With the BU subscription, members can save search criteria and receive weekly updates on new and upcoming funding opportunities. Learn how to set up your account and get started on the Office of Research website.

Grant Management

Important National Science Foundation Updates

NSF has announced updates to its Proposal and Award Policies and Procedures Guide, Award Terms and Conditions, and Grants.gov Application Guide. If you have questions, please contact your Sponsored Programs pre-award officer.

Ethics & Compliance

Required Change to Satellite Accumulation Areas in BU Labs

A satellite accumulation area (SAA) allows research teams to collect hazardous waste near the work area where it’s generated. While it was once common practice for these areas to be located inside chemical hoods in BU research labs, this practice is no longer acceptable, per fire code. This change is effective immediately. If your designated SAA is currently set up in a fume hood, please relocate it to another area in your lab such as under laboratory benches; on open, un-used counterspace; in flammable or corrosive cabinets; or on a lab cart. Learn more in this guidance from BU Environmental Health & Safety (EHS).

Batteries and Fire Safety

Lithium-ion batteries, commonly used in consumer electronics, electric vehicles, and micromobility devices (e.g., electric bikes and scooters), contain highly flammable electrolytes that can ignite if the battery is punctured, overheated, or overcharged. BU community members are encouraged to educate themselves on practices for battery safety, including proper charging, safe storage, monitoring, maintenance, and disposal. Learn more on the EHS website or contact EHS by email with questions.

Procedural Changes for Expedited Review of Human Subjects Research

The Charles River Campus Institutional Review Board is discontinuing annual progress reports for expedited research and moving instead to a triennial review cycle. For research teams, this will go into effect at the next expedited annual progress report review and will apply to all newly approved expedited research going forward. Annual continuing review will remain for research reviewed by the full board, per regulatory requirements.

Collaboration & Impact

Get to Know Your Campus Communicators

For researchers ready to promote findings, it can be invaluable to loop in communications professionals around the University for assistance amplifying your scholarship and expertise. Communicators embedded in schools, colleges, centers, and departments are an excellent first contact. They are connected to other communicators around BU’s campuses and have a broad view of the channels and resources available to get the word out about your work. Learn more about promoting your research.

BU Inventors and Entrepreneurs to Offer Insights and Advice for Aspiring Innovators

On Tuesday, May 7, BU faculty and alumni will share their diverse paths from invention to market to impact at the final Office of Research event of the academic year: The Innovator’s Journey. Each researcher will offer insights on their challenges, opportunities, mentors, and institutional supports. The event also includes the presentation of the BU Innovator of the Year Award to Dr. Thomas Bifano, professor of mechanical engineering and director of the BU Photonics Center.

The Latest Innovations at Your Fingertips

The Innovator’s Quarterly is a newsletter for BU faculty, staff, and students that provides the latest on research innovations and industry collaborations coming out of BU—and the resources offered by BU Industry Engagement and Technology Development to help researchers move their work into the world. Sign up to receive updates on the Office of Research website.

Notes & News

Professor Vivek Goyal, Electrical & Computer Engineering (ENG), was named a 2024 Guggenheim Fellow by the John Simon Guggenheim Memorial Foundation.

A group of students at the College of Engineering won this year’s Anthony Janetos Climate Action Prize for a project piloting bicycle-mounted air pollution monitors with funding from the Institute for Global Sustainability’s Campus Climate Lab. The research team, which was featured in a recent video on BU sustainability research, is now in talks with the Bluebikes bikeshare program about collecting air quality data from bike trips around the city.

Associate Professor Nahid Bhadelia, Infectious Diseases (Chobanian & Avedisian School of Medicine) and founding director of the Center on Emerging Infectious Diseases, participated in a National Academies of Sciences, Engineering, and Medicine committee that developed a government-requested report on smallpox readiness based on the lessons of COVID-19 and mpox.

Professors Deborah Carr, Sociology (CAS), and Vivien Schmidt, International Relations (Pardee), were named members of the American Academy of Arts & Sciences.

BU’s Center on Forced Displacement became the first US-based research center to join the International Migration Research Network, the leading global consortium of universities focusing on migration and forced displacement.

Assistant Professors Chuanfei Dong, Astronomy (CAS), Meg Younger, Biology (CAS), and Hadi Nia, Biomedical Engineering (ENG), have been named 2024 Sloan Research Fellows.

Assistant Professors Ana Fiszbein, Biology (CAS), Jonathan Huggins, Mathematics & Statistics (CAS), Rabia Yazicigil, Electrical & Computer Engineering (ENG), Wenchao Li, Electrical & Computer Engineering (ENG), and Andrew Sabelhaus, Mechanical Engineering (ENG), received NSF Early Career Development Awards.

Sarah Hokanson, assistant vice president and assistant provost for research development and PhD and postdoctoral affairs, became the first nonfaculty principal investigator to be awarded the Gallagher Mentor Award by the National Postdoctoral Association.

Professor Jaimie Gradus, Epidemiology (SPH), and Associate Professor Patricia Janulewicz Lloyd, Environmental Health (SPH), were appointed to serve on a National Academies of Sciences, Engineering, and Medicine committee examining possible connections between veterans’ military service exposure to toxic substances and potential mental health conditions.

A team of researchers led by Professor Darrell Kotton, Medicine (Chobanian & Avedisian School of Medicine), has been awarded a five-year, $14 million grant from the National Heart, Lung, and Blood Institute for their research, “Developing Pluripotent Stem Cells to Model and Treat Lung Disease.”

Assistant Professor Sean Lubner, Mechanical Engineering (ENG), was awarded a Young Investigator Program award from the Air Force Office of Scientific Research for his project, “Investigating Coupled Thermal, Mechanical, and Electrical Phenomena in High-Temperature Materials using Thermal Wave Sensors.”

Professors Siddharth Ramachandran, Electrical & Computer Engineering (ENG), Bradley Lee Roberts, Physics (CAS), and Daniel Segrè, Biology (CAS), were named American Association for the Advancement of Science Fellows.

Assistant Professor Dane Scantling, Surgery (Chobanian & Avedisian School of Medicine), was awarded the C. James Carrico, MD, FACS, Faculty Research Fellowship for the Study of Trauma and Critical Care from the American College of Surgeons to improve access to trauma care for underserved victims of firearm violence.

Professor Cara Stepp, Speech, Language & Hearing Sciences (Sargent), was inducted into the American Institute for Medical and Biological Engineering College of Fellows.

Mark Your Calendar

Trainings & How-to

- May 1: Workshop on Federal and Foundation Grants for Social Science Faculty

- May 7: The Innovator’s Journey, including presentation of the BU Innovator of the Year award to Dr. Thomas Bifano

- May 8: Meet the Funder: American Society for Suicide Prevention

- May 21: Meet the Funder: American Cancer Society

Seminars & Events

- May 1: Architects Without Frontiers: A Journey from Divided Cities to Zones of Fragility

- May 2: Distinguished Hariri Institute/CISE Seminar: Andrew A. Chien, University of Chicago

- May 7: A Thousand Cuts: Social Protection in the Age of Austerity

- May 7: Power & People Symposium: Mapping Community Exposure to Energy Infrastructure

- May 10: Reinforcement Learning Focused Research Program Symposium

- May 24: Space Travel with Earth Wisdom Focused Research Program Symposium

- Aug 19: The Human Spirit Can Make The ‘Impossible’ Possible: What Space Exploration Can Learn about Passion, Persistence, and Resilience from a Groundbreaking Athlete

- Aug 26-27: Neuroscience of the Everyday World Conference

Recent Event Recordings

- Amplify Your Expertise: How to Elevate Your Research with The Conversation

- Celebrating Women in Research: International Women’s Day Panel

- Rock Your Profile: Strategies to Enhance Your LinkedIn Presence and Maximize Research Impact

- Meet the Alfred P. Sloan Foundation

- Research for People & Planet Webinar Series: Investigating Consumer Interest in Circular Take-Back Programs

- Research on Tap: Climate Change and Infectious Diseases

- Research on Tap: The Global Housing Crisis

NSF funds groundbreaking research project led by Northeastern to ‘democratize’ artificial intelligence

Computer science professor David Bau is the lead principal investigator of National Deep Inference Fabric, a revolutionary new project involving industry and academic partners aimed at unlocking the secrets of AI.

Groundbreaking research by Northeastern University will investigate how generative AI works and provide industry and the scientific community with unprecedented access to the inner workings of large language models.

Backed by a $9 million grant from the National Science Foundation, Northeastern will lead the National Deep Inference Fabric (NDIF), which will open up the inner workings of large language models.

The project will create a computational infrastructure that equips the scientific community with deep inference tools for developing innovative solutions across fields. No infrastructure with this capability currently exists.

At a fundamental level, large language models such as OpenAI’s ChatGPT or Google’s Gemini are considered “black boxes,” which limits the ability of researchers and companies across multiple sectors to leverage large-scale AI.

Sethuraman Panchanathan, director of the NSF, says the impact of NDIF will be far-reaching.

“Chatbots have transformed society’s relationship with AI, but how they operate is yet to be fully understood,” Panchanathan says. “With NDIF, U.S. researchers will be able to peer inside the ‘black box’ of large language models, gaining new insights into how they operate and greater awareness of their potential impacts on society.”

Even the sharpest minds in artificial intelligence are still trying to wrap their heads around how these and other neural network-based tools reason and make decisions, explains David Bau, a computer science professor at Northeastern and the lead principal investigator for NDIF.

“We fundamentally don’t understand how these systems work, what they learned from the data, what their internal algorithms are,” Bau says. “I consider it one of the greatest mysteries facing scientists today — what is the basis for synthetic cognition?”

David Madigan, Northeastern’s provost and senior vice president for academic affairs, says the project will “help address one of the most pressing socio-technological problems of our time — how does AI work?”

“Progress toward solving this problem is clearly necessary before we can unlock the massive potential for AI to do good in a safe and trustworthy way,” Madigan says.

NDIF aims to democratize AI

In addition to establishing an infrastructure that will open up the inner workings of these AI models, NDIF aims to democratize AI, expanding access to large language models.

Northeastern will build an open software library of neural network tools that will let researchers conduct experiments without bringing their own resources, along with educational materials that teach them how to use NDIF.

The project will build an AI-enabled workforce by training scientists and students to serve as networks of experts, who will train users across disciplines.

“There will be online and in-person educational workshops that we will be running, and we’re going to do this geographically dispersed at many locations taking advantage of Northeastern’s physical presence in a lot of parts of the country,” Bau says.

Research emerging from the fabric could have worldwide implications outside of science and academia, Bau explains. It could help demystify how these systems work for policymakers, creatives and others.

“The goal of understanding how these systems work is to equip humanity with a better understanding for how we could effectively use these systems,” Bau says. “What are their capabilities? What are their limitations? What are their biases? What are the potential safety issues we might face by using them?”

Putting AI through an MRI machine

Large language models like ChatGPT and Google’s Gemini are trained on huge amounts of data using deep learning techniques. Underlying these techniques are neural networks, computational systems that loosely mimic the activity of the human brain and enable these chatbots to make decisions.

But when you use these services through a web browser or an app, you are interacting with them in a way that obscures these processes, Bau says.

“They give you the answers, but they don’t give you any insights as to what computation has happened in the middle,” Bau says. “Those computations are locked up inside the computer, and for efficiency reasons, they’re not exposed to the outside world. And so, the large commercial players are creating systems to run AIs in deployment, but they’re not suitable for answering the scientific questions of how they actually work.”

At NDIF, researchers will be able to take a deeper look at the neural pathways these chatbots make, Bau says, allowing them to see what’s going on under the hood while these AI models actively respond to prompts and questions.

Researchers won’t have direct access to OpenAI’s ChatGPT or Google’s Gemini, as the companies haven’t opened up their models for outside research. They will instead be able to access open source AI models from companies such as Mistral AI and Meta.

“What we’re trying to do with NDIF is the equivalent of running an AI with its head stuck in an MRI machine, except the difference is the MRI is in full resolution. We can read every single neuron at every single moment,” Bau says.
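To make the “MRI” analogy a little more concrete, here is a minimal sketch of what reading neurons during a computation can look like: a hook is attached to a network, and every layer’s activations are recorded while the model produces its answer. This is a hypothetical pure-Python toy; the `ToyLayer` and `ToyNetwork` classes, the `record_activations` helper, and the weights are invented for illustration and are not part of NDIF or its software. Real interpretability tools operate on models with billions of parameters, but attaching hooks that read intermediate activations is a common approach in this kind of work.

```python
from typing import Callable, Dict, List, Tuple

Vector = List[float]
Hook = Callable[[str, Vector], None]


class ToyLayer:
    """Hypothetical fully connected layer followed by a ReLU."""

    def __init__(self, name: str, weights: List[List[float]], bias: Vector) -> None:
        self.name = name
        self.weights = weights  # one row of weights per output neuron
        self.bias = bias

    def forward(self, x: Vector) -> Vector:
        # Each neuron computes a weighted sum of its inputs plus a bias,
        # then applies ReLU (negative values become 0).
        return [
            max(0.0, sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(self.weights, self.bias)
        ]


class ToyNetwork:
    """Hypothetical stack of layers that calls registered hooks after each layer."""

    def __init__(self, layers: List[ToyLayer]) -> None:
        self.layers = layers
        self.hooks: List[Hook] = []

    def forward(self, x: Vector) -> Vector:
        for layer in self.layers:
            x = layer.forward(x)
            # Expose this layer's activations to every registered hook.
            for hook in self.hooks:
                hook(layer.name, x)
        return x


def record_activations(net: ToyNetwork, x: Vector) -> Tuple[Vector, Dict[str, Vector]]:
    """Run one forward pass and return the output plus every layer's activations."""
    trace: Dict[str, Vector] = {}

    def hook(name: str, activations: Vector) -> None:
        trace[name] = list(activations)  # store a copy of this layer's output

    net.hooks.append(hook)
    try:
        output = net.forward(x)
    finally:
        net.hooks.remove(hook)  # leave the network as we found it
    return output, trace


if __name__ == "__main__":
    net = ToyNetwork([
        ToyLayer("hidden", [[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),
        ToyLayer("output", [[1.0, 1.0]], [-0.5]),
    ])
    output, trace = record_activations(net, [2.0, 1.0])
    print(output)  # [2.0]
    print(trace)   # {'hidden': [1.0, 1.5], 'output': [2.0]}
```

The hook is removed in a `finally` clause, so the network is left unchanged after the trace even if the forward pass raises an error.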

But how are they doing this?

Significant computational power required

Such an operation requires significant computational power on the hardware front. As part of the undertaking, Northeastern has teamed up with the University of Illinois Urbana-Champaign, which is building data centers equipped with state-of-the-art graphics processing units (GPUs) at the National Center for Supercomputing Applications. NDIF will leverage the resources of the NCSA DeltaAI project.

NDIF will partner with New America’s Public Interest Technology University Network, a consortium of 63 universities and colleges, to ensure that the new NDIF research capabilities advance interdisciplinary research in the public interest.

Northeastern is building the software layer of the project, Bau says.

“The software layer is the thing that enables the scientists to customize these experiments and to share these very large neural networks that are running on this very fancy hardware,” he says.

Northeastern professors Jonathan Bell, Carla Brodley, Bryon Wallace and Arjun Guha are co-PIs on the initiative.

Guha explains the barriers that have hindered research into the inner workings of large generative AI models up to now.

“Conducting research to crack open large neural networks poses significant engineering challenges,” he says. “First of all, large AI models require specialized hardware to run, which puts the cost out of reach of most labs. Second, scientific experiments that open up models require running the networks in ways that are very different from standard commercial operations. The infrastructure for conducting science on large-scale AI does not exist today.”

NDIF will have implications beyond the academic scientific community. The social sciences and humanities, as well as neuroscience, medicine and patient care, can benefit from the project.

“Understanding how large networks work, and especially what information informs their outputs, is critical if we are going to use such systems to inform patient care,” Wallace says.

NDIF will also prioritize the ethical use of AI with a focus on social responsibility and transparency. The project will include collaboration with public interest technology organizations.



The 1956 Dartmouth summer research project on artificial intelligence was initiated by this August 31, 1955 proposal, authored by John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon. The original typescript consisted of 17 pages plus a title page. Copies of the typescript are housed in the archives at Dartmouth College and Stanford University.

A PROPOSAL FOR THE DARTMOUTH SUMMER RESEARCH PROJECT ON ARTIFICIAL INTELLIGENCE. J. McCarthy, Dartmouth College; M. L. Minsky, Harvard University; N. Rochester, I.B.M. Corporation; C. E. Shannon, Bell Telephone Laboratories. August 31, 1955. We propose that a 2 month, 10 man study of artificial intelligence be carried out during the summer of 1956 ...



The text of the proposal is presented along with short autobiographical statements of the proposers.



September 30, 2021. Held in the summer of 1956, the Dartmouth Summer Research Project on Artificial Intelligence brought together some of the brightest minds in computing and cognitive science, and it is considered to have founded artificial intelligence (AI) as a field. In the early 1950s, the field of "thinking machines" was given an array of ...

The Dartmouth Summer Research Project on Artificial Intelligence was a 1956 summer workshop widely considered [1] [2] [3] to be the founding event of artificial intelligence as a field. The project lasted approximately six to eight weeks and was essentially an extended brainstorming session. Eleven mathematicians and scientists originally ...

Published May 19, 2023. For six weeks in the summer of 1956, a group of scientists convened on Dartmouth's campus for the Dartmouth Summer Research Project on Artificial Intelligence. The term "artificial intelligence" was coined in the 1955 proposal for this meeting. In the decades since, artificial intelligence has advanced significantly.


AI News. From hosting the seminal Dartmouth Summer Research Project on Artificial Intelligence in 1956 to leading multidisciplinary research on large language models today, Dartmouth has always been at the forefront of AI. See what's happening around campus and learn about Dartmouth experts in the field.

In 1956, a small group of scientists gathered for the Dartmouth Summer Research Project on Artificial Intelligence, which was the birth of this field of research. To celebrate the anniversary, more than 100 researchers and scholars again met at Dartmouth for AI@50, a conference that not only honored the past and assessed present ...



A Proposal for the Dartmouth Summer Research Project on Artificial Intelligence, August 31, 1955. John McCarthy, Marvin L. Minsky, Nathaniel Rochester, Claude E. Shannon.

The "summer study" in question was titled "The Dartmouth Summer Research Project on Artificial Intelligence," and the funding was requested from the Rockefeller Foundation.


Spotlight. BU Task Force Releases Guidance for Generative AI in Research and Education. This month, BU's Artificial Intelligence (AI) Task Force released a comprehensive report on Generative AI in research and education, including opportunities, challenges, and guiding principles of relevance to BU researchers, as well as 100+ policies and advice documents on generative AI in higher education.


August 31, 1955. We propose that a 2 month, 10 man study of artificial intelligence be carried out during the summer of 1956 at Dartmouth College in Hanover, New Hampshire. The study is to proceed on the basis of the conjecture that every aspect of learning or any other feature of intelligence can in principle be so precisely described that a ...