Lesson 10 of 24 By Avijeet Biswal

In today’s data-driven world, decisions are based on data all the time. Hypothesis testing plays a crucial role in that process, whether in business decisions, the health sector, academia, or quality improvement. Without hypotheses and hypothesis tests, you risk drawing the wrong conclusions and making bad decisions. In this tutorial, you will look at Hypothesis Testing in Statistics.

What Is Hypothesis Testing in Statistics?

Hypothesis Testing is a type of statistical analysis in which you put your assumptions about a population parameter to the test. It can also be used to assess whether a relationship exists between two statistical variables.

Let's discuss a few real-life examples of statistical hypotheses:

- A teacher assumes that 60% of his college's students come from lower-middle-class families.

- A doctor believes that 3D (Diet, Dose, and Discipline) is 90% effective for diabetic patients.

Now that you know what hypothesis testing is, look at the formula for one of the most common test statistics, the z-score.

Hypothesis Testing Formula

Z = ( x̅ – μ0 ) / (σ /√n)

- Here, x̅ is the sample mean,

- μ0 is the population mean assumed under the null hypothesis,

- σ is the population standard deviation,

- n is the sample size.
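
The formula above can be sketched as a small Python function. The function name `z_statistic` and the numbers passed to it are illustrative assumptions, not values from this tutorial.

```python
from math import sqrt

def z_statistic(sample_mean, hypothesized_mean, sigma, n):
    """Return z = (x̅ - μ0) / (σ / √n)."""
    standard_error = sigma / sqrt(n)  # spread of the sample mean
    return (sample_mean - hypothesized_mean) / standard_error

# Hypothetical inputs chosen only for illustration.
z = z_statistic(sample_mean=52.0, hypothesized_mean=50.0, sigma=10.0, n=25)
print(z)  # 1.0, i.e. the sample mean is one standard error above μ0
```

A larger sample shrinks the standard error σ/√n, so the same gap between x̅ and μ0 produces a larger z.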

How Does Hypothesis Testing Work?

An analyst performs hypothesis testing on a statistical sample to present evidence of the plausibility of the null hypothesis. Measurements and analyses are conducted on a random sample of the population to test a theory. Analysts use a random population sample to test two hypotheses: the null and alternative hypotheses.

The null hypothesis is typically an equality hypothesis between population parameters; for example, a null hypothesis may claim that the population mean return equals zero. The alternate hypothesis is essentially the inverse of the null hypothesis (e.g., the population mean return is not equal to zero). As a result, they are mutually exclusive, and only one can be correct. One of the two, however, will always be correct.

Null Hypothesis and Alternate Hypothesis

The Null Hypothesis is the default assumption that the event will not occur, meaning there is no effect or no difference. It is retained unless the sample data provide enough evidence to reject it.

H0 is the symbol for it, and it is pronounced H-naught.

The Alternate Hypothesis is the logical opposite of the null hypothesis. The acceptance of the alternative hypothesis follows the rejection of the null hypothesis. H1 is the symbol for it.

Let's understand this with an example.

A sanitizer manufacturer claims that its product kills 95 percent of germs on average.

To put this company's claim to the test, create a null and alternate hypothesis.

Null Hypothesis (H0): The average is 95%.

Alternative Hypothesis (H1): The average is less than 95%.

Another straightforward example to understand this concept is determining whether or not a coin is fair and balanced. The null hypothesis states that the probability of heads is equal to the probability of tails. In contrast, the alternate hypothesis states that the probabilities of heads and tails are different.
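
As a rough sketch of how the coin example could be tested, the Python snippet below computes an exact two-sided binomial p-value using only the standard library. The function name `binomial_two_sided_p` and the count of 60 heads in 100 flips are hypothetical choices for illustration.

```python
from math import comb

def binomial_two_sided_p(heads, flips, p_heads=0.5):
    """Exact two-sided p-value under H0: P(heads) = p_heads.

    Sums the probabilities of every outcome that is no more likely
    than the observed number of heads.
    """
    pmf = [
        comb(flips, k) * p_heads**k * (1 - p_heads) ** (flips - k)
        for k in range(flips + 1)
    ]
    observed = pmf[heads]
    # Small relative tolerance guards against floating-point ties.
    return sum(prob for prob in pmf if prob <= observed * (1 + 1e-9))

# Hypothetical experiment: 60 heads in 100 flips.
p_value = binomial_two_sided_p(heads=60, flips=100)
print(f"p-value = {p_value:.4f}")
print("reject H0 at 0.05" if p_value < 0.05 else "fail to reject H0 at 0.05")
```

With these assumed numbers the p-value lands just above 0.05, so the evidence is not quite strong enough to call the coin unfair at that level.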

Hypothesis Testing Calculation With Examples

Let's consider a hypothesis test for the average height of women in the United States. Suppose our null hypothesis is that the average height is 5'4" (64 inches). We gather a sample of 100 women and determine that their average height is 5'5" (65 inches). The population standard deviation is 2 inches.

To calculate the z-score, we would use the following formula:

z = ( x̅ – μ0 ) / (σ /√n)

z = (65 - 64) / (2 / √100)

z = 1 / 0.2 = 5

We will reject the null hypothesis as the z-score of 5 is very large and conclude that there is evidence to suggest that the average height of women in the US is greater than 5'4".
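
The height calculation can be checked numerically. The sketch below converts both heights to inches (64 and 65) and uses Python's standard-library `NormalDist`; the variable names are illustrative. With a standard error of 2/√100 = 0.2 inches, the z-score works out to 5.

```python
from math import sqrt
from statistics import NormalDist

sample_mean = 65.0  # 5'5" expressed in inches
mu_0 = 64.0         # 5'4" expressed in inches (null hypothesis value)
sigma = 2.0         # population standard deviation in inches
n = 100             # sample size

standard_error = sigma / sqrt(n)          # 0.2 inches
z = (sample_mean - mu_0) / standard_error
# Right-tailed p-value: chance of a z this large or larger if H0 is true.
p_right = 1 - NormalDist().cdf(z)

print(f"z = {z:.2f}")
print(f"right-tailed p-value = {p_right:.2e}")
```

A z-score this large corresponds to a p-value far below any conventional significance level, which is why the null hypothesis is rejected.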

Steps of Hypothesis Testing

Step 1: Specify Your Null and Alternate Hypotheses

It is critical to rephrase your original research hypothesis (the prediction that you wish to study) as a null (H0) and alternative (Ha) hypothesis so that you can test it quantitatively. Your first hypothesis, which predicts a link between variables, is generally your alternate hypothesis. The null hypothesis predicts no link between the variables of interest.

Step 2: Gather Data

For a statistical test to be legitimate, sampling and data collection must be done in a way that is meant to test your hypothesis. You cannot draw statistical conclusions about the population you are interested in if your data is not representative.

Step 3: Conduct a Statistical Test

Many statistical tests are available, but most of them compare within-group variance (how spread out the data are inside a category) against between-group variance (how different the categories are from one another). If the between-group variance is big enough that there is little or no overlap between groups, your statistical test will display a low p-value to represent this. This suggests that the disparities between these groups are unlikely to have occurred by chance. Alternatively, if there is a large within-group variance and a low between-group variance, your statistical test will show a high p-value. Any difference you find across groups is then most likely attributable to chance. The types of variables and the level of measurement of your collected data will influence your choice of statistical test.

Step 4: Determine Rejection Of Your Null Hypothesis

Your statistical test results determine whether your null hypothesis should be rejected or not. In most circumstances, you will base your judgment on the p-value provided by the statistical test. Your preset level of significance for rejecting the null hypothesis will usually be 0.05, that is, when there is less than a 5% likelihood that these data would be seen if the null hypothesis were true. In other circumstances, researchers use a lower level of significance, such as 0.01 (1%). This reduces the possibility of wrongly rejecting the null hypothesis.

Step 5: Present Your Results

The findings of hypothesis testing will be discussed in the results and discussion portions of your research paper, dissertation, or thesis. You should include a concise overview of the data and a summary of the findings of your statistical test in the results section. In the discussion section, you can talk about whether your results confirmed your initial hypothesis or not. "Rejecting" or "failing to reject" the null hypothesis is the formal wording used in hypothesis testing. This is likely a must for your statistics assignments.
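
The five steps above can be sketched end to end in Python. This is a minimal illustration that assumes a two-tailed one-sample z-test with a known population standard deviation; the function name `one_sample_z_test` and the data are hypothetical.

```python
from math import sqrt
from statistics import NormalDist

def one_sample_z_test(data, mu_0, sigma, alpha=0.05):
    """Two-tailed one-sample z-test. Returns (z, p_value, reject_null)."""
    n = len(data)
    sample_mean = sum(data) / n
    z = (sample_mean - mu_0) / (sigma / sqrt(n))      # Step 3: test statistic
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))      # area in both tails
    return z, p_value, p_value < alpha                # Step 4: decision

# Step 1: H0: mean = 5.0, Ha: mean != 5.0 (hypothetical claim).
# Step 2: hypothetical measurements.
data = [5.1, 4.9, 5.6, 5.8, 5.2, 5.5, 5.7, 5.3, 5.4, 5.9]
z, p_value, reject = one_sample_z_test(data, mu_0=5.0, sigma=0.5)

# Step 5: report the result using the formal wording.
decision = "reject" if reject else "fail to reject"
print(f"z = {z:.2f}, p = {p_value:.4f}: {decision} the null hypothesis")
```

The significance level is a parameter rather than a hard-coded 0.05 so that a stricter threshold such as 0.01 can be supplied when wrongly rejecting the null hypothesis is costly.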

Types of Hypothesis Testing

Z Test

To determine whether a discovery or relationship is statistically significant, hypothesis testing can use a z-test. It usually checks whether two means are the same (the null hypothesis). A z-test is generally applied only when the population standard deviation is known and the sample size is 30 data points or more.

T Test

A t-test is a statistical test used to compare the means of two groups. It is frequently used in hypothesis testing to determine whether two groups differ or whether a procedure or treatment affects the population of interest.

Chi-Square

You utilize a Chi-square test for hypothesis testing concerning whether your data is as predicted. To determine if the expected and observed results are well-fitted, the Chi-square test analyzes the differences between categorical variables from a random sample. The test's fundamental premise is that the observed values in your data should be compared to the predicted values that would be present if the null hypothesis were true.
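
A goodness-of-fit version of the Chi-square test can be sketched as follows. The die-roll counts are hypothetical, and the critical value 11.070 (5 degrees of freedom, 0.05 significance level) is taken from a standard Chi-square table rather than computed.

```python
def chi_square_statistic(observed, expected):
    """Sum of (observed - expected)^2 / expected over all categories."""
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))

# Hypothetical data: 120 rolls of a die, 20 expected per face if it is fair.
observed = [25, 17, 15, 23, 24, 16]
expected = [20] * 6

statistic = chi_square_statistic(observed, expected)
critical_value = 11.070  # table value for df = 6 - 1 = 5 at alpha = 0.05
reject_null = statistic > critical_value

print(f"chi-square = {statistic:.2f}")  # 5.00
print("reject H0" if reject_null else "fail to reject H0")
```

Here the statistic is well below the critical value, so these assumed counts are consistent with a fair die.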

Hypothesis Testing and Confidence Intervals

Both confidence intervals and hypothesis tests are inferential techniques that depend on approximating the sampling distribution. Confidence intervals use data from a sample to estimate a population parameter. Hypothesis tests use data from a sample to examine a given hypothesis. We must have a hypothesized parameter value to conduct hypothesis testing.

Bootstrap distributions and randomization distributions are created using comparable simulation techniques. The observed sample statistic is the focal point of a bootstrap distribution, whereas the null hypothesis value is the focal point of a randomization distribution.

Confidence intervals contain a range of plausible values for the population parameter. In this lesson, we created just two-tailed confidence intervals. There is a direct connection between two-tailed confidence intervals and two-tailed hypothesis tests: they typically lead to the same conclusion. In other words, a hypothesis test at the 0.05 level will virtually always fail to reject the null hypothesis if the 95% confidence interval contains the hypothesized value. A hypothesis test at the 0.05 level will nearly always reject the null hypothesis if the 95% confidence interval does not include the hypothesized value.
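
The link between a two-tailed test and a confidence interval can be illustrated with a short sketch. It assumes a z-based interval with known population standard deviation; the function name `z_interval_and_test` and all numbers are hypothetical.

```python
from math import sqrt
from statistics import NormalDist

def z_interval_and_test(sample_mean, mu_0, sigma, n, alpha=0.05):
    """Return the (1 - alpha) confidence interval and the two-tailed p-value."""
    standard_error = sigma / sqrt(n)
    z_critical = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    margin = z_critical * standard_error
    interval = (sample_mean - margin, sample_mean + margin)
    z = (sample_mean - mu_0) / standard_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return interval, p_value

# Same hypothetical sample, two different hypothesized means.
for mu_0 in (100.0, 103.0):
    interval, p_value = z_interval_and_test(104.0, mu_0, sigma=10.0, n=50)
    inside = interval[0] <= mu_0 <= interval[1]
    print(f"mu_0 = {mu_0}: inside 95% CI = {inside}, reject at 0.05 = {p_value < 0.05}")
```

In this z-based setting the two columns always disagree: a hypothesized value inside the interval is never rejected, and one outside it always is.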

Simple and Composite Hypothesis Testing

Depending on the population distribution, you can classify the statistical hypothesis into two types.

Simple Hypothesis: A simple hypothesis specifies an exact value for the parameter.

Composite Hypothesis: A composite hypothesis specifies a range of values.

A company is claiming that their average sales for this quarter are 1000 units. This is an example of a simple hypothesis.

Suppose the company claims that the sales are in the range of 900 to 1000 units. Then this is a case of a composite hypothesis.

One-Tailed and Two-Tailed Hypothesis Testing

The One-Tailed test, also called a directional test, considers a critical region of data that would result in the null hypothesis being rejected if the test sample falls into it, inevitably meaning the acceptance of the alternate hypothesis.

In a one-tailed test, the critical distribution area is one-sided, meaning the test sample is either greater or lesser than a specific value.

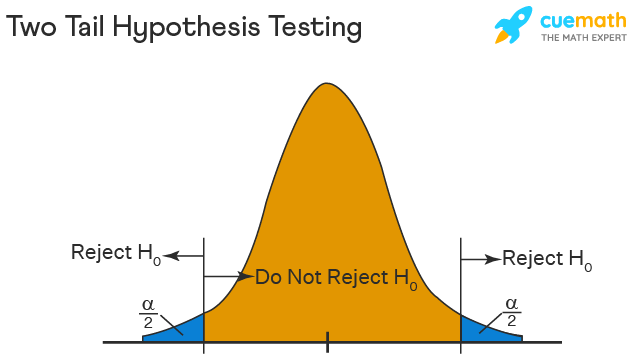

In a Two-Tailed test, the test sample is checked to be greater or less than a range of values, implying that the critical distribution area is two-sided.

If the sample falls outside this range, in either tail, the alternate hypothesis will be accepted, and the null hypothesis will be rejected.

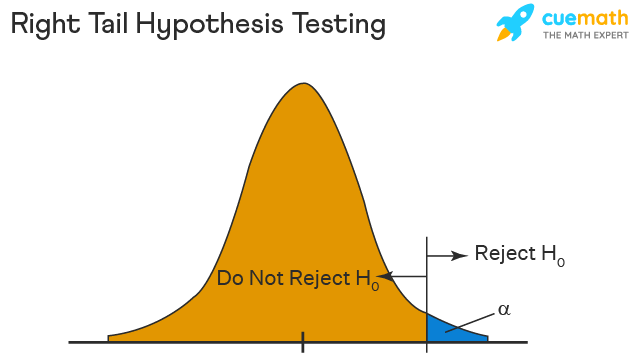

Right Tailed Hypothesis Testing

If the greater than (>) sign appears in your hypothesis statement, you are using a right-tailed test, also known as an upper-tailed test. Or, to put it another way, the critical region is to the right. For instance, you can contrast the battery life before and after a change in production. Your hypothesis statements can be the following if you want to know if the battery life is longer than the original (let's say 90 hours):

- The null hypothesis is that battery life has not increased (H0: mean <= 90).

- The alternate hypothesis is that battery life has risen (H1: mean > 90).

The crucial point in this situation is that the alternate hypothesis (H1), not the null hypothesis, decides whether you get a right-tailed test.

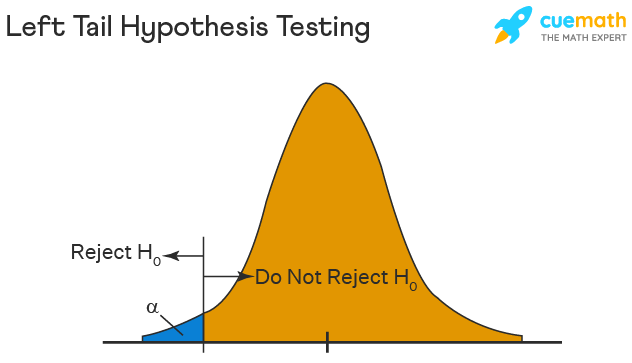

Left Tailed Hypothesis Testing

Alternative hypotheses that assert the true value of a parameter is lower than the value in the null hypothesis are tested with a left-tailed test; they are indicated by the less than sign "<".

Suppose H0: mean = 50 and H1: mean not equal to 50

According to the H1, the mean can be greater than or less than 50. This is an example of a Two-tailed test.

In a similar manner, if H0: mean >=50, then H1: mean <50

Here the mean is less than 50. It is called a One-tailed test.
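
The difference between left-tailed, right-tailed, and two-tailed tests shows up in how the p-value is computed from the same test statistic. The sketch below assumes a z statistic and uses the standard normal distribution; the function name `p_value_from_z` is illustrative.

```python
from statistics import NormalDist

def p_value_from_z(z, tail):
    """p-value for a z statistic; tail is 'left', 'right', or 'two'."""
    std_normal = NormalDist()
    if tail == "left":       # H1: parameter < hypothesized value
        return std_normal.cdf(z)
    if tail == "right":      # H1: parameter > hypothesized value
        return 1 - std_normal.cdf(z)
    if tail == "two":        # H1: parameter != hypothesized value
        return 2 * (1 - std_normal.cdf(abs(z)))
    raise ValueError("tail must be 'left', 'right', or 'two'")

z = -1.8  # hypothetical test statistic
for tail in ("left", "right", "two"):
    print(f"{tail:>5}-tailed p-value: {p_value_from_z(z, tail):.4f}")
```

The same statistic can be significant in a one-tailed test and not in a two-tailed one, which is why the direction of H1 has to be fixed before looking at the data.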

Type 1 and Type 2 Error

A hypothesis test can result in two types of errors.

Type 1 Error: A Type-I error occurs when the sample results lead you to reject the null hypothesis even though it is true.

Type 2 Error: A Type-II error occurs when the null hypothesis is not rejected when it is false, unlike a Type-I error.

Suppose a teacher evaluates the examination paper to decide whether a student passes or fails.

H0: Student has passed

H1: Student has failed

Type I error will be the teacher failing the student [rejects H0] although the student scored the passing marks [H0 was true].

Type II error will be the case where the teacher passes the student [does not reject H0] although the student did not score the passing marks [H1 is true].

Level of Significance

The alpha value is a criterion for determining whether a test statistic is statistically significant. In a statistical test, Alpha represents an acceptable probability of a Type I error. Because alpha is a probability, it can be anywhere between 0 and 1. In practice, the most commonly used alpha values are 0.01, 0.05, and 0.1, which represent a 1%, 5%, and 10% chance of a Type I error, respectively (i.e. rejecting the null hypothesis when it is in fact correct).
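
One way to see that alpha is the probability of a Type I error is to simulate many experiments in which the null hypothesis is true and count how often it is rejected. The sketch below is an illustration under assumed settings (standard normal data, two-tailed z-test, fixed random seed); the function name `type_one_error_rate` is hypothetical.

```python
import random
from math import sqrt
from statistics import NormalDist

def type_one_error_rate(alpha, trials=4000, n=30, seed=0):
    """Fraction of simulated experiments that wrongly reject a true H0."""
    rng = random.Random(seed)
    std_normal = NormalDist()
    rejections = 0
    for _ in range(trials):
        # H0 is true by construction: data come from N(0, 1) and H0 says mean = 0.
        sample = [rng.gauss(0.0, 1.0) for _ in range(n)]
        z = (sum(sample) / n) / (1.0 / sqrt(n))
        p_value = 2 * (1 - std_normal.cdf(abs(z)))
        if p_value < alpha:
            rejections += 1
    return rejections / trials

rate = type_one_error_rate(alpha=0.05)
print(f"observed Type I error rate: {rate:.3f}")  # should be close to 0.05
```

Lowering alpha to 0.01 in the call lowers the simulated rejection rate accordingly, at the cost of making real effects harder to detect.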

A p-value is the probability of observing a difference at least as extreme as the one in your sample if the null hypothesis were true. As the p-value decreases, the statistical significance of the observed difference increases. If the p-value falls below your chosen level of significance, you reject the null hypothesis.

Take an example in which you are trying to test whether a new advertising campaign has increased the product's sales. The null hypothesis states that there is no change in sales due to the new advertising campaign. The p-value is not the probability that this null hypothesis is true; it is the probability of seeing a change in sales at least as large as the one observed if the campaign truly had no effect. If the p-value is 0.30, a change that large would appear about 30% of the time through chance alone, so the data give little reason to doubt the null hypothesis. If the p-value is 0.03, such a change would occur only about 3% of the time under the null hypothesis. As you can see, the lower the p-value, the stronger the evidence for the alternate hypothesis that the new advertising campaign causes an increase or decrease in sales.

Why is Hypothesis Testing Important in Research Methodology?

Hypothesis testing is crucial in research methodology for several reasons:

- Provides evidence-based conclusions: It allows researchers to make objective conclusions based on empirical data, providing evidence to support or refute their research hypotheses.

- Supports decision-making: It helps make informed decisions, such as accepting or rejecting a new treatment, implementing policy changes, or adopting new practices.

- Adds rigor and validity: It adds scientific rigor to research using statistical methods to analyze data, ensuring that conclusions are based on sound statistical evidence.

- Contributes to the advancement of knowledge: By testing hypotheses, researchers contribute to the growth of knowledge in their respective fields by confirming existing theories or discovering new patterns and relationships.

Limitations of Hypothesis Testing

Hypothesis testing has some limitations that researchers should be aware of:

- It cannot prove or establish the truth: Hypothesis testing provides evidence to support or reject a hypothesis, but it cannot confirm the absolute truth of the research question.

- Results are sample-specific: Hypothesis testing is based on analyzing a sample from a population, and the conclusions drawn are specific to that particular sample.

- Possible errors: During hypothesis testing, there is a chance of committing type I error (rejecting a true null hypothesis) or type II error (failing to reject a false null hypothesis).

- Assumptions and requirements: Different tests have specific assumptions and requirements that must be met to accurately interpret results.

After reading this tutorial, you should have a much better understanding of hypothesis testing, one of the most important concepts in the field of Data Science. The majority of hypotheses are based on speculation about observed behavior, natural phenomena, or established theories.

1. What is hypothesis testing in statistics with example?

Hypothesis testing is a statistical method used to determine if there is enough evidence in sample data to draw conclusions about a population. It involves formulating two competing hypotheses, the null hypothesis (H0) and the alternative hypothesis (Ha), and then collecting data to assess the evidence. An example: testing if a new drug improves patient recovery (Ha) compared to the standard treatment (H0) based on collected patient data.

2. What is hypothesis testing and its types?

Hypothesis testing is a statistical method used to make inferences about a population based on sample data. It involves formulating two hypotheses: the null hypothesis (H0), which represents the default assumption, and the alternative hypothesis (Ha), which contradicts H0. The goal is to assess the evidence and determine whether there is enough statistical significance to reject the null hypothesis in favor of the alternative hypothesis.

Types of hypothesis testing:

- One-sample test: Used to compare a sample to a known value or a hypothesized value.

- Two-sample test: Compares two independent samples to assess if there is a significant difference between their means or distributions.

- Paired-sample test: Compares two related samples, such as pre-test and post-test data, to evaluate changes within the same subjects over time or under different conditions.

- Chi-square test: Used to analyze categorical data and determine if there is a significant association between variables.

- ANOVA (Analysis of Variance): Compares means across multiple groups to check if there is a significant difference between them.

3. What are the steps of hypothesis testing?

The steps of hypothesis testing are as follows:

- Formulate the hypotheses: State the null hypothesis (H0) and the alternative hypothesis (Ha) based on the research question.

- Set the significance level: Determine the acceptable level of error (alpha) for making a decision.

- Collect and analyze data: Gather and process the sample data.

- Compute test statistic: Calculate the test statistic for the chosen statistical test to assess the evidence.

- Make a decision: Compare the test statistic with critical values or p-values and determine whether to reject H0 in favor of Ha or not.

- Draw conclusions: Interpret the results and communicate the findings in the context of the research question.

4. What are the 2 types of hypothesis testing?

- One-tailed (or one-sided) test: Tests for the significance of an effect in only one direction, either positive or negative.

- Two-tailed (or two-sided) test: Tests for the significance of an effect in both directions, allowing for the possibility of a positive or negative effect.

The choice between one-tailed and two-tailed tests depends on the specific research question and the directionality of the expected effect.

5. What are the 3 major types of hypothesis?

The three major types of hypotheses are:

- Null Hypothesis (H0): Represents the default assumption, stating that there is no significant effect or relationship in the data.

- Alternative Hypothesis (Ha): Contradicts the null hypothesis and proposes a specific effect or relationship that researchers want to investigate.

- Nondirectional Hypothesis: An alternative hypothesis that doesn't specify the direction of the effect, leaving it open for both positive and negative possibilities.

About the author.

Avijeet is a Senior Research Analyst at Simplilearn. Passionate about Data Analytics, Machine Learning, and Deep Learning, Avijeet is also interested in politics, cricket, and football.

9.4 Full Hypothesis Test Examples

Tests on Means

Example 9.8

Jeffrey, as an eight-year-old, established a mean time of 16.43 seconds for swimming the 25-yard freestyle, with a standard deviation of 0.8 seconds. His dad, Frank, thought that Jeffrey could swim the 25-yard freestyle faster using goggles. Frank bought Jeffrey a new pair of expensive goggles and timed Jeffrey for 15 25-yard freestyle swims. For the 15 swims, Jeffrey's mean time was 16 seconds. Frank thought that the goggles helped Jeffrey to swim faster than the 16.43 seconds. Conduct a hypothesis test using a preset α = 0.05. Assume that the swim times for the 25-yard freestyle are normal.

Set up the Hypothesis Test:

Since the problem is about a mean, this is a test of a single population mean.

$H_0: \mu = 16.43$   $H_a: \mu < 16.43$

For Jeffrey to swim faster, his time will be less than 16.43 seconds. The "<" tells you this is left-tailed.

Determine the distribution needed:

Random variable: $\bar{X}$ = the mean time to swim the 25-yard freestyle.

Distribution for the test: $\bar{X}$ is normal (population standard deviation is known: σ = 0.8)

$\bar{X} \sim N\left(\mu, \frac{\sigma_X}{\sqrt{n}}\right)$ Therefore, $\bar{X} \sim N\left(16.43, \frac{0.8}{\sqrt{15}}\right)$

μ = 16.43 comes from $H_0$ and not the data. σ = 0.8, and n = 15.

Calculate the p-value using the normal distribution for a mean:

p-value = $P(\bar{x} < 16)$ = 0.0187 where the sample mean in the problem is given as 16.

p-value = 0.0187 (This is called the actual level of significance.) The p-value is the area to the left of the sample mean, which is given as 16.

μ = 16.43 comes from $H_0$. Our assumption is μ = 16.43.

Interpretation of the p-value: If $H_0$ is true, there is a 0.0187 probability (1.87%) that Jeffrey's mean time to swim the 25-yard freestyle is 16 seconds or less. Because a 1.87% chance is small, the mean time of 16 seconds or less is unlikely to have happened randomly. It is a rare event.

Compare α and the p-value:

α = 0.05, p-value = 0.0187, so α > p-value.

Make a decision: Since α > p-value, reject $H_0$.

This indicates that you reject the null hypothesis that the mean time to swim the 25-yard freestyle is at least 16.43 seconds.

Conclusion: At the 5% significance level, there is sufficient evidence that Jeffrey's mean time to swim the 25-yard freestyle is less than 16.43 seconds. Thus, based on the sample data, we conclude that Jeffrey swims faster using the new goggles.

The Type I and Type II errors for this problem are as follows: The Type I error is to conclude that Jeffrey swims the 25-yard freestyle, on average, in less than 16.43 seconds when, in fact, he actually swims the 25-yard freestyle, on average, in at least 16.43 seconds. (Reject the null hypothesis when the null hypothesis is true.)

The Type II error is that there is not evidence to conclude that Jeffrey swims the 25-yard freestyle, on average, in less than 16.43 seconds when, in fact, he actually does swim the 25-yard free-style, on average, in less than 16.43 seconds. (Do not reject the null hypothesis when the null hypothesis is false.)
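
The p-value in Example 9.8 can be reproduced with a few lines of Python. This sketch uses the standard-library `NormalDist` to model the sampling distribution of the mean under the null hypothesis; the variable names are illustrative.

```python
from math import sqrt
from statistics import NormalDist

mu_0 = 16.43   # mean time under H0 (seconds)
sigma = 0.8    # known population standard deviation (seconds)
n = 15         # number of timed swims
x_bar = 16.0   # observed sample mean (seconds)
alpha = 0.05

# Sampling distribution of the mean under H0: N(16.43, 0.8 / sqrt(15)).
sampling_dist = NormalDist(mu=mu_0, sigma=sigma / sqrt(n))

# Left-tailed test: p-value is the area to the left of the sample mean.
p_value = sampling_dist.cdf(x_bar)
reject_null = p_value < alpha

print(f"p-value = {p_value:.4f}")
print("reject H0" if reject_null else "fail to reject H0")
```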

The mean throwing distance of a football for Marco, a high school quarterback, is 40 yards, with a standard deviation of two yards. The team coach tells Marco to adjust his grip to get more distance. The coach records the distances for 20 throws. For the 20 throws, Marco’s mean distance was 45 yards. The coach thought the different grip helped Marco throw farther than 40 yards. Conduct a hypothesis test using a preset α = 0.05. Assume the throw distances for footballs are normal.

First, determine what type of test this is, set up the hypothesis test, find the p-value, sketch the graph, and state your conclusion.

Example 9.9

Jasmine has just begun her new job on the sales force of a very competitive company. In a sample of 16 sales calls it was found that she closed the contract for an average value of 108 dollars with a standard deviation of 12 dollars. Test at the 5% significance level the null hypothesis that the population mean is at most 100 dollars against the alternative that it is greater than 100 dollars. Company policy requires that new members of the sales force must exceed an average of $100 per contract during the trial employment period. Can we conclude that Jasmine has met this requirement at the 5% significance level (95% confidence)?

- $H_0: \mu \leq 100$   $H_a: \mu > 100$ The null and alternative hypotheses are for the parameter µ because the number of dollars of the contracts is a continuous random variable. Also, this is a one-tailed test because the company is only interested in whether the number of dollars per contract is below a particular number, not in whether it is "too high." This can be thought of as making a claim that the requirement is being met, and thus the claim is in the alternative hypothesis.

- Test statistic: $t_c = \frac{\bar{x} - \mu_0}{\frac{s}{\sqrt{n}}} = \frac{108 - 100}{\left(\frac{12}{\sqrt{16}}\right)} = 2.67$

- Critical value: $t_\alpha = 1.753$ with n − 1 = 15 degrees of freedom

The test statistic is a Student's t because the sample size is below 30; therefore, we cannot use the normal distribution. Comparing the calculated value of the test statistic and the critical value of t ($t_\alpha$) at a 5% significance level, we see that the calculated value is in the tail of the distribution. Thus, we conclude that 108 dollars per contract is significantly larger than the hypothesized value of 100, and thus we cannot accept the null hypothesis. There is evidence that Jasmine's performance meets company standards.
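
The arithmetic in Example 9.9 can be checked with a short sketch. The critical value 1.753 is copied from the example (15 degrees of freedom, one tail, 5% level) rather than computed, because Python's standard library has no Student's t distribution; the variable names are illustrative.

```python
from math import sqrt

x_bar = 108.0  # sample mean contract value (dollars)
mu_0 = 100.0   # hypothesized mean (dollars)
s = 12.0       # sample standard deviation (dollars)
n = 16         # number of sales calls

# t statistic: (sample mean - hypothesized mean) / (s / sqrt(n)).
t_stat = (x_bar - mu_0) / (s / sqrt(n))

t_critical = 1.753  # from the example: 15 degrees of freedom, one tail, 5% level
reject_null = t_stat > t_critical  # right-tailed test

print(f"t = {t_stat:.2f}")  # 2.67
print("reject H0" if reject_null else "fail to reject H0")
```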

It is believed that a stock price for a particular company will grow at a rate of $5 per week with a standard deviation of $1. An investor believes the stock won’t grow as quickly. The changes in stock price are recorded for ten weeks and are as follows: $4, $3, $2, $3, $1, $7, $2, $1, $1, $2. Perform a hypothesis test using a 5% level of significance. State the null and alternative hypotheses, state your conclusion, and identify the Type I error.

Example 9.10

A manufacturer of salad dressings uses machines to dispense liquid ingredients into bottles that move along a filling line. The machine that dispenses salad dressings is working properly when 8 ounces are dispensed. Suppose that the average amount dispensed in a particular sample of 35 bottles is 7.91 ounces with a variance, $s^2$, of 0.03 ounces squared. Is there evidence that the machine should be stopped and production wait for repairs? The lost production from a shutdown is potentially so great that management feels that the level of significance in the analysis should be 99% (that is, α = 0.01).

Again we will follow the steps in our analysis of this problem.

STEP 1 : Set the Null and Alternative Hypothesis. The random variable is the quantity of fluid placed in the bottles. This is a continuous random variable and the parameter we are interested in is the mean. Our hypothesis therefore is about the mean. In this case we are concerned that the machine is not filling properly. From what we are told it does not matter if the machine is over-filling or under-filling, both seem to be an equally bad error. This tells us that this is a two-tailed test: if the machine is malfunctioning it will be shut down regardless of whether it is over-filling or under-filling. The null and alternative hypotheses are thus:

$H_0: \mu = 8$   $H_a: \mu \neq 8$

STEP 2 : Decide the level of significance and draw the graph showing the critical value.

This problem has already set the level of significance at 99%. The decision seems an appropriate one and shows the thought process when setting the significance level. Management wants to be very certain, as certain as probability will allow, that they are not shutting down a machine that is not in need of repair. To draw the distribution and the critical value, we need to know which distribution to use. Because this is a continuous random variable and we are interested in the mean, and the sample size is greater than 30, the appropriate distribution is the normal distribution and the relevant critical value is 2.575 from the normal table or the t-table at 0.005 column and infinite degrees of freedom. We draw the graph and mark these points.

STEP 3 : Calculate sample parameters and the test statistic. The sample parameters are provided, the sample mean is 7.91 and the sample variance is .03 and the sample size is 35. We need to note that the sample variance was provided not the sample standard deviation, which is what we need for the formula. Remembering that the standard deviation is simply the square root of the variance, we therefore know the sample standard deviation, s, is 0.173. With this information we calculate the test statistic as -3.07, and mark it on the graph.

STEP 4 : Compare test statistic and the critical values. Now we compare the test statistic and the critical value by placing the test statistic on the graph. We see that the test statistic is in the tail, with an absolute value decidedly greater than the critical value of 2.575. We note that even the very small difference between the hypothesized value and the sample value is still a large number of standard deviations. The sample mean is only 0.09 ounces different from the required level of 8 ounces, but it is more than 3 standard deviations away, and thus we cannot accept the null hypothesis.

STEP 5 : Reach a Conclusion

Three standard deviations of a test statistic will virtually guarantee that the null hypothesis is rejected. The probability that a value falls more than three standard deviations from the mean is almost zero. Actually it is about 0.0027 on the normal distribution, which is certainly almost zero in a practical sense. Our formal conclusion would be “At a 99% level of significance we cannot accept the hypothesis that the sample mean came from a distribution with a mean of 8 ounces.” Or less formally, and getting to the point, “At a 99% level of significance we conclude that the machine is underfilling the bottles and is in need of repair.”
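
The numbers in Example 9.10 can be verified with a brief sketch. It assumes, as the example does, that the normal distribution is appropriate because the sample size exceeds 30, and it treats the stated 99% level as α = 0.01; the variable names are illustrative.

```python
from math import sqrt
from statistics import NormalDist

x_bar = 7.91      # sample mean (ounces)
mu_0 = 8.0        # hypothesized mean (ounces)
variance = 0.03   # sample variance (ounces squared)
n = 35            # bottles sampled
alpha = 0.01      # two-tailed, 0.005 in each tail

s = sqrt(variance)                                  # about 0.173
z = (x_bar - mu_0) / (s / sqrt(n))                  # test statistic
z_critical = NormalDist().inv_cdf(1 - alpha / 2)    # about 2.576
reject_null = abs(z) > z_critical

print(f"s = {s:.3f}, z = {z:.2f}, critical value = ±{z_critical:.3f}")
print("reject H0" if reject_null else "fail to reject H0")
```

The computed critical value differs from the table value of 2.575 only by rounding.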

Try It 9.10

A company records the mean time of employees working in a day. The mean comes out to be 475 minutes, with a standard deviation of 45 minutes. A manager recorded times of 20 employees. The times of working were (frequencies are in parentheses) 460(3); 465(2); 470(3); 475(1); 480(6); 485(3); 490(2).

Conduct a hypothesis test using a 2.5% level of significance to determine if the mean time is more than 475.

Hypothesis Test for Proportions

Just as there were confidence intervals for proportions, or more formally, the population parameter p of the binomial distribution, there is the ability to test hypotheses concerning p .

The population parameter for the binomial is p . The estimated value (point estimate) for p is p′ where p′ = x/n , x is the number of successes in the sample and n is the sample size.

When you perform a hypothesis test of a population proportion p , you take a simple random sample from the population. The conditions for a binomial distribution must be met, which are: there are a certain number n of independent trials, meaning random sampling; the outcomes of any trial are binary, success or failure; and each trial has the same probability of a success p . The shape of the binomial distribution needs to be similar to the shape of the normal distribution. To ensure this, the quantities np′ and nq′ must both be greater than five ( np′ > 5 and nq′ > 5). In this case the binomial distribution of a sample (estimated) proportion can be approximated by the normal distribution with \(\mu = np\) and \(\sigma = \sqrt{npq}\). Remember that \(q = 1 - p\). There is no distribution that can correct for this small sample bias, and thus if these conditions are not met we simply cannot test the hypothesis with the data available at that time. We met this condition when we first were estimating confidence intervals for p .

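Again, we begin with the standardizing formula, modified because this is the distribution of a binomial:

\(Z = \frac{p' - p}{\sqrt{\frac{pq}{n}}}\)

Substituting \(p_{0}\), the hypothesized value of p , and \(q_{0} = 1 - p_{0}\), we have:

\(Z_{c} = \frac{p' - p_{0}}{\sqrt{\frac{p_{0}q_{0}}{n}}}\)

This is the test statistic for testing hypothesized values of p , where the null and alternative hypotheses take one of the following forms:

- Two-tailed: \(H_{0}\): \(p = p_{0}\), \(H_{a}\): \(p \neq p_{0}\)

- Left-tailed: \(H_{0}\): \(p \geq p_{0}\), \(H_{a}\): \(p < p_{0}\)

- Right-tailed: \(H_{0}\): \(p \leq p_{0}\), \(H_{a}\): \(p > p_{0}\)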

The decision rule stated above applies here also: if the calculated value of \(Z_{c}\) shows that the sample proportion is "too many" standard deviations from the hypothesized proportion, the null hypothesis cannot be accepted. The decision as to what is "too many" is pre-determined by the analyst depending on the level of significance required in the test.

Example 9.11

The mortgage department of a large bank is interested in the nature of loans of first-time borrowers. This information will be used to tailor their marketing strategy. They believe that 50% of first-time borrowers take out smaller loans than other borrowers. They perform a hypothesis test to determine if the percentage is the same or different from 50%. They sample 100 first-time borrowers and find 53 of these loans are smaller than those of the other borrowers. For the hypothesis test, they choose a 5% level of significance.

STEP 1 : Set the null and alternative hypothesis.

\(H_{0}\): p = 0.50

\(H_{a}\): p ≠ 0.50

The words "is the same or different from" tell you this is a two-tailed test. The Type I and Type II errors are as follows: The Type I error is to conclude that the proportion of borrowers is different from 50% when, in fact, the proportion is actually 50%. (Reject the null hypothesis when the null hypothesis is true). The Type II error is there is not enough evidence to conclude that the proportion of first time borrowers differs from 50% when, in fact, the proportion does differ from 50%. (You fail to reject the null hypothesis when the null hypothesis is false.)

STEP 2 : Decide the level of significance and draw the graph showing the critical value

The level of significance has been set by the problem at the 5% level. Because this is a two-tailed test, one-half of the alpha value will be in the upper tail and one-half in the lower tail, as shown on the graph. The critical value for the normal distribution at the 95% level of confidence is 1.96. This can easily be found on the Student's t-table at the very bottom, at infinite degrees of freedom, remembering that at infinity the t-distribution is the normal distribution. Of course the value can also be found on the normal table, but you have to go looking for one-half of 0.95 (0.475) inside the body of the table and then read out to the sides and top for the number of standard deviations.

STEP 3 : Calculate the sample parameters and the test statistic.

The test statistic for testing proportions follows the standard normal distribution, Z, and is:

\(Z_{c} = \frac{p' - p_{0}}{\sqrt{\frac{p_{0}q_{0}}{n}}} = \frac{0.53 - 0.50}{\sqrt{\frac{(0.5)(0.5)}{100}}} = 0.60\)

For this case, the sample of 100 found 53 of these loans were smaller than those of other borrowers. The sample proportion is p′ = 53/100 = 0.53. The test question, therefore, is: “Is 0.53 significantly different from 0.50?” Putting these values into the formula for the test statistic, we find that 0.53 is only 0.60 standard deviations away from 0.50. This is barely off the mean of the standard normal distribution, which is zero. There is virtually no difference between the sample proportion and the hypothesized proportion in terms of standard deviations.

STEP 4 : Compare the test statistic and the critical value.

The calculated value is well within the critical values of ± 1.96 standard deviations and thus we cannot reject the null hypothesis. To reject the null hypothesis we need significant evidence of a difference between the hypothesized value and the sample value. In this case the sample value is very nearly the same as the hypothesized value measured in terms of standard deviations.

STEP 5 : Reach a conclusion

The formal conclusion would be “At a 5% level of significance we cannot reject the null hypothesis that 50% of first-time borrowers take out smaller loans than other borrowers.” Notice the length to which the conclusion goes to include all of the conditions that are attached to the conclusion. Statisticians, for all the criticism they receive, are careful to be very specific even when this seems trivial. Statisticians cannot say more than they know, and the data constrain the conclusion to be within the metes and bounds of the data.
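The five steps of Example 9.11 can be reproduced in a few lines. The following is a minimal sketch under the normal approximation described above; the function name `proportion_z_statistic` is illustrative and does not come from the text.

```python
import math
from statistics import NormalDist

def proportion_z_statistic(successes, n, p0):
    """Z statistic for a one-sample test of a proportion (normal approximation)."""
    p_hat = successes / n
    standard_error = math.sqrt(p0 * (1 - p0) / n)
    return (p_hat - p0) / standard_error

# Example 9.11: 53 of 100 sampled loans, hypothesized proportion 0.50
alpha = 0.05
z = proportion_z_statistic(successes=53, n=100, p0=0.50)

# Two-tailed test: alpha is split between the two tails
critical_value = NormalDist().inv_cdf(1 - alpha / 2)

reject_null = abs(z) > critical_value
print(f"z = {z:.2f}, critical value = ±{critical_value:.2f}, reject H0: {reject_null}")
```

The statistic of 0.60 lies well inside ± 1.96, which matches the conclusion that the null hypothesis cannot be rejected.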

Try It 9.11

A teacher believes that 85% of students in the class will want to go on a field trip to the local zoo. The teacher performs a hypothesis test to determine if the percentage is the same or different from 85%. The teacher samples 50 students and 39 reply that they would want to go to the zoo. For the hypothesis test, use a 1% level of significance.

Example 9.12

Suppose a consumer group suspects that the proportion of households that have three or more cell phones is 30%. A cell phone company has reason to believe that the proportion is not 30%. Before they start a big advertising campaign, they conduct a hypothesis test. Their marketing people survey 150 households with the result that 43 of the households have three or more cell phones.

Here is an abbreviated version of the system for solving hypothesis tests, applied to a test on a proportion.

Try It 9.12

Marketers believe that 92% of adults in the United States own a cell phone. A cell phone manufacturer believes that number is actually lower. 200 American adults are surveyed, of which, 174 report having cell phones. Use a 5% level of significance. State the null and alternative hypothesis, find the p -value, state your conclusion, and identify the Type I and Type II errors.

Example 9.13

The National Institute of Standards and Technology provides exact data on conductivity properties of materials. Following are conductivity measurements for 11 randomly selected pieces of a particular type of glass.

1.11; 1.07; 1.11; 1.07; 1.12; 1.08; 0.98; 0.98; 1.02; 0.95; 0.95

Is there convincing evidence that the average conductivity of this type of glass is greater than one? Use a significance level of 0.05.

Let’s follow a four-step process to answer this statistical question.

- H 0 : μ ≤ 1

- H a : μ > 1

- Plan : We are testing a sample mean without a known population standard deviation and with fewer than 30 observations. Therefore, we need to use a Student's t distribution. Assume the underlying population is normal.

- Do the calculations and draw the graph .

- State the Conclusions : We cannot accept the null hypothesis. It is reasonable to state that the data supports the claim that the average conductivity level is greater than one.
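The calculation step for Example 9.13 can be sketched as follows. This is an illustrative check, not the textbook's worked solution: the critical value 1.812 is the standard t-table entry for a one-tailed test at 0.05 with n - 1 = 10 degrees of freedom, entered here as a constant because the Python standard library has no t distribution.

```python
import math
import statistics

# Conductivity measurements from Example 9.13
data = [1.11, 1.07, 1.11, 1.07, 1.12, 1.08, 0.98, 0.98, 1.02, 0.95, 0.95]
mu0 = 1.0            # hypothesized mean under H0
t_critical = 1.812   # t-table value: one tail, alpha = 0.05, df = 10

n = len(data)
sample_mean = statistics.mean(data)
s = statistics.stdev(data)   # sample standard deviation (n - 1 denominator)
t = (sample_mean - mu0) / (s / math.sqrt(n))

reject_null = t > t_critical   # right-tailed test
print(f"mean = {sample_mean:.2f}, s = {s:.4f}, t = {t:.2f}, reject H0: {reject_null}")
```

The test statistic comes out slightly above 2, which exceeds the critical value and is consistent with the stated conclusion.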

Try It 9.13

The boiling point of a specific liquid is measured for 15 samples, and the boiling points are obtained as follows:

205; 206; 206; 202; 199; 194; 197; 198; 198; 201; 201; 202; 207; 211; 205

Is there convincing evidence that the average boiling point is greater than 200? Use a significance level of 0.1. Assume the population is normal.

Example 9.14

In a study of 420,019 cell phone users, 172 of the subjects developed brain cancer. Test the claim that cell phone users developed brain cancer at a greater rate than that for non-cell phone users (the rate of brain cancer for non-cell phone users is 0.0340%). Since this is a critical issue, use a 0.005 significance level. Explain why the significance level should be so low in terms of a Type I error.

- H 0 : p ≤ 0.00034

- H a : p > 0.00034

If we commit a Type I error, we are essentially accepting a false claim. Since the claim describes cancer-causing environments, we want to minimize the chances of incorrectly identifying causes of cancer.

- We will be testing a sample proportion with x = 172 and n = 420,019. The sample is sufficiently large because we have np' = 420,019(0.00034) = 142.8, nq' = 420,019(0.99966) = 419,876.2, two independent outcomes, and a fixed probability of success p' = 0.00034. Thus we will be able to generalize our results to the population.
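The test statistic and p-value for this example can be computed directly. This is a minimal sketch assuming the normal approximation for a right-tailed test on a proportion; the variable names are illustrative.

```python
import math
from statistics import NormalDist

x, n = 172, 420_019   # cases and sample size from Example 9.14
p0 = 0.00034          # hypothesized rate for non-cell phone users
alpha = 0.005

p_hat = x / n
standard_error = math.sqrt(p0 * (1 - p0) / n)
z = (p_hat - p0) / standard_error

# Right-tailed test: the p-value is the area above z under the standard normal curve
p_value = 1 - NormalDist().cdf(z)

reject_null = p_value < alpha
print(f"p' = {p_hat:.6f}, z = {z:.2f}, p-value = {p_value:.4f}, reject H0: {reject_null}")
```

With these inputs the p-value is above 0.005, so under this sketch the null hypothesis is not rejected at the chosen significance level, even though it would be rejected at the more common 0.05 level.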

Try It 9.14

In a study of 390,000 moisturizer users, 138 of the subjects developed skin diseases. Test the claim that moisturizer users developed skin diseases at a greater rate than that for non-moisturizer users (the rate of skin diseases for non-moisturizer users is 0.041%). Since this is a critical issue, use a 0.005 significance level. Explain why the significance level should be so low in terms of a Type I error.

This book may not be used in the training of large language models or otherwise be ingested into large language models or generative AI offerings without OpenStax's permission.

Want to cite, share, or modify this book? This book uses the Creative Commons Attribution License and you must attribute OpenStax.

Access for free at https://openstax.org/books/introductory-business-statistics-2e/pages/1-introduction

- Authors: Alexander Holmes, Barbara Illowsky, Susan Dean

- Publisher/website: OpenStax

- Book title: Introductory Business Statistics 2e

- Publication date: Dec 13, 2023

- Location: Houston, Texas

- Book URL: https://openstax.org/books/introductory-business-statistics-2e/pages/1-introduction

- Section URL: https://openstax.org/books/introductory-business-statistics-2e/pages/9-4-full-hypothesis-test-examples

© Dec 6, 2023 OpenStax. Textbook content produced by OpenStax is licensed under a Creative Commons Attribution License . The OpenStax name, OpenStax logo, OpenStax book covers, OpenStax CNX name, and OpenStax CNX logo are not subject to the Creative Commons license and may not be reproduced without the prior and express written consent of Rice University.

Hypothesis Testing

Hypothesis testing is a tool for making statistical inferences about the population data. It is an analysis tool that tests assumptions and determines how likely something is within a given standard of accuracy. Hypothesis testing provides a way to verify whether the results of an experiment are valid.

A null hypothesis and an alternative hypothesis are set up before performing the hypothesis testing. This helps to arrive at a conclusion regarding the sample obtained from the population. In this article, we will learn more about hypothesis testing, its types, steps to perform the testing, and associated examples.

What is Hypothesis Testing in Statistics?

Hypothesis testing uses sample data from the population to draw useful conclusions regarding the population probability distribution. It tests an assumption made about the data using different types of hypothesis testing methodologies. The hypothesis testing results in either rejecting or not rejecting the null hypothesis.

Hypothesis Testing Definition

Hypothesis testing can be defined as a statistical tool that is used to identify if the results of an experiment are meaningful or not. It involves setting up a null hypothesis and an alternative hypothesis. These two hypotheses will always be mutually exclusive. This means that if the null hypothesis is true then the alternative hypothesis is false and vice versa. An example of hypothesis testing is setting up a test to check if a new medicine works on a disease in a more efficient manner.

Null Hypothesis

The null hypothesis is a concise mathematical statement that is used to indicate that there is no difference between two possibilities. In other words, there is no difference between certain characteristics of data. This hypothesis assumes that the outcomes of an experiment are based on chance alone. It is denoted as \(H_{0}\). Hypothesis testing is used to conclude if the null hypothesis can be rejected or not. Suppose an experiment is conducted to check if girls are shorter than boys at the age of 5. The null hypothesis will say that they are the same height.

Alternative Hypothesis

The alternative hypothesis is an alternative to the null hypothesis. It is used to show that the observations of an experiment are due to some real effect. It indicates that there is a statistically significant difference between two possible outcomes and can be denoted as \(H_{1}\) or \(H_{a}\). For the above-mentioned example, the alternative hypothesis would be that girls are shorter than boys at the age of 5.

Hypothesis Testing P Value

In hypothesis testing, the p value is used to indicate whether the results obtained after conducting a test are statistically significant or not. It is the probability, assuming the null hypothesis is true, of obtaining a result at least as extreme as the one observed. This value is always a number between 0 and 1. The p value is compared to an alpha level, \(\alpha\), also called the significance level. The alpha level can be defined as the acceptable risk of incorrectly rejecting the null hypothesis. The alpha level is usually chosen between 1% and 5%.

Hypothesis Testing Critical region

All sets of values that lead to rejecting the null hypothesis lie in the critical region. Furthermore, the value that separates the critical region from the non-critical region is known as the critical value.

Hypothesis Testing Formula

Depending upon the type of data available and the sample size, different types of hypothesis testing are used to determine whether the null hypothesis can be rejected or not. The formulas for some important test statistics are given below:

- z = \(\frac{\overline{x}-\mu}{\frac{\sigma}{\sqrt{n}}}\). \(\overline{x}\) is the sample mean, \(\mu\) is the population mean, \(\sigma\) is the population standard deviation and n is the size of the sample.

- t = \(\frac{\overline{x}-\mu}{\frac{s}{\sqrt{n}}}\). s is the sample standard deviation.

- \(\chi ^{2} = \sum \frac{(O_{i}-E_{i})^{2}}{E_{i}}\). \(O_{i}\) is the observed value and \(E_{i}\) is the expected value.

We will learn more about these test statistics in the upcoming section.
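The three formulas above translate directly into code. This is a minimal sketch: the function names are illustrative, and the inputs in the usage lines are hypothetical values chosen only to make the arithmetic easy to follow.

```python
import math

def z_statistic(sample_mean, mu, sigma, n):
    """One-sample z statistic; sigma is the population standard deviation."""
    return (sample_mean - mu) / (sigma / math.sqrt(n))

def t_statistic(sample_mean, mu, s, n):
    """One-sample t statistic; s is the sample standard deviation."""
    return (sample_mean - mu) / (s / math.sqrt(n))

def chi_square_statistic(observed, expected):
    """Chi-square statistic: sum of (O_i - E_i)^2 / E_i over all categories."""
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))

# Hypothetical inputs, chosen only to exercise the functions
print(z_statistic(sample_mean=52, mu=50, sigma=10, n=25))   # (52 - 50) / (10 / 5)
print(t_statistic(sample_mean=52, mu=50, s=8, n=16))        # (52 - 50) / (8 / 4)
print(chi_square_statistic(observed=[18, 22, 20], expected=[20, 20, 20]))
```

The z and t statistics share the same form; they differ in which standard deviation is used and in the distribution the result is compared against.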

Types of Hypothesis Testing

Selecting the correct test for performing hypothesis testing can be confusing. These tests are used to determine a test statistic on the basis of which the null hypothesis can either be rejected or not rejected. Some of the important tests used for hypothesis testing are given below.

Hypothesis Testing Z Test

A z test is a way of hypothesis testing that is used for a large sample size (n ≥ 30). It is used to determine whether there is a difference between the population mean and the sample mean when the population standard deviation is known. It can also be used to compare the means of two samples. The formulas for the z test statistic are given as follows:

- One sample: z = \(\frac{\overline{x}-\mu}{\frac{\sigma}{\sqrt{n}}}\).

- Two samples: z = \(\frac{(\overline{x_{1}}-\overline{x_{2}})-(\mu_{1}-\mu_{2})}{\sqrt{\frac{\sigma_{1}^{2}}{n_{1}}+\frac{\sigma_{2}^{2}}{n_{2}}}}\).

Hypothesis Testing t Test

The t test is another method of hypothesis testing that is used for a small sample size (n < 30). It is also used to compare the sample mean and population mean, but in cases where the population standard deviation is not known and the sample standard deviation is used instead. The means of two samples can also be compared using the t test. The formulas for the t test statistic are given as follows:

- One sample: t = \(\frac{\overline{x}-\mu}{\frac{s}{\sqrt{n}}}\).

- Two samples: t = \(\frac{(\overline{x_{1}}-\overline{x_{2}})-(\mu_{1}-\mu_{2})}{\sqrt{\frac{s_{1}^{2}}{n_{1}}+\frac{s_{2}^{2}}{n_{2}}}}\).

Hypothesis Testing Chi Square

The Chi square test is a hypothesis testing method that is used to check whether the variables in a population are independent or not. It is used when the test statistic is chi-squared distributed.

One Tailed Hypothesis Testing

One tailed hypothesis testing is done when the rejection region is only in one direction. It can also be known as directional hypothesis testing because the effects can be tested in one direction only. This type of testing is further classified into the right tailed test and left tailed test.

Right Tailed Hypothesis Testing

The right tail test is also known as the upper tail test. This test is used to check whether the population parameter is greater than some value. The null and alternative hypotheses for this test are given as follows:

\(H_{0}\): The population parameter is ≤ some value

\(H_{1}\): The population parameter is > some value.

The null hypothesis is rejected if the test statistic is greater than the critical value.

Left Tailed Hypothesis Testing

The left tail test is also known as the lower tail test. It is used to check whether the population parameter is less than some value. The hypotheses for this hypothesis testing can be written as follows:

\(H_{0}\): The population parameter is ≥ some value

\(H_{1}\): The population parameter is < some value.

The null hypothesis is rejected if the test statistic is less than the critical value.

Two Tailed Hypothesis Testing

In this hypothesis testing method, the critical region lies on both sides of the sampling distribution. It is also known as a non-directional hypothesis testing method. The two-tailed test is used to determine whether the population parameter is different from some value. The hypotheses can be set up as follows:

\(H_{0}\): the population parameter = some value

\(H_{1}\): the population parameter ≠ some value

The null hypothesis is rejected if the absolute value of the test statistic is greater than the critical value, that is, if the test statistic falls in either tail.
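The decision rules for right-tailed, left-tailed, and two-tailed tests can be collected into one helper. This is a sketch under two stated assumptions: the critical value is passed as a positive table value, and in the two-tailed case the null hypothesis is rejected when the statistic falls in either tail. The function name and the test statistics in the usage lines are hypothetical.

```python
def reject_null(test_statistic, critical_value, tail):
    """Apply the critical-value decision rule.

    critical_value is the positive table value; tail is "right", "left", or "two".
    """
    if tail == "right":
        return test_statistic > critical_value
    if tail == "left":
        return test_statistic < -critical_value
    if tail == "two":
        return abs(test_statistic) > critical_value
    raise ValueError(f"unknown tail: {tail!r}")

# Hypothetical test statistics checked against standard normal critical values
print(reject_null(2.10, 1.645, "right"))   # True: 2.10 > 1.645
print(reject_null(-1.20, 1.645, "left"))   # False: -1.20 is not below -1.645
print(reject_null(-2.30, 1.96, "two"))     # True: |-2.30| > 1.96
```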

Hypothesis Testing Steps

Hypothesis testing can be easily performed in five simple steps. The most important step is to correctly set up the hypotheses and identify the right method for hypothesis testing. The basic steps to perform hypothesis testing are as follows:

- Step 1: Set up the null hypothesis by correctly identifying whether it is the left-tailed, right-tailed, or two-tailed hypothesis testing.

- Step 2: Set up the alternative hypothesis.

- Step 3: Choose the correct significance level, \(\alpha\), and find the critical value.

- Step 4: Calculate the correct test statistic (z, t or \(\chi^{2}\)) and p-value.

- Step 5: Compare the test statistic with the critical value or compare the p-value with \(\alpha\) to arrive at a conclusion. In other words, decide if the null hypothesis is to be rejected or not.

Hypothesis Testing Example

The best way to solve a problem on hypothesis testing is by applying the 5 steps mentioned in the previous section. Suppose a researcher claims that the mean weight of men is greater than 100 kg, with a population standard deviation of 15 kg. A sample of 30 men is chosen, with an average weight of 112.5 kg. Using hypothesis testing, check if there is enough evidence to support the researcher's claim. The confidence level is given as 95%.

Step 1: This is an example of a right-tailed test. Set up the null hypothesis as \(H_{0}\): \(\mu\) = 100.

Step 2: The alternative hypothesis is given by \(H_{1}\): \(\mu\) > 100.

Step 3: The confidence level is 95%, so \(\alpha\) = 100% - 95% = 5%. As this is a one-tailed test, the entire 5% lies in the upper tail. This can be used to determine the critical value.

1 - \(\alpha\) = 1 - 0.05 = 0.95

0.95 gives the required area under the curve. Now using a normal distribution table, the area 0.95 is at z = 1.645. A similar process can be followed for a t-test. The only additional requirement is to calculate the degrees of freedom given by n - 1.

Step 4: Calculate the z test statistic. The z test is appropriate because the sample size is 30 and the population standard deviation is known, along with the sample mean and the hypothesized population mean.

z = \(\frac{\overline{x}-\mu}{\frac{\sigma}{\sqrt{n}}}\).

\(\mu\) = 100, \(\overline{x}\) = 112.5, n = 30, \(\sigma\) = 15

z = \(\frac{112.5-100}{\frac{15}{\sqrt{30}}}\) = 4.56

Step 5: Conclusion. As 4.56 > 1.645, the null hypothesis can be rejected. There is enough evidence to support the researcher's claim.
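The same example can be run end to end in code. This is a minimal sketch using `statistics.NormalDist` from the Python standard library in place of a normal distribution table; the p-value line is an addition to show the alternative comparison mentioned in Step 5 of the general procedure.

```python
import math
from statistics import NormalDist

mu0, sample_mean, sigma, n = 100, 112.5, 15, 30   # values from the example
alpha = 0.05

critical_value = NormalDist().inv_cdf(1 - alpha)  # right-tailed critical value
z = (sample_mean - mu0) / (sigma / math.sqrt(n))
p_value = 1 - NormalDist().cdf(z)

print(f"critical value = {critical_value:.3f}, z = {z:.2f}, p-value = {p_value:.2g}")
print("reject H0" if z > critical_value else "fail to reject H0")
```

Comparing the test statistic with the critical value and comparing the p-value with \(\alpha\) always lead to the same decision.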

Hypothesis Testing and Confidence Intervals

Confidence intervals form an important part of hypothesis testing. This is because the alpha level can be determined from a given confidence level. Suppose a confidence level is given as 95%. Subtract the confidence level from 100%. This gives 100 - 95 = 5% or 0.05. This is the alpha value, and in a one-tailed test the whole of it lies in a single tail. In a two-tailed test, the alpha value is split equally between the two tails, so the area in each tail used to find the critical value is 0.05 / 2 = 0.025.
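The link between a confidence level and the critical value can be sketched in code. This is an illustrative helper for the z test only; the function name `critical_z` is an assumption, not something defined in the article.

```python
from statistics import NormalDist

def critical_z(confidence_level, two_tailed):
    """Positive standard normal critical value for a given confidence level."""
    alpha = 1 - confidence_level
    tail_area = alpha / 2 if two_tailed else alpha
    return NormalDist().inv_cdf(1 - tail_area)

print(f"one-tailed, 95%: {critical_z(0.95, two_tailed=False):.3f}")
print(f"two-tailed, 95%: {critical_z(0.95, two_tailed=True):.3f}")
```

For a 95% confidence level this gives the familiar values of about 1.645 for a one-tailed test and 1.96 for a two-tailed test.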


Important Notes on Hypothesis Testing

- Hypothesis testing is a technique that is used to verify whether the results of an experiment are statistically significant.

- It involves the setting up of a null hypothesis and an alternate hypothesis.

- Three commonly used tests in hypothesis testing are the z test, t test, and chi square test.

- Hypothesis testing can be classified as right tail, left tail, and two tail tests.

Examples on Hypothesis Testing

- Example 1: The average weight of a dumbbell in a gym is 90lbs. However, a physical trainer believes that the average weight might be higher. A random sample of 5 dumbbells has an average weight of 110lbs and a standard deviation of 18lbs. Using hypothesis testing, check if the physical trainer's claim can be supported at a 95% confidence level.
  Solution: As the sample size is less than 30 and the population standard deviation is not known, the t test is used.
  \(H_{0}\): \(\mu\) = 90, \(H_{1}\): \(\mu\) > 90
  \(\overline{x}\) = 110, \(\mu\) = 90, n = 5, s = 18, \(\alpha\) = 0.05
  Using the t-distribution table with n - 1 = 4 degrees of freedom, the critical value is 2.132.
  t = \(\frac{\overline{x}-\mu}{\frac{s}{\sqrt{n}}}\) = \(\frac{110-90}{\frac{18}{\sqrt{5}}}\) = 2.485
  As 2.485 > 2.132, the null hypothesis is rejected.
  Answer: The data support the claim that the average weight of the dumbbells is greater than 90lbs.

- Example 2: The average score on a test is 80 with a standard deviation of 10. With a new teaching curriculum introduced, it is believed that this score will change. On randomly testing the scores of 36 students, the mean was found to be 88. With a 0.05 significance level, is there any evidence to support this claim?
  Solution: This is an example of two-tail hypothesis testing. The z test will be used.
  \(H_{0}\): \(\mu\) = 80, \(H_{1}\): \(\mu\) ≠ 80
  \(\overline{x}\) = 88, \(\mu\) = 80, n = 36, \(\sigma\) = 10
  \(\alpha\) = 0.05, so the area in each tail is 0.05 / 2 = 0.025.
  The critical value using the normal distribution table is 1.96.
  z = \(\frac{\overline{x}-\mu}{\frac{\sigma}{\sqrt{n}}}\) = \(\frac{88-80}{\frac{10}{\sqrt{36}}}\) = 4.8
  As 4.8 > 1.96, the null hypothesis is rejected.
  Answer: There is a difference in the scores after the new curriculum was introduced.

- Example 3: The average score of a class is 90. However, a teacher believes that the average score might be lower. The scores of 6 students were randomly measured. The mean was 82 with a standard deviation of 18. With a 0.05 significance level, use hypothesis testing to check if this claim is true.
  Solution: The t test will be used.
  \(H_{0}\): \(\mu\) = 90, \(H_{1}\): \(\mu\) < 90
  \(\overline{x}\) = 82, \(\mu\) = 90, n = 6, s = 18
  The critical value from the t table, with n - 1 = 5 degrees of freedom, is -2.015.
  t = \(\frac{\overline{x}-\mu}{\frac{s}{\sqrt{n}}}\) = \(\frac{82-90}{\frac{18}{\sqrt{6}}}\) = -1.089
  As -1.089 > -2.015, we fail to reject the null hypothesis.
  Answer: There is not enough evidence to support the claim.
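The three test statistics above can be verified with a few lines of code. This is a sketch for checking the arithmetic only: the critical values are copied from the solutions rather than computed, the helper name `standardized_statistic` is illustrative, and Example 2 uses n = 36, the sample size that appears in its calculation.

```python
import math

def standardized_statistic(sample_mean, mu0, sd, n):
    """(sample mean - hypothesized mean) / (sd / sqrt(n)); serves for both z and t here."""
    return (sample_mean - mu0) / (sd / math.sqrt(n))

# Example 1: right-tailed t test, df = 4, table critical value 2.132
t1 = standardized_statistic(110, 90, 18, 5)
# Example 2: two-tailed z test, critical value 1.96
z2 = standardized_statistic(88, 80, 10, 36)
# Example 3: left-tailed t test, df = 5, table critical value -2.015
t3 = standardized_statistic(82, 90, 18, 6)

print(f"Example 1: t = {t1:.3f}, reject H0: {t1 > 2.132}")
print(f"Example 2: z = {z2:.1f}, reject H0: {abs(z2) > 1.96}")
print(f"Example 3: t = {t3:.3f}, reject H0: {t3 < -2.015}")
```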


FAQs on Hypothesis Testing

What is Hypothesis Testing?

Hypothesis testing in statistics is a tool that is used to make inferences about the population data. It is also used to check if the results of an experiment are valid.

What is the z Test in Hypothesis Testing?

The z test in hypothesis testing is used to find the z test statistic for normally distributed data. The z test is used when the standard deviation of the population is known and the sample size is greater than or equal to 30.

What is the t Test in Hypothesis Testing?

The t test in hypothesis testing is used when the test statistic follows a Student's t distribution. It is used when the sample size is less than 30 and the standard deviation of the population is not known.

What is the formula for z test in Hypothesis Testing?

The formula for a one sample z test in hypothesis testing is z = \(\frac{\overline{x}-\mu}{\frac{\sigma}{\sqrt{n}}}\) and for two samples is z = \(\frac{(\overline{x_{1}}-\overline{x_{2}})-(\mu_{1}-\mu_{2})}{\sqrt{\frac{\sigma_{1}^{2}}{n_{1}}+\frac{\sigma_{2}^{2}}{n_{2}}}}\).

What is the p Value in Hypothesis Testing?

The p value helps to determine if the test results are statistically significant or not. In hypothesis testing, the null hypothesis can either be rejected or not rejected based on the comparison between the p value and the alpha level.

What is One Tail Hypothesis Testing?

When the rejection region is only on one side of the distribution curve then it is known as one tail hypothesis testing. The right tail test and the left tail test are two types of directional hypothesis testing.

What is the Alpha Level in Two Tail Hypothesis Testing?

In a two tail hypothesis test, the overall alpha level is unchanged, but it is split equally between the two rejection regions in the curve. Divide \(\alpha\) by 2 to get the area in each tail when finding the critical values.


How to Write a Great Hypothesis

Hypothesis Format, Examples, and Tips

Kendra Cherry, MS, is a psychosocial rehabilitation specialist, psychology educator, and author of the "Everything Psychology Book."


Amy Morin, LCSW, is a psychotherapist and international bestselling author. Her books, including "13 Things Mentally Strong People Don't Do," have been translated into more than 40 languages. Her TEDx talk, "The Secret of Becoming Mentally Strong," is one of the most viewed talks of all time.


A hypothesis is a tentative statement about the relationship between two or more variables. It is a specific, testable prediction about what you expect to happen in a study.

For example, a study designed to look at the relationship between sleep deprivation and test performance might have a hypothesis that states: "This study is designed to assess the hypothesis that sleep-deprived people will perform worse on a test than individuals who are not sleep-deprived."

This article explores how a hypothesis is used in psychology research, how to write a good hypothesis, and the different types of hypotheses you might use.

The Hypothesis in the Scientific Method

In the scientific method, whether it involves research in psychology, biology, or some other area, a hypothesis represents what the researchers think will happen in an experiment. The scientific method involves the following steps:

- Forming a question

- Performing background research

- Creating a hypothesis

- Designing an experiment

- Collecting data

- Analyzing the results

- Drawing conclusions

- Communicating the results

The hypothesis is a prediction, but it involves more than a guess. Most of the time, the hypothesis begins with a question which is then explored through background research. It is only at this point that researchers begin to develop a testable hypothesis. Unless you are creating an exploratory study, your hypothesis should always explain what you expect to happen.

In a study exploring the effects of a particular drug, the hypothesis might be that researchers expect the drug to have some type of effect on the symptoms of a specific illness. In psychology, the hypothesis might focus on how a certain aspect of the environment might influence a particular behavior.

Remember, a hypothesis does not have to be correct. While the hypothesis predicts what the researchers expect to see, the goal of the research is to determine whether this guess is right or wrong. When conducting an experiment, researchers might explore a number of factors to determine which ones might contribute to the ultimate outcome.

In many cases, researchers may find that the results of an experiment do not support the original hypothesis. When writing up these results, the researchers might suggest other options that should be explored in future studies.

In many cases, researchers might draw a hypothesis from a specific theory or build on previous research. For example, prior research has shown that stress can impact the immune system. So a researcher might hypothesize: "People with high-stress levels will be more likely to contract a common cold after being exposed to the virus than people who have low-stress levels."

In other instances, researchers might look at commonly held beliefs or folk wisdom. "Birds of a feather flock together" is one example of folk wisdom that a psychologist might try to investigate. The researcher might pose a specific hypothesis that "People tend to select romantic partners who are similar to them in interests and educational level."

Elements of a Good Hypothesis

So how do you write a good hypothesis? When trying to come up with a hypothesis for your research or experiments, ask yourself the following questions:

- Is your hypothesis based on your research on a topic?

- Can your hypothesis be tested?

- Does your hypothesis include independent and dependent variables?

Before you come up with a specific hypothesis, spend some time doing background research. Once you have completed a literature review, start thinking about potential questions you still have. Pay attention to the discussion section in the journal articles you read. Many authors will suggest questions that still need to be explored.

To form a hypothesis, you should take these steps:

- Collect as many observations about a topic or problem as you can.

- Evaluate these observations and look for possible causes of the problem.

- Create a list of possible explanations that you might want to explore.

- After you have developed some possible hypotheses, think of ways that you could confirm or disprove each hypothesis through experimentation. This is known as falsifiability.

Falsifiability of a Hypothesis

In the scientific method, falsifiability is an important part of any valid hypothesis. In order to test a claim scientifically, it must be possible that the claim could be proven false.

Students sometimes confuse the idea of falsifiability with the idea that it means that something is false, which is not the case. What falsifiability means is that if something was false, then it is possible to demonstrate that it is false.

One of the hallmarks of pseudoscience is that it makes claims that cannot be refuted or proven false.

A variable is a factor or element that can be changed and manipulated in ways that are observable and measurable. However, the researcher must also define how the variable will be manipulated and measured in the study.

For example, a researcher might operationally define the variable "test anxiety" as the results of a self-report measure of anxiety experienced during an exam. A "study habits" variable might be defined by the amount of studying that actually occurs as measured by time.

These precise descriptions are important because many things can be measured in a number of different ways. One of the basic principles of any type of scientific research is that the results must be replicable. By clearly detailing the specifics of how the variables were measured and manipulated, other researchers can better understand the results and repeat the study if needed.

Some variables are more difficult than others to define. How would you operationally define a variable such as aggression? For obvious ethical reasons, researchers cannot create a situation in which a person behaves aggressively toward others.

In order to measure this variable, the researcher must devise a measurement that assesses aggressive behavior without harming other people. In this situation, the researcher might utilize a simulated task to measure aggressiveness.

Hypothesis Checklist

- Does your hypothesis focus on something that you can actually test?

- Does your hypothesis include both an independent and dependent variable?

- Can you manipulate the variables?

- Can your hypothesis be tested without violating ethical standards?

The hypothesis you use will depend on what you are investigating and hoping to find. Some of the main types of hypotheses that you might use include:

- Simple hypothesis: This type of hypothesis suggests that there is a relationship between one independent variable and one dependent variable.

- Complex hypothesis: This type of hypothesis suggests a relationship between three or more variables, such as two independent variables and a dependent variable.

- Null hypothesis: This hypothesis suggests no relationship exists between two or more variables.

- Alternative hypothesis: This hypothesis states the opposite of the null hypothesis.

- Statistical hypothesis: This hypothesis uses statistical analysis to evaluate a representative sample of the population and then generalizes the findings to the larger group.

- Logical hypothesis: This hypothesis assumes a relationship between variables without collecting data or evidence.

A hypothesis often follows a basic format of "If {this happens} then {this will happen}." One way to structure your hypothesis is to describe what will happen to the dependent variable if you change the independent variable.

The basic format might be: "If {these changes are made to a certain independent variable}, then we will observe {a change in a specific dependent variable}."

A few examples of simple hypotheses:

- "Students who eat breakfast will perform better on a math exam than students who do not eat breakfast."

- "Students who experience test anxiety before an English exam will get lower scores than students who do not experience test anxiety."

- "Motorists who talk on the phone while driving will be more likely to make errors on a driving course than those who do not talk on the phone."

Examples of a complex hypothesis include:

- "People with high-sugar diets and sedentary activity levels are more likely to develop depression."

- "Younger people who are regularly exposed to green, outdoor areas have better subjective well-being than older adults who have limited exposure to green spaces."

Examples of a null hypothesis include:

- "Children who receive a new reading intervention will have scores no different from those of students who do not receive the intervention."

- "There will be no difference in scores on a memory recall task between children and adults."

Examples of an alternative hypothesis:

- "Children who receive a new reading intervention will perform better than students who did not receive the intervention."

- "Adults will perform better on a memory task than children."

Collecting Data on Your Hypothesis

Once a researcher has formed a testable hypothesis, the next step is to select a research design and start collecting data. The research method depends largely on exactly what they are studying. There are two basic types of research methods: descriptive research and experimental research.

Descriptive Research Methods

Descriptive research methods such as case studies, naturalistic observations, and surveys are often used when it would be impossible or difficult to conduct an experiment. These methods are best used to describe different aspects of a behavior or psychological phenomenon.

Once a researcher has collected data using descriptive methods, a correlational study can then be used to look at how the variables are related. This type of research method might be used to investigate a hypothesis that is difficult to test experimentally.

Experimental Research Methods

Experimental methods are used to demonstrate causal relationships between variables. In an experiment, the researcher systematically manipulates a variable of interest (known as the independent variable) and measures the effect on another variable (known as the dependent variable).

Unlike correlational studies, which can only be used to determine if there is a relationship between two variables, experimental methods can be used to determine the actual nature of the relationship—whether changes in one variable actually cause another to change.

A Word From Verywell

The hypothesis is a critical part of any scientific exploration. It represents what researchers expect to find in a study or experiment. In situations where the hypothesis is unsupported by the research, the research still has value. Such research helps us better understand how different aspects of the natural world relate to one another. It also helps us develop new hypotheses that can then be tested in the future.

Some examples of how to write a hypothesis include:

- "Staying up late will lead to worse test performance the next day."

- "People who consume one apple each day will visit the doctor fewer times each year."

- "Breaking study sessions up into three 20-minute sessions will lead to better test results than a single 60-minute study session."

The four parts of a hypothesis are:

- The research question

- The independent variable (IV)

- The dependent variable (DV)

- The proposed relationship between the IV and DV

By Kendra Cherry, MSEd

Kendra Cherry, MS, is a psychosocial rehabilitation specialist, psychology educator, and author of the "Everything Psychology Book."

4 Examples of Hypothesis Testing in Real Life

In statistics, hypothesis tests are used to test whether or not some hypothesis about a population parameter is true.

To perform a hypothesis test in the real world, researchers will obtain a random sample from the population and perform a hypothesis test on the sample data, using a null and alternative hypothesis:

- Null Hypothesis (H0): The sample data occurs purely from chance.

- Alternative Hypothesis (HA): The sample data is influenced by some non-random cause.

If the p-value of the hypothesis test is less than some significance level (e.g. α = .05), then we can reject the null hypothesis and conclude that we have sufficient evidence to say that the alternative hypothesis is true.
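The decision rule above can be sketched in a few lines of Python. This is a minimal illustration, not a full testing procedure: it assumes the test statistic is a z statistic that follows a standard normal distribution under the null hypothesis, and the function names `p_value_from_z` and `reject_null` are hypothetical names chosen for this sketch.

```python
from statistics import NormalDist


def p_value_from_z(z, alternative="two-sided"):
    """Return the p-value of a z statistic under a standard normal null.

    alternative: "greater" (HA: parameter > null value),
                 "less" (HA: parameter < null value),
                 or "two-sided" (HA: parameter != null value).
    """
    cdf = NormalDist().cdf(z)  # P(Z <= z) for a standard normal Z
    if alternative == "greater":
        return 1.0 - cdf
    if alternative == "less":
        return cdf
    if alternative == "two-sided":
        return 2.0 * min(cdf, 1.0 - cdf)
    raise ValueError("alternative must be 'greater', 'less', or 'two-sided'")


def reject_null(p_value, alpha=0.05):
    """Apply the rule: reject H0 when the p-value is below alpha."""
    return p_value < alpha


# The same statistic can lead to different decisions depending on HA.
z = 1.645
print(reject_null(p_value_from_z(z, "greater")))    # one-sided test
print(reject_null(p_value_from_z(z, "two-sided")))  # two-sided test
```

The direction of the alternative hypothesis determines which tail of the distribution counts as evidence against the null, which is why the examples that follow state HA explicitly.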

The following examples provide several situations where hypothesis tests are used in the real world.

Example 1: Biology

Hypothesis tests are often used in biology to determine whether some new treatment, fertilizer, pesticide, chemical, etc. causes increased growth, stamina, immunity, etc. in plants or animals.

For example, suppose a biologist believes that a certain fertilizer will cause plants to grow more during a one-month period than they normally do, which is currently 20 inches. To test this, she applies the fertilizer to each of the plants in her laboratory for one month.

She then performs a hypothesis test using the following hypotheses:

- H0: μ = 20 inches (the fertilizer will have no effect on the mean plant growth)

- HA: μ > 20 inches (the fertilizer will cause mean plant growth to increase)

If the p-value of the test is less than some significance level (e.g. α = .05), then she can reject the null hypothesis and conclude that the fertilizer leads to increased plant growth.
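As a rough sketch of how the biologist's test might look in code, the following computes a one-sample t statistic for H0: μ = 20 against HA: μ > 20. The ten growth measurements are invented for illustration, and the example assumes a one-sample t-test is appropriate (roughly normal growth values). Instead of computing a p-value, it compares the statistic with 1.833, the one-sided critical value at α = .05 for 9 degrees of freedom from a standard t table, which is equivalent to checking whether the p-value is below .05.

```python
from math import sqrt
from statistics import mean, stdev


def one_sample_t(sample, mu0):
    """t statistic for H0: population mean equals mu0."""
    n = len(sample)
    standard_error = stdev(sample) / sqrt(n)  # stdev uses the n - 1 divisor
    return (mean(sample) - mu0) / standard_error


# Hypothetical one-month growth (inches) for 10 fertilized plants.
growth_inches = [21.5, 20.8, 22.1, 19.9, 21.0, 22.4, 20.3, 21.7, 20.9, 21.4]

# One-sided critical value for alpha = .05 with df = 10 - 1 = 9.
T_CRITICAL_DF9 = 1.833

t_stat = one_sample_t(growth_inches, mu0=20.0)
reject_h0 = t_stat > T_CRITICAL_DF9  # upper tail only, because HA is mu > 20

print(f"t = {t_stat:.2f}, reject H0: {reject_h0}")  # t is about 4.89
```

With these made-up numbers the statistic falls well beyond the critical value, so the null hypothesis would be rejected. A different sample size would need a different critical value.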

Example 2: Clinical Trials

Hypothesis tests are often used in clinical trials to determine whether some new treatment, drug, procedure, etc. causes improved outcomes in patients.

For example, suppose a doctor believes that a new drug is able to reduce blood pressure in obese patients. To test this, he may measure the blood pressure of 40 patients before and after using the new drug for one month.

He then performs a hypothesis test using the following hypotheses:

- H0: μ_after = μ_before (the mean blood pressure is the same before and after using the drug)

- HA: μ_after < μ_before (the mean blood pressure is less after using the drug)

If the p-value of the test is less than some significance level (e.g. α = .05), then he can reject the null hypothesis and conclude that the new drug leads to reduced blood pressure.
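Because each patient is measured twice, the test works on the paired differences (after minus before). The sketch below is one way to get a p-value using only the Python standard library. Note that it swaps in an exact sign-flip permutation test rather than the paired t-test that is typically used here: under H0 each difference is assumed equally likely to be positive or negative. The readings are invented, and only 8 patients are used (not 40) so that all 2^8 sign patterns can be enumerated.

```python
from itertools import product


def paired_sign_flip_p_value(before, after):
    """One-sided exact p-value for HA: mean(after) < mean(before).

    Under H0 the sign of each paired difference is treated as arbitrary,
    so every +/- pattern of the observed magnitudes is equally likely.
    """
    diffs = [a - b for a, b in zip(after, before)]
    observed = sum(diffs)  # sum is equivalent to the mean for a fixed n
    magnitudes = [abs(d) for d in diffs]

    as_extreme = 0
    total = 0
    for signs in product((-1, 1), repeat=len(magnitudes)):
        total += 1
        flipped_sum = sum(s * m for s, m in zip(signs, magnitudes))
        if flipped_sum <= observed:  # at least as low as what was observed
            as_extreme += 1
    return as_extreme / total


# Hypothetical systolic blood pressure readings for 8 patients.
before = [150, 142, 160, 155, 148, 152, 158, 145]
after = [144, 140, 151, 150, 149, 145, 150, 143]

p_value = paired_sign_flip_p_value(before, after)
print(f"p = {p_value:.4f}, reject H0: {p_value < 0.05}")  # p = 2/256
```

For these made-up readings only 2 of the 256 sign patterns give a total drop at least as large as the observed one, so the p-value is about .0078 and the null hypothesis would be rejected at α = .05.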

Example 3: Advertising Spend

Hypothesis tests are often used in business to determine whether or not some new advertising campaign, marketing technique, etc. causes increased sales.

For example, suppose a company believes that spending more money on digital advertising leads to increased sales. To test this, the company may increase money spent on digital advertising during a two-month period and collect data to see if overall sales have increased.

They may perform a hypothesis test using the following hypotheses:

- H0: μ_after = μ_before (mean sales are the same before and after spending more on advertising)

- HA: μ_after > μ_before (mean sales increased after spending more on advertising)

If the p-value of the test is less than some significance level (e.g. α = .05), then the company can reject the null hypothesis and conclude that increased digital advertising leads to increased sales.

Example 4: Manufacturing

Hypothesis tests are also used often in manufacturing plants to determine if some new process, technique, method, etc. causes a change in the number of defective products produced.

For example, suppose a certain manufacturing plant wants to test whether or not some new method changes the number of defective widgets produced per month, which is currently 250. To test this, they may measure the mean number of defective widgets produced before and after using the new method for one month.

They can then perform a hypothesis test using the following hypotheses:

- H0: μ_after = μ_before (the mean number of defective widgets is the same before and after using the new method)

- HA: μ_after ≠ μ_before (the mean number of defective widgets produced is different before and after using the new method)

If the p-value of the test is less than some significance level (e.g. α = .05), then the plant can reject the null hypothesis and conclude that the new method leads to a change in the number of defective widgets produced per month.

Additional Resources

- Introduction to Hypothesis Testing

- Introduction to the One Sample t-test

- Introduction to the Two Sample t-test

- Introduction to the Paired Samples t-test

Published by Zach

S.3 Hypothesis Testing

In reviewing hypothesis tests, we start first with the general idea. Then, we keep returning to the basic procedures of hypothesis testing, each time adding a little more detail.

The general idea of hypothesis testing involves:

- Making an initial assumption.

- Collecting evidence (data).

- Based on the available evidence (data), deciding whether to reject or not reject the initial assumption.

Every hypothesis test — regardless of the population parameter involved — requires the above three steps.

Example S.3.1

Is normal body temperature really 98.6 degrees F?