Empirical Research: Definition, Methods, Types and Examples

Content Index

- Empirical research: Definition
- Empirical research: Origin
- Quantitative research methods
- Qualitative research methods
- Steps for conducting empirical research
- Empirical research methodology cycle
- Advantages of empirical research
- Disadvantages of empirical research
- Why is there a need for empirical research?

Empirical research: Definition

Empirical research is defined as any research in which the conclusions of the study are drawn strictly from concrete empirical evidence, that is, from evidence that is verifiable.

This empirical evidence can be gathered using quantitative market research and qualitative market research methods.

For example: A study is conducted to find out whether listening to happy music in the workplace promotes creativity. An experiment is run using a music website survey on one group of participants who are exposed to happy music and another group who do not listen to music at all, and the subjects are then observed. The results of such a study provide empirical evidence on whether or not happy music promotes creativity.

Empirical research: Origin

You must have heard the quote "I will not believe it unless I see it". This idea came from the ancient empiricists; it is a fundamental understanding that powered the emergence of science during the Renaissance and laid the foundation of modern science as we know it today. The word itself has its roots in Greek: it is derived from the Greek word empeirikos, which means "experienced".

Today, the word empirical refers to the collection of data using evidence gathered through observation or experience, or by using calibrated scientific instruments. All of these senses have one thing in common: a dependence on observation and experiment to collect data and test it in order to reach conclusions.

Types and methodologies of empirical research

Empirical research can be conducted and analysed using qualitative or quantitative methods.

- Quantitative research: Quantitative research methods are used to gather information through numerical data. They are used to quantify opinions, behaviors or other defined variables. The questions are predetermined and follow a more structured format. Some of the commonly used methods are surveys, longitudinal studies, polls, etc.

- Qualitative research: Qualitative research methods are used to gather non-numerical data. They are used to find meanings, opinions, or the underlying reasons from the subjects. These methods are unstructured or semi-structured. The sample size for such research is usually small, and the method is conversational in order to provide more insight or in-depth information about the problem. Some of the most popular methods are focus groups, experiments, interviews, etc.

Data collected from these methods needs to be analysed. Empirical evidence can be analysed either quantitatively or qualitatively. Using this analysis, the researcher can answer empirical questions, which have to be clearly defined and answerable with the findings obtained. The type of research design used will vary depending on the field in which it is going to be used. Many researchers choose a combined approach involving both quantitative and qualitative methods to better answer questions that cannot be studied in a laboratory setting.

Quantitative research methods

Quantitative research methods aid in analyzing the empirical evidence gathered. Using these methods, a researcher can find out whether the hypothesis is supported or not.

- Survey research: Survey research generally involves a large audience in order to collect a large amount of data. It is a quantitative method with a predetermined set of closed questions that are easy to answer. Because of the simplicity of the method, high response rates are achieved. It is one of the most commonly used methods for all kinds of research today.

Previously, surveys were conducted only face to face, perhaps with a recorder. However, with advances in technology and for ease of use, new mediums such as email and social media have emerged.

For example: Depletion of energy resources is a growing concern, and hence there is a need for awareness about renewable energy. According to recent studies, fossil fuels still account for around 80% of energy consumption in the United States. Even though the use of green energy rises every year, certain factors still keep the general population from opting for it. To understand why, a survey can be conducted to gather the opinions of the general population about green energy and the factors that influence their choice of switching to renewable energy. Such a survey can help institutions or governing bodies promote appropriate awareness and incentive schemes to push the use of greener energy.

- Experimental research: In experimental research, an experiment is set up and a hypothesis is tested by creating a situation in which one of the variables is manipulated. This is also used to check cause and effect: the researcher observes what happens to the dependent variable when the independent variable is removed or altered. The process usually consists of proposing a hypothesis, experimenting on it, analyzing the findings, and reporting them to show whether they support the theory or not.

For example: A product company is trying to find out why it is unable to capture the market. The organisation makes changes to each of its processes, such as manufacturing, marketing, sales and operations. Through the experiment it learns that sales training directly impacts the market coverage of its product: if the salesperson is trained well, the product will have better coverage.

- Correlational research: Correlational research is used to find the relationship between two sets of variables. Regression analysis is generally used to predict outcomes in such a method. The correlation can be positive, negative or neutral (no correlation).

For example: Individuals with more education tend to get higher-paying jobs. This is a positive correlation between education and pay: more education is associated with higher-paying jobs, and less education with lower-paying jobs.
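
As a rough illustration of how the direction and strength of such a relationship can be measured, the sketch below computes the Pearson correlation coefficient. The function name `pearson_r` and the education and salary figures are made up for this example; they are not data from any study.

```python
import math

def pearson_r(xs, ys):
    """Return the Pearson correlation coefficient of two equal-length samples."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two samples of equal length with at least 2 points")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sum of cross-products and sums of squared deviations from the means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when a sample has no variation")
    return cov / math.sqrt(var_x * var_y)

# Hypothetical, made-up data: years of education and annual salary (in thousands).
years_of_education = [10, 12, 14, 16, 18, 20]
salary_thousands = [28, 35, 41, 55, 62, 80]

r = pearson_r(years_of_education, salary_thousands)
print(f"r = {r:.3f}")  # close to +1: positive, close to -1: negative, near 0: neutral
```

A coefficient near +1 would correspond to the positive correlation described above. Note that a correlation on its own does not show that one variable causes the other.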

- Longitudinal study: A longitudinal study is used to understand the traits or behavior of a subject under observation by repeatedly testing the subject over a period of time. Data collected with this method can be qualitative or quantitative in nature.

For example: A study to find out the benefits of exercise. The participants are asked to exercise every day for a particular period of time, and the results show higher endurance, stamina, and muscle growth. This supports the claim that exercise benefits an individual's body.

- Cross sectional: A cross sectional study is an observational method in which a set of people is observed at a given point in time. The people are chosen so that they are similar in all variables except the one being researched. This type of study does not enable the researcher to establish a cause and effect relationship, because the subjects are not observed over a continuous time period. It is mainly used in the healthcare sector and the retail industry.

For example: A medical study to find the prevalence of under-nutrition disorders in kids of a given population. This will involve looking at a wide range of parameters like age, ethnicity, location, income and social background. If a significant number of kids coming from poor families show under-nutrition disorders, the researcher can investigate further. Usually a cross sectional study is followed by a longitudinal study to find out the exact reason.

- Causal-Comparative research: This method is based on comparison. It is mainly used to find out the cause-effect relationship between two or more variables.

For example: A researcher measured the productivity of employees in a company that gave breaks to its employees during work and compared it with the productivity of employees in a company that did not give breaks at all.
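
A comparison like the one above can be sketched in a few lines. The example below compares the mean productivity of the two groups and computes Welch's t statistic, which is one common choice for two independent groups and is an assumption here, not a method named in the article. The function name `welch_t` and all scores are hypothetical.

```python
import math
import statistics

def welch_t(group_a, group_b):
    """Return Welch's t statistic for the difference in means of two independent groups."""
    mean_a, mean_b = statistics.mean(group_a), statistics.mean(group_b)
    # statistics.variance is the sample variance (divides by n - 1).
    var_a, var_b = statistics.variance(group_a), statistics.variance(group_b)
    standard_error = math.sqrt(var_a / len(group_a) + var_b / len(group_b))
    return (mean_a - mean_b) / standard_error

# Hypothetical, made-up productivity scores (tasks completed per day).
with_breaks = [22, 25, 27, 24, 26, 28]
without_breaks = [20, 21, 23, 19, 22, 24]

difference = statistics.mean(with_breaks) - statistics.mean(without_breaks)
t_stat = welch_t(with_breaks, without_breaks)
print(f"mean difference = {difference:.2f}, Welch t = {t_stat:.2f}")
```

Because the two groups already existed and were not randomly assigned, a large difference is a lead worth investigating, not conclusive evidence that breaks caused it.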

Qualitative research methods

Some research questions need to be analysed qualitatively, because quantitative methods are not applicable to them. In many cases in-depth information is needed, or a researcher may need to observe the behavior of a target audience, so the results are needed in descriptive form. Qualitative research results are descriptive rather than predictive. They enable the researcher to build or support theories for potential future quantitative research. In such situations qualitative research methods are used to derive a conclusion that supports the theory or hypothesis being studied.

- Case study: The case study method is used to find more information by carefully analyzing existing cases. It is very often used for business research or to gather empirical evidence for investigation purposes. It is a method of investigating a problem within its real-life context through existing cases. The researcher has to analyse carefully, making sure the parameters and variables in the existing case are the same as in the case being investigated. Using the findings from the case study, conclusions can be drawn about the topic being studied.

For example: A report describing the solution a company provided to its client, including the challenges faced during initiation and deployment, the findings of the case, and the solutions offered for the problems. Such case studies are used by most companies because they form empirical evidence that the company can promote in order to get more business.

- Observational method: The observational method is a process of observing and gathering data from a target. Because it is a qualitative method, it is time consuming and very personal. Observational research can be seen as a part of ethnographic research, which is also used to gather empirical evidence. It is usually a qualitative form of research; however, in some cases it can be quantitative as well, depending on what is being studied.

For example: setting up a study to observe a particular animal in the rainforests of the Amazon. Such research usually takes a lot of time, as observation has to be done for a set amount of time to study the patterns or behavior of the subject. Another example used widely nowadays is observing people shopping in a mall to figure out the buying behavior of consumers.

- One-on-one interview: This method is purely qualitative and one of the most widely used, because it enables a researcher to get precise, meaningful data if the right questions are asked. It is a conversational method in which in-depth data can be gathered depending on where the conversation leads.

For example: A one-on-one interview with the finance minister to gather data on the financial policies of the country and their implications for the public.

- Focus groups: Focus groups are used when a researcher wants to find answers to why, what and how questions. A small group is generally chosen for such a method, and it is not necessary to interact with the group in person. A moderator is generally needed when the group is being addressed in person. This method is widely used by product companies to collect data about their brands and products.

For example: A mobile phone manufacturer wants feedback on the dimensions of one of its models that is yet to be launched. Such studies help the company meet customer demand and position the model appropriately in the market.

- Text analysis: The text analysis method is relatively new compared to the other types. It is used to analyse social life by going through the images or words used by individuals. Today, with social media playing a major part in everyone's life, such a method enables the researcher to follow the patterns that relate to the study.

For example: A lot of companies ask customers for detailed feedback on how satisfied they are with the customer support team. Such data enables the researcher to take appropriate decisions to make the support team better.
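
One very simple form of text analysis is counting which terms come up most often in free-text feedback. The sketch below is a minimal illustration of that idea only; the function name `top_terms`, the stop-word list, and the feedback comments are all made up for this example, and real projects typically use fuller word lists and more careful text processing.

```python
import re
from collections import Counter

# A small, hypothetical stop-word list chosen for this example.
STOP_WORDS = {"the", "a", "an", "and", "was", "is", "to", "my", "i",
              "but", "very", "it", "of", "for", "were"}

def top_terms(comments, n=3):
    """Count non-stop-word terms across free-text comments; return the n most common."""
    counts = Counter()
    for comment in comments:
        # Lowercase the comment and keep runs of letters and apostrophes as words.
        words = re.findall(r"[a-z']+", comment.lower())
        counts.update(word for word in words if word not in STOP_WORDS)
    return counts.most_common(n)

# Hypothetical customer comments about a support team.
feedback = [
    "The support agent was slow to respond",
    "Slow response, but the agent was helpful",
    "Helpful agent and very friendly",
]
print(top_terms(feedback))
```

Frequent terms such as "slow" or "helpful" only point the researcher toward themes; the comments themselves still need to be read in context.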

Sometimes a combination of methods is needed for questions that cannot be answered using only one type of method, especially when a researcher needs to gain a complete understanding of a complex subject.

Steps for conducting empirical research

Since empirical research is based on observation and capturing experiences, it is important to plan the steps for conducting the experiment and for analysing it. This will enable the researcher to resolve problems or obstacles that can occur during the experiment.

Step #1: Define the purpose of the research

This is the step where the researcher has to answer questions like: What exactly do I want to find out? What is the problem statement? Are there any issues in terms of the availability of knowledge, data, time or resources? Will this research be more beneficial than what it will cost?

Before going ahead, a researcher has to clearly define the purpose of the research and set up a plan to carry out further tasks.

Step #2: Supporting theories and relevant literature

The researcher needs to find out whether there are theories that can be linked to the research problem, and whether any theory can help support the findings. All kinds of relevant literature will help the researcher find out whether others have researched this before and what problems they faced. The researcher will also have to set up assumptions and find out whether there is any history regarding the research problem.

Step #3: Creation of hypothesis and measurement

Before beginning the actual research, the researcher needs a working hypothesis, that is, a guess at the probable result. The researcher has to set up variables, decide the environment for the research, and work out how the variables relate to each other.

The researcher will also need to define the units of measurement and the tolerable degree of error, and find out whether the chosen measurement will be acceptable to others.
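
For survey-style studies, one common way to turn a "tolerable degree of error" into a concrete number is the standard sample-size formula for a proportion, n = z^2 * p * (1 - p) / e^2. The sketch below assumes simple random sampling from a large population; the function name `sample_size_for_proportion` and the default values (z = 1.96 for roughly 95% confidence, p = 0.5 as the most conservative guess) are choices made for this illustration, not values from the article.

```python
import math

def sample_size_for_proportion(margin_of_error, z=1.96, p=0.5):
    """Minimum sample size to estimate a proportion within +/- margin_of_error.

    Uses n = z^2 * p * (1 - p) / e^2 and rounds up to a whole respondent.
    """
    if not 0 < margin_of_error < 1:
        raise ValueError("margin_of_error must be between 0 and 1")
    n = (z ** 2) * p * (1 - p) / (margin_of_error ** 2)
    return math.ceil(n)

print(sample_size_for_proportion(0.05))  # tolerating a 5-point margin of error
print(sample_size_for_proportion(0.03))  # a tighter tolerance needs more respondents
```

The point of the sketch is the trade-off: the smaller the error the researcher is willing to tolerate, the more data has to be collected.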

Step #4: Methodology, research design and data collection

In this step, the researcher has to define a strategy for conducting the research and set up experiments to collect the data that will be used to test the hypothesis. The researcher decides whether an experimental or non-experimental method is needed. The type of research design will vary depending on the field in which the research is being conducted. Last but not least, the researcher has to identify the parameters that will affect the validity of the research design. Data collection is done by choosing appropriate samples depending on the research question, using one of the many sampling techniques. Once data collection is complete, the researcher will have empirical data that needs to be analysed.
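
Two widely used sampling techniques are simple random sampling and stratified sampling. The sketch below shows both under simplifying assumptions; the function names, the fixed random seed, and the urban/rural sampling frame are hypothetical and exist only to make the example reproducible.

```python
import random
from collections import defaultdict

def simple_random_sample(population, k, seed=0):
    """Draw k units without replacement, each unit equally likely."""
    return random.Random(seed).sample(population, k)

def stratified_sample(population, stratum_of, fraction, seed=0):
    """Sample the same fraction from every stratum (proportional allocation)."""
    rng = random.Random(seed)
    strata = defaultdict(list)
    for unit in population:
        strata[stratum_of(unit)].append(unit)
    sample = []
    for members in strata.values():
        k = max(1, round(len(members) * fraction))  # keep at least one unit per stratum
        sample.extend(rng.sample(members, k))
    return sample

# Hypothetical sampling frame: 80 urban and 20 rural respondents.
frame = [("urban", i) for i in range(80)] + [("rural", i) for i in range(20)]

srs = simple_random_sample(frame, 10)
strat = stratified_sample(frame, stratum_of=lambda unit: unit[0], fraction=0.1)
print(len(srs), sum(1 for unit in strat if unit[0] == "rural"))
```

Stratifying guarantees that the small rural group is represented in proportion to its size, whereas a simple random sample of 10 may by chance contain few or no rural respondents.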

Step #5: Data analysis and result

Data analysis can be done in two ways, qualitatively and quantitatively. The researcher needs to decide which qualitative or quantitative method is needed, or whether a combination of both is required. Depending on the outcome of the analysis, the researcher will know whether the hypothesis is supported or rejected. Analyzing the data is the most important part of supporting the hypothesis.

Step #6: Conclusion

A report will need to be made with the findings of the research. The researcher can give the theories and literature that support his research. He can make suggestions or recommendations for further research on his topic.

Empirical research methodology cycle

A.D. de Groot, a famous Dutch psychologist and chess expert, conducted some of the most notable experiments using chess in the 1940s. During his study, he came up with a cycle that is consistent and is now widely used to conduct empirical research. It consists of 5 phases, each as important as the next. The empirical cycle captures the process of coming up with hypotheses about how certain subjects work or behave and then testing these hypotheses against empirical data in a systematic and rigorous way. It can be said that it characterizes the deductive approach to science. Following is the empirical cycle.

- Observation: At this phase an idea is sparked for proposing a hypothesis, and empirical data is gathered using observation. For example: a particular species of flower blooms in a different color only during a specific season.

- Induction: Inductive reasoning is then carried out to form a general conclusion from the data gathered through observation. For example: As stated above, it is observed that the species of flower blooms in a different color during a specific season. A researcher may ask, "Does the temperature in the season cause the color change in the flower?" The researcher can assume that this is the case, but it is a mere conjecture, and hence an experiment needs to be set up to support the hypothesis. So the researcher tags a few sets of flowers kept at a different temperature and observes whether they still change color.

- Deduction: In this phase the researcher deduces, through logic and rationality, what the experiment should show if the hypothesis holds, so that the results are specific and unbiased. For example: In the experiment, if the tagged flowers in a different temperature environment do not change color, then it can be concluded that temperature plays a role in changing the color of the bloom.

- Testing: In this phase the researcher returns to empirical methods to put the hypothesis to the test. The researcher now needs to make sense of the data, and hence uses statistical analysis to determine the relationship between temperature and bloom color. If the researcher finds that most flowers bloom in a different color when exposed to a certain temperature, and that the others do not when the temperature is different, the hypothesis has found support. Please note that this is not proof, only support for the hypothesis.

- Evaluation: This phase is generally forgotten by most but is an important one for continuing to gain knowledge. During this phase the researcher puts forth the data collected, the supporting argument and the conclusion. The researcher also states the limitations of the experiment and the hypothesis, and offers suggestions so that others can pick it up and continue with more in-depth research in the future.
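
The testing phase of the flower example can be sketched with a chi-square test on a 2x2 table of counts. This is one possible statistical analysis, chosen here for illustration; the article does not say which test to use. The function name `chi_square_2x2` and the flower counts are hypothetical.

```python
def chi_square_2x2(a, b, c, d):
    """Pearson chi-square statistic (no continuity correction) for the table [[a, b], [c, d]]."""
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    if 0 in (row1, row2, col1, col2):
        raise ValueError("every row and column needs at least one observation")
    return n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)

# Hypothetical, made-up counts of tagged flowers.
#                     changed color   did not change
# seasonal temp            18               2
# altered temp              4              16
chi2 = chi_square_2x2(18, 2, 4, 16)

CRITICAL_VALUE_05_DF1 = 3.841  # chi-square critical value, alpha = 0.05, 1 degree of freedom
print(f"chi-square = {chi2:.2f}")
print("supports the hypothesis" if chi2 > CRITICAL_VALUE_05_DF1 else "no support found")
```

A statistic above the critical value suggests that color change and temperature are related in this sample. As the cycle stresses, that is support for the hypothesis, not proof.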

Advantages of empirical research

There is a reason why empirical research is one of the most widely used methods. It has several advantages. Following are a few of them.

- It is used to authenticate traditional research through various experiments and observations.

- This research methodology makes the research being conducted more competent and authentic.

- It enables a researcher to understand the dynamic changes that can happen and to change strategy accordingly.

- The level of control in such research is high, so the researcher can control multiple variables.

- It plays a vital role in increasing internal validity.

Disadvantages of empirical research

Even though empirical research makes the research more competent and authentic, it does have a few disadvantages. Following are a few of them.

- Such research needs patience, as it can be very time consuming. The researcher has to collect data from multiple sources, and quite a few parameters are involved, which makes the research lengthy.

- Most of the time, a researcher will need to conduct research at different locations or in different environments, which can be expensive.

- There are rules about how experiments can be performed, and hence permissions are needed. It is often very difficult to get the permissions required to carry out certain methods of this research.

- Collection of data can sometimes be a problem, as it has to be collected from a variety of sources through different methods.

Why is there a need for empirical research?

Empirical research is important today because most people believe only in what they can see, hear or experience. It is used to validate hypotheses, to increase human knowledge, and to keep advancing in various fields.

For example: Pharmaceutical companies use empirical research to try out a specific drug on controlled groups or random groups to study cause and effect. In this way, they test the theories they had proposed for the specific drug. Such research is very important, as it can sometimes lead to a cure for a disease that has existed for many years. It is useful in science and in many other fields like history, social sciences, business, etc.

Empirical research has become critical and a norm in many fields, where it is used to support hypotheses and gain more knowledge. The methods mentioned above are very useful for carrying out such research. However, new methods will keep emerging as investigative questions change and become more specialized.

Research: Overview & Approaches

Introduction to Empirical Research

- Introductory Video: This video covers what empirical research is, what kinds of questions and methods empirical researchers use, and some tips for finding empirical research articles in your discipline.

- Guided Search: Finding Empirical Research Articles. This is a hands-on tutorial that will allow you to use your own search terms to find resources.

Examples of Empirical Research

- Study on radiation transfer in human skin for cosmetics

- Long-Term Mobile Phone Use and the Risk of Vestibular Schwannoma: A Danish Nationwide Cohort Study

- Emissions Impacts and Benefits of Plug-In Hybrid Electric Vehicles and Vehicle-to-Grid Services

- Review of design considerations and technological challenges for successful development and deployment of plug-in hybrid electric vehicles

- Endocrine disrupters and human health: could oestrogenic chemicals in body care cosmetics adversely affect breast cancer incidence in women?

- Last Updated: Apr 5, 2024 9:55 AM

- URL: https://guides.lib.purdue.edu/research_approaches

- University of Memphis Libraries

Empirical Research: Defining, Identifying, & Finding

The Introduction Section

The Introduction exists to explain the research project and to justify why this research has been done. The introduction will discuss:

- The topic covered by the research,

- Previous research done on this topic,

- What is still unknown about the topic that this research will answer, and

- Why someone would want to know that answer.

What Criteria to Look For

The "Introduction" is where you are most likely to find the research question.

Finding the Criteria

The research question may not be clearly labeled in the Introduction. Often, the author(s) may rephrase their question as a research statement or a hypothesis. Some research may have more than one research question or a research question with multiple parts.

Words That May Signify the Research Question

These are some common word choices authors make when they are describing their research question as a research statement or hypothesis.

- Hypothesize, hypothesized, or hypothesis

- Investigation, investigate(s), or investigated

- Predict(s) or predicted

- Evaluate(s) or evaluated

- This research, this study, the current study, or this paper

- The aim of this study or this research

You might also look for common question words (who, what, when, where, why, how) in a statement to see if it might be a rephrased research question.
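
The cue words listed above can also be used for a rough first pass over an introduction. The sketch below flags sentences that contain one of those words or phrases; the function name `flag_candidate_sentences`, the regular expression, and the sample introduction text are written for this example, and a flagged sentence still needs to be read to decide whether it actually states the research question.

```python
import re

# Cue words and phrases taken from the list above.
CUE_PATTERN = re.compile(
    r"\b(hypothes(?:is|ize|ized|es)|investigat(?:e|es|ed|ion)|predict(?:s|ed)?|"
    r"evaluate(?:s|d)?|this research|this study|the current study|this paper|"
    r"the aim of this study)\b",
    re.IGNORECASE,
)

def flag_candidate_sentences(text):
    """Return (sentence, cues) pairs for sentences that contain a cue word."""
    # Naive sentence split: break after ., ! or ? followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    flagged = []
    for sentence in sentences:
        cues = [match.group(0).lower() for match in CUE_PATTERN.finditer(sentence)]
        if cues:
            flagged.append((sentence, cues))
    return flagged

# Hypothetical introduction text, written for this example.
intro = (
    "Inequality has been widely discussed. "
    "This paper examines how people perceive inequality at the collective level. "
    "We hypothesized that perceptions differ by context."
)
for sentence, cues in flag_candidate_sentences(intro):
    print(cues, "->", sentence)
```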

What Headings to Look Under

- General heading for the section.

- Since this is the first heading after the title and abstract, some authors leave it unlabeled.

- Likely where the research question is located if there is not a separate heading for it.

- Explicit discussion of what is being investigated in the research.

- Should have some form of the research question.

- Often a separate heading where the authors discuss previous research done on the topic.

- May be labeled by the topic being reviewed.

- Less likely to find the research question clearly stated. The authors may be talking about their topic more broadly than their current research question.

- Single "Introduction" heading.

- Includes phrase "this paper."

- Includes question word "how."

- You could turn the phrase "how people perceive inequality in outcomes and risk at the collective level" into the question "How do people perceive inequality in outcomes and risk at the collective level?"

- Labeled "Introduction" heading along with headings for topics of literature review.

- Includes phrase "this research investigates."

- Includes question word "how."

- You could turn the phrase "how LGBTQ college students negotiate the hookup scene on college campuses" into the question "How do LGBTQ college students negotiate the hookup scene on college campuses?"

- Beginning of Introduction section is unlabeled. It then includes headings for different parts of the literature review and ends with a heading called "The Current Study" on page 573 for discussing the research questions.

- Includes the words and phrases "aim of this study," "hypothesized," and "predicted."

- You could turn the phrase "examine the effects of racial discrimination on anxiety symptom distress" into the question "What are the effects of racial discrimination on anxiety symptom distress?"

- You could turn the phrase "explore the moderating role of internalized racism in the link between racial discrimination and changes in anxiety symptom distress" into the question "How does internalized racism moderate the link between racial discrimination and changes in anxiety symptom distress?"

- Last Updated: Apr 2, 2024 11:25 AM

- URL: https://libguides.memphis.edu/empirical-research

Penn State University Libraries

Empirical research in the social sciences and education.

Designing Empirical Research (eBooks only)

Start with:

- SAGE Project Planner A tutorial that guides you through all aspects of designing an original research project, from defining a topic, to reviewing the literature, to collecting data, to writing and sharing your results.

- SAGE Research Methods Core This link opens in a new window A collection of encyclopedias, handbooks, and other materials for designing Education, Behavioral Sciences, and Social Sciences Research. Use the search box to find information on specific methods, or use the "Methods Map" to explore related methodologies. more... less... SAGE Research Methods is a research methods tool created to help researchers, faculty and students with their research projects. SAGE Research Methods links over 100,000 pages of SAGE's renowned book, journal and reference content with truly advanced search and discovery tools. Researchers can explore methods concepts to help them design research projects, understand particular methods or identify a new method, conduct their research, and write up their findings. Since SAGE Research Methods focuses on methodology rather than disciplines, it can be used across the social sciences, health sciences, and more. SAGE Research Methods contains content from more than 640 books, dictionaries, encyclopedias, and handbooks, the entire Little Green Book, and Little Blue Book series, two Major Works collating a selection of journal articles, and newly commissioned videos. Our access is to: SRM Core Update 2020-2025; SRM Cases (includes updates through 2025); SRM Cases 2.

- SpringerLink eBooks Although Springer is better known for publishing in the life sciences, it offers a growing collection of social science materials. To find research methods books, type research* OR method* into the search box. Then, under "Discipline," click on "See All," and choose your topic of interest.

- PsycTESTS (formerly listed as APA PsycTests®) This link opens in a new window Helps you identify questionnaires and tests to use in your research. more... less... PsycTESTS is a research database that provides access to psychological tests, measures, scales, surveys, and other assessments as well as descriptive information about the test and its development and administration.

- Health & Psychosocial Instruments - HAPI (PSU access will expire on June 30, 2024.) This link opens in a new window Additional questionnaires and tests to use in your research. more... less... Health and Psychosocial Instruments features material on unpublished information-gathering tools for clinicians that are discussed in journal articles, such as questionnaires, interview schedules, tests, checklists, rating and other scales, coding schemes, and projective techniques. The majority of tools are in medical and nursing areas such pain measurement, quality of life assessment, and drug efficacy evaluation. However, HaPI also includes tests used in medically related disciplines such as psychology, social work, occupational therapy, physical therapy, and speech & hearing therapy. For more information on the HaPI database, please click here.

- LinkedIn Learning Provides video tutorials on data analysis and statistical software. Lynda.com, Inc. offers over a thousand video tutorials on leading software topics like Microsoft Office, Adobe Creative Suite, SQL, Drupal, audio and video editing applications, ColdFusion, operating systems, and many more. These high-quality tutorials are taught by industry experts and available 24/7 for convenient, self-paced learning.

Some Additional Resources:

- Library catalog search for books on social science research methodology

Penn State Research Offices

- Office for Research Protections Ensures that research at Penn State is conducted in accordance with federal, state, and local regulations and guidelines that protect human participants, animals, students, and personnel.

- Research Data Services Provides consultation on data, geospatial, statistical, and other research questions.

- Statistical Consulting Center Offers advice on research planning and design, sampling, modeling, analysis, results interpretation, and software.

- Institute for State and Regional Affairs, Penn State Harrisburg Located at Penn State Harrisburg, ISRA includes departments for census, geospatial, and survey research. Services include consultation/training and contract research.

- Last Updated: Feb 18, 2024 8:33 PM

- URL: https://guides.libraries.psu.edu/emp

5 Research design

Research design is a comprehensive plan for data collection in an empirical research project. It is a ‘blueprint’ for empirical research aimed at answering specific research questions or testing specific hypotheses, and must specify at least three processes: the data collection process, the instrument development process, and the sampling process. The instrument development and sampling processes are described in the next two chapters, and the data collection process—which is often loosely called ‘research design’—is introduced in this chapter and is described in further detail in Chapters 9–12.

Broadly speaking, data collection methods can be grouped into two categories: positivist and interpretive. Positivist methods, such as laboratory experiments and survey research, are aimed at theory (or hypotheses) testing, while interpretive methods, such as action research and ethnography, are aimed at theory building. Positivist methods employ a deductive approach to research, starting with a theory and testing theoretical postulates using empirical data. In contrast, interpretive methods employ an inductive approach that starts with data and tries to derive a theory about the phenomenon of interest from the observed data. These methods are often incorrectly equated with quantitative and qualitative research. Quantitative and qualitative methods refer to the type of data being collected (quantitative data involve numeric scores, metrics, and so on, while qualitative data include interviews, observations, and so forth) and to how the data are analysed (i.e., using quantitative techniques such as regression or qualitative techniques such as coding). Positivist research uses predominantly quantitative data, but can also use qualitative data. Interpretive research relies heavily on qualitative data, but can sometimes benefit from including quantitative data as well. Sometimes, joint use of qualitative and quantitative data may help generate unique insight into a complex social phenomenon that is not available from either type of data alone, and hence, mixed-mode designs that combine qualitative and quantitative data are often highly desirable.

Key attributes of a research design

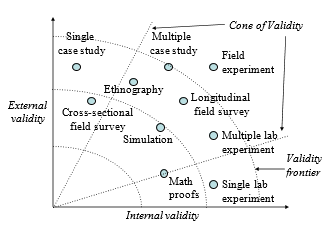

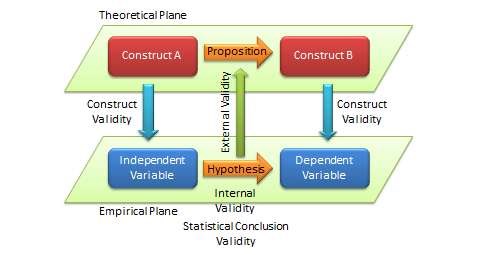

The quality of research designs can be defined in terms of four key design attributes: internal validity, external validity, construct validity, and statistical conclusion validity.

Internal validity, also called causality, examines whether the observed change in a dependent variable is indeed caused by a corresponding change in a hypothesised independent variable, and not by variables extraneous to the research context. Causality requires three conditions: covariation of cause and effect (i.e., if cause happens, then effect also happens; if cause does not happen, effect does not happen), temporal precedence (cause must precede effect in time), and the absence of spurious correlation (i.e., there is no plausible alternative explanation for the change). Certain research designs, such as laboratory experiments, are strong in internal validity by virtue of their ability to manipulate the independent variable (cause) via a treatment and observe the effect (dependent variable) of that treatment after a certain point in time, while controlling for the effects of extraneous variables. Other designs, such as field surveys, are poor in internal validity because of their inability to manipulate the independent variable (cause), and because cause and effect are measured at the same point in time, which defeats temporal precedence and makes it equally likely that the expected effect might have influenced the expected cause rather than the reverse. Although higher in internal validity compared to other methods, laboratory experiments are by no means immune to threats to internal validity, and are susceptible to history, testing, instrumentation, regression, and other threats that are discussed later in the chapter on experimental designs. Nonetheless, different research designs vary considerably in their respective level of internal validity.

External validity or generalisability refers to whether the observed associations can be generalised from the sample to the population (population validity), or to other people, organisations, contexts, or time (ecological validity). For instance, can results drawn from a sample of financial firms in the United States be generalised to the population of financial firms (population validity) or to other firms within the United States (ecological validity)? Survey research, where data is sourced from a wide variety of individuals, firms, or other units of analysis, tends to have broader generalisability than laboratory experiments where treatments and extraneous variables are more controlled. The variation in internal and external validity for a wide range of research designs is shown in Figure 5.1.

Some researchers claim that there is a trade-off between internal and external validity: higher external validity can come only at the cost of internal validity and vice versa. But this is not always the case. Research designs such as field experiments, longitudinal field surveys, and multiple case studies have higher degrees of both internal and external validities. Personally, I prefer research designs that have reasonable degrees of both internal and external validities, i.e., those that fall within the cone of validity shown in Figure 5.1. But this should not suggest that designs outside this cone are any less useful or valuable. Researchers’ choice of designs is ultimately a matter of their personal preference and competence, and the level of internal and external validity they desire.

Construct validity examines how well a given measurement scale is measuring the theoretical construct that it is expected to measure. Many constructs used in social science research such as empathy, resistance to change, and organisational learning are difficult to define, much less measure. For instance, construct validity must ensure that a measure of empathy is indeed measuring empathy and not compassion, which may be difficult since these constructs are somewhat similar in meaning. Construct validity is assessed in positivist research based on correlational or factor analysis of pilot test data, as described in the next chapter.
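
The correlational check mentioned above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the item names (`empathy_item_1`, `empathy_item_2`, `compassion_item_1`) and the pilot responses are hypothetical, and comparing within-construct against cross-construct correlations is just one simple way of looking for convergent and discriminant evidence, not a full factor analysis.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length score lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


# Hypothetical pilot responses (1-5 scale) from eight respondents.
empathy_item_1 = [1, 2, 3, 4, 5, 4, 3, 2]
empathy_item_2 = [2, 2, 3, 5, 5, 4, 3, 1]
compassion_item_1 = [3, 1, 4, 2, 5, 2, 4, 3]

# Items meant to measure the same construct should correlate strongly ...
within_construct = pearson_r(empathy_item_1, empathy_item_2)
# ... and more weakly with an item meant to measure a different construct.
across_constructs = pearson_r(empathy_item_1, compassion_item_1)

print(f"empathy 1 vs empathy 2:    r = {within_construct:.2f}")
print(f"empathy 1 vs compassion 1: r = {across_constructs:.2f}")
```

If the within-construct correlation were not clearly higher than the cross-construct one, that would be a warning sign that the empathy items may be picking up compassion as well.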

Statistical conclusion validity examines the extent to which conclusions derived using a statistical procedure are valid. For example, it examines whether the right statistical method was used for hypotheses testing, whether the variables used meet the assumptions of that statistical test (such as sample size or distributional requirements), and so forth. Because interpretive research designs do not employ statistical tests, statistical conclusion validity is not applicable for such analysis. The different kinds of validity and where they exist at the theoretical/empirical levels are illustrated in Figure 5.2.

Improving internal and external validity

The best research designs are those that can ensure high levels of internal and external validity. Such designs would guard against spurious correlations, inspire greater faith in the hypotheses testing, and ensure that the results drawn from a small sample are generalisable to the population at large. Controls are required to ensure internal validity (causality) of research designs, and can be accomplished in five ways: manipulation, elimination, inclusion, statistical control, and randomisation.

In manipulation, the researcher manipulates the independent variables in one or more levels (called ‘treatments’), and compares the effects of the treatments against a control group where subjects do not receive the treatment. Treatments may include a new drug or different dosage of drug (for treating a medical condition), a teaching style (for students), and so forth. This type of control is achieved in experimental or quasi-experimental designs, but not in non-experimental designs such as surveys. Note that if subjects cannot distinguish adequately between different levels of treatment manipulations, their responses across treatments may not be different, and manipulation would fail.

The elimination technique relies on eliminating extraneous variables by holding them constant across treatments, such as by restricting the study to a single gender or a single socioeconomic status. In the inclusion technique, the role of extraneous variables is considered by including them in the research design and separately estimating their effects on the dependent variable, such as via factorial designs where one factor is gender (male versus female). Such a technique allows for greater generalisability, but also requires substantially larger samples. In statistical control, extraneous variables are measured and used as covariates during the statistical testing process.
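
To make statistical control concrete, the following Python sketch compares a naive estimate of a treatment effect with one that adjusts for a measured covariate. It rests on several assumptions that are not part of the text above: the data are made up so that the outcome equals exactly 2 × treatment + 3 × covariate, the variable names are hypothetical, and the adjustment is done by residualisation (regressing both treatment and outcome on the covariate and then relating the residuals), which is one standard way of obtaining a covariate-adjusted slope in a linear model.

```python
from statistics import mean


def slope_intercept(x, y):
    """Ordinary least squares fit of y = intercept + slope * x."""
    mean_x, mean_y = mean(x), mean(y)
    sxx = sum((a - mean_x) ** 2 for a in x)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def residuals(x, y):
    """Part of y that is not linearly explained by x."""
    slope, intercept = slope_intercept(x, y)
    return [b - (intercept + slope * a) for a, b in zip(x, y)]


def adjusted_slope(treatment, outcome, covariate):
    """Slope of outcome on treatment after removing the covariate from both."""
    treatment_res = residuals(covariate, treatment)
    outcome_res = residuals(covariate, outcome)
    return slope_intercept(treatment_res, outcome_res)[0]


# Hypothetical data: the extraneous variable is correlated with the treatment
# level, and the outcome is built as 2 * treatment + 3 * covariate.
covariate = [0, 1, 2, 3, 4, 5]
treatment = [1, 0, 3, 2, 5, 4]
outcome = [2 * t + 3 * c for t, c in zip(treatment, covariate)]

naive = slope_intercept(treatment, outcome)[0]
adjusted = adjusted_slope(treatment, outcome, covariate)

print(f"naive slope:    {naive:.2f}")
print(f"adjusted slope: {adjusted:.2f}")
```

In this constructed example the naive slope overstates the treatment effect because it absorbs part of the covariate's influence, while the adjusted slope recovers the value built into the data. With real data the adjustment only helps for extraneous variables that were actually measured.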

Finally, the randomisation technique is aimed at cancelling out the effects of extraneous variables through a process of random sampling, if it can be assured that these effects are of a random (non-systematic) nature. Two types of randomisation are: random selection, where a sample is selected randomly from a population, and random assignment, where subjects selected in a non-random manner are randomly assigned to treatment groups.

Randomisation also ensures external validity, allowing inferences drawn from the sample to be generalised to the population from which the sample is drawn. Note that random assignment is mandatory when random selection is not possible because of resource or access constraints. However, generalisability across populations is harder to ascertain since populations may differ on multiple dimensions and you can only control for a few of those dimensions.
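
The difference between random selection and random assignment can be illustrated with a short Python sketch. The sampling frame, the group labels, and the seeds below are hypothetical choices made only so that the example is reproducible. The sketch draws a simple random sample and then deals the sampled subjects into equally sized groups.

```python
import random


def random_selection(population, sample_size, seed=None):
    """Random selection: draw a simple random sample without replacement."""
    rng = random.Random(seed)
    return rng.sample(population, sample_size)


def random_assignment(subjects, groups=("treatment", "control"), seed=None):
    """Random assignment: shuffle subjects, then deal them round-robin into groups."""
    rng = random.Random(seed)
    shuffled = list(subjects)
    rng.shuffle(shuffled)
    assignment = {group: [] for group in groups}
    for index, subject in enumerate(shuffled):
        assignment[groups[index % len(groups)]].append(subject)
    return assignment


# Hypothetical sampling frame of 200 subject identifiers.
population = [f"subject_{i:03d}" for i in range(200)]

sample = random_selection(population, 20, seed=42)
groups = random_assignment(sample, seed=7)

for name, members in groups.items():
    print(name, len(members), members[:3])
```

When random selection is not feasible, `random_assignment` can still be applied to a convenience sample. That supports internal validity within the study, but it does not by itself justify generalising to the wider population.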

Popular research designs

As noted earlier, research designs can be classified into two categories—positivist and interpretive—depending on the goal of the research. Positivist designs are meant for theory testing, while interpretive designs are meant for theory building. Positivist designs seek generalised patterns based on an objective view of reality, while interpretive designs seek subjective interpretations of social phenomena from the perspectives of the subjects involved. Some popular examples of positivist designs include laboratory experiments, field experiments, field surveys, secondary data analysis, and case research, while examples of interpretive designs include case research, phenomenology, and ethnography. Note that case research can be used for theory building or theory testing, though not at the same time. Not all techniques are suited for all kinds of scientific research. Some techniques such as focus groups are best suited for exploratory research, others such as ethnography are best for descriptive research, and still others such as laboratory experiments are ideal for explanatory research. Following are brief descriptions of some of these designs. Additional details are provided in Chapters 9–12.

Experimental studies are those that are intended to test cause-effect relationships (hypotheses) in a tightly controlled setting by separating the cause from the effect in time, administering the cause to one group of subjects (the ‘treatment group’) but not to another group (‘control group’), and observing how the mean effects vary between subjects in these two groups. For instance, if we design a laboratory experiment to test the efficacy of a new drug in treating a certain ailment, we can get a random sample of people afflicted with that ailment, randomly assign them to one of two groups (treatment and control groups), administer the drug to subjects in the treatment group, but only give a placebo (e.g., a sugar pill with no medicinal value) to subjects in the control group. More complex designs may include multiple treatment groups, such as low versus high dosage of the drug or combining drug administration with dietary interventions. In a true experimental design, subjects must be randomly assigned to each group. If random assignment is not followed, then the design becomes quasi-experimental. Experiments can be conducted in an artificial or laboratory setting such as at a university (laboratory experiments) or in field settings such as in an organisation where the phenomenon of interest is actually occurring (field experiments). Laboratory experiments allow the researcher to isolate the variables of interest and control for extraneous variables, which may not be possible in field experiments. Hence, inferences drawn from laboratory experiments tend to be stronger in internal validity, but those from field experiments tend to be stronger in external validity. Experimental data is analysed using quantitative statistical techniques.
The primary strength of the experimental design is its strong internal validity due to its ability to isolate, control, and intensively examine a small number of variables, while its primary weakness is limited external generalisability since real life is often more complex (i.e., involving more extraneous variables) than contrived lab settings. Furthermore, if the research does not identify ex ante relevant extraneous variables and control for such variables, such lack of controls may hurt internal validity and may lead to spurious correlations.
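
As a rough illustration of how mean effects are compared between a treatment group and a control group, the Python sketch below computes the difference in group means and a Welch t statistic. The scores are invented for the example, and the Welch statistic is only one of several tests a researcher might choose. Turning the statistic into a p-value would additionally require the t distribution, which is left out here.

```python
from math import sqrt
from statistics import mean, variance


def difference_in_means(treatment, control):
    """Estimated treatment effect: mean outcome of treated minus mean of controls."""
    return mean(treatment) - mean(control)


def welch_t(treatment, control):
    """Welch t statistic, which does not assume equal variances in the two groups."""
    standard_error = sqrt(
        variance(treatment) / len(treatment) + variance(control) / len(control)
    )
    return difference_in_means(treatment, control) / standard_error


# Hypothetical outcome scores after the drug (treatment) or placebo (control).
treatment_scores = [12, 15, 14, 10, 13]
control_scores = [9, 11, 8, 10, 12]

effect = difference_in_means(treatment_scores, control_scores)
t_statistic = welch_t(treatment_scores, control_scores)

print(f"difference in means: {effect:.2f}")
print(f"Welch t statistic:   {t_statistic:.2f}")
```

The comparison supports a causal reading only to the extent that subjects were randomly assigned to the two groups. With non-random assignment the same arithmetic describes a quasi-experimental contrast.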

Field surveys are non-experimental designs that do not control for or manipulate independent variables or treatments, but measure these variables and test their effects using statistical methods. Field surveys capture snapshots of practices, beliefs, or situations from a random sample of subjects in field settings through a survey questionnaire or, less frequently, through a structured interview. In cross-sectional field surveys, independent and dependent variables are measured at the same point in time (e.g., using a single questionnaire), while in longitudinal field surveys, dependent variables are measured at a later point in time than the independent variables. The strengths of field surveys are their external validity (since data is collected in field settings), their ability to capture and control for a large number of variables, and their ability to study a problem from multiple perspectives or using multiple theories. However, because of their non-temporal nature, internal validity (cause-effect relationships) is difficult to infer, and surveys may be subject to respondent biases (e.g., subjects may provide a ‘socially desirable’ response rather than their true response), which further hurts internal validity.

Secondary data analysis is an analysis of data that has previously been collected and tabulated by other sources. Such data may include data from government agencies such as employment statistics from the U.S. Bureau of Labor Statistics or development statistics by countries from the United Nations Development Programme, data collected by other researchers (often used in meta-analytic studies), or publicly available third-party data, such as financial data from stock markets or real-time auction data from eBay. This is in contrast to most other research designs where collecting primary data for research is part of the researcher’s job. Secondary data analysis may be an effective means of research where primary data collection is too costly or infeasible, and secondary data is available at a level of analysis suitable for answering the researcher’s questions. The limitations of this design are that the data might not have been collected in a systematic or scientific manner and hence may be unsuitable for scientific research; that, since the data was collected for a presumably different purpose, it may not adequately address the research questions of interest to the researcher; and that internal validity is problematic if the temporal precedence between cause and effect is unclear.

Case research is an in-depth investigation of a problem in one or more real-life settings (case sites) over an extended period of time. Data may be collected using a combination of interviews, personal observations, and internal or external documents. Case studies can be positivist in nature (for hypotheses testing) or interpretive (for theory building). The strength of this research method is its ability to discover a wide variety of social, cultural, and political factors potentially related to the phenomenon of interest that may not be known in advance. Analysis tends to be qualitative in nature, but heavily contextualised and nuanced. However, interpretation of findings may depend on the observational and integrative ability of the researcher, lack of control may make it difficult to establish causality, and findings from a single case site may not be readily generalised to other case sites. Generalisability can be improved by replicating and comparing the analysis in other case sites in a multiple case design.

Focus group research is a type of research that involves bringing in a small group of subjects (typically six to ten people) at one location, and having them discuss a phenomenon of interest for a period of one and a half to two hours. The discussion is moderated and led by a trained facilitator, who sets the agenda and poses an initial set of questions for participants, makes sure that the ideas and experiences of all participants are represented, and attempts to build a holistic understanding of the problem situation based on participants’ comments and experiences. Internal validity cannot be established due to lack of controls and the findings may not be generalised to other settings because of the small sample size. Hence, focus groups are not generally used for explanatory or descriptive research, but are more suited for exploratory research.

Action research assumes that complex social phenomena are best understood by introducing interventions or ‘actions’ into those phenomena and observing the effects of those actions. In this method, the researcher is embedded within a social context such as an organisation and initiates an action—such as new organisational procedures or new technologies—in response to a real problem such as declining profitability or operational bottlenecks. The researcher’s choice of actions must be based on theory, which should explain why and how such actions may cause the desired change. The researcher then observes the results of that action, modifying it as necessary, while simultaneously learning from the action and generating theoretical insights about the target problem and interventions. The initial theory is validated by the extent to which the chosen action successfully solves the target problem. Simultaneous problem solving and insight generation is the central feature that distinguishes action research from all other research methods, and hence, action research is an excellent method for bridging research and practice. This method is also suited for studying unique social problems that cannot be replicated outside that context, but it is also subject to researcher bias and subjectivity, and the generalisability of findings is often restricted to the context where the study was conducted.

Ethnography is an interpretive research design inspired by anthropology that emphasises that a research phenomenon must be studied within the context of its culture. The researcher is deeply immersed in a certain culture over an extended period of time (eight months to two years) and during that period, engages, observes, and records the daily life of the studied culture, and theorises about the evolution and behaviours in that culture. Data is collected primarily via observational techniques, formal and informal interaction with participants in that culture, and personal field notes, while data analysis involves ‘sense-making’. The researcher must narrate her experience in great detail so that readers may experience that same culture without necessarily being there. The advantages of this approach are its sensitivity to the context, the rich and nuanced understanding it generates, and minimal respondent bias. However, this is also an extremely time- and resource-intensive approach, and findings are specific to a given culture and less generalisable to other cultures.

Selecting research designs

Given the above multitude of research designs, which design should researchers choose for their research? Generally speaking, researchers tend to select those research designs that they are most comfortable with and feel most competent to handle, but ideally, the choice should depend on the nature of the research phenomenon being studied. In the preliminary phases of research, when the research problem is unclear and the researcher wants to scope out the nature and extent of a certain research problem, a focus group (for an individual unit of analysis) or a case study (for an organisational unit of analysis) is an ideal strategy for exploratory research. As one delves further into the research domain, but finds that there are no good theories to explain the phenomenon of interest and wants to build a theory to fill in the unmet gap in that area, interpretive designs such as case research or ethnography may be useful designs. If competing theories exist and the researcher wishes to test these different theories or integrate them into a larger theory, positivist designs such as experimental design, survey research, or secondary data analysis are more appropriate.

Regardless of the specific research design chosen, the researcher should strive to collect quantitative and qualitative data using a combination of techniques such as questionnaires, interviews, observations, documents, or secondary data. For instance, even in a highly structured survey questionnaire, intended to collect quantitative data, the researcher may leave some room for a few open-ended questions to collect qualitative data that may generate unexpected insights not otherwise available from structured quantitative data alone. Likewise, while case research employs mostly face-to-face interviews to collect qualitative data, the potential and value of collecting quantitative data should not be ignored. As an example, in a study of organisational decision-making processes, the case interviewer can record numeric quantities such as how many months it took to make certain organisational decisions, how many people were involved in that decision process, and how many decision alternatives were considered, which can provide valuable insights not otherwise available from interviewees’ narrative responses. Irrespective of the specific research design employed, the goal of the researcher should be to collect as much and as diverse data as possible that can help generate the best possible insights about the phenomenon of interest.

Social Science Research: Principles, Methods and Practices (Revised edition) Copyright © 2019 by Anol Bhattacherjee is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License, except where otherwise noted.

What is Empirical Research? Definition, Methods, Examples

Appinio Research · 09.02.2024 · 35min read

Ever wondered how we gather the facts, unveil hidden truths, and make informed decisions in a world filled with questions? Empirical research holds the key.

In this guide, we'll delve deep into the art and science of empirical research, unraveling its methods, mysteries, and manifold applications. From defining the core principles to mastering data analysis and reporting findings, we're here to equip you with the knowledge and tools to navigate the empirical landscape.

What is Empirical Research?

Empirical research is the cornerstone of scientific inquiry, providing a systematic and structured approach to investigating the world around us. It is the process of gathering and analyzing empirical or observable data to test hypotheses, answer research questions, or gain insights into various phenomena. This form of research relies on evidence derived from direct observation or experimentation, allowing researchers to draw conclusions based on real-world data rather than purely theoretical or speculative reasoning.

Characteristics of Empirical Research

Empirical research is characterized by several key features:

- Observation and Measurement : It involves the systematic observation or measurement of variables, events, or behaviors.

- Data Collection : Researchers collect data through various methods, such as surveys, experiments, observations, or interviews.

- Testable Hypotheses : Empirical research often starts with testable hypotheses that are evaluated using collected data.

- Quantitative or Qualitative Data : Data can be quantitative (numerical) or qualitative (non-numerical), depending on the research design.

- Statistical Analysis : Quantitative data often undergo statistical analysis to determine patterns, relationships, or significance.

- Objectivity and Replicability : Empirical research strives for objectivity, minimizing researcher bias. It should be replicable, allowing other researchers to conduct the same study to verify results.

- Conclusions and Generalizations : Empirical research generates findings based on data and aims to make generalizations about larger populations or phenomena.

Importance of Empirical Research

Empirical research plays a pivotal role in advancing knowledge across various disciplines. Its importance extends to academia, industry, and society as a whole. Here are several reasons why empirical research is essential:

- Evidence-Based Knowledge : Empirical research provides a solid foundation of evidence-based knowledge. It enables us to test hypotheses, confirm or refute theories, and build a robust understanding of the world.

- Scientific Progress : In the scientific community, empirical research fuels progress by expanding the boundaries of existing knowledge. It contributes to the development of theories and the formulation of new research questions.

- Problem Solving : Empirical research is instrumental in addressing real-world problems and challenges. It offers insights and data-driven solutions to complex issues in fields like healthcare, economics, and environmental science.

- Informed Decision-Making : In policymaking, business, and healthcare, empirical research informs decision-makers by providing data-driven insights. It guides strategies, investments, and policies for optimal outcomes.

- Quality Assurance : Empirical research is essential for quality assurance and validation in various industries, including pharmaceuticals, manufacturing, and technology. It ensures that products and processes meet established standards.

- Continuous Improvement : Businesses and organizations use empirical research to evaluate performance, customer satisfaction, and product effectiveness. This data-driven approach fosters continuous improvement and innovation.

- Human Advancement : Empirical research in fields like medicine and psychology contributes to the betterment of human health and well-being. It leads to medical breakthroughs, improved therapies, and enhanced psychological interventions.

- Critical Thinking and Problem Solving : Engaging in empirical research fosters critical thinking skills, problem-solving abilities, and a deep appreciation for evidence-based decision-making.

Empirical research empowers us to explore, understand, and improve the world around us. It forms the bedrock of scientific inquiry and drives progress in countless domains, shaping our understanding of both the natural and social sciences.

How to Conduct Empirical Research?

So, you've decided to dive into the world of empirical research. Let's begin by exploring the crucial steps involved in getting started with your research project.

1. Select a Research Topic

Selecting the right research topic is the cornerstone of a successful empirical study. It's essential to choose a topic that not only piques your interest but also aligns with your research goals and objectives. Here's how to go about it:

- Identify Your Interests : Start by reflecting on your passions and interests. What topics fascinate you the most? Your enthusiasm will be your driving force throughout the research process.

- Brainstorm Ideas : Engage in brainstorming sessions to generate potential research topics. Consider the questions you've always wanted to answer or the issues that intrigue you.

- Relevance and Significance : Assess the relevance and significance of your chosen topic. Does it contribute to existing knowledge? Is it a pressing issue in your field of study or the broader community?

- Feasibility : Evaluate the feasibility of your research topic. Do you have access to the necessary resources, data, and participants (if applicable)?

2. Formulate Research Questions

Once you've narrowed down your research topic, the next step is to formulate clear and precise research questions. These questions will guide your entire research process and shape your study's direction. To create effective research questions:

- Specificity : Ensure that your research questions are specific and focused. Vague or overly broad questions can lead to inconclusive results.

- Relevance : Your research questions should directly relate to your chosen topic. They should address gaps in knowledge or contribute to solving a particular problem.

- Testability : Ensure that your questions are testable through empirical methods. You should be able to gather data and analyze it to answer these questions.

- Avoid Bias : Craft your questions in a way that avoids leading or biased language. Maintain neutrality to uphold the integrity of your research.

3. Review Existing Literature

Before you embark on your empirical research journey, it's essential to immerse yourself in the existing body of literature related to your chosen topic. This step, often referred to as a literature review, serves several purposes:

- Contextualization : Understand the historical context and current state of research in your field. What have previous studies found, and what questions remain unanswered?

- Identifying Gaps : Identify gaps or areas where existing research falls short. These gaps will help you formulate meaningful research questions and hypotheses.

- Theory Development : If your study is theoretical, consider how existing theories apply to your topic. If it's empirical, understand how previous studies have approached data collection and analysis.

- Methodological Insights : Learn from the methodologies employed in previous research. What methods were successful, and what challenges did researchers face?

4. Define Variables

Variables are fundamental components of empirical research. They are the factors or characteristics that can change or be manipulated during your study. Properly defining and categorizing variables is crucial for the clarity and validity of your research. Here's what you need to know:

- Independent Variables : These are the variables that you, as the researcher, manipulate or control. They are the "cause" in cause-and-effect relationships.

- Dependent Variables : Dependent variables are the outcomes or responses that you measure or observe. They are the "effect" influenced by changes in independent variables.

- Operational Definitions : To ensure consistency and clarity, provide operational definitions for your variables. Specify how you will measure or manipulate each variable.

- Control Variables : In some studies, controlling for other variables that may influence your dependent variable is essential. These are known as control variables.
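One way to keep variable definitions consistent is to write them down in a structured form before data collection starts. The following is a minimal sketch, not a standard format: the `Variable` class, the `by_role` helper, and the example variables (loosely based on the workplace-music example earlier in this article) are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Variable:
    name: str
    role: str  # "independent", "dependent", or "control"
    operational_definition: str  # how the variable is measured or manipulated


# Hypothetical study plan; names and definitions are illustrative assumptions.
study_variables = [
    Variable("music_condition", "independent",
             "Happy music played vs. no music during the task"),
    Variable("creativity_score", "dependent",
             "Number of distinct ideas produced in a 10-minute task"),
    Variable("time_of_day", "control",
             "All sessions run between 9:00 and 11:00"),
]


def by_role(variables, role):
    """Return the names of all variables that have the given role."""
    return [v.name for v in variables if v.role == role]


print(by_role(study_variables, "independent"))
```

Writing the operational definition next to each variable makes it harder for team members to measure the same variable in different ways.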

Getting these basics right sets a solid foundation for the rest of your study. With the essentials of getting started in place, let's look more closely at research design.

Empirical Research Design

Now that you've selected your research topic, formulated research questions, and defined your variables, it's time to turn to the heart of your empirical research: research design. This pivotal step determines how you will collect data and what methods you'll employ to answer your research questions. Let's explore the various facets of research design in detail.

Types of Empirical Research

Empirical research can take on several forms, each with its own unique approach and methodologies. Understanding the different types of empirical research will help you choose the most suitable design for your study. Here are some common types:

- Experimental Research : In this type, researchers manipulate one or more independent variables to observe their impact on dependent variables. It's highly controlled and often conducted in a laboratory setting.

- Observational Research : Observational research involves the systematic observation of subjects or phenomena without intervention. Researchers are passive observers, documenting behaviors, events, or patterns.

- Survey Research : Surveys are used to collect data through structured questionnaires or interviews. This method is efficient for gathering information from a large number of participants.

- Case Study Research : Case studies focus on in-depth exploration of one or a few cases. Researchers gather detailed information through various sources such as interviews, documents, and observations.

- Qualitative Research : Qualitative research aims to understand behaviors, experiences, and opinions in depth. It often involves open-ended questions, interviews, and thematic analysis.

- Quantitative Research : Quantitative research collects numerical data and relies on statistical analysis to draw conclusions. It involves structured questionnaires, experiments, and surveys.

Your choice of research type should align with your research questions and objectives. Experimental research, for example, is ideal for testing cause-and-effect relationships, while qualitative research is more suitable for exploring complex phenomena.

Experimental Design

Experimental research is a systematic approach to studying causal relationships. It's characterized by the manipulation of one or more independent variables while controlling for other factors. Here are some key aspects of experimental design:

- Control and Experimental Groups : Participants are randomly assigned to either a control group or an experimental group. The independent variable is manipulated for the experimental group but not for the control group.

- Randomization : Randomization guards against selection bias in group assignment. In a simple two-group design, it gives each participant an equal chance of being placed in either group.

- Hypothesis Testing : Experimental research often involves hypothesis testing. Researchers formulate hypotheses about the expected effects of the independent variable and use statistical analysis to test these hypotheses.
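The ideas above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a prescribed procedure: `assign_groups` and `permutation_test` are hypothetical helper names, the scores are made-up numbers, and a permutation test is just one of several tests you could use to compare two groups.

```python
import random
from statistics import mean


def assign_groups(participants, seed=None):
    """Randomly split participants into a control and an experimental group."""
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


def permutation_test(control, experimental, n_permutations=10_000, seed=None):
    """Two-sided permutation test on the difference in group means.

    Returns the observed difference and an approximate p-value.
    """
    rng = random.Random(seed)
    observed = mean(experimental) - mean(control)
    pooled = list(control) + list(experimental)
    n_control = len(control)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # reshuffle the group labels
        diff = mean(pooled[n_control:]) - mean(pooled[:n_control])
        if abs(diff) >= abs(observed):
            extreme += 1
    # Add-one correction so the estimated p-value is never exactly zero.
    return observed, (extreme + 1) / (n_permutations + 1)


# Hypothetical participant IDs and outcome scores, for illustration only.
control_ids, experimental_ids = assign_groups(range(1, 21), seed=7)
control_scores = [12, 15, 11, 14, 13, 12, 16, 10, 13, 14]
experimental_scores = [15, 17, 14, 18, 16, 15, 19, 13, 17, 16]
difference, p_value = permutation_test(control_scores, experimental_scores, seed=7)
print(f"difference in means: {difference:.2f}, p-value: {p_value:.4f}")
```

A small p-value means a difference this large would rarely arise if group labels were unrelated to the outcome. It does not by itself tell you how large or how important the effect is.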

Observational Design

Observational research entails careful and systematic observation of subjects or phenomena. It's advantageous when you want to understand natural behaviors or events. Key aspects of observational design include:

- Participant Observation : Researchers immerse themselves in the environment they are studying. They become part of the group being observed, allowing for a deep understanding of behaviors.

- Non-Participant Observation : In non-participant observation, researchers remain separate from the subjects. They observe and document behaviors without direct involvement.

- Data Collection Methods : Observational research can involve various data collection methods, such as field notes, video recordings, photographs, or coding of observed behaviors.

Survey Design

Surveys are a popular choice for collecting data from a large number of participants. Effective survey design is essential to ensure the validity and reliability of your data. Consider the following:

- Questionnaire Design : Create clear and concise questions that are easy for participants to understand. Avoid leading or biased questions.

- Sampling Methods : Decide on the appropriate sampling method for your study, whether it's random, stratified, or convenience sampling.

- Data Collection Tools : Choose the right tools for data collection, whether it's paper surveys, online questionnaires, or face-to-face interviews.

Case Study Design

Case studies are an in-depth exploration of one or a few cases to gain a deep understanding of a particular phenomenon. Key aspects of case study design include:

- Single Case vs. Multiple Case Studies : Decide whether you'll focus on a single case or multiple cases. Single case studies are intensive and allow for detailed examination, while multiple case studies provide comparative insights.

- Data Collection Methods : Gather data through interviews, observations, document analysis, or a combination of these methods.

Qualitative vs. Quantitative Research

In empirical research, you'll often encounter the distinction between qualitative and quantitative research. Here's a closer look at these two approaches:

- Qualitative Research : Qualitative research seeks an in-depth understanding of human behavior, experiences, and perspectives. It involves open-ended questions, interviews, and the analysis of textual or narrative data. Qualitative research is exploratory and often used when the research question is complex and requires a nuanced understanding.

- Quantitative Research : Quantitative research collects numerical data and employs statistical analysis to draw conclusions. It involves structured questionnaires, experiments, and surveys. Quantitative research is well suited to testing hypotheses; establishing cause-and-effect relationships additionally requires an experimental design with controlled manipulation and random assignment.

Understanding the various research design options is crucial in determining the most appropriate approach for your study. Your choice should align with your research questions, objectives, and the nature of the phenomenon you're investigating.

Data Collection for Empirical Research

Now that you've established your research design, it's time to roll up your sleeves and collect the data that will fuel your empirical research. Effective data collection is essential for obtaining accurate and reliable results.

Sampling Methods

Sampling methods are critical in empirical research, as they determine the subset of individuals or elements from your target population that you will study. Here are some standard sampling methods:

- Random Sampling : Random sampling ensures that every member of the population has an equal chance of being selected. It minimizes bias and is often used in quantitative research.

- Stratified Sampling : Stratified sampling involves dividing the population into subgroups or strata based on specific characteristics (e.g., age, gender, location). Samples are then randomly selected from each stratum, ensuring representation of all subgroups.

- Convenience Sampling : Convenience sampling involves selecting participants who are readily available or easily accessible. While it's convenient, it may introduce bias and limit the generalizability of results.

- Snowball Sampling : Snowball sampling is useful when studying hard-to-reach or hidden populations. One participant refers you to another, creating a "snowball" effect. This method is common in qualitative research.

- Purposive Sampling : In purposive sampling, researchers deliberately select participants who meet specific criteria relevant to their research questions. It's often used in qualitative studies to gather in-depth information.

The choice of sampling method depends on the nature of your research, available resources, and the degree of precision required. It's crucial to carefully consider your sampling strategy to ensure that your sample accurately represents your target population.
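To make the difference between random and stratified sampling concrete, here is a minimal sketch using only Python's standard library. The function names, the `age_group` field, and the 10% sampling fraction are assumptions chosen for illustration. The stratified version uses proportional allocation, which is one common choice among several.

```python
import random
from collections import defaultdict


def simple_random_sample(population, k, seed=None):
    """Draw k members so that every member has an equal chance of selection."""
    return random.Random(seed).sample(list(population), k)


def stratified_sample(population, stratum_of, fraction, seed=None):
    """Sample the same fraction from every stratum (proportional allocation).

    stratum_of is a function that maps a member to its stratum label.
    """
    rng = random.Random(seed)
    strata = defaultdict(list)
    for member in population:
        strata[stratum_of(member)].append(member)
    sample = []
    for label in sorted(strata):
        members = strata[label]
        # Take at least one member so that no stratum is left out.
        k = max(1, round(len(members) * fraction))
        sample.extend(rng.sample(members, k))
    return sample


# Hypothetical population: 60 younger and 40 older respondents.
population = [
    {"id": i, "age_group": "18-34" if i < 60 else "35+"} for i in range(100)
]
random_sample = simple_random_sample(population, 10, seed=42)
strat_sample = stratified_sample(
    population, lambda person: person["age_group"], 0.1, seed=42
)
print(len(random_sample), len(strat_sample))
```

With a simple random sample, the share of each age group varies from draw to draw. The stratified sample fixes that share in advance, which matters when a subgroup is small.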

Data Collection Instruments

Data collection instruments are the tools you use to gather information from your participants or sources. These instruments should be designed to capture the data you need accurately. Here are some popular data collection instruments:

- Questionnaires : Questionnaires consist of structured questions with predefined response options. When designing questionnaires, consider the clarity of questions, the order of questions, and the response format (e.g., Likert scale, multiple-choice).

- Interviews : Interviews involve direct communication between the researcher and participants. They can be structured (with predetermined questions) or unstructured (open-ended). Effective interviews require active listening and probing for deeper insights.

- Observations : Observations entail systematically and objectively recording behaviors, events, or phenomena. Researchers must establish clear criteria for what to observe, how to record observations, and when to observe.

- Surveys : Surveys are a common data collection instrument for quantitative research. They can be administered through various means, including online surveys, paper surveys, and telephone surveys.

- Documents and Archives : In some cases, data may be collected from existing documents, records, or archives. Ensure that the sources are reliable, relevant, and properly documented.
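As an illustration of how a questionnaire's response format turns into analyzable data, the sketch below converts 5-point Likert answers into numbers and averages them per respondent. The label wording, the 1 to 5 coding, and the function name `score_response` are assumptions. Averaging Likert items treats them as roughly interval-scaled, which is a common but debated simplification.

```python
from statistics import mean

# Assumed 5-point coding; adjust the labels to match your own questionnaire.
LIKERT_5 = {
    "Strongly disagree": 1,
    "Disagree": 2,
    "Neutral": 3,
    "Agree": 4,
    "Strongly agree": 5,
}


def score_response(answers, scale=LIKERT_5):
    """Return the mean numeric score, skipping unanswered items (None)."""
    values = [scale[answer] for answer in answers if answer is not None]
    return mean(values) if values else None


# Hypothetical answers from one respondent; the third item was left blank.
print(score_response(["Agree", "Strongly agree", None]))  # prints 4.5
```

Deciding up front how to handle skipped items, as this sketch does by ignoring them, avoids inconsistent treatment once the data starts coming in.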

To streamline your process and gather insights with precision and efficiency, consider leveraging innovative tools like Appinio. With Appinio's intuitive platform, you can harness the power of real-time consumer data to inform your research decisions effectively. Whether you're conducting surveys, interviews, or observations, Appinio empowers you to define your target audience, collect data from diverse demographics, and analyze results seamlessly.

Data Collection Procedures

Data collection procedures outline the step-by-step process for gathering data. These procedures should be meticulously planned and executed to maintain the integrity of your research.

- Training : If you have a research team, ensure that they are trained in data collection methods and protocols. Consistency in data collection is crucial.

- Pilot Testing : Before launching your data collection, conduct a pilot test with a small group to identify any potential problems with your instruments or procedures. Make necessary adjustments based on feedback.

- Data Recording : Establish a systematic method for recording data. This may include timestamps, codes, or identifiers for each data point.

- Data Security : Safeguard the confidentiality and security of collected data. Ensure that only authorized individuals have access to the data.

- Data Storage : Properly organize and store your data in a secure location, whether in physical or digital form. Back up data to prevent loss.
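A systematic recording method can be as simple as giving every data point an identifier and a timestamp at the moment it is captured. The sketch below shows one possible layout. The field names, the `make_record` and `write_records` helpers, and the CSV format are illustrative assumptions rather than a required schema.

```python
import csv
import io
import uuid
from datetime import datetime, timezone

FIELDS = ["record_id", "participant_code", "recorded_at", "item", "value"]


def make_record(participant_code, item, value):
    """Build one data point with a unique ID and a UTC timestamp."""
    return {
        "record_id": uuid.uuid4().hex,
        "participant_code": participant_code,
        "recorded_at": datetime.now(timezone.utc).isoformat(),
        "item": item,
        "value": value,
    }


def write_records(records, stream):
    """Write records as CSV with a header row to any text stream."""
    writer = csv.DictWriter(stream, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(records)


# Usage with an in-memory buffer; for a real file, open it with newline="".
buffer = io.StringIO()
write_records([make_record("P001", "q1", 4), make_record("P001", "q2", 2)], buffer)
print(buffer.getvalue())
```

Recording timestamps in UTC avoids ambiguity when data is collected across time zones or around daylight saving changes.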

Ethical Considerations

Ethical considerations are paramount in empirical research, as they ensure the well-being and rights of participants are protected.

- Informed Consent : Obtain informed consent from participants, providing clear information about the research purpose, procedures, risks, and their right to withdraw at any time.

- Privacy and Confidentiality : Protect the privacy and confidentiality of participants. Ensure that data is anonymized and sensitive information is kept confidential.

- Beneficence : Ensure that your research benefits participants and society while minimizing harm. Consider the potential risks and benefits of your study.

- Honesty and Integrity : Conduct research with honesty and integrity. Report findings accurately and transparently, even if they are not what you expected.
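For the privacy point above, one common first step is to replace direct identifiers with codes before analysis. The sketch below uses a keyed hash for this. The field names (`email`, `name`, `phone`) and both function names are hypothetical. Note that this is pseudonymization rather than full anonymization: anyone holding the secret key can re-create the codes, and the remaining fields may still identify a person in combination.

```python
import hashlib
import hmac


def pseudonymize(identifier, secret_key):
    """Map an identifier to a stable code using HMAC-SHA256."""
    digest = hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


def strip_direct_identifiers(row, secret_key, id_field="email",
                             drop_fields=("name", "phone")):
    """Return a copy of row without direct identifiers, plus a participant code."""
    cleaned = {
        key: value
        for key, value in row.items()
        if key not in drop_fields and key != id_field
    }
    cleaned["participant_code"] = pseudonymize(row[id_field], secret_key)
    return cleaned


# Hypothetical record; in practice the key is stored separately from the data.
key = b"replace-with-a-long-random-secret"
row = {"name": "Jane Doe", "email": "jane@example.com", "phone": "555-0100",
       "age_group": "35+", "q1": 4}
print(strip_direct_identifiers(row, key))
```

Because the same input always yields the same code, responses from one participant can still be linked across sessions without storing the email address in the analysis dataset.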