
Assisting in Writing Wikipedia-like Articles From Scratch with Large Language Models

stanford-oval/storm • 22 Feb 2024

We study how to apply large language models to write grounded and organized long-form articles from scratch, with comparable breadth and depth to Wikipedia pages.

Mini-Gemini: Mining the Potential of Multi-modality Vision Language Models

We try to narrow the gap by mining the potential of VLMs for better performance and any-to-any workflow from three aspects, i.e., high-resolution visual tokens, high-quality data, and VLM-guided generation.

InstantMesh: Efficient 3D Mesh Generation from a Single Image with Sparse-view Large Reconstruction Models

We present InstantMesh, a feed-forward framework for instant 3D mesh generation from a single image, featuring state-of-the-art generation quality and significant training scalability.

Magic Clothing: Controllable Garment-Driven Image Synthesis

We propose Magic Clothing, a latent diffusion model (LDM)-based network architecture for an unexplored garment-driven image synthesis task.

LLaVA-UHD: an LMM Perceiving Any Aspect Ratio and High-Resolution Images

To address the challenges, we present LLaVA-UHD, a large multimodal model that can efficiently perceive images in any aspect ratio and high resolution.

Megalodon: Efficient LLM Pretraining and Inference with Unlimited Context Length

The quadratic complexity and weak length extrapolation of Transformers limit their ability to scale to long sequences, and while sub-quadratic solutions like linear attention and state space models exist, they empirically underperform Transformers in pretraining efficiency and downstream task accuracy.
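For a rough sense of what "quadratic complexity" means here, the sketch below counts the entries of the attention score matrix that full self-attention computes per head and per layer, next to a cost that grows linearly with sequence length. It is a back-of-the-envelope illustration under simplifying assumptions (head dimension, batch size and layer count are ignored), not a model of Megalodon itself.

```python
def attention_score_entries(seq_len: int) -> int:
    """Full self-attention builds a seq_len x seq_len score matrix per head."""
    return seq_len * seq_len


def linear_cost(seq_len: int) -> int:
    """A sub-quadratic layer (e.g. linear attention or a state space model)
    does work proportional to seq_len; constants are ignored here."""
    return seq_len


for n in (4_096, 8_192, 32_768):
    print(f"n={n:,}: attention entries={attention_score_entries(n):,}, linear ~{linear_cost(n):,}")
```

Doubling the context from 4,096 to 8,192 tokens quadruples the number of attention entries (16,777,216 to 67,108,864), whereas the linear term only doubles.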

Visual Autoregressive Modeling: Scalable Image Generation via Next-Scale Prediction

We present Visual AutoRegressive modeling (VAR), a new generation paradigm that redefines the autoregressive learning on images as coarse-to-fine "next-scale prediction" or "next-resolution prediction", diverging from the standard raster-scan "next-token prediction".
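The difference between the two orderings can be illustrated by counting sequential generation steps. The sketch below assumes a hypothetical pyramid of square token maps with side lengths 1, 2, 4, 8 and 16 (illustrative values, not necessarily the paper's configuration); it only tallies steps and tokens and does not implement VAR's tokenizer or transformer.

```python
# Hypothetical multi-scale pyramid: side lengths of square token maps,
# ordered coarse to fine. These values are illustrative assumptions.
SCALES = [1, 2, 4, 8, 16]


def raster_scan_steps(side: int) -> list[int]:
    """Next-token prediction: one token per sequential step over a side x side map."""
    return [1] * (side * side)


def next_scale_steps(scales: list[int]) -> list[int]:
    """Next-scale prediction: each sequential step emits an entire token map,
    conditioned on all coarser maps generated so far."""
    return [s * s for s in scales]


if __name__ == "__main__":
    raster = raster_scan_steps(SCALES[-1])
    multiscale = next_scale_steps(SCALES)
    print(f"raster-scan: {len(raster)} steps, {sum(raster)} tokens")
    print(f"next-scale:  {len(multiscale)} steps, {sum(multiscale)} tokens")
```

With these assumed scales, raster-scan decoding needs 256 sequential steps for the final 16×16 map, while next-scale decoding needs 5 steps (emitting 1 + 4 + 16 + 64 + 256 = 341 tokens in total), because each step predicts a whole token map in parallel.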

Actions Speak Louder than Words: Trillion-Parameter Sequential Transducers for Generative Recommendations

Large-scale recommendation systems are characterized by their reliance on high cardinality, heterogeneous features and the need to handle tens of billions of user actions on a daily basis.

MyGO: Discrete Modality Information as Fine-Grained Tokens for Multi-modal Knowledge Graph Completion

To overcome their inherent incompleteness, multi-modal knowledge graph completion (MMKGC) aims to discover unobserved knowledge from given MMKGs, leveraging both structural information from the triples and multi-modal information of the entities.

State Space Model for New-Generation Network Alternative to Transformers: A Survey

In this paper, we give the first comprehensive review of these works and also provide experimental comparisons and analysis to better demonstrate the features and advantages of SSM.


Artificial Intelligence

Authors and titles for recent submissions.

- Fri, 19 Apr 2024

- Thu, 18 Apr 2024

- Wed, 17 Apr 2024

- Tue, 16 Apr 2024

- Mon, 15 Apr 2024

Fri, 19 Apr 2024 (showing first 25 of 92 entries)


A free, AI-powered research tool for scientific literature


Semantic Scholar is based at the Allen Institute for AI.


The Top 17 ‘Must-Read’ AI Papers in 2022

We caught up with experts in the RE•WORK community to find out what the top 17 AI papers of 2022 are so far, so you can add them to your summer must-reads. The papers cover a wide range of topics, including AI in social media and how AI can benefit humanity, and are free to access.


Max Li, Staff Data Scientist – Tech Lead at Wish

Max is a Staff Data Scientist at Wish, where he focuses on experimentation (A/B testing) and machine learning. His passion is to empower data-driven decision-making through the rigorous use of data. Max presented ‘Assign Experiment Variants at Scale in A/B Tests’ at our Deep Learning Summit in February 2022.

1. Bootstrapped Meta-Learning (2022) – Sebastian Flennerhag et al.

The first paper selected by Max proposes an algorithm that lets the meta-learner teach itself, helping to overcome the meta-optimisation challenge. Focusing on meta-learning with gradients, the authors establish conditions that guarantee performance improvements. The paper also explores the new possibilities that bootstrapping opens up.

2. Multi-Objective Bayesian Optimization over High-Dimensional Search Spaces (2022) – Samuel Daulton et al.

Another paper selected by Max proposes MORBO, a scalable method for multi-objective Bayesian optimisation (BO) over high-dimensional search spaces. MORBO significantly improves sample efficiency over the current BO approach, including on problems where existing BO algorithms fail.

3. Tabular Data: Deep Learning is Not All You Need (2021) – Ravid Shwartz-Ziv, Amitai Armon

To solve real-life data science problems, selecting the right model is crucial. This final paper selected by Max explores whether deep models should be recommended as an option for tabular data.

Jigyasa Grover, Senior Machine Learning Engineer at Twitter

Jigyasa Grover is a Senior Machine Learning Engineer at Twitter working in the performance ads ranking domain. Recently, she was honoured with the 'Outstanding in AI: Young Role Model Award' by Women in AI across North America. She is one of the few ML Google Developer Experts globally. Jigyasa presented at our Deep Learning Summit and MLOps event in San Francisco earlier this year.

4. Privacy for Free: How does Dataset Condensation Help Privacy? (2022) – Tian Dong et al.

Jigyasa’s first recommendation concentrates on privacy-preserving machine learning, specifically on mitigating the leakage of sensitive data in machine learning. The paper is one of the first to propose using dataset condensation techniques to preserve data efficiency during model training while also providing membership privacy. This paper, from Sony AI, won an Outstanding Paper Award at ICML 2022.

5. Affective Signals in a Social Media Recommender System (2022) – Jane Dwivedi-Yu et al.

The second paper recommended by Jigyasa discusses operationalising Affective Computing, also known as Emotional AI, to improve personalised feeds on social media. The paper describes the design of an affective taxonomy customised to user needs on social media. It further lays out how suitable training data were curated by combining engagement data with data from a human-labelling task, enabling the identification of the affective response a user might exhibit for a particular post.

6. ItemSage: Learning Product Embeddings for Shopping Recommendations at Pinterest (2022) – Paul Baltescu et al.

Jigyasa’s last recommendation is a paper by Pinterest that illustrates how textual and visual information can be aggregated into a unified set of product embeddings that enhance recommendation results on e-commerce websites. By applying multi-task learning, the proposed embeddings can optimise for multiple engagement types and ensure that the shopping recommendation stack is efficient with respect to all objectives.

Asmita Poddar, Software Development Engineer at Amazon Alexa

Asmita is a Software Development Engineer at Amazon Alexa, where she works on developing and productionising natural language processing and speech models. Asmita also has prior experience in applying machine learning in diverse domains. Asmita will be presenting at our London AI Summit in September, where she will discuss AI for Spoken Communication.

7. Competition-Level Code Generation with AlphaCode (2022) – Yujia Li et al.

Systems that assist programmers, or even generate programs independently, can make programming more productive, yet incorporating AI innovations into such systems has proven challenging. Asmita selected this paper, which introduces AlphaCode, a code-generation system that creates novel solutions to competitive programming problems requiring deeper reasoning.

8. A Commonsense Knowledge Enhanced Network with Retrospective Loss for Emotion Recognition in Spoken Dialog (2022) – Yunhe Xie et al.

Existing emotion recognition in spoken dialog (ERSD) datasets limit how well models can reason. The final paper selected by Asmita proposes a Commonsense Knowledge Enhanced Network with a retrospective (backward-looking) loss that performs dialog modelling, external knowledge integration and historical state retrospection. The authors report that the model outperforms competing models.


Sergei Bobrovskyi, Expert in Anomaly Detection for Root Cause Analysis at Airbus

Dr. Sergei Bobrovskyi is a Data Scientist within the Analytics Accelerator team of the Airbus Digital Transformation Office. His work focuses on applications of AI for anomaly detection in time series, spanning various use-cases across Airbus. Sergei will be presenting at our Berlin AI Summit in October about Anomaly Detection, Root Cause Analysis and Explainability.

9. LaMDA: Language Models for Dialog Applications (2022) – Romal Thoppilan et al.

The paper chosen by Sergei describes LaMDA, the system at the centre of this summer's furore, when a former Google engineer claimed it had shown signs of sentience. LaMDA is a family of Transformer-based large language models specialised for dialog applications. Its most interesting features are fine-tuning with human-annotated data and the ability to consult external knowledge sources. Either way, this is a very interesting model family, one we might encounter in many of the applications we use daily.

10. A Path Towards Autonomous Machine Intelligence Version 0.9.2, 2022-06-27 (2022) – Yann LeCun

The second paper chosen by Sergei lays out a vision of how to progress towards general AI. It combines several concepts, including a configurable predictive world model, behaviour driven by intrinsic motivation, and hierarchical joint embedding architectures.

11. Coordination Among Neural Modules Through a Shared Global Workspace (2022) – Anirudh Goyal et al.

This paper chosen by Sergei combines the Transformer architecture, which underlies most recent successes of deep learning, with ideas from the Global Workspace Theory of cognitive science. It is an interesting read for understanding why certain model architectures perform well and in which direction we might go to further improve performance on challenging tasks.

12. Magnetic control of tokamak plasmas through deep reinforcement learning (2022) – Jonas Degrave et al.

Sergei chose the next paper because it addresses the question ‘How can AI research benefit humanity?’. Using AI to enable the safe, reliable and scalable deployment of fusion energy could contribute to solving the pressing problem of climate change. Sergei describes it as an extremely interesting application of AI technology to engineering.

13. TranAD: Deep Transformer Networks for Anomaly Detection in Multivariate Time Series Data (2022) – Shreshth Tuli, Giuliano Casale and Nicholas R. Jennings

The final paper chosen by Sergei is a specialised paper applying the Transformer architecture to unsupervised anomaly detection in multivariate time series. Many architectures that proved successful in other fields are eventually applied to time series as well. The paper shows improved performance on several well-known datasets.

Abdullahi Adamu, Senior Software Engineer at Sony

Abdullahi has worked in various industries, including at a market research start-up where he developed models that could extract insights from human conversations about products or services. He then moved to Publicis, where he became a Data Engineer and Data Scientist in 2018. Abdullahi will be part of our panel discussion at the London AI Summit in September on Harnessing the Power of Deep Learning.

14. Self-Supervision for Learning from the Bottom Up (2022) – Alexei Efros

This paper chosen by Abdullahi makes compelling arguments that self-supervision is the next step in the evolution of AI/ML for building more robust models. These arguments further justify why self-supervised learning matters on our journey towards models that are more robust and generalise better in the wild.

15. Neural Architecture Search Survey: A Hardware Perspective (2022) – Krishna Teja Chitty-Venkata and Arun K. Somani

Another paper chosen by Abdullahi reflects that, as we move towards edge computing and federated learning, hardware-aware neural architecture search (NAS) will become more critical for producing leaner neural network models that balance latency and generalisation performance. This survey gives a bird's-eye view of the various NAS algorithms that take hardware constraints into account to design artificial neural networks with the best trade-off between hardware performance and accuracy.

16. What Should Not Be Contrastive In Contrastive Learning (2021) – Tete Xiao et al.

The paper chosen by Abdullahi highlights the assumptions underlying data augmentation methods and how these can be counterproductive in contrastive learning, for example applying colour augmentation when the downstream task requires distinguishing objects by colour. The reported results are promising in the wild, and overall the paper presents an elegant solution for using data augmentation in contrastive learning.

17. Why do tree-based models still outperform deep learning on tabular data? (2022) – Leo Grinsztajn, Edouard Oyallon and Gael Varoquaux

The final paper selected by Abdullahi addresses why deep learning models still struggle to compete with tree-based models on tabular data. It shows that MLP-like architectures are more sensitive to uninformative features than their tree-based counterparts.


Join us at our upcoming events this year:

· London AI Summit – 14-15 September 2022

· Berlin AI Summit – 4-5 October 2022

· AI in Healthcare Summit Boston – 13-14 October 2022

· Sydney Deep Learning and Enterprise AI Summits – 17-18 October 2022

· MLOps Summit – 9-10 November 2022

· Toronto AI Summit – 9-10 November 2022

· Nordics AI Summit - 7-8 December 2022

Tracking Firm Use of AI in Real Time: A Snapshot from the Business Trends and Outlook Survey

Timely and accurate measurement of AI use by firms is both challenging and crucial for understanding the impacts of AI on the U.S. economy. We provide new, real-time estimates of current and expected future use of AI for business purposes based on the Business Trends and Outlook Survey for September 2023 to February 2024. During this period, bi-weekly estimates of AI use rate rose from 3.7% to 5.4%, with an expected rate of about 6.6% by early Fall 2024. The fraction of workers at businesses that use AI is higher, especially for large businesses and in the Information sector. AI use is higher in large firms but the relationship between AI use and firm size is non-monotonic. In contrast, AI use is higher in young firms although, on an employment-weighted basis, it is U-shaped in firm age. Common uses of AI include marketing automation, virtual agents, and data/text analytics. AI users often utilize AI to substitute for worker tasks and equipment/software, but few report reductions in employment due to AI use. Many firms undergo organizational changes to accommodate AI, particularly by training staff, developing new workflows, and purchasing cloud services/storage. AI users also exhibit better overall performance and higher incidence of employment expansion compared to other businesses. The most common reason for non-adoption is the inapplicability of AI to the business.

This research paper is associated with the research program of the Center for Economic Studies (CES) which produces a wide range of economic analyses to improve the statistical programs of the U.S. Census Bureau. Research papers from this program have not undergone the review accorded Census Bureau publications and no endorsement should be inferred. Any opinions and conclusions expressed herein are those of the authors and do not represent the views of the U.S. Census Bureau. The Census Bureau has reviewed this data product to ensure appropriate access, use, and disclosure avoidance protection of the confidential source data (Project No. P-7529868, Disclosure Review Board (DRB) approval numbers: CBDRB-FY23-0478, CBDRB-FY24-0162, and CBDRB-FY24-0225). We thank Joe Staudt and John Eltinge for helpful comments. John Haltiwanger was also a Schedule A employee of the Bureau of the Census at the time of the writing of this paper. The views expressed herein are those of the authors and do not necessarily reflect the views of the National Bureau of Economic Research.



Research with Generative AI

Resources for scholars and researchers

Generative AI (GenAI) technologies offer new opportunities to advance research and scholarship. This resource page aims to provide Harvard researchers and scholars with basic guidance, information on available resources, and contacts. The content will be regularly updated as these technologies continue to evolve. Your feedback is welcome.

Leading the way

Harvard’s researchers are making strides not only on generative AI, but the larger world of artificial intelligence and its applications. Learn more about key efforts.

The Kempner Institute

The Kempner Institute is dedicated to revealing the foundations of intelligence in both natural and artificial contexts, and to leveraging these findings to develop groundbreaking technologies.

Harvard Data Science Initiative

The Harvard Data Science Initiative is dedicated to understanding the many dimensions of data science and propelling it forward.

More AI @ Harvard

Generative AI is only part of the fascinating world of artificial intelligence. Explore Harvard’s groundbreaking and cross-disciplinary academic work in AI.

funding opportunity

GenAI Research Program / Summer Funding for Harvard College Students 2024

The Office of the Vice Provost for Research, in partnership with the Office of Undergraduate Research and Fellowships, is pleased to offer an opportunity for collaborative research projects related to Generative AI between Harvard faculty and undergraduate students over the summer of 2024.


Frequently asked questions

Can I use generative AI to write and/or develop research papers?

Academic publishers have a range of policies on the use of AI in research papers. In some cases, publishers may prohibit the use of AI for certain aspects of paper development. You should review the specific policies of the target publisher to determine what is permitted.

Here is a sampling of policies available online:

- JAMA and the JAMA Network

- Springer Nature

How should AI-generated content be cited in research papers?

Guidance will likely develop as AI systems evolve, but some leading style guides have offered recommendations:

- The Chicago Manual of Style

- MLA Style Guide

Should I disclose the use of generative AI in a research paper?

Yes. Most academic publishers require researchers using AI tools to document this use in the methods or acknowledgements sections of their papers. You should review the specific guidelines of the target publisher to determine what is required.

Can I use AI in writing grant applications?

You should review the specific policies of potential funders to determine if the use of AI is permitted. For its part, the National Institutes of Health (NIH) advises caution: “If you use an AI tool to help write your application, you also do so at your own risk,” as these tools may inadvertently introduce issues associated with research misconduct, such as plagiarism or fabrication.

Can I use AI in the peer review process?

Many funders have not yet published policies on the use of AI in the peer review process. However, the National Institutes of Health (NIH) has prohibited such use “for analyzing and formulating peer review critiques for grant applications and R&D contract proposals.” You should carefully review the specific policies of funders to determine their stance on the use of AI.

Are there AI safety concerns or potential risks I should be aware of?

Yes. Some of the primary safety issues and risks include the following:

- Bias and discrimination: The potential for AI systems to exhibit unfair or discriminatory behavior.

- Misinformation, impersonation, and manipulation: The risk of AI systems disseminating false or misleading information, or being used to deceive or manipulate individuals.

- Research and IP compliance: The necessity for AI systems to adhere to legal and ethical guidelines when utilizing proprietary information or conducting research.

- Security vulnerabilities: The susceptibility of AI systems to hacking or unauthorized access.

- Unpredictability: The difficulty in predicting the behavior or outcomes of AI systems.

- Overreliance: The risk of relying excessively on AI systems without considering their limitations or potential errors.

See Initial guidelines for the use of Generative AI tools at Harvard for more information.


Generative AI tools

- Explore Tools Available to the Harvard Community

- Request API Access

- Request a Vendor Risk Assessment

- Questions? Contact HUIT

Copyright and intellectual property

- Copyright and Fair Use: A Guide for the Harvard Community

- Copyright Advisory Program

- Intellectual Property Policy

- Protecting Intellectual Property

Data security and privacy

- Harvard Information Security and Data Privacy

- Data Security Levels – Research Data Examples

- Privacy Policies and Guidelines

Research support

- University Research Computing and Data (RCD) Services

- Research Administration and Compliance

- Research Computing

- Research Data and Scholarship

- Faculty engaged in AI research

- Centers and initiatives engaged in AI research

- Degree and other education programs in AI



Top 10 Influential AI Research Papers in 2023 from Google, Meta, Microsoft, and More

December 5, 2023 by Mariya Yao

From Generative Agents research paper

In this article, we delve into ten transformative research papers from diverse domains, spanning language models, image processing, image generation, and video editing. With discussions around Artificial General Intelligence (AGI) suggesting that AGI is more approachable than ever, it’s no wonder that some of the featured papers explore various paths to AGI, such as extending language models or harnessing reinforcement learning for domain-spanning mastery.

If you’d like to skip around, here are the research papers we featured:

- Sparks of AGI by Microsoft

- PaLM-E by Google

- LLaMA 2 by Meta AI

- LLaVA by University of Wisconsin–Madison, Microsoft, and Columbia University

- Generative Agents by Stanford University and Google

- Segment Anything by Meta AI

- DALL-E 3 by OpenAI

- ControlNet by Stanford University

- Gen-1 by Runway

- DreamerV3 by DeepMind and University of Toronto


Top 10 AI Research Papers 2023

1. Sparks of AGI by Microsoft

In this research paper, a team from Microsoft Research analyzes an early version of OpenAI’s GPT-4, which was still under active development at the time. The team argues that GPT-4 represents a new class of large language models, exhibiting more generalized intelligence compared to previous AI models. Their investigation reveals GPT-4’s expansive capabilities across various domains, including mathematics, coding, vision, medicine, law, and psychology. They highlight that GPT-4 can solve complex and novel tasks without specialized prompting, often achieving performance close to human level.

The Microsoft team also emphasizes the potential of GPT-4 to be considered an early, albeit incomplete, form of artificial general intelligence (AGI). They focus on identifying GPT-4’s limitations and discuss the challenges in progressing towards more advanced and comprehensive AGI versions. This includes considering new paradigms beyond the current next-word prediction model.

Where to learn more about this research?

- Sparks of Artificial General Intelligence: Early experiments with GPT-4 (research paper)

- Sparks of AGI: early experiments with GPT-4 (a talk by the paper’s first author Sébastien Bubeck)

Where can you get implementation code?

- Not applicable

2. PaLM-E by Google

The research paper introduces PaLM-E, a novel approach to language models that bridges the gap between words and percepts in the real world by directly incorporating continuous sensor inputs. The inputs to this embodied language model are multi-modal sentences that interleave visual, continuous state estimation, and textual input encodings. These encodings are trained end-to-end in conjunction with a pre-trained LLM and applied to various embodied tasks, including sequential robotic manipulation planning, visual question answering, and captioning.

PaLM-E, particularly the largest model with 562B parameters, performs strongly across a wide range of tasks and modalities. Notably, it excels at embodied reasoning tasks, exhibits positive transfer from joint training across language, vision, and visual-language domains, and achieves state-of-the-art performance on the OK-VQA benchmark. Beyond embodied reasoning, PaLM-E-562B also exhibits an array of capabilities, including zero-shot multimodal chain-of-thought reasoning, few-shot prompting, OCR-free math reasoning, and multi-image reasoning, despite being trained on only single-image examples.
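The idea of a "multi-modal sentence" can be sketched as follows: text tokens are looked up in the language model's embedding table, while a continuous observation is mapped by an encoder into vectors of the same width and spliced in at a placeholder position. Everything here is an assumption for illustration: the toy vocabulary, the `<img>` placeholder, the single linear encoder, and the tiny dimensions. PaLM-E's real encoders are much larger and are trained end-to-end with the LLM.

```python
import numpy as np

EMBED_DIM = 8  # hypothetical LLM embedding width (toy value)
rng = np.random.default_rng(0)

# Hypothetical toy vocabulary and word-embedding table standing in for the LLM's.
VOCAB = {"what": 0, "is": 1, "in": 2, "<img>": 3, "?": 4}
word_embeddings = rng.normal(size=(len(VOCAB), EMBED_DIM))

# Hypothetical encoder: maps a continuous observation (e.g. image features or a
# robot state vector) to K vectors in the LLM embedding space via one matrix.
OBS_DIM, K = 5, 2
encoder_weights = rng.normal(size=(OBS_DIM, K * EMBED_DIM))


def encode_observation(obs: np.ndarray) -> np.ndarray:
    """Return K embedding-sized vectors for one observation."""
    return (obs @ encoder_weights).reshape(K, EMBED_DIM)


def build_multimodal_sentence(tokens: list[str], observation: np.ndarray) -> np.ndarray:
    """Interleave text embeddings with observation embeddings at <img> slots."""
    rows = []
    for tok in tokens:
        if tok == "<img>":
            rows.extend(encode_observation(observation))  # adds K row vectors
        else:
            rows.append(word_embeddings[VOCAB[tok]])
    return np.stack(rows)


sequence = build_multimodal_sentence(["what", "is", "in", "<img>", "?"], rng.normal(size=OBS_DIM))
print(sequence.shape)  # (6, 8): 4 text tokens + K=2 observation vectors
```

The resulting sequence has the same shape as an ordinary sequence of word embeddings, which is what allows a pre-trained decoder to consume it unchanged.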

- PaLM-E: An Embodied Multimodal Language Model (research paper)

- PaLM-E (demos)

- PaLM-E (blog post)

- Code implementation of the PaLM-E model is not available.

3. LLaMA 2 by Meta AI


LLaMA 2 is an enhanced version of its predecessor, trained on a new mix of data with a 40% larger pretraining corpus, double the context length, and grouped-query attention (in the 34B and 70B variants). The LLaMA 2 series of models includes LLaMA 2 and LLaMA 2-Chat, the latter optimized for dialogue, with sizes ranging from 7 to 70 billion parameters. These models outperform open-source counterparts on helpfulness and safety benchmarks and are comparable to some closed-source models. The development process involved rigorous safety measures, including safety-specific data annotation and red-teaming. The paper aims to contribute to the responsible development of LLMs by providing detailed descriptions of fine-tuning methodologies and safety improvements.
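Grouped-query attention lets several query heads share a single key/value head, which shrinks the key/value cache that must be kept during inference. A minimal NumPy sketch follows; the head counts and sizes are toy assumptions rather than LLaMA 2's configuration, and details such as rotary position embeddings and the output projection are omitted.

```python
import numpy as np


def grouped_query_attention(q: np.ndarray, k: np.ndarray, v: np.ndarray) -> np.ndarray:
    """Causal scaled dot-product attention with grouped-query heads.

    q:    (n_q_heads, seq_len, head_dim)
    k, v: (n_kv_heads, seq_len, head_dim), with n_q_heads % n_kv_heads == 0.
    Each consecutive group of n_q_heads // n_kv_heads query heads shares one K/V head.
    """
    n_q_heads, seq_len, head_dim = q.shape
    n_kv_heads = k.shape[0]
    assert n_q_heads % n_kv_heads == 0, "query heads must split evenly into groups"
    group_size = n_q_heads // n_kv_heads

    # Repeat each K/V head so it lines up with its group of query heads.
    k = np.repeat(k, group_size, axis=0)
    v = np.repeat(v, group_size, axis=0)

    scores = q @ k.transpose(0, 2, 1) / np.sqrt(head_dim)
    # Causal mask: position i may only attend to positions <= i.
    mask = np.triu(np.ones((seq_len, seq_len), dtype=bool), k=1)
    scores = np.where(mask, -np.inf, scores)
    scores -= scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v


rng = np.random.default_rng(0)
q = rng.normal(size=(8, 4, 16))  # 8 query heads
k = rng.normal(size=(2, 4, 16))  # only 2 key/value heads need to be cached
v = rng.normal(size=(2, 4, 16))
out = grouped_query_attention(q, k, v)
print(out.shape)  # (8, 4, 16)
```

Setting the number of key/value heads equal to the number of query heads recovers standard multi-head attention, and setting it to one gives multi-query attention; grouped-query attention sits between the two.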

- Llama 2: Open Foundation and Fine-Tuned Chat Models (research paper)

- Llama 2: open source, free for research and commercial use (blog post)

- Meta AI released LLaMA 2 models to individuals, creators, researchers, and businesses of all sizes. You can access model weights and starting code for pretrained and fine-tuned LLaMA 2 language models through GitHub.

4. LLaVA by University of Wisconsin–Madison, Microsoft, and Columbia University

The research paper introduces LLaVA (Large Language and Vision Assistant), a groundbreaking multimodal model that leverages language-only GPT-4 to generate instruction-following data for both text and images. This novel approach extends the concept of instruction tuning to the multimodal space, enabling the development of a general-purpose visual assistant.

The paper addresses the challenge of a scarcity of vision-language instruction-following data by presenting a method to convert image-text pairs into the appropriate instruction-following format, utilizing GPT-4. They construct a large multimodal model (LMM) by integrating the open-set visual encoder of CLIP with the language decoder LLaMA. The fine-tuning process on generated instructional vision-language data proves effective, and practical insights are offered for building a general-purpose instruction-following visual agent.

The paper’s contributions include the generation of multimodal instruction-following data, the development of large multimodal models through end-to-end training on generated data, and state-of-the-art performance on the ScienceQA multimodal reasoning dataset. Additionally, the paper demonstrates a commitment to open-source principles by making the generated multimodal instruction data, codebase for data generation and model training, model checkpoint, and a visual chat demo available to the public.
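The model construction described above, connecting CLIP's visual encoder to the LLaMA language decoder, can be sketched at the level of tensor shapes. This sketch assumes a single trainable linear projection as the connector and uses random arrays as stand-ins for CLIP patch features and text embeddings; all sizes are toy values chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy sizes (assumptions, far smaller than real CLIP/LLaMA widths).
NUM_PATCHES, VISION_DIM, LLM_DIM = 4, 6, 10

# Stand-in for the frozen CLIP visual encoder output: one feature per image patch.
z_v = rng.normal(size=(NUM_PATCHES, VISION_DIM))

# Trainable projection W mapping visual features into the LLM word-embedding space.
W = rng.normal(scale=0.02, size=(VISION_DIM, LLM_DIM))
h_v = z_v @ W  # "visual tokens", shape (NUM_PATCHES, LLM_DIM)

# Stand-in embeddings for a tokenized instruction such as "Describe the image."
h_text = rng.normal(size=(5, LLM_DIM))

# The language decoder consumes the visual tokens followed by the instruction tokens.
decoder_input = np.concatenate([h_v, h_text], axis=0)
print(decoder_input.shape)  # (9, 10)
```

Because the projected visual features have the same width as word embeddings, the decoder can treat them like any other tokens; during instruction tuning, gradients flow into `W` (and, depending on the training stage, into the decoder).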

- Visual Instruction Tuning (research paper)

- LLaVA: Large Language and Vision Assistant (blog post with demos)

- The LLaVA code implementation is available on GitHub.

5. Generative Agents by Stanford University and Google

The paper introduces a groundbreaking concept – generative agents that can simulate believable human behavior. These agents exhibit a wide range of actions, from daily routines like cooking breakfast to creative endeavors such as painting and writing. They form opinions, engage in conversations, and remember past experiences, creating a vibrant simulation of human-like interactions.

To achieve this, the paper presents an architectural framework that extends large language models, allowing agents to store their experiences in natural language, synthesize memories over time, and retrieve them dynamically for behavior planning. These generative agents find applications in various domains, from role-play scenarios to social prototyping in virtual worlds. The research validates their effectiveness through evaluations, emphasizing the importance of memory, reflection, and planning in creating convincing agent behavior while addressing ethical and societal considerations.
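The memory mechanism can be illustrated with a small retrieval function. This sketch assumes a scoring rule that sums three min-max-normalized signals, namely recency (exponential decay since last access), importance, and relevance (cosine similarity to the query), in the spirit of the paper's memory stream. The decay factor, importance ratings, and three-dimensional embeddings are illustrative assumptions; a real agent would ask an LLM to rate importance and use a text encoder for embeddings.

```python
import math
from dataclasses import dataclass


@dataclass
class Memory:
    description: str         # experience stored in natural language
    last_access_hour: float  # simulation time the memory was last retrieved
    importance: float        # e.g. 1-10; an LLM would rate this in practice
    embedding: list[float]   # toy embedding; a real system would use a text encoder


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def min_max(values: list[float]) -> list[float]:
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) if hi > lo else 1.0 for v in values]


def retrieve(memories, query_embedding, now_hour, top_k=2, decay=0.995):
    """Rank memories by normalized recency + importance + relevance.

    This sketch does not update last_access_hour after retrieval.
    """
    recency = min_max([decay ** (now_hour - m.last_access_hour) for m in memories])
    importance = min_max([m.importance for m in memories])
    relevance = min_max([cosine(m.embedding, query_embedding) for m in memories])
    scored = sorted(
        zip(memories, recency, importance, relevance),
        key=lambda t: t[1] + t[2] + t[3],
        reverse=True,
    )
    return [m.description for m, *_ in scored[:top_k]]


# Illustrative memories; hours and embeddings are made up for the example.
memories = [
    Memory("Ate breakfast at the cafe", 1.0, 2, [1.0, 0.0, 0.0]),
    Memory("Planned a Valentine's Day party", 20.0, 8, [0.0, 1.0, 0.0]),
    Memory("Talked with Maria about the party", 22.0, 6, [0.1, 0.9, 0.0]),
]
print(retrieve(memories, query_embedding=[0.0, 1.0, 0.0], now_hour=24.0))
```

With the query pointing in the "party" direction, the two party-related memories outrank the older, low-importance breakfast memory; the retrieved descriptions would then be placed into the LLM prompt used for planning.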

- Generative Agents: Interactive Simulacra of Human Behavior (research paper)

- Generative Agents (video presentation of the research by the paper’s first author, Joon Sung Park)

- The core simulation module for generative agents was released on GitHub.

6. Segment Anything by Meta AI

In this paper, the Meta AI team introduces a new task, model, and dataset for image segmentation. Using an efficient model in a data collection loop, the project built the largest segmentation dataset to date, with over 1 billion masks on 11 million licensed and privacy-respecting images. Toward the goal of a foundation model for image segmentation, the project focuses on promptable models trained on this diverse dataset. SAM, the Segment Anything Model, uses a simple yet effective architecture consisting of an image encoder, a prompt encoder, and a mask decoder. Experiments show that SAM's zero-shot performance is often competitive with, or even superior to, prior fully supervised results on a range of downstream tasks, including edge detection, object proposal generation, and instance segmentation.
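One practical consequence of this split architecture is that the expensive image encoder runs once per image, while the lightweight prompt encoder and mask decoder run once per prompt, which is what makes interactive segmentation fast. The sketch below illustrates only that amortization: all three components are toy stand-ins (the real image encoder is a Vision Transformer), and the class and method names are hypothetical rather than the released SAM API.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Image = List[List[int]]
Mask = List[List[bool]]
Point = Tuple[int, int]  # (row, col) of a foreground click


def image_encoder(image: Image) -> Image:
    """Stand-in for the heavy image encoder; here it just copies the pixels."""
    return [row[:] for row in image]


def prompt_encoder(point: Point) -> Point:
    """Stand-in for the prompt encoder; a point prompt passes through unchanged."""
    return point


def mask_decoder(embedding: Image, prompt: Point) -> Mask:
    """Toy decoder: select every pixel whose value matches the clicked pixel."""
    r, c = prompt
    target = embedding[r][c]
    return [[value == target for value in row] for row in embedding]


@dataclass
class ToySegmenter:
    encoder_calls: int = 0
    embedding: Optional[Image] = None

    def set_image(self, image: Image) -> None:
        # Expensive step: run once per image.
        self.encoder_calls += 1
        self.embedding = image_encoder(image)

    def predict(self, point: Point) -> Mask:
        # Cheap step: run once per prompt, reusing the cached image embedding.
        if self.embedding is None:
            raise ValueError("call set_image() first")
        return mask_decoder(self.embedding, prompt_encoder(point))


segmenter = ToySegmenter()
segmenter.set_image([[0, 0, 1],
                     [0, 1, 1],
                     [2, 2, 1]])
left = segmenter.predict((0, 0))
right = segmenter.predict((0, 2))
print(segmenter.encoder_calls)  # 1: two prompts, one encoder pass
```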

- Segment Anything (research paper)

- Segment Anything (the research website with demos, datasets, etc.)

- The Segment Anything Model (SAM) and the corresponding dataset (SA-1B) of 1B masks and 11M images have been publicly released.

7. DALL-E 3 by OpenAI

The research paper tackles one of the most significant challenges for text-to-image models: prompt following. Text-to-image models have historically struggled to turn detailed image descriptions into matching visuals, often misinterpreting prompts or overlooking critical details. The authors hypothesize that these issues stem from noisy and inaccurate image captions in the training dataset. To address this, they train a specialized image captioner that generates highly descriptive and precise captions, then use it to recaption the training dataset for text-to-image models. DALL-E models trained on the recaptioned dataset show significantly better prompt following.
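The recaptioning step amounts to a pass over the dataset that swaps captions for synthetic ones. The paper reports blending mostly synthetic captions with a small share of the original ones (95% synthetic in its experiments, as we understand it) so the model does not overfit to the captioner's style; the `0.95` default below reflects that assumption. `recaption` is a hypothetical helper, and `toy_captioner` is a placeholder for the trained captioning model.

```python
import random
from typing import Callable, List, Tuple

Example = Tuple[str, str]  # (image_id, caption)


def recaption(dataset: List[Example], captioner: Callable[[str], str],
              synthetic_ratio: float = 0.95, seed: int = 0) -> List[Example]:
    """Replace each caption with a synthetic one with probability synthetic_ratio."""
    rng = random.Random(seed)  # seeded so the blend is reproducible
    blended = []
    for image_id, original in dataset:
        if rng.random() < synthetic_ratio:
            blended.append((image_id, captioner(image_id)))
        else:
            blended.append((image_id, original))  # keep the original caption
    return blended


def toy_captioner(image_id: str) -> str:
    """Hypothetical stand-in for the trained descriptive captioner."""
    return f"A detailed, descriptive caption for {image_id}."


data = [("img-001", "dog"), ("img-002", "a cat, photo")]
print(recaption(data, toy_captioner, synthetic_ratio=1.0))
```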

Note: The paper does not cover training or implementation details of the DALL-E 3 model; it focuses only on evaluating how training on highly descriptive generated captions improves DALL-E 3's prompt following.

- Improving Image Generation with Better Captions (research paper)

- DALL-E 3 (blog post by OpenAI)

- The code implementation of DALL-E 3 is not available, but the authors released the text-to-image samples collected for evaluating DALL-E 3 against competing models.

8. ControlNet by Stanford University

ControlNet is a neural network structure designed by the Stanford University research team to control pretrained large diffusion models and support additional input conditions. ControlNet learns task-specific conditions in an end-to-end manner and demonstrates robust learning even with small training datasets. The training process is as fast as fine-tuning a diffusion model and can be performed on personal devices or scaled to handle large amounts of data using powerful computation clusters. By augmenting large diffusion models like Stable Diffusion with ControlNets, the researchers enable conditional inputs such as edge maps, segmentation maps, and keypoints, thereby enriching methods to control large diffusion models and facilitating related applications.
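The mechanism that lets ControlNet attach to a pretrained model without damaging it is the zero convolution: a trainable copy of the model's encoder blocks receives the extra condition, and its contribution is routed through 1×1 convolutions whose weights and biases start at zero. The toy sketch below reduces each block to a one-channel signal so the idea is visible: at initialization the output equals the frozen block's output exactly, and the condition only starts to matter once training moves the zero-convolution weights. `locked_block`, `controlnet_block`, and all numeric values are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Callable, List

Signal = List[float]


@dataclass
class ZeroConv:
    """1x1 convolution on a single channel (elementwise w * x + b), initialized to zero."""
    weight: float = 0.0
    bias: float = 0.0

    def __call__(self, x: Signal) -> Signal:
        return [self.weight * v + self.bias for v in x]


def locked_block(x: Signal) -> Signal:
    """Stand-in for a frozen, pretrained block F(x; theta)."""
    return [2.0 * v + 1.0 for v in x]


def controlnet_block(x: Signal, cond: Signal, trainable_copy: Callable[[Signal], Signal],
                     z_in: ZeroConv, z_out: ZeroConv) -> Signal:
    """y = F(x) + Z_out(F_copy(x + Z_in(cond)))."""
    copy_input = [a + b for a, b in zip(x, z_in(cond))]
    control = z_out(trainable_copy(copy_input))
    return [a + b for a, b in zip(locked_block(x), control)]


x = [0.0, 1.0, 2.0]
cond = [5.0, -3.0, 4.0]  # e.g. a (tiny) edge map
z_in, z_out = ZeroConv(), ZeroConv()

# The trainable copy starts as a clone of the locked block, so we pass the same function.
# At initialization the zero convolutions cancel the control branch entirely.
print(controlnet_block(x, cond, locked_block, z_in, z_out))  # [1.0, 3.0, 5.0]

# After (pretend) training has moved the zero-conv weights, the condition matters.
z_in.weight, z_out.weight = 1.0, 0.5
print(controlnet_block(x, cond, locked_block, z_in, z_out))  # [6.5, 1.5, 11.5]
```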

- Adding Conditional Control to Text-to-Image Diffusion Models (research paper)

- Ablation Study: Why ControlNets use deep encoder? What if it was lighter? Or even an MLP? (blog post by ControlNet developers)

- The official implementation of this paper is available on GitHub.

9. Gen-1 by Runway

The Gen-1 paper extended text-guided generative diffusion models to video editing. While such models had already transformed image creation and manipulation, applying them to video editing had remained a formidable challenge: existing methods either required laborious re-training for each input or relied on error-prone techniques to propagate image edits across frames. In response, the researchers presented a structure- and content-guided video diffusion model that edits videos based on textual or visual descriptions of the desired output. Their solution conditions the model on monocular depth estimates with varying levels of detail, giving control over how closely the output follows the structure and content of the input.

Gen-1 was trained jointly on images and videos, enabling versatile video editing. It gave users fine-grained control over output characteristics, including customization based on a few reference images. Extensive experiments showed that it preserves temporal consistency and that users preferred its editing results.
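The idea of conditioning on depth with varying levels of detail can be illustrated with a simple degradation knob. This summary does not say which degradation the paper applies, so the sketch swaps in a plain repeated box blur as an assumption: each extra blur step removes fine structure (measured here by total variation), leaving the model a coarser structural signal and more freedom over the output. The function names are hypothetical, and a real pipeline would operate on 2D depth maps rather than a single row.

```python
from typing import List


def box_blur(row: List[float]) -> List[float]:
    """3-tap box blur with edge replication."""
    n = len(row)
    return [(row[max(i - 1, 0)] + row[i] + row[min(i + 1, n - 1)]) / 3.0 for i in range(n)]


def degrade_depth(row: List[float], blur_steps: int) -> List[float]:
    """More blur steps give a coarser structure signal for the diffusion model."""
    for _ in range(blur_steps):
        row = box_blur(row)
    return row


def total_variation(row: List[float]) -> float:
    """Crude measure of how much structural detail a depth row still carries."""
    return sum(abs(a - b) for a, b in zip(row, row[1:]))


depth_row = [0.0, 0.0, 9.0, 0.0, 0.0]  # a thin object in front of a flat background
for steps in range(3):
    blurred = degrade_depth(depth_row, steps)
    print(steps, blurred, total_variation(blurred))
```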

- Structure and Content-Guided Video Synthesis with Diffusion Models (research paper)

- Gen-1: The Next Step Forward for Generative AI (blog post by Runway)

- Gen-2: The Next Step Forward for Generative AI (blog post by Runway)

- The code implementation of Gen-1 is not available.

10. DreamerV3 by DeepMind and University of Toronto

The paper introduces DreamerV3, a general algorithm based on world models that performs well across a wide range of domains: continuous and discrete actions, visual and low-dimensional inputs, 2D and 3D environments, and varied data budgets, reward frequencies, and reward scales. DreamerV3 learns a world model from experience and trains its behavior in imagination. It consists of three neural networks: the world model, which predicts the outcomes of potential actions; the critic, which judges the value of each situation; and the actor, which learns to reach valuable situations. The same fixed hyperparameters work across all domains thanks to transformations of signal magnitudes and robust normalization techniques.

A particularly noteworthy achievement: DreamerV3 is the first algorithm to collect diamonds in Minecraft from scratch, without human data or curricula. DreamerV3 also scales well, with larger models directly translating to higher data efficiency and better final performance.
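Two of the normalization techniques behind DreamerV3's fixed hyperparameters can be sketched in a few lines, as we understand the paper. The symlog transform compresses inputs and prediction targets of very different magnitudes into a similar range while staying invertible through symexp. Return normalization divides returns by the spread between their 5th and 95th percentiles, but never by less than 1, so small returns are not amplified. The moving average the paper applies to that spread is omitted here, and `return_scale` is a hypothetical helper name.

```python
import math
import statistics
from typing import List


def symlog(x: float) -> float:
    """sign(x) * ln(|x| + 1): near-identity for small |x|, logarithmic for large |x|."""
    return math.copysign(math.log1p(abs(x)), x)


def symexp(x: float) -> float:
    """Inverse of symlog: sign(x) * (exp(|x|) - 1)."""
    return math.copysign(math.expm1(abs(x)), x)


def return_scale(returns: List[float]) -> float:
    """Spread between the 5th and 95th return percentiles, floored at 1.

    Simplified: DreamerV3 tracks this range with a moving average, omitted here.
    """
    cuts = statistics.quantiles(returns, n=20, method="inclusive")  # 5th, 10th, ..., 95th
    return max(1.0, cuts[-1] - cuts[0])


for reward in (-1000.0, -1.0, 0.0, 0.5, 1e6):
    print(reward, round(symlog(reward), 3), symexp(symlog(reward)))

small = [0.0, 0.1, 0.2, 0.3]                   # tiny returns: scale stays 1, so no amplification
large = [float(r) for r in range(0, 1001, 10)]
print(return_scale(small), return_scale(large))
```

Returns would then be divided by `return_scale(...)` before being used to train the actor.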

- Mastering Diverse Domains through World Models (research paper)

- DreamerV3 (project website)

- A reimplementation of DreamerV3 is available on GitHub.

In 2023, AI research advanced remarkably, and these ten papers mark some of the year's most important steps. From new language models to breakthroughs in image generation and video editing, they pushed the boundaries of AI capabilities. As we reflect on these achievements, we anticipate even more transformative discoveries and applications in the years to come.


About Mariya Yao

Mariya is the co-author of Applied AI: A Handbook For Business Leaders and former CTO at Metamaven. She "translates" arcane technical concepts into actionable business advice for executives and designs lovable products people actually want to use. Follow her on Twitter at @thinkmariya to raise your AI IQ.



A curated list of the most impressive AI papers

aimerou/awesome-ai-papers

Awesome AI Papers ⭐️

Description

This repository is an up-to-date list of significant AI papers organized by publication date. It covers five fields: computer vision, natural language processing, audio processing, multimodal learning and reinforcement learning. Feel free to give this repository a star if you enjoy the work.

Maintainer: Aimerou Ndiaye

Table of Contents

- Computer Vision

- Natural Language Processing

- Audio Processing

- Multimodal Learning

- Reinforcement Learning

- Other Papers

- Historical Papers

To select the most relevant papers, we set subjective thresholds on citation counts. Each icon below designates a paper type that meets one of these criteria.

🏆 Historical Paper: more than 10k citations and a decisive impact in the evolution of AI.

⭐ Important Paper: more than 50 citations and state-of-the-art results.

⏫ Trend: 1 to 50 citations; a recent, innovative paper with growing adoption.

📰 Important Article: decisive work that was not accompanied by a research paper.
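The selection criteria can be read as a small decision rule. In this sketch the citation thresholds come from the list above, while the subjective parts (decisive impact, state-of-the-art results, growing adoption) become boolean flags a curator would set by hand; the function and flag names are hypothetical, and `None` stands for work released without a research paper.

```python
from typing import Optional


def paper_icon(citations: Optional[int], decisive_impact: bool = False,
               sota: bool = False, trending: bool = False) -> str:
    """Return the list icon for a paper, or "" if it meets none of the criteria."""
    if citations is None:
        return "📰"  # Important Article: no accompanying research paper
    if citations > 10_000 and decisive_impact:
        return "🏆"  # Historical Paper
    if citations > 50 and sota:
        return "⭐"  # Important Paper
    if 1 <= citations <= 50 and trending:
        return "⏫"  # Trend: recent, innovative, growing adoption
    return ""


print(paper_icon(25_000, decisive_impact=True), paper_icon(120, sota=True), paper_icon(12, trending=True))
```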

2023 Papers

Computer Vision

- ⭐ 01/2023: Muse: Text-To-Image Generation via Masked Generative Transformers (Muse)

- ⭐ 02/2023: Structure and Content-Guided Video Synthesis with Diffusion Models (Gen-1)

- ⭐ 02/2023: Scaling Vision Transformers to 22 Billion Parameters (ViT 22B)

- ⭐ 02/2023: Adding Conditional Control to Text-to-Image Diffusion Models (ControlNet)

- ⭐ 03/2023: Visual ChatGPT: Talking, Drawing and Editing with Visual Foundation Models (Visual ChatGPT)

- ⭐ 03/2023: Scaling up GANs for Text-to-Image Synthesis (GigaGAN)

- ⭐ 04/2023: Segment Anything (SAM)

- ⭐ 04/2023: DINOv2: Learning Robust Visual Features without Supervision (DINOv2)

- ⭐ 04/2023: Visual Instruction Tuning

- ⭐ 04/2023: Align your Latents: High-Resolution Video Synthesis with Latent Diffusion Models (VideoLDM)

- ⭐ 04/2023: Synthetic Data from Diffusion Models Improves ImageNet Classification

- ⭐ 04/2023: Segment Anything in Medical Images (MedSAM)

- ⭐ 05/2023: Drag Your GAN: Interactive Point-based Manipulation on the Generative Image Manifold (DragGAN)

- ⭐ 06/2023: Neuralangelo: High-Fidelity Neural Surface Reconstruction (Neuralangelo)

- ⭐ 07/2023: SDXL: Improving Latent Diffusion Models for High-Resolution Image Synthesis (SDXL)

- ⭐ 08/2023: 3D Gaussian Splatting for Real-Time Radiance Field Rendering

- ⭐ 08/2023: Qwen-VL: A Versatile Vision-Language Model for Understanding, Localization... (Qwen-VL)

- ⏫ 08/2023: MVDream: Multi-view Diffusion for 3D Generation (MVDream)

- ⏫ 11/2023: Florence-2: Advancing a Unified Representation for a Variety of Vision Tasks (Florence-2)

- ⏫ 12/2023: VideoPoet: A Large Language Model for Zero-Shot Video Generation (VideoPoet)

Natural Language Processing

- ⭐ 01/2023: DetectGPT: Zero-Shot Machine-Generated Text Detection using Probability Curvature (DetectGPT)

- ⭐ 02/2023: Toolformer: Language Models Can Teach Themselves to Use Tools (Toolformer)

- ⭐ 02/2023: LLaMA: Open and Efficient Foundation Language Models (LLaMA)

- 📰 03/2023: GPT-4

- ⭐ 03/2023: Sparks of Artificial General Intelligence: Early experiments with GPT-4 (GPT-4 Eval)

- ⭐ 03/2023: HuggingGPT: Solving AI Tasks with ChatGPT and its Friends in HuggingFace (HuggingGPT)

- ⭐ 03/2023: BloombergGPT: A Large Language Model for Finance (BloombergGPT)

- ⭐ 04/2023: Instruction Tuning with GPT-4

- ⭐ 04/2023: Generative Agents: Interactive Simulacra of Human Behavior (Gen Agents)

- ⭐ 05/2023: PaLM 2 Technical Report (PaLM-2)

- ⭐ 05/2023: Tree of Thoughts: Deliberate Problem Solving with Large Language Models (ToT)

- ⭐ 05/2023: LIMA: Less Is More for Alignment (LIMA)

- ⭐ 05/2023: QLoRA: Efficient Finetuning of Quantized LLMs (QLoRA)

- ⭐ 05/2023: Voyager: An Open-Ended Embodied Agent with Large Language Models (Voyager)

- ⭐ 07/2023: ToolLLM: Facilitating Large Language Models to Master 16000+ Real-world APIs (ToolLLM)

- ⭐ 08/2023: MetaGPT: Meta Programming for Multi-Agent Collaborative Framework (MetaGPT)

- ⭐ 08/2023: Code Llama: Open Foundation Models for Code (Code Llama)

- ⏫ 09/2023: RLAIF: Scaling Reinforcement Learning from Human Feedback with AI Feedback (RLAIF)

- ⭐ 09/2023: Large Language Models as Optimizers (OPRO)

- ⏫ 10/2023: Eureka: Human-Level Reward Design via Coding Large Language Models (Eureka)

- ⏫ 12/2023: Mathematical discoveries from program search with large language models (FunSearch)

Audio Processing

- ⭐ 01/2023: Neural Codec Language Models are Zero-Shot Text to Speech Synthesizers (VALL-E)

- ⭐ 01/2023: MusicLM: Generating Music From Text (MusicLM)

- ⭐ 01/2023: AudioLDM: Text-to-Audio Generation with Latent Diffusion Models (AudioLDM)

- ⭐ 03/2023: Google USM: Scaling Automatic Speech Recognition Beyond 100 Languages (USM)

- ⭐ 05/2023: Scaling Speech Technology to 1,000+ Languages (MMS)

- ⏫ 06/2023: Simple and Controllable Music Generation (MusicGen)

- ⏫ 06/2023: AudioPaLM: A Large Language Model That Can Speak and Listen (AudioPaLM)

- ⏫ 06/2023: Voicebox: Text-Guided Multilingual Universal Speech Generation at Scale (Voicebox)

Multimodal Learning

- ⭐ 02/2023: Language Is Not All You Need: Aligning Perception with Language Models (Kosmos-1)

- ⭐ 03/2023: PaLM-E: An Embodied Multimodal Language Model (PaLM-E)

- ⭐ 04/2023: AudioGPT: Understanding and Generating Speech, Music, Sound, and Talking Head (AudioGPT)

- ⭐ 05/2023: ImageBind: One Embedding Space To Bind Them All (ImageBind)

- ⏫ 07/2023: Scaling Autoregressive Multi-Modal Models: Pretraining and Instruction Tuning (CM3Leon)

- ⏫ 07/2023: Meta-Transformer: A Unified Framework for Multimodal Learning (Meta-Transformer)

- ⏫ 08/2023: SeamlessM4T: Massively Multilingual & Multimodal Machine Translation (SeamlessM4T)

Reinforcement Learning

- ⭐ 01/2023: Mastering Diverse Domains through World Models (DreamerV3)

- ⏫ 02/2023: Grounding Large Language Models in Interactive Environments with Online RL (GLAM)

- ⏫ 02/2023: Efficient Online Reinforcement Learning with Offline Data (RLPD)

- ⏫ 03/2023: Reward Design with Language Models

- ⭐ 05/2023: Direct Preference Optimization: Your Language Model is Secretly a Reward Model (DPO)

- ⏫ 06/2023: Faster sorting algorithms discovered using deep reinforcement learning (AlphaDev)

- ⏫ 08/2023: Retroformer: Retrospective Large Language Agents with Policy Gradient Optimization (Retroformer)

Other Papers

- ⭐ 02/2023: Symbolic Discovery of Optimization Algorithms (Lion)

- ⭐ 07/2023: RT-2: Vision-Language-Action Models Transfer Web Knowledge to Robotic Control (RT-2)

- ⏫ 11/2023: Scaling deep learning for materials discovery (GNoME)

- ⏫ 12/2023: Discovery of a structural class of antibiotics with explainable deep learning

2022 Papers

Computer Vision

- ⭐ 01/2022: A ConvNet for the 2020s (ConvNeXt)

- ⭐ 01/2022: Patches Are All You Need (ConvMixer)

- ⭐ 02/2022: Block-NeRF: Scalable Large Scene Neural View Synthesis (Block-NeRF)

- ⭐ 03/2022: DINO: DETR with Improved DeNoising Anchor Boxes for End-to-End Object Detection (DINO)

- ⭐ 03/2022: Scaling Up Your Kernels to 31×31: Revisiting Large Kernel Design in CNNs (Large Kernel CNN)

- ⭐ 03/2022: TensoRF: Tensorial Radiance Fields (TensoRF)

- ⭐ 04/2022: MaxViT: Multi-Axis Vision Transformer (MaxViT)

- ⭐ 04/2022: Hierarchical Text-Conditional Image Generation with CLIP Latents (DALL-E 2)

- ⭐ 05/2022: Photorealistic Text-to-Image Diffusion Models with Deep Language Understanding (Imagen)

- ⭐ 05/2022: GIT: A Generative Image-to-text Transformer for Vision and Language (GIT)

- ⭐ 06/2022: CMT: Convolutional Neural Networks Meet Vision Transformers (CMT)

- ⭐ 07/2022: Swin UNETR: Swin Transformers for Semantic Segmentation of Brain Tumors... (Swin UNETR)

- ⭐ 07/2022: Classifier-Free Diffusion Guidance

- ⭐ 08/2022: Fine Tuning Text-to-Image Diffusion Models for Subject-Driven Generation (DreamBooth)

- ⭐ 09/2022: DreamFusion: Text-to-3D using 2D Diffusion (DreamFusion)

- ⭐ 09/2022: Make-A-Video: Text-to-Video Generation without Text-Video Data (Make-A-Video)

- ⭐ 10/2022: On Distillation of Guided Diffusion Models

- ⭐ 10/2022: LAION-5B: An open large-scale dataset for training next generation image-text models (LAION-5B)

- ⭐ 10/2022: Imagic: Text-Based Real Image Editing with Diffusion Models (Imagic)

- ⭐ 11/2022: Visual Prompt Tuning

- ⭐ 11/2022: Magic3D: High-Resolution Text-to-3D Content Creation (Magic3D)

- ⭐ 11/2022: DiffusionDet: Diffusion Model for Object Detection (DiffusionDet)

- ⭐ 11/2022: InstructPix2Pix: Learning to Follow Image Editing Instructions (InstructPix2Pix)

- ⭐ 12/2022: Multi-Concept Customization of Text-to-Image Diffusion (Custom Diffusion)

- ⭐ 12/2022: Scalable Diffusion Models with Transformers (DiT)

Natural Language Processing

- ⭐ 01/2022: LaMDA: Language Models for Dialog Applications (LaMDA)

- ⭐ 01/2022: Chain-of-Thought Prompting Elicits Reasoning in Large Language Models (CoT)

- ⭐ 02/2022: Competition-Level Code Generation with AlphaCode (AlphaCode)

- ⭐ 02/2022: Finetuned Language Models Are Zero-Shot Learners (FLAN)

- ⭐ 03/2022: Training language models to follow instructions with human feedback (InstructGPT)

- ⭐ 03/2022: Multitask Prompted Training Enables Zero-Shot Task Generalization (T0)

- ⭐ 03/2022: Training Compute-Optimal Large Language Models (Chinchilla)

- ⭐ 04/2022: Do As I Can, Not As I Say: Grounding Language in Robotic Affordances (SayCan)

- ⭐ 04/2022: GPT-NeoX-20B: An Open-Source Autoregressive Language Model (GPT-NeoX)

- ⭐ 04/2022: PaLM: Scaling Language Modeling with Pathways (PaLM)

- ⭐ 06/2022: Beyond the Imitation Game: Quantifying and extrapolating the capabilities of lang... (BIG-bench)

- ⭐ 06/2022: Solving Quantitative Reasoning Problems with Language Models (Minerva)

- ⭐ 10/2022: ReAct: Synergizing Reasoning and Acting in Language Models (ReAct)

- ⭐ 11/2022: BLOOM: A 176B-Parameter Open-Access Multilingual Language Model (BLOOM)

- 📰 11/2022: Optimizing Language Models for Dialogue (ChatGPT)

- ⭐ 12/2022: Large Language Models Encode Clinical Knowledge (Med-PaLM)

Audio Processing

- ⭐ 02/2022: mSLAM: Massively multilingual joint pre-training for speech and text (mSLAM)

- ⭐ 02/2022: ADD 2022: the First Audio Deep Synthesis Detection Challenge (ADD)

- ⭐ 03/2022: Efficient Training of Audio Transformers with Patchout (PaSST)

- ⭐ 04/2022: MAESTRO: Matched Speech Text Representations through Modality Matching (Maestro)

- ⭐ 05/2022: SpeechT5: Unified-Modal Encoder-Decoder Pre-Training for Spoken Language... (SpeechT5)

- ⭐ 06/2022: WavLM: Large-Scale Self-Supervised Pre-Training for Full Stack Speech Processing (WavLM)

- ⭐ 07/2022: BigSSL: Exploring the Frontier of Large-Scale Semi-Supervised Learning for ASR (BigSSL)

- ⭐ 08/2022: MuLan: A Joint Embedding of Music Audio and Natural Language (MuLan)

- ⭐ 09/2022: AudioLM: a Language Modeling Approach to Audio Generation (AudioLM)

- ⭐ 09/2022: AudioGen: Textually Guided Audio Generation (AudioGen)

- ⭐ 10/2022: High Fidelity Neural Audio Compression (EnCodec)

- ⭐ 12/2022: Robust Speech Recognition via Large-Scale Weak Supervision (Whisper)

Multimodal Learning

- ⭐ 01/2022: BLIP: Bootstrapping Language-Image Pre-training for Unified Vision-Language... (BLIP)

- ⭐ 02/2022: data2vec: A General Framework for Self-supervised Learning in Speech, Vision and... (Data2vec)

- ⭐ 03/2022: VL-Adapter: Parameter-Efficient Transfer Learning for Vision-and-Language Tasks (VL-Adapter)

- ⭐ 04/2022: Winoground: Probing Vision and Language Models for Visio-Linguistic... (Winoground)

- ⭐ 04/2022: Flamingo: a Visual Language Model for Few-Shot Learning (Flamingo)

- ⭐ 05/2022: A Generalist Agent (Gato)

- ⭐ 05/2022: CoCa: Contrastive Captioners are Image-Text Foundation Models (CoCa)

- ⭐ 05/2022: VLMo: Unified Vision-Language Pre-Training with Mixture-of-Modality-Experts (VLMo)

- ⭐ 08/2022: Image as a Foreign Language: BEiT Pretraining for All Vision and Vision-Language Tasks (BEiT-3)

- ⭐ 09/2022: PaLI: A Jointly-Scaled Multilingual Language-Image Model (PaLI)

Reinforcement Learning

- ⭐ 01/2022: Learning robust perceptive locomotion for quadrupedal robots in the wild

- ⭐ 02/2022: BC-Z: Zero-Shot Task Generalization with Robotic Imitation Learning

- ⭐ 02/2022: Outracing champion Gran Turismo drivers with deep reinforcement learning (Sophy)

- ⭐ 02/2022: Magnetic control of tokamak plasmas through deep reinforcement learning

- ⭐ 08/2022: Learning to Walk in Minutes Using Massively Parallel Deep Reinforcement Learning (ANYmal)

- ⭐ 10/2022: Discovering faster matrix multiplication algorithms with reinforcement learning (AlphaTensor)

Other Papers

- ⭐ 02/2022: FourCastNet: A Global Data-driven High-resolution Weather Model... (FourCastNet)

- ⭐ 05/2022: ColabFold: making protein folding accessible to all (ColabFold)

- ⭐ 06/2022: Measuring and Improving the Use of Graph Information in GNN

- ⭐ 10/2022: TimesNet: Temporal 2D-Variation Modeling for General Time Series Analysis (TimesNet)

- ⭐ 12/2022: RT-1: Robotics Transformer for Real-World Control at Scale (RT-1)

Historical Papers

- 🏆 1958: Perceptron: A probabilistic model for information storage and organization in the brain (Perceptron)

- 🏆 1986: Learning representations by back-propagating errors (Backpropagation)

- 🏆 1986: Induction of decision trees (ID3)

- 🏆 1989: A Tutorial on Hidden Markov Models and Selected Applications in Speech Recognition (HMM)

- 🏆 1989: Multilayer feedforward networks are universal approximators

- 🏆 1992: A training algorithm for optimal margin classifiers (SVM)

- 🏆 1996: Bagging predictors

- 🏆 1998: Gradient-based learning applied to document recognition (CNN/GTN)

- 🏆 2001: Random Forests

- 🏆 2002: A fast and elitist multiobjective genetic algorithm (NSGA-II)

- 🏆 2003: Latent Dirichlet Allocation (LDA)

- 🏆 2006: Reducing the Dimensionality of Data with Neural Networks (Autoencoder)

- 🏆 2008: Visualizing Data using t-SNE (t-SNE)

- 🏆 2009: ImageNet: A large-scale hierarchical image database (ImageNet)

- 🏆 2012: ImageNet Classification with Deep Convolutional Neural Networks (AlexNet)

- 🏆 2013: Efficient Estimation of Word Representations in Vector Space (Word2vec)

- 🏆 2013: Auto-Encoding Variational Bayes (VAE)

- 🏆 2014: Generative Adversarial Networks (GAN)

- 🏆 2014: Dropout: A Simple Way to Prevent Neural Networks from Overfitting (Dropout)

- 🏆 2014: Sequence to Sequence Learning with Neural Networks

- 🏆 2014: Neural Machine Translation by Jointly Learning to Align and Translate (RNNSearch-50)

- 🏆 2014: Adam: A Method for Stochastic Optimization (Adam)

- 🏆 2015: Batch Normalization: Accelerating Deep Network Training by Reducing Internal Cov... (BatchNorm)

- 🏆 2015: Going Deeper With Convolutions (Inception)

- 🏆 2015: Human-level control through deep reinforcement learning (Deep Q Network)

- 🏆 2015: Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks (Faster R-CNN)

- 🏆 2015: U-Net: Convolutional Networks for Biomedical Image Segmentation (U-Net)

- 🏆 2015: Deep Residual Learning for Image Recognition (ResNet)

- 🏆 2016: You Only Look Once: Unified, Real-Time Object Detection (YOLO)

- 🏆 2017: Attention Is All You Need (Transformer)

- 🏆 2018: BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding (BERT)

- 🏆 2020: Language Models are Few-Shot Learners (GPT-3)

- 🏆 2020: An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale (ViT)

- 🏆 2021: Highly accurate protein structure prediction with AlphaFold (AlphaFold)

- 📰 2022: ChatGPT: Optimizing Language Models For Dialogue (ChatGPT)


NEWS FEATURE

27 September 2023 (correction 10 October 2023)

AI and science: what 1,600 researchers think

Richard Van Noorden & Jeffrey M. Perkel


Artificial-intelligence (AI) tools are becoming increasingly common in science, and many scientists anticipate that they will soon be central to the practice of research, suggests a Nature survey of more than 1,600 researchers around the world.


Nature 621, 672-675 (2023)

doi: https://doi.org/10.1038/d41586-023-02980-0

Updates & Corrections

Correction 10 October 2023 : An earlier version of this story erroneously affiliated Kedar Hippalgaonkar with the National University of Singapore.


Supplementary Information

- AI survey methodology (docx)

- AI survey questions (pdf)

- AI survey results (xlsx)


April 18, 2024

AI Report Shows ‘Startlingly Rapid’ Progress—And Ballooning Costs

A new report finds that AI matches or outperforms people at tasks such as competitive math and reading comprehension

By Nicola Jones & Nature magazine


Artificial intelligence (AI) systems, such as the chatbot ChatGPT, have become so advanced that they now very nearly match or exceed human performance in tasks including reading comprehension, image classification and competition-level mathematics, according to a new report. Rapid progress in the development of these systems also means that many common benchmarks and tests for assessing them are quickly becoming obsolete.

These are just a few of the top-line findings from the Artificial Intelligence Index Report 2024, which was published on 15 April by the Institute for Human-Centered Artificial Intelligence at Stanford University in California. The report charts the meteoric progress in machine-learning systems over the past decade.

In particular, the report says, new ways of assessing AI — for example, evaluating their performance on complex tasks, such as abstraction and reasoning — are more and more necessary. “A decade ago, benchmarks would serve the community for 5–10 years” whereas now they often become irrelevant in just a few years, says Nestor Maslej, a social scientist at Stanford and editor-in-chief of the AI Index. “The pace of gain has been startlingly rapid.”


Stanford’s annual AI Index, first published in 2017, is compiled by a group of academic and industry specialists to assess the field’s technical capabilities, costs, ethics and more — with an eye towards informing researchers, policymakers and the public. This year’s report, which is more than 400 pages long and was copy-edited and tightened with the aid of AI tools, notes that AI-related regulation in the United States is sharply rising. But the lack of standardized assessments for responsible use of AI makes it difficult to compare systems in terms of the risks that they pose.

The rising use of AI in science is also highlighted in this year’s edition: for the first time, it dedicates an entire chapter to science applications, highlighting projects including Graph Networks for Materials Exploration (GNoME), a project from Google DeepMind that aims to help chemists discover materials, and GraphCast, another DeepMind tool, which does rapid weather forecasting.

The current AI boom (built on neural networks and machine-learning algorithms) dates back to the early 2010s. The field has since rapidly expanded. For example, the number of AI coding projects on GitHub, a common platform for sharing code, increased from about 800 in 2011 to 1.8 million last year. And journal publications about AI roughly tripled over this period, the report says.

Much of the cutting-edge work on AI is being done in industry: that sector produced 51 notable machine-learning systems last year, whereas academic researchers contributed 15. “Academic work is shifting to analysing the models coming out of companies — doing a deeper dive into their weaknesses,” says Raymond Mooney, director of the AI Lab at the University of Texas at Austin, who wasn’t involved in the report.

That includes developing tougher tests to assess the visual, mathematical and even moral-reasoning capabilities of large language models (LLMs), which power chatbots. One of the latest tests is the Graduate-Level Google-Proof Q&A Benchmark (GPQA), developed last year by a team including machine-learning researcher David Rein at New York University.

The GPQA, consisting of more than 400 multiple-choice questions, is tough: PhD-level scholars could correctly answer questions in their field 65% of the time. The same scholars, when attempting to answer questions outside their field, scored only 34%, despite having access to the Internet during the test (randomly selecting answers would yield a score of 25%). As of last year, AI systems scored about 30–40%. This year, Rein says, Claude 3 — the latest chatbot released by AI company Anthropic, based in San Francisco, California — scored about 60%. “The rate of progress is pretty shocking to a lot of people, me included,” Rein adds. “It’s quite difficult to make a benchmark that survives for more than a few years.”

Cost of business

As performance is skyrocketing, so are costs. GPT-4 — the LLM that powers ChatGPT and that was released in March 2023 by San Francisco-based firm OpenAI — reportedly cost US$78 million to train. Google’s chatbot Gemini Ultra, launched in December, cost $191 million. Many people are concerned about the energy use of these systems, as well as the amount of water needed to cool the data centres that help to run them. “These systems are impressive, but they’re also very inefficient,” Maslej says.

Costs and energy use for AI models are high in large part because one of the main ways to make current systems better is to make them bigger. This means training them on ever-larger stocks of text and images. The AI Index notes that some researchers now worry about running out of training data. Last year, according to the report, the non-profit research institute Epoch projected that we might exhaust supplies of high-quality language data as soon as this year. (However, the institute’s most recent analysis suggests that 2028 is a better estimate.)

Ethical concerns about how AI is built and used are also mounting. “People are way more nervous about AI than ever before, both in the United States and across the globe,” says Maslej, who sees signs of a growing international divide. “There are now some countries very excited about AI, and others that are very pessimistic.”

In the United States, the report notes a steep rise in regulatory interest. In 2016, there was just one US regulation that mentioned AI; last year, there were 25. “After 2022, there’s a massive spike in the number of AI-related bills that have been proposed” by policymakers, Maslej says.

Regulatory action is increasingly focused on promoting responsible AI use. Although benchmarks are emerging that can score metrics such as an AI tool’s truthfulness, bias and even likability, not everyone is using the same models, Maslej says, which makes cross-comparisons hard. “This is a really important topic,” he says. “We need to bring the community together on this.”

This article is reproduced with permission and was first published on April 15, 2024.

The best AI tools for research papers and academic research (Literature review, grants, PDFs and more)

As our collective understanding and application of artificial intelligence (AI) continue to evolve, so too does the realm of academic research. Some people are scared by it, while others are openly embracing the change.

Make no mistake, AI is here to stay!

Instead of endlessly scrolling through hundreds of PDFs, you can let a powerful AI tool summarize the key information in your research papers. Instead of manually combing through citations and conducting literature reviews yourself, you can hand these tasks to an AI research assistant.

These aren’t futuristic dreams, but today’s reality. Welcome to the transformative world of AI-powered research tools!

The influence of AI in scientific and academic research is an exciting development, opening the doors to more efficient, comprehensive, and rigorous exploration.

This blog post will dive deeper into these tools, providing a detailed review of how AI is revolutionizing academic research. We’ll look at the tools that can make your literature review process less tedious, your search for relevant papers more precise, and your overall research process more efficient and fruitful.

I know that I wish these had been around during my time in academia. It can be quite confronting to work out which ones you should and shouldn't use, and a new one seems to come out every day!

Here is everything you need to know about AI for academic research, including the tools I have personally trialed on my YouTube channel.

Best ChatGPT interface – Chat with PDFs/websites and more

I get more out of ChatGPT with HeyGPT. It can do things that ChatGPT cannot, which makes it really valuable for researchers.

Use your own OpenAI API key. No login required. Access ChatGPT anytime, including during peak periods, with faster response times. Unlock advanced functionality with HeyGPT Ultra, available as a one-time lifetime subscription.

AI literature search and mapping – best AI tools for a literature review – Elicit and more

Harnessing AI tools for literature reviews and mapping brings a new level of efficiency and precision to academic research. No longer do you have to spend hours looking in obscure research databases to find what you need!

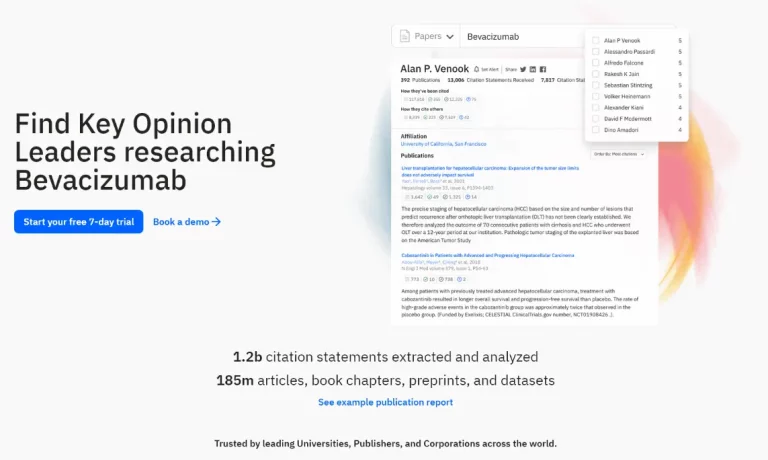

AI-powered tools like Semantic Scholar and elicit.org use sophisticated search engines to quickly identify relevant papers.

They can mine key information from countless PDFs, drastically reducing research time. You can even search by asking questions in plain language, rather than having to wrangle keywords.

With AI as your research assistant, you can navigate the vast sea of scientific research with ease, uncovering citations and focusing on academic writing. It’s a revolutionary way to take on literature reviews.

- Elicit – https://elicit.org

- Supersymmetry.ai – https://www.supersymmetry.ai

- Semantic Scholar – https://www.semanticscholar.org

- Connected Papers – https://www.connectedpapers.com/

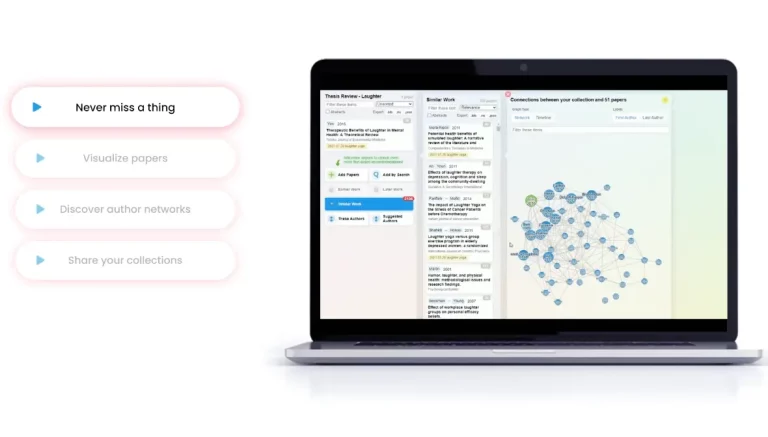

- ResearchRabbit – https://www.researchrabbit.ai/

- Laser AI – https://laser.ai/

- Litmaps – https://www.litmaps.com

- Inciteful – https://inciteful.xyz/

- Scite – https://scite.ai/

- System – https://www.system.com

If you like AI tools, you may want to check out this article:

- How to get ChatGPT to write an essay [The prompts you need]

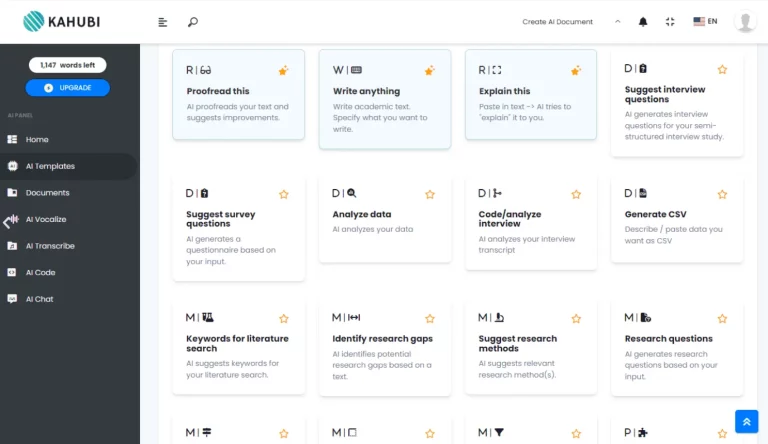

AI-powered research tools and AI for academic research

AI research tools like Consensus offer immense benefits in scientific research. Here are some general-purpose AI-powered tools for academic research.

These AI-powered tools can efficiently summarize PDFs, extract key information, perform AI-powered searches and much more. Some are even working towards letting you add your own database of files to ask questions about.

Tools like Scite even analyze citations in depth, while AI models like ChatGPT can offer new perspectives.

The result? The research process, previously a grueling endeavor, becomes significantly streamlined, offering you time for deeper exploration and understanding. Say goodbye to traditional struggles, and hello to your new AI research assistant!

- Bit AI – https://bit.ai/

- Consensus – https://consensus.app/

- Exper AI – https://www.experai.com/

- Hey Science (in development) – https://www.heyscience.ai/

- Iris AI – https://iris.ai/

- PapersGPT (currently in development) – https://jessezhang.org/llmdemo

- Research Buddy – https://researchbuddy.app/

- Mirror Think – https://mirrorthink.ai

AI for reading peer-reviewed papers easily

Using AI tools like Explain Paper and Humata can significantly enhance your engagement with peer-reviewed papers. I always used to skip over the details of papers because I had reached saturation point with the information coming in.

These AI-powered research tools provide succinct summaries, saving you from sifting through extensive PDFs – no more boring nights trying to figure out which papers are the most important ones for you to read!

They not only facilitate efficient literature reviews by presenting key information, but also find overlooked insights.

With AI, deciphering complex citations and accelerating research has never been easier.

- Open Read – https://www.openread.academy

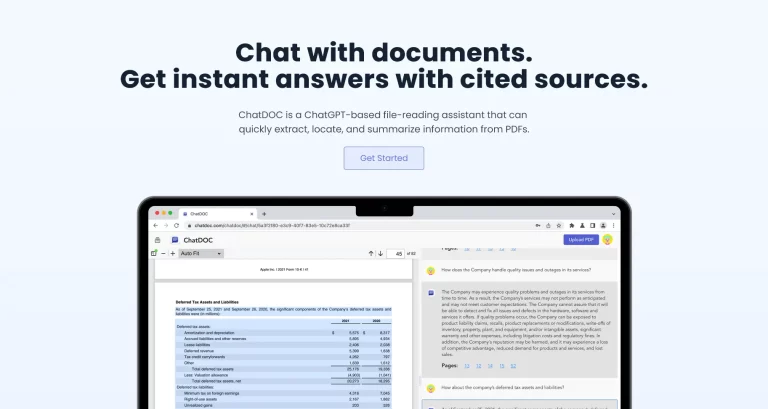

- Chat PDF – https://www.chatpdf.com

- Explain Paper – https://www.explainpaper.com

- Humata – https://www.humata.ai/

- Lateral AI – https://www.lateral.io/

- Paper Brain – https://www.paperbrain.study/

- Scholarcy – https://www.scholarcy.com/

- SciSpace Copilot – https://typeset.io/

- Unriddle – https://www.unriddle.ai/

- Sharly.ai – https://www.sharly.ai/

AI for scientific writing and research papers

In the ever-evolving realm of academic research, AI tools are increasingly taking center stage.

Enter Paper Wizard, Jenni AI and Wisio: these groundbreaking platforms are set to revolutionize the way we approach scientific writing.

Together, these AI tools are pioneering a new era of efficient, streamlined scientific writing.

- Paper Wizard – https://paperwizard.ai/

- Jenni AI – https://jenni.ai/ (20% off with code ANDY20)

- Wisio – https://www.wisio.app

AI academic editing tools

In the realm of scientific writing and editing, artificial intelligence (AI) tools are making a world of difference, offering precision and efficiency like never before. Consider tools such as Paper Pal, Writefull, and Trinka.

Together, these tools usher in a new era of scientific writing, where AI is your dedicated partner in the quest for impeccable composition.

- Paper Pal – https://paperpal.com/

- Writefull – https://www.writefull.com/

- Trinka – https://www.trinka.ai/

AI tools for grant writing

In the challenging realm of science grant writing, two innovative AI tools are making waves: Granted AI and Grantable.

These platforms are game-changers, leveraging the power of artificial intelligence to streamline and enhance the grant application process.

Granted AI, an intelligent tool, uses AI algorithms to simplify the process of finding, applying, and managing grants. Meanwhile, Grantable offers a platform that automates and organizes grant application processes, making it easier than ever to secure funding.

Together, these tools are transforming the way we approach grant writing, using the power of AI to turn a complex, often arduous task into a more manageable, efficient, and successful endeavor.

- Granted AI – https://grantedai.com/

- Grantable – https://grantable.co/

Free AI research tools

Many tools are emerging online to help researchers streamline their research processes. Convenience doesn't have to come at a massive cost and break the bank.

The best free ones at time of writing are:

- Elicit – https://elicit.org

- Connected Papers – https://www.connectedpapers.com/

- Litmaps – https://www.litmaps.com (10% off a Pro subscription with the code “STAPLETON”)

- Consensus – https://consensus.app/

Wrapping up

The integration of artificial intelligence in the world of academic research is nothing short of revolutionary.

With the array of AI tools we’ve explored today, covering literature search and mapping, reading peer-reviewed papers, scientific writing, academic editing and grant writing, the research landscape is being significantly transformed.

The advantages that AI-powered research tools bring to the table – efficiency, precision, time saving, and a more streamlined process – cannot be overstated.

These AI research tools aren’t just about convenience; they are transforming the way we conduct and comprehend research.

They liberate researchers from the clutches of tedium and overwhelm, allowing for more space for deep exploration, innovative thinking, and in-depth comprehension.

Whether you’re an experienced academic researcher or a student just starting out, these tools provide indispensable aid in your research journey.

And with a suite of free AI tools also available, there is no reason not to explore and embrace this AI revolution in academic research.

We are on the precipice of a new era of academic research, one where AI and human ingenuity work in tandem for richer, more profound scientific exploration. The future of research is here, and it is smart, efficient, and AI-powered.

Before we get too excited however, let us remember that AI tools are meant to be our assistants, not our masters. As we engage with these advanced technologies, let’s not lose sight of the human intellect, intuition, and imagination that form the heart of all meaningful research. Happy researching!

Thank you to Ivan Aguilar – Ph.D. Student at SFU (Simon Fraser University), for starting this list for me!

Dr Andrew Stapleton has a Masters and PhD in Chemistry from the UK and Australia. He has many years of research experience and has worked as a Postdoctoral Fellow and Associate at a number of universities. Although he had secured funding for his own research, he left academia to help others through his YouTube channel, which is all about the inner workings of academia and how to make it work for you.

Thank you for visiting Academia Insider.

We are here to help you navigate academia as painlessly as possible. We are supported by our readers, and by visiting, you are helping us earn a small amount through ads and affiliate revenue. Thank you!

2024 © Academia Insider

AI Research Tools

ChatDOC lets you chat with PDFs and tables to quickly extract information and insights. Sources are cited for fact-checking, this is a ChatGPT based file-reading

ChatPDF allows you to talk to your PDF documents as if they were human. It’s perfect for quickly extracting information or answering questions from large

Genei is a research tool that automates the process of summarizing background reading and can also generate blogs, articles, and reports. It allows you to