Search code, repositories, users, issues, pull requests...

Provide feedback.

We read every piece of feedback, and take your input very seriously.

Saved searches

Use saved searches to filter your results more quickly.

To see all available qualifiers, see our documentation .

- Notifications

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement . We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

"FailedHealthCheck: Data generation extremely slow" when using strategies without filtering #2195

SethMMorton commented Nov 8, 2019

DRMacIver commented Nov 8, 2019

Sorry, something went wrong.

SethMMorton commented Nov 8, 2019 • edited

SethMMorton commented Nov 9, 2019

Zac-HD commented Nov 9, 2019

Drmaciver commented nov 9, 2019.

- 👍 1 reaction

SethMMorton commented Nov 10, 2019

Zac-hd commented nov 17, 2019.

DRMacIver commented Nov 22, 2019

Successfully merging a pull request may close this issue.

Hypothesis Generation and Interpretation pp 33–69 Cite as

- Hiroshi Ishikawa 3

- First Online: 01 January 2024

129 Accesses

Part of the book series: Studies in Big Data ((SBD,volume 139))

This chapter will explain the definition and properties of a hypothesis, the related concepts, and basic methods of hypothesis generation as follows.

Describe the definition, properties, and life cycle of a hypothesis.

Describe relationships between a hypothesis and a theory, a model, and data.

Categorize and explain research questions that provide hints for hypothesis generation.

Explain how to visualize data and analysis results.

Explain the philosophy of science and scientific methods in relation to hypothesis generation in science.

Explain deduction, induction, plausible reasoning, and analogy concretely as reasoning methods useful for hypothesis generation.

Explain problem solving as hypothesis generation methods by using familiar examples.

This is a preview of subscription content, log in via an institution .

Buying options

- Available as PDF

- Read on any device

- Instant download

- Own it forever

- Available as EPUB and PDF

- Durable hardcover edition

- Dispatched in 3 to 5 business days

- Free shipping worldwide - see info

Tax calculation will be finalised at checkout

Purchases are for personal use only

Aufmann RN, Lockwood JS et al (2018) Mathematical excursions. CENGAGE

Google Scholar

Bortolotti L (2008) An introduction to the philosophy of science. Polity

Cairo A (2016) The truthful art: data, charts, and maps for communication. New Riders

Cellucci C (2017) Rethinking knowledge: the heuristic view. Springer

Chang M (2014) Principles of scientific methods. CRC Press

Crease RP (2010) The great equations: breakthroughs in science from Pythagoras to Heisenberg. W. W. Norton & Company

Danks D, Ippoliti E (eds) Building theories: Heuristics and hypotheses in sciences. Springer

Diggle PJ, Chetwynd AG (2011) Statistics and scientific method: an introduction for students and researchers. Oxford University Press

DOAJ (2022) Directory of open access journal. https://doaj.org/ Accessed 2022

Gilchrist P, Wheaton B (2011) Lifestyle sport, public policy and youth engagement: examining the emergence of Parkour. Int J Sport Policy Polit 3(1):109–131. https://doi.org/10.1080/19406940.2010.547866

Article Google Scholar

Google Maps. https://www.google.com/maps Accessed 2022.

Ishikawa H (2015) Social big data mining. CRC Press

Järvinen P (2008) Mapping research questions to research methods. In: Avison D, Kasper GM, Pernici B, Ramos I, Roode D (eds) Advances in information systems research, education and practice. Proceedings of IFIP 20th world computer congress, TC 8, information systems, vol 274. Springer. https://doi.org/10.1007/978-0-387-09682-7-9_3

JAXA (2022) Martian moons eXploration. http://www.mmx.jaxa.jp/en/ . Accessed 2022

Lewton T (2020) How the bits of quantum gravity can buzz. Quanta Magazine. 2020. https://www.quantamagazine.org/gravitons-revealed-in-the-noise-of-gravitational-waves-20200723/ . Accessed 2022

Mahajan S (2014) The art of insight in science and engineering: Mastering complexity. The MIT Press

Méndez A, Rivera–Valentín EG (2017) The equilibrium temperature of planets in elliptical orbits. Astrophys J Lett 837(1)

NASA (2022) Mars sample return. https://www.jpl.nasa.gov/missions/mars-sample-return-msr Accessed 2022

OpenStreetMap (2022). https://www.openstreetmap.org . Accessed 2022

Pólya G (2009) Mathematics and plausible reasoning: vol I: induction and analogy in mathematics. Ishi Press

Pólya G, Conway JH (2014) How to solve it. Princeton University Press

Rehm J (2019) The four fundamental forces of nature. Live science https://www.livescience.com/the-fundamental-forces-of-nature.html

Sadler-Smith E (2015) Wallas’ four-stage model of the creative process: more than meets the eye? Creat Res J 27(4):342–352. https://doi.org/10.1080/10400419.2015.1087277

Siegel E, This is why physicists think string theory might be our ‘theory of everything.’ Forbes, 2018. https://www.forbes.com/sites/startswithabang/2018/05/31/this-is-why-physicists-think-string-theory-might-be-our-theory-of-everything/?sh=b01d79758c25

Zeitz P (2006) The art and craft of problem solving. Wiley

Download references

Author information

Authors and affiliations.

Department of Systems Design, Tokyo Metropolitan University, Hino, Tokyo, Japan

Hiroshi Ishikawa

You can also search for this author in PubMed Google Scholar

Corresponding author

Correspondence to Hiroshi Ishikawa .

Rights and permissions

Reprints and permissions

Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this chapter

Cite this chapter.

Ishikawa, H. (2024). Hypothesis. In: Hypothesis Generation and Interpretation. Studies in Big Data, vol 139. Springer, Cham. https://doi.org/10.1007/978-3-031-43540-9_2

Download citation

DOI : https://doi.org/10.1007/978-3-031-43540-9_2

Published : 01 January 2024

Publisher Name : Springer, Cham

Print ISBN : 978-3-031-43539-3

Online ISBN : 978-3-031-43540-9

eBook Packages : Computer Science Computer Science (R0)

Share this chapter

Anyone you share the following link with will be able to read this content:

Sorry, a shareable link is not currently available for this article.

Provided by the Springer Nature SharedIt content-sharing initiative

- Publish with us

Policies and ethics

- Find a journal

- Track your research

Test faster, fix more

Generating the right data

One thing that often causes people problems is figuring out how to generate the right data to fit their data model. You can start with just generating strings and integers, but eventually you want to be able to generate objects from your domain model. Hypothesis provides a lot of tools to help you build the data you want, but sometimes the choice can be a bit overwhelming.

Here’s a worked example to walk you through some of the details and help you get to grips with how to use them.

Suppose we have the following class:

A project has a name, a start date, and an end date.

How do we generate such a thing?

The idea is to break the problem down into parts, and then use the tools Hypothesis provides to assemble those parts into a strategy for generating our projects.

We’ll start by generating the data we need for each field, and then at the end we’ll see how to put it all together to generate a Project.

First we need to generate a name. We’ll use Hypothesis’s standard text strategy for that:

Lets customize this a bit: First off, lets say project names have to be non-empty.

Now, lets avoid the high end unicode for now (of course, your system should handle the full range of unicode, but this is just an example, right?).

To do this we need to pass an alphabet to the text strategy. This can either be a range of characters or another strategy. We’re going to use the characters strategy, which gives you a flexible way of describing a strategy for single-character text strings, to do that.

The max and min codepoint parameters do what you’d expect: They limit the range of permissible codepoints. We’ve blocked off the 0 codepoint (it’s not really useful and tends to just cause trouble with C libraries) and anything with a codepoint above 1000 - so we’re considering non-ASCII characters but nothing really high end.

The blacklist_categories parameter uses the notion of unicode category to limit the range of acceptable characters. If you want to see what category a character has you can use Python’s unicodedata module to find out:

The categories we’ve excluded are control characters and surrogates . Surrogates are excluded by default but when you explicitly pass in blacklist categories you need to exclude them yourself.

So we can put that together with text() to get a name matching our requirements:

But this is still not quite right: We’ve allowed spaces in names, but we don’t really want a name to start with or end with a space. You can see that this is currently allowed by asking Hypothesis for a more specific example:

So lets fix it so that they can’t by stripping the spaces off it.

To do this we’re going to use the strategy’s map method which lets you compose it with an arbitrary function to post-process the results into the for you want:

Now lets check that we can no longer have the above problem:

The problem is that our initial test worked because the strings we were generating were always non-empty because of the min_size parameter. We’re still only generating non-empty strings, but if we generate a string which is all spaces then strip it, the result will be empty after our map.

We can fix this using the strategy’s filter function, which restricts to only generating things which satisfy some condition:

And repeating the check:

Hypothesis raises NoSuchExample to indicate that… well, that there’s no such example.

In general you should be a little bit careful with filter and only use it to filter to conditions that are relatively hard to happen by accident. In this case it’s fine because the filter condition only fails if our initial draw was a string consisting entirely of spaces, but if we’d e.g. tried the opposite and tried to filter to strings that only had spaces, we’d have had a bad time of it and got a very slow and not very useful test.

Anyway, we now really do have a strategy that produces decent names for our projects. Lets put this all together into a test that demonstrates that our names now have the desired properties:

It’s not common practice to write tests for your strategies, but it can be helpful when trying to figure things out.

Dates and times

Hypothesis has date and time generation in a hypothesis.extra subpackage because it relies on pytz to generate them, but other than that it works in exactly the same way as before:

Lets constrain our dates to be UTC, because the sensible thing to do is to use UTC internally and convert on display to the user:

We can also constrain our projects to start in a reasonable range of years, as by default Hypothesis will cover the whole of representable history:

Again we can put together a test that checks this behaviour (though we have less code here so it’s less useful):

Putting it all together

We can now generate all the parts for our project definitions, but how do we generate a project?

The first thing to reach for is the builds function.

builds lets you take a set of strategies and feed their results as arguments to a function (or, in this case, class. Anything callable really) to create a new strategy that works by drawing those arguments then passing them to the function to give you that example.

Unfortunately, this isn’t quite right:

Projects can start after they end when we use builds this way. One way to fix this would be to use filter():

This will work, but it starts to edge into the territory of where filter should be avoided - about half of the initially generated examples will fail the filter.

What we’ll do instead is draw two dates and use whichever one is smallest as the start, and whatever is largest at the end. This is hard to do with builds because of the dependence between the arguments, so instead we’ll use builds’ more advanced cousin, composite :

The idea of composite is you get passed a magic first argument ‘draw’ that you can use to get examples out of a strategy. You then make as many draws as you want and use these to return the desired data.

You can also use the assume function to discard the current call if you get yourself into a state where you can’t proceed or where it’s easier to start again. In this case we do that when we draw the same data twice.

Note that in all of our examples we’re now writing projects() instead of projects. That’s because composite returns a function rather than a strategy. Any arguments to your defining function other than the first are also arguments to the one produced by composite.

We can now put together one final test that we got this bit right too:

Wrapping up

There’s a lot more to Hypothesis’s data generation than this, but hopefully it gives you a flavour of the sort of things to try and the sort of things that are possible.

It’s worth having a read of the documentation for this, and if you’re still stuck then try asking the community for some help. We’re pretty friendly.

Thank you for visiting nature.com. You are using a browser version with limited support for CSS. To obtain the best experience, we recommend you use a more up to date browser (or turn off compatibility mode in Internet Explorer). In the meantime, to ensure continued support, we are displaying the site without styles and JavaScript.

- View all journals

- Explore content

- About the journal

- Publish with us

- Sign up for alerts

- Perspective

- Published: 10 January 2012

Machine learning and data mining: strategies for hypothesis generation

- M A Oquendo 1 ,

- E Baca-Garcia 1 , 2 ,

- A Artés-Rodríguez 3 ,

- F Perez-Cruz 3 , 4 ,

- H C Galfalvy 1 ,

- H Blasco-Fontecilla 2 ,

- D Madigan 5 &

- N Duan 1 , 6

Molecular Psychiatry volume 17 , pages 956–959 ( 2012 ) Cite this article

5417 Accesses

60 Citations

6 Altmetric

Metrics details

- Data mining

- Machine learning

- Neurological models

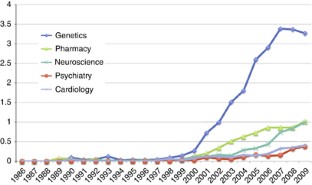

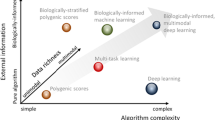

Strategies for generating knowledge in medicine have included observation of associations in clinical or research settings and more recently, development of pathophysiological models based on molecular biology. Although critically important, they limit hypothesis generation to an incremental pace. Machine learning and data mining are alternative approaches to identifying new vistas to pursue, as is already evident in the literature. In concert with these analytic strategies, novel approaches to data collection can enhance the hypothesis pipeline as well. In data farming, data are obtained in an ‘organic’ way, in the sense that it is entered by patients themselves and available for harvesting. In contrast, in evidence farming (EF), it is the provider who enters medical data about individual patients. EF differs from regular electronic medical record systems because frontline providers can use it to learn from their own past experience. In addition to the possibility of generating large databases with farming approaches, it is likely that we can further harness the power of large data sets collected using either farming or more standard techniques through implementation of data-mining and machine-learning strategies. Exploiting large databases to develop new hypotheses regarding neurobiological and genetic underpinnings of psychiatric illness is useful in itself, but also affords the opportunity to identify novel mechanisms to be targeted in drug discovery and development.

This is a preview of subscription content, access via your institution

Access options

Subscribe to this journal

Receive 12 print issues and online access

251,40 € per year

only 20,95 € per issue

Buy this article

- Purchase on Springer Link

- Instant access to full article PDF

Prices may be subject to local taxes which are calculated during checkout

Similar content being viewed by others

A primer on the use of machine learning to distil knowledge from data in biological psychiatry

Thomas P. Quinn, Jonathan L. Hess, … on behalf of the Machine Learning in Psychiatry (MLPsych) Consortium

Axes of a revolution: challenges and promises of big data in healthcare

Smadar Shilo, Hagai Rossman & Eran Segal

Deep learning for small and big data in psychiatry

Georgia Koppe, Andreas Meyer-Lindenberg & Daniel Durstewitz

Carlsson A . A paradigm shift in brain research. Science 2001; 294 : 1021–1024.

Article CAS Google Scholar

Mitchell TM . The Discipline of Machine Learning . School of Computer Science: Pittsburgh, PA, 2006. Available from: http://aaai.org/AITopics/MachineLearning .

Google Scholar

Nilsson NJ . Introduction to Machine Learning. An early draft of a proposed textbook . Robotics Laboratory, Department of Computer Science, Stanford University: Stanford, 1996. Available from: http://robotics.stanford.edu/people/nilsson/mlbook.html .

Hand DJ . Mining medical data. Stat Methods Med Res 2000; 9 : 305–307.

PubMed CAS Google Scholar

Smyth P . Data mining: data analysis on a grand scale? Stat Methods Med Res 2000; 9 : 309–327.

Burgun A, Bodenreider O . Accessing and integrating data and knowledge for biomedical research. Yearb Med Inform 2008; 47 (Suppl 1): 91–101.

Hochberg AM, Hauben M, Pearson RK, O’Hara DJ, Reisinger SJ, Goldsmith DI et al . An evaluation of three signal-detection algorithms using a highly inclusive reference event database. Drug Saf 2009; 32 : 509–525.

Article Google Scholar

Sanz EJ, De-las-Cuevas C, Kiuru A, Bate A, Edwards R . Selective serotonin reuptake inhibitors in pregnant women and neonatal withdrawal syndrome: a database analysis. Lancet 2005; 365 : 482–487.

Baca-Garcia E, Perez-Rodriguez MM, Basurte-Villamor I, Saiz-Ruiz J, Leiva-Murillo JM, de Prado-Cumplido M et al . Using data mining to explore complex clinical decisions: A study of hospitalization after a suicide attempt. J Clin Psychiatry 2006; 67 : 1124–1132.

Ray S, Britschgi M, Herbert C, Takeda-Uchimura Y, Boxer A, Blennow K et al . Classification and prediction of clinical Alzheimer's diagnosis based on plasma signaling proteins. Nat Med 2007; 13 : 1359–1362.

Baca-Garcia E, Perez-Rodriguez MM, Basurte-Villamor I, Lopez-Castroman J, Fernandez del Moral AL, Jimenez-Arriero MA et al . Diagnostic stability and evolution of bipolar disorder in clinical practice: a prospective cohort study. Acta Psychiatr Scand 2007; 115 : 473–480.

Baca-Garcia E, Vaquero-Lorenzo C, Perez-Rodriguez MM, Gratacos M, Bayes M, Santiago-Mozos R et al . Nucleotide variation in central nervous system genes among male suicide attempters. Am J Med Genet B Neuropsychiatr Genet 2010; 153B : 208–213.

Sun D, van Erp TG, Thompson PM, Bearden CE, Daley M, Kushan L et al . Elucidating a magnetic resonance imaging-based neuroanatomic biomarker for psychosis: classification analysis using probabilistic brain atlas and machine learning algorithms. Biol Psychiatry 2009; 66 : 1055–1060.

Shen H, Wang L, Liu Y, Hu D . Discriminative analysis of resting-state functional connectivity patterns of schizophrenia using low dimensional embedding of fMRI. Neuroimage 2010; 49 : 3110–3121.

Hay MC, Weisner TS, Subramanian S, Duan N, Niedzinski EJ, Kravitz RL . Harnessing experience: exploring the gap between evidence-based medicine and clinical practice. J Eval Clin Pract 2008; 14 : 707–713.

Unutzer J, Choi Y, Cook IA, Oishi S . A web-based data management system to improve care for depression in a multicenter clinical trial. Psychiatr Serv 2002; 53 : 671–673.

Download references

Acknowledgements

Dr Blasco-Fontecilla acknowledges the Spanish Ministry of Health (Rio Hortega CM08/00170), Alicia Koplowitz Foundation, and Conchita Rabago Foundation for funding his post-doctoral rotation at CHRU, Montpellier, France. SAF2010-21849.

Author information

Authors and affiliations.

Department of Psychiatry, New York State Psychiatric Institute and Columbia University, New York, NY, USA

M A Oquendo, E Baca-Garcia, H C Galfalvy & N Duan

Fundacion Jimenez Diaz and Universidad Autonoma, CIBERSAM, Madrid, Spain

E Baca-Garcia & H Blasco-Fontecilla

Department of Signal Theory and Communications, Universidad Carlos III de Madrid, Madrid, Spain

A Artés-Rodríguez & F Perez-Cruz

Princeton University, Princeton, NJ, USA

F Perez-Cruz

Department of Statistics, Columbia University, New York, NY, USA

Department of Biostatistics, Columbia University, New York, NY, USA

You can also search for this author in PubMed Google Scholar

Corresponding author

Correspondence to M A Oquendo .

Ethics declarations

Competing interests.

Dr Oquendo has received unrestricted educational grants and/or lecture fees form Astra-Zeneca, Bristol Myers Squibb, Eli Lilly, Janssen, Otsuko, Pfizer, Sanofi-Aventis and Shire. Her family owns stock in Bistol Myers Squibb. The remaining authors declare no conflict of interest.

PowerPoint slides

Powerpoint slide for fig. 1, rights and permissions.

Reprints and permissions

About this article

Cite this article.

Oquendo, M., Baca-Garcia, E., Artés-Rodríguez, A. et al. Machine learning and data mining: strategies for hypothesis generation. Mol Psychiatry 17 , 956–959 (2012). https://doi.org/10.1038/mp.2011.173

Download citation

Received : 15 July 2011

Revised : 20 October 2011

Accepted : 21 November 2011

Published : 10 January 2012

Issue Date : October 2012

DOI : https://doi.org/10.1038/mp.2011.173

Share this article

Anyone you share the following link with will be able to read this content:

Sorry, a shareable link is not currently available for this article.

Provided by the Springer Nature SharedIt content-sharing initiative

- data farming

- inductive reasoning

This article is cited by

Applications of artificial intelligence−machine learning for detection of stress: a critical overview.

- Alexios-Fotios A. Mentis

- Donghoon Lee

- Panos Roussos

Molecular Psychiatry (2023)

Optimizing prediction of response to antidepressant medications using machine learning and integrated genetic, clinical, and demographic data

- Dekel Taliaz

- Amit Spinrad

- Bernard Lerer

Translational Psychiatry (2021)

Computational psychiatry: a report from the 2017 NIMH workshop on opportunities and challenges

- Michele Ferrante

- A. David Redish

- Joshua A. Gordon

Molecular Psychiatry (2019)

The role of machine learning in neuroimaging for drug discovery and development

- Orla M. Doyle

- Mitul A. Mehta

- Michael J. Brammer

Psychopharmacology (2015)

Stabilized sparse ordinal regression for medical risk stratification

- Truyen Tran

- Svetha Venkatesh

Knowledge and Information Systems (2015)

Quick links

- Explore articles by subject

- Guide to authors

- Editorial policies

Hypothesis generation for data science projects

This article was published as part of the Data Science Blogathon .

Introduction

The first step toward problem solving in data science projects is not about building machine learning models.. Yes, you read it right!

That distinction belongs to the generation of hypotheses, the step where our problem solving skills are combined with our business intuition. It is a truly crucial step in ensuring a successful data science project.

Let's be honest: we all think of a hypothesis almost every day. Consider the example of a famous sport in India: cricket. It's that time of year when IPL fever is high and we're all engrossed in predicting the winner..

If you've been guessing which team would win based on various factors, such as the size of the stadium and the batters present in the team with six batting abilities or batters with high averages of T20, Congratulations to all. You have all been making an educated guess and generating hypotheses based on your domain knowledge of the sport..

In the same way, the first step in solving any business problem using machine learning is hypothesis generation. Understanding the problem statement with a good knowledge of the domain is important and formulating a hypothesis will further expose you to new problem-solving ideas..

Then, in this article, Let's dive into what hypothesis generation is and find out why it matters to all data scientists.

Table of Contents

What is hypothesis generation.

- Hypothesis generation vs hypothesis testing

How does hypothesis generation help?

When should hypothesis generation.

- Case study: Generating hypotheses with predicting the length of the New York City taxi ride

Hypothesis The generation is one “assumption” informed of various factors that are affecting the business problem that needs to be solved by machine learning. When formulating a hypothesis, the data scientist should not know the result of the hypothesis that has been generated based on any evidence.

"A hypothesis can be defined simply as an assumption. A scientific hypothesis is an intelligent assumption “. – Isaac asimov

Hypothesis generation is a crucial step in any data science project. If you skip this or review it, the probability that the project will fail increases exponentially.

Hypothesis generation vs. Hypothesis testing

This is a very common mistake that data science beginners make.

Hypothesis generation is a process that begins with an educated guess, whereas hypothesis testing is a process of concluding that the grounded assumption is true / false or that the relationship between the variables is statistically significant or not.

This last part could be used for future research using statistical tests. A hypothesis is accepted or rejected based on the level of significance and the score of the test used to test the hypothesis.

To understand more about hypothesis testing in detail, you can read it here or you can also learn it through this course .

Here there is 5 key reasons hypothesis generation is so important in data science:

- Hypothesis generation helps to understand the business problem as we delve deeper into the inference of the various factors that affect our target variable..

- You will get a much better idea of what are the main factors that are responsible for solving the problem.

- Data to be collected from various sources that are key to turning your business problem into a data science based problem

- Improve your domain awareness if you are new to the domain as you spend time understanding the issue

- Helps to approach the problem in a structured way

The million dollar question: At what time in the world should you perform hypothesis generation?

- Hypothesis generation should be done before looking at the dataset or data collection.

- You will notice that if you have generated your hypothesis properly, would have included all the variables present in the data set in the generation of its hypothesis.

- It may also have included variables that are not present in the dataset

Case study: generation of hypotheses about “predicting the length of the taxi ride in New York City”

Let's see now the “ NEW YORK CITY TAXI TRIP DURATION FORECAST “ statement of the problem and generate some hypotheses that would affect the duration of our taxi trip to understand the generation of hypotheses.

Here is the problem statement:

Predict the duration of a trip so that the company can assign the taxis that are left free for the next trip. This will help reduce customer waiting time and also help gain customer trust..

Let's start!

Hypothesis generation based on various factors

1. distance-based functions / speed.

Let's try to come up with a formula that is related to the duration of the trip and helps us generate several hypotheses for the problem:

TIME = DISTANCE / SPEED

Distance and speed play an important role in predicting trip duration.

We can notice that the duration of the trip is directly proportional to the distance traveled and inversely proportional to the speed of the taxi. With this we can arrive at a hypothesis based on distance and speed.

- Distance : The greater the distance traveled by the taxi, the longer the duration of the trip.

- Inner drop point : Disembarkation points in congested or inland lanes could result in an increase in the duration of the trip.

- Speed: At higher speed, shorter trip duration

2. Car-based functions

Cars are of various types, sizes and brands, and these characteristics of the car could be vital for travel not only for the safety of the passengers but also for the duration of the trip. Let's now generate some hypotheses based on the characteristics of the car.

- Condition of the ac r : Well-conditioned cars are unlikely to have breakdown problems and could have a shorter trip duration

- Car size : Small cars (Hatchback) may have a shorter trip duration and larger size cars (XUV) may have a longer travel time depending on the size of the car and congestion in the city.

3. Trip type

Travel types may be different depending on the travel providers: it may be a trip in a remote station, individual or group trips. Now let's define a hypothesis based on the type of trip used.

- Pool car : Bundled trips can increase the duration of the trip, since the car reaches several places before reaching the assigned destination.

4. Functions based on controller details

A driver is an important person when it comes to travel time. Various factors about the driver can help to understand the reason behind the duration of the trip and here are some hypotheses about it.

- Driver's age : Older drivers could be more careful and contribute to a longer journey time.

- Gender : Female drivers are likely to drive slowly and this could contribute to a longer journey time.

- Driver experience : Drivers with less driving experience can lead to a longer trip duration.

- Medical condition : Drivers with a medical condition can contribute to a longer trip duration.

5. Passenger data

Passengers can influence the duration of the trip knowingly or unknowingly. As usual, we come across passengers asking drivers to speed up as they are getting late and there could be other hypothesis factors that we can consider.

- Age of passengers : Older people as passengers can contribute to a longer trip duration, as drivers tend to slow down on trips involving older people.

- Medical conditions or pregnancy: Passengers with medical conditions contribute to a longer trip duration.

- Emergency: Passengers with an emergency could help reduce travel time.

- Passenger counting : Higher passenger numbers lead to shorter trips due to seat congestion

6. Date and time functions

The day and time of the week are important, as New York is a busy city and could be very congested during office hours or on weekdays. Let's now generate some hypotheses about the characteristics based on date and time.

Pick up day :

- Weekends could contribute to more trips in remote stations and could have a longer trip duration.

- Weekdays tend to have a longer travel time due to high traffic.

- If the pick-up day is a holiday, the duration of the trip may be shorter.

- If the pick-up day falls on a holiday week, travel time could be shorter due to less traffic.

- Early morning trips have a shorter trip duration due to less traffic

- Night trips have a longer trip duration due to rush hours

7. Road-based features

The roads are of different types and the condition of the road or the obstructions in the road are factors that cannot be ignored. Let's form some hypotheses based on these factors.

- State of the road : The duration of the trip is longer if the condition of the road is bad

- Road type : Trips on concrete roads tend to have a shorter trip duration.

- Strike on the road: Strikes carried out on the roads in the direction of travel increase the duration of the journey

8. Weather-based features

The weather can change at any time and could possibly affect the trip if the weather worsens. Therefore, this is an important feature to consider in our hypothesis.

- Weather at the start of the trip : The rainy weather condition contributes to a longer trip duration

Final notes

- After writing our hypothesis and looking at the data set You will notice that you would have covered hypothesis writing about most of the features present in the dataset. There could also be the possibility that you have to work with fewer functions and the functions that you have hypothesized about are not being captured. / currently stored by the company and not available.

- Always go ahead and capture data from external sources if you think the data is relevant to your prediction. Not .: Get weather information

- It is also important to note that, since hypothesis generation is an estimated assumption, the hypothesis generated could turn out to be true or false once exploratory data analyzes and hypothesis tests are performed on the data.

I hope you have been able to get something of value out of this post. If there is something I missed or something inaccurate or if you have any comments, let me know in the comments. I would be greatly appreciated.

Related Posts:

- Classification Models of Traditional vs.Deep Learning

- Multiprocessing in Python | A Beginner's Guide to Multiprocessing in Python

- Building a convolutional neural network

- Projects to learn natural language processing

Recent posts

Artificial Intelligence in Video: How New Technologies Are Changing Video Production?

IT profiles you should consider

How to record a screen on Windows computer?

¿Do you know the seniority levels?

Find Your Best Slip Rings and Rotary Joints Here

Posittion Agency: Advantages of link building for an online store

Subscribe to our newsletter.

We will not send you SPAM mail. We hate it as much as you.

The data always to the Peak

- Submit your COVID-19 Pandemic Research

- Research Leap Manual on Academic Writing

- Conduct Your Survey Easily

- Research Tools for Primary and Secondary Research

- Useful and Reliable Article Sources for Researchers

- Tips on writing a Research Paper

- Stuck on Your Thesis Statement?

- Out of the Box

- How to Organize the Format of Your Writing

- Argumentative Versus Persuasive. Comparing the 2 Types of Academic Writing Styles

- Very Quick Academic Writing Tips and Advices

- Top 4 Quick Useful Tips for Your Introduction

- Have You Chosen the Right Topic for Your Research Paper?

- Follow These Easy 8 Steps to Write an Effective Paper

- 7 Errors in your thesis statement

- How do I even Write an Academic Paper?

- Useful Tips for Successful Academic Writing

Collaborative Governance in Government Administration in the Field of State Security Along the Republic of Indonesia (RI)-Malaysia Border Area

It service management system practices in kenya, introduction economic and psychological well-being during covid-19 pandemic in albania, a need for sustainability.

- Designing a Framework for Assessing Agripreneurship Action for the Green Scheme Irrigation Projects, Namibia

- The Potential Utilisation of Artificial Intelligence (AI) in Enterprises

- Case Study – Developing a National Research and Evidence Base for The Health and Wellbeing Chapter of The Welsh Government’s 2023 Innovation Strategy for Wales

- The Impacts of Technology Innovation on Customer Satisfaction, Employee and Leadership Commitment in CSR Practice

- Current Developments on the Chinese Real Estate Market

- Slide Share

Harnessing AI for Hypothesis Generation: Accelerate Discovery in Your Research Field

Artificial intelligence (AI) has been making waves in the scientific community , offering new opportunities for researchers to accelerate discovery and make groundbreaking discoveries. One of the most exciting applications of AI in research is hypothesis generation. By harnessing the power of AI, researchers can quickly generate and test hypotheses, accelerating the pace of discovery in their field. In this article, we will explore the benefits of AI hypothesis generation and how it can speed up discovery in your research field.

The Role of AI in Hypothesis Generation

Traditionally, hypothesis generation has been a slow and laborious process, requiring significant amounts of time and resources. Researchers must review large volumes of literature, analyze data, and consult with colleagues to generate potential hypotheses. However, with the advent of AI, this process has become much faster and more efficient.

AI-powered hypothesis generation tools use natural language processing algorithms to analyze large volumes of literature and data, identifying potential hypotheses and connections between different research areas. By analyzing patterns and relationships within the data, these tools can generate new and innovative hypotheses that might not have been identified through traditional means.

The Benefits of AI Hypothesis Generation

AI hypothesis generation offers a number of benefits to researchers looking to accelerate discovery in their field. Firstly, it can help to identify potential research areas that might have been overlooked. By analyzing data and literature from a wide range of sources, AI-powered tools can identify connections and patterns that might not have been apparent through traditional means. This can lead to the discovery of new research areas and the development of innovative hypotheses that can drive new discoveries.

Secondly, AI hypothesis generation can help to save time and resources. By automating the process of hypothesis generation, researchers can save significant amounts of time and resources that can be used for other aspects of their research. This can be particularly beneficial for researchers who are working with limited resources or who are under tight deadlines.

Finally, AI hypothesis generation can help to drive innovation and collaboration. By generating new and innovative hypotheses, researchers can attract the attention of colleagues and potential collaborators, fostering collaboration and driving the exchange of ideas across different fields. This can lead to the development of new research areas and the creation of innovative solutions to complex problems.

Challenges and Limitations

While AI hypothesis generation offers many benefits, there are also challenges and limitations that must be taken into account. Firstly, there is the issue of data quality. AI-powered tools are only as good as the data that is fed into them, and if the data is incomplete or of poor quality, the results may be inaccurate or misleading. Secondly, there is the issue of bias in AI algorithms. AI algorithms can be biased in a number of ways, such as through the selection of training data or the algorithms themselves. This can lead to the generation of biased hypotheses, which can be detrimental to research.

Finally, there is the issue of interpretability. AI algorithms can be highly complex, and it can be difficult for researchers to understand how they arrived at a particular hypothesis or conclusion. This can make it challenging to validate the results or to identify potential errors or biases.

AI hypothesis generation offers an exciting opportunity for researchers to accelerate discovery in their field. By automating the process of hypothesis generation and analyzing large volumes of data and literature, AI-powered tools can identify new and innovative hypotheses that might not have been identified through traditional means. However, there are also challenges and limitations that must be taken into account, such as data quality, bias, and interpretability. Despite these challenges, AI hypothesis generation is likely to play an increasingly important role in research in the future, driving innovation and enabling researchers to make groundbreaking discoveries.

Suggested Articles

The Role of Primary and Secondary Research Tools in Your Survey Try a New Method…

How to write a Research Paper fbq('track', 'ViewContent', { content_ids: 'PPT writing view', });

The purpose of academic writing is to make your work clear and understandable to whoever…

As a researcher, you want your work to make a difference. You want it to…

Related Posts

Comments are closed.

An official website of the United States government

The .gov means it’s official. Federal government websites often end in .gov or .mil. Before sharing sensitive information, make sure you’re on a federal government site.

The site is secure. The https:// ensures that you are connecting to the official website and that any information you provide is encrypted and transmitted securely.

- Publications

- Account settings

Preview improvements coming to the PMC website in October 2024. Learn More or Try it out now .

- Advanced Search

- Journal List

- Genome Biol

The data-hypothesis relationship

Teppo felin.

1 Saïd Business School, University of Oxford, Oxford, UK

Jan Koenderink

2 Department of Physics, Delft University of Technology, Delft, The Netherlands

3 Department of Experimental Psychology, University of Leuven, Leuven, Belgium

Joachim I. Krueger

4 Department of Cognitive, Linguistic and Psychological Sciences, Brown University, Providence, USA

Denis Noble

5 Department of Physiology, Anatomy and Genetics, University of Oxford, Oxford, UK

George F.R. Ellis

6 Department of Mathematics, University of Cape Town, Cape Town, South Africa

Every conscious cognitive process will show itself to be steeped in theories; full of hypotheses. Rupert Riedl [ 1 ]

In a provocative editorial, Yanai and Lercher (henceforth Y&L) claim that “a hypothesis is a liability” [ 2 ]. They contend that having a hypothesis is costly because it causes scientists to miss hidden data and interesting phenomena. Y&L advocate “hypothesis-free” data exploration, which they argue can yield significant scientific discoveries.

We disagree. While we concur that a bad hypothesis is a liability, there is no such thing as hypothesis-free data exploration. Observation and data are always hypothesis- or theory-laden. Data is meaningless without some form of hypothesis or theory. Any exploration of data, however informal, is necessarily guided by some form of expectations. Even informal hunches or conjectures are types of proto-hypothesis. Furthermore, seemingly hypothesis-free statistical tools and computational techniques also contain latent hypotheses and theories about what is important—what might be interesting, worth measuring or paying attention to. Thus, while Y&L argue that a “hypothesis is a liability,” we argue that hypothesis-free observation is not possible (nor desirable) and that hypotheses in fact are the primary engine of scientific creativity and discovery.

The hidden gorilla

To illustrate their point about how a hypothesis is a liability, Y&L present their own version of the famous gorilla experiment [ 3 ]. In their experiment, subjects receive some made-up data featuring three variables: the BMI of individuals, the number of steps taken on a particular day, and their gender. One experimental group received three hypotheses to consider, while the other was “hypothesis-free.” Subjects in this latter group were simply asked to address the question “what do you conclude from the dataset?”

The “catch” of Y&L’s experiment was that a visual plot of the data showed a waving gorilla. And the key finding was that subjects in the hypothesis-free group were five times more likely to see the gorilla, compared with subjects in the hypothesis-focused group. Y&L concluded from this that hypotheses blind us to hidden patterns and insights in the data. Perhaps ironically, Y&L come to this conclusion based on their own hypothesis about the dangers of hypotheses.

But how exactly does missing the gorilla generalize to Y&L’s point about a hypothesis being a liability in scientific discovery? They argue that missing the gorilla is a problem, even though it is hard to see how finding an irrelevant gorilla mimics making a scientific insight. Now, we understand the gorilla is used as a metaphor for missing surprising or hidden things in science. But a meteorologist missing a cloud that looks like a gorilla is roughly equivalent to what Y&L are doing. A gorilla-shaped cloud has no scientific interest to the meteorologist, just as the gorilla-shaped data is irrelevant to Y&L’s context (the health data with three variables: BMI, steps taken and gender). Furthermore, the gorilla example does not generalize to scientific discovery because a gorilla is something that is universally recognized, while scientific discovery is essentially about finding new data, establishing new facts and relationships. New insights and scientific discoveries do not somehow “pop out” like the gorilla does once one plots the raw data. Hypotheses are needed. Thus, there is a mismatch between the experiment and what Y&L are claiming, on a number of levels.

Y&L import some of these problems from the original gorilla study [ 4 ]. The most serious concern is that various versions of the gorilla study can be seen as a form of attentional misdirection, similar to what is practiced by magicians. Experimental tasks are artificially constructed and designed to prove a specific hypothesis: that people are blind and miss large objects in their visual scenes. Experimenters first hide something in the visual scene, then distract their subjects with other tasks (whether counting basketball passes or asking them to analyze specific hypotheses), and then, voilà, reveal to them what they have missed. The problem is that—whether in science or in everyday life—an indefinite number of things remain undetected when we interact with data or visual scenes. It is not obvious what an apple falling means, without the right question, hypothesis, or theory. Visual scenes and data teem with possibilities, uses and meanings. Of course, the excitement generated by these studies comes from the fact that something so large and surprising—like a gorilla—goes undetected, even though it should be plainly obvious.

But there are deeper issues here. Reductionist forms of science assume that cues and data (somehow) jump out and tell us why they are relevant and important, based on the characteristics of the data itself (the physical properties of the world). In vision science, this assumption is based on research in psychophysics (and inverse optics and ideal observer theory) that focuses on salience as a function of cue or stimulus characteristics. From this perspective, cues and stimuli become data, information, and evidence due to their inherent nature [ 5 ].

To illustrate the problem with this, consider two stimulus or cue characteristics that are important to various versions of the gorilla study—and central to psychophysics and the cognitive sciences more generally—namely “size” and “surprisingness” [ 6 ]. The idea in psychophysics is that these characteristics should make cues salient. For example, researchers embedded an image of a gorilla in the CT scan images of patients’ lungs. They then asked expert radiologists to look for nodules as part of lung-cancer screening. Eighty-three percent of the radiologists missed the gorilla embedded in the image, despite the fact that the gorilla was 48 times the size of the nodules they were looking for [ 7 ].

But if radiologists or experimental subjects were asked to, say, “look for something unusual” or to “see if you can find the animal,” they would presumably find the gorilla. Thus, visual awareness or recognition has little to do with size or surprisingness. It has more to do with the question posed by the experimenter or the expectations of experimental subjects. In fact, experimental subjects themselves might suspect that the study actually is not about counting basketball passes or about analyzing health data or finding cancerous nodules in lungs. If subjects think that they are being tricked by experimenters—as is often the case—they might ignore the distracting tasks and priming questions and look for and find the gorilla. Note, again, that the a priori hypothesis of experimenters themselves is that people are blind, and so the experiments themselves are designed to prove this point. Alert subjects might suspect that they are being purposefully distracted and thus try to guess what they are meant to look for and find it.

The key point here is that the “transformation” of raw cues or data to information and evidence is not a straightforward process. It requires some form of hypothesis. Cues and data do not automatically tell us what they mean, whether or why they are relevant, or for which hypothesis they might provide evidence. Size is relevant in some situations, but not in others. Cues and data only become information and evidence in response to the questions and queries that we are asking.

Fishing expeditions require a net

One alternative to having a hypothesis, Y&L argue, is hypothesis- free exploration of data or what they call fishing expeditions. Of course, the idea of engaging in a fishing expedition—as Y&L recognize—has highly negative connotations, suggesting haphazard, unscientific, and perhaps even unethical practices. But they make a valid point: more exploratory and imaginative practices are important in science.

But fishing expeditions are hardly hypothesis-free. That is, fishing expeditions—to extend Y&L’s metaphor—require a net or some type of device for catching fish. Data and insights (just like fish) do not jump out and declare their relevance, meaning, or importance. As put by physical chemist Michael Polanyi, “things are not labelled ‘evidence’ in nature” [ 8 ]. The relevant data needs to be identified and lured in some fashion. Even the most exploratory process in science features choices and assumptions about what will count as data and evidence and what should be measured (and how). Any look at data—however preliminary it might be— necessarily represents some form of proto-hypothesis: a latent expectation, question, or even guess about what might be lurking, about what might potentially be interesting or relevant and how it might be caught.

In short, there’s no systematic way to extract and identify anything hidden without at least some rough idea of what one is looking for. The tools and devices scientists use are the net, sieve, or filter for capturing relevance and meaning. These nets come in vastly different materials and textures, sizes, types of weights, and anchors. Choices also need to be made about where to cast these nets. There are various ways to use and deploy them (trolling, longline, and so forth). Each choice implies a hypothesis. The choice of fishing net implies a hypothesis about what one is looking for and about what one might expect to catch and see as relevant [ 9 ].

Now, it might seem like we are stretching the definition of a hypothesis by including expectations, conjectures, and even the statistical and computational tools that are used to generate insights. But we think it is important to recognize that any tool—whether cognitive, computational, or statistical—functions like a net, as it already embodies implicit hypotheses about what matters and what does not. Perhaps these are not full-fledged, formal hypotheses in the sense that Y&L discuss. But they certainly are proto-hypotheses that direct awareness and attention toward what should be measured and what counts as data and evidence. A hypothesis is some form of expectation or question about what one is looking for and about what one expects to find. The identification and collection of data necessarily is of the same form, as one cannot collect all data about what is going on in the world at a specific time: flu patterns in China, weather patterns in the Pacific, sunspot cycles, the state of the New York stock exchange, earthquakes in Tahiti, and so on. Science is about making decisions about what subset of all this “stuff” should be focused on and included in the analysis.

Y&L specifically emphasize correlations and the generation of various statistical patterns as a way to make hypothesis-free discoveries in data. Correlations are one form of “net” for looking at data. But correlations are ubiquitous and their strength tells us little [ 10 ]. One needs a hypothesis to arbitrate between which correlation might be worth investigating and which not. The genome-wide associational studies have pointed this out. With the exception of the usual outliers (rare genetic diseases), the association levels are relatively small. More data may offer more stable statistical estimates, but it will not achieve the identification of causality required for a physiological explanation. On the contrary, the extremely low association data can be hiding substantial causality or perhaps more complex or interconnected, omnigenic factors are at play in the genome [ 11 ]. A causal hypothesis, tested rigorously with quantitative modeling, can reveal the potential pathways for understanding genetic variation, epigenetic factors, and disease or traits [ 12 ].

Science: bottom-up versus top-down

Y&L argue that scientific discoveries are “undiscoverable without data.” While this is correct in principle, Y&L mis-specify the data-hypothesis relationship by privileging the role the data to the detriment of hypothesis and theory. They ignore the temporal primacy of theory and hypothesis. A hypothesis tells us what data to look for. Data emerges and becomes evidence in response to a hypothesis. In physics, for example, the existence of gravitational waves had long been hypothesized. The hypothesis guided scientists to look for this data. This specifically led to the invention and construction of exquisitely sensitive devices to detect and measure gravitational radiation (e.g., LIGO and VIRGO observations). Eventually, in 2015, gravitational waves were discovered. The data emerged because of the conceptualization, design, and construction of relevant devices for measurement. The data was manifest due to the hypothesis rather than the other way around. And the data analysis itself is theory-based [ 13 ]: it depends on templates of waves expected from the gravitational coalescence of black holes or neutron stars.

Einstein aptly captured the relationship between hypotheses and data when noting that “whether you can observe a thing or not depends on the theory which you use. It is the theory which decides what can be observed.” Einstein’s point might be illustrated by the so-called DIKW hierarchy (Fig. 1 ) [ 14 ]. Currently popular data-first approaches assume that scientific understanding is built from the bottom-up. But to the contrary, many of the greatest insights have come “top-down,” where scientists start with theories and hypotheses that guide them to identify the right data and evidence. One of the most profound ways this happens is when scientists query fundamental assumptions that are taken for granted, such as that species are fixed for all time, or that simultaneity is independent of the state of motion. This questioning of axiomatic assumptions drives the creation of transformational theories (the theory of evolution, special relativity) and the subsequent collection of associated data that tests such profound reshaping of the foundations.

The DIKW “hierarchy” is often seen as “bottom-up.” But, as we argue, top-down mechanisms play a critical role in discovering data, relevance, and meaning

There certainly are significant reciprocal influences between these “levels” of the hierarchy. But Y&L’s central argument that a “hypothesis is a liability” simply does not recognize the profound, top-down influence played by hypotheses and theories in science, and how these enable the identification and generation of data.

Our concern is that starting at the bottom—as suggested by Y&L’s notion of hypothesis-free exploration of data—will inadvertently lead to an overly descriptive science: what Ernest Rutherford called “stamp collecting.” Charles Darwin anticipated this problem when he wrote to a friend:

It made me laugh to read of [Edwin Lankester’s] advice or rather regret that I had not published facts alone . How profoundly ignorant he must be of the very soul of observation. About 30 years ago there was much talk that Geologists ought only to observe and not theorise ; and I well remember someone saying, that at this rate a man might as well go into a gravel-pit and count the pebbles and describe their colours. How odd it is that everyone should not see that all observation must be for or against some view , if it is to be of any service [ 15 ].

Acknowledgements

TF, DN and GFRE gratefully acknowledge University of Oxford's Foundations of Value and Values-initiative for providing a forum to discuss these types of interdisciplinary issues.

Authors’ contributions

TF wrote the initial draft of the manuscript. JK, JIK, DN and GFRE added many ideas, examples and further edits to subsequent iterations of the article. The authors read and approved the final manuscript.

Competing interests

The authors declare that they have no competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

IMAGES

VIDEO

COMMENTS

I have what I believe is a fairly straightforward test that is using an out-of-the box strategy, but I am getting failed health checks that the data generation is very slow. The entire test file is at the bottom of this issue so you can let me know if there is anything glaringly wrong with the test.

Hypothesis' health checks are designed to detect and warn you about performance problems where your tests are slow, inefficient, or generating very large examples. ... , or occasionally for Hypothesis internal reasons. too_slow = 3 ¶ Check for when your data generation is extremely slow and likely to hurt testing.

For example, everything_except(int) returns a strategy that can generate anything that from_type() can ever generate, except for instances of int, and excluding instances of types added via register_type_strategy(). This is useful when writing tests which check that invalid input is rejected in a certain way. hypothesis.strategies. frozensets (elements, *, min_size = 0, max_size = None ...

It aims to improve the integration between Hypothesis and Pytest by providing extra information and convenient access to config options. pytest --hypothesis-show-statistics can be used to display test and data generation statistics. pytest --hypothesis-profile=<profile name> can be used to load a settings profile.

1. This is because Pandera has a fairly inefficient approach: they generate a custom strategy for the first part of the schema, and then use rejection sampling to filter out values which fail any subsequent filter. That's why their docs recommend specifying the most-restrictive constraint as the base strategy.

This is a very common mistake data science beginners make. Hypothesis generation is a process beginning with an educated guess whereas hypothesis testing is a process to conclude that the educated guess is true/false or the relationship between the variables is statistically significant or not.

Although critically important, they limit hypothesis generation to an incremental pace. Machine learning and data mining are alternative approaches to identifying new vistas to pursue, as is ...

The problem is that your strategy for unique names doesn't allow Hypothesis to generate the same name in two different runs of your test function, which must be allowed - otherwise, we can't try variations or discover a minimal failing example (among other problems).. This may also require some work to reset the state of your API for each input Hypothesis tries.

One of the disadvantages of this is that the fallback strategy is to simply apply the check to the generated data, which can be highly inefficient. In this case, hypothesis will generate strings and try to find examples of strings that are in the set {"foo", "bar"}, which will be very slow and most likely raise an Unsatisfiable exception. To ...

This chapter will explain the definition and properties of a hypothesis, the related concepts, and basic methods of hypothesis generation as follows. Describe the definition, properties, and life cycle of a hypothesis. Describe relationships between a hypothesis and a theory, a model, and data. Categorize and explain research questions that ...

4. Photo by Anna Nekrashevich from Pexels. Hypothesis testing is a common statistical tool used in research and data science to support the certainty of findings. The aim of testing is to answer how probable an apparent effect is detected by chance given a random data sample. This article provides a detailed explanation of the key concepts in ...

Feb 7, 2021. 1. A short primer on why we can reject hypotheses, but cannot accept them, with examples and visuals. Image by the author. Hypothesis testing is the basis of classical statistical inference. It's a framework for making decisions under uncertainty with the goal to prevent you from making stupid decisions — provided there is data ...

Generating the right data One thing that often causes people problems is figuring out how to generate the right data to fit their data model. You can start with just generating strings and integers, but eventually you want to be able to generate objects from your domain model. Hypothesis provides a lot of tools to help you build the data you want, but sometimes the choice can be a bit ...

Although critically important, they limit hypothesis generation to an incremental pace. Machine learning and data mining are alternative approaches to identifying new vistas to pursue, as is ...

Hypothesis generation vs. Hypothesis testing. This is a very common mistake that data science beginners make. Hypothesis generation is a process that begins with an educated guess, whereas hypothesis testing is a process of concluding that the grounded assumption is true / false or that the relationship between the variables is statistically ...

The Role of AI in Hypothesis Generation. Traditionally, hypothesis generation has been a slow and laborious process, requiring significant amounts of time and resources. Researchers must review large volumes of literature, analyze data, and consult with colleagues to generate potential hypotheses.

A hypothesis tells us what data to look for. Data emerges and becomes evidence in response to a hypothesis. In physics, for example, the existence of gravitational waves had long been hypothesized. The hypothesis guided scientists to look for this data.