- Privacy Policy

Buy Me a Coffee

Home » Quantitative Research – Methods, Types and Analysis

Quantitative Research – Methods, Types and Analysis

Table of Contents

Quantitative Research

Quantitative research is a type of research that collects and analyzes numerical data to test hypotheses and answer research questions . This research typically involves a large sample size and uses statistical analysis to make inferences about a population based on the data collected. It often involves the use of surveys, experiments, or other structured data collection methods to gather quantitative data.

Quantitative Research Methods

Quantitative Research Methods are as follows:

Descriptive Research Design

Descriptive research design is used to describe the characteristics of a population or phenomenon being studied. This research method is used to answer the questions of what, where, when, and how. Descriptive research designs use a variety of methods such as observation, case studies, and surveys to collect data. The data is then analyzed using statistical tools to identify patterns and relationships.

Correlational Research Design

Correlational research design is used to investigate the relationship between two or more variables. Researchers use correlational research to determine whether a relationship exists between variables and to what extent they are related. This research method involves collecting data from a sample and analyzing it using statistical tools such as correlation coefficients.

Quasi-experimental Research Design

Quasi-experimental research design is used to investigate cause-and-effect relationships between variables. This research method is similar to experimental research design, but it lacks full control over the independent variable. Researchers use quasi-experimental research designs when it is not feasible or ethical to manipulate the independent variable.

Experimental Research Design

Experimental research design is used to investigate cause-and-effect relationships between variables. This research method involves manipulating the independent variable and observing the effects on the dependent variable. Researchers use experimental research designs to test hypotheses and establish cause-and-effect relationships.

Survey Research

Survey research involves collecting data from a sample of individuals using a standardized questionnaire. This research method is used to gather information on attitudes, beliefs, and behaviors of individuals. Researchers use survey research to collect data quickly and efficiently from a large sample size. Survey research can be conducted through various methods such as online, phone, mail, or in-person interviews.

Quantitative Research Analysis Methods

Here are some commonly used quantitative research analysis methods:

Statistical Analysis

Statistical analysis is the most common quantitative research analysis method. It involves using statistical tools and techniques to analyze the numerical data collected during the research process. Statistical analysis can be used to identify patterns, trends, and relationships between variables, and to test hypotheses and theories.

Regression Analysis

Regression analysis is a statistical technique used to analyze the relationship between one dependent variable and one or more independent variables. Researchers use regression analysis to identify and quantify the impact of independent variables on the dependent variable.

Factor Analysis

Factor analysis is a statistical technique used to identify underlying factors that explain the correlations among a set of variables. Researchers use factor analysis to reduce a large number of variables to a smaller set of factors that capture the most important information.

Structural Equation Modeling

Structural equation modeling is a statistical technique used to test complex relationships between variables. It involves specifying a model that includes both observed and unobserved variables, and then using statistical methods to test the fit of the model to the data.

Time Series Analysis

Time series analysis is a statistical technique used to analyze data that is collected over time. It involves identifying patterns and trends in the data, as well as any seasonal or cyclical variations.

Multilevel Modeling

Multilevel modeling is a statistical technique used to analyze data that is nested within multiple levels. For example, researchers might use multilevel modeling to analyze data that is collected from individuals who are nested within groups, such as students nested within schools.

Applications of Quantitative Research

Quantitative research has many applications across a wide range of fields. Here are some common examples:

- Market Research : Quantitative research is used extensively in market research to understand consumer behavior, preferences, and trends. Researchers use surveys, experiments, and other quantitative methods to collect data that can inform marketing strategies, product development, and pricing decisions.

- Health Research: Quantitative research is used in health research to study the effectiveness of medical treatments, identify risk factors for diseases, and track health outcomes over time. Researchers use statistical methods to analyze data from clinical trials, surveys, and other sources to inform medical practice and policy.

- Social Science Research: Quantitative research is used in social science research to study human behavior, attitudes, and social structures. Researchers use surveys, experiments, and other quantitative methods to collect data that can inform social policies, educational programs, and community interventions.

- Education Research: Quantitative research is used in education research to study the effectiveness of teaching methods, assess student learning outcomes, and identify factors that influence student success. Researchers use experimental and quasi-experimental designs, as well as surveys and other quantitative methods, to collect and analyze data.

- Environmental Research: Quantitative research is used in environmental research to study the impact of human activities on the environment, assess the effectiveness of conservation strategies, and identify ways to reduce environmental risks. Researchers use statistical methods to analyze data from field studies, experiments, and other sources.

Characteristics of Quantitative Research

Here are some key characteristics of quantitative research:

- Numerical data : Quantitative research involves collecting numerical data through standardized methods such as surveys, experiments, and observational studies. This data is analyzed using statistical methods to identify patterns and relationships.

- Large sample size: Quantitative research often involves collecting data from a large sample of individuals or groups in order to increase the reliability and generalizability of the findings.

- Objective approach: Quantitative research aims to be objective and impartial in its approach, focusing on the collection and analysis of data rather than personal beliefs, opinions, or experiences.

- Control over variables: Quantitative research often involves manipulating variables to test hypotheses and establish cause-and-effect relationships. Researchers aim to control for extraneous variables that may impact the results.

- Replicable : Quantitative research aims to be replicable, meaning that other researchers should be able to conduct similar studies and obtain similar results using the same methods.

- Statistical analysis: Quantitative research involves using statistical tools and techniques to analyze the numerical data collected during the research process. Statistical analysis allows researchers to identify patterns, trends, and relationships between variables, and to test hypotheses and theories.

- Generalizability: Quantitative research aims to produce findings that can be generalized to larger populations beyond the specific sample studied. This is achieved through the use of random sampling methods and statistical inference.

Examples of Quantitative Research

Here are some examples of quantitative research in different fields:

- Market Research: A company conducts a survey of 1000 consumers to determine their brand awareness and preferences. The data is analyzed using statistical methods to identify trends and patterns that can inform marketing strategies.

- Health Research : A researcher conducts a randomized controlled trial to test the effectiveness of a new drug for treating a particular medical condition. The study involves collecting data from a large sample of patients and analyzing the results using statistical methods.

- Social Science Research : A sociologist conducts a survey of 500 people to study attitudes toward immigration in a particular country. The data is analyzed using statistical methods to identify factors that influence these attitudes.

- Education Research: A researcher conducts an experiment to compare the effectiveness of two different teaching methods for improving student learning outcomes. The study involves randomly assigning students to different groups and collecting data on their performance on standardized tests.

- Environmental Research : A team of researchers conduct a study to investigate the impact of climate change on the distribution and abundance of a particular species of plant or animal. The study involves collecting data on environmental factors and population sizes over time and analyzing the results using statistical methods.

- Psychology : A researcher conducts a survey of 500 college students to investigate the relationship between social media use and mental health. The data is analyzed using statistical methods to identify correlations and potential causal relationships.

- Political Science: A team of researchers conducts a study to investigate voter behavior during an election. They use survey methods to collect data on voting patterns, demographics, and political attitudes, and analyze the results using statistical methods.

How to Conduct Quantitative Research

Here is a general overview of how to conduct quantitative research:

- Develop a research question: The first step in conducting quantitative research is to develop a clear and specific research question. This question should be based on a gap in existing knowledge, and should be answerable using quantitative methods.

- Develop a research design: Once you have a research question, you will need to develop a research design. This involves deciding on the appropriate methods to collect data, such as surveys, experiments, or observational studies. You will also need to determine the appropriate sample size, data collection instruments, and data analysis techniques.

- Collect data: The next step is to collect data. This may involve administering surveys or questionnaires, conducting experiments, or gathering data from existing sources. It is important to use standardized methods to ensure that the data is reliable and valid.

- Analyze data : Once the data has been collected, it is time to analyze it. This involves using statistical methods to identify patterns, trends, and relationships between variables. Common statistical techniques include correlation analysis, regression analysis, and hypothesis testing.

- Interpret results: After analyzing the data, you will need to interpret the results. This involves identifying the key findings, determining their significance, and drawing conclusions based on the data.

- Communicate findings: Finally, you will need to communicate your findings. This may involve writing a research report, presenting at a conference, or publishing in a peer-reviewed journal. It is important to clearly communicate the research question, methods, results, and conclusions to ensure that others can understand and replicate your research.

When to use Quantitative Research

Here are some situations when quantitative research can be appropriate:

- To test a hypothesis: Quantitative research is often used to test a hypothesis or a theory. It involves collecting numerical data and using statistical analysis to determine if the data supports or refutes the hypothesis.

- To generalize findings: If you want to generalize the findings of your study to a larger population, quantitative research can be useful. This is because it allows you to collect numerical data from a representative sample of the population and use statistical analysis to make inferences about the population as a whole.

- To measure relationships between variables: If you want to measure the relationship between two or more variables, such as the relationship between age and income, or between education level and job satisfaction, quantitative research can be useful. It allows you to collect numerical data on both variables and use statistical analysis to determine the strength and direction of the relationship.

- To identify patterns or trends: Quantitative research can be useful for identifying patterns or trends in data. For example, you can use quantitative research to identify trends in consumer behavior or to identify patterns in stock market data.

- To quantify attitudes or opinions : If you want to measure attitudes or opinions on a particular topic, quantitative research can be useful. It allows you to collect numerical data using surveys or questionnaires and analyze the data using statistical methods to determine the prevalence of certain attitudes or opinions.

Purpose of Quantitative Research

The purpose of quantitative research is to systematically investigate and measure the relationships between variables or phenomena using numerical data and statistical analysis. The main objectives of quantitative research include:

- Description : To provide a detailed and accurate description of a particular phenomenon or population.

- Explanation : To explain the reasons for the occurrence of a particular phenomenon, such as identifying the factors that influence a behavior or attitude.

- Prediction : To predict future trends or behaviors based on past patterns and relationships between variables.

- Control : To identify the best strategies for controlling or influencing a particular outcome or behavior.

Quantitative research is used in many different fields, including social sciences, business, engineering, and health sciences. It can be used to investigate a wide range of phenomena, from human behavior and attitudes to physical and biological processes. The purpose of quantitative research is to provide reliable and valid data that can be used to inform decision-making and improve understanding of the world around us.

Advantages of Quantitative Research

There are several advantages of quantitative research, including:

- Objectivity : Quantitative research is based on objective data and statistical analysis, which reduces the potential for bias or subjectivity in the research process.

- Reproducibility : Because quantitative research involves standardized methods and measurements, it is more likely to be reproducible and reliable.

- Generalizability : Quantitative research allows for generalizations to be made about a population based on a representative sample, which can inform decision-making and policy development.

- Precision : Quantitative research allows for precise measurement and analysis of data, which can provide a more accurate understanding of phenomena and relationships between variables.

- Efficiency : Quantitative research can be conducted relatively quickly and efficiently, especially when compared to qualitative research, which may involve lengthy data collection and analysis.

- Large sample sizes : Quantitative research can accommodate large sample sizes, which can increase the representativeness and generalizability of the results.

Limitations of Quantitative Research

There are several limitations of quantitative research, including:

- Limited understanding of context: Quantitative research typically focuses on numerical data and statistical analysis, which may not provide a comprehensive understanding of the context or underlying factors that influence a phenomenon.

- Simplification of complex phenomena: Quantitative research often involves simplifying complex phenomena into measurable variables, which may not capture the full complexity of the phenomenon being studied.

- Potential for researcher bias: Although quantitative research aims to be objective, there is still the potential for researcher bias in areas such as sampling, data collection, and data analysis.

- Limited ability to explore new ideas: Quantitative research is often based on pre-determined research questions and hypotheses, which may limit the ability to explore new ideas or unexpected findings.

- Limited ability to capture subjective experiences : Quantitative research is typically focused on objective data and may not capture the subjective experiences of individuals or groups being studied.

- Ethical concerns : Quantitative research may raise ethical concerns, such as invasion of privacy or the potential for harm to participants.

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer

You may also like

Questionnaire – Definition, Types, and Examples

Case Study – Methods, Examples and Guide

Observational Research – Methods and Guide

Qualitative Research Methods

Explanatory Research – Types, Methods, Guide

Survey Research – Types, Methods, Examples

Quantitative Data Analysis 101

The lingo, methods and techniques, explained simply.

By: Derek Jansen (MBA) and Kerryn Warren (PhD) | December 2020

Quantitative data analysis is one of those things that often strikes fear in students. It’s totally understandable – quantitative analysis is a complex topic, full of daunting lingo , like medians, modes, correlation and regression. Suddenly we’re all wishing we’d paid a little more attention in math class…

The good news is that while quantitative data analysis is a mammoth topic, gaining a working understanding of the basics isn’t that hard , even for those of us who avoid numbers and math . In this post, we’ll break quantitative analysis down into simple , bite-sized chunks so you can approach your research with confidence.

Overview: Quantitative Data Analysis 101

- What (exactly) is quantitative data analysis?

- When to use quantitative analysis

- How quantitative analysis works

The two “branches” of quantitative analysis

- Descriptive statistics 101

- Inferential statistics 101

- How to choose the right quantitative methods

- Recap & summary

What is quantitative data analysis?

Despite being a mouthful, quantitative data analysis simply means analysing data that is numbers-based – or data that can be easily “converted” into numbers without losing any meaning.

For example, category-based variables like gender, ethnicity, or native language could all be “converted” into numbers without losing meaning – for example, English could equal 1, French 2, etc.

This contrasts against qualitative data analysis, where the focus is on words, phrases and expressions that can’t be reduced to numbers. If you’re interested in learning about qualitative analysis, check out our post and video here .

What is quantitative analysis used for?

Quantitative analysis is generally used for three purposes.

- Firstly, it’s used to measure differences between groups . For example, the popularity of different clothing colours or brands.

- Secondly, it’s used to assess relationships between variables . For example, the relationship between weather temperature and voter turnout.

- And third, it’s used to test hypotheses in a scientifically rigorous way. For example, a hypothesis about the impact of a certain vaccine.

Again, this contrasts with qualitative analysis , which can be used to analyse people’s perceptions and feelings about an event or situation. In other words, things that can’t be reduced to numbers.

How does quantitative analysis work?

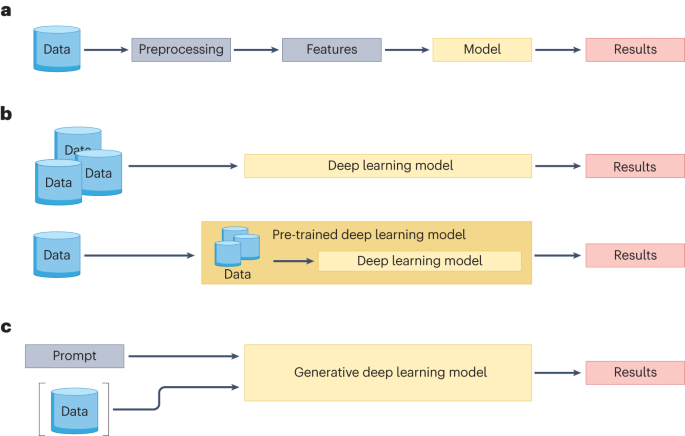

Well, since quantitative data analysis is all about analysing numbers , it’s no surprise that it involves statistics . Statistical analysis methods form the engine that powers quantitative analysis, and these methods can vary from pretty basic calculations (for example, averages and medians) to more sophisticated analyses (for example, correlations and regressions).

Sounds like gibberish? Don’t worry. We’ll explain all of that in this post. Importantly, you don’t need to be a statistician or math wiz to pull off a good quantitative analysis. We’ll break down all the technical mumbo jumbo in this post.

Need a helping hand?

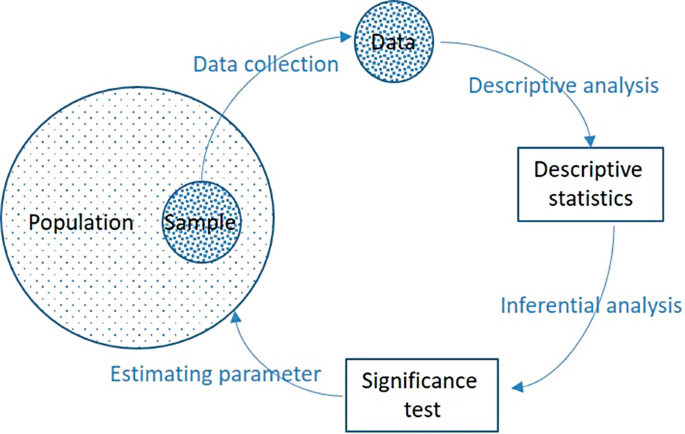

As I mentioned, quantitative analysis is powered by statistical analysis methods . There are two main “branches” of statistical methods that are used – descriptive statistics and inferential statistics . In your research, you might only use descriptive statistics, or you might use a mix of both , depending on what you’re trying to figure out. In other words, depending on your research questions, aims and objectives . I’ll explain how to choose your methods later.

So, what are descriptive and inferential statistics?

Well, before I can explain that, we need to take a quick detour to explain some lingo. To understand the difference between these two branches of statistics, you need to understand two important words. These words are population and sample .

First up, population . In statistics, the population is the entire group of people (or animals or organisations or whatever) that you’re interested in researching. For example, if you were interested in researching Tesla owners in the US, then the population would be all Tesla owners in the US.

However, it’s extremely unlikely that you’re going to be able to interview or survey every single Tesla owner in the US. Realistically, you’ll likely only get access to a few hundred, or maybe a few thousand owners using an online survey. This smaller group of accessible people whose data you actually collect is called your sample .

So, to recap – the population is the entire group of people you’re interested in, and the sample is the subset of the population that you can actually get access to. In other words, the population is the full chocolate cake , whereas the sample is a slice of that cake.

So, why is this sample-population thing important?

Well, descriptive statistics focus on describing the sample , while inferential statistics aim to make predictions about the population, based on the findings within the sample. In other words, we use one group of statistical methods – descriptive statistics – to investigate the slice of cake, and another group of methods – inferential statistics – to draw conclusions about the entire cake. There I go with the cake analogy again…

With that out the way, let’s take a closer look at each of these branches in more detail.

Branch 1: Descriptive Statistics

Descriptive statistics serve a simple but critically important role in your research – to describe your data set – hence the name. In other words, they help you understand the details of your sample . Unlike inferential statistics (which we’ll get to soon), descriptive statistics don’t aim to make inferences or predictions about the entire population – they’re purely interested in the details of your specific sample .

When you’re writing up your analysis, descriptive statistics are the first set of stats you’ll cover, before moving on to inferential statistics. But, that said, depending on your research objectives and research questions , they may be the only type of statistics you use. We’ll explore that a little later.

So, what kind of statistics are usually covered in this section?

Some common statistical tests used in this branch include the following:

- Mean – this is simply the mathematical average of a range of numbers.

- Median – this is the midpoint in a range of numbers when the numbers are arranged in numerical order. If the data set makes up an odd number, then the median is the number right in the middle of the set. If the data set makes up an even number, then the median is the midpoint between the two middle numbers.

- Mode – this is simply the most commonly occurring number in the data set.

- In cases where most of the numbers are quite close to the average, the standard deviation will be relatively low.

- Conversely, in cases where the numbers are scattered all over the place, the standard deviation will be relatively high.

- Skewness . As the name suggests, skewness indicates how symmetrical a range of numbers is. In other words, do they tend to cluster into a smooth bell curve shape in the middle of the graph, or do they skew to the left or right?

Feeling a bit confused? Let’s look at a practical example using a small data set.

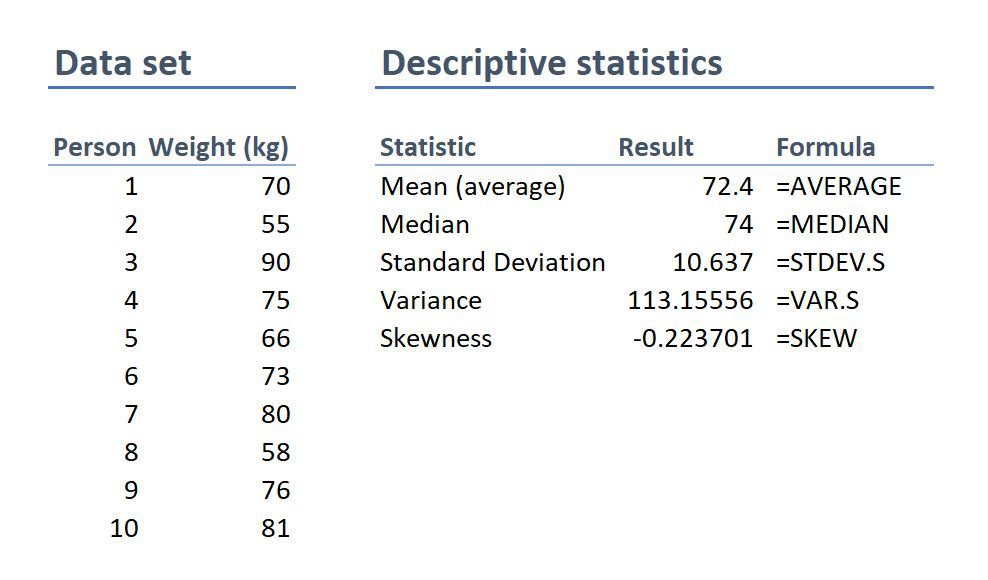

On the left-hand side is the data set. This details the bodyweight of a sample of 10 people. On the right-hand side, we have the descriptive statistics. Let’s take a look at each of them.

First, we can see that the mean weight is 72.4 kilograms. In other words, the average weight across the sample is 72.4 kilograms. Straightforward.

Next, we can see that the median is very similar to the mean (the average). This suggests that this data set has a reasonably symmetrical distribution (in other words, a relatively smooth, centred distribution of weights, clustered towards the centre).

In terms of the mode , there is no mode in this data set. This is because each number is present only once and so there cannot be a “most common number”. If there were two people who were both 65 kilograms, for example, then the mode would be 65.

Next up is the standard deviation . 10.6 indicates that there’s quite a wide spread of numbers. We can see this quite easily by looking at the numbers themselves, which range from 55 to 90, which is quite a stretch from the mean of 72.4.

And lastly, the skewness of -0.2 tells us that the data is very slightly negatively skewed. This makes sense since the mean and the median are slightly different.

As you can see, these descriptive statistics give us some useful insight into the data set. Of course, this is a very small data set (only 10 records), so we can’t read into these statistics too much. Also, keep in mind that this is not a list of all possible descriptive statistics – just the most common ones.

But why do all of these numbers matter?

While these descriptive statistics are all fairly basic, they’re important for a few reasons:

- Firstly, they help you get both a macro and micro-level view of your data. In other words, they help you understand both the big picture and the finer details.

- Secondly, they help you spot potential errors in the data – for example, if an average is way higher than you’d expect, or responses to a question are highly varied, this can act as a warning sign that you need to double-check the data.

- And lastly, these descriptive statistics help inform which inferential statistical techniques you can use, as those techniques depend on the skewness (in other words, the symmetry and normality) of the data.

Simply put, descriptive statistics are really important , even though the statistical techniques used are fairly basic. All too often at Grad Coach, we see students skimming over the descriptives in their eagerness to get to the more exciting inferential methods, and then landing up with some very flawed results.

Don’t be a sucker – give your descriptive statistics the love and attention they deserve!

Branch 2: Inferential Statistics

As I mentioned, while descriptive statistics are all about the details of your specific data set – your sample – inferential statistics aim to make inferences about the population . In other words, you’ll use inferential statistics to make predictions about what you’d expect to find in the full population.

What kind of predictions, you ask? Well, there are two common types of predictions that researchers try to make using inferential stats:

- Firstly, predictions about differences between groups – for example, height differences between children grouped by their favourite meal or gender.

- And secondly, relationships between variables – for example, the relationship between body weight and the number of hours a week a person does yoga.

In other words, inferential statistics (when done correctly), allow you to connect the dots and make predictions about what you expect to see in the real world population, based on what you observe in your sample data. For this reason, inferential statistics are used for hypothesis testing – in other words, to test hypotheses that predict changes or differences.

Of course, when you’re working with inferential statistics, the composition of your sample is really important. In other words, if your sample doesn’t accurately represent the population you’re researching, then your findings won’t necessarily be very useful.

For example, if your population of interest is a mix of 50% male and 50% female , but your sample is 80% male , you can’t make inferences about the population based on your sample, since it’s not representative. This area of statistics is called sampling, but we won’t go down that rabbit hole here (it’s a deep one!) – we’ll save that for another post .

What statistics are usually used in this branch?

There are many, many different statistical analysis methods within the inferential branch and it’d be impossible for us to discuss them all here. So we’ll just take a look at some of the most common inferential statistical methods so that you have a solid starting point.

First up are T-Tests . T-tests compare the means (the averages) of two groups of data to assess whether they’re statistically significantly different. In other words, do they have significantly different means, standard deviations and skewness.

This type of testing is very useful for understanding just how similar or different two groups of data are. For example, you might want to compare the mean blood pressure between two groups of people – one that has taken a new medication and one that hasn’t – to assess whether they are significantly different.

Kicking things up a level, we have ANOVA, which stands for “analysis of variance”. This test is similar to a T-test in that it compares the means of various groups, but ANOVA allows you to analyse multiple groups , not just two groups So it’s basically a t-test on steroids…

Next, we have correlation analysis . This type of analysis assesses the relationship between two variables. In other words, if one variable increases, does the other variable also increase, decrease or stay the same. For example, if the average temperature goes up, do average ice creams sales increase too? We’d expect some sort of relationship between these two variables intuitively , but correlation analysis allows us to measure that relationship scientifically .

Lastly, we have regression analysis – this is quite similar to correlation in that it assesses the relationship between variables, but it goes a step further to understand cause and effect between variables, not just whether they move together. In other words, does the one variable actually cause the other one to move, or do they just happen to move together naturally thanks to another force? Just because two variables correlate doesn’t necessarily mean that one causes the other.

Stats overload…

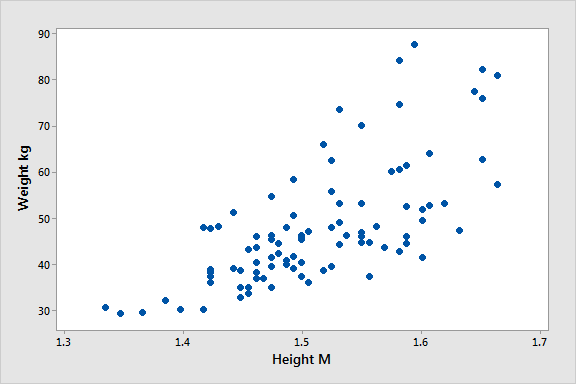

I hear you. To make this all a little more tangible, let’s take a look at an example of a correlation in action.

Here’s a scatter plot demonstrating the correlation (relationship) between weight and height. Intuitively, we’d expect there to be some relationship between these two variables, which is what we see in this scatter plot. In other words, the results tend to cluster together in a diagonal line from bottom left to top right.

As I mentioned, these are are just a handful of inferential techniques – there are many, many more. Importantly, each statistical method has its own assumptions and limitations.

For example, some methods only work with normally distributed (parametric) data, while other methods are designed specifically for non-parametric data. And that’s exactly why descriptive statistics are so important – they’re the first step to knowing which inferential techniques you can and can’t use.

How to choose the right analysis method

To choose the right statistical methods, you need to think about two important factors :

- The type of quantitative data you have (specifically, level of measurement and the shape of the data). And,

- Your research questions and hypotheses

Let’s take a closer look at each of these.

Factor 1 – Data type

The first thing you need to consider is the type of data you’ve collected (or the type of data you will collect). By data types, I’m referring to the four levels of measurement – namely, nominal, ordinal, interval and ratio. If you’re not familiar with this lingo, check out the video below.

Why does this matter?

Well, because different statistical methods and techniques require different types of data. This is one of the “assumptions” I mentioned earlier – every method has its assumptions regarding the type of data.

For example, some techniques work with categorical data (for example, yes/no type questions, or gender or ethnicity), while others work with continuous numerical data (for example, age, weight or income) – and, of course, some work with multiple data types.

If you try to use a statistical method that doesn’t support the data type you have, your results will be largely meaningless . So, make sure that you have a clear understanding of what types of data you’ve collected (or will collect). Once you have this, you can then check which statistical methods would support your data types here .

If you haven’t collected your data yet, you can work in reverse and look at which statistical method would give you the most useful insights, and then design your data collection strategy to collect the correct data types.

Another important factor to consider is the shape of your data . Specifically, does it have a normal distribution (in other words, is it a bell-shaped curve, centred in the middle) or is it very skewed to the left or the right? Again, different statistical techniques work for different shapes of data – some are designed for symmetrical data while others are designed for skewed data.

This is another reminder of why descriptive statistics are so important – they tell you all about the shape of your data.

Factor 2: Your research questions

The next thing you need to consider is your specific research questions, as well as your hypotheses (if you have some). The nature of your research questions and research hypotheses will heavily influence which statistical methods and techniques you should use.

If you’re just interested in understanding the attributes of your sample (as opposed to the entire population), then descriptive statistics are probably all you need. For example, if you just want to assess the means (averages) and medians (centre points) of variables in a group of people.

On the other hand, if you aim to understand differences between groups or relationships between variables and to infer or predict outcomes in the population, then you’ll likely need both descriptive statistics and inferential statistics.

So, it’s really important to get very clear about your research aims and research questions, as well your hypotheses – before you start looking at which statistical techniques to use.

Never shoehorn a specific statistical technique into your research just because you like it or have some experience with it. Your choice of methods must align with all the factors we’ve covered here.

Time to recap…

You’re still with me? That’s impressive. We’ve covered a lot of ground here, so let’s recap on the key points:

- Quantitative data analysis is all about analysing number-based data (which includes categorical and numerical data) using various statistical techniques.

- The two main branches of statistics are descriptive statistics and inferential statistics . Descriptives describe your sample, whereas inferentials make predictions about what you’ll find in the population.

- Common descriptive statistical methods include mean (average), median , standard deviation and skewness .

- Common inferential statistical methods include t-tests , ANOVA , correlation and regression analysis.

- To choose the right statistical methods and techniques, you need to consider the type of data you’re working with , as well as your research questions and hypotheses.

Psst... there’s more!

This post was based on one of our popular Research Bootcamps . If you're working on a research project, you'll definitely want to check this out ...

You Might Also Like:

74 Comments

Hi, I have read your article. Such a brilliant post you have created.

Thank you for the feedback. Good luck with your quantitative analysis.

Thank you so much.

Thank you so much. I learnt much well. I love your summaries of the concepts. I had love you to explain how to input data using SPSS

Amazing and simple way of breaking down quantitative methods.

This is beautiful….especially for non-statisticians. I have skimmed through but I wish to read again. and please include me in other articles of the same nature when you do post. I am interested. I am sure, I could easily learn from you and get off the fear that I have had in the past. Thank you sincerely.

Send me every new information you might have.

i need every new information

Thank you for the blog. It is quite informative. Dr Peter Nemaenzhe PhD

It is wonderful. l’ve understood some of the concepts in a more compréhensive manner

Your article is so good! However, I am still a bit lost. I am doing a secondary research on Gun control in the US and increase in crime rates and I am not sure which analysis method I should use?

Based on the given learning points, this is inferential analysis, thus, use ‘t-tests, ANOVA, correlation and regression analysis’

Well explained notes. Am an MPH student and currently working on my thesis proposal, this has really helped me understand some of the things I didn’t know.

I like your page..helpful

wonderful i got my concept crystal clear. thankyou!!

This is really helpful , thank you

Thank you so much this helped

Wonderfully explained

thank u so much, it was so informative

THANKYOU, this was very informative and very helpful

This is great GRADACOACH I am not a statistician but I require more of this in my thesis

Include me in your posts.

This is so great and fully useful. I would like to thank you again and again.

Glad to read this article. I’ve read lot of articles but this article is clear on all concepts. Thanks for sharing.

Thank you so much. This is a very good foundation and intro into quantitative data analysis. Appreciate!

You have a very impressive, simple but concise explanation of data analysis for Quantitative Research here. This is a God-send link for me to appreciate research more. Thank you so much!

Avery good presentation followed by the write up. yes you simplified statistics to make sense even to a layman like me. Thank so much keep it up. The presenter did ell too. i would like more of this for Qualitative and exhaust more of the test example like the Anova.

This is a very helpful article, couldn’t have been clearer. Thank you.

Awesome and phenomenal information.Well done

The video with the accompanying article is super helpful to demystify this topic. Very well done. Thank you so much.

thank you so much, your presentation helped me a lot

I don’t know how should I express that ur article is saviour for me 🥺😍

It is well defined information and thanks for sharing. It helps me a lot in understanding the statistical data.

I gain a lot and thanks for sharing brilliant ideas, so wish to be linked on your email update.

Very helpful and clear .Thank you Gradcoach.

Thank for sharing this article, well organized and information presented are very clear.

VERY INTERESTING AND SUPPORTIVE TO NEW RESEARCHERS LIKE ME. AT LEAST SOME BASICS ABOUT QUANTITATIVE.

An outstanding, well explained and helpful article. This will help me so much with my data analysis for my research project. Thank you!

wow this has just simplified everything i was scared of how i am gonna analyse my data but thanks to you i will be able to do so

simple and constant direction to research. thanks

This is helpful

Great writing!! Comprehensive and very helpful.

Do you provide any assistance for other steps of research methodology like making research problem testing hypothesis report and thesis writing?

Thank you so much for such useful article!

Amazing article. So nicely explained. Wow

Very insightfull. Thanks

I am doing a quality improvement project to determine if the implementation of a protocol will change prescribing habits. Would this be a t-test?

The is a very helpful blog, however, I’m still not sure how to analyze my data collected. I’m doing a research on “Free Education at the University of Guyana”

tnx. fruitful blog!

So I am writing exams and would like to know how do establish which method of data analysis to use from the below research questions: I am a bit lost as to how I determine the data analysis method from the research questions.

Do female employees report higher job satisfaction than male employees with similar job descriptions across the South African telecommunications sector? – I though that maybe Chi Square could be used here. – Is there a gender difference in talented employees’ actual turnover decisions across the South African telecommunications sector? T-tests or Correlation in this one. – Is there a gender difference in the cost of actual turnover decisions across the South African telecommunications sector? T-tests or Correlation in this one. – What practical recommendations can be made to the management of South African telecommunications companies on leveraging gender to mitigate employee turnover decisions?

Your assistance will be appreciated if I could get a response as early as possible tomorrow

This was quite helpful. Thank you so much.

wow I got a lot from this article, thank you very much, keep it up

Thanks for yhe guidance. Can you send me this guidance on my email? To enable offline reading?

Thank you very much, this service is very helpful.

Every novice researcher needs to read this article as it puts things so clear and easy to follow. Its been very helpful.

Wonderful!!!! you explained everything in a way that anyone can learn. Thank you!!

I really enjoyed reading though this. Very easy to follow. Thank you

Many thanks for your useful lecture, I would be really appreciated if you could possibly share with me the PPT of presentation related to Data type?

Thank you very much for sharing, I got much from this article

This is a very informative write-up. Kindly include me in your latest posts.

Very interesting mostly for social scientists

Thank you so much, very helpfull

You’re welcome 🙂

woow, its great, its very informative and well understood because of your way of writing like teaching in front of me in simple languages.

I have been struggling to understand a lot of these concepts. Thank you for the informative piece which is written with outstanding clarity.

very informative article. Easy to understand

Beautiful read, much needed.

Always greet intro and summary. I learn so much from GradCoach

Quite informative. Simple and clear summary.

I thoroughly enjoyed reading your informative and inspiring piece. Your profound insights into this topic truly provide a better understanding of its complexity. I agree with the points you raised, especially when you delved into the specifics of the article. In my opinion, that aspect is often overlooked and deserves further attention.

Absolutely!!! Thank you

Thank you very much for this post. It made me to understand how to do my data analysis.

Submit a Comment Cancel reply

Your email address will not be published. Required fields are marked *

Save my name, email, and website in this browser for the next time I comment.

- Print Friendly

Quantitative Data Analysis: A Comprehensive Guide

By: Ofem Eteng | Published: May 18, 2022

A healthcare giant successfully introduces the most effective drug dosage through rigorous statistical modeling, saving countless lives. A marketing team predicts consumer trends with uncanny accuracy, tailoring campaigns for maximum impact.

Table of Contents

These trends and dosages are not just any numbers but are a result of meticulous quantitative data analysis. Quantitative data analysis offers a robust framework for understanding complex phenomena, evaluating hypotheses, and predicting future outcomes.

In this blog, we’ll walk through the concept of quantitative data analysis, the steps required, its advantages, and the methods and techniques that are used in this analysis. Read on!

What is Quantitative Data Analysis?

Quantitative data analysis is a systematic process of examining, interpreting, and drawing meaningful conclusions from numerical data. It involves the application of statistical methods, mathematical models, and computational techniques to understand patterns, relationships, and trends within datasets.

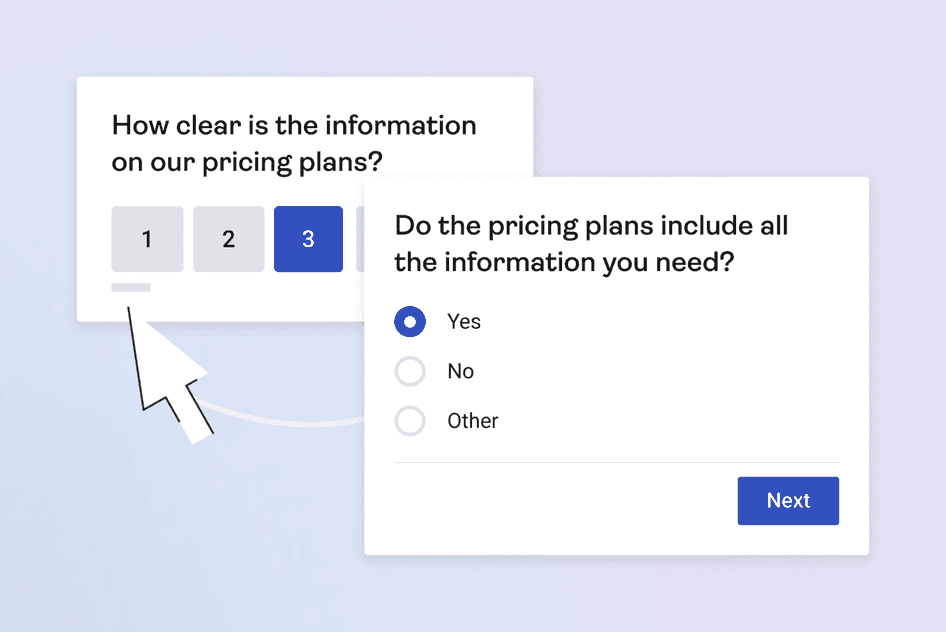

Quantitative data analysis methods typically work with algorithms, mathematical analysis tools, and software to gain insights from the data, answering questions such as how many, how often, and how much. Data for quantitative data analysis is usually collected from close-ended surveys, questionnaires, polls, etc. The data can also be obtained from sales figures, email click-through rates, number of website visitors, and percentage revenue increase.

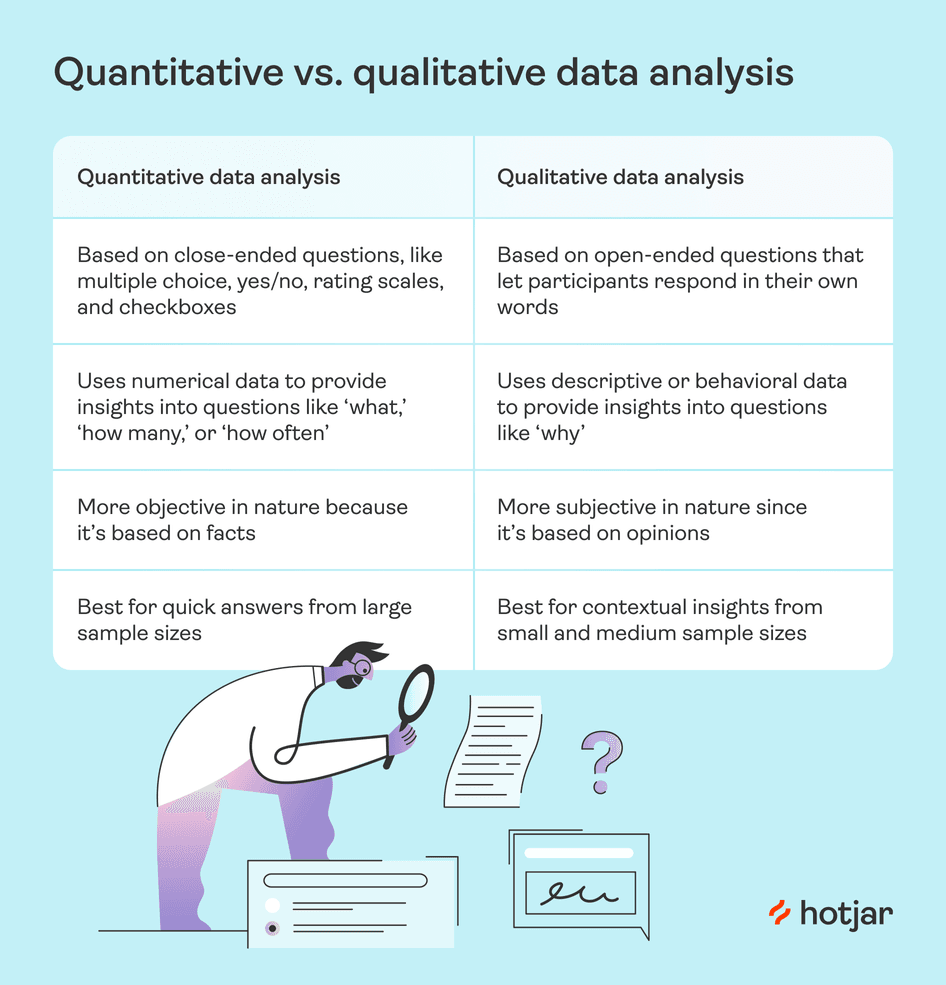

Quantitative Data Analysis vs Qualitative Data Analysis

When we talk about data, we directly think about the pattern, the relationship, and the connection between the datasets – analyzing the data in short. Therefore when it comes to data analysis, there are broadly two types – Quantitative Data Analysis and Qualitative Data Analysis.

Quantitative data analysis revolves around numerical data and statistics, which are suitable for functions that can be counted or measured. In contrast, qualitative data analysis includes description and subjective information – for things that can be observed but not measured.

Let us differentiate between Quantitative Data Analysis and Quantitative Data Analysis for a better understanding.

Data Preparation Steps for Quantitative Data Analysis

Quantitative data has to be gathered and cleaned before proceeding to the stage of analyzing it. Below are the steps to prepare a data before quantitative research analysis:

- Step 1: Data Collection

Before beginning the analysis process, you need data. Data can be collected through rigorous quantitative research, which includes methods such as interviews, focus groups, surveys, and questionnaires.

- Step 2: Data Cleaning

Once the data is collected, begin the data cleaning process by scanning through the entire data for duplicates, errors, and omissions. Keep a close eye for outliers (data points that are significantly different from the majority of the dataset) because they can skew your analysis results if they are not removed.

This data-cleaning process ensures data accuracy, consistency and relevancy before analysis.

- Step 3: Data Analysis and Interpretation

Now that you have collected and cleaned your data, it is now time to carry out the quantitative analysis. There are two methods of quantitative data analysis, which we will discuss in the next section.

However, if you have data from multiple sources, collecting and cleaning it can be a cumbersome task. This is where Hevo Data steps in. With Hevo, extracting, transforming, and loading data from source to destination becomes a seamless task, eliminating the need for manual coding. This not only saves valuable time but also enhances the overall efficiency of data analysis and visualization, empowering users to derive insights quickly and with precision

Hevo is the only real-time ELT No-code Data Pipeline platform that cost-effectively automates data pipelines that are flexible to your needs. With integration with 150+ Data Sources (40+ free sources), we help you not only export data from sources & load data to the destinations but also transform & enrich your data, & make it analysis-ready.

Start for free now!

Now that you are familiar with what quantitative data analysis is and how to prepare your data for analysis, the focus will shift to the purpose of this article, which is to describe the methods and techniques of quantitative data analysis.

Methods and Techniques of Quantitative Data Analysis

Quantitative data analysis employs two techniques to extract meaningful insights from datasets, broadly. The first method is descriptive statistics, which summarizes and portrays essential features of a dataset, such as mean, median, and standard deviation.

Inferential statistics, the second method, extrapolates insights and predictions from a sample dataset to make broader inferences about an entire population, such as hypothesis testing and regression analysis.

An in-depth explanation of both the methods is provided below:

- Descriptive Statistics

- Inferential Statistics

1) Descriptive Statistics

Descriptive statistics as the name implies is used to describe a dataset. It helps understand the details of your data by summarizing it and finding patterns from the specific data sample. They provide absolute numbers obtained from a sample but do not necessarily explain the rationale behind the numbers and are mostly used for analyzing single variables. The methods used in descriptive statistics include:

- Mean: This calculates the numerical average of a set of values.

- Median: This is used to get the midpoint of a set of values when the numbers are arranged in numerical order.

- Mode: This is used to find the most commonly occurring value in a dataset.

- Percentage: This is used to express how a value or group of respondents within the data relates to a larger group of respondents.

- Frequency: This indicates the number of times a value is found.

- Range: This shows the highest and lowest values in a dataset.

- Standard Deviation: This is used to indicate how dispersed a range of numbers is, meaning, it shows how close all the numbers are to the mean.

- Skewness: It indicates how symmetrical a range of numbers is, showing if they cluster into a smooth bell curve shape in the middle of the graph or if they skew towards the left or right.

2) Inferential Statistics

In quantitative analysis, the expectation is to turn raw numbers into meaningful insight using numerical values, and descriptive statistics is all about explaining details of a specific dataset using numbers, but it does not explain the motives behind the numbers; hence, a need for further analysis using inferential statistics.

Inferential statistics aim to make predictions or highlight possible outcomes from the analyzed data obtained from descriptive statistics. They are used to generalize results and make predictions between groups, show relationships that exist between multiple variables, and are used for hypothesis testing that predicts changes or differences.

There are various statistical analysis methods used within inferential statistics; a few are discussed below.

- Cross Tabulations: Cross tabulation or crosstab is used to show the relationship that exists between two variables and is often used to compare results by demographic groups. It uses a basic tabular form to draw inferences between different data sets and contains data that is mutually exclusive or has some connection with each other. Crosstabs help understand the nuances of a dataset and factors that may influence a data point.

- Regression Analysis: Regression analysis estimates the relationship between a set of variables. It shows the correlation between a dependent variable (the variable or outcome you want to measure or predict) and any number of independent variables (factors that may impact the dependent variable). Therefore, the purpose of the regression analysis is to estimate how one or more variables might affect a dependent variable to identify trends and patterns to make predictions and forecast possible future trends. There are many types of regression analysis, and the model you choose will be determined by the type of data you have for the dependent variable. The types of regression analysis include linear regression, non-linear regression, binary logistic regression, etc.

- Monte Carlo Simulation: Monte Carlo simulation, also known as the Monte Carlo method, is a computerized technique of generating models of possible outcomes and showing their probability distributions. It considers a range of possible outcomes and then tries to calculate how likely each outcome will occur. Data analysts use it to perform advanced risk analyses to help forecast future events and make decisions accordingly.

- Analysis of Variance (ANOVA): This is used to test the extent to which two or more groups differ from each other. It compares the mean of various groups and allows the analysis of multiple groups.

- Factor Analysis: A large number of variables can be reduced into a smaller number of factors using the factor analysis technique. It works on the principle that multiple separate observable variables correlate with each other because they are all associated with an underlying construct. It helps in reducing large datasets into smaller, more manageable samples.

- Cohort Analysis: Cohort analysis can be defined as a subset of behavioral analytics that operates from data taken from a given dataset. Rather than looking at all users as one unit, cohort analysis breaks down data into related groups for analysis, where these groups or cohorts usually have common characteristics or similarities within a defined period.

- MaxDiff Analysis: This is a quantitative data analysis method that is used to gauge customers’ preferences for purchase and what parameters rank higher than the others in the process.

- Cluster Analysis: Cluster analysis is a technique used to identify structures within a dataset. Cluster analysis aims to be able to sort different data points into groups that are internally similar and externally different; that is, data points within a cluster will look like each other and different from data points in other clusters.

- Time Series Analysis: This is a statistical analytic technique used to identify trends and cycles over time. It is simply the measurement of the same variables at different times, like weekly and monthly email sign-ups, to uncover trends, seasonality, and cyclic patterns. By doing this, the data analyst can forecast how variables of interest may fluctuate in the future.

- SWOT analysis: This is a quantitative data analysis method that assigns numerical values to indicate strengths, weaknesses, opportunities, and threats of an organization, product, or service to show a clearer picture of competition to foster better business strategies

How to Choose the Right Method for your Analysis?

Choosing between Descriptive Statistics or Inferential Statistics can be often confusing. You should consider the following factors before choosing the right method for your quantitative data analysis:

1. Type of Data

The first consideration in data analysis is understanding the type of data you have. Different statistical methods have specific requirements based on these data types, and using the wrong method can render results meaningless. The choice of statistical method should align with the nature and distribution of your data to ensure meaningful and accurate analysis.

2. Your Research Questions

When deciding on statistical methods, it’s crucial to align them with your specific research questions and hypotheses. The nature of your questions will influence whether descriptive statistics alone, which reveal sample attributes, are sufficient or if you need both descriptive and inferential statistics to understand group differences or relationships between variables and make population inferences.

Pros and Cons of Quantitative Data Analysis

1. Objectivity and Generalizability:

- Quantitative data analysis offers objective, numerical measurements, minimizing bias and personal interpretation.

- Results can often be generalized to larger populations, making them applicable to broader contexts.

Example: A study using quantitative data analysis to measure student test scores can objectively compare performance across different schools and demographics, leading to generalizable insights about educational strategies.

2. Precision and Efficiency:

- Statistical methods provide precise numerical results, allowing for accurate comparisons and prediction.

- Large datasets can be analyzed efficiently with the help of computer software, saving time and resources.

Example: A marketing team can use quantitative data analysis to precisely track click-through rates and conversion rates on different ad campaigns, quickly identifying the most effective strategies for maximizing customer engagement.

3. Identification of Patterns and Relationships:

- Statistical techniques reveal hidden patterns and relationships between variables that might not be apparent through observation alone.

- This can lead to new insights and understanding of complex phenomena.

Example: A medical researcher can use quantitative analysis to pinpoint correlations between lifestyle factors and disease risk, aiding in the development of prevention strategies.

1. Limited Scope:

- Quantitative analysis focuses on quantifiable aspects of a phenomenon , potentially overlooking important qualitative nuances, such as emotions, motivations, or cultural contexts.

Example: A survey measuring customer satisfaction with numerical ratings might miss key insights about the underlying reasons for their satisfaction or dissatisfaction, which could be better captured through open-ended feedback.

2. Oversimplification:

- Reducing complex phenomena to numerical data can lead to oversimplification and a loss of richness in understanding.

Example: Analyzing employee productivity solely through quantitative metrics like hours worked or tasks completed might not account for factors like creativity, collaboration, or problem-solving skills, which are crucial for overall performance.

3. Potential for Misinterpretation:

- Statistical results can be misinterpreted if not analyzed carefully and with appropriate expertise.

- The choice of statistical methods and assumptions can significantly influence results.

This blog discusses the steps, methods, and techniques of quantitative data analysis. It also gives insights into the methods of data collection, the type of data one should work with, and the pros and cons of such analysis.

Gain a better understanding of data analysis with these essential reads:

- Data Analysis and Modeling: 4 Critical Differences

- Exploratory Data Analysis Simplified 101

- 25 Best Data Analysis Tools in 2024

Carrying out successful data analysis requires prepping the data and making it analysis-ready. That is where Hevo steps in.

Want to give Hevo a try? Sign Up for a 14-day free trial and experience the feature-rich Hevo suite first hand. You may also have a look at the amazing Hevo price , which will assist you in selecting the best plan for your requirements.

Share your experience of understanding Quantitative Data Analysis in the comment section below! We would love to hear your thoughts.

Ofem is a freelance writer specializing in data-related topics, who has expertise in translating complex concepts. With a focus on data science, analytics, and emerging technologies.

No-code Data Pipeline for your Data Warehouse

- Data Strategy

Get Started with Hevo

Related articles

Preetipadma Khandavilli

Data Mining and Data Analysis: 4 Key Differences

Nicholas Samuel

Data Quality Analysis Simplified: A Comprehensive Guide 101

Sharon Rithika

Data Analysis in Tableau: Unleash the Power of COUNTIF

I want to read this e-book.

Have a language expert improve your writing

Run a free plagiarism check in 10 minutes, automatically generate references for free.

- Knowledge Base

- Methodology

- What Is Quantitative Research? | Definition & Methods

What Is Quantitative Research? | Definition & Methods

Published on 4 April 2022 by Pritha Bhandari . Revised on 10 October 2022.

Quantitative research is the process of collecting and analysing numerical data. It can be used to find patterns and averages, make predictions, test causal relationships, and generalise results to wider populations.

Quantitative research is the opposite of qualitative research , which involves collecting and analysing non-numerical data (e.g. text, video, or audio).

Quantitative research is widely used in the natural and social sciences: biology, chemistry, psychology, economics, sociology, marketing, etc.

- What is the demographic makeup of Singapore in 2020?

- How has the average temperature changed globally over the last century?

- Does environmental pollution affect the prevalence of honey bees?

- Does working from home increase productivity for people with long commutes?

Table of contents

Quantitative research methods, quantitative data analysis, advantages of quantitative research, disadvantages of quantitative research, frequently asked questions about quantitative research.

You can use quantitative research methods for descriptive, correlational or experimental research.

- In descriptive research , you simply seek an overall summary of your study variables.

- In correlational research , you investigate relationships between your study variables.

- In experimental research , you systematically examine whether there is a cause-and-effect relationship between variables.

Correlational and experimental research can both be used to formally test hypotheses , or predictions, using statistics. The results may be generalised to broader populations based on the sampling method used.

To collect quantitative data, you will often need to use operational definitions that translate abstract concepts (e.g., mood) into observable and quantifiable measures (e.g., self-ratings of feelings and energy levels).

Prevent plagiarism, run a free check.

Once data is collected, you may need to process it before it can be analysed. For example, survey and test data may need to be transformed from words to numbers. Then, you can use statistical analysis to answer your research questions .

Descriptive statistics will give you a summary of your data and include measures of averages and variability. You can also use graphs, scatter plots and frequency tables to visualise your data and check for any trends or outliers.

Using inferential statistics , you can make predictions or generalisations based on your data. You can test your hypothesis or use your sample data to estimate the population parameter .

You can also assess the reliability and validity of your data collection methods to indicate how consistently and accurately your methods actually measured what you wanted them to.

Quantitative research is often used to standardise data collection and generalise findings . Strengths of this approach include:

- Replication

Repeating the study is possible because of standardised data collection protocols and tangible definitions of abstract concepts.

- Direct comparisons of results

The study can be reproduced in other cultural settings, times or with different groups of participants. Results can be compared statistically.

- Large samples

Data from large samples can be processed and analysed using reliable and consistent procedures through quantitative data analysis.

- Hypothesis testing

Using formalised and established hypothesis testing procedures means that you have to carefully consider and report your research variables, predictions, data collection and testing methods before coming to a conclusion.

Despite the benefits of quantitative research, it is sometimes inadequate in explaining complex research topics. Its limitations include:

- Superficiality

Using precise and restrictive operational definitions may inadequately represent complex concepts. For example, the concept of mood may be represented with just a number in quantitative research, but explained with elaboration in qualitative research.

- Narrow focus

Predetermined variables and measurement procedures can mean that you ignore other relevant observations.

- Structural bias

Despite standardised procedures, structural biases can still affect quantitative research. Missing data , imprecise measurements or inappropriate sampling methods are biases that can lead to the wrong conclusions.

- Lack of context

Quantitative research often uses unnatural settings like laboratories or fails to consider historical and cultural contexts that may affect data collection and results.

Quantitative research deals with numbers and statistics, while qualitative research deals with words and meanings.

Quantitative methods allow you to test a hypothesis by systematically collecting and analysing data, while qualitative methods allow you to explore ideas and experiences in depth.

In mixed methods research , you use both qualitative and quantitative data collection and analysis methods to answer your research question .

Data collection is the systematic process by which observations or measurements are gathered in research. It is used in many different contexts by academics, governments, businesses, and other organisations.

Operationalisation means turning abstract conceptual ideas into measurable observations.

For example, the concept of social anxiety isn’t directly observable, but it can be operationally defined in terms of self-rating scores, behavioural avoidance of crowded places, or physical anxiety symptoms in social situations.

Before collecting data , it’s important to consider how you will operationalise the variables that you want to measure.

Reliability and validity are both about how well a method measures something:

- Reliability refers to the consistency of a measure (whether the results can be reproduced under the same conditions).

- Validity refers to the accuracy of a measure (whether the results really do represent what they are supposed to measure).

If you are doing experimental research , you also have to consider the internal and external validity of your experiment.

Hypothesis testing is a formal procedure for investigating our ideas about the world using statistics. It is used by scientists to test specific predictions, called hypotheses , by calculating how likely it is that a pattern or relationship between variables could have arisen by chance.

Cite this Scribbr article

If you want to cite this source, you can copy and paste the citation or click the ‘Cite this Scribbr article’ button to automatically add the citation to our free Reference Generator.

Bhandari, P. (2022, October 10). What Is Quantitative Research? | Definition & Methods. Scribbr. Retrieved 22 April 2024, from https://www.scribbr.co.uk/research-methods/introduction-to-quantitative-research/

Is this article helpful?

Pritha Bhandari

Data Analysis in Quantitative Research

- Reference work entry

- First Online: 13 January 2019

- Cite this reference work entry

- Yong Moon Jung 2

1749 Accesses

1 Citations

Quantitative data analysis serves as part of an essential process of evidence-making in health and social sciences. It is adopted for any types of research question and design whether it is descriptive, explanatory, or causal. However, compared with qualitative counterpart, quantitative data analysis has less flexibility. Conducting quantitative data analysis requires a prerequisite understanding of the statistical knowledge and skills. It also requires rigor in the choice of appropriate analysis model and the interpretation of the analysis outcomes. Basically, the choice of appropriate analysis techniques is determined by the type of research question and the nature of the data. In addition, different analysis techniques require different assumptions of data. This chapter provides introductory guides for readers to assist them with their informed decision-making in choosing the correct analysis models. To this end, it begins with discussion of the levels of measure: nominal, ordinal, and scale. Some commonly used analysis techniques in univariate, bivariate, and multivariate data analysis are presented for practical examples. Example analysis outcomes are produced by the use of SPSS (Statistical Package for Social Sciences).

Download reference work entry PDF

Similar content being viewed by others

Data Analysis Techniques for Quantitative Study

Meta-Analytic Methods for Public Health Research

- Quantitative data analysis

- Levels of measurement

- Choice of analysis model

1 Introduction

Quantitative data analysis is an essential process that supports decision-making and evidence-based research in health and social sciences. Compared with qualitative counterpart, quantitative data analysis has less flexibility (see Chaps. 48, “Thematic Analysis,” 49, “Narrative Analysis,” 28, “Conversation Analysis: An Introduction to Methodology, Data Collection, and Analysis,” and 50, “Critical Discourse/Discourse Analysis” ). Conducting quantitative data analysis requires a prerequisite understanding of the statistical knowledge and skills. It also requires rigor in the choice of appropriate analysis model and in the interpretation of the analysis outcomes. In addition, different analysis techniques require different assumptions of data. When these conditions are not fully satisfied, the analysis is regarded as inappropriate and misleading.

This chapter provides introductory guides for readers to assist them with their informed decision-making in choosing the correct analysis models. The chapter begins with discussion of the levels of measure: nominal, ordinal, and scale. Some commonly used analysis techniques in univariate, bivariate, and multivariate data analysis are presented for practical examples. Example analysis outcomes are produced by the use of SPSS (Statistical Package for Social Sciences).

2 Nature of Data for Quantitative Data Analysis

2.1 significance of understating of levels of measurement.

For proper quantitative analysis, information needs to be expressed in numerical formats. In order for the numbers to be the basic components of the dataset in quantitative analysis, every attributes of the variables need to be converted into numbers. This process is call coding, where numbers are assigned to each attribute of a variable. For instance, the attribute of male in sex variable is given the value of 1, and the attribute of female is given the value of 2. In this case, the numbers represent the attributes expressed in lengthier text terms for the purpose of quantitative analysis.

While the coding process is applied to replace any non-numerical information such as letters and symbols, the numbers do not represent attributes in the same way. This means that the nature and value of the same number can be different. For instance, the value of number 1 in the above sex variable does not have any numerical meaning but is just a shorter placeholder for male (Trochim and Donnelly 2007 ). However, the value of number 1 in income variable represents the actual value of the number. In these two cases, different meanings are attached to the same value of number 1. Put another way, the number represents different levels depending on the nature of the variable.

Level of measurement has critical implications for data analysis. Firstly, an understanding of level of measurement helps with evaluating the measurement. Each variable must be measured in such way that its attributes are clearly represented in the measurement, and the maximum amount of information is collected. Measurement evaluation can judge if the most appropriate level of measurement was selected for the collection of the best information of a variable. This will be further explained in the following section. Secondly, understanding of the level of measurement ensures the correct interpretation of the analysis outcomes. A clear understanding of what the value of the number exactly represents and what the distance among the values means guides proper interpretation. Lastly but most importantly, the level of measurement of a variable determines the choice of the analysis model . For example, mean value is not produced for sex variable, and subsequently an analysis model for mean comparison for sex variable simply does not make sense. The choice of inappropriate analysis model has a possibility of misdealing the discussions and conclusions.

2.2 Four Levels of Measurement

The level of measurement defines the nature and the relationship of the values assigned to the attributes of a variable (Trochim and Donnelly 2007 ). That is to say that the relationship between the values of 1 and 2 in sex variable is different from that in income variable. Unlike the latter case, 2 is neither greater than 1 nor double the magnitude or quantity of 1. A variety of measurements is in use in quantitative research. Most of the texts for quantitative research present four levels of measurement (Brockopp and Hastings-Tolsma 2003 ; Trochim and Donnelly 2007 ; Babbie 2016 ).

The first or lowest level of measurement is nominal . The nominal measurement is characterized by variables that are discrete and noncontinuous (Brockopp and Hastings-Tolsma 2003 ). The values are assigned arbitrarily, and thus it is not assumed that higher values mean more of something. The nominal level is appropriate for categorical variables such as sex (male, female), marital status (married, unmarried), and religion (Christianity, non-Christianity).

The second level of measurement is ordinal , where the attributes are rank-ordered along the continuum of the characteristics. The ordinal measurement is more than classifying information, and higher values signify more of things in this measurement. However, distances between values do not represent the numerical differences. Educational attainment (high school or less, undergraduate, postgraduate), level of socioeconomic status (high, medium, low), and degree of agreement (agree, neutral, disagree) can be measured by the ordinal measurement.

The third level of measurement is interval . While the values do not have numerical meanings in the previous two measurements, the distances between the values are interpretable in this measurement. In this measurement, computing average makes sense. However, the interval measurement does not have an absolute zero, and ratios do not make sense in this measurement. Temperature is a typical example of the interval measurement, where 0° does not mean there is no temperature and 40° is not twice as hot as 20°.

The last or highest measurement is ratio . This measurement is characterized by variables that are assessed incrementally with equal distances and has a meaningful absolute zero. Height, weight, number of clients, and annual income can be measured by the ratio measurement and meaningful ratios or fraction can be calculated in this measurement. Although interval and ratio measurement are distinctive in their concepts, it is noted that they are not strictly distinguished in the data analysis in social and behavioral sciences. For instance, SPSS combines these two measurements into one measurement.

As was indicated, four levels of measurement form a hierarchy by the amount of information. Also the nature of the values in each measurement defines appropriate statistics that can be produced (McHugh 2007 ). Higher levels of measurement have capacity to produce more statistics. Table 1 summarizes the key features of the levels of measurement and the producible statistics.

It should be noted here that variables that can be measured by the interval and ratio measurements can also be measured by lower measurements. For instance, income variable can be measured by nominal (yes, no), ordinal (low, medium, high), and ratio (actual amount of income). If income is measured by the ratio measurement, it can be later reduced to ordinal or nominal measurements. However, variables measured by the lower measurements cannot be converted into higher ones (Babbie 2016 ). The ability to manipulate higher measurements means that a wider variety of statistics can be used to test and describe variables at that level (McHugh 2007 ). Therefore, it is suggested that a variable should be measured at the highest level possible (Brockopp and Hastings-Tolsma 2003 ; Babbie 2016 ). If lower measurements are used when higher measurements are applicable, it causes loss of information and decreases the variety and the power of statistics.

3 Types of Analysis Models