The list went on and on. But what was it that made us identify one application or approach as "Web 1.0" and another as "Web 2.0"? (The question is particularly urgent because the Web 2.0 meme has become so widespread that companies are now pasting it on as a marketing buzzword, with no real understanding of just what it means. The question is particularly difficult because many of those buzzword-addicted startups are definitely not Web 2.0, while some of the applications we identified as Web 2.0, like Napster and BitTorrent, are not even properly web applications!) We began trying to tease out the principles that are demonstrated in one way or another by the success stories of web 1.0 and by the most interesting of the new applications.

1. The Web As Platform

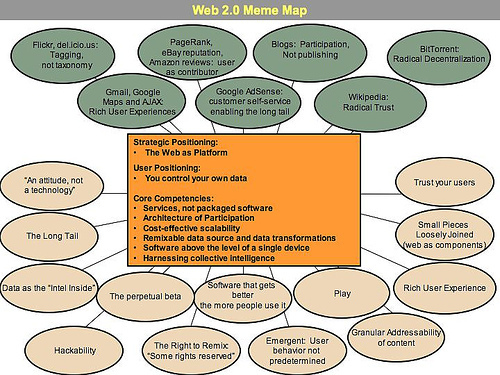

Like many important concepts, Web 2.0 doesn't have a hard boundary, but rather, a gravitational core. You can visualize Web 2.0 as a set of principles and practices that tie together a veritable solar system of sites that demonstrate some or all of those principles, at a varying distance from that core.

Figure 1 shows a "meme map" of Web 2.0 that was developed at a brainstorming session during FOO Camp, a conference at O'Reilly Media. It's very much a work in progress, but shows the many ideas that radiate out from the Web 2.0 core.

For example, at the first Web 2.0 conference, in October 2004, John Battelle and I listed a preliminary set of principles in our opening talk. The first of those principles was "The web as platform." Yet that was also a rallying cry of Web 1.0 darling Netscape, which went down in flames after a heated battle with Microsoft. What's more, two of our initial Web 1.0 exemplars, DoubleClick and Akamai, were both pioneers in treating the web as a platform. People don't often think of it as "web services", but in fact, ad serving was the first widely deployed web service, and the first widely deployed "mashup" (to use another term that has gained currency of late). Every banner ad is served as a seamless cooperation between two websites, delivering an integrated page to a reader on yet another computer. Akamai also treats the network as the platform, and at a deeper level of the stack, building a transparent caching and content delivery network that eases bandwidth congestion.

Nonetheless, these pioneers provided useful contrasts because later entrants have taken their solution to the same problem even further, understanding something deeper about the nature of the new platform. Both DoubleClick and Akamai were Web 2.0 pioneers, yet we can also see how it's possible to realize more of the possibilities by embracing additional Web 2.0 design patterns.

Let's drill down for a moment into each of these three cases, teasing out some of the essential elements of difference.

Netscape vs. Google

If Netscape was the standard bearer for Web 1.0, Google is most certainly the standard bearer for Web 2.0, if only because their respective IPOs were defining events for each era. So let's start with a comparison of these two companies and their positioning.

Netscape framed "the web as platform" in terms of the old software paradigm: their flagship product was the web browser, a desktop application, and their strategy was to use their dominance in the browser market to establish a market for high-priced server products. Control over standards for displaying content and applications in the browser would, in theory, give Netscape the kind of market power enjoyed by Microsoft in the PC market. Much like the "horseless carriage" framed the automobile as an extension of the familiar, Netscape promoted a "webtop" to replace the desktop, and planned to populate that webtop with information updates and applets pushed to the webtop by information providers who would purchase Netscape servers.

In the end, both web browsers and web servers turned out to be commodities, and value moved "up the stack" to services delivered over the web platform.

Google, by contrast, began its life as a native web application, never sold or packaged, but delivered as a service, with customers paying, directly or indirectly, for the use of that service. None of the trappings of the old software industry are present. No scheduled software releases, just continuous improvement. No licensing or sale, just usage. No porting to different platforms so that customers can run the software on their own equipment, just a massively scalable collection of commodity PCs running open source operating systems plus homegrown applications and utilities that no one outside the company ever gets to see.

At bottom, Google requires a competency that Netscape never needed: database management. Google isn't just a collection of software tools, it's a specialized database. Without the data, the tools are useless; without the software, the data is unmanageable. Software licensing and control over APIs--the lever of power in the previous era--is irrelevant because the software never need be distributed but only performed, and also because without the ability to collect and manage the data, the software is of little use. In fact, the value of the software is proportional to the scale and dynamism of the data it helps to manage.

Google's service is not a server--though it is delivered by a massive collection of internet servers--nor a browser--though it is experienced by the user within the browser. Nor does its flagship search service even host the content that it enables users to find. Much like a phone call, which happens not just on the phones at either end of the call, but on the network in between, Google happens in the space between browser and search engine and destination content server, as an enabler or middleman between the user and his or her online experience.

While both Netscape and Google could be described as software companies, it's clear that Netscape belonged to the same software world as Lotus, Microsoft, Oracle, SAP, and other companies that got their start in the 1980's software revolution, while Google's fellows are other internet applications like eBay, Amazon, Napster, and yes, DoubleClick and Akamai.


© 2024, O’Reilly Media, Inc.


What Is Web 2.0? Definition, Impact, and Examples


Investopedia / Joules Garcia

Web 2.0 describes the current state of the internet, which has more user-generated content and usability for end-users compared to its earlier incarnation, Web 1.0. Web 2.0 generally refers to the 21st-century internet applications that have transformed the digital era in the aftermath of the dotcom bubble.

Key Takeaways

- Web 2.0 describes the current state of the internet, which has more user-generated content and usability for end-users compared to its earlier incarnation, Web 1.0.

- It does not refer to any specific technical upgrades to the internet; it refers to a shift in how the internet is used.

- There is a higher level of information sharing and interconnectedness among participants in the new age of the internet.

- It allowed for the creation of applications such as Facebook, X (formerly Twitter), Reddit, TikTok, and Wikipedia.

- Web 2.0 paved the way for Web 3.0, the next generation of the web that uses many of the same technologies to approach problems differently.

Understanding Web 2.0

The term Web 2.0 first came into use in 1999 as the internet pivoted toward a system that actively engaged the user. Users were encouraged to provide content, rather than just viewing it. The social aspect of the internet has been particularly transformed; in general, social media allows users to engage and interact with one another by sharing thoughts, perspectives, and opinions. Users can tag, share, post, and like.

Web 2.0 does not refer to any specific technical upgrades to the internet. It simply refers to a shift in how the internet is used in the 21st century. In the new age, there is a higher level of information sharing and interconnectedness among participants. This new version allows users to actively participate in the experience rather than just acting as passive viewers who take in information.

Because of Web 2.0, people can publish articles and comments on different platforms, increasing content creation and participation through accounts on different sites. It also gave rise to web apps, self-publishing platforms like WordPress, Medium, and Substack, as well as social media sites. Examples of Web 2.0 sites include Wikipedia, Facebook, X, and various blogs, which all transformed the way the same information is shared and delivered.

History of Web 2.0

In a 1999 article called "Fragmented Future," Darcy DiNucci coined the phrase Web 2.0. In the article, DiNucci mentions that the "first glimmerings" of this new stage of the web were beginning to appear. In "Fragmented Future," DiNucci describes Web 2.0 as a "transport mechanism, the ether through which interactivity happens."

The phrase became popularized after a 2004 conference held by O'Reilly Media and MediaLive International. Tim O'Reilly, founder and chief executive officer (CEO) of the media company, is credited with the streamlining of the process, as he hosted various interviews and Web 2.0 conferences to explore the early business models for web content.

The workings of Web 2.0 have continually evolved over the years. Rather than being created in a single instance, Web 2.0 has a definition and capabilities that continue to change. For example, Justin Hall is credited as being one of the first bloggers, even though his personal blog dates back to 1994, well before the term was popularized.

Advantages and Disadvantages of Web 2.0

The development of technology has allowed users to share their thoughts and opinions with others, creating new ways of organizing and connecting with other people. One of the largest advantages of Web 2.0 is improved communication through web applications that enhance interactivity, collaboration, and knowledge sharing.

This is most evident through social networking, where individuals armed with a Web 2.0 connection can publish content, share ideas, extract information, and subscribe to various informational feeds. This has brought about major strides in marketing optimization as more strategic, targeted marketing approaches are now possible.

Web 2.0 also brings about a certain level of equity. Most individuals have an equal chance of posting their views and comments, and each individual may build a network of contacts. Because information may be transmitted more quickly under Web 2.0 compared to prior methods of information sharing, the latest updates and news may be available to more people.

Disadvantages

Unfortunately, there are a lot of disadvantages to the internet acting more like an open forum. Through the expansion of social media, we have seen an increase in online stalking, doxing, cyberbullying, identity theft, and other online crimes. There is also the threat of misinformation spreading among users, whether that's through open-source information-sharing sites or on social media.

Individuals may blame Web 2.0 for misinformation, information overload, or the unreliability of what people read. As almost anyone can post anything via various blogs, social media, or Web 2.0 outlets, there is an increased risk of confusion on what is real and what sources may be deemed reliable.

As a result, Web 2.0 raises the stakes of communication. Fake accounts, spammers, forgers, and hackers are more likely to attempt to steal information, imitate personas, or trick unsuspecting Web 2.0 users into following their agenda. Because Web 2.0 platforms don't always verify information, and often can't, there is a heightened risk of bad actors taking advantage of opportunities.

Web 2.0 vs. Web 1.0

Web 1.0 is used to describe the first stage of the internet. At this point, there were few content creators; most of those using the internet were consumers. Static pages were more common than dynamic HTML, which uses scripting to make websites interactive and animated.

Content in this stage came from a server's file system rather than a database management system. Users were able to sign online guestbooks, and HTML forms were sent via email. Examples of internet sites that are classified as Web 1.0 are Britannica Online, personal websites, and mp3.com. In general, these websites are static and have limited functionality and flexibility.

| Web 2.0 | Web 1.0 |
|---|---|
| Dynamic information (always changing) | Static information (more difficult to change) |
| Less control over user input | More controlled user input |
| Promotes greater collaboration, as channels are more dynamic and flexible | Promoted individual contribution; channels were less dynamic |
| Considered much more social and interactive-driven | Considered much more informative and data-driven |

Web 2.0 vs. Web 3.0

The world is already shifting into the next iteration of the web (appropriately dubbed "Web 3.0"). Though both rely on many similar technologies, they use the available capabilities to solve problems differently.

One strong example of Web 3.0 relates to currency. Under Web 2.0, users could input fiat currency information such as bank account information or credit card data. This information could be processed by the receiver to allow for transactions. Web 3.0 strives to approach the transaction process using related but distinct processes. With the introduction of Bitcoin, Ethereum, and other cryptocurrencies, the same problem can be solved in a theoretically more efficient way under Web 3.0.

Web 3.0 is more heavily rooted in increasing the trust between users. More often, applications rely on decentralization, letting data be exchanged in several locations simultaneously. Web 3.0 is also more likely to incorporate artificial intelligence or machine learning applications.

| Web 2.0 | Web 3.0 |
|---|---|
| Focuses on reading and writing content | Focused on creating content |
| May be more susceptible to less-secure technology | Often has more robust cybersecurity measures |
| May use more antiquated, simpler processing techniques | May incorporate more advanced concepts such as AI or machine learning |
| Primarily aims to connect people | Primarily aims to connect data or information |

Web 2.0 Components

There is no single, universally accepted definition for Web 2.0. Instead, it's best described as a series of components that, when put together, create an online environment of interactivity and greater capacity compared to the original version of the web. Here are the more prominent components of Web 2.0.

Wikis

Wikis are often information repositories that collect input from various users. Users may edit, update, and change the information within a web page, meaning there is often no singular owner of the page or the information within. As opposed to users simply absorbing information given to them, wiki-based sites such as Wikipedia are successful when users contribute information to the site.

Software Applications

The early days of the web relied upon local software being installed on-premises. With Web 2.0, applications gained a greater opportunity to be housed off-site, downloaded over the web, or even offered as a service via web applications and cloud computing. This has ushered in a new type of business model where companies can sell software applications on a monthly subscription basis.

Social Networking

Social networking is often one of the first aspects that comes to mind when discussing Web 2.0. It is similar to wikis in that individuals are empowered to post information on the web. Whereas wikis are informational and often require verification, social networking has looser constraints on what can be posted. In addition, users have greater capabilities to interact and connect with other social networking users.

General User-Generated Content

In addition to social media posts, users can more easily post artwork, images, audio, video, or other user-generated media. This content may be shared online for purchase or freely distributed. This has led to wider crediting of content creators (though creators are also at greater risk of having their content stolen by others).

Crowdsourcing

Though many may think of Web 2.0 as allowing for individual contribution, Web 2.0 brought about great capabilities regarding crowdsourced, crowdfunded, and crowd-tested content. Web 2.0 let individuals collectively share resources to meet a common goal, whether that goal be knowledge-based or financial.

There is no single universally accepted definition for Web 2.0 or Web 3.0. Because of its expansive nature, it's often hard to confine the boundaries of Web 2.0 into a single simple definition.

Web 2.0 Applications

The components above are directly related to the applications of Web 2.0. Those components allowed for new types of software, platforms, or applications that are still used today.

- Zoom, Netflix, and Spotify are all examples of software as a service (SaaS). With the greater capability of connecting individuals via Web 2.0, off-premise software applications are far more capable and powerful.

- HuffPost, Boing Boing, and TechCrunch are blogs that allow users to input opinions and information onto web pages. These pages are informative, much like Web 1.0 pages; however, individual contributors have a much greater capability to create and distribute their own informative content.

- X, Instagram, Facebook, and Threads are social media networks that allow for personalized content to be uploaded to the web. This content can then be shared with a private collection of friends or with a broad social media user base.

- Reddit, Digg, and Pinterest are also applications that allow for user input. These types of applications are more geared towards organizing social content around specific themes or topics, much as early forums did.

- YouTube, TikTok, and Flickr are further examples of content sharing. These applications specialize in the distribution of multimedia, video, or audio.

What Does Web 2.0 Mean?

Web 2.0 describes how the initial version of the web has advanced into a more robust, capable system. After the breakthrough of the first web capabilities, more advanced technologies were developed to allow users to interact more freely and contribute to what resides on the web. The ability for web users to be more connected to other web users is at the core of Web 2.0.

What Are Examples of Web 2.0 Applications?

The most commonly cited examples of Web 2.0 applications include Facebook, X, Instagram, and TikTok. These sites allow users to interact with web pages instead of simply viewing them. These types of websites extend to sites like Wikipedia, where a broad range of users can help form the information that is shared and distributed on the web.

Are Web 2.0 and Web 3.0 the Same?

Web 2.0 and Web 3.0 use many of the same technologies (AJAX, JavaScript, HTML5, CSS3). Web 3.0 is more likely to leverage even more modern technologies or principles in an attempt to connect the information to drive even greater value.

The Bottom Line

In the early days of web browsing, users would often navigate to simple web pages filled with information and limited-to-no ability to interact with the page. Today, the web has advanced and allows for users to connect with others, contribute information, and have greater flexibility in how the web is being used. Though Web 2.0 is already paving the way for Web 3.0, many of the fundamental pieces of Web 2.0 are still used today.




Essay: Web 2.0


- Published: 16 June 2012*


The term "Web 2.0" was first used in January 1999 by Darcy DiNucci, a consultant on electronic information design (information architecture). In her article "Fragmented Future," DiNucci writes: "The Web we know now, which loads into a browser window in essentially static screenfuls, is only an embryo of the Web to come. The first glimmerings of Web 2.0 are beginning to appear, and we are just starting to see how that embryo might develop. The Web will be understood not as screenfuls of text and graphics but as a transport mechanism, the ether through which interactivity happens."

The term Web 2.0 was initially championed by bloggers and by technology journalists, culminating in the 2006 TIME magazine Person of The Year (You). Web 2.0 websites allow users to do more than just retrieve information. By increasing what was already possible in "Web 1.0", they provide the user with more user-interface, software and storage facilities, all through their browser. This has been called "network as platform" computing. Major features of Web 2.0 include social networking sites, user created web sites, self-publishing platforms, tagging, and social bookmarking. Users can provide the data that is on a Web 2.0 site and exercise some control over that data.

Web 2.0 offers all users the same freedom to contribute. While this opens the possibility for serious debate and collaboration, it also increases the incidence of "spamming" and "trolling" by unscrupulous or less mature users. The impossibility of excluding group members who don’t contribute to the provision of goods from sharing profits gives rise to the possibility that serious members will prefer to withhold their contribution of effort and free ride on the contribution of others.

Key features of Web 2.0:

- Folksonomy: free classification of information

- Rich user experience

- User as a contributor

- Long tail

- User participation

- Basic trust

- Dispersion
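Folksonomy, the first feature listed above, can be pictured as users attaching free-form labels to items with no fixed category scheme. The following is only a minimal sketch of that idea: the `Folksonomy` class, its method names, and the sample labels are hypothetical and are not taken from any particular site.

```python
from collections import Counter, defaultdict


class Folksonomy:
    """Minimal store of free-form tags that users attach to items."""

    def __init__(self) -> None:
        # Maps each normalized tag to the set of (user, item) pairs that used it.
        self._uses: dict[str, set[tuple[str, str]]] = defaultdict(set)

    def tag(self, user: str, item: str, label: str) -> None:
        """Record that a user applied a label to an item."""
        # Normalizing case and whitespace is an assumption made for this sketch.
        self._uses[label.strip().lower()].add((user, item))

    def items_for(self, label: str) -> set[str]:
        """Return all items that any user has tagged with the label."""
        uses = self._uses.get(label.strip().lower(), set())
        return {item for _, item in uses}

    def popular_tags(self, n: int) -> list[tuple[str, int]]:
        """Return the n most-used tags with their use counts."""
        counts = Counter({label: len(uses) for label, uses in self._uses.items()})
        return counts.most_common(n)
```

The classification here emerges from what users type rather than from a taxonomy chosen in advance, which is the sense in which the list calls it "free."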

The client-side (web browser) technologies used in Web 2.0 development include Ajax and JavaScript frameworks such as YUI Library, Dojo Toolkit, MooTools, jQuery and Prototype JavaScript Framework. Ajax programming uses JavaScript to upload and download new data from the web server without undergoing a full page reload.

Adobe Flex is another technology often used in Web 2.0 applications. Compared to JavaScript libraries like jQuery, Flex makes it easier for programmers to populate large data grids, charts, and other heavy user interactions.

On the server side, Web 2.0 uses many of the same technologies as Web 1.0. Languages such as PHP, Ruby, Perl, Python, as well as JSP, and ASP.NET, are used by developers to output data dynamically using information from files and databases. What has begun to change in Web 2.0 is the way this data is formatted. In the early days of the Internet, there was little need for different websites to communicate with each other and share data. In the new "participatory web", however, sharing data between sites has become an essential capability.
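As a rough illustration of the server-side pattern described above, the sketch below reads rows from a database and emits them as JSON, one format that another site could consume. The `posts` table, its columns, and the function names are hypothetical; Python and SQLite stand in for whichever language and database a real site might use.

```python
import json
import sqlite3


def posts_as_json(conn: sqlite3.Connection) -> str:
    """Return every row of the (hypothetical) posts table as a JSON array."""
    rows = conn.execute(
        "SELECT id, author, title FROM posts ORDER BY id"
    ).fetchall()
    # Convert each row tuple into a dictionary keyed by column name.
    records = [
        {"id": row[0], "author": row[1], "title": row[2]} for row in rows
    ]
    return json.dumps(records)


def demo_connection() -> sqlite3.Connection:
    """Build an in-memory database holding two illustrative rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE posts (id INTEGER PRIMARY KEY, author TEXT, title TEXT)"
    )
    conn.executemany(
        "INSERT INTO posts (author, title) VALUES (?, ?)",
        [("alice", "Hello, web"), ("bob", "Feeds and mashups")],
    )
    return conn


if __name__ == "__main__":
    print(posts_as_json(demo_connection()))
```

The same rows could equally be rendered as HTML for a browser; what changes when sites need to share data is only the output format.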

Web 2.0 can be described in three parts:

- Rich Internet application (RIA): defines the experience brought from desktop to browser, whether it is from a graphical point of view or usability point of view. Some buzzwords related to RIA are Ajax and Flash.

- Web-oriented architecture (WOA): a key piece in Web 2.0, which defines how Web 2.0 applications expose their functionality so that other applications can leverage and integrate the functionality, providing a set of much richer applications. Examples are feeds, RSS, Web Services, mash-ups.

- Social Web: defines how Web 2.0 tends to interact much more with the end user and make the end-user an integral part.
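The web-oriented architecture idea above, in which one application reuses what others expose, can be sketched as a tiny mash-up of two RSS feeds. This is only an illustration under stated assumptions: the feed contents, the `example` URLs, and the function names `read_items` and `mash_up` are all made up, and the feeds are inline strings rather than documents fetched over the network.

```python
import xml.etree.ElementTree as ET

# Two tiny RSS 2.0 documents standing in for feeds published by different sites.
FEED_A = """<rss version="2.0"><channel><title>Site A</title>
<item><title>Wikis explained</title><link>https://a.example/wikis</link></item>
</channel></rss>"""

FEED_B = """<rss version="2.0"><channel><title>Site B</title>
<item><title>Ajax basics</title><link>https://b.example/ajax</link></item>
<item><title>Tagging tips</title><link>https://b.example/tags</link></item>
</channel></rss>"""


def read_items(feed_xml: str) -> list[dict[str, str]]:
    """Extract the channel title plus each item's title and link from an RSS string."""
    channel = ET.fromstring(feed_xml).find("channel")
    source = channel.findtext("title", default="")
    return [
        {
            "source": source,
            "title": item.findtext("title", default=""),
            "link": item.findtext("link", default=""),
        }
        for item in channel.findall("item")
    ]


def mash_up(*feeds: str) -> list[dict[str, str]]:
    """Merge items from several feeds into one list, sorted by item title."""
    merged = [entry for feed in feeds for entry in read_items(feed)]
    return sorted(merged, key=lambda entry: entry["title"])
```

Calling `mash_up(FEED_A, FEED_B)` yields a single combined listing in which each entry still records the site it came from.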

A third important part of Web 2.0 is the Social web, which is a fundamental shift in the way people communicate. The social web consists of a number of online tools and platforms where people share their perspectives, opinions, thoughts and experiences. Web 2.0 applications tend to interact much more with the end user. As such, the end user is not only a user of the application but also a participant by:

- Podcasting

- Blogging

- Tagging

- Curating with RSS

- Social bookmarking

- Social networking

- Web content voting

The popularity of the term Web 2.0, along with the increasing use of blogs, wikis, and social networking technologies, has led many in academia and business to append a flurry of 2.0’s to existing concepts and fields of study, including Library 2.0, Social Work 2.0, Enterprise 2.0, PR 2.0, Classroom 2.0, Publishing 2.0, Medicine 2.0, Telco 2.0, Travel 2.0, Government 2.0, and even Porn 2.0. Many of these 2.0s refer to Web 2.0 technologies as the source of the new version in their respective disciplines and areas.

Blogs, wikis and RSS are often held up as exemplary manifestations of Web 2.0. A reader of a blog or a wiki is provided with tools to add a comment or even, in the case of the wiki, to edit the content. This is what we call the Read/Write web. Talis believes that Library 2.0 means harnessing this type of participation so that libraries can benefit from increasingly rich collaborative cataloging efforts, such as including contributions from partner libraries as well as adding rich enhancements, such as book jackets or movie files, to records from publishers and others.

Futurist John Smart, lead author of the Metaverse Roadmap, defines Web 3.0 as the first-generation Metaverse (convergence of the virtual and physical world), a web development layer that includes TV-quality open video, 3D simulations, augmented reality, human-constructed semantic standards, and pervasive broadband, wireless, and sensors. Web 3.0’s early geosocial (Foursquare, etc.) and augmented reality (Layar, etc.) webs are an extension of Web 2.0’s participatory technologies and social networks (Facebook, etc.) into 3D space. Of all its metaverse-like developments, Smart suggests Web 3.0’s most defining characteristic will be the mass diffusion of NTSC-or-better quality video to TVs, laptops, tablets, and mobile devices, a time when "the internet swallows the television." Smart considers Web 3.0 to be the Semantic Web and in particular, the rise of statistical, machine-constructed semantic tags and algorithms, driven by broad collective use of conversational interfaces, perhaps circa 2020.[77] David Siegel’s perspective in Pull: The Power of the Semantic Web, 2009, is consonant with this, proposing that the growth of human-constructed semantic standards and data will be a slow, industry-specific incremental process for years to come, perhaps unlikely to tip into broad social utility until after 2020.

According to some Internet experts, Web 3.0 will enable the use of autonomous agents to perform some tasks for the user. Rather than having search engines gear towards your keywords, the search engines will gear towards the user.


About this essay:

If you use part of this page in your own work, you need to provide a citation, as follows:

Essay Sauce, Web 2.0 . Available from:<https://www.essaysauce.com/information-technology-essays/web-2-0/> [Accessed 03-05-24].


* This essay may have been previously published on Essay.uk.com at an earlier date.



Web 2.0 and Literary Criticism

Edited by Aarthi Vadde and Jessica Pressman

- Introduction (Aarthi Vadde and Jessica Pressman)

- Multiplayer Lit/Multiplayer Crit (Sarah Wasserman)

- The Participatory Cultures of Omenana: Reading and Writing on a Nigerian SF Website (Matthew Eatough)

- Do It for the Vine: Literary Reviews and Online Amplification (Kinohi Nishikawa)

- Can Literary Theory be Participatory? (Priya Joshi)

- The Handwritten Styles of Instagram Poetry (Seth Perlow)

- Studying and Preserving the Global Networks of Twitter Literature (Christian Howard)

- Close Shaves with Content (Tess McNulty)

- Prescribed Print: Bibliotherapy after Web 2.0

- Literary criticism 2.0: emerging ideas (Jared Zeiders)

- A Creative Reading of Web 2.0 and Literary Criticism Using Voyant’s TermsBerry (Tina Lumbis)

Introduction

Web 2.0 is changing the literary. We all know this, and we have emergent fields of study based upon this knowledge: electronic literature, game studies, cultural analytics, digital humanities. Yet, scholarship on how the contemporary digital environment is transforming literary criticism deserves more attention. Our cluster takes up this topic: in the individual essays, in their networked relationship, and in the pathway to its production and publication.

This cluster of essays began as a seminar at the American Comparative Literature Association (ACLA) conference, held at Georgetown University in March 2019. Aarthi and Jessica bonded over a shared sense that literary critics need to learn from, follow, and take seriously the trends in web 2.0 literature and literary culture even as we remained skeptical of web 2.0's rhetoric of newness and openness. Jessica had recently published a polemical piece, "Electronic Literature as Comparative Literature," a call for comparative literature scholars to take seriously media and digital translation as part of comparative studies; Aarthi had recently published on the transformative nature of collaborative writing platforms in "Amateur Creativity: Contemporary Literature and the Digital Publishing Scene." Both of these pieces were oriented to the changing media ecology of literary and critical writing, and we convened the seminar with the hopes of converging our individual lines of inquiry while inviting others to the table.

Our call-for-papers asked potential participants to explore the impact of information technologies on the making of contemporary literature and literary culture in a global context. The personal computer, mobile devices, the cloud, the server farm, the search engine, the algorithm, and the network are now indispensable parts of daily life; they are equally indispensable to the reading, writing, and distribution of literature. We wished to understand the consequences of their ubiquity on specific developments in the contemporary literary field — its aesthetic forms, medial substrates, institutional sites of canonization, and informal sites of readership. Popular aspects of web 2.0 literariness elude our specialist methods of analysis and challenge the prevailing norms of selectivity guiding our profession. How might literary history and literary value look different if the discipline paid more heed to the collaborative reading and writing practices of social media, online reviewing culture, and self-publishing platforms? Where is a literary critic to focus her gaze when what counts as content, text, or poetics is inseparable from hardware, software, platform, social network?

The contributors to this cluster collectively examine the specific challenges and opportunities posed to literary critics approaching web 2.0 participatory culture. They present astute analyses of literature in medial translation, make social media platforms their objects of study, and take methodological cues from multiplayer collaborations of various kinds. Yet, even as the topic of our seminar sought to address the bleeding-edge of literary culture, its format (three days spent in close conversation around a table) reminded us of how much older than the internet participatory culture is and how much literary criticism depends upon it. Although the term gained popularity as a way of distinguishing web 2.0 and differentiating digital media from broadcast media, one could say participatory culture goes as far back as the ancient Greek practice of methexis in which audiences would participate and improvise in theatrical performances. To retain one's individual voice while also becoming enmeshed in a collective such as "the people" or "the hive mind" is a paradox of participatory culture that applies as much to theories of democratic politics and the state as it does to internet culture and literary tradition.

The seminar at Georgetown was so generative in part because we as members understood ourselves to be becoming a written collective even before we met in person. We knew from the start that we were going to turn the seminar into a published cluster for Post45, so we began our conversation at the conference with the intention of building something together for a digital venue. Though we each wrote and shared individual papers for the seminar, we used these short talks as building blocks for a conversation that combined and recombined over the space of the conference and the editorial process. The essays before you bear the spirit of our dialogue, and the current (we hesitate to say "final") form of the cluster reflects our editorial desire to bring the collaborative ethos of the live seminar into the more highly mediated and modular environment of WordPress.

One way in which we have approximated conversation is through hyperlinks, both conceptual and programmatic. We asked each of our contributors to consider their individual essay in relationship to the other contributions and to revise with the goal of referencing key streams of thought from the seminar. We also asked each contributor to denote in their essays where those connections occur and where we could include HTML hyperlinks to other essays so that the conceptual connections perform programmatically as a network. What we realized around that table in that room, and what any virtual meet-up misses, is the importance of live, in-person, embodied interaction. Residual liveness is, perhaps, why online participatory culture is so vibrant and addictive. Philip Auslander understood the importance of "liveness," the sense of being "live" even when that embodied presence is experienced through technological mediation (extended by microphones, screens, etc.), before web 2.0 emerged, and the concept of liveness seems ever more vital to understanding our contemporary literary condition. 1 Many of the essays in this cluster attend to the liveness of contemporary literary culture, from emphasis on handwriting in Instagram poetry to the need for a literary theory driven by actual readers rather than the ideal reader of critical imagination.

Rather than thinking of the published cluster as a remediation of the seminar, we seek to present it as evidence of the iterative, even recursive nature of participatory culture. Participation at its most idealistic implies active membership in some community larger than ourselves, but as the emancipatory promises of web 2.0 lose their luster, so too do their notions of agency and self-fashioning. We are not only linked to other humans, objects, and data files, but we are also informed and, in machinic metaphors, even formatted by these relationships. Participation and conscription converge in online life as "opting in" has become the default setting.

If we consider how computational infrastructures inform our experiences as users, we face uneasy questions of how our objects of study find us online. Digital life is not only about using tools but also about being shaped and even circumscribed by them. Our past searches on Google inform what we find in future searches; our network of friends on Facebook shapes the content of our sidebars. What we see online is neither random nor unimportant, and it is determined by exactly what does not meet the eye. For us as cultural and literary critics, this fact should have profound implications for our approach to our work. Our seminar kept returning to questions of what we could not see and why that occlusion matters.

Perspective and orientation are never just about vision but also about politics, as Sara Ahmed has shown; and, following Franco Moretti, a visualization can change the way we see and the questions we ask. 2 It is not clear whether such concepts have the capacity to explain our lack of access to user data or the invisibility of the algorithms guiding what we see, but it seems right to say that too provincial a notion of literary value is its own form of blindness. Why do we roll our eyes at Instagram poetry? Why do we blow off amateur reviewers and critics? Why do we trivialize the platforms that process and promote literariness to wider audiences than we as scholars will ever reach (e.g. Amazon Vine, social media teasers, the genre of listicles)? Our seminar served as a reminder that literary critics need to think reflexively and critically about why and how we proceed with our values and assertions, close readings and citations, in a climate of both information overload and opacity. Our cluster now gives you a taste of those conversations as they take networked form.

Sarah Wasserman offers an account of "multiplayer crit" to illustrate how literary criticism has changed with the web-enabled times and should continue to become a more collaborative endeavor. Her inspiration for "multiplayer crit" is the multiplayer novel, which formally and thematically embeds the strategies of contemporary videogames. Multiplayer crit goes further than the singly-authored novel or monograph by modelling scenes of collective reading and writing that recall gamers building worlds together. A degree of autonomous world-building also plays into the rise of online literary journals curating African science-fiction, as Matt Eatough explains. A focus on new African literary journals that publish online, especially the Nigerian science fiction magazine Omenana, which serves as Matt's case study, reveals a younger generation of writers more interested in cultivating common tastes than in assuming the social missions of an Achebe or Ngũgĩ. In adopting the perspectives and vocabularies of web 2.0, and fan culture in particular, these magazines set out to release African literature from its historical obligations to educate the public and satisfy a Euro-American market. Kinohi Nishikawa takes us to the polar opposite of the digital publishing scene with Amazon Vine: a platform for Amazon.com users to write online reviews and form community while increasing company revenue. Nishikawa considers the implications of Vine on and for literature, especially literature by writers outside of the United States and for literature in translation.

If literary criticism, little magazines, and reviewing culture are proving adaptable and even reinvigorated by the participatory cultures of web 2.0, the fate of literary theory remains less sure. Priya Joshi asks the million-dollar question: Can literary theory be participatory? Turning away from the abstracted concepts of author, reader, and text, she brings book history's methods of analysis to bear on the communication circuits of contemporary literature. If "Theory" with a capital T remains a category intent on policing the boundaries of the literary, she argues, then it will be unable to account for the popular movements endogenous to contemporary literary culture. Seth Perlow and Christian Howard spotlight such popular (even viral) movements by taking Instapoetry and Twitterature as their objects of study. While both concede the distance of these corporate-branded genres from the sanctified space of the literary, they use that distance to reconsider the metaphysics of authorial presence and the critic's duty to specify inherited criteria of value when writing about such texts.

Finally, Tess McNulty and Leah Price take us from the zone of popular culture to the paraliterary where reading resides not in the hands of critics but in those of marketers, scientists, and doctors. Tess asks what counts as "content" in web 2.0, prompting us to recognize and shift our critical gaze towards the stuff that demands our attention but does not reward it. She counterintuitively finds the strategies of clickbait permeating the aesthetics of writers we can confidently call literary, foremost George Saunders. Leah in turn makes a distinction between reading literature and literary reading as she follows the newly minted profession of bibliotherapy through its neuroscientific rationales and its own participatory networks. When reading recommendations morph into medical prescriptions and concierge services, the consolations of literature soften the critical edge of disciplinary literary studies.

As is evident from each of these synopses, a major objective of this cluster is to conduct a self-assessment of our own processes, methods, and perspectives as literary critics. To that end, we have turned the tables on ourselves and made our contributions into a combined object of study for two new participants: Tina Lumbis and Jared Zeiders. Both graduate students in English and Comparative Literature at San Diego State University with a focus on digital humanities, Tina and Jared synthesize the ideas from our essayistic arguments into two different types of data visualizations that together add another layer of interpretation and collectivization.

Our editorial vision is to create a microcosm of the participatory cultures we study. We employ an array of digital tools and digitally-informed strategies to chart the changing contours of the literary field. As our cluster demonstrates, contemporary literary criticism need not be segregated into qualitative and quantitative camps. The criticism for which we advocate ranges between methods, modes, and objects of study. The literary is alive and well within web 2.0 participatory cultures and presents ample opportunity for creative, even heterodox, approaches to it.

Aarthi Vadde is associate professor of English at Duke University. She is the author of Chimeras of Form: Modernist Internationalism beyond Europe, 1914-2016 (Columbia UP, 2016; winner of the 2018 Harry Levin Prize) and the co-editor of The Critic as Amateur (Bloomsbury Academic, 2019).

Jessica Pressman is associate professor of English at San Diego State University. She is the author of Digital Modernism: Making It New in New Media (Oxford UP, 2014), co-author, with Mark C. Marino and Jeremy Douglass, of Reading Project: A Collaborative Analysis of William Poundstone's Project for Tachistoscope {Bottomless Pit} (Iowa UP, 2015), and co-editor, with N. Katherine Hayles, of Comparative Textual Media: Transforming the Humanities in a Postprint Era (Minnesota UP, 2013).

Keywords: Web 2.0, literary criticism, participatory culture, liveness

1. Philip Auslander, Liveness: Performance in a Mediatized Culture (New York: Routledge, 1999).

2. See Sara Ahmed, Queer Phenomenology: Orientations, Objects, Others (Durham: Duke University Press, 2006) and Franco Moretti, Graphs, Maps, Trees (New York: Verso, 2005).


What is Web 2.0?

P.S. Interestingly, the fact that I'm using Flickr for figures in my writing these days led to a leak of one part of the essay before the piece as a whole was published. I posted a "Web 2.0 meme map" to Flickr so I could reference it from there in the article. I thought I'd get the article up before anyone noticed it, but I was surprised to see Business Week pick it up last week.

Comments: 16

Josh Owens [09.30.05 08:08 PM]

Good read. We actually discussed the map in question on our latest podcast... http://web20show.com/articles/2005/09/28/web-2-0-show-episode-2

Shamil [10.01.05 01:48 AM]

Great article explaining not only Web 2.0 but underlying psychology and business logic.

There is one doubt though - access. Isn't access a critical component in making Web 2.0 a ubiquitous platform? It appears to me that a monopoly could emerge in this capital-intensive business, as entire networks are owned by a few large telecom companies.

Would appreciate your thoughts on the above, Thanks Shamil

Douglass Turner [10.01.05 07:58 AM]

Excellent piece. What Web 2.0 does not address, and indeed is incapable of addressing, is the need for fundamental advancement in the user interface of the Web. The Internet is the platform, but Loose Coupling and Mashups create woeful interfaces.

If you want to get a look at where Web interfaces need to go, take a look at our work in search interface technology called SearchIris:

demo: http://www.visual-io.com/search/index.html

howto: http://www.visual-io.com/search/about.html

Tim O'Reilly [10.01.05 10:33 AM]

I said I'm not fond of definitions, but I woke up this morning with the start of one in my head:

Web 2.0 is the network as platform, spanning all connected devices; Web 2.0 applications are those that make the most of the intrinsic advantages of that platform: delivering software as a continually-updated service that gets better the more people use it, consuming and remixing data from multiple sources, including individual users, while providing their own data and services in a form that allows remixing by others, creating network effects through an "architecture of participation," and going beyond the page metaphor of Web 1.0 to deliver rich user experiences.

C. Enrique Ortiz [10.01.05 05:19 PM]

Do you remember "the network is the computer"? Web 2.0 is based on that same concept, except that Web 2.0 further defines what the network should be -- an open and always available architecture and set of services, that can be consumed as is, or combined into compound services, that are accessible via open and consistent methods, regardless of device, platform, computer language.

SutroStyle [10.01.05 11:37 PM]

You should really look into this news before you muse about web 2.0 - this might be the more important: http://www.eweek.com/article2/0,1895,1865104,00.asp

Thomas Madsen-Mygdal [10.02.05 01:35 PM]

I'm very disappointed that you're promoting company owned data silos as a core of web2.0.

"Control over unique, hard-to-recreate data sources that get richer as more people use them".

Although you describe the free data movement as something that will come, the result before that will be many years of lock-in.

Products where the product actually is the community/commons. Products that get network effects and require people to use their product to be a part of the community/commons.

Is it really "web2.0" to lock your customers in and base your business model on aggregation of your customers' data, while at the same time limiting competition because of network effects? Simple open standards could allow decentralization today, and in many instances already do. (What would the blogosphere be like if it were based on this centralized model?)

Why shouldn't we be able to do this now, and why not promote this model, which is already emerging in many ways?

Suresh Kumar [10.02.05 01:40 PM]

I don't think Tim was promoting Data silos...

I think he was making the point that a Web 2.0 company knows how to extend the value of a 'data-set' that it may not necessarily own.

Google Maps and Amazon are examples of that.

Tim O'Reilly [10.02.05 03:15 PM]

What Suresh said is correct.

However, I *do* believe that owning a hard-to-recreate source of data will be seen as a competitive advantage in the Web 2.0 era. Recognizing that fact is not "promoting" it. If anything, it will allow those who value free access to data to take steps to ensure that they own their own data, rather than getting sucked into various schemes where other people have them by the short hairs because they don't realize what the levers of power are.

choi li akiro singh santos [10.03.05 09:07 AM]

East Asia is already on Web 4.0 :-)

Great summary. The discussion and examples ignore the real changes sweeping East Asia. The last time I checked, it is the WORLD Wide Web. East Asia is paving the way as far as the next-generation Internet goes -- while North America is stuck at 1-3 megabits, we are at 10 megabits.

Here is a recent article on Web 4.0 in South Korea:

http://www.businessweek.com/@@@l0wPIUQ@6DlRwEA/magazine/content/05_39/b3952405.htm

One cannot underestimate the potential of multi-player games in creating persistent virtual environments. Warcraft has hundreds of thousands of FANATIC users in South Korea alone:

http://www.businessweek.com/@@IPq474UQ*aDlRwEA/magazine/content/05_38/b3951085.htm

We in the east think that our edge in bandwidth will allow us to not look too much to the West this time around. We may not be doing much in terms of conceptualizing the framework we are in, but things are moving so fast out here, we defer to you bandwidth-starved folks in the West on that point. As William Gibson aptly said, "The future is here. It's just not evenly distributed yet."

Perhaps another core competency is the ability to function and build communities in a multi-lingual WWW.

Thomas Madsen-Mygdal [10.04.05 05:13 AM]

I understand your position.

Although I respect your "radar" tremendously, I think it's off a bit on this one. There's data proving that what you're promoting/deconstructing isn't the emerging model.

And that piece of data is that little thing called the blogosphere.

If one sat down and read your article and accepted that as best practice, the whole blogosphere would be running one giant eBay-style service where you'd need to be in order to be a part of the party. All the early blog pioneers recognized that they were part of something bigger and that any attempts to centralize it would fail. So they created RSS, ping, the MetaWeblog API, etc. Blogs were decentralized from their very beginning. Imagine how much less innovation would have happened if all the tools and services were controlled by a single company.

What is happening now is that all the new companies aren't pushing open standards, creating light versions of the semantic web in their spaces, etc. They are pushing the ebay best practice of the middle 90's - not the decentralized web model that really is the core of what's happening.

So imho there's data to back that this is the emerging model. And in my opinion especially at times where you know this is gonna become hype/buzz there's certainly a possibility to take a stand and make sure we don't go down 10 years in the same wrong path as before.

I think you're underestimating the impact you're having on this one and thereby the responsibility.

As Brenda Laurel says in a great quote: "Stories, movies, videogames and websites don't have to be about values to have a profound influence on values. Values are everywhere, embedded in every aspect of our culture and lurking in the very natures of our media and technologies. Culture workers know the question isn't whether they are there but who is taking responsibility for them, how they are being shaped, and how they are shaping us for the future."

At the core the web2.0 should be about giving up control on users and data - and recognizing that your little company is only a very little part of something much larger whether it's in your field or in general.

Tim O'Reilly [10.04.05 09:14 AM]

I think you misunderstand my position. It's far more nuanced than the idea that either centralization OR decentralization is a trump card. I believe that decentralization is a key driver of innovation in Web 2.0 (P2P, web services, blogging -- and the fundamental architecture of the web, or the internet itself, being good examples.) But each wave of decentralization leads to clever new forms of centralization, clever new forms of competitive advantage.

I'm willing to take a large bet that the blogosphere is NOT the counter example that you argue for. Why? History repeats itself. The rhetoric of the early web was much the same as the rhetoric of blogging: everyone is equal, anyone can put up a web site. Not only that, the web was one of the most profoundly decentralized architectures you can imagine, with zero barriers to entry. Yet within only a decade, we had giant companies so powerful that a whole industry has grown up whereby the "long tail" of decentralization tries to optimize their notice by the search engines, who are now at the hugely profitable head of the once flat web.

And this is already happening in blogging. Look at the influence of sites like Technorati, Feedster, Bloglines etc. in anointing "the top bloggers" (who get increasing attention as a result). Notice also how many of the sites in the top 100 blogs are already owned not by individuals but by blog publishing companies like Weblogsinc and Gawker Media.

As to taking a stand, I will take my stand here: identifying a trend is not promoting it. Is a scientist who writes about global warming promoting it?

I see all around me people who understand that the business opportunity is in controlling key data sources, or building network-effect businesses that give them a preeminent position in what was originally a decentralized marketplace.

How you choose to react to that is up to you. Some people will make it part of their business plan, others will try to oppose it. But knowing that that is the game is step one.

If you look at my talks (and I've been talking about this for quite a few years), one slide I frequently show is one about the Internet Operating System, with two images, and the question, "What kind of operating system do we want?" The two images: "One ring to bind them", and a routing map of the Internet, with the caption "Small Pieces Loosely Joined."

I love the "small pieces loosely joined" architecture of the Internet and of the most successful open source projects, and I spend a great deal of my time promoting the benefits of that architecture. But I also recognize the counterforce, and understand its attraction, perhaps even its inevitability.

When Steven Levy was working on the profile he did of me in the recent issue of Wired, we talked about this dynamic. I quoted from a poem of Wallace Stevens (Esthetique du Mal): "the tragedy begins again, in the yes of the realist spoken because he must say yes, because beneath every no lay a yes that had never been broken."

Decentralization is overcome by re-centralization again and again (the PC, once the haven of homebrew hackers, became the core of huge corporate hegemonies; the internet followed the same path; so too will the latest generation of applications.) Yet innovation comes again and again from the fringes, because of something in us that keeps inventing a new, free future, and will not take no for an answer.

That dynamic is the core of progress. It was only through the profit impulse, which led to huge companies taking control of these once decentralized hacker projects, that they were brought to the general public. The hackers move on, and make the future interesting again.

It's really all quite lovely.

George Chiramattel [10.14.05 12:18 PM]

Hi Tim, First of all let me congratulate you on this beautiful article.

I would also like to add to this discussion. If the Internet represents the 'collective intelligence of humanity' then in my opinion we require better tooling to utilize it. I wouldn't expect the 'virtual brain of humanity' to come with a 'search box' as its primary interface :-) At the following URL, I have described how we can build a better tool to handle the huge volume of information that is getting published on the net. I call this tool FolkMind. http://www.chiramattel.com/george/blog/archives/2005_10.html#a000084

gogle [06.15.06 02:59 AM]

well. I'm willing to take a large bet that the blogosphere is NOT the counter example that you argue for. Why? History repeats itself. History never repeats. I am not argue with you.

Alex Szilaghi [01.11.07 08:59 PM]

Web 2.0 is the future. It is really hard to define something that is just starting. Anyhow, keep up the writing. You have good info in your articles.

taly weiss [01.17.07 07:23 AM]

Web 2.0 is a platform open to all; as such, it must hold all information with no restraints. That is the beauty of this platform and yes, it does imply that you will find a lot of irrelevant information (the Web 2.0 antagonists claim that "we get too much crap"). But this is exactly why contribution is necessary. If you meet unimportant information, you should "bury" it, or rank it low. This contribution will serve to sort the information by its relevancy and true value. I see contribution not only as a means of adding more information but as a key to helping others navigate the endless information offered. With others actively responding, you can decide what is best to read and what is worth your attention. Improving contribution behavior is a necessary key to Web 2.0 success, but unfortunately it hasn't yet received the attention it deserves. We can easily recognize that not many people contribute to this collective intelligence, as the ratio data (voters or rankers per view, comments per viewer, members per viewer) found at Web 2.0 sites reach about 3-5% at most. For more on this issue see www.trendsspotting.com/blog/?p=1, www.trendsspotting.com/blog/?p=4



Excite really never got the business model right at all. We fell into the classic problem of how when a new medium comes out it adopts the practices, the content, the business models of the old medium—which fails, and then the more appropriate models get figured out.

Sites like del.icio.us and flickr allow users to "tag" content with descriptive tokens. But there is also a huge source of implicit tags that they ignore: the text within web links. Moreover, these links represent a social network connecting the individuals and organizations who created the pages, and by using graph theory we can compute from this network an estimate of the reputation of each member. We plan to mine the web for these implicit tags, and use them together with the reputation hierarchy they embody to enhance web searches.
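The idea in the passage above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the link data is a hypothetical toy set, the function names are invented here, and the reputation estimate uses a PageRank-style power iteration as a stand-in, since the passage says only that graph theory is used and does not name a method.

```python
from collections import defaultdict

# Hypothetical toy data: (source page, target page, anchor text of the link).
LINKS = [
    ("a.example", "b.example", "photo sharing"),
    ("c.example", "b.example", "photo community"),
    ("b.example", "c.example", "bookmarks"),
    ("a.example", "c.example", "social bookmarks"),
]


def implicit_tags(links):
    """Treat the words of each link's anchor text as tags on the link target."""
    tags = defaultdict(set)
    for _source, target, anchor in links:
        tags[target].update(anchor.lower().split())
    return dict(tags)


def reputation(links, damping=0.85, iterations=50):
    """Estimate a reputation score per page with a PageRank-style iteration.

    This is an assumed stand-in for the unspecified graph computation.
    """
    pages = sorted({page for s, t, _ in links for page in (s, t)})
    outgoing = defaultdict(list)
    for source, target, _anchor in links:
        outgoing[source].append(target)

    n = len(pages)
    rank = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            # A page with no outgoing links spreads its score over all pages.
            targets = outgoing[page] or pages
            share = damping * rank[page] / len(targets)
            for target in targets:
                new_rank[target] += share
        rank = new_rank
    return rank


if __name__ == "__main__":
    print(implicit_tags(LINKS))
    print(reputation(LINKS))
```

In this toy graph, `a.example` receives no inbound links and so ends up with the lowest score, while the tags for `b.example` come entirely from what other pages wrote when linking to it.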

Six ways to make Web 2.0 work

Technologies known collectively as Web 2.0 have spread widely among consumers over the past five years. Social-networking Web sites, such as Facebook and MySpace, now attract more than 100 million visitors a month. As the popularity of Web 2.0 has grown, companies have noted the intense consumer engagement and creativity surrounding these technologies. Many organizations, keen to harness Web 2.0 internally, are experimenting with the tools or deploying them on a trial basis.

Over the past two years, McKinsey has studied more than 50 early adopters to garner insights into successful efforts to use Web 2.0 as a way of unlocking participation. We have surveyed, independently, a range of executives on Web 2.0 adoption. Our work suggests the challenges that lie ahead. To date, as many survey respondents are dissatisfied with their use of Web 2.0 technologies as are satisfied. Many of the dissenters cite impediments such as organizational structure, the inability of managers to understand the new levers of change, and a lack of understanding about how value is created using Web 2.0 tools. We have found that, unless a number of success factors are present, Web 2.0 efforts often fail to launch or to reach expected heights of usage. Executives who are suspicious or uncomfortable with perceived changes or risks often call off these efforts. Others fail because managers simply don’t know how to encourage the type of participation that will produce meaningful results.

Twitter responses from our readers

After “Six ways to make Web 2.0 work” was posted, we wanted to encourage Twitter users to continue the conversation. Twitter allows individuals to broadcast 140-character posts to a loosely connected community of followers. Within a few days, over 300 posts used the #web2.0work hashtag we established to monitor conversations and respond to the stream of opinions surrounding the article. (Hashtags are a community-driven convention for adding additional context and metadata to Twitter posts. Users can create a hashtag simply by prefixing a word with a hash (#) symbol, for example #web2.0work.)
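As a rough illustration of how a hashtag can be used to follow a conversation, the sketch below pulls hashtags out of post text and filters posts by one tag. The regular expression and both function names are assumptions made for this example; it deliberately allows inner dots so that #web2.0work stays whole, and it does not claim to match how Twitter itself tokenizes hashtags.

```python
import re

# Assumed pattern: a hash symbol, then word characters, optionally followed by
# ".word" groups. A trailing period at the end of a sentence is not captured.
HASHTAG = re.compile(r"#\w+(?:\.\w+)*")


def extract_hashtags(post):
    """Return the hashtags found in a post, lowercased, in order of appearance."""
    return [tag.lower() for tag in HASHTAG.findall(post)]


def posts_with_tag(posts, tag):
    """Keep only the posts that carry the given hashtag (case-insensitive)."""
    wanted = tag.lower()
    return [post for post in posts if wanted in extract_hashtags(post)]


if __name__ == "__main__":
    sample = [
        "Point 3 really important, embed new things in the day job #web2.0work.",
        "Unrelated post about lunch #food",
    ]
    print(posts_with_tag(sample, "#Web2.0Work"))
```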

The tweets (a tweet is a single post or status update on Twitter) came in several varieties. Many respondents simply reported that we had posted the article and offered a shortened URL back to the piece on mckinseyquarterly.com. Others, however, went further, commenting on the findings of the article and sharing how they have been integrating some of the “six ways” precepts into their own Web 2.0 processes.

@estephen : @mckquarterly #web2.0work Rec 1 is spot on, even for conservative companies. we use this technique for wiki/blog internally. very effective.

@Racecarver48 : #web2.0work From recent first pilots, I can specifically agree with your theses 4, 5, and 6. We applied the successful w/o knowing them;-)

@Nurbani : @McKQuarterly #web2.0work #5 hit home 4 mktg initiatives.

The issue that Twitter respondents seemed to agree on the most was that Web 2.0 work can’t be added to an already full load, but instead needs to be meshed with everyday workflows.

@Barry_H : #web2.0work McKinsey report http://bit.ly/5Ac1y. Point 3 really important, embed new things in the day job don't add more work

@sifowler : @McKQuarterly 3. The tool has to fit in the workflow. Perceived 'extra' work reduces take-up. #web2.0work

@Salv_Reina : @McKQuarterly re Workflow, this is critical. If the tool sits outside day 2 day work, it won't take easily #web2.0work

Some users raised questions about points in our analysis that they thought were missing or not fully developed.

@tomguarriello : @McKQuarterly Yes, but your recs don't address the fear of social media that paralyzes many organizations. Loss of control/risk stops them

@RichardStacy : @mckquarterly possible adjunct to point 2 - create permission to fail, encourage experimentation and provide 'ROI break' #web2.0work.

Or what they thought we got wrong.

@drkleiman : it feels like too much focus on the tools and tech itself, not enough on thinking thru strategy & goals that can be accomplished #web2.0work

Others felt the article’s list focused too heavily on issues internal to companies rather than on the potential for participation beyond organizational borders.

@timolsen : #web2.0work - Article emphasizes how to use web 2.0 internally, I think using it externally is the real kicker.

One of the article’s authors, Michael Chui (@mchui), directed this respondent to other published research where we analyze how Web 2.0 can be used to enlist participation among consumers.

@mchui : @timolsen Definitely agree that external uses of Web 2.0 are important - see http://bit.ly/FDPsM #web2.0work

In some cases, the article prompted a fuller dialogue away from the Twitter environment. A number of tweets posted links to more substantial blog site responses.

@theparallaxview : new post 'McKinsey's Six of the Best for Web 2.0 Work' http://bit.ly/xvc9v #web2.0work @McKQuarterly

@broadstuff : McKinsey - 6 Lessons for making good use of Web 2.0 http://tinyurl.com/bd9ahg

@SocialMedia411 : Contribution and Connection are the New Currency (ConversationAgent): http://bit.ly/aSBY2 Response to McKinsey Report

@SandeepVizEdu : 6 Ways To Make Web2.0 Work - Visual Adaption of #web2.0work by McKinsey http://tinyurl.com/b5f5o6

One respondent, after reading the piece, vowed to take action.

@kwjenkins : @McKQuarterly The article inspired me to start a blog on our work Intranet - we believe in collab, just not a lot of grassroots efforts yet

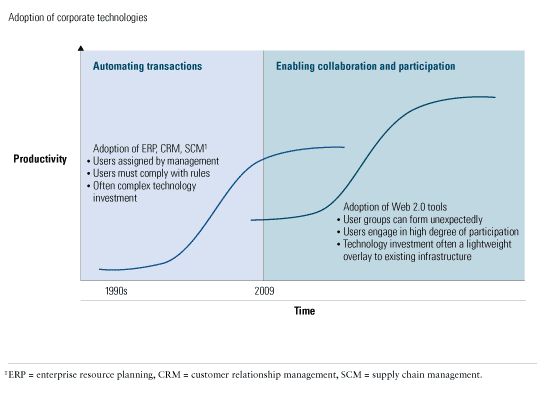

Some historical perspective is useful. Web 2.0, the latest wave in corporate technology adoptions, could have a more far-reaching organizational impact than technologies adopted in the 1990s, such as enterprise resource planning (ERP), customer relationship management (CRM), and supply chain management (Exhibit 1). The latest Web tools have a strong bottom-up element and engage a broad base of workers. They also demand a mind-set different from that of earlier IT programs, which were instituted primarily by edicts from senior managers.

The new tools

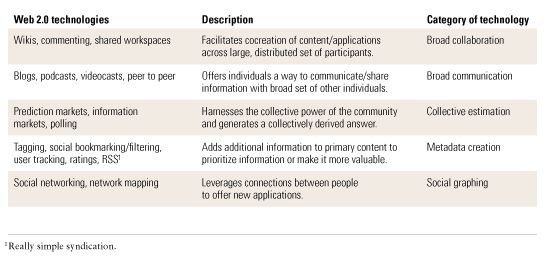

Web 2.0 covers a range of technologies. The most widely used are blogs, wikis, podcasts, information tagging, prediction markets, and social networks (Exhibit 2). New technologies constantly appear as the Internet continues to evolve. Of the companies we interviewed for our research, all were using at least one of these tools. What distinguishes them from previous technologies is the high degree of participation they require to be effective. Unlike ERP and CRM, where most users either simply process information in the form of reports or use the technology to execute transactions (such as issuing payments or entering customer orders), Web 2.0 technologies are interactive and require users to generate new information and content or to edit the work of other participants.

A range of technologies

Earlier technologies often required expensive and lengthy technical implementations, as well as the realignment of formal business processes. With such memories still fresh, some executives naturally remain wary of Web 2.0. But the new tools are different. While they are inherently disruptive and often challenge an organization and its culture, they are not technically complex to implement. Rather, they are a relatively lightweight overlay to the existing infrastructure and do not necessarily require complex technology integration.

Gains from participation

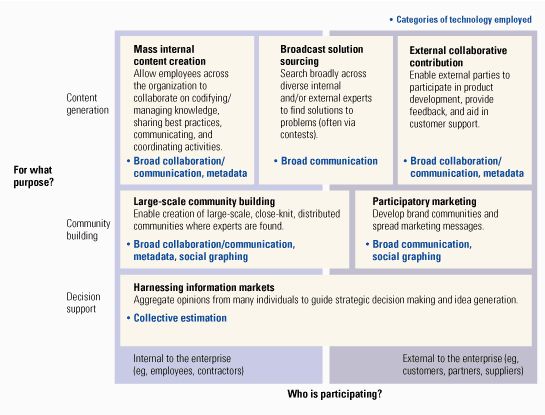

Clay Shirky, an adjunct professor at New York University, calls the underused human potential at companies an immense “cognitive surplus” and one that could be tapped by participatory tools. Corporate leaders are, of course, eager to find new ways to add value. Over the past 15 years, using a combination of technology investments and process reengineering, they have substantially raised the productivity of transactional processes. Web 2.0 promises further gains, although the capabilities differ from those of the past technologies (Exhibit 3).

Management capabilities unlocked by participation

Research by our colleagues shows how differences in collaboration are correlated with large differences in corporate performance (Scott C. Beardsley, Bradford C. Johnson, and James M. Manyika, “Competitive advantage from better interactions,” mckinseyquarterly.com, May 2006). Our most recent Web 2.0 survey demonstrates that despite early frustrations, a growing number of companies remain committed to capturing the collaborative benefits of Web 2.0 (“Building the Web 2.0 Enterprise: McKinsey Global Survey Results,” mckinseyquarterly.com, July 2008). Since we first polled global executives two years ago, the adoption of these tools has continued. Spending on them is now a relatively modest $1 billion, but the level of investment is expected to grow by more than 15 percent annually over the next five years, despite the current recession (see G. Oliver Young et al., “Can enterprise Web 2.0 survive the recession?” forrester.com, January 6, 2009).
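To put the spending forecast in concrete terms, the short sketch below compounds the figures quoted above. It assumes growth of exactly 15 percent a year from a $1 billion base; because the forecast says "more than 15 percent," the result is a floor rather than a prediction, and the function name is made up for this example.

```python
def projected_spending(current, annual_growth, years):
    """Compound a starting amount at a fixed annual growth rate."""
    return current * (1.0 + annual_growth) ** years


if __name__ == "__main__":
    # Amounts are in billions of dollars; 0.15 is the assumed 15 percent floor.
    floor = projected_spending(1.0, 0.15, 5)
    print(f"Spending after five years: at least ${floor:.2f} billion")
```

Under these assumptions, spending would roughly double to about $2 billion over the five years.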

Management imperatives for unlocking participation

To help companies navigate the Web 2.0 landscape, we have identified six critical factors that determine the outcome of efforts to implement these technologies.

1. The transformation to a bottom-up culture needs help from the top. Web 2.0 projects often are seen as grassroots experiments, and leaders sometimes believe the technologies will be adopted without management intervention—a “build it and they will come” philosophy. These business leaders are correct in thinking that participatory technologies are founded upon bottom-up involvement from frontline staffers and that this pattern is fundamentally different from the rollout of ERP systems, for example, where compliance with rules is mandatory. Successful participation, however, requires not only grassroots activity but also a different leadership approach: senior executives often become role models and lead through informal channels.