How to Write an Image Analysis Essay in 6 Easy Steps

Writing an analysis of a picture can be a little daunting, especially if analyzing and essay writing are not your strengths. Not to worry. In this tutorial, you’ll learn how to do it, even if you’re a beginner.

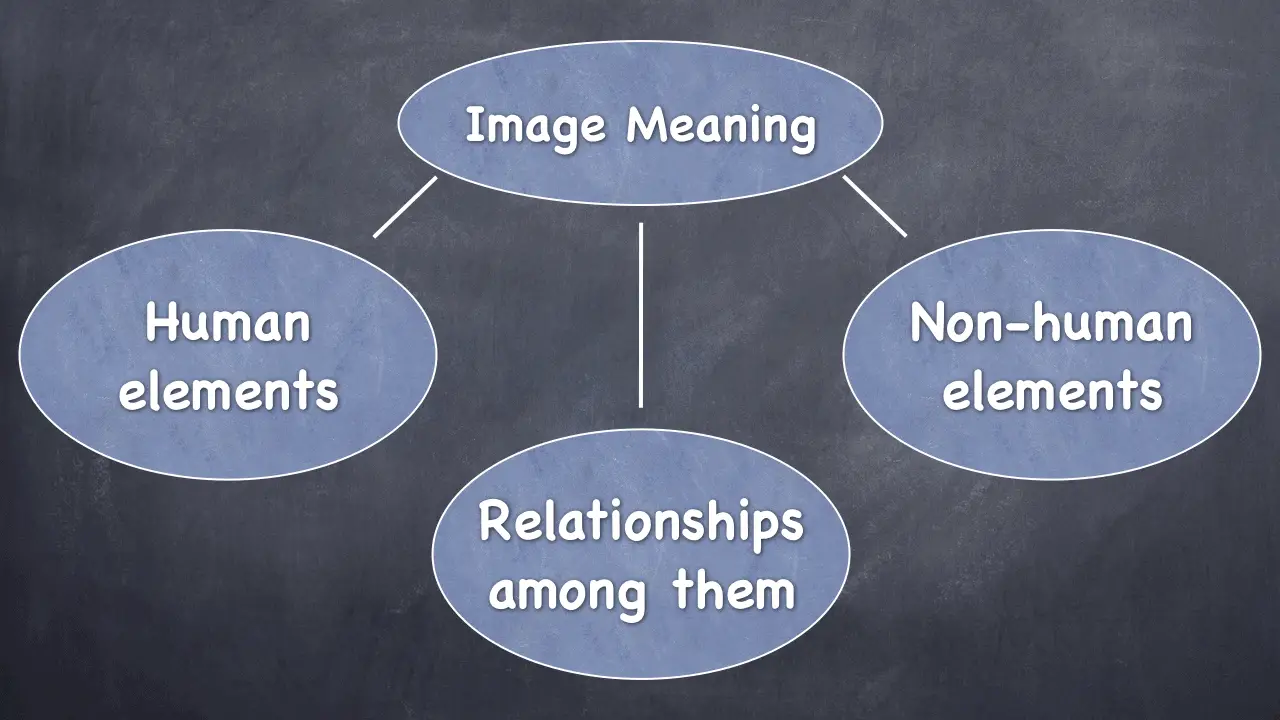

To write an effective visual analysis, all you need to do is break the image into parts and discuss the relationship between them. That’s it in a nutshell.

Writing an image analysis essay, whether you’re analyzing a photo, a painting, or any other kind of image, is a simple, 6-step process. Let me take you through it.

Together, we’ll analyze a simple image and write a short analysis essay based on it. You can analyze any image, such as a photo or a painting, by following these steps.

Here is a simple image we’ll analyze.

And we’re ready for the…

6 Steps to Writing a Visual Analysis Essay

Step 1: Identify the Elements

When you look at this image, what do you see?

Right now, you are not just a casual observer. You are like a detective who must inspect things thoroughly and be careful not to miss any details.

So, let’s put on our Sherlock Holmes hat, grab a magnifying glass, and make a list of all the major and some minor elements of this picture.

What do we observe?

- Children. How many? Four.

- Children’s hands. Four pairs.

Great. These are all human elements. In fact, it would be useful for us to have two categories of elements: human and non-human.

Grouping elements into categories will help us later, when we write the essay. Categories make it easier to think about the elements.

What other elements do we see?

- The hands are holding soil.

- Each handful of soil also has a tiny plant in it.

- Finally, we see the green lawn or ground on which the children stand.

These are all of the obvious elements in the image. But can we dig deeper and observe more?

Again, with our Sherlock Holmes hat on, our job is to gather information that may not be immediately obvious or noticeable.

Let’s take another look, using our detective instincts:

- The children’s hands are arranged in a circle.

- The children’s skin color varies from lighter to darker.

- The children wear summer clothes.

You may have noticed these elements even when you first saw the image. In that case, great job!

It looks like we’ve covered all the elements. We’re ready to move on to the next step.

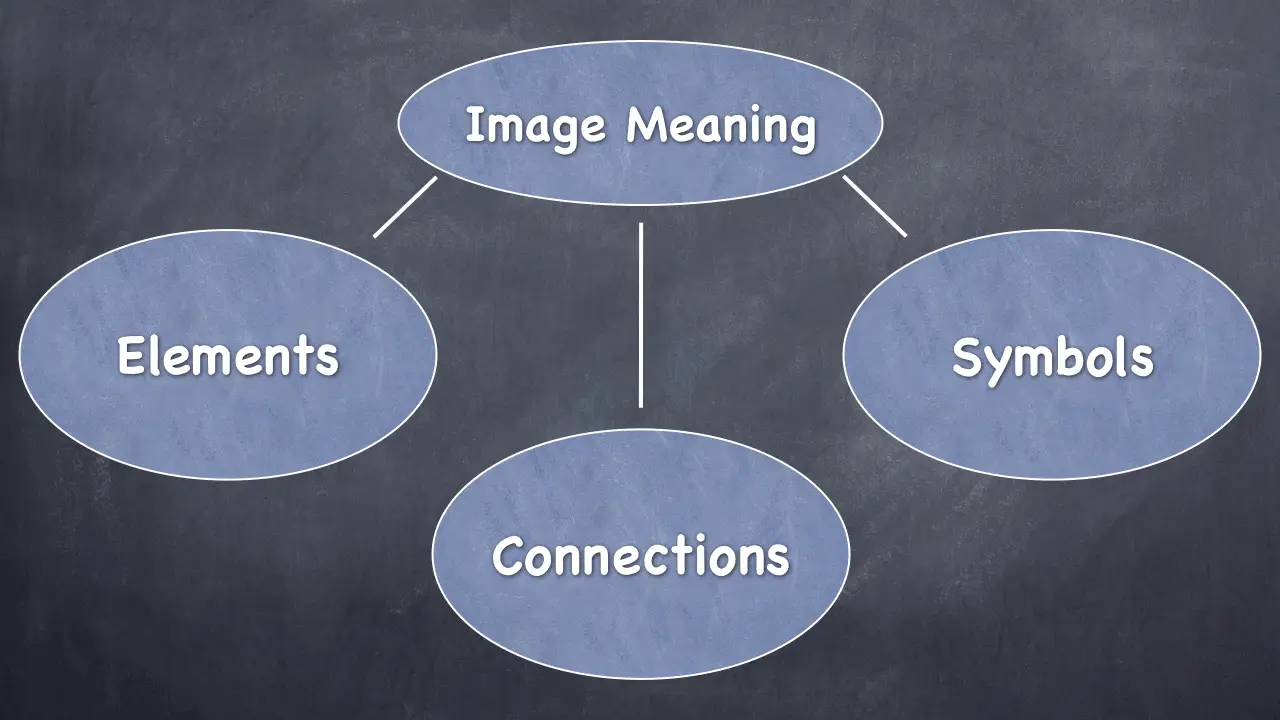

Step 2: Detect Symbols and Connections

What does Sherlock Holmes or any good detective do after basic observation? It is time to think and use our logic and imagination.

We will now look for symbols and any connections or relationships among the elements.

Identifying Symbols

- Children symbolize the future and hope.

- Their hands form a circle, creating a unifying effect. The symbol is unity, and there is power in unity.

- Children’s hands hold soil, and soil symbolizes earth, perhaps planet Earth.

- The earth holds young plants, which symbolize the environment and ecology.

- The young plants also symbolize youth and the future.

- The children wear summer clothes, and summer symbolizes happiness and freedom because this is when children are on vacation and enjoy life.

Great. Now, let’s see if we can make some connections and identify some relationships among the elements and symbols.

We will use our imagination to put together some kind of meaning.

In analyzing an image, we want to understand what the creator or the artist is trying to convey.

Do artists and photographers always want to convey something, or is it sometimes just a picture?

It doesn’t matter, because we never know what the artist really thought when creating the work. We’re not mind readers.

But we can always gather meaning using our own logic and imagination. We can derive meaning from any image. And that’s all we need to do to write an analysis essay.

Finding Connections and Relationships

Let’s allow our imagination to roam free and write down a few thoughts. Some ideas will be more obvious than others.

- This entire image seems to be about the future of the environment.

- Why is this future important? It’s important because of the future generations, symbolized by the children.

- A strong sense of long-term future is conveyed because not only do the children hold plants, but these are baby plants. The message is “children hold future generations.”

- The variety of skin colors implies diversity. Also, the hands form a circle. Together, these two elements can mean: “global diversity.”

As you can see, we can derive really interesting meaning from even a simple image.

We did a great job here and now have plenty of material to work with and write about. It’s time for the next step.

Step 3: Formulate Your Thesis

In this step, your task is to put together an argument that you will support in your essay. What can this argument be?

The goal of writing a visual analysis is to arrive at the meaning of the image and to reveal it to the reader.

We just finished the analysis by breaking the image down into parts. As a result, we have a pretty good idea of the meaning of the image.

Now, we need to take these parts and put them together into a meaningful statement. This statement will be our thesis.

Let’s do it.

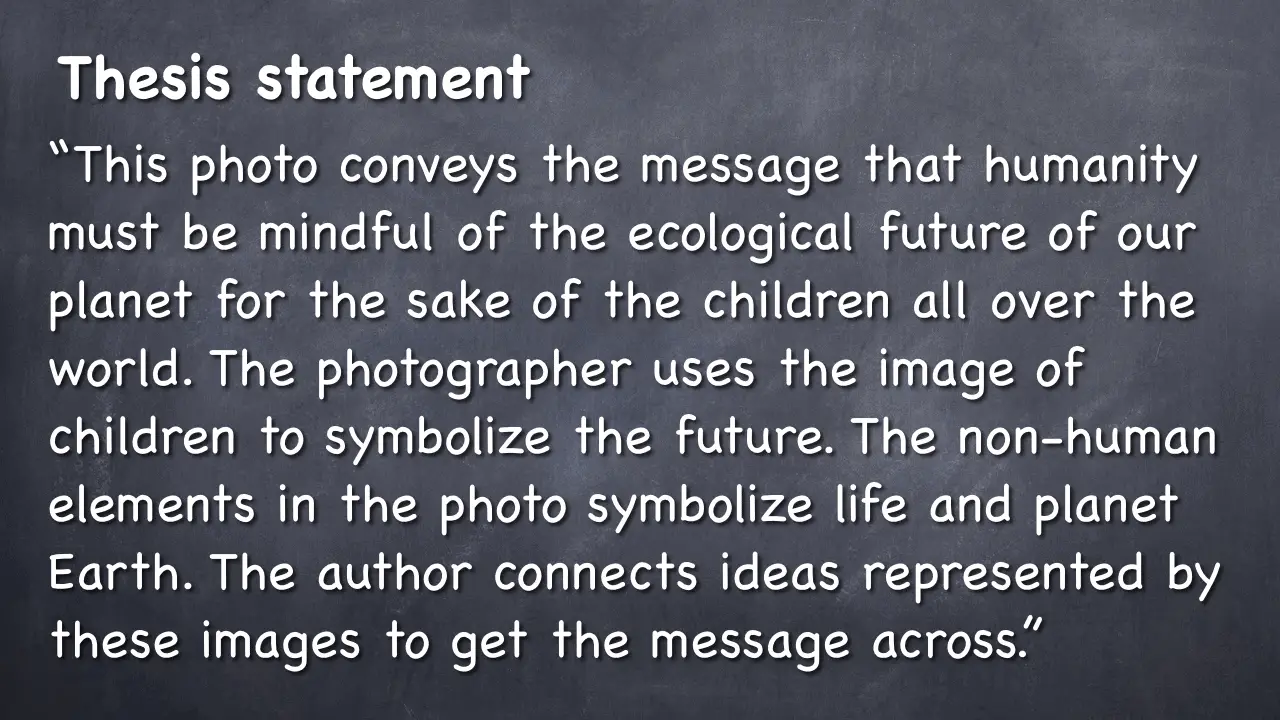

Writing the Thesis

This whole picture may mean something like the following:

This sounds good. Let’s write another version:

This sounds good, as well. What is the difference between the two statements?

The first one places the responsibility for the future of the planet on children.

The second one places this responsibility on all of humanity.

Therefore, the second statement just makes more sense. Based on it, let’s write our thesis.

We now have our thesis, which means we know exactly what argument we will be supporting in the essay.

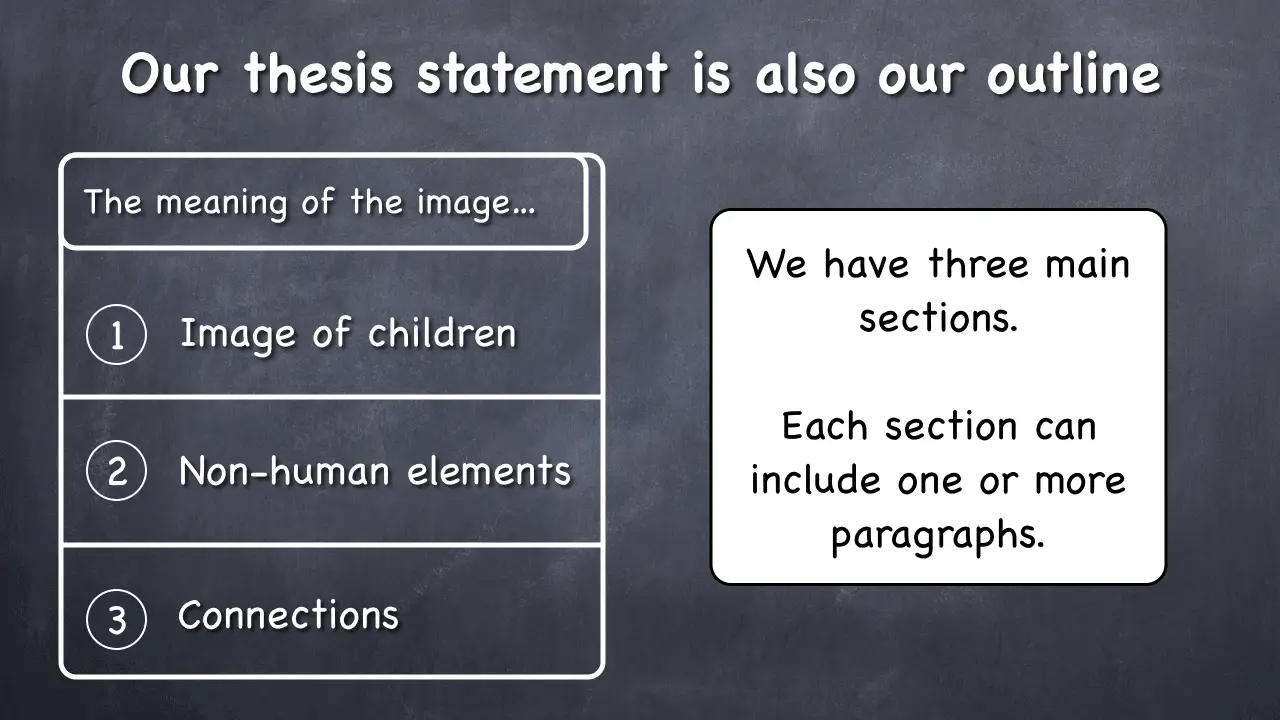

Step 4: Write the Complete Thesis Statement

While a thesis is our main point, a thesis statement is a complete paragraph that includes the supporting points.

To write it, we’ll use the Power of Three. This means that we are going to come up with three supporting points for our main point.

This is where our categories from Step 1 will come in handy. These categories are human and non-human elements. They will make up the first two supporting points for the thesis.

The third supporting point can be the relationships among the elements.

We can also pick a different set of supporting points. Our job here is to simply have three supporting ideas that make sense to us.

For example, we have our elements, symbols, and connections. And we can structure the complete argument this way:

All we really need is one way to organize our thoughts in the essay. Let’s go with the first version and formulate the supporting points.

Here’s our main point again:

Here are our supporting points:

- The photographer uses the image of children to symbolize the future.

- The non-human elements in the photo symbolize life and planet Earth.

- The author connects many ideas represented by images to get the message across.

Now we have everything we need to write the complete thesis statement. We’ll just put the main and the supporting statements into one paragraph.

Thesis Statement

Step 5: Write the Body of Your Essay

At this point, we have everything we need to write the rest of the essay. We know that it will have three main sections because the thesis statement is also our outline.

We’re ready to write the body of the essay. Let’s do it.

Body of the Essay (3 paragraphs)

“The author of this photograph chose children and, more specifically, children’s hands in order to convey his point. In many, if not all, human cultures, children evoke feelings of hope, new beginnings, and the future. This is why people often say, ‘Children are our future.’ Furthermore, the children in the photo are of different ethnic backgrounds. This is evident from their skin colors, which vary from lighter to darker. This detail shows that the author probably meant children all over the world.

The non-human elements of the picture are the plants and the soil. The plants are very young – they are just sprouts, and that signifies the fragility of life. The soil in which they grow evokes the image of our planet Earth. Soil also symbolizes fertility. The clothes the children wear are summer clothes, and summer signifies freedom because this is the time of a long vacation for schoolchildren. Perhaps the author implies that the environment affects people’s freedom.

Finally, the relationships and connections among these elements help the photographer convey the message that humans should be mindful of their decisions today to ensure a bright future for the planet. This idea can be arrived at by careful examination. First, the children’s hands are arranged in a circle, which is a symbol of our planet and also signifies the power of unity. The future depends on people’s cooperation. Second, the children seem to be in the process of planting. The author emphasizes the long-term future because the children hold baby plants. In other words, they ‘hold the future of other children’ in their hands. Third, the placement of the sprouts, which rest inside the soil in the children’s hands, is a strong way to suggest that the future of the ecology is literally ‘in our hands.’”

Step 6: Add an Introduction and a Conclusion

Before we continue: I have an entire, detailed article on how to write an essay step by step for beginners. In it, I walk you through writing every part of an essay, from the thesis to the conclusion.

Introduction

That said, your introduction should be just a sentence or two that come right before the thesis.

Let’s revisit our thesis statement, and then write the introduction.

And now let’s write an introductory sentence that would make the opening paragraph complete:

Now, if you read this intro sentence followed by the thesis statement, you’ll see that they work great together. And we’re done with the opening paragraph.

Conclusion

Your conclusion should be a simple restatement of your thesis. You can conclude your essay in many ways, but this is the most basic, time-proven one.

Let’s do it:

We simply restated our thesis here. Your conclusion can be one or more sentences. In a short essay, a sentence will suffice.

Guess what – we just wrote a visual analysis essay together, and now you have a pretty good idea of how to write one.

Hope this was helpful!


Tutor Phil is an e-learning professional who helps adult learners finish their degrees by teaching them academic writing skills.


How to Write a Visual Analysis Essay: Examples & Template

A visual analysis essay is an academic paper type that history and art students often deal with. It consists of a detailed description of an image or object. It can also include an interpretation or an argument that is supported by visual evidence.


In this article, our custom writing experts will:

- explain what a visual analysis is;

- share useful tips on how to write a good visual analysis essay;

- provide an essay sample.

- 🎨 Visual Analysis Definition

- 🏺 Artwork Analysis Tips

- ✅ Visual Analysis Writing Guide

- 📑 Example & Citation Tips

🎨 What Is a Visual Analysis?

The primary objective of visual analysis is to understand an artwork better by examining the visual elements. There are two types of visual analysis: formal and contextual.

- A formal analysis focuses on artwork elements such as texture, color, size, and line. It aims to organize visual information and translate it into words. A formal analysis doesn’t interpret the piece.

- Unlike formal analysis, contextual analysis aims primarily to connect an artwork to its purpose or meaning within a culture. A contextual analysis includes formal analysis. Additionally, it discusses an artwork’s social purpose and significance.

Usually, students deal with formal visual analysis. Before starting to work on your essay, make sure to ask your professor whether to include contextual analysis or not.

The Purpose of Analyzing Images

Why is visual analysis important? What does it help students learn? There are several things that visual analysis helps with:

- It allows students to enhance their appreciation of art.

- It enables students to develop the ability to synthesize information.

- It encourages students to seek out answers instead of simply receiving them.

- It prompts higher-order critical thinking and helps to create a well-reasoned analysis.

- By conducting visual analysis, students learn how to support and explain their ideas by studying visual information.

What Is Formal Analysis: Art History

When we look at an artwork, we want to know why it was created, who made it, and what its function was. That’s why art historians and researchers pay special attention to the role of artworks within historical contexts.


Visual analysis is a helpful tool in exploring art. It focuses on the following aspects:

- Interpretation of subject matter (iconography). An iconographic analysis is an explanation of the work’s meaning. Art historians try to understand what is shown and why it is depicted in a certain way.

- The analysis of function. Many works of art were designed to serve a purpose that goes beyond aesthetics. Studying how they were used historically helps us understand that purpose and learn more about the artworks. It also establishes a connection between function and appearance.

Formal Analysis: Art Glossary

Now, let’s look at some visual elements and principles and learn how to define them.

Visual Elements:

Visual Principles:

🏺 How to Analyze Artworks: Different Types

Writing a formal analysis is a skill that requires practice. Being careful and attentive during the pre-writing stage is essential if you want to create a good and well-structured visual analysis.


A visual analysis essay mainly consists of two components:

- Description of the selected image or object,

- Interpretation built on the visual evidence.

During the pre-writing stage:

- Collect general information about an artwork. Describe it briefly. Pay special attention to visual elements and principles:

- Develop an interpretation. Think critically. What does the information in your notes imply? How can it be interpreted?

- Support your ideas. To do so, refer to the visual elements directly. Avoid generalizations about art, and double-check your assignment prompt.

How to Analyze a Painting Using the Elements of Art

To write an excellent formal visual analysis, you need to consider as many visual principles and elements as you can apply. In the formal analysis part:

- Target your description;

- Address only those elements relevant to your essay;

- Pay attention to visual elements and principles;

- Introduce the subject of the painting and describe it;

- Explain why you have decided to discuss specific elements;

- Discuss the relationship between visual elements of the artwork;

- Use the appropriate art vocabulary.

If you are asked to do a contextual analysis, you may want to:

- Focus on the historical importance of an artwork;

- Explore the style or movement associated with an artwork;

- Learn about the historical context and the public’s reaction to the artwork;

- Learn about the author and how they created the piece of art.

Painting Analysis Essay Example & Tips

Here is a template you can use for your essay.


Now, let’s take a look at an essay example.

How to Analyze a Photograph

Analyzing photos has a lot in common with paintings. There are three methods on which photo visual analysis relies: description, reflection, and formal analysis. Historical analysis can be included as well, though it is optional.

- Description. This means looking closely at the photo and considering all the details. The description needs to be objective and consist of basic statements that don’t express an opinion.

- Reflection. For the next step, focus on the emotions that the photograph evokes. Here, every viewer will have a different opinion and feelings about the artwork. Knowing some historical context may be helpful to construct a thoughtful response.

- Formal analysis. Think of the visual elements and principles. How are they represented in the photograph?

- Historical analysis. For a contextual analysis, you need to pay attention to the external elements of the photograph. Make sure that you understand the environmental context in which the photo was taken. Under what historical circumstances was the picture made?

Photo Analysis Essay Tips

Now that we’ve talked about analyzing a photograph, let’s look at some tips that will help you write an essay.

How to Analyze a Sculpture

Visual analysis of a sculpture is slightly different from that of a painting or a photograph. However, it still uses similar concepts and relies on visual elements and principles. When you write about sculpture, consider:

Visual Analysis Essay on a Sculpture: Writing Tips

A sculpture analysis consists of the following parts:

- Description. Include specific details, such as what the sculpture may represent. For instance, the human figure may be an athlete, an ancient god, a poet, etc. Consider their pose, body build, and attire.

- Formal analysis. Here, visual elements and principles become the focus. Discuss the color, shape, technique, and medium.

- Contextual analysis. If you decide to include a contextual analysis, you can talk about the sculpture’s function and how it conveys the ideas and sentiments of its period. Mention its historical and cultural importance.

When it comes to sculpture analysis, you may also want to collect technical data such as:

- The size of the sculpture

- Medium (the material)

- The current condition (is it damaged, preserved as a fragment, or as a whole piece)

- Display (Was a sculpture a part of an architectural setting, or was it an independent piece of work?)

For instance, if you were to do a visual analysis of Laocoön and His Sons, you could first look up such details:

- Location: Discovered in a Roman vineyard in 1506

- Current location: Vatican

- Date: Hellenistic Period (323 BCE – 31 BCE)

- Size: Height 208 cm; Width 163 cm; Depth 112 cm

- Material: Marble

- Current condition: Missing several parts.

Visual Analysis Essay: Advertisement Analysis

Visuals are used in advertisements to attract attention or convince the public that they need what is being advertised. The purpose of a visual argument is to create interest. Advertisements use images to convey information and communicate with the audience.

When writing a visual analysis of an advertisement, pay attention to the following:

- text elements,

- illustrations,

- composition.

All of this influences how the viewer perceives the information and reacts to it.

When you write about an advertisement, you conduct a rhetorical analysis of its visual elements. Visual rhetoric is mainly directed at analyzing images and extracting information from them. It helps to understand the use of typography, imagery, and the arrangement of elements on the page.

Think of famous examples of visual rhetoric, such as the We Can Do It! poster or a Chanel №5 commercial. Both examples demonstrate how persuasive imagery has been used throughout history.

How to Write a Visual Analysis Paper on an Advertisement

The presentation of visual elements in advertising is essential. It helps to convince the audience. When you analyze visual arguments, always keep the rhetorical situation in mind. Here are some crucial elements to focus on:

✅ How to Write a Visual Analysis Paper: Step by Step

Now, we’ll focus on the paper itself and how to structure it. But first, check out the list of topics and choose what suits you best.

Visual Analysis Essay Topics

There are a lot of artworks and advertisements that can be analyzed and viewed from different perspectives. Here are some essay topics on visual analysis that you may find helpful:

Painting:

- Analyze Gustav Klimt’s The Kiss (1907-1908).

- The theme of humanity and The Son of Man (1964) by René Magritte.

- The use of visual elements in Almond Blossom by Vincent van Gogh (1888-1890).

- Identity and Seated Harlequin (1901) by Picasso.

- Explore the themes of Paul Klee’s The Tree of Houses (1918).

- Objectives, activities, and instructions of Pietro Perugino’s fresco The Delivery of the Keys to Saint Peter.

- Reflection on social issues of the time in Two Fridas by Frida Kahlo and Untitled by Ramses Younan.

- Analyze the importance of Mural (1943) by Jackson Pollock.

- The political message in John Gast’s painting American Progress (1872).

- Describe the visual techniques used in Toy Pieta by Scott Avett.

- The interpretation of the painting Indian Fire God by Frederic Remington.

- Explore the historical significance and aesthetic meaning of Ognissanti Madonna by Giotto di Bondone.

- Analyze different interpretations of The Three Dancers by Pablo Picasso.

Photography:

- The idea behind Lindsay Key (1985) by Robert Mapplethorpe.

- Explore the mythical appeal of Robert Capa’s photograph The Falling Soldier (Spain, 1936) from the photobook Death in the Making.

- Describe Two Boys with Fish (2018) from the Faith series by Mario Macilau.

- Kevin Carter’s Starving Child and Vulture (1993) as a representation of photojournalism.

- The story behind Philippe Halsman’s Dali Atomicus (1948).

- Describe The Starving Boy in Uganda photograph by Mike Wells.

- Analyze the view of a historic disaster in George R. Lawrence’s San Francisco photograph.

- The statement behind Eddie Adams’s photo Shooting a Viet Cong Prisoner.

- How is Steve McCurry’s perception of the world reflected in his photo Afghan Girl?

- Analyze the reflection of Ansel Adams’s environmental philosophy in his photo Moon and Half Dome (1960).

- Describe Girl on the Garda Lake (2016) by Giuseppe Milo.

- Combination of internal geometry and true-to-life moments in Behind the Gare Saint Lazare by Henri Cartier-Bresson.

Sculpture:

- Modern art and Couple on Seat by Lynn Chadwick (1984).

- Analyze the biblical context of Pieta (1498-1499) by Michelangelo.

- The use of shapes in Louise Bourgeois’ Spider (1996).

- Analysis of the symbolism behind The Thinker (1880) by Rodin.

- The historical meaning of Fountain (1917) by Duchamp.

- Analyze the Miniature Statue of Liberty by Willard Wigan.

- The combination of Egyptian culture and classical Greek ideology in the statue of Osiris-Antinous.

- Reflection of civilizational values in Emperor Qin’s Terracotta Army.

- The aesthetic and philosophical significance of Michelangelo’s David.

- Explore the controversial meaning of Damien Hirst’s sculpture For the Love of God (2007).

- Analyze the elements of art and design used in The Thinker by Auguste Rodin.

- Symbolic elements in the Ancient Greek statues of Zeus.

- Depiction of the fundamental aspects of Buddhism in The Parinirvana of Siddhartha/Shakyamuni.

Advertisement:

- How the Volkswagen Think Small (1960) ad changed advertising.

- Analyze the use of figures in the California Milk Processor Board’s Got Milk? (1993) ad campaign.

- Analyze the use of colors in Coca-Cola’s The Pause That Refreshes (1931).

- Explore the historical context of the We Can Do It! (1942) campaign.

- The importance of the slogan in De Beers’ A Diamond Is Forever (1947).

- Examine the specifics of visual advertising: dogs and their humans.

- Describe the use of visual techniques in a Kentucky Fried Chicken advertisement.

- Analyze the multiple messages behind the JBL print ad.

- Discuss the methods used in the Toyota Highlander advertisement.

- Elucidation of people’s dependency on social networks in Miller Lite’s advertising campaign Followers.

- The use of visual arguments in the Schlitz Brewing Company advertisement.

- The role of colors and fonts in the Viva la Juicy perfume advertisement.

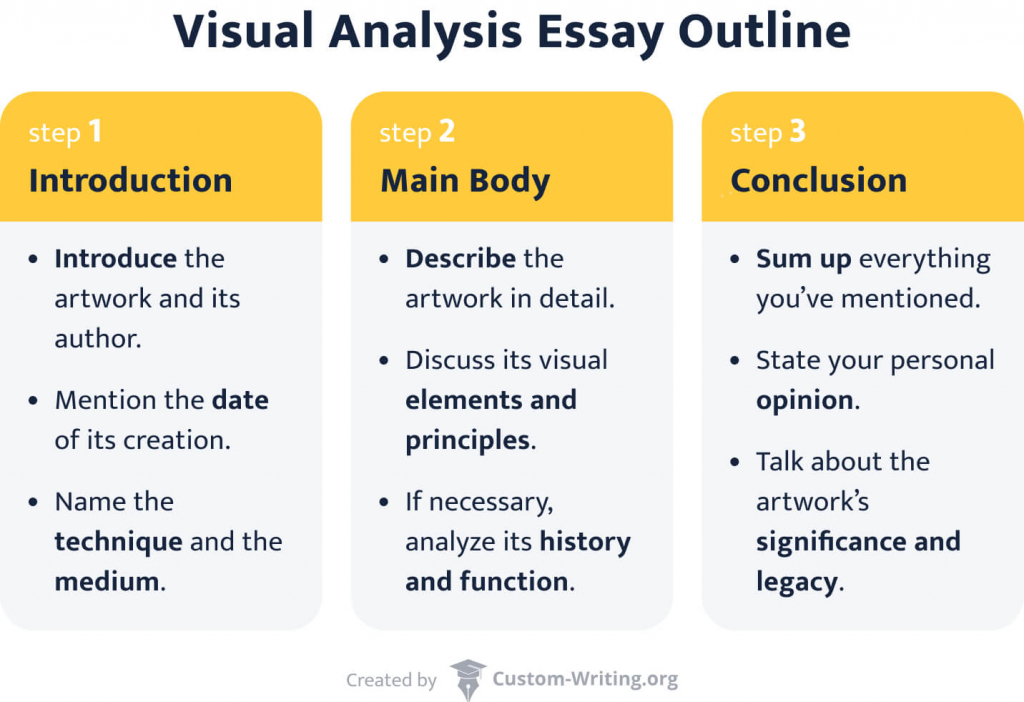

Visual Analysis Essay Outline

You can use this art analysis template to structure your essay:

How to Start an Art Essay

Every analysis starts with an introduction. In the first paragraph, make sure that:

- the reader knows that this essay is a visual analysis;

- you have provided all the necessary background information about an artwork.

It’s also important to know how to introduce an artwork. If you’re dealing with a painting or a photograph, it’s better to include an image of it on the first page of your analysis. This way, the reader can see the piece and use it as a reference while reading your paper.

Art Thesis Statement Examples & Tips

Formulating a thesis is an essential step in every essay. Depending on the purpose of your paper, you can either focus your visual analysis thesis statement on formal elements or connect it with the contextual meaning.

To create a strong thesis, you should relate it to an artwork’s meaning, significance, or effect. Your interpretation should put forward an argument that someone could potentially disagree with.

- For instance, you can consider how formal elements or principles impact the meaning of an artwork. Here are some options you can consider:

- If your focus is the contextual analysis, you can find the connection between the artwork and the artist’s personal life or a historical event.

How to Write Visual Analysis Body Paragraphs

Body paragraphs of a formal analysis consist of two parts: the description and the analysis itself. Let’s take Klimt’s The Kiss as an example:

The contextual analysis includes interpretation and evaluation.

Visual Analysis Essay Conclusion

When you work on the conclusion, try to conclude your paper without restating the thesis. At the end of your essay, you can present an interesting fact. You can also try to:

- Compare an artwork to similar ones;

- Contrast your own ideas about the piece with the reaction people had when it was first revealed;

- Talk about an artwork’s significance to the culture and art in general.

📑 Visual Analysis Essay Example & Citation Tips

In this section of the article, we will share some tips on how to reference an artwork in a paper. We will also provide an essay example.

How to Reference a Painting in an Essay

When you work on a visual analysis, it is important to know how to write the title of an artwork properly. Citing a painting, a photograph, or any other visual source requires a little more information than citing a book or an article. Here is what you will need:

- Size dimensions

- Current location

- Name of the piece

- Artist’s name

- Date when the artwork was created

If you want to cite a painting or an artwork you saw online, you will also need:

- The name of the website

- Website URL

- Page’s publication date

- Date of your access

How to Properly Credit an Artwork in APA

How to Properly Credit an Artwork in MLA

How to Properly Credit an Artwork in Chicago Format

Finally, here’s a sample visual analysis of Rodin’s sculpture The Thinker in APA format.

Many people believe that works of art are bound to be immortal. Indeed, some remarkable masterpieces have outlived their artists by many years, gaining more and more popularity with time. Among them is The Thinker, a brilliant sculpture by Auguste Rodin that depicts a young, athletic man immersed deep in thought.

You can also look at the following essay samples to get even more ideas.

- The Protestors Cartoon by Clay Bennett: Visual Analysis

- Visual Analysis – Editorial Cartoon

- Visual Analysis: “Dust Storm” Photo by Steve McCurry

- Visual, Aural, Read & Write, Kinesthetic Analysis

- Schlitz Brewing Company Advertisement: Visual Arguments Analysis

Thanks for reading through our article! We hope you found it helpful.


❓ Visual Analysis FAQs

To write a visual argument essay, you need to use rhetorical analysis. Visual rhetoric is directed at analyzing images and extracting the information they contain. It helps to analyze the visuals and the arrangement of elements on the page.

A well-thought-out contextual analysis will include:

1. Formal analysis.

2. Some information about the artist.

3. Details on when and where the piece was created.

4. The social purpose of the work.

5. Its cultural meaning.

It is better to include pictures in the introduction part of your paper. Make sure to cite them correctly according to the format you’re using. Don’t forget to add the website name, the URL, and the access date.

To analyze means not only to describe but also to evaluate and synthesize visual information. To do that, you need to learn about visual elements and principles and see how and why they are used within artworks.


- Share to Facebook

- Share to Twitter

- Share to LinkedIn

- Share to email



How to Write a Visual Analysis Essay: Mastering Artful Interpretations 👌


Setting itself apart from other essays, visual analysis essays necessitate a thorough examination of design elements and principles. Whether it's the mysterious smile of the 'Mona Lisa' or a striking photograph capturing a fleeting moment, visual art has the power to move us. Writing this kind of paper is like peeling back the layers of a visual story, uncovering its meanings, and unraveling its impact.

Think of it as decoding the secrets a picture holds. Imagine standing in front of a famous painting, like the 'Mona Lisa' in the Louvre. Millions are drawn to it, captivated by the tale it tells. Your essay lets you share your perspective on the stories hidden in images.

If you're unsure about tackling this kind of essay, don't worry: this blog offers a straightforward guide. The expert team at our online essay service will walk you through each step of writing the essay, offering tips and examples along the way.

What Is a Visual Analysis Essay

A visual analysis essay is a unique form of writing that delves into the interpretation of visual elements within an image, such as a painting, photograph, or advertisement. Rather than focusing solely on the subject matter, this type of essay scrutinizes the design elements and principles employed in the creation of the visual piece.

Design Elements: These include fundamental components like color, size, shape, and line. By dissecting these elements, you gain a deeper understanding of how they contribute to the overall composition and convey specific messages or emotions.

Design Principles: Equally important are the design principles—balance, texture, contrast, and proportion. These principles guide the arrangement and interaction of the design elements, influencing the visual impact of the entire composition.

Purpose: The goal is not only to describe the visual content but also to decipher its underlying meaning and the artistic choices made by the creator. It goes beyond the surface level, encouraging the writer to explore the intentions behind the visual elements and how they communicate with the audience.

Stepwise Approach: Work through this essay in stages. Begin by closely observing the image, noting each design element and principle. Then, interpret how these choices contribute to the overall message or theme. Structure your essay to guide the reader through your analysis, providing evidence and examples to support your interpretations.

Tips for How to Write a Visual Analysis Essay Successfully:

- Use clear and concise language.

- Support your analysis with specific details from the visual piece.

- Consider the historical or cultural context when applicable.

- Connect your observations to the overall artistic or rhetorical goals.

Sample Visual Analysis Essay Outline

This sample outline offers a framework for organizing a comprehensive structure for a visual analysis essay, ensuring a systematic exploration of design elements and principles. Adjustments can be made based on the specific requirements of the assignment and the characteristics of the chosen visual piece. Now, let's delve into how to start a visual analysis essay using this template.

I. Visual Analysis Essay Introduction

A. Briefly introduce the chosen visual piece

- Include relevant details (title, artist, date)

B. Provide a thesis statement

- Express the main point of your analysis

- Preview the key design elements and principles to be discussed

II. Description of the Visual Piece

A. Present an overview of the visual content

- Describe the subject matter and overall composition

- Highlight prominent visual elements (color, size, shape, line)

III. Design Elements Analysis

A. Color

- Discuss the use of color and its impact on the composition

- Explore the emotional or symbolic associations of specific colors

B. Size and Shape

- Analyze the significance of size and shape in conveying meaning

- Discuss how these elements contribute to the overall visual appeal

C. Line

- Examine the use of lines and their role in guiding the viewer's gaze

- Discuss any stylistic choices related to lines

IV. Design Principles Analysis

A. Balance

- Discuss the visual balance and how it contributes to the overall harmony

- Analyze whether the balance is symmetrical or asymmetrical

B. Texture

- Explore the use of texture and its impact on the viewer's perception

- Discuss how texture adds depth and visual interest

C. Contrast

- Analyze the contrast between elements and its effect on the composition

- Discuss whether the contrast enhances the visual impact

D. Proportion

- Discuss the proportion of elements and their role in creating a cohesive visual experience

- Analyze any intentional distortions for artistic effect

V. Interpretation and Analysis

A. Explore the overall meaning or message conveyed by the visual piece

- Consider the synthesis of design elements and principles

- Discuss any cultural or historical context influencing the interpretation

VI. Conclusion

A. Summarize the key points discussed in the analysis

B. Restate the thesis in the context of the insights gained

C. Conclude with a reflection on the overall impact and effectiveness of the visual piece.

An In-Depth Guide to Analyzing Visual Art

This in-depth guide on how to start a visual analysis essay begins with establishing a contextual foundation, progresses to a meticulous description of the painting, and culminates in a comprehensive analysis that unveils the intricate layers of meaning embedded in the artwork. As we navigate through each step of writing a visual analysis paper, the intention is not only to see the art but to understand the language it speaks and the stories it tells.

Step 1: Introduction and Background

Analyzing a work of art starts with setting the stage: the introduction and background. Begin by providing essential context about the artwork, including details about the artist, the time period, and the broader artistic movement it may belong to. This preliminary step allows the audience to grasp the significance of the painting within a larger cultural or historical framework.

Step 2: Painting Description

The next crucial phase in visual analysis involves a meticulous examination and description of the painting itself. Take your audience on a vivid tour through the canvas, unraveling its visual elements such as color palette, composition, shapes, and lines.

Provide a comprehensive snapshot of the subject matter, capturing the essence of what the artist intended to convey. This step serves as the foundation for the subsequent in-depth analysis, offering a detailed understanding of the visual elements at play.

Step 3: In-Depth Analysis

With the groundwork laid in the introduction and the painting description, now it's time to dive into the heart of writing a visual analysis paper. Break down the visual elements and principles, exploring how they interact to convey meaning and emotion. Discuss the deliberate choices made by the artist in terms of color symbolism, compositional techniques, and the use of texture.

Consider the emotional impact on the viewer and any cultural or historical influences that might be reflected in the artwork. According to our custom essay service experts, this in-depth analysis goes beyond the surface, encouraging a profound exploration of the artistic decisions that shape the overall narrative of the visual piece.

How to Write a Visual Analysis Essay: A Proper Structure

Using the conventional five-paragraph essay structure proves to be a reliable approach for your essay. When examining a painting, carefully select the relevant aspects that capture your attention and analyze them in relation to your thesis. Keep it simple and adhere to the classic essay structure; it's like a reliable roadmap for your thoughts.


Introduction

The gateway to a successful visual analysis essay lies in a compelling introduction. Begin by introducing the chosen visual piece, offering essential details such as the title, artist, and date. Capture the reader's attention by providing a brief overview of the artwork's significance. Conclude the introduction with a concise thesis statement, outlining the main point of your analysis and previewing the key aspects you will explore.

Thesis Statement

Crafting a robust thesis statement is pivotal in guiding your analysis. Clearly articulate the primary message or interpretation you aim to convey through your essay. Your thesis should serve as the roadmap for the reader, indicating the specific elements and principles you will analyze and how they contribute to the overall meaning of the visual piece.

Body Paragraphs

The body is where the intricate exploration takes place. Divide this section into coherent paragraphs, each dedicated to a specific aspect of your analysis. Focus on the chosen design elements and principles, discussing their impact on the composition and the intended message. Support your analysis with evidence from the visual piece, providing detailed descriptions and interpretations. Consider the historical or cultural context if relevant, offering a well-rounded understanding of the artwork.

Conclusion

End with a concise yet impactful conclusion. Summarize the key points discussed in the body of the essay, reinforcing the connection between design elements, principles, and the overall message. Restate your thesis in the context of the insights gained through your analysis. Leave the reader with a final thought that encapsulates the significance of the visual piece and the depth of understanding achieved through your exploration.

Citations

In your essays, it's important to follow the usual citation rules to give credit to your sources. When you quote from a book, website, journal, or movie, use in-text citations in the style your teacher prefers, such as MLA or APA. At the end of your essay, list all your sources on a page titled 'Works Cited' (MLA) or 'References' (APA).

The good news for your analysis essays is that citing art is simpler. You don't need to stress about putting art citations in the middle of your sentences. In your introduction, just identify the artwork you're discussing, mentioning details like its title and who made it. After that, in the main part of your essay, you can refer to the artwork by its title, such as 'The Starry Night' by Vincent van Gogh.

This way, you can keep your focus on talking about the art without getting tangled up in the details of citing it in your text. Always keep in mind that using citations correctly makes your writing look more professional.

Visual Analysis Essay Example

To show more clearly what a good paper looks like, let's look at our sample art history visual analysis essay.

Unveiling the Details in Image Analysis Essay

Have you ever gazed at an image and wondered about the stories it silently holds? Describing images in visual analysis papers is not just about putting what you see into words; it's about unraveling the visual tales woven within every pixel. So, how do you articulate the unspoken language of images? Let's examine below:


- Start with the Basics: Begin your description by addressing the fundamental elements like colors, shapes, and lines. What hues dominate the image? Are there distinct shapes that catch your eye? How do the lines guide your gaze?

- Capture the Atmosphere: Move beyond the surface and capture the mood or atmosphere the image evokes. Is it serene or bustling with energy? Does it exude warmth or coolness? Conveying the emotional tone adds layers to your description.

- Detail the Composition: Dive into the arrangement of elements. How are objects positioned? What is the focal point? Analyzing the composition unveils the intentional choices made by the creator.

- Consider Scale and Proportion: Explore the relationships between objects. Are there disparities in size? How do these proportions contribute to the overall visual impact? Scale and proportion provide insights into the image's dynamics.

- Examine Textures and Patterns: Zoom in on the finer details. Are there textures that invite touch? Do patterns emerge upon closer inspection? Describing these nuances enriches your analysis, offering a tactile dimension.

- Cultural and Historical Context: Consider the broader context in which the image exists. How might cultural or historical factors influence its meaning? Understanding context adds depth to your description.

Final Thoughts

As we conclude our journey, consider this: how might your newfound appreciation for the subtleties of visual description enhance your understanding of the world around you? Every image, whether captured in art or everyday life, has a story to tell. Will you be the perceptive storyteller, wielding the brush of description to illuminate the tales that images whisper? The adventure of discovery lies in your hands, and the language of images eagerly awaits your interpretation. How will you let your descriptions shape the narratives yet untold?

Keep exploring, keep questioning, and let the rich tapestry of visual storytelling unfold before you. And if you're looking for a boost on how to write a thesis statement for a visual analysis essay, order an essay online, and our experts will gladly handle it for you!




Baker College Research Guides


COM 1020: Composition and Critical Thinking II


Understanding Visual Analysis Essays

A written analysis allows writers to explore the discrete parts of something (in this case, several visual artifacts) to better understand the whole and how it communicates its message.

We should also consider how the image(s) appeal to ethos, pathos, and logos, and why. Consider, for example, how most advertisements rely on an appeal to pathos, or emotion, to persuade consumers to buy a product. Some ads use humor to do so. Others evoke patriotism to persuade consumers to purchase a product (suggesting that buying a certain product will make them a good American).

This particular analysis will allow students to focus on visual materials relating to their career of interest to better understand how messages related to their field are composed and presented. This project will grant students the means to evaluate qualitative and quantitative arguments in the visual artifacts as well as interpret the claims made and supporting reasons. The project also will allow students to research discipline-specific and professional visual resources.

The audience for the analysis is an audience with comparable knowledge on the topic. Students should define and explain any terminology or jargon used that may be difficult for a general audience to understand.

Instructions:

Begin the essay by finding at least two examples of images relating to your intended future field of study (or a field that you are interested in learning more about). Use the Visual Analysis Planning Sheet to record your observations about the images. You will describe the images in great detail.

You will also need to research and find out who made the images, when, why, and for what purpose. (This is called the rhetorical situation.)

The essay should also explain what the purpose and intent of the images is and if there are any implicit messages (hidden messages) as well. An ad for Coca-Cola sells soda, but it also might imply something about family values. A public service announcement about hand-washing might also imply a sense of fear about pathogens and the spread of viruses from abroad. You should explore such obvious and hidden messages in your essay.

After describing all the key components, you’ll consider whether or not the images succeed at their goal or purpose and what these images suggest about how the field communicates its messages. See the Visual Analysis Planning Sheet for more help: https://docs.google.com/document/d/1HUa4_XZ84svJPJ2Ppe5TTIK20Yp7bd-h/edit

Suggested Organization of Visual Analysis Essay

I. Introduction (1 paragraph) - should contain a hook (attention-grabber), set the context for the essay, and contain your thesis statement (described below).

a. Thesis statement: State which two images are being analyzed and what your overall claim about them is. The thesis should make a claim about the images, such as whether they are effective or ineffective at communicating their message.

II. Explain the Rhetorical Situation of both images: (2 paragraphs) Begin by discussing what is being advertised or displayed, who made it (company, artist, writer, etc.), who is the target audience, where and when the image was published and shared, and where the image was made (country). Provide these details for both images being discussed and analyzed.

III. Description of both images (4-8 paragraphs). Discuss each image in full detail, providing the following details about both:

a. Describe what appears in the image. Be as detailed as possible.

b. Discuss the primary color choice used and what mood these colors create.

c. Explain the overall layout and organization of each image.

d. Discuss the use of wording in the visual image. What font is used, and what are its color and size?

e. Explain what the message in the visual actually says and what this message means/indicates/asks of viewers and readers.

f. Discuss any other relevant information (from the planning worksheet or anything else you think is noteworthy).

IV. Discussion and Evaluation (2-4 paragraphs) - Synthesize your findings and analyze what you think the smaller details accomplish.

- Discuss if the images appeal to ethos, pathos, or logos and provide evidence to back up your claim.

- Discuss what sociological, political, economic or cultural attitudes are indirectly reflected in the images. Back up your claims with evidence. An advertisement may be about a pair of blue jeans but it might, indirectly, reflect such matters as sexism, alienation, stereotyped thinking, conformism, generational conflict, loneliness, elitism, and so on.

- Assert what claims are being made by the images. Consider the reasons which support that claim: reasons about the nature of the visual's product or service, reasons about those responsible for that product or service, and reasons which appeal to the audience's values, beliefs, or desires.

V. Conclusion (1 paragraph) - should recap your response and close with a statement about your overall response to the chosen images. Review the messages the images convey, and combine the results of your findings to explain why they matter.

Drafting/Research Strategies:

To write a visual analysis, you must look closely at a visual object—and translate your visual observations into written text. However, a visual analysis does not simply record your observations. It also makes a claim about the images. You will describe the images in detail and then offer an analysis of what the images communicate at the surface level. You will also highlight any implicit messages that the images communicate. (Use Visual Analysis Planning Sheet). Students should begin the project by taking detailed notes about the images. Review every component of each image. Be precise. Consider the composition, colors, textures, size, space, and other visual and material attributes of the images. Go beyond your first impressions. This should take some time—allow your eye to absorb the image. Making a sketch of the work can help you understand its visual logic.

Good to Know

Below are some helpful resources to aid in creating your Visual Analysis Essay.

- Visual Analysis essay sample

- Photos and Illustrations

- Visual Elements: Play, Use, and Design


- Last Updated: Feb 23, 2024 2:01 PM

- URL: https://guides.baker.edu/com1020



- University of Washington Libraries


Images Research Guide: Image Analysis

Analyze images.

Content analysis

- What do you see?

- What is the image about?

- Are there people in the image? What are they doing? How are they presented?

- Can the image be looked at different ways?

- How effective is the image as a visual message?

Visual analysis

- How is the image composed? What is in the background, and what is in the foreground?

- What are the most important visual elements in the image? How can you tell?

- How is color used?

- What meanings are conveyed by design choices?

Contextual information

- What information accompanies the image?

- Does the text change how you see the image? How?

- Is the textual information intended to be factual and inform, or is it intended to influence what and how you see?

- What kind of context does the information provide? Does it answer the questions Where, How, Why, and For whom was the image made?

Image source

- Where did you find the image?

- What information does the source provide about the origins of the image?

- Is the source reliable and trustworthy?

- Was the image found in an image database, or was it being used in another context to convey meaning?

Technical quality

- Is the image large enough to suit your purposes?

- Are the color, light, and balance true?

- Is the image a quality digital image, without pixelation or distortion?

- Is the image in a file format you can use?

- Are there copyright or other use restrictions you need to consider?

Developed by Denise Hattwig.

More Resources

National Archives document analysis worksheets:

- Photographs

- All worksheets

Visual literacy resources:

- Visual Literacy for Libraries: A Practical, Standards-Based Guide (book, 2016) by Brown, Bussert, Hattwig, Medaille (UW Libraries availability)

- 7 Things You Should Know About... Visual Literacy (Educause, 2015)

- Keeping Up With... Visual Literacy (ACRL, 2013)

- Visual Literacy Competency Standards for Higher Education (ACRL, 2011)

- Visual Literacy White Paper (Adobe, 2003)

- Reading Images: an Introduction to Visual Literacy (UNC School of Education)

- Visual Literacy Activities (Oakland Museum of California)


- Last Updated: Nov 15, 2023 12:45 PM

- URL: https://guides.lib.uw.edu/newimages


ENC 1101 - Prof. Berkley


Image Analysis Essay

Assignment Description: Write an argumentative essay based on an image. The argument should focus on the image and the message the image conveys. All evidence for your argument should come from the image. The analysis should come from you. An excellent essay will analyze the image in a way that conveys a deeper meaning than one gets from simply observing the image.

Assignment Outcomes: The Image Analysis Essay should demonstrate your ability to make a logical argument that is well supported by evidence and to use MLA format and citation style correctly.

Assignment Requirements:

Write an argumentative essay on an image. The image cannot include any text.

Have an arguable thesis that is well supported by every paragraph of the essay.

Have a conclusion that answers the question, “So what?”

The only required source is the image itself. If necessary for your argument, you may bring in other sources that give historical era, artist’s information, or other background material that provides context for the image. All sources must be from a credible, academic source like those found in the Broward College databases.

Correctly cite and document sources according to MLA format, using both in-text citations and the works cited list.

Essays must be 800-1,000 words minimum.

Advice: Choose an image that evokes a strong reaction in you. Look for an image that is rich, so you have plenty of material with which to work. You may also want to tie it thematically to the research you've done in the other two essays.

Image Databases

- Norman Rockwell Museum (works best in Internet Explorer)

- Opposing Viewpoints in Context: Use "Advanced Search" to select "Cartoon" in the search box and "Images" as the content type.

- ARTstor: A repository of hundreds of thousands of digital images and related data.

- Cartoon Bank: Condé Nast single-image cartoons.

- Library of Congress: Collections of photographs, cartoons, and caricatures from American newspapers and magazines.

- LIFE Magazine: Hosted by Google; cover-to-cover issues of LIFE Magazine from November 23, 1936 to December 29, 1972, including advertisements.

- American Memory

- National Geographic Image Library

- Florida Memory Project


- Last Updated: Apr 18, 2024 10:58 AM

- URL: https://libguides.broward.edu/berkley

Medical image analysis based on deep learning approach

- Published: 06 April 2021

- Volume 80, pages 24365–24398 (2021)


- Muralikrishna Puttagunta

- S. Ravi (ORCID: orcid.org/0000-0001-7267-9233)


Medical imaging plays a significant role in many clinical applications, such as the procedures used for early detection, monitoring, diagnosis, and treatment evaluation of various medical conditions. The basics of the principles and implementations of artificial neural networks and deep learning are essential for understanding medical image analysis in computer vision. The Deep Learning Approach (DLA) in medical image analysis is emerging as a fast-growing research field. DLA has been widely used in medical imaging to detect the presence or absence of disease. This paper presents the development of artificial neural networks and a comprehensive analysis of DLA, which delivers promising medical imaging applications. Most DLA implementations concentrate on X-ray images, computerized tomography, mammography images, and digital histopathology images. The paper provides a systematic review of articles on the classification, detection, and segmentation of medical images based on DLA. This review is intended to guide researchers toward appropriate improvements in DLA-based medical image analysis.


1 Introduction

In the health care system, demand for medical imaging services has increased dramatically, e.g., radiography, endoscopy, Computed Tomography (CT), Mammography Images (MG), Ultrasound images, Magnetic Resonance Imaging (MRI), Magnetic Resonance Angiography (MRA), nuclear medicine imaging, Positron Emission Tomography (PET), and pathological tests. Moreover, analyzing medical images is often challenging and, because of the shortage of radiologists, time-consuming.

Artificial Intelligence (AI) can address these problems. Machine Learning (ML) is an application of AI that can function without being explicitly programmed: it learns from data and makes predictions or decisions based on past data. ML uses three learning approaches, namely, supervised learning, unsupervised learning, and semi-supervised learning. Conventional ML techniques involve extracting features, and selecting suitable features for a specific problem requires a domain expert. Deep learning (DL) techniques solve this feature selection problem: DL is a subset of ML that can automatically extract essential features from raw input data [88]. DL algorithms draw on concepts from cognitive and information theories. In general, DL has two properties: (1) multiple processing layers that can learn distinct features of data through multiple levels of abstraction, and (2) unsupervised or supervised learning of feature representations at each layer. Many recent review papers have highlighted the capabilities of advanced DLA in medical fields such as MRI [8], radiology [96], cardiology [11], and neurology [155].

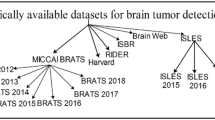

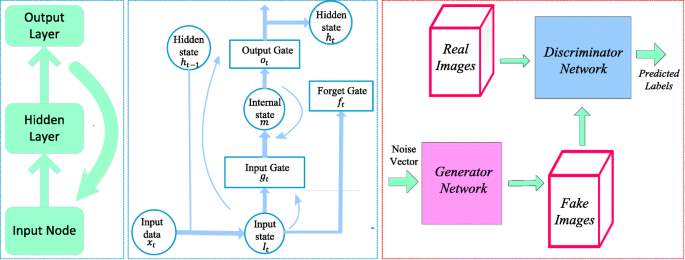

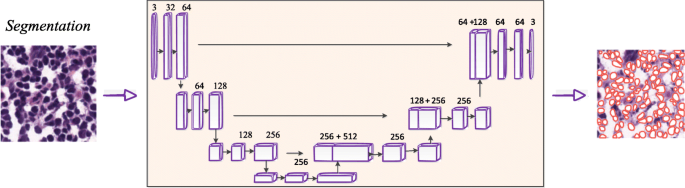

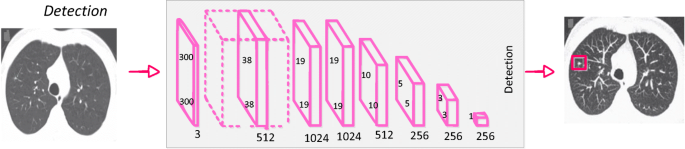

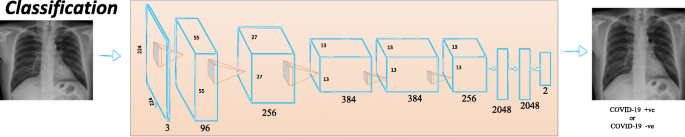

Different forms of DLA were borrowed from computer vision and applied to medical image analysis. Recurrent Neural Networks (RNNs) and Convolutional Neural Networks (CNNs) are examples of supervised DL algorithms. Unsupervised learning algorithms have also been studied in medical image analysis. These include Deep Belief Networks (DBNs), Restricted Boltzmann Machines (RBMs), Autoencoders, and Generative Adversarial Networks (GANs) [84]. DLA are generally applied to detect abnormalities and to classify specific types of disease. For medical images, CNNs are well suited to classification, segmentation, object detection, registration, and other tasks [29, 44]. A CNN is a neural network architecture, originally inspired by the visual system, that recognizes patterns in medical images by means of the convolution operation. DL applications in medical images are visualized in Fig. 1.

(a) X-ray image with pulmonary masses [121]; (b) CT image with a lung nodule [82]; (c) digitized histopathological tissue image [132]

2 Neural networks

2.1 History of neural networks

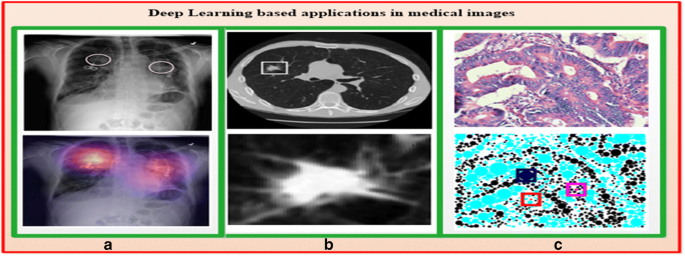

The study of artificial neural networks and deep learning stems from the goal of creating a computer system that simulates the human brain [33]. In the early 1940s, neurophysiologist Warren McCulloch and mathematician Walter Pitts [97] developed a primitive neural network based on what was then known about biological neurons. In 1949, the book "The Organization of Behavior" [100] was the first to describe a process for updating synaptic weights, now known as the Hebbian learning rule. In 1958, Frank Rosenblatt's [127] landmark paper defined the perceptron, a neural network structure for binary classification.

In 1962, Widrow [172] introduced the Adaptive Linear Neuron (ADALINE) and implemented the design in hardware. Minsky and Papert (1969) [98] highlighted the limitations of perceptrons. Backward propagation of errors as a training method was discussed by Werbos in 1974 [171]. In 1979, Fukushima [38] designed the Neocognitron, an artificial neural network with multiple convolution and pooling layers. In 1989, Yann LeCun [71] combined CNNs with backpropagation to recognize handwritten digits automatically. One of the most important breakthroughs in deep learning came in 2006. That year, Hinton et al. [9] introduced the Deep Belief Network, a stack of Restricted Boltzmann Machines trained greedily, one layer at a time, in an unsupervised fashion. Figure 2 shows important advances in the history of neural networks that led to the deep learning era.

Significant developments in the history of neural networks [33, 134]

2.2 Artificial neural networks

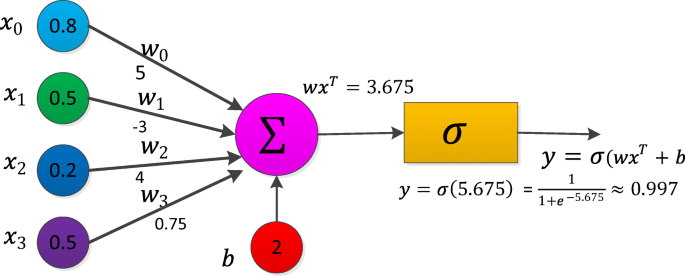

Artificial Neural Networks (ANNs) form the basis of most DLA. An ANN is a computational model whose behavior resembles that of biological neural networks in some respects. It consists of simple processing units, called neurons or nodes, interconnected by weighted links. A biological neuron can be described mathematically by Eq. (1). Figure 3 shows the simplest artificial neuron model, known as the perceptron.

Perceptron [77]
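This is a minimal sketch of the perceptron in Fig. 3. It assumes Eq. (1) takes the standard neuron form \( y = f(\sum_i w_i x_i + b) \), with weights \( w_i \), bias \( b \) and activation \( f \). The step activation, the zero initialization, the learning rate and the logical-AND training data are illustrative choices, not taken from the text. Training uses the classic perceptron learning rule.

```python
from typing import Sequence


def step(z: float) -> int:
    """Step activation: the neuron fires (1) only for a positive net input."""
    return 1 if z > 0 else 0


def neuron_output(x: Sequence[float], w: Sequence[float], b: float) -> int:
    """Assumed form of Eq. (1): y = f(sum_i w_i * x_i + b) with f = step."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return step(z)


def train_perceptron(data, targets, eta: float = 1.0, epochs: int = 20):
    """Perceptron rule: w <- w + eta * (t - y) * x and b <- b + eta * (t - y)."""
    w = [0.0] * len(data[0])
    b = 0.0
    for _ in range(epochs):
        for x, t in zip(data, targets):
            err = t - neuron_output(x, w, b)
            w = [wi + eta * err * xi for wi, xi in zip(w, x)]
            b += eta * err
    return w, b


# Logical AND is linearly separable, so the perceptron rule converges on it.
X = [(0, 0), (0, 1), (1, 0), (1, 1)]
T = [0, 0, 0, 1]
w, b = train_perceptron(X, T)
predictions = [neuron_output(x, w, b) for x in X]
print(predictions)  # -> [0, 0, 0, 1]
```

A single perceptron can only separate classes with a hyperplane. This is the limitation Minsky and Papert emphasized, and multilayer networks trained with backpropagation overcome it.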

2.3 Training a neural network with Backpropagation (BP)

In neural networks, learning is modeled as an iterative optimization of the weights that minimizes a loss function. The weights are adjusted according to the network's performance on the examples in the training set. Training consists of a forward phase and a backward phase: an activation function is chosen for forward propagation, and BP is used to update the weights. The BP algorithm enables a multilayer feedforward neural network (FFNN) to learn input-output mappings from training samples [16]. The following algorithm illustrates forward propagation and backpropagation for a neural network with one hidden layer.

The backpropagation algorithm for a neural network with one hidden layer is as follows:

1. Initialize all weights and biases to small random values.
2. While the stopping condition is false, do Steps 3 through 10.
3. For each training pair \( (x, t) \), do Steps 4 through 9. Here \( x = (x_1, \ldots, x_n) \) is an input pattern and \( t = (t_1, \ldots, t_m) \) is its target pattern.

Feedforward propagation:

4. Each input unit \( X_i \) (\( i = 1, \ldots, n \)) receives the input signal \( x_i \) and sends it to all units in the hidden layer.
5. Each hidden unit \( Z_j \) (\( j = 1, \ldots, p \)) computes its net input \( z_{j\_in} = b_j + \sum_{i=1}^{n} w_{ij} x_i \). It then applies the activation function \( z_j = f(z_{j\_in}) \) and sends \( z_j \) to the output units.
6. Each output unit \( Y_k \) (\( k = 1, \ldots, m \)) computes its net input \( y_{k\_in} = b_k + \sum_{j=1}^{p} z_j w_{jk} \) and its activation \( y_k = f(y_{k\_in}) \).

Backpropagation:

7. Each output unit computes its error term \( \delta_k = (t_k - y_k)\, f'(y_{k\_in}) \).
8. Each hidden unit computes its error term \( \delta_j = f'(z_{j\_in}) \sum_{k=1}^{m} \delta_k w_{jk} \), using the \( \delta_k \) of the output units obtained in Step 7.

Weight and bias update, where \( \eta \) is the learning rate:

9. Update the weights and biases as follows.
   - Each output unit \( Y_k \) updates its weights (\( j = 1, \ldots, p \)) and bias: \( w_{jk}(\text{new}) = w_{jk}(\text{old}) + \eta \delta_k z_j \) and \( b_k(\text{new}) = b_k(\text{old}) + \eta \delta_k \).
   - Each hidden unit \( Z_j \) updates its weights (\( i = 1, \ldots, n \)) and bias: \( w_{ij}(\text{new}) = w_{ij}(\text{old}) + \eta \delta_j x_i \) and \( b_j(\text{new}) = b_j(\text{old}) + \eta \delta_j \).
10. Test the stopping condition.
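The one-hidden-layer backpropagation procedure can be sketched in NumPy as below. The sketch rests on several assumptions. The sigmoid serves as \( f \) for every unit, so that \( f'(a) = f(a)(1 - f(a)) \). The stopping condition is a fixed epoch budget, and the weights start uniform in \( [-0.5, 0.5] \). The function names and the logical-AND toy data are hypothetical.

```python
import numpy as np


def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))


def train_backprop(X, T, p=3, eta=0.5, epochs=5000, seed=0):
    """Online backpropagation for a network with one hidden layer of p units.

    X: (N, n) input patterns; T: (N, m) target patterns. The sigmoid is used
    as f everywhere, so f'(a) is computed from the unit's output s as s * (1 - s).
    """
    rng = np.random.default_rng(seed)
    n, m = X.shape[1], T.shape[1]
    # Initialize weights and biases to small random values.
    W_in = rng.uniform(-0.5, 0.5, size=(n, p))   # w_ij: input i -> hidden j
    b_hid = rng.uniform(-0.5, 0.5, size=p)       # b_j
    W_out = rng.uniform(-0.5, 0.5, size=(p, m))  # w_jk: hidden j -> output k
    b_out = rng.uniform(-0.5, 0.5, size=m)       # b_k
    for _ in range(epochs):                      # stopping condition: epoch budget
        for x, t in zip(X, T):                   # one training pair at a time
            # Feedforward propagation
            z = sigmoid(b_hid + x @ W_in)        # z_j = f(z_j_in)
            y = sigmoid(b_out + z @ W_out)       # y_k = f(y_k_in)
            # Error terms, computed before any weight changes
            delta_k = (t - y) * y * (1.0 - y)            # (t_k - y_k) f'(y_k_in)
            delta_j = z * (1.0 - z) * (W_out @ delta_k)  # f'(z_j_in) sum_k delta_k w_jk
            # Weight and bias updates with learning rate eta
            W_out += eta * np.outer(z, delta_k)
            b_out += eta * delta_k
            W_in += eta * np.outer(x, delta_j)
            b_hid += eta * delta_j
    return W_in, b_hid, W_out, b_out


def predict(params, X):
    W_in, b_hid, W_out, b_out = params
    return sigmoid(b_out + sigmoid(b_hid + X @ W_in) @ W_out)


# Toy problem (logical AND); data and hyperparameters are illustrative.
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
T = np.array([[0], [0], [0], [1]], dtype=float)
untrained = train_backprop(X, T, epochs=0)   # same seed, so same initial weights
trained = train_backprop(X, T)
mse_before = np.mean((T - predict(untrained, X)) ** 2)
mse_after = np.mean((T - predict(trained, X)) ** 2)
print(f"MSE before: {mse_before:.4f}, after: {mse_after:.4f}")
print((predict(trained, X) > 0.5).astype(int).ravel())
```

The hidden error terms are computed with the old output weights, before any update is applied, as the algorithm prescribes.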

2.4 Activation function

The activation function is the mechanism by which artificial neurons process and transfer information [42]. Various activation functions can be used in neural networks, depending on the characteristics of the application. Activation functions are typically non-linear and differentiable, although some, such as ReLU, are differentiable everywhere except at a single point. Differentiability matters mainly because neural networks are trained with the gradient descent method. Some widely used activation functions are listed in Table 1.
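This minimal NumPy sketch covers three widely used activation functions: sigmoid, tanh and ReLU. Whether these match the rows of Table 1 is an assumption. Each is paired with the derivative that gradient descent relies on, and a central finite-difference check confirms the derivatives.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def sigmoid_grad(x):
    s = sigmoid(x)
    return s * (1.0 - s)


def tanh(x):
    return np.tanh(x)


def tanh_grad(x):
    return 1.0 - np.tanh(x) ** 2


def relu(x):
    return np.maximum(0.0, x)


def relu_grad(x):
    # ReLU is not differentiable at 0; a common convention uses 0 there.
    return (x > 0).astype(float)


ACTIVATIONS = {
    "sigmoid": (sigmoid, sigmoid_grad),
    "tanh": (tanh, tanh_grad),
    "relu": (relu, relu_grad),
}

# Compare each analytic derivative with a central finite difference
# (points chosen away from 0, where ReLU has its kink).
x = np.array([-2.0, -0.5, 0.3, 1.7])
eps = 1e-6
for name, (f, df) in ACTIVATIONS.items():
    numeric = (f(x + eps) - f(x - eps)) / (2 * eps)
    print(name, np.max(np.abs(numeric - df(x))))
```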

3 Deep learning

Deep learning is a subset of machine learning that deals with the development of deep neural networks inspired by the biological neural networks of the human brain.

3.1 Autoencoder

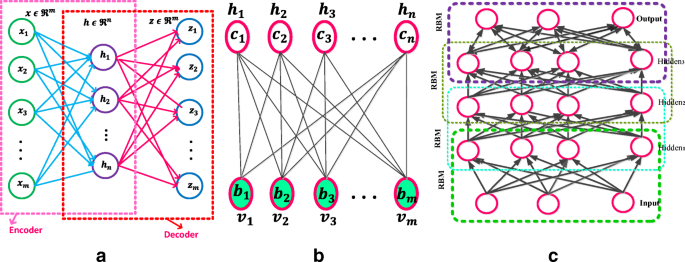

The autoencoder (AE) [128] is a deep learning model that exemplifies the principle of unsupervised representation learning, as depicted in Fig. 4a. An AE is useful when unlabeled data far outnumber labeled data. It encodes the input x into a lower-dimensional hidden representation. Through this single hidden layer, it then decodes the representation into an approximate reconstruction of x.

(a) Autoencoder [187]; (b) Restricted Boltzmann Machine with n hidden and m visible units [88]; (c) Deep Belief Network [88]

A basic AE involves three main steps:

Encode: convert the input vector \( x \in \mathbb{R}^{m} \) into the hidden representation \( h \in \mathbb{R}^{n} \) by \( h = f(Wx + b) \), where \( W \in \mathbb{R}^{n \times m} \) and \( b \in \mathbb{R}^{n} \). Here m and n are the dimensions of the input vector and the hidden state. The hidden dimension is chosen smaller than the input dimension (\( n < m \)), and \( f \) is an activation function.

Decode: from \( h \), reconstruct the input vector as \( z = f'(W'h + b') \), where \( W' \in \mathbb{R}^{m \times n} \) and \( b' \in \mathbb{R}^{m} \). Here \( f' \) denotes the decoder's activation function (not a derivative), of the same kind as \( f \).

Compute the squared error: the reconstruction error cost function is

\[ L_{\mathrm{recons}}(x, z) = \lVert x - z \rVert^{2} \tag{2} \]

and the reconstruction error is minimized by optimizing this cost function.
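The three AE steps can be sketched in NumPy as below. The sketch makes these assumptions:

- a sigmoid for both \( f \) and \( f' \);
- toy sizes \( m = 8 \) and \( n = 3 \);
- plain per-sample gradient descent on the squared reconstruction error.

The class name `TinyAutoencoder`, the attributes `W2`/`b2` (standing for \( W' \) and \( b' \)) and the one-hot training data are hypothetical.

```python
import numpy as np


def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))


class TinyAutoencoder:
    """One-hidden-layer AE: x in R^m -> h in R^n -> z in R^m, with n < m."""

    def __init__(self, m, n, seed=0):
        rng = np.random.default_rng(seed)
        self.W = rng.normal(0.0, 0.1, size=(n, m))    # encoder weights W
        self.b = np.zeros(n)                          # encoder bias b
        self.W2 = rng.normal(0.0, 0.1, size=(m, n))   # decoder weights W'
        self.b2 = np.zeros(m)                         # decoder bias b'

    def encode(self, x):
        return sigmoid(self.W @ x + self.b)           # h = f(Wx + b)

    def decode(self, h):
        return sigmoid(self.W2 @ h + self.b2)         # z = f'(W'h + b')

    def loss(self, x):
        z = self.decode(self.encode(x))
        return float(np.sum((x - z) ** 2))            # ||x - z||^2

    def sgd_step(self, x, lr):
        h = self.encode(x)
        z = self.decode(h)
        # Gradients of ||x - z||^2 through the sigmoid decoder, then the encoder.
        d_out = -2.0 * (x - z) * z * (1.0 - z)
        d_hid = (self.W2.T @ d_out) * h * (1.0 - h)   # uses W2 before its update
        self.W2 -= lr * np.outer(d_out, h)
        self.b2 -= lr * d_out
        self.W -= lr * np.outer(d_hid, x)
        self.b -= lr * d_hid


X = np.eye(4, 8)                  # four one-hot inputs in R^8 (toy data)
ae = TinyAutoencoder(m=8, n=3)
before = np.mean([ae.loss(x) for x in X])
for _ in range(2000):
    for x in X:
        ae.sgd_step(x, lr=0.5)
after = np.mean([ae.loss(x) for x in X])
print(f"mean reconstruction error: {before:.3f} -> {after:.3f}")
```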

Another unsupervised representation-learning model is the Stacked Autoencoder (SAE). An SAE stacks several autoencoder layers on top of each other, with the output of each layer wired to the input of the next. The Denoising Autoencoder (DAE) was introduced by Vincent et al. [159]. A DAE is trained to reconstruct the clean input from a copy corrupted with random noise. The Variational Autoencoder (VAE) [66] modifies the encoder so that the latent space used to represent the images follows a unit Gaussian distribution. The VAE is trained with two losses. One is a mean squared error; the other is the Kullback-Leibler (KL) divergence, which measures how closely the latent variables match the unit Gaussian. Sparse autoencoders [106] and VAEs have been applied to unsupervised and semi-supervised learning and to segmentation.
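The two VAE loss terms can be illustrated with a short NumPy sketch. It assumes the encoder outputs a mean `mu` and a log-variance `log_var` for a diagonal Gaussian. Under that assumption, the KL term to the unit Gaussian has a closed form, and sampling uses the reparameterization trick. The encoder and decoder networks are omitted, and the numeric inputs are hypothetical stand-ins. Adding the two terms without weights is also an assumption, since implementations often weight or scale them differently.

```python
import numpy as np


def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, I) (reparameterization trick)."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * log_var) * eps


def kl_to_unit_gaussian(mu, log_var):
    """Closed-form KL( N(mu, diag(exp(log_var))) || N(0, I) )."""
    return -0.5 * np.sum(1.0 + log_var - mu ** 2 - np.exp(log_var))


def vae_loss(x, x_recon, mu, log_var):
    """Mean squared reconstruction error plus the KL term (unweighted sum)."""
    mse = np.mean((x - x_recon) ** 2)
    return mse + kl_to_unit_gaussian(mu, log_var)


rng = np.random.default_rng(0)
mu = np.array([0.5, -1.0])                 # hypothetical encoder outputs
log_var = np.array([0.0, -0.5])
z = reparameterize(mu, log_var, rng)       # latent sample that would go to the decoder
x = np.array([0.2, 0.8, 0.5])
x_recon = np.array([0.25, 0.7, 0.5])       # stand-in for the decoder's output
print(z, vae_loss(x, x_recon, mu, log_var))
```

The KL term is zero exactly when every latent dimension has mean 0 and variance 1. This is how the loss pulls the latent distribution toward the unit Gaussian.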

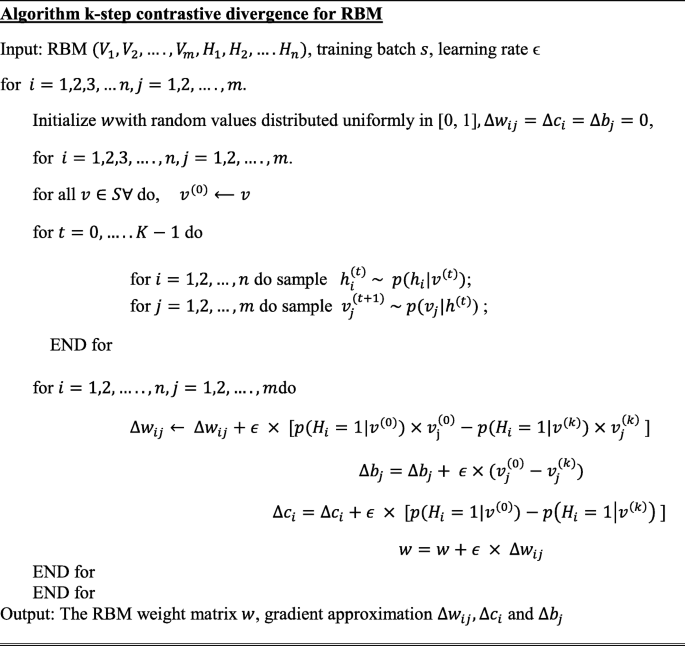

3.2 Restricted Boltzmann machine

A Restricted Boltzmann Machine (RBM) is a Markov Random Field (MRF) associated with a two-layer, undirected, probabilistic generative model, as shown in Fig. 4b. An RBM contains visible (input) units v and hidden (output) units h. A key feature of this model is that there are no direct connections between any two visible units or between any two hidden units. In binary RBMs, the random variables take values \( (v, h) \in \{0, 1\}^{m+n} \). Like the general Boltzmann machine [50], the RBM is an energy-based model. The energy of the state \( \{v, h\} \) is defined as

\[ E(v, h) = -\sum_{j=1}^{m} b_j v_j - \sum_{i=1}^{n} c_i h_i - \sum_{i=1}^{n} \sum_{j=1}^{m} w_{ij} v_j h_i \tag{3} \]

where:

- \( v_j \) and \( h_i \) are the binary states of visible unit \( j \in \{1, \ldots, m\} \) and hidden unit \( i \in \{1, \ldots, n\} \);
- \( b_j \) and \( c_i \) are the biases of the visible and hidden units;
- \( w_{ij} \) is the symmetric interaction weight between units \( v_j \) and \( h_i \).

The joint probability of \( (v, h) \) is given by the Gibbs distribution

\[ p(v, h) = \frac{1}{Z} e^{-E(v, h)} \tag{4} \]

Z is the partition function, obtained by summing over all possible pairs of visible and hidden vectors:

\[ Z = \sum_{v} \sum_{h} e^{-E(v, h)} \tag{5} \]

Because there are no connections within a layer, the conditional distributions \( p(h \mid v) \) and \( p(v \mid h) \) factorize:

\[ p(h \mid v) = \prod_{i=1}^{n} p(h_i \mid v), \qquad p(v \mid h) = \prod_{j=1}^{m} p(v_j \mid h) \tag{6} \]

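For a binary RBM, the conditional distributions of the visible and hidden units are given by

\[ p(v_j = 1 \mid h) = \sigma\Big(b_j + \sum_{i=1}^{n} w_{ij} h_i\Big) \tag{7} \]

\[ p(h_i = 1 \mid v) = \sigma\Big(c_i + \sum_{j=1}^{m} w_{ij} v_j\Big) \tag{8} \]

where \( \sigma(\cdot) \) is the logistic sigmoid function, \( \sigma(x) = 1/(1 + e^{-x}) \).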
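For a toy binary RBM, the energy-based definitions can be checked by brute force. The sketch below enumerates all joint states to build the partition function and the Gibbs distribution. It then confirms that the exact conditionals match the factorized sigmoid form. The sizes and random parameters are illustrative, and `W` is stored with `W[i, j]` \( = w_{ij} \) (hidden unit i, visible unit j).

```python
import itertools

import numpy as np


def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))


def energy(v, h, W, b, c):
    """E(v, h) = -sum_j b_j v_j - sum_i c_i h_i - sum_ij w_ij v_j h_i, W[i, j] = w_ij."""
    return -(b @ v) - (c @ h) - (h @ W @ v)


rng = np.random.default_rng(0)
m, n = 3, 2                       # numbers of visible and hidden units (toy sizes)
W = rng.normal(size=(n, m))
b = rng.normal(size=m)            # visible biases b_j
c = rng.normal(size=n)            # hidden biases c_i

vs = [np.array(s, dtype=float) for s in itertools.product([0, 1], repeat=m)]
hs = [np.array(s, dtype=float) for s in itertools.product([0, 1], repeat=n)]

# Partition function: sum of exp(-E) over all 2^(m+n) joint states.
Z = sum(np.exp(-energy(v, h, W, b, c)) for v in vs for h in hs)


def joint(v, h):
    """Gibbs distribution p(v, h) = exp(-E(v, h)) / Z."""
    return np.exp(-energy(v, h, W, b, c)) / Z


# Exact p(h_i = 1 | v) by enumeration versus the sigmoid closed form.
v0 = vs[5]
p_v0 = sum(joint(v0, h) for h in hs)
exact_h = np.array([sum(joint(v0, h) for h in hs if h[i] == 1) / p_v0 for i in range(n)])
formula_h = sigmoid(c + W @ v0)

# Exact p(v_j = 1 | h) by enumeration versus the sigmoid closed form.
h0 = hs[1]
p_h0 = sum(joint(v, h0) for v in vs)
exact_v = np.array([sum(joint(v, h0) for v in vs if v[j] == 1) / p_h0 for j in range(m)])
formula_v = sigmoid(b + W.T @ h0)

print(np.allclose(exact_h, formula_h), np.allclose(exact_v, formula_v))
```

Enumeration costs \( 2^{m+n} \) energy evaluations, which is why practical RBM training avoids computing Z and relies on the sigmoid conditionals instead.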

The RBM parameters \( (w_{ij}, b_j, c_i) \) can be estimated efficiently with the contrastive divergence learning method [150]. A batch version of k-step contrastive divergence learning (CD-k) is described in [36].
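Here is a minimal NumPy sketch of batch CD-k for a binary RBM. It follows the usual scheme: positive statistics come from the data, and negative statistics come after k steps of block Gibbs sampling. It is not a transcription of the algorithm in [36], and the function names, toy data and hyperparameters are assumptions. The statistics use hidden probabilities rather than binary samples, a common variance-reducing choice.

```python
import numpy as np


def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))


def cd_k_update(V, W, b, c, k=1, lr=0.1, rng=None):
    """One batch CD-k update of a binary RBM, modifying W, b, c in place.

    V: (batch, m) binary data; W: (n, m) with W[i, j] = w_ij;
    b: (m,) visible biases b_j; c: (n,) hidden biases c_i.
    """
    if k < 1:
        raise ValueError("k must be at least 1")
    rng = np.random.default_rng() if rng is None else rng
    # Positive phase: p(h_i = 1 | v) for the data vectors.
    ph_data = sigmoid(c + V @ W.T)
    h = (rng.random(ph_data.shape) < ph_data).astype(float)
    # Negative phase: k steps of block Gibbs sampling, starting from the data.
    for _ in range(k):
        pv = sigmoid(b + h @ W)                              # p(v_j = 1 | h)
        v_model = (rng.random(pv.shape) < pv).astype(float)
        ph_model = sigmoid(c + v_model @ W.T)
        h = (rng.random(ph_model.shape) < ph_model).astype(float)
    # Approximate gradient: data statistics minus model statistics, batch-averaged.
    batch = V.shape[0]
    W += lr * (ph_data.T @ V - ph_model.T @ v_model) / batch
    b += lr * (V - v_model).mean(axis=0)
    c += lr * (ph_data - ph_model).mean(axis=0)


def reconstruction_error(V, W, b, c):
    ph = sigmoid(c + V @ W.T)
    pv = sigmoid(b + ph @ W)
    return float(np.mean((V - pv) ** 2))


# Toy data: two binary patterns repeated in random order (illustrative).
rng = np.random.default_rng(0)
patterns = np.array([[1, 1, 1, 0, 0, 0], [0, 0, 0, 1, 1, 1]], dtype=float)
V = patterns[rng.integers(0, 2, size=64)]
m, n = V.shape[1], 4
W = rng.normal(0.0, 0.01, size=(n, m))
b = np.zeros(m)
c = np.zeros(n)
before = reconstruction_error(V, W, b, c)
for _ in range(1000):
    cd_k_update(V, W, b, c, k=1, lr=0.1, rng=rng)
after = reconstruction_error(V, W, b, c)
print(f"reconstruction error: {before:.4f} -> {after:.4f}")
```

Reconstruction error is only a rough progress signal for CD training, since CD-k does not follow the exact log-likelihood gradient.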

3.3 Deep belief networks

The Deep Belief Network (DBN) proposed by Hinton et al. [51] is a non-convolutional model that can extract features and learn a deep hierarchical representation of the training data. DBNs are generative models built by stacking multiple RBMs. A DBN is a hybrid model: its top two layers form an undirected RBM, and the remaining layers form a directed generative model. A DBN has one visible layer v and a series of hidden layers \( h^{(1)}, h^{(2)}, \ldots, h^{(l)} \), as shown in Fig. 4c. The DBN models the joint distribution of the observed units v and the l hidden layers \( h^{(k)} \) (\( k = 1, \ldots, l \)) as

\[ P\big(v, h^{(1)}, \ldots, h^{(l)}\big) = \Bigg( \prod_{k=0}^{l-2} P\big(h^{(k)} \mid h^{(k+1)}\big) \Bigg) P\big(h^{(l-1)}, h^{(l)}\big) \tag{9} \]

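where \( v = h^{(0)} \) and \( P(h^{(k)} \mid h^{(k+1)}) \) is the conditional distribution of layer k given the units of layer k + 1. For binary units it factorizes into sigmoid terms:

\[ P\big(h^{(k)} \mid h^{(k+1)}\big) = \prod_{i} P\big(h^{(k)}_i \mid h^{(k+1)}\big), \qquad P\big(h^{(k)}_i = 1 \mid h^{(k+1)}\big) = \sigma\Big(b^{(k)}_i + \sum_{j} W^{(k+1)}_{ji} h^{(k+1)}_j\Big) \tag{10} \]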

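A DBN has l weight matrices \( W^{(1)}, \ldots, W^{(l)} \) and l + 1 bias vectors \( b^{(0)}, \ldots, b^{(l)} \). The joint distribution of the top-level RBM is \( P(h^{(l-1)}, h^{(l)}) \):

\[ P\big(h^{(l-1)}, h^{(l)}\big) = \frac{1}{Z} \exp\Big( b^{(l-1)\top} h^{(l-1)} + b^{(l)\top} h^{(l)} + h^{(l)\top} W^{(l)} h^{(l-1)} \Big) \tag{11} \]

where Z is the partition function of the top-level RBM.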

The probability distribution of a DBN is given by Eq. (12).
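The sketch below makes the DBN factorization concrete by drawing an ancestral sample. It first runs Gibbs sampling in the top-level RBM over \( (h^{(l-1)}, h^{(l)}) \). It then samples each lower layer from \( P(h^{(k)} \mid h^{(k+1)}) \) down to \( v = h^{(0)} \). Binary sigmoid units are assumed in every layer, and \( W^{(k)} \) is stored as an array of shape \( (d_k, d_{k-1}) \). The weights are random and untrained, and the layer sizes and function names are hypothetical, purely for illustration.

```python
import numpy as np


def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))


def bernoulli(p, rng):
    """Draw independent binary samples with success probabilities p."""
    return (rng.random(p.shape) < p).astype(float)


def sample_dbn(weights, biases, gibbs_steps=50, rng=None):
    """Ancestral sample of v = h^(0) from a DBN with binary sigmoid units.

    weights[k - 1] = W^(k), shape (d_k, d_{k-1}), connects layers k-1 and k.
    biases[k] = b^(k), shape (d_k,), for k = 0, ..., l.
    """
    rng = np.random.default_rng() if rng is None else rng
    l = len(weights)
    W_top = weights[-1]
    # Block Gibbs sampling in the top-level RBM over (h^(l-1), h^(l)).
    h_below = bernoulli(np.full(W_top.shape[1], 0.5), rng)   # random start for h^(l-1)
    for _ in range(gibbs_steps):
        h_top = bernoulli(sigmoid(biases[l] + W_top @ h_below), rng)
        h_below = bernoulli(sigmoid(biases[l - 1] + h_top @ W_top), rng)
    # Directed top-down pass: h^(k) ~ P(h^(k) | h^(k+1)) for k = l-2, ..., 0.
    h = h_below
    for k in range(l - 2, -1, -1):
        h = bernoulli(sigmoid(biases[k] + h @ weights[k]), rng)
    return h


sizes = [6, 5, 4, 3]     # hypothetical layer sizes d_0 (visible), ..., d_3
rng = np.random.default_rng(0)
weights = [rng.normal(0.0, 0.1, size=(sizes[k], sizes[k - 1])) for k in range(1, len(sizes))]
biases = [np.zeros(d) for d in sizes]
v = sample_dbn(weights, biases, rng=rng)
print(v)
```

Only the top two layers need iterative Gibbs sampling. Every lower layer is produced by a single directed pass, which mirrors the hybrid undirected/directed structure of the model.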

3.4 Convolutional neural networks (CNN)