15 Scientific Method Examples

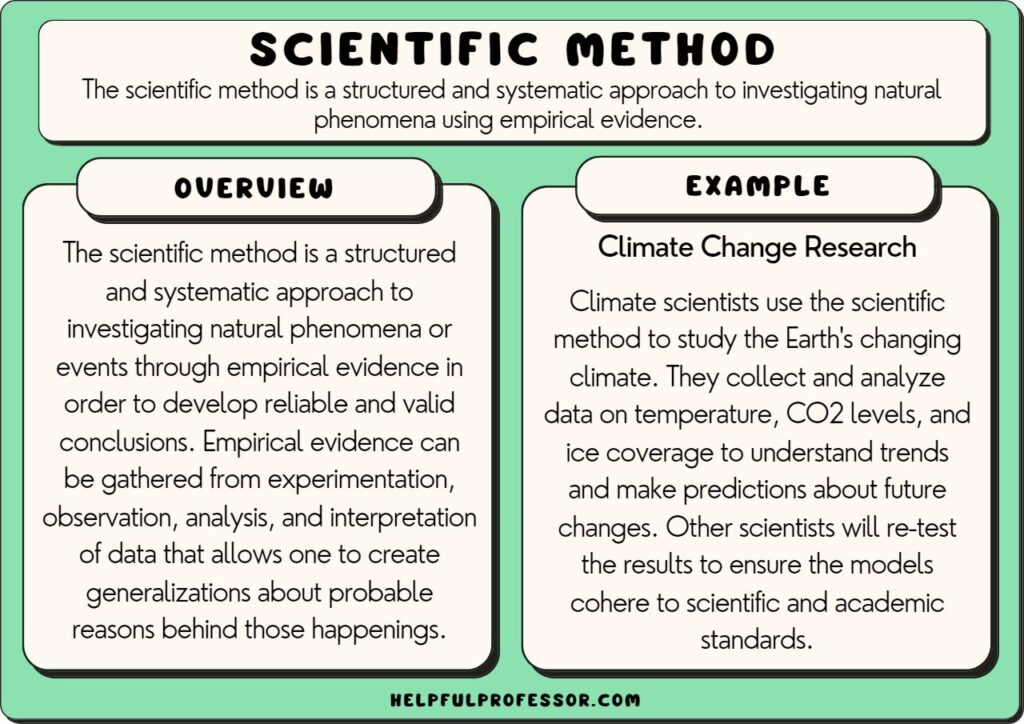

The scientific method is a structured and systematic approach to investigating natural phenomena using empirical evidence.

The scientific method has been a lynchpin for rapid improvements in human development. It has been an invaluable procedure for testing and improving upon human ingenuity. It’s led to amazing scientific, technological, and medical breakthroughs.

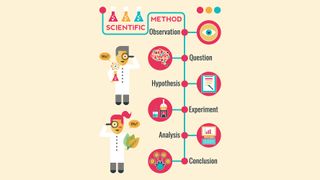

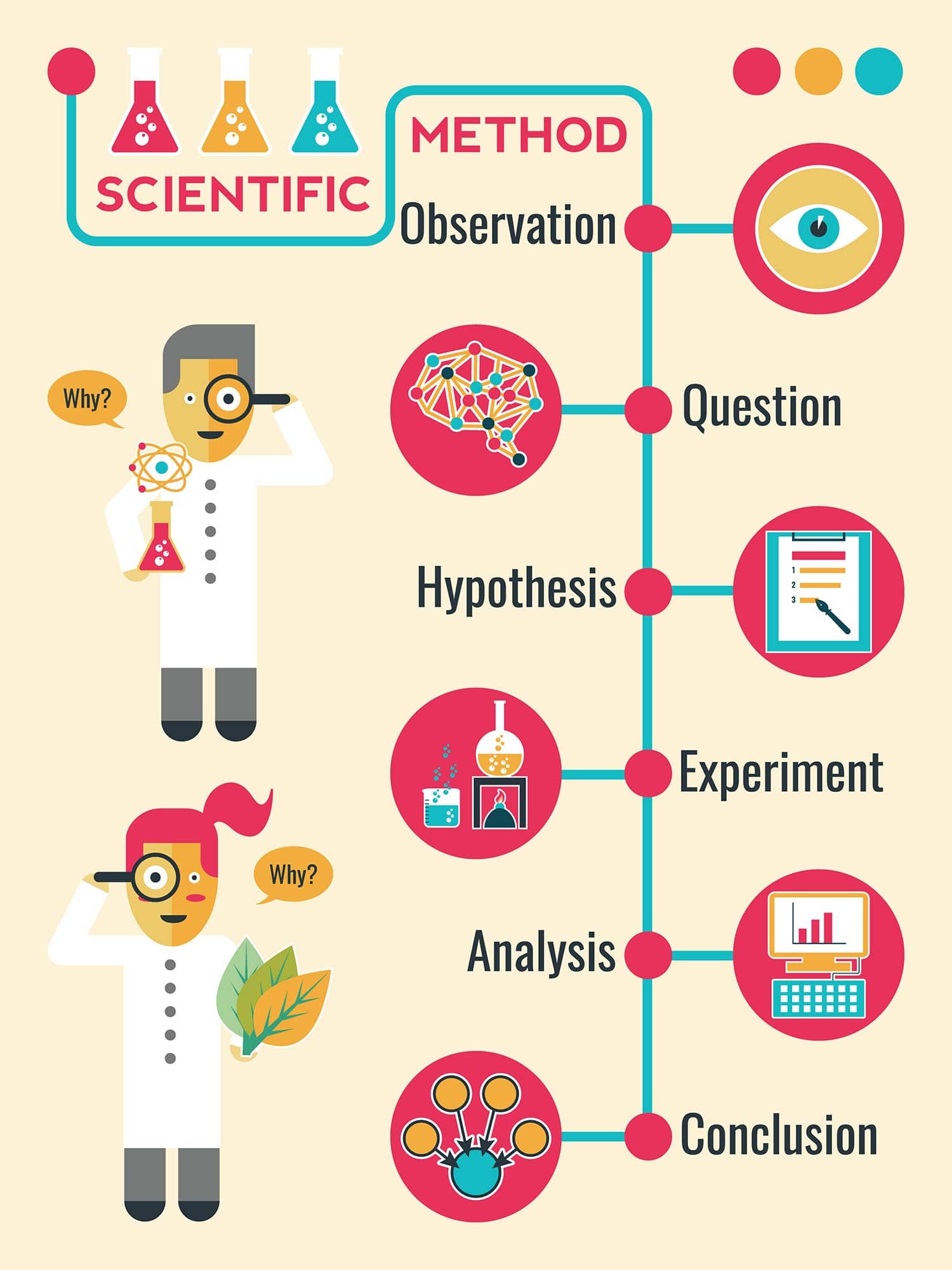

Some common steps in a scientific approach would include:

- Observation

- Question formulation

- Hypothesis development

- Experimentation and collecting data

- Analyzing results

- Drawing conclusions

Definition of Scientific Method

The scientific method is a structured and systematic approach to investigating natural phenomena or events through empirical evidence.

Empirical evidence can be gathered from experimentation, observation, analysis, and interpretation of data that allows one to create generalizations about probable reasons behind those happenings.

As an editorial in the journal Nature Methods puts it,

“As schoolchildren, we are taught that the scientific method involves a question and suggested explanation (hypothesis) based on observation, followed by the careful design and execution of controlled experiments, and finally validation, refinement or rejection of this hypothesis” (“Defining the scientific method,” 2009, p. 237).

The scientific method lets other researchers replicate and validate an analysis, which leads to improvements on earlier results and to sounder empirical conclusions.

Voit (2019) adds that:

“…it not only prescribes the order and types of activities that give a scientific study validity and a stamp of approval but also has substantially shaped how we collectively think about the endeavor of investigating nature” (p. 1).

This method aims to minimize subjective bias and maximize objectivity, helping researchers gather factual data.

It follows set procedures and guidelines for testing hypotheses under controlled conditions, which supports accurate and relevant conclusions (Blystone & Blodgett, 2006).

Overall, the scientific method gives researchers in many fields a structured form of inquiry that grounds explanations in evidence.

15 Examples of Scientific Method

- Medicine Delivery: Scientists use the scientific method to determine the most effective way of delivering a medicine to its target location in the body. They perform experiments and gather data on the different methods of medicine delivery, monitoring factors such as dosage and time release.

- Agricultural Research: The scientific method is frequently used in agricultural research to determine the most effective way to grow crops or raise livestock. This may involve testing different fertilizers, irrigation methods, or animal feed, measuring yield, and analyzing data.

- Food Science and Nutrition: Nutritionists and food scientists use the scientific method to study the effects of different food types and diets on health. They design experiments to understand the impact of dietary changes on weight, disease risk, and overall health outcomes.

- Environmental Studies: Researchers use the scientific method to study natural ecosystems and how human activities impact them. They collect data on things like biodiversity, water quality, and pollution levels, analyzing changes over time.

- Psychological Studies: Psychologists use the scientific method to understand human behavior and cognition. They conduct experiments under controlled conditions to test theories about learning, memory, social interaction, and more.

- Climate Change Research: Climate scientists use the scientific method to study the Earth’s changing climate. They collect and analyze data on temperature, CO2 levels, and ice coverage to understand trends and make predictions about future changes.

- Geology Exploration: Geologists use the scientific method to analyze rock samples from deep in the earth’s crust and gather information about geological processes over millions of years. They evaluate data by studying patterns left behind by these processes.

- Space Exploration: Scientists use the scientific method in designing space missions to explore other planets or learn more about our solar system. They employ experiments such as landing-craft missions as well as remote sensing techniques that allow them to examine far-off planets without physically landing on their surfaces.

- Archaeology: Archaeologists use the scientific method to understand past human cultures. They formulate hypotheses about a site or artifact, conduct excavations or analyses, and then interpret the data to test their hypotheses.

- Clinical Trials: Medical researchers use the scientific method to test new treatments and therapies for various diseases. They design controlled studies that track patients’ outcomes while varying factors like dosage or treatment frequency.

- Industrial Research & Development: Many companies use the scientific method in their R&D departments. For example, automakers may test the effectiveness of anti-lock brakes against dummy targets before releasing them into the marketplace.

- Material Science Experiments: Engineers use the scientific method when designing new materials and testing which options are flexible enough for certain applications. These experiments might include casting molten material into molds and then subjecting it to high heat to expose vulnerabilities.

- Chemical Engineering Investigations: Chemical engineers apply the scientific method to create new chemical compounds and technologies of value to industry. They may experiment with different substances, varying concentrations and heating conditions to verify the safety and reliability of the end product.

- Biotechnology: Biotechnologists use the scientific method to develop new products or processes. For instance, they may experiment with genetic modification techniques to enhance crop resistance to pests or disease.

- Physics Research: Physicists use the scientific method to study the fundamental principles of the universe. They investigate questions such as how atoms and molecules break down, running simulations with computer models or designing sophisticated experiments to test hypotheses.

Origins of the Scientific Method

The scientific method can be traced back to ancient times when philosophers like Aristotle used observation and logic to understand the natural world.

These early philosophers were focused on understanding the world around them and sought explanations for natural phenomena through direct observation (Betz, 2010).

In the Middle Ages, Muslim scholars played a key role in developing scientific inquiry by emphasizing empirical observations.

Alhazen (also known as Ibn al-Haytham), for example, introduced experimental methods that helped establish optics as a modern science. He emphasized investigation through experimentation under controlled conditions (De Brouwer, 2021).

During the Scientific Revolution of the 17th century in Europe, scientists such as Francis Bacon and René Descartes began to develop what we now know as the scientific method (Betz, 2010).

Bacon argued that knowledge must be based on empirical evidence obtained through observation and experimentation rather than relying solely upon tradition or authority.

Descartes emphasized mathematical methods as tools in experimentation and rigorous thinking processes (Fukuyama, 2012).

These ideas later developed into systematic research designs, including hypothesis testing, controlled experiments, and statistical analysis, all of which are still fundamental aspects of modern-day scientific research.

Since then, technological advancements have allowed for more sophisticated instruments and measurements, yielding the far more precise data sets that scientists use today in fields ranging from medicine and chemistry to astrophysics and genetics.

So, while early Greek philosophers laid much of the groundwork for an observation-based approach to explaining nature, Muslim scholars furthered our understanding of logical reasoning techniques and gave rise to a more formalized methodology.

Steps in the Scientific Method

While there may be variations in the specific steps scientists follow, the general process has six key steps (Blystone & Blodgett, 2006).

Here is a brief overview of each of these steps:

1. Observation

The first step in the scientific method is to identify and observe a phenomenon that requires explanation.

This can involve asking open-ended questions, making detailed observations using our senses or tools, or exploring natural patterns, all of which can be sources of hypotheses.

2. Formulation of a Hypothesis

A hypothesis is an educated guess or proposed explanation for the observed phenomenon, based on previous observations and experiences or on working assumptions derived from a valid literature review.

The hypothesis should be testable and falsifiable through experimentation and subsequent analysis.

3. Testing of the Hypothesis

In this step, scientists perform experiments to test their hypothesis while holding constant every variable except the one under study.

The data collected in these experiments must be measurable, repeatable, and consistent.

4. Data Analysis

Researchers carefully scrutinize the data gathered from experiments, typically using inferential statistics to assess whether the results support their hypotheses.

The statistical evidence can also point to previously unknown mechanisms at work in the system being studied.
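As a rough illustration of the inferential step described above, the sketch below runs a permutation test, one of several techniques that could be used to ask whether two groups differ by more than chance would explain. The function name `permutation_test`, the plant-height figures, and the 0.05 threshold are assumptions made for this example only; they do not come from the sources cited in this article.

```python
import random
from statistics import mean


def permutation_test(control, treatment, n_permutations=10_000, seed=0):
    """Estimate a two-sided p-value for a difference in group means.

    The group labels are shuffled many times; the p-value is the share of
    shuffles whose mean difference is at least as large as the observed one.
    """
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    observed = abs(mean(treatment) - mean(control))
    pooled = list(control) + list(treatment)
    n_control = len(control)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        diff = abs(mean(pooled[n_control:]) - mean(pooled[:n_control]))
        if diff >= observed:
            extreme += 1
    # The add-one correction keeps the estimate strictly above zero.
    return (extreme + 1) / (n_permutations + 1)


# Hypothetical plant heights in cm, invented for illustration only.
control = [20.1, 19.8, 21.0, 20.5, 19.9, 20.3]
treatment = [22.4, 21.9, 23.1, 22.0, 22.8, 21.7]

p_value = permutation_test(control, treatment)
print(f"estimated p-value: {p_value:.4f}")
if p_value < 0.05:  # conventional threshold, assumed here
    print("The data are unlikely under the 'no difference' hypothesis.")
else:
    print("The data are compatible with the 'no difference' hypothesis.")
```

A small p-value does not prove the hypothesis. It only indicates that the observed difference would be unusual if the treatment had no effect, which is why replication remains part of the process.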

See: 15 Examples of Data Analysis

5. Drawing Conclusions

Based on their data analyses, scientists reach conclusions about whether their original hypotheses were supported by evidence obtained from testing.

If the evidence does not support their ideas, scientists often try again with a modified version of the original hypothesis.

6. Communicating Results

Once results have been analyzed and interpreted under accepted principles within the scientific community, scientists publish findings in respected peer-reviewed journals.

These publications help research communities identify trends within their fields, and the peer review they undergo helps raise research quality across the discipline.

Importance of the Scientific Method

The scientific method is important because it helps us to collect reliable data and develop testable hypotheses that can be used to explain natural phenomena (Haig, 2018).

Here are some reasons why the scientific method is so essential:

- Objectivity: The scientific method requires researchers to design experiments and analyses that limit bias, which leads to more impartial conclusions. Replication of findings by peers also shows that results rest on sound principles, giving others the confidence to build on existing research.

- Precision & Predictive Power: Scientific methods usually include techniques for obtaining precise measurements, which reduces the uncertainty introduced by measurement error and gives statistically significant results a firmer footing. Predictions that are tested under clearly defined conditions also help set realistic expectations.

- Validation: By following principles established within the scientific community, independent scholars can replicate observations without being swayed by subjective biases or prejudices. This supports general acceptance among researchers who follow similar protocols in their own fields.

- Application & Innovation: Advances built on sound hypothesis testing commonly lead scientists toward new discoveries and potential breakthroughs. They pave the way for innovations often seen as game changers, such as mapping the human genome to create novel therapies for genetic diseases, or probing the workings of the universe through discoveries at the Large Hadron Collider (LHC).

- Impactful Decision-Making: Policymakers can draw on scientific findings to direct resources and make informed decisions that lead toward a sustainable future. For example, research on the impact of carbon pollution on climate change informs debate and policy decisions about the planet’s environment (Haig, 2018).

The scientific method is an essential tool that has revolutionized our understanding of the natural world.

By emphasizing rigorous experimentation, objective measurement, and logical analysis, scientists can obtain less biased evidence with empirical validity.

This methodology has led to groundbreaking discoveries and an expansion of knowledge that have shaped our modern world, from medicine to technology.

The scientific method plays a crucial role in advancing research and in building societal consensus on what information is reliable, so that we can make more informed decisions toward a sustainable future.

As science continues to advance rapidly, applying the core principles of this process keeps research moving toward its objectives and opens new paths for interdisciplinary work, fuelling human curiosity.

Betz, F. (2010). Origin of scientific method. Managing Science, 21–41. https://doi.org/10.1007/978-1-4419-7488-4_2

Blystone, R. V., & Blodgett, K. (2006). WWW: The scientific method. CBE—Life Sciences Education, 5(1), 7–11. https://doi.org/10.1187/cbe.05-12-0134

De Brouwer, P. J. S. (2021). The big r-book: From data science to learning machines and big data. John Wiley & Sons, Inc.

Defining the scientific method. (2009). Nature Methods, 6(4), 237–237. https://doi.org/10.1038/nmeth0409-237

Fukuyama, F. (2012). The end of history and the last man. New York: Penguin.

Haig, B. D. (2018). The importance of scientific method for psychological science. Psychology, Crime & Law, 25(6), 527–541. https://doi.org/10.1080/1068316x.2018.1557181

Voit, E. O. (2019). Perspective: Dimensions of the scientific method. PLOS Computational Biology, 15(9), e1007279. https://doi.org/10.1371/journal.pcbi.1007279

Viktoriya Sus (MA)

Viktoriya Sus is an academic writer specializing mainly in economics and business from Ukraine. She holds a Master’s degree in International Business from Lviv National University and has more than 6 years of experience writing for different clients. Viktoriya is passionate about researching the latest trends in economics and business. However, she also loves to explore different topics such as psychology, philosophy, and more.


Chris Drew (PhD)

This article was peer-reviewed and edited by Chris Drew (PhD). The review process on Helpful Professor involves having a PhD level expert fact check, edit, and contribute to articles. Reviewers ensure all content reflects expert academic consensus and is backed up with reference to academic studies. Dr. Drew has published over 20 academic articles in scholarly journals. He is the former editor of the Journal of Learning Development in Higher Education and holds a PhD in Education from ACU.


Scientific Method


The scientific method is a series of steps followed by scientific investigators to answer specific questions about the natural world. It involves making observations, formulating a hypothesis, and conducting scientific experiments. Scientific inquiry starts with an observation followed by the formulation of a question about what has been observed. The steps of the scientific method are as follows:

Observation

The first step of the scientific method involves making an observation about something that interests you. This is very important if you are doing a science project because you want your project to be focused on something that will hold your attention. Your observation can be on anything from plant movement to animal behavior, as long as it is something you really want to know more about. This is where you come up with the idea for your science project.

Question

Once you've made your observation, you must formulate a question about what you have observed. Your question should tell what it is that you are trying to discover or accomplish in your experiment. When stating your question you should be as specific as possible. For example, if you are doing a project on plants, you may want to know how plants interact with microbes. Your question may be: Do plant spices inhibit bacterial growth?

Hypothesis

The hypothesis is a key component of the scientific process. A hypothesis is an idea that is suggested as an explanation for a natural event, a particular experience, or a specific condition that can be tested through definable experimentation. It states the purpose of your experiment, the variables used, and the predicted outcome of your experiment. It is important to note that a hypothesis must be testable. That means that you should be able to test your hypothesis through experimentation. Your hypothesis must either be supported or falsified by your experiment. An example of a good hypothesis is: If there is a relation between listening to music and heart rate, then listening to music will cause a person's resting heart rate to either increase or decrease.

Experiment

Once you've developed a hypothesis, you must design and conduct an experiment that will test it. You should develop a procedure that states very clearly how you plan to conduct your experiment. It is important that you identify the controlled variables, the independent variable, and the dependent variable in your procedure. Controls allow us to test a single variable in an experiment because they are held unchanged. We can then compare the control with the conditions in which the independent variable (the thing that changes in the experiment) was varied, and develop an accurate conclusion.

Results

The results are where you report what happened in the experiment. That includes detailing all observations and data made during your experiment. Most people find it easier to visualize the data by charting or graphing the information.

Conclusion

The final step of the scientific method is developing a conclusion. This is where all of the results from the experiment are analyzed and a determination is reached about the hypothesis. Did the experiment support or reject your hypothesis? If your hypothesis was supported, great. If not, repeat the experiment or think of ways to improve your procedure.


Science and the scientific method: Definitions and examples

Here's a look at the foundation of doing science — the scientific method.

The scientific method


Science is a systematic and logical approach to discovering how things in the universe work. It is also the body of knowledge accumulated through the discoveries about all the things in the universe.

The word "science" is derived from the Latin word "scientia," which means knowledge based on demonstrable and reproducible data, according to the Merriam-Webster dictionary. True to this definition, science aims for measurable results through testing and analysis, a process known as the scientific method. Science is based on fact, not opinion or preferences. The process of science is designed to challenge ideas through research. One important aspect of the scientific process is that it focuses only on the natural world, according to the University of California, Berkeley. Anything that is considered supernatural, or beyond physical reality, does not fit into the definition of science.

When conducting research, scientists use the scientific method to collect measurable, empirical evidence in an experiment related to a hypothesis (often in the form of an if/then statement) that is designed to support or contradict a scientific theory.

"As a field biologist, my favorite part of the scientific method is being in the field collecting the data," Jaime Tanner, a professor of biology at Marlboro College, told Live Science. "But what really makes that fun is knowing that you are trying to answer an interesting question. So the first step in identifying questions and generating possible answers (hypotheses) is also very important and is a creative process. Then once you collect the data you analyze it to see if your hypothesis is supported or not."

The steps of the scientific method go something like this, according to Highline College:

- Make an observation or observations.

- Form a hypothesis — a tentative description of what's been observed, and make predictions based on that hypothesis.

- Test the hypothesis and predictions in an experiment that can be reproduced.

- Analyze the data and draw conclusions; accept or reject the hypothesis or modify the hypothesis if necessary.

- Reproduce the experiment until there are no discrepancies between observations and theory. "Replication of methods and results is my favorite step in the scientific method," Moshe Pritsker, a former post-doctoral researcher at Harvard Medical School and CEO of JoVE, told Live Science. "The reproducibility of published experiments is the foundation of science. No reproducibility — no science."
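The role of replication in the steps above can be sketched with a small simulation. Everything in it is hypothetical: the function names `measure_effect` and `replicate`, the sample size, and the assumed true effect of 1.5 units are invented for illustration and are not drawn from Highline College or Live Science. Each call to `measure_effect` stands in for one independent repetition of the same study.

```python
import random
from statistics import mean, stdev


def measure_effect(seed, n=30, true_effect=1.5):
    """Simulate one study and return the treated-minus-untreated mean difference."""
    rng = random.Random(seed)  # each seed plays the role of a separate lab
    untreated = [rng.gauss(0.0, 1.0) for _ in range(n)]
    treated = [rng.gauss(true_effect, 1.0) for _ in range(n)]
    return mean(treated) - mean(untreated)


def replicate(n_replications=20):
    """Repeat the simulated study and collect the estimated effect from each run."""
    return [measure_effect(seed) for seed in range(n_replications)]


effects = replicate()
positive = sum(e > 0 for e in effects)
print(f"mean effect across replications: {mean(effects):.2f}")
print(f"spread (standard deviation):     {stdev(effects):.2f}")
print(f"replications with a positive effect: {positive} of {len(effects)}")
```

If the estimates cluster tightly around one value, the finding looks reproducible. A wide spread, or estimates that change sign between runs, would suggest that the original result needs another look.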

Some key underpinnings to the scientific method:

- The hypothesis must be testable and falsifiable, according to North Carolina State University. Falsifiable means that there must be a possible negative answer to the hypothesis.

- Research must involve deductive reasoning and inductive reasoning. Deductive reasoning is the process of using true premises to reach a logical true conclusion while inductive reasoning uses observations to infer an explanation for those observations.

- An experiment should include an independent variable (which the experimenter deliberately changes) and a dependent variable (which is measured to see how it responds), according to the University of California, Santa Barbara.

- An experiment should include an experimental group and a control group. The control group is what the experimental group is compared against, according to Britannica.
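To make the vocabulary in the list above concrete, here is a minimal simulated experiment. The scenario (a fertilizer dose affecting plant growth), the function name `run_experiment`, and all numbers are assumptions chosen for this sketch, not data from any of the cited sources. The dose is the independent variable, the measured growth is the dependent variable, and random assignment decides which subjects land in the control group.

```python
import random
from statistics import mean


def run_experiment(n_subjects=40, true_effect=2.0, seed=1):
    """Randomly assign subjects to two groups and simulate their growth in cm."""
    rng = random.Random(seed)
    subjects = list(range(n_subjects))
    rng.shuffle(subjects)  # random assignment guards against selection bias
    half = n_subjects // 2
    groups = {"control": subjects[:half], "experimental": subjects[half:]}

    results = {}
    for name, members in groups.items():
        dose = 1 if name == "experimental" else 0  # independent variable
        # Dependent variable: baseline growth plus noise plus any dose effect.
        results[name] = [rng.gauss(10.0, 1.0) + true_effect * dose for _ in members]
    return results


results = run_experiment()
difference = mean(results["experimental"]) - mean(results["control"])
print(f"control mean:      {mean(results['control']):.2f} cm")
print(f"experimental mean: {mean(results['experimental']):.2f} cm")
print(f"difference:        {difference:.2f} cm")
```

Because both groups share the same baseline and noise, any consistent difference between them can be attributed to the dose, which is the point of comparing against a control group.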

The process of generating and testing a hypothesis forms the backbone of the scientific method. When an idea has been confirmed over many experiments, it can be called a scientific theory. While a theory provides an explanation for a phenomenon, a scientific law provides a description of a phenomenon, according to The University of Waikato. One example would be the law of conservation of energy, which is the first law of thermodynamics that says that energy can neither be created nor destroyed.

A law describes an observed phenomenon, but it doesn't explain why the phenomenon exists or what causes it. "In science, laws are a starting place," said Peter Coppinger, an associate professor of biology and biomedical engineering at the Rose-Hulman Institute of Technology. "From there, scientists can then ask the questions, 'Why and how?'"

Laws are generally considered to be without exception, though some laws have been modified over time after further testing found discrepancies. For instance, Newton's laws of motion describe everything we've observed in the macroscopic world, but they break down at the subatomic level.

This does not mean theories are not meaningful. For a hypothesis to become a theory, scientists must conduct rigorous testing, typically across multiple disciplines by separate groups of scientists. Saying something is "just a theory" confuses the scientific definition of "theory" with the layperson's definition. To most people a theory is a hunch. In science, a theory is the framework for observations and facts, Tanner told Live Science.

The earliest evidence of science can be found as far back as records exist. Early tablets contain numerals and information about the solar system, which were derived by using careful observation, prediction and testing of those predictions. Science became decidedly more "scientific" over time, however.

1200s: Robert Grosseteste developed the framework for the proper methods of modern scientific experimentation, according to the Stanford Encyclopedia of Philosophy. His works included the principle that an inquiry must be based on measurable evidence that is confirmed through testing.

1400s: Leonardo da Vinci began his notebooks in pursuit of evidence that the human body is microcosmic. The artist, scientist and mathematician also gathered information about optics and hydrodynamics.

1500s: Nicolaus Copernicus advanced the understanding of the solar system with his discovery of heliocentrism. This is a model in which Earth and the other planets revolve around the sun, which is the center of the solar system.

1600s: Johannes Kepler built upon those observations with his laws of planetary motion. Galileo Galilei improved on a new invention, the telescope, and used it to study the sun and planets. The 1600s also saw advancements in the study of physics as Isaac Newton developed his laws of motion.

1700s: Benjamin Franklin discovered that lightning is electrical. He also contributed to the study of oceanography and meteorology. The understanding of chemistry also evolved during this century as Antoine Lavoisier, dubbed the father of modern chemistry, developed the law of conservation of mass.

1800s: Milestones included Alessandro Volta's discoveries regarding electrochemical series, which led to the invention of the battery. John Dalton also introduced atomic theory, which stated that all matter is composed of atoms that combine to form molecules. Georg Ohm's law provided the basis for understanding how to harness electrical charges. The basis of modern study of genetics advanced as Gregor Mendel unveiled his laws of inheritance. Later in the century, Wilhelm Conrad Röntgen discovered X-rays.

1900s: The discoveries of Albert Einstein, who is best known for his theory of relativity, dominated the beginning of the 20th century. Einstein's theory of relativity is actually two separate theories. His special theory of relativity, which he outlined in a 1905 paper, "On the Electrodynamics of Moving Bodies," concluded that time must change according to the speed of a moving object relative to the frame of reference of an observer. His second theory, general relativity, which he published as "The Foundation of the General Theory of Relativity," advanced the idea that matter causes space to curve.

In 1952, Jonas Salk developed the polio vaccine, which reduced the incidence of polio in the United States by nearly 90%, according to Britannica. The following year, James D. Watson and Francis Crick discovered the structure of DNA, which is a double helix formed by base pairs attached to a sugar-phosphate backbone, according to the National Human Genome Research Institute.

2000s: The 21st century saw the first draft of the human genome completed, leading to a greater understanding of DNA. This advanced the study of genetics, its role in human biology and its use as a predictor of diseases and other disorders, according to the National Human Genome Research Institute.

- This video from City University of New York delves into the basics of what defines science.

- Learn about what makes science science in this book excerpt from Washington State University.

- This resource from the University of Michigan — Flint explains how to design your own scientific study.

Merriam-Webster Dictionary, Scientia. 2022. https://www.merriam-webster.com/dictionary/scientia

University of California, Berkeley, "Understanding Science: An Overview." 2022. https://undsci.berkeley.edu/article/0_0_0/intro_01

Highline College, "Scientific method." July 12, 2015. https://people.highline.edu/iglozman/classes/astronotes/scimeth.htm

North Carolina State University, "Science Scripts." https://projects.ncsu.edu/project/bio183de/Black/science/science_scripts.html

University of California, Santa Barbara. "What is an Independent variable?" October 31, 2017. http://scienceline.ucsb.edu/getkey.php?key=6045

Encyclopedia Britannica, "Control group." May 14, 2020. https://www.britannica.com/science/control-group

The University of Waikato, "Scientific Hypothesis, Theories and Laws." https://sci.waikato.ac.nz/evolution/Theories.shtml

Stanford Encyclopedia of Philosophy, Robert Grosseteste. May 3, 2019. https://plato.stanford.edu/entries/grosseteste/

Encyclopedia Britannica, "Jonas Salk." October 21, 2021. https://www.britannica.com/biography/Jonas-Salk

National Human Genome Research Institute, "Phosphate Backbone." https://www.genome.gov/genetics-glossary/Phosphate-Backbone

National Human Genome Research Institute, "What is the Human Genome Project?" https://www.genome.gov/human-genome-project/What

Live Science contributor Ashley Hamer updated this article on Jan. 16, 2022.


- Bipolar Disorder

- Therapy Center

- When To See a Therapist

- Types of Therapy

- Best Online Therapy

- Best Couples Therapy

- Best Family Therapy

- Managing Stress

- Sleep and Dreaming

- Understanding Emotions

- Self-Improvement

- Healthy Relationships

- Student Resources

- Personality Types

- Guided Meditations

- Verywell Mind Insights

- 2023 Verywell Mind 25

- Mental Health in the Classroom

- Editorial Process

- Meet Our Review Board

- Crisis Support

Scientific Method Steps in Psychology Research

Steps, Uses, and Key Terms

Kendra Cherry, MS, is a psychosocial rehabilitation specialist, psychology educator, and author of the "Everything Psychology Book."

Emily is a board-certified science editor who has worked with top digital publishing brands like Voices for Biodiversity, Study.com, GoodTherapy, Vox, and Verywell.

How do researchers investigate psychological phenomena? They utilize a process known as the scientific method to study different aspects of how people think and behave.

When conducting research, the scientific method steps to follow are:

- Observe what you want to investigate

- Ask a research question and make predictions

- Test the hypothesis and collect data

- Examine the results and draw conclusions

- Report and share the results

This process not only allows scientists to investigate and understand different psychological phenomena but also provides researchers and others a way to share and discuss the results of their studies.

Generally, there are five main steps in the scientific method, although some may break down this process into six or seven steps. An additional step in the process can also include developing new research questions based on your findings.

What Is the Scientific Method?

What is the scientific method and how is it used in psychology?

The scientific method consists of five steps. It is essentially a step-by-step process that researchers can follow to determine if there is some type of relationship between two or more variables.

By knowing the steps of the scientific method, you can better understand the process researchers go through to arrive at conclusions about human behavior.

Scientific Method Steps

While research studies can vary, these are the basic steps that psychologists and scientists use when investigating human behavior.

The following are the scientific method steps:

Step 1. Make an Observation

Before a researcher can begin, they must choose a topic to study. Once an area of interest has been chosen, the researchers must then conduct a thorough review of the existing literature on the subject. This review will provide valuable information about what has already been learned about the topic and what questions remain to be answered.

A literature review might involve looking at a considerable amount of written material from both books and academic journals dating back decades.

The relevant information collected by the researcher will be presented in the introduction section of the final published study results. This background material will also help the researcher with the first major step in conducting a psychology study: formulating a hypothesis.

Step 2. Ask a Question

Once a researcher has observed something and gained some background information on the topic, the next step is to ask a question. The researcher will form a hypothesis, which is an educated guess about the relationship between two or more variables.

For example, a researcher might ask a question about the relationship between sleep and academic performance: Do students who get more sleep perform better on tests at school?

In order to formulate a good hypothesis, it is important to think about different questions you might have about a particular topic.

You should also consider how you could investigate the causes. Falsifiability is an important part of any valid hypothesis. In other words, if a hypothesis is false, there needs to be a way for scientists to demonstrate that it is false.

Step 3. Test Your Hypothesis and Collect Data

Once you have a solid hypothesis, the next step of the scientific method is to put this hunch to the test by collecting data. The exact methods used to investigate a hypothesis depend on exactly what is being studied. There are two basic forms of research that a psychologist might utilize: descriptive research or experimental research.

Descriptive research is typically used when it would be difficult or even impossible to manipulate the variables in question. Examples of descriptive research include case studies, naturalistic observation, and correlational studies. The phone surveys often used by marketers are one example of descriptive research.

Correlational studies are quite common in psychology research. While they do not allow researchers to determine cause-and-effect, they do make it possible to spot relationships between different variables and to measure the strength of those relationships.
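As a rough illustration of what "measuring the strength of a relationship" can look like, the sketch below computes a Pearson correlation coefficient, one common measure of linear association. The sleep and test-score values are invented for illustration, and `pearson_r` is a hypothetical helper name rather than anything taken from a specific study.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Return the Pearson correlation coefficient of two equal-length samples."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two samples of equal length (at least 2 points)")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sum of co-deviations and sums of squared deviations from each mean.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when a sample has no variation")
    return cov / sqrt(var_x * var_y)


# Hypothetical data: nightly hours of sleep and test scores for six students.
hours_of_sleep = [5, 6, 6, 7, 8, 9]
test_scores = [62, 70, 68, 75, 83, 88]

r = pearson_r(hours_of_sleep, test_scores)
print(f"r = {r:.2f}")
```

A value of r near +1 or -1 indicates a strong linear relationship and a value near 0 a weak one. Even a very high r says nothing about which variable, if either, causes the other.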

Experimental research is used to explore cause-and-effect relationships between two or more variables. This type of research involves systematically manipulating an independent variable and then measuring the effect that it has on a defined dependent variable.

One of the major advantages of this method is that it allows researchers to determine whether changes in one variable actually cause changes in another.

While psychology experiments are often quite complex, a simple experiment is fairly basic but does allow researchers to determine cause-and-effect relationships between variables. Most simple experiments use a control group (those who do not receive the treatment) and an experimental group (those who do receive the treatment).

Step 4. Examine the Results and Draw Conclusions

Once a researcher has designed the study and collected the data, it is time to examine this information and draw conclusions about what has been found. Using statistics, researchers can summarize the data, analyze the results, and draw conclusions based on this evidence.

So how does a researcher decide what the results of a study mean? Not only can statistical analysis support (or refute) the researcher’s hypothesis; it can also be used to determine if the findings are statistically significant.

When results are said to be statistically significant, it means that it is unlikely that these results are due to chance.
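One way to make "unlikely to be due to chance" concrete is a permutation test, sketched below under stated assumptions. The article does not name a specific test, so this is only one possible choice. The symptom-improvement scores are invented, and `permutation_p_value` is a hypothetical helper. The idea: if the treatment made no difference, the group labels are arbitrary, so we can ask how often a random relabeling produces a difference at least as large as the one observed.

```python
from itertools import combinations


def permutation_p_value(treatment, control):
    """One-sided exact permutation test for mean(treatment) > mean(control)."""
    pooled = treatment + control
    n_treat = len(treatment)
    n_ctrl = len(control)
    total = sum(pooled)
    observed = sum(treatment) / n_treat - sum(control) / n_ctrl

    as_extreme = 0
    relabelings = 0
    # Enumerate every way of assigning n_treat of the pooled scores to "treatment".
    for idx in combinations(range(len(pooled)), n_treat):
        treat_sum = sum(pooled[i] for i in idx)
        diff = treat_sum / n_treat - (total - treat_sum) / n_ctrl
        if diff >= observed - 1e-12:  # small tolerance for float rounding
            as_extreme += 1
        relabelings += 1
    return as_extreme / relabelings


# Hypothetical improvement scores (higher = more improvement).
experimental_group = [8, 7, 9, 6, 8]
control_group = [3, 4, 2, 5, 3]

p_value = permutation_p_value(experimental_group, control_group)
print(f"p = {p_value:.4f}")
```

A small p-value means that few relabelings match the observed difference. Many researchers treat p < 0.05 as the cutoff for statistical significance, but that threshold is a convention rather than a law.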

Based on these observations, researchers must then determine what the results mean. In some cases, an experiment will support a hypothesis, but in other cases, it will fail to support the hypothesis.

So what happens if the results of a psychology experiment do not support the researcher's hypothesis? Does this mean that the study was worthless?

Just because the findings fail to support the hypothesis does not mean that the research is not useful or informative. In fact, such research plays an important role in helping scientists develop new questions and hypotheses to explore in the future.

After conclusions have been drawn, the next step is to share the results with the rest of the scientific community. This is an important part of the process because it contributes to the overall knowledge base and can help other scientists find new research avenues to explore.

Step 5. Report the Results

The final step in a psychology study is to report the findings. This is often done by writing up a description of the study and publishing the article in an academic or professional journal. The results of psychological studies can be seen in peer-reviewed journals such as Psychological Bulletin, the Journal of Social Psychology, Developmental Psychology, and many others.

The structure of a journal article follows a specified format that has been outlined by the American Psychological Association (APA). In these articles, researchers:

- Provide a brief history and background on previous research

- Present their hypothesis

- Identify who participated in the study and how they were selected

- Provide operational definitions for each variable

- Describe the measures and procedures that were used to collect data

- Explain how the information collected was analyzed

- Discuss what the results mean

Why is such a detailed record of a psychological study so important? When researchers clearly explain the steps and procedures used throughout the study, others can attempt to replicate the results. The editorial process employed by academic and professional journals is designed so that each submitted article undergoes a thorough peer review, which helps ensure that the study is scientifically sound.

Once published, the study becomes another piece of the existing puzzle of our knowledge base on that topic.

Before you begin exploring the scientific method steps, here's a review of some key terms and definitions that you should be familiar with:

- Falsifiable: The variables can be measured so that if a hypothesis is false, it can be proven false

- Hypothesis: An educated guess about the possible relationship between two or more variables

- Variable: A factor or element that can change in observable and measurable ways

- Operational definition: A full description of exactly how variables are defined, how they will be manipulated, and how they will be measured

Uses for the Scientific Method

The goals of psychological studies are to describe, explain, predict and perhaps influence mental processes or behaviors. In order to do this, psychologists utilize the scientific method to conduct psychological research. The scientific method is a set of principles and procedures that are used by researchers to develop questions, collect data, and reach conclusions.

Goals of Scientific Research in Psychology

Researchers seek not only to describe behaviors and explain why these behaviors occur; they also strive to create research that can be used to predict and even change human behavior.

Psychologists and other social scientists regularly propose explanations for human behavior. On a more informal level, people make judgments about the intentions, motivations, and actions of others on a daily basis.

While the everyday judgments we make about human behavior are subjective and anecdotal, researchers use the scientific method to study psychology in an objective and systematic way. The results of these studies are often reported in popular media, which leads many to wonder just how or why researchers arrived at the conclusions they did.

Examples of the Scientific Method

Now that you're familiar with the scientific method steps, it's useful to see how each step could work with a real-life example.

Say, for instance, that researchers set out to discover what the relationship is between psychotherapy and anxiety.

- Step 1. Make an observation: The researchers choose to focus their study on adults ages 25 to 40 with generalized anxiety disorder.

- Step 2. Ask a question: The question they want to answer in their study is: Do weekly psychotherapy sessions reduce symptoms in adults ages 25 to 40 with generalized anxiety disorder?

- Step 3. Test your hypothesis: Researchers collect data on participants' anxiety symptoms. They work with therapists to create a consistent program that all participants undergo. Group 1 may attend therapy once per week, whereas group 2 does not attend therapy.

- Step 4. Examine the results: Participants record their symptoms and any changes over a period of three months. After this period, people in group 1 report significant improvements in their anxiety symptoms, whereas those in group 2 report no significant changes.

- Step 5. Report the results: Researchers write a report that includes their hypothesis, information on participants, variables, procedure, and conclusions drawn from the study. In this case, they say that "Weekly therapy sessions are shown to reduce anxiety symptoms in adults ages 25 to 40."

Of course, many details go into planning and executing a study such as this. But this general outline gives you a sense of how an idea is formulated and tested, and how researchers arrive at results using the scientific method.

Erol A. How to conduct scientific research? Noro Psikiyatr Ars. 2017;54(2):97-98. doi:10.5152/npa.2017.0120102

University of Minnesota. Psychologists use the scientific method to guide their research.

Shaughnessy JJ, Zechmeister EB, Zechmeister JS. Research Methods in Psychology. New York: McGraw Hill Education; 2015.

A Guide to Using the Scientific Method in Everyday Life

The scientific method, the process used by scientists to understand the natural world, has the merit of investigating natural phenomena in a rigorous manner. Working from hypotheses, scientists draw conclusions based on empirical data. These data are validated on large samples and take into consideration the intrinsic variability of the real world. For people unfamiliar with its jargon and formalities, science may seem esoteric. And this is a huge problem: science invites criticism because it is not easily understood. So why is it important, then, that every person understand how science is done?

Because the scientific method is, first of all, a matter of logical reasoning and only afterwards, a procedure to be applied in a laboratory.

Individuals without training in logical reasoning more easily fall victim to distorted perspectives about themselves and the world. One example is the so-called “cognitive biases”: systematic mistakes that individuals make when they try to think rationally, and which lead to erroneous or inaccurate conclusions. People can easily overestimate the relevance of their own behaviors and choices. They can lack the ability to self-estimate the quality of their performances and thoughts. Unconsciously, they could even end up selecting only the arguments that support their hypothesis or beliefs. This is why the scientific framework should be conceived not only as a mechanism for understanding the natural world, but also as a framework for engaging in logical reasoning and discussion.

A brief history of the scientific method

The scientific method has its roots in the sixteenth and seventeenth centuries. Philosophers Francis Bacon and René Descartes are often credited with formalizing the scientific method because they opposed the idea that research should be guided by metaphysical, preconceived concepts of the nature of reality, a position that, at the time, was widely supported by their colleagues. In essence, Bacon thought that inductive reasoning based on empirical observation was critical to the formulation of hypotheses and the generation of new understanding: general or universal principles describing how nature works are derived only from observations of recurring phenomena and data recorded from them. The inductive method was used, for example, by the scientist Rudolf Virchow to formulate the third principle of the well-known cell theory, according to which every cell derives from a pre-existing one. The rationale behind this conclusion is that because all observations of cell behavior show that cells are only derived from other cells, this assertion must always be true.

Inductive reasoning, however, is not immune to mistakes and limitations. Referring back to cell theory, there may be rare occasions in which a cell does not arise from a pre-existing one, even though we haven’t observed it yet—our observations on cell behavior, although numerous, can still benefit from additional observations to either refute or support the conclusion that all cells arise from pre-existing ones. And this is where limited observations can lead to erroneous conclusions reasoned inductively. In another example, if one never has seen a swan that is not white, they might conclude that all swans are white, even when we know that black swans do exist, however rare they may be.

The universally accepted scientific method, as it is used in science laboratories today, is grounded in hypothetico-deductive reasoning. Research progresses via iterative empirical testing of testable hypotheses (themselves formulated through inductive reasoning). A testable hypothesis is one that can be rejected (falsified) by empirical observations, a concept known as the principle of falsification. Initially, ideas and conjectures are formulated. Experiments are then performed to test them. If the body of evidence fails to reject the hypothesis, the hypothesis stands. It stands, however, only until another empirical observation (even a single one) falsifies it. However, just as with inductive reasoning, hypothetico-deductive reasoning is not immune to pitfalls: assumptions built into hypotheses can be shown to be false, thereby nullifying previously unrejected hypotheses. The bottom line is that science does not work to prove anything about the natural world. Instead, it builds hypotheses that explain the natural world and then attempts to find the hole in the reasoning (i.e., it works to disprove things about the natural world).
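The asymmetry at the heart of falsification can be sketched in a few lines of Python: no number of confirming observations proves a universal claim, but a single contradicting one rejects it. The swan records and the helper `find_counterexample` are hypothetical and exist only to illustrate the logic.

```python
def find_counterexample(prediction, observations):
    """Return the first observation that contradicts the prediction, or None."""
    for obs in observations:
        if not prediction(obs):
            return obs
    return None


def all_swans_are_white(swan):
    """The hypothesis under test, expressed as a check on a single observation."""
    return swan["color"] == "white"


# Hypothetical field notes: the first batch agrees with the hypothesis.
first_batch = [
    {"id": 1, "color": "white"},
    {"id": 2, "color": "white"},
    {"id": 3, "color": "white"},
]
# A later observation does not.
later_batch = first_batch + [{"id": 4, "color": "black"}]

# The hypothesis survives the first batch: it "stands" but is not proven.
print(find_counterexample(all_swans_are_white, first_batch))

# One black swan is enough to reject it.
counterexample = find_counterexample(all_swans_are_white, later_batch)
print(counterexample)
```

A result of `None` means only that the hypothesis has not yet been rejected, which is the sense in which science "does not work to prove anything."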

How do scientists test hypotheses?

Controlled experiments

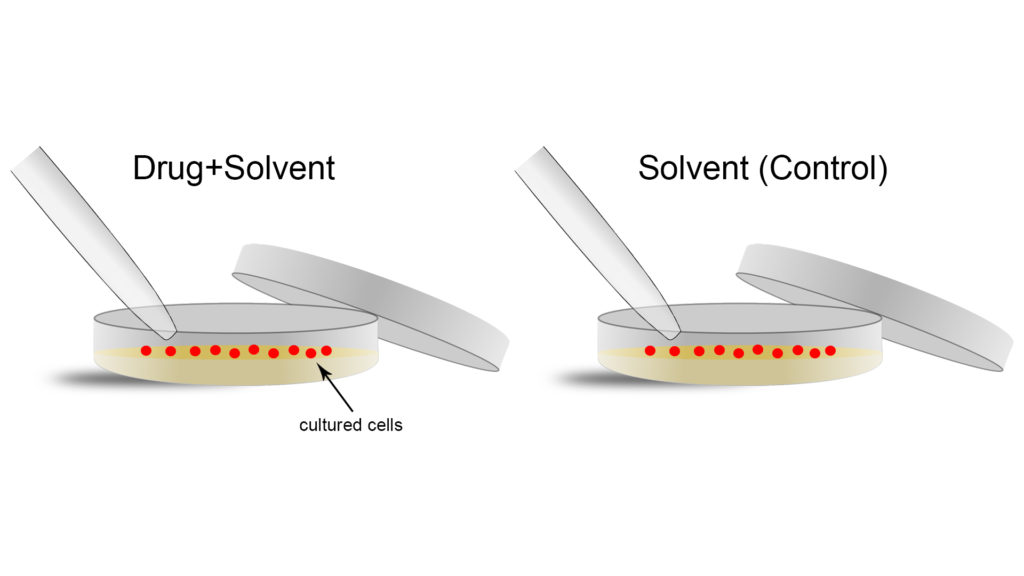

The word “experiment” can be misleading because it implies a lack of control over the process. Therefore, it is important to understand that science uses controlled experiments in order to test hypotheses and contribute new knowledge. So what exactly is a controlled experiment, then?

Let us take a practical example. Our starting hypothesis is the following: we have a novel drug that we think inhibits the division of cells, meaning that it prevents one cell from dividing into two cells (recall the description of cell theory above). To test this hypothesis, we could treat some cells with the drug on a plate that contains nutrients and fuel required for their survival and division (a standard cell biology assay). If the drug works as expected, the cells should stop dividing. This type of drug might be useful, for example, in treating cancers because slowing or stopping the division of cells would result in the slowing or stopping of tumor growth.

Although this experiment is relatively easy to do, the mere process of doing science means that several experimental variables (like temperature of the cells or drug, dosage, and so on) could play a major role in the experiment. This could result in a failed experiment when the drug actually does work, or it could give the appearance that the drug is working when it is not. Given that these variables cannot be eliminated, scientists always run control experiments in parallel to the real ones, so that the effects of these other variables can be determined. Control experiments are designed so that all variables, with the exception of the one under investigation, are kept constant. In simple terms, the conditions must be identical between the control and the actual experiment.

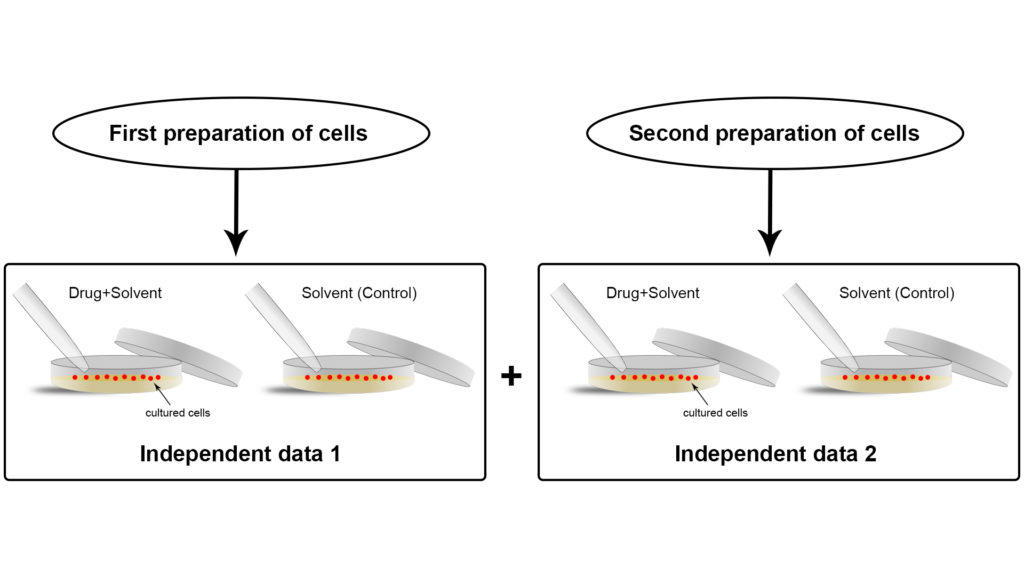

Coming back to our example, when a drug is administered it is not pure. Often, it is dissolved in a solvent like water or oil. Therefore, the perfect control to the actual experiment would be to administer pure solvent (without the added drug) at the same time and with the same tools, where all other experimental variables (like temperature, as mentioned above) are the same between the two (Figure 1). Any difference in effect on cell division in the actual experiment here can be attributed to an effect of the drug because the effects of the solvent were controlled.

In order to provide evidence of the quality of a single, specific experiment, it needs to be performed multiple times in the same experimental conditions. We call these multiple experiments “replicates” of the experiment (Figure 2). The more replicates of the same experiment, the more confident the scientist can be about the conclusions of that experiment under the given conditions. However, multiple replicates under the same experimental conditions are of no help when scientists aim at acquiring more empirical evidence to support their hypothesis. Instead, they need independent experiments (Figure 3), in their own lab and in other labs across the world, to validate their results.
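As a minimal sketch of how replicates and a solvent control might be summarized, the Python snippet below compares mean cell counts between the two conditions and reports the standard error of each mean, which shrinks as replicates are added. All counts are invented for illustration, and `summarize` is a hypothetical helper. A real analysis would typically go on to a formal statistical test.

```python
from math import sqrt
from statistics import mean, stdev


def summarize(replicates):
    """Return (mean, standard error of the mean) for replicate measurements."""
    n = len(replicates)
    if n < 2:
        raise ValueError("need at least two replicates to estimate variability")
    return mean(replicates), stdev(replicates) / sqrt(n)


# Hypothetical cell counts per plate after treatment, six replicates each.
# Both conditions receive the same solvent; only the drug differs.
solvent_control = [198, 205, 201, 196, 210, 202]
drug_treated = [104, 99, 110, 101, 97, 107]

control_mean, control_sem = summarize(solvent_control)
drug_mean, drug_sem = summarize(drug_treated)

# Difference attributable to the drug, given that the solvent effect is controlled.
effect = control_mean - drug_mean

print(f"control: {control_mean:.1f} +/- {control_sem:.1f}")
print(f"drug:    {drug_mean:.1f} +/- {drug_sem:.1f}")
print(f"estimated reduction in cell count: {effect:.1f}")
```

Because the standard error divides by the square root of the number of replicates, more replicates give a tighter estimate under these specific conditions. They do not replace independent experiments, which test whether the result holds elsewhere.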

Oftentimes, especially when a given experiment has been repeated and its outcome is still not fully clear, it is better to find alternative experimental assays to test the hypothesis.

Applying the scientific approach to everyday life

So, what can we take from the scientific approach to apply to our everyday lives?

A few weeks ago, I had an agitated conversation with a bunch of friends concerning the following question: What is the definition of intelligence?

Defining “intelligence” is not easy. At the beginning of the conversation, everybody had a different, “personal” conception of intelligence in mind, which – tacitly – implied that the conversation could have taken several different directions. We realized rather soon that someone thought that an intelligent person is whoever is able to adapt faster to new situations; someone else thought that an intelligent person is whoever is able to deal with other people and empathize with them. Personally, I thought that an intelligent person is whoever displays high cognitive skills, especially in abstract reasoning.

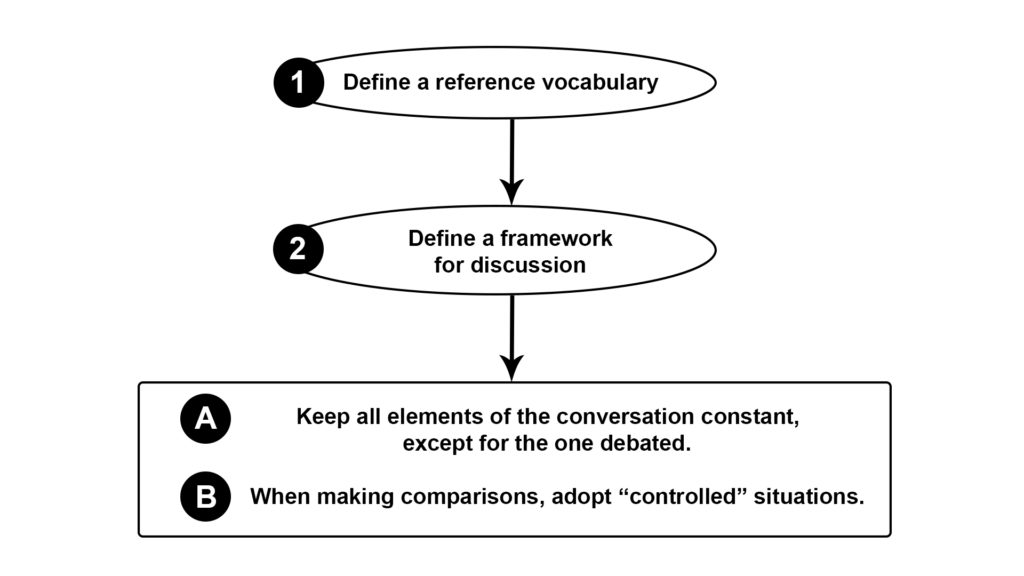

The scientific method has the merit of providing a reference system, with precise protocols and rules to follow. Remember: experiments must be reproducible, which means that an independent scientist in a different laboratory, when provided with the same equipment and protocols, should get comparable results. Fruitful conversations likewise need precise language, a kind of reference vocabulary everybody should agree upon, in order to discuss the same “content”. This is something we often forget, something that was somehow missing at the opening of the aforementioned conversation: even among friends, we should always agree on premises, and define them in a rigorous manner, so that they are the same for everybody. When speaking about “intelligence”, we must all make sure we understand the meaning and context of the vocabulary adopted in the debate (Figure 4, point 1). This is the first step of “controlling” a conversation.

There is another pitfall that a discussion well-grounded in a scientific framework would avoid. The mistake is not structuring the debate so that all its elements, except for the one under investigation, are kept constant (Figure 4, point 2). This is particularly true when people aim at making comparisons between groups to support their claim. For example, they may try to define what intelligence is by comparing the achievements in life of different individuals: “Stephen Hawking is a brilliant example of intelligence because of his great contribution to the physics of black holes”. This statement does not help to define what intelligence is, simply because it compares Stephen Hawking, a famous and exceptional physicist, to any other person, who, statistically speaking, knows nothing about physics. Hawking first went to the University of Oxford, then he moved to the University of Cambridge. He was in contact with the most influential physicists on Earth. Other people were not. All of this, of course, does not disprove Hawking’s intelligence; but from a logical and methodological point of view, given the multitude of variables included in this comparison, it cannot prove it. Thus, the sentence “Stephen Hawking is a brilliant example of intelligence because of his great contribution to the physics of black holes” is not a valid argument to describe what intelligence is. If we really intend to approximate a definition of intelligence, Stephen Hawking should be compared to other physicists, ideally his classmates at college and his colleagues during his later years of academic research.

In simple terms, as scientists do in the lab, while debating we should try to compare groups of elements that display identical, or highly similar, features. As previously mentioned, all variables – except for the one under investigation – must be kept constant.

This insightful piece presents a detailed analysis of how and why science can help to develop critical thinking.

In a nutshell

Here is how to approach a daily conversation in a rigorous, scientific manner:

- First discuss the reference vocabulary, then discuss the content of the discussion. Think about a researcher who is writing down an experimental protocol that will be used by thousands of other scientists on different continents. If the protocol is rigorously written, all scientists using it should get comparable experimental outcomes. In science this means reproducible knowledge; in daily life this means fruitful conversations in which individuals are on the same page.

- Adopt “controlled” arguments to support your claims. When making comparisons between groups, visualize two blank scenarios. As you start to add details to both of them, you have two options. If your aim is to hide a specific detail, it is better to design the two scenarios in completely different ways, that is, to increase the number of variables. But if your intention is to help the observer isolate a specific detail, it is better to design identical scenarios, with the exception of the intended detail, that is, to keep most of the variables constant. This is precisely how scientists devise adequate experiments to isolate new pieces of knowledge, and how individuals should organize their thoughts in order to test them and make them easier for others to understand.

The scientific method should offer individuals not only an elitist way to investigate reality, but also an accessible tool to reason properly about it and discuss it.

Edited by Jason Organ, PhD, Indiana University School of Medicine.

Simone is a molecular biologist on the verge of obtaining a doctoral title at the University of Ulm, Germany. He is Vice-Director at Culturico (https://culturico.com/), where his writings span from Literature to Sociology, from Philosophy to Science. His writings recently appeared in Psychology Today, openDemocracy, Splice Today, Merion West, Uncommon Ground and The Society Pages. Follow Simone on Twitter: @simredaelli


Scientific Method

Science is an enormously successful human enterprise. The study of scientific method is the attempt to discern the activities by which that success is achieved. Among the activities often identified as characteristic of science are systematic observation and experimentation, inductive and deductive reasoning, and the formation and testing of hypotheses and theories. How these are carried out in detail can vary greatly, but characteristics like these have been looked to as a way of demarcating scientific activity from non-science, where only enterprises which employ some canonical form of scientific method or methods should be considered science (see also the entry on science and pseudo-science). Others have questioned whether there is anything like a fixed toolkit of methods which is common across science and only science. Some reject privileging one view of method as part of rejecting broader views about the nature of science, such as naturalism (Dupré 2004); some reject any restriction in principle (pluralism).

Scientific method should be distinguished from the aims and products of science, such as knowledge, predictions, or control. Methods are the means by which those goals are achieved. Scientific method should also be distinguished from meta-methodology, which includes the values and justifications behind a particular characterization of scientific method (i.e., a methodology) — values such as objectivity, reproducibility, simplicity, or past successes. Methodological rules are proposed to govern method and it is a meta-methodological question whether methods obeying those rules satisfy given values. Finally, method is distinct, to some degree, from the detailed and contextual practices through which methods are implemented. The latter might range over: specific laboratory techniques; mathematical formalisms or other specialized languages used in descriptions and reasoning; technological or other material means; ways of communicating and sharing results, whether with other scientists or with the public at large; or the conventions, habits, enforced customs, and institutional controls over how and what science is carried out.

While it is important to recognize these distinctions, their boundaries are fuzzy. Hence, accounts of method cannot be entirely divorced from their methodological and meta-methodological motivations or justifications. Moreover, each aspect plays a crucial role in identifying methods. Disputes about method have therefore played out at the detail, rule, and meta-rule levels. Changes in beliefs about the certainty or fallibility of scientific knowledge, for instance (which is a meta-methodological consideration of what we can hope for methods to deliver), have meant different emphases on deductive and inductive reasoning, or on the relative importance attached to reasoning over observation (i.e., differences over particular methods). Beliefs about the role of science in society will affect the place one gives to values in scientific method.

The issue which has shaped debates over scientific method the most in the last half century is the question of how pluralist we need to be about method. Unificationists continue to hold out for one method essential to science; nihilism is a form of radical pluralism, which considers the effectiveness of any methodological prescription to be so context sensitive as to render it not explanatory on its own. Some middle degree of pluralism regarding the methods embodied in scientific practice seems appropriate. But the details of scientific practice vary with time and place, from institution to institution, across scientists and their subjects of investigation. How significant are the variations for understanding science and its success? How much can method be abstracted from practice? This entry describes some of the attempts to characterize scientific method or methods, as well as arguments for a more context-sensitive approach to methods embedded in actual scientific practices.

- 1. Overview and organizing themes

- 2. Historical review: Aristotle to Mill

- 3.1 Logical constructionism and operationalism

- 3.2 H-D as a logic of confirmation

- 3.3 Popper and falsificationism

- 3.4 Meta-methodology and the end of method

- 4. Statistical methods for hypothesis testing

- 5.1 Creative and exploratory practices

- 5.2 Computer methods and the ‘new ways’ of doing science

- 6.1 “The scientific method” in science education and as seen by scientists

- 6.2 Privileged methods and ‘gold standards’

- 6.3 Scientific method in the court room

- 6.4 Deviating practices

- 7. Conclusion

- Other Internet Resources

- Related Entries

This entry could have been given the title Scientific Methods and gone on to fill volumes, or it could have been extremely short, consisting of a brief summary rejection of the idea that there is any such thing as a unique Scientific Method at all. Both unhappy prospects are due to the fact that scientific activity varies so much across disciplines, times, places, and scientists that any account which manages to unify it all will either consist of overwhelming descriptive detail, or trivial generalizations.

The choice of scope for the present entry is more optimistic, taking a cue from the recent movement in philosophy of science toward a greater attention to practice: to what scientists actually do. This “turn to practice” can be seen as the latest form of studies of methods in science, insofar as it represents an attempt at understanding scientific activity, but through accounts that are neither meant to be universal and unified, nor singular and narrowly descriptive. To some extent, different scientists at different times and places can be said to be using the same method even though, in practice, the details are different.

Whether the context in which methods are carried out is relevant, or to what extent, will depend largely on what one takes the aims of science to be and what one’s own aims are. For most of the history of scientific methodology the assumption has been that the most important output of science is knowledge and so the aim of methodology should be to discover those methods by which scientific knowledge is generated.

Science was seen to embody the most successful form of reasoning (but which form?) to the most certain knowledge claims (but how certain?) on the basis of systematically collected evidence (but what counts as evidence, and should the evidence of the senses take precedence, or rational insight?). Section 2 surveys some of the history, pointing to two major themes. One theme is seeking the right balance between observation and reasoning (and the attendant forms of reasoning which employ them); the other is how certain scientific knowledge is or can be.

Section 3 turns to 20th century debates on scientific method. In the second half of the 20th century the epistemic privilege of science faced several challenges and many philosophers of science abandoned the reconstruction of the logic of scientific method. Views changed significantly regarding which functions of science ought to be captured and why. For some, the success of science was better identified with social or cultural features. Historical and sociological turns in the philosophy of science were made, with a demand that greater attention be paid to the non-epistemic aspects of science, such as sociological, institutional, material, and political factors. Even outside of those movements there was an increased specialization in the philosophy of science, with more and more focus on specific fields within science. The combined upshot was that very few philosophers argued any longer for a grand unified methodology of science. Sections 3 and 4 survey the main positions on scientific method in 20th century philosophy of science, focusing on where they differ in their preference for confirmation or falsification or for waiving the idea of a special scientific method altogether.

In recent decades, attention has primarily been paid to scientific activities traditionally falling under the rubric of method, such as experimental design and general laboratory practice, the use of statistics, the construction and use of models and diagrams, interdisciplinary collaboration, and science communication. Sections 4–6 attempt to construct a map of the current domains of the study of methods in science.

As these sections illustrate, the question of method is still central to the discourse about science. Scientific method remains a topic for education, for science policy, and for scientists. It arises in the public domain where the demarcation or status of science is at issue. Some philosophers have recently returned, therefore, to the question of what it is that makes science a unique cultural product. This entry will close with some of these recent attempts at discerning and encapsulating the activities by which scientific knowledge is achieved.

Attempting a history of scientific method compounds the vast scope of the topic. This section briefly surveys the background to modern methodological debates. What can be called the classical view goes back to antiquity, and represents a point of departure for later divergences. [1]

We begin with a point made by Laudan (1968) in his historical survey of scientific method:

Perhaps the most serious inhibition to the emergence of the history of theories of scientific method as a respectable area of study has been the tendency to conflate it with the general history of epistemology, thereby assuming that the narrative categories and classificatory pigeon-holes applied to the latter are also basic to the former. (1968: 5)

To see knowledge about the natural world as falling under knowledge more generally is an understandable conflation. Histories of theories of method would naturally employ the same narrative categories and classificatory pigeon-holes. An important theme of the history of epistemology, for example, is the unification of knowledge, a theme reflected in the question of the unification of method in science. Those who have identified differences in kinds of knowledge have often likewise identified different methods for achieving that kind of knowledge (see the entry on the unity of science).

Different views on what is known, how it is known, and what can be known are connected. Plato distinguished the realms of things into the visible and the intelligible (The Republic, 510a, in Cooper 1997). Only the latter, the Forms, could be objects of knowledge. The intelligible truths could be known with the certainty of geometry and deductive reasoning. What could be observed of the material world, however, was by definition imperfect and deceptive, not ideal. The Platonic way of knowledge therefore emphasized reasoning as a method, downplaying the importance of observation. Aristotle disagreed, locating the Forms in the natural world as the fundamental principles to be discovered through the inquiry into nature (Metaphysics Z, in Barnes 1984).

Aristotle is recognized as giving the earliest systematic treatise on the nature of scientific inquiry in the western tradition, one which embraced observation and reasoning about the natural world. In the Prior and Posterior Analytics, Aristotle reflects first on the aims and then the methods of inquiry into nature. A number of features can be found which are still considered by most to be essential to science. For Aristotle, empiricism, careful observation (but passive observation, not controlled experiment), is the starting point. The aim is not merely recording of facts, though. For Aristotle, science (epistêmê) is a body of properly arranged knowledge or learning: the empirical facts, but also their ordering and display, are of crucial importance. The aims of discovery, ordering, and display of facts partly determine the methods required of successful scientific inquiry. Also determinant is the nature of the knowledge being sought, and the explanatory causes proper to that kind of knowledge (see the discussion of the four causes in the entry on Aristotle on causality).

In addition to careful observation, then, scientific method requires a logic as a system of reasoning for properly arranging, but also inferring beyond, what is known by observation. Methods of reasoning may include induction, prediction, or analogy, among others. Aristotle’s system (along with his catalogue of fallacious reasoning) was collected under the title the Organon. This title would be echoed in later works on scientific reasoning, such as Novum Organum by Francis Bacon, and Novum Organon Renovatum by William Whewell (see below). In Aristotle’s Organon reasoning is divided primarily into two forms, a rough division which persists into modern times. The division, known most commonly today as deductive versus inductive method, appears in other eras and methodologies as analysis/synthesis, non-ampliative/ampliative, or even confirmation/verification. The basic idea is that there are two “directions” to proceed in our methods of inquiry: one away from what is observed, to the more fundamental, general, and encompassing principles; the other, from the fundamental and general to instances or implications of principles.

The basic aim and method of inquiry identified here can be seen as a theme running throughout the next two millennia of reflection on the correct way to seek after knowledge: carefully observe nature and then seek rules or principles which explain or predict its operation. The Aristotelian corpus provided the framework for a commentary tradition on scientific method independent of science itself (cosmos versus physics). During the medieval period, figures such as Albertus Magnus (1206–1280), Thomas Aquinas (1225–1274), Robert Grosseteste (1175–1253), Roger Bacon (1214/1220–1292), William of Ockham (1287–1347), Andreas Vesalius (1514–1564), and Giacomo Zabarella (1533–1589) all worked to clarify the kind of knowledge obtainable by observation and induction, the source of justification of induction, and the best rules for its application. [2] Many of their contributions we now think of as essential to science (see also Laudan 1968). As Aristotle and Plato had employed a framework of reasoning either “to the forms” or “away from the forms”, medieval thinkers employed directions away from the phenomena or back to the phenomena. In analysis, a phenomenon was examined to discover its basic explanatory principles; in synthesis, explanations of a phenomenon were constructed from first principles.

During the Scientific Revolution these various strands of argument, experiment, and reason were forged into a dominant epistemic authority. The 16th–18th centuries were a period of not only dramatic advance in knowledge about the operation of the natural world (advances in mechanical, medical, biological, political, and economic explanations) but also of self-awareness of the revolutionary changes taking place, and intense reflection on the source and legitimation of the method by which the advances were made. The struggle to establish the new authority included methodological moves. The Book of Nature, according to the metaphor of Galileo Galilei (1564–1642) or Francis Bacon (1561–1626), was written in the language of mathematics, of geometry and number. This motivated an emphasis on mathematical description and mechanical explanation as important aspects of scientific method. Through figures such as Henry More and Ralph Cudworth, a neo-Platonic emphasis on the importance of metaphysical reflection on nature behind appearances, particularly regarding the spiritual as a complement to the purely mechanical, remained an important methodological thread of the Scientific Revolution (see the entries on Cambridge Platonists; Boyle; Henry More; Galileo).