Open Access

Peer-reviewed

Research Article

Clustering algorithms: A comparative approach

Roles Data curation, Formal analysis, Investigation, Methodology, Software, Validation, Visualization, Writing – original draft, Writing – review & editing

Affiliation Institute of Mathematics and Computer Science, University of São Paulo, São Carlos, São Paulo, Brazil

Roles Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Validation, Writing – original draft, Writing – review & editing

* E-mail: [email protected]

Affiliation Department of Computer Science, Federal University of São Carlos, São Carlos, São Paulo, Brazil

Roles Validation, Writing – original draft, Writing – review & editing

Affiliation Federal University of Technology, Paraná, Paraná, Brazil

Roles Funding acquisition, Project administration, Supervision, Validation, Writing – review & editing

Affiliation São Carlos Institute of Physics, University of São Paulo, São Carlos, São Paulo, Brazil

Roles Funding acquisition, Project administration, Supervision, Validation, Writing – original draft, Writing – review & editing

Roles Conceptualization, Formal analysis, Funding acquisition, Methodology, Project administration, Resources, Supervision, Validation, Writing – original draft, Writing – review & editing

- Mayra Z. Rodriguez,

- Cesar H. Comin,

- Dalcimar Casanova,

- Odemir M. Bruno,

- Diego R. Amancio,

- Luciano da F. Costa,

- Francisco A. Rodrigues

- Published: January 15, 2019

- https://doi.org/10.1371/journal.pone.0210236

Many real-world systems can be studied in terms of pattern recognition tasks, so that proper use (and understanding) of machine learning methods in practical applications becomes essential. While many classification methods have been proposed, there is no consensus on which methods are more suitable for a given dataset. As a consequence, it is important to comprehensively compare methods in many possible scenarios. In this context, we performed a systematic comparison of 9 well-known clustering methods available in the R language assuming normally distributed data. In order to account for the many possible variations of data, we considered artificial datasets with several tunable properties (number of classes, separation between classes, etc). In addition, we also evaluated the sensitivity of the clustering methods with regard to their parameter configuration. The results revealed that, when considering the default configurations of the adopted methods, the spectral approach tended to present particularly good performance. We also found that the default configuration of the adopted implementations was not always accurate. In these cases, a simple approach based on random selection of parameter values proved to be a good alternative to improve the performance. All in all, the reported approach provides guidance for the choice of clustering algorithms.

Citation: Rodriguez MZ, Comin CH, Casanova D, Bruno OM, Amancio DR, Costa LdF, et al. (2019) Clustering algorithms: A comparative approach. PLoS ONE 14(1): e0210236. https://doi.org/10.1371/journal.pone.0210236

Editor: Hans A. Kestler, University of Ulm, GERMANY

Received: December 26, 2016; Accepted: December 19, 2018; Published: January 15, 2019

Copyright: © 2019 Rodriguez et al. This is an open access article distributed under the terms of the Creative Commons Attribution License , which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Data Availability: All datasets used for evaluating the algorithms can be obtained from Figshare: https://figshare.com/s/29005b491a418a667b22 .

Funding: This work has been supported by FAPESP - Fundação de Amparo à Pesquisa do Estado de São Paulo (grant nos. 15/18942-8 and 18/09125-4 for CHC, 14/20830-0 and 16/19069-9 for DRA, 14/08026-1 for OMB and 11/50761-2 and 15/22308-2 for LdFC), CNPq - Conselho Nacional de Desenvolvimento Científico e Tecnológico (grant nos. 307797/2014-7 for OMB and 307333/2013-2 for LdFC), Núcleo de Apoio à Pesquisa (LdFC) and CAPES - Coordenação de Aperfeiçoamento de Pessoal de Nível Superior (Finance Code 001).

Competing interests: The authors have declared that no competing interests exist.

Introduction

In recent years, the automation of data collection and recording has produced a deluge of information about many different kinds of systems [ 1 – 8 ]. As a consequence, many methodologies aimed at organizing and modeling data have been developed [ 9 ]. Such methodologies are motivated by their widespread application in diagnosis [ 10 ], education [ 11 ], forecasting [ 12 ], and many other domains [ 13 ]. The definition, evaluation and application of these methodologies are all part of the machine learning field [ 14 ], which has become a major subarea of computer science and statistics due to its crucial role in the modern world.

Machine learning encompasses different topics such as regression analysis [ 15 ], feature selection methods [ 16 ], and classification [ 14 ]. The latter involves assigning classes to the objects in a dataset. Three main approaches can be considered for classification: supervised, semi-supervised and unsupervised classification. In the first case, the classes, or labels, of some objects are known beforehand, defining the training set, and an algorithm is used to obtain the classification criteria. Semi-supervised classification deals with training the algorithm using both labeled and unlabeled data. It is commonly used when manually labeling a dataset becomes costly. Lastly, unsupervised classification, henceforth referred to as clustering , deals with defining classes from the data without knowledge of the class labels. The purpose of clustering algorithms is to identify groups of objects, or clusters, whose members are more similar to each other than to the members of other clusters. Such an approach to data analysis is closely related to the task of creating a model of the data, that is, defining a simplified set of properties that can provide an intuitive explanation of relevant aspects of a dataset. Clustering methods are generally more demanding than supervised approaches, but provide more insights about complex data. This type of classifier constitutes the main object of the current work.

Because clustering algorithms involve several parameters, often operate in high dimensional spaces, and have to cope with noisy, incomplete and sampled data, their performance can vary substantially for different applications and types of data. For such reasons, several different approaches to clustering have been proposed in the literature (e.g. [ 17 – 19 ]). In practice, it becomes a difficult endeavor, given a dataset or problem, to choose a suitable clustering approach. Nevertheless, much can be learned by comparing different clustering methods. Several previous efforts for comparing clustering algorithms have been reported in the literature [ 20 – 29 ]. Here, we focus on generating a diversified and comprehensive set of artificial, normally distributed data containing not only distinct numbers of classes, features and objects and distinct separations between classes, but also a varied structure of the involved groups (e.g. possessing predefined correlation distributions between features). The purpose of using artificial data is the possibility to obtain an unlimited number of samples and to systematically change any of the aforementioned properties of a dataset. Such features allow the clustering algorithms to be comprehensively and strictly evaluated in a vast number of circumstances, and also grant the possibility of quantifying the sensitivity of the performance with respect to small changes in the data. It should be observed, nevertheless, that the performance results reported in this work are therefore specific and limited to normally distributed data, and other results could be expected for data following other statistical behavior. Here we associate performance with the similarity between the known labels of the objects and those found by the algorithm. Many measurements have been defined for quantifying such similarity [ 30 ]; we compare the Jaccard index [ 31 ], Adjusted Rand index [ 32 ], Fowlkes-Mallows index [ 33 ] and Normalized mutual information [ 34 ]. A modified version of the procedure developed by [ 35 ] was used to create 400 distinct datasets, which were used to quantify the performance of the clustering algorithms. We describe the adopted procedure and the respective parameters used for data generation. Related approaches include [ 36 ].

Each clustering algorithm relies on a set of parameters that needs to be adjusted in order to achieve viable performance, which is an important point to be addressed when comparing clustering algorithms. A long-standing problem in machine learning is the definition of a proper procedure for setting the parameter values [ 37 ]. In principle, one can apply an optimization procedure (e.g., simulated annealing [ 38 ] or genetic algorithms [ 39 ]) to find the parameter configuration providing the best performance of a given algorithm. Nevertheless, there are two major problems with such an approach. First, adjusting parameters to a given dataset may lead to overfitting [ 40 ]. That is, the specific values found to provide good performance may lead to lower performance when new data is considered. Second, parameter optimization can be infeasible in some cases, given the time complexity of many algorithms, combined with their typically large number of parameters. Ultimately, many researchers resort to applying classification or clustering algorithms using the default parameters provided by the software. Therefore, efforts are required for evaluating and comparing the performance of clustering algorithms in both the optimized and the default situations. In the following, we consider some representative examples of algorithms applied in the literature [ 37 , 41 ].

Clustering algorithms have been implemented in several programming languages and packages. During the development and implementation of such codes, it is common to implement changes or optimizations, leading to new versions of the original methods. The current work focuses on the comparative analysis of several clustering algorithms found in popular packages available in the R programming language [ 42 ]. This choice was motivated by the popularity of the R language in the data mining field, and by virtue of the well-established clustering packages it contains. This study is intended to assist researchers who have programming skills in the R language, but little experience with data clustering.

The algorithms are evaluated in three distinct situations. First, we consider their performance when using the default parameters provided by the packages. Then, we consider the performance variation when single parameters of the algorithms are changed, while the rest are kept at their default values. Finally, we consider the simultaneous variation of all parameters by means of a random sampling procedure. We compare the results obtained for the latter two situations with those achieved by the default parameters, in order to investigate the possible improvements in performance that could be achieved by adjusting the parameters of the algorithms.
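The third situation, random sampling of all parameters at once, can be illustrated with a generic random search. The sketch below is only an assumption about how such a procedure might look, not the protocol used in this study: the names `random_search`, `evaluate` and `param_ranges` are hypothetical, and every parameter is assumed to be a real number drawn uniformly from a given interval.

```python
import random

def random_search(evaluate, param_ranges, n_trials=100, seed=0):
    """Draw random parameter configurations and keep the best-scoring one.

    evaluate     -- hypothetical callable mapping a parameter dict to a score
                    (higher is better), e.g. an external validity index
    param_ranges -- dict mapping parameter name to a (low, high) interval
    """
    rng = random.Random(seed)
    best_params, best_score = None, float("-inf")
    for _ in range(n_trials):
        # All parameters are sampled simultaneously and independently.
        params = {name: rng.uniform(low, high)
                  for name, (low, high) in param_ranges.items()}
        score = evaluate(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score
```

In practice `evaluate` would run a clustering algorithm with the sampled configuration and return a performance index; the score of the best sampled configuration can then be compared with the score obtained for the default parameters.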

The algorithms were evaluated on 400 artificial, normally distributed, datasets generated by a robust methodology previously described in [ 36 ]. The number of features, number of classes, number of objects for each class and average distance between classes can be systematically changed among the datasets.

The text is divided as follows. We start by reviewing some of the main approaches to the comparison of clustering algorithms. Next, we describe the clustering methods considered in the analysis and present the R packages implementing such methods. The data generation method and the performance measurements used to compare the algorithms are then presented, followed by the performance results obtained for the default parameters, for single parameter variation and for random parameter sampling.

Related works

Previous approaches for comparing the performance of clustering algorithms can be divided according to the nature of the datasets used. While some studies use either real-world or artificial data, others employ both types of datasets to compare the performance of several clustering methods.

A comparative analysis using real-world datasets is presented in several works [ 20 , 21 , 24 , 25 , 43 , 44 ]. Some of these works are briefly reviewed in the following. In [ 43 ], the authors propose an evaluation approach based on multiple criteria decision making in the domain of financial risk analysis, applied to three real-world credit risk and bankruptcy risk datasets. More specifically, clustering algorithms are evaluated in terms of a combination of clustering measurements, which includes a collection of external and internal validity indexes. Their results show that no algorithm can achieve the best performance on all measurements for any dataset and, for this reason, it is mandatory to use more than one performance measure to evaluate clustering algorithms.

In [ 21 ], a comparative analysis of clustering methods was performed in the context of a text-independent speaker verification task, using three datasets of documents. Two approaches were considered: clustering algorithms focused on minimizing a distance-based objective function and a Gaussian models-based approach. The following algorithms were compared: k-means, random swap, expectation-maximization, hierarchical clustering, self-organized maps (SOM) and fuzzy c-means. The authors found that the most important factor for the success of the algorithms is the model order, which represents the number of centroids or Gaussian components (for Gaussian models-based approaches) considered. Overall, the recognition accuracy was similar for clustering algorithms focused on minimizing a distance-based objective function. When the number of clusters was small, SOM and hierarchical methods provided lower accuracy than the other methods. Finally, a comparison of the computational efficiency of the methods revealed that the split hierarchical method is the fastest clustering algorithm in the considered dataset.

In [ 25 ], five clustering methods were studied: k-means, multivariate Gaussian mixture, hierarchical clustering, spectral and nearest neighbor methods. Four proximity measures were used in the experiments: Pearson and Spearman correlation coefficients, cosine similarity and the Euclidean distance. The algorithms were evaluated on 35 gene expression datasets from either Affymetrix or cDNA chip platforms, using the adjusted Rand index for performance evaluation. The multivariate Gaussian mixture method provided the best performance in recovering the actual number of clusters of the datasets. The k-means method displayed similar performance. In this same analysis, the hierarchical method led to limited performance, while the spectral method proved to be particularly sensitive to the proximity measure employed.

In [ 24 ], experiments were performed to compare five different types of clustering algorithms: CLICK, self organized mapping-based method (SOM), k-means, hierarchical and dynamical clustering. Data sets of gene expression time series of the Saccharomyces cerevisiae yeast were used. A k-fold cross-validation procedure was considered to compare different algorithms. The authors found that k-means, dynamical clustering and SOM tended to yield high accuracy in all experiments. On the other hand, hierarchical clustering presented a more limited performance in clustering larger datasets, yielding low accuracy in some experiments.

A comparative analysis using artificial data is presented in [ 45 – 47 ]. In [ 47 ], two subspace clustering methods were compared: MAFIA (Adaptive Grids for Clustering Massive Data Sets) [ 48 ] and FINDIT (A Fast and Intelligent Subspace Clustering Algorithm Using Dimension Voting) [ 49 ]. The artificial data, modeled according to a normal distribution, allowed the control of the number of dimensions and instances. The methods were evaluated in terms of both scalability and accuracy. In the former, the running times of both algorithms were compared for different numbers of instances and features. In addition, the authors assessed the ability of the methods to find adequate subspaces for each cluster. They found that MAFIA discovered all relevant clusters, but one significant dimension was left out in most cases. Conversely, the FINDIT method performed better in the task of identifying the most relevant dimensions. Both algorithms were found to scale linearly with the number of instances; however, MAFIA outperformed FINDIT in most of the tests.

Another common approach for comparing clustering algorithms considers using a mixture of real world and artificial data (e.g. [ 23 , 26 – 28 , 50 ]). In [ 28 ], the performance of k-means, single linkage and simulated annealing (SA) was evaluated, considering different partitions obtained by validation indexes. The authors used two real world datasets obtained from [ 51 ] and three artificial datasets (having two dimensions and 10 clusters). The authors proposed a new validation index called I index that measures the separation based on the maximum distance between clusters and compactness based on the sum of distances between objects and their respective centroids. They found that such an index was the most reliable among other considered indices, reaching its maximum value when the number of clusters is properly chosen.

A systematic quantitative evaluation of four graph-based clustering methods was performed in [ 27 ]. The compared methods were: Markov clustering (MCL), restricted neighborhood search clustering (RNSC), super paramagnetic clustering (SPC), and molecular complex detection (MCODE). Six datasets modeling protein interactions in Saccharomyces cerevisiae and 84 random graphs were used for the comparison. For each algorithm, robustness was measured in a twofold fashion: the variation of performance was quantified in terms of changes in the (i) method parameters and (ii) dataset properties. In the latter, connections were included and removed to reflect uncertainties in the relationship between proteins. The restricted neighborhood search clustering method turned out to be particularly robust to variations in the choice of method parameters, whereas the other algorithms were found to be more robust to dataset alterations.

In [ 52 ] the authors report a brief comparison of clustering algorithms using the Fundamental Clustering Problems Suite (FCPS) as dataset. The FCPS contains artificial and real datasets for testing clustering algorithms. Each dataset represents a particular challenge that the clustering algorithm has to handle. For example, in the Hepta and LSum datasets the clusters can be separated by a linear decision boundary, but have different densities and variances. On the other hand, the ChainLink and Atom datasets cannot be separated by linear decision boundaries. Likewise, the Target dataset contains outliers. Lower performance was obtained by the single linkage clustering algorithm for the Tetra, EngyTime, Twodiamonds and Wingnut datasets. Although the datasets are quite versatile, it is not possible to control and evaluate how some of their characteristics, such as dimensions or number of features, affect the clustering accuracy.

Clustering methods

Many different types of clustering methods have been proposed in the literature [ 53 – 56 ]. Despite such diversity, some methods are more frequently used [ 57 ]. Also, many of the commonly employed methods are defined in terms of similar assumptions about the data (e.g., k-means and k-medoids) or consider analogous mathematical concepts (e.g., similarity matrices for spectral or graph clustering) and, consequently, should provide similar performance in typical usage scenarios. Therefore, in the following we consider a choice of clustering algorithms from different families of methods. Several taxonomies have been proposed to organize the many different types of clustering algorithms into families [ 29 , 58 ]. While some taxonomies categorize the algorithms based on their objective functions [ 58 ], others aim at the specific structures desired for the obtained clusters (e.g. hierarchical) [ 29 ]. Here we consider the algorithms indicated in Table 1 as examples of the categories indicated in the same table. The algorithms represent some of the main types of methods in the literature. Note that some algorithms are from the same family, but in these cases they possess notable differences in their applications (e.g., treating very large datasets using clara). A short description of the parameters of each considered algorithm is provided in S1 File of the supplementary material.

The first column shows the name of the algorithms used throughout the text. The second column indicates the category of the algorithms. The third and fourth columns contain, respectively, the function name and R library of each algorithm.

https://doi.org/10.1371/journal.pone.0210236.t001

Regarding partitional approaches, the k-means [ 68 ] algorithm has been widely used by researchers [ 57 ]. This method requires as input parameters the number of groups ( k ) and a distance metric. Initially, each data point is associated with one of the k clusters according to its distance to the centroids (cluster centers) of each cluster. An example is shown in Fig 1(a) , where black points correspond to centroids and the remaining points have the same color if the centroid that is closest to them is the same. Then, new centroids are calculated, and the classification of the data points is repeated for the new centroids, as indicated in Fig 1(b) , where gray points indicate the position of the centroids in the previous iteration. The process is repeated until no significant change in the centroid positions is observed at each new step, as shown in Fig 1(c) and 1(d) .

Each plot shows the partition obtained after specific iterations of the algorithm. The centroids of the clusters are shown as a black marker. Points are colored according to their assigned clusters. Gray markers indicate the position of the centroids in the previous iteration. The dataset contains 2 clusters, but k = 4 seeds were used in the algorithm.

https://doi.org/10.1371/journal.pone.0210236.g001
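The k-means iteration described in the text and illustrated in Fig 1 can be sketched in a few lines. This is a minimal, illustrative Python version of the basic assign-and-update loop, not the routine from the R stats package evaluated in this work. The function names, the random choice of initial seeds among the data points, the squared Euclidean distance and the stopping tolerance are all assumptions made for the example.

```python
import random

def sq_dist(p, q):
    """Squared Euclidean distance between two points given as tuples."""
    return sum((a - b) ** 2 for a, b in zip(p, q))

def kmeans(points, k, max_iter=100, tol=1e-9, seed=0):
    """Basic k-means: alternate assignment and centroid update until stable."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # assumption: seeds are random data points
    labels = [0] * len(points)
    for _ in range(max_iter):
        # Assignment step: each point is associated with its closest centroid.
        labels = [min(range(k), key=lambda j: sq_dist(p, centroids[j]))
                  for p in points]
        # Update step: each centroid moves to the mean of its assigned points.
        new_centroids = []
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                new_centroids.append(
                    tuple(sum(c) / len(members) for c in zip(*members)))
            else:
                new_centroids.append(centroids[j])  # empty cluster: keep centroid
        shift = max(sq_dist(a, b) for a, b in zip(centroids, new_centroids))
        centroids = new_centroids
        if shift <= tol:  # no significant change of the centroid positions
            break
    return labels, centroids
```

Running the function with a value of k larger than the true number of groups, as in Fig 1, still returns k clusters, which is one reason the choice of k matters so much for this method.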

The a priori setting of the number of clusters is the main limitation of the k-means algorithm. This is so because the final classification can strongly depend on the choice of the number of centroids [ 68 ]. In addition, k-means is not particularly recommended in cases where the clusters do not show a convex distribution or have very different sizes [ 59 , 60 ]. Moreover, the k-means algorithm is sensitive to the initial seed selection [ 41 ]. Given these limitations, many modifications of this algorithm have been proposed [ 61 – 63 ], such as the k-medoid [ 64 ] and k-means++ [ 65 ]. Nevertheless, this algorithm, besides having low computational cost, can provide good results in many practical situations such as in anomaly detection [ 66 ] and data segmentation [ 67 ]. The R routine used for k-means clustering was the kmeans function from the stats package, which contains the implementation of the algorithms proposed by MacQueen [ 68 ] and by Hartigan and Wong [ 69 ]. The algorithm of Hartigan and Wong is employed by the stats package when setting the parameters to their default values, while the algorithm proposed by MacQueen is used for all other cases.

Another interesting example of partitional clustering algorithms is the clustering for large applications (clara) [ 70 ]. This method takes into account multiple fixed samples of the dataset to minimize sampling bias and, subsequently, selects the best medoids among the chosen samples, where a medoid is defined as the object i for which the average dissimilarity to all other objects in its cluster is minimal. This method tends to be efficient for large amounts of data because it does not explore the whole neighborhood of the data points [ 71 ], although the quality of the results has been found to strongly depend on the number of objects in the sample data [ 62 ]. The clara algorithm employed in our analysis was provided by the clara function contained in the cluster package. This function implements the method developed by Kaufman and Rousseeuw [ 70 ].
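The sampling idea behind clara can be illustrated as follows. This is a simplified sketch under stated assumptions, not the algorithm of Kaufman and Rousseeuw as implemented in the cluster package: an exhaustive search over the sample stands in for the medoid search performed on each sample, which is only practical for tiny samples, and the names `total_cost` and `clara_sketch` are hypothetical.

```python
import itertools
import random

def total_cost(data, medoids, dist):
    """Sum of dissimilarities between each object and its closest medoid."""
    return sum(min(dist(x, m) for m in medoids) for x in data)

def clara_sketch(data, k, dist, n_samples=5, sample_size=10, seed=0):
    """Pick medoids on several random samples, keep the set that is best
    when evaluated on the whole dataset."""
    rng = random.Random(seed)
    best_medoids, best_cost = None, float("inf")
    for _ in range(n_samples):
        sample = rng.sample(data, min(sample_size, len(data)))
        # Assumption: brute force over all k-subsets of the sample replaces
        # the medoid search used by the real method.
        medoids = min(itertools.combinations(sample, k),
                      key=lambda c: total_cost(sample, c, dist))
        # Candidate medoids are scored against all objects, not just the sample.
        cost = total_cost(data, medoids, dist)
        if cost < best_cost:
            best_medoids, best_cost = medoids, cost
    return best_medoids, best_cost
```

Because the medoids are searched only within each sample, the cost of the search depends on the sample size rather than on the size of the dataset, which is also why the quality of the result depends on how many objects each sample contains.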

The Ordering Points To Identify the Clustering Structure (OPTICS) [ 72 , 73 ] is a density-based cluster ordering based on the concept of maximal density-reachability [ 72 ]. The algorithm starts with a data point and expands its neighborhood using a procedure similar to that of the dbscan algorithm [ 74 ], with the difference that the neighborhood is first expanded to points with low core-distance. The core distance of an object p is defined as the m -th smallest distance between p and the objects in its ϵ -neighborhood (i.e., objects having distance less than or equal to ϵ from p ), where m is a parameter of the algorithm indicating the smallest number of points that can form a cluster. The optics algorithm can detect clusters having large density variations and irregular shapes. The R routine used for optics clustering was the optics function from the dbscan package. This function considers the original algorithm developed by Ankerst et al. [ 72 ]. A hierarchical clustering structure can be constructed from the output of the optics algorithm using the function extractXi from the dbscan package. We note that the function extractDBSCAN , from the same package, provides a clustering from an optics ordering that is similar to what the dbscan algorithm would generate.
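The core distance defined above is simple to compute directly. The sketch below is illustrative only and is not taken from the dbscan package. It assumes Euclidean distance, excludes p from its own neighborhood (formulations differ on this point), and returns `None` when fewer than m objects lie within ϵ, in which case the core distance is undefined.

```python
import math

def core_distance(index, data, eps, m):
    """m-th smallest distance from data[index] to the objects within eps of it.

    Assumption: the point itself is not counted among its neighbors.
    Returns None if the eps-neighborhood holds fewer than m objects.
    """
    p = data[index]
    neighbor_dists = sorted(
        d for i, q in enumerate(data)
        if i != index and (d := math.dist(p, q)) <= eps
    )
    if len(neighbor_dists) < m:
        return None
    return neighbor_dists[m - 1]
```

Points in dense regions have small core distances, which is why expanding the ordering towards low core-distance points first tends to visit dense clusters before sparse ones.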

Clustering methods that take into account the linkage between data points, traditionally known as hierarchical methods, can be subdivided into two groups: agglomerative and divisive [ 59 ]. In an agglomerative hierarchical clustering algorithm, initially, each object belongs to a respective individual cluster. Then, after successive iterations, groups are merged until stop conditions are reached. On the other hand, a divisive hierarchical clustering method starts with all objects in a single cluster and, after successive iterations, objects are separated into clusters. There are two main packages in the R language that provide routines for performing hierarchical clustering, namely stats and cluster . Here we consider the agnes routine from the cluster package, which implements the algorithm proposed by Kaufman and Rousseeuw [ 70 ]. Four well-known linkage criteria are available in agnes , namely single linkage, complete linkage, Ward’s method, and weighted average linkage [ 75 ].
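The agglomerative procedure can be sketched as repeated merging of the two closest clusters. The example below is a naive illustration, not the agnes routine: the stop condition is assumed to be a target number of clusters, distances are Euclidean, and the `linkage` argument is a hypothetical hook where passing `min` gives single linkage and `max` gives complete linkage.

```python
import math

def agglomerative(points, n_clusters, linkage=min):
    """Merge the two closest clusters until n_clusters remain.

    Returns a list of clusters, each a list of indices into points.
    """
    clusters = [[i] for i in range(len(points))]  # every object starts alone
    while len(clusters) > n_clusters:
        best = None
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                # Cluster distance: linkage over all cross-cluster pairs.
                d = linkage(math.dist(points[i], points[j])
                            for i in clusters[a] for j in clusters[b])
                if best is None or d < best[0]:
                    best = (d, a, b)
        _, a, b = best
        merged = clusters.pop(b)  # b > a, so index a is unaffected
        clusters[a].extend(merged)
    return clusters
```

Recomputing all pairwise cluster distances at every step keeps the sketch short but makes it far slower than practical implementations, which update a dissimilarity matrix incrementally.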

Model-based methods can be regarded as a general framework for the maximum likelihood estimation of the parameters of an underlying distribution fitted to a given dataset. A well-known instance of model-based methods is the expectation-maximization (EM) algorithm. Most commonly, one considers that the data from each class can be modeled by multivariate normal distributions, and, therefore, the distribution observed for the whole data can be seen as a mixture of such normal distributions. A maximum likelihood approach is then applied for finding the most probable parameters of the normal distributions of each class. The EM approach for clustering is particularly suitable when the dataset is incomplete [ 76 , 77 ]. On the other hand, the clusters obtained from the method may strongly depend on the initial conditions [ 54 ]. In addition, the algorithm may fail to find very small clusters [ 29 , 78 ]. In the R language, the package mclust [ 79 , 80 ] provides iterative EM (Expectation-Maximization) methods for maximum likelihood estimation using parameterized Gaussian mixture models. Functions estep and mstep implement the individual steps of an EM iteration. A related algorithm that is also analyzed in the current study is the hcmodel, which can be found in the hc function of the mclust package. The hcmodel algorithm, which is also based on Gaussian mixtures, was proposed by Fraley [ 81 ]. The algorithm contains many additional steps compared to traditional EM methods, such as an agglomerative procedure and the adjustment of model parameters through a Bayes factor selection using the BIC approximation [ 82 ].
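The alternation between the two EM steps can be made concrete with a one-dimensional Gaussian mixture. The sketch below is a textbook-style illustration and does not reproduce the mclust implementation: it assumes univariate data, unit initial variances, equal initial weights, user-supplied initial means and a fixed number of iterations, and it is not hardened against numerical underflow.

```python
import math

def normal_pdf(x, mean, var):
    """Density of a univariate normal distribution."""
    return math.exp(-(x - mean) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)

def em_gmm_1d(xs, init_means, n_iter=50, var_floor=1e-6):
    """EM for a 1-D Gaussian mixture; returns (means, variances, weights)."""
    k = len(init_means)
    means = list(init_means)
    variances = [1.0] * k
    weights = [1.0 / k] * k
    for _ in range(n_iter):
        # E-step: responsibility of each component for each observation.
        resp = []
        for x in xs:
            dens = [weights[j] * normal_pdf(x, means[j], variances[j])
                    for j in range(k)]
            total = sum(dens)  # assumed non-zero; no underflow guard here
            resp.append([d / total for d in dens])
        # M-step: weighted maximum likelihood update of each component.
        for j in range(k):
            nj = sum(r[j] for r in resp)
            if nj == 0.0:
                continue  # component received no mass; leave it unchanged
            means[j] = sum(r[j] * x for r, x in zip(resp, xs)) / nj
            var = sum(r[j] * (x - means[j]) ** 2 for r, x in zip(resp, xs)) / nj
            variances[j] = max(var_floor, var)
            weights[j] = nj / len(xs)
    return means, variances, weights
```

Starting the same function from different initial means can lead to different fitted mixtures, which reflects the dependence on initial conditions mentioned above.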

In recent years, the efficient handling of high dimensional data has become of paramount importance and, for this reason, this feature has been desired when choosing the most appropriate method for obtaining accurate partitions. To tackle high dimensional data, subspace clustering was proposed [ 49 ]. This method works by considering the similarity between objects with respect to distinct subsets of the attributes [ 88 ]. The motivation for doing so is that different subsets of the attributes might define distinct separations between the data. Therefore, the algorithm can identify clusters that exist in multiple, possibly overlapping, subspaces [ 49 ]. Subspace algorithms can be categorized into four main families [ 89 ], namely: lattice, statistical, approximation and hybrid. The hddc function from package HDclassif implements the subspace clustering method of Bouveyron [ 90 ] in the R language. The algorithm is based on statistical models, with the assumption that all attributes may be relevant for clustering [ 91 ]. Some parameters of the algorithm, such as the number of clusters or model to be used, are estimated using an EM procedure.

So far, we have discussed the application of clustering algorithms on static data. Nevertheless, when analyzing data, it is important to take into account whether the data are dynamic or static. Dynamic data, unlike static data, undergo changes over time. Some kinds of data, like the network packets received by a router and credit card transaction streams, are transient in nature and are known as data streams . Time series are another example of dynamic data, because their values change over time [ 92 ]. Dynamic data usually include a large number of features and the number of objects is potentially unbounded [ 59 ]. This requires the application of novel approaches to quickly process the entire volume of continuously incoming data [ 93 ], to detect new clusters as they are formed and to identify outliers [ 94 ].

Materials and methods

Artificial datasets.

The proper comparison of clustering algorithms requires a robust artificial data generation method to produce a variety of datasets. For such a task, we apply a methodology based on a previous work by Hirschberger et al. [ 35 ]. The procedure can be used to generate normally distributed samples characterized by F features and separated into C classes. In addition, the method can control both the variance and correlation distributions among the features for each class. The artificial dataset can also be generated by varying the number of objects per class, N e , and the expected separation, α , between the classes.

For each class i in the dataset, a covariance matrix R i of size F × F is created, and this matrix is used for generating N e objects for the class. This means that pairs of features can have distinct correlations for each generated class. Then, the generated class values are divided by α and translated by s i , where s i is a random variable described by a uniform random distribution defined in the interval [−1, 1]. Parameter α is associated with the expected distances between classes. Such distances can have different impacts on clustering depending on the number of objects and features used in the dataset. The features in the generated data have a multivariate normal distribution. In addition, the covariances among the features also have a normal distribution. Notice that such a procedure for the generation of artificial datasets was previously used in [ 36 ].
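The generation steps above (one covariance matrix per class, division by α, translation by s i) can be sketched as follows. This is only an approximation of the procedure under explicit assumptions: the covariance matrix is built as B Bᵀ from a random Gaussian matrix B, which is a stand-in for the method of [ 35 ] and does not reproduce its control over variance and correlation distributions; s i is assumed to be a vector with one uniform component per feature; and all function names are hypothetical.

```python
import math
import random

def cholesky(a):
    """Lower-triangular Cholesky factor of a symmetric positive-definite matrix."""
    n = len(a)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(low[i][k] * low[j][k] for k in range(j))
            if i == j:
                low[i][j] = math.sqrt(a[i][i] - s)
            else:
                low[i][j] = (a[i][j] - s) / low[j][j]
    return low

def generate_dataset(n_classes, n_features, n_per_class, alpha, seed=0):
    """Return (data, labels) with n_per_class normally distributed objects per class."""
    rng = random.Random(seed)
    data, labels = [], []
    for c in range(n_classes):
        # Assumption: random covariance B B^T plus a tiny diagonal term,
        # used here in place of the covariance construction of the paper.
        b = [[rng.gauss(0, 1) for _ in range(n_features)]
             for _ in range(n_features)]
        cov = [[sum(b[i][k] * b[j][k] for k in range(n_features))
                + (1e-6 if i == j else 0.0)
                for j in range(n_features)] for i in range(n_features)]
        chol = cholesky(cov)
        # Assumption: one uniform shift in [-1, 1] per feature for this class.
        shift = [rng.uniform(-1, 1) for _ in range(n_features)]
        for _ in range(n_per_class):
            z = [rng.gauss(0, 1) for _ in range(n_features)]
            x = [sum(chol[i][k] * z[k] for k in range(i + 1))
                 for i in range(n_features)]
            # Divide by alpha, then translate by the class shift.
            data.append([xi / alpha + si for xi, si in zip(x, shift)])
            labels.append(c)
    return data, labels
```

In this sketch the class centers stay within [−1, 1] while the spread of each class shrinks as α grows, so larger values of α produce classes that overlap less.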

In Fig 2 , we show some examples of artificially generated data. For visualization purposes, all considered cases contain F = 2 features. The parameters used for each case are described in the caption of the figure. Note that the methodology can generate a variety of dataset configurations, including variations in features correlation for each class.

The parameters used for each case are (a) C = 2, Ne = 100 and α = 3.3. (b) C = 2, Ne = 100 and α = 2.3. (c) C = 10, Ne = 50 and α = 4.3. (d) C = 10, Ne = 50 and α = 6.3. Note that each class can present highly distinct properties due to differences in correlation between their features.

https://doi.org/10.1371/journal.pone.0210236.g002

- Number of classes ( C ): The generated datasets are divided into C = {2, 10, 50} classes.

- Number of features ( F ): The number of features to characterize the objects is F = {2, 5, 10, 50, 200}.

- Number of objects per class ( N e ): We considered Ne = {5, 50, 100, 500, 5000} objects per class. In our experiments, in a given generated dataset, the number of instances for each class is constant.

- Mixing parameter ( α ): This parameter has a non-trivial dependence on the number of classes and features. Therefore, for each dataset, the value of this parameter was tuned so that no algorithm would achieve an accuracy of 0% or 100%.

We refer to datasets containing 2, 10, 50 and 200 features as DB2F, DB10F, DB50F, DB200F respectively. Such datasets are composed of all considered number of classes, C = {2, 10, 50}, and 50 elements for each class (i.e., Ne = 50). In some cases, we also indicate the number of classes considered for the dataset. For example, dataset DB2C10F contains 2 classes, 10 features and 50 elements per class.
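The naming convention above can be captured by a small helper. The function name `dataset_name` is hypothetical and only illustrates how the labels are composed.

```python
def dataset_name(n_features, n_classes=None):
    """Build labels such as DB10F or DB2C10F from the dataset properties."""
    classes = f"{n_classes}C" if n_classes is not None else ""
    return f"DB{classes}{n_features}F"

print(dataset_name(10))      # all considered numbers of classes, 10 features
print(dataset_name(10, 2))   # 2 classes, 10 features
```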

For each case, we consider 10 realizations of the dataset. Therefore, 400 datasets were generated in total.

Evaluating the performance of clustering algorithms

The evaluation of the quality of the generated partitions is one of the most important issues in cluster analysis [ 30 ]. Indices used for measuring the quality of a partition can be categorized into two classes, internal and external indices. Internal validation indices are based on information intrinsic to the data and evaluate the goodness of a clustering structure without external information. When the correct partition is not available, it is possible to estimate the quality of a partition by measuring how closely each instance is related to its cluster and how well separated a cluster is from the other clusters. Internal indices are mainly used for choosing an optimal clustering algorithm to be applied on a specific dataset [ 96 ]. On the other hand, external validation indices measure the similarity between the output of the clustering algorithm and the correct partitioning of the dataset. The Jaccard, Fowlkes-Mallows and adjusted Rand indices belong to the same pair-counting category, making them closely related. They differ in that some of them can exhibit bias with respect to the number of clusters or the distribution of class sizes in a partition. Normalization helps prevent this unwanted effect. In [ 97 ] the authors discuss several types of bias that may affect external cluster validity indices. A total of 26 pair-counting based external cluster validity indices were used to identify the bias generated by the number of clusters. It was shown that the Fowlkes-Mallows and Jaccard indices monotonically decrease as the number of clusters increases, favoring partitions with a smaller number of clusters, while the adjusted Rand index tends to be indifferent to the number of clusters.

Note that when the two sets of labels have a perfect one-to-one correspondence, the quality measures are all equal to unity.
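To make the pair-counting view concrete, the sketch below computes the Jaccard, Fowlkes-Mallows and adjusted Rand indices from the contingency table of two labelings, using their standard textbook definitions. The function names are illustrative, and degenerate partitions (for example, all objects in singleton clusters) are not handled.

```python
from collections import Counter
from math import comb, sqrt

def pair_counts(true_labels, pred_labels):
    """Count object pairs grouped together in both, in the true, and in the predicted partition."""
    contingency = Counter(zip(true_labels, pred_labels))
    same_both = sum(comb(c, 2) for c in contingency.values())
    same_true = sum(comb(c, 2) for c in Counter(true_labels).values())
    same_pred = sum(comb(c, 2) for c in Counter(pred_labels).values())
    return same_both, same_true, same_pred, comb(len(true_labels), 2)

def jaccard_index(true_labels, pred_labels):
    both, in_true, in_pred, _ = pair_counts(true_labels, pred_labels)
    return both / (in_true + in_pred - both)

def fowlkes_mallows_index(true_labels, pred_labels):
    both, in_true, in_pred, _ = pair_counts(true_labels, pred_labels)
    return both / sqrt(in_true * in_pred)

def adjusted_rand_index(true_labels, pred_labels):
    both, in_true, in_pred, total = pair_counts(true_labels, pred_labels)
    expected = in_true * in_pred / total      # agreement expected by chance
    maximum = (in_true + in_pred) / 2
    return (both - expected) / (maximum - expected)

truth = [0, 0, 1, 1, 2, 2]
relabeled = [2, 2, 0, 0, 1, 1]   # same partition, different label names
coarse = [0, 0, 0, 1, 1, 1]      # a different partition with two clusters
print(adjusted_rand_index(truth, relabeled), adjusted_rand_index(truth, coarse))
```

The `relabeled` case illustrates the one-to-one correspondence mentioned above: the cluster names differ, but every pair of objects is grouped identically, so all three indices equal one.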

Previous works have shown that there is no single internal cluster validation index that outperforms the other indices [ 100 , 101 ]. In [ 101 ] the authors compare a set of internal cluster validation indices in many distinct scenarios, indicating that the Silhouette index yielded the best results in most cases.
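As an illustration of an internal index, the following sketch computes the Silhouette index with Euclidean distances using the standard definition: for each object, a is the mean distance to the other members of its own cluster and b is the smallest mean distance to the members of any other cluster. It assumes at least two clusters and assigns a score of zero to singleton clusters, which is a common convention rather than something stated in the text.

```python
from math import dist

def silhouette_index(points, labels):
    """Mean silhouette score over all objects (Euclidean distance)."""
    clusters = {}
    for point, label in zip(points, labels):
        clusters.setdefault(label, []).append(point)
    scores = []
    for point, label in zip(points, labels):
        own = clusters[label]
        if len(own) == 1:
            scores.append(0.0)   # convention for singleton clusters
            continue
        # dist(point, point) is zero, so summing over the whole cluster is safe.
        a = sum(dist(point, q) for q in own) / (len(own) - 1)
        b = min(sum(dist(point, q) for q in other) / len(other)
                for key, other in clusters.items() if key != label)
        denom = max(a, b)
        scores.append((b - a) / denom if denom > 0 else 0.0)
    return sum(scores) / len(scores)

data = [(0.0,), (1.0,), (10.0,), (11.0,)]
print(silhouette_index(data, [0, 0, 1, 1]))   # compact, well-separated clusters
print(silhouette_index(data, [0, 1, 0, 1]))   # clusters that mix distant objects
```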

Results and discussion

The accuracy of each considered clustering algorithm was evaluated using three methodologies. In the first methodology, we consider the default parameters of the algorithms provided by the R package. The reason for measuring performance using the default parameters is to consider the case where a researcher applies the classifier to a dataset without any parameter adjustment. This is a common scenario when the researcher is not a machine learning expert. In the second methodology, we quantify the influence of the algorithms' parameters on the accuracy. This is done by varying a single parameter of an algorithm while keeping the others at their default values. The third methodology consists in analyzing the performance by randomly varying all parameters of a classifier. This procedure allows the quantification of certain properties such as the maximum accuracy attained and the sensitivity of the algorithm to parameter variation.

Performance when using default parameters

In this experiment, we evaluated the performance of the classifiers for all datasets described in Section Artificial datasets . All unsupervised algorithms were set with their default configuration of parameters. For each algorithm, we divide the results according to the number of features contained in the dataset. In other words, for a given number of features, F , we used datasets with C = {2, 10, 50} classes, and N e = {5, 50, 100} objects for each class. Thus, the performance results obtained for each F correspond to the performance averaged over distinct numbers of classes and objects per class. We note that the algorithm based on subspaces cannot be applied to datasets containing 2 features, and therefore its accuracy was not quantified for such datasets.

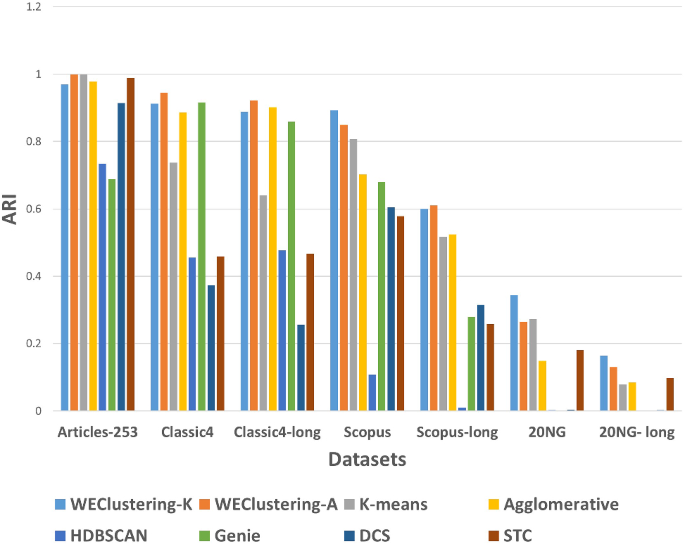

In Fig 3 , we show the obtained values for the four considered performance metrics. The results indicate that all performance metrics provide similar results. Also, the hierarchical method seems to be strongly affected by the number of features in the dataset. In fact, when using 50 and 200 features the hierarchical method provided lower accuracy. The k-means, spectral, optics and dbscan methods benefit from an increase in the number of features. Interestingly, the hcmodel has a better performance in the datasets containing 10 features than in those containing 2, 50 and 200 features, which suggests an optimum performance for this algorithm for datasets containing around 10 features. It is also clear that for 2 features the performance of the algorithms tends to be similar, with the exception of the optics and dbscan methods. On the other hand, a larger number of features induces marked differences in performance. In particular, for 200 features, the spectral algorithm provides the best results among all classifiers.

All artificial datasets were used for evaluation. The averages were calculated separately for datasets containing 2, 10 and 50 features. The considered performance indexes are (a) adjusted Rand, (b) Jaccard, (c) normalized mutual information and (d) Fowlkes Mallows.

https://doi.org/10.1371/journal.pone.0210236.g003

We use the Kruskal-Wallis test [ 102 ], a nonparametric test, to explore the statistical differences in performance among the clustering methods for each number of features. First, we test if the difference in performance is significant for 2 features. For this case, the Kruskal-Wallis test returns a p-value of p = 6.48 × 10⁻⁷, with a chi-squared statistic of χ² = 41.50. Therefore, the difference in performance is statistically significant when considering all algorithms. For datasets containing 10 features, a p-value of p = 1.53 × 10⁻⁸ is returned by the Kruskal-Wallis test, with a chi-squared statistic of χ² = 52.20. For 50 features, the test returns a p-value of p = 1.56 × 10⁻⁶, with a chi-squared statistic of χ² = 41.67. For 200 features, the test returns a p-value of p = 2.49 × 10⁻⁶, with a chi-squared statistic of χ² = 40.58. Therefore, the null hypothesis of the Kruskal-Wallis test is rejected in all cases. This means that the algorithms indeed have significant differences in performance for 2, 10, 50 and 200 features, as indicated in Fig 3 .
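For readers unfamiliar with the test, the sketch below computes the Kruskal-Wallis statistic from its textbook definition, using average ranks for tied values but without the tie correction factor. The p-value helper uses the closed form of the chi-squared survival function that holds only for an even number of degrees of freedom. Both function names are illustrative, and the toy input is not data from this study.

```python
from math import exp

def average_ranks(values):
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1
        for pos in range(start, end + 1):
            ranks[order[pos]] = shared
        start = end + 1
    return ranks

def kruskal_wallis_h(groups):
    """H = 12 / (N (N + 1)) * sum(R_i^2 / n_i) - 3 (N + 1), without tie correction."""
    pooled = [value for group in groups for value in group]
    ranks = average_ranks(pooled)
    n = len(pooled)
    total, offset = 0.0, 0
    for group in groups:
        rank_sum = sum(ranks[offset:offset + len(group)])
        total += rank_sum ** 2 / len(group)
        offset += len(group)
    return 12.0 / (n * (n + 1)) * total - 3.0 * (n + 1)

def chi2_sf_even_df(x, df):
    """P(chi-squared with df degrees of freedom > x); valid for even df only."""
    if df % 2:
        raise ValueError("closed form implemented for even df only")
    term, total = 1.0, 1.0
    for k in range(1, df // 2):
        term *= (x / 2) / k
        total += term
    return exp(-x / 2) * total

h = kruskal_wallis_h([[1, 2, 3], [4, 5, 6], [7, 8, 9]])   # toy groups
p = chi2_sf_even_df(h, df=2)                              # df = number of groups - 1
```

Under the assumption that nine methods are compared (eight degrees of freedom), `chi2_sf_even_df(52.20, 8)` evaluates to roughly 1.5 × 10⁻⁸, which is in line with the value reported above for datasets with 10 features.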

In order to verify the influence of the number of objects used for classification, we also calculated the average accuracy for datasets separated according to the number of objects N e . The result is shown in Fig 4 . We observe that the impact that changing N e has on the accuracy depends on the algorithm. Surprisingly, the hierarchical, k-means and clara methods attain lower accuracy when more data are used. The result indicates that these algorithms tend to be less robust with respect to the larger overlap between the clusters due to an increase in the number of objects. We also observe that a larger N e enhances the performance of the hcmodel, optics and dbscan algorithms. This result is in agreement with [ 90 ].

All artificial datasets were used for evaluation. The averages were calculated separately for datasets containing 5, 50 and 100 objects per class. The considered performance indexes are (a) adjusted Rand, (b) Jaccard, (c) normalized mutual information and (d) Fowlkes Mallows.

https://doi.org/10.1371/journal.pone.0210236.g004

In most clustering algorithms, the size of the data has an effect on the clustering quality. In order to quantify this effect, we considered a scenario where the data has a high number of instances. Datasets with F = 5, C = 10 and Ne = {5, 50, 500, 5000} instances per class were created. These datasets are referred to as DB10C5F. In Fig 5 we can observe that the subspace and spectral methods lead to improved accuracy when the number of instances increases. On the other hand, the size of the dataset does not seem to influence the accuracy of the k-means, clara, hcmodel and EM algorithms. For the spectral, hierarchical and hcmodel algorithms, the accuracy could not be calculated when 5000 instances per class were used, due to the amount of memory required by these methods. For example, in the case of the spectral method, a large amount of processing power and memory is required to compute and store the kernel matrix when the algorithm is executed. When the dataset is too small, the subspace algorithm results in low accuracy.

The plots correspond to the ARI, Jaccard and FM indexes averaged for all datasets containing 10 classes and 5 features (DB10C5F).

https://doi.org/10.1371/journal.pone.0210236.g005
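A rough estimate helps explain why memory becomes a limiting factor for methods that store a pairwise matrix. The sketch below assumes a dense N × N matrix of 8-byte floating-point entries, with N = C × Ne; the actual storage used by the R implementations may differ.

```python
def dense_matrix_gib(n_objects, bytes_per_entry=8):
    """Memory needed for a dense n_objects x n_objects matrix, in GiB."""
    return n_objects ** 2 * bytes_per_entry / 2 ** 30

n_classes = 10
for n_per_class in (5, 50, 500, 5000):
    n_objects = n_classes * n_per_class
    print(n_objects, round(dense_matrix_gib(n_objects), 3))
```

With 5000 instances per class the dataset contains 50,000 objects, and a single dense matrix of this kind would occupy about 18.6 GiB under these assumptions, compared with about 0.19 GiB for 500 instances per class.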

It is also interesting to verify the performance of the clustering algorithms when setting distinct values for the expected number of classes K in the dataset. Such a value is usually not known beforehand in real datasets. For instance, one might expect the data to contain 10 classes, and, as a consequence, set K = 10 in the algorithm, but the objects may actually be better accommodated into 12 classes. An accurate algorithm should still provide reasonable results even when a wrong number of classes is assumed. Thus, we varied K for each algorithm and verified the resulting variation in accuracy. Observe that the optics and dbscan methods were not considered in this analysis as they do not have a parameter for setting the number of classes. In order to simplify the analysis, we only considered datasets comprising objects described by 10 features and divided into 10 classes (DB10C10F). The results are shown in Fig 6 . The top figures correspond to the average ARI and Jaccard indexes calculated for DB10C10F, while the Silhouette and Dunn indexes are shown at the bottom of the figure. The results indicate that setting K < 10 leads to worse performance than that obtained for K > 10, which suggests that a slight overestimation of the number of classes has a smaller effect on the performance. Therefore, a good strategy for choosing K seems to be setting it to values that are slightly larger than the number of expected classes. An interesting behavior is observed for hierarchical clustering: the accuracy improves as the number of expected classes increases. This behavior is due to the default value of the method parameter, which is set to “average”. The “average” value means that the unweighted pair group method with arithmetic mean (UPGMA) is used to agglomerate the points. In UPGMA, the distance between two clusters is the average of the dissimilarities between the points in one cluster and the points in the other cluster. The moderate performance of UPGMA in recovering the original groups, even with high subgroup differentiation, is probably a consequence of the fact that UPGMA tends to result in more unbalanced clusters, that is, the majority of the objects are assigned to a few clusters while many other clusters contain only one or two objects.

The upper plots correspond to the ARI and Jaccard indices averaged for all datasets containing 10 classes and 10 features (DB10C10F). The lower plots correspond to the Silhouette and Dunn indices for the same dataset. The red line indicates the actual number of clusters in the dataset.

https://doi.org/10.1371/journal.pone.0210236.g006
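The average-linkage (UPGMA) rule discussed above can be illustrated with a naive agglomerative loop. This is a didactic sketch with Euclidean distances and cubic-time merging, not the R implementation used in the experiments; the toy points are invented for illustration.

```python
from itertools import combinations
from math import dist

def average_linkage(cluster_a, cluster_b):
    """Mean pairwise distance between the points of two clusters."""
    total = sum(dist(p, q) for p in cluster_a for q in cluster_b)
    return total / (len(cluster_a) * len(cluster_b))

def upgma(points, n_clusters):
    """Merge the two closest clusters until n_clusters remain."""
    clusters = [[p] for p in points]
    while len(clusters) > n_clusters:
        i, j = min(combinations(range(len(clusters)), 2),
                   key=lambda ij: average_linkage(clusters[ij[0]], clusters[ij[1]]))
        clusters[i] = clusters[i] + clusters[j]
        del clusters[j]   # safe because i < j
    return clusters

toy = [(0, 0), (0, 1), (5, 5), (5, 6), (20, 20)]
print([len(c) for c in upgma(toy, 2)])
```

In this toy example the single distant point ends up as its own cluster while the remaining four points are merged, which mirrors the tendency towards unbalanced clusters described above.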

The external validation indices show that most of the clustering algorithms correctly identify the 10 main clusters in the dataset. Naturally, this knowledge would not be available in a real-life cluster analysis. For this reason, we also consider internal validation indices, which provide feedback on the partition quality. Two internal validation indices were considered, the Silhouette index (defined in the range [−1, 1]) and the Dunn index (defined in the range [0, ∞)). These indices were applied to the DB10C10F and DB10C2F datasets while varying the expected number of clusters K . The results are presented in Figs 6 and 7 . In Fig 6 we can see that the results obtained for the different algorithms are mostly similar. The results for the Silhouette index indicate high accuracy around K = 10. The Dunn index displays a slightly lower performance, misestimating the correct number of clusters for the hierarchical algorithm. In Fig 7 the Silhouette and Dunn indices show similar behavior.

The upper plots correspond to the ARI and Jaccard indices averaged for all datasets containing 10 classes and 2 features (DB10C2F). The lower plots correspond to the Silhouette and Dunn indices for the same dataset. The red line indicates the actual number of clusters in the dataset.

https://doi.org/10.1371/journal.pone.0210236.g007
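Several variants of the Dunn index exist. The sketch below uses the classic one, the smallest distance between objects of different clusters divided by the largest cluster diameter, with Euclidean distances. It assumes at least two clusters and is not necessarily the variant computed by the R package used in the experiments.

```python
from itertools import combinations
from math import dist

def dunn_index(points, labels):
    """Minimum inter-cluster distance divided by maximum cluster diameter."""
    clusters = {}
    for point, label in zip(points, labels):
        clusters.setdefault(label, []).append(point)
    diameter = max((dist(p, q) for members in clusters.values()
                    for p, q in combinations(members, 2)), default=0.0)
    separation = min(dist(p, q)
                     for a, b in combinations(clusters.values(), 2)
                     for p in a for q in b)
    return separation / diameter if diameter > 0 else float("inf")

data = [(0.0,), (1.0,), (10.0,), (11.0,)]
print(dunn_index(data, [0, 0, 1, 1]))   # compact, well-separated clusters
print(dunn_index(data, [0, 1, 0, 1]))   # clusters that mix distant objects
```

Larger values indicate compact and well-separated clusters, which is why the index has no upper bound.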

The results obtained for the default parameters are summarized in Table 2 . The table is divided into four parts, each corresponding to a performance metric. For each performance metric, the value in row i and column j of the table represents the average performance of the method in row i minus the average performance of the method in column j . The last column of the table indicates the average performance of each algorithm. We note that the averages were taken over all generated datasets.

In general, the spectral algorithm provides the highest accuracy rate among all evaluated methods.

https://doi.org/10.1371/journal.pone.0210236.t002
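The row-minus-column layout of Table 2 can be reproduced with a short helper. The function name is illustrative; the two input values are the average ARI figures reported in the Conclusions for the spectral and hierarchical methods, and the remaining methods are omitted here.

```python
def difference_table(mean_performance):
    """Entry [row][col] is the mean performance of row minus that of col."""
    names = list(mean_performance)
    return {row: {col: mean_performance[row] - mean_performance[col] for col in names}
            for row in names}

mean_ari = {"spectral": 68.16, "hierarchical": 21.34}   # average ARI, in percent
table = difference_table(mean_ari)
print(round(table["spectral"]["hierarchical"], 2))
```

A positive entry means that the method in the row outperformed the method in the column on average, and the table is antisymmetric by construction.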

The results shown in Table 2 indicate that the spectral algorithm tends to outperform the other algorithms by at least 10%. On the other hand, the hierarchical method attained lower performance in most of the considered cases. Another interesting result is that the k-means and clara methods provided equivalent performance when considering all datasets. In the light of the results, the spectral method could be preferred when no optimization of parameter values is performed.

One-dimensional analysis

In addition to the aforementioned quantities, we also measured, for each dataset, the maximum accuracy obtained when varying each single parameter of the algorithm. We then calculate the average of maximum accuracies, 〈max Acc〉, obtained over all considered datasets. In Table 3 , we show the values of 〈 S 〉, max S , Δ S and 〈max Acc〉 for datasets containing two features. When considering a two-class problem (DB2C2F), a significant improvement in performance (〈 S 〉 = 10.75% and 〈 S 〉 = 13.35%) was observed when varying parameter modelName , minPts and kpar of, respectively, the EM, optics and spectral methods. For all other cases, only minor average gain in performance was observed. For the 10-class problem, we notice that an inadequate value for parameter method of the hierarchical algorithm can lead to substantial loss of accuracy (16.15% on average). In most cases, however, the average variation in performance was small.

This analysis is based on the performance (measured through the ARI index) obtained when varying a single parameter of the clustering algorithm, while maintaining the others in their default configuration. 〈 S 〉, Δ S and max S are, respectively, the average, standard deviation and maximum of the difference between the performance obtained when varying a single parameter and the performance obtained for the default parameter values. We also measure 〈max Acc〉, the average of best ARI values obtained when varying each parameter, where the average is calculated over all considered datasets.

https://doi.org/10.1371/journal.pone.0210236.t003
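The quantities reported in these tables can be computed from a list of ARI values obtained while sweeping one parameter. The sketch below handles a single dataset and a single parameter; the function name and the sample values are hypothetical, and the population standard deviation is an assumption, since the text does not state which estimator was used.

```python
from statistics import mean, pstdev

def one_dimensional_summary(default_ari, varied_aris):
    """Summarize the ARI change caused by sweeping one parameter."""
    diffs = [ari - default_ari for ari in varied_aris]
    return {
        "mean_S": mean(diffs),         # average difference to the default
        "std_S": pstdev(diffs),        # spread of the differences (assumed estimator)
        "max_S": max(diffs),           # largest gain over the default
        "max_acc": max(varied_aris),   # best ARI reached during the sweep
    }

# Hypothetical ARI values for three settings of one parameter.
summary = one_dimensional_summary(default_ari=0.5, varied_aris=[0.4, 0.6, 0.8])
print(summary)
```

Averaging `max_acc` over all datasets of a given type would give the quantity denoted 〈max Acc〉.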

In Table 4 , we show the values of 〈 S 〉, max S , Δ S and 〈max Acc〉 for datasets described by 10 features. For the two-class clustering problem, a moderate improvement can be observed for the k-means, hierarchical and optics algorithms through the variation of, respectively, parameters nstart , method and minPts . A large increase in accuracy was observed when varying parameter modelName of the EM method. Changing the modelName used by the algorithm led to, on average, an improvement of 18.8%. A similar behavior was obtained when the number of classes was set to C = 10. For 10 classes, the variation of method in the hierarchical algorithm provided an average improvement of 6.72%. A high improvement was also observed when varying parameter modelName of the EM algorithm, with an average improvement of 13.63%.

This analysis is based on the performance obtained when varying a single parameter, while maintaining the others in their default configuration. 〈 S 〉, Δ S and max S are, respectively, the average, standard deviation and maximum of the difference between the performance obtained when varying a single parameter and the performance obtained for the default parameter values. We also measure 〈max Acc〉, the average of best ARI values obtained when varying each parameter, where the average is calculated over all considered datasets.

https://doi.org/10.1371/journal.pone.0210236.t004

Differently from the parameters discussed so far, the variation of some parameters plays a minor role in the discriminative power of the clustering algorithms. This is the case, for instance, of parameters kernel and iter of the spectral clustering algorithm and parameter iter.max of the k-means algorithm. In some cases, the unidimensional variation of a parameter resulted in a reduction of performance. For instance, the variation of min.individuals and models of the subspace algorithm provided an average loss of accuracy on the order of 〈 S 〉 = 20%, depending on the dataset. Similar behavior is observed for the dbscan method, for which the variation of minPts causes an average loss of accuracy of 20.32%. Parameters metric and rngR of the clara algorithm also led to a marked decrease in performance.

In Table 5 , we show the values of 〈 S 〉, max S , Δ S and 〈max Acc〉 for datasets described by 200 features. For the two-class clustering problem, a significant improvement in performance was observed when varying nstart in the k-means method, method in the hierarchical algorithm, modelName in the hcmodel method and modelName in the EM method. On the other hand, when varying metric , min.individuals and use in, respectively, the clara, subspace, and hcmodel methods an average loss of accuracy larger than 10% was verified. The largest loss of accuracy happens with parameter minPts (49.47%) of the dbscan method. For the 10-class problem, similar results were observed, with the exception of the clara method, for which any parameter change resulted in a large loss of accuracy.

This analysis is based on the performance obtained when varying a single parameter, while maintaining the others in their default configuration. 〈 S 〉, Δ S and max S are, respectively, the average, standard deviation and maximum of the difference between the performance obtained when varying a single parameter and the performance obtained for the default parameter values. We also measure 〈max Acc〉, the average of best ARI values obtained when varying each parameter.

https://doi.org/10.1371/journal.pone.0210236.t005

Multi-dimensional analysis

A complete analysis of the performance of a clustering algorithm requires the simultaneous variation of all of its parameters. Nevertheless, such a task is difficult to do in practice, given the large number of parameter combinations that need to be taken into account. Therefore, here we consider a random variation of parameters aimed at obtaining a sampling of each algorithm performance for its complete multi-dimensional parameter space.
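A random sampling of the parameter space can be sketched as follows. The search space shown here is purely hypothetical: the parameter names nstart and iter.max are those mentioned for k-means in the text, but the ranges are assumptions and not the ones used in the study.

```python
import random

# Hypothetical search space; integer ranges are inclusive (low, high) tuples,
# and a list would denote categorical options.
SEARCH_SPACE = {
    "nstart": (1, 20),
    "iter.max": (5, 100),
}

def sample_configuration(space, rng):
    """Draw one value per parameter, independently and uniformly."""
    config = {}
    for name, spec in space.items():
        if isinstance(spec, tuple):
            config[name] = rng.randint(spec[0], spec[1])
        else:
            config[name] = rng.choice(spec)
    return config

def draw_configurations(space, n_draws, seed=0):
    """Return n_draws random parameter configurations (reproducible via seed)."""
    rng = random.Random(seed)
    return [sample_configuration(space, rng) for _ in range(n_draws)]

configs = draw_configurations(SEARCH_SPACE, 500)
print(configs[0])
```

Each drawn configuration would then be passed to the clustering algorithm, and the resulting partition scored with an external index such as the ARI.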

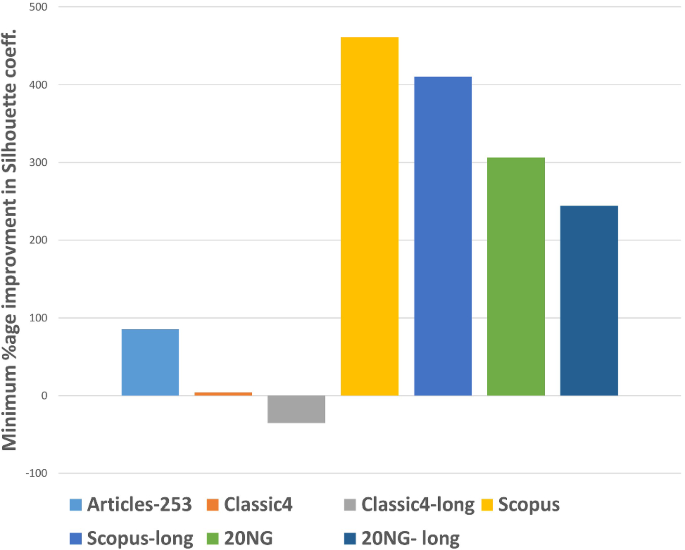

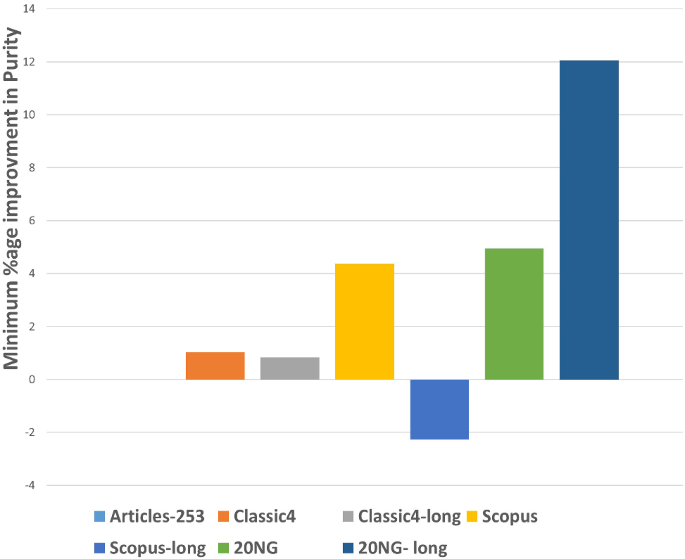

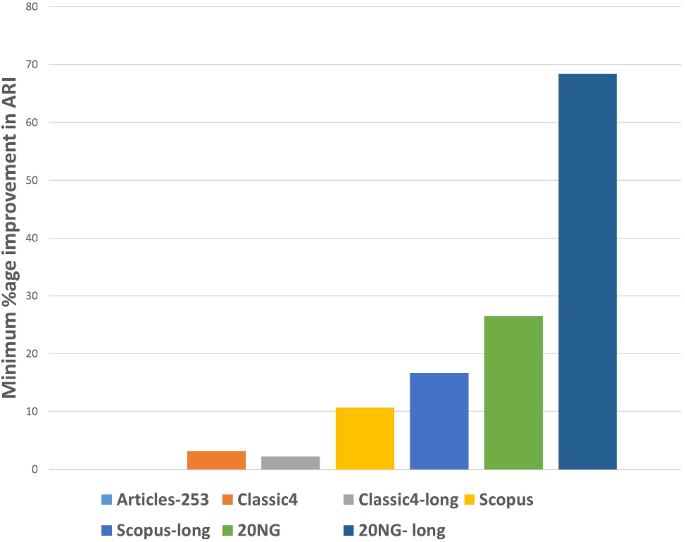

The performance of the algorithms for the different sets of parameters was evaluated according to the following procedure. Consider the histogram of ARI values obtained for the random sampling of parameters for the k-means algorithm, shown in Fig 8 . The red dashed line indicates the ARI value obtained for the default parameters of the algorithm. The light blue shaded region indicates the parameter configurations where the performance of the algorithm improved. From this result we calculated four main measures. The first, which we call p-value, is given by the area of the blue region divided by the total histogram area, multiplied by 100 in order to result in a percentage value. The p-value represents the percentage of parameter configurations where the algorithm performance improved when compared to the default parameter configuration. The second, third and fourth measures are given by the mean, 〈 R 〉, standard deviation, Δ R , and maximum value, max R , of the relative performance for all cases where the performance is improved (i.e., the blue shaded region in Fig 8 ). The relative performance is calculated as the difference in performance between a given realization of parameter values and the default parameters. The mean indicates the expected improvement of the algorithm for the random variation of parameters. The standard deviation represents the stability of such improvement, that is, how certain one is that the performance will be improved when doing such random variation. The maximum value indicates the largest improvement obtained when random parameters are considered. We also measured the average of the maximum accuracies 〈max ARI〉 obtained for each dataset when randomly selecting the parameters. In the S2 File of the supplementary material we show the distribution of ARI values obtained for the random sampling of parameters for all clustering algorithms considered in our analysis.

The algorithm was applied to dataset DB10C10F, and 500 sets of parameters were drawn.

https://doi.org/10.1371/journal.pone.0210236.g008
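The four measures described above can be computed directly from the sampled ARI values, since the fraction of the histogram area above the default equals the fraction of samples above it. The function name and the sample values below are hypothetical, and the population standard deviation is an assumption. Note that the p-value here is the percentage of improved configurations, not the p-value of a statistical test.

```python
from statistics import mean, pstdev

def random_search_summary(default_ari, sampled_aris):
    """Summarize the gains of random parameter configurations over the default."""
    gains = [ari - default_ari for ari in sampled_aris if ari > default_ari]
    summary = {
        "p_value": 100.0 * len(gains) / len(sampled_aris),   # percent improved
        "max_ari": max(sampled_aris),                         # best ARI found
    }
    if gains:   # the remaining measures are defined only when something improved
        summary.update(mean_R=mean(gains), std_R=pstdev(gains), max_R=max(gains))
    return summary

# Hypothetical ARI values for four random configurations.
summary = random_search_summary(default_ari=0.5, sampled_aris=[0.4, 0.6, 0.7, 0.5])
print(summary)
```

Averaging `max_ari` over the realizations of a dataset type would give the quantity denoted 〈max ARI〉.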

In Table 6 we show the performance (ARI) of the algorithms for dataset DB2C2F when applying the aforementioned random selection of parameters. The optics and EM methods are the only algorithms with a p-value larger than 50%. Also, a high average gain in performance was observed for the EM (22.1%) and hierarchical (30.6%) methods. Moderate improvement was observed for the hcmodel, kmeans, spectral, optics and dbscan algorithms.

The p-value represents the probability that the classifier set with a random configuration of parameters outperforms the same classifier set with its default parameters. 〈 R 〉, Δ R and max R represent the average, standard deviation and maximum value of the improvement obtained when random parameters are considered. Column 〈max ARI〉 indicates the average of the best accuracies obtained for each dataset.

https://doi.org/10.1371/journal.pone.0210236.t006

The performance of the algorithms for dataset DB10C2F is presented in Table 7 . A high p-value was obtained for the optics (96.6%), EM (76.5%) and k-means (77.7%). Nevertheless, the average improvement in performance was relatively low for most algorithms, with the exception of the optics method, which led to an average improvement of 15.9%.

https://doi.org/10.1371/journal.pone.0210236.t007

A more marked variation in performance was observed for dataset DB2C10F, with results shown in Table 8 . The EM, kmeans, hierarchical and optics clustering algorithms resulted in a p-value larger than 50%. In such cases, when the performance was improved, the average gain in performance was, respectively, 30.1%, 18.0%, 25.9% and 15.5%. This means that the random variation of parameters might represent a valid approach for improving these algorithms. Actually, with the exception of clara and dbscan, all methods display significant average improvement in performance for this dataset. The results also show that a maximum accuracy of 100% can be achieved for the EM and subspace algorithms.

https://doi.org/10.1371/journal.pone.0210236.t008

In Table 9 we show the performance of the algorithms for dataset DB10C10F. The p-values for the EM, clara, k-means and optics indicate that the random selection of parameters usually improves the performance of these algorithms. The hierarchical algorithm can be significantly improved by the considered random selection of parameters. This is a consequence of the default value of parameter method , which, as discussed in the previous section, is not appropriate for this dataset.

https://doi.org/10.1371/journal.pone.0210236.t009

The performance of the algorithms for the dataset DB2C200F is presented in Table 10 . A high p-value was obtained for the EM (65.1%) and k-means (65.6%) algorithms. The average gain in performance in such cases was 39.1% and 35.4%, respectively. On the other hand, the spectral and subspace methods resulted in an improved ARI in only approximately 16% of the cases. Interestingly, the random variation of parameters led to, on average, large performance improvements for all algorithms.

https://doi.org/10.1371/journal.pone.0210236.t010

In Table 11 we show the performance of the algorithms for dataset DB10C200F. A high p-value was obtained for all methods. On the other hand, the average improvement in accuracy tended to be lower than in the case of the dataset DB2C200F.

https://doi.org/10.1371/journal.pone.0210236.t011

Conclusions

Clustering data is a complex task involving the choice between many different methods, parameters and performance metrics, with implications in many real-world problems [ 63 , 103 – 108 ]. Consequently, the analysis of the advantages and pitfalls of clustering algorithms is also a difficult task that has received much attention. Here, we approached this task focusing on a comprehensive methodology for generating a large diversity of heterogeneous datasets with precisely defined properties such as the distances between classes and correlations between features. Using packages in the R language, we developed a comparison of the performance of nine popular clustering methods applied to 400 artificial datasets. Three situations were considered: default parameters, single parameter variation and random variation of parameters. It should nevertheless be borne in mind that all results reported in this work are specific to the considered configurations of normally distributed data and algorithmic implementations, so that different performance can be obtained in other situations. Besides serving as practical guidance for the application of clustering methods when the researcher is not an expert in data mining techniques, a number of interesting results regarding the considered clustering methods were obtained.

Regarding the default parameters, the algorithms tended to show similar performance for low-dimensional datasets, although the Kruskal-Wallis test indicated statistically significant differences for every number of features considered. Specifically, the test on the differences in performance when 2 features were considered resulted in a p-value of p = 6.48 × 10⁻⁷ (with a chi-squared statistic of χ² = 41.50). For 10 features, a p-value of p = 1.53 × 10⁻⁸ (χ² = 52.20) was obtained. Considering 50 features resulted in a p-value of p = 1.56 × 10⁻⁶ for the Kruskal-Wallis test (χ² = 41.67). For 200 features, the obtained p-value was p = 2.49 × 10⁻⁶ (χ² = 40.58).

The Spectral method provided the best performance when using default parameters, with an Adjusted Rand Index (ARI) of 68.16%, as indicated in Table 2 . In contrast, the hierarchical method yielded an ARI of 21.34%. It is also interesting that underestimating the number of classes in the dataset led to worse performance than in overestimation situations. This was observed for all algorithms and is in accordance with previous results [ 44 ].

Regarding single parameter variations, for datasets containing 2 features, the hierarchical, optics and EM methods showed significant performance variation. On the other hand, for datasets containing 10 or more features, most methods could be readily improved through changes on selected parameters.

With respect to the multidimensional analysis for datasets containing ten classes and two features, the performance of the algorithms for the multidimensional selection of parameters was similar to that using the default parameters. This suggests that the algorithms are not sensitive to parameter variations for this dataset. For datasets containing two classes and ten features, the EM, hcmodel, subspace and hierarchical algorithms showed a significant gain in performance. The EM algorithm also resulted in a high p-value (70.8%), which indicates that many parameter values for this algorithm can provide better results than the default configuration. For datasets containing ten classes and ten features, the improvement was significantly lower for almost all the algorithms, with the exception of the hierarchical clustering. When a large number of features was considered, such as in the case of the datasets containing 200 features, large gains in performance were observed for all methods.

In Tables 12 , 13 and 14 we show a summary of the best accuracies obtained during our analysis. The tables contain the best performance, measured as the ARI of the resulting partitions, achieved by each algorithm in the three considered situations (default, one- and multi-dimensional adjustment of parameters). The results refer to datasets DB2C2F, DB10C2F, DB2C10F, DB10C10F, DB2C200F and DB10C200F. We observe that, for datasets containing 2 features, the algorithms tend to show similar performance, especially when the number of classes is increased. For datasets containing 10 features or more, the spectral algorithm seems to consistently provide the best performance, although the EM, hierarchical, k-means and subspace algorithms can also achieve similar performance with some parameter tuning. It should be observed that several clustering algorithms, such as optics and dbscan, are aimed at other data distributions, such as elongated or S-shaped ones [ 72 , 74 ]. Therefore, different results could be obtained for non-normally distributed data.

https://doi.org/10.1371/journal.pone.0210236.t012

https://doi.org/10.1371/journal.pone.0210236.t013

https://doi.org/10.1371/journal.pone.0210236.t014

Other algorithms could be compared in future extensions of this work. An important aspect that could also be explored is to consider other statistical distributions for modeling the data. In addition, an analogous approach could be applied to semi-supervised classification.

Supporting information

S1 File. Description of the clustering algorithms’ parameters.

We provide a brief description about the parameters of the clustering algorithms considered in the main text.

https://doi.org/10.1371/journal.pone.0210236.s001

S2 File. Clustering performance obtained for the random selection of parameters.

The file contains figures showing the histograms of ARI values obtained for identifying the clusters of, respectively, datasets DB10C10F and DB2C10F using a random selection of parameters. Each plot corresponds to a clustering method considered in the main text.

https://doi.org/10.1371/journal.pone.0210236.s002


- 7. Aggarwal CC, Zhai C. In: Aggarwal CC, Zhai C, editors. A Survey of Text Clustering Algorithms. Boston, MA: Springer US; 2012. p. 77–128.

- 13. Joachims T. Text categorization with support vector machines: Learning with many relevant features. In: European conference on machine learning. Springer; 1998. p. 137–142.

- 14. Witten IH, Frank E. Data Mining: Practical Machine Learning Tools and Techniques. 2nd ed. San Francisco: Morgan Kaufmann; 2005.

- 37. Berkhin P. In: Kogan J, Nicholas C, Teboulle M, editors. A Survey of Clustering Data Mining Techniques. Berlin, Heidelberg: Springer Berlin Heidelberg; 2006. p. 25–71.

- 42. R Development Core Team. R: A Language and Environment for Statistical Computing; 2006. Available from: http://www.R-project.org .

- 44. Erman J, Arlitt M, Mahanti A. Traffic classification using clustering algorithms. In: Proceedings of the 2006 SIGCOMM workshop on mining network data. ACM; 2006. p. 281–286.

- 47. Parsons L, Haque E, Liu H. Evaluating subspace clustering algorithms. In: Workshop on Clustering High Dimensional Data and its Applications, SIAM Int. Conf. on Data Mining. Citeseer; 2004. p. 48–56.

- 48. Burdick D, Calimlim M, Gehrke J. MAFIA: A Maximal Frequent Itemset Algorithm for Transactional Databases. In: Proceedings of the 17th International Conference on Data Engineering. Washington, DC, USA: IEEE Computer Society; 2001. p. 443–452.

- 51. UCI. breast-cancer-wisconsin. Available from: https://archive.ics.uci.edu/ml/machine-learning-databases/breast-cancer-wisconsin/.

- 52. Ultsch A. Clustering with SOM: U*C. In: Proceedings of the 5th Workshop on Self-Organizing Maps. vol. 2; 2005. p. 75–82.

- 54. Aggarwal CC, Reddy CK. Data Clustering: Algorithms and Applications. vol. 2. 1st ed. Chapman & Hall/CRC; 2013.

- 58. Jain AK, Topchy A, Law MH, Buhmann JM. Landscape of clustering algorithms. In: Pattern Recognition, 2004. ICPR 2004. Proceedings of the 17th International Conference on. vol. 1. IEEE; 2004. p. 260–263.

- 64. Kaufman L, Rousseeuw PJ. Finding groups in data: an introduction to cluster analysis. Series in Probability & Mathematical Statistics; 2009.

- 65. Arthur D, Vassilvitskii S. k-means++: The advantages of careful seeding. In: Proceedings of the eighteenth annual ACM-SIAM symposium on Discrete algorithms. Society for Industrial and Applied Mathematics; 2007. p. 1027–1035.

- 66. Sequeira K, Zaki M. ADMIT: anomaly-based data mining for intrusions. In: Proceedings of the eighth ACM SIGKDD international conference on Knowledge discovery and data mining. ACM; 2002. p. 386–395.

- 67. Williams GJ, Huang Z. Mining the knowledge mine. In: Australian Joint Conference on Artificial Intelligence. Springer; 1997. p. 340–348.

- 68. MacQueen J. Some methods for classification and analysis of multivariate observations. In: Proceedings of the Fifth Berkeley Symposium on Mathematical Statistics and Probability, Volume 1: Statistics. Berkeley, Calif: University of California Press; 1967. p. 281–297.

- 70. Kaufman L, Rousseeuw PJ. Finding Groups in Data: an introduction to cluster analysis. John Wiley & Sons; 1990.

- 71. Han J, Kamber M. Data Mining: Concepts and Techniques. vol. 2. 2nd ed. Morgan Kaufmann; 2006.

- 73. Ankerst M, Breunig MM, Kriegel HP, Sander J. OPTICS: Ordering Points To Identify the Clustering Structure. ACM Press; 1999. p. 49–60.

- 74. Ester M, Kriegel HP, Sander J, Xu X. A Density-based Algorithm for Discovering Clusters in Large Spatial Databases with Noise. In: Proceedings of the Second International Conference on Knowledge Discovery and Data Mining. KDD’96. AAAI Press; 1996. p. 226–231.

- 86. Ng AY, Jordan MI, Weiss Y. On spectral clustering: Analysis and an algorithm. In: Advances in Neural Information Processing Systems 14. MIT Press; 2001. p. 849–856.

- 95. Horn RA, Johnson CR. Matrix Analysis. 2nd ed. New York, NY, USA: Cambridge University Press; 2012.

- 96. Liu Y, Li Z, Xiong H, Gao X, Wu J. Understanding of internal clustering validation measures. In: Data Mining (ICDM), 2010 IEEE 10th International Conference on. IEEE; 2010. p. 911–916.

- 98. Cover TM, Thomas JA. Elements of Information Theory. vol. 2. Wiley; 2012.

- 102. McKight PE, Najab J. Kruskal-Wallis Test. Corsini Encyclopedia of Psychology; 2010.

An official website of the United States government

The .gov means it’s official. Federal government websites often end in .gov or .mil. Before sharing sensitive information, make sure you’re on a federal government site.

The site is secure. The https:// ensures that you are connecting to the official website and that any information you provide is encrypted and transmitted securely.

- Publications

- Account settings

Preview improvements coming to the PMC website in October 2024. Learn More or Try it out now .

- Advanced Search

- Journal List

- Springer Nature - PMC COVID-19 Collection

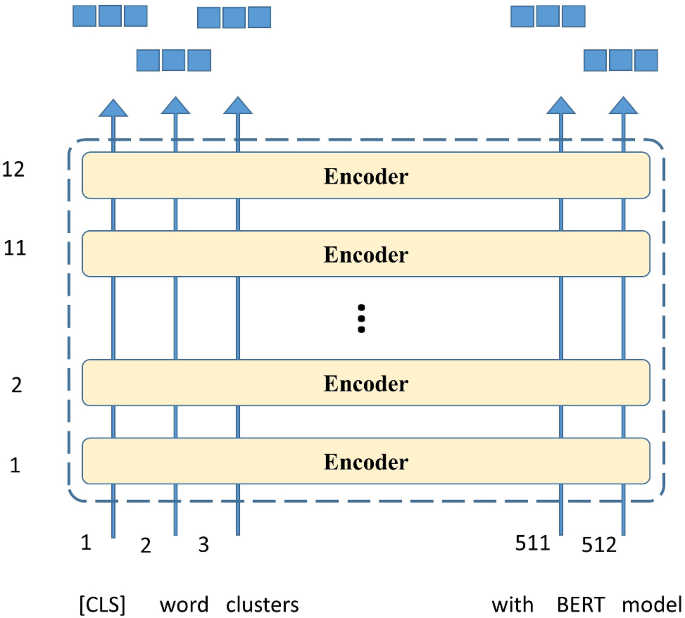

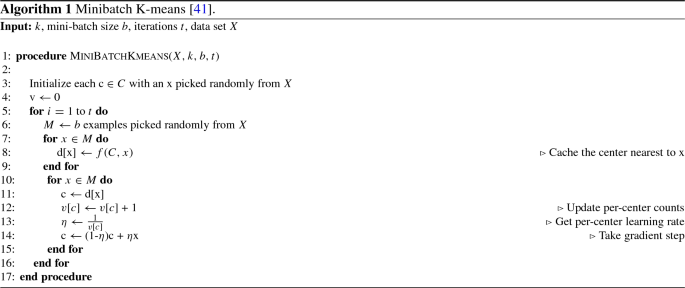

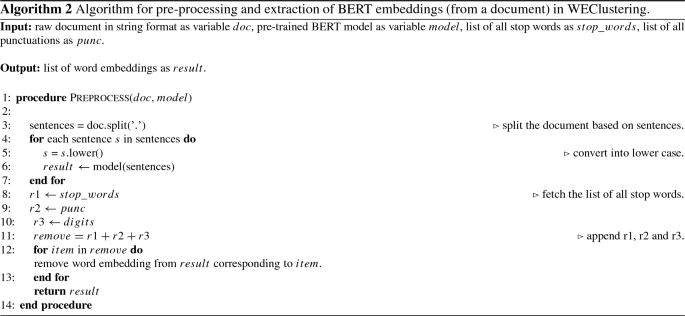

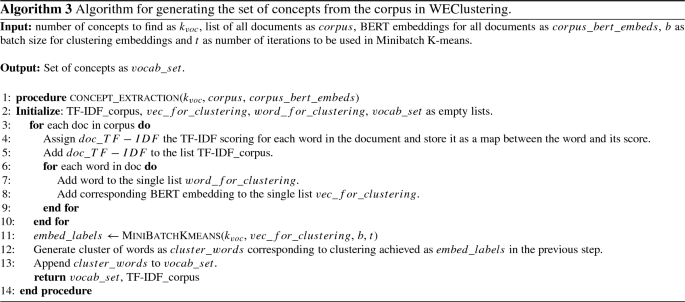

An Improved K-means Clustering Algorithm Towards an Efficient Data-Driven Modeling

1 Department of Computer Science and Engineering, Chittagong University of Engineering & Technology, Chittagong, 4349 Bangladesh

MD. Asif Iqbal

Avijeet Shil, M. J. M. Chowdhury

2 Department of Computer Science and Information Technology, La Trobe University, Victoria, 3086 Australia

Mohammad Ali Moni

3 School of Health and Rehabilitation Sciences, Faculty of Health and Behavioural Sciences, The University of Queensland, St Lucia, QLD 4072 Australia

Iqbal H. Sarker

Associated Data

Data and codes used in this work can be made available upon reasonable request.

The K-means algorithm is one of the best-known unsupervised machine learning algorithms. It finds distinct, non-overlapping clusters, with each point assigned to exactly one group: the minimum squared distance criterion assigns each point to the nearest cluster. One of the main concerns of the K-means algorithm is the choice of the initial cluster centroids, and determining good positions for these centroids at the very first iteration is the most challenging task. This paper proposes an approach to find the initial centroids efficiently in order to reduce the number of iterations and the execution time. To analyze the effectiveness of our proposed method, we have conducted experiments on different real-world datasets. We have first analyzed COVID-19 and patient datasets to show our proposed method’s efficiency. A synthetic dataset of 10M instances with 8 dimensions is also used to estimate the performance of the proposed algorithm. Experimental results show that our proposed method outperforms the traditional kmeans++ and random centroid initialization methods with regard to computation time and number of iterations.

Introduction

Machine learning is a subset of Artificial Intelligence that makes applications capable of learning and improving their results through experience, without being explicitly programmed [1]. Supervised and unsupervised learning are the two basic approaches in machine learning. Unsupervised algorithms identify hidden structures in the unlabelled data contained in a dataset [2]. Based on these hidden structures, clustering algorithms are typically used to find groups of similar data, which can also be considered a core part of data science, as mentioned in Sarker et al. [3].

In the context of data science and machine learning, K-means clustering is known as one of the powerful unsupervised techniques for identifying the structure of a given dataset. It is a common choice for separating data into groups and is widely used for its simplicity [4]. Its applications have been seen in different important real-world scenarios, for example, recommendation systems, various smart city services, cybersecurity and many more. Beyond this, clustering is one of the most useful techniques for business data analysis [5]. The K-means algorithm has also been used to analyze users’ behavior and context-aware services [6]. Moreover, the K-means algorithm plays a vital role in complicated feature extraction.

In terms of problem type, K-means clustering is considered an NP-hard problem [7]. The algorithm is widely used to divide an unlabelled dataset into clusters in order to solve real-world problems in the application domains mentioned above. This is done by calculating the distances of the points from the centroid of each cluster. The coordinates of the initial centroids must be fixed at initialization, so this step has a crucial role in the K-means algorithm. Generally, the initial centroids are selected randomly. If we can determine the initial centroids efficiently, the algorithm will take fewer steps to converge. According to D. T. Pham et al. [8], the overall complexity of the K-means algorithm is

Where t is the number of iterations, k is the number of clusters and n is the number of data points.
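The effect of the initial centroids on the number of iterations can be illustrated with a minimal Lloyd-style K-means sketch. This is not the authors' implementation: the function names `squared_distance` and `lloyd_kmeans`, the toy points and the stopping rule (stop when the assignments no longer change) are assumptions made for illustration. In this sketch each pass evaluates one distance per point and centroid, so a run of t iterations over n points and k clusters performs t·k·n distance evaluations.

```python
def squared_distance(p, q):
    """Squared Euclidean distance between two points of equal dimension."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def lloyd_kmeans(points, initial_centroids, max_iter=100):
    """Run Lloyd-style K-means; return (centroids, labels, iterations)."""
    centroids = [tuple(c) for c in initial_centroids]
    labels = None
    for iteration in range(1, max_iter + 1):
        # Assignment step: k * n squared-distance evaluations.
        new_labels = [
            min(range(len(centroids)),
                key=lambda j: squared_distance(p, centroids[j]))
            for p in points
        ]
        if new_labels == labels:
            # Assignments are stable, so the centroids would not move either.
            return centroids, labels, iteration
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for j in range(len(centroids)):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:  # an empty cluster keeps its previous centroid
                centroids[j] = tuple(sum(x) / len(members) for x in zip(*members))
    return centroids, labels, max_iter


# Toy data with two well-separated groups (hypothetical, for illustration).
points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
# One initial centroid per group versus both initial centroids in one group.
_, labels_good, iters_good = lloyd_kmeans(points, [(0, 0), (10, 10)])
_, labels_bad, iters_bad = lloyd_kmeans(points, [(0, 0), (0, 1)])
```

On this toy data both runs reach the same partition, but the run that starts with one centroid in each group needs fewer passes than the run that starts with both centroids in the same group.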

Optimization plays an important role in both supervised and unsupervised learning algorithms [9], so it is a great advantage if some computational cost can be saved through optimization. This paper presents a way to find the initial centroids more efficiently, using principal component analysis (PCA) and the percentile concept to estimate them. The goal is to need fewer iterations and less execution time than the conventional method.
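As a rough illustration of how a percentile-based choice of initial centroids could work, the sketch below orders the points by a one-dimensional score, cuts the ordered list into k groups of nearly equal size at the percentile boundaries, and uses the mean of each group as an initial centroid. This is one assumed reading of the idea, not the procedure specified by the paper. The function name `percentile_initial_centroids` is hypothetical, and the scores are assumed to come from a projection such as the first principal component computed elsewhere; the example simply uses the first coordinate as a stand-in.

```python
def percentile_initial_centroids(points, scores, k):
    """Pick k initial centroids from percentile groups of a 1-D score.

    points: list of equal-length tuples.
    scores: one number per point (assumed here to be a projection such as
            the first principal component; any 1-D ordering works).
    k:      number of clusters, with 1 <= k <= len(points).
    """
    if len(points) != len(scores):
        raise ValueError("points and scores must have the same length")
    n = len(points)
    if not 1 <= k <= n:
        raise ValueError("k must be between 1 and the number of points")

    # Indices of the points, ordered by their score.
    order = sorted(range(n), key=lambda i: scores[i])
    centroids = []
    for g in range(k):
        # Group g spans the scores between percentiles 100*g/k and 100*(g+1)/k.
        start = g * n // k
        stop = (g + 1) * n // k
        members = [points[i] for i in order[start:stop]]
        centroids.append(tuple(sum(x) / len(members) for x in zip(*members)))
    return centroids


# Toy data (hypothetical); the first coordinate stands in for a PCA score.
toy_points = [(0, 0), (10, 10), (0, 1), (10, 11), (1, 0), (11, 10)]
toy_scores = [p[0] for p in toy_points]
initial = percentile_initial_centroids(toy_points, toy_scores, k=2)
```

Because the groups are built from an ordering of the data, each initial centroid starts inside a populated region, which is the property that would be expected to reduce the number of iterations compared with a random start.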

In this paper, recent COVID-19 data, a healthcare dataset and a synthetic dataset of 10 million instances are used to analyze our proposed method. In the COVID-19 dataset, K-means clustering is used to divide the countries into different clusters based on health care quality. A patient dataset with a relatively high number of instances and low dimensionality is used for clustering the patients and inspecting the performance of the proposed algorithm. Finally, the synthetic dataset of 10M instances is used to evaluate the performance at scale. We have also compared our method with the kmeans++ and random centroid selection methods.

The key contributions of our work are as follows:

- We propose an improved K-means clustering algorithm that can be used to build an efficient data-driven model.

- Our approach finds the optimal initial centroids efficiently to reduce the number of iterations and execution time.

- To show the efficiency of our model compared with the existing approaches, we conduct an experimental analysis using a real-world COVID-19 dataset. A 10M synthetic dataset and a health care dataset have also been analyzed to determine our proposed method’s efficiency compared to the benchmark models.

This paper provides an algorithmic overview of our proposed method to develop an efficient k-means clustering algorithm. It is an extended and refined version of the paper [10]. The extensions over the previous paper are (i) analysis of the proposed algorithm with two additional datasets along with the COVID-19 dataset [10], (ii) comparison with the random centroid selection and kmeans++ centroid selection methods, (iii) analysis of our proposed method in a more generalized way across different fields, and (iv) inclusion of more recent related works and a summary of several real-world applications.

In Sect. 2, we discuss related works based on similar concepts. In Sect. 3, we describe our proposed methodology with an example. In Sect. 4, we present the experimental results, a description of the datasets and a comparative analysis. Sects. 5 and 6 contain the discussion and the conclusion.

Related Work