
2.1: Types of Data Representation


Two common types of graphic displays are bar charts and histograms. Both use vertical or horizontal bars to represent the number of data points in each category or interval. The main graphical difference is that a bar chart has spaces between the bars and a histogram does not. Why does this subtle difference exist, and what does it imply about graphic displays in general?

Displaying Data

It is often easier for people to interpret relative sizes of data when that data is displayed graphically. Note that a categorical variable is a variable that can take on one of a limited number of values, and a quantitative variable is a variable that takes on numerical values that represent a measurable quantity. Examples of categorical variables are TV stations, the state someone lives in, and eye color, while examples of quantitative variables are the height of students or the population of a city. There are a few common ways of displaying data graphically that you should be familiar with.

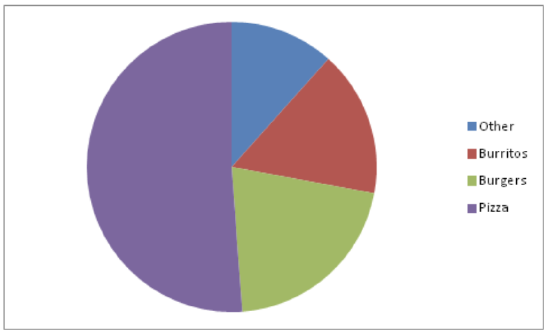

A pie chart shows the relative proportions of data in different categories. Pie charts are excellent ways of displaying categorical data with easily separable groups. The following pie chart shows six categories labeled A−F. The size of each pie slice is determined by the central angle. Since there are 360° in a circle, the size of the central angle $\theta_A$ of category A can be found by:

$$\theta_A = \frac{\text{number of data points in category } A}{\text{total number of data points}} \times 360^\circ$$

CK-12 Foundation - https://www.flickr.com/photos/slgc/16173880801 - CCSA

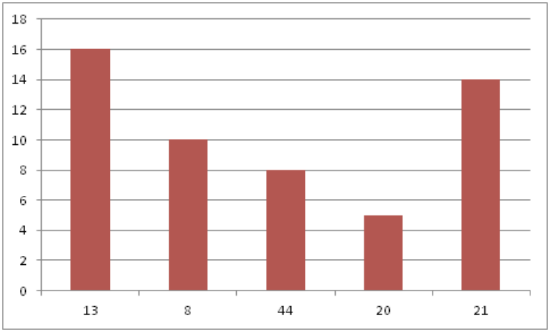

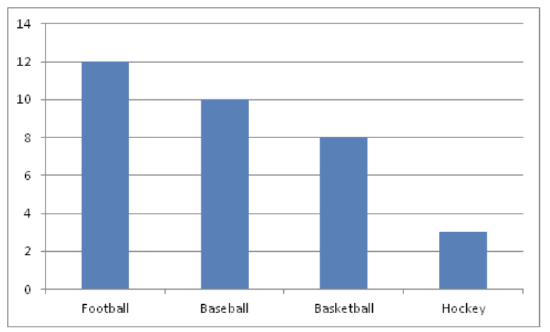

A bar chart displays frequencies of categories of data. The bar chart below has 5 categories and shows the TV channel preferences of 53 adults. The horizontal axis could also have been labeled News, Sports, Local News, Comedy, Action Movies. The bars are separated by spaces to emphasize that they are categories and not continuous numbers. For example, just because you split your time between channel 8 and channel 44 does not mean that on average you watch channel 26. Categories can be numbers, so you need to be very careful.


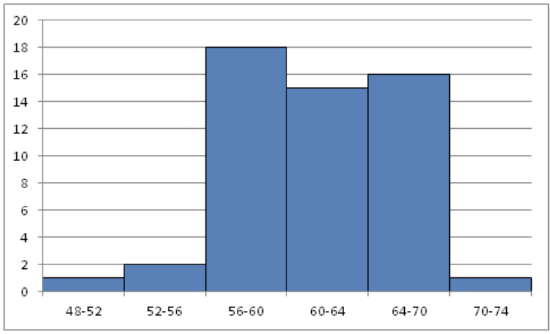

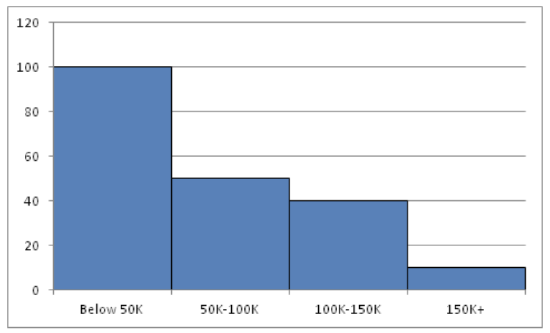

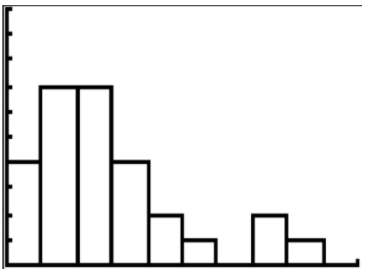

A histogram displays frequencies of quantitative data that has been sorted into intervals. The following is a histogram that shows the heights of a class of 53 students. Notice the largest category is 56-60 inches with 18 people.

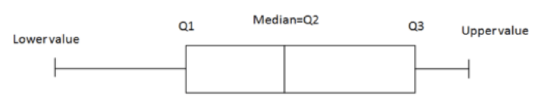

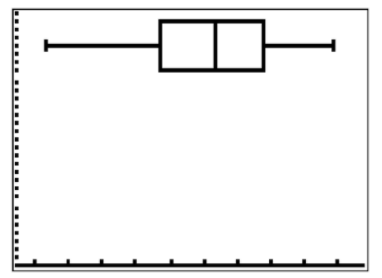

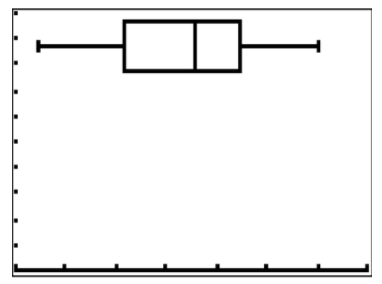
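The sorting step behind a histogram, placing each quantitative value into an interval and counting, can be sketched in a few lines. This is only an illustration: the `heights` list is hypothetical data (not the class of 53 students described above), and the function name `histogram_counts` and the 5-inch interval width are assumptions chosen to mirror intervals such as 56-60 inches.

```python
def histogram_counts(values, low, width, bins):
    """Count how many values fall in each interval.

    Interval i covers [low + i*width, low + (i+1)*width).
    Values outside all intervals are ignored.
    """
    counts = [0] * bins
    for v in values:
        i = int((v - low) // width)
        if 0 <= i < bins:
            counts[i] += 1
    return counts


# Hypothetical heights in whole inches (illustrative only).
heights = [52, 57, 58, 59, 61, 63, 64, 66, 58, 60, 71, 56]

LOW, WIDTH, BINS = 51, 5, 5
counts = histogram_counts(heights, low=LOW, width=WIDTH, bins=BINS)

# Text rendering: adjacent intervals share boundaries, so there are
# no gaps between the bars of a histogram.
for i, c in enumerate(counts):
    start = LOW + i * WIDTH
    print(f"{start}-{start + WIDTH - 1} in | {'#' * c}")
```

Because the intervals tile a continuous number line, reordering them would destroy the meaning of the chart, which is not true of the categories in a bar chart.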

A boxplot (also known as a box and whiskers plot) is another way to display quantitative data. It displays the five-number summary (minimum, Q1, median, Q3, maximum). The box can be displayed either vertically or horizontally, depending on the labeling of the axis. The box does not need to be perfectly symmetrical because it represents data that might not be perfectly symmetrical.

Earlier, you were asked about the difference between histograms and bar charts. The reason for the space in bar charts but no space in histograms is that bar charts graph categorical variables while histograms graph quantitative variables. It would be extremely improper to forget the space with bar charts because you would run the risk of implying a spectrum from one side of the chart to the other. Note that in the bar chart where TV stations were shown, the station numbers were not listed horizontally in order by size. This was to emphasize the fact that the stations were categories.

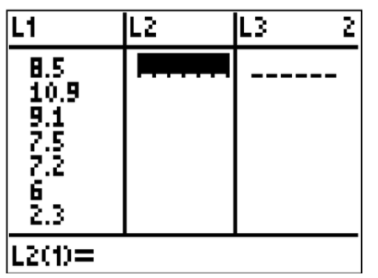

Create a boxplot of the following numbers in your calculator.

8.5, 10.9, 9.1, 7.5, 7.2, 6, 2.3, 5.5

Enter the data into L1 by going into the Stat menu.

CK-12 Foundation - CCSA

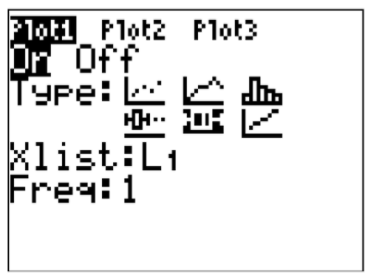

Then turn the statplot on and choose boxplot.

Use Zoomstat to automatically center the window on the boxplot.
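As a cross-check on the calculator, the five-number summary that the boxplot draws can be computed directly. The sketch below assumes one common quartile convention: Q1 and Q3 are the medians of the lower and upper halves of the sorted data, leaving out the middle value when the count is odd. Other software may use a different quartile rule and give slightly different Q1 and Q3. The function name `five_number_summary` is illustrative.

```python
from statistics import median


def five_number_summary(data):
    """Return (minimum, Q1, median, Q3, maximum).

    Assumes at least two values. Q1 and Q3 are medians of the lower
    and upper halves; the middle value is excluded when len(data) is odd.
    """
    values = sorted(data)
    n = len(values)
    half = n // 2
    lower = values[:half]
    upper = values[half + 1:] if n % 2 else values[half:]
    return (values[0], median(lower), median(values), median(upper), values[-1])


numbers = [8.5, 10.9, 9.1, 7.5, 7.2, 6, 2.3, 5.5]
minimum, q1, med, q3, maximum = five_number_summary(numbers)
print(minimum, q1, med, q3, maximum)
```

For the eight numbers above this gives a minimum of 2.3, Q1 of 5.75, a median of about 7.35, Q3 of about 8.8, and a maximum of 10.9, which are the values the box and whiskers should mark.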

Create a pie chart to represent the preferences of 43 hungry students.

- Other – 5

- Burritos – 7

- Burgers – 9

- Pizza – 22
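Each slice's central angle is its category's share of the total, multiplied by the 360° in a circle. A minimal sketch of that arithmetic for the 43 students follows; the function name `central_angles` is illustrative.

```python
def central_angles(frequencies):
    """Map each category to its pie-slice central angle in degrees."""
    total = sum(frequencies.values())
    return {name: count / total * 360 for name, count in frequencies.items()}


preferences = {"Other": 5, "Burritos": 7, "Burgers": 9, "Pizza": 22}
angles = central_angles(preferences)

for name, angle in angles.items():
    print(f"{name}: {angle:.1f} degrees")
```

Pizza accounts for 22 of the 43 students, a little over half, so its slice comes out slightly larger than a semicircle (a bit more than 180°), and the four angles add up to 360°.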

Create a bar chart representing the preference for sports of a group of 33 people.

- Football – 12

- Baseball – 10

- Basketball – 8

- Hockey – 3

Create a histogram for the income distribution of 200 million people.

- Below $50,000 is 100 million people

- Between $50,000 and $100,000 is 50 million people

- Between $100,000 and $150,000 is 40 million people

- Above $150,000 is 10 million people

1. What types of graphs show categorical data?

2. What types of graphs show quantitative data?

A math class of 30 students had the following grades:

3. Create a bar chart for this data.

4. Create a pie chart for this data.

5. Which graph do you think makes a better visual representation of the data?

A set of 20 exam scores is 67, 94, 88, 76, 85, 93, 55, 87, 80, 81, 80, 61, 90, 84, 75, 93, 75, 68, 100, 98

6. Create a histogram for this data. Use your best judgment to decide what the intervals should be.

7. Find the five number summary for this data.

8. Use the five number summary to create a boxplot for this data.

9. Describe the data shown in the boxplot below.

10. Describe the data shown in the histogram below.

A math class of 30 students has the following eye colors:

11. Create a bar chart for this data.

12. Create a pie chart for this data.

13. Which graph do you think makes a better visual representation of the data?

14. Suppose you have data that shows the breakdown of registered republicans by state. What types of graphs could you use to display this data?

15. From which types of graphs could you obtain information about the spread of the data? Note that spread is a measure of how spread out all of the data is.

Review (Answers)

To see the Review answers, open this PDF file and look for section 15.4.


Computer Science: Reflections on the Field, Reflections from the Field (2004)

Chapter 5: Data, Representation, and Information

The preceding two chapters address the creation of models that capture phenomena of interest and the abstractions both for data and for computation that reduce these models to forms that can be executed by computer. We turn now to the ways computer scientists deal with information, especially in its static form as data that can be manipulated by programs.

Gray begins by narrating a long line of research on databases—storehouses of related, structured, and durable data. We see here that the objects of research are not data per se but rather designs of “schemas” that allow deliberate inquiry and manipulation. Gray couples this review with introspection about the ways in which database researchers approach these problems.

Databases support storage and retrieval of information by defining—in advance—a complex structure for the data that supports the intended operations. In contrast, Lesk reviews research on retrieving information from documents that are formatted to meet the needs of applications rather than predefined schematized formats.

Interpretation of information is at the heart of what historians do, and Ayers explains how information technology is transforming their paradigms. He proposes that history is essentially model building—constructing explanations based on available information—and suggests that the methods of computer science are influencing this core aspect of historical analysis.

DATABASE SYSTEMS: A TEXTBOOK CASE OF RESEARCH PAYING OFF

Jim Gray, Microsoft Research

A small research investment helped produce U.S. market dominance in the $14 billion database industry. Government and industry funding of a few research projects created the ideas for several generations of products and trained the people who built those products. Continuing research is now creating the ideas and training the people for the next generation of products.

Industry Profile

The database industry generated about $14 billion in revenue in 2002 and is growing at 20 percent per year, even though the overall technology sector is almost static. Among software sectors, the database industry is second only to operating system software. Database industry leaders are all U.S.-based corporations: IBM, Microsoft, and Oracle are the three largest. There are several specialty vendors: Tandem sells over $1 billion/year of fault-tolerant transaction processing systems, Teradata sells about $1 billion/year of data-mining systems, and companies like Information Resources Associates, Verity, Fulcrum, and others sell specialized data and text-mining software.

In addition to these well-established companies, there is a vibrant group of small companies specializing in application-specific databases—for text retrieval, spatial and geographical data, scientific data, image data, and so on. An emerging group of companies offer XML-oriented databases. Desktop databases are another important market focused on extreme ease of use, small size, and disconnected (offline) operation.

Historical Perspective

Companies began automating their back-office bookkeeping in the 1960s. The COBOL programming language and its record-oriented file model were the workhorses of this effort. Typically, a batch of transactions was applied to the old-tape-master, producing a new-tape-master and printout for the next business day. During that era, there was considerable experimentation with systems to manage an online database that could capture transactions as they happened. At first these systems were ad hoc, but late in that decade network and hierarchical database products emerged. A COBOL subcommittee defined a network data model standard (DBTG) that formed the basis for most systems during the 1970s. Indeed, in 1980 DBTG-based Cullinet was the leading software company.

However, there were some problems with DBTG. DBTG uses a low-level, record-at-a-time procedural language to access information. The programmer has to navigate through the database, following pointers from record to record. If the database is redesigned, as often happens over a decade, then all the old programs have to be rewritten.

The relational data model, enunciated by IBM researcher Ted Codd in a 1970 Communications of the Association for Computing Machinery article, 1 was a major advance over DBTG. The relational model unified data and metadata so that there was only one form of data representation. It defined a non-procedural data access language based on algebra or logic. It was easier for end users to visualize and understand than the pointers-and-records-based DBTG model.
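The contrast between record-at-a-time navigation and non-procedural access can be made concrete with a small sketch. Everything here is hypothetical and for illustration only: the dictionaries with `first_emp` and `next_emp` fields merely imitate pointer-linked records (they are not DBTG syntax), and the `dept` and `emp` tables are invented. The relational half uses Python's standard `sqlite3` module with an in-memory database.

```python
import sqlite3

# Navigational style: hypothetical records linked by explicit "pointers".
departments = {1: {"name": "Sales", "first_emp": 10}}
employees = {
    10: {"name": "Ada", "next_emp": 11},
    11: {"name": "Grace", "next_emp": None},
}


def navigate(dept_id):
    """Follow pointers from record to record, one record at a time."""
    names = []
    emp_id = departments[dept_id]["first_emp"]
    while emp_id is not None:
        record = employees[emp_id]
        names.append(record["name"])
        emp_id = record["next_emp"]
    return names


# Relational style: state what is wanted and let the system find it.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE dept (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute("CREATE TABLE emp (id INTEGER PRIMARY KEY, name TEXT, dept_id INTEGER)")
conn.execute("INSERT INTO dept VALUES (1, 'Sales')")
conn.executemany("INSERT INTO emp VALUES (?, ?, ?)",
                 [(10, "Ada", 1), (11, "Grace", 1)])


def query_by_department(dept_name):
    """Declarative query: no pointers, no traversal order in the program."""
    rows = conn.execute(
        "SELECT emp.name FROM emp JOIN dept ON emp.dept_id = dept.id "
        "WHERE dept.name = ? ORDER BY emp.id",
        (dept_name,),
    )
    return [row[0] for row in rows]


print(navigate(1))
print(query_by_department("Sales"))
```

If the pointer layout in the first half changes, `navigate` has to be rewritten, whereas the query in the second half depends only on table and column names, not on how the records are physically linked.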

The research community (both industry and university) embraced the relational data model and extended it during the 1970s. Most significantly, researchers showed that a non-procedural language could be compiled to give performance comparable to the best record-oriented database systems. This research produced a generation of systems and people that formed the basis for products from IBM, Ingres, Oracle, Informix, Sybase, and others. The SQL relational database language was standardized by ANSI/ISO between 1982 and 1986. By 1990, virtually all database systems provided an SQL interface (including network, hierarchical, and object-oriented systems).

Meanwhile the database research agenda moved on to geographically distributed databases and to parallel data access. Theoretical work on distributed databases led to prototypes that in turn led to products. Today, all the major database systems offer the ability to distribute and replicate data among nodes of a computer network. Intense research on data replication during the late 1980s and early 1990s gave rise to a second generation of replication products that are now the mainstays of mobile computing.

Research of the 1980s showed how to execute each of the relational data operators in parallel, giving hundred-fold and thousand-fold speedups. The results of this research began to appear in the products of several major database companies. With the proliferation of data mining in the 1990s, huge databases emerged. Interactive access to these databases requires that the system use multiple processors and multiple disks to read all the data in parallel. In addition, these problems require near-linear time search algorithms. University and industrial research of the previous decade had solved these problems and forms the basis of the current VLDB (very large database) data-mining systems.

Rollup and drilldown data reporting systems had been a mainstay of decision-support systems ever since the 1960s. In the middle 1990s, the research community really focused on data-mining algorithms. They invented very efficient data cube and materialized view algorithms that form the basis for the current generation of business intelligence products.

The most recent round of government-sponsored research creating a new industry comes from the National Science Foundation’s Digital Libraries program, which spawned Google. It was founded by a group of “database” graduate students who took a fresh look at how information should be organized and presented in the Internet era.

Current Research Directions

There continues to be active and valuable research on representing and indexing data, adding inference to data search, compiling queries more efficiently, executing queries in parallel, integrating data from heterogeneous data sources, analyzing performance, and extending the transaction model to handle long transactions and workflow (transactions that involve human as well as computer steps). The availability of huge volumes of data on the Internet has prompted the study of data integration, mediation, and federation in which a portal system presents a unification of several data sources by pulling data on demand from different parts of the Internet.

In addition, there is great interest in unifying object-oriented concepts with the relational model. New data types (image, document, and drawing) are best viewed as the methods that implement them rather than by the bytes that represent them. By adding procedures to the database system, one gets active databases, data inference, and data encapsulation. This object-oriented approach is an area of active research and ferment both in academe and industry. It seems that in 2003, the research prototypes are mostly done and this is an area that is rapidly moving into products.

The Internet is full of semi-structured data—data that has a bit of schema and metadata, but is mostly a loose collection of facts. XML has emerged as the standard representation of semi-structured data, but there is no consensus on how such data should be stored, indexed, or searched. There have been intense research efforts to answer these questions. Prototypes have been built at universities and industrial research labs, and now products are in development.

The database research community now has a major focus on stream data processing. Traditionally, databases have been stored locally and are updated by transactions. Sensor networks, financial markets, telephone calls, credit card transactions, and other data sources present streams of data rather than a static database. The stream data processing researchers are exploring languages and algorithms for querying such streams and providing approximate answers.

Now that nearly all information is online, data security and data privacy are extremely serious and important problems. A small, but growing, part of the database community is looking at ways to protect people’s privacy by limiting the ways data is used. This work also has implications for protecting intellectual property (e.g., digital rights management, watermarking) and protecting data integrity by digitally signing documents and then replicating them so that the documents cannot be altered or destroyed.

Case Histories

The U.S. government funded many database research projects from 1972 to the present. Projects at the University of California at Los Angeles gave rise to Teradata and produced many excellent students. Projects at Computer Corp. of America (SDD-1, Daplex, Multibase, and HiPAC) pioneered distributed database technology and object-oriented database technology. Projects at Stanford University fostered deductive database technology, data integration technology, query optimization technology, and the popular Yahoo! and Google Internet sites. Work at Carnegie Mellon University gave rise to general transaction models and ultimately to the Transarc Corporation. There have been many other successes from AT&T, the University of Texas at Austin, Brown and Harvard Universities, the University of Maryland, the University of Michigan, Massachusetts Institute of Technology, Princeton University, and the University of Toronto among others. It is not possible to enumerate all the contributions here, but we highlight three representative research projects that had a major impact on the industry.

Project INGRES

Project Ingres started at the University of California at Berkeley in 1972. Inspired by Codd’s paper on relational databases, several faculty members (Stonebraker, Rowe, Wong, and others) started a project to design and build a relational system. Incidental to this work, they invented a query language (QUEL), relational optimization techniques, a language binding technique, and interesting storage strategies. They also pioneered work on distributed databases.

The Ingres academic system formed the basis for the Ingres product now owned by Computer Associates. Students trained on Ingres went on to start or staff all the major database companies (AT&T, Britton Lee, HP, Informix, IBM, Oracle, Tandem, Sybase). The Ingres project went on to investigate distributed databases, database inference, active databases, and extensible databases. It was rechristened Postgres, which is now the basis of the digital library and scientific database efforts within the University of California system. Recently, Postgres spun off to become the basis for a new object-relational system from the start-up Illustra Information Technologies.

Project System R

Codd’s ideas were inspired by seeing the problems IBM and its customers were having with IBM’s IMS product and the DBTG network data model. His relational model was at first very controversial; people thought that the model was too simplistic and that it could never give good performance. IBM Research management took a gamble and chartered a small (10-person) systems effort to prototype a relational system based on Codd’s ideas. That effort produced a prototype that eventually grew into the DB2 product series. Along the way, the IBM team pioneered ideas in query optimization, data independence (views), transactions (logging and locking), and security (the grant-revoke model). In addition, the SQL query language from System R was the basis for the ANSI/ISO standard.

The System R group went on to investigate distributed databases (project R*) and object-oriented extensible databases (project Starburst). These research projects have pioneered new ideas and algorithms. The results appear in IBM’s database products and those of other vendors.

Project Gamma

Not all research ideas work out. During the 1970s there was great enthusiasm for database machines: special-purpose computers that would be much faster than general-purpose operating systems running conventional database systems. These research projects were often based on exotic hardware like bubble memories, head-per-track disks, or associative RAM. The problem was that general-purpose systems were improving at 50 percent per year, so it was difficult for exotic systems to compete with them. By 1980, most researchers realized the futility of special-purpose approaches and the database-machine community switched to research on using arrays of general-purpose processors and disks to process data in parallel.

The University of Wisconsin hosted the major proponents of this idea in the United States. Funded by the government and industry, those researchers prototyped and built a parallel database machine called Gamma. That system produced ideas and a generation of students who went on to staff all the database vendors. Today the parallel systems from IBM, Tandem, Oracle, Informix, Sybase, and Microsoft all have a direct lineage from the Wisconsin research on parallel database systems. The use of parallel database systems for data mining is the fastest-growing component of the database server industry.

The Gamma project evolved into the Exodus project at Wisconsin (focusing on an extensible object-oriented database). Exodus has now evolved to the Paradise system, which combines object-oriented and parallel database techniques to represent, store, and quickly process huge Earth-observing satellite databases.

And Then There Is Science

In addition to creating a huge industry, database theory, science, and engineering constitute a key part of computer science today. Representing knowledge within a computer is one of the central challenges of computer science (Box 5.1). Database research has focused primarily on this fundamental issue. Many universities have faculty investigating these problems and offer classes that teach the concepts developed by this research program.

COMPUTER SCIENCE IS TO INFORMATION AS CHEMISTRY IS TO MATTER

Michael Lesk, Rutgers University

In other countries computer science is often called “informatics” or some similar name. Much computer science research derives from the need to access, process, store, or otherwise exploit some resource of useful information. Just as chemistry is driven to a large extent by the need to understand substances, computing is driven by a need to handle data and information. As an example of the way chemistry has developed, see Oliver Sacks’s book Uncle Tungsten: Memories of a Chemical Boyhood (Vintage Books, 2002). He describes his explorations through the different metals, learning the properties of each, and understanding their applications. Similarly, in the history of computer science, our information needs and our information capabilities have driven parts of the research agenda. Information retrieval systems take some kind of information, such as text documents or pictures, and try to retrieve topics or concepts based on words or shapes. Deducing the concept from the bytes can be difficult, and the way we approach the problem depends on what kind of bytes we have and how many of them we have.

Our experimental method is to see if we can build a system that will provide some useful access to information or service. If it works, those algorithms and that kind of data become a new field: look at areas like geographic information systems. If not, people may abandon the area until we see a new motivation to exploit that kind of data. For example, face-recognition algorithms have received a new impetus from security needs, speeding up progress in the last few years. An effective strategy to move computer science forward is to provide some new kind of information and see if we can make it useful.

Chemistry, of course, involves a dichotomy between substances and reactions. Just as we can (and frequently do) think of computer science in terms of algorithms, we can talk about chemistry in terms of reactions. However, chemistry has historically focused on substances: the encyclopedias and indexes in chemistry tend to be organized and focused on compounds, with reaction names and schemes getting less space on the shelf. Chemistry is becoming more balanced as we understand reactions better; computer science has always been more heavily oriented toward algorithms, but we cannot ignore the driving force of new kinds of data.

The history of information retrieval, for example, has been driven by the kinds of information we could store and use. In the 1960s, for example, storage was extremely expensive. Research projects were limited to text materials. Even then, storage costs meant that a research project could just barely manage to have a single ASCII document available for processing. For example, Gerard Salton’s SMART system, one of the leading text retrieval systems for many years (see Salton’s book, The SMART Automatic Retrieval System, Prentice-Hall, 1971), did most of its processing on collections of a few hundred abstracts. The only collections of “full documents” were a collection of 80 extended abstracts, each a page or two long, and a collection of under a thousand stories from Time Magazine, each less than a page in length. The biggest collection was 1400 abstracts in aeronautical engineering. With this data, Salton was able to experiment on the effectiveness of retrieval methods using suffixing, thesauri, and simple phrase finding. Salton also laid down the standard methodology for evaluating retrieval systems, based on Cyril Cleverdon’s measures of “recall” (percentage of the relevant material that is retrieved in response to a query) and “precision” (the percentage of the material retrieved that is relevant). A system with perfect recall finds all relevant material, making no errors of omission and leaving out nothing the user wanted. In contrast, a system with perfect precision finds only relevant material, making no errors of commission and not bothering the user with stuff of no interest. The SMART system produced these measures for many retrieval experiments and its methodology was widely used, making text retrieval one of the earliest areas of computer science with agreed-on evaluation methods. Salton was not able to do anything with image retrieval at the time; there were no such data available for him.
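The two measures can be written down directly from their definitions. The sketch below is illustrative only: the document identifiers are made up, the function name `precision_recall` is an assumption, the results are returned as fractions rather than percentages, and returning 0.0 when a set is empty is a convention chosen here, not something the text specifies.

```python
def precision_recall(retrieved, relevant):
    """Compute (precision, recall) as fractions between 0 and 1.

    precision = relevant retrieved / all retrieved
    recall    = relevant retrieved / all relevant
    Empty denominators yield 0.0 (an assumed convention).
    """
    retrieved, relevant = set(retrieved), set(relevant)
    hits = retrieved & relevant
    precision = len(hits) / len(retrieved) if retrieved else 0.0
    recall = len(hits) / len(relevant) if relevant else 0.0
    return precision, recall


# Hypothetical query result: four documents returned, three truly relevant.
retrieved_docs = ["d1", "d2", "d3", "d4"]
relevant_docs = ["d2", "d4", "d7"]

precision, recall = precision_recall(retrieved_docs, relevant_docs)
print(f"precision = {precision:.2f}, recall = {recall:.2f}")
```

Here two of the four retrieved documents are relevant (precision 0.5), and two of the three relevant documents were found (recall about 0.67). Retrieving every document in the collection would push recall to 1 while driving precision down, which is why the two measures are reported together.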

Another idea shaped by the amount of information available was “relevance feedback,” the idea of identifying useful documents from a first retrieval pass in order to improve the results of a later retrieval. With so few documents, high precision seemed like an unnecessary goal. It was simply not possible to retrieve more material than somebody could look at. Thus, the research focused on high recall (also stimulated by the insistence by some users that they had to have every single relevant document). Relevance feedback helped recall. By contrast, the use of phrase searching to improve precision was tried but never got much attention simply because it did not have the scope to produce much improvement in the running systems.

The basic problem is that we wish to search for concepts, and what we have in natural language are words and phrases. When our documents are few and short, the main problem is not to miss any, and the research at the time stressed algorithms that found related words via associations or improved recall with techniques like relevance feedback.

Then, of course, several other advances (computer typesetting and word processing to generate material, and cheap disks to hold it) led to much larger text collections. Figure 5.1 shows the decline in the price of disk space since the first disks in the mid-1950s, generally following the cost-performance trends of Moore’s law.

FIGURE 5.1 Decline in the price of disk space, 1950 to 2004.

Cheaper storage led to larger and larger text collections online. Now there are many terabytes of data on the Web. These vastly larger volumes mean that precision has now become more important, since a common problem is to wade through vastly too many documents. Not surprisingly, in the mid-1980s efforts started on separating the multiple meanings of words like “bank” or “pine” and became the research area of “sense disambiguation.” 2 With sense disambiguation, it is possible to imagine searching for only one meaning of an ambiguous word, thus avoiding many erroneous retrievals.

Large-scale research on text processing took off with the availability of the TREC (Text REtrieval Conference) data. Thanks to the National Institute of Standards and Technology, several hundred megabytes of text were provided (in each of several years) for research use. This stimulated more work on query analysis, text handling, searching algorithms, and related areas; see the series titled TREC Conference Proceedings, edited by Donna Harman of NIST.

Document clustering appeared as an important way to shorten long search results. Clustering enables a system to report not, say, 5000 documents but rather 10 groups of 500 documents each, and the user can then explore the group or groups that seem relevant. Salton anticipated the future possibility of such algorithms, as did others. 3 Until we got large collections, though, clustering did not find application in the document retrieval world. Now one routinely sees search engines using these techniques, and faster clustering algorithms have been developed.

Thus the algorithms explored switched from recall aids to precision aids as the quantity of available data increased. Manual thesauri, for example, have dropped out of favor for retrieval, partly because of their cost but also because their goal is to increase recall, which is not today’s problem. In terms of finding the concepts hinted at by words and phrases, our goals now are to sharpen rather than broaden these concepts: thus disambiguation and phrase matching, and not as much work on thesauri and term associations.

Again, multilingual searching started to matter, because multilingual collections became available. Multilingual research shows a more precise example of particular information resources driving research. The Canadian government made its Parliamentary proceedings (called Hansard) available in both French and English, with paragraph-by-paragraph translation. This data stimulated a number of projects looking at how to handle bilingual material, including work on automatic alignment of the parallel texts, automatic linking of similar words in the two languages, and so on. 4

A similar effect was seen with the Brown corpus of tagged English text, where the part of speech of each word (e.g., whether a word is a noun or a verb) was identified. This produced a few years of work on algorithms that learned how to assign parts of speech to words in running text based on statistical techniques, such as the work by Garside. 5

One might see an analogy to various new fields of chemistry. The recognition that pesticides like DDT were environmental pollutants led to a new interest in biodegradability, and the Freon propellants used in aerosol cans stimulated research in reactions in the upper atmosphere. New substances stimulated a need to study reactions that previously had not been a top priority for chemistry and chemical engineering.

As storage became cheaper, image storage was now as practical as text storage had been a decade earlier. Starting in the 1980s we saw the IBM QBIC project demonstrating that something could be done to retrieve images directly, without having to index them by text words first. 6 Projects like this were stimulated by the availability of “clip art” such as the COREL image disks. Several different projects were driven by the easy access to images in this way, with technology moving on from color and texture to more accurate shape processing. At Berkeley, for example, the “Blobworld” project made major improvements in shape detection and recognition, as described in Carson et al. 7 These projects demonstrated that retrieval could be done with images as well as with words, and that properties of images could be found that were usable as concepts for searching.

Another new kind of data that became feasible to process was sound, in particular human speech. Here it was the Defense Advanced Research Projects Agency (DARPA) that took the lead, providing the SWITCHBOARD corpus of spoken English. Again, the availability of a substantial file of tagged information helped stimulate many research projects that used this corpus and developed much of the technology that eventually went into the commercial speech recognition products we now have. As with the TREC contests, the competitions run by DARPA based on its spoken language data pushed the industry and the researchers to new advances. National needs created a new technology; one is reminded of the development of synthetic rubber during World War II or the advances in catalysis needed to make explosives during World War I.

Yet another kind of new data was geo-coded data, introducing a new set of conceptual ideas related to place. Geographical data started showing up in machine-readable form during the 1980s, especially with the release of the Dual Independent Map Encoding (DIME) files after the 1980 census and the Topologically Integrated Geographic Encoding and Referencing (TIGER) files from the 1990 census. The availability, free of charge, of a complete U.S. street map stimulated much research on systems to display maps, to give driving directions, and the like. 8 When aerial photographs also became available, there was the triumph of Microsoft’s “Terraserver,” which made it possible to look at a wide swath of the world from the sky along with correlated street and topographic maps. 9

More recently, in the 1990s, we have started to look at video search and retrieval. After all, if a CD-ROM contains about 300,000 times as many bytes per pound as a deck of punched cards, and a digitized video has about 500,000 times as many bytes per second as the ASCII script it comes from, we should be about where we were in the 1960s with video today. And indeed there are a few projects, most notably the Informedia project at Carnegie Mellon University, that experiment with video signals; they do not yet have ways of searching enormous collections, but they are developing algorithms that exploit whatever they can find in the video: scene breaks, closed-captioning, and so on.

Again, there is the problem of deducing concepts from a new kind of information. We started with the problem of words in one language needing to be combined when synonymous, picked apart when ambiguous, and moved on to detecting synonyms across multiple languages and then to concepts depicted in pictures and sounds. Now we see research such as that by Jezekiel Ben-Arie associating words like “run” or “hop” with video images of people doing those actions. In the same way we get again new chemistry when molecules like “buckyballs” are created and stimulate new theoretical and reaction studies.

Defining concepts for search can be extremely difficult. For example, despite our abilities to parse and define every item in a computer language, we have made no progress on retrieval of software; people looking for search or sort routines depend on metadata or comments. Some areas seem more flexible than others: text and naturalistic photograph processing software tends to be very general, while software to handle CAD diagrams and maps tends to be more specific. Algorithms are sometimes portable; both speech processing and image processing need Fourier transforms, but the literature is less connected than one might like (partly because of the difference between one-dimensional and two-dimensional transforms).

There are many other examples of interesting computer science research stimulated by the availability of particular kinds of information. Work on string matching today is often driven by the need to align sequences in either protein or DNA data banks. Work on image analysis is heavily influenced by the need to deal with medical radiographs. And there are many other interesting projects specifically linked to an individual data source. Among examples:

The British Library scanning of the original manuscript of Beowulf in collaboration with the University of Kentucky, working on image enhancement until the result of the scanning is better than reading the original;

The Perseus project, demonstrating the educational applications possible because of the earlier Thesaurus Linguae Graecae project, which digitized all the classical Greek authors;

The work in astronomical analysis stimulated by the Sloan Digital Sky Survey;

The creation of the field of “forensic paleontology” at the University of Texas as a result of doing MRI scans of fossil bones;

And, of course, the enormous amount of work on search engines stimulated by the Web.

When one of these fields takes off, and we find wide usage of some online resource, it benefits society. Every university library gained readers as their catalogs went online and became accessible to students in their dorm rooms. Third World researchers can now access large amounts of technical content their libraries could rarely acquire in the past.

In computer science, and in chemistry, there is a tension between the algorithm/reaction and the data/substance. For example, should one look up an answer or compute it? Once upon a time logarithms were looked up in tables; today we also compute them on demand. Melting points and other physical properties of chemical substances are looked up in tables; perhaps with enough quantum mechanical calculation we could predict them, but it’s impractical for most materials. Predicting tomorrow’s weather might seem a difficult choice. One approach is to measure the current conditions, take some equations that model the atmosphere, and calculate forward a day. Another is to measure the current conditions, look in a big database for the previous day most similar to today, and then take the day after that one as the best prediction for tomorrow. However, so far the meteorologists feel that calculation is better. Another complicated example is chess: given the time pressure of chess tournaments against speed and storage available in computers, chess programs do the opening and the endgame by looking in tables of old data and calculate for the middle game.
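The "look it up" approach to weather prediction described above can be sketched in a few lines. This is only an illustration under stated assumptions: the function name `analog_forecast`, the use of squared distance as the similarity measure, and the sample records are all hypothetical and not taken from any real forecasting system.

```python
def analog_forecast(history, today):
    """Predict tomorrow by finding the past day most similar to today.

    history: daily observations in chronological order (tuples of numbers).
    today:   today's observation, in the same form.
    Returns the observation recorded on the day after the best match.
    """
    def distance(a, b):
        # Assumed similarity measure: sum of squared differences.
        return sum((x - y) ** 2 for x, y in zip(a, b))

    # The last day in history has no recorded "day after", so skip it.
    candidates = range(len(history) - 1)
    best = min(candidates, key=lambda i: distance(history[i], today))
    return history[best + 1]


# Hypothetical records: (temperature in degrees C, pressure in hPa).
history = [(12.0, 1012.0), (15.0, 1008.0), (9.0, 1020.0),
           (14.0, 1009.0), (18.0, 1003.0)]
today = (14.8, 1008.2)
forecast = analog_forecast(history, today)
```

The alternative approach, calculating forward from equations that model the atmosphere, needs no stored history at all, which is the trade-off the paragraph describes.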

To conclude, a recipe for stimulating advances in computer science is to make some data available and let people experiment with it. With the incredibly cheap disks and scanners available today, this should be easier than ever. Unfortunately, what we gain with technology we are losing to law and economics. Many large databases are protected by copyright; few motion pictures, for example, are old enough to have gone out of copyright. Content owners generally refuse to grant permission for wide use of their material, whether out of greed or fear: they may have figured out how to get rich off their files of information or they may be afraid that somebody else might have. Similarly it is hard to get permission to digitize in-copyright books, no matter how long they have been out of print. Jim Gray once said to me, “May all your problems be technical.” In the 1960s I was paying people to key in aeronautical abstracts. It never occurred to us that we should be asking permission of the journals involved (I think what we did would qualify as fair use, but we didn’t even think about it). Today I could scan such things much more easily, but I would not be able to get permission. Am I better off or worse off?

There are now some 22 million chemical substances in the Chemical Abstracts Service Registry and 7 million reactions. New substances continue to intrigue chemists and cause research on new reactions, with of course enormous interest in biochemistry both for medicine and agriculture. Similarly, we keep adding data to the Web, and new kinds of information (photographs of dolphins, biological flora, and countless other things) can push computer scientists to new algorithms. In both cases, synthesis of specific instances into concepts is a crucial problem. As we see more and more kinds of data, we learn more about how to extract meaning from it, and how to present it, and we develop a need for new algorithms to implement this knowledge. As the data gets bigger, we learn more about optimization. As it gets more complex, we learn more about representation. And as it gets more useful, we learn more about visualization and interfaces, and we provide better service to society.

HISTORY AND THE FUNDAMENTALS OF COMPUTER SCIENCE

Edward L. Ayers, University of Virginia

We might begin with a thought experiment: What is history? Many people, I’ve discovered, think of it as books and the things in books. That’s certainly the explicit form in which we usually confront history. Others, thinking less literally, might think of history as stories about the past; that would open us to oral history, family lore, movies, novels, and the other forms in which we get most of our history.

All these images are wrong, of course, in the same way that images of atoms as little solar systems are wrong, or pictures of evolution as profiles of ever taller and more upright apes and people are wrong. They are all models, radically simplified, that allow us to think about such things in the exceedingly small amounts of time that we allot to these topics.

The same is true for history, which is easiest to envision as technological progress, say, or westward expansion, or the emergence of freedom, or else as increasing alienation, exploitation of the environment, or the growth of intrusive government.

Those of us who think about specific aspects of society or nature for a living, of course, are never satisfied with the stories that suit the purposes of everyone else so well.

We are troubled by all the things that don’t fit, all the anomalies, variance, and loose ends. We demand more complex measurement and description, and fewer smoothing metaphors and lowest common denominators.

Thus, to scientists, atoms appear as clouds of probability; evolution appears as a branching, labyrinthine bush in which some branches die out and others diversify. It can certainly be argued that past human experience is as complex as anything in nature and likely much more so, if by complexity we mean numbers of components, variability of possibilities, and unpredictability of outcomes.

Yet our means of conveying that complexity remain distinctly analog: the story, the metaphor, the generalization. Stories can be wonderfully complex, of course, but they are complex in specific ways: of implication, suggestion, evocation. That’s what people love and what they remember.

But maybe there is a different way of thinking about the past: as information. In fact, information is all we have. Studying the past is like studying scientific processes for which you have the data but cannot run the experiment again, in which there is no control, and in which you can never see the actual process you are describing and analyzing. All we have is information in various forms: words in great abundance, billions of numbers, millions of images, some sounds and buildings, artifacts.

The historian’s goal, it seems to me, should be to account for as much of the complexity embedded in that information as we can. That, it appears, is what scientists do, and it has served them well.

And how has science accounted for ever-increasing amounts of complexity in the information they use? Through ever more sophisticated instruments. The connection between computer science and history could be analogous to that between telescopes and stars, microscopes and cells. We could be on the cusp of a new understanding of the patterns of complexity in human behavior of the past.

The problem may be that there is too much complexity in that past, or too much static, or too much silence. In the sciences, we’ve learned how to filter, infer, use indirect evidence, and fill in the gaps, but we have a much more literal approach to the human past.

We have turned to computer science for tasks of more elaborate description, classification, representation. The digital archive my colleagues and I have built, the Valley of the Shadow Project, permits the manipulation of millions of discrete pieces of evidence about two communities in the era of the American Civil War. It uses sorting mechanisms, hypertextual display, animation, and the like to allow people to handle the evidence of this part of the past for themselves. This isn’t cutting-edge computer science, of course, but it’s darned hard and deeply disconcerting to some, for it seems to abdicate responsibility, to undermine authority, to subvert narrative, to challenge story.

Now, we’re trying to take this work to the next stage, to analysis. We have composed a journal article that employs an array of technologies, especially geographic information systems and statistical analysis in the creation of the evidence. The article presents its argument, evidence, and historiographical context as a complex textual, tabular, and graphical representation. XML offers a powerful means to structure text and XSL an even more powerful means to transform it and manipulate its presentation. The text is divided into sections called “statements,” each supported with “explanation.” Each explanation, in turn, is supported by evidence and connected to relevant historiography.

Linkages, forward and backward, between evidence and narrative are central. The historiography can be automatically sorted by author, date, or title; the evidence can be arranged by date, topic, or type. Both evidence and historiographical entries are linked to the places in the analysis where they are invoked. The article is meant to be used online, but it can be printed in a fixed format with all the limitations and advantages of print.

So, what are the implications of thinking of the past in the hardheaded sense of admitting that all we really have of the past is information? One implication might be great humility, since all we have for most of the past are the fossils of former human experience, words frozen in ink and images frozen in line and color. Another implication might be hubris: if we suddenly have powerful new instruments, might we be on the threshold of a revolution in our understanding of the past? We’ve been there before.

A connection between history and social science was tried before, during the first days of accessible computers. Historians taught themselves statistical methods and even programming languages so that they could adopt the techniques, models, and insights of sociology and political science. In the 1950s and 1960s the creators of the new political history called on historians to emulate the precision, explicitness, replicability, and inclusivity of the quantitative social sciences. For two decades that quantitative history flourished, promising to revolutionize the field. And to a considerable extent it did: it changed our ideas of social mobility, political identification, family formation, patterns of crime, economic growth, and the consequences of ethnic identity. It explicitly linked the past to the present and held out a history of obvious and immediate use.

But that quantitative social science history collapsed suddenly, the victim of its own inflated claims, limited method and machinery, and changing academic fashion. By the mid-1980s, history, along with many of the humanities and social sciences, had taken the linguistic turn. Rather than software manuals and codebooks, graduate students carried books of French philosophy and German literary interpretation. The social science of choice shifted from sociology to anthropology; texts replaced tables. A new generation defined itself in opposition to social scientific methods just as energetically as an earlier generation had seen in those methods the best means of writing a truly democratic history. The first computer revolution largely failed.

The first effort at that history fell into decline in part because historians could not abide the distance between their most deeply held beliefs and what the statistical machinery permitted, the abstraction it imposed. History has traditionally been built around contingency and particularity, but the most powerful tools of statistics are built on sampling and extrapolation, on generalization and tendency. Older forms of social history talked about vague and sometimes dubious classifications in part because that was what the older technology of tabulation permitted us to see. It has become increasingly clear across the social sciences that such flat ways of describing social life are inadequate; satisfying explanations must be dynamic, interactive, reflexive, and subtle, refusing to reify structures of social life or culture. The new technology permits a new cross-fertilization.

Ironically, social science history faded just as computers became widely available, just as new kinds of social science history became feasible. No longer is there any need for white-coated attendants at huge mainframes and expensive proprietary software. Rather than reducing people to rows and columns, searchable databases now permit researchers to maintain the identities of individuals in those databases and to represent entire populations rather than samples. Moreover, the record can now include things social science history could only imagine before the Web: completely indexed newspapers, with the original readable on the screen; completely searchable letters and diaries by the thousands; and interactive maps with all property holders identified and linked to other records. Visualization of patterns in the data, moreover, far outstrips the possibilities of numerical calculation alone. Manipulable histograms, maps, and time lines promise a social history that is simultaneously sophisticated and accessible. We have what earlier generations of social science historians dreamed of: a fast and widely accessible network linked to cheap and powerful computers running common software with well-established standards for the handling of numbers, texts, and images. New possibilities of collaboration and cumulative research beckon. Perhaps the time is right to reclaim a worthy vision of a disciplined and explicit social scientific history that we abandoned too soon.

What does this have to do with computer science? Everything, it seems to me. If you want hard problems, historians have them. And what’s the hardest problem of all right now? The capture of the very information that is history. Can computer science imagine ways to capture historical information more efficiently? Can it offer ways to work with the spotty, broken, dirty, contradictory, nonstandardized information we work with?

The second hard problem is the integration of this disparate evidence in time and space, offering new precision, clarity, and verifiability, as well as opening new questions and new ways of answering them.

If we can think of these ways, then we face virtually limitless possibilities. Is there a more fundamental challenge or opportunity for computer science than helping us to figure out human society over human time?

Computer Science: Reflections on the Field, Reflections from the Field provides a concise characterization of key ideas that lie at the core of computer science (CS) research. The book offers a description of CS research recognizing the richness and diversity of the field. It brings together two dozen essays on diverse aspects of CS research, their motivation and results. By describing in accessible form computer science’s intellectual character, and by conveying a sense of its vibrancy through a set of examples, the book aims to prepare readers for what the future might hold and help to inspire CS researchers in its creation.

CHAPTER 4: DATA MEASUREMENT

4-3: Types of Data and Appropriate Representations

Introduction.

Graphs and charts can be effective visual tools because they present information quickly and easily. Graphs and charts condense large amounts of information into easy-to-understand formats that clearly and effectively communicate important points. Graphs are commonly used by print and electronic media as they quickly convey information in a small space. Statistics are often presented visually as they can effectively facilitate understanding of the data. Different types of graphs and charts are used to represent different types of data.

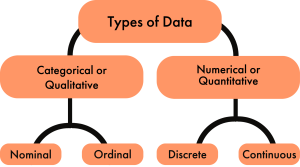

Types of Data

There are four types of data used in statistics: nominal data, ordinal data, discrete data, and continuous data. Nominal and ordinal data fall under the umbrella of categorical (qualitative) data, while discrete data and continuous data fall under the umbrella of quantitative data.

Qualitative Data

Categorical or qualitative data labels data into categories. Categorical data is defined in terms of natural language specifications. For example, name, sex, country of origin, are categories that represent qualitative data. There are two subcategories of qualitative data, nominal data and ordinal data.

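Nominal Data

When the categories have no natural order, the categories are said to be nominal. For example, country of origin and basketball team jersey number are labels that cannot be ranked or measured. Nominal data is commonly represented using a pie chart or a bar chart.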

Ordinal Data

When the categories have a natural order, the categories are said to be ordinal. The categories can be ranked, but the distances between them are not measured. For example, education level (H.S. diploma; 1 year certificate; 2 year degree; 4 year degree; masters degree; doctorate degree) and satisfaction rating (extremely dislike; dislike; neutral; like; extremely like) are categories that have a natural order to them. Ordinal data are commonly collected alongside demographic information (e.g., age group or education level). This is particularly prevalent in marketing and insurance sectors, but it is also used by governments (e.g. the census), and is commonly used when conducting customer satisfaction surveys. Ordinal data is commonly represented using a bar graph.

Quantitative Data

Quantitative data has two subcategories, discrete data and continuous data.

Discrete Data

The data is discrete when the possible values are separated from each other on a real number line (e.g., 0, 1, 2, 3, 4…). Discrete data typically consists of whole-number counts, with only a limited set of possible values in any given range. For example, the number of visits to the doctor and the number of students in a class are discrete. Discrete data is typically represented by a histogram.

Continuous Data

The data is continuous when it has an infinite number of possible values that can be selected within certain limits (i.e., the numbers run into each other on a real number line). Continuous data is data that is measured or calculated rather than counted. Examples of continuous data are temperature, time, and height. Continuous data is typically represented by a line graph.

Explore 1 – Types of data

Classify the data into qualitative or quantitative, then into a subcategory of nominal, ordinal, discrete or continuous.

- weight

Weight is a number that is measured and has order. It can also take on any number. So, weight is quantitative: continuous.

- egg size (small, medium, large, extra large)

Egg size is typically labeled small, medium, large, or extra large, and these labels have a natural order. So, egg size is qualitative: ordinal.

- number of miles driven to work

Number of miles is a number that is measured and has order. It can also take on any number. So, number of miles is quantitative: continuous.

- body temperature

Body temperature is a number that is measured and has order. It can also take on any number. So, temperature is quantitative: continuous.

- basketball team jersey number

Jersey numbers have no order and are numbers that are not measured. So, jersey number is qualitative: nominal.

- U.S. shoe size

Shoe size is a number. It is calculated based on a formula that includes the measure of your foot length. However, it has only whole or half numbers (e.g., 8 or 9.5). Shoe size has a natural order but has a finite number of options (e.g., half or whole numbers). So, shoe size is quantitative: discrete.

- military rank

Military rank is not numerical but is categorical with a natural order. So, military rank is qualitative: ordinal.

- university GPA

University GPA is a weighted average that is calculated, so it is quantitative: continuous.

Practice Exercises

- year of birth

- levels of fluency (language)

- height of players on a team

- dose of medicine

- political party

- course letter grades

- Quantitative: discrete

- Qualitative: ordinal

- Quantitative: continuous

- Qualitative: nominal

Types of Graphs and Charts

The type of graph or chart used to visualize data is determined by the type of data being represented. A pie chart or bar chart is typically used for nominal data and a bar chart for ordinal data. For quantitative data, we typically use a histogram for discrete data and a line graph for continuous data.
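The pairing of data types with displays can be written down as a small lookup table. The sketch below simply restates the paragraph above; the names `TYPICAL_CHARTS`, `BROAD_CATEGORY`, and `describe` are illustrative choices, and the pairings are typical conventions rather than strict rules.

```python
# Typical display for each data type, as stated in the text above.
TYPICAL_CHARTS = {
    "nominal": ["pie chart", "bar chart"],
    "ordinal": ["bar chart"],
    "discrete": ["histogram"],
    "continuous": ["line graph"],
}

# Broad category that each data type falls under.
BROAD_CATEGORY = {
    "nominal": "qualitative",
    "ordinal": "qualitative",
    "discrete": "quantitative",
    "continuous": "quantitative",
}


def describe(data_type):
    """Return (broad category, typical charts) for a data type name."""
    key = data_type.lower()
    return BROAD_CATEGORY[key], TYPICAL_CHARTS[key]


category, charts = describe("Ordinal")
```

For example, military rank was classified earlier as qualitative: ordinal, so `describe("ordinal")` points to a bar chart.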

A pie chart is a circular graphic which is divided into slices to illustrate numerical proportion. Pie charts are widely used in the business world and the mass media. The size of each slice is determined by the percentage represented by a category compared to the whole (i.e., the entire dataset). The percentages of all the categories add up to 100%, or the whole.
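As a rough illustration of how slice sizes follow from the data, the sketch below converts category counts into percentages and central angles, using the fact that a full circle is 360 degrees. The function name `pie_slices` and the category counts are made up for the example.

```python
def pie_slices(counts):
    """Convert category counts into percentages and central angles."""
    total = sum(counts.values())
    slices = {}
    for category, count in counts.items():
        slices[category] = {
            "percent": 100 * count / total,        # share of the whole
            "angle_degrees": 360 * count / total,  # share of the circle
        }
    return slices


# Hypothetical counts for four categories.
counts = {"A": 10, "B": 20, "C": 30, "D": 40}
slices = pie_slices(counts)

total_percent = sum(s["percent"] for s in slices.values())
total_angle = sum(s["angle_degrees"] for s in slices.values())
```

Whatever the counts are, the percentages sum to 100 and the angles sum to 360, which is why a pie chart only makes sense when the categories together make up the whole.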

Explore 2 – Pie Charts

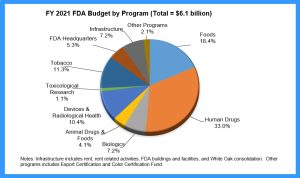

The pie chart shows the distribution of the Food and Drug Administration’s Budget of different programs for the fiscal year 2021. The total budget was $6.1 billion. [1]

- How many categories are shown in the pie chart?

If we count the number of slices, there are 10 categories shown.

- What do the percentages represent?

The percentages show the percent of the $6.1 billion FDA budget that was spent on each category.

- Why is it vital to show the total budget on the chart?

Without the total budget we would be unable to calculate the amount spent on each category.

- Is there a limit to the number of categories that can be shown on a pie chart?

Yes. If the slices are too small to see, another method of representing the data should be used. Ideally, a pie chart should show no more than 5 or 6 categories.

- What does the largest slice represent?

The percentage of the total budget spent on human drugs.

- What does the smallest slice represent?

The percentage of the total budget spent on toxicological research.

- How could this pie chart be improved?

The slices could be ordered around the circle by size, and the 3-D look could be eliminated to avoid the distorted perspective and to make the graph clearer.

- Is this an appropriate use of a pie chart?

The chart is showing a comparison of all categories the budget went towards so it is appropriate.

Bar graphs are used to represent categorical data. Each category is represented as a bar, drawn either vertically or horizontally. The length of each bar shows the measured value or percentage of its category, and there is equal space between each pair of consecutive bars. Bar graphs have the advantage of being easy to read and offer direct comparison of categories.

Explore 3 – Bar Graphs

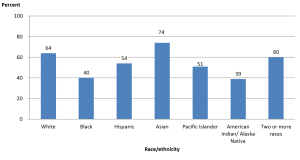

Graduation rates within 6 years from the first institution attended for first-time, full-time bachelor’s degree-seeking students at 4-year postsecondary institutions, by race/ethnicity: cohort entry year 2010.

- How many categories are represented in the bar graph and what do they represent?

There are 7 categories representing the race/ethnicity of the students.

- What do the numbers above each bar represent and why may they be necessary?

The rounded percent of the category. They are necessary because it is very difficult to tell from the vertical scale the height of each bar.

- What does the tallest bar represent?

The percent of Asian students who graduated from their first institution within 6 years.

- What does the shortest bar represent?

The percent of American Indian or Alaska Native students who graduated from their first institution within 6 years.

- Is this an appropriate use of a bar graph?

Yes. The data is qualitative: nominal; there is no order within the categories.

Histograms are used to represent quantitative data that is discrete. A histogram divides up the range of possible values in a data set into classes or intervals. For each class, a rectangle is constructed with a base length equal to the range of values in that specific class and a height equal to the number of observations falling into that class. A histogram has an appearance similar to a vertical bar chart, but there are no gaps between the bars. The bars are ordered along the axis from the smallest to the largest possible value. Consequently, the bars cannot be reordered. Histograms are often used to illustrate the major features of the distribution of the data in a convenient form. They are also useful when dealing with large data sets (greater than 100 observations). They can help detect any unusual observations (outliers) or any gaps in the data.

Histograms may look similar to bar charts but they are really completely different. Histograms plot quantitative data with ranges of the data grouped into classes or intervals while bar charts plot categorical data. Histograms are used to show distributions while bar charts are used to compare categories. Bars can be reordered in bar charts but not in histograms. The bars of bar charts have the same width. The widths of the bars in a histogram need not be the same, as long as the total area of all bars is one hundred percent (if percentages are used) or the total count (if counts are used). Therefore, values in bar graphs are given by the length of the bar while values in histograms are given by areas.
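To make the idea of classes and areas concrete, here is a minimal sketch that sorts observations into classes and then sets each bar's height so that its area equals the class count. The helper names `class_counts` and `bar_heights`, the class edges, and the salary figures are hypothetical; each class is assumed to include its lower edge and exclude its upper edge.

```python
def class_counts(values, edges):
    """Count the observations in each class [edges[i], edges[i + 1])."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        for i in range(len(edges) - 1):
            if edges[i] <= v < edges[i + 1]:
                counts[i] += 1
                break
    return counts


def bar_heights(counts, edges):
    """Height of each bar so that height * width equals the class count."""
    return [c / (edges[i + 1] - edges[i]) for i, c in enumerate(counts)]


# Hypothetical salaries, in thousands of dollars.
salaries = [8, 15, 22, 27, 33, 38, 41, 44, 45, 47, 49, 52, 58, 63, 71, 95]
edges = [0, 10, 20, 30, 40, 50, 60, 70, 80, 90, 100]

counts = class_counts(salaries, edges)
heights = bar_heights(counts, edges)
total_area = sum(h * (edges[i + 1] - edges[i]) for i, h in enumerate(heights))
```

With equal-width classes, as here, the heights are simply the counts divided by a constant, so the shape is the same either way. The area rule only changes the picture when class widths differ.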

Explore 4 – Histograms

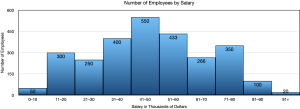

Reading data from a table can be less than enlightening and certainly doesn’t inspire much interest. Graphing the same data in a histogram gives a graphical representation where certain features are automatically highlighted.

- What do you notice about the bars of this histogram compared to the bars of a bar graph?

The bars touch in a histogram but not in a bar chart. This is because the classes are adjacent intervals on a numerical axis, so there is no gap between one class and the next.

- What do the numbers above the bars represent?

The number of employees whose salary lands in each class.

- State a feature of the graph that is very obvious to you.

Answers may vary. Very few employees make less than $10,000 or more than $91,000. $41,000 – $50,000 is the most common salary.

Line graphs are used when the data is quantitative and continuous. The axis acts as a real number line where every possible value is located. Line graphs are typically used to show how data values change over time.

Explore 5 – Line Graphs

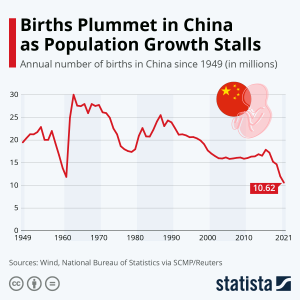

Here is an example of a line graph.

- What does this line graph represent?

Solution: The number of annual births in China from 1949 to 2021.

- What do the numbers on the vertical axis represent?

Solution: The number of births in millions.

- What do the numbers on the horizontal axis represent?

Solution: The year.

- Is this an appropriate use of a line graph?

Solution: Yes. The time scale in years is continuous and a line graph is appropriate for continuous data.

- Does a line graph highlight anything that a histogram may not?

Solution: Yes. The trend in data over time. In this graph the trend of annual births is decreasing.
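The idea of reading a trend from values recorded over time can be sketched numerically. The values below are invented for illustration and are not the births data from the figure; the sketch simply computes the change from one year to the next and labels the overall direction.

```python
# Hypothetical yearly values, invented for illustration only.
years = [2018, 2019, 2020, 2021, 2022]
values = [50, 46, 41, 39, 33]

# Change from each year to the next: the slope of each line segment.
changes = [later - earlier for earlier, later in zip(values, values[1:])]

if all(change < 0 for change in changes):
    trend = "decreasing"
elif all(change > 0 for change in changes):
    trend = "increasing"
else:
    trend = "mixed"

for year, change in zip(years[1:], changes):
    print(f"{year}: {change:+d}")
print(f"overall trend: {trend}")
```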

Infographics are often used by media outlets that are trying to tell a specific (often biased) story. They often combine charts or graphs with narrative and statistics.

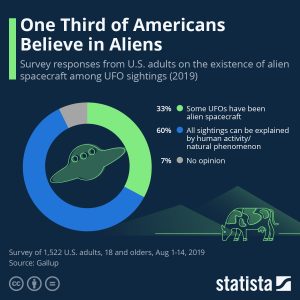

Explore 6 – Infographics

- What type of graph or chart is this infographic based on?

Solution: Since it is circular and based on percentages in each category, it is based on a pie chart.

- How many categories are represented?

Solution: There are three categories.

- What story is the infographic trying to tell?

Solution: About one third of Americans believe in aliens.

- How was the data gathered?

Solution: A survey of 1522 U.S. adults.

- What does the largest blue area on the chart represent?

Solution: The percentage of those surveyed that believe that all sightings can be explained by human activity or natural phenomena.

- What does the smallest grey area on the chart represent?

Solution: The percentage of those surveyed that have no opinion on UFO sightings.

- Robert is involved in a group project for a class. The group has collected data to show the amount of time spent performing different tasks on a cell phone. The categories include making calls, Internet, text, music, videos, social media, email, games, and photos. What type of graph or chart should be used to display the average time spent per day on any of these tasks? Explain your reasoning.

- A marketing firm wants to show what fraction of the overall market uses a particular Internet browser. What type of graph or chart should be used to display this information? Explain your reasoning.

- The data is categorical so a bar graph should be used.

- The data is categorical. If there are not too many categories (browser used) then a pie chart would work since fraction of the market is used. Alternatively, a bar chart could be used showing the fraction or percent as the height of each bar.

- Name three (3) differences between a bar graph and a histogram.

- A bar graph is used for qualitative data while a histogram is used for quantitative data.

- In a bar graph the categories can be reordered. In a histogram the categories cannot be reordered.

- In a bar graph the bars do not touch. In a histogram the bars touch.

- A teacher wants to show their class the results of a midterm exam, without exposing any student names. What type of graph or chart should be used to display the scores earned on the midterm? Explain your reasoning.

- A pizza company wants to display a graphic of the five favorite pizzas of their customers on the company website. What type of graph or chart should be used to display this information? Explain your reasoning.

- Maria is keeping track of her daughter’s height by measuring her height on her birthday each year and recording it in a spreadsheet. What type of graph or chart should be used to display this information? Explain your reasoning.

- Midterm scores may be quantitative, as either raw scores or percentages, in which case the teacher should use a histogram showing the number of students scoring in each score (or percentage) interval. If the midterm results are letter grades, the data is qualitative but ordered. In this case, a pie chart could be used to show the percent of students with each letter grade, but it would be very busy. A better option would be a bar graph showing the number of students at each letter grade.

- An infographic. This is categorical data so a (pizza) pie chart would be a good option or a bar chart.

- A line graph since the data is collected over time and time is continuous.

Perspectives

- Mike has collected data for a school project from a survey that asked, “What is your favorite pizza?” He surveyed 200 people and discovered that there were only 9 pizzas that were on the favorites list. In his report, he plans to show his data in a (pizza) pie chart. Is this the correct chart to use for his purpose? Explain your reasoning.

- Sarah is keeping track of the value of her car every year. She started when she first bought the car new and looks up its value every year. She figures that when the car’s value drops to $5000, it is time for an upgrade. What type of graph or chart should be used to display this information? Explain your reasoning.

- The Earth’s atmosphere is made up of 77% Nitrogen, 21% Oxygen, and 2% other gases. What type of graph or chart should be used to display this data? Explain your reasoning.

- A pie chart could be used but with 9 categories there may be too many slices for the chart to be clear. A bar graph may be better due to the number of categories.

- A line graph since time is continuous and she will be able to see the trend in car value over time.

- The data is qualitative: nominal and has percentages that add to 100% so a pie chart would work well with only 3 categories. Alternatively, a bar chart would work.
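For the atmosphere question, the size of each pie slice follows from the central angle: a category's share of the 360° in a circle equals its percentage of the whole. A short sketch of that arithmetic, using the percentages stated in the question (the variable names are illustrative):

```python
# Percentages taken from the atmosphere question above.
composition = {"Nitrogen": 77, "Oxygen": 21, "Other gases": 2}

# A pie chart only makes sense if the categories account for the whole.
assert sum(composition.values()) == 100

# Central angle of each slice: (percent / 100) * 360 degrees.
angles = {name: percent * 360 / 100 for name, percent in composition.items()}

for name, angle in angles.items():
    print(f"{name}: {angle:.1f} degrees")
```

The "other gases" slice comes out at only about 7 degrees, which shows why a pie chart becomes hard to read when categories are very small or very numerous.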

Skills Exercises

- phone number

- https://www.fda.gov/about-fda/fda-basics/fact-sheet-fda-glance

able to be put into categories

data that can be given labels and put into categories

qualitative data that can be put into labelled categories that have no order and no overlap

having nothing in common; no overlap

the number of times a data value has been recorded

a number or ratio expressed as a fraction of 100

a circular graphic which is divided into slices representing the number or percentage in each category

qualitative data that has a natural order

a graph where each category is represented by a vertical or horizontal bar that measures a frequency or percentage of the whole

expressed using a number or numbers

data that involves numerical values with order

data that is measured using whole numbers with only a finite number of possibilities

a graph similar in appearance to a vertical bar graph but with no gaps between the bars, with ordered bars, each with a base length equal to the range of values in a specific class

data that has an infinite number of possible values that can be selected within certain limits

use arithmetic and the order of operations

a graph used for continuous data that uses an axis as a real number line where every possible value is located

a graphic showing a combination of graphs, charts, and statistics

Numeracy Copyright © 2023 by Utah Valley University is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License , except where otherwise noted.

Data Analysis in Research: Types & Methods

Content Index

- What is data analysis in research?

- Why analyze data in research?

- Types of data in research

- Finding patterns in the qualitative data

- Methods used for data analysis in qualitative research

- Preparing data for analysis

- Methods used for data analysis in quantitative research

- Considerations in research data analysis

Definition of data analysis in research: According to LeCompte and Schensul, research data analysis is a process used by researchers to reduce data to a story and interpret it to derive insights. The data analysis process helps reduce a large chunk of data into smaller fragments that make sense.

Three essential things occur during the data analysis process. The first is data organization. The second is data reduction through summarization and categorization, which helps find patterns and themes in the data for easy identification and linking. The third is the data analysis itself, which researchers carry out in both a top-down and a bottom-up fashion.

On the other hand, Marshall and Rossman describe data analysis as a messy, ambiguous, and time-consuming but creative and fascinating process through which a mass of collected data is brought to order, structure and meaning.

We can say that “the data analysis and data interpretation is a process representing the application of deductive and inductive logic to the research and data analysis.”

Researchers rely heavily on data because they have a story to tell or research problems to solve. Research starts with a question, and data is nothing but an answer to that question. But what if there is no question to ask? It is possible to explore data even without a problem. This is called ‘data mining’, and it often reveals interesting patterns within the data that are worth exploring.

Regardless of the type of data researchers explore, their mission and their audience’s vision guide them in finding the patterns that shape the story they want to tell. One of the essential things expected from researchers while analyzing data is to stay open and remain unbiased toward unexpected patterns, expressions, and results. Sometimes data analysis tells the most unforeseen yet exciting stories, ones that were not expected when the analysis began. Therefore, rely on the data you have at hand and enjoy the journey of exploratory research.

Data describes things by assigning specific values to them. For analysis, these values need to be organized, processed, and presented in a given context to be useful. Data can take different forms; here are the primary data types.

- Qualitative data: When the data consists of words and descriptions, we call it qualitative data. Although you can observe this data, it is subjective and harder to analyze, especially for comparison. Example: anything describing taste, experience, texture, or an opinion is considered qualitative data. This type of data is usually collected through focus groups, personal qualitative interviews, qualitative observation, or open-ended questions in surveys.

- Quantitative data: Any data expressed in numbers or numerical figures is called quantitative data. This type of data can be distinguished into categories, grouped, measured, calculated, or ranked. Example: answers to questions about age, rank, cost, length, weight, scores, etc. all come under this type of data. You can present such data in graphs and charts or apply statistical analysis methods to it. Outcomes Measurement Systems (OMS) questionnaires in surveys are a significant source of numeric data.

- Categorical data: This is data presented in groups, where an item cannot belong to more than one group. Example: a person responding to a survey by describing their lifestyle, marital status, smoking habit, or drinking habit provides categorical data. A chi-square test is a standard method used to analyze this data.
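As a rough illustration of the chi-square test mentioned for categorical data, the sketch below computes Pearson's chi-square statistic for a 2×2 table of counts by comparing each observed count with the count expected if the two variables were unrelated. The table values and the category labels in the comments are invented, and the step of turning the statistic into a p-value is left out.

```python
# Hypothetical 2x2 table of survey counts, invented for illustration only.
# Rows: smoker / non-smoker; columns: married / not married.
observed = [
    [30, 20],
    [20, 30],
]

row_totals = [sum(row) for row in observed]
col_totals = [sum(col) for col in zip(*observed)]
grand_total = sum(row_totals)

chi_square = 0.0
for i, row in enumerate(observed):
    for j, count in enumerate(row):
        # Expected count if row and column categories were independent.
        expected = row_totals[i] * col_totals[j] / grand_total
        chi_square += (count - expected) ** 2 / expected

degrees_of_freedom = (len(observed) - 1) * (len(observed[0]) - 1)
print(f"chi-square = {chi_square:.2f}, df = {degrees_of_freedom}")
```

With one degree of freedom, the commonly tabulated critical value at the 5% significance level is about 3.84, so a statistic above that would usually be read as evidence that the two categorical variables are related.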

Data analysis in qualitative research

Data analysis in qualitative research works a little differently from the analysis of numerical data, because qualitative data is made up of words, descriptions, images, objects, and sometimes symbols. Getting insight from such complicated information is a complicated process, so it is typically used for exploratory research and data analysis.

Although there are several ways to find patterns in textual information, a word-based method is the most relied upon and widely used technique for research and data analysis. Notably, the data analysis process in qualitative research is manual: the researchers usually read the available data and look for repetitive or commonly used words.

For example, while studying data collected from African countries to understand the most pressing issues people face, researchers might find “food” and “hunger” are the most commonly used words and will highlight them for further analysis.
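The text describes this word-based method as a manual reading process. As a supplementary sketch, the same idea can be approximated by counting word frequencies automatically. The responses and the small stop-word list below are invented for illustration.

```python
import re
from collections import Counter

# Hypothetical open-ended survey responses, invented for illustration only.
responses = [
    "We need more food for our families",
    "Hunger is the biggest problem, and food prices keep rising",
    "There is not enough food and clean water",
]

# A tiny, illustrative stop-word list; a real analysis would use a fuller one.
STOP_WORDS = {"we", "for", "our", "is", "the", "and", "there", "not", "more"}

words = []
for text in responses:
    for word in re.findall(r"[a-z']+", text.lower()):
        if word not in STOP_WORDS:
            words.append(word)

counts = Counter(words)
for word, count in counts.most_common(3):
    print(f"{word}: {count}")
```

A count like this only flags candidate words; the researcher still has to read the responses to judge what the frequent words mean in context.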

The keyword context is another widely used word-based technique. In this method, the researcher tries to understand the concept by analyzing the context in which the participants use a particular keyword.

For example, researchers conducting research and data analysis for studying the concept of ‘diabetes’ amongst respondents might analyze the context of when and how the respondent has used or referred to the word ‘diabetes.’
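A keyword-in-context listing can be sketched as follows: for every occurrence of the keyword, show a few words on either side. The response text, the window of three words, and the helper name `keyword_in_context` are assumptions made for this example.

```python
def keyword_in_context(text, keyword, window=3):
    """Return (left context, keyword, right context) for each occurrence."""
    tokens = text.split()
    hits = []
    for i, token in enumerate(tokens):
        # Ignore surrounding punctuation and letter case when matching.
        if token.strip(".,;:!?'\"").lower() == keyword.lower():
            left = " ".join(tokens[max(0, i - window):i])
            right = " ".join(tokens[i + 1:i + 1 + window])
            hits.append((left, token, right))
    return hits


# Hypothetical interview response, invented for illustration only.
response = (
    "My father was diagnosed with diabetes last year, "
    "and since then diabetes has changed how we eat."
)

hits = keyword_in_context(response, "diabetes")
for left, word, right in hits:
    print(f"... {left} [{word}] {right} ...")
```

Lining up the occurrences this way makes it easier to compare how different respondents use the same word.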

The scrutiny-based technique is another highly recommended text analysis method for identifying patterns in qualitative data. Compare and contrast is the most widely used method under this technique; it examines how pieces of text are similar to or different from each other.

For example: To find out the “importance of resident doctor in a company,” the collected data is divided into people who think it is necessary to hire a resident doctor and those who think it is unnecessary. Compare and contrast is the best method for analyzing polls with single-answer question types.

Metaphors can be used to reduce the data pile and find patterns in it so that it becomes easier to connect data with theory.

Variable Partitioning is another technique used to split variables so that researchers can find more coherent descriptions and explanations from the enormous data.

There are several techniques to analyze the data in qualitative research, but here are some commonly used methods: