Automated Essay Scoring

24 papers with code • 1 benchmarks • 1 datasets

Essay scoring: Automated Essay Scoring is the task of assigning a score to an essay, usually in the context of assessing the language ability of a language learner. The quality of an essay is affected by the following four primary dimensions: topic relevance, organization and coherence, word usage and sentence complexity, and grammar and mechanics.

Source: A Joint Model for Multimodal Document Quality Assessment

Most implemented papers

Automated Essay Scoring Based on Two-Stage Learning

Current state-of-the-art feature-engineered and end-to-end Automated Essay Scoring (AES) methods have been shown to be unable to detect adversarial samples, e.g. essays composed of permuted sentences and prompt-irrelevant essays.

A Neural Approach to Automated Essay Scoring

nusnlp/nea • EMNLP 2016

SkipFlow: Incorporating Neural Coherence Features for End-to-End Automatic Text Scoring

Our new method proposes a new SkipFlow mechanism that models relationships between snapshots of the hidden representations of a long short-term memory (LSTM) network as it reads.

Neural Automated Essay Scoring and Coherence Modeling for Adversarially Crafted Input

Youmna-H/Coherence_AES • NAACL 2018

We demonstrate that current state-of-the-art approaches to Automated Essay Scoring (AES) are not well-suited to capturing adversarially crafted input of grammatical but incoherent sequences of sentences.

Co-Attention Based Neural Network for Source-Dependent Essay Scoring

This paper presents an investigation of using a co-attention based neural network for source-dependent essay scoring.

Language models and Automated Essay Scoring

In this paper, we present a new comparative study on automatic essay scoring (AES).

Evaluation Toolkit For Robustness Testing Of Automatic Essay Scoring Systems

midas-research/calling-out-bluff • 14 Jul 2020

This number is increasing further due to COVID-19 and the associated automation of education and testing.

Prompt Agnostic Essay Scorer: A Domain Generalization Approach to Cross-prompt Automated Essay Scoring

Cross-prompt automated essay scoring (AES) requires the system to use non target-prompt essays to award scores to a target-prompt essay.

Many Hands Make Light Work: Using Essay Traits to Automatically Score Essays

To find out which traits work best for different types of essays, we conduct ablation tests for each of the essay traits.

EXPATS: A Toolkit for Explainable Automated Text Scoring

octanove/expats • 7 Apr 2021

Automated text scoring (ATS) tasks, such as automated essay scoring and readability assessment, are important educational applications of natural language processing.

Automated Essay Grading

A CS109a final project by Anmol Gupta, Annie Hwang, Paul Lisker, and Kevin Loughlin

Introduction

One of the main responsibilities of teachers and professors in the humanities is grading students’ essays [1]. Of course, manual essay grading for a classroom of students is a time-consuming process, and can even become tedious at times. Furthermore, essay grading can be plagued by inconsistencies in determining what a “good” essay really is. Indeed, the grading of essays is often a topic of controversy, due to its intrinsic subjectivity. Instructors might be more inclined to better reward essays with a particular voice or writing style, or even a specific position on the essay prompt.

With these and other issues taken into consideration, the problem of essay grading is clearly a field ripe for a more systematic, unbiased method of rating written work. There has been much research into creating AI agents, ultimately based on statistical models, that can automatically grade essays and therefore reduce or even eliminate the potential for bias. Such a model would take typical features of strong essays into account, analyzing each essay for the existence of these features.

In this project, which stems from an existing Kaggle competition sponsored by the William and Flora Hewlett Foundation [2], we have attempted to provide an efficient, automated solution to essay grading, thereby eliminating grader bias, as well as expediting a tedious and time-consuming job. While superior auto-graders that have resulted from years of extensive research surely exist, we feel that our final project demonstrates our ability to apply the data science process learned in this course to a complex, real-world problem.

Data Exploration

It was unnecessary for us to collect any data, as the essays were provided by the Hewlett Foundation. The data comprises eight separate essay sets, split into a training set of 12,976 essays and a validation set of 4,218 essays. Each essay set had a unique topic and scoring system, which certainly complicated fitting a model, given the diverse data. On the bright side, the essay sets were complete; that is, there was no missing data. Furthermore, the data was clearly laid out in both txt and csv formats, which made importing it into a Pandas DataFrame a relatively simple process. In fact, the only complication to arise from collecting the data was a rather sneaky one, only discovered in the later stages when we attempted to spell check the essays. A very small number of essays contained special characters that could not be decoded as Unicode text (presumably UTF-8, the most popular text encoding). To handle these special characters, we used ISO-8859-1 text encoding, which eliminated encoding-related errors.
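The encoding problem described above can be reproduced in a few lines. This is a minimal sketch, not the project's actual loading code: the sample bytes and the helper name `decode_essays` are made up for illustration, and it assumes the failing decoder was UTF-8.

```python
# Hypothetical essay file contents: byte 0xE9 is "é" in ISO-8859-1,
# but on its own it is not a valid UTF-8 sequence.
raw = b"essay_id\tessay\n1\tMy fianc\xe9 loves the library.\n"


def decode_essays(data: bytes) -> str:
    """Try UTF-8 first; fall back to ISO-8859-1, which maps every byte to a character."""
    try:
        return data.decode("utf-8")
    except UnicodeDecodeError:
        return data.decode("iso-8859-1")


text = decode_essays(raw)
print(text.splitlines()[1])
```

When reading the file directly with pandas, the same effect should be obtainable by passing `encoding="ISO-8859-1"` to `read_csv`.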

The training and validation sets did have plenty of information that we deemed to be extraneous to the scope of our project. For example, the score was often broken down by scorer, and at times into subcategories. We decided to take the average of the overall provided scores as our notion of “score” for each essay. Ultimately, then, the three crucial pieces of information were the essay, the essay set to which it belonged, and the overall essay score.

With the information we needed in place, we tested a few essay features at a basic level to get a better grasp on the data’s format as well as to investigate the sorts of features that might prove useful in predicting an essay’s score. In particular, we calculated the number of words and the vocabulary sizes (number of unique words), for each essay in the training set, plotting them against the provided essay scores.
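The two exploratory features can be computed as sketched below. The tokenizer (lowercasing and keeping runs of letters and apostrophes) is an assumption made for illustration; the write-up does not say how words were split.

```python
import re


def tokenize(essay):
    """Lowercase the essay and keep runs of letters/apostrophes (a simplifying assumption)."""
    return re.findall(r"[a-z']+", essay.lower())


def length_features(essay):
    """Return the word count and vocabulary size (number of unique words) of an essay."""
    tokens = tokenize(essay)
    return {"word_count": len(tokens), "vocab_size": len(set(tokens))}


sample = "The library is quiet. The library is open, and the books are free."
features = length_features(sample)
print(features)
```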

We hypothesized that word count would certainly be correlated positively with essay score. As students, we note that longer essays often reflect deeper thought and stronger content. On the flip side, there is also value in being succinct by eliminating “filler” content and unnecessary details in papers. As such, we figured that the strength of correlation would weaken as the length of the essays increased.

With regard to vocabulary sizes, we reasoned that individuals who typically read and write more have broader lexicons, and as such a larger vocabulary size would correlate with a higher quality essay, and thus a higher score. After all, the more individuals read and write, the greater their exposure to a larger vocabulary and the more thorough their understanding of how to properly use it in their own writing. As such, a skilled writer will likely use a variety of exciting words in an effort to more effectively keep readers engaged and to best express their ideas in response to the prompt. Therefore, we hypothesized that a larger vocabulary list would correlate with a higher essay score.

The first notable finding, as evidenced in Figure 1, is that the Word Count vs. Score scatter plots closely mirror the Vocab Size vs Score scatter plots when paired by essay set. This suggests that there might be a relationship between the length of the essay and the different number of words that a writer uses, a discovery that makes sense: a longer essay is bound to have more unique words.

From the Word Count vs Score scatter plots, we note that in general, there seems to be an upward, positive trend between the essay word counts and the score, with the data expanding in a funnel-like shape. In set 4, there are certainly a couple of essays with the score of 1 that have a smaller word count and vocabulary list than the essays with a score of 0, but that result is likely due to essays with a score of 0 being either incomplete or unrelated to the prompt. As such, these data represent outliers and therefore do not speak to the general, positive relationship. Similar trends and patterns hold true for Vocab Size vs Score.

For set 3, we see that as the scores increase, the range of values for the number of words increases, meaning the number of words itself tends to increase with score in the funnel-like shape mentioned above. That is, while low scores were almost exclusively reserved for short essays, good grades were assigned to essays anywhere along the word count spectrum. In other words, there are many essays which have comparable word and vocabulary counts with different scores, especially those of smaller size. On the other hand, those essays with a distinctly greater word count and vocabulary size clearly receive higher scores. Similarly, for sets 1, 2, 4, 5, 6, and 7, we noted that, although the average word count increases as the score increases, the range of word counts also becomes wider, resulting in significant overlap of word counts across scores. This reinforces the conclusion that while word count is, in fact, correlated with essay score, the correlation is weaker for higher-scored essays, since there exists a significant overlap of word count across different scores.

Essay set 8 has different trends: essays with large word counts and vocabulary sizes range greatly in scores. However, despite the unpredictability highlighted by this wide range, a clear predictor does emerge: essays with a small word count and small vocabulary size are graded with correspondingly low scores. As such, unlike in other datasets, where higher word and vocabulary counts equate to higher scores, we see that higher word essays may still be graded across the full range of scores. On the other hand, low word and vocabulary counts are a strong predictor of low score. In our investigation of this phenomenon, we noticed a disparity with essay set 8: it was the only prompt that has a maximum essay length, as measured by word count. Ultimately, this factor could have encouraged essays of particular size, regardless of essay quality.

The Baseline

With a sufficient grasp on the data, we set out to create a baseline essay grading model to which we could compare our final (hopefully more advanced) model. In order to generalize the model across different essay sets (which each contained different scoring systems, as mentioned), we standardized each essay set’s score distribution to have a mean of 0 and a standard deviation of 1.
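Per-set standardization can be sketched as follows. The function name and toy scores are illustrative, and the use of the population standard deviation is an assumption (the text does not say which variant was used).

```python
from collections import defaultdict
from statistics import mean, pstdev


def standardize_by_set(rows):
    """rows: list of (essay_set, score) pairs. Returns z-scores in the same order.

    Each score is centered and scaled using the mean and population standard
    deviation of its own essay set, so sets with different scales become comparable.
    """
    by_set = defaultdict(list)
    for essay_set, score in rows:
        by_set[essay_set].append(score)
    stats = {s: (mean(v), pstdev(v)) for s, v in by_set.items()}
    return [(score - stats[s][0]) / stats[s][1] for s, score in rows]


# Set 1 is scored on a wider scale than set 2, yet both map to the same z-scores.
rows = [(1, 8), (1, 10), (1, 12), (2, 1), (2, 2), (2, 3)]
z = standardize_by_set(rows)
print([round(v, 3) for v in z])
```

A set in which every essay has the same score would have zero standard deviation and would need special handling.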

For the baseline model, we began by considering the various essay features in order to choose the ones that we believed would be most effective, ultimately settling on n-grams. In natural language processing, n-grams are a very powerful resource. An n-gram refers to a consecutive sequence of n words in a given text. As an example, the n-grams with n=1 (unigrams) of the sentence “I like ice cream” would be “I”, “like”, “ice”, and “cream”. The bigrams (n=2) of this same sentence would thus be “I like”, “like ice”, and “ice cream”. Ultimately, n-grams may be extracted for any positive integer n, provided the text contains at least n words.
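The “I like ice cream” example can be reproduced with a short helper. This is a sketch that splits on whitespace only; the helper name `ngrams` is illustrative.

```python
def ngrams(text, n):
    """Return all consecutive n-word sequences in text, splitting on whitespace."""
    tokens = text.split()
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


unigrams = ngrams("I like ice cream", 1)
bigrams = ngrams("I like ice cream", 2)
print(unigrams)
print(bigrams)
```

If n exceeds the number of words, the helper returns an empty list.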

In analyzing a text, using n-grams of different n values may be important. For example, while the meaning of “bad” is successfully conveyed as a unigram, it would be lost in “not good,” since the two words would be analyzed independently. In this scenario, then, a bigram would be more useful. By a similar argument, a bigram may be effective for “not good,” but less so for “bad,” since it could associate the word with potentially unrelated words. For our baseline, however, we decided to proceed with unigrams in the name of simplicity.

To quantify the concept of n-grams, we used an information retrieval method called term frequency-inverse document frequency (tf-idf). This measure counts the number of times that an n-gram appears in the essay and weights that count by how frequently the n-gram appears in a general corpus of text. In other words, the tf-idf measure provides a powerful way of standardizing n-gram counts based on the expected number of times that they would have appeared in an essay in the first place. As a result, while the count of a particular n-gram may be large if found often in the text, this can be offset by the tf-idf weighting if the n-gram is one that already appears frequently in essays.
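A minimal tf-idf sketch for unigrams is shown below. It assumes the plain tf × log(N/df) variant, with the essay collection itself standing in for the reference corpus; libraries typically use smoothed or normalized variants, so the exact numbers would differ from the project's.

```python
import math
from collections import Counter


def tfidf(docs):
    """Return one {term: weight} dict per document, using tf * log(N / df)."""
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    # Document frequency: in how many documents each term occurs at least once.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    weights = []
    for tokens in tokenized:
        tf = Counter(tokens)
        weights.append({t: tf[t] * math.log(n_docs / df[t]) for t in tf})
    return weights


docs = ["the essay argues the point", "the essay wanders", "the point stands"]
w = tfidf(docs)
print(w[0])
```

Here “the” occurs twice in the first document but appears in every document, so its weight is zero, while the rarer “argues” receives the largest weight.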

As such, given the benefits of n-grams and their quantification via the tf-idf method, we created a baseline model using unigrams with tf-idf as the predictive features. As our baseline model, we decided to use a simple linear regression model to predict a set of (standardized) scores for our training essays.

To evaluate our linear regression model, we opted to eschew the traditional R^2 measure in favor of Spearman’s Rank Correlation Coefficient. While R^2 measures the accuracy of our model (that is, how closely the predicted scores correspond to the true scores), Spearman instead measures the strength and direction of the monotonic association between the predicted scores and the true scores. In other words, it determines how well the ranking of the predictions corresponds with the ranking of the actual scores. This suits essay grading, since we are interested in how well the model predicts the relative score of an essay (i.e., how an essay compares to another essay) rather than the actual score given to the essay. Ultimately, this is a better measure than accuracy for our purposes, because relative accuracy might be more important than absolute accuracy.

Spearman results in a score ranging from -1 to 1, where the closer the score is to an absolute value of 1, the stronger the monotonic association (and where positive values imply a positive monotonic association, versus negative values implying a negative one). The closer the value to 0, the weaker the monotonic association. The general consensus of Spearman correlation strength interpretation is as follows:

- .00-.19 “very weak”

- .20-.39 “weak”

- .40-.59 “moderate”

- .60-.79 “strong”

- .80-1.0 “very strong”[3]
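The coefficient and the interpretation bands above can be sketched in plain Python. Spearman's coefficient is computed here as the Pearson correlation of the ranks, with ties given their average rank; the function names and sample scores are illustrative.

```python
def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def spearman(x, y):
    """Spearman's rho: Pearson correlation computed on the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


def strength(rho):
    """Map |rho| to the verbal bands listed above."""
    r = abs(rho)
    for upper, label in [(0.20, "very weak"), (0.40, "weak"),
                         (0.60, "moderate"), (0.80, "strong")]:
        if r < upper:
            return label
    return "very strong"


predicted = [0.1, 0.4, 0.35, 0.8, 0.9]  # hypothetical model outputs
actual = [1, 2, 3, 4, 5]                # hypothetical true scores
rho = spearman(predicted, actual)
print(rho, strength(rho))
```

Only the ordering of the predictions matters: any rescaling that preserves their order leaves rho unchanged.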

As seen in Figure 2, the baseline model received scores that ranged from very weak to moderate, all with p-values far below 0.05 (i.e. statistically significant results). However, even with this statistical significance, such weak Spearman correlations are ultimately far too low for this baseline model to provide a trustworthy system. As such, we clearly need a stronger model with a more robust selection of features, as expected!

Advanced Modeling

To improve upon our original model, we first brainstormed what other essay features might better predict an essay’s scores. Our early data exploration pointed to word count and vocab size being useful features. Other trivial features that we opted to include were number of sentences, percent of misspellings, and percentages of each part of speech. We believed these features would be valuable additions to our existing baseline model, as they provide greater insight into the overall structure of each essay, and thus foreseeably could be correlated with score.

However, we also wanted to include at least one nontrivial feature, operating under the belief that essay grading depends on the actual content of the essay—that is, an aspect of the writing that is not captured by trivial statistics on the essay. After all, the number of words in an essay tells us very little about the essay’s content; rather, it is simply generally correlated with better scores. Based on a recommendation by our Teaching Fellow Yoon, we decided to implement the nontrivial perplexity feature.

Perplexity is a measure of the likelihood of a sequence of words appearing, given a training set of text. Somewhat confusingly, a low perplexity score corresponds to a high likelihood of appearing. As an example, if my training set of three essays were “I like food”, “I like donuts”, and “I like pasta”, the essay “I love pasta” would have a lower perplexity than “you hate cabbage,” since “I love pasta” is more similar to an essay in the training set. This is important, because it gives us a quantifiable way to measure an essay’s content relative to other essays in a set. One would logically conclude that good essays on a certain topic would have similar ideas (and thus similar vocabulary). As such, it follows that given a sufficient training set, perplexity may well provide a valid measure of the content of the essays [4].

Using perplexity proved to be much more of a challenge than anticipated. While the NLTK module provides a method that builds a language model and can subsequently calculate the perplexity of a string based on this model, the method is currently removed from NLTK due to several existing bugs [5]. While alternatives to NLTK do exist, they are all either (a) not free, or (b) generally implemented in C++. Though it is possible to port C++ code into Python, this approach seemed to be time-consuming and beyond the scope of this project. As such, we concluded that the most appealing option was to implement a basic version of the perplexity library ourselves.

We therefore constructed a unigram language model and perplexity function. Ideally, we will be able to expand this functionality to n-grams in the future, but due to time constraints, complexity, code efficiency, and the necessity of testing code we write ourselves, we have only managed to implement perplexity on a unigram model for now. The relationship of each feature to the score can be seen in Figure 3.
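A unigram perplexity function along these lines might look like the sketch below. It is not the authors' implementation: add-one (Laplace) smoothing, lowercasing, and whitespace tokenization are all assumptions made so that unseen words receive a nonzero probability.

```python
import math
from collections import Counter


def train_unigram(essays):
    """Count lowercase whitespace-separated tokens across the training essays."""
    counts = Counter(tok for essay in essays for tok in essay.lower().split())
    return counts, sum(counts.values())


def perplexity(text, counts, total):
    """Unigram perplexity with add-one smoothing (an assumed smoothing choice)."""
    vocab = len(counts) + 1  # +1 reserves probability mass for unseen words
    tokens = text.lower().split()
    log_prob = sum(math.log((counts[t] + 1) / (total + vocab)) for t in tokens)
    return math.exp(-log_prob / len(tokens))


counts, total = train_unigram(["I like food", "I like donuts", "I like pasta"])
pp_similar = perplexity("I love pasta", counts, total)
pp_unrelated = perplexity("you hate cabbage", counts, total)
print(pp_similar, pp_unrelated)
```

With the three training essays from the earlier example, “I love pasta” comes out at a perplexity of about 7.5 and “you hate cabbage” at about 15, matching the intuition that the more familiar essay has the lower perplexity.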

Unique word count, word count, and sentence count all seem to have a clearly correlated relationship with score, while perplexity demonstrates a possible trend. It is our belief that with a more advanced perplexity library, perhaps one based on n-grams rather than unigrams, this relationship would be strengthened. Indeed, this is a point of discussion later in this report.

With these additional features in place, we moved on to select the actual model to predict our response variable. In the end, we decided to continue using linear regression, as we saw no reason to stray from this approach, and also because we were recommended to use such a model! However, we decided that it was important to include a regularization component in order to limit the influence of any collinear relationships among our thousands of features.

We experimented with both Lasso and Ridge regularization, tuning for optimal alpha with values ranging from 0.05 to 1 in 0.05 increments. As learned in class, Lasso performs both parameter shrinkage and variable selection, automatically removing predictors that are collinear with other predictors. Ridge regression, on the other hand, does not zero out coefficients for the predictors, but does shrink them, limiting their influence on the predictions.
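The difference between the two penalties is easiest to see in a special case. The sketch below assumes orthonormal predictors and the objectives ½‖y − Xβ‖² + α‖β‖₁ (Lasso) and ½‖y − Xβ‖² + (α/2)‖β‖² (Ridge); under those assumptions each coefficient can be obtained directly from its least-squares value. It is an illustration only, not the fitting procedure used in the project, and the coefficient values are made up.

```python
def alpha_grid(start=0.05, stop=1.0, step=0.05):
    """Alphas from start to stop inclusive, rounded to avoid float drift."""
    n = round((stop - start) / step) + 1
    return [round(start + i * step, 2) for i in range(n)]


def ridge_shrink(beta, alpha):
    """Ridge scales the least-squares coefficient toward zero but never reaches it."""
    return beta / (1.0 + alpha)


def lasso_shrink(beta, alpha):
    """Lasso soft-thresholds: coefficients with |beta| <= alpha become exactly zero."""
    magnitude = abs(beta) - alpha
    if magnitude <= 0:
        return 0.0
    return magnitude if beta > 0 else -magnitude


alphas = alpha_grid()
ols = [2.0, 0.3, -0.1]  # hypothetical least-squares coefficients
ridge = [ridge_shrink(b, 0.5) for b in ols]
lasso = [lasso_shrink(b, 0.5) for b in ols]
print(len(alphas), ridge, lasso)
```

At alpha = 0.5, Lasso drops the two small coefficients entirely while Ridge keeps all three at reduced size, which is the variable-selection behavior described above.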

Analysis & Interpretation

With this improved model, we see that the Spearman rank correlations have significantly improved from the baseline model. The Spearman rank correlation values now mostly lie in either the “strong” or “very strong” range, a notable improvement from our baseline model producing mostly “very weak” to “moderate” Spearman values.

In Figure 5, we highlight the scores of the models that yielded the highest Spearman correlations for each of the essay sets. Our highest Spearman correlation was achieved on Essay Set 1, at approximately 0.884, whereas our lowest was achieved on Essay Set 8, at approximately 0.619. It is interesting to note the vast difference in performance across essay sets, a fact that may indicate a failure to sufficiently and successfully generalize the model’s accuracy across such a wide variety of essay sets and prompts. We discuss ways to improve this in the following section.

Figures 4 and 5 also show that Lasso regularization generally performed better than the Ridge regularization, exhibiting better Spearman scores in six out of the eight essay sets; in fact, the average score of Lasso was also slightly higher (.793 as compared to .780). While this difference is not large, we would nonetheless opt for the Lasso model. Given that we have thousands of features with the inclusion of tf-idf, it is likely that plenty of these features are not statistically significant in our linear model. Hence, completely eliminating those features—as Lasso does—rather than just shrinking their coefficients, gives us a more interpretable, computationally efficient, and simpler model.

Ultimately, our Lasso linear regression yielded the greatest overall Spearman correlation, and is intuitively justifiable as a model. With proper tuning for regularization, we note that an alpha value of no greater than 0.5 yielded the best results (it should be noted, though, that all nonzero alphas produced comparable Spearman scores, as evidenced in Figure 4). Importantly, p-values remained well below 0.05, confirming the statistical significance of our findings. In layman’s terms, this high Spearman correlation is significant because it indicates that the scores we have predicted for the essays are relatively similar in rank to the actual scores that the essays have received (as in, if essay A is ranked higher than essay B, our model did well in successfully providing the same conclusion).

Future Work & Concluding Thoughts

In sum, we were able to successfully implement a Lasso linear regression model using both trivial and nontrivial essay features to vastly improve upon our baseline model. While features like word count appear to have the most correlated relationship with score from a graphical standpoint, we believe that a feature such as perplexity, which actually takes a language model into account, would in the long run be a superior predictor. Namely, we would ideally extend our self-implemented perplexity functionality to the n-gram case, rather than simply using unigrams. With this added capability, we believe our model could achieve even greater Spearman correlation scores.

Other features that we believe could improve the effectiveness of the model include parse trees. Parse trees are ordered trees that represent the syntactic structure of a phrase. This linguistic model is, much like perplexity, based on content rather than the “metadata” that many trivial features provide. As such, it may prove effective in contributing to the model a more in-depth analysis of the context and construction of sentences, pointing to writing styles that may correlate with higher grades. Finally, we would like to take the prompts of the essays into account. This could be a significant feature for our model, because depending on the type of essay being written (e.g. persuasive, narrative, summary), the organization of the essay could vary, which would then affect how we create our models and which features become more important.

There is certainly room for improvement on our model—namely, the features we just mentioned, as well as many more we have not discussed. However, given the time, resources and scope for this project, we were very pleased with our results. None of us had ever performed NLP before, but we now look forward to continuing to apply statistical methodology to such problems in the future!

[1] U.S. Bureau of Labor Statistics. "What High School Teachers Do." U.S. Bureau of Labor Statistics, Dec. 2015. Web. 13 Dec. 2016. http://www.bls.gov/ooh/education-training-and-library/high-school-teachers.htm#tab-2

[2] The Hewlett Foundation. "The Hewlett Foundation: Automated Essay Scoring." Kaggle, Feb. 2012. Web. 13 Dec. 2016. https://www.kaggle.com/c/asap-aes

[3] "Spearman's Correlation." Statstutor, n.d. Web. 14 Dec. 2016. http://www.statstutor.ac.uk/resources/uploaded/spearmans.pdf

[4] Berwick, Robert C. "Natural Language Processing Notes for Lectures 2 and 3, Fall 2012." Massachusetts Institute of Technology - Natural Language Processing Course. Massachusetts Institute of Technology, n.d. Web. 13 Dec. 2016. http://web.mit.edu/6.863/www/fall2012/lectures/lecture2&3-notes12.pdf

[5] "NgramModel No Longer Available? - Issue #738 - Nltk/nltk." GitHub. NLTK Open Source Library, Aug. 2014. Web. 13 Dec. 2016. https://github.com/nltk/nltk/issues/738

Automated Essay Scoring with Transformers

Comparing BERT and DeBERTa models for multi-dimensional automated essay scoring.

The relevant code is available in the project's GitHub repo; continue reading below for a non-technical description of the project.

Technologies/Skills Used

- Transformers - BERT

- Transformers - DeBERTa

- Hugging Face

- Google Colab

Problem Description

English language learners require timely and accurate feedback on essays in order to measure progress towards fluency. Ideally, students receive constructive feedback indicating which particular areas require greater focus. Manually grading essays with comprehensive rubrics with multiple traits, however, is a laborious process that prevents timely evaluation and is subject to bias and grader fatigue.

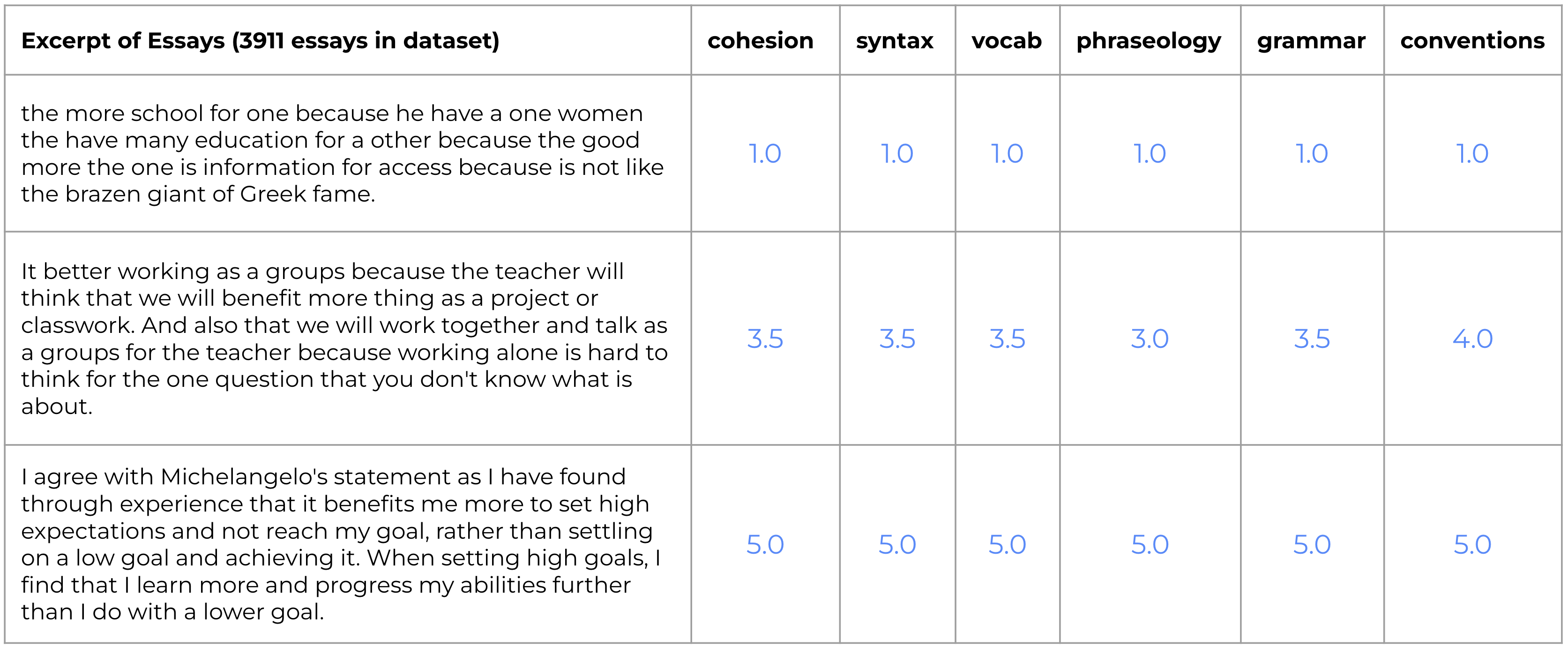

Data & Performance Metric

Several foundations, in conjunction with Vanderbilt University and the educational non-profit The Learning Agency Lab, published the ELLIPSE dataset via Kaggle in 2022. Students wrote argumentative essays in response to a variety of different prompts, setting up a cross-prompt multi-trait AES scoring task. According to the Learning Agency Lab, the ELLIPSE corpus “comprises 3911 argumentative essays written by 8th-12th grade ELLs. The essays were scored according to six analytic measures: cohesion, syntax, vocabulary, phraseology, grammar, and conventions…each measure represents a component of proficiency in essay writing, with greater scores corresponding to greater proficiency in that measure. The scores range from 1.0 to 5.0 in increments of 0.5.” Performance was measured by mean column-wise root mean squared error (MCRMSE), where lower is better. Below are some short excerpts from selected essays.
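The MCRMSE metric can be sketched as follows: compute the root mean squared error separately for each trait column, then average across columns. The function name and the two-trait toy data are illustrative (the real task has six traits).

```python
import math


def mcrmse(y_true, y_pred):
    """Mean column-wise RMSE: RMSE per trait column, averaged over the columns."""
    n_rows, n_cols = len(y_true), len(y_true[0])
    col_rmse = []
    for c in range(n_cols):
        mse = sum((y_true[r][c] - y_pred[r][c]) ** 2 for r in range(n_rows)) / n_rows
        col_rmse.append(math.sqrt(mse))
    return sum(col_rmse) / n_cols


# Hypothetical scores for two essays on two traits.
y_true = [[3.0, 4.0], [2.0, 3.5]]
y_pred = [[3.5, 4.0], [2.5, 3.5]]
score = mcrmse(y_true, y_pred)
print(score)
```

In this toy case the first trait is off by 0.5 on every essay and the second is predicted exactly, so the column RMSEs are 0.5 and 0.0 and the MCRMSE is 0.25.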

Experiments

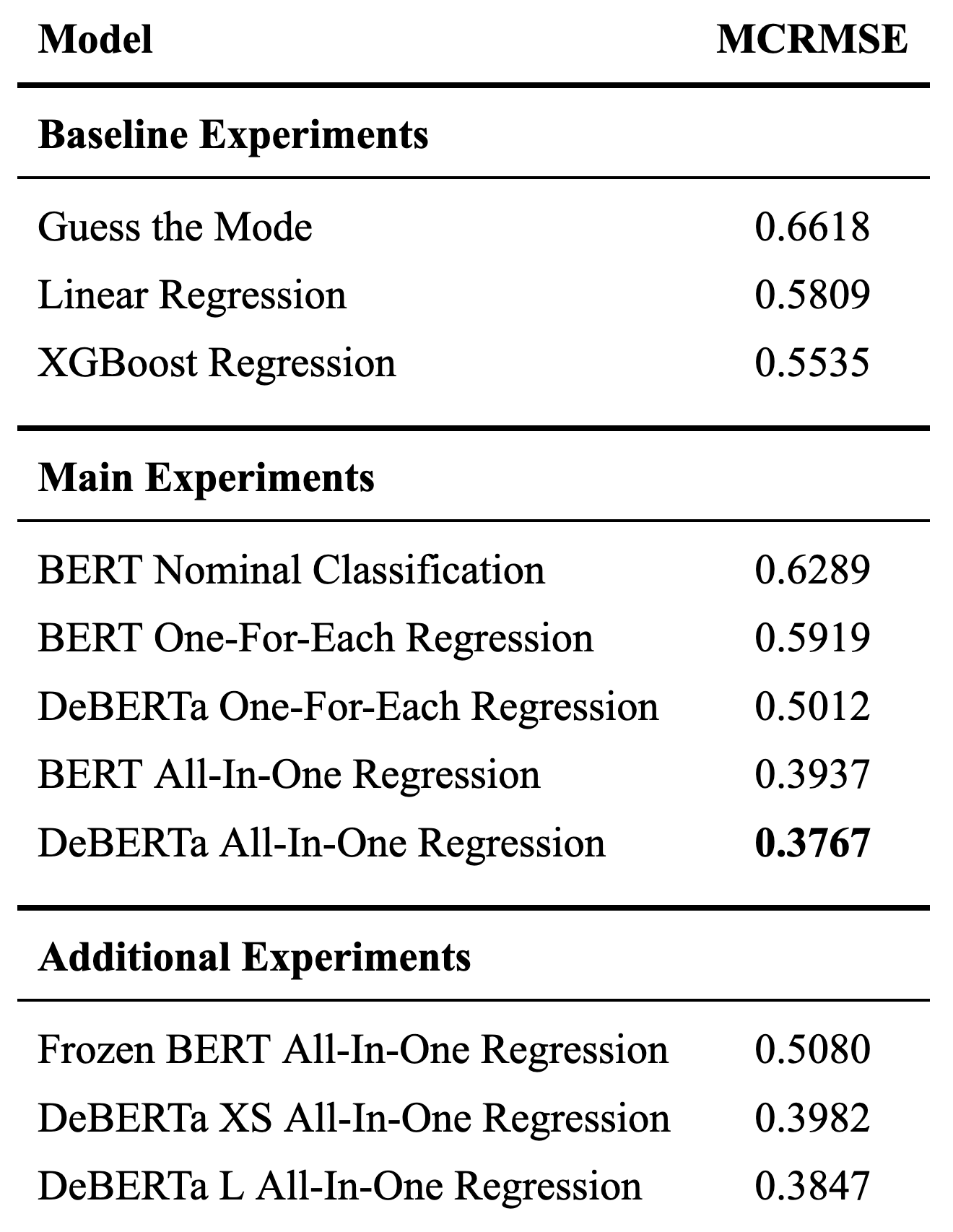

After preprocessing our text inputs and selecting a performance metric, we developed three families of models:

- Baseline experiments that relied on handcrafted features created using spaCy

- The most performant BERT and DeBERTa models that were fine-tuned for our particular task

- Additional BERT and DeBERTa models that were used to illustrate alternative approaches

A table of the baseline models, subsequent transformer-based models, and accompanying MCRMSE on the final, held-out test set, all run with the same number of epochs and batch size, follows below. All DeBERTa models employed DeBERTaV3.

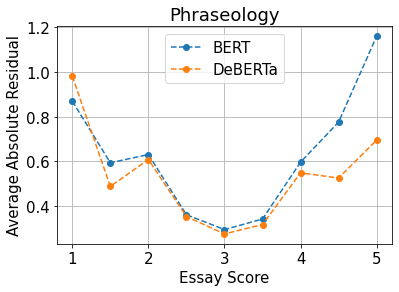

The source of greatest progress came from “going all-in” with DeBERTa All-In-One Regression, as evidenced by its 0.3767 MCRMSE. In contrast to the one-for-each approach, where separate models were trained in parallel, one per rubric trait, these all-in-one models predicted all six traits at once from the same network with a simple six-node dense regression layer. Since essays that scored well in cohesion also tended to score highly in vocabulary, we hypothesize that the all-in-one models performed better because one trait is highly predictive of another, so that mutually reinforcing or shared signals propagate through a shared architecture, boosting performance. For example, an essay with excellent cohesion is also likely to have advanced vocabulary, and thus feedback that improves vocabulary performance would also improve cohesion performance, and vice versa.

One hypothesis for why DeBERTa is better able to score phraseology in general is its enhanced mask decoder, in which both absolute and relative positions of the words are taken into account instead of just the absolute position embeddings that BERT uses. BERT’s strategy of using absolute position vectors in the input layer hinders the model’s ability to learn information about relative positions. DeBERTa incorporates absolute position vectors after the transformer blocks and before the softmax layer for predicting masked tokens. In doing this, DeBERTa gathers the relative position information from all transformer blocks and only uses absolute position as complementary information when predicting masked words. This understanding of relative position is critical in assessing the phraseology rubric. Lexical bundles, a component of phraseology, are a prime example: scoring them depends on how words are used in relation to one another, which DeBERTa is able to assess.

Experiments using all-in-one and one-for-each architectures with both BERT and DeBERTa demonstrate that the most performant approach is to use an all-in-one DeBERTa regression model to predict scores for multi-trait essays written by high school ELLs. Meaningful hyperparameter considerations include fine-tuning DeBERTa on the training set and using small batch sizes.

Paper was completed by Kurt Eulau, Alex Carite, and Tom Welsh. Please contact Kurt Eulau for a copy of the full paper.

- Entropy (Basel)

Improving Automated Essay Scoring by Prompt Prediction and Matching

1 School of Artificial Intelligence, Beijing Normal University, Beijing 100875, China

Tianbao Song

2 School of Computer Science and Engineering, Beijing Technology and Business University, Beijing 100048, China

Weiming Peng

Associated data.

Publicly available datasets were used in this study. These data can be found here: http://hsk.blcu.edu.cn/ (accessed on 6 March 2022).

Automated essay scoring aims to evaluate the quality of an essay automatically. It is one of the main educational applications in the field of natural language processing. Recently, pre-training techniques have been used to improve performance on downstream tasks, and many studies have attempted to use pre-training and then fine-tuning mechanisms in an essay scoring system. However, obtaining better features such as prompts by the pre-trained encoder is critical but not fully studied. In this paper, we create a prompt feature fusion method that is better suited for fine-tuning. In addition, we use multi-task learning by designing two auxiliary tasks, prompt prediction and prompt matching, to obtain better features. The experimental results show that both auxiliary tasks can improve model performance, and the combination of the two auxiliary tasks with the NEZHA pre-trained encoder produces the best results, with Quadratic Weighted Kappa improving 2.5% and Pearson’s Correlation Coefficient improving 2% on average across all results on the HSK dataset.

1. Introduction

Automated essay scoring (AES), which aims to automatically evaluate and score essays, is one typical application of natural language processing (NLP) techniques in the field of education [1]. In earlier studies, a combination of handcrafted features and statistical machine learning was used [2, 3], and with the development of deep learning, neural network-based approaches have gradually become mainstream [4, 5, 6, 7, 8]. Recently, pre-trained language models have gradually become the foundation module of NLP, and the paradigm of pre-training, then fine-tuning, is also widely adopted. Pre-training is the most common method for transfer learning, in which a model is trained on a surrogate task and then adapted to the desired downstream task by fine-tuning [9]. Some research has attempted to use pre-training modules in AES tasks [10, 11, 12]. Howard et al. [10] utilize the pre-trained encoder as a feature extraction module to obtain a representation of the input text and update the pre-trained model parameters based on the downstream text classification task by adding a linear layer. Rodriguez et al. [11] employ a pre-trained encoder as the essay representation extraction module for the AES task, with inputs at various granularities of the sentence, paragraph, overall, etc., and then use regression as the training target for the downstream task to further optimize the representation. In this paper, we fine-tune the pre-trained encoder as a feature extraction module and convert the essay scoring task into regression as in previous studies [4, 5, 6, 7].

Existing neural methods obtain a generic representation of the text through a hierarchical model using convolutional neural networks (CNN) for word-level representation and long short-term memory (LSTM) for sentence-level representation [4], which is not specific to different features. To enhance the representation of the essay, some studies have attempted to incorporate features such as prompt [3, 13], organization [14], coherence [2], and discourse structure [15, 16, 17] into the neural model. These features are critical for the AES task because they help the model understand the essay while also making the essay scoring more interpretable. In actual scenarios, prompt adherence is an important feature in essay scoring tasks [3]. The hierarchical model is insensitive to changes in the prompt and always assigns the same score to the same essay, regardless of the essay prompt. Persing and Ng [3] propose a feature-rich approach that integrates the prompt adherence dimension. Klebanov et al. [18] improve essay modeling with topic words. Li et al. [7] utilize a hierarchical structure with an attention mechanism to construct prompt information. However, the above feature fusion methods are unsuitable for fine-tuning.

The two challenges in effectively incorporating pre-trained models into AES feature representation lie in the data dimension and the methodological dimension. For the data dimension, transferring a pre-trained encoder to a downstream task through fine-tuning frequently requires sufficient data. Most research uses training and testing data from the same target prompt [4, 5], but the data size per prompt is relatively small, varying between a few hundred and a few thousand essays, so pre-trained encoders cannot be fine-tuned well. To address this challenge, we use the whole training set, which includes various prompts. In terms of methodology, we employ the pre-training and multi-task learning (MTL) paradigms, which can learn features that cannot be learned in a single task through joint learning, learning to learn, and learning with auxiliary tasks [19]. MTL methods have been applied to several NLP tasks, such as text classification [20, 21] and semantic analysis [22]. Our method creates two auxiliary tasks that need to be learned alongside the main task. The main task and auxiliary tasks can increase each other’s performance by sharing information and complementing each other.

In this paper, we propose an essay scoring model based on fine-tuning that utilizes multi-task learning to fuse prompt features through two auxiliary tasks, prompt prediction and prompt matching, a design that is more suitable for fine-tuning. Our approach can effectively incorporate the prompt feature in essays and improve the representation and understanding of the essay. The paper is organized as follows. In Section 2, we first review related studies. We describe our method and experiments in Section 3 and Section 4. Section 5 presents the findings and discussions. Finally, in Section 6, we provide a conclusion, future work, and the limitations of the paper.

2. Related Work

Pre-trained language models, such as BERT [23], BERT-WWM [24], RoBERTa [25], and NEZHA [26], have gradually become a fundamental technique for NLP, with great success on both English and Chinese tasks [27]. In our approach, we use BERT and NEZHA as feature extraction layers. BERT stands for Bidirectional Encoder Representations from Transformers; it is built from transformer blocks that use the attention mechanism [28] to extract semantic information. It is trained on two unsupervised tasks using large-scale datasets: masked language modeling (MLM) and next sentence prediction (NSP). NEZHA is a Chinese pre-trained model that, unlike BERT, employs functional relative positional encoding and whole word masking (WWM). The pre-train-then-fine-tune mechanism is widely used in downstream NLP tasks, including AES [11, 12, 15]. Mim et al. [15] propose a pre-training approach for evaluating the organization and argument strength of essays based on modeling coherence. Song et al. [12] present a multi-stage pre-training method for automated Chinese essay scoring that consists of three components: weakly supervised pre-training, supervised cross-prompt fine-tuning, and supervised target-prompt fine-tuning. Rodriguez et al. [11] use BERT and XLNet [29] for representation and fine-tuning on an English corpus.

The essay prompt introduces the topic, offers concepts, and restricts both content and perspective. Some studies have attempted to enhance the AES system by incorporating prompt features in various ways, such as by integrating prompt information to determine if an essay is off-topic [13, 18] or by considering prompt adherence as a crucial indicator [3]. Louis and Higgins [13] improve model performance by expanding prompt information with a list of related words and reducing spelling errors. Persing and Ng [3] propose a feature-rich method for incorporating the prompt adherence dimension via manual annotation. Klebanov et al. [18] also improve essay modeling with topic words to quantify the overall relevance of the essay to the prompt, and they discuss the relationship between prompt adherence scores and total essay quality. The methods described above mostly employ statistical machine learning, and prompt information is enriched through annotation and dataset construction, as well as through word lists and topic word mining. While all of them have made great progress, these approaches are difficult to transfer directly to fine-tuning. Li et al. [7] propose a shared model and an enhanced model (EModel), and utilize a hierarchical neural network structure with an attention mechanism to construct features of the essay such as discourse, coherence, relevancy, and prompt. For the representation, the paper employs GloVe [30] rather than a pre-trained model. In the experiment section, we compare our method to the sub-module of EModel (Pro.), which incorporates the prompt feature.

3.1. Motivation

Although previous studies on automated essay scoring models for specific prompts have shown promising results, most research focuses on generic features of essays. Only a few studies have focused on prompt feature extraction, and none has attempted to use a multi-task approach to make the model capture prompt features and become sensitive to prompts automatically. Our approach is motivated by capturing prompt features to make the model aware of the prompt, and by applying the pre-train-then-fine-tune mechanism to AES. Based on this motivation, we use a multi-task learning approach to obtain features that are more applicable to Essay Scoring (ES) by adding essay prompts to the model input and proposing two auxiliary tasks: Prompt Prediction (PP) and Prompt Matching (PM). The overall architecture of our model is illustrated in Figure 1.

Figure 1. The proposed framework. “一封求职信” is the prompt of the essay; its English translation is “A cover letter”. “主管您好” means “Hello Manager”. The prompt and essay are separated by [SEP].

3.2. Input and Feature Extraction Layer

The input representation for a given essay is built by adding the corresponding token embeddings $E_{token}$, segment embeddings $E_{segment}$, and position embeddings $E_{position}$. To fully exploit the prompt information, we concatenate the prompt in front of the essay. The first token of each input is a special classification token [CLS], and the prompt and essay are separated by [SEP]. The token embedding of the j-th essay in the i-th prompt can be expressed as Equation (1); $E_{segment}$ and $E_{position}$ are obtained from the tokenizer of the pre-trained encoder.

We utilize BERT and NEZHA as feature extraction layers. The final hidden state corresponding to the [CLS] token is the essay representation $r_e$ used for essay scoring and the subtasks.
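The input layout described above can be illustrated with a minimal sketch. The function name `build_input`, the character-level tokenization, and the segment-id convention (0 for the prompt part, 1 for the essay part, as is common for BERT-style sentence pairs) are assumptions for illustration only; a real encoder would use its own tokenizer and vocabulary. The default maximum length of 512 follows the parameter settings in Section 4.4.

```python
from typing import List, Tuple

CLS, SEP = "[CLS]", "[SEP]"

def build_input(prompt: str, essay: str, max_len: int = 512) -> Tuple[List[str], List[int], List[int]]:
    """Return (tokens, segment_ids, position_ids) for one prompt-essay pair."""
    # Hypothetical character-level tokenization (an assumption, not the encoder's tokenizer).
    prompt_tokens = list(prompt)
    essay_tokens = list(essay)
    # Reserve three slots for [CLS] and the two [SEP] markers; truncate the essay if needed.
    budget = max_len - len(prompt_tokens) - 3
    essay_tokens = essay_tokens[:max(budget, 0)]
    # Layout: [CLS] prompt [SEP] essay [SEP]
    tokens = [CLS] + prompt_tokens + [SEP] + essay_tokens + [SEP]
    # Assumed convention: segment 0 covers [CLS] + prompt + [SEP], segment 1 covers essay + [SEP].
    segment_ids = [0] * (len(prompt_tokens) + 2) + [1] * (len(essay_tokens) + 1)
    position_ids = list(range(len(tokens)))
    return tokens, segment_ids, position_ids

tokens, segment_ids, position_ids = build_input("一封求职信", "主管您好")
```

In this sketch only the essay is truncated, so the prompt is always kept whole; whether the original system truncates in the same way is not stated in the text.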

3.3. Essay Scoring Layer

We view essay scoring as a regression task. Following existing studies, the real scores are scaled to the range [0, 1] for training and rescaled to the original range during evaluation:

$$ s_{ij} = \frac{score_{ij} - minscore_{i}}{maxscore_{i} - minscore_{i}} \tag{2} $$

where $s_{ij}$ is the scaled score of the j-th essay under the i-th prompt, $score_{ij}$ is the actual score of that essay, and $maxscore_{i}$ and $minscore_{i}$ are the maximum and minimum of the real scores for the i-th prompt. The input is the essay representation $r_e$ from the pre-trained encoder, which is fed into a linear layer with a sigmoid activation function:

$$ \hat{s} = \sigma(W_{es} r_e + b_{es}) \tag{3} $$

where $\hat{s}$ is the score predicted by the AES system, $\sigma$ is the sigmoid function, $W_{es}$ is a trainable weight matrix, and $b_{es}$ is a bias. The essay scoring (es) training objective is given by Equation (4).
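As a rough sketch of this layer, the score scaling, the sigmoid scoring head, and a regression loss can be written in a few lines. All function names are hypothetical, the essay representation is treated as a plain list of floats, and mean squared error is used as the loss only as an assumption, since the text says that scoring is treated as regression but the exact objective is not reproduced here. The 40 to 95 score range is the one reported for the HSK data in Section 4.1.

```python
import math
from typing import Sequence

def scale_score(score: float, min_score: float, max_score: float) -> float:
    """Min-max scale a raw score into [0, 1] for training."""
    return (score - min_score) / (max_score - min_score)

def rescale_score(scaled: float, min_score: float, max_score: float) -> float:
    """Map a scaled score in [0, 1] back to the original score range."""
    return scaled * (max_score - min_score) + min_score

def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))

def predict_score(r_e: Sequence[float], w_es: Sequence[float], b_es: float) -> float:
    """Linear layer followed by a sigmoid, applied to the essay representation."""
    return sigmoid(sum(w * r for w, r in zip(w_es, r_e)) + b_es)

def mse_loss(predicted: Sequence[float], gold: Sequence[float]) -> float:
    """Mean squared error; an assumed choice of regression objective."""
    return sum((p - g) ** 2 for p, g in zip(predicted, gold)) / len(predicted)

scaled = scale_score(67.5, 40, 95)       # halfway between 40 and 95
restored = rescale_score(scaled, 40, 95)
```

Scaling per prompt keeps targets comparable when prompts have different score ranges, which matters once essays from several prompts are mixed in one training set.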

3.4. Subtask 1: Prompt Prediction

Prompt prediction is the task of determining, given an essay, which prompt it belongs to. We view prompt prediction as a classification task. The input is the essay representation $r_e$, which is fed into a linear layer with a softmax function. The formula is given by Equation (5):

$$ \hat{u} = \mathrm{softmax}(W_{pp} r_e + b_{pp}) \tag{5} $$

where $\hat{u}$ is the probability distribution over the classification results, $W_{pp}$ is a parameter matrix, and $b_{pp}$ is a bias. The loss function is given by Equation (6).

where $u_k$ is the real prompt label of the k-th sample, $p^{pp}_{kc}$ is the probability that the k-th sample belongs to the c-th category, and $C$ denotes the number of prompts, which in this study is ten.
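A classification head of this kind can be sketched as a softmax over ten prompt logits followed by a cross-entropy loss. The cross-entropy form is an assumption based on the symbols defined above (true label, per-class probability, number of classes); the function names are hypothetical, and the logits stand in for the output of the linear layer.

```python
import math
from typing import List, Sequence

def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over one vector of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(prob_batch: Sequence[Sequence[float]], labels: Sequence[int]) -> float:
    """Average negative log-probability of the true class over a batch (assumed loss form)."""
    return -sum(math.log(p[y]) for p, y in zip(prob_batch, labels)) / len(labels)

NUM_PROMPTS = 10                        # C = 10 in this study
uniform = softmax([0.0] * NUM_PROMPTS)  # an untrained head is roughly uniform
loss_pp = cross_entropy([uniform], [3])
```

With a uniform prediction over ten prompts the loss equals ln(10), which is a handy reference point when checking that the auxiliary task is actually learning.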

3.5. Subtask 2: Prompt Matching

Prompt matching is the task of deciding, given a prompt-essay pair, whether the essay and the prompt are compatible. We consider prompt matching to be a classification task. The formula is as follows:

$$ \hat{v} = \mathrm{softmax}(W_{pm} r_e + b_{pm}) $$

where $\hat{v}$ is the probability distribution over the matching results, $W_{pm}$ is a parameter matrix, and $b_{pm}$ is a bias. The objective function is shown in Equation (9).

where $v_k$ indicates whether the input prompt and essay match, $p^{pm}_{km}$ is the probability that the matching degree of the k-th sample falls into category $m$, and $m$ denotes the matching degree: 0 for a match, 1 for a mismatch. The distinction between prompt prediction and prompt matching is that as the number of prompts increases, the difference in classification targets leads to increasingly obvious differences in task difficulty, sample distribution and diversity, and scalability.
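Training data for prompt matching can be built by occasionally swapping in a wrong prompt. The sketch below uses the 50% mismatch probability mentioned for the BERT/NEZHA-PM model in Section 4.3 and the label convention above (0 for a match, 1 for a mismatch). The function name `make_pm_example` and the uniform choice among the other prompts are assumptions; how the scoring target is handled for mismatched pairs is not specified in the text and is not addressed here.

```python
import random
from typing import List, Tuple

def make_pm_example(
    essay: str,
    true_prompt: str,
    all_prompts: List[str],
    rng: random.Random,
    mismatch_prob: float = 0.5,
) -> Tuple[str, str, int]:
    """Return (prompt, essay, label) with label 0 = match, 1 = mismatch."""
    others = [p for p in all_prompts if p != true_prompt]
    if others and rng.random() < mismatch_prob:
        # Pair the essay with a randomly chosen wrong prompt (assumed sampling scheme).
        return rng.choice(others), essay, 1
    return true_prompt, essay, 0

rng = random.Random(0)
prompts = [f"prompt-{i}" for i in range(10)]
examples = [make_pm_example("essay text", "prompt-3", prompts, rng) for _ in range(1000)]
mismatch_rate = sum(label for _, _, label in examples) / len(examples)
```

Because the label depends only on whether the prompt was swapped, the task stays binary no matter how many prompts exist, which is the scalability difference from prompt prediction noted above.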

3.6. Multi-Task Loss Function

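The final loss $\mathcal{L}$ for each input is a weighted sum of the essay scoring loss $\mathcal{L}_{es}$ and the losses of the two subtasks, prompt prediction ($\mathcal{L}_{pp}$) and prompt matching ($\mathcal{L}_{pm}$), formalized as follows:

$$ \mathcal{L} = \alpha \mathcal{L}_{es} + \beta \mathcal{L}_{pp} + \gamma \mathcal{L}_{pm} \tag{10} $$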

where α , β , and γ are non-negative weights assigned in advance to balance the importance of the three tasks. Because the objective of this research is to improve the AES system, the main task should be given more weight than the two auxiliary tasks. The optimal parameters in this paper are α : β = α : γ = 100:1, and in Section 5.3 , we design experiments to figure out the optimal value interval for α , β , and γ .
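The weighted combination can be sketched as a single function. The name `multitask_loss` is hypothetical, and the default values 100, 1, and 1 are just one way to realize the reported ratio α : β = α : γ = 100:1; the absolute scale of the weights is an assumption.

```python
def multitask_loss(
    loss_es: float,
    loss_pp: float,
    loss_pm: float,
    alpha: float = 100.0,
    beta: float = 1.0,
    gamma: float = 1.0,
) -> float:
    """Weighted sum of the main-task loss and the two auxiliary losses."""
    if min(alpha, beta, gamma) < 0:
        raise ValueError("loss weights must be non-negative")
    return alpha * loss_es + beta * loss_pp + gamma * loss_pm

# Example: a small regression loss and larger classification losses.
total = multitask_loss(loss_es=0.01, loss_pp=2.0, loss_pm=0.5)
# Setting beta or gamma to 0 recovers the single-auxiliary-task variants.
pp_only = multitask_loss(loss_es=0.01, loss_pp=2.0, loss_pm=0.5, gamma=0.0)
```

A regression loss on targets in [0, 1] is typically much smaller in magnitude than a ten-class cross-entropy, so a large α is one plausible way to keep the main task from being dominated by the auxiliary terms.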

4. Experiment

4.1. Dataset

We use the HSK Dynamic Composition Corpus (http://hsk.blcu.edu.cn/ (accessed on 6 March 2022)) as our dataset, as in existing studies [31]. HSK is the acronym of Hanyu Shuiping Kaoshi, which is Chinese Pinyin for the Chinese Proficiency Test. HSK is also called “TOEFL in Chinese” and is a national standardized test designed to assess the proficiency of non-native speakers of Chinese. The HSK corpus includes 11,569 essays composed by foreigners from more than thirty different nations or regions in response to more than fifty distinct prompts. We eliminate any prompts with fewer than 500 student essays from the HSK dataset to constitute the experimental data. The statistics of the final filtered dataset are provided in Table 1; it comprises 8878 essays across 10 prompts taken from the actual HSK test. Each essay score ranges from 40 to 95 points. We divide the entire dataset at random into the training set, validation set, and test set in the ratio of 6:2:2. To alleviate the problem of insufficient data under a single prompt, we use the entire training set, which consists of different prompts, for fine-tuning. During the testing phase, we test every prompt individually as well as the entire test set, and we utilize the same 5-fold cross-validation procedure as [4, 5]. Finally, we report the average performance.

Table 1. HSK dataset statistics.

4.2. Evaluation Metrics

For the main task, we use the Quadratic Weighted Kappa (QWK) metric, which is widely used in AES [32], to analyze the agreement between predicted scores and the ground truth. QWK is calculated by Equations (11) and (12). First, a weight matrix $W$ is constructed according to Equation (11):

$$ W_{i,j} = \frac{(i - j)^2}{(N - 1)^2} \tag{11} $$

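where $i$ and $j$ are the golden score of the human rater and the AES system score, respectively, and each essay has $N$ possible ratings. Second, the QWK score is calculated using Equation (12):

$$ \kappa = 1 - \frac{\sum_{i,j} W_{i,j} O_{i,j}}{\sum_{i,j} W_{i,j} Z_{i,j}} \tag{12} $$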

where $O_{i,j}$ denotes the number of essays that receive a rating $i$ from the human rater and a rating $j$ from the AES system. The expected rating matrix $Z$ is the outer product of the histogram vectors of the golden ratings and the AES system ratings, normalized so that the sum of its elements equals the sum of the elements of $O$. We also utilize Pearson’s Correlation Coefficient (PCC) to measure the association as in previous studies [3, 32, 33]; it quantifies the degree of linear dependency between two variables and describes the level of covariation. In contrast to the QWK metric, which evaluates the agreement between the model output and the gold standard, we use PCC to assess whether the AES system ranks essays similarly to the gold standard, indicating the capacity of the AES system to rank texts appropriately, i.e., high scores ahead of low scores. For the auxiliary tasks, we treat prompt prediction and prompt matching as classification problems and use the macro-F1 score (F1) and accuracy (Acc.) as evaluation metrics.
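Both metrics can be computed directly from paired rating lists. The following is a minimal pure-Python sketch under the assumption that ratings are integers in a known range; the function names are hypothetical, and the sketch does not guard against degenerate inputs such as a single possible rating or constant predictions.

```python
import math
from typing import Sequence

def quadratic_weighted_kappa(gold: Sequence[int], pred: Sequence[int],
                             min_rating: int, max_rating: int) -> float:
    """QWK from the observed matrix O, expected matrix Z, and quadratic weights W."""
    n = max_rating - min_rating + 1          # N possible ratings
    observed = [[0.0] * n for _ in range(n)]
    for g, p in zip(gold, pred):
        observed[g - min_rating][p - min_rating] += 1
    hist_gold = [sum(row) for row in observed]
    hist_pred = [sum(observed[i][j] for i in range(n)) for j in range(n)]
    total = float(len(gold))
    numerator = 0.0
    denominator = 0.0
    for i in range(n):
        for j in range(n):
            weight = (i - j) ** 2 / (n - 1) ** 2
            expected = hist_gold[i] * hist_pred[j] / total  # outer product, normalized
            numerator += weight * observed[i][j]
            denominator += weight * expected
    return 1.0 - numerator / denominator

def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson's correlation coefficient between two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

perfect = quadratic_weighted_kappa([1, 2, 3, 4], [1, 2, 3, 4], 1, 4)
reversed_kappa = quadratic_weighted_kappa([1, 2, 3], [3, 2, 1], 1, 3)
```

Perfect agreement gives a kappa of 1, while systematically reversed ratings drive it negative; PCC, by contrast, only reflects linear association and is insensitive to a constant offset between the two raters.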

4.3. Comparisons

Our model is compared to the baseline models listed below. The first three are existing neural AES methods, and we experiment with both character and word input when training them for comparison. The fourth method is to fine-tune the pre-trained model, and the rest are variations of our proposed method.

CNN-LSTM [4]: This method builds a document representation using CNN for word-level representation and LSTM for sentence-level representation, with an additional pooling layer to obtain the text representation. Finally, the score is obtained by applying a linear layer with a sigmoid function.

CNN-LSTM-att [ 5 ]: This method incorporates an attention mechanism into both the word-level and sentence-level representations of CNN-LSTM.

EModel (Pro.): This method concatenates the prompt information in the input layer of CNN-LSTM-att, which is a sub-module of [ 7 ].

BERT/NEZHA-FT: This method fine-tunes the pre-trained model. To obtain the essay representation, we directly feed an essay into the pre-trained encoder as the input. We use the [CLS] embedding as the essay representation and feed it into a linear layer with a sigmoid function for scoring.

BERT/NEZHA-concat: This method differs from the FT method in that the input representation concatenates the prompt to the front of the essay in the token embedding, as in Figure 1.

BERT/NEZHA-PP: This model incorporates prompt prediction as an auxiliary task, with the same input as the concat model and the output using [CLS] as the essay representation. A linear layer with the sigmoid function is used for essay scoring, and a linear layer with the softmax function is used for prompt prediction.

BERT/NEZHA-PM: This model includes prompt matching as an auxiliary task. In the input stage of constructing the training data, there is a 50% probability that the prompt and the essay are mismatched. [CLS] embedding is used to represent the essay. A linear layer with the sigmoid function is used for essay scoring, and a linear layer with the softmax function is used for prompt matching.

BERT/NEZHA-PP&PM: This model utilizes two auxiliary tasks, prompt prediction, and prompt matching, with the same inputs and outputs as the PM model. The output layer of the auxiliary tasks is the same as above.

4.4. Parameter Settings

We use BERT (https://github.com/google-research/bert (accessed on 11 March 2022)) and NEZHA (https://github.com/huawei-noah/Pretrained-Language-Model/tree/master/NEZHA-TensorFlow (accessed on 11 March 2022)) as pre-trained encoders. To obtain tokens and token embeddings, we employ the tokenizer and vocabulary of the pre-trained encoder. The parameters of the pre-trained encoder are learnable during both the fine-tuning and training phases. The maximum length of the input is set to 512, and Table 2 includes additional parameters. The baseline models, CNN-LSTM and CNN-LSTM-att, are trained from scratch, and their parameters are also shown in Table 2. Our experiments are carried out on NVIDIA TESLA V100 32 GB GPUs.

Table 2. Parameter settings.

5. Results and Discussions

5.1. Main Results and Analysis

We report our experimental results in Table 3 and Table A1 (due to space limitations, the latter is included in Appendix A). Table A1 reports the average QWK and PCC for each prompt. Table 3 shows QWK and PCC across the entire test set and the average results over the per-prompt test sets. As shown in Table 3, the models with the proposed auxiliary tasks (PP, PM, and PP&PM; lines 8–10 and 13–15) outperform the other comparison models on both QWK and PCC, and the PP&PM models with either pre-trained encoder, BERT or NEZHA, outperform PP and PM on QWK. In terms of the PCC metric, the PM models exceed the other two models except for the average result with the NEZHA encoder. These findings indicate that both proposed auxiliary tasks are effective.

Table 3. QWK and PCC for the Total test set and average QWK and PCC over the per-prompt test sets; † denotes character input; ‡ denotes word input. The best results are in bold.

On the Total test set, our best results, obtained by a pre-trained encoder with PM and PP, are higher than those of the fine-tuning method and EModel (Pro.), exceed the strong concat baseline by 1.8% with BERT and 2.3% with NEZHA on QWK, and achieve a generally consistent correlation. Table 3 shows that our proposed models yield similar gains on the Average test set: the PP&PM models improve QWK by 1.6% with BERT and 2% with NEZHA over the concat model, and by 2% with BERT and 2.5% with NEZHA over the fine-tuning model, with competitive results on the PCC metric. Compared with plain fine-tuning, our multi-task learning approach outperforms the baseline on both QWK and PCC, indicating that better essay representations can be obtained through multi-task learning. Furthermore, compared with the concat model with fused prompt representation, our proposed approach outperforms the baseline in QWK scores, although the Total PCC values in lines 10 and 15 of Table 3 are lower than the baseline by less than 1%. This demonstrates that our proposed auxiliary tasks are effective in representing the essay prompt.

We train the hierarchical models (lines 1–4) using characters and words as input, respectively. The results show that training on characters is generally better, but the best Total and Average results are still more than 4% lower than those of the pre-training methods. This indicates that using the pre-trained encoders, both BERT and NEZHA, for feature extraction works well on the HSK dataset. The comparison between pre-trained models reveals that BERT and NEZHA are competitive, with NEZHA delivering the best results.

Results for each prompt with BERT and NEZHA are displayed in Figure 2. Our proposed models (PP, PM, and PP&PM) achieve improvements on several prompts. Among them, the PP&PM results exceed both baselines, fine-tuning and concat, on all prompts except prompt 1 and prompt 5. The results indicate that our proposed auxiliary tasks for incorporating the prompt are generic and can be employed with a range of genres and prompts. The primary reason that results on individual prompts are suboptimal is that the loss-function hyperparameters α, β, and γ are not adjusted specifically for each prompt; we analyze this further in Section 5.3.

Figure 2. (a) Results of each prompt with the BERT pre-trained encoder on QWK; (b) Results of each prompt with the NEZHA pre-trained encoder on QWK.

5.2. Result and Effect of Auxiliary Tasks

Table 4 reports the results of the auxiliary tasks (PP and PM) on the validation set. Accuracy and F1 are both greater than 85% for BERT and 90% for NEZHA, indicating that the model is well trained on the auxiliary tasks. Of the two pre-trained models, NEZHA produces the better auxiliary-task results as a feature extraction module.

Table 4. Accuracy and F1 for PP and PM on the validation set.

Comparing the contributions of PP and PM, as shown in Table A1, Table 3, and Figure 3, the contribution of PM is higher and more effective. Figure 3a,b show radar graphs of PP and PM with each pre-trained encoder across the 10 prompts on the QWK metric. Figure 3a shows that the QWK value of PM is higher than that of PP on all prompts except prompt 9 with the BERT encoder, and Figure 3b shows that PM outperforms PP on 60% of the prompts with the NEZHA encoder, implying that PM is also superior to PP for a specific prompt. The PM and PP comparison results for the Total and Average datasets are provided in Figure 3c,d. Except for the PM model with the NEZHA pre-trained encoder, which has a slightly lower QWK than the PP model, all models that use PM as a single auxiliary task perform better, further demonstrating the superiority of prompt matching in representing and incorporating the prompt.

Figure 3. (a) Radar graph of BERT-PP and BERT-PM; (b) Radar graph of NEZHA-PP and NEZHA-PM; (c) Results of PP and PM on QWK; (d) Results of PP and PM on PCC.

5.3. Effect of Loss Weight

We examine how the ratio of the loss weight parameters β and γ affects the model. Figure 4a shows that the model works best when the ratio is 1:1 on both QWK and PCC metrics. Figure A1 depicts the QWK results for various β and γ ratios and reveals that the model produces the best results at around 1:1 for most prompts, except for prompts 1, 5, and 6; the same is true for the average results. Concerning the issue of our model being suboptimal for individual prompts, Figure A1 illustrates that the best results for prompts 1, 5, and 6 are not achieved at 1:1, suggesting that this ratio is not appropriate for these prompts. Because we shuffle the entire training set and fix the β and γ ratio before testing each prompt independently, the parameters for the different prompts cannot be dynamically adjusted within a single training procedure. We make this choice to address the lack of data and to focus on the average performance of the model, which also prevents the model from overfitting to specific prompts. According to the results in Table A1, NEZHA-PP and NEZHA-PM both outperform the baselines and the PP&PM model for prompt 1, indicating that both PP and PM can enhance the results when employed separately. For prompt 5, NEZHA-PP performs better than NEZHA-PM, showing that PP plays a greater role. The PP&PM model already gives the best result for prompt 6, even though the 1:1 ratio is not optimal in Figure A1, demonstrating that there is still potential for improvement. These observations reveal that different prompts pose varying degrees of difficulty for joint training and parameter optimization of the main and auxiliary tasks, and that the two auxiliary tasks we present have different conditions of applicability.

Figure 4. (a) The effect of different β/γ ratios on QWK and PCC for PP&PM on the Total dataset, with the value of α fixed; (b) The smoothed training losses across all tasks; (c) The results of different α:β (PP), α:γ (PM), and α:β:γ (PP&PM) ratios on QWK.

We also measure the effect of α on the model, keeping the β/γ ratio fixed at 1:1. Figure 4c demonstrates that the PP, PM, and PP&PM models are all optimal at α : β = α : γ = 100:1, with the best QWK values for PP&PM, indicating that our suggested method of combining two auxiliary tasks for joint training is effective. Observations over the ratio range [1, 100] show that when the ratio is small, the main task cannot be trained well: the two auxiliary tasks together have a negative impact on the main task, whereas a single auxiliary task has less impact, indicating that multiple auxiliary tasks are more difficult to train concurrently than a single auxiliary task. Future research should therefore consider how to dynamically optimize the parameters of multiple tasks.

The training losses for ES, PP, and PM are shown in Figure 4b. The loss of the main task decreases rapidly in the early stage, and the model converges at around 6000 steps. PM converges faster than PP because it is a binary classification task, whereas PP is a ten-class classification task; in addition, among the ten prompts, prompt 6 “A letter to parent” and prompt 9 “Parents are children’s first teachers” are rather similar, making PP more difficult. As a result, further research into how to select the appropriate weight ratio and design better-matched auxiliary tasks is required.

6. Conclusions and Future Work

This paper presents a pre-train-then-fine-tune model for automated essay scoring. The model adds the essay prompt to the model input and obtains features more applicable to essay scoring through multi-task learning with two auxiliary tasks, prompt prediction and prompt matching. Experiments demonstrate that the model outperforms the baselines on QWK and PCC on average across all results on the HSK dataset, indicating that our model is substantially better in terms of agreement and association. The experimental results also show that both auxiliary tasks can effectively improve the model performance, and the combination of the two auxiliary tasks with the NEZHA pre-trained encoder yields the best results, with QWK improving by 2.5% and PCC improving by 2% compared to the strong baseline, the concatenate model, on average across all results on the HSK dataset. When compared to existing neural essay scoring methods, the experimental results show that QWK improves by 7.2% and PCC improves by 8% on average across all results.

Although our work has enhanced the effectiveness of the AES system, there are still limitations. Regarding the data dimension, this research primarily investigates fusing prompt features in Chinese; other languages are not examined extensively. Nevertheless, our method is easier to transfer than the manual annotation approach and can be applied directly to other languages. Furthermore, other features in different languages can use our method to create similar auxiliary tasks for information fusion. Moreover, as the number of prompts grows, the difficulty of training for prompt prediction increases, and we will consider combining prompts with genre and other information to design auxiliary tasks suitable for more prompts, as well as attempting to find a balance between the number of essays and the number of prompts to make prompt prediction more efficient. At the methodological level, the parameters of the loss function are currently set empirically, which does not scale well to additional auxiliary tasks. In future work, we will optimize the parameter selection scheme and build dynamic parameter optimization techniques to accommodate variable numbers of auxiliary tasks. In terms of application, our approach focuses on fusing the textual information in prompts and does not cover all prompt forms; our system currently requires additional modules for chart and picture prompts. In future research, we will experiment with multimodal prompt data to broaden the application scenarios of the AES system.

Abbreviations

The following abbreviations are used in this manuscript:

Table A1. QWK and PCC for each prompt on the HSK dataset; † denotes character input; ‡ denotes word input. The best results are in bold.

Figure A1. The effect of different β/γ ratios on QWK for PP&PM across all datasets, with the value of α fixed.

Funding Statement

This research was funded by the National Natural Science Foundation of China (Grant No.62007004), the Major Program of the National Social Science Foundation of China (Grant No.18ZDA295), and the Doctoral Interdisciplinary Foundation Project of Beijing Normal University (Grant No.BNUXKJC2020).

Author Contributions

Conceptualization and methodology, J.S. (Jingbo Sun); writing—original draft preparation, J.S. (Jingbo Sun) and T.S.; writing—review and editing, T.S., J.S. (Jihua Song) and W.P. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

The authors declare no conflict of interest.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.


automated-essay-scoring

Here are 8 public repositories matching this topic...

siyuanzhao / automated-essay-grading

Source code for the paper A Memory-Augmented Neural Model for Automated Grading

- Updated Sep 2, 2019

zlliang / essaysense

An experiment on Automated Essay Scoring

- Updated Dec 22, 2019

doheejin / ProTACT

This repository is the implementation of the ProTACT architecture, introduced in the paper "Prompt- and Trait Relation-aware Cross-prompt Essay Trait Scoring" (ACL Findings 2023).

- Updated Oct 29, 2023

audreycs / Memory-Networks-Automated-Essay-Grading

An implementation of paper "A Memory-Augmented Neural Model for Automated Grading" in PyTorch

- Updated Nov 24, 2020

travismoore3 / aes_system

AES system for ESL essays in Python

- Updated May 12, 2018

albertusk95 / nips-challenge-essay-scoring-arabic

Global NIPS Paper Implementation Challenge - An Automated System for Essay Scoring of Online Exams in Arabic based on Stemming Techniques and Levenshtein Edit Operations

- Updated Jan 31, 2018

Tenvence / ulra

Official Code of ACL 2023 (long, main conference) paper "Aggregating Multiple Heuristic Signals as Supervision for Unsupervised Automated Essay Scoring".

- Updated Sep 15, 2023

rupesh11101999 / automated-essay-grading-siyuanzhao


From Automation to Augmentation: Large Language Models Elevating Essay Scoring Landscape

Receiving immediate and personalized feedback is crucial for second-language learners, and Automated Essay Scoring (AES) systems are a vital resource when human instructors are unavailable. This study investigates the effectiveness of Large Language Models (LLMs), specifically GPT-4 and fine-tuned GPT-3.5, as tools for AES. Our comprehensive set of experiments, conducted on both public and private datasets, highlights the remarkable advantages of LLM-based AES systems. They include superior accuracy, consistency, generalizability, and interpretability, with fine-tuned GPT-3.5 surpassing traditional grading models. Additionally, we undertake LLM-assisted human evaluation experiments involving both novice and expert graders. One pivotal discovery is that LLMs not only automate the grading process but also enhance the performance of human graders. Novice graders when provided with feedback generated by LLMs, achieve a level of accuracy on par with experts, while experts become more efficient and maintain greater consistency in their assessments. These results underscore the potential of LLMs in educational technology, paving the way for effective collaboration between humans and AI, ultimately leading to transformative learning experiences through AI-generated feedback.

Changrong Xiao¹, Wenxing Ma², Sean Xin Xu¹, Kunpeng Zhang³, Yufang Wang⁴, Qi Fu⁴

¹ Center for AI and Management (AIM), School of Economics and Management, Tsinghua University
² School of Economics and Management, Tsinghua University
³ Department of Decision, Operations & Information Technologies, University of Maryland
⁴ Beijing Xicheng Educational Research Institute

1 Introduction

English learning is an integral part of the high school curriculum in China, with a particular emphasis on writing practice. While timely and reliable feedback is essential for improving students’ proficiency, it presents a significant challenge for educators to provide individualized feedback, due to the high student-teacher ratio in China. This limitation hinders students’ academic progress, especially those who aspire to enhance their self-directed learning. Hence, the development of automated systems capable of delivering accurate and constructive feedback and assessment scores carries immense importance in this context.

Automated Essay Scoring (AES) systems provide valuable assistance to students by offering immediate and consistent feedback on their work, while also simplifying the grading process for educators. However, the effective implementation of AES systems in real-world educational settings presents several challenges. One of the primary challenges is the diverse range of exercise contexts and the inherent ambiguity in scoring rubrics. Take, for example, the case of Chinese high school students who engage in various writing exercises as part of their preparation for the College Entrance Examination. Although established scoring guidelines for these exams are widely recognized by English educators, they often lack the necessary granularity, especially when assessing abstract evaluation criteria such as logical structure. Furthermore, interviews conducted with high school teachers have revealed that subjective elements and personal experiences frequently exert influence over the grading process. These intricacies and complexities introduce significant hurdles when it comes to ensuring the accuracy, generalizability, and interpretability of AES systems.

To address these challenges, it is important to highlight recent advancements in the field of Natural Language Processing (NLP), particularly the development of large language models (LLMs). A notable example is OpenAI’s ChatGPT (https://chat.openai.com), which showcases impressive capabilities. ChatGPT not only demonstrates robust logical reasoning but also displays a remarkable ability to comprehend and adhere to human instructions (Ouyang et al., 2022). Moreover, recent studies have further underscored the potential of leveraging LLMs in AES tasks (Mizumoto and Eguchi, 2023; Yancey et al., 2023; Naismith et al., 2023).

In this study, we employed GPT-3.5 and GPT-4 as the foundational LLMs for our investigation. We carefully designed appropriate prompts for the LLMs, instructing them to evaluate essays and provide detailed explanations. Additionally, we enhanced the performance of GPT-3.5 by fine-tuning it on annotated datasets. We conducted extensive experiments on both publicly available essay-scoring datasets and a private dataset of student essays. To further assess the potential of LLMs in enhancing human grading, we conducted human evaluation experiments involving both novice and expert graders. These experiments yielded compelling insights into the educational context and potential avenues for effective collaboration between humans and AI. In summary, our study makes three significant contributions:

We pioneer the exploration of LLMs’ capabilities as AES systems, especially in intricate scenarios with tailored grading criteria. Our best fine-tuned GPT-3.5 model exhibits superior accuracy, consistency, and generalizability, coupled with the ability to provide detailed explanations and recommendations.

We introduce a substantial essay-scoring dataset, comprising 6,559 essays written by Chinese high school students, along with multi-dimensional scores provided by expert educators. This dataset enriches the resources available for research in the field of AI in Education (AIEd). Code and resources can be found in our GitHub repository: https://github.com/Xiaochr/LLM-AES

The most significant implications of our study emerge from the LLM-assisted human evaluation experiments. Our findings underscore the potential of LLM-generated feedback to elevate the capabilities of individuals with limited domain knowledge to a level comparable to experts. These insights pave the way for future research in the realm of human-AI collaboration and AI-assisted learning in educational contexts.

2 Related Work

2.1 Automated Essay Scoring (AES)

Automated Essay Scoring (AES) stands as a pivotal research area at the intersection of NLP and education. Traditional AES methods usually involve a two-stage process, as outlined in Ramesh and Sanampudi (2022). First, features are extracted from the essay, including statistical features (Miltsakaki and Kukich, 2004; Ridley et al., 2020) and latent vector representations (Mikolov et al., 2013; Pennington et al., 2014). Subsequently, regression-based or classification-based machine learning models are employed to predict the essay’s score (Sultan et al., 2016; Mathias and Bhattacharyya, 2018b, a; Salim et al., 2019).

With the advancement of deep learning, AES has witnessed the integration of advanced techniques such as convolutional neural networks (CNNs), long short-term memory networks (LSTMs), attention-based models, and other deep learning technologies. These innovations have led to more precise score predictions (Dong and Zhang, 2016; Taghipour and Ng, 2016; Riordan et al., 2017).

2.2 LLM Applications in AES

The domain of AES has also experienced advancements with the incorporation of pre-trained language models to enhance performance. Rodriguez et al. (2019) and Lun et al. (2020) utilized Bidirectional Encoder Representations from Transformers (BERT; Devlin et al., 2018) to automatically evaluate essays and short answers. Additionally, Yang et al. (2020) improved BERT’s performance by fine-tuning it through a combination of regression and ranking loss, while Wang et al. (2022) employed BERT for jointly learning multi-scale essay representations.

Recent studies have explored the potential of leveraging modern LLMs in AES tasks. Mizumoto and Eguchi (2023) provided ChatGPT with specific IELTS scoring rubrics for essay evaluation but found limited improvements when incorporating GPT scores into the regression model. Similarly, Han et al. (2023) introduced an automated scoring framework that did not outperform the BERT baseline. In a different approach, Yancey et al. (2023) used GPT-4’s few-shot capabilities to predict Common European Framework of Reference for Languages (CEFR) levels for short essays written by second-language learners. However, the Quadratic Weighted Kappa (QWK) scores did not surpass those achieved by the baseline model trained with XGBoost or by human annotators.
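Because QWK is the agreement metric referred to here, a minimal sketch of its standard definition may help: $\kappa = 1 - \sum_{i,j} w_{ij} O_{ij} / \sum_{i,j} w_{ij} E_{ij}$ with $w_{ij} = (i-j)^2 / (N-1)^2$, where $O$ is the observed confusion matrix, $E$ is the matrix expected from the two marginal score histograms, and $N$ is the number of score levels. The function name and arguments below are illustrative and are not taken from the paper's code.

```python
from collections import Counter


def quadratic_weighted_kappa(y_true, y_pred, min_score, max_score):
    """QWK between two lists of integer scores in [min_score, max_score]."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("score lists must be non-empty and of equal length")
    n_levels = max_score - min_score + 1
    if n_levels < 2:
        raise ValueError("need at least two score levels")

    n = len(y_true)
    observed = Counter(zip(y_true, y_pred))  # O_ij as sparse counts
    hist_true = Counter(y_true)              # marginal histogram of rater 1
    hist_pred = Counter(y_pred)              # marginal histogram of rater 2

    numerator = 0.0
    denominator = 0.0
    for i in range(min_score, max_score + 1):
        for j in range(min_score, max_score + 1):
            weight = (i - j) ** 2 / (n_levels - 1) ** 2
            expected = hist_true[i] * hist_pred[j] / n  # E_ij
            numerator += weight * observed[(i, j)]
            denominator += weight * expected

    if denominator == 0.0:
        # Both raters assigned the same single score to every essay.
        return 1.0
    return 1.0 - numerator / denominator
```

A value of 1 indicates perfect agreement, 0 indicates agreement no better than chance, and negative values indicate systematic disagreement.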

Building on these insights, our study aims to further investigate the effectiveness of LLMs in AES tasks. We focus on more complex contexts and leverage domain-specific datasets to fine-tune LLMs for enhanced prediction performance. This research area offers promising avenues for future exploration and improvement.

ASAP dataset

Our Chinese Student English Essay Dataset

4.1 Essay Scoring

In this section, we present the methods employed in the experiments of this study. The methods can be broadly divided into two main components: prompt engineering and further fine-tuning with the training dataset. We used OpenAI’s GPT-series models to generate both essay scores and feedback, specifically leveraging the zero-shot and few-shot capabilities of gpt-4, as well as gpt-3.5-turbo for fine-tuning purposes.

To create appropriate prompts for different scenarios, our approach began with the development of initial instructions, followed by their refinement using GPT-4. An illustrative example of a prompt and its corresponding model-generated output can be found in Table 9 in the Appendices.

In this study, we considered various grading approaches, including zero-shot, few-shot, fine-tuning, and the baseline, which are as follows:

GPT-4, zero-shot, without rubrics

In this setting, we simply provide the prompt and the target essay to GPT-4. The model then evaluates the essay and assigns a score based on its comprehension within the specified score range.

GPT-4, zero-shot, with rubrics

Alongside the prompt and the target essay, we also provide GPT-4 with explicit scoring rubrics, guiding its evaluation.

GPT-4, few-shot, with rubrics

In addition to the zero-shot settings, the few-shot prompts include sample essays and their corresponding scores. This assists GPT-4 in understanding the latent scoring patterns. With the given prompt, target essay, scoring rubrics, and a set of $k$ essay examples, GPT-4 provides an appropriate score reflecting this enriched context.

As indicated by prior studies in AES tasks (Yancey et al., 2023), increasing the value of $k$ did not consistently yield better results, showing a trend of diminishing marginal returns. Therefore, we chose $k=3$, as described in the study.

We explored two approaches for selecting the sample essays. The first approach involved randomly selecting essays from various levels of quality to help the LLM understand the approximate level of the target essay. The second adopted a retrieval-based approach, which has been shown to be effective in enhancing LLM performance (Khandelwal et al., 2020; Shi et al., 2023; Ram et al., 2023). Leveraging OpenAI’s text-embedding-ada-002 model, we calculated the embedding for each essay. This allowed us to identify the top $k$ similar essays based on cosine similarity (excluding the target essay from the selection). Our experiments demonstrated that this retrieval strategy consistently yielded superior results. Therefore, we focused on the outcomes of this approach in the subsequent sections.
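The retrieval step can be sketched as follows. This is a minimal illustration that assumes the essay embeddings have already been computed (for example with text-embedding-ada-002) and are available as plain lists of floats; the function names are hypothetical and the embedding API call itself is deliberately left out.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity of two equal-length vectors; 0.0 if either is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def top_k_similar(embeddings, target_index, k=3):
    """Indices of the k essays most similar to the target, excluding the target."""
    target = embeddings[target_index]
    scored = [
        (cosine_similarity(target, emb), idx)
        for idx, emb in enumerate(embeddings)
        if idx != target_index  # never retrieve the essay being graded
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [idx for _, idx in scored[:k]]
```

The returned indices would then be used to look up the sample essays and their scores for the few-shot prompt. For a large pool, a vectorized or approximate nearest-neighbour search would likely replace this linear scan.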

In all these configurations, we adopted the Chain-of-Thought (CoT) strategy (Wei et al., 2022). This approach instructed the LLM to first analyze and explain the provided materials before making final score determinations. Research studies (Lampinen et al., 2022; Zhou et al., 2023; Li et al., 2023) have shown that this structured approach significantly enhances the capabilities of the LLM, optimizing performance in tasks that require inference and reasoning.
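To make the three GPT-4 settings concrete, the sketch below assembles a single prompt string that covers zero-shot without rubrics, zero-shot with rubrics, and few-shot with rubrics, and always ends with a CoT-style instruction to explain before scoring. All wording, the function name, and the output format are assumptions made for illustration; the authors' actual prompt is the one shown in their Table 9 and is not reproduced here.

```python
def build_scoring_prompt(essay_prompt, essay, score_range, rubrics=None, examples=None):
    """Assemble a grading prompt (hypothetical wording, not the authors' prompt).

    rubrics:  optional rubric text; omit for the "without rubrics" setting.
    examples: optional list of (sample_essay, sample_score) pairs for few-shot.
    """
    low, high = score_range
    parts = [
        "You are an experienced English teacher grading a student essay.",
        f"Writing task:\n{essay_prompt}",
    ]
    if rubrics:
        parts.append(f"Scoring rubrics:\n{rubrics}")
    for n, (sample_essay, sample_score) in enumerate(examples or [], start=1):
        parts.append(f"Example essay {n}:\n{sample_essay}\nScore: {sample_score}")
    parts.append(f"Essay to grade:\n{essay}")
    # CoT-style instruction: reasoning first, final score last.
    parts.append(
        "First analyze the essay and explain your reasoning. "
        f"Then give a final integer score between {low} and {high} "
        "on the last line in the form 'Score: <number>'."
    )
    return "\n\n".join(parts)
```

Calling it with neither `rubrics` nor `examples` corresponds to the first setting, with `rubrics` only to the second, and with both (three retrieved examples) to the third.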

Fine-tuned GPT-3.5

We conducted further investigations into the effectiveness of supervised fine-tuning methods. Specifically, we employed OpenAI’s gpt-3.5-turbo and fine-tuned it individually for each dataset. This fine-tuning process incorporated prompts containing scoring rubrics, examples, and the target essays. Given that our private dataset includes scores in three sub-dimensions and an overall score, we explored two fine-tuning strategies. The first approach directly generates all four scores simultaneously. Alternatively, we experimented with training three specialized models, each focusing on a distinct scoring dimension. We then combined the scores from these expert models to obtain the overall score.
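As a rough illustration of how training data for such fine-tuning might be prepared, the sketch below writes examples in the chat-style JSONL layout (a `messages` list with system, user, and assistant turns) that OpenAI's fine-tuning service accepts for gpt-3.5-turbo. The helper names, the choice to serialize scores as JSON in the assistant turn, and the dimension keys are hypothetical. In particular, the text does not state how the three expert scores are combined, so the summation below is an assumption, not the authors' rule.

```python
import json


def make_finetune_record(system_prompt, user_prompt, scores):
    """One chat-format training example; the target completion is the scores as JSON."""
    completion = json.dumps(scores, ensure_ascii=False)
    return {
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_prompt},
            {"role": "assistant", "content": completion},
        ]
    }


def to_jsonl(records):
    """Serialize records as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records)


def combine_dimension_scores(dimension_scores):
    """ASSUMPTION: the overall score is the sum of the sub-dimension scores."""
    return sum(dimension_scores.values())
```

Under the first strategy a record's `scores` would hold all four values; under the second, each of the three specialized models would get its own file whose records hold a single dimension score.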

BERT baseline