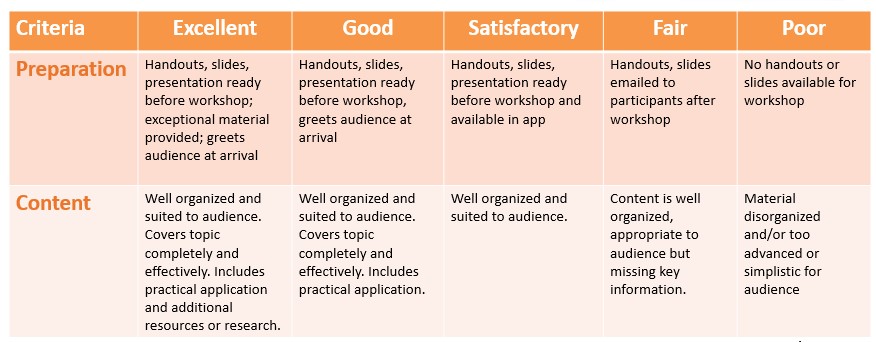

Training delivery and facilitation competency rubric.

How can we help “part-time trainers” be more effective when they deliver training? These are people who may not have “training” in their job title, or a background in instructional design or adult learning theory, but who are nonetheless asked to train others.

Some organizations offer a train-the-trainer program, but I’ve found that a lot of organizations leave their “part-time trainers” to fend for themselves. To their credit, many of these part-time trainers have deep subject matter expertise and stories and experiences to help them train others. Other part-time trainers blast their learners with lots of content.

If you’d like to help your part-time trainers (or if you’re a part-time trainer yourself), this Training Facilitator Evaluation Rubric, focused on basic training delivery and facilitation competencies, may help.

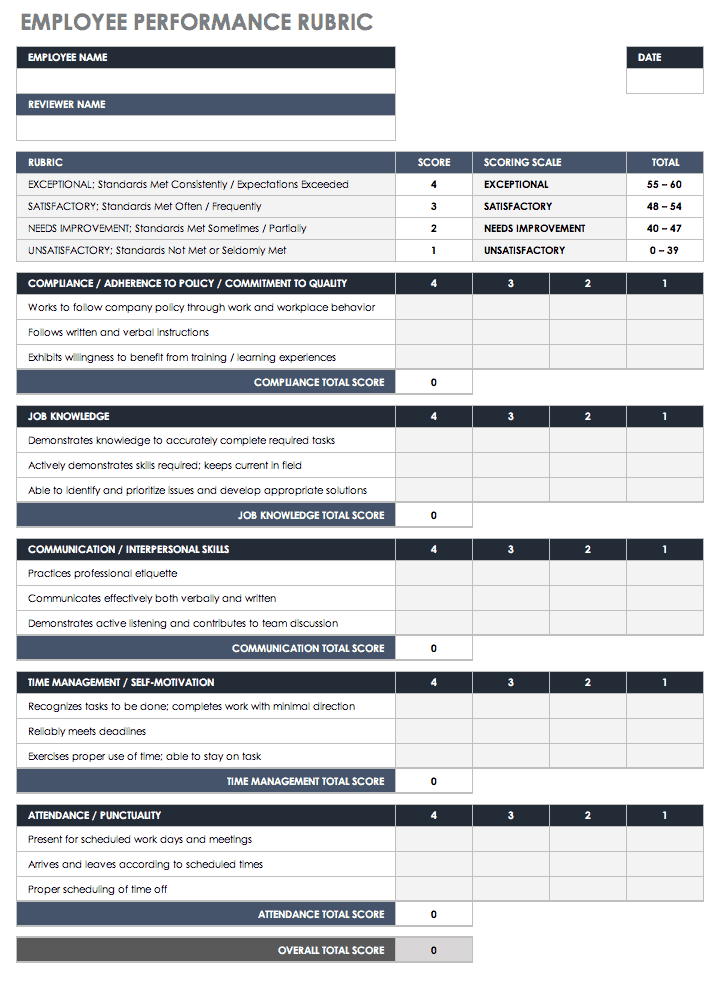

There are four essential competencies for anyone (whether you’re a part-time trainer or if this is the bulk of your work) who delivers and facilitates training, with descriptions of what it looks like to demonstrate each competency at a “developing facilitator” level, “proficient facilitator” level, and “master facilitator” level.

Subject-Matter Expertise

This competency revolves around how well you know the content. While complete mastery of the content isn’t necessary, Training Industry reported on a study by L&D Partners that concluded that there is actually a correlation between content mastery and overall training delivery, and that if trainers don’t master the content they are delivering, that weakness could overshadow their delivery skills.

Content mastery is important, but as you can see, it’s only one of the four pillars to this competency rubric. Solely knowing the content well is not nearly enough to be considered a competent training facilitator.

Presentation Skills

Perhaps you think “presentation skills” and “training delivery” are synonymous, but for the purpose of this rubric, the concept of presentation skills specifically refers to the manner in which you deliver your words, respond to your learners, and present your body language.

Flexibility

Keep in mind that training isn’t just about what information you share or how closely you stick to the training materials (your facilitator guide or lesson plan, your slides, etc. – which are indeed important), but also how you read the room and meet the needs of your learners. The degree to which you can balance the goals of your training session with the needs of your learners will have a big impact on the outcome.

Results-Focused

At the end of the day, you deliver training so that people can do something new or differently or better. This particular competency is what differentiates a trainer who says: “I plan to cover _____” from a trainer who says: “By the end of this session, my learners will be able to ____”. The former ensures that you say what you want to say. The latter creates an environment where relevant knowledge can be learned, new skills can be developed, and on-the-job behaviors can change.

You can download a PDF of the Facilitator Evaluation Rubric. Fill out the form below with your email.

If you’d like to know more about this Training Facilitator Evaluation Rubric, if you’d like to bring a train-the-trainer program to your team, and/or if you just want to talk about effective training design and delivery, feel free to connect with me on LinkedIn or send me an email: [email protected]

Brian Washburn

Brian has over 25 years of experience in Learning & Development including the last 7 as CEO of Endurance Learning.

Brian is always available to chat about learning & development and to talk about whether Endurance Learning can be your training team’s “extra set of hands”.

See author's posts

Instructor-Led Training Resources

These are some of our favorite resources to support everyone involved with instructor-led training.

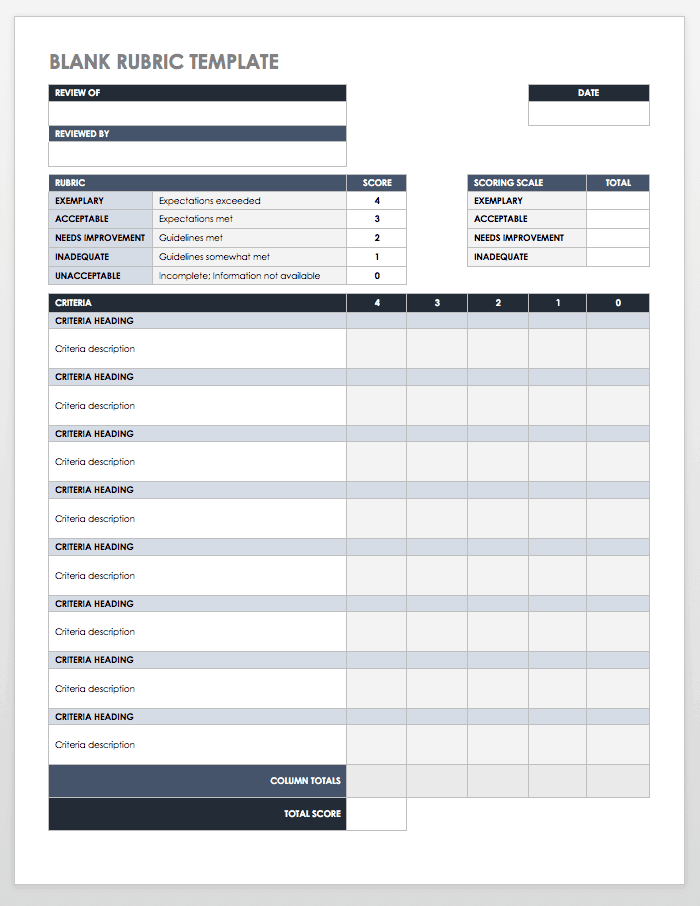

A rubric is a way to assess performance with a standard set of evaluation criteria. The next time you need to assess the performance of someone delivering training (even if that someone is you), you may find this rubric helpful.

263 Training Activities to Boost Your Workshop

Get quick access to the training activities and workshop activities that help you generate ideas for your next training session.

The Role of Co-facilitators

Co-facilitators play an important role in a training workshop. The most obvious benefit is that when you co-facilitate, you get a break from leading the

18 Instructor-led Training Activities

Engaging, intentional, face-to-face and virtual instructor-led training activities can make the difference between a session that helps learners to apply new skills or knowledge and one that falls flat.

Articles Similar to Training Delivery and Facilitation Competency Rubric

Accessibility and Inclusion in Instructor-Led Training (ILT)

Is your ILT designed with accessibility and inclusion in mind? Gwen Navarrete Klapperich wants to make sure you consider accessibility and inclusion in your ILT design, and offers some suggestions on how to do just that.

Turning the Tables: From Trainer to Student

As people who have designed and delivered effective training, Kassy Laborie and Zovig Garboushian know a thing or two about good learning experiences. So what nuggets have they gleaned from a 9-month course that they’re both attending, and that all of us should consider when designing our own programs? Today’s podcast answers that question.

Is this the world’s most effective role play?

When it comes to your training participants, two of the dirtiest, or perhaps scariest, words you can say during a session may be: role play. In today’s podcast, John Crook, Head of Learning at Intersol Global, offers some thoughts on how to make role plays more authentic and robust.

What can training designers learn from a popular keynote speaker?

What can anyone who designs training learn from the way a keynote speaker designs and refines their presentation? Renowned keynote speaker, Jessica Kriegel, answers that question and more in today’s podcast.

Using a Whiteboard in a Virtual Classroom

Do you remember the time way back before COVID when we all gathered in classrooms for training? We have seen some Instructor-Led Training (ILT) return,

Beyond ChatGPT: Combining AI and Sound Instructional Design

ChatGPT can do a lot, but it’s not (yet) able to generate all the stuff you need for an effective training session. Combining ChatGPT and Soapbox, however, might actually get you there.

Subscribe to Get Updates from Endurance Learning

Brian Washburn CEO & Chief Ideas Guy

Enter your information below and we’ll send you the latest updates from our blog. Thanks for following!

Download the Facilitator Evaluation Rubric

Enter your email below and we’ll send you the PDF of the rubric to help you assess the skills of someone delivering training.

Grow your L&D Career Today!

The Foundations of L&D course through the L&D Pro Academy provides the concepts and practical experience you need to grow your confidence and abilities as a well-rounded L&D professional.

Enter your email below and we’ll be in touch with an info sheet!

Find Your L&D Career Path

Explore the range of careers to understand what role might be a good fit for your L&D career.

Enter your email below and we’ll send you the PDF of the What’s Possible in L&D Worksheet .

Let's Talk Training!

Enter your information below and we’ll get back to you soon.

Download the Feedback Lesson Plan

Enter your email below and we’ll send you the lesson plan as a PDF.

Download the Microsoft Word Job Aid Template

Enter your email below and we’ll send you the Word version of this template.

Download the Free Lesson Plan Template!

Enter your email below and we’ll send you a Word document that you can start using today!

Download the Training Materials Checklist

Enter your email below and we’ll send you the PDF of the Training Materials Checklist.

Subscribe to Endurance Learning for updates

Get regular updates from the Endurance Learning team.

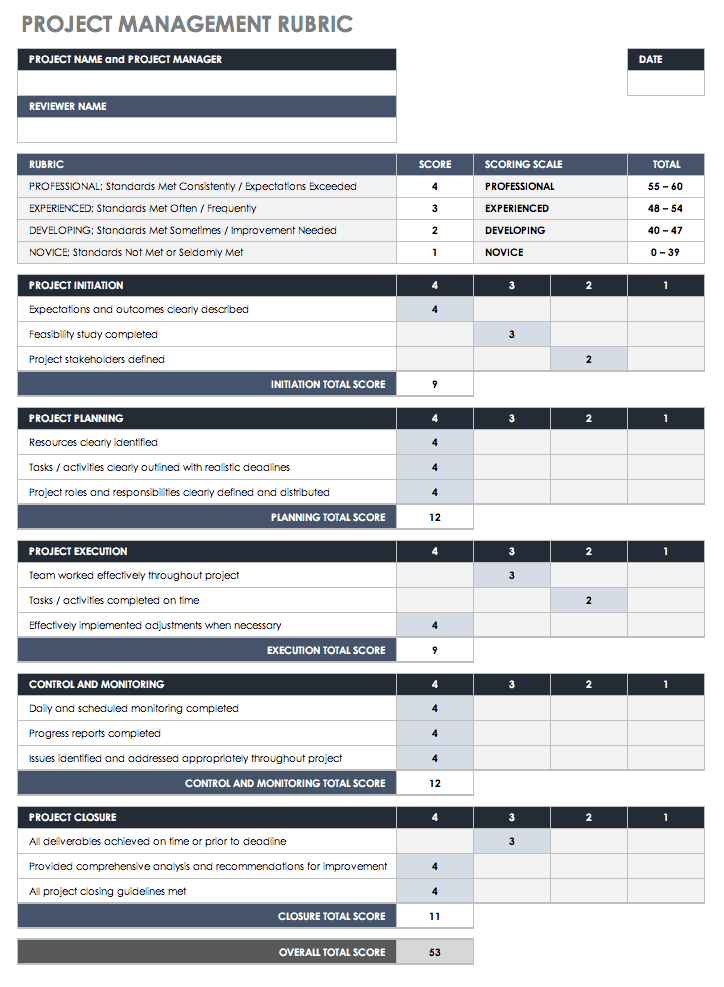

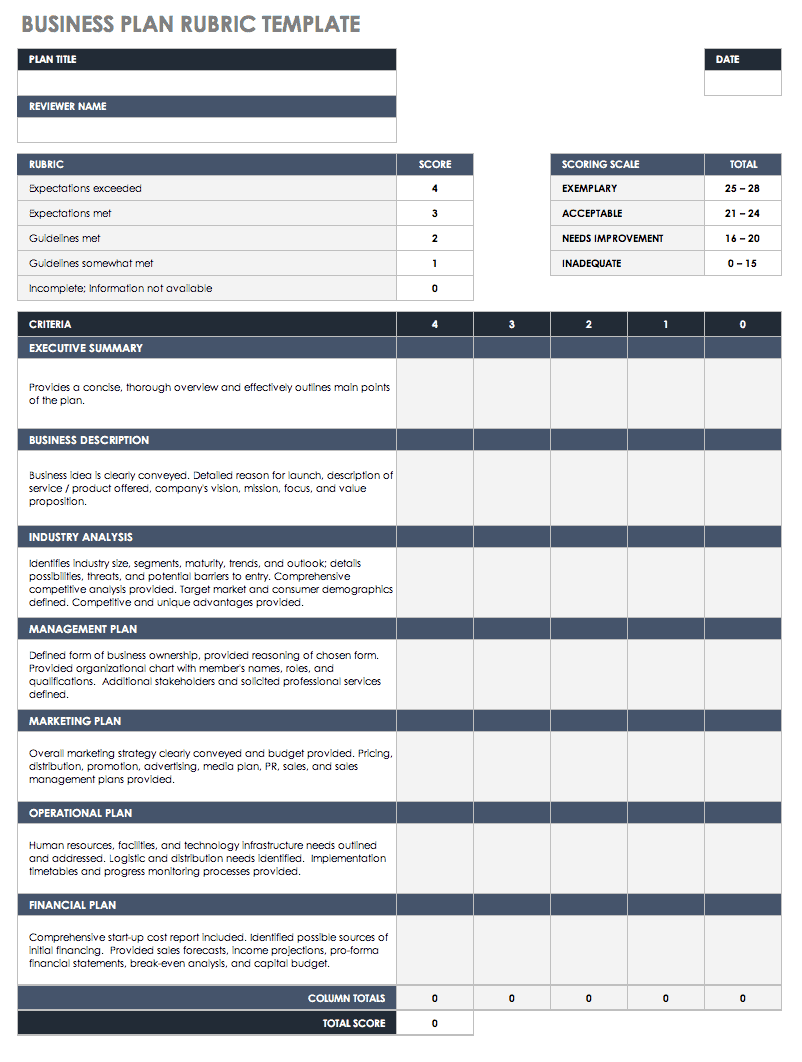

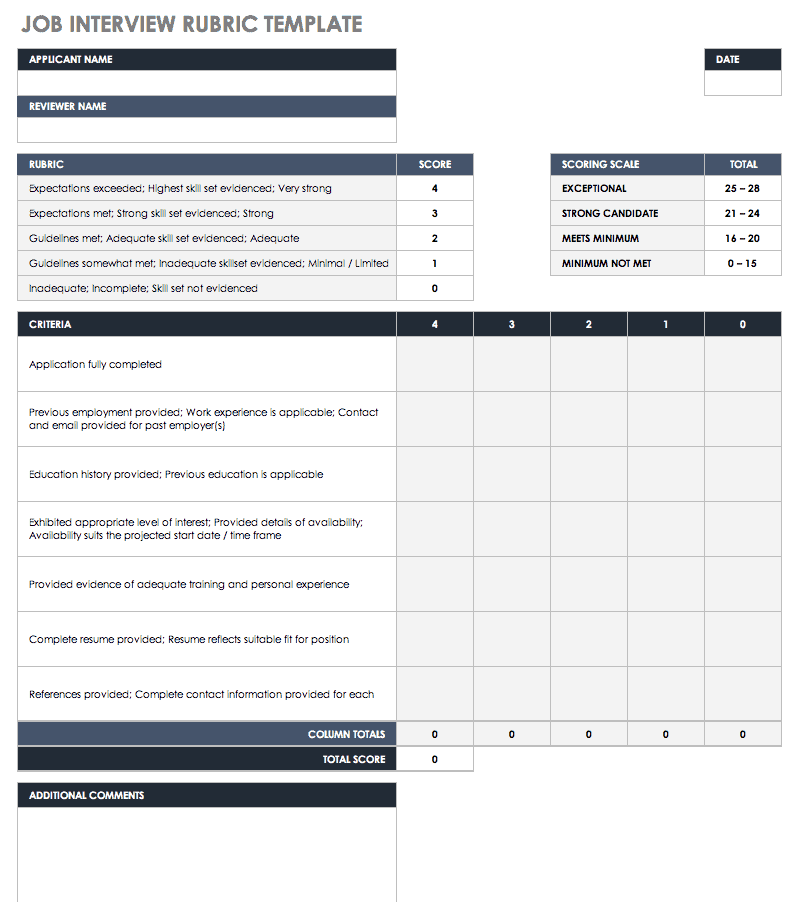

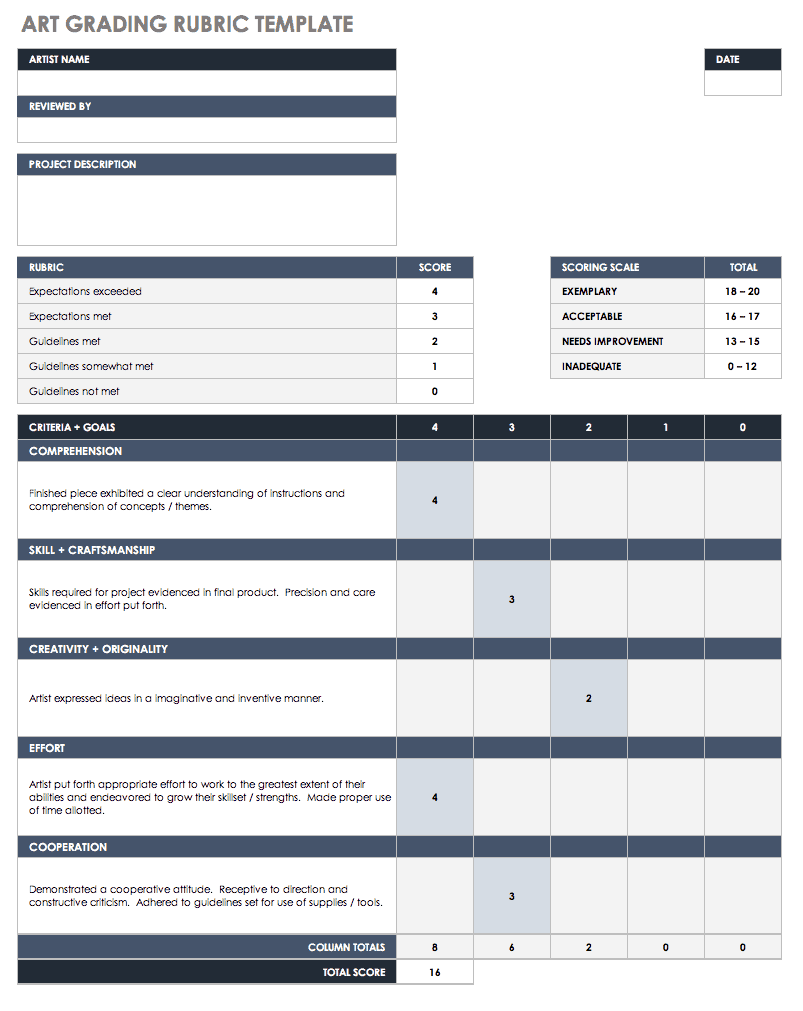

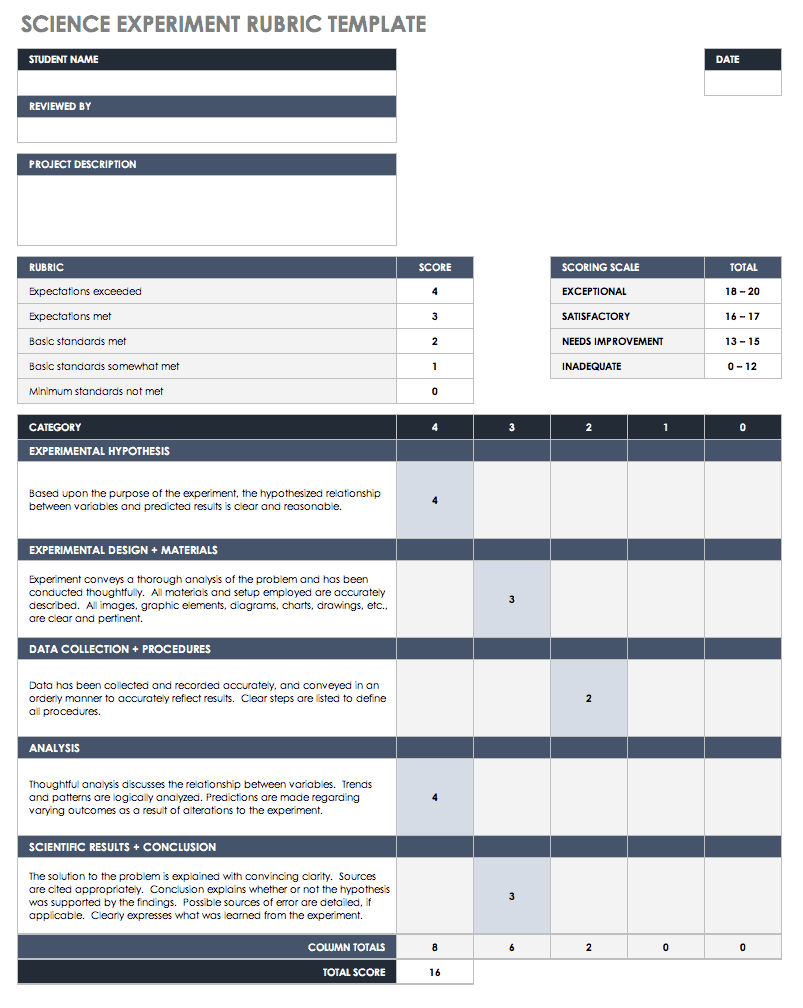

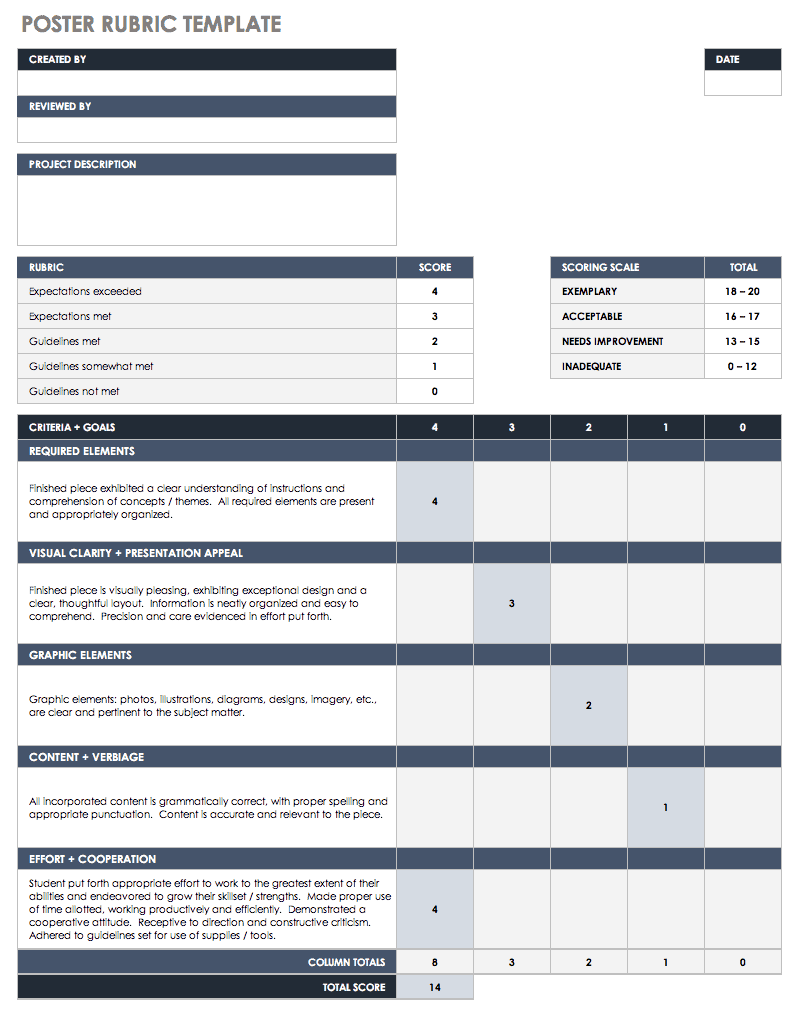

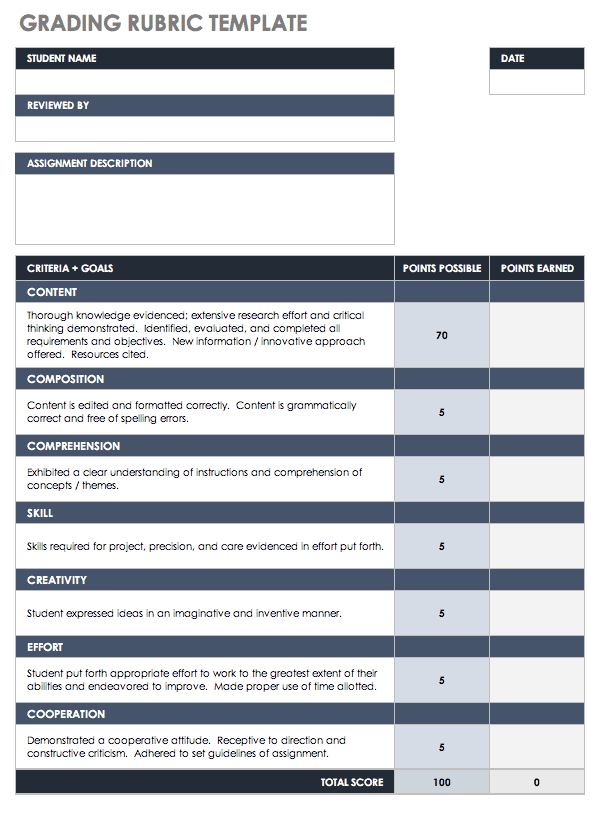

Rubric Best Practices, Examples, and Templates

A rubric is a scoring tool that identifies the different criteria relevant to an assignment, assessment, or learning outcome and states the possible levels of achievement in a specific, clear, and objective way. Use rubrics to assess project-based student work including essays, group projects, creative endeavors, and oral presentations.

Rubrics can help instructors communicate expectations to students and assess student work fairly, consistently and efficiently. Rubrics can provide students with informative feedback on their strengths and weaknesses so that they can reflect on their performance and work on areas that need improvement.

How to Get Started

Step 1: Analyze the assignment

The first step in the rubric creation process is to analyze the assignment or assessment for which you are creating a rubric. To do this, consider the following questions:

- What is the purpose of the assignment and your feedback? What do you want students to demonstrate through the completion of this assignment (i.e. what are the learning objectives measured by it)? Is it a summative assessment, or will students use the feedback to create an improved product?

- Does the assignment break down into different or smaller tasks? Are these tasks equally important as the main assignment?

- What would an “excellent” assignment look like? An “acceptable” assignment? One that still needs major work?

- How detailed do you want the feedback you give students to be? Do you want/need to give them a grade?

Step 2: Decide what kind of rubric you will use

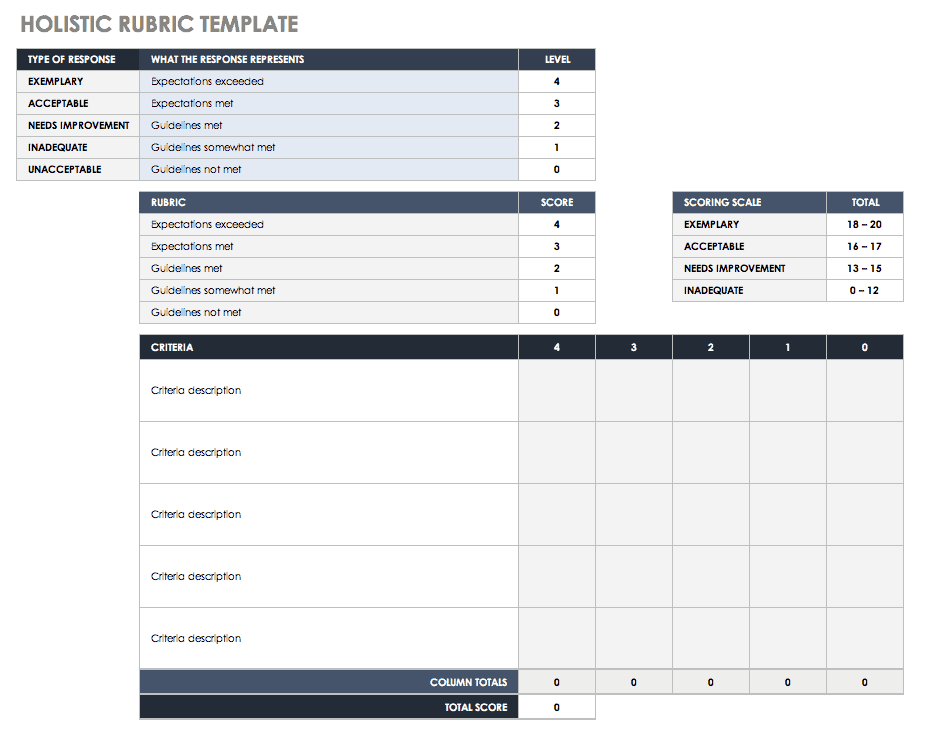

Types of rubrics: holistic, analytic/descriptive, single-point

Holistic Rubric. A holistic rubric includes all the criteria (such as clarity, organization, mechanics, etc.) to be considered together and included in a single evaluation. With a holistic rubric, the rater or grader assigns a single score based on an overall judgment of the student’s work, using descriptions of each performance level to assign the score.

Advantages of holistic rubrics:

- Can place an emphasis on what learners can demonstrate rather than what they cannot

- Save grader time by minimizing the number of evaluations to be made for each student

- Can be used consistently across raters, provided they have all been trained

Disadvantages of holistic rubrics:

- Provide less specific feedback than analytic/descriptive rubrics

- Can be difficult to choose a score when a student’s work is at varying levels across the criteria

- Any weighting of criteria cannot be indicated in the rubric

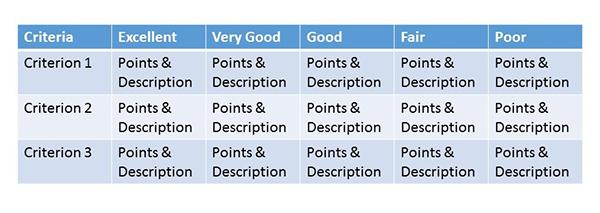

Analytic/Descriptive Rubric. An analytic or descriptive rubric often takes the form of a table with the criteria listed in the left column and with levels of performance listed across the top row. Each cell contains a description of what the specified criterion looks like at a given level of performance. Each of the criteria is scored individually.

Advantages of analytic rubrics:

- Provide detailed feedback on areas of strength or weakness

- Each criterion can be weighted to reflect its relative importance

Disadvantages of analytic rubrics:

- More time-consuming to create and use than a holistic rubric

- May not be used consistently across raters unless the cells are well defined

- May result in giving less personalized feedback
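As a rough illustration of how per-criterion scoring and weighting might be combined in an analytic rubric, here is a minimal Python sketch. The function name, the criteria, the weights, and the 4-level scale are all assumptions made for the example; they are not taken from any particular rubric or grading tool.

```python
from typing import Dict


def weighted_rubric_score(
    scores: Dict[str, int],
    weights: Dict[str, float],
    max_level: int,
) -> float:
    """Return a percentage from per-criterion levels and relative weights.

    `scores` maps each criterion to the level awarded (1..max_level).
    `weights` maps each criterion to its relative importance.
    """
    if set(scores) != set(weights):
        raise ValueError("scores and weights must cover the same criteria")
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    earned = sum(scores[name] * weights[name] for name in scores)
    return 100.0 * earned / (max_level * total_weight)


# Hypothetical example: clarity counts twice as much as the other criteria.
weights = {"clarity": 2.0, "organization": 1.0, "mechanics": 1.0}
scores = {"clarity": 4, "organization": 3, "mechanics": 2}
result = weighted_rubric_score(scores, weights, max_level=4)
print(f"Weighted score: {result:.2f}%")
```

Keeping the weights in their own mapping means the same set of scores can be re-totalled if you later decide one criterion deserves more emphasis.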

Single-Point Rubric. A single-point rubric breaks down the components of an assignment into different criteria, but instead of describing different levels of performance, only the “proficient” level is described. Feedback space is provided for instructors to give individualized comments to help students improve and/or show where they excelled beyond the proficiency descriptors.

Advantages of single-point rubrics:

- Easier to create than an analytic/descriptive rubric

- Perhaps more likely that students will read the descriptors

- Areas of concern and excellence are open-ended

- May remove a focus on the grade/points

- May increase student creativity in project-based assignments

Disadvantage of single-point rubrics:

- Requires more work for instructors writing feedback

Step 3 (Optional): Look for templates and examples.

You might Google, “Rubric for persuasive essay at the college level” and see if there are any publicly available examples to start from. Ask your colleagues if they have used a rubric for a similar assignment. Some examples are also available at the end of this article. These rubrics can be a great starting point for you, but consider steps 4, 5, and 6 below to ensure that the rubric matches your assignment description, learning objectives and expectations.

Step 4: Define the assignment criteria

Make a list of the knowledge and skills you are measuring with the assignment/assessment. Refer to your stated learning objectives, the assignment instructions, past examples of student work, etc. for help.

Helpful strategies for defining grading criteria:

- Collaborate with co-instructors, teaching assistants, and other colleagues

- Brainstorm and discuss with students

- Check the candidate criteria against a few questions:

  - Can they be observed and measured?

  - Are they important and essential?

  - Are they distinct from other criteria?

  - Are they phrased in precise, unambiguous language?

- Revise the criteria as needed

- Consider whether some are more important than others, and how you will weight them.

Step 5: Design the rating scale

Most rating scales include between 3 and 5 levels. Consider the following questions when designing your rating scale:

- Given what students are able to demonstrate in this assignment/assessment, what are the possible levels of achievement?

- How many levels would you like to include? (More levels means more detailed descriptions.)

- Will you use numbers and/or descriptive labels for each level of performance? (for example 5, 4, 3, 2, 1 and/or Exceeds expectations, Accomplished, Proficient, Developing, Beginning, etc.)

- Don’t use too many columns, and recognize that some criteria can have more columns than others. The rubric needs to be comprehensible and organized. Pick the right number of columns so that the criteria flow logically and naturally across levels.
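If you decide to pair numbers with descriptive labels, the scale can be written down once and reused wherever scores are recorded. The sketch below is one possible way to do that in Python, using the five example labels mentioned above; the `Level` class and `label` helper are names invented for this illustration.

```python
from enum import IntEnum


class Level(IntEnum):
    """A five-level rating scale pairing each number with a label."""
    BEGINNING = 1
    DEVELOPING = 2
    PROFICIENT = 3
    ACCOMPLISHED = 4
    EXCEEDS_EXPECTATIONS = 5


def label(level: Level) -> str:
    """Turn a member name such as EXCEEDS_EXPECTATIONS into display text."""
    return level.name.replace("_", " ").capitalize()


# List the column headings from the highest level to the lowest.
ordered = sorted(Level, reverse=True)
for lvl in ordered:
    print(int(lvl), label(lvl))
```

A shorter scale only requires removing members, which keeps the numeric order and the labels in step with each other.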

Step 6: Write descriptions for each level of the rating scale

Artificial intelligence tools like ChatGPT have proven useful for creating a rubric. You will want to engineer the prompt that you provide to the AI assistant to ensure you get what you want. For example, you might provide the assignment description, the criteria you feel are important, and the number of levels of performance you want in your prompt. Use the results as a starting point, and adjust the descriptions as needed.

Building a rubric from scratch

For a single-point rubric, describe what would be considered “proficient,” i.e. B-level work, and provide that description. You might also include suggestions for students outside of the actual rubric about how they might surpass proficient-level work.

For analytic and holistic rubrics, create statements of expected performance at each level of the rubric.

- Consider what descriptor is appropriate for each criterion, e.g., presence vs absence, complete vs incomplete, many vs none, major vs minor, consistent vs inconsistent, always vs never. If you have an indicator described in one level, it will need to be described in each level.

- You might start with the top/exemplary level. What does it look like when a student has achieved excellence for each/every criterion? Then, look at the “bottom” level. What does it look like when a student has not achieved the learning goals in any way? Then, complete the in-between levels.

- For an analytic rubric, do this for each particular criterion of the rubric so that every cell in the table is filled. These descriptions help students understand your expectations and their performance in regard to those expectations.

Well-written descriptions:

- Describe observable and measurable behavior

- Use parallel language across the scale

- Indicate the degree to which the standards are met
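The advice that every cell of an analytic rubric should be filled can be checked mechanically while you draft. The following Python sketch assumes the draft is stored as a dictionary of criteria, each mapping a level name to its description. The function name, level names, and placeholder descriptions are hypothetical.

```python
from typing import Dict, List, Tuple


def find_missing_cells(
    draft: Dict[str, Dict[str, str]],
    levels: List[str],
) -> List[Tuple[str, str]]:
    """Return (criterion, level) pairs whose description is absent or blank."""
    missing = []
    for criterion, descriptions in draft.items():
        for level in levels:
            if not descriptions.get(level, "").strip():
                missing.append((criterion, level))
    return missing


# Hypothetical draft with placeholder text; "Evidence" is only partly written.
levels = ["Exemplary", "Proficient", "Developing"]
draft = {
    "Organization": {
        "Exemplary": "Ideas follow a clear, logical sequence throughout.",
        "Proficient": "Ideas follow a logical sequence with minor lapses.",
        "Developing": "Sequence of ideas is frequently hard to follow.",
    },
    "Evidence": {
        "Exemplary": "Every claim is supported by a relevant source.",
        "Developing": "   ",
    },
}
gaps = find_missing_cells(draft, levels)
for criterion, level in gaps:
    print(f"Missing description: {criterion} / {level}")
```

A check like this only confirms that text exists in each cell. Whether the descriptions are parallel, observable, and measurable still needs a human read.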

Step 7: Create your rubric

Create your rubric in a table or spreadsheet in Word, Google Docs, Sheets, etc., and then transfer it by typing it into Moodle. You can also use online tools to create the rubric, but you will still have to type the criteria, indicators, levels, etc., into Moodle. Rubric creators: Rubistar, iRubric

Step 8: Pilot-test your rubric

Prior to implementing your rubric on a live course, obtain feedback from:

- Teacher assistants

Try out your new rubric on a sample of student work. After you pilot-test your rubric, analyze the results to consider its effectiveness and revise accordingly.

Best Practices

- Limit the rubric to a single page for reading and grading ease

- Use parallel language. Use similar language and syntax/wording from column to column. Make sure that the rubric can be easily read from left to right or vice versa.

- Use student-friendly language. Make sure the language is learning-level appropriate. If you use academic language or concepts, you will need to teach those concepts.

- Share and discuss the rubric with your students. Students should understand that the rubric is there to help them learn, reflect, and self-assess. If students use a rubric, they will understand the expectations and their relevance to learning.

- Consider scalability and reusability of rubrics. Create rubric templates that you can alter as needed for multiple assignments.

- Maximize the descriptiveness of your language. Avoid words like “good” and “excellent.” For example, instead of saying, “uses excellent sources,” you might describe what makes a resource excellent so that students will know. You might also consider reducing the reliance on quantity, such as a number of allowable misspelled words. Focus instead, for example, on how distracting any spelling errors are.

Example of an analytic rubric for a final paper

Example of a holistic rubric for a final paper

Single-point rubric

More examples:

- Single Point Rubric Template (variation)

- Analytic Rubric Template (make a copy to edit)

- A Rubric for Rubrics

- Bank of Online Discussion Rubrics in different formats

- Mathematical Presentations Descriptive Rubric

- Math Proof Assessment Rubric

- Kansas State Sample Rubrics

- Design Single Point Rubric

Technology Tools: Rubrics in Moodle

- Moodle Docs: Rubrics

- Moodle Docs: Grading Guide (use for single-point rubrics)

Tools with rubrics (other than Moodle)

- Google Assignments

- Turnitin Assignments: Rubric or Grading Form

Creating and using rubrics.

A rubric describes the criteria that will be used to evaluate a specific task, such as a student writing assignment, poster, oral presentation, or other project. Rubrics allow instructors to communicate expectations to students, allow students to check in on their progress mid-assignment, and can increase the reliability of scores. Research suggests that when rubrics are used on an instructional basis (for instance, included with an assignment prompt for reference), students tend to utilize and appreciate them (Reddy and Andrade, 2010).

Rubrics generally exist in tabular form and are composed of:

- A description of the task that is being evaluated,

- The criteria that are being evaluated (row headings),

- A rating scale that demonstrates different levels of performance (column headings), and

- A description of each level of performance for each criterion (within each box of the table).
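The four components listed above map naturally onto a small data structure. The Python sketch below is one way to represent them and lay them out as a table; the `Rubric` class, its field names, and the sample criterion are assumptions made for this illustration, not a format used by any particular tool.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Rubric:
    task: str                      # description of the task being evaluated
    levels: List[str]              # rating scale (column headings)
    # criterion (row heading) -> {level -> description of that cell}
    cells: Dict[str, Dict[str, str]] = field(default_factory=dict)

    def add_criterion(self, name: str, descriptions: Dict[str, str]) -> None:
        """Add one row of the rubric."""
        self.cells[name] = dict(descriptions)

    def as_rows(self) -> List[List[str]]:
        """Return the rubric as a table: header row, then one row per criterion."""
        header = ["Criterion"] + self.levels
        body = [
            [name] + [descriptions.get(level, "") for level in self.levels]
            for name, descriptions in self.cells.items()
        ]
        return [header] + body


# Hypothetical example with placeholder descriptions.
rubric = Rubric(task="Oral presentation", levels=["Strong", "Adequate", "Weak"])
rubric.add_criterion(
    "Delivery",
    {
        "Strong": "Speaks clearly and maintains eye contact throughout.",
        "Adequate": "Speaks clearly but relies heavily on notes.",
        "Weak": "Difficult to hear or follow.",
    },
)
rows = rubric.as_rows()
for row in rows:
    print(" | ".join(row))
```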

When multiple individuals are grading, rubrics also help improve the consistency of scoring across all graders. Instructors should ensure that the structure, presentation, consistency, and use of their rubrics pass rigorous standards of validity, reliability, and fairness (Andrade, 2005).

Major Types of Rubrics

There are two major categories of rubrics:

- Holistic: In this type of rubric, a single score is provided based on raters’ overall perception of the quality of the performance. Holistic rubrics are useful when only one attribute is being evaluated, as they detail different levels of performance within a single attribute. This category of rubric is designed for quick scoring but does not provide detailed feedback. For these rubrics, the criteria may be the same as the description of the task.

- Analytic: In this type of rubric, scores are provided for several different criteria that are being evaluated. Analytic rubrics provide more detailed feedback to students and instructors about their performance. Scoring is usually more consistent across students and graders with analytic rubrics.

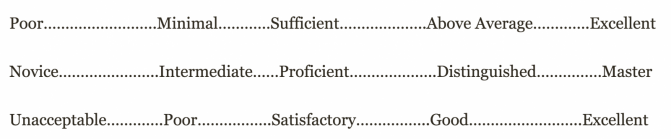

Rubrics utilize a scale that denotes level of success with a particular assignment, usually a 3-, 4-, or 5- category grid:

Figure 1: Grading Rubrics: Sample Scales (Brown Sheridan Center)

Sample Rubrics

Instructors can consider a sample holistic rubric developed for an English Writing Seminar course at Yale.

The Association of American Colleges and Universities also has a number of free (non-invasive free account required) analytic rubrics that can be downloaded and modified by instructors. These 16 VALUE rubrics enable instructors to measure items such as inquiry and analysis, critical thinking, written communication, oral communication, quantitative literacy, teamwork, problem-solving, and more.

Recommendations

The following provides a procedure for developing a rubric, adapted from Brown’s Sheridan Center for Teaching and Learning:

- Define the goal and purpose of the task that is being evaluated - Before constructing a rubric, instructors should review their learning outcomes associated with a given assignment. Are skills, content, and deeper conceptual knowledge clearly defined in the syllabus, and do class activities and assignments work towards intended outcomes? The rubric can only function effectively if goals are clear and student work progresses towards them.

- Decide what kind of rubric to use - The kind of rubric used may depend on the nature of the assignment, intended learning outcomes (for instance, does the task require the demonstration of several different skills?), and the amount and kind of feedback students will receive (for instance, is the task a formative or a summative assessment?). Instructors can read the above, or consider “Additional Resources” for kinds of rubrics.

- Define the criteria - Instructors can review their learning outcomes and assessment parameters to determine specific criteria for the rubric to cover. Instructors should consider what knowledge and skills are required for successful completion, and create a list of criteria that assess outcomes across different vectors (comprehensiveness, maturity of thought, revisions, presentation, timeliness, etc). Criteria should be distinct and clearly described, and ideally, not surpass seven in number.

- Define the rating scale to measure levels of performance - Whatever rating scale instructors choose, they should ensure that it is clear, and review it in class to field student questions and concerns. Instructors can consider whether the scale will include descriptors or only be numerical, and might include prompts on the rubric for reaching higher achievement levels. Rubrics typically include 3-5 levels in their rating scales (see Figure 1 above).

- Write descriptions for each performance level of the rating scale - Each level should be accompanied by a descriptive paragraph that outlines ideals for each level, lists or names all performance expectations within the level, and if possible, provides a detail or example of ideal performance within each level. Across the rubric, descriptions should be parallel, observable, and measurable.

- Test and revise the rubric - The rubric can be tested before implementation, by arranging for writing or testing conditions with several graders or TFs who can use the rubric together. After grading with the rubric, graders might grade a similar set of materials without the rubric to check consistency. Instructors can consider discrepancies, share the rubric and results with faculty colleagues for further opinions, and revise the rubric for use in class. Instructors might also seek out colleagues’ rubrics for comparison.

Regarding course implementation, instructors might consider passing rubrics out during the first class, in order to make grading expectations clear as early as possible. Rubrics should fit on one page, so that descriptions and criteria are viewable quickly and simultaneously. During and after a class or course, instructors can collect feedback on the rubric’s clarity and effectiveness from TFs and even students through anonymous surveys. Comparing scores and quality of assignments with parallel or previous assignments that did not include a rubric can reveal effectiveness as well. Instructors should feel free to revise a rubric following a course too, based on student performance and areas of confusion.
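When several graders pilot a rubric on the same set of work, one simple way to look at discrepancies is to count how often two graders assigned the same level, and how often they were within one level of each other. The Python sketch below illustrates that idea. It is a basic agreement count chosen for this example, not a method prescribed by the procedure above, and the scores are invented.

```python
from typing import Sequence, Tuple


def agreement_rates(
    grader_a: Sequence[int],
    grader_b: Sequence[int],
) -> Tuple[float, float]:
    """Return (exact agreement, agreement within one level) as fractions."""
    if len(grader_a) != len(grader_b) or len(grader_a) == 0:
        raise ValueError("both graders must score the same non-empty set of work")
    n = len(grader_a)
    pairs = list(zip(grader_a, grader_b))
    exact = sum(1 for a, b in pairs if a == b)
    adjacent = sum(1 for a, b in pairs if abs(a - b) <= 1)
    return exact / n, adjacent / n


# Hypothetical scores from two graders on the same six assignments (4-level scale).
grader_a = [4, 3, 2, 4, 1, 3]
grader_b = [4, 2, 2, 3, 3, 3]
exact_rate, adjacent_rate = agreement_rates(grader_a, grader_b)
print(f"Exact agreement: {exact_rate:.0%}")
print(f"Within one level: {adjacent_rate:.0%}")
```

Assignments where graders differ by two or more levels are good candidates for discussion, since they often point to a descriptor that graders are reading differently.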

Additional Resources

Cox, G. C., Brathwaite, B. H., & Morrison, J. (2015). The Rubric: An assessment tool to guide students and markers. Advances in Higher Education, 149-163.

Creating and Using Rubrics - Carnegie Mellon Eberly Center for Teaching Excellence & Educational Innovation

Creating a Rubric - UC Denver Center for Faculty Development

Grading Rubric Design - Brown University Sheridan Center for Teaching and Learning

Moskal, B. M. (2000). Scoring rubrics: What, when and how? Practical Assessment, Research & Evaluation, 7(3).

Quinlan, A. M. (2011). A Complete Guide to Rubrics: Assessment Made Easy for Teachers of K-college (2nd ed.). Rowman & Littlefield Education.

Andrade, H. (2005). Teaching with Rubrics: The Good, the Bad, and the Ugly. College Teaching, 53(1), 27-30.

Reddy, Y. M., & Andrade, H. (2010). A review of rubric use in higher education. Assessment & Evaluation in Higher Education, 35(4), 435-448.

Sheridan Center for Teaching and Learning, Brown University

Eberly Center

Teaching Excellence & Educational Innovation

Creating and Using Rubrics

A rubric is a scoring tool that explicitly describes the instructor’s performance expectations for an assignment or piece of work. A rubric identifies:

- criteria: the aspects of performance (e.g., argument, evidence, clarity) that will be assessed

- descriptors: the characteristics associated with each dimension (e.g., argument is demonstrable and original, evidence is diverse and compelling)

- performance levels: a rating scale that identifies students’ level of mastery within each criterion

Rubrics can be used to provide feedback to students on diverse types of assignments, from papers, projects, and oral presentations to artistic performances and group projects.

Benefitting from Rubrics

Rubrics help instructors to:

- reduce the time spent grading by allowing instructors to refer to a substantive description without writing long comments

- more clearly identify strengths and weaknesses across an entire class and adjust their instruction appropriately

- help to ensure consistency across time and across graders

- reduce the uncertainty which can accompany grading

- discourage complaints about grades

Rubrics help students to:

- understand instructors’ expectations and standards

- use instructor feedback to improve their performance

- monitor and assess their progress as they work towards clearly indicated goals

- recognize their strengths and weaknesses and direct their efforts accordingly

Examples of Rubrics

Here we are providing a sample set of rubrics designed by faculty at Carnegie Mellon and other institutions. Although your particular field of study or type of assessment may not be represented, viewing a rubric that is designed for a similar assessment may give you ideas for the kinds of criteria, descriptions, and performance levels you use on your own rubric.

Paper Assignments

- Example 1: Philosophy Paper. This rubric was designed for student papers in a range of courses in philosophy (Carnegie Mellon).

- Example 2: Psychology Assignment. Short, concept application homework assignment in cognitive psychology (Carnegie Mellon).

- Example 3: Anthropology Writing Assignments. This rubric was designed for a series of short writing assignments in anthropology (Carnegie Mellon).

- Example 4: History Research Paper. This rubric was designed for essays and research papers in history (Carnegie Mellon).

Projects

- Example 1: Capstone Project in Design. This rubric describes the components and standards of performance from the research phase to the final presentation for a senior capstone project in design (Carnegie Mellon).

- Example 2: Engineering Design Project This rubric describes performance standards for three aspects of a team project: research and design, communication, and team work.

Oral Presentations

- Example 1: Oral Exam This rubric describes a set of components and standards for assessing performance on an oral exam in an upper-division course in history (Carnegie Mellon).

- Example 2: Oral Communication This rubric is adapted from Huba and Freed, 2000.

- Example 3: Group Presentations This rubric describes a set of components and standards for assessing group presentations in history (Carnegie Mellon).

Class Participation/Contributions

- Example 1: Discussion Class This rubric assesses the quality of student contributions to class discussions. This is appropriate for an undergraduate-level course (Carnegie Mellon).

- Example 2: Advanced Seminar This rubric is designed for assessing discussion performance in an advanced undergraduate or graduate seminar.

See also the "Examples and Tools" section of this site for more rubrics.


Rubrics for Oral Presentations

Introduction

Many instructors require students to give oral presentations, which they evaluate and count in students’ grades. It is important that instructors clarify their goals for these presentations as well as the student learning objectives to which they are related. Embedding the assignment in course goals and learning objectives allows instructors to be clear with students about their expectations and to develop a rubric for evaluating the presentations.

A rubric is a scoring guide that articulates and assesses specific components and expectations for an assignment. Rubrics identify the various criteria relevant to an assignment and then explicitly state the possible levels of achievement along a continuum, so that an effective rubric accurately reflects the expectations of an assignment. Using a rubric to evaluate student performance has advantages for both instructors and students.

Rubrics can be either analytic or holistic. An analytic rubric comprises a set of specific criteria, with each one evaluated separately and receiving a separate score. The template resembles a grid with the criteria listed in the left column and levels of performance listed across the top row, using numbers and/or descriptors. The cells within the center of the rubric contain descriptions of what expected performance looks like for each level of performance.

A holistic rubric consists of a set of descriptors that generate a single, global score for the entire work. The single score is based on raters’ overall perception of the quality of the performance. Often, sentence- or paragraph-length descriptions of different levels of competencies are provided.

When applied to an oral presentation, rubrics should reflect the elements of the presentation that will be evaluated as well as their relative importance. Thus, the instructor must decide whether to include dimensions relevant to both form and content and, if so, which ones. Additionally, the instructor must decide how to weight each of the dimensions – are they all equally important, or are some more important than others? Finally, if the presentation represents a group project, the instructor must decide how to balance grading individual and group contributions.
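To make the weighting decision concrete, here is a minimal sketch of how an analytic rubric with unequal weights could be scored. The `Criterion` class, the `weighted_score` function, the weights, and the ratings are all hypothetical and chosen only for illustration; they are not drawn from any of the rubrics referenced on this page.

```python
from dataclasses import dataclass


@dataclass
class Criterion:
    name: str
    weight: float   # relative importance chosen by the instructor (hypothetical)
    max_level: int  # highest level on the rating scale, e.g. 4


def weighted_score(criteria, ratings):
    """Return a 0-100 score from per-criterion ratings, honoring the weights."""
    total_weight = sum(c.weight for c in criteria)
    earned = sum(c.weight * ratings[c.name] / c.max_level for c in criteria)
    return 100 * earned / total_weight


# Hypothetical rubric: content counts twice as much as each other criterion.
criteria = [
    Criterion("Knowledge of content", weight=2.0, max_level=4),
    Criterion("Organization of content", weight=1.0, max_level=4),
    Criterion("Eye contact/audience engagement", weight=1.0, max_level=4),
]
ratings = {
    "Knowledge of content": 4,
    "Organization of content": 3,
    "Eye contact/audience engagement": 2,
}
score = weighted_score(criteria, ratings)
```

Setting every weight to the same value reduces this to the equal-weighting case, so the same function can be used to compare both choices on a sample of presentations.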

Creating Rubrics

The steps for creating an analytic rubric include the following:

1. Clarify the purpose of the assignment. What learning objectives are associated with the assignment?

2. Look for existing rubrics that can be adopted or adapted for the specific assignment

3. Define the criteria to be evaluated

4. Choose the rating scale to measure levels of performance

5. Write descriptions for each criterion for each performance level of the rating scale

6. Test and revise the rubric

Examples of criteria that have been included in rubrics for evaluating oral presentations include:

- Knowledge of content

- Organization of content

- Presentation of ideas

- Research/sources

- Visual aids/handouts

- Language clarity

- Grammatical correctness

- Time management

- Volume of speech

- Rate/pacing of speech

- Mannerisms/gestures

- Eye contact/audience engagement

Examples of scales/ratings that have been used to rate student performance include:

- Strong, Satisfactory, Weak

- Beginning, Intermediate, High

- Exemplary, Competent, Developing

- Excellent, Competent, Needs Work

- Exceeds Standard, Meets Standard, Approaching Standard, Below Standard

- Exemplary, Proficient, Developing, Novice

- Excellent, Good, Marginal, Unacceptable

- Advanced, Intermediate High, Intermediate, Developing

- Exceptional, Above Average, Sufficient, Minimal, Poor

- Master, Distinguished, Proficient, Intermediate, Novice

- Excellent, Good, Satisfactory, Poor, Unacceptable

- Always, Often, Sometimes, Rarely, Never

- Exemplary, Accomplished, Acceptable, Minimally Acceptable, Emerging, Unacceptable

Grading and Performance Rubrics Carnegie Mellon University Eberly Center for Teaching Excellence & Educational Innovation

Creating and Using Rubrics Carnegie Mellon University Eberly Center for Teaching Excellence & Educational Innovation

Using Rubrics Cornell University Center for Teaching Innovation

Rubrics DePaul University Teaching Commons

Building a Rubric University of Texas/Austin Faculty Innovation Center

Building a Rubric Columbia University Center for Teaching and Learning

Rubric Development University of West Florida Center for University Teaching, Learning, and Assessment

Creating and Using Rubrics Yale University Poorvu Center for Teaching and Learning

Designing Grading Rubrics Brown University Sheridan Center for Teaching and Learning

Examples of Oral Presentation Rubrics

Oral Presentation Rubric Pomona College Teaching and Learning Center

Oral Presentation Evaluation Rubric University of Michigan

Oral Presentation Rubric Roanoke College

Oral Presentation: Scoring Guide Fresno State University Office of Institutional Effectiveness

Presentation Skills Rubric State University of New York/New Paltz School of Business

Oral Presentation Rubric Oregon State University Center for Teaching and Learning

Oral Presentation Rubric Purdue University College of Science

Group Class Presentation Sample Rubric Pepperdine University Graziadio Business School

Center for Excellence in Teaching


Group presentation rubric

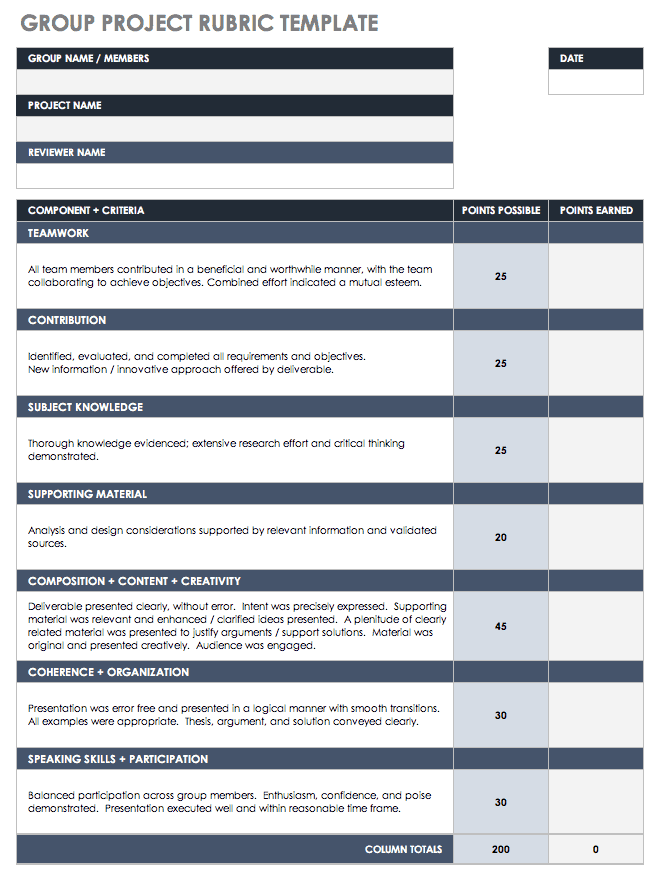

This is a grading rubric an instructor uses to assess students’ work on this type of assignment. It is a sample rubric that needs to be edited to reflect the specifics of a particular assignment. Students can self-assess using the rubric as a checklist before submitting their assignment.



- Am J Pharm Educ

- v.74(9); 2010 Nov 10

A Standardized Rubric to Evaluate Student Presentations

Michael J. Peeters

a University of Toledo College of Pharmacy

Eric G. Sahloff

Gregory E. Stone

b University of Toledo College of Education

To design, implement, and assess a rubric to evaluate student presentations in a capstone doctor of pharmacy (PharmD) course.

A 20-item rubric was designed and used to evaluate student presentations in a capstone fourth-year course in 2007-2008, and then revised and expanded to 25 items and used to evaluate student presentations for the same course in 2008-2009. Two faculty members evaluated each presentation.

The Many-Facets Rasch Model (MFRM) was used to determine the rubric's reliability, quantify the contribution of evaluator harshness/leniency in scoring, and assess grading validity by comparing the current grading method with a criterion-referenced grading scheme. In 2007-2008, rubric reliability was 0.98, with a separation of 7.1 and 4 rating scale categories. In 2008-2009, MFRM analysis suggested 2 of 98 grades be adjusted to eliminate evaluator leniency, while a further criterion-referenced MFRM analysis suggested 10 of 98 grades should be adjusted.

The evaluation rubric was reliable and evaluator leniency appeared minimal. However, a criterion-referenced re-analysis suggested a need for further revisions to the rubric and evaluation process.

INTRODUCTION

Evaluations are important in the process of teaching and learning. In health professions education, performance-based evaluations are identified as having “an emphasis on testing complex, ‘higher-order’ knowledge and skills in the real-world context in which they are actually used.” 1 Objective structured clinical examinations (OSCEs) are a common, notable example. 2 On Miller's pyramid, a framework used in medical education for measuring learner outcomes, “knows” is placed at the base of the pyramid, followed by “knows how,” then “shows how,” and finally, “does” is placed at the top. 3 Based on Miller's pyramid, evaluation formats that use multiple-choice testing focus on “knows” while an OSCE focuses on “shows how.” Just as performance evaluations remain highly valued in medical education, 4 authentic task evaluations in pharmacy education may be better indicators of future pharmacist performance. 5 Much attention in medical education has been focused on reducing the unreliability of high-stakes evaluations. 6 Regardless of educational discipline, high-stakes performance-based evaluations should meet educational standards for reliability and validity. 7

PharmD students at University of Toledo College of Pharmacy (UTCP) were required to complete a course on presentations during their final year of pharmacy school and then give a presentation that served as both a capstone experience and a performance-based evaluation for the course. Pharmacists attending the presentations were given Accreditation Council for Pharmacy Education (ACPE)-approved continuing education credits. An evaluation rubric for grading the presentations was designed to allow multiple faculty evaluators to objectively score student performances in the domains of presentation delivery and content. Given the pass/fail grading procedure used in advanced pharmacy practice experiences, passing this presentation-based course and subsequently graduating from pharmacy school were contingent upon this high-stakes evaluation. As a result, the reliability and validity of the rubric used and the evaluation process needed to be closely scrutinized.

Each year, about 100 students completed presentations and at least 40 faculty members served as evaluators. With the use of multiple evaluators, a question of evaluator leniency often arose (ie, whether evaluators used the same criteria for evaluating performances or whether some evaluators graded easier or more harshly than others). At UTCP, opinions among some faculty evaluators and many PharmD students implied that evaluator leniency in judging the students' presentations significantly affected specific students' grades and ultimately their graduation from pharmacy school. While it was plausible that evaluator leniency was occurring, the magnitude of the effect was unknown. Thus, this study was initiated partly to address this concern over grading consistency and scoring variability among evaluators.

Because both students' presentation style and content were deemed important, each item of the rubric was weighted the same across delivery and content. However, because there were more categories related to delivery than content, an additional faculty concern was that students feasibly could present poor content but have an effective presentation delivery and pass the course.

The objectives for this investigation were: (1) to describe and optimize the reliability of the evaluation rubric used in this high-stakes evaluation; (2) to identify the contribution and significance of evaluator leniency to evaluation reliability; and (3) to assess the validity of this evaluation rubric within a criterion-referenced grading paradigm focused on both presentation delivery and content.

DESIGN

The University of Toledo's Institutional Review Board approved this investigation. This study investigated performance evaluation data for an oral presentation course for final-year PharmD students from 2 consecutive academic years (2007-2008 and 2008-2009). The course was taken during the fourth year (P4) of the PharmD program and was a high-stakes, performance-based evaluation. The goal of the course was to serve as a capstone experience, enabling students to demonstrate advanced drug literature evaluation and verbal presentation skills through the development and delivery of a 1-hour presentation. These presentations were to be on a current pharmacy practice topic and of sufficient quality for ACPE-approved continuing education. This experience allowed students to demonstrate their competencies in literature searching, literature evaluation, and application of evidence-based medicine, as well as their oral presentation skills. Students worked closely with a faculty advisor to develop their presentation. Each class (2007-2008 and 2008-2009) was randomly divided, with half of the students taking the course and completing their presentation and evaluation in the fall semester and the other half in the spring semester. To accommodate such a large number of students presenting for 1 hour each, it was necessary to use multiple rooms with presentations taking place concurrently over 2.5 days for both the fall and spring sessions of the course. Two faculty members independently evaluated each student presentation using the provided evaluation rubric. The 2007-2008 presentations involved 104 PharmD students and 40 faculty evaluators, while the 2008-2009 presentations involved 98 students and 46 faculty evaluators.

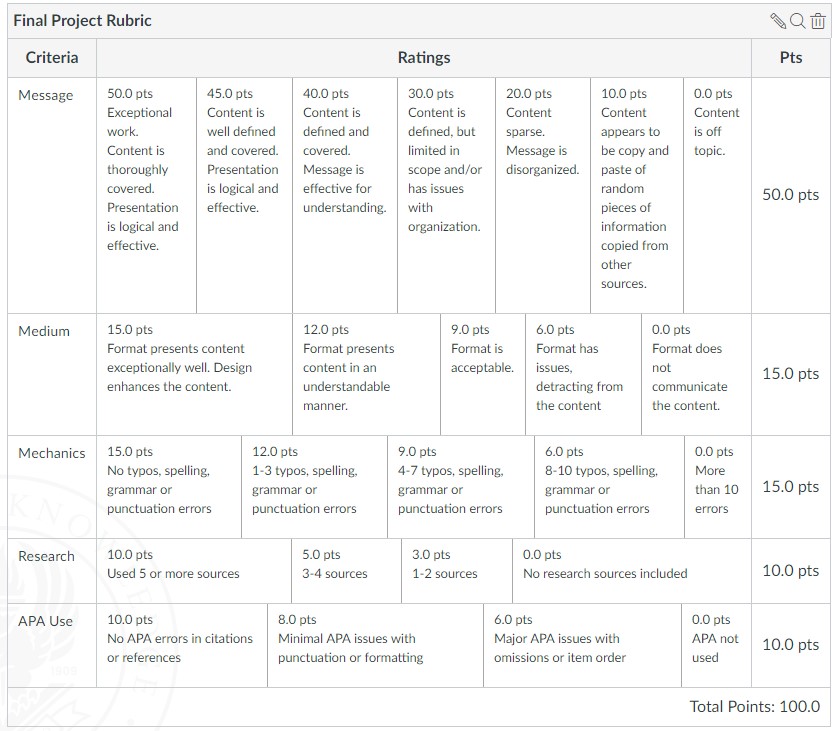

After vetting through the pharmacy practice faculty, the initial rubric used in 2007-2008 focused on describing explicit, specific evaluation criteria such as amounts of eye contact, voice pitch/volume, and descriptions of study methods. The evaluation rubric used in 2008-2009 was similar to the initial rubric, but with 5 items added (Figure 1). The evaluators rated each item (eg, eye contact) based on their perception of the student's performance. The 25 rubric items had equal weight (ie, 4 points each), but each item received a rating from the evaluator of 1 to 4 points. Thus, only 4 rating categories were included as has been recommended in the literature. 8 However, some evaluators created an additional 3 rating categories by marking lines in between the 4 ratings to signify half points (ie, 1.5, 2.5, and 3.5). For example, for the “notecards/notes” item in Figure 1, a student looked at her notes sporadically during her presentation, but not distractingly nor enough to warrant a score of 3 in the faculty evaluator's opinion, so a 3.5 was given. Thus, a 7-category rating scale (1, 1.5, 2, 2.5, 3, 3.5, and 4) was analyzed. Each independent evaluator's ratings for the 25 items were summed to form a score (0-100%). The 2 evaluators' scores then were averaged and a letter grade was assigned based on the following scale: >90% = A, 80%-89% = B, 70%-79% = C, <70% = F.

Figure 1. Rubric used to evaluate student presentations given in a 2008-2009 capstone PharmD course.
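The traditional scoring procedure described in this section (sum 25 item ratings per evaluator, average the 2 evaluators, map to a letter grade) can be sketched as follows. This is an illustration only, not the course's actual grading tool. The function names are hypothetical, and the handling of boundary scores is an assumption: the article gives the top band as ">90%" in one place and "90%" in another, so this sketch treats a score of exactly 90 as an A and assigns averages between bands (eg, 89.5) to the lower band.

```python
def evaluator_percent(item_ratings):
    """Sum 25 item ratings (1-4 points each, half points allowed) into a percentage."""
    if len(item_ratings) != 25:
        raise ValueError("expected 25 item ratings")
    # 25 items x 4 points = 100 possible points, so the sum is already a percentage.
    return float(sum(item_ratings))


def letter_grade(percent):
    """Map a percentage to a letter grade (boundary handling is an assumption)."""
    if percent >= 90:
        return "A"
    if percent >= 80:
        return "B"
    if percent >= 70:
        return "C"
    return "F"


def course_grade(ratings_a, ratings_b):
    """Average the two evaluators' percentages and assign a letter grade."""
    average = (evaluator_percent(ratings_a) + evaluator_percent(ratings_b)) / 2
    return average, letter_grade(average)


# Hypothetical ratings from two evaluators for one presentation.
evaluator_1 = [4] * 15 + [3] * 10   # sums to 90
evaluator_2 = [4] * 5 + [3] * 20    # sums to 80
result = course_grade(evaluator_1, evaluator_2)
```

Because each item is rated from 1 to 4, the lowest score an evaluator can actually give under this scheme is 25, not 0.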

EVALUATION AND ASSESSMENT

Rubric Reliability

To measure rubric reliability, iterative analyses were performed on the evaluations using the Many-Facets Rasch Model (MFRM) following the 2007-2008 data collection period. While Cronbach's alpha is the most commonly reported coefficient of reliability, its single number reporting without supplementary information can provide incomplete information about reliability. 9-11 Due to its formula, Cronbach's alpha can be increased by simply adding more repetitive rubric items or having more rating scale categories, even when no further useful information has been added. The MFRM reports separation, which is calculated differently than Cronbach's alpha and is another source of reliability information. Unlike Cronbach's alpha, separation does not appear enhanced by adding further redundant items. From a measurement perspective, a higher separation value is better than a lower one because students are being divided into meaningful groups after measurement error has been accounted for. Separation can be thought of as the number of units on a ruler where the more units the ruler has, the larger the range of performance levels that can be measured among students. For example, a separation of 4.0 suggests 4 graduations such that a grade of A is distinctly different from a grade of B, which in turn is different from a grade of C or of F. In measuring performances, a separation of 9.0 is better than 5.5, just as a separation of 7.0 is better than a 6.5; a higher separation coefficient suggests that student performance potentially could be divided into a larger number of meaningfully separate groups.
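In Rasch measurement, separation (G) and reliability (R) are conventionally linked by R = G² / (1 + G²), which is why reliability saturates near 1 while separation keeps growing. The sketch below applies that conventional relationship to the separation values reported in this article. The function names are hypothetical, and whether the authors' software computed reliability in exactly this way is an assumption.

```python
import math


def reliability_from_separation(separation):
    """Conventional Rasch relationship: R = G^2 / (1 + G^2)."""
    return separation ** 2 / (1 + separation ** 2)


def separation_from_reliability(reliability):
    """Inverse relationship: G = sqrt(R / (1 - R)), defined for 0 <= R < 1."""
    return math.sqrt(reliability / (1 - reliability))


# Separation values reported in this article.
for g in (6.31, 7.10, 1.85):
    print(g, round(reliability_from_separation(g), 2))
```

Under this relationship, separations of 6.31 and 7.10 both round to a reliability of 0.98, which illustrates how a real gain in separation can be invisible in a reliability coefficient that is already close to 1.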

The rating scale can have substantial effects on reliability, 8 while description of how a rating scale functions is a unique aspect of the MFRM. With analysis iterations of the 2007-2008 data, the rating scale categories were collapsed consecutively until improvements in reliability and/or separation were no longer found. The last iteration that led to improvements in reliability or separation was deemed an optimal rating scale for this evaluation rubric.

In the 2007-2008 analysis, iterations of the data were run through the MFRM. While only 4 rating scale categories had been included on the rubric, because some faculty members inserted 3 in-between categories, 7 categories had to be included in the analysis. This initial analysis based on a 7-category rubric provided a reliability coefficient (similar to Cronbach's alpha) of 0.98, while the separation coefficient was 6.31. The separation coefficient denoted 6 distinctly separate groups of students based on the items. Rating scale categories were collapsed, with “in-between” categories included in adjacent full-point categories. Table 1 shows the reliability and separation for the iterations as the rating scale was collapsed. As shown, the optimal evaluation rubric maintained a reliability of 0.98, but separation improved to 7.10, or 7 distinctly separate groups of students based on the items. Another distinctly separate group was added through a reduction in the rating scale while no change was seen to Cronbach's alpha, even though the number of rating scale categories was reduced. Table 1 describes the stepwise, sequential pattern across the final 4 rating scale categories analyzed. Informed by the 2007-2008 results, the 2008-2009 evaluation rubric (Figure 1) used 4 rating scale categories and reliability remained high.

Table 1. Evaluation Rubric Reliability and Separation with Iterations While Collapsing Rating Scale Categories.

a Reliability coefficient of variance in rater response that is reproducible (ie, Cronbach's alpha).

b Separation is a coefficient of item standard deviation divided by average measurement error and is an additional reliability coefficient.

c Optimal number of rating scale categories based on the highest reliability (0.98) and separation (7.1) values.
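The collapsing step described above, in which half-point ratings are folded into an adjacent full-point category, can be sketched as a simple recoding. The article does not state whether each in-between rating was merged into the category below or above it, so the `direction` parameter here is an assumption that exposes both options; the function name is hypothetical as well.

```python
import math


def collapse_rating(rating, direction="down"):
    """Map a rating on the 7-category scale (1, 1.5, ..., 4) to the 4-category scale."""
    if rating not in (1, 1.5, 2, 2.5, 3, 3.5, 4):
        raise ValueError(f"unexpected rating: {rating}")
    if direction == "down":
        return math.floor(rating)  # eg, 3.5 is merged into 3
    if direction == "up":
        return math.ceil(rating)   # eg, 3.5 is merged into 4
    raise ValueError("direction must be 'down' or 'up'")


# Hypothetical item ratings from one evaluator.
ratings = [4, 3.5, 3, 2.5, 1.5, 1]
collapsed_down = [collapse_rating(r) for r in ratings]
collapsed_up = [collapse_rating(r, "up") for r in ratings]
```

In an iterative analysis like the one described, each recoded dataset would be rerun through the measurement model and the reliability and separation compared across iterations.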

Evaluator Leniency

Described by Fleming and colleagues over half a century ago, 6 harsh raters (ie, hawks) and lenient raters (ie, doves) have also been demonstrated as an issue in more recent studies. 12-14 Shortly after 2008-2009 data were collected, those evaluations by multiple faculty evaluators were collated and analyzed in the MFRM to identify possible inconsistent scoring. While traditional interrater reliability does not deal with this issue, the MFRM had been used previously to illustrate evaluator leniency on licensing examinations for medical students and medical residents in the United Kingdom. 13 Thus, accounting for evaluator leniency may prove important to grading consistency (and reliability) in a course using multiple evaluators. Along with identifying evaluator leniency, the MFRM also corrected for this variability. For comparison, course grades were calculated by summing the evaluators' actual ratings (as discussed in the Design section) and compared with the MFRM-adjusted grades to quantify the degree of evaluator leniency occurring in this evaluation.

Measures created from the data analysis in the MFRM were converted to percentages using a common linear test-equating procedure involving the mean and standard deviation of the dataset. 15 To these percentages, student letter grades were assigned using the same traditional method used in 2007-2008 (ie, 90% = A, 80% - 89% = B, 70% - 79% = C, <70% = F). Letter grades calculated using the revised rubric and the MFRM then were compared to letter grades calculated using the previous rubric and course grading method.
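One common form of linear equating rescales values so that they take on a chosen mean and standard deviation. The sketch below shows that general technique only; the article does not report which mean and standard deviation were used, so the target values, the sample measures, and the function name are all hypothetical.

```python
import statistics


def linear_equate(measures, target_mean, target_sd):
    """Rescale measures linearly so they have the target mean and standard deviation."""
    mean = statistics.mean(measures)
    sd = statistics.stdev(measures)  # sample standard deviation
    return [target_mean + target_sd * (m - mean) / sd for m in measures]


# Hypothetical student ability measures (in logits) and hypothetical targets.
measures = [-1.0, 0.0, 1.0]
percentages = linear_equate(measures, target_mean=85.0, target_sd=5.0)
```

Because the transformation is linear, it preserves the rank order and relative spacing of the students' measures; only the scale changes.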

In the analysis of the 2008-2009 data, the interrater reliability for the letter grades when comparing the 2 independent faculty evaluations for each presentation was 0.98 by Cohen's kappa. However, using the 3-facet MFRM revealed significant variation in grading. The interaction of evaluator leniency on student ability and item difficulty was significant, with a chi-square of p < 0.01. As well, the MFRM showed a reliability of 0.77, with a separation of 1.85 (ie, almost 2 groups of evaluators). The MFRM student ability measures were scaled to letter grades and compared with course letter grades. As a result, 2 B's became A's and so evaluator leniency accounted for a 2% change in letter grades (ie, 2 of 98 grades).
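For readers unfamiliar with Cohen's kappa, the statistic compares observed agreement between 2 raters with the agreement expected by chance: kappa = (p_o - p_e) / (1 - p_e). The following is a minimal sketch of that standard formula using hypothetical letter grades; it is not the authors' analysis code, and the function name is an assumption.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters assigning categories to the same subjects."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must score the same non-empty set of subjects")
    n = len(rater_a)
    # Observed agreement: share of subjects given the same category by both raters.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of the raters' marginal proportions, summed over categories.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1:
        return 1.0  # both raters used a single identical category throughout
    return (observed - expected) / (1 - expected)


# Hypothetical letter grades from two evaluators for five presentations.
grades_a = ["A", "A", "B", "B", "C"]
grades_b = ["A", "A", "B", "C", "C"]
kappa = cohens_kappa(grades_a, grades_b)
```

Kappa summarizes agreement on the final categories only, so it can be high even when one evaluator scores individual items consistently more harshly than another.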

Validity and Grading

Explicit criterion-referenced standards for grading are recommended for higher evaluation validity. 3 , 16 - 18 The course coordinator completed 3 additional evaluations of a hypothetical student presentation rating the minimal criteria expected to describe each of an A, B, or C letter grade performance. These evaluations were placed with the other 196 evaluations (2 evaluators × 98 students) from 2008-2009 into the MFRM, with the resulting analysis report giving specific cutoff percentage scores for each letter grade. Unlike the traditional scoring method of assigning all items an equal weight, the MFRM ordered evaluation items from those more difficult for students (given more weight) to those less difficult for students (given less weight). These criterion-referenced letter grades were compared with the grades generated using the traditional grading process.

When the MFRM data were rerun with the criterion-referenced evaluations added into the dataset, a 10% change was seen with letter grades (ie, 10 of 98 grades). When the 10 letter grades were lowered, 1 was below a C, the minimum standard, and suggested a failing performance. Qualitative feedback from faculty evaluators agreed with this suggested criterion-referenced performance failure.

Measurement Model

Within modern test theory, the Rasch Measurement Model maps examinee ability with evaluation item difficulty. Items are not arbitrarily given the same value (ie, 1 point) but vary based on how difficult or easy the items were for examinees. The Rasch measurement model has been used frequently in educational research, 19 by numerous high-stakes testing professional bodies such as the National Board of Medical Examiners, 20 and also by various state-level departments of education for standardized secondary education examinations. 21 The Rasch measurement model itself has rigorous construct validity and reliability. 22 A 3-facet MFRM model allows an evaluator variable to be added to the student ability and item difficulty variables that are routine in other Rasch measurement analyses. Just as multiple regression accounts for additional variables in analysis compared to a simple bivariate regression, the MFRM is a multiple variable variant of the Rasch measurement model and was applied in this study using the Facets software (Linacre, Chicago, IL). The MFRM is ideal for performance-based evaluations with the addition of independent evaluator/judges. 8 , 23 From both yearly cohorts in this investigation, evaluation rubric data were collated and placed into the MFRM for separate though subsequent analyses. Within the MFRM output report, a chi-square for a difference in evaluator leniency was reported with an alpha of 0.05.
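For orientation, a 3-facet rating scale model of this kind is conventionally written as below. The notation is the customary one for many-facet Rasch models and is not taken from the article itself.

```latex
\log\!\left(\frac{P_{nijk}}{P_{nij(k-1)}}\right) = B_n - D_i - C_j - F_k
```

Here $P_{nijk}$ is the probability that student $n$ receives rating category $k$ on item $i$ from evaluator $j$, $B_n$ is the student's ability, $D_i$ is the item's difficulty, $C_j$ is the evaluator's severity, and $F_k$ is the difficulty of the step from category $k-1$ to category $k$. Dropping the $C_j$ term gives the 2-facet rating scale model with only student ability and item difficulty.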

The presentation rubric was reliable. Results from the 2007-2008 analysis illustrated that the number of rating scale categories impacted the reliability of this rubric and that use of only 4 rating scale categories appeared best for measurement. While a 10-point Likert-like scale may commonly be used in patient care settings, such as in quantifying pain, most people cannot process more than 7 points or categories reliably. 24 Presumably, when more than 7 categories are used, the categories beyond 7 either are not used or are collapsed by respondents into fewer than 7 categories. Five-point scales commonly are encountered, but use of an odd number of categories can be problematic to interpretation and is not recommended. 25 Responses using the middle category could denote a true perceived average or neutral response or responder indecisiveness or even confusion over the question. Therefore, removing the middle category appears advantageous and is supported by our results.

With 2008-2009 data, the MFRM identified evaluator leniency with some evaluators grading more harshly while others were lenient. Although evaluator leniency was indeed found in the dataset, only a couple of grade changes were suggested after the MFRM corrected for it, and leniency did not appear to play a substantial role in the evaluation of this course at this time.

Performance evaluation instruments are either holistic or analytic rubrics. 26 The evaluation instrument used in this investigation exemplified an analytic rubric, which elicits specific observations and often demonstrates high reliability. However, Norman and colleagues point out a conundrum where drastically increasing the number of evaluation rubric items (creating something similar to a checklist) could augment a reliability coefficient though it appears to dissociate from that evaluation rubric's validity. 27 Validity may be more than the sum of behaviors on evaluation rubric items. 28 Having numerous, highly specific evaluation items appears to undermine the rubric's function. With this investigation's evaluation rubric and its numerous items for both presentation style and presentation content, equal numeric weighting of items can in fact allow student presentations to receive a passing score while falling short of the course objectives, as was shown in the present investigation. As opposed to analytic rubrics, holistic rubrics often demonstrate lower yet acceptable reliability, while offering a higher degree of explicit connection to course objectives. A summative, holistic evaluation of presentations may improve validity by allowing expert evaluators to provide their “gut feeling” as experts on whether a performance is “outstanding,” “sufficient,” “borderline,” or “subpar” for dimensions of presentation delivery and content. A holistic rubric that integrates with criteria of the analytic rubric (Figure 1) for evaluators to reflect on but maintains a summary, overall evaluation for each dimension (delivery/content) of the performance, may allow for benefits of each type of rubric to be used advantageously. This finding has been demonstrated with OSCEs in medical education where checklists for completed items (ie, yes/no) at an OSCE station have been successfully replaced with a few reliable global impression rating scales. 29-31

Alternatively, and because the MFRM model was used in the current study, an items-weighting approach could be used with the analytic rubric. That is, item weighting based on the difficulty of each rubric item could suggest how many points should be given for that rubric item (eg, some items would be worth 0.25 points, while others would be worth 0.5 points or 1 point) (Table 2). As could be expected, the more complex the rubric scoring becomes, the less feasible the rubric is to use. This was the main reason why this revision approach was not chosen by the course coordinator following this study. As well, it does not address the conundrum that the performance may be more than the summation of behavior items in the Figure 1 rubric. This current study cannot suggest which approach would be better as each would have its merits and pitfalls.

Table 2. Rubric Item Weightings Suggested in the 2008-2009 Data Many-Facet Rasch Measurement Analysis

Regardless of which approach is used, alignment of the evaluation rubric with the course objectives is imperative. Objectivity has been described as a general striving for value-free measurement (ie, free of the evaluator's interests, opinions, preferences, sentiments). 27 This is a laudable goal pursued through educational research. Strategies to reduce measurement error, termed objectification, may not necessarily lead to increased objectivity. 27 The current investigation suggested that a rubric could become too explicit if all the possible areas of an oral presentation that could be assessed (ie, objectification) were included. This appeared to dilute the effect of important items and lose validity. A holistic rubric that is more straightforward and easier to score quickly may be less likely to lose validity (ie, “lose the forest for the trees”), though operationalizing a revised rubric would need to be investigated further. Similarly, weighting items in an analytic rubric based on their importance and difficulty for students may alleviate this issue; however, adding up individual items might prove arduous. While the rubric in Figure 1, which has evolved over the years, is the subject of ongoing revisions, it appears a reliable rubric on which to build.

The major limitation of this study involves the observational method that was employed. Although the 2 cohorts were from a single institution, investigators did use a completely separate class of PharmD students to verify initial instrument revisions. Optimizing the rubric's rating scale involved collapsing data from misuse of a 4-category rating scale (expanded by evaluators to 7 categories) by a few of the evaluators into 4 independent categories without middle ratings. As a result of the study findings, no actual grading adjustments were made for students in the 2008-2009 presentation course; however, adjustments using the MFRM have been suggested by Roberts and colleagues. 13 Since 2008-2009, the course coordinator has made further small revisions to the rubric based on feedback from evaluators, but these have not yet been re-analyzed with the MFRM.

The evaluation rubric used in this study for student performance evaluations showed high reliability and the data analysis agreed with using 4 rating scale categories to optimize the rubric's reliability. While lenient and harsh faculty evaluators were found, variability in evaluator scoring affected grading in this course only minimally. Aside from reliability, issues of validity were raised using criterion-referenced grading. Future revisions to this evaluation rubric should reflect these criterion-referenced concerns. The rubric analyzed herein appears a suitable starting point for reliable evaluation of PharmD oral presentations, though it has limitations that could be addressed with further attention and revisions.

ACKNOWLEDGEMENT

Author contributions— MJP and EGS conceptualized the study, while MJP and GES designed it. MJP, EGS, and GES gave educational content foci for the rubric. As the study statistician, MJP analyzed and interpreted the study data. MJP reviewed the literature and drafted a manuscript. EGS and GES critically reviewed this manuscript and approved the final version for submission. MJP accepts overall responsibility for the accuracy of the data, its analysis, and this report.

Evaluation Rubrics a Valuable Tool for Assessing eLearning

Contributor

Jean Marrapodi

VP/Senior Instructional Designer, UMB Bank

Unless you have attended a college course or have a child in elementary school, you may not be familiar with evaluation rubrics. Basically, a rubric is a grid that defines expectations and provides an objective scoring system. Susan Brookhart defines a rubric as “a coherent set of criteria for students’ work that includes descriptions of levels of performance quality on the criteria.”

Rubrics identify the criteria used for rating and have a value scale that defines each of the criteria, similar to a Likert scale, going from best to worst.

Using rubrics

Figure 2 shows a rubric that I used to grade a final project in an online undergraduate course that I authored and taught. This particular rubric is embedded in Canvas, the LMS the college uses. For scoring, the professor clicks on the appropriate boxes when grading the work, making things quite efficient.

For this project, students had the option of creating their assignments in a variety of formats, so I needed to create an equitable system that would be able to evaluate PowerPoint presentations, web pages, videos, and papers. I wanted to grade not only their use of the selected tool, but also that their information was accurate, that they were careful to proofread for correct grammar and spelling, and that they included documented research to support their claims. Basically, I broke the criteria down into the message, medium, mechanics, research, and citation accuracy. I distributed the points so that message, which was the most important factor, had the most weight.
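To make the weighting idea concrete, here is a minimal sketch of how points might be distributed across those five criteria. The point values are hypothetical (the actual distribution in my rubric is not reproduced here); the only property taken from the description above is that message carries the most weight. The function name and the 0-to-1 rating convention are also assumptions for illustration.

```python
# Hypothetical maximum points per criterion. Only the ordering
# (message weighted most heavily) comes from the article.
MAX_POINTS = {
    "message": 40,
    "medium": 20,
    "mechanics": 15,
    "research": 15,
    "citation accuracy": 10,
}


def weighted_total(ratings: dict[str, float]) -> float:
    """Combine per-criterion ratings (0.0 to 1.0) into a point total."""
    missing = set(MAX_POINTS) - set(ratings)
    if missing:
        raise ValueError(f"Unrated criteria: {sorted(missing)}")
    total = 0.0
    for criterion, max_points in MAX_POINTS.items():
        rating = ratings[criterion]
        if not 0.0 <= rating <= 1.0:
            raise ValueError(f"Rating for {criterion} must be between 0 and 1")
        total += rating * max_points
    return total


# A project with a strong message but a weaker medium and research.
example = {
    "message": 1.0,
    "medium": 0.5,
    "mechanics": 1.0,
    "research": 0.5,
    "citation accuracy": 1.0,
}
print(weighted_total(example))
```

Because each criterion is rated independently and then scaled, the same rubric can score a video, a web page, or a paper without changing the arithmetic.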

Make expectations clear in student learning

When a rubric is used for grading, each criterion is reviewed independently. Students are given the rubric with the assignment, so they understand how their work will be evaluated and they can self-assess prior to submission. When well-written, rubrics make expectations clear, creating an objective standard so anyone reviewing an assignment should arrive at the same score. (In theory, anyway. Inter-rater reliability can be tricky.) When an assignment is returned, the rubric helps the student see what areas need improvement.
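One way to check whether two reviewers really do arrive at the same score is to compare their ratings on the same set of assignments. The sketch below computes simple exact agreement and Cohen's kappa, a standard statistic that corrects for agreement expected by chance. The function names and the sample ratings are hypothetical, and this is only one of several possible reliability measures.

```python
from collections import Counter


def exact_agreement(rater_a: list[str], rater_b: list[str]) -> float:
    """Share of items on which two raters chose the same level."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Both raters must score the same non-empty set of items")
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return matches / len(rater_a)


def cohens_kappa(rater_a: list[str], rater_b: list[str]) -> float:
    """Agreement corrected for the agreement expected by chance."""
    observed = exact_agreement(rater_a, rater_b)
    n = len(rater_a)
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: probability both raters pick the same level at random,
    # given how often each rater used each level.
    expected = sum(counts_a[level] * counts_b[level] for level in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical level throughout
    return (observed - expected) / (1 - expected)


# Hypothetical ratings of four assignments on one criterion.
first_rater = ["good", "good", "fair", "poor"]
second_rater = ["good", "fair", "fair", "poor"]
print(exact_agreement(first_rater, second_rater))
print(cohens_kappa(first_rater, second_rater))
```

A kappa well below the raw agreement figure suggests that some of the matching scores could have happened by chance, which is a cue to tighten the descriptions.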

Rubrics require higher-order thinking on the part of the learner since they involve evaluation. This helps deepen the learning and increases transfer to other settings.

Using rubrics in corporate training

In corporate training, rubrics can be used in project-based learning to review what has been created and demonstrate the skill being taught. Rubrics are useful for peer review as well as for evaluation by a trainer or manager reviewing the work product.

When rubrics are used in peer review, most people find that they learn as much reviewing their colleagues’ work as they do in creating their own submissions. Rubrics can be used for evaluating customer service calls or role plays, and even for defining career development paths for individuals on a team. (Download Marrapodi’s rubric for IDs here.)

Rubrics also have their place in eLearning. Coursera, one of the largest providers of MOOCs, uses rubrics for peer grading of assignments. Each assignment is reviewed and commented on by three-to-four students to ensure a fair evaluation. In online higher education, rubrics are routinely used to score students’ work.
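When several peers score the same assignment, their scores have to be combined somehow. The sketch below takes the median, which keeps one unusually harsh or lenient reviewer from dominating the result. This is an illustrative assumption, not a description of how Coursera or any other platform actually aggregates peer grades; the function name and the minimum of three reviews are hypothetical.

```python
from statistics import median


def combine_peer_scores(scores: list[float], min_reviews: int = 3) -> float:
    """Return the median of peer scores once enough reviews are in."""
    if len(scores) < min_reviews:
        raise ValueError(
            f"Need at least {min_reviews} peer reviews, got {len(scores)}"
        )
    return median(scores)


# Three reviewers, then four reviewers, scoring on a hypothetical 1-4 scale.
print(combine_peer_scores([3, 4, 2]))
print(combine_peer_scores([4, 3, 3, 2]))
```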

In corporate eLearning, a rubric can be used in several ways, such as:

- A pretest that allows learners to self-assess their skills and develop goals for the course.

- A coaching and skill-building tool when included with a project for the employee to complete. The learner’s supervisor can then review the finished product against the rubric.

- A way to rate the performance of an actor in a scenario in a skill-based training. This can help learners think through what went well, what went poorly, and why.

Building a rubric

Rubrics have three key components.

- Criteria define the elements being reviewed. They answer the question of what is being reviewed. Criteria must be appropriate, definable, observable, distinct, and able to support descriptions along a continuum.

- Descriptions describe the levels of performance on a scale, which is usually high to low. The description tells what work at each level (excellent, very good, good, fair, poor) looks like. If the rubric is used for scoring, this section includes point values. Descriptions center the target performance (acceptable, mastery, passing) at the appropriate level.

- The rating scale is the terminology used to describe the levels of performance. Scales always go from high to low and might use ranges like excellent to poor, or expert to novice. The key is that the levels have equal increments and are presented in descending order. Most rubrics use four-to-five columns, but there is no hard-and-fast rule. It’s best not to use yes/no in a rubric, but in some instances, a yes/no or met/failed criterion might be appropriate. If the entire rubric consists of yes/no questions, it is better to just use a checklist.

Most rubrics can be built in a simple table, but the ed tech people have some tools that you can use, such as Rubric Maker and Quick Rubric.

When building rubrics, remember:

- Criteria assess only one item per row.

- Descriptions must include gradations of the same thing. Think grayscale.
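If you prefer to think of a rubric as data rather than a table, the three components map onto a small structure: a rating scale, a list of criteria, and one description per criterion per level. The sketch below is a hypothetical representation (the class names, the `problems` method, and the sample descriptions are all assumptions for illustration) that checks for the gaps described above, such as a criterion with no description at some level.

```python
from dataclasses import dataclass


@dataclass
class Criterion:
    name: str                     # what is being reviewed (one item per row)
    descriptions: dict[str, str]  # rating level -> what work at that level looks like


@dataclass
class Rubric:
    scale: list[str]              # rating levels in descending order
    criteria: list[Criterion]

    def problems(self) -> list[str]:
        """List structural problems; an empty list means the grid is complete."""
        found = []
        names = [c.name for c in self.criteria]
        if len(names) != len(set(names)):
            found.append("criterion names must be distinct")
        for c in self.criteria:
            missing = [level for level in self.scale if level not in c.descriptions]
            if missing:
                found.append(f"{c.name}: no description for {', '.join(missing)}")
        if len(self.scale) < 3:
            found.append("fewer than three levels: consider a checklist instead")
        return found


scale = ["excellent", "good", "fair", "poor"]
rubric = Rubric(
    scale=scale,
    criteria=[
        # Placeholder descriptions; real ones would be gradations of the same thing.
        Criterion("message", {level: f"{level} message" for level in scale}),
        Criterion("mechanics", {level: f"{level} mechanics" for level in scale[:3]}),
    ],
)
print(rubric.problems())
```

Running a check like this before piloting catches empty cells early, though it cannot tell you whether the descriptions themselves are well written.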

Writing a rubric

The first step in creating a rubric is to determine the categories to evaluate. In my first example, I used the message, medium, and mechanics. If we were baking blueberry muffins, the rating categories might be texture, flavor, and appearance. When determining components for your criteria, use brief statements, phrases, or keywords. Each rubric line should focus on a different skill and must evaluate only one measurable criterion. Be careful that they don’t overlap.

After you determine components, you need to write the descriptions for each of your criteria. Ask yourself how proficient the learner should be at the task. Keep Gloria Gery’s proficiency scale (Figure 3) in mind. If the learner is a beginner at something, don’t expect mastery of the skill. Rate things accordingly.

Figure 4 shows the beginning of a rubric for evaluating a workshop presenter. Do you see how things move up and down from the midpoint of Satisfactory?

Ideally, the entire rubric should fit on one sheet of paper, and it should always be presented with the assignment.

Roll out the rubric

Obviously, you want to have your SME review your rubric as part of your development process, but no matter how well you design it, it is likely you will have missed something. Plan to pilot your rubric before finalizing it. As you evaluate assignments with it, you will find things that you didn’t think of or identify areas that were problematic for the students that you may not have anticipated. Ask yourself if it worked and was sufficiently detailed, and adjust things before releasing it for your learning program.

The first time you launch something with a rubric, plan on explaining it to your learners. If it was new to you, it will be new to them. Encourage them to use it to plan their work, then as a review tool prior to submission. Don’t assume they will know to do that.

In conclusion, rubrics definitely have their place in the eLearning world. They provide a good way for learners to show what they know; they also help learners see that they know what they think they know, which is yet another way to strengthen and build their learning.

To further explore eLearning evaluation and assessment, download The eLearning Guild’s research report, Evaluating Learning: Insights from Learning Professionals.

Brookhart, S. (2013). How to create and use rubrics for formative assessment and grading. Alexandria, VA: ASCD.

April 1, 2019

How to Evaluate Learning Videos with a Rubric

by Christopher Karel

Evaluating learning videos is easy with a rubric. Reflecting upon effectiveness is also easier if you use the same tool to measure all of your videos. Therefore, I offer you a method to evaluate learning videos using a rubric that will help you improve the KSB (Knowledge, Skills, and Behaviors) of your learners.

FYI: I’m on a mission to help people make and use video for learning purposes. If you are making a learning video for yourself or for a client, then you are managing numerous moving parts. By always beginning with the end in mind (guided by a rubric), you will be on your way to creating video content that will boost the KSB of your learners. If you are managing a team and need to evaluate your video content, then using a rubric will help your entire team align their feedback around a common goal.

Let’s get to it!

The main purpose of rubrics is to assess performance. -ASCD

Using a rubric will help you set a consistent standard for your learning video content. By evaluating content the same way for every project, you will be efficient and objective – every time. Below, I offer an annotated path to evaluate learning videos with a rubric. Each section of the rubric is captured in a screenshot followed by a brief explanation and several questions you can ask yourself to help you complete that section. Download the rubric and use it with your existing content or on your new videos. Then, let me know how it goes. Feel free to modify the rubric to suit your needs and attribute the original rubric to Learning Carton.

The purpose is the first thing you want to identify in each video you evaluate. Ask yourself these questions and then circle the appropriate word.

- Knowledge : Is the video designed to create awareness on a topic? Examples: teach product knowledge, explain a process, share information about a topic

- Skills : Is the video designed to demonstrate a skill or show someone how to act (behavior modeling)?

- Behavior : Is the video designed to change the learner’s behavior by requiring the viewer to make informed decisions?

The purpose of the video should be clear and concise. Can you easily state the purpose in a single sentence?

Type of Video

Next, you should circle the type of video. What type of learning video is it? Check out The 6 Types of Video for Learning if you need a further explanation of the types. If you feel the video is not one of these six types, then it may not be a learning video at all.

As you start to dive deep into evaluating the learning video, look for these seven categories. Read this section carefully before you watch the video and have the rubric on paper or a nearby screen as you watch. Your goal is to openly and honestly rank the video by answering these questions:

- Are facts and information up to date? Is it organized and clearly delivered?

- Does the video present value by offering information designed for the learning audience?

- Are the learning objectives clearly stated or easily accessible?

- Is the content free from bias?

- Is there a call to action that implores the learner to do something to extend their learning?

- Is there a story structure to the content? Beginning-middle-end.

- Is it clear how the video is meant to be shared with the audience?

Now it’s time to evaluate the video’s visual merits. This is the last thing you should evaluate, which is why it sits at the end of the rubric. Training and learning videos are not made with Hollywood budgets. That’s not necessary! You can learn how to do something from a video someone made in their house using their cellphone! A learning video should show professional production skills, but production is not as important as the content and purpose. That being said, rank your video’s technical score with these questions:

- How is the overall look of the video? Is it pleasing to the eye?

- Is it easy to understand the audio? Is the volume consistent? Is the audio free from imperfections?

- Are the visuals composed nicely so that the program is engaging to look at for a long period of time?

- Does lighting enhance or distract from the subject in the video?

- Are there too many effects? Are graphics used to support the message?

- Is the video the same size throughout or do you see black bars and boxes on the sides or top?

- Is the video quality sharp?

Total Score
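If you track your evaluations in a script or spreadsheet, adding up the score can be sketched as below. The rubric's actual scale is not spelled out in the text, so this assumes each of the seven content questions and seven technical questions is ranked from 1 (weak) to 4 (strong). The short item labels are my own shorthand for the questions above, and the function names are hypothetical.

```python
# Shorthand labels for the seven content and seven technical questions.
CONTENT_ITEMS = ["accuracy", "audience value", "objectives", "free from bias",
                 "call to action", "story structure", "sharing plan"]
TECHNICAL_ITEMS = ["overall look", "audio", "composition", "lighting",
                   "effects and graphics", "frame size", "sharpness"]
SCALE = range(1, 5)  # assumed scale: 1 (weak) to 4 (strong)


def subtotal(items: list[str], ranks: dict[str, int]) -> int:
    """Sum the ranks for one section, rejecting missing or out-of-range values."""
    for item in items:
        if ranks.get(item) not in SCALE:
            raise ValueError(f"{item!r} needs a rank from 1 to 4")
    return sum(ranks[item] for item in items)


def total_score(ranks: dict[str, int]) -> dict[str, int]:
    """Return content and technical subtotals plus the overall total."""
    content = subtotal(CONTENT_ITEMS, ranks)
    technical = subtotal(TECHNICAL_ITEMS, ranks)
    return {"content": content, "technical": technical, "total": content + technical}


# A video with solid content and modest production values.
ranks = {item: 3 for item in CONTENT_ITEMS}
ranks.update({item: 2 for item in TECHNICAL_ITEMS})
print(total_score(ranks))
```

Keeping the two subtotals separate makes it easy to see when a low total comes from production polish rather than from content and purpose, which matter more.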