Memory construction: a brief and selective history

Affiliation.

- 1 Department of Psychology, City, University of London, London, UK.

- PMID: 35331087

- DOI: 10.1080/09658211.2021.1964795

In this short article, we provide a brief introduction to the idea that memory involves constructive processes. The importance of constructive processes in memory has a rich history, one that stretches back more than 125 years. This historical context provides a backdrop for the articles appearing in this special issue of Memory, articles that outline the current thinking about the constructive nature of memory. We argue that memory construction, either implicitly or explicitly, represents the current framework in which modern memory research is embedded.

Keywords: Memory construction; autobiographical memory; false memory; knowledge representation; memory accuracy.

10.10: Eyewitness Testimony and Memory Construction

Learning Objectives

- Describe the unreliability of eyewitness testimony

- Explain the misinformation effect

Memory Construction and Reconstruction

The formulation of new memories is sometimes called construction, and the process of bringing up old memories is called reconstruction. Yet as we retrieve our memories, we also tend to alter and modify them. A memory pulled from long-term storage into short-term memory is flexible. New events can be added and we can change what we think we remember about past events, resulting in inaccuracies and distortions. People may not intend to distort facts, but it can happen in the process of retrieving old memories and combining them with new memories (Roediger and DeSoto, in press).

Suggestibility

When someone witnesses a crime, that person’s memory of the details of the crime is very important in catching the suspect. Because memory is so fragile, witnesses can be easily (and often accidentally) misled due to the problem of suggestibility. Suggestibility describes the effects of misinformation from external sources that lead to the creation of false memories. In the fall of 2002, a sniper in the DC area shot people who were at a gas station, leaving a Home Depot, or walking down the street. These attacks went on in a variety of places for over three weeks and resulted in the deaths of ten people. During this time, as you can imagine, people were terrified to leave their homes, go shopping, or even walk through their neighborhoods. Police officers and the FBI worked frantically to solve the crimes, and a tip hotline was set up. Law enforcement received over 140,000 tips, which resulted in approximately 35,000 possible suspects (Newseum, n.d.).

Most of the tips were dead ends, until a white van was spotted at the site of one of the shootings. The police chief went on national television with a picture of the white van. After the news conference, several other eyewitnesses called to say that they too had seen a white van fleeing from the scene of the shooting. At the time, there were more than 70,000 white vans in the area. Police officers, as well as the general public, focused almost exclusively on white vans because they believed the eyewitnesses. Other tips were ignored. When the suspects were finally caught, they were driving a blue sedan.

As illustrated by this example, we are vulnerable to the power of suggestion, simply based on something we see on the news. Or we can claim to remember something that in fact is only a suggestion someone made. It is the suggestion that is the cause of the false memory.

Eyewitness Misidentification

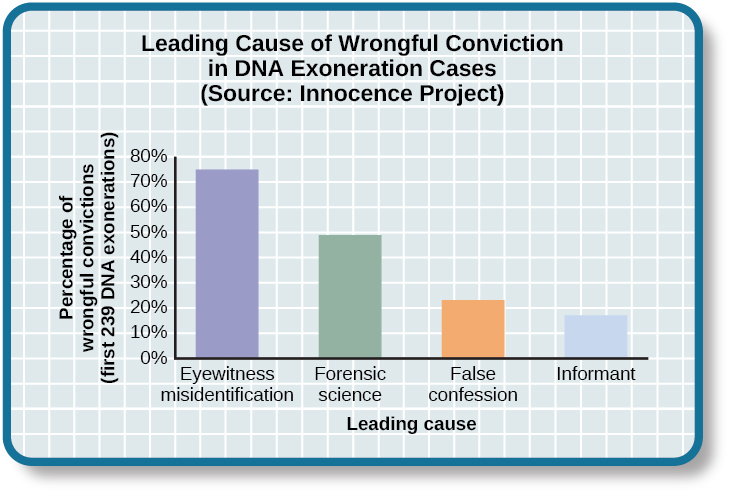

Even though memory and the process of reconstruction can be fragile, police officers, prosecutors, and the courts often rely on eyewitness identification and testimony in the prosecution of criminals. However, faulty eyewitness identification and testimony can lead to wrongful convictions (Figure 1).

How does this happen? In 1984, Jennifer Thompson, then a 22-year-old college student in North Carolina, was brutally raped at knifepoint. As she was being raped, she tried to memorize every detail of her rapist’s face and physical characteristics, vowing that if she survived, she would help get him convicted. After the police were contacted, a composite sketch was made of the suspect, and Jennifer was shown six photos. She chose two, one of which was of Ronald Cotton. After looking at the photos for 4–5 minutes, she said, “Yeah. This is the one,” and then she added, “I think this is the guy.” When the detective asked, “You’re sure? Positive?” she said that it was him. Then she asked the detective if she did OK, and he reinforced her choice by telling her she did great. These kinds of unintended cues and suggestions by police officers can lead witnesses to identify the wrong suspect. The district attorney was concerned about her lack of certainty the first time, so she viewed a lineup of seven men. She said she was trying to decide between numbers 4 and 5, finally deciding that Cotton, number 5, “Looks most like him.” He was 22 years old.

By the time the trial began, Jennifer Thompson had absolutely no doubt that she was raped by Ronald Cotton. She testified at the court hearing, and her testimony was compelling enough that it helped convict him. How did she go from “I think this is the guy” and “Looks most like him” to such certainty? Gary Wells and Deah Quinlivan (2009) attribute this kind of shift to suggestive police identification procedures, such as stacking lineups to make the defendant stand out, telling the witness which person to identify, and confirming witnesses’ choices by telling them “Good choice” or “You picked the guy.”

After Cotton was convicted of the rape, he was sent to prison for life plus 50 years. After 4 years in prison, he was able to get a new trial. Jennifer Thompson once again testified against him. This time Ronald Cotton was given two life sentences. After he had served 11 years in prison, DNA evidence finally demonstrated that Ronald Cotton was innocent: he had spent over a decade in prison for a crime he did not commit.

Link to Learning

To learn more about Ronald Cotton and the fallibility of memory, watch these excellent Part 1 and Part 2 videos by 60 Minutes.

Ronald Cotton’s story, unfortunately, is not unique. There are also people who were convicted and placed on death row, who were later exonerated. The Innocence Project is a non-profit group that works to exonerate falsely convicted people, including those convicted by eyewitness testimony. To learn more, you can visit innocenceproject.org.

Dig Deeper: Preserving Eyewitness Memory: The Elizabeth Smart Case

Contrast the Cotton case with what happened in the Elizabeth Smart case. When Elizabeth was 14 years old and fast asleep in her bed at home, she was abducted at knifepoint. Her nine-year-old sister, Mary Katherine, was sleeping in the same bed and watched, terrified, as her beloved older sister was abducted. Mary Katherine was the sole eyewitness to this crime and was very fearful. In the coming weeks, the Salt Lake City police and the FBI proceeded with caution with Mary Katherine. They did not want to implant any false memories or mislead her in any way. They did not show her police line-ups or push her to do a composite sketch of the abductor. They knew if they corrupted her memory, Elizabeth might never be found. For several months, there was little or no progress on the case. Then, about 4 months after the kidnapping, Mary Katherine first recalled that she had heard the abductor’s voice prior to that night (he had worked one time as a handyman at the family’s home) and then she was able to name the person whose voice it was. The family contacted the press and others recognized him. After a total of nine months, the suspect was caught and Elizabeth Smart was returned to her family.

Elizabeth Loftus and the Misinformation Effect

Cognitive psychologist Elizabeth Loftus has conducted extensive research on memory. She has studied false memories as well as recovered memories of childhood sexual abuse. Loftus also developed the misinformation effect paradigm, which holds that after exposure to incorrect information, a person may misremember the original event.

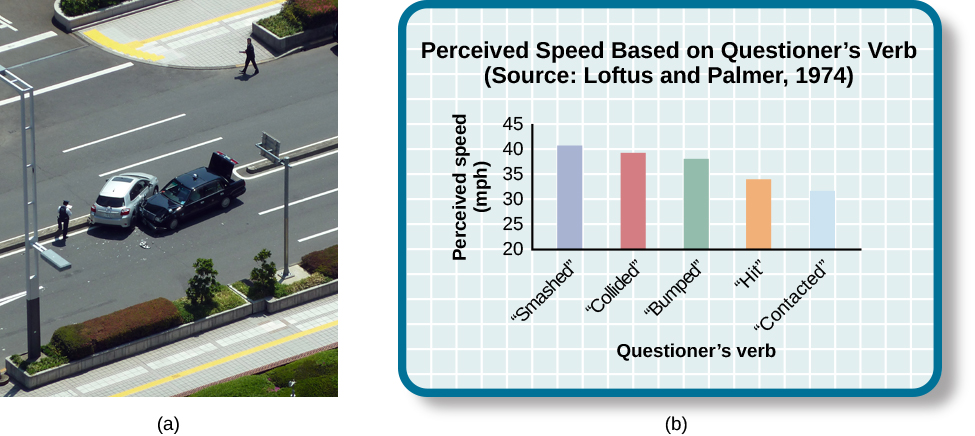

According to Loftus, an eyewitness’s memory of an event is very flexible due to the misinformation effect. To test this theory, Loftus and John Palmer (1974) asked 45 U.S. college students to estimate the speed of cars using different forms of questions (Figure 2). The participants were shown films of car accidents and were asked to play the role of the eyewitness and describe what happened. They were asked, “About how fast were the cars going when they (smashed, collided, bumped, hit, contacted) each other?” The participants estimated the speed of the cars based on the verb used.

Participants who heard the word “smashed” estimated that the cars were traveling at a much higher speed than participants who heard the word “contacted.” The implied information about speed, based on the verb they heard, had an effect on the participants’ memory of the accident. In a follow-up one week later, participants were asked if they saw any broken glass (none was shown in the accident pictures). Participants who had been in the “smashed” group were more than twice as likely to indicate that they did remember seeing glass. Loftus and Palmer demonstrated that a leading question encouraged them to not only remember the cars were going faster, but to also falsely remember that they saw broken glass.

Studies have demonstrated that young adults (the typical research subjects in psychology) are often susceptible to misinformation, but that children and older adults can be even more susceptible (Bartlett & Memon, 2007; Ceci & Bruck, 1995). In addition, misinformation effects can occur easily, and without any intention to deceive (Allan & Gabbert, 2008). Even slight differences in the wording of a question can lead to misinformation effects. Subjects in one study were more likely to say yes when asked “Did you see the broken headlight?” than when asked “Did you see a broken headlight?” (Loftus, 1975).

Other studies have shown that misinformation can corrupt memory even more easily when it is encountered in social situations (Gabbert, Memon, Allan, & Wright, 2004). This is a problem particularly in cases where more than one person witnesses a crime. In these cases, witnesses tend to talk to one another in the immediate aftermath of the crime, including as they wait for police to arrive. But because different witnesses are different people with different perspectives, they are likely to see or notice different things, and thus remember different things, even when they witness the same event. So when they communicate about the crime later, they not only reinforce common memories for the event, they also contaminate each other’s memories for the event (Gabbert, Memon, & Allan, 2003; Paterson & Kemp, 2006; Takarangi, Parker, & Garry, 2006).

The misinformation effect has been modeled in the laboratory. Researchers had subjects watch a video in pairs. Both subjects sat in front of the same screen, but because they wore differently polarized glasses, they saw two different versions of a video, projected onto a screen. So, although they were both watching the same screen, and believed (quite reasonably) that they were watching the same video, they were actually watching two different versions of the video (Garry, French, Kinzett, & Mori, 2008).

In the video, Eric the electrician is seen wandering through an unoccupied house and helping himself to the contents thereof. A total of eight details were different between the two videos. After watching the videos, the “co-witnesses” worked together on 12 memory test questions. Four of these questions dealt with details that were different in the two versions of the video, so subjects had the chance to influence one another. Then subjects worked individually on 20 additional memory test questions. Eight of these were for details that were different in the two videos. Subjects’ accuracy was highly dependent on whether they had discussed the details previously. Their accuracy for items they had not previously discussed with their co-witness was 79%. But for items that they had discussed, their accuracy dropped markedly, to 34%. That is, subjects allowed their co-witnesses to corrupt their memories for what they had seen.

Controversies over Repressed and Recovered Memories

Other researchers have described how whole events, not just words, can be falsely recalled, even when they did not happen. The idea that memories of traumatic events could be repressed has been a theme in the field of psychology, beginning with Sigmund Freud, and the controversy surrounding the idea continues today.

Recall of false autobiographical memories is called false memory syndrome. This syndrome has received a lot of publicity, particularly as it relates to memories of events that do not have independent witnesses, such as sexual abuse, where often the only witnesses are the perpetrator and the victim.

On one side of the debate are those who hold that memories of childhood abuse can be recovered years after it occurred. These researchers argue that some children’s experiences have been so traumatizing and distressing that they must lock those memories away in order to lead some semblance of a normal life. They believe that repressed memories can be locked away for decades and later recalled intact through hypnosis and guided imagery techniques (Devilly, 2007).

Research suggests that having no memory of childhood sexual abuse is quite common in adults. For instance, one large-scale study conducted by John Briere and Jon Conte (1993) revealed that 59% of 450 men and women who were receiving treatment for sexual abuse that had occurred before age 18 had forgotten their experiences. Ross Cheit (2007) suggested that repressing these memories created psychological distress in adulthood. The Recovered Memory Project was created so that victims of childhood sexual abuse can recall these memories and allow the healing process to begin (Cheit, 2007; Devilly, 2007).

On the other side, Loftus has challenged the idea that individuals can repress memories of traumatic events from childhood, including sexual abuse, and then recover those memories years later through therapeutic techniques such as hypnosis, guided visualization, and age regression.

Loftus is not saying that childhood sexual abuse doesn’t happen, but she does question whether or not those memories are accurate, and she is skeptical of the questioning process used to access these memories, given that even the slightest suggestion from the therapist can lead to misinformation effects. For example, researchers Stephen Ceci and Maggie Bruck (1993, 1995) asked three-year-old children to use an anatomically correct doll to show where their pediatricians had touched them during an exam. Fifty-five percent of the children pointed to the genital/anal area on the dolls, even when they had not received any form of genital exam.

Ever since Loftus published her first studies on the suggestibility of eyewitness testimony in the 1970s, social scientists, police officers, therapists, and legal practitioners have been aware of the flaws in interview practices. Consequently, steps have been taken to decrease suggestibility of witnesses. One way is to modify how witnesses are questioned. When interviewers use neutral and less leading language, children more accurately recall what happened and who was involved (Goodman, 2006; Pipe, 1996; Pipe, Lamb, Orbach, & Esplin, 2004). Another change is in how police lineups are conducted. It’s recommended that a blind photo lineup be used. This way the person administering the lineup doesn’t know which photo belongs to the suspect, minimizing the possibility of giving leading cues. Additionally, judges in some states now inform jurors about the possibility of misidentification. Judges can also suppress eyewitness testimony if they deem it unreliable.

More on False Memories

In early false memory studies, undergraduate subjects’ family members were recruited to provide events from the students’ lives. The student subjects were told that the researchers had talked to their family members and learned about four different events from their childhoods. The researchers asked if the now-undergraduate students remembered each of these four events, which were introduced via short hints. The subjects were asked to write about each of the four events in a booklet and then were interviewed two separate times. The trick was that one of the events came from the researchers rather than the family (and the family had actually assured the researchers that this event had not happened to the subject). In the first such study, this researcher-introduced event was a story about being lost in a shopping mall and rescued by an older adult. In this study, after simply being asked on three separate occasions whether they remembered these events, a quarter of subjects came to believe that they had indeed been lost in the mall (Loftus & Pickrell, 1995). In subsequent studies, similar procedures were used to get subjects to believe that they nearly drowned and had been rescued by a lifeguard, or that they had spilled punch on the bride’s parents at a family wedding, or that they had been attacked by a vicious animal as a child, among other events (Heaps & Nash, 1999; Hyman, Husband, & Billings, 1995; Porter, Yuille, & Lehman, 1999).

More recent false memory studies have used a variety of different manipulations to produce false memories in substantial minorities and even occasional majorities of manipulated subjects (Braun, Ellis, & Loftus, 2002; Lindsay, Hagen, Read, Wade, & Garry, 2004; Mazzoni, Loftus, Seitz, & Lynn, 1999; Seamon, Philbin, & Harrison, 2006; Wade, Garry, Read, & Lindsay, 2002). For example, one group of researchers used a mock-advertising study, wherein subjects were asked to review (fake) advertisements for Disney vacations, to convince subjects that they had once met the character Bugs Bunny at Disneyland—an impossible false memory because Bugs is a Warner Brothers character (Braun et al., 2002). Another group of researchers photoshopped childhood photographs of their subjects into a hot air balloon picture and then asked the subjects to try to remember and describe their hot air balloon experience (Wade et al., 2002). Other researchers gave subjects unmanipulated class photographs from their childhoods along with a fake story about a class prank, and thus enhanced the likelihood that subjects would falsely remember the prank (Lindsay et al., 2004).

Using a false feedback manipulation, we have been able to persuade subjects to falsely remember having a variety of childhood experiences. In these studies, subjects are told (falsely) that a powerful computer system has analyzed questionnaires that they completed previously and has concluded that they had a particular experience years earlier. Subjects apparently believe what the computer says about them and adjust their memories to match this new information. A variety of different false memories have been implanted in this way. In some studies, subjects are told they once got sick on a particular food (Bernstein, Laney, Morris, & Loftus, 2005). These memories can then spill out into other aspects of subjects’ lives, such that they often become less interested in eating that food in the future (Bernstein & Loftus, 2009b). Other false memories implanted with this methodology include having an unpleasant experience with the character Pluto at Disneyland and witnessing physical violence between one’s parents (Berkowitz, Laney, Morris, Garry, & Loftus, 2008; Laney & Loftus, 2008).

Importantly, once these false memories are implanted—whether through complex methods or simple ones—it is extremely difficult to tell them apart from true memories (Bernstein & Loftus, 2009a; Laney & Loftus, 2008).

Think It Over

Jurors place a lot of weight on eyewitness testimony. Imagine you are an attorney representing a defendant who is accused of robbing a convenience store. Several eyewitnesses have been called to testify against your client. What would you tell the jurors about the reliability of eyewitness testimony?

false memory syndrome: recall of false autobiographical memories

memory construction: formulation of new memories

misattribution: memory error in which you confuse the source of your information

misinformation effect paradigm: after exposure to incorrect information, a person may misremember the original event

reconstruction: process of bringing up old memories that might be distorted by new information

suggestibility: effects of misinformation from external sources that lead to the creation of false memories

Licenses and Attributions

CC licensed content, Shared previously

- Problems with Memory. Authored by : OpenStax College. Located at : http://cnx.org/contents/[email protected]:I97J3Te3@7/Problems-with-Memory . License : CC BY: Attribution . License Terms : Download for free at http://cnx.org/contents/[email protected]

- False Memories and more on the Misinformation Effect. Authored by : Cara Laney and Elizabeth F. Loftus . Provided by : Reed College, University of California, Irvine. Located at : http://nobaproject.com/textbooks/wendy-king-introduction-to-psychology-the-full-noba-collection/modules/eyewitness-testimony-and-memory-biases . Project : The Noba Project. License : CC BY: Attribution

- The Misinformation Effect. Authored by : Eureka Foong. Located at : https://www.youtube.com/watch?v=iMPIWkFtd88 . License : Other . License Terms : Standard YouTube License

- Open access

- Published: 19 January 2024

A generative model of memory construction and consolidation

- Eleanor Spens ORCID: orcid.org/0000-0002-9327-6342 1 &

- Neil Burgess ORCID: orcid.org/0000-0003-0646-6584 1 , 2

Nature Human Behaviour volume 8, pages 526–543 (2024)

- Cognitive neuroscience

- Computational neuroscience

Episodic memories are (re)constructed, share neural substrates with imagination, combine unique features with schema-based predictions and show schema-based distortions that increase with consolidation. Here we present a computational model in which hippocampal replay (from an autoassociative network) trains generative models (variational autoencoders) to (re)create sensory experiences from latent variable representations in entorhinal, medial prefrontal and anterolateral temporal cortices via the hippocampal formation. Simulations show effects of memory age and hippocampal lesions in agreement with previous models, but also provide mechanisms for semantic memory, imagination, episodic future thinking, relational inference and schema-based distortions including boundary extension. The model explains how unique sensory and predictable conceptual elements of memories are stored and reconstructed by efficiently combining both hippocampal and neocortical systems, optimizing the use of limited hippocampal storage for new and unusual information. Overall, we believe hippocampal replay training generative models provides a comprehensive account of memory construction, imagination and consolidation.

Episodic memory concerns autobiographical experiences in their spatiotemporal context, whereas semantic memory concerns factual knowledge 1 . The former is thought to rapidly capture multimodal experience via long-term potentiation in the hippocampus, enabling the latter to learn statistical regularities over multiple experiences in the neocortex 2 , 3 , 4 , 5 . Crucially, episodic memory is thought to be constructive; recall is the (re)construction of a past experience, rather than the retrieval of a copy 6 , 7 . But the mechanisms behind episodic (re)construction and its link to semantic memory are not well understood.

Old memories can be preserved after hippocampal damage despite amnesia for recent ones 8 , suggesting that memories initially encoded in the hippocampus end up being stored in neocortical areas, an idea known as ‘systems consolidation’ 9 . The standard model of systems consolidation involves transfer of information from the hippocampus to the neocortex 2 , 3 , 4 , 10 , whereas other views suggest that episodic and semantic information from the same events can exist in parallel 11 . Hippocampal ‘replay’ of patterns of neural activity during rest 12 , 13 is thought to play a role in consolidation 14 , 15 . However, consolidation does not just change which brain regions support memory traces; it also converts them into a more abstract representation, a process sometimes referred to as semanticization 16 , 17 .

Generative models capture the probability distributions underlying data, enabling the generation of realistic new items by sampling from these distributions. Here we propose that consolidated memory takes the form of a generative network, trained to capture the statistical structure of stored events by learning to reproduce them (see also refs. 18 , 19 ). As consolidation proceeds, the generative network supports both the recall of ‘facts’ (semantic memory) and the reconstruction of experience from these ‘facts’ (episodic memory), in conjunction with additional information from the hippocampus that becomes less necessary as training progresses.
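To make the notion of a generative model concrete, the following is a minimal sketch rather than the authors' method: it fits a one-dimensional Gaussian to a set of observations and then samples new items from the fitted distribution. The model described in the article uses variational autoencoders on images; the Gaussian, the helper names `fit_gaussian` and `generate`, and the synthetic data are all assumptions chosen for illustration.

```python
import random
import statistics

def fit_gaussian(samples):
    """Estimate the mean and standard deviation of a 1-D dataset."""
    return statistics.mean(samples), statistics.stdev(samples)

def generate(mean, std, n, rng):
    """Draw n new items from the fitted distribution."""
    return [rng.gauss(mean, std) for _ in range(n)]

rng = random.Random(0)

# Synthetic stand-in for "experienced events" (assumed true mean 5, std 2).
observed = [rng.gauss(5.0, 2.0) for _ in range(2000)]

# "Training": capture the statistical structure of the observations.
mean, std = fit_gaussian(observed)

# "Generation": new items that resemble, but do not copy, the observations.
new_items = generate(mean, std, 5, rng)
print(f"fitted mean={mean:.2f}, std={std:.2f}")
print("generated:", [round(x, 2) for x in new_items])
```

The fitted parameters play the role of the "facts" extracted from experience, and sampling from them plays the role of constructing a plausible new instance.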

This builds on existing models of spatial cognition in which recall and imagination of scenes involve the same neural circuits 20 , 21 , 22 , and is supported by evidence from neuropsychology that damage to the hippocampal formation (HF) leads to deficits in imagination 23 , episodic future thinking 24 , dreaming 25 and daydreaming 26 , as well as by neuroimaging evidence that recall and imagination involve similar neural processes 27 , 28 .

We model consolidation as the training of a generative model by an initial autoassociative encoding of memory through ‘teacher–student learning’ 29 during hippocampal replay (see also ref. 30 ). Recall after consolidation has occurred is a generative process mediated by schemas representing common structure across events, as are other forms of scene construction or imagination. Our model builds on: (1) research into the relationship between generative models and consolidation 18 , 19 , (2) the use of variational autoencoders to model the hippocampal formation 31 , 32 , 33 and (3) the view that abstract allocentric latent variables are learned from egocentric sensory representations in spatial cognition 22 .

More generally, we build on the idea that the memory system learns schemas which encode ‘priors’ for the reconstruction of input patterns 34 , 35 . Unpredictable aspects of experience need to be stored in detail for further learning, while fully predicted aspects do not, consistent with the idea that memory helps to predict the future 36 , 37 , 38 , 39 . We suggest that familiar components are encoded in the autoassociative network as concepts (relying on the generative network for reconstruction), while novel components are encoded in greater sensory detail. This is efficient in terms of memory storage 40 , 41 , 42 and reflects the fact that consolidation can be a gradual transition, during which the autoassociative network supports aspects of memory not yet captured by the generative network. In other words, the generative network can reconstruct predictable aspects of an event from the outset on the basis of existing schemas, but as consolidation progresses, the network updates its schemas to reconstruct the event more accurately until the formerly unpredictable details stored in HF are no longer required.
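The storage argument in this paragraph can be illustrated with a small sketch, offered under loud assumptions: features are plain numbers, the schema's prediction is given as a list, and a feature counts as "novel" when its prediction error exceeds a fixed threshold. The function names `encode_event` and `reconstruct` and the threshold value are hypothetical and are not taken from the article.

```python
def encode_event(event, predicted, threshold=0.5):
    """Store only poorly predicted features; leave the rest to the schema."""
    return {
        i: x
        for i, (x, p) in enumerate(zip(event, predicted))
        if abs(x - p) > threshold
    }

def reconstruct(predicted, stored_details):
    """Recall: use a stored detail where one exists, else the schema's prediction."""
    return [stored_details.get(i, p) for i, p in enumerate(predicted)]

event = [1.0, 0.0, 1.0, 0.2, 3.0]       # what was actually experienced
predicted = [0.9, 0.1, 1.0, 0.0, 0.5]   # what the schema expects

details = encode_event(event, predicted)
recalled = reconstruct(predicted, details)

print(details)   # only the surprising feature is stored in detail
print(recalled)  # predictable features are filled in from the schema
```

Only one of five features is stored explicitly, which is the efficiency gain. The cost is that predictable features are recalled as the schema expects them rather than as they were, a toy version of schema-based distortion.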

Our model draws together existing ideas in machine learning to suggest an explanation for the following key features of memory, only subsets of which are captured by previous models:

The initial encoding of memory requires only a single exposure to the event and depends on the HF, while the consolidated form of memory is acquired more gradually 2 , 3 , 10 , as in the complementary learning systems (CLS) model 4 .

The semantic content of memories becomes independent of the HF over time 43 , 44 , 45 , consistent with CLS.

Vivid, detailed episodic memory remains dependent on HF 46 , consistent with multiple trace theory 11 (but not with CLS).

Similar neural circuits are involved in recall, imagination and episodic future thinking 27 , 28 , suggesting a common mechanism for event generation, as modelled in spatial cognition 22 .

Consolidation extracts statistical regularities from episodic memories to inform behaviour 47 , 48 , and supports relational inference and generalization 49 . The Tolman–Eichenbaum machine (TEM) 31 simulates this in the domain of multiple tasks with common transition structures (see also ref. 50 ), while ref. 51 models how both individual examples and statistical regularities could be learned within HF.

Post-consolidation episodic memories are more prone to schema-based distortions in which semantic or contextual knowledge influences recall 6 , 52 , consistent with the behaviour of generative models 32 .

Neural representations in the entorhinal cortex (EC) such as grid cells 53 are thought to encode latent structures underlying experiences 31 , 54 , and other regions of the association cortex, such as the medial prefrontal cortex (mPFC), may compress stimuli to a minimal representation 55 .

Novelty is thought to promote encoding within HF 56 , while more predictable events consistent with existing schemas are consolidated more rapidly 57 . Activity in the hippocampus can reflect prediction error or mismatch novelty 58 , 59 , and novelty is thought to affect the degree of compression of representations in memory 60 to make efficient use of limited HF capacity 42 .

Memory traces in the hippocampus appear to involve a mixture of sensory and conceptual features, with the latter encoded by concept cells 61 , potentially bound together by episode-specific neurons 62 . Few models explore how this could happen.

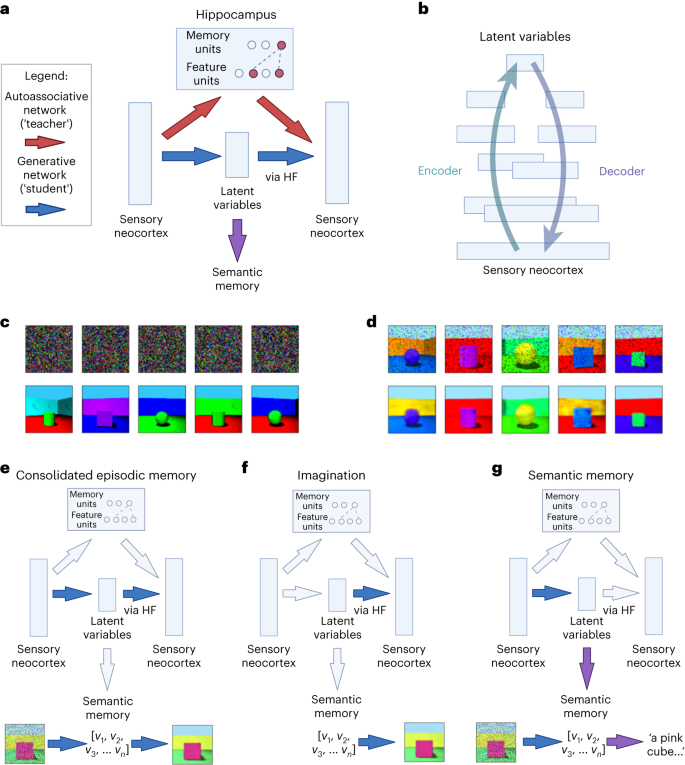

Consolidation as the training of a generative model

Our model simulates how the initial representation of memories can be used to train a generative network, which learns to reconstruct memories by capturing the statistical structure of experienced events (or ‘schemas’). First, the hippocampus rapidly encodes an event; then, generative networks gradually take over after being trained on replayed representations from the hippocampus. This makes the memory more abstracted, more supportive of generalization and relational inference, but also more prone to gist-based distortion. The generative networks can be used to reconstruct (for memory) or construct (for imagination) sensory experience, or to support semantic memory and relational inference directly from their latent variable representations (see Fig. 1 ).

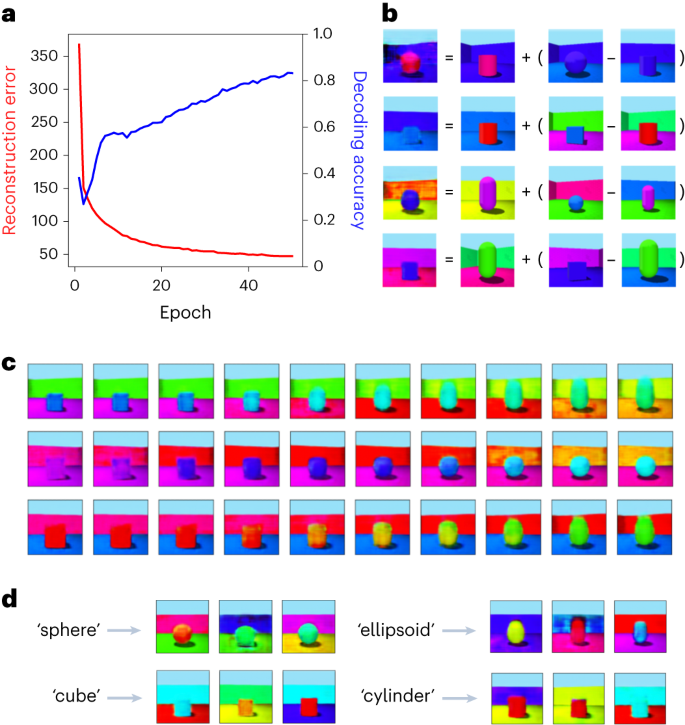

a , First, the hippocampus rapidly encodes an event, modelled as one-shot memorization in an autoassociative network (an MHN). Then, generative networks are trained on replayed representations from the autoassociative network, learning to reconstruct memories by capturing the statistical structure of experienced events. b , A more detailed schematic of the generative network to indicate the multiple layers of, and overlap between, the encoder and decoder (where layers closer to the sensory neocortex overlap more). The generation of a sensory experience, for example, visual imagery, requires the decoder’s return projections to the sensory neocortex via HF. c , Random noise inputs to the MHN (top row) reactivate its memories (bottom row) after 10,000 items from the Shapes3D dataset are encoded, with five examples shown. d , The generative model (a variational autoencoder) can recall images (bottom row) from a partial input (top row), following training on 10,000 replayed memories sampled from the MHN. e , Episodic memory after consolidation: a partial input is mapped to latent variables whose return projections to the sensory neocortex via HF then decode these back into a sensory experience. f , Imagination: latent variables are decoded into an experience via HF and return projections to the neocortex. g , Semantic memory: a partial input is mapped to latent variables, which capture the ‘key facts’ of the scene. The bottom rows of e – g illustrate these functions in a model that has encoded the Shapes3D dataset into latent variables (v_1, v_2, v_3, …, v_n). Diagrams were created using BioRender.com.

Before consolidation, the hippocampal autoassociative network encodes the memory. A modern Hopfield network (MHN) 63 is used, which can be interpreted such that the feature units activated by an event are bound together by a memory unit 64 (see Methods and Supplementary Information ). Teacher–student learning 29 allows transfer of memories from one neural network to another during consolidation 30 . Accordingly, we use outputs from the autoassociative network to train the generative network: random inputs to the hippocampus result in the reactivation of memories, and this reactivation results in consolidation. After consolidation, generative networks encode the information contained in memories. Reliance on the generative networks increases over time as they learn to reconstruct a particular event.
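The two properties of the autoassociative network used here (one-shot storage, and retrieval of a stored memory from a partial or random input) can be illustrated with a minimal sketch. It assumes the softmax update rule commonly used for modern Hopfield networks; the function names, the inverse temperature `beta`, the number of update steps and the toy patterns are all hypothetical, and the implementation described in Methods may differ.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def mhn_retrieve(memories, query, beta=8.0, steps=5):
    """Pattern completion: repeatedly replace the state with a
    softmax-weighted average of the stored patterns (assumed update rule)."""
    state = list(query)
    for _ in range(steps):
        scores = [beta * sum(m * s for m, s in zip(mem, state)) for mem in memories]
        weights = softmax(scores)
        state = [
            sum(w * mem[j] for w, mem in zip(weights, memories))
            for j in range(len(state))
        ]
    return state


# One-shot "encoding": each event is stored as a row after a single exposure.
# Toy patterns, chosen for illustration only.
memories = [
    [1, 1, 1, 1, -1, -1, -1, -1],
    [1, -1, 1, -1, 1, -1, 1, -1],
    [-1, -1, 1, 1, 1, 1, -1, -1],
]

# Recall from a partial cue: the second half of the first memory is missing.
partial = [1, 1, 1, 1, 0, 0, 0, 0]
recalled = [round(x) for x in mhn_retrieve(memories, partial)]

# Replay: a random-noise input settles on one of the stored memories.
rng = random.Random(0)
noise = [rng.gauss(0, 1) for _ in range(8)]
replayed = [round(x) for x in mhn_retrieve(memories, noise)]
```

In this sketch the replayed pattern is whichever stored memory the noise happens to be closest to, which is the sense in which random inputs sample from the set of memories to provide training data for the generative network.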

Specifically, the generative networks are implemented as variational autoencoders (VAEs), which are autoencoders with special properties such that the most compressed layer represents a set of latent variables, which can be sampled from to generate realistic new examples corresponding to the training dataset 65 , 66 . Latent variables can be thought of as hidden factors behind the observed data, and directions in the latent space can correspond to meaningful transformations (see Methods ). The VAE’s encoder ‘encodes’ sensory experience as latent variables, while its decoder ‘decodes’ latent variables back to sensory experience. In psychological terms, after training on a class of stimuli, VAEs can reconstruct such stimuli from a partial input according to the schema for that class, and generate novel stimuli consistent with the schema. (Our use of VAEs is illustrative, and we would expect a range of other generative latent variable models, such as predictive coding networks 67 , 68 , 69 , to show similar behaviour.) See Methods and Supplementary Information for further details.
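For reference, the objective usually minimized when training a VAE can be written as below. This is the textbook formulation rather than a statement of the exact loss used in our simulations (see Methods); the notation is assumed for this sketch, with $x$ the sensory input, $z$ the latent variables, $q_\phi(z \mid x)$ the encoder, $p_\theta(x \mid z)$ the decoder and $p(z)$ a fixed prior (typically a standard normal distribution).

$$
\mathcal{L}(\theta, \phi; x) = -\,\mathbb{E}_{q_\phi(z \mid x)}\big[\log p_\theta(x \mid z)\big] + D_{\mathrm{KL}}\big(q_\phi(z \mid x)\,\|\,p(z)\big)
$$

The first term is the reconstruction error, and the second keeps the encoded latent variables close to the prior, which is what allows new examples to be generated by sampling $z$ and decoding it.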

Generative networks capture the probability distributions underlying events, or ‘schemas’. In other words, here ‘schemas’ are rules or priors (expected probability distributions) for reconstructing a certain type of stimulus (for example, the schema for an office predicts the presence of co-occurring objects such as desks and chairs, facilitating episode generation), whereas concepts represent categories but not necessarily how to reconstruct them. However, schemas and concepts are closely related, and their meanings can overlap, with conflicting definitions in the psychology literature 70 , 71 .

During perception, the generative model provides an ongoing estimate of novelty from its reconstruction error (also known as ‘prediction error’, the difference between input and output representations). Aspects of an event that are consistent with previous experience (that is, with low reconstruction error) do not need to be encoded in detail in the autoassociative ‘teacher’ network 36 , 37 , 38 , 39 . Once the generative network’s reconstruction error is sufficiently low, the hippocampal trace is unnecessary, freeing up capacity for new encodings. However, we have not simulated decay, deletion or capacity constraints in the autoassociative memory part of the model.

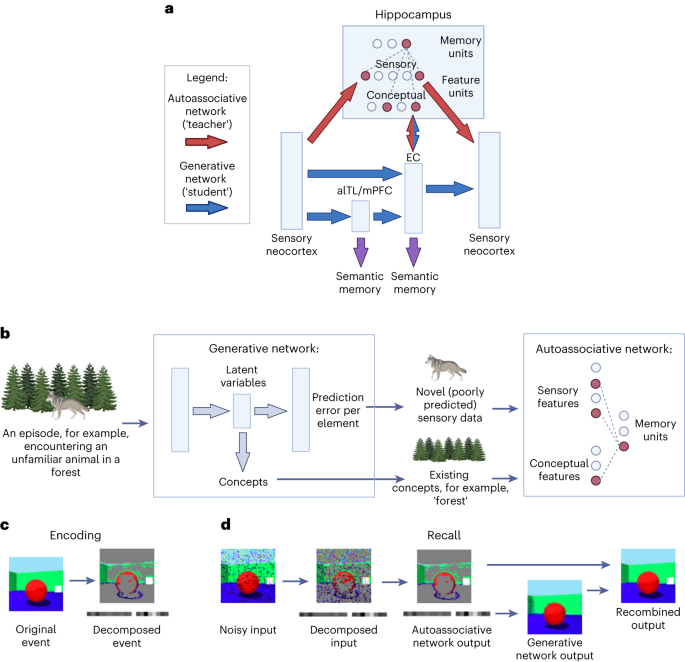

Combining conceptual and sensory features in episodic memory

Consolidation is often considered in terms of fine-grained sensory representations updating coarse-grained conceptual representations, for example, the sight of a particular dog updating the concept of a dog. Modelling hippocampal representations as sensory-like is a reasonable simplification, which we make in simulations of the ‘basic’ model in Fig. 1 . However, memories probably bind together representations along a spectrum from coarse-grained and conceptual to fine-grained and sensory. For example, the hippocampal encoding of a day at the beach is likely to bind together coarse-grained concepts such as ‘beach’ and ‘sea’ along with sensory representations such as the melody of an unfamiliar song or the sight of a particular sandcastle, consistent with the evidence for concept cells in the hippocampus 61 . (This also fits with the observation that ambiguous images ‘flip’ between interpretations in perception but are stable when held in memory 72 , reflecting how the conceptual content of memories constrains recall.)

Furthermore, encoding every sensory detail in the hippocampus would be inefficient (elements already predicted by conceptual representations being redundant); an efficient system should take advantage of shared structure across memories to encode only what is necessary 40 , 41 . Accordingly, we suggest that predictable elements are encoded as conceptual features linked to the generative latent variable representation, while unpredictable elements are encoded in a more detailed and veridical form as sensory features.

Suppose someone sees an unfamiliar animal in the forest (Fig. 2b ). Much of the event might be consistent with an existing forest schema, but the unfamiliar animal would be novel. In the extended model (Fig. 2 and section ‘Combining conceptual and unpredictable sensory features’), the reconstruction error per element of the experience is calculated by the generative model during perception, and elements with high reconstruction error are encoded in the autoassociative network as sensory features, along with conceptual features linked to the generative model’s latent variable representation. In other words, each pattern is split into a predictable component (approximating the generative network’s prediction for the pattern), plus an unpredictable component (elements with high prediction error). This produces a sparser vector than storing every element in detail, increasing the capacity of the network 42 .
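The split into predictable and unpredictable components can be sketched as follows. This is an illustrative simplification, not the simulation code: it assumes that the per-element reconstruction error is the absolute difference between input and prediction, and the function name `encode_event`, the threshold value and the toy vectors are hypothetical.

```python
def encode_event(pattern, latent, prediction, threshold):
    """Build a hippocampal trace from conceptual and sensory features.

    pattern:    the experienced event (list of floats)
    latent:     the generative network's latent variables for the event
    prediction: the generative network's reconstruction of the event
    threshold:  per-element error above which an element is stored in detail
    """
    # Assumption: per-element error is the absolute difference.
    sensory = {
        i: x
        for i, (x, p) in enumerate(zip(pattern, prediction))
        if abs(x - p) > threshold
    }
    # Conceptual features are a copy of the latent variables (a simplification).
    return {"conceptual": list(latent), "sensory": sensory}


# Toy example: elements 2 and 4 are the 'unfamiliar animal' in an
# otherwise well-predicted 'forest' scene (values are made up).
pattern = [0.1, 0.2, 0.9, 0.2, 1.0, 0.1]
prediction = [0.1, 0.25, 0.2, 0.2, 0.15, 0.1]
latent = [0.3, -1.2]

trace = encode_event(pattern, latent, prediction, threshold=0.3)
```

Here only two of the six elements are stored as sensory features, alongside the two latent variables, so the trace is sparser than a copy of every element.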

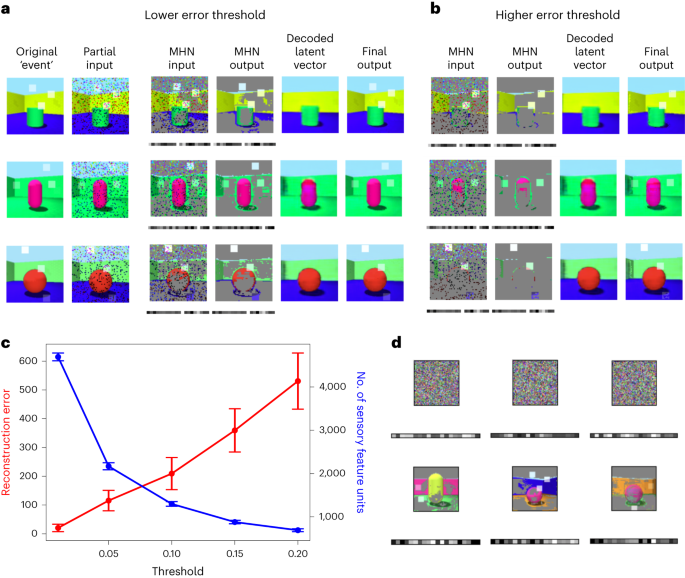

a , Each scene is initially encoded as a combination of predictable conceptual features related to the latent variables of the generative network and unpredictable sensory features that were poorly predicted by the generative network. An MHN (in red) encodes both sensory and conceptual features (with connections to the sensory neocortex and latent variables in EC, respectively), binding them together via memory units. Memories may eventually be learned by the generative model (in blue), but consolidation can be a prolonged process, during which time the generative network provides schemas for reconstruction and the autoassociative network supports new or detailed information not yet captured by these schemas. Multiple generative networks can be trained concurrently, with different networks optimized for different tasks. This includes networks with latent variables in EC, mPFC and alTL, each with its own semantic projections. However, in all cases, return projections to the sensory neocortex are via HF. b , An illustration of encoding in the extended model. c , Encoding ‘scenes’ from the Shapes3D dataset, with each ‘scene’ decomposed into unpredicted sensory features (top) and conceptual features linked to the generative network’s latent variables (bottom). Novel features (white squares overlaid on the image with varying opacity) are added to each ‘scene’. d , Recalling ‘scenes’ (with novel features) from the Shapes3D dataset. First, the input is decomposed; then, the MHN performs pattern completion on both sensory and conceptual features. The conceptual features (which in these simulations are simply the generative network’s latent variables) are then decoded into a schema-based prediction, onto which any stored sensory features are overwritten. Diagrams were created using BioRender.com .

Neural substrates of the model

Which brain regions do the components of this model represent? The autoassociative network involves the hippocampus binding together the constituents of a memory in the neocortex, whereas the generative network involves neocortical inputs projecting to latent variable representations in the higher association cortex, which then project back to the neocortex via the HF. The entorhinal cortex (EC), medial prefrontal cortex (mPFC) and anterolateral temporal lobe (alTL) are all prime candidates for the site of latent variable representations.

First, the EC is the main route between the hippocampus and the neocortex, and is where grid cells, which are thought to be a latent variable representation of spatial or relational structure 31 , 54 , are most often observed 73 . Second, mPFC and its connections to HF play a crucial role in episodic memory processing 70 , 74 , 75 , 76 , 77 , 78 , are thought to encode schemas 57 , 71 , are implicated in transitive inference 79 and the integration of memories 80 , and perform dimensionality reduction by compressing irrelevant features 55 . Third, the anterior and lateral temporal cortices associated with semantic memory 81 and retrograde amnesia 82 probably contain latent variable representations capturing semantic structure. This might correspond to the ‘anterior temporal network’ associated with semantic dementia 83 , while the first network (between sensory and entorhinal cortices) might correspond to the ‘posterior medial network’ 83 , and to the network mapping between visual scenes and allocentric spatial representations 20 , 21 , 22 .

Which regions constitute the generative network’s decoder? The decoder converts latent variable representations in the higher association cortex back to sensory neocortical representations via HF. Patients with damage to the hippocampus proper but not the EC can generate simple scenes (or fragments thereof), but an intact hippocampus is required for more coherent imagery of complex ones 23 . We hypothesize that conceptual units in the hippocampus proper help to generate complex, conceptually coherent scenes (perhaps through a recurrent ‘clean up’ mechanism), but that an intact EC and its return pathway to the sensory neocortex (the ventral visual stream for images) can still decode representations to some extent in their absence.

Multiple generative networks can be trained concurrently from a single autoassociative network through consolidation, with different networks optimized for different tasks. In other words, multiple networks could update their parameters to minimize prediction error on the basis of the same replayed memories. This could consist of a primary VAE with latent variables in the EC, plus additional parallel pathways from the higher sensory cortex to the EC via latent variables in the mPFC or the alTL. (Computationally, the shared connections could be fixed as the alternative pathways are trained.) Note that in all cases, return projections to the sensory neocortex via HF are required to decode latent variables into sensory experiences.

Modelling encoding and recall

Each new event is encoded as an autoassociative trace in the hippocampus, modelled as an MHN. Two properties of this network are particularly important: memorization occurs with only one exposure, and random inputs to the network retrieve stored memories sampled from the whole set of memories (modelling replay).

We model recall as (re)constructing a scene from a partial input. First, we simulate encoding and replay in the autoassociative network. The network memorizes a set of scenes, representing events, as described above. When the network is given a partial input, it retrieves the closest stored memory. Even when the network is given random noise, it retrieves stored memories (see Fig. 1c ). Second, we simulate recall in the generative network trained on reactivated memories from the autoassociative network, which is able to reconstruct the original image when presented with a partial version of an item from the training data (Fig. 1d ).

In the basic model (Fig. 1a ), the prediction error could be calculated for each event so that only the unpredictable events are stored in the hippocampus, as the predictable ones can already be retrieved by the generative network (however, this is not simulated explicitly). In the extended model (Fig. 2 and section ‘Combining conceptual and unpredictable sensory features’), prediction error is calculated for each element of an event, determining which sensory details are stored.

Modelling semantic memory

Existing semantic memory survives when the hippocampus is lesioned 43 , 44 , 45 , and hippocampal amnesics can describe remote memories more successfully than recent ones 8 , 84 , even if they might not recall them ‘episodically’ 11 . This temporal gradient indicates that the semantic component of memories becomes HF-independent. In the model, EC lesions impair all truly episodic recollection since the return projections from the HF are required for the generation of sensory experiences. Here we describe how remote memories could be retrieved ‘in semantic form’ despite lesions including the hippocampus and the EC.

The latent variable representation of an event in the generative network encodes the key facts about the event and can drive semantic memory directly without decoding the representation back into a sensory experience (Fig. 1g). The output route via HF is necessary for turning latent variable representations in mPFC or alTL into a sensory experience, but the latent variables themselves could support semantic retrieval. Thus, when the HF (including the EC) is removed, the model can still support retrieval of semantic information (see section ‘Modelling brain damage’ for details). To show this, we trained models to predict attributes of each image from its latent vector. Figure 3a shows that semantic ‘decoding accuracy’ increases as training progresses, reflecting the learning of semantic structure as a by-product of learning to reconstruct the sensory input patterns (r_s(48) = 0.997, P < 0.001, 95% confidence interval (CI) = 0.987, 1.000). While semantic memory is much more complex than simple classification, richer ‘semantic’ outputs such as verbal descriptions can also be decoded from latent variable representations of images 85 , 86 .

a , Reconstruction error (red) and decoding accuracy (blue) improve during training of the generative model. Decoding accuracy refers to the performance of a support vector classifier trained to output the central object’s shape from the latent variables, using 200 examples at the end of each epoch of generative model training. An epoch is one presentation of the training set of 10,000 samples from the hippocampus. b , Relational inference as vector arithmetic in the latent space. The three items on the right of each equation are items from the training data. Their latent variable representations are combined as vectors according to the equation, giving the latent variable representation from which the first item is generated. The pair in brackets describes a relation which is applied to the second item to produce the first. In the top row, the object shape changes from a cylinder to a sphere. In the second, the object shape changes from a cylinder to a cube, and the object colour from red to blue. In the third and fourth, more complex transitions change the object colour and shape, wall colour and angle. c , Imagining new items via interpolation in latent space. Each row shows points along a line in the latent space between two items from the training data, decoded into images by the generative network’s decoder. d , Imagining new items from a category. Samples from each of the shape categories of the support vector classifier in a are shown.

Imagination, episodic future thinking and relational inference

Here we model the generation of events that have not been experienced from the generative network’s latent variables. Events can be generated either by external specification of latent variables (imagination) or by transforming the latent variable representations of specific events (relational inference). The former is simulated by sampling from categories in the latent space then decoding the results (Fig. 3d). The latter is simulated by interpolating between the latent representations of events (Fig. 3c) or by doing vector arithmetic in the latent space (Fig. 3b). This illustrates that the model has learnt some of the conceptual structure of the data, supporting reasoning tasks of the form ‘what is to A as B is to C?’, and provides a model for the flexible recombination of memories thought to underlie episodic future thinking 24 .
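The two latent-space operations used for relational inference can be sketched in a few lines. The two-dimensional latent codes below are hypothetical stand-ins (one axis for shape, one for colour); in the model, the latent vectors come from the trained encoder and the results are passed through the decoder, neither of which is included here.

```python
def apply_relation(base, source, target):
    """Vector arithmetic: apply the relation (target - source) to base."""
    return [b + (t - s) for b, s, t in zip(base, source, target)]


def interpolate(z_start, z_end, steps):
    """Evenly spaced points on the line between two latent vectors."""
    return [
        [a + (b - a) * k / (steps - 1) for a, b in zip(z_start, z_end)]
        for k in range(steps)
    ]


# Hypothetical latent codes: [shape axis, colour axis].
red_cylinder = [0.0, 0.0]
red_sphere = [1.0, 0.0]
blue_cylinder = [0.0, 1.0]

# 'Cylinder is to sphere as blue cylinder is to ...?'
blue_sphere = apply_relation(blue_cylinder, red_cylinder, red_sphere)

# Five latent points between two items, each of which would then be decoded.
path = interpolate(red_cylinder, blue_sphere, steps=5)
```

Decoding each point in `path` would correspond to one row of interpolated images, and decoding `blue_sphere` to the generated item on the left of an equation.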

Modelling schema-based distortions

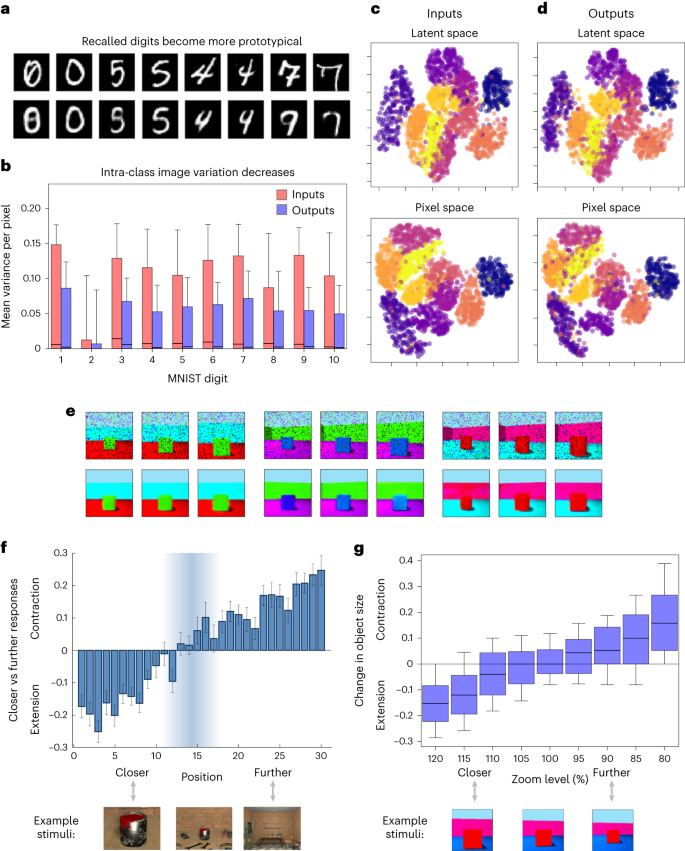

The schema-based distortions observed in human episodic memory increase over time 6 and with sleep 52 , suggesting an association with consolidation. Recall by the generative network distorts memories towards prototypical representations. Figure 4a–d shows that handwritten digits from the MNIST dataset 87 ‘recalled’ by a VAE become more prototypical (MNIST is used for this because each image has a single category). Recalled pairs from the same class become more similar, that is, intra-class variation decreases (paired-samples t-test, t(7,839) = 60.523, P < 0.001, Cohen’s d = −0.684, 95% CI = 0.021, 0.022). The pixel space of MNIST digits before and after recall and the latent space of their encodings also show this effect. In summary, recall with a generative network distorts stimuli towards more prototypical representations even when no class information is given during training. As reliance on the generative model increases, so does the level of distortion.

a , MNIST digits (top) and the VAE’s output for each (bottom). Recalled pairs from the same class become more similar. A total of 10,000 items from the MNIST dataset were encoded in the MHN, and 10,000 replayed samples were used to train the VAE. b , The variation within each MNIST class is smaller for the recalled items than for the original inputs. For each of the 10 classes, the variance per pixel is calculated across 500 images, and the 784 pixel variances are then plotted for each class before and after recall. In each boxplot, the box gives the interquartile range, its central line gives the median, and its whiskers extend to the 10th and 90th percentiles of the data. c , d , The pixel spaces of MNIST digits (bottom row) and the latent space of their encodings (top row) show more compact clusters for the generative network’s outputs ( d ) than for its inputs ( c ). Pixel and latent spaces are shown projected into 2D with UMAP 146 and colour-coded by class. e , Examples of boundary extension and contraction. Top row: the noisy input images (from a held-out test set), with an atypically ‘zoomed out’ or ‘zoomed in’ view (by 80% and 120% on the left and right, respectively) for three original images. Bottom row: the predicted images for each input image, which are distorted towards the ‘typical view’ in each case. f , Adapted figure from ref. 92 , showing the distribution of boundary extension vs contraction as a function of the viewpoint of an image. Specifically, the values are the average of ‘closer’ vs ‘further’ judgements, assigned −1 and 1, respectively, of an identical stimulus image in comparison with the remembered image (with 900 trials per position). Error bars give the standard error of the mean. Example stimuli are shown at the bottom. g , In our model, the VAE increases the estimated size of the central object in atypically ‘zoomed out’ views compared with the training data, and decreases it in atypically ‘zoomed in’ views, as in ref. 92. Two hundred images are used at each ‘zoom level’. See b for a description of boxplot elements.

Boundary extension and contraction exemplify this phenomenon. Boundary extension is the tendency to remember a wider field of view than was observed 88 , while boundary contraction is the opposite 89 . Unusually close-up views appear to cause boundary extension, and unusually far away ones boundary contraction 89 , although this is debated 90 , 91 . We modelled this by giving the generative network a range of new scenes that were artificially ‘zoomed in’ or ‘zoomed out’ compared with those in its training set; its reconstructions are distorted towards the ‘typical view’ (Fig. 4e ), as in human data. Figure 4g shows the change in the object size in memory quantitatively, mirroring the findings in ref. 92 (Fig. 4f ). (Note that the measure of boundary extension vs contraction used by ref. 92 is produced by averaging ‘closer’ vs ‘further’ judgements of an identical stimulus image in comparison with the remembered image, rather than the drawing-based measure we use, but the two measures are significantly correlated 89 .)

Combining conceptual and unpredictable sensory features

In the extended model, memories stored in the hippocampal autoassociative network combine conceptual features (derived from the generative network’s latent variables) and unpredictable sensory features (those with a high reconstruction error during encoding) (Fig. 2 ). In these simulations, the conceptual features are simply a one-to-one copy of latent variable representations. (Since latent variable representations are not stable as the generative network learns, concepts derived from latent variables seem more likely to be stored than the latent variables themselves, so this is a simplification; see section ‘Extended model’ for further details.)

Figure 5a,b shows the stages of recall in the extended model after encoding with a lower or higher prediction error threshold. After decomposing the input into its predictable (conceptual) and unpredictable (sensory) features, the autoassociative network performs pattern completion on the combined representation. The prototypical (that is, predicted) image corresponding to the retrieved conceptual features must then be obtained by decoding the associated latent variable representation into an experience via the return projections to the sensory neocortex. Next, the predictable and unpredictable elements are recombined, simply by overwriting the prototypical prediction with any unpredictable elements, via the connections from the sensory features to the sensory neocortex. The extended model is therefore able to exploit the generative network to reconstruct the predictable aspects of the event from its latent variables, storing only those sensory details that were poorly predicted in the autoassociative network. Equally, as the generative network improves, sensory features stored in the hippocampus may no longer differ significantly from the initial schematic reconstruction in the sensory neocortex, signalling that the hippocampal representation is no longer needed.
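The recombination step, and the effect of the encoding threshold on what is recalled, can be illustrated with a small sketch. It assumes absolute per-element error at encoding and mean squared error as the measure of reconstruction error; the function names and toy values are hypothetical and are not taken from the simulations.

```python
def stored_features(pattern, prototype, threshold):
    """Sensory features kept at encoding: elements the prototype predicts poorly."""
    return {
        i: x
        for i, (x, p) in enumerate(zip(pattern, prototype))
        if abs(x - p) > threshold
    }


def recall(prototype, sensory):
    """Overwrite the schema-based prediction with any stored sensory features."""
    output = list(prototype)
    for index, value in sensory.items():
        output[index] = value
    return output


def mean_squared_error(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


# Toy event and its prototypical (decoded) prediction; values are made up.
pattern = [0.1, 0.2, 0.9, 0.2, 1.0, 0.1]
prototype = [0.1, 0.25, 0.2, 0.2, 0.15, 0.1]

results = {}
for threshold in (0.0, 0.3, 0.8, 1.0):
    sensory = stored_features(pattern, prototype, threshold)
    recalled = recall(prototype, sensory)
    results[threshold] = (len(sensory), mean_squared_error(pattern, recalled))
```

In this toy case, raising the threshold from 0.0 to 1.0 reduces the number of stored sensory features from three to none, while the reconstruction error rises from zero towards that of the prototype alone.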

a , The stages of recall are shown from left to right (see Fig. 2d ), where each row represents an example scene. Each scene consists of a standard Shapes3D image with the addition of novel features (several white squares overlaid on the image with varying opacity). b , Repeating this process with a higher error threshold for encoding (with the same events and partial inputs) means fewer poorly predicted sensory features are stored in the autoassociative MHN, leading to more prototypical recall with increased reconstruction error. c , Average reconstruction error and number of sensory features (that is, pixels) stored in the autoassociative MHN against the error threshold for encoding. One hundred images are tested and error bars give the s.e.m. d , Replay in the extended model. The autoassociative network retrieves memories when random noise is given as input, as shown for three example inputs (upper row). As above, the square images show the poorly predicted sensory features and the rectangles below these display the latent variable representations (lower row).

Schema-based distortions in the extended model

The schema-based distortions shown in the basic model result from the generative network and increase with dependence on it, but memory distortions can also have a rapid onset 93 , 94 . In the extended model, even immediate recall involves a combination of conceptual and sensory features, and the presence of conceptual features induces distortions before consolidation of that specific memory.

In general, recall is biased towards the ‘mean’ of the class soon after encoding due to the influence of the conceptual representations (Fig. 5a,b). This is more pronounced when the error threshold for encoding is high, as there is more reliance on the ‘prototypical’ representations, resulting in the recall of fewer novel features. At a lower error threshold, more sensory detail is encoded, that is, the dimension of the memory trace is higher (r_s(3) = −1, P < 0.001). This results in a lower reconstruction error (r_s(3) = 1, P < 0.001), indicating lower distortion but at the expense of efficiency.

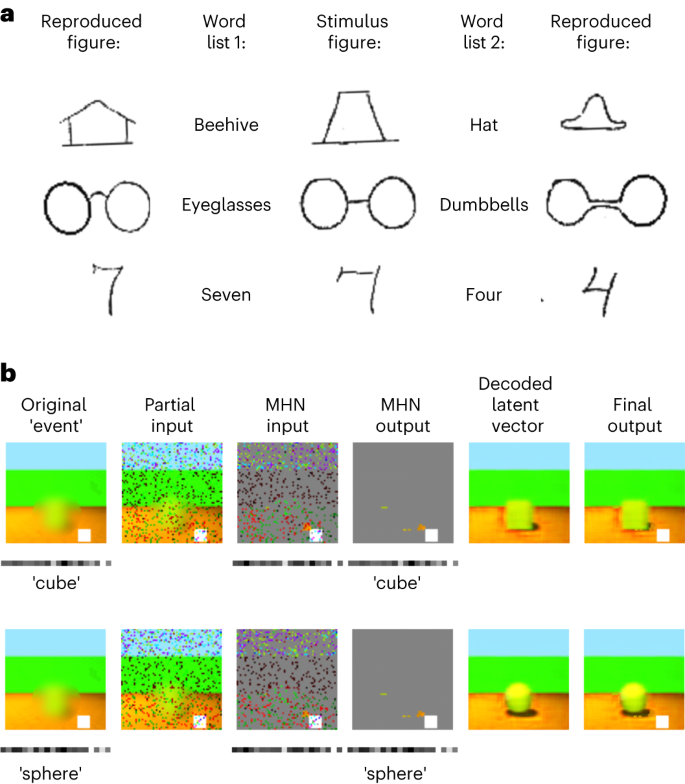

External context further distorts memory. Reference 95 asked participants to reproduce ambiguous sketches. A context was established by telling the participants that they would see images from a certain category. After a delay, drawings from memory were distorted to look more like members of the context category. Figure 6b shows the result of encoding the same ambiguous image with two different externally provided concepts (a cube in the top row, a sphere in the bottom row), represented by the latent variables for each concept, as opposed to the latent variables predicted by the image itself as in Fig. 5a,b . During recall, the encoded concept is retrieved in the autoassociative network, determining the prototypical scene reconstructed by the generative network. This biases recall towards the class provided as context, mirroring Fig. 6a .

a , Adapted figure from ref. 95 showing that recall of an ambiguous item (stimulus figure, centre) depends on its context at encoding (word from list 1, left; or list 2, right), as shown by drawing from memory (reproduced figure, far left and far right). b , Memory distortions in the extended model, when the original scene (containing an ambiguous blurred shape) is encoded with a given concept (cube, top; sphere, bottom), represented by the latent variables for that class. Then, a partial input is processed by the generative network to produce predicted conceptual features and the sensory features not predicted by the prototype for that concept (in this case, a white square) for input to the autoassociative MHN. However, pattern completion in the MHN reproduces the originally encoded sensory and conceptual features (cube, top; sphere, bottom), and these are recombined to produce the final output, which is distorted towards the encoded conceptual context.

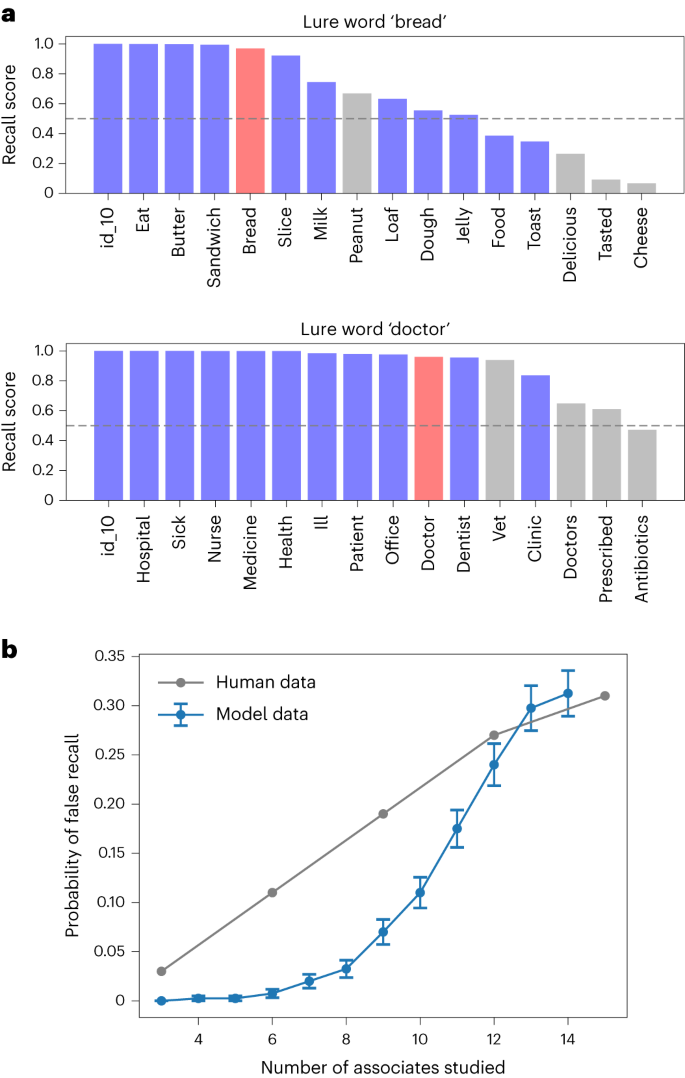

We also simulate the Deese–Roediger–McDermott (DRM) task 93 , 94 in the extended model to demonstrate its applicability to non-image stimuli. In the DRM task, participants are shown lists of words that are semantically related to ‘lure words’ not present in the list; there is a robust finding that false recognition and recall of the lure words occur 93 , 94 . In the extended model, gist-based semantic intrusions arise as a consequence of learning the co-occurrence statistics of words. First, the VAE is trained to reconstruct the sets of words in simple stories 96 converted to vectors of word counts, representing background knowledge. The system then encodes the experimental lists as the combination of an ‘id_n’ term capturing unique spatiotemporal context, and the VAE’s latent representation of each word list (respectively analogous to the stimulus-unique pixels and the VAE’s latent representation of each image in Fig. 5). As in the human data, lure words are often but not always recalled when the system is presented with ‘id_n’ (Fig. 7a), since the latent variable representations that generate the words in the list also tend to generate the lure word. The system also forgets some words and produces additional semantic intrusions. In addition, the chance of recalling the lure word is higher for longer lists, as in human data from ref. 97 , as more related words provide a stronger ‘prior’ for the lure (Fig. 7b) (r_s(10) = 0.998, P < 0.001, 95% CI = 0.982, 1.000).
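The input and output formats of this simulation can be sketched as follows. Only the data handling is shown: the vocabulary is a hypothetical six-word example, and the output scores are made-up numbers chosen to show how a recall criterion of 0.5 is applied. They are not model output, and no VAE is trained here.

```python
from collections import Counter


def to_count_vector(words, vocabulary):
    """Convert a word list into a vector of word counts over a fixed vocabulary."""
    counts = Counter(words)
    return [counts[word] for word in vocabulary]


def recalled_words(scores, vocabulary, cutoff=0.5):
    """Treat output scores as probabilities; words scoring above the cutoff are recalled."""
    return [word for word, score in zip(vocabulary, scores) if score > cutoff]


# Hypothetical vocabulary; 'sleep' is the lure and is never presented.
vocabulary = ["bed", "rest", "tired", "dream", "sleep", "chair"]
study_list = ["bed", "rest", "tired", "dream"]

# Count vector of the kind given to the generative network.
x = to_count_vector(study_list, vocabulary)

# Made-up output scores, for illustration only (not simulation results).
scores = [0.9, 0.8, 0.4, 0.7, 0.6, 0.1]
recalled = recalled_words(scores, vocabulary)
```

With these illustrative scores, one studied word falls below the criterion and is forgotten, while the lure exceeds it and is falsely recalled, which is the qualitative pattern described above.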

a , First, the VAE is trained to reconstruct simple stories 96 converted to vectors of word counts, representing background knowledge. The system then encodes the lists as the combination of an ‘id_n’ term capturing unique spatiotemporal context, and the VAE’s latent variable representation of the word list. In each plot, recalled stimuli when the system is presented with ‘id_n’ are shown, with output scores treated as probabilities so that words with a score >0.5 (above dashed lines) are recalled. Words from the stimulus list are shown in blue, and lures in red. See Fig. 1 of Supplementary Information for results for the remaining DRM lists. b , The chance of recalling the lure word is higher when longer lists are encoded (blue). Each measurement is averaged across 400 trials (20 random subsets of each of the 20 DRM lists), and error bars give the s.e.m. This qualitatively resembles human data from ref. 97 (grey).

Modelling brain damage

Recent episodic memory is impaired following damage to the HF, whereas semantic memory, including the semantic content of remote episodes, appears relatively spared. In the model, the semantic form of a consolidated memory survives damage to the HF due to latent variable representations in the mPFC or the alTL (even if those in the EC are lesioned); Fig. 3a demonstrates how semantic recall performance improves with the age of a memory, reflecting the temporal gradient of retrograde amnesia (see section ‘Modelling semantic memory’). However, these semantic ‘facts’ cannot be used to generate an experience ‘episodically’ without the generative network’s decoder, in agreement with multiple trace theory 11 .

The extent of retrograde amnesia can vary greatly depending in part on which regions of the HF are damaged 98 , 99 . The dissociation of retrograde and anterograde amnesia in some cases suggests that the circuits for encoding memories and the circuits for recalling them via the HF only overlap partially 99 . For example, if the autoassociative network is damaged but not the generative network’s decoder, the generative network can still perform reconstruction of fully consolidated memories. This could explain varying reports of the gradient of retrograde amnesia when assessing episodic recollection (as opposed to semantic memory), if the generative network’s decoder is intact in patients showing spared episodic recollection of early memories 45 . Note that the location of damage within the generative network’s decoder also affects the resulting deficit in our model. In particular, patients with damage restricted to the hippocampus proper can (re)construct simple scenes but not more complex ones 23 .

Our model also shows the characteristic anterograde amnesia after hippocampal damage, as the hippocampus is required to initially bind features together and support off-line training of the generative model. Anterograde semantic learning would also be impaired by hippocampal damage (as the generative network is trained by hippocampal replay). While hippocampal replay need not be the only mechanism for schema acquisition, schema acquisition would probably be much slower without it. However, semantic learning over short timescales may be relatively unimpaired, as it is less dependent on extracting regularities from long-term memory 100 .

In semantic dementia, semantic memory is impaired, and remote episodic memory is impaired more than recent episodic memory 101 . This would be consistent with lesions to the generative network, as recent memories can rely more on the hippocampal autoassociative network. However, the exact effects would depend on the distribution of damage across the various potential generative networks in the EC, mPFC and alTL. Of these, the alTL network is associated with semantic dementia, and the posterior medial network (corresponding to the generative network between the sensory areas and the EC) with Alzheimer’s disease 83 .

Finally, neuropsychological evidence suggests a distinction between familiarity and recollection, and furthermore a partial dissociation between different tests of familiarity; patients with selective hippocampal damage can exhibit recognition memory deficits in a simple ‘yes/no’ task with similar foils, but not in a ‘forced choice’ variant involving choosing the more familiar stimulus from a set 102 . This is consistent with the idea that lower prediction error in the neocortical generative network indicates familiarity, but retrieval of unique details from the hippocampus is required for more definitive recognition memory.
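The idea that lower prediction error signals familiarity can be sketched in a few lines. This is an illustration under strong assumptions rather than the model itself: a linear projection onto principal components stands in for the neocortical generative network, the data are synthetic, and the function names `prediction_error` and `forced_choice` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy "experiences": 200 patterns in 20 dimensions generated from 3 latent causes.
n_items, n_latent, n_features = 200, 3, 20
mixing = rng.normal(size=(n_latent, n_features))
experiences = rng.normal(size=(n_items, n_latent)) @ mixing
experiences += 0.01 * rng.normal(size=experiences.shape)   # small sensory noise

# Linear stand-in for the generative network: project onto the top principal components.
mean = experiences.mean(axis=0)
_, _, components = np.linalg.svd(experiences - mean, full_matrices=False)
basis = components[:n_latent]            # shape (n_latent, n_features)

def prediction_error(pattern):
    """Squared reconstruction error after encoding to, and decoding from, the latent space."""
    centred = pattern - mean
    reconstruction = (centred @ basis.T) @ basis
    return float(np.sum((centred - reconstruction) ** 2))

def forced_choice(candidates):
    """Return the index of the candidate with the lowest prediction error (most familiar)."""
    return int(np.argmin([prediction_error(c) for c in candidates]))

target = experiences[0]                               # a studied item
foil = target + 0.5 * rng.normal(size=n_features)     # similar but unstudied item
choice = forced_choice([foil, target])
print(choice, prediction_error(target), prediction_error(foil))
```

Here a relative comparison of prediction errors is enough to pick the studied item. The sketch does not model the ‘yes/no’ task, where an absolute criterion would have to separate targets from similar foils.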

Discussion

We have proposed a model of systems consolidation as the training of a generative neural network, which learns to support episodic memory, and also imagination, semantic memory and inference. This occurs through teacher–student learning. The hippocampal ‘teacher’ rapidly encodes an event, which may combine unpredictable sensory elements (with connections to and from the sensory cortex) and predictable conceptual elements (with connections to and from latent variable representations in the generative network). After exposure to replayed representations from the ‘teacher’, the generative ‘student’ network supports reconstruction of events by forming a schematic representation in the sensory neocortex from latent variables via the HF, with unpredictable sensory elements added from the hippocampus.
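The teacher–student arrangement can be sketched with a toy example. Everything here is a simplifying assumption made for illustration: the ‘teacher’ is a softmax (modern Hopfield-style) autoassociative network, replay is triggered by random noise cues, and the ‘student’ is a linear autoencoder fitted in closed form by PCA rather than a VAE trained by gradient descent. The names `teacher_retrieve` and `student_error` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)

# Toy memories: 50 unit-norm patterns in 16 dimensions that share a 4-dimensional structure.
n_memories, n_latent, n_features = 50, 4, 16
memories = rng.normal(size=(n_memories, n_latent)) @ rng.normal(size=(n_latent, n_features))
memories /= np.linalg.norm(memories, axis=1, keepdims=True)

def teacher_retrieve(cue, beta=50.0, steps=3):
    """Pattern completion in a softmax autoassociative network."""
    state = cue
    for _ in range(steps):
        logits = beta * (memories @ state)
        weights = np.exp(logits - logits.max())   # numerically stable softmax
        weights /= weights.sum()
        state = weights @ memories                # weighted blend of stored patterns
    return state

# "Replay": random noise cues are completed by the teacher into memory-like patterns.
replayed = np.stack([teacher_retrieve(rng.normal(size=n_features)) for _ in range(300)])

# "Student": a linear autoencoder fitted in closed form (PCA) on the replayed patterns only.
mean = replayed.mean(axis=0)
_, _, components = np.linalg.svd(replayed - mean, full_matrices=False)
basis = components[:n_latent]

def student_error(pattern):
    """Squared error of the student's reconstruction of a pattern."""
    centred = pattern - mean
    return float(np.sum((centred - (centred @ basis.T) @ basis) ** 2))

memory_error = np.mean([student_error(m) for m in memories])
novel_error = np.mean([student_error(rng.normal(size=n_features)) for _ in range(50)])
print(memory_error, novel_error)
```

The student never sees the original memories, only the teacher's replayed outputs, yet it reconstructs the stored patterns far better than unstructured novel patterns because replay exposes the structure that the memories share.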

In contrast to the relatively veridical initial encoding, the generative model learns to capture the probability distributions underlying experiences, or ‘schemas’. This enables not just efficient recall, reconstructing memories without the need to store them individually, but also imagination (by sampling from the latent variable distributions) and inference (by using the learned statistics of experience to predict the values of unseen variables). In addition, semantic memory (that is, factual knowledge) develops as a by-product of learning to predict sensory experience. As the generative model becomes more accurate, the need to store and retrieve unpredicted details in the hippocampus decreases (producing a gradient of retrograde amnesia in cases of hippocampal damage). However, the generative network necessarily introduces distortion compared to the initial memory system. Multiple generative networks can be trained in parallel, and we expect this to include networks with latent variables in the EC, mPFC and alTL.
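Imagination by sampling and inference of unseen variables can both be illustrated with a linear stand-in for the decoder. This is a sketch under stated assumptions: the decoder weights are random rather than learned, the mapping is linear and noiseless, and `decode`, `imagine` and `infer_unseen` are hypothetical helper names.

```python
import numpy as np

rng = np.random.default_rng(2)

n_latent, n_features = 3, 12
decoder = rng.normal(size=(n_latent, n_features))   # hypothetical "learned" decoder weights

def decode(latent):
    """Map latent variables to a sensory pattern (a linear stand-in for a VAE decoder)."""
    return latent @ decoder

def imagine():
    """Imagination: sample latent variables from a standard normal prior and decode them."""
    return decode(rng.normal(size=n_latent))

def infer_unseen(pattern, observed):
    """Inference: estimate latents from the observed features, then predict every feature."""
    latent, *_ = np.linalg.lstsq(decoder[:, observed].T, pattern[observed], rcond=None)
    return decode(latent)

event = imagine()
observed = np.arange(n_features) < 6      # only the first six features are seen
completed = infer_unseen(event, observed)
print(np.abs(completed[~observed] - event[~observed]).max())
```

Because the toy event lies exactly in the range of the decoder, the unseen features are recovered almost perfectly. With a nonlinear, noisy generative network the prediction would instead be an estimate shaped by the learned statistics.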

We now compare the model’s performance to the list of key findings from the introduction:

1. Gradual consolidation follows one-shot encoding: A memory is encoded in the hippocampal ‘teacher’ network after a single exposure, and transferred to the generative ‘student’ network after being replayed repeatedly (Fig. 1c,d).

2. Semantic memory becomes hippocampus-independent: The latent variable representations learned by the generative networks constitute the ‘key facts’ of an episode, supporting semantic memory (Fig. 3a).

3. Episodic memory remains hippocampus-dependent: Return projections to the sensory neocortex via the HF are required to decode the latent variable representations into a sensory experience (Fig. 1). (The EC is required for even simple (re)construction, while the hippocampus proper helps to generate complex conceptually coherent scenes and retrieves unpredictable details that are not yet consolidated into the generative network; see section ‘Neural substrates of the model’.)

4. Shared substrate for episode generation: Generative models are a common mechanism for episode generation. Familiar scenes can be reconstructed and new ones can be generated by sampling or transforming existing latent variable representations (Fig. 3b–d), providing a model for imagination, scene construction and episodic future thinking.

5. Consolidation promotes inference and generalization: Relational inference corresponds to vector arithmetic applied to the generative network’s latent variables (Fig. 3b).

6. Episodic memories are distorted: We show how memory distortions arise from the generative network (Figs. 4, 6 and 7). This extends the model of ref. 32 to relate memory distortion to consolidation.

7. Association cortex encodes latent structure: Latent variable representations in the EC, mPFC and alTL provide schemas for episodic recollection and imagination (via the HF) and for semantic retrieval and inference.

8. Prediction error affects memory processing: The generative network is constantly calculating the reconstruction error of experiences 58 , 59 . Events that are consistent with the existing generative model require less encoding in the autoassociative hippocampal network (Fig. 5).

9. Episodic memories include conceptual features: When an experience combines a mixture of familiar and unfamiliar elements, both concepts and poorly predicted sensory elements are stored in the hippocampus via association to a specific memory unit.
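The claim in the list above that relational inference corresponds to vector arithmetic on latent variables can be made concrete with a toy example. The latent codes below are hand-specified so that shape and colour combine additively; in the model they would be learned by the generative network, so this is only an illustration, and `analogy` is a hypothetical helper.

```python
import numpy as np

shapes = ["square", "circle", "triangle"]
colours = ["red", "blue", "green"]

# Hand-specified latent codes: one axis per shape and one per colour (illustrative only).
axes = np.eye(len(shapes) + len(colours))
shape_code = dict(zip(shapes, axes[:3]))
colour_code = dict(zip(colours, axes[3:]))
latents = {f"{c} {s}": shape_code[s] + colour_code[c] for s in shapes for c in colours}

def analogy(a, b, c):
    """Solve a : b :: c : ? by adding the latent offset (b - a) to c."""
    query = latents[c] + (latents[b] - latents[a])
    candidates = [name for name in latents if name not in (a, b, c)]
    return min(candidates, key=lambda name: np.linalg.norm(latents[name] - query))

answer = analogy("red square", "blue square", "red circle")
print(answer)
```

The offset between ‘red square’ and ‘blue square’ encodes the change of colour, so adding it to ‘red circle’ lands on the code for ‘blue circle’.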

Our model can be seen as an update to the complementary learning systems (CLS) 4 framework to better account for points 3 to 9 above, reconciling the development of semantic representations in the neocortex (as in CLS) with the continued dependence on the hippocampal formation for episodic recall (as in multiple trace theory 11 ). Furthermore, it provides a unified view of: (1) episode generation, (2) how episodic memories change over time and exhibit distortions and (3) how semantic and episodic information are combined in memory. We build on previous work exploring the role of generative networks in consolidation 18 , 19 , as models of the hippocampal formation 31 , 32 , 33 , as priors for episodic memory 35 and as models of spatial cognition 22 .

A key aspect of the model is that multiple generative networks can be trained concurrently from a single autoassociative network (Fig. 2a ) and may be optimized for different tasks. Thus, the latent representations in the mPFC and the alTL may be more closely linked to value or language than those in the EC 103 , 104 . These differences may arise from differences in network structure (for example, the degree of compression) or from additional training objectives that shape their representations 105 (for example, the generative network with latent variables in the mPFC might be trained to predict task-relevant value in addition to the EC representations). We expect the generative networks to overlap closer to their sensory inputs/outputs, where general-purpose features are more useful, and diverge as the representations become more abstract (or task-specific if there are additional training objectives) 106 . This may involve a primary VAE with latent variables in the EC, with additional pathways from the higher sensory cortex to the EC routed via latent variables in the mPFC or the alTL.

Our model raises some fundamental questions: Does true episodic memory require event-unique detail, and does this require the hippocampus? Or can prototypical predictions qualify as memory rather than imagination? In the model, event-unique details are initially provided by the hippocampus but can also be provided by the generative network. For example, if you know that someone attended your 8th birthday party and gave you a particular gift, these personal semantic facts need not be hippocampus-dependent but could generate a scene with the right event-specific details, which would seem like episodic memory. The increasingly sophisticated generation of images from text using generative models 107 suggests that episode construction from semantic facts is computationally plausible.

Episodic memories are defined by their unique spatiotemporal context 1 . In the model, spatial and temporal context correspond to conceptual features captured by place 108 , 109 or time 110 , 111 cells in the hippocampus and might be linked to latent variable representations formed in the EC, such as grid cells in the medial EC, which form an efficient basis for locations in real 31 , 112 , 113 or cognitive spaces 31 , 54 , or temporal context representations in the lateral EC 114 , 115 . Events with specific spatial and temporal context can be generated from these latent variable representations, as has been modelled in detail for space 20 , 21 , 22 .

More generally, this work builds on the spatial cognition literature, in which place and head direction cells act as latent variables in a generative model 20 , 21 , 22 , allowing the generation of a scene from a specific viewpoint. References 20 , 21 , 22 explore how egocentric sensory representations could be transformed into allocentric latent variables before storage in the medial temporal lobe and, conversely, how egocentric representations could be reconstructed from allocentric ones to support imagery. The latent representations learned through consolidation in our model correspond loosely to the allocentric representations, and the sensory representations produced by the HF to the egocentric ones; only egocentric and sensory representations are directly experienced, whereas allocentric and semantic representations are useful abstractions that can also be exploited for efficient hippocampal encoding.

Our model simplifies the true nature of mnemonic processing in several ways. First, the interaction of sensory and conceptual features in the hippocampus and latent variables in the EC during retrieval could be more complex, with each type of representation contributing to pattern completion of the other as in interactions between items and contextual representations in the Temporal Context Model 116 , and might iterate over retrievals from both hippocampal and generative networks 50 . Second, our model distinguishes between ‘sensory’ and ‘conceptual’ representations in the hippocampus, respectively linked to the sensory neocortex at the input/output of the generative network and to the latent variable layer in the middle. In reality, a gradient of levels of representation in the hippocampus is more likely, from detailed sensory representations to coarse-grained conceptual ones, respectively linked to lower or higher neocortical areas 117 , and might map onto the observed functional gradients along the longitudinal axis of the hippocampus 118 . Third, our generative network uses back-propagation of the prediction error between output and input patterns to learn. Generative networks with more plausible (if less efficient) learning rules exist 67 , 68 , 69 , which have the advantage of producing a prediction error signal at each layer (between top–down prediction and bottom–up recognition), potentially allowing learning of concepts and exceptions at all levels of description. Fourth, considering consolidation as a continual lifelong process, rather than as the encoding of a single dataset, introduces new complexities; these include the instability of latent representations and the prevention of catastrophic forgetting of already consolidated memories as new memories are assimilated into the generative network. The model could be extended to address this, for example, by using replay from the generative network as well as from the hippocampal network, which could reduce catastrophic forgetting and stabilize latent variable representations in both networks 33 , 119 , 120 , building on previous research on sleep and learning 121 . Fifth, we model semantic memory as prediction of categorical information for an ‘event’, but future work should model more complex semantic knowledge, for example, by decoding language from latent representations of multimodal stimuli 85 , 86 . In particular, the relationship between semantic memory for specific ‘events’ and the broader ‘web’ of general knowledge should be considered.