Textual Analysis – Types, Examples and Guide

Table of Contents

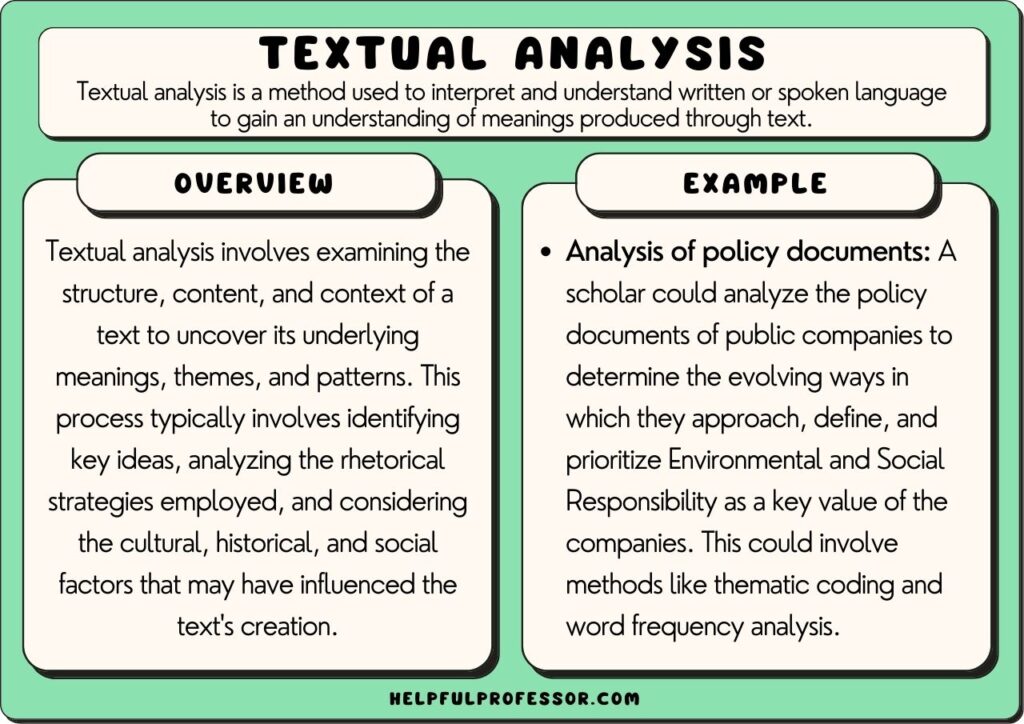

Textual Analysis

Textual analysis is the process of examining a text in order to understand its meaning. It can be used to analyze any type of text, including literature, poetry, speeches, and scientific papers. Textual analysis involves analyzing the structure, content, and style of a text.

Textual analysis can be used to understand a text’s author, date, and audience. It can also reveal how a text was constructed and how it functions as a piece of communication.

Textual Analysis in Research

Textual analysis is a valuable tool in research because it allows researchers to examine and interpret text data in a systematic and rigorous way. Here are some ways that textual analysis can be used in research:

- To explore research questions: Textual analysis can be used to explore research questions in various fields, such as literature, media studies, and social sciences. It can provide insight into the meaning, interpretation, and communication patterns of text.

- To identify patterns and themes: Textual analysis can help identify patterns and themes within a set of text data, such as analyzing the representation of gender or race in media.

- To evaluate interventions: Textual analysis can be used to evaluate the effectiveness of interventions, such as analyzing the language and messaging of public health campaigns.

- To inform policy and practice: Textual analysis can provide insights that inform policy and practice, such as analyzing legal documents to inform policy decisions.

- To analyze historical data: Textual analysis can be used to analyze historical data, such as letters, diaries, and newspapers, to provide insights into historical events and social contexts.

Textual Analysis in Cultural and Media Studies

Textual analysis is a key tool in cultural and media studies as it enables researchers to analyze the meanings, representations, and discourses present in cultural and media texts. Here are some ways that textual analysis is used in cultural and media studies:

- To analyze representation: Textual analysis can be used to analyze the representation of different social groups, such as gender, race, and sexuality, in media and cultural texts. This analysis can provide insights into how these groups are constructed and represented in society.

- To analyze cultural meanings: Textual analysis can be used to analyze the cultural meanings and symbols present in media and cultural texts. This analysis can provide insights into how culture and society are constructed and understood.

- To analyze discourse: Textual analysis can be used to analyze the discourse present in cultural and media texts. This analysis can provide insights into how language is used to construct meaning and power relations.

- To analyze media content: Textual analysis can be used to analyze media content, such as news articles, TV shows, and films, to understand how they shape our understanding of the world around us.

- To analyze advertising: Textual analysis can be used to analyze advertising campaigns to understand how they construct meanings, identities, and desires.

Textual Analysis in the Social Sciences

Textual analysis is a valuable tool in the social sciences as it enables researchers to analyze and interpret text data in a systematic and rigorous way. Here are some ways that textual analysis is used in the social sciences:

- To analyze interview data: Textual analysis can be used to analyze interview data, such as transcribed interviews, to identify patterns and themes in the data.

- To analyze survey responses: Textual analysis can be used to analyze survey responses to identify patterns and themes in the data.

- To analyze social media data: Textual analysis can be used to analyze social media data, such as tweets and Facebook posts, to identify patterns and themes in the data.

- To analyze policy documents: Textual analysis can be used to analyze policy documents, such as government reports and legislation, to identify discourses and power relations present in the policy.

- To analyze historical data: Textual analysis can be used to analyze historical data, such as letters and diaries, to provide insights into historical events and social contexts.

Textual Analysis in Literary Studies

Textual analysis is a key tool in literary studies as it enables researchers to analyze and interpret literary texts in a systematic and rigorous way. Here are some ways that textual analysis is used in literary studies:

- To analyze narrative structure: Textual analysis can be used to analyze the narrative structure of a literary text, such as identifying the plot, character development, and point of view.

- To analyze language and style: Textual analysis can be used to analyze the language and style used in a literary text, such as identifying figurative language, symbolism, and rhetorical devices.

- To analyze themes and motifs: Textual analysis can be used to analyze the themes and motifs present in a literary text, such as identifying recurring symbols, themes, and motifs.

- To analyze historical and cultural context: Textual analysis can be used to analyze the historical and cultural context of a literary text, such as identifying how the text reflects the social and political context of its time.

- To analyze intertextuality: Textual analysis can be used to analyze the intertextuality of a literary text, such as identifying how the text references or is influenced by other literary works.

Textual Analysis Methods

Textual analysis methods are techniques used to analyze and interpret various types of text, including written documents, audio and video recordings, and online content. These methods are commonly used in fields such as linguistics, communication studies, sociology, psychology, and literature.

Some common textual analysis methods include:

Content Analysis

This involves identifying patterns and themes within a set of text data. This method is often used to analyze media content or other types of written materials, such as policy documents or legal briefs.

Discourse Analysis

This involves examining how language is used to construct meaning in social contexts. This method is often used to analyze political speeches or other types of public discourse.

Critical Discourse Analysis

This involves examining how power and social relations are constructed through language use, particularly in political and social contexts.

Narrative Analysis

This involves examining the structure and content of stories or narratives within a set of text data. This method is often used to analyze literary texts or oral histories.

Semiotic Analysis

This involves analyzing the meaning of signs and symbols within a set of text data. This method is often used to analyze advertising or other types of visual media.

Text Mining

This involves using computational techniques to extract patterns and insights from large sets of text data. This method is often used in fields such as marketing and social media analysis.

Close Reading

This involves a detailed and in-depth analysis of a particular text, focusing on the language, style, and literary techniques used by the author.

How to Conduct Textual Analysis

Here are some general steps to conduct textual analysis:

- Choose your research question: Define your research question and identify the text or set of texts that you want to analyze.

- Familiarize yourself with the text: Read and re-read the text, paying close attention to its language, structure, and content. Take notes on key themes, patterns, and ideas that emerge.

- Choose your analytical approach: Select the appropriate analytical approach for your research question, such as close reading, thematic analysis, content analysis, or discourse analysis.

- Create a coding scheme: If you are conducting content analysis, create a coding scheme to categorize and analyze the content of the text. This may involve identifying specific words, themes, or ideas to code.

- Code the text: Apply your coding scheme to the text and systematically categorize the content based on the identified themes or patterns.

- Analyze the data: Once you have coded the text, analyze the data to identify key patterns, themes, or trends. Use appropriate software or tools to help with this process if needed.

- Draw conclusions: Draw conclusions based on your analysis and answer your research question. Present your findings and provide evidence to support your conclusions.

- Reflect on limitations and implications: Reflect on the limitations of your analysis, such as any biases or limitations of the selected method. Also, discuss the implications of your findings and their relevance to the broader research field.
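The coding steps above can be sketched in a few lines of Python. This is only a minimal illustration, assuming a keyword-based coding scheme: the codes (`cost`, `service`), their keywords, and the sample segments are hypothetical, and a real scheme would normally be developed and refined by reading the texts.

```python
import re
from collections import Counter

# Hypothetical coding scheme: each code maps to indicator keywords.
CODING_SCHEME = {
    "cost": ["price", "expensive", "cheap", "fee"],
    "service": ["staff", "support", "helpful", "rude"],
}

def code_segment(segment, scheme):
    """Return the sorted list of codes whose keywords occur in the segment."""
    words = set(re.findall(r"[a-z']+", segment.lower()))
    return sorted(code for code, keywords in scheme.items()
                  if words.intersection(keywords))

def code_corpus(segments, scheme):
    """Code every segment and tally how often each code was applied."""
    coded = [(seg, code_segment(seg, scheme)) for seg in segments]
    tally = Counter(code for _, codes in coded for code in codes)
    return coded, tally

# Invented sample segments, for illustration only.
segments = [
    "The staff were helpful but the fee was too high.",
    "Far too expensive for what you get.",
    "I waited an hour and nobody answered.",
]
coded, tally = code_corpus(segments, CODING_SCHEME)
for segment, codes in coded:
    print(codes or ["uncoded"], "-", segment)
print(dict(tally))
```

The third segment stays uncoded because none of its words appear in the scheme. Uncoded segments like this are a useful prompt to revisit the scheme rather than a result in themselves.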

When to Use Textual Analysis

Textual analysis can be used in various research fields and contexts. Here are some situations when textual analysis can be useful:

- Understanding meaning and interpretation: Textual analysis can help understand the meaning and interpretation of text, such as literature, media, and social media.

- Analyzing communication patterns: Textual analysis can be used to analyze communication patterns in different contexts, such as political speeches, social media conversations, and legal documents.

- Exploring cultural and social contexts: Textual analysis can be used to explore cultural and social contexts, such as the representation of gender, race, and identity in media.

- Examining historical documents: Textual analysis can be used to examine historical documents, such as letters, diaries, and newspapers.

- Evaluating marketing and advertising campaigns: Textual analysis can be used to evaluate marketing and advertising campaigns, such as analyzing the language, symbols, and imagery used.

Examples of Textual Analysis

Here are a few examples:

- Media Analysis: Textual analysis is frequently used in media studies to examine how news outlets and social media platforms frame and present news stories. Researchers can use textual analysis to examine the language and images used in news articles, tweets, and other forms of media to identify patterns and biases.

- Customer Feedback Analysis: Textual analysis is often used by businesses to analyze customer feedback, such as online reviews or social media posts, to identify common themes and areas for improvement. This allows companies to make data-driven decisions and improve their products or services.

- Political Discourse Analysis: Textual analysis is commonly used in political science to analyze political speeches, debates, and other forms of political communication. Researchers can use this method to identify the language and rhetoric used by politicians, as well as the strategies they employ to appeal to different audiences.

- Literary Analysis: Textual analysis is a fundamental tool in literary criticism, allowing scholars to examine the language, structure, and themes of literary works. This can involve close reading of individual texts or analysis of larger literary movements.

- Sentiment Analysis: Textual analysis is used to analyze social media posts, customer feedback, or other sources of text data to determine the sentiment of the text. This can be useful for businesses or organizations to understand how their brand or product is perceived in the market.
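To make the sentiment analysis example concrete, here is a minimal lexicon-based sketch in Python. The word lists are tiny, invented placeholders (an assumption for illustration); practical tools rely on much larger lexicons or trained models.

```python
import re

# Tiny illustrative lexicons (assumption); real lexicons hold thousands of entries.
POSITIVE = {"good", "great", "love", "excellent", "helpful"}
NEGATIVE = {"bad", "poor", "hate", "terrible", "slow"}

def sentiment_score(text):
    """Count positive words minus negative words in the text."""
    tokens = re.findall(r"[a-z']+", text.lower())
    return sum(t in POSITIVE for t in tokens) - sum(t in NEGATIVE for t in tokens)

def sentiment_label(text):
    """Map the score to a coarse label."""
    score = sentiment_score(text)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

# Invented sample posts, for illustration only.
posts = [
    "I love this product, the support is great",
    "Terrible app, slow and bad",
    "It arrived on Tuesday",
]
for post in posts:
    print(sentiment_label(post), "-", post)
```

A word-counting approach like this ignores negation and sarcasm ("not good" would still count as positive), which is one reason human interpretation remains important.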

Purpose of Textual Analysis

There are several specific purposes for using textual analysis, including:

- To identify and interpret patterns in language use: Textual analysis can help researchers identify patterns in language use, such as common themes, recurring phrases, and rhetorical devices. This can provide insights into the values and beliefs that underpin the text.

- To explore the cultural context of the text: Textual analysis can help researchers understand the cultural context in which the text was produced, including the historical, social, and political factors that shaped the language and messages.

- To examine the intended and unintended meanings of the text: Textual analysis can help researchers uncover both the intended and unintended meanings of the text, and to explore how the language is used to convey certain messages or values.

- To understand how texts create and reinforce social and cultural identities: Textual analysis can help researchers understand how texts contribute to the creation and reinforcement of social and cultural identities, such as gender, race, ethnicity, and nationality.

Applications of Textual Analysis

Here are some common applications of textual analysis:

Media Studies

Textual analysis is frequently used in media studies to analyze news articles, advertisements, and social media posts to identify patterns and biases in media representation.

Literary Criticism

Textual analysis is a fundamental tool in literary criticism, allowing scholars to examine the language, structure, and themes of literary works.

Political Science

Textual analysis is commonly used in political science to analyze political speeches, debates, and other forms of political communication.

Marketing and Consumer Research

Textual analysis is used to analyze customer feedback, such as online reviews or social media posts, to identify common themes and areas for improvement.

Healthcare Research

Textual analysis is used to analyze patient feedback and medical records to identify patterns in patient experiences and improve healthcare services.

Social Sciences

Textual analysis is used in various fields within social sciences, such as sociology, anthropology, and psychology, to analyze various forms of data, including interviews, field notes, and documents.

Linguistics

Textual analysis is used in linguistics to study language use and its relationship to social and cultural contexts.

Advantages of Textual Analysis

There are several advantages of textual analysis in research. Here are some of the key advantages:

- Systematic and consistent: Textual analysis offers a systematic way of analyzing text data. When guided by an explicit coding scheme, it enables researchers to analyze text in a consistent and rigorous way, reducing (though not eliminating) the risk of bias or subjectivity.

- Versatile: Textual analysis can be used to analyze a wide range of text data, including interview transcripts, survey responses, social media data, policy documents, and literary texts.

- Efficient: With the help of software tools, textual analysis can be more efficient than fully manual methods of qualitative analysis, allowing researchers to process large volumes of text data more quickly and consistently.

- Allows for in-depth analysis: Textual analysis enables researchers to conduct in-depth analysis of text data, uncovering patterns and themes that may not be visible through other methods of data analysis.

- Can provide rich insights: Textual analysis can provide rich and detailed insights into complex social phenomena. It can uncover subtle nuances in language use, reveal underlying meanings and discourses, and shed light on the ways in which social structures and power relations are constructed and maintained.

Limitations of Textual Analysis

While textual analysis can provide valuable insights into the ways in which language is used to convey meaning and create social and cultural identities, it also has several limitations. Some of these limitations include:

- Limited Scope: Textual analysis is only able to analyze the content of written or spoken language, and does not provide insights into non-verbal communication such as facial expressions or body language.

- Subjectivity: Textual analysis is subject to the biases and interpretations of the researcher, as well as the context in which the language was produced. Different researchers may interpret the same text in different ways, leading to inconsistencies in the findings.

- Time-consuming: Textual analysis can be a time-consuming process, particularly if the researcher is analyzing a large amount of text. This can be a limitation in situations where quick analysis is necessary.

- Lack of Generalizability: Textual analysis is often used in qualitative research, which means that its findings often cannot be generalized to larger populations. This limits the ability to draw conclusions that are applicable to a wider range of contexts.

- Limited Accessibility: Textual analysis requires specialized skills and training, which may limit its accessibility to researchers who are not trained in this method.

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer

Textual Analysis: Definition, Types & 10 Examples

Textual analysis is a research methodology that involves exploring written text as empirical data. Scholars explore both the content and structure of texts, and attempt to discern key themes and statistics emergent from them.

This method of research is used in various academic disciplines, including cultural studies, literature, biblical studies, anthropology, sociology, and others (Dearing, 2022; McKee, 2003).

This method of analysis involves breaking down a text into its constituent parts for close reading and making inferences about its context, underlying themes, and the intentions of its author.

Textual Analysis Definition

Alan McKee is one of the preeminent scholars of textual analysis. He provides a clear and approachable definition in his book Textual Analysis: A Beginner’s Guide (2003) where he writes:

“When we perform textual analysis on a text we make an educated guess at some of the most likely interpretations that might be made of the text […] in order to try and obtain a sense of the ways in which, in particular cultures at particular times, people make sense of the world around them.”

A key insight worth extracting from this definition is that textual analysis can reveal what cultural groups value, how they create meaning, and how they interpret reality.

This is invaluable in situations where scholars are seeking to more deeply understand cultural groups and civilizations – both past and present (Metoyer et al., 2018).

As such, it may be beneficial for a range of different types of studies, such as:

- Studies of Historical Texts: A study of how certain concepts are framed, described, and approached in historical texts, such as the Bible.

- Studies of Industry Reports: A study of how industry reports frame and discuss concepts such as environmental and social responsibility.

- Studies of Literature: A study of how a particular text or group of texts within a genre define and frame concepts. For example, you could explore how great American literature mythologizes the concept of ‘The American Dream’.

- Studies of Speeches: A study of how certain politicians position national identities in their appeals for votes.

- Studies of Newspapers: A study of the biases within newspapers toward or against certain groups of people.

- Etc. (For more, see: Dearing, 2022)

McKee uses the term ‘textual analysis’ to also refer to text types that are not just written, but multimodal. For a dive into the analysis of multimodal texts, I recommend my article on content analysis, where I explore the study of texts like television advertisements and movies in detail.

Features of a Textual Analysis

When conducting a textual analysis, you’ll need to consider a range of factors within the text that are worthy of close examination to infer meaning. Features worthy of considering include:

- Content: What is being said or conveyed in the text, including explicit and implicit meanings, themes, or ideas.

- Context: When and where the text was created, the culture and society it reflects, and the circumstances surrounding its creation and distribution.

- Audience: Who the text is intended for, how it’s received, and the effect it has on its audience.

- Authorship: Who created the text, their background and perspectives, and how these might influence the text.

- Form and structure: The layout, sequence, and organization of the text and how these elements contribute to its meanings (Metoyer et al., 2018).

Textual Analysis Coding Methods

The above features may be examined through quantitative or qualitative research designs, or a mixed-methods angle.

1. Quantitative Approaches

You could analyze several of the above features, namely, content, form, and structure, from a quantitative perspective using computational linguistics and natural language processing (NLP) analysis.

From this approach, you would use algorithms to extract useful information or insights about frequency of word and phrase usage, etc. This can include techniques like sentiment analysis, topic modeling, named entity recognition, and more.
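As a rough illustration of counting word and phrase frequency, the sketch below uses only Python's standard library. The sample sentence is invented, and the regex tokenizer is a simplifying assumption; NLP libraries offer more careful tokenization.

```python
import re
from collections import Counter

def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())

def ngram_counts(tokens, n):
    """Count n-word phrases (n-grams) as tuples of adjacent tokens."""
    return Counter(zip(*(tokens[i:] for i in range(n))))

# Invented sample text, for illustration only.
text = "The American Dream is a dream of opportunity. The dream endures."
tokens = tokenize(text)
word_freq = Counter(tokens)
bigram_freq = ngram_counts(tokens, 2)

print(word_freq.most_common(2))
print(bigram_freq[("american", "dream")])
```

Counts like these tell you how often a term appears, not what it means in context, so they work best as a starting point for closer reading.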

2. Qualitative Approaches

In many ways, textual analysis lends itself best to qualitative analysis. When identifying words and phrases, you’re also going to want to look at the surrounding context and possibly cultural interpretations of what is going on (Mayring, 2015).

Generally, humans are far more perceptive at teasing out these contextual factors than machines (although, AI is giving us a run for our money).

One qualitative approach to textual analysis that I regularly use is inductive coding, a step-by-step methodology that can help you extract themes from texts. If you’re interested in using this step-by-step method, read my guide on inductive coding.

Textual Analysis Examples

Title: “Discourses on Gender, Patriarchy and Resolution 1325: A Textual Analysis of UN Documents”
Author: Nadine Puechguirbal
Year: 2010
APA Citation: Puechguirbal, N. (2010). Discourses on Gender, Patriarchy and Resolution 1325: A Textual Analysis of UN Documents, International Peacekeeping, 17 (2): 172-187. doi: 10.1080/13533311003625068

Summary: The article discusses the language used in UN documents related to peace operations and analyzes how it perpetuates stereotypical portrayals of women as vulnerable individuals. The author argues that this language removes women’s agency and keeps them in a subordinate position as victims, instead of recognizing them as active participants and agents of change in post-conflict environments. Despite the adoption of UN Security Council Resolution 1325, which aims to address the role of women in peace and security, the author suggests that the UN’s male-dominated power structure remains unchallenged, and gender mainstreaming is often presented as a non-political activity.

Title: “Racism and the Media: A Textual Analysis”
Author: Kassia E. Kulaszewicz
Year: 2015
APA Citation: Kulaszewicz, K. E. (2015). Racism and the Media: A Textual Analysis. Dissertation. Retrieved from: https://sophia.stkate.edu/msw_papers/477

Summary: This study delves into the significant role media plays in fostering explicit racial bias. Using Bandura’s Learning Theory, it investigates how media content influences our beliefs through ‘observational learning’. Conducting a textual analysis, it finds differences in the representation of Black and White people, stereotyping of Black people, and apparent microaggressions toward Black people. The research highlights how media often criminalizes Black men, portraying them as violent, while justifying or supporting the actions of White officers, regardless of their potential criminality. The study concludes that news media likely continues to reinforce racism, whether consciously or unconsciously.

Title: “On the metaphorical nature of intellectual capital: a textual analysis”
Author: Daniel Andriessen
Year: 2006
APA Citation: Andriessen, D. (2006). On the metaphorical nature of intellectual capital: a textual analysis. Journal of Intellectual Capital, 7 (1), 93-110.

Summary: This article delves into the metaphorical underpinnings of intellectual capital (IC) and knowledge management, examining how knowledge is conceptualized through metaphors. The author employed a textual analysis methodology, scrutinizing key texts in the field to identify prevalent metaphors. The analysis found that over 95% of statements about knowledge are metaphor-based, with “knowledge as a resource” and “knowledge as capital” being the most dominant. This study demonstrates how textual analysis helps us to understand current understandings and ways of speaking about a topic.

Title: “Race in Rhetoric: A Textual Analysis of Barack Obama’s Campaign Discourse Regarding His Race”
Author: Andrea Dawn Andrews
Year: 2011
APA Citation: Andrews, A. D. (2011). Race in Rhetoric: A Textual Analysis of Barack Obama’s Campaign Discourse Regarding His Race. Undergraduate Honors Thesis Collection. 120. https://digitalcommons.butler.edu/ugtheses/120

Summary: This undergraduate honors thesis is a textual analysis of Barack Obama’s speeches that explores how Obama frames the concept of race. The student’s capstone project found that Obama tended to frame racial inequality as something that could be overcome, and that this was a positive and uplifting project. The student breaks down the times when Obama utilizes the concept of race in his speeches, and examines the surrounding content to see the connotations associated with race and race relations embedded in the text. Here, we see a decidedly qualitative approach to textual analysis which can deliver contextualized and in-depth insights.

Sub-Types of Textual Analysis

While above I have focused on a generalized textual analysis approach, a range of sub-types and offshoots have emerged that focus on specific concepts, often within their own specific theoretical paradigms. Each is outlined below:

- Content Analysis: Content analysis is similar to textual analysis, and I would consider it a type of textual analysis that takes a broader understanding of the term ‘text’. In this type, a text is any type of ‘content’, and could be multimodal in nature, such as television advertisements, movies, posters, and so forth. Content analysis can be both qualitative and quantitative, depending on whether it focuses more on the meaning of the content or the frequency of certain words or concepts (Chung & Pennebaker, 2018).

- Discourse Analysis: Emergent specifically from critical and postmodern/poststructural theories, discourse analysis focuses closely on the use of language within a social context, with the goal of revealing how repeated framing of terms and concepts has the effect of shaping how cultures understand social categories. It considers how texts interact with and shape social norms, power dynamics, ideologies, etc. For example, it might examine how gender is socially constructed as a distinct social category through Disney films. It may also be called ‘critical discourse analysis’.

- Narrative Analysis: This approach is used for analyzing stories and narratives within text. It looks at elements like plot, characters, themes, and the sequence of events to understand how narratives construct meaning.

- Frame Analysis: This approach looks at how events, ideas, and themes are presented or “framed” within a text. It explores how these frames can shape our understanding of the information being presented. While similar to discourse analysis, a frame analysis tends to be less associated with the loaded concept of ‘discourse’ that exists specifically within postmodern paradigms (Smith, 2017).

- Semiotic Analysis: This approach studies signs and symbols, both visual and textual, and could be a good complement to a content analysis, as it provides the language and concepts necessary to describe how signs make meaning in cultural contexts, drawing on ideas also found in the fields of semantics and pragmatics. It’s based on the theory of semiotics, which is concerned with how meaning is created and communicated through signs and symbols.

- Computational Textual Analysis: In the context of data science or artificial intelligence, this type of analysis involves using algorithms to process large amounts of text. Techniques can include topic modeling, sentiment analysis, word frequency analysis, and others. While being extremely useful for a quantitative analysis of a large dataset of text, it falls short in its ability to provide deep contextualized understandings of words-in-context.

Each of these methods has its strengths and weaknesses, and the choice of method depends on the research question, the type of text being analyzed, and the broader context of the research.
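One simple way to combine computational speed with human reading of words-in-context is a keyword-in-context (concordance) listing, where the computer finds every occurrence of a term and a person interprets the surrounding words. The sketch below is a minimal version under stated assumptions: the function name, the window size, and the sample text are all invented for illustration.

```python
import re

def keyword_in_context(text, keyword, window=3):
    """Return (left context, keyword, right context) for each occurrence."""
    tokens = re.findall(r"[\w']+", text)
    hits = []
    for i, tok in enumerate(tokens):
        if tok.lower() == keyword.lower():
            left = " ".join(tokens[max(0, i - window):i])
            right = " ".join(tokens[i + 1:i + 1 + window])
            hits.append((left, tok, right))
    return hits

# Invented sample text, for illustration only.
sample = ("The dream of home ownership shaped the town. "
          "Many feared the dream was fading.")
hits = keyword_in_context(sample, "dream", window=2)
for left, word, right in hits:
    print(f"{left:>12} [{word}] {right}")
```

The listing does not interpret anything by itself. It simply lines up each use of the term so that connotations and framing can be compared by eye.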

Strengths and Weaknesses of Textual Analysis

When writing your methodology for your textual analysis, make sure to define not only what textual analysis is, but (if applicable) the type of textual analysis, the features of the text you’re analyzing, and the ways you will code the data. It’s also worth actively reflecting on the potential weaknesses of a textual analysis approach, but also explaining why, despite those weaknesses, you believe this to be the most appropriate methodology for your study.

Chung, C. K., & Pennebaker, J. W. (2018). Textual analysis. In Measurement in social psychology (pp. 153-173). Routledge.

Dearing, V. A. (2022). Manual of textual analysis. Univ of California Press.

McKee, A. (2003). Textual analysis: A beginner’s guide. Textual analysis, 1-160.

Mayring, P. (2015). Qualitative content analysis: Theoretical background and procedures. Approaches to qualitative research in mathematics education: Examples of methodology and methods, 365-380. doi: https://doi.org/10.1007/978-94-017-9181-6_13

Metoyer, R., Zhi, Q., Janczuk, B., & Scheirer, W. (2018, March). Coupling story to visualization: Using textual analysis as a bridge between data and interpretation. In 23rd International Conference on Intelligent User Interfaces (pp. 503-507). doi: https://doi.org/10.1145/3172944.3173007

Smith, J. A. (2017). Textual analysis. The international encyclopedia of communication research methods, 1-7.

Chris Drew (PhD)

Dr. Chris Drew is the founder of the Helpful Professor. He holds a PhD in education and has published over 20 articles in scholarly journals. He is the former editor of the Journal of Learning Development in Higher Education.

The Practical Guide to Textual Analysis

Textual analysis is the process of gathering and examining qualitative data to understand what it’s about.

But making sense of qualitative information is a major challenge. Whether you are analyzing data in business or performing academic research, manually reading, analyzing, and tagging text is often no longer practical: it’s time-consuming, results are often inaccurate, and the process is far from scalable.

Fortunately, developments in the sub-fields of Artificial Intelligence (AI) like machine learning and natural language processing (NLP) are creating unprecedented opportunities to process and analyze large collections of text data.

Thanks to algorithms trained with machine learning, it is possible to perform a myriad of tasks that involve analyzing text, like topic classification (automatically tagging texts by topic), feature extraction (identifying specific characteristics in a text), and sentiment analysis (recognizing the emotions that underlie a given text).

Below, we’ll dive into textual analysis with machine learning, what it is and how it works, and reveal its most important applications in business and academic research:

Getting started with textual analysis

- What is textual analysis?

- Difference between textual analysis and content analysis?

- What is computer-assisted textual analysis?

- Methods and techniques

- Why is it important?

How does textual analysis work?

- Text classification

- Text extraction

Use cases and applications

- Customer service

- Customer feedback

- Academic research

Let’s start with the basics!

Getting Started With Textual Analysis

What is Textual Analysis?

While similar to text analysis, textual analysis is mainly used in academic research to analyze content related to media and communication studies, popular culture, sociology, and philosophy.

In this case, the purpose of textual analysis is to understand the cultural and ideological aspects that underlie a text and how they are connected with the particular context in which the text has been produced. In short, textual analysis consists of describing the characteristics of a text and making interpretations to answer specific questions.

One of the challenges of textual analysis resides in how to turn complex, large-scale data into manageable information. Computer-assisted textual analysis can be instrumental at this point, as it allows you to perform certain tasks automatically (without having to read all the data) and makes it simple to observe patterns and get unexpected insights. For example, you could perform automated textual analysis on a large set of data and easily tag all the information according to a series of previously defined categories. You could also use it to extract specific pieces of data, like names, countries, emails, or any other features.

Companies are using computer-assisted textual analysis to make sense of unstructured business data and find relevant insights that lead to data-driven decisions. It’s being used to automate everyday tasks like ticket tagging and routing, improving productivity, and saving valuable time.

Difference Between Textual Analysis and Content Analysis?

When we talk about textual analysis we refer to a data-gathering process for analyzing text data. This qualitative methodology examines the structure, content, and meaning of a text, and how it relates to the historical and cultural context in which it was produced. To do so, textual analysis combines knowledge from different disciplines, like linguistics and semiotics.

Content analysis can be considered a subcategory of textual analysis, which intends to systematically analyze text, by coding the elements of the text to get quantitative insights. By coding text (that is, establishing different categories for the analysis), content analysis makes it possible to examine large sets of data and make replicable and valid inferences.

Sitting at the intersection between qualitative and quantitative approaches, content analysis has proved to be very useful to study a wide array of text data ― from newspaper articles to social media messages ― within many different fields, that range from academic research to organizational or business studies.

What is Computer-Assisted Textual Analysis?

Computer-assisted textual analysis involves using software, a digital platform, or computational tools to perform tasks related to text analysis automatically.

The developments in machine learning make it possible to create algorithms that can be trained with examples and learn a series of tasks, from identifying topics on a given text to extracting relevant information from an extensive collection of data. Natural Language Processing (NLP), another sub-field of AI, helps machines process unstructured data and transform it into manageable information that’s ready to analyze.

Automated textual analysis enables you to analyze large amounts of data that would require a significant amount of time and resources if done manually. Not only is automated textual analysis fast and straightforward, but it’s also scalable and provides consistent results.

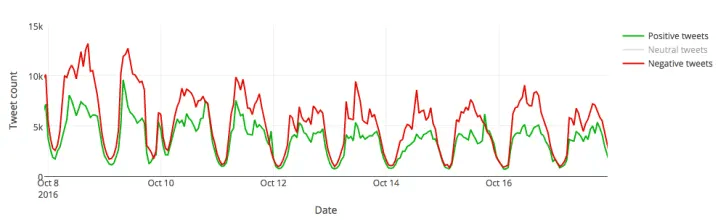

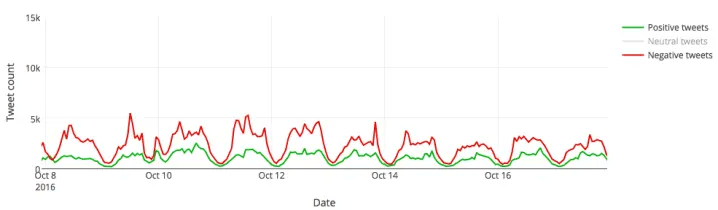

Let’s look at an example. During the 2016 US elections, we used MonkeyLearn to analyze millions of tweets referring to Donald Trump and Hillary Clinton. A text classification model allowed us to tag each tweet with one of two predefined categories: Trump and Hillary. The results showed that, on an average day, Donald Trump was getting around 450,000 Twitter mentions while Hillary Clinton was only getting about 250,000. And that was just the tip of the iceberg! What was really interesting were the nuances of those mentions: were they favorable or unfavorable? By performing sentiment analysis, we were able to discover the feelings behind those messages and gain some interesting insights about the polarity of those opinions.

For example, this is what the tweets mentioning Trump looked like when counted by sentiment:

And this graphic shows the same for Hillary Clinton:

There are many methods and techniques for automated textual analysis. In the following section, we’ll take a closer look at each of them so that you have a better idea of what you can do with computer-assisted textual analysis.

Textual Analysis Methods & Techniques

- Word frequency

- Collocation

- Concordance

Basic Methods

Word Frequency

Word frequency helps you find the most recurrent terms or expressions within a set of data. Counting the times a word is mentioned in a group of texts can lead you to interesting insights, for example, when analyzing customer feedback responses. If the terms ‘hard to use’ or ‘complex’ often appear in comments about your product, it may indicate you need to make UI/UX adjustments.
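To make the idea concrete, here is a minimal sketch of word counting in Python. The feedback comments are invented for illustration, and the tokenizer is a deliberately simple assumption (lowercase runs of letters), not a production-grade approach.

```python
import re
from collections import Counter

def word_frequencies(texts):
    """Count how often each lowercase word appears across a list of texts."""
    counts = Counter()
    for text in texts:
        # Lowercase the text and treat runs of letters/apostrophes as words.
        counts.update(re.findall(r"[a-z']+", text.lower()))
    return counts

# Hypothetical feedback comments, invented for this example.
comments = [
    "The dashboard is complex and hard to use",
    "Setup was complex but support helped",
    "Great product, though reports are hard to use",
]

freq = word_frequencies(comments)
print(freq["complex"], freq["hard"])  # 2 2
```

In practice you would usually remove very common words (like ‘the’ or ‘to’) before counting, so that the terms that matter stand out.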

Collocation

By ‘collocation’ we mean a sequence of words that frequently occur together. Collocations are usually bigrams (a pair of words) and trigrams (a combination of three words). ‘Average salary’, ‘global market’, ‘close a deal’, ‘make an appointment’, and ‘attend a meeting’ are examples of collocations related to business.

In textual analysis, identifying collocations is useful to understand the semantic structure of a text. Counting bigrams and trigrams as one word can improve the accuracy of the analysis.
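A rough way to surface candidate collocations is to count how often each bigram or trigram occurs. The sketch below does only that; the sample sentences are invented, and raw frequency is a simplifying assumption (dedicated tools typically use statistical association measures rather than plain counts).

```python
import re
from collections import Counter

def ngram_counts(texts, n=2):
    """Count every sequence of n consecutive words across the given texts."""
    counts = Counter()
    for text in texts:
        tokens = re.findall(r"[a-z']+", text.lower())
        # zip over n shifted copies of the token list to get sliding windows.
        counts.update(zip(*(tokens[i:] for i in range(n))))
    return counts

# Hypothetical business sentences, invented for this example.
docs = [
    "We need to close a deal before the global market shifts",
    "The global market rewards teams that close a deal quickly",
]

bigrams = ngram_counts(docs, n=2)
trigrams = ngram_counts(docs, n=3)
print(bigrams[("global", "market")])       # 2
print(trigrams[("close", "a", "deal")])    # 2
```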

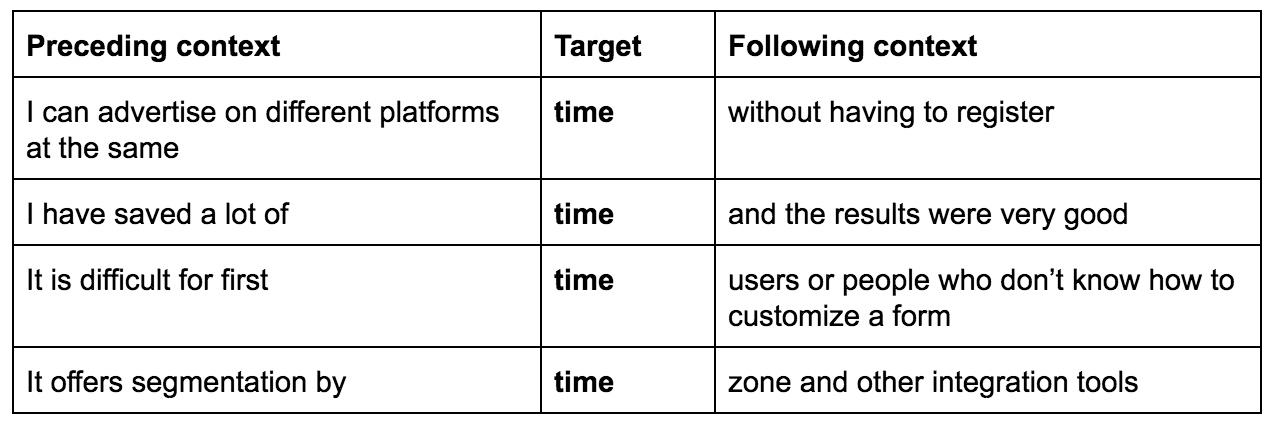

Concordance

Human language is ambiguous: depending on the context, the same word can mean different things. Concordance is used to identify instances in which a word or a series of words appear, to understand its exact meaning. For example, here are a few sentences from product reviews containing the word ‘time’:

Advanced Methods

Text Classification

Text classification is the process of assigning tags or categories to unstructured data based on its content.

When we talk about unstructured data we refer to all sorts of text-based information that is unorganized, and therefore complex to sort and manage. For businesses, unstructured data may include emails, social media posts, chats, online reviews, support tickets, among many others. Text classification ― one of the essential tasks of Natural Language Processing (NLP) ― makes it possible to analyze text in a simple and cost-efficient way, organizing the data according to topic, urgency, sentiment or intent. We’ll take a closer look at each of these applications below:

Topic Analysis consists of assigning predefined tags to an extensive collection of text data, based on its topics or themes. Let’s say you want to analyze a series of product reviews to understand what aspects of your product are being discussed, and a review reads ‘the customer service is very responsive, they are always ready to help’ . This piece of feedback will be tagged under the topic ‘Customer Service’ .

Sentiment Analysis, also known as ‘opinion mining’, is the automated process of understanding the attributes of an opinion, that is, the emotions that underlie a text (e.g. positive, negative, and neutral). Sentiment analysis provides exciting opportunities in all kinds of fields. In business, you can use it to analyze customer feedback, social media posts, emails, support tickets, and chats. For instance, you could analyze support tickets to identify angry customers and solve their issues as a priority. You may also combine topic analysis with sentiment analysis (known as aspect-based sentiment analysis) to identify the topics being discussed about your product and how people are reacting to those topics. For example, take the product review we mentioned earlier for topic analysis: ‘the customer service is very responsive, they are always ready to help’. This statement would be classified as both Positive and Customer Service.

Language detection : this allows you to classify a text based on its language. It’s particularly useful for routing purposes. For example, if you get a support ticket in Spanish, it could be automatically routed to a Spanish-speaking customer support team.

Intent detection : text classifiers can also be used to recognize the intent of a given text. What is the purpose behind a specific message? This can be helpful if you need to analyze customer support conversations or the results of a sales email campaign. For example, you could analyze email responses and classify your prospects based on their level of interest in your product.

Text Extraction

Text extraction is a textual analysis technique which consists of extracting specific terms or expressions from a collection of text data. Unlike text classification, the result is not a predefined tag but a piece of information that is already present in the text. For example, if you have a large collection of emails to analyze, you could easily pull out specific information such as email addresses, company names or any keyword that you need to retrieve. In some cases, you can combine text classification and text extraction in the same analysis.

The most useful text extraction tasks include:

Named-entity recognition : used to extract the names of companies , people , or organizations from a set of data.

Keyword extraction: allows you to extract the most relevant terms within a text. You can use keyword extraction to index data to be searched, create tag clouds, or summarize the content of a text, among many other things.

Feature extraction : used to identify specific characteristics within a text. For example, if you are analyzing a series of product descriptions, you could create customized extractors to retrieve information like brand, model, color, etc .

Why is Textual Analysis Important?

Every day, we create a colossal amount of digital data. In fact, in the last two years alone we generated 90 percent of all the data in the world. That includes social media messages, emails, Google searches, and every other source of online data.

At the same time, books, media libraries, reports, and other types of databases are now available in digital format, providing researchers of all disciplines opportunities that didn’t exist before.

But the problem is that most of this data is unstructured. Since it doesn’t follow any organizational criteria, unstructured text is hard to search, manage, and examine. In this scenario, automated textual analysis tools are essential, as they help make sense of text data and find meaningful insights in a sea of information.

Text analysis enables businesses to go through massive collections of data with minimum human effort, saving precious time and resources, and allowing people to focus on areas where they can add more value. Here are some of the advantages of automated textual analysis:

Scalability

You can analyze as much data as you need in just seconds. Not only will you save valuable time, but you’ll also make your teams much more productive.

Real-time analysis

For businesses, it is key to detect angry customers on time or be warned of a potential PR crisis. By creating customized machine learning models for text analysis, you can easily monitor chats, reviews, social media channels, support tickets and all sorts of crucial data sources in real time, so you’re ready to take action when needed.

Academic researchers, especially in the political science field , may find real-time analysis with machine learning particularly useful to analyze polls, Twitter data, and election results.

Consistent criteria

Routine manual tasks (like tagging incoming tickets or processing customer feedback, for example) often end up being tedious and time-consuming. There are more chances of making mistakes, and the criteria applied by different team members often turn out to be inconsistent and subjective. Machine learning algorithms, on the other hand, learn from previous examples and always use the same criteria to analyze data.

How does Textual Analysis Work?

Computer-assisted textual analysis makes it easy to analyze large collections of text data and find meaningful information. Thanks to machine learning, it is possible to create models that learn from examples and can be trained to classify or extract relevant data.

But how easy is it to get started with textual analysis?

As with most things related to artificial intelligence (AI), automated text analysis is perceived as a complex tool, only accessible to those with programming skills. Fortunately, that’s no longer the case. AI platforms like MonkeyLearn are actually very simple to use and don’t require any previous machine learning expertise. First-time users can try different pre-trained text analysis models right away, and use them for specific purposes even if they don’t have coding skills or have never studied machine learning.

However, if you want to take full advantage of textual analysis and create your own customized models, you should understand how it works.

There are two steps you need to follow before running an automated analysis: data gathering and data preparation. Here, we’ll explain them more in detail:

Data gathering : when we think of a topic we want to analyze, we should first make sure that we can obtain the data we need. Let’s say you want to analyze all the customer support tickets your company has received over a designated period of time. You should be able to export that information from your software and create a CSV or an Excel file. The data can be either internal (that is, data that’s only available to your business, like emails, support tickets, chats, spreadsheets, surveys, databases, etc) or external (like review sites, social media, news outlets or other websites).

Data preparation : before performing automated text analysis it’s necessary to prepare the data that you are going to use. This is done by applying a series of Natural Language Processing (NLP) techniques. Tokenization , parsing , lemmatization , stemming and stopword removal are just a few of them.
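As an illustration of the data preparation step, the sketch below chains three of the techniques just mentioned: tokenization, stopword removal, and stemming. The stopword list and the suffix-stripping stemmer are toy assumptions written for this example; real projects normally rely on an NLP library for both.

```python
import re

# A tiny, hand-picked stopword list (an assumption; real lists are much longer).
STOPWORDS = {"the", "a", "an", "is", "are", "to", "and", "of", "my", "with", "i"}

def naive_stem(token):
    """Very crude suffix stripping; a stand-in for a real stemmer."""
    for suffix in ("ing", "ed", "s"):
        # Only strip the suffix if at least three characters remain.
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token

def prepare(text):
    """Tokenize, drop stopwords, and stem what is left."""
    tokens = re.findall(r"[a-z]+", text.lower())        # tokenization
    tokens = [t for t in tokens if t not in STOPWORDS]  # stopword removal
    return [naive_stem(t) for t in tokens]              # stemming

print(prepare("The customers reported problems with delayed payments"))
# ['customer', 'report', 'problem', 'delay', 'payment']
```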

Once these steps are complete, you will be all set up for the data analysis itself. In this section, we’ll refer to how the most common textual analysis methods work: text classification and text extraction.

Text Classification

Text classification is the process of assigning tags to a collection of data based on its content.

When done manually, text categorization is a time-consuming task that often leads to mistakes and inaccuracies. By doing this automatically, it is possible to obtain very good results while spending less time and resources. There are three main approaches to automatic text classification: rule-based, machine learning-based, and hybrid.

Rule-based systems

Rule-based systems follow an ‘if-then’ (condition-action) structure based on linguistic rules. Basically, rules are human-made associations between a linguistic pattern in a text and a predefined tag. These linguistic patterns often refer to morphological, syntactic, lexical, semantic, or phonological aspects.

For instance, this could be a rule to classify a series of laptop descriptions:

( Lenovo | Sony | Hewlett Packard | Apple ) → Brand

In this case, when the text classification model detects any of those words within a text (the ‘if’ portion), it will assign the predefined tag ‘brand’ to them (the ‘then’ portion).

One of the main advantages of rule-based systems is that they are easy to understand by humans. On the downside, creating complex systems is quite tricky, because you need to have good knowledge of linguistics and of the topics present in the text that you want to analyze. Besides, adding new rules can be tough as it requires several tests, making rule-based systems hard to scale.
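The laptop brand rule shown earlier can be sketched in a few lines of Python. Expressing the ‘if’ part as a regular expression is an assumption made for this example; the function name and the sample description are hypothetical.

```python
import re

# Each rule maps a tag to a pattern: if the pattern matches, the tag applies.
RULES = {
    "Brand": re.compile(r"\b(Lenovo|Sony|Hewlett Packard|Apple)\b", re.IGNORECASE),
}

def apply_rules(text):
    """Return (tag, matched text) pairs for every rule that fires."""
    return [
        (tag, match.group(0))
        for tag, pattern in RULES.items()
        for match in pattern.finditer(text)
    ]

print(apply_rules("Sony laptop with 16 GB RAM, similar to the Apple model"))
# [('Brand', 'Sony'), ('Brand', 'Apple')]
```

Adding a second tag means adding another entry to the rule table, which is easy at first but illustrates why large rule sets become hard to test and maintain.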

Machine learning-based systems

Machine learning-based systems are trained to make predictions based on examples. This means that a person needs to provide representative and consistent samples and assign the expected tags manually so that the system learns to make its own predictions from those past observations. The collection of manually tagged data is called training data .

But how does machine learning actually work?

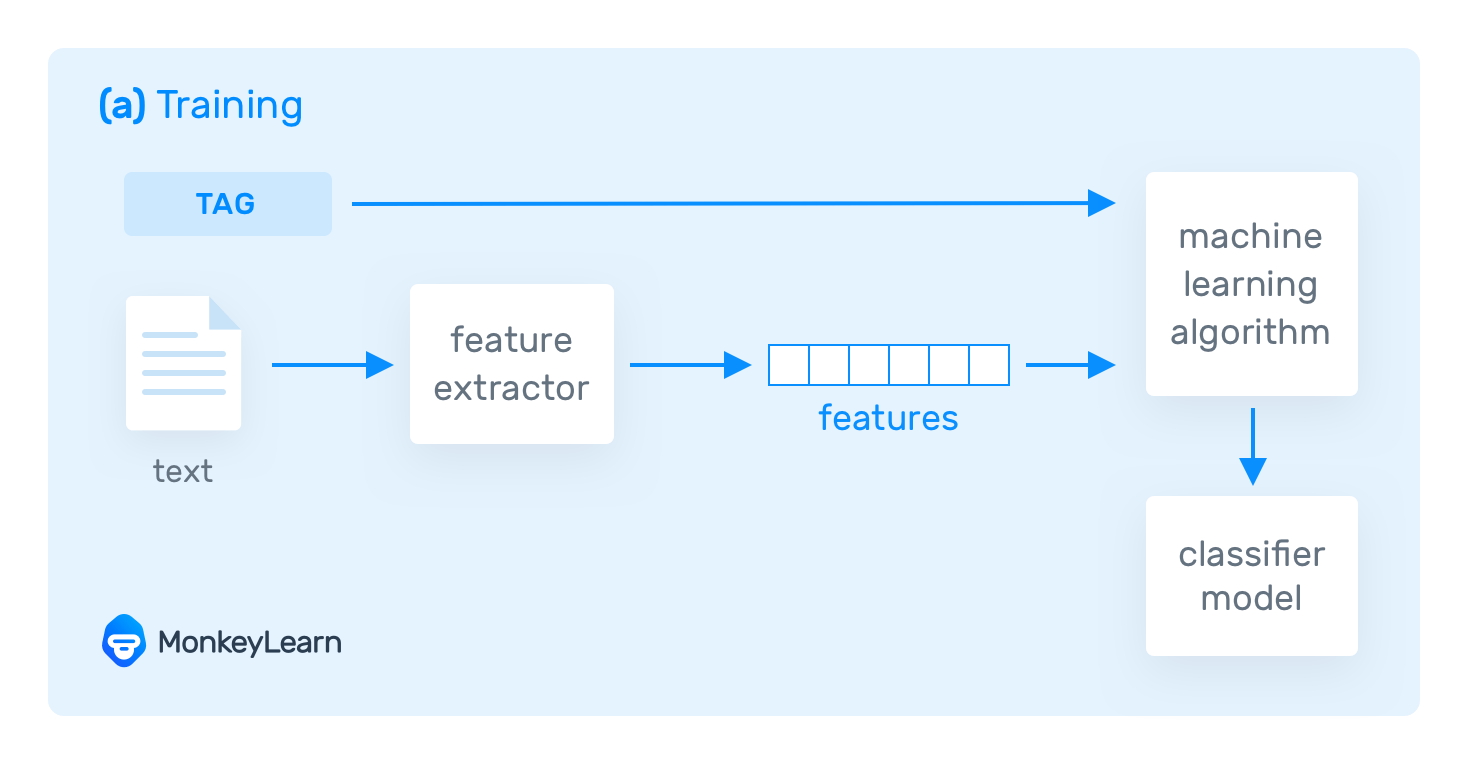

Suppose you are training a machine learning-based classifier. The system needs to transform the training data into something it can understand: in this case, vectors (an array of numbers with encoded data). Vectors contain a set of relevant features from the given text, and use them to learn and make predictions on future data.

One of the most common methods for text vectorization is called bag of words and consists of counting how many times a particular word (from a predetermined list of words) appears in the text you want to analyze.
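Here is a minimal sketch of bag of words, assuming a small hand-written vocabulary and a simple lowercase tokenizer (both chosen for illustration only).

```python
import re

def bag_of_words(text, vocabulary):
    """Return one count per vocabulary word, in vocabulary order."""
    tokens = re.findall(r"[a-z']+", text.lower())
    return [tokens.count(word) for word in vocabulary]

# Hypothetical predetermined word list.
vocabulary = ["price", "support", "easy", "slow"]

vector = bag_of_words("Support was slow, really slow, but the price is fair", vocabulary)
print(vector)  # [1, 1, 0, 2]
```

Note that the vector keeps the counts but discards word order, which is exactly what the ‘bag’ in the name refers to.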

So, the text is transformed into vectors and fed into a machine learning algorithm along with its expected tags, creating a text classification model:

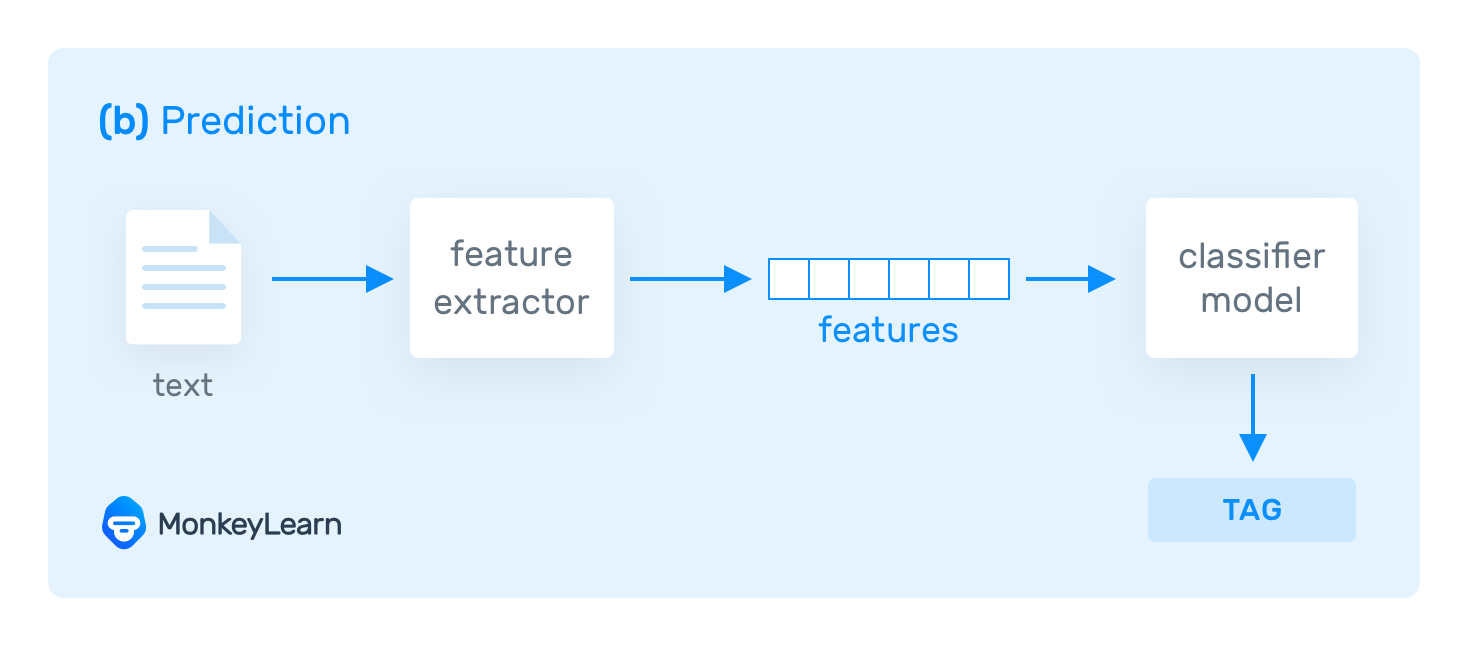

After being trained, the model can make predictions over unseen data:

Machine learning algorithms

The most common algorithms used in text classification are the Naive Bayes (NB) family of algorithms, Support Vector Machines (SVM), and deep learning algorithms.

The Naive Bayes (NB) family of algorithms is probabilistic: it uses Bayes’ theorem to calculate the probability of each tag for a given text, and then returns the tag with the highest probability. These algorithms can provide good results even when training data is scarce.

Support Vector Machines (SVM) is a machine learning algorithm that looks for the boundary (a hyperplane) that best separates vectors into two groups within a multi-dimensional space. In one group, you have vectors that belong to a given tag, and in the other group, vectors that don’t belong to that tag. Using this algorithm requires more coding skills, but the results are often better than those obtained with Naive Bayes.

Deep learning algorithms are loosely inspired by the way the human brain works. Trained on very large numbers of examples (often millions), they generate very rich representations of texts, which can lead to more accurate predictions than other machine learning algorithms. The downside is that they need vast amounts of training data to provide accurate results and require intensive coding.
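To show what training and prediction look like in practice, here is a small from-scratch sketch of a multinomial Naive Bayes classifier. The class name, the add-one smoothing choice, and the four training tickets are assumptions made for this example; a real project would normally use a tested library implementation and far more training data.

```python
import math
import re
from collections import Counter, defaultdict

def tokenize(text):
    return re.findall(r"[a-z']+", text.lower())

class NaiveBayes:
    def fit(self, texts, tags):
        """Count documents per tag and words per tag from the training data."""
        self.tag_counts = Counter(tags)
        self.word_counts = defaultdict(Counter)
        for text, tag in zip(texts, tags):
            self.word_counts[tag].update(tokenize(text))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict(self, text):
        """Return the tag with the highest (log) probability for the text."""
        total_docs = sum(self.tag_counts.values())
        scores = {}
        for tag, n_docs in self.tag_counts.items():
            # log P(tag) + sum of log P(word | tag), with add-one smoothing.
            score = math.log(n_docs / total_docs)
            total_words = sum(self.word_counts[tag].values())
            for word in tokenize(text):
                count = self.word_counts[tag][word]
                score += math.log((count + 1) / (total_words + len(self.vocab)))
            scores[tag] = score
        return max(scores, key=scores.get)

# Hypothetical, manually tagged training data.
texts = [
    "I cannot pay with my credit card",
    "My card payment was declined",
    "The app crashes on startup",
    "Login page crashes every time",
]
tags = ["Payment Issues", "Payment Issues", "Bug", "Bug"]

model = NaiveBayes().fit(texts, tags)
print(model.predict("payment declined on my card"))  # Payment Issues
```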

Hybrid systems

These systems combine rule-based systems and machine learning-based systems to obtain more accurate predictions.

There are different parameters to evaluate the performance of a text classifier: accuracy , precision , recall , and F1 score .

You can measure how your text classifier works by comparing it to a fixed testing set (that is, a group of data that already includes its expected tags) or by using cross-validation, a process that repeatedly divides your training data into two groups: one used to train the model, and the other used to test the results.

Let’s go into more detail about each of these parameters:

Accuracy : this is the number of correct predictions that the text classifier makes divided by the total number of predictions. However, accuracy alone is not the best parameter to analyze the performance of a text classifier. When the number of examples is imbalanced (for example, a lot of the data belongs to one of the categories) you may experience an accuracy paradox , that is, a model with high accuracy, but one that’s not necessarily able to make accurate predictions for all tags. In this case, it’s better to look at precision and recall, and F1 score.

Precision: this metric indicates the number of correct predictions for a given tag, divided by the total number of correct and incorrect predictions for that tag. A high precision level indicates there were fewer false positives. For some tasks, like sending automated email responses, you will need text classification models with a high level of precision, which will only deliver an answer when it’s highly likely that the recipient belongs to a given tag.

Recall: this shows the number of correct predictions for a given tag, divided by the number of examples that should have been predicted as belonging to that tag. A high recall indicates there were fewer false negatives; if you are routing support tickets, for example, it means that most tickets meant for a given team will actually reach it.

F1 score: this metric combines precision and recall into a single value (their harmonic mean), and provides an idea of how well your text classifier is working. It allows you to see how accurate your model is for all the tags you’re using.
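The metrics above are simple to compute once you have the expected and predicted tags side by side. The sketch below assumes the standard definitions (F1 as the harmonic mean of precision and recall); the function names and the six sample tickets are hypothetical.

```python
def accuracy(expected, predicted):
    """Share of predictions that match the expected tag."""
    return sum(e == p for e, p in zip(expected, predicted)) / len(expected)

def precision_recall_f1(expected, predicted, tag):
    """Compute precision, recall, and F1 for a single tag."""
    pairs = list(zip(expected, predicted))
    tp = sum(e == tag and p == tag for e, p in pairs)  # true positives
    fp = sum(e != tag and p == tag for e, p in pairs)  # false positives
    fn = sum(e == tag and p != tag for e, p in pairs)  # false negatives
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

# Hypothetical expected tags and model predictions for six tickets.
expected = ["Urgent", "Urgent", "Normal", "Normal", "Normal", "Urgent"]
predicted = ["Urgent", "Normal", "Normal", "Normal", "Normal", "Urgent"]

print(accuracy(expected, predicted))
print(precision_recall_f1(expected, predicted, "Urgent"))
```

Here the model never tags a normal ticket as urgent (precision of 1.0) but misses one of the three urgent tickets (recall of about 0.67), which is the kind of difference that accuracy alone would hide.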

Cross-validation

Cross-validation is a method used to measure the accuracy of a text classifier model. It consists of splitting the training dataset into a number of equal-length subsets, in a random way. For instance, let’s imagine you have four subsets and each of them contains 25% of your training data.

All of those subsets except one are used to train the text classifier. Then, the classifier is used to make predictions over the remaining subset. After this, you need to compile all the metrics we mentioned before (accuracy, precision, recall, and F1 score), and start the process all over again, until all the subsets have been used for testing. Finally, all the results are compiled to obtain the average performance of each metric.
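The procedure just described can be sketched as follows. The `evaluate` argument is a hypothetical callback standing in for "train a classifier on the training subsets and return a metric on the test subset"; the splitting strategy (shuffle, then deal items into k folds) is one simple choice among several.

```python
import random

def k_fold_splits(items, k=4, seed=0):
    """Yield (train, test) pairs; each item is used for testing exactly once."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)           # random assignment to subsets
    folds = [shuffled[i::k] for i in range(k)]      # k subsets of near-equal size
    for i in range(k):
        test = folds[i]
        train = [x for j, fold in enumerate(folds) if j != i for x in fold]
        yield train, test

def cross_validate(items, evaluate, k=4):
    """Average a metric over all k train/test rounds."""
    scores = [evaluate(train, test) for train, test in k_fold_splits(items, k)]
    return sum(scores) / len(scores)

# Toy usage: eight examples, four folds, so each test subset holds 25% of the data.
for train, test in k_fold_splits(range(8), k=4):
    print(len(train), len(test))  # 6 2
```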

Text Extraction

Text extraction is the process of identifying specific pieces of text from unstructured data. This is very useful for a variety of purposes, from extracting company names from a LinkedIn dataset to pulling out prices on product descriptions.

Text extraction allows you to automatically locate the relevant terms or expressions, without needing to read or scan all the text yourself. And that is particularly relevant when you have massive databases, which would otherwise take ages to analyze manually.

There are different approaches to text extraction. Here, we’ll refer to the most commonly used and reliable:

Regular expressions

Regular expressions are similar to rules for text classification models. They can be defined as a series of characters that define a pattern.

Every time the text extractor detects a match for a pattern, it assigns the corresponding tag.

This approach allows you to create text extractors quickly and with good results, as long as you find the right patterns for the data you want to analyze. However, as it gets more complex, it can be hard to manage and scale.
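As a small illustration, the sketch below uses Python’s `re` module to pull email addresses and dollar prices out of a text. Both patterns are simplified assumptions written for this example (real-world email and price formats are more varied), and the sample sentence is invented.

```python
import re

# Simplified patterns; each one is paired with the tag it produces.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PRICE = re.compile(r"\$\d+(?:\.\d{2})?")

def extract(text):
    """Return every match for each pattern, grouped by tag."""
    return {"Email": EMAIL.findall(text), "Price": PRICE.findall(text)}

print(extract("Contact sales@example.com for the $49.99 plan or the $120 bundle."))
# {'Email': ['sales@example.com'], 'Price': ['$49.99', '$120']}
```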

Conditional Random Fields

Conditional Random Fields (CRF) is a statistical approach used for text extraction with machine learning. It identifies different patterns by assigning a weight to each of the word sequences within a text. CRFs also allow you to create additional parameters related to the patterns, based on syntactic or semantic information.

This approach creates more complex and richer patterns than regular expressions and can encode a large volume of information. However, if you want to train the text extractor properly, you will need to have in-depth NLP and computing knowledge.

You can use the same performance metrics that we mentioned for text classification (accuracy, precision, recall, and F1 score), although these metrics only consider exact matches as positive results, leaving partial matches aside.

If you want partial matches to be included in the results, you should use a performance metric called ROUGE (Recall-Oriented Understudy for Gisting Evaluation). This group of metrics measures lengths and numbers of sequences to make a match between the source text and the extraction performed by the model.

The parameters used to compare these two texts need to be defined manually. You may define ROUGE-N metrics (where N is the length, in words, of the units you want to measure) or ROUGE-L metrics (based on the longest common subsequence of words).
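To see how partial matches earn partial credit, here is a simplified, recall-only sketch of the two ROUGE variants. It works on lists of words and leaves out the precision and F-measure components that full ROUGE implementations also report; the function names and the company-name example are hypothetical.

```python
from collections import Counter

def ngrams(tokens, n):
    """Multiset of all sequences of n consecutive tokens."""
    return Counter(zip(*(tokens[i:] for i in range(n))))

def rouge_n_recall(reference, candidate, n=1):
    """Share of the reference n-grams that also appear in the candidate."""
    ref, cand = ngrams(reference, n), ngrams(candidate, n)
    total = sum(ref.values())
    return sum((ref & cand).values()) / total if total else 0.0

def lcs_length(a, b):
    """Length of the longest common subsequence, by dynamic programming."""
    table = [[0] * (len(b) + 1) for _ in range(len(a) + 1)]
    for i, x in enumerate(a, 1):
        for j, y in enumerate(b, 1):
            if x == y:
                table[i][j] = table[i - 1][j - 1] + 1
            else:
                table[i][j] = max(table[i - 1][j], table[i][j - 1])
    return table[-1][-1]

def rouge_l_recall(reference, candidate):
    """Longest common subsequence length relative to the reference length."""
    return lcs_length(reference, candidate) / len(reference) if reference else 0.0

# The extractor found only part of the expected company name.
reference = "Acme Software Inc".split()
candidate = "Acme Software".split()

print(rouge_n_recall(reference, candidate, n=1))  # 2 of 3 words matched
print(rouge_n_recall(reference, candidate, n=2))  # 0.5
print(rouge_l_recall(reference, candidate))       # 2 of 3 words in sequence
```

An exact-match metric would score this extraction as a miss, while these scores reflect that most of the expected text was recovered.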

Use Cases and Applications

Automated textual analysis is the process of obtaining meaningful information out of raw data. Considering unstructured data is getting closer to 80% of the existing information in the digital world , it’s easy to understand why this brings outstanding opportunities for businesses, organizations, and academic researchers.

For companies, it is now possible to obtain real-time insights on how their users feel about their products and make better business decisions based on data. Shifting to a data-driven approach is one of the main challenges of businesses today.

Textual analysis has many exciting applications across different areas of a company, like customer service, marketing, product, or sales. By allowing the automation of specific tasks that used to be manual, textual analysis is helping teams become more productive and efficient, and allowing them to focus on areas where they can add real value.

In the academic research field, computer-assisted textual analysis (and mainly, machine learning-based models) are expanding the horizons of investigation, by providing new ways of processing, classifying, and obtaining relevant data.

In this section, we’ll describe the most significant applications related to customer service, customer feedback, and academic research.

Customer Service

It’s not all about having an amazing product or investing a lot of money in advertising. What really tips the balance when it comes to business success is providing high-quality customer service. Stats claim that 70% of the customer journey is defined by how people feel they are being treated.

So, how can textual analysis help companies deliver a better customer service experience?

Automatically tag support tickets

Every time a customer sends a request, comment, or complaint, there’s a new support ticket to be processed. Customer support teams need to categorize every incoming message based on its content, a routine task that can be boring, time-consuming, and inconsistent if done manually.

Textual analysis with machine learning allows you to automatically identify the topic of each support ticket and tag it accordingly. How does it work?

- First, a person defines a set of categories and trains a classifier model by applying the appropriate tags to a number of representative samples.

- The model analyzes the words and expressions used in each ticket. For example: ‘I’m having problems when paying with my credit card’ , and it compares it with previous examples.

- Finally, it automatically tags the ticket according to its content. In this case, the ticket would be tagged as Payment Issues .

Automatically route and triage support tickets

Once support tickets are tagged, they need to be routed to the team in charge of dealing with that issue. Machine learning enables teams to send a ticket to the right person in real time, based on the ticket’s topic, language, or complexity. For example, a ticket previously tagged as Payment Issues will be automatically routed to the Billing Area.

Detect the urgency of a ticket

A simple task, like being able to prioritize tickets based on their urgency, can have a substantial positive impact on your customer service. By analyzing the content of each ticket, a textual analysis model can let you assess which of them are more critical and prioritize accordingly . For instance, a ticket containing the words or expressions ‘as soon as possible’ or ‘immediately’ would be automatically classified as Urgent .
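Once a ticket has a tag, routing and urgency detection can be as simple as a lookup and a phrase check. The sketch below is a keyword-based stand-in for a trained model; the `triage` function, the fallback team name, and the sample ticket are hypothetical, while the Payment Issues to Billing Area mapping and the two urgent phrases come from the examples above.

```python
# Tag -> team lookup; only the first pair comes from the example in the text.
ROUTES = {"Payment Issues": "Billing Area"}
FALLBACK_TEAM = "General Support"  # hypothetical default team

# Phrases that mark a ticket as urgent (taken from the example in the text).
URGENT_PHRASES = ("as soon as possible", "immediately")

def triage(ticket_text, tag):
    """Decide which team receives the ticket and whether it is urgent."""
    lowered = ticket_text.lower()
    return {
        "team": ROUTES.get(tag, FALLBACK_TEAM),
        "urgent": any(phrase in lowered for phrase in URGENT_PHRASES),
    }

print(triage("My card was charged twice, please fix this immediately", "Payment Issues"))
# {'team': 'Billing Area', 'urgent': True}
```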

Get insights from ticket analytics

The performance of customer service teams is usually measured by KPIs, like first response time, the average time of resolution, and customer satisfaction (CSAT).

Textual analysis algorithms can be used to analyze the different interactions between customers and the customer service area, like chats, support tickets, emails, and customer satisfaction surveys.

You can use aspect-based sentiment analysis to understand the main topics discussed by your customers and how they are feeling about those topics. For example, you may have a lot of mentions referring to the topic ‘UI/UX’. But are all those customers’ opinions positive, negative, or neutral? This type of analysis can provide a more accurate perspective of what they think about your product and give you a deeper understanding of overall customer satisfaction.

Customer Feedback

Listening to the Voice of Customer (VoC) is critical to understand the customers’ expectations, experience and opinion about your brand. Two of the most common tools to monitor and examine customer feedback are customer surveys and product reviews.

By analyzing customer feedback data, companies can detect topics for improvement, spot product flaws, get a better understanding of their customers’ needs and measure their level of satisfaction, among many other things.

But how do you process and analyze tons of reviews or thousands of customer surveys? Here are some ideas of how you can use textual analysis algorithms to analyze different kinds of customer feedback:

Analyze NPS Responses

Net Promoter Score (NPS) is one of the most popular tools to measure customer satisfaction. The first part of the survey involves giving the brand a score from 0 to 10 based on the question: 'How likely is it that you would recommend [brand] to a friend or colleague?'. The results allow you to classify your customers as promoters, passives, and detractors.
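The classification step can be sketched in a few lines. The thresholds below follow the usual NPS convention, which the text above does not spell out, so treat them as an assumption: 9 and 10 are promoters, 7 and 8 are passives, and 0 to 6 are detractors, with the score being the percentage of promoters minus the percentage of detractors. The survey answers are invented.

```python
def nps_category(score):
    """Map a 0-10 answer to the conventional NPS group."""
    if score >= 9:
        return "promoter"
    if score >= 7:
        return "passive"
    return "detractor"

def net_promoter_score(scores):
    """Percentage of promoters minus percentage of detractors."""
    categories = [nps_category(s) for s in scores]
    promoters = categories.count("promoter")
    detractors = categories.count("detractor")
    return 100 * (promoters - detractors) / len(scores)

# Hypothetical survey answers.
answers = [10, 9, 8, 7, 6, 3, 10, 9]
print(net_promoter_score(answers))  # 25.0
```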

Then, there’s a follow-up question, inquiring about the reasons for your previous score. These open-ended responses often provide the most insightful information about your company. At the same time, it’s the most complex data to process. Yes, you could read and tag each of the responses manually, but what if there are thousands of them?

Textual analysis with machine learning enables you to detect the main topics that your customers are referring to, and even extract the most relevant keywords related to those topics . To make the most of your data, you could also perform sentiment analysis and find out if your customers are talking about a given topic positively or negatively.

Analyze Customer Surveys

Besides NPS, textual analysis algorithms can help you analyze all sorts of customer surveys. Using a text classification model to tag your responses can save you a lot of valuable time and resources while allowing you to obtain consistent results.

Analyze Product Reviews

Product reviews are a significant factor when buying a product. Prospective buyers read at least 10 reviews before feeling they can trust a local business and that’s just one of the (many) reasons why you should keep a close eye on what people are saying about your brand online.

Analyzing product reviews can give you an idea of what people love and hate the most about your product and service. It can provide useful insights and opportunities for improvement. And it can show you what to do to get one step ahead of your competition.

The truth is that going through pages and pages of product reviews is not a very exciting task. Categorizing all those opinions can take teams hours and in the end, it becomes an expensive and unproductive process. That’s why automated textual analysis is a game-changer.

Imagine you want to analyze a set of product reviews for your SaaS company on G2 Crowd. A textual analysis model will allow you to tag each review based on topic, like Ease of Use, Price, UI/UX, or Integrations. You could also run a sentiment analysis to discover how your customers feel about those topics: do they think the price is suitable or too expensive? Do they find it too complex or easy to use?

Thanks to textual analysis algorithms, you can get powerful information to help you make data-driven decisions, and empower your teams to be more productive by reducing manual tasks to a minimum.

Academic Research

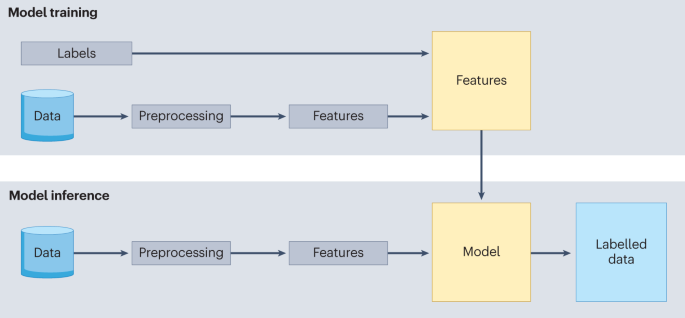

What if you were able to sift through tons of papers and journals, and discover data that is relevant to your research in just seconds? Just imagine if you could easily classify years of news articles and extract meaningful keywords from them, or analyze thousands of tweets after a significant political change.

Even though machine learning applications in business and science seem to be more frequent, social science research is also benefiting from ML to perform tasks related to the academic world.

Social science researchers need to deal with vast volumes of unstructured data. Therefore, one of the major opportunities provided by computer-assisted textual analysis is being able to classify data, extract relevant information, or identify different groups in extensive collections of data.

Another application of textual analysis with machine learning is supporting the coding process. Coding is one of the early steps of any qualitative textual analysis. It involves a detailed examination of what you want to analyze to become familiar with the data. When done manually, this task can be very time-consuming and often inaccurate or inconsistent. Fortunately, machine learning algorithms (like text classifier models) can help you do this in very little time and allow you to scale up the coding process easily.

Finally, using machine learning algorithms to scan large numbers of papers, databases, and journal articles can lead to new research hypotheses.

Final Words

In a world overloaded with data, textual analysis with machine learning is a powerful tool that enables you to make sense of unstructured information and find what’s relevant in just seconds.

With promising use cases across many fields, from marketing to social science research, machine learning algorithms are far from being a niche technology available only to a few. Moreover, they are turning into user-friendly applications that can be mastered by workers with little or no coding skills.

Thanks to text analysis models, teams are becoming more productive by being released from manual and routine tasks that used to take valuable time from them. At the same time, companies can make better decisions based on valuable, real-time insights obtained from data.

By now, you probably have an idea of what textual analysis with machine learning is and how you can use it to make your everyday tasks more efficient and straightforward. Ready to get started? MonkeyLearn makes it very simple to take your first steps. Just contact us and get a personalized demo from one of our experts!

Analyzing Text Data

An introduction to text analysis and text mining, an overview of text analysis methods, additional resources.

What is text analysis?

Text analysis is a broad term that encompasses the examination and interpretation of textual data. It involves various techniques to understand, organize, and derive insights from text, including methods from linguistics, statistics, and machine learning. Text analysis often includes processes like text categorization, sentiment analysis, and entity recognition, to gain valuable insights from textual data.

What is text mining?

Text mining, also known as text data mining, is a process of using computer programs and algorithms to dig through large amounts of text, like books, articles, websites, or social media posts, to find valuable and hidden information. This information could be patterns, trends, insights, or specific pieces of knowledge that are not immediately obvious when you read the texts on your own. Text data mining helps people make sense of vast amounts of text data quickly and efficiently, making it easier to discover useful information and gain new perspectives from written content.

There are many different methods for text analysis, such as:

- word frequency analysis

- natural language processing

- sentiment analysis

These text analysis techniques serve various purposes, from organizing and understanding text data to making predictions, extracting knowledge, and automating tasks.
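Word frequency analysis, the first method listed above, can be sketched in a few lines using only the Python standard library. The stopword list and sample sentence are illustrative assumptions; real projects typically use a fuller stopword list and a more careful tokenizer.

```python
import re
from collections import Counter

# A tiny illustrative stopword list (an assumption, not a standard one).
STOPWORDS = {"the", "a", "an", "and", "of", "to", "in", "is", "it"}


def word_frequencies(text, stopwords=STOPWORDS):
    """Count how often each non-stopword token occurs in the text."""
    tokens = re.findall(r"[a-z']+", text.lower())
    return Counter(t for t in tokens if t not in stopwords)


freqs = word_frequencies("The cat and the hat. The cat sat in the hat.")
for word, count in freqs.most_common(3):
    print(word, count)
```

Removing stopwords first keeps very common function words such as "the" from dominating the counts.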

Before beginning your text analysis project, it is important to specify your goals and then choose the method that will allow you to meet those goals. Then, consider how much data you need, and identify a sampling plan, before beginning data collection.

- Examples of Text and Data Mining Research Using Copyrighted Materials By Sean Flynn and Lokesh Vyas, an exploration of text and data mining across disciplines, from medicine to literature. Published December 5, 2022.

- Last Updated: Feb 7, 2024 10:26 AM

- URL: https://libguides.gwu.edu/textanalysis

- Published: 11 April 2024

Quantitative text analysis

- Kristoffer L. Nielbo (ORCID: orcid.org/0000-0002-5116-5070)

- Folgert Karsdorp

- Melvin Wevers (ORCID: orcid.org/0000-0001-8177-4582)

- Alie Lassche (ORCID: orcid.org/0000-0002-7607-0174)

- Rebekah B. Baglini (ORCID: orcid.org/0000-0002-2836-5867)

- Mike Kestemont

- Nina Tahmasebi (ORCID: orcid.org/0000-0003-1688-1845)

Nature Reviews Methods Primers volume 4, Article number: 25 (2024)

- Computational science

- Interdisciplinary studies

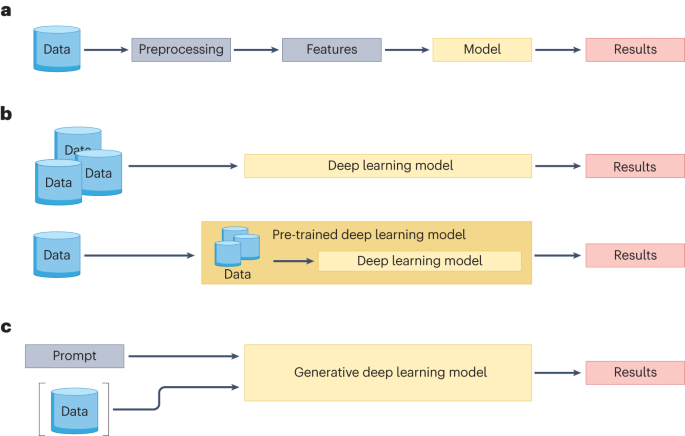

Text analysis has undergone substantial evolution since its inception, moving from manual qualitative assessments to sophisticated quantitative and computational methods. Beginning in the late twentieth century, a surge in the utilization of computational techniques reshaped the landscape of text analysis, catalysed by advances in computational power and database technologies. Researchers in various fields, from history to medicine, are now using quantitative methodologies, particularly machine learning, to extract insights from massive textual data sets. This transformation can be described in three discernible methodological stages: feature-based models, representation learning models and generative models. Although sequential, these stages are complementary, each addressing analytical challenges in text analysis. The progression from feature-based models that require manual feature engineering to contemporary generative models, such as GPT-4 and Llama2, signifies a change in the workflow, scale and computational infrastructure of quantitative text analysis. This Primer presents a detailed introduction to some of these developments, offering insights into the methods, principles and applications pertinent to researchers embarking on quantitative text analysis, especially within the field of machine learning.

Introduction

Qualitative analysis of textual data has a long research history. However, a fundamental shift occurred in the late twentieth century when researchers began investigating the potential of computational methods for text analysis and interpretation [1]. Today, researchers in diverse fields, such as history, medicine and chemistry, commonly use the quantification of large textual data sets to uncover patterns and trends, producing insights and knowledge that can aid in decision-making and offer novel ways of viewing historical events and current realities. Quantitative text analysis (QTA) encompasses a range of computational methods that convert textual data or natural language into structured formats before subjecting them to statistical, mathematical and numerical analysis. With the increasing availability of digital text from numerous sources, such as books, scientific articles, social media posts and online forums, these methods are becoming increasingly valuable, facilitated by advances in computational technology.

Given the widespread application of QTA across disciplines, it is essential to understand the evolution of the field. As a relatively consolidated field, QTA embodies numerous methods for extracting and structuring information in textual data. It gained momentum in the late 1990s as a subset of the broader domain of data mining, catalysed by advances in database technologies, software accessibility and computational capabilities [2,3]. However, it is essential to recognize that the evolution of QTA extends beyond computer science and statistics. It has heavily incorporated techniques and algorithms derived from corpus linguistics [4], computer linguistics [5] and information retrieval [6]. Today, QTA is largely driven by machine learning, a crucial component of data science, artificial intelligence (AI) and natural language processing (NLP).

Methods of QTA are often referred to as techniques that are innately linked with specific tasks (Table 1). For example, sentiment analysis aims to determine the emotional tone of a text [7], whereas entity and concept extraction seek to identify and categorize elements in a text, such as names, locations or key themes [8,9]. Text classification refers to the task of sorting texts into groups with predefined labels [10], for example sorting news articles into semantic categories such as politics, sports or entertainment. In contrast to machine-learning tasks that use supervised learning, text clustering, which uses unsupervised learning, involves finding naturally occurring groups in unlabelled texts [11]. A significant subset of tasks primarily aims to simplify and structure natural language. For example, representation learning includes tasks that automatically convert texts into numerical representations, which can then be used for other tasks [12]. The lines separating these techniques can be blurred and often vary depending on the research context. For example, topic modelling, a type of statistical modelling used for concept extraction, serves simultaneously as a clustering and representation learning technique [13,14,15].
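The idea of converting texts into numerical representations that other tasks can reuse can be sketched as follows. Note the substitution: a bag-of-words count vector is a hand-built feature representation, not a learned one, and it stands in here only to show how texts become vectors that a clustering step could compare. The function names and example sentences are hypothetical.

```python
import math
import re
from collections import Counter


def vectorize(text):
    """Represent a text as a sparse vector of word counts (bag of words)."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Hypothetical unlabelled texts: two about sport, one about politics.
sport_1 = vectorize("the team won the match")
sport_2 = vectorize("the team lost the match")
politics = vectorize("the senate passed the bill")

same_topic = cosine(sport_1, sport_2)
cross_topic = cosine(sport_1, politics)
```

Here the two sports sentences come out more similar to each other than either is to the politics sentence, which is the kind of signal an unsupervised clustering method groups on. Learned representations aim to capture similarity in meaning even when the texts share few exact words.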

QTA, similar to machine learning, learns from observation of existing data rather than by manipulating variables as in scientific experiments [16]. In QTA, experiments encompass the design and implementation of empirical tests to explore and evaluate the performance of models, algorithms and techniques in relation to specific tasks and applications. In practice, this involves a series of steps. First, text data are collected from real-world sources such as newspaper articles, patient records or social media posts. Then, a specific type of machine-learning model is selected and designed. The model could be a tree-based decision model, a clustering technique or more complex encoder–decoder models for tasks such as translation. Subsequently, the selected model is trained on the collected data, learning to make categorizations or predictions based on the data. The performance of the model is evaluated using predominantly intrinsic performance metrics (such as accuracy for a classification task) and, to a lesser degree, extrinsic metrics that measure how the output of the model impacts a broader task or system.
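The steps above (collect data, select a model, train it, evaluate it with an intrinsic metric) can be sketched end to end with a small multinomial naive Bayes classifier. Naive Bayes is chosen here only because it fits in a few lines; it is not one of the model families the text names. The toy training and test sentences are invented for illustration, and a real experiment would need far more data.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text):
    return re.findall(r"[a-z]+", text.lower())


class NaiveBayes:
    """Multinomial naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, texts, labels):
        self.class_counts = Counter(labels)
        self.word_counts = defaultdict(Counter)
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = {w for c in self.word_counts.values() for w in c}
        return self

    def predict(self, text):
        total_docs = sum(self.class_counts.values())
        best_label, best_score = None, -math.inf
        for label, n_docs in self.class_counts.items():
            counts = self.word_counts[label]
            denom = sum(counts.values()) + len(self.vocab)
            score = math.log(n_docs / total_docs)  # log prior
            for word in tokenize(text):
                if word in self.vocab:  # ignore words never seen in training
                    score += math.log((counts[word] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


def accuracy(model, texts, labels):
    """Fraction of texts whose predicted label matches the true label."""
    hits = sum(model.predict(t) == y for t, y in zip(texts, labels))
    return hits / len(labels)


# Step 1: collect (toy, hypothetical) labelled data.
train_texts = [
    "the team won the match",
    "a late goal won the game",
    "the senate passed the bill",
    "the minister announced a new policy",
]
train_labels = ["sports", "sports", "politics", "politics"]
test_texts = ["the team scored a goal", "the senate debated the policy"]
test_labels = ["sports", "politics"]

# Steps 2-3: select and train the model. Step 4: evaluate on held-out texts.
model = NaiveBayes().fit(train_texts, train_labels)
test_accuracy = accuracy(model, test_texts, test_labels)
```

Accuracy is computed on texts the model did not see during training, which is what makes it a meaningful intrinsic metric; an extrinsic evaluation would instead measure the effect of these predictions on a downstream task.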