Factor Analysis – Steps, Methods and Examples

Factor Analysis

Definition:

Factor analysis is a statistical technique that is used to identify the underlying structure of a relatively large set of variables and to explain these variables in terms of a smaller number of common underlying factors. It helps to investigate the latent relationships between observed variables.

Factor Analysis Steps

Here are the general steps involved in conducting a factor analysis:

1. Define the Research Objective:

Clearly specify the purpose of the factor analysis. Determine what you aim to achieve or understand through the analysis.

2. Data Collection:

Gather the data on the variables of interest. These variables should be measurable and related to the research objective. Ensure that you have a sufficient sample size for reliable results.

3. Assess Data Suitability:

Examine the suitability of the data for factor analysis. Check for the following aspects:

- Sample size: Ensure that you have an adequate sample size to perform factor analysis reliably.

- Missing values: Handle missing data appropriately, either by imputation or exclusion.

- Variable characteristics: Verify that the variables are continuous or at least ordinal in nature. Categorical variables may require different analysis techniques.

- Linearity: Assess whether the relationships among variables are linear.
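
As a minimal sketch of two of these checks, the Python snippet below covers missing values and sample size. It assumes NumPy and that missing values are coded as `np.nan`. Missing data are handled by listwise deletion, which is one of the two options above; imputation is not shown. The snippet then reports the remaining sample size and the number of cases per variable. The function name `suitability_summary` is hypothetical. The snippet does not test linearity and does not impose a sample-size threshold, because published rules of thumb differ.

```python
import numpy as np

def suitability_summary(data):
    """Listwise-delete incomplete rows and report basic size checks.

    data: 2-D array (observations x variables), missing values as np.nan.
    Returns a summary dict and the cleaned array.
    """
    data = np.asarray(data, dtype=float)
    complete = ~np.isnan(data).any(axis=1)      # rows with no missing values
    clean = data[complete]
    n, p = clean.shape
    summary = {
        "n_dropped": int((~complete).sum()),
        "n": n,
        "p": p,
        "cases_per_variable": n / p,
        # A variable with zero variance cannot be correlated with the others.
        "constant_vars": [j for j in range(p) if np.std(clean[:, j]) == 0],
    }
    return summary, clean

# Hypothetical data: 200 observations of 5 variables, one missing value.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 5))
X[3, 2] = np.nan
summary, clean = suitability_summary(X)
print(summary["n_dropped"], summary["n"], summary["cases_per_variable"])  # 1 199 39.8
```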

4. Determine the Factor Analysis Technique:

There are different types of factor analysis techniques available, such as exploratory factor analysis (EFA) and confirmatory factor analysis (CFA). Choose the appropriate technique based on your research objective and the nature of the data.

5. Perform Factor Analysis:

a. Exploratory Factor Analysis (EFA):

- Extract factors: Use factor extraction methods (e.g., principal component analysis or common factor analysis) to identify the initial set of factors.

- Determine the number of factors: Decide on the number of factors to retain based on statistical criteria (e.g., eigenvalues, scree plot) and theoretical considerations.

- Rotate factors: Apply factor rotation techniques (e.g., an orthogonal rotation such as varimax or an oblique rotation such as promax) to simplify the factor structure and make it more interpretable.

- Interpret factors: Analyze the factor loadings (correlations between variables and factors) to interpret the meaning of each factor.

- Determine factor reliability: Assess the internal consistency or reliability of the factors using measures like Cronbach’s alpha.

- Report results: Document the factor loadings, rotated component matrix, communalities, and any other relevant information.
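
The reliability step can be illustrated with Cronbach's alpha. The sketch below uses the standard formula α = k/(k − 1) · (1 − sum of item variances / variance of the total score). It assumes NumPy and a complete (respondents × items) score matrix for the items of a single factor. The scores are made up for illustration.

```python
import numpy as np

def cronbach_alpha(items):
    """Cronbach's alpha for an (n_respondents x k_items) score matrix."""
    items = np.asarray(items, dtype=float)
    k = items.shape[1]
    item_vars = items.var(axis=0, ddof=1)        # sample variance of each item
    total_var = items.sum(axis=1).var(ddof=1)    # sample variance of the summed score
    return k / (k - 1) * (1 - item_vars.sum() / total_var)

# Hypothetical scores: 5 respondents, 3 items assumed to measure one factor.
scores = np.array([[4, 5, 4],
                   [2, 3, 3],
                   [5, 5, 4],
                   [3, 3, 2],
                   [1, 2, 1]])
print(round(cronbach_alpha(scores), 3))  # 0.964
```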

b. Confirmatory Factor Analysis (CFA):

- Formulate a theoretical model: Specify the hypothesized relationships among variables and factors based on prior knowledge or theoretical considerations.

- Define measurement model: Establish how each variable is related to the underlying factors by specifying which factor loadings are freely estimated and which are fixed (for example, to zero) in the model.

- Test the model: Use statistical techniques like maximum likelihood estimation or structural equation modeling to assess the goodness-of-fit between the observed data and the hypothesized model.

- Modify the model: If the initial model does not fit the data adequately, revise the model by adding or removing paths, allowing for correlated errors, or other modifications to improve model fit.

- Report results: Present the final measurement model, parameter estimates, fit indices (e.g., chi-square, RMSEA, CFI), and any modifications made.
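
Two of the fit indices named above can be computed from chi-square results, as the sketch below shows. It uses the common definitions:

- RMSEA = √(max(χ² − df, 0) / (df · (N − 1))). Some software uses N rather than N − 1.
- CFI compares the model's χ² − df with that of a baseline (independence) model.

The chi-square values are hypothetical, and the snippet does not estimate a model or apply any cut-offs.

```python
import math

def rmsea(chi2, df, n):
    """Root mean square error of approximation (form using N - 1)."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))

def cfi(chi2_model, df_model, chi2_base, df_base):
    """Comparative fit index relative to a baseline (independence) model."""
    d_model = max(chi2_model - df_model, 0.0)
    d_base = max(chi2_base - df_base, d_model)   # never smaller than d_model
    return 1.0 if d_base == 0 else 1.0 - d_model / d_base

# Hypothetical results for a fitted CFA model and its baseline model.
print(round(rmsea(chi2=85.0, df=40, n=301), 4))   # 0.0612
print(round(cfi(85.0, 40, 900.0, 55), 4))         # 0.9467
```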

6. Interpret and Validate the Factors:

Once you have identified the factors, interpret them based on the factor loadings, theoretical understanding, and research objectives. Validate the factors by examining their relationships with external criteria or by conducting further analyses if necessary.

Types of Factor Analysis

Types of Factor Analysis are as follows:

Exploratory Factor Analysis (EFA)

EFA is used to explore the underlying structure of a set of observed variables without any preconceived assumptions about the number or nature of the factors. It aims to discover the number of factors and how the observed variables are related to those factors. EFA does not impose any restrictions on the factor structure and allows for cross-loadings of variables on multiple factors.

Confirmatory Factor Analysis (CFA)

CFA is used to test a pre-specified factor structure based on theoretical or conceptual assumptions. It aims to confirm whether the observed variables measure the latent factors as intended. CFA tests the fit of a hypothesized model and assesses how well the observed variables are associated with the expected factors. It is often used for validating measurement instruments or evaluating theoretical models.

Principal Component Analysis (PCA)

PCA is a dimensionality reduction technique that can be considered a form of factor analysis, although it has some differences. PCA aims to explain the maximum amount of variance in the observed variables using a smaller number of uncorrelated components. Unlike traditional factor analysis, PCA does not assume that the observed variables are caused by underlying factors but focuses solely on accounting for variance.

Common Factor Analysis

Common factor analysis assumes that the observed variables are influenced by common factors and unique factors (specific to each variable). It attempts to estimate the common factor structure by extracting the shared variance among the variables while also accounting for the unique variance of each variable.
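
In matrix form, the common factor model is often written as shown below. This is a standard textbook formulation, included as a sketch. The symbols are:

- x: the vector of observed variables
- Λ: the matrix of factor loadings
- f: the common factors, with covariance matrix Φ
- ε: the unique factors, with diagonal covariance matrix Ψ

```latex
x = \Lambda f + \varepsilon, \qquad
\operatorname{Cov}(f) = \Phi, \quad
\operatorname{Cov}(\varepsilon) = \Psi \ (\text{diagonal}), \quad
\operatorname{Cov}(f, \varepsilon) = 0

\Sigma = \operatorname{Cov}(x) = \Lambda \Phi \Lambda^{\top} + \Psi

% Standardized variables with uncorrelated factors (\Phi = I):
1 = h_j^2 + \psi_j, \qquad h_j^2 = \sum_{k} \lambda_{jk}^2
```

Here h_j² is the communality of variable j and ψ_j its unique variance.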

Hierarchical Factor Analysis

Hierarchical factor analysis involves multiple levels of factors. It explores both higher-order and lower-order factors, aiming to capture the complex relationships among variables. Higher-order factors are based on the relationships among lower-order factors, which are in turn based on the relationships among observed variables.

Factor Analysis Formulas

Factor Analysis is a statistical method used to describe variability among observed, correlated variables in terms of a potentially lower number of unobserved variables called factors.

Here are some of the essential formulas and calculations used in factor analysis:

Correlation Matrix :

The first step in factor analysis is to create a correlation matrix, which contains the correlation coefficients between all pairs of variables.

Correlation coefficient (Pearson’s r) between variables X and Y is calculated as:

$$ r(X,Y) = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{(n-1)\,\sigma_x \sigma_y} $$

where: xi, yi are the data points, x̄, ȳ are the means of X and Y respectively, σx, σy are the sample standard deviations of X and Y respectively (computed with n − 1 in the denominator), and n is the number of data points.
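
A minimal Python sketch of this formula follows, assuming NumPy. It computes r directly from the definition and fills a correlation matrix pair by pair. In practice `np.corrcoef` gives the same matrix, and the test below checks that.

```python
import numpy as np

def pearson_r(x, y):
    """Pearson's r: sum of cross-products over (n - 1) times the sample SDs."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = len(x)
    cross = np.sum((x - x.mean()) * (y - y.mean()))
    return cross / ((n - 1) * x.std(ddof=1) * y.std(ddof=1))

def correlation_matrix(data):
    """Pairwise Pearson correlations for an (n observations x p variables) array."""
    data = np.asarray(data, dtype=float)
    p = data.shape[1]
    R = np.eye(p)                      # each variable correlates 1 with itself
    for i in range(p):
        for j in range(i + 1, p):
            R[i, j] = R[j, i] = pearson_r(data[:, i], data[:, j])
    return R

x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]
print(round(pearson_r(x, y), 4))       # 0.7746
```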

Extraction of Factors :

The extraction of factors from the correlation matrix is typically done with methods such as Principal Component Analysis (PCA), principal axis factoring, or maximum likelihood.

The formula used in PCA to calculate the principal components (factors) involves finding the eigenvalues and eigenvectors of the correlation matrix.

Let’s denote the correlation matrix as R. If λ is an eigenvalue of R, and v is the corresponding eigenvector, they satisfy the equation: Rv = λv
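
The eigen decomposition can be sketched with NumPy's `np.linalg.eigh`, which handles symmetric matrices and returns eigenvalues in ascending order. The 6-variable correlation matrix is hypothetical: two blocks of three variables with r = 0.6 within a block and r = 0.1 between blocks. The retention rule shown is the Kaiser criterion (keep eigenvalues greater than 1). It is one common but rough rule, and scree plots or other criteria may disagree with it.

```python
import numpy as np

def sorted_eigen(R):
    """Eigenvalues and eigenvectors of a symmetric matrix, largest eigenvalue first."""
    eigvals, eigvecs = np.linalg.eigh(R)   # eigh returns ascending eigenvalues
    order = np.argsort(eigvals)[::-1]
    return eigvals[order], eigvecs[:, order]

# Hypothetical correlation matrix: two blocks of three variables.
R = np.full((6, 6), 0.1)
R[:3, :3] = 0.6
R[3:, 3:] = 0.6
np.fill_diagonal(R, 1.0)

eigvals, eigvecs = sorted_eigen(R)
assert np.allclose(R @ eigvecs, eigvecs * eigvals)   # every column satisfies Rv = λv
print(np.round(eigvals, 2))                          # [2.5 1.9 0.4 0.4 0.4 0.4]

# Kaiser criterion: retain factors whose eigenvalue exceeds 1.
n_factors = int(np.sum(eigvals > 1))                 # 2
```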

Factor Loadings :

Factor loadings are the correlations between the original variables and the factors (for standardized variables and uncorrelated factors). In PCA, they can be calculated by multiplying each eigenvector by the square root of its corresponding eigenvalue.

Communality and Specific Variance :

Communality of a variable is the proportion of variance in that variable explained by the factors. It can be calculated as the sum of squared factor loadings for that variable across all factors.

The specific (or unique) variance of a variable is the proportion of variance in that variable not explained by the common factors. It is calculated as 1 – Communality and includes measurement error.
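
Continuing with the same hypothetical two-block correlation matrix, the sketch below follows the principal-component route described above. Each retained eigenvector is scaled by the square root of its eigenvalue to give loadings. Communalities are then the row sums of squared loadings, and the remainder is the unexplained variance. Principal axis factoring or maximum likelihood would give somewhat different numbers. Retaining two factors is an assumption for this example.

```python
import numpy as np

# Hypothetical two-block correlation matrix (r = 0.6 within, 0.1 between blocks).
R = np.full((6, 6), 0.1)
R[:3, :3] = 0.6
R[3:, 3:] = 0.6
np.fill_diagonal(R, 1.0)

eigvals, eigvecs = np.linalg.eigh(R)
order = np.argsort(eigvals)[::-1]                  # largest eigenvalue first
eigvals, eigvecs = eigvals[order], eigvecs[:, order]

k = 2                                              # number of retained factors
loadings = eigvecs[:, :k] * np.sqrt(eigvals[:k])   # scale eigenvectors by sqrt(eigenvalue)
communality = (loadings ** 2).sum(axis=1)          # sum of squared loadings per variable
specific = 1 - communality                         # variance left unexplained by the k factors

print(np.round(communality, 3))                    # [0.733 0.733 0.733 0.733 0.733 0.733]
```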

Factor Rotation :

Factor rotation, such as Varimax (an orthogonal rotation) or Promax (an oblique rotation), is used to make the output more interpretable. It doesn’t change the overall fit of the factor solution, but it redistributes the loadings of the variables across the factors.

For example, in the Varimax rotation, the objective is to maximize the variance of the squared loadings within each factor (column) across all the variables (rows) in the factor matrix, summed over the factors. This produces more high and low loadings and fewer intermediate ones, making each factor easier to interpret.
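
A sketch of varimax using the widely circulated SVD-based iteration is shown below, assuming NumPy. It omits the Kaiser row normalization that many packages apply by default, so results may differ slightly from those packages. The example builds a hypothetical simple structure, rotates it by 30 degrees so it is harder to read, and lets varimax recover the high/low pattern. The columns may come back reordered or with flipped signs. Because the rotation is orthogonal, each variable's communality is unchanged.

```python
import numpy as np

def varimax(loadings, max_iter=500, tol=1e-8):
    """Orthogonal varimax rotation (SVD-based iteration, no Kaiser normalization).

    Returns the rotated loadings and the orthogonal rotation matrix.
    """
    L = np.asarray(loadings, dtype=float)
    p, k = L.shape
    rotation = np.eye(k)
    criterion = 0.0
    for _ in range(max_iter):
        rotated = L @ rotation
        col_ss = (rotated ** 2).sum(axis=0)         # sum of squared loadings per factor
        # Matrix proportional to the gradient of the varimax criterion.
        target = rotated ** 3 - rotated * col_ss / p
        u, s, vt = np.linalg.svd(L.T @ target)
        rotation = u @ vt                           # nearest orthogonal matrix
        new_criterion = s.sum()
        if criterion != 0 and new_criterion < criterion * (1 + tol):
            break
        criterion = new_criterion
    return L @ rotation, rotation

# Hypothetical simple structure, deliberately rotated by 30 degrees.
true = np.array([[0.8, 0.0], [0.7, 0.0], [0.6, 0.0],
                 [0.0, 0.8], [0.0, 0.7], [0.0, 0.6]])
theta = np.deg2rad(30)
T = np.array([[np.cos(theta), -np.sin(theta)],
              [np.sin(theta),  np.cos(theta)]])
unrotated = true @ T
rotated, rotation = varimax(unrotated)
print(np.round(rotated, 2))   # high/low pattern, up to column order and sign
```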

Examples of Factor Analysis

Here are some real-world examples of factor analysis:

- Psychological Research: In a study examining personality traits, researchers may use factor analysis to identify the underlying dimensions of personality by analyzing responses to various questionnaires or surveys. Factors such as extroversion, neuroticism, and conscientiousness can be derived from the analysis.

- Market Research: In marketing, factor analysis can be used to understand consumers’ preferences and behaviors. For instance, by analyzing survey data related to product features, pricing, and brand perception, researchers can identify factors such as price sensitivity, brand loyalty, and product quality that influence consumer decision-making.

- Finance and Economics: Factor analysis is widely used in portfolio management and asset pricing models. By analyzing historical market data, factors such as market returns, interest rates, inflation rates, and other economic indicators can be identified. These factors help in understanding and predicting investment returns and risk.

- Social Sciences: Factor analysis is employed in social sciences to explore underlying constructs in complex datasets. For example, in education research, factor analysis can be used to identify dimensions such as academic achievement, socio-economic status, and parental involvement that contribute to student success.

- Health Sciences: In medical research, factor analysis can be utilized to identify underlying factors related to health conditions, symptom clusters, or treatment outcomes. For instance, in a study on mental health, factor analysis can be used to identify underlying factors contributing to depression, anxiety, and stress.

- Customer Satisfaction Surveys: Factor analysis can help businesses understand the key drivers of customer satisfaction. By analyzing survey responses related to various aspects of product or service experience, factors such as product quality, customer service, and pricing can be identified, enabling businesses to focus on areas that impact customer satisfaction the most.

Factor analysis in Research Example

Here’s an example of how factor analysis might be used in research:

Let’s say a psychologist is interested in the factors that contribute to overall wellbeing. They conduct a survey with 1000 participants, asking them to respond to 50 different questions relating to various aspects of their lives, including social relationships, physical health, mental health, job satisfaction, financial security, personal growth, and leisure activities.

Given the broad scope of these questions, the psychologist decides to use factor analysis to identify underlying factors that could explain the correlations among responses.

After conducting the factor analysis, the psychologist finds that the responses can be grouped into five factors:

- Physical Wellbeing : Includes variables related to physical health, exercise, and diet.

- Mental Wellbeing : Includes variables related to mental health, stress levels, and emotional balance.

- Social Wellbeing : Includes variables related to social relationships, community involvement, and support from friends and family.

- Professional Wellbeing : Includes variables related to job satisfaction, work-life balance, and career development.

- Financial Wellbeing : Includes variables related to financial security, savings, and income.

By reducing the 50 individual questions to five underlying factors, the psychologist can more effectively analyze the data and draw conclusions about the major aspects of life that contribute to overall wellbeing.

In this way, factor analysis helps researchers understand complex relationships among many variables by grouping them into a smaller number of factors, simplifying the data analysis process, and facilitating the identification of patterns or structures within the data.

When to Use Factor Analysis

Here are some circumstances in which you might want to use factor analysis:

- Data Reduction : If you have a large set of variables, you can use factor analysis to reduce them to a smaller set of factors. This helps in simplifying the data and making it easier to analyze.

- Identification of Underlying Structures : Factor analysis can be used to identify underlying structures in a dataset that are not immediately apparent. This can help you understand complex relationships between variables.

- Validation of Constructs : Factor analysis can be used to confirm whether a scale or measure truly reflects the construct it’s meant to measure. If all the items in a scale load highly on a single factor, that supports the construct validity of the scale.

- Generating Hypotheses : By revealing the underlying structure of your variables, factor analysis can help to generate hypotheses for future research.

- Survey Analysis : If you have a survey with many questions, factor analysis can help determine if there are underlying factors that explain response patterns.

Applications of Factor Analysis

Factor Analysis has a wide range of applications across various fields. Here are some of them:

- Psychology : It’s often used in psychology to identify the underlying factors that explain different patterns of correlations among mental abilities. For instance, factor analysis has been used to identify personality traits (like the Big Five personality traits), intelligence structures (like Spearman’s g), or to validate the constructs of different psychological tests.

- Market Research : In this field, factor analysis is used to identify the factors that influence purchasing behavior. By understanding these factors, businesses can tailor their products and marketing strategies to meet the needs of different customer groups.

- Healthcare : In healthcare, factor analysis is used in a similar way to psychology, identifying underlying factors that might influence health outcomes. For instance, it could be used to identify lifestyle or behavioral factors that influence the risk of developing certain diseases.

- Sociology : Sociologists use factor analysis to understand the structure of attitudes, beliefs, and behaviors in populations. For example, factor analysis might be used to understand the factors that contribute to social inequality.

- Finance and Economics : In finance, factor analysis is used to identify the factors that drive financial markets or economic behavior. For instance, factor analysis can help understand the factors that influence stock prices or economic growth.

- Education : In education, factor analysis is used to identify the factors that influence academic performance or attitudes towards learning. This could help in developing more effective teaching strategies.

- Survey Analysis : Factor analysis is often used in survey research to reduce the number of items or to identify the underlying structure of the data.

- Environment : In environmental studies, factor analysis can be used to identify the major sources of environmental pollution by analyzing the data on pollutants.

Advantages of Factor Analysis

Advantages of Factor Analysis are as follows:

- Data Reduction : Factor analysis can simplify a large dataset by reducing the number of variables. This helps make the data easier to manage and analyze.

- Structure Identification : It can identify underlying structures or patterns in a dataset that are not immediately apparent. This can provide insights into complex relationships between variables.

- Construct Validation : Factor analysis can be used to validate whether a scale or measure accurately reflects the construct it’s intended to measure. This is important for ensuring the reliability and validity of measurement tools.

- Hypothesis Generation : By revealing the underlying structure of your variables, factor analysis can help generate hypotheses for future research.

- Versatility : Factor analysis can be used in various fields, including psychology, market research, healthcare, sociology, finance, education, and environmental studies.

Disadvantages of Factor Analysis

Disadvantages of Factor Analysis are as follows:

- Subjectivity : The interpretation of the factors can sometimes be subjective, depending on how the data is perceived. Different researchers might interpret the factors differently, which can lead to different conclusions.

- Assumptions : Factor analysis assumes that there’s some underlying structure in the dataset and that all variables are related. If these assumptions do not hold, factor analysis might not be the best tool for your analysis.

- Large Sample Size Required : Factor analysis generally requires a large sample size to produce reliable results. This can be a limitation in studies where data collection is challenging or expensive.

- Correlation, not Causation : Factor analysis identifies correlational relationships, not causal ones. It cannot prove that changes in one variable cause changes in another.

- Complexity : The statistical concepts behind factor analysis can be difficult to understand and require expertise to implement correctly. Misuse or misunderstanding of the method can lead to incorrect conclusions.

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer

- Int J Med Educ

Factor Analysis: a means for theory and instrument development in support of construct validity

Mohsen Tavakol

1 School of Medicine, Medical Education Centre, the University of Nottingham, UK

Angela Wetzel

2 School of Education, Virginia Commonwealth University, USA

Introduction

Factor analysis (FA) allows us to simplify a set of complex variables or items using statistical procedures to explore the underlying dimensions that explain the relationships between the multiple variables/items. For example, to explore inter-item relationships for a 20-item instrument, a basic analysis would produce a 20 × 20 correlation matrix with 400 entries (190 unique inter-item correlations); it is not an easy task to keep such a matrix in our heads. FA simplifies a matrix of correlations so a researcher can more easily understand the relationship between items in a scale and the underlying factors that the items may have in common. FA is a commonly applied and widely promoted procedure for developing and refining clinical assessment instruments to produce evidence for the construct validity of the measure.

In the literature, the strong association between construct validity and FA is well documented, as the method provides evidence based on test content and evidence based on internal structure, key components of construct validity. 1 From FA, evidence based on internal structure and evidence based on test content can be examined to tell us what the instrument really measures - the intended abstract concept (i.e., a factor/dimension/construct) or something else. Establishing construct validity for the interpretations from a measure is critical to high quality assessment and subsequent research using outcomes data from the measure. Therefore, FA should be a researcher’s best friend during the development and validation of a new measure or when adapting a measure to a new population. FA is also a useful companion when critiquing existing measures for application in research or assessment practice. However, despite the popularity of FA, when applied in medical education instrument development, factor analytic procedures do not always match best practice. 2 This editorial article is designed to help medical educators use FA appropriately.

The Applications of FA

The applications of FA depend on the purpose of the research. Generally speaking, the two most important types of FA are Exploratory Factor Analysis (EFA) and Confirmatory Factor Analysis (CFA).

Exploratory Factor Analysis

Exploratory Factor Analysis (EFA) is widely used in medical education research in the early phases of instrument development, specifically for measures of latent variables that cannot be assessed directly. Typically, in EFA, the researcher, through a review of the literature and engagement with content experts, selects as many instrument items as necessary to fully represent the latent construct (e.g., professionalism). Then, using EFA, the researcher explores the factor loadings, along with other criteria (e.g., previous theory, minimum average partial, 3 parallel analysis, 4 conceptual meaningfulness, etc.), to refine the measure. Suppose an instrument consisting of 30 questions yields two factors, Factor 1 and Factor 2. A good way to define a factor as a theoretical construct is to look at its factor loadings. 5 The factor loading is the correlation between the item and the factor; a factor loading of more than 0.30 usually indicates a moderate correlation between the item and the factor. Most statistical software packages, such as SAS, SPSS and R, report factor loadings. Upon review of the items loading on each factor, the researcher identifies two distinct constructs, with items loading on Factor 1 all related to professionalism, and items loading on Factor 2 related, instead, to leadership. Here, EFA helps the researcher build evidence based on internal structure by retaining only those items with appropriately high loadings on Factor 1 for professionalism, the construct of interest.
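
The item-review step can be sketched as below, assuming NumPy. The helper `assign_items` is hypothetical. It groups each item under the factor on which it has the largest absolute loading, using the 0.30 value mentioned above as the cut-off. Items whose strongest loading does not exceed 0.30 are listed separately for review. The loadings are invented, and real decisions would also weigh cross-loadings and content.

```python
import numpy as np

def assign_items(loadings, item_names, cutoff=0.30):
    """Group items by the factor with their largest absolute loading.

    Items whose strongest absolute loading does not exceed `cutoff` are returned separately.
    """
    L = np.abs(np.asarray(loadings, dtype=float))
    groups = {j: [] for j in range(L.shape[1])}
    weak = []
    for name, row in zip(item_names, L):
        best = int(np.argmax(row))
        if row[best] > cutoff:
            groups[best].append(name)
        else:
            weak.append(name)
    return groups, weak

# Hypothetical rotated loadings for six items on two factors.
loadings = np.array([[0.72, 0.10],
                     [0.65, 0.05],
                     [0.58, 0.21],
                     [0.12, 0.70],
                     [0.08, 0.61],
                     [0.22, 0.18]])
names = ["item1", "item2", "item3", "item4", "item5", "item6"]
groups, weak = assign_items(loadings, names)
print(groups)  # {0: ['item1', 'item2', 'item3'], 1: ['item4', 'item5']}
print(weak)    # ['item6']
```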

It is important to note that, often, Principal Component Analysis (PCA) is applied and described, in error, as exploratory factor analysis. 2 , 6 PCA is appropriate if the study primarily aims to reduce the number of original items in the intended instrument to a smaller set. 7 However, if the instrument is being designed to measure a latent construct, EFA, using Maximum Likelihood (ML) or Principal Axis Factoring (PAF), is the appropriate method. 7 These exploratory procedures statistically analyze the interrelationships between the instrument items and domains to uncover the unknown underlying factorial structure (dimensions) of the construct of interest. PCA, by design, seeks to explain total variance (i.e., common, specific and error variance) in the correlation matrix. The sum of an item's squared loadings across the factors in the factor matrix indicates the proportion of that item's variance that is explained by the factors. This is called the communality. The higher the communality value, the more the extracted factors explain the variance of the item. Further, the mean of the squared factor loadings on a factor specifies the proportion of variance explained by that factor. For example, assume four items of an instrument have produced Factor 1, with factor loadings of 0.86, 0.75, 0.66 and 0.58, respectively. Squaring an item's factor loading gives the percentage of that item's variance which is explained by Factor 1. In this example, the variance explained by the first principal component (PC) for item1, item2, item3 and item4 is 74%, 56%, 44% and 34%, respectively. Summing the squared factor loadings of Factor 1 gives the eigenvalue, which is about 2.07, and dividing the eigenvalue by four (2.07/4 ≈ 0.52) gives the proportion of variance accounted for by Factor 1, which is 52%.
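
The arithmetic in this example can be checked with a few lines of Python (NumPy assumed). The inputs are the four Factor 1 loadings quoted in the paragraph. Rounded to two decimals, the squared loadings are 0.74, 0.56, 0.44 and 0.34, and their sum is about 2.07.

```python
import numpy as np

loadings = np.array([0.86, 0.75, 0.66, 0.58])   # Factor 1 loadings from the example
explained = loadings ** 2                        # share of each item's variance explained
eigenvalue = explained.sum()                     # sum of squared loadings
proportion = eigenvalue / len(loadings)          # mean squared loading

print(np.round(explained, 2))   # [0.74 0.56 0.44 0.34]
print(round(eigenvalue, 2))     # 2.07
print(round(proportion, 2))     # 0.52
```
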
Since PCA does not separate common variance from specific and error variance, it often inflates factor loadings and limits the potential for the factor structure to be generalized and applied with other samples in subsequent studies. On the other hand, Maximum Likelihood and Principal Axis Factoring extraction methods separate common and unique variance (specific and error variance), which overcomes this issue with PCA. Thus, the proportion of variance explained by an extracted factor more precisely reflects the extent to which the latent construct is measured by the instrument items. This focus on shared variance among items explained by the underlying factor, particularly during instrument development, helps the researcher understand the extent to which a measure captures the intended construct. It is useful to mention that in PAF, the initial communalities are not set at 1s; instead, each is set to the item's squared multiple correlation coefficient. Indeed, if you run a multiple regression to predict, say, item1 (dependent variable) from the other items (independent variables) and then look at the R-squared (R2), you will see that R2 is equal to the initial communality of item1 in PAF.
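
A sketch of this relationship is below, assuming NumPy and simulated data: 500 hypothetical respondents answering four items driven by one factor plus noise. The squared multiple correlations (SMCs) are computed as 1 − 1/diag(R⁻¹) and checked against the R-squared of an ordinary regression of item1 on the other items. A basic PAF loop then starts from the SMCs and re-estimates the communalities until they stabilize. The function names are hypothetical, and the loop omits safeguards that statistical packages add, for example handling communalities above 1.

```python
import numpy as np

def smc(R):
    """Squared multiple correlation of each variable with all the others."""
    return 1 - 1 / np.diag(np.linalg.inv(R))

def principal_axis(R, n_factors=1, max_iter=200, tol=1e-6):
    """Principal axis factoring with SMCs as the initial communalities."""
    h2 = smc(R)
    for _ in range(max_iter):
        reduced = R.copy()
        np.fill_diagonal(reduced, h2)              # communalities replace the 1s
        vals, vecs = np.linalg.eigh(reduced)
        top = np.argsort(vals)[::-1][:n_factors]
        loadings = vecs[:, top] * np.sqrt(np.clip(vals[top], 0, None))
        new_h2 = (loadings ** 2).sum(axis=1)
        converged = np.max(np.abs(new_h2 - h2)) < tol
        h2 = new_h2
        if converged:
            break
    return loadings, h2

# Simulated data: 500 respondents, 4 items driven by one common factor plus noise.
rng = np.random.default_rng(1)
factor = rng.normal(size=(500, 1))
items = factor @ np.array([[0.8, 0.7, 0.6, 0.5]]) + rng.normal(scale=0.6, size=(500, 4))
R = np.corrcoef(items, rowvar=False)

# R-squared from regressing item1 on the other items (with an intercept).
X = np.column_stack([np.ones(len(items)), items[:, 1:]])
coef, *_ = np.linalg.lstsq(X, items[:, 0], rcond=None)
resid = items[:, 0] - X @ coef
r_squared = 1 - resid.var() / items[:, 0].var()

loadings, h2 = principal_axis(R)
```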

Confirmatory Factor Analysis

When prior EFA studies are available for your intended instrument, Confirmatory Factor Analysis builds on those findings, allowing you to confirm or disconfirm the underlying factor structures, or dimensions, extracted in prior research. CFA is a theory- or model-driven approach that tests how well the data “fit” the proposed model or theory. CFA thus departs from EFA in that researchers must first specify a factor model before analysing the data. More fundamentally, CFA is a means for statistically testing the internal structure of instruments; it typically relies on maximum likelihood estimation (MLE) and a different set of standards for assessing the suitability of the construct of interest. 7 , 8

Factor analysts usually use a path diagram to show the theoretical and hypothesized relationships between items and factors, creating a hypothetical model to test using the ML method. In the path diagram, circles or ovals represent factors and rectangles represent the instrument items. Lines (→ or ↔) represent relationships between variables; no line means no relationship. A single-headed arrow shows a hypothesized causal relationship (the variable the arrowhead points to is the dependent variable), and a double-headed arrow shows a covariance between variables or factors.
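
A path diagram can be translated into matrices, as in the sketch below (NumPy assumed, all values hypothetical):

- Λ holds the factor-to-item arrows, with a zero meaning "no line".
- Φ holds the factor variances and the double-headed arrow between factors.
- Θ holds the item error variances.

The model-implied covariance is Σ = ΛΦΛᵀ + Θ. The ML discrepancy F_ML = log|Σ| − log|S| + tr(SΣ⁻¹) − p measures how far a sample covariance S is from Σ, and it is zero when they match. This sketch only evaluates F_ML. Actual CFA software estimates the free parameters by minimizing it, and (N − 1) · F_ML at the estimates gives the model chi-square (some programs use N).

```python
import numpy as np

def implied_cov(Lambda, Phi, Theta):
    """Model-implied covariance matrix: Sigma = Lambda Phi Lambda^T + Theta."""
    return Lambda @ Phi @ Lambda.T + Theta

def f_ml(S, Sigma):
    """ML discrepancy: log|Sigma| - log|S| + trace(S Sigma^-1) - p."""
    p = S.shape[0]
    _, logdet_sigma = np.linalg.slogdet(Sigma)
    _, logdet_s = np.linalg.slogdet(S)
    return logdet_sigma - logdet_s + np.trace(S @ np.linalg.inv(Sigma)) - p

# Hypothetical diagram: factor A -> items 1-3, factor B -> items 4-6, A <-> B.
# A zero in Lambda means "no arrow" from that factor to that item.
Lambda = np.array([[0.8, 0.0], [0.7, 0.0], [0.6, 0.0],
                   [0.0, 0.8], [0.0, 0.7], [0.0, 0.6]])
Phi = np.array([[1.0, 0.3],
                [0.3, 1.0]])                       # factor variances and A <-> B covariance
Theta = np.diag(1 - (Lambda ** 2).sum(axis=1))     # error variances (standardized items)
Sigma = implied_cov(Lambda, Phi, Theta)

# A made-up "observed" covariance matrix, for illustration only.
S = Sigma + 0.05 * np.eye(6)
discrepancy = f_ml(S, Sigma)                       # > 0 because S differs from Sigma
```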

If CFA indicates that the primary factors, or first-order factors, produced by the prior PAF are correlated, then second-order factors need to be modelled and estimated to get a greater understanding of the data. It should be noted that if the prior EFA applied an orthogonal rotation to the factor solution, the factors produced would be uncorrelated; hence, the analysis of second-order factors is not possible. Generally, in social science research, most constructs are assumed to have inter-related factors, and researchers should therefore apply an oblique rotation. The justification for analyzing second-order factors is that when correlations between the primary factors exist, CFA can then statistically model a broad picture of factors not captured by the primary factors (i.e., the first-order factors). 9 The analysis of first-order factors is like surveying mountains through binoculars with a zoom lens, while the analysis of second-order factors uses a wide-angle lens. 10 Goodness-of-fit tests need to be conducted when evaluating the hypothetical model tested by CFA. The question is: do the new data fit the hypothetical model? However, the statistical models behind goodness-of-fit tests are complex and extend beyond the scope of this editorial paper; thus, we strongly encourage readers to consult factor analysts for resources and possible advice.

Conclusions

Factor analysis methods can be incredibly useful tools for researchers attempting to establish high quality measures of those constructs not directly observed and captured by observation. Specifically, the factor solution derived from an Exploratory Factor Analysis provides a snapshot of the statistical relationships of the key behaviors, attitudes, and dispositions of the construct of interest. This snapshot provides critical evidence for the validity of the measure based on the fit of the test content to the theoretical framework that underlies the construct. Further, the relationships between factors, which can be explored with EFA and confirmed with CFA, help researchers interpret the theoretical connections between underlying dimensions of a construct and even extending to relationships across constructs in a broader theoretical model. However, studies that do not apply recommended extraction, rotation, and interpretation in FA risk drawing faulty conclusions about the validity of a measure. As measures are picked up by other researchers and applied in experimental designs, or by practitioners as assessments in practice, application of measures with subpar evidence for validity produces a ripple effect across the field. It is incumbent on researchers to ensure best practices are applied or engage with methodologists to support and consult where there are gaps in knowledge of methods. Further, it remains important to also critically evaluate measures selected for research and practice, focusing on those that demonstrate alignment with best practice for FA and instrument development. 7 , 11

Conflicts of Interest

The authors declare that they have no conflicts of interest.


Factor analysis and how it simplifies research findings.

There are many forms of data analysis used to report on and study survey data. Factor analysis is best when used to simplify complex data sets with many variables.

What is factor analysis?

Factor analysis is the practice of condensing many variables into just a few, so that your research data is easier to work with.

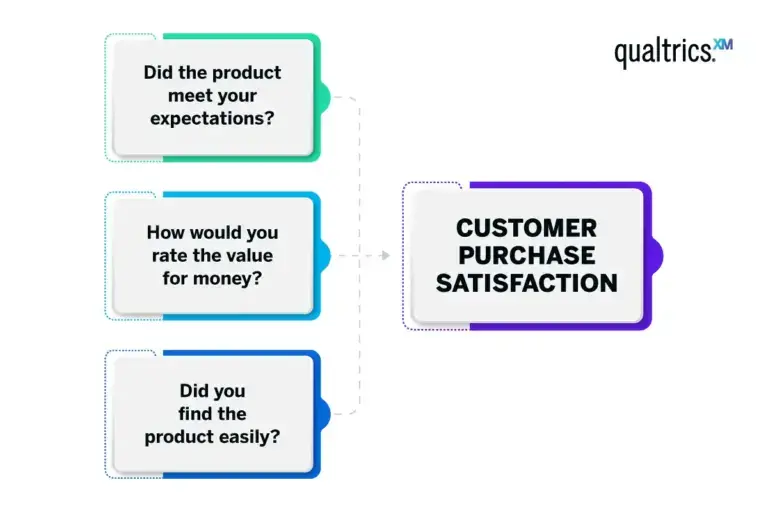

For example, a retail business trying to understand customer buying behaviours might consider variables such as ‘did the product meet your expectations?’, ‘how would you rate the value for money?’ and ‘did you find the product easily?’. Factor analysis can help condense these variables into a single factor, such as ‘customer purchase satisfaction’.

The theory is that there are deeper factors driving the underlying concepts in your data, and that you can uncover and work with them instead of dealing with the lower-level variables that cascade from them. Note that these deeper concepts aren’t necessarily immediately obvious: they might represent traits or tendencies that are hard to measure, such as extraversion or IQ.

Factor analysis is also sometimes called “dimension reduction”: you can reduce the “dimensions” of your data into one or more “super-variables,” also known as unobserved variables or latent variables. This process involves creating a factor model and often yields a factor matrix that organizes the relationship between observed variables and the factors they’re associated with.

As with any kind of process that simplifies complexity, there is a trade-off between the accuracy of the data and how easy it is to work with. With factor analysis, the best solution is the one that yields a simplification that represents the true nature of your data, with minimum loss of precision. This often means balancing two goals: maximizing the variance explained by the model, and using as few factors as possible to keep the model simple.

Factor analysis isn’t a single technique, but a family of statistical methods that can be used to identify the latent factors driving observable variables. Factor analysis is commonly used in market research, as well as other disciplines like technology, medicine, sociology, field biology, education, psychology and many more.

What is a factor?

In the context of factor analysis, a factor is a hidden or underlying variable that we infer from a set of directly measurable variables.

Take ‘customer purchase satisfaction’ as an example again. This isn’t a variable you can directly ask a customer to rate, but it can be determined from the responses to correlated questions like ‘did the product meet your expectations?’, ‘how would you rate the value for money?’ and ‘did you find the product easily?’.

While not directly observable, factors are essential for providing a clearer, more streamlined understanding of data. They enable us to capture the essence of our data’s complexity, making it simpler and more manageable to work with, without losing much information.


Key concepts in factor analysis

These concepts are the foundational pillars that guide the application and interpretation of factor analysis.

Variance

Central to factor analysis, variance measures how much numerical values differ from the average. In factor analysis, you’re essentially trying to understand how underlying factors influence this variance among your variables. Some factors will explain more variance than others, meaning they more accurately represent the variables they consist of.

Eigenvalue

The eigenvalue expresses the amount of variance a factor explains. When the analysis is run on standardized variables (a correlation matrix), each observed variable contributes a variance of exactly 1. A factor with an eigenvalue greater than 1 therefore explains more variance than a single observed variable, which is what makes it useful for reducing the number of variables in your analysis. Factors with eigenvalues below 1 account for less variability than a single variable and are generally dropped. This rule is known as Kaiser’s criterion.
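A minimal sketch in Python with NumPy, assumed here because this section gives no code, shows how eigenvalues relate to variance explained. For a correlation matrix, the eigenvalues sum to the number of variables, so each eigenvalue divided by that total is the share of variance its factor accounts for. The 4-variable correlation matrix is hypothetical.

```python
import numpy as np


def variance_explained(corr):
    """Return the eigenvalues of a correlation matrix (largest first) and the
    share of total variance that each one accounts for."""
    corr = np.asarray(corr, dtype=float)
    # eigvalsh is meant for symmetric matrices and returns ascending order; reverse it
    eigvals = np.linalg.eigvalsh(corr)[::-1]
    # For standardized variables the total variance is the trace (= number of variables)
    share = eigvals / np.trace(corr)
    return eigvals, share


# Hypothetical correlation matrix: items 1-2 and items 3-4 form two related pairs
R = np.array([
    [1.0, 0.6, 0.1, 0.1],
    [0.6, 1.0, 0.1, 0.1],
    [0.1, 0.1, 1.0, 0.5],
    [0.1, 0.1, 0.5, 1.0],
])

eigvals, share = variance_explained(R)
kaiser_keep = int(np.sum(eigvals > 1.0))   # Kaiser's criterion: keep eigenvalues > 1
print(np.round(eigvals, 3))                # eigenvalues are about 1.756, 1.344, 0.5, 0.4
print(np.round(share, 3))
print(kaiser_keep)                         # 2
```

The two eigenvalues above 1 correspond to the two pairs of related items. Together they account for about 78% of the total variance of 4.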

Factor score

A factor score is a numeric estimate of where an individual case (for example, one survey respondent) stands on a specific factor. In principal component analysis the equivalent is called the component score. Factor scores let you use the factors themselves as new variables in later analyses such as segmentation. To see which variables are most influenced by each factor, look at the factor loadings instead.
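There are several ways to estimate factor scores. As one hedged illustration, the regression (Thomson) method computes scores as F = Z R⁻¹ L. Here Z is the standardized data, R is the correlation matrix and L is the loading matrix. The Python/NumPy sketch below simulates survey data; the "satisfaction" factor and the loading of 0.8 are assumptions for illustration, not estimates from real data.

```python
import numpy as np


def regression_factor_scores(data, loadings):
    """Regression (Thomson) factor scores: F = Z R^{-1} L.
    data: (n_cases, p) responses; loadings: (p, k) loading matrix."""
    X = np.asarray(data, dtype=float)
    Z = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)   # standardize each variable
    R = np.corrcoef(X, rowvar=False)                   # p x p correlation matrix
    weights = np.linalg.solve(R, loadings)             # R^{-1} L without forming the inverse
    return Z @ weights                                 # one row of scores per case


rng = np.random.default_rng(0)
n = 200
latent = rng.normal(size=n)   # hypothetical 'satisfaction' factor
# Three hypothetical survey items, each driven by the same factor plus noise
items = np.column_stack([0.8 * latent + 0.6 * rng.normal(size=n) for _ in range(3)])
L = np.array([[0.8], [0.8], [0.8]])   # assumed loadings, for illustration only

scores = regression_factor_scores(items, L)
print(scores.shape)                              # (200, 1): one score per respondent
print(np.corrcoef(scores[:, 0], latent)[0, 1])   # scores track the hidden factor closely
```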

Factor loading

Factor loading is the correlation coefficient between a variable and a factor. Strictly, this holds for standardized variables and uncorrelated factors. The squared loading gives the proportion of the variance in an observed variable that the factor explains. High factor loadings (close to 1 or -1) mean the factor strongly influences the variable.
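The sketch below uses principal components in Python with NumPy and simulated data; these are assumptions, since the text names no tool. It shows that a loading computed as eigenvector × √eigenvalue equals the correlation between the variable and the component score. It also shows that squared loadings on a component add up to that component's eigenvalue.

```python
import numpy as np


def pca_loadings(data, n_components):
    """Loadings and unit-variance scores for the leading principal components."""
    X = np.asarray(data, dtype=float)
    Z = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)    # standardize each variable
    R = np.corrcoef(X, rowvar=False)                   # p x p correlation matrix
    eigvals, eigvecs = np.linalg.eigh(R)               # ascending eigenvalues
    order = np.argsort(eigvals)[::-1][:n_components]   # keep the largest ones
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    loadings = eigvecs * np.sqrt(eigvals)              # scale column j by sqrt(eigenvalue j)
    scores = (Z @ eigvecs) / np.sqrt(eigvals)          # standardized component scores
    return loadings, scores, eigvals


rng = np.random.default_rng(1)
f = rng.normal(size=500)   # hypothetical latent factor
X = np.column_stack([w * f + rng.normal(scale=0.6, size=500) for w in (0.9, 0.7, 0.5, 0.3)])

loadings, scores, eigvals = pca_loadings(X, n_components=1)
# Each loading equals the correlation between that variable and the component score
for j in range(X.shape[1]):
    r = np.corrcoef(X[:, j], scores[:, 0])[0, 1]
    print(f"variable {j}: loading={loadings[j, 0]:+.3f}  corr={r:+.3f}")
```

The sign of a component is arbitrary, so all loadings may come out negative. Flipping the sign of the whole column changes nothing substantive.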

When to use factor analysis

Factor analysis is a powerful tool when you want to simplify complex data, find hidden patterns, and set the stage for deeper, more focused analysis.

It’s typically used when you’re dealing with a large number of interconnected variables, and you want to understand the underlying structure or patterns within this data. It’s particularly useful when you suspect that these observed variables could be influenced by some hidden factors.

For example, consider a business that has collected extensive customer feedback through surveys. The survey covers a wide range of questions about product quality, pricing, customer service and more. This huge volume of data can be overwhelming, and this is where factor analysis comes in. It can help condense these numerous variables into a few meaningful factors, such as ‘product satisfaction’, ‘customer service experience’ and ‘value for money’.

Factor analysis doesn’t operate in isolation – it’s often used as a stepping stone for further analysis. For example, once you’ve identified key factors through factor analysis, you might then proceed to a cluster analysis – a method that groups your customers based on their responses to these factors. The result is a clearer understanding of different customer segments, which can then guide targeted marketing and product development strategies.

By combining factor analysis with other methodologies, you can not only make sense of your data but also gain valuable insights to drive your business decisions.

Factor analysis assumptions

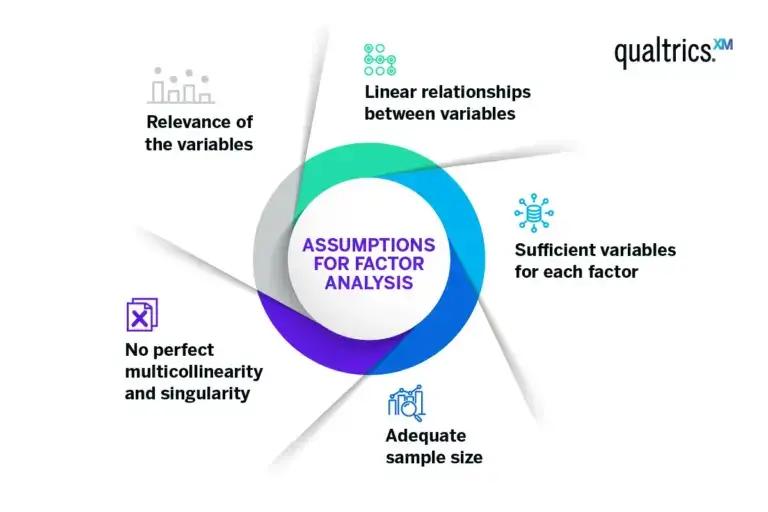

Factor analysis relies on several assumptions for accurate results. Violating these assumptions may lead to factors that are hard to interpret or misleading.

Linear relationships between variables

Factor analysis works from correlations, which capture only linear relationships. A strong but nonlinear relationship between two variables will therefore be understated.

Sufficient variables for each factor

If only a few variables represent a factor, it might not be identified accurately. A common rule of thumb is at least three variables per factor.

Adequate sample size

The larger the ratio of cases (respondents, for instance) to variables, the more reliable the analysis.

No perfect multicollinearity or singularity

No variable is a perfect linear combination of other variables, and no variable is a duplicate of another.

Relevance of the variables

There should be some correlation between variables to make a factor analysis feasible.
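Two common checks that variables are correlated enough for factor analysis are Bartlett’s test of sphericity and the Kaiser-Meyer-Olkin (KMO) measure of sampling adequacy. Neither is named in the text above, so treat them as suggested additions. Bartlett’s test checks whether the correlation matrix differs from an identity matrix. KMO compares raw correlations with partial correlations; values above roughly 0.6 are often treated as adequate. A Python/NumPy sketch on simulated data:

```python
import numpy as np


def bartlett_sphericity(data):
    """Bartlett's test that the correlation matrix is an identity matrix.
    Returns (chi-square statistic, degrees of freedom)."""
    X = np.asarray(data, dtype=float)
    n, p = X.shape
    R = np.corrcoef(X, rowvar=False)
    _, logdet = np.linalg.slogdet(R)         # log|R|, more stable than log(det(R))
    chi2 = -(n - 1 - (2 * p + 5) / 6) * logdet
    df = p * (p - 1) // 2
    return chi2, df


def kmo(data):
    """Overall Kaiser-Meyer-Olkin measure of sampling adequacy."""
    R = np.corrcoef(np.asarray(data, dtype=float), rowvar=False)
    R_inv = np.linalg.inv(R)
    d = np.sqrt(np.diag(R_inv))
    partial = -R_inv / np.outer(d, d)        # partial correlations (off-diagonal part)
    off = ~np.eye(R.shape[0], dtype=bool)    # mask that drops the diagonal
    r2 = np.sum(R[off] ** 2)
    p2 = np.sum(partial[off] ** 2)
    return r2 / (r2 + p2)


rng = np.random.default_rng(2)
f = rng.normal(size=(500, 1))
related = 0.7 * f + 0.7 * rng.normal(size=(500, 6))   # six items sharing one factor
unrelated = rng.normal(size=(500, 6))                 # six independent items

print(bartlett_sphericity(related), round(kmo(related), 3))
print(bartlett_sphericity(unrelated), round(kmo(unrelated), 3))
```

To turn the Bartlett statistic into a p-value you would compare it with a chi-square distribution on `df` degrees of freedom, for example with `scipy.stats.chi2.sf(chi2, df)`.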

Types of factor analysis

There are two main factor analysis methods: exploratory and confirmatory. Here’s how they are used to add value to your research process.

Confirmatory factor analysis

In this type of analysis, the researcher starts out with a hypothesis about their data that they want to test. They specify in advance which variables should load on which latent factors. The analysis then tests whether that structure fits the data and how much variance the factors account for.

Exploratory factor analysis

As the name suggests, exploratory factor analysis is undertaken without a hypothesis in mind. It’s an investigatory process that helps researchers understand whether associations exist between the initial variables, and if so, where they lie and how they are grouped.

Principal component analysis (PCA) is a popular technique that is often used alongside, or in place of, exploratory factor analysis; it is not a form of confirmatory factor analysis. With this method, the researcher runs the analysis to obtain multiple possible solutions that split the data among a number of components. Items that load onto a single component are more strongly related to one another. The researcher can group them using conceptual knowledge or pre-existing research.

PCA generates a range of solutions with different numbers of components, from a simple 1-component solution to more complex ones. However, the fewer components you retain, the less variance the solution accounts for.

How to perform factor analysis: A step-by-step guide

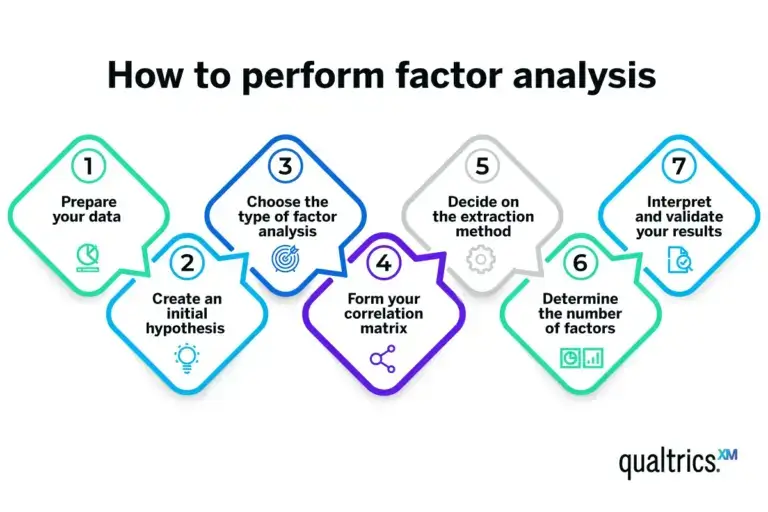

Performing a factor analysis involves a series of steps, often facilitated by statistical software packages like SPSS, Stata and the R programming language. Here’s a simplified overview of the process.

Prepare your data

Start with a dataset where each row represents a case (for example, a survey respondent), and each column is a variable you’re interested in. Ensure your data meets the assumptions necessary for factor analysis.

Create an initial hypothesis

If you have a theory about the underlying factors and their relationships with your variables, make a note of this. This hypothesis can guide your analysis, but keep in mind that the beauty of factor analysis is its ability to uncover unexpected relationships.

Choose the type of factor analysis

The most common type is exploratory factor analysis, which is used when you’re not sure what to expect. If you have a specific hypothesis about the factors, you might use confirmatory factor analysis.

Form your correlation matrix

After you’ve chosen the type of factor analysis, you’ll need to create the correlation matrix of your variables. This matrix, which shows the correlation coefficients between each pair of variables, forms the basis for the extraction of factors. This is a key step in building your factor analysis model.
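As a small illustration (Python with NumPy; the 5-respondent survey is hypothetical), the correlation matrix is the average cross-product of standardized scores. The result matches NumPy’s built-in `np.corrcoef`.

```python
import numpy as np


def correlation_matrix(data):
    """Pearson correlation matrix of the columns (variables) of a cases-by-variables array."""
    X = np.asarray(data, dtype=float)
    n = X.shape[0]
    Z = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)   # standardize each column
    return (Z.T @ Z) / (n - 1)                         # average cross-product of z-scores


# Hypothetical survey: 5 respondents (rows) x 3 questions (columns), 1-5 ratings
responses = np.array([
    [5, 4, 2],
    [4, 4, 3],
    [2, 1, 4],
    [3, 3, 3],
    [1, 2, 5],
])
R = correlation_matrix(responses)
print(np.round(R, 2))
print(np.allclose(R, np.corrcoef(responses, rowvar=False)))   # True
```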

Decide on the extraction method

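Principal component analysis is the most commonly used extraction method. If you want to model only the variance your variables share (common variance) rather than their total variance, you might opt for a common factor method such as principal axis factoring or maximum likelihood. Whether you believe your factors are correlated matters at the rotation stage. An orthogonal rotation such as varimax keeps factors uncorrelated, while an oblique rotation such as direct oblimin allows them to correlate.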
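The difference between principal components and principal axis factoring is what goes on the diagonal of the correlation matrix. PCA uses 1s (total variance). Principal axis factoring replaces them with communality estimates (shared variance) and iterates until those estimates stabilize. The Python/NumPy sketch below is one simple version of that iteration, starting from squared multiple correlations. It is tested on a correlation matrix built from hypothetical known loadings, not on real survey data.

```python
import numpy as np


def principal_axis_factoring(R, n_factors, max_iter=500, tol=1e-8):
    """Iterated principal axis factoring on a correlation matrix R.
    Returns (loadings, communalities)."""
    R = np.asarray(R, dtype=float)
    # Starting communalities: squared multiple correlations, 1 - 1/diag(R^-1)
    h2 = 1.0 - 1.0 / np.diag(np.linalg.inv(R))
    for _ in range(max_iter):
        reduced = R.copy()
        np.fill_diagonal(reduced, h2)            # keep only shared variance on the diagonal
        eigvals, eigvecs = np.linalg.eigh(reduced)
        idx = np.argsort(eigvals)[::-1][:n_factors]
        lam = np.clip(eigvals[idx], 0.0, None)   # guard against tiny negative eigenvalues
        loadings = eigvecs[:, idx] * np.sqrt(lam)
        new_h2 = np.sum(loadings ** 2, axis=1)   # communality = sum of squared loadings
        converged = np.max(np.abs(new_h2 - h2)) < tol
        h2 = new_h2
        if converged:
            break
    return loadings, h2


# Correlation matrix implied by a hypothetical one-factor model with known loadings
true_loadings = np.array([0.8, 0.7, 0.6, 0.5])
R = np.outer(true_loadings, true_loadings)
np.fill_diagonal(R, 1.0)

loadings, h2 = principal_axis_factoring(R, n_factors=1)
print(np.round(np.abs(loadings[:, 0]), 3))   # close to the true loadings
print(np.round(h2, 3))                       # close to the squared loadings
```

Running PCA on the same matrix would give larger first-component loadings, because PCA also absorbs each variable’s unique variance.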

Determine the number of factors

Various criteria can be used here, such as Kaiser’s criterion (eigenvalues greater than 1), the scree plot method or parallel analysis. The choice depends on your data and your goals.
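Parallel analysis (Horn’s method) keeps factors whose eigenvalues exceed those of random, uncorrelated data of the same size. The Python/NumPy sketch below is a component-based version that compares against the 95th percentile of simulated eigenvalues. That choice is an assumption; software such as R’s psych package offers other variants. The two-factor data set is simulated for illustration.

```python
import numpy as np


def parallel_analysis(data, n_sims=100, percentile=95, seed=0):
    """Horn's parallel analysis on principal-component eigenvalues.
    Returns (number to keep, observed eigenvalues, random-data thresholds)."""
    X = np.asarray(data, dtype=float)
    n, p = X.shape
    rng = np.random.default_rng(seed)
    observed = np.linalg.eigvalsh(np.corrcoef(X, rowvar=False))[::-1]
    random_eigs = np.empty((n_sims, p))
    for s in range(n_sims):
        noise = rng.normal(size=(n, p))   # uncorrelated data of the same shape
        random_eigs[s] = np.linalg.eigvalsh(np.corrcoef(noise, rowvar=False))[::-1]
    threshold = np.percentile(random_eigs, percentile, axis=0)
    n_keep = 0
    for obs, thr in zip(observed, threshold):   # stop at the first eigenvalue that fails
        if obs <= thr:
            break
        n_keep += 1
    return n_keep, observed, threshold


data_rng = np.random.default_rng(3)
n = 500
f1, f2 = data_rng.normal(size=(n, 1)), data_rng.normal(size=(n, 1))
# Hypothetical data: items 1-3 driven by factor 1, items 4-6 by factor 2
X = np.hstack([0.8 * f1 + 0.6 * data_rng.normal(size=(n, 3)),
               0.8 * f2 + 0.6 * data_rng.normal(size=(n, 3))])

n_keep, observed, threshold = parallel_analysis(X)
kaiser = int(np.sum(observed > 1.0))   # Kaiser's criterion for comparison
print(n_keep, kaiser)
```

On real data the two criteria often disagree. Kaiser’s rule tends to retain more factors when there are many variables, which is one reason parallel analysis is popular.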

Interpret and validate your results

Each factor will be associated with a set of your original variables, so label each factor based on how you interpret these associations. These labels should represent the underlying concept that ties the associated variables together.

Validation can be done through a variety of methods, like splitting your data in half and checking if both halves produce the same factors.
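One way to make the split-half check concrete is to compare the loading vectors from the two halves with Tucker’s congruence coefficient. Values around 0.95 or higher are commonly read as "the same factor". This metric is an assumption on our part; the text does not name a specific measure. A Python/NumPy sketch on simulated one-factor data:

```python
import numpy as np


def first_component_loadings(data):
    """Loadings on the first principal component of the correlation matrix."""
    R = np.corrcoef(np.asarray(data, dtype=float), rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(R)
    return eigvecs[:, -1] * np.sqrt(eigvals[-1])   # eigh sorts ascending, so -1 is the largest


def tucker_congruence(a, b):
    """Tucker's congruence coefficient between two loading vectors (range -1 to 1)."""
    a, b = np.asarray(a, dtype=float), np.asarray(b, dtype=float)
    return float(a @ b / np.sqrt((a @ a) * (b @ b)))


rng = np.random.default_rng(4)
n = 800
f = rng.normal(size=(n, 1))
X = 0.7 * f + 0.7 * rng.normal(size=(n, 6))   # hypothetical one-factor survey data

idx = rng.permutation(n)                      # random split into two halves
half_a, half_b = X[idx[: n // 2]], X[idx[n // 2:]]
phi = tucker_congruence(first_component_loadings(half_a), first_component_loadings(half_b))
print(abs(phi))   # component signs are arbitrary, so compare magnitudes
```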

How factor analysis can help you

As well as giving you fewer variables to navigate, factor analysis can help you understand grouping and clustering in your input variables, since they’ll be grouped according to the latent variables.

Say you ask several questions all designed to explore different, but closely related, aspects of customer satisfaction:

- How satisfied are you with our product?

- Would you recommend our product to a friend or family member?

- How likely are you to purchase our product in the future?

But you only want one variable to represent a customer satisfaction score. One option would be to average the three question responses. Another option would be to create a single factor-based variable by running a principal component analysis (PCA) and keeping the first principal component (often loosely called a factor). The advantage of PCA over a simple average is that it weights each variable by how strongly it relates to the shared component, rather than weighting all three equally.
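To see the weighting in action, here is a minimal Python/NumPy sketch. It assumes three hypothetical satisfaction items, where the third tracks the underlying attitude only weakly. The first principal component gives that weak item the smallest weight, while a simple average would treat it the same as the others.

```python
import numpy as np


def first_pc_score(data):
    """Score each respondent on the first principal component of the standardized items."""
    X = np.asarray(data, dtype=float)
    Z = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)
    eigvals, eigvecs = np.linalg.eigh(np.corrcoef(X, rowvar=False))
    w = eigvecs[:, -1]    # eigenvector for the largest eigenvalue
    if w.sum() < 0:       # the sign is arbitrary; flip so a higher score = more satisfied
        w = -w
    return Z @ w, w


rng = np.random.default_rng(5)
n = 500
satisfaction = rng.normal(size=n)   # hypothetical latent satisfaction
# Three hypothetical items; the third one tracks satisfaction only weakly
items = np.column_stack([
    0.9 * satisfaction + 0.45 * rng.normal(size=n),
    0.8 * satisfaction + 0.60 * rng.normal(size=n),
    0.3 * satisfaction + 0.95 * rng.normal(size=n),
])

pc_score, weights = first_pc_score(items)
avg_score = items.mean(axis=1)   # equal-weight alternative
print(np.round(weights, 3))      # the weak third item receives the smallest weight
```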

Say you have a list of questions and you don’t know exactly which responses will move together and which will move differently; for example, purchase barriers of potential customers. The following are possible barriers to purchase:

- Price is prohibitive

- Overall implementation costs

- We can’t reach a consensus in our organization

- Product is not consistent with our business strategy

- I need to develop an ROI, but cannot or have not

- We are locked into a contract with another product

- The product benefits don’t outweigh the cost

- We have no reason to switch

- Our IT department cannot support your product

- We do not have sufficient technical resources

- Your product does not have a feature we require

- Other (please specify)

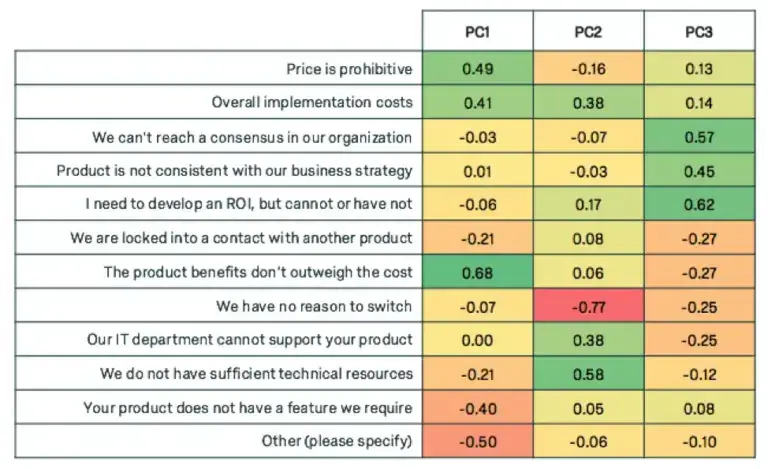

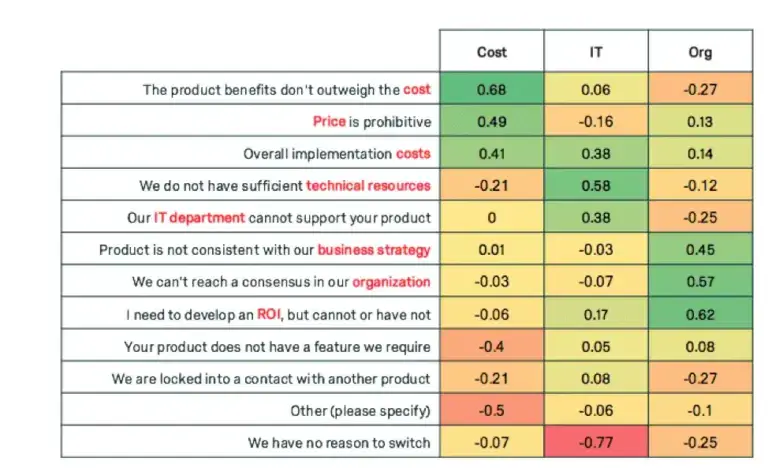

Factor analysis can uncover the trends of how these questions will move together. The following are loadings for 3 factors for each of the variables.

Notice how each of the principal components has high weights for a subset of the variables. Here, weight is used interchangeably with loading, and a high weight indicates the variables that are most influential for each principal component. A loading of 0.30 or more in absolute value is a common rule-of-thumb threshold for a heavy weight.
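Applying the 0.30 rule of thumb can be automated by assigning each variable to the factor where its absolute loading is largest, as long as that loading reaches the threshold. In the plain-Python sketch below, the loadings are made-up numbers for four of the barrier items, not the values shown in the figure above.

```python
def group_by_loading(loadings, names, threshold=0.30):
    """Assign each variable to the factor where its absolute loading is largest,
    provided that loading reaches the threshold; otherwise leave it unassigned."""
    n_factors = len(loadings[0])
    groups = {k: [] for k in range(n_factors)}
    unassigned = []
    for name, row in zip(names, loadings):
        best = max(range(n_factors), key=lambda k: abs(row[k]))
        if abs(row[best]) >= threshold:
            groups[best].append(name)
        else:
            unassigned.append(name)
    return groups, unassigned


# Hypothetical loadings on 3 factors (illustrative only)
names = ["Price is prohibitive", "Overall implementation costs",
         "Our IT department cannot support your product",
         "We can't reach a consensus in our organization"]
loadings = [[0.71, 0.05, 0.10],
            [0.64, 0.22, 0.02],
            [0.08, 0.68, 0.11],
            [0.12, 0.04, 0.25]]

groups, unassigned = group_by_loading(loadings, names)
print(groups)       # factor 0: the two cost items; factor 1: the IT item; factor 2: none
print(unassigned)   # the consensus item, whose largest loading is only 0.25
```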

The first component displays heavy weights for variables related to cost, the second weights variables related to IT, and the third weights variables related to organizational factors. We can give our new super variables clever names.

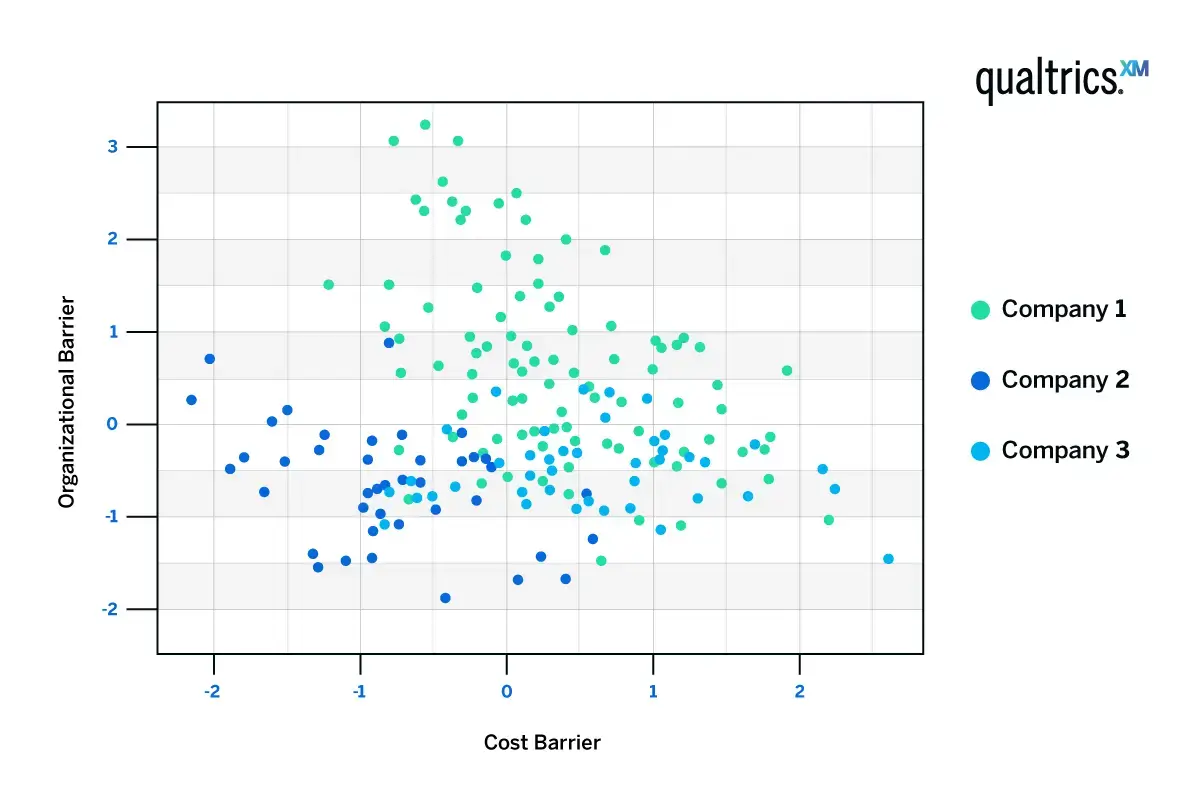

If we cluster the customers based on these three components, some trends emerge: customers tend to be high in cost barriers or organizational barriers, but not both.

The red dots represent respondents who indicated they had higher organizational barriers; the green dots represent respondents who indicated they had higher cost barriers.

Considerations when using factor analysis

Factor analysis is a tool, and like any tool its effectiveness depends on how you use it. When employing factor analysis, it’s essential to keep a few key considerations in mind.

Oversimplification

While factor analysis is great for simplifying complex data sets, there’s a risk of oversimplification when grouping variables into factors. To avoid this you should ensure the reduced factors still accurately represent the complexities of your variables.

Subjectivity

Interpreting the factors can sometimes be subjective, and requires a good understanding of the variables and the context. Be mindful that multiple analysts may come up with different names for the same factor.

Supplementary techniques

Factor analysis is often just the first step. Consider how it fits into your broader research strategy and which other techniques you’ll use alongside it.

Examples of factor analysis studies

Factor analysis, including PCA, is often used in tandem with segmentation studies. It might be an intermediary step to reduce variables before using k-means clustering to make the segments.
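The sketch below shows the segmentation step in Python with NumPy. It runs plain k-means (Lloyd’s algorithm) with a simple farthest-point initialization on hypothetical factor scores for a "cost barrier" and an "organizational barrier" factor. In practice you would more likely call a library implementation such as scikit-learn’s `KMeans`; this version only shows the mechanics.

```python
import numpy as np


def kmeans(points, k, n_iter=100, seed=0):
    """Plain k-means (Lloyd's algorithm) with farthest-point initialization."""
    X = np.asarray(points, dtype=float)
    rng = np.random.default_rng(seed)
    centers = [X[rng.integers(len(X))]]
    for _ in range(1, k):
        # Next center: the point farthest from all centers chosen so far
        d = np.min(np.linalg.norm(X[:, None, :] - np.array(centers)[None, :, :], axis=2), axis=1)
        centers.append(X[d.argmax()])
    centers = np.array(centers)
    for _ in range(n_iter):
        dists = np.linalg.norm(X[:, None, :] - centers[None, :, :], axis=2)
        labels = dists.argmin(axis=1)   # assign each respondent to the nearest center
        new_centers = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
            for j in range(k)
        ])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels, centers


rng = np.random.default_rng(6)
# Hypothetical factor scores (cost barrier, organizational barrier) for 100 respondents
cost_group = rng.normal(loc=[2.0, -1.0], scale=0.5, size=(50, 2))
org_group = rng.normal(loc=[-1.0, 2.0], scale=0.5, size=(50, 2))
scores = np.vstack([cost_group, org_group])

labels, centers = kmeans(scores, k=2)
print(np.bincount(labels))   # cluster sizes
print(np.round(centers, 2))
```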

Factor analysis provides simplicity after reducing variables. For long studies with large blocks of Matrix Likert scale questions, the number of variables can become unwieldy. Simplifying the data using factor analysis helps analysts focus and clarify the results, while also reducing the number of dimensions they’re clustering on.

Sample questions for factor analysis

Choosing exactly which questions to perform factor analysis on is both an art and a science. Choosing which variables to reduce takes some experimentation, patience and creativity. Factor analysis works well on Likert scale questions and Sum to 100 question types.

Factor analysis works well on matrix blocks of the following question genres:

Psychographics (Agree/Disagree):

- I value family

- I believe brand represents value

Behavioral (Agree/Disagree):

- I purchase the cheapest option

- I am a bargain shopper

Attitudinal (Agree/Disagree):

- The economy is not improving

- I am pleased with the product

Activity-Based (Agree/Disagree):

- I love sports

- I sometimes shop online during work hours

Behavioral and psychographic questions are especially suited for factor analysis.

Sample output reports

Factor analysis produces weights (called loadings) for each variable, and it can also produce factor scores for each respondent. These factor scores can be used like other responses in the survey.


Lesson 12: Factor Analysis

Overview

Factor Analysis is a method for modeling observed variables, and their covariance structure, in terms of a smaller number of underlying unobservable (latent) “factors.” The factors typically are viewed as broad concepts or ideas that may describe an observed phenomenon. For example, a basic desire of obtaining a certain social level might explain most consumption behavior. These unobserved factors are more interesting to the social scientist than the observed quantitative measurements.
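In symbols, one standard way to write the (orthogonal) factor model for p observed variables and m < p factors is shown below. The notation L, F, ε and Ψ is chosen here and may differ from the textbook’s.

$$
\mathbf{X} - \boldsymbol{\mu} = \mathbf{L}\,\mathbf{F} + \boldsymbol{\varepsilon},
\qquad E(\mathbf{F}) = \mathbf{0},\ \operatorname{Cov}(\mathbf{F}) = \mathbf{I},
\qquad E(\boldsymbol{\varepsilon}) = \mathbf{0},\ \operatorname{Cov}(\boldsymbol{\varepsilon}) = \boldsymbol{\Psi} = \operatorname{diag}(\psi_1, \ldots, \psi_p),
\qquad \operatorname{Cov}(\boldsymbol{\varepsilon}, \mathbf{F}) = \mathbf{0}
$$

Because the factors and errors are uncorrelated, the covariance structure of the observed variables follows directly:

$$
\boldsymbol{\Sigma} = \operatorname{Cov}(\mathbf{X}) = \mathbf{L}\,\operatorname{Cov}(\mathbf{F})\,\mathbf{L}^{\top} + \boldsymbol{\Psi} = \mathbf{L}\mathbf{L}^{\top} + \boldsymbol{\Psi},
\qquad
\sigma_{ii} = \underbrace{\ell_{i1}^2 + \cdots + \ell_{im}^2}_{h_i^2\ \text{(communality)}} + \underbrace{\psi_i}_{\text{specific variance}}
$$

Here L is the p x m matrix of factor loadings ℓ_ij.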

Factor analysis is generally an exploratory/descriptive method that requires many subjective judgments. It is a widely used tool and often controversial because the models, methods, and subjectivity are so flexible that debates about interpretations can occur.

The method is similar to principal components although, as the textbook points out, factor analysis is more elaborate. In one sense, factor analysis is an inversion of principal components. In factor analysis, we model the observed variables as linear functions of the “factors.” In principal components, we create new variables that are linear combinations of the observed variables. In both PCA and FA, the dimension of the data is reduced. Recall that in PCA, the interpretation of the principal components is often not very clean. A particular variable may, on occasion, contribute significantly to more than one of the components. Ideally, we would like each variable to contribute significantly to only one component. A technique called factor rotation is employed toward that goal. Examples of fields where factor analysis is involved include physiology, health, intelligence, sociology, and sometimes ecology, among others.
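As a sketch of what rotation does, the Python/NumPy code below implements raw varimax, an orthogonal rotation, using the common SVD-based iteration. It omits the Kaiser row normalization that packages often apply by default (R’s `stats::varimax` uses `normalize = TRUE`). The example takes a hypothetical simple-structure loading matrix, rotates it by 30 degrees so that every variable loads on both factors, and checks that varimax recovers the clean pattern.

```python
import numpy as np


def varimax(loadings, gamma=1.0, max_iter=500, tol=1e-10):
    """Raw varimax rotation (no Kaiser normalization).
    Returns (rotated loadings, orthogonal rotation matrix)."""
    L = np.asarray(loadings, dtype=float)
    p, k = L.shape
    rot = np.eye(k)
    crit = 0.0
    for _ in range(max_iter):
        lam = L @ rot
        # Target matrix whose SVD gives the next rotation (standard varimax update)
        target = lam ** 3 - (gamma / p) * lam @ np.diag(np.sum(lam ** 2, axis=0))
        u, s, vt = np.linalg.svd(L.T @ target)
        rot = u @ vt                      # closest orthogonal matrix to L.T @ target
        crit_old, crit = crit, np.sum(s)
        if crit_old != 0 and crit / crit_old < 1 + tol:
            break
    return L @ rot, rot


# Hypothetical simple-structure loadings, deliberately rotated by 30 degrees
simple = np.array([[0.8, 0.0], [0.7, 0.0], [0.6, 0.0],
                   [0.0, 0.8], [0.0, 0.7], [0.0, 0.6]])
theta = np.deg2rad(30)
turn = np.array([[np.cos(theta), -np.sin(theta)], [np.sin(theta), np.cos(theta)]])
messy = simple @ turn                   # every variable now loads on both factors

rotated, rot = varimax(messy)
print(np.round(np.abs(rotated), 3))     # close to the simple structure again
```

Because the rotation matrix is orthogonal, each variable’s communality (row sum of squared loadings) is unchanged. Only the way the variance is split across factors changes. The recovered factors may come out in a different order or with flipped signs.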

- Understand the terminology of factor analysis, including the interpretation of factor loadings, specific variances, and communalities;

- Understand how to apply both principal component and maximum likelihood methods for estimating the parameters of a factor model;

- Understand factor rotation, and interpret rotated factor loadings.
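For standardized variables, communalities and specific variances follow directly from a loading matrix. The communality is h_i² = Σ_j ℓ_ij² and the specific variance is ψ_i = 1 − h_i². The model-implied correlation matrix LLᵀ + Ψ then has 1s on its diagonal. A minimal Python/NumPy sketch with hypothetical loadings:

```python
import numpy as np


def communalities_and_specific(loadings):
    """Communality h_i^2 = sum_j l_ij^2 and specific variance psi_i = 1 - h_i^2
    (assumes standardized variables)."""
    L = np.asarray(loadings, dtype=float)
    h2 = np.sum(L ** 2, axis=1)
    psi = 1.0 - h2
    return h2, psi


# Hypothetical loadings for 4 standardized variables on 2 factors
L = np.array([[0.8, 0.1],
              [0.7, 0.2],
              [0.1, 0.9],
              [0.2, 0.6]])

h2, psi = communalities_and_specific(L)
implied = L @ L.T + np.diag(psi)   # model-implied correlation matrix LL' + Psi
print(np.round(h2, 2))             # 0.65, 0.53, 0.82, 0.40
print(np.round(psi, 2))            # 0.35, 0.47, 0.18, 0.60
```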


Institute for Digital Research and Education

A Practical Introduction to Factor Analysis

Factor analysis is a method for modeling observed variables and their covariance structure in terms of unobserved variables (i.e., factors). There are two types of factor analyses, exploratory and confirmatory. Exploratory factor analysis (EFA) is a method to explore the underlying structure of a set of observed variables, and is a crucial step in the scale development process. The first step in EFA is factor extraction. During this seminar, we will discuss how principal components analysis and common factor analysis differ in their approach to variance partitioning. Common factor analysis models can be estimated using various estimation methods such as principal axis factoring and maximum likelihood, and we will compare the practical differences between these two methods. After extracting the best factor structure, we can obtain a more interpretable factor solution through factor rotation. Here is where we will discuss the difference between orthogonal and oblique rotations, and finally how to use the final solution to generate factor scores. For the latter portion of the seminar we will introduce confirmatory factor analysis (CFA), which is a method to verify a factor structure that has already been defined. Topics to discuss include identification, model fit, and degrees of freedom, demonstrated through a three-item, two-item and eight-item one-factor CFA and a two-factor CFA. SPSS will be used for the EFA portion of the seminar and R (lavaan) will be used for the CFA portion.

I. Exploratory Factor Analysis (EFA)

- Motivating example: The SAQ

- Pearson correlation formula

- Partitioning the variance in factor analysis

- principal components analysis

- principal axis factoring

- maximum likelihood

- Simple Structure

- Orthogonal rotation (Varimax)

- Oblique (Direct Oblimin)

- Generating factor scores

II. Confirmatory Factor Analysis (CFA)

- Motivating example: SPSS Anxiety Questionnaire

- The factor analysis model

- The model-implied covariance matrix

- The path diagram

- Known values, parameters, and degrees of freedom

- Three-item (one) factor analysis

- Identification of a three-item one factor CFA

- Running a one-factor CFA in lavaan

- (Optional) How to manually obtain the standardized solution

- (Optional) Degrees of freedom with means

- One factor CFA with two items

- One factor CFA with more than three items (SAQ-8)

- Model chi-square

- A note on sample size

- (Optional) Model test of the baseline or null model

- Incremental versus absolute fit index

- CFI (Comparative Fit Index)

- TLI (Tucker Lewis Index)

- Uncorrelated factors

- Correlated factors

- Second-Order CFA

- (Optional) Warning message with second-order CFA

- (Optional) Obtaining the parameter table



- © 2021 UC REGENTS


Factor Analysis 101: The Basics


What is Factor Analysis?

Factor analysis is a powerful data reduction technique that enables researchers to investigate concepts that cannot easily be measured directly. By boiling down a large number of variables into a handful of comprehensible underlying factors, factor analysis results in easy-to-understand, actionable data.

By applying this method to your research, you can spot trends faster and see themes throughout your datasets, enabling you to learn what the data points have in common.

Unlike statistical methods such as regression analysis, factor analysis does not require you to designate dependent and independent variables.

Factor analysis is most commonly used to examine the interrelationships among all of the variables in a given dataset.

The Objectives of Factor Analysis

Think of factor analysis as shrink wrap. When applied to a large amount of data, it compresses the set into a smaller set that is far more manageable, and easier to understand.

The overall objective of factor analysis can be broken down into four smaller objectives:

- To determine how many factors are needed to explain common themes amongst a given set of variables.

- To determine the extent to which each variable in the dataset is associated with a common theme or factor.

- To provide an interpretation of the common factors in the dataset.

- To determine the degree to which each observed data point represents each theme or factor.

When to Use Factor Analysis

Determining when to use particular statistical methods to get the most insight out of your data can be tricky.

When considering factor analysis, have your goal top-of-mind.

Factor analysis generally serves one of three goals. If your goal aligns with any of these, factor analysis is a good statistical method to choose:

Exploratory Factor Analysis should be used when you need to develop a hypothesis about a relationship between variables.

Confirmatory Factor Analysis should be used to test a hypothesis about the relationship between variables.

Construct Validity: factor analysis can be used to test the degree to which your survey actually measures what it is intended to measure.

How To Ensure Your Survey is Optimized for Factor Analysis

If you know that you’ll want to perform a factor analysis on response data from a survey, there are a few things you can do ahead of time to ensure that your analysis will be straightforward, informative, and actionable.

Identify and Target Enough Respondents

Large datasets are the lifeblood of factor analysis. You’ll need large groups of survey respondents, often found through panel services, for factor analysis to yield stable, reliable results.

While variables such as population size and your topic of interest will influence how many respondents you need, it’s best to maintain a “more respondents the better” mindset.

The More Questions, The Better

While designing your survey, load in as many specific questions as possible. Factor analysis will fall flat if your survey only has a few broad questions.

The ultimate goal of factor analysis is to take a broad concept and simplify it by considering more granular, contextual information, so this approach will provide you the results you’re looking for.

Aim for Quantitative Data

If you’re looking to perform a factor analysis, you’ll want to avoid having open-ended survey questions.

By providing answer options in the form of scales (whether they be Likert Scales, numerical scales, or even ‘yes/no’ scales) you’ll save yourself a world of trouble when you begin conducting your factor analysis. Just make sure that you’re using the same scaled answer options as often as possible.


9 Factor Analysis Overview

You can load this file with open_template_file("factoranalysis").

Background: see https://usq.pressbooks.pub/statisticsforresearchstudents/part/factor-analysis/ and https://advstats.psychstat.org/book/factor/efa.php for an overview, though there are many introductions that cover the same general territory.

Sage: Kim, J., & Mueller, C. W. (1978). Factor analysis. SAGE Publications, Inc., https://doi.org/10.4135/9781412984256

Finch, W. (2020). Exploratory factor analysis. SAGE Publications, Inc., https://doi.org/10.4135/9781544339900

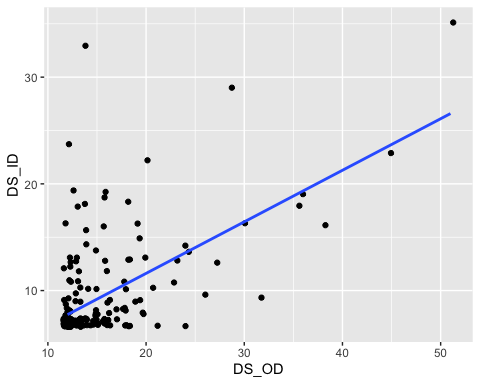

We have seen that the scales in the ethics1 data set are at least minimally internally reliable, but the weakest ones are DS_OD and DS_ID.

```r
library(ggplot2)

ggplot(data = ethics1, aes(x = DS_OD, y = DS_ID)) +
  geom_jitter() +
  geom_smooth(method = "lm", se = FALSE)
```

Clearly they are positively associated, but there are some high values for both scales that may be very influential, making the line seem steeper than it would be without them.

Remember that DS_ID was constructed from 7 items and DS_OD used 12 items. If you look at the ethics vignette you can see the specific items.

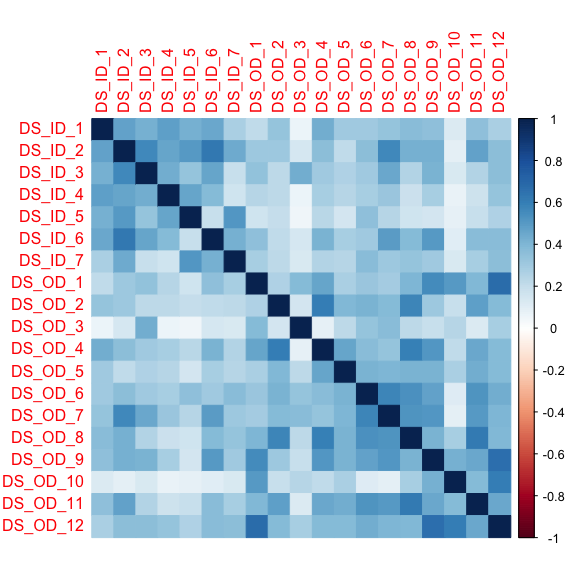

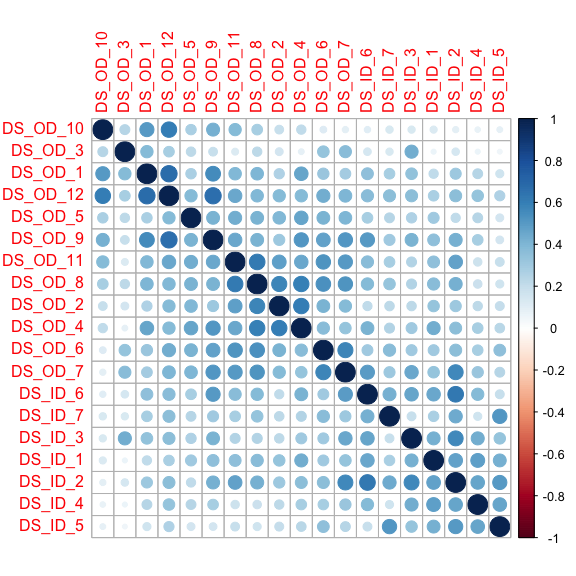

Looking at the correlation matrix for the original 19 variables we can see that these vary quite a bit.

```r
library(dplyr)

# Create a data frame with just our variables of interest (complete cases only).
ethics1[complete.cases(ethics1), ] |>
  select(starts_with("DS_ID_") | starts_with("DS_OD_")) |>
  haven::zap_labels() -> DS_data

# Create a correlation matrix for those variables.
DS_data |> cor() -> DS_cor

# Rounding makes it easier to read.
round(DS_cor, 2)
```

```
##          DS_ID_1 DS_ID_2 DS_ID_3 DS_ID_4 DS_ID_5 DS_ID_6 DS_ID_7 DS_OD_1
## DS_ID_1     1.00    0.47    0.43    0.49    0.42    0.46    0.28    0.22
## DS_ID_2     0.47    1.00    0.57    0.46    0.51    0.62    0.44    0.31
## DS_ID_3     0.43    0.57    1.00    0.43    0.33    0.46    0.20    0.34
## DS_ID_4     0.49    0.46    0.43    1.00    0.47    0.38    0.17    0.24
## DS_ID_5     0.42    0.51    0.33    0.47    1.00    0.20    0.52    0.16
## DS_ID_6     0.46    0.62    0.46    0.38    0.20    1.00    0.43    0.35
## DS_ID_7     0.28    0.44    0.20    0.17    0.52    0.43    1.00    0.28
## DS_OD_1     0.22    0.31    0.34    0.24    0.16    0.35    0.28    1.00
## DS_OD_2     0.33    0.32    0.23    0.22    0.20    0.21    0.23    0.27
## DS_OD_3     0.06    0.14    0.43    0.06    0.06    0.15    0.13    0.39
## DS_OD_4     0.43    0.36    0.30    0.28    0.23    0.42    0.25    0.46
## DS_OD_5     0.31    0.22    0.27    0.24    0.16    0.29    0.25    0.28
## DS_OD_6     0.30    0.37    0.30    0.28    0.35    0.30    0.38    0.32
## DS_OD_7     0.34    0.57    0.45    0.32    0.24    0.50    0.31    0.29
## DS_OD_8     0.38    0.43    0.26    0.18    0.16    0.38    0.34    0.40
## DS_OD_9     0.35    0.43    0.42    0.29    0.15    0.51    0.31    0.55
## DS_OD_10    0.13    0.10    0.13    0.08    0.09    0.10    0.14    0.50
## DS_OD_11    0.36    0.47    0.25    0.18    0.20    0.37    0.29    0.39
## DS_OD_12    0.28    0.36    0.37    0.34    0.26    0.38    0.37    0.67
##          DS_OD_2 DS_OD_3 DS_OD_4 DS_OD_5 DS_OD_6 DS_OD_7 DS_OD_8 DS_OD_9
## DS_ID_1     0.33    0.06    0.43    0.31    0.30    0.34    0.38    0.35
## DS_ID_2     0.32    0.14    0.36    0.22    0.37    0.57    0.43    0.43
## DS_ID_3     0.23    0.43    0.30    0.27    0.30    0.45    0.26    0.42
## DS_ID_4     0.22    0.06    0.28    0.24    0.28    0.32    0.18    0.29
## DS_ID_5     0.20    0.06    0.23    0.16    0.35    0.24    0.16    0.15
## DS_ID_6     0.21    0.15    0.42    0.29    0.30    0.50    0.38    0.51
## DS_ID_7     0.23    0.13    0.25    0.25    0.38    0.31    0.34    0.31
## DS_OD_1     0.27    0.39    0.46    0.28    0.32    0.29    0.40    0.55
## DS_OD_2     1.00    0.16    0.60    0.40    0.41    0.38    0.58    0.31
## DS_OD_3     0.16    1.00    0.08    0.22    0.34    0.37    0.22    0.19
## DS_OD_4     0.60    0.08    1.00    0.46    0.38    0.34    0.60    0.51
## DS_OD_5     0.40    0.22    0.46    1.00    0.41    0.40    0.41    0.42
## DS_OD_6     0.41    0.34    0.38    0.41    1.00    0.57    0.54    0.48
## DS_OD_7     0.38    0.37    0.34    0.40    0.57    1.00    0.52    0.52
## DS_OD_8     0.58    0.22    0.60    0.41    0.54    0.52    1.00    0.42
## DS_OD_9     0.31    0.19    0.51    0.42    0.48    0.52    0.42    1.00
## DS_OD_10    0.19    0.25    0.22    0.28    0.11    0.10    0.29    0.43
## DS_OD_11    0.48    0.12    0.46    0.43    0.53    0.50    0.62    0.46
## DS_OD_12    0.38    0.29    0.38    0.38    0.44    0.41    0.39    0.67
##          DS_OD_10 DS_OD_11 DS_OD_12
## DS_ID_1      0.13     0.36     0.28
## DS_ID_2      0.10     0.47     0.36
## DS_ID_3      0.13     0.25     0.37
## DS_ID_4      0.08     0.18     0.34
## DS_ID_5      0.09     0.20     0.26
## DS_ID_6      0.10     0.37     0.38
## DS_ID_7      0.14     0.29     0.37
## DS_OD_1      0.50     0.39     0.67
## DS_OD_2      0.19     0.48     0.38
## DS_OD_3      0.25     0.12     0.29
## DS_OD_4      0.22     0.46     0.38
## DS_OD_5      0.28     0.43     0.38
## DS_OD_6      0.11     0.53     0.44
## DS_OD_7      0.10     0.50     0.41
## DS_OD_8      0.29     0.62     0.39
## DS_OD_9      0.43     0.46     0.67
## DS_OD_10     1.00     0.38     0.60
## DS_OD_11     0.38     1.00     0.46
## DS_OD_12     0.60     0.46     1.00
```

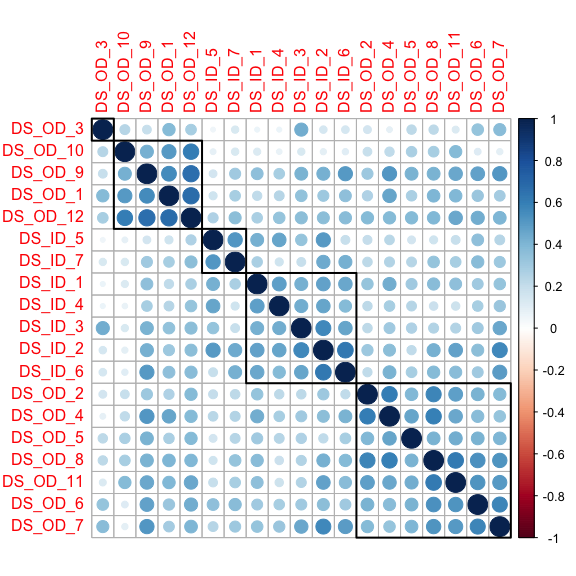

A visualization can be helpful for seeing patterns.

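# Notice that the figure size is adjusted (in the chunk options, not shown).
corrplot::corrplot(DS_cor, method = 'color')

# order = 'AOE' sorts the variables by the angular order of the eigenvectors.
corrplot::corrplot(DS_cor, order = 'AOE')

# order = 'hclust' orders by hierarchical clustering; addrect = 5 outlines 5 clusters.
corrplot::corrplot(DS_cor, order = 'hclust', addrect = 5)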

There are many other options for displaying the correlation matrix, which you can see here: https://cran.r-project.org/web/packages/corrplot/vignettes/corrplot-intro.html

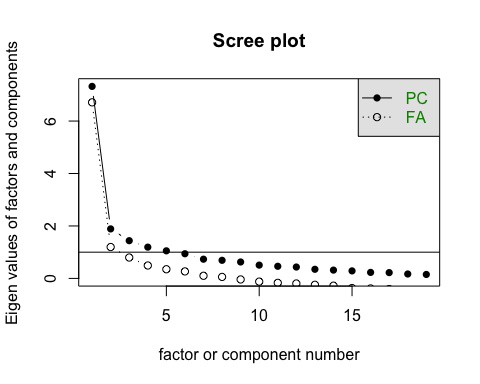
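The rectangles that `addrect` draws come from hierarchically clustering the variables. The base-R sketch below shows the idea on simulated, hypothetical data rather than the ethics1 survey. It assumes corrplot uses complete linkage on the distance 1 - r, which we believe is its default, but corrplot's internals may differ in detail.

```r
# Simulated, hypothetical data: items a1-a3 depend on factor f1, b1-b3 on f2
set.seed(1)
n <- 500
f1 <- rnorm(n)
f2 <- rnorm(n)
sim <- data.frame(
  a1 = f1 + rnorm(n, sd = 0.6), a2 = f1 + rnorm(n, sd = 0.6),
  a3 = f1 + rnorm(n, sd = 0.6), b1 = f2 + rnorm(n, sd = 0.6),
  b2 = f2 + rnorm(n, sd = 0.6), b3 = f2 + rnorm(n, sd = 0.6)
)
sim_cor <- cor(sim)

# Strongly correlated variables are "close": distance = 1 - r
hc <- hclust(as.dist(1 - sim_cor), method = "complete")

# Variable order along the axes of an hclust-ordered plot
print(colnames(sim_cor)[hc$order])

# Cut the tree into k clusters; k plays the role of addrect = k
groups <- cutree(hc, k = 2)
print(groups)
```

With the survey data, a comparable grouping would be `cutree(hclust(as.dist(1 - DS_cor)), k = 5)`, which corresponds to `addrect = 5`.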

How many factors are there?

ev <- eigen(DS_cor) # get eigenvalues
ev$values

##  [1] 7.3216335 1.8875037 1.4382953 1.1918764 1.0513941 0.9402367 0.7320710
##  [8] 0.6899644 0.6261405 0.5065023 0.4623007 0.4337202 0.3475235 0.3164977
## [15] 0.2865786 0.2289359 0.2207224 0.1679963 0.1501070
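The eigenvalues above can be read against a common rule of thumb. This small sketch copies them from the printed output and applies the Kaiser criterion, which retains factors whose eigenvalues are above 1. It also shows the share of total variance each eigenvalue carries. For a correlation matrix, the eigenvalues sum to the number of variables, which is 19 here.

```r
# Eigenvalues copied from the output above (19 items)
ev_values <- c(7.3216335, 1.8875037, 1.4382953, 1.1918764, 1.0513941,
               0.9402367, 0.7320710, 0.6899644, 0.6261405, 0.5065023,
               0.4623007, 0.4337202, 0.3475235, 0.3164977, 0.2865786,
               0.2289359, 0.2207224, 0.1679963, 0.1501070)

# Eigenvalues of a correlation matrix sum to the number of variables
print(sum(ev_values))

# Kaiser criterion: count eigenvalues greater than 1
n_kaiser <- sum(ev_values > 1)
print(n_kaiser)

# Cumulative share of total variance for the retained eigenvalues
prop_var <- ev_values / length(ev_values)
print(round(cumsum(prop_var)[seq_len(n_kaiser)], 3))
```

Five of the printed eigenvalues exceed 1: 7.32, 1.89, 1.44, 1.19 and 1.05.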

psych::scree(DS_data)

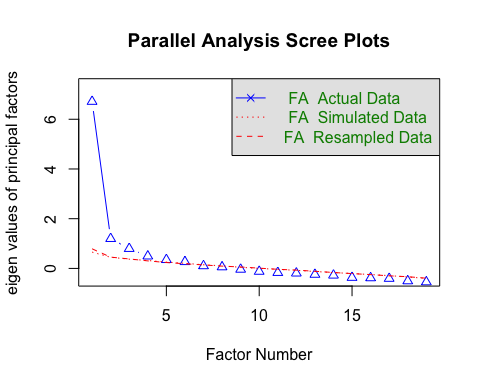

psych::fa.parallel(DS_data, fa = "fa")

## Parallel analysis suggests that the number of factors = 6 and the number of components = NA

In this case there seem to be more than two factors: five eigenvalues exceed 1, and parallel analysis suggests six.
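Parallel analysis, proposed by Horn, compares the observed eigenvalues with eigenvalues from random data of the same size. Factors are retained for as long as the observed values are larger. The function below, `parallel_pca()`, is a hypothetical base-R sketch of the principal-component version, tested on simulated two-factor data. It is not psych's implementation. With `fa = "fa"`, `fa.parallel()` works with factor-analysis eigenvalues instead, so the two approaches can give different answers.

```r
# Hypothetical helper: principal-component parallel analysis
parallel_pca <- function(data, n_iter = 100) {
  n <- nrow(data)
  p <- ncol(data)
  observed <- eigen(cor(data), symmetric = TRUE, only.values = TRUE)$values
  # Eigenvalues of correlation matrices from uncorrelated normal data (p x n_iter)
  random <- replicate(n_iter, {
    eigen(cor(matrix(rnorm(n * p), n, p)), symmetric = TRUE,
          only.values = TRUE)$values
  })
  mean_random <- rowMeans(random)
  # Keep components until an observed eigenvalue no longer beats the random mean
  exceeds <- observed > mean_random
  n_keep <- if (all(exceeds)) p else which(!exceeds)[1] - 1
  list(observed = observed, random = mean_random, n_keep = n_keep)
}

# Simulated, hypothetical data: 8 items, 4 per factor
set.seed(42)
n <- 500
f1 <- rnorm(n)
f2 <- rnorm(n)
sim <- cbind(sapply(1:4, function(i) 0.8 * f1 + rnorm(n, sd = 0.6)),
             sapply(1:4, function(i) 0.8 * f2 + rnorm(n, sd = 0.6)))
pa <- parallel_pca(sim)
print(pa$n_keep)
```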

# Note: the object is named fa5, but this model fits six factors.
DS_data |> factanal(factors = 6, scores = "Bartlett") -> fa5
fa5

##
## Call:
## factanal(x = DS_data, factors = 6, scores = "Bartlett")
##
## Uniquenesses:
##  DS_ID_1  DS_ID_2  DS_ID_3  DS_ID_4  DS_ID_5  DS_ID_6  DS_ID_7  DS_OD_1
##    0.591    0.288    0.430    0.609    0.005    0.371    0.624    0.379
##  DS_OD_2  DS_OD_3  DS_OD_4  DS_OD_5  DS_OD_6  DS_OD_7  DS_OD_8  DS_OD_9
##    0.497    0.005    0.005    0.659    0.439    0.346    0.337    0.346
## DS_OD_10 DS_OD_11 DS_OD_12
##    0.469    0.392    0.173
##
## Loadings:
## Factor1 Factor2 Factor3 Factor4 Factor5 Factor6
## DS_ID_1 0.248 0.459 0.301 0.202
## DS_ID_2 0.320 0.695 0.350
## DS_ID_3 0.610 0.156 0.186 0.349
## DS_ID_4 0.474 0.135 0.367
## DS_ID_5 0.202 0.970
## DS_ID_6 0.241 0.730 0.151 0.118
## DS_ID_7 0.271 0.256 0.177 0.452
## DS_OD_1 0.187 0.226 0.659 0.213 0.228
## DS_OD_2 0.587 0.155 0.113 0.338
## DS_OD_3 0.153 0.207 0.959
## DS_OD_4 0.445 0.246 0.210 0.826
## DS_OD_5 0.449 0.155 0.244 0.204
## DS_OD_6 0.622 0.186 0.181 0.258 0.199
## DS_OD_7 0.576 0.496 0.102 0.224
## DS_OD_8 0.735 0.195 0.171 0.225
## DS_OD_9 0.337 0.448 0.559 0.163
## DS_OD_10 0.152 0.702
## DS_OD_11 0.684 0.222 0.279
## DS_OD_12 0.278 0.256 0.809 0.161
##
##                Factor1 Factor2 Factor3 Factor4 Factor5 Factor6
## SS loadings      3.057   2.717   2.321   1.673   1.212   1.054
## Proportion Var   0.161   0.143   0.122   0.088   0.064   0.055
## Cumulative Var   0.161   0.304   0.426   0.514   0.578   0.633
##
## Test of the hypothesis that 6 factors are sufficient.
## The chi square statistic is 230.2 on 72 degrees of freedom.
## The p-value is 1.96e-18
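The summary rows of the `factanal()` output can be derived from the loadings matrix:

- SS loadings are column sums of squared loadings.
- Proportion Var divides those sums by the number of variables. For Factor1 above, 3.057 / 19 ≈ 0.161.
- Each variable's communality is its row sum of squared loadings.

The sketch checks these on simulated, hypothetical data. For a maximum-likelihood fit on standardized variables, communality plus uniqueness should come out at approximately 1.

```r
# Simulated, hypothetical data: 8 items, 4 per factor
set.seed(7)
n <- 500
f1 <- rnorm(n)
f2 <- rnorm(n)
sim <- cbind(sapply(1:4, function(i) 0.7 * f1 + rnorm(n, sd = 0.7)),
             sapply(1:4, function(i) 0.7 * f2 + rnorm(n, sd = 0.7)))
colnames(sim) <- paste0("item", 1:8)

fit <- factanal(sim, factors = 2)
L <- unclass(fit$loadings)    # p x k numeric loadings matrix
p <- nrow(L)

ss_loadings <- colSums(L^2)   # "SS loadings" row
prop_var <- ss_loadings / p   # "Proportion Var" row
cum_var <- cumsum(prop_var)   # "Cumulative Var" row
print(round(rbind(ss_loadings, prop_var, cum_var), 3))

# Communality: variance of each item explained by the common factors
communality <- rowSums(L^2)
print(round(communality + fit$uniquenesses, 3))
```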

For contrast, we can also see what the 2-factor solution looks like.

DS_data |> factanal(factors = 2) -> fa2
fa2

##
## Call:
## factanal(x = DS_data, factors = 2)
##
## Uniquenesses:
##  DS_ID_1  DS_ID_2  DS_ID_3  DS_ID_4  DS_ID_5  DS_ID_6  DS_ID_7  DS_OD_1
##    0.605    0.342    0.616    0.695    0.715    0.521    0.728    0.436
##  DS_OD_2  DS_OD_3  DS_OD_4  DS_OD_5  DS_OD_6  DS_OD_7  DS_OD_8  DS_OD_9
##    0.702    0.862    0.597    0.709    0.599    0.494    0.559    0.408
## DS_OD_10 DS_OD_11 DS_OD_12
##    0.474    0.551    0.289
##
## Loadings:
## Factor1 Factor2
## DS_ID_1 0.607 0.161
## DS_ID_2 0.795 0.161
## DS_ID_3 0.574 0.233
## DS_ID_4 0.536 0.133
## DS_ID_5 0.531
## DS_ID_6 0.648 0.244
## DS_ID_7 0.465 0.235
## DS_OD_1 0.207 0.722
## DS_OD_2 0.402 0.370
## DS_OD_3 0.154 0.338
## DS_OD_4 0.459 0.439
## DS_OD_5 0.337 0.421
## DS_OD_6 0.504 0.383
## DS_OD_7 0.641 0.309
## DS_OD_8 0.491 0.447
## DS_OD_9 0.408 0.652
## DS_OD_10 0.722
## DS_OD_11 0.458 0.489
## DS_OD_12 0.272 0.798
##
##                Factor1 Factor2
## SS loadings      4.450   3.649
## Proportion Var   0.234   0.192
## Cumulative Var   0.234   0.426
##
## Test of the hypothesis that 2 factors are sufficient.
## The chi square statistic is 721.19 on 134 degrees of freedom.
## The p-value is 3.24e-81
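Each output ends with a likelihood-ratio test of whether k factors are sufficient. The degrees of freedom follow the usual formula for maximum-likelihood factor analysis, df = ((p - k)^2 - (p + k)) / 2, with p = 19 variables. This formula reproduces the 72 and 134 degrees of freedom reported for the six- and two-factor models. The p-values are upper-tail chi-square probabilities. Both are tiny, so each test rejects the hypothesis that k factors are enough. This test is known to reject readily in large samples, which is one reason the number of factors is usually also judged with other criteria.

```r
# Degrees of freedom of the ML factor-analysis test for k factors and p variables
fa_df <- function(p, k) ((p - k)^2 - (p + k)) / 2

p <- 19
print(sapply(c(6, 2), function(k) fa_df(p, k)))

# Upper-tail chi-square probabilities for the reported statistics
print(pchisq(c(230.2, 721.19), df = c(72, 134), lower.tail = FALSE))
```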

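We use rotations to simplify the representation of the factors, ideally so that each variable loads strongly on as few factors as possible. Note that factanal() applies a varimax rotation by default, so the solutions above are already rotated.

There are many options; let’s use promax as an example.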
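Because `factanal()` uses varimax by default, it may help to see what an orthogonal rotation does before trying promax. This sketch applies `stats::varimax()` to a small hypothetical loading matrix and checks two properties:

- Each item's communality is unchanged by the rotation.
- The rotation matrix is orthogonal.

```r
# Hypothetical unrotated loadings: 6 items on 2 factors
L <- matrix(c(0.70, 0.60, 0.65, 0.50, 0.55, 0.45,
              0.30, 0.35, 0.25, -0.40, -0.45, -0.35), ncol = 2)

vm <- varimax(L)               # orthogonal rotation from the stats package
L_vm <- unclass(vm$loadings)   # rotated loadings as a plain matrix
print(round(L_vm, 3))

# Communalities (row sums of squared loadings) before and after rotation
print(round(cbind(unrotated = rowSums(L^2), varimax = rowSums(L_vm^2)), 3))

# An orthogonal rotation matrix T satisfies t(T) %*% T = identity
print(round(crossprod(vm$rotmat), 6))
```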

DS_data |> factanal(factors = 5, scores = "Bartlett", rotation = "promax") -> fa5p
print(fa5p, cutoff = 0.2) # hide loadings below 0.2 in absolute value

##
## Call:
## factanal(x = DS_data, factors = 5, scores = "Bartlett", rotation = "promax")
##
## Uniquenesses:
##  DS_ID_1  DS_ID_2  DS_ID_3  DS_ID_4  DS_ID_5  DS_ID_6  DS_ID_7  DS_OD_1
##    0.557    0.314    0.553    0.621    0.005    0.330    0.633    0.409
##  DS_OD_2  DS_OD_3  DS_OD_4  DS_OD_5  DS_OD_6  DS_OD_7  DS_OD_8  DS_OD_9
##    0.461    0.750    0.248    0.654    0.403    0.272    0.338    0.364
## DS_OD_10 DS_OD_11 DS_OD_12
##    0.464    0.473    0.193
##
## Loadings:
## Factor1 Factor2 Factor3 Factor4 Factor5
## DS_ID_1 0.294 0.431
## DS_ID_2 0.655 0.216
## DS_ID_3 0.578
## DS_ID_4 0.421 0.281
## DS_ID_5 1.074
## DS_ID_6 0.895 -0.247
## DS_ID_7 0.412
## DS_OD_1 0.729
## DS_OD_2 0.764
## DS_OD_3 0.240 0.452
## DS_OD_4 0.895 -0.290
## DS_OD_5 0.411
## DS_OD_6 0.250 0.590
## DS_OD_7 0.356 0.736
## DS_OD_8 0.701 0.280
## DS_OD_9 0.499 0.349
## DS_OD_10 0.862 -0.244
## DS_OD_11 0.443 0.307
## DS_OD_12 0.871
##
##                Factor1 Factor2 Factor3 Factor4 Factor5
## SS loadings      2.478   2.441   2.330   1.628   1.544
## Proportion Var   0.130   0.128   0.123   0.086   0.081
## Cumulative Var   0.130   0.259   0.382   0.467   0.548
##
## Factor Correlations:
##         Factor1 Factor2 Factor3 Factor4 Factor5
## Factor1   1.000   0.367  -0.271  -0.568  -0.346
## Factor2   0.367   1.000  -0.571  -0.543  -0.559
## Factor3  -0.271  -0.571   1.000   0.476   0.543
## Factor4  -0.568  -0.543   0.476   1.000   0.470
## Factor5  -0.346  -0.559   0.543   0.470   1.000
##
## Test of the hypothesis that 5 factors are sufficient.
## The chi square statistic is 323.4 on 86 degrees of freedom.
## The p-value is 3.33e-29

One interesting thing to notice is that DS_OD_3 no longer stands by itself, as it did when we looked at the bivariate correlation matrix. The same is true for some of the other highlighted rectangles. This is because factor analysis is a multivariate method that models the relationships among all of the variables at once. When you do that, the bivariate relationships can change.
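Partial correlations are a simpler tool that shows the same effect. A partial correlation measures the association between two variables after controlling for the others. In this hypothetical simulation, x and y are correlated only because both depend on z. Their bivariate correlation is therefore about 0.5, while their partial correlation given z is about 0. This is an analogy for how conditioning on other variables changes bivariate patterns; it is not what `factanal()` computes.

```r
set.seed(10)
n <- 5000
z <- rnorm(n)
x <- z + rnorm(n)   # x depends on z
y <- z + rnorm(n)   # y depends on z, not directly on x
R <- cor(cbind(x, y, z))

# Partial correlations from the inverse correlation (precision) matrix:
# r_ij.rest = -P_ij / sqrt(P_ii * P_jj)
P <- solve(R)
partial <- -P / sqrt(outer(diag(P), diag(P)))
diag(partial) <- 1

print(round(R[1, 2], 2))        # x with y, bivariate: about 0.5
print(round(partial[1, 2], 2))  # x with y, controlling for z: about 0
```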

One decision in choosing a rotation is whether the factors may be correlated (oblique) or must be uncorrelated (orthogonal) with each other. Promax is an oblique rotation, so it allows correlated factors, while the default varimax rotation used for fa5 is orthogonal. To see the implications, we can compare the correlations among the factor scores from fa5 and fa5p.
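With an oblique rotation, the factor correlations can be recovered from the rotation matrix. This sketch assumes, as `print.factanal()` appears to do, that the factor correlation matrix is `solve(t(U) %*% U)` for a rotation matrix `U`. It applies that formula to `promax()` and `varimax()` rotations of a hypothetical loading matrix. The promax result has a unit diagonal and generally nonzero off-diagonal entries, while varimax gives the identity.

```r
# Hypothetical unrotated loadings: 6 items on 2 factors
L <- matrix(c(0.70, 0.60, 0.65, 0.50, 0.55, 0.45,
              0.30, 0.35, 0.25, -0.40, -0.45, -0.35), ncol = 2)

pm <- promax(L, m = 4)   # oblique rotation; m = 4 is the stats default power
U <- pm$rotmat

# Assumed relationship: factor correlations = solve(t(U) %*% U)
phi_promax <- solve(crossprod(U))
print(round(phi_promax, 3))

# The same formula for an orthogonal (varimax) rotation gives the identity
phi_varimax <- solve(crossprod(varimax(L)$rotmat))
print(round(phi_varimax, 3))
```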

round(cor(fa5$scores), 2)

##         Factor1 Factor2 Factor3 Factor4 Factor5 Factor6
## Factor1    1.00   -0.11   -0.07    0.01   -0.02   -0.09
## Factor2   -0.11    1.00   -0.04   -0.04    0.00    0.01
## Factor3   -0.07   -0.04    1.00    0.01   -0.02    0.00
## Factor4    0.01   -0.04    0.01    1.00    0.00    0.00
## Factor5   -0.02    0.00   -0.02    0.00    1.00    0.02
## Factor6   -0.09    0.01    0.00    0.00    0.02    1.00

round(cor(fa5p$scores), 2)

##         Factor1 Factor2 Factor3 Factor4 Factor5
## Factor1    1.00    0.50    0.46    0.35    0.46
## Factor2    0.50    1.00    0.41    0.25    0.47
## Factor3    0.46    0.41    1.00    0.54    0.34
## Factor4    0.35    0.25    0.54    1.00    0.31
## Factor5    0.46    0.47    0.34    0.31    1.00

From a criminology perspective, we would probably expect different types of deviance to be correlated, so we would likely use the scores from the oblique (promax) rotation.
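Bartlett scores are weighted least-squares estimates of each respondent's factor values: f = (L' Psi^-1 L)^-1 L' Psi^-1 z. Here L is the loadings matrix, Psi is the diagonal matrix of uniquenesses and z is the respondent's standardized item vector. This sketch reproduces `factanal()`'s Bartlett scores by hand on simulated, hypothetical data. It assumes that `factanal()` standardizes the items with `scale()` before scoring.

```r
# Simulated, hypothetical data: 6 items, 3 per factor
set.seed(5)
n <- 400
f1 <- rnorm(n)
f2 <- rnorm(n)
sim <- cbind(sapply(1:3, function(i) 0.8 * f1 + rnorm(n, sd = 0.6)),
             sapply(1:3, function(i) 0.8 * f2 + rnorm(n, sd = 0.6)))
colnames(sim) <- paste0("item", 1:6)

fit <- factanal(sim, factors = 2, scores = "Bartlett", rotation = "promax")
Lambda <- unclass(fit$loadings)   # rotated loadings, p x k
psi_inv <- 1 / fit$uniquenesses   # diagonal of Psi^-1
Z <- scale(sim)                   # standardized items (mean 0, sd 1)

# A = L' Psi^-1 (k x p); row i of Lambda is multiplied by psi_inv[i]
A <- t(Lambda * psi_inv)
manual <- t(solve(A %*% Lambda, A %*% t(Z)))   # n x k matrix of scores

print(max(abs(manual - fit$scores)))   # agreement with factanal()
print(round(cor(manual), 2))           # promax scores are correlated
```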

We can use these scores in further analyses, or we could instead use summary scales with Cronbach’s α, as we did previously.
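For the summary-scale route, Cronbach's α can be computed directly from the item variances and the variance of the total score: α = k/(k - 1) × (1 - sum of item variances / variance of the total). The helper `cronbach_alpha()` below is a hypothetical, minimal base-R sketch. Packaged versions such as `psych::alpha()` report more detail.

```r
# Hypothetical helper: rows = respondents, columns = items
cronbach_alpha <- function(items) {
  items <- as.matrix(items)
  k <- ncol(items)
  item_var <- apply(items, 2, var)      # variance of each item
  total_var <- var(rowSums(items))      # variance of the summed scale
  k / (k - 1) * (1 - sum(item_var) / total_var)
}

# Simulated, hypothetical 5-item scale driven by one common factor
set.seed(11)
n <- 300
f <- rnorm(n)
scale_items <- sapply(1:5, function(i) f + rnorm(n))
print(round(cronbach_alpha(scale_items), 2))
```

With these simulated items, the population value of α is 5/6, so the estimate should land close to 0.83.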

Research Methods for Lehman EdD Copyright © by elinwaring. All Rights Reserved.


A Primer on Factor Analysis in Research using Reproducible R Software

Abdisalam Hassan Muse (PhD)

Amoud University

This primer provides an overview of factor analysis in research. It covers the meaning and assumptions of factor analysis and the differences between exploratory factor analysis (EFA) and confirmatory factor analysis (CFA). It explains the procedure for conducting factor analysis, with a focus on the role of the correlation matrix and a general model of the correlation matrix of individual variables. The paper covers methods for extracting factors, including principal component analysis (PCA). It also covers criteria for determining the number of factors to extract, such as comprehensibility, the Kaiser criterion, the variance-explained criterion, Cattell’s scree plot and Horn’s parallel analysis (PA). The meaning and interpretation of communalities and eigenvalues are discussed, as are factor loadings and rotation methods such as varimax. Finally, the paper covers the meaning and interpretation of factor scores and their use in subsequent analyses. R software is used throughout to provide reproducible examples and code for conducting factor analysis.

Introduction

Factor analysis is a statistical technique commonly used in research to identify underlying dimensions or constructs that explain the variability among a set of observed variables. It is often used to reduce the complexity of a dataset by summarizing a large number of variables into a smaller set of factors that are easier to understand and analyze. Factor analysis is widely used in fields such as psychology, education, marketing, and social sciences to explore the relationships between variables and to identify underlying latent constructs.

In this tutorial paper, we will provide an overview of factor analysis, including its meaning and assumptions, the differences between exploratory factor analysis (EFA) and confirmatory factor analysis (CFA), and the procedure for conducting factor analysis. We will also cover the role of the correlation matrix and a general model of the correlation matrix of individual variables.

The paper will discuss methods for extracting factors, including principal component analysis (PCA). It will then cover criteria for determining the number of factors to extract, such as comprehensibility, the Kaiser criterion, the variance-explained criterion, Cattell’s scree plot and Horn’s parallel analysis (PA). The meaning and interpretation of communalities and eigenvalues will be discussed, as will factor loadings and rotation methods such as varimax.

Finally, the paper will cover the meaning and interpretation of factor scores and their use in subsequent analyses. The R software will be used throughout the paper to provide reproducible examples and code for conducting factor analysis. By the end of this tutorial paper, readers will have a better understanding of the fundamentals of factor analysis and how to apply it in their research.

Module I: Factor Analysis in Research

Meaning of Factor Analysis

Factor analysis is a statistical method that is widely used in research to identify the underlying factors that explain the variations in a set of observed variables. The method is particularly useful in fields such as psychology, sociology, marketing, and education, where researchers often deal with complex datasets that contain many variables. The basic idea behind factor analysis is to identify the common factors that underlie a set of observed variables. By identifying these factors, researchers can reduce the number of variables they need to analyze, simplify the data, and gain insights into the underlying structure of the data.
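This idea can be stated compactly as the common factor model. The notation below is standard notation assumed for illustration; it is not taken from this primer. It describes p standardized observed variables x, k < p common factors f and unique parts ε:

$$
\mathbf{x} = \boldsymbol{\Lambda}\mathbf{f} + \boldsymbol{\varepsilon},
\qquad
\boldsymbol{\Sigma} = \operatorname{Cov}(\mathbf{x}) = \boldsymbol{\Lambda}\boldsymbol{\Lambda}^{\top} + \boldsymbol{\Psi}
$$

$$
h_i^2 = \sum_{j=1}^{k} \lambda_{ij}^2,
\qquad
\psi_i = 1 - h_i^2
$$

In this model:

- Λ is the p × k matrix of factor loadings λ_ij.
- The factors are uncorrelated, have unit variance and are independent of ε.
- Ψ is the diagonal matrix of unique variances ψ_i.
- The communality h_i² is the share of variable i's variance explained by the common factors, and ψ_i is the remainder.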

Factor analysis can be used in two main ways: exploratory and confirmatory.

Exploratory factor analysis is used when the researcher does not have a priori knowledge of the underlying factors and wants to identify them from the data.

Confirmatory factor analysis, on the other hand, is used when the researcher has a specific hypothesis about the underlying factors and wants to test this hypothesis using the data.

Factor analysis has several advantages over other statistical methods. It can help researchers identify the most important variables in a dataset, reduce the number of variables they need to analyze, and provide insights into the relationships between variables. However, it also has some limitations and assumptions that must be taken into account when applying the method.

In this primer, we will provide an overview of factor analysis, its applications in research, and the steps involved in performing factor analysis. We will also discuss the assumptions and limitations of the method, as well as methods for interpreting and visualizing the results. Finally, we will provide several examples of factor analysis in different fields of research, illustrating how the method can be used to extract meaningful information from complex datasets.

Assumptions of Factor Analysis

Factor analysis is a statistical technique that is used to identify the underlying factors that explain the correlations between a set of observed variables. In order to obtain valid results from factor analysis, certain assumptions must be met. Here are some of the key assumptions of factor analysis: