
Data Analysis in Research: Types & Methods


Definition of data analysis in research: According to LeCompte and Schensul, research data analysis is a process researchers use to reduce data to a story and interpret it to derive insights. The data analysis process helps reduce a large chunk of data into smaller fragments that make sense.

Three essential things happen during the data analysis process. The first is data organization. The second is data reduction through summarization and categorization, which helps find patterns and themes in the data for easy identification and linking. The third and last is data analysis itself, which researchers carry out in both top-down and bottom-up fashion.


On the other hand, Marshall and Rossman describe data analysis as a messy, ambiguous, and time-consuming but creative and fascinating process through which a mass of collected data is brought to order, structure and meaning.

In other words, data analysis and interpretation is a process that applies deductive and inductive logic to research data.

Researchers rely heavily on data because they have a story to tell or research problems to solve. Research starts with a question, and data provides the answer to that question. But what if there is no question to ask? It is still possible to explore data without a specific problem in mind; this is called ‘data mining’, and it often reveals interesting patterns worth exploring.

Regardless of the type of data researchers explore, their mission and their audience’s vision guide them toward the patterns that shape the story they want to tell. One of the most important things expected of researchers while analyzing data is to stay open and unbiased toward unexpected patterns, expressions, and results. Sometimes data analysis tells unforeseen yet exciting stories that were not expected at the outset. Therefore, rely on the data you have at hand and enjoy the journey of exploratory research.


Every kind of data describes things once a specific value is assigned to it. To make these values useful for analysis, you need to organize, process, and present them in a given context. Data can take different forms; here are the primary data types.

- Qualitative data: When the data consists of words and descriptions, we call it qualitative data. Although you can observe this data, it is subjective and harder to analyze, especially for comparison. Example: anything describing taste, experience, texture, or an opinion is qualitative data. This type of data is usually collected through focus groups, personal qualitative interviews, qualitative observation, or open-ended questions in surveys.

- Quantitative data: Any data expressed in numbers or numerical figures is called quantitative data. This type of data can be distinguished into categories, grouped, measured, calculated, or ranked. Example: questions about age, rank, cost, length, weight, scores, and so on all produce this type of data. You can present such data in graphs or charts, or apply statistical analysis methods to it. Outcomes Measurement Systems (OMS) questionnaires in surveys are a significant source of numeric data.

- Categorical data: This is data presented in groups. However, an item included in categorical data cannot belong to more than one group. Example: a person describing their lifestyle, marital status, smoking habit, or drinking habit in a survey provides categorical data. A chi-square test is a standard method used to analyze this data.
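A chi-square test of independence compares the counts observed in a contingency table with the counts you would expect if the two categorical variables were unrelated. The minimal sketch below computes the statistic by hand for a hypothetical smoking-habit-by-marital-status table; the counts are invented, and the p-value shortcut used here is only valid for a 2x2 table (one degree of freedom). For general tables, a library routine such as SciPy’s `scipy.stats.chi2_contingency` is the usual choice; note that it applies Yates’ continuity correction to 2x2 tables by default, so its statistic will differ from this uncorrected version.

```python
import math

# Hypothetical counts (illustrative only): rows = smoker / non-smoker,
# columns = married / single.
observed = [
    [20, 30],
    [30, 20],
]

def chi_square_independence(table):
    """Pearson chi-square statistic and degrees of freedom for a contingency table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, obs in enumerate(row):
            # Expected count if the two variables were independent.
            expected = row_totals[i] * col_totals[j] / grand_total
            stat += (obs - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return stat, dof

stat, dof = chi_square_independence(observed)
# Only for 1 degree of freedom: the upper-tail p-value equals erfc(sqrt(stat / 2)).
p_value = math.erfc(math.sqrt(stat / 2)) if dof == 1 else None
print(f"chi2 = {stat:.2f}, df = {dof}, p = {p_value}")
```

A small p-value (conventionally below 0.05) suggests the two categorical variables are not independent in the population the sample represents.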


Data analysis in qualitative research

Data analysis in qualitative research works a little differently from the analysis of numerical data, because qualitative data is made up of words, descriptions, images, objects, and sometimes symbols. Getting insight from such complex information is a complicated process, so qualitative analysis is typically used for exploratory research.

Although there are several ways to find patterns in textual information, word-based methods are the most relied-upon and widely used techniques. Notably, the data analysis process in qualitative research is largely manual: researchers usually read the available data and look for repeated or commonly used words.

For example, while studying data collected from African countries to understand the most pressing issues people face, researchers might find “food” and “hunger” are the most commonly used words and will highlight them for further analysis.
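A word-frequency count is the simplest form of this word-based approach. The sketch below assumes a handful of hypothetical open-ended responses and a small, illustrative stop-word list (`STOP_WORDS`); real projects typically use a fuller stop-word list and may also group word variants (for example, “hungry” and “hunger”).

```python
import re
from collections import Counter

# Hypothetical open-ended survey responses (illustrative data only).
responses = [
    "Food prices keep rising and hunger is common in our village.",
    "Hunger affects children the most; food aid rarely arrives.",
    "Clean water and food are the biggest concerns.",
]

# A small, illustrative stop-word list; real projects use a fuller list.
STOP_WORDS = {"and", "is", "in", "our", "the", "are", "most", "keep", "rarely"}

def word_frequencies(texts, stop_words=STOP_WORDS):
    """Count how often each non-stop-word appears across all texts."""
    counts = Counter()
    for text in texts:
        words = re.findall(r"[a-z']+", text.lower())
        counts.update(w for w in words if w not in stop_words)
    return counts

top = word_frequencies(responses).most_common(2)
print(top)
```

The most frequent words are then candidates for closer reading, not conclusions in themselves.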


The keyword context is another widely used word-based technique. In this method, the researcher tries to understand the concept by analyzing the context in which the participants use a particular keyword.

For example, researchers studying the concept of ‘diabetes’ among respondents might analyze when and how each respondent used or referred to the word ‘diabetes.’
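Keyword-in-context (KWIC) listings are one common way to support this kind of analysis: every occurrence of the keyword is shown with a few surrounding words so the researcher can read how it is used. The sketch below is illustrative only; the responses are hypothetical and the default `window` of three words is an arbitrary assumption.

```python
import re

def keyword_in_context(texts, keyword, window=3):
    """Return (left context, keyword, right context) for each keyword occurrence."""
    hits = []
    for text in texts:
        words = re.findall(r"[A-Za-z']+", text)
        for i, word in enumerate(words):
            if word.lower() == keyword.lower():
                left = " ".join(words[max(0, i - window):i])
                right = " ".join(words[i + 1:i + 1 + window])
                hits.append((left, word, right))
    return hits

# Hypothetical respondent statements.
responses = [
    "My father has diabetes so we changed our diet at home.",
    "I only think about diabetes when I visit the clinic.",
]
for left, kw, right in keyword_in_context(responses, "diabetes"):
    print(f"{left} [{kw}] {right}")
```

Reading the listed contexts side by side makes it easier to see whether respondents talk about the condition as a family experience, a medical visit, or something else.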

The scrutiny-based technique is another highly recommended text analysis method for identifying patterns in qualitative data. Compare and contrast is the most widely used method under this technique; it shows how specific texts are similar to or different from each other.

For example, to find out the “importance of a resident doctor in a company,” the collected data is divided into people who think it is necessary to hire a resident doctor and those who think it is unnecessary. Compare and contrast works well for analyzing polls that use single-answer question types.
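One way to support a compare-and-contrast reading in code is to split responses by their single-choice answer and then look at which words the groups share and which are distinctive to each. This is only a rough aid to the manual comparison described above; the poll data and the helper `vocabulary_by_group` are hypothetical.

```python
import re
from collections import defaultdict

# Hypothetical single-answer poll with an open-ended follow-up (illustrative data).
poll = [
    ("necessary", "Staff health emergencies need quick care"),
    ("necessary", "Quick care reduces sick days"),
    ("unnecessary", "Nearby clinics already provide care"),
    ("unnecessary", "Cost is too high for a small team"),
]

def vocabulary_by_group(rows):
    """Collect the set of words used by respondents in each answer group."""
    groups = defaultdict(set)
    for answer, reason in rows:
        groups[answer].update(re.findall(r"[a-z]+", reason.lower()))
    return groups

groups = vocabulary_by_group(poll)
shared = groups["necessary"] & groups["unnecessary"]            # points of similarity
only_necessary = groups["necessary"] - groups["unnecessary"]    # distinctive to "necessary"
only_unnecessary = groups["unnecessary"] - groups["necessary"]  # distinctive to "unnecessary"
print("shared:", sorted(shared))
print("only 'necessary':", sorted(only_necessary))
print("only 'unnecessary':", sorted(only_unnecessary))
```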

Metaphors can be used to reduce the data pile and find patterns in it so that it becomes easier to connect data with theory.

Variable Partitioning is another technique used to split variables so that researchers can find more coherent descriptions and explanations from the enormous data.


There are several techniques for analyzing data in qualitative research, but here are some commonly used methods:

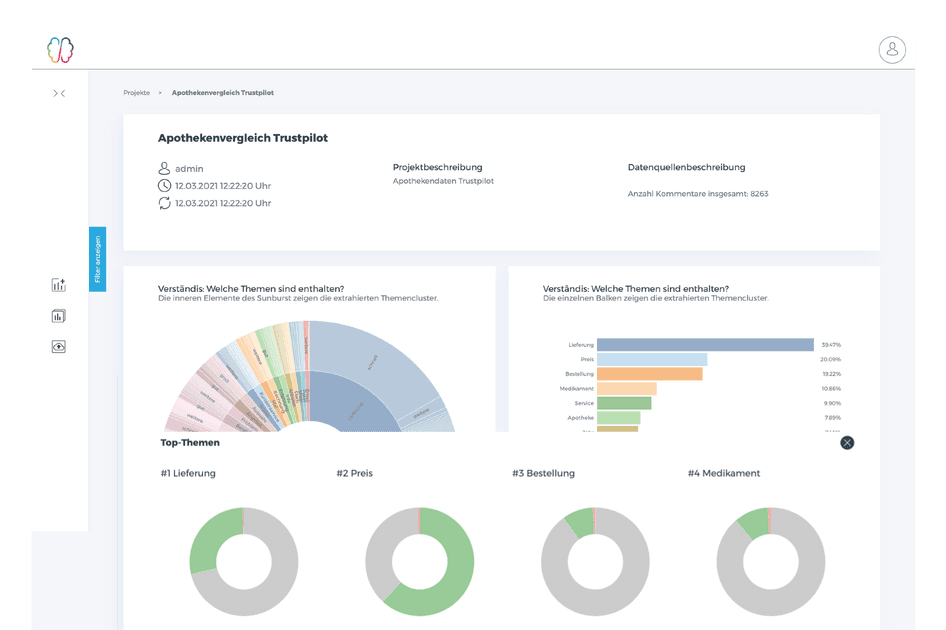

- Content Analysis: This is a widely accepted and frequently employed technique for data analysis in research methodology. It can be used to analyze documented information from text, images, and sometimes physical items. The research questions determine when and where to use this method.

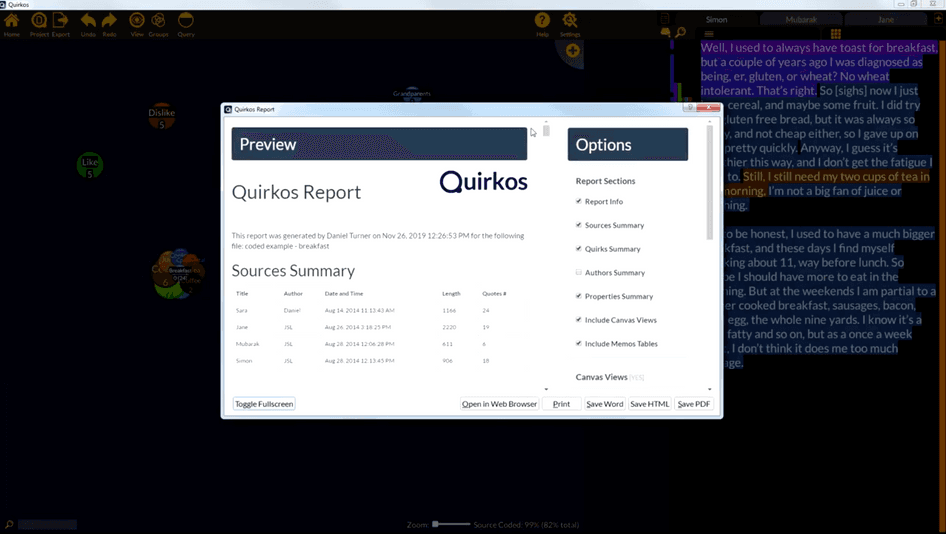

- Narrative Analysis: This method is used to analyze content gathered from various sources such as personal interviews, field observation, and surveys. Most of the time, the stories or opinions people share are examined to find answers to the research questions.

- Discourse Analysis: Like narrative analysis, discourse analysis is used to analyze interactions with people. However, this method also considers the social context in which the communication between researcher and respondent takes place. In addition, discourse analysis takes the respondent’s lifestyle and day-to-day environment into account when deriving any conclusion.

- Grounded Theory: When you want to explain why a particular phenomenon happened, grounded theory is a strong choice for analyzing qualitative data. It is applied to data about a host of similar cases occurring in different settings. When using this method, researchers may alter explanations or produce new ones until they arrive at a conclusion.
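Content analysis often starts with a coding frame: a set of categories, each defined by indicator words, that is applied consistently to every document. The sketch below shows a simple dictionary-based version; the `CODING_FRAME` categories, indicator words, and documents are all hypothetical, and in practice coding frames are usually refined by hand and checked for agreement between coders.

```python
import re
from collections import Counter

# Hypothetical coding frame: each category is defined by indicator words.
CODING_FRAME = {
    "cost": {"price", "expensive", "cost", "cheap"},
    "service": {"staff", "friendly", "rude", "helpful"},
    "quality": {"broken", "durable", "quality"},
}

def code_documents(documents, frame=CODING_FRAME):
    """Count how many documents mention each category at least once."""
    category_counts = Counter()
    for doc in documents:
        words = set(re.findall(r"[a-z]+", doc.lower()))
        for category, indicators in frame.items():
            if words & indicators:  # any indicator word present
                category_counts[category] += 1
    return category_counts

# Hypothetical customer comments.
docs = [
    "The staff were friendly but the price was high.",
    "Too expensive for what you get.",
    "Helpful staff, durable product.",
]
print(code_documents(docs))
```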


Data analysis in quantitative research

The first stage in quantitative data analysis is to prepare the data so that raw data can be converted into something meaningful. Data preparation consists of the phases below.

Phase I: Data Validation

Data validation checks whether the collected data sample meets the pre-set standards or whether it is biased. It is divided into four stages:

- Fraud: To ensure an actual human being records each response to the survey or the questionnaire

- Screening: To make sure each participant or respondent is selected or chosen in compliance with the research criteria

- Procedure: To ensure ethical standards were maintained while collecting the data sample

- Completeness: To ensure that the respondent has answered all the questions in an online survey or, for interviews, that the interviewer asked all the questions in the questionnaire.
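Some of these validation stages can be automated. The sketch below checks completeness and screening for hypothetical survey records; the field names in `REQUIRED_FIELDS` and the minimum-age criterion are assumptions chosen for illustration. Fraud and procedure checks usually depend on platform metadata and documentation, so they are not covered here.

```python
# Hypothetical survey records; field names and criteria are illustrative only.
REQUIRED_FIELDS = ("respondent_id", "age", "q1", "q2")

def validate(record, min_age=18):
    """Return a list of validation problems for one survey record."""
    problems = []
    # Completeness: every required question must have an answer.
    missing = [f for f in REQUIRED_FIELDS if record.get(f) in (None, "")]
    if missing:
        problems.append(f"incomplete: {', '.join(missing)}")
    # Screening: the respondent must meet the (assumed) research criteria.
    age = record.get("age")
    if isinstance(age, (int, float)) and age < min_age:
        problems.append("screening: under minimum age")
    return problems

records = [
    {"respondent_id": 1, "age": 34, "q1": "Yes", "q2": 4},
    {"respondent_id": 2, "age": 16, "q1": "No", "q2": 2},
    {"respondent_id": 3, "age": 41, "q1": "", "q2": 5},
]
for r in records:
    print(r["respondent_id"], validate(r) or "ok")
```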

Phase II: Data Editing

More often than not, a large research data sample contains errors. Respondents sometimes fill in fields incorrectly or skip them accidentally. Data editing is the process of confirming that the data is free of such errors. Researchers need to conduct the necessary checks, including outlier checks, to edit the raw data and make it ready for analysis.
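As one example of an outlier check, the sketch below flags values outside Tukey’s fences (1.5 times the interquartile range beyond the first and third quartiles). This common rule of thumb is swapped in for illustration and is not the only option; the age values are hypothetical, and flagged values should be reviewed rather than deleted automatically.

```python
import statistics

def iqr_outliers(values, k=1.5):
    """Return values lying more than k * IQR below Q1 or above Q3."""
    q1, _, q3 = statistics.quantiles(values, n=4)  # default "exclusive" method
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]

# Hypothetical reported ages; 250 is most likely a data-entry error.
ages = [23, 25, 27, 29, 31, 33, 35, 250]
print(iqr_outliers(ages))
```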

Phase III: Data Coding

Of the three phases, this is the most critical one. It involves grouping survey responses and assigning values to them. For example, if a survey is completed with a sample size of 1,000, the researcher might create age brackets to group respondents by age. It is easier to analyze small data buckets than to deal with one massive data pile.
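Coding ages into brackets can be sketched with Python’s `bisect` module. The bracket boundaries and labels below are hypothetical, and the function assumes respondents have already been screened to be 18 or older (any younger age would fall into the first bracket).

```python
from bisect import bisect_right
from collections import Counter

# Hypothetical brackets: EDGES holds the lower bound of every bracket after the first.
EDGES = [25, 35, 45, 55]
LABELS = ["18-24", "25-34", "35-44", "45-54", "55+"]

def age_bracket(age):
    """Map an age (assumed >= 18) to its bracket label."""
    return LABELS[bisect_right(EDGES, age)]

# Hypothetical respondent ages.
ages = [19, 24, 25, 34, 35, 52, 60, 71]
bracket_counts = Counter(age_bracket(a) for a in ages)
print(bracket_counts)
```

Using `bisect_right` means a boundary age such as 25 lands in the bracket that starts at 25.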


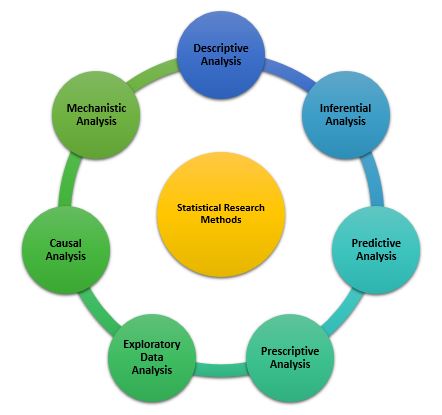

After the data is prepared, researchers can use different research and data analysis methods to derive meaningful insights. Statistical analysis is the most favored approach for numerical data. In statistical analysis, distinguishing between categorical data and numerical data is essential, as categorical data involves distinct categories or labels, while numerical data consists of measurable quantities. Statistical methods are classified into two groups: ‘descriptive statistics’, used to describe data, and ‘inferential statistics’, which help compare data and draw conclusions beyond the sample.

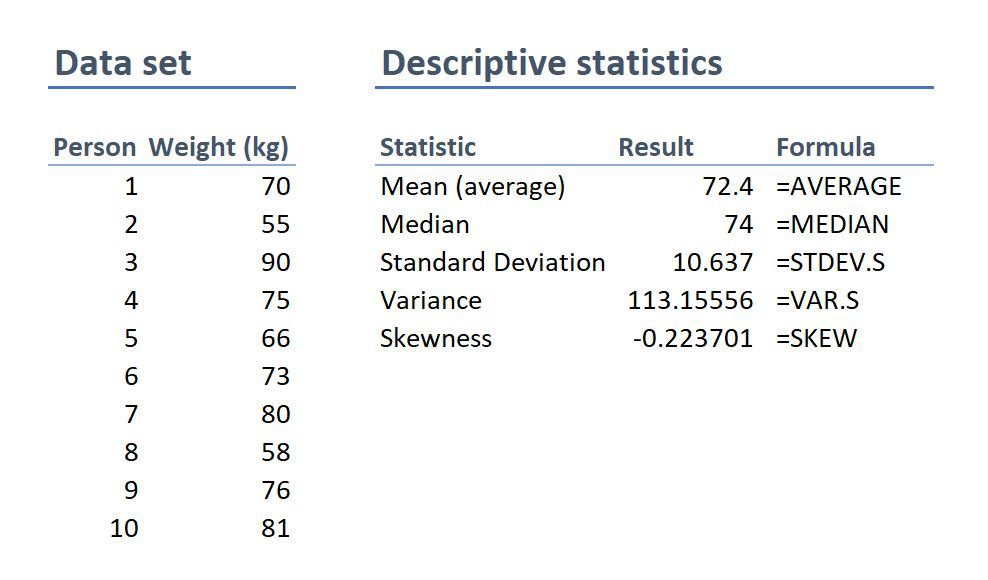

Descriptive statistics

This method is used to describe the basic features of various types of data in research. It presents the data in a meaningful way so that patterns in the data start to make sense. However, descriptive analysis does not draw conclusions beyond the data being described; any conclusions still rest on the hypotheses researchers have formulated so far. Here are the major types of descriptive analysis methods.

Measures of Frequency

- Count, Percent, Frequency

- It is used to denote how often a particular event occurs.

- Researchers use it when they want to showcase how often a response is given.

Measures of Central Tendency

- Mean, Median, Mode

- These measures describe where the center of a distribution lies.

- Researchers use them when they want to show the most common or the average response.

Measures of Dispersion or Variation

- Range, Variance, Standard deviation

- Range is the difference between the highest and lowest points.

- Variance and standard deviation are based on the differences between each observed score and the mean.

- It is used to identify the spread of scores by stating intervals.

- Researchers use these measures to show how spread out the data is and how far that spread affects how well the mean represents the data.

Measures of Position

- Percentile ranks, Quartile ranks

- It relies on standardized scores, helping researchers identify the relationship between different scores.

- It is often used when researchers want to compare scores with the average count.
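The four groups of descriptive measures above can all be computed with Python’s standard `statistics` module. The sketch below uses hypothetical 1-10 survey scores; `percentile_rank` uses one common definition (percent of scores strictly below a value), and other definitions exist. `statistics.variance` and `statistics.stdev` compute sample (not population) measures.

```python
import statistics
from collections import Counter

# Hypothetical survey scores on a 1-10 scale (illustrative only).
scores = [7, 8, 8, 6, 9, 8, 5, 7, 10, 6]

# Measures of frequency: count and percent for each score.
counts = Counter(scores)
percents = {score: 100 * n / len(scores) for score, n in counts.items()}

# Measures of central tendency.
mean = statistics.mean(scores)
median = statistics.median(scores)
mode = statistics.mode(scores)

# Measures of dispersion (sample variance and standard deviation).
value_range = max(scores) - min(scores)
variance = statistics.variance(scores)
std_dev = statistics.stdev(scores)

# Measures of position: quartile cut points, a percentile rank, and a z-score.
q1, q2, q3 = statistics.quantiles(scores, n=4)  # default "exclusive" method

def percentile_rank(data, value):
    """Percent of observations strictly below `value` (one common definition)."""
    return 100 * sum(x < value for x in data) / len(data)

def z_score(value, data):
    """Standardized score: distance from the mean in standard deviations."""
    return (value - statistics.mean(data)) / statistics.stdev(data)

print(f"mean={mean}, median={median}, mode={mode}, range={value_range}")
print(f"variance={variance:.2f}, sd={std_dev:.2f}, quartiles={q1}, {q2}, {q3}")
print(f"percentile rank of 8: {percentile_rank(scores, 8)}%, z of 10: {z_score(10, scores):.2f}")
```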

In quantitative research, descriptive analysis often gives absolute numbers, but it is never sufficient on its own to demonstrate the rationale behind those numbers. It is therefore necessary to choose the analysis method that best suits your survey questionnaire and the story you want to tell. For example, the mean is the best way to show students’ average scores in schools. Rely on descriptive statistics when you intend to keep the research or outcome limited to the provided sample without generalizing it. For example, when you want to compare the average voting in two different cities, descriptive statistics are enough.

Descriptive analysis is also called a ‘univariate analysis’ since it is commonly used to analyze a single variable.

Inferential statistics

Inferential statistics are used to make predictions about a larger population based on the analysis of a sample collected from that population. For example, you can ask roughly 100 audience members at a movie theater whether they like the movie they are watching. Researchers then use inferential statistics on that sample to reason that about 80-90% of all moviegoers like the movie.
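Estimating the share of all moviegoers who like the movie from the sample is a confidence-interval problem. The sketch below uses the simple normal-approximation (Wald) interval for a proportion and assumes, hypothetically, that 85 of 100 sampled moviegoers said yes and that the sample is random. For small samples or proportions near 0 or 1, other intervals (such as the Wilson interval) behave better.

```python
import math
from statistics import NormalDist

def proportion_confidence_interval(successes, n, confidence=0.95):
    """Normal-approximation (Wald) confidence interval for a population proportion."""
    p_hat = successes / n
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    margin = z * math.sqrt(p_hat * (1 - p_hat) / n)
    return p_hat - margin, p_hat + margin

# Hypothetical survey result: 85 of 100 moviegoers say they like the movie.
low, high = proportion_confidence_interval(85, 100)
print(f"Estimated share who like the movie: {low:.1%} to {high:.1%}")
```

With these invented inputs, the 95% interval runs from roughly 78% to 92%.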

Here are two significant areas of inferential statistics.

- Estimating parameters: This uses statistics from the sample research data to say something about a population parameter.

- Hypothesis test: This uses sampled research data to answer the research questions. For example, researchers might want to know whether a newly launched lipstick shade is well received, or whether multivitamin capsules help children perform better at games.
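For the multivitamin example, one simple hypothesis test is a two-proportion z-test comparing the share of children who improved in each group. The counts below are hypothetical, and the test assumes independent random samples large enough for the normal approximation; a different outcome measure (for example, raw game scores) would call for a different test, such as a t-test.

```python
import math
from statistics import NormalDist

def two_proportion_z_test(success_a, n_a, success_b, n_b):
    """Two-sided z-test for a difference between two independent proportions."""
    p_a, p_b = success_a / n_a, success_b / n_b
    # Pooled proportion under the null hypothesis of no difference.
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

# Hypothetical: 60 of 100 children taking multivitamins improved their game scores,
# versus 45 of 100 children in a control group.
z, p = two_proportion_z_test(60, 100, 45, 100)
print(f"z = {z:.2f}, p = {p:.3f}")
```

With these invented counts, z is about 2.12 and the two-sided p-value is about 0.034, so the difference would be significant at the 5% level.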

These are more sophisticated analysis methods used to show the relationship between different variables instead of describing a single variable. They are often used when researchers want something beyond absolute numbers to understand how variables relate.

Here are some of the commonly used methods for data analysis in research.

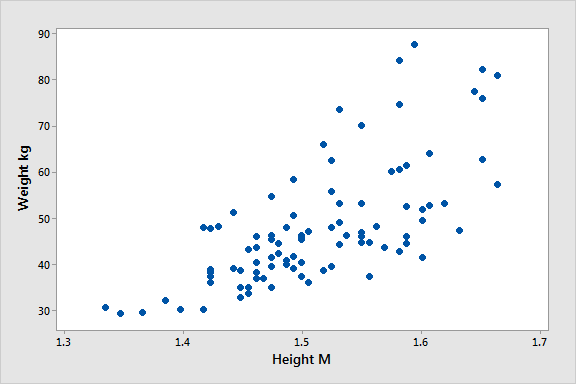

- Correlation: When researchers are not conducting experimental or quasi-experimental research but want to understand the relationship between two or more variables, they opt for correlational research methods.

- Cross-tabulation: Also called contingency tables, cross-tabulation is used to analyze the relationship between multiple variables. Suppose the data has age and gender categories presented in rows and columns. A two-dimensional cross-tabulation then shows the number of males and females in each age category.

- Regression analysis: To understand the strength of the relationship between variables, researchers often turn to regression analysis, a widely used method that is also a type of predictive analysis. In this method, there is one essential factor called the dependent variable and one or more independent variables. You try to find out the impact of the independent variables on the dependent variable. The values of both the independent and dependent variables are assumed to have been measured in an error-free, random manner.

- Frequency tables: This procedure summarizes how often each value or category of a variable occurs, often alongside percentages, making it easy to see the most and least common responses.

- Analysis of variance: This statistical procedure tests the degree to which two or more groups vary or differ in an experiment. A considerable degree of variation between groups, relative to the variation within them, indicates that the findings are statistically significant. The method is commonly abbreviated as ANOVA.

- Researchers must have the necessary skills to analyze and manipulate the data, and they should be trained to demonstrate a high standard of research practice. Ideally, researchers should understand the rationale for selecting one statistical method over another well enough to obtain better data insights.

- Research and data analytics projects usually differ by scientific discipline; therefore, getting statistical advice at the beginning of the analysis helps in designing the survey questionnaire, selecting data collection methods, and choosing samples.


- The primary aim of research data analysis is to derive insights that are unbiased. Any mistake or bias in collecting data, selecting an analysis method, or choosing an audience sample is likely to lead to a biased inference.

- No amount of sophistication in research data analysis can rectify poorly defined objective outcome measurements. Whether the design is at fault or the intentions are unclear, a lack of clarity can mislead readers, so avoid it.

- The motive behind data analysis in research is to present accurate and reliable data. As far as possible, avoid statistical errors, and find ways to deal with everyday challenges such as outliers, missing data, data alteration, data mining, and developing graphical representations.

The sheer amount of data generated daily is staggering, especially now that data analysis has taken center stage. In 2018 alone, the total data supply amounted to 2.8 trillion gigabytes. Clearly, enterprises that want to survive in a hypercompetitive world must be able to analyze complex research data, derive actionable insights, and adapt to new market needs.


QuestionPro is an online survey platform that empowers organizations in data analysis and research and provides them a medium to collect data by creating appealing surveys.


The 11 Best Data Analytics Tools for Data Analysts in 2024

As the field of data analytics evolves, the range of available data analysis tools grows with it. If you’re considering a career in the field, you’ll want to know: Which data analysis tools do I need to learn?

In this post, we’ll highlight some of the key data analytics tools you need to know and why. From open-source tools to commercial software, you’ll get a quick overview of each, including its applications, pros, and cons. Better still, several of the tools on this list are AI data analytics tools, so you’ll be at the forefront of the field as 2024 comes around.

We’ll start our list with the must-haves, then we’ll move onto some of the more popular tools and platforms used by organizations large and small. Whether you’re preparing for an interview, or are deciding which tool to learn next, by the end of this post you’ll have an idea how to progress.

If you’re only starting out, then CareerFoundry’s free data analytics short course will help you take your first steps.

Here are the data analysis tools we’ll cover:

- Microsoft Excel

- Python

- R

- Jupyter Notebook

- Apache Spark

- Google Cloud AutoML

- SAS

- Microsoft Power BI

- Tableau

- KNIME

- Streamlit

How to choose a data analysis tool

Data analysis tools FAQ

So, let’s get into the list then!

1. Microsoft Excel

Excel at a glance:

- Type of tool: Spreadsheet software.

- Availability : Commercial.

- Mostly used for: Data wrangling and reporting.

- Pros: Widely-used, with lots of useful functions and plug-ins.

- Cons: Cost, calculation errors, poor at handling big data.

Excel: the world’s best-known spreadsheet software. What’s more, it features calculations and graphing functions that are ideal for data analysis.

Whatever your specialism, and no matter what other software you might need, Excel is a staple in the field. Its invaluable built-in features include pivot tables (for sorting or totaling data) and form creation tools.

It also has a variety of other functions that streamline data manipulation. For instance, the CONCATENATE function allows you to combine text, numbers, and dates into a single cell. SUMIF lets you create value totals based on variable criteria, and Excel’s search function makes it easy to isolate specific data.

It has limitations, though. For instance, it runs very slowly with big datasets and tends to approximate large numbers, leading to inaccuracies. Nevertheless, it’s an important and powerful data analysis tool, and with many plug-ins available, you can work around many of Excel’s shortcomings. Get started with these ten Excel formulas that all data analysts should know.

2. Python

Python at a glance:

- Type of tool: Programming language.

- Availability: Open-source, with thousands of free libraries.

- Used for: Everything from data scraping to analysis and reporting.

- Pros: Easy to learn, highly versatile, widely-used.

- Cons: Memory intensive—doesn’t execute as fast as some other languages.

A programming language with a wide range of uses, Python is a must-have for any data analyst. Unlike more complex languages, it focuses on readability, and its general popularity in the tech field means many programmers are already familiar with it.

Python is also extremely versatile; it has a huge range of resource libraries suited to a variety of different data analytics tasks. For example, the NumPy and pandas libraries are great for streamlining highly computational tasks, as well as supporting general data manipulation.

Libraries like Beautiful Soup and Scrapy are used to scrape data from the web, while Matplotlib is excellent for data visualization and reporting. Python’s main drawback is its speed: it is memory intensive and slower than many languages. In general, though, if you’re building software from scratch, Python’s benefits far outweigh its drawbacks. You can learn more about Python in our full guide.

3. R

R at a glance:

- Availability: Open-source.

- Mostly used for: Statistical analysis and data mining.

- Pros: Platform independent, highly compatible, lots of packages.

- Cons: Slower, less secure, and more complex to learn than Python.

R, like Python, is a popular open-source programming language. It is commonly used to create statistical/data analysis software.

R’s syntax is more complex than Python’s, and the learning curve is steeper. However, it was built specifically to deal with heavy statistical computing tasks and is very popular for data visualization. A bit like Python, R also has a network of freely available code, called CRAN (the Comprehensive R Archive Network), which offers 10,000+ packages.

It integrates well with other languages and systems (including big data software) and can call on code from languages like C, C++, and FORTRAN. On the downside, it has poor memory management, and while there is a good community of users to call on for help, R has no dedicated support team. But there is an excellent R-specific integrated development environment (IDE) called RStudio , which is always a bonus!

4. Jupyter Notebook

Jupyter Notebook at a glance:

- Type of tool: Interactive authoring software.

- Mostly used for: Sharing code, creating tutorials, presenting work.

- Pros: Great for showcasing, language-independent.

- Cons: Not self-contained, nor great for collaboration.

Jupyter Notebook is an open-source web application that allows you to create interactive documents. These combine live code, equations, visualizations, and narrative text.

Imagine something a bit like a Microsoft Word document, only far more interactive, and designed specifically for data analytics! As a data analytics tool, it’s great for showcasing work: Jupyter Notebook runs in the browser and supports over 40 languages, including Python and R. It also integrates with big data analysis tools, like Apache Spark (see below), and offers various outputs from HTML to images, videos, and more.

But as with every tool, it has its limitations. Jupyter Notebook documents have poor version control, and tracking changes is not intuitive. This means it’s not the best place for development and analytics work (you should use a dedicated IDE for these) and it isn’t well suited to collaboration.

Since it isn’t self-contained, this also means you have to provide any extra assets (e.g. libraries or runtime systems) to anybody you’re sharing the document with. But for presentation and tutorial purposes, it remains an invaluable data science and data analytics tool.

5. Apache Spark

Apache Spark at a glance:

- Type of tool: Data processing framework

- Availability: Open-source

- Mostly used for: Big data processing, machine learning

- Pros: Fast, dynamic, easy to use

- Cons: No file management system, rigid user interface

Apache Spark is a software framework that allows data analysts and data scientists to quickly process vast data sets. First developed in 2012 and designed to analyze unstructured big data, Spark distributes computationally heavy analytics tasks across many computers.

While other similar frameworks exist (for example, Apache Hadoop), Spark is exceptionally fast. By processing data in memory (RAM) rather than reading from and writing to disk between steps, it can run up to around 100x faster than Hadoop MapReduce for some workloads. That’s why it’s often used for the development of data-heavy machine learning models.

It even has a library of machine learning algorithms, MLlib, including classification, regression, and clustering algorithms, to name a few. On the downside, consuming so much memory means Spark is computationally expensive. It also lacks a file management system, so it usually needs integration with other software, such as Hadoop.

6. Google Cloud AutoML

Google Cloud AutoML at a glance:

- Type of tool: Machine learning platform

- Availability: Cloud-based, commercial

- Mostly used for: Automating machine learning tasks

- Pros: Allows analysts with limited coding experience to build and deploy ML models, skipping lots of steps

- Cons: Can be pricey for large-scale projects, lacks some flexibility

A serious proposition for data analysts and scientists in 2024 is Google Cloud’s AutoML tool. With the hype around generative AI in 2023 set to roll over into the next year, tools like AutoML put the capability to create machine learning models into your own hands.

Google Cloud AutoML contains a suite of tools across categories from structured data to language translation, image and video classification. As more and more organizations adopt machine learning, there will be a growing demand for data analysts who can use AutoML tools to automate their work easily.

7. SAS

SAS at a glance:

- Type of tool: Statistical software suite

- Availability: Commercial

- Mostly used for: Business intelligence, multivariate, and predictive analysis

- Pros: Easily accessible, business-focused, good user support

- Cons: High cost, poor graphical representation

SAS (which stands for Statistical Analysis System) is a popular commercial suite of business intelligence and data analysis tools. It was developed by the SAS Institute in the 1960s and has evolved ever since. Its main use today is for profiling customers, reporting, data mining, and predictive modeling. Created for an enterprise market, the software is generally more robust, versatile, and easier for large organizations to use. This is because they tend to have varying levels of in-house programming expertise.

But as a commercial product, SAS comes with a hefty price tag. Nevertheless, with cost come benefits: it regularly has new modules added, based on customer demand. Although there are fewer of these modules than there are, say, Python libraries, they are highly focused. For instance, it offers modules for specific uses such as anti-money laundering and analytics for the Internet of Things.

8. Microsoft Power BI

Power BI at a glance:

- Type of tool: Business analytics suite.

- Availability: Commercial software (with a free version available).

- Mostly used for: Everything from data visualization to predictive analytics.

- Pros: Great data connectivity, regular updates, good visualizations.

- Cons: Clunky user interface, rigid formulas, data limits (in the free version).

At less than a decade old, Power BI is a relative newcomer to the market of data analytics tools. It began life as an Excel plug-in but was redeveloped in the early 2010s as a standalone suite of business data analysis tools. Power BI allows users to create interactive visual reports and dashboards , with a minimal learning curve. Its main selling point is its great data connectivity—it operates seamlessly with Excel (as you’d expect, being a Microsoft product) but also text files, SQL server, and cloud sources, like Google and Facebook analytics.

It also offers strong data visualization but has room for improvement in other areas. For example, it has quite a bulky user interface, rigid formulas, and the proprietary language (Data Analysis Expressions, or ‘DAX’) is not that user-friendly. It does offer several subscriptions though, including a free one. This is great if you want to get to grips with the tool, although the free version does have drawbacks, the main limitation being the low data limit (around 2GB).

9. Tableau

Tableau at a glance:

- Type of tool: Data visualization tool.

- Availability: Commercial.

- Mostly used for: Creating data dashboards and worksheets.

- Pros: Great visualizations, speed, interactivity, mobile support.

- Cons: Poor version control, no data pre-processing.

If you’re looking to create interactive visualizations and dashboards without extensive coding expertise, Tableau is one of the best commercial data analysis tools available. The suite handles large amounts of data better than many other BI tools, and it is very simple to use. It has a visual drag and drop interface (another definite advantage over many other data analysis tools). However, because it has no scripting layer, there’s a limit to what Tableau can do. For instance, it’s not great for pre-processing data or building more complex calculations.

While it does contain functions for manipulating data, these aren’t great. As a rule, you’ll need to carry out scripting functions using Python or R before importing your data into Tableau. But its visualization is pretty top-notch, making it very popular despite its drawbacks. Furthermore, it’s mobile-ready. As a data analyst, mobility might not be your priority, but it’s nice to have if you want to dabble on the move! You can learn more about Tableau in this post.

KNIME at a glance:

- Type of tool: Data integration platform.

- Mostly used for: Data mining and machine learning.

- Pros: Open-source platform that is great for visually-driven programming.

- Cons: Lacks scalability, and technical expertise is needed for some functions.

Next up is KNIME (Konstanz Information Miner), an open-source, cloud-based data integration platform. It was developed in 2004 by software engineers at the University of Konstanz in Germany. Although it was first created for the pharmaceutical industry, KNIME’s strength in pulling data from numerous sources into a single system has driven its adoption in other areas, including customer analysis, business intelligence, and machine learning.

Its main draw (besides being free) is its usability. A drag-and-drop graphical user interface (GUI) makes it ideal for visual programming, so users don’t need a lot of technical expertise to create data workflows. While it claims to support the full range of data analytics tasks, in reality its strength lies in data mining. It offers in-depth statistical analysis too, but users will benefit from some knowledge of Python and R. Being open-source, KNIME is very flexible and customizable to an organization’s needs, without heavy costs. This makes it popular with smaller businesses that have limited budgets.

Before we look at how to choose the right tool for your business needs, there’s one more newcomer worth adding to the list.

11. Streamlit

- Type of tool: Python library for building web applications.

- Availability: Open-source.

- Mostly used for: Creating interactive data visualizations and dashboards.

- Pros: Easy to use, can create a wide range of graphs, charts, and maps, can be deployed as web apps.

- Cons: Not as powerful as Power BI or Tableau, requires a Python installation.

Sure, we mentioned Python itself as a tool earlier and introduced a few of its libraries, but Streamlit is definitely one data analytics tool to watch in 2024, and one to consider for your own toolkit.

Essentially, Streamlit is an open-source Python library for building interactive, shareable web apps for data science and machine learning projects. It’s relatively new, but it’s already getting attention from data professionals who want to create visualizations easily!

Alright, so you’ve got your data ready to go, and you’re looking for the perfect tool to analyze it with. How do you find the one that’s right for your organization?

First, consider that no single data analytics tool will address all the data analytics issues you may have. Looking at this list, you may choose one tool for most of your needs but require a secondary tool for smaller processes.

Second, consider the business needs of your organization and figure out exactly who will need to make use of the data analysis tools. Will they be used primarily by fellow data analysts or scientists, non-technical users who require an interactive and intuitive interface—or both? Many tools on this list will cater to both types of user.

Third, consider the tool’s data modeling capabilities. Does the tool have these capabilities, or will you need to use SQL or another tool to perform data modeling prior to analysis?

Fourth, and finally, consider the practical aspect of price and licensing. Some of the options are totally free or have some free-to-use features (but will require licensing for the full product). Other data analysis tools are offered on a subscription or licensing basis. In this case, you may need to consider the number of users required or, if you’re working on a project-by-project basis, the potential length of the subscription.

In this post, we’ve explored some of the most popular data analysis tools currently in use. The key thing to take away is that there’s no one tool that does it all. A good data analyst has wide-ranging knowledge of different languages and software.

CareerFoundry’s own data expert, Tom Gadsby, explains which data analytics tools are best for specific processes in the following short video:

If you found a tool on this list that you didn’t know about, why not research more? Play around with the open-source data analysis tools (they’re free, after all!) and read up on the rest.

At the very least, it helps to know which data analytics tools organizations are using. To learn more about the field, start our free 5-day data analytics short course.

For more industry insights, check out the following:

- The 7 most useful data analysis methods and techniques

- How to build a data analytics portfolio

- Get started with SQL: A cheatsheet

What are data analytics tools?

Data analytics tools are software and apps that help data analysts collect, clean, analyze, and visualize data. These tools are used to extract insights from data that can be used to make informed business decisions.

What is the most used tool by data analysts?

Microsoft Excel continues to be the most widely used tool by data analysts for data wrangling and reporting. A big reason is that it provides a user-friendly interface for data manipulation, calculations, and data visualization.

Is SQL a data analysis tool?

Yes. SQL is a specialized programming language for managing and querying data in relational databases. Data analysts use SQL to extract and analyze data from databases, which can then be used to generate insights and reports.

Which tool is best to analyse data?

It depends on what you want to do with the data and the context. Some of the most popular and versatile tools are included in this article, namely Python, SQL, MS Excel, and Tableau.

Data Analysis in Quantitative Research

- Reference work entry

- First Online: 13 January 2019

- Yong Moon Jung

Quantitative data analysis serves as part of an essential process of evidence-making in health and social sciences. It is adopted for any type of research question and design, whether descriptive, explanatory, or causal. However, compared with its qualitative counterpart, quantitative data analysis has less flexibility. Conducting quantitative data analysis requires prior statistical knowledge and skills. It also requires rigor in choosing an appropriate analysis model and in interpreting the analysis outcomes. Basically, the choice of appropriate analysis techniques is determined by the type of research question and the nature of the data. In addition, different analysis techniques make different assumptions about the data. This chapter provides introductory guidance to help readers make informed decisions when choosing the correct analysis models. To this end, it begins with a discussion of the levels of measurement: nominal, ordinal, and scale. Some commonly used analysis techniques in univariate, bivariate, and multivariate data analysis are presented as practical examples. Example analysis outcomes are produced using SPSS (Statistical Package for the Social Sciences).
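The chapter’s examples use SPSS. As a minimal sketch of the same idea in Python (standard library only), the snippet below picks a univariate summary according to the level of measurement: frequencies and the mode for nominal data, the median for ordinal data, and the mean and standard deviation for scale data. The variables and values are hypothetical, and this is the conventional rule of thumb rather than a reproduction of the chapter’s procedures.

```python
import statistics
from collections import Counter

# Hypothetical responses from seven participants
blood_type = ["A", "O", "O", "B", "AB", "O", "A"]  # nominal: unordered categories
pain_rating = [1, 3, 2, 4, 3, 5, 3]  # ordinal: 1 = none ... 5 = severe
age_years = [34, 45, 29, 52, 41, 38, 47]  # scale: numeric with equal intervals


def summarize(values, level):
    """Return descriptive statistics that are meaningful for the level of measurement."""
    if level == "nominal":
        # Categories cannot be ordered or averaged, so count them
        return {"frequencies": Counter(values), "mode": statistics.mode(values)}
    if level == "ordinal":
        # Order is meaningful but distances are not, so use rank-based statistics
        return {"median": statistics.median(values), "mode": statistics.mode(values)}
    if level == "scale":
        # Equal intervals make means and standard deviations interpretable
        return {"mean": statistics.mean(values), "sd": statistics.stdev(values)}
    raise ValueError(f"unknown level of measurement: {level!r}")


for name, values, level in [
    ("blood_type", blood_type, "nominal"),
    ("pain_rating", pain_rating, "ordinal"),
    ("age_years", age_years, "scale"),
]:
    print(name, level, summarize(values, level))
```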



Author information

Authors and affiliations

Centre for Business and Social Innovation, University of Technology Sydney, Ultimo, NSW, Australia

Yong Moon Jung

Corresponding author

Correspondence to Yong Moon Jung.

Editor information

Editors and affiliations

School of Science and Health, Western Sydney University, Penrith, NSW, Australia

Pranee Liamputtong


Copyright information

© 2019 Springer Nature Singapore Pte Ltd.

About this entry

Cite this entry

Jung, Y.M. (2019). Data Analysis in Quantitative Research. In: Liamputtong, P. (eds) Handbook of Research Methods in Health Social Sciences. Springer, Singapore. https://doi.org/10.1007/978-981-10-5251-4_109

DOI : https://doi.org/10.1007/978-981-10-5251-4_109

Published : 13 January 2019

Publisher Name : Springer, Singapore

Print ISBN : 978-981-10-5250-7

Online ISBN : 978-981-10-5251-4

eBook Packages : Social Sciences Reference Module Humanities and Social Sciences Reference Module Business, Economics and Social Sciences


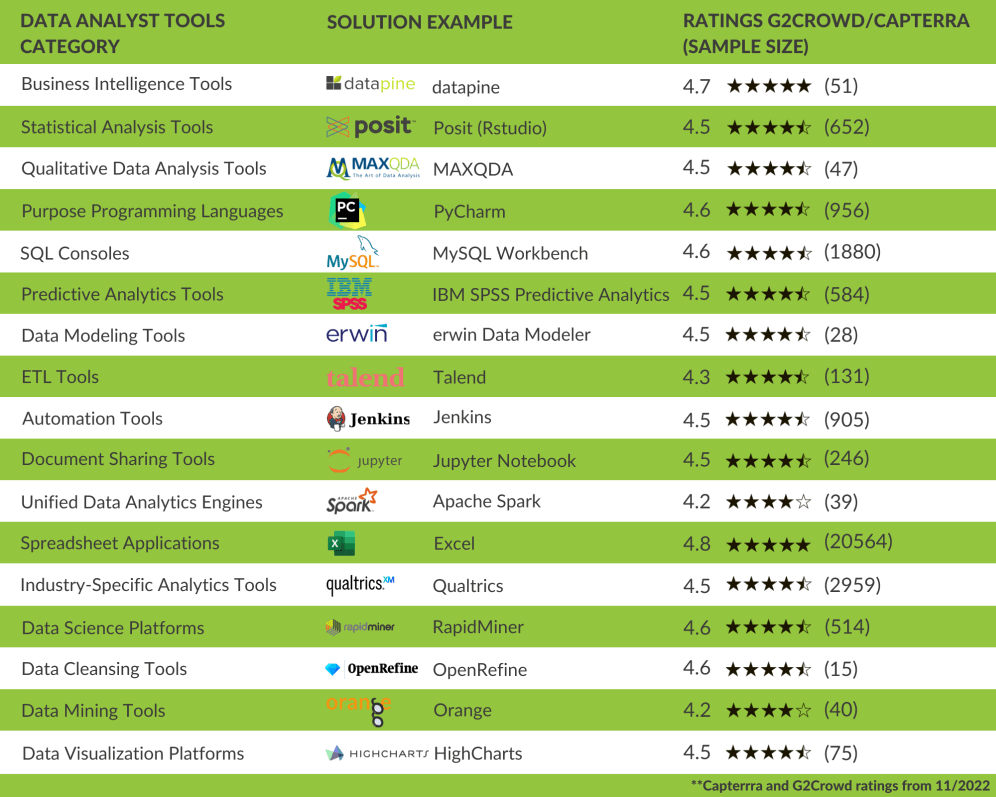

Essential Data Analyst Tools: Discover a List of the 17 Best Data Analysis Software & Tools on the Market

Top 17 software & tools for data analysts (2023).

Table of Contents

1) What are data analyst tools?

2) The best 17 data analyst tools for 2023

3) Key takeaways & guidance

To perform data analysis at the highest possible level, analysts and data professionals rely on software that delivers the best results across a range of tasks, from executing algorithms, preparing data, generating predictions, and automating processes to standard tasks such as visualizing and reporting on the data. Although there are many of these solutions on the market, data analysts must choose wisely to benefit their analytical efforts. With that in mind, in this article we will cover the best data analyst tools and name the key features of each, organized by type of analysis process. But first, we will start with a basic definition and a brief introduction.

1) What Are Data Analyst Tools?

Data analyst tools is a term used to describe software and applications that data analysts use to develop and perform analytical processes that help companies make better-informed business decisions while decreasing costs and increasing profits.

To help you make the best possible decision on which software to choose as an analyst, we have compiled a list of top data analyst tools with various focuses and features, organized into software categories and represented with an example for each. These examples were researched and selected using rankings from two major software review sites: Capterra and G2Crowd. Within each software category presented in this article, we selected the most successful solutions with a minimum of 15 reviews across both review websites as of November 2022. The order in which these solutions are listed is completely random and does not represent a grading or ranking system.

2) What Tools Do Data Analysts Use?

To make the most of the vast range of software currently on the market, we will focus on the most prominent tools needed to be an expert data analyst. The image above provides a visual summary of all the areas and tools that will be covered in this post. These data analysis tools are mostly focused on making analysts’ lives easier by providing solutions that make complex analytical tasks more efficient, which gives them more time for the analytical part of their job. Let’s get started with business intelligence tools.

1. Business intelligence tools

BI tools are among the most widely used means of performing data analysis. Specializing in business analytics, these solutions benefit every data analyst who needs to analyze, monitor, and report on important findings. Features such as self-service, predictive analytics, and advanced SQL modes make them adaptable to every level of expertise, without the need for heavy IT involvement. With these features, analysts can understand trends and make tactical decisions. Our data analytics tools article wouldn’t be complete without business intelligence, and datapine is one example that covers most of the requirements of both beginner and advanced users. This all-in-one tool aims to facilitate the entire analysis process, from data integration and discovery to reporting.

KEY FEATURES:

Visual drag-and-drop interface to build SQL queries automatically, with the option to switch to advanced (manual) SQL mode

Powerful predictive analytics features, interactive charts and dashboards, and automated reporting

AI-powered alarms that are triggered as soon as an anomaly occurs or a goal is met

datapine is a popular business intelligence software with an outstanding rating of 4.8 stars on Capterra and 4.6 stars on G2Crowd. It focuses on putting simple yet powerful analysis features into the hands of beginners and advanced users who need a fast and reliable online data analysis solution for all analysis stages. Its intuitive user interface lets you drag and drop your desired values into datapine’s Analyzer and create numerous charts and graphs that can be combined into an interactive dashboard. If you’re an experienced analyst, you might want to consider the SQL mode, where you can build your own queries or run existing code or scripts. Another crucial feature is the predictive analytics forecast engine, which can analyze data from multiple sources that you first integrate through datapine’s various data connectors. While there are numerous predictive solutions out there, datapine focuses on simplicity and speed: by simply defining the input and output of the forecast based on specified data points and the desired model quality, you get a complete chart together with predictions.

We should also mention robust artificial intelligence that is becoming an invaluable assistant in today’s analysis processes. Neural networks, pattern recognition, and threshold alerts will alarm you as soon as a business anomaly occurs or a previously set goal is met so you don’t have to manually analyze large volumes of data – the data analytics software does it for you. Access your data from any device with an internet connection, and share your findings easily and securely via dashboards or customized reports for anyone that needs quick answers to any type of business question.
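The alarms described above are AI-powered, and the Python sketch below is not datapine’s implementation. It is a much simpler stand-in that shows the basic idea behind threshold alerts: flag the newest value of a KPI when it lies more than a chosen number of standard deviations from its history (a z-score rule), and separately when it reaches a goal. The function name, the default threshold of 3, and the sample data are assumptions for illustration.

```python
import statistics


def check_alerts(history, latest, goal, z_threshold=3.0):
    """Return alert messages for the newest value of a KPI.

    history: past values (e.g. daily orders); latest: the new value;
    goal: target that triggers a "goal reached" alert when met or exceeded.
    """
    alerts = []
    mean = statistics.mean(history)
    sd = statistics.stdev(history)  # needs at least two past values
    if sd > 0:
        z = (latest - mean) / sd
        if abs(z) >= z_threshold:
            alerts.append(f"anomaly: {latest} is {z:+.1f} standard deviations from the mean")
    if latest >= goal:
        alerts.append(f"goal reached: {latest} >= {goal}")
    return alerts


daily_orders = [100, 102, 98, 101, 99, 100, 100]
print(check_alerts(daily_orders, latest=130, goal=150))  # unusual spike, goal not met
print(check_alerts(daily_orders, latest=101, goal=100))  # normal day, goal met
```

A fixed z-score rule is easy to reason about, but it assumes the history is roughly stable; seasonal data would need a baseline that accounts for the season.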

2. Statistical Analysis Tools

Next in our list of data analytics tools comes a more technical area: statistical analysis. Statistical computing relies on a variety of techniques to manipulate, explore, and generate insights from data, and multiple programming languages exist to make (data) scientists’ work easier and more effective. As these languages have spread, science has developed its own rules and scenarios that need special attention when it comes to statistical data analysis and modeling. Here we will present one of the most popular tools for data analysts: Posit (formerly RStudio), best known as a development environment for the R programming language. Although other languages also focus on (scientific) data analysis, R is particularly popular in the community.

POSIT (RSTUDIO)

An ecosystem of more than 10 000 packages and extensions for distinct types of data analysis

Statistical analysis, modeling, and hypothesis testing (e.g. analysis of variance, t test, etc.)

Active and communicative community of researchers, statisticians, and scientists

Posit, formerly known as RStudio, is one of the top data analyst tools for R and Python. Its development dates back to 2009, and it is one of the most widely used software packages for statistical analysis and data science, maintaining an open-source policy and running on a variety of platforms, including Windows, macOS, and Linux. As a result of the rebranding, some of the platform’s well-known products have changed their names, while others have stayed the same. For example, RStudio Workbench and RStudio Connect are now known as Posit Workbench and Posit Connect, respectively. On the other hand, products like RStudio Desktop and RStudio Server keep their names. As stated on the company’s website, the rebranding happened because the name RStudio no longer reflected the variety of products and languages that the platform supports.

Posit is the most popular integrated development environment (IDE) for R, with 4.7 stars on Capterra and 4.5 stars on G2Crowd. Its capabilities for data cleaning, data reduction, and data analysis report output with R Markdown make this tool an invaluable analytical assistant that covers both general and academic data analysis. It comprises an ecosystem of more than 10,000 packages and extensions that you can explore by category to perform any kind of statistical analysis, such as regression, conjoint, factor, and cluster analysis. Easy to understand for those without advanced programming skills, it can perform complex mathematical operations with a single command. Graphical libraries such as ggplot2 and plotly also set R apart from other statistical languages, since they make it efficient to create quality visualizations.
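To make "a single command" concrete: in R, `t.test(a, b)` runs a two-sample t test and by default uses Welch’s version, which does not assume equal variances. The sketch below, kept in Python for consistency with the other examples here, computes what that test’s statistic and degrees of freedom are made of. It stops short of the p-value, which needs the t distribution (available in libraries such as SciPy, not in Python’s standard library). The sample values are made up.

```python
import math
import statistics


def welch_t(sample_a, sample_b):
    """Welch's two-sample t statistic and Welch-Satterthwaite degrees of freedom."""
    n_a, n_b = len(sample_a), len(sample_b)
    mean_a, mean_b = statistics.mean(sample_a), statistics.mean(sample_b)
    # Sample variances (n - 1 denominator) divided by n: squared standard errors
    se2_a = statistics.variance(sample_a) / n_a
    se2_b = statistics.variance(sample_b) / n_b
    t = (mean_a - mean_b) / math.sqrt(se2_a + se2_b)
    df = (se2_a + se2_b) ** 2 / (se2_a**2 / (n_a - 1) + se2_b**2 / (n_b - 1))
    return t, df


treatment = [5, 6, 7, 8, 9]
control = [1, 2, 3, 4, 5]
t_stat, dof = welch_t(treatment, control)
print(f"t = {t_stat:.2f}, df = {dof:.1f}")  # t = 4.00, df = 8.0
```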

Although Posit was mostly used in academia in the past, today it has applications across industries and at large companies such as Google, Facebook, Twitter, and Airbnb, among others. Because so many researchers, scientists, and statisticians use it, the tool has an extensive and active community where innovative technologies and ideas are presented and discussed regularly.

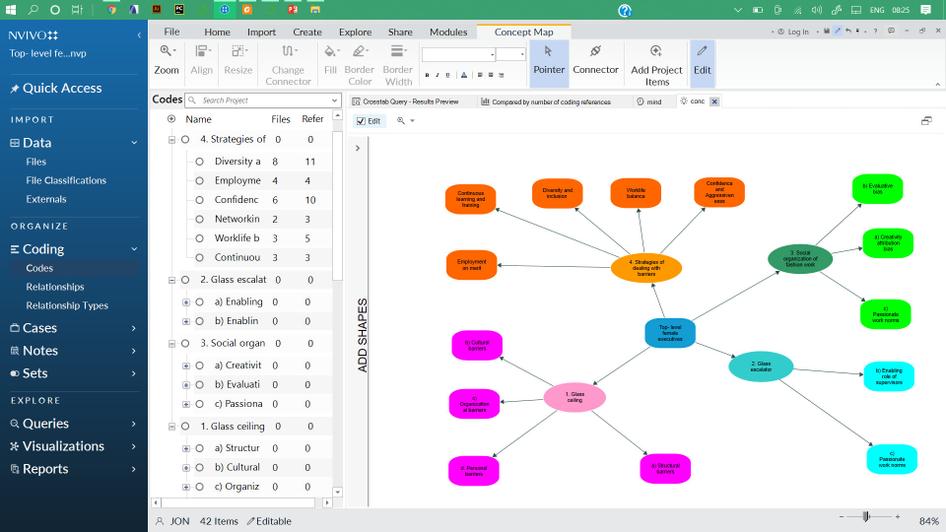

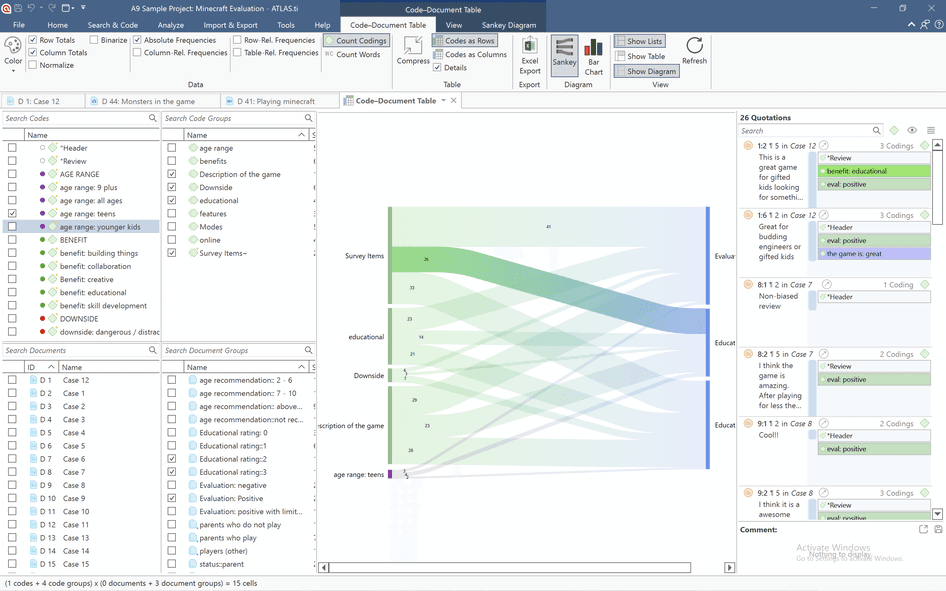

3. Qualitative data analysis tools

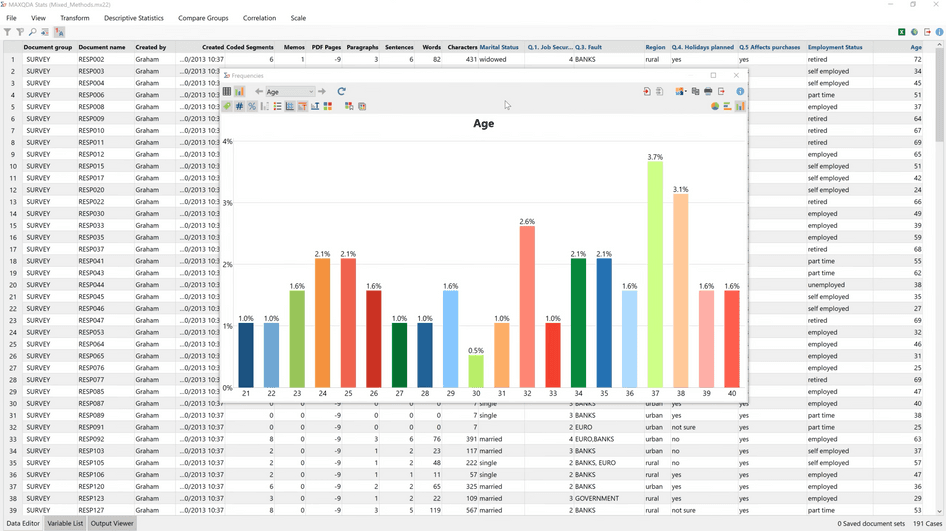

Naturally, when we think about data, our minds go straight to numbers. Although much of the extracted data might be numeric, there is also immense value in collecting and analyzing non-numerical information, especially in a business context. This is where qualitative data analysis tools come into the picture. These solutions give researchers, analysts, and businesses the functionality they need to make sense of massive amounts of qualitative data from sources such as interviews, surveys, e-mails, customer feedback, social media comments, and much more, depending on the industry. There is a wide range of qualitative analysis software out there, and the most innovative options rely on artificial intelligence and machine learning algorithms to make the analysis process faster and more efficient. Today, we will discuss MAXQDA, one of the most powerful QDA platforms on the market.

MAXQDA

The possibility to mark important information using codes, colors, symbols, or emojis

AI-powered audio transcription capabilities such as speed and rewind controls, speaker labels, and others

Possibility to work with multiple languages and scripts thanks to Unicode support

Founded in 1989 “by researchers, for researchers”, MAXQDA is a qualitative data analysis software for Windows and Mac that helps users organize and interpret qualitative data from different sources with the help of innovative features. Unlike some other solutions in the same category, MAXQDA supports a wide range of data sources and formats. Users can import traditional text data from interviews, focus groups, web pages, and YouTube or Twitter comments, as well as various types of multimedia data such as video or audio files. In addition, the software offers a Mixed Methods tool that lets users combine qualitative and quantitative data for a more complete analytics process. This versatility has earned MAXQDA worldwide recognition for many years. The tool has a 4.6-star rating on Capterra and 4.5 on G2Crowd.

Amongst its most valuable functions, MAXQDA offers users the capability of setting different codes to mark their most important data and organize it in an efficient way. Codes can be easily generated via drag & drop and labeled using colors, symbols, or emojis. Your findings can later be transformed, automatically or manually, into professional visualizations and exported in various readable formats such as PDF, Excel, or Word, among others.
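In MAXQDA, coding is done interactively by the researcher. To illustrate what a set of codes does to the data, here is a deliberately simple Python sketch that applies a hypothetical keyword codebook to text segments and counts how often each code occurs. Real qualitative coding relies on human judgment; keyword matching like this is only a rough first pass and is not a MAXQDA feature, and the codebook and feedback snippets are invented.

```python
from collections import Counter

# Hypothetical codebook: code name -> keywords that signal the code
CODEBOOK = {
    "price": ["expensive", "cheap", "cost", "price"],
    "support": ["support", "help desk", "agent"],
    "usability": ["easy", "confusing", "intuitive"],
}


def code_segment(text, codebook=CODEBOOK):
    """Return the sorted codes whose keywords occur in a text segment.

    Plain substring matching is crude: "easy" also matches "uneasy".
    """
    lowered = text.lower()
    return sorted(
        code
        for code, keywords in codebook.items()
        if any(keyword in lowered for keyword in keywords)
    )


def code_frequencies(segments, codebook=CODEBOOK):
    """Count how many segments were tagged with each code."""
    counts = Counter()
    for segment in segments:
        counts.update(code_segment(segment, codebook))
    return counts


feedback = [
    "The app is easy to use but too expensive.",
    "The support agent solved my issue quickly.",
    "Confusing menus everywhere.",
]
for segment in feedback:
    print(code_segment(segment), "<-", segment)
print(code_frequencies(feedback))
```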

4. General-purpose programming languages

Programming languages are used to solve a variety of data problems. Having covered R and statistical programming, we will now focus on general-purpose languages, which use letters, numbers, and symbols to create programs and require the formal syntax used by programmers. They are often called text-based languages because you need to write code that will ultimately solve a problem. Examples include C#, Java, PHP, Ruby, Julia, and Python, among many others. Here we will focus on Python and present PyCharm as one of the best tools for data analysts who also have coding knowledge.

PyCharm

Intelligent code inspection and completion with error detection, code fixes, and automated code refactorings

Built-in developer tools for smart debugging, testing, profiling, and deployment

Cross-technology development supporting JavaScript, CoffeeScript, HTML/CSS, Node.js, and more

PyCharm is an integrated development environment (IDE) by JetBrains, designed for developers who want to write better Python code more productively from a single platform. The tool, rated 4.7 stars on Capterra and 4.6 on G2Crowd, offers a range of essential features, including an integrated visual debugger, a GUI-based test runner, integration with major version control systems (VCS), built-in database tools, and much more. Among its most praised features, intelligent code assistance provides smart code inspections that highlight errors and offer quick fixes and code completions.

PyCharm supports the most important Python implementations, including Python 2.x and 3.x, Jython, IronPython, PyPy, and Cython, and it is available in three editions: the free, open-source Community edition; the paid Professional edition, which includes all advanced features; and the Edu edition, which is also free and open source and intended for educational purposes. It is one of the best Python tools for data analysts on the market.

5. SQL consoles

Our data analyst tools list wouldn’t be complete without SQL consoles. Essentially, SQL is a programming language used to manage and query data held in relational databases, and it is particularly effective for handling structured data. It’s highly popular in the data science community and is used in a wide range of business cases and data scenarios. The reason is simple: most data is stored in relational databases, and you need SQL to access it and unlock its value, so learning it gives analysts a competitive advantage. There are different relational (SQL-based) database management systems, such as MySQL, PostgreSQL, MS SQL, and Oracle, and learning to work with them is extremely beneficial for any serious analyst. Here we will focus on MySQL Workbench as the most popular one.

MySQL Workbench

A unified visual tool for data modeling, SQL development, administration, backup, etc.

Instant access to database schema and objects via the Object Browser

SQL Editor that offers color syntax highlighting, reuse of SQL snippets, and execution history

MySQL Workbench is used by analysts to visually design, model, and manage databases, optimize SQL queries, administer MySQL environments, and use a suite of tools to improve the performance of MySQL applications. It lets you perform tasks such as creating and viewing databases and objects (e.g., triggers or stored procedures), configuring servers, and much more. You can easily perform backup and recovery as well as inspect audit data. MySQL Workbench also helps with database migration and is a complete solution for analysts working in relational database management and for companies that need to keep their databases clean and effective. The tool, which is very popular among analysts and developers, is rated 4.6 stars on Capterra and 4.5 on G2Crowd.
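To give a feel for the kind of query you would write in an SQL editor such as MySQL Workbench’s, here is a small Python sketch. It uses SQLite through Python’s built-in `sqlite3` module instead of MySQL, so it runs without a database server; the `orders` table and its rows are hypothetical. The aggregation query itself is standard SQL and should run unchanged in MySQL.

```python
import sqlite3

# In-memory SQLite database standing in for a MySQL server
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders (region, amount) VALUES (?, ?)",  # placeholders avoid SQL injection
    [("North", 120.0), ("South", 80.0), ("North", 60.0), ("West", 200.0)],
)

# Typical analyst query: orders and revenue per region, highest revenue first
query = """
    SELECT region, COUNT(*) AS n_orders, SUM(amount) AS revenue
    FROM orders
    GROUP BY region
    ORDER BY revenue DESC
"""
rows = conn.execute(query).fetchall()
for region, n_orders, revenue in rows:
    print(f"{region}: {n_orders} orders, {revenue:.2f} revenue")
conn.close()
```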

6. Standalone predictive analytics tools

Predictive analytics is an advanced technique that combines data mining, machine learning, predictive modeling, and artificial intelligence to predict future events. It deserves a special place in our list of data analysis tools, as its popularity has increased in recent years with the introduction of smart solutions that simplify analysts’ predictive analytics processes. Keep in mind that some BI tools already discussed in this list offer easy-to-use, built-in predictive analytics. In this section, however, we focus on standalone, advanced predictive analytics software that companies use for various purposes, from detecting fraud through pattern detection to optimizing marketing campaigns by analyzing consumer behavior and purchases. Here is one data analysis software that helps analysts predict future scenarios.

IBM SPSS PREDICTIVE ANALYTICS ENTERPRISE

A visual predictive analytics interface to generate predictions without code

Can be integrated with other IBM SPSS products for a complete analysis scope

Flexible deployment to support multiple business scenarios and system requirements

IBM SPSS Predictive Analytics gives enterprises the power to make better operational decisions with the help of various predictive intelligence features, such as in-depth statistical analysis, predictive modeling, and decision management. The tool offers a visual interface for predictive analytics that average business users with no coding knowledge can easily use, while still providing analysts and data scientists with more advanced capabilities. This lets users take advantage of predictions to inform important decisions in real time with a high level of certainty.

Additionally, the platform provides flexible deployment options to support multiple scenarios, business sizes, and use cases, such as supply chain analysis or cybercrime prevention, among many others. Flexible data integration and manipulation is another important feature: structured and unstructured data, including text data, from multiple sources can be analyzed for predictive modeling that translates into intelligent business outcomes.

As part of the IBM product suite, the tool lets users take advantage of other solutions and modules, such as IBM SPSS Modeler, IBM SPSS Statistics, and IBM SPSS Analytic Server, for a complete analytical scope. Reviewers gave the software a 4.5-star rating on Capterra and 4.2 on G2Crowd.

7. Data modeling tools

Our list of data analysis tools wouldn’t be complete without data modeling. Data modeling means creating models that structure the database and design business systems using diagrams, symbols, and text; these models ultimately represent how the data flows and how it is connected. Businesses use data modeling tools to determine the exact nature of the information they control and the relationships between datasets, and analysts are critical in this process. If you need to discover, analyze, and specify changes in information stored in a software system, database, or other application, chances are your skills are critical for the overall business. Here we will show one of the most popular data analyst tools used to create models and design your data assets.

erwin data modeler (DM)

Automated data model generation to increase productivity in analytical processes

Single interface no matter the location or the type of the data

5 different versions of the solution you can choose from and adjust based on your business needs

erwin DM works both with structured and unstructured data in a data warehouse and in the cloud. It’s used to “find, visualize, design, deploy and standardize high-quality enterprise data assets,” as stated on their official website. erwin can help you reduce complexities and understand data sources to meet your business goals and needs. They also offer automated processes where you can automatically generate models and designs to reduce errors and increase productivity. This is one of the tools for analysts that focus on the architecture of the data and enable you to create logical, conceptual, and physical data models.

Additional features, such as a single interface for any data you might possess, whether it’s structured or unstructured, in a data warehouse or in the cloud, make this solution highly adaptable to your analytical needs. With five versions of erwin data modeler, companies and analysts can pick the one that offers the data modeling features they need. This versatility is reflected in its positive reviews, earning the platform an almost perfect 4.8-star rating on Capterra and 4.3 stars on G2Crowd.
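In erwin, models like this are built visually. As a hand-written illustration of what a physical data model ultimately becomes in a relational database, here is a Python sketch that defines a hypothetical two-table model (one customer places many orders) as DDL and shows the database enforcing the relationship. SQLite, via Python’s built-in `sqlite3` module, is used only so the example runs anywhere; the table and column names are assumptions.

```python
import sqlite3

# Physical model of a hypothetical logical model: one customer places many orders
SCHEMA = """
CREATE TABLE customer (
    customer_id INTEGER PRIMARY KEY,
    name        TEXT NOT NULL,
    country     TEXT
);
CREATE TABLE orders (
    order_id    INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customer (customer_id),
    order_date  TEXT NOT NULL,  -- ISO 8601 date string
    amount      REAL NOT NULL CHECK (amount >= 0)
);
"""

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled
conn.executescript(SCHEMA)
conn.execute("INSERT INTO customer VALUES (1, 'Acme Ltd', 'DE')")
conn.execute("INSERT INTO orders VALUES (10, 1, '2023-01-15', 250.0)")

try:
    # Customer 99 does not exist, so this row would break the relationship
    conn.execute("INSERT INTO orders VALUES (11, 99, '2023-01-16', 80.0)")
    fk_enforced = False
except sqlite3.IntegrityError as err:
    fk_enforced = True
    print("rejected:", err)

order_count = conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
conn.close()
```

Encoding the relationship as a foreign key, rather than only in a diagram, means the database itself rejects data that contradicts the model.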

8. ETL tools

ETL (extract, transform, load) is a process used by companies of all sizes across the world: as a business grows, chances are you will need to extract data, transform it, and load it into another database to be able to analyze it and build queries. There are some core types of ETL tools for data analysts, such as batch ETL, real-time ETL, and cloud-based ETL, each with its own specifications and features that suit different business needs. These are the tools used by analysts who take part in the more technical processes of data management within a company, and one of the best examples is Talend.

Talend

Collecting and transforming data through data preparation, integration, and a cloud pipeline designer

Talend Trust Score to ensure data governance and resolve quality issues across the board

Sharing data internally and externally through comprehensive deliveries via APIs

Talend is a data integration platform used by experts across the globe for data management processes, cloud storage, enterprise application integration, and data quality. It’s a Java-based ETL tool that analysts use to easily process millions of data records, and it offers comprehensive solutions for any data project you might have. Talend’s features include (big) data integration, data preparation, a cloud pipeline designer, and Stitch Data Loader to cover multiple data management requirements of an organization. Users have rated it 4.2 stars on Capterra and 4.3 on G2Crowd. It is an especially important tool if you need to work on ETL processes in your analytics department.

Apart from collecting and transforming data, Talend also offers a data governance solution for building a data hub and delivering it through self-service access on a unified cloud platform. You can use their data catalog and data inventory, and produce clean data through their data quality feature. Sharing is also part of their portfolio: Talend's data fabric solution lets you deliver your information to every stakeholder through a comprehensive API delivery platform. If you need a data analyst tool to cover ETL processes, Talend might be worth considering.

9. Automation tools

As mentioned, the goal of all the solutions on this list is to make data analysts' lives easier and their work more efficient. With that in mind, automation tools could not be left out. Simply put, data analytics automation is the practice of using systems and processes to perform analytical tasks with little or no human interaction. In recent years, automation solutions have changed the way analysts do their jobs, assisting them with tasks such as data discovery, data preparation, and data replication, as well as simpler ones like report automation or writing scripts. Automating analytical processes significantly increases productivity and frees up time for more important tasks. We will look at this in more detail through Jenkins, one of the leaders in open-source automation software.

Popular continuous integration (CI) solution with advanced automation features, such as running code on multiple platforms

Job automation: customized tasks can be scheduled or triggered by a specific event

Several job automation plugins for different purposes, such as Jenkins Job Builder, Jenkins Job DSL, or Jenkins Pipeline DSL

Developed in 2004 under the name Hudson, Jenkins is an open-source CI automation server that can be integrated with many DevOps tools via plugins. By default, Jenkins helps developers automate parts of the software development process such as building, testing, and deploying. However, it is also widely used by data analysts to automate jobs such as running code and scripts daily or whenever a specific event occurs; for example, running a specific command when new data becomes available.
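The "run a specific command when new data is available" case can be sketched in plain Python. The function `run_on_new_files` and the file name are hypothetical; in Jenkins itself this logic would be expressed as a job or Pipeline triggered on a schedule or by an event, not as this code. The sketch simply checks a folder for files it has not seen before and runs a job on each new one.

```python
import os
import tempfile

def run_on_new_files(directory, seen, job):
    """Run `job` once for every file in `directory` that is not already in `seen`.

    Returns the updated set of seen file names. A scheduler (for example a timed
    Jenkins job) would call this repeatedly; here it is called by hand.
    """
    current = set(os.listdir(directory))
    for name in sorted(current - seen):
        job(os.path.join(directory, name))
    return seen | current

processed = []
with tempfile.TemporaryDirectory() as inbox:
    seen = run_on_new_files(inbox, set(), processed.append)  # empty folder: nothing runs
    # Simulate new data arriving.
    with open(os.path.join(inbox, "sales_2024_01.csv"), "w") as f:
        f.write("region,amount\nnorth,10\n")
    seen = run_on_new_files(inbox, seen, processed.append)  # new file: the job runs once
    seen = run_on_new_files(inbox, seen, processed.append)  # no new files: nothing runs
    names = [os.path.basename(p) for p in processed]

print(names)  # ['sales_2024_01.csv']
```

Tracking which files have already been handled is what keeps the job from re-running on the same data every time the check fires.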

Several Jenkins plugins and companion tools can generate jobs automatically. For example, Jenkins Job Builder takes simple descriptions of jobs in YAML or JSON format and turns them into runnable jobs in Jenkins's format. The Job DSL plugin, meanwhile, lets users generate jobs from other jobs and edit the XML configuration directly to supplement or fix elements that the DSL itself does not cover. Lastly, the Pipeline plugin is mostly used to build complex automated processes.

For Jenkins, automation is not useful unless it is tied to integration. For this reason, the project provides hundreds of plugins and extensions to integrate Jenkins with your existing tools. This way, the entire process of code generation and execution can be automated at every stage and across different platforms, leaving you time for other relevant tasks. Jenkins and its plugins are written in Java, which means the tool can be installed on any operating system that runs Java. Users rated Jenkins 4.5 stars on Capterra and 4.4 stars on G2Crowd.

10. Document sharing tools

As an analyst working with programming, you have very likely found yourself needing to share your code or analytical findings with others. Whether you want someone to check your code for errors or give other feedback on your work, a document sharing tool is the way to go. These solutions let users share interactive documents that can contain live code and other multimedia elements, making collaboration easier. Below, we present Jupyter Notebook, one of the most popular and efficient platforms for this purpose.

Jupyter Notebook

Supports over 40 programming languages, including Python, R, Julia, C++, and more

Easily share notebooks with others via email, Dropbox, GitHub and Jupyter Notebook Viewer

In-browser editing for code, with automatic syntax highlighting, indentation, and tab completion

Jupyter Notebook is an open-source, web-based interactive development environment used to create and share documents called notebooks, which contain live code, data visualizations, and text in a simple, streamlined way. Its name is derived from the core programming languages it supports (Julia, Python, and R), and, according to its website, it has a flexible interface that lets users view, execute, and share their code on the same platform. Notebooks allow analysts, developers, and anyone else to combine code, comments, multimedia, and visualizations in an interactive document that can be easily shared and reworked directly in the web browser.
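Under the hood, a notebook is a JSON document (the `.ipynb` file) that holds a list of cells. The sketch below builds a minimal notebook in the nbformat 4 layout with Python's standard `json` module: one Markdown cell and one code cell. The `make_notebook` helper and the cell contents are hypothetical, and notebooks saved by Jupyter itself usually carry extra metadata, such as kernel information.

```python
import json

def make_notebook(markdown_text, code_text):
    """Build a minimal notebook (nbformat 4) with one text cell and one code cell."""
    return {
        "cells": [
            {"cell_type": "markdown", "metadata": {}, "source": markdown_text},
            {
                "cell_type": "code",
                "execution_count": None,  # not run yet
                "metadata": {},
                "outputs": [],  # outputs are stored alongside the code once executed
                "source": code_text,
            },
        ],
        "metadata": {},
        "nbformat": 4,
        "nbformat_minor": 4,
    }

nb = make_notebook("# Monthly sales\nTotals by region.", "print(120.5 + 80.0)")
text = json.dumps(nb, indent=1)
# Saving `text` to a file with the .ipynb extension gives a document Jupyter can open.
print([cell["cell_type"] for cell in json.loads(text)["cells"]])  # ['markdown', 'code']
```

Because the format is plain JSON, notebooks can be versioned on GitHub, emailed, or converted to other formats by tools that read this structure.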

Although Python is the default, Jupyter Notebook supports over 40 programming languages and can be used in many scenarios. These include sharing notebooks with interactive visualizations instead of the static output of other software, live documentation that explains how specific Python modules or libraries work, or simply sharing code and data files with others. Notebooks can easily be converted into other output formats such as HTML, LaTeX, PDF, and more. This versatility has earned the tool a 4.7-star rating on Capterra and 4.5 on G2Crowd.

11. Unified data analytics engines

If you work for a company that produces massive datasets and needs a big data management solution, a unified data analytics engine might be the best fit for your analytical processes. To make quality decisions in a big data environment, analysts need tools that give them full control of their company's large, complex data environment. That's where machine learning and AI play a significant role. Apache Spark is the data analysis tool on our list that supports large-scale data processing with the help of an extensive ecosystem.

Apache Spark

High performance: Spark set a record in large-scale data sorting in 2014

A large ecosystem of data frames, streaming, machine learning, and graph computation

Perform exploratory analysis on petabyte-scale data without the need for downsampling

Apache Spark was originally developed at UC Berkeley in 2009, and since then it has spread across industries, with companies such as Netflix, Yahoo, and eBay deploying Spark and processing petabytes of data with it. That track record has made Spark a go-to solution for big data management and earned it a 4.2-star rating on both Capterra and G2Crowd. Its ecosystem consists of Spark SQL, streaming, machine learning, graph computation, and core Java, Scala, and Python APIs to ease development. In 2014, Spark officially set a record in large-scale sorting, and the project states that it can run some workloads up to 100x faster than Hadoop MapReduce, a speed advantage that is crucial when processing massive volumes of data.

You can run applications in Java, Python, Scala, R, and SQL, while the more than 80 high-level operators Spark offers make data transformation easy and effective. As a unified engine, Spark comes with support for SQL queries, MLlib for machine learning, GraphX for graph computation, and Spark Streaming for streaming data, and these libraries can be combined to create complex analytical workflows. Additionally, it runs on Hadoop, Kubernetes, Apache Mesos, standalone, or in the cloud, and can access diverse data sources. Spark is a powerful engine for analysts who need support in their big data environment.
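Spark's high-level operators chain small transformations into a pipeline. As a single-machine illustration only (no Spark involved), the classic word count below imitates the `flatMap`, `map`, and `reduceByKey` steps in plain Python. In PySpark, the same chain would look roughly like `sc.parallelize(lines).flatMap(lambda l: l.split()).map(lambda w: (w, 1)).reduceByKey(lambda a, b: a + b)`, with Spark distributing the work across a cluster. The input lines and the `reduce_by_key` helper are hypothetical.

```python
from collections import defaultdict
from functools import reduce

lines = [
    "spark runs on hadoop",
    "spark runs in the cloud",
]

# flatMap: split every line into words (one input item becomes many output items)
words = [word for line in lines for word in line.split()]

# map: turn each word into a (key, value) pair
pairs = [(word, 1) for word in words]

def reduce_by_key(pairs, func):
    """reduceByKey: combine all values that share a key using an associative function."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return {key: reduce(func, values) for key, values in grouped.items()}

counts = reduce_by_key(pairs, lambda a, b: a + b)
print(sorted(counts.items()))
# [('cloud', 1), ('hadoop', 1), ('in', 1), ('on', 1), ('runs', 2), ('spark', 2), ('the', 1)]
```

The combining function has to be associative because, on a cluster, Spark merges partial results from different machines in no guaranteed order.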

12. Spreadsheet applications

Spreadsheets are one of the most traditional forms of data analysis. They are so popular across industries, businesses, and organizations that there is only a slim chance you haven't created at least one spreadsheet to analyze your data. Often used by people who lack the technical skills to code, spreadsheets suit fairly simple analyses that don't require extensive training, large and complex volumes of data, or databases to manage. To look at spreadsheets in more detail, we have chosen Excel, one of the most popular in business.

Part of the Microsoft Office family, hence compatible with other Microsoft applications

Pivot tables and building complex equations through designated rows and columns

Perfect for smaller analysis processes through workbooks and quick sharing

With a 4.8-star rating on Capterra and 4.7 on G2Crowd, Excel deserves its own category, since this powerful tool has been in the hands of analysts for a very long time. Although it is often considered a traditional form of analysis, Excel is still widely used across the globe. The reasons are fairly simple: few people have never used it or come across it at least once in their career. It's a versatile data analyst tool where you manipulate rows and columns to create your analysis. Once this part is finished, you can export your data and send it to the desired recipients, so you can use Excel as a reporting tool as well. You do need to update the data yourself, however, since Excel doesn't offer automation comparable to that of other tools on our list. With pivot tables, the management of smaller amounts of data, and tinkering with the tabular form of analysis, Excel has grown from an electronic version of the accounting worksheet into one of the most widespread tools for data analysts.

A wide range of functionality comes with Excel, from arranging, manipulating, calculating, and evaluating quantitative data to building complex equations and using pivot tables, conditional formatting, multiple rows, and charts and graphs. Excel has definitely earned its place in traditional data management.
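A pivot table groups rows by one field, spreads another field across columns, and aggregates a value in each cell. In Excel this is done through the PivotTable interface without any code; the plain-Python sketch below only reproduces the "sum of revenue by region and quarter" logic on hypothetical data, and it fills empty combinations with 0 where Excel would leave the cell blank.

```python
from collections import defaultdict

# Hypothetical sales rows, as they might appear in a worksheet.
rows = [
    {"region": "North", "quarter": "Q1", "revenue": 100},
    {"region": "North", "quarter": "Q2", "revenue": 150},
    {"region": "South", "quarter": "Q1", "revenue": 80},
    {"region": "North", "quarter": "Q1", "revenue": 20},
]

def pivot(rows, row_field, col_field, value_field):
    """Sum value_field for every (row_field, col_field) combination, like a pivot table."""
    table = defaultdict(lambda: defaultdict(int))
    for r in rows:
        table[r[row_field]][r[col_field]] += r[value_field]
    return {key: dict(cells) for key, cells in table.items()}

result = pivot(rows, "region", "quarter", "revenue")
columns = sorted({r["quarter"] for r in rows})
for region in sorted(result):
    # Print one line per region with a value for every quarter column.
    print(region, [result[region].get(q, 0) for q in columns])
# North [120, 150]
# South [80, 0]
```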

13. Industry-specific analytics tools

While many data analysis tools on this list are used across industries and applied daily in analysts' workflows, some solutions are developed specifically for a single industry and cannot be used in another. For that reason, we have decided to include one of these solutions on our list, although there are many other industry-specific data analysis programs and software. Here we focus on Qualtrics, one of the leading research software platforms, which is used by over 11,000 of the world's brands, has over 2M users across the globe, and offers many industry-specific features focused on market research.

5 main experience features: design, customer, brand, employee, and product

Additional research services by their in-house experts

Advanced statistical analysis with their Stats iQ analysis tool

Qualtrics is data analysis software focused on experience management (XM) and used for market research by companies across the globe. The tool, which has a positive 4.8-star rating on Capterra and 4.4 on G2Crowd, offers five product pillars for enterprise XM, covering design, customer, brand, employee, and product experiences, as well as additional research services performed by its own experts. The XM platform consists of a directory, automated actions, the Qualtrics iQ tool, and platform security features that combine automated and integrated workflows into a single point of access. That way, users can refine each stakeholder's experience and use the tool as an “ultimate listening system.”

Since automation is becoming increasingly important in our data-driven age, Qualtrics has also developed drag-and-drop integrations with the systems companies already use, such as CRM, ticketing, or messaging, while enabling users to send automatic notifications to the right people. This feature works across brand tracking and product feedback as well as customer and employee experience. Other critical features, such as the directory, where users can connect data from 130 channels (including web, SMS, voice, video, or social), and Qualtrics iQ, which analyzes unstructured data, let users take advantage of the predictive analytics engine and build detailed customer journeys. If you're looking for data analytics software to handle your company's market research, Qualtrics is worth a try.

14. Data science platforms

Data science can be applied with most software solutions on our list, but it deserves a special category since it has become one of the most sought-after skills of the decade. Whether you need preparation, integration, or data analyst reporting tools, data science platforms will probably rank high on your list for simplifying analytical processes and using advanced analytics models to generate in-depth insights. To put this into perspective, we will present RapidMiner, one of the top data analyst software platforms, which combines deep but simplified analysis.

A comprehensive data science and machine learning platform with 1500+ algorithms and functions

Integrates with Python and R and supports database connections (e.g., Oracle)