
What Is Critical Thinking and Why Do We Need To Teach It?

Question the world and sort out fact from opinion.

The world is full of information (and misinformation) from books, TV, magazines, newspapers, online articles, social media, and more. Everyone has their own opinions, and these opinions are frequently presented as facts. Making informed choices is more important than ever, and that takes strong critical thinking skills. But what exactly is critical thinking? Why should we teach it to our students? Read on to find out.

What is critical thinking?

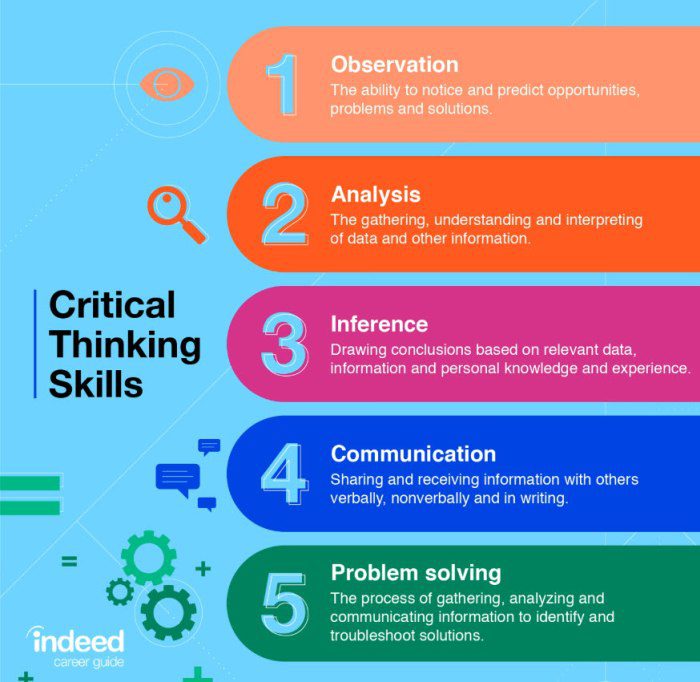

Source: Indeed

Critical thinking is the ability to examine a subject and develop an informed opinion about it. It’s about asking questions, then looking closely at the answers to form conclusions that are backed by provable facts, not just “gut feelings” and opinion. These skills allow us to confidently navigate a world full of persuasive advertisements, opinions presented as facts, and confusing and contradictory information.

The Foundation for Critical Thinking says, “Critical thinking can be seen as having two components: 1) a set of information and belief-generating and processing skills, and 2) the habit, based on intellectual commitment, of using those skills to guide behavior.”

In other words, good critical thinkers know how to analyze and evaluate information, breaking it down to separate fact from opinion. After a thorough analysis, they feel confident forming their own opinions on a subject. And what’s more, critical thinkers use these skills regularly in their daily lives. Rather than jumping to conclusions or being guided by initial reactions, they’ve formed the habit of applying their critical thinking skills to all new information and topics.

Why is critical thinking so important?

Imagine you’re shopping for a new car. It’s a big purchase, so you want to do your research thoroughly. There’s a lot of information out there, and it’s up to you to sort through it all.

- You’ve seen TV commercials for a couple of car models that look really cool and have features you like, such as good gas mileage. Plus, your favorite celebrity drives that car!

- The manufacturer’s website has a lot of information, like cost, MPG, and other details. It also mentions that this car has been ranked “best in its class.”

- Your neighbor down the street used to have this kind of car, but he tells you that he eventually got rid of it because he didn’t think it was comfortable to drive. Plus, he heard that brand of car isn’t as good as it used to be.

- Three independent organizations have done test-drives and published their findings online. They all agree that the car has good gas mileage and a sleek design. But they each have their own concerns or complaints about the car, including one that found it might not be safe in high winds.

So much information! It’s tempting to just go with your gut and buy the car that looks the coolest (or is the cheapest, or says it has the best gas mileage). Ultimately, though, you know you need to slow down and take your time, or you could wind up making a mistake that costs you thousands of dollars. You need to think critically to make an informed choice.

What does critical thinking look like?

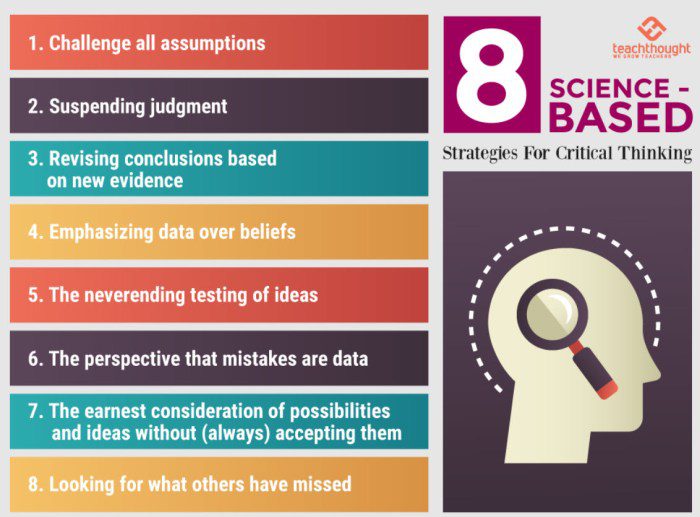

Source: TeachThought

Let’s continue with the car analogy, and apply some critical thinking to the situation.

- Critical thinkers know they can’t trust TV commercials to help them make smart choices, since every single one wants you to think their car is the best option.

- The manufacturer’s website will have some details that are proven facts, but other statements that are hard to prove or clearly just opinions. Which information is factual, and even more important, relevant to your choice?

- A neighbor’s stories are anecdotal, so they may or may not be useful. They’re the opinions and experiences of just one person and might not be representative of a whole. Can you find other people with similar experiences that point to a pattern?

- The independent studies could be trustworthy, although it depends on who conducted them and why. Closer analysis might show that the most positive study was conducted by a company hired by the car manufacturer itself. Who conducted each study, and why?

Did you notice all the questions that started to pop up? That’s what critical thinking is about: asking the right questions, and knowing how to find and evaluate the answers to those questions.

Good critical thinkers do this sort of analysis every day, on all sorts of subjects. They seek out proven facts and trusted sources, weigh the options, and then make a choice and form their own opinions. It’s a process that becomes automatic over time; experienced critical thinkers question everything thoughtfully, with purpose. This helps them feel confident that their informed opinions and choices are the right ones for them.

Key Critical Thinking Skills

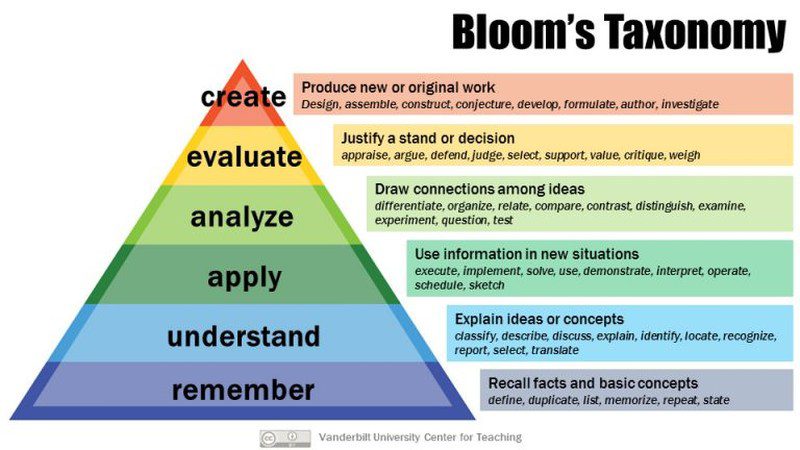

Source: Vanderbilt University

There’s no official list, but many people use Bloom’s Taxonomy to help lay out the skills kids should develop as they grow up.

Bloom’s Taxonomy is laid out as a pyramid, with foundational skills at the bottom providing a base for more advanced skills higher up. The lowest phase, “Remember,” doesn’t require much critical thinking. These are skills like memorizing math facts, defining vocabulary words, or knowing the main characters and basic plot points of a story.

Higher skills on Bloom’s list incorporate more critical thinking.

True understanding is more than memorization or reciting facts. It’s the difference between a child reciting by rote “one times four is four, two times four is eight, three times four is twelve,” versus recognizing that multiplication is the same as adding a number to itself a certain number of times. When you understand a concept, you can explain how it works to someone else.

When you apply your knowledge, you take a concept you’ve already mastered and apply it to new situations. For instance, a student learning to read doesn’t need to memorize every word. Instead, they use their skills in sounding out letters to tackle each new word as they come across it.

When we analyze something, we don’t take it at face value. Analysis requires us to find facts that stand up to inquiry. We put aside personal feelings or beliefs, and instead identify and scrutinize primary sources for information. This is a complex skill, one we hone throughout our entire lives.

Evaluating means reflecting on analyzed information, selecting the most relevant and reliable facts to help us make choices or form opinions. True evaluation requires us to put aside our own biases and accept that there may be other valid points of view, even if we don’t necessarily agree with them.

Finally, critical thinkers are ready to create their own result. They can make a choice, form an opinion, cast a vote, write a thesis, debate a topic, and more. And they can do it with the confidence that comes from approaching the topic critically.

How do you teach critical thinking skills?

The best way to create a future generation of critical thinkers is to encourage them to ask lots of questions. Then, show them how to find the answers by choosing reliable primary sources. Require them to justify their opinions with provable facts, and help them identify bias in themselves and others. Try some of these resources to get started.

- 5 Critical Thinking Skills Every Kid Needs To Learn (And How To Teach Them)

- 100+ Critical Thinking Questions for Students To Ask About Anything

- 10 Tips for Teaching Kids To Be Awesome Critical Thinkers

- Free Critical Thinking Poster, Rubric, and Assessment Ideas

More Critical Thinking Resources

The answer to “What is critical thinking?” is a complex one. These resources can help you dig more deeply into the concept and hone your own skills.

- The Foundation for Critical Thinking

- Cultivating a Critical Thinking Mindset (PDF)

- Asking the Right Questions: A Guide to Critical Thinking (Browne/Keeley, 2014)

Have more questions about what critical thinking is or how to teach it in your classroom? Join the WeAreTeachers HELPLINE group on Facebook to ask for advice and share ideas!


Copyright © 2023. All rights reserved. 5335 Gate Parkway, Jacksonville, FL 32256

Critical thinking – Why is it so hard to teach?

Critical thinking is not a set of skills that can be deployed at any time, in any context. It is a type of thought that even 3-year-olds can engage in – and even trained scientists can fail in.

Willingham, D. T. (2008). Critical thinking: Why is it so hard to teach? Arts Education Policy Review, 109 (4), 21–32. https://doi.org/10.3200/AEPR.109.4.21-32


Critical Thinking: Why Is It So Hard to Teach?

Teacher quality standards.

- Element D: Teachers establish and communicate high expectations and use processes to support the development of critical-thinking and problem-solving skills.

PD resource content

This article takes a deep dive into what it really means for students to think critically. Daniel Willingham, a professor of cognitive psychology, details the latest research in an effort to support teachers as they explicitly model and scaffold critical thinking strategies with their students.

Resource Links

Willingham, D. T. (2007). Critical thinking: Why is it so hard to teach? American Educator. Retrieved from http://www.aft.org/sites/default/files/periodicals/Crit_Thinking.pdf


Critical Thinking: Why is it so hard to teach?

By: Daniel Willingham, Reading Rockets

Topic(s): Comprehension

Description

- A1. Transferable Skills

In this Reading Rockets article, Daniel Willingham explores the topic of critical thinking and how to foster metacognitive skills in the classroom. Willingham notes that background knowledge plays a role in critical thinking and understanding the surface structure of problems. He provides evidence-based strategies, such as promoting thinking within a particular domain to bring everyday thinking into the classroom.

Curriculum Connection

Critical thinking is essential in all areas of the curriculum and this website broadly supports the successful implementation of fostering metacognitive skills to enhance critical thinking.


This is a good article, and it really makes you think about the big push that always comes from the top down to make critical thinkers in our education systems. After you read this, it makes a lot of sense why students have a difficult time doing math word problems, looking at multiple perspectives in middle school geography, and connecting the dots in science. Great read for intermediate teachers.

These are great resources. I would love to see research and resources from Canadian researchers as well.


Funding for ONlit.org is provided by the Ministry of Education. Please note that the views expressed in these resources are the views of ONlit and do not necessarily reflect those of the Ministry of Education.

© 2023 ONlit. All rights reserved.

Critical Thinking: Why Is It So Hard to Teach?

Learning critical thinking skills can only take a student so far. Critical thinking depends on knowing relevant content very well and thinking about it, repeatedly. Here are five strategies, consistent with the research, to help bring critical thinking into the everyday classroom.

On this page:

- Why is thinking critically so hard?

- Thinking tends to focus on a problem's "surface structure"

- With deep knowledge, thinking can penetrate beyond surface structure

- Looking for a deep structure helps, but it only takes you so far

- Is thinking like a scientist easier?

- Why scientific thinking depends on scientific knowledge

Virtually everyone would agree that a primary, yet insufficiently met, goal of schooling is to enable students to think critically. In layperson’s terms, critical thinking consists of seeing both sides of an issue, being open to new evidence that disconfirms your ideas, reasoning dispassionately, demanding that claims be backed by evidence, deducing and inferring conclusions from available facts, solving problems, and so forth. Then too, there are specific types of critical thinking that are characteristic of different subject matter: That’s what we mean when we refer to “thinking like a scientist” or “thinking like a historian.”

This proper and commonsensical goal has very often been translated into calls to teach “critical thinking skills” and “higher-order thinking skills” and into generic calls for teaching students to make better judgments, reason more logically, and so forth. In a recent survey of human resource officials 1 and in testimony delivered just a few months ago before the Senate Finance Committee, 2 business leaders have repeatedly exhorted schools to do a better job of teaching students to think critically. And they are not alone. Organizations and initiatives involved in education reform, such as the National Center on Education and the Economy, the American Diploma Project, and the Aspen Institute, have pointed out the need for students to think and/or reason critically. The College Board recently revamped the SAT to better assess students’ critical thinking and ACT, Inc. offers a test of critical thinking for college students.

These calls are not new. In 1983, A Nation At Risk, a report by the National Commission on Excellence in Education, found that many 17-year-olds did not possess the “‘higher-order’ intellectual skills” this country needed. It claimed that nearly 40 percent could not draw inferences from written material and only one-fifth could write a persuasive essay.

Following the release of A Nation At Risk, programs designed to teach students to think critically across the curriculum became extremely popular. By 1990, most states had initiatives designed to encourage educators to teach critical thinking, and one of the most widely used programs, Tactics for Thinking, sold 70,000 teacher guides. 3 But, for reasons I’ll explain, the programs were not very effective, and today we still lament students’ lack of critical thinking.

After more than 20 years of lamentation, exhortation, and little improvement, maybe it’s time to ask a fundamental question: Can critical thinking actually be taught? Decades of cognitive research point to a disappointing answer: not really. People who have sought to teach critical thinking have assumed that it is a skill, like riding a bicycle, and that, like other skills, once you learn it, you can apply it in any situation. Research from cognitive science shows that thinking is not that sort of skill. The processes of thinking are intertwined with the content of thought (that is, domain knowledge). Thus, if you remind a student to “look at an issue from multiple perspectives” often enough, he will learn that he ought to do so, but if he doesn’t know much about an issue, he can’t think about it from multiple perspectives. You can teach students maxims about how they ought to think, but without background knowledge and practice, they probably will not be able to implement the advice they memorize. Just as it makes no sense to try to teach factual content without giving students opportunities to practice using it, it also makes no sense to try to teach critical thinking devoid of factual content.

In this article, I will describe the nature of critical thinking, explain why it is so hard to do and to teach, and explore how students acquire a specific type of critical thinking: thinking scientifically. Along the way, we’ll see that critical thinking is not a set of skills that can be deployed at any time, in any context. It is a type of thought that even 3-year-olds can engage in — and even trained scientists can fail in. And it is very much dependent on domain knowledge and practice.

Educators have long noted that school attendance and even academic success are no guarantee that a student will graduate an effective thinker in all situations. There is an odd tendency for rigorous thinking to cling to particular examples or types of problems. Thus, a student may have learned to estimate the answer to a math problem before beginning calculations as a way of checking the accuracy of his answer, but in the chemistry lab, the same student calculates the components of a compound without noticing that his estimates sum to more than 100%. And a student who has learned to thoughtfully discuss the causes of the American Revolution from both the British and American perspectives doesn’t even think to question how the Germans viewed World War II. Why are students able to think critically in one situation, but not in another? The brief answer is: Thought processes are intertwined with what is being thought about. Let’s explore this in depth by looking at a particular kind of critical thinking that has been studied extensively: problem solving.

Imagine a seventh-grade math class immersed in word problems. How is it that students will be able to answer one problem, but not the next, even though mathematically both word problems are the same, that is, they rely on the same mathematical knowledge? Typically, the students are focusing on the scenario that the word problem describes (its surface structure) instead of on the mathematics required to solve it (its deep structure). So even though students have been taught how to solve a particular type of word problem, when the teacher or textbook changes the scenario, students still struggle to apply the solution because they don’t recognize that the problems are mathematically the same.

To understand why the surface structure of a problem is so distracting and, as a result, why it’s so hard to apply familiar solutions to problems that appear new, let’s first consider how you understand what’s being asked when you are given a problem. Anything you hear or read is automatically interpreted in light of what you already know about similar subjects. For example, suppose you read these two sentences: “After years of pressure from the film and television industry, the President has filed a formal complaint with China over what U.S. firms say is copyright infringement. These firms assert that the Chinese government sets stringent trade restrictions for U.S. entertainment products, even as it turns a blind eye to Chinese companies that copy American movies and television shows and sell them on the black market.” Background knowledge not only allows you to comprehend the sentences, it also has a powerful effect as you continue to read because it narrows the interpretations of new text that you will entertain. For example, if you later read the word “Bush,” it would not make you think of a small shrub, nor would you wonder whether it referred to the former President Bush, the rock band, or a term for rural hinterlands. If you read “piracy,” you would not think of eye-patched swabbies shouting “shiver me timbers!” The cognitive system gambles that incoming information will be related to what you’ve just been thinking about. Thus, it significantly narrows the scope of possible interpretations of words, sentences, and ideas. The benefit is that comprehension proceeds faster and more smoothly; the cost is that the deep structure of a problem is harder to recognize.

The narrowing of ideas that occurs while you read (or listen) means that you tend to focus on the surface structure, rather than on the underlying structure of the problem. For example, in one experiment, 4 subjects saw a problem like this one:

Members of the West High School Band were hard at work practicing for the annual Homecoming Parade. First they tried marching in rows of 12, but Andrew was left by himself to bring up the rear. Then the director told the band members to march in columns of eight, but Andrew was still left to march alone. Even when the band marched in rows of three, Andrew was left out. Finally, in exasperation, Andrew told the band director that they should march in rows of five in order to have all the rows filled. He was right. Given that there were at least 45 musicians on the field but fewer than 200 musicians, how many students were there in the West High School Band?

Earlier in the experiment, subjects had read four problems along with detailed explanations of how to solve each one, ostensibly to rate them for the clarity of the writing. One of the four problems concerned the number of vegetables to buy for a garden, and it relied on the same type of solution necessary for the band problem: calculation of the least common multiple. Yet few subjects (just 19 percent) saw that the band problem was similar and that they could use the garden problem solution. Why?

When a student reads a word problem, her mind interprets the problem in light of her prior knowledge, as happened when you read the two sentences about copyrights and China. The difficulty is that the knowledge that seems relevant relates to the surface structure — in this problem, the reader dredges up knowledge about bands, high school, musicians, and so forth. The student is unlikely to read the problem and think of it in terms of its deep structure — using the least common multiple. The surface structure of the problem is overt, but the deep structure of the problem is not. Thus, people fail to use the first problem to help them solve the second: In their minds, the first was about vegetables in a garden and the second was about rows of band marchers.
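To make the deep structure of the band problem concrete, here is a minimal Python sketch. The function and parameter names (`lcm`, `band_sizes`, `rows_leaving_one`, `full_row`) are illustrative and do not come from the experiment. It assumes the reading given above: the band size leaves exactly one marcher over in rows of 12, 8, and 3, fills rows of 5 evenly, and is at least 45 but fewer than 200.

```python
from functools import reduce
from math import gcd


def lcm(a, b):
    """Least common multiple of two positive integers."""
    return a * b // gcd(a, b)


def band_sizes(rows_leaving_one=(12, 8, 3), full_row=5, low=45, high=200):
    """Return every band size n with low <= n < high that leaves exactly
    one marcher over for each row length in rows_leaving_one and fills
    rows of full_row evenly."""
    # A number that is one more than a multiple of 12, 8 and 3 is one
    # more than a multiple of their least common multiple (24).
    step = reduce(lcm, rows_leaving_one)
    return [n for n in range(low, high)
            if n % step == 1 and n % full_row == 0]


print(band_sizes())  # [145]
```

Nothing in the function mentions bands or gardens. Only the numbers change from one scenario to the next, which is what sharing a deep structure means here.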

If knowledge of how to solve a problem never transferred to problems with new surface structures, schooling would be inefficient or even futile — but of course, such transfer does occur. When and why is complex, 5 but two factors are especially relevant for educators: familiarity with a problem’s deep structure and the knowledge that one should look for a deep structure. I’ll address each in turn. When one is very familiar with a problem’s deep structure, knowledge about how to solve it transfers well. That familiarity can come from long-term, repeated experience with one problem, or with various manifestations of one type of problem (i.e., many problems that have different surface structures, but the same deep structure). After repeated exposure to either or both, the subject simply perceives the deep structure as part of the problem description. Here’s an example:

A treasure hunter is going to explore a cave up on a hill near a beach. He suspected there might be many paths inside the cave so he was afraid he might get lost. Obviously, he did not have a map of the cave; all he had with him were some common items such as a flashlight and a bag. What could he do to make sure he did not get lost trying to get back out of the cave later?

The solution is to carry some sand with you in the bag, and leave a trail as you go, so you can trace your path back when you’re ready to leave the cave. About 75% of American college students thought of this solution, but only 25% of Chinese students solved it. 6 The experimenters suggested that Americans solved it because most grew up hearing the story of Hansel and Gretel, which includes the idea of leaving a trail as you travel to an unknown place in order to find your way back. The experimenters also gave subjects another puzzle based on a common Chinese folk tale, and the percentage of solvers from each culture reversed. (Read the puzzle based on the Chinese folk tale, and the tale itself, at www.aft.org/pubs-reports/american_educator/index.htm.)

It takes a good deal of practice with a problem type before students know it well enough to immediately recognize its deep structure, irrespective of the surface structure, as Americans did for the Hansel and Gretel problem. American subjects didn’t think of the problem in terms of sand, caves, and treasure; they thought of it in terms of finding something with which to leave a trail. The deep structure of the problem is so well represented in their memory, that they immediately saw that structure when they read the problem.

Now let’s turn to the second factor that aids in transfer despite distracting differences in surface structure — knowing to look for a deep structure. Consider what would happen if I said to a student working on the band problem, “this one is similar to the garden problem.” The student would understand that the problems must share a deep structure and would try to figure out what it is. Students can do something similar without the hint. A student might think “I’m seeing this problem in a math class, so there must be a math formula that will solve this problem.” Then he could scan his memory (or textbook) for candidates, and see if one of them helps. This is an example of what psychologists call metacognition, or regulating one’s thoughts. In the introduction, I mentioned that you can teach students maxims about how they ought to think. Cognitive scientists refer to these maxims as metacognitive strategies. They are little chunks of knowledge — like “look for a problem’s deep structure” or “consider both sides of an issue” — that students can learn and then use to steer their thoughts in more productive directions.

Helping students become better at regulating their thoughts was one of the goals of the critical thinking programs that were popular 20 years ago. These programs are not very effective. Their modest benefit is likely due to teaching students to effectively use metacognitive strategies. Students learn to avoid biases that most of us are prey to when we think, such as settling on the first conclusion that seems reasonable, only seeking evidence that confirms one’s beliefs, ignoring countervailing evidence, overconfidence, and others. 7 Thus, a student who has been encouraged many times to see both sides of an issue, for example, is probably more likely to spontaneously think “I should look at both sides of this issue” when working on a problem.

Unfortunately, metacognitive strategies can only take you so far. Although they suggest what you ought to do, they don’t provide the knowledge necessary to implement the strategy. For example, when experimenters told subjects working on the band problem that it was similar to the garden problem, more subjects solved the problem (35% compared to 19% without the hint), but most subjects, even when told what to do, weren’t able to do it. Likewise, you may know that you ought not accept the first reasonable-sounding solution to a problem, but that doesn’t mean you know how to come up with alternative solutions or weigh how reasonable each one is. That requires domain knowledge and practice in putting that knowledge to work.

Since critical thinking relies so heavily on domain knowledge, educators may wonder if thinking critically in a particular domain is easier to learn. The quick answer is yes, it’s a little easier. To understand why, let’s focus on one domain, science, and examine the development of scientific thinking.

Teaching science has been the focus of intensive study for decades, and the research can be usefully categorized into two strands. The first examines how children acquire scientific concepts; for example, how they come to forgo naive conceptions of motion and replace them with an understanding of physics. The second strand is what we would call thinking scientifically, that is, the mental procedures by which science is conducted: developing a model, deriving a hypothesis from the model, designing an experiment to test the hypothesis, gathering data from the experiment, interpreting the data in light of the model, and so forth. Most researchers believe that scientific thinking is really a subset of reasoning that is not different in kind from other types of reasoning that children and adults do. 8 What makes it scientific thinking is knowing when to engage in such reasoning, and having accumulated enough relevant knowledge and spent enough time practicing to do so.

Recognizing when to engage in scientific reasoning is so important because the evidence shows that being able to reason is not enough; children and adults use and fail to use the proper reasoning processes on problems that seem similar. For example, consider a type of reasoning about cause and effect that is very important in science: conditional probabilities. If two things go together, it’s possible that one causes the other. Suppose you start a new medicine and notice that you seem to be getting headaches more often than usual. You would infer that the medication influenced your chances of getting a headache. But it could also be that the medication increases your chances of getting a headache only in certain circumstances or conditions. In conditional probability, the relationship between two things (e.g., medication and headaches) is dependent on a third factor. For example, the medication might increase the probability of a headache only when you’ve had a cup of coffee. The relationship of the medication and headaches is conditional on the presence of coffee.
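The medication example can be worked through with a small Python sketch. The diary counts below are entirely hypothetical, invented only to illustrate the idea, and the helper names (`make_days`, `headache_rate`) are not from the article. The sketch compares the headache rate on medication days overall with the rate once the days are split by whether coffee was also drunk.

```python
def make_days(medication, coffee, total, headaches):
    """Build `total` diary entries, the first `headaches` of which
    record a headache. All counts are hypothetical."""
    return [{"medication": medication, "coffee": coffee,
             "headache": i < headaches} for i in range(total)]


# Hypothetical diary: 4 days in each combination of medication and coffee.
days = (make_days(True, True, 4, 3)      # medication and coffee
        + make_days(True, False, 4, 1)   # medication, no coffee
        + make_days(False, True, 4, 1)   # coffee, no medication
        + make_days(False, False, 4, 1)) # neither


def headache_rate(days, medication, coffee=None):
    """Share of matching days with a headache; coffee=None ignores coffee."""
    matching = [d for d in days
                if d["medication"] == medication
                and (coffee is None or d["coffee"] == coffee)]
    return sum(d["headache"] for d in matching) / len(matching)


print(headache_rate(days, medication=True))                # 0.5
print(headache_rate(days, medication=False))               # 0.25
print(headache_rate(days, medication=True, coffee=True))   # 0.75
print(headache_rate(days, medication=True, coffee=False))  # 0.25
```

In this made-up diary the medication appears to double the headache rate overall, but the increase shows up only on coffee days. Without coffee, the rate on medication days matches the rate on days without medication.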

Understanding and using conditional probabilities is essential to scientific thinking because it is so important in reasoning about what causes what. But people’s success in thinking this way depends on the particulars of how the question is presented. Studies show that adults sometimes use conditional probabilities successfully, 9 but fail to do so with many problems that call for it. 10 Even trained scientists are open to pitfalls in reasoning about conditional probabilities (as well as other types of reasoning). Physicians are known to discount or misinterpret new patient data that conflict with a diagnosis they have in mind, 11 and Ph.D.- level scientists are prey to faulty reasoning when faced with a problem embedded in an unfamiliar context. 12

And yet, young children are sometimes able to reason about conditional probabilities. In one experiment, 13 the researchers showed 3-year-olds a box and told them it was a “blicket detector” that would play music if a blicket were placed on top. The child then saw one of the two sequences shown below in which blocks are placed on the blicket detector. At the end of the sequence, the child was asked whether each block was a blicket. In other words, the child was to use conditional reasoning to infer which block caused the music to play.

Note that the relationship between each individual block (yellow cube and blue cylinder) and the music is the same in sequences 1 and 2. In either sequence, the child sees the yellow cube associated with music three times, and the blue cylinder associated with the absence of music once and the presence of music twice. What differs between the first and second sequence is the relationship between the blue and yellow blocks, and therefore, the conditional probability of each block being a blicket. Three-year-olds understood the importance of conditional probabilities. For sequence 1, they said the yellow cube was a blicket, but the blue cylinder was not; for sequence 2, they chose equally between the two blocks.
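The two sequences are not reproduced in this text, so the Python sketch below uses a reconstruction that is only an assumption. It is chosen to match the counts described above: yellow with music three times, blue without music once and with music twice. In the assumed sequence 1 the blue cylinder plays music only when the yellow cube is also on the detector. In the assumed sequence 2 each block is always placed alone. The name `music_rate` and the trial encoding are illustrative.

```python
from fractions import Fraction

# Each trial: (set of blocks on the detector, whether music played).
# Assumed reconstruction; the original figure is not available here.
sequence_1 = [({"yellow"}, True),
              ({"blue"}, False),
              ({"yellow", "blue"}, True),
              ({"yellow", "blue"}, True)]

sequence_2 = [({"yellow"}, True)] * 3 + [({"blue"}, False),
                                         ({"blue"}, True),
                                         ({"blue"}, True)]


def music_rate(trials, present, absent=frozenset()):
    """Fraction of trials with music, among trials where every block in
    `present` is on the detector and no block in `absent` is."""
    outcomes = [music for blocks, music in trials
                if set(present) <= blocks and not (set(absent) & blocks)]
    return Fraction(sum(outcomes), len(outcomes))


for name, seq in (("sequence 1", sequence_1), ("sequence 2", sequence_2)):
    print(name,
          "| blue overall:", music_rate(seq, {"blue"}),
          "| blue without yellow:", music_rate(seq, {"blue"}, {"yellow"}))
```

Under these assumptions the blue cylinder is paired with music on two of three trials in both sequences. Once the yellow cube is ruled out, that rate falls to zero in sequence 1 and stays at two-thirds in sequence 2, which is the distinction the children's answers tracked.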

This body of studies has been summarized simply: Children are not as dumb as you might think, and adults (even trained scientists) are not as smart as you might think.

What’s going on? One issue is that the common conception of critical thinking or scientific thinking (or historical thinking) as a set of skills is not accurate. Critical thinking does not have certain characteristics normally associated with skills, in particular the ability to use that skill at any time. If I told you that I learned to read music, for example, you would expect, correctly, that I could use my new skill (i.e., read music) whenever I wanted. But critical thinking is very different. As we saw in the discussion of conditional probabilities, people can engage in some types of critical thinking without training, but even with extensive training, they will sometimes fail to think critically. This understanding that critical thinking is not a skill is vital. It tells us that teaching students to think critically probably lies in small part in showing them new ways of thinking, and in large part in enabling them to deploy the right type of thinking at the right time.

Returning to our focus on science, we’re ready to address a key question: Can students be taught when to engage in scientific thinking? Sort of. It is easier than trying to teach general critical thinking, but not as easy as we would like. Recall that when we were discussing problem solving, we found that students can learn metacognitive strategies that help them look past the surface structure of a problem and identify its deep structure, thereby getting them a step closer to figuring out a solution. Essentially the same thing can happen with scientific thinking. Students can learn certain metacognitive strategies that will cue them to think scientifically. But, as with problem solving, the metacognitive strategies only tell the students what they should do — they do not provide the knowledge that students need to actually do it. The good news is that within a content area like science, students have more context cues to help them figure out which metacognitive strategy to use, and teachers have a clearer idea of what domain knowledge they must teach to enable students to do what the strategy calls for.

For example, two researchers 14 taught second-, third-, and fourth-graders the scientific concept behind controlling variables; that is, of keeping everything in two comparison conditions the same, except for the one variable that is the focus of investigation. The experimenters gave explicit instruction about this strategy for conducting experiments and then had students practice with a set of materials (e.g., springs) to answer a specific question (e.g., which of these factors determine how far a spring will stretch: length, coil diameter, wire diameter, or weight?). The experimenters found that students not only understood the concept of controlling variables, they were able to apply it seven months later with different materials and a different experimenter, although the older children showed more robust transfer than the younger children. In this case, the students recognized that they were designing an experiment and that cued them to recall the metacognitive strategy, “When I design experiments, I should try to control variables.” Of course, succeeding in controlling all of the relevant variables is another matter: that depends on knowing which variables may matter and how they could vary.
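The control-of-variables rule itself is simple enough to state as a check. The following is a minimal sketch, not the researchers' materials: the variable names come from the spring example above, while the specific values and the helper names `differing_variables` and `is_controlled` are hypothetical.

```python
def differing_variables(setup_a, setup_b):
    """Return the names of the variables whose values differ between two setups."""
    names = set(setup_a) | set(setup_b)
    return sorted(n for n in names if setup_a.get(n) != setup_b.get(n))


def is_controlled(setup_a, setup_b, focus):
    """A comparison is controlled if the focal variable is the only difference."""
    return differing_variables(setup_a, setup_b) == [focus]


# Hypothetical spring setups (values are illustrative only).
spring_a = {"length": "long", "coil_diameter": "wide",
            "wire_diameter": "thin", "weight": "heavy"}
spring_b = dict(spring_a, length="short")                  # only length changes
spring_c = dict(spring_a, length="short", weight="light")  # length and weight change

print(is_controlled(spring_a, spring_b, "length"))  # a fair test of length
print(is_controlled(spring_a, spring_c, "length"))  # confounded by weight
print(differing_variables(spring_a, spring_c))
```

The check only works for variables that have been listed, which mirrors the caveat in the text: knowing which variables belong in the list is a matter of domain knowledge, not of the strategy.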

Experts in teaching science recommend that scientific reasoning be taught in the context of rich subject matter knowledge. A committee of prominent science educators brought together by the National Research Council put it plainly: “Teaching content alone is not likely to lead to proficiency in science, nor is engaging in inquiry experiences devoid of meaningful science content.”

The committee drew this conclusion based on evidence that background knowledge is necessary to engage in scientific thinking. For example, knowing that one needs a control group in an experiment is important. Like having two comparison conditions, having a control group in addition to an experimental group helps you focus on the variable you want to study. But knowing that you need a control group is not the same as being able to create one. Since it’s not always possible to have two groups that are exactly alike, knowing which factors can vary between groups and which must not vary is one example of necessary background knowledge. In experiments measuring how quickly subjects can respond, for example, control groups must be matched for age, because age affects response speed, but they need not be perfectly matched for gender.

More formal experimental work verifies that background knowledge is necessary to reason scientifically. For example, consider devising a research hypothesis. One could generate multiple hypotheses for any given situation. Suppose you know that car A gets better gas mileage than car B and you’d like to know why. There are many differences between the cars, so which will you investigate first? Engine size? Tire pressure? A key determinant of the hypothesis you select is plausibility. You won’t choose to investigate a difference between cars A and B that you think is unlikely to contribute to gas mileage (e.g., paint color), but if someone provides a reason to make this factor more plausible (e.g., the way your teenage son’s driving habits changed after he painted his car red), you are more likely to say that this now-plausible factor should be investigated. 16 One’s judgment about the plausibility of a factor being important is based on one’s knowledge of the domain.

Other data indicate that familiarity with the domain makes it easier to juggle different factors simultaneously, which in turn allows you to construct experiments that simultaneously control for more factors. For example, in one experiment, 17 eighth-graders completed two tasks. In one, they were to manipulate conditions in a computer simulation to keep imaginary creatures alive. In the other, they were told that they had been hired by a swimming pool company to evaluate how the surface area of swimming pools was related to the cooling rate of its water. Students were more adept at designing experiments for the first task than the second, which the researchers interpreted as being due to students’ familiarity with the relevant variables. Students are used to thinking about factors that might influence creatures’ health (e.g., food, predators), but have less experience working with factors that might influence water temperature (e.g., volume, surface area). Hence, it is not the case that “controlling variables in an experiment” is a pure process that is not affected by subjects’ knowledge of those variables.

Prior knowledge and beliefs not only influence which hypotheses one chooses to test, they influence how one interprets data from an experiment. In one experiment, 18 undergraduates were evaluated for their knowledge of electrical circuits. Then they participated in three weekly, 1.5-hour sessions during which they designed and conducted experiments using a computer simulation of circuitry, with the goal of learning how circuitry works. The results showed a strong relationship between subjects’ initial knowledge and how much subjects learned in future sessions, in part due to how the subjects interpreted the data from the experiments they had conducted. Subjects who started with more and better integrated knowledge planned more informative experiments and made better use of experimental outcomes.

Other studies have found similar results, and have found that anomalous, or unexpected, outcomes may be particularly important in creating new knowledge, and particularly dependent upon prior knowledge. 19 Data that seem odd because they don’t fit one’s mental model of the phenomenon under investigation are highly informative. They tell you that your understanding is incomplete, and they guide the development of new hypotheses. But you could only recognize the outcome of an experiment as anomalous if you had some expectation of how it would turn out. And that expectation would be based on domain knowledge, as would your ability to create a new hypothesis that takes the anomalous outcome into account.

The idea that scientific thinking must be taught hand in hand with scientific content is further supported by research on scientific problem solving; that is, when students calculate an answer to a textbook-like problem, rather than design their own experiment. A meta-analysis 20 of 40 experiments investigating methods for teaching scientific problem solving showed that effective approaches were those that focused on building complex, integrated knowledge bases as part of problem solving, for example by including exercises like concept mapping. Ineffective approaches focused exclusively on the strategies to be used in problem solving while ignoring the knowledge necessary for the solution.

What do all these studies boil down to? First, critical thinking (as well as scientific thinking and other domain-based thinking) is not a skill. There is not a set of critical thinking skills that can be acquired and deployed regardless of context. Second, there are metacognitive strategies that, once learned, make critical thinking more likely. Third, the ability to think critically (to actually do what the metacognitive strategies call for) depends on domain knowledge and practice. For teachers, the situation is not hopeless, but no one should underestimate the difficulty of teaching students to think critically.


Getting Students Comfortable with Critical Thinking

Three Strategies to Support Critical Thinking

Toward more joyful learning.


- Critical thinking is subject-specific. Art critics and football analysts are, arguably, both critical thinkers in their own right. Yet we wouldn't want an art critic to offer color commentary for the Super Bowl or a sportscaster to serve as a docent at the Museum of Modern Art. The same principle applies to academics. As cognitive scientist Daniel Willingham (2007) notes, being able to solve a complex mathematics problem does not transfer to being able to analyze a historical text or construct a well-reasoned essay. We must help students develop distinct critical thinking skills in all subject areas.

- We must teach critical thinking skills directly. As I've noted previously in this column (Goodwin, 2017), critical thinking skills do not develop via osmosis or incidental exposure to critical thought. A study that compared the effects of providing one group of college students with direct instruction in critical thinking and another with texts that reflected analytical and evaluative thinking found the first group to be far more capable of demonstrating critical thinking afterward (Marin & Halpern, 2011).

- Mental models are key to critical thinking. In many pursuits, critical thought depends on our ability to use and reflect on mental models, or schema. Research has shown that one of the biggest differences between experts and novices is that experts use well-developed mental models to solve problems by categorizing them, creating a mental representation of them, retrieving strategies for solving them, and reflecting afterward on the validity of their answers (Nokes, Schunn, & Chi, 2010). So, to help students develop critical thinking skills, we must help them develop mental schema for solving problems.

1. Structured problem solving

2. Cognitive writing

3. Guided investigations

Collins, J. L., Lee, J., Fox, J. D., & Madigan, T. P. (2017). Bringing together reading and writing: An experimental study of writing intensive reading comprehension in low-performing urban elementary schools. Reading Research Quarterly, 52(3), 311–332.

Goodwin, B. (2017). Critical thinking won't develop through osmosis. Educational Leadership, 74(5), 80–81.

Kahneman, D. (2011). Thinking, fast and slow. Farrar, Straus & Giroux.

Lynch, S., Taymans, J., Watson, W. A., Ochsendorf, R. J., Pyke, C., & Szesze, M. J. (2007). Effectiveness of a highly rated science curriculum unit for students with disabilities in general education classrooms. Exceptional Children, 73(2), 202–223.

Marin, L. M., & Halpern, D. F. (2011). Pedagogy for developing critical thinking in adolescents: Explicit instruction produces greatest gains. Thinking Skills and Creativity, 6, 1–13.

Nokes, T. J., Schunn, C. D., & Chi, M. T. (2010). Problem solving and human expertise. International Encyclopedia of Education, vol. 5, pp. 265–272.

Olson, C. B., Matuchniak, T., Chung, H. Q., Stumpf, R., & Farkas, G. (2017). Reducing achievement gaps in academic writing for Latinos and English Learners in grades 7-12. Journal of Educational Psychology, 109(1), 1–21.

Willingham, D. T. (2007). Critical thinking: Why is it so hard to teach? American Educator, 31, 8–19.

Bryan Goodwin is the president and CEO of McREL International, a Denver-based nonprofit education research and development organization. Goodwin, a former teacher and journalist, has been at McREL for more than 20 years, serving previously as chief operating officer and director of communications and marketing. Goodwin writes a monthly research column for Educational Leadership and presents research findings and insights to audiences across the United States and in Canada, the Middle East, and Australia.


What influences students’ abilities to critically evaluate scientific investigations?

Ashley B. Heim

1 Department of Ecology and Evolutionary Biology, Cornell University, Ithaca, NY, United States of America

2 Laboratory of Atomic and Solid State Physics, Cornell University, Ithaca, NY, United States of America

David Esparza

Michelle K. Smith

N. G. Holmes

Associated Data

All raw data files are available from the Cornell Institute for Social and Economic Research (CISER) data and reproduction archive ( https://archive.ciser.cornell.edu/studies/2881 ).

Critical thinking is the process by which people make decisions about what to trust and what to do. Many undergraduate courses, such as those in biology and physics, include critical thinking as an important learning goal. Assessing critical thinking, however, is non-trivial, with mixed recommendations for how to assess critical thinking as part of instruction. Here we evaluate the efficacy of assessment questions to probe students’ critical thinking skills in the context of biology and physics. We use two research-based standardized critical thinking instruments known as the Biology Lab Inventory of Critical Thinking in Ecology (Eco-BLIC) and Physics Lab Inventory of Critical Thinking (PLIC). These instruments provide experimental scenarios and pose questions asking students to evaluate what to trust and what to do regarding the quality of experimental designs and data. Using more than 3000 student responses from over 20 institutions, we sought to understand what features of the assessment questions elicit student critical thinking. Specifically, we investigated (a) how students critically evaluate aspects of research studies in biology and physics when they are individually evaluating one study at a time versus comparing and contrasting two and (b) whether individual evaluation questions are needed to encourage students to engage in critical thinking when comparing and contrasting. We found that students are more critical when making comparisons between two studies than when evaluating each study individually. Also, compare-and-contrast questions are sufficient for eliciting critical thinking, with students providing similar answers regardless of whether the individual evaluation questions are included. This research offers new insight on the types of assessment questions that elicit critical thinking at the introductory undergraduate level; specifically, we recommend instructors incorporate more compare-and-contrast questions related to experimental design in their courses and assessments.

Introduction

Critical thinking and its importance

Critical thinking, defined here as “the ways in which one uses data and evidence to make decisions about what to trust and what to do” [ 1 ], is a foundational learning goal for almost any undergraduate course and can be integrated in many points in the undergraduate curriculum. Beyond the classroom, critical thinking skills are important so that students are able to effectively evaluate data presented to them in a society where information is so readily accessible [ 2 , 3 ]. Furthermore, critical thinking is consistently ranked as one of the most necessary outcomes of post-secondary education for career advancement by employers [ 4 ]. In the workplace, those with critical thinking skills are more competitive because employers assume they can make evidence-based decisions based on multiple perspectives, keep an open mind, and acknowledge personal limitations [ 5 , 6 ]. Despite the importance of critical thinking skills, there are mixed recommendations on how to elicit and assess critical thinking during and as a result of instruction. In response, here we evaluate the degree to which different critical thinking questions elicit students’ critical thinking skills.

Assessing critical thinking in STEM

Across STEM (i.e., science, technology, engineering, and mathematics) disciplines, several standardized assessments probe critical thinking skills. These assessments focus on aspects of critical thinking and ask students to evaluate experimental methods [ 7 – 11 ], form hypotheses and make predictions [ 12 , 13 ], evaluate data [ 2 , 12 – 14 ], or draw conclusions based on a scenario or figure [ 2 , 12 – 14 ]. Many of these assessments are open-response, so they can be difficult to score, and several are not freely available.

In addition, there is an ongoing debate regarding whether critical thinking is a domain-general or context-specific skill. That is, can someone transfer their critical thinking skills from one domain or context to another (domain-general) or do their critical thinking skills only apply in their domain or context of expertise (context-specific)? Research on the effectiveness of teaching critical thinking has found mixed results, primarily due to a lack of consensus definition of and assessment tools for critical thinking [ 15 , 16 ]. Some argue that critical thinking is domain-general—or what Ennis refers to as the “general approach”—because it is an overlapping skill that people use in various aspects of their lives [ 17 ]. In contrast, others argue that critical thinking must be elicited in a context-specific domain, as prior knowledge is needed to make informed decisions in one’s discipline [ 18 , 19 ]. Current assessments include domain-general components [ 2 , 7 , 8 , 14 , 20 , 21 ], asking students to evaluate, for instance, experiments on the effectiveness of dietary supplements in athletes [ 20 ] and context-specific components, such as to measure students’ abilities to think critically in domains such as neuroscience [ 9 ] and biology [ 10 ].

Others maintain the view that critical thinking is a context-specific skill for the purpose of undergraduate education, but argue that it should be content accessible [ 22 – 24 ], as “thought processes are intertwined with what is being thought about” [ 23 ]. From this viewpoint, the context of the assessment would need to be embedded in a relatively accessible context to assess critical thinking independent of students’ content knowledge. Thus, to effectively elicit critical thinking among students, instructors should use assessments that present students with accessible domain-specific information needed to think deeply about the questions being asked [ 24 , 25 ].

Within the context of STEM, current critical thinking assessments primarily ask students to evaluate a single experimental scenario (e.g., [ 10 , 20 ]), though compare-and-contrast questions about more than one scenario can be a powerful way to elicit critical thinking [ 26 , 27 ]. Generally included in the “Analysis” level of Bloom’s taxonomy [ 28 – 30 ], compare-and-contrast questions encourage students to recognize, distinguish between, and relate features between scenarios and discern relevant patterns or trends, rather than compile lists of important features [ 26 ]. For example, a compare-and-contrast assessment may ask students to compare the hypotheses and research methods used in two different experimental scenarios, instead of having them evaluate the research methods of a single experiment. Alternatively, students may inherently recall and use experimental scenarios based on their prior experiences and knowledge as they evaluate an individual scenario. In addition, evaluating a single experimental scenario individually may act as metacognitive scaffolding [ 31 , 32 ], a process which “guides students by asking questions about the task or suggesting relevant domain-independent strategies” [ 32 ], to support students in their compare-and-contrast thinking.

Purpose and research questions

Our primary objective of this study was to better understand what features of assessment questions elicit student critical thinking using two existing instruments in STEM: the Biology Lab Inventory of Critical Thinking in Ecology (Eco-BLIC) and Physics Lab Inventory of Critical Thinking (PLIC). We focused on biology and physics since critical thinking assessments were already available for these disciplines. Specifically, we investigated (a) how students critically evaluate aspects of research studies in biology and physics when they are individually evaluating one study at a time or comparing and contrasting two studies and (b) whether individual evaluation questions are needed to encourage students to engage in critical thinking when comparing and contrasting.

Providing undergraduates with ample opportunities to practice critical thinking skills in the classroom is necessary for evidence-based critical thinking in their future careers and everyday life. While most critical thinking instruments in biology and physics contexts have undergone some form of validation to ensure they are accurately measuring the intended construct, to our knowledge none have explored how different question types influence students’ critical thinking. This research offers new insight on the types of questions that elicit critical thinking, which can further be applied by educators and researchers across disciplines to measure cognitive student outcomes and incorporate more effective critical thinking opportunities in the classroom.

Ethics statement

The procedures for this study were approved by the Institutional Review Board of Cornell University (Eco-BLIC: #1904008779; PLIC: #1608006532). Informed consent was obtained from all participating students via online consent forms at the beginning of the study, and students did not receive compensation for participating in this study unless their instructor offered credit for completing the assessment.

Participants and assessment distribution

We administered the Eco-BLIC to undergraduate students across 26 courses at 11 institutions (six doctoral-granting, three Master’s-granting, and two Baccalaureate-granting) in Fall 2020 and Spring 2021 and received 1612 usable responses. Additionally, we administered the PLIC to undergraduate students across 21 courses at 11 institutions (six doctoral-granting, one Master’s-granting, three four-year colleges, and one 2-year college) in Fall 2020 and Spring 2021 and received 1839 usable responses. We recruited participants via convenience sampling by emailing instructors of primarily introductory ecology-focused courses or introductory physics courses who expressed potential interest in implementing our instrument in their course(s). Both instruments were administered online via Qualtrics and students were allowed to complete the assessments outside of class. The demographic distribution of the response data is presented in Table 1 , all of which were self-reported by students. The values presented in this table represent all responses we received.

Instrument description

Question types

Though the content and concepts featured in the Eco-BLIC and PLIC are distinct, both instruments share a similar structure and set of question types. The Eco-BLIC—which was developed using a structure similar to that of the PLIC [ 1 ]—includes two predator-prey scenarios based on relationships between (a) smallmouth bass and mayflies and (b) great-horned owls and house mice. Within each scenario, students are presented with a field-based study and a laboratory-based study focused on a common research question about feeding behaviors of smallmouth bass or house mice, respectively. The prompts for these two Eco-BLIC scenarios are available in S1 and S2 Appendices. The PLIC focuses on two research groups conducting different experiments to test the relationship between oscillation periods of masses hanging on springs [ 1 ]; the prompts for this scenario can be found in S3 Appendix . The descriptive prompts in both the Eco-BLIC and PLIC also include a figure presenting data collected by each research group, from which students are expected to draw conclusions. The research scenarios (e.g., field-based group and lab-based group on the Eco-BLIC) are written so that each group has both strengths and weaknesses in their experimental designs.

After reading the prompt for the first experimental group (Group 1) in each instrument, students are asked to identify possible claims from Group 1’s data (data evaluation questions). Students next evaluate the strengths and weaknesses of various study features for Group 1 (individual evaluation questions). Examples of these individual evaluation questions are in Table 2 . They then suggest next steps the group should pursue (next steps items). Students are then asked to read about the prompt describing the second experimental group’s study (Group 2) and again answer questions about the possible claims, strengths and weaknesses, and next steps of Group 2’s study (data evaluation questions, individual evaluation questions, and next steps items). Once students have independently evaluated Groups 1 and 2, they answer a series of questions to compare the study approaches of Group 1 versus Group 2 (group comparison items). In this study, we focus our analysis on the individual evaluation questions and group comparison items.

The Eco-BLIC examples are derived from the owl/mouse scenario.

Instrument versions

To determine whether the individual evaluation questions impacted the assessment of students’ critical thinking, students were randomly assigned to take one of two versions of the assessment via Qualtrics branch logic: 1) a version that included the individual evaluation and group comparison items or 2) a version with only the group comparison items, with the individual evaluation questions removed. We calculated the median time it took students to answer each of these versions for both the Eco-BLIC and PLIC.

Think-aloud interviews

We also conducted one-on-one think-aloud interviews with students to elicit feedback on the assessment questions (Eco-BLIC n = 21; PLIC n = 4). Students were recruited via convenience sampling at our home institution and were primarily majoring in biology or physics. All interviews were audio-recorded and screen captured via Zoom and lasted approximately 30–60 minutes. We asked participants to discuss their reasoning for answering each question as they progressed through the instrument. We did not analyze these interviews in detail, but rather used them to extract relevant examples of critical thinking that helped to explain our quantitative findings. Multiple think-aloud interviews were conducted with students using previous versions of the PLIC [ 1 ], though these data are not discussed here.

Data analyses

Our analyses focused on (1) investigating the alignment between students’ responses to the individual evaluation questions and the group comparison items and (2) comparing student responses between the two instrument versions. If individual evaluation and group comparison items elicit critical thinking in the same way, we would expect to see the same frequency of responses for each question type, as per Fig 1 . For example, if students evaluated one study feature of Group 1 as a strength and the same study feature for Group 2 as a strength, we would expect that students would respond that both groups were highly effective for this study feature on the group comparison item (i.e., data represented by the purple circle in the top right quadrant of Fig 1 ). Alternatively, if students evaluated one study feature of Group 1 as a strength and the same study feature for Group 2 as a weakness, we would expect that students would indicate that Group 1 was more effective than Group 2 on the group comparison item (i.e., data represented by the green circle in the lower right quadrant of Fig 1 ).
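The expected alignment between the two question types can be written as a small lookup. This is a sketch under stated assumptions rather than the authors' analysis code: the function name `expected_comparison` is hypothetical, and treating ratings of 3 and 4 on the 1 (weakness) to 4 (strength) scale as "strength" is an assumed cutoff.

```python
def expected_comparison(rating_group1, rating_group2, strength_cutoff=3):
    """Map a pair of individual ratings (1 = weakness ... 4 = strength) to the
    group comparison response we would expect if both question types elicited
    the same judgment.

    ASSUMPTION: ratings at or above `strength_cutoff` count as a strength.
    """
    for rating in (rating_group1, rating_group2):
        if rating not in (1, 2, 3, 4):
            raise ValueError("ratings must be integers from 1 to 4")
    g1_strong = rating_group1 >= strength_cutoff
    g2_strong = rating_group2 >= strength_cutoff
    if g1_strong and g2_strong:
        return "both groups effective"
    if g1_strong:
        return "Group 1 more effective"
    if g2_strong:
        return "Group 2 more effective"
    return "neither group effective"


print(expected_comparison(4, 4))  # both rated as strengths
print(expected_comparison(4, 1))  # Group 1 strength, Group 2 weakness
```

Comparing this expected response with what students actually chose on the group comparison items is one way to see where the two question types diverge.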

The x- and y-axes represent rankings on the individual evaluation questions for Groups 1 and 2 (or field and lab groups), respectively. The colors in the legend at the top of the figure denote responses to the group comparison items. In this idealized example, all pie charts are the same size to indicate that the student answers are equally proportioned across all answer combinations.

We ran descriptive statistics to summarize student responses to questions and examine distributions and frequencies of the data on the Eco-BLIC and PLIC. We also conducted chi-square goodness-of-fit tests to analyze differences in student responses between versions within the relevant questions from the same instrument. In all of these tests, we used a Bonferroni correction to lower the chances of receiving a false positive and account for multiple comparisons. We generated figures—primarily multi-pie chart graphs and heat maps—to visualize differences between individual evaluation and group comparison items and between versions of each instrument with and without individual evaluation questions, respectively. All aforementioned data analyses and figures were conducted or generated in the R statistical computing environment (v. 4.1.1) and Microsoft Excel.
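For readers unfamiliar with these tests, the sketch below shows the general shape of a chi-square goodness-of-fit test followed by a Bonferroni correction. It is not the authors' code (their analyses were run in R and Excel): the counts and reference proportions are hypothetical, the function names are illustrative, and the p-value comes from a hand-written series expansion so that the example needs only the Python standard library.

```python
import math


def chi2_sf(stat, df):
    """Upper-tail probability of a chi-square distribution with `df` degrees
    of freedom, via the series for the regularized lower incomplete gamma."""
    if stat <= 0:
        return 1.0
    a, x = df / 2.0, stat / 2.0
    term = 1.0 / a
    total = term
    n = 1
    while abs(term) > 1e-15 * abs(total):
        term *= x / (a + n)
        total += term
        n += 1
    lower = total * math.exp(-x + a * math.log(x) - math.lgamma(a))
    return max(0.0, 1.0 - lower)


def goodness_of_fit(observed, expected_proportions):
    """Return (chi-square statistic, p-value) for observed counts against
    reference proportions that sum to 1."""
    n = sum(observed)
    expected = [p * n for p in expected_proportions]
    stat = sum((o - e) ** 2 / e for o, e in zip(observed, expected))
    return stat, chi2_sf(stat, len(observed) - 1)


def bonferroni_significant(p_values, alpha=0.05):
    """Flag p-values that stay below alpha after dividing alpha by the
    number of comparisons."""
    threshold = alpha / len(p_values)
    return [p < threshold for p in p_values]


# HYPOTHETICAL data: responses to one group comparison item in one version
# (Group 1 more effective, Group 2 more effective, both, neither), compared
# with the response proportions from the other version.
observed = [48, 35, 90, 27]
reference = [0.25, 0.20, 0.40, 0.15]
stat, p = goodness_of_fit(observed, reference)
print(f"chi-square = {stat:.3f}, p = {p:.3f}")
print(bonferroni_significant([0.001, 0.02, 0.04]))
```

Dividing the significance threshold by the number of tests is the simplest form of the correction; it trades some statistical power for a lower chance of a false positive across the whole set of comparisons.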

We asked students to evaluate different experimental set-ups on the Eco-BLIC and PLIC in two ways. Students first evaluated the strengths and weaknesses of study features for each scenario individually (individual evaluation questions, Table 2) and, subsequently, answered a series of questions to compare and contrast the study approaches of both research groups side-by-side (group comparison items, Table 2). Through analyzing the individual evaluation questions, we found that students generally ranked experimental features (i.e., those related to study set-up, data collection and summary methods, and analysis and outcomes) of the independent research groups as strengths (Fig 2), evidenced by mean scores greater than 2 on a scale from 1 (weakness) to 4 (strength).

Each box represents the interquartile range (IQR). Lines within each box represent the median. Circles represent outliers of mean scores for each question.

Individual evaluation versus compare-and-contrast evaluation

Our results indicate that when students consider Group 1 or Group 2 individually, they mark most study features as strengths (consistent with the means in Fig 2), shown by the large circles in the upper right quadrant across the three experimental scenarios (Fig 3). However, the proportion of colors on each pie chart shows that students select a range of responses when comparing the two groups [e.g., Group 1 being more effective (green), Group 2 being more effective (blue), both groups being effective (purple), and neither group being effective (orange)]. We infer that students were more discerning (i.e., more selective) when they were asked to compare the two groups across the various study features (Fig 3). In short, students think about the groups differently if they are rating either Group 1 or Group 2 in the individual evaluation questions versus directly comparing Group 1 to Group 2.

The x- and y-axes represent students’ rankings on the individual evaluation questions for Groups 1 and 2 on each assessment, respectively, where 1 indicates weakness and 4 indicates strength. The overall size of each pie chart represents the proportion of students who responded with each pair of ratings. The colors in the pie charts denote the proportion of students’ responses who chose each option on the group comparison items. (A) Eco-BLIC bass-mayfly scenario (B) Eco-BLIC owl-mouse scenario (C) PLIC oscillation periods of masses hanging on springs scenario.
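The quantities behind each pie chart in this kind of figure can be tallied as follows. This is a minimal sketch under assumed inputs: the record layout (one tuple per student response holding the two individual ratings and the group comparison choice) and the function name are hypothetical, and the sample data are invented.

```python
from collections import Counter, defaultdict


def tally_pies(responses):
    """Summarize responses for a multi-pie chart.

    responses: iterable of (rating_g1, rating_g2, comparison_choice).
    Returns a dict keyed by (rating_g1, rating_g2) with:
      "size":   share of all responses that gave this pair of ratings
      "slices": share of those responses choosing each comparison option
    """
    responses = list(responses)
    total = len(responses)

    by_pair = defaultdict(Counter)
    for rating_g1, rating_g2, choice in responses:
        by_pair[(rating_g1, rating_g2)][choice] += 1

    pies = {}
    for pair, counts in by_pair.items():
        n_pair = sum(counts.values())
        pies[pair] = {
            "size": n_pair / total,
            "slices": {c: k / n_pair for c, k in counts.items()},
        }
    return pies


# Invented example: three students rate both groups 4, one rates (3, 4).
sample = [
    (4, 4, "both"),
    (4, 4, "group1"),
    (4, 4, "group1"),
    (3, 4, "group2"),
]
pies = tally_pies(sample)
```

In this invented sample, the (4, 4) pie would be the largest, yet its slices are split between "both" and "group1", which mirrors the pattern of high individual ratings paired with varied comparison responses.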

These results are further supported by student responses from the think-aloud interviews. For example, one interview participant responding to the bass-mayfly scenario of the Eco-BLIC explained that accounting for bias/error in both the field and lab groups in this scenario was a strength (i.e., 4). This participant mentioned that Group 1, who performed the experiment in the field, “[had] outliers, so they must have done pretty well,” and that Group 2, who collected organisms in the field but studied them in lab, “did a good job of accounting for bias.” However, when asked to compare between the groups, this student argued that Group 2 was more effective at accounting for bias/error, noting that “they controlled for more variables.”

Another individual who was evaluating “repeated trials for each mass” in the PLIC expressed a similar pattern. In response to ranking this feature of Group 1 as a strength, they explained: “Given their uncertainties and how small they are, [the group] seems like they’ve covered their bases pretty well.” Similarly, they evaluated this feature of Group 2 as a strength as well, simply noting: “Same as the last [group], I think it’s a strength.” However, when asked to compare between Groups 1 and 2, this individual argued that Group 1 was more effective because they conducted more trials.

Individual evaluation questions to support compare and contrast thinking

Given that students were more discerning when they directly compared two groups for both biology and physics experimental scenarios, we next sought to determine if the individual evaluation questions for Group 1 or Group 2 were necessary to elicit or helpful to support student critical thinking about the investigations. To test this, we randomly assigned students to one of two versions of the instrument. Students in one version saw individual evaluation questions about Group 1 and Group 2 and then saw group comparison items for Group 1 versus Group 2. Students in the second version only saw the group comparison items. We found that students assigned to the two versions responded similarly to the group comparison questions, indicating that the individual evaluation questions did not promote additional critical thinking. We visually represent these similarities across versions with and without the individual evaluation questions in Fig 4 as heat maps.

The x-axis denotes students’ responses on the group comparison items (i.e., whether they ranked Group 1 as more effective, Group 2 as more effective, both groups as highly effective, or neither group as effective/both groups were minimally effective). The y-axis lists each of the study features that students compared between the field and lab groups. White and lighter shades of red indicate a lower percentage of student responses, while brighter red indicates a higher percentage of student responses. (A) Eco-BLIC bass-mayfly scenario. (B) Eco-BLIC owl-mouse scenario. (C) PLIC oscillation periods of masses hanging on springs scenario.

We ran chi-square goodness-of-fit tests comparing student responses between the two instrument versions. There were no significant differences on the Eco-BLIC bass-mayfly scenario (Fig 4A; based on an adjusted p-value of 0.006) or owl-mouse questions (Fig 4B; based on an adjusted p-value of 0.004). There were only three significant differences (out of 53 items) in how students responded to questions on the two versions of the PLIC (Fig 4C; based on an adjusted p-value of 0.0005). The items that students responded to differently (p < 0.0005) across the two versions were items where the two groups were identical in their design; namely, the equipment used (i.e., stopwatches), the variables measured (i.e., time and mass), and the number of bounces of the spring per trial (i.e., five bounces). We calculated Cramér’s V (Vc; [33]), a measure commonly applied to chi-square goodness-of-fit models to understand the magnitude of significant results. We found that the effect sizes for these three items were small (Vc = 0.11, Vc = 0.10, Vc = 0.06, respectively).
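For reference, a common form of this effect size for a goodness-of-fit test divides the chi-square statistic by the sample size times one less than the number of response categories, then takes the square root. The sketch below assumes that form. Whether the study used exactly this formula is not stated here, and the function name and the numbers in the example are hypothetical.

```python
import math


def cramers_v_gof(chi2_stat: float, n: int, k: int) -> float:
    """Effect size for a chi-square goodness-of-fit test.

    chi2_stat: chi-square statistic
    n: total number of responses
    k: number of response categories (must be at least 2)
    """
    if n <= 0 or k < 2:
        raise ValueError("need n > 0 and at least two categories")
    return math.sqrt(chi2_stat / (n * (k - 1)))


# Invented example: statistic of 20 from 100 responses over 4 options.
v = cramers_v_gof(20.0, 100, 4)
```

Because the statistic is scaled by the sample size, an item can reach significance in a large sample while still having a small effect size.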

The trend that students answer the Group 1 versus Group 2 comparison questions similarly, regardless of whether they responded to the individual evaluation questions, is further supported by student responses from the think-aloud interviews. For example, one participant who did not see the individual evaluation questions for the owl-mouse scenario of the Eco-BLIC independently explained that sampling mice from other fields was a strength for both the lab and field groups. They explained that for the lab group, “I think that [the mice] coming from multiple nearby fields is good…I was curious if [mouse] behavior was universal.” For the field group, they reasoned, “I also noticed it was just from a single nearby field…I thought that was good for control.” However, this individual ultimately reasoned that the field group was “more effective for sampling methods…it’s better to have them from a single field because you know they were exposed to similar environments.” Thus, even without individual evaluation questions available, students can still make individual evaluations when comparing and contrasting between groups.

We also determined that removing the individual evaluation questions decreased the time students needed to complete the Eco-BLIC and PLIC. On the Eco-BLIC, the median time to completion for the version with individual evaluation and group comparison questions was approximately 30 minutes, while the version with only the group comparisons had a median time to completion of 18 minutes. On the PLIC, the median time to completion for the version with individual evaluation questions and group comparison questions was approximately 17 minutes, while the version with only the group comparisons had a median time to completion of 15 minutes.

To determine how to elicit critical thinking in a streamlined manner using introductory biology and physics material, we investigated (a) how students critically evaluate aspects of experimental investigations in biology and physics when they are individually evaluating one study at a time versus comparing and contrasting two and (b) whether individual evaluation questions are needed to encourage students to engage in critical thinking when comparing and contrasting.

Students are more discerning when making comparisons

We found that students were more discerning when comparing between the two groups in the Eco-BLIC and PLIC rather than when evaluating each group individually. While students tended to independently evaluate study features of each group as strengths (Fig 2), there was greater variation in their responses to which group was more effective when directly comparing between the two groups (Fig 3). Literature evaluating the role of contrasting cases provides plausible explanations for our results. In that work, contrasting between two cases supports students in identifying deep features of the cases, compared with evaluating one case after the other [34–37]. When presented with a single example, students may deem certain study features as unimportant or irrelevant, but comparing study features side-by-side allows students to recognize the distinct features of each case [38]. We infer, therefore, that students were better able to recognize the strengths and weaknesses of the two groups in each of the assessment scenarios when evaluating the groups side by side, rather than in isolation [39, 40]. This result is somewhat surprising, however, as students could have used their knowledge of experimental designs as a contrasting case when evaluating each group. Future work, therefore, should evaluate whether experts use their vast knowledge base of experimental studies as discerning contrasts when evaluating each group individually. This work would help determine whether our results here suggest that students do not have a sufficient experiment-base to use as contrasts or if the students just do not use their experiment-base when evaluating the individual groups. Regardless, our study suggests that critical thinking assessments should ask students to compare and contrast experimental scenarios, rather than just evaluate individual cases.

Individual evaluation questions do not influence answers to compare and contrast questions

We found that individual evaluation questions were unnecessary for eliciting or supporting students’ critical thinking on the two assessments. Students responded to the group comparison items similarly whether or not they had received the individual evaluation questions. The exception to this pattern was that students responded differently to three group comparison items on the PLIC when individual evaluation questions were provided. These three questions constituted a small portion of the PLIC and showed a small effect size. Furthermore, removing the individual evaluation questions decreased the median time for students to complete the Eco-BLIC and PLIC. It is plausible that spending more time thinking about the experimental methods while responding to the individual evaluation questions would then prepare students to be better discerners on the group comparison questions. However, the overall trend is that individual evaluation questions do not have a strong impact on how students evaluate experimental scenarios, nor do they set students up to be better critical thinkers later. This finding aligns with prior research suggesting that students tend to disregard details when they evaluate a single case, rather than comparing and contrasting multiple cases [38], further supporting our findings about the effectiveness of the group comparison questions.

Practical implications

Individual evaluation questions were effective neither for engaging students in critical thinking nor for preparing them for subsequent questions that elicit their critical thinking. Thus, researchers and instructors could make critical thinking assessments more effective and less time-consuming by encouraging comparisons between cases. Additionally, the study raises a question about whether instructors should incorporate more experimental case studies throughout their courses and assessments so that students have a richer experiment-base to use as contrasts when evaluating individual experimental scenarios. To help students discern information about experimental design, we suggest that instructors consider providing them with multiple experimental studies (i.e., cases) and asking them to compare and contrast between these studies.

Future directions and limitations

When designing critical thinking assessments, questions should ask students to make meaningful comparisons that require them to consider the important features of the scenarios. One challenge of relying on compare-and-contrast questions in the Eco-BLIC and PLIC to elicit students’ critical thinking is ensuring that students are comparing similar yet distinct study features across experimental scenarios, and that these comparisons are meaningful [38]. For example, though sample size is different between experimental scenarios in our instruments, it is a significant feature that has implications for other aspects of the research like statistical analyses and behaviors of the animals. Additionally, one limitation of our study could be that we exclusively focused on experimental method evaluation questions (i.e., what to trust), and we are unsure if the same principles hold for other dimensions of critical thinking (i.e., what to do). Future research should explore whether questions that are not in a compare-and-contrast format also effectively elicit critical thinking, and if so, to what degree.