Quantitative Data Analysis 101

The lingo, methods and techniques, explained simply.

By: Derek Jansen (MBA) and Kerryn Warren (PhD) | December 2020

Quantitative data analysis is one of those things that often strikes fear in students. It’s totally understandable – quantitative analysis is a complex topic, full of daunting lingo, like medians, modes, correlation and regression. Suddenly we’re all wishing we’d paid a little more attention in math class…

The good news is that while quantitative data analysis is a mammoth topic, gaining a working understanding of the basics isn’t that hard, even for those of us who avoid numbers and math. In this post, we’ll break quantitative analysis down into simple, bite-sized chunks so you can approach your research with confidence.

Overview: Quantitative Data Analysis 101

- What (exactly) is quantitative data analysis?

- When to use quantitative analysis

- How quantitative analysis works

- The two “branches” of quantitative analysis

- Descriptive statistics 101

- Inferential statistics 101

- How to choose the right quantitative methods

- Recap & summary

What is quantitative data analysis?

Despite being a mouthful, quantitative data analysis simply means analysing data that is numbers-based – or data that can be easily “converted” into numbers without losing any meaning.

For example, category-based variables like gender, ethnicity, or native language could all be “converted” into numbers without losing meaning – for example, English could equal 1, French 2, etc.
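To make the “conversion” idea concrete, here is a minimal sketch in Python. The responses and the code numbers are made up for illustration; any consistent mapping would do.

```python
# Hypothetical survey responses for a "native language" question.
responses = ["English", "French", "English", "Zulu", "French", "English"]

# Assign each category an arbitrary numeric code (the codes are labels, not quantities).
codes = {"English": 1, "French": 2, "Zulu": 3}

encoded = [codes[r] for r in responses]
print(encoded)  # [1, 2, 1, 3, 2, 1]

# The mapping is reversible, so no meaning is lost.
decode = {number: label for label, number in codes.items()}
assert [decode[n] for n in encoded] == responses
```

Because the codes are only labels, it makes sense to count them, but not to average them.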

This contrasts with qualitative data analysis, where the focus is on words, phrases and expressions that can’t be reduced to numbers.

What is quantitative analysis used for?

Quantitative analysis is generally used for three purposes.

- Firstly, it’s used to measure differences between groups. For example, the popularity of different clothing colours or brands.

- Secondly, it’s used to assess relationships between variables. For example, the relationship between weather temperature and voter turnout.

- Thirdly, it’s used to test hypotheses in a scientifically rigorous way. For example, a hypothesis about the impact of a certain vaccine.

Again, this contrasts with qualitative analysis, which can be used to analyse people’s perceptions and feelings about an event or situation. In other words, things that can’t be reduced to numbers.

How does quantitative analysis work?

Well, since quantitative data analysis is all about analysing numbers, it’s no surprise that it involves statistics. Statistical analysis methods form the engine that powers quantitative analysis, and these methods can vary from pretty basic calculations (for example, averages and medians) to more sophisticated analyses (for example, correlations and regressions).

Sounds like gibberish? Don’t worry. Importantly, you don’t need to be a statistician or math whiz to pull off a good quantitative analysis. We’ll break down all the technical mumbo jumbo in this post.


As I mentioned, quantitative analysis is powered by statistical analysis methods. There are two main “branches” of statistical methods that are used – descriptive statistics and inferential statistics. In your research, you might only use descriptive statistics, or you might use a mix of both, depending on what you’re trying to figure out. In other words, depending on your research questions, aims and objectives. I’ll explain how to choose your methods later.

So, what are descriptive and inferential statistics?

Well, before I can explain that, we need to take a quick detour to explain some lingo. To understand the difference between these two branches of statistics, you need to understand two important words. These words are population and sample.

First up, population. In statistics, the population is the entire group of people (or animals or organisations or whatever) that you’re interested in researching. For example, if you were interested in researching Tesla owners in the US, then the population would be all Tesla owners in the US.

However, it’s extremely unlikely that you’re going to be able to interview or survey every single Tesla owner in the US. Realistically, you’ll likely only get access to a few hundred, or maybe a few thousand owners using an online survey. This smaller group of accessible people whose data you actually collect is called your sample.

So, to recap – the population is the entire group of people you’re interested in, and the sample is the subset of the population that you can actually get access to. In other words, the population is the full chocolate cake, whereas the sample is a slice of that cake.

So, why is this sample-population thing important?

Well, descriptive statistics focus on describing the sample, while inferential statistics aim to make predictions about the population, based on the findings within the sample. In other words, we use one group of statistical methods – descriptive statistics – to investigate the slice of cake, and another group of methods – inferential statistics – to draw conclusions about the entire cake. There I go with the cake analogy again…
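If you’d like to see the cake and the slice side by side, here’s a rough sketch. The population is simulated (a made-up satisfaction score for 10,000 owners), so treat the numbers as illustrative only.

```python
import random
import statistics

random.seed(42)  # fixed seed so the sketch is repeatable

# Hypothetical population: a satisfaction score (1-10) for 10,000 owners.
population = [random.randint(1, 10) for _ in range(10_000)]

# The sample is the slice of cake: 300 owners drawn at random.
sample = random.sample(population, 300)

population_mean = statistics.mean(population)
sample_mean = statistics.mean(sample)

print(f"population mean: {population_mean:.2f}")
print(f"sample mean:     {sample_mean:.2f}")
```

In real research you never get to see the population mean. The point of the sketch is simply that a randomly drawn sample tends to land close to it.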

With that out of the way, let’s take a closer look at each of these branches.

Branch 1: Descriptive Statistics

Descriptive statistics serve a simple but critically important role in your research – to describe your data set – hence the name. In other words, they help you understand the details of your sample. Unlike inferential statistics (which we’ll get to soon), descriptive statistics don’t aim to make inferences or predictions about the entire population – they’re purely interested in the details of your specific sample.

When you’re writing up your analysis, descriptive statistics are the first set of stats you’ll cover, before moving on to inferential statistics. But, that said, depending on your research objectives and research questions, they may be the only type of statistics you use. We’ll explore that a little later.

So, what kind of statistics are usually covered in this section?

Some common statistical measures used in this branch include the following:

- Mean – this is simply the mathematical average of a range of numbers.

- Median – this is the midpoint in a range of numbers when the numbers are arranged in numerical order. If the data set makes up an odd number, then the median is the number right in the middle of the set. If the data set makes up an even number, then the median is the midpoint between the two middle numbers.

- Mode – this is simply the most commonly occurring number in the data set.

- Standard deviation – this indicates how dispersed a range of numbers is around the mean (the average). In cases where most of the numbers are quite close to the average, the standard deviation will be relatively low. Conversely, in cases where the numbers are scattered all over the place, the standard deviation will be relatively high.

- Skewness – as the name suggests, skewness indicates how symmetrical a range of numbers is. In other words, do they tend to cluster into a smooth bell curve shape in the middle of the graph, or do they skew to the left or right?
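A few of the definitions in the list above are easier to see in action. The sketch below uses Python’s built-in `statistics` module on small made-up lists: the odd and even cases for the median, a simple mode, and two lists with the same mean but very different standard deviations.

```python
import statistics

# Median: odd-sized set takes the middle value, even-sized set takes the midpoint.
odd_set = [3, 9, 5, 1, 7]        # sorted: 1 3 5 7 9
even_set = [3, 9, 5, 1, 7, 11]   # sorted: 1 3 5 7 9 11
print(statistics.median(odd_set))   # 5
print(statistics.median(even_set))  # 6.0

# Mode: the most frequently occurring value.
print(statistics.mode([2, 4, 4, 6, 8]))  # 4

# Standard deviation: same mean, very different spread.
tight = [49, 50, 50, 51, 50]
scattered = [10, 90, 30, 70, 50]
print(statistics.mean(tight), statistics.mean(scattered))  # 50 50
print(round(statistics.stdev(tight), 2))      # 0.71
print(round(statistics.stdev(scattered), 2))  # 31.62
```

Note that `statistics.stdev` is the sample standard deviation (it divides by n - 1), which is usually what you want when your data is a sample rather than the whole population.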

Feeling a bit confused? Let’s look at a practical example using a small data set.

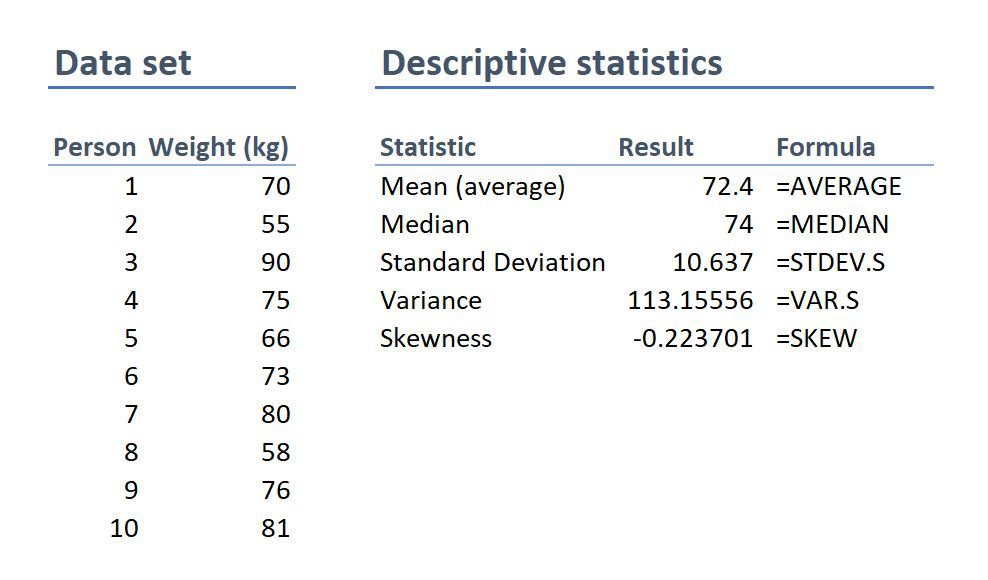

On the left-hand side is the data set. This details the bodyweight of a sample of 10 people. On the right-hand side, we have the descriptive statistics. Let’s take a look at each of them.

First, we can see that the mean weight is 72.4 kilograms. In other words, the average weight across the sample is 72.4 kilograms. Straightforward.

Next, we can see that the median is very similar to the mean (the average). This suggests that this data set has a reasonably symmetrical distribution (in other words, a relatively smooth, centred distribution of weights, clustered towards the centre).

In terms of the mode, there is no mode in this data set. This is because each number is present only once and so there cannot be a “most common number”. If there were two people who were both 65 kilograms, for example, then the mode would be 65.

Next up is the standard deviation. A value of 10.6 indicates that there’s quite a wide spread of numbers. We can see this quite easily by looking at the numbers themselves, which range from 55 to 90, quite a stretch either side of the mean of 72.4.

And lastly, the skewness of -0.2 tells us that the data is very slightly negatively skewed. This makes sense since the mean and the median are slightly different.

As you can see, these descriptive statistics give us some useful insight into the data set. Of course, this is a very small data set (only 10 records), so we can’t read into these statistics too much. Also, keep in mind that this is not a list of all possible descriptive statistics – just the most common ones.
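If you want to produce a summary like this yourself, the sketch below shows one way to do it. The weights are hypothetical (they are not the values from the example above), the `describe` helper is a name invented for this illustration, and the skewness formula is the adjusted Fisher-Pearson version, which is one common definition among several.

```python
import statistics
from collections import Counter

def describe(values):
    """Return the descriptive statistics discussed above for a list of numbers."""
    n = len(values)
    mean = statistics.mean(values)
    stdev = statistics.stdev(values)  # sample standard deviation (n - 1)

    # Report a mode only if some value occurs more than once.
    value, count = Counter(values).most_common(1)[0]
    mode = value if count > 1 else None

    # Adjusted Fisher-Pearson sample skewness (one common definition).
    skewness = n / ((n - 1) * (n - 2)) * sum(((x - mean) / stdev) ** 3 for x in values)

    return {
        "mean": mean,
        "median": statistics.median(values),
        "mode": mode,
        "stdev": stdev,
        "skewness": skewness,
    }

# Hypothetical bodyweights in kilograms (not the values from the example above).
weights = [55, 61, 65, 68, 72, 74, 77, 80, 84, 90]
for name, value in describe(weights).items():
    print(name, value if value is None else round(value, 2))
```

As in the worked example, every weight here is unique, so the helper reports no mode.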

But why do all of these numbers matter?

While these descriptive statistics are all fairly basic, they’re important for a few reasons:

- Firstly, they help you get both a macro and micro-level view of your data. In other words, they help you understand both the big picture and the finer details.

- Secondly, they help you spot potential errors in the data – for example, if an average is way higher than you’d expect, or responses to a question are highly varied, this can act as a warning sign that you need to double-check the data.

- And lastly, these descriptive statistics help inform which inferential statistical techniques you can use, as those techniques depend on the skewness (in other words, the symmetry and normality) of the data.

Simply put, descriptive statistics are really important, even though the statistical techniques used are fairly basic. All too often at Grad Coach, we see students skimming over the descriptives in their eagerness to get to the more exciting inferential methods, and then ending up with some very flawed results.

Don’t be a sucker – give your descriptive statistics the love and attention they deserve!

Branch 2: Inferential Statistics

As I mentioned, while descriptive statistics are all about the details of your specific data set – your sample – inferential statistics aim to make inferences about the population. In other words, you’ll use inferential statistics to make predictions about what you’d expect to find in the full population.

What kind of predictions, you ask? Well, there are two common types of predictions that researchers try to make using inferential stats:

- Firstly, predictions about differences between groups – for example, height differences between children grouped by their favourite meal or gender.

- And secondly, relationships between variables – for example, the relationship between body weight and the number of hours a week a person does yoga.

In other words, inferential statistics (when done correctly) allow you to connect the dots and make predictions about what you expect to see in the real-world population, based on what you observe in your sample data. For this reason, inferential statistics are used for hypothesis testing – in other words, to test hypotheses that predict changes or differences.

Of course, when you’re working with inferential statistics, the composition of your sample is really important. In other words, if your sample doesn’t accurately represent the population you’re researching, then your findings won’t necessarily be very useful.

For example, if your population of interest is a mix of 50% male and 50% female, but your sample is 80% male, you can’t make inferences about the population based on your sample, since it’s not representative. This area of statistics is called sampling, but we won’t go down that rabbit hole here (it’s a deep one!) – we’ll save that for another post.
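A quick sanity check on representativeness can be as simple as comparing group shares. The sketch below uses the 50/50 population and 80% male sample from the example above. The `composition_gap` helper is a hypothetical name, and how big a gap is “too big” is a judgment call that this sketch doesn’t try to make.

```python
def composition_gap(population_share, sample_labels):
    """Compare each group's share in the sample with its share in the population.

    Returns {group: sample share minus population share}.
    """
    n = len(sample_labels)
    return {
        group: sample_labels.count(group) / n - share
        for group, share in population_share.items()
    }

# Population is 50% male and 50% female; the hypothetical sample is 80% male.
population_share = {"male": 0.5, "female": 0.5}
sample_labels = ["male"] * 80 + ["female"] * 20

for group, gap in composition_gap(population_share, sample_labels).items():
    print(f"{group}: {gap:+.0%}")
```

Here males are over-represented by 30 percentage points and females under-represented by the same amount, which is exactly the kind of imbalance that undermines inferences about the population.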

What statistics are usually used in this branch?

There are many, many different statistical analysis methods within the inferential branch and it’d be impossible for us to discuss them all here. So we’ll just take a look at some of the most common inferential statistical methods so that you have a solid starting point.

First up are t-tests. T-tests compare the means (the averages) of two groups of data to assess whether they’re statistically significantly different. In other words, is the gap between the two means larger than you’d expect from chance alone, given how spread out each group is and how many data points it contains?

This type of testing is very useful for understanding just how similar or different two groups of data are. For example, you might want to compare the mean blood pressure between two groups of people – one that has taken a new medication and one that hasn’t – to assess whether they are significantly different.
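To show what goes on under the hood, here is a minimal sketch of the t statistic for two independent groups. It uses Welch’s version of the test, which doesn’t assume equal variances; that choice is an assumption of this sketch, not something the post prescribes. The blood pressure readings are invented.

```python
import math
import statistics

def welch_t(group_a, group_b):
    """Return (t statistic, approximate degrees of freedom) for Welch's t-test."""
    mean_a, mean_b = statistics.mean(group_a), statistics.mean(group_b)
    # Squared standard error of each group's mean.
    se2_a = statistics.variance(group_a) / len(group_a)
    se2_b = statistics.variance(group_b) / len(group_b)

    t = (mean_a - mean_b) / math.sqrt(se2_a + se2_b)
    # Welch-Satterthwaite approximation for the degrees of freedom.
    df = (se2_a + se2_b) ** 2 / (
        se2_a ** 2 / (len(group_a) - 1) + se2_b ** 2 / (len(group_b) - 1)
    )
    return t, df

# Hypothetical systolic blood pressure readings (mmHg).
medicated = [118, 122, 125, 119, 121, 117, 124, 120]
unmedicated = [131, 128, 135, 127, 133, 129, 136, 130]

t, df = welch_t(medicated, unmedicated)
print(f"t = {t:.2f}, df = {df:.1f}")
```

Turning the t statistic into a p-value requires the t distribution, which the standard library doesn’t provide. In practice you’d likely reach for a statistics package instead, for example SciPy’s `scipy.stats.ttest_ind(a, b, equal_var=False)`.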

Kicking things up a level, we have ANOVA, which stands for “analysis of variance”. This test is similar to a t-test in that it compares the means of various groups, but ANOVA allows you to analyse multiple groups, not just two. So it’s basically a t-test on steroids…
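For the curious, the core of a one-way ANOVA is a single ratio, the F statistic, which compares how much the group means differ from each other with how much the values vary inside each group. A rough sketch, with invented scores and a hypothetical `one_way_anova_f` helper:

```python
import statistics

def one_way_anova_f(*groups):
    """Return the F statistic for a one-way ANOVA across two or more groups."""
    all_values = [x for group in groups for x in group]
    grand_mean = statistics.mean(all_values)

    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (statistics.mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of individual values around their own group mean.
    ss_within = sum((x - statistics.mean(g)) ** 2 for g in groups for x in g)

    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    return (ss_between / df_between) / (ss_within / df_within)

# Hypothetical test scores under three teaching methods.
method_a = [1, 2, 3]
method_b = [2, 3, 4]
method_c = [5, 6, 7]
print(round(one_way_anova_f(method_a, method_b, method_c), 2))  # 13.0
```

A large F suggests that at least one group mean differs from the others. As with the t-test, you’d need the F distribution (from a statistics package) to turn this into a p-value.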

Next, we have correlation analysis. This type of analysis assesses the relationship between two variables. In other words, if one variable increases, does the other variable also increase, decrease or stay the same? For example, if the average temperature goes up, do average ice cream sales increase too? We’d expect some sort of relationship between these two variables intuitively, but correlation analysis allows us to measure that relationship scientifically.
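One common way to put a number on that relationship is the Pearson correlation coefficient, which runs from -1 (perfect negative relationship) through 0 (no linear relationship) to +1 (perfect positive relationship). The post doesn’t name a specific coefficient, so treat Pearson as this sketch’s assumption. The temperature and sales figures are invented.

```python
import math

def pearson_r(xs, ys):
    """Return the Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    covariation = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return covariation / (spread_x * spread_y)

# Hypothetical daily figures: average temperature (°C) and ice creams sold.
temperature = [14, 17, 20, 23, 26, 29, 32]
ice_creams = [110, 135, 160, 210, 240, 290, 310]

print(round(pearson_r(temperature, ice_creams), 3))
```

With data like this, where sales climb steadily as it gets warmer, the coefficient comes out close to +1.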

Lastly, we have regression analysis – this is quite similar to correlation in that it assesses the relationship between variables, but it goes a step further by modelling how an outcome variable changes as one or more predictor variables change, which lets you make predictions rather than just noting that two variables move together. Keep in mind that regression on its own doesn’t prove cause and effect: two variables can move together thanks to another force, so just because they correlate doesn’t necessarily mean that one causes the other.
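The simplest form of regression fits a straight line through the data using ordinary least squares. Here’s a minimal sketch with one predictor. The yoga and weight figures are invented, and `fit_line` is a hypothetical helper name.

```python
def fit_line(xs, ys):
    """Fit y = intercept + slope * x by ordinary least squares."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical data: weekly hours of yoga and body weight (kg).
yoga_hours = [0, 1, 2, 3, 4, 5]
weight_kg = [82, 80, 79, 76, 75, 73]

slope, intercept = fit_line(yoga_hours, weight_kg)
print(f"weight = {intercept:.1f} + ({slope:.2f} x hours)")

# Use the fitted line to predict weight for someone doing 6 hours a week.
print(round(intercept + slope * 6, 1))
```

The slope tells you how much the outcome changes, on average, for each extra unit of the predictor. It describes the pattern in the data and doesn’t by itself show that yoga causes the change.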

Stats overload…

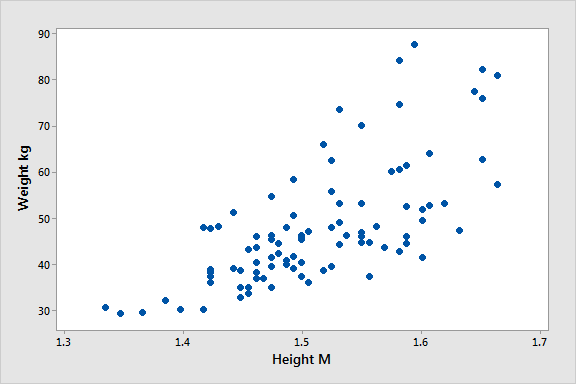

I hear you. To make this all a little more tangible, let’s take a look at an example of a correlation in action.

Here’s a scatter plot demonstrating the correlation (relationship) between weight and height. Intuitively, we’d expect there to be some relationship between these two variables, which is what we see in this scatter plot. In other words, the results tend to cluster together in a diagonal line from bottom left to top right.

As I mentioned, these are just a handful of inferential techniques – there are many, many more. Importantly, each statistical method has its own assumptions and limitations.

For example, some methods (known as parametric methods) assume that the data is normally distributed, while other (non-parametric) methods are designed for data that doesn’t meet that assumption. And that’s exactly why descriptive statistics are so important – they’re the first step to knowing which inferential techniques you can and can’t use.

How to choose the right analysis method

To choose the right statistical methods, you need to think about two important factors:

- The type of quantitative data you have (specifically, level of measurement and the shape of the data)

- Your research questions and hypotheses

Let’s take a closer look at each of these.

Factor 1: Data type

The first thing you need to consider is the type of data you’ve collected (or the type of data you will collect). By data types, I’m referring to the four levels of measurement – namely, nominal (unordered categories), ordinal (ordered categories), interval (numbers with equal spacing but no true zero) and ratio (numbers with a true zero).

Why does this matter?

Well, because different statistical methods and techniques require different types of data. This is one of the “assumptions” I mentioned earlier – every method has its assumptions regarding the type of data.

For example, some techniques work with categorical data (for example, yes/no type questions, or gender or ethnicity), while others work with continuous numerical data (for example, age, weight or income) – and, of course, some work with multiple data types.
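The same idea applies even to basic summaries: counts suit categorical data, while means and medians suit numerical data. The sketch below is a deliberately naive illustration (the `summarise` helper is hypothetical, and it decides based on whether the values are Python numbers, so it would be fooled by categories that have been coded as 1, 2, 3).

```python
import statistics
from collections import Counter

def summarise(values):
    """Summarise a variable according to whether it is categorical or numerical."""
    if all(isinstance(v, (int, float)) for v in values):
        # Numerical data: central tendency makes sense.
        return {"mean": statistics.mean(values), "median": statistics.median(values)}
    # Categorical data: counts per category are the meaningful summary.
    return dict(Counter(values))

print(summarise(["yes", "no", "yes", "yes"]))  # {'yes': 3, 'no': 1}
print(summarise([23, 31, 27, 45, 38]))
```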

If you try to use a statistical method that doesn’t support the data type you have, your results will be largely meaningless. So, make sure that you have a clear understanding of what types of data you’ve collected (or will collect). Once you have this, you can then check which statistical methods support your data types.

If you haven’t collected your data yet, you can work in reverse and look at which statistical method would give you the most useful insights, and then design your data collection strategy to collect the correct data types.

Another important factor to consider is the shape of your data. Specifically, does it have a normal distribution (in other words, is it a bell-shaped curve, centred in the middle) or is it very skewed to the left or the right? Again, different statistical techniques work for different shapes of data – some are designed for symmetrical data while others are designed for skewed data.

This is another reminder of why descriptive statistics are so important – they tell you all about the shape of your data.

Factor 2: Your research questions

The next thing you need to consider is your specific research questions, as well as your hypotheses (if you have some). The nature of your research questions and research hypotheses will heavily influence which statistical methods and techniques you should use.

If you’re just interested in understanding the attributes of your sample (as opposed to the entire population), then descriptive statistics are probably all you need. For example, if you just want to assess the means (averages) and medians (centre points) of variables in a group of people.

On the other hand, if you aim to understand differences between groups or relationships between variables and to infer or predict outcomes in the population, then you’ll likely need both descriptive statistics and inferential statistics.

So, it’s really important to get very clear about your research aims and research questions, as well as your hypotheses – before you start looking at which statistical techniques to use.

Never shoehorn a specific statistical technique into your research just because you like it or have some experience with it. Your choice of methods must align with all the factors we’ve covered here.

Time to recap…

You’re still with me? That’s impressive. We’ve covered a lot of ground here, so let’s recap on the key points:

- Quantitative data analysis is all about analysing number-based data (which includes categorical and numerical data) using various statistical techniques.

- The two main branches of statistics are descriptive statistics and inferential statistics. Descriptives describe your sample, whereas inferentials make predictions about what you’ll find in the population.

- Common descriptive statistical methods include mean (average), median, standard deviation and skewness.

- Common inferential statistical methods include t-tests, ANOVA, correlation and regression analysis.

- To choose the right statistical methods and techniques, you need to consider the type of data you’re working with, as well as your research questions and hypotheses.




What Is Data Analysis? (With Examples)

Data analysis is the practice of working with data to glean useful information, which can then be used to make informed decisions.

![analysis in research sample [Featured image] A female data analyst takes notes on her laptop at a standing desk in a modern office space](https://d3njjcbhbojbot.cloudfront.net/api/utilities/v1/imageproxy/https://images.ctfassets.net/wp1lcwdav1p1/2CUbULaq9mEfSSIq6lsCUu/b8ec58abf5106bf9bf75b17da09c39c0/What_is_data_analysis.png?w=1500&h=680&q=60&fit=fill&f=faces&fm=jpg&fl=progressive&auto=format%2Ccompress&dpr=1&w=1000)

"It is a capital mistake to theorize before one has data. Insensibly one begins to twist facts to suit theories, instead of theories to suit facts," Sherlock Holme's proclaims in Sir Arthur Conan Doyle's A Scandal in Bohemia.

This idea lies at the root of data analysis. When we can extract meaning from data, it empowers us to make better decisions. And we’re living in a time when we have more data than ever at our fingertips.

Companies are wising up to the benefits of leveraging data. Data analysis can help a bank to personalize customer interactions, a health care system to predict future health needs, or an entertainment company to create the next big streaming hit.

The World Economic Forum Future of Jobs Report 2023 listed data analysts and scientists as one of the most in-demand jobs, alongside AI and machine learning specialists and big data specialists [1]. In this article, you'll learn more about the data analysis process, different types of data analysis, and recommended courses to help you get started in this exciting field.


Beginner-friendly data analysis courses

Interested in building your knowledge of data analysis today? Consider enrolling in one of these popular courses on Coursera:

In Google's Foundations: Data, Data, Everywhere course, you'll explore key data analysis concepts, tools, and jobs.

In Duke University's Data Analysis and Visualization course, you'll learn how to identify key components for data analytics projects, explore data visualization, and find out how to create a compelling data story.

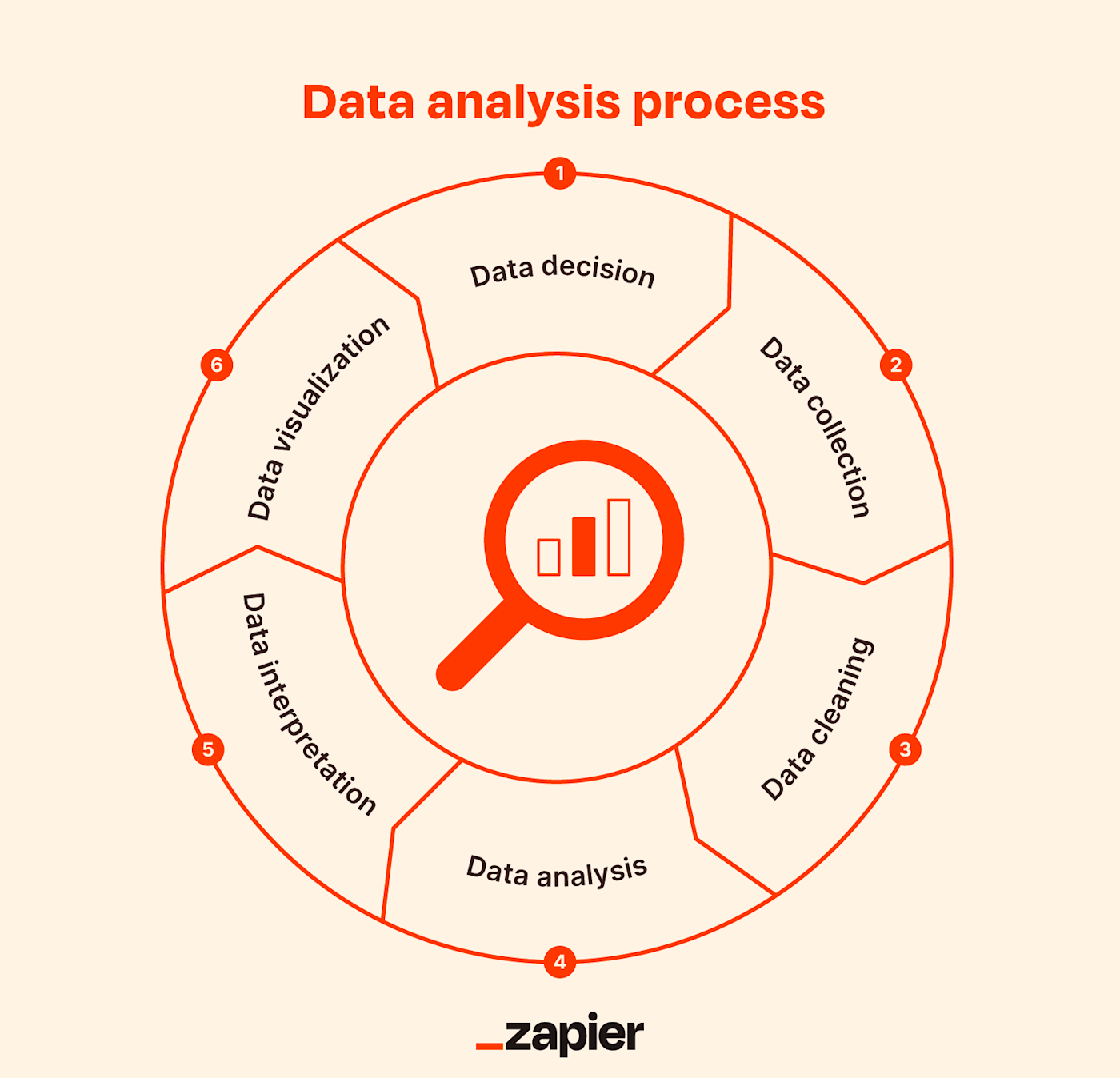

Data analysis process

As the data available to companies continues to grow both in amount and complexity, so too does the need for an effective and efficient process by which to harness the value of that data. The data analysis process typically moves through several iterative phases. Let’s take a closer look at each.

Identify the business question you’d like to answer. What problem is the company trying to solve? What do you need to measure, and how will you measure it?

Collect the raw data sets you’ll need to help you answer the identified question. Data collection might come from internal sources, like a company’s client relationship management (CRM) software, or from secondary sources, like government records or social media application programming interfaces (APIs).

Clean the data to prepare it for analysis. This often involves purging duplicate and anomalous data, reconciling inconsistencies, standardizing data structure and format, and dealing with white spaces and other syntax errors.

Analyze the data. By manipulating the data using various data analysis techniques and tools, you can begin to find trends, correlations, outliers, and variations that tell a story. During this stage, you might use data mining to discover patterns within databases or data visualization software to help transform data into an easy-to-understand graphical format.

Interpret the results of your analysis to see how well the data answered your original question. What recommendations can you make based on the data? What are the limitations to your conclusions?
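As a rough illustration of the cleaning phase described above, the following sketch trims stray white space, standardizes formatting, and drops duplicate rows. The field names and the sample rows are hypothetical, and real cleaning work usually involves many more checks than this.

```python
def clean_records(records):
    """Strip whitespace, standardise case, and drop duplicate rows."""
    cleaned, seen = [], set()
    for record in records:
        name = " ".join(record["name"].split()).title()   # collapse stray spaces
        email = record["email"].strip().lower()           # standardise format
        key = (name, email)
        if key not in seen:                                # skip exact duplicates
            seen.add(key)
            cleaned.append({"name": name, "email": email})
    return cleaned

# Hypothetical raw rows exported from a CRM.
raw = [
    {"name": "  ada   lovelace ", "email": "Ada@Example.com "},
    {"name": "Ada Lovelace", "email": "ada@example.com"},
    {"name": "alan turing", "email": " alan@example.com"},
]
print(clean_records(raw))
```

The first two rows only differ in spacing and capitalization, so after standardizing they collapse into a single record.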


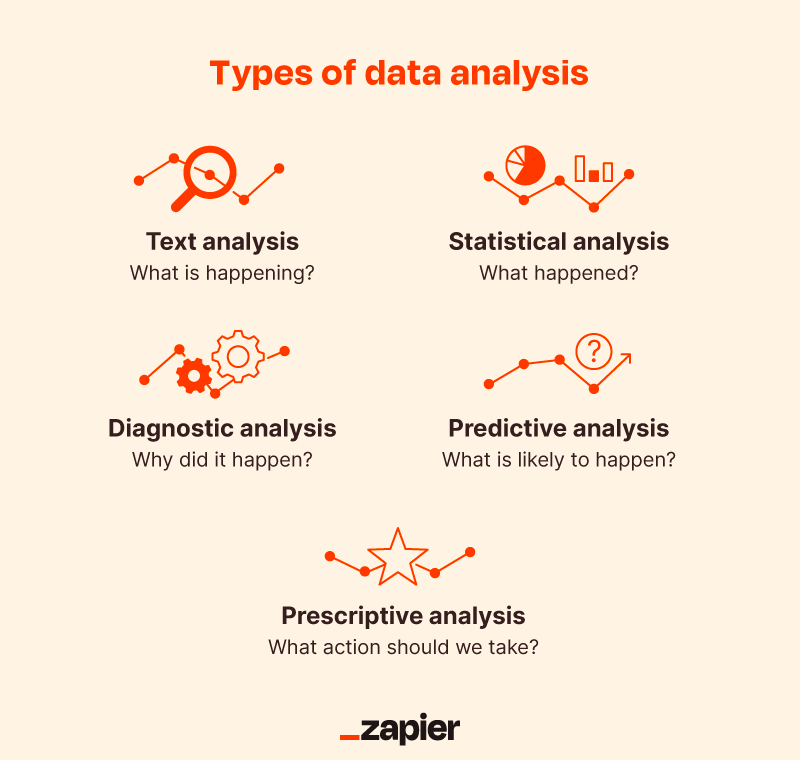

Types of data analysis (with examples)

Data can be used to answer questions and support decisions in many different ways. To identify the best way to analyze your data, it can help to familiarize yourself with the four types of data analysis commonly used in the field.

In this section, we’ll take a look at each of these data analysis methods, along with an example of how each might be applied in the real world.

Descriptive analysis

Descriptive analysis tells us what happened. This type of analysis helps describe or summarize quantitative data by presenting statistics. For example, descriptive statistical analysis could show the distribution of sales across a group of employees and the average sales figure per employee.

Descriptive analysis answers the question, “what happened?”
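The sales example could be sketched as follows. The employee names and figures are invented for illustration.

```python
import statistics

# Hypothetical monthly sales figures per employee.
sales = {"Amara": 42, "Ben": 35, "Chen": 58, "Dina": 35}

average = statistics.mean(list(sales.values()))
print(f"average sales per employee: {average}")  # 42.5

# Distribution: each employee's share of total sales.
total = sum(sales.values())
for name, amount in sales.items():
    print(f"{name}: {amount} ({amount / total:.0%})")
```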

Diagnostic analysis

If the descriptive analysis determines the “what,” diagnostic analysis determines the “why.” Let’s say a descriptive analysis shows an unusual influx of patients in a hospital. Drilling into the data further might reveal that many of these patients shared symptoms of a particular virus. This diagnostic analysis can help you determine that an infectious agent—the “why”—led to the influx of patients.

Diagnostic analysis answers the question, “why did it happen?”

Predictive analysis

So far, we’ve looked at types of analysis that examine and draw conclusions about the past. Predictive analytics uses data to form projections about the future. Using predictive analysis, you might notice that a given product has had its best sales during the months of September and October each year, leading you to predict a similar high point during the upcoming year.

Predictive analysis answers the question, “what might happen in the future?”
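One very simple way to turn the seasonal pattern described above into a projection is to average each calendar month across past years. The sketch below assumes invented sales records and a hypothetical `seasonal_forecast` helper; real predictive work would typically use more history and a proper forecasting model.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical (year, month, units_sold) records; figures are illustrative.
history = [
    (2021, 8, 80), (2021, 9, 130), (2021, 10, 125), (2021, 11, 90),
    (2022, 8, 85), (2022, 9, 140), (2022, 10, 135), (2022, 11, 95),
]


def seasonal_forecast(records):
    """Forecast each month as the average of that month in past years."""
    by_month = defaultdict(list)
    for _year, month, units in records:
        by_month[month].append(units)
    return {month: mean(units) for month, units in by_month.items()}


forecast = seasonal_forecast(history)
peak_month = max(forecast, key=forecast.get)  # month with the highest projection
print(forecast, peak_month)
```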

Prescriptive analysis

Prescriptive analysis takes all the insights gathered from the first three types of analysis and uses them to form recommendations for how a company should act. Using our previous example, this type of analysis might suggest a market plan to build on the success of the high sales months and harness new growth opportunities in the slower months.

Prescriptive analysis answers the question, “what should we do about it?”

This last type is where the concept of data-driven decision-making comes into play.


What is data-driven decision-making (DDDM)?

Data-driven decision-making (sometimes abbreviated to DDDM) can be defined as the process of making strategic business decisions based on facts, data, and metrics instead of intuition, emotion, or observation.

This might sound obvious, but in practice, not all organizations are as data-driven as they could be. According to global management consulting firm McKinsey Global Institute, data-driven companies are better at acquiring new customers, maintaining customer loyalty, and achieving above-average profitability [ 2 ].


Frequently asked questions (FAQ)

Where is data analytics used?

Just about any business or organization can use data analytics to help inform their decisions and boost their performance. Some of the most successful companies across a range of industries — from Amazon and Netflix to Starbucks and General Electric — integrate data into their business plans to improve their overall business performance.

What are the top skills for a data analyst?

Data analysis makes use of a range of analysis tools and technologies. Some of the top skills for data analysts include SQL, data visualization, statistical programming languages (like R and Python), machine learning, and spreadsheets.


What is a data analyst job salary?

Data from Glassdoor indicates that the average base salary for a data analyst in the United States is $75,349 as of March 2024 [ 3 ]. How much you make will depend on factors like your qualifications, experience, and location.

Do data analysts need to be good at math?

Data analytics tends to be less math-intensive than data science. While you probably won’t need to master any advanced mathematics, a foundation in basic math and statistical analysis can help set you up for success.


Article sources

World Economic Forum. "The Future of Jobs Report 2023, https://www3.weforum.org/docs/WEF_Future_of_Jobs_2023.pdf." Accessed March 19, 2024.

McKinsey & Company. "Five facts: How customer analytics boosts corporate performance, https://www.mckinsey.com/business-functions/marketing-and-sales/our-insights/five-facts-how-customer-analytics-boosts-corporate-performance." Accessed March 19, 2024.

Glassdoor. "Data Analyst Salaries, https://www.glassdoor.com/Salaries/data-analyst-salary-SRCH_KO0,12.htm." Accessed March 19, 2024.

Coursera staff.

Editorial Team


Content Analysis

Content analysis is a research tool used to determine the presence of certain words, themes, or concepts within some given qualitative data (i.e. text). Using content analysis, researchers can quantify and analyze the presence, meanings, and relationships of such words, themes, or concepts. As an example, researchers can evaluate language used within a news article to search for bias or partiality. Researchers can then make inferences about the messages within the texts, the writer(s), the audience, and even the culture and time surrounding the text.

Description

Sources of data could be interviews, open-ended questions, field research notes, conversations, or literally any occurrence of communicative language (such as books, essays, discussions, newspaper headlines, speeches, media, historical documents). A single study may analyze various forms of text in its analysis. To analyze the text using content analysis, the text must be coded, or broken down, into manageable units for analysis (i.e. “codes”). Once the text is coded, the codes can then be grouped into “code categories” to summarize the data even further.

Three different definitions of content analysis are provided below.

Definition 1: “Any technique for making inferences by systematically and objectively identifying special characteristics of messages.” (from Holsti, 1968)

Definition 2: “An interpretive and naturalistic approach. It is both observational and narrative in nature and relies less on the experimental elements normally associated with scientific research (reliability, validity, and generalizability).” (from Ethnography, Observational Research, and Narrative Inquiry, 1994-2012)

Definition 3: “A research technique for the objective, systematic and quantitative description of the manifest content of communication.” (from Berelson, 1952)

Uses of Content Analysis

Identify the intentions, focus or communication trends of an individual, group or institution

Describe attitudinal and behavioral responses to communications

Determine the psychological or emotional state of persons or groups

Reveal international differences in communication content

Reveal patterns in communication content

Pre-test and improve an intervention or survey prior to launch

Analyze focus group interviews and open-ended questions to complement quantitative data

Types of Content Analysis

There are two general types of content analysis: conceptual analysis and relational analysis. Conceptual analysis determines the existence and frequency of concepts in a text. Relational analysis develops the conceptual analysis further by examining the relationships among concepts in a text. Each type of analysis may lead to different results, conclusions, interpretations and meanings.

Conceptual Analysis

Typically people think of conceptual analysis when they think of content analysis. In conceptual analysis, a concept is chosen for examination and the analysis involves quantifying and counting its presence. The main goal is to examine the occurrence of selected terms in the data. Terms may be explicit or implicit. Explicit terms are easy to identify. Coding of implicit terms is more complicated: you need to decide the level of implication and base judgments on subjectivity (an issue for reliability and validity). Therefore, coding of implicit terms involves using a dictionary or contextual translation rules or both.

To begin a conceptual content analysis, first identify the research question and choose a sample or samples for analysis. Next, the text must be coded into manageable content categories. This is basically a process of selective reduction. By reducing the text to categories, the researcher can focus on and code for specific words or patterns that inform the research question.

General steps for conducting a conceptual content analysis:

1. Decide the level of analysis: word, word sense, phrase, sentence, themes

2. Decide how many concepts to code for: develop a pre-defined or interactive set of categories or concepts. Decide either: A. to allow flexibility to add categories through the coding process, or B. to stick with the pre-defined set of categories.

Option A allows for the introduction and analysis of new and important material that could have significant implications to one’s research question.

Option B allows the researcher to stay focused and examine the data for specific concepts.

3. Decide whether to code for existence or frequency of a concept. The decision changes the coding process.

When coding for the existence of a concept, the researcher would count a concept only once if it appeared at least once in the data and no matter how many times it appeared.

When coding for the frequency of a concept, the researcher would count the number of times a concept appears in a text.

4. Decide on how you will distinguish among concepts:

Should words be coded exactly as they appear, or coded as the same when they appear in different forms? For example, “dangerous” vs. “dangerousness”. The point here is to create coding rules so that these word segments are transparently categorized in a logical fashion. The rules could make all of these word segments fall into the same category, or perhaps the rules can be formulated so that the researcher can distinguish these word segments into separate codes.

What level of implication is to be allowed? Words that imply the concept or words that explicitly state the concept? For example, “dangerous” vs. “the person is scary” vs. “that person could cause harm to me”. These word segments may not merit separate categories, due to the implicit meaning of “dangerous”.

5. Develop rules for coding your texts. After the decisions of steps 1-4 are complete, a researcher can begin developing rules for the translation of text into codes. This will keep the coding process organized and consistent. The researcher can code for exactly what he/she wants to code. Validity of the coding process is ensured when the researcher is consistent and coherent in their codes, meaning that they follow their translation rules. In content analysis, abiding by the translation rules is equivalent to validity.

6. Decide what to do with irrelevant information: should this be ignored (e.g. common English words like “the” and “and”), or used to reexamine the coding scheme in the case that it would add to the outcome of coding?

7. Code the text: This can be done by hand or by using software. By using software, researchers can input categories and have coding done automatically, quickly and efficiently, by the software program. When coding is done by hand, a researcher can recognize errors far more easily (e.g. typos, misspelling). If using computer coding, text could be cleaned of errors to include all available data. This decision of hand vs. computer coding is most relevant for implicit information where category preparation is essential for accurate coding.

8. Analyze your results: Draw conclusions and generalizations where possible. Determine what to do with irrelevant, unwanted, or unused text: reexamine, ignore, or reassess the coding scheme. Interpret results carefully as conceptual content analysis can only quantify the information. Typically, general trends and patterns can be identified.
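The steps above can be sketched in a few lines of Python. This is a minimal illustration, not a substitute for dedicated coding software: the coding rules, the stop-word list, and the sample sentence are all invented, and the helper names (`code_text`, `code_frequency`, `code_existence`) are hypothetical. It codes at the word level only (step 1), uses a pre-defined set of categories (step 2, option B), and shows the difference between coding for existence and coding for frequency (step 3).

```python
import re
from collections import Counter

# Hypothetical coding rules (steps 4-5): each surface form maps to one code.
CODING_RULES = {
    "dangerous": "DANGER",
    "dangerousness": "DANGER",
    "scary": "DANGER",      # implicit term coded under the same concept
    "safe": "SAFETY",
    "safety": "SAFETY",
}

# Irrelevant words to ignore (step 6).
STOP_WORDS = {"the", "and", "is", "a", "of"}


def code_text(text):
    """Return the concept codes found in the text, in order of appearance."""
    words = re.findall(r"[a-z]+", text.lower())
    return [CODING_RULES[w] for w in words
            if w not in STOP_WORDS and w in CODING_RULES]


def code_frequency(text):
    """Coding for frequency: count every occurrence of each concept."""
    return Counter(code_text(text))


def code_existence(text):
    """Coding for existence: each concept counts once, however often it appears."""
    return set(code_text(text))


sample = "The street is dangerous and scary, but the park is safe."
print(code_frequency(sample))
print(code_existence(sample))
```

Word-level matching like this cannot handle implicit phrases such as “that person could cause harm to me”; those would still need human judgment or richer translation rules.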

Relational Analysis

Relational analysis begins like conceptual analysis, where a concept is chosen for examination. However, the analysis involves exploring the relationships between concepts. Individual concepts are viewed as having no inherent meaning and rather the meaning is a product of the relationships among concepts.

To begin a relational content analysis, first identify a research question and choose a sample or samples for analysis. The research question must be focused so the concept types are not open to interpretation and can be summarized. Next, select text for analysis. Select it carefully, striking a balance: too little information limits the results of the analysis, while too much makes the coding process so arduous that it cannot supply meaningful and worthwhile results.

There are three subcategories of relational analysis to choose from prior to going on to the general steps.

Affect extraction: an emotional evaluation of concepts explicit in a text. A challenge to this method is that emotions can vary across time, populations, and space. However, it could be effective at capturing the emotional and psychological state of the speaker or writer of the text.

Proximity analysis: an evaluation of the co-occurrence of explicit concepts in the text. Text is defined as a string of words called a “window” that is scanned for the co-occurrence of concepts. The result is the creation of a “concept matrix”, or a group of interrelated co-occurring concepts that would suggest an overall meaning.

Cognitive mapping: a visualization technique for either affect extraction or proximity analysis. Cognitive mapping attempts to create a model of the overall meaning of the text such as a graphic map that represents the relationships between concepts.
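Proximity analysis in particular lends itself to a small sketch. The code below slides a fixed-size window over a tokenized text and counts how many windows contain each pair of concepts, which gives a simple “concept matrix”. The window size, the example sentence, and the function name `concept_matrix` are assumptions chosen for illustration; because the windows overlap, a pair that sits close together is counted in several windows.

```python
from collections import Counter
from itertools import combinations


def concept_matrix(tokens, concepts, window=4):
    """Count, for each pair of concepts, the windows in which both occur."""
    counts = Counter()
    # If the text is shorter than the window, scan it once as a single window.
    for start in range(max(1, len(tokens) - window + 1)):
        present = sorted(set(tokens[start:start + window]) & concepts)
        for pair in combinations(present, 2):
            counts[pair] += 1
    return counts


tokens = "smoking causes cancer and smoking harms health".split()
concepts = {"smoking", "cancer", "health"}
matrix = concept_matrix(tokens, concepts, window=4)
print(matrix)
```

A cognitive map could then be drawn from these counts, for example by linking concepts whose co-occurrence count exceeds a chosen threshold.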

General steps for conducting a relational content analysis:

1. Determine the type of analysis: Once the sample has been selected, the researcher needs to determine what types of relationships to examine and the level of analysis: word, word sense, phrase, sentence, themes.

2. Reduce the text to categories and code for words or patterns. A researcher can code for existence of meanings or words.

3. Explore the relationship between concepts: once the words are coded, the text can be analyzed for the following:

Strength of relationship: degree to which two or more concepts are related.

Sign of relationship: are concepts positively or negatively related to each other?

Direction of relationship: the types of relationship that categories exhibit. For example, “X implies Y” or “X occurs before Y” or “if X then Y” or if X is the primary motivator of Y.

4. Code the relationships: a difference between conceptual and relational analysis is that the statements or relationships between concepts are coded.

5. Perform statistical analyses: explore differences or look for relationships among the identified variables during coding.

6. Map out representations: such as decision mapping and mental models.

Reliability and Validity

Reliability: Because of the human nature of researchers, coding errors can never be eliminated but only minimized. Generally, 80% is an acceptable margin for reliability. Three criteria comprise the reliability of a content analysis:

Stability: the tendency for coders to consistently re-code the same data in the same way over a period of time.

Reproducibility: the tendency for a group of coders to classify category membership in the same way.

Accuracy: extent to which the classification of text corresponds to a standard or norm statistically.
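A common way to put a number on reproducibility is to have two coders code the same segments and compare their codes. The sketch below computes simple percent agreement, which is one reading of the 80% guideline mentioned above, and also Cohen's kappa, a standard chance-corrected statistic that is not discussed in the text and is added here only for comparison. The codes assigned by the two coders are invented.

```python
from collections import Counter


def percent_agreement(codes_a, codes_b):
    """Share of segments that two coders assigned to the same code."""
    if len(codes_a) != len(codes_b) or not codes_a:
        raise ValueError("Both coders must code the same non-empty set of segments")
    matches = sum(a == b for a, b in zip(codes_a, codes_b))
    return matches / len(codes_a)


def cohens_kappa(codes_a, codes_b):
    """Agreement between two coders, corrected for agreement expected by chance."""
    n = len(codes_a)
    p_observed = percent_agreement(codes_a, codes_b)
    freq_a, freq_b = Counter(codes_a), Counter(codes_b)
    p_expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if p_expected == 1:
        return 1.0  # both coders used a single identical code throughout
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical codes from two coders for the same ten text segments.
coder_1 = ["D", "D", "S", "S", "D", "S", "D", "S", "D", "S"]
coder_2 = ["D", "D", "S", "S", "D", "S", "D", "D", "D", "S"]

agreement = percent_agreement(coder_1, coder_2)
kappa = cohens_kappa(coder_1, coder_2)
print(f"agreement={agreement:.2f}, kappa={kappa:.2f}")
```

The same functions can be used to check stability by comparing one coder's first and second pass over the same data.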

Validity: Three criteria comprise the validity of a content analysis:

Closeness of categories: this can be achieved by utilizing multiple classifiers to arrive at an agreed upon definition of each specific category. Using multiple classifiers, a concept category that may be an explicit variable can be broadened to include synonyms or implicit variables.

Conclusions: What level of implication is allowable? Do conclusions correctly follow the data? Are results explainable by other phenomena? This becomes especially problematic when using computer software for analysis and distinguishing between synonyms. For example, the word “mine” variously denotes a personal pronoun, an explosive device, and a deep hole in the ground from which ore is extracted. Software can obtain an accurate count of that word’s occurrence and frequency, but cannot produce an accurate accounting of the meaning inherent in each particular usage. This problem could throw off one’s results and make any conclusion invalid.

Generalizability of the results to a theory: dependent on the clear definitions of concept categories, how they are determined and how reliable they are at measuring the idea one is seeking to measure. Generalizability parallels reliability as much of it depends on the three criteria for reliability.

Advantages of Content Analysis

Directly examines communication using text

Allows for both qualitative and quantitative analysis

Provides valuable historical and cultural insights over time

Allows a closeness to data

Coded form of the text can be statistically analyzed

Unobtrusive means of analyzing interactions

Provides insight into complex models of human thought and language use

When done well, is considered a relatively “exact” research method

Is a readily understood and inexpensive research method

A more powerful tool when combined with other research methods such as interviews, observation, and use of archival records. It is very useful for analyzing historical material, especially for documenting trends over time.

Disadvantages of Content Analysis

Can be extremely time consuming

Is subject to increased error, particularly when relational analysis is used to attain a higher level of interpretation

Is often devoid of theoretical base, or attempts too liberally to draw meaningful inferences about the relationships and impacts implied in a study

Is inherently reductive, particularly when dealing with complex texts

Tends too often to simply consist of word counts

Often disregards the context that produced the text, as well as the state of things after the text is produced

Can be difficult to automate or computerize

Textbooks & Chapters

Berelson, Bernard. Content Analysis in Communication Research. New York: Free Press, 1952.

Busha, Charles H. and Stephen P. Harter. Research Methods in Librarianship: Techniques and Interpretation. New York: Academic Press, 1980.

de Sola Pool, Ithiel. Trends in Content Analysis. Urbana: University of Illinois Press, 1959.

Krippendorff, Klaus. Content Analysis: An Introduction to its Methodology. Beverly Hills: Sage Publications, 1980.

Fielding, NG & Lee, RM. Using Computers in Qualitative Research. SAGE Publications, 1991. (Refer to Chapter by Seidel, J. ‘Method and Madness in the Application of Computer Technology to Qualitative Data Analysis’.)

Methodological Articles

Hsieh HF & Shannon SE. (2005). Three Approaches to Qualitative Content Analysis. Qualitative Health Research. 15(9): 1277-1288.

Elo S, Kaarianinen M, Kanste O, Polkki R, Utriainen K, & Kyngas H. (2014). Qualitative Content Analysis: A focus on trustworthiness. Sage Open. 4:1-10.

Application Articles

Abroms LC, Padmanabhan N, Thaweethai L, & Phillips T. (2011). iPhone Apps for Smoking Cessation: A content analysis. American Journal of Preventive Medicine. 40(3):279-285.

Ullstrom S, Sachs MA, Hansson J, Ovretveit J, & Brommels M. (2014). Suffering in Silence: a qualitative study of second victims of adverse events. British Medical Journal, Quality & Safety Issue. 23:325-331.

Owen P. (2012). Portrayals of Schizophrenia by Entertainment Media: A Content Analysis of Contemporary Movies. Psychiatric Services. 63:655-659.

Choosing whether to conduct a content analysis by hand or by using computer software can be difficult. Refer to ‘Method and Madness in the Application of Computer Technology to Qualitative Data Analysis’ listed above in “Textbooks and Chapters” for a discussion of the issue.

QSR NVivo: http://www.qsrinternational.com/products.aspx

Atlas.ti: http://www.atlasti.com/webinars.html

R- RQDA package: http://rqda.r-forge.r-project.org/

Rolly Constable, Marla Cowell, Sarita Zornek Crawford, David Golden, Jake Hartvigsen, Kathryn Morgan, Anne Mudgett, Kris Parrish, Laura Thomas, Erika Yolanda Thompson, Rosie Turner, and Mike Palmquist. (1994-2012). Ethnography, Observational Research, and Narrative Inquiry. Writing@CSU. Colorado State University. Available at: https://writing.colostate.edu/guides/guide.cfm?guideid=63 .

As an introduction to Content Analysis by Michael Palmquist, this is the main resource on Content Analysis on the Web. It is comprehensive, yet succinct. It includes examples and an annotated bibliography. The information contained in the narrative above draws heavily from and summarizes Michael Palmquist’s excellent resource on Content Analysis but was streamlined for the purpose of doctoral students and junior researchers in epidemiology.

At Columbia University Mailman School of Public Health, more detailed training is available through the Department of Sociomedical Sciences- P8785 Qualitative Research Methods.


How to conduct a meta-analysis in eight steps: a practical guide

- Open access

- Published: 30 November 2021

- Volume 72, pages 1–19 (2022)


- Christopher Hansen,

- Holger Steinmetz &

- Jörn Block


1 Introduction

“Scientists have known for centuries that a single study will not resolve a major issue. Indeed, a small sample study will not even resolve a minor issue. Thus, the foundation of science is the cumulation of knowledge from the results of many studies.” (Hunter et al. 1982, p. 10)

Meta-analysis is a central method for knowledge accumulation in many scientific fields (Aguinis et al. 2011c; Kepes et al. 2013). Similar to a narrative review, it serves as a synopsis of a research question or field. However, going beyond a narrative summary of key findings, a meta-analysis adds value in providing a quantitative assessment of the relationship between two target variables or the effectiveness of an intervention (Gurevitch et al. 2018). Also, it can be used to test competing theoretical assumptions against each other or to identify important moderators where the results of different primary studies differ from each other (Aguinis et al. 2011b; Bergh et al. 2016). Rooted in the synthesis of the effectiveness of medical and psychological interventions in the 1970s (Glass 2015; Gurevitch et al. 2018), meta-analysis is nowadays also an established method in management research and related fields.

The increasing importance of meta-analysis in management research has resulted in the publication of guidelines in recent years that discuss the merits and best practices in various fields, such as general management (Bergh et al. 2016; Combs et al. 2019; Gonzalez-Mulé and Aguinis 2018), international business (Steel et al. 2021), economics and finance (Geyer-Klingeberg et al. 2020; Havranek et al. 2020), marketing (Eisend 2017; Grewal et al. 2018), and organizational studies (DeSimone et al. 2020; Rudolph et al. 2020). These articles discuss existing and trending methods and propose solutions for frequently encountered problems. This editorial briefly summarizes the insights of these papers; provides a workflow of the essential steps in conducting a meta-analysis; suggests state-of-the-art methodological procedures; and points to other articles for in-depth investigation. Thus, this article has two goals: (1) based on the findings of previous editorials and methodological articles, it defines methodological recommendations for meta-analyses submitted to Management Review Quarterly (MRQ); and (2) it serves as a practical guide for researchers who have little experience with meta-analysis as a method but plan to conduct one in the future.

2 Eight steps in conducting a meta-analysis

2.1 Step 1: defining the research question

The first step in conducting a meta-analysis, as with any other empirical study, is the definition of the research question. Most importantly, the research question determines the realm of constructs to be considered or the type of interventions whose effects shall be analyzed. When defining the research question, two hurdles might develop. First, when defining an adequate study scope, researchers must consider that the number of publications has grown exponentially in many fields of research in recent decades (Fortunato et al. 2018). On the one hand, a larger number of studies increases the potentially relevant literature basis and enables researchers to conduct meta-analyses. On the other hand, scanning a large number of studies that could be potentially relevant for the meta-analysis results in a perhaps unmanageable workload. Thus, Steel et al. (2021) highlight the importance of balancing manageability and relevance when defining the research question.

Second, like the number of primary studies, the number of meta-analyses in management research has also grown strongly in recent years (Geyer-Klingeberg et al. 2020; Rauch 2020; Schwab 2015). Therefore, it is likely that one or several meta-analyses for many topics of high scholarly interest already exist. However, this should not deter researchers from investigating their research questions. One possibility is to consider moderators or mediators of a relationship that have previously been ignored. For example, a meta-analysis about startup performance could investigate the impact of different ways to measure the performance construct (e.g., growth vs. profitability vs. survival time) or certain characteristics of the founders as moderators. Another possibility is to replicate previous meta-analyses and test whether their findings can be confirmed with an updated sample of primary studies or newly developed methods. Frequent replications and updates of meta-analyses are important contributions to cumulative science and are increasingly called for by the research community (Anderson & Kichkha 2017; Steel et al. 2021). Consistent with its focus on replication studies (Block and Kuckertz 2018), MRQ therefore also invites authors to submit replication meta-analyses.

2.2 Step 2: literature search

2.2.1 Search strategies

Similar to conducting a literature review, the search process of a meta-analysis should be systematic, reproducible, and transparent, resulting in a sample that includes all relevant studies (Fisch and Block 2018; Gusenbauer and Haddaway 2020). There are several identification strategies for relevant primary studies when compiling meta-analytical datasets (Harari et al. 2020). First, previous meta-analyses on the same or a related topic may provide lists of included studies that offer a good starting point to identify and become familiar with the relevant literature. This practice is also applicable to topic-related literature reviews, which often summarize the central findings of the reviewed articles in systematic tables. Both article types likely include the most prominent studies of a research field.

The most common and important search strategy, however, is a keyword search in electronic databases (Harari et al. 2020). This strategy will probably yield the largest number of relevant studies, particularly so-called ‘grey literature’, which may not be considered by literature reviews. Gusenbauer and Haddaway (2020) provide a detailed overview of 34 scientific databases, of which 18 are multidisciplinary or have a focus on management sciences, along with their suitability for literature synthesis. To prevent biased results due to the scope or journal coverage of one database, researchers should use at least two different databases (DeSimone et al. 2020; Martín-Martín et al. 2021; Mongeon & Paul-Hus 2016). However, a database search can easily lead to an overload of potentially relevant studies. For example, key term searches in Google Scholar for “entrepreneurial intention” and “firm diversification” resulted in more than 660,000 and 810,000 hits, respectively. Therefore, a precise research question and precise search terms using Boolean operators are advisable (Gusenbauer and Haddaway 2020).

Addressing the challenge of identifying relevant articles in the growing number of database publications, (semi)automated approaches using text mining and machine learning (Bosco et al. 2017; O’Mara-Eves et al. 2015; Ouzzani et al. 2016; Thomas et al. 2017) can also be promising and time-saving search tools in the future. Also, some electronic databases offer the possibility to track forward citations of influential studies and thereby identify further relevant articles. Finally, collecting unpublished or undetected studies through conferences, personal contact with (leading) scholars, or listservs can be strategies to increase the study sample size (Grewal et al. 2018; Harari et al. 2020; Pigott and Polanin 2020).

2.2.2 Study inclusion criteria and sample composition

Next, researchers must decide which studies to include in the meta-analysis. Some guidelines for literature reviews recommend limiting the sample to studies published in renowned academic journals to ensure the quality of findings (e.g., Kraus et al. 2020). For meta-analysis, however, Steel et al. (2021) advocate for the inclusion of all available studies, including grey literature, to prevent selection biases based on availability, cost, familiarity, and language (Rothstein et al. 2005), or the “Matthew effect”, which denotes the phenomenon that highly cited articles are found faster than less cited articles (Merton 1968). Harrison et al. (2017) find that the effects of published studies in management are inflated on average by 30% compared to unpublished studies. This so-called publication bias or “file drawer problem” (Rosenthal 1979) results from the preference of academia to publish statistically significant study results more readily than statistically insignificant ones. Owen and Li (2020) showed that publication bias is particularly severe when variables of interest are used as key variables rather than control variables. To consider the true effect size of a target variable or relationship, the inclusion of all types of research outputs is therefore recommended (Polanin et al. 2016). Different test procedures to identify publication bias are discussed subsequently in Step 7.

In addition to the decision of whether to include certain study types (i.e., published vs. unpublished studies), there can be other reasons to exclude studies that are identified in the search process. These reasons can be manifold and are primarily related to the specific research question and methodological peculiarities. For example, studies identified by keyword search might not qualify thematically after all, may use unsuitable variable measurements, or may not report usable effect sizes. Furthermore, there might be multiple studies by the same authors using similar datasets. If they do not differ sufficiently in terms of their sample characteristics or variables used, only one of these studies should be included to prevent bias from duplicates (Wood 2008; see this article for a detection heuristic).

In general, the screening process should be conducted stepwise, beginning with a removal of duplicate citations from different databases, followed by abstract screening to exclude clearly unsuitable studies and a final full-text screening of the remaining articles (Pigott and Polanin 2020). A graphical tool to systematically document the sample selection process is the PRISMA flow diagram (Moher et al. 2009). Page et al. (2021) recently presented an updated version of the PRISMA statement, including an extended item checklist and flow diagram to report the study process and findings.
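The first screening step, the removal of duplicate citations, can be illustrated with a minimal Python sketch. The record layout (dictionaries with optional `doi` and `title` fields) and the matching rule (DOI if present, otherwise a normalized title) are assumptions made for illustration only; reference managers and screening tools use more elaborate matching, and a record exported with a DOI from one database will not be matched here to the same record exported without a DOI from another.

```python
import re


def record_key(record):
    """Build a comparison key: the DOI if available, otherwise a normalized title."""
    doi = (record.get("doi") or "").strip().lower()
    if doi:
        return "doi:" + doi
    title = (record.get("title") or "").lower()
    # Collapse punctuation and whitespace so trivial formatting differences do not matter.
    title = re.sub(r"[^a-z0-9]+", " ", title).strip()
    return "title:" + title


def remove_duplicates(records):
    """Keep the first occurrence of each record key and drop later duplicates."""
    seen = set()
    unique = []
    for record in records:
        key = record_key(record)
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


# Hypothetical search results merged from two databases.
hits = [
    {"doi": "10.1000/ABC", "title": "A study"},
    {"doi": "10.1000/abc", "title": "A Study"},
    {"title": "Another  Study!"},
    {"title": "another study"},
]
unique_hits = remove_duplicates(hits)
print(f"identified: {len(hits)}, after duplicate removal: {len(unique_hits)}")
```

The two counts printed at the end correspond to the first boxes of a PRISMA-style flow diagram; the later abstract and full-text screening steps require human judgment and are not sketched here.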

2.3 Step 3: choice of the effect size measure

2.3.1 Types of effect sizes

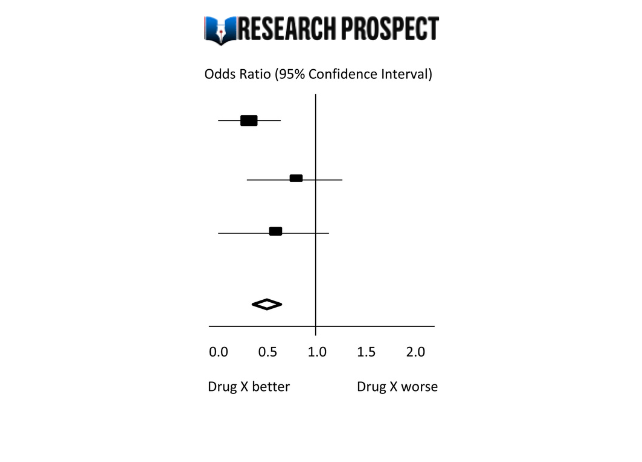

The two most common meta-analytical effect size measures in management studies are (z-transformed) correlation coefficients and standardized mean differences (Aguinis et al. 2011a; Geyskens et al. 2009). However, meta-analyses in management science and related fields may not be limited to those two effect size measures but rather depend on the subfield of investigation (Borenstein 2009; Stanley and Doucouliagos 2012). In economics and finance, researchers are more interested in the examination of elasticities and marginal effects extracted from regression models than in pure bivariate correlations (Stanley and Doucouliagos 2012). Regression coefficients can also be converted to partial correlation coefficients based on their t-statistics to make regression results comparable across studies (Stanley and Doucouliagos 2012). Although some meta-analyses in management research have combined bivariate and partial correlations in their study samples, Aloe (2015) and Combs et al. (2019) advise researchers not to use this practice. Most importantly, they argue that the effect size strength of partial correlations depends on the other variables included in the regression model and is therefore incomparable to bivariate correlations (Schmidt and Hunter 2015), resulting in a possible bias of the meta-analytic results (Roth et al. 2018). We endorse this opinion. If both measures are used at all, we recommend separate analyses for each measure. In addition to these measures, survival rates, risk ratios or odds ratios, which are common measures in medical research (Borenstein 2009), can be suitable effect sizes for specific management research questions, such as understanding the determinants of the survival of startup companies. To summarize, the choice of a suitable effect size is often not up to the researcher because it is typically dependent on the investigated research question as well as the conventions of the specific research field (Cheung and Vijayakumar 2016).
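The two transformations mentioned above can be sketched in a few lines of Python. The sketch assumes the commonly used formulas r_p = t / sqrt(t² + df) for the partial correlation implied by a regression t-statistic and z = atanh(r) with sampling variance 1 / (n − 3) for the Fisher z-transformation of a bivariate correlation; the function names are illustrative and do not come from any particular package.

```python
import math


def partial_r_from_t(t, df):
    """Partial correlation implied by a regression coefficient's t-statistic.

    Assumes r_p = t / sqrt(t^2 + df), with df the residual degrees of freedom.
    """
    return t / math.sqrt(t * t + df)


def fisher_z(r):
    """Fisher z-transformation of a bivariate correlation."""
    return math.atanh(r)


def fisher_z_variance(n):
    """Approximate sampling variance of Fisher's z for a bivariate correlation."""
    if n <= 3:
        raise ValueError("sample size must exceed 3")
    return 1.0 / (n - 3)


def inverse_fisher_z(z):
    """Back-transform a (mean) z value to the correlation metric."""
    return math.tanh(z)


# Hypothetical regression result: t = 2.0 with 96 residual degrees of freedom.
print(partial_r_from_t(2.0, 96))
# Hypothetical bivariate correlation of 0.5 from a sample of 103 observations.
print(fisher_z(0.5), fisher_z_variance(103))
```

In line with the recommendation above, partial and bivariate correlations obtained this way would be analyzed separately rather than pooled.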

2.3.2 Conversion of effect sizes to a common measure

After having defined the primary effect size measure for the meta-analysis, it might become necessary in the later coding process to convert study findings that are reported in effect sizes other than the chosen primary effect size. For example, a study might report only descriptive statistics for two study groups but no correlation coefficient, even though the correlation coefficient is the primary effect size measure in the meta-analysis. Different effect size measures can be harmonized using conversion formulae, which are provided by standard method books such as Borenstein et al. (2009) or Lipsey and Wilson (2001). There also exist online effect size calculators for meta-analysis. Footnote 2
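As an illustration of such a conversion, the following Python sketch turns group means, standard deviations, and sample sizes into a standardized mean difference d and then into a correlation r. It assumes the textbook formulas d = (m1 − m2) / s_pooled and r = d / sqrt(d² + a) with a = (n1 + n2)² / (n1 · n2); the function names and the input values are hypothetical.

```python
import math


def cohens_d(m1, sd1, n1, m2, sd2, n2):
    """Standardized mean difference based on the pooled standard deviation."""
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2)
    return (m1 - m2) / math.sqrt(pooled_var)


def d_to_r(d, n1, n2):
    """Convert a standardized mean difference to a correlation coefficient."""
    a = (n1 + n2) ** 2 / (n1 * n2)  # correction factor; equals 4 for equal group sizes
    return d / math.sqrt(d * d + a)


# Hypothetical study reporting only descriptive statistics for two groups.
d = cohens_d(m1=10.0, sd1=4.0, n1=50, m2=8.0, sd2=4.0, n2=50)
r = d_to_r(d, n1=50, n2=50)
print(d, r)
```

Whether a converted value is sufficiently comparable to directly reported correlations remains a judgment call for the coder, so it can be useful to flag converted effect sizes in the coding sheet.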

2.4 Step 4: choice of the analytical method used

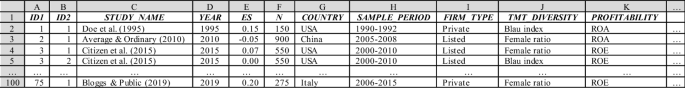

Choosing which meta-analytical method to use is directly connected to the research question of the meta-analysis. Research questions in meta-analyses can address a relationship between constructs or an effect of an intervention in a general manner, or they can focus on moderating or mediating effects. There are four meta-analytical methods that are primarily used in contemporary management research (Combs et al. 2019; Geyer-Klingeberg et al. 2020), which allow the investigation of these different types of research questions: traditional univariate meta-analysis, meta-regression, meta-analytic structural equation modeling, and qualitative meta-analysis (Hoon 2013). While the first three are quantitative, the last one summarizes qualitative findings. Table 1 summarizes the key characteristics of the three quantitative methods.

2.4.1 Univariate meta-analysis

In its traditional form, a meta-analysis reports a weighted mean effect size for the relationship or intervention of investigation and provides information on the magnitude of variance among primary studies (Aguinis et al. 2011c; Borenstein et al. 2009). Accordingly, it serves as a quantitative synthesis of a research field (Borenstein et al. 2009; Geyskens et al. 2009). Prominent traditional approaches have been developed, for example, by Hedges and Olkin (1985) or Hunter and Schmidt (1990, 2004). However, beyond its simple summary function, the traditional approach has limitations in explaining the observed variance among findings (Gonzalez-Mulé and Aguinis 2018). To identify moderators (or boundary conditions) of the relationship of interest, meta-analysts can create subgroups and investigate differences between those groups (Borenstein and Higgins 2013; Hunter and Schmidt 2004). Potential moderators can be study characteristics (e.g., whether a study is published vs. unpublished), sample characteristics (e.g., study country, industry focus, or type of survey/experiment participants), or measurement artifacts (e.g., different types of variable measurements). The univariate approach is thus suitable to identify the overall direction of a relationship and can serve as a good starting point for additional analyses. However, due to its limitations in examining boundary conditions and developing theory, the univariate approach on its own is currently often viewed as insufficient (Rauch 2020; Shaw and Ertug 2017).
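To make the idea of a weighted mean effect size and the "magnitude of variance among primary studies" concrete, the following Python sketch computes an inverse-variance weighted mean, Cochran's Q, the I² statistic, and a random-effects mean based on the DerSimonian-Laird estimate of the between-study variance. This is one common variant in the spirit of the Hedges and Olkin tradition, chosen here for illustration; it is not the Hunter and Schmidt procedure, and the function name and input data are hypothetical.

```python
import math


def univariate_meta(effects, variances):
    """Inverse-variance meta-analysis with a DerSimonian-Laird random-effects step."""
    weights = [1.0 / v for v in variances]
    sum_w = sum(weights)
    mean_fixed = sum(w * y for w, y in zip(weights, effects)) / sum_w

    # Cochran's Q: weighted squared deviations from the fixed-effect mean.
    q = sum(w * (y - mean_fixed) ** 2 for w, y in zip(weights, effects))
    df = len(effects) - 1

    # DerSimonian-Laird estimate of the between-study variance tau^2.
    c = sum_w - sum(w * w for w in weights) / sum_w
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0

    # I^2: share of total variation attributed to heterogeneity rather than sampling error.
    i2 = max(0.0, (q - df) / q) if q > 0 else 0.0

    weights_re = [1.0 / (v + tau2) for v in variances]
    mean_random = sum(w * y for w, y in zip(weights_re, effects)) / sum(weights_re)
    se_random = math.sqrt(1.0 / sum(weights_re))

    return {
        "mean_fixed": mean_fixed,
        "mean_random": mean_random,
        "se_random": se_random,
        "Q": q,
        "tau2": tau2,
        "I2": i2,
    }


# Hypothetical effect sizes (e.g., Fisher z values) with their sampling variances.
result = univariate_meta([0.1, 0.3, 0.5], [0.01, 0.01, 0.01])
print(result)
```

A simple subgroup analysis can be approximated by calling the same function separately on the effect sizes of each subgroup (e.g., published vs. unpublished studies) and comparing the resulting means.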

2.4.2 Meta-regression analysis

Meta-regression analysis (Hedges and Olkin 1985; Lipsey and Wilson 2001; Stanley and Jarrell 1989) aims to investigate the heterogeneity among observed effect sizes by testing multiple potential moderators simultaneously. In meta-regression, the coded effect size is used as the dependent variable and is regressed on a list of moderator variables. These moderator variables can be categorical variables as described previously in the traditional univariate approach or (semi)continuous variables such as country scores that are merged with the meta-analytical data. Thus, meta-regression analysis overcomes the disadvantages of the traditional approach, which only allows us to investigate moderators one at a time using dichotomized subgroups (Combs et al. 2019; Gonzalez-Mulé and Aguinis 2018). These possibilities allow a more fine-grained analysis of research questions that are related to moderating effects. However, Schmidt (2017) critically notes that the number of effect sizes in the meta-analytical sample must be sufficiently large to produce reliable results when investigating multiple moderators simultaneously in a meta-regression. For further reading, Tipton et al. (2019) outline the technical, conceptual, and practical developments of meta-regression over the last decades. Gonzalez-Mulé and Aguinis (2018) provide an overview of methodological choices and develop evidence-based best practices for future meta-analyses in management using meta-regression.
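The core mechanics of a meta-regression can be sketched as a weighted least squares regression of effect sizes on a moderator. The Python sketch below is deliberately reduced to a single continuous moderator and fixed-effect weights (the inverse sampling variances); real applications typically include several moderators and a between-study variance component, for which dedicated software is preferable. The function name and the data are hypothetical.

```python
import math


def simple_meta_regression(effects, variances, moderator):
    """Weighted least squares of effect sizes on one moderator (fixed-effect weights)."""
    weights = [1.0 / v for v in variances]
    sum_w = sum(weights)
    x_bar = sum(w * x for w, x in zip(weights, moderator)) / sum_w
    y_bar = sum(w * y for w, y in zip(weights, effects)) / sum_w

    sxx = sum(w * (x - x_bar) ** 2 for w, x in zip(weights, moderator))
    if sxx == 0:
        raise ValueError("the moderator does not vary across studies")
    sxy = sum(
        w * (x - x_bar) * (y - y_bar)
        for w, x, y in zip(weights, moderator, effects)
    )

    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    # With known sampling variances, the slope's standard error is sqrt(1 / Sxx).
    se_slope = math.sqrt(1.0 / sxx)
    return {"intercept": intercept, "slope": slope, "se_slope": se_slope}


# Hypothetical data: four effect sizes and a country-level score as moderator.
fit = simple_meta_regression(
    effects=[0.1, 0.3, 0.5, 0.7],
    variances=[0.01, 0.02, 0.01, 0.02],
    moderator=[0.0, 1.0, 2.0, 3.0],
)
print(fit)
```

With only four effect sizes, such an estimate would be far too unstable in practice, which illustrates Schmidt's (2017) point about sample size.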

2.4.3 Meta-analytic structural equation modeling (MASEM)

MASEM is a combination of meta-analysis and structural equation modeling and allows researchers to simultaneously investigate the relationships among several constructs in a path model. Researchers can use MASEM to test several competing theoretical models against each other or to identify mediation mechanisms in a chain of relationships (Bergh et al. 2016). This method is typically performed in two steps (Cheung and Chan 2005): In Step 1, a pooled correlation matrix is derived, which includes the meta-analytical mean effect sizes for all variable combinations; Step 2 then uses this matrix to fit the path model. While MASEM was based primarily on traditional univariate meta-analysis to derive the pooled correlation matrix in its early years (Viswesvaran and Ones 1995), more advanced methods, such as the GLS approach (Becker 1992, 1995) or the TSSEM approach (Cheung and Chan 2005), have been subsequently developed. Cheung (2015a) and Jak (2015) provide an overview of these approaches in their books with exemplary code. For datasets with more complex data structures, Wilson et al. (2016) also developed a multilevel approach that is related to the TSSEM approach in the second step. Bergh et al. (2016) discuss nine decision points and develop best practices for MASEM studies.
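The two-step logic can be illustrated with a deliberately simplified Python sketch. Step 1 pools each correlation separately using sample-size weights, which resembles the early univariate approach rather than the GLS or TSSEM approaches; Step 2 derives standardized path coefficients for a simple mediation model (X → M → Y with a direct path X → Y) from the pooled correlations. The sketch yields no standard errors or fit statistics, and all names and numbers are hypothetical.

```python
def pool_correlation(correlations, sample_sizes):
    """Step 1 (simplified): sample-size weighted mean of one bivariate correlation."""
    total_n = sum(sample_sizes)
    return sum(r * n for r, n in zip(correlations, sample_sizes)) / total_n


def mediation_paths(r_xm, r_xy, r_my):
    """Step 2 (simplified): standardized paths of X -> M -> Y with a direct X -> Y path."""
    denom = 1.0 - r_xm ** 2
    a = r_xm                                # X -> M
    b = (r_my - r_xy * r_xm) / denom        # M -> Y, controlling for X
    c_direct = (r_xy - r_my * r_xm) / denom  # X -> Y, controlling for M
    return {"a": a, "b": b, "c_direct": c_direct, "indirect": a * b}


# Hypothetical primary studies reporting the X-M correlation.
r_xm = pool_correlation([0.4, 0.6], [200, 200])
# Hypothetical pooled values for the remaining two cells of the matrix.
paths = mediation_paths(r_xm=r_xm, r_xy=0.4, r_my=0.6)
print(paths)
```

In this just-identified model, the direct and indirect effects add up to the pooled X-Y correlation, which offers a quick plausibility check on the computation.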

2.4.4 Qualitative meta-analysis

While the approaches explained above focus on quantitative outcomes of empirical studies, qualitative meta-analysis aims to synthesize qualitative findings from case studies (Hoon 2013; Rauch et al. 2014). The distinctive feature of qualitative case studies is their potential to provide in-depth information about specific contextual factors or to shed light on reasons for certain phenomena that cannot usually be investigated by quantitative studies (Rauch 2020; Rauch et al. 2014). In a qualitative meta-analysis, the identified case studies are systematically coded in a meta-synthesis protocol, which is then used to identify influential variables or patterns and to derive a meta-causal network (Hoon 2013). Thus, the insights of contextualized and typically nongeneralizable single studies are aggregated to a larger, more generalizable picture (Habersang et al. 2019). Although still the exception, this method can thus provide important contributions for academics in terms of theory development (Combs et al. 2019; Hoon 2013) and for practitioners in terms of evidence-based management or entrepreneurship (Rauch et al. 2014). Levitt (2018) provides a guide and discusses conceptual issues for conducting qualitative meta-analysis in psychology, which is also useful for management researchers.

2.5 Step 5: choice of software

Software solutions to perform meta-analyses range from built-in functions or additional packages of statistical software to software purely focused on meta-analyses and from commercial to open-source solutions. However, in addition to personal preferences, the choice of the most suitable software depends on the complexity of the methods used and the dataset itself (Cheung and Vijayakumar 2016). Meta-analysts therefore must carefully check if their preferred software is capable of performing the intended analysis.

Among commercial software providers, Stata (from version 16 on) offers built-in functions to perform various meta-analytical analyses or to produce various plots (Palmer and Sterne 2016). For SPSS and SAS, there exist several macros for meta-analyses provided by scholars, such as David B. Wilson or Andy P. Field and Raphael Gillett (Field and Gillett 2010). Footnote 3 Footnote 4 For researchers using the open-source software R (R Core Team 2021), Polanin et al. (2017) provide an overview of 63 meta-analysis packages and their functionalities. For new users, they recommend the package metafor (Viechtbauer 2010), which includes most necessary functions and for which the author Wolfgang Viechtbauer provides tutorials on his project website. Footnote 5 Footnote 6 In addition to packages and macros for statistical software, templates for Microsoft Excel have also been developed to conduct simple meta-analyses, such as Meta-Essentials by Suurmond et al. (2017). Footnote 7 Finally, programs purely dedicated to meta-analysis also exist, such as Comprehensive Meta-Analysis (Borenstein et al. 2013) or RevMan by The Cochrane Collaboration (2020).

2.6 Step 6: coding of effect sizes

2.6.1 Coding sheet